PRE2019 3 Group10


<ul>

<li>Large tech companies use neural networks to analyze and cluster the users of their services. This data is then used for targeted advertising, which is one of the most lucrative online business models.</li>

<li>In health care, neural networks are used to detect certain diseases, such as cancer. Studies have shown that neural networks perform well in detecting lung cancer (Zhou, 2002), breast cancer (Karabatak, 2009) and prostate cancer (Djavan, 2002). There are many more examples of researchers using neural networks to detect specific diseases.</li>

<li>In businesses, neural networks are used to filter job applicants (Qin, 2018) (Van Huynh, 2019). The network uses information about the applicant and about the job to predict how well the applicant fits the job. This way, the business only has to invite the best applicants for interviews, which reduces the amount of manual labour needed.</li>

<li>Neural networks are also used to detect cracks in roads (Zhang, 2016). Manually keeping track of road conditions is very costly and time-consuming, so automated road crack detection makes roads much easier to maintain.</li>

<li>Neural networks are also able to recognize faces (Sun, 2015). Facial recognition systems based on neural networks are already heavily deployed in China (Kuo, 2019).</li>

<li>In self-driving cars, deep convolutional neural networks are used to parse the visual input from the car's sensors. This information can be used to steer the car (Bojarski, 2016).</li>

</ul>

<br>

All in all, there are many use cases for neural networks. Research on modern large-scale neural networks is very recent: the most promising progress in the field has been made in the last 10 years. The reason for the rise in popularity of neural networks is the increase in available data, because neural networks are only successful when they have a large amount of data to train on. Every day, people using technology produce data on a massive scale, and even more data will be created in the future. As time progresses, more industries will be able to start using neural networks.

<br>

A big issue with modern neural networks is that they are a black-box approach: even experts do not fully understand what is going on inside the network. Neural networks are given input data and are expected to produce output data, and they do this extremely well, but what exactly happens in between is largely unknown. This is an issue when people use neural networks to make decisions with real, impactful consequences.

<br>


== Objectives ==

Our aim is to provide a tool that helps users better understand how the convolutional neural network they use makes decisions and produces its output. We do not aim to answer every question a user might have about the network's decisions, because we think this is not technically feasible. Instead, we aim to provide as many visualizations as we can to help users understand those decisions. We think that by providing several explanatory images we can, at the very least, improve our users' understanding of the network's decisions.

We are going to make a web application in which users can see visualizations of the inner workings of a neural network. In this web application, users should be able to choose between different datasets with their corresponding neural networks, and between different images. For both images and networks, users can either select one that is already on the website or upload their own.

We will provide two types of visualizations. First, to give a better understanding of the network and of what individual layers and neurons look at, we show users how each layer and neuron perceives the image and which nodes had the most impact on the result. For now, this visualization will only be available for the preselected networks. Second, we provide users with a saliency map of the image, so they can see which parts of the image the network considered important overall.

Another visualization technique we wish to provide is projecting a learned dataset onto a 2D space to visualize how close certain classes are to each other.

Our first focus will be on visualizing the individual layers of a neural network. The user selects a dataset, a trained network, a layer and an input image, and we show a visualization of that layer. If the user does not have an image, we can also select a random image from the dataset.

We have mentioned many visualization techniques in our objectives, and we are not yet sure to what extent it is feasible to implement all of them. We will implement them in order, and we will prioritize polishing our tool over adding another visualization technique.

== Deliverables and Milestones ==

<h5>Planned milestones set at the beginning of the project</h5>

Week 3: Have a working website with at least one visualization.

*Functional front-end that implements the basic functionality needed to finalize this project.

*Back-end must have a general framework for supporting several different networks/datasets.

*One visualization must be functional for the user.

<br>


If time allows, we want to make it possible for users to construct their own networks for simple neural network tasks.

<br><br>

We want to deliver a finished, user-friendly website. Using the site, users can gain a better understanding of the inner workings of a convolutional neural network. This is done by visualizing the computations and transformations applied to the input data. Wherever necessary, we will add text to further explain the visualizations to the user.<br><br>

<h5>Actual milestones</h5>

'''Week 2'''

*Website for the front-end was partly designed.

'''Week 3'''

*Back-end visualization for Saliency Map and Layer visualization working.

*Working image picker.

*Working image uploader.

'''Week 4'''

*Integration of back-end and front-end.

*Completion of first prototype.

*Demo shown to tutors.

'''Week 5'''

*Refinements for website and front-end.

'''Week 7'''

*Small redesign of the website (adjustments after user feedback).

*New functionality: allowing users to upload their own Neural Network.

== State of the Art ==


*https://distill.pub/2017/feature-visualization

<br>

Additionally, the references section lists many sources, each with a summary, showing what has already been done (or could be done) in this field.

== Users and what they need ==

We believe many different users can benefit from our tool. Our main target users are people who use convolutional neural networks to analyse visual input. They use neural networks because these give good results on visual data. What neural networks currently do not provide is a clear explanation of why they produce the outputs they do. Our users are people who can benefit from seeing images that represent the inner workings of a neural network.

Below, we name several users and explain how they could use our tool. Note that this list is not exhaustive, since convolutional neural networks are applied in an extremely wide variety of situations by many different users.

<ul>

<li>

Doctors who use images to determine whether a patient has a certain type of cancer. Detecting different types of cancer involves different processes, requires different skills and is done by different types of medical experts. We do not have enough domain knowledge to explain the differences between these processes, so we try to provide information that is general enough to be useful in all these cases.

For a doctor, it is interesting to see which exact parts of an image contributed most to the neural network's decision. The reason for using a neural network in the first place is that not all experts can easily reach a decision just by looking at the input image. If the expert is also shown which regions of the image were most important, they should get a better understanding of the network's reasoning.

</li>

<li>

Engineers developing systems to detect road cracks. These users differ from doctors, since we assume the engineers creating these systems know more about neural networks. Engineers creating neural networks will train several networks and test their performance. While testing, they might not understand why their network sometimes outputs wrong answers. Our visualizations should help them understand which part of the image motivates their network's output. This could help them come up with preprocessing techniques that help the network in this specific area and improve its overall performance.

</li>

<li>

Governments using facial recognition to monitor their citizens. Governments could use facial recognition networks to determine who committed a crime. It is very important for the people using the network that they can see why certain faces were matched. We think that showing these users which parts of a face led to the network's decision could help them understand why the network reached it.

</li>

</ul>

These three users all use a convolutional neural network for image analysis, but their expertise differs. Doctors are not expected to know anything about neural networks; they only need to know how to provide an input image and read the output.

Engineers working on road crack detection are expected to know more about neural networks, because they design them for their problem. The amount of information these two users can obtain from our tool will therefore differ. We will make our tool as easy to use as possible and give many clear explanations. However, some types of visualization are better suited to advanced users, while others are better for users without much knowledge of neural networks. Our aim is to provide a broad toolset that can benefit many different users, even if it benefits one type of user more than another.

Our users need an environment in which the inner workings of a neural network are visualized intuitively, so that they become more understandable. They need to be able to select or upload the dataset and network they are working with. They then need to be able to switch between different types of visualization in real time, with clear and easily understandable explanations for each type. For visualizations based on an input image, users need to be able to upload their own image.

<h5>New detailed Users</h5>

<h6>Engineers</h6>

Engineers creating neural networks for image recognition, or image processing in general, can use our tool to better understand the strengths and weaknesses of their network and how it works. They can use this knowledge to iteratively improve their network until it meets a standard they are satisfied with.

The main way our tool helps with this is by showing important features. The saliency map in our tool indicates very clearly which features the network focuses on. Knowing these features can help engineers improve their networks in several ways.

They can preprocess their test data to show certain features more clearly, they can modify their training dataset, and, if their network cannot capture key features even with a lot of data, they can change its entire architecture.

Below are some examples of how neural network engineers can use our tool:

It is interesting to look at the saliency map of a certain class and see which features the network values. If the data the network is intended for does not always contain these features, engineers might want to extend the training dataset so that other features are also recognised. Alternatively, they could limit the use of their network to images that have these features.

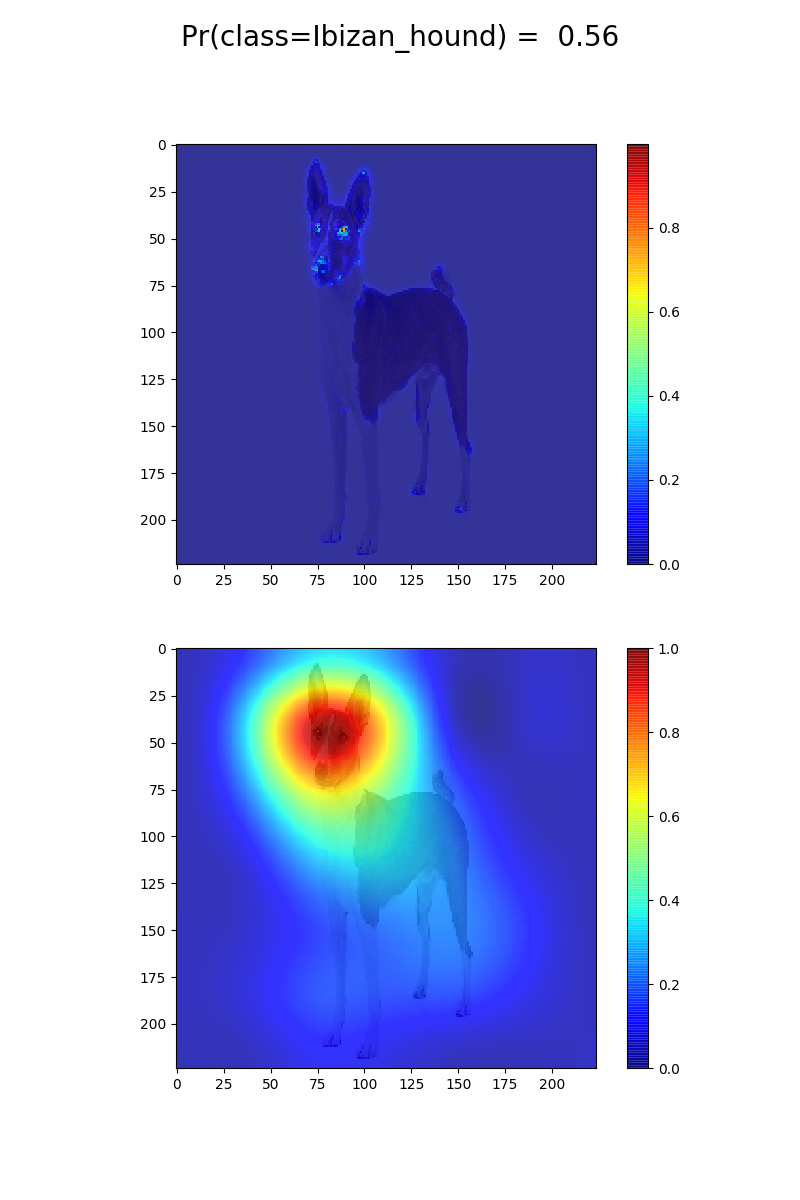

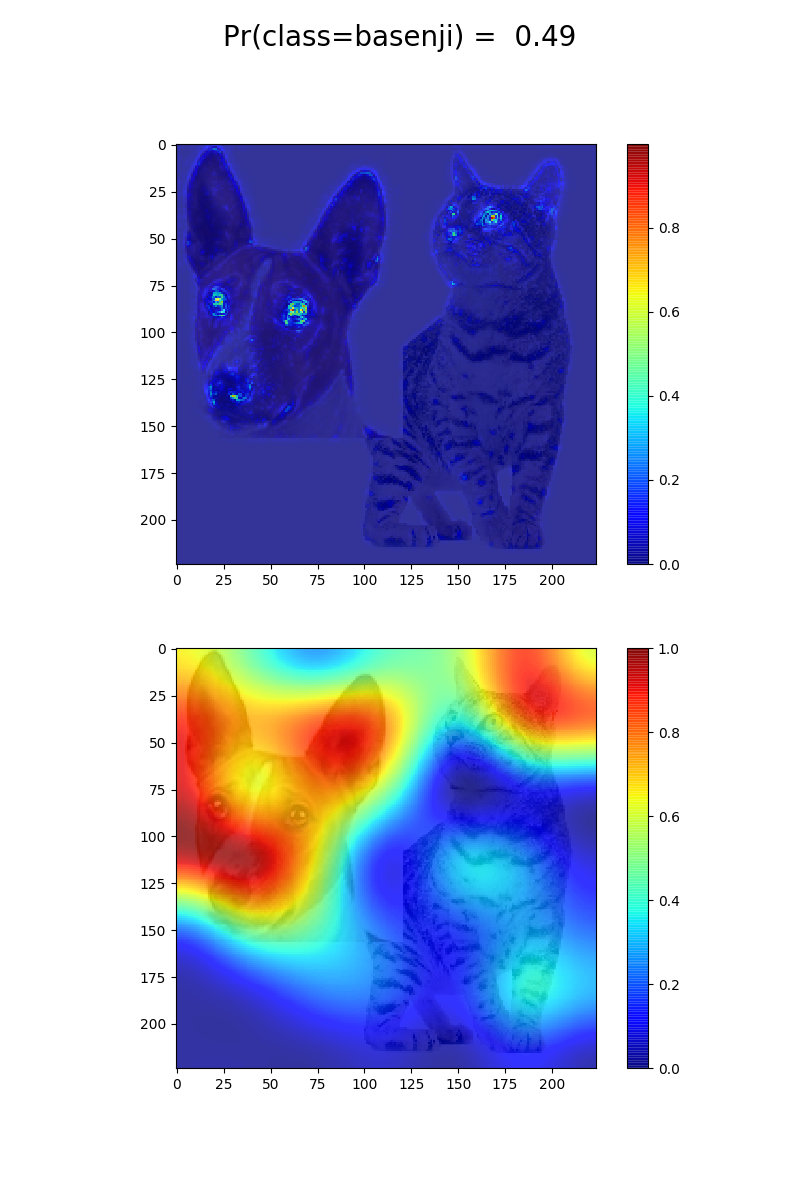

For demonstration purposes, in our current prototype we want to find out whether the network needs to see a dog's face to recognize it as a dog.

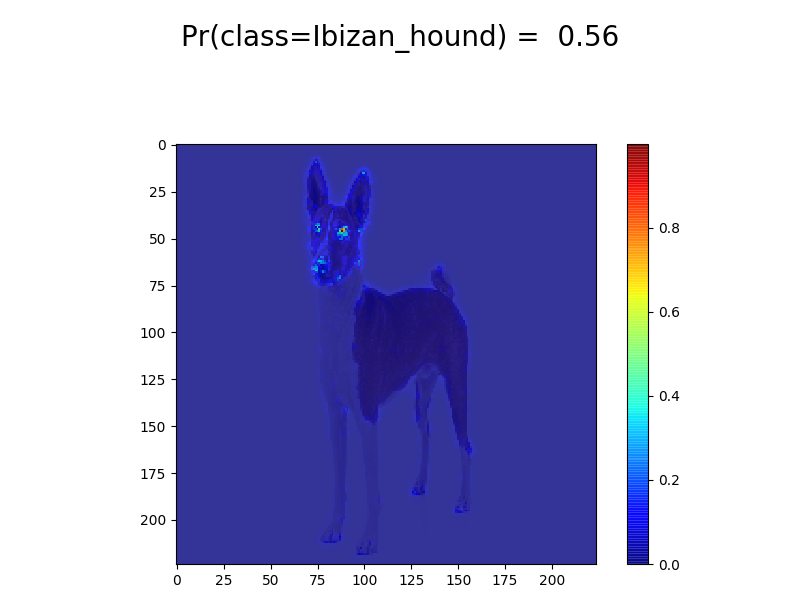

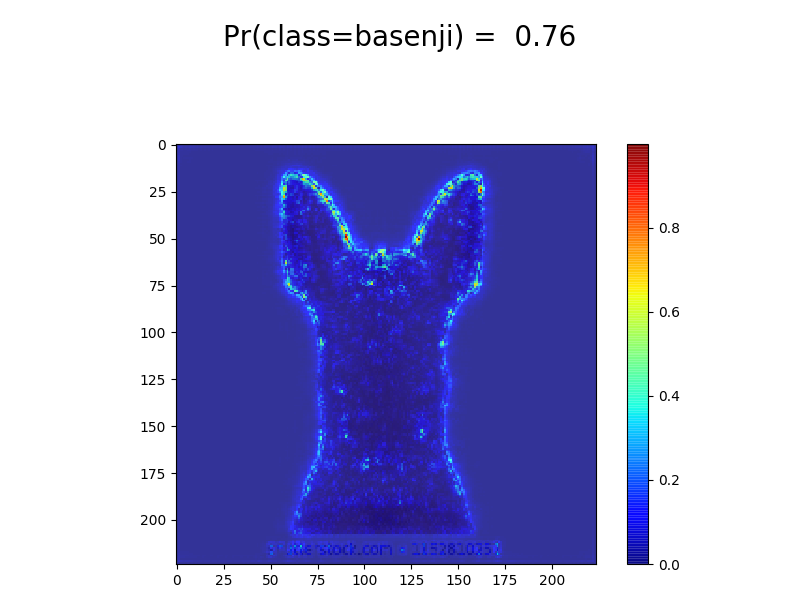

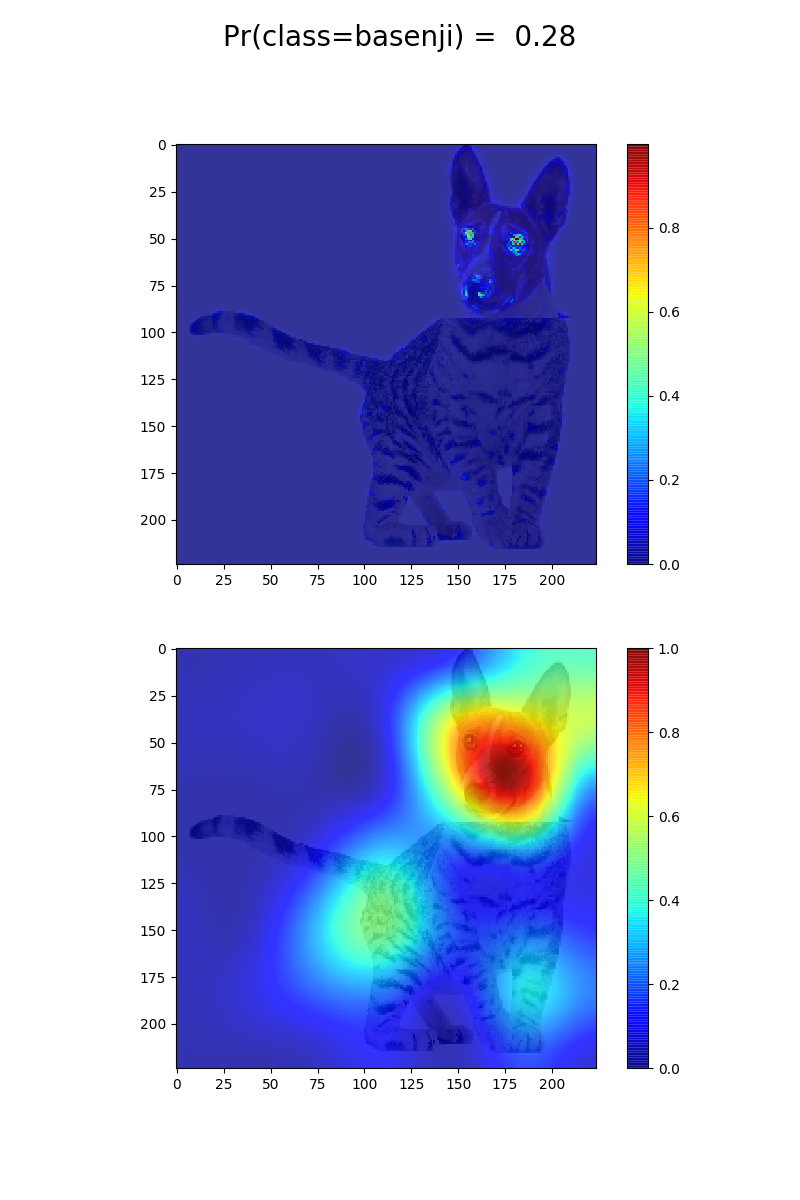

We take a picture of a dog that shows its face and body clearly, and a picture of just the back of a dog's head. The network we use classifies both pictures as dogs (note that ibizan hound and basenji are dog breeds). Our saliency map shows how it does this. On the saliency map of the full dog, a lot of emphasis is put on the eyes and the nose. The saliency map of the other picture, on the other hand, shows that the network only looks at the shape of the dog. This means that the shape of the dog is enough for our network to recognize it as a dog. This suggests that we can use our network to classify dogs as long as the outline of the dog (or its face) is visible.

[[File:Dog.jpg|300px]]

[[File:Dog-back.jpg|300px]]

[[File:Saliency_basenji_hond_crop.png|300px]]

[[File:Saliency_hond_back_crop.png|300px]]

Another thing to notice in this demonstration is that our network is not good at classifying dog breeds: in both cases it misclassified the breed. This tells us that if we want our network to distinguish between dog breeds, we need to train it on more dog-specific datasets.

Yet another thing engineers may want to take into account is how biased their data is. Here we show how colors affect the network's prediction. <br>

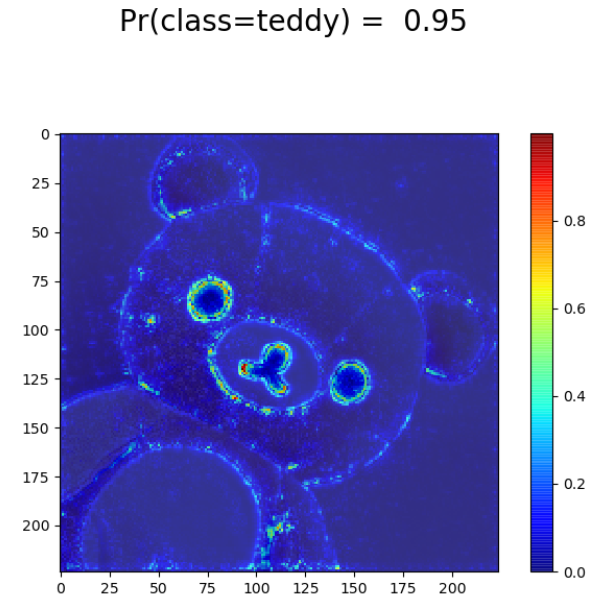

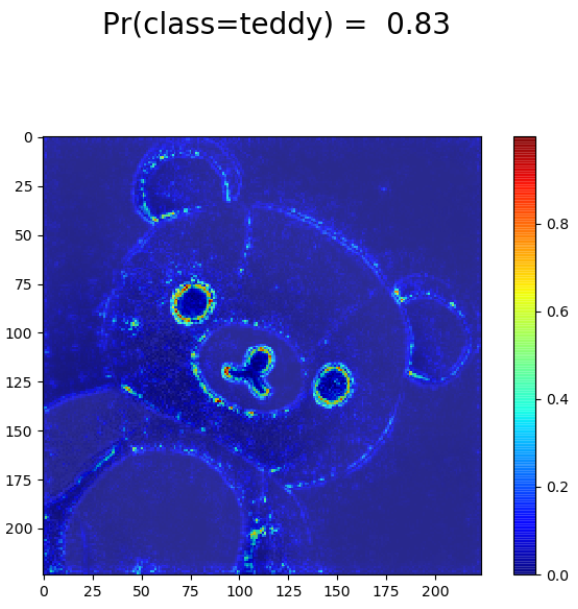

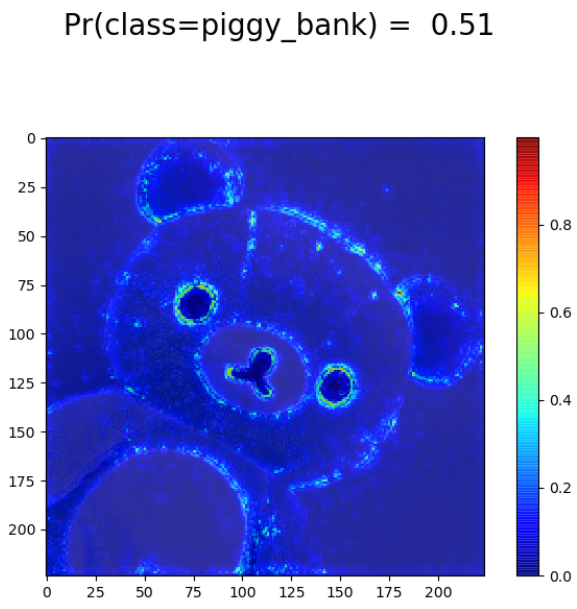

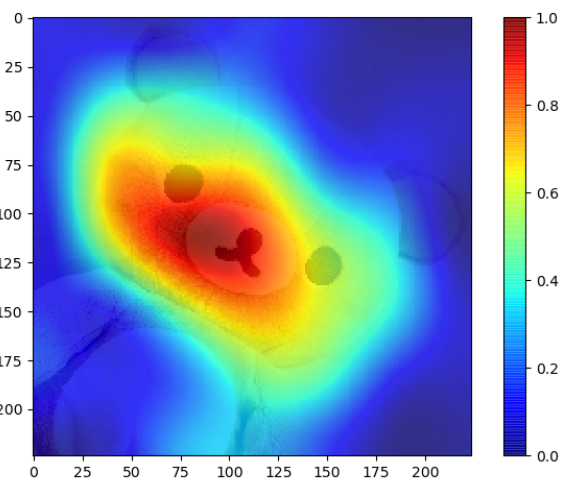

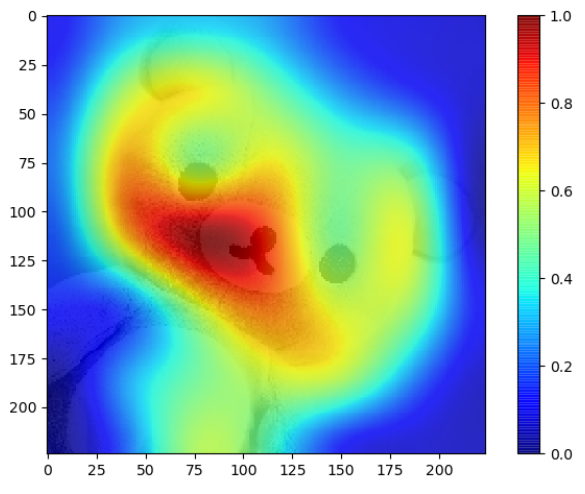

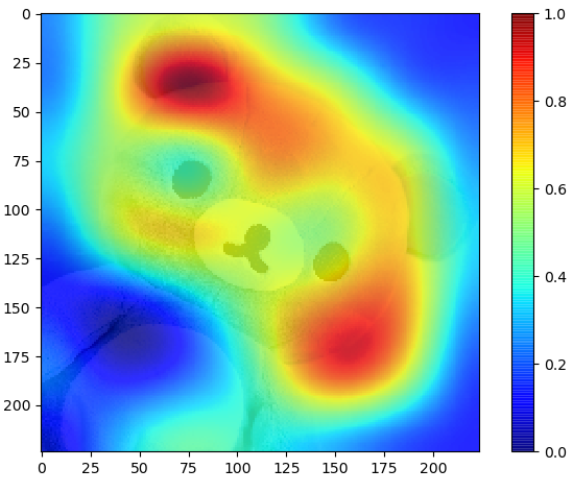

First, we have a teddy bear without filters. The network is very certain of its prediction. What happens if we apply a black-and-white filter to the image? The certainty of the prediction drops quite a bit. It gets even worse when we apply a blue filter: now the prediction is completely wrong, and the network predicts a piggy bank (although far from confidently). <br>

Why does the network perform worse? One explanation may be that teddy bears usually have similar colors (beige/brown), so a network trained on these colors is less certain when it is fed a bear with an uncommon color. This suggests a small bias in the training data, which should be kept in mind when designing your own network. <br>

On the second map, the network takes the ears into account, whereas on the first map the ears are not that important. You would expect that taking the ears into account would let the network make a better prediction, but the opposite seems to be the case. On the third map, the blue filter seems sufficient to throw the network off entirely: the mouth, nose and eyes are no longer the most important parts for its decision. Instead, the focus shifts to the ears, which might explain why the network predicts a piggy bank, as these usually have similarly shaped ears. The last example in particular shows why visualizing a neural network's prediction is so helpful: without the visualization, it would be difficult to explain why the network predicts a piggy bank.

[[File:Bear_without_filter.jpg|300px]]

[[File:Bear_with_blackwhite_filter.jpg|300px]]

[[File:Bear_with_blue_filter.jpg|300px]]

[[File:Bear_without_filter_sal1.PNG|300px]]

[[File:Bear_with_blackwhite_filter_sal1.PNG|300px]]

[[File:Bear_with_blue_filter_sal1.PNG|300px]]

[[File:Bear_without_filter_sal2.PNG|300px]]

[[File:Bear_with_blackwhite_filter_sal2.PNG|300px]]

[[File:Bear_with_blue_filter_sal2.PNG|300px]]
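The exact filters used for the teddy bear images are not specified. If you want to repeat this experiment, here is a minimal sketch under that caveat. The black-and-white filter below uses the standard ITU-R BT.601 luma weights. The blue filter is an assumption: a simple blend of each pixel towards pure blue. Pixels are a flat list of RGB tuples, and the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def black_and_white(pixels: List[Pixel]) -> List[Pixel]:
    """Grayscale via ITU-R BT.601 luma weights, kept as three equal channels
    because a network trained on RGB images still expects three input channels."""
    out = []
    for r, g, b in pixels:
        y = round(0.299 * r + 0.587 * g + 0.114 * b)
        out.append((y, y, y))
    return out


def blue_filter(pixels: List[Pixel], strength: float = 0.5) -> List[Pixel]:
    """Blend every pixel towards pure blue (0, 0, 255); strength=1.0 gives solid blue.
    This blend is an assumed stand-in for the filter used in the experiment."""
    target = (0, 0, 255)
    return [tuple(round((1 - strength) * c + strength * t) for c, t in zip(px, target))
            for px in pixels]


bear = [(200, 100, 0), (255, 255, 255)]
gray_bear = black_and_white(bear)
blue_bear = blue_filter(bear, strength=0.5)
```

Each filtered image can then be fed to the same network so that the predictions and saliency maps can be compared with those of the unfiltered image.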

<h6>Students</h6>

Students learning about convolutional neural networks in any setting can also benefit greatly from our tool. Most courses focus on how to implement neural networks and how the mathematics behind them works. Usually there is also practical work involved: coding a neural network, then training and evaluating it on given data.

Our tool would complement such a course by letting students experiment with a network and understand more intuitively how it operates. Below, we give an example of how we learned more about a network using our prototype tool.

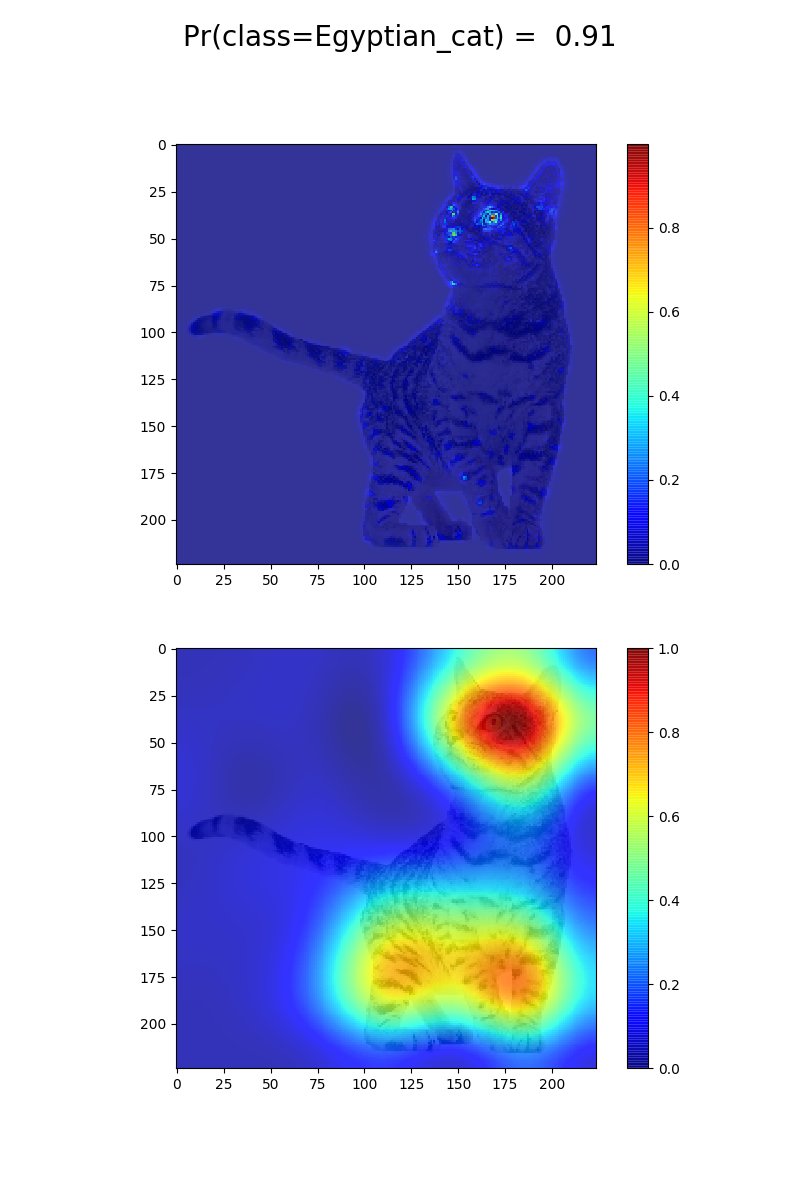

First, we saw that the network looked mostly at the dog's face (see the pictures below). We then saw that it also looked mostly at the face of a cat to classify it; for the cat, the network used the legs a bit as well, but mostly the head. We modified our cat image in several ways to see how the network would react.

[[File:Dog.jpg|300px]]

[[File:Cat.png|300px]]

[[File:Saliency_basenji_hond.png|300px]]

[[File:Saliency_cat.png|300px]]

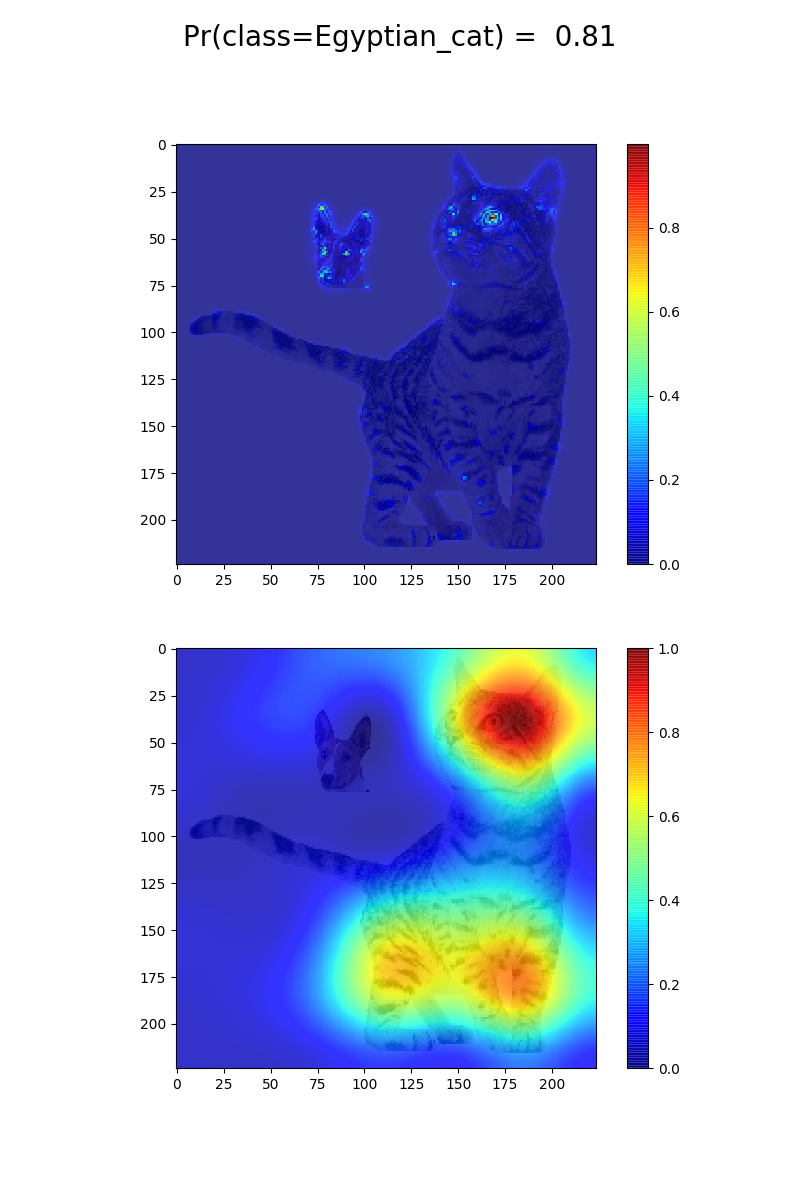

The first modification was placing the dog's head over the cat's head in the cat image, scaled to roughly the same size. Interestingly, the network then thought it was a dog. Next, we placed the dog's head over the empty space next to the cat's head instead, and the network thought it was a cat again. We then increased the size of the dog's head, and the network thought it was a dog again. The relevant saliency images can be seen below.

We learned a lot about how the network classifies dogs and cats. The head counts the most for the network, not its location. We also learned that if more pixels are covered by something (in our case a dog's head), the network values it more than if the same thing is at exactly the same position but smaller. This is an interesting thing to learn about neural networks, and it is hard to learn without experimenting with our tool.

[[File:Cat_and_dog.png|300px]]

[[File:Cat_bigdog.png|300px]]

[[File:Cat_doghead.png|300px]]

[[File:Saliency_cat_and_dog.png|300px]]

[[File:Saliency_cat_bigdog.png|300px]]

[[File:Saliency_cat_doghead.png|300px]]
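The composite images in this experiment can also be produced programmatically, which makes it easy to try many head sizes and positions. Here is a minimal sketch, assuming images are stored as nested lists of pixel values. `resize_nearest` scales the dog-head patch with nearest-neighbour sampling, and `paste` draws it onto a copy of the image at a chosen position. Both names are hypothetical and are not part of our tool.

```python
from typing import List

Grid = List[list]  # image as rows of pixel values (any pixel type)


def resize_nearest(patch: Grid, new_h: int, new_w: int) -> Grid:
    """Nearest-neighbour resize, e.g. to make a pasted head larger or smaller."""
    h, w = len(patch), len(patch[0])
    return [[patch[y * h // new_h][x * w // new_w] for x in range(new_w)]
            for y in range(new_h)]


def paste(image: Grid, patch: Grid, top: int, left: int) -> Grid:
    """Return a copy of `image` with `patch` drawn over it at (top, left),
    clipped to the image borders; the original image is left unchanged."""
    out = [row[:] for row in image]
    for y, patch_row in enumerate(patch):
        for x, value in enumerate(patch_row):
            yy, xx = top + y, left + x
            if 0 <= yy < len(out) and 0 <= xx < len(out[0]):
                out[yy][xx] = value
    return out


canvas = [[0] * 3 for _ in range(3)]
head = resize_nearest([[1]], 2, 2)      # enlarge a 1x1 "head" to 2x2
composite = paste(canvas, head, 1, 1)   # place it in the lower-right corner
```

Sweeping `top`, `left` and the patch size, and running the saliency map on each composite, would turn the three manual trials above into a systematic comparison.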

<h5>User Feedback</h5>

We made our tool available to specific types of users to gain extra insight into how well it works for our target audience. We will be adding more users soon.

*Bart is a student in Computer Science and Engineering. He is currently taking courses in AI, so he fits nicely within our target audience. Bart found navigating the site a bit difficult at first, because it was not very clear how to get to the visualizations. However, once he got to the visualizations, he was quite excited. He said that the saliency maps in particular added a lot of insight into how the network made its classification decision. They helped him a lot in understanding why a network sometimes does not classify an image correctly. He found the layer visualization a bit messy, although it looked cool, and did not gain much insight into the network's decision making from it. He also had some suggestions for the website itself: the homepage gave little direction on how to get to the datasets/networks, and the visualization page did not clearly indicate where the visualization would appear.

*Oscar is a student in Computer Science and Engineering who has taken the course Data Mining and Machine Learning. He said that, overall, the website was well designed. His only problem with it was that on the homepage it was not clear what the dataset cards do. This complaint is similar to Bart's: it is not clear how to get to the visualization page. Oscar said the saliency visualization looked really nice and was useful to him, because by highlighting the important parts it helped him better understand why a network reaches its decision. The layer visualization was not very useful to him, as he did not know what it was supposed to show.<br><br>

<h5>Adjustments based on user feedback</h5>

User feedback would be useless if we did nothing with it. Below, we list the changes we made based on user feedback.

*Adjusted the homepage to indicate more clearly where users can start the visualizations and what options they have.

*Adjusted the visualization page to indicate more clearly where the visualization will be shown.

*Added extra information to all pages to make it clearer what each page is about and how it should be used.

== Approach ==

We will use a website on which users can visualize the activations of the layers of a neural network by uploading a picture/file. The website runs a forward pass of the input through the selected trained network. This yields layer activations, which are returned to the user to give insight into how the network detects objects. <br>

The user only has to select which network and dataset they want to understand; we then show visualizations and explanations. This happens on the front-end of our website. The back-end handles the requests and computes the actual visualizations, which are then sent back to the front-end and shown to the user. <br>

We will need both front-end and back-end development. <br><br>
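The forward pass described above can be sketched in plain Python. This is a toy illustration, not the tool's Keras code. Layers are plain functions with hypothetical names (`dense_1`, `relu_1`, `dense_2`). The point is only that running an input through the network once lets us record every intermediate activation. In Keras, the same idea is usually expressed by building a sub-model whose outputs are the outputs of intermediate layers.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def relu(xs: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in xs]


def dense(weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Layer:
    """Toy fully connected layer: out[j] = sum_i weights[j][i] * x[i] + bias[j]."""
    def layer(xs: Vector) -> Vector:
        return [sum(w * x for w, x in zip(row, xs)) + b for row, b in zip(weights, bias)]
    return layer


def forward_with_activations(layers: Dict[str, Layer], x: Vector) -> Dict[str, Vector]:
    """Run one forward pass and keep the output of every named layer, in order."""
    activations: Dict[str, Vector] = {}
    for name, layer in layers.items():
        x = layer(x)
        activations[name] = x
    return activations


# Hypothetical two-layer network, only to show the mechanics.
net = {
    "dense_1": dense([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    "relu_1": relu,
    "dense_2": dense([[1.0, 1.0]], [0.1]),
}
acts = forward_with_activations(net, [2.0, 1.0])
print(acts["dense_1"])  # [1.0, 1.5]
```

The back-end would then turn the activation of the layer the user selected into an image, instead of returning only the final prediction.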

'''Programming languages and frameworks'''

Front-end: Bootstrap and Vue

*Bootstrap with Less (CSS framework)

*Vue (front-end JavaScript framework)<br>

Back-end: Django and Keras

*Network structures saved on the server side (Keras/Python)

*Our own datasets, stored on the server and available to the user

*Django (back-end Python framework)<br><br>

We chose an architecture with a separate back-end and front-end for computational reasons. We could have implemented the whole application as a purely client-side program, but then all our users would need a device fast enough to do the computations required for our visualizations. These computations are quite expensive: they already take on the order of seconds on our reasonably fast laptops, so on a slow laptop they would take a long time. With our setup, the speed of the tool depends on the device(s) hosting the server. Currently, that is us, because we host the server locally during this project. This setup scales well: once the application is hosted on a fast server, the tool immediately becomes faster. Due to the time limit of this project, we did not manage to host everything on a remote server; this is part of the future work.

<h5>GitHub repositories</h5>

The front-end code is in the public GitHub repository [http://www.github.com/DanielVerloop/robotproject http://www.github.com/DanielVerloop/robotproject], so all of it is publicly available. The back-end repository is [https://www.github.com/JeroenJoao/UnderstandableAI/tree/netupload https://www.github.com/JeroenJoao/UnderstandableAI/tree/netupload], with netupload as the active branch; this code is also publicly available.

<h5>Execution Flow</h5>

The user starts on a home page and can navigate through our UI. They can select the dataset/network they want to analyse, and then select one of our provided images or upload their own. When they upload an image, we send a POST request with the image file to the server at http://(IPOFSERVER):(PORT)/upload/, and the server saves the image.
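Here is a minimal sketch of such an upload request that uses only Python's standard library. The host, the port and the form field name `file` are assumptions for illustration. The request is built but not sent.

```python
import urllib.request
import uuid


def build_upload_request(host: str, port: int, filename: str, data: bytes,
                         field: str = "file") -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data POST for one image.

    The form field name `field` is a hypothetical placeholder; the real name is
    whatever the Django view reads from request.FILES.
    """
    boundary = uuid.uuid4().hex  # random separator, very unlikely to occur in the data
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        data,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        f"http://{host}:{port}/upload/",
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )


req = build_upload_request("127.0.0.1", 8000, "dog.jpg", b"\xff\xd8\xff")
# urllib.request.urlopen(req) would send it to a running server.
```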

After an image has been selected, the user can press the visualize button. We then send two GET requests to the server: one for the saliency image and one for the layer image. These requests look as follows:

http://(IPOFSERVER):(PORT)/dataset/(NAMEOFNETWORK)/1/(LAYER)/(NAMEOFIMAGE)/(TYPE)/(IMGWIDTH)/(SAL1)/(SAL2). The capitalized variables are defined as follows:

<ul>

<li>IPOFSERVER is the IP address or domain name of the server</li>

<li>PORT is the port the server is hosted on</li>

<li>NAMEOFNETWORK is the name of the network being analysed; the default is currently imagenet</li>

<li>LAYER is the layer shown in the layer visualization</li>

<li>NAMEOFIMAGE is the name of the image being analysed</li>

<li>TYPE indicates whether we want a saliency or a layer visualization</li>

<li>IMGWIDTH is the width of the input layer of the CNN, used for custom network uploads</li>

<li>SAL1 is the layer at which the first saliency map is created, used for custom network uploads</li>

<li>SAL2 is the layer at which the second saliency map is created, also used for custom network uploads</li>

</ul>
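The URL pattern above can be composed with a small helper. This is a sketch: the function name is hypothetical, the TYPE values `saliency` and `layer` are assumed, and the example numbers (224, 10, 12) are only illustrative. Each path segment is percent-encoded so that image names containing spaces still produce a valid URL.

```python
from urllib.parse import quote


def visualization_url(host: str, port: int, network: str, layer: int, image: str,
                      vis_type: str, img_width: int, sal1: int, sal2: int) -> str:
    """Compose the GET URL for one visualization, following the documented pattern.

    The constant "1" segment is copied from the pattern as-is.
    """
    segments = [network, "1", str(layer), image, vis_type,
                str(img_width), str(sal1), str(sal2)]
    path = "/".join(quote(seg, safe="") for seg in segments)
    return f"http://{host}:{port}/dataset/{path}"


# One request per visualization tab.
urls = [visualization_url("localhost", 8000, "imagenet", 3, "my dog.jpg", t, 224, 10, 12)
        for t in ("saliency", "layer")]
```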

While we are computing/downloading the images, the user sees that the visualization tabs are loading, indicated by spinning circles. As soon as one of the images is done, the corresponding tab is enabled and the user can click it to see the visualization.
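This loading behaviour amounts to starting both requests at once and handling each response as soon as it arrives. Our front-end does this in Vue/JavaScript. The sketch below is only a Python `asyncio` analogue of the idea. `fetch_visualization` is a hypothetical stand-in whose sleep simulates server computation time.

```python
import asyncio
from typing import Dict, List


async def fetch_visualization(kind: str, delay: float) -> str:
    """Stand-in for the HTTP GET; the sleep simulates the server computing the image."""
    await asyncio.sleep(delay)
    return kind


async def load_tabs(delays: Dict[str, float]) -> List[str]:
    """Start all requests at once and enable each tab as soon as its image arrives."""
    enabled: List[str] = []
    tasks = [asyncio.create_task(fetch_visualization(kind, d)) for kind, d in delays.items()]
    for next_done in asyncio.as_completed(tasks):
        kind = await next_done
        enabled.append(kind)  # here the UI would hide the spinner and enable this tab
    return enabled


# The layer image is simulated as faster, so its tab is enabled first.
order = asyncio.run(load_tabs({"saliency": 0.2, "layer": 0.01}))
```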

<br><br>

Users also have the option to upload their own network. On the upload network page, they can upload the files of their Keras network. Three files are required: the model architecture, the model weights and the classes. In addition, the user needs to enter three numbers: the dimension of the input image, the layer at which the first saliency map should be created, and the layer at which the second saliency map should be created.

Once the user has filled in these details, they can press a button to upload their network. We then make a special POST request, containing the three model files in the body, to http://(IPOFSERVER):(PORT)/netupload. After this, the user can upload/choose images to visualize. Not all visualizations are available for an uploaded network: currently, we only show saliency map visualizations for custom uploaded networks. Due to time constraints, we could not also add a layer visualization for uploaded networks; this is future work.
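Before the server can use an uploaded network, it needs all three files and all three numbers. Here is a minimal validation sketch, assuming hypothetical field names (`architecture`, `weights`, `classes`, `input_dim`, `sal1`, `sal2`). It only checks the request contents and does not load the Keras model itself.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class NetUpload:
    architecture: bytes
    weights: bytes
    classes: bytes
    input_dim: int  # dimension of the network's input image
    sal1: int       # layer for the first saliency map
    sal2: int       # layer for the second saliency map


REQUIRED_FILES = ("architecture", "weights", "classes")
REQUIRED_NUMBERS = ("input_dim", "sal1", "sal2")


def parse_net_upload(files: Mapping[str, bytes], fields: Mapping[str, str]) -> NetUpload:
    """Check that all three files and all three numbers were supplied and are sane."""
    missing = [name for name in REQUIRED_FILES if not files.get(name)]
    if missing:
        raise ValueError("missing files: " + ", ".join(missing))
    try:
        input_dim, sal1, sal2 = (int(fields[name]) for name in REQUIRED_NUMBERS)
    except (KeyError, ValueError) as exc:
        raise ValueError("input_dim, sal1 and sal2 must all be integers") from exc
    if input_dim <= 0 or sal1 < 0 or sal2 < 0:
        raise ValueError("input_dim must be positive and layer indices non-negative")
    return NetUpload(files["architecture"], files["weights"], files["classes"],
                     input_dim, sal1, sal2)


upload = parse_net_upload(
    {"architecture": b"<architecture>", "weights": b"<weights>", "classes": b"cat\ndog"},
    {"input_dim": "224", "sal1": "10", "sal2": "12"},
)
```

Rejecting an incomplete upload up front gives the user an immediate error message, instead of a failure later when the first visualization is requested.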

<h5>Saliency Mapping</h5>

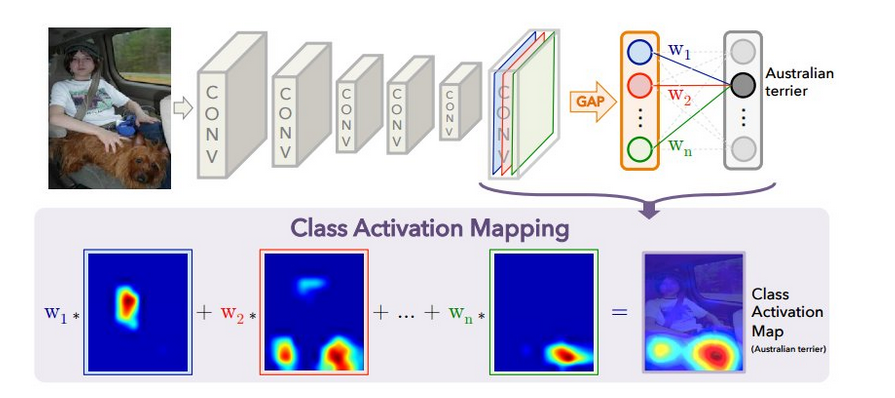

Saliency mapping is one of the tools which our user uses to get a better understanding of convolutional neural networks. It creates a so-called Attention Heatmap which the user shows which regions of an image are found to be interesting. As earlier described this can be very useful, however until now the inner workings of creating this heatmap has remained a black box. In this section we are going to take a look at these inner workings of saliency mapping and how it creates the Attention Heatmap. | |||

First we must find out what such a heatmap actually means. A picture essentially is a matrix with pixels, where each pixel has a color value. The heatmap works in a similar way. For each pixel in the original image there is a pixel in the mapl. Instead of a color value this pixel is mapped to a value between 0 and 1, where 0 means that the pixel is not important and 1 that the pixel is really important. The saliency map is created for each class. This makes sense, since the network will look at different aspects of a picture when it looks whether an image resembles a dog or an airplane. | |||

The saliency map is created via the following procedure. Just before the final output layer, we take the feature maps of the last convolutional layer, each of which responds to a certain feature in the image, and compute the average of each map (global average pooling). These averages are fully connected to the output class for which we want to obtain the heatmap, with one weight per feature map (the w vector in the figure below), and from this the class score is computed. The same weights are then used to map the score back onto the last convolutional layer: the weighted sum of the feature maps forms the class activation map.

[[File:saliency.png|500px]]
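The procedure described above corresponds to class activation mapping (CAM). A minimal numpy sketch follows, assuming the network ends in global average pooling followed by a single dense layer; the shapes (7x7x512 feature maps, 1000 classes) and the random values are illustrative only, and the dense layer's bias is ignored. This is not necessarily how our back-end computes it, and it differs from the gradient-based saliency maps of Simonyan et al. (2013) in the reference list.

```python
import numpy as np


def class_activation_map(feature_maps, dense_weights, class_idx):
    """Class activation map (CAM).

    feature_maps: (H, W, K) activations of the last convolutional layer.
    dense_weights: (K, num_classes) weights connecting the K averaged
        feature maps to the class scores (bias ignored).
    Returns the class score and an (H, W) map.
    """
    # Global average pooling: one average per feature map.
    pooled = feature_maps.mean(axis=(0, 1))               # shape (K,)
    w = dense_weights[:, class_idx]                        # the w vector for this class
    score = float(pooled @ w)                              # class score before softmax
    # Map the score back onto the conv layer: weighted sum of the feature maps.
    cam = np.tensordot(feature_maps, w, axes=([2], [0]))   # shape (H, W)
    return score, cam


rng = np.random.default_rng(0)
fmaps = rng.random((7, 7, 512))
weights = rng.standard_normal((512, 1000))
score, cam = class_activation_map(fmaps, weights, class_idx=3)
print(cam.shape)                      # (7, 7)
# Averaging the CAM gives back the class score, since averaging and weighting commute.
print(np.isclose(cam.mean(), score))  # True
```

The coarse 7x7 map is then normalized to [0, 1] and upsampled to the image size to obtain the Attention Heatmap.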

<h5>Layer Visualization</h5>

The other visualization we implemented in our tool is the layer visualization. It helps the user see what is actually going on inside the layers of the neural network: the input image is passed through the network, and the tool shows what the image ‘looks like’ inside it by visualizing the activations of the layers for that input image.
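Collecting the activations of each layer for one input image can be sketched as follows. In Keras this is commonly done by building a second model, for example `keras.Model(inputs=model.input, outputs=model.get_layer(name).output)`; to stay self-contained, the sketch below instead mimics it with a toy numpy network. The naive convolution, the random untrained weights and the layer list are assumptions for illustration only; the sizes (a 28x28x3 input and a stride-2 layer with 32 filters) yield a 14x14x32 activation.

```python
import numpy as np


def conv2d_same(x, kernels, stride=1):
    """Naive 'same'-padded convolution (cross-correlation, as in CNN libraries).

    x: (H, W, C_in); kernels: (k, k, C_in, C_out) with odd k.
    """
    k = kernels.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h_out = (x.shape[0] + 2 * pad - k) // stride + 1
    w_out = (x.shape[1] + 2 * pad - k) // stride + 1
    out = np.empty((h_out, w_out, kernels.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = xp[i * stride:i * stride + k, j * stride:j * stride + k, :]
            out[i, j] = np.tensordot(patch, kernels, axes=([0, 1, 2], [0, 1, 2]))
    return out


def relu(x):
    return np.maximum(x, 0.0)


def forward_with_activations(image, layers):
    """Run the image through each layer and keep every intermediate output."""
    activations = []
    x = image
    for layer in layers:
        x = layer(x)
        activations.append(x)
    return activations


rng = np.random.default_rng(0)
k1 = rng.standard_normal((3, 3, 3, 32)) * 0.1
# Toy network: stride-2 convolution followed by ReLU (random, untrained weights).
layers = [lambda x: conv2d_same(x, k1, stride=2), relu]
acts = forward_with_activations(rng.random((28, 28, 3)), layers)
print([a.shape for a in acts])  # [(14, 14, 32), (14, 14, 32)]
```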

Say we have a convolutional layer that maps a 28x28x3 input image to a next layer of size 14x14x32. We can interpret this 14x14x32 layer as 32 different grayscale images: it is literally a 14x14x32 array of numbers within a certain range. The numbers are scaled to 0-1 or to 0-255, depending on the visualization library. The array is then split along its third dimension, and each of the 32 resulting 14x14 matrices is visualized as a grayscale image. Many libraries can do this; we used matplotlib, a well-known Python plotting library. The 32 images are put together in a grid and sent to the user.
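The scaling, splitting and tiling steps above can be sketched in numpy as follows. This is an illustrative assumption of how such a grid could be built, not our exact code: it scales each channel to 0-255 separately and uses 8 columns, both arbitrary choices, and it uses random values in place of a real 14x14x32 activation.

```python
import numpy as np


def activation_grid(activation, cols=8):
    """Turn an (H, W, C) activation into one grayscale grid image.

    Each of the C channels is min-max scaled to 0-255 on its own and the
    channels are tiled row by row; unused grid cells stay black.
    """
    h, w, c = activation.shape
    rows = -(-c // cols)  # ceiling division
    grid = np.zeros((rows * h, cols * w), dtype=np.uint8)
    for idx in range(c):
        channel = activation[:, :, idx].astype(float)
        lo, hi = channel.min(), channel.max()
        scaled = (channel - lo) / (hi - lo) if hi > lo else np.zeros_like(channel)
        r, col = divmod(idx, cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = (scaled * 255).astype(np.uint8)
    return grid


layer_output = np.random.default_rng(0).random((14, 14, 32))
grid = activation_grid(layer_output)
print(grid.shape, grid.dtype)  # (56, 112) uint8
```

To display the grid, matplotlib's `plt.imshow(grid, cmap="gray")` can be used. Scaling each channel separately makes weak channels visible; scaling the whole layer jointly would instead preserve the relative strength of the channels.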

==Navigating through the UI and Design Decisions==

'''NOTE: Click an image to view it in full quality, because the wiki reduces the resolution.'''

<h5>Introduction Page</h5>

[[File:Introduction_page.png|500px]]<br>

''Introduction page of the website.''<br><br>

The introduction page is the first page that the user sees when entering the website. From this page, the user can proceed to the home page.

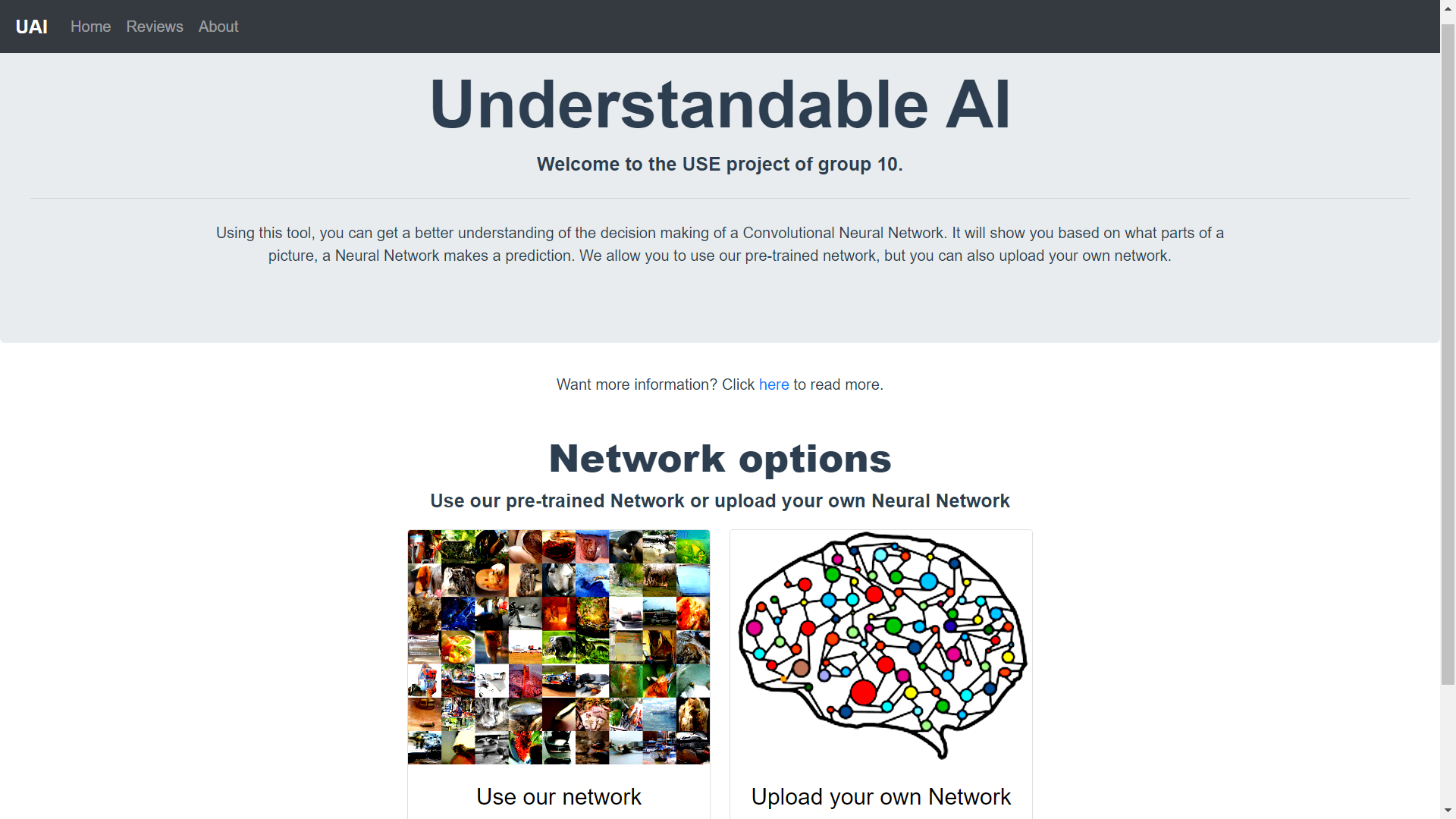

<h5>Home Page</h5>

[[File:Home_page.png|500px]]<br>

''The home page of the website.''<br><br>

The home page welcomes the user and explains what the tool has to offer. If the user wants more information, he can click on the blue 'here' button, which expands the explanation. <br>

Below this, the user is presented with 2 options. He can click on:

*'Use our network' to use our pre-trained network. This network was trained on ImageNet, an image database organized according to the WordNet hierarchy (currently only the nouns), in which each node of the hierarchy is depicted by hundreds to thousands of images.

*'Upload your own network' to use a network he designed himself.

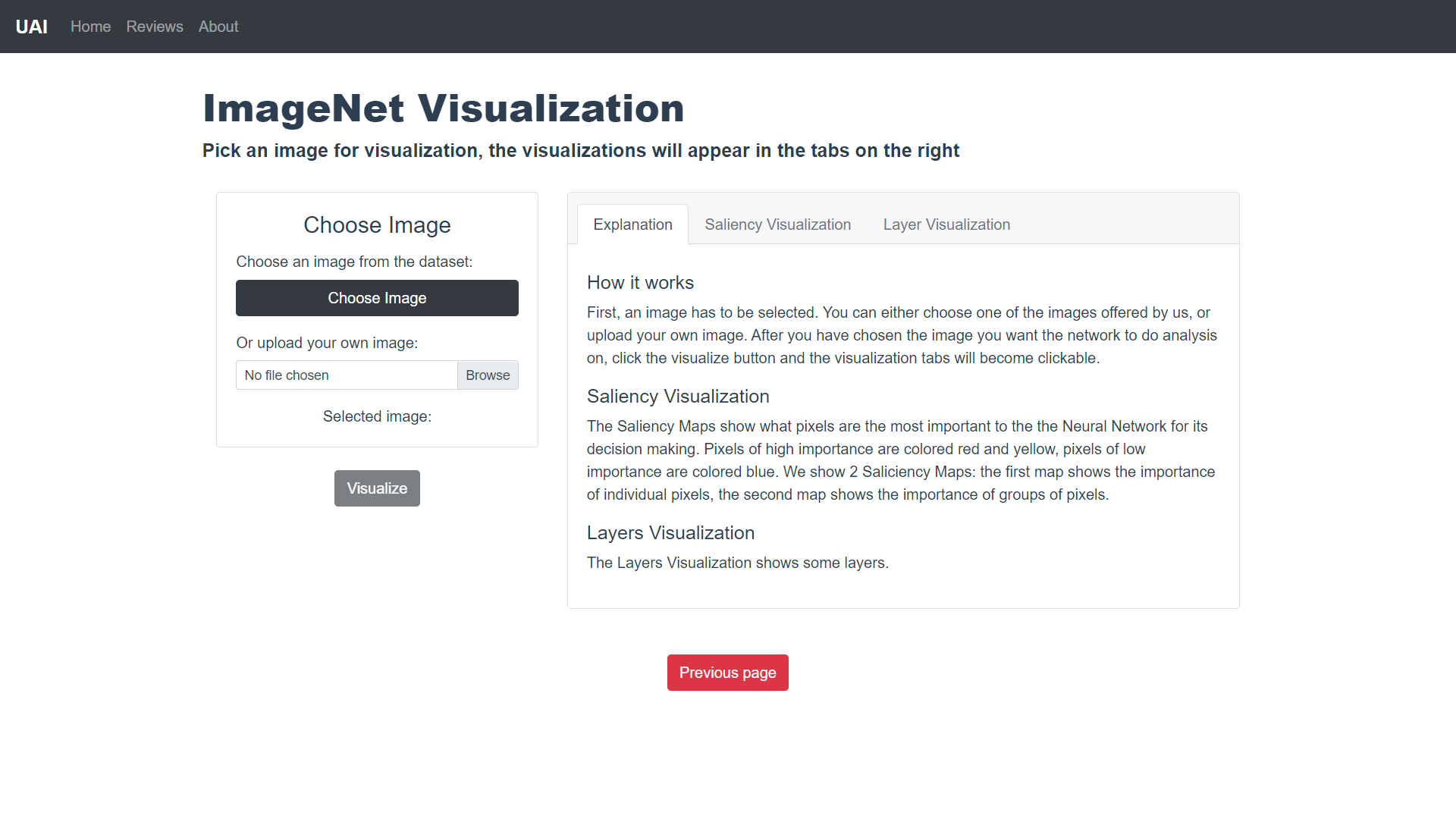

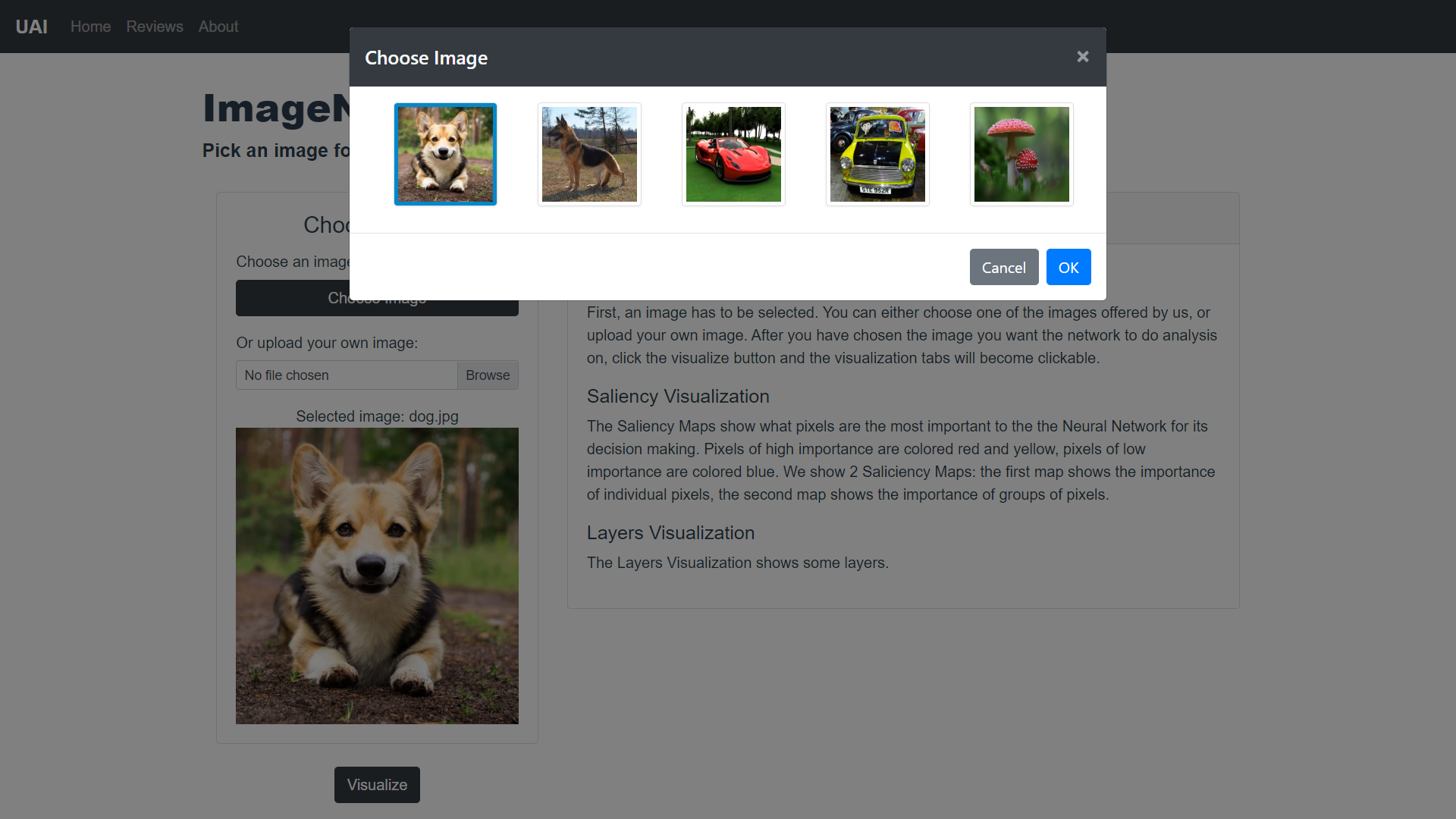

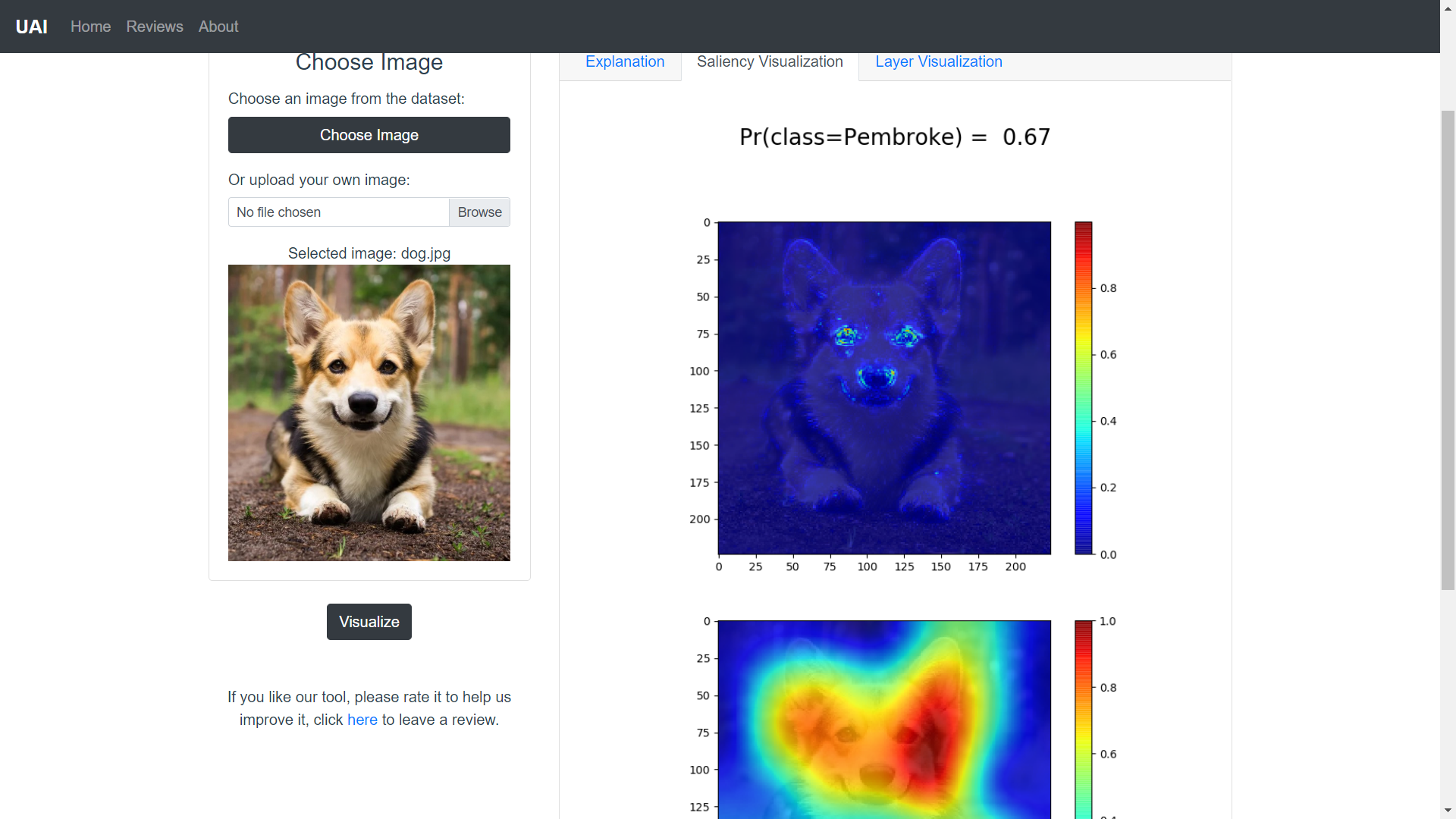

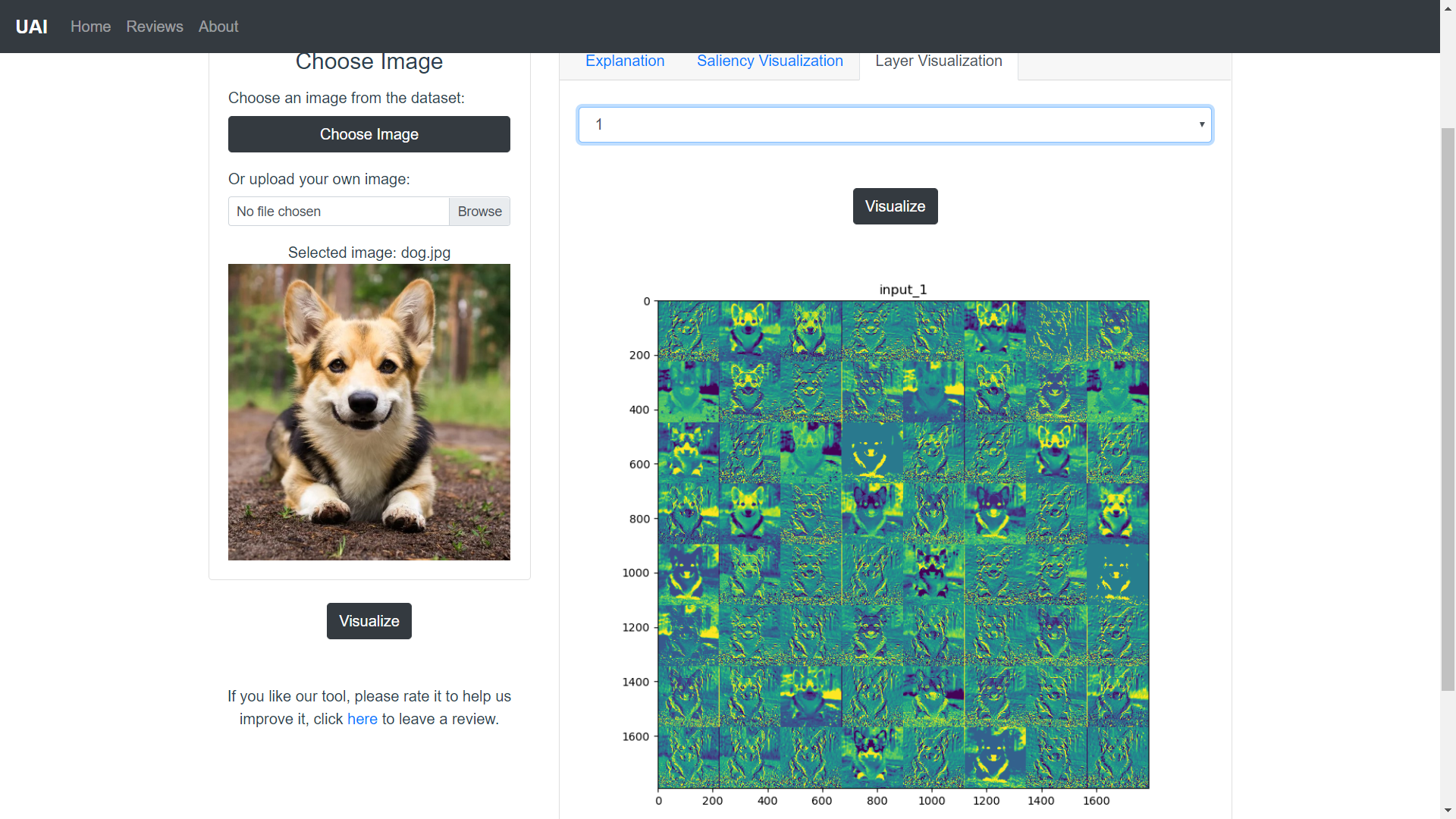

<h5>Use our network Page</h5>

[[File:Vis1_blank.png|500px]]

[[File:Vis1_imagepicker.png|500px]]

[[File:Vis1_sal.png|500px]]

[[File:Vis1_layer.png|500px]]

[[File:Vis1_reviewform.png|500px]]<br>

''In order:''

*''The first visualization page, meant for users who want to use our pre-trained network.''

*''The image picker on the visualization pages.''

*''The Saliency Maps on the visualization pages.''

*''The Layer Visualization on the visualization pages.''

*''The review form that appears after the user has chosen an image to process.''<br><br>

This page is one of the 2 visualization pages and is meant for users who want to make use of our pre-trained network. <br>

On the left, the user can choose a picture to upload. The neural network then runs and tries to predict which class the object in the picture belongs to. To provide an image, the user has 2 options:

*Pick an image from our database by clicking 'choose image'.

*Upload his own image by clicking 'browse'.

After an image has been chosen, the 'visualize' button becomes clickable, and clicking it starts the network. <br>

On the right, explanations of how the tool works, the Saliency Maps and the Layer Visualizations are given. The Saliency and Layer tabs become accessible only after the user has clicked 'visualize'. Each tab shows the corresponding visualizations. The Saliency tab also shows the prediction of the network along with its certainty, and the Layer tab shows different layers, which the user can select from the dropdown menu. <br>
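The prediction and its certainty shown in the Saliency tab can be derived from the network's raw class scores with a softmax, as sketched below. Treating the softmax probability as the "certainty" is our assumption for illustration, and the class names are made up. Many Keras ImageNet models already end in a softmax layer, in which case the output can be used directly, and Keras provides `keras.applications.imagenet_utils.decode_predictions` to map ImageNet outputs to class names.

```python
import numpy as np


def predict_with_certainty(logits, class_names, top_k=3):
    """Convert raw class scores into probabilities and return the top-k
    (class name, probability) pairs, most likely first."""
    logits = np.asarray(logits, dtype=float)
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    probs = exp / exp.sum()
    order = np.argsort(probs)[::-1][:top_k]
    return [(class_names[i], float(probs[i])) for i in order]


names = ["airplane", "dog", "cat"]
for name, p in predict_with_certainty([1.0, 3.0, 2.0], names):
    print(f"{name}: {p:.1%}")
```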

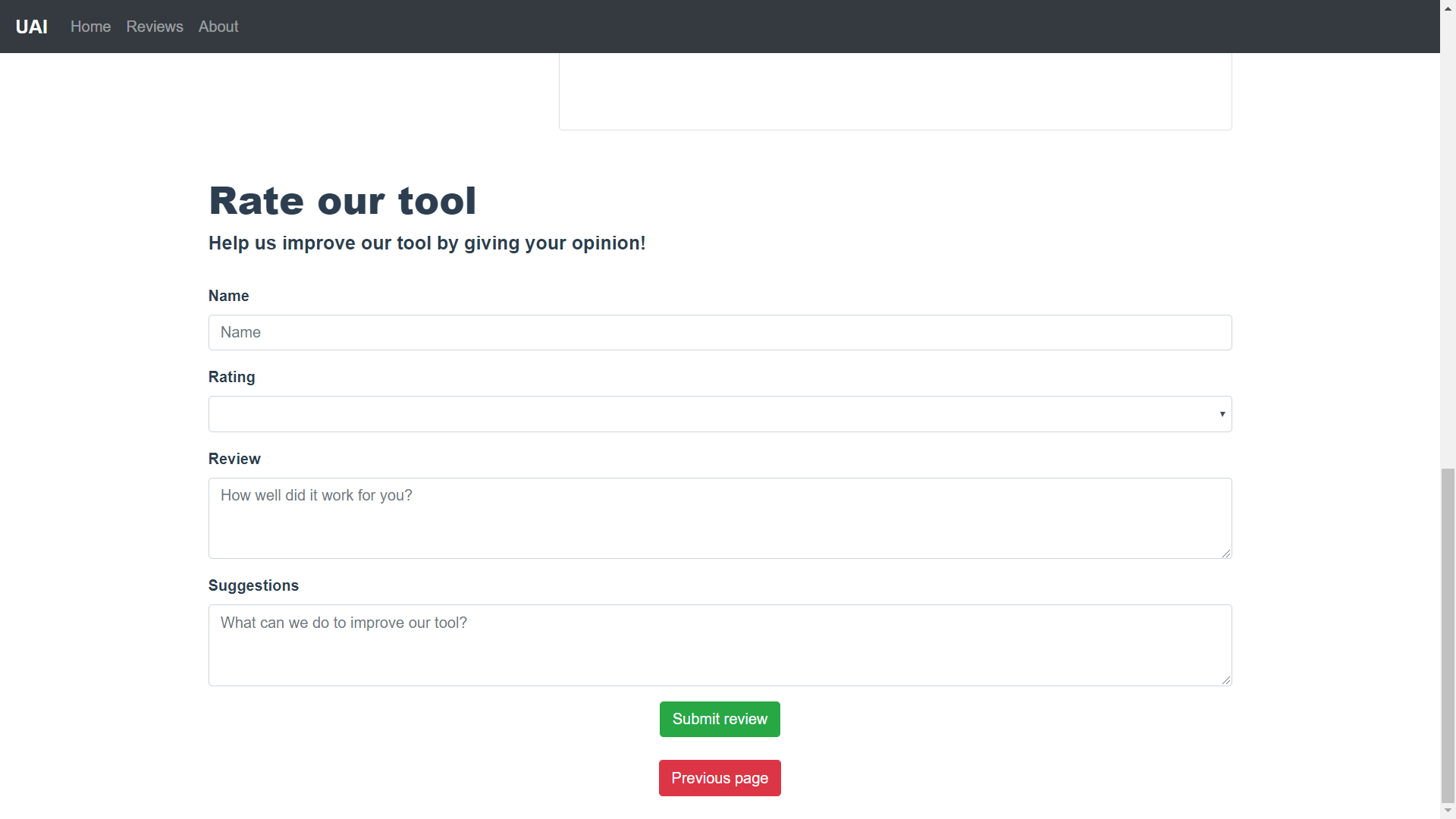

Additionally, after the user has chosen an image to process, a suggestion appears asking the user to review the tool. If the user agrees, a review form appears at the bottom of the page, where the user can leave a rating, give his opinion and possibly make suggestions.

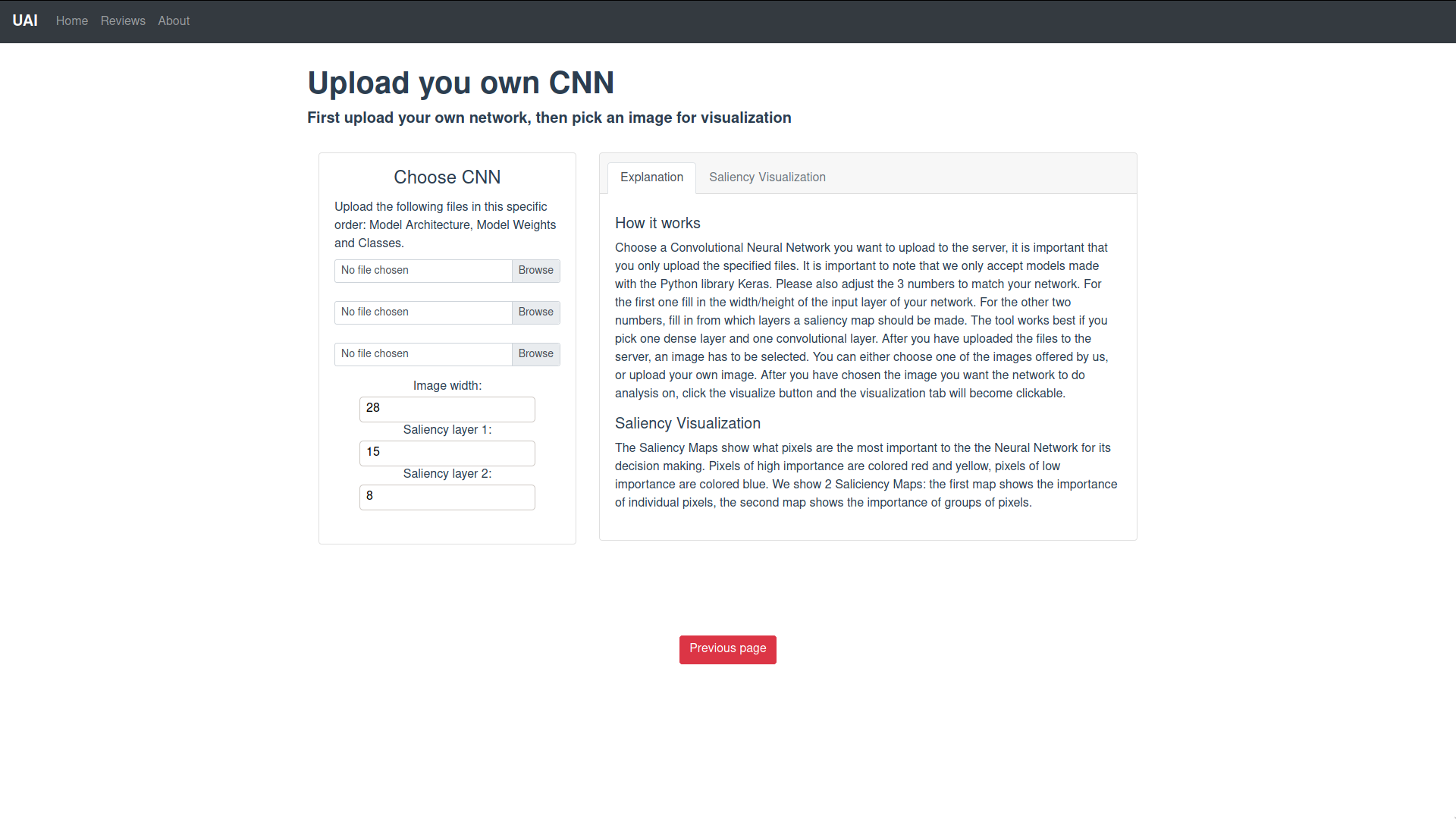

<h5>Upload network page</h5>

[[File:Vis2_NEW.png|500px]]<br>

''The second visualization page, meant for users who want to use their own network.''<br><br>

This is the other visualization page, meant for users who want to use their own network. On the left, the user can upload the files of his network. Below that, the input fields let the user set the image width and the Saliency layers of his network, that is, the width/height of the input layer of his network and the layers from which a Saliency Map should be made. Once all required files are chosen, the 'upload network' button appears. After the network has been uploaded, the image picker appears and the user can proceed in the same way as on the other visualization page.

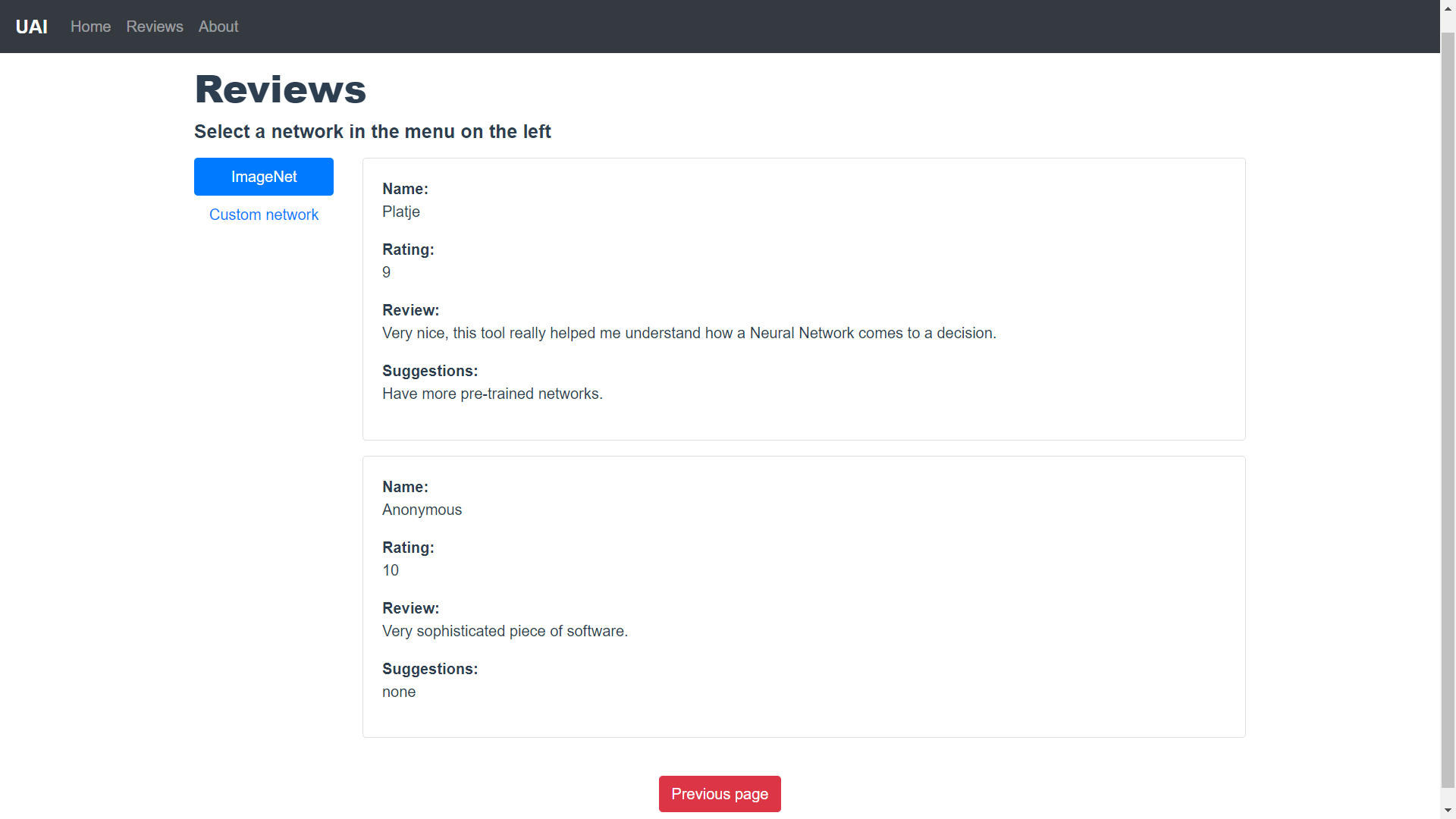

<h5>Reviews Page</h5>

[[File:Reviews_page.png|500px]]<br>

''Reviews shown on the review page.''<br><br>

The review page shows the reviews of users. There are 2 tabs: one for users who used our network and one for users who used their own network. A review includes: name, rating, opinion and suggestions.

<h5>About Page</h5>

[[File:About_page.png|500px]]<br>

''The about page.''<br><br>

The about page shows more information about us, the developers of the project. Furthermore, a copyright notice is shown.

<h5>Design Decisions</h5>

Navigating from page to page is done using the navigation bar, which is shown at the very top of every page (except for the title page). We chose a horizontal navigation bar at the top because we think this is the easiest way for the user to switch between the different pages: Home, Reviews and About. <br>

Furthermore, all pages (except for the title page) have a red 'previous page' button at the bottom, which redirects the user to the home page.

All navigation elements have a zoom animation when hovered over.

On the home page, we decided to use cards in a grid layout because this makes it easy to see at a glance which options are available. Putting everything below each other would require the user to scroll down, which we think is unnecessary. Cards suit our purpose because they show exactly what we need: the title of the option, a brief explanation and a picture. They also work well with the zoom effect and the grid layout, and are visually pleasing.<br>

On the first visualization page (Use our network), we also used a grid layout with cards. In the left card, the user can upload the image. The right card contains a tab layout. We could have put the explanation and the visualizations below each other, but that would again require the user to scroll up and down to switch between visualizations. With a tab layout, the user can simply click on the appropriate tab, which is more convenient. The visualization page also lets the user leave a review when he is done, so he does not have to go to the reviews page to do so. This is easier for the user because he does not have to remember which option he chose; the system fills that in automatically. The suggestion to leave a review is deliberately minimal and unobtrusive, since an intrusive prompt would annoy the user and make for a bad user experience. <br>

Everything mentioned for this visualization page also applies to the second visualization page (Upload your own network). On both pages, certain options only appear after the previous steps are done: the 'visualize' button becomes clickable only when a picture has been chosen, the image picker appears only when the user has uploaded his network, and the tabs become clickable only when a picture has been chosen to process. This prevents confusion and makes it easier for the user to go through the required steps in the right order.

== Future Work==

<h5>More visualization techniques</h5>

We currently offer two main techniques to analyze the user's neural network: saliency maps and layer/activation visualizations. Other visualizations would give the user even more insight into their network. To name a few techniques that would be beneficial to add: visualizing the raw weights, visualizing a 2D projection of the last layer's feature space, showing intermediate features and visualizing the optimal image per class. These techniques are fairly complicated and take a lot of time to implement, so we did not implement them due to time constraints, but adding them would improve our tool.

Another limitation is that, currently, when a user uploads a custom network, they can only use the saliency visualization. We did not implement the layer visualization for custom networks due to time constraints.

<h5>Current Network upload feature is limited</h5>

Right now, a user has to upload his own network under a lot of restrictions. Some of these restrictions will always remain. For example, the uploaded network has to be a convolutional neural network, since our current visualizations are designed for that type of network. However, a trained network from another framework should in principle be convertible to a Keras network, so support for other formats could be added. The requirement to upload the files in a specific order could also be removed, but we did not have time for this during our project. Lifting these limitations would greatly improve the user experience, so this is an important feature for future work.

<h5>Compare two networks at the same time</h5>

If the user is developing a neural network with the help of our tool, he tests his network to see how well it performs. If he finds that his network still needs improvement and makes some changes to it, he will want to know whether those were the right changes. It would therefore be useful if he could compare his old network with his new network side by side. He could then easily compare the visualizations of the two networks and see which one performs better on certain images. Thus it would be nice to add this feature in the future.

<h5>More available networks to play around with</h5>

Right now, we have only one network available for users to ‘freely’ explore the visualisations with. We planned to make more than one network, but getting even one network done was more work than we expected, so making more networks available was no longer possible in the remaining time. However, giving users more ‘tools’ to play around with would add a lot of value to the user experience, so adding at least one more default network in the future is definitely worth considering.

<h5>Image pre-processing</h5>

Convolutional neural networks that end in fully connected layers, as is typical for image classifiers, only accept input images of a fixed size. This means that when a user wants to analyze an image whose size the network does not accept, the image first needs to be pre-processed to the right size. This can be done in multiple ways, each with its own benefits and disadvantages. One way is to simply scale the image; another is to crop it in a certain way. We currently always scale the input image to the right size. In some cases, scaling the image may limit the network's ability to analyze it, for example because it distorts the aspect ratio. For this reason, it would be good to let the user choose how images are pre-processed.
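The two pre-processing options mentioned above, scaling and cropping, can be sketched in numpy as follows. These helpers are illustrative assumptions rather than our tool's code, and the 224x224 target size is only an example. A common compromise is to first scale the shorter side to the target size and then crop the centre, which preserves the aspect ratio.

```python
import numpy as np


def resize_nearest(image, size):
    """Scale an (H, W, C) image to (size, size) with nearest-neighbour sampling.
    The aspect ratio is not preserved, so objects may be stretched."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows][:, cols]


def center_crop(image, size):
    """Cut the central (size, size) square out of an image at least that large.
    Keeps the original pixels but discards the borders."""
    h, w = image.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return image[top:top + size, left:left + size]


img = np.zeros((300, 400, 3), dtype=np.uint8)
print(resize_nearest(img, 224).shape)  # (224, 224, 3)
print(center_crop(img, 224).shape)     # (224, 224, 3)
```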

<h5>Server Hosted Website</h5>

Right now we do not have an online website for this project, so the website can only be run locally. This brings a couple of problems, such as storage and computing power. Some of the visualisations on our website require the server to have at least a couple of gigabytes of free disk space to keep running, and not all local systems can reserve that much disk space for a program. Also, running locally means that we use the computing power of the local system, which is not always sufficient. The algorithms for the visualisations are computationally heavy, so with the resources of a local system it can take several minutes to respond to some requests from the front-end. This limits the user-friendliness of the whole product. Why not simply put the project on a hosted server? One of the reasons we did not do that yet is security. Currently, to limit access to the back-end server, we use an API key as an access token, which makes it impossible to use the services of our REST API without this token. However, we store the key locally, which makes it easy to find. Since we did not take any security measures other than input sanity checks, we advise investing more in security before putting this project on a server. The other main reason we did not host it was the limited amount of time we had. With more time, hosting our tool on a remote server would be one of the most important things to work on.
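A server-side check of the API key described above could look roughly like the sketch below. It is a hypothetical helper, not our back-end's code: the header name X-API-Key and the environment variable NETVIS_API_KEY are assumptions. Reading the key from the environment keeps it out of the source tree, and `hmac.compare_digest` avoids leaking information through comparison timing. It does not address a key that is stored on or shipped to the client, so it is only one small part of the additional security measures advised above.

```python
import hmac
import os


def is_authorized(headers, expected_key=None):
    """Return True if the request headers carry the expected API key.

    The header name "X-API-Key" and the environment variable NETVIS_API_KEY
    are hypothetical placeholders.
    """
    if expected_key is None:
        expected_key = os.environ.get("NETVIS_API_KEY", "")
    # Reject outright if no key is configured, so an empty key never matches.
    if not expected_key:
        return False
    supplied = headers.get("X-API-Key", "")
    # Constant-time comparison of the supplied and expected keys.
    return hmac.compare_digest(supplied.encode(), expected_key.encode())


print(is_authorized({"X-API-Key": "s3cret"}, expected_key="s3cret"))  # True
print(is_authorized({"X-API-Key": "guess"}, expected_key="s3cret"))   # False
print(is_authorized({}, expected_key="s3cret"))                       # False
```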

==Video==

Our video (with demo) can be found at [https://www.youtube.com/watch?v=YBOXeoWjxSE https://www.youtube.com/watch?v=YBOXeoWjxSE]

== Peer Review==

Jeroen: +0.5

Daniel: 0

Ka Yip: 0

Joris: 0

Rik: -0.5

== References ==

'''Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (pp. 1097-1105).''' <br>

''Summary: AlexNet. One of the first CNNs used for image classification in the ImageNet competition, which it won by a large margin. A relatively small neural network by later standards.'' <br>

'''Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.''' <br>

''Summary: VGGNet. A later, larger CNN that achieved top results in the ImageNet competition. Very deep architecture with many convolutional and pooling layers. Power from size.'' <br>

'''He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770-778).''' <br>

''Summary: ResNet. A new neural network that won the ImageNet competition again. The idea behind ResNet is to propagate gradients better by adding shortcut connections (‘jumps’) to the network. A start to thinking about how neural networks work, what their bottlenecks are and how to improve those aspects.'' <br>

'''Maaten, L. V. D., & Hinton, G. (2008). Visualizing data using t-SNE. Journal of machine learning research, 9(Nov), 2579-2605.''' <br>

''Summary: This paper presents a method for visualizing a high-dimensional feature space in 2D. This can be useful for analysing and visualising the dataset on which a neural network is trained.'' <br>

'''Simonyan, K., Vedaldi, A., & Zisserman, A. (2013). Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034.''' <br>

''Summary: This paper explains how to create saliency maps for a given input image and CNN. It works by applying a special backward pass through the network.'' <br>

'''Zhou, Z. H., Jiang, Y., Yang, Y. B., & Chen, S. F. (2002). Lung cancer cell identification based on artificial neural network ensembles. Artificial Intelligence in Medicine, 24(1), 25-36.'''<br>

''Summary: This paper uses neural networks to detect lung cancer cells.''<br>

'''Karabatak, M., & Ince, M. C. (2009). An expert system for detection of breast cancer based on association rules and neural network. Expert systems with Applications, 36(2), 3465-3469.''' <br>

''Summary: This paper uses neural networks to detect breast cancer.'' <br>

'''Djavan, B., Remzi, M., Zlotta, A., Seitz, C., Snow, P., & Marberger, M. (2002). Novel artificial neural network for early detection of prostate cancer. Journal of Clinical Oncology, 20(4), 921-929.''' <br>

''Summary: This paper uses neural networks to detect early prostate cancer.'' <br>

'''Qin, C., Zhu, H., Xu, T., Zhu, C., Jiang, L., Chen, E., & Xiong, H. (2018, June). Enhancing person-job fit for talent recruitment: An ability-aware neural network approach. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval (pp. 25-34).''' <br>

''Summary: Using neural networks to predict how well a person fits a job.'' <br>

'''Van Huynh, T., Van Nguyen, K., Nguyen, N. L. T., & Nguyen, A. G. T. (2019). Job Prediction: From Deep Neural Network Models to Applications. arXiv preprint arXiv:1912.12214.''' <br>

''Summary: Neural networks for job applications.'' <br>

'''Sun, Y., Liang, D., Wang, X., & Tang, X. (2015). Deepid3: Face recognition with very deep neural networks. arXiv preprint arXiv:1502.00873.''' <br>

''Summary: Using neural networks to recognize faces.'' <br>

'''Kuo, Lily. “China Brings in Mandatory Facial Recognition for Mobile Phone Users.” The Guardian, Guardian News and Media, 2 Dec. 2019, www.theguardian.com/world/2019/dec/02/china-brings-in-mandatory-facial-recognition-for-mobile-phone-users.''' <br>

''Summary: News article covering the use of facial recognition in China.'' <br>

'''Bojarski, M., Del Testa, D., Dworakowski, D., Firner, B., Flepp, B., Goyal, P., ... & Zhang, X. (2016). End to end learning for self-driving cars. arXiv preprint arXiv:1604.07316.''' <br>

''Summary: Using a CNN to steer a self-driving car based on camera images.'' <br>

'''Qin, Zhuwei & Yu, Fuxun & Liu, Chenchen & Chen, Xiang. (2018). How convolutional neural networks see the world - A survey of convolutional neural network visualization methods. https://arxiv.org/abs/1804.11191''' <br>

''Summary: Features learned by convolutional NNs are hard for humans to identify and understand. This paper shows how CNN interpretability can be improved using CNN visualizations. The paper discusses several visualization methods, e.g. Activation Maximization, Network Inversion, Deconvolutional NN and Network Dissection based visualization.'' <br>

'''Yu, Wei & Yang, Kuiyuan & Bai, Yalong & Yao, Hongxun & Rui, Yong. (2014). Visualizing and comparing Convolutional Neural Networks. https://arxiv.org/abs/1412.6631''' <br>

''Summary: This paper attempts to understand the internal workings of a CNN, using visualization among other methods.'' <br>

'''Zeiler, Matthew D & Fergus, Rob. (2014). Visualizing and Understanding Convolutional Networks. https://link.springer.com/chapter/10.1007/978-3-319-10590-1_53''' <br>

''Summary: This paper introduces a visualization technique that gives insight into the function of feature layers and the operation of the classifier. According to the authors, the visualizations can be used to improve the network so that it outperforms other networks.'' <br>

'''Zintgraf, Luisa M. & Cohen, Taco S. & Welling, Max. (2017). A New Method to Visualize Deep Neural Networks. https://arxiv.org/abs/1603.02518''' <br>

''Summary: This paper presents a visualization method for the response of a NN to a specific input. For example, it highlights areas that show why (or why not) a certain class was chosen.'' <br>

'''Zhang, Quanshi & Wu, Ying Nian & Zhu, Song-Chun. (2018). Interpretable Convolutional Neural Networks. http://openaccess.thecvf.com/content_cvpr_2018/html/Zhang_Interpretable_Convolutional_Neural_CVPR_2018_paper.html''' <br>

''Summary: This paper uses a different approach to make CNNs more understandable. Instead of focusing on visualization, it proposes a method to modify a traditional CNN to make it more interpretable.'' <br>

'''https://machinelearningmastery.com/how-to-visualize-filters-and-feature-maps-in-convolutional-neural-networks/''' <br>

''Summary: Coding tutorial on how to visualize neural networks.''<br>

'''Yosinski, J., Clune, J., Nguyen, A., Fuchs, T., & Lipson, H. (2015). Understanding neural networks through deep visualization. arXiv preprint arXiv:1506.06579. https://arxiv.org/abs/1506.06579''' <br>

''Summary: Introduces several new regularization methods that combine to produce qualitatively clearer, more interpretable visualizations.''<br>

'''Tzeng, F. Y., & Ma, K. L. (2005). Opening the black box-data driven visualization of neural networks (pp. 383-390). IEEE. https://ieeexplore.ieee.org/abstract/document/1532820''' <br>

''Summary: Presents designs and shows that the visualizations not only help to design more efficient neural networks, but also assist in the process of using neural networks for problem solving, such as performing a classification task.''<br>

'''Nguyen, A., Yosinski, J., & Clune, J. (2016). Multifaceted feature visualization: Uncovering the different types of features learned by each neuron in deep neural networks. arXiv preprint arXiv:1602.03616. https://arxiv.org/abs/1602.03616''' <br>

''Summary: Introduces an algorithm that explicitly uncovers the multiple facets of each neuron by producing a synthetic visualization of each of the types of images that activate a neuron. The paper also introduces regularization methods that produce state-of-the-art results in terms of the interpretability of images obtained by activation maximization.''<br>

'''Olah, C., Mordvintsev, A., & Schubert, L. (2017). Feature visualization. Distill, 2(11), e7.''' <br>

'''https://distill.pub/2017/feature-visualization/'''<br>

''Summary: This article focuses on feature visualization. While feature visualization is a powerful tool, actually getting it to work involves a number of details. In this article, the authors examine the major issues and explore common approaches to solving them. They find that remarkably simple methods can produce high-quality visualizations.''<br>

'''Leon A. Gatys, Alexander S. Ecker, Matthias Bethge (2016). Image Style Transfer Using Convolutional Neural Networks.'''<br>

''Summary: Paper about a neural network that can separate and combine the style and content of natural images, which provides new insights into the deep image representations learned by Convolutional Neural Networks and demonstrates their potential for high-level image synthesis and manipulation.''<br>

'''Patrice Y. Simard, Dave Steinkraus, John C. Platt (2003). Best Practices for Convolutional Neural Networks Applied to Visual Document Analysis.''' <br>

''Summary: Neural networks are a powerful technology for classification of visual inputs arising from documents. However, there is a confusing plethora of different neural network methods that are used in the literature and in industry. This paper describes a set of concrete best practices that document analysis researchers can use to get good results with neural networks.'' <br>

'''Mohammad Rastegari, Vicente Ordonez, Joseph Redmon, Ali Farhadi (2016). XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks.''' <br>

''Summary: Paper in which two approximations of standard Convolutional Networks, using binary filters and binary inputs to convolutional layers, are proposed and compared to networks with full-precision weights by running them on the ImageNet task. The approximations result in large memory and runtime savings.''<br>

'''Hubert Cecotti and Axel Gräser (2011). Convolutional Neural Networks for P300 Detection with Application to Brain-Computer Interfaces.'''<br>

''Summary: Paper that proposes the use of a Convolutional Neural Network to analyse brain activity and in this way detect P300 brain waves.''<br>

'''Yunjie Liu, Evan Racah, Prabhat, Joaquin Correa, Amir Khosrowshahi, David Lavers, Kenneth Kunkel, Michael Wehner, William Collins (2016). Application of Deep Convolutional Neural Networks for Detecting Extreme Weather in Climate Datasets.'''<br>

''Summary: In this paper, the authors develop a deep Convolutional Neural Network (CNN) classification system and demonstrate the usefulness of Deep Learning techniques for tackling climate pattern detection problems.''<br>

'''[Rowley et al., 1998] Henry A. Rowley, Shumeet Baluja, and Takeo Kanade. Neural network based face detection.''' <br>

''Summary: This paper describes neural networks that can detect faces. From it we can learn how these layers are structured and how each layer can be visualized.''<br>

'''J. Abdul Jaleel, Sibi Salim, and Aswin R.B. Artificial neural network based detection of skin cancer.''' <br>

''Summary: Here they discuss neural networks that detect skin cancer at an early stage even more reliably than humans, something that might save lives.''<br>

'''B. Lo, H.P. Chan, J.S. Lin, H. Li, M.T. Freedman, and S.K. Mun. Artificial convolution neural network for medical image pattern recognition.''' <br>

''Summary: A broader paper covering the different diseases that can be detected using CNNs.''<br>

Summary:Here they talk about neural nets that detect skin cnacer even more reliable then humans in a early stage, something that might safe lives.<br> | '''A. Canziani & E. Culurciello, and A. Paszke. An analysis of deep neural networks for practical applications''' <br> | ||

B. Lo, H.P. Chan, J.S. Lin, H Li, M.T. Freedman, and S.K. Mun. Artificial convolution neural network for medical image pattern recognition. <br> | ''Summary:In this paper they describe neural nets that can detect more 'normal' objects. They do this with the imagenet dataset.''<br> | ||

Summary:This is a paper that is more broad in the different deseases that it can find using CNN's.<br> | '''L. Zhang, F. Yang, Y.D. Zhang, and Y.J. Zhu. Road crack detection using deep convolutional neural networks.''' <br> | ||

A. Canziani & E. Culurciello, and A. Paszke. An analysis of deep neural networks for practical applications <br> | ''Summary:A paper that goes in detail of CNN's that detect certain road conditions like cracks that are hard to find by humans.''<br> | ||


== Logbook ==


|-
| Joris Goddijn
| 14
| Meeting (2h), Research references 6-13 (4h), Rewrite problem statement, objectives and users (3h), Learning Django basics (4h), Updating wiki (1h)

|-
| Daniël Verloop
| 14
| Meeting (2h), Learning language (3h), Initializing Vue project (3h), Website design (6h)
|-
| Ka Yip Fung
| 13
| Meeting (2h), Learning Vue basics (3h), Working on website design (7h), Updating Doc/Wiki (1h)
|-
| Rik Maas
| 15
| Meeting (2h), Learning Django basics (5h), working on saliency maps (8h)
|-
| Jeroen Struijk
| 17
| Meeting (2h), Learning Django basics (5h), working on layer visualizations (10h)
|}

'''WEEK 3'''

{| class="wikitable"
|-
! Name
! Total hours
! Break-down
|-
| Joris Goddijn
| 24
| Meeting (4h), Integrating front end and back end in Docker (10h), Developing connection between front end and back end in Vue.js (10h).
|-
| Daniël Verloop
| 24
| Meeting (4h), Design Vis1 page (10h), Front end (5h), Learning Axios (2h), Learning Ajax (2h), Integrating HTTP request functionality (1h).
|-
| Ka Yip Fung
| 22
| Meeting (4h), Designing title page (3h), working on design/explanation Home page (3.5h), working on Vis1 page (8.5h), attempt at migrating backend using Docker (2h), research Axios (1h).
|-
| Rik Maas
| 12
| Meeting (4h), Working on saliency maps (8h).
|-
| Jeroen Struijk
| 24
| Meeting (4h), working on backend (20h).
|}

'''WEEK 4'''

{| class="wikitable"
|-
! Name
! Total hours
! Break-down
|-
| Joris Goddijn
| 11
| Meeting (3h), fixing bugs (1h), creating user examples (4h), Updating wiki (6h).
|-
| Daniël Verloop
| 15
| Meeting (3h), Image picker Vis1 (12h).
|-
| Ka Yip Fung
| 13
| Meeting (3h), Updating Home+Title page (4h), Research PHP/SQLite (2h), Designing Reviews page (2.5h), New users Wiki (1.5h).
|-
| Rik Maas
| 8
| Meeting (3h), working on saliency mapping (5h).
|-
| Jeroen Struijk
| 21
| Meeting (3h), working on backend (18h).
|}

'''WEEK 5'''

{| class="wikitable"
|-
! Name
! Total hours
! Break-down
|-
| Joris Goddijn
| 8
| Meeting (4h), Creating explanations (2h), modularising code (2h).
|-
| Daniël Verloop
| 12
| Meeting (4h), modularising/cleaning code (8h).
|-
| Ka Yip Fung
| 9
| Meeting (4h), Updating Home page (1h), Explanation UI (3h), Updating navigation elements (1h).
|-
| Rik Maas
| 8
| Meeting (4h), Doing research on saliency mapping (4h).
|-
| Jeroen Struijk
| 20
| Meeting (4h), working on backend (16h).
|}

'''WEEK 7'''

{| class="wikitable"
|-
! Name
! Total hours
! Break-down
|-
| Joris Goddijn
| 14
| Meeting (4h), Front end for network upload (6h), Testing and fixing network upload (4h).
|-
| Daniël Verloop
| 13
| Meeting (4h), Getting user feedback (2h), Processing feedback (1h), Front end for network upload (6h).
|-
| Ka Yip Fung
| 12.5
| Meeting (4h), Website: adjustments (based on feedback) + adjusting for network uploading (3h), Updating Wiki (4.5h), Getting user feedback (0.5h), Processing feedback (0.5h).
|-
| Rik Maas
| 13
| Meeting (4h), Updating wiki (5h), making presentation text (4h).
|-
| Jeroen Struijk
| 26
| Meeting (4h), upload functionality backend (22h).
|}

'''WEEK 8'''

{| class="wikitable"
|-
! Name
! Total hours
! Break-down
|-
| Joris Goddijn
| 14
| Meeting (8h), Workflow explanation (1h), Future Work (1h), Record and edit demo video (4h).
|-
| Daniël Verloop
| 15
| Meeting (8h), Future Work (4h), Presentation (3h).
|-
| Ka Yip Fung
| 9
| Meeting (8h), Updating/checking Wiki (1h).
|-
| Rik Maas
| 16
| Meeting (8h), Making presentation (8h).
|-
| Jeroen Struijk
| 22
| Meeting (8h), bug fixing backend (2h), finalizing project (12h).
|}

Latest revision as of 14:16, 6 April 2020

Group Members

| Name | Student Number | Study | E-mail |

|---|---|---|---|

| Joris Goddijn | 1244648 | Computer Science | j.d.goddijn@student.tue.nl |

| Daniël Verloop | 1263544 | Computer Science | a.c.verloop@student.tue.nl |

| Ka Yip Fung | 1245300 | Computer Science | k.y.fung@student.tue.nl |

| Rik Maas | 1244503 | Computer Science | r.maas@student.tue.nl |

| Jeroen Struijk | 1252070 | Computer Science | j.j.struijk@student.tue.nl |

Problem Statement

Neural networks are used extensively in a wide variety of fields:

- Large tech companies use neural networks to analyze and cluster the users of their services. The resulting data is then used for targeted advertising, which is one of the most lucrative online business models.

- In health care, neural networks are used for detecting certain diseases, for example cancer. Recent studies have shown that neural networks perform well in the detection of lung cancer (Zhou, 2002), breast cancer (Karabatak, 2009), and prostate cancer (Djavan, 2002). There are many more examples of researchers using neural networks to detect specific diseases.

- In businesses, neural networks are used to filter job applicants (Qin, 2018) (Van Huynh, 2019). Such a network uses information about the applicant and about the job to predict how well the applicant fits the job. This way the business only has to invite the best applicants for interviews, which reduces the amount of manual labour needed.

- Neural networks are also used to detect cracks in the road (Zhang, 2016). Manually keeping track of road conditions is very costly and time-consuming, so automated road crack detection makes road maintenance a lot easier.

- Neural networks are also able to recognize faces (Sun, 2015). Facial recognition systems based on neural networks are already heavily deployed in China (Kuo, 2019).

- In self-driving cars, deep convolutional neural networks are used to parse the visual input from the car's sensors. This information can be used to steer the car (Bojarski, 2016).

All in all, there are many use cases for neural networks. Research into modern large-scale neural networks is very recent: the most promising progress in the field has been made in the last 10 years. A major reason for the increased popularity of neural networks is the growing amount of available data, because neural networks are only successful when they have a large amount of data to train on. Every day, people using technology produce more data on a massive scale, and this will only increase in the future. As time progresses, more industries will be able to start using neural networks.

A big issue with modern neural networks is that they are a black-box approach: even experts do not fully understand what is going on inside a neural network. Neural networks are given input data and are expected to produce output data, and they do this extremely well, but what exactly happens in between is largely unknown. This is an issue when people use neural networks to make decisions with real, impactful consequences.

In this project we focus on convolutional neural networks applied to visual data. We chose visual data because neural networks currently show their most promising results in the field of image processing. Furthermore, many of the impactful use cases mentioned above revolve around visual data.

The main problem we are tackling is that many users of convolutional neural networks only see the network's final decision. They get no explanation of why the network produced the output it did. We consider this a problem because we believe users can get more value out of their neural networks if the networks provide more information than just their output.

Objectives

Our aim is to provide a tool that helps our users better understand how the convolutional neural network they are using makes decisions and produces an output. Our goal is not to answer every question a user might have about the network's decision, because we think this is not technically feasible. Instead, our goal is to provide the user with as many visualizations as we can to help them understand the neural network's decision. We think that by providing our users with several explanatory images we can at the very least improve their understanding of the network's decision.

We are going to make a web application in which users can see visualizations of the inner workings of a neural network. In this web application, users should be able to choose between different datasets, the corresponding neural networks, and different images. For both images and networks, users can either select one that is already on the website or upload their own.

We will provide two types of visualizations. First, to give a better understanding of the network and of what individual layers and neurons look at, we show users how each layer and neuron perceives the image and which nodes had the most impact on the result. For now, this visualization will only be available for the preselected networks. Second, we provide users with a saliency map of the image, so they can see which parts of the image the network considered important overall.
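Saliency maps can be computed in several ways, and this section does not say which method the tool uses. As a minimal sketch, the function below uses occlusion sensitivity (a swapped-in technique): it replaces one square patch at a time with a constant baseline value and records how much the network's class score drops. The names `occlusion_saliency` and `toy_score`, the patch size, and the baseline of 0.0 are illustrative assumptions; the network is abstracted as any Python function that maps an image (a list of pixel rows) to a score.

```python
from typing import Callable, List

Image = List[List[float]]  # greyscale image as rows of pixel values


def occlusion_saliency(
    image: Image,
    score_fn: Callable[[Image], float],
    patch: int = 2,
    baseline: float = 0.0,
) -> Image:
    """Return a map of how much the class score drops when each patch is occluded."""
    height, width = len(image), len(image[0])
    reference = score_fn(image)  # score on the unmodified image
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            # Copy the image and replace one patch with the baseline value.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = reference - score_fn(occluded)
            # Every pixel in the patch receives that patch's score drop.
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def toy_score(img: Image) -> float:
    """Hypothetical stand-in for a network: only looks at the top-left 2x2 region."""
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [[1.0] * 4 for _ in range(4)]
saliency = occlusion_saliency(image, toy_score, patch=2)
for row in saliency:
    print(row)
```

A larger patch gives a coarser map but needs fewer evaluations of the network: for an H x W image and patch size p, the network is run about (H/p)·(W/p) + 1 times.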

Another visualization technique we wish to provide is projecting a dataset, as represented by a trained network, onto a 2D space to visualize how close certain classes are to each other.
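A common way to project learned representations onto 2D is principal component analysis (PCA); t-SNE is another popular choice. The sketch below is a dependency-free PCA, assuming each image has already been turned into a feature vector (for example, the activations of the network's last hidden layer). The function names, the power-iteration approach, and the example features are illustrative assumptions, not the project's implementation.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def _matvec(m: List[Vector], v: Vector) -> Vector:
    return [_dot(row, v) for row in m]


def _normalize(v: Vector) -> Vector:
    norm = math.sqrt(_dot(v, v)) or 1.0  # guard against a zero vector
    return [x / norm for x in v]


def pca_2d(points: List[Vector], iters: int = 200, seed: int = 0) -> List[Tuple[float, float]]:
    """Project feature vectors onto their top two principal components.

    The components are found by power iteration on the covariance matrix,
    with deflation so that the second pass finds the second component.
    """
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centred = [[p[j] - mean[j] for j in range(d)] for p in points]
    cov = [[sum(p[i] * p[j] for p in centred) / n for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        v = _normalize([rng.random() for _ in range(d)])
        for _ in range(iters):
            v = _normalize(_matvec(cov, v))
        eigenvalue = _dot(v, _matvec(cov, v))
        # Remove this direction from the covariance before finding the next one.
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)] for i in range(d)]
        components.append(v)
    # Coordinates of each centred point along the two components.
    return [(_dot(p, components[0]), _dot(p, components[1])) for p in centred]


# Hypothetical 3-D features: two tight clusters far apart along the first axis.
features = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [5.0, 0.0, 0.1], [5.1, 0.0, 0.0]]
projected = pca_2d(features)
print(projected)
```

If the network separates the classes well, points from the same class land close together in the resulting scatter plot, as the two clusters do here.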

Our first focus will be on showing visualizations of the individual layers of a neural network. The user selects a dataset, a trained network, a layer, and an input image, and we show them a visualization of that layer. If the user does not have an image, we can select a random image from the dataset instead.
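To illustrate what such a layer visualization shows, the sketch below computes the feature map of one convolutional filter and rescales it to greyscale pixel values that a web page could display. It assumes a single-channel image, a hand-written 2x2 vertical-edge kernel, and a ReLU activation; in the tool itself, the kernels would come from the selected trained network. The helper names `conv2d_valid` and `to_greyscale` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image without padding (cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def to_greyscale(feature_map: Matrix) -> List[List[int]]:
    """Apply ReLU, then min-max rescale the activations to 0..255 for display."""
    relu = [[max(0.0, x) for x in row] for row in feature_map]
    lo = min(min(row) for row in relu)
    hi = max(max(row) for row in relu)
    span = (hi - lo) or 1.0  # a constant map would otherwise divide by zero
    return [[round(255 * (x - lo) / span) for x in row] for row in relu]


# Hypothetical 4x4 input: dark left half, bright right half.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
vertical_edge = [[-1.0, 1.0], [-1.0, 1.0]]  # responds to dark-to-bright transitions
pixels = to_greyscale(conv2d_valid(image, vertical_edge))
print(pixels)  # [[0, 255, 0], [0, 255, 0], [0, 255, 0]]
```

Bright pixels in the output mark where the filter responds strongly; here that is the column where the image changes from dark to bright.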