Drone Referee - MSD 2018/9


[[File:Avular drone.png|thumb|The Drone Referee]]

=Introduction=

Football is a long-established and popular sport with a large following worldwide, and it supports a growing industry with a large market. At the same time, technology is advancing rapidly in many areas of daily life, and sports, football in particular, are no exception. Examples of technologies already in use include automatic goal detection [1], trackers that monitor players' performance [2] and robot soccer, in which the players themselves are robots [3].

One possible use of technology in football matches is a drone referee, replacing either a human referee or a fixed camera system covering the field. Such a system offers several advantages over these conventional refereeing methods. First, human referees are naturally prone to error, which is one of the main sources of controversy in the game because it can lead to unfair decisions. A camera-based autonomous system would remove this unfairness, since every game would be refereed by the same algorithm, and it could cover virtually any game situation; however, such a system is still very expensive in many settings, such as regional games. A moving camera carried by a drone can replace such an expensive installation and could therefore appeal to a large market.

While the technology to enforce the rules of the game automatically from video is not yet available, a camera system that captures important game situations can assist a remote referee by providing video and replays, on the basis of which he or she can make decisions and send them to the main referee.


• Autonomous refereeing system

== Scope ==

Design an autonomous refereeing system for the RoboCup Middle Size League (MSL) using drones.

== Team ==

Mechatronics system design 2018 PDEng trainees:

1. Arash, System Architect, a.arjmandi.basmenj@tue.nl

2. Siddhesh, Communication manager, s.v.rane@tue.nl

3. Navaneeth, Team Leader, n.bhat@tue.nl

4. Sareh, Quality manager, s.heydari@tue.nl

5. Mohamed, Project manager, m.a.m.kamel@tue.nl

6. Eduardo, Test manager, e.f.tropeano@tue.nl

7. Andrew, Team Leader, a.n.k.wasef@tue.nl

== Deliverables ==

1. System Architecture

2. Drone refereeing system

3. Wiki page documentation

= System Architecture =

==Referee aiding system==

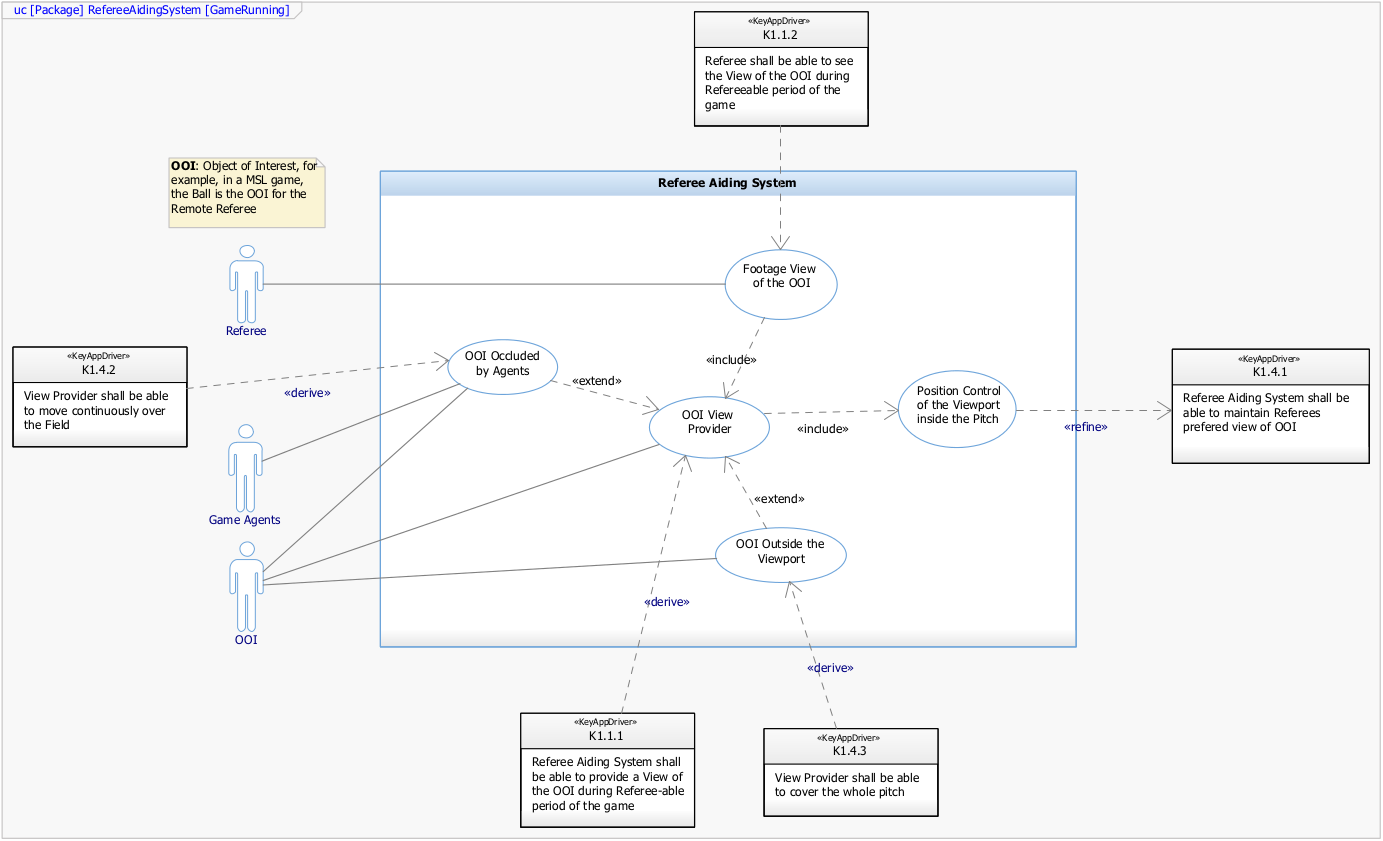

===RAS use case===

[[File:RAS-Game-Running-UseCase.png]]

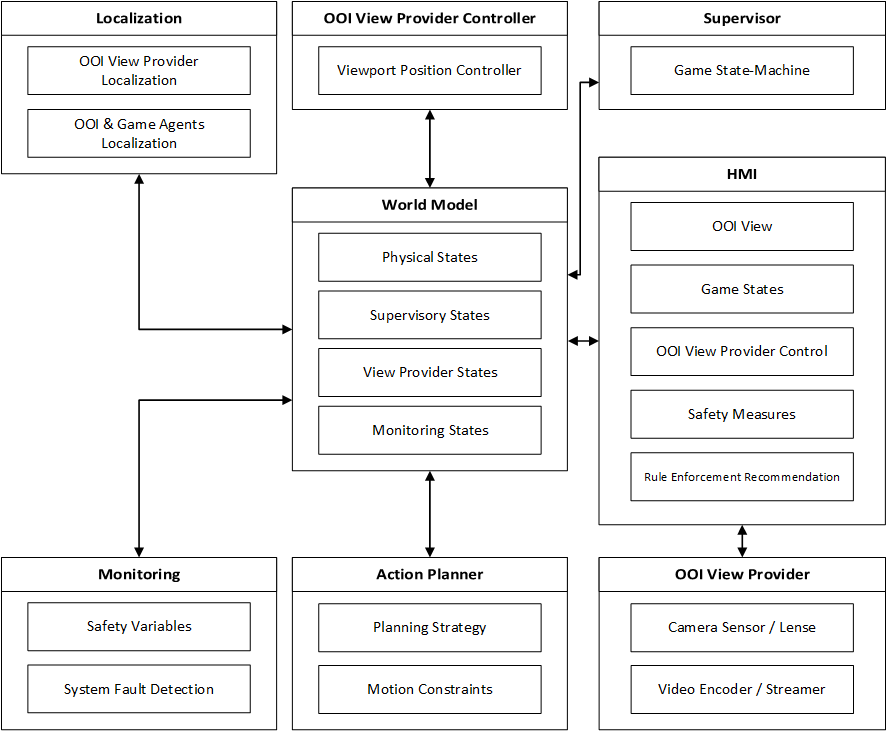

===RAS context diagrams===

[[File:RAS-Context-Diagram.png]]

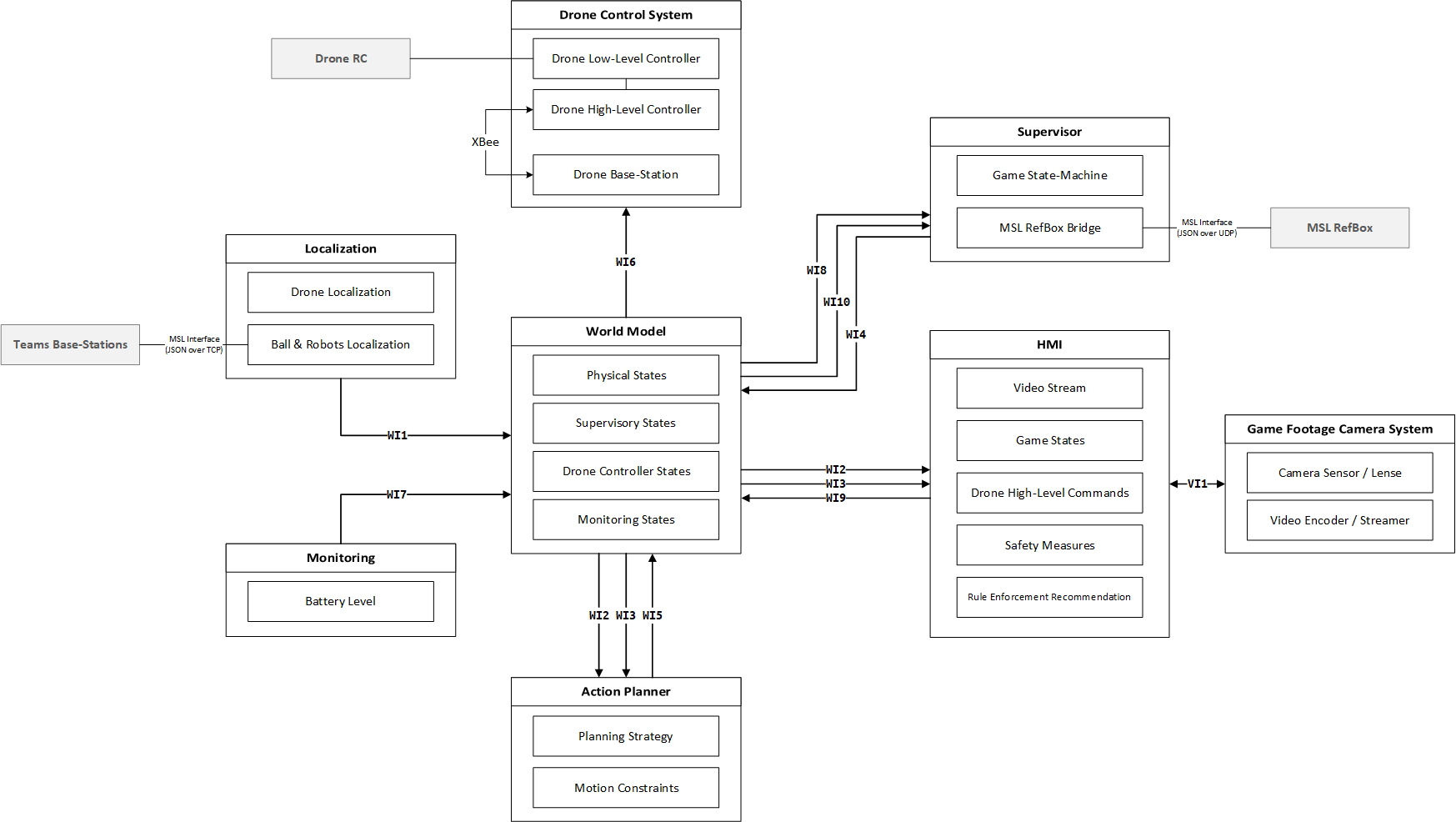

===MSL/Ball-Following 2D context diagrams===

[[File:MSL-Context-Diagram.png]]

= Drone refereeing system =

==MSL/Ball-Following 2D sub-systems==

This section introduces the subsystems of the MSL 2D package.

These subsystems are:

1. World model

2. Supervisor

=== World model ===

==== Functions ====

* Acts as the system's model, avoiding lateral dependencies between subsystems,

* State storage for the whole system,

* Single logging point of the system.
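The world-model functions listed above (one shared store that all subsystems read and write instead of calling each other, which also acts as the single logging point) can be sketched in a few lines of Python. This is an illustrative sketch only, not the project's implementation, which was built around MATLAB Simulink/Stateflow; the class `WorldModel`, its `write`/`read` methods and the example keys are hypothetical.

```python
import logging
from typing import Any, Dict

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("world_model")


class WorldModel:
    """Hypothetical blackboard: the only place where shared subsystem state lives."""

    def __init__(self) -> None:
        self._state: Dict[str, Any] = {}

    def write(self, writer: str, key: str, value: Any) -> None:
        # Every write passes through this method, so it is also the single logging point.
        log.info("%s wrote %s=%r", writer, key, value)
        self._state[key] = value

    def read(self, key: str, default: Any = None) -> Any:
        # Readers do not need to know which subsystem produced the value,
        # which avoids lateral dependencies between subsystems.
        return self._state.get(key, default)


if __name__ == "__main__":
    wm = WorldModel()
    wm.write("monitoring", "battery_emergency", False)
    wm.write("supervisor", "game_state", "S1")
    print(wm.read("game_state"))  # S1
```

Because subsystems only depend on the world model, one subsystem can be replaced or tested in isolation by writing the values it expects directly into the store.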

=== Supervisor ===

==== '''Introduction''' ====

The supervisor is a subsystem of RAS. It is responsible for providing high-level control states to the other subsystems of RAS.

It also translates user commands into states and monitors the overall safety of RAS.

==== '''Architecture constraints''' ====

===== '''RAS''' =====

The supervisor subsystem is responsible for keeping a record of the states of RAS.

The supervisor states must capture the pre-game, game and emergency situations.

The states of the supervisor must be accessible to other subsystems of RAS.

===== '''Drone MSL''' =====

The supervisor states must include drone positioning as additional states.

The supervisor must include drone emergency situations in its states.

The supervisor states are independent of the MSL sub-packages (2D, gimbal, inclined?).

The supervisor takes input from the MSL referee box through a JSON interface.

The supervisor takes the land, take-off and safe-to-land commands from the remote referee (RR) through the world model.

The supervisor outputs the high-level game states through the world model.

The supervisor enables the RR to land the drone from any point in the field.

The supervisor enables the drone to take off after it receives the take-off command from the RR.

The supervisor enables the drone to start refereeing the game after it receives the start game signal from the MSL referee box.

The supervisor dictates that, after a half ends, the drone hovers above the landing position.

The supervisor dictates that, after the land command is received, the drone hovers above the landing position.

The supervisor enables the drone to land only after both the RR and the drone action planner (AP) have confirmed that it is safe to land. State transition: check with AP requirements.
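The JSON-interface constraint above implies a small translation step from referee box messages to supervisor events. The sketch below shows one way to do this in Python with the standard `json` module. The message layout (a top-level `command` field) and all command strings are assumptions made for illustration, not the documented MSL referee box protocol; they would have to be replaced with the real command set.

```python
import json
from typing import Optional

# Hypothetical mapping from referee box command strings to supervisor events.
# These command names are assumptions, not the documented MSL refbox protocol.
REFBOX_EVENTS = {
    "START": "game_start",
    "FIRSTHALF_END": "half_end",   # hypothetical name
    "SECONDHALF_END": "half_end",  # hypothetical name
}


def parse_refbox_message(raw: str) -> Optional[str]:
    """Translate one JSON message into a supervisor event, or None if it is irrelevant."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return None  # malformed input is ignored instead of crashing the supervisor
    if not isinstance(msg, dict):
        return None
    command = msg.get("command")
    if not isinstance(command, str):
        return None
    return REFBOX_EVENTS.get(command)
```

For example, `parse_refbox_message('{"command": "START"}')` yields `"game_start"`, which the supervisor would then use as a transition variable; unknown or malformed messages are ignored rather than changing the state.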

==== '''System description''' ====

This section describes the supervisor subsystem of the MSL 2D architectural package. The description covers four points: scenarios, purpose, inputs and outputs.

===== '''Scenarios''' =====

The following subsections present selected game-operation scenarios. Each scenario shows the sequence of events during a running game, obtained by applying the requirements and constraints to the drone referee system. These scenarios are then used to derive the supervisory states of the MSL 2D architectural package. Three kinds of scenarios are covered: the game scenario, intentional landing scenarios and emergency scenarios.

===== '''Game scenario''' =====

This scenario shows a complete sequence for the first half of an MSL 2D game. The steps are:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The MSL referee issues the start game signal.

6. The game starts.

7. The drone starts the refereeing technique.

8. The first half is over.

9. The MSL referee issues the end of first half signal.

10. The drone hovers above the landing position.

11. The RR confirms in the HMI that it is safe to land.

12. The drone lands at the landing position.

13. The drone waits for the next game start.

===== '''Intentional land scenarios''' =====

These scenarios represent situations in which the RR wants to land the drone before or during the game. Two scenarios are shown: in the first (ILS1), the RR wants the drone to land before the game starts; in the second (ILS2), the RR wants the drone to land during the game. The steps of ILS1 are:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The RR presses land in the HMI.

6. The drone hovers above the landing position.

7. The RR confirms in the HMI that it is safe to land.

8. The drone lands at the landing position.

9. The drone waits for the next game start.

The steps of ILS2 are:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The MSL referee issues the start game signal.

6. The game starts.

7. The drone starts the refereeing technique.

8. The RR presses land in the HMI.

9. The drone hovers above the landing position.

10. The RR confirms in the HMI that it is safe to land.

11. The drone lands at the landing position.

12. The drone waits for the next game start.

===== '''Drone emergency scenarios''' =====

In these scenarios, three sequences are presented. They deal with a single kind of emergency, a battery emergency, occurring at different moments of the game: before the game starts while the drone is still on the ground (EMS1), while the drone is hovering at the center before the game starts (EMS2), and during the game (EMS3). The steps of EMS1 are:

1. Before the game, the drone is on the ground.

2. A drone emergency occurs.

3. The drone cannot take off, regardless of any command given by the RR.

4. The drone can take off again only when the emergency is resolved.

For EMS2, the sequence is:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. A drone emergency occurs.

6. The drone lands.

7. The drone cannot take off, regardless of any command given by the RR.

For EMS3, the sequence is:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The MSL referee issues the start game signal.

6. The game starts.

7. The drone starts the refereeing technique.

8. A drone emergency occurs.

9. The drone lands.

10. The drone cannot take off, regardless of any command given by the RR.

11. The drone can take off again only when the emergency is resolved.

==== '''Functions''' ====

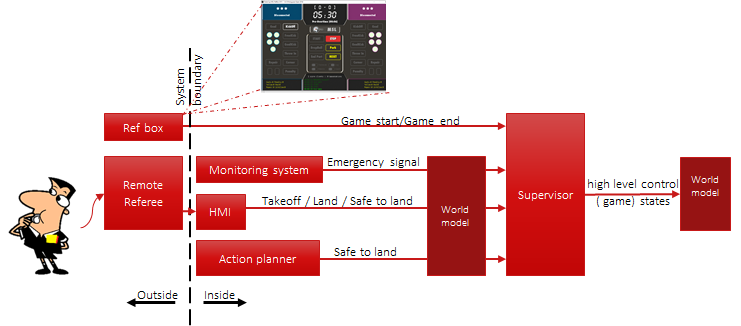

From the previous scenarios and constraints, the functions of the supervisor subsystem can be summarized as follows:

• To take the remote referee's commands, the referee box game states and the drone emergency status as inputs.

• To provide high-level control (game) states according to these inputs.

• To react to emergency situations.

The other subsystems act on the basis of these high-level abstract states. Figure 1 depicts a context diagram of the supervisor subsystem, showing its inputs and outputs together with the possible messages for each. In the figure, the system boundary line separates the subsystems of the drone referee from the outside environment.

[[File:Over_4.png]]

Figure 1: Context diagram of the supervisor subsystem

==== '''Inputs''' ====

===== '''Remote referee''' =====

The supervisor receives the take-off, land and safe-to-land commands from the remote referee via the HMI, through the world model.

===== '''Monitoring system''' =====

The supervisor reads the emergency status from the world model. This status is written by the monitoring system.

===== '''Referee box''' =====

The supervisor reads the game status from the MSL HMI (referee box). Based on this status, the supervisor knows when the game starts and ends.

===== '''Action planner''' =====

The action planner sends a “safe to land” signal to the world model, and the supervisor reads that signal from the world model. The drone can land only when both safety checks, the RR's safe-to-land command and the action planner's safe-to-land signal, are fulfilled.
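The landing rule above is a conjunction of two independent checks. A minimal Python sketch, assuming the two signals are stored in the world model under the hypothetical keys `rr_safe_to_land` and `ap_safe_to_land`:

```python
from typing import Mapping


def landing_permitted(world_model: Mapping[str, bool]) -> bool:
    """Landing is allowed only when both safety checks are true."""
    # Hypothetical keys; a missing value is treated as "not safe".
    rr_ok = world_model.get("rr_safe_to_land", False)  # written via the RR's HMI
    ap_ok = world_model.get("ap_safe_to_land", False)  # written by the action planner
    return bool(rr_ok) and bool(ap_ok)
```

Treating a missing value as unsafe keeps the check fail-safe: if either subsystem has not yet written its signal, the drone keeps hovering above the landing position.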

==== '''Output''' ====

===== '''High level control (game) states''' =====

The supervisor writes these states into the world model. Other subsystems, such as the action planner, read these states and act on them.

==== '''Design choices''' ====

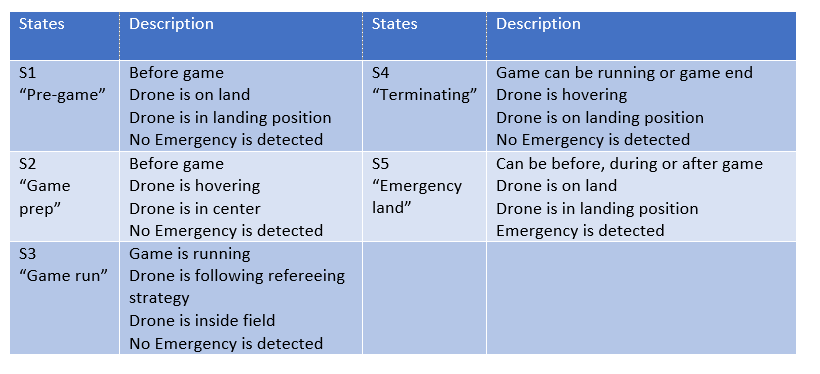

For MSL 2D, the supervisor has 5 states. Transitions between these states are governed by transition conditions. These conditions depend on the values and combinations of so-called transition variables, which are in turn derived from the inputs of the supervisor subsystem.

===== '''States''' =====

The 5 states of the supervisor and their descriptions are presented in Table 1.

Table 1: State descriptions

[[File:T11.PNG]]

===== '''Transition variables''' =====

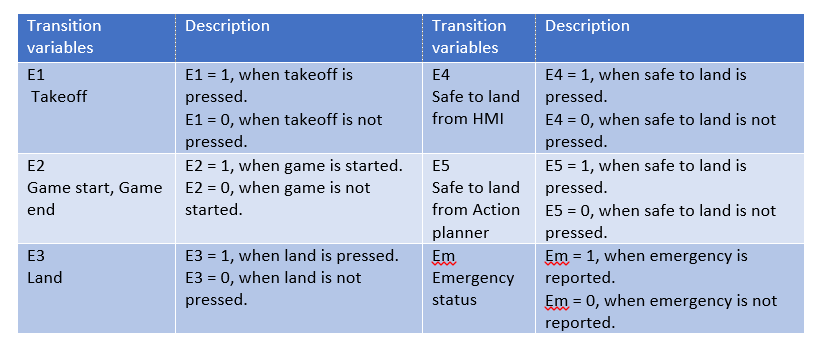

Table 2 shows the description, value and condition of each transition variable.

Table 2: Transition variable descriptions

[[File:T22.png]]

==== '''Transition conditions''' ====

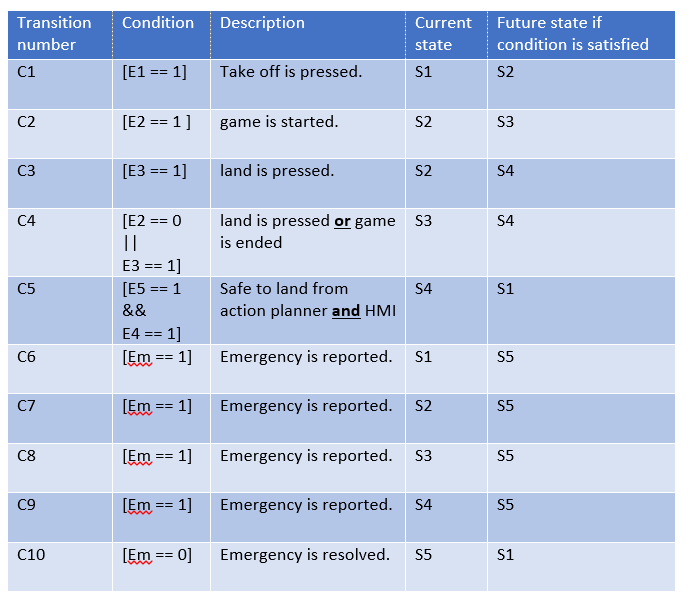

Transition conditions are logical expressions built from the transition variables and the operators || (OR), == (EQUALS) and && (AND). Whenever a condition is true, the supervisor may move from the current state to the next one. Table 3 shows the description of each condition together with its current state and next state.

Table 3: Transition conditions

[[File:T33.PNG]]

==== '''State machine''' ====

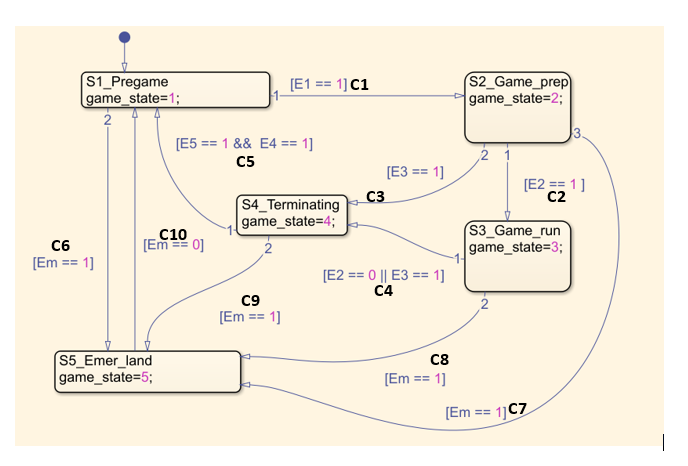

The complete state machine of the supervisor subsystem is shown in Figure 2. The initial state of the system is S1. The figure shows the states, variables and conditions. The output of the supervisor, the high-level control (game) state, is held in the variable game_state, which is sent to the world model and can be read by other subsystems.

[[File:state.png]]

Figure 2: State machine of the supervisor subsystem
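To make the structure of the states, transition conditions and game_state output concrete, the sketch below expresses a five-state supervisor as a transition table evaluated in Python. It is a minimal sketch under stated assumptions, not the Stateflow model: the meaning given to S1-S5 and the variable names (`takeoff_cmd`, `land_cmd`, `rr_safe_to_land`, `ap_safe_to_land`, `game_start`, `half_end`, `emergency`, `landed`) are hypothetical, inferred from the scenarios rather than taken from Tables 1-3. Python's `and` and `or` play the role of `&&` and `||`, and `not x` stands for a comparison such as `x == 0`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical state meanings inferred from the scenarios; Table 1 is authoritative.
S1_LANDED = "S1"         # on the ground, waiting for a game (initial state)
S2_HOVER_START = "S2"    # taken off, flying to / hovering at the start position
S3_REFEREEING = "S3"     # following the refereeing technique
S4_HOVER_LANDING = "S4"  # hovering above the landing position
S5_EMERGENCY = "S5"      # emergency landing

Vars = Dict[str, bool]
VARIABLE_NAMES = ("takeoff_cmd", "land_cmd", "rr_safe_to_land", "ap_safe_to_land",
                  "game_start", "half_end", "emergency", "landed")

# (current state, condition, next state). Rows are checked top to bottom and the
# first match wins, so the emergency rows come first to give them priority.
TRANSITIONS: List[Tuple[str, Callable[[Vars], bool], str]] = [
    (S2_HOVER_START, lambda v: v["emergency"], S5_EMERGENCY),
    (S3_REFEREEING, lambda v: v["emergency"], S5_EMERGENCY),
    (S4_HOVER_LANDING, lambda v: v["emergency"], S5_EMERGENCY),
    (S1_LANDED, lambda v: v["takeoff_cmd"] and not v["emergency"], S2_HOVER_START),
    (S2_HOVER_START, lambda v: v["game_start"], S3_REFEREEING),
    (S2_HOVER_START, lambda v: v["land_cmd"], S4_HOVER_LANDING),
    (S3_REFEREEING, lambda v: v["half_end"] or v["land_cmd"], S4_HOVER_LANDING),
    (S4_HOVER_LANDING, lambda v: v["rr_safe_to_land"] and v["ap_safe_to_land"], S1_LANDED),
    (S5_EMERGENCY, lambda v: v["landed"], S1_LANDED),
]


def make_vars(**overrides: bool) -> Vars:
    """All transition variables default to False unless given explicitly."""
    v = {name: False for name in VARIABLE_NAMES}
    v.update(overrides)
    return v


@dataclass
class Supervisor:
    game_state: str = S1_LANDED  # the system always starts in S1
    world_model: Dict[str, str] = field(default_factory=dict)

    def step(self, variables: Vars) -> str:
        """Evaluate the transition table once and publish game_state."""
        for current, condition, nxt in TRANSITIONS:
            if current == self.game_state and condition(variables):
                self.game_state = nxt
                break
        self.world_model["game_state"] = self.game_state  # read by other subsystems
        return self.game_state
```

Running the game scenario through it (take-off, game start, end of the half, then both safe-to-land checks) brings the supervisor back to S1, and an emergency while the drone is on the ground keeps it in S1 regardless of the take-off command, as in EMS1. Keeping the conditions in a table mirrors the tabular description and makes each row easy to check against its scenario.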

==== '''Reaction to emergency situations''' ====

In MSL 2D, there are two emergency situations: a drone emergency, and an emergency identified by the remote referee's own assessment. In both situations, the drone lands.

==== '''Assumptions''' ====

• The RR must make sure that the robots are in their starting places while the drone is hovering above the starting position.

• The RR must make sure that it is safe to take off before pressing take off in the HMI.

• The only drone emergency situations considered in the system are battery emergencies.

• The RR must make sure there are no obstacles in the airfield or at the landing position.

• The RR must make sure the safety net is closed.

• During game pauses, the drone still follows the refereeing strategy.

• The system's initial state is always S1.

==== '''Technology''' ====

The supervisor was implemented in MATLAB Stateflow [add ref].

==== '''Recommendations''' ====

===== '''Emergency situations''' =====

The supervisor should consider other drone emergency situations. These situations can be identified by testing the system over several test runs. Additional functional safety measures could also be considered for each subsystem.

===== '''Model based supervisor''' =====

With the time available, our team could not model each subsystem separately and synthesize a maximally permissive supervisor. Furthermore, the drone manufacturer used MATLAB Simulink to control the drone, and designing a maximally permissive supervisor in MATLAB is very difficult. We therefore recommend modelling the subsystems with dedicated supervisory control tools and, once the supervisor has been synthesized, coupling it with MATLAB. The main challenges in this approach are modelling, capturing the requirements and supervisor synthesis. [add ref]

===Drone===

====Introduction====

The drone is the main hardware subsystem of the RAS. It includes sensors, a microcontroller, communication ports and mechanical components. The drone used in this project is the latest version of the Avular Curiosity drone, provided by the company Avular. The drone can be programmed with MATLAB Simulink. All other subsystems must eventually be implemented on the drone to achieve the final goal of refereeing the game.

====Architecture constraints====

* '''RAS:'''

The drone must be able to fly autonomously.

The drone is responsible for following the commands of the action planner through the world model.

The sensor data must be accessible to the world model (and, through it, to the other subsystems of RAS).

The drone should provide battery level information to the other subsystems.

It must be possible to mount the footage camera on the drone.

* '''Drone MSL:'''

The drone must be able to fly for 5+2 minutes and report its battery level at all times.

===='''System description'''====

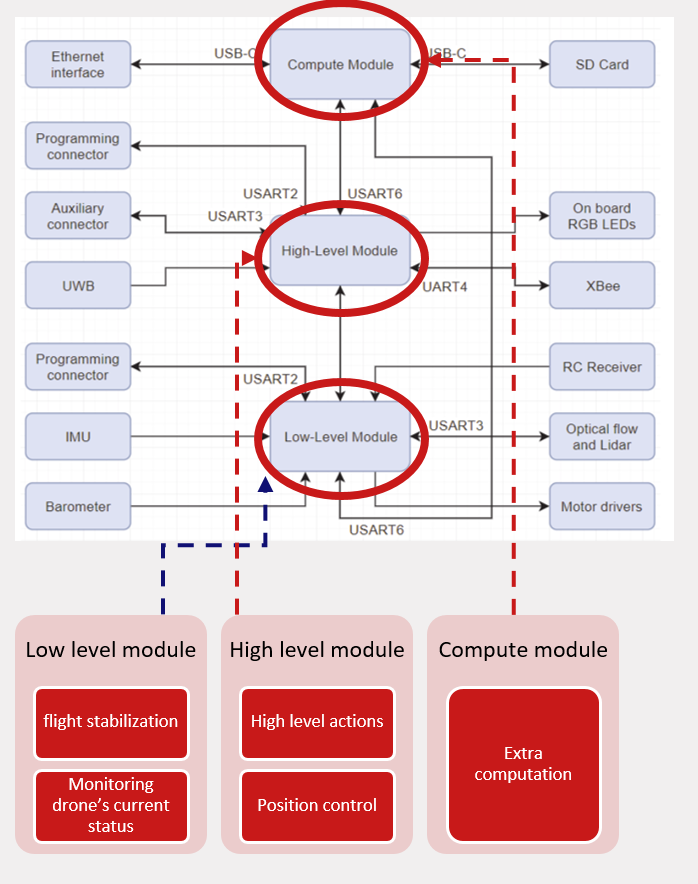

The drone is the main hardware subsystem of the drone referee system and itself consists of several components and subsystems. Its main task is to receive commands in real time from a remote computing module (usually a PC) and to perform the actions required by the action planner while carrying the footage camera. The main challenge is therefore programming the drone and setting up the wireless link that sends data to the microcontroller on the drone. The Avular Curiosity drone can be programmed through three computing modules: the Low-Level Module, the High-Level Module and the Compute Module. Each module is responsible for part of the drone's functionality and is connected to a different set of sensors, actuators or communication devices. These modules, their general functionality and their communication links are shown in the figure below. The High-Level and Low-Level Modules each use a 180 MHz Cortex-M4 processor, and the Compute Module is a Raspberry Pi Compute Module 3.

[[File:Compute modules.PNG]]

The idea is to use the Low-Level Module for calculations related to flight stabilization and for monitoring the current status of the drone, and the High-Level Module for other high-level calculations, such as ''path planning'' or ''position control of the drone'', which may differ depending on the project requirements. The Compute Module can be combined with the High-Level and Low-Level Modules for intensive computations. In the drone referee project it is used only for communication with the footage camera, as described in the camera section.

==== '''Functionality Overview''' ====

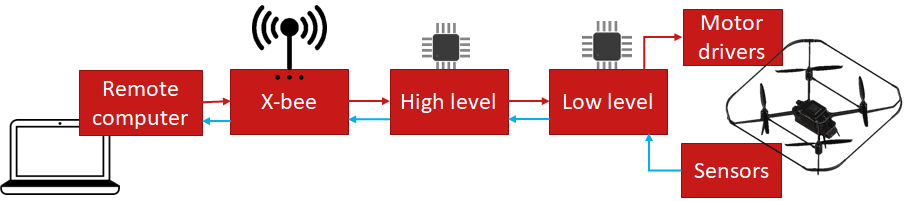

After the Low-Level and High-Level Modules have been programmed, the drone can be communicated with over an XBee radio link using the MAVLink protocol. An overview of this communication process is shown in the figure below.

[[File:Overview_3.png]]
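MAVLink messages travel over the XBee link as small binary frames. To illustrate what the protocol puts on the wire, the sketch below packs a MAVLink v1 HEARTBEAT frame by hand, with the X.25 (CRC-16/MCRF4XX) checksum finished with the message's CRC_EXTRA byte. It is only a protocol sketch: in practice a library such as pymavlink generates these frames, and which MAVLink messages the Avular firmware actually exchanges is not stated here. The function names, the system and component IDs and the field values are placeholders.

```python
import struct

MAVLINK_V1_STX = 0xFE     # start-of-frame marker for MAVLink v1
HEARTBEAT_MSG_ID = 0
HEARTBEAT_CRC_EXTRA = 50  # CRC_EXTRA of HEARTBEAT in the MAVLink common message set


def x25_crc(data: bytes, crc: int = 0xFFFF) -> int:
    """MAVLink's X.25 checksum (CRC-16/MCRF4XX), accumulated byte by byte."""
    for byte in data:
        tmp = (byte ^ (crc & 0xFF)) & 0xFF
        tmp = (tmp ^ (tmp << 4)) & 0xFF
        crc = ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF
    return crc


def heartbeat_frame(seq: int, sysid: int = 1, compid: int = 1) -> bytes:
    # Payload fields in wire order (largest type first): custom_mode (uint32), then
    # type, autopilot, base_mode, system_status, mavlink_version (uint8 each).
    # Placeholder values: type 2 = quadrotor, system_status 4 = active.
    payload = struct.pack("<IBBBBB", 0, 2, 0, 0, 4, 3)
    header = bytes([len(payload), seq & 0xFF, sysid, compid, HEARTBEAT_MSG_ID])
    crc = x25_crc(header + payload)                # checksum excludes the STX byte
    crc = x25_crc(bytes([HEARTBEAT_CRC_EXTRA]), crc)
    return bytes([MAVLINK_V1_STX]) + header + payload + struct.pack("<H", crc)
```

The resulting 17-byte frame (6 header bytes, 9 payload bytes, 2 checksum bytes) is what would be written to the XBee serial port; the receiver recomputes the checksum, including CRC_EXTRA, to reject corrupted or mismatched messages.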

====Technology====

In this project, only the High-Level and Low-Level Modules are used for programming the drone. They are programmed in MATLAB Simulink via an ST-LINK programmer.

===== Flying manually with the remote control =====

====== Diagnosed problems ======

* Hardware: defective battery

* Software: probably the Kalman filter or the low-level control

[[File:ch_1.PNG]]

====== Solutions ======

* New batteries (at least 3)

* More tests to improve the low-level control system

===== Autonomous flight =====

====== Diagnosed problems ======

Incorrect sensor readings (probably the magnetometer)

[[File:ch_2.png]]

====== Solutions ======

* Better sensor calibration

* Use other measurement techniques

==== Localization system, UWB (Ultra-wide band) flight ==== | |||

===== Diagnosed Problems ===== | |||

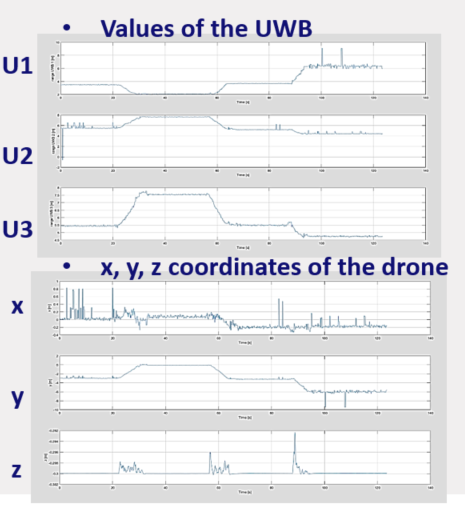

Noisy Ultra-wide band data | |||

[[File:ch_3.PNG]] | |||

===== Solutions ===== | |||

* Better calibration of the UWB | |||

* Apply a median-filter in the MATLAB algorithm | |||
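The median-filter solution proposed above for the MATLAB algorithm can be illustrated with an equivalent causal sliding-window median in Python. The class and function names and the window length of 5 samples are assumptions; the window would need tuning against the UWB update rate, since a longer window rejects longer bursts of outliers but adds a lag of roughly half the window.

```python
from collections import deque
from statistics import median
from typing import Deque, Iterable, List


class MedianFilter:
    """Sliding-window median filter for one noisy UWB range or position channel."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._buf: Deque[float] = deque(maxlen=window)

    def update(self, sample: float) -> float:
        # Keep only the last `window` samples and return their median. Spikes are
        # rejected as long as they make up less than half of the window.
        self._buf.append(sample)
        return median(self._buf)


def filter_series(samples: Iterable[float], window: int = 5) -> List[float]:
    """Apply the filter to a recorded series, sample by sample."""
    f = MedianFilter(window)
    return [f.update(s) for s in samples]
```

Unlike a moving average, the median ignores a single large spike entirely instead of smearing it over the next few samples, which suits the occasional outliers typical of UWB ranging.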

==== Autonomous flight II ====

In this test, the drone was controlled autonomously in the z direction and manually in x, y and yaw. Autonomous control of the z position of the drone was performed successfully.

== | === Camera footage system and HMI === | ||

====Introduction==== | |||

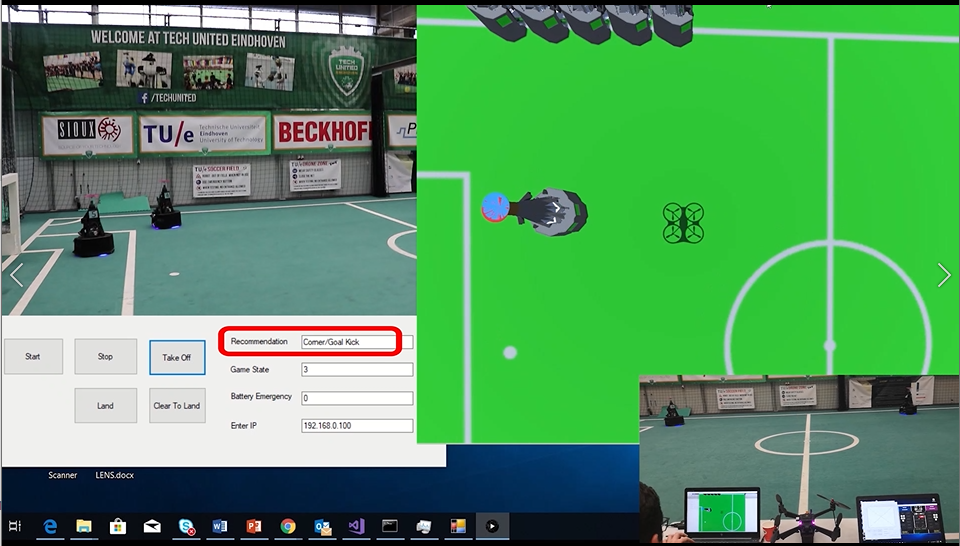

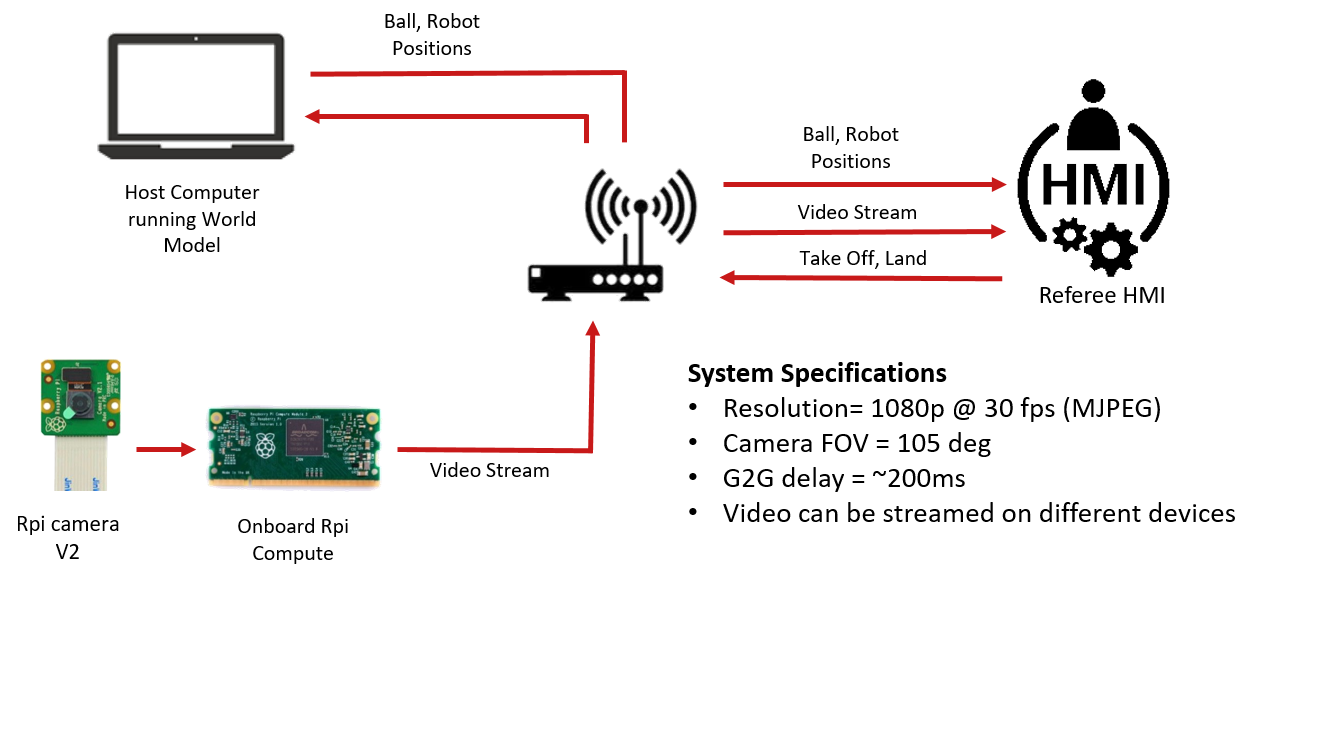

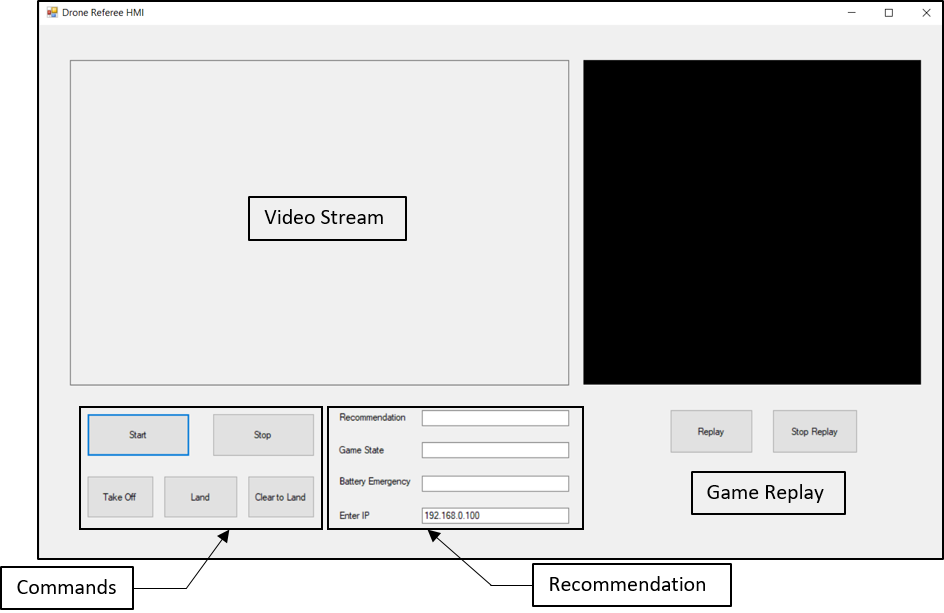

The purpose of the camera footage system and the HMI is to provide a video stream on the game referee’s console. The camera attached to the drone should provide enough situational awareness to enable the referee to take decisions. Additionally, the referee should be provided with recommendations, such as ball out of the pitch or a collision between players, to help him or her make better decisions.

====Requirements and architecture constraints====

After discussion with the stakeholders and team members, the following requirements and constraints were imposed on the system:

1. The referee’s HMI shall display the raw video from the received video stream

The HMI should show the video stream of the game on the referee’s console.

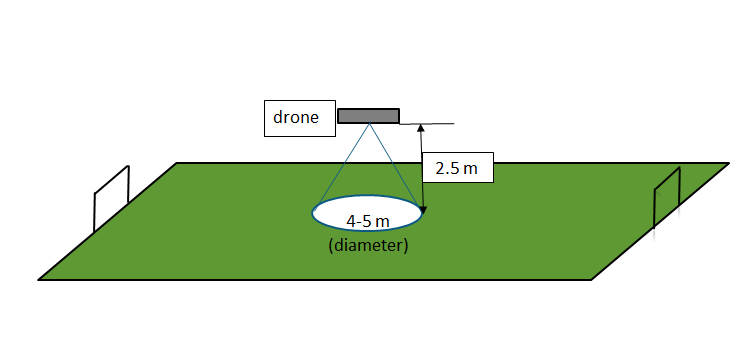

2. The “Game Footage Camera” parameters (FOV, depth of field, etc.) shall be chosen to cover a 4-5 m field of view (FOV) around the ball at the nominal allowable flight height in each match

[[File:Camera_FOV.png|frame|centre|alt=Field of view of the camera|Fig. Field of view (FOV) of the camera]]

It is important for the referee to see enough area around the ball to make a proper judgement. After discussion with the experienced robot soccer referee, it was decided that a 4-5 m field of view around the ball is preferable. The field of view depends on the height at which the drone is flying.
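The dependence on flight height follows from pinhole geometry: for a camera looking straight down over flat ground with angle of view α, the covered width at height h is w = 2h·tan(α/2), so h = w / (2·tan(α/2)). The Python sketch below is a minimal illustration assuming a distortion-free lens and a level drone; the 62.2° in the example is the nominal horizontal angle of view of the stock Raspberry Pi Camera Module V2 lens and serves only as an example, since the lens actually used may differ.

```python
import math


def covered_width(height_m: float, angle_of_view_deg: float) -> float:
    """Ground width seen by a downward-looking pinhole camera at a given height."""
    return 2.0 * height_m * math.tan(math.radians(angle_of_view_deg) / 2.0)


def flight_height_for_fov(required_width_m: float, angle_of_view_deg: float) -> float:
    """Height at which the camera covers exactly `required_width_m` on the ground."""
    if not 0.0 < angle_of_view_deg < 180.0:
        raise ValueError("angle of view must be between 0 and 180 degrees")
    return required_width_m / (2.0 * math.tan(math.radians(angle_of_view_deg) / 2.0))


# Example: 4 m and 5 m of coverage with an assumed 62.2 degree angle of view.
for width in (4.0, 5.0):
    print(f"{width:.0f} m -> fly at {flight_height_for_fov(width, 62.2):.2f} m")
```

Using the narrower of the horizontal and vertical angles of view guarantees the required coverage in both image directions.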

3. The overall streaming delay (from the real scene viewed by the camera to the video stream displayed for the remote referee (RR)) shall be less than 0.5 s

In sports, it is important for the referee to make decisions as soon as possible. Therefore, the referee should see the video with as little delay as possible. Based on the feedback received from the experienced referee, the delay should not exceed 0.5 seconds.

4. Any image processing (detection, distortion correction, boundary marking, etc.) shall be done on external processors

To minimize the on-board footprint in terms of size, processing power and energy consumption, all image processing should be done on an external processor.

5. Each video stream shall take less than 10% of the network link bandwidth

The system needs to scale to multiple cameras and referee consoles. Therefore, each video stream should not consume more than 10% of the network bandwidth.

6. Any on-board camera on any drone shall stream raw video to the referee’s HMI using a network connection

The camera should use a network connection for streaming in order to make the system flexible and scalable.

7. The RR laptops and any drones within the DR system shall be accessible to each other over a wireless network connection

====System description====

The figure below shows the camera and referee HMI system.

[[File:System_Overview.png|frame|centre|alt=Camera and HMI system overview|Fig. Camera and Human Machine Interface (HMI)]]

The system consists of a camera sensor connected to a Raspberry Pi, which streams the video to the HMI. The camera is mechanically mounted on the drone with the lens looking vertically downwards. The HMI is programmed in C#, which makes it portable to different devices running the Windows operating system. The HMI also communicates with the world model running on the host computer to receive the ball, robot and drone position streams. This information can be used to give useful recommendations to the referee. The current HMI can recommend that the referee award a corner, throw-in or goal kick when the ball goes out of the pitch. The referee can also send commands to take off or land the drone from the HMI. The game states described in section 4.4 are also displayed on the HMI.

====Subsystems====

=====Raspberry Pi with camera and lenses=====

[[File:Rpi_Camera.png|frame|centre|alt=Raspberry Pi with camera module V2|Fig. Raspberry Pi with camera module V2]]

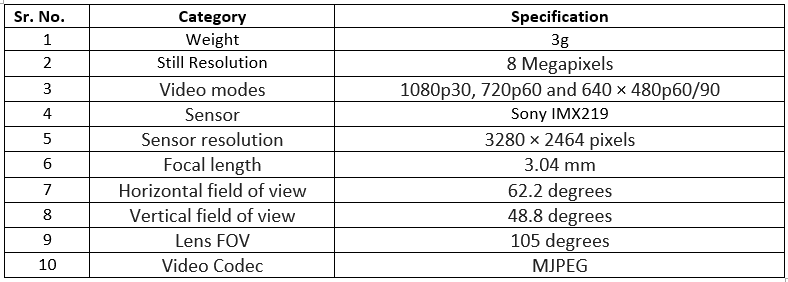

The Raspberry Pi camera module was chosen for the following reasons:

• Modularity: the Pi camera can be fitted with different lenses, which offer different fields of view

• Flexibility: the Raspberry Pi can be configured to work with different video codecs, which can be adapted to different hardware

• Community support: the Raspberry Pi has a large user community

• Availability: the Avular drone has a Raspberry Pi Compute Module on board, which supports the camera and eliminates the need for additional hardware

The specifications of the camera and lens are given below.

[[File:Camera_Specs.PNG|frame|centre|alt=Camera specifications|Table. Camera specifications]]

=====Human Machine Interface (HMI)=====

[[File:GUI.png|frame|centre|alt=Human Machine Interface|Fig. Human Machine Interface]]

The figure above shows the Human Machine Interface (HMI) for the drone referee. The HMI is programmed in C# and communicates with the world model running in MATLAB over the network. The application can be ported to different devices running Windows. The HMI has the following functions:

• Display the video stream from the camera

• Provide a replay of the last 30 seconds on demand

• Send take-off, clear-to-land and land commands to the drone

• Display recommendations such as goal kick, corner and throw-in

• Display the state of the game
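The 30-second replay amounts to a fixed-size buffer that always holds the most recent frames. The HMI itself is written in C#; the Python class below is only a minimal sketch of the idea, assuming frames arrive at a known frame rate and are stored as already-encoded objects (the frame rate in the example is hypothetical).

```python
from collections import deque
from typing import Any, List, Tuple


class ReplayBuffer:
    """Keeps the most recent `seconds` of video frames for on-demand replay."""

    def __init__(self, fps: float, seconds: float = 30.0):
        capacity = max(1, int(round(fps * seconds)))
        self._frames = deque(maxlen=capacity)  # old frames fall out automatically

    @property
    def capacity(self) -> int:
        return self._frames.maxlen

    def push(self, timestamp: float, frame: Any) -> None:
        self._frames.append((timestamp, frame))

    def replay(self) -> List[Tuple[float, Any]]:
        """Snapshot of the buffered frames, oldest first."""
        return list(self._frames)
```

Storing encoded frames rather than raw images keeps 30 seconds of video small enough to hold in memory on the referee laptop.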

====Interfaces====

=====Remote Referee=====

The supervisor receives Take Off, Clear to Land and Land commands from the remote referee.

=====World Model=====

The HMI receives Game States and Physical States (extracted from positioning data) from the world model.

====Technology====

The HMI is programmed in C# to make it portable and to avoid a dependency on MATLAB.

===Localization systems===

==== Function ====

Provides the positions of the ball, the individual robots and the drones to the world model.

==== '''Ball localization system''' ====

===== '''Requirements and architecture constraints''' =====

The positioning system shall be able to:

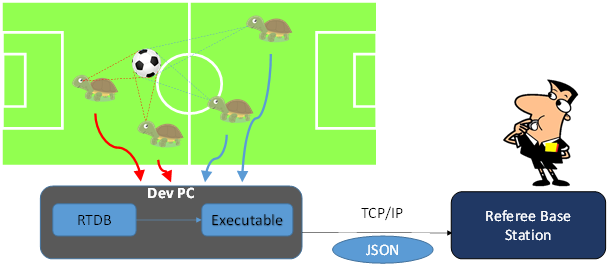

===== '''System description''' =====

In a robot soccer team, each of the turtles (soccer robots) and the team’s DevPC share data using a real-time database (RTDB). The turtles share a data set containing the robot ID, robot position, ball position, ball velocity and ball confidence. The DevPC hosts the RTDB server.

According to the MSL rules, each participating team is required to log these data to the referee base station.

===== '''Overview''' =====

[[File:Overview.png]]

[[File:ball_params.png|Positioning system parameters]]

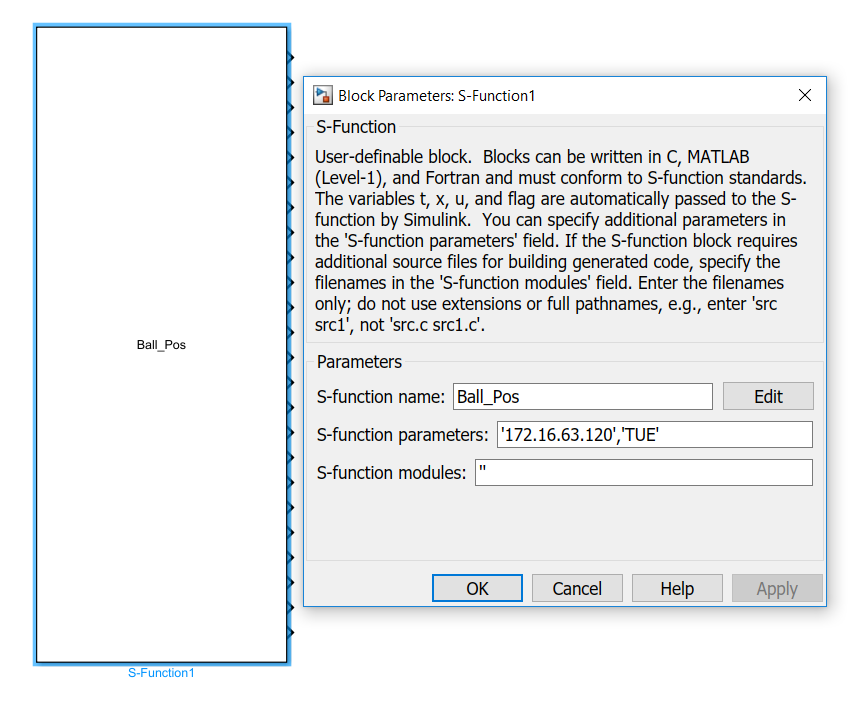

Integrated positioning system with world model

The Simulink block takes the IP address of the TCP server and the team name as parameters, as shown in the figure, and outputs the ball position with the highest confidence. In case of equal confidence, the value from the robot with the lower ID is used. The block also outputs the positions of the individual robots.
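The selection rule of the Simulink block can be sketched in a few lines. This Python version is an illustration only: reading the data from the TCP server is omitted, and the field names of `TurtleSample` are hypothetical rather than the RTDB's actual names.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

Vec2 = Tuple[float, float]


@dataclass(frozen=True)
class TurtleSample:
    """One robot's entry in the shared data set (field names are illustrative)."""
    robot_id: int
    robot_position: Vec2
    ball_position: Vec2
    ball_velocity: Vec2
    ball_confidence: float


def select_ball(samples: Sequence[TurtleSample]) -> Optional[Vec2]:
    """Ball position reported with the highest confidence; ties go to the lower robot ID."""
    if not samples:
        return None
    best = min(samples, key=lambda s: (-s.ball_confidence, s.robot_id))
    return best.ball_position


def robot_positions(samples: Sequence[TurtleSample]) -> Dict[int, Vec2]:
    """Map each robot ID to its reported position."""
    return {s.robot_id: s.robot_position for s in samples}
```

Sorting on the tuple (negative confidence, robot ID) expresses both the primary criterion and the tie-break in a single key.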

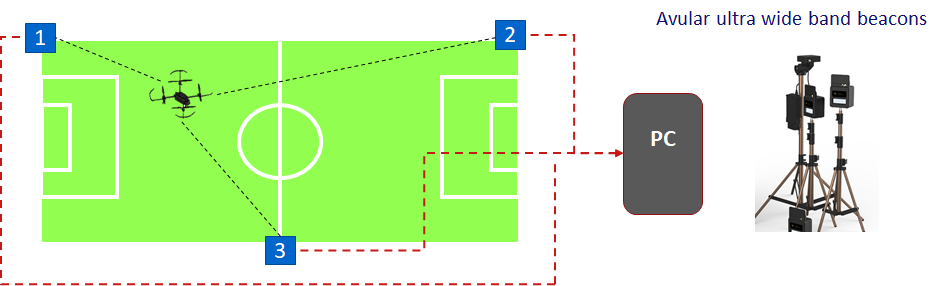

==== '''Drone localization system''' ====

===== '''Drone localization system overview''' =====

[[File:Overview_2.png]]

Overview of the drone localization system

===== '''Technology''' =====

The system was implemented using the Avular ultra-wideband (UWB) system.

=== Action Planner ===

==== Introduction ====

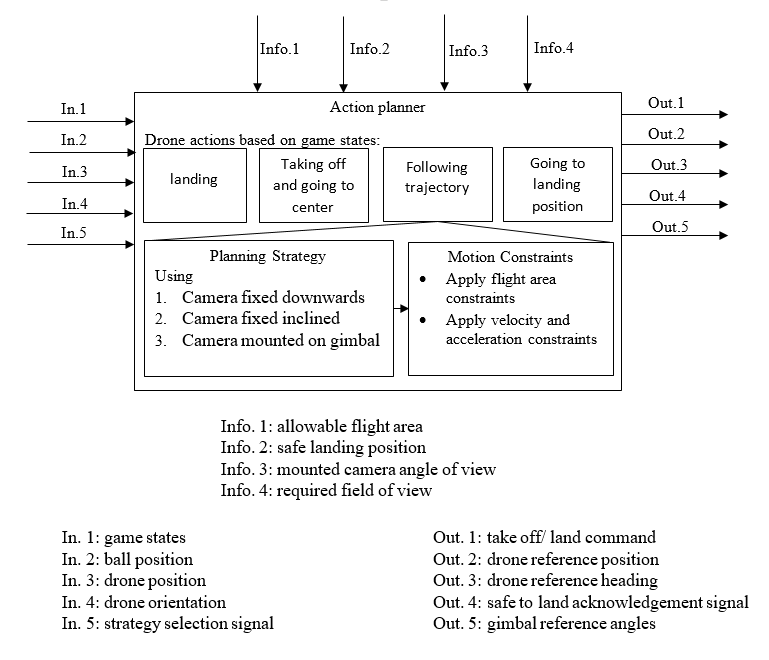

This section presents the action planner used for the drone referee system. The action planner is the part of the system responsible for sending the proper actions to the drone. These actions include take-off and landing commands, as well as generating the reference trajectory for the drone in flight. The action planner takes these decisions based on knowledge of the game states and the positions of the objects of interest (in this case, the drone and the ball).

==== Functionality ====

The action planner is concerned with three main functionalities:

1. Controlling the take-off and landing of the drone based on the current game state.

2. While in the running game state, positioning the drone such that the remote referee has a good view of the game through the on-board camera.

3. Taking the safety constraints into account while performing the above two functionalities.

==== Architecture and Requirements Constraints ====

The functional requirements of the action planner are given in the architecture section. Here, some key requirements that come from the referee and have a large impact on the implementation of the action planner are recalled.

In the previous section, we mentioned that one of the functionalities of the action planner is positioning the drone such that the remote referee has a good view of the game through the on-board camera. A good view is assessed based on the requirements taken from the remote referee. The key requirements are:

1. The video should mainly provide a view of the ball (not the robots or anything else).

2. The video should give a top view of the field.

3. The ball should leave the viewport as rarely as possible (this is usually a problem when the ball is shot too far).

==== Inputs and Outputs ====

The action planner receives online inputs and sends online outputs. It also receives some offline configurable information. These inputs, outputs and offline information are discussed in the following subsections and are also represented in the action planner structure figure in the next section.

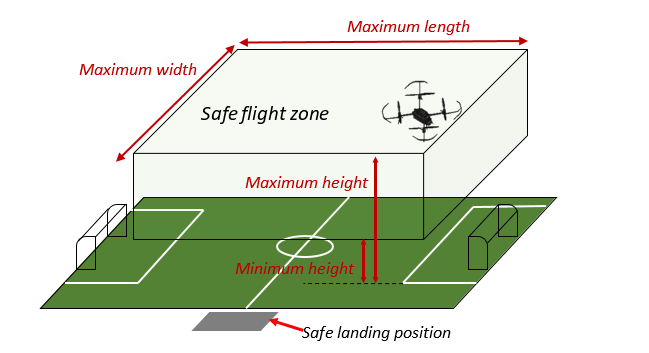

===== Offline Configurable Information =====

The action planner receives a priori information about:

1. The allowable flight area during the game (for safety reasons and physical limitations).

This is specified by a maximum length and width, in addition to a maximum and minimum flight height (see figure below).

2. The safe landing position (see figure below).

3. The camera’s angle of view.

4. The required field of view around the ball.

These data can be configured before the game.

[[File:Safety_configutations.PNG|frame|centre|alt=Safety configurations|Fig. Safety Configurations]]

===== Online Inputs =====

At run time, the action planner receives information about:

1. Game states

2. Ball position

3. Drone position

For other strategies, the action planner also takes the following inputs:

4. Drone orientation

5. A selection signal between planning strategies

===== Online Outputs =====

At run time, the action planner gives the following outputs:

1. Takeoff/land signal for the drone.

2. The reference position for the drone.

3. The reference heading for the drone.

4. An acknowledgment signal indicating that the drone is hovering over the landing position and is thus safe to land.

For other strategies, the action planner also outputs:

5. Gimbal reference orientation.

==== System Description ====

The action planner structure is shown in the figure below.

[[File:Action_planner_structure.PNG|frame|centre|alt=Action planner structure|Fig. Action Planner Structure]]

The outermost structure of the action planner differentiates between the different drone actions based on the received game state.

The most abstract action set is given as:

1. Landing

2. Taking off and going to the center

3. Following trajectory

4. Going to landing position

Each of these actions is subdivided into further states and actions. Particular attention is given to the action of following the trajectory. This can be decomposed into generating the reference point based on the planning strategy and applying the motion constraints for trajectory generation. Details are given in the following subsections.

===== Planning Strategy =====

In implementing the planning strategies, the key requirements mentioned in section 3 are taken into account. Three planning strategies were implemented towards an enhanced video stream that satisfies most of the requirements. The three strategies assume an on-board camera in one of the following configurations:

1. Camera fixed looking downwards

2. Camera fixed inclined

3. Camera mounted on a gimbal system

It is noteworthy that the first strategy, using a camera looking downwards, is the one that was considered through architecture and implementation (one of the reasons being the presence of such a camera on the Avular Curiosity drone used). The other two strategies are considered for comparison and recommendation, but require further work towards implementation. In the following subsections, each strategy is discussed briefly and illustrated with a simulation video.

====== Strategy with camera fixed looking downwards ======

The first strategy considers a camera mounted on the lower part of the drone looking downwards. This camera is present on board the Avular Curiosity drone. Given the requirements of the referee, the strategy can be summarized in the following points:

1. The ball position is passed as the drone’s reference set point in the horizontal plane.

2. Low-pass filtering is used to minimize the drone’s motion for small ball tracking errors.

3. The reference flight height and heading are kept fixed.

4. The fixed value for the height is calculated based on the prior information of the mounted camera’s angle of view and the required field of view.

5. The fixed value of the heading is kept at zero degrees.

This strategy satisfies the first two key requirements of focusing on the ball (not other objects) and maintaining an almost top view of the field (except when the drone’s pitch and roll angles are far from zero). However, a drawback is that the ball leaves the field of view when it is shot too far. The video in this link simulates the strategy [https://www.youtube.com/watch?v=R_J6VmtNcP0].
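The points above amount to a very small set-point generator. The Python sketch below is not the Simulink/Stateflow implementation; it assumes a discrete first-order low-pass filter with a hypothetical gain `alpha` applied once per planner cycle, and takes the fixed flight height (computed from the angle of view and the required field of view) as an input.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DownwardCameraPlanner:
    """Set-point generator for the downward-looking camera strategy."""
    alpha: float            # low-pass gain in (0, 1]; hypothetical tuning parameter
    flight_height: float    # fixed reference height
    x: float = 0.0          # filtered horizontal set point
    y: float = 0.0

    def step(self, ball_x: float, ball_y: float) -> Tuple[float, float, float, float]:
        """Return (x_ref, y_ref, z_ref, heading_ref) for one planner cycle."""
        # First-order low-pass filter: small, fast ball movements are smoothed out.
        self.x += self.alpha * (ball_x - self.x)
        self.y += self.alpha * (ball_y - self.y)
        return self.x, self.y, self.flight_height, 0.0  # heading fixed at zero
```

A smaller `alpha` gives a calmer drone and a steadier image but lets the ball drift further from the image center before the drone catches up.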

====== Strategy with camera fixed inclined ======

The second strategy considers a camera mounted on the lower part of the drone but inclined from the vertical axis by 45 degrees. The strategy is therefore different and is summarized in the following points:

1. The reference flight height is kept fixed.

2. The fixed value for the height is calculated based on the prior information of the mounted camera’s angle of view and the required field of view.

3. The reference set point in the horizontal plane is always a point on a circle above the ball, centered on the ball, with a radius equal to the flight height.

4. Of the points on this circle, the one closest to the current drone position is used as the set point.

5. Low-pass filtering is again used to minimize the drone’s motion for small ball tracking errors.

6. The reference heading angle is calculated as the angle between the zero heading line and the projection, on a horizontal plane, of the line connecting the drone to the ball.

This strategy satisfies the first and third key requirements to some extent, except that the ball still leaves the viewport a few times due to the slow heading dynamics. However, it does not satisfy the key requirement of a top view of the field. This top view is preferred by the referee and should make it easier to take correct decisions. Not having a top view also leads to occlusion when one of the robots stands between the camera and the ball. The proposed algorithm would have to be modified to try to avoid occlusion based on knowledge of the robots’ positions, and even then, avoiding occlusion completely is questionable. The video in this link simulates the strategy [https://www.youtube.com/watch?v=GnpSzbacMVk].
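Points 3, 4 and 6 of this strategy reduce to a little plane geometry, sketched below in Python. With a 45° tilt, the optical axis meets the ground at a horizontal distance equal to the flight height, which is why the circle radius equals the height. This is an illustration under assumptions: headings are measured in the field frame from the x axis (taken as the zero heading line), and the low-pass filtering of point 5 is left out.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def inclined_camera_reference(drone_xy: Vec2, ball_xy: Vec2,
                              flight_height: float) -> Tuple[Vec2, float]:
    """Unfiltered set point and heading for a camera tilted 45 degrees from vertical."""
    dx = drone_xy[0] - ball_xy[0]
    dy = drone_xy[1] - ball_xy[1]
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        ux, uy = 1.0, 0.0  # drone right above the ball: any direction is equally close
    else:
        ux, uy = dx / dist, dy / dist
    # Closest point on the circle of radius flight_height centered on the ball.
    setpoint = (ball_xy[0] + flight_height * ux, ball_xy[1] + flight_height * uy)
    # Heading: horizontal direction from the drone to the ball, from the x axis.
    heading = math.atan2(ball_xy[1] - drone_xy[1], ball_xy[0] - drone_xy[0])
    return setpoint, heading
```

Choosing the closest point on the circle keeps the drone's travel short, but it also means the viewing direction is dictated by where the drone happens to be, which is one source of the occlusion problem described above.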

====== Strategy with camera mounted on gimbal ======

The third strategy considers a camera mounted on a gimbal system fixed to the drone’s lower part. This has the advantage of extra degrees of freedom for the camera orientation. In terms of drone movement, the proposed strategy is almost the same as the first strategy, but in addition it uses the gimbal angles to avoid losing the ball when it is shot far. For completeness, the strategy is as follows:

1. The ball position is passed as the drone’s reference set point in the horizontal plane.

2. Low-pass filtering is used to minimize the drone’s motion for small ball tracking errors.

3. The reference flight height and heading are kept fixed.

4. The fixed value for the height is calculated based on the prior information of the mounted camera’s angle of view and the required field of view.

5. The fixed value of the heading is kept at zero degrees.

6. The gimbal rotation first compensates for the drone orientation to ensure that the camera has an exact top view.

7. In addition, the gimbal uses two angles, yaw and pitch, to always look at the ball.

8. The gimbal reference yaw angle is calculated as the angle between the zero gimbal yaw angle line and the projection, on a horizontal plane, of the line connecting the drone to the ball.

9. The gimbal reference yaw angle is only changed if the projection of the line connecting the drone to the ball on a horizontal plane is longer than 1 meter. Otherwise, it is kept at its last value.

10. The gimbal reference pitch angle is calculated as the angle between the line connecting the drone to the ball and the vertical axis.

11. The gimbal reference pitch angle is used only when the projection of the line connecting the drone to the ball on a horizontal plane is longer than 1 meter. Otherwise, it is kept fixed at zero.

This strategy satisfies almost all of the referee’s key requirements. It provides a top view of the field when the ball does not move too far, while adjusting the gimbal to keep the ball in the viewport when the ball is shot too far. The video in this link simulates the strategy [https://www.youtube.com/watch?v=f742MX8Kc8c].

The strategy still needs further consideration. The gimbal dynamics are not considered at this point; they are only approximated by a low-pass filter applied after the reference gimbal angles. A correct simulation of the gimbal dynamics, and a study of their effect on stability, might be needed. Furthermore, the method can be enhanced by using the heading angle in combination with the gimbal rotation. Also, instead of using the gimbal’s pitch and yaw angles, it may be possible to use the pitch and roll angles together with some image processing (to invert the image when it is upside down). This has to be tried to see whether it provides a better view.
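Points 7 to 11 of the gimbal strategy can be expressed compactly, as in the Python sketch below. It is illustrative only: the attitude compensation of point 6 and the gimbal dynamics are left out, the ball is assumed to lie on the ground (z = 0), and the zero gimbal yaw line is assumed to coincide with the field x axis because the drone heading is held at zero.

```python
import math
from typing import Tuple


class GimbalReference:
    """Gimbal yaw/pitch references for the gimbal strategy (points 7 to 11)."""

    def __init__(self, threshold_m: float = 1.0):
        self.threshold_m = threshold_m  # horizontal distance threshold (1 m in the list)
        self.yaw = 0.0                  # last yaw reference, kept when the ball is close

    def update(self, drone_xyz: Tuple[float, float, float],
               ball_xy: Tuple[float, float]) -> Tuple[float, float]:
        """Return (yaw_ref, pitch_ref) in radians."""
        dx = ball_xy[0] - drone_xyz[0]
        dy = ball_xy[1] - drone_xyz[1]
        horizontal = math.hypot(dx, dy)
        if horizontal > self.threshold_m:
            self.yaw = math.atan2(dy, dx)
            # Angle between the drone-to-ball line and the vertical axis.
            pitch = math.atan2(horizontal, drone_xyz[2])
        else:
            pitch = 0.0  # ball close below the drone: keep the top view
        return self.yaw, pitch
```

Holding the last yaw inside the 1 m radius avoids the yaw reference spinning erratically when the ball passes almost directly below the drone, where the direction to the ball is ill-defined.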

===== Motion Constraints =====

After the set point is determined by the planning strategy, the trajectory is generated by the low-pass filtering already mentioned, in addition to position/velocity/acceleration saturation to ensure safe flight. Up to this point, only the flight area (drone position) constraint is configurable. It is easy, and recommended, to make the maximum velocity and acceleration values configurable as well.
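The saturation stage can be sketched per axis as below. This Python class is an illustration under assumptions, not the Simulink implementation: the limits are hypothetical constructor parameters (which also illustrates making velocity and acceleration configurable), and besides plain clipping it adds a braking-distance term, sqrt(2·a_max·|e|), so that the limited reference starts decelerating before it reaches the set point. Clipping velocity and acceleration alone tends to make the reference oscillate around a step target.

```python
import math
from dataclasses import dataclass


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


@dataclass
class AxisLimiter:
    """Position/velocity/acceleration saturation for one axis of the reference."""
    pos_min: float
    pos_max: float
    v_max: float
    a_max: float
    dt: float
    pos: float = 0.0
    vel: float = 0.0

    def step(self, target: float) -> float:
        target = clamp(target, self.pos_min, self.pos_max)  # flight-area limit
        error = target - self.pos
        # Requested speed: capped by v_max, by the braking-distance speed, and by
        # |error|/dt so the requested velocity alone would not overshoot in one step.
        speed = min(self.v_max,
                    math.sqrt(2.0 * self.a_max * abs(error)),
                    abs(error) / self.dt)
        v_des = math.copysign(speed, error)
        # Acceleration limit: velocity may change by at most a_max*dt per step.
        dv = clamp(v_des - self.vel, -self.a_max * self.dt, self.a_max * self.dt)
        self.vel += dv
        self.pos = clamp(self.pos + self.vel * self.dt, self.pos_min, self.pos_max)
        return self.pos
```

Because of the discrete time step, a small overshoot can still occur near the set point; it dies out after a few cycles.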

==== Technology ====

The action planner is implemented in MATLAB/Simulink/Stateflow. The landing, take-off and ball-following actions are implemented in Stateflow because they involve drone states and transitions between them, which are easier to describe in Stateflow. The strategy and motion constraints are implemented in Simulink, with MATLAB functions where convenient. Implementation details can be found in the attached files.

== Integration plan ==

=== Levels ===

Level 0 before world model integration (highest priority)

Level 1 (two system elements together), 6 possible integrations

Level 2 (three system elements together), 4 possible integrations

Level 3 (four system elements together), 1 possible integration

Level 4 (five system elements together), 1 possible integration
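The number of integrations per level is a binomial coefficient: choosing k of the four Level 0 results gives C(4, k) combinations, i.e. 6, 4 and 1 for k = 2, 3 and 4, and Level 4 adds the world model to the single four-element combination. The short Python script below simply enumerates the combinations as a cross-check of the lists given for each level.

```python
from itertools import combinations
from math import comb

# The four Level 0 results that are combined at Levels 1 to 3.
ELEMENTS = [
    "Supervisor",
    "HMI with camera",
    "Linked action planner",
    "Position system (ball & robot & drone)",
]


def integrations(k: int) -> list:
    """All ways of integrating k of the four elements, in list order."""
    return [" + ".join(group) for group in combinations(ELEMENTS, k)]


for level, k in ((1, 2), (2, 3), (3, 4)):
    print(f"Level {level}: {comb(len(ELEMENTS), k)} possible integrations")
    for line in integrations(k):
        print("  " + line)
```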

=== '''Level 0 before world model integration (highest priority)''' ===

HMI + Camera = HMI with camera (chosen by team)

Action planner + drone = Linked action planner (should be implemented by Avular)

Ball & Robot from Tech United + drone from Avular = Position system (ball & robot & drone)

=== '''Level 1 (two system elements together), 6 possible integrations''' ===

Supervisor + HMI with camera

Supervisor + Position system (ball & robot & drone)

Supervisor + Linked action planner

Position system (ball & robot & drone) + HMI with camera

Position system (ball & robot & drone) + Linked action planner

Linked action planner + HMI with camera

=== '''Level 2 (three system elements together), 4 possible integrations''' ===

Supervisor + HMI with camera + Linked action planner

Supervisor + HMI with camera + Position system (ball & robot & drone)

Supervisor + Linked action planner + Position system (ball & robot & drone)

Linked action planner + HMI with camera + Position system (ball & robot & drone)

=== '''Level 3 (four system elements together), 1 possible integration''' ===

Supervisor + HMI with camera + Linked action planner + Position system (ball & robot & drone)

=== '''Level 4 (five system elements together), 1 possible integration''' ===

Supervisor + HMI with camera + Linked action planner + Position system (ball & robot & drone) + World Model GOAL!

== Simulation ==

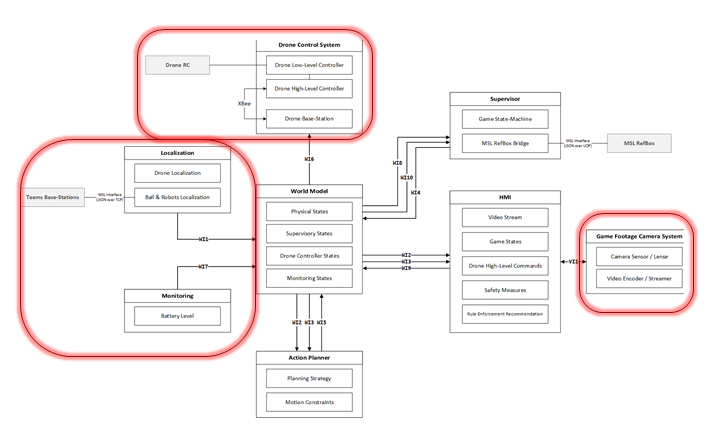

A software simulation has been implemented to test the system. In the simulation, the hardware parts are simulated. These parts are highlighted in the context diagram below.

[[File:Sim_1.png]]

=== '''Drone''' ===

Simulated using the dynamic equations of motion.

Instead of coming from the sensors, feedback is received directly from the dynamic equations.

Instead of being sent to the brushless motor drivers, the PWM (pulse-width modulation) signals are fed into the dynamic equations.
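To give an idea of how the dynamic equations replace both the sensors and the motor drivers, the Python sketch below reduces the drone to its vertical axis: the PWM duty cycle goes in, and the height that a sensor would have measured comes out. It is a minimal illustration, not the project's Simulink model; the mass, the maximum thrust and the linear PWM-to-thrust map are hypothetical, and the equation m·z'' = T - m·g is integrated with semi-implicit Euler.

```python
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2


@dataclass
class VerticalDroneModel:
    """1-D (altitude only) point-mass stand-in for the drone dynamics."""
    mass_kg: float
    max_thrust_n: float   # total thrust at 100 % duty cycle (hypothetical)
    z: float = 0.0        # height above the field [m]
    vz: float = 0.0       # vertical velocity [m/s]

    def step(self, pwm_duty: float, dt: float) -> float:
        """Advance one time step; return the simulated height (the 'sensor' feedback)."""
        duty = min(max(pwm_duty, 0.0), 1.0)
        thrust = duty * self.max_thrust_n          # assumed linear PWM-to-thrust map
        az = thrust / self.mass_kg - GRAVITY
        self.vz += az * dt                         # semi-implicit Euler integration
        self.z += self.vz * dt
        if self.z < 0.0:                           # the drone cannot go below the ground
            self.z, self.vz = 0.0, 0.0
        return self.z
```

At the hover duty cycle (thrust equal to weight) the height stays constant, which is a quick sanity check for any such model.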

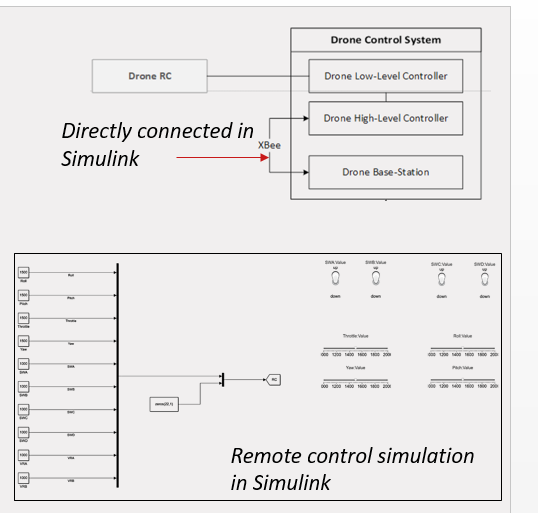

=== '''Remote control''' ===

The remote control is simulated in Simulink.

Instead of sending data over XBee, the signals are connected directly in Simulink.

[[File:Sim_2.PNG]]

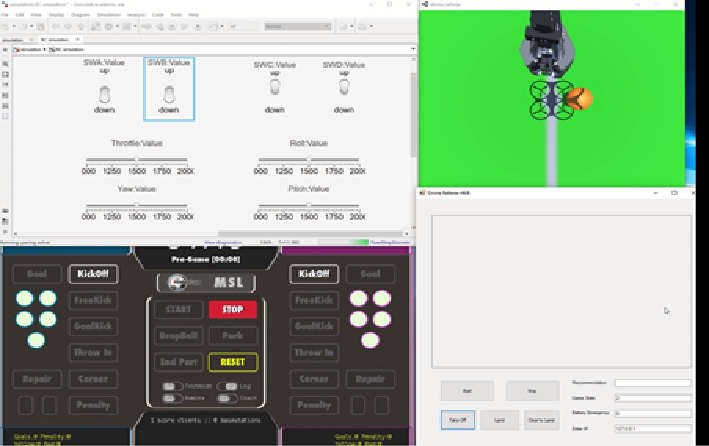

=== '''Features''' ===

Simulated remote control

MSL referee box

Simulated viewport of the camera

Remote referee HMI without live camera feed

[[File:Sim_3.png]]

=== '''Simulation video''' ===

For the video, follow this link:

[http://youtu.be/bA-McPrU_gQ]

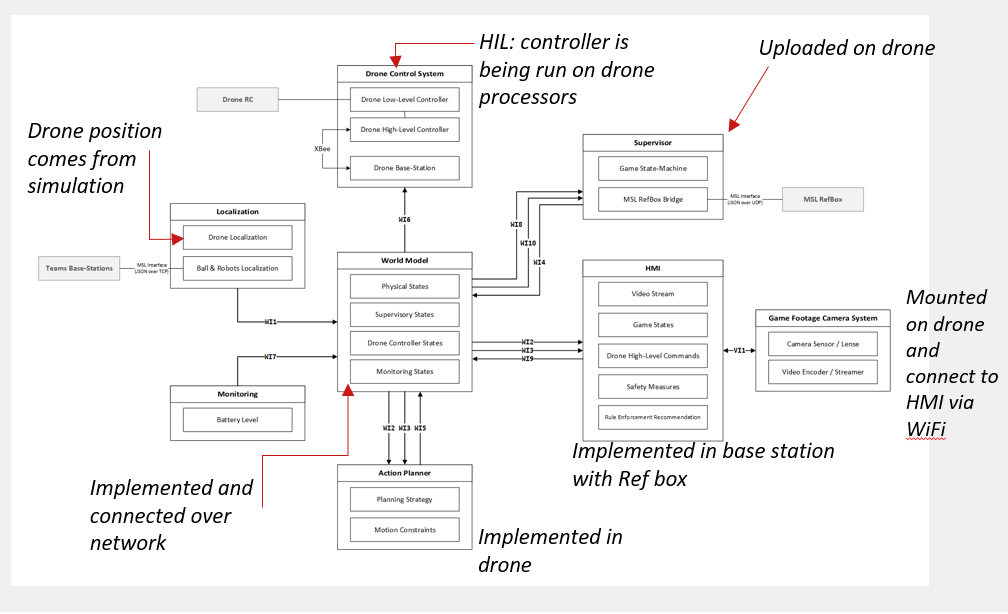

== Full system integration with hardware-in-the-loop (HIL) drone ==

=== '''Purpose''' ===

Test all subsystems except the drone while an MSL game is running.

Provide a proof of concept of the whole refereeing system.

Act as a mitigation action if the Avular hardware fails.

=== '''Test conditions''' ===

[[File:te_1.PNG]]

=== '''Test record''' ===

[[File:teest.PNG]]

For the video, follow this link:

[https://www.youtube.com/watch?v=mseOMjUeobc]

== Recommendations ==

The biggest challenge of the project was to make the hardware, that is, the drone and the sensors, reliable and functional.

We expected this to happen and included it in our risk mitigation plan. However, we believe that these problems will recur in the future due to the nature of the project.

Our recommendations are:

1. Extend the duration of the project (minimum 12 weeks)

2. Organize a week of interaction between the MSD groups of successive years so that they can continue working on the same project with a clear handover.

Latest revision as of 10:13, 27 June 2019

Introduction

Football is a long-lived and well-liked sport which is a widespread passion nowadays. Consequently, it represents a growing industry with a huge market size. On the other hand, technology is advancing rapidly in many areas of human life, and sports, and football in particular, are no exception. Some examples of the new technologies used are: automatic goal detection [1], use of trackers to monitor players' performance [2], soccer robots (the players are robots) [3], etc.

One possibility of technology usage in football matches is to use a drone referee instead of a human referee or a camera system covering the field. This system provides several advantages with respect to the mentioned conventional refereeing methods. First, human referees are naturally prone to human errors, which are one of the main sources of controversy in the game through possibly unfair decisions. A camera-based autonomous system would remove the unfairness factor, since every game would be refereed according to the same algorithm and would cover virtually any possible game situation; however, such a system is still very expensive in many situations, such as regional games. A moving camera provided by the drone can therefore replace such an expensive system and thus appeal to a large market. While the technology to automatically enforce the rules of the game based on video is not available yet, a camera system capturing important game situations can assist a remote referee by providing video and replays, based on which he or she can make decisions and send them to the ultimate referee.

The use of a remote referee also makes sense in a robot soccer match, where, although the players are robots, the referee is still human. In this case, in addition to the difficulties of refereeing a football match with human players, some rules in particular are difficult for a human referee to check and enforce but rather easy for an autonomous referee with a vision system.

In summary, the current football industry can be described by:

• Football is a growing industry with a market size of several billion euros.

• Human referees are naturally prone to human errors.

• Unfair situations will happen in games where both financial and emotional stakes are high.

Solution

• Autonomous refereeing system

Scope

Design an autonomous refereeing system for the RoboCup Middle Size League (MSL) using drones.

Team

Mechatronics system design 2018 PDEng trainees:

1. Arash, System Architect, a.arjmandi.basmenj@tue.nl

2. Siddhesh, Communication manager, s.v.rane@tue.nl

3. Navaneeth, Team Leader, n.bhat@tue.nl

4. Sareh, Quality manager, s.heydari@tue.nl

5. Mohamed, Project manager, m.a.m.kamel@tue.nl

6. Eduardo, Test manager, e.f.tropeano@tue.nl

7. Andrew, Team Leader, a.n.k.wasef@tue.nl

Deliverables

1. System Architecture

2. Drone refereeing system

3. Wiki page documentation

System Architecture

Referee aiding system

RAS use case

RAS context diagrams

MSL/Ball-Following 2D context diagrams

Drone refereeing system

MSL/Ball-Following 2D sub-systems

In the following part, the subsystems of the MSL 2D package are introduced. These subsystems are: 1. World model, 2. Supervisor.

World model

Functions

- Acts as the system’s model, avoiding lateral dependencies between subsystems,

- State storage for the whole system,

- Master message-passing node for subsystems,

- Single logging point of the system.
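The four functions above describe a hub-and-spoke design: subsystems publish to and read from the world model instead of calling each other. The project's world model runs in MATLAB; the Python class below only illustrates the pattern under that assumption, and the topic names in the usage are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List, Tuple


class WorldModel:
    """Central state store and message hub; subsystems talk only to this object."""

    def __init__(self) -> None:
        self._state: Dict[str, Any] = {}
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)
        self.log: List[Tuple[str, Any]] = []  # single logging point

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, value: Any) -> None:
        self._state[topic] = value            # state storage
        self.log.append((topic, value))       # every update is logged here
        for callback in self._subscribers.get(topic, []):
            callback(value)                   # message passing to interested subsystems

    def get(self, topic: str, default: Any = None) -> Any:
        return self._state.get(topic, default)
```

For example, the HMI could subscribe to a `ball_position` topic published by the positioning system without either subsystem knowing about the other.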

Supervisor

Introduction

The supervisor is a subsystem of RAS. This subsystem is responsible for providing high-level control states for the other subsystems of RAS. Furthermore, it is responsible for translating user commands into states. The supervisor subsystem also monitors the overall safety of RAS.

Architecture constraints

RAS

The supervisor subsystem is responsible for keeping record of the states of RAS.

The supervisor must capture the game, pre-game and emergency situations in its states.

The states of the supervisor must be accessible to other subsystems of RAS.

Drone MSL

Supervisor states must include drone positioning as additional states.

The supervisor must include drone emergency situations in its states.

Supervisor states are independent of the MSL sub-packages (2D, gimbal, inclined?).

The supervisor takes input from the MSL referee box through a JSON interface.

The supervisor takes the land, take-off and safe-to-land commands from the remote referee through the world model.

The supervisor gives the high-level game states as outputs through the world model.

The supervisor enables the RR to land the drone from any point in the field.

The supervisor enables the drone to take off after a take-off command is received from the RR.

The supervisor enables the drone to start refereeing the game after receiving the start game signal from the MSL referee box.

The supervisor dictates that after a half ends, the drone will hover above the landing position.

The supervisor dictates that after receiving the land command, the drone will hover above the landing position.

The supervisor enables the landing of the drone after both the RR and the drone action planner confirm that it is safe to land. State transition: check with AP requirements.

System description

In this section, a description of the supervisor subsystem for the MSL 2D architectural package is given. The description is divided into four points: scenarios, purpose, inputs and outputs.

Scenarios

In the upcoming subsection, selected game operation scenarios are presented. The purpose of these scenarios is to show the sequence of events of a running game. These sequences result from applying the requirements or constraints to our drone referee system. In the next part, we will use these scenarios to obtain the supervisory states for our MSL 2D architectural package. These are the game, intentional landing and emergency scenarios.

Game scenario

This scenario shows a complete sequence for the first half of an MSL 2D game. The step sequence is the following:

1. RR makes sure the robot players are positioned in the center.

2. RR presses take off on the HMI.

3. Drone takes off, then flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. Drone is hovering at the start position.

5. MSL referee issues the start game signal.

6. Game starts.

7. Drone starts the refereeing technique.

8. First half is over.

9. MSL referee issues the end-of-first-half signal.

10. Drone hovers above the landing position.

11. RR presses "okay to land" on the HMI.

12. Drone lands in the landing position.

13. Drone is waiting for another game start.
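The game scenario, together with the land command and the emergency scenarios described below, maps naturally onto a small event-driven state machine. The Python sketch below is illustrative only: the state and event names are hypothetical, the project's own supervisor implementation is not reproduced, and "okay to land" is only accepted when the action planner also reports that the drone is above the landing position, following the supervisor constraints listed above.

```python
from enum import Enum, auto


class State(Enum):
    ON_GROUND = auto()
    HOVER_AT_START = auto()
    REFEREEING = auto()
    HOVER_ABOVE_LANDING = auto()
    LANDING = auto()


AIRBORNE = {State.HOVER_AT_START, State.REFEREEING, State.HOVER_ABOVE_LANDING}


class Supervisor:
    """Event-driven sketch of the supervisor states in the game scenario."""

    def __init__(self) -> None:
        self.state = State.ON_GROUND
        self.emergency = False  # e.g. battery emergency; blocks take-off while set

    def handle(self, event: str, ap_safe_to_land: bool = False) -> State:
        s = self.state
        if event == "emergency":
            self.emergency = True
            if s in AIRBORNE:
                self.state = State.LANDING               # drone lands immediately
        elif event == "emergency_cleared":
            self.emergency = False
        elif event == "takeoff" and s is State.ON_GROUND and not self.emergency:
            self.state = State.HOVER_AT_START            # steps 2-4
        elif event == "start_game" and s is State.HOVER_AT_START:
            self.state = State.REFEREEING                # steps 5-7
        elif event in ("half_time_end", "land") and s in (State.HOVER_AT_START, State.REFEREEING):
            self.state = State.HOVER_ABOVE_LANDING       # steps 9-10
        elif event == "ok_to_land" and s is State.HOVER_ABOVE_LANDING and ap_safe_to_land:
            self.state = State.LANDING                   # steps 11-12: RR and AP agree
        elif event == "landed" and s is State.LANDING:
            self.state = State.ON_GROUND                 # step 13
        return self.state
```

Events that do not match a transition from the current state are simply ignored, so, for example, a take-off command during a battery emergency leaves the drone on the ground.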

Intentional land scenarios

These scenarios represent situations where the RR wants to land the drone before or during the game. In this document, two scenarios are shown. In the first scenario (ILS1), the RR wants the drone to land before the game starts. In the second one (ILS2), the RR wants the drone to land during the game. The sequence of steps of ILS1 is:

1. RR makes sure the robot players are positioned in the center.

2. RR presses take off on the HMI.

3. Drone takes off, then flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. Drone is hovering at the start position.

5. RR presses land on the HMI.

6. Drone hovers above the landing position.

7. RR presses "okay to land" on the HMI.

8. Drone lands in the landing position.

9. Drone is waiting for another game start.

While, the steps for ILS2 are:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The MSL referee issues the start-game signal.

6. The game starts.

7. The drone starts the refereeing technique.

8. The RR presses land in the HMI.

9. The drone hovers above the landing position.

10. The RR presses “okay to land” in the HMI.

11. The drone lands at the landing position.

12. The drone waits for the next game start.

Drone emergency scenarios

In these scenarios, three sequences are presented. All of them deal with a single kind of emergency, a battery emergency, occurring at different moments: before the game starts while the drone is still on the ground (EMS1), while the drone hovers at the center before the game starts (EMS2), and during the game (EMS3). The sequence of steps of EMS1 is:

1. Before the game, the drone is on the ground.

2. A drone emergency occurs.

3. The drone cannot take off, regardless of any command given by the RR.

4. The drone can take off again only once the emergency is solved.

For EMS2, the sequence is:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. A drone emergency occurs.

6. The drone lands.

7. The drone cannot take off, regardless of any command given by the RR.

For EMS3, the sequence is:

1. The RR makes sure the robot players are positioned in the center.

2. The RR presses take off in the HMI.

3. The drone takes off and flies to the start position. For MSL 2D, the start position is the center of the pitch.

4. The drone hovers at the start position.

5. The MSL referee issues the start-game signal.

6. The game starts.

7. The drone starts the refereeing technique.

8. A drone emergency occurs.

9. The drone lands.

10. The drone cannot take off, regardless of any command given by the RR.

11. The drone can take off again only once the emergency is solved.
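All three emergency scenarios share one rule: once a battery emergency is raised, take-off commands from the RR are ignored until the emergency is solved. The minimal Python sketch below shows that gate. The class and method names are hypothetical, and it assumes (the scenarios do not say so explicitly) that the emergency stays latched until it is explicitly resolved, for example after a battery swap, rather than clearing itself when a battery reading briefly recovers.

```python
class TakeoffGate:
    """Hypothetical sketch: latches a battery emergency and blocks take-off."""

    def __init__(self) -> None:
        self.emergency_latched = False

    def report_emergency(self) -> None:
        # Set when the monitoring system reports a battery emergency;
        # it stays set until resolve() is called.
        self.emergency_latched = True

    def resolve(self) -> None:
        # Assumption: called only once the emergency has been solved
        # (e.g. the battery has been replaced).
        self.emergency_latched = False

    def handle_takeoff_command(self) -> bool:
        """Return True if the RR's take-off command is accepted."""
        return not self.emergency_latched


gate = TakeoffGate()
gate.report_emergency()
print(gate.handle_takeoff_command())  # RR command ignored while latched
gate.resolve()
print(gate.handle_takeoff_command())  # take-off possible again
```

Latching rather than reading the live battery status avoids a drone that lands on a low battery and is then allowed to take off again as soon as the voltage recovers under no load.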

Functions

From the previous scenarios and constraints, the functions of the supervisor subsystem are:

• To take the remote referee’s commands, the referee box game states and the drone emergency status as inputs.

• To provide high-level control (game) states according to the inputs.

• To react to emergency situations.

The other subsystems act on these high-level abstract states. Figure 1 depicts a context diagram for the supervisor subsystem, showing its inputs and outputs together with the possible messages for each of them. In the figure, the system boundary line separates the subsystems of the drone referee from the outside environment.

Figure 1 Context diagram for the supervisor subsystem.

Inputs

Remote referee

The supervisor receives the take off, land and safe-to-land commands from the remote referee, which are sent from the HMI through the world model.

Monitoring system

The supervisor reads the emergency status from the world model. This status is written by the monitoring system.

Referee box

The supervisor reads the game status from the MSL HMI (ref box). Based on this status, the supervisor knows when the game starts and ends.

Action planner

The action planner sends a “safe to land” signal to the world model, from which the supervisor reads it. The drone can only land when both safety checks are fulfilled: the RR’s safe-to-land command and the action planner’s safe-to-land signal.
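The two-check landing rule can be expressed as a single predicate over the world model. In this minimal Python sketch the world model is assumed to be a plain key-value store, and the key names `rr_safe_to_land` and `planner_safe_to_land` are hypothetical placeholders for whatever the real world model uses.

```python
from typing import Any, Dict


def landing_permitted(world_model: Dict[str, Any]) -> bool:
    """Both safety checks must hold before the supervisor allows landing."""
    # Check 1: the RR pressed "okay to land" in the HMI (relayed via the world model).
    rr_ok = world_model.get("rr_safe_to_land", False) is True
    # Check 2: the action planner reported that it is safe to land.
    planner_ok = world_model.get("planner_safe_to_land", False) is True
    return rr_ok and planner_ok


wm = {"rr_safe_to_land": True, "planner_safe_to_land": False}
print(landing_permitted(wm))  # action planner has not confirmed yet
wm["planner_safe_to_land"] = True
print(landing_permitted(wm))  # both checks fulfilled
```

Missing keys default to "not safe", so a subsystem that has not yet written its signal can never accidentally allow a landing.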

Output

High-level control (game) states

The supervisor writes these states into the world model. Other subsystems, such as the action planner, read these states and act on them.

Design choices

For MSL 2D, the supervisor has five states. The transitions between these states are governed by transition conditions. These conditions are built from the values and combinations of the so-called transition variables, which in turn are derived from the inputs of the supervisor subsystem.

States

Table 1 lists the five states of the supervisor together with a description of each state.

Table 1 State description

Transition variables

Table 2 shows the description, values and condition of each transition variable.

Table 2 Transition variable description

Transition conditions

Transition conditions are expressions built from the transition variables and the operators || (OR), == (EQUALS) and && (AND). Whenever a condition is true, the supervisor is allowed to move from the current state to the future state. Table 3 shows the description of each condition together with its current state and future state.

Table 3 Transition conditions

State machine

Figure 2 shows the complete state machine of the supervisor subsystem, including its states, variables and conditions. The initial state of the system is S1. The output of the supervisor, the high-level control (game) state, is held in the variable game_state. This variable is sent to the world model, where other subsystems can read it.
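The supervisor itself was built in MATLAB Stateflow, and its actual states, variables and conditions are those of Tables 1-3 and Figure 2. As an illustration only, the Python sketch below derives a five-state machine from the scenarios above. The state names, the transition variables in `Inputs` and the exact conditions are hypothetical, chosen so that the game, intentional landing and emergency scenarios can be replayed; as in the text, the machine starts in S1 and its current state plays the role of game_state.

```python
from dataclasses import dataclass

# Hypothetical state names; the real ones are defined in Table 1.
S1 = "S1_landed_waiting"
S2 = "S2_at_start_hovering"
S3 = "S3_refereeing"
S4 = "S4_hover_above_landing"
S5 = "S5_landing"


@dataclass
class Inputs:
    """Hypothetical transition variables read from the world model."""
    takeoff: bool = False          # RR pressed take off in the HMI
    land: bool = False             # RR pressed land in the HMI
    rr_safe_to_land: bool = False  # RR pressed "okay to land"
    ap_safe_to_land: bool = False  # action planner safe-to-land signal
    game_started: bool = False     # start signal from the ref box
    half_over: bool = False        # end-of-half signal from the ref box
    emergency: bool = False        # battery emergency (monitoring system)
    touched_down: bool = False     # drone reports it has landed


def step(state: str, i: Inputs) -> str:
    """Return the next game_state; stay put if no condition holds."""
    if state == S1:
        return S2 if (i.takeoff and not i.emergency) else S1
    if i.emergency and state in (S2, S3, S4):
        return S5  # emergency: land without waiting for RR confirmation
    if state == S2:
        if i.land:
            return S4
        return S3 if i.game_started else S2
    if state == S3:
        return S4 if (i.land or i.half_over) else S3
    if state == S4:
        return S5 if (i.rr_safe_to_land and i.ap_safe_to_land) else S4
    if state == S5:
        return S1 if i.touched_down else S5
    raise ValueError(f"unknown state {state!r}")


# Replay the game scenario: take off, referee the first half, land.
game_state = S1
for inputs in [Inputs(takeoff=True), Inputs(game_started=True),
               Inputs(half_over=True),
               Inputs(rr_safe_to_land=True, ap_safe_to_land=True),
               Inputs(touched_down=True)]:
    game_state = step(game_state, inputs)
    print(game_state)
```

Checking the emergency before any other condition in the airborne states gives it priority over RR commands, which matches the emergency scenarios where the drone lands directly.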

Figure 2 State machine of the supervisor subsystem

Reaction to emergency situations

In MSL 2D, there are two emergency situations. The first one is a drone emergency; the second one depends on the remote referee’s judgement. In both situations, the drone lands.

Assumptions

• The RR must make sure that the robots are in their starting places while the drone hovers above the starting position.

• The RR must make sure that it is safe to take off before pressing safe to take off in the HMI.

• The only drone emergencies considered in the system are battery emergencies.

• The RR must make sure there are no obstacles in the flight area or at the landing position.

• The RR must make sure the safety net is closed.

• During game pauses, the drone still follows the refereeing strategy.

• The system’s initial state is always S1.

Technology

The supervisor was implemented in MATLAB Stateflow [add ref].

Recommendations

Emergency situations

The supervisor should consider other drone emergency situations, which can be identified by testing the system over several runs. Furthermore, additional functional safety measures could be considered for each subsystem.

Model based supervisor

With the time available, our team could not model each subsystem separately and synthesize a maximally permissive supervisor. Furthermore, the drone manufacturer uses MATLAB Simulink to control the drone, and designing a maximally permissive supervisor in MATLAB is very difficult. Our recommendation is to model the subsystems using a dedicated supervisory control tool and, once the supervisor is finished, to couple it with MATLAB. The challenges in this approach would be modeling, capturing the requirements and supervisor synthesis. [add ref]

Drone

Introduction

The drone is the main hardware subsystem of the RAS. This subsystem includes sensors, a microcontroller, communication ports and mechanical components. The drone used in this project is the latest version of the Avular Curiosity drone, provided by the company Avular. The drone can be programmed in MATLAB Simulink. All the other subsystems must eventually be implemented on the drone to achieve the final goal of refereeing the game.

Architecture constraints

- RAS:

The drone must be able to fly autonomously.

The drone is responsible for following the commands of the action planner through the world model.

The sensor data must be accessible to the world model (another subsystem of the RAS).

The drone should provide the battery level information to other subsystems.

It must be possible to mount the footage camera on the drone.

- Drone MSL:

The drone must be able to fly for 5+2 minutes and show the battery level at all times.

System description

The drone is the main hardware subsystem of the drone referee system and itself consists of different components and subsystems. The main task of the drone is to receive commands in real time from a remote computing module (usually a PC) and perform the actions required by the action planner while carrying the footage camera. The main challenge is therefore programming the drone and setting up the wireless connection that sends data to the microcontroller on the drone. The Avular Curiosity drone can be programmed through three computing modules: the Low-Level Module, the High-Level Module and the Compute Module. Each of these modules is responsible for part of the functionality of the drone and is connected to a different set of sensors, actuators or communication devices. These modules, their general functionality and their communication are shown in the figure below. The High-Level and Low-Level Modules each use a 180 MHz Cortex-M4 processor, and the Compute Module is a Raspberry Pi Compute Module 3.

The idea is to use the Low-Level Module for calculations related to flight stabilization and monitoring the current status of the drone, and the High-Level Module for other high-level calculations, such as path planning or position control of the drone, which can differ depending on the project requirements. The Compute Module can be used in combination with both the High-Level and Low-Level Modules for intensive computations. In the drone referee project, it is only used for communication with the footage camera, as mentioned in the camera section.

Functionality Overview

After the Low-Level and High-Level modules are programmed, communication with the drone is possible over an XBee link using the MAVLink protocol. An overview of this communication process is shown in the figure below.
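To make the MAVLink part concrete, the Python sketch below builds one MAVLink frame as it would travel over the XBee link. The project does not state which MAVLink version or messages it uses, so this assumes MAVLink v1 framing and uses the standard HEARTBEAT message (message ID 0, CRC_EXTRA 50) as an example; the field values passed in are arbitrary. The checksum is the X.25 CRC (CRC-16/MCRF4XX) that MAVLink uses. In practice, a generated MAVLink library would do this framing; the sketch only shows what the bytes look like.

```python
import struct

MAVLINK_V1_STX = 0xFE
HEARTBEAT_MSG_ID = 0
HEARTBEAT_CRC_EXTRA = 50  # per-message seed from the MAVLink common message set


def x25_crc(data: bytes, crc: int = 0xFFFF) -> int:
    """CRC-16/MCRF4XX ("X.25") as accumulated byte by byte in MAVLink."""
    for byte in data:
        tmp = byte ^ (crc & 0xFF)
        tmp = (tmp ^ (tmp << 4)) & 0xFF
        crc = ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF
    return crc


def heartbeat_frame(seq: int, sys_id: int, comp_id: int, mav_type: int,
                    autopilot: int, base_mode: int, custom_mode: int,
                    system_status: int) -> bytes:
    # Payload fields in MAVLink wire order (largest type first), little-endian;
    # the last byte is mavlink_version, which is 3 for MAVLink v1.
    payload = struct.pack("<IBBBBB", custom_mode, mav_type, autopilot,
                          base_mode, system_status, 3)
    # Header after STX: payload length, sequence, system ID, component ID, message ID.
    header = struct.pack("<BBBBB", len(payload), seq & 0xFF, sys_id,
                         comp_id, HEARTBEAT_MSG_ID)
    # The checksum covers everything after STX plus the message's CRC_EXTRA byte.
    crc = x25_crc(header + payload + bytes([HEARTBEAT_CRC_EXTRA]))
    return bytes([MAVLINK_V1_STX]) + header + payload + struct.pack("<H", crc)


frame = heartbeat_frame(seq=0, sys_id=1, comp_id=1, mav_type=2, autopilot=0,
                        base_mode=0, custom_mode=0, system_status=4)
print(len(frame), frame.hex())
```

The CRC_EXTRA seed lets sender and receiver detect a mismatch in message definitions, not only transmission errors, which matters when the drone firmware and the PC side are built from different MAVLink definitions.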

Technology

In this project, only the High-Level and Low-Level modules are used for programming the drone. They are programmed in MATLAB Simulink via an ST-LINK programmer.

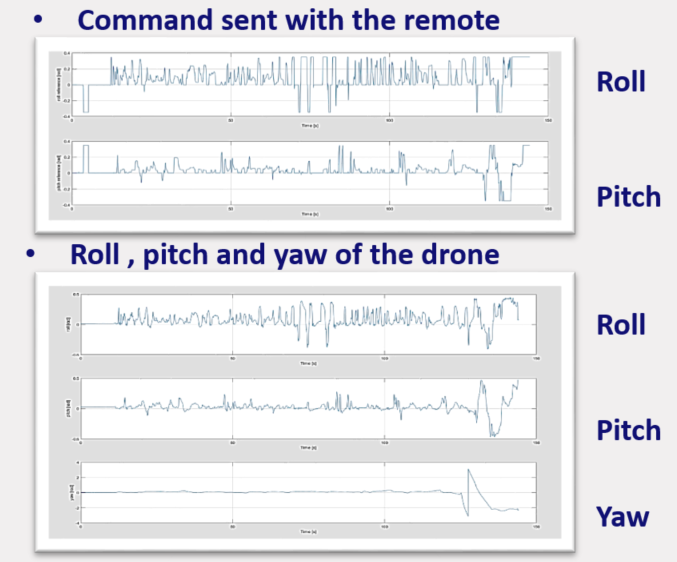

Flying manually with the remote control

Diagnosed Problems

- Hardware: defective battery

- Software: probably the Kalman filter or the low-level control

Solutions

- New batteries (at least 3)

- More tests to improve the low-level control system

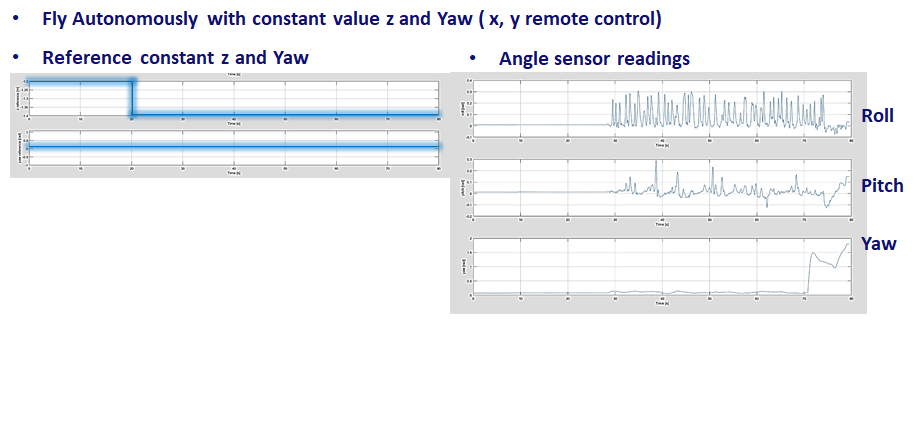

Autonomous flight

Diagnosed Problems

Incorrect sensor readings (probably the magnetometer)

Solutions

- Better sensor calibration

- Use other measurement techniques

Localization system, UWB (Ultra-wide band) flight

Diagnosed Problems

Noisy Ultra-wide band data

Solutions

- Better calibration of the UWB

- Apply a median-filter in the MATLAB algorithm
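A median filter suits UWB data because isolated outliers (for example, a single range jump caused by a reflection) are discarded instead of being averaged into the estimate, as a moving average would do. The sketch below is in Python for illustration, whereas the project's algorithm is in MATLAB (where the Signal Processing Toolbox offers `medfilt1`). The window length of 5 and the sample values are assumptions and would need tuning against the real UWB update rate.

```python
from statistics import median
from typing import List, Sequence


def median_filter(samples: Sequence[float], window: int = 5) -> List[float]:
    """Sliding-window median; the window shrinks at the edges of the signal."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        out.append(median(samples[lo:hi]))
    return out


# Hypothetical UWB position samples in meters with one outlier at index 2.
raw = [1.00, 1.02, 5.00, 1.01, 0.99]
print(median_filter(raw, window=3))
```

The trade-off is delay: a centered window of length n needs n // 2 future samples, so on live data the filter lags the true position by that many UWB updates.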

Autonomous flight II

In this test, the drone was controlled autonomously in the z direction and manually in x, y and yaw. The z position of the drone was controlled successfully.

Camera footage system and HMI

Introduction

The purpose of the camera footage system and the HMI is to provide a video stream on the game referee’s console. The camera attached to the drone should provide enough situational awareness for the referee to make decisions. Additionally, the referee should be provided with recommendations, such as ball out of pitch or a collision between players, to help him or her make better decisions.

Requirements and architecture constraints

After discussion with stakeholders and team members, the following requirements and constraints were imposed on the system:

1. The referee’s HMI shall display the raw video from the received video stream

The HMI should show the video stream of the game on the referee’s console.

2. The “Game Footage Camera” parameters (FOV, depth of field, etc.) shall be chosen to cover 4-5 meters of field of view (FOV) around the ball at the nominal allowable flight height in each match

It is important for the referee to see enough of the area around the ball to make a proper judgement. After discussion with an experienced robot soccer referee, it was decided that 4-5 meters of field of view around the ball is preferable. The area covered depends on the height at which the drone is flying.
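The dependence on flight height follows from simple geometry. For a camera looking straight down at a flat pitch, the ground width covered is w = 2 h tan(θ/2), where h is the height above the ground and θ the camera's field-of-view angle, so the height needed for a given coverage is h = w / (2 tan(θ/2)). The Python sketch below assumes an ideal pinhole camera pointing straight down, interprets "4-5 meters around the ball" as the total footprint width centered on the ball, and uses a 90° FOV purely as an example value.

```python
import math


def footprint_width(height_m: float, fov_deg: float) -> float:
    """Ground width seen by a downward-facing camera with the given FOV."""
    return 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)


def height_for_coverage(width_m: float, fov_deg: float) -> float:
    """Flight height needed for the camera to cover width_m on the ground."""
    return width_m / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


# Example only: 90 degree FOV, target coverage of 4 m and 5 m.
for width in (4.0, 5.0):
    h = height_for_coverage(width, 90.0)
    print(f"{width:.0f} m coverage at 90 deg FOV needs about {h:.2f} m height")
```

Run in the other direction, the same formula tells which FOV a camera must have to meet the requirement at the nominal allowable flight height of a given venue.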

3. The overall streaming delay (from the real scene viewed by the camera to the video stream displayed for the RR) shall be less than 0.5 sec

In sports, it is important for the referee to make decisions as soon as possible, so the referee should see the video with as little delay as possible. Based on feedback from the experienced referee, the delay should not be more than 0.5 seconds.
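Because the 0.5 s limit applies end to end, it is convenient to treat it as a budget shared by every stage between the scene and the referee's console. In the Python sketch below, the stage names and latency values are hypothetical placeholders, not measurements from this project; they only show how the check is done once real values are measured.

```python
# Hypothetical stage latencies in seconds; replace with measured values.
STAGE_LATENCY_S = {
    "camera_capture": 0.033,
    "encoding": 0.050,
    "wireless_transfer": 0.100,
    "decoding": 0.040,
    "display": 0.033,
}
MAX_DELAY_S = 0.5  # requirement 3


def total_delay(stages: dict) -> float:
    """End-to-end delay: the sum of all pipeline stage latencies."""
    return sum(stages.values())


def meets_delay_requirement(stages: dict, limit_s: float = MAX_DELAY_S) -> bool:
    return total_delay(stages) < limit_s


delay = total_delay(STAGE_LATENCY_S)
print(f"total {delay:.3f} s, margin {MAX_DELAY_S - delay:.3f} s, "
      f"ok={meets_delay_requirement(STAGE_LATENCY_S)}")
```

Keeping the budget per stage makes it easy to see which stage to optimize first when a measured pipeline exceeds the limit.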

4. Any image processing (detection/distortion improvements/ marking boundaries etc.) shall be done on external processors

To minimize the footprint on the drone in terms of size, processing power and energy consumption, all image processing should be done on an external processor.

5. Each video stream shall take less than 10% of the network link bandwidth

The system needs to scale to multiple cameras and referee consoles. Therefore, each video stream should not consume more than 10% of the network bandwidth.
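The 10% rule translates directly into a maximum bitrate per stream for a given link. The Python sketch below performs that check with integer arithmetic so that a stream exactly at the limit is not rejected through floating-point rounding. The 100 Mbit/s link and the 4 Mbit/s stream are hypothetical example values, not figures from this project.

```python
MAX_SHARE_PERCENT = 10  # requirement 5: at most 10 % of the link per stream


def max_stream_bitrate(link_bps: int, max_percent: int = MAX_SHARE_PERCENT) -> int:
    """Largest bitrate (bit/s) a single stream may use on the given link."""
    return link_bps * max_percent // 100


def stream_fits(stream_bps: int, link_bps: int,
                max_percent: int = MAX_SHARE_PERCENT) -> bool:
    # Integer comparison avoids floating-point rounding at the boundary.
    return stream_bps * 100 <= link_bps * max_percent


link = 100_000_000   # hypothetical 100 Mbit/s network link
stream = 4_000_000   # hypothetical 4 Mbit/s encoded video stream
print(max_stream_bitrate(link), stream_fits(stream, link))
```

In practice, the encoder's target bitrate is the parameter to tune against this limit, and it also trades off against the delay budget of requirement 3.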