PRE2018 3 Group12: Difference between revisions

| (52 intermediate revisions by 3 users not shown) | |||

| Line 285: | Line 285: | ||

This section gives an in-depth description of how a product is created that conforms to the requirements.

== Simulation of the sensor configuration ==

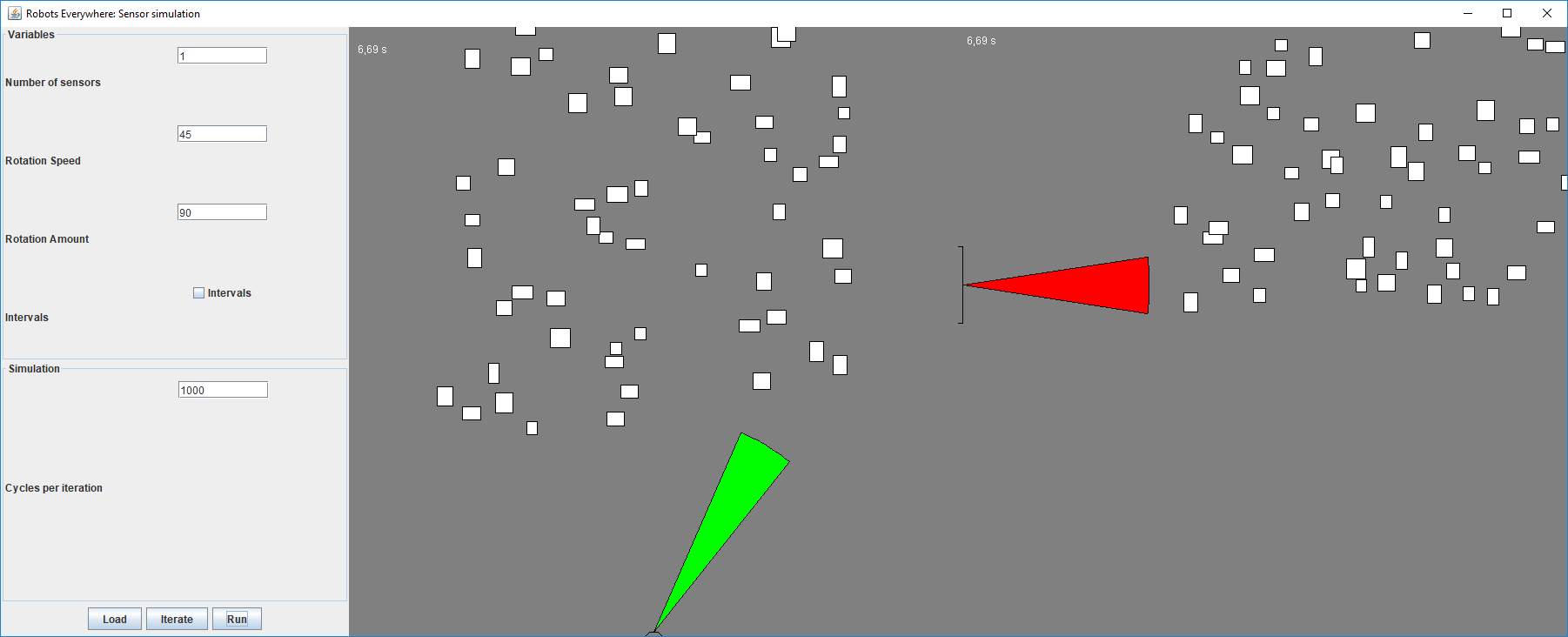

One of the goals of this project is to find a solution for the limited field of view of the prototype from last year's group. A proposed solution is to have the sensors rotate, which enlarges their field of view. Many variables play a role in such a configuration. Since testing all possible configurations physically would be very time-consuming and costly, we have chosen to create a simulation. The goal of this simulation is to find the effectiveness of as many configurations as possible. This is done by gathering data from as many configurations as possible and analyzing this data to determine what exactly makes a configuration effective. The source can be found here: [[File:Simulation-source.zip]].

[[File:Screenshot1.PNG|thumb|right|700 px|Screenshot of the simulation, including configuration (left), top down view (center), sideways view (right)]]

| Line 343: | Line 343: | ||

Now that the mentioned parameter has been processed, only one parameter in this setup is left to analyze: the Rotation amount. It is processed in the same way as the parameter Rotation per second, and is first analyzed per number of sensors. These are the outcomes.

[[File:Poging 3.png|thumb|left|500 px|]]

[[File:Regressie analyse.png|thumb|right|500 px|]]

[[File:Figure_1.png|thumb|right|500 px|Critical misses plotted against the height of the sensors.]]

From the results and the scatter plots, several conclusions can be drawn about the Rotation amount. The first thing that stands out is the very low value of R², which indicates that there is almost no correlation between the percentage of missed objects and the Rotation amount. The highest R² found is around 1.12%, which is almost nothing in comparison with the number of sensors and the Rotation per second. From this value we can say that adjusting the Rotation amount will not have a big influence on the percentage of missed objects.

| Line 349: | Line 351: | ||

One other conclusion can be drawn from the scatter plots. When looking at the fitted functions of the plots, two things stand out. The first, as said before, is the very low impact of the Rotation amount. The second is that the slopes become positive in the cases with 5 and 6 sensors. This means that if the Rotation amount rises, the percentage of missed objects also rises. The goal of this simulation was to get the percentage of missed objects as low as possible. So although the positive slopes for 5 and 6 sensors are small, it is possible to say that increasing the Rotation amount when using 5 or 6 sensors does not contribute to the goal of missing as few objects as possible.

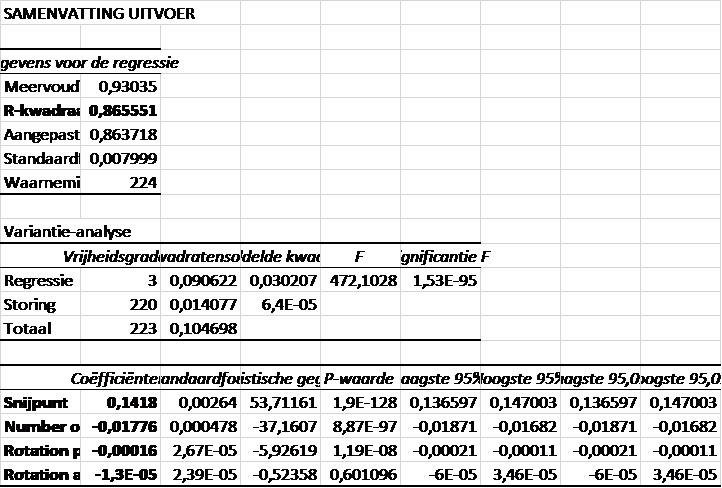

As visible in the regression analysis, 86.56% of the variation in the Y-data can be explained by the three parameters that we tested in our simulation. The number of sensors has the most influence on the percentage of missed objects, accounting for 84.39%. The rotation per second and the rotation amount together account for 2.17%. 86.56% is a high share to be able to attribute to known causes, but 13.44% still cannot be attributed to anything. This 13.44% consists of parameters that we did not take into account in this simulation, which can vary from the size of the object to an error in the sensor. Further research is needed to determine where this remaining 13.44% comes from.
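To make the R² figures above more concrete, the following is a minimal sketch of how the explained share of variance can be computed for a single predictor with an ordinary least-squares line. It is not the analysis tool used for the figures: the function names `linear_fit` and `r_squared` are hypothetical, and the regression reported above used three parameters rather than one.

```python
def linear_fit(xs, ys):
    """Least-squares slope and intercept for one predictor (illustrative helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def r_squared(xs, ys):
    """Share of the variance in ys that the fitted line explains (0 to 1)."""
    slope, intercept = linear_fit(xs, ys)
    mean_y = sum(ys) / len(ys)
    # Residual sum of squares: what the line fails to explain.
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    # Total sum of squares: all variation around the mean.
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# The unexplained share is simply the complement of R²,
# e.g. 100% - 86.56% = 13.44% for the figures quoted in the text.
unexplained_percent = round(100.0 - 86.56, 2)
```

An R² close to 0, such as the 1.12% found for the Rotation amount, means the fitted line explains almost none of the variation, regardless of the sign of its slope.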

In the figure, it can be seen that the impact of a higher rotation speed and a wider rotation angle is very small when there are two or more sensors.

==== Influence of height ====

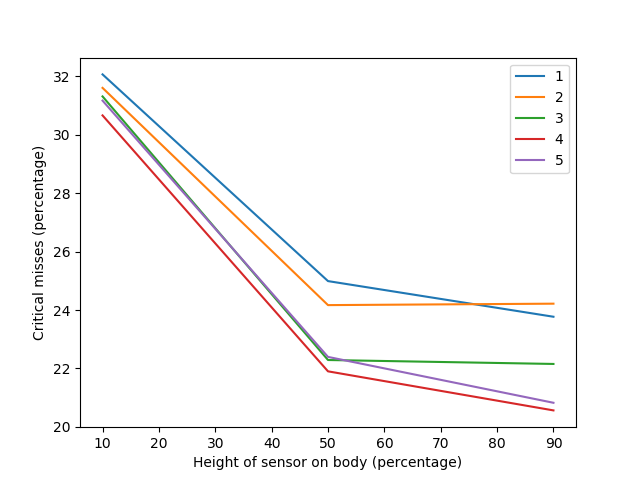

To determine the optimal height of the sensors on the body of the person, we adapted the simulation to accommodate a third dimension. Another variable is introduced, namely the height at which the sensors are mounted on the body of the person. The objects now have, in addition to positions and dimensions on the x and y axes, a position and a dimension on the z axis. However, the collision detection between the three-dimensional cone-like sensor and the rectangular objects is more computationally expensive. Because of this, we were not able to run experiments as extensive as those for the two-dimensional configurations. Another notable detail is that the number of missed objects is higher than for a two-dimensional configuration.
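As an illustration of the kind of geometry involved, the sketch below tests whether a point lies inside a cone-shaped sensor beam and approximates the detection of a rectangular object by testing its eight corners. This is a simplification and not the collision detection of the actual simulation: a box can intersect the cone without any corner being inside it. All names and parameters (`point_in_cone`, `box_detected`, the half angle, the range) are assumptions made for this example. The mounting height corresponds to the z coordinate of `apex`.

```python
import math
from itertools import product


def point_in_cone(point, apex, axis, half_angle_deg, max_range):
    """True if point lies within the cone starting at apex and opening along axis."""
    v = [p - a for p, a in zip(point, apex)]
    dist = math.sqrt(sum(c * c for c in v))
    if dist == 0.0:
        return True  # the apex itself counts as inside
    if dist > max_range:
        return False  # beyond the assumed sensor range
    axis_len = math.sqrt(sum(c * c for c in axis))
    # Cosine of the angle between the beam axis and the direction to the point.
    cos_angle = sum(vc * ac for vc, ac in zip(v, axis)) / (dist * axis_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


def box_corners(min_corner, max_corner):
    """All eight corners of an axis-aligned box."""
    return list(product(*zip(min_corner, max_corner)))


def box_detected(min_corner, max_corner, apex, axis, half_angle_deg, max_range):
    """Corner-based approximation: detected if any corner is inside the cone."""
    return any(
        point_in_cone(corner, apex, axis, half_angle_deg, max_range)
        for corner in box_corners(min_corner, max_corner)
    )
```

An exact cone-box intersection test needs considerably more work per object, which is consistent with the higher computational cost mentioned above.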

| Line 368: | Line 369: | ||

For each part of the electronic design, a description is made. This description is shown below and goes into detail on every component of the whole circuit.

=== General ===

| Line 374: | Line 375: | ||

===Ultrasonic===

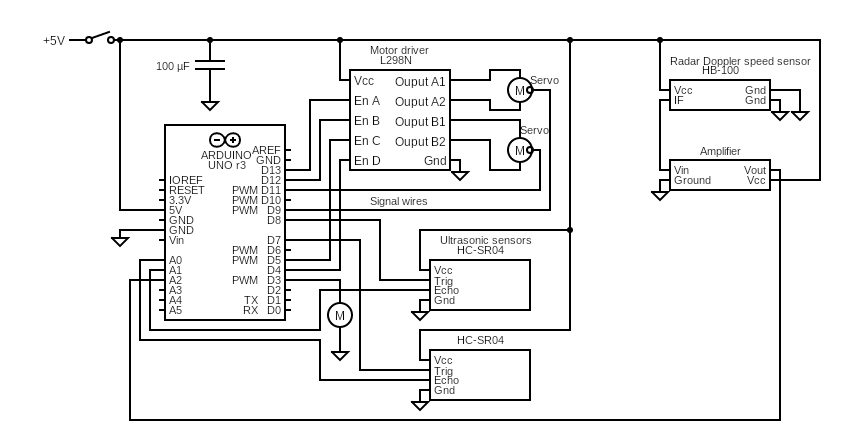

[[File:circuit (9).png|thumb|right|540 px| The amplifier of the radar section]]

As described above, two ultrasound sensors are placed on top of servos that can rotate. These servos are powered using a motor driver, which is connected to the power source, as the Arduino cannot provide the current needed for the servos. However, the Arduino can send signals through the signal wires indicating to which position the servos should rotate. These signal wires are connected to two Pulse Width Modulation (PWM) pins (pin 9 & 11) of the Arduino. The ultrasonic sensors are connected to the power source as well. Furthermore, each sensor has one input pin, on which a signal is sent, and one output pin, from which the returned ultrasonic pulse is read. Using the Arduino, the time difference between the sent and received pulse can be used to determine the distance of an object. Finally, the shorter the distance the ultrasonic sensor measures, the higher the voltage the Arduino puts on the pins of the vibration motor, and thus the stronger the vibrations.
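The two relations described above (echo time to distance, and distance to vibration strength) can be sketched as follows. This is an illustrative model in Python, not the Arduino code of the prototype. The speed of sound of 343 m/s (air at roughly 20 °C), the maximum range of 2.0 m, the 0 to 255 PWM scale, and the linear mapping are all assumptions made for this example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value for air at about 20 degrees C


def echo_time_to_distance_m(echo_time_s):
    """Distance to the object from the round-trip time of the ultrasonic pulse."""
    # The pulse travels to the object and back, hence the division by two.
    return SPEED_OF_SOUND_M_PER_S * echo_time_s / 2.0


def distance_to_pwm(distance_m, max_range_m=2.0, max_pwm=255):
    """Map a distance to a vibration strength: the closer, the stronger.

    max_range_m and the linear mapping are hypothetical choices.
    """
    if distance_m >= max_range_m:
        return 0  # nothing close enough: no vibration
    closeness = 1.0 - max(distance_m, 0.0) / max_range_m
    return round(closeness * max_pwm)
```

For example, a round-trip time of 10 ms corresponds to a distance of about 1.7 m under these assumptions, which would give only a weak vibration.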

===Radar===

| Line 381: | Line 385: | ||

=Implementation=

==Prototyping==

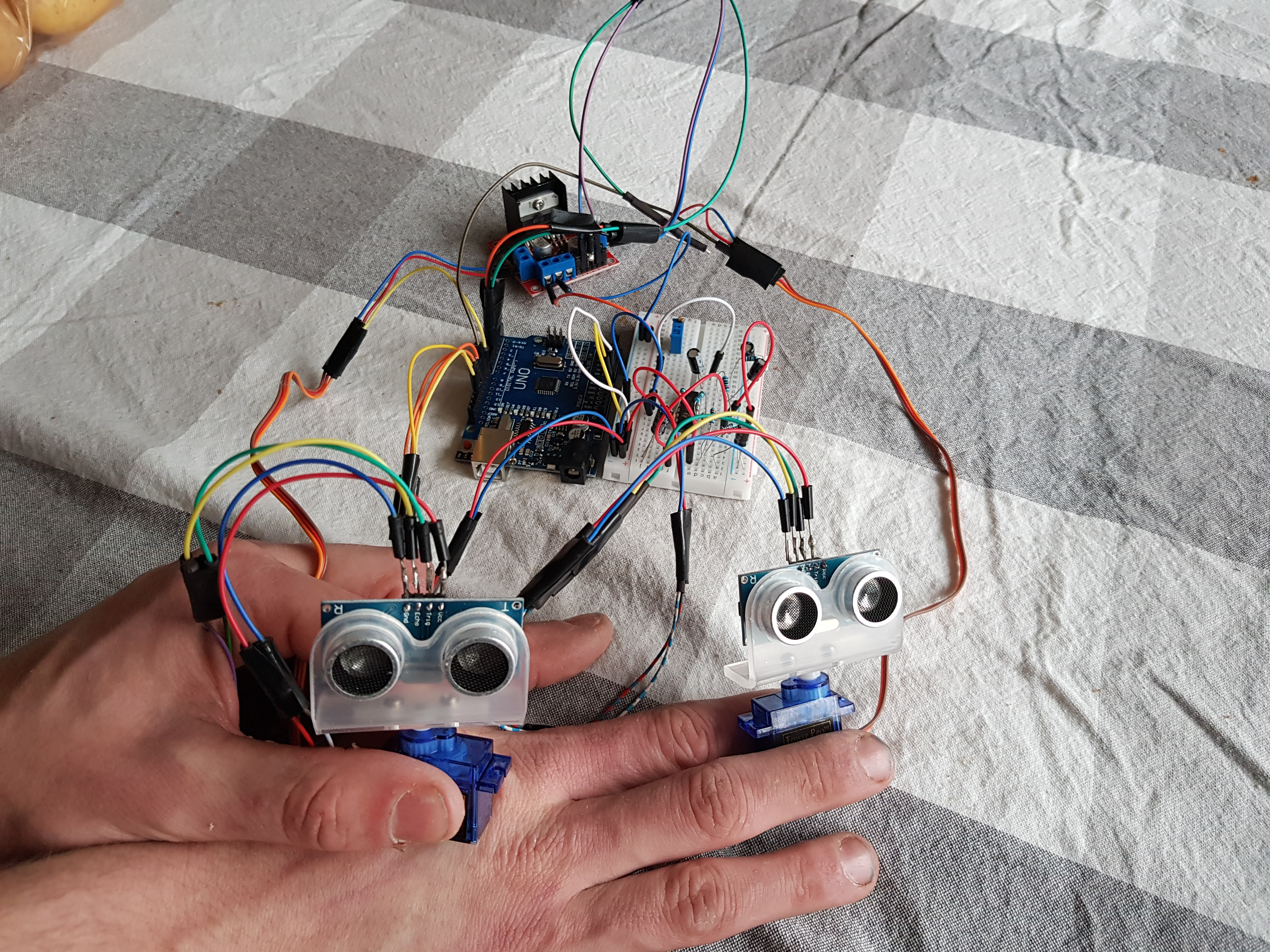

To check whether our ideas and research have actually improved on the work of the previous group, we have made a prototype. This prototype implements the electronic design as described in [[PRE2018_3_Group12#Electronic design]] and the data on placement and rotation of the ultrasonic sensors as described in [[PRE2018_3_Group12#Simulation of the sensor field of view]].

We have decided to create a wearable prototype, as we are extending the work of the previous group. Furthermore, the prototype is controlled by an Arduino Uno running Arduino code. [[file:0LAUK0_group12_2019.zip]]

===Building the prototype===

[[File:prot4.png|thumb|right|400 px|Prototype of the moving ultrasonic sensor]]

Building the prototype started with testing all individual components, most notably the servos and the ultrasonic sensors. The radar sensor could not be tested, as it was impossible to get an output from the bare component. After verifying that the servos and ultrasonic sensors worked, they were mounted on top of each other with the screws provided with the servo. Afterwards, both sensors were tested together. However, there was a problem where the servos would not always turn together. One servo seemed to get priority to turn, while the other servo would only turn once every 5 or 10 times. If the two servos were switched with each other, the servo that had worked stopped working, and the one that had not worked started working. The same servo did not always have priority over the other, which indicated an issue with the power delivered. However, after analyzing the power, it could be determined that both servos received more than enough power to turn at the same time. As this was not the cause, the next most probable fault would be the Arduino not setting the servo pin to the right value. This was tested and was also not the source of the problem. The only other possible source of the problem was the servo itself. However, this could not be confirmed, as switching the servo out did not solve it. Although the servos did not turn every time they should, when they did turn, they turned as intended.

When the ultrasonic sensor part was finished, the radar part was built next. After building the amplifier needed for the radar, it was connected to an audio output. When listening to this audio output, it seemed really quiet, with an occasional small 'plop'. After connecting the output to the Arduino, it could be concluded that the amplifier did not amplify the signal enough. Therefore, a new design was made that did amplify the signal enough. When it was connected, the Arduino showed a frequency difference when there was a moving object in the range of the sensor, which was linearly dependent on the speed of the object. However, after carrying the sensor around, it suddenly stopped working. The way the amplifier was built (on a breadboard) made it very vulnerable. Although it was fixable, we decided not to include the radar in the validation, as it would likely break again. We did, however, test whether the radar worked correctly; these results are shown below. The graph on top represents a fast-moving object, while the graph below shows a slow-moving object.

[[File:prot3.png|thumb|center|800 px|Amplified radar output signal]]

As can be seen from the figure above, the frequency for the fast-moving object is much higher, which is what is expected from a Doppler shift frequency. Furthermore, both signals are either 1 or 0, which means that the output can be connected to a digital pin on the Arduino instead of an analog pin. Digital pins are easier to manufacture and thus a lot cheaper. Using the relation that the Doppler shift frequency (in Hz) can be divided by 19.49 (a constant that differs for other carrier frequencies) to obtain the velocity in km/h, it is estimated that the object in the top graph travels at ~9.5 km/h while the object in the bottom graph travels at ~1 km/h.
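The constant 19.49 follows from the Doppler relation f_d = 2·v·f_0/c once the velocity is expressed in km/h. The sketch below reproduces it under two assumptions that are not stated in the text: a carrier frequency of 10.525 GHz and a rounded speed of light of 3.0 × 10^8 m/s. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 3.0e8  # rounded value, assumed for this example


def doppler_hz_per_kmh(carrier_hz):
    """Doppler shift in Hz produced by 1 km/h of radial speed.

    From f_d = 2 * v * f_0 / c with v in m/s; the factor 3.6 converts km/h to m/s.
    """
    return 2.0 * carrier_hz / (SPEED_OF_LIGHT_M_PER_S * 3.6)


def doppler_to_kmh(doppler_hz, hz_per_kmh=19.49):
    """Velocity in km/h from a measured Doppler shift, using the constant from the text."""
    return doppler_hz / hz_per_kmh


# With the assumed 10.525 GHz carrier the factor comes out at about 19.49 Hz per km/h.
factor = doppler_hz_per_kmh(10.525e9)
```

Under these assumptions, the estimated ~9.5 km/h of the fast-moving object corresponds to a Doppler shift of roughly 185 Hz, and ~1 km/h to roughly 19.5 Hz.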

=Validation=

| Line 430: | Line 436: | ||

Just like the previous group, we compare the tests to each other using the measure ‘objects hit’. This way, different configurations of the device can be compared to each other. The configuration with the fewest objects missed is noted down as ‘best’.

==Conducting the tests==

[[File:course.jpg|thumb|right|500 px|Picture of the obstacle course, taken from the starting point. The user had to traverse the course around all the chairs and would finish when reaching the table behind all the chairs in the middle of the hallway.]]

We have done each of the tests described above four times. The test runs are denoted by 1), 2), 3) and 4). The data behind each of these tests is given at each test individually.

| Line 471: | Line 477: | ||

The device in its current state (the prototype) is not a standalone product, but with some extra changes it could work as one.

==Theoretical Situations==

This section describes a few situations in which our device might run into problems.

===Situation 1: The hallway===

Right now, our device has sensors that scan the surroundings for close objects and notify the user of these objects. A wall is detected as an object close to the user. In a hallway of, let's say, 1.5 m wide, the device will detect an object on both the left and the right side of the user.

The problem with our current setup, two rotating sensors and two vibration motors, is that the device would almost constantly pick up the signal of a close object. The user would not know how to move in this situation without any further aids.

A possible way to fix this is to add a third, stationary sensor and vibration motor combination in the front that notifies the user that the direction they are currently facing is free.

===Situation 2: The stairs===

When the user gets close to a set of stairs, the sensors would detect an obstacle close to the user. With a walking stick, the user would be able to feel the bottom step and confirm that it is indeed a stairway by trying to find the step above it. Our device, however, would only detect an obstacle somewhere in the close surroundings of the user, rather than directly in front of them, because of the height at which the device is worn. In this case the user would be in danger, as they could fall on the stairs.

= Conclusion =

We can conclude that we have reached our goal of improving on last year's group design. We have succeeded in making a design that helps the visually impaired navigate in unknown situations and that removes the problem of objects that suddenly popped into existence. On top of that, the field of view of the user has greatly improved.

However, we must also conclude that the prototype we designed is not a standalone aid. It is not possible to completely orient yourself with it. Because of the limited time we had, we have not been able to test our prototype in the 'real world', outside of the test environments we created ourselves. For our own safety, we have decided not to put the theoretical 'stairs' situation to the test.

= Discussion =

The goal of the project, improving on last year's design, has been reached. We were able to obtain additional information from rotating the sensors, even if it was not the information we intended to obtain. The results from the simulation clashed with the practical experience, because the simulation showed that the impact of rotation is minimal when having two or more sensors. However, the practical tests indicated (from personal experience) that the added value of rotation is noticeable. This difference in results is most likely due to the inaccuracies of the simulation.

A better prototype could have been delivered if the building and testing of the prototype had started earlier in the project. Due to time constraints, we did not have time for more than one design iteration. One thing we learned from testing our prototype is that it is difficult to orient yourself, as you have nothing to compare the rotation of the sensors to. If we had started testing earlier, we would have had the opportunity to add, for instance, a third, static ultrasonic sensor in the middle of the prototype, pointing straight forward. Another (obvious) factor contributing to a better prototype is to not let it fall during testing. While we have improved on last year's design, several improvements can still be made. Situations such as going down stairs can be handled better. More testing with better integrated components could have been done to obtain a more compact design. Currently, the prototype does not fit in a container of a practical size. This also means that the prototype could not be worn, unlike last year's. This complicated testing as well. Furthermore, the radar module did end up working at the end, but it was very unstable. All components should have been soldered on a piece of perfboard, or a dedicated PCB could even have been designed for it. This would allow the radar module to work reliably.

Although we reached out multiple times to multiple individuals and organizations in our end user group, response was limited and late. Starting to reach out on day one, and to more parties, might have resulted in a better incorporation of the end users in our project. Improvements to the simulation mainly consist of making the simulation more accurate. Examples of this are giving the person a realistic three-dimensional bounding box (instead of a cylinder) and tweaking the parameters of the objects (dimensions and locations).

=Appendix=

Latest revision as of 20:09, 11 April 2019

Introduction

| Name | Study | Student ID |
|---|---|---|
| Harm van den Dungen | Electrical Engineering | 1018118 |
| Nol Moonen | Software Science | 1003159 |
| Johan van Poppel | Software Science | 0997566 |
| Maarten Flippo | Software Science | 1006482 |
| Jelle Zaadnoordijk | Mechanical Engineering | 1256238 |

The wiki page is divided into five sections: exploration, requirements, design, implementation, and conclusion. These five sections represent the five phases of the project.

Exploration

This section defines our chosen problem, the background of this problem, and our approach to tackling it.

Problem Statement

In 2011, almost 302,000 people in the Netherlands had a visual handicap. [1] This means that a person is either visually impaired or completely blind, but in both cases the person needs help. This number is expected to grow in the future, because the Netherlands is dealing with an aging society: the average age of people is rising. While this is not a bad thing in itself, the human body slowly breaks down over the years. This includes the eyes, and it affects eyesight. The older a person gets, the bigger the chance that he or she becomes visually impaired or, in the worst case, even goes blind. So far, no way has been found to prevent this from happening, so we have to deal with it.

We know that people with a visual impairment will never be able to sense the world as people without a visual impairment do. But there are some ways to make their lives more comfortable. Thanks to guide dogs and white canes, these people are able to enjoy independence when it comes to navigating outdoors. They can enjoy a walk with the dog without having a person telling them what to do or where to walk. This reduces their feeling of dependency. They can walk when and where they want to. Although this is currently the best way to deal with the impairment, it is not flawless. With the white cane there is the problem of the range of the cane. The person only knows what is happening inside this range, but not what is happening outside of it. The same problem exists with a guide dog, because it only makes sure that the person moves out of the way of obstacles. So the dog only looks at the movement of the person over small distances. Both tools help the person to have an idea of what is around them, but only within a certain range. They do not get a full representation of what the rest of the world looks like.

With the use of new technology, that might change. Using sensors, these people could be given the ability to sense more than their immediate surroundings, including objects that their white cane did not come into contact with, or that the dog ignored because they were not in the way. Also, using physical phenomena such as the Doppler effect, motion relative to the user can be detected, further enhancing the image a visually impaired person can obtain of the world.

USE aspects

User

When designing a product, the most important thing to know is for whom we are creating the product. This target group of people is called the users. In the early years, designers developed a product only in the way they themselves thought best. History has shown us that developing products in this way is not the most efficient way. [2] When developing a product based only on what you think is best, it is easy to miss certain aspects. This can, but need not, result in major problems. Developing a new product without a clear view of your future users increases the chance of developing a product fit for nobody. This is not efficient in terms of money or time. We can conclude that it is necessary to take the user into account when developing a product. In the next two sections we answer the following questions: who are our users and what do they want, and how are we going to satisfy all the needs of our users?

Identifying the users and their needs

The primary users we are designing the technology for are visually impaired people. Almost 302,000 people in the Netherlands are visually impaired. [1] This is, in our eyes, a large part of the population. For a blind or partially blind person, even the simplest tasks can be hard to complete. A walk for some fresh air can be a really hard task for someone who cannot orient themselves in an unfamiliar area. For those tasks they often require aids, in most cases a guide dog or a white cane. By using those aids, the visually impaired can get those tasks done. They still cannot orient themselves, but they can figure out where objects are. This is, of course, already a nice improvement over the situation without these aids. However, in our opinion there is still a lot to improve, because (as stated in the problem statement) those aids have their flaws. By using the existing aids, the user can avoid a car, but does not know that the object being avoided is a car. When the dog drags the user away from the car, or the cane hits the car, the user knows that something has to be avoided, but not what. What our design aims to create for the primary user is a better impression of how the world looks and works. Improving the living experience of our primary users is our main target.

The secondary users are the friends, family and possibly the caretaker of the primary user. These people are close to the user and assist the primary user if something does not work out as planned. For example, when the primary user cannot take the guide dog to the veterinarian, the secondary user assists him or her. The most important questions that we need to take into account are: how do we want to bring the information to the secondary user, and is that everything that the secondary user needs? To get this information, it is possible to use scenarios to define every possible outcome and, in turn, the different problems that can come up. In this way, we get an idea of which problems there may be that we did not take into account the first time. After identifying those possible problems, we have to find a way in which the secondary user can assist in overcoming them. This needs to be simple, because every one of the secondary users has to be able to do it.

Satisfying the user needs

Now that we have identified our users and their needs, we look at how we are going to satisfy these needs. As stated before, we want to know what our users like about our concept, and what they would like to see changed. There is no better way to get this information than to get it directly from our primary and secondary users. We want to contact several of our users and ask them about our concept. Through this survey we want to find out whether our concept is one that they would like to use. Only after we have this information can we determine whether we can proceed with our concept, or have to rethink it entirely. We then have an image of what the user would like to see, and know how to create a product that our user would like to use. After that, perhaps the most difficult part has to happen: combining the concept and its technology with the users' wishes. The most important requirement of the final product is that it offers a valid alternative to existing aids. This does not necessarily mean that the technology supports the user's disability better than the alternatives; it could also mean that it is simply cheaper. If the product is cheaper, it can still be an option for people not able to afford more costly alternatives. There are many factors determining the value of a product. Two important factors are the production and selling costs, and the support given and the usability of the technology.

Society

As mentioned before, almost 302.000 people in the Netherlands currently live with a visual impairment. That is around 3% of the population of the Netherlands. 3% does not seem to be a large number, but almost everyone knows someone with a visual impairment, for example a neighbor or even a close relative. Although people differ in how much they care about those around them, everyone deserves to live their life as well as they want to, and to make that possible we have to support people with a visual disability. For many disabilities there are tools that allow the user to do almost anything that someone without that disability can do; take for instance the wheelchair for someone who cannot walk on their own. For vision, there are no tools like this. As research on replacing vision will not deliver a 'cure' for at least the next five years [3], we have to think about tools that help in another way, to get as close as possible to that same end goal: creating tools that allow visually impaired people to do almost anything that someone without the visual disability can.

Most people now try to avoid visually impaired people in public. One reason can be that we do not want to interrupt them while they focus on finding their way. Another can be the thought that visually impaired people do not know that we are there, so why interrupt them. Everyone can have a different reason, but in most cases it leads to giving the visually impaired person space. Whatever the reason, the outcome is the same: the visually impaired person is treated differently from a person with healthy sight. This can cause mixed feelings, because in most cases we as a society only try to help. This help is always welcome, but at the same time visually impaired people most of the time want to be treated as equals. To make that possible, we have to get as close as possible to the end goal described above. That is where our concept comes in. Our concept gives visually impaired people a sense of what is happening around them and may make them feel more a part of society. With our product, they are aware that someone is walking beside them and may want to start a conversation with him or her. The visually impaired become less dependent and can do more on their own. Society will also react less strongly to a visually impaired person, because with our concept it will not be as noticeable that the person is visually impaired as it is with a cane or dog.

Our concept will not influence the entire society. However, it will improve the lives of our earlier defined primary and secondary users. People that are not involved in these user groups will not notice that much of a change in their daily lives.

As said before, we cannot yet reach the end goal of 'curing' the disability, because the technology does not exist. But with our concept we extend the possibilities of what visually impaired people can do without burdening society in a major way. We only ask the secondary user to assist the primary user, which in most cases already happens. With our product, primary users are more aware of their surroundings and have a better understanding of what is going on around them, which is a step in the right direction towards the end goal.

Enterprise

The enterprise aspect of equipment for the handicapped is a complicated one. This is because the target demographic is, more often than not, dependent on government benefit programs or health insurance. The reason for this is that these people are commonly not able to provide for themselves due to their handicap. [4] This complicates the enterprise aspect, since the final product has to comply with several government and health insurance restrictions.

Some of these restrictions (in the Netherlands) include:

- People can only get one device per category from their health insurance. These categories are reading, watching television, and using a computer. These restrictions are imposed by health insurers, who reason that one device per category is enough. The problem with this restriction is that one device can fall into two categories, or two completely different devices can fall into the same category. For example, a device for enlarging a physical newspaper falls in the same category as an electronic magnifier for mobile use. [5]

- Companies that manufacture devices to aid blind people, and whose devices are covered by health insurance, cannot advertise their products. [6]

- Health insurers do not like to cover proprietary devices, as these devices are commonly more expensive than alternatives containing older technology. [6] Avoiding them is beneficial for insurers as it cuts costs.

These rules and restrictions have a great impact on the final product and its success. A product can be perfect in every way, but if it does not comply with these written and unwritten rules, it may never reach the hands of the people that need it most. Even if the company making the product does not aim to make money and can price it as low as possible, the price can still be too high for users to afford without help from their health insurance.

State of the Art

After doing some initial exploration, we found that the problem can be subdivided into two sub problems: how the environment can be perceived to create data, and how this data can be communicated back to the user. Now follows a short summary of existing technologies:

Mapping the environment

Many studies have been conducted on mapping an environment to electrical signals in the context of supporting visually impaired users. This section goes over the different technologies that these studies have used. These methods can be subdivided into two categories: technologies that scan the environment, and technologies that read previously planted information from the environment.

One way of reading an environment, is to provide beacons in this environment from which an agent can obtain information. In combination with a communication technology, it can be used to communicate this geographical information to a user. Such a system is called a geographic information system (GIS), and can save, store, manipulate, analyze, manage and present geographic data. [7] Examples of these communication technologies are the following:

- Bluetooth can be used to communicate spatial information to devices, for example to a cell phone. [8]

- Radio-frequency identification (RFID) uses electromagnetic fields to communicate data between devices. [9]

- Global Positioning System (GPS) is a satellite based navigation system. GPS can be used to transfer navigation data to a device. However, it is quite inaccurate. [9][10]

The other method of reading an environment is to use some technology to scan the environment by measuring some statistics. Examples of these scanning technologies are the following:

- A laser telemeter is a device that uses triangulation to measure distances to obstacles.[11]

- (stereo) Cameras can be used in combination with computer vision techniques to observe the environment. [12][13][14][15][16][17][18]

- Radar and ultrasound work by echo ranging: radar uses high-frequency radio waves, ultrasound uses high-frequency sound waves. A device sends out these waves and receives them when they reflect off objects. The time between sending and receiving is used to calculate the distance between sender and object. [19][20][21][22][23][24][25][26][10][27][28][29][30]

- Pyroelectricity is a property of certain materials that generate a voltage when their temperature changes. It can be used to detect objects.[29]

- A physical robot can be used in combination with any of the above mentioned techniques, instead of the device directly interacting with the environment. [31]
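The echo-ranging principle in the list above can be illustrated with a small calculation. The sketch below is ours and not taken from any of the cited papers; it assumes sound travelling through air at roughly 343 m/s (about 20 °C), and the function name `echo_time_to_distance_m` is hypothetical.

```python
# Minimal sketch of ultrasonic time-of-flight ranging.
# Assumption: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_time_to_distance_m(round_trip_s: float) -> float:
    """Convert a round-trip echo time in seconds to a one-way distance in meters."""
    if round_trip_s < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the object and back, so only half the path counts.
    return SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo that returns after 10 milliseconds.
    print(echo_time_to_distance_m(0.010))
```

The same relation holds for radar, with the speed of light in place of the speed of sound.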

Communicating to the user

Given we are dealing with the visually impaired, we cannot convey the gathered information through a display. The most common alternatives are using haptic feedback or audio cues, either spoken or generic tones.

Cassinelli et al. have shown that haptic feedback is an intuitive means to convey spatial information to the visually impaired [30]. Their experiments detail how untrained individuals were able to reliably dodge objects approaching from behind. This is of great use, as it shows that haptic feedback is a very good option for encoding spatial information.

Another way to encode spatial information is through audio, most commonly through an earbud worn by the user. An example of such a system was created by Farcy et al. [11]. By having different notes correspond to distance ranges, this information can be clearly relayed. Farcy et al. make use of a handheld device, which caused a problem for them: it required a lot of cognitive work to merge the audio cues with where the user pointed the device. This made the sonorous interface difficult to use as long as the information processing was not intuitive. In this project the aim is to have a wearable system, which could mean this problem is not significant.

Finally, regardless of how distance is encoded for the user to interpret, it is vital the user does not experience information overload. According to Van Erp et al. [32] users are easily overwhelmed with information.

State of the Art conclusion

From our State of the Art literature study, we conclude that a wide variety of technologies have been used to develop an even wider variety of devices to aid visually impaired people. However, we noticed that relatively few papers focus on what is most important: the user. Many papers pick a technology and develop a product using that technology. This in and of itself is impressive, but too often there is little focus on what this technology can do for the user. Only afterwards is a short experiment conducted on whether or not the product is even remotely usable. Even worse, in most cases the final product is not tested by visually impaired users at all. Instead, it is tested with blindfolded sighted people, but differences exist that a blindfold cannot simulate. Research has shown that the brains of blind people and sighted people are physically different [33], which could lead to them responding differently to the feedback that the product provides. Because the user is not involved in the early stages of decision making, the final product is often not suited to the problem. When the problem is fully understood by involving the actual users, a product can be developed that solves it.

Approach

Following our State of the Art conclusion, our goal is to design a system to aid blind people that is tailored to the needs of this user from the ground up. That is why we aim to involve the user from the start of the project. First, we are going to conduct questionnaire-based research to fully understand our user. Only after understanding the user will we start to gather requirements and make a preliminary design that meets the needs of these users. After the preliminary design is finished, building the prototype can start. During the design and the building of the prototype, it is probable that some things will not go as planned and that it will be necessary to go back a few steps to improve the design. When the prototype is finished, it is tweaked to perform as well as possible using several tests. We also aim to test the final prototype with visually impaired people. Finally, everything will be documented in the wiki.

Deliverables and Milestones

The main deliverable is a prototype that aids blind people in moving around areas that are unknown to them. This prototype is based on last year's design [34]. From this design, a new design was made that tries to improve on the issues the previous design faced. Additionally, a wiki will be made that gives additional information about the prototype, such as costs and components, and provides some backstory on the subject. Finally, a presentation is given on the final design and prototype.

- Presentation

- Presentation represents all aspects of the project

- Design

- Preliminary design

- Final design based on preliminary design, with possible alterations due to feedback from building the prototype

- Prototype

- Finish building the prototype regarding the final design

- Prototype is fully debugged and all components work as intended

- Prototype follows requirements

- Must haves are implemented

- Should haves are implemented

- Could haves are implemented

- Wiki

- Find at least 25 relevant state-of-the-art papers

- Wiki page is finished containing all aspects of the project

Planning

| Week | Day | Date | Activity | Content | Comments |
|---|---|---|---|---|---|
| Week 1 | Thursday | 07-02 | Meeting | First meeting, no content | |
| Week 1 | Sunday | 10-02 | Deadline | Finding and summarizing 7 papers | |
| Week 2 | Monday | 11-02 | Meeting | Creating SotA from researched papers | |
| Week 2 | Tuesday | 12-02 | Deadline | Planning, users, SotA, logbook, approach, problem statement, milestones, deliverables | Edited in wiki 18 hours before next panel |
| Week 2 | Thursday | 14-02 | Panel | | |
| Week 2 | Sunday | 17-02 | Deadline | Prioritized and reviewed requirements document | |
| Week 3 | Monday | 18-02 | Meeting | Discussing previous deadline (requirements) | |
| Week 3 | Thursday | 21-02 | Panel | | |
| Week 3 | Sunday | 24-02 | Deadline | Preliminary design | |
| Week 4 | Monday | 25-02 | Meeting | Discussing previous deadline (preliminary design) | |
| Week 4 | Thursday | 28-02 | Panel | Maarten not present at panel | |
| Vacation | Sunday | 10-03 | Deadline | Final design | Final design is based on preliminary design |
| Week 5 | Monday | 11-03 | Meeting | Discussing previous deadline (final design) | |
| Week 5 | Thursday | 14-03 | Panel | | |
| Week 6 | Monday | 18-03 | Meeting | Discussing deadline progress (prototype) | |
| Week 6 | Thursday | 21-03 | Panel | | |
| Week 6 | Sunday | 24-03 | Deadline | Prototype complete | |
| Week 7 | Monday | 25-03 | Meeting | Discussing previous deadline (prototype) | |
| Week 7 | Thursday | 28-03 | Panel | | |
| Week 7 | Sunday | 31-03 | Deadline | Conclusion, discussion, presentation | |
| Week 8 | Monday | 01-04 | Meeting | Discussing what is left | |
| Week 8 | Thursday | 04-04 | Final presentation | | |

Findings from visiting Zichtbaar Veldhoven

On Thursday 21 February Jelle, Harm and Nol went to Zichtbaar Veldhoven. Zichtbaar Veldhoven is a small association for the visually handicapped. On the day of our visit, a presentation took place about currently available aids. The presentation was given by a representative from a company specialized in this equipment. The most notable findings are the following:

- The target demographic is dominated by the elderly. A visual handicap is often an illness that comes with age. This is something we had not taken into consideration. It is very important however, because people in this demographic more often suffer from other conditions that influence the design: partial hearing loss, tremors, and a general lack of knowledge of technology.

- The market for aids for the visually impaired is very complicated. These people are dependent on insurance, and the insurance companies are reluctant to buy proprietary equipment due to costs.

These findings are worked out in more detail in their respective sections. References to findings from this visit are stated with "Personal communication with..". We have also made contact with H. Scheurs, chairman of the council of members.

Requirements

This section contains the requirements for the product, as well as the approach of gathering these.

Getting information from the intended users

To get information from the intended users, we have decided to contact a few foundations for visually impaired people. We asked them if we could interview them, or even better some members of their foundation. The questions we asked them (translated from Dutch):

- What obstacles do you encounter in daily life while navigating, and how could these be solved?

- How do you do this at unfamiliar locations?

- Which aids do you already have?

- What would you like to perceive that your current aids do not already provide?

- Suppose you could perceive objects that are nearby?

- Suppose you could perceive the movement of objects?

- Would you benefit from technology that gives you extra support when walking at (unfamiliar) locations?

- Where would you find it comfortable to wear sensors? Examples: with an external device, as a belt, as a vest on the body, on the head.

- Would it matter to you if the device is visible?

- Suppose we have this product; it will need to contain a battery somewhere. In what way would it be clear to you how to connect it?

- What should we take into account most when designing our product?

- Would you be willing to spend extra money on this technology?

- (Optionally: How much? €100-200, €200-500, €500-2000, €2000-10000)

- If we get far enough in this course to build a working prototype, would you be willing to try it out?

Prioritized Requirements

The priority levels are defined via the MoSCoW method[35]: Must have, Should have, Could have and Won't have.

Full Product

| Category | Identification | Priority | Description | Verification | Comment |
|---|---|---|---|---|---|
| Hardware | P_HAW_001 | M | Product will not contain sharp parts | Users cannot hurt themselves with the product | |
| Hardware | P_HAW_002 | M | Product will not interfere with user movement | When the product is in use, the user should be able to freely move around without hitting (parts of) the product. | |
| Hardware | P_HAW_003 | M | Product does not have exposed electric wires. | | |
| Hardware | P_HAW_004 | M | Device is wearable | The user should not hold the device in their hands, but wear it and have their hands free. | |
| Hardware | P_HAW_005 | S | Battery life is at least 2 hours | Product battery should last at least two hours in use. | |
| Hardware | P_HAW_006 | S | Battery is replaceable | Battery should be replaceable by the (visually impaired) user. Should be replaceable while in use. | |
| Hardware | P_HAW_007 | S | Device will have monitor for textual feedback | Trivial | |
| Software | P_SOW_001 | M | Software will not interfere with radar | When running the software, the radar should not pick up anything from the device and its software-driven features | |
| Usability | P_USE_001 | M | Device is operable by visually impaired people | No textual instructions. Everything should be clear by touch. | |
| Usability | P_USE_002 | M | Device is placed such that it cannot be blocked by clothing | The sensor should not be covered by clothing. | |

Radar

| Category | Identification | Priority | Description | Verification | Comment |
|---|---|---|---|---|---|
| Hardware | R_HAW_001 | C | Radar is able to go through clothing | Radar should be strong enough to go through clothing. | |
| Hardware | R_HAW_002 | W | Radar can measure distance to an object. | Put an obstacle in front of user, the radar should be able to show the location of this object. | Won't have, a radar that is able to do this costs more than our budget |
| Hardware | R_HAW_003 | M | Radar is able to detect moving object | Let an object move in front of the user, the radar should detect this. | |
| Hardware | R_HAW_004 | M | Device communicates to the wearer that an object is moving. | Trivial | |
| Hardware | R_HAW_005 | M | Received signal should be amplified without distortion | http://www.radartutorial.eu/09.receivers/rx04.en.html | |
| Hardware | R_HAW_006 | M | Receiving bandwidth should be in proportion to thermal noise. | http://www.radartutorial.eu/09.receivers/rx04.en.html | |
| Software | R_SOW_001 | M | Latency between detection and action should be at most 0.2 s | Trivial | |
| Software | R_SOW_002 | M | Software will not interfere with radar | Trivial | |
| Usability | R_USE_001 | S | Device gives auditory feedback when detecting moving obstacle | Trivial. | |
| Usability | R_USE_002 | C | Device has volume option for auditory feedback | When operating the volume option the device's audio output has to be modified accordingly | |
| Usability | R_USE_003 | C | Device gives haptic feedback when detecting moving obstacle | | |

Servo powered sensors

| Category | Identification | Priority | Description | Verification | Comment |
|---|---|---|---|---|---|
| Hardware | U_HAW_001 | M | Ultrasound is able to detect obstacles | Set an object in front of user, if the ultrasound detects the object the requirement is satisfied. | |
| Hardware | U_HAW_002 | C | Device can vibrate | Trivial | |
| Hardware | U_HAW_003 | M | Ultrasound can rotate to extend FoV | If the ultrasound sensor is rotatable via a servo and still able to operate, this is validated. | |
| Software | U_SOW_001 | M | Latency between detection and action should be at most 0.2 s | Trivial | |
| Software | U_SOW_002 | M | Ultrasound will keep rotating | Trivial | |
| Software | U_SOW_003 | S | State information is visible on monitor | Trivial | |
| Usability | U_USE_001 | C | Device vibrates when detecting obstacle | Trivial. | |
| Usability | U_USE_002 | C | Device gives textual feedback when detecting obstacle. | When the ultrasonic sensor spots an obstacle, the device will produce a textual feedback that describes the distance to this obstacle. | Changed textual to auditory. 19/2 Johan: Changed back, textual is intentional: More or less a debug thing. Talked about this with Harm in meeting. |

Design

This section gives an in-depth description of how a product is created that conforms to the requirements.

Simulation of the sensor configuration

One of the goals for this project is to come up with a solution for the limited field of view of the prototype from last year's group. In order to do that, a proposed solution is to have the sensors rotate, enlarging their field of view. Many variables play a role in this simulation. Since it is very time consuming and cost intensive to test all possible configurations physically, we have chosen to create a simulation. The goal of this simulation is to find the effectiveness of as many configurations as possible. This is done by gathering data from as many configurations as possible, and analyzing this data to figure out what it exactly means to be effective. The source can be found here: File:Simulation-source.zip.

Setup

The simulation is rendered on screen both in a top-down view and in a sideways view. The simulation itself is three-dimensional, but rendering it in three dimensions would be too intensive. In the simulation, the human body is roughly approximated by an ellipse from the top-down perspective, and by a rectangle in the sideways perspective. The sensors are attached to the body based on two variables: the number of sensors (determining the horizontal position), and the height of the sensors (determining the vertical position). The horizontal position is determined as follows: the sensors are mounted on an elliptical curve at the bottom of the window, facing the top of the window. The sensors are presumed to be spaced evenly across the curve. The vertical position simply follows from the sensor height parameter.
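As an illustration of the horizontal placement, the sketch below computes sensor positions on the front half of the body ellipse. It is not the code from the simulation source: the function name `sensor_positions` is hypothetical, the body dimensions are the constants listed under Variables, and "evenly spaced" is assumed here to mean equal steps in the ellipse angle (spacing by arc length would give slightly different positions).

```python
import math

# Body dimensions taken from the simulation constants (millimeters).
BODY_WIDTH_MM = 350.0  # side to side
BODY_DEPTH_MM = 220.0  # stomach to back


def sensor_positions(n_sensors: int) -> list[tuple[float, float]]:
    """Return (x, y) positions in mm on the front half of the body ellipse.

    The body center is the origin, x points sideways and y points forward.
    Assumption: sensors are spaced by equal angle steps, and no sensor
    is placed exactly on the left or right edge of the body.
    """
    if n_sensors < 1:
        raise ValueError("at least one sensor is required")
    a = BODY_WIDTH_MM / 2.0  # semi-axis sideways
    b = BODY_DEPTH_MM / 2.0  # semi-axis forward
    positions = []
    for i in range(n_sensors):
        theta = math.pi * (i + 1) / (n_sensors + 1)
        positions.append((a * math.cos(theta), b * math.sin(theta)))
    return positions


if __name__ == "__main__":
    for x, y in sensor_positions(3):
        print(f"x = {x:7.1f} mm, y = {y:6.1f} mm")
```

With a single sensor this places it in the middle of the chest; with more sensors they spread symmetrically to both sides.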

According to the specification overview of the ultrasonic sensor used by last year's group, the field of view of each sensor is at most 15 degrees [36] and its range is between 2 centimeters and 5 meters, so these values are used per sensor in the simulation as well. Finally, to simulate the user moving forward, cubes of random dimensions are initialized at random positions a set distance away from the person, and move towards the bottom of the window at the average walking speed of a human.
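To make the sensor model concrete, the following sketch checks whether a point lies inside the field of view of one sensor in the top-down view. It is a simplification for illustration only: the function name `in_field_of_view` is hypothetical, the 15 degrees are assumed to be the full opening angle (7.5 degrees to either side of the heading), and an object is reduced to a single point, whereas the simulation works with cubes.

```python
import math

FOV_DEG = 15.0         # full opening angle per sensor (assumed symmetric)
MIN_RANGE_MM = 20.0    # 2 centimeters
MAX_RANGE_MM = 5000.0  # 5 meters


def in_field_of_view(sensor_xy, heading_deg, point_xy) -> bool:
    """Return True if point_xy is within range and within the sensor cone.

    heading_deg is the direction the sensor faces, measured
    counter-clockwise from the positive x axis.
    """
    dx = point_xy[0] - sensor_xy[0]
    dy = point_xy[1] - sensor_xy[1]
    distance = math.hypot(dx, dy)
    if not (MIN_RANGE_MM <= distance <= MAX_RANGE_MM):
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between bearing and heading, in [-180, 180).
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= FOV_DEG / 2.0


if __name__ == "__main__":
    # Sensor at the origin facing forward (+y), object 1 meter straight ahead.
    print(in_field_of_view((0.0, 0.0), 90.0, (0.0, 1000.0)))
```

The narrow cone is the reason a fixed sensor misses most of the area in front of the user, which motivates rotating the sensors.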

Variables

To test every possible configuration, many variables are part of the simulation:

- The number of sensors in use, ranging from 1 to 10. 1 is the minimum, since no objects are detected with zero sensors. 10 is the maximum, which is sufficient since only the 180 degree field of view in front of the user is simulated.

- The speed of rotation of all sensors, ranging from 0 degrees/s to 180 degrees/s. The maximum rotation speed is sufficient since it allows a single sensor to sweep the entire field of view in one second.

- The amount of rotation, ranging from 0 degrees to 180 degrees, with 0 degrees being no motion and 180 degrees being the maximum angle required to scan the whole area in front of the user.

- Whether the sensors rotate with intervals. This lets the sensors rotate in different directions at the same moment, thereby creating a different scanning motion for each sensor.

- The height of the sensors, ranging from 0 to the height of the person.
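The interplay between rotation speed and rotation amount can be sketched as a back-and-forth sweep. The function below is an assumption about how such a sweep could be modelled, not the implementation from the simulation source; the name `sweep_offset_deg` and the choice to start each sweep at one extreme are ours.

```python
def sweep_offset_deg(t_s: float, speed_deg_per_s: float, amount_deg: float) -> float:
    """Angular offset from the rest heading at time t_s, in degrees.

    The sensor sweeps back and forth over amount_deg at a constant
    speed, so the result lies in [-amount_deg / 2, +amount_deg / 2].
    """
    if speed_deg_per_s <= 0 or amount_deg <= 0:
        return 0.0  # no rotation configured
    # One full back-and-forth cycle covers twice the rotation amount.
    travelled = (speed_deg_per_s * t_s) % (2.0 * amount_deg)
    position = travelled if travelled <= amount_deg else 2.0 * amount_deg - travelled
    return position - amount_deg / 2.0


if __name__ == "__main__":
    # Maximum speed and amount: one full sweep per second.
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(t, sweep_offset_deg(t, 180.0, 180.0))
```

Rotating "with intervals" could be modelled in this sketch by giving each sensor a different time offset, so that neighbouring sensors are at different points of the sweep.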

The simulation also contains several constants:

- The walking speed of a person is estimated at 140 millimeters per second.

- The average human width (side to side) is estimated at 350 millimeters.

- The average human width (stomach to back) is estimated at 220 millimeters.

- The average human height is estimated at 1757 millimeters.

Measurements

When running the simulation, the following data will be collected:

- How many objects are not detected that would be in range of the sensors.

- How much electricity is required to run the sensors and the servos.

- How many of the undetected objects would have hit the user.
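The object-related measurements can be summarized per simulation run as in the sketch below. The record type `ObjectOutcome`, the function `summarize` and the choice to express both measures relative to the total number of objects are assumptions for illustration; the electricity measurement is left out.

```python
from dataclasses import dataclass


@dataclass
class ObjectOutcome:
    """Hypothetical per-object record collected during one simulation run."""
    detected: bool   # did any sensor see the object while it was in range?
    would_hit: bool  # would the object have collided with the person?


def summarize(outcomes: list[ObjectOutcome]) -> dict[str, float]:
    """Return the percentage of missed objects and the ratio of misses that would hit."""
    total = len(outcomes)
    if total == 0:
        return {"missed_pct": 0.0, "hit_ratio": 0.0}
    missed = sum(1 for o in outcomes if not o.detected)
    missed_and_hit = sum(1 for o in outcomes if not o.detected and o.would_hit)
    return {
        "missed_pct": 100.0 * missed / total,
        "hit_ratio": missed_and_hit / total,
    }


if __name__ == "__main__":
    run = [
        ObjectOutcome(detected=True, would_hit=True),
        ObjectOutcome(detected=False, would_hit=False),
        ObjectOutcome(detected=False, would_hit=True),
        ObjectOutcome(detected=True, would_hit=False),
    ]
    print(summarize(run))
```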

Data Analysis

The first part of the analysis has been conducted on only two dimensions, to the sides and in front of the person. This can also be described as a top-down view. The reason for this, is that this part of the simulation was done earlier on, and results could be processed earlier. The second part of this section will cover the analysis of the introduction of a third dimension.

Two dimensional

To find a setup with the lowest possible percentage of misses, we simulated different situations. Every situation is a possible configuration of our final product. The situations differ in a few parameters: the number of sensors, the rotation per second of the sensors, and the rotation amount that each sensor covers. These parameters are combined in every possible way to determine which combination is the best one.

After running the simulations for all possible combinations, the raw data of 225 simulations was ready to be processed. The data was processed in Excel. Before the simulation data could be used, it had to be put into the right form; after that, the actual processing could start.

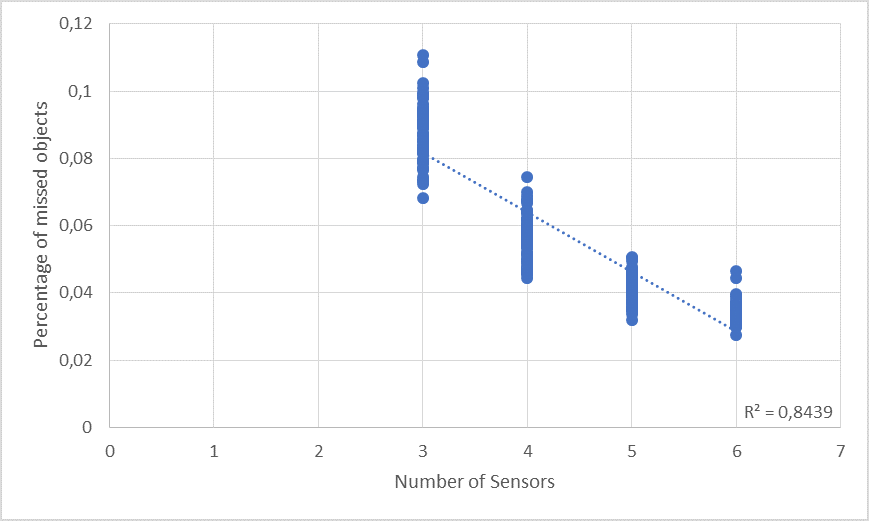

The first thing that is processed is the correlation between the number of sensors and the percentage of missed objects. To give a clear picture of this correlation, and of how the number of sensors influences the percentage of missed objects, a scatter plot has been made.

As visible in the scatter plot, the number of sensors is negatively correlated with the percentage of missed objects: if you use more sensors, the percentage of missed objects drops. This could be expected, since the more sensors you use, the larger the field of view of the product. The data now confirms this expectation, and shows that the number of sensors has the most influence on the percentage of missed objects. This follows from the R² of 0.8439, which indicates a strong correlation between the Y-data and the X-data. In other words, 84.39% of the variation in the Y-data is explained by the change in the X-data. So 84.39% of the variation can be attributed to the number of sensors, while 15.61% remains unexplained.
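For readers who want to reproduce this kind of figure outside Excel, the sketch below computes R² for a straight-line fit by least squares. It assumes a linear trend line; the function name `r_squared` is ours, and the numbers in the usage example are made up for illustration and are not the simulation data.

```python
def r_squared(xs: list[float], ys: list[float]) -> float:
    """Coefficient of determination of a least-squares straight-line fit."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or ss_tot == 0:
        raise ValueError("x and y must both vary")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variation that the fitted line does not explain.
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Illustrative values only: number of sensors vs. percentage of missed objects.
    sensors = [1, 2, 3, 4, 5]
    missed_pct = [60.0, 45.0, 40.0, 20.0, 15.0]
    print(round(r_squared(sensors, missed_pct), 4))
```

A value close to 1 means the fitted line explains almost all variation in the percentage of missed objects; a value close to 0 means it explains almost none.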

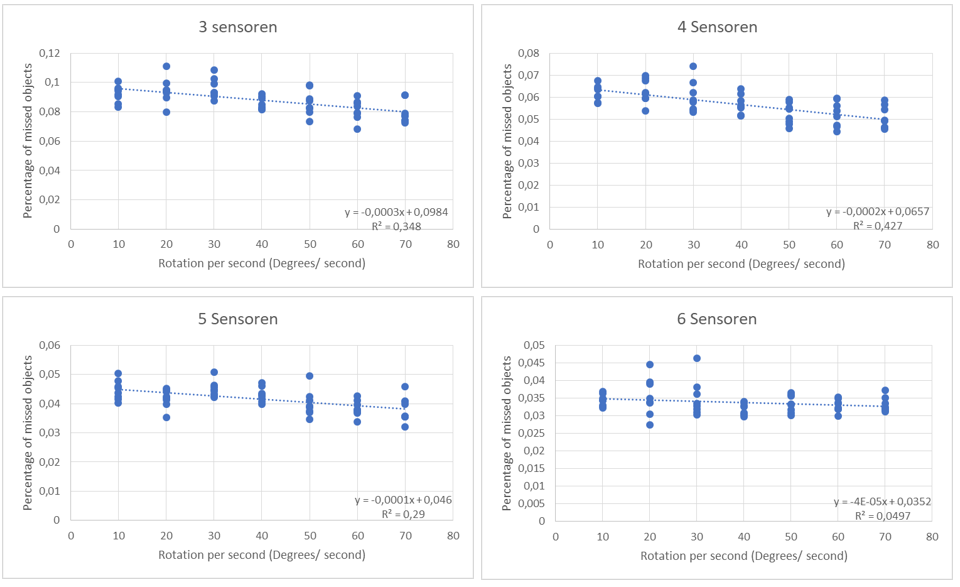

For further data processing, we look at the correlation between the rotation per second and the percentage of missed objects. Because of the combinations that we simulated, we could not test the rotation per second in isolation, but only per number of sensors.

The results show that the influence of the rotation per second differs with the number of sensors used. The R² values of the scatter plots show that the rotation per second has the most influence when using 4 sensors, and the least when using 6 sensors. A couple of other things can be seen in the scatter plots. One is that when the rotation per second is increased, the percentage of missed objects goes down. The influence is not large, but the fitted functions of all scatter plots slope downward. This means that a higher rotation per second helps to lower the percentage of missed objects. So speeding up the sensors has a positive effect when we work with 3 or more sensors.

Another thing that is noticeable in the scatter plots is that the effect of the rotation per second decreases as the number of sensors increases. This is possibly because each sensor needs to cover a smaller area in the same time when more sensors are used.

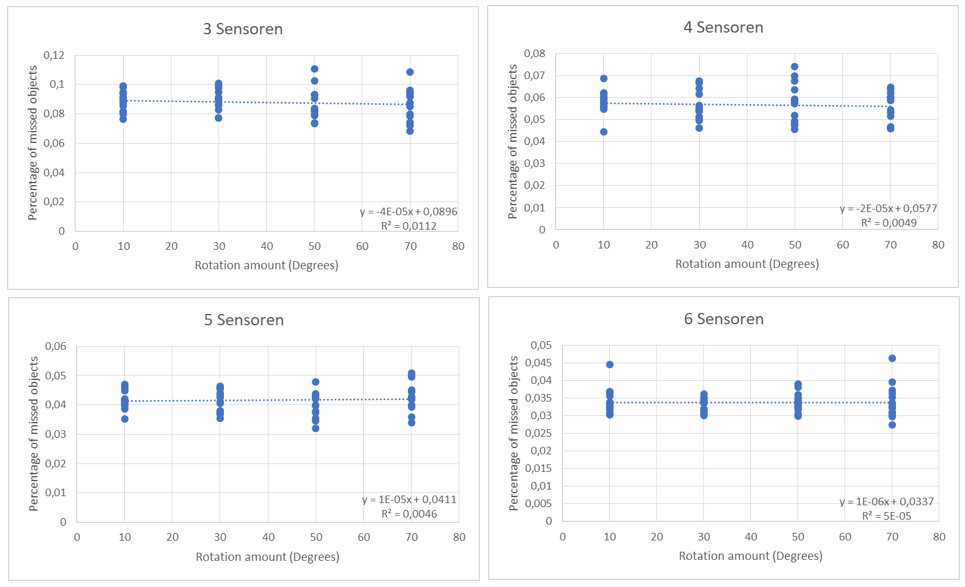

Now that the rotation per second has been processed, there is only one parameter left to analyze in this setup: the rotation amount. It is processed in the same way as the rotation per second, so it is first analyzed per number of sensors. The outcomes are as follows.

From the results and the scatter plots, several conclusions can be drawn about the rotation amount. The first one that stands out is the very low value of R², which indicates that there is almost no correlation between the percentage of missed objects and the rotation amount. The highest R² found is around 1.12%, which is almost nothing in comparison with the number of sensors and the rotation per second. From this we can say that adjusting the rotation amount will not have a big influence on the percentage of missed objects.

One other conclusion can be drawn from the scatter plots. Looking at the fitted functions of the plots, two things stand out. The first, as said before, is the very low impact of the rotation amount. The second is that the slopes become positive in the cases with 5 and 6 sensors. This means that if the rotation amount rises, the percentage of missed objects also rises. The goal of this simulation is to get the percentage of missed objects as low as possible. So although the positive slopes for 5 and 6 sensors are small, increasing the rotation amount when using 5 or 6 sensors does not contribute to that goal.

As visible in the regression analysis, 86.56% of the variation in the Y-data can be explained by the 3 parameters that we tested in our simulation. The number of sensors has the most influence on the percentage of missed objects, at 84.39%. The rotation per second and the rotation amount together account for 2.17%. 86.56% is a high percentage to be able to attribute to known causes, but 13.44% still cannot be attributed to anything. This 13.44% consists of parameters that we did not take into account in this simulation; these can vary from the size of the objects to an error in the sensor. Further research is needed to determine where the remaining 13.44% comes from.

In the figure, it can be seen that the impact of a higher rotation speed, and a wider rotation angle is very small when having two or more sensors.

Influence of height

To determine what the optimal height is for the sensor on the body of the person, we adopted the simulation to accommodate a third dimension. Another variable is introduced, namely the height on which the sensors are mounted on the body of the person. The objects will now have, in addition to positions and dimensions on the x and y axis, a position and a dimension on the z axis. The collision detection of the three dimensional cone-like sensor and the rectangular objects are more computationally expensive however. Because of this, we were not able to run as extensive experiments as for the two dimensional configurations. Another notable detail is that the amount of missed objects is higher than for a two dimensional configuration.

The simulation has been run with the same configurations, as well as a varying height. It was tested for heights of 10, 50, and 90 percent of the height of the person. These are reasonable configurations corresponding to the feet, middle of the body, and head of a person. The figure to the right shows the influence of the height of the sensor on the ratio of critical misses. A critical miss is defined as an object that has not been detected and would have hit the person. The differently colored lines represent the varying number of sensors. As can be seen in the figure, the sensor should actually be worn higher rather than lower, at least among the configurations tested. For the difference between 50% and 90%, the results are inconclusive; the best height is most probably somewhere in between.
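The definition of a critical miss can be made concrete with a minimal sketch. The class `SimObject`, its field names, and the choice to divide by the total number of objects are assumptions made for illustration; they are not taken from the simulation source.

```python
from dataclasses import dataclass


@dataclass
class SimObject:
    # Hypothetical record for one simulated object (names are illustrative).
    detected: bool   # True if at least one sensor saw the object
    would_hit: bool  # True if the object's path intersects the person


def critical_miss_ratio(objects):
    """Fraction of objects that were undetected AND on a collision path.

    Assumption: the ratio is taken over all simulated objects.
    """
    if not objects:
        return 0.0
    critical = sum(1 for o in objects if not o.detected and o.would_hit)
    return critical / len(objects)


# Example: four objects, of which one is a critical miss.
example = [
    SimObject(detected=True, would_hit=True),
    SimObject(detected=False, would_hit=True),   # critical miss
    SimObject(detected=False, would_hit=False),  # missed, but harmless
    SimObject(detected=True, would_hit=False),
]
ratio = critical_miss_ratio(example)
```

An object that is missed but would not have hit the person does not count, which is why the critical-miss ratio can be lower than the plain percentage of missed objects.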

Electronic design

A description is given for each part of the electronic design. These descriptions are shown below and go into detail about every component of the circuit.

General

In the design, a 100 μF capacitor is added between ground and Vcc. This capacitor makes sure that no voltage spike is present when flipping the switch on or off. The capacitor makes the voltage, and thus the current, increase or decrease gradually to its intended level. Ideally it does not leak any current when the system is on, as the system operates at DC and an ideal capacitor has infinite resistance at a constant voltage.

Ultrasonic

As described above, two ultrasonic sensors are placed on top of servos that can rotate. These servos are powered using a motor driver, which is connected to the power source, as the Arduino cannot provide the current needed for the servos. However, the Arduino can send signals through the signal wires indicating to which position the servos should rotate. These signal wires are connected to two Pulse Width Modulation (PWM) pins (pins 9 and 11) of the Arduino. The ultrasonic sensors are connected to the power source as well. Furthermore, both sensors use one input pin to send a signal, and one output pin to read the returned ultrasonic pulse. Using the Arduino, the time difference between the sent and received pulse can be used to determine the distance of an object. Furthermore, the shorter the distance the ultrasonic sensor measures, the higher the voltage the Arduino puts on the pins of the vibration motor, and thus the stronger the vibrations.
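The two steps described above (echo time to distance, distance to vibration strength) can be sketched as follows. This is an illustration in Python rather than the Arduino code of the prototype. The speed of sound, the maximum range, the 8-bit output scale, and the linear mapping are all assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees Celsius
MAX_RANGE_M = 4.0           # assumption: hypothetical maximum useful range
PWM_MAX = 255               # assumption: 8-bit PWM duty cycle


def echo_time_to_distance(echo_time_s):
    """Distance in metres from the round-trip time of the ultrasonic pulse.

    The pulse travels to the object and back, hence the division by two.
    """
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def distance_to_pwm(distance_m):
    """Map distance to a vibration level: the closer, the stronger.

    Assumption: a linear mapping, clamped to the range [0, MAX_RANGE_M].
    """
    clamped = min(max(distance_m, 0.0), MAX_RANGE_M)
    return round(PWM_MAX * (1.0 - clamped / MAX_RANGE_M))


# Example: an echo that returns after 10 ms.
distance = echo_time_to_distance(0.010)
level = distance_to_pwm(distance)
```

Objects at or beyond the assumed maximum range give a level of 0, so the motor stays off until something comes within range.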

Radar

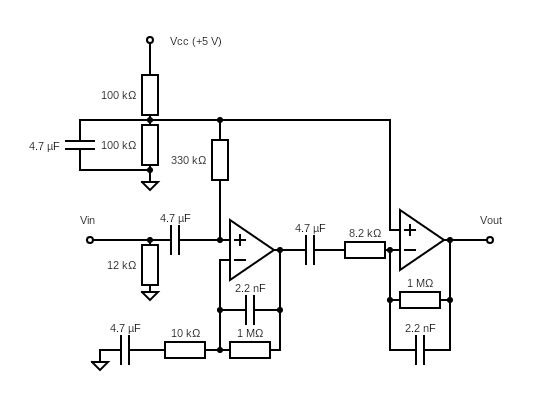

The radar is also connected to the power source. It outputs the Doppler shift frequency (the difference between the sent and received frequency due to the Doppler effect) on its IF pin. This output signal has a rather small amplitude, so it needs to be amplified before it can be processed by the Arduino. For this a 2-stage amplifier is used, where both amplifiers are connected to 5V and ground. The first stage of the 2-stage amplifier amplifies the signal such that it can be detected by the Arduino, while the second stage amplifies it such that the output signal is a block wave. The + input is connected to a voltage divider set to 2.5V, so the output is a block wave between 0 and 5V. The Doppler shift frequency can then be calculated on the Arduino by measuring the time that the output signal is high. As the signal is a block wave, the time that the signal is high is half of one period, so the Doppler shift frequency is one divided by twice the high time. Finally, all R and C values are chosen to amplify low frequencies in the audible part of the frequency spectrum, while attenuating high frequencies, which are mostly due to noise. The output of the amplifier is read by the Arduino, which determines the frequency. This frequency is then linearly mapped to values between 0 and 1023. These values are then output as a voltage for a vibration motor. The larger the difference in speed, the higher the Doppler shift frequency. The higher the Doppler shift frequency, the higher the output voltage for the vibration motor, and thus the stronger the vibrations.
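The frequency calculation and the linear mapping can be sketched as follows, in Python rather than Arduino code. The upper frequency bound `MAX_DOPPLER_HZ` is a hypothetical value chosen for illustration; the 0 to 1023 output range is taken from the description above.

```python
LEVEL_MAX = 1023        # output range from the text: 0 to 1023
MAX_DOPPLER_HZ = 500.0  # assumption: hypothetical upper bound for the mapping


def high_time_to_frequency(high_time_s):
    """Frequency of a block wave with 50% duty cycle from its high time.

    The high time is half a period, so f = 1 / (2 * t_high).
    """
    if high_time_s <= 0:
        raise ValueError("high time must be positive")
    return 1.0 / (2.0 * high_time_s)


def frequency_to_level(freq_hz):
    """Linearly map a frequency to 0..LEVEL_MAX, clamping out-of-range input."""
    clamped = min(max(freq_hz, 0.0), MAX_DOPPLER_HZ)
    return int(clamped / MAX_DOPPLER_HZ * LEVEL_MAX)


# Example: the signal is high for 5 ms, so one period lasts 10 ms.
freq = high_time_to_frequency(0.005)
level = frequency_to_level(freq)
```

Measuring only the high time assumes a symmetric block wave; if the duty cycle deviates from 50%, measuring a full period would give a more reliable frequency.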

Implementation

Prototyping

To check whether our ideas and research have actually improved on the work of the previous group, we have made a prototype. This prototype implements the electronic design as described in PRE2018_3_Group12#Electronic design and the data on placement and rotation of the ultrasonic sensors as described in PRE2018_3_Group12#Simulation of the sensor field of view.

We have decided to create a wearable prototype, as we are extending the work of the previous group. Furthermore, the prototype is controlled by an Arduino Uno running Arduino code, which can be found here: File:0LAUK0 group12 2019.zip

Building the prototype

Building the prototype started with testing all individual components, most notably the servos and the ultrasonic sensors. The radar sensor could not be tested, as it was impossible to get an output from the bare component. After verifying that the servos and ultrasonic sensors worked, they were mounted on top of each other with the screws provided with the servo. Afterwards, both sensors were tested together. However, there was a problem where the servos would not always turn together. One servo seemed to get priority to turn, while the other servo would only turn once every 5 or 10 times. If the two servos were swapped, the servo that had worked stopped working, and the one that had not worked started working. One servo did not always have priority over the other, which indicated an issue with the power delivered. However, an analysis of the power showed that both servos received more than enough power to turn at the same time. As this was not the cause, the next most probable fault was the Arduino not setting the servo pin to the right value. This was tested and was also not the source of the problem. The only other possible source of the problem was the servo itself. However, this could not be confirmed, as swapping out the servo did not solve it. Although the servos did not always turn when they should, when they did turn, they turned as intended.

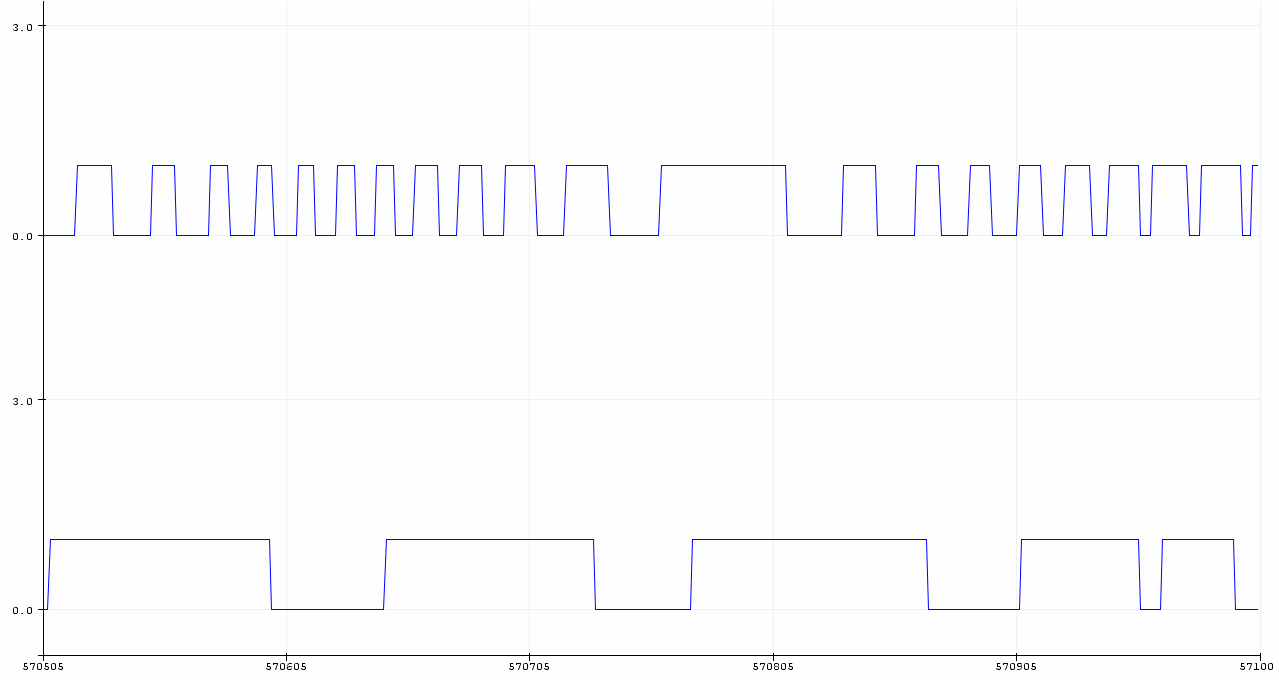

When the ultrasonic sensor part was finished, the radar part was built next. After building the amplifier needed for the radar, it was connected to an audio output. When listening to this audio output, it was nearly silent, with an occasional small 'plop'. After connecting the output to the Arduino, it could be concluded that the amplifier did not amplify the signal enough. Therefore, a new design was made that did amplify the signal enough. When it was connected, the Arduino showed a frequency difference when there was a moving object in the range of the sensor, and this difference was linearly dependent on the speed of the object. However, after the sensor had been carried around, it suddenly stopped working. The way the amplifier was built (on a breadboard) made it very vulnerable. Although it was fixable, we decided not to include the radar in the validation, as it would likely break again. We did, however, test whether the radar worked correctly; these results are shown in the figure. The top graph represents a fast-moving object, while the bottom graph shows a slow-moving object.

As can be seen from the figure above, the frequency for the fast-moving object is much higher, which is what is to be expected of a Doppler shift frequency. Furthermore, both signals are either 1 or 0, which means that the output can be connected to a digital pin on the Arduino instead of an analog pin. Digital pins are easier to manufacture and thus a lot cheaper. Using the relation that the Doppler shift frequency in Hz can be divided by 19.49 (this factor is different for other carrier frequencies) to obtain the velocity in km/h, it is estimated that the object in the top graph travels at ~9.5 km/h while the object in the bottom graph travels at ~1 km/h.
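The conversion factor can be related to the standard Doppler relation f_d = 2·v·f_0/c. The sketch below assumes a carrier frequency of 10.525 GHz, which is an assumption based on the factor 19.49 and is not stated in the text; for that carrier the relation gives roughly 19.5 Hz per km/h.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
HZ_PER_KMH = 19.49  # factor from the text, valid for the carrier used there


def hz_per_kmh(carrier_hz):
    """Doppler shift in Hz per km/h of relative speed, from f_d = 2*v*f0/c."""
    metres_per_second_per_kmh = 1.0 / 3.6
    return 2.0 * carrier_hz * metres_per_second_per_kmh / SPEED_OF_LIGHT_M_S


def doppler_to_kmh(freq_hz, factor=HZ_PER_KMH):
    """Relative speed in km/h for a measured Doppler shift frequency."""
    return freq_hz / factor


# Assumed carrier of 10.525 GHz (not stated in the text).
factor_for_assumed_carrier = hz_per_kmh(10.525e9)

# Example: a measured Doppler shift of 194.9 Hz.
speed = doppler_to_kmh(194.9)
```

Working backwards with the same factor, the estimated speeds of ~9.5 km/h and ~1 km/h would correspond to Doppler shift frequencies of roughly 185 Hz and 19.5 Hz.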

Validation

Test plan

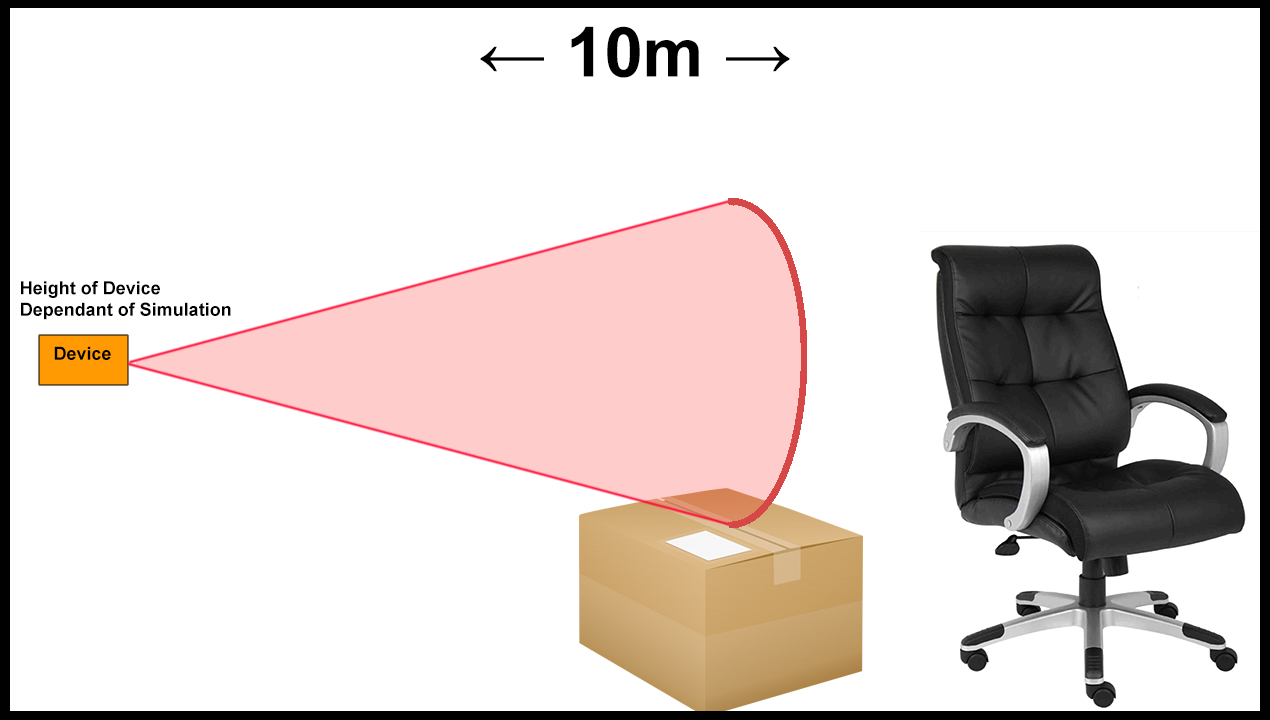

To test whether our prototype has improved on that of last year's group, we are going to conduct two physical tests. The first test will take place in a set environment. The placement of the sensors and the rotation amount and speed will be taken from the simulation. In this environment, we will be moving objects towards the sensors. These will be of varying heights and widths. The test environment we are going to use is visualized in the sketch.

We are going to place the device at a static position. The precise placement depends on the simulation output, as we will pick the best values for two sensors. In the sketch, we depicted an office chair and a cardboard box. The office chair will be used to test the radar, as we can smoothly move this object towards the sensor. We will use the box to simulate curbs and other obstacles, as we can easily hold this object at different heights. This way we can test whether we have improved on last year's feedback that objects used to suddenly pop into existence.

The second test will have the same set-up as the previous group. We are going to put the device on a group member, and let him walk through a test environment.

TEST 1: Device not moving

The intention of this test is to test the visual range of the device. This is done by putting the device at a static position that will not be changed during the whole test. The height of the device will be determined from the simulation data. The following scenarios will be tested; to pass, the ultrasonic sensors have to detect the object.

- Chair moving straight towards device.

- Chair moving in front of device from the side.

- Chair moving away from the device

- Chair dangerously close to device (i.e. if the person wearing the device would walk forwards (s)he would hit the side of the chair).

- Low object (+/- 25cm, which is the average curb height according to https://www.codepublishing.com/CA/MtShasta/html/MtShasta12/MtShasta1204.html#12.04.040) moving on the floor towards the device

- Low object (same size as above) moving in front of device from the side

The following scenarios will be tested; to pass, the radar has to detect the object.

- Person moving towards device

- Person moving away from device

- Person moving from left to right across the device range

TEST 2: Physical test; device moving

For this test the device will be mounted on the user. This can be done in multiple ways, for instance by using a belt or a GoPro chest harness. The placement of the device will be taken from the simulation. For this test, we will create a small obstacle course for the user to traverse.

The user will have to successfully walk along the following obstacles:

- A ‘wall’ of tables in the way that you have to walk around left or right

- A circle of chairs that you have to walk around

- A ‘hallway’ of tables that you have to walk through the middle

- A chair that has fallen over (thus a low obstacle) that the user has to walk around

Just like the previous group, we will compare the tests to each other using the measure ‘objects hit’. This way different configurations of the device can be compared to each other. The configuration with the fewest objects missed will be noted down as ‘best’.

Conducting the tests

We have done each of the tests described above four times. The test runs are denoted by 1), 2), 3) and 4). What was measured is stated with each test individually. The tests were conducted with the following set-up:

- Distance between sensors: 20cm.

- Height of sensors: 80cm.

- Angle of rotation, both sensors: 180 degrees.

- Test location: Dorgelozaal, Traverse building.

- Number of sensors: 2.

STATIC TEST:

- Chair moving towards sensor (distance at which the object is first observed is noted down): 1) 2.48m, 2) 3.51m, 3) 2.33m, 4) 2.08m

- (Additional observation: The closer the object gets, the faster the sensors rotate as the sound is received earlier.)

- Chair moving very fast from the side at 1.5m distance (Boolean; detected yes/no) 1) Yes, 2) No 3) Yes, 4) Yes.

- Chair moving from side at walking pace at 1.5m distance (Boolean; detected yes/no) 1) Yes, 2) Yes, 3) Yes, 4) Yes

- Chair moving away at walking pace (Distance noted when it doesn't detect anymore) 1) 1.15m 2) 1.8m 3) 1.93m 4) 1.73m

- Chair dangerously close to device. (Device on left side of body, Chair on right side, 0.4m from sensor.) (Angle at which the device detected the chair is noted) 1) 18 degrees from middle, 2) 18 degrees from middle, 3) 0 degrees from middle, 4) 0 degrees from middle

- Object +/- 25cm height moving towards the device. (Distance at which object first detected is noted down.) 1) 3.13m 2) 2.78m 3) 2.28m 4) Not detected.

- Object +/- 25cm height moving from side at 2m distance (Boolean; detected yes/no) 1) Yes, 2) Yes, 3) Yes, 4) No
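The static test results listed above can be summarized with a short script. This sketch only aggregates the values reported in the list. The dictionary keys are labels chosen here for illustration, and a run marked "Not detected" is represented as `None` and excluded from the mean.

```python
from statistics import mean

# Distances in metres, copied from the list above (None = not detected).
distance_runs = {
    "chair towards, first detected": [2.48, 3.51, 2.33, 2.08],
    "chair away, last detected": [1.15, 1.8, 1.93, 1.73],
    "low object towards, first detected": [3.13, 2.78, 2.28, None],
}

# Yes/no outcomes, copied from the list above.
boolean_runs = {
    "chair fast from side": [True, False, True, True],
    "chair walking pace from side": [True, True, True, True],
    "low object from side": [True, True, True, False],
}


def mean_detected(values):
    """Mean over the runs in which the object was detected, or None."""
    detected = [v for v in values if v is not None]
    return mean(detected) if detected else None


def detection_rate(values):
    """Fraction of runs with a detection (True, or a measured distance)."""
    hits = sum(1 for v in values if v is not None and v is not False)
    return hits / len(values)


for name, values in distance_runs.items():
    print(f"{name}: mean {mean_detected(values):.2f} m, "
          f"rate {detection_rate(values):.0%}")
for name, values in boolean_runs.items():
    print(f"{name}: rate {detection_rate(values):.0%}")
```

With only four runs per scenario these figures are indicative at best, so they should be read as a summary of the observations rather than as statistically meaningful results.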

Static Radar Tests

During our testing period the radar gave problems again. Whereas it had correctly identified moving objects before the morning of testing, it now only picked up and amplified background noise. After trying a few times, we had to conclude that the radar was not going to deliver trustworthy results, as the background noise had the same amplitude that moving objects normally had. We therefore had to skip the radar tests.