Embedded Motion Control 2013 Group 5: Difference between revisions

No edit summary |

|||

| (248 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

== Group | == Group information == | ||

=== Members === | |||

<table> | <table> | ||

<tr> | <tr> | ||

| Line 31: | Line 32: | ||

Sjoerd van den Dries<br /><br /> | Sjoerd van den Dries<br /><br /> | ||

== | === Weekly meetings === | ||

<table> | |||

<tr> | |||

<td width="150px"><b>Day:</b></td> | |||

<td width="100px"><b>Time:</b></td> | |||

<td width="150px"><b>Subject:</b></td> | |||

<td width="150px"><b>Location:</b></td> | |||

</tr> | |||

<tr> | |||

<td>Monday</td> | |||

<td>11:00</td> | |||

<td>Tutor meeting</td> | |||

<td>OGO1</td> | |||

</tr> | |||

<tr> | |||

<td>Monday</td> | |||

<td>12:00</td> | |||

<td>Group meeting</td> | |||

<td>OGO1</td> | |||

</tr> | |||

<tr> | |||

<td>Wednesday</td> | |||

<td>10:45</td> | |||

<td>College</td> | |||

<td>GEM-Z 3A08</td> | |||

</tr> | |||

<tr> | |||

<td>Thursday</td> | |||

<td>10:00</td> | |||

<td>Testing</td> | |||

<td>Robocup stadium</td> | |||

</tr> | |||

</table><br /> | |||

=== Deadlines === | |||

<table> | |||

<tr> | |||

<td width="150px"><b>Day:</b></td> | |||

<td width="100px"><b>Time:</b></td> | |||

<td width="150px"><b>Subject:</b></td> | |||

</tr> | |||

<tr> | |||

<td>September, 25th</td> | |||

<td>10:45</td> | |||

<td>Corridor competition</td> | |||

</tr> | |||

<tr> | |||

<td>October, 23th</td> | |||

<td>10:45</td> | |||

<td>Final competition</td> | |||

</tr> | |||

<tr> | |||

<td>October, 27th</td> | |||

<td>23:59</td> | |||

<td>Finish wiki</td> | |||

</tr> | |||

<tr> | |||

<td>October, 27th</td> | |||

<td>23:59</td> | |||

<td>Hand in peer review</td> | |||

</tr> | |||

</table><br /> | |||

== Log == | |||

'''Week 1:'''<br /> | |||

*Installed the software | |||

'''Week 2:'''<br /> | |||

*Did tutorials for ROS and the Jazz simulator | |||

*Get familiar to 'safe_drive.cpp' and use this as base for our program | |||

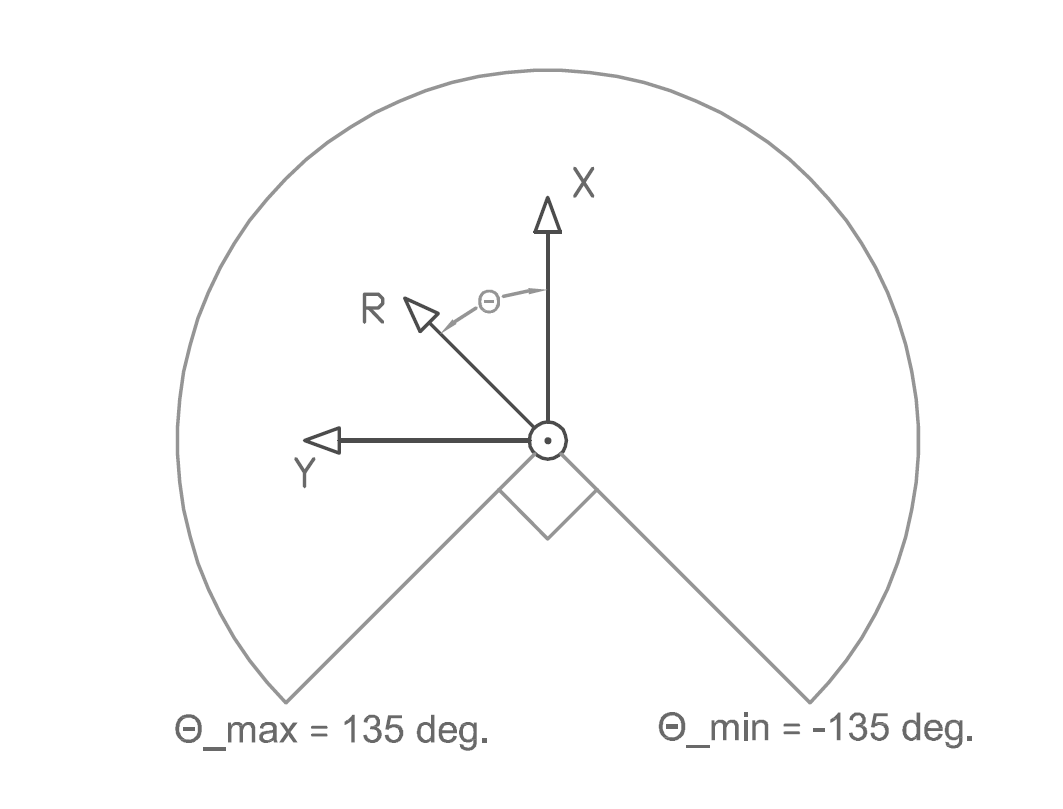

*Defined coordinate system for PICO (see figure 3.2a) | |||

'''Week 3:'''<br /> | |||

*Played with the PICO in the Jazz simulator by adding code to safe_drive.cpp | |||

*Translated the laser data to a 2d plot | |||

*Implemented OpenCV | |||

*Used the Hough transform to detect lines in the laser data | |||

*Tested the line detection method mentioned above in the simulation (see figure 3.7a) | |||

*Started coding for driving straight through a corridor ( drive straight node) | |||

*Started coding for turning ( turn node) | |||

'''Week 4:'''<br /> | |||

* Participated in corridor competition, failed in execution. | |||

* Reorganize our software architecture after the corridor competition | |||

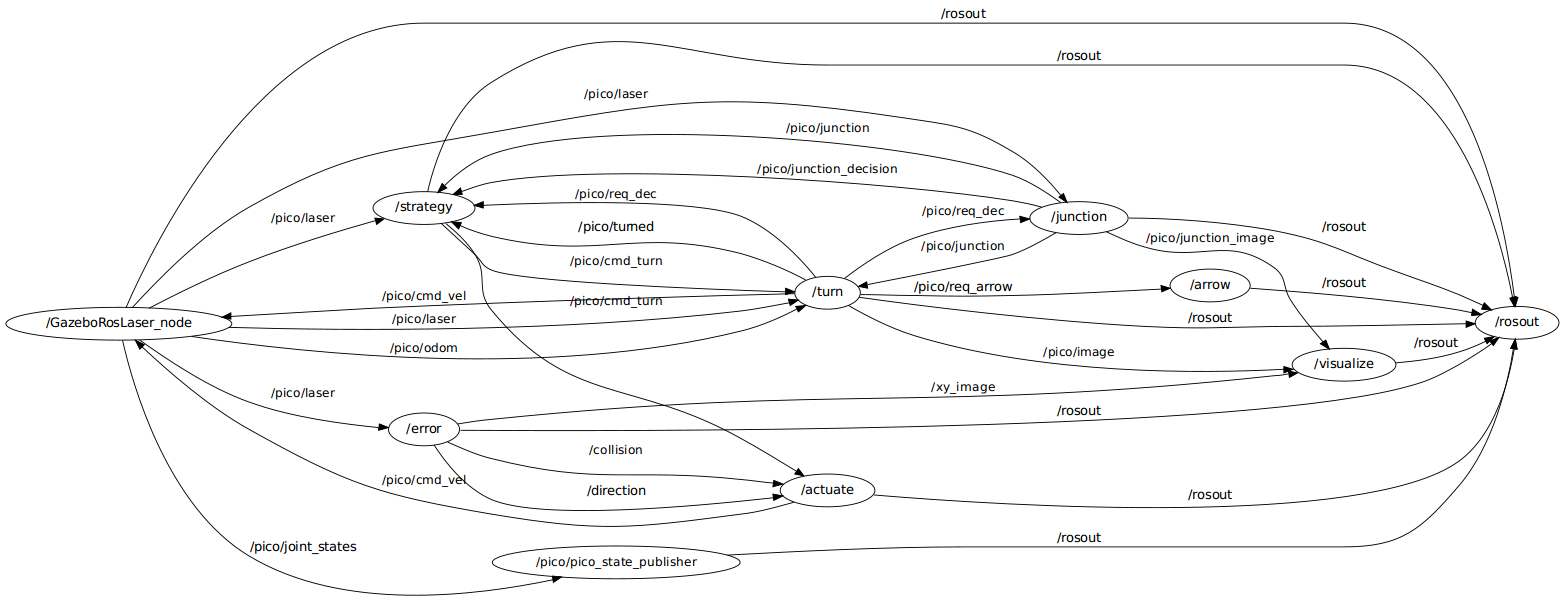

*Created structure of communicating nodes (see Figure 3.3a) | |||

*Finish drive straight node | |||

*Finish turn node | |||

*Started creating a visualization node | |||

'''Week 5:'''<br /> | |||

*Finished visualization node | |||

*Started creating node that can recognize all possible junctions in the maze (junction node) | |||

*Started creating node that generates a strategy (strategy node) | |||

*Tested drive-straight and turn node on PICO separately, after tuning they worked good individually. | |||

'''Week 6:'''<br /> | |||

*Finished junction node | |||

*Started fine tuning strategy node in simulation | |||

*Tested visualization and junction node on PICO, visualization ok but junction operated troublesome. | |||

*Created bag files for testing | |||

'''Week 7:'''<br /> | |||

*Finished strategy node (in simulation) | |||

*Tested strategy node on PICO, did not work as planned | |||

*Did further fine tuning of Turn & Junction node | |||

*Tested the arrow recognition node, arrows recognizable but direction not robust | |||

'''Week 8:'''<br /> | |||

*Tested arrow recognition (whether there is an arrow and its direction), tested ok! | |||

*Simulation ok, tested 30 corridor configurations | |||

*All nodes communicating properly w.r.t. each other | |||

*Tested on PICO, found large differences between simulation & experiment, did not manage to get PICO to operate well due to limited test time. | |||

<br /> | |||

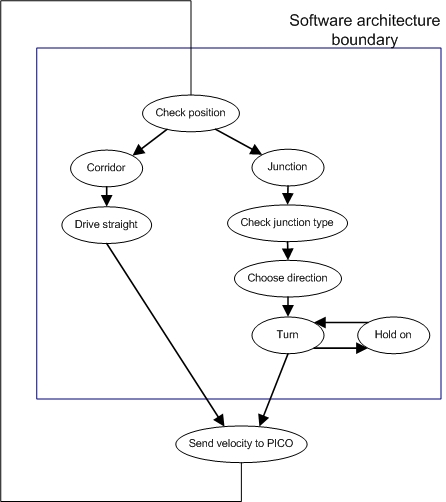

== Software architecture == | |||

The software architecture is shown in figure 3.1a. In this section the architecture is explained in more detail. First we present an overview of all nodes, inputs and outputs. Then the most challenging problems that have to be tackled to solve the maze and the solutions are discussed. | |||

=== Design choices === | |||

During the entire development process we made a number of design choices. Major decisions were made collectively. This was done by small brainstorm sessions and trial-and-error iterations. Smaller, more detailed design choices were made mostly individually when defining the code and are not discussed here. | |||

At first we all agreed on a coordinate system for PICO and decided that all control should be, if possible, based on feedback. Our opinion was that feed forward (driving/turning for several seconds) is not the way to go. The coordinate system is depicted in the figure below:[[File:Coor.png|center|thumb|400px|]] | |||

<div style="text-align: center;"> | |||

''Figure 3.1a: PICO coordinate system'' | |||

</div> | |||

Prior to the corridor competition we dissected the work in two parts: driving straight and turning. Together these would be able to let PICO drive through the corridor. The group was split in two to work on these separate parts. For the straight drive part we looked at the potential field algorithm and the finding the walls using the Hough transform. We decided to implement the Hough algorithm, since it is easier to implement and the hough transform also returns physical data in terms of dimensions of its surroundings (lines), which might come in use later. | |||

As for the second part, the turning algorithm, we used the derivative of the laserscan data to find peaks and thus identify corners. Once a exit was detected, we continued driving until the distance between the found corners was nearly equal. | |||

After the corridor competition all code was still in a single file, and since we had to expand it with more algorithms we decided to re-organize the structure by creating separate nodes and agreed on a strict input-output for each node. These nodes are displayed in table 3.1a, but at this time there was not yet a arrow/junction detection. | |||

Now we started on a new turning and driving straight algorithm, because the first one did not work well enough. For the turning we have come op with a homebrew solution (see section 3.5) instead of turning for a given time (no feedback) or using odometry (false feedback). For the straight driving we use the hough from the openCV functions & libraries. | |||

When these functions were completed we started to expand the software with extra nodes, Strategy, Junction and Arrow detection. To do this we first collectively discussed the approach for these nodes and determined the possible junctions PICO could encounter. As the deadline (maze competition) was coming closer we decided to first build a algorithm that goes left when possible and then add extra options to this to make PICO smarter. So the plan was to first build a strategy node which can recognize dead ends and avoid these, and if there was any spare time we could implement a mapping algorithm. | |||

For the arrow detection we chose for a gradient based search algorithm. At first we had another one (based on outer positions of points on the arrow) but this was not usable when the arrow was tilted. The gradient based algorithm is more robust, so we used that one. We decided not to use the outcome of the arrow node in the strategy node since it may cause problems. This problem can best be recognized when visualized: | |||

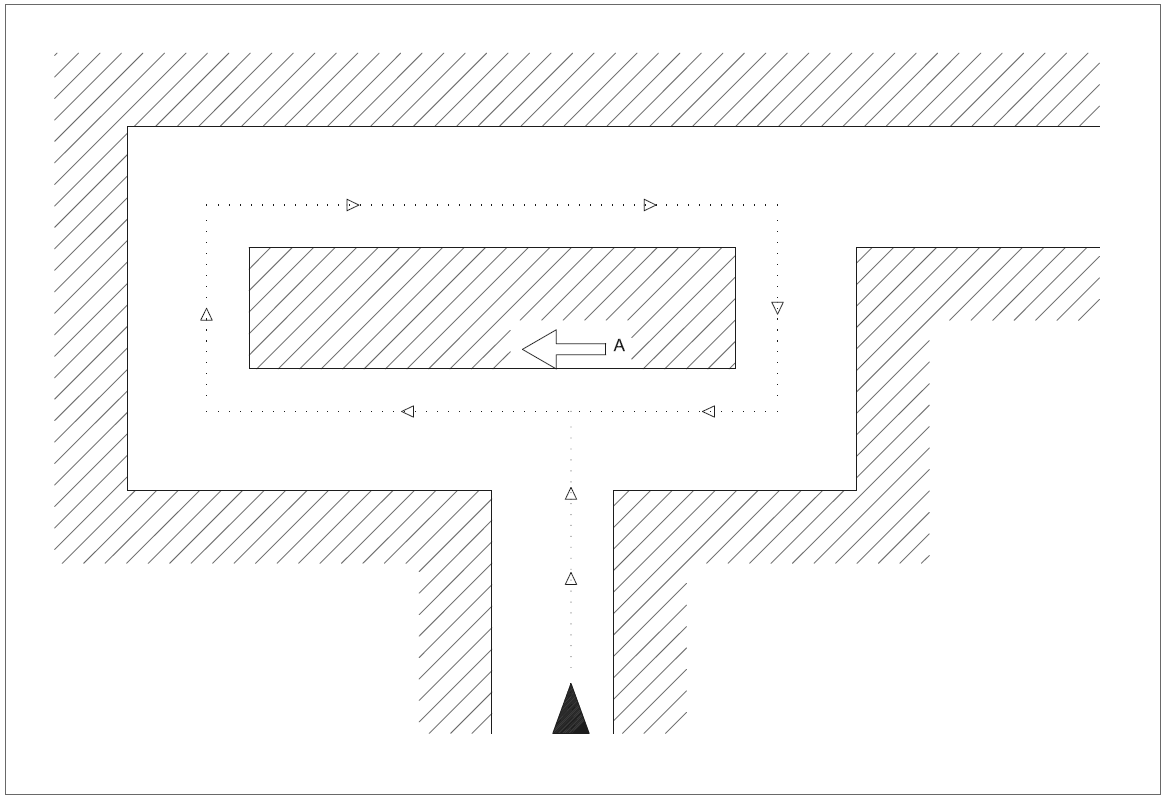

[[File:emc05infiniteloop.png|center|thumb|500px|]] | |||

<div style="text-align: center;"> | |||

''Figure 3.1b: infinite trajectory'' | |||

</div> | |||

When we would use the discussed strategy algorithm but also implement the arrow detection in the strategy the result may be an infinite loop. This occurs when there are multiple paths that lead towards the exit. For example in the figure, if we prefer PICO to make right turns but take a single left (as suggested by the red arrow A), PICO will continue to go right after this left turn and as a result, be stuck on this "island" in the maze. For other directions an analogous situation can be depicted. | |||

=== Overview nodes=== | |||

The software to solve the maze is build around the strategy node. This node receives all the information that is needed to solve the maze, and sends information to the nodes that actuate PICO. An overview of all nodes is given below.<br /> | |||

<br /> | <br /> | ||

{| | |||

{| border="3" width=50% align=center | |||

|- | |- | ||

| '' | | ''NAME'' | ||

| '' | | ''INPUT'' | ||

| '' | | ''OUTPUT'' | ||

|- | |- | ||

| | | Strategy | ||

| | | Laser data<br />Junction data<br />Turn data<br />Junction decision<br />request decision<br /> | ||

| | | Command for left, right or straight | ||

|- | |- | ||

| | | Junction | ||

| | | Laser data<br />request decision | ||

| | | Junction<br />Junction decision<br /> Derivative graph | ||

|- | |- | ||

| | | Turn | ||

| | | Command for left, right or straight<br />Laser data | ||

| | | Velocity command<br />Request decision<br />Turn data<br />rt graph | ||

|- | |- | ||

| | | Drive straight:<br />Consists of error & actuate | ||

| Command for left, right or straight<br />Laser data | |||

<br /> | | Velocity command<br />xy graph | ||

| | |||

|- | |- | ||

| | | Arrow detection | ||

| | | Camera data | ||

| | | Command for left or right or nil | ||

|- | |- | ||

| | | Visualization | ||

| | | xy graph<br />rt graph<br />Derivative graph | ||

| Images on screen | |||

|- | |} <br /> | ||

| | |||

The integration of the nodes can be visualized using rxgraph, which displays all used nodes and their mutual communication. This is done in figure 3.2a. | |||

[[File:rxgraph.png|center|thumb|900px| ]] | |||

| | <div style="text-align: center;"> | ||

''Fig 3.2a:Total software architecture'' | |||

</div> | |||

| | === '''Strategy node''' === | ||

The goal of this competition is to solve the maze. The strategy node contains the plan of action to achieve this goal. The strategy node determines at every junction what the next step is: the most left corridor that is not a dead end. This will be explained in more detail later on. | |||

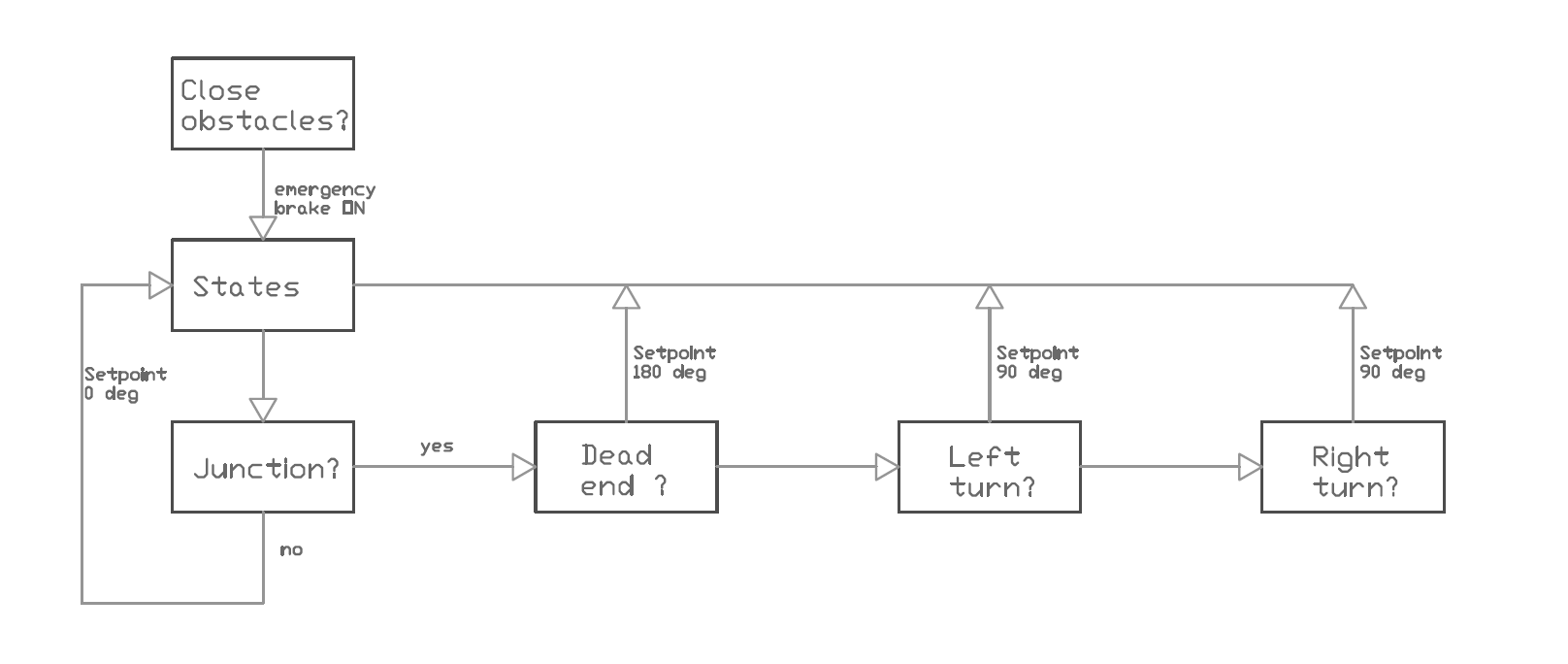

Figure 3.3a is a schematic representation of the strategy that is used. It is an ongoing loop which gets all the input data at the beginning of one cycle and ends the cycle with sending a command of what PICO should do. There is one exception in this ongoing loop, and that is when PICO is turning. More information about this exception is given later. Next the scheme in figure 3.3a is discussed. | |||

[[File:globalarchitect.jpg|center|thumb|400px| ]] | |||

<div style="text-align: center;"> | |||

''Fig 3.3a: High level software architecture '' | |||

</div> | |||

It all starts with a position check, the node will determine whether PICO is in a corridor or at a junction. When PICO is in a corridor it will send a command to drive straight trough the corridor. The straight-drive node react on this command a will control the motion of PICO. When PICO is at a junction, the strategy node will first check what type of junction it is. This information is given by the junction node. When the junction type is clear, the strategy node will make a decision based on the scheme in figure 3.3b. | |||

[[File:Steps.png|center|thumb|500px|]] | |||

<div style="text-align: center;"> | |||

''Figure 3.3b: Strategy, steps for driving'' | |||

</div> | |||

This means that when PICO has the possibility to go left, it will first be checked whether the corridor on the left is a dead end or not. When it is, the option to go left will not be take into account. So when PICO is at a junction it actually checks if there is a new junction after the current junction. Basically the strategy node is able to look two junctions ahead and makes a decision based on that information. | |||

When the decision is made, the strategy node will send a command to the turn node which controls the turn motion. This is the point when the ongoing loop holds for a while. The turn node will make shore that PICO turns the way the strategy node has commanded and will give a command back when the turn is completed. After that command the strategy node will continue the loop. | |||

So what the strategy node does is collect all input signals, decides what would be the best step to take to solve the maze and commands either to the straight-drive or turn node to control the motion. The special feature of the Wall follower ++ strategy is that it makes a decision based on the information that is gathered by looking two junctions ahead. | |||

=== '''Drive straight node''' === | |||

PICO is driving through a rather narrow corridor and may not hit the wall. So when PICO is between two walls (this is determined in our strategy node) the drive straight node is activated until PICO is on a junction again. This node lets PICO drive parallel to the wall and in the middle of the corridor. This is done by the following algorithm/pseudo code. | |||

1. Create black-white image from the laserdata. | |||

* The laserpoints will be white the rest black. | |||

* Pay attention to the orientation of the picture. | |||

2. Find lines through opencv function HoughlinesP() | |||

* These will correspond to the walls. | |||

* Returns (x,y) coordinates of begin and endpoints of the lines. | |||

* Coordinate system in top left corner, positive x axis to the right, positive y down. | |||

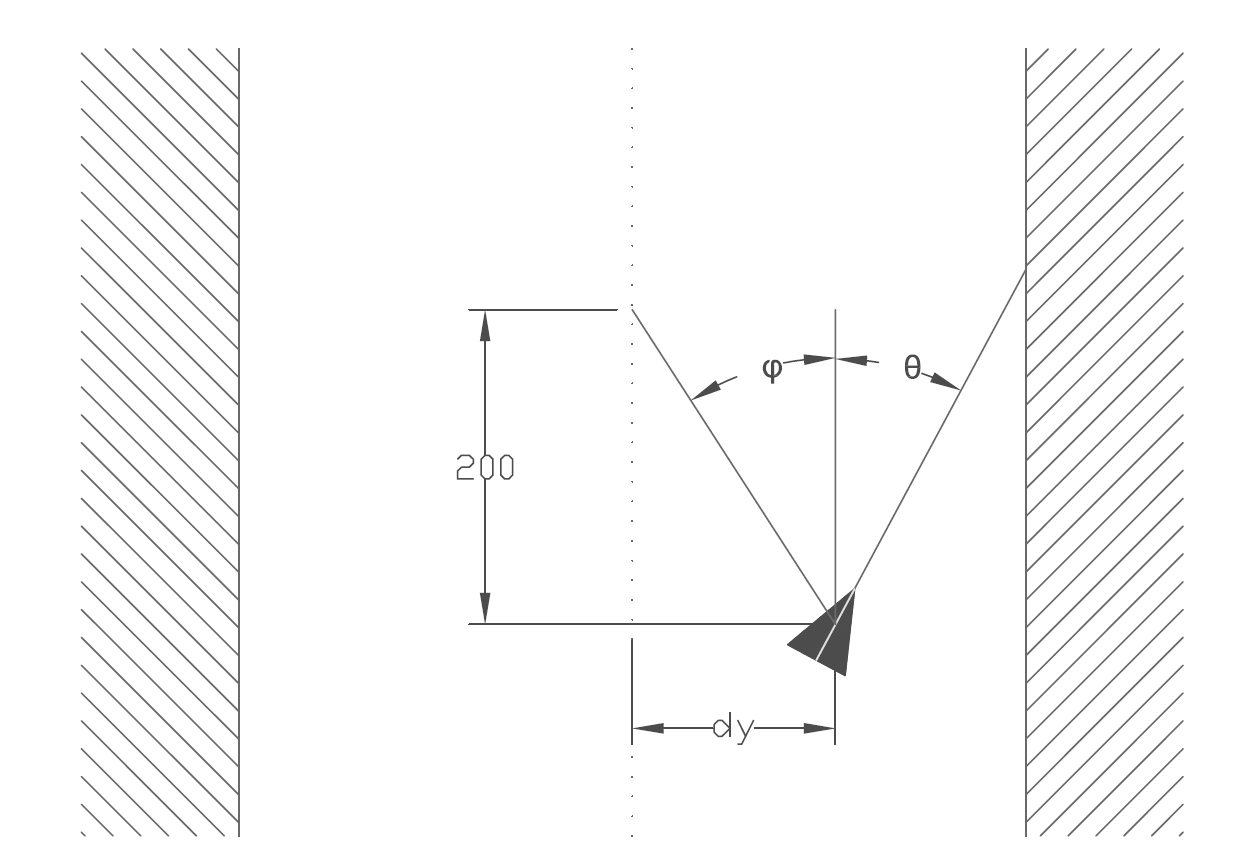

3. Calculate angle of the lines 'theta' , and length 'd' of a vector perpendicular to the line. See figure (..) | |||

* Express these in the 'PICO' coordinate system. | |||

4. Pick the closest wall | |||

* Based on the smallest 'd'. | |||

5. Find the opposite wall | |||

* Using 'theta' of the closest wall, the index in the laserdata of the closest point is calculated. | |||

* Since the walls should be about parralel the same theta is used to find the index of the opposite wall. | |||

6. Find middle of the corridor<br/> | |||

7. Calculate drive angle | |||

* Using the distance to the middle and a arbitrary distance along the corridor we find 'phi'. | |||

* Our drive angle will be 'theta', to get parallel to the wall, plus 'phi' to turn towards the setpoint in the middle of the corridor. | |||

8. Send the drive angle. | |||

* the drive angle is set to a node called actuate. This node sets an x speed and uses the drive angle as the z-rotation speed. | |||

As discussed above, the setpoint generator can be visualized as following: | |||

[[File:setpointgenerator.png|center|thumb|500px|]] | |||

<div style="text-align: center;"> | |||

''Figure 3.4a: Setpoint generation'' | |||

</div> | |||

=== '''Turn node''' === | |||

The turn node always publishes the velocity command, except when the decision is 1. That means that the turn node has to handle with all the situations in which PICO does not meet both of these two requirements: | |||

*PICO has to drive straight ahead. | |||

*PICO is between two walls. | |||

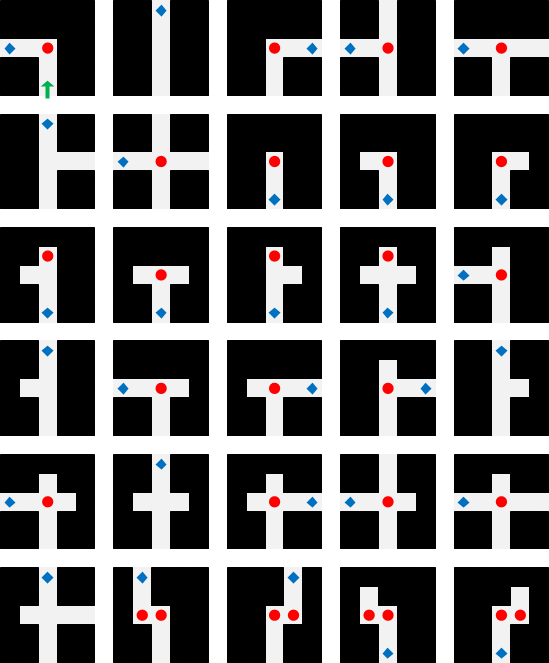

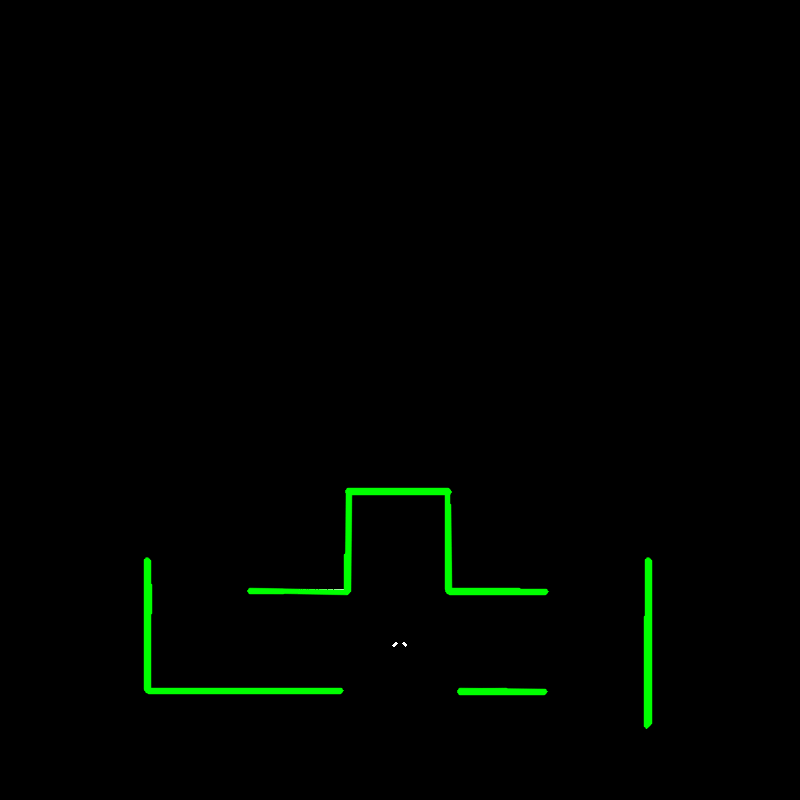

All possible situations are depicted in figure 3.5a, so the turn node only does nothing in the second situation. In all the configurations in the figure PICO starts at the place and in the directions of the green arrow (shown in the first configuration). The red bullet shows the location were PICO turns and the blue diamond images the location where PICO leaves the configuration. | |||

[[File:pico.png|center|thumb|400px|]] | |||

<div style="text-align: center;"> | |||

''Figure 3.5a: possible junctions'' | |||

</div> | |||

''Turning''<br /> | |||

We decided to make a turn of 90 degrees using feedback control. Initially we only used the laserdata, because it is much more robust than the odometry, which can be erroneous due to wheel slip. To make decisions we set thresholds, as shown in figure 3.6b. The radius that is measured by PICO has to be above or below these thresholds within specific ranges of the angle. This method is used to ensure that PICO is in the middle of a junction and for turning exactly 90 degrees. Before PICO makes a turn the turn node asks the strategy node what to do by making the request-boolean true. So, the strategy node only publishes a decision when this boolean is true. This is always the case when PICO is driving straight ahead and when PICO is in the middle of a junction. | |||

For example, when PICO has to turn 90 degrees to the left it drives forward till at least 95% of the laserdata points around 90 degrees is above the threshold. At that moment PICO is in the middle of the junction and has a good view at all possible corridors. Because of that it sends again a decision request, to know for sure which corridors lead to a dead end and which possibly lead to the exit. At this moment it also sends an arrow-boolean to the arrow node to ask if an arrow is detected. When a decision is made in the middle of the junction PICO cannot make a new decision before this turn is completed. Now the choice is confirmed and PICO may start turning. It stops turning and drives forward again when 90% of the laserdata points around 0 degrees is above the threshold. When 95% of the laserdata points is below the threshold the turn node sends a turned-boolean to the strategy node. When the turned-boolean is true, the strategy node knows that PICO completed the turn and all the variables in the turn node are set to their initial values, so that a new decision can be made. | |||

To manage all the turns looking only to a corridor in the front of PICO is not enough to ensure a turn of exactly 90 degrees is made. For example on a junction of type 4 or 6, shown in figure 3.6a, PICO immediately sees a corridor in the front, so it immediately thinks that a turn of 90 degrees is made. Also an S-turn goes wrong with this method, because the laserdata points in front of PICO are to early above the threshold. This is solved by not only looking to the front, but only looking to left. So, when a turn to the left has to be made, PICO turns till the laserdata points in the front and at the left are above the threshold. With the method that we have now, a junction of type 4 or 6 still cannot be managed. This is solved (for a turn to the left) by not looking to the left, but to the right and to the front. The same yields for turning right. | |||

<br /> | <br /> | ||

<br /> | <br /> | ||

'' | ''Dead ends''<br /> | ||

Because our strategy is based on always driving into the most left we also turn left when we drive into a dead end. For a dead end we also turn till all the data points in front of PICO are above the threshold. But when the dead end is a junction of type 1 PICO will drive forward in the left corridor, even though it is a dead end. This problem is solved by using the odometry. Although this information is not as stable as the laserdata it can help by making a turn. | |||

We used some code to transform the x-, y-, z-, w-orientation of the odometry to roll-, pitch-, yaw-angles. When PICO is driving straight ahead the yaw-angle is stored in a reference angle and when PICO starts turning this reference angle is subtracted from the current yaw-angle. The resulting angle is the angle that PICO made in this turn. To turn past the left corridor PICO is only allowed to drive forward when the resulting angle is bigger than 2. | |||

This odometry is also used to manage a junction of type 7. Because on this type of junction the methods explained above will not work. This is because PICO always sees a corridor to the left, to the right and in the front and it immediately thinks that it turned 90 degrees. This is solved by ensuring that PICO does not drive forward when the angle is smaller than 1. | |||

<br /> | <br /> | ||

<br /> | <br /> | ||

'' | ''Cross''<br /> | ||

Now almost all decision are described, but one more has to be added to be able to manage all configurations of figure 3.5a. For example at the bottom left junction PICO has to drive straight ahead, but it is not between two walls. So, when it tries to drive straight ahead a huge error occurs when PICO crosses the junction and it will possibly make undesirable turn. This is solved making another state, where PICO just drive straight ahead and disables the error correction when it is not between two walls. | |||

=== '''Junction node''' === | |||

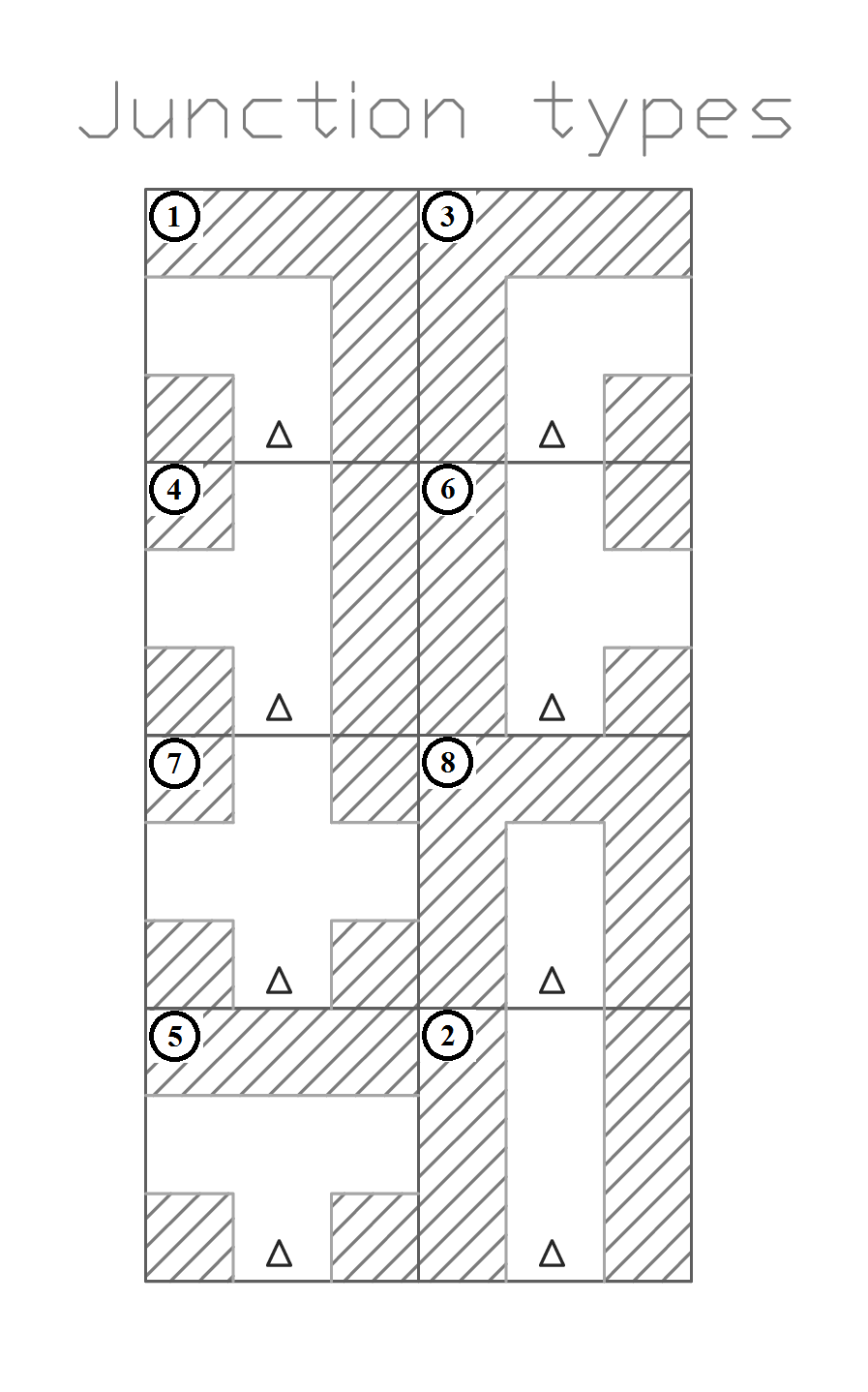

Our strategy is mainly based on the decisions pico can make at each junction. Therefore a Junction node is realised, which is able to detect all 8 different types of junctions, see figure 3.6a. Also he can detect a dead end of corridor. Detecting dead ends in the beginning of a crossing is very usefull for solving the maze fast. No time is lost for driving into these blind corridors. The junction node only listens to the laser data and a request send by the strategy node. | |||

''' | [[File:junctionstype.png|center|thumb|300px|]] | ||

<div style="text-align: center;"> | |||

''Fig 3.6a: Different types of junctions'' | |||

</div> | |||

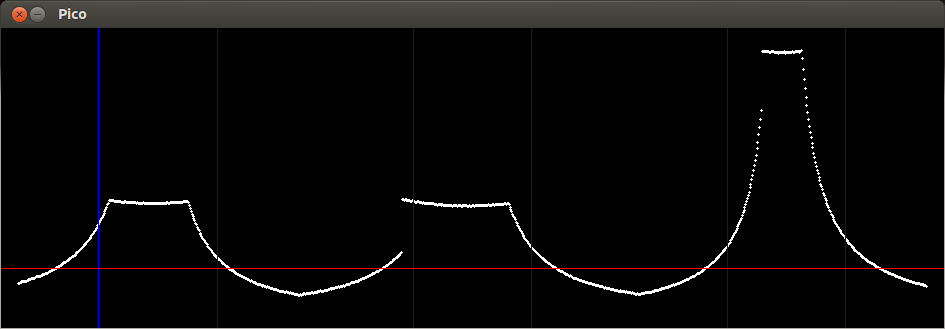

< | First of all, the junctions are detected. The regions for detecting left, straight and right corridors are determined and can be seen in Fig. 3.6b as blue vertical lines. The three humps in the graph are the three ways in the crossing of figure 3.6d. All junctions have a unique distance-angle characteristic. Therefore three booleans are used for determining humps right, straight or left. If a significant percentage laser points in one region is higher than the red threshold line, the hump is detected as a corridor and the corresponding boolean is set high. The three booleans are converted to an integer value and is published on demand of the strategy node. | ||

'' | |||

[[File:Screenshot_junction_good.png|thumb|center|500px|]] | |||

<div style="text-align: center;"> | |||

''Fig 3.6b: Laser Data, distance-angle characteristic'' | |||

< | </div> | ||

= | |||

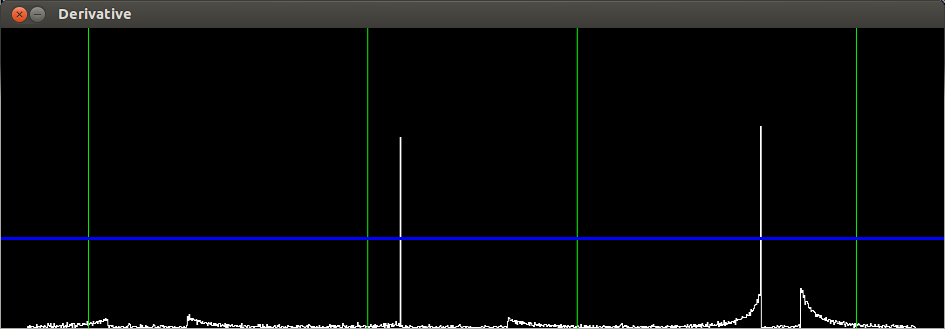

'' | To detect dead ends, the derivative of the laser data is calculated and can be seen in figure 3.6c. This image is published and will be visualized in the visualization node. The peaks in the derivative are gaps in the laser data. Therefore large gaps represents a gap in the corridor . Also three regions and a threshold line can be obtained in the figure. This function also uses three booleans (Go_left Go_straight and Go_right). If a peak in a region is above the threshold, there is a possible way out and the bool is made true. The three booleans are also converted to an integer, which represents the possible ways out at a junction. This decision integer is converted in the same way as the junction integer. | ||

= | [[File:Screenshot_diff_good.png|center|thumb|500px| ]] | ||

<div style="text-align: center;"> | |||

''Fig 3.6c: Derivative of laser data'' | |||

</div> | |||

How the booleans are converted to the integers can be seen in table 3.6.1. The junction integer represents the type of junction, while the possibility contains the possibilities PICO has at a crossing. The possibility value is a combination of the junction and possible ways out. For example, a junction of type 5 (left and right a corridor) can have blind ways left, right or both. Therefore it is possible that the junction message contains a 5 and the possibility message a 3 (Only right is a way out). This possibility integer will be published on demand to the strategy node. | |||

=== | {| border="3" width=50% align=center | ||

|- | |||

| ''Left .'' | |||

| ''Straight .'' | |||

| ''Right .'' | |||

| ''Junction .'' | |||

| ''Possibility'' | |||

|- | |||

|align=center| 1 | |||

|align=center| 0 | |||

|align=center| 0 | |||

|align=center| 1 | |||

|align=center| 1, 8 | |||

|- | |||

|align=center| 0 | |||

|align=center| 1 | |||

|align=center| 0 | |||

|align=center| 2 | |||

|align=center| 2 | |||

|- | |- | ||

| | |align=center| 0 | ||

| | |align=center| 0 | ||

| | |align=center| 1 | ||

| | |align=center| 3 | ||

|align=center| 3, 8 | |||

|- | |- | ||

| | |align=center| 1 | ||

| | |align=center| 1 | ||

| | |align=center| 0 | ||

| | |align=center| 4 | ||

|align=center| 1, 2, 4, 8 | |||

|- | |- | ||

| | |align=center| 1 | ||

| | |align=center| 0 | ||

| | |align=center| 1 | ||

| | |align=center| 5 | ||

|align=center| 1, 3, 5, 8 | |||

|- | |- | ||

| | |align=center| 0 | ||

| | |align=center| 1 | ||

| | |align=center| 1 | ||

| | |align=center| 6 | ||

|align=center| 2, 3, 6, 8 | |||

|- | |- | ||

| | |align=center| 1 | ||

| | |align=center| 1 | ||

| | |align=center| 1 | ||

| | |align=center| 7 | ||

|align=center| 1 up to 8 | |||

|- | |- | ||

| | |align=center| 0 | ||

| | |align=center| 0 | ||

| | |align=center| 0 | ||

| | |align=center| 8 | ||

|align=center| 8 | |||

|} <br /> | |} <br /> | ||

<div style="text-align: center;"> | |||

''table 3.6.1: Possibilities on several junctions'' | |||

</div> | |||

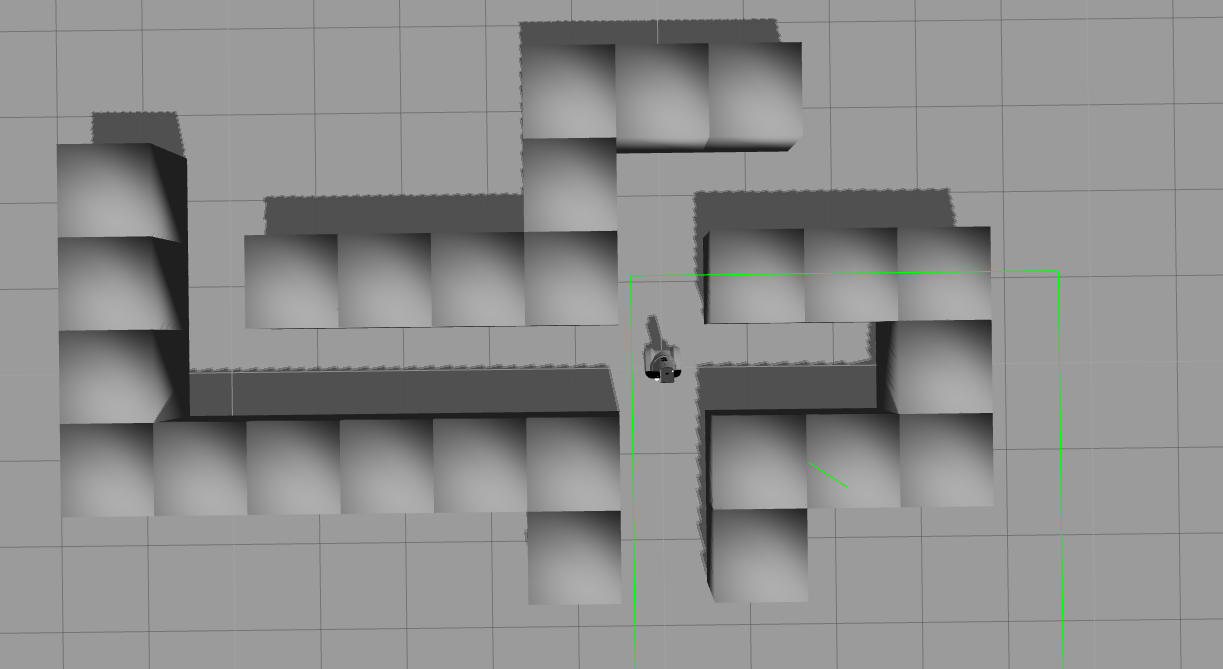

In total, there are 26 different combinations that can occur. At a 4 way crossing, junction is 7, there are 8 possible combinations! All these combinations are tested in simulation and the PICO could detect all possibilities very well as you can see in figure 3.6d, 3.6c & 3.6b. PICO sees three corridors and publishes a junction with value 7. But he can only go into the left or straight corridor and therefore he publishes a possibility with value 4. | |||

'' | [[File:Screenshot_gazebo_good.png|thumb|center|500px|]] | ||

<div style="text-align: center;"> | |||

''Fig 3.6d: Example crossing (junction = 7, Possibility = 4)'' | |||

</div> | |||

=== Visualization node === | |||

As soon as we started programming an efficient way for debugging was chosen. Putting print statements everywhere is not very efficient, so we decided to visualize as much as possible. The standard library of C++ does not support visualization, so an extra library (OpenCV) was added already in the third week. This library contains visualization functions, but there are also many image processing functions that can be used in the arrow detection node and other functions. | |||

At the corridor competition we already had some visualization, but this was not implemented in a separate node. This was also the problem whereby we failed at the corridor competition. Due to the imshow() function, we were publishing the velocity message on a very low frequency, ± 0.5Hz instead of the desired 20 Hz. At that point we decided to make an independent visualization node which will be run on a separate laptop. Through this simple modification, the nodes which run on PICO will publish and read at 20 Hz. The visualization node will read the topics at a lower 10Hz rate. | |||

The visualization node listens to three topics, which contains three images we show: | |||

* The first image contains the xy graph PICO sees, which is shown in figure 3.7a. This image shows the laser data converted to Cartesian coordinates and is used by the drive-straight nodes. The Hough-transformation uses this image for detect the nearest lines. | |||

* The laser data will be plot in the second image, which is shown in figure 3.6b. The distance is plotted against the angle on the y and x axis respectively. This plot will be used to define thresholds and regions for turning and junction detection. | |||

* The last image contains the derivative of the laser data, which is shown in figure 3.6c. The absolute value of the derivative function is used to detect gaps in a corridor. These gaps are used by the junction decision making function. | |||

[[File:LinedetectionOpencv.png|center|thumb|300px|]] | |||

<div style="text-align: center;"> | |||

''Fig 3.7a: Cartesian reconstruction of laserdata'' | |||

</div> | |||

By visualizing as much as possible, debugging went quite fast. Fine-tuning the interesting regions and the thresholds went much easier. The threshold values for example must be lower in the real maze and higher in the simulation. More insight in the data is obtained when the data is plotted, because some strange behavior like noise in the laser data was seen directly. Also the images are used in the presentation and on the wiki page, for clarification. A picture is worth as much as thousand words. | |||

=== Arrow recognition node === | |||

As mentioned before, we did incorporate arrow recognition in the software. We kept the results on a scrict information-output basis (using a cout//). The arrow recognition software was constructed as a separate node which communicates with the central strategy node. | |||

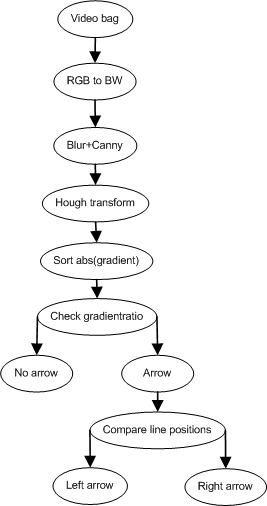

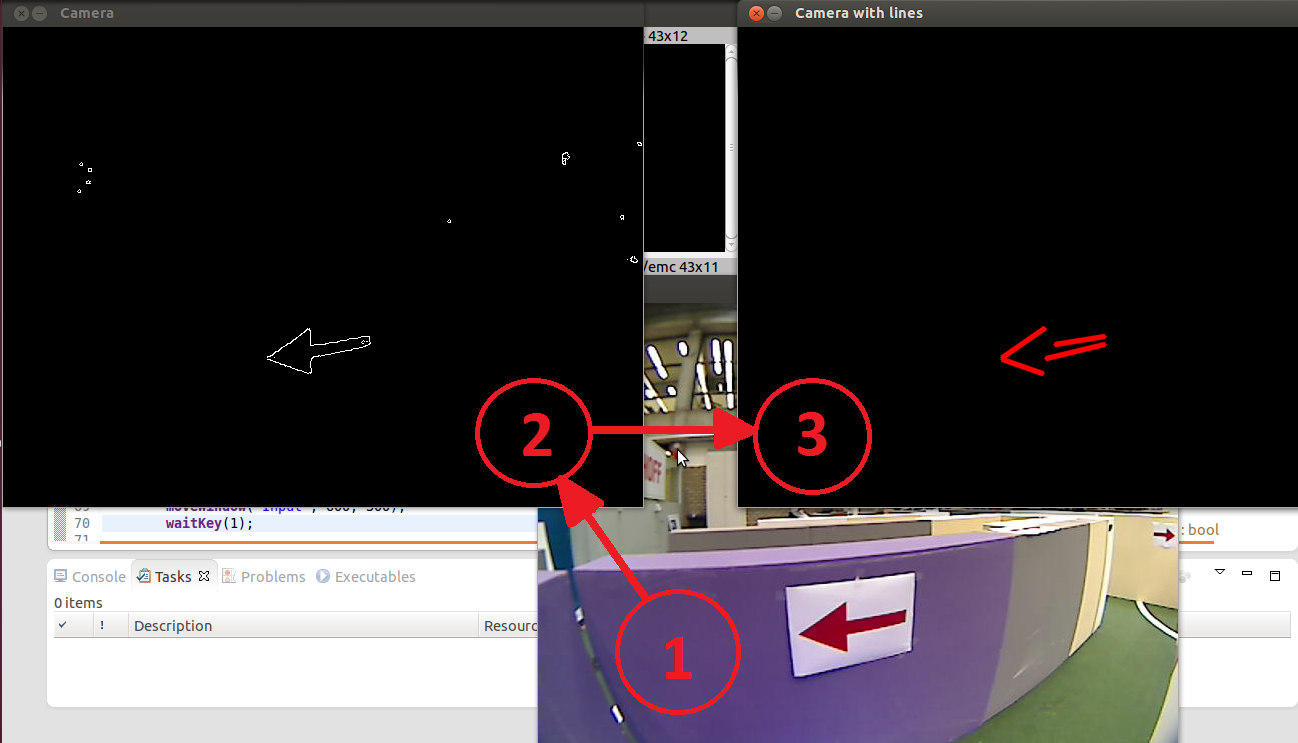

We first convert the video (bag, see step 1 of figure 3.8b from red, green and blue (RGB) to black and white (BW). Next, we apply a blur filter to the image. This is a openCV function and smooths the image. After this a Canny edge detector is applied. We now have the contours displayed as displayed in step 2 of figure 3.8b. Next, we apply a Hough transform to this contour. The options are tweaked such that only the arrows tail and head is found by the Hough transform. Now we have arrived at step 3 of figure 3.8b. | |||

[[File:vision.jpg|center|thumb|300px| ]] | |||

<div style="text-align: center;"> | |||

''fig 3.8a: Flowchart for arrow recognition'' | |||

</div> | |||

When online, these functions output their data to the GUI as displayed in figure 3.8b. | |||

[[File:visionsteps.png|center|thumb|400px| ]] | |||

<div style="text-align: center;"> | |||

''fig 3.8b: Steps involving arrow recognition'' | |||

</div> | |||

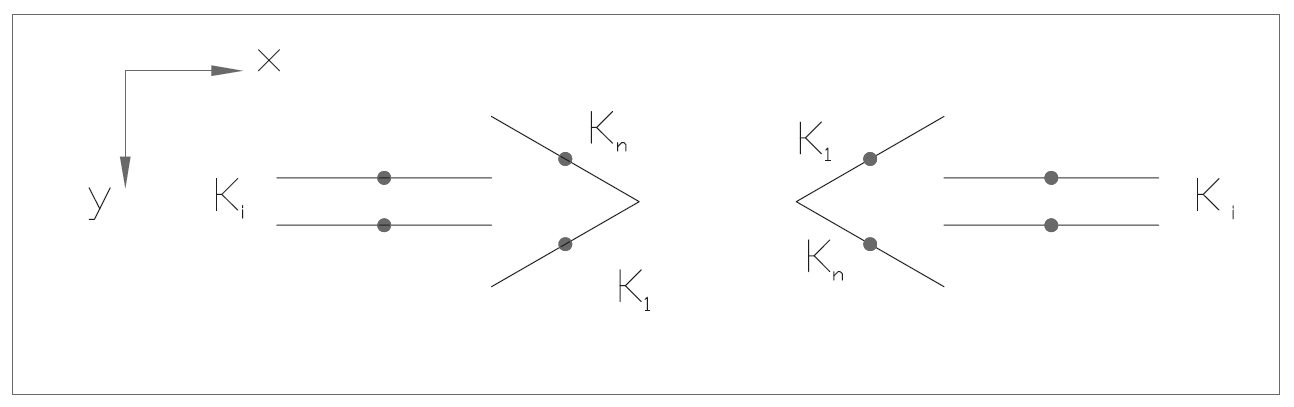

=== | Next, we calculate the gradient (<math>K_i=\frac{dy_i}{dx_i}</math>, i=1..n, n=no. found lines) and average x-value (indicated by the points in figure 3.8c. We couple these values and then we sort these by the magnitude of the gradients. These variables are shown in the figure below. | ||

[[File:arrowrecog.png|center|thumb|500px| ]] | |||

<div style="text-align: center;"> | |||

''Fig 3.8c: Arrow properties'' | |||

</div> | |||

We want to compare the head of the arrow with its tail. If the average x position of the head is smaller than its tail we know it points to the left and vice versa to the right. We know that <math>K_1</math> has the largest gradient and <math>K_n</math> the lowest. The tail is somewhere in between. So if we compare the average x corresponding to the maximum gradient with that of the median gradient we can find the orientation of the arrow by checking at which side (x) the maximum gradient is w.r.t. the median gradient. | |||

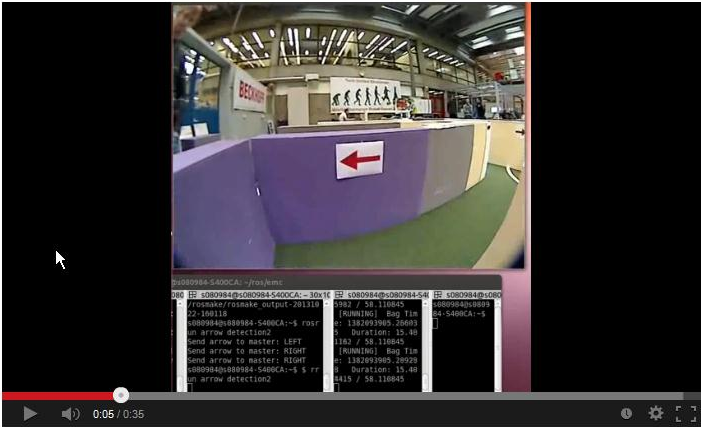

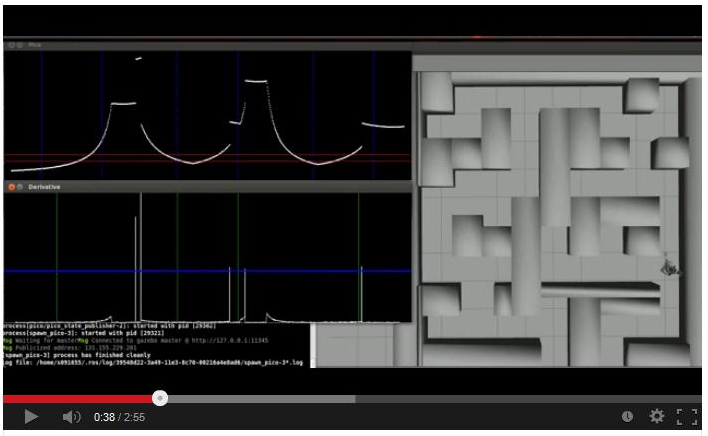

[[File:emc05_thumb2.png|400px|center|link=http://www.youtube.com/watch?v=9DhfgQhNfzQ]] | |||

== Evaluation == | |||

In the youtube-video below the simualation of PICO in the maze is shown: | |||

[[File:EMC05_screenshot1.PNG|400px|center|link=http://www.youtube.com/watch?v=7y6LOpRv1sA&]] | |||

===Maze evaluation=== | |||

During testing on the day before the final competition we find out that some thresholds had to be adjusted, but we did not have enough time to make it perfect. This was the reason why we did not succeeded in the competition. For example, we set the accuracy of the turning from 95% to 90%. In theory the turns should be a little less than 90 degrees. We did this because when PICO stops turning, the rear wheels are not still not in the good position for driving forward. So, when PICO wants to drive straight ahead it also turns a little further than the angle that is already made. Unfortunately PICO still made a larger turn than 90 degrees.<br />PICO hit the wall, because he didn't detect it very well. In our safe_drive function we made the decision on more than one data points. We thought this was more robust against noise. Because PICO drove against the corner of the wall with an angle of approximately 45 degrees, to less data points were inside the region of 30cm of PICO and he hit the wall. This never happened during testing, so we taught the decision was safe enough. It is unfortunate that we found out that this was not the case during the final competition. | |||

== | ===Improvements=== | ||

When we monitored the rostopics we saw that the decisions were made right. So we are sure that, with a little more test time, we would be able to make much better turns and solve the maze. Another improvement that we could implement is a self-learning strategy node, that measures the width of the corridors. Knowing this width, the thresholds can be fine tuned. When the thresholds are not hard coded and the strategy node could set them itself, it would be easier to make turns of exactly 90 degrees in every corridor. | |||

<br /> | <br /> | ||

Latest revision as of 21:22, 27 October 2013

Group information

Members

| Name: | Student ID: |

| Arjen Hamers | 0792836 |

| Erwin Hoogers | 0714950 |

| Ties Janssen | 0607344 |

| Tim Verdonschot | 0715838 |

| Rob Zwitserlood | 0654389 |

Tutor:

Sjoerd van den Dries

Weekly meetings

| Day: | Time: | Subject: | Location: |

| Monday | 11:00 | Tutor meeting | OGO1 |

| Monday | 12:00 | Group meeting | OGO1 |

| Wednesday | 10:45 | College | GEM-Z 3A08 |

| Thursday | 10:00 | Testing | Robocup stadium |

Deadlines

| Day: | Time: | Subject: |

| September, 25th | 10:45 | Corridor competition |

| October, 23th | 10:45 | Final competition |

| October, 27th | 23:59 | Finish wiki |

| October, 27th | 23:59 | Hand in peer review |

Log

Week 1:

- Installed the software

Week 2:

- Did tutorials for ROS and the Jazz simulator

- Get familiar to 'safe_drive.cpp' and use this as base for our program

- Defined coordinate system for PICO (see figure 3.2a)

Week 3:

- Played with the PICO in the Jazz simulator by adding code to safe_drive.cpp

- Translated the laser data to a 2d plot

- Implemented OpenCV

- Used the Hough transform to detect lines in the laser data

- Tested the line detection method mentioned above in the simulation (see figure 3.7a)

- Started coding for driving straight through a corridor ( drive straight node)

- Started coding for turning ( turn node)

Week 4:

- Participated in corridor competition, failed in execution.

- Reorganize our software architecture after the corridor competition

- Created structure of communicating nodes (see Figure 3.3a)

- Finish drive straight node

- Finish turn node

- Started creating a visualization node

Week 5:

- Finished visualization node

- Started creating node that can recognize all possible junctions in the maze (junction node)

- Started creating node that generates a strategy (strategy node)

- Tested drive-straight and turn node on PICO separately, after tuning they worked good individually.

Week 6:

- Finished junction node

- Started fine tuning strategy node in simulation

- Tested visualization and junction node on PICO, visualization ok but junction operated troublesome.

- Created bag files for testing

Week 7:

- Finished strategy node (in simulation)

- Tested strategy node on PICO, did not work as planned

- Did further fine tuning of Turn & Junction node

- Tested the arrow recognition node, arrows recognizable but direction not robust

Week 8:

- Tested arrow recognition (whether there is an arrow and its direction), tested ok!

- Simulation ok, tested 30 corridor configurations

- All nodes communicating properly w.r.t. each other

- Tested on PICO, found large differences between simulation & experiment, did not manage to get PICO to operate well due to limited test time.

Software architecture

The software architecture is shown in figure 3.1a. In this section the architecture is explained in more detail. First we present an overview of all nodes, inputs and outputs. Then the most challenging problems that have to be tackled to solve the maze and the solutions are discussed.

Design choices

During the entire development process we made a number of design choices. Major decisions were made collectively. This was done by small brainstorm sessions and trial-and-error iterations. Smaller, more detailed design choices were made mostly individually when defining the code and are not discussed here.

At first we all agreed on a coordinate system for PICO and decided that all control should be, if possible, based on feedback. Our opinion was that feed forward (driving/turning for several seconds) is not the way to go. The coordinate system is depicted in the figure below:

Figure 3.1a: PICO coordinate system

Prior to the corridor competition we dissected the work in two parts: driving straight and turning. Together these would be able to let PICO drive through the corridor. The group was split in two to work on these separate parts. For the straight drive part we looked at the potential field algorithm and the finding the walls using the Hough transform. We decided to implement the Hough algorithm, since it is easier to implement and the hough transform also returns physical data in terms of dimensions of its surroundings (lines), which might come in use later.

As for the second part, the turning algorithm, we used the derivative of the laserscan data to find peaks and thus identify corners. Once a exit was detected, we continued driving until the distance between the found corners was nearly equal.

After the corridor competition all code was still in a single file, and since we had to expand it with more algorithms we decided to re-organize the structure by creating separate nodes and agreed on a strict input-output for each node. These nodes are displayed in table 3.1a, but at this time there was not yet a arrow/junction detection.

Now we started on a new turning and driving straight algorithm, because the first one did not work well enough. For the turning we have come op with a homebrew solution (see section 3.5) instead of turning for a given time (no feedback) or using odometry (false feedback). For the straight driving we use the hough from the openCV functions & libraries.

When these functions were completed we started to expand the software with extra nodes, Strategy, Junction and Arrow detection. To do this we first collectively discussed the approach for these nodes and determined the possible junctions PICO could encounter. As the deadline (maze competition) was coming closer we decided to first build a algorithm that goes left when possible and then add extra options to this to make PICO smarter. So the plan was to first build a strategy node which can recognize dead ends and avoid these, and if there was any spare time we could implement a mapping algorithm.

For the arrow detection we chose for a gradient based search algorithm. At first we had another one (based on outer positions of points on the arrow) but this was not usable when the arrow was tilted. The gradient based algorithm is more robust, so we used that one. We decided not to use the outcome of the arrow node in the strategy node since it may cause problems. This problem can best be recognized when visualized:

Figure 3.1b: infinite trajectory

When we would use the discussed strategy algorithm but also implement the arrow detection in the strategy the result may be an infinite loop. This occurs when there are multiple paths that lead towards the exit. For example in the figure, if we prefer PICO to make right turns but take a single left (as suggested by the red arrow A), PICO will continue to go right after this left turn and as a result, be stuck on this "island" in the maze. For other directions an analogous situation can be depicted.

Overview nodes

The software to solve the maze is build around the strategy node. This node receives all the information that is needed to solve the maze, and sends information to the nodes that actuate PICO. An overview of all nodes is given below.

| NAME | INPUT | OUTPUT |

| Strategy | Laser data Junction data Turn data Junction decision request decision |

Command for left, right or straight |

| Junction | Laser data request decision |

Junction Junction decision Derivative graph |

| Turn | Command for left, right or straight Laser data |

Velocity command Request decision Turn data rt graph |

| Drive straight: Consists of error & actuate |

Command for left, right or straight Laser data |

Velocity command xy graph |

| Arrow detection | Camera data | Command for left or right or nil |

| Visualization | xy graph rt graph Derivative graph |

Images on screen |

The integration of the nodes can be visualized using rxgraph, which displays all used nodes and their mutual communication. This is done in figure 3.2a.

Fig 3.2a:Total software architecture

Strategy node

The goal of this competition is to solve the maze. The strategy node contains the plan of action to achieve this goal. The strategy node determines at every junction what the next step is: the most left corridor that is not a dead end. This will be explained in more detail later on. Figure 3.3a is a schematic representation of the strategy that is used. It is an ongoing loop which gets all the input data at the beginning of one cycle and ends the cycle with sending a command of what PICO should do. There is one exception in this ongoing loop, and that is when PICO is turning. More information about this exception is given later. Next the scheme in figure 3.3a is discussed.

Fig 3.3a: High level software architecture

It all starts with a position check, the node will determine whether PICO is in a corridor or at a junction. When PICO is in a corridor it will send a command to drive straight trough the corridor. The straight-drive node react on this command a will control the motion of PICO. When PICO is at a junction, the strategy node will first check what type of junction it is. This information is given by the junction node. When the junction type is clear, the strategy node will make a decision based on the scheme in figure 3.3b.

Figure 3.3b: Strategy, steps for driving

This means that when PICO has the possibility to go left, it will first be checked whether the corridor on the left is a dead end or not. When it is, the option to go left will not be take into account. So when PICO is at a junction it actually checks if there is a new junction after the current junction. Basically the strategy node is able to look two junctions ahead and makes a decision based on that information. When the decision is made, the strategy node will send a command to the turn node which controls the turn motion. This is the point when the ongoing loop holds for a while. The turn node will make shore that PICO turns the way the strategy node has commanded and will give a command back when the turn is completed. After that command the strategy node will continue the loop. So what the strategy node does is collect all input signals, decides what would be the best step to take to solve the maze and commands either to the straight-drive or turn node to control the motion. The special feature of the Wall follower ++ strategy is that it makes a decision based on the information that is gathered by looking two junctions ahead.

Drive straight node

PICO is driving through a rather narrow corridor and may not hit the wall. So when PICO is between two walls (this is determined in our strategy node) the drive straight node is activated until PICO is on a junction again. This node lets PICO drive parallel to the wall and in the middle of the corridor. This is done by the following algorithm/pseudo code.

1. Create black-white image from the laserdata.

- The laserpoints will be white the rest black.

- Pay attention to the orientation of the picture.

2. Find lines through opencv function HoughlinesP()

- These will correspond to the walls.

- Returns (x,y) coordinates of begin and endpoints of the lines.

- Coordinate system in top left corner, positive x axis to the right, positive y down.

3. Calculate angle of the lines 'theta' , and length 'd' of a vector perpendicular to the line. See figure (..)

- Express these in the 'PICO' coordinate system.

4. Pick the closest wall

- Based on the smallest 'd'.

5. Find the opposite wall

- Using 'theta' of the closest wall, the index in the laserdata of the closest point is calculated.

- Since the walls should be about parralel the same theta is used to find the index of the opposite wall.

6. Find middle of the corridor

7. Calculate drive angle

- Using the distance to the middle and a arbitrary distance along the corridor we find 'phi'.

- Our drive angle will be 'theta', to get parallel to the wall, plus 'phi' to turn towards the setpoint in the middle of the corridor.

8. Send the drive angle.

- the drive angle is set to a node called actuate. This node sets an x speed and uses the drive angle as the z-rotation speed.

As discussed above, the setpoint generator can be visualized as following:

Figure 3.4a: Setpoint generation

Turn node

The turn node always publishes the velocity command, except when the decision is 1. That means that the turn node has to handle with all the situations in which PICO does not meet both of these two requirements:

- PICO has to drive straight ahead.

- PICO is between two walls.

All possible situations are depicted in figure 3.5a, so the turn node only does nothing in the second situation. In all the configurations in the figure PICO starts at the place and in the directions of the green arrow (shown in the first configuration). The red bullet shows the location were PICO turns and the blue diamond images the location where PICO leaves the configuration.

Figure 3.5a: possible junctions

Turning

We decided to make a turn of 90 degrees using feedback control. Initially we only used the laserdata, because it is much more robust than the odometry, which can be erroneous due to wheel slip. To make decisions we set thresholds, as shown in figure 3.6b. The radius that is measured by PICO has to be above or below these thresholds within specific ranges of the angle. This method is used to ensure that PICO is in the middle of a junction and for turning exactly 90 degrees. Before PICO makes a turn the turn node asks the strategy node what to do by making the request-boolean true. So, the strategy node only publishes a decision when this boolean is true. This is always the case when PICO is driving straight ahead and when PICO is in the middle of a junction.

For example, when PICO has to turn 90 degrees to the left it drives forward till at least 95% of the laserdata points around 90 degrees is above the threshold. At that moment PICO is in the middle of the junction and has a good view at all possible corridors. Because of that it sends again a decision request, to know for sure which corridors lead to a dead end and which possibly lead to the exit. At this moment it also sends an arrow-boolean to the arrow node to ask if an arrow is detected. When a decision is made in the middle of the junction PICO cannot make a new decision before this turn is completed. Now the choice is confirmed and PICO may start turning. It stops turning and drives forward again when 90% of the laserdata points around 0 degrees is above the threshold. When 95% of the laserdata points is below the threshold the turn node sends a turned-boolean to the strategy node. When the turned-boolean is true, the strategy node knows that PICO completed the turn and all the variables in the turn node are set to their initial values, so that a new decision can be made.

To manage all the turns looking only to a corridor in the front of PICO is not enough to ensure a turn of exactly 90 degrees is made. For example on a junction of type 4 or 6, shown in figure 3.6a, PICO immediately sees a corridor in the front, so it immediately thinks that a turn of 90 degrees is made. Also an S-turn goes wrong with this method, because the laserdata points in front of PICO are to early above the threshold. This is solved by not only looking to the front, but only looking to left. So, when a turn to the left has to be made, PICO turns till the laserdata points in the front and at the left are above the threshold. With the method that we have now, a junction of type 4 or 6 still cannot be managed. This is solved (for a turn to the left) by not looking to the left, but to the right and to the front. The same yields for turning right.

Dead ends

Because our strategy is based on always driving into the most left we also turn left when we drive into a dead end. For a dead end we also turn till all the data points in front of PICO are above the threshold. But when the dead end is a junction of type 1 PICO will drive forward in the left corridor, even though it is a dead end. This problem is solved by using the odometry. Although this information is not as stable as the laserdata it can help by making a turn.

We used some code to transform the x-, y-, z-, w-orientation of the odometry to roll-, pitch-, yaw-angles. When PICO is driving straight ahead the yaw-angle is stored in a reference angle and when PICO starts turning this reference angle is subtracted from the current yaw-angle. The resulting angle is the angle that PICO made in this turn. To turn past the left corridor PICO is only allowed to drive forward when the resulting angle is bigger than 2.

This odometry is also used to manage a junction of type 7. Because on this type of junction the methods explained above will not work. This is because PICO always sees a corridor to the left, to the right and in the front and it immediately thinks that it turned 90 degrees. This is solved by ensuring that PICO does not drive forward when the angle is smaller than 1.

Cross

Now almost all decision are described, but one more has to be added to be able to manage all configurations of figure 3.5a. For example at the bottom left junction PICO has to drive straight ahead, but it is not between two walls. So, when it tries to drive straight ahead a huge error occurs when PICO crosses the junction and it will possibly make undesirable turn. This is solved making another state, where PICO just drive straight ahead and disables the error correction when it is not between two walls.

Junction node

Our strategy is mainly based on the decisions pico can make at each junction. Therefore a Junction node is realised, which is able to detect all 8 different types of junctions, see figure 3.6a. Also he can detect a dead end of corridor. Detecting dead ends in the beginning of a crossing is very usefull for solving the maze fast. No time is lost for driving into these blind corridors. The junction node only listens to the laser data and a request send by the strategy node.

Fig 3.6a: Different types of junctions

First of all, the junctions are detected. The regions for detecting left, straight and right corridors are determined and can be seen in Fig. 3.6b as blue vertical lines. The three humps in the graph are the three ways in the crossing of figure 3.6d. All junctions have a unique distance-angle characteristic. Therefore three booleans are used for determining humps right, straight or left. If a significant percentage laser points in one region is higher than the red threshold line, the hump is detected as a corridor and the corresponding boolean is set high. The three booleans are converted to an integer value and is published on demand of the strategy node.

Fig 3.6b: Laser Data, distance-angle characteristic

To detect dead ends, the derivative of the laser data is calculated and can be seen in figure 3.6c. This image is published and will be visualized in the visualization node. The peaks in the derivative are gaps in the laser data. Therefore large gaps represents a gap in the corridor . Also three regions and a threshold line can be obtained in the figure. This function also uses three booleans (Go_left Go_straight and Go_right). If a peak in a region is above the threshold, there is a possible way out and the bool is made true. The three booleans are also converted to an integer, which represents the possible ways out at a junction. This decision integer is converted in the same way as the junction integer.

Fig 3.6c: Derivative of laser data

How the booleans are converted to the integers can be seen in table 3.6.1. The junction integer represents the type of junction, while the possibility contains the possibilities PICO has at a crossing. The possibility value is a combination of the junction and possible ways out. For example, a junction of type 5 (left and right a corridor) can have blind ways left, right or both. Therefore it is possible that the junction message contains a 5 and the possibility message a 3 (Only right is a way out). This possibility integer will be published on demand to the strategy node.

| Left . | Straight . | Right . | Junction . | Possibility |

| 1 | 0 | 0 | 1 | 1, 8 |

| 0 | 1 | 0 | 2 | 2 |

| 0 | 0 | 1 | 3 | 3, 8 |

| 1 | 1 | 0 | 4 | 1, 2, 4, 8 |

| 1 | 0 | 1 | 5 | 1, 3, 5, 8 |

| 0 | 1 | 1 | 6 | 2, 3, 6, 8 |

| 1 | 1 | 1 | 7 | 1 up to 8 |

| 0 | 0 | 0 | 8 | 8 |

table 3.6.1: Possibilities on several junctions

In total, there are 26 different combinations that can occur. At a 4 way crossing, junction is 7, there are 8 possible combinations! All these combinations are tested in simulation and the PICO could detect all possibilities very well as you can see in figure 3.6d, 3.6c & 3.6b. PICO sees three corridors and publishes a junction with value 7. But he can only go into the left or straight corridor and therefore he publishes a possibility with value 4.

Fig 3.6d: Example crossing (junction = 7, Possibility = 4)

Visualization node

As soon as we started programming an efficient way for debugging was chosen. Putting print statements everywhere is not very efficient, so we decided to visualize as much as possible. The standard library of C++ does not support visualization, so an extra library (OpenCV) was added already in the third week. This library contains visualization functions, but there are also many image processing functions that can be used in the arrow detection node and other functions. At the corridor competition we already had some visualization, but this was not implemented in a separate node. This was also the problem whereby we failed at the corridor competition. Due to the imshow() function, we were publishing the velocity message on a very low frequency, ± 0.5Hz instead of the desired 20 Hz. At that point we decided to make an independent visualization node which will be run on a separate laptop. Through this simple modification, the nodes which run on PICO will publish and read at 20 Hz. The visualization node will read the topics at a lower 10Hz rate.

The visualization node listens to three topics, which contains three images we show:

- The first image contains the xy graph PICO sees, which is shown in figure 3.7a. This image shows the laser data converted to Cartesian coordinates and is used by the drive-straight nodes. The Hough-transformation uses this image for detect the nearest lines.

- The laser data will be plot in the second image, which is shown in figure 3.6b. The distance is plotted against the angle on the y and x axis respectively. This plot will be used to define thresholds and regions for turning and junction detection.

- The last image contains the derivative of the laser data, which is shown in figure 3.6c. The absolute value of the derivative function is used to detect gaps in a corridor. These gaps are used by the junction decision making function.

Fig 3.7a: Cartesian reconstruction of laserdata

By visualizing as much as possible, debugging went quite fast. Fine-tuning the interesting regions and the thresholds went much easier. The threshold values for example must be lower in the real maze and higher in the simulation. More insight in the data is obtained when the data is plotted, because some strange behavior like noise in the laser data was seen directly. Also the images are used in the presentation and on the wiki page, for clarification. A picture is worth as much as thousand words.

Arrow recognition node

As mentioned before, we did incorporate arrow recognition in the software. We kept the results on a scrict information-output basis (using a cout//). The arrow recognition software was constructed as a separate node which communicates with the central strategy node. We first convert the video (bag, see step 1 of figure 3.8b from red, green and blue (RGB) to black and white (BW). Next, we apply a blur filter to the image. This is a openCV function and smooths the image. After this a Canny edge detector is applied. We now have the contours displayed as displayed in step 2 of figure 3.8b. Next, we apply a Hough transform to this contour. The options are tweaked such that only the arrows tail and head is found by the Hough transform. Now we have arrived at step 3 of figure 3.8b.

fig 3.8a: Flowchart for arrow recognition

When online, these functions output their data to the GUI as displayed in figure 3.8b.

fig 3.8b: Steps involving arrow recognition

Next, we calculate the gradient ([math]\displaystyle{ K_i=\frac{dy_i}{dx_i} }[/math], i=1..n, n=no. found lines) and average x-value (indicated by the points in figure 3.8c. We couple these values and then we sort these by the magnitude of the gradients. These variables are shown in the figure below.

Fig 3.8c: Arrow properties

We want to compare the head of the arrow with its tail. If the average x position of the head is smaller than its tail we know it points to the left and vice versa to the right. We know that [math]\displaystyle{ K_1 }[/math] has the largest gradient and [math]\displaystyle{ K_n }[/math] the lowest. The tail is somewhere in between. So if we compare the average x corresponding to the maximum gradient with that of the median gradient we can find the orientation of the arrow by checking at which side (x) the maximum gradient is w.r.t. the median gradient.

Evaluation

In the youtube-video below the simualation of PICO in the maze is shown:

Maze evaluation

During testing on the day before the final competition we find out that some thresholds had to be adjusted, but we did not have enough time to make it perfect. This was the reason why we did not succeeded in the competition. For example, we set the accuracy of the turning from 95% to 90%. In theory the turns should be a little less than 90 degrees. We did this because when PICO stops turning, the rear wheels are not still not in the good position for driving forward. So, when PICO wants to drive straight ahead it also turns a little further than the angle that is already made. Unfortunately PICO still made a larger turn than 90 degrees.

PICO hit the wall, because he didn't detect it very well. In our safe_drive function we made the decision on more than one data points. We thought this was more robust against noise. Because PICO drove against the corner of the wall with an angle of approximately 45 degrees, to less data points were inside the region of 30cm of PICO and he hit the wall. This never happened during testing, so we taught the decision was safe enough. It is unfortunate that we found out that this was not the case during the final competition.

Improvements

When we monitored the rostopics we saw that the decisions were made right. So we are sure that, with a little more test time, we would be able to make much better turns and solve the maze. Another improvement that we could implement is a self-learning strategy node, that measures the width of the corridors. Knowing this width, the thresholds can be fine tuned. When the thresholds are not hard coded and the strategy node could set them itself, it would be easier to make turns of exactly 90 degrees in every corridor.

References

- A. Alempijevic. High-speed feature extraction in sensor coordinates for laser rangefinders. In Proceedings of the 2004 Australasian Conference on Robotics and Automation, 2004.

- J. Diaz, A. Stoytchev, and R. Arkin. Exploring unknown structured environments. In Proc. of the Fourteenth International Florida Artificial Intelligence Research Society Conference (FLAIRS-2001), Florida, 2001.

- B. Giesler, R. Graf, R. Dillmann and C. F. R. Weiman (1998). Fast mapping using the log-Hough transformation. Intelligent Robots and Systems, 1998.

- Laser Based Corridor Detection for Reactive Navigation, Johan Larsson, Mathias Broxvall, Alessandro Saffiotti http://aass.oru.se/~mbl/publications/ir08.pdf