PRE2018 3 Group9


=Preface=

=== Group Members ===

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="3"
!style="text-align:left;"| Name
|-
|}

===Initial Concepts===

After discussing various topics, we came up with the following shortlist of projects that seemed interesting to us:

*Drone interception
*A tunnel-digging robot
*A firefighting drone for finding people
*Delivery UAV (blood in Africa, parcels, medicine, etc.)
*Voice-controlled robot (a general technique with many applications)
*A spider robot that can be used to get to hard-to-reach places

==Chosen Project: Drone Interception==

===Introduction===

According to recent industry forecasts, the unmanned aerial systems (UAS) market is expected to reach 4.7 million units by 2020.<ref name="rise">Allianz Global Corporate & Specialty (2016). [https://www.agcs.allianz.com/assets/PDFs/Reports/AGCS_Rise_of_the_drones_report.pdf Rise of the Drones] Managing the Unique Risks Associated with Unmanned Aircraft Systems</ref> Nevertheless, regulatory and technical challenges need to be addressed before such unmanned aircraft become as common and as publicly accepted as their manned counterparts. The impact of a mid-air collision between a UAS and a manned aircraft is a concern to both the public and government officials at all levels. Worldwide, the primary goal of regulating UAS operations in national airspace is to ensure an appropriate level of safety. Research is therefore needed to determine airborne hazard impact thresholds for collisions between unmanned and manned aircraft, and even for collisions with people on the ground, as this FAA study already illustrates.<ref name="ato">Federal Aviation Administration (FAA) (2017). [http://www.assureuas.org/projects/deliverables/a3/Volume%20I%20-%20UAS%20Airborne%20Collision%20Severity%20Evaluation%20-%20Structural%20Evaluation.pdf UAS Airborne Collision Severity Evaluation] Air Traffic Organization, Washington, DC 20591</ref>

With recent developments in small and cheap electronics, unmanned aerial vehicles (UAVs) are becoming more affordable for the public, and the number of drones flying in the sky is increasing. This poses several potential risks, which may jeopardize not only our daily lives but also the security of various high-value assets such as airports, stadiums and similar protected airspaces. The latest incident involving a drone invading the airspace of an airport took place in December 2018, when Gatwick Airport had to be closed and hundreds of flights were cancelled following reports of drone sightings close to the runway. The incident caused major disruption, affecting about 140,000 passengers and over 1,000 flights. This was the biggest disruption since ash from an Icelandic volcano shut down air traffic across Europe in 2010.<ref name="gatwick">From Wikipedia, the free encyclopedia (2018). [https://en.wikipedia.org/wiki/Gatwick_Airport_drone_incident Gatwick Airport drone incident] Wikipedia</ref>

Tests performed at the University of Dayton Research Institute show that even a small drone can cause major damage to an airliner’s wing in a collision at more than 300 kilometers per hour.<ref name="dayton">Pamela Gregg (2018). [https://www.udayton.edu/blogs/udri/18-09-13-risk-in-the-sky.php Risk in the Sky?] University of Dayton Research Institute</ref>

The Alliance for System Safety of UAS through Research Excellence (ASSURE), the FAA's Center of Excellence for UAS research, also conducted a study<ref name="assure_11">ASSURE (2017). [http://www.assureuas.org/projects/deliverables/sUASAirborneCollisionReport.php ASSURE] Alliance for System Safety of UAS through Research Excellence (ASSURE)</ref> on the collision severity of unmanned aerial systems and evaluated the impact that such collisions might have on passenger airplanes. The study is relevant here because it shows how much damage small drones, or even radio-controlled airplanes, can inflict on large airplanes. This poses a serious safety threat to aircraft worldwide. Airplanes are most vulnerable to these types of collisions during take-off and landing, so protecting the airspace around airports is of utmost importance.

This project will mostly focus on the importance of interceptor drones for an airport’s security system and on the impact of rogue drones on such a system. However, drone terrorism, privacy violations and drone spying will also be discussed, and the impact that these drones can have on users, society and enterprises will be analyzed. This topic has been widely debated worldwide over the past years, so we will also provide an overview of current drone regulations and the restrictions that apply when flying drones in certain airspaces. With the research carried out for this project, together with the various deliverables that will be produced, we hope to shed some light on the importance of having systems such as interceptor drones in place to protect the airspace of the future.

===Problem Statement===

The problem statement is: ''How can autonomous unmanned aerial systems be used to quickly intercept and stop unmanned aerial vehicles in airborne situations without endangering people or property?''

A UAV (unmanned aerial vehicle) differs from an unmanned aerial system (UAS) in one major way: the term UAV refers only to the aircraft itself, whereas a UAS also includes the ground control and communication units.<ref name="uas">From Wikipedia, the free encyclopedia (2019). [https://en.wikipedia.org/wiki/Unmanned_aerial_vehicle Unmanned aerial vehicle] Wikipedia</ref>

===Objectives===

*Determine the best UAS that can intercept a UAV in airborne situations
*Improve the chosen concept
*Create a design for the improved concept, including software and hardware
*Build a prototype
*Make an evaluation based on the prototype

==Project Organisation==

===Approach===

The aim of our project is to deliver a prototype and a model of how an interceptor drone can be implemented. The approach to reaching this goal consists of multiple steps.

First, we will go through research papers and other sources that describe the state of the art (SotA) of such drones and their components. This gives our group a grasp of the current technology and introduces us to new developments in this field. It also creates a foundation for the project that we can build on. The state of the art also gives valuable insight into the possible solutions we can think of and into whether their implementation is feasible, given our knowledge and the limited time. The SotA research will be carried out by studying the literature and recent reports from research institutes and the media, and by analyzing patents that are strongly connected to our project.

Next, we will analyze the problem from a USE (user, society, enterprise) perspective. An important source for this analysis is the state-of-the-art research, where the impact of these drone systems on different stakeholders is discussed. The USE aspects are of utmost importance for our project. Every engineer should strive to develop new technologies that help not only the users but also society as a whole, and should anticipate the possible negative consequences of the systems they develop. This analysis will lead to a list of requirements for our design. Moreover, we will discuss the impact of our solutions on each of the USE categories.

Finally, we hope to develop a prototype of an interceptor drone. We do not plan to build a physical prototype, as the project is too short for this task. Instead, we plan to create a 3D model of the drone and to detail how such a system would be implemented at an airport. To show how the tracking works, we are building a demo tracker. To complement this, we also plan to build an application that serves as a dashboard for the drones, tracking parameters such as their position and overall status. In addition, we will research a list of hardware components that suit our project and would result in a cost-effective product. For the software, a UML diagram will first be created to represent the system that would be implemented later. Together with this wiki page, these will be our final deliverables for the project.

Below we summarize the main steps of our approach:

*Research the chosen project through a SotA literature analysis
*Analyze the USE aspects and determine the requirements of our system
*Consider multiple design strategies
*Choose the hardware and create the UML diagram
*Work on the prototype (3D model and mobile application)
*Create a demo of the tracking functionality
*Evaluate the prototype

===Milestones===

Within this project there are four major milestones:

*After week 2, the best UAS has been chosen, options for improving this system have been identified, and there is a clear vision of the users: it is known who the users are and what their requirements are.
*After week 5, the software and hardware for the improved system have been designed, and a prototype has been made.
*After week 7, the tracker demo is finished so that it can be shown at the presentations.
*After week 8, the wiki page is finished and updated with the results of testing the prototype. Future developments have also been investigated and added to the wiki page.

===Deliverables===

*This wiki page, which contains all of our research and findings
*A presentation, which summarizes what was done and what our most important results are
*A prototype
*A video of the tracker demo

===Planning===

The project plan is given as a table in which every team member has specific tasks for each week. There are also group tasks that every team member should work on. The plan also includes the milestones and deliverables of the project.

{| class="wikitable" style="vertical-align:middle; border-collapse:collapse;" border="2"
! rowspan="2" style="text-align: center; font-weight:bold; background-color:#dae8fc;" | Name
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#b6d7a8;" | Week #1
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#d9ead3;" | Week #2
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#b6d7a8;" | Week #3
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#d9ead3;" | Week #4
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#b6d7a8;" | Week #5
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#d9ead3;" | Week #6
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#b6d7a8;" | Week #7
! rowspan="1" style="text-align: center; font-weight:bold; background-color:#d9ead3;" | Week #8
|-
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Research
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Requirements and USE Analysis
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Hardware Design
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Software Design
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Prototype / Concept
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Proofreading
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Future Developments
| style="text-align: center; font-style:italic; background-color:#fff2cc;" | Conclusions
|-
| rowspan="4" style="text-align: center;" | Claudiu Ion
| Make a draft planning
| Add requirements for drone on wiki
| Regulations and present situation
| Write pseudocode for interceptor drone
| Mobile app development
| Proofread the wiki page and correct mistakes
| Make a final presentation
| Review wiki page
|-
| Summarize project ideas
| Improve introduction
| Wireframe the dashboard app
| Build UML diagram for software architecture
| Improve UML with net rounds
| Add system security considerations
| Add new references on wiki (FAA)
| Add final planning on wiki
|-
| Write wiki introduction
| Check approach
|
| Start design for dashboard mobile app
| Add user interface form
| Work on demo (YOLO neural network)
| Export app screens to presentation
|
|-
| Find 5 research papers
|
|
|
| Add login page to app
|
|
|
|-
| rowspan="4" style="text-align: center;" | Endi Selmanaj
| Research 6 or more papers
| Elaborate on the SotA
| Research the hardware components
| Mathematical model of net launcher
| Write on the security of the system
| Write on hardware/software interface
| Work on the presentation
| Present the work done
|-
| Write about the USE aspects
| Review the whole wiki page
| Draw the schematics of the electronics used
| What makes a drone friend or enemy
| Try to fix the LaTeX
|
| Write on the base station
| Finalize writing on base station
|-
|
| Improve approach
|
| Start with the simulation
|
|
| Research drone detection radars
| Review the wiki
|-
|
| Check introduction and requirements
|
|
|
|
|
|
|-
| rowspan="4" style="text-align: center;" | Martijn Verhoeven
| Find 6 or more research papers
| Update SotA
| Research hardware options
| List of parts and estimation of costs
| Target recognition & target following
| Review wiki page
| Finalise demo and presentation
| Finalise wiki page
|-
| Fill in draft planning
| Check USE
| Start thinking about electronics layout
| Target recognition & target following
| Image recognition
| Contact Bluejay
| Make ArUco marker detection demo
|
|-
| Write about objectives
|
|
|
|
| Work on demo
|
|
|-
|
|
|
|
|
|
|
|
|-
| rowspan="4" style="text-align: center;" | Leo van der Zalm
| Find 6 or more research papers
| Update milestones and deliverables
|
| Finish hardware research
| User interview
| Review wiki page
| Finish experts part
| Finalise wiki page
|-
| Search for information about the subject
| Check SotA
| Research hardware components
| Make a bill of costs and list of parts
| Confirmation of having acquired target
| Contact the army
| Improve justification
|
|-
| Write problem statements
| USE analysis including references
|
| Improve design of net gun
|
| Justify requirements and constraints
| Improve planning
|
|-
| Write objectives
|
|
|
|
|
|
|
|-
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
| style="background-color:#fe0000; color:#efefef;" |
|-
| rowspan="5" style="text-align: center;" | Group Work
| Introduction
| Expand on the requirements
| Research hardware components
| Interface design for the mobile app
| Finish the software parts
| Brainstorm about the presentation
| Work on the final presentation
|
|-
| Brainstorming ideas
| Expand on the state of the art
| Research systems for stopping drones
| Start working on 3D drone model
| Finish simulation of drone forces
| Make a draft for the presentation
| Finish the demo
|
|-
| Find papers (5 per member)
| Society and enterprise needs
|
| Start thinking about the software
| Start contacting experts
| Look for ideas for the demo
| Improve the planning
|
|-
| User needs and user impacts
| Work on UML activity diagram
|
|
|
|
|
|
|-
| Define the USE aspects
| Improve week 1 topics
|
|
|
|
|
|
|-
| rowspan="4" style="text-align: center;" | Milestones
| Decide on research topic
| Add requirements to wiki page
| USE analysis finished
| 3D drone model finished
| Simulation of drone forces
| Mobile app prototype finished
| The demo(s) work
| Wiki page is finished
|-
| Add research papers to wiki
| Add state of the art to wiki page
| UML activity diagram finished
| List of parts and estimation of costs
|
| Information from experts is processed
| Presentation is finished
|
|-
| Write introduction for wiki
|
| Research into building a drone
|
|
|
|
|
|-
| Finish planning
|
| Research into the costs involved
|
|
|
|
|
|-
| rowspan="2" style="text-align: center;" | Deliverables
|
|
|
|
|
| Mobile app prototype
| Demo tracker(s)
| Final presentation
|-
|
|
|
|
|
| 3D drone model
|
| Wiki page
|}

=USE=

In this section, we analyze the different aspects involving users, society and enterprises in the context of interceptor drones. We start by identifying the key stakeholders in each of these categories and then give a more in-depth analysis. After identifying all these stakeholders, we state which of them our project mainly focuses on. The topic of interceptor drones can be approached from many angles. Focusing on a specific group of stakeholders will therefore help us produce a better prototype and conduct better research for that group. Moreover, each of these stakeholders has different concerns, which are discussed separately below.

===Users===

When analyzing the main users of an interceptor drone, it quickly becomes clear that airports are the most interested in such a technology. The airspace within and around airports is heavily restricted and regulated. Unauthorized drones are therefore a real danger not only to the operations of airports and airlines, but also to the safety and comfort of passengers. As previous incidents have shown, intruder drones violating airport airspace lead to airport shutdowns, which result in long delays and large financial losses.

Another key group of users consists of governmental agencies and civil infrastructure operators that want to protect certain high-value assets, such as embassies. Intruder drones flying above such a place could lead to serious problems, such as diplomatic disputes, and could even harm the relations between the two countries involved. One could even argue that a drone could be used with the deliberate, malicious intent of straining such relations.

Another example worth mentioning is the 2014 match between Serbia and Albania. During this match, a drone carrying an Albanian nationalist banner flew over the pitch, which led to a pitch invasion by Serbian fans and a full-scale riot. This incident led to retaliation from both Serbians and Albanians, which resulted in significant material damage and further damaged the already fragile relations between the two countries.<ref name="euro">From Wikipedia, the free encyclopedia (2019). [https://en.wikipedia.org/wiki/Serbia_v_Albania_(UEFA_Euro_2016_qualifying) Serbia v Albania (UEFA Euro 2016 qualifying)] Wikipedia</ref>

We can also imagine such an interceptor drone being used by the military or other government branches to fend off terrorist attacks. Being able to deploy such a countermeasure (for example, on a battlefield) would not only improve the safety of people but also help deter terrorists from carrying out such acts of violence in the first place.

Lastly, a smaller group of users that is still worth considering consists of individuals who are likely to be targeted by drones and thus have their privacy violated. This could be the case for celebrities or other VIPs whom the media target to obtain information about their private lives.

To summarize, from a user perspective we think that the research that goes into this project can benefit airports the most. One could say that we are taking a utilitarian approach to this problem, as implementing a security system for airports would produce the greatest good for the greatest number of people.

===Society===

When thinking about how society could benefit from a system that detects and stops intruder drones, the best example to consider is again the airport scenario. Whenever an unauthorized drone enters the restricted airspace of an airport, this causes major concern for the safety of passengers. Moreover, it causes long delays and creates big problems for the operations of the airport and of the airlines, which lose a lot of money. Apart from this, rogue drones around airports cause logistical nightmares for airports and airlines alike. Not only do they create bottlenecks in the passenger flow through the airport, but airlines might also need to rebook passengers onto other routes and planes. Cargo flights will also suffer delays, which could lead to bigger problems further down the supply chain, such as medicine not reaching patients in time. All of these problems are a great concern for society.

Another big issue for society that interceptor drones could help solve is safely stopping a rogue drone from attacking large crowds of people, for example at public events. To provide the necessary protection in these situations, it is crucial that the interceptor drone reacts very quickly and stops the intruder in a safe and controlled manner, without putting the lives of other people in danger. Again, when we think in terms of providing the greatest good for the greatest number of people, the airport security example stands out, which is why the research in this project focuses on it.

===Enterprise===

When analyzing the impact that interceptor drones will have on enterprises in the context of airport security, we identify two main players: the airport security and the airlines operating from that airport. Moreover, the airlines can be further divided into two categories: those that transport passengers and those that transport cargo (some airlines do both).

From the airport’s perspective, a drone sighting near the airport requires a complete shutdown of all operations for at least 30 minutes, according to current regulations.<ref name="alex_hern">Alex Hern, Gwyn Topham (2018). [https://www.theguardian.com/technology/2018/dec/20/how-dangerous-are-drones-to-aircraft How dangerous are drones to aircraft?] The Guardian</ref> As long as the airport is closed, it loses money and suffers operational problems. Furthermore, when the airport reopens, congestion causes even more problems, since all planes want to leave at the same time, which is obviously not possible. This can lead to incidents on the tarmac due to improper handling or lack of space at an airport that may already be overfilled with planes.

Whether we are talking about passenger transport or cargo, drones violating an airport’s airspace translate directly into large losses, long delays and unhappy passengers. Airlines need to compensate passengers if a flight is cancelled, and in some cases they also need to cover accommodation expenses. For cargo companies, a delay in delivering packages can literally be a matter of life or death when it comes to medicine that needs to reach patients. Moreover, disruptions in the transport of goods can greatly affect the supply chains of numerous other businesses. These events (rogue drones near airports) can therefore have even bigger ramifications.

Having analyzed the USE implications of intruder drones in the context of airport security, we now focus on researching different types of systems that can be deployed to detect, stop and catch such intruders as quickly as possible. This will help mitigate both the risks and the various negative implications that such events have on the USE stakeholders mentioned above.

===Experts===

We have explained who the main users are. We have not yet explained how useful our idea would be in practice or whether it could be implemented at airports. To find out how our idea could be implemented, we contacted several airports: Schiphol Airport, Rotterdam The Hague Airport, Eindhoven Airport, Groningen Eelde Airport and Maastricht Aachen Airport. Only Eindhoven Airport responded to our questions.

We asked the airports the following questions:

*Are you aware of the problem that rogue intruder drones pose?
*How are you taking care of this problem now?
*Who is responsible if things go wrong?
*How does this process go? (By this we mean the process from the moment a rogue drone is detected until it is taken down.)
*Do you see room for innovation, or are you satisfied with the current process?

Their response made clear that they are very aware of the problem, but they told us that it is not their responsibility. The airport grounds are the property of the Dutch army, which is also responsible for this problem. Because the problem is new, it is handled by the army's innovation center.

This answer gave us some information, but we still did not know anything about the current process. We therefore e-mailed the army's innovation center. We also contacted Delft Dynamics, because they also deal with this problem and probably know more about it. Neither the army's innovation center nor Delft Dynamics responded to our e-mails.

===Requirements===

To better understand the needs and design of an interceptor drone, a list of requirements is necessary. A rogue UAV can be detected, intercepted, tracked and stopped in various ways. However, the requirements for the interceptor drone need to be analyzed carefully. Any design for such a system must ensure the safety of bystanders and minimize all risks involved in taking down the rogue UAV. Equally important are the constraints on the interceptor drone and, finally, our preferences for the system. To prioritize the requirements, the MoSCoW model was used. Each requirement has one of four priority levels: must have (''M''), should have (''S''), could have (''C'') or won't have this time (''W''). We now give the RPC (requirements, preferences and constraints) table for the autonomous interceptor drone and later provide more details about each requirement.
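The MoSCoW grouping can be expressed in a few lines. The Python sketch below is only an illustration and is not part of the project's software. The names `MOSCOW_RANK`, `PRIORITIES` and `group_by_priority` are hypothetical. The priorities for R1 to R20 are transcribed by hand from the table below, and ''W'' is assumed to mean "won't have this time".

```python
from collections import defaultdict

# MoSCoW levels, most important first. "W" is assumed to mean
# "won't have (this time)"; no requirement in the table uses it.
MOSCOW_RANK = {"M": 0, "S": 1, "C": 2, "W": 3}

# Hypothetical transcription of the priority column (R1 to R20).
PRIORITIES = {
    "R1": "M", "R2": "M", "R3": "M", "R4": "S", "R5": "S",
    "R6": "M", "R7": "M", "R8": "M", "R9": "C", "R10": "C",
    "R11": "M", "R12": "S", "R13": "C", "R14": "C", "R15": "M",
    "R16": "M", "R17": "M", "R18": "S", "R19": "M", "R20": "C",
}


def group_by_priority(priorities: dict[str, str]) -> dict[str, list[str]]:
    """Group requirement IDs by MoSCoW level, most important level first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for req_id, level in priorities.items():
        if level not in MOSCOW_RANK:
            raise ValueError(f"{req_id}: unknown MoSCoW level {level!r}")
        groups[level].append(req_id)
    # Only levels that actually occur are returned, ordered M, S, C, W.
    return {level: groups[level] for level in sorted(groups, key=MOSCOW_RANK.get)}


if __name__ == "__main__":
    for level, ids in group_by_priority(PRIORITIES).items():
        print(level, ", ".join(ids))
```

Running the sketch lists the eleven must-have requirements first, then the should-haves and could-haves. The MoSCoW model suggests addressing them in that order.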

{| class="wikitable" style="vertical-align:middle; border-collapse:collapse;" border="2"
! style="text-align: center; font-weight:bold;" | ID
! style="text-align: center; font-weight:bold;" | Requirement
! style="text-align: center; font-weight:bold;" | Preference
! style="text-align: center; font-weight:bold;" | Constraint
! style="text-align: center; font-weight:bold;" | Category
! style="text-align: center; font-weight:bold;" | Priority
|-
| style="text-align: center; font-style:italic;" | R1
| Detect rogue drone
| Long-range system for detecting intruders
| Does NOT require human action
| Software
| M
|-
| style="text-align: center; font-style:italic;" | R2
| Autonomous flight
| Fully autonomous drone
| Does NOT require human action
| Software & Control
| M
|-
| style="text-align: center; font-style:italic;" | R3
| Object recognition
| Accuracy of 100%
| Operator is able to intervene
| Software
| M
|-
| style="text-align: center; font-style:italic;" | R4
| Detect rogue drone's flying direction
| Accuracy of 100%
|
| Software & Hardware
| S
|-
| style="text-align: center; font-style:italic;" | R5
| Detect rogue drone's velocity
| Accuracy of 100%
|
| Software & Hardware
| S
|-
| style="text-align: center; font-style:italic;" | R6
| Track target
| Tracking target for at least 30 minutes
| Allows operator to correct drone
| Software & Hardware
| M
|-
| style="text-align: center; font-style:italic;" | R7
| Velocity of 50 km/h
| Drone is as fast as possible
|
| Hardware
| M
|-
| style="text-align: center; font-style:italic;" | R8
| Flight time of 30 minutes
| Flight time is maximized
|
| Hardware
| M
|-
| style="text-align: center; font-style:italic;" | R9
| FPV live feed (60 FPS)
| Drone records and transmits flight video
| Records all flight video footage
| Hardware
| C
|-
| style="text-align: center; font-style:italic;" | R10
| High-resolution camera
| Flight video is as clear as possible
| Allows human identification of drones
| Hardware
| C
|-
| style="text-align: center; font-style:italic;" | R11
| Stop rogue drone
| Is always successful
| Cannot endanger others
| Hardware
| M
|-
| style="text-align: center; font-style:italic;" | R12
| Stable connection to operation base
| Drone is always connected to base
| If connection is lost, drone pauses intervention
| Software
| S
|-
| style="text-align: center; font-style:italic;" | R13
| Sensor monitoring
| Drone sends sensor data to base and app
| Critical sensor information is sent to base
| Software
| C
|-
| style="text-align: center; font-style:italic;" | R14
| Drone autonomously returns home
| Drone always gets home by itself
|
| Software
| C
|-
| style="text-align: center; font-style:italic;" | R15
| Auto take-off
|
| Drone can take off autonomously at any moment
| Control
| M
|-
| style="text-align: center; font-style:italic;" | R16
| Auto landing
|
| Drone can land autonomously at any moment
| Control
| M
|-
| style="text-align: center; font-style:italic;" | R17
| Auto leveling (in flight)
| Drone is able to fly in heavy weather
| Does not require human action
| Control
| M
|-
| style="text-align: center; font-style:italic;" | R18
| Minimal weight
|
|
| Hardware
| S
|-
| style="text-align: center; font-style:italic;" | R19
| Cargo capacity of 8 kg
|
| Drone is able to carry captured drone
| Hardware
| M
|-
| style="text-align: center; font-style:italic;" | R20
| Portability
| Drone is portable and easy to transport
| Does not hinder drone's robustness
| Hardware
| C
|-
| style="text-align: center; font-style:italic;" | R21
| Fast deployment

| Drone can be deployed in under 5 minutes | |||

| Does not hinder drone's functionality | |||

| Hardware | |||

| S | |||

|- | |||

| style="text-align: center; font-style:italic;" | R22 | |||

| Minimal costs | |||

| System cost is less than 100000 euros | |||

| | |||

| Costs | |||

| S | |||

|} | |||

===Justification Requirements=== | |||

Starting with R1, our drone must be able to detect a rogue drone before it can take it down. Our preference is a long-range detection system, since this allows fewer detectors to cover a larger airspace. As the constraint of R1 shows, we want the system to be autonomous, which R2 specifies further. Autonomy is required to make the system respond as fast as possible, as humans are less reliable in that regard. In addition, automatic systems allow for higher accuracy, as they can be controlled to generate optimal solutions. The difference between autonomous flight (R2) and full autonomy of the system lies in detection and deployment: in our system, the intruder is detected autonomously and the interceptor drone is also deployed autonomously. R2 only takes effect after deployment.

R3 to R5 concern recognition and following. R3 states that our drone must be able to recognize different objects. R4 and R5 have a lower priority but are still important: detecting the rogue drone's flying direction and speed is not strictly necessary, but it lets the interceptor follow the rogue drone more closely, and closer following improves the aim of the net launcher, which increases the chance of a catch. As with R3, we prefer that R4 and R5 are achieved with 100 percent accuracy, since this improves our chance of success. The drone must also be able to track the rogue drone in order to catch it. If the rogue drone escapes, we cannot find out why it was there and will not be able to prevent it from happening again; R6 therefore gets a high priority. Its constraint allows an operator to interrupt the tracking process, to prevent takedowns of friendly targets or to stop the drone if it disproportionately endangers others. R7 sets the minimum top speed: if our drone cannot keep up with the rogue drone, the intruder might escape. We prefer our drone to be as fast as possible, but we have to keep in mind that a higher speed drains the battery faster. 50 km/h has been chosen because it lets the drone cross a 5 km airfield in about 6 minutes, well within 10 minutes, and it is also fast enough to follow fast, light drones. The drone must have a flight time of at least 30 minutes (R8), because otherwise it might not have enough battery life to engage in a dogfight with an intruding drone. This value is chosen because it is the average flight time of a prosumer drone, the most advanced class of commercially manufactured drones we can expect to encounter.
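A quick check of the numbers behind R7 and R8, assuming the drone flies in a straight line at exactly the 50 km/h of R7 (no acceleration, climbing or hovering):

<math display="block">t_{\text{cross}} = \frac{d}{v} = \frac{5\ \text{km}}{50\ \text{km/h}} = 0.1\ \text{h} = 6\ \text{min}</math>

<math display="block">d_{\text{flight}} = v \, t_{\text{flight}} = 50\ \text{km/h} \times 0.5\ \text{h} = 25\ \text{km}</math>

The second figure is the distance covered at 50 km/h over the full 30-minute flight time of R8; a real interception spends much of that time manoeuvring, so it is an optimistic bound.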

R9 and R10 concern the cameras on the drone, of which there are two. R9 covers the high-speed camera used to track the intruder. It needs low latency and a high frame rate so that the control can be optimized (see the section ''Automatic target recognition and following''). In addition, a high-resolution camera is needed so that the operator can identify the intruder; its stream is fed back to the base for inspection (R10). R11 is the most important RPC because it is the main goal of our drone: the requirement is to stop the rogue drone, and the preference is that this is always successful. Because we chose a net launcher (justified later on), the drone needs to reload at its base after every shot. The constraint of R11 echoes that of R6: the drone may never endanger others.

R12 concerns the connection to the operation base. A stable connection is required because the base needs to know what is happening: the drone can act on its own because it is autonomous, but human intervention may still be needed, and that is not possible if the base is not connected to the drone. We prefer that the drone is always connected to the base. When the connection is lost, however, the drone continues tracking but does not launch its net, so that it cannot capture a friendly drone. R13 concerns the sensor information that is returned to the base. With this information we can monitor the drone and adjust it for better performance, which allows intervention in case of, for example, mechanical failure. Because the data can be saved, it also helps us understand incidents better afterwards, prepare for upcoming incidents and find out how to resolve them even better. R14, R15 and R16 represent returning home, take-off and landing. These tasks are best performed autonomously, for the same reasons as given for R1 and R2.

Our drone also needs to withstand different types of weather. Auto-leveling in flight (R17) is required because the drone must remain stable in changing weather conditions, such as a change in wind speed; this is best done by sensors and computing power rather than by human control. We prefer that our drone can also fly in heavy weather, at least as heavy as a regular plane can handle, so that the system can always be used. R7 and R8 ask for maximum speed and maximum flight time, which requires the drone to have minimal weight (R18). Because the drone must carry the captured rogue drone, R19 requires a cargo capacity of 8 kg, based on the estimated weight of an intruder with possible cargo such as explosives. The drone should be transportable in case of emergency (R20), with the constraint that this does not compromise its robustness: a less robust drone breaks more easily, which decreases the chance of success. Lastly, R22 states that the system should be delivered at minimal cost so that it can be used at as many airports as possible. This price is based on a rough estimate, but it is much lower than the potential losses, which is why we consider it justified.

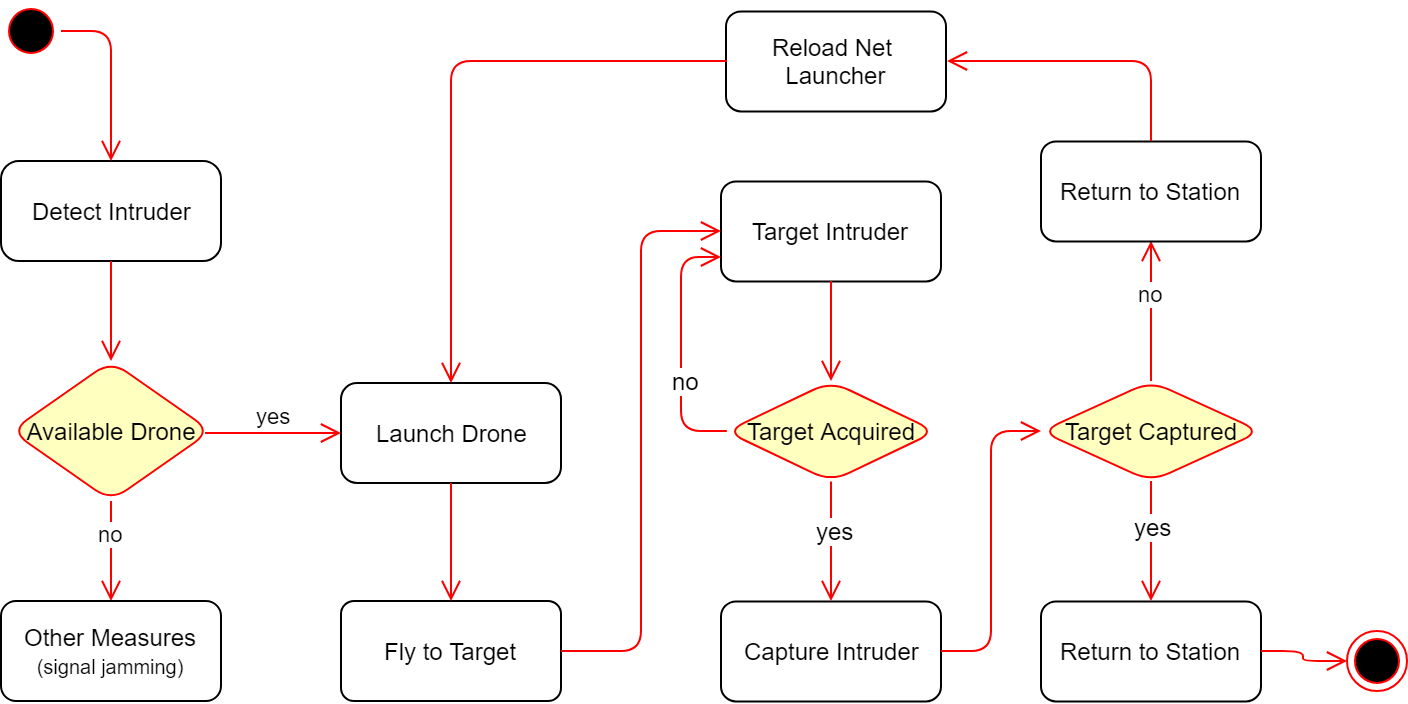

=== UML Activity Diagram === | |||

Activity diagrams, along with use case and state machine diagrams, describe what must happen in the system being modeled, which is why they are also called behavior diagrams. Since stakeholders have many issues to consider and manage, it is important to communicate clearly what the overall system should do and to map out process flows in a way that is easy to understand. For this purpose, we give an activity diagram of our system, including an overview of how the interceptor drone works. With it, we hope to demonstrate the logic of our system and also model some of the software architecture elements, such as methods, functions and the operation of the drone.

[[File:Uml.png|center|800px]] | |||
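The activity diagram itself is an image. As a hedged illustration of how such behaviour could be encoded in software, the sketch below models the interceptor as a small state machine. The state names and transition inputs are our own assumptions derived from the requirements table, not a transcription of the diagram, and missed shots or emergencies are deliberately left out.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical interceptor states, loosely following requirements R1-R16."""
    IDLE = auto()          # on the ground, waiting for a detection (R1)
    TAKING_OFF = auto()    # autonomous take-off (R15)
    TRACKING = auto()      # following the intruder (R6)
    NET_LAUNCHED = auto()  # net fired, carrying the captured drone (R11, R19)
    RETURNING = auto()     # autonomous return home (R14)
    LANDING = auto()       # autonomous landing (R16)


def next_state(state, *, intruder_detected=False, airborne=False,
               target_locked=False, link_ok=True, operator_abort=False,
               at_home=False, landed=False):
    """Return the next state for the given inputs (a simplified sketch)."""
    if state is State.IDLE:
        return State.TAKING_OFF if intruder_detected else State.IDLE
    if state is State.TAKING_OFF:
        return State.TRACKING if airborne else State.TAKING_OFF
    if state is State.TRACKING:
        if operator_abort:
            return State.RETURNING      # operator intervention (R6 constraint)
        if target_locked and link_ok:
            return State.NET_LAUNCHED   # only fire while connected to the base (R12)
        return State.TRACKING           # link lost: keep tracking, hold fire
    if state is State.NET_LAUNCHED:
        return State.RETURNING          # reload at the base after every shot (R11)
    if state is State.RETURNING:
        return State.LANDING if at_home else State.RETURNING
    if state is State.LANDING:
        return State.IDLE if landed else State.LANDING
    raise ValueError(f"unknown state: {state}")
```

For example, `next_state(State.TRACKING, target_locked=True, link_ok=False)` stays in `TRACKING`, which encodes the R12 rule that a drone without a base connection keeps following but does not launch its net.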

=Regulations= | |||

The world’s airspace is divided into multiple segments, each of which is assigned to a specific class. The International Civil Aviation Organization (ICAO) specifies this classification, to which most nations adhere. In the US, however, special rules and other regulations also apply to the airspace for reasons of security or safety.

The current airspace classification scheme is defined in terms of flight rules (IFR, instrument flight rules; VFR, visual flight rules; SVFR, special visual flight rules) and in terms of interaction with ATC (air traffic control). Generally, different airspaces allocate the responsibility for avoiding other aircraft to either the pilot or ATC.

===ICAO adopted classifications=== | |||

''Note: These are the ICAO definitions.'' | |||

*'''Class A''': All operations must be conducted under IFR. All aircraft are subject to ATC clearance. All flights are separated from each other by ATC. | |||

*'''Class B''': Operations may be conducted under IFR, SVFR, or VFR. All aircraft are subject to ATC clearance. All flights are separated from each other by ATC. | |||

*'''Class C''': Operations may be conducted under IFR, SVFR, or VFR. All aircraft are subject to ATC clearance (country-specific variations notwithstanding). Aircraft operating under IFR and SVFR are separated from each other and from flights operating under VFR, but VFR flights are not separated from each other. Flights operating under VFR are given traffic information in respect of other VFR flights. | |||

*'''Class D''': Operations may be conducted under IFR, SVFR, or VFR. All flights are subject to ATC clearance (country-specific variations notwithstanding). Aircraft operating under IFR and SVFR are separated from each other and are given traffic information in respect of VFR flights. Flights operating under VFR are given traffic information in respect of all other flights. | |||

*'''Class E''': Operations may be conducted under IFR, SVFR, or VFR. Aircraft operating under IFR and SVFR are separated from each other and are subject to ATC clearance. Flights under VFR are not subject to ATC clearance. As far as is practical, traffic information is given to all flights in respect of VFR flights. | |||

*'''Class F''': Operations may be conducted under IFR or VFR. ATC separation will be provided, so far as practical, to aircraft operating under IFR. Traffic Information may be given as far as is practical in respect of other flights. | |||

*'''Class G''': Operations may be conducted under IFR or VFR. ATC has no authority, but VFR minimums are to be known by pilots. Traffic Information may be given as far as is practical in respect of other flights. | |||

Special airspace: these areas may limit pilot operations. They consist of prohibited areas, restricted areas, warning areas, MOAs (military operations areas), alert areas and controlled firing areas (CFAs), all of which can be found on aeronautical charts.

Note: Classes A–E are referred to as controlled airspace. Classes F and G are uncontrolled airspace. | |||

Currently, each country is responsible for enforcing its own restrictions and regulations for flying drones, as there are no EU laws on this matter. Most countries distinguish two categories of drone pilots: recreational pilots (hobbyists) and commercial pilots (professionals). Depending on how a drone is used, certain regulations apply, and pilots may even need to obtain permits before flying.

For example, in the Netherlands, recreational drone pilots may fly at a maximum altitude of 120 m (only in Class G airspace) and need special permission to fly higher. Moreover, the drone must remain in sight at all times and the maximum takeoff weight is 25 kg. Recreational pilots do not need a license; however, flying at night requires special approval.

For commercial drone pilots, one or more permits are required depending on the situation. The RPAS (remotely piloted aircraft system) certificate is the most common license and allows pilots to fly drones for commercial use. The maximum height, distance and takeoff weight limits are higher than for recreational use; however, night-time flying still requires special approval and drones must still be flown in Class G airspace.
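An interception system first has to decide whether a detected flight breaks the rules at all. As a hedged sketch, the Dutch recreational rules summarised above can be encoded as simple checks. The `RecreationalFlight` fields are hypothetical, the no-fly zones are not modelled, and this is a simplified encoding of our summary, not of the regulations themselves.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RecreationalFlight:
    """Hypothetical description of a recreational drone flight in the Netherlands."""
    altitude_m: float
    airspace_class: str                 # ICAO class letter, e.g. "G"
    takeoff_weight_kg: float
    in_line_of_sight: bool
    at_night: bool
    night_approval: bool = False
    altitude_permission: bool = False   # special permission to fly above 120 m


def rule_violations(f: RecreationalFlight) -> List[str]:
    """Return the summarised recreational rules that this flight breaks (empty if none)."""
    problems = []
    if f.airspace_class.upper() != "G":
        problems.append("outside Class G airspace")
    if f.altitude_m > 120 and not f.altitude_permission:
        problems.append("above 120 m without permission")
    if f.takeoff_weight_kg > 25:
        problems.append("takeoff weight above 25 kg")
    if not f.in_line_of_sight:
        problems.append("drone not in sight")
    if f.at_night and not f.night_approval:
        problems.append("night flight without approval")
    return problems
```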

Apart from these rules, there are certain no-fly zones where flying drones is strictly forbidden: state institutions, federal or regional authority buildings, airport control zones, industrial plants, railway tracks, vessels, crowds of people, populated areas, hospitals, operation sites of police, military or search and rescue forces and, finally, the Dutch Caribbean islands of Bonaire, St. Eustatius and Saba. Failing to abide by the rules may result in a warning or a fine, and the drone may also be confiscated. The amount of the fine or the punishment depends on the type of violation; for example, the judicial authorities will consider whether the drone was being used professionally or as a hobby and whether people have been endangered.

As is usually the case with emerging technologies, rules and regulations fail to keep up with technological advancements. However, recent developments in the European Parliament aim to create a unified set of laws on the use of drones for all European countries. A recent study<ref name="economy_drones">EU Parliament (2018). [http://www.europarl.europa.eu/news/en/headlines/economy/20180601STO04820/drones-new-rules-for-safer-skies-across-europe Drones: new rules for safer skies across Europe] Civilian Drones: Rules that Apply to European Countries</ref> suggests that the rapidly developing drone sector will generate up to 150,000 jobs by 2050 and that in the future this industry could account for 10% of the EU’s aviation market, which amounts to 15 billion euros per year.

There is therefore a clear need to change the current regulations, which in some cases complicate cross-border trade in this fast-growing sector. As the previous example shows, unmanned aircraft (drones) weighing less than 25 kg are regulated at a national level, which leads to inconsistent standards across countries.

Following a four-month consultation period, the European Union Aviation Safety Agency (EASA) published a proposal<ref name="easa_proposal">European Union Aviation Safety Agency (2019). [https://www.easa.europa.eu/easa-and-you/civil-drones-rpas Civil Drones] European Union Aviation Safety Agency</ref> for a new regulation on unmanned aerial system operations in the open (recreational) and specific (professional) categories. On 28 February 2019, the EASA Committee gave its positive vote to the European Commission proposal to implement these regulations, which are expected to be adopted by 15 March 2019 at the latest. Although these are still small steps, EASA is working on enabling safe operations of unmanned aerial systems (UAS) across Europe’s airspace and the integration of these new airspace users into an already busy ecosystem.

=The Interceptor Drone= | |||

==Catching a Drone== | |||

[[File:Skywall_100.jpg|thumb|The Skywall 100 is a manual drone intercepting device]] | |||

The next step is to look at the device used to intercept the drone. This could be another drone, as suggested above, but there is also another option: shooting the drone down with a specialized launcher, like the ‘Skywall 100’ from OpenWorks Engineering.<ref name = "mp200">OpenWorks Engineering [https://openworksengineering.com/]</ref> This British company invented a net launcher specialized in shooting down drones. It is operated manually and has a short reload time. This makes taking down a drone easy and fast, but it has two big problems. The first is that the drone falls to the ground after it is shot down, so it could fall onto people or, even worse, inflict enormous damage if it is armed with explosives. The ‘Skywall 100’ therefore cannot be used in every situation. The second problem is that the launcher is manual, so the operator's life can be at risk when an armed drone must be taken down.

[[File:Interceptor_from_Delft_Dynamics.jpg|thumb|A drone catching another one by using a net launcher]] | |||

That leaves two alternatives in which another drone is used to catch the violating drone. No human lives are put at risk, and the violating drone can be delivered at a desired place. The first option is an interceptor drone that carries a net in which the drone is caught. This is an existing idea: a French company named MALOU-tech has built the Interceptor MP200.<ref name = "interceptor"> MALOU-tech [http://groupe-assmann.fr/malou-tech/]</ref> However, this way of catching a drone has drawbacks. On the one hand, such an interceptor drone can catch a violating drone and deliver it at a desired place. On the other hand, the relatively large interceptor drone must be as fast and agile as the smaller drone, which is hard to achieve. Also, the net is quite rigid, so when the violating drone collides with it, the interceptor drone must be stable enough to regain its balance; otherwise it will fall to the ground. Another problem is that the violating drone is caught in the net but not sealed in it: it can easily fall out, or not get caught at all. Drones with a frame that protects the rotor blades may not get caught, because their blades cannot get entangled in the net.

Drones like the Interceptor MP200 are good solutions for violating drones that need to be taken down, but we think there is a better option. If we mount a net launcher on the interceptor drone instead of the big net, performance improves because of the lower weight. And when the shot is aimed correctly, the violating drone is completely entangled in the net and cannot get out, even if it has blade guards. This is important when the drone is equipped with explosives: in that case we must be sure that the armed drone is completely neutralized, meaning that it cannot escape or crash in an unforeseen location. This is also an existing idea; Delft Dynamics built such a drone.<ref name="dronecatcher">Delft Dynamics [https://dronecatcher.nl/ DroneCatcher]</ref> Unlike our proposed design, however, this drone is not fully autonomous.

==Building the Interceptor Drone== | |||

Building a drone, like most other high-tech systems today, involves hardware as well as software design. In this part we focus on the software and give a general overview of the hardware, because our interests lie more in the software, where many more innovative leaps can still be made. In the hardware part, we provide an overview of drone design considerations and a rough estimate of what such a drone would cost. In the software part, we look at the software that makes this drone autonomous: how to detect the intruder, how to target it and how to ensure that it has been captured. A dashboard app is also shown, which displays real-time system information and provides critical controls.

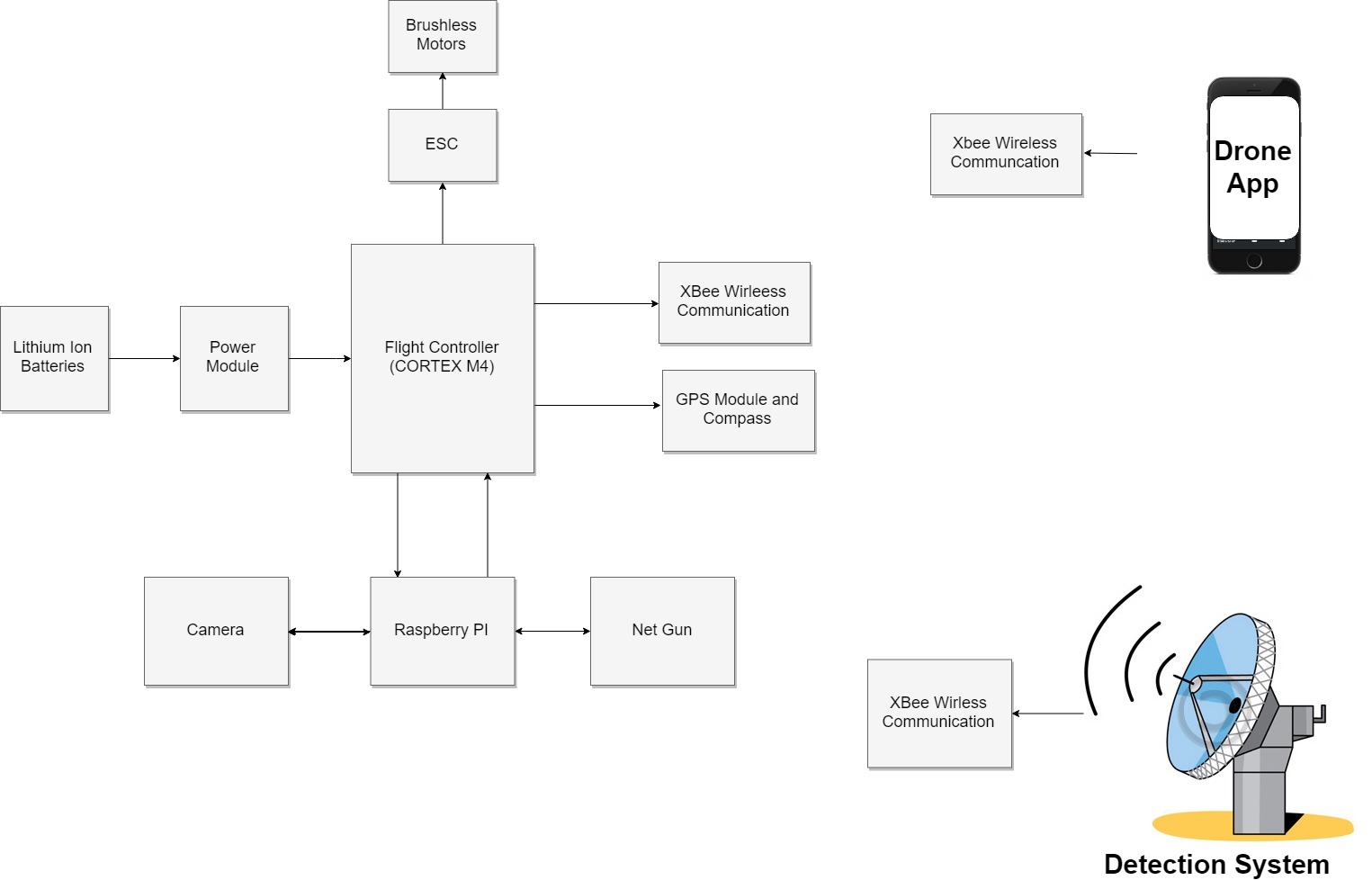

===Hardware/Software interface=== | |||

[[File:Hard.jpg|center|800px|The hardware design]] | |||

The main unit of the drone is the flight controller, an ST Cortex-M4 processor, which serves as the brain of the drone. The drone is powered by high-capacity lithium-ion batteries. Their power goes through a power module, which feeds the drone constant power while measuring the voltage and current passing through it, so that it can detect power anomalies or when the drone needs recharging. The code for autonomous flight runs on the Cortex-M4 chip, which controls the brushless DC motors that spin the propellers through the electronic speed controllers (ESCs). Each motor has its own ESC, so each motor is controlled separately.
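Because each motor has its own ESC, the flight controller must turn one desired throttle and roll/pitch/yaw correction into eight individual motor commands, a step usually called mixing. The sketch below shows such a mixer for an octocopter, written in Python for readability rather than as code for the Cortex-M4. It assumes a flat "X8" layout with motors every 45 degrees and alternating spin directions; real flight-control firmware defines its own motor geometries and sign conventions.

```python
import math

# Hypothetical flat octocopter layout: motor i sits at 22.5° + 45°·i,
# measured clockwise from the nose (x forward, y to the right).
MOTOR_ANGLES_DEG = [22.5 + 45.0 * i for i in range(8)]
# Adjacent motors spin in opposite directions so that their yaw torques can cancel.
SPIN_DIRECTION = [1 if i % 2 == 0 else -1 for i in range(8)]


def mix(throttle, roll, pitch, yaw):
    """Map normalised throttle/roll/pitch/yaw commands to eight motor outputs in [0, 1].

    Assumed sign conventions: positive roll lowers the right side, positive pitch
    raises the nose, positive yaw speeds up the motors with spin direction +1.
    """
    outputs = []
    for angle_deg, spin in zip(MOTOR_ANGLES_DEG, SPIN_DIRECTION):
        a = math.radians(angle_deg)
        x, y = math.cos(a), math.sin(a)  # motor position: forward, right
        # Left motors (y < 0) push harder for positive roll,
        # front motors (x > 0) push harder for positive pitch.
        value = throttle - roll * y + pitch * x + yaw * spin
        outputs.append(min(1.0, max(0.0, value)))  # clamp to the ESC command range
    return outputs
```

Because the layout is symmetric, pure roll, pitch or yaw commands redistribute thrust between motors without changing the total, as long as no output hits its limit.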

To know the drone's position in real time, a GPS module is used, which provides an accurate location for outdoor flight. This module communicates with the Cortex-M4, which processes the drone's location and updates it relative to the target location.
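Relating the drone's GPS fix to a target location usually comes down to a distance and a heading. A minimal sketch, assuming a spherical Earth (adequate over the few kilometres of an airfield), using the haversine formula for distance and the standard initial-bearing formula:

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (degrees clockwise from north)
    from point 1 (e.g. the drone) to point 2 (e.g. the target), inputs in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula for the central angle between the two points.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))
    # Initial bearing (forward azimuth), normalised to [0, 360).
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing
```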

To detect an intruder drone with computer vision, a Raspberry Pi is placed on the drone to provide the extra computation power needed. The Raspberry Pi is connected to a camera, with which it detects the attacking drone. Once the target has been locked, the net is fired towards the attacking drone.

The drone communicates with a system located near the area under surveillance, using XBee wireless communication to receive and transmit data. It uses this link for two purposes. The first is communicating with the app, where all the data of the drone is displayed. The second is communicating with the detection system placed around the area. This can be a radar-based system or external cameras connected to the main server, which then sends the rough location of the intruder drone back to the drone.
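The link only carries bytes, so the drone and the base have to agree on a message format. As a hedged sketch, assuming the XBee modules are used as a transparent serial link, a fixed-size telemetry frame could be packed with Python's `struct` module. The field selection, the `0xAA` start byte and the checksum rule are our own hypothetical choices, not an XBee protocol.

```python
import struct

FRAME_START = 0xAA  # hypothetical start-of-frame marker
# Payload (little-endian): latitude, longitude (deg, double), altitude (m, float),
# battery voltage (V, float), battery current (A, float), state id (unsigned byte).
PAYLOAD_FORMAT = "<ddfffB"
PAYLOAD_SIZE = struct.calcsize(PAYLOAD_FORMAT)


def checksum(data: bytes) -> int:
    """Simple 8-bit checksum: 0xFF minus the low byte of the byte sum."""
    return 0xFF - (sum(data) & 0xFF)


def encode(lat, lon, alt, voltage, current, state_id) -> bytes:
    """Build one telemetry frame: start byte, payload, checksum byte."""
    payload = struct.pack(PAYLOAD_FORMAT, lat, lon, alt, voltage, current, state_id)
    return bytes([FRAME_START]) + payload + bytes([checksum(payload)])


def decode(frame: bytes):
    """Return the unpacked fields, or raise ValueError for a malformed frame."""
    if len(frame) != PAYLOAD_SIZE + 2 or frame[0] != FRAME_START:
        raise ValueError("bad frame length or start byte")
    payload, received = frame[1:-1], frame[-1]
    if checksum(payload) != received:
        raise ValueError("checksum mismatch")
    return struct.unpack(PAYLOAD_FORMAT, payload)
```

A fixed-size frame keeps parsing trivial on both ends; the checksum lets the base discard frames corrupted in transit instead of displaying wrong sensor values (R13).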

===Hardware=== | |||

From the requirements a general design of the drone can be created. The main requirements concerning the hardware design of the drone are: | |||

*Velocity of 50 km/h

*Flight time of 30 minutes

*FPV live feed

*Stop rogue drone

*Minimal weight

*Cargo capacity of 8 kg

*Portability

*Fast deployment

*Minimal cost

A large span is required to stay stable while carrying a high weight (i.e. an intruder with heavy explosives), because rotors farther from the center have more leverage. However, a larger format decreases portability and maneuverability. Based on the intended maximum target weight of 8 kg, a drone of roughly 80 cm has been chosen. The chosen design uses eight rotors instead of the more common four or six (an octocopter, [https://3dinsider.com/hexacopters-quadcopters-octocopters/]) to increase maneuverability and carrying capacity. This decreases flight time and increases costs. Flight time, however, is not a big concern, as a typical interception will not take more than ten minutes. For quick recharging, a system with automated battery swapping can be deployed.<ref name= "lee"> D. Lee, J. Zhou, W. Tze Lin [https://ieeexplore.ieee.org/abstract/document/7152282/ Autonomous battery swapping system for quadcopter] (2015) </ref>
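The payload requirement translates directly into a thrust requirement per motor. The sketch below assumes a hypothetical 6 kg airframe (the original gives no airframe mass) and a thrust-to-weight ratio of 2, a commonly quoted rule of thumb for multirotors that leaves headroom for manoeuvring.

```python
G = 9.81  # gravitational acceleration, m/s^2


def thrust_per_motor_newton(frame_mass_kg, payload_kg, n_motors=8, thrust_to_weight=2.0):
    """Full-throttle thrust each motor must deliver for the given thrust-to-weight ratio."""
    total_weight_n = (frame_mass_kg + payload_kg) * G
    return total_weight_n * thrust_to_weight / n_motors


# Hypothetical 6 kg airframe carrying the 8 kg maximum target of R19.
per_motor = thrust_per_motor_newton(6.0, 8.0)
print(f"{per_motor:.1f} N per motor (about {per_motor / G:.2f} kgf)")
```

With these assumptions each motor needs about 34 N (3.5 kgf); with four motors each would need twice as much, which illustrates why more rotors increase carrying capacity.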

Another important aspect of this drone is its ability to track another drone. To do so, it is equipped with two cameras. The FPV camera has low resolution and low latency and is used for tracking; because of its low resolution, its feed can also be streamed to the base station in real time. The drone is also equipped with a high-resolution camera that captures images at a lower frame rate. These images are streamed to the base station and can be used to identify intruders. Both cameras are mounted to the drone on a gimbal, which keeps their feeds steady at all times and makes targeting and identification easier.

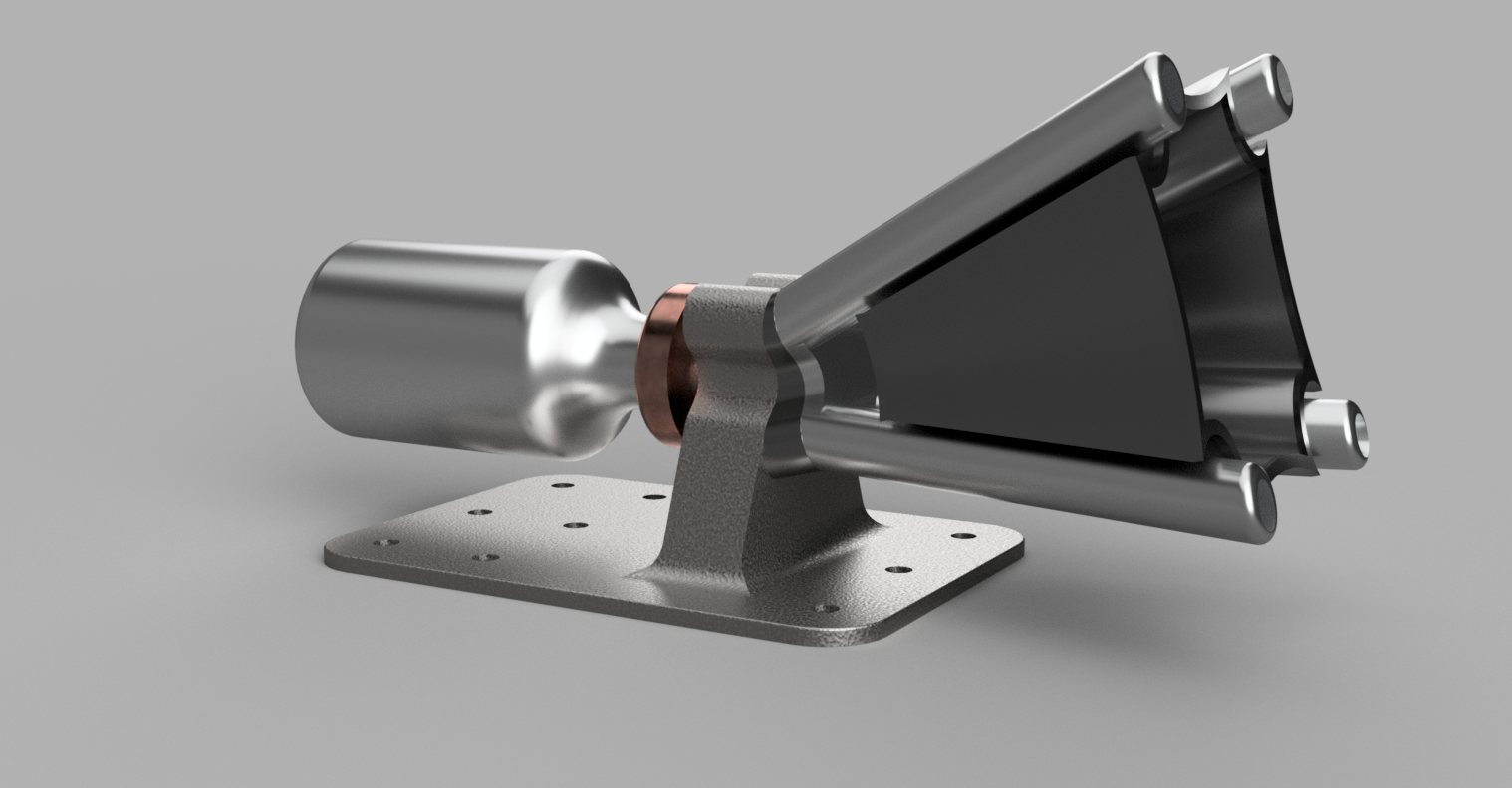

The drone is equipped with a net launcher to stop the targeted intruder, as described in the section “Catching a Drone”.

To clarify our hardware design, we made a 3D model of the proposed drone and the net launcher. This model is based on work by Felipe Westin on GrabCAD [https://grabcad.com/library/drone-octocopter-z8-1] and extended with a net launcher.

[[File:Z8_Octo_v6.png|center|800px|A render of the proposed drone design]] | |||

[[File:Net_launcher_Render.png|center|800px|A render of the proposed net launcher design]] | |||

===Bill of costs=== | |||

To build the drone, we would start with the frame, which must accommodate eight motors; suitable frames can be bought for around 2000 euros. The next step is to fit the motors. Our drone must be able to follow the violating drone, so it must be fast: motors rated at 1280 KV can drive it to a velocity of about 80 kilometers per hour. These motors cost about 150 euros each. The motors are powered by the battery, whose price is only a rough estimate, because we do not know exactly how much power our drone needs and how much it will weigh. For this estimate we take a 4500 mAh battery costing about 200 euros. As explained before, two cameras are also needed. FPV cameras for racing drones cost about 50 euros. High-resolution cameras vary widely in price, but to keep the cost reasonable we choose a camera that records 5.2K Ultra HD at 30 frames per second. These two cameras and the net launcher need to be mounted on a gimbal; a sufficiently strong and stable gimbal costs about 2000 euros. Further costs are electronics such as a flight controller, ESCs, antennas, transmitters and cables.
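Since the power draw is still unknown, it helps to see how the battery choice relates to R8. A rough sketch, assuming endurance is simply usable charge divided by average current, with 80% of the rated capacity usable (an assumption to avoid deep discharge); the currents are placeholders, not measurements.

```python
def flight_time_min(capacity_mah, avg_current_a, usable_fraction=0.8):
    """Rough endurance estimate in minutes: usable charge divided by average current."""
    usable_ah = capacity_mah / 1000.0 * usable_fraction
    return usable_ah / avg_current_a * 60.0


def required_capacity_mah(target_min, avg_current_a, usable_fraction=0.8):
    """Battery capacity needed to reach a target flight time at a given average current."""
    return target_min / 60.0 * avg_current_a / usable_fraction * 1000.0
```

For example, `flight_time_min(4500, 7.2)` gives 30 minutes, so under these assumptions the 4500 mAh battery from the estimate only meets R8 if the whole drone draws no more than about 7.2 A on average; `required_capacity_mah` gives the capacity needed once a realistic current is known.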

{| class="wikitable" style="border-style: solid; border-width: 1px;" width="40%" cellpadding="3" | |||

!style="text-align:left;"| Parts | |||

!style="text-align:left"| Number | |||

!style="text-align:left;"| Estimated cost | |||

|- | |||

|Frame and landing gear || 1 || €2000,- | |||

|- | |||

|Motor and propeller || 8 || €1200,-

|- | |||

|Battery || 1 || €200,- | |||

|- | |||

|FPV-camera || 1 || €200,- | |||

|- | |||

|High resolution camera || 1 || €2000,- | |||

|- | |||

|Gimbal || 1 || €2000,- | |||

|- | |||

|Net launcher || 1 || €1000,- | |||

|- | |||

|Electronics || || €1000,- | |||

|- | |||

|Other || || €750,- | |||

|- | |||

| style="background-color:#000; color:#efefef;" | | |||

| style="background-color:#000; color:#efefef;" | | |||

| style="background-color:#000; color:#efefef;" | | |||

|- | |||

| Total || || €10350,- | |||

|} | |||
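The table total can be reproduced and compared with the R22 budget. Note that R22 covers the whole system, including the detection system, so the drone's share below is only part of the picture.

```python
# Estimated part costs in euros, copied from the table above.
PARTS = {
    "Frame and landing gear": 2000,
    "Motor and propeller (8 x 150)": 8 * 150,
    "Battery": 200,
    "FPV-camera": 200,
    "High resolution camera": 2000,
    "Gimbal": 2000,
    "Net launcher": 1000,
    "Electronics": 1000,
    "Other": 750,
}
BUDGET_EUR = 100_000  # R22: system cost below 100000 euros

total = sum(PARTS.values())
print(f"Total: €{total}, about {total / BUDGET_EUR:.0%} of the R22 budget")
```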

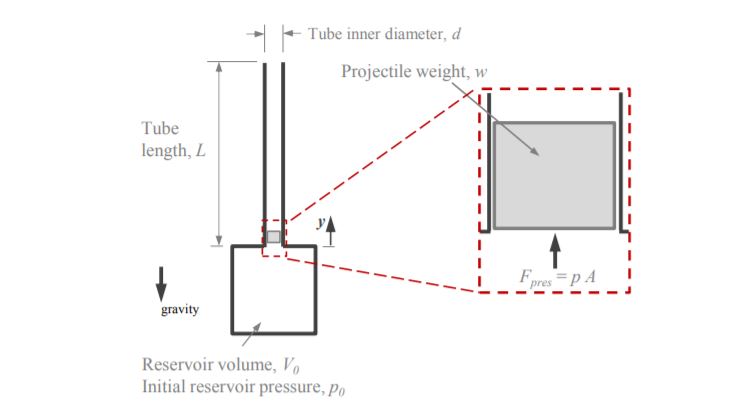

===Net launcher mathematical modeling=== | |||

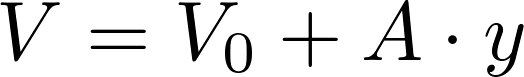

The drone's net launcher uses a pneumatic mechanism to shoot the net. To fully understand its capabilities and how to design it, we first need to derive a model.

[[File:Schematic_and_free_body_diagram_of_the_pneumatic_Launcher.JPG |none|800px|Schematic and free body diagram]] | |||

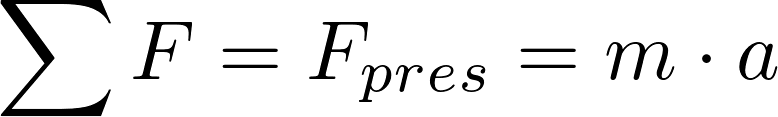

From Newton's second law, the sum of the forces acting on the projectiles attached to the corners of the net is:

[[File:formula_1.png|none|150px]] | |||

Where | |||

[[File:formula_2.png|none|150px]] | |||

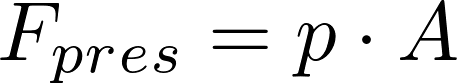

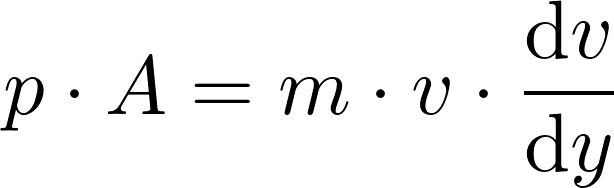

Here p is the pressure on the projectile and A is its cross-sectional area. Using these equations, we find:

[[File:formula_3.png|none|150px]] | |||

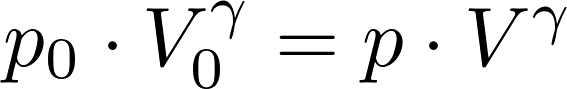

For the pneumatic launcher to work, we need a chamber of carbon dioxide whose pressure pushes the projectile. We assume that no heat is lost through the tubes of the net launcher, so that the gas expands adiabatically. This process is described by:

[[File:formula_4.png|none|150px]] | |||

where <math display="inline">p</math> is the initial pressure, <math display="inline">v</math> is the initial volume of the gas and <math display="inline">\gamma</math> is the ratio of the specific heats at constant pressure and at constant volume, which is 1.4 for air between 26.6 degrees and 49 degrees (for carbon dioxide it is closer to 1.3), and

[[File:formula_5.png|none|150px]] | |||

is the volume at any point in time. Combining the previous formulas, we get:

[[File:formula_6.png|none|250px]] | |||

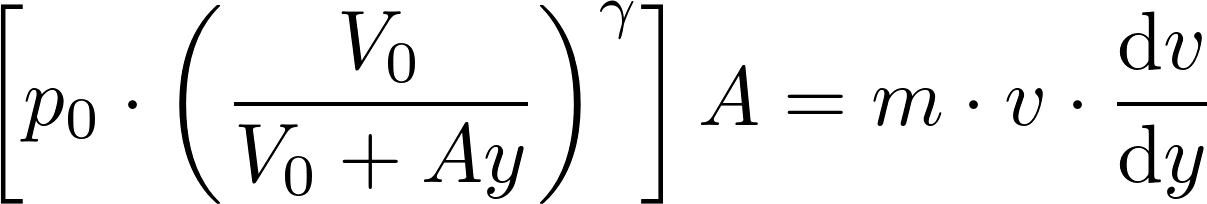

Integrating from <math display="inline">y = 0</math> to <math display="inline">y = L</math>, where L is the length of the projectile tube, gives the speed at which the projectile leaves the muzzle:

[[File:formula_7.png|none|300px]] | |||

This equation helps us design the net launcher, more specifically its diameter and length: since the net must be launched at a certain speed, these parameters have to be chosen so that the required speed is met.
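The formulas above are only available as images, so the sketch below rebuilds the muzzle speed from the modelling assumptions stated in the text, and its form may differ in detail from the figures. It assumes an adiabatic expansion <math display="inline">p\,V^\gamma = p_0 V_0^\gamma</math> with <math display="inline">V = V_0 + A y</math>, neglects friction and atmospheric back-pressure, and uses the work–energy relation <math display="inline">\tfrac{1}{2} m v^2 = \int_0^L p(y) A \, dy</math>. A step-by-step integration of <math display="inline">m \ddot{y} = p(y) A</math> serves as a cross-check. All design values are placeholders.

```python
import math


def muzzle_velocity(p0, vol0, area, length, mass, gamma=1.4):
    """Closed-form exit speed (m/s) for adiabatic expansion.

    p0: initial absolute chamber pressure (Pa); vol0: initial gas volume (m^3);
    area: bore cross-section (m^2); length: tube length (m); mass: projectile mass (kg).
    Friction and atmospheric back-pressure are neglected.
    """
    ratio = vol0 / (vol0 + area * length)
    # Work done by the gas: integral of p(y) * A dy with p * V**gamma = const.
    work = p0 * vol0 / (gamma - 1.0) * (1.0 - ratio ** (gamma - 1.0))
    return math.sqrt(2.0 * work / mass)


def muzzle_velocity_numeric(p0, vol0, area, length, mass, gamma=1.4, dt=1e-6):
    """Cross-check: integrate m * y'' = p(y) * A in small time steps until y reaches length."""
    y, v = 0.0, 0.0
    while y < length:
        pressure = p0 * (vol0 / (vol0 + area * y)) ** gamma
        v += pressure * area / mass * dt  # semi-implicit Euler: update velocity first
        y += v * dt
    return v


# Placeholder values: 20 bar, 50 cm^3 chamber, 2 cm bore, 30 cm tube, 50 g projectile.
params = dict(p0=2.0e6, vol0=5.0e-5, area=math.pi * 0.01 ** 2, length=0.3, mass=0.05)
print(muzzle_velocity(**params), muzzle_velocity_numeric(**params))
for tube_length in (0.2, 0.3, 0.4):
    print(tube_length, round(muzzle_velocity(**{**params, "length": tube_length}), 1))
```

With these placeholder numbers both functions give roughly 59 m/s, and a longer tube raises the exit speed, which is the diameter/length trade-off the equation is meant to explore.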

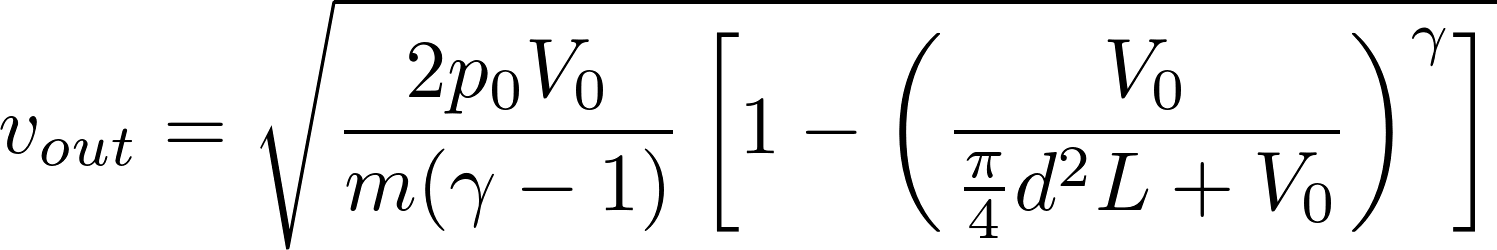

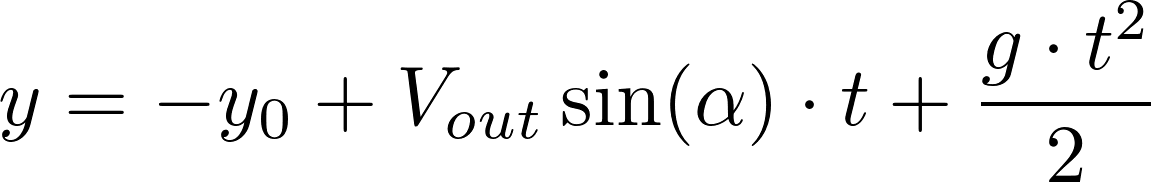

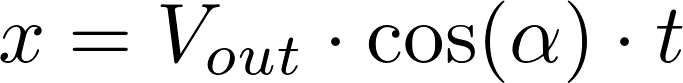

Treating the net as a projectile and assuming that friction and tension forces are negligible, we get the following equations for its x and y position at any time t:

[[File:formula_8.png|none|250px]] | |||

[[File:formula_9.png|none|200px]] | |||

Where <math display="inline">y</math> is the initial height and <math display="inline">\alpha</math> is the angle that the projectile forms with the x-axis. | |||

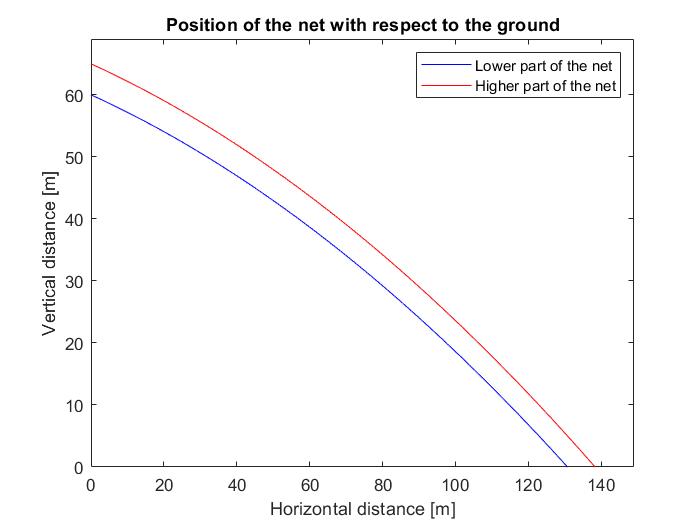

Assuming a launch speed of 60 m/s, we can plot the position of the net over time, which helps us predict where our drone needs to be to successfully launch the net at the other drone.

[[File:Plot2.jpg|none|500px]] | |||

The plot shows that the drone can capture its target from a large distance, up to 130 m. Although this is an advantage of our drone, shooting from such a distance should only be seen as a last resort, because the model does not predict the projectile's path perfectly. Assumptions such as an ideal gas and negligible air friction introduce inaccuracies, which are amplified over larger distances.
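The same drag-free projectile model can be inverted to answer the aiming question directly: at which angle must the net be fired to reach a target a given distance ahead and height above the drone? A minimal sketch, assuming the standard equations <math display="inline">x = v \cos\alpha \, t</math> and <math display="inline">y = y_0 + v \sin\alpha \, t - \tfrac{1}{2} g t^2</math>; because drag is ignored, real shots will fall short at long range.

```python
import math

G = 9.81  # m/s^2


def launch_angles(speed, dx, dy):
    """Launch angles (rad) that hit a point dx metres ahead and dy metres higher, drag-free.

    Returns [low, high] solutions, or an empty list if the point is out of reach.
    """
    if dx <= 0:
        raise ValueError("dx must be positive (target ahead of the drone)")
    v2 = speed * speed
    disc = v2 * v2 - G * (G * dx * dx + 2.0 * dy * v2)
    if disc < 0:
        return []  # target beyond the drag-free range for this speed
    root = math.sqrt(disc)
    return [math.atan2(v2 - root, G * dx), math.atan2(v2 + root, G * dx)]


def position(speed, angle, t, y0=0.0):
    """Drag-free projectile position (x, y) at time t."""
    x = speed * math.cos(angle) * t
    y = y0 + speed * math.sin(angle) * t - 0.5 * G * t * t
    return x, y


for angle in launch_angles(60.0, 100.0, 10.0):
    print(round(math.degrees(angle), 1))
```

The low solution is normally preferable: its flight time is much shorter, so the target has less time to move before the net arrives.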

===Automatic target recognition and following=== | |||

''Many thanks to Duarte Antunes for helping us with drone detection and drone control theory''

For the drone to track and target the intruder, it needs to know where the intruder is at any point in time. Various techniques have been developed for this over the years, all belonging to the field of automatic target recognition (ATR). There has long been a strong need for this research field, with applications in, for example, guided missiles, automated surveillance and automated vehicles.

ATR started with radar and manual operators, but as better cameras and more computation power became accessible, cameras have taken a big part in today's development. A camera can supply large amounts of information at low cost and weight, which is why it has been chosen for this drone. Additionally, the camera is mounted on a gimbal, which makes the video stream more stable, reduces the filtering needed and thereby increases the quality of the information.

This large amount of information does require a lot of filtering to extract the relevant information from the camera. Doing so is computationally heavy, although a lot of effort has been made to reduce the computational load. Our drone therefore carries a Raspberry Pi computation unit next to its regular flight controller to do the heavy image processing. One example algorithm is contour tracking, which detects the boundary of a predefined object. The computational complexity of these algorithms is typically low, but so is their performance in complex environments (for example when mounted on a moving platform or tracking objects that move behind obstructions). An alternative approach is based on particle filters: applied to color-based information, a particle filter can robustly estimate the target's position, orientation and scale. <ref name="teuliere">C. Teuliere, L. Eck, E. Marchand (2011) [https://ieeexplore.ieee.org/abstract/document/6094404 Chasing a moving target from a flying UAV] ''2011 IEEE/RSJ International Conference on Intelligent Robots and Systems''</ref>
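The cited work applies a particle filter to color-based information. The sketch below is a deliberately simpler variant, not the cited method: a bootstrap particle filter that tracks the target's 2D position in the image from noisy position measurements (for example the centres of boxes returned by a detector), under an assumed constant-velocity motion model. The particle count and all noise levels are placeholders.

```python
import math
import random


def particle_filter(measurements, n=500, meas_std=5.0, pos_noise=1.0, vel_noise=0.5, seed=0):
    """Track a 2D target (pixels) from noisy (x, y) measurements with a bootstrap particle filter.

    Each particle is [x, y, vx, vy] with a constant-velocity motion model.
    Returns the weighted-mean position estimate after each measurement.
    """
    rng = random.Random(seed)
    mx, my = measurements[0]
    particles = [[mx + rng.gauss(0, 20), my + rng.gauss(0, 20), rng.gauss(0, 5), rng.gauss(0, 5)]
                 for _ in range(n)]
    estimates = []
    for zx, zy in measurements:
        # 1. Predict: move each particle with its own velocity plus random process noise.
        for p in particles:
            p[2] += rng.gauss(0, vel_noise)
            p[3] += rng.gauss(0, vel_noise)
            p[0] += p[2] + rng.gauss(0, pos_noise)
            p[1] += p[3] + rng.gauss(0, pos_noise)
        # 2. Weight: Gaussian likelihood of the measurement given each particle.
        weights = [math.exp(-((p[0] - zx) ** 2 + (p[1] - zy) ** 2) / (2 * meas_std ** 2))
                   for p in particles]
        total = sum(weights)
        if total == 0.0:  # every particle far from the measurement: fall back to uniform
            weights = [1.0 / n] * n
        else:
            weights = [w / total for w in weights]
        # 3. Estimate: weighted mean position.
        estimates.append((sum(w * p[0] for w, p in zip(weights, particles)),
                          sum(w * p[1] for w, p in zip(weights, particles))))
        # 4. Systematic resampling: duplicate likely particles, drop unlikely ones.
        step = 1.0 / n
        u = rng.random() * step
        cumulative, i, resampled = weights[0], 0, []
        for _ in range(n):
            while u > cumulative and i < n - 1:
                i += 1
                cumulative += weights[i]
            resampled.append(list(particles[i]))
            u += step
        particles = resampled
    return estimates
```

On the drone, the Gaussian position likelihood in step 2 would be replaced by whatever score the vision pipeline provides, such as a color-histogram similarity as in the cited work.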

Another option is a neural network trained to detect drones. A pretrained network could then run on the drone's Raspberry Pi and detect the other drone in real time. Among other advantages, such networks can cope with varying environments and low-quality images. Their main downside is a higher computational cost. This cost is accepted because other methods, such as Haar cascades, do not cope well with changing environments, partially visible objects or objects that change appearance (such as spinning rotors). [https://www.baseapp.com/deepsight/opencv-vs-yolo-face-detector/] A very popular network is YOLO (You Only Look Once), which is open source and can be run with the widely used computer vision library OpenCV. The network must be trained on a large dataset of images containing drones, from which it learns what a drone looks like.
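Once such a network is trained, its raw output still has to be reduced to a single target position. The function below is a sketch assuming the output layout that OpenCV's Darknet importer produces for YOLO (one row per candidate box: normalized center, width and height, an objectness score, then one score per class). `best_detection`, the class index and the threshold are illustrative choices, not part of the project code.

```python
import numpy as np


def best_detection(outputs, frame_w, frame_h, class_id=0, conf_threshold=0.5):
    """Pick the highest-scoring box of one class from YOLO output arrays.

    Each row is assumed to be [cx, cy, w, h, objectness, class scores...] with
    coordinates normalized to [0, 1]. Returns (x, y, w, h, score) with the
    top-left corner and size in pixels, or None if no box passes the threshold.
    """
    if not outputs:
        return None
    rows = np.vstack(outputs)          # merge the outputs of all detection scales
    if rows.shape[0] == 0:
        return None
    scores = rows[:, 5 + class_id]     # per-box score for the requested class
    best = int(np.argmax(scores))
    if scores[best] < conf_threshold:
        return None
    scale = np.array([frame_w, frame_h, frame_w, frame_h], dtype=float)
    cx, cy, w, h = (float(v) for v in rows[best, :4] * scale)
    return (round(cx - w / 2), round(cy - h / 2), round(w), round(h), float(scores[best]))
```

With OpenCV, `outputs` could come from `net = cv2.dnn.readNetFromDarknet(cfg_path, weights_path)`, followed by `net.setInput(cv2.dnn.blobFromImage(frame, 1 / 255.0, (416, 416), swapRB=True, crop=False))` and `net.forward(net.getUnconnectedOutLayersNames())`. Keeping only the best box skips non-maximum suppression (`cv2.dnn.NMSBoxes`), which is reasonable when a single intruder is expected.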

To track the intruding drone using visual information, one of two approaches can be used. The first is to determine the 3D pose of the drone and use it to generate the error values for a controller, which in turn tries to follow the target. The second is to use the 2D position of the target in the camera frame, taking the target's offset from the center of the frame as the controller's error term. To complete this second method, the distance to the target must also be known. It can be measured with an ultrasonic sensor, which is cheap and fast, or with stereo cameras, or approximated from the perceived size of the drone in the camera frame.
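For the second approach, the controller's error and a distance estimate from the perceived size can be computed as below. This is a sketch assuming a calibrated pinhole camera: `focal_px` is the focal length in pixels (for example from an OpenCV camera calibration) and `real_width_m` is the known physical width of the target drone; both values in the example are hypothetical.

```python
def center_error(cx, cy, frame_w, frame_h):
    """Target offset from the frame center, normalized to [-1, 1] per axis."""
    ex = (cx - frame_w / 2) / (frame_w / 2)
    ey = (cy - frame_h / 2) / (frame_h / 2)
    return ex, ey


def distance_from_size(width_px, real_width_m, focal_px):
    """Pinhole-camera range estimate: Z = f * W / w (meters)."""
    if width_px <= 0:
        raise ValueError("target width in pixels must be positive")
    return focal_px * real_width_m / width_px
```

For instance, with a hypothetical 0.35 m wide drone and a focal length of 600 px, a bounding box 100 px wide gives `distance_from_size(100, 0.35, 600)` ≈ 2.1 m, and a target centered at pixel (480, 240) in a 640×480 frame gives an error of (0.5, 0.0). The estimate degrades when the drone is seen at an angle, since its apparent width then changes.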

Which controller to use depends on the required speed, the complexity of the system and the computational load. LQR and MPC are two popular control methods that are well suited to generating optimal solutions, even in complex environments. Unfortunately, they require a lot of tuning and are relatively computationally heavy. A well-known alternative is the PID controller, which does not always produce the optimal solution but is easy to tune and implement. Such a controller therefore suits this project best. For future versions, we advise investigating more advanced controllers such as LQR and MPC.
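A textbook PID controller, as it could be used for each tracking axis, is sketched below. The class name, the optional output clamp and the gains in the tests are assumptions for illustration; real gains would have to be tuned on the drone.

```python
class PID:
    """Textbook PID controller: u = kp*e + ki*integral(e dt) + kd*de/dt."""

    def __init__(self, kp, ki, kd, output_limit=None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit  # symmetric clamp on the output, or None
        self.integral = 0.0
        self.prev_error = None

    def reset(self):
        """Forget accumulated state, e.g. when a new target is acquired."""
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Return the control output for the current error, dt seconds after the last call."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        # No derivative term on the first sample: there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        if self.output_limit is not None:
            out = max(-self.output_limit, min(self.output_limit, out))
        return out
```

One controller per axis (for example yaw and pitch) could be fed the normalized center error every frame, with `dt` set to the time since the previous frame.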

===YOLO demo===

To demonstrate the ATR capabilities of a neural network, we built a demo in which the YOLO (You Only Look Once) network is trained to detect humans. Based on the location of a detected person in the video frame, a Python script sends control signals to an Arduino, which controls a two-axis gimbal carrying the camera. This way, the system can keep a person in its camera frame. Because this neural network is computationally heavy and could not be run on a GPU, performance was quite limited: in controlled environments, the demo ran at roughly 3 FPS. As a result, a person could easily move out of the camera frame, causing the system to “lose” them.
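The demo's Python script has to turn the desired gimbal angles into something the Arduino can read over USB serial. The demo's actual message format is not documented here, so the encoder below assumes a hypothetical text protocol: one line per update containing the pan and tilt angles in whole degrees, clamped to the 0 to 180 degree range of typical hobby servos. The matching parser only illustrates what the Arduino side would have to do.

```python
def clamp_angle(angle):
    """Round to whole degrees and clamp to the 0-180 range of a hobby servo."""
    return max(0, min(180, round(angle)))


def gimbal_command(pan_deg, tilt_deg):
    """Encode pan/tilt angles as one ASCII line such as 90,45 plus a newline
    (hypothetical protocol)."""
    return f"{clamp_angle(pan_deg)},{clamp_angle(tilt_deg)}\n".encode("ascii")


def parse_gimbal_command(line):
    """Inverse of gimbal_command, mirroring what the Arduino sketch would do."""
    pan, tilt = line.decode("ascii").strip().split(",")
    return int(pan), int(tilt)
```

With pyserial, the port could be opened once as `port = serial.Serial("/dev/ttyACM0", 9600)` and each update sent with `port.write(gimbal_command(pan, tilt))`; the port name and baud rate are placeholders. On the Arduino, `Serial.readStringUntil('\n')` could read one such line.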

Video of the YOLO demo:

[https://youtu.be/1KOLKnDnK0I https://youtu.be/1KOLKnDnK0I]

To also show a faster tracker, we built a demo that tracks so-called ArUco markers. These markers are designed for robotics and are easy for image recognition algorithms to detect, so this demo runs at a higher frame rate. A second Python script computes the error as the distance from the center of the marker to the center of the frame and uses it to generate a control output for the Arduino, which in turn moves the gimbal. This way, the tracker tries to keep the target at the center of the frame.
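The ArUco error computation can be sketched as follows. OpenCV's ArUco module returns each detected marker as an array of four corner points of shape (1, 4, 2) (from `cv2.aruco.detectMarkers`, or `cv2.aruco.ArucoDetector(...).detectMarkers` in newer OpenCV versions), and the marker center is their mean. The proportional gain, the dead band and the sign conventions for pan and tilt are assumptions that depend on how the servos are mounted.

```python
import numpy as np


def marker_center(corners):
    """Center of one ArUco marker: mean of its four corner points (shape (1, 4, 2))."""
    pts = np.asarray(corners, dtype=float).reshape(4, 2)
    return pts.mean(axis=0)


def aruco_track_step(pan, tilt, corners, frame_w, frame_h, gain=10.0, deadband=0.05):
    """Proportional gimbal update (degrees) that moves the marker toward the frame center."""
    cx, cy = (float(v) for v in marker_center(corners))
    ex = (cx - frame_w / 2) / (frame_w / 2)   # normalized horizontal error
    ey = (cy - frame_h / 2) / (frame_h / 2)   # normalized vertical error
    if abs(ex) > deadband:
        pan -= gain * ex    # sign is an assumption: depends on servo mounting
    if abs(ey) > deadband:
        tilt += gain * ey   # sign is an assumption: depends on servo mounting
    return pan, tilt
```

The small dead band keeps the gimbal from twitching when the marker is already close to the center.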

Video of the ArUco demo:

[https://youtu.be/ARxtBIWKNT8 https://youtu.be/ARxtBIWKNT8]

See [https://github.com/martinusje/USE_robots_everywhere] for all code.

===Confirmation of acquired target===

Shooting the net is one of the most important steps, but afterwards the drone must know what to do next. If it misses, it must return to base to reload the net gun. If the intruder is caught, the drone should deliver it to a safe place, after which its mission has succeeded.
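The decision logic described above is a small state machine. The sketch below encodes it in Python; the state names and the `arrived` flag are hypothetical, and what happens after reloading (here: resuming the pursuit) is an assumption, since the text does not specify it.

```python
from enum import Enum, auto


class Mission(Enum):
    PURSUE = auto()            # chasing the intruder with a loaded net
    RETURN_TO_RELOAD = auto()  # missed: fly back to base and reload the net gun
    DELIVER_TARGET = auto()    # caught: carry the intruder to a safe place
    DONE = auto()              # intruder delivered, mission succeeded


def next_state(state, net_fired=False, target_caught=False, arrived=False):
    """Advance the mission after one control cycle.

    `arrived` means the drone reached its current destination
    (the base when reloading, the safe drop zone when delivering).
    """
    if state is Mission.PURSUE and net_fired:
        return Mission.DELIVER_TARGET if target_caught else Mission.RETURN_TO_RELOAD
    if state is Mission.RETURN_TO_RELOAD and arrived:
        return Mission.PURSUE  # assumption: relaunch right after reloading
    if state is Mission.DELIVER_TARGET and arrived:
        return Mission.DONE
    return state
```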

To confirm whether the target drone has been caught, a robust and sensitive force sensor is mounted between the net and the net launcher. From the vibrations in the net (caused by the target trying to fly) and the measured weight, the drone can determine whether the other drone has been caught. In addition, the drone is equipped with a microphone that recognizes the characteristic pitch of the other drone's rotor noise. If this sound disappears, the drone combines this information with its other sensors to determine whether the target has been acquired.
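One way to fuse these cues is sketched below. It assumes a load-cell signal in newtons sampled while the net hangs below the drone, an audio clip from the microphone, and a hypothetical frequency band for the target's rotor noise. The rule (extra load, plus either vibrations or silent target rotors) and all thresholds are illustrative assumptions, not tested values; on the real drone, the interceptor's own rotor noise would also reach the microphone, so the band would have to be chosen or filtered accordingly.

```python
import numpy as np


def band_energy_ratio(audio, sample_rate, low_hz, high_hz):
    """Fraction of the clip's spectral energy between low_hz and high_hz."""
    spectrum = np.abs(np.fft.rfft(audio)) ** 2
    freqs = np.fft.rfftfreq(len(audio), d=1.0 / sample_rate)
    total = spectrum.sum()
    if total == 0:
        return 0.0
    band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(spectrum[band].sum() / total)


def target_caught(force_n, audio, sample_rate, empty_net_n=0.0,
                  min_extra_n=2.0, min_vibration_n=0.3,
                  rotor_band_hz=(100.0, 400.0), max_rotor_ratio=0.2):
    """Hypothetical fusion rule: the net must carry extra load, and the target
    must either be struggling (force vibrations) or have stopped its rotors."""
    force_n = np.asarray(force_n, dtype=float)
    loaded = force_n.mean() - empty_net_n > min_extra_n
    struggling = force_n.std() > min_vibration_n
    rotors_audible = band_energy_ratio(audio, sample_rate, *rotor_band_hz) > max_rotor_ratio
    return bool(loaded and (struggling or not rotors_audible))
```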

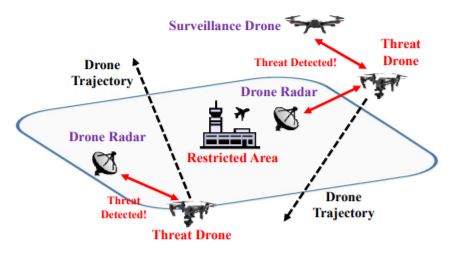

=Base Station=

The base station is where all data processing for drone detection takes place and where all decisions are made. Drone detection is the most important part of the system, and it must always be accurate to prevent unwanted situations.

Many state-of-the-art technologies can be employed for drone detection, such as mmWave Radar <ref name="Droz">J. Drozdowicz, M. Wielgo, P. Samczynski, K. Kulpa, J. Krzonkalla, M. Mordzonek, M. Bryl, and Z. Jakielaszek (2016). [https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7497351 35 GHz FMCW drone detection system]. In Proc. Int. Radar Symposium (IRS), IEEE, pp. 1–4.</ref>, UWB Radar <ref name="Fontana">R. J. Fontana, E. A. Richley, A. J. Marzullo, L. C. Beard, R. W. Mulloy, and E. Knight (2002). [https://ieeexplore.ieee.org/abstract/document/1006344/ An ultra-wideband radar for micro air vehicle applications]. In Proc. IEEE Conf. Ultra Wideband Syst. Technol., pp. 187–191.</ref>, Acoustic Tracking <ref name="Benyamin">M. Benyamin and G. H. Goldman (2014). [https://www.arl.army.mil/arlreports/2014/ARL-TR-7086.pdf Acoustic Detection and Tracking of a Class I UAS with a Small Tetrahedral Microphone Array]. Army Research Laboratory Technical Report ARL-TR-7086, DTIC Document, Sep. 2014.</ref> and Computer Vision <ref name="Boddhu">S. K. Boddhu, M. McCartney, O. Ceccopieri, and R. L. Williams (2013). [https://www.researchgate.net/publication/271452517_A_collaborative_smartphone_sensing_platform_for_detecting_and_tracking_hostile_drones A collaborative smartphone sensing platform for detecting and tracking hostile drones]. SPIE Defense, Security, and Sensing, pp. 874 211–874 211.</ref>. In an airport environment, radar-based systems work best, as they can identify a drone reliably in any weather and in high-noise conditions, which cause problems for Computer Vision and Acoustic Tracking respectively.

Acoustic Tracking suffers from heavy interference at an airport, caused by airplanes, jets and numerous other machines. To work reliably, an acoustic system would need to recognize all these noises and distinguish them from the noise a drone makes, which can prove troublesome. Computer Vision becomes considerably more feasible with special cameras, such as night-vision cameras, thermal sensors or Infiniti near-infrared cameras. We suggest using such cameras in addition to radar, combining both with data fusion techniques to get the best result, because Computer Vision alone requires a large number of cameras and computes the drone's distance less accurately than a radar system.

[[File:Base.JPG|none|400px]]

The radar systems considered for drone detection are mmWave and UWB Radar. Although mmWave offers a 20% larger detection range, UWB radar gives better results for measuring distance and distinguishing drones, so it is the preferred type of radar for our application <ref name="Guve">İ. Güvenç, O. Ozdemir, Y. Yapici, H. Mehrpouyan, and D. Matolak (2017). [https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/20170009465.pdf Detection, localization, and tracking of unauthorized UAS and jammers]. In 2017 IEEE/AIAA 36th Digital Avionics Systems Conference (DASC), IEEE, pp. 1–10.</ref>. Installing more radar systems increases the cost, but since the user of the system is an airport, accuracy is more important than cost.

Such radars allow the user to accurately analyze the Doppler spectrum, which is important for distinguishing drones from birds and for identifying the type of drone entering the airport area. By studying the characteristics of UWB radar echoes from drones and testing with different types of drones, a lot of information about attacking drones can be extracted, such as a drone's range, radial velocity, size, type, shape and altitude <ref name=Droz />. Collecting these data in a database makes it easier to detect an attacking drone once one is located near an airport.
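The Doppler relations behind this can be made concrete. For a monostatic radar, the Doppler shift is f_d = 2 v_r f_c / c, so the radial velocity v_r follows directly from the measured shift. Rotating propeller blades add micro-Doppler components around the body's shift, and because propeller tips typically move much faster than a bird's wings, these components spread much wider for drones. The carrier frequency, blade radius and rotor speed below are hypothetical values chosen only for illustration.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def radial_velocity(doppler_hz, carrier_hz):
    """Monostatic radar: f_d = 2 * v_r * f_c / c, solved for v_r (m/s)."""
    return doppler_hz * C / (2.0 * carrier_hz)


def blade_tip_doppler(radius_m, rpm, carrier_hz):
    """Largest micro-Doppler shift (Hz) caused by a rotor blade tip."""
    tip_speed = 2.0 * math.pi * radius_m * rpm / 60.0  # m/s
    return 2.0 * tip_speed * carrier_hz / C
```

For example, a 12 cm blade at 6000 rpm has a tip speed of about 75 m/s, which at a 10 GHz carrier gives a micro-Doppler shift of about 5 kHz.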

The location of the enemy drone can be estimated from the various UWB radars using triangulation <ref name="Park">H. Park, J. Noh, and S. Cho. [https://journals.sagepub.com/doi/pdf/10.1177/1550147716671720 Three-dimensional positioning system using Bluetooth low-energy beacons]. International Journal of Distributed Sensor Networks, 12. doi:10.1177/1550147716671720.</ref>. The accuracy of triangulation depends on the number of radars that detect the drone, but the estimate is good enough to bring the attacking drone within sight of our drone. Once this happens, another method is used to locate the attacking drone accurately in 3D. This 3D localization is realized with UWB transceivers in the ground station and another one on our drone. The considered approach uses the two-way time-of-flight technique and works at communication ranges of up to 80 m. Finally, because the range measurements are noisy, a Kalman filter can be used to track the range to the target. Results have shown that noisy range-likelihood estimates can be smoothed to obtain an accurate range estimate to the attacking drone <ref name=Guve />.
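The localization chain can be sketched in three steps. The sketch makes several assumptions: it uses range-based trilateration (radars measuring distances) as a stand-in for the triangulation described above, solved by linearized least squares, which needs at least four anchors that do not all lie in one plane (radars mounted at the same height cannot resolve the drone's altitude this way); it uses the standard two-way ranging relation for the time-of-flight step; and it smooths the range with a scalar Kalman filter using a random-walk model. Function names and noise values are illustrative.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def twr_distance(t_round_s, t_reply_s):
    """Two-way ranging: half the round-trip time minus the responder's reply delay."""
    return C * (t_round_s - t_reply_s) / 2.0


def trilaterate(anchors, ranges):
    """Least-squares position from >= 4 non-coplanar anchors and measured ranges.

    Subtracting the first sphere equation |x - p_0|^2 = r_0^2 from the others gives
    the linear system 2 (p_i - p_0) . x = r_0^2 - r_i^2 + |p_i|^2 - |p_0|^2.
    """
    p = np.asarray(anchors, dtype=float)
    r = np.asarray(ranges, dtype=float)
    A = 2.0 * (p[1:] - p[0])
    b = r[0] ** 2 - r[1:] ** 2 + np.sum(p[1:] ** 2, axis=1) - np.sum(p[0] ** 2)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


def kalman_smooth_range(measurements, meas_var, process_var, p0=1e3):
    """Scalar Kalman filter with a random-walk model for the range to the target."""
    x = float(measurements[0])
    p = p0
    out = []
    for z in measurements:
        p += process_var          # predict: the range may drift between samples
        k = p / (p + meas_var)    # Kalman gain
        x += k * (z - x)          # correct with the new range measurement
        p *= 1.0 - k
        out.append(x)
    return np.array(out)
```

With a measurement noise of 2 m standard deviation (variance 4 m²) and a small process variance, the filtered range settles much closer to the true value than the raw measurements, at the cost of reacting more slowly when the target really moves.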

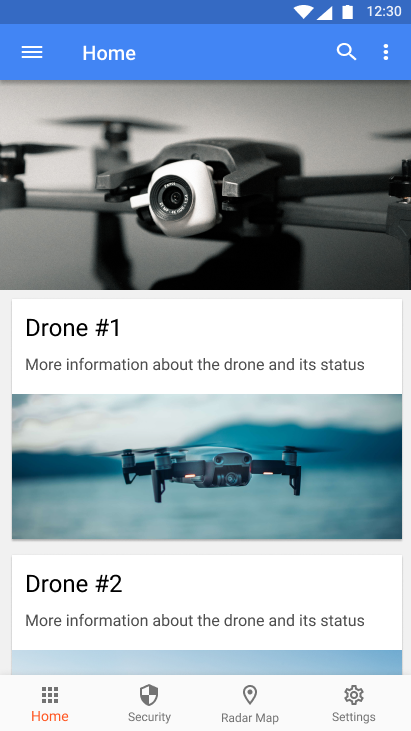

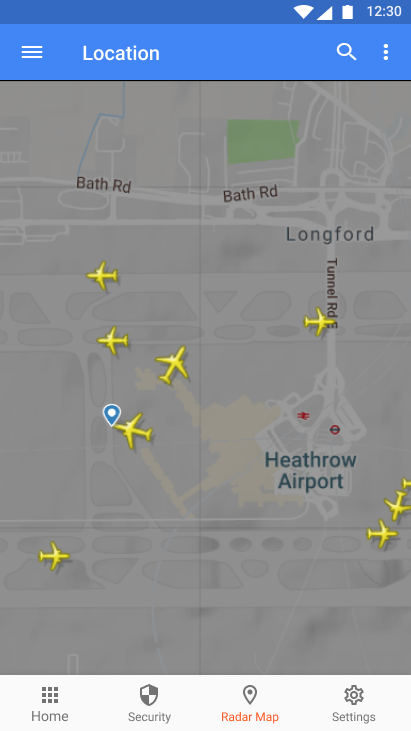

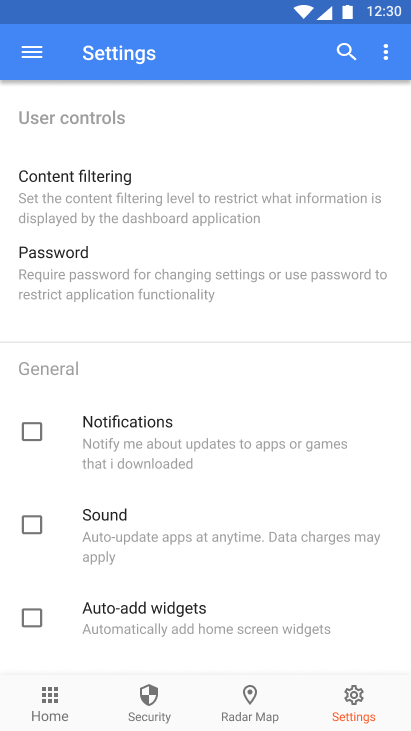

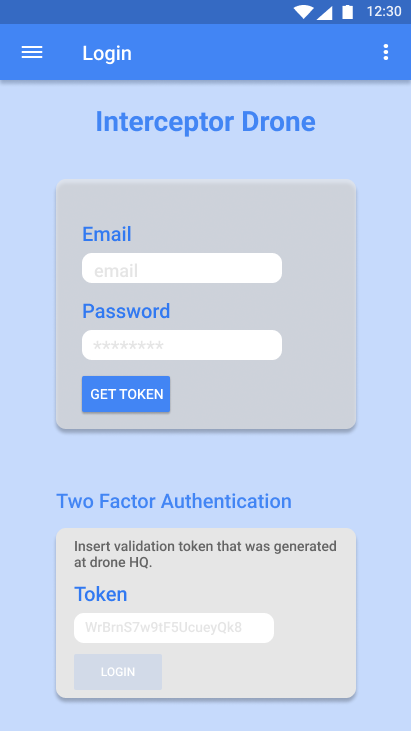

=Application=

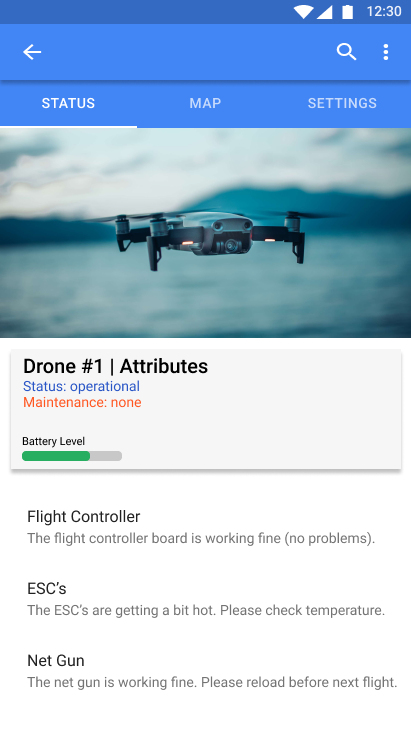

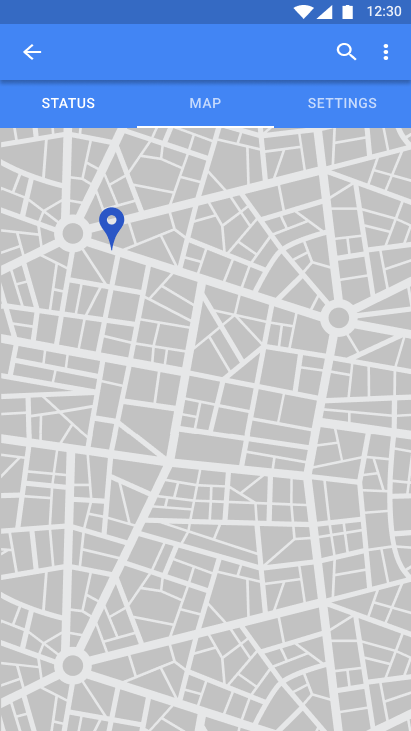

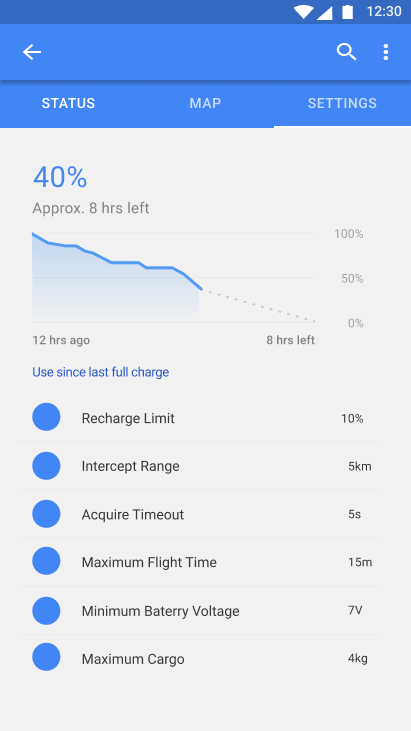

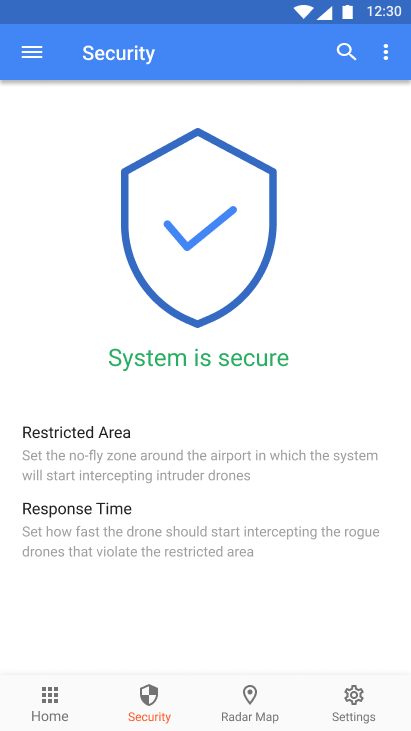

For the interceptor drone system, a mobile application will be developed in which the most important statistics of the interceptor drones can be viewed. The application will communicate with the interceptor drone (or drones, if multiple are deployed) in real time, which speeds up the whole operation of intercepting and catching an intruder drone. Being able to see the statistics of the various interceptor drones in one's fleet is also very useful for the users and for the maintenance personnel who service the drones. For example, when a drone returns from a mission, its battery level can immediately be checked in the app and the drone charged accordingly.

''Note:'' no commands will be sent from the application to the drones. The application's main purpose is to display the data coming from the drones, so that users can better analyze the drones' performance and maintenance personnel can service the drones more quickly.
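The app's data flow can be sketched with a simple telemetry record. The JSON schema, field names and battery threshold below are hypothetical; they only illustrate how incoming status messages could be parsed and how the app could list the drones that need charging or a new net before the next mission.

```python
import json
from dataclasses import dataclass


@dataclass
class DroneStatus:
    drone_id: str
    battery_pct: float     # remaining battery charge, 0-100
    net_loaded: bool       # False after the net has been fired
    flight_minutes: float  # accumulated flight time, useful for maintenance


def parse_status(message):
    """Build a DroneStatus from one JSON telemetry message (hypothetical schema)."""
    return DroneStatus(**json.loads(message))


def needs_service(fleet, min_battery_pct=30.0):
    """IDs of drones that must be charged or reloaded before the next mission."""
    return [d.drone_id for d in fleet
            if d.battery_pct < min_battery_pct or not d.net_loaded]
```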