Firefly Eindhoven - Trajectory App: Difference between revisions


Team Firefly is working hard on creating a drone show in which the lighting, the song and the movement of the drone all interact with each other. As of now these interactions are created manually; if they are to be changed, they need to be adjusted in the code. The purpose of the trajectory editor is to give any user the possibility to create their own drone show. With this application it will be possible to change the song, trajectory and LED design without having to dig deep into the code.

The trajectory editor is being built using JavaScript and HTML5, which makes it a web application. A plan of action has been created with the goal of building three prototypes. The first prototype focuses mainly on the functionality of the editor, in order to have a working prototype. It only contains the top view of the drone, so the height is constant when editing the trajectory. The second prototype will be created when most functions have been added. It will focus on the user experience, and a side view of the drone will be added. Lastly, for the final prototype we will try to create a 3D view of the drone, which will be quite a challenge.

As of now we are still working on the first prototype, and most functions have been added. The plan was to have a first working prototype by the end assessment of this academic year, and this deadline has been met.


For building the trajectory application, one of the most important parts of the whole project was staying organized at all times. When working on such a large application, it is vital that every team member has a good idea of the tasks of the others, so that work does not overlap within the team.

The team that was assigned the task of building the trajectory application had its own private repository on GitHub. Whenever a new feature was being developed, a new branch was first created for that specific feature. After the feature had been built on the new branch, a pull request was created for merging the feature branch into master. The repository was configured such that at least one other team member had to approve the code changes before the branch could be merged into master, ensuring that everyone had a general understanding both of the code and of each other’s tasks. When everyone agreed on the code changes, the feature branch was merged into master. The final version of the application was published using GitHub Pages, so that the link could be shared online with other team members. The link for the application is: [https://cldme.github.io/trajectory-editor/ trajectory-editor]

==User Interface==

===User Interface Design===

For the first prototype, the user interface design was created jointly by all three group members. It had to look simple and clean, since the first prototype was mainly meant to implement the functionality of the application. The design was created using Adobe XD.

[[File:prototype1.png|thumb|right|300px|The first prototype of the trajectory editor.]]

The design is really simple: it consists of a toolbar, a canvas and a timeline. The toolbar contains the show design and the movements which can be added. In the show design the user can add the LED design and the song which has to be playing. For the first prototype the user can only add a song, either by choosing from a list or by importing their own song. The toolbar also contains the movements which can be added to the trajectory of the drone. The trajectory of the drone can consist of different kinds of movements. For the functionality of the first prototype, however, it was decided to only use ''point'' as a movement. This can also be seen in the canvas right beneath the toolbar.

Inside the canvas the user can add these points by simply clicking. The user can also drag the numbered points around in the 2D space. These adjustments show up in the timeline under the canvas. In this timeline the different points can be adjusted as well, which causes the drone to reach its destination sooner or later. When both the song and the trajectory have been added, the show can be played, paused or stopped using the buttons on the left side of the timeline. During the show the volume can be adjusted on the right side of the timeline.

==Functionality==


===Canvas Elements===

Added in HTML5, the HTML <canvas> element can be used to draw graphics via scripting in JavaScript. For example, it can be used to draw graphs, make photo compositions, create animations, or even do real-time video processing or rendering.

Since our application needs to render the trajectory that is being drawn by the user in real time, the obvious choice is to use an HTML canvas element. This gives us the flexibility of freely drawing various (custom) shapes and plotting the trajectory in real time.


The main method of interacting with HTML elements from JavaScript is using event listeners for the various events that might be triggered on a page, such as mouse clicks or drags. These event listeners are attached to the main canvas and fire based on the actions taken by users. For the main canvas, the most important event handlers are onMouseDown(), onMouseUp() and onMouseMove(), each tasked with handling the event suggested by its name. By using these three event listeners we can determine when points are added or dragged, or when no action is taken. The same principles are applied to the timeline canvas, but more checks are made to ensure that certain properties hold (like handles not crossing into each other’s intervals).

===Undo and Redo===

Undo and redo functionality is implemented in undo/redo.js. Before a change to the trajectory is applied, the function "snapshotTrajectory" pushes the state of the trajectory before the change onto the "undoStack". Calling undo pops the latest value from the undoStack and replaces the current trajectory with it. To allow redo functionality, undo also pushes the state of the trajectory before the undo is applied onto the "redoStack". Redo does exactly the same as undo, but with the two stacks swapped: redo pushes the current trajectory onto the "undoStack", and pops the latest entry of the "redoStack" into the current trajectory.

When applying undo or redo it is important to check whether the stack the function will pop a trajectory from is empty. If so, the function must do nothing. Also, when loading a new file, the undoStack and redoStack must be cleared to prevent the user from accidentally 'leaving' the loaded trajectory. Currently both stacks can grow without bound, so after long periods of editing the undoStack might become impractically large. This might cause performance problems, which should be prevented by limiting the undoStack to a maximum size (by forgetting the oldest entry once that size is reached).

===Saving and Loading Trajectories===

Saving and loading only concerns the state of the trajectory and is implemented in save_load.js, though it relies on specific functionality implemented in state.js, event.js and trajectory.js. The output is a string whose format is given by: (t, x, y, psi).

===Improving Functionality===


After this, the team plans on adding a side view (next to the existing top-down view) in order to better visualize the trajectory. Ideally, the last version will have a 3D view of the whole trajectory, but as this is harder to program in JavaScript (it would require extensive knowledge of WebGL), it was decided to first implement multiple side views for the next prototypes. For implementing 3D functionality, a dedicated JavaScript library will probably be used to make working with WebGL easier. The team will start researching 3D libraries as soon as the 2D version of the application is finished, so that the main functionality is already implemented once we move on to building the 3D application. This way, we will have better and faster results when porting the application from 2D to 3D.

==Trajectory==

===Trajectory representation===

To describe the trajectory representation, a few types must be explained. Firstly, the state type (implemented in state.js) is a tuple (x, y, z, psi), where x, y, z and psi are the parameters describing the position and orientation of a drone. Notice that a state and its derivatives at a certain point in time fully constrain the translation, velocity, orientation and rate of rotation of a drone, without any possibility of the system being overdetermined. For this reason every followable trajectory is fully described by a function from time to state (with continuity to sufficient order). States have addition, subtraction and scalar multiplication functions, which makes the set of states a vector space.

The second type used in the trajectory representation is the event (implemented in event.js). An event is a tuple containing a time and a state: e = (t, s). An event can be thought of as a waypoint that the drone should follow. Like states, events have addition, subtraction and scalar multiplication functions, which makes the set of events a vector space.

It is clear that a list of events together with an interpolation function (which produces a continuous set of events) can be used to map time to state, and therefore fully describes a drone trajectory. It should be noted that the method of interpolation must guarantee that the state varies sufficiently smoothly over time for the trajectory to be followable by a drone. Also, to describe a trajectory it is not required that the generating events are contained in the continuous set of events produced by the interpolation, which is not in line with the usual definition of the word interpolation. For this reason the entire process will be referred to as sampling from now on. Our trajectory parameterization is based on these ideas: a trajectory is parameterized by a time-ordered array of events. The sampling functions are described in the next section.

===Trajectory sampling===

As explained in the previous section, sampling is achieved by interpolating events to find the states corresponding to intermediate times with sufficient smoothness. Because the acceleration of the drone is coupled to its orientation, the x, y, z components of followable trajectories must be continuous up to at least the fourth-order time derivative. However, we use a control system which is split into a high-level and a low-level controller, where the spatial part of the high-level controller only uses (x, y, z), (x, y, z)' and (x, y, z)". For this reason discontinuities in the third and fourth order time derivatives of the state are 'forgotten' after passing through the high-level controller, and the interpolation only needs to be twice differentiable.

[[File:fig1.png|thumb|left|300px|Events on the t, x plane.]]

[[File:fig2.png|thumb|right|300px|Events on the t, x plane, with corners split, showing for one corner base, v0, v1, and the time window corresponding to the corner.]]

The code for sampling is implemented in trajectory.js, and it is useful to have taken a look at the code before reading this section. The sampling works by dividing the trajectory into 'corners': every event 'base' of the trajectory array is combined with two event vectors v0 and v1. v0 spans halfway to the previous event, while v1 spans halfway to the next event. We sample the corner using the following formulae:

<math>
e(\tau) = base + v0 \cdot c0(\tau) + v1 \cdot c1(\tau)
</math>

<math>
c0(\tau) = c1(1-\tau)
</math>

SIDENOTE: in the code the values of v0 and v1 are twice the values described here; the difference is compensated by halving c0 and c1.

These formulae leave the freedom to choose the function c1. We require a few conditions: c1: [0, 1] -> [0, 1], to ensure all times covered by the corner can be 'generated'; c1 is bijective, to ensure every time has a unique corresponding tau; and c1(0) = 0, c1'(0) = 0, c1"(0) = 0, and so on up to the N-th order derivative. This last condition guarantees that at the edges of the window the trajectory transitions smoothly to N-th order (the zeroth and first order derivatives match across the edge, higher order time derivatives are 0).

<br><br>

A method of achieving this is taking:

<math>
c1(\tau) = \alpha \cdot \tau^{N - 1} + \beta \cdot \tau^N
</math>

<math>
\alpha = N - 1
</math>

<math>
\beta = 2 - N
</math>
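As a small illustration, the polynomial above can be written out directly. This is only a sketch: the function names c1 and c0 mirror the symbols in the formulae, while passing N as a second argument is an assumption made for this example and not necessarily how trajectory.js is organised.

```javascript
// Blending function c1(tau) = alpha * tau^(N-1) + beta * tau^N
// with alpha = N - 1 and beta = 2 - N, as given in the formulae above.
function c1(tau, N) {
  const alpha = N - 1;
  const beta = 2 - N;
  return alpha * Math.pow(tau, N - 1) + beta * Math.pow(tau, N);
}

// c0 is the mirrored version of c1: c0(tau) = c1(1 - tau).
function c0(tau, N) {
  return c1(1 - tau, N);
}
```

Since alpha + beta = 1, c1 runs from 0 at tau = 0 to 1 at tau = 1, and c0 runs from 1 down to 0 over the same interval.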

Now, the union of all corners, with <math>\tau</math> in [0, 1], gives a set of events in which every time (within the duration of the trajectory) occurs exactly once. The remaining problem is to find this unique event given a certain time t. The correct corner can be found by comparing the start and end times of all corners; a corner contains the desired event if and only if (base + v0).t < t < (base + v1).t. Searching through all corners and checking this condition gives the correct corner.

Within every corner <math>\frac{dt}{d\tau} > 0</math> at every point, therefore bisection can be used to find the value of <math>\tau</math> corresponding to t. Once <math>\tau</math> is found, the corner can be sampled to finally find the event, and therefore the state, at t.
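The lookup described above (find the corner, bisect for tau, sample the corner) can be sketched as follows. This is a simplified illustration under several assumptions, not the code from trajectory.js: events are stored as flat arrays [t, x, y, z, psi], the helper names (corner, sampleCorner, sampleAt) are hypothetical, the first and last events get a zero-length v0 or v1, and times on a corner boundary are accepted by the first matching corner.

```javascript
// Element-wise vector helpers for events stored as [t, x, y, z, psi].
const add = (a, b) => a.map((v, i) => v + b[i]);
const sub = (a, b) => a.map((v, i) => v - b[i]);
const scale = (a, k) => a.map((v) => v * k);

// Blending functions from the formulae above.
const c1 = (tau, N) => (N - 1) * Math.pow(tau, N - 1) + (2 - N) * Math.pow(tau, N);
const c0 = (tau, N) => c1(1 - tau, N);

// Build the corner around events[i]: v0 spans halfway to the previous
// event, v1 halfway to the next one (zero at the ends of the list).
function corner(events, i) {
  const base = events[i];
  const prev = events[Math.max(i - 1, 0)];
  const next = events[Math.min(i + 1, events.length - 1)];
  return { base, v0: scale(sub(prev, base), 0.5), v1: scale(sub(next, base), 0.5) };
}

// e(tau) = base + v0 * c0(tau) + v1 * c1(tau)
function sampleCorner(c, tau, N) {
  return add(c.base, add(scale(c.v0, c0(tau, N)), scale(c.v1, c1(tau, N))));
}

// Find the event at time t: linear search for the corner, then bisection on tau.
function sampleAt(events, t, N = 3, iterations = 50) {
  for (let i = 0; i < events.length; i++) {
    const c = corner(events, i);
    const tStart = c.base[0] + c.v0[0];
    const tEnd = c.base[0] + c.v1[0];
    if (t < tStart || t > tEnd) continue;
    if (tStart === tEnd) return c.base; // degenerate corner (single event)
    let lo = 0;
    let hi = 1;
    for (let k = 0; k < iterations; k++) {
      const mid = (lo + hi) / 2;
      // The time component increases with tau, so bisection applies.
      if (sampleCorner(c, mid, N)[0] < t) lo = mid; else hi = mid;
    }
    return sampleCorner(c, (lo + hi) / 2, N);
  }
  return null; // t lies outside the duration of the trajectory
}
```

For example, with the events [0, 0, 0, 0, 0], [2, 2, 0, 0, 0] and [4, 2, 2, 0, 0], sampling at t = 2 returns a point near x = 1.625, y = 0.375 rather than the waypoint (2, 0) itself, which illustrates why the generating events need not lie on the sampled path.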

This sampling method was developed because it ensures sufficient smoothness of the path while guaranteeing that modifications to the input events only modify the sampled path locally. In addition, allowing the user to modify the time variable of the events in the trajectory list makes syncing the trajectory with the music simple.

Unfortunately this sampling method has some problems. Most importantly, the condition that the derivatives from second to N-th order are zero at the transitions between corners, which is what guarantees smoothness, causes issues. In a circular motion the magnitude of the acceleration is constant, but when such a motion is emulated with this method the acceleration drops to zero at the transitions between corners, while varying smoothly in between. This implies that when a circular motion is replicated, the magnitude of the acceleration oscillates between zero and a value that is significantly greater than that of the perfect circle. This can only be fixed by making the acceleration non-zero during transitions, which requires another way of smoothing the transition between corners such that the acceleration stays continuous.

We propose to do this by applying a convolution to the first-order continuous trajectory, where the convolution kernel has compact support and is smooth to at least first order (a single oscillation of a cosine wave or part of a fourth order polynomial are some examples). We are currently working on this, but it is not in the codebase yet.

Latest revision as of 11:32, 27 May 2018

Concept

Trajectory Application

Team Firefly is working hard on creating a drone show in which the lighting, the song and the movement of the drone all interact with each other. As of now these interactions are created manually; if they are to be changed, they need to be adjusted in the code. The purpose of the trajectory editor is to give any user the possibility to create their own drone show. With this application it will be possible to change the song, trajectory and LED design without having to dig deep into the code.

The trajectory editor is being built using JavaScript and HTML5, which makes it a web application. A plan of action has been created with the goal of building three prototypes. The first prototype focuses mainly on the functionality of the editor, in order to have a working prototype. It only contains the top view of the drone, so the height is constant when editing the trajectory. The second prototype will be created when most functions have been added. It will focus on the user experience, and a side view of the drone will be added. Lastly, for the final prototype we will try to create a 3D view of the drone, which will be quite a challenge.

As of now we are still working on the first prototype, and most functions have been added. The plan was to have a first working prototype by the end assessment of this academic year, and this deadline has been met.

Organization

For building the trajectory application, one of the most important parts of the whole project was staying organized at all times. When working on such a large application, it is vital that every team member has a good idea of the tasks of the others, so that work does not overlap within the team.

The team that was assigned the task of building the trajectory application had its own private repository on GitHub. Whenever a new feature was being developed, a new branch was first created for that specific feature. After the feature had been built on the new branch, a pull request was created for merging the feature branch into master. The repository was configured such that at least one other team member had to approve the code changes before the branch could be merged into master, ensuring that everyone had a general understanding both of the code and of each other’s tasks. When everyone agreed on the code changes, the feature branch was merged into master. The final version of the application was published using GitHub Pages, so that the link could be shared online with other team members. The link for the application is: trajectory-editor (https://cldme.github.io/trajectory-editor/)

User Interface

User Interface Design

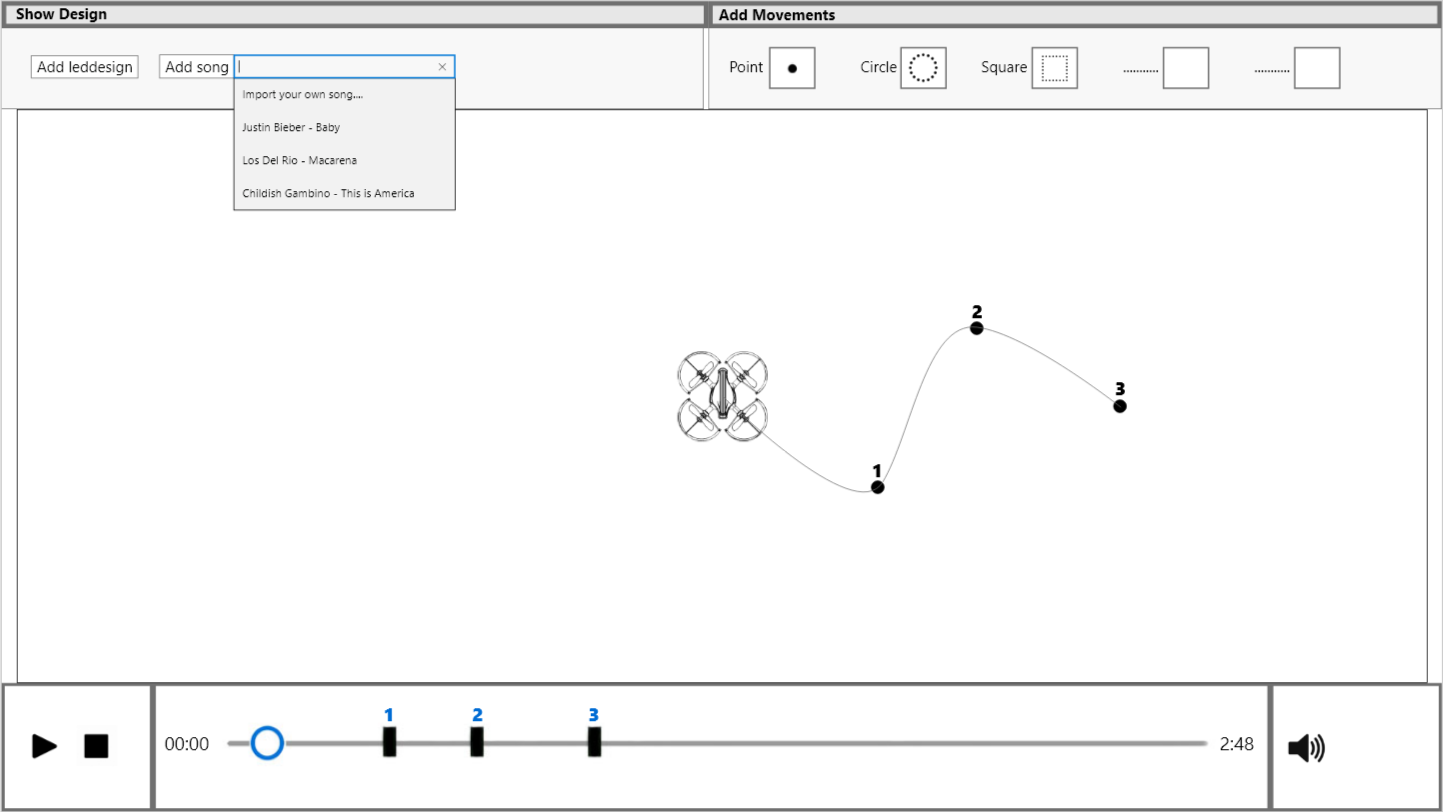

For the first prototype, the user interface design was created jointly by all three group members. It had to look simple and clean, since the first prototype was mainly meant to implement the functionality of the application. The design was created using Adobe XD.

The design is really simple: it consists of a toolbar, a canvas and a timeline. The toolbar contains the show design and the movements which can be added. In the show design the user can add the LED design and the song which has to be playing. For the first prototype the user can only add a song, either by choosing from a list or by importing their own song. The toolbar also contains the movements which can be added to the trajectory of the drone. The trajectory of the drone can consist of different kinds of movements. For the functionality of the first prototype, however, it was decided to only use point as a movement. This can also be seen in the canvas right beneath the toolbar.

Inside the canvas the user can add these points by simply clicking. The user can also drag the numbered points around in the 2D space. These adjustments show up in the timeline under the canvas. In this timeline the different points can be adjusted as well, which causes the drone to reach its destination sooner or later. When both the song and the trajectory have been added, the show can be played, paused or stopped using the buttons on the left side of the timeline. During the show the volume can be adjusted on the right side of the timeline.

Functionality

This section provides some details regarding the core functionality of the application and how this is built. The structure of the code is explained and details are given regarding why certain software design choices were made.

Code Structure

The whole application functionality was split into smaller parts, each concerning a very specific core function. These include the main canvas for displaying the drone path animation and also for allowing users to input trajectories, the timeline, which allows users to manipulate the different time intervals for the points and the song functionality for uploading a song file. Furthermore, for each of these main core functions, helper methods were implemented in order to facilitate both scalability and to allow for easy maintenance.

Although JavaScript is a loosely typed programming language, the application was structured so that it adheres to the object-oriented SOLID principles. This includes building helper methods, isolating specific code in separate .js files and avoiding code duplication by parametrizing functions.

Bootstrap (front-end framework)

A front-end framework is a library with useful .js functions and CSS libraries such that web applications are easier and faster to develop. For our application it was decided that Bootstrap should be used for the front-end prototyping.

Bootstrap is a free and open-source front-end library for designing websites and web applications. It contains HTML and CSS-based design templates for typography, forms, buttons, navigation and other interface components, as well as optional JavaScript extensions. Unlike many web frameworks, it concerns itself with front-end development only. Bootstrap is the second most-starred project on GitHub, which makes it a strong choice for web-related projects and means that a lot of support is available for various problems (it was originally designed by two Twitter engineers who were looking for a fast and easy way to design nice-looking websites).

Since the trajectory design application only uses basic UI elements and two main canvas elements, Bootstrap’s grid system was used to ensure that the application is responsive. That is, the application is displayed properly on devices of various sizes, such as mobile phones and tablets. However, not all functionality works on such small devices as of now.

Canvas Elements

Added in HTML5, the HTML <canvas> element can be used to draw graphics via scripting in JavaScript. For example, it can be used to draw graphs, make photo compositions, create animations, or even do real-time video processing or rendering.

Since our application needs to render the trajectory that is being drawn by the user in real time, the obvious choice is to use an HTML canvas element. This gives us the flexibility of freely drawing various (custom) shapes and plotting the trajectory in real time.

Our application uses two canvas elements: one for the main drawing of the drone and plotting the trajectory (together with the points and also a visualization of the path) and one for the timeline, for drawing a handle for each point such that users can change the time at which the drone needs to reach specific points (in the trajectory). Using these elements gives a lot of flexibility in terms of how the visual elements can be updated with .js dynamically.

Event Listeners

DOM Events are sent to notify code of interesting things that have taken place. Each event is represented by an object which is based on the Event interface, and may have additional custom fields and/or functions used to get additional information about what happened. Events can represent everything from basic user interactions to automated notifications of things happening in the rendering model.

The main method of interacting with HTML elements from JavaScript is using event listeners for the various events that might be triggered on a page, such as mouse clicks or drags. These event listeners are attached to the main canvas and fire based on the actions taken by users. For the main canvas, the most important event handlers are onMouseDown(), onMouseUp() and onMouseMove(), each tasked with handling the event suggested by its name. By using these three event listeners we can determine when points are added or dragged, or when no action is taken. The same principles are applied to the timeline canvas, but more checks are made to ensure that certain properties hold (like handles not crossing into each other’s intervals).
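A minimal sketch of how three such mouse handlers could cooperate is given below. Only the handler names onMouseDown, onMouseUp and onMouseMove come from the description above; createPointEditor, attachToCanvas, hitRadius and the point layout are hypothetical names chosen for illustration, and the actual code may be organised differently.

```javascript
// Editor state kept separate from the DOM so the logic can be reasoned
// about on its own. All names here are illustrative assumptions.
function createPointEditor(hitRadius = 10) {
  const points = [];   // points placed on the canvas, in order
  let dragged = null;  // the point currently being dragged, if any

  // Return the first point within hitRadius of (x, y), or null.
  const findPoint = (x, y) =>
    points.find((p) => Math.hypot(p.x - x, p.y - y) <= hitRadius) || null;

  return {
    points,
    // Pressing on an existing point starts a drag; otherwise a point is added.
    onMouseDown({ x, y }) {
      dragged = findPoint(x, y);
      if (dragged === null) points.push({ x, y });
    },
    // Moving only has an effect while a point is being dragged.
    onMouseMove({ x, y }) {
      if (dragged !== null) {
        dragged.x = x;
        dragged.y = y;
      }
    },
    // Releasing the button ends any drag.
    onMouseUp() {
      dragged = null;
    },
  };
}

// Browser-only wiring: translate mouse events to canvas coordinates.
function attachToCanvas(canvas, editor) {
  const toCanvas = (e) => {
    const rect = canvas.getBoundingClientRect();
    return { x: e.clientX - rect.left, y: e.clientY - rect.top };
  };
  canvas.addEventListener("mousedown", (e) => editor.onMouseDown(toCanvas(e)));
  canvas.addEventListener("mousemove", (e) => editor.onMouseMove(toCanvas(e)));
  canvas.addEventListener("mouseup", () => editor.onMouseUp());
}
```

Keeping the handlers free of DOM access is a design choice of this sketch; it makes the add and drag behaviour easy to check without a browser.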

Undo and Redo

Undo and redo functionality is implemented in undo/redo.js. Before a change to the trajectory is applied, the function "snapshotTrajectory" pushes the state of the trajectory before the change onto the "undoStack". Calling undo pops the latest value from the undoStack and replaces the current trajectory with it. To allow redo functionality, undo also pushes the state of the trajectory before the undo is applied onto the "redoStack". Redo does exactly the same as undo, but with the two stacks swapped: redo pushes the current trajectory onto the "undoStack", and pops the latest entry of the "redoStack" into the current trajectory.

When applying undo or redo it is important to check whether the stack the function will pop a trajectory from is empty. If so, the function must do nothing. Also, when loading a new file, the undoStack and redoStack must be cleared to prevent the user from accidentally 'leaving' the loaded trajectory. Currently both stacks can grow without bound, so after long periods of editing the undoStack might become impractically large. This might cause performance problems, which should be prevented by limiting the undoStack to a maximum size (by forgetting the oldest entry once that size is reached).
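The two-stack scheme, including the proposed size limit, could look roughly like the sketch below. The names snapshotTrajectory, undoStack and redoStack follow the text; createHistory, edit, load, getTrajectory and maxSize are hypothetical, copying via JSON is an assumption that only holds for plain-data trajectories, and clearing the redoStack on a new edit is a common convention that the description above does not state.

```javascript
function createHistory(initialTrajectory, maxSize = 100) {
  let current = initialTrajectory;
  const undoStack = [];
  const redoStack = [];

  // Deep copy so later edits cannot alter stored snapshots (plain data only).
  const copy = (trajectory) => JSON.parse(JSON.stringify(trajectory));

  // Push an entry and forget the oldest one once maxSize is exceeded.
  const pushBounded = (stack, entry) => {
    stack.push(entry);
    if (stack.length > maxSize) stack.shift();
  };

  // Store the trajectory as it is before a change is applied.
  function snapshotTrajectory() {
    pushBounded(undoStack, copy(current));
    redoStack.length = 0; // assumption: a new edit invalidates redo history
  }

  // Apply a change function to a copy of the current trajectory.
  function edit(change) {
    snapshotTrajectory();
    current = change(copy(current));
  }

  function undo() {
    if (undoStack.length === 0) return; // nothing to undo: do nothing
    pushBounded(redoStack, copy(current));
    current = undoStack.pop();
  }

  function redo() {
    if (redoStack.length === 0) return; // nothing to redo: do nothing
    pushBounded(undoStack, copy(current));
    current = redoStack.pop();
  }

  // Loading a file replaces the trajectory and clears both stacks.
  function load(trajectory) {
    current = trajectory;
    undoStack.length = 0;
    redoStack.length = 0;
  }

  return { snapshotTrajectory, edit, undo, redo, load, getTrajectory: () => current };
}
```

Storing full copies keeps undo and redo trivial at the cost of memory, which is exactly why a bound such as maxSize matters for long editing sessions.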

Saving and Loading Trajectories

Saving and loading only concerns the state of the trajectory and is implemented in save_load.js, though it relies on specific functionality implemented in state.js, event.js and trajectory.js. The output is a string whose format is given by: (t, x, y, psi).

Improving Functionality

For the next iteration the team plans on adding new functionality such as: drawing predefined shapes (not only points), improving the user experience by adding tooltips to indicate various functions of the application, improving the overall look and feel of the application and also adding the possibility to get direct feedback. Adding a sound visualiser (to see the various pitches of the sound for better designing the show) and adding another slider to restrict the range of the main timeline are also ideas that we hope to include soon.

After this, the team plans on adding a side view (next to the existing top-down view) in order to better visualize the trajectory. Ideally, the last version will have a 3D view of the whole trajectory, but as this is harder to program in JavaScript (it would require extensive knowledge of WebGL), it was decided to first implement multiple side views for the next prototypes. For implementing 3D functionality, a dedicated JavaScript library will probably be used to make working with WebGL easier. The team will start researching 3D libraries as soon as the 2D version of the application is finished, so that the main functionality is already implemented once we move on to building the 3D application. This way, we will have better and faster results when porting the application from 2D to 3D.

Trajectory

Trajectory representation

To describe the trajectory representation, a few types must be explained. Firstly, the state type (implemented in state.js) is a tuple (x, y, z, psi), where x, y, z describe the position of the drone and psi its heading. Notice that a state and its derivatives at a certain point in time fully constrain the translation, velocity, orientation and rate of rotation of a drone without a possibility of the system being overdetermined. For this reason every followable trajectory is fully described by a function from time to state (with continuity to sufficient order). States have addition, subtraction and scalar multiplication functions, which makes the set of states a vector space.

The second type used in the trajectory representation is the event (implemented in event.js). An event is a tuple containing a time and state: e = (t, s). An event can be thought of as a waypoint that the drone should follow. Like states, events have addition, subtraction and scalar multiplication functions, which makes the set of events a vector space.
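A minimal sketch of these two types and their vector operations is given below. The function and field names (makeState, makeEvent, addEvents, halfwayVector, and so on) are hypothetical and chosen for illustration; the actual interfaces of state.js and event.js may differ.

```javascript
// Hypothetical names; state.js and event.js may expose different interfaces.
function makeState(x, y, z, psi) {
  return { x, y, z, psi };
}
function addStates(a, b) {
  return makeState(a.x + b.x, a.y + b.y, a.z + b.z, a.psi + b.psi);
}
function subStates(a, b) {
  return makeState(a.x - b.x, a.y - b.y, a.z - b.z, a.psi - b.psi);
}
function scaleState(s, k) {
  return makeState(k * s.x, k * s.y, k * s.z, k * s.psi);
}

// An event pairs a time t with a state s.
function makeEvent(t, s) {
  return { t, s };
}
function addEvents(a, b) {
  return makeEvent(a.t + b.t, addStates(a.s, b.s));
}
function subEvents(a, b) {
  return makeEvent(a.t - b.t, subStates(a.s, b.s));
}
function scaleEvent(e, k) {
  return makeEvent(k * e.t, scaleState(e.s, k));
}

// Event vector spanning half of the way from event a to event b.
function halfwayVector(a, b) {
  return scaleEvent(subEvents(b, a), 0.5);
}
```

Because time is treated as just another component, an expression such as addEvents(a, halfwayVector(a, b)) yields the midpoint of two waypoints in both time and state.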

It is clear that a list of events with an interpolation function (which produces a continuous set of events) can be used to map time to state, and therefore fully describes a drone trajectory. It should be noted that the method of interpolation must guarantee that the state varies sufficiently smoothly over time in order for the trajectory to be followable by a drone. Also, to describe a trajectory it is not required that the generating events themselves are contained in the continuous set of events produced by the interpolation, which is not in line with the usual definition of the word interpolation. From now on the entire process will therefore be referred to as sampling. Our trajectory parameterization is based on these ideas: a trajectory is parameterized by a time-ordered array of events. The sampling functions are described in the next section.

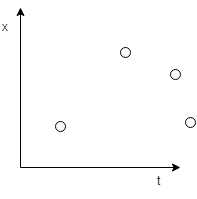

Trajectory sampling

As explained in the previous section, sampling is achieved by interpolating events to find states corresponding to intermediate times with sufficient smoothness. Because the acceleration of the drone is coupled to its orientation, the x, y, z components of followable trajectories must be continuous up to at least the fourth order time derivative. However, we use a control system which is split into a high-level and a low-level controller, where the spatial part of the high-level controller only uses (x, y, z), (x, y, z)', and (x, y, z)". For this reason discontinuities in the third and fourth order time derivatives of the state are 'forgotten' after passing through the high-level controller, and the interpolation only needs to be second order differentiable.

The code for sampling is implemented in trajectory.js, and it is useful to have taken a look at the code before reading this section. The sampling works by dividing the trajectory into 'corners': every event 'base' of the trajectory array is combined with two event vectors v0 and v1. v0 spans halfway to the previous event, while v1 spans halfway to the next event. We sample the corner using the following formulae:

[math]\displaystyle{ e(\tau) = base + v0 * c0(\tau) + v1 * c1(\tau) }[/math]

[math]\displaystyle{ c0(\tau) = c1(1-\tau) }[/math]

SIDENOTE: in the code the values of v0 and v1 are twice the value described here, the difference is compensated by halving c0 and c1.

This leaves freedom to choose the function c1. We require a few conditions: c1: [0, 1] -> [0, 1], to ensure all times covered by the corner can be 'generated'; c1 is bijective, to ensure every time has a unique corresponding tau; and c1(0) = 0, c1'(0) = 0, c1"(0) = 0, and so on up to the N-th order derivative.

The last condition guarantees that at the sides of the window, the trajectory transitions smoothly to N-th order (the zeroth and first order derivatives are the same across the edge, higher order time derivatives are 0).

A method of achieving this is taking:

[math]\displaystyle{ c1(\tau) = \alpha * \tau^{N - 1} + \beta * \tau^N }[/math]

[math]\displaystyle{ \alpha = N - 1 }[/math]

[math]\displaystyle{ \beta = 2 - N }[/math]
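The formulae above can be written down directly, as in the sketch below. For brevity it samples a single scalar component; in the application the same expression would be applied to every component of an event (the time and each state component). The function names and the assumption N >= 2 are ours, and the sketch follows the formulae as written here rather than the doubled v0, v1 and halved c0, c1 used in trajectory.js.

```javascript
// c1 as defined above, with alpha = N - 1 and beta = 2 - N (N >= 2 assumed).
function c1(tau, N) {
  const alpha = N - 1;
  const beta = 2 - N;
  return alpha * Math.pow(tau, N - 1) + beta * Math.pow(tau, N);
}

// c0 is c1 mirrored around tau = 0.5.
function c0(tau, N) {
  return c1(1 - tau, N);
}

// One scalar component of a corner: base + v0 * c0(tau) + v1 * c1(tau).
function sampleCorner(base, v0, v1, tau, N) {
  return base + v0 * c0(tau, N) + v1 * c1(tau, N);
}
```

With these definitions c1(0) = 0 and c1(1) = 1 for every N, so the corner starts at base + v0 for tau = 0 and ends at base + v1 for tau = 1, which is where the neighbouring corners take over.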

Now, the union of all corners, with [math]\displaystyle{ \tau }[/math] in [0, 1], gives a set of events where every time (in the duration of the trajectory) occurs exactly once. The remaining problem is to find this unique event given a certain time t. The correct corner can be found by comparing the start and end times of all corners: a corner contains the desired event if and only if (base + v0).t < t < (base + v1).t. Searching through all corners and checking this condition gives the correct corner. Within every corner [math]\displaystyle{ \frac{dt}{d\tau} \gt 0 }[/math] at every point, therefore bisection can be used to find the value of [math]\displaystyle{ \tau }[/math] corresponding to t. When [math]\displaystyle{ \tau }[/math] is found, the corner can be sampled to finally find the event, and therefore the state at t.
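The corner search and the bisection step might look like the sketch below. It is not the code from trajectory.js: the corner objects only carry the time components under the hypothetical field names baseT, v0T and v1T, the helper names are ours, and the comparison is made inclusive (an assumption) so that a time exactly on the boundary between two corners is also found.

```javascript
// Return the first corner whose time window contains t, or null if none does.
// Field names baseT, v0T, v1T are hypothetical.
function findCorner(corners, t) {
  for (const corner of corners) {
    const start = corner.baseT + corner.v0T;
    const end = corner.baseT + corner.v1T;
    if (start <= t && t <= end) return corner; // inclusive bounds (assumption)
  }
  return null; // t lies outside the trajectory
}

// Solve timeOfTau(tau) = t for tau in [0, 1] by bisection.
// Requires timeOfTau to be strictly increasing on [0, 1].
function findTau(timeOfTau, t, tolerance = 1e-9) {
  let lo = 0;
  let hi = 1;
  while (hi - lo > tolerance) {
    const mid = 0.5 * (lo + hi);
    if (timeOfTau(mid) < t) {
      lo = mid;
    } else {
      hi = mid;
    }
  }
  return 0.5 * (lo + hi);
}

// Usage: two corners covering the times 0..2 and 2..4.
const corners = [
  { baseT: 1, v0T: -1, v1T: 1 },
  { baseT: 3, v0T: -1, v1T: 1 },
];
const corner = findCorner(corners, 2.5);
// Time map of the corner for N = 2, where c1(tau) = tau and c0(tau) = 1 - tau.
const tau = findTau(
  (u) => corner.baseT + corner.v0T * (1 - u) + corner.v1T * u,
  2.5
);
```

Bisection halves the search interval on every iteration, so the default tolerance is reached after about 30 evaluations of the time map regardless of the shape of c1.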

This sampling method was developed because it ensures sufficient smoothness of the path while guaranteeing that modifications to the input events only modify the sampled path locally. In addition, allowing the user to modify the time variable of the events in the trajectory list makes syncing the trajectory with the music simple. Unfortunately, this sampling method has some problems. Most importantly, the condition that the derivatives from second to N-th order are zero when transitioning between corners, which guarantees smoothness, causes issues. In a true circular motion the magnitude of the acceleration is constant, but in the emulated motion it drops to zero at the transitions between corners while varying smoothly in between. This implies that when a circular motion is replicated, the magnitude of the acceleration oscillates between zero and a value significantly greater than that of the perfect circle. This can only be fixed by making the acceleration non-zero during transitions, which requires another way of smoothing the transition between corners such that the acceleration stays continuous. We propose to do this by convolving the first-order continuous trajectory with a kernel that has compact support and is smooth to at least first order (a single oscillation of a cosine wave or part of a fourth order polynomial are some examples). We are currently working on this, but it is not in the codebase yet.
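To illustrate the proposed idea only, the sketch below convolves one uniformly sampled trajectory component with a raised-cosine kernel (a single oscillation of a cosine wave, lifted so that it falls to zero at both ends). Everything in it is an assumption made for the example: the function names, the uniform sampling, the kernel width parameter halfWidth, and the choice to repeat the first and last samples at the ends of the trajectory.

```javascript
// Raised-cosine kernel with 2 * halfWidth + 1 taps, normalized to sum to 1.
function cosineKernel(halfWidth) {
  const weights = [];
  let sum = 0;
  for (let k = -halfWidth; k <= halfWidth; k++) {
    const w = 0.5 * (1 + Math.cos((Math.PI * k) / (halfWidth + 1)));
    weights.push(w);
    sum += w;
  }
  return weights.map((w) => w / sum);
}

// Convolve uniformly spaced samples of one component with the kernel.
function smooth(samples, halfWidth) {
  const kernel = cosineKernel(halfWidth);
  return samples.map((_, i) => {
    let acc = 0;
    for (let k = -halfWidth; k <= halfWidth; k++) {
      // Clamp the index so the first and last samples are repeated at the ends.
      const j = Math.min(samples.length - 1, Math.max(0, i + k));
      acc += kernel[k + halfWidth] * samples[j];
    }
    return acc;
  });
}
```

Because the kernel is normalized, a constant signal is left unchanged, while a sharp step is spread out over the width of the kernel.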