Drone Referee - MSD 2017/18


[[File: Collina.png|thumb|upright=2|right|none|alt=Alt text|The Drone Referee]]

=Introduction=

==Abstract==

As a billion-euro industry, football is constantly evolving through advancing technologies that improve not only the game but also the fan experience. Most football stadiums are outfitted with state-of-the-art camera technologies that provide previously unseen vantage points to audiences worldwide. However, football matches are still refereed by humans who make decisions based on their visual information alone. As a result, referees sometimes make incorrect decisions, which can strongly affect the outcome of a game. There is a need for supporting technologies that can improve the accuracy of referee decisions. Through this project, TU Eindhoven hopes to develop a system with intelligent technology that can monitor the game in real time and make fair decisions based on observed events. This project is a follow-up of two previous projects <Ref>http://cstwiki.wtb.tue.nl/index.php?title=Autonomous_Referee_System</Ref> <Ref>http://cstwiki.wtb.tue.nl/index.php?title=Robotic_Drone_Referee</Ref> working towards this goal.

In this project, a drone is used to assist in refereeing a football match by detecting events and providing recommendations to a remote referee, who then makes decisions based on these recommendations. The match is played by the university's RoboCup robots, and, as a proof of concept, the drone referee is developed for this environment.

This project focuses on the design and development of a high-level system architecture and corresponding software modules on an existing quadrotor (drone). It builds upon data and recommendations from the first two generations of Mechatronics System Design trainees, with the purpose of providing a proof-of-concept Drone Referee for a 2x2 robot-soccer match.

==Background and Context==

The Drone Referee project was introduced to the PDEng Mechatronics Systems Design team of 2015. That team successfully demonstrated a proof-of-concept architecture, and the PDEng team of 2016 developed it further on an off-the-shelf drone. The challenge presented to the team of 2017 was to use the lessons of the previous teams to develop a drone referee using a new custom-made quadrotor. This drone was built and configured by a master's student, Peter Rooijakkers, whose thesis <Ref name="peter">http://cstwiki.wtb.tue.nl/index.php?title=File:Rooijakkers_PLM_2017_Design_and_Control_of_a_Quad-Rotor.pdf</Ref> was used as the baseline for this project.

The MSD 2017 team consisted of seven people with different technical and academic backgrounds. One project manager and two team leaders were appointed, and the remaining four team members were divided between the two team leaders. The team was organized as follows:

{| class="wikitable" border="1"

| Mohammad Reza Homayoun || Team 2 || m.r.homayoun@tue.nl

|}

==Problem Description==

===Problem Setting===

Two agents will be used to referee the game: a drone moving above the pitch and a remote human referee who receives video streams from a camera system attached to the drone. The motion controller of the drone must provide video streams that give the human referee adequate situational awareness, enabling him/her to make proper decisions. A visualization and command interface shall allow interaction between the drone and the human referee. Besides visualizing the real-time video stream, this interface shall offer further features, such as on-demand replays of recent plays, and shall enable the human referee to send decisions (kick-off, foul, free throw, etc.), which should be signaled to the audience via LEDs placed on the drone and a display connected to a ground station. Moreover, an algorithm based on computer vision, running on the remote PC that shows the video stream to the human referee, shall give the human referee a recommendation with respect to the following two rules:

* RULE A: Free throw, when the ball moves out of the bounds of the pitch, crossing one of the four lines delimiting the field.

* WP4: Automatic recommendation for rule enforcement

==Project Scope==

The scope of the project was refined during the design phase and narrowed down to the following topics, which are explained below:

* System Architecture

** Task-Skill-World model

=System Architecture=

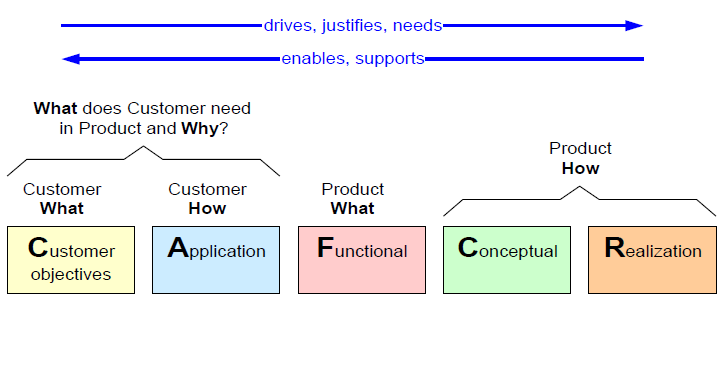

The initial architecture of the autonomous drone referee is based on the CAFCR system architecture principles described in ''CAFCR: A Multi-view Method for Embedded Systems Architecting. Balancing Genericity and Specificity''<Ref>http://www.gaudisite.nl/ThesisBook.pdf</Ref>. This architecting technique gave insight into the different viewpoints in the project and helped create an architecture that balances customer demands against the available resources.

[[File:CAFCR diagram.PNG|center||frame|none|alt=Alt text|CAFCR viewpoints for system architecture]]

== Task Skill Motion Model ==

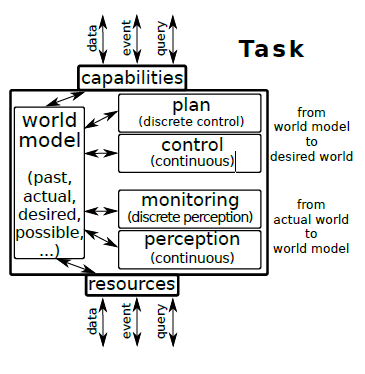

This project followed a paradigm often used to define the architecture of robotic systems, taken from ''Composable control stacks in component-based cyber-physical system platforms'' <Ref>https://people.mech.kuleuven.be/~bruyninc/tmp/modelling-for-motion-stack.pdf</Ref>. It presents a model of components whose final goal is a software application deployed in an operating-system process on top of hardware resources. The model helps to realize the compositions an application needs to perform its tasks and, eventually, to integrate multiple subsystems into a complete system.

This model helps to make several aspects of a system explicit:

* The goals of the application.

* How those goals will be achieved.

* How to exploit the available resources and build a model with them.

* The capabilities that the application can offer.

=== Components of the Task model ===

* Resources: The limitations and constraints imposed by the resources available to the project.

* Capability: What the task can deliver to its user, i.e. the limits of what can be achieved with it.

* World Model: The store of all information. It contains the past, present and future (desired) states of the tasks. The world model must be interconnected with the other task models so that information can be shared and updated accordingly.

* Plan: The high-level discrete events that bring the current world model to the desired world model.

* Control: How the plan is realized in the actual world, under the actual task requirements and constraints. It contains the continuous actions that carry out the plan.

* Monitor: A check on the perception that verifies the expected behaviour of the tasks. The monitor triggers control actions to keep the task parameters in check.

* Perception: How the world is perceived and how the world model is updated accordingly.

[[File: TSM.PNG|center|frame|none|alt=Alt text|Mereo-topological diagram for task skill motion model]]

==Implemented Architecture and Description==

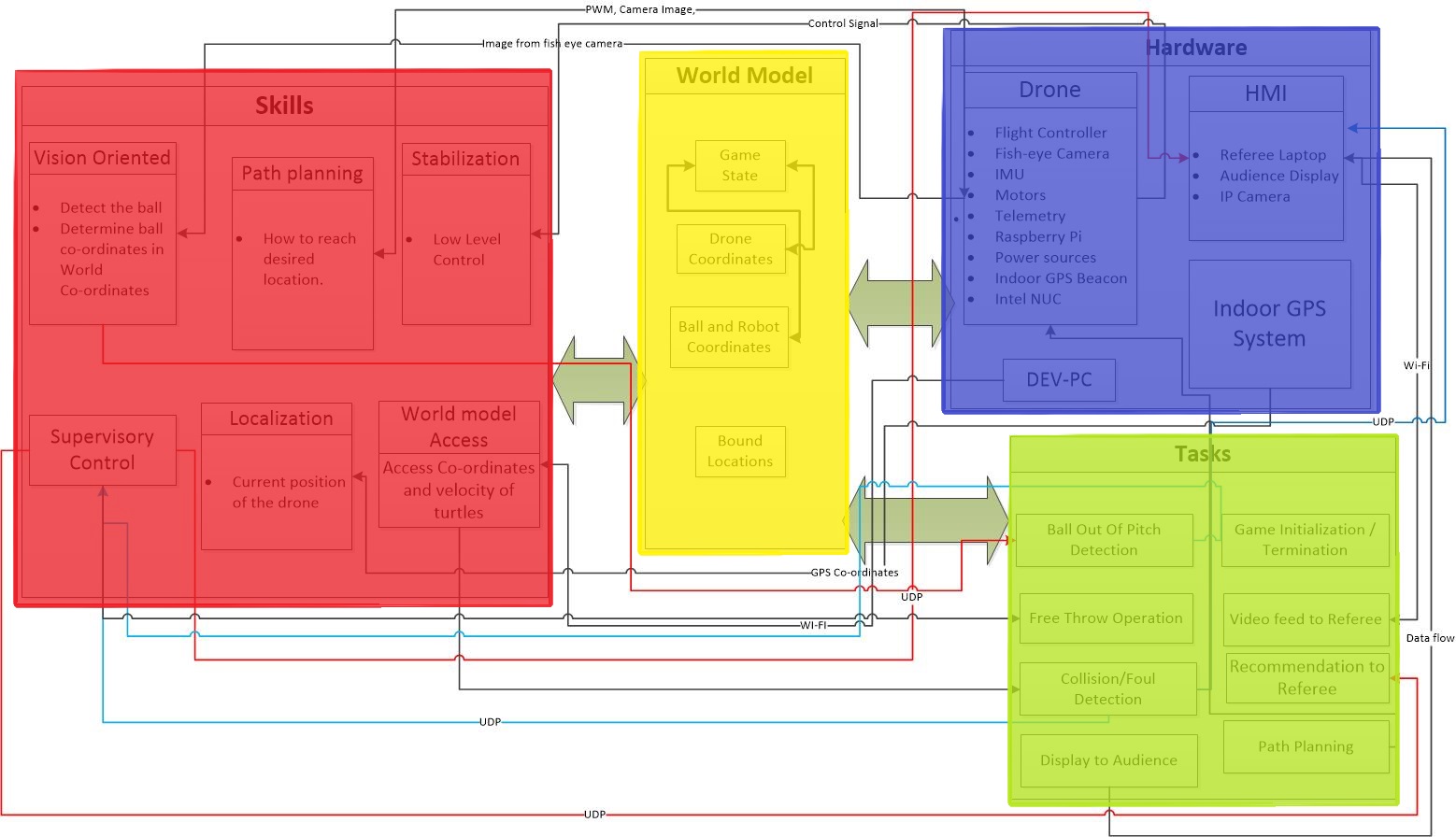

The proposed architecture has four prominent blocks: tasks, hardware, world model and skills. The tasks (green) are the objectives to be accomplished to complete the project. The skills (red) are the implementations required to perform the tasks successfully; they amalgamate the control and plan elements of the task skill motion model. The hardware (blue) block consists of all hardware resources used to complete the tasks. It also includes the platform on which the tasks are performed, so the hardware determines the capabilities of the system; therefore, the hardware block in this architecture contains both resources and capabilities. The world model (yellow) acts as a dynamically updated database for parameters such as the game state, which describes the current status of the game, for example whether play is ongoing or paused so that a decision can be enforced. The world model also stores and updates the ball and robot coordinates, which are crucial for collision detection and ball-out-of-pitch detection. <br>

The architecture also defines the interfaces between the interlinked components in the system. These interfaces are labeled with the kind of information that flows between the blocks. The world model is connected to all blocks because it must be dynamically updated with all the information.<br>

[[File: System archi wiki.jpg|1000px|thumb|center|none|alt=Alt text|Implemented system architecture]]

<p>

'''Hardware''':

*'''''Drone''''': The drone was built by Peter Rooijakkers (master's student at TU/e) as his master's thesis project [https://surfdrive.surf.nl/files/index.php/s/hmlMrW6OkkqqlHg here]. It consists of a Pixhawk PX4 flight controller, motors, a fisheye camera, a lidar, a Raspberry Pi as on-board processor for vision, and an indoor GPS beacon. A telemetry link was used for communication between the drone and the off-board PC (Intel NUC).

*'''''Indoor GPS System''''': The drone was localized with an indoor GPS system consisting of ultrasound beacons placed around the field. One of these beacons was mounted on the drone and provided the drone's coordinates.

*'''''Dev-PC''''': A Linux machine that can connect to the TechUnited network to access the TechUnited world model.

*'''''Referee Laptop''''': A laptop on which the remote referee monitors the game and uses an HMI to give decisions based on the recommendations and the video feed.

</p>

<p>

'''World Model''':

*'''''Game States''''': Stores the state of the game: whether it is in progress, whether an event has occurred (ball out of pitch, ball inside pitch), whether there is a pause (the referee taking time to consider a decision) or whether the game has ended.

*'''''Drone Coordinates''''': Stores the coordinates of the drone obtained from the indoor GPS system and the lidar.

*'''''Ball and Robot Coordinates''''': The ball coordinates were obtained in the world-model frame using the fisheye camera and the Raspberry Pi. The robot coordinates were obtained by accessing the TechUnited world model.

</p>
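As an illustration of the World Model block, the sketch below keeps the game state and the drone, ball and robot coordinates in one object that the other blocks can read and update. It is a minimal Python sketch, not the project's implementation: the class, state and method names (`WorldModel`, `GameState`, `update_ball`, `update_robot`, `set_state`) are hypothetical, and the rule that an ended game cannot change state again is an assumption.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class GameState(Enum):
    """States named in the World Model description (hypothetical identifiers)."""
    IN_PROGRESS = auto()
    BALL_OUT_OF_PITCH = auto()
    BALL_IN_PITCH = auto()
    PAUSED = auto()  # referee taking time to consider a decision
    ENDED = auto()


@dataclass
class WorldModel:
    """Hypothetical shared store for the game state and coordinates (metres)."""
    state: GameState = GameState.IN_PROGRESS
    drone_xyz: tuple = (0.0, 0.0, 0.0)  # x, y from indoor GPS; z from lidar
    ball_xy: tuple = (0.0, 0.0)         # from fisheye camera + Raspberry Pi
    robots_xy: dict = field(default_factory=dict)  # robot id -> (x, y), TechUnited world model

    def update_ball(self, x: float, y: float) -> None:
        self.ball_xy = (x, y)

    def update_robot(self, robot_id: str, x: float, y: float) -> None:
        self.robots_xy[robot_id] = (x, y)

    def set_state(self, new_state: GameState) -> None:
        # Assumption of this sketch: once ENDED, the game cannot change state again.
        if self.state is GameState.ENDED:
            raise ValueError("game has already ended")
        self.state = new_state
```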

<p>

'''Tasks''': The objectives of the system are explained here:

*'''''Ball out of pitch''''': The system detects when the ball goes out of the pitch.

*'''''Free throw''''': The referee communicates the decision of a ''free throw'' to the audience via the HMI.

*'''''Collision detection''''': The system detects when two robots collide with each other.

*'''''Display to audience''''': A screen informs the audience of the remote referee's decision.

*'''''Video feed to referee''''': A live video feed of the gameplay is provided to the referee.

*'''''Recommendation to referee''''': Recommendations of events are sent to the referee via the referee HMI.

*'''''Path Planning''''': Determines an optimal way for the drone to move over the pitch so that it can detect events with ease.

</p>
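The collision-detection task can be sketched with the robot coordinates from the TechUnited world model. The minimal Python example below assumes circular robot footprints and flags two robots as colliding when their centres are closer than two radii; the radius value and the function names are hypothetical placeholders, not measured TechUnited dimensions.

```python
import math


def robots_colliding(p1, p2, robot_radius=0.26):
    """True if two circular robots centred at p1 and p2 (x, y in metres) touch or overlap.

    robot_radius is a hypothetical placeholder, not a measured robot dimension.
    """
    return math.hypot(p1[0] - p2[0], p1[1] - p2[1]) <= 2.0 * robot_radius


def detect_collisions(robots, robot_radius=0.26):
    """Return (id_a, id_b) pairs, in sorted id order, of robots that touch or overlap.

    robots: dict mapping robot id -> (x, y), e.g. read from the world model.
    """
    ids = sorted(robots)
    pairs = []
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if robots_colliding(robots[a], robots[b], robot_radius):
                pairs.append((a, b))
    return pairs
```

Distance alone cannot tell a collision apart from two robots standing side by side; the robot velocities available from the world model could be used to refine the check.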

<p>

'''Skills''': The skills the system requires to perform these tasks are explained here:

*'''''Vision Oriented''''': The vision system can detect the ball and determine its coordinates in the world frame.

*'''''Path Planning''''': The path planner can track the ball in an optimal way.

*'''''Localisation''''': Informs the flight controller (Pixhawk PX4) of the drone's current position.

*'''''Stabilization''''': This skill belongs to the flight controller, which provides stable flight with the help of a built-in PID controller.

*'''''World Model access''''': Provides the coordinates and velocities of the robots (turtles) during gameplay.

*'''''Supervisory Control''''': Regulates the discrete flags sent to the HMI and automates the sending of recommendations to the HMI.

</p>
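One way to read the Supervisory Control skill is as an edge detector: per-frame detection flags are turned into a single recommendation when an event starts, so the HMI is not flooded while, for example, the ball stays out of the pitch. The Python sketch below assumes this behaviour; the class name, flag names and message texts are hypothetical.

```python
class SupervisoryControl:
    """Hypothetical supervisor: per-frame flags in, one-shot HMI recommendations out."""

    MESSAGES = {
        "ball_out": "Recommendation: free throw (ball out of pitch)",
        "collision": "Recommendation: possible collision",
    }

    def __init__(self):
        self._previous = {flag: False for flag in self.MESSAGES}
        self.sent = []  # every recommendation that would be pushed to the referee HMI

    def step(self, ball_out: bool, collision: bool) -> list:
        """Process one cycle of detection flags and return the new recommendations."""
        current = {"ball_out": ball_out, "collision": collision}
        new = []
        for flag, active in current.items():
            # Only a False -> True transition (rising edge) raises a recommendation,
            # so an event that persists over many frames is reported once.
            if active and not self._previous[flag]:
                new.append(self.MESSAGES[flag])
        self._previous = current
        self.sent.extend(new)
        return new
```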

=Implementation=

==Flight and Control==

===Drone Localization===

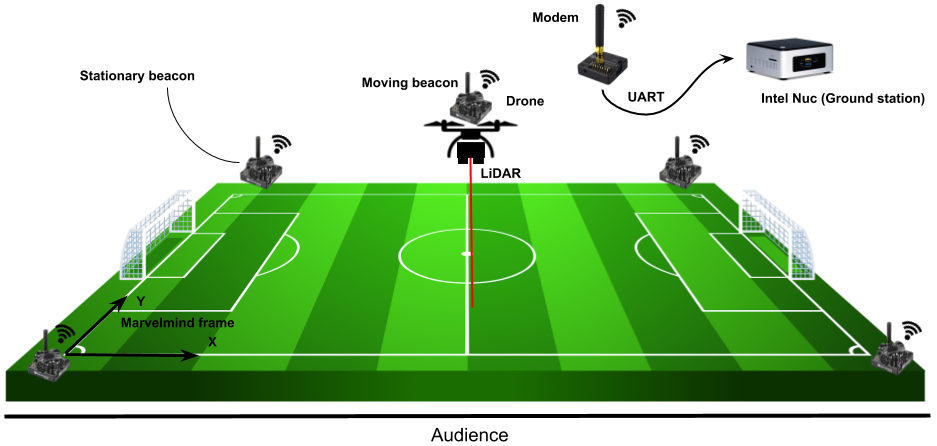

To plan and execute trajectories efficiently, the drone needs an accurate representation of its current pose (position and attitude) in a Cartesian frame of reference. The internal IMU of the Pixhawk estimates the attitude (roll, pitch and yaw angles) of the drone. However, the Pixhawk relies on external positioning systems to determine its position (X, Y and Z coordinates). Thus, the following localization systems were used:

* A lidar for determining the height (Z coordinate).

* A Marvelmind setup for estimating the X and Y coordinates.

====Lidar====

A LIDAR-Lite V3 was physically attached to the drone. Since the Pixhawk has a built-in option for using a lidar as its primary height measurement device, the lidar was connected directly to the Pixhawk via the Pixhawk's I2C port.

To enable this option, the following Pixhawk parameters should be set:

*EKF2_HGT_MODE = 2 (range sensor as the primary height source)

*EKF2_RNG_AID = 1

These parameters can be changed using QGroundControl, a ground control software for the Pixhawk. QGroundControl can be installed on either a Linux or a Windows computer, to which the Pixhawk is then connected via USB. In QGroundControl

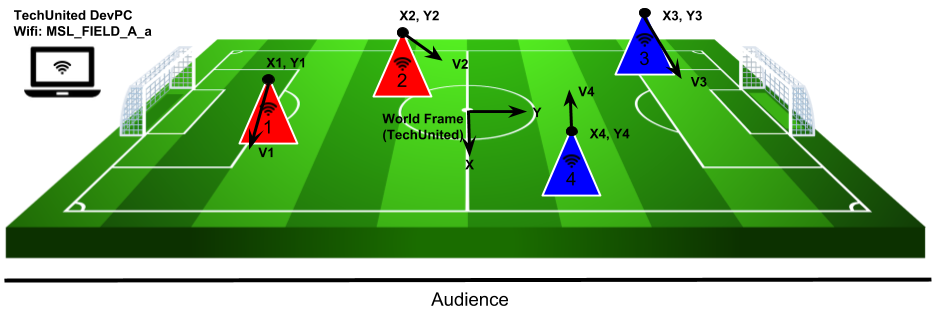

====Marvelmind Localization====

Marvelmind Robotics is a company that develops an off-the-shelf indoor GPS. Indoor localization is achieved using ultrasound beacons. In this application, four stationary beacons are placed at a height of around 1 meter at each corner of the RoboCup field. A fifth beacon, known as the hedgehog (or hedge for short), is attached to the drone and powered by the drone's on-board power circuit. The hedgehog is a mobile beacon whose position is tracked by the stationary beacons. Pose data of the four beacons and the hedgehog are communicated via the modem, which also provides a data output over UART. In this application, the hedgehog's pose data is read from the modem by a C program. This program reads the data via UART, decodes the pose data and broadcasts it to the MATLAB/Simulink model via UDP. All of this is executed on the Intel NUC. The coordinates obtained from the Marvelmind setup were mapped to the NED coordinate frame used by the Pixhawk.

[[File:MarvelmindLocalization.png|thumb|center||frame|none|alt=Alt text|Localization using Marvelmind indoor GPS: Coordinate frames and interfaces]]
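The mapping to NED and the UDP broadcast described above can be sketched as follows. The actual program was written in C; this Python sketch only illustrates the idea and assumes that the Marvelmind frame is ENU-like (x east, y north, z up) with a chosen origin, so that NED follows as north = y, east = x, down = -z. The wire format (three little-endian doubles) and the port number are hypothetical and must match whatever the Simulink UDP receive block expects.

```python
import struct


def marvelmind_to_ned(x, y, z, origin=(0.0, 0.0, 0.0)):
    """Map a hedgehog position (metres) to NED.

    ASSUMPTION: the Marvelmind frame is ENU-like (x east, y north, z up) and
    `origin` is the Marvelmind position chosen as the NED origin. Swap or negate
    axes to match the real beacon layout.
    """
    dx, dy, dz = x - origin[0], y - origin[1], z - origin[2]
    return dy, dx, -dz  # (north, east, down)


def pack_pose(north, east, down):
    """Hypothetical wire format: three little-endian float64 values (24 bytes)."""
    return struct.pack("<3d", north, east, down)


def send_pose(sock, payload, addr=("127.0.0.1", 25000)):
    """Send one packed pose as a UDP datagram.

    sock: a socket.socket(socket.AF_INET, socket.SOCK_DGRAM); the port is a placeholder.
    """
    sock.sendto(payload, addr)
```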

====Autonomous Flight====

This section explains how the on-board Pixhawk was used to fly the drone autonomously.

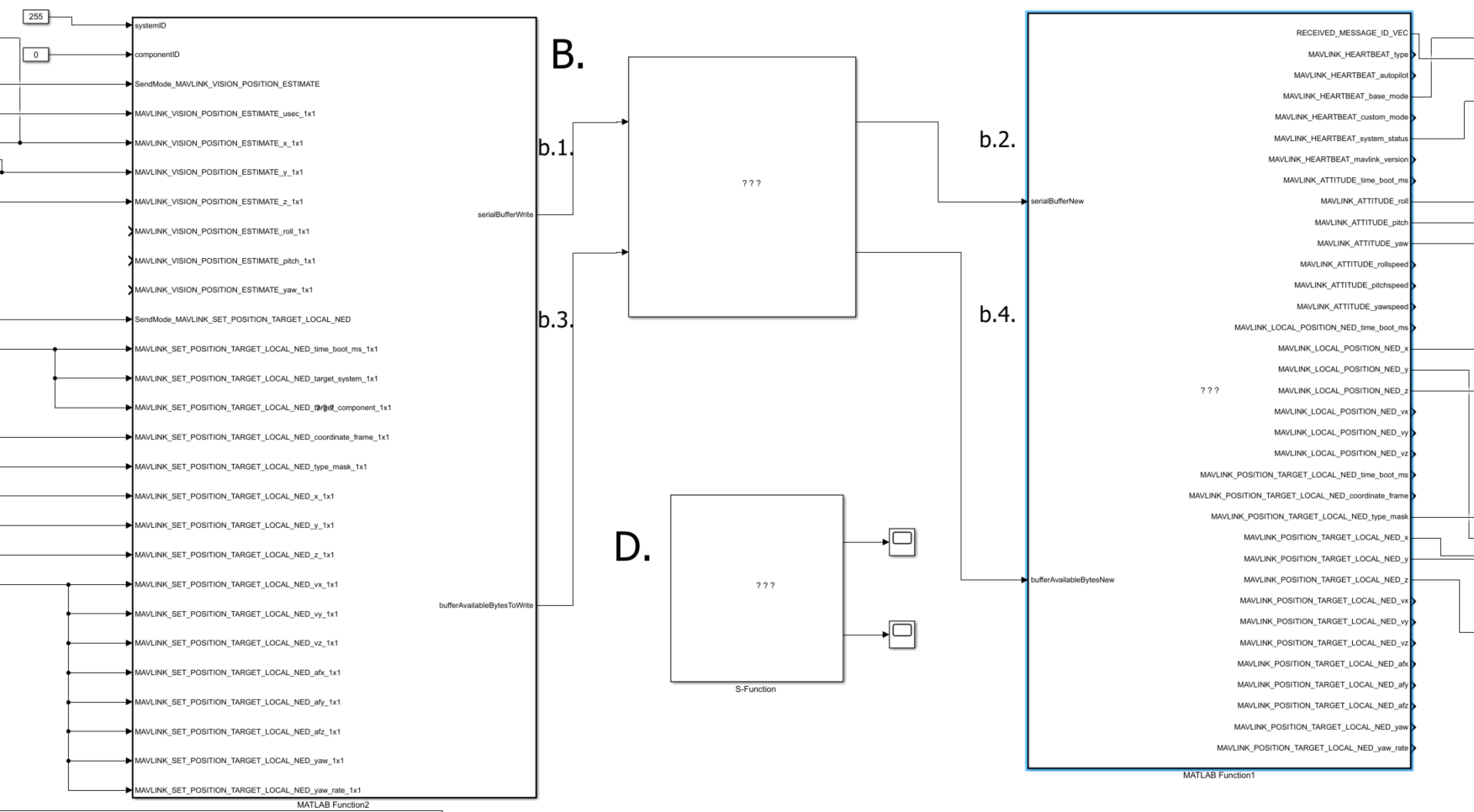

The actions taken by the Pixhawk are dictated by the messages it receives via the Mavlink message protocol. The Mavlink messages were generated in MATLAB Simulink running on an off-board Intel NUC computer and were sent to the Pixhawk via telemetry. The telemetry link was established by connecting two telemetry modules, one to the ''telem2'' port of the Pixhawk and the other to the Intel NUC via USB. In every iteration of the MATLAB Simulink simulation, one Mavlink message is sent and another is received. Each Mavlink message sent to the Pixhawk includes the position estimate from the Marvelmind setup and a required set point. The set point represents the target location that the Pixhawk, and hence the drone, is required to move to. The Mavlink message received includes information about the drone's current pose and position. This information was used in [[#Path Planning|planning the drone's path]] by deciding on the set points sent to the Pixhawk.

[[File:Simulink Block Screenshot.png|thumb|upright=5|center|none|alt=Alt text|MATLAB Simulink blocks provided by Peter Rooijakkers <ref name="peter" />. The left block is used to send Mavlink messages to the Pixhawk and the right block is used to receive Mavlink messages from the Pixhawk.]]
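In this project the set point was sent as Mavlink message #84 (SET_POSITION_TARGET_LOCAL_NED), whose `type_mask` field tells the autopilot which fields to ignore. The sketch below computes that mask from the POSITION_TARGET_TYPEMASK bit values of the MAVLink common message set; the helper names and the dictionary layout of `make_setpoint` are hypothetical and only illustrate the message content, not the Simulink blocks used in the project.

```python
# POSITION_TARGET_TYPEMASK bits from the MAVLink common message set:
# a set bit tells the autopilot to IGNORE the corresponding field.
X_IGNORE, Y_IGNORE, Z_IGNORE = 1, 2, 4
VX_IGNORE, VY_IGNORE, VZ_IGNORE = 8, 16, 32
AX_IGNORE, AY_IGNORE, AZ_IGNORE = 64, 128, 256
YAW_IGNORE, YAW_RATE_IGNORE = 1024, 2048


def position_only_type_mask(use_yaw=False):
    """type_mask for SET_POSITION_TARGET_LOCAL_NED (#84) commanding only x, y, z."""
    mask = (VX_IGNORE | VY_IGNORE | VZ_IGNORE
            | AX_IGNORE | AY_IGNORE | AZ_IGNORE
            | YAW_RATE_IGNORE)
    if not use_yaw:
        mask |= YAW_IGNORE
    return mask


def make_setpoint(north, east, down, yaw=None):
    """Hypothetical container for the fields of one position set point in the local NED frame."""
    setpoint = {"type_mask": position_only_type_mask(use_yaw=yaw is not None),
                "x": north, "y": east, "z": down}
    if yaw is not None:
        setpoint["yaw"] = yaw  # radians
    return setpoint
```

In NED, a negative `z` lies above the origin, so a hover at 1.5 m corresponds to `z = -1.5`.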

By design, Pixhawk controllers must be connected to a remote controller. A radio receiver was therefore connected to the Pixhawk to receive signals from a paired remote controller. The remote controller was used for the drone's take-off. When the drone reached an appropriate height, the remote controller was used to switch the Pixhawk to Offboard mode, the Pixhawk mode in which it uses the received set points to move autonomously.

Note: To allow switching to Offboard mode, the parameters below have to be set as follows:

*SYS_COMPANION = 57600: Companion Link (57600 baud, 8N1)

*RC_MAP_OFFB_SW = 5 ''(any other desired channel can be selected)''

Note that fully autonomous flight was never achieved. During flight trials, we observed that the set point read back from the Pixhawk (Mavlink message #85) did not always match the set point being sent to it (Mavlink message #84). We were not able to determine the reason for this difference.
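Since the cause of the mismatch was not found, one way to investigate it is to log, in every iteration, the set point sent in message #84 together with the one reported in message #85, and to flag the iterations in which they differ. The Python sketch below does this; the tolerance value and the function names are hypothetical.

```python
import math


def setpoint_error(sent, reported):
    """Euclidean distance (m) between the set point sent in message #84 and the one
    reported back in message #85, both given as (x, y, z) in the local NED frame."""
    return math.dist(sent, reported)


def mismatched_iterations(log, tol=0.05):
    """Return indices of logged iterations whose set points differ by more than tol metres.

    log: list of (sent_xyz, reported_xyz) pairs, one per Simulink iteration.
    tol is a hypothetical threshold.
    """
    return [i for i, (sent, reported) in enumerate(log)
            if setpoint_error(sent, reported) > tol]
```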

===Path Planning===

Path planning is of significant importance for autonomous systems: it lets a system such as a drone find a suitable path. A suitable path can be defined as one with a minimal amount of turning or hovering, or whatever else a specific application requires. Path planning requires a map of the environment, and the drone needs to know its location with respect to that map.

In this project, the drone is assumed to fly to the center of the pitch in manual mode and then switch to automatic mode. In automatic mode, a number of further assumptions are made.

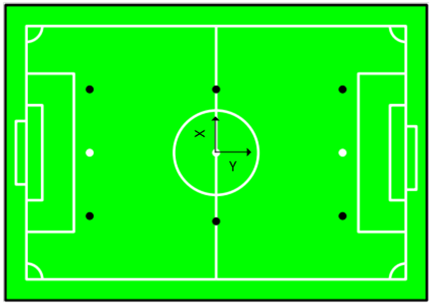

We assume for now that the drone is able to localize itself with respect to the map, which is a RoboSoccer football field. A view of the field is depicted in the figure below. The drone is assumed to keep the same attitude during the game, and it receives the ball location (x, y) on the map. The ball is assumed to be on the ground. It is also assumed that there are no obstacles at the drone's flight height. Finally, the position obtained from the vision algorithms is assumed to contain minimal noise.

[[File:The_map_of_the_field.png|center||frame|none|alt=Alt text|The map of the field]]

Based on these assumptions, the problem reduces to finding a 2D path planning algorithm. Simple 2D path planning algorithms cannot deal with complex 3D environments containing many structures and obstacles, which result in constraints and uncertainties; the assumptions above exclude such environments.

Essentially, the path planning problem is defined as finding the shortest path for the drone, because of the energy constraint imposed by the hardware and the duration of the match. The drone used in this project is limited to a maximum velocity of 3 m/s. This limitation is applied for the sake of tran… Another constraint is to keep the ball in view of the camera attached to the drone.

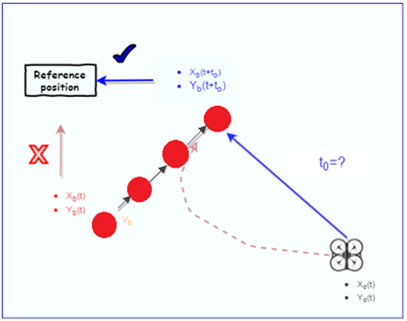

The path planning algorithm receives the x and y coordinates of the ball from the vision algorithm, and a reference path must be defined for the drone. One possible solution is to give the ball position directly as the reference. Since the ball frequently changes direction during the game, it is more efficient to use the ball velocity to predict its future position; the drone then needs less effort to follow the ball. The figure below illustrates how the distance traveled by the drone is reduced by using the future position of the ball.

[[File: Trajectory-based on the future position of the ball.png|center|frame|none|alt=Alt text|Trajectory based on the future position of the ball]]
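The prediction step can be sketched with a constant-velocity model: the velocity is estimated from two consecutive ball detections and the position is extrapolated a short horizon ahead. This is a minimal Python sketch that assumes a constant-velocity model and a hypothetical prediction horizon; the page does not state which prediction model was used.

```python
def estimate_velocity(prev_xy, curr_xy, dt):
    """Finite-difference ball velocity (m/s) from two detections dt seconds apart."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return ((curr_xy[0] - prev_xy[0]) / dt, (curr_xy[1] - prev_xy[1]) / dt)


def predict_ball_position(curr_xy, vel_xy, horizon):
    """Constant-velocity extrapolation of the ball position `horizon` seconds ahead."""
    return (curr_xy[0] + vel_xy[0] * horizon, curr_xy[1] + vel_xy[1] * horizon)
```

The drone reference would then be `predict_ball_position(curr, estimate_velocity(prev, curr, dt), horizon)`. Finite differences amplify detection noise, which is one reason the low-noise assumption on the vision measurements matters for this approach.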

The trajectory generated with this approach and the resulting drone position are shown in the figure below.

[[File:Ball Tracking without position hold mechanism.png|center|frame|none|alt=Alt text|Ball Tracking without position hold mechanism]]

The drone still has to put considerable effort into tracking the ball constantly. Furthermore, results from the simulated game show that following the ball requires a fast-moving drone, which may degrade image quality. Therefore, the total velocity of the drone along the x- and y-axes is limited for the sake of image quality. A video of the simulated game with the drone following the ball is shown in Video 1.

[[File:TopViewG.gif|center||frame|none|alt=Alt text|Video 1: Top view of the ball]]
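Limiting the total velocity along the x- and y-axes can be sketched as scaling the commanded horizontal velocity vector so that its magnitude never exceeds a bound. The Python sketch below uses the 3 m/s hardware limit as the default; a lower limit chosen for image quality would be a tuning value that is not given here.

```python
import math


def limit_xy_velocity(vx, vy, v_max=3.0):
    """Scale (vx, vy) so that the total horizontal speed does not exceed v_max (m/s),
    keeping the direction of motion unchanged."""
    speed = math.hypot(vx, vy)
    if speed <= v_max:
        return vx, vy
    scale = v_max / speed
    return vx * scale, vy * scale
```

Scaling the whole vector, rather than clipping each axis separately, keeps the direction of motion toward the reference unchanged.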

To make this more efficient, a circle around the ball can be defined as its vicinity. When the drone is within this vicinity, the supervisory control can switch the drone to position-hold mode. As soon as the ball moves so that the drone is no longer within its vicinity, the drone switches back to ball-tracking mode. In addition, the ball is sometimes occluded by the robot players in the images. Thus, three different modes are designed in the current path planning. The first is the ball-tracking mode, used when the ball is far from the drone. The second is position hold, used when the drone is in the ball's vicinity. The third is the occlusion mode, in which the drone moves around the last known position of the ball. However, this algorithm adds complexity to the system and may be hard to implement.
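The three-mode logic can be sketched as a small selection function plus a waypoint generator for the occlusion mode. The Python sketch below is illustrative only: the vicinity radius, the circular search pattern around the last known ball position and all names are assumptions.

```python
import math
from enum import Enum


class Mode(Enum):
    BALL_TRACKING = "ball_tracking"
    POSITION_HOLD = "position_hold"
    OCCLUSION = "occlusion"


def select_mode(drone_xy, ball_xy, vicinity_radius=1.0):
    """Pick the path-planning mode.

    ball_xy is None when the ball is not detected (e.g. occluded by a robot).
    vicinity_radius (m) is a hypothetical tuning value.
    """
    if ball_xy is None:
        return Mode.OCCLUSION
    if math.dist(drone_xy, ball_xy) <= vicinity_radius:
        return Mode.POSITION_HOLD
    return Mode.BALL_TRACKING


def occlusion_waypoints(last_ball_xy, radius=0.5, n=8):
    """Hypothetical search pattern: n waypoints on a circle around the last known ball position."""
    cx, cy = last_ball_xy
    return [(cx + radius * math.cos(2 * math.pi * k / n),
             cy + radius * math.sin(2 * math.pi * k / n)) for k in range(n)]
```

With a single radius, the mode can chatter when the ball sits near the vicinity boundary; using two radii (hysteresis) is a common remedy.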

A different scenario is to follow the ball from one side of the field, tracking it along the y-axis, as shown in Video 2. In this case, the drone would have to fly higher than is possible in reality, so this approach is not practical.

[[File:SideVideoG.gif|center||frame|none|alt=Alt text|Video 2: Side view of the ball]]

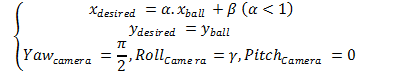

The drone follows the ball along the x-axis but does not follow it completely along the y-axis. Instead, it moves along one side of the field and the camera records the image at an angle (see Equation 1).

[[File:Equation.png|center]]

As a result of this approach, the drone moves within one part of the field and does not need to move all around the field to follow the ball. The coefficient in Equation 1 should be set based on the camera properties and the height at which the drone flies. Video 3 illustrates this approach.

[[File:PredefinedAreaG.gif|center||frame|none|alt=Alt text|Video 3: Moving in a pre-defined area]]

==Event Detection and Rule Enforcement==

In robot soccer, a referee has to interpret every situation correctly; a wrong interpretation can have a large effect on the result of the game. The main duties of a robot referee are analysing the game and making refereeing decisions in real time. A robot referee should be able to follow the game, i.e. stay near the most important game actions, as human referees do. In addition, it should be able to communicate its decisions to the human referee. Thus, a camera is attached to the drone to capture images of the game and stream the video to the referee's HMI. The images also have to be processed to estimate the position of the ball on the pitch.

===Drone Camera===

Compared to the field of vision of birds, regular cameras have a rather narrow field of view, with an angle of around 70 degrees. The view angle of a fisheye camera is 180 degrees, so it covers a much wider field of view than a normal camera [[http://cstwiki.wtb.tue.nl/index.php?title=Autonomous_Referee_System Autonomous Referee System, 2016]]. In addition, experimental conclusions from the Autonomous Referee System project show that it is difficult to track a fast-moving ball with a narrow-angle, downward-facing camera. To solve this problem, a fisheye camera was selected in this work. A benefit of the wide field of view is that the ball can be tracked for a longer period without actively controlling the drone. The technical specifications of the fisheye camera are given in the following table.

{| cellpadding=5 style="border:1px solid #BBB"
|- bgcolor="#fafeff"
! Camera
! ELP-USB8MP02G-L180
|- bgcolor="#fafeff"
| Sensor
| Sony IMX179 (1/3.2")
|- bgcolor="#fafeff"
| Lens
| 180° fisheye
|- bgcolor="#fafeff"
| Format
| MJPEG / YUY2
|- bgcolor="#fafeff"
| USB protocol
| USB 2.0 HS / FS
|- bgcolor="#fafeff"
| Performance (resolution: MJPEG frame rate / YUY2 frame rate)
| 3264x2448: 15 fps / 2 fps<br />
2592x1944: 15 fps / 3 fps<br />
2048x1536: 15 fps / 3 fps<br />
1600x1200: 10 fps / 10 fps<br />
1280x960: 15 fps / 10 fps<br />
1024x768: 30 fps / 10 fps<br />
800x600: 30 fps / 30 fps<br />
640x480: 30 fps / 30 fps
|- bgcolor="#fafeff"
| Mass
| 12 g
|- bgcolor="#fafeff"
| Power
| DC 5 V, 150 mA
|}
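To put the 70-degree figure into perspective, an ideal (pinhole) camera looking straight down from height h sees a ground strip 2h·tan(FOV/2) wide. The Python sketch below evaluates this formula. The flight heights are hypothetical, because the drone altitude is not given in this section. The formula also does not apply to the 180-degree fisheye, whose projection is not a pinhole projection.

```python
import math


def pinhole_footprint_width(height_m: float, fov_deg: float) -> float:
    """Ground width (m) seen by an ideal pinhole camera looking straight down.

    Only valid for 0 < fov_deg < 180: at 180 degrees a pinhole model would need
    an infinitely large image plane, which is why fisheye lenses use a
    different projection model.
    """
    if not 0.0 < fov_deg < 180.0:
        raise ValueError("pinhole model requires 0 < fov_deg < 180")
    return 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    # Hypothetical flight heights, for illustration only.
    for height in (2.0, 3.0, 4.0):
        width = pinhole_footprint_width(height, 70.0)
        print(f"height {height:.1f} m -> 70-degree camera sees about {width:.1f} m")
```

At an assumed height of 3 m, a 70-degree camera sees a strip about 4.2 m wide. In that case, the ball only has to travel about 2 m from the image centre to leave the view.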

===Image Processing===

====Objectives====

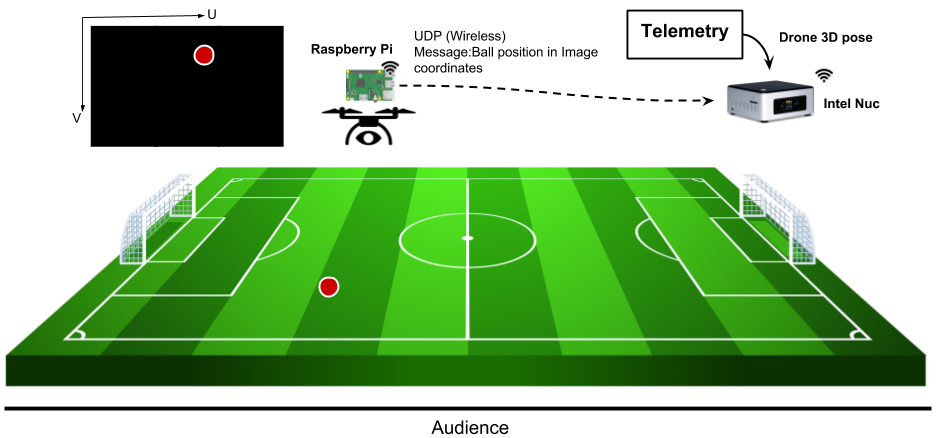

The position of the ball on the pitch has to be estimated. To do this, images are captured by the camera attached to the drone and processed to detect the ball and estimate its position. Based on this position, a recommendation is sent to the supervisory controller when the ball goes out of the pitch (B.O.O.P). The referee also needs a video stream to follow the game, so the camera's video stream has to be sent to the HMI of the remote referee.

====Method====

Two approaches are available for detecting the ball in the image:

*MATLAB
**FFmpeg
**gigecam
**hebicam
*C++/Python/Java
**OpenCV

Test results from the MSD Group 2016 show that capturing one image in MATLAB takes 2.2 seconds [[http://cstwiki.wtb.tue.nl/index.php?title=Autonomous_Referee_System Autonomous Referee System, 2016]]. This is too slow for detecting the ball and estimating its position. For this reason, C++ was preferred over MATLAB. This project uses the C++ OpenCV library, and the position of the ball is estimated from its color (yellow/orange/red).
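A color-based detector of this kind can be sketched as follows. In OpenCV this is commonly done by converting the frame to HSV with cv2.cvtColor and thresholding it with cv2.inRange. To keep the example self-contained, the sketch below shows the same idea with NumPy only, on an RGB frame. The color rule and the function name are hypothetical, and the thresholds would need tuning for the real ball, lighting and camera. Taking the centroid of all matching pixels also assumes that only one ball-colored object is in view.

```python
import numpy as np


def detect_ball_centroid(rgb: np.ndarray, min_pixels: int = 20):
    """Return the (u, v) pixel centroid of ball-colored pixels, or None.

    rgb: H x W x 3 uint8 image in RGB order.
    The rule below (strong red, little blue, green not above red) is a
    hypothetical stand-in for a tuned yellow/orange/red threshold.
    """
    r = rgb[..., 0].astype(np.int32)
    g = rgb[..., 1].astype(np.int32)
    b = rgb[..., 2].astype(np.int32)
    mask = (r > 150) & (b < 100) & (g <= r)
    rows, cols = np.nonzero(mask)        # rows are v, columns are u
    if rows.size < min_pixels:           # too few pixels: treat as "no ball"
        return None
    return float(cols.mean()), float(rows.mean())


if __name__ == "__main__":
    # Synthetic frame: green pitch with an orange disc centred at (u, v) = (200, 150).
    frame = np.zeros((240, 320, 3), dtype=np.uint8)
    frame[:] = (30, 120, 30)
    vv, uu = np.mgrid[0:240, 0:320]
    frame[(uu - 200) ** 2 + (vv - 150) ** 2 <= 15 ** 2] = (255, 140, 0)
    print(detect_ball_centroid(frame))
```

The pixel centroid returned here is the input to the transformation steps described next.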

====Transformation====

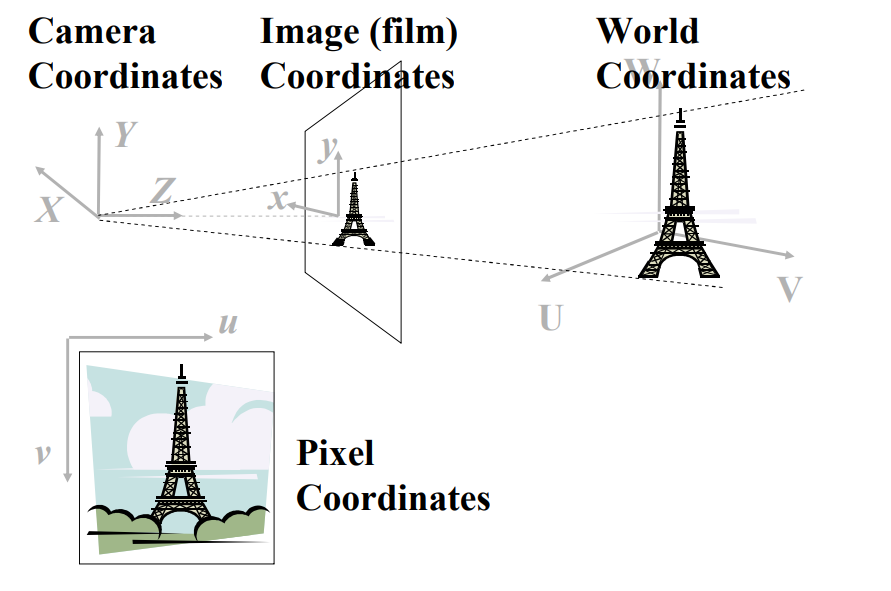

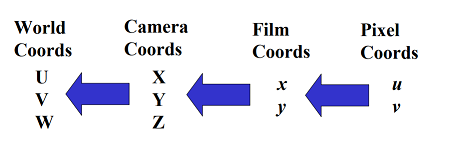

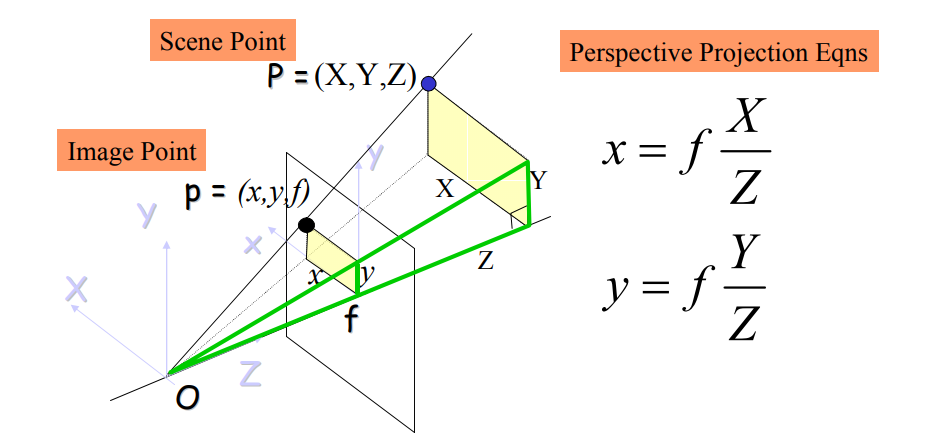

After the position of the ball has been found in the image, a mathematical model is needed that describes how 3D world points are projected onto 2D pixel points, so that this projection can be inverted. The coordinate systems involved are shown in the following figure:

[[File:ImagingGeometry.png|thumb|alt=Imaging Geometry.|Imaging Geometry|upright=3|center]]

The goal is therefore to derive the backward projection, from pixel coordinates back to world coordinates:

[[File:smallerpic2.png|center|200px|frame|none|alt=Alt text|Backward projection]]

Image formation in a camera projects the 3D world onto a 2D surface. The depth information is lost in this projection. From the image alone, we can no longer tell whether it shows a large object far away or a smaller object close by.

First, projecting from pixel coordinates to film coordinates requires an undistorted image. The image can be undistorted with the OpenCV library. This needs the calibration matrix and the distortion coefficients, which are obtained from a camera calibration code using ArUco markers. For more information, see the OpenCV documentation on the fisheye camera model. With these coefficients and a basic perspective projection, the position of the ball in film coordinates is obtained.
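The per-point part of this step can be sketched in Python. OpenCV's fisheye model maps the angle θ between a ray and the optical axis to a distorted angle θ_d = θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸). In practice, cv2.fisheye.undistortPoints or cv2.fisheye.undistortImage would be called with the calibrated camera matrix and coefficients. The sketch below inverts that model with Newton's method for a single ball pixel, to show what the calibration coefficients do. The function names are hypothetical, the intrinsics and coefficients in the test are made up, and skew is ignored.

```python
import math


def _theta_d(theta, k):
    """Distorted angle of the OpenCV fisheye model, k = (k1, k2, k3, k4)."""
    t2 = theta * theta
    return theta * (1 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4)


def distort(x, y, k):
    """Normalized undistorted point -> normalized distorted point."""
    r = math.hypot(x, y)
    if r == 0.0:
        return x, y
    scale = _theta_d(math.atan(r), k) / r
    return x * scale, y * scale


def undistort_pixel(u, v, fx, fy, cx, cy, k, iters=20):
    """Pixel -> normalized undistorted film coordinates (x, y), i.e. the ray (x, y, 1)."""
    xd, yd = (u - cx) / fx, (v - cy) / fy     # remove intrinsics (skew ignored)
    theta_d = math.hypot(xd, yd)
    if theta_d == 0.0:
        return 0.0, 0.0                       # principal point: ray along the optical axis
    theta = theta_d                           # initial guess
    for _ in range(iters):                    # Newton iteration on _theta_d(theta) = theta_d
        t2 = theta * theta
        f = _theta_d(theta, k) - theta_d
        df = 1 + 3 * k[0] * t2 + 5 * k[1] * t2**2 + 7 * k[2] * t2**3 + 9 * k[3] * t2**4
        theta -= f / df
    scale = math.tan(theta) / theta_d         # undistorted radius / distorted radius
    return xd * scale, yd * scale
```

A round trip (distort a known point, convert it to pixels, then undistort it) is a quick way to check a set of calibration values.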

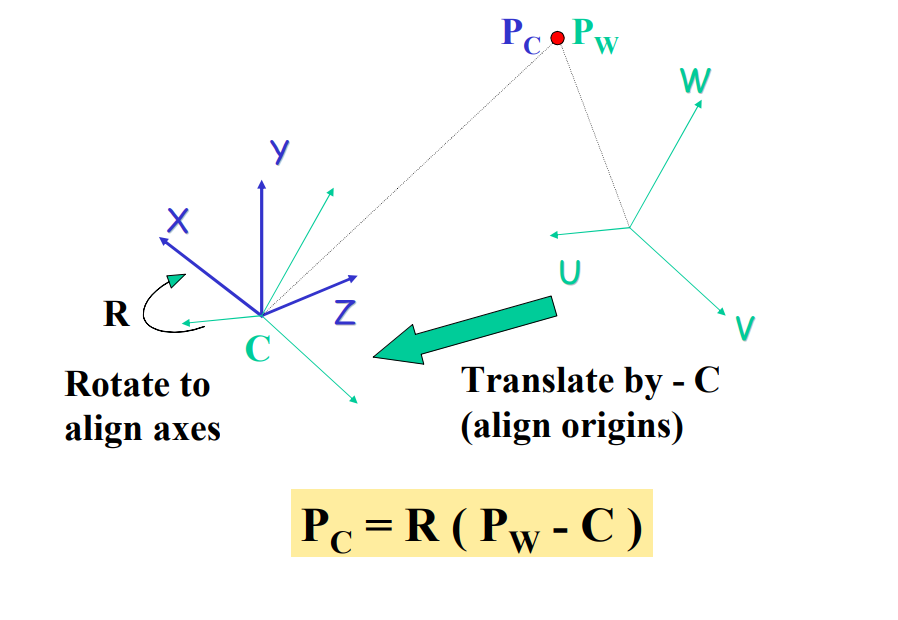

Second, the projection from film coordinates to camera coordinates is illustrated in the following figure:

[[File:mappingcoordinates.png|thumb|alt=Mapping from film coordinates to camera coordinates|Mapping from film coordinates to camera coordinates|upright=3|center]]
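Once the ball pixel has been undistorted into normalized film coordinates (x, y), it only defines a ray (x, y, 1) from the camera. Because depth is lost in the image, one extra piece of information is needed to pick a point on that ray. A minimal sketch scales the ray by the camera-to-ball-centre distance. It assumes that the camera looks straight down at a flat pitch, that the drone's height above the pitch is known (for example from the flight controller), and that the ball rests on the ground. The function name and the default ball radius of 0.11 m (a size-5 ball) are assumptions.

```python
def film_to_camera(x: float, y: float, camera_height_m: float,
                   ball_radius_m: float = 0.11):
    """Scale the normalized ray (x, y, 1) to a 3D ball position in the camera frame.

    Assumes a downward-looking camera (optical axis perpendicular to the pitch)
    and a ball lying on the pitch, so its centre is ball_radius_m above the ground.
    """
    depth = camera_height_m - ball_radius_m   # distance along the optical axis
    if depth <= 0.0:
        raise ValueError("camera must be above the ball centre")
    return x * depth, y * depth, depth
```

If the drone tilts while flying, the ray would first have to be rotated by the drone's attitude. This sketch leaves that step out.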

Finally, once the projection equation has given the position of the ball relative to the camera, a rigid transformation (rotation + translation) between the world and camera coordinate systems is needed.

[[File:transformationcoordinates.png|thumb|alt=Transformation between world and camera coordinates|Transformation between world and camera coordinates|upright=2|center]]

Here, the top camera provides the position of the drone in the world model, so the camera transformation to the world model does not have to be estimated separately. The Raspberry Pi attached to the drone calculates the position of the ball relative to the camera and sends it via UDP to the NUC. On the NUC, the position of the ball in the world model is calculated from this relative position and the position of the drone. The connections between the drone and the ground station are shown here:

[[File:communicationcoordinates.png|center|700px|alt=Alt text|Communication between drone and ground station]]
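The hand-off from the Raspberry Pi to the NUC and the final camera-to-world step can be sketched together in Python. The message layout (three little-endian float32 values) is a hypothetical choice, and so are the function names. The sketch also simplifies the geometry: it assumes the ball offset is already expressed in the drone's horizontal body frame, so only the drone's yaw and its position from the top camera are needed. A tilted or rotated camera mount would require the full calibrated rotation.

```python
import math
import socket
import struct

# Hypothetical message layout: ball position relative to the camera as three
# little-endian float32 values (x, y, z) in metres.
BALL_MSG = struct.Struct("<3f")


def encode_ball(x: float, y: float, z: float) -> bytes:
    return BALL_MSG.pack(x, y, z)


def decode_ball(payload: bytes):
    return BALL_MSG.unpack(payload)


def send_ball(sock: socket.socket, addr, x: float, y: float, z: float) -> None:
    """Raspberry Pi side: send one measurement to the NUC at addr = (ip, port)."""
    sock.sendto(encode_ball(x, y, z), addr)


def camera_to_world(ball_xy, drone_xy, drone_yaw_rad: float):
    """NUC side: rotate the horizontal offset by the drone yaw, then add the drone position.

    Assumes ball_xy is already expressed in the drone's horizontal body frame.
    """
    bx, by = ball_xy
    c, s = math.cos(drone_yaw_rad), math.sin(drone_yaw_rad)
    return drone_xy[0] + c * bx - s * by, drone_xy[1] + s * bx + c * by


if __name__ == "__main__":
    # Loopback demo: rx stands in for the NUC, tx for the Raspberry Pi.
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))
    rx.settimeout(1.0)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_ball(tx, rx.getsockname(), 0.5, -1.25, 3.0)
    x, y, _z = decode_ball(rx.recv(BALL_MSG.size))
    print(camera_to_world((x, y), drone_xy=(2.0, 1.0), drone_yaw_rad=0.0))
    tx.close()
    rx.close()
```

Because UDP does not guarantee delivery, a lost packet simply means the NUC keeps using the previous ball estimate until the next one arrives.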

===Detecting Ball Out of the Pitch===

The objective is to detect when the ball is out of the pitch and send this information to the remote referee. The idea is to decide this from the position of the ball and the boundaries of the pitch. For more accuracy, it is recommended to combine this check with line detection in the algorithm.
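A minimal sketch of the position-based check follows. It assumes a world frame with its origin at the centre of the pitch and known pitch dimensions. It also follows the football convention that the ball is out only when the whole ball is over the line. The function and class names, the pitch size used in the test, the ball radius, and which world axis runs along the touchline are all assumptions. Because the position estimate is noisy, requiring the condition in several consecutive frames would likely reduce false recommendations.

```python
from dataclasses import dataclass


@dataclass
class Pitch:
    length_m: float  # extent along the world x axis (assumed touchline direction)
    width_m: float   # extent along the world y axis (assumed goal-line direction)


def ball_out_of_pitch(x: float, y: float, pitch: Pitch,
                      ball_radius_m: float = 0.11) -> bool:
    """True if the whole ball has crossed a touchline or a goal line.

    (x, y) is the ball centre in a world frame with its origin at the centre
    of the pitch. Line width is ignored, i.e. lines are treated as infinitely thin.
    """
    half_length = pitch.length_m / 2.0
    half_width = pitch.width_m / 2.0
    return (abs(x) - ball_radius_m > half_length
            or abs(y) - ball_radius_m > half_width)
```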

===Collision Detection===

====ArUco Markers====

ArUco is a library for augmented reality (AR) applications based on OpenCV. It provides a dictionary of uniquely numbered AR tags that can be printed and stuck to any surface. With a sufficient number of tags at known positions, the library can determine the 3D position of the camera that views them. In this application, ArUco tags were stuck on top of each player robot, and the camera was attached to the drone. The ArUco algorithm was reversed using perspective projections: from the known position of the drone (localization), the positions of the AR tags were determined. However, this solution turned out to have two drawbacks:

* The trajectory-planning strategy keeps the ball visible at all times. Because the ball has priority, not all robot players are necessarily visible to the camera at all times. In certain circumstances, a collision may also occur away from the ball and therefore outside the camera's field of view.

| Line 214: | Line 329: | ||

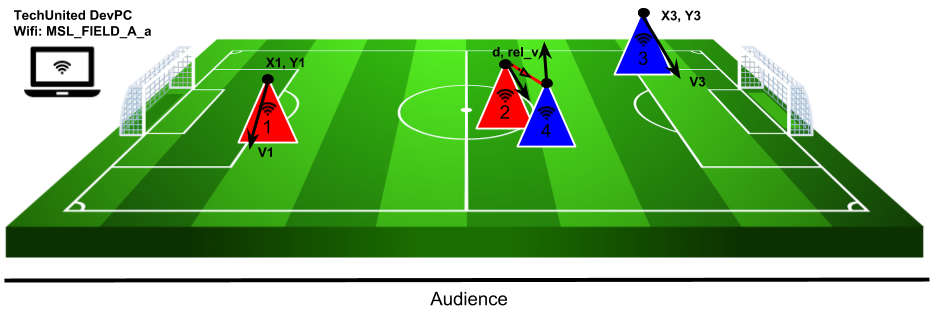

The image below shows four player robots divided into two teams. The multicast server provides the position and velocity (with direction) of each robot, which are stored. The axes in the center show the global world-frame convention used by TechUnited.

[[File:WorldModel.png|center|700px|frame|none|alt=Alt text|TechUnited World Model]]

====Collision Detection====

In the above pseudo-code, a collision is only considered when two robots are within a certain distance of each other. In that case, the relative velocity between the robots is checked, and a collision is detected if it exceeds a certain threshold. The diagram below shows the scenario for a collision. The red line indicates that the two robots are close to each other, within the distance threshold, so they can be considered to be touching. However, a collision is only detected if the robots are actually colliding, which is why the relative velocity, denoted ''rel_v'' in the diagram, is also checked.

[[File:CollisionDetectionalgorithm.png|center|frame|none|alt=Alt text|Scenario for collision between robots]]
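The distance-and-relative-velocity test described above can be sketched in Python as follows. The robot record, the function name and both threshold values are hypothetical placeholders. In the project, the positions and velocities come from the TechUnited world model via the multicast server. Each unordered pair of robots is checked once.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass
class Robot:
    robot_id: int
    x: float   # world-frame position [m]
    y: float
    vx: float  # world-frame velocity [m/s]
    vy: float


def detect_collisions(robots, dist_threshold=0.6, rel_v_threshold=1.0):
    """Return (id, id) pairs of robots that are close AND moving fast relative to each other.

    The default thresholds are hypothetical placeholders, not tuned project values.
    """
    collisions = []
    for a, b in itertools.combinations(robots, 2):   # every unordered pair once
        dist = math.hypot(a.x - b.x, a.y - b.y)
        if dist >= dist_threshold:
            continue                                  # too far apart to be touching
        rel_v = math.hypot(a.vx - b.vx, a.vy - b.vy)  # magnitude of relative velocity
        if rel_v > rel_v_threshold:
            collisions.append((a.robot_id, b.robot_id))
    return collisions
```

Two robots driving side by side at the same speed are close but have zero relative velocity, so this test does not flag them as a collision.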

The collision-detection pseudo-code given earlier is broken down and explained below:

| Line 292: | Line 407: | ||

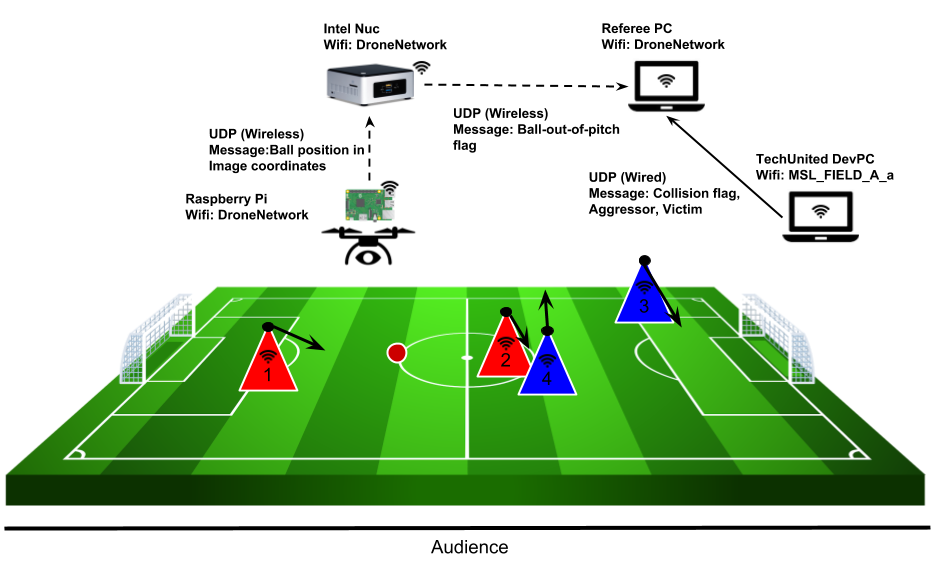

Developing additional interfaces for event detection and rule enforcement was necessary due to the following constraints:

* The Intel NUC was unreliable on board the drone. The telemetry module and the Pixhawk were used to fly the drone, while a Raspberry Pi handled the computer vision. The Raspberry Pi communicates with the NUC over UDP, and both operate on an ad-hoc wireless network (DroneNetwork).

* The collision detection uses the World Model of the TechUnited robots, which can only be accessed from a computer connected to the TechUnited wireless network. The collision detection algorithm is unrelated to the flight of the drone, so there is no need to send the collision information to the Intel NUC. Instead, this information is broadcast directly to the remote referee over the TechUnited network.

The diagram below describes all these interfaces and the data that is transmitted and received.

[[File:RuleEnforcementinterfaces.png|center|frame|none|alt=Alt text|Interfaces for the event detection and rule enforcement subsystem]]

==Human-Machine Interface==

* A supervisor, which reads the collision-detection and ball-out-of-pitch flags and determines the signals to be sent to the referee.

* A GUI, where the referee can make decisions based on the detected events and display these decisions and their enforcement to the audience.

===Supervisory Control===

| Line 311: | Line 426: | ||

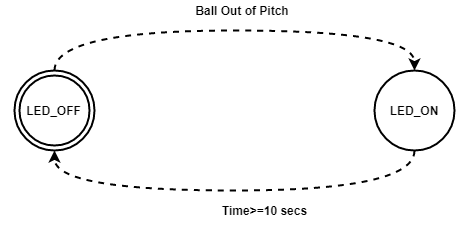

* The supervisor receives input from the vision algorithm as soon as the event ''Ball Out of Pitch'' occurs. It then sends a recommendation to the remote referee via the GUI running on the referee's laptop. If the referee does not acknowledge the recommendation, it is terminated automatically after 10 seconds.

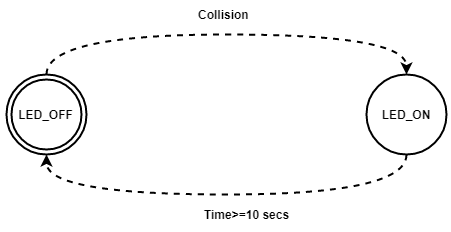

* The supervisor receives input when the event ''Collision Detected'' occurs. As soon as this event is detected, a recommendation is sent to the remote referee via the GUI. This recommendation is terminated in the same way as the previous one.

* The referee has a button to show the audience the decision he has taken. As soon as he pushes the button in the GUI, the decision is shown as an LED on another screen. After a certain time, the supervisor switches the LED off by releasing the push button automatically.
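The timeout behaviour of these recommendations can be illustrated with a small Python sketch. It is not the CIF model itself, which was designed in CIF and run in Simulink as an S-function. It only mimics the two-state behaviour: switch on when an event arrives, and switch off when the referee acknowledges or after 10 seconds. The class name is hypothetical. The choice that a repeated event does not restart the 10-second window is also an assumption.

```python
from typing import Optional


class RecommendationLed:
    """Two-state automaton: OFF -> ON on an event, ON -> OFF on acknowledge or timeout.

    Time (in seconds) is passed in explicitly, so the logic can be tested without a clock.
    """

    def __init__(self, timeout_s: float = 10.0):
        self.timeout_s = timeout_s
        self.on = False
        self._since: Optional[float] = None

    def event(self, t: float) -> None:
        """E.g. ball out of pitch or collision detected at time t."""
        if not self.on:
            self.on, self._since = True, t

    def acknowledge(self) -> None:
        """The referee reacts in the GUI."""
        self.on, self._since = False, None

    def tick(self, t: float) -> bool:
        """Called periodically; switches off after the timeout and returns the LED state."""
        if self.on and t - self._since >= self.timeout_s:
            self.on, self._since = False, None
        return self.on
```

One instance per recommendation (ball out of pitch, collision) keeps the two timeouts independent, similar to the separate automata shown below.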

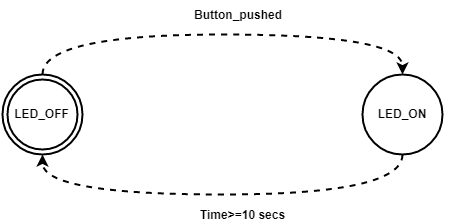

The following automata were used for these tasks:

[[File:Ball_out_of_pitch_a.PNG|center|frame|none|alt=Alt text|LED to recommend Ball-out-of-pitch]]

[[File:Collision_detection_a.PNG|center|frame|none|alt=Alt text|LED to recommend collision]]

[[File:Push_button_a.PNG|center|frame|none|alt=Alt text|Push button for enforcing rules]]

A brief study of both MATLAB Stateflow and CIF was conducted to decide which platform to use for designing the supervisor. We concluded that CIF is the better choice in our case, for the following reasons:

* In CIF, a supervisory controller can easily be synthesized as soon as the model of the system has been created.
* In CIF, one can straightforwardly check controllable and uncontrollable events as well as marked and unmarked states.
* In CIF, defining states and specifying their type is quite easy.
* Designing the supervisor is less complex in CIF than in Stateflow.
* A supervisor constructed in CIF is quite simple to implement in MATLAB.

To use the CIF supervisor in Simulink, MATLAB/Simulink S-function C code was generated from it and used in Simulink through an S-Function block. The code was generated by following the steps given on the CIF website<ref> http://cif.se.wtb.tue.nl/tools/codegen/simulink.html </ref>.

===Graphical User Interface===

====The background====

The acceptance of model-based design across the embedded community has led to more and more systems being developed in Simulink. Simulink models are also becoming more complex as more features are incorporated into controllers. To analyze the outputs of Simulink models, we generally rely on Scope and Display blocks. In large models, however, Scope and Display blocks alone are no longer enough to understand what is happening in the system. A customizable HMI is more convenient here. It consists of a panel of buttons, checkboxes, etc. that feeds the necessary data to the model, and a graphical user interface (GUI, also known as UI) that displays the outputs of the model using plots, fields, etc.

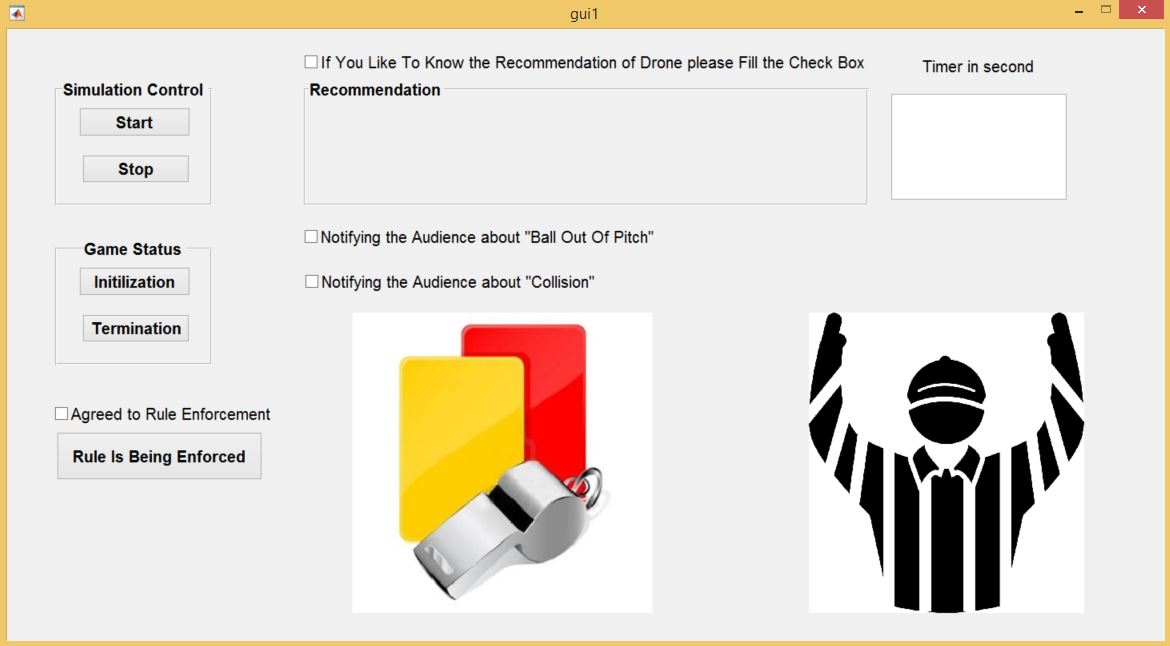

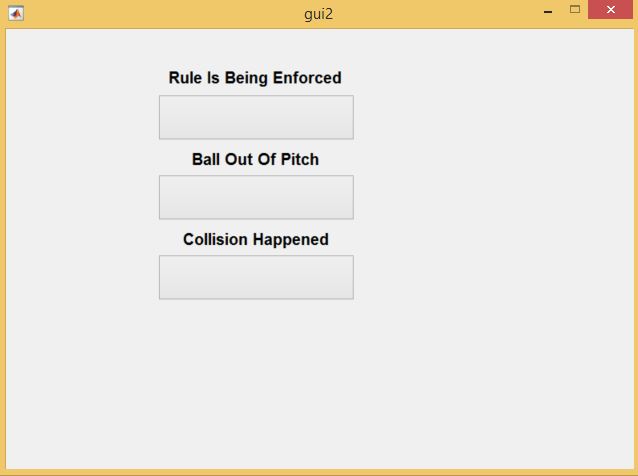

In this project, two agents referee the game: a drone moving above the pitch and a remote human referee who receives video streams from a camera attached to the drone. These video streams give the human referee adequate situational awareness, which enables him/her to make proper decisions. In addition, vision-based algorithms were developed to provide recommendations such as “Ball Out Of Pitch” and “Collision Of Two Robots” that help the human referee make the final decision. It is worth mentioning that the UAV only provides the RECOMMENDATION; the FINAL decision is made by the human referee.

A visualization and command interface is therefore needed for interaction between the drone and the human referee. Besides showing the real-time video stream, this interface should provide on-demand recommendations (“Ball Out Of Pitch” and “Collision Of Two Robots”). It should also enable the human referee to send decisions (kick-off, foul, free throw, etc.), which are signaled to the audience via LEDs placed on the second ground-station interface.

This part of the documentation describes in detail how the HMI was built. The HMI used in this study was created with MATLAB GUIDE. GUIs provide point-and-click control of software applications, in this case of the unmanned aerial vehicle (UAV). GUIDE (GUI development environment) provides tools to design user interfaces for custom apps. The graphical design of the UI can be changed in the GUIDE Layout Editor. GUIDE then automatically generates the MATLAB code that constructs the UI, and this code can be modified to program the behavior of the app.

Consequently, the final design of the HMI should cover the following points:

*Two GUIs (one for the human referee and one for the audience)
*Timer (countdown of the game)
*Remote start/stop of the application (Simulink in this case)
*Initialization/termination of the game
*The above-mentioned recommendations, when requested
*Real-time video stream
*Communication with the audience interface (ability to share the result with the audience)

====Literature review====

For the basics of MATLAB GUIDE, the interested reader is referred to:

* The online course “MATLAB App Designing: The Ultimate Guide for MATLAB Apps” on www.udemy.com
* [http://www.apmath.spbu.ru/ru/staff/smirnovmn/files/buildgui.pdf buildgui.pdf]
* [https://nl.mathworks.com/matlabcentral/fileexchange/24861-41-complete-gui-examples GUI Example]
* [https://ece.uwaterloo.ca/~nnikvand/Coderep/gui%20examples/A4-Gui_tutorial.pdf Gui_tutorial.pdf]
* [https://blogs.mathworks.com/pick/2012/06/01/use-matlab-guis-with-simulink-models/ Use MATLAB GUIs with Simulink models]

Among the many functions available in Simulink/GUIDE, the following are worth knowing, as they may come in handy:

<pre>
open_system('sys')
close_system('sys')
paramValue = get_param(object, paramName)
set_param(object, paramName, Value)
</pre>

====AIM====

To make two GUIs:

#; Remote referee interface (1st GUI)
## Collects information from the Simulink model and processes it for the human referee
## Controls the Simulink model remotely
#; Audience interface (2nd GUI)
## Controlled by the 1st GUI in order to change the LED colors depending on the referee's decision

Two GUIs were designed to fulfill these requirements, using drag and drop in GUIDE. These two GUIs are shown in Figures 1 and 2, respectively.

[[File:HMI1.jpg|center|300px|frame|none|alt=Alt text|Visualization and command interface designed for the human referee]]

[[File:HMI2.jpg|center|frame|none|alt=Alt text|Visualization for the audience]]

====Property Inspector of GUIs====

The following settings should be made in the Property Inspector before proceeding any further:

# All components were given specific Tags for easy identification.
# The HandleVisibility property of both GUIs was set to "on" to allow data flow between the two GUIs.
# The String of the Edit Text component (where the timer is displayed) was deleted.

====Opening Function (OpeningFcn)====

In addition, the following commands were implemented in the OpeningFcn of the 1st GUI, so that these indicators are hidden at start-up:

<pre>
set(handles.gameover,'visible','off');
set(handles.BOOP,'visible','off');
set(handles.collision,'visible','off');
</pre>

The following code was implemented in the OpeningFcn of the 1st GUI to automate loading and opening the Simulink model:

<pre>
find_system('Name','check');   % look for a loaded system named 'check' (result not used)
open_system('check');          % load the Simulink model 'check' if needed and open it
</pre>

The OpeningFcn of the 1st GUI also loads the background images of the axes:

<pre>
% Background image of the first axes
bg = imread('C:\Users\20176896\surfdrive\HMI Drone\HMI\ref4.jpg');
axes(handles.axes1)
imshow(bg);

% Background image of the second axes
bg1 = imread('C:\Users\20176896\surfdrive\HMI Drone\HMI\ref2.png');
axes(handles.axes2)
imshow(bg1);
</pre>

====Provide Recommendation (Receiving/Setting Data from/to Simulink)====

As mentioned earlier, the interface for the remote human referee should provide on-demand recommendations (“Ball Out Of Pitch” and “Collision Of Two Robots”). It should also enable the referee to send decisions (kick-off, foul, free throw, etc.). This visualization and command interface must therefore be able to receive real-time DYNAMIC data running in Simulink, whether it comes from the supervisor, from UDP or from any other type of communication.

There are different methods for interacting with a running simulation, and this section describes some of the ways in which Simulink data can be used in a MATLAB GUI. Possible methods include Simulink Function blocks, Simulink callback functions, Simulink event listeners, custom MATLAB S-functions and gluing Simulink to GUIDE. This documentation does not explore every option in detail.

To avoid future confusion, some of the NON-real-time methods that were tried for collecting information from the Simulink model are explained below:

=====Non-real-time solutions=====

* Using a To Workspace block to export the data. However, only the data at the very beginning of the simulation could be extracted.
* Using a Scope block with modified properties: check "Save data to workspace" and uncheck "Limit data points to last". However, ScopeData only appears in the workspace once the simulation is stopped.
* Using the SIMSET function to define which workspace the Simulink model interacts with (simOut = sim(model,Name,Value)).

=====Real-time solution=====

For receiving data from Simulink in real time, a combination of a RuntimeObject and a Simulink event listener was used. The interested reader is referred to:

* [https://nl.mathworks.com/help/simulink/ug/accessing-block-data-during-simulation.html Accessing block data during simulation]

======Access a Run-Time Object======

First, load the model and start the simulation with the following commands:

<pre>
load_system('myModel')                              % load the model without opening it
set_param('myModel','SimulationCommand','Start');   % start the simulation
</pre>

Then, to read data on any line of the Simulink model, first get the run-time object of a Simulink block (for example, a Clock block named Clock):

<pre>
block = 'myModel/Clock';                    % full path of the block
rto = get_param(block, 'RuntimeObject');    % run-time object, available while simulating
</pre>

Then read the data on its first (or any other) output port, or on an input port:

<pre>
time = rto.OutputPort(1).Data;   % current value on output port 1
</pre>

Note that the data obtained this way may not be the true block output if the run-time object is not synchronized with the Simulink execution. Simulink only guarantees this synchronization when the run-time object is used within a Level-2 MATLAB S-function or in an event listener callback. When called from the MATLAB Command Window, the run-time object can return incorrect output data if other blocks in the model are allowed to share memory.

To ensure that the Data field contains the correct block output, open the Configuration Parameters dialog box and clear the Signal storage reuse check box.

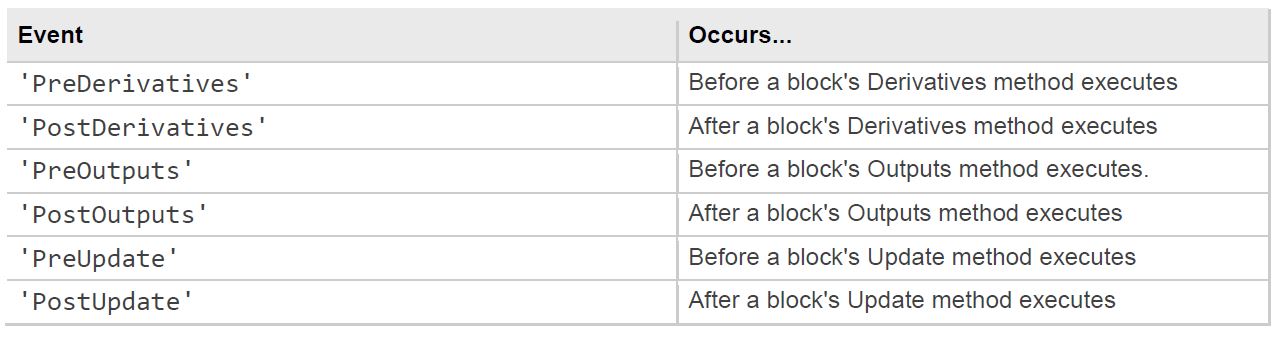

======Simulink Event Listener (Listen for Method Execution Events)======

One application of the block run-time API is to collect diagnostic data at key points during simulation, such as the value of block states before or after blocks compute their outputs or derivatives. The block run-time API provides an event-listener mechanism that facilitates such applications.

To apply an event listener to a Simulink block, add_exec_event_listener can be used (h = add_exec_event_listener(blk,event,listener)). It registers a listener for a block method execution event. To set this up, double-click the annotation below Model Properties in the Simulink model and implement the desired code in the StartFcn callback.

* [https://nl.mathworks.com/help/simulink/slref/add_exec_event_listener.html add_exec_event_listener]
* [https://nl.mathworks.com/help/simulink/ug/accessing-block-data-during-simulation.html Accessing block data during simulation]

In the event listener, blk gives the full path name of the block whose method execution event the listener is intended to handle. Here, check is the name of the Simulink model and S-Function is that block. Next, the type of event that the listener listens to should be specified, depending on the need and application. The event can be any of the following:

[[File:Photo_8.JPG|thumb|upright=4|center|alt=Alt text|Different types of events]]

Then, the custom function displayBusdata.m is registered as an event listener that is executed after every call to the S-function's Outputs method. displayBusdata.m follows the calling syntax required for any listener and can be found in the source code.

In conclusion, the referee can use the GUI to access the real-time data of the supervisor (S-function) in the Simulink model whenever he/she wants, by ticking a checkbox. The functionality described above is therefore incorporated in the callback of this checkbox.

====Data Flow between two GUIs====

As described above, the final decision of the remote referee should be signaled to the audience via LEDs placed on the second ground-station interface. Data therefore has to flow between the GUI of the human referee and the GUI for the audience.

For data flow between the two GUIs, the HandleVisibility property of both GUIs should be set to "on". No code needs to be implemented in the 2nd GUI (audience GUI). The colors of its LEDs (push buttons in the audience GUI) are changed from within the 1st GUI (remote referee GUI) by the commands implemented there.

==== Timer Implementation====

The remote referee can set and see the remaining time of the game at all times. The timer (countdown) can be created with the following steps:

# Create a timer object.
# Specify the MATLAB commands to be executed to control the behavior of the object.
# Start the timer with a push button (here, the Initialization push button).
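In MATLAB, steps 1 and 3 typically amount to t = timer('ExecutionMode','fixedRate','Period',1,'TimerFcn',@callback) and start(t), with the callback updating the Edit Text field. The countdown logic inside such a callback can be sketched independently of MATLAB, here in Python. The function name and the mm:ss display format are assumptions.

```python
import math


def countdown_text(duration_s: float, elapsed_s: float):
    """Return (display_text, game_over) for a game clock counting down to zero.

    Remaining time is rounded up, so the display only shows 00:00 once the
    game time has really run out.
    """
    left = max(0, math.ceil(duration_s - elapsed_s))
    minutes, seconds = divmod(left, 60)
    return f"{minutes:02d}:{seconds:02d}", left == 0
```

The GUI could call this once per timer period and make the gameover indicator (hidden in the OpeningFcn) visible once game_over becomes true.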

==== Real-Time Video Feed from USB Webcam ====

The live video stream can be shown in a custom GUI by passing any Handle Graphics® image object to the preview function. This requires the USB camera to be PHYSICALLY connected to the computer on which the GUI is running. First, make sure that the hardware support packages “MATLAB Support Package for USB Webcams” and “Image Acquisition Toolbox Support Package for OS Generic Video Interface” are installed. Then create an image object in the axes and call the preview function with a handle to that image object as an argument. The preview function outputs the live video stream to the specified image object, as in the following example:

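<pre>
axes(handles.axes1);
vid = videoinput('winvideo', 2);      % device 2 of the winvideo adaptor
vidRes = vid.VideoResolution;         % [width height] of the video
nBands = vid.NumberOfBands;           % number of color bands
% The image data must be rows x columns x bands, i.e. height x width x bands
himage = image(zeros(vidRes(2), vidRes(1), nBands), 'Parent', handles.axes1);
preview(vid, himage);
</pre>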

For a better understanding, the interested reader is referred to:

* [http://nl.mathworks.com/help/imaq/previewing-data.html#f11-76067 Matlab Previewing Data]
* [https://www.youtube.com/watch?v=RP5VeeTceeo YouTube tutorial]

==== Start/Stop of Simulink remotely ====

The simulation status can be changed by calling the set_param() function in the callback of the corresponding GUI object. Further information can be found at the following link:

* [https://nl.mathworks.com/help/simulink/ug/control-simulations-programmatically.html Control simulations programmatically]
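As a sketch of this idea, each GUI button can be mapped to one of the `SimulationCommand` values that `set_param` accepts. The model name `drone_referee_model` and the button names are hypothetical; the actual calls are shown as comments because they require the model to be loaded.

```matlab
% Sketch: start/pause/resume/stop a Simulink model from GUI callbacks.
mdl = 'drone_referee_model';   % hypothetical model name

% GUI action -> value of the model's SimulationCommand parameter.
simCommand = containers.Map( ...
    {'Initialization', 'Pause', 'Resume', 'Termination'}, ...
    {'start', 'pause', 'continue', 'stop'});

% Function handle each push-button callback can call, e.g.
%   load_system(mdl);                    % the model must be loaded first
%   sendSimCommand('Initialization')     % in the Initialization callback
sendSimCommand = @(action) set_param(mdl, 'SimulationCommand', simCommand(action));

% The current state can be queried, e.g. to enable/disable buttons:
%   status = get_param(mdl, 'SimulationStatus');  % 'stopped', 'running', 'paused', ...
```

Keeping the mapping in one place means the Initialization and Termination callbacks differ only in the key they pass.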

==== Initialization/Termination of the game ====

The initialization and termination of the game can be controlled remotely via the GUI provided to the human referee. The remote referee must be able to terminate the game at any time. For details, see the "Initialization" and "Termination" parts of the source code.

=== Live Video feed and Replay ===

One of the important HMI features was to provide the human referee with a live video stream of the game, including a replay feature. An attempt to achieve this was successful as a stand-alone application, but due to limited resources and time constraints, the integrated implementation did not meet expectations.

==== Implementation ====

During the implementation, a mobile phone camera was converted into an IP camera using the IP Webcam Android application. Once the IP address of the camera is known, VLC media player can be used to display a live video feed and simultaneously record the video on the system <ref>https://wiki.videolan.org/Documentation:Streaming_HowTo/Easy_Streaming/</ref>. The IP address of this camera can also be used in a MATLAB script to display the live video feed. The recorded video can be used by the referee as a reference to verify events that happened during the game.
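A possible MATLAB counterpart of the VLC setup is sketched below, assuming the MATLAB Support Package for IP Cameras is installed. The stream address (`http://<phone-ip>:8080/video`, which is typically where the IP Webcam app serves its MJPEG stream), the IP itself, the file name and the recording frame rate are all assumptions. The hardware part is guarded by a flag so the script also runs without a camera.

```matlab
% Sketch: live feed and a short recording from the phone's IP Webcam stream.
phoneIp = '192.168.1.20';                       % placeholder IP address
streamUrl = sprintf('http://%s:8080/video', phoneIp);
connectToCamera = false;   % set to true on a PC that can reach the phone

if connectToCamera
    cam = ipcam(streamUrl);         % connect to the IP camera
    preview(cam);                   % live feed window for the referee

    % Record 100 frames to a file for replays. snapshot is called as fast
    % as the stream allows; the FrameRate of 10 fps is an assumption.
    writer = VideoWriter('match_replay.avi');
    writer.FrameRate = 10;
    open(writer);
    for k = 1:100
        writeVideo(writer, snapshot(cam));   % grab and store one frame
    end
    close(writer);

    closePreview(cam);
    clear cam
end
```

Unlike the VLC route, this keeps the live view and the replay file inside MATLAB, where they could later be attached to the referee GUI.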

==Integration==

===Demo Video===

<center>[[File:YoutubeThumbnailDroneReferee.jpg|center|link=https://www.youtube.com/watch?v=Q4CtUdvZS1U?autoplay=1]]</center>

=Risk Analysis=

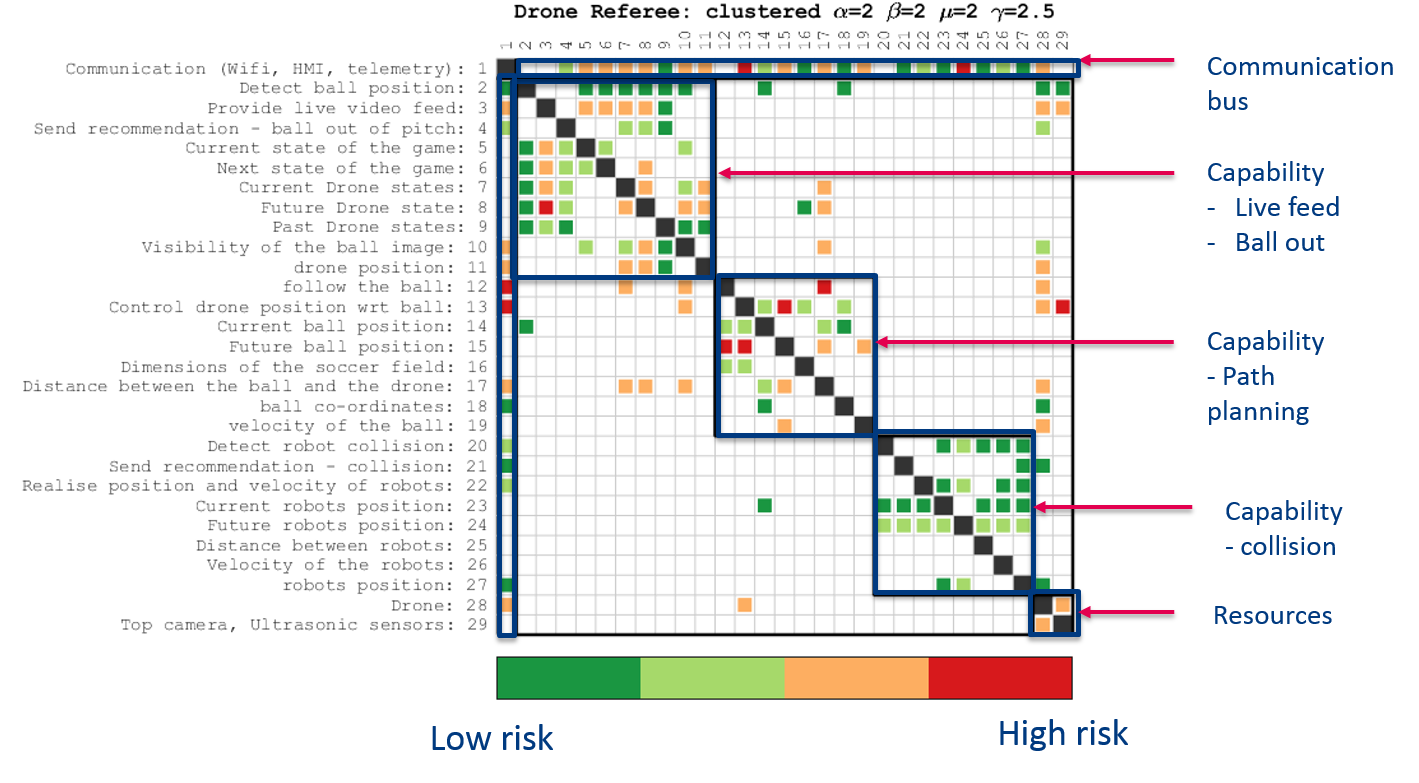

The goal of this exercise is to analyze the risk within the drone referee system using an objective function called the technology risk (TR). Here, TR is the sum of the ratings given to the actual test outputs and to the failure risk of the elements within the system architecture. The rating criteria can be found [https://surfdrive.surf.nl/files/index.php/s/xS46kbhNAu1toGd?path=%2FDSM%2Fdata here].<br>

The rating criterion for the test results is defined such that a rating of 1 implies that the test results are reliable, 3 that they are moderately reliable, and 5 that they are unsatisfactory. A similar rating criterion is provided for the failure risk, where 1 signifies no drop in performance (low failure risk), 3 means the risk is moderate but the system is operable, and 5 means that the system is inoperable.<br>

For example, the drone referee has a ball position detection capability. After conducting several tests, we rated this capability with a failure risk of 1 and a test result of 1, because we were always able to detect the ball when it was moving on the ground.<br>

However, for the live video feed, we rated the failure risk as 3 and the test results as 5. This is because a Raspberry Pi was used to provide the live feed, and its low processing power prevented us from achieving a stable feed.<br>

Similarly, for the capability of following the ball, we found that the drone was unable to move to a predefined location because the Marvelmind sensors could not provide a yaw input to the drone, and the magnetometer within the Pixhawk was also inoperable. The ideal solution would have been to use the top camera for the yaw input, but unfortunately the top camera code was not working. Hence, we rated the test results as 5 and the failure risk as 3.<br>
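The scoring can be illustrated with the three examples above. This sketch only encodes the ratings stated in the text; summing the per-capability scores into one system-level number is an assumed reading of "summation", not necessarily how the project's DSM tool aggregates them.

```matlab
% Technology-risk (TR) score per capability: TR = test-result rating +
% failure-risk rating, both on the 1 (good) to 5 (bad) scale.
capability  = {'Ball position detection'; 'Live video feed'; 'Follow the ball'};
testRating  = [1; 5; 5];
failureRisk = [1; 3; 3];

TR = testRating + failureRisk;          % per-capability risk, range 2..10
riskTable = table(capability, testRating, failureRisk, TR);
disp(riskTable)

% Assumed aggregation: one system-level figure over the rated capabilities.
totalTR = sum(TR);
fprintf('Total TR over these capabilities: %d\n', totalTR);
```

With two ratings on a 1 to 5 scale, each capability's TR lies between 2 (reliable, low risk) and 10 (unsatisfactory, inoperable).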

The figure below shows the system architecture mapped onto a matrix, where the diagonal terms represent the elements themselves and the off-diagonal terms represent the interactions between elements. This is also known as an interaction matrix or design structure matrix (DSM).<br>

The main motivation for using the DSM is to obtain a concise architectural representation and to clearly highlight the risk in the interactions between the elements of the system.

As the architectural representation is generic, future generations can use this tool to highlight risks. Information on how to use the tool is provided [https://surfdrive.surf.nl/files/index.php/s/xS46kbhNAu1toGd?path=%2FDSM%2Fdata here].<br>

To this end, multi-level flow-based Markov clustering was used <ref>Wilschut, T., Etman, L. F. P., Rooda, J. E., & Adan, I. J. B. F. (2017). Multi-level flow-based Markov clustering for design structure matrices. Journal of Mechanical Design : Transactions of the ASME, 139(12), [121402]. DOI: 10.1115/1.4037626</ref>. The clustering algorithm permutes the system elements with respect to capabilities, resources, and a bus connection. Furthermore, the color map shows the increase in risk from green to red, as highlighted in the figure. Notably, all risks within the system are now represented by a simple color map that distinctly shows the level of risk involved.<br>
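The green-to-red rendering can be sketched as below. This is not the project's DSM tool and does not implement the Markov clustering; it only shows how risk values could be drawn on a matrix with such a color map. Only the diagonal is filled, with the TR values of the three examples in the text (1+1, 5+3, 5+3); the interaction entries are left empty because their ratings are not given here.

```matlab
% Sketch: risk values on a small DSM with a green-yellow-red color map.
labels = {'Ball detection', 'Live feed', 'Follow ball'};
n = numel(labels);
dsm = nan(n);                          % off-diagonal interactions: unrated here
dsm(logical(eye(n))) = [2 8 8];        % diagonal: TR of each element

% Green (low risk) -> yellow -> red (high risk), 64 steps.
x = linspace(0, 1, 64)';
greenToRed = [min(1, 2 * x), min(1, 2 * (1 - x)), zeros(64, 1)];

fig = figure('Visible', 'off');
imagesc(dsm, 'AlphaData', double(~isnan(dsm)));   % unrated cells transparent
colormap(greenToRed);
caxis([2 10]);                                    % TR ranges from 2 to 10
colorbar;
set(gca, 'XTick', 1:n, 'XTickLabel', labels, 'YTick', 1:n, 'YTickLabel', labels);
```

Fixing the color limits to the full TR range (2 to 10) keeps colors comparable between matrices produced by different generations.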

[[File:Risk analysis.png|thumb|upright=4|center|alt=Alt text| Interaction matrix for risk analysis]]

=Conclusions and Recommendations=

==Conclusions==

* The previous on-board Intel NUC computer has been replaced by a Raspberry Pi and a telemetry connection.
* Ball detection and ball-out-of-pitch detection have been successfully implemented using a manually operated drone.
* Collision Detection
** Detection and tracking of robots using the drone camera proved unreliable due to drone/robot speed and processing latency issues
** It was decided to access state values from the robots themselves
** The TechUnited world model was accessed and used successfully
** Using the robots' positions and velocities proved reliable, assuming the communication interfaces work perfectly
* Communication Interfaces
** The telemetry interface showed latency issues and had a significantly lower update rate than when the Pixhawk was connected directly to the on-board NUC
** The UDP interfaces showed high update rates and reliability. These connections, however, were not completely secure
** There was high latency in the video streaming of the fish-eye camera using the Raspberry Pi. This was solved by using an IP camera, which proved reliable as a stand-alone solution; however, it was not integrated into the final system
* Simulations were conducted to gain insights into path planning.
* Supervisory Control
** CIF was found to be a better tool than MATLAB Stateflow for designing a supervisor for the current use case. A supervisor made in CIF was successfully implemented in MATLAB/Simulink by converting it into an S-function in C code.
* An HMI has been established for the remote referee.
* Communication between the human remote referee interface and the audience interface has been established.

==Recommendations==

* Fuse the sensor data of the top camera and the indoor GPS system for drone localization.
* Use a more compact drone, because the airflow from the current drone's propellers displaces the ball.
* Collision Detection
** Consider using either beacons on board the robot players or the top camera for robot detection and tracking. This eliminates the dependency on the TechUnited software architecture
** As an alternative to the above, consider developing a standardized interface for robot-soccer teams to provide robot states (position/velocity/acceleration) to the referee system
** The collision detection algorithm needs to be updated to take the impact on the robots into account, in order to determine which player caused the collision
** Consider algorithms that can predict an impending collision
** Detect the position of the collision and integrate this information with the trajectory planning to re-orient the drone during collisions
* Combine the line detection algorithm with the current algorithm to improve accuracy.
* Procure an FPV camera kit or a wide-angle IP camera to obtain a wider field of view than a mobile phone camera offers. An IP camera has proven to be a feasible solution for providing live feed and replays, and MATLAB offers a compatible hardware support package for it.

=Additional Resources=

* MATLAB/Simulink Code

The C/C++ code is open source on GitHub [https://github.com/adityakamath/drone_referee_2017_18 here] and in the team's private GitLab repository [https://gitlab.com/msd_module2/droneref_cv here]. For access to the GitLab workspace, please email Aditya Kamath (a.kamath@tue.nl). The GitLab/GitHub repository contains the following source code:

* ArUco detection/tracking: This is a re-used code sample using the ArUco library for the detection and tracking of AR markers
* Marvelmind: This code reads pose data of a selected beacon from the Marvelmind modem and broadcasts the data via UDP. This is accessed by the MATLAB/Simulink files.

The MATLAB/Simulink files are stored [https://surfdrive.surf.nl/files/index.php/s/xS46kbhNAu1toGd?path=%2F here] and contain the following resources:

* MAV004: This repository contains all the files required to communicate with and control the Pixhawk using MATLAB/Simulink
* HMI and Supervisory Controller: This contains an integration of the developed supervisor and the referee/audience GUIs
* DSM: This folder contains files for the risk analysis matrix
* Drone Motion: This repository contains simulation files for trajectory planning

Useful references for development using Pixhawk PX4:

* [https://github.com/PX4/Firmware PX4 source code]
* [https://dev.px4.io/en/advanced/parameter_reference.html PX4 parameters list]
* [http://mavlink.org/messages/common Mavlink messages]

=References=

{{reflist}}

Latest revision as of 16:38, 9 May 2018

Introduction

Abstract

As a billion-euro industry, football is constantly evolving through advancing technologies that improve not only the game but also the fan experience. Most football stadiums are outfitted with state-of-the-art camera technologies that provide previously unseen vantage points to audiences worldwide. However, football matches are still refereed by humans who make decisions based on their visual information alone. This can lead referees to make incorrect decisions, which may strongly affect the outcome of games. There is a need for supporting technologies that can improve the accuracy of referee decisions. Through this project, TU Eindhoven hopes to develop a system with intelligent technology that can monitor the game in real time and make fair decisions based on observed events. This project is a follow-up of two previous projects [1] [2] working towards this goal.

In this project, a drone is used to assist a football match by detecting events and providing recommendations to a remote referee. The remote referee is then able to make decisions based on these recommendations from the drone. This football match is played by the university’s RoboCup robots, and, as a proof-of-concept, the drone referee is developed for this environment.

This project focuses on the design and development of a high-level system architecture and corresponding software modules on an existing quadrotor (drone). It builds upon the data and recommendations of the first two generations of Mechatronics System Design trainees, with the purpose of providing a proof-of-concept Drone Referee for a 2x2 robot-soccer match.

Background and Context

The Drone Referee project was introduced to the PDEng Mechatronics Systems Design team of 2015. The team successfully demonstrated a proof-of-concept architecture, and the PDEng team of 2016 developed it further on an off-the-shelf drone. The challenge presented to the team of 2017 was to use the lessons of the previous teams to develop a drone referee using a new custom-made quadrotor. This drone was built and configured by a master's student, Peter Rooijakkaers, as part of his thesis [3], and was used as the baseline for this project.

The MSD 2017 team consisted of seven people with different technical and academic backgrounds. One project manager and two team leaders were appointed, and the remaining four team members were divided between the two team leaders. The team was organized as follows:

| Name | Role | Contact |
|---|---|---|
| Siddharth Khalate | Project Manager | s.r.khalate@tue.nl |
| Mohamed Abdel-Alim | Team 1 Leader | m.a.a.h.alosta@tue.nl |
| Aditya Kamath | Team 1 | a.kamath@tue.nl |
| Bahareh Aboutalebian | Team 1 | b.aboutalebian@tue.nl |
| Sabyasachi Neogi | Team 2 Leader | s.neogi@tue.nl |
| Sahar Etedali | Team 2 | s.etedalidehkordi@tue.nl |
| Mohammad Reza Homayoun | Team 2 | m.r.homayoun@tue.nl |

Problem Description

Problem Setting