PRE2017 3 Groep13


[[Coaching Questions Group 13]]

== Group members ==

{| class="wikitable" style="vertical-align:middle; border-collapse:collapse;" border="2"
! align="center" style="width: 20%" | '''Name'''
! align="center" style="width: 20%" | '''Student ID'''
|-
| Bruno te Boekhorst || 0950789
|-
| Evianne Kruithof || 0980004
|-
| Stefan Cloudt || 0940775
|-
| Eline Cloudt || 0940758
|-
| Tom Kamperman || 0961483
|}

== '''Problem Statement''' ==

People with visual impairments have trouble navigating through space because they lack clear sight. Currently, they have to rely on a guide dog or a white cane to navigate and avoid objects. With the cane, it is possible to feel whether objects are present in the environment and where they are located. However, it is not possible to feel multiple objects at the same time. A guide dog is not ideal either, as people can be allergic to dogs and dogs are not always allowed into buildings. Furthermore, both the guide dog and the cane occupy one of the user's hands, so the user never has both hands free. This project will look into designing a more convenient tool that helps people with visual impairments navigate safely and comfortably.

== '''Users''' ==

The primary users of the tool that will be designed are people with visual impairments. For them, the tool will replace the cane or the guide dog. If possible, a potential user will be contacted so the tool can be designed by means of user-centered design.

The secondary users of the tool will be the people in the same environment as the primary users, such as colleagues in the same office room. These users do not want to be annoyed by the tool.

== '''Scenario''' ==

The scenario for which the tool will be designed is an office space with obstacles like desks, chairs and bags. By using the tool, a blind person should be able to navigate through the room, for example from his or her desk to a coffee machine, without hitting objects.

=== '''Specific test environment''' ===

To test the device, a course will be built from tables, chairs and bags. A blindfolded subject, such as a blindfolded group member, will try to navigate through the course without hitting objects, in a general direction given by someone else (in real life, the blind person generally knows the location of, for example, the coffee machine). The course will be randomized for every trial so the subject will not know the locations of the objects.

== '''State-of-the-art''' ==

Bousbia-Salah suggests a system in which obstacles on the ground are detected by an ultrasonic sensor integrated into the cane, and surrounding obstacles are detected using sonar sensors attached to the user's shoulders. Shoval et al. propose the Navbelt, a system consisting of a belt fitted with ultrasonic sensors. One limitation of this kind of system is that it is exceedingly difficult for the user to understand the guidance signals in time to allow fast walking. Sainarayanan et al. present another system, which uses a vision sensor to capture the environment in front of the user. The image is processed by a real-time image-processing scheme using fuzzy clustering algorithms. The processed image is mapped onto a specially structured stereo acoustic pattern and transferred to stereo earphones. Some authors use stereovision to obtain 3D information about the surrounding environment. Sang-Woong Lee proposes a walking guidance system which uses stereovision to obtain 3D range information and an area correlation method for approximate depth information. It includes a pedestrian detection model trained with a dataset and polynomial functions as kernel functions.<ref>Filipe, V., Fernandes, F., Fernandes, H., Sousa, A., Paredes, H., & Barroso, J. (2012). Blind Navigation Support System based on Microsoft Kinect. ''Procedia Computer Science'', 14, 94-101. https://doi.org/10.1016/j.procs.2012.10.011</ref>

Ghiani, Leporini, & Paternò (2009) designed a multimodal mobile application for museum environments. They extended the vocal output with haptic output in the form of vibrations. Using a within-subject design, they tested whether participants preferred discontinuous output or continuous output differing in intensity. The results suggested that participants preferred the discontinuous variant, but the difference was not significant.<ref>Ghiani, G., Leporini, B., & Paternò, F. (2009). Vibrotactile feedback to aid blind users of mobile guides. ''Journal of Visual Languages & Computing'', 20(5), 305-317. https://doi.org/10.1016/j.jvlc.2009.07.004</ref>

== '''Deliverable''' ==

A design of a new tool to help blind people navigate through the environment will be made. The deliverable will be a prototype of this design. Furthermore, the wiki will present the background information, and a final presentation about the design and the prototype will be given.

== '''Requirements''' ==

{| class="wikitable" style="vertical-align:middle; border-collapse:collapse;" border="2"
! align="center" style="width: 5%" | '''ID'''
! align="center" style="width: 10%" | '''Category'''
! align="center" style="width: 60%" | '''Requirement'''
|-
| R1 || rowspan="3" | Lightweight || The weight attached to the head shall be at most 200 grams.
|-
| R2 || The weight attached to the waist shall be at most 1 kg.
|-
| R3 || The weight attached to body parts other than those in R1 and R2 shall be at most 200 grams.
|-
| R4 || Freedom of movement || The device shall not impair the user's ability to move.
|-
| R5 || Comfortable || The device shall not cause itching, pressure, or rash.
|-
| R6 || rowspan="2" | Detecting of objects || The device shall detect objects at a distance between 5 cm and 150 cm.
|-
| R7 || The device shall be able to differentiate five distances between 5 cm and 150 cm.
|-
| R8 || Lack of delay || The device shall not have a noticeable delay (<30 ms).
|-
| R9 || rowspan="2" | Energy || The device shall be able to operate for two hours.
|-
| R10 || The device shall use no more than 5 Wh in one hour.
|-
| R11 || rowspan="2" | Portable || The device shall be mountable and unmountable.
|-
| R12 || The device shall be wearable under clothing.
|-
| R13 || Quiet || The device shall produce less than 40 dB of sound.
|-
| R14 || Aesthetic || The device shall be fashionable or unnoticeable.
|-
| R15 || Health safety || The device shall not cause any health problems.
|-
| R16 || Robust || The device shall not break under minimal impact.
|-
| R17 || Sustainable || Manufacturing costs shall not exceed 100 euros.
|-
| R18 || Calibrated || The user shall be able to calibrate the device in at most 5 user actions.
|-
| R19 || On/off switch || The device shall be easy to switch on and off.
|}
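The energy requirements R9 and R10 together imply a minimum battery size. The following is a minimal sketch of that arithmetic; the supply voltage of 5 V is an assumption made only for illustration (for example a USB power bank) and is not specified anywhere in the requirements.

```python
def required_energy_wh(runtime_h: float, max_power_w: float) -> float:
    """Energy in Wh needed to run at max_power_w for runtime_h hours."""
    return runtime_h * max_power_w


def capacity_mah(energy_wh: float, voltage_v: float) -> float:
    """Convert an energy budget in Wh to a capacity in mAh at a given voltage."""
    return energy_wh / voltage_v * 1000.0


RUNTIME_H = 2.0          # R9: operate for two hours
MAX_POWER_W = 5.0        # R10: 5 Wh per hour equals 5 W on average
ASSUMED_VOLTAGE_V = 5.0  # assumption, not taken from the requirements

energy = required_energy_wh(RUNTIME_H, MAX_POWER_W)
print(f"Energy budget: {energy} Wh")
print(f"Capacity at {ASSUMED_VOLTAGE_V} V: {capacity_mah(energy, ASSUMED_VOLTAGE_V)} mAh")
```

Under these assumptions, a battery of 10 Wh (2000 mAh at 5 V) is the upper bound on what the device may draw during the required two hours; conversion losses are ignored.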

== '''Objective''' ==

To make a device that makes it easier and less demanding for blind people to navigate through space.

== '''Approach''' ==

A user-centered design (if possible) of a device that helps blind people navigate through space. This can be done by making a device that gives more information about the user's immediate environment. The user has to get information about obstacles near the feet and near the head or other body parts at the same time. A point of orientation is needed so the user knows in which direction he or she is walking. To find out which options make the device easy and comfortable to use, a blind person will be contacted. After designing a device that meets the requirements, a prototype of this device will be made. For this, the following milestones have to be achieved:

== '''Milestones''' ==

- Literature study

- The device is usable (usability testing)

== '''Who’s doing what and roles''' ==

=== '''Initial Planning''' ===

Week 1: literature study (all), requesting permission for the usability testing experiment (Eline), writing the problem statement (Evianne), making the planning (all), determining roles (all), making requirements (all)

Week 2: think about the specific scenario and problem (all), make specifications and requirements (Evianne)

Week 3: interviewing a user (Eline), making the design of the device (Eline and Evianne), making the bill of materials (Stefan, Tom, Bruno), updating the wiki (all)

Week 4: write the scenario (Bruno), make the planning for this week (Tom), think about the design (Evianne), update the wiki (Evianne), make the bill of materials (Stefan), answer the coaching questions (Tom), do specific literature research (Tom), take care of the budget (Eline), write the technology section (Eline)

Week 5: order the items on the bill of materials (Stefan), program the feedback system (Stefan), improve the design (Evianne), build the prototype (Tom, Eline, Bruno)

Week 6: completing tasks that are not finished yet (all), testing the feedback system of the wearable (Eline)

Week 7: finishing the prototype (all), final presentation (Eline)

Week 8: organizing the wiki (all)

=== '''Initial Roles - Who will be doing what''' ===

Eline: Designing, usability testing, literature study - external contacts

Stefan: Programming, literature study - checks the planning

Tom: Assembling, selecting products, literature study - prepares meetings

Bruno: Assembling, selecting products, literature study - takes minutes

Evianne: Human-machine interactions, designing, usability testing, literature study - checks the wiki

== '''Literature research''' ==

The topics needed for the project are split up into 5 different directions.

=== '''Spatial discrimination of the human skin''' ===

Surfaces often have to be smooth; for example, food textures have to feel smooth to users. Healthy humans have been tested on roughness sensation <ref>Aktar, T., Chen, J., Ettelaie, R., Holmes, M., & Henson, B. (2016). Human roughness perception and possible factors effecting roughness sensation. ''Journal of Texture Studies'', 48(3), 181–192. http://doi.org/10.1111/jtxs.12245</ref>. The results showed that the human capability for roughness discrimination decreases with increasing viscosity of the lubricant, whereas the influence of temperature was not found to be significant. Moreover, an increase in the applied force load increased the sensitivity of roughness discrimination.

Pain in the skin can be localized with an accuracy of 10-20 mm <ref>Schlereth, T., Magerl, W., & Treede, R.-D. (2001). Spatial discrimination thresholds for pain and touch in human hairy skin. ''Pain'', 92(1), 187–194. http://doi.org/10.1016/S0304-3959(00)00484-X</ref>. Mechanically induced pain can be localized more precisely than heat or a non-painful touch, possibly because two different sensory channels are involved. The spatial discrimination capacities of the tactile and nociceptive systems are about equal.

There are two categories of thermal discrimination <ref>Justesen, D. R., Adair, E. R., Stevens, J. C., & Brucewolfe, V. (1982). A comparative study of human sensory thresholds: 2450-MHz microwaves vs far-infrared radiation. ''Bioelectromagnetics'', 3(1), 117–125. https://doi.org/10.1002/bem.2250030115</ref>: a rising or falling temperature, and temperatures above or below the neutral temperature of the skin. Temperature is sensed by myelinated fibers in nerves.

=== '''Localization''' ===

Indoor localization can be achieved using a beacon system of ultrasonic sensors and a digital compass. Although ultrasonic sensors are very sensitive to noise and shocks, these disadvantages can be mitigated by using a band-pass filter <ref>Hong-Shik, K., & Jong-Suk, C. (2008). Advanced indoor localization using ultrasonic sensor and digital compass. ''Control, Automation and Systems, 2008, ICCAS 2008.''. https://doi.org/10.1109/ICCAS.2008.4694553</ref>. Generally, the use of an Unscented Kalman Filter further improves the accuracy of the measurements.

The digital compass inside a smartphone can be used to track head movement. This can then be used to reproduce a virtual surround sound field using headphones <ref>Shingchern, D. Y., & Yi-Ta, W. (2017). Using Digital Compass Function in Smartphone for Head-Tracking to Reproduce Virtual Sound Field with Headphones. ''Consumer Electronics (GCCE), 2017''. https://doi.org/10.1109/GCCE.2017.8229205</ref>. Applying such a system to blind people could help them navigate by producing sounds that come from a certain direction without other people hearing them.

It is shown that a digital compass can give accurate readings using an RC circuit, an ADC and an Atmega16L MCU <ref>Shaocheng, Q., ShaNi, Lili, N., & Wentong, L. (2011). Design of Three Axis Fluxgate Digital Magnetic Compass. ''Modelling, Identification and Control (ICMIC)''. https://doi.org/10.1109/ICMIC.2011.5973727</ref>. Furthermore, slowly rotating the compass to measure the magnetic field interference can further improve accuracy. The paper shows the entire design of such a compass.

If it is known in which plane the compass is being turned, this knowledge can be used to further reduce errors <ref>Zhang, L., & Chang, J. (2011). Development and error compensation of a low-cost digital compass for MUAV applications. ''Computer Science and Automation Engineering (CSAE), 2011''. https://doi.org/10.1109/CSAE.2011.5952670</ref>. However, these solutions were applied to micro unmanned air vehicles, which do require very precise readings. Applications that help blind people might not need such precise readings.

An application of digital compasses might be the orientation of a robotic arm. It is shown how an entire system using digital compasses could be built, addressing multiple issues mainly at a high level without going too much into the details <ref>Marcu, C., Lazea, G., Bordencea, D., Lupea, D., & Valean, H. (2013). Robot orientation control using digital compasses. ''System Theory, Control and Computing (ICSTCC), 2013.''. https://doi.org/10.1109/ICSTCC.2013.6688981</ref>. Furthermore, it is shown how to convert the coordinates using the readings of the compass.
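To illustrate what converting coordinates with a compass reading can look like, here is a minimal sketch. It is not the method from the cited paper: it is a plain 2D rotation, and the function name, the body frame (forward/right) and the convention that the heading is measured clockwise from north are assumptions chosen for this example.

```python
import math
from typing import Tuple


def body_to_world(forward_m: float, right_m: float,
                  heading_deg: float) -> Tuple[float, float]:
    """Rotate a point given relative to the wearer (forward, right) into
    (north, east) coordinates, using a compass heading in degrees measured
    clockwise from north. All conventions here are illustrative assumptions."""
    h = math.radians(heading_deg)
    north = forward_m * math.cos(h) - right_m * math.sin(h)
    east = forward_m * math.sin(h) + right_m * math.cos(h)
    return north, east


# An obstacle 1 m straight ahead while the wearer faces east (heading 90 degrees)
print(body_to_world(1.0, 0.0, 90.0))
```

With such a conversion, an obstacle detected relative to the belt could be expressed relative to a fixed point of orientation, such as the direction of the coffee machine in the scenario.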

=== '''Usability testing and universal design''' ===

To implement user-centered design, it is important to define the term usability. Usability has been interpreted in many different ways, but fortunately a clear and extensive usability taxonomy has also been defined <ref>Alonso-Ríos, D., Vázquez-García, A., Mosqueira-Rey, E., & Moret-Bonillo, V. (2009). Usability: A Critical Analysis and a Taxonomy. ''International Journal of Human-Computer Interaction'', 26(1), 53-74. https://doi.org/10.1080/10447310903025552</ref>.

Usability testing is effective in helping developers produce more usable products <ref>Lewis, J. R. (2012). Usability Testing. In G. Salvendy (Ed.), ''Handbook Human Factors Ergonomics'' (4th ed., pp. 1267–1312). Boca Raton, Florida.</ref>, which is useful for this project. This chapter explains several ways of doing usability testing, such as thinking aloud, which is quite easy but yields a lot of information. Furthermore, several examples are presented.

Especially when people with impairments are involved, it is important to make a universal design. Many things have to be considered if a design is to be universal. <ref>Story, M. F., Mueller, J. L., & Mace, R. L. (1998b). Understanding the Spectrum of Human Abilities. In ''The Universal Design File: Designing for People of All Ages and Abilities'', (pp. 15–30).</ref>

Seven principles of universal design are defined <ref>Story, M. F., Mueller, J. L., & Mace, R. L. (1998). The Principles of Universal Design and Their Application. In ''The Universal Design File: Designing for People of All Ages and Abilities'', (pp. 31–84). Retrieved from https://files.eric.ed.gov/fulltext/ED460554.pdf</ref>. When these principles are followed, the design will be universally usable. Furthermore, many examples are provided in this chapter.

=== '''Developed aid devices and optical sensors''' ===

The objective of a robot mobility aid is to let blind people regain personal autonomy by taking exercise independently of the people who care for them. <ref>Giudice, N. A., & Legge, G. E. (2008). Blind Navigation and the Role of Technology (pp. 479–500). http://doi.org/10.1002/9780470379424.ch25</ref> The perceived usefulness, rather than the usability, is the limiting factor in the adoption of new technology. Multiple impairments can be tackled at the same time; in the paper, the device works as both a mobility device and a walking support. It is preferable that either the user is in control of what happens or the robot decides what happens, but not something in between, as that leads to confusion. Users also prefer voice feedback over tonal information, perhaps because it is more human-like.

A device has been created which uses a laser to calculate distances to objects <ref>Yuan, D., & Manduchi, R. (2004). A Tool for Range Sensing and Environment Discovery for the Blind. Retrieved from https://users.soe.ucsc.edu/~manduchi/papers/DanPaperV3.pdf</ref>. This could help the user decide where to move, but is not sufficient for safe deambulation. The device would work as a virtual white cane, without using the actual invasive sensing method of the white cane. It uses active triangulation to produce reliable local range measurements. Objects can be found, but it is still hard to convey this information to the user.

The long cane (white cane) is still the primary mobility aid for the blind. New electronic mobility aids mostly use the transmission of an energy wave and the reception of echoes (echo-location) from objects in or near the user’s path <ref>Brabyn, J. A. (1982). New Developments in Mobility and Orientation Aids for the Blind. IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING, (4). Retrieved from http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.476.159&rep=rep1&type=pdf</ref>. Optical transmission is another widely used concept. As wavelength is the limiting factor in the detection of small objects, optical transmission has advantages. One class of aids is known as the “obstacle detectors”; other aids attempt more than obstacle detection alone, for example by using auditory output to show the way. Incorporating sensing on the skin has also been researched. Lastly, there is the area of cortical implants.

The most important difference between visual navigation and blind navigation is that visual navigation is more of a perceptual process, whereas blind navigation is more of an effortful endeavor requiring the use of cognitive and attentional resources <ref>Kay, L. (1964). An ultrasonic sensing probe as a mobility aid for the blind. Ultrasonics, 2(2), 53–59. http://doi.org/10.1016/0041-624X(64)90382-8</ref>. Some technologies work better in certain environments than in others. Aesthetics also have an impact on the user. The long cane and the guide dog are the most common navigation tools; other aids are mostly used to complement them. Other devices are based on sonar detection, optical technologies, infrared signage, GPS, or sound, and there are some tools for indoor navigation.

It is quite hard to get a clear understanding of an environment via echo location or ultrasonic sensing. Under specific conditions, objects were located with echo location <ref>Lacey, G., & Dawson-Howe, K. M. (1998). The application of robotics to a mobility aid for the elderly blind. Robotics and Autonomous Systems, 23(4), 245–252. http://doi.org/10.1016/S0921-8890(98)00011-6</ref>. Different environments give very different amounts of perception. Moreover, users needed to learn to use the device. Learning to interpret the sounds did not necessarily improve mobility. Objects could be detected, but users still had to learn how to avoid them. Also, as hearing was used for echo location, normal use of the sense of hearing was slightly impaired.

=== '''Electromagnetics and sensors''' ===

Proximity sensors can detect the presence of an object without physical contact. There are many variations of these sensors, but 3 basic designs exist: electromagnetic, optical, and ultrasonic <ref>Seraji, H., Steele, R., & Iviev, R. (1996). Sensor-based collision avoidance: Theory and experiments. Journal of Robotic Systems, 13(9), 571–586. http://doi.org/10.1002/(SICI)1097-4563(199609)13:9<571::AID-ROB2>3.0.CO;2-J</ref><ref>When close is good enough - ProQuest. (1995). Retrieved from https://search.proquest.com/docview/217152713?OpenUrlRefId=info:xri/sid:wcdiscovery&accountid=27128</ref>. The electromagnetic types are subdivided into inductive and capacitive sensors. However, these work with metal. Optical proximity sensors use one of two basic principles: reflection or through-beam. Here, shiny surfaces can cause trouble.

Ultrasound works similarly, in that sound also reflects off objects, and this reflection can be measured. Here, objects that do not reflect the sound back can cause trouble.
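As an illustration of how a reflected ultrasonic pulse is turned into a distance, here is a minimal time-of-flight sketch. The speed of sound of 343 m/s (air at roughly 20 °C) is an assumed constant, and the function names are hypothetical; a real sensor may need temperature compensation.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees Celsius


def echo_time_to_distance_cm(round_trip_s: float) -> float:
    """Distance to the reflecting object from the round-trip time of a pulse.
    The pulse travels to the object and back, hence the division by two."""
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0 * 100.0


def distance_to_echo_time_ms(distance_cm: float) -> float:
    """Round-trip time in milliseconds for an object at the given distance."""
    return 2.0 * (distance_cm / 100.0) / SPEED_OF_SOUND_M_S * 1000.0


print(echo_time_to_distance_cm(0.005))   # distance for a 5 ms echo
print(distance_to_echo_time_ms(150.0))   # echo time at 150 cm
```

Under this assumption, an echo from 150 cm takes a little under 9 ms to return, so the travel time of the sound alone does not prevent a response within 30 ms.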

A non-contact proximity sensor is able to detect any kind of material using a low-frequency electromagnetic field. <ref>Benniu, Z., Junqian, Z., Kaihong, Z., & Zhixiang, Z. (2007). A non-contact proximity sensor with low frequency electromagnetic field. Sensors and Actuators A: Physical, 135(1), 162–168. http://doi.org/10.1016/J.SNA.2006.06.068</ref>

A medium-range radar sensor is proposed; however, it is intended for distances too large for our purposes. <ref>Jackson, M. R., Parkin, R. M., & Tao, B. (2001). A FM-CW radar proximity sensor for use in mechatronic products. Mechatronics, 11(2), 119–130. http://doi.org/10.1016/S0957-4158(99)00087-2</ref>

The ideal circumstances for an ultrasonic sensor in air are discussed. <ref>Lee, Y., & Hamilton, M. F. (1988). A parametric array for use as an ultrasonic proximity sensor in air. The Journal of the Acoustical Society of America, 84(S1), S8–S8. http://doi.org/10.1121/1.2026546</ref>

The homing behaviour of Eptesicus fuscus, known as the big brown bat, can be altered by artificially shifting the Earth's magnetic field, indicating that these bats rely on a magnetic compass to return to their home roost <ref>Holland, R. A., Thorup, K., Vonhof, M. J., Cochran, W. W., & Wikelski, M. (2006). Bat orientation using Earth’s magnetic field. ''Nature'', 444(7120), 702. http://doi.org/10.1038/444702a</ref>. Homing pigeons also use the magnetic field for navigation.

=== '''Sensors that are able to observe objects''' ===

A short-distance ultrasonic distance meter has been made to detect objects at short distances <ref>Park, K. T., & Toda, M. (1993). U.S. Patent No. US5483501A. Washington, DC: U.S. Patent and Trademark Office. </ref>. When an object is detected, the device starts to ring; if an object cannot be observed because it is too far away, anti-feedback makes sure the ringing is cancelled. Opposite-phase methods are also used for the same purpose. Lastly, both are combined to obtain optimal results.

The distance to obstacles at close range to a motor vehicle is determined with a displacement sensor instead of an ultrasonic measurement <ref>Widmann, F. (1993). U.S. Patent No. US5602542A. Washington, DC: U.S. Patent and Trademark Office. </ref>. The distance is measured above a predetermined limiting value. By comparing the signals of the displacement sensor and the ultrasonic measurement, a calibration and correction factor for the displacement sensor can be obtained.

A driver in a car always has blind spots in which he or she cannot see whether there is an object. Those blind spots cannot be observed through the mirrors. To solve this problem, a sequentially operating dual-sensor technology is used <ref>Miller, B. A., & Pitton, D. (1986). U.S. Patent No. US4694295A. Washington, DC: U.S. Patent and Trademark Office.</ref>. The first sensor responds to a photonic event: infrared light emitted by an IRLED is coupled to an infrared-sensitive phototransistor or photo-darlington. Once the reflected infrared light is detected, a second sensor is activated. The second sensor works with ultrasonic waves. The driver can then be given the distance between the vehicle and the threatening obstacle.

== '''Design''' ==

A design of the prototype has been made that meets the specifications. One of the specifications is that both the obstacles in front of the feet and the obstacles at waist height have to be detected. It is convenient if the wearable is carried at that same height. The legs and feet are not stable enough to detect a specific area in a constant manner. We therefore chose a belt, which is also easy to use and could also be used to indicate that the wearer is blind.

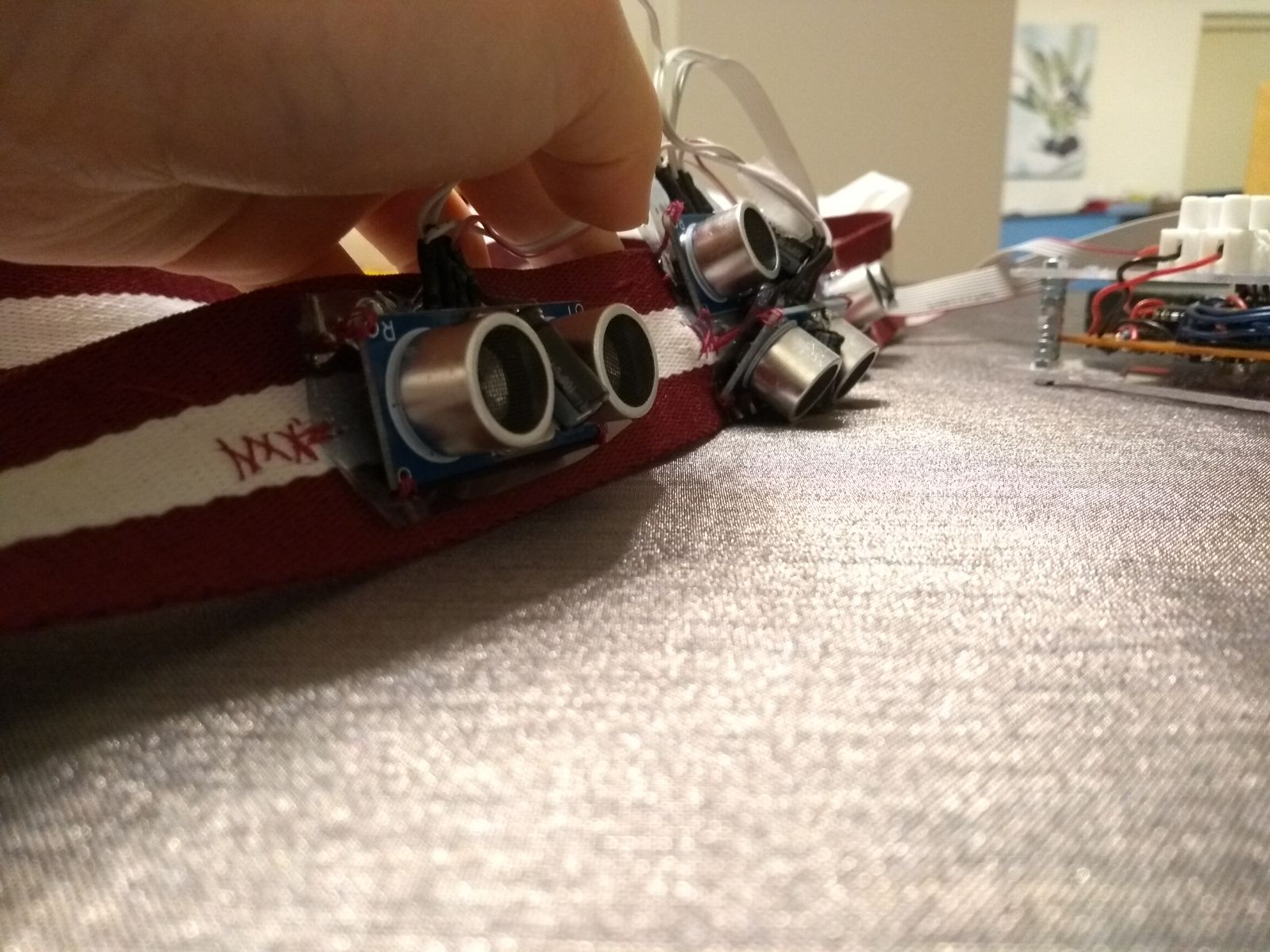

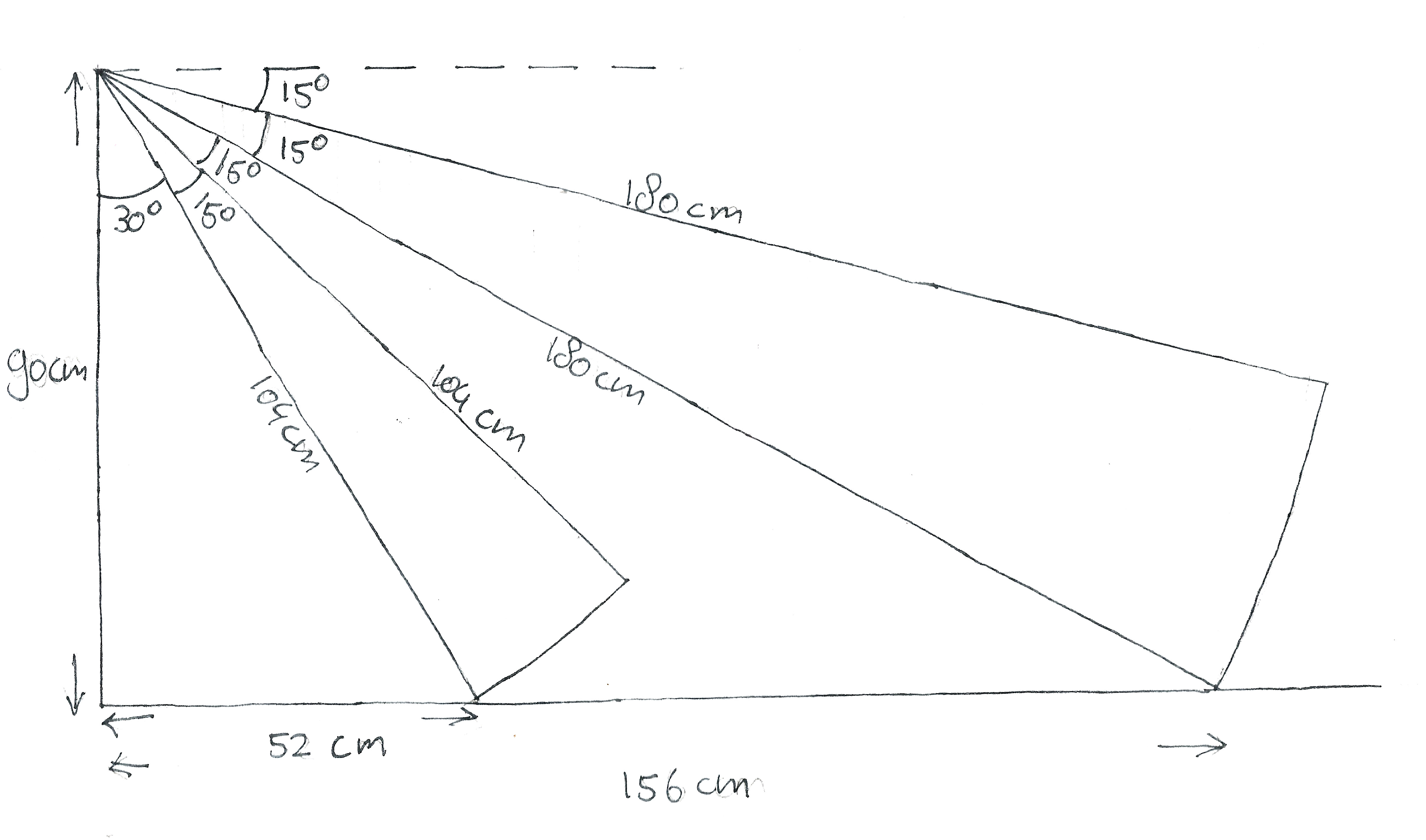

The affordable and small sensors used in this project only have a detection angle of 15 degrees. These sensors can detect the area in front of the feet and at waist height. For this, the sensors detect forwards in a cone shape of 15 degrees. If a beam comes within 30 degrees of the user's body, the sensor will detect the user's own legs and feet while walking. Three sensors will be placed at an angle of 22.5 degrees relative to the belt, and another sensor is placed at an angle of 52.5 degrees so it can detect objects in front of the user's feet. The ideal angles have been calculated using trigonometry (taking the measuring angle of the sensor into account) and are drawn in figure 1.

[[File:Corners.jpeg|thumb|upright=2|right|alt=Alt text|Figure 1: The angles at which the sensors will work and what ranges it will work for.]]
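The geometry of the sensor angles can be sketched as follows. This is an illustration under stated assumptions, not the calculation behind figure 1: the tilt angles are interpreted as measured downward from the horizontal, the belt height of 100 cm is an assumed value, the 150 cm range is taken from requirement R6, and the function names are hypothetical.

```python
import math
from typing import Optional, Tuple


def floor_hit_cm(height_cm: float, angle_below_horizontal_deg: float,
                 max_range_cm: float) -> Optional[float]:
    """Horizontal distance at which a ray reaches the floor, or None if the
    ray does not reach the floor within the sensor's range."""
    if angle_below_horizontal_deg <= 0.0:
        return None  # ray points level or upwards and never meets the floor
    angle = math.radians(angle_below_horizontal_deg)
    slant_cm = height_cm / math.sin(angle)  # distance travelled along the ray
    if slant_cm > max_range_cm:
        return None
    return height_cm / math.tan(angle)


def ground_footprint_cm(height_cm: float, tilt_deg: float,
                        cone_deg: float = 15.0,
                        max_range_cm: float = 150.0
                        ) -> Tuple[Optional[float], Optional[float]]:
    """Near and far floor distances covered by a cone of cone_deg degrees
    whose axis is tilted tilt_deg degrees below the horizontal."""
    half = cone_deg / 2.0
    near = floor_hit_cm(height_cm, tilt_deg + half, max_range_cm)
    far = floor_hit_cm(height_cm, tilt_deg - half, max_range_cm)
    return near, far


BELT_HEIGHT_CM = 100.0  # assumption for illustration only

print(ground_footprint_cm(BELT_HEIGHT_CM, 52.5))  # sensor aimed at the feet
print(ground_footprint_cm(BELT_HEIGHT_CM, 22.5))  # sensors aimed further ahead
```

With these assumptions, the 52.5 degree sensor covers the floor from roughly 58 cm to 100 cm in front of the user, and its steepest edge stays 30 degrees away from the vertical line of the legs. The 22.5 degree sensors do not reach the floor within 150 cm, so they respond only to objects that rise above the floor, such as tables and chairs.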

One of the specifications is that the user has to know in which direction the obstacle is located. For this, four sensor beams are needed to indicate the location of the obstacle: right, left, in front and far away, and in front and close by. It would be annoying if the belt constantly gave feedback when someone walks next to the blind person or when the user's hands block a sensor. We also assume that users only walk straight ahead and not sideways. The sensors will therefore roughly cover a cone of 60 degrees in front of the user. This way, the user has room to move his or her hands and is able to wear an open jacket over the belt without blocking the sensors. This area of 60 degrees has to be split up into three sub-areas. Obstacles in front of the user are the most important to detect in order to prevent an accident, so two sensors are placed in the middle, one for nearby and one for distant objects. The 60 degrees can be split up into two front beams of 15 degrees at the vertical angles mentioned above. The left and right beams will also each have a width of 15 degrees. Another option for filtering out hand movement in front of a sensor would be to ignore obstacles that are closer than 5-10 cm, although a signal should then be given that the sensor is blocked and that this can affect the detection of other objects.

To let other people know the user is blind, the belt has the same colors as the cane (red and white). Furthermore, (colored) lights could be added to the belt, lighting the area where the sensors detect obstacles. In this way, other people can tell that the user of the belt is visually impaired. Because of the light, people will stay out of the area the sensors are covering, which will help the user get a clearer feedback signal. The button will be placed in a spot that is easy to find, so the user is able to operate the belt without much effort.

=== '''Feedback system''' ===

The feedback of the sensors will be given by vibration. There are 4 sensors on the belt at only 3 different locations: left, middle and right. The vibration motors will be placed under the belt at the same places as the sensors. To indicate where the obstacle is with respect to the person wearing the device, the sensor that senses an obstacle will make the vibration motor at that place vibrate. For the 2 middle sensors, the lower vibration motor vibrates if an object like a bag is in front of the user's feet, and the upper vibration motor vibrates when the upper sensor senses an object further away, like a table or chair.

The further away an obstacle is, the softer the vibration motor should vibrate, and the closer the obstacle is, the more vigorously it should vibrate. These vibration levels are equally distributed over 6 distances between 0 and 150 cm <ref>Mansfield, J (2005). Washington, D.C. Human Response to Vibration, CRC Press, 113-129. https://books.google.nl/books?id=cpe6mfCVHZEC&pg=PA133&lpg=PA133&dq=human+signal+vibration&source=bl&ots=xu53EB8II4&sig=lg0Dg8mA7iAwKaTEChJEJsEMHIA&hl=nl&sa=X&ved=0ahUKEwiduribr6DaAhUiMewKHQ7sDq8Q6AEIXDAG#v=onepage&q=stepwise%20signal&f=false </ref>.

=== '''Testing of the feedback system, Wizard of Oz''' ===

Before the wearable is finished, we can already test the feedback system. The user has to know where the obstacles are in an office room and in which direction he can walk without hitting them. To do this, the user needs information about the environment through signals from the wearable. These signals have to be clear and easy to understand. To investigate which kind of signals are preferable, we made a test course. On this course, we guided several blindfolded test persons by giving them a different kind of feedback in each test. We studied the walking behavior of these test persons and asked them several questions. After these tests, we can answer the following questions about the feedback system and know how to make the signals optimal for the users.

1) How many feedback signals are preferable?

2) What range of the sensors is preferable?

3) Is it clearer in which way the user can walk when several vibration motors can give a signal at the same time?

4) What is easier to understand: the vibration motors signal in which direction the obstacles are, or the vibration motors signal in which direction there are no obstacles?

5) Is it helpful to use intervals in the signals?

6) Is sound or vibration clearer as a signal for the user?

7) How long do the signals have to last?

8) Which place is preferable for receiving signals: hips (belt) or waist?

In the tests we used a room with the same kind of obstacles that are common in office rooms. The blindfolded test person had to walk through a room in Cascade in which chairs, tables, walls and bags were placed. The test person was first guided through the room by vibration signals, first at the hip and then at the waist. After this, the test person was guided through the room by sound signals. The tests gave us the following insights:

''How many feedback signals are preferable?''

When more than 3 feedback signals come at the same time, the user gets confused. He needs more time to think about the meaning of the signals, so he has to slow down while working out in which direction he can walk. Because of this, it is unnecessary, and even confusing, to use more than 4 feedback signals in our wearable. If more signals are used, several of them can give feedback about the same obstacle, which is confusing.

''What range of the sensors is preferable?''

Using the full range of the sensors would be inconvenient, because the user would get feedback about obstacles at a distance of 4 meters, which is too far. During the tests it became clear that this was not useful: the information only became useful when the test person was within a meter of the object. That way he is informed in time, so he knows there is an object and that it is not yet right at his feet. From this we conclude that the range of our sensors should be around that distance.

''Is it clearer in which way the user can walk when several vibration motors can give a signal at the same time?''

It is helpful for the user when several vibration motors can give a signal at the same time. For example, when the user walks between two tables, he has to know that there are obstacles on the left and on the right at the same time. When the feedback vibrates left and right, he knows he has to walk straight ahead. It is confusing to give these signals alternately, because the user loses information about distances etc. when some vibration motors cannot be used continuously.

''What is easier to understand: the vibration motors signal in which direction the obstacles are, or the vibration motors signal in which direction there are no obstacles?''

To the user, a vibration signal feels like a prompt to act. It is therefore confusing when, e.g., the vibration motor at the front of the user gives a signal when the user can walk straight ahead. Vibration motors that signal the direction in which there are no obstacles are less intuitive. It is also not desirable to receive a signal continuously when there are no obstacles in the vicinity of the user.

''Is it helpful to use intervals in the signals?''

After the tests we concluded that this is not the case. When intervals were used, the user got confused more quickly, and sometimes the feedback did not come in time to prevent a collision. We have therefore decided to give continuous feedback to the user, to prevent this confusion and delay.

''Is sound or vibration clearer as a signal for the user?''

Sound and vibration are both usable for the blindfolded test persons. It is more important that the signals are given continuously and that the user receives information about the distance between him and the obstacles. Sound and vibration can both give the user clear information about his environment. However, it is not pleasant for other persons in an office room to hear the signals, and sound signals also make it more difficult to have a conversation with other persons in the room.

''How long do the signals have to last?''

The tests made clear that the signal should last as long as the sensor is measuring an object. If the signal stops while the object is still in sight, the person could become confused and walk in that direction while the object is still there. This is why the signal has to last until the object is no longer measurable.

''Which place is preferable for receiving signals: hips (belt) or waist?''

For our test persons it did not matter where the signals were given. At both places the signals were easy to feel and interpret.

=== '''Belt''' ===

The design will be a belt which is worn around the hips, like a normal belt. The devices (sensors, motors, PCB) will be placed at the end of the belt (the PCB can be put in a pocket of the jeans together with the power supply), so the first part of the belt can be worn normally through the loops of the jeans. After calculating which parts of the environment the sensors can and cannot see, we decided to add a fourth sensor at the front. In this way all 3 positions scan for objects like tables and chairs, and the front position also scans a lower area in which bags may be located, to prevent the user from tripping over them. The 3 sensor positions are relatively close to each other, as the detection of clothes is not desirable. The sensors will be placed on the belt: 3 sensors at an angle of 22.5 degrees with respect to the belt and 1 sensor at an angle of 52.5 degrees relative to the belt. The vibration motors will be placed inside the belt, which makes the signal easier for the user to feel and improves the aesthetics. The device will have an on/off button, which will be placed with the PCB.

==='''Can a jacket be worn while using the wearable?'''===

A jacket can be worn while using the belt. The sensors are spaced far enough apart to provide enough width in the viewing area, yet close enough to fit between the two sides of an open jacket. This way the jacket will not be picked up as an input by the sensors.

==='''How does the wearable adjust to other body types?'''===

Not all people have the same body, which is why the belt should be adjustable to different body types. We have determined that for a slim person it does not really matter whether the belt is on the hips or on the belly, so the location only depends on the preference of the person wearing the belt. A heavier person, however, could have a problem with the positions of the sensors: because the belly is bigger, the sensors might point at a larger angle than required. This can be solved by wearing the belt on the hips, as for most people the hips do not cause a big difference in angle. If this still causes a problem, the belt can be moved along the belly until it reaches a good location, although the suitable area is not very large because the curvature of the belly has a big impact on the angles.

== '''The product''' ==

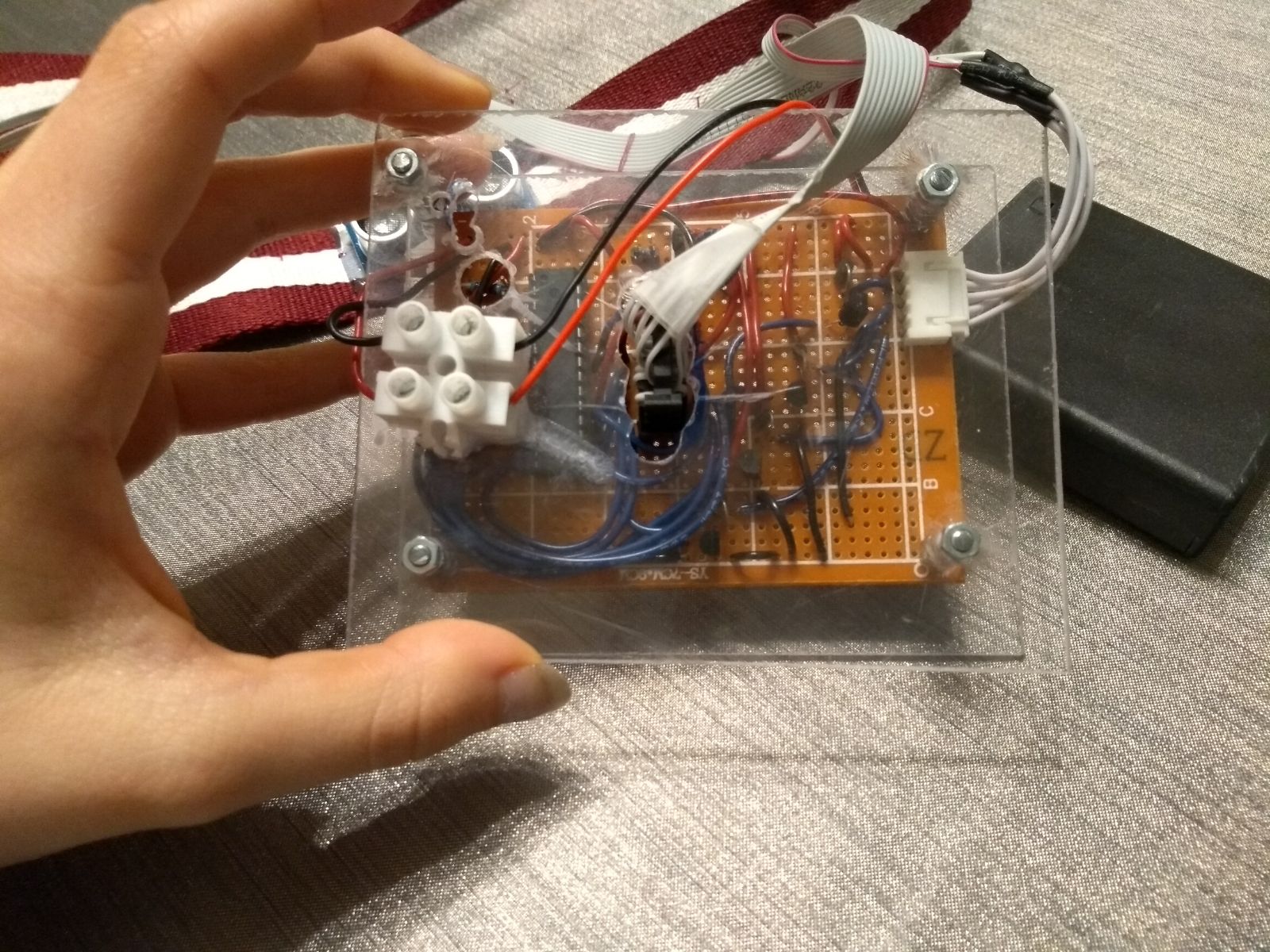

=== '''Electronic design''' ===

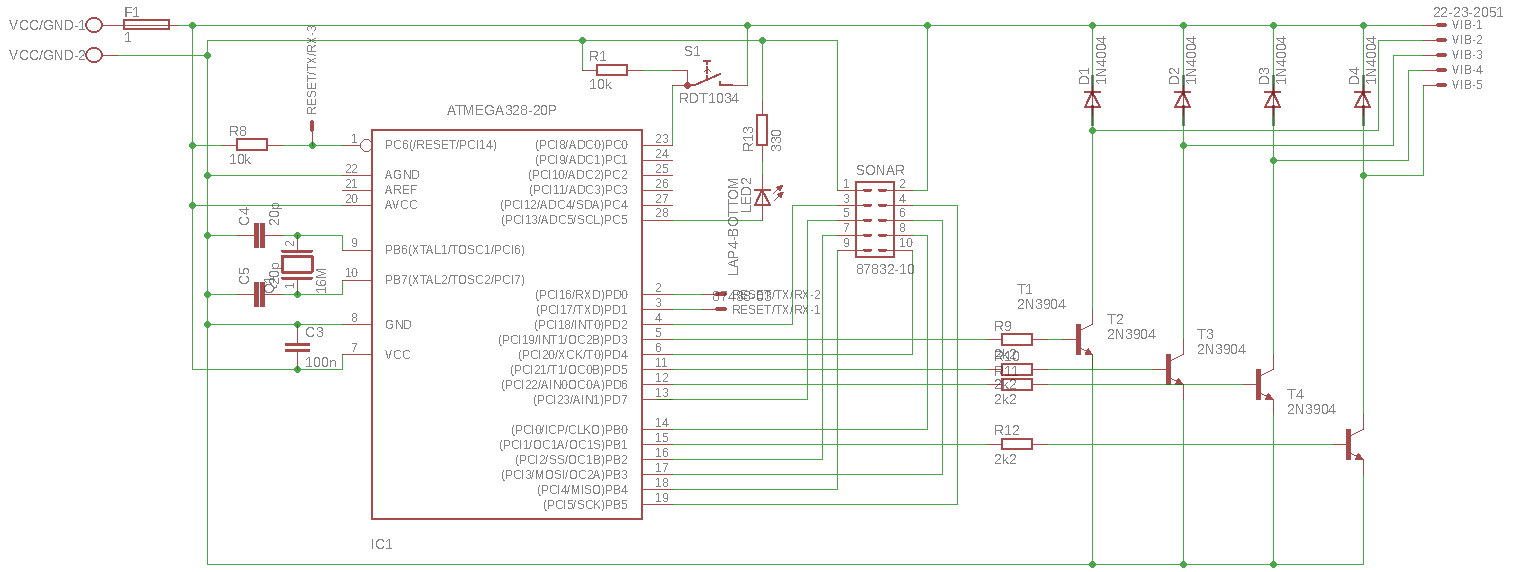

[[File:Circuit.png|thumb|upright=5|right|alt=Alt text|Figure 2: Circuit of the wearable, note that the diodes used are of type 1N4007, not 1N4004]]

==== '''Microcontroller''' ====

The system uses an ATmega328p-pu<ref>8-bit AVR Microcontrollers, ATmega328/P, Datasheet Summary http://ww1.microchip.com/downloads/en/DeviceDoc/Atmel-42735-8-bit-AVR-Microcontroller-ATmega328-328P_Summary.pdf</ref> microcontroller. Because this microcontroller is used in some Arduino boards, the Arduino ecosystem is useful for this project. To make the ATmega faster we attached a 16 MHz crystal to pins 9 and 10. Each terminal of this crystal should be connected to ground through a 20 pF capacitor. Pin 22 (AGND) is the analog ground pin; all analog measurements are relative to this ground. Pin 20 (AVCC) is the analog supply, which is the source for all analog signals inside the ATmega. We connected AGND and AVCC to GND and VCC respectively. The terms GND and VCC are used to represent the ground and the source. The VCC pin (pin 7) and the GND pin (pin 8) of the ATmega are connected to VCC and GND respectively. Some components in the system might cause a spike in the current. This spike might result in the ATmega not getting any current, in which case the ATmega powers down and restarts after the spike. This is a highly undesirable situation, as it can seriously interfere with the operation of the software. To solve the problem we placed a 100 nF capacitor between the GND and VCC pins of the ATmega. When such a spike happens, the capacitor acts as a temporary source to keep the ATmega alive. The ATmega is configured to operate at a potential of 5 V.

An important part of the process of programming the ATmega is uploading the software onto it. This is done using three pins. Pins 2 and 3 (RX and TX) provide a serial interface; in combination with pin 1 (RESET) we can communicate with the ATmega using a programmer. In our project we used an Arduino Uno without its microcontroller as the programmer. As soon as the RESET pin has a potential equal to GND the ATmega resets itself. To prevent unintended resets, a pull-up resistor is required to keep the potential at VCC. Only when a reset is needed does one short RESET to GND in order to trigger it.

==== '''Peak energy consumption'''====

{| class="wikitable" | style="vertical-align:middle;" | border="2" style="border-collapse:collapse" ;
! align="center"; style="width: 40%" | '''Part'''
! align="center"; style="width: 10%" | '''Multiplier'''
! align="center"; style="width: 10%" | '''Usage (mA)'''
! align="center"; style="width: 15%" | '''Resulting usage (mA)'''
|-
|Vibration motor || 4 || 100 || 400
|-
|Ultrasonic sensor || 4 || 15 || 60
|-
|ATmega328p || 1 || 1 || 1
|-
|LED || 1 || 40 || 40
|-
|Button || 1 || 0.5 || 0.5
|- style="border-top: 1.5pt solid black;"
|Subtotal || || || 501.5
|-
|Risk || || || 20%
|-style="border-top: 2pt solid black;"
|Total || || || 601.8
|}
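The totals in the table can be reproduced with a few lines of arithmetic. The C++ sketch below is only an illustration: the struct and function names are hypothetical, and the numbers are copied from the table, with the 20% risk row applied as a multiplier on the subtotal.

```cpp
#include <cassert>

// One row of the peak-consumption table (names are illustrative only).
struct Load {
    const char* part;
    int count;             // "Multiplier" column
    double milliampsEach;  // "Usage (mA)" column
};

// Values copied from the table.
constexpr Load kLoads[] = {
    {"Vibration motor", 4, 100.0},
    {"Ultrasonic sensor", 4, 15.0},
    {"ATmega328p", 1, 1.0},
    {"LED", 1, 40.0},
    {"Button", 1, 0.5},
};

// Sum of count * usage over all rows, in mA.
double subtotalMilliamps() {
    double sum = 0.0;
    for (const Load& load : kLoads) {
        sum += load.count * load.milliampsEach;
    }
    return sum;
}

// Subtotal increased by a fractional safety margin (0.20 for the 20% risk row).
double totalMilliamps(double riskFraction) {
    assert(riskFraction >= 0.0);
    return subtotalMilliamps() * (1.0 + riskFraction);
}
```

With a risk fraction of 0.20 this gives the 601.8 mA total of the table, which stays below the 1 A fuse rating.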

==== '''Power supply''' ====

As calculated in our [[#Peak energy consumption|peak energy consumption]] section, the current does not exceed 1 A. To enhance the safety of the device we therefore added a 1 A fuse directly after the power source. When something in the circuit shorts, the fuse blocks all current as soon as it exceeds 1 A. This fuse saved the board multiple times during development. As the HC-SR04 ultrasonic sensors operate at 5 V, we used 4 rechargeable AA batteries in series. Each rechargeable AA battery keeps its voltage constant at about 1.2 V, so using four of them we achieve a constant 4.8 V power supply capable of driving our system.

==== '''HC-SR04 Sonar''' ====

The HC-SR04 sonar has a 4-pin interface. The VCC and GND pins simply need to be connected to the source and ground. Because some components cause current spikes, the ultrasonic sensor needs to be protected too. This is done by adding a 100 μF electrolytic capacitor across VCC and GND of the sensor. Adding this capacitor improved the stability of the measurements. The TRIG pin is used to trigger a measurement. The ATmega needs to send a high signal of at least 10 μs in order to trigger a measurement. The ultrasonic sensor then generates 8 pulses at 40 kHz. When the pulses return, the sensor holds its ECHO output high for the same amount of time it took the pulses to get back to the sensor. The ATmega can calculate the measured distance from the velocity of sound and this time. If the pulse does not return, the measured time will be 0. Note that it is not possible to measure a distance of 0, because then the pulse is blocked instantly and is not able to actually reach the microphone. The ECHO and TRIG pins can be connected to digital pins of the ATmega without further components.
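The conversion from echo time to distance can be sketched as follows. This is a minimal illustration and not the project's firmware: the function names are hypothetical, and the speed of sound of 343 m/s (0.0343 cm/μs, roughly 20 °C air) is an assumption. The echo time covers the trip to the obstacle and back, so it is halved.

```cpp
#include <cassert>

// Assumed speed of sound in air at about 20 degrees C, in cm per microsecond.
constexpr double kSoundCmPerUs = 0.0343;

// Converts the width of the ECHO pulse (microseconds) into a distance in cm.
// The pulse width spans the round trip, so the result is halved.
// An echo time of 0 (no pulse returned) yields 0.
double echoToDistanceCm(unsigned long echoUs) {
    return echoUs * kSoundCmPerUs / 2.0;
}

// Longest echo time (microseconds) that can belong to an obstacle within
// maxDistanceCm; waiting longer than this is pointless.
unsigned long maxEchoUs(double maxDistanceCm) {
    assert(maxDistanceCm > 0.0);
    return static_cast<unsigned long>(2.0 * maxDistanceCm / kSoundCmPerUs);
}
```

For example, an echo of 1000 μs corresponds to about 17 cm, and a 180 cm limit corresponds to a waiting time of roughly 10.5 ms per measurement.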

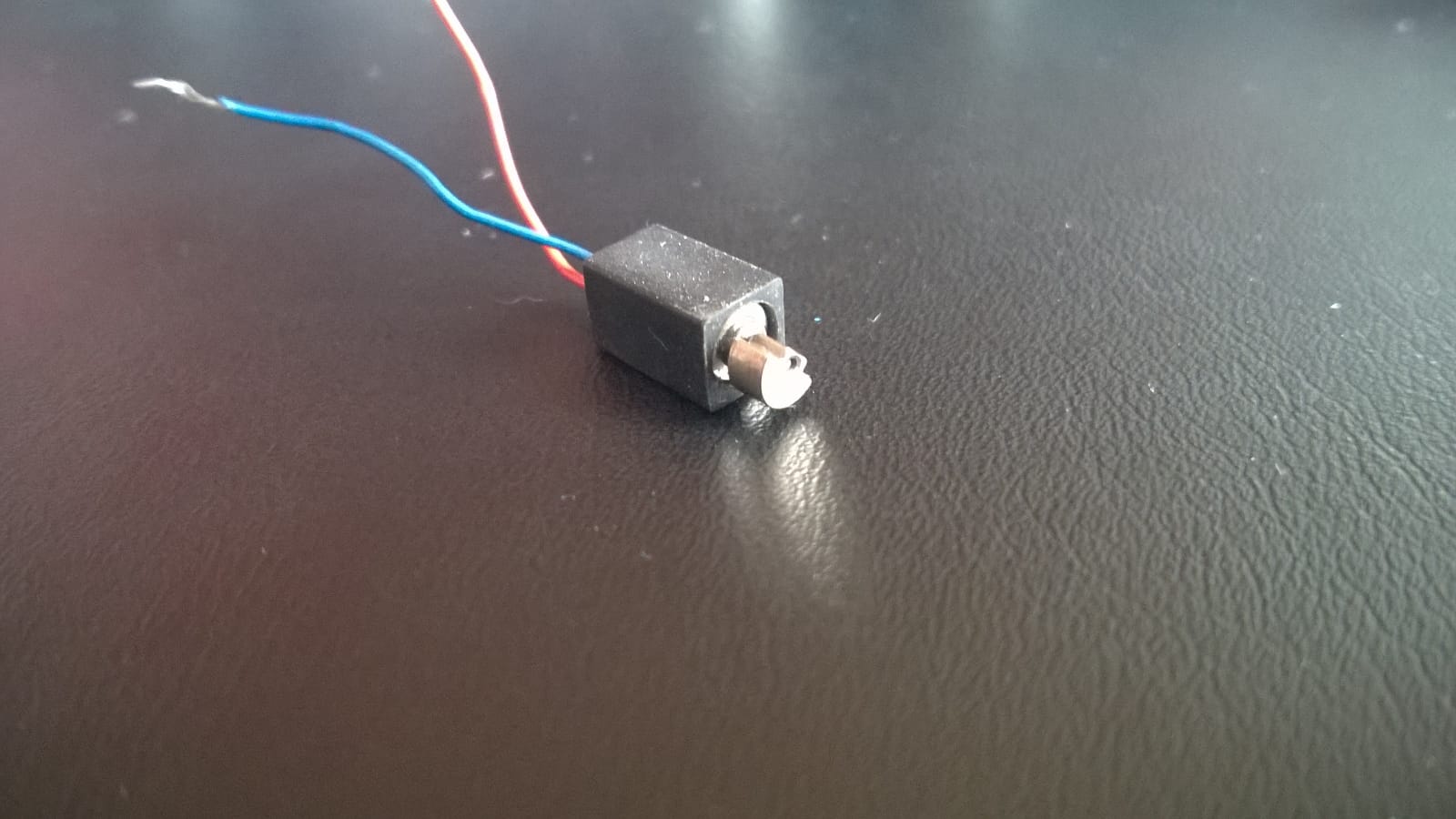

==== '''Vibration motor''' ====

The vibration motors operate at a voltage between 3 and 6 V. Tests using a 5 V power supply resulted in a current on the order of 100 mA. This current is too high for the ATmega to drive. A possible solution that avoids buying separate amplifier circuits is to use a transistor. We chose the 2N3904 NPN transistor<ref>2N3904/ MMBT3904/ PZT3904, NPN General-Purpose Amplifier. https://www.fairchildsemi.com/datasheets/2N/2N3904.pdf</ref>. This transistor is biased in saturation mode by a 2.2 kΩ base resistor, which means that we can use it as a switch. Measurements showed a base current of 1.6 mA, a collector current of 94 mA and a voltage drop across the motor of 3.6 V. Tests showed that the motor operated normally in this configuration.

As soon as a motor is no longer driven it will not immediately stop rotating; instead it directly starts generating power. The motor converts its kinetic energy into electrical energy, and the resulting current can destroy the other components in the circuit. To prevent this, a so-called freewheeling diode is added in parallel with the motor, blocking current from VCC to the collector of the transistor. This diode allows the current generated by the motor to flow directly back into the motor to drive it again. The result is that the motor stops without destroying other components. The 1N4007 diode<ref>1N4001 - 1N4007, 1.0A Rectifier. https://www.diodes.com/assets/Datasheets/ds28002.pdf</ref> is used for this purpose.

==== '''Alive LED''' ====

Additionally an 'alive' LED is added. This LED is used to inform users and developers that the device is up and running. LEDs typically operate at a current of about 20 mA. If an LED is connected directly from VCC to GND, the current will be too high for the LED. To prevent this, a 330 Ω resistor is placed in series with the LED so that the current is limited.

==== '''Switch''' ====

The circuit contains a switch which is connected to the ATmega. The purpose of this switch is to stop the device from operating without cutting its power. One side of the switch is directly connected to an analog pin of the ATmega; the other side is connected to VCC. A 10 kΩ pull-down resistor pulls the potential at the analog pin down to GND while the switch is open. When the switch closes, the potential is raised to VCC.

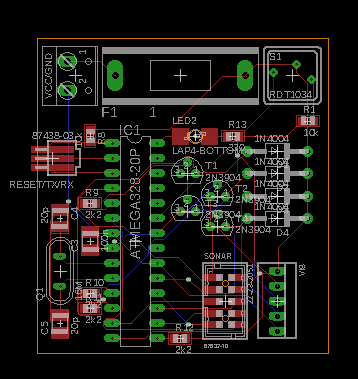

==== '''PCB''' ====

[[File:Board.png|thumb|upright=5|right|alt=Alt text|Figure 3:PCB layout]]

Because we used the Eagle design suite of Autodesk, we could easily export our schematic to a board design. This design can be exported to a format that is readable by machines which fabricate PCBs. The size would be around 5 by 5 cm, which is 60% smaller than our hand-soldered board, so a fabricated PCB would fit inside a pocket. However, we did not actually fabricate this PCB, and therefore any problems which might arise are unknown.

=== '''Software design''' ===

==== '''Bootloader and uploading software''' ====

Because the ATmega328p is used in the Arduino, we could use the Arduino IDE. This made the setup easier and gave us access to the Arduino libraries, whose additional functionality makes the ATmega328p easy to use. The ATmega328p came from our supplier with the Arduino Uno bootloader already flashed onto it. Therefore, by simply connecting VCC, GND, RESET, TX and RX to an Arduino board, we were able to use the ATmega as if it were an Arduino.

==== '''The main loop''' ====

Operation of the device is modelled in two parts: initialization and loop. Inside the loop we execute the tasks that need to be repeated very quickly, so no long-running operations can be included here. A few parts of the system need to be handled inside this loop.

===== '''Sonar and averaging filter''' =====

The ATmega needs to control the ultrasonic sensor. It is important to configure a maximum distance to measure, because that also limits the maximum waiting time for the pulse to return. If the pulse does not return within the time that a `max distance` pulse would take, the software returns the distance as a 0 value. Because the ultrasonic sensor is not stable, we added an averaging filter, which is configurable in code. It keeps a buffer of the last measurements and calculates the average of all of them. This way the value used in the control logic is more stable. The 0 values need to be handled differently, however, because they would wrongly pull the average down. These 0 values are therefore not included in the averaging. Instead, a threshold is added such that if more than y measurements in the buffer were 0 (and thus beyond the max distance), the value returned to the control logic is 0.
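The filter described above can be sketched roughly as follows. This is an illustration under assumptions, not the project's actual code: the class name `SonarFilter`, the buffer size `N` and the parameter `maxZeros` (the threshold y from the text) are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Averaging filter over the last N readings (in cm) that treats 0 as
// "nothing measured". Names and layout are illustrative only.
template <std::size_t N>
class SonarFilter {
public:
    // maxZeros is the threshold y: with more than maxZeros zero readings
    // in the buffer, the filter reports 0 (nothing in range).
    explicit SonarFilter(std::size_t maxZeros) : maxZeros_(maxZeros) {
        assert(maxZeros <= N);
    }

    // Stores a new reading, overwriting the oldest one once the buffer is full.
    void add(unsigned distanceCm) {
        buf_[next_] = distanceCm;
        next_ = (next_ + 1) % N;
        if (count_ < N) ++count_;
    }

    // Average of the non-zero readings, or 0 if too many readings were 0.
    unsigned value() const {
        unsigned long sum = 0;
        std::size_t zeros = 0;
        for (std::size_t i = 0; i < count_; ++i) {
            if (buf_[i] == 0) ++zeros; else sum += buf_[i];
        }
        const std::size_t nonZero = count_ - zeros;
        if (zeros > maxZeros_ || nonZero == 0) return 0;
        return static_cast<unsigned>(sum / nonZero);
    }

private:
    std::array<unsigned, N> buf_{};
    std::size_t next_ = 0;
    std::size_t count_ = 0;
    std::size_t maxZeros_;
};
```

A single missed echo then leaves the reported distance unchanged, while a run of missed echoes makes the filter report that nothing is in range.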

===== '''Vibration motor''' =====

In order to change the intensity of the vibration motors, Pulse Width Modulation (PWM for short) is used. The ATmega already contains PWM modules, so the software only needs to write a value to the correct register. This 'analog' value is then emulated using PWM.

The software calculates the required intensity from the distance measured by the sonar. The intensity is a linear step-wise function of the distance. This function can be described as a line between a minimal distance and a maximal distance, which is then split up into a number of steps that is configurable in code. Within a step the intensity is constant and equal to the value of the line at the middle of that step. We illustrate this with a small example. Consider a minimal distance of 0 cm and a maximal distance of 10 cm, with 10 steps. Then we get a function which maps all distances in <math>[0,1)</math> to the value <math>0</math>, all distances in <math>[1,2)</math> to <math>1</math>, and so on.
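One possible reading of this step-wise mapping is sketched below. It is a hedged illustration rather than the project's implementation: the names `IntensityConfig`, `stepIndex` and `intensityFor` are hypothetical, the 8-bit PWM range of 0 to 255 is an assumption, and the line is oriented so that closer obstacles give a stronger vibration, as described in the feedback system section.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical configuration; all names are illustrative.
struct IntensityConfig {
    float minDistanceCm;
    float maxDistanceCm;
    int steps;            // number of equally wide distance steps
    std::uint8_t maxPwm;  // PWM value for the strongest vibration (assumed 8-bit)
};

// Index of the step that contains distanceCm, clamped to [0, steps - 1].
int stepIndex(float distanceCm, const IntensityConfig& c) {
    assert(c.steps > 0 && c.maxDistanceCm > c.minDistanceCm);
    const float stepWidth = (c.maxDistanceCm - c.minDistanceCm) / c.steps;
    int idx = static_cast<int>((distanceCm - c.minDistanceCm) / stepWidth);
    if (idx < 0) idx = 0;
    if (idx >= c.steps) idx = c.steps - 1;
    return idx;
}

// PWM value for a measured distance. A distance of 0 (no echo) or beyond
// the maximum gives 0; otherwise the line is evaluated at the middle of
// the step, with nearer steps giving higher values.
std::uint8_t intensityFor(float distanceCm, const IntensityConfig& c) {
    if (distanceCm <= 0.0f || distanceCm >= c.maxDistanceCm) return 0;
    const int idx = stepIndex(distanceCm, c);
    const float fraction = 1.0f - (idx + 0.5f) / c.steps;
    return static_cast<std::uint8_t>(fraction * c.maxPwm + 0.5f);
}
```

With 6 steps between 0 and 150 cm, every obstacle within the same 25 cm band produces the same vibration level, so the user feels 6 clearly separated levels instead of a continuously changing one.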

===== '''The on/off button''' =====

The button used is a push button, so to actually get toggle behaviour we needed to implement a state machine with 4 states: off, off and pressed, on, on and pressed. When the button is pressed, the software goes into the 'off and pressed' state if the previous state was 'off', and into the 'on and pressed' state if the previous state was 'on'. While the button remains pressed, the software stays in that 'and pressed' state until a certain amount of time has passed. If the button is released within this time the state goes back to the previous state, otherwise it goes to the new state. This basically means that in order to switch from 'on' to 'off' and vice versa, the button needs to be held down for at least some configurable amount of time.
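The four-state machine can be sketched as below. This is one interpretation of the description and not the project's code: the names `ButtonState` and `ToggleButton` and the parameter `holdMs` are hypothetical, and the sketch makes the assumption that the decision between the previous and the new state is taken at the moment the button is released.

```cpp
#include <cassert>

enum class ButtonState { Off, OffPressed, On, OnPressed };

// Toggle logic for a momentary push button (illustrative names).
class ToggleButton {
public:
    // holdMs: how long the button must be held down to toggle.
    explicit ToggleButton(unsigned long holdMs) : holdMs_(holdMs) {}

    // Call once per loop pass with the raw button level and the time in ms.
    void update(bool pressed, unsigned long nowMs) {
        switch (state_) {
            case ButtonState::Off:
            case ButtonState::On:
                if (pressed) {
                    pressedAtMs_ = nowMs;
                    state_ = (state_ == ButtonState::Off)
                                 ? ButtonState::OffPressed
                                 : ButtonState::OnPressed;
                }
                break;
            case ButtonState::OffPressed:
            case ButtonState::OnPressed:
                if (!pressed) {
                    const bool wasOn = (state_ == ButtonState::OnPressed);
                    // Unsigned subtraction stays correct if the ms counter wraps.
                    const bool heldLongEnough = (nowMs - pressedAtMs_) >= holdMs_;
                    const bool nowOn = heldLongEnough ? !wasOn : wasOn;
                    state_ = nowOn ? ButtonState::On : ButtonState::Off;
                }
                break;
        }
    }

    ButtonState state() const { return state_; }

    // The device counts as on in both 'on' and 'on and pressed'.
    bool isOn() const {
        return state_ == ButtonState::On || state_ == ButtonState::OnPressed;
    }

private:
    ButtonState state_ = ButtonState::Off;
    unsigned long pressedAtMs_ = 0;
    unsigned long holdMs_;
};
```

Deciding on release means that holding the button for a long time toggles the device only once; short accidental presses leave the state unchanged.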

===== '''The LED''' =====

The control for the LED is quite simple. We produce a HIGH signal for 1 second, followed by a LOW signal for 1 second, and repeat this.
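Because the main loop must not contain long waits, the blinking can be derived from the elapsed time instead of from a delay. The sketch below is an assumption about how this could be done, not the project's code; the function name is hypothetical. In an Arduino sketch the time would typically come from `millis()` and the result would be written to the LED pin with `digitalWrite`.

```cpp
#include <cassert>

// Half of the blink period: 1 second HIGH, then 1 second LOW.
constexpr unsigned long kHalfPeriodMs = 1000;

// Returns true when the alive LED should be HIGH at time nowMs.
bool aliveLedLevel(unsigned long nowMs) {
    return (nowMs / kHalfPeriodMs) % 2 == 0;
}
```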

=== '''Bill of materials for this project''' ===

{| class="wikitable" | border="2" style="border-collapse:collapse; text-align: center; width: 80%"
! style="width: 26%" | '''Part'''
! '''Price'''
! '''Amount'''
! '''Total costs'''
! style="width: 16%" | '''Store'''
! style="width: 8%" | '''Paid By'''
|-
| [https://www.tinytronics.nl/shop/nl/robotica/motoren/kleine-tril-vibratie-dc-motor-3-6v Vibration Motors] || € 1.00 || 5 || € 5.00 || Tinytronics || Stefan
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/sensoren/afstand/ultrasonische-sensor-hc-sr04 Distance Sensor] || € 3.00 || 5 || € 15.00 || Tinytronics || Stefan
|-
| [https://www.tinytronics.nl/shop/nl/componenten/crystal/16mhz-crystal-hc49?search=16MHz 16MHz Crystal] || € 0.50 || 2 || € 1.00 || Tinytronics || Eline
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/microcontrollers-chips/atmel-atmega328p-met-uno-bootloader-incl.-crystal-kit?search=atmel Atmel ATmega328P] || € 6.00 || 2 || € 12.00 || Tinytronics || Stefan
|-
| [https://www.tinytronics.nl/shop/nl/componenten/schakelaars/breadboard-tactile-pushbutton-switch-momentary-2pin-6*6*5mm?search=button Button] || € 0.15 || 1 || € 0.15 || Tinytronics || Stefan
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/zekeringen/zelfherstellende-zekering-pptc-polyfuse-1000ma Fuse 1A] || € 0.50 || 1 || € 0.50 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/10-pins-header-connector-2x5p?search=header Header Connector] || € 0.30 || 1 || € 0.30 || Tinytronics || Eline
|- style="background-color: #d1d1d1;"
| Tube round silver || € 3.79 || 1 || € 3.79 || Gamma || Tom
|-
| [https://www.tinytronics.nl/shop/nl/componenten/weerstanden/10k%CF%89-weerstand-(standaard-pull-up-of-pull-down-weerstand) 10kΩ-resistor] || € 0.05 || 5 || € 0.25 || Tinytronics || Tom
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/weerstanden/330%CF%89-weerstand-(led-voorschakelweerstand) 330Ω-resistor] || € 0.05 || 1 || € 0.05 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/printplaten/experimenteer-printplaat-7cm*9cm PCB] || € 0.60 || 1 || € 0.60 || Tinytronics || Stefan
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/microcontrollers-chips/28-pins-ic-voet 28 pins socket] || € 0.50 || 1 || € 0.50 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/solderen/desoldeerlint-1mm-1.5m Desoldering braid] || € 1.50 || 1 || € 1.50 || Tinytronics || Tom
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/prototyping/krimpkousen/krimpkous-2:1-%C3%B8-2mm-diameter-50cm Heatshrink 2mm] || € 0.55 || 1 || € 0.55 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/krimpkousen/krimpkous-2:1-%C3%B8-4mm-diameter-50cm Heatshrink 4mm] || € 0.65 || 1 || € 0.65 || Tinytronics || Tom
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/kabels/prototype-draden/alpha-wire-draad-enkeladerig-solide-%C3%B81.5mm-0.33mm2-blauw-1m Alpha wire] || € 1.00 || 3 || € 3.00 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/componenten/diode/diode-1n4007 Diode] || € 0.10 || 4 || € 0.40 || Tinytronics || Tom
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/batterij-en-accu/batterijhouders/4x-aa-batterijdoosje-met-losse-draden-en-schakelaar Battery box] || € 2.00 || 1 || € 2.00 || Tinytronics || Tom
|-
| [https://www.tinytronics.nl/shop/nl/componenten/transistor-fet/npn-transistor-2n3904 NPN Transistor 2N3904] || € 0.15 || 4 || € 0.60 || Tinytronics || Tom
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/condensatoren/100nf-50v-ceramische-condensator 100nF capacitor] || € 0.10 || 1 || € 0.10 || Tinytronics || Tom
|-
| Delivery Costs || € 2.50 || 3 || € 7.50 || Tinytronics || Stefan
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/2-pin-schroef-terminal-block-connector-2.54mm-afstand?search=terminal Screw clamp] || € 0.30 || 1 || € 0.30 || Tinytronics || Eline
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/female-header-flatcable-connector-10p-2x5p?search=header Flatcable Connector] || € 0.30 || 1 || € 0.30 || Tinytronics || Eline
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/kabels/flatcable/flatcable-grijs-0.5m?search=flatcable Flatcable] || € 1.00 || 2 || € 2.00 || Tinytronics || Eline
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/printplaten/experimenteer-printplaat-7cm*9cm?search=PCB Experimental PCB] || € 1.00 || 1 || € 1.00 || Tinytronics || Tom
|-style="background-color: #d1d1d1;"
| Delivery Costs || € 2.50 || 1 || € 2.50 || Tinytronics || Tom
|-
| 1.5 mm drill || € 2.49 || 1 || € 2.49 || Gamma || Stefan
|-style="background-color: #d1d1d1;"
| Belt || € 4.00 || 1 || € 4.00 || Primark || Eline
|-
| colspan="3"| '''Total costs''' || € 68.03 || ||
|}

=== '''Bill of materials of product only''' ===

This bill lists the prices, excluding VAT, of all components inside the product. We chose a batch size of 100 to limit the costs.

{| class="wikitable" | border="2" style="border-collapse:collapse; text-align: center; width: 80%"
! style="width: 26%" | '''Part'''
! style="width: 8%" | '''Min batch size'''
! style="width: 8%" | '''Price'''
! style="width: 8%" | '''Amount'''
! style="width: 8%" | '''Total costs'''
! style="width: 16%" | '''Store'''
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/robotica/motoren/kleine-tril-vibratie-dc-motor-3-6v Vibration Motors] || 1 || € 0.83 || 4 || € 3.32 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/sensoren/afstand/ultrasonische-sensor-hc-sr04 Distance Sensor] || 10 || € 2.07 || 4 || € 8.28 || Tinytronics
|-
| [https://be.eurocircuits.com/shop/orders/configurator.aspx?loadfrom=web&service=pcbproto&lang=en&quantity=5&dimX=50&dimY=50&layers=2&deliveryTerm=25&deliverycountry=NL&invcountry=NL&country=NL PCB] || 100 || € 2.12 || 1 || € 2.12 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/microcontrollers-chips/atmel-atmega328p-met-uno-bootloader-incl.-crystal-kit?search=atmel Atmel ATmega328P] || 1 || € 4.96 || 1 || € 4.96 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/componenten/schakelaars/breadboard-tactile-pushbutton-switch-momentary-2pin-6*6*5mm?search=button Pushbutton] || 1 || € 0.12 || 1 || € 0.12 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/zekeringen/zelfherstellende-zekering-pptc-polyfuse-1000ma Fuse 1A] || 1 || € 0.41 || 1 || € 0.41 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/10-pins-header-connector-2x5p?search=header 10 pins Header Connector] || 50 || € 0.2158 || 1 || € 0.2158 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/3-pins-header-female?search=header 3 Pins Female Header] || 1 || € 0.10 || 1 || € 0.10 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/componenten/weerstanden/10k%CF%89-weerstand-(standaard-pull-up-of-pull-down-weerstand) 10kΩ-resistor] || 50 || € 0.0249 || 2 || € 0.0498 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/weerstanden/330%CF%89-weerstand-(led-voorschakelweerstand) 330Ω-resistor] || 50 || € 0.0249 || 1 || € 0.0249 || Tinytronics
|-
| 2.2kΩ-resistor || 50 || € 0.0249 || 4 || € 0.0996 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/6-pins-header-connector-2x3p?search=header 6 Pins Header Connector] || 1 || € 0.17 || 1 || € 0.17 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/female-header-flatcable-connector-10p-2x5p?search=header 10 Pins Female Connector] || 1 || € 0.25 || 1 || € 0.25 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/female-header-flatcable-connector-6p-2x3p?search=header 6 Pins Female Connector] || 1 || € 0.17 || 1 || € 0.17 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/krimpkousen/krimpkous-2:1-%C3%B8-2mm-diameter-50cm Heatshrink] || 1 || € 0.45 || 0.5 || € 0.225 || Tinytronics
|- style="background-color: #d1d1d1;"
| Aluminium Tube || 1 || € 3.79 || 1 || € 3.79 || Gamma
|-
| [https://www.tinytronics.nl/shop/nl/componenten/diode/diode-1n4007 Diode] || 50 || € 0.0581 || 4 || € 0.2324 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/batterij-en-accu/batterijhouders/4x-aa-batterijdoosje-met-losse-draden-en-schakelaar Battery box] || 1 || € 1.65 || 1 || € 1.65 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/componenten/transistor-fet/npn-transistor-2n3904 NPN Transistor 2N3904] || 1 || € 0.12 || 4 || € 0.48 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/condensatoren/100nf-50v-ceramische-condensator?search=100nF 100nF ceramic capacitor] || 1 || € 0.08 || 1 || € 0.08 || Tinytronics
|-
| Red LED 5mm || 50 || € 0.0498 || 1 || € 0.0489 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/componenten/condensatoren/100uf-25v-elektrolytische-condensator?search=elektro 100μF electrolytic capacitor] || 1 || € 0.08 || 4 || € 0.32 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/prototyping/toebehoren/2-pin-schroef-terminal-block-connector-2.54mm-afstand?search=terminal 2 Pins Screw Clamp] || 1 || € 0.25 || 1 || € 0.25 || Tinytronics
|- style="background-color: #d1d1d1;"
| [https://www.tinytronics.nl/shop/nl/kabels/flatcable/flatcable-grijs-0.5m?search=flatcable Flatcable 10 wires] || 20 || € 0.415 || 3 || € 1.245 || Tinytronics
|-
| [https://www.tinytronics.nl/shop/nl/batterij-en-accu/aa/eneloop-oplaadbare-batterij-4x-aa-1900mah Rechargeable AA battery] || 1 || € 7.85 || 1 || € 7.85 || Tinytronics
|-style="background-color: #d1d1d1;"
| Belt || 1 || € 3.32 || 1 || € 3.32 || Primark
|-
| || 100 || colspan="2"| '''Costs per wearable''' || € 39.78 ||
|}

'' | |||

=== '''Sensor range and interpretation of signals''' ===

The three upper sensors have a range of 180 cm, so that objects at a distance of 156 cm can be seen. To get this range, the sensors are mounted at an angle of 22.5 degrees relative to the belt. The last sensor, which is mounted at an angle of 52.5 degrees relative to the belt, has a range of 104 cm, so that objects at a distance of 52 cm can be seen.

If a sensor does not measure anything within its range, its input is zero and therefore its output is zero as well. This is the case for distances above 180 cm for the three upper sensors and above 104 cm for the last sensor. The sensors are capable of measuring objects up to a maximum distance of 4 meters, so a large part of their measuring interval is filtered out. The resulting output of the measurements signals the vibration motors to start vibrating at a certain speed.
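The cutoff behaviour described above can be sketched as follows. This is only an illustration and not the code running on the wearable: the function name <code>vibration_level</code>, the 0 to 255 output scale and the linear "closer means stronger" mapping are assumptions, as the text only states that the motors vibrate "at a certain speed". The two cutoff values are taken from the text.

```python
# Cutoff distances from the text (in cm).
UPPER_CUTOFF_CM = 180.0  # three upper sensors
LOWER_CUTOFF_CM = 104.0  # last (downward-facing) sensor

# Assumed output scale for the vibration motor (hypothetical, e.g. an 8-bit PWM value).
MAX_LEVEL = 255


def vibration_level(distance_cm, cutoff_cm, max_level=MAX_LEVEL):
    """Map a measured distance to a vibration level.

    A reading of zero (nothing measured) or a reading beyond the cutoff
    gives zero output. Within the cutoff, the level grows linearly as the
    object gets closer (this linear mapping is an assumption).
    """
    if distance_cm <= 0 or distance_cm > cutoff_cm:
        return 0
    closeness = 1.0 - distance_cm / cutoff_cm
    # Keep at least level 1 so an object exactly at the cutoff is still signalled.
    return max(1, round(closeness * max_level))
```

For example, <code>vibration_level(300, UPPER_CUTOFF_CM)</code> gives 0 because 300 cm lies outside the 180 cm range, even though the sensor itself can measure up to 4 meters.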

=== '''Measurement errors''' ===

A measurement error can cause the input to be zero while there is an object nearby. This can cause problems for the user of the device: the user is not notified of the nearby object and could walk into it. To deal with this problem, enough measurements are taken that an erroneous measurement is just one of many. The user still gets the signal that an object is near from the other measurements, so a single erroneous measurement is negligible. If many erroneous measurements occur directly after each other, for which the chance is very small, there could still be a problem for the user. These kinds of errors are worse than measurement errors in which the device gives output while there is no object. With the latter errors, the user acts because he or she thinks there is an object while there is none (a "ghost object"). If the device measures the real objects around this ghost object, the user can still walk around them properly. Moreover, a ghost object is detected in only one or a few measurements. The other measurements do not detect it, so at most it leads to confusion for the user.

Another measure against measurement errors is to take multiple measurements and use their mean. This can also filter out the measurement errors. Zero values could be left out of this mean.
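A minimal sketch of this averaging step is given below. The function name <code>filtered_distance</code> and the choice to return zero when every reading is zero are assumptions made for this illustration, not a description of the actual implementation.

```python
def filtered_distance(readings):
    """Return the mean of the non-zero readings (in cm).

    Zero readings are treated as "nothing measured" and are left out of
    the mean, so one missed detection does not pull the average down.
    If all readings are zero, zero is returned (assumed behaviour).
    """
    valid = [r for r in readings if r > 0]
    if not valid:
        return 0.0
    return sum(valid) / len(valid)
```

With the readings <code>[100, 0, 110, 90]</code>, the zero is ignored and the mean of the remaining three values is used.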

== '''Design for Usability Testing''' ==

'''Group A:'''

walk course 1 time with stick
* measure time
* write down how many obstacles have been hit
* fill in survey 1

walk course 3 times with stick
* measure time each trial
* write down how many obstacles have been hit each trial
* fill in survey 2

walk course 1 time with belt
* measure time
* write down how many obstacles have been hit
* fill in survey 1

walk course 3 times with belt
* measure time each trial
* write down how many obstacles have been hit each trial
* fill in survey 2

fill in survey 3

'''Group B:'''

walk course 1 time with belt
* measure time
* write down how many obstacles have been hit
* fill in survey 1

walk course 3 times with belt
* measure time each trial
* write down how many obstacles have been hit each trial
* fill in survey 2

walk course 1 time with stick
* measure time
* write down how many obstacles have been hit
* fill in survey 1

walk course 3 times with stick
* measure time each trial
* write down how many obstacles have been hit each trial
* fill in survey 2

'''Survey 1:'''
* How do you experience the use of the stick/belt to help you navigate?
* Do you feel restrictions while using the stick/belt, disregarding the absence of sight?
* Do you have points of improvement for the stick/belt?

'''Survey 2:''' (Fill in if you changed your mind)
* How do you experience the use of the stick/belt to help you navigate?
* Do you feel restrictions while using the stick/belt, disregarding the absence of sight?
* In what aspects did the stick/belt fall short?
* Do you have points of improvement for the stick/belt?
* Do you experience differences now that you have used the stick/belt more often?

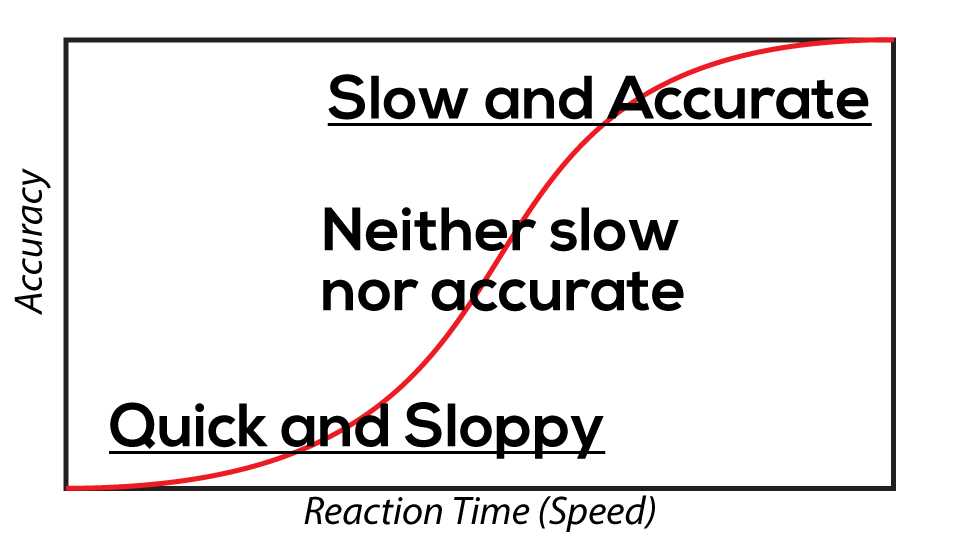

[[File:Speed-accuracy_trade-off.png|thumb|upright=2|right|alt=Alt text|Figure 4: Graph of the speed accuracy trade-off.]] | |||

'''Survey 3:'''
* What are the advantages of the belt in comparison with the stick?
* What are the disadvantages of the belt in comparison with the stick?

The design of this usability test is a within-subject design, as every participant conducts the experiment in both conditions. The independent variable is the tool, which is either the stick or the belt. The dependent variables are the time the subjects take to complete the course and the number of obstacles they hit. Both are measured because both indicate how successful the tool is. Measuring only one is not enough because of the speed-accuracy trade-off <ref>Wickelgren, W. A. (1977). Speed-accuracy tradeoff and information processing dynamics. Acta Psychologica, 41(1), 67-85. https://doi.org/10.1016/0001-6918(77)90012-9 </ref>: as someone becomes faster, he or she becomes less accurate, and the other way around. Two different groups have been chosen to control for a possible learning effect. This means that navigating a course without sight becomes easier the more often one has done it, regardless of the tool used, even though the course is randomized every trial. Because the number of participants is very low, the results of statistical analysis will not be very reliable, so only exploratory (t-)tests will be done.
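Because every participant walks the course with both tools, an exploratory t-test on the completion times would typically be a paired t-test on the per-participant differences. The sketch below shows how the t statistic could be computed with the Python standard library. The function name <code>paired_t</code> and its inputs are hypothetical, and it is an assumption that the paired variant is the test intended here.

```python
import math
import statistics


def paired_t(stick_times, belt_times):
    """Return (t statistic, degrees of freedom) for paired samples.

    stick_times[i] and belt_times[i] must belong to the same participant.
    The statistic is mean(d) / (stdev(d) / sqrt(n)) with d = belt - stick.
    """
    n = len(stick_times)
    if n != len(belt_times) or n < 2:
        raise ValueError("need at least two paired observations")
    diffs = [belt - stick for stick, belt in zip(stick_times, belt_times)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are equal; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1
```

The resulting t value has to be compared with a t distribution with n - 1 degrees of freedom. With only a handful of participants, this should be read as an indication only, in line with the exploratory nature of the test. The same function could be applied to the number of obstacles hit.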

== '''Final wearable''' == | |||

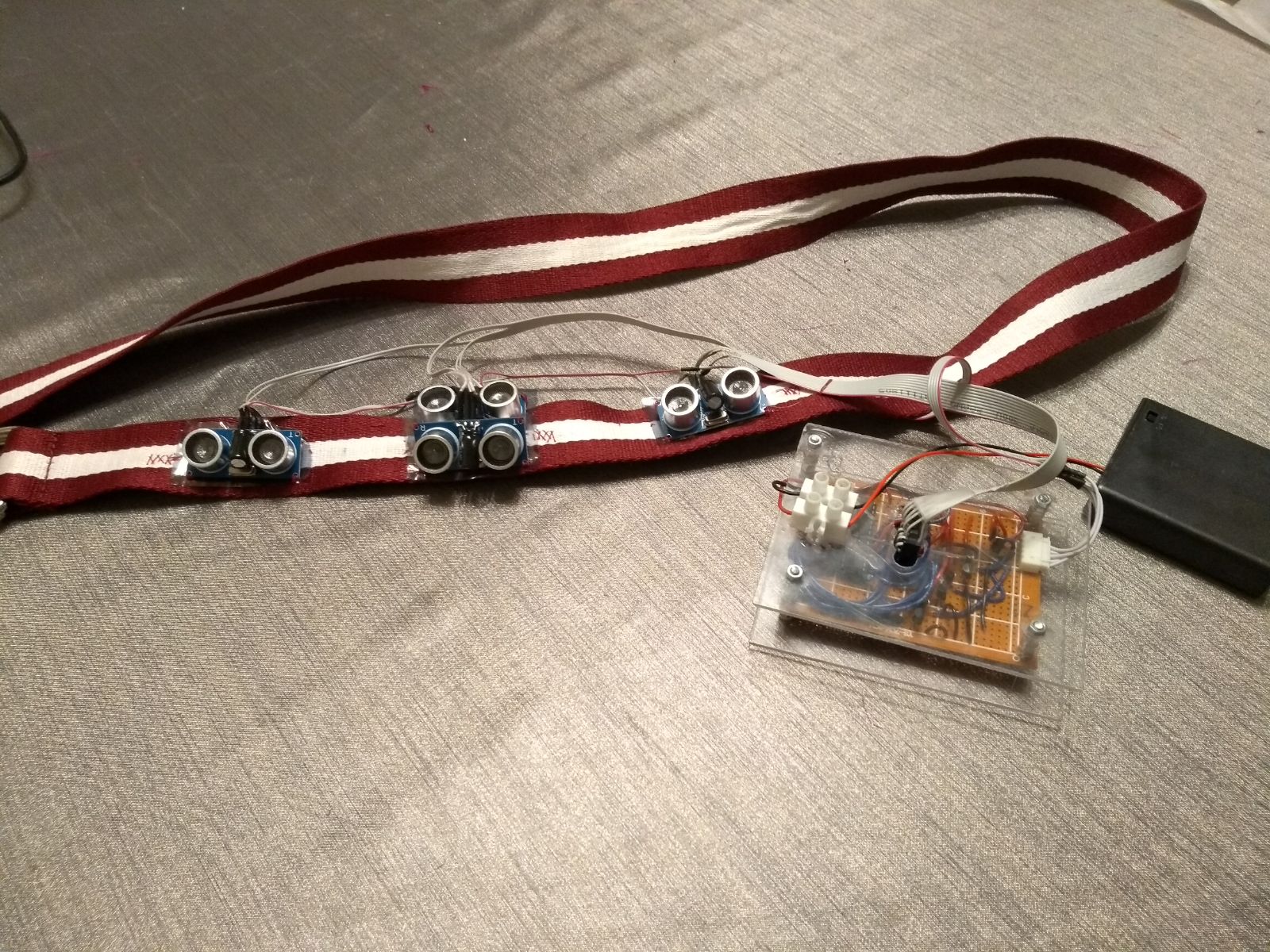

<gallery caption="Wearable" heights="220px" widths="303px" > | |||

File:Use1.jpeg|Total wearable | |||

File:Use2.jpeg|Distance sensor | |||

File:Use7.jpeg|Distance sensors in several angles | |||

File:Use3.jpeg|Electronic circuit | |||

File:Use8.jpeg|Vibration motor | |||

File:Use5.jpeg|Mounted vibration motor | |||

File:Use6.jpeg|User wearing the wearable | |||

</gallery> | |||

=== Conclusion ===

After the wearable was completed, a small test was done to investigate whether it works. For this, an environment resembling an office room was created: chairs and bags were placed in the rooms of De Zwarte Doos. Several blindfolded persons had to walk the course guided by the wearable.

It can be concluded that the wearable works. Several blindfolded persons walked from A to B without hitting any obstacles. However, there is plenty of room for improvement. The test persons walked very slowly. This can be caused by the measurement errors and by the user's trust in the technology, which still has to develop. Also, the range of the sensors was limited, especially the angle within which the sensors could measure. Because of this, obstacles could appear out of nowhere. It can also be concluded that the user has to get used to the wearable. In the first part of the test, test persons were confused when several signals were given at the same time. This happened when test persons were enclosed between chairs and the wall. In later parts of the test, test persons were less confused in comparable situations, and they could walk around without hitting the obstacles. The expectation is that, after improvement and more research, this wearable can be helpful for visually impaired persons walking in office rooms or other rooms containing chairs, tables and bags.

== '''Task division''' ==

{| class="wikitable" | style="vertical-align:middle;" | border="2" style="border-collapse:collapse" ;
! align="center"; style="width: 10%" | '''Week'''
! align="center"; style="width: 50%" | '''Task'''
! align="center"; style="width: 20%" | '''Person'''
|-
| rowspan="5"|1 || style="background-color: #d1d1d1;" | Literature study || Everyone
|-
| Make planning || Everyone
|-
| style="background-color: #d1d1d1;"| Determining roles || Everyone
|-
| Making requirements || Everyone
|-
| style="background-color: #d1d1d1;"| Writing problem statement || Evianne
|-
| rowspan="4"|2 || Determining a concrete problem || Everyone
|-
| style="background-color: #d1d1d1;"| Invent a solution to the concrete problem || Everyone
|-
| Evaluate if the solution is user friendly and can be introduced to blind people || Everyone
|-
| style="background-color: #d1d1d1;"| Update wiki || Evianne & Tom
|-
| rowspan="5"|3 || Update the overall planning || Evianne
|-
| style="background-color: #d1d1d1;"| Defining what problems can occur for blind people || Everyone
|-
| Thinking about the scenario || Everyone
|-
| style="background-color: #d1d1d1;"| Evaluate if the solutions are user friendly || Eline
|-
| Update wiki || Evianne & Tom & Eline
|-
| rowspan="8"|4 || style="background-color: #d1d1d1;"| Literature study about the new problem || Tom
|-
| Defining the requirements and specifications of the prototype to be built || Evianne
|-
| style="background-color: #d1d1d1;"| Think and write about design || Evianne
|-
| Update wiki || Evianne & Tom & Eline
|-
| style="background-color: #d1d1d1;"| Making a planning for each member || Tom & Evianne
|-
| Think of technologies that should be used || Stefan & Tom
|-
| style="background-color: #d1d1d1;"| Thinking about and writing the scenario || Tom & Evianne & Stefan & Bruno
|-
| Thinking and writing about the feedback system || Bruno