PRE2017 1 Groep3


Robotics is an unavoidable, rapidly evolving technology that could bring many improvements to the modern world as we know it today. The challenge, however, is to invest in the kind of robotics that makes the investment worthwhile, instead of in research that will never pay its investment back. This report investigates a robotics technology with the goal of solving the problem stated in this chapter. This chapter describes the chosen problem, the objective of our project and the approach by which the solution will take shape.

== Problem Definition & Approach ==

If you travel by train regularly, you might have noticed that boarding or exiting goes rather slowly for people in a wheelchair. Before they can get on or off the train, train staff first have to fetch a ramp to let them board or exit. This can even delay the train. As we know, trains in the Netherlands tend to run late sometimes, so every obstacle that gets in the way of the schedule should be dealt with. Wheelchair boarding is one of these obstacles, because it tends to cause delays. But the perspective of the disabled person is also important. For them, the feeling of constantly being dependent on others is the worst part of living with a disability. This dependence raises the threshold for them to travel by train. Disabled people who stop using the train lose part of their long-distance mobility. This might affect their social well-being (Oishi, 2010) and may contribute to loneliness or depression, as they are less able to sustain distant relationships (Steptoe et al., 2013). In a survey conducted by the SP, 154 disabled persons shared their complaints.
Laurens Ivens and Agnes Kant translated these complaints into thirteen recommendations. Among these, they state that the height difference between train and platform should be reduced or made easier to bridge, that there should be a travel tracking system for the disabled and, finally, that accessibility has to be increased (Ivens and Kant, 2004). This project will research improvements for wheelchair users travelling by train in the Netherlands.

This project will first determine the problems wheelchair-bound people face when travelling by train. Then, we look at the different stakeholders and possible solutions. After that, questionnaires will be held with stakeholders to determine their needs. Finally, a final design for our assistance robot will be made, and a prototype will demonstrate some of the working principles that need to be proven to give credibility to the final design.


Team motto:

'''Veni, vidi, wheelie!'''

=USE-analysis=

To get a better view of the design criteria the design should comply with, this section considers the USE aspects of the problem statement and the objective. Moreover, the topic is discussed from the perspective of several other stakeholders, such as society and train companies (i.e. the Nederlandse Spoorwegen, NS).

== Who are the users? ==


== What is the NS' perspective? ==

The NS is a very important stakeholder in this project, as they are the ones who will eventually need to pay for the research and manufacturing of the robot. Moreover, the NS staff are also the current users: in the current situation, they help disabled people board the train by means of a ramp. It is therefore very important to get a better view of what the operating NS staff think of our idea. For this purpose, a questionnaire for NS personnel was made. It asks the train personnel about the current assistance and what they think could or should be improved, as well as their view on the idea of a robot helping disabled people.

== The questionnaires ==


To find participants for this study, several steps were taken: a call for the target groups was posted on Facebook, personal networks were contacted, and NS staff at Eindhoven station were approached in person. The questionnaires could be filled in online or on paper.

We aimed to get 5 participants for the disabled target group, and 2 to 3 for the NS staff. These numbers are based on what is reasonable for the scope of this project; due to time constraints, finding large groups of participants was not an option. The low response to our call for participants further confirmed that it is hard to find wheelchair-bound people who actually use the train. The questionnaires were written and answered in Dutch.

Link to paper questionnaire for the disabled: [[Media: Enquete mindervaliden.pdf]]

URL to online questionnaire for the disabled: https://www.survio.com/survey/d/G1O9M1J3Y2Q3T3L0A

Link to paper questionnaire for NS staff: [[Media: enquete staff.pdf]]

URL to online questionnaire for NS staff: https://www.survio.com/survey/d/I8K8O0G2F7V4E9U6O

=== The questionnaire for the disabled ===


== Thematic analysis of the results of the questionnaires ==

In the thematic analysis, we gathered all codes from both questionnaires and combined them into themes.

An overview of the codes used can be found here: [[Media: Overview of codes used.pdf]]

An overview of the filled-in questionnaires and their coding can be found here:

Questionnaires for disabled people: [[Media: Enquete ingevuld disabled.pdf]]

Questionnaires for NS staff: [[Media: Enquete ingevuld staff.pdf]]

=== Method ===

As described above, participants for this study were recruited via social media, through our personal networks and in person at train stations.

After the questionnaires were filled in, the answers were coded. These codes were subsequently evaluated to create themes.

=== Results ===

'''Improvements'''

Combining the codes related to improvements of the current system yielded the following. First of all, one of the disabled respondents wanted changing trains to be faster. Moreover, better accessibility of all stations, at every time of the day, is desirable. The staff wanted better operability of the current bridge and better communication with the taxi company.

(In the current situation, NS travel assistance is only available at the larger, manned stations. If a disabled person wants to travel to a smaller station, they have to contact an NS-affiliated taxi company. These taxi drivers have access to the bridge and are assigned to help disabled people get off the train. Unfortunately, especially when the train schedule is disrupted, clear communication with the taxi company is lacking.)

'''Current situation'''

This theme encompasses all codes related to the current situation. From the questionnaires we learned that multiple parties are involved: the disabled person, NS service staff, NS conductors and, as mentioned above, appointed taxi companies. From the codes we established that the service staff escort the disabled person at the bigger stations and taxi companies do so at the smaller stations, while conductors are mainly involved in maintaining safety at all times. The conductor reported helping a disabled person about 3 times a day, whereas the service staff help over 20 times a day.

'''Advantages and disadvantages of current system'''

In this theme we merge all codes related to advantages and disadvantages of the current system. Staff reported the following advantages: the time needed for reporting is short, the time needed for helping is short, and the location of the disabled person in the train is clearly communicated. A disabled respondent reported arriving on time at the desired station as an advantage.


Disabled respondents reported having little room in the train as a disadvantage. Staff reported that the system fails in case of train disruptions and that it takes too much time.

'''New concept'''

By aggregating all codes, this theme looks at the opinions on the new concept and at important aspects of the system. Disabled respondents reacted positively, whereas the NS staff were generally negative towards the concept. The staff made clear they feared losing their jobs to the robot, and they considered it impossible for an automated system to work because of crowdedness. Disabled respondents mentioned an extending shelf and a lift as possible ideas. According to them, the system should be fast, it should give the disabled person influence over the situation, and it should be suitable for different users with different disabilities. Of course, these aspects could be combined with the improvements for the current system.

=== Conclusion ===

The above themes can be aggregated and examined to discover new relations between them. The main results of the questionnaires are a better view of the current situation and the identification of user requirements for the new concept.

==== Current situation ====

In the theme Current situation we established exactly how the current situation works. New information for us was the involvement of taxi companies.

==== User requirements ====

Multiple themes above can be used to identify user requirements. The new concept should build on the Advantages of the current system (at the very least it should not take those advantages away), it should avoid the Disadvantages of the current system, it should incorporate the Improvements mentioned, and it should take into account the aspects mentioned in New concept. Combining these gives the following list of user requirements:

*The new concept should make changing trains faster for a disabled person

*The new concept should grant accessibility to all train stations and all platforms at all times

*The operability of the new system should be good for staff


The government is also a stakeholder, as it is the institution responsible for making society as accessible to disabled people as possible. It may therefore be involved in partially funding the project. More specifically, the Ministry of Infrastructure and Environment is a stakeholder.

==Conclusions of the questionnaires==

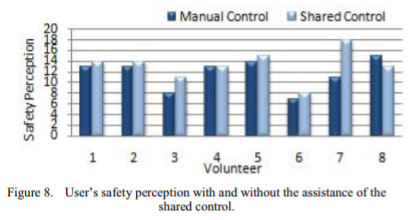

From the questionnaires we identified a specific user need: a respondent mentioned wanting more influence on the process. Since we are designing iteratively, our concept was updated once the questionnaire results were known, and we decided to incorporate the concept of shared control into our autonomous robot. To close the loop, we would ideally test this interpretation of the user need with the user. However, due to time constraints and a lack of interest from disabled people in answering questions on the topic, we were unable to check this interpretation. Instead, a literature study can be performed to learn more about disabled people, shared control and a lack of influence. This can be found further down this wiki page.

==Jobs of the train staff==

As already mentioned in the results of the questionnaire for the NS staff, the NS service staff are afraid of losing their jobs when assisting disabled people is automated. Their concern is justified: if the robot functions as intended, no staff are needed to help disabled people enter and exit the train. It is important to think about the consequences of introducing a robotic technology and the impact it might have on people's jobs. NS assistance is only available at 100 of the 400 train stations in the Netherlands; more information about this can be found in the Current Situation chapter. When the robot is ready for implementation, it could be beneficial to start at stations where NS assistance is not available. This way, disabled people are able to travel to more locations, and the current NS service staff can keep working at their current stations. When implementation at these stations is successful, it can be extended to the bigger stations as well. The staff currently working at these stations can then be deployed differently, for example to check that the robots work without problems and that everyone understands how to use them properly. They can also fulfil other service jobs at the NS, such as informing people how to get to their destination. Of course, this will result in fewer jobs, but since the robotic concept is designed to make it easier for disabled people to travel by train, this is not the primary concern.

= Current Situation =

This section looks at the current situation of train travel for disabled people.

== The current model==

In the following section you will be guided through the current process of boarding a train as a disabled person.


== Other Countries ==

[[File:wheelchairlift.jpg|thumb|300px| A modern wheelchair lift]]

Most railway companies in other European countries are bound by law to accommodate disabled people on their trains. Trains like the Eurostar have dedicated spaces in the 1st class cars and allow an additional passenger to accompany the wheelchair-bound customer. Most railway companies work like the NS system: you have to plan your trip ahead of time (online or through customer service) so that railway employees can help you along your trip. However, not all trips are possible, because railway companies like Deutsche Bahn need a certain minimum amount of time to ensure you can transfer between trains; some passengers therefore have to wait for the next train because a 10-minute transfer is not possible.

Either ramps or mobile wheelchair lifts are used. These are stored on the platform, chained to a pole or wall, and the railway employee puts the ramp in place for you. Once it is connected to the train door, the railway employee pushes you on board or places you on the mobile wheelchair lift. When you are on the lift, both sides are closed and the employee presses a button to align the height with the train door. Once it is done lifting, the front ramp goes down and you can ride into the train on your own. Some trains also have a ramp in the train floor that extends when a button is pressed.

Companies that use the wheelchair lift are: VIA Rail Canada, TGV (France), SBB (Switzerland) and Trenitalia (Italy).

Starting in 2013, a test was done in Den Bosch with LED lights on the platform showing where the train will stop and where its doors are located, including which cars are full and which are empty. The results of this experiment have been incorporated into the NS app, which now shows how long trains are and how busy certain trains are.

= Robotic Solution Specifications =

In this section the requirements, preferences and constraints of the complete solution are stated. Subsequently, the new solution and the concepts that led to it are described.

== RPCs ==

The requirements, preferences and constraints (RPCs) of the solution.

'''Requirements'''


* Time available to board the train equals 4 minutes

== Conceptual designs ==

To arrive at a solution for our problem statement, five different conceptual designs were drawn up. On the basis of the RPCs, the best conceptual designs were chosen to arrive at a preliminary design. Each design answers part of the problem and is the product of an individual brainstorm session.

=== Design 1 ===

Design 1 involves an autonomous vehicle that can automatically drive to a certain location on the platform. The vehicle only drives in a straight line parallel to the railway, so one robotic vehicle is needed per platform. The robot has wheels and an extendable shelf that can be attached to the train when the doors are opened. When someone wants to use the robot to board a train, they simply walk up to the robot and push a button. The robot is positioned at the front or rear end of the platform, depending on where the nearest elevator is located. When the train has arrived, the robot moves to the door nearest to its location. This is either at the rear or at the front of the train (depending on which direction the train travels).

The robot is positioned using sensors in the doors that let it know exactly where the doors are located. When the doors are opened, the robot unfolds its ramp and the person can board the train. A pressure sensor in the shelf tells the robot whether the person has entered the train. After the person has entered the train, the robot lifts the shelf up again and drives back to its original position. When the person inside the train wants to exit at a certain station, a (not yet existing) extension of the NS app can be used. The app shares this information with the robot, so the robot knows in advance that someone wants to exit the train. The robot can then move into place when the train arrives (it can start moving when the door sensor is within its reach).

When the doors open, the shelf is put in place again and the person can exit the train. When the person has left the shelf and is on the platform, the robot lifts the shelf again and returns to its original position. To make sure the robot has enough power, there is a power station at the robot's starting position. The robot can attach itself to the power station and charge its batteries (in the same way as a robotic lawnmower).
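The boarding and exiting cycle of Design 1 can be summarised as a small state machine. The sketch below is a minimal illustration only. It assumes boolean sensor readings for the button or app request, the door sensor, the door state, the shelf pressure sensor and the homing position. The state names and the `step` function are hypothetical and not part of an existing implementation.

```python
from enum import Enum, auto


class State(Enum):
    DOCKED = auto()          # at the power station at the end of the platform
    MOVING_TO_DOOR = auto()  # driving parallel to the track towards the door sensor
    SHELF_DEPLOYED = auto()  # shelf unfolded, waiting for the pressure sensor
    OCCUPIED = auto()        # pressure sensor reports a wheelchair on the shelf
    RETURNING = auto()       # shelf lifted, driving back to the power station


def step(state: State, *, request: bool = False, at_door: bool = False,
         doors_open: bool = False, shelf_loaded: bool = False,
         at_home: bool = False) -> State:
    """Advance the (hypothetical) Design 1 cycle by one tick from sensor readings."""
    if state is State.DOCKED and request:
        return State.MOVING_TO_DOOR
    if state is State.MOVING_TO_DOOR and at_door and doors_open:
        return State.SHELF_DEPLOYED
    if state is State.SHELF_DEPLOYED and shelf_loaded:
        return State.OCCUPIED
    if state is State.OCCUPIED and not shelf_loaded:
        # the passenger has rolled off the shelf (into the train or onto the platform)
        return State.RETURNING
    if state is State.RETURNING and at_home:
        return State.DOCKED
    return state  # no transition this tick
```

Boarding and exiting follow the same sequence. In both cases the pressure sensor first reports a load on the shelf and then reports it empty again.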

=== Design 2 ===

Design 2 uses a crane to lift wheelchairs and move them on or off the train. With this design there is no need for a vehicle on the platform. There will be designated doors for people in wheelchairs, where the crane is positioned on the train. The crane has a lifting cable with four universal clamps that can be locked onto the wheels of the wheelchair. The advantage of this concept is that it does not need anything on the platform that could obstruct other people. Getting off the train is just as easy as getting on. You do not have to worry whether the crane is at the right platform, at the right door and at the right time when you arrive, because the crane travels with you in the train. The disadvantage of this design is that you need to attach the clamps to the wheelchair yourself.

It does not work autonomously: if you are incapable of operating it yourself, you still need someone to help you. The second disadvantage is that all trains need to be adapted, which takes a lot of time and will probably cost a lot of money.

=== Design 3 ===

Design 3 is in many ways similar to the current ramp used at NS stations. It involves two ramps that are folded upwards. When someone wants to use the ramp, both sides flip down and level with the desired height. On one side of the ramp this equals the height of the train entrance, and on the other side the height of the platform. In this way, a person in a wheelchair can simply drive up or down to enter or leave the train, respectively. When the ramp is folded upward, a simple user interface could be installed. The screen would allow interaction between the user and the platform: the user could enter an 'order', after which the robot performs its duty.

The robot is driven by two large wheels, one on each side, which allow for easy rotation within the platform environment. The robot navigates this environment autonomously. It is stationed at a single spot per platform, where it can recharge itself after serving. The ramp has raised edges to prevent anyone from falling off.
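Design 3 has two independently driven wheels, one on each side. We read this as a differential-drive layout, with passive casters for support assumed, and it is what lets the robot rotate on the spot. The sketch below shows how the pose of such a robot can be propagated from the two wheel speeds. The wheel radius, track width and time step are assumed values, not measured ones.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # m, along the platform
    y: float      # m, across the platform
    theta: float  # rad, heading


def integrate(pose: Pose, w_left: float, w_right: float,
              wheel_radius: float, track_width: float, dt: float) -> Pose:
    """One forward-Euler step of differential-drive kinematics.

    w_left and w_right are wheel angular velocities in rad/s.
    """
    v = wheel_radius * (w_right + w_left) / 2.0              # forward speed (m/s)
    omega = wheel_radius * (w_right - w_left) / track_width  # yaw rate (rad/s)
    return Pose(
        x=pose.x + v * math.cos(pose.theta) * dt,
        y=pose.y + v * math.sin(pose.theta) * dt,
        theta=pose.theta + omega * dt,
    )
```

Rotating on the spot corresponds to equal and opposite wheel speeds (`w_left = -w_right`). This gives zero forward speed and a pure yaw rate.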

=== Design 4 ===

This design focuses on the docking problem for an autonomous robot. As mentioned, the wheelchair boarding system has three main stages: alert, dock and board. In this design the vehicle uses a wireless network and signal latency to triangulate its position. There will be beacons in the platform; they could also be placed in the docking station, but that is probably less accurate. The robot has two transmitter/receiver combinations, one at the front and one at the back. They send signals to the beacons, and the beacons send them back. With the latency information, the robot is able to triangulate its position and orientation. At the same time, there is a transmitter/receiver module under the step of the train. It also pings the beacons, which in turn triangulate its position and send this information to the robot. At this point the robot knows where it is and where its goal is. To move to the goal, at least three things are required:

'''Solutions'''

* The motion has to be planned within the kinematic constraints of the robot. A quintic polynomial could be used to prescribe the start and end values of position, velocity and acceleration. The problem is that the robot is constrained in its movement, so its orientation matters. We could describe the path as a series of robotic links with constraints between the links, so that the robot can always go from one to the next.

* The motion should be tracked while suppressing disturbances. This could be done using the kinematic equations of motion represented in state-space form.

* The robot has to move around obstacles, both human and non-human. This could be done by planning a path around them, with proximity sensors mapping the nearest obstacles. One conceivable solution is a controller that tracks the path but starts deviating from it as the sensors pick up obstacles: instead of striving for zero error, the allowed error could increase with sensor input. Human obstacles are mobile, which means that they could move aside if urged to.

The solutions posed for these problems are based on our current knowledge. To find smarter solutions, we might look into "truck docking", which is investigated by truck companies and poses a similar problem.
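The latency-based positioning in Design 4 can be sketched in a few lines. Strictly speaking, estimating a position from measured distances is trilateration rather than triangulation. The sketch assumes the following:

* each beacon's round-trip time has already been corrected for its fixed turnaround delay
* the platform is treated as a 2D plane
* at least three non-collinear beacons are in range

The function names are illustrative. Because the signals travel at the speed of light, a round-trip timing error of only 1 ns already corresponds to about 15 cm of range error, so the timing resolution of the hardware matters a great deal.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def rtt_to_distance(rtt_s: float, turnaround_s: float = 0.0) -> float:
    """Convert a measured round-trip time (s) to a one-way distance (m)."""
    return SPEED_OF_LIGHT * (rtt_s - turnaround_s) / 2.0


def trilaterate(beacons, distances):
    """Least-squares 2D position from >= 3 beacon positions and measured ranges.

    Subtracting the first range equation from the others gives linear equations
    A p = b, which are solved through the normal equations A^T A p = A^T b.
    """
    (x0, y0), d0 = beacons[0], distances[0]
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (xi, yi), di in zip(beacons[1:], distances[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        rhs = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * rhs
        b2 += ay * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear or too few; position is not unique")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


def heading(front_xy, back_xy):
    """Robot orientation (rad) from the front and back transceiver positions."""
    return math.atan2(front_xy[1] - back_xy[1], front_xy[0] - back_xy[0])
```

Running `trilaterate` once for the front transceiver and once for the back one, then passing both results to `heading`, gives the position and orientation the text describes.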
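For the first of the three required points in Design 4, a quintic polynomial p(t) = c0 + c1 t + ... + c5 t^5 has exactly six coefficients. That is enough to match position, velocity and acceleration at both the start (t = 0) and the end (t = T) of a manoeuvre. The sketch below computes these coefficients in closed form for a single coordinate, for example the distance driven along the platform. It does not handle the orientation constraint mentioned in Design 4, which would also require planning the heading. The distance and duration in the usage note are assumed values.

```python
def quintic_coefficients(p0, v0, a0, pf, vf, af, T):
    """Coefficients [c0..c5] of p(t) = sum(c_k * t**k) that match position,
    velocity and acceleration at t = 0 (p0, v0, a0) and at t = T (pf, vf, af)."""
    h = pf - p0
    c3 = (20 * h - (8 * vf + 12 * v0) * T - (3 * a0 - af) * T**2) / (2 * T**3)
    c4 = (-30 * h + (14 * vf + 16 * v0) * T + (3 * a0 - 2 * af) * T**2) / (2 * T**4)
    c5 = (12 * h - 6 * (vf + v0) * T - (a0 - af) * T**2) / (2 * T**5)
    return [p0, v0, a0 / 2, c3, c4, c5]


def evaluate(coeffs, t):
    """Return (position, velocity, acceleration) of the polynomial at time t."""
    pos = sum(c * t**k for k, c in enumerate(coeffs))
    vel = sum(k * c * t**(k - 1) for k, c in enumerate(coeffs) if k >= 1)
    acc = sum(k * (k - 1) * c * t**(k - 2) for k, c in enumerate(coeffs) if k >= 2)
    return pos, vel, acc
```

For example, `quintic_coefficients(0, 0, 0, 12, 0, 0, 8)` describes driving 12 m along the platform in 8 s, starting and ending at rest. Because velocity and acceleration are continuous at both ends, the wheelchair user does not experience a sudden jolt.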
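For the second required point in Design 4, one common way to track a reference while suppressing a constant disturbance is state feedback with integral action. An example of such a disturbance is an unknown constant force such as a slight slope. This is a swapped-in textbook technique rather than a design we worked out.

The sketch below models motion along the platform as a double integrator p'' = u + d, with state [p, v, z], where z is the integral of the position error. It simulates this model with a forward-Euler step. The gains kp, kd and ki are assumed values chosen so that the closed loop s^3 + kd s^2 + kp s + ki is stable (kd * kp > ki).

```python
def simulate_tracking(target: float, disturbance: float,
                      kp: float = 4.0, kd: float = 4.0, ki: float = 2.0,
                      dt: float = 0.01, steps: int = 3000) -> float:
    """Simulate p'' = u + d under state feedback with integral action.

    State: position p (m), velocity v (m/s), integrated error z (m*s).
    Returns the final position error p - target (m).
    """
    p, v, z = 0.0, 0.0, 0.0
    for _ in range(steps):
        e = p - target
        u = -kp * e - kd * v - ki * z  # state feedback u = -K [e, v, z]
        # forward-Euler step of the state-space model
        p, v, z = p + v * dt, v + (u + disturbance) * dt, z + e * dt
    return p - target
```

Without the integral term (`ki=0`), the same simulation settles at a steady-state error of d/kp = 0.5/4 = 0.125 m for a disturbance of 0.5 m/s². With integral action, the error goes to zero, which is why the integral state is worth adding.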
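For the third required point in Design 4, the idea of letting the allowed tracking error grow with the sensor input could look like the sketch below. It assumes a single proximity reading (the distance in metres to the nearest obstacle) and a linear relation between closeness and allowed lateral deviation. The safety distance, the maximum deviation and the left/right sign convention are hypothetical and would have to be tuned on a real platform.

```python
def allowed_deviation(obstacle_distance: float,
                      safe_distance: float = 1.5,
                      max_deviation: float = 0.8) -> float:
    """Lateral deviation (m) from the planned path that the controller may accept.

    Zero when the nearest obstacle is at least safe_distance away, growing
    linearly to max_deviation as the obstacle distance approaches zero.
    """
    if obstacle_distance >= safe_distance:
        return 0.0
    closeness = (safe_distance - max(obstacle_distance, 0.0)) / safe_distance
    return max_deviation * closeness


def lateral_reference(path_offset: float, obstacle_distance: float,
                      obstacle_side: int) -> float:
    """Shift the lateral reference away from the obstacle.

    obstacle_side is +1 if the obstacle is on the left of the path (positive y),
    -1 if it is on the right; the reference moves to the opposite side.
    """
    return path_offset - obstacle_side * allowed_deviation(obstacle_distance)
```

The tracking controller then follows `lateral_reference(...)` instead of the nominal path. It returns to zero error once the obstacle is more than `safe_distance` away.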

=== Design 5 ===

Design 5 is an autonomous mobile lifting robot on four wheels that helps the disabled person board the train without any assistance from railway employees. The robot is stationed at a charging hub on each platform and has to be activated through the NS app and by physical interaction with the OV-chipcard. Once the trip is planned and you "log in" on the robot with your chipcard, the robot moves to where the train will stop, ideally already aligned with a train door. When the train arrives, the robot autonomously aligns itself with the door and opens its back gate so the disabled person can ride onto the lifting platform.

Then the back gate closes for safety and the person is lifted. At the right height, the front gate is lowered so the wheelchair can move over it into the train. When the person has left the robot, it should detect this, return to its original position and then move back to the charging hub as soon as possible so that other passengers can use the door. This design will be connected to Wi-Fi to get the trip information from the NS app and accurate train arrival times.

To be ready to dock to the train when it arrives, the robot has to be activated by the disabled person 5-10 minutes before the train arrives, so that it can move to the correct position. It will have to avoid passengers and bags on the ground on its own. Ideally, this problem is limited either by introducing a "wheelchair robot path" on the ground, so people know not to place bags there, or by sensors at the front that enable the robot to manoeuvre around these objects.

Because it has accurate trip information through the NS app, the robot knows which arriving trains carry a wheelchair user. It therefore needs to be ready to help this person out of the train completely on its own, without any physical "log in".
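Because Design 5 receives trip information through the NS app, the robot could derive its own schedule for passengers who get off at its platform. The sketch below is hypothetical: the `Reservation` fields, the robot speed and the safety margin are assumptions, not an existing NS app interface. For each arriving wheelchair user, it computes the latest time the robot must leave its charging hub to reach the expected door position in time.

```python
from dataclasses import dataclass


@dataclass
class Reservation:
    # Hypothetical trip record pushed from the NS app; field names are illustrative.
    train_id: str
    arrival_s: float        # expected arrival, seconds since midnight
    door_position_m: float  # expected door position along the platform (m)
    alighting: bool         # True if the wheelchair user gets off at this platform


def departure_times(reservations, hub_position_m=0.0, speed_mps=0.5, margin_s=60.0):
    """Latest hub departure time for every alighting passenger, in arrival order."""
    plan = []
    for r in sorted(reservations, key=lambda r: r.arrival_s):
        if not r.alighting:
            continue  # boarding passengers activate the robot themselves
        travel_s = abs(r.door_position_m - hub_position_m) / speed_mps
        plan.append((r.train_id, r.arrival_s - travel_s - margin_s))
    return plan
```

Boarding passengers are skipped because, in Design 5, they activate the robot themselves 5-10 minutes before the train arrives.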

== Preliminary design == | |||

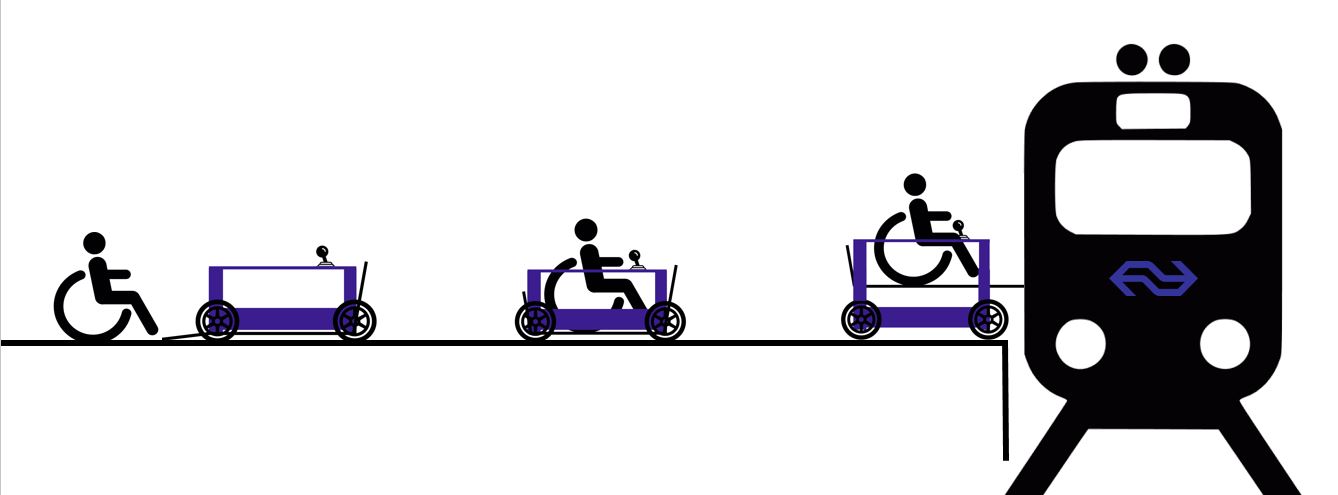

The Preliminary design is basically a combination of design 1 and 5. The design will be an autonomously driving vehicle that can be placed at each platform. The vehicle has 4 wheels and uses a horizontal plate that can be lifted up and down to be able to reach the right height to enter the train. It will be placed at one end of the platform which will be called its homing position. | |||

[[File:Chairliftdown.JPG|340px]][[File: Wheelchairfinal.png|200px]] [[File: Chairliftup.JPG|240px]]

A power station will be placed at the homing position. The robot always returns to the homing position and attaches itself to the power station. The robot has to be equipped with several kinds of sensors. For example, it should be able to sense obstacles in its driving path. When the robot senses that something is in its way, it should stop and give a signal to let its surroundings know that something is blocking it. Another design challenge is how the robot can locate a door where the person can enter or exit the train. Our first idea is to equip every train with a sensor at its very first and very last door; these doors will then be used as the entrance for disabled people. An advantage of this solution is that the robot can always choose the door nearest to its homing position, so fewer people will walk in its path and the time to reach the door will be short.

== Idealized solution ==

The idealized solution has to fulfill every requirement, preference and constraint. The main goal is that disabled people are able to travel entirely by themselves: they can reach the platform and use the automated assistance system to exit and enter the train without any staff being involved.

*Before using the robot, the disabled person has to use the app (see information below) to enter his/her trip and to reserve the robot at the platforms of departure and arrival.

*When someone arrives at a train station, the first thing they need to do is get to the right platform using the elevators that are already present at every station.

*The disabled person needs to check in like every other passenger. People who need assistance when entering or exiting the train have a special OV-card, which can be used at the robot's touchscreen to activate the automated assistance. Since the user has entered his/her trip in the app, the robot knows which side of the platform it has to drive to, in case it has to drive autonomously.

*The disabled person enters the robot with his/her wheelchair. He/she can then select on a touchscreen whether to drive the robot themselves or let the robot drive.

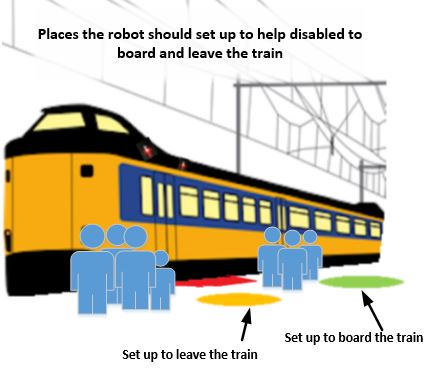

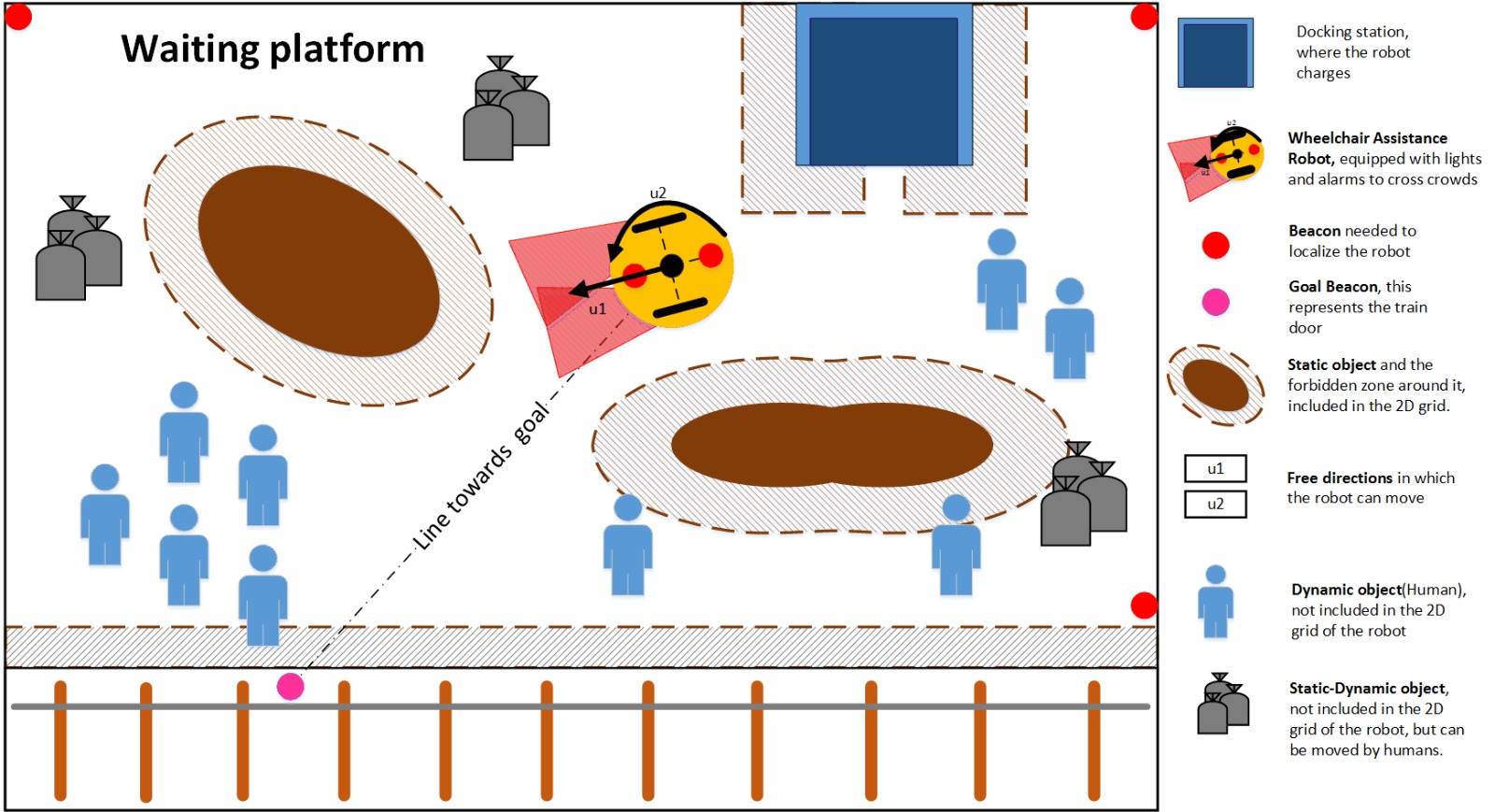

*If you want to drive yourself, you can use the joystick to navigate towards the train doors. The figure below shows where to position the robot before docking; the picture is explained in detail in the next chapter.

*With shared control, the user can already drive the robot towards the train. If he/she steers too close to an obstacle, the system takes over and steers the robot past it; the person can choose whether to pass the object on the left or the right side. When the train finally arrives, the robot docks autonomously.

*If the disabled person does not want to drive, the robot drives autonomously to its docking position. While moving, the robot should always pick the shortest path to the door that is also the safest, meaning that the robot never hits any obstacles or passengers. To achieve this, the robot needs to know its own position and the positions of obstacles and people, and it must constantly adapt its driving path to avoid moving obstacles as efficiently as possible. This is a very important aspect, which is elaborated on later in this wiki.

*After docking, the person can enter the train.

*Meanwhile, at the desired destination, the robot is already stationed at the door where the person wants to exit the train (this information is transmitted through the OV pole).

*When the person then arrives, he can leave the train immediately.

*The train's timetable may change, causing the train to arrive at a different platform. Since the disabled person's trip is registered with NS, NS knows where the person is heading and that the robot at the 'old' platform was booked. In case of such a platform change, the system can automatically reschedule, making sure a robot is available at the new platform. As there are two robots on every platform, a robot there cannot already be taken: if the train can stop at that platform, no other train is standing at that side of the platform, which leaves one robot free. A disabled person may be using the robot on the other side of the platform, but there are two robots, and only one disabled person can travel per train per time frame.

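The "shortest but safest path" requirement in the list above can be illustrated with A*, a standard grid search described by Russell and Norvig. The sketch below is one possible approach under stated assumptions, not a method this design prescribes: it assumes the platform is discretized into a hypothetical occupancy grid (1 = blocked), inflates every obstacle by a safety margin of `radius` cells so the robot keeps its distance, and searches a 4-connected grid. Replanning around moving obstacles would mean calling `plan_path` again whenever the sensed grid changes. All names and the grid format are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in a hypothetical platform grid


def inflate(grid: List[List[int]], radius: int) -> List[List[int]]:
    """Return a copy of `grid` in which every cell within `radius` cells of an obstacle is blocked."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                        out[rr][cc] = 1
    return out


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* on a 4-connected grid with unit step cost; assumes start and goal are free cells."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:  # Manhattan distance: admissible for 4-connected unit moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}
    frontier = [(h(start), 0, start)]
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:  # walk back through the parents to rebuild the path
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # outdated queue entry; a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and not grid[nr][nc]:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable
```

Inflating obstacles first keeps the search itself simple: "safe" becomes a property of the grid, and A* only has to find the shortest route through the remaining free cells.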

== Safety regulations & Patent Check ==

The concept needs to comply with safety regulations for autonomous driving vehicles:

To date, there are no universal laws or regulations for autonomous driving vehicles. A congress (Arc, n.d.) near the end of this year should shed some light on this issue. For now, we think it suffices to make the vehicle as safe as possible, so that the risk of a hazard while driving is minimal. People around the vehicle should be aware that it is driving and have to move out of the way; this can be achieved with an alarm and floodlights.

Safety regulations for lifting people:

There are many rules and regulations for lifting a person, but most of them are simple, such as: the lift should be designed to minimize the risk of a hazard. The full list of rules and regulations can be found in HSE (2008). The important thing to keep in mind regarding these safety regulations is that the vehicle must never tip over while lifting a person, and the person must never be able to drive off the lift while it goes up or down.

We found no existing patents regarding the basic idea of autonomous train assistance. This patent check was done by entering the term combinations 'wheelchair, train' and 'wheelchair, lifting' into the US Patent & Trademark Office search tool, which includes international trademarks. Thus, the robotic solution does not need to take patent law into account regarding the robotic wheelchair lift concept.

== Comparing Current and New Solution ==

In this section, we compare the current and new solution and check whether the new solution complies with a number of the RPCs.

* Completely safe to use for the disabled person, and also completely safe for other passengers on the train.

The current solution meets this requirement: since train staff are involved, it is completely safe to use.

* Able to be used continuously; if not, it will delay the train or the person will miss the train.


* The solution should not cause delay for other people who want to board the train.

The current solution usually does not delay other people, since staff wait until everyone else has boarded before helping the disabled person enter the train.

= Robotic Solution Concept Explained =

This chapter discusses the new solution in detail. First, the update to the current app is explained, followed by the interface of the robot. Then the interaction with the surroundings is discussed, and finally the docking of the robot. These first parts are mainly about ethics, surroundings and experience. Since the robot can also move on its own, the autonomous driving chapter discusses the capabilities of the robot without human input. At the end, the concept of shared control, which resulted from the user questionnaire, is discussed.

== NS App integration ==

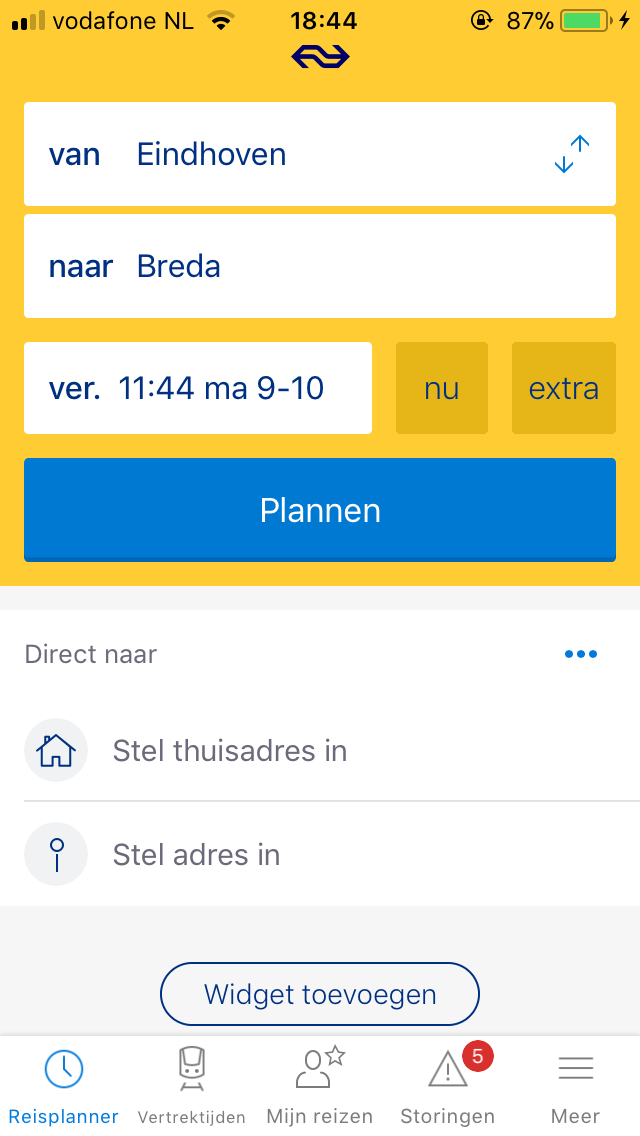

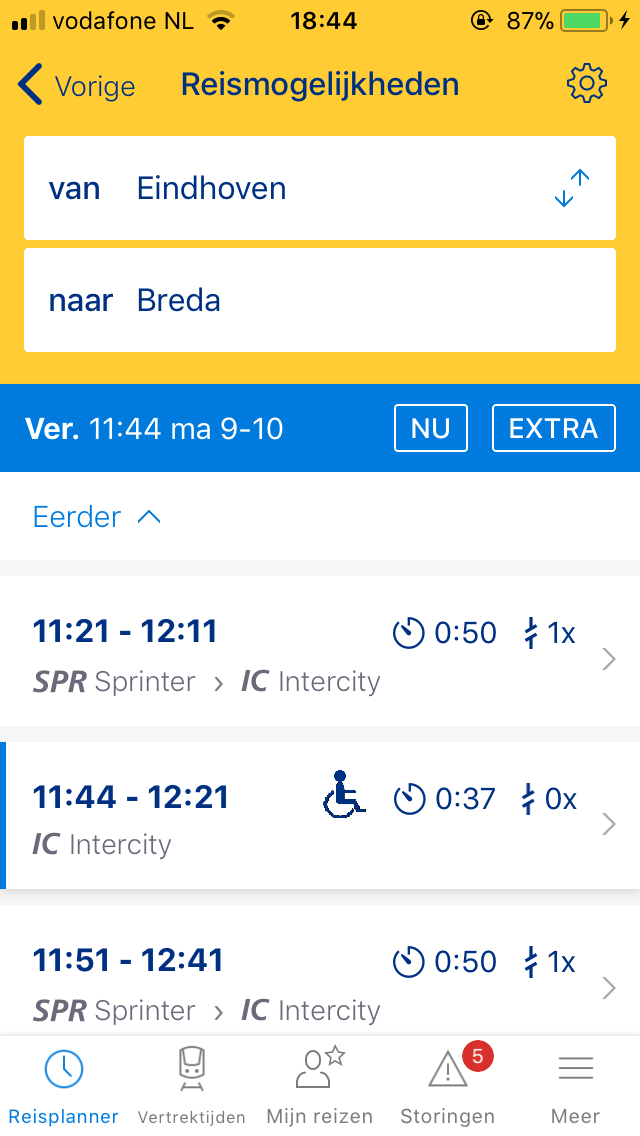

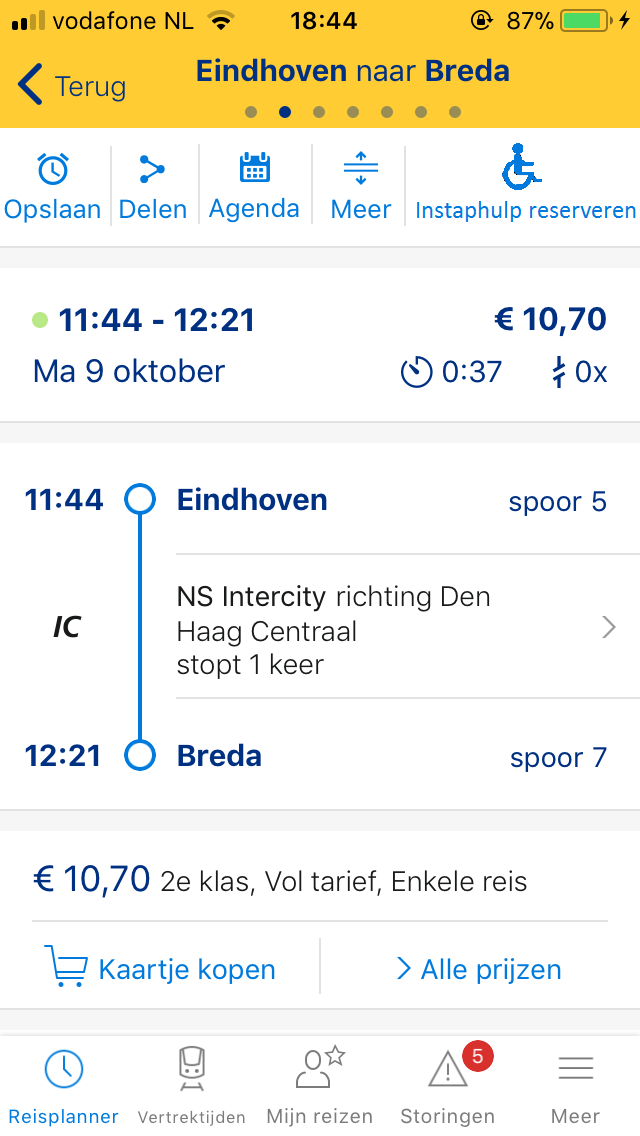

The disabled person uses the app to enter his/her trip. This can easily be implemented in the existing app. The first picture shows the app where one can plan a trip. In the second picture you can select the time frame in which you want to travel; a wheelchair logo marks which trips are possible, i.e. those for which the robot is not already reserved in that time frame. The third picture shows the button at the top with which you can then reserve the robot for your trip.


[[File:app2.PNG|200px| Picture 2]]

[[File:app3.PNG|200px| Picture 3]]
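The availability check behind the wheelchair logo can be sketched as an interval-overlap test: a time frame is bookable for a robot if it does not overlap any existing reservation of that robot. This is a minimal Python sketch under assumptions: the `Reservation` record, the robot identifiers and the half-open time frames are hypothetical and not part of the NS app.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable


@dataclass(frozen=True)
class Reservation:
    robot_id: str      # hypothetical identifier of one platform robot
    start: datetime    # start of the reserved time frame (inclusive)
    end: datetime      # end of the reserved time frame (exclusive)


def overlaps(a_start: datetime, a_end: datetime, b_start: datetime, b_end: datetime) -> bool:
    """Half-open intervals [start, end) overlap if each one starts before the other ends."""
    return a_start < b_end and b_start < a_end


def robot_available(robot_id: str, start: datetime, end: datetime,
                    reservations: Iterable[Reservation]) -> bool:
    """True if `robot_id` has no reservation overlapping the requested time frame."""
    return not any(
        r.robot_id == robot_id and overlaps(start, end, r.start, r.end)
        for r in reservations
    )
```

Using half-open time frames means a booking that ends at 10:15 does not block another one starting at 10:15.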

As we all know, trains do not always run as they should. To deal with delayed and cancelled trains, the robot needs to be aware of them. For example, if the platform at which the train arrives changes, the robot needs to know this in order to get to the right door. To solve these problems the NS app will be used: it sends real-time information to the robot, letting it know when trains are delayed or moved to a different platform. With this information the robot can always get to the right location in time. The people who need to use the robot can activate it with the same app, letting the robot know when it has to drive to a certain location.

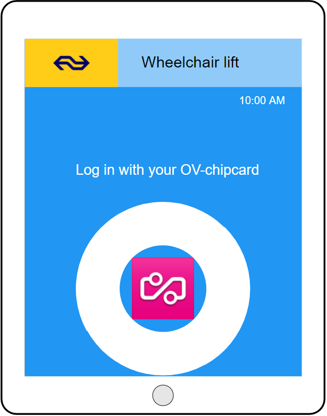

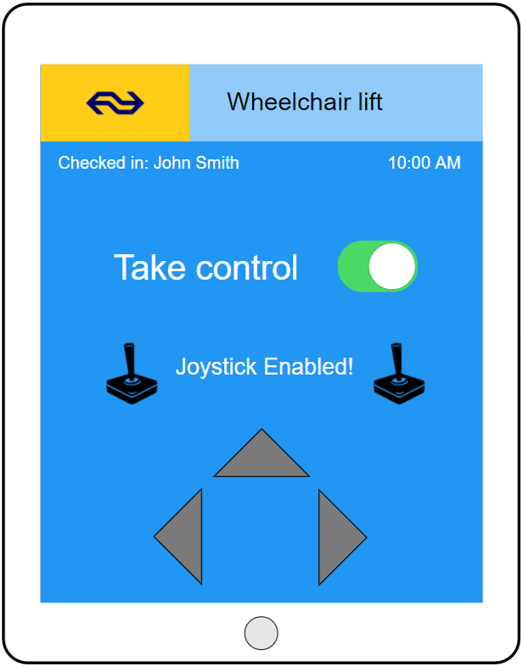

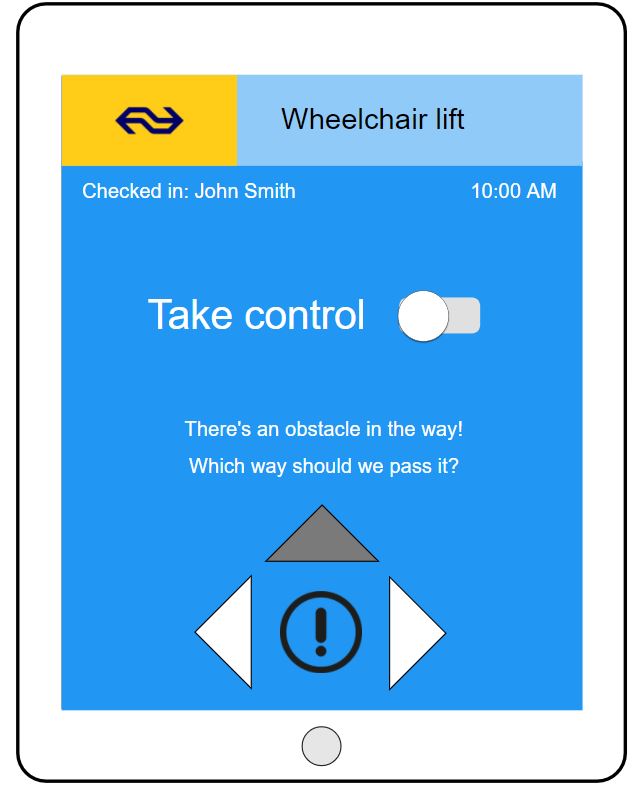

== Interface on robot and check-in ==


[[File:joystick.jpg|270px|Armrest]][[File:Ovcheckin.png|250px|OV-checkin]] [[File:Screen1.png|250px|touchscreen]] [[File:Screen2.JPG|260px|touchscreen]]

On the left side of the wheelchair, an integrated touchscreen is visible to the user. This touchscreen acts as a panel to activate the robot with the OV-chipcard (picture 1) and as a navigation tool. With this control, users can toggle between joystick mode and autonomous mode. In autonomous mode, navigation options are also shown when obstacles are encountered.

== Robot-surrounding interaction ==

=== Personal distance ===

When approaching and passing other people at the train station, the robot should take the concept of personal space into account. Based on past research on the matter, we should therefore devise an ideal way of approaching other people.

Research by Brandl et al. (2016) has looked at the design of the phase when a personal-service robot approaches a human being. Although in our case the robot merely passes other people, multiple important insights from this research should be incorporated in our design:

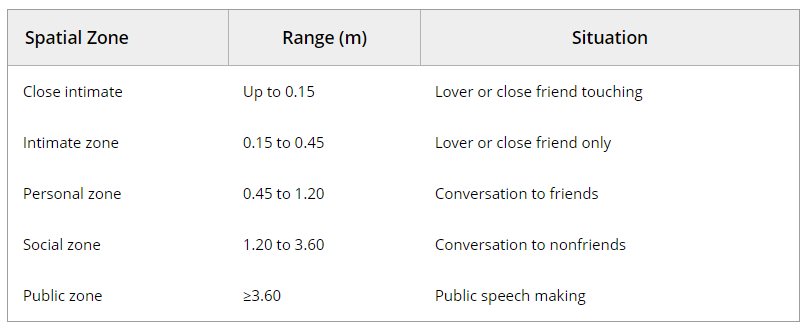

*In human-human interaction, Hall (1966) roughly distinguished between 5 zones, which were later described by Walters et al. (2008).

[[File:personalspace.png|500px]]

*Research by Koay, Syrdal et al. (2007) found that a mechanoid robot is allowed to come closer to humans than a humanoid robot. Our robot is mechanoid, which reduces the personal space required.

*Butler and Agah (2001) found that a fast approach by a robot (1 m/s) made participants feel less comfortable than a slow approach (0.25 or 0.38 m/s). We should therefore be aware that we cannot increase the robot's speed without limit to speed up the process; a slower approach is apparently more human-friendly.

*Zlotwoski et al. (2012) researched the direction from which walking humans are approached, which is also highly relevant for this project. They found that humans prefer to be approached from the front left or front right rather than from straight ahead. However, as our robot will be dealing with many people in a highly dynamic environment, the extent to which this ideal angle can be achieved is limited.

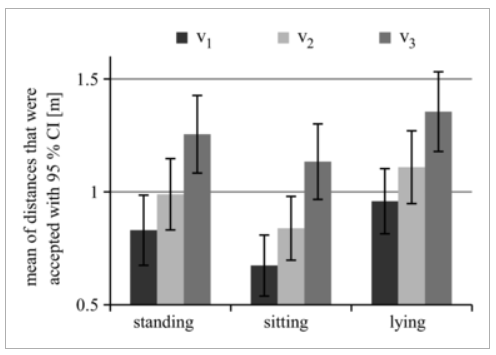

*Brandl et al. (2016) studied which distances people accepted when a robot approached them while they were standing, sitting or lying, at three approach speeds: V1 = 0.25 m/s, V2 = 0.5 m/s and V3 = 0.75 m/s.

[[File:graphps.png|400px]]

*As the graph shows, at a speed of 0.5 m/s the mean accepted distance is about 1 meter. This implies that our robot should not come closer to people than 1 meter. However, as we are dealing with highly crowded and dynamic situations, this is not realistic. If we want to set this bar lower than this study recommends, we should employ other techniques to reduce the personal space people desire.

*A study by Koay et al. (2014) investigated whether using LED display colours to signal movements would reduce the personal space needed. This hypothesis was not supported, suggesting that such signals would not make a difference.

Several conclusions can be drawn from the information above:

*The ideal minimal distance for our robot is 1 meter at a speed of 0.5 m/s, which is the robot's average speed. At a lower speed of 0.25 m/s, the distance drops to about 0.8 meter. Since 1 meter is not feasible in a busy train station, we will lower this distance, but with 1 meter as our anchor we must take care not to lower it too much. A reasonable distance may be 0.6 meter; according to Hall (1966), this falls within the personal zone without intruding on the intimate zone.

*Since our robot is mechanoid, it is allowed to come closer to humans than humanoid robots.
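The distance reasoning above can be made concrete in a small helper. This Python sketch assumes the commonly cited boundaries of Hall's zones as tabulated by Walters et al. (2008): close intimate up to 0.15 m, intimate up to 0.45 m, personal up to 1.2 m, social up to 3.6 m and public beyond; check them against the zone figure before relying on them. `accepted_distance` only interpolates linearly between the two approximate readings quoted above (about 0.8 m at 0.25 m/s, about 1 m at 0.5 m/s); it is not a model fitted by Brandl et al. The names are hypothetical.

```python
import bisect

# Assumed zone boundaries in metres (Hall, 1966, as tabulated by Walters et al., 2008).
ZONE_LIMITS = [0.15, 0.45, 1.2, 3.6]
ZONE_NAMES = ["close intimate", "intimate", "personal", "social", "public"]

# Approximate (speed in m/s, accepted distance in m) readings quoted in the text.
READINGS = [(0.25, 0.8), (0.5, 1.0)]

MIN_CLEARANCE_M = 0.6  # the compromise distance proposed above


def hall_zone(distance_m: float) -> str:
    """Name of the Hall zone a distance falls in (a boundary value belongs to the outer zone)."""
    return ZONE_NAMES[bisect.bisect_right(ZONE_LIMITS, distance_m)]


def accepted_distance(speed_ms: float) -> float:
    """Linear interpolation between the two readings, clamped to the measured speed range."""
    (v1, d1), (v2, d2) = READINGS
    v = min(max(speed_ms, v1), v2)
    return d1 + (d2 - d1) * (v - v1) / (v2 - v1)
```

With these values the 0.6 m compromise lies in the personal zone, below the roughly 0.8 to 1.0 m that participants accepted in the study.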

=== Traversing the platform ===

While traversing the platform, two requirements should be kept in mind:

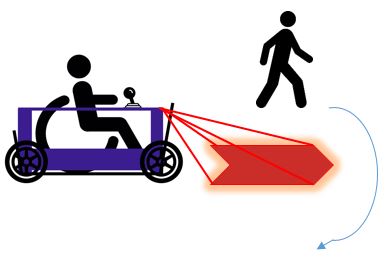

*If the robot is driving (autonomously), its direction should be clear at all times. This should be communicated to the other people at the train station to avoid collisions. The robot should also make clear what its next actions are. For example, if the robot will turn left within 3 seconds, arrows should point to the left; the signal thus runs about 3 seconds ahead of the movement.

*If the robot is driving, other people at the station should not walk in front of it or closely behind it.

To find out how best to fulfil these requirements, we first look at the state of the art, from which we may draw inspiration.

==== Vodafone Smart Jacket ====

The Vodafone smart jacket is a ‘smart’ jacket intended to make cyclists more visible in traffic in the dark and to improve traffic safety.

The jacket is connected to your smartphone, on which you plan your trip before cycling. By actively tracking your location during the trip, the jacket indicates your future direction with an illuminated red arrow on the back: see the figure below.

So, if the cyclist intends to turn right in the near future (about 30 meters ahead), other road users know his intentions. The jacket is still a prototype and has not been introduced to the public, so we cannot draw many conclusions about the effectiveness of the concept. However, the arrow indicating the cyclist’s direction serves as an inspiration for our robot.

[[File:vodafonejacket.jpeg|300px| Picture 1]]

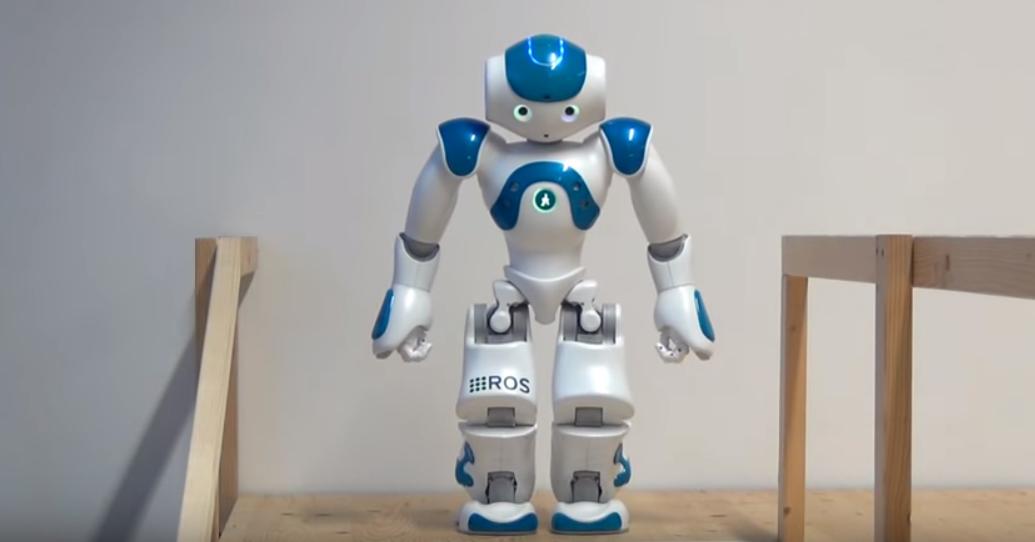

==== Nao robot ====

The well-known Nao robot most often indicates its direction by looking in that direction, so the perception of the robot's gaze direction is crucial for this to work. A study by Torta (2014) has indicated that a ‘3D head is needed for mimicking gaze direction’, and that ‘head orientation is sufficient to elicit eye contact’. Our robotic system has no 3D head, so we cannot draw inspiration from this. Moreover, Nao generally walks slowly, which limits the risk of collision while walking. However, slow movement is not a suitable technique for our robotic system, as we aim to transport the disabled person as fast as possible.

[[File:naowalking.PNG|300px| Picture 1]]

==== Car ====

A major example of an electronic system indicating a vehicle’s direction is, of course, the car, which is by far the most familiar to people. A car’s headlights are white/yellow, while its rear lights are red. Since cars are extremely common in everyday life, people are most likely to associate white light with the front of a vehicle and red light with the back. This can be incorporated in our project.

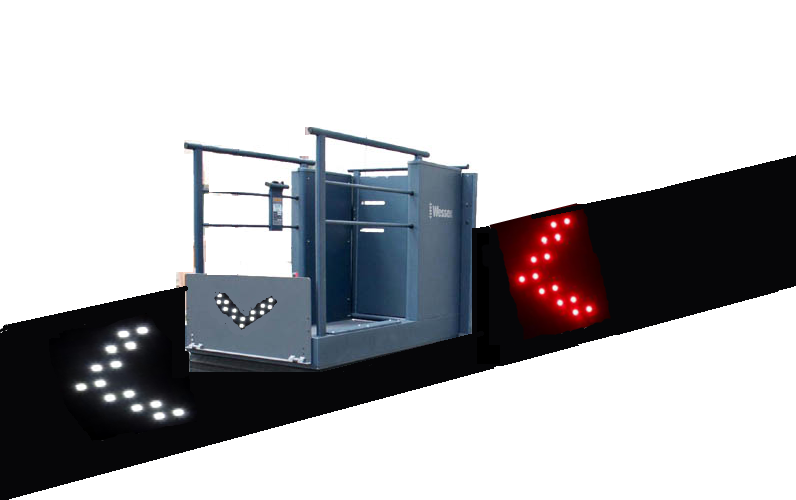

=== Our design ===

Summarizing the above, we can design the following system to indicate the robot’s direction to bystanders at the train station: the robot will have 4 lights indicating its direction. Two LED arrows will be displayed on the floor, one at the front and one at the back. Two other LED arrows will be displayed on the robot itself; since many people do not watch the floor while walking, these additional arrows at eye level will increase the robot's visibility. This system indicates direction in multiple ways:

*The arrow points in the direction the robot is moving


*The colors on the ground will prevent people from walking in this illuminated space

The right picture below shows how lights are used in the design.

[[File:robot navigation.png|300px| Picture 1]] [[File:Lightschair.JPG|300px| Picture 2]]
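The arrow logic can be sketched as follows, using the 3-second advance from the requirements for traversing the platform and the average speed of 0.5 m/s: look ahead along the planned path by speed × 3 s and compare the path direction there with the robot's current heading. This is a hypothetical Python sketch; the path format (a list of (x, y) waypoints in metres, starting at the robot, with angles measured counter-clockwise so that positive means left) and the 20° threshold are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def segment_at(path: List[Point], distance: float) -> int:
    """Index of the path segment that contains the point `distance` metres along the path."""
    travelled = 0.0
    for i, ((x0, y0), (x1, y1)) in enumerate(zip(path, path[1:])):
        length = math.hypot(x1 - x0, y1 - y0)
        if travelled + length >= distance:
            return i
        travelled += length
    return len(path) - 2  # beyond the end of the path: use the last segment


def arrow_for(path: List[Point], heading: float, speed: float,
              lookahead_s: float = 3.0, threshold: float = math.radians(20)) -> str:
    """Arrow to display: 'left', 'right' or 'straight' (path needs at least two waypoints)."""
    i = segment_at(path, speed * lookahead_s)
    (x0, y0), (x1, y1) = path[i], path[i + 1]
    turn = wrap_angle(math.atan2(y1 - y0, x1 - x0) - heading)  # future direction vs. current heading
    if turn > threshold:
        return "left"
    if turn < -threshold:
        return "right"
    return "straight"
```

At 0.5 m/s the robot looks 1.5 m ahead, so a corner 1 m away already switches the arrow, while at 0.25 m/s (0.75 m ahead) the same corner still shows "straight".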

==== Light ====

The lights are red in the direction the robot is heading (in front of the robot) and green at the back of the robot. These colors indicate that people are allowed to walk behind the robot but should avoid walking close in front of it. With these lights, people will avoid the area in front of the robot, which makes it easier to drive through a crowded area.

==== Alarm ====

When people do not pay attention to the lights and get too close to the robot, it sounds an alarm, so that people standing in its way are alerted. The alarm should not cause panic on the waiting platform, so it should not sound similar to the sirens of the police, ambulance, fire brigade or other emergency services. In fact, it might even be a good idea to play some familiar music, perhaps piano music. This is already done in Taiwan for garbage collection: the garbage trucks play "Für Elise" by Beethoven, people recognise it and go out to bring their garbage to the truck. It draws attention without being especially agitating, so it might help make the waiting platform slightly more friendly. The robot should indicate its approach by a sound. For the scope of this project, finding the actual sound is not a priority; however, we can identify the requirements for this sound:

*It should be loud enough to be heard by everyone within a radius of 10 meters around the robot, including people who are hard of hearing and people wearing headphones. It should, however, not be too loud: it should not cause hearing damage or annoyance, or scare people.

*The sound should not be very ‘alarm-like’, as this may scare people and, more importantly, may cause them to panic or think of an emergency. The robot passing is obviously not an emergency, so the sound should announce the robot’s passing in a serene but audible manner. Another option is a robot voice signalling its passing, e.g. 'Please move!'.

*To make the robot more pleasant to use, we could choose a song to signal the robot’s passing. As the sound is meant to warn people that the robot is passing through, we could for example use ‘Go your own way’ by Fleetwood Mac.
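As a rough check on the 10-meter requirement, the standard free-field relation for a point source gives how the sound pressure level <math>L_p</math> drops with distance <math>r</math> from a reference distance <math>r_0</math>. This idealization is assumed here only for illustration: reflections from the platform roof and walls and the station's background noise will change real levels, so they would have to be measured on site.

<math>L_p(r) = L_p(r_0) - 20\log_{10}\frac{r}{r_0}</math>

<math>L_p(10\ \mathrm{m}) = L_p(1\ \mathrm{m}) - 20\log_{10}10 = L_p(1\ \mathrm{m}) - 20\ \mathrm{dB}</math>

Under this assumption, people standing right next to the robot hear it about 20 dB louder than people at the edge of the 10-meter radius, which is the tension between the lower and upper loudness limits above.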

== Autonomous Driving ==

This chapter presents the autonomous function of the robot. The background and techniques described here are intended as a starting point for an actual hardware implementation. First, the localization and orientation of the robot are discussed, because they are the starting point for planning and acting in the real world (Russell, S. and Norvig, P., 2014). Then planning and acting on the platform are discussed at a fundamental level. This chapter is mainly based on the book "Artificial Intelligence: A Modern Approach" by Stuart Russell and Peter Norvig, published in 2014. For the eventual implementation, we advise reading their book alongside this chapter.

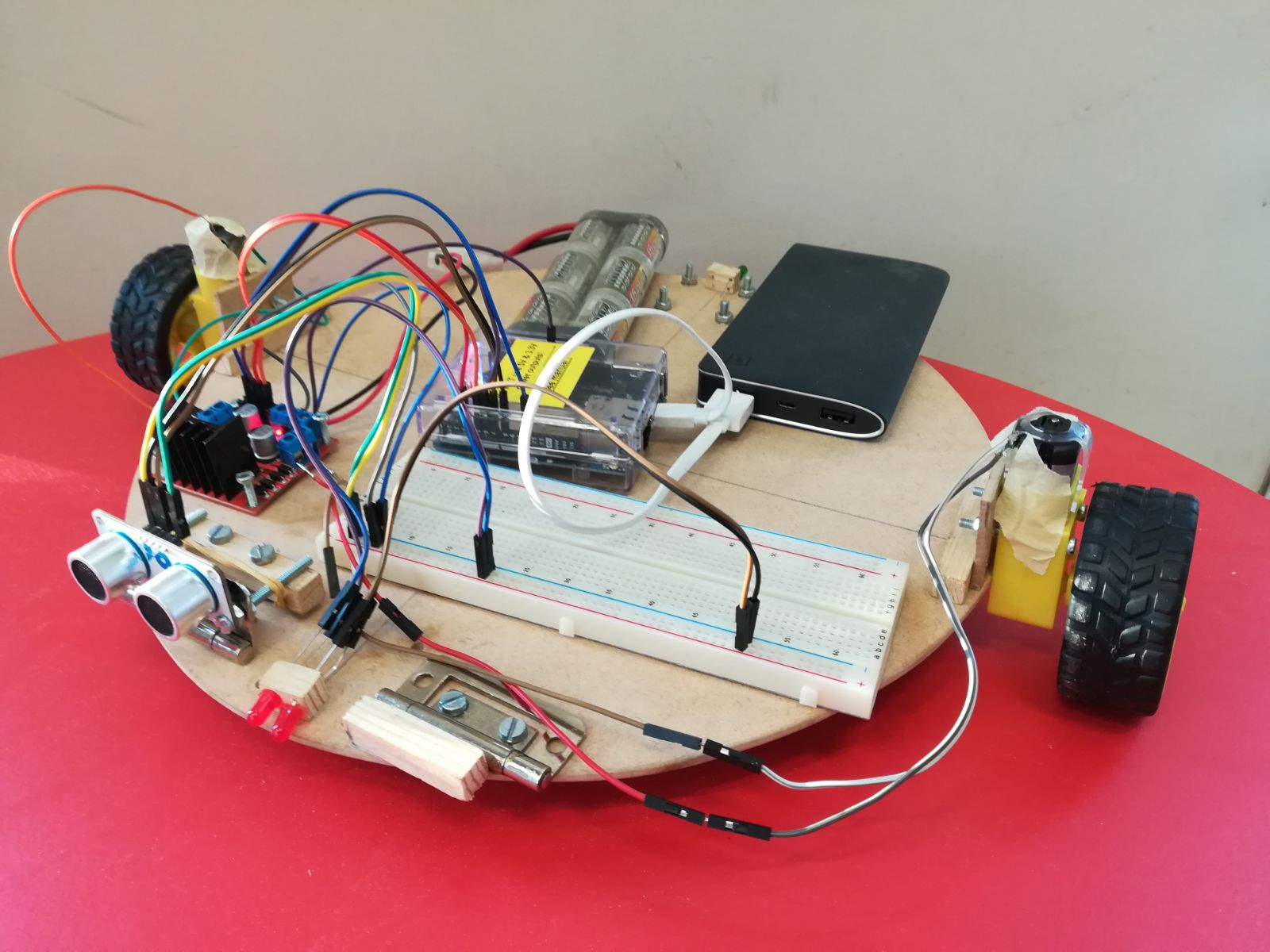

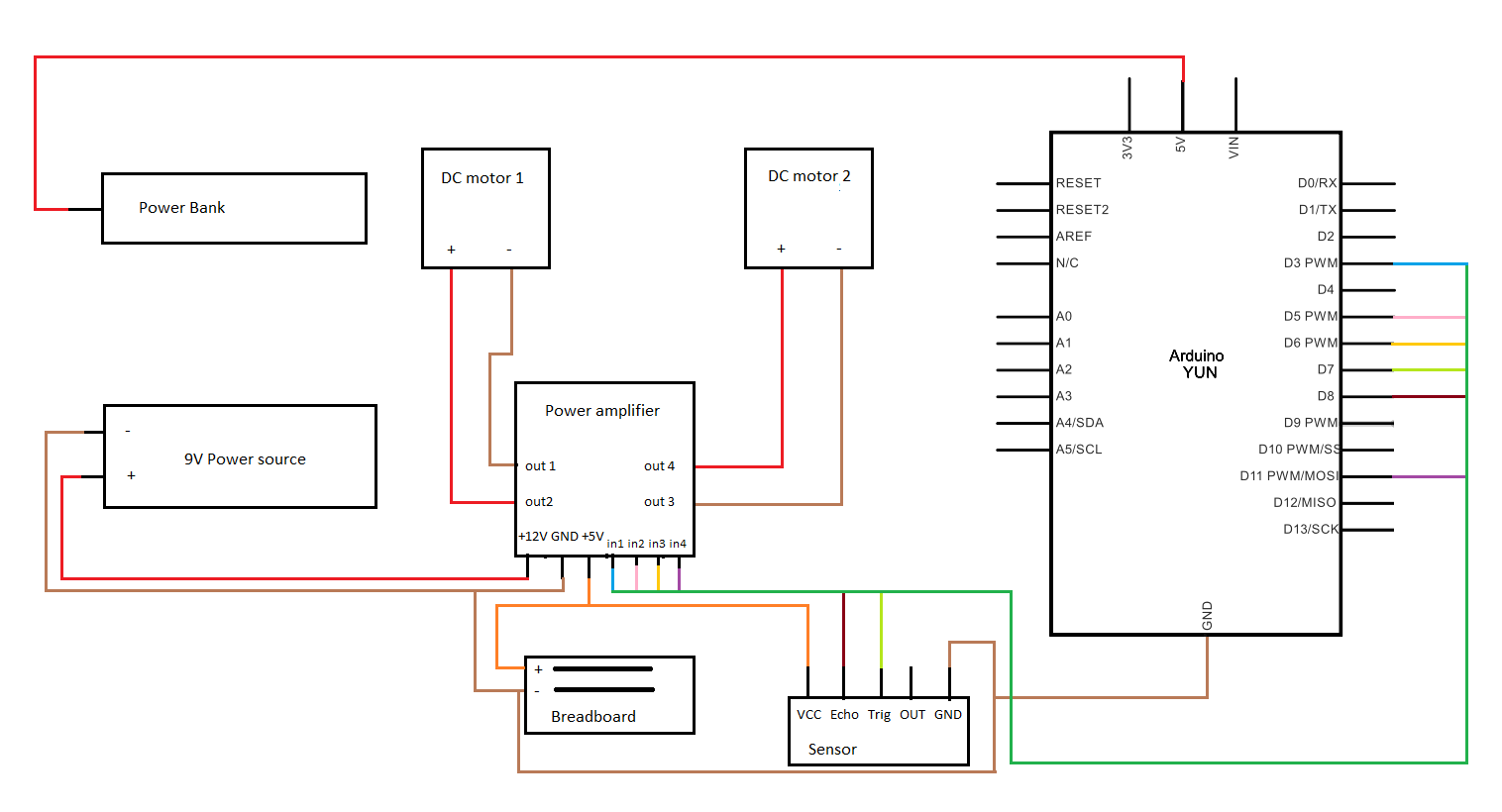

=== Robot Hardware ===

==== Perception ====

Although perception appears effortless for humans, it requires a significant amount of sophisticated computation. The goal of vision is to extract the information needed for tasks such as manipulation, navigation and object recognition. The robot traversing the platform will have different methods to deal with different obstacles, but to choose the right course of action it first has to know what is happening. Perception in our robot will be done with active sensing: the robot should combine ultrasound with laser or camera vision. Visual observations are extraordinarily rich, both in the detail they can reveal and in the sheer amount of data they produce. The additional problem for the wheelchair robot is then to determine which aspects of the rich visual stimulus should be considered to help make good choices, and which to ignore. The ultrasound sensors will mainly determine the world map and notice when objects are moving. The visual observations should help the robot distinguish between different dynamic objects (e.g. a human and a cat). Russell and Norvig also note that visual object recognition in its full generality is a very hard problem, but with simple feature-based approaches our robot should be able to know enough to take action. Perception can also help our robot measure its own motion using accelerometers and gyroscopes.
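A minimal sketch of how the ultrasound sensors could notice that something moved: compare two consecutive range scans and flag the sensors whose reading changed by more than a threshold. It assumes the robot stands still between the two scans (otherwise its own motion must be compensated first), that `math.inf` means "no echo", and a hypothetical 10 cm threshold; `changed_sensors` is a hypothetical name.

```python
import math
from typing import List, Sequence


def changed_sensors(previous: Sequence[float], current: Sequence[float],
                    threshold_m: float = 0.10) -> List[int]:
    """Indices of ultrasonic sensors whose range changed by more than `threshold_m` between scans.

    A reading of math.inf means no echo; an object appearing or disappearing counts as a change.
    """
    if len(previous) != len(current):
        raise ValueError("scans must come from the same set of sensors")
    changed = []
    for i, (before, after) in enumerate(zip(previous, current)):
        if before == after:
            continue  # identical readings, including inf == inf (still no echo)
        if abs(after - before) > threshold_m:
            changed.append(i)
    return changed
```

The flagged sensors tell the robot where something moved; the camera could then be used to classify what it is, as described above.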

==== Effectors ====

Effectors are the means by which robots move and change the shape of their bodies (Russell and Norvig, 2015). Our robot will use a differential drive for locomotion. This gives the robot three degrees of freedom (x and y position and orientation in the xy-plane), which can be obtained with the Marvelmind beacons discussed under Localization. Only two degrees of freedom are directly controllable, so the robot is nonholonomic. This makes the robot harder to control, as it cannot move sideways. Yet driving only two wheels on opposite sides of a disk makes the kinematic model of our robot a lot simpler.
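The nonholonomic behaviour shows up directly in the standard kinematic model of a differential drive (the unicycle model): the two wheel speeds only set a forward speed and a turn rate, and there is no input for sideways motion. This Python sketch integrates that model over one time step; the wheel base in the example and all names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians, counter-clockwise from the x-axis


def drive_step(pose: Pose, v_left: float, v_right: float, wheel_base: float, dt: float) -> Pose:
    """Advance a differential-drive robot by `dt` seconds given its wheel speeds in m/s."""
    v = (v_right + v_left) / 2.0                  # forward speed of the robot centre
    omega = (v_right - v_left) / wheel_base       # turn rate in rad/s
    mid_heading = pose.theta + omega * dt / 2.0   # midpoint heading improves accuracy on curves
    return Pose(
        x=pose.x + v * math.cos(mid_heading) * dt,
        y=pose.y + v * math.sin(mid_heading) * dt,
        theta=pose.theta + omega * dt,
    )
```

Position changes only along the current heading, so the robot has to turn before it can move sideways, which is exactly what makes it nonholonomic.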

=== Robotic perception and path planning ===

Earlier, the perception criteria for the robot were discussed; here the hardware/software implementation is discussed. Russell and Norvig define perception as follows: "Perception is the process by which robots map sensor measurements into internal representations of the environment." Perception is hard for our robot because the environment is partially observable, unpredictable and dynamic, and because the sensors are noisy. In all cases, the robot should filter out the useful information and make a state estimate that contains enough information to make good decisions.
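As a minimal illustration of filtering noisy measurements into a state estimate, here is a one-dimensional Kalman filter for a position that is assumed to stay roughly constant between measurements, such as successive beacon readings of a waiting robot. It is a textbook sketch rather than the robot's actual estimator; the variances and the name `kalman_1d` are hypothetical.

```python
from typing import List, Sequence, Tuple


def kalman_1d(measurements: Sequence[float], meas_var: float, process_var: float,
              x0: float, p0: float) -> Tuple[List[float], float]:
    """Filter 1D position measurements; returns the estimate after each step and the final variance."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + process_var        # predict: position unchanged, uncertainty grows
        k = p / (p + meas_var)     # Kalman gain: how much to trust the new measurement
        x = x + k * (z - x)        # update the estimate towards the measurement
        p = (1.0 - k) * p          # updated (smaller) uncertainty
        estimates.append(x)
    return estimates, p
```

Starting from a very uncertain prior (`p0` large), the first measurement is taken almost as-is and later ones are averaged in, so the variance shrinks roughly as `meas_var` divided by the number of measurements when `process_var` is zero.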

==== Localization ====


The algorithm that enables the robot to find the goal requires positions and orientations. This section elaborates on the trilateration of positions and orientations. To trilaterate the position, three beacons <math>(A, B, C)</math> are required. These beacons are located at <math>A = (0,0)</math>, <math>B = (0,b)</math> and <math>C = (c,0)</math>, in which <math>b</math> and <math>c</math> are constant values since the beacons do not move. Next, we calculate the distance from the sender/receiver on the robot to each of the beacons, using the timestamp <math>t_{X,i}</math> and the propagation speed of the signal <math>v</math>. For radio signals this would be the speed of light; for the ultrasonic Marvelmind beacons discussed below, it is the speed of sound, about 343 m/s in air at 20 °C. The index <math>i</math> refers to the sender/receiver node to which the distance applies; in other words, <math>i</math> refers to the corresponding coordinate system.

<math>d_{X,i} = v\, t_{X,i}</math>

<math>\theta = \arctan\left(\frac{Y_2 - Y_1}{X_2 - X_1}\right)</math>
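The localization formulas can be checked with a short sketch. For beacons at (0, 0), (0, b) and (c, 0), subtracting the circle equations d_A² = x² + y², d_B² = x² + (y − b)² and d_C² = (x − c)² + y² gives the closed form used below. The orientation uses `math.atan2`, which, unlike a plain arctangent of the ratio, returns the correct quadrant and does not divide by zero when the two receivers are vertically aligned. The sketch assumes, as the orientation formula suggests, that (X_1, Y_1) and (X_2, Y_2) are the positions of two receivers on the robot, and that distances come from one-way time of flight multiplied by the propagation speed (taken here as the speed of sound for ultrasonic beacons). Noise handling, such as a least-squares fit over more beacons, is left out, and all names are hypothetical.

```python
import math
from typing import Tuple

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed for ultrasonic beacons)


def distance_from_tof(t_flight_s: float, speed: float = SPEED_OF_SOUND) -> float:
    """Distance travelled by the signal during its one-way time of flight."""
    return speed * t_flight_s


def trilaterate(d_a: float, d_b: float, d_c: float, b: float, c: float) -> Tuple[float, float]:
    """Position (x, y) from distances to beacons A = (0, 0), B = (0, b) and C = (c, 0)."""
    x = (d_a ** 2 - d_c ** 2 + c ** 2) / (2 * c)  # from d_A^2 - d_C^2 = 2cx - c^2
    y = (d_a ** 2 - d_b ** 2 + b ** 2) / (2 * b)  # from d_A^2 - d_B^2 = 2by - b^2
    return x, y


def heading(p1: Tuple[float, float], p2: Tuple[float, float]) -> float:
    """Orientation in radians of the line from receiver 1 to receiver 2."""
    return math.atan2(p2[1] - p1[1], p2[0] - p1[0])
```

For example, with b = 10 m and c = 8 m, a robot at (3, 4) measures distances of 5, √45 and √41 m, and `trilaterate` recovers (3, 4) from them.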

===== Beacons of Marvelous Minds =====

After some research, we found that the drones team uses a [https://marvelmind.com/ Marvelmind] Robotics beacon system. The system can be used with an Arduino. The company provides, among other things, the following information:

"Marvelmind Indoor Navigation System is off-the-shelf indoor navigation system designed for providing precise (+-2cm) location data to autonomous robots, vehicles (AGV) and copters. The navigation system is based on stationary ultrasonic beacons united by radio interface in license-free band. Location of a mobile beacon installed on a robot (vehicle, copter, human, VR) is calculated based on the propagation delay of ultrasonic signal (Time-Of-Flight or TOF) to a set of stationary ultrasonic beacons using trilateration.

Stationary beacons form the map automatically. No manual entering of coordinates or distance measurement is required. If stationary beacons are not moved, the map is built only once and then the system is ready to function after 7-10 seconds after the modem is powered.

The system needs an unobstructed sight by a mobile beacon of two stationary or more stationary beacons simultaneously – for 2D (X,Y) tracking. The distance between beacons cannot exceed 30 m."

We propose to use this system in our robot, so that the robot always has a fixed reference to the real world and knows its own position.

==== Mapping ====

To plan a path, a map is needed, and knowing where the robot is forms only part of creating that map. The robot knows where it is through the beacons and its local measurement hardware. The next step is to determine where the obstacles are relative to the robot and to add them to the map. For this the robot uses ultrasonic range sensors: each measured range, combined with the robot's own position, places the detected object in the map as a landmark. Russell and Norvig describe the Kalman filter and the extended Kalman filter, which differ in how they model the sensors and the robot: the standard Kalman filter uses only linear motion and sensor models, while the extended Kalman filter linearizes nonlinear models. They present these filters as an alternative to Monte Carlo localization, which uses a particle filter instead. In our solution the robot is given a map of the waiting platform before it has to traverse it, and the beacons are used to acquire its location. This means that the robot is not required to localize and map simultaneously. In other words, we consider SLAM neither needed nor desired for localization.
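To illustrate the Kalman filter idea in its simplest form, the sketch below smooths a series of ultrasonic range readings with a scalar Kalman filter. It assumes the range is (nearly) constant between readings, for example while the robot stands still in front of a static obstacle, and the noise variances are hypothetical values that would have to be tuned on the real sensor.

```python
def kalman_1d(measurements, x0, p0, process_var, measurement_var):
    """Scalar Kalman filter for a quantity modelled as (nearly) constant.

    x0, p0: initial estimate and its variance.
    process_var: how much the true value may drift per step (assumed).
    measurement_var: variance of one sensor reading (assumed).
    Returns the filtered estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: constant model, so only the uncertainty grows.
        p += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + measurement_var)
        x += k * (z - x)
        p *= 1 - k
        estimates.append(x)
    return estimates
```

A smaller `measurement_var` makes the filter trust new readings more; a larger one gives smoother but slower estimates.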

A greater problem is finding out what kind of object is in front of the robot; in other words, we would like to identify the landmarks. We would like to use camera vision for this, but visual perception is complex, so this report only outlines some possible solutions and provides some background as a starting point.

In addition, the planning should be able to deal with uncertainty. To achieve this, the robot needs to re-plan its path continuously and keep requesting information when it faces high uncertainty.

==== Determining location of the door ====

For the robot to help a person exit the train, it needs to know where that person is in the train. Trains in the Netherlands do not stop at exactly the same place on the platform every time, which is a problem for a robot-based system. In Den Bosch, real-time updated LED lights are being tested that show people where the doors will be located when the train stops. This information can be used to determine the location of the person on board. Once the location of the door for disabled passengers is known, the system can send it to the robot, which then knows where the person is and can drive to that location.

[[File:ledlight.PNG|400px|thumb|right]]

==== Planning to move ====

The robot will use point-to-point motion to reach its goal location. The original 3D workspace is transformed into the configuration space. This space is continuous, and planning in it can be done by either cell decomposition or skeletonization; both reduce the continuous path-planning problem to a discrete graph-search problem. In addition, the robot should be capable of compliant motion, that is, moving while in physical contact with an obstacle. This is necessary because people on the waiting platform may push the robot or touch it in other ways.
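The reduction from a continuous space to discrete graph search can be sketched with cell decomposition on a coarse occupancy grid, searched with A*. The grid encoding (0 = free, 1 = occupied), the 4-connected moves and the unit step costs are assumptions for illustration; the text does not prescribe a specific search algorithm. Calling the function again whenever the grid changes is one simple way to get the continuous re-planning mentioned above.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = occupied).

    start and goal are (row, col) cells. Returns the list of cells from
    start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk back through the parents to reconstruct the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```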

==== Moving ====

A path found by a search algorithm can be executed by using it as the reference trajectory for a PID controller. Such a controller is necessary for our robot, as path planning alone is usually insufficient. In our demonstration we specified a robot controller directly: rather than deriving a path from a model of the world, our controller switches state in a finite state machine whenever it encounters a problem. This implementation is much simpler and gives a good demonstration of the robot we built. The finite state machine also makes it easy to give feedback to the user, because each state clearly indicates which action will be taken. However, higher-level path planning remains necessary for traversing the platform autonomously.
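A minimal sketch of the PID part, assuming a one-dimensional, velocity-controlled robot whose position simply integrates the commanded speed; the gains are hypothetical. On the real robot the error would be the deviation from the reference trajectory, for example the cross-track or heading error.

```python
class PID:
    """Textbook PID controller: u = Kp*e + Ki*integral(e) + Kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_tracking(setpoint, kp, ki, kd, dt=0.01, steps=1000):
    """Drive a 1D velocity-controlled robot (x' = u) from x = 0 to setpoint."""
    pid = PID(kp, ki, kd)
    x = 0.0
    for _ in range(steps):
        u = pid.update(setpoint - x, dt)
        x += u * dt  # position integrates the commanded velocity
    return x
```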

==== Obstacle Avoidance ====

To make obstacle avoidance more suitable for the train platform, we would like to make use of the dynamics of the people moving on the platform. This first requires distinguishing between static and dynamic objects; otherwise the robot cannot determine which action to take. To distinguish them, we want to use a coarse 2D grid (cell decomposition) in which all benches and other static objects are hard-coded. The sensors then detect objects and indicate the tile in which each object is located. The robot checks this tile against the map to decide whether it knows the object. If it does, it can simply follow the path already planned around the object; otherwise it has to find a way past the object. An unknown object may be a static object that can be moved (a "static-dynamic" object), such as a suitcase, or a dynamic object that moves by itself.
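The known/unknown check described above can be sketched as follows. The 0.5 m tile size and the function names are hypothetical; detections are assumed to be already converted to platform coordinates in metres using the robot's beacon position.

```python
def to_cell(x, y, cell_size):
    """Map a platform position (metres) to the index of its grid tile."""
    return (int(x // cell_size), int(y // cell_size))


def unknown_detections(detections, static_cells, cell_size=0.5):
    """Return the tiles of detected objects that are not hard-coded
    static objects (benches etc.), i.e. candidates for re-planning."""
    unknown = set()
    for x, y in detections:
        cell = to_cell(x, y, cell_size)
        if cell not in static_cells:
            unknown.add(cell)
    return unknown
```

Tiles returned by `unknown_detections` could then be marked as temporarily occupied in the planning grid, while detections in known tiles leave the existing path unchanged.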

==== Docking and entering the train ====

In the final moments of the approach, the robot positions itself using local light-sensitive sensors and reflectors beneath the train. At that point the robot is already so close to the train that people cannot interfere with the light sensors. These sensors also require probability filters to filter out noise and obtain reliable results. Docking is left to the robot itself and cannot be done manually, to prevent accidents.
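One simple probability filter for this step is a binary Bayes filter in log-odds form, which estimates the probability that the robot is aligned with the reflector from a series of noisy light-sensor readings. This is a sketch under assumptions: the readings are treated as independent, the robot is assumed to hold still while filtering, and the sensor-model probabilities are hypothetical.

```python
import math


def reflector_probability(readings, p_hit_aligned=0.9, p_hit_not_aligned=0.2, prior=0.5):
    """Binary Bayes filter for the static state "robot is aligned with
    the reflector", given boolean light-sensor readings (True = hit).
    The sensor-model probabilities are illustrative assumptions."""
    # Start from the prior belief, expressed as log-odds.
    log_odds = math.log(prior / (1 - prior))
    for hit in readings:
        if hit:
            likelihood_ratio = p_hit_aligned / p_hit_not_aligned
        else:
            likelihood_ratio = (1 - p_hit_aligned) / (1 - p_hit_not_aligned)
        # Each independent reading adds its log-likelihood ratio.
        log_odds += math.log(likelihood_ratio)
    # Convert log-odds back to a probability without overflowing exp().
    if log_odds >= 0:
        return 1 / (1 + math.exp(-log_odds))
    e = math.exp(log_odds)
    return e / (1 + e)
```

The robot could then, for example, only complete docking once this probability exceeds a chosen threshold such as 0.99.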

[[File:Laser_docking.JPG|400px| Picture 1]]

When the robot docks to the train, other passengers might block it. As mentioned, the train has information about the position of the disabled person in the train. When a disabled person scans his OV-chipcard inside the train, a beacon is activated, together with a red light on the door indicating that a disabled person will leave the train. The same applies when a disabled person scans his OV-chipcard at the dock: the robot connects to the beacon at the door of the train, and the established connection also triggers a red light on the outside of the door. This will make the final phase of docking less troublesome, because the light will hopefully deter other passengers from standing at the door where the robot will dock.

[[File:Docking_user.JPG|400px| Picture 1]] [[File:Traindock.JPG|850px| Picture 1]]

The disabled person boards the train using a lift system. Once the robot is docked to the train, the plate rises; when it reaches the right height, the lift stops and the person can easily get on or off the train.

== Shared Control ==


Another study that inspired this project was performed by Connell and Viola (1990). They made a striking comparison between riding a horse and driving a car: a horse will not crash at high speed, and “if you fall asleep in the saddle, a horse will continue to follow the path it is on.” This illustrates the added value of shared control very well. In their article, the robot works as follows: the operator (the disabled person) is free to drive the robot in any direction, but the robot refuses to continue its path if it detects an obstacle. Our robot is designed in a similar way. Shared control is beneficial in two cases. If the robot is too cautious (for example in a very busy environment), the disabled person can take complete control to increase efficiency. On the other hand, when the person is unable to drive or is tired, he can hand over full control to the robot.

Because no information is available on shared control in wheelchair lifting devices, related research on vehicle guidance is used instead. When the system encounters an object or the end of the platform, it needs the user to take over the driving. But what is the best way to indicate to the user that he needs to act? The system must signal what is happening and when it wants the user to take over and move the machine himself. “Shared control between human and machine: using a haptic steering wheel to aid in land vehicle guidance” (Steele et al, 2001) concludes that incorporating haptic feedback into the control device (in our case the joystick) improves the alertness of users. An example of haptic feedback is a vibration signal given when the machine encounters a problem and needs to hand over control to the user; this alerts the user to take control immediately. “Haptic shared control: smoothly shifting control authority?” (Abbink et al, 2011) concludes that haptic shared control can lead to short-term performance benefits (faster and more accurate vehicle control, lower levels of control effort). It would therefore be wise to incorporate force feedback (haptic control) into the feedback system to the user. Much like Tesla's Autopilot (see reference list), which requires the driver to keep their hands on or near the steering wheel and gives haptic feedback when the driver needs to act, our machine could require the user to keep a hand on the joystick.

When the wheelchair lifting device encounters an obstacle, the end of the platform, or an error, it needs to signal to the user what is expected of him. Because a screen is already incorporated in the device for the OV-chipcard check-in system, it makes sense to also use it to indicate when the user needs to take control. The user also needs the option to take control himself, even when the machine has not encountered a problem. This shared control can be displayed on the screen, which can be made a touchscreen so that the user can press a button to take control of the machine.

This, however, introduces a problem: according to “Visual-haptic feedback interaction in automotive touchscreens” (Pitts et al, 2012), touchscreens in the automotive industry reduce the user's awareness of the surroundings, because they add a visual workload. The same study also concludes that incorporating haptic feedback counters this and improves overall situational awareness. This suggests that it is a good idea to show information on the screen alerting the user that he needs to take control (by pressing the button on the touchscreen), while also alerting him with force feedback (vibration) that he needs to take action.


The results of the questionnaire, combined with the research above, have several implications for our design:

*Although we are unable to test our interpretation of the questionnaires, the literature research above nevertheless supports the view that shared control adds value to this robotic system.

*Shared control will be implemented similarly to the ‘Mister Ed’ robot by Connell and Viola (1990): the operator is free to drive in any direction, but the robot refuses to continue its path when it detects an obstacle. The robot will then pass the obstacle on the left or right side, as chosen by the user. The robot is effectively looking over the disabled person’s shoulder to remain safe at all times.
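The ‘Mister Ed’ style of shared control maps naturally onto the finite state machine used in our demonstration. The sketch below is hypothetical: the state names, the `"left"`/`"right"` choice (for example made on the touchscreen) and the idea of vibrating the joystick while blocked are illustrative assumptions combining the points above, not the project's actual code.

```python
from enum import Enum, auto


class State(Enum):
    USER_DRIVING = auto()   # operator is free to drive in any direction
    BLOCKED = auto()        # obstacle ahead: refuse to continue, alert user
    PASSING_LEFT = auto()   # robot passes the obstacle on the left
    PASSING_RIGHT = auto()  # robot passes the obstacle on the right


def next_state(state, obstacle_ahead, user_choice=None, obstacle_cleared=False):
    """One transition of the hypothetical shared-control state machine.
    user_choice: None, "left" or "right"."""
    if state is State.USER_DRIVING:
        return State.BLOCKED if obstacle_ahead else State.USER_DRIVING
    if state is State.BLOCKED:
        if not obstacle_ahead:
            return State.USER_DRIVING
        if user_choice == "left":
            return State.PASSING_LEFT
        if user_choice == "right":
            return State.PASSING_RIGHT
        return State.BLOCKED  # keep waiting for the user's choice
    # Passing states: hand control back once the obstacle has been passed.
    return State.USER_DRIVING if obstacle_cleared else state


def filter_forward_speed(requested, obstacle_ahead):
    """The robot 'looks over the shoulder': forward motion into a detected
    obstacle is refused, all other joystick commands pass through."""
    return 0.0 if obstacle_ahead and requested > 0 else requested


def should_alert(state):
    # Haptic vibration plus an on-screen message while waiting for the user.
    return state is State.BLOCKED
```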