PRE2016 4 Groep3


|-

| 0854765

| Liselotte van Wissen

|-

| 0944862

| Michalina Tataj

|}

= Introduction =

=== Problem description ===

The problem we decided to address is the improvement of the security procedure at airports. First, it is useful to look at the current situation, as this gives a starting point for creating a model to optimize this procedure.

Currently, all security checks are done by humans. Apart from the standard checks everybody gets, the security guards determine who looks suspicious and needs to be checked more thoroughly. However, this approach has some problems that we would like to address. We propose a model that detects various illegal or dangerous activities, so that it can alert the guards to violence, acts of terrorism, smuggling and theft and enable action before these events occur. The model will determine whether a person is acting suspiciously by measuring biometrics, namely body movements while walking and motion patterns, because these can be used to deduce a person’s mental state, such as anxiousness <ref name="suspicious_inconspicuous"> Koller, C. I., Wetter, O. E., & Hofer, F. (2015) </ref>. The problems our model should solve and its constraints are listed below.

First problem:

An airport can be very crowded, which makes it hard for the guards to see every single person and determine whether they act suspiciously. Using multiple cameras already improves the view, but we believe that with a model that analyses the camera footage, nobody will be missed: everybody at the airport will at least be checked for suspicious behaviour. This should lead to fewer or no potential criminals escaping inspection.

Because of the crowds at the airport, it is seldom possible to capture the motions of a person's lower body, especially with (stationary) cameras. This leads to the constraint that the model can only analyse the biometrics of the upper body.

Second problem:

The human aspect of the security checks introduces bias and/or (instructed) profiling. Since our model will analyse a person's behaviour and not their appearance, gender or ethnicity, it should be more objective and will not use visual profiling.

Because people from many different countries and cultures are present at the airport, the model should only use globally consistent traits to determine whether or not a person is suspicious.

=== Definitions ===

; Abnormal behaviour : Abnormal behaviour is defined as behaviour that deviates from the standard behaviour of people in a specific context and is not frequently observed. The chapter 'Suspicious behaviour' describes in more depth how we define and detect this abnormal/suspicious behaviour.

; Biometrics : We define biometrics as the measurement and analysis of body movement characteristics that are unique to different types of behaviour. See the chapter 'Possible biometrics for detecting abnormal behavior in crowds' for more detailed information on how we determine the biometrics from surveillance footage.

= Objectives =

'''Goal: Develop a software model for a video-based abnormal behaviour detection program'''

Objectives of this project:

*Formulate concrete problem statement

*Develop overview of the State-of-the-Art

**List the possible biometrics for detecting abnormal/suspicious behaviour

**List the different methods available for measuring biometrics (pros/cons, what method works best for what purpose/setting)

**Study current areas of research

**Indicate problems with the current technologies

*Develop model scenarios for determining abnormal behaviour

**Determine what constitutes abnormal behaviour (heavily dependent on context)

**What techniques could be used (pros/cons, possible new ideas)

*Develop USE aspects

***Develop easy-to-understand graphical interface for primary users

***Maintain sense of participation in primary users

***Conduct survey among primary users to gain insight into their current approach and to gauge support for such an application

***Incorporate findings into design

**Society:

*Create final presentation

The main goal of the model is to provide a general structure of a program that is capable of identifying suspicious persons for security applications. The method should be based on biometrics that can be used to determine abnormal behaviour, in order to obtain a higher success rate than comparable human-based surveillance.

The objectives of the model are:

**Decrease false-negative rate compared to human-based surveillance

**Decrease false-positive rate compared to human-based surveillance

**Provide results effectively to primary user(s) (security guards/police)

*USE objectives:

**Users:

***Provide easy-to-understand information to primary users

***Provide a higher sense of security (secondary users)

**Society:

***Decrease terrorist activity

***Create a higher global sense of security

***Increase crime prevention rate

***Decrease racial/religious tensions

**Enterprise:

***Improve success rate of security checks

***Be economically feasible

***Decrease damage caused to assets and maintain company reputation
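The first two model objectives compare error rates with human-based surveillance. As a minimal sketch of that comparison, assuming confusion counts (true/false positives and negatives) are available for both the guards and the model on the same footage, the two rates can be computed as below. The function name `error_rates` and all counts are hypothetical and only illustrate the arithmetic.

```python
def error_rates(tp: int, fp: int, tn: int, fn: int) -> tuple[float, float]:
    """Return (false-positive rate, false-negative rate) from confusion counts.

    FPR = FP / (FP + TN): share of innocent people who are flagged.
    FNR = FN / (FN + TP): share of offenders who are missed.
    """
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return fpr, fnr


# Hypothetical counts for guards and model, evaluated on the same footage.
human = error_rates(tp=6, fp=40, tn=9950, fn=4)
model = error_rates(tp=8, fp=20, tn=9970, fn=2)

# Both objectives are met only if both rates decrease.
print(model[0] < human[0] and model[1] < human[1])
```

Reporting both rates separately, rather than a single accuracy figure, matters here: with thousands of innocent passengers and very few offenders, a system that flags nobody would still look highly "accurate".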

= State-of-the-art =

== Possible biometrics for detecting abnormal behavior in crowds ==

To be able to detect abnormal behaviour, certain characteristics are required to identify agents in a scene. Such characteristics are either physiological or behavioural and are generally referred to as biometrics. To assess biometrics, the following conditions can be used:<ref name="biometrics general"> Jain, A., Bolle, R., & Pankanti, S. (Eds.). (2006). Biometrics: personal identification in networked society (Vol. 479). Springer Science & Business Media.</ref>

*Universality (every person in the scene should possess the trait)

*Permanence (the trait should not vary too much over time)

*Measurability (the trait should be relatively easy to measure)

*Performance (relates to the speed, accuracy and robustness of the technology used)

*Acceptability (the subjects should accept the technology used)

*Circumvention (it should not be easy to imitate the trait)

Most research on identifying behaviour via computer vision techniques focuses on non-crowded situations, in which the subject is either isolated or only a very small number of people are present. Also, most conventional computer vision methods are not appropriate for use in crowded areas. This is partly because people display different behaviour in a crowd context: some individual characteristics can no longer be observed, while new collective characteristics of the crowd as a whole emerge. Another important factor is the difficulty of identifying and tracking individuals in a crowd, mostly due to occlusion of (parts of) the subject(s) by objects or other agents. The quality of the video image and the increased processing power needed to track individuals are also important factors.

<ref name="crowd analysis techniques survey"> Junior, J. C. S. J., Musse, S. R., & Jung, C. R. (2010). Crowd analysis using computer vision techniques. IEEE Signal Processing Magazine, 27(5), 66-77.</ref>

Most current research on detecting abnormal behaviour in crowds focuses on tracking individuals in the crowd, which has proven to be difficult. Many different methods have been proposed for individual tracking, and while these tend to work satisfactorily in low to moderately crowded situations, they tend to fail in higher-density crowds. There are also models that use general crowd characteristics to detect anomalies, but these tend to ignore singular abnormalities and are better suited for detecting general locations in the scene that contain anomalies, for example where a fire has broken out.<ref name="crowd analysis techniques survey" />

There are promising models that try to combine both approaches. One model uses a set of low-level motion features to form trajectories of the people in the crowd, but additionally uses a rule set computed from the longest common subsequences <ref> Cheriyadat, A. M., & Radke, R. J. (2008). Detecting dominant motions in dense crowds. IEEE Journal of Selected Topics in Signal Processing, 2(4), 568-581.</ref>. This results in a system capable of highlighting individual movements that are not coherent with the dominant flow. Another paper created an unsupervised learning framework to model activities and interactions in crowded and complicated scenes <ref>Wang, X., Ma, X., & Grimson, W. E. L. (2009). Unsupervised activity perception in crowded and complicated scenes using hierarchical bayesian models. IEEE Transactions on pattern analysis and machine intelligence, 31(3), 539-555.</ref>. They used three elements: low-level visual elements, "atomic" activities (the most fundamental actions, which cannot be further divided into sub-activities) and interactions. This model was capable of completing challenging visual surveillance tasks such as detecting abnormalities.
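The longest common subsequence (LCS) mentioned above is a classic dynamic-programming measure of how much two sequences share in order. A minimal sketch, assuming trajectories have already been quantised into sequences of grid-cell labels (a hypothetical encoding, not necessarily the one used by Cheriyadat and Radke), shows how an LCS-based similarity can single out a track that is not coherent with the dominant flow. The similarity normalisation and the 0.5 threshold are arbitrary illustrative choices.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via dynamic programming."""
    # dp[i][j] holds the LCS length of a[:i] and b[:j]
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, start=1):
        for j, y in enumerate(b, start=1):
            if x == y:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]


def lcs_similarity(a, b) -> float:
    """Normalise the LCS length by the shorter sequence, giving a value in [0, 1]."""
    shortest = min(len(a), len(b))
    return lcs_length(a, b) / shortest if shortest else 0.0


# Hypothetical dominant motion and two tracks, encoded as grid-cell labels.
dominant = ["A1", "A2", "A3", "A4", "A5"]
tracks = {
    "p1": ["A1", "A2", "A3", "A5"],        # follows the flow, skips one cell
    "p2": ["A5", "A4", "A3", "A2", "A1"],  # walks against the flow
}
for name, track in tracks.items():
    sim = lcs_similarity(dominant, track)
    print(name, round(sim, 2), "incoherent" if sim < 0.5 else "coherent")
```

Because a subsequence may skip elements, a track that misses a grid cell (p1) still matches the dominant motion fully, while the reversed track (p2) shares only one cell in order.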

Common problems in crowded scenes, such as occlusion of the subjects, can be reduced by moving to a multi-camera surveillance system. Having different angles of the same scene available allows the system to better identify and track subjects. Dynamic cameras, i.e. cameras able to turn and zoom in and out, should be able to increase the efficiency of identifying suspicious persons, for example by zooming in on the relevant area. However, the use of multiple cameras brings new problems with it. It is difficult to calibrate camera views with significant overlap and to compute their topology. Calibrating camera views that are disjoint, and where objects move on multiple ground planes, has proven to be challenging. Most research on video surveillance assumes a single-camera view, even though multiple-camera surveillance systems can better handle occlusions and scene clutter. Most research on multi-camera systems is based on small camera networks.<ref> Wang, X. (2013). Intelligent multi-camera video surveillance: A review. Pattern recognition letters, 34(1), 3-19.</ref>

== Detecting human activity ==

To recognise human activity, a general framework is used that divides human activity recognition into three levels. The low level represents the core technology, meaning the technical aspects of recognising humans in a scene. The mid level represents the actual human activity recognition systems. The high level represents the recognised results applied in an environment, for example a surveillance environment.

The low level contains three main processing stages: object segmentation, feature extraction and representation, and activity detection and classification algorithms.

Object segmentation is performed on each frame in the video sequence to detect humans in the scene. The segmentation can be divided into two types based on the mobility of the camera used. For a static camera, the most common segmentation method is background subtraction. In background subtraction, a background image without any foreground object(s) is first established. The background image can then be subtracted from the current image to obtain the foreground objects. However, this process is highly sensitive to illumination changes and background changes. Other, more complex methods are based on statistical models or on tracking. For dynamic cameras, the background is constantly changing. The most commonly used segmentation method is then temporal differencing, i.e. taking the difference between consecutive image frames. It is also possible to transform the coordinate system of the moving camera based on the pixel-level motion between two images in the video.
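Both segmentation strategies described above reduce, in their simplest form, to thresholded per-pixel differences. The sketch below assumes grayscale frames given as small 2-D lists of intensities and a hypothetical threshold of 30; it ignores the camera-motion compensation mentioned above, and a real system would use an image-processing library and a more robust background model.

```python
Frame = list[list[int]]  # hypothetical: grayscale intensities, one list per row


def abs_diff_mask(a: Frame, b: Frame, threshold: int) -> list[list[bool]]:
    """Mark pixels whose absolute intensity difference exceeds the threshold."""
    return [
        [abs(pa - pb) > threshold for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def background_subtraction(frame: Frame, background: Frame, threshold: int = 30):
    """Static camera: compare the current frame with an empty-scene background."""
    return abs_diff_mask(frame, background, threshold)


def temporal_difference(prev: Frame, curr: Frame, threshold: int = 30):
    """Moving camera (no motion compensation): compare consecutive frames."""
    return abs_diff_mask(prev, curr, threshold)


background = [[10, 10, 10],
              [10, 10, 10]]
frame_1 = [[10, 200, 10],   # a bright "person" appears in the middle column
           [10, 200, 10]]
frame_2 = [[10, 10, 200],   # the person has moved one column to the right
           [10, 10, 200]]

print(background_subtraction(frame_2, background))
print(temporal_difference(frame_1, frame_2))
```

Note that temporal differencing marks both the old and the new position of a moving object, while background subtraction marks only the current one; this is one reason the simple versions are usually combined with further processing.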

The second stage of the low level looks at the characteristics of the segmented objects and represents them as features. These features can generally be categorised into four groups: space-time information, frequency transforms, local descriptors and body modelling. Different methods are used for the different categories. The classification algorithm is then chosen based on the available set of suitable feature representations.

The actual mid-level abnormal activity recognition generally relies on a deviation approach. Explicitly defining abnormal behaviour depends heavily on context and surrounding environments, and such behaviours are, by definition, not frequently observed. Most models therefore use a reference model, analogous to the background image in background subtraction, built from examples or previously seen data, and consider new observations abnormal if they deviate from the trained model. Different methods exist for building this reference model.
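The deviation approach can be sketched with one of the simplest possible reference models. This assumes each observation has already been reduced to a fixed-length feature vector; the two example features (walking speed and direction changes per minute), the class name `ReferenceModel` and the threshold `k = 3` are hypothetical. The model stores a per-feature mean and standard deviation learned from normal data and flags an observation when any feature lies more than `k` standard deviations away.

```python
import statistics


class ReferenceModel:
    """Per-feature Gaussian reference model trained on normal observations (a sketch)."""

    def __init__(self, k: float = 3.0):
        self.k = k
        self.means: list[float] = []
        self.stdevs: list[float] = []

    def fit(self, normal_data: list[list[float]]) -> "ReferenceModel":
        columns = list(zip(*normal_data))  # one tuple of values per feature
        self.means = [statistics.fmean(c) for c in columns]
        # A constant feature has stdev 0; z_scores() treats it as never deviating.
        self.stdevs = [statistics.pstdev(c) for c in columns]
        return self

    def z_scores(self, x: list[float]) -> list[float]:
        return [
            (xi - m) / s if s > 0 else 0.0
            for xi, m, s in zip(x, self.means, self.stdevs)
        ]

    def is_abnormal(self, x: list[float]) -> bool:
        return any(abs(z) > self.k for z in self.z_scores(x))


# Hypothetical normal data: [walking speed (m/s), direction changes per minute]
normal = [[1.2, 2.0], [1.4, 3.0], [1.3, 2.0], [1.1, 3.0], [1.5, 2.5]]
model = ReferenceModel(k=3.0).fit(normal)
print(model.is_abnormal([1.3, 2.5]))   # typical pedestrian
print(model.is_abnormal([1.3, 12.0]))  # many abrupt direction changes
```

Treating features independently keeps the sketch short; a model that captures correlations between features (for example a full covariance matrix) would be a natural next step.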

The last level, the high level, represents the actual application, which depends on the environment of the system. This research focuses on surveillance environments. In surveillance systems, human activity recognition is mostly focused on automatically tracking individuals and crowds in order to support security personnel. These environments tend to have multiple cameras, which can be used together as a network-connected visual system. The cameras can then track the position and velocity path of each subject, and the tracking results can be used to detect suspicious behaviour.<ref> Ke, S. R., Thuc, H. L. U., Lee, Y. J., Hwang, J. N., Yoo, J. H., & Choi, K. H. (2013). A review on video-based human activity recognition. Computers, 2(2), 88-131.</ref>
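Once a subject is tracked, the velocity path described above follows directly from consecutive positions. A minimal sketch, assuming the tracker outputs ground-plane positions in metres together with timestamps in seconds (a hypothetical input format; the function name `velocity_path` is also illustrative):

```python
import math


def velocity_path(track: list[tuple[float, float, float]]) -> list[tuple[float, float]]:
    """Convert (t, x, y) samples into one (speed, heading) pair per interval.

    Speed is in metres per second; heading is in degrees, measured
    counter-clockwise from the positive x-axis.
    """
    path = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order samples
        vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
        path.append((math.hypot(vx, vy), math.degrees(math.atan2(vy, vx))))
    return path


# Hypothetical track: walking east for two seconds, then turning north.
track = [(0.0, 0.0, 0.0), (1.0, 1.2, 0.0), (2.0, 2.4, 0.0), (3.0, 2.4, 1.2)]
for speed, heading in velocity_path(track):
    print(f"{speed:.2f} m/s at {heading:.0f} degrees")
```

The resulting speed and heading series are the raw material for the motion-pattern cues discussed in the next chapter.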

== Suspicious behaviour ==

To teach our software to recognise suspicious activity, we must first determine what constitutes such behaviour. Trying to remain inconspicuous while conducting a highly suspicious action results in a behavioural paradox that can be difficult for bystanders to detect. However, there are some general patterns in body language and motion that are observed significantly more frequently in individuals with criminal intentions. In our research we distinguish between two types of non-verbal cues: motion of the body itself and motion of the individual through a crowd. By ‘body’ we refer to the torso, head and arms, because these are mostly visible in a crowd, while the lower body is not. Facial expressions are outside the scope of our project and will not be taken into account.

=== Body language ===

Body language of people with criminal intent tends to differ from that of bystanders, because they need to remain undetected <ref name=suspicious_inconspicuous />. Frey <ref name="Whos_the_criminal">Frey, C. (2014). " Who's the Criminal?": Early Detection of Hidden Criminal Intentions-Influence of Nonverbal Behavioral Cues, Theoretical Knowledge, and Professional Experience (Doctoral dissertation).</ref>, among others, showed that people recognise this deception far more often than can be accounted for by chance.

During the build-up phase of a crime, offenders often show an increased frequency of object- and self-adaptors, in other words, the “manipulation of objects without instrumental goal” <ref name="Nonverbal_indicators">Burgoon, J. K. (2005). Measuring nonverbal indicators of deceit. The sourcebook of nonverbal measures: Going beyond words, 237-250.</ref> and an increased frequency and duration of contact between the hand and one's own body <ref name="Moderator_of_nonverbal_indicators">Sporer, S. L., & Schwandt, B. (2007). Moderators of nonverbal indicators of deception: A meta-analytic synthesis.</ref>. This includes touching and scratching one's own hair and face <ref name="Detecting_lies_deceit">Vrij, A. (2008). Detecting lies and deceit: Pitfalls and opportunities. (Vol. 13, 2nd ed.). John Wiley & Sons.</ref> and strictly unnecessary contact with carried objects, such as repeatedly tapping pens or reaching for an object multiple times without using it. This behaviour was observed in both assassination and bomb-planting scenarios in large crowds, indicating that it is likely not crime-specific <ref name="Fahigkeit_identifikation">Heubrock, D., Kindermann, S., Palkies, P., & Röhrs, A. (2009). Die Fähigkeit zur Identifikation von Attentätern im öffentlichen Raum. Polizei&Wissenschaft, 2(2009), 2-11.</ref> <ref name="Moglichkeiten">Heubrock, D. (2011). Möglichkeiten der polizeilichen Verhaltensanalyse zur Identifikation muslimischer Kofferbomben-Attentäter.[Possibilities of behavior analysis for identifying muslimic suitcase bombers.]. Polizei-Heute, 11(2), 13-24.</ref>.
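The frequency of self-adaptors described above could in principle be estimated from upper-body keypoints. A minimal sketch, assuming a pose estimator already supplies per-frame 2-D positions of one hand and of the head (hypothetical inputs, here in metres), and treating any frame in which the hand comes within an arbitrary `radius` of the head as contact. A contact event is counted each time contact begins, so one long touch counts once.

```python
import math

Point = tuple[float, float]  # hypothetical 2-D keypoint position


def touch_events_per_minute(hand: list[Point], head: list[Point],
                            fps: float, radius: float = 0.15) -> float:
    """Count hand-to-head contact onsets and normalise to events per minute."""
    events = 0
    in_contact = False
    for (hx, hy), (fx, fy) in zip(hand, head):
        touching = math.hypot(hx - fx, hy - fy) < radius
        if touching and not in_contact:
            events += 1  # rising edge: a new contact begins
        in_contact = touching
    # Duration is taken from the hand series; both series should be equally long.
    minutes = len(hand) / fps / 60.0
    return events / minutes if minutes > 0 else 0.0


# Hypothetical 6-second clip at 1 frame per second containing two separate touches.
head = [(0.0, 1.6)] * 6
hand = [(0.4, 1.0), (0.05, 1.6), (0.05, 1.6), (0.4, 1.0), (0.1, 1.6), (0.4, 1.0)]
print(touch_events_per_minute(hand, head, fps=1.0))
```

Counting onsets rather than contact frames separates the frequency of contact from its duration, which the literature cited above treats as two distinct measures.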

Troscianko et al. <ref name="What_happens_next">Troscianko, T., Holmes, A., Stillman, J., Mirmehdi, M., Wright, D., & Wilson, A. (2004). What happens next? The predictability of natural behaviour viewed through CCTV cameras. Perception, 33(1), 87-101.</ref> observed that head orientation can also give away suspicious intentions: offenders look away from their walking direction more often and look around repeatedly. However, one should be careful when considering these signs, as airports are vast and crowded, which often results in passengers getting confused or lost. Their searching behaviour can produce similar head movements, even though they have no harmful intentions.
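A minimal sketch of the head-orientation cue, assuming head yaw and walking heading are both available per frame in degrees (hypothetical inputs from a pose estimator and the tracker). It reports the fraction of frames in which the head points more than an arbitrary 45 degrees away from the walking direction. As cautioned above, a lost passenger may also score high, so such a value is only meaningful when compared with the rest of the crowd.

```python
def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees (0 to 180)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def look_away_fraction(head_yaw: list[float], walk_heading: list[float],
                       threshold: float = 45.0) -> float:
    """Fraction of frames in which the head is turned away from the walking direction."""
    pairs = list(zip(head_yaw, walk_heading))
    if not pairs:
        return 0.0
    away = sum(1 for h, w in pairs if angular_difference(h, w) > threshold)
    return away / len(pairs)


# Hypothetical per-frame angles (degrees) for a person walking roughly east.
walk = [0.0, 5.0, 355.0, 0.0]
yaw = [10.0, 90.0, 350.0, 200.0]
print(look_away_fraction(yaw, walk))
```

The wrap-around in `angular_difference` matters: a yaw of 350 degrees and a heading of 355 degrees differ by 5 degrees, not 355.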

Therefore, in addition to the cues themselves, a reliable method is needed to differentiate between real cues and normal behaviour. One way to do this is by measuring behaviour relative to the crowd. To ensure that one wrong gesture does not lead to a false positive, a baseline is established first: the frequency of suspicious behavioural cues is measured in the crowd overall to determine what should be regarded as ‘normal’ behaviour <ref name="Protecting_airline_passengers">Frank, M. G., Maccario, C. J., & Govindaraju, V. (2009). Protecting Airline Passengers in the Age of Terrorism. ABC-CLIO, Santa Barbara.</ref>. Only distinct deviations from this baseline are considered suspicious.
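The crowd baseline described above can be sketched as follows, assuming each tracked person already has a rate of suspicious cues per minute (hypothetical values and IDs). The baseline is the crowd median, and the spread is the median absolute deviation (MAD). This is a swapped-in robust choice rather than a mean and standard deviation: with a small crowd, a single extreme individual inflates the standard deviation enough to hide themselves. Only individuals more than an arbitrary `k` scaled MADs above the baseline are flagged; the test is one-sided because the cues are positive deviations.

```python
import statistics


def flag_above_baseline(cue_rates: dict[str, float], k: float = 3.0) -> list[str]:
    """Return IDs whose cue rate lies far above the crowd baseline.

    Baseline: crowd median. Spread: median absolute deviation (MAD), scaled
    by 1.4826 so it approximates a standard deviation for normal data.
    """
    values = list(cue_rates.values())
    baseline = statistics.median(values)
    mad = statistics.median(abs(v - baseline) for v in values)
    scale = 1.4826 * mad
    if scale == 0:
        return []  # no spread in the crowd, so no meaningful deviation measure
    # One-sided: only deviations above the baseline count as suspicious.
    return [pid for pid, v in cue_rates.items() if (v - baseline) / scale > k]


# Hypothetical cue rates (suspicious cues per minute) for eight tracked people.
crowd = {"p1": 2.0, "p2": 3.0, "p3": 1.0, "p4": 2.0,
         "p5": 4.0, "p6": 2.0, "p7": 3.0, "p8": 12.0}
print(flag_above_baseline(crowd))
```

Because the baseline is recomputed from the current crowd, a general rise in a cue (for example during boarding announcements) raises the baseline instead of producing many alarms.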

The recognition of body language does not rely on perfect information and vision. In experiments using point-light animation footage, it was observed that humans can still pick up behavioural cues from this limited visual information <ref name="Visual_perception">Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Perception & psychophysics, 14(2), 201-211.</ref>. This supports the idea that computer software will be able to pick up behavioural cues, despite its visual limitations.

=== Motion patterns ===

Criminal intentions show not only through a person’s body movement, but also in the way they move through a crowd. In general, an offender shows significantly more abrupt movement during the build-up phase of a crime, with more changes in speed, position and direction than in the general crowd <ref name=suspicious_inconspicuous />.
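The abruptness described above can be summarised per person from a sampled trajectory. A minimal sketch, assuming positions in metres sampled at a fixed time step `dt` in seconds (a hypothetical input format): it returns the mean absolute change in speed and the mean absolute change in heading between consecutive steps. The function name `abruptness` is illustrative only.

```python
import math


def abruptness(points: list[tuple[float, float]], dt: float = 1.0) -> dict[str, float]:
    """Mean absolute change in speed (m/s per step) and heading (degrees per step)."""
    velocities = [((x1 - x0) / dt, (y1 - y0) / dt)
                  for (x0, y0), (x1, y1) in zip(points, points[1:])]
    speeds = [math.hypot(vx, vy) for vx, vy in velocities]
    # Note: a stationary step gets heading 0 from atan2(0, 0); a fuller
    # version would skip such steps when computing heading changes.
    headings = [math.degrees(math.atan2(vy, vx)) for vx, vy in velocities]

    speed_changes = [abs(b - a) for a, b in zip(speeds, speeds[1:])]
    heading_changes = []
    for a, b in zip(headings, headings[1:]):
        d = (b - a) % 360.0
        heading_changes.append(min(d, 360.0 - d))  # wrap into 0..180 degrees

    def mean(xs):
        return sum(xs) / len(xs) if xs else 0.0

    return {"speed_change": mean(speed_changes), "heading_change": mean(heading_changes)}


# Hypothetical trajectories sampled once per second.
steady = [(0, 0), (1, 0), (2, 0), (3, 0)]   # constant speed, straight line
erratic = [(0, 0), (1, 0), (1, 1), (3, 1)]  # turns twice and speeds up
print(abruptness(steady))
print(abruptness(erratic))
```

These per-person values are not meaningful in isolation; as the next paragraph argues, they should be compared with the same measures for the rest of the crowd.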

However, it is important to keep in mind that these movement patterns should be observed within the relevant context. For example, in an airport, changes in speed and direction could also indicate searching behaviour. It is therefore important to study movement that deviates from the rest of the crowd, rather than universal ‘suspicious’ movement.
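Studying movement relative to the rest of the crowd can be sketched by comparing each person's direction with the dominant flow, in the spirit of the "not coherent with the dominant flow" idea from the state-of-the-art chapter. A minimal sketch, assuming one velocity vector per tracked person (hypothetical IDs and values in m/s): the dominant flow is taken as the mean of all unit velocity vectors, and each person gets the cosine similarity between their direction and that flow. The cut-off of 0 (moving against the flow) is an arbitrary illustrative choice.

```python
import math


def flow_coherence(velocities: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Cosine similarity between each person's velocity and the dominant flow.

    The dominant flow is the mean of all unit velocity vectors, so every moving
    person counts equally regardless of speed. Values near 1 follow the flow,
    values near -1 move against it. People standing still have no direction
    and are left out.
    """
    units = {}
    for pid, (vx, vy) in velocities.items():
        norm = math.hypot(vx, vy)
        if norm > 0:
            units[pid] = (vx / norm, vy / norm)
    if not units:
        return {}
    fx = sum(u[0] for u in units.values()) / len(units)
    fy = sum(u[1] for u in units.values()) / len(units)
    flow_norm = math.hypot(fx, fy)
    if flow_norm == 0:
        return {}  # no dominant direction, e.g. two perfectly opposing streams
    fx, fy = fx / flow_norm, fy / flow_norm
    return {pid: ux * fx + uy * fy for pid, (ux, uy) in units.items()}


# Hypothetical velocities (m/s) in a hall where most people walk east.
crowd = {"p1": (1.2, 0.1), "p2": (1.0, -0.1), "p3": (1.4, 0.0),
         "p4": (1.1, 0.2), "p5": (-1.3, 0.0), "p6": (0.0, 0.0)}
coherence = flow_coherence(crowd)
against_flow = [pid for pid, c in coherence.items() if c < 0]
print(against_flow)
```

Because the flow is estimated from the same crowd, the measure adapts to the local context: in a corridor where everyone walks west, walking east becomes the deviation.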

All cues, both for motion and body language, were found to be positive deviations, i.e. the behaviour was expressed more strongly by the culprit than by the bystanders <ref name=suspicious_inconspicuous />. This is a useful property for our project, as it is easier to spot the deviating behaviour of one individual than to find a behaviour that occurs in the overall crowd, but not in one suspicious individual.

= Approach =


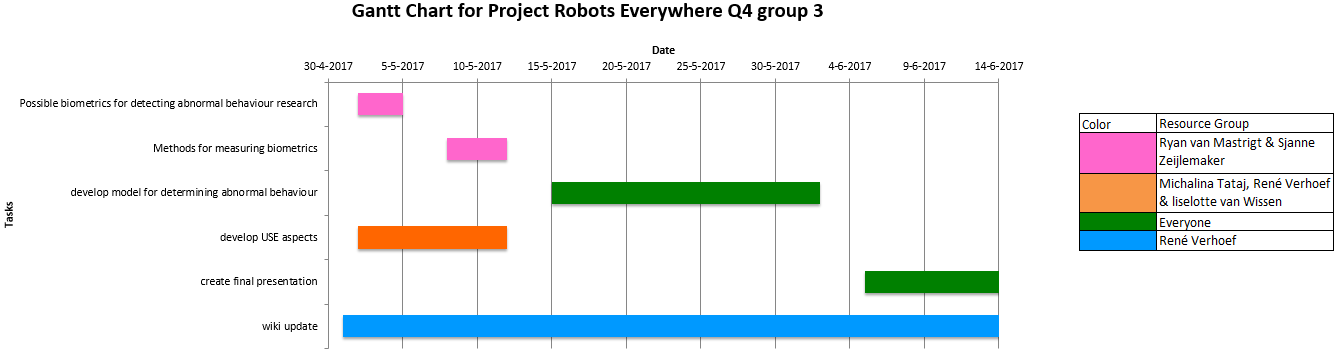

=== Planning ===

To keep track of the progress of the project and set deadlines for our goals, we made a Gantt chart. This chart shows which tasks are done when and how these tasks are divided among our team members.

[[File:Gantt chart PRE 16 Q4 group3`v2.png|1250px]]

=== Milestones ===

Based on the tasks in our planning, we determined a number of milestones to close off each phase of the project:

*Finish the research into what defines abnormal behaviour (planned by the end of week 2)

*Finish the research into the existing methods for biometric scanning (planned by the end of week 3)

*Finish analysing the USE aspects of our project (planned by the end of week 3)

*Develop a model for the detection of abnormal behaviour based on previous research and analyses (planned by the end of week 6)

*Present the finished product in the final presentation (planned by the end of week 8)

*Finalise the wiki for judging (planned by the end of week 8)

===Deliverables===

= USE aspects =

== User ==

Our system will interact directly or indirectly with the following users:

Primary users

*Security guards

*Police officers

*Military personnel

Secondary users

*Persons being filmed

*Technical maintenance personnel

*The people manufacturing the product

Tertiary users

*The people manufacturing, repairing and designing the product

*Government

*Airline companies

==== User friendliness ====

User friendliness can be described using the following factors:

*Learnability:

The system does not require the user to learn many new skills. The only thing the user (airport security) needs to learn is how the system signals that a suspicious person has been detected, and who and where this person is.

*Efficiency:

Once the system is in use, a higher level of efficiency should be reached, since more criminals will be detected and fewer innocent people will be checked by security.

*Memorability:

Since there is not much to learn, users should be able to use the system even after not using it for a longer period of time.

*Errors:

Once the system is realised, its error rate should be analysed extensively. If it made more incorrect detections than a human security guard, the system would be unnecessary. For the effectiveness of the product, it is important to keep the error rate of the system as low as possible.

*Satisfaction:

With the interview questions below, a general idea of what the users are looking for in the system can be established and interpreted. However, since the survey is restricted to only one individual, we cannot guarantee that the results are representative. If the system were realised, user tests would still be needed during and after the design process to find out what users think of the interaction with the system and what should be changed to suit their needs optimally.

==== Interview primary users ====

To gain insight into the current methods of detecting suspicious persons and into the wishes of the primary users of the system, a survey will be done with security personnel at airport Veldhoven. We will ask them the following questions:

Behaviour:

*Which behavioural cues do you look for when trying to identify suspicious persons?

*What kind of criminal activities do you encounter at the airport?

*Do different criminal activities correspond to different behaviours?

**Could you explain which cues are specific to each crime?

*To what extent do you look at body language specifically?

*Do you consider the way people move through a crowd when trying to identify suspicious individuals?

**How does the motion pattern of a suspicious person differ from that of an innocent person?

*What role does facial recognition play in your job?

Procedure and failure rate:

*How many people are present in the departure hall during peak hours?

*What actions do you take after identifying a suspicious person?

*How often does it occur that an apprehended person turns out to be innocent?

**Which specific causes contribute to this false-positive rate?

System interaction:

*To what extent would you trust a camera-based computer system which detects suspicious persons automatically?

**Why would you (not) trust it?

*If such a system existed and it detected a suspicious individual, how would you prefer this information to be presented to you?

**Why do you find this type of interaction most convenient?

*Are there any particular features you would like to see in such a program?

*Are there any aspects of your job that, in your opinion, cannot or should not be automated?

In the end, we could not arrange a meeting with a security guard from the airport. Instead, we interviewed a teacher from Summa College who trains security guards. The interview was conducted in Dutch; the questions and answers below stay as close as possible to the original wording.

''Which behavioural characteristics do you look at to recognise suspicious people?''

That is very broad, especially at the airport. An example: there are people who are in a hurry. At an airport, there are always people in a hurry. Elsewhere that would stand out, but at the airport it does not, because it is normal there. People who do not know where they need to go usually ask for information. If they instead stand somewhere looking around and do not know where they need to be, that is a deviating situation. You can also think of people without a suitcase, or people wearing a long coat in summer: clothing that does not fit the time of year. Excessive sweating when it is freezing could also be an indicator. Certain groups that are together, for example a large group of men. That does not mean something is always wrong. You also have to consider, for example, whether it is a business flight or a holiday flight.

''Do you also look at small things, such as how often people touch their face?''

Yes, that is possible. It is mainly a matter of posture. Research has been done on this: you have a number of primal instincts, and everyone reacts from those primal instincts. You have no control over that, so if you are nervous, you always show it in one way or another through your posture or behaviour. We look at what the normal situation is. If people deviate from it, something is going on, but that does not yet mean it is wrong.

''And when people walk against the flow?''

That can indeed be suspicious as well. Why is someone walking against the flow?

''What kinds of criminal activity do you see at an airport?''

Possible terrorist activities, people travelling illegally, human smuggling and drug smuggling. But basically, all of those people know they are doing something wrong, so you always see that primary reaction.

''Can you also tell from someone's reaction what kind of criminal activity they are planning to carry out?''

There is certainly a difference between drug smuggling and preparing an attack, but that is not my speciality.

''To what extent do you look at body language?''

A great deal. Non-verbal communication is easily 90%. You cannot approach everyone with: “Hey, are you going to smuggle drugs?”. You always go by physical characteristics.

''Does that also apply to people who watch camera footage on screens, or is that hard to see on camera?''

No, as far as I know, they go by the same characteristics.

''How do you look at people who move differently through a crowd?''

They walk in a different direction, or stand still. You can also work with triggers. An example: at Schiphol, they visibly stationed a number of marechaussee (military police) officers at the entrances and watched how people reacted. On the camera footage you could then see that some people made a detour and entered from a different side. Not everyone who does that is planning something, but they do have a certain natural reaction to uniforms, so there is always something going on.

''To what extent does facial recognition play a role?''

More and more, also in connection with big data and terrorism. If there are suspects, they are in the system, and if they turn up somewhere, they are registered. The difficult thing is that some countries do not want to or cannot cooperate with each other, but that will come.

There are already systems that measure everything: face, temperature, perspiration, fingerprints, but what do you do with that information? There is a company in Weert, UTC, that does a lot with this. They might be able to give you more information about it.

''How many people are in a departure hall on average?''

That depends very much on the airport. At Maastricht it is around 200 to 300 people, but at Schiphol you have to think of thousands of people. That is also interesting for your system: are you going to scan all of them, or only high-risk flights?

''What action do you take when you recognise a suspicious person?''

We approach that person. Why does your behaviour deviate from the norm? They might say, for example: “I stubbed my toe, that is why I am walking with difficulty.” Then you go into it further and filter from that: is he telling the truth? If not, something is going on.

''How often does it happen that a person who is stopped is innocent?''

I have no insight into that, certainly not at the airport. Ideally it would be 90%: then a lot of work is being done and little is found.

''Is it not annoying for innocent people to be stopped?''

The point is: their behaviour deviates. If you only address the behaviour of people who are guilty, you miss a lot of things. So it is better to pick out a lot of people and find out that they behave in a certain way without having bad intentions.

''Are there specific causes that lead to innocent people being suspected?''

Haste and ignorance, for example. And tension: people are tense because they are about to fly. Those characteristics are the same as those of someone with bad intentions.

''To what extent would you trust the system we are describing?''

I think you could rely on it well. One security guard finds a situation suspicious and another does not. One day he has slept well and the next day he has not. That should not matter, but it happens. If a system can make part of his decisions, that would be ideal.

''How would you prefer to have this information presented?''

That is difficult, because you have people walking around. It could be done with a mobile screen on the phone or with a control room.

''Are there any specific things you would like to see in such a program?''

No, I would not dare to say right now. I think it can and may be very extensive.

''Are there aspects that should not be taken over by the system?''

No, not in the system as you are describing it now. There is still an element of approaching people. I think that should remain. You still need to find out why someone behaves that way. Someone can be sweating because it is thirty degrees or because he is wearing a bomb belt: how do you find that out with a system?

The interview confirms several important assumptions that we made. It shows that suspicious behaviour is universal and context-dependent, and that it usually appears as a positive deviation from the crowd. The behavioural cues from our literature study seem to be quite accurate; however, we need to keep the context in mind at all times.

With these assumptions supported by the interview, we can implement them in our model. It was also good to hear that guards check every person who deviates from the crowd, even if this leads to many false positives. Our system will likely produce some false alarms too; while we want to minimise them, it is good to know that some false positives are preferable to missing a real criminal. The idea of a mobile application was something we had overlooked. If guards move around the airport, a portable screen is indeed useful, so that they can interact with the system from any location. Finally, we were positively surprised by his open attitude towards our system. We had feared that security guards would object to such a system because they would feel they could do a better job themselves. That they would trust the system enough to use it is crucial to our project, because there is no point in a system that no one is willing to use, no matter how good it is.

== Society ==

=== Advantages ===

For a system to work and be accepted by society, it should offer clear societal advantages. The main advantages, and thus the reasons to design this system, are listed below.

==== Terrorist/criminal activity ====

The first advantage is also the main goal of the system: detecting criminal activity at airports.

By using cameras and algorithms to detect movements linked to criminal activity, potential terrorists, smugglers and thieves can be caught. The process also becomes more efficient, because the system can analyse every person walking through the airport. The system should therefore lead to a higher detection rate of criminals and thus increase airport safety.

==== Security ====

As stated above, the system should result in more criminals and terrorists being caught, making airports and flying safer. The system will not replace airport security staff, but will help them be more accurate and select fewer harmless people for checks. It also makes it possible to screen every person entering the airport, so that nobody escapes screening. Since the system can only flag potentially suspicious persons, security guards and police will still have to check for evidence and, if necessary, make the arrest.

==== Racial/religious tensions ====

The last advantage of this system is its objectivity. Since the system 'scans' persons based on their behaviour and movements, a person's outward appearance is not taken into account when determining whether that person could be dangerous.

When people (security staff or police) detect suspicious persons, there will always be some bias, since it is humanly impossible to be completely unbiased. Moreover, the current selection of people is partly based on profiling: looking for external characteristics that convicted criminals have in common, and then searching for people who share these characteristics, because they would supposedly be more likely to be criminals. This is a self-reinforcing system. If, for example, 70% of convicted criminals wore blue nail polish, people wearing blue nail polish would be checked far more often than people who do not, leading to more findings and more arrests of people with blue nail polish, which in turn sustains this profile.

There is currently much discussion about this phenomenon, because profiling is claimed to be based on characteristics such as race and religion. This leads to considerable tension between races and religions, both within countries and worldwide. With the system, this (racial) profiling can be eliminated, since it is not based on a database of suspicious external characteristics but on behaviour. Much of our behaviour and body language is unconscious, so psychological research can be used to analyse this unconscious behaviour and link it to certain feelings and acts, such as nervousness and lying.

=== Disadvantages ===

Besides the advantages, it is also important to consider the disadvantages of our system and, if possible, to minimise or solve them. For our system to be socially acceptable, its advantages for society should clearly outweigh these disadvantages.

==== Privacy concerns ====

The biggest disadvantage for the people subjected to the system is the invasion of privacy. Being filmed and watched from the moment you walk into the airport means that others can know where you are, when you leave the country and where you are going.

In the privacy vs. security debate, three questions need to be answered to determine whether the advantages outweigh the disadvantages (Brey, 2004):

*How much added security results from the system?

*How invasive to privacy is the technology, as can be judged from both public response and scholarly arguments?

*Are there reasonable alternatives to the technology that may yield similar security results without the privacy concerns?

The first question cannot be answered yet, because we cannot yet measure whether, and how many, more criminals will be caught with the system. The system must first be built and tested before we can obtain these results.

Compared to the current state of the art, the system will not be more invasive to privacy, because airports already have security cameras. Our system will use this existing infrastructure. The only difference is that the footage will be analysed by an algorithm instead of by a person. This could even be an improvement, because being watched by an actual person might feel more invasive than being analysed by a computer program. A survey could give more insight into how the public thinks about this.

The answer to the third question is debatable because of the word 'reasonable'. Letting security guards search for suspicious people can be seen as a reasonable alternative, since it is accepted at the moment. On the other hand, one could argue that there is no reasonable alternative, because no person can be completely unbiased and the system is expected to be more effective than the current method. If the system prevents significantly more crimes at the airport, one could argue that human guards are no longer a reasonable alternative, because using them would knowingly expose passengers to a larger risk.

====Errors====

Another possible disadvantage to take into account is the error rate of the system. Since suspicious behaviour is involuntary, it is nearly impossible to simulate it accurately, so the real error rate can only be determined by testing in real life. This could be risky if the error rate then turns out to be large. The system might either select many harmless people or fail to detect potential criminals. The first type of error only inconveniences the passengers involved, but the second type could be dangerous, because criminals and terrorists are not caught and can carry out their criminal activity unnoticed.

One way to deal with this problem is to test the system while the current security measures are still in place. This way, the airport will be at least as safe as before while the system is being tested.

== Enterprise ==

==== Feasibility ====

The proposed system is relatively easy to implement. No new infrastructure is needed, as the system uses the existing camera network. However, the program that analyses the camera recordings must be installed on a computer that is powerful enough to run it. Since regular CCTV monitoring does not require such a computer, this is an additional cost of implementing the new system.

==== Advantages ====

The proposed system can be quite advantageous for businesses. By increasing the safety of passengers and of airline and airport employees, more people may be willing to travel by air, which benefits both airlines and the stores at airports. Better safety for personnel also makes jobs in this line of work more appealing. Another advantage is less damage to company property, such as aircraft or airport buildings, caused by bomb detonations or theft. The system can thus reduce losses for companies, increase income by ensuring the safety of clients, and thereby make air travel more competitive.

==== Disadvantages ====

Despite the potential rise in income, the system brings additional costs. The hardware will require maintenance as well as adjustments, both of which can only be carried out by specialised personnel, which increases costs. Security guards will also need training to learn how to use the system; an easy-to-use interface should keep this training to a minimum.

=Model=

==Conceptual model==

In summary, we found the following behavioural cues that indicate suspicious behaviour:

*Non-instrumental use of objects/fidgeting

*Looking around

*Looking away from walking direction

*Touching your own body (face/hair)

*Deviation from general crowd flow

*Walking in circles

One ‘offence’ should not lead to immediate action. There must be a baseline to determine what level of offence is suspicious. Because the system we want to design is completely new, no existing baselines are available. However, we could implement a learning system that generates a baseline.

The software would be placed in the departure hall of an airport and detect the indicated behaviour. At first, however, it should not report to security. It merely counts the number of offences to establish the average number of behavioural cues per individual. Once a baseline has been established and the system is running, we will use a probabilistic model, specified below, to determine whether a person is suspicious or not. Since the system keeps detecting the cues to spot suspicious behaviour, it can actively adjust the baseline to adapt to possible changes.
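The calibration-then-adapt procedure described above can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the project's MATLAB code: it assumes the system delivers one total cue count per observed person, and the class `AdaptiveBaseline`, the forgetting factor `alpha` and the `warmup` length are hypothetical choices. For the first persons it computes a plain running average; after that it switches to an exponentially weighted average, so the baseline keeps following slow changes in the crowd.

```python
import math


class AdaptiveBaseline:
    """Hypothetical sketch: running mean and spread of cue counts per person.

    alpha:  assumed forgetting factor; larger values adapt faster to changes.
    warmup: assumed number of persons to observe before the baseline is used.
    """

    def __init__(self, alpha=0.01, warmup=500):
        self.alpha = alpha
        self.warmup = warmup
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, cue_count):
        """Add one person's cue count to the baseline."""
        self.n += 1
        # Weight 1/n gives an exact running average at first; once 1/n drops
        # below alpha, old observations are gradually forgotten instead.
        a = max(self.alpha, 1.0 / self.n)
        diff = cue_count - self.mean
        incr = a * diff
        self.mean += incr
        self.var = (1.0 - a) * (self.var + diff * incr)

    @property
    def calibrated(self):
        """True once enough persons were seen; before that, nothing is reported."""
        return self.n >= self.warmup

    @property
    def std(self):
        return math.sqrt(self.var)


# Usage with a few hypothetical cue counts.
baseline = AdaptiveBaseline(alpha=0.05, warmup=3)
for count in [3, 4, 2, 3, 5]:
    baseline.update(count)
print(baseline.calibrated, round(baseline.mean, 2), round(baseline.std, 2))
```

Keeping a running variance next to the mean means the spread needed for a "significantly higher" test is available at any moment without storing past observations.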

===Assumptions===

The model is based on the following assumptions:

*There is only one suspicious person at a time

*The only behavioural cues for suspicious behaviour are the ones mentioned above

*The behavioural cues are positive deviations

*All cues have equal weight

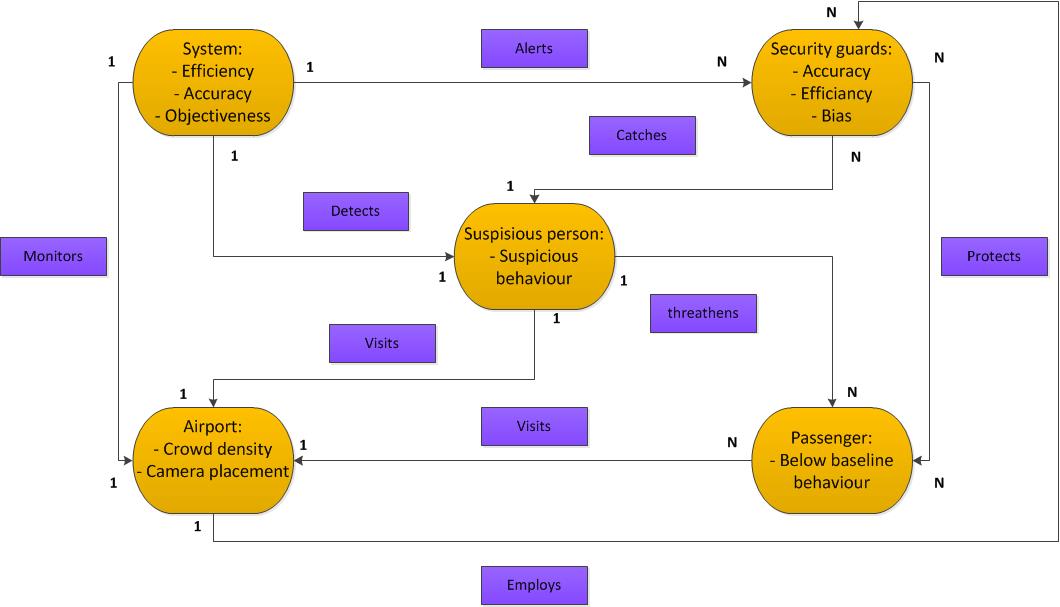

===Entities and properties===

Security guard:

*Accuracy

*Efficiency

*Bias

Passenger:

*Below-baseline behaviour

Suspicious person:

*Suspicious behaviour

Software system:

*Efficiency

*Accuracy

*Objectiveness

Airport:

*Crowd density

*Camera placement

===Relations===

The diagram below illustrates the proposed model and the relations between agents.

[[File:Model_scheme.jpg|750px]]

===When to report===

The outcome of the video footage analysis needs to be processed so that the system can decide whether or not a person should be considered suspicious.

For each behavioural cue, the video footage analysis outputs how often it occurs. This output is the input of our probability model. In our model, we assume all cues to be equally important, but this can easily be changed by assigning a weight to each cue. The model consists of a function that sums the occurrences of all cues, each multiplied by its assigned weight, resulting in a so-called suspicious score that indicates how suspicious a person is.

Such a function looks like this:

<math>S = w_1 C_1 + w_2 C_2 + \ldots + w_n C_n = \sum_{i=1}^{n} w_i C_i</math>

In this function, <math>w_i</math> is the weight assigned to cue <math>i</math>, <math>C_i</math> is the number of times (or the duration) that the cue was observed in the video footage analysis, and <math>S</math> is the suspicious score. The model then compares this score to the baseline, and if the score is significantly higher than the baseline, it alerts the guard. This means the model must also establish a baseline.
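As an illustration, the weighted sum and a comparison against the baseline could look like the Python sketch below. The function names are hypothetical, and reading "significantly higher" as more than `k` standard deviations above the baseline mean (with `k = 3` by default) is our assumption; the text does not fix a rule.

```python
def suspicious_score(cue_counts, weights=None):
    """S = w1*C1 + w2*C2 + ... + wn*Cn for one person.

    cue_counts: how often (or how long) each cue was observed.
    weights:    one weight per cue; equal weights of 1.0 by default,
                matching the model's equal-weight assumption.
    """
    if weights is None:
        weights = [1.0] * len(cue_counts)
    if len(weights) != len(cue_counts):
        raise ValueError("expected one weight per cue")
    return sum(w * c for w, c in zip(weights, cue_counts))


def exceeds_baseline(score, baseline_mean, baseline_std, k=3.0):
    """Hypothetical rule: flag scores more than k standard deviations
    above the baseline mean."""
    if baseline_std <= 0.0:
        return score > baseline_mean
    return (score - baseline_mean) / baseline_std > k


# Hypothetical counts for the six cues listed above, in that order:
# fidgeting, looking around, looking away, self-touching, counter-flow, circling.
counts = [2, 3, 1, 4, 0, 1]
s = suspicious_score(counts)
print(s, exceeds_baseline(s, baseline_mean=5.0, baseline_std=1.5))  # 11.0 True
```

Unequal weights only change the `weights` argument, so the equal-weight assumption can be relaxed later without touching the rest of the pipeline.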

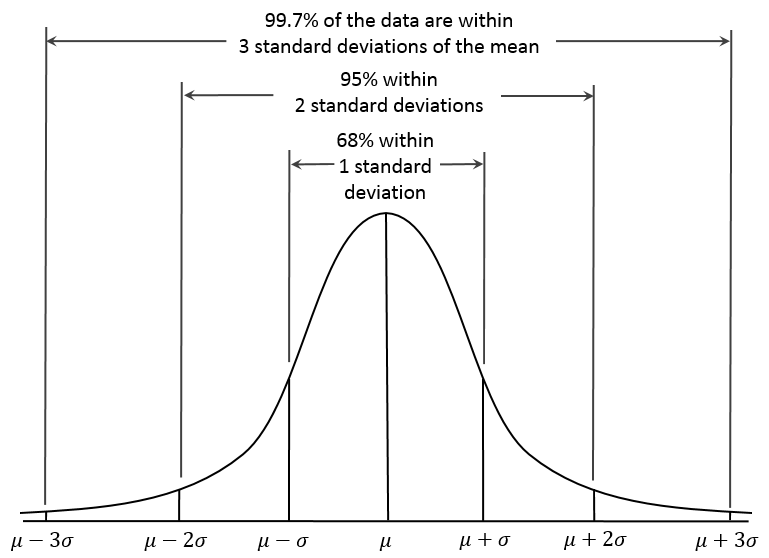

The baseline of the model can be determined by letting the model run for a while and plotting the results. We expect these results to resemble a normal distribution with mean <math>\mu</math> and standard deviation <math>\sigma</math>. This distribution should fit well, since scores close to the baseline are the most likely and higher numbers of behavioural cues become increasingly unlikely.

[[File:Normal.PNG|500px]] <ref name="Normal distribution"> https://en.wikipedia.org/wiki/Normal_distribution#/media/File:Empirical_Rule.PNG </ref>
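If the baseline is indeed normal, a threshold of μ + kσ directly determines how many people with perfectly normal behaviour will still be flagged. The Python sketch below computes that fraction with the complementary error function; for k = 2 it is about 2.3%, and for k = 3 about 0.13%. In a hypothetical departure hall with 3000 people (the interview mentioned thousands at Schiphol), that amounts to roughly 68 and 4 false alarms respectively, assuming each person is scored once. The function names are illustrative.

```python
import math


def tail_fraction(k):
    """Fraction of a normal baseline that lies above mean + k * std."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def expected_alarms(n_people, k):
    """Expected number of normally behaving people flagged at threshold
    mean + k * std, assuming each person is scored once."""
    return n_people * tail_fraction(k)


for k in (1.0, 2.0, 3.0):
    print(f"k = {k}: {100 * tail_fraction(k):.2f}% flagged, "
          f"about {expected_alarms(3000, k):.0f} of 3000 people")
```

This makes the trade-off from the interview explicit: a lower `k` catches more deviating behaviour but also stops more innocent passengers.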

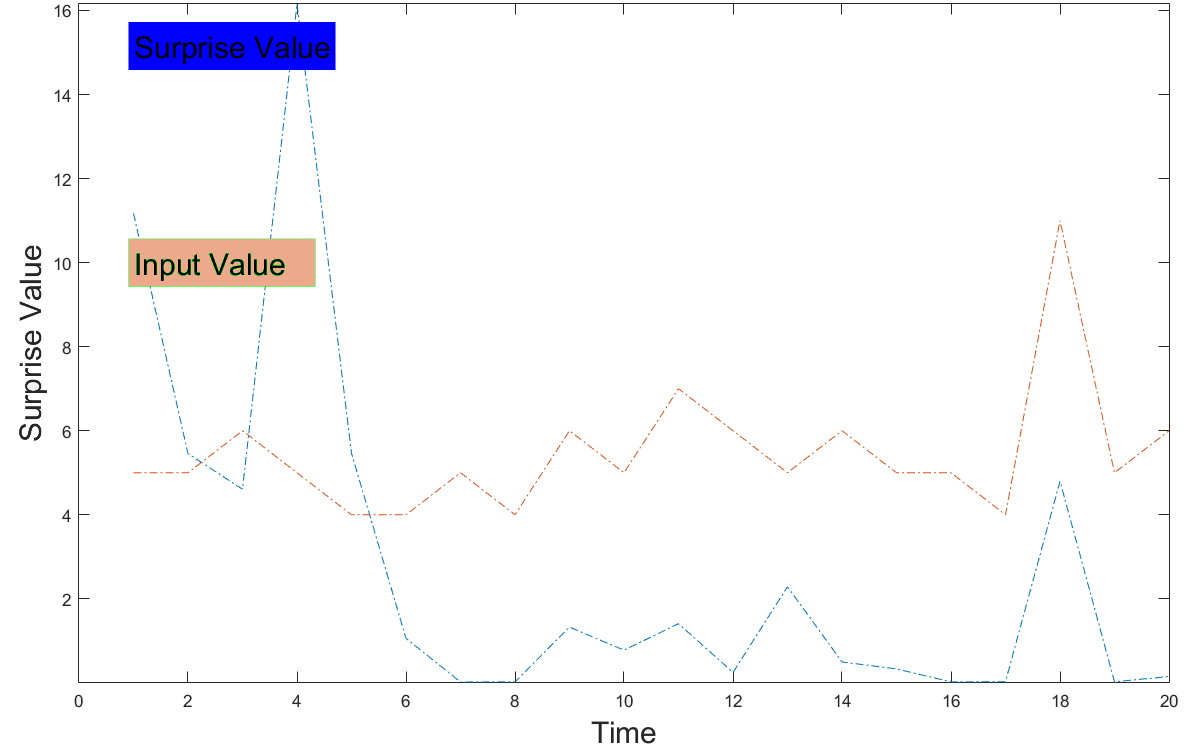

To find deviations from the baseline, we use the concept of Bayesian surprise. Suppose our system starts with a given probability distribution of the suspicious scores. Every new event, i.e. every newly measured score, slightly changes the probability distribution of the system. If the new score is close to the previous scores, the distribution hardly changes. However, if a score deviates strongly, it is more ‘surprising’ and has a larger impact on the distribution <ref name="Bayesian surprise"> Itti, L., & Baldi, P. (2005, June). A principled approach to detecting surprising events in video. In Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on (Vol. 1, pp. 631-637). IEEE.</ref>. For our system, a surprising score means that a person behaves significantly differently from the rest, and is therefore considered suspicious.

We use a MATLAB toolkit that implements Bayesian surprise, which can be downloaded here<ref name="Link model">https://sourceforge.net/projects/surprise-mltk/</ref>. The model takes the series of previous measurements into account to calculate how surprising a new score is. A manually set decay factor regulates how quickly the belief in a measurement decays over time. This way, if the general behaviour of a crowd slowly changes over time, the system adapts to it.

We ran a simple test to check whether the program behaves as expected, using decay factor 0.4 and the following sample data: 5 5 6 5 4 4 5 4 6 5 7 6 5 6 5 5 4 11 5 6. The 18<sup>th</sup> measurement clearly deviates from the rest. The corresponding surprise graph looks as follows:

[[File:Surprise.PNG|800px]]

The graph shows a clear peak where the value 11 occurs. This example also shows the importance of a calibration phase. The first few measurements give very sharp peaks, because the system has not seen much data yet, so even small deviations are surprising. After a while, it starts to see the larger picture and small deviations no longer cause large surprises.
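The behaviour in this test can be reproduced without the toolkit. The Python sketch below is not a port of the linked MATLAB toolkit: it swaps in a simple conjugate model, a Gaussian belief about the typical score with an assumed known observation variance `obs_var`, and measures surprise as the Kullback-Leibler divergence between the belief after and before each new score, following Itti and Baldi's definition of surprise. The decay factor weakens the old belief before every update (1 means no forgetting). The prior values and `obs_var` are assumptions.

```python
import math


def bayesian_surprise(scores, decay=0.4, obs_var=1.0, prior_mean=0.0, prior_var=100.0):
    """Return the surprise (in nats) caused by each score in `scores`.

    Belief about the typical score: Normal(mean, var).
    decay:      in (0, 1]; the belief's precision is multiplied by it before
                every update, so old evidence fades (1 = no forgetting).
    obs_var:    assumed variance of one score around the typical score.
    prior_mean, prior_var: assumed, deliberately vague starting belief.
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    mean, var = prior_mean, prior_var
    surprises = []
    for x in scores:
        # Forget: dividing the variance by decay multiplies the precision by decay.
        prior_m, prior_v = mean, var / decay
        # Conjugate Normal-Normal update with the new score.
        post_v = 1.0 / (1.0 / prior_v + 1.0 / obs_var)
        post_m = post_v * (prior_m / prior_v + x / obs_var)
        # Surprise = KL(posterior || prior) for two univariate normals.
        kl = 0.5 * (math.log(prior_v / post_v)
                    + (post_v + (post_m - prior_m) ** 2) / prior_v - 1.0)
        surprises.append(kl)
        mean, var = post_m, post_v
    return surprises


data = [5, 5, 6, 5, 4, 4, 5, 4, 6, 5, 7, 6, 5, 6, 5, 5, 4, 11, 5, 6]
s = bayesian_surprise(data, decay=0.4)
peak = max(range(len(s)), key=s.__getitem__)
print(peak + 1, data[peak])  # 18 11
```

With these settings the largest surprise falls on the 18th measurement, while the very first measurement also gives a noticeable peak because the prior is still vague, mirroring the calibration effect described above.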

==Software model==

The previous chapter described how to determine what is suspicious and what is normal behaviour, based on cues that people show when moving through or waiting at an airport. Another crucial aspect is, of course, extracting these cues from security footage in real time. The software should therefore not only track persons and some body parts accurately, but also do so efficiently enough to deliver results to the security guards in real time.

There are a couple of ways to allow the software to perform in real time:

*Simply providing the necessary hardware. This has no negative effect on the performance of the software, but leads to high hardware and maintenance costs.

*Limiting the frame rate the software uses. The software then does not have to analyse every frame, so it can keep up with the incoming data more easily, but detection performance suffers because gaps are created in the data.

*Taking a more holistic approach to estimating cues in a high-density crowd. This is far more efficient than object-based tracking, but is unlikely to detect subtle cues like fidgeting. This method could also be combined with object-based tracking for better results.<ref name="Social_Force_Model"> Mehran, R., Oyama, A., & Shah, M. (2009, June). Abnormal crowd behavior detection using social force model. In Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on (pp. 935-942). IEEE.</ref>

For this project, we first take a holistic approach to crowd tracking, and will later add as many extractable behavioural cues as the project time and available computing capacity allow.

For the crowd tracking, we use the social force based particle advection model designed by Mehran et al.<ref name ="Social_Force_Model" />. However, they use it to detect abnormal crowd behaviour as it occurs, while we want to detect the behavioural cues leading up to the event that causes the abnormal behaviour, in order to prevent that event from happening. We therefore have to extend the model to detect the behavioural cues we are interested in.

To develop the model, we use data from the publicly available UMN dataset that is applicable to our context, and data recorded from live airport webcams <ref name ="airport_Koln">http://www.koeln-bonn-airport.de/am-airport/airport-webcam.html</ref><ref name = "airport_Warsaw">http://www.airport.gdansk.pl/airport/kamery-internetowe</ref><ref name = "airport_Hamburg">http://www.hamburg-airport.de/de/livebilder_terminal_2.php</ref>. We also used self-made footage of scenarios displaying suspicious behaviour in multiple ways. The model is written in MATLAB R2017a with Piotr's Computer Vision Matlab Toolbox<ref name = "Piotr">https://pdollar.github.io/toolbox/</ref>, which builds on MATLAB's Image Processing Toolbox.

===Analysing the general flow===

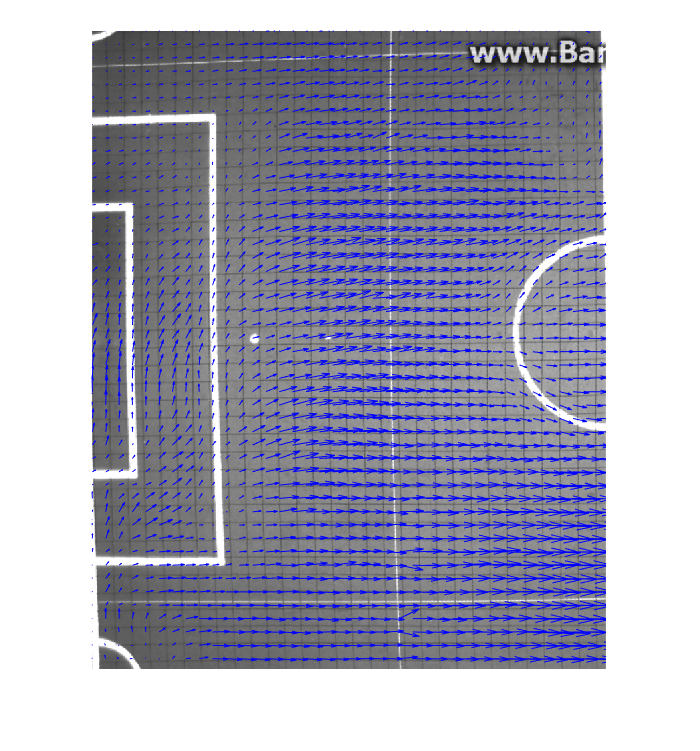

To obtain flow data from the raw video footage, we use an optical flow algorithm from Piotr's Computer Vision Toolbox <ref name = "Piotr">https://pdollar.github.io/toolbox/</ref>. The optical flow algorithm returns a displacement vector for each pixel for each time step, i.e. for each transition to the next frame. This data can be used to determine the general flow by taking the time average of the displacement vector at each pixel location. The general flow of the baseline video is shown in the figure at the end of this section.

Because analysing every pixel location would take too much processing power, we adopt a particle approach in which a set of particles is spread evenly over the first frame of the video. These particles then move according to their local average velocity vector, which is calculated as the weighted average of the velocity vectors within a radius of 10 pixels around the particle. The velocity vectors are weighted using a Gaussian distribution centred on the location of the particle. The particle approach has an extra advantage: it doubles as a tool to track individuals in the crowd without complicated person-detection algorithms. If a particle comes within 10 pixels of the edge of the video frame, it is reset to its initial position. Because there are significantly fewer particles than pixels in the scene, analysing the accompanying data takes less computing power than analysing the data for each pixel.

[[File:Average flow Baseline2.png|500px|thumb|none| The average flow of the baseline video. The direction of each blue arrow indicates the direction of the flow at that location, and its length indicates the speed of the flow at that location.]]
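The general flow and the particle approach can be sketched in plain Python as below. The optical flow itself is assumed to come from an external routine (in the project, Piotr's toolbox); here a flow field is simply a list of rows of `(u, v)` displacement tuples. The 10-pixel radius and the 10-pixel edge margin follow the text, while the Gaussian width `sigma = 5` pixels and all function names are assumptions.

```python
import math


def time_average_flow(flows):
    """Average several flow fields (one per frame transition) pixel by pixel.

    Each field is a list of rows of (u, v) displacement tuples.
    """
    n = len(flows)
    h, w = len(flows[0]), len(flows[0][0])
    return [[(sum(f[y][x][0] for f in flows) / n,
              sum(f[y][x][1] for f in flows) / n)
             for x in range(w)] for y in range(h)]


def grid_particles(width, height, spacing, margin=10):
    """Spread particles evenly over the frame, away from the edge margin."""
    return [(float(x), float(y))
            for y in range(margin, height - margin, spacing)
            for x in range(margin, width - margin, spacing)]


def local_velocity(flow, px, py, radius=10.0, sigma=5.0):
    """Gaussian-weighted mean of the flow vectors within `radius` px of (px, py)."""
    h, w = len(flow), len(flow[0])
    r = int(math.ceil(radius))
    cx, cy = int(round(px)), int(round(py))
    su = sv = sw = 0.0
    for y in range(max(0, cy - r), min(h, cy + r + 1)):
        for x in range(max(0, cx - r), min(w, cx + r + 1)):
            d2 = (x - px) ** 2 + (y - py) ** 2
            if d2 > radius ** 2:
                continue  # outside the circular neighbourhood
            weight = math.exp(-d2 / (2.0 * sigma ** 2))
            su += weight * flow[y][x][0]
            sv += weight * flow[y][x][1]
            sw += weight
    return (su / sw, sv / sw) if sw > 0.0 else (0.0, 0.0)


def advect(particles, initial, flow, margin=10.0, radius=10.0, sigma=5.0):
    """Move each particle one frame; reset it if it comes within `margin` px of the edge."""
    h, w = len(flow), len(flow[0])
    moved = []
    for (px, py), start in zip(particles, initial):
        u, v = local_velocity(flow, px, py, radius, sigma)
        nx, ny = px + u, py + v
        if nx < margin or ny < margin or nx > w - 1 - margin or ny > h - 1 - margin:
            nx, ny = start
        moved.append((nx, ny))
    return moved


# Usage: a uniform flow of one pixel per frame to the right in a 50 x 40 frame.
flow = [[(1.0, 0.0) for _ in range(50)] for _ in range(40)]
start = [(20.0, 20.0), (38.5, 20.0)]
print(advect(start, start, flow))  # [(21.0, 20.0), (38.5, 20.0)]
```

In the usage example the flow is uniform, so the first particle simply moves one pixel to the right, while the second crosses the 10-pixel edge margin and is reset to its starting point.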

===Social Force model<ref name ="Social_Force_Model"/>===

The social force model was originally introduced to model the dynamics of pedestrian movement<ref name = "Helberg">Helbing, D., & Molnar, P. (1995). Social force model for pedestrian dynamics. Physical review E, 51(5), 4282.</ref>. However, it can be used in many situations in which general crowd tracking and interaction are relevant. The social force model measures the effect of the environment and other people on the movement of a person.

The first step in calculating the force flow in footage is to measure the optical flow. The optical flow can be described as the pattern of apparent motion between a scene and an observer<ref name="optical_flow">Burton, A., & Radford, J. (Eds.). (1978). Thinking in perspective: critical essays in the study of thought processes. Methuen.</ref>. From the optical flow over time, an average crowd motion in the footage can be determined. Like Mehran et al., we scatter particles over the footage, which refine and visualise the optical flow within a small window. The particles move according to a weighted average of the optical flow within a region of interest around each particle, and when particles are about to leave the frame, they are reset to their initial positions.

Using the optical flow and a relaxation parameter, we calculate the force flow according to:

<math>F_{\text{int}} = \frac{1}{\tau}\left(V_{\text{desired}} - V_{\text{optical}}\right) - \frac{dV_{\text{optical}}}{d\,\text{Frame}}</math>

where <math>F_{\text{int}}</math> is the interaction force or force flow, <math>\tau</math> is the relaxation parameter, <math>V_{\text{desired}}</math> is the desired velocity of an individual, assumed to be the local optical flow, <math>V_{\text{optical}}</math> is the average local optical flow, and <math>dV_{\text{optical}}/d\,\text{Frame}</math> is the change of the average local optical flow from one frame to the next.

This force flow highlights positions in the footage where relatively many interactions between an individual and its environment take place. By combining the deviation from the optical flow with the intensity of the local force flow, we can achieve our holistic detection of suspicious behaviour.

===Measuring Suspicious Behaviour===

To actually measure suspicious behaviour, a general level of suspiciousness needs to be determined. To do this, the model uses three parameters:

*Deviation of the local velocity vector size from the local average velocity vector size

*Deviation of the local velocity vector direction from the local average velocity vector direction

*The social force

All three parameters are indicators of possible suspicious behaviour. The deviation of the local velocity vector size from the local average velocity vector size is calculated as the size of the difference between the local velocity vector and the local average velocity vector:

<math>V_{\text{relsize}}(x,y) = \sqrt{\left(V_{\text{av},x}(x,y) - V_{\text{loc},x}(x,y)\right)^2 + \left(V_{\text{av},y}(x,y) - V_{\text{loc},y}(x,y)\right)^2}</math>

In this equation, <math>V_{\text{av}}(x,y)</math> is the average local velocity vector and <math>V_{\text{loc}}(x,y)</math> is the local velocity vector.

The angle between the local velocity vector and the average local velocity vector is calculated with:

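<math>V_{\text{relangle}}(x,y) = \mathrm{mod}\left(\left|\mathrm{atan2}\left(V_{\text{av},y}(x,y), V_{\text{av},x}(x,y)\right) - \mathrm{atan2}\left(V_{\text{loc},y}(x,y), V_{\text{loc},x}(x,y)\right)\right|, 2\pi\right)</math>

Here, atan2 is the four-quadrant inverse tangent, which gives the direction of a vector as an angle, and mod takes the remainder after division by 2π.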

The social force <math>F_{\text{soc}}</math> is the magnitude of the interaction force vector <math>F_{\text{int}}</math>:

<math>F_{\text{soc}}(x,y) = \sqrt{F_{\text{int},x}(x,y)^2 + F_{\text{int},y}(x,y)^2}</math>

The three parameters are added linearly to a particle-bound suspicious score <math>S(x,y)</math>:

<math>S(x,y,t) = S(x,y,t-1) + w_1 V_{\text{relsize}}(x,y,t) + w_2 V_{\text{relangle}}(x,y,t) + w_3 F_{\text{soc}}(x,y,t)</math>

Here, <math>t</math> is the discrete time step (or frame) at which the parameters are determined, and <math>w_n</math> are the weight factors of the individual parameters.
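The three parameters and the score update can be written per particle as in the following Python sketch; the function names are hypothetical and the interaction force `f_int` is taken as an input computed elsewhere. One deliberate deviation from the formula: the angle difference is folded into [0, π], so that two almost identical directions on either side of ±π, such as 179° and −179°, count as nearly aligned rather than 358° apart. Dropping the `min(...)` reproduces the formula exactly.

```python
import math


def rel_size(v_av, v_loc):
    """V_relsize: length of the difference between average and local velocity."""
    return math.hypot(v_av[0] - v_loc[0], v_av[1] - v_loc[1])


def rel_angle(v_av, v_loc):
    """V_relangle: angle between the two vectors, using atan2 for each direction.

    Folded into [0, pi] (our addition); a zero vector gets direction 0.
    """
    d = abs(math.atan2(v_av[1], v_av[0]) - math.atan2(v_loc[1], v_loc[0])) % (2.0 * math.pi)
    return min(d, 2.0 * math.pi - d)


def social_force(f_int):
    """F_soc: magnitude of the interaction force vector F_int."""
    return math.hypot(f_int[0], f_int[1])


def update_score(prev_score, v_av, v_loc, f_int, weights=(1.0, 1.0, 1.0)):
    """S(t) = S(t-1) + w1*V_relsize + w2*V_relangle + w3*F_soc for one particle."""
    w1, w2, w3 = weights
    return (prev_score
            + w1 * rel_size(v_av, v_loc)
            + w2 * rel_angle(v_av, v_loc)
            + w3 * social_force(f_int))


# Usage: a particle moving upward where the crowd moves to the right.
score = update_score(0.0, v_av=(1.0, 0.0), v_loc=(0.0, 1.0), f_int=(0.3, 0.4))
print(round(score, 3))  # 3.485
```

Because the score accumulates over frames, a particle that keeps deviating builds up a high score, while a single odd frame adds only a small amount.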

This value is then fed into a Bayesian surprise model, which calculates a surprise factor using the method described earlier. If the surprise factor is higher than a certain threshold, the behaviour is deemed suspicious and the system highlights the corresponding particle. The threshold is determined using a baseline video in which only 'normal' behaviour takes place. That way, small deviations that occur in normal scenarios are not flagged, while more surprising behaviour is.

When we discussed the probabilistic part of the model, one of the assumptions was that the suspicious score would be spread around some mean value like a Gaussian distribution. A measurement on the baseline video is consistent with this. A histogram of the suspicious score <math>S</math> is shown in the figure below; the red line shows a Gaussian distribution fitted to the data using the least-squares method. This supports using the Bayesian surprise algorithm to find outliers in the data and identify suspicious persons. The MATLAB scripts are available for download <span class="plainlinks">[http://cstwiki.wtb.tue.nl/index.php?title=File:Abnormal_detection.zip here]</span>. Piotr's Computer Vision Matlab Toolbox<ref name = "Piotr">https://pdollar.github.io/toolbox/</ref> and the Bayesian Surprise Toolkit for Matlab<ref name="Link model">https://sourceforge.net/projects/surprise-mltk/</ref> are needed to run the scripts.

[[File:Histogram Tmean S Baseline2 Gaussfit.png|500px|thumb|none| Histogram of the time-averaged suspicious score measured from the baseline video. A Gaussian distribution was fitted to the data and is displayed as a red line.]]

===User Interface===

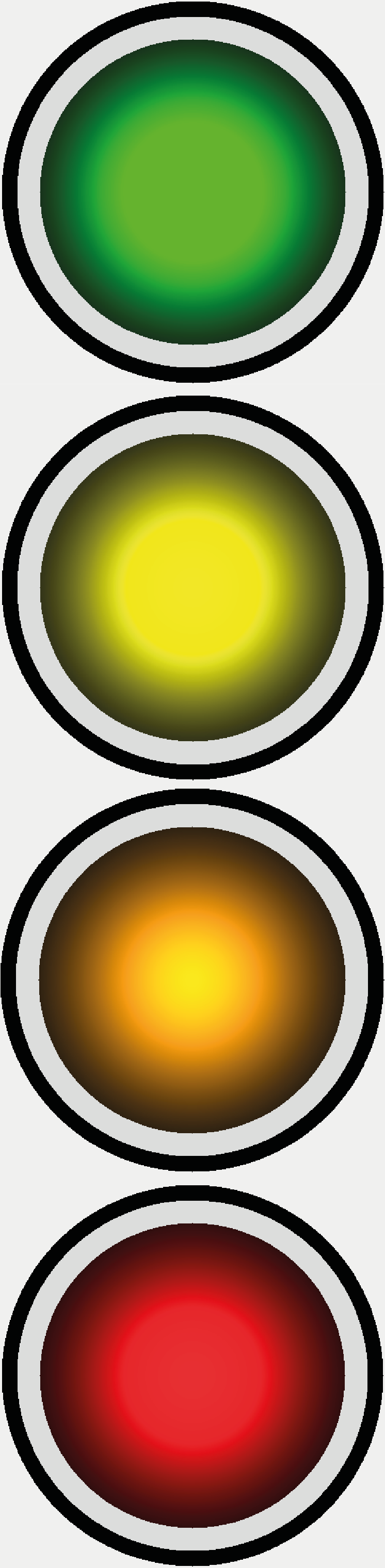

[[File:Alarm lights.png|100px|thumb|from top to bottom green,yellow orange and red alarm light levels respectively]] | |||

To give security guards easy-to-understand information, we have developed a user interface. On this interface the guard sees the camera footage together with a light next to it that indicates the threat level. The light has four possible colours, green, yellow, orange and red, in order of increasing severity. Most of the time the light on a monitor will be green, which indicates that the system does not detect any suspicious behaviour; the guard can then keep a general overview and focus on monitors that show a different colour.

Yellow is the lowest threat level. It indicates a slight deviation from the usual behaviour of an airport visitor and does not call for immediate action, although keeping an eye on the situation is advised.

Orange is the next threat level. It indicates a considerable deviation from the usual behaviour, and further investigation by security personnel is advised.

Red is the highest threat level, indicating a strong deviation from the usual behaviour. In this case immediate action by multiple security guards is strongly recommended.
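The text does not specify how a deviation is mapped to one of the four colours. The sketch below assumes a simple rule: express a particle's suspicious score as the number of baseline standard deviations from the baseline mean, then compare it with fixed thresholds. The thresholds of 3, 4 and 5 standard deviations are hypothetical, as are the names `Alarm`, `alarm_level` and `monitor_level`. In practice the thresholds would have to be tuned, for instance on the baseline video, so that green is the normal state. Bayesian surprise values could be thresholded in the same way.

```python
from enum import IntEnum


class Alarm(IntEnum):
    """Alarm light colours, ordered by severity."""
    GREEN = 0
    YELLOW = 1
    ORANGE = 2
    RED = 3


# Hypothetical thresholds in baseline standard deviations, checked from most severe down.
THRESHOLDS = [(5.0, Alarm.RED), (4.0, Alarm.ORANGE), (3.0, Alarm.YELLOW)]


def alarm_level(score, mu, sigma):
    """Map one particle's suspicious score to an alarm colour.

    Deviations in both directions count, so the absolute z-score is used.
    """
    z = abs(score - mu) / sigma
    for limit, level in THRESHOLDS:
        if z >= limit:
            return level
    return Alarm.GREEN


def monitor_level(scores, mu, sigma):
    """Assumption: a monitor shows the most severe level among the particles in view."""
    return max((alarm_level(s, mu, sigma) for s in scores), default=Alarm.GREEN)
```

Because `Alarm` is an `IntEnum`, the levels compare by severity, so the monitor light is simply the maximum over all particles in view.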

When the alarm light is any colour other than green, the screen shows a red circle around the area where the suspicious behaviour occurs, to draw the guard's attention to this spot. This way the guard does not have to inspect the entire monitor when an alarm goes off. The guard can also pause the screen to identify the person(s) inside the circle; pressing the play/pause button again resumes the live footage. The figure below shows an example of what the final UI could look like.

[[File:Guard screen.png|500px|thumb|none| The screens the guard sees when using the system. Each screen has a light indicating the alarm level and a play/pause button next to it.]]
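The text does not describe how the circle is placed. One simple way, assumed here rather than taken from the project code, is to centre the circle on the mean position of the flagged particles. Its radius is then the largest distance from that centre plus a margin. This encloses every flagged particle but is generally not the smallest enclosing circle. The function name `alert_circle` and the pixel margin are hypothetical.

```python
import math


def alert_circle(flagged, margin=20.0):
    """Return (cx, cy, r) of a circle around flagged particle positions, or None.

    flagged: list of (x, y) image coordinates of particles judged suspicious.
    margin: hypothetical extra radius in pixels so the person is not cut off.
    """
    if not flagged:
        return None  # nothing to highlight
    cx = sum(x for x, _ in flagged) / len(flagged)
    cy = sum(y for _, y in flagged) / len(flagged)
    r = max(math.hypot(x - cx, y - cy) for x, y in flagged) + margin
    return cx, cy, r
```

For two flagged particles at (0, 0) and (4, 0) with a margin of 1 pixel, the circle is centred at (2, 0) with radius 3.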

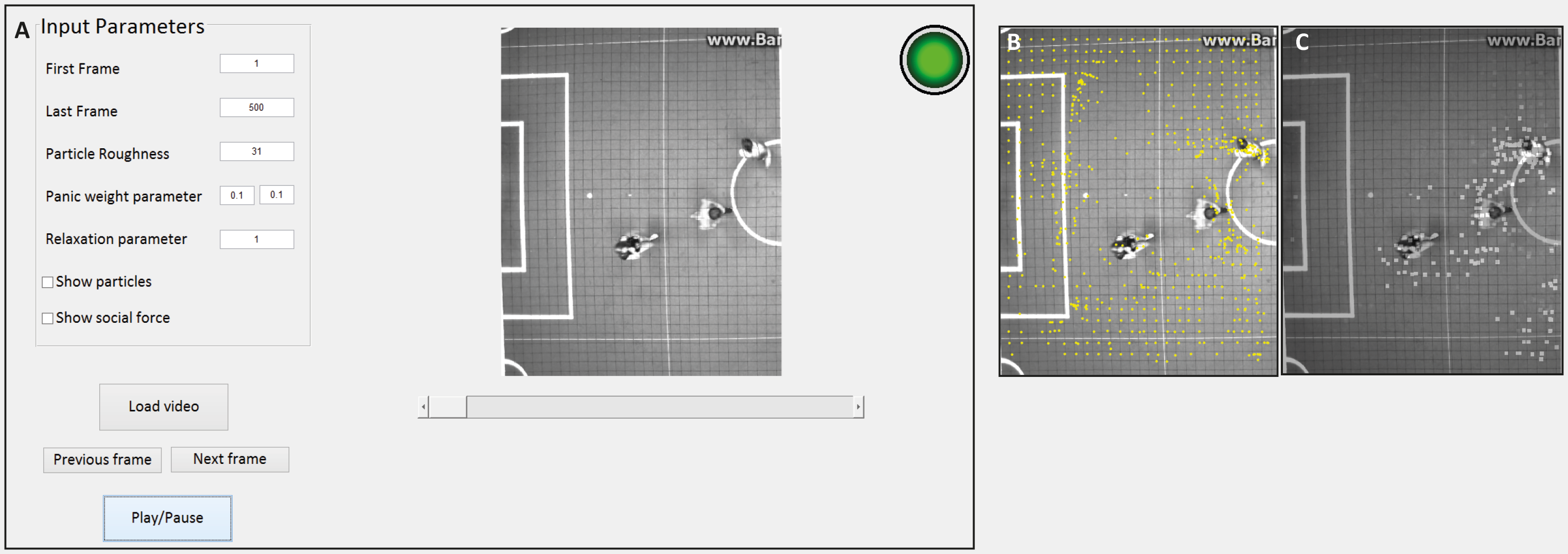

For demonstration purposes we have developed a second UI that has more options and works with recorded video instead of live footage. This UI can overlay the particle advection and the social force on a video to show how the system works; the security guard does not need to see these, but they are useful for demonstration. Since we do not have access to live footage, we use this UI and the software behind it to test our model. The figure below shows the developer UI with examples of the particle advection and the social force. The developer UI can be downloaded by clicking <span class="plainlinks">[http://cstwiki.wtb.tue.nl/images/UI.zip here]</span>.

[[File:Dev UI.png|1000px|thumb|none| The developer UI. A) The screen the developer sees. The input parameters are the first and last frame of the video to show during playback; the particle roughness, which determines how far apart the particles are when calculating the particle advection; and the panic and relaxation parameters, which are used in the calculation of the social force. The tick boxes overlay the particle advection (see B) or the social force (see C) on the video. The buttons load a video from your computer, step between frames, and play or pause the video. B) The frame from the video shown in A, overlaid with the particle advection. C) The frame from the video shown in A, overlaid with the social force.]]
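The caption names the panic and relaxation parameters of the social force. A widely used formulation for crowd video is the social force model for abnormal crowd detection by Mehran et al. It computes an interaction force per particle as F = (v_desired - v)/tau - dv/dt. The desired velocity mixes the optical flow O at the particle with the local average flow O_avg through a panic weight p: v_desired = (1 - p)·O + p·O_avg.

The Python sketch below illustrates that formulation together with grid seeding and one advection step. It assumes the UI's panic and relaxation parameters play the roles of p and tau, and that the particle roughness is the grid spacing. It is not the project's Matlab code, and all function names are hypothetical. The flow field is passed in as a function instead of being computed from video.

```python
from typing import Callable, List, Tuple

Vec = Tuple[float, float]
Flow = Callable[[float, float], Vec]  # hypothetical: flow velocity at image position (x, y)


def grid_particles(width: int, height: int, spacing: int) -> List[Vec]:
    """Seed particles on a regular grid; spacing stands in for the UI's particle roughness."""
    return [(float(x), float(y)) for y in range(0, height, spacing) for x in range(0, width, spacing)]


def advect(particles: List[Vec], flow: Flow) -> List[Vec]:
    """Move every particle one frame along the flow field (forward Euler step)."""
    moved = []
    for x, y in particles:
        u, v = flow(x, y)
        moved.append((x + u, y + v))
    return moved


def interaction_force(v_prev: Vec, v_now: Vec, flow_here: Vec, flow_avg: Vec,
                      panic: float, relax: float, dt: float = 1.0) -> Vec:
    """Social-force interaction term: F = (v_desired - v_now) / relax - dv/dt.

    v_desired = (1 - panic) * flow_here + panic * flow_avg. In the Mehran et al.
    formulation the particle's velocity v_now is the optical flow at its position.
    """
    desired = tuple((1.0 - panic) * o + panic * a for o, a in zip(flow_here, flow_avg))
    dvdt = tuple((n - p) / dt for n, p in zip(v_now, v_prev))
    return tuple((d - v) / relax - acc for d, v, acc in zip(desired, v_now, dvdt))
```

A particle that moves exactly with its neighbours feels no interaction force. A particle that deviates from the local average flow or accelerates suddenly gets a large force, and that is the quantity the overlay in panel C visualises.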

==Testing==

To find out whether our software works in practice, we filmed and analysed a number of scenarios in a crowd context. In every video one or two people act out some kind of suspicious behaviour while the rest of the crowd walks normally. If our software detects these suspicious individuals, we know that our program works for these scenarios. A real airport would of course be more crowded and chaotic, but the tests give a good indication of whether our software can pick up some very basic cues.

===Footage used===

The footage used for the analysis was recorded at the Tech United soccer field using their topcam. We invited a large number of students and instructed them to walk from one side of the field to the other while one or two people moved through the crowd in a suspicious manner.

We made the following videos to test our program on:

{| class="wikitable"
|-
! Video #
! Description
|-
| 1
| Baseline: everyone walks in the same direction at the same pace
|-
| 2
| 1 person zigzags through the crowd
|-
| 3
| 1 person walks at double the speed
|-
| 4
| 1 person walks in the opposite direction
|-
| 5
| 1 person walks, stops in the middle, then walks again
|-
| 6
| 1 person walks in circles through the crowd
|-
| 7
| 2 people zigzag through the crowd
|-
| 8
| 2 people walk at double the speed
|-
| 9
| 2 people walk in the opposite direction
|-
| 10
| 2 people walk, stop in the middle, then walk again
|-
| 11
| 2 people walk in circles through the crowd
|}

In our original model we assumed that there would be only one suspicious person at a time, but the software we wrote is not strictly limited to one individual. To see how it performs when there are multiple deviations from the crowd flow, we shot every scenario with both one and two suspicious persons.

===Results===

The results of our tests were rather disappointing. Our software flagged several particles as suspicious, but these were mostly false positives, while the actual suspicious individuals often went unnoticed. This was the case for every video, regardless of the behavioural cue acted out. Adjusting the sensitivity did not solve this problem. However, when we visualised the crowd flow, there clearly were particles following each person and the arrows indicating the flow pointed in the correct direction. It seems that although the footage is analysed correctly, the system does not give the appropriate response. This indicates that the problem might be in the code that determines what is suspicious.

Part of the problem was that our crowd was not much of a crowd. Although we invited many friends, only 15 people showed up for our experiment. We used half of the field and walked in circles, but there were still large gaps in the crowd flow. Since these gaps were deviations, the system often flagged particles there as suspicious when in fact nobody was there. The general and local flow were calculated correctly in these places; however, the Bayesian surprise model jumped to the wrong conclusions. In an airport there could also be moments when few people are around, for example between flights, so this is an important issue that needs to be addressed.

In conclusion, our results are mixed. On the one hand, the underlying software seems to work fine. On the other hand, it gives the user wrong information. This means that the most important part of the system needs to be revised. A security guard does not know about the social force or general flow that the system calculates: he or she just wants to know whether there is a suspicious individual who needs to be monitored. If the system gives incorrect information about this, it is of little use, even if the basics work correctly.

=Conclusion=

The goal of our project was to solve two problems in airport security: the large number of people who need to be monitored and the bias that human guards introduce.

The first problem is solved by our software, because it can oversee the whole crowd. The particle model in our software allows us to check every individual without overloading the system. This way every person is checked, and nobody is skipped simply because the guards cannot watch everyone at once.

The second problem would be solved by the full implementation of our idea. Our literature research showed that behavioural cues are universal, so a system based on these cues cannot discriminate on culture or ethnicity. This would make security checks fairer and more accurate. However, we only managed to implement one of the behavioural cues that are considered suspicious, so while in theory our system eliminates bias, we cannot yet confirm that this is actually the case.

All in all, our system should be considered a first step towards more accurate and fairer airport surveillance. It is not yet complete, but it paves the way for more elaborate systems that take all specified cues into account and make airports safer.

===Recommendations for further research and development===

The system we developed still leaves a lot of room for improvement. Our proposals for improving it are listed below.

*Further research on indicators of suspicious behaviour may improve the accuracy of the system in detecting possible suspects. Such research would also make it possible to assign weights to the different traits in the probabilistic model.

*So far only one of the listed cues has been implemented in the system. A way to distinguish different behaviours in the crowd should be researched, and their baselines established, before the system is extended to recognise more traits.

*One of the assumptions of the proposed system is that only one suspicious person is present at a time. This is not very realistic, as there is no way to enforce this constraint in real life. The system should be able to detect all suspicious people, each with their own suspicious score.

*At the moment a person can only be tracked while he or she stays within the range of one particular camera. If this person moves on, the next camera does not know this person's history and the behaviour analysis starts again from scratch. A biometric recognition system, for example facial recognition, could be used to identify individuals and follow them as they walk from camera to camera.

*The experiments we did should be repeated with a larger crowd to model an airport-like environment more accurately. This might influence the performance of our model.

*The Bayesian surprise model seemed to cause most of the problems during testing. Research into other probabilistic methods for detecting abnormalities is needed to find a better alternative.

=References=


'''26-04-2017'''

*The subject of the project has been chosen and the deliverables and objectives (as found on the wiki) have been determined.

'''30-04-2017'''


*A schedule and milestones have been determined (see the Approach section).

*A wiki page has been created, including a template for the documentation with the already available information filled in.

'''03-05-2017'''

*We agreed on a list of questions to ask the security officer at Veldhoven. Research into behavioural cues and biometric scanners has been discussed and is still ongoing. Several sections of the wiki, including the planning and charts, were updated and given a more structured layout.

'''08-05-2017'''

*The problem statement and deliverables were discussed extensively to clarify the goal of the project. Tasks for the upcoming week were determined and divided.

'''11-05-2017'''

*We went to Eindhoven Airport to talk to the security guards. They did not have time for us, but gave us an email address through which we could make an appointment.

'''15-05-2017'''

*We discussed the problems with Eindhoven Airport and decided to focus on the model instead if we could not get an interview within the next week, because waiting any longer would disrupt our planning too much. We then agreed on what needed to be done for the next week and divided the tasks.

'''22-05-2017'''

*We set the goal of having 'working' code by next week; that is, we want the program to be able to track a person. All other details will be implemented later. We also agreed to look further into the probabilistic model that determines whether behaviour is suspicious. The group was split into two teams to carry out these tasks.

*We received a response from a teacher who trains security guards at Summa College. We made an appointment to interview him on Tuesday 30-05.

'''29-05-2017'''

*We brainstormed about real-life crowd experiments to test our system. We discussed possible scenarios and divided the tasks needed to organise them.

'''12-06-2017'''

*The software model can now attach a suspicious score to each particle based on Gaussian distributions of behavioural characteristics. It can also detect particles with unusual suspicious scores.

*The interview with the teacher at Summa College was conducted and transcribed, and is available on the wiki.

*Final preparations were made for the experiments.

'''15-06-2017'''

*The test footage of a crowd with suspicious people was recorded at the robot soccer field.

'''19-06-2017'''

*A schedule was made to finish the wiki.

Latest revision as of 19:54, 25 June 2017

Group members

| Student ID | Name |
|---|---|
| 0900940 | Ryan van Mastrigt |
| 0891024 | René Verhoef |
| 0854765 | Liselotte van Wissen |
| 0944862 | Sjanne Zeijlemaker |
| 0980963 | Michalina Tataj |

Introduction

Problem description