PRE2016 3 Groep16

| T. Jansen
|-
| 0897620
| J. Van Galen
|-
| 0965090
| B.G.M Hopman
|}

* Local security and criminal detection
* Inspection of insulation materials in houses (not directly "human recognition", but considered valuable research)

Since Europe is currently struggling with a refugee crisis in the Mediterranean Sea, it was decided to focus on the first application.

==Problem Statement==

Due to turbulent geopolitical times in parts of Africa, thousands of people try to reach a safer, better life in Europe. Conflicts in countries such as Somalia and the violation of human rights in countries with strict regimes, such as Eritrea, force certain groups to move elsewhere<ref>Herkomstlanden van vluchtelingen. (n.d.). Retrieved March 08, 2017, from https://www.vluchtelingenwerk.nl/feiten-cijfers/landen-van-herkomst</ref>. The safest place they can reach is Europe. However, to make it to Europe, they have to cross the Mediterranean Sea. The crossing is often made in old, small boats that were originally intended for far fewer passengers than they are currently loaded with. As a result, many boats sink and their passengers drown in the open sea. In 2016 alone, there were 4,218 confirmed deaths in the Mediterranean due to sinking boats. In the first month of 2017, the death toll from this cause had already reached 377 migrants<ref>Mediterranean Update. (2017, January 31). Retrieved March 08, 2017, from http://migration.iom.int/docs/MMP/170131_Mediterranean_Update.pdf</ref>.

Up until November 2014, Italy had its own rescue operation, called Mare Nostrum, to find refugees who were victims of boat accidents. The rescue mission was successful: about 160,000 people were saved from drowning. However, the use of seven ships, two helicopters, three planes and the help of the navy, coast guard and Red Cross cost €9.5 million per month, which was considered too expensive, and the mission was stopped<ref>Laer, M. V. (2015, April 28). Operaties vergeleken: Mare Nostrum vs Triton. Retrieved March 08, 2017, from http://solidair.org/artikels/operaties-vergeleken-mare-nostrum-vs-triton</ref>. Moreover, it was thought that the rescue operation would encourage refugees to take their chances crossing the sea, as they would be rescued anyway if they got into trouble. Stopping the operation resulted in a tenfold increase in casualties<ref>Fijter, N. D. (2015, April 3). Tien keer meer drenkelingen. Retrieved March 08, 2017, from https://www.trouw.nl/home/tien-keer-meer-drenkelingen~a9d369cd/</ref>.

== Planning == | == Planning == | ||

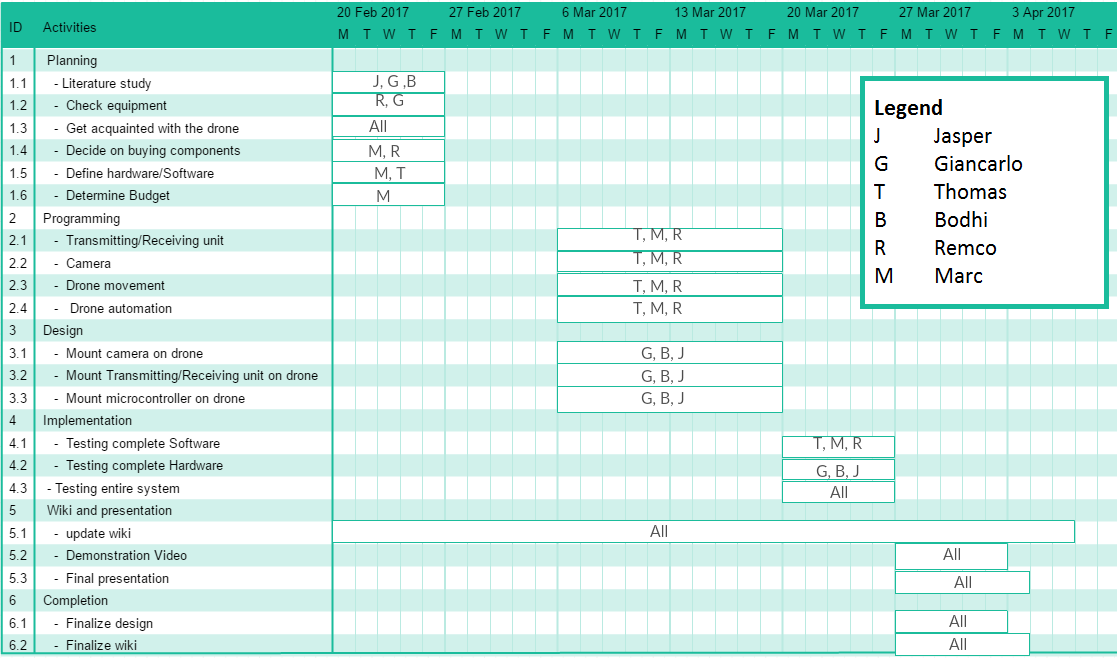

To organize the workload of the project a planning is required, where the main requirements and deadlines are set, together with the task division (starting from week 3 of the quartile e.g. 20th February 2017). | To organize the workload of the project a planning is required, where the main requirements and deadlines are set, together with the task division (starting from week 3 of the quartile, e.g. 20th February 2017). A Gantt chart can be found in Fig. 1. | ||

[[File:ganto.png|thumb|700px|Gantt chart for workload division during the project.]] | [[File:ganto.png|thumb|700px|Fig.1. Gantt chart for workload division during the project.]] | ||

== Logbook == | == Logbook == | ||

==== USE analysis of the application ====

===== User =====

The users of the rescue drone (the ideal stakeholders) will be the coast guards of Italy, Greece, etc. Since deploying ships and planes for surveillance is costly and slow, the use of drones can significantly decrease costs and increase search speed. Because of the lower costs, many drones can be deployed, increasing the surveillance area. Deploying drones is also easier for the operator, since drones can fly in autonomous mode. This makes it possible for anyone to control the drone, instead of requiring skilled pilots. Automatic detection of refugees by the drone simplifies locating them, after which rescue ships can be sent to their location. The user requirements are that the drone should be easily deployable and able to detect refugees with as little operator control as possible.

===== Society =====

Rescue drones will benefit society, since their deployment can prevent many refugee deaths. A reduction in costs will make more money available for the shelter and care of refugees. Moreover, better shelter and care would reduce tensions and civil unrest. Thus, the main requirement from society's perspective is to reduce the cost of rescue operations.

===== Enterprise =====

A rescue drone has few commercial applications, but a drone equipped with a thermal camera could be used by insulation companies to scan houses in urban areas for possible improvements. It could also be used by security companies to scan business premises, instead of fixed cameras that have a limited view.

** Minimum height: 10 m
** Minimum speed: 1 m/s
** Minimum angle of detection (with respect to the perpendicular): 10°
** Minimum flying autonomy: 2 minutes
** Minimum detection rate: 70%
*Camera

*General
** Mapped Area: 100 m<sup>2</sup>
** Autonomous search strategy: No

==== Validation of the thermal image processing ==== | ==== Validation of the thermal image processing ==== | ||

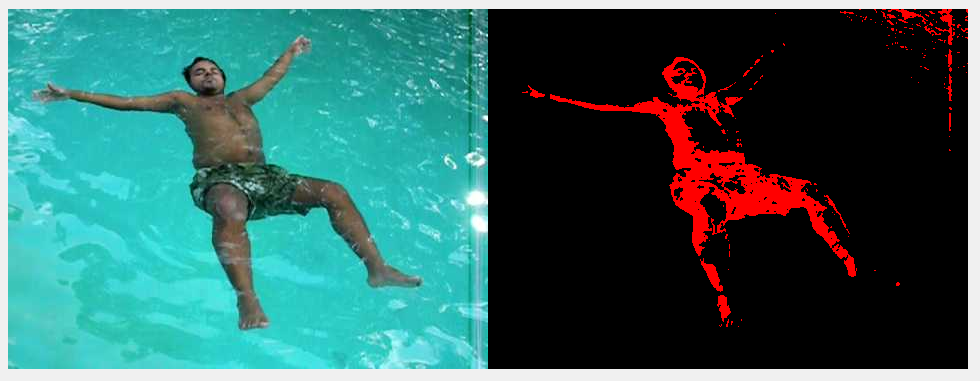

[[File:Regular&thermal.png|thumb|500px| | [[File:Regular&thermal.png|thumb|500px|Fig. 2. Thermal image of a whale in see (left). Mimic by red object (right).]] | ||

As explained in the introduction of week 5, the thermal camera is not available and can therefore not be used. The thermal camera would make detection in real life environment significantly easier than detection by regular camera, as the distinction between water (sea) and humans is very obvious when comparing heat characteristics. Nevertheless, the technique of distinction that is used in these thermal cameras can be used by use of the normal cameras installed on the drone. | As explained in the introduction of week 5, the thermal camera is not available and can therefore not be used. The thermal camera would make detection in real life environment significantly easier than detection by regular camera, as the distinction between water (sea) and humans is very obvious when comparing heat characteristics. Nevertheless, the technique of distinction that is used in these thermal cameras can be used by use of the normal cameras installed on the drone. | ||

The image generated by a thermal camera from a creature in the sea consists of a color pattern dependent on the heat radiation of the environment. The amount of heat is translated into a color pattern which deviates between black (cold) and red (hot), which is shown in | The image generated by a thermal camera from a creature in the sea consists of a color pattern dependent on the heat radiation of the environment. The amount of heat is translated into a color pattern which deviates between black (cold) and red (hot), which is shown in the figure above <ref>Beynen, J. V. (n.d.). Thermal imaging may save Hauraki Gulf whales. Retrieved March 15, 2017, from http://www.stuff.co.nz/environment/67712222/thermal-imaging-may-save-hauraki-gulf-whales/</ref>. In order to achieve this kind of patterns with the regular camera, the environment can be adapted to the image gathered from a thermal camera. | ||
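The black-to-red mapping can be illustrated with a short Python sketch; the project itself worked in MATLAB. The sketch assumes a simple linear ramp on the red channel between two display limits, `t_min` and `t_max`. These are hypothetical values: real thermal cameras use richer palettes, and the temperatures below are illustrative only.

```python
def heat_to_rgb(temp_c, t_min=10.0, t_max=40.0):
    """Map a temperature (deg C) to a colour on a black (cold) to red (hot) scale.

    Linear ramp on the red channel only; t_min and t_max are assumed display
    limits, and temperatures outside them are clamped.
    """
    t = (temp_c - t_min) / (t_max - t_min)  # normalise to [0, 1]
    t = min(max(t, 0.0), 1.0)               # clamp out-of-range readings
    return (round(255 * t), 0, 0)


# Hypothetical readings: sea water and the exposed head of a swimmer.
for label, temp_c in [("sea", 20.0), ("head", 34.0)]:
    print(label, heat_to_rgb(temp_c))
```

The warmer head ends up with a much brighter red than the surrounding water. This is the contrast that the red object in the pool is meant to imitate.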

The test environment, which stands in for detecting refugees in open water with a thermal camera, should be modified as much as possible to resemble the images described above. The water color will be blue/white, as the tiles in the swimming pool are white and the water appears bright blue. This color plays the role of the black (cold) background in the thermal image. The color contrast between a human and the water is much weaker in a regular image than in a thermal one, so the environment has to be adapted to create a good simulation. To mimic the thermal image, the swimmer, who represents a refugee, is equipped with a bright red object the size of a head or body. This object contrasts strongly with the color of the water as seen by the regular camera, as shown in Fig. 2, so the same color-contrast method used for thermal images can be applied. The next section describes how this image processing is done.

==== Image Processing ====

[[File:floating1.png|thumb|500px|Fig. 3. Regular image of a person in the pool (left). Processed image mimicking a thermal image (right).]]

While investigating how to mimic thermal vision with a regular camera, several possibilities came up in MATLAB. One is to convert the acquired frames to binary images based on a threshold value, since binary images are likely easier to analyze. Two examples are given below.

Binarizing the images works quite well, but the problem with these two images is that they require opposite threshold values due to the difference in skin color. This might pose a problem in a real scenario, but is unlikely to be a problem in our test scenario, since only one test environment is used. If a thermal camera is used, differences in water and skin color do not matter, since the image is based only on body heat.
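The thresholding step and the "opposite threshold" issue can be sketched in Python on tiny hypothetical greyscale frames (0 = black, 255 = white). The function name `binarize`, the grey levels and the thresholds are all illustrative assumptions. They are not the MATLAB code or the values used in the project.

```python
def binarize(gray, threshold, invert=False):
    """Convert a greyscale frame (list of rows, values 0-255) to a 0/1 image.

    By default pixels brighter than `threshold` become 1; with invert=True
    pixels darker than `threshold` become 1 instead.
    """
    if invert:
        return [[1 if p < threshold else 0 for p in row] for row in gray]
    return [[1 if p > threshold else 0 for p in row] for row in gray]


WATER = 150  # hypothetical grey level of the pool water
dark_skin = [[WATER, WATER, WATER], [WATER, 40, WATER], [WATER, WATER, WATER]]
light_skin = [[WATER, WATER, WATER], [WATER, 230, WATER], [WATER, WATER, WATER]]

# The swimmer is darker than the water in one frame and brighter in the other,
# so each frame needs a threshold acting in the opposite direction.
print(binarize(dark_skin, 100, invert=True))
print(binarize(light_skin, 200))
```

With a thermal image, a single "warmer than the water" threshold would isolate both swimmers.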

Identifying persons in the drone's video stream might be possible with a neural network. The images were put through AlexNet, a pre-trained deep learning model available in MATLAB. It was able to identify some objects, but did not give satisfying results for the images in Fig. 3, so a more suitable approach needs to be found.

=== Week 6 === | === Week 6 === | ||

==== Investigation on interesting area for the refugees search ==== | ==== Investigation on interesting area for the refugees search ==== | ||

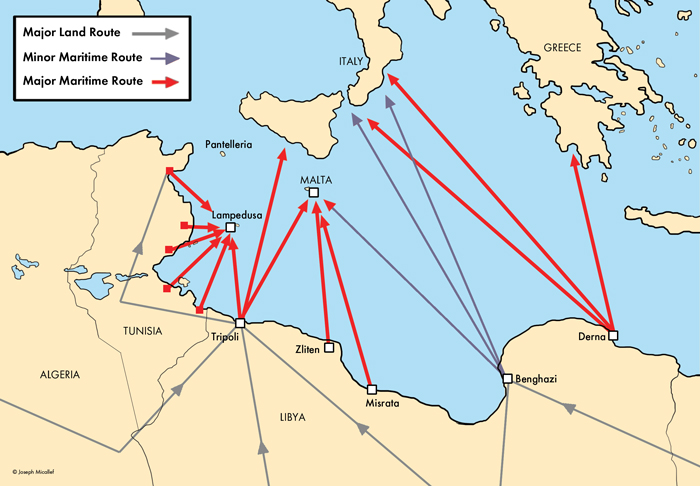

Another requirement that has to be set is the area that the drone has to cover. To acquire numbers regarding these area’s common refugee routes have to be known. Since the refugee crisis is already going on for several years organizations like the UNHCR and BBC have conducted quite some investigation in it. The | Another requirement that has to be set is the area that the drone has to cover. To acquire numbers regarding these area’s common refugee routes have to be known. Since the refugee crisis is already going on for several years organizations like the UNHCR and BBC have conducted quite some investigation in it. The picture in Fig. 4 from the BBC depicts the most used sea routes. | ||

[[File:BBCroutes2.jpg|thumb|700px|This image | [[File:BBCroutes2.jpg|thumb|700px|Fig. 4. This image displays the most common maritime refugee routes<ref>Micallef J.V. (2015, June 24) Reflections on the Mediterranean refugee crisis. Retrieved March 20 2017, from http://www.huffingtonpost.com/joseph-v-micallef/reflections-on-the-medite_b_7120708.html</ref> ]] | ||

Clearly visible in this picture is that most refugees travel from Tunisia or Libya to the island of Lampedusa. This is also the region on which our research is based. This due to the fact that drones won’t be able to fly to other popular location as for example near Morocco, but are able to cover routs from more cities. Using a distance measurement tool embedded in google maps it was possible to measure the distance. This distance was from the most popular Tunisian and Libyan cities to the most common European target island which is Lampedusa. Lampedusa is an island that is part of Italy | Clearly visible in this picture is that most refugees travel from Tunisia or Libya to the island of Lampedusa. This is also the region on which our research is based. This due to the fact that drones won’t be able to fly to other popular location as for example near Morocco, but are able to cover routs from more cities. Using a distance measurement tool embedded in google maps it was possible to measure the distance. This distance was from the most popular Tunisian and Libyan cities to the most common European target island which is Lampedusa. Lampedusa is an island that is part of Italy and 20 square kilometer big having around 6000 Italian inhabitants. Because this is Italian, and with that European soil the refugees can start their asylum procedures there. The first couple of miles into the sea however are territorial waters for which the local coast guard is responsible, this is 12 miles in total. After these 12 miles the refugees are regarded to be on open sea. Distances from most popular cities to travel to Lampedusa can be viewed in the following table. | ||
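As a rough cross-check of distances measured with Google Maps, the great-circle (haversine) distance between two coordinates can be computed directly. The coordinates below are approximate values for Tripoli and Lampedusa, assumed for illustration only. A straight line over the sea is also shorter than any real boat route.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0   # mean Earth radius
NAUTICAL_MILE_KM = 1.852   # exact by international definition


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


# Approximate (lat, lon) in degrees; assumed values for illustration only.
TRIPOLI = (32.89, 13.19)
LAMPEDUSA = (35.50, 12.61)

print(f"Tripoli to Lampedusa: {haversine_km(*TRIPOLI, *LAMPEDUSA):.0f} km")
print(f"12 nautical miles of territorial waters: {12 * NAUTICAL_MILE_KM:.1f} km")
```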

===== Distances to Lampedusa =====

{| class="wikitable" style="width: 600px"
|-

* The flight can be recorded easily;
* The flight control is more stable than the MATLAB code.

However, the app is less convenient to use than the MATLAB code, since it is controlled via the touch screen and gyroscopic sensing of the smartphone. To investigate the most suitable flight height for the camera, which has a resolution of 360p (480x360)<ref>Piskorski, S., Brulez, N., Eline, P., & D'Haeyer, F. (2012, May 21). A.R.Drone Developers Guide [PDF]. Parrot.</ref>, a red card was placed on a black surface. The drone then executed the following procedure:

* Fly over the card;
* Hover above the card;
* Increase the altitude up to the maximum set height of 12 m.

The recorded flight videos were then processed with another MATLAB script, which was tuned to find up to which height the card remained visible. To estimate the height, a second video was recorded that filmed the drone from a greater distance.

==== Demonstration video making==== | ==== Demonstration video making==== | ||

[[File:Drone1.JPG|thumb|400px| Drone used during the demonstration]] | [[File:Drone1.JPG|thumb|400px| Fig. 5. Drone used during the demonstration]] | ||

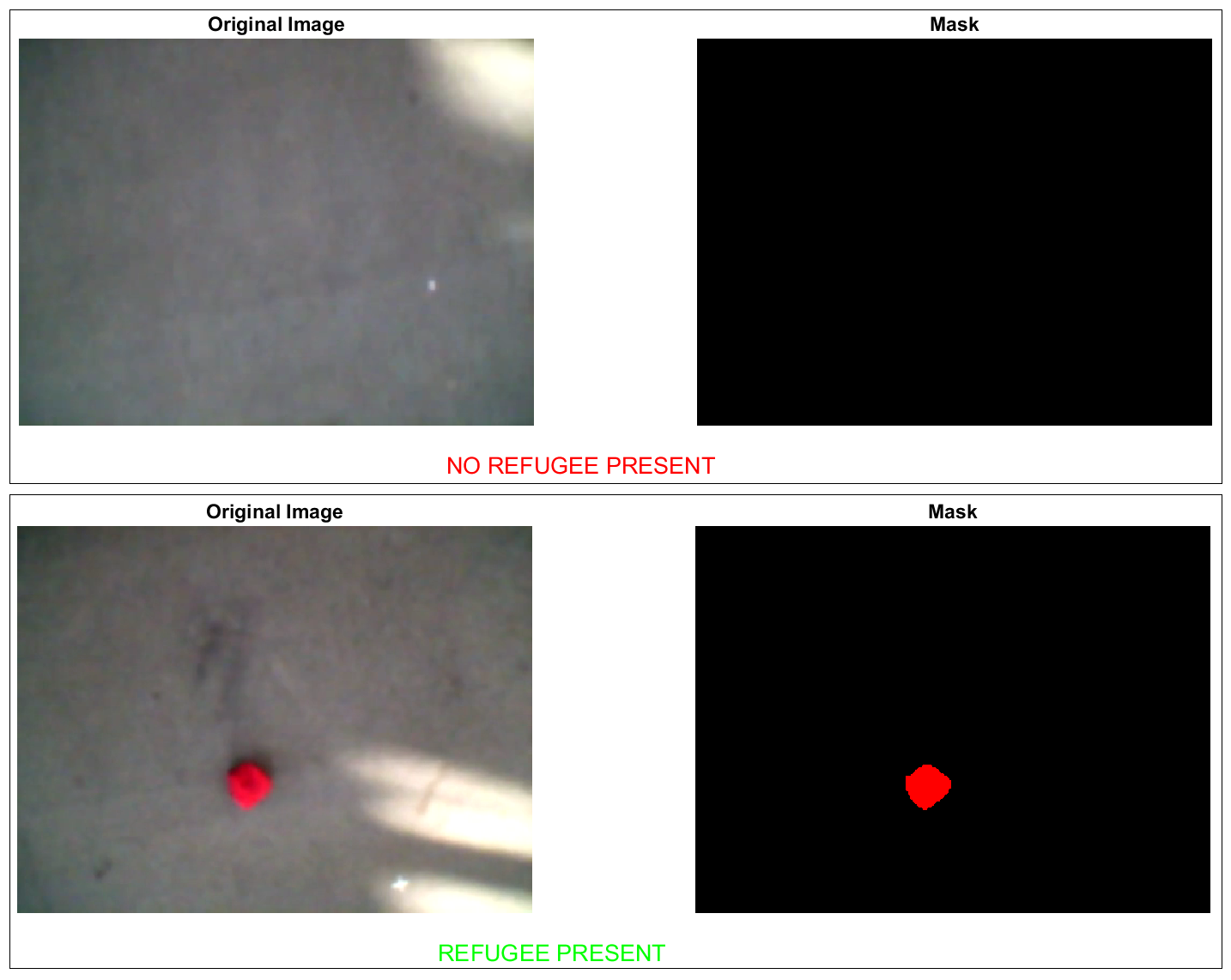

For the demonstration video, the recordings | For the demonstration video, the recordings described in the previous section have been used. The images have been processed using MATLAB using the script in Appendix I-B. A simple description of the script is as follows. It extracts each individual frame and converts it from red, green and blue (RGB) format to Hue Saturation and Value (HSV) format. The HSV threshold for the specified color range (red) is set, after which the corresponding parts of the HSV-layers are extracted. From these layers a mask is created that shows only the part where the color (red) is present. Based on the ratio of the color and background, it is then determined if ‘a refugee’ is present. | ||
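The pipeline of the Appendix I-B script (RGB to HSV, color threshold, mask, color/background ratio) can be sketched in Python as below. This is not the project's MATLAB code. The hue, saturation and value limits, the ratio threshold and the tiny hypothetical frame are all assumptions chosen for illustration. Red is accepted at both ends of the hue scale because hue wraps around at red.

```python
import colorsys


def red_mask(frame, hue_tol=0.05, min_sat=0.5, min_val=0.3):
    """Return a 0/1 mask marking the 'red' pixels of an RGB frame (0-255 values).

    Hue wraps around at red, so hues close to 0 and close to 1 both count.
    All three limits are assumed values, not the ones from Appendix I-B.
    """
    mask = []
    for row in frame:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            red_hue = h <= hue_tol or h >= 1 - hue_tol
            mask_row.append(1 if red_hue and s >= min_sat and v >= min_val else 0)
        mask.append(mask_row)
    return mask


def refugee_present(frame, min_ratio=0.01):
    """Flag the frame when the share of red pixels reaches min_ratio."""
    mask = red_mask(frame)
    red_pixels = sum(sum(row) for row in mask)
    total_pixels = sum(len(row) for row in mask)
    return red_pixels / total_pixels >= min_ratio


# Hypothetical 10x10 frame: bright-blue pool water with a 2-pixel red marker.
WATER = (120, 190, 230)
RED = (220, 30, 30)
frame = [[WATER] * 10 for _ in range(10)]
frame[4][5] = frame[5][5] = RED

print(refugee_present(frame))                  # True  (ratio 0.02)
print(refugee_present(frame, min_ratio=0.05))  # False
```

Lowering `min_ratio` lets smaller or more distant objects be detected, but it also makes it easier for a few noisy pixels to trigger a false positive. This is the trade-off observed with the recorded videos.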

[[File:matlaboutput.png|thumb|400px|Fig. 6. MATLAB script detecting 'a refugee' only when the red object is in sight.]]

The MATLAB script was used to analyze three different videos of the drone flying over objects of varying size at different altitudes. With a large object at low altitude, it was easy to distinguish the object and conclude that ‘a refugee is present’. With a smaller object, detection was still easy, but the color/background ratio threshold had to be lowered. The MATLAB output for this video is shown in Fig. 6. Finally, the video of the credit card at maximum altitude was analyzed. Detection was still possible, but sometimes troublesome. The color/background ratio threshold had to be set very low, which makes the result less accurate, because noise can then easily lead to a false positive detection. The accuracy can likely be increased by using a higher-resolution camera.

==== Final validation of the model ====

Before conclusions can be drawn from the work done during the past weeks, that work first has to be validated. The test results have to be compared to verify how reliable they would have been if a thermal camera had been used. The drone functionalities will not be inspected and validated in depth, because the image processing algorithm and method are designed to be implemented on the AVY drone.

==== Validation of cost-efficiency ====

This subsection validates the cost-efficiency. A drone such as Avy, equipped with a sensor system to detect refugees automatically, is expected to perform better in terms of both cost and risk. Drones clearly pose less risk to the pilot/controller, as there is no risk of a crewed crash while carrying out the small tasks that the drone does not perform autonomously. The cost analysis is complex and involves many estimations, as many operators do not publish their exact costs.

To estimate how much the use of drones could reduce the money spent on migrant search in the Mediterranean Sea, the initial costs need to be known. For our research, these costs consist solely of the money spent on coast guards. This excludes, for example, the costs of migrant centers on the island of Lampedusa, since the drone is intended to assist the coast guard. A good cost estimate was found by analyzing the Italian Mare Nostrum operation, a ‘successful’ refugee rescue mission we came across in one of the first weeks of the project. This sea operation was launched in 2013 after high migrant death counts near Lampedusa, with the aim of saving lives and arresting human traffickers. It took place in the Mediterranean Sea off the Tunisian coast, which is also the area investigated in this project. The mission involved several ships, helicopters, airplanes and many personnel. Obviously, not all of these assets can be replaced by drones, but the cost reduction can be estimated. The Mare Nostrum mission cost around 9.5 million euros per month, which amounts to around 114 million euros per year. This is a very large sum, yet it does not include the purchase price of the equipment, such as helicopters, planes and ships. For example, a military helicopter, which can be used for transporting people and searching, can easily cost more than 20 million euros<ref>Welcome to Aircraft Compare. (n.d.). Retrieved March 30, 2017, from https://www.aircraftcompare.com/helicopter-airplane/Agusta-Westland-AW101-Merlin/251</ref>. The refugees are not transported by these helicopters, as their capacity is much smaller than that of ships. This means that the ships will still be required, but the money spent on searching and first aid at sea is likely to be reduced significantly.

The costs of developing Avy are not publicly known, and as Avy did not respond to our request for more information, an assumption has to be made. Patrique Zaman, one of the founders of Avy, states in an interview with NOS that the development of Avy is mostly financed from the company’s own funds, with a small contribution from the European Space Agency (ESA)<ref>NOSop3. (2017, February 11). Deze Nederlandse drone gaat bootvluchtelingen redden. Retrieved March 05, 2017, from http://nos.nl/op3/artikel/2157627-deze-nederlandse-drone-gaat-bootvluchtelingen-redden.html</ref>. The amount of ESA funding varies widely<ref>Funding. (2016, September 16). Retrieved March 29, 2017, from https://artes.esa.int/funding</ref>. However, since he made clear that it is a minority of the development budget, it is unlikely that they received as much as 500,000 euros. We assume the funding to be in the order of 100,000 euros, with the company’s own investment around 200,000 euros. This gives a budget of 300,000 euros for Avy, which seems a reliable estimate that is not overly optimistic.

Another very important point is the flight altitude. A helicopter flies fairly low above sea level, or uses a camera with a zoom function to scan the sea surface. As a result, it covers a smaller area than the full image that can be captured with a (high-quality) thermal camera, where the image processing does the detection work and humans are only required for verification. The Avy is therefore likely to fly at a higher altitude than a human-operated helicopter for the same detection rate. A thermal camera likely to be suitable for this kind of operation is a law-enforcement thermal camera, used for patrolling neighborhoods, which costs around 6,000 euros<ref>FLIR Systems. (n.d.). LS-Series Thermal Night Vision Monocular. Retrieved March 29, 2017, from http://www.flir.eu/law-enforcement/display/?id=57065</ref>.

As the drone is not yet in production but still in the development phase, approximating its cost price is difficult. Let us assume that the cost price of the drone depends on the number of drones produced. During the described rescue mission, 2 helicopters and 3 planes were used. As a cut in this budget would be beneficial, assume that a fleet of Avys replaces the two helicopters. A budget of around 40 million euros (two helicopters at roughly 20 million euros each) would then be available. Taking the development costs (€310,000) as the price per drone gives an upper bound, since the real cost of an Avy will be lower. On that basis, a fleet of around 130 Avys can be established. The area these Avys can cover will be much larger than the area covered by the helicopters, and their operating costs are likely to be much lower.
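The cost arithmetic in this subsection can be reproduced in a few lines of Python. The inputs are the figures quoted above: €9.5 million per month, more than €20 million per helicopter and €310,000 per Avy. The helicopter price is treated as a lower bound, and the variable names are illustrative.

```python
MONTHLY_COST_EUR = 9.5e6        # Mare Nostrum operating cost per month
HELICOPTER_PRICE_EUR = 20e6     # lower bound on the price of one military helicopter
HELICOPTERS_REPLACED = 2        # helicopters assumed to be replaced by Avys
AVY_UNIT_COST_EUR = 310e3       # development costs, used as an upper bound per drone

annual_cost = MONTHLY_COST_EUR * 12
freed_budget = HELICOPTERS_REPLACED * HELICOPTER_PRICE_EUR
fleet_size = int(freed_budget // AVY_UNIT_COST_EUR)  # whole drones only

print(f"Annual operating cost: EUR {annual_cost / 1e6:.0f} million")
print(f"Budget from two helicopters: EUR {freed_budget / 1e6:.0f} million")
print(f"Affordable Avys: {fleet_size}")
```

The floor division gives 129 drones, which the text rounds to around 130.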

=== Week 8 === | === Week 8 === | ||

==== | ==== Calculations and model finalization ==== | ||

The camera on the drone used in this project has a resolution of 640x360 pixels and an angle of view of 22.6 degrees. The algorithm used could reliably detect a bank card (8.6x5.4 cm) while the drone was flying at a height of 5 meters. At this height every pixel of the camera sees an area of about 0.3x0.3 cm, which means the bank card had to be covered by approximately 500 pixels to be detected reliably. When searching for refugees, the worst-case scenario is when only the head of a person is above the water. In that case, the camera would have to detect an area of approximately 300 cm², which means every pixel should span at most 0.8x0.8 cm (300 cm² / 500 pixels ≈ 0.6 cm² per pixel). The camera used in this project could achieve this while covering an area of 5x3 m. At its top speed of 200 km/h, the AVY drone could then cover an area of 1 km² per hour, while each area would appear in only about three frames if the camera runs at 60 frames per second (at 200 km/h the drone moves about 0.9 m between frames). This is not much and does not make drones sound like a good alternative for finding refugees. However, this area could be much larger with a better camera and algorithm. Thermal cameras usually do not have very high resolutions, but the quality of their pictures can be much better. The camera used in this project is of low quality, and its video feed was sent over Wi-Fi, which means it was compressed. In a real deployment the drone would process its video feed itself, so the feed would not have to be compressed first and the quality of the camera alone would determine the quality of the pictures. When there is a clear distinction between the pixel values of the sea and of a human in the sea, the number of pixels needed to reliably detect a refugee could be much lower; just a few pixels could be enough. In the same situation where only the head is above the water, but now with only 5 pixels needed to cover the head, the camera could cover an area of 50x30 m, resulting in 10 km² covered per hour, while each area would appear in about 30 frames at 60 frames per second. This means the camera could run at a lower frame rate to save energy, and the reliability would be much higher.
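The footprint arithmetic above can be reproduced with a short Python sketch. It is an illustration, not the project's own calculation: it assumes the 22.6° angle of view spans the 640-pixel side, that the drone flies with the long side of the footprint across its track, and it reuses the text's 300 cm² head area. All function names are hypothetical.

```python
import math

# Figures from the text; treating 22.6 degrees as the angle across the
# 640-pixel side is an assumption.
RES_X, RES_Y = 640, 360      # camera resolution (pixels)
ANGLE_OF_VIEW_DEG = 22.6     # angle of view across the 640-pixel side
HEAD_AREA_CM2 = 300.0        # worst case: only the head above the water
SPEED_KMH = 200.0            # AVY top speed
FPS = 60.0                   # camera frame rate


def pixel_size_cm(height_m):
    """Ground size (cm) covered by one pixel at the given flying height."""
    swath_m = 2 * height_m * math.tan(math.radians(ANGLE_OF_VIEW_DEG / 2))
    return swath_m * 100 / RES_X


def footprint_m(pixels_on_head):
    """Footprint (width, length) in metres if the head must fill N pixels."""
    pixel_cm = math.sqrt(HEAD_AREA_CM2 / pixels_on_head)
    return RES_X * pixel_cm / 100, RES_Y * pixel_cm / 100


def coverage_km2_per_hour(width_m):
    """Area swept per hour with the wide side of the footprint across the track."""
    return width_m / 1000 * SPEED_KMH


def frames_per_point(length_m):
    """Number of consecutive frames in which a point on the sea is visible."""
    metres_per_frame = SPEED_KMH / 3.6 / FPS
    return length_m / metres_per_frame


if __name__ == "__main__":
    card_pixels = 8.6 * 5.4 / pixel_size_cm(5) ** 2
    print(f"pixel at 5 m: {pixel_size_cm(5):.2f} cm, bank card: {card_pixels:.0f} px")
    for n in (500, 5):
        width_m, length_m = footprint_m(n)
        print(f"{n} px on head: {width_m:.1f} x {length_m:.1f} m, "
              f"{coverage_km2_per_hour(width_m):.1f} km2/h, "
              f"{frames_per_point(length_m):.0f} frames per point")
```

Under these assumptions the sketch reproduces the rounded figures in the text: about 0.3 cm per pixel at 5 m, footprints of roughly 5x3 m and 50x30 m, about 1 and 10 km² per hour, and about 3 and 30 frames per point.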

Using the calculated 130 Avy drones that would be available for the mission, the fleet could search an area of 1300 km² per hour (130 drones x 10 km² per hour), which means the whole Lampedusa triangle could be fully searched in just 6 hours. This is already quite fast, and all calculations are on the conservative side, so real-world results would probably be even better.
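The fleet estimate is a single division. The sketch below assumes each drone sweeps 10 km² per hour (the 5-pixel case above) and ignores overlap between drones, transit and recharging; the size of the Lampedusa triangle is not given in this section, so it is a parameter. The function name is hypothetical.

```python
def fleet_search_hours(area_km2, drones=130, km2_per_drone_hour=10.0):
    """Hours for a fleet to sweep an area once.

    Idealised: no overlap between drones, no transit or recharging time.
    """
    if drones <= 0 or km2_per_drone_hour <= 0:
        raise ValueError("drones and coverage rate must be positive")
    return area_km2 / (drones * km2_per_drone_hour)


# Area the fleet can sweep in the 6 hours quoted in the text.
implied_area_km2 = 6 * 130 * 10.0
```

Read the other way round, the 6-hour figure corresponds to an area of about 7,800 km² under these idealised assumptions.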

== Conclusion ==

=== USE analysis ===

====User====

As said before, the intended end users of the refugee drone are the coast guards along the Mediterranean Sea, such as the national coast guards of Italy and Greece. These countries are heavily involved in the current refugee situation, in which refugees from Africa seek asylum in European countries.

The refugees often travel in small, crowded boats, resulting in unsafe situations at sea. As a consequence, accidents occasionally occur, which in turn cause drownings. The current rescue operations, performed by the coast guards together with the military, use helicopters, planes and large ships to find and rescue or capture these refugees. The costs of these missions can reach €9.5 million per month. Such costs are generally considered too high, which has led to the cancellation of rescue missions, as in the case of Mare Nostrum.

A drone such as the Avy, equipped with a thermal camera, could in theory be a much cheaper method than, for example, a turboprop airplane to spot refugee boats and refugees who have fallen off those boats. When rescue missions become cheaper, they are more likely to be continued for a longer period. Moreover, a large fleet of drones (which is necessary in any case due to limited battery life and maximum altitude) could in theory also scan the sea surface more efficiently.

====Society====

Although at first sight the coast guards may seem to benefit most from the drone in terms of costs, society profits indirectly as well. This is because coast guards are often a branch of the national navy, which in turn is funded by tax money. When less funding is needed for the coast guard, more money becomes available for other public services.

This extra money can, for example, be used for better shelter and care for the refugees, decreasing civil unrest. Also, more information could be spread about the nature of the refugee crisis, leading to more respect towards refugees, which in turn could decrease the tension between the native population and the immigrants.

On top of that, since the rescue missions save lives, there is a humanitarian reason to continue them. Lowering the costs increases the chance that they are continued. As mentioned, the use of drones could in theory also be more efficient, which would further increase the chance of saving more lives.

====Enterprise====

The use of drones with thermal cameras might be unfavorable for certain enterprises. As stated before, the coast guards currently use boats, airplanes and helicopters to find refugees. Drones might completely replace the airplanes, and a large part of the helicopter fleet as well (some helicopters are still needed to lift casualties out of the water in certain circumstances). Therefore, helicopter and airplane manufacturers could lose a customer. Moreover, helicopters and airplanes rely on fossil fuel, whereas drones rely on electrical power, so the fuel suppliers for those vehicles could also lose a customer.

On the other hand, the use of drones creates business opportunities as well. Since drones and thermal imaging are both relatively new technologies, a lot of development is yet to take place. The coast guards could be a valuable customer for (startup) companies in those fields. On top of that, the use of a drone equipped with a thermal camera does not have to be limited to finding refugees. Although care needs to be taken with regard to laws and regulations, the drones could also be used by law enforcement, such as the police, to pursue fugitives. Another application might be to use the drones to scan neighborhoods for heat losses caused by poor insulation.

=== Further research ===

Follow-up research could be done in several ways. First, the model created in this research project could be enhanced, for example through more accurate detection that makes fewer mistakes. Another enhancement that would greatly benefit the purposes of the project mentioned above is making the drones fly autonomously. If a swarm of drones could operate independently, less human intervention would be needed in the search procedure. This would reduce costs and could also increase the number of refugees found.

Second, instead of enhancing the technology, research could explore a broader range of applications. The technology presented in this research focuses on the search for refugees. However, searching for humans is relevant in many other contexts. The technology developed here detects persons in the water, but with slight adjustments it might also be able to recognize people on land. This could then be applied in numerous fields, for example one that is often in the news: the search for poachers. With several animal species on the brink of extinction, autonomous detection of poachers might contribute to wildlife preservation.

== Reflection ==

As shown in the conclusion, the User, Society and Enterprise aspects of the project have been addressed. Nevertheless, the path to the final results and, subsequently, the conclusion was not a straight one. During the project there were setbacks, reformulations and other difficulties, which are analyzed in this section. From this analysis, improvements for similar projects can be derived.

First of all, the opportunity for groups to decide entirely on the subject they want to focus on is very appealing. Nevertheless, this also requires a lot of creativity and structure within the groups, as it is easy to focus on the wrong things in such cases. Unfortunately, this group also experienced moments in which the focus was not on the most important part, as stated in the objective: “The goal is to study the feasibility of using autonomous drones equipped with thermal cameras to search for refugees in replacement of airplanes, helicopters and boats currently assigned to this task”. For example, the group focused on controlling the drone via MATLAB, which took a lot of time due to bugs in the code. Controlling the drone by smartphone to acquire an image or video for processing should have happened at an earlier stage of the project. As the final results include an image processing technique, information about the refugee routes, an implementation on a drone under development and much more, the time outside these misdirected efforts was used very efficiently, mainly thanks to the good collaboration in the group, which is described further in the next paragraph.

The group was very well balanced. Firstly, all group members were motivated to work on the project, as they were genuinely interested in the subject. Secondly, the combination of five Electrical Engineers, who are passionate about electronics and embedded system design, and an Applied Physicist, who is very skilled in MATLAB and has a critical eye, proved to be very strong. Working in subgroups also did not harm the coherence of the project as a whole.

Overall, the group logistics, motivation and collaboration were successful. Nevertheless, due to enthusiasm and misplaced focus, some time was lost researching and developing parts that were not necessary to meet the objective. Effort should have been allocated in a more structured manner throughout the project. This will be kept in mind when participating in another group project with a structure similar to the USE Robots Everywhere final project, or in any real-life problem that fits the structure mentioned above.

== References ==


==== B: Code used for image processing ====

clear; clc;
% Set the color to find in SetThresholds(): 1 = Yellow, 2 = Green, 3 = Red, 4 = White
[hueThresholdLow, hueThresholdHigh, saturationThresholdLow, saturationThresholdHigh, ...
    valueThresholdLow, valueThresholdHigh] = SetThresholds();
smallestAcceptableArea = 10;             % smallest blob (in pixels) kept by bwareaopen
structuringElement = strel('disk', 25);  % disk used by imclose to merge nearby blobs
video = VideoReader('remco360p.mp4');
while hasFrame(video)
    rgbImage = readFrame(video);
    % Left panel: original frame
    subplot(1,2,1);
    cla reset
    imagesc(rgbImage);
    title('Original Image', 'Fontsize', 14);
    axis off
    % Split the frame into hue, saturation and value channels (all in [0, 1])
    hsvImage = rgb2hsv(rgbImage);
    hImage = hsvImage(:,:,1);
    sImage = hsvImage(:,:,2);
    vImage = hsvImage(:,:,3);
    % Create Hue, Saturation and Value masks based on threshold values
    hueMask = (hImage >= hueThresholdLow) & (hImage <= hueThresholdHigh);
    saturationMask = (sImage >= saturationThresholdLow) & (sImage <= saturationThresholdHigh);
    valueMask = (vImage >= valueThresholdLow) & (vImage <= valueThresholdHigh);
    % Combine masks: a pixel is kept only if it passes all three thresholds
    coloredObjectsMask = uint8(hueMask & saturationMask & valueMask);
    % Remove small areas (smallestAcceptableArea is set before the loop)
    coloredObjectsMask = uint8(bwareaopen(coloredObjectsMask, smallestAcceptableArea));
    % Smoothen borders (structuringElement is set before the loop)
    coloredObjectsMask = imclose(coloredObjectsMask, structuringElement);
    % Fill holes
    coloredObjectsMask = imfill(logical(coloredObjectsMask), 'holes');
    % Lay mask over original image
    coloredObjectsMaskConv = cast(coloredObjectsMask, 'like', rgbImage);
    maskedImageR = coloredObjectsMaskConv .* rgbImage(:,:,1);
    maskedImageG = coloredObjectsMaskConv .* rgbImage(:,:,2);
    maskedImageB = coloredObjectsMaskConv .* rgbImage(:,:,3);
    maskedRGBImage = cat(3, maskedImageR, maskedImageG, maskedImageB);
    % Right panel: binary mask (black background, detected pixels in red)
    subplot(1, 2, 2);
    cla reset
    %imagesc(maskedRGBImage); % Mask laid over original image
    cmap = [0,0,0
            1,0,0];
    colormap(cmap);
    imagesc(coloredObjectsMask); % Mask
    title('Mask', 'FontSize', 14);
    axis off
    % Fraction of the frame covered by the mask
    [rows, columns, numberOfColorChannels] = size(rgbImage);
    numberOfPixels = rows*columns;
    ratio = sum(sum(coloredObjectsMask))/numberOfPixels;
    % Ratio thresholds used in tests: remco 0.01; jacket 0.005; card 0.0001.
    % The threshold for detection decreases with drone height, which might
    % increase the number of false positives.
    if ratio > 0.0001
        text(-0.5, -0.1, 'REFUGEE PRESENT', 'Units', 'Normalized', 'FontSize', 16, 'Color', 'green')
    else
        text(-0.5, -0.1, ' NO REFUGEE PRESENT', 'Units', 'Normalized', 'FontSize', 16, 'Color', 'red')
    end
    drawnow
    truesize
end

function [hueThresholdLow, hueThresholdHigh, saturationThresholdLow, saturationThresholdHigh, valueThresholdLow, valueThresholdHigh] = SetThresholds()
    % Use values that I know work for the onions and peppers demo images.
    color = 3;
    switch color
        case 1
            % Yellow
            hueThresholdLow = 0.10;
            hueThresholdHigh = 0.14;
            saturationThresholdLow = 0.4;
            saturationThresholdHigh = 1;
            valueThresholdLow = 0.8;
            valueThresholdHigh = 1.0;
        case 2
            % Green
            hueThresholdLow = 0.15;
            hueThresholdHigh = 0.60;
            saturationThresholdLow = 0.36;
            saturationThresholdHigh = 1;
            valueThresholdLow = 0;
            valueThresholdHigh = 0.8;
        case 3
            % Red.
            % IMPORTANT NOTE FOR RED. Red spans hues both less than 0.1 and more than 0.8.
            % We're only getting one range here so we will miss some of the red pixels - those with hue less than around 0.1.
            % To properly get all reds, you'd have to get a hue mask that is the result of TWO threshold operations.
            hueThresholdLow = 0.80;
            hueThresholdHigh = 1;
            saturationThresholdLow = 0.58;
            saturationThresholdHigh = 1;
            valueThresholdLow = 0.55;
            valueThresholdHigh = 1.0;
        case 4
            % White
            hueThresholdLow = 0.0;
            hueThresholdHigh = 1;
            saturationThresholdLow = 0;
            saturationThresholdHigh = 0.36;
            valueThresholdLow = 0.7;
            valueThresholdHigh = 1.0;
    end
    return; % From SetThresholds()
end

Based on code: https://nl.mathworks.com/matlabcentral/fileexchange/26420-simplecolordetection
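The per-pixel test and the ratio decision of the MATLAB script can be sketched in plain Python with the standard colorsys module, whose hue, like MATLAB's rgb2hsv, runs from 0 to 1. This is an illustrative re-implementation, not the project's code: it skips the morphological steps (bwareaopen, imclose, imfill), and it adds the second, low-hue red band (0 to 0.10) that the note in SetThresholds() recommends, which is an assumption beyond what the script actually does.

```python
import colorsys

# Thresholds copied from case 3 (red) of SetThresholds(); the low-hue band
# follows the note in that function and is an addition to the original script.
RED_HUE_HIGH_BAND = (0.80, 1.00)
RED_HUE_LOW_BAND = (0.00, 0.10)
SAT_RANGE = (0.58, 1.00)
VAL_RANGE = (0.55, 1.00)


def is_red(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the red HSV window."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    in_hue = (RED_HUE_HIGH_BAND[0] <= h <= RED_HUE_HIGH_BAND[1]
              or RED_HUE_LOW_BAND[0] <= h <= RED_HUE_LOW_BAND[1])
    return (in_hue
            and SAT_RANGE[0] <= s <= SAT_RANGE[1]
            and VAL_RANGE[0] <= v <= VAL_RANGE[1])


def red_ratio(image):
    """Fraction of red pixels; image is a list of rows of (r, g, b) tuples."""
    total = sum(len(row) for row in image)
    red = sum(is_red(*px) for row in image for px in row)
    return red / total if total else 0.0


def refugee_present(image, ratio_threshold=0.0001):
    """Same decision rule as the script: mask ratio above a fixed threshold."""
    return red_ratio(image) > ratio_threshold
```

A pure red pixel has hue 0, so it passes this two-band test but would be rejected by the single 0.80 to 1 hue band used in the script.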

Latest revision as of 15:21, 11 April 2017

Group members

| Student ID | Name |
|---|---|
| 0943957 | M.D Visser |
| 0980082 | G. Marzano |
| 0950506 | R. Schalk |
| 0960353 | T. Jansen |
| 0897620 | J. Van Galen |
| 0965090 | B.G.M Hopman |

Introduction

This is the Wiki page of group 16 for the project Robots Everywhere (0LAUK0). The subject chosen is "Detection of people by using drones equipped with IR/heat sensors and Camera". Developing such a technology could have an impact because of the many applications it offers. In the first phase of the project, after brainstorming, the following applications emerged:

- Find refugees in open sea

- Local security and criminals detection

- Inspection of insulation materials in houses (not directly "human recognition", but considered a valuable research)

Since Europe is currently struggling with a refugee problem in the Mediterranean Sea, the group chose to focus on the first application.

Problem Statement

Due to turbulent geopolitical times in parts of Africa, thousands of people try to reach a safe, better life in Europe. Conflicts in countries such as Somalia and human rights violations in countries with strict regimes, such as Eritrea, force certain groups to move elsewhere[1]. The safest place they can reach is Europe. However, to reach Europe, the Mediterranean Sea has to be crossed. The crossing is often made in old, small boats that were originally intended for far fewer passengers than they are currently loaded with. As a result, many boats sink and their passengers drown in the open sea. In 2016 alone, there were 4,218 confirmed deaths in the Mediterranean due to sinking boats. In the first month of 2017, the death toll from this cause already stood at 377 migrants[2].

Until November 2014, Italy had its own rescue operation, called Mare Nostrum, to find refugees who were victims of boat accidents. The mission was successful: about 160,000 people were saved from drowning. However, the use of seven ships, two helicopters, three planes and the help of the navy, the coast guard and the Red Cross cost €9.5 million per month, which was considered too expensive, and the mission was stopped[3]. Moreover, it was thought that the rescue operation would encourage refugees to take their chances crossing the sea, as they would be rescued anyway if they got into trouble. Stopping the operation resulted in a tenfold increase in casualties[4].

The search for refugees is currently done with helicopters and planes equipped with cameras. However, these aircraft are very expensive to keep in the air. For example, the assistance of a C-130 turboprop plane in rescue missions costs more than €6,000 per hour. The main purpose of these aircraft is to spot refugees, after which a boat picks them up from the water[5].

In order to keep rescue operations such as Mare Nostrum in action, costs have to be cut. Since the aircraft are among the most expensive parts of those operations, an alternative is needed. Drones are a promising technology for this, since they are much cheaper to keep flying. Moreover, they could be used in large numbers to cover large water surfaces, making rescue operations much more efficient. Therefore, this wiki discusses the feasibility of deploying drones in refugee rescue missions. Since drones currently rely on rather weak power sources, batteries in particular, their equipment should be minimal. The use of a thermal camera will therefore be investigated: apart from being small and lightweight, it should make the automatic detection of organisms, in this case human refugees, relatively easy to implement, since humans have a higher body temperature than the surrounding water. Moreover, since thermal cameras do not depend on visible light, they can also be used at night, increasing the operational hours of the drone.
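The reason thermal detection is expected to be simple can be shown with a minimal sketch. It assumes a radiometric thermal frame, i.e. a grid of temperatures in °C, which this project did not have; the water temperature is estimated as the frame median (assuming water fills most of the image), and the 5 °C margin is a hypothetical value, not one from this project.

```python
import statistics


def warm_pixels(temps, min_delta_c=5.0):
    """Return (row, col) indices of pixels clearly warmer than the water.

    temps is a list of rows of temperatures in degrees Celsius. The water
    temperature is estimated as the median of the whole frame, which assumes
    that water covers most of the image.
    """
    water_temp = statistics.median(t for row in temps for t in row)
    return [(r, c)
            for r, row in enumerate(temps)
            for c, t in enumerate(row)
            if t - water_temp >= min_delta_c]
```

For example, a 4x5 frame of 18 °C water with a single 31 °C pixel at row 2, column 3 returns only that index, while a frame of water with small natural variations returns nothing.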

Objectives

Final formulation of the project objectives

The focus of this project will be on the methods applied in searching for refugees. The goal is to study the feasibility of using autonomous drones equipped with thermal cameras to search for refugees as a replacement for the airplanes, helicopters and boats currently assigned to this task. This is done by testing how much area one drone can cover and, from that value, estimating how many drones would be needed to replace the current search methods. The final result of this project should, therefore, be a comparison of the costs and efficiency of the current search methods and of the use of drones.

Approach

General

- Determine the demands and benefits for user, society & enterprise.

- Divide group in subgroups working on different aspects of the project.

- Make a detailed planning.

Technical

- Equip the drone with a thermal camera (considering that a drone can be provided by TU/e).

- Program the drone and tune the sensors (e.g. find the threshold voltages) to detect the different values.

- Link the obtained data to an environmental structure (e.g. environment heat model).

- Adjust the program to map the perimeters properly and account for external heat variations.

- Act on the environment under inspection (e.g. transmitting signal to operator).

Literature Study

For the literature study of this project, the article titled ‘feasibility study of inexpensive thermal sensors and small UAS deployment for living human detection in rescue missions application scenarios’ was found[6]. The article identifies two critical phases in which geospatial imaging can be very useful for rescue purposes: first, the detection of humans, and second, the confirmation of whether a detected human is dead or alive. Moreover, the article elaborates on the “proof of concept for using small UAVs equipped with infrared and visible diapason sensors for detection of living humans in outdoor settings”, in which “Electro-optical imagery was used for the research in optimal human detection algorithms”.

This research provided a lot of useful information about thermal imaging. The introduction already highlights a psychological aspect, namely “the human tendency to disregard opportunity costs when the life of identifiable individuals are visibly threatened. Due to this fact, we may observe operations when hundreds of people and multiple sets of equipment are deployed to save only one human life”. Moreover, Rudol et al. already introduced human body detection via positioning algorithms using visible and infrared imagery in 2008. Follow-up research in this field produced analysis algorithms that detect breathing and heartbeat rates through 15 cm of rubble.

We were already aware that manned aerial vehicles for rescue purposes are quite expensive. However, the article raised a point we had not considered: for manned aerial vehicles “very strict requirements are needed for areas of take-off and landing, and these areas are often far from the search and rescue area”. We also learned that several classes of UAVs exist; however, this project will use the drone that can be provided by TU/e.

The authors conducted their research on human dummy objects and real humans. Experimental results showed that “various types of boundaries created by changes in feature signs such as color and texture, bringing a lot of difficulties in automated image processing. Thus, a potentially reliable algorithm needs to consider all combination of different types of image attributes together in order to provide correct segmentation of real natural images”. Based on this, the authors concluded that living humans can be detected reliably in both positive (13 °C) and negative (-5 °C) ambient temperatures.

Planning

To organize the workload of the project, a planning is required in which the main requirements and deadlines are set, together with the task division (starting from week 3 of the quartile, i.e. 20 February 2017). A Gantt chart can be found in Fig. 1.

Logbook

Each week, the progress of the group is reported in this logbook. The purpose is to keep the whole group up to date with the progress of each subgroup. Moreover, it makes it possible to compare the actual progress with the planning at any time.

Week 1

During week 1 the group was formed, the possible objectives of the project were discussed and, for each possibility, the main USE aspects were identified, as reported in the Objectives section. Moreover, a rough planning was discussed to find the most suitable times for the group members to meet.

Week 2

During week 2 the group had to present the subject of the project, with the related objectives and approach. Based on the feedback received, the group started to focus on one of the applications presented: the refugee search.

USE analysis of the application

User

The users of the rescue drone (ideal stakeholders) will be the coast guards of Italy, Greece, etc. Since deploying ships and planes for surveillance is costly and slow, the use of drones can decrease costs significantly and increase search speed. Due to the reduction in costs, many drones can be deployed, increasing the surveillance area. Deploying drones is also easier for the operator, since drones can fly in autonomous mode. This makes it possible for anyone to control the drone, instead of requiring skilled pilots. Automatic detection of refugees by the drone simplifies locating them, after which rescue ships can be sent to their location. The user requirements are that the drone should be easily deployable and able to detect refugees with as little control by the operators as possible.

Society

Rescue drones will benefit society, since many refugee deaths can be prevented by their deployment. A reduction in costs will make more money available for the shelter and care of the refugees. Moreover, better shelter and care would make an impact, reducing tensions and decreasing civil unrest. Thus, the main requirement for society is to reduce the costs of rescue operations.

Enterprise

A rescue drone has few commercial applications, but a drone equipped with a thermal camera could be used by insulation companies to scan houses in urban areas for improvements. It could also be used by security companies to scan business premises, instead of fixed cameras that have a limited view.

Demonstration

To demonstrate the functionality of the drone, the detection of a human being in a marine environment will be inspected. This could be shown by a test in a swimming pool or in the 'Dommel', with an individual swimming in the water. If the drone is able to recognize whether or not there is a human in the water, the test is passed. The test will be recorded on video and shown at the final presentation of the project.

State of the art

Refugee drone Avy

Drones have not been around for decades. As a result, research on drones for specific application domains is not widespread, and there are few state-of-the-art systems comparable to the idea this group wants to develop. Nevertheless, one drone has been developed specifically for refugees.

Avy is a drone that has received publicity lately, as it focuses on delivering goods (a huge floater) to refugees on the open sea. Once the floater is dropped, the location of the refugees is known immediately and the emergency services can head to the area. The weakness of this design is that the drone does not focus on detecting refugees, as the location that is transmitted is simply the location at which the floater is dropped. To drop a floater, the refugees must first be detected by some means, as dropping floaters at random will not help. A thermal camera, with which our design will be equipped, should enable detection of refugees on the open sea, even at night. Many other elements of Avy, such as its design, are promising and inspiring for our development[7].

The design of Avy focused on traveling large distances over sea, which is not feasible with a 'regular' quadcopter drone. This, however, is not directly relevant to our project, as its main focus is detection with a thermal camera rather than designing a drone that can cover large distances. Even though Avy was designed for long-distance travel at high speeds (around 200 km/h), it is still able to take off and land vertically. Moreover, the design is fully electrically propelled, able to fly (nearly) autonomously and able to carry a payload of around 10 kg[8].

"UAE Drones for Good Award", Dubai

In Dubai, an annual competition called "UAE Drones for Good Award" has been held since 2016. Its mission, as stated on its website [9], is as follows:

The UAE Government invite the most innovative and creative minds to find solutions that will improve people’s lives and provide positive technological solutions to modern day issues.

The Avy project described above was one of the finalists of this competition, along with numerous other intriguing projects. Some of them focused on rescue and assistance in case of disasters, namely "Drones for Search and Rescue" and "FINDER drone". One of the finalist projects, called "Drones 4Right2Life", is particularly interesting because of its similarities with the objectives of this group.

On the project page [10], the team states:

The solution promises to have [...] an earlier, closer, and more efficient detection of the vessels, which may result not only in a better use of human and technological resources in the rescue effort, but more importantly, in saving more lives.

Week 3

In preparation for this week's presentation, the group defined the deliverables of the project.

Deliverables

- A working drone equipped with a bottom-facing camera capable of detecting humans

- A demonstration video which shows the functionalities of the drone

- Complete wiki with all relevant information on the project. This includes:

- introduction

- context analysis (state of the art)

- problem statement

- research with an accent on the USE perspective

- conclusion with some ideas for further improvement.

Week 4

During the carnival break, the group contacted dr. ir. M.J.G. van de Molengraft and subsequently received the drone. Moreover, the literature study was completed and improved. An important discussion took place during Week 4 regarding the use of the thermal camera for recognition. The reasons for proposing such a technology can be found in the previous sections of the wiki. However, an alternative might be to use a regular camera and, instead of showing the actual working principle, demonstrate it by means of a brightly colored object. Using colors that can easily be distinguished from the water in normal situations eliminates the need for a thermal camera. This and other possible options were discussed during the weekly meeting with the professors. The feedback received has been used to start working on a list of requirements.

Work on the Drone

After receiving the drone, the group started to work on it. To make the drone more convenient to control, it was decided to use MATLAB to send commands and receive data. The idea was that the image analysis could also be done within MATLAB. However, after spending hours testing different MATLAB scripts, there was still no success: the drone would not get into the air, nor was MATLAB able to receive data from the drone. After some time we found out that one of the scripts received from TU/e was only suitable for a previous version of the drone. Several other scripts were tested, but none of them got the drone into the air either.

Week 5

At this point, some adjustments had to be made to the original planning due to changes in the direction of the project. These mainly concern the type of camera, which is going to be a regular camera instead of a thermal one. Consequently, to clarify which objectives have to be achieved, the final requirements have been listed. Moreover, during this week and the end of the previous one, some work has been done on the image processing, which is necessary for detecting refugees with the camera. Finally, the requirements are also important for preparing the final demonstration video and for assessing how valuable the outcome is going to be, even though a different camera is used.

List of Requirements

A list of requirements has been drawn up in order to proceed efficiently in the following weeks. Considering the nature of the project, which focuses on image processing and human recognition, and the state of the art in equipping drones with sensors to improve existing solutions, the group has decided on the position this project will take. In the realization and the demonstration, the work shown will be an extension of the already existing AVY drone presented earlier. The testing of the human recognition and the validation of its functionality with respect to the use of a thermal camera will be the main points of the final demonstration. The detailed list of requirements follows:

- Drone

- Minimum height: 10m

- Minimum speed: 1 m/s

- Minimum angle of detection (with respect to the perpendicular): 10 °

- Minimum flying autonomy: 2 minutes

- Minimum detection rate 70%

- Camera

- Minimum resolution: QVGA, 320x240 (bottom camera & front camera)

- Conversion of output image in a thermal image: YES

- General

- Mapped Area: 100 m2

- Autonomous search strategy: NO
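The numeric requirements above can also be written down as data, so that a recorded test flight can be checked against them. The sketch below is hypothetical: the field names are invented, the detection rate is assumed to be detected frames divided by frames in which the target was visible, and the angle-of-detection and mapped-area requirements are left out because the list does not say how they are measured.

```python
from dataclasses import dataclass

QVGA = (320, 240)  # QVGA resolution in pixels (width, height)


@dataclass
class DemoRun:
    """Measured values of one demonstration flight (hypothetical structure)."""
    height_m: float
    speed_m_s: float
    flight_time_min: float
    detections: int          # frames in which the "refugee" was detected
    visible_frames: int      # frames in which the "refugee" was in view
    resolution: tuple        # camera (width, height) in pixels


def failed_requirements(run):
    """Return the names of the listed minimum requirements that a run misses."""
    rate = run.detections / run.visible_frames if run.visible_frames else 0.0
    checks = {
        "height >= 10 m": run.height_m >= 10,
        "speed >= 1 m/s": run.speed_m_s >= 1,
        "flying autonomy >= 2 min": run.flight_time_min >= 2,
        "detection rate >= 70%": rate >= 0.70,
        "resolution >= QVGA": (run.resolution[0] >= QVGA[0]
                               and run.resolution[1] >= QVGA[1]),
    }
    return [name for name, passed in checks.items() if not passed]
```

Since QVGA is 320x240 pixels, the 640x360 camera used in this project meets the resolution requirement; a run flown at 5 m with 6 detections in 10 visible frames would be reported as failing the height and detection-rate requirements.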

The demonstration will be filmed and then shown. The drone will have to fly (under control of the team) and map the surrounding area (a pool of approximately 300 m2, of which only a third will be mapped). By means of the camera, it will be able to take pictures of the environment. The demo will take place in a public pool or similar, in order to simulate the real-life situation in which the drone has to find refugees in the open sea. Obviously, some assumptions had to be made due to the testing conditions, namely:

- No currents, waves or any other kind of water motion

- No use of a thermal camera

- A single "refugee" that has to be recognized

- The refugee's body is completely above the water (e.g. not swimming or drowning)

Finally, the resulting image, taken under all the restrictions and requirements stated above, will be converted into a thermal-style image, both to validate the model and to show a useful result that extends the research done with the AVY drone.

Validation of the thermal image processing

As explained in the introduction of week 5, the thermal camera is not available and therefore cannot be used. A thermal camera would make detection in a real-life environment significantly easier than a regular camera, since water (sea) and humans differ clearly in their heat characteristics. Nevertheless, the distinction technique used with thermal cameras can also be applied to the images of the regular cameras installed on the drone.

The image generated by a thermal camera of a person in the sea consists of a color pattern that depends on the heat radiated by the scene. The amount of heat is mapped to colors ranging from black (cold) to red (hot), as shown in the figure above [11]. To obtain similar patterns with a regular camera, the environment can be adapted so that the camera image resembles an image from a thermal camera.
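The black-to-red mapping described above can be sketched as a small false-color palette. The Python below is an illustration, not the group's MATLAB code: it normalizes a grid of scalar "heat" values to [0, 1] and interpolates linearly between color stops, with black for the coldest and red for the hottest value. The stop list `PALETTE` and the function names are hypothetical; extra stops can be added as long as their positions stay strictly increasing.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Color stops with strictly increasing positions in [0, 1].
# Black for the coldest value, red for the hottest, as in thermal images.
PALETTE: List[Tuple[float, RGB]] = [(0.0, (0, 0, 0)), (1.0, (255, 0, 0))]


def palette_color(t: float, stops: List[Tuple[float, RGB]] = PALETTE) -> RGB:
    """Linearly interpolate the color for a normalized value t."""
    t = min(max(t, 0.0), 1.0)  # clamp to the palette range
    for (t0, c0), (t1, c1) in zip(stops, stops[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)
            return tuple(round(a + f * (b - a)) for a, b in zip(c0, c1))
    return stops[-1][1]


def to_false_color(grid: Sequence[Sequence[float]]) -> List[List[RGB]]:
    """Min-max normalize a 2-D grid of values and map each one to a palette color."""
    lo = min(min(row) for row in grid)
    hi = max(max(row) for row in grid)
    span = (hi - lo) or 1.0  # avoid division by zero for a uniform grid
    return [[palette_color((v - lo) / span) for v in row] for row in grid]
```

For example, `to_false_color([[0.0, 10.0]])` maps the colder cell to black and the warmer cell to red.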

The test environment, which stands in for the detection of refugees in open water by a thermal camera, should therefore be modified to resemble the images described above as closely as possible. In the swimming pool the water appears blue/white, because the tiles are white and the water shows a bright blue color. This water color plays the role that black (cold) plays in the thermal image. Since the color difference between a human and the water is much less pronounced for a regular camera than for a thermal camera, the environment has to be adapted to create a good simulation. To mimic the thermal image, the swimmer who represents a refugee is equipped with a bright red object the size of a head or body. This object differs strongly from the color of the water as seen by the regular camera, as shown in Figure, so the deviation method of thermal images can be used. The next section describes how this image processing is done.
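The idea of a red marker that stands out against blue/white pool water can be expressed as a simple per-pixel score. The sketch below is an assumption-based illustration in Python (the group worked in MATLAB): it measures how far the red channel exceeds the larger of the green and blue channels, so blue or white water scores close to zero while a bright red object scores high. The threshold of 0.3, the example pixel values and the function names are hypothetical and would need tuning on real frames.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def redness(pixel: RGB) -> float:
    """Excess of red over the strongest other channel, scaled to [0, 1]."""
    r, g, b = pixel
    return max(0, r - max(g, b)) / 255.0


def hot_mask(image: Sequence[Sequence[RGB]], threshold: float = 0.3) -> List[List[bool]]:
    """Mark pixels whose redness reaches the threshold (the 'hot' pixels)."""
    return [[redness(p) >= threshold for p in row] for row in image]


if __name__ == "__main__":
    # Hypothetical pixel values for a white tile, blue water and a red marker.
    white_tile, blue_water, red_marker = (230, 240, 250), (60, 150, 220), (220, 30, 40)
    print(hot_mask([[white_tile, blue_water, red_marker]]))  # [[False, False, True]]
```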

Image Processing

While investigating how thermal vision can be mimicked with a regular camera, several possibilities using MATLAB came up. One is to convert the acquired frames to binary images based on a threshold value, since binary images are likely easier to analyze. Two examples are given below.

Binarizing the images works quite well, but these two images require opposite threshold values because of the difference in skin color. This might be a problem in a real scenario, but is likely not a problem in our test scenario, since only one test environment is used. With a thermal camera, differences in water and skin color would not matter, since the image is based only on body heat.
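The threshold and polarity issue described above can be sketched as follows. This is not the group's MATLAB code: it converts RGB pixels to gray with the ITU-R BT.601 luma weights (essentially the weights MATLAB's `rgb2gray` uses), picks a threshold automatically with Otsu's method (the method behind MATLAB's `graythresh`), and exposes a `bright_target` flag for the polarity, since a dark object on bright water and a bright object on dark water need opposite comparisons. Otsu's method only chooses the threshold value; the polarity still has to be known. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def to_gray(pixel: RGB) -> int:
    """Luma from RGB using the ITU-R BT.601 weights, rounded to 0..255."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def otsu_threshold(values: Sequence[int]) -> int:
    """Threshold t (0..255) maximizing the between-class variance; class 0 is v <= t."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0:
            continue  # no pixels in the lower class yet
        if w_fg == 0:
            break  # all pixels are in the lower class
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        between_var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(gray: Sequence[Sequence[int]], t: int, bright_target: bool) -> List[List[bool]]:
    """True marks the target; the comparison flips with the polarity of the target."""
    return [[(v > t) if bright_target else (v <= t) for v in row] for row in gray]
```

With half of the pixels at gray level 10 and half at 200, the chosen threshold lies between the two levels, and only the `bright_target` flag decides which group is marked as the target.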

Identifying persons in the drone's video stream might be possible with a neural network. The images were passed through AlexNet, a pre-trained deep learning model that can be used in MATLAB. It was able to identify some objects but did not give satisfying results on the images in Fig. 3, so a more suitable approach needs to be investigated.

Week 6

Investigation of the area of interest for the refugee search

Another requirement that has to be set is the area the drone has to cover. To quantify this area, the common refugee routes have to be known. Since the refugee crisis has been going on for several years, organizations such as the UNHCR and the BBC have investigated it extensively. The BBC picture in Fig. 4 shows the most used sea routes.

The picture clearly shows that most refugees travel from Tunisia or Libya to the island of Lampedusa, which is also the region this research focuses on. The reason is that drones would not be able to reach other popular locations, for example near Morocco, whereas from Lampedusa they can cover routes from several cities. With the distance measurement tool in Google Maps, the distances from the most popular Tunisian and Libyan departure cities to Lampedusa, the most common European target island, were measured. Lampedusa is part of Italy, covers about 20 km² and has around 6,000 inhabitants. Because it is Italian, and therefore European, soil, refugees can start their asylum procedures there. The first 12 miles off the coast, however, are territorial waters for which the local coast guard is responsible; beyond these 12 miles the refugees are regarded as being on the open sea. The distances from the most popular departure cities to Lampedusa are listed in the following table.

Distances to Lampedusa

| City | Distance (km) | Distance (statute miles) | Open-sea distance (statute miles) |
| Misratah | 412.52 | 256.24 | 244.24 |
| Tripoli | 295.38 | 183.54 | 171.54 |
| Zuara | 289.54 | 179.91 | 167.91 |
| Gabès | 290.07 | 180.24 | 168.24 |
| Mahdia | 131.07 | 81.44 | 69.44 |
| Monastir | 154.90 | 96.25 | 84.25 |

This results in two triangular search areas: one from Libya to Lampedusa and one from Tunisia to the same island. Measured with the same Google Maps tool, the Libyan triangle covers around 34,792 km² and the Tunisian triangle around 7,906 km².
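The mile columns of the distance table can be reproduced from the kilometer values, which also makes the unit explicit: they correspond to statute miles (1 mile = 1.609344 km), with 12 miles subtracted for the territorial waters. Under the UN Convention on the Law of the Sea, territorial waters extend up to 12 nautical miles (1 nautical mile = 1.852 km, so about 22.2 km), so a nautical-mile variant is included for comparison. The Python sketch below is illustrative and the function names are hypothetical. Applied to Misratah, 412.52 km converts to about 256.33 statute miles rather than the listed 256.24, so one of those two values may contain a small typo.

```python
KM_PER_STATUTE_MILE = 1.609344
KM_PER_NAUTICAL_MILE = 1.852
TERRITORIAL_WATERS = 12.0  # width of territorial waters, in the unit of each function


def km_to_statute_miles(km: float) -> float:
    return km / KM_PER_STATUTE_MILE


def open_sea_statute_miles(km: float) -> float:
    """Distance minus 12 miles of territorial water, as computed in the table."""
    return km_to_statute_miles(km) - TERRITORIAL_WATERS


def open_sea_nautical_miles(km: float) -> float:
    """Same idea, but with the 12-nautical-mile territorial limit."""
    return km / KM_PER_NAUTICAL_MILE - TERRITORIAL_WATERS


# Distances (km) from the table; "Gabes" is written without the accent here.
DISTANCES_KM = {
    "Misratah": 412.52, "Tripoli": 295.38, "Zuara": 289.54,
    "Gabes": 290.07, "Mahdia": 131.07, "Monastir": 154.90,
}

if __name__ == "__main__":
    for city, km in DISTANCES_KM.items():
        print(f"{city:<9} {km:7.2f} km {km_to_statute_miles(km):7.2f} mi "
              f"{open_sea_statute_miles(km):7.2f} open-sea mi "
              f"{open_sea_nautical_miles(km):7.2f} open-sea nmi")
```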

Further research on AVY project

In week 2, research was done on state-of-the-art drones designed to aid refugees. Since this group is not developing an entire drone but a smart image processing technique to detect refugees autonomously on the open sea, the technique must be applicable to a drone type that is already in use. The Avy drone is a good example of a drone designed to help solve the refugee problem.

Little information about the specifications of Avy is available online; the specifications given in week 2 are essentially the only ones that can be found. For example, the Avy website only states that “the Avy Rescue possesses a powerful and complete sensor & communication package” [13]. The website also allows visitors to ask the developers questions, which would be an excellent way to learn about the sensor package and to determine whether the sensors required for the developed technique are present. Unfortunately, Avy has not responded so far, so no further information can be included.

Work on the drone

The drone is finally flying and can be controlled with MATLAB and standard code [14]. The drone can perform all the standard functions, including automated takeoff and landing. After much trial and error the camera feed is also available, but with a large lag of about 8 seconds. The cause is the slow execution of an external program that converts the drone's H.264 video, a format that is not directly usable in this setup, into a viewable stream or image. Unfortunately, this may not be fixable. As a result, a new image can only be obtained for processing about every 8 seconds. Single images are processed rather than the stream, because processing an image in MATLAB is easier than processing a stream, and with such a large lag there is no point in analyzing the stream anyway. Some things still need to be done. First, the software has to be made more stable: errors sometimes occur when taking a picture with the drone, which leaves the drone uncontrollable. Second, the image processing still needs to be fully implemented.
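One way to keep the drone controllable when taking a picture hangs or fails is to run the snapshot in a worker thread and give it a deadline. The Python sketch below only illustrates this pattern: `take_snapshot` and `on_failure` are hypothetical callbacks standing in for the real drone calls (for example a hover or land command), and the timeout would have to be set somewhat above the observed lag of about 8 seconds. A hanging Python thread cannot be killed, so the pool is given more than one worker to keep later snapshots from queueing behind a stuck one.

```python
import concurrent.futures as cf
import time
from typing import Callable, Optional


def snapshot_with_deadline(take_snapshot: Callable[[], bytes],
                           on_failure: Callable[[], None],
                           timeout_s: float,
                           pool: cf.ThreadPoolExecutor) -> Optional[bytes]:
    """Return the image, or call on_failure() and return None if it is late or fails."""
    future = pool.submit(take_snapshot)
    try:
        return future.result(timeout=timeout_s)
    except Exception:  # covers cf.TimeoutError as well as errors raised by take_snapshot
        on_failure()   # e.g. a hypothetical hover/land command so the drone stays in control
        return None


if __name__ == "__main__":
    def slow_camera() -> bytes:  # simulates a snapshot that takes too long
        time.sleep(0.5)
        return b"late image"

    with cf.ThreadPoolExecutor(max_workers=2) as pool:
        print(snapshot_with_deadline(lambda: b"image", lambda: print("hover"), 1.0, pool))
        print(snapshot_with_deadline(slow_camera, lambda: print("hover"), 0.1, pool))
```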

Week 7

During this week, the group took a step back to reconsider the final objectives and deliverables of the project, which are reported in the corresponding subsections. In addition, the final work on the drone and on the image processing algorithm was completed, and several videos of the drone were recorded.

Testing

The MATLAB code has been further improved and the program was made somewhat more stable; the final code can be found in Appendix I-A. However, the camera lag could not be eliminated, and the program still froze in about 30% of the cases when taking a snapshot. In addition, the bottom camera of the drone could not be accessed despite numerous attempts with different code. These complications undermined the original idea of delivering a drone that could detect a ‘victim’, in the form of a red floating object on the water, in real time. The main reason is that the lag forces the drone to hover over the object, keeping it in the camera's field of view, until the snapshot has been taken and analyzed. This would take too much time, and the battery would run out in the process. Moreover, the risk of the drone crashing into the water could not be taken, since the control program occasionally freezes and leaves the drone uncontrollable. During the final week, the objective was therefore changed to exploring the profitability of using drones to replace the current rescue resources. The drone is still useful for this objective, since it has to be determined which type of camera is needed for an adequate flying height. For this purpose, the drone is controlled via the standard smartphone app [15]. Using the app has a few advantages over the MATLAB code:

- The bottom camera can be reached;

- The flight can be recorded easily;

- The flight control is more stable than the MATLAB code.

However, the app is less convenient to use than the MATLAB code, since the drone is controlled via the smartphone's touch screen and gyroscope. To investigate the most suitable height for the camera, which has a resolution of 360p (480 × 360) [16], a red card was placed on a black surface. The drone then executed the following procedure:

- Fly over the card;

- Hover above the card;

- Increase the altitude up to the maximum set height of 12 m.

The recorded flight videos were then processed with a second MATLAB script, which was tuned to determine up to which height the card remained visible. To estimate the drone's height, a second video was recorded from a greater distance, showing the drone itself.
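Why a small card becomes hard to detect at 12 m can be estimated with a simple pinhole-camera calculation: the ground width seen by a downward-looking camera is 2·h·tan(FOV/2), and dividing the object size by that width and multiplying by the image width gives the number of pixels the object spans. The sketch assumes a horizontal field of view of 64° (an assumed value, not one measured in this project), the 480-pixel image width mentioned above, a camera pointing straight down, and the standard ID-1 card size of 85.60 × 53.98 mm (ISO/IEC 7810). The function names are hypothetical.

```python
import math

CARD_WIDTH_M = 0.0856     # ISO/IEC 7810 ID-1 card, long side
CARD_HEIGHT_M = 0.05398   # short side
ASSUMED_HFOV_DEG = 64.0   # hypothetical horizontal field of view
IMAGE_WIDTH_PX = 480      # 360p frame width


def ground_width_m(height_m: float, hfov_deg: float = ASSUMED_HFOV_DEG) -> float:
    """Width of the ground strip imaged by a straight-down pinhole camera."""
    return 2.0 * height_m * math.tan(math.radians(hfov_deg) / 2.0)


def pixels_across(object_m: float, height_m: float,
                  hfov_deg: float = ASSUMED_HFOV_DEG,
                  image_width_px: int = IMAGE_WIDTH_PX) -> float:
    """How many image pixels an object of the given size spans (ignoring blur)."""
    return object_m / ground_width_m(height_m, hfov_deg) * image_width_px


if __name__ == "__main__":
    for h in (2.0, 6.0, 12.0):
        print(f"{h:4.1f} m: card is about {pixels_across(CARD_WIDTH_M, h):.1f} x "
              f"{pixels_across(CARD_HEIGHT_M, h):.1f} px")
```

Under these assumptions the card spans only about 3 × 2 pixels at 12 m, compared with roughly 16 × 10 pixels at 2 m, which is consistent with detection becoming troublesome at maximum altitude. The pixel count grows linearly with image resolution and inversely with height.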

Demonstration video making

For the demonstration video, the recordings described in the previous section have been used. The images were processed in MATLAB with the script in Appendix I-B. In short, the script extracts each frame and converts it from the red, green and blue (RGB) color space to the hue, saturation and value (HSV) color space. It then applies HSV thresholds for the specified color range (red) and extracts the corresponding parts of the HSV layers. From these layers, a mask is created that shows only the regions where red is present. Based on the ratio between the red area and the background, the script then decides whether ‘a refugee’ is present.
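The steps of the script can be sketched in Python with the standard `colorsys` module. This is an illustration, not the script from Appendix I-B: the hue, saturation and value limits, the minimum ratio and the function names are assumptions. Because red lies at both ends of the hue circle (hue 0 and hue 1 in `colorsys`), the hue test has to accept values near either end.

```python
import colorsys
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def is_red(pixel: RGB, hue_tol: float = 0.05,
           min_sat: float = 0.5, min_val: float = 0.3) -> bool:
    """HSV test for red; hue wraps around, so accept h near 0 or near 1."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return (h <= hue_tol or h >= 1.0 - hue_tol) and s >= min_sat and v >= min_val


def red_mask(frame: Sequence[Sequence[RGB]]) -> List[List[bool]]:
    """Binary mask that is True only where the color is red."""
    return [[is_red(p) for p in row] for row in frame]


def color_background_ratio(mask: Sequence[Sequence[bool]]) -> float:
    """Number of red pixels divided by the number of background pixels."""
    red = sum(sum(row) for row in mask)
    if red == 0:
        return 0.0
    background = sum(len(row) for row in mask) - red
    return red / background if background else float("inf")


def refugee_present(frame: Sequence[Sequence[RGB]], min_ratio: float = 0.01) -> bool:
    """Decision rule: enough red relative to the background means 'a refugee is present'."""
    return color_background_ratio(red_mask(frame)) >= min_ratio
```

Lowering `min_ratio` lets smaller or more distant objects through, at the price of letting isolated noise pixels trigger a detection as well.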

The MATLAB script was used to analyze three different videos of the drone flying over objects of varying size at different altitudes. With a large object at low altitude, it was easy to distinguish the object and conclude that ‘a refugee is present’. With a smaller object, detection was still easy, but the color/background ratio threshold had to be lowered; the MATLAB output for this video is shown in Fig. 6. Finally, the video of the credit card at maximum altitude was analyzed. Detection was still possible but sometimes troublesome. The color/background ratio threshold had to be set very low, which makes the result less accurate, because noise can then easily lead to a false positive detection. The accuracy can likely be increased by using a higher-resolution camera.
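The false positives caused by a very low color/background threshold come from scattered noise pixels being counted as if they formed one object. A common remedy, swapped in here as an illustration and not used in the project, is to require a single connected region of red pixels of some minimum size, similar in spirit to MATLAB's `bwareaopen`. The Python sketch below finds 4-connected regions with a breadth-first search; `min_blob_px` is a hypothetical parameter that has to be chosen in relation to the expected size of the object in pixels, which shrinks with altitude.

```python
from collections import deque
from typing import Sequence


def largest_blob(mask: Sequence[Sequence[bool]]) -> int:
    """Size in pixels of the largest 4-connected region of True pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    best = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first search over the region containing (r0, c0).
            size = 0
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                size += 1
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            best = max(best, size)
    return best


def detect(mask: Sequence[Sequence[bool]], min_blob_px: int = 4) -> bool:
    """Report a detection only if one connected red region is large enough."""
    return largest_blob(mask) >= min_blob_px
```

Four isolated noise pixels and one 2 × 2 blob give the same color/background ratio, but only the blob passes this test.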

Final validation of the model

Before conclusions can be drawn from the work of the past weeks, that work first has to be validated. The test results have to be compared with what a thermal camera would have produced, to verify how reliable they would have been in that case. The drone functionalities themselves will not be inspected and validated in depth, because the image processing algorithm and method are designed to be implemented on the AVY drone.

Validation of cost-efficiency

This subsection is dedicated to the validation of the cost-efficiency. A drone such as Avy, equipped with a sensor system that detects refugees automatically, is expected to be better in terms of both cost and risk. Drones clearly pose less risk to the pilot or controller, who is not on board and is therefore not at risk if the drone crashes while performing the small tasks it does not carry out autonomously. The cost analysis is complex and involves many estimates, as many operators do not publish their exact costs.