PRE2015 4 Groep2: Difference between revisions

| (248 intermediate revisions by 6 users not shown) | |||

| Line 10: | Line 10: | ||

==Introduction== | ==Introduction== | ||

We are developing a fruit visual inspection system that uses a neural network to determine fruit ripeness. The system is being initially developed for | We are developing a fruit visual inspection system that uses a neural network to determine fruit ripeness. The system is being initially developed for analysing the quality of strawberries; however, the neural network, database back end, and mobile application have all been designed to scale to different kinds of produce. In this project we detail the creation of our system and implement the technology in a demo to display how the system can be used in a quality assurance setting. Our demo is one example application of the system, but the solution can be applied to harvesting robots, fruit-counting robots, and many other technologies. | ||

==Abstract== | ==Abstract== | ||

[[File:conveyor.png | 340px | thumb | Render of concept.]] | |||

[[File:qualitycontrol.png| 194px | thumb | Current strawberry quality control.]] | |||

At the start of this project the goal we had in mind was to build a robot that can harvest fruit (strawberries in our case) autonomously. The idea was that the robot would drive along a row of plants, detect which of the fruits are ripe and harvest the fruits that are ripe enough for consumption. Our plan was to use a cheap camera, like a Kinect, to take low resolution pictures of the fruit. These pictures would then be fed through a neural network (a Convolutional Neural Net) and classified into several categories with different degrees of ripeness. This classification can then be used to determine if the fruit should be harvested or not. | At the start of this project the goal we had in mind was to build a robot that can harvest fruit (strawberries in our case) autonomously. The idea was that the robot would drive along a row of plants, detect which of the fruits are ripe and harvest the fruits that are ripe enough for consumption. Our plan was to use a cheap camera, like a Kinect, to take low resolution pictures of the fruit. These pictures would then be fed through a neural network (a Convolutional Neural Net) and classified into several categories with different degrees of ripeness. This classification can then be used to determine if the fruit should be harvested or not. | ||
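The last step of this plan, turning a ripeness classification into a harvest decision, can be sketched as follows. This is a minimal illustration, not our actual implementation: the class names, the <code>should_harvest</code> function and the confidence threshold are all assumptions made for the example, and the probabilities stand in for the output of whatever classifier is used.

```python
# Hypothetical ripeness classes; the text above only says "several categories".
RIPENESS_CLASSES = ["unripe", "partly_ripe", "ripe", "overripe"]


def should_harvest(class_probabilities, harvest_classes=("ripe",), min_confidence=0.6):
    """Return (decision, label) for one fruit image.

    class_probabilities holds one probability per entry of RIPENESS_CLASSES,
    for example the softmax output of a classifier.
    """
    if len(class_probabilities) != len(RIPENESS_CLASSES):
        raise ValueError("expected one probability per ripeness class")
    # Pick the most likely class.
    best_index = max(range(len(class_probabilities)),
                     key=lambda i: class_probabilities[i])
    label = RIPENESS_CLASSES[best_index]
    # Only harvest when the classifier is sufficiently sure (assumed threshold).
    confident = class_probabilities[best_index] >= min_confidence
    return (label in harvest_classes and confident), label
```

The threshold keeps the robot from picking fruit on a weak prediction; a suitable value would have to be tuned on real data.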

| Line 32: | Line 31: | ||

==Problem statement== | ==Problem statement== | ||

How can we, as a society, produce enough food to feed over 9 billion people by 2050 when there are already over 900 million people living in famine today? | |||

The vision system we provide should be a simple, scalable system that can be implemented on farms, quality assurance centers, and other produce processing facilities, to mainly improve crop yields for farmers, as well as preserve best agricultural and post-harvest practices. | |||

==Research== | |||

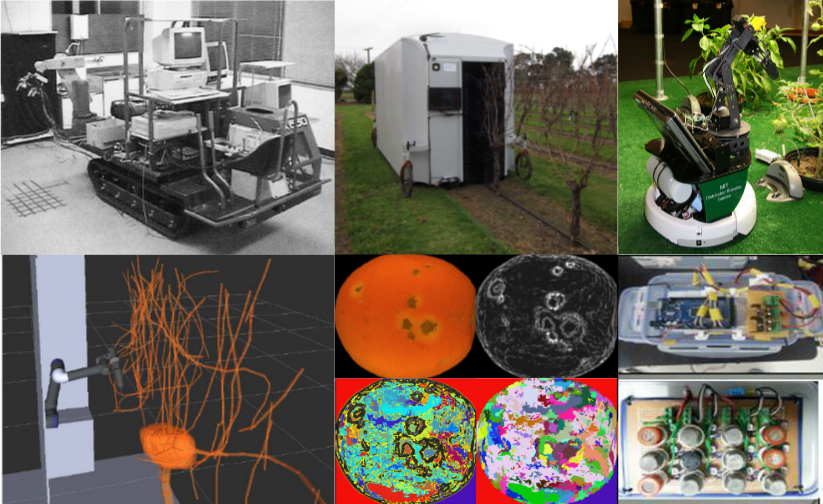

When embarking on developing our vision system, we first did a preliminary search for what had already been developed. The first question we asked was whether such a vision system was necessary, and whether it was already standardized across a number of technologies. In the image provided below, a number of projects are displayed that effectively capture the scope of the vision problems within agriculture today. There are a number of pruning/harvesting robots, which use stereo vision to create RGB and depth maps of the plants. The robot can then use these representations of the plant to create a 3D space in which to plan the optimal path for guiding its arm to harvest fruit, or prune the plant’s branches. This use of stereo cameras is the standard today for in-field machine vision. | |||

Moving away from visible light, there are numerous techniques involving microwave and x-ray sensing of the fruit for internal/external quality tests. These methods are promising within specific applications within quality assurance, and they even could be used with our neural network fruit classification system, if we replaced the RGB images with hyperspectral images. | |||

After reading up on the various kinds of machine vision techniques used in agriculture, it was clear that an approach using a neural network, and a shared database of images, had not been implemented previously. Thus, the research confirmed that our project had its niche, and so we began looking into the USE aspects surrounding our project, as well as searching for validation of our conceptualized system. | |||

===Manual strawberry harvesting process=== | |||

[[File:stateoftheart.png | thumb | 350px | Previous agritech projects related to harvesting and quality assurance.]] | |||

Strawberries that grow outside can be harvested once a year, during a three to four week period. The fruit is ripe 28 to 30 days after the plant has bloomed fully. Since the fruits do not all ripen at the same time, it is important to check on the strawberries every two or three days [http://www.gardeningknowhow.com/edible/fruits/strawberry/picking-strawberry-fruit.htm]. | |||

When harvesting the berries, the stem should be cut about a quarter of the way up from the strawberry. It is very important to handle the fruit carefully, since bruised fruit will degrade faster. The harvested berries should be refrigerated quickly after harvesting, since they only last three days when refrigerated. Luckily, strawberries handle freezing really well, which prolongs their lifespan considerably. When grown in a greenhouse, the period during which the berries can be harvested is prolonged. Different types of strawberries also bloom at different times during the year, enabling the harvesting of strawberries throughout the year [http://www.chrisbowers.co.uk/guides/articles_strawberry_cultivation.php]. | |||

===Harvesting robots=== | |||

Quite some research on robots that can harvest fruit has already been done. There is, for example, a cucumber harvesting robot that can work autonomously in a greenhouse [http://link.springer.com/article/10.1023/A:1020568125418]. The research shows a way to handle soft fruit without loss of quality, which is important for our initial type of fruit, strawberries. The robot can detect, pick and transport cucumbers. This research is already 15 years old, but the robot is still not widely used. The main reason is that the average time to pick a cucumber is around 45 seconds [http://link.springer.com/article/10.1023/A:1020568125418]. This is fairly slow. Speed will therefore be an important subject we must take into account when designing our own robot. To compensate for the long time it takes to pick one fruit, one can think of operating in parallel. This solution has been examined for tomatoes [http://alexandria.tue.nl/extra2/afstversl/W/Hamers_2015.pdf] and may make up for the cycle time. An important aspect of the design is that it should be easy to implement in farms or greenhouses as they are now. The farmers should not have to make major changes to their farms to be able to use the robot(s) [http://alexandria.tue.nl/extra2/afstversl/W/Hamers_2015.pdf]. The article shows that a multi-functional robot can work well, and that an important feature will be that the plants grow at a fixed height. This can be taken care of by, for example, using growing buckets, as in the picture of the modern strawberry farm below in the section Design. | |||

A major challenge for most harvesting robots is recognition and localization of the fruit in a greenhouse or at a farm [http://www.sciencedirect.com/science/article/pii/S0021863483710206]. Handling the fruit without damaging it or the tree is also challenging [http://www.sciencedirect.com/science/article/pii/S0021863483710206]. Major progress has already been made in identifying fruit on the tree, but there is still a long way to go, since only 85% of the fruits are being identified, mostly because of the variability in lighting conditions and occlusion of fruits by leaves [http://www.sciencedirect.com/science/article/pii/S0021863483710206]. Even when the fruit is identified, there is no total freedom of movement, since the tree structure can hinder the robotic arm. | |||

For our initial type of fruit, strawberries, there is currently a machine that helps farmers harvest the strawberries. However, this machine is very large, bulky and expensive [https://www.google.nl/url?sa=i&rct=j&q=&esrc=s&source=images&cd=&ved=0ahUKEwi7r9eHqbTNAhVOkRQKHU0JDkgQjRwIBw&url=http%3A%2F%2Fwww.agrobot.com%2F&psig=AFQjCNEr2Ik2SSY9-xcjQsKEc_QyzNQARg&ust=1466433391988049 (see picture)]. Cost prices are in the order of 50,000 dollars. | |||

===Older Research=== | |||

* Yamamoto, S., et al. "Development of a stationary robotic strawberry harvester with picking mechanism that approaches target fruit from below (Part 1)-Development of the end-effector." Journal of the Japanese Society of Agricultural Machinery 71.6 (2009): 71-78. [https://www.jircas.affrc.go.jp/english/publication/jarq/48-3/48-03-02.pdf Link] | |||

* Sam Corbett-Davies , Tom Botterill , Richard Green , Valerie Saxton, An expert system for automatically pruning vines, Proceedings of the 27th Conference on Image and Vision Computing New Zealand, November 26-28, 2012, Dunedin, New Zealand [http://dl.acm.org.dianus.libr.tue.nl/citation.cfm?id=2425849 Link] | |||

* Hayashi, Shigehiko, Katsunobu Ganno, Yukitsugu Ishii, and Itsuo Tanaka. "Robotic Harvesting System for Eggplants." JARQ Japan Agricultural Research Quarterly: JARQ 36.3 (2002): 163-68. Web. [https://www.jstage.jst.go.jp/article/jarq/36/3/36_163/_article Link] | |||

* Blasco, J., N. Aleixos, and E. Moltó. "Machine Vision System for Automatic Quality Grading of Fruit." Biosystems Engineering 85.4 (2003): 415-23. Web. [http://www.sciencedirect.com/science/article/pii/S1537511003000886 Link] | |||

* Cubero, Sergio, Nuria Aleixos, Enrique Moltó, Juan Gómez-Sanchis, and Jose Blasco. "Advances in Machine Vision Applications for Automatic Inspection and Quality Evaluation of Fruits and Vegetables." Food Bioprocess Technol Food and Bioprocess Technology 4.4 (2010): 487-504. Web. [https://www.researchgate.net/publication/226403140_Advances_in_Machine_Vision_Applications_for_Automatic_Inspection_and_Quality_Evaluation_of_Fruits_and_Vegetables Link] | |||


* Tanigaki, Kanae, et al. "Cherry-harvesting robot." Computers and Electronics in Agriculture 63.1 (2008): 65-72. [http://www.sciencedirect.com/science/article/pii/S0168169908000458 Direct] [http://www.sciencedirect.com.dianus.libr.tue.nl/science/article/pii/S0168169908000458 Dianus] | |||

** Evaluation of a cherry-harvesting robot. It picks by grabbing the peduncle and lifting it upwards. | |||

* Hayashi, Shigehiko, et al. "Evaluation of a strawberry-harvesting robot in a field test." Biosystems Engineering 105.2 (2010): 160-171. [http://www.sciencedirect.com/science/article/pii/S1537511009002797 Direct] [http://www.sciencedirect.com.dianus.libr.tue.nl/science/article/pii/S1537511009002797 Dianus] | |||

** Evaluation of a strawberry-harvesting robot. | |||

==USE aspects== | ==USE aspects== | ||

===User | ===User Need=== | ||

The user group for the system introduced above can be split into three categories. The primary users are farmers and their permanent workers; they are the ones who will be interacting with our system on a daily basis. The secondary users are the quality assurance companies as well as the food distributors. Whilst they will not be interacting with the system on a daily basis, it will still influence them. The tertiary user is the company that will be producing and maintaining the system. | |||

'''Primary users''' | |||

The primary users have the following needs: | |||

*Farmers want information about their farm and their crops, as much as possible. | |||

:''The more information they have the better: it allows them to find potential problems with the crops, irrigation systems or any other technology on the farm. By making the system more transparent in this way, farmers can fix problems before they lose an entire harvest. It also enables farmers to experiment with different soil types, irrigation, fertilizer, etc. and to see the result of these experiments in real time. However, farmers do not have an autonomous system that can monitor their plants as they grow. Such a system would be tremendously useful, since real time monitoring by hand can take a lot of time.'' | |||

*Farmers would like to have more time for the business side of the work, besides the operational side | |||

:''Working on a farm is very labor intensive; an autonomous harvesting system would decrease the amount of physical work that the farmer has to do. They would then only occasionally need to check the harvest and possibly adjust some parameters. This work is less heavy than harvesting, and therefore fewer health problems due to heavy work are expected. Additionally, more time can be spent on evaluating new technologies to improve the farm's competitive position.'' | |||

*Farmers want to waste less time | |||

:''By automating some of the processes on the farm, repetitive tasks can be eliminated. This leaves more room for the trained and expensive workers to do tasks a robot cannot currently perform. An additional advantage is that there is no longer a need to train seasonal workers.'' | |||

'''Secondary users''' | |||

*Information is also very important for secondary users | |||

:''Especially if quality control centers discover that a large part of a batch of fruit is not fit for consumption or contaminated by a disease, they want to know where this fruit came from. If information about the individual fruits could already be gathered at the farm, this would greatly increase the transparency of the whole food production line. For the food distributor, traceability is very important. People want to know where the food that they are eating comes from, to avoid things like the 2013 [http://nos.nl/artikel/2026645-vijf-jaar-cel-geeist-in-paardenvleesschandaal.html horse meat scandal].'' | |||

*Shorter lines within the food chain | |||

:''In the current state it takes relatively long for the food to travel from farm to fork, causing (unnecessary) deterioration before the food has even arrived at the consumer. Days often pass before the food that was picked today is available for consumption. This could be done much more efficiently if harvesting can be done 24/7, thus enabling distributors to pick up the produce whenever suits them best. Quicker handling in the delivery chain can reduce loss significantly, due to prolonged shelf life either at the supermarket or at the customer. All quality loss before that contributes to the waste of food in general.'' | |||

'''Tertiary users''' | |||

*The tertiary user needs the system to be easily maintainable (which also includes easy to clean) | |||

:''These aspects should be taken into account during the design of the system. If maintainability is low, it costs both a lot of time and money to fix or improve the system, which is a major disadvantage for the manufacturer and the user. It will also decrease the likelihood of selling the robot, because downtime of the system causes great problems. The cleanability of the system is especially important, since the food industry has very high hygiene standards. Difficult cleaning processes will decrease the benefit of implementing a robotic handling system.'' | |||

*They are also interested in increasing demand for their services | |||

:''Increased demand provides additional employment, additional money flows and thus increases the possibility to innovate and develop the technology even more. Additionally it allows for scalability, which is of great importance for lowering the overall production costs and in the end the profitability of the product. These factors contribute to the viability of the company and that of the entire industry.'' | |||

===User Impact=== | |||

The impact of the implementation is manifold. Below the different user segments are listed again for the sake of readability. | |||

Primary: Farmer (and permanent employees) | Primary: Farmer (and permanent employees) | ||

*Transparency | *'''Transparency''': this is an effect of providing more information on the produce throughout the entire food chain. | ||

*Traceability | *'''Traceability''': this is a result of keeping track of more detailed information on where the produce comes from. | ||

*Less labor intensive work | *'''Less labor intensive work''': this is a direct consequence of automation. | ||

Secondary: Quality control / Supermarkets | Secondary: Quality control / Supermarkets | ||

*Transparency | *'''Transparency''': this is an effect of providing more information on the produce throughout the entire food chain. | ||

*Traceability | *'''Traceability''': this is a result of keeping track of more detailed information on where the produce comes from. | ||

Tertiary: Maintenance / Supplier | Tertiary: Maintenance / Supplier | ||

*Increase in demand for their services | *'''Increase in demand for their services''': this is an outcome of the fact that when more farmers start using the system, word-of-mouth advertising and other indirect forms will contribute to increasing product awareness. | ||

===Societal Need=== | |||

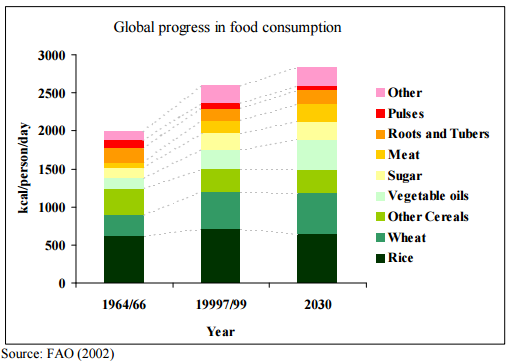

[[File:FoodDistribution.PNG | 400px | thumb | Evolution of the average diet composition of a human. | |||

[http://www.fao.org/fileadmin/templates/wsfs/docs/expert_paper/How_to_Feed_the_World_in_2050.pdf] ]] | |||

There are several problems to which our solution can relate such as: | There are several problems to which our solution can relate such as: | ||

* There will not be enough food in the near future for all the people | * There will not be enough food in the near future for all the people on earth | ||

* There is an increasing shortage of workers due to aging | * There is an increasing shortage of workers due to aging | ||

* Decrease in wage gap due to overabundance of food | * Decrease in wage gap due to overabundance of food | ||

| Line 92: | Line 134: | ||

[http://www.un.org/waterforlifedecade/food_security.shtml UN] facts (cited) supporting these statements are: | |||

* | * “The world population is predicted to grow from 6.9 billion in 2010 to 8.3 billion in 2030 and to 9.1 billion in 2050. By 2030, food demand is predicted to increase by 50% (70% by 2050). The main challenge facing the agricultural sector is not so much growing 70% more food in 40 years, but making 70% more food available on the plate.” | ||

* | * “Roughly 30% of the food produced worldwide – about 1.3 billion tons - is lost or wasted every year, which means that the water used to produce it is also wasted. Agricultural products move along extensive value chains and pass through many hands – farmers, transporters, store keepers, food processors, shopkeepers and consumers – as it travels from field to fork.” | ||

* | * “In 2008, the surge of food prices has driven 110 million people into poverty and added 44 million more to the undernourished. 925 million people go hungry because they cannot afford to pay for it. In developing countries, rising food prices form a major threat to food security, particularly because people spend 50-80% of their income on food.” | ||

Going from all the above mentioned we can address several calls listed in the Horizon 2020 Work Programme 2016-2017 (section on Food [http://ec.europa.eu/research/participants/data/ref/h2020/wp/2016_2017/main/h2020-wp1617-food_en.pdf]). Some would require a slight shift of our focus, but could well be addressed after our project is completed too since one can build upon our knowledge, making it easier to solve such issues in a likewise way. These include: | |||


* SFS-05-2017: Robotics Advances for Precision Farming | * SFS-05-2017: Robotics Advances for Precision Farming | ||

* SFS-34-2017: Innovative agri-food chains: unlocking the potential for competitiveness and sustainability | * SFS-34-2017: Innovative agri-food chains: unlocking the potential for competitiveness and sustainability | ||

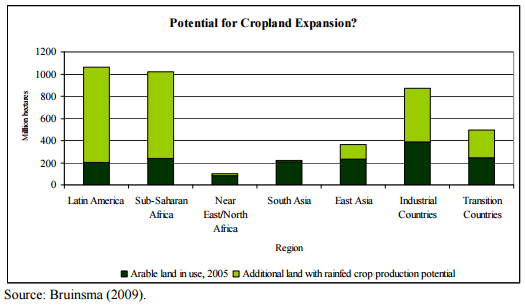

[[File:GrowthPlaces.PNG| 400px | thumb | Places with space and conditions appropriate for food production. [http://www.fao.org/fileadmin/templates/wsfs/docs/expert_paper/How_to_Feed_the_World_in_2050.pdf] ]] | |||

Our solution helps because by integrating the robots’ operation system into the system of distributors, less food will be wasted, and thus more will be available for others when developing the system in such a way that distribution can reach further for instance. Additionally we can help reduce poverty or limit the number of people that are driven into poverty, robots can reduce prices significantly on the middle to long term (before 2050 is certainly doable). By using the procedure we will use to make sure computer vision combined with machine learning is able to identify strawberries in the field, it will become easier to produce more legumes (semi-)automatically, thus addressing the SFS-26-2016 call. Our solution meets the expected mentioned at SFS-15-2017, namely: ‘increase in the safety, reliability and manageability of agricultural technology, reducing excessive human burden for laborious tasks’. Our robotic solution aims at reducing the number of people needed at a farm site. Also it specifically meets one of the expected impacts from the SFS-34-2017 call which says solutions should ‘enhance transparency, information flow and management capacity’. This is what the system behind the robot is intended to achieve. | Our solution helps because by integrating the robots’ operation system into the system of distributors, less food will be wasted, and thus more will be available for others when developing the system in such a way that distribution can reach further for instance. Additionally we can help reduce poverty or limit the number of people that are driven into poverty, robots can reduce prices significantly on the middle to long term (before 2050 is certainly doable). By using the procedure we will use to make sure computer vision combined with machine learning is able to identify strawberries in the field, it will become easier to produce more legumes (semi-)automatically, thus addressing the SFS-26-2016 call. 
Our solution meets the expected impact mentioned at SFS-15-2017, namely: ‘increase in the safety, reliability and manageability of agricultural technology, reducing excessive human burden for laborious tasks’. Our robotic solution aims at reducing the number of people needed at a farm site. Also it specifically meets one of the expected impacts from the SFS-34-2017 call which says solutions should ‘enhance transparency, information flow and management capacity’. This is what the system behind the robot is intended to achieve. | ||

=== | ===Societal Impact=== | ||

On the short to middle term our solution can contribute to the following: | |||

*'''Decrease of jobs in agriculture''': this is a direct effect of replacing human labor by robots. | |||

**'''Increase in robot maintenance jobs''': this is an outcome of increased sales in this sector and functions as a buffer for some of the job losses in agriculture. | |||

*'''Increasing food security''': this is a result of better transparency and traceability throughout the food chain. | |||

*'''Lower prices''': this is a consequence of decreased labor costs due to deployment of robots. | |||

*'''Less waste''': this is a result of more efficient food chains and better resource management. | |||

On the long term one major impact is expected: | |||

* | *'''More food available''': this will be an effect of growing farms, thus actually producing more food, and less waste of the actual produce. | ||

===Enterprise Need=== | |||

[[File: enterprisetable.png | thumb | 340 px| Percentage of greenhouses/nurseries with various level of automation for different tasks. [http://horttech.ashspublications.org/content/18/4/697/T2.expansion.html] ]] | |||

*Automation can result in more sales | |||

:''A socioeconomic survey of 87 nurseries and greenhouses located in Mississippi, Louisiana, and Alabama has shown that greenhouses or nurseries with higher levels of sales tend to also have a higher level of automation [http://horttech.ashspublications.org/content/18/4/697.full]. The level of automation was measured as the percentage of tasks that were automated or mechanized. It was found that “significant increases in total revenues were associated with the level of mechanization, the number of full time equivalent workers, and the number of acres in production. These positive coefficients indicate that an increase in total revenues, on the average, by $4,900/year was associated with a one-unit (1%) increase in the level of mechanization.”'' | |||

:''The same survey also showed that within these 87 nurseries and greenhouses the automation level of ‘harvesting and grading production’ was 0 percent. This could mean that there is incentive to introduce automated harvesting robots in similar greenhouses and nurseries. It also confirms what we were told by the farmer from Kwadendamme: grading production involves the inspection, assessment and sorting of produce, and was indeed found to be 0 percent automated.'' | |||

:''Another study, on the farm development in Dutch agriculture and horticulture [https://core.ac.uk/download/files/153/7082880.pdf] shows that “the degree of mechanization increases the probability of firm growth and firm renewal.”'' | |||

*Farms are getting bigger | |||

:''The structure of agricultural production is experiencing fundamental changes worldwide [https://core.ac.uk/download/files/153/7082880.pdf]. Farms are moving away from the traditional family-owned, small scale and independent farming model and moving towards an industry that is structured in line with the production and distribution value chain. The result of these changes is that the number of farms is decreasing, whereas the average farm size is increasing. Autonomous harvesting robots could help farmers deal with this increased size in a cost efficient way.'' | |||

:''These robots would also be beneficial for the second stage in the production chain, the quality control and packing centers. The robots could gather information about the produce as it is growing and share this with the quality control centers.'' | |||

*Farmers show interest in autonomous machines | |||

:''A recent survey of German farmers [http://link.springer.com.dianus.libr.tue.nl/chapter/10.3920/978-90-8686-778-3_97] has shown that these farmers are interested in adopting (semi-)autonomous machinery. They see several benefits, among them saving labor, saving time, higher precision and increased efficiency. When asked about fully-autonomous machines, some farmers also saw additional benefits: lower health risks and an advantage in documentation and evaluation. However, they were skeptical about the reliability and safety of the machines. This shows that there is a potential market for our idea.'' | |||

*Lower costs | |||

:''The increase in automation will be paired with lower wage costs and fewer health benefits that have to be paid by the farmers, since the number of full time, part time and seasonal workers will decrease. This is already a reason for current development of machinery.'' | |||

:''“High labor costs in recent years have encouraged larger, wider and faster machinery for cost efficient production.” [http://link.springer.com.dianus.libr.tue.nl/chapter/10.3920/978-90-8686-778-3_97].'' | |||

The need may be summarized as enhanced competitive advantage needed to survive the price [http://www.ad.nl/economie/prijsverlaging-ah-ontketent-nieuwe-prijzenoorlog~a32ce14e/ ‘war’], since lower prices in supermarkets will in the end put more pressure on the producers of the food they sell. Therefore growth and automation are needed. Our robotic solution can provide just what these enterprises need. | |||

===Enterprise Impact=== | |||

The impact of our solution on the enterprise (the farm(er) in our case) can be summarized as follows: | |||

*'''More sales''': in the survey cited above, each 1% increase in the level of mechanization was associated with about $4,900 more revenue per year. | |||

*'''Lower costs''': as a result of less labor costs. | |||

**'''Investment''': as a result of lower costs more money will be available | |||

***'''Expansion''': the above mentioned room for investment can lead the farmer to this decision. | |||

***'''System improvement''': the above mentioned money for investment can be used for this too. | |||

***'''Other technical advances''': the same reason as the upper two. | |||
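To give a feeling for the size of the "more sales" effect, the survey figure can be turned into a back-of-the-envelope calculation. This is only a sketch: it assumes that the association holds linearly over the range considered, which the survey reports as a correlation, not as a guaranteed return. The function name is ours.

```python
# USD per year associated with a 1% increase in mechanization (survey figure).
REVENUE_PER_PERCENT_USD = 4900


def associated_revenue_change(mechanization_increase_pct):
    """Yearly revenue change associated with a given increase in mechanization.

    Assumes the linear association reported in the survey; it is an
    association, not a proven causal effect.
    """
    return REVENUE_PER_PERCENT_USD * mechanization_increase_pct
```

For example, a farm that raises its level of mechanization by 5 percentage points would, under this assumption, be associated with 5 × $4,900 = $24,500 more revenue per year.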

===Transparency and traceability=== | |||

Transparency and traceability are immensely important within the context of our project. Beyond the novelty of being able to see that your fruit comes from Sri Lanka, Guatemala, etc., the ability to track which farms the produce has passed through allows for better food regulation and, even more importantly, provides the means to prevent diseased fruit from contaminating the food supply. Imagine that there was an outbreak of E. coli, and that the first recorded instance could be traced back to the farm from which it originated. The farmer would then know which of his crops would need to be uprooted. | |||

Another example: a food crate comes in weighing ten kilos, while the RFID tag attached to the crate states that it should contain fifteen kilos. The mismatch signals that produce was lost or tampered with along the way. This kind of produce checksum helps to verify that the food has not been tampered with, and provides an added sense of security in the food that is being purchased. | |||
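The crate weight check can be sketched in a few lines. This is an illustration only: the function name and the tolerance of 0.25 kg are assumptions, and a real system would read the declared weight from the RFID tag and the measured weight from a scale.

```python
def crate_weight_matches(declared_kg, measured_kg, tolerance_kg=0.25):
    """Compare the weight stored on the crate's tag with the measured weight.

    Returns True when the two agree within the tolerance, which allows for
    small losses such as moisture evaporation (tolerance value is assumed).
    """
    return abs(declared_kg - measured_kg) <= tolerance_kg
```

With the numbers from the example, <code>crate_weight_matches(15.0, 10.0)</code> returns <code>False</code>, so the crate would be flagged for inspection.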

==Requirements== | |||

As a result of the research addressed above, the following requirements were formulated. | |||

===Functional requirements=== | |||

The | * The robot should be able to detect fruit using a camera. | ||

* It should be able to classify the ripeness of the fruit into several different classes. | |||

* The robot will query an online database about the ripeness of a certain fruit and the database will return the percentile of ripeness the fruit is in based on different fruit image sets. | |||

The | * The robot should not damage the fruit in any way. | ||

* The farmer should be able to give feedback, which should increase the performance of the system. | |||

* The farmer should be able to interface with the database as well as different harvesting metrics through a mobile device. | |||

* The robot must be easy to maintain and clean. | |||

* The robot should not be able to spread diseases from one plant to another plant. | |||
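The database query from the requirements above, which returns the percentile of ripeness of a fruit relative to a stored image set, could be computed as in the following sketch. It assumes that every image has already been reduced to a single numeric ripeness score; the function name and the in-memory list standing in for the database are hypothetical.

```python
from bisect import bisect_right


def ripeness_percentile(score, reference_scores):
    """Percentage of reference fruits whose ripeness score is at or below `score`.

    reference_scores stands in for the scores of one fruit image set in the
    database (an assumption made for this example).
    """
    if not reference_scores:
        raise ValueError("reference set is empty")
    ordered = sorted(reference_scores)
    # Number of reference scores that are <= score.
    at_or_below = bisect_right(ordered, score)
    return 100.0 * at_or_below / len(ordered)
```

A fruit with score 0.5 compared against the reference scores 0.1, 0.2, 0.5 and 0.9 lies in the 75th percentile, since three of the four reference fruits are at or below it.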

===Non-functional requirements=== | |||

* It should be relatively simple to add the Kinect and the Raspberry Pi to an existing harvesting system. | |||

* The farmer should be able to use the system with minimal prior knowledge. | |||


* The robot should perform better than a human quality controller. | |||


* The robot should be faster and more efficient than a human worker. | |||

* This robot should have all the safety features necessary to ensure no critical failures. | |||

==Validation of concept==

===Conversation with cucumber farmer===

On the 12th of May we conducted an interview with a cucumber farmer named Wilco Biemans. A friend of Maarten and Cameron works at his farm; through her we were able to get his contact information and schedule an interview. During the interview we asked him several questions about his farm, the current state of the art, developments that were taking place and his opinion on our system. His overall response was very positive: he saw the potential in our system, but he did point out several points of improvement. The main questions and his answers are outlined below. Please note that we are not reporting his exact answers, since the interview was conducted in Dutch and we did not record it.

'''What system is in place for detecting the ripeness of fruit?'''

The farmer said that currently the fruit is sorted by trained workers and machines. The cucumbers are picked by workers who have received training which allows them to identify which cucumbers are ripe enough for picking. These vegetables are put in crates, which are then transported to a sorting facility. There the cucumbers that are deformed and not fit for consumption are separated from the rest; these are sold to the cosmetics or fertilizer industry. The remaining cucumbers are separated by a machine into several weight categories (400-500, 500-600 grams), which are then sold for consumption.

'''What developments are currently being made in the agriculture industry, with respect to automated harvesting?'''

Another development is a machine which can automatically sort cucumbers based on their length. Development of this machine has been ongoing for about 10 years. Methods for detecting rot on the inside of fruits are also being developed. Finally, he pointed us to the work that is being done by the University of Wageningen; more on this can be found in the section on PicknPack and in the section on the state of the art.

'''Would a more accurate system be helpful?'''

===Trip to PicknPack workshop at Wageningen University===

[[File:Imagebrix.jpg | 400px | thumb | An assessment done by the quality assessment module of the PicknPack demo. [https://goo.gl/photos/AHox56GW1U79fTcX7 We have more images here.]]]

One of our mentors pointed us to the PicknPack project. In this project, research is being done on solutions for easy packaging of food products. The goal of the research is to provide knowledge on possible solutions for picking food from (harvest) bins and packing the food, while also doing quality control and adding traceability information. To that end they created a prototype industrial conveyor system with machines that provide this functionality. The system is designed to be flexible and to allow easy changing of the processed food type/category. While looking into the project, we saw a workshop announcement where they planned on showing the prototype and giving information. The time suited us and the content was interesting, so we decided to go with a group of 3 (Mark, Cameron, Maarten). We went to Wageningen University on May 26 for the workshop.

The quality assessment module is the most interesting for our project, and we were pleasantly surprised to learn that their approach used components that we were planning to use ourselves. In the quality assessment module, they set up an RGB camera, a hyperspectral camera, a 3D camera and a microwave sensor. The RGB camera is used for detecting ripeness. To process images taken by this camera, a statistical model is used. By color the system determines the location of the fruits, as well as the stem and the background. This determination was trained manually. In a user interface, a couple of determination results were shown (around 7) using different parameters. Each result showed the original image with an overlay showing what the computer determined for each area in the complete image (fruit, stem or background). The user would then choose the best result, which would alter the parameters accordingly. Using the information of where the fruits are, weight was estimated using the 3D camera. Features they measure via the hyperspectral camera are sugar content, raw/cooked state (for meat products) and chlorophyll, also using the location information. The image on the right shows a result of a live assessment that was demoed for us on their system. The different fruits are located on the image and for each fruit the estimated weight, color and sugar content (brix) is shown. The last sensor that they set up is a microwave sensor, which is used for freshness and water content (among other things).

Cameron asked a PhD student working on the RGB ripeness detection about the use of machine learning in combination with the RGB camera. The student explained that they weren't opposed to using machine learning; in this project, however, the statistical model suited their needs better. He mentioned the black box nature and overfitting as potential problems for using machine learning.

Another big aspect of the project was traceability. A system was designed to closely track each food packet. Techniques used for this are RFID, QR codes and bar codes. There is also a database where the details are stored.

Cleanliness was also very important. To deal with this, a robot was created that could move through all machines using the conveyor system. For each separate machine a separate cleaning procedure can be defined.

On the software side, a research group from KU Leuven created a general software system as a back end for each module in the conveyor system. This was designed in such a way that modules can be added or removed easily and that it is easy to communicate between modules.

An overview of all different aspects of the system can be seen here: [http://www.picknpack.eu/index.php/workpackages]

'''Trends identified by the PicknPack team'''

* More personalized fresh foods (online)

* Advancing technology

===Visit to Kwadendamme Farm===

[[File:kwad.jpg | 350px | thumb | Section of the apple and pear farm.]]

'''Overview'''

We learned a great deal from the Steijn farmers in Kwadendamme. These farmers plant Conference pears and Elstar apples, and they process over 900,000 kg of fruit every year across 15 hectares of land. As seen in the picture above, the trees are kept to a relatively low height (around 2 meters) and they are aligned nicely in even rows.

'''Farmer Problems'''

The main priority of the farmer is to maximize his revenue. In our conversations with Farmer Rene, he mentioned that the biggest detractor from their bottom line was labor. The hourly cost of a Romanian/Polish harvester was 16 euros (which includes tax, healthcare and other employee benefits), even though only 7 euros of that ends up going directly to the worker. The farmers only hire farmhands during the 3-4 weeks of the year when they are harvesting their apples and pears; the rest of the year the majority of their work goes into pruning, fertilizing, and maintaining the plants.

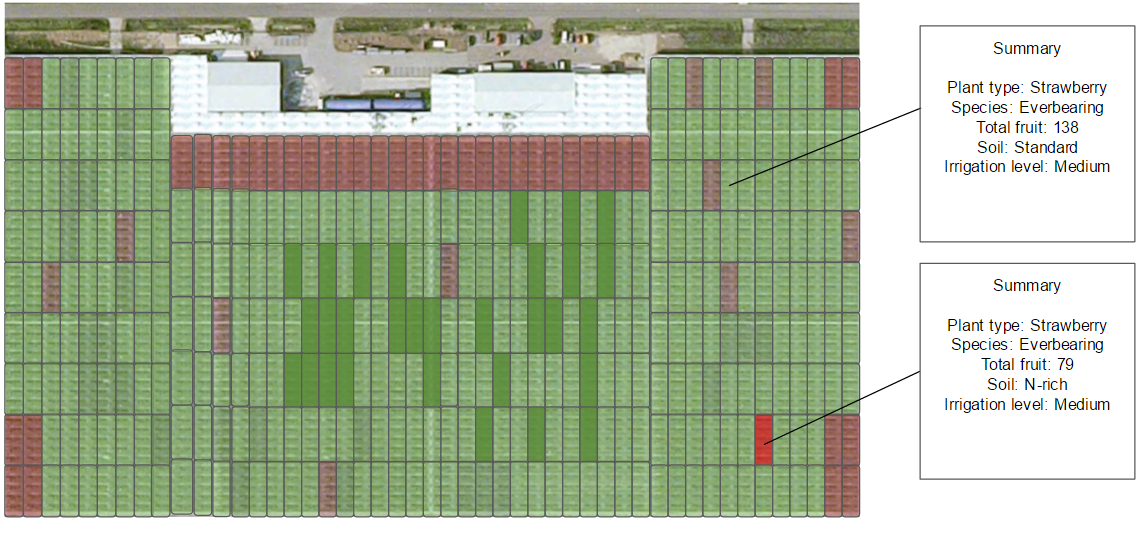

Another way for the farmer to increase his bottom line is to drive top line growth. If the farmer can produce plants that yield 20% more fruit, this translates to an almost equally proportional revenue increase. The farmers mentioned that a robotic system that continually keeps track of the amount of produce each row of trees (or each individual tree, if such accuracy is attainable) is bearing would be useful. We discussed the concept of a density map for their land, and the idea resonated with them. We decided here that we would develop the planning for a high-tech farm in which our image processing system would serve as a proof of concept for one of the many technologies that would be implemented in the overall farm. This allows us to focus on a broader perspective, while still creating a technically viable solution.

[[File:densitymap.png | 350px | thumb | Example density map.]]

Interestingly enough, on this farm they were testing new kinds of fertilizers and plant species on specific areas of the farm. They buy new products (for example pretreated seed that protects against insects) from Bayer, Monsanto, OCI and other companies, which they subsequently plant in test crops to gauge their efficacy. Using a density map similar to the one above allows the farmer to try more experimental methods to increase yield on their farm.

We also asked the farmers how they felt about certain technologies we saw at Delft, such as the 3D X-ray scanners for detecting internal quality of fruit. Again, they said that such technologies had their place in quality assurance but were not useful to them, since proving that an apple that looks good on the outside has internal defects just subtracts from the total number of apples they can sell to the intermediary trader between them and Albert Heijn.

===Support vector machine vs convolutional neural network===

One of the main design choices we had to make in our project was which classification algorithm to use to classify the fruit. We did not want to choose a convolutional neural network just because it sounded exciting if a simple K-means clustering algorithm with color thresholds would have sufficed. We spoke with an expert in machine learning and he told us that the state of the art in classification algorithms were the convolutional neural network and the support vector machine. We decided to investigate which of the two worked better for our project, and it turned out that the CNN was more suited to our needs. Below is a comparison between the two techniques.

====SVM (support vector machines)====

In an SVM the training data is represented as points in a multi-dimensional feature space; to this end the data has to be presented as vectors. The idea is that the data can be separated into two groups, which (ideally) consist of clusters of points in this feature space. The SVM then constructs a hyperplane that best separates the two groups of points; this is achieved by finding the plane with the largest distance to the nearest training data point of either class. Once the best fitting hyperplane has been found, it can be used to quickly classify new data into one of the two categories. To use an SVM for image classification, the image has to be represented as a vector.

=====Benefits=====

* Once the hyperplane has been found, the classification of new data can be done really quickly.

* Implementation is relatively easy.

=====Downsides=====

* Representing an image as a vector is not ideal. Not only is all spatial correlation between parts of the image lost, we would also have to choose one color channel, convert the image to black and white (losing all information about the color) or train multiple SVMs that each look at one color channel (which will take a lot of time).

* An SVM can only separate the training data into two classes; further subdivision of the data would require more SVMs. This means SVMs are not well suited for our project, since our aim is to separate the strawberries into different levels of ripeness.
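The remark that further subdivision "would require more SVMs" corresponds to what is commonly called a one-vs-rest scheme: one binary hyperplane per class, with the highest-scoring class winning. The sketch below only illustrates that idea. The two-value feature vector (mean red and green intensity) and the hand-picked weights are assumptions made for illustration; they are not trained values and not part of our system.

```python
import numpy as np

def one_vs_rest_predict(x, weights, biases):
    """Return the index of the class whose binary SVM gives the highest score.

    Each row of `weights` and entry of `biases` defines one hyperplane
    w . x + b = 0 that separates a single class from all the others.
    """
    scores = weights @ x + biases
    return int(np.argmax(scores))

# Hypothetical feature vector: mean (red, green) intensity of a fruit, in [0, 1].
# The three hyperplanes below are hand-picked for illustration, not trained.
CLASSES = ["unripe", "ripe", "overripe"]
weights = np.array([
    [-1.0,  1.0],   # unripe: more green than red
    [ 1.0, -1.0],   # ripe: more red than green
    [-1.0, -1.0],   # overripe: dark, low in both channels
])
biases = np.array([0.0, 0.0, 0.6])

label = CLASSES[one_vs_rest_predict(np.array([0.8, 0.2]), weights, biases)]
```

With three ripeness levels this already needs three hyperplanes, each of which would have to be trained separately.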

====Convolutional Neural Network (CNN)====

In a convolutional neural network the connectivity pattern between the neurons is inspired by the animal visual cortex. In the visual cortex the neurons are arranged such that they respond to overlapping regions in the visual field, called receptive fields. CNNs are widely used in image recognition, since they can exploit the 2-D data structure of the images.

When used for image recognition, CNNs are made up of several layers of neurons, each of which processes a receptive field. The outputs of the neurons in each layer are then combined such that their receptive fields overlap, giving a better representation of the original image. This allows the CNN to be invariant to (small) translations in the input. Neurons in adjacent layers are connected using a local connectivity pattern, which allows the network to take spatial correlations and patterns (like edges) in the image into account. The network then looks for patterns at different depths in the set of shapes and patterns, and combines this knowledge, in a few fully connected layers, to classify the image.
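To make the local connectivity pattern concrete, the following is a minimal sketch of a single convolution step, written with NumPy only. Each output value depends on a small patch of the input (its receptive field), and neighboring patches overlap. The tiny image and the edge kernel are made up for illustration; a real CNN learns its kernels during training. Like most deep learning libraries, the function computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` and return the response at every position
    where the kernel fits completely inside the image."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Receptive field of output (i, j): a kh x kw patch of the input.
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# A 4x4 image that is dark on the left half and bright on the right half.
image = np.array([[0.0, 0.0, 1.0, 1.0]] * 4)

# A 1x2 kernel that responds to a dark-to-bright step from left to right.
edge_kernel = np.array([[-1.0, 1.0]])

response = conv2d_valid(image, edge_kernel)
```

The response is non-zero only in the column where the brightness changes, which is the kind of edge pattern the text above refers to.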

=====Benefits=====

* CNNs are widely used for image recognition and have been used to achieve very high levels of correct classification on various data sets (error rates: MNIST 0.23%, ImageNet 6.656%).

* There are many libraries available for the implementation of CNNs, for instance TensorFlow.

* Can be used to classify images into multiple categories.

=====Downsides=====

* Training the network is very computationally intensive.

* A large training set of images is needed, ideally with different lighting conditions, object orientations and colors.

==Design==

===Initial design===

[[File:Strawberry_farm.jpg | 300 px | thumb | Modern strawberry farm.]]

Our initial idea was to build an autonomous harvesting robot, made to harvest strawberries. It would be able to determine the level of ripeness of the strawberries and harvest the correct ones accordingly.

===Final design===

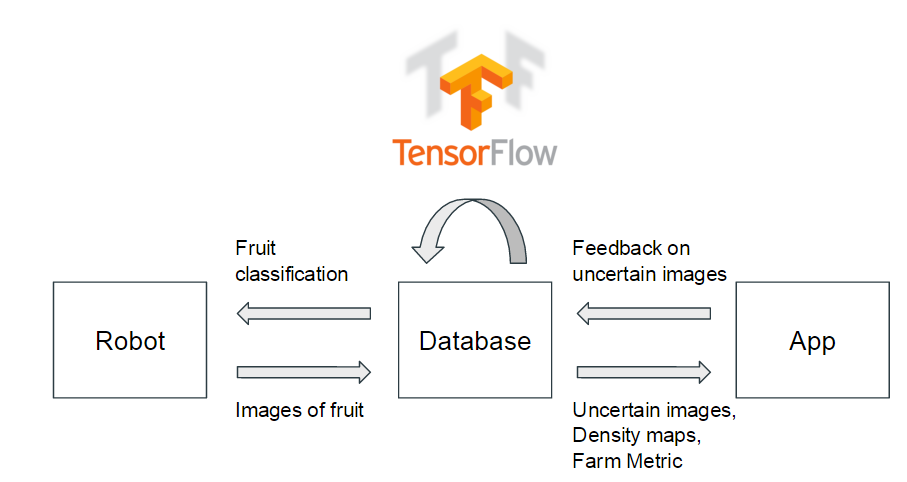

For our final design we took a fundamentally different approach. The first thing we looked at was how our system would fit in on a farm and how the farmer should interact with the system. There would still be a robot working in the field; the purpose of this robot is to inspect the fruit and harvest those fruits which are ripe enough to be harvested. The second part of the system is an application that allows the farmer to see how his system is performing and what the status of his growing crops is, and to give feedback and improve the performance. The third component would be the back-end database, which connects the robot and the application.

[[File:System Overview.PNG | 250 px | thumb | Overview of system.]]

'''Robot'''

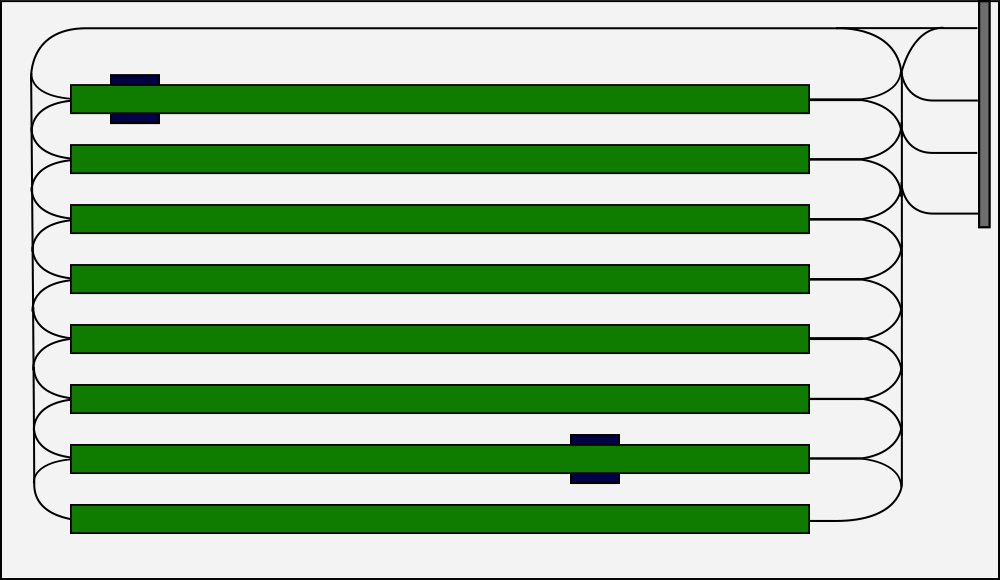

We started by looking at the way that modern strawberry farms are set up, whilst keeping our old design in mind. These modern farms are all built inside greenhouses, which allows for control of the temperature and humidity of the farm. The strawberry plants are grown in rectangular trays, which are suspended from the ceiling at a height of about 1 meter. The plants are placed such that the grown strawberries dangle from the side of the trays, which allows for easy harvesting. To further optimise the harvesting process, the plants are suspended at a height suited for human pickers. This layout allows for further optimization than we initially anticipated.

[[File:Whole_system 1.PNG | 250 px | thumb| Sketch of floor plan of conceptual system.]]

[[File:Robot_tekening.jpg | 250 px | thumb| Concept sketch of the robot.]]

The layout of the plants is fixed; the layout of the greenhouse, however, is not. We therefore decided not to build the robot on rails, but rather to adopt a system similar to the one used in the warehouses of, for example, Amazon. These warehouses employ robots to transport packets around; black stripes painted on the floor are used as roads by the robots. The stripes indicate where they are allowed to move and, since the complete pattern is known by the robots, they can find their way around the warehouse easily. In the greenhouse, these stripes would be placed underneath the trays that hold the plants and would also be connected to a charging station and a place to drop the harvested fruit. A top-down view of the envisioned system can be seen in the figure on the right. By guiding the robots around in this way, the system can easily be adapted to work in a greenhouse of any shape. This easy adaptability is of great interest to farmers, since they do not want to change the layout of their farms.

By allowing the robot to move underneath the trays, it is able to look at the strawberries on both sides of the tray at the same time. This greatly increases the efficiency of the robot and allows it to process all of the fruit on the farm much faster. A concept sketch of the robot can be found on the right. The robot would consist of a cubic base, which houses all of the electronics and batteries. This allows us to waterproof the system, such that it can withstand the harsh conditions inside a greenhouse (for a robot, that is).

On top of the base would be a spot to place the basket, where the fruit is collected after harvesting. This allows for easy loading and depositing of the baskets at the base station. The basket would be mounted on a piston, which will allow for vertical movement of the basket. This is done to reduce the distance that the fruit has to fall.

On each side of the robot, a structure would be mounted. Onto this structure we would mount the camera; as can be seen in the sketch, the camera system is supported by two metal rods. A third rod, with screw thread, is mounted in the middle, which allows for vertical movement of the camera assembly. Besides the camera for taking images, the camera assembly would also house a mirror galvanometer. This system consists of a mirror that rotates when it receives an electric signal; these mirrors are widely used to guide laser beams. In our case we would use the laser to cut the fruit, as suggested by Wilco Biemans. The laser would come from the base and the beam would run in between the metal rods that support the camera assembly.

The robot would have two functions within the system. The first is to inspect the fruit as it grows. As we learned from the apple and pear farmer in Kwadendamme, farmers have no automated way of tracking the development of their crops as they are growing. The vision system on our robot enables it to do this and we can display this information inside the app. This allows the farmer to see the development of his crop, as well as experiment with different soil types and see the results in real time. It would also allow him / her to troubleshoot much more effectively. Since the system would allow the farmer to find areas that are lagging behind the rest of the farm, early intervention might save crops in these areas.

The second function of the robot would be to harvest fruit that is ripe. If the robot detects that a fruit is ripe enough to be harvested, it would stop moving along the tray with strawberries and use the laser to cut the stem of the ripe fruit, just above the crown of the strawberry. The strawberry would then fall down, a short distance, into the basket in the middle of the robot.

'''Application'''

It was clear that the farmer should be able to monitor the system without having to go into the field or greenhouse. To this end we decided to build a mobile application, allowing the farmer to monitor his harvesting system from within the comfort of his home. The app would display statistics about the system, such as power usage, potential defects, temperature of the greenhouse, etc.

The app would also allow the farmer to give feedback. If the system is unsure whether a certain fruit is ripe enough to be harvested, it would send the image it took to the farmer's application. The farmer could then tell the system whether or not the fruit was ripe enough. This information could then be sent back to the system and incorporated into its grading system.

Since the feedback of one farmer is not going to have a big impact on the system's performance, we decided to combine the feedback from multiple users. This means that all of the systems have to utilise the same database, which has two main benefits:

:*When the robot sends out a picture that it could not classify with enough accuracy, multiple users will receive it on their application. Individually, they can decide whether or not the fruit is ripe enough. If enough of these users agree, the image can be added to the database to improve the accuracy. Since the feedback from multiple users is considered, the chance that the image is classified incorrectly is greatly decreased.

:*Because the system is receiving feedback from multiple users, the number of new pictures added to the database is a lot larger. This means that the impact of the feedback will be a lot greater and the overall accuracy of the system a lot higher.
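The rule "if enough of these users agree" could be sketched as a simple majority vote with two thresholds. This is only an illustration: the function name and the threshold values (`min_votes`, `min_agreement`) are assumptions, since no concrete numbers have been fixed for the system.

```python
from collections import Counter

def consensus_label(votes, min_votes=5, min_agreement=0.8):
    """Return the label most users agree on, or None if there is no consensus.

    `votes` is a list of labels given by different users for one image.
    The thresholds are illustrative defaults, not values from our system.
    """
    if len(votes) < min_votes:
        return None  # too few users have answered so far
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= min_agreement:
        return label  # safe to add the image to the training set
    return None       # users disagree; keep the image out of the database

agreed = consensus_label(["ripe"] * 5 + ["unripe"])
disputed = consensus_label(["ripe"] * 3 + ["unripe"] * 3)
```

Only images for which the function returns a label would be added to the database; the rest stay pending until more users have answered.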

The third and final main feature of the app is the display of information about the crops that it receives from the robot. This information consists of the amount of fruit in each tray and the level of ripeness of each of these fruit. The app can use this information to build density maps of the farm. Several maps we had in mind are:

:*The average quality level of the fruit in several sections of the farm.

:*The expected revenue in these sections.

:*The amount of processed fruit per section.

:*The amount of fruit that is present in a certain section.

These maps can be used by the farmer to gain insight into the growth of his crops as well as detect problems in certain areas of the farm.
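As a rough sketch of how such maps could be computed, the robot's observations can be grouped per section and summarized. The section identifiers and the ripeness score between 0 and 1 are assumptions made for this example; the actual data format of the robot is not specified here.

```python
from collections import defaultdict

def density_map(observations):
    """Summarize fruit observations per farm section.

    `observations` is an iterable of (section, ripeness) pairs, where
    ripeness is a hypothetical score between 0 (unripe) and 1 (fully ripe).
    """
    counts = defaultdict(int)
    totals = defaultdict(float)
    for section, ripeness in observations:
        counts[section] += 1
        totals[section] += ripeness
    return {
        section: {
            "fruit_count": counts[section],
            "mean_ripeness": totals[section] / counts[section],
        }
        for section in counts
    }

summary = density_map([("A1", 1.0), ("A1", 0.5), ("B2", 0.0)])
```

Each entry of the result corresponds to one cell of a density map; the app would only need to color the cells by fruit count or mean ripeness.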

'''Back end'''

The back end of the system connects the robot and the application. It consists mainly of a large database with all of the pictures sorted into different categories. The robot would communicate with the back end in two ways. Firstly, it will send the pictures that it takes to the database for classification. The image will then be classified using the CNN and the result will be communicated back to the robot, which then decides whether or not to pick the fruit.

The app also interacts with the database: images that are not classified with enough certainty will be sent to users of the app, and the app will send their feedback back to the database. The database will also send the information gathered by the robot to the app, where it is used to create the density maps.

The feedback of the users will be implemented into the database at predetermined time intervals, which can be chosen by the farmer. This is done because the neural network has to be retrained every time feedback is implemented. This retraining is a lengthy process (4 hours with ~200 images) and therefore cannot be done every time a user gives feedback.

==Vision system==

[[File:lamp.png | 350px | thumb| Demo electronics schematic.]]

The robot needs a vision system to detect whether a fruit in its sight is ready to be plucked. When a fruit is detected, it should be left hanging when it is not ripe (green in case of strawberries). When the fruit is ripe or overripe it should be plucked. Overripe fruits will be filtered during later quality assessment phases which are already in place. This now often happens during the packaging phase.

For the vision system we chose to use image recognition using machine learning. This has a couple of advantages over simple color detection. It is not hard to implement using existing libraries and provides good results even with a small dataset. For simple color detection you also need to detect the position of the strawberry to cancel out different background colors. A color detection system also needs to be adjusted for each different food category. For the machine learning system, however, it is only necessary to provide a new dataset for a new food category and retrain the model. A PhD student at Wageningen UR was also interested in using machine learning for this. They did not use it because they had a slightly different use case for which a statistical model was more appropriate.

A disadvantage of the use of machine learning is the required computing power. Training of the model is not the main computing power problem here: the existing model can be retrained once a week with newly added images, and retraining only takes 20-30 minutes on a single core. Images taken by the robot need to be classified using the model, to determine whether or not the fruit should be plucked. For each image, a considerable amount of computing power is needed to do this. Experimental results have shown that a small server with only 2 cores takes around 10-20 seconds to classify an image. A system with 16 cores reduces the classification time to around 1 second. This system is more expensive, however: when using a Google virtual machine the hourly cost is $0.779. This is not a significant cost compared to farm workers. The system can also easily be shared by multiple farms.
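To put the hourly price in perspective, the figures above can be turned into a rough cost per classified image. The calculation assumes that the 16-core machine is kept busy continuously and that every image takes the quoted 1 second; both are simplifications.

```python
# Figures taken from the text above.
HOURLY_COST_USD = 0.779      # Google virtual machine with 16 cores
SECONDS_PER_IMAGE = 1.0      # approximate classification time on that machine

# Assumption: the machine classifies images back to back for the whole hour.
images_per_hour = 3600 / SECONDS_PER_IMAGE
cost_per_image = HOURLY_COST_USD / images_per_hour

print(f"{images_per_hour:.0f} images per hour, "
      f"about ${cost_per_image:.5f} per image")
```

Under these assumptions the machine handles 3600 images per hour at roughly $0.0002 per image.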

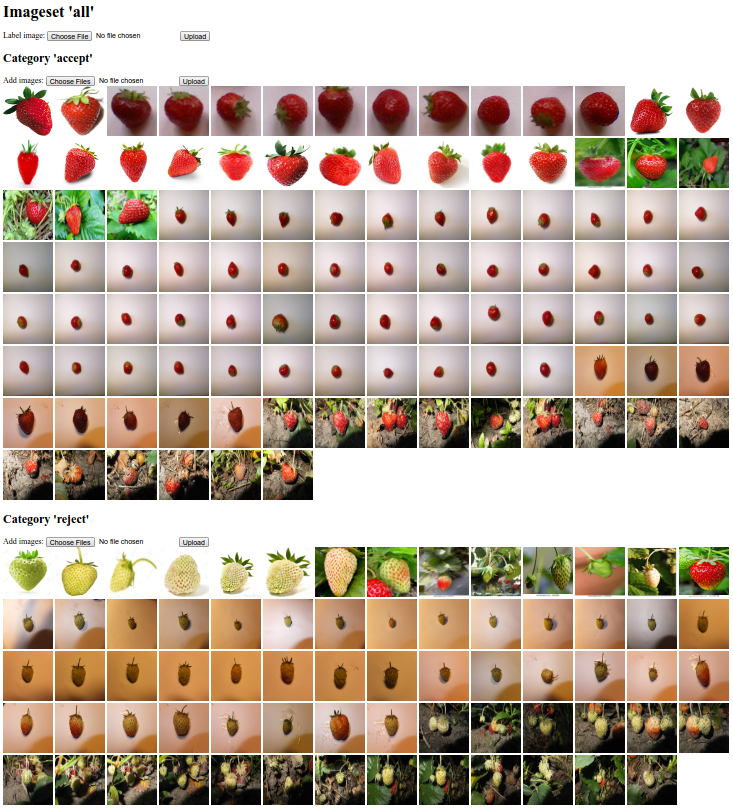

[[File:StrawberryDataSet.png | 300px | thumb | Strawberry data set.]]

===TensorFlow library===

For our classification of fruit ripeness, we are using TensorFlow, an open-source Python/C++ library for machine learning. We are using a convolutional neural network (CNN) with a custom image set of ripe and unripe strawberries. To get acquainted with the library, we followed the tutorials for the basic MNIST dataset as well as the CIFAR-10 CNN. Our CNN is a modified version of the Inception v3 model, with the implementation based on the following guides: [https://www.tensorflow.org/versions/r0.9/how_tos/image_retraining/index.html#how-to-retrain-inceptions-final-layer-for-new-categories] and [https://www.tensorflow.org/versions/r0.9/tutorials/image_recognition/index.html#image-recognition].

To train the neural network we chose to retrain an existing general model, trained on ImageNet, for our use case, as explained in the links above. Research by Donahue et al. [http://arxiv.org/pdf/1310.1531v1.pdf] showed that features in a model trained on a large general dataset are also effective for novel use cases, fruit ripeness in our case. Retraining only part of the existing model (the final layer) should theoretically give worse results than training a new model from scratch. However, it has the advantage of being fast: it only takes around 20 minutes on one core. Training a model from scratch can take weeks according to the sources in the previous paragraph, which is also why we were unable to verify experimentally that training from scratch gives better performance. Another advantage of retraining is that it reuses features already present in the original model, and can therefore achieve good performance with a small training set.
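The idea of retraining only the final layer can be illustrated with a toy example. This is a minimal sketch and not our TensorFlow code: the "frozen" feature extractor is a hypothetical stand-in (mean color per channel) for the pretrained network's penultimate layer, the images are synthetic, and the trainable final layer is a plain logistic regression.

```python
import math
import random


def frozen_features(image):
    """Stand-in for the pretrained network: never updated during retraining.
    Hypothetical: an image is a list of (r, g, b) pixels in 0..1 and the
    features are the mean of each color channel."""
    n = len(image)
    return [sum(pixel[c] for pixel in image) / n for c in range(3)]


def train_final_layer(features, labels, epochs=500, lr=0.5):
    """Fit a single logistic layer on top of the fixed features with SGD."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            error = predict(weights, bias, x) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    """Probability that the fruit should be plucked (label 1)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def toy_image(ripe):
    """Synthetic 16-pixel image: reddish if ripe, greenish otherwise."""
    base = (0.8, 0.2, 0.2) if ripe else (0.3, 0.7, 0.2)
    return [
        tuple(min(1.0, max(0.0, c + random.uniform(-0.1, 0.1))) for c in base)
        for _ in range(16)
    ]


random.seed(0)
labels = [1, 0] * 10  # 1 = plucking, 0 = not plucking
features = [frozen_features(toy_image(bool(y))) for y in labels]
weights, bias = train_final_layer(features, labels)
print(predict(weights, bias, frozen_features(toy_image(True))))
```

Because the features are computed once and never change, only a handful of weights are learned, which is why this kind of retraining is fast and works with few examples.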

===Back end===

We have set up a Python web server to act as the back end for the vision system. This is where all images are stored and where training and classification are done, using the TensorFlow library. Front end devices communicate with the server through a REST API. Via this API, the robot can send an image to the server, where it is classified, and the result is returned to the robot. In the demo we show this on the Raspberry Pi. The second use of the back end is to improve the training set: via the API, new images can be added to the training set. This is used in the farmer front end application (the app), so that the farmer can improve the dataset with his/her own classified images.
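A client for such an API could look roughly like the sketch below. The endpoint path <code>/classify</code>, the JPEG content type and the JSON response shape are assumptions made for illustration; the actual routes and formats are defined in the server code.

```python
import json
import urllib.request


def build_classify_request(server_url: str, image_bytes: bytes) -> urllib.request.Request:
    """Build a POST request carrying the raw image.
    Hypothetical endpoint: '/classify' is an assumed path."""
    return urllib.request.Request(
        url=server_url.rstrip("/") + "/classify",
        data=image_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def parse_classification(response_body: bytes) -> bool:
    """Return True if the fruit should be plucked.
    Assumed response shape: {"label": "plucking"} or {"label": "not plucking"}."""
    result = json.loads(response_body.decode("utf-8"))
    return result["label"] == "plucking"


def classify(server_url: str, image_bytes: bytes, timeout: float = 30.0) -> bool:
    """Send one image to the back end and return the pluck decision."""
    request = build_classify_request(server_url, image_bytes)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_classification(response.read())
```

Keeping request building and response parsing separate from the network call makes the client easy to check without a running server.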

An administration front end for the back end can be found [http://plant-ml.appspot.com here]. You can browse through the images in the data set, add images, classify images and start retraining. The code used can be found [https://github.com/mhvis/farm-ml/blob/master/server.py here].

===Data set and results===

For the main training data set we decided to use a mix of strawberry pictures taken in different ways. The data set includes pictures with a white background and pictures taken in the field. This diversity is meant to make sure that classification works under changing conditions, for instance with different lighting. Pictures were taken of strawberries from our own strawberry garden, with extra images from Google for more diversity. We ran tests with a data set of 173 images (104 plucking, 69 not plucking), shown on the right. After retraining, we validated the model with 56 new pictures not in this data set (24 plucking, 32 not plucking). To ensure objectivity when creating the validation set, we used Google image search and picked the first images that applied. The results: from the plucking set, 23 of 24 images were classified correctly; from the not plucking set, 30 of 32 images were classified correctly. Overall, 53 of the 56 validation images (about 95%) were classified correctly. These results show that performance is already good even with a relatively small data set (see farmer needs in the last paragraph of the cucumber farmer conversation). To draw stronger conclusions it would be helpful to test on a set of images taken at a farm during harvesting season; this was, however, not feasible for us.
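The validation figures can be summarised with a few standard metrics. The sketch below treats "plucking" as the positive class; the function name is illustrative, and the counts are the ones reported above.

```python
def summarize(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn),        # share of ripe fruit that gets plucked
        "precision": tp / (tp + fp),     # share of plucked fruit that is ripe
        "specificity": tn / (tn + fp),   # share of unripe fruit left hanging
    }


# 23 of 24 plucking images and 30 of 32 not-plucking images were correct.
metrics = summarize(tp=23, fn=1, tn=30, fp=2)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

The accuracy of 53/56 corresponds to the roughly 95% quoted above. With only 56 validation images, each misclassification shifts the accuracy by almost two percentage points, so these numbers should be read as indicative.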

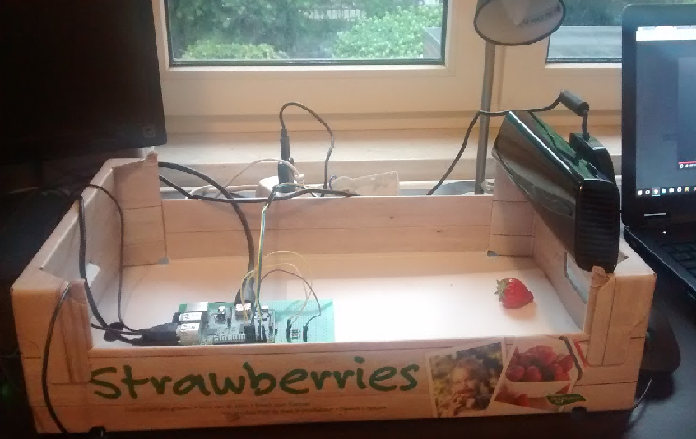

[[File:demosetup.png | 350px | thumb | Setup of the demo.]]

===Demo setup===

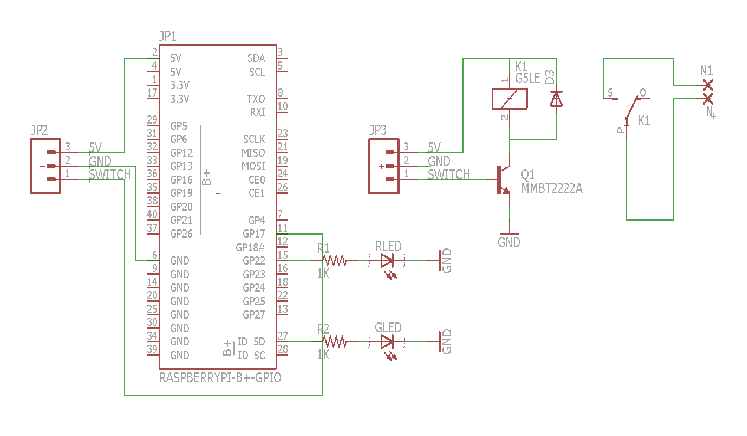

We decided to use a standard off-the-shelf Raspberry Pi as the controller for our implementation of the vision system. The idea is that any device can post an image of a ripe/unripe fruit to the database and receive a classification. Using the Raspberry Pi demonstrates that any device with an internet connection and sufficient processing power can use our fruit classification system.

The Raspberry Pi used a single Python script to turn on the spotlight, take a picture using the Kinect, and flash a red or green LED depending on whether the fruit was rejected or accepted. The code can be found [https://drive.google.com/open?id=0B89EvnWAmZI-WnVQYXJDWDhjM00 here]. An image of our demo setup is shown on the right.
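The control flow of such a script can be sketched as follows. This is not the linked demo code: the hardware and network steps are passed in as functions (all names are hypothetical), so the sequence can be tried without a Raspberry Pi, and switching the spotlight off after the capture is an assumption.

```python
from typing import Callable


def inspect_fruit(
    set_spotlight: Callable[[bool], None],
    capture_image: Callable[[], bytes],
    classify: Callable[[bytes], bool],
    set_led: Callable[[str, bool], None],
) -> bool:
    """Light the fruit, photograph it, classify it and signal the result."""
    set_spotlight(True)
    try:
        image = capture_image()
    finally:
        # Assumption: the light is only needed while the picture is taken.
        set_spotlight(False)
    accepted = classify(image)
    # Green LED for an accepted fruit, red LED for a rejected one.
    set_led("green" if accepted else "red", True)
    return accepted


# Dry run with fake hardware that only records what would happen.
log = []
inspect_fruit(
    set_spotlight=lambda on: log.append(("spotlight", on)),
    capture_image=lambda: b"fake image bytes",
    classify=lambda image: False,
    set_led=lambda color, on: log.append((color, on)),
)
print(log)
```

On the real device, the injected functions would wrap the GPIO pins, the Kinect capture and the call to the back end.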

To turn on the spotlight, a GPIO on the Raspberry Pi was connected to a custom relay board that we produced, which was in turn connected to mains electricity. The neutral wire from the mains split into two wires, which could be electrically connected by the relay, turning on the light. Using a MOSFET, we were able to switch on/off the relay using a 3.3V GPIO from the Raspberry Pi. A video of the demo can be found [https://drive.google.com/file/d/0B89EvnWAmZI-VTlHdUhsbzNscU0/view?usp=sharing here].

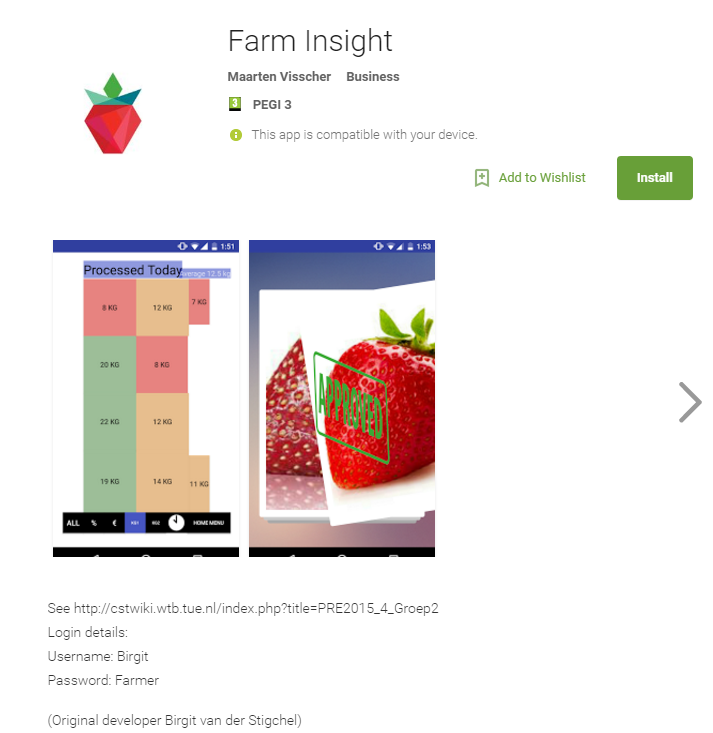

==App==

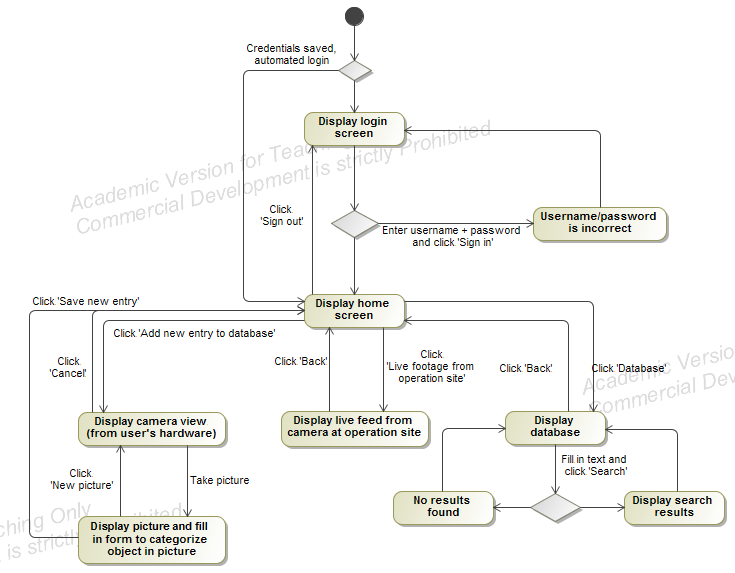

[[File:Screenflow v1.PNG| 300px | thumb | Screenflow version 1.]]

===Idea behind the app===

One of the requirements of the system was for the user/farmer to be able to add pictures of unripe and ripe fruit to the database in order to improve the system. We hypothesized that more pictures of the different fruit classifications, taken under different lighting and other circumstances, would result in a better and faster neural network and thus improve the overall performance of the system. Furthermore, we wanted to give the farmer an extra way of gaining insight into his/her farm and the system in use: for example, how much fruit was picked, the overall quality of this fruit, the temperature, and ways to connect to the CCTV system of the farm and keep an eye on everything.

We decided to use an app for this, since we cannot expect the user to know programming or other difficult means of adding his/her own labelled pictures to the database. With this app the farmer can add pictures to the database and label them, get more information about his/her farm, and go into the database to delete pictures or change their labels.

We decided to first create screenflows and functional PowerPoint versions of the app, to get a good look and feel before programming the "real" app. This often resulted in slight changes, because we found that some aspects were not working nicely or lacked an intuitive feel.

===Screenflows===

[[File:Screenflow v2.PNG| 300px | thumb | Screenflow version 2.]]

====Version 1====

The first version was made during the third week of the course and formed the base for the first version of the app. The initial idea was that a farmer should be able to alter the database, adding new pictures of instances that did not meet expectations when checking the strawberries the system had picked, so that the system could learn by using these pictures for additional training. Finally, it should have an option to view what the robot is doing by live streaming the footage from the robot's camera.

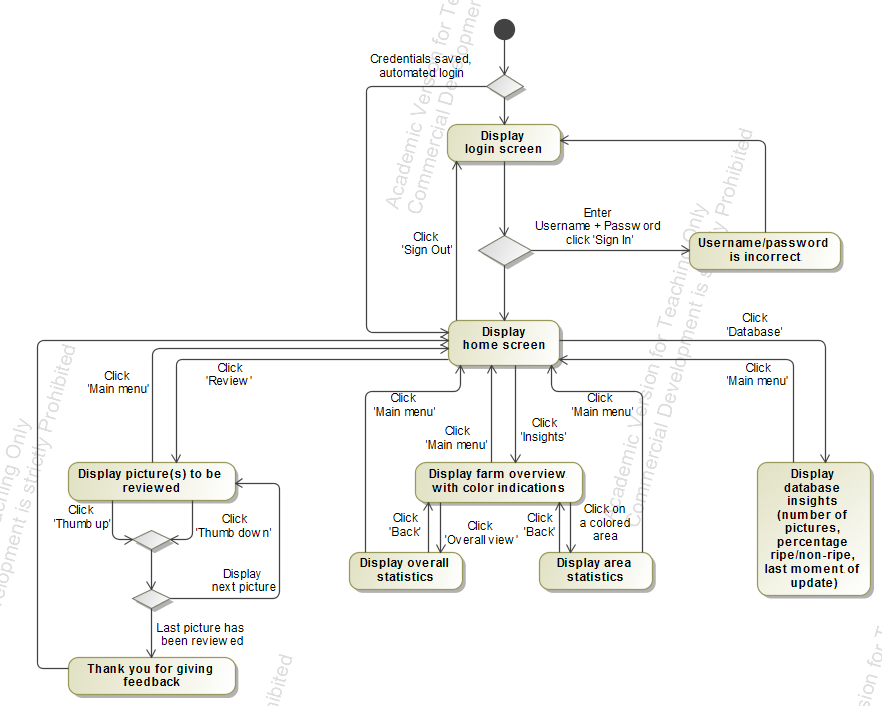

====Version 2====

The database aspect was changed so that it now displays statistics about the database, including the latest version, when that update took place, and how many pictures it contains.

The live feed was replaced by an overview of the farm with different 'filters' that show the performance of different sections in terms of, among others, revenue, average quality and expected harvest in the coming days. This can be seen for the farm as a whole, but also per section individually.

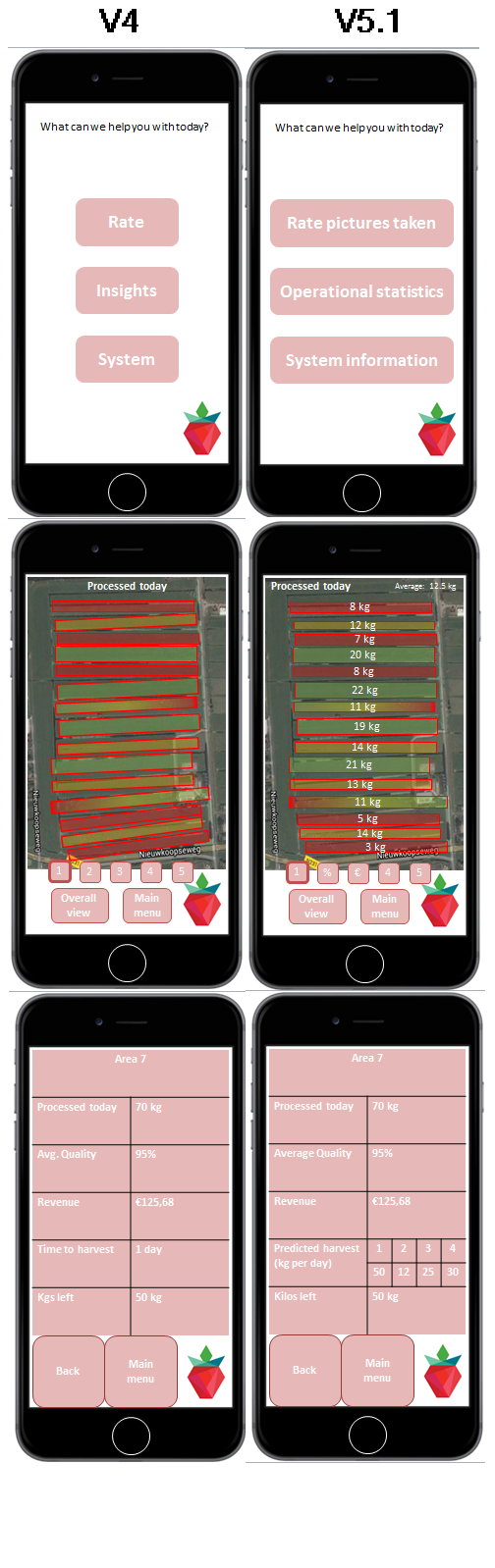

===Different versions of the app===

====Version 1====

[[File: app1.jpg | 180 px | thumb| Example of the color coding per segment.]]

The first version of the app was made during the 4th week of the course. This app had the functionality described in the "Idea behind the app" section. The user could log in to his/her personal account, take pictures, label them and add them to the database. Furthermore, the farmer could gain insight into the performance of his/her farm and could change and delete pictures in the database.

The first version of the app can be found here: [[File: Use app.pdf]].

====Version 2====