Embedded Motion Control 2012 Group 9: Difference between revisions

| (55 intermediate revisions by 4 users not shown) | |||

| Line 8: | Line 8: | ||

'''Objective'''<br> | |||

== '''Objective'''<br> == | |||

The Jazz robot find his way out of a maze in the shortest amount of time <br> | The Jazz robot find his way out of a maze in the shortest amount of time <br> | ||

<br> | <br> | ||

''' | |||

== '''Requirements'''<br> == | |||

The Jazz robot refrains from colliding with the walls in the maze<br> | The Jazz robot refrains from colliding with the walls in the maze<br> | ||

The Jazz robot detects arrow (pointers) to choose moving to the left or to the right<br> | The Jazz robot detects arrow (pointers) to choose moving to the left or to the right<br> | ||

== '''Planning'''<br> == | |||

An intermediate review will be held on June 4th, during the corridor competition<br> | An intermediate review will be held on June 4th, during the corridor competition<br> | ||

| Line 22: | Line 26: | ||

'''Week 1 | == '''Progress Before Corridor Competition '''<br> == | ||

==='''Week 1 '''<br>=== | |||

Make a group, find book & literature<br> | Make a group, find book & literature<br> | ||

<br> | <br> | ||

'''Week 2 | ==='''Week 2 ''' <br>=== | ||

''Installation :''<br> | ''Installation :''<br> | ||

''1st laptop :''<br> | ''1st laptop :''<br> | ||

| Line 41: | Line 47: | ||

<br> | <br> | ||

'''Week 3 | ==='''Week 3 '''<br>=== | ||

''Installation :''<br> | ''Installation :''<br> | ||

SVN (Got the username & password on 8th May)<br> | SVN (Got the username & password on 8th May)<br> | ||

| Line 55: | Line 61: | ||

3 computers will use Ubuntu 11.10 and 1 computer uses Xubuntu 12.04<br> | 3 computers will use Ubuntu 11.10 and 1 computer uses Xubuntu 12.04<br> | ||

'''Week 4 | ==='''Week 4 '''<br>=== | ||

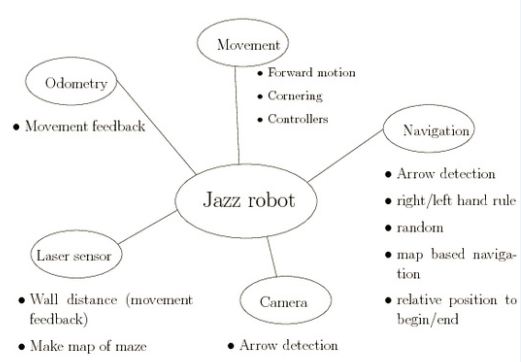

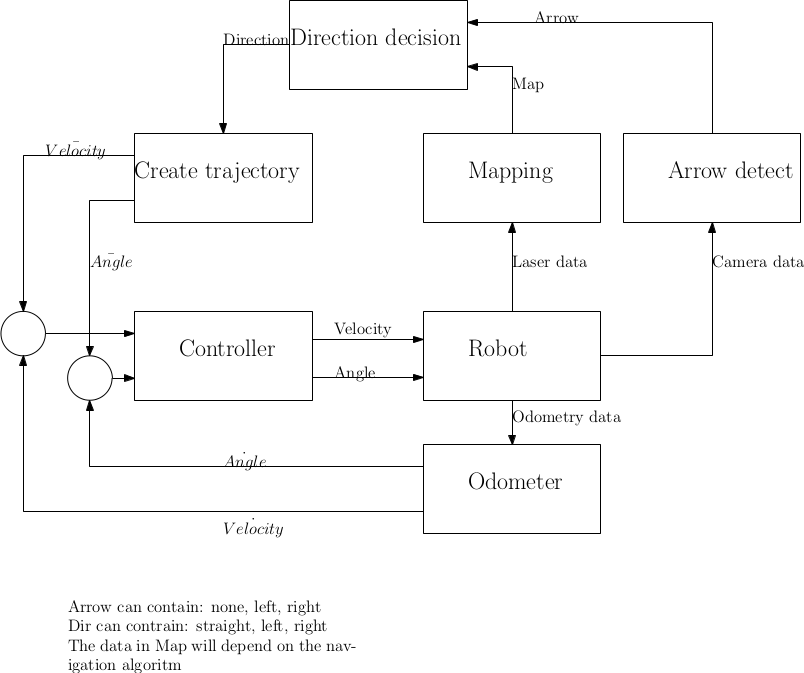

We did a brainstorm session on what functions we should make in the software, the result is below. <br> | We did a brainstorm session on what functions we should make in the software, the result is below. <br> | ||

[[File:brainstorm.jpg]] | [[File:brainstorm.jpg]] | ||

'''Week 5 | ==='''Week 5 '''<br>=== | ||

We made a rudimentary software map and started developing two branches of software based on different ideas. The software map will be discussed and revised in light of the results of the software that was written early in week 6. The group members responsible for the lecture started reading/researching chapter 11.<br> | We made a rudimentary software map and started developing two branches of software based on different ideas. The software map will be discussed and revised in light of the results of the software that was written early in week 6. The group members responsible for the lecture started reading/researching chapter 11.<br> | ||

<br> | <br> | ||

[[File:software_map.png]] | [[File:software_map.png|600px|]] | ||

<br> | <br> | ||

| Line 69: | Line 77: | ||

==='''Week 6 '''<br>=== | |||

Making slide for Presentation Chapter 11 : Timer <br> | |||

[[File:Chapter_11.pdf ]] | |||

<br> | == <br>'''Implementation So Far & Decision We Made'''<br> == | ||

'''Implementation So Far & Decision We Made'''<br> | |||

''' | ==='''Navigation with Laser'''=== | ||

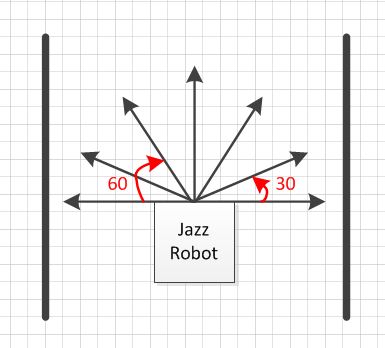

We are using 7 laser points with variety degrees as shown below : <br> | We are using 7 laser points with variety degrees as shown below : <br> | ||

| Line 84: | Line 94: | ||

<br> | <br> | ||

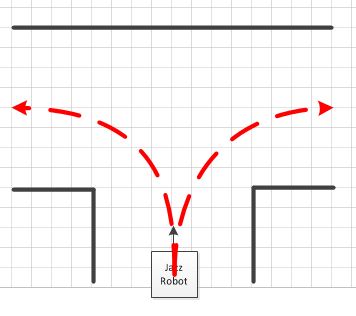

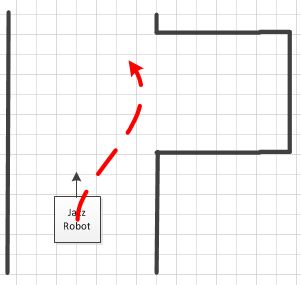

''' | ==='''Robot Movement'''<br>=== | ||

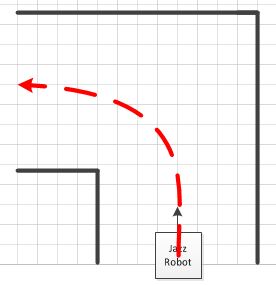

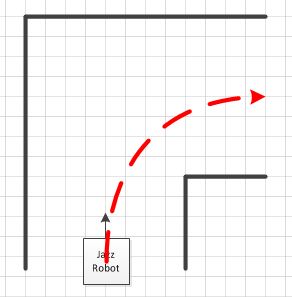

we designed the movement of the robot as show in the picture below. We want to make the robot turn the corner like one-fourth of circle, so it will cut the time because it has shorter path than turn the corner with 90 degrees movement | we designed the movement of the robot as show in the picture below. We want to make the robot turn the corner like one-fourth of circle, so it will cut the time because it has shorter path than turn the corner with 90 degrees movement | ||

'' | ====''Turn Left or Right'' <br>==== | ||

[[File:Turn left.JPG]] | [[File:Turn left.JPG]] | ||

[[File:Turn right.JPG]] | [[File:Turn right.JPG]] | ||

| Line 94: | Line 104: | ||

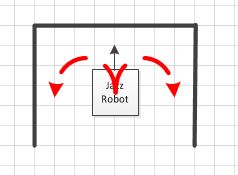

'' | ====''Turn T connection''<br>==== | ||

Because we have not already implemented the camera vision to help robot navigation, the movement of the robot either turn to right or left depends on the wall follower algorithm. If Righ Hand Rule activated, so the robot will go to right and vice versa.<br> | Because we have not already implemented the camera vision to help robot navigation, the movement of the robot either turn to right or left depends on the wall follower algorithm. If Righ Hand Rule activated, so the robot will go to right and vice versa.<br> | ||

[[File:T connection.JPG]] | [[File:T connection.JPG]] | ||

'' | ====''Dead end ''<br>==== | ||

[[File:Deadend.JPG]] | [[File:Deadend.JPG]] | ||

<br> | <br> | ||

'' | ====''Avoid Dead end ''<br>==== | ||

The robot we will avoid dead end<br> | The robot we will avoid dead end<br> | ||

[[File:Antideadend.JPG]] | [[File:Antideadend.JPG]] | ||

| Line 110: | Line 120: | ||

<br> | <br> | ||

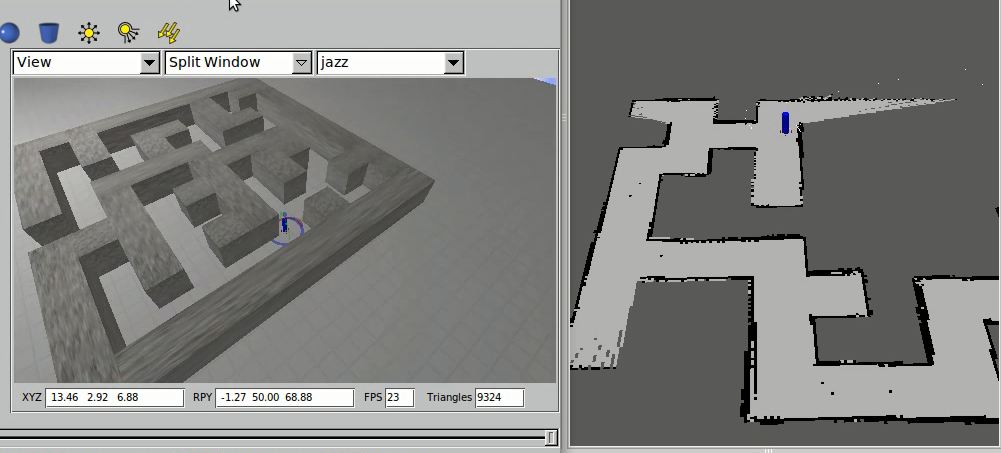

''' | ==='''Building map with Laser & Odometry'''=== | ||

The map is used to give information where the current location of the robot is. For future development this map also can be used for implementing Tremaux's Algorithm (http://www.youtube.com/watch?v=6OzpKm4te-E). We are trying to do that, so our robot would not go to the same place twice. <br> | The map is used to give information where the current location of the robot is. For future development this map also can be used for implementing Tremaux's Algorithm (http://www.youtube.com/watch?v=6OzpKm4te-E). We are trying to do that, so our robot would not go to the same place twice. <br> | ||

[[File:Map.JPG]] | [[File:Map.JPG|500px|thumb|center|]] | ||

<br> | <br> | ||

''' | |||

==='''Algorithm'''<br>=== | |||

There are a lot of algorithms to solve the maze problem (http://en.wikipedia.org/wiki/Maze_solving_algorithm) , the simplest basic idea that can be implemented easily is using Wall follower (Right Hand Rule of Left Hand Rule). We are trying to find the best algorithm that give us the shortest time to find exit <br> | There are a lot of algorithms to solve the maze problem (http://en.wikipedia.org/wiki/Maze_solving_algorithm) , the simplest basic idea that can be implemented easily is using Wall follower (Right Hand Rule of Left Hand Rule). We are trying to find the best algorithm that give us the shortest time to find exit <br> | ||

| Line 137: | Line 148: | ||

3. If possible the robot must try to go straight.<br> | 3. If possible the robot must try to go straight.<br> | ||

http://www.robotix.in/rbtx09/tutorials/m4d4 | http://www.robotix.in/rbtx09/tutorials/m4d4 | ||

<br> | <br> | ||

==='''Video Documentation''' <br>=== | |||

1. First Trial Mouse Algorithm <br> | |||

[[File:Mouse.JPG|300px|thumb|center|http://www.youtube.com/watch?v=9xynPzQU7Q8&feature=youtu.be]] | |||

2. First Trial Right Hand Rule Algorithm <br> | |||

[[File:RHR.JPG|300px|thumb|center|http://www.youtube.com/watch?v=_c31Wct0tPI&feature=youtu.be]] | |||

<br> | <br> | ||

<br> | <br> | ||

<br> | <br> | ||

'''Choice between 2 | ==='''Choice between 2 approaches for corridor competition''' <br>=== | ||

''1st | ''1st approach (complex):'' <br> | ||

* Make Gmap with odometry and laser <br> | * Make Gmap with odometry and laser <br> | ||

* Detect arrow <br> | * Detect arrow <br> | ||

| Line 157: | Line 169: | ||

* Collision detection <br> | * Collision detection <br> | ||

<br> | <br> | ||

''2nd | ''2nd approach (simple):'' <br> | ||

* Follow right wall <br> | * Follow right wall <br> | ||

* Detect dead ends <br> | * Detect dead ends <br> | ||

* Make nice | * Make nice turns <br> | ||

* Detect | * Detect arrows <br> | ||

<br> | <br> | ||

''Comparision:'' <br> | ''Comparision:'' <br> | ||

| Line 170: | Line 182: | ||

* The 2nd algoritm will probably work most of the time <br> | * The 2nd algoritm will probably work most of the time <br> | ||

<br> | <br> | ||

We choose the 2nd algoritm | We choose the 2nd algoritm and continue the design/implementation process. | ||

<br> | |||

== <br>'''Corridor competition strategy''' <br> == | |||

For the corridor competition we decided to focus on controlling the robot well, for straight driving and taking a corner. The camera node will be left for after the corridor competition because we will not use the camera for that. | |||

== <br>'''Implementation for the corridor competition''' <br> == | |||

For the corridor competition we implemented a very simple algorithm in which only seven laser beams are used: the 0, +/- 30, +/- 60 and +/- 90 degree beams. The 0 degree beam measures the distance to the wall in front of the robot, to detect the forward path and avoid head-on collisions. The beams at +/- 90 degrees keep the robot in the center of the corridor while driving. To detect free paths we use the +/- 30 and +/- 60 degree beams (two beams per side for robustness). | |||

All this was implemented in a simple while loop consisting of several if-statements. The parameters were tuned in the simulator and the algorithm seemed to function well enough to get the robot through the corridor competition. | |||

== <br>'''Test before the corridor competition'''<br> == | |||

We were scheduled for testing on the Friday afternoon before the corridor competition. During that test, we first had to figure out why the lasers did not behave as expected (the number of laser points on the robot differed from that in the simulator). After that we noticed the algorithm did not work as expected either. It was far less robust than we thought it would be and performed much worse than in the simulator. Despite this we did have a few successful runs among many attempts. Since this test was on Friday afternoon we had no chance to change the algorithm or adjust the parameters (we had no way of checking that a change would work on the real robot), so we left it at this and hoped the corridor competition would be one of those successful runs. | |||

== <br>'''After the corridor competition''' <br> == | |||

Unfortunately we were not lucky in the corridor competition: as expected, it did not go well. The main problem was taking corners, and driving straight did not go well either. The problem might be caused by communication delay, but it is more probably caused by the controller's lack of robustness. The solution is to improve the controller. The current controller is based on a nested if-then structure, so we need a different strategy. | |||

== <br>'''Maze competition strategy'''<br> == | |||

After the corridor competition we had a meeting in which we decided we needed a completely different approach, both for control and for corner detection. We decided on a program structure with three nodes: a controller node, a decision node and a camera node. They communicate as shown in the figure below. | |||

[[File:Struct.png]] | |||

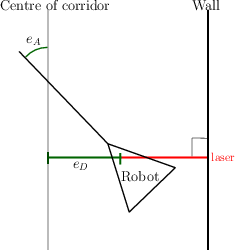

== <br>'''Controller node''' <br> == | |||

[[File:G9 robot errors.png]] <br> | |||

<br> | |||

The laser measures 1081 distances, and among all these points there is one shortest distance. Our algorithm finds this shortest distance. The shortest distance measured is always perpendicular to the wall, and using the known angle of this laser point we can calculate the error angle and the error distance. The formulas below are for the left side only; the right side uses the negative values because the laser points to the right wall there.<br> | |||

<br> | |||

<math> e_A = change\_index\_to\_degree(laser\_point) + 90 </math><br> | |||

<math> e_D = 0.5 - shortest\_distance </math><br> | |||

<br> | |||

We want the velocity of the robot to be 0 when it faces the wall, and full speed when it drives straight. The cosine of the error angle has exactly this behaviour. The angular velocity has to steer the robot to the center of the corridor while also correcting the error angle. These two tasks cannot both be satisfied perfectly at the same time, so the controller trades them off through tunable gains. We use the following control law: <br> | |||

<br> | |||

<math>Velocity = K_1 cos(e_A)</math> <br> | |||

<math>Angular = K_2 e_A + K_3 e_D </math> <br> | |||

<br> | |||

The velocity will be 0 when the robot faces the wall, and it will be maximum when the robot is straight. The angular velocity makes the robot steer towards the middle when it is at the side of the corridor. When the robot is in the middle of the corridor it will steer the robot straight. This controller is currently working in the simulator and we tested it on the real robot (video at the bottom of the page).<br> | |||

<br> | <br> | ||

This controller can also be used to turn corners. When the corner command is given, one side of the lasers (left half or right half) is ignored, so the robot only follows the wall on one side. When that wall has a corner in it, the robot follows it around, because the shortest distance to the wall is then the distance to the corner point. The robot drives through the corner at a distance of 0.5 m from the corner point. | |||

== | == '''Decision node''' == | ||

The decision node serves two purposes, the first is detecting corners and the second is deciding the direction the robot should take. | |||

The decisions depend on three factors: the rule in effect (left or right hand rule), the available paths, and a detected arrow. Depending on the rule in effect, one direction takes precedence over the other when both paths are free. The direction opposite to the rule is only taken when the forward path and the direction of the rule are both blocked, or when an arrow indicating that direction is detected. When all paths are blocked a dead end is detected. The direction to be taken is sent to the controller node. | |||
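The precedence described above can be sketched as a small pure function. This is only an illustration, not the group's decision node: the type and function names are hypothetical, and two details are assumptions, namely that the rule direction is preferred over going forward and that an arrow is only followed when the path it points to is free.

```cpp
#include <cassert>

enum Direction { LEFT, RIGHT, FORWARD, DEAD_END };
enum Arrow { NO_ARROW, ARROW_LEFT, ARROW_RIGHT };

// Which of the three paths the laser currently reports as free.
struct Paths {
    bool left;
    bool forward;
    bool right;
};

// rule must be LEFT or RIGHT (left hand rule or right hand rule).
Direction decide(Direction rule, const Paths& paths, Arrow arrow) {
    assert(rule == LEFT || rule == RIGHT);
    // A detected arrow overrides the rule (assumed: only if that path is free).
    if (arrow == ARROW_LEFT && paths.left) return LEFT;
    if (arrow == ARROW_RIGHT && paths.right) return RIGHT;

    const bool ruleFree = (rule == LEFT) ? paths.left : paths.right;
    const bool otherFree = (rule == LEFT) ? paths.right : paths.left;
    const Direction other = (rule == LEFT) ? RIGHT : LEFT;

    if (ruleFree) return rule;          // direction of the rule comes first
    if (paths.forward) return FORWARD;  // assumed second choice
    if (otherFree) return other;        // opposite direction is the last resort
    return DEAD_END;                    // everything blocked
}
```

With the right hand rule and all paths free this returns RIGHT; the same situation with a left arrow returns LEFT.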

'''Segment red arrow''' <br> | The detection of corners works by detecting the 'corner points'. This is done by first correcting the laser data of all beams and then looking for jumps in the corrected values. <br> | ||

<br> | |||

<math> laser\_corrected = laser\_data * sin(angle\_of\_laser\_data)</math><br> | |||

<br> | |||

The data is now normalized to lines parallel to the robot. A gap in the wall or a corner is characterized by a jump in the value of the data. We look for jumps bigger than 0.55 to find the corner points. When a decision about the direction has been taken we start turning (see controller node). The turning stops when the odometry yaw value has changed by more than 70 degrees and no more turns are seen. The last condition makes sure we do not 'see' the same turn again right after taking it. | |||
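A minimal sketch of the jump search, assuming the scan is available as two equally long arrays of ranges and beam angles in radians. The function name and the array layout are hypothetical; only the sine correction and the 0.55 threshold come from the text.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the indices at which the corrected value jumps by more than
// jumpThreshold compared to the previous beam.
std::vector<std::size_t> findCornerPoints(const std::vector<double>& ranges,
                                          const std::vector<double>& anglesRad,
                                          double jumpThreshold) {
    assert(ranges.size() == anglesRad.size());
    std::vector<std::size_t> corners;
    double previous = 0.0;
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        // laser_corrected = laser_data * sin(angle_of_laser_data)
        const double corrected = ranges[i] * std::sin(anglesRad[i]);
        if (i > 0 && std::fabs(corrected - previous) > jumpThreshold) {
            corners.push_back(i);
        }
        previous = corrected;
    }
    return corners;
}
```

Beams close to straight ahead have a sine near zero, so in practice the search would probably be limited to the side beams; that restriction is left out here.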

== '''Camera node''' == | |||

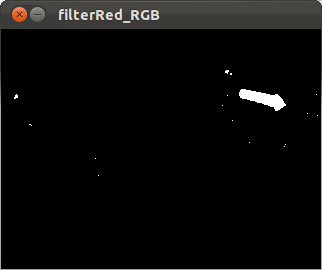

Arrow detection needs at least two steps: segmenting the red arrow from a complex background and detecting its direction. The details follow below, with picture frame0008.jpg from the video as an example. | |||

[[File:frame0008.jpg |300px|frame0008]] | |||

==='''Segment red arrow''' <br> === | |||

* Segment red part from original picture. Since pictures in | * Segment red part from original picture. Since pictures in OpenCV can be easily represented in RGB and HSV and converted between each other, we try two filtering methods based on RGB and HSV respectively and then compare the results to choose one with better performance. | ||

With the RGB colorspace, the three color channels can be split with the ''cvSplit()'' function in OpenCV. Because white is a combination of red, blue and green, white regions also show up in each single channel. We therefore approximate <math>realRed = cRed - cBlue - cGreen</math> to remove the influence of white. After that, ''cvThreshold()'' makes the picture binary and filters out dark points below a set threshold. | |||

cvSplit(img, cBlue, cGreen, cRed, NULL); | |||

cvAdd(cBlue, cGreen, cGreen); | |||

cvSub(cRed, cGreen, cRed); | |||

cvThreshold(cRed, cRed,REDBOUND,255, CV_THRESH_BINARY); | |||

To work with the HSV colorspace, first use ''cvCvtColor()'' to convert BGR to HSV, then select the red region of interest with ''cvInRangeS()'' and ''cvOr()''. Two hue ranges are used because hue is circular: red lies at both ends of the hue axis. | |||

cvInRangeS(imgHSV, Low1, High1, imageArrowPixels1); | |||

cvInRangeS(imgHSV, Low2, High2, imageArrowPixels2); | |||

cvOr(imageArrowPixels1, imageArrowPixels2, imageArrowPixels); | |||
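Why two ranges are needed can be shown on a single pixel without OpenCV. The sketch below assumes 8-bit HSV as OpenCV stores it (hue 0 to 179, saturation and value 0 to 255). All bounds are illustrative placeholders, not the values of Low1, High1, Low2 and High2 used by the group, and the bounds here are inclusive on both ends.

```cpp
#include <cassert>

// Inclusive hue range plus minimum saturation and value (hypothetical type).
struct HsvRange {
    int hueLow;
    int hueHigh;
    int minSaturation;
    int minValue;
};

bool inRange(int h, int s, int v, const HsvRange& r) {
    assert(h >= 0 && h <= 179);
    return h >= r.hueLow && h <= r.hueHigh &&
           s >= r.minSaturation && v >= r.minValue;
}

// Red wraps around hue 0, so it is the union of a low and a high hue band.
bool isRed(int h, int s, int v) {
    const HsvRange lowBand = {0, 10, 100, 100};      // illustrative bounds
    const HsvRange highBand = {170, 179, 100, 100};  // illustrative bounds
    return inRange(h, s, v, lowBand) || inRange(h, s, v, highBand);
}
```

A hue of 5 and a hue of 175 are both accepted, while a green hue such as 60 or a washed-out pixel with low saturation is rejected.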

When we set ''REDBOUND'' in the RGB method to 10 and set the boundaries in the HSV method to a relatively large range, the segmentation result is as shown below. The left picture is from the RGB method; the right one is from the HSV method and has less noise (small areas). After trying all the samples from the video, we found that any ''REDBOUND'' that segments all the arrows always brings more noise than the HSV method. We therefore chose the second method. | |||

| Line 186: | Line 264: | ||

[[File:HSV_fil_pic8.png |300px|''Using HSV filter'']] | [[File:HSV_fil_pic8.png |300px|''Using HSV filter'']] | ||

* Remove all the environment noise. | |||

To cope with more complex environments, it is better to remove all the environment noise. For this we found a very useful blob extraction library for OpenCV, ''cvBlobsLib''. It provides functions to manipulate, filter and extract results from the extracted blobs. We can use ''blobs.Filter'' to remove blobs with small areas, then select the biggest blob as the arrow and fill it with white. In this way only one blob is left in the picture, and as long as there is no other large red object in the environment, we can assume the remaining area is the arrow. As can be seen below, this filter removes all the noise very well. | |||

[[File:Blob_Fil_pic8.png |300px|''Blob_Fil'']] | |||

However, we ran into problems when we moved the blob filter to ROS, because ''cvBlobsLib'' is not an internal library of OpenCV. Since we did not want to spend too much time fixing this, we tried a simple filter as a substitute. In this method, ''cvErode()'' and ''cvDilate()'' are used to remove small groups of pixels. Because our HSV filter in the first step is good enough (only quite small pieces are left), this simple second filter also works well, as the following picture shows. | |||

[[File:Simple_filter_pic8.png |300px|''Simple filter'']] | |||

==='''Detect direction'''=== | |||

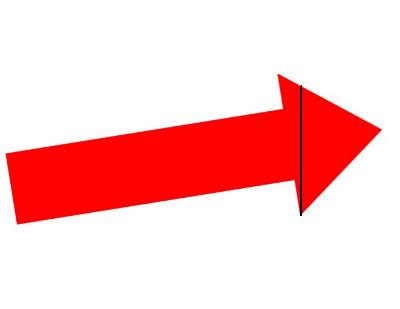

At first we considered finding the corners of the arrow. However, because the filter above is relatively strict, the final arrow shape is not very accurate, i.e. it has no sharp angles, so we cannot get the correct number of corners. Since the arrow head is visibly "heavier" than the arrow tail, we decided to use areas to detect the direction. The problem then becomes finding a proper dividing line in the arrow and comparing the areas on both sides. | |||

* Find dividing line. | |||

In this part, we obtain the approximate position of the arrow head by finding the position of the longest vertical line: as long as the arrow plane is approximately parallel to the camera, the longest vertical line is always on the arrow head side. We thank Royce from group 3 for this idea. | |||

[[File:Arrow_meitu_1.jpg]] | |||

* | * Calculate the areas of the left and right parts, and get the direction by comparing the two area values. | ||

To separate the two parts, we use a Region of Interest (ROI) in OpenCV, and use ''cvMoments()'' to calculate the areas easily. Since the dividing line lies at the arrow head, the side with the smaller area gives the arrow direction. To get a more accurate result, we also filter out arrows with small areas, which means an arrow is only detected when it is large enough in Jazz's eyes. | |||
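The two steps above can be sketched on a plain binary mask, without ROI or ''cvMoments()''. Several things here are assumptions for illustration: the mask is a vector of equally long strings with '#' for arrow pixels, the "longest vertical line" is approximated by the column with the most arrow pixels, and all names, including the minArea parameter, are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

enum ArrowDirection { UNKNOWN, POINTS_LEFT, POINTS_RIGHT };

ArrowDirection detectDirection(const std::vector<std::string>& mask,
                               std::size_t minArea) {
    if (mask.empty()) return UNKNOWN;
    const std::size_t width = mask[0].size();

    // Count arrow pixels per column and in total.
    std::vector<std::size_t> columnCount(width, 0);
    std::size_t total = 0;
    for (std::size_t y = 0; y < mask.size(); ++y) {
        assert(mask[y].size() == width);
        for (std::size_t x = 0; x < width; ++x) {
            if (mask[y][x] == '#') {
                ++columnCount[x];
                ++total;
            }
        }
    }
    // Ignore arrows that are too small (too far away) to trust.
    if (total == 0 || total < minArea) return UNKNOWN;

    // Dividing line: the tallest column, assumed to lie in the arrow head.
    std::size_t divide = 0;
    for (std::size_t x = 1; x < width; ++x) {
        if (columnCount[x] > columnCount[divide]) divide = x;
    }

    // Areas on both sides of the dividing line.
    std::size_t left = 0;
    std::size_t right = 0;
    for (std::size_t x = 0; x < divide; ++x) left += columnCount[x];
    for (std::size_t x = divide + 1; x < width; ++x) right += columnCount[x];

    if (left == right) return UNKNOWN;
    // The smaller side is the tip of the head, i.e. the pointing direction.
    return (left < right) ? POINTS_LEFT : POINTS_RIGHT;
}
```

For a five-row arrow whose head is on the right, the tallest column sits in the head, the shaft on the left carries the larger area, and the function reports POINTS_RIGHT.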

We tested this code on all the samples as well as on the video we made ourselves. The results are quite good, but there are still wrong detections when Jazz moves too sharply, so an accurate controller is needed to move Jazz smoothly. | |||

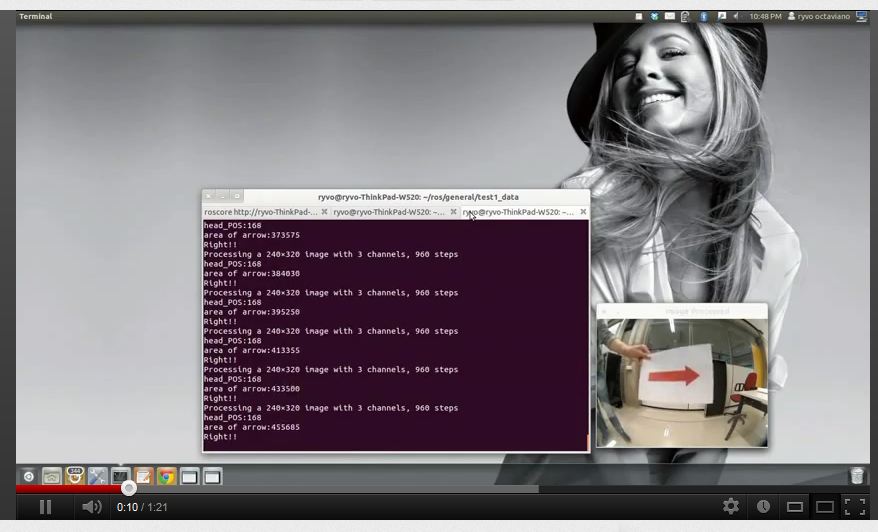

==='''Arrow testing video : from bag file''' <br>=== | |||

[[File:Arrow.JPG|300px|thumb|center|http://www.youtube.com/watch?v=3siXzV95cfE&feature=youtu.be]] | |||

<br> | <br> | ||

''' | |||

The | == <br>'''Results first test''' <br> == | ||

The first test was scheduled on Wednesday the 13th of June. We mainly tested the controller node because the decision node and the camera node were not ready yet. The result was pretty good and showed that the algorithm works the way it should. There is one small issue: the robot starts to stray when the corridor width varies a little, which is caused by the algorithm. The robot tries to stay at 0.5 m from both walls, so when the width is less than 1 m it has to choose between the walls and shows undesirable behaviour. A solution would be to either decrease the required distance to the wall, or add a dead zone. <br> | |||
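The dead zone option could look like the sketch below, applied to the distance error before it enters the controller. This was only a proposed fix in the text, so the function, its name and the width parameter are all hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Returns 0 for errors inside [-halfWidth, +halfWidth] and shifts larger
// errors towards zero so the output stays continuous at the edges.
double applyDeadZone(double error, double halfWidth) {
    assert(halfWidth >= 0.0);
    if (std::fabs(error) <= halfWidth) return 0.0;
    return (error > 0.0) ? error - halfWidth : error + halfWidth;
}
```

With a half width of 0.05, a distance error of 0.03 is ignored, while an error of 0.15 is passed on as 0.10.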

<br> | <br> | ||

The wall following algorithm also takes corners when some laser data is ignored. This means that the robot navigates using only the left side lasers when turning left (it only sees the left wall), and vice versa. During testing we noticed that the robot takes the corner a bit too wide and corrects itself afterwards (overshoot). This could become a problem at higher speeds; a solution could be to shorten the distance to the wall on the inside of the corner. <br> | |||

<br> | <br> | ||

A video of the trial run is shown here. The decision to take corners is made by hand, but driving through the corner is autonomous. <br> | |||

[[File:Jazz.JPG|300px|thumb|center|http://www.youtube.com/watch?v=qIfZxWd8xc8&feature=youtu.be]] | |||

<br> | <br> | ||

http://www.youtube.com/watch?v= | == <br>'''Results second test''' <br> == | ||

Our second test was scheduled on Wednesday the 20th of June. Unfortunately testing was not possible then because the setup did not work. This was a setback because we had implemented a lot of new features, and the next (and last) opportunity to test was only two days before the final contest. | |||

== <br>'''Results third test''' <br> == | |||

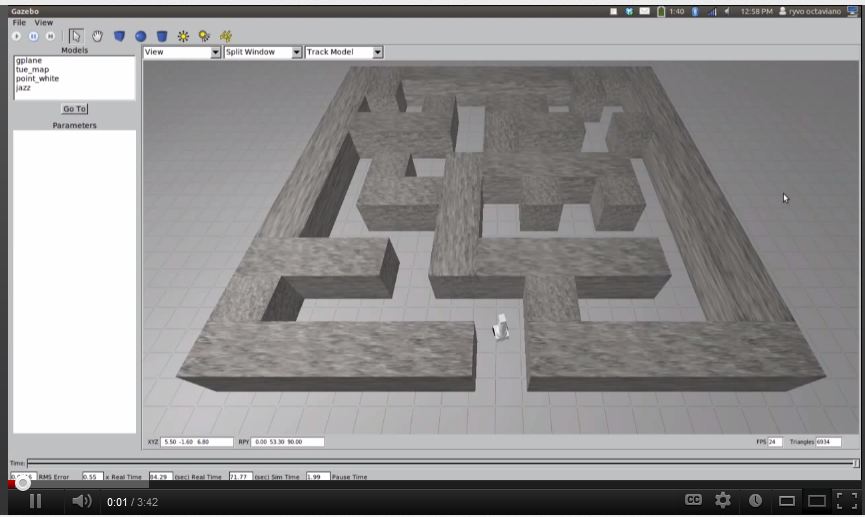

The last test was scheduled on Wednesday the 27th of June, two days before the contest. We wanted to test the final result with all the nodes integrated. A lot had changed since the first test a few weeks earlier, and we noticed that not everything worked as expected. We did not have enough time to find out exactly why, and we also had exams the following day, so we had to enter the contest knowing the robot was not going to do what it was supposed to, even though it performed very well in the simulator. For proof of this, see the video below. The video is sped up 5 times; the actual maximum speed of the Jazz robot in the simulator is 0.1. | |||

[[File:Gazebo.JPG|300px|thumb|center|http://www.youtube.com/watch?v=jQ5RrSp04a0]] <br> | |||

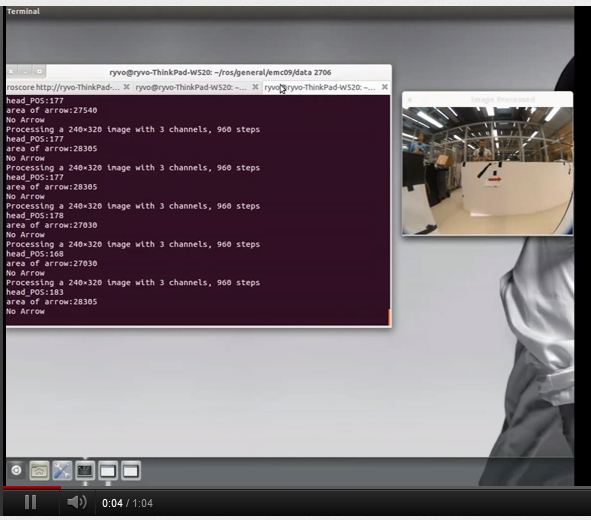

We also integrated the camera node in the third test. The image recognition of the arrow works really well, as can be seen in the video below. | |||

[[File:Camera.JPG|300px|thumb|center|http://www.youtube.com/watch?v=sGyVWsk4zSM]] <br> | |||

== '''Closing'''<br> == | |||

- In the final test, our Jazz robot did not work as we expected. <br> | |||

- Many thanks to our tutor, Jos Elfring. <br> | |||

Latest revision as of 01:27, 2 July 2012

Group Members :

- Ryvo Octaviano 0787614 r.octaviano@student.tue.nl

- Weitian Kou 0786886 w.kou@student.tue.nl

- Dennis Klein 0756547 d.klein@student.tue.nl

- Harm Weerts 0748457 h.h.m.weerts@student.tue.nl

- Thomas Meerwaldt 0660393 t.t.meerwaldt@student.tue.nl

Objective

The Jazz robot must find its way out of a maze in the shortest possible time

Requirements

The Jazz robot refrains from colliding with the walls in the maze

The Jazz robot detects arrows (pointers) to decide whether to turn left or right

Planning

An intermediate review will be held on June 4th, during the corridor competition

The final contest will be held some day between June 25th and July 6th

Progress Before Corridor Competition

Week 1

Make a group, find book & literature

Week 2

Installation :

1st laptop :

Ubuntu 10.04 (had an error in wireless connection : solved)

ROS Electric

Eclipse

Environmental setup

Learning :

C++ programming (http://www.cplusplus.com/doc/tutorial)

Chapter 11

Week 3

Installation :

SVN (Got the username & password on 8th May)

Learning :

ROS (http://www.ros.org/wiki/ROS/Tutorials)

Jazz Simulator (http://cstwiki.wtb.tue.nl/index.php?title=Jazz_Simulator)

To DO :

end of week 3

Finish setup for all computers

3 computers will use Ubuntu 11.10 and 1 computer uses Xubuntu 12.04

Week 4

We held a brainstorming session on which functions the software needs; the result is shown below.

Week 5

We made a rudimentary software map and started developing two branches of software based on different ideas. The software map will be discussed and revised in light of the results of the software that was written early in week 6. The group members responsible for the lecture started reading/researching chapter 11.

We decided to work on two branches of software in parallel, each developing a different idea. The first branch is based on the software map above. We started writing two nodes: one to process and publish laser data, and one controller node to calculate and follow a trajectory. The first node reads laser data from the /scan topic and processes it to detect whether the robot is driving straight or is in a corner, to detect and transmit corner coordinates, and to provide collision warnings. The second node is the controller, which uses odometry data to provide input coordinates (i.e. a trajectory) for the robot to follow. The controller implemented is a PID controller, which is still to be tuned. The I and D actions might not be needed in the end. The controller node will also use the laser data provided by the laser data node to plan the trajectory for the robot. A decision algorithm (i.e. go left or right) is still to be developed.

Week 6

Making slides for the presentation on Chapter 11: Timer

File:Chapter 11.pdf

Implementation So Far & Decision We Made

We use 7 laser beams at various angles, as shown below:

1. The 0 degree beam measures the distance between the robot and the wall in front of it; it is also used for collision avoidance

2. The -90 and 90 degree beams keep the robot pointing forward and keep its distance to the side walls

3. The -30, -60, 60 and 30 degree beams detect on which side (left or right) there is a free path

Robot Movement

We designed the movement of the robot as shown in the picture below. We want the robot to take a corner along a quarter circle, which saves time because that path is shorter than driving into the corner and turning 90 degrees on the spot.

Turn Left or Right

Turn T connection

Because we have not yet implemented camera vision to help the robot navigate, whether the robot turns right or left depends on the wall follower algorithm. If the Right Hand Rule is active the robot goes right, and vice versa.

Dead end

Avoid Dead end

The robot will avoid dead ends

Building map with Laser & Odometry

The map is used to determine the current location of the robot. In future development this map can also be used to implement Tremaux's Algorithm (http://www.youtube.com/watch?v=6OzpKm4te-E). We are trying to do that, so that our robot does not visit the same place twice.

Algorithm

There are many algorithms for solving a maze (http://en.wikipedia.org/wiki/Maze_solving_algorithm). The simplest idea that can be implemented easily is the Wall follower (Right Hand Rule or Left Hand Rule). We are trying to find the algorithm that gives us the shortest time to find the exit

a. We have already implemented the Wall follower algorithm

This would be fine except for two things.

1. Firstly, you may have to visit almost the entire maze before you find the exit so this can be a very slow technique.

2. Secondly, the maze may have islands in it. Unless all the walls are eventually connected to the outside, you may wander around forever without reaching the exit.

http://www.robotix.in/rbtx09/tutorials/m4d4

To solve this problem, we can use Camera for navigation

b. We also have already implemented the random mouse algorithm

But to make it more intelligent we want to add memory (Modified FloodFill), so the robot won't go to the same place twice (http://www.youtube.com/watch?v=DVB_twrqlu8)

As the cells are mapped with the numbers as shown in the figure, at each cell the robot is expected to take three decisions.

1. Move to cell which it has gone to least

2. Move to the cell that has minimum cell value

3. If possible the robot must try to go straight.

http://www.robotix.in/rbtx09/tutorials/m4d4
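The three rules above can be read as one ordered comparison between the reachable neighbouring cells. The sketch below is an interpretation under that assumption, not the group's code: the struct, its field names and the strict priority order (visits first, then cell value, then straight) are illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <vector>

// One reachable neighbouring cell (hypothetical representation).
struct Neighbour {
    int visits;     // how often the robot has already entered this cell
    int cellValue;  // flood-fill number written in the cell
    bool straight;  // true if the robot reaches it without turning
};

// Returns the index of the neighbour to move to: fewest visits first,
// then lowest cell value, then prefer going straight.
std::size_t chooseNeighbour(const std::vector<Neighbour>& options) {
    assert(!options.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < options.size(); ++i) {
        const Neighbour& a = options[i];
        const Neighbour& b = options[best];
        // !straight is false (0) for "straight", so straight sorts first.
        if (std::make_tuple(a.visits, a.cellValue, !a.straight) <
            std::make_tuple(b.visits, b.cellValue, !b.straight)) {
            best = i;
        }
    }
    return best;
}
```

Ties on all three criteria keep the first candidate in the list, so the order in which neighbours are listed acts as a final tie-breaker.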

Video Documentation

1. First Trial Mouse Algorithm

2. First Trial Right Hand Rule Algorithm

Choice between 2 approaches for corridor competition

1st approach (complex):

- Make Gmap with odometry and laser

- Detect arrow

- Decide left/right/straight (Navigation algorithm)

- Create trajectory (As in a tracking control problem)

- Follow trajectory (Tracking control problem)

- Collision detection

2nd approach (simple):

- Follow right wall

- Detect dead ends

- Make nice turns

- Detect arrows

Comparison:

- The 1st algorithm is smarter

- The 2nd algorithm is easier to implement

- The 2nd algorithm costs less time to implement

- The robustness of the 1st algorithm is unknown

- The 2nd algorithm will probably work most of the time

We chose the 2nd algorithm and continued the design/implementation process.

Corridor competition strategy

For the corridor competition we decided to focus on controlling the robot well, for straight driving and taking a corner. The camera node will be left for after the corridor competition because we will not use the camera for that.

Implementation for the corridor competition

For the corridor competition we implemented a very simple algorithm in which only seven laser beams are used: the 0, +/- 30, +/- 60 and +/- 90 degree beams. The 0 degree beam measures the distance to the wall in front of the robot, to detect the forward path and avoid head-on collisions. The beams at +/- 90 degrees keep the robot in the center of the corridor while driving. To detect free paths we use the +/- 30 and +/- 60 degree beams (two beams per side for robustness). All this was implemented in a simple while loop consisting of several if-statements. The parameters were tuned in the simulator and the algorithm seemed to function well enough to get the robot through the corridor competition.

Test before the corridor competition

We were scheduled for testing on the Friday afternoon before the corridor competition. During that test, we first had to figure out why the lasers did not behave as expected (the number of laser points on the robot differed from that in the simulator). After that we noticed the algorithm did not work as expected either. It was far less robust than we thought it would be and performed much worse than in the simulator. Despite this we did have a few successful runs among many attempts. Since this test was on Friday afternoon we had no chance to change the algorithm or adjust the parameters (we had no way of checking that a change would work on the real robot), so we left it at this and hoped the corridor competition would be one of those successful runs.

After the corridor competition

Unfortunately we were not lucky in the corridor competition: as expected, it did not go well. The main problem was taking corners, and driving straight did not go well either. The problem might be caused by communication delay, but it is more probably caused by the controller's lack of robustness. The solution is to improve the controller. The current controller is based on a nested if-then structure, so we need a different strategy.

Maze competition strategy

After the corridor competition we had a meeting in which we decided we needed a completely different approach, both for control and for corner detection. We decided on a program structure with three nodes: a controller node, a decision node and a camera node. They communicate as shown in the figure below.

Controller node

The laser measures 1081 distances, and one of these is the shortest. Our algorithm finds this shortest distance. The shortest measured distance is always perpendicular to the wall, so from the known angle of that laser point we can calculate the error angle and the error distance. The formulas below are for the left side only; the right side uses the negated values because the laser points to the right wall there.

[math]\displaystyle{ e_A = change\_index\_to\_degree(laser\_point) + 90 }[/math]

[math]\displaystyle{ e_D = 0.5 - shortest\_distance }[/math]
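As a rough illustration of the two error terms, the sketch below finds the shortest beam and applies the formulas above. The mapping from beam index to angle is an assumption: 1081 beams spread evenly over 270 degrees, with the middle beam pointing straight ahead. The names `indexToDegree`, `WallError` and `computeWallError` are hypothetical and are not taken from our node.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed scan layout (not stated in the text): the beams are spread evenly
// over 270 degrees, so index 0 is at -135 degrees and the middle index at 0.
double indexToDegree(std::size_t index, std::size_t beamCount = 1081,
                     double fieldOfViewDeg = 270.0) {
    assert(beamCount > 1 && index < beamCount);
    return -fieldOfViewDeg / 2.0 +
           fieldOfViewDeg * static_cast<double>(index) /
               static_cast<double>(beamCount - 1);
}

struct WallError {
    double angleDeg;  // e_A, in degrees
    double distance;  // e_D, in the same unit as the ranges
};

// Applies e_A = angle(shortest beam) + 90 and e_D = 0.5 - shortest distance.
WallError computeWallError(const std::vector<double>& ranges,
                           double desiredDistance = 0.5) {
    assert(ranges.size() > 1);
    const auto shortest = std::min_element(ranges.begin(), ranges.end());
    const std::size_t index =
        static_cast<std::size_t>(shortest - ranges.begin());
    return {indexToDegree(index, ranges.size()) + 90.0,
            desiredDistance - *shortest};
}
```

With this mapping the error angle is 0 when the shortest beam is the one at -90 degrees. Whether that beam points to the left or to the right on the real robot depends on the scan direction of the laser, which is not stated here.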

We want the velocity of the robot to be 0 when it faces the wall and at full speed when it drives straight along it. The cosine of the error angle has exactly this behaviour. The angular velocity has to steer the robot to the center of the corridor while also correcting the error angle. These two tasks cannot both be done perfectly at the same time, so we made a controller that trades them off with tunable parameters. We use the following control law:

[math]\displaystyle{ Velocity = K_1 cos(e_A) }[/math]

[math]\displaystyle{ Angular = K_2 e_A + K_3 e_D }[/math]

The velocity will be 0 when the robot faces the wall, and it will be maximum when the robot is straight. The angular velocity makes the robot steer towards the middle when it is at the side of the corridor. When the robot is in the middle of the corridor it will steer the robot straight. This controller is currently working in the simulator and we tested it on the real robot (video at the bottom of the page).
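A minimal sketch of this control law is given below. The function name `wallFollowCommand` and the default gains are placeholders chosen for illustration, not our tuned values. The error angle is kept in degrees as in the formulas and is only converted to radians for the cosine.

```cpp
#include <cassert>
#include <cmath>

struct VelocityCommand {
    double linear;   // forward velocity
    double angular;  // rotational velocity
};

// Velocity = K1 * cos(e_A), Angular = K2 * e_A + K3 * e_D.
// The default gains are placeholders, not tuned values.
VelocityCommand wallFollowCommand(double errorAngleDeg, double errorDistance,
                                  double k1 = 0.1, double k2 = 0.01,
                                  double k3 = 1.0) {
    assert(std::isfinite(errorAngleDeg) && std::isfinite(errorDistance));
    const double kPi = std::acos(-1.0);
    const double errorAngleRad = errorAngleDeg * kPi / 180.0;
    return {k1 * std::cos(errorAngleRad),
            k2 * errorAngleDeg + k3 * errorDistance};
}
```

The ratio between K2 and K3 is the trade-off mentioned above: a larger K2 favours aligning with the wall, a larger K3 favours returning to the desired distance.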

This controller can also be used to turn corners. When the corner command is given, one side of the lasers (left half or right half) is ignored, so the robot only follows the wall on one side. When that wall has a corner in it, the robot follows it around, because the shortest distance to the wall is then the distance to the corner point. The robot drives through the corner at a distance of 0.5 m from the corner point.
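Ignoring one half of the scan could look roughly like the sketch below, which searches for the shortest beam in only one half of the range array. The names `ScanHalf` and `shortestBeamInHalf` are hypothetical, and which half corresponds to the left or the right wall is left open because it depends on the scan direction of the laser.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

enum class ScanHalf { First, Second };

// Returns the index of the shortest beam, looking only at one half of the
// scan. The other half is ignored, as during a corner command.
std::size_t shortestBeamInHalf(const std::vector<double>& ranges,
                               ScanHalf half) {
    assert(ranges.size() >= 2);
    const std::size_t middle = ranges.size() / 2;
    const std::size_t begin = (half == ScanHalf::First) ? 0 : middle;
    const std::size_t end = (half == ScanHalf::First) ? middle : ranges.size();
    const auto first = ranges.begin() + static_cast<std::ptrdiff_t>(begin);
    const auto last = ranges.begin() + static_cast<std::ptrdiff_t>(end);
    return static_cast<std::size_t>(std::min_element(first, last) -
                                    ranges.begin());
}
```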

Decision node

The decision node serves two purposes: the first is detecting corners and the second is deciding which direction the robot should take.

The decision depends on three factors: the rule in effect (left-hand or right-hand rule), the available paths, and any detected arrow. Depending on the rule in effect, one direction takes precedence over the other when both paths are free. The direction opposite to the rule is only taken when the forward path and the direction of the rule are both blocked, or when an arrow indicating that direction is detected. When all paths are blocked, a dead end is detected. The direction to be taken is sent to the controller node.
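The decision logic can be sketched as a small priority list. Two points in the sketch are assumptions and not stated above: an arrow is only obeyed when the path it points to is free, and the direction of the rule is tried before the forward path (as in standard wall following). All type and function names are hypothetical.

```cpp
#include <cassert>

enum class Direction { Left, Forward, Right, DeadEnd };
enum class Arrow { None, Left, Right };

struct FreePaths {
    bool left;
    bool forward;
    bool right;
};

Direction chooseDirection(bool leftHandRule, const FreePaths& free,
                          Arrow arrow) {
    // Assumption: a detected arrow overrides the rule if that path is free.
    if (arrow == Arrow::Left && free.left) return Direction::Left;
    if (arrow == Arrow::Right && free.right) return Direction::Right;

    const bool ruleFree = leftHandRule ? free.left : free.right;
    const bool oppositeFree = leftHandRule ? free.right : free.left;
    const Direction ruleDir =
        leftHandRule ? Direction::Left : Direction::Right;
    const Direction oppositeDir =
        leftHandRule ? Direction::Right : Direction::Left;

    if (ruleFree) return ruleDir;                 // rule direction first
    if (free.forward) return Direction::Forward;  // assumed second choice
    if (oppositeFree) return oppositeDir;         // only when the rest is blocked
    return Direction::DeadEnd;                    // all paths blocked
}
```

For example, with the left-hand rule and only the forward and right paths free, the sketch returns `Direction::Forward`.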

The detection of corners works by detecting the 'corner points'. This is done by first correcting the data of all laser beams and then looking for jumps in the value.

[math]\displaystyle{ laser\_corrected = laser\_data * sin(angle\_of\_laser\_data) }[/math]

The data is now normalized to lines parallel to the robot. A gap in the wall or a corner is characterized by a jump in the value of the data. We look for jumps bigger than 0.55 to find the corner points. When a decision about the direction has been taken we start turning (see controller node). The turn is stopped when the odometry yaw value has changed by more than 70 degrees and no more turns are seen. The last condition makes sure we do not 'see' the same turn again right after taking it.
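The correction, the jump search and the stop condition for a turn can be sketched as follows. The sketch assumes that each beam angle is given in radians relative to the driving direction and that the yaw is available in degrees; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// laser_corrected = laser_data * sin(angle_of_laser_data).
// Assumption: anglesRad[i] is the angle of beam i relative to the driving
// direction, in radians.
std::vector<double> correctScan(const std::vector<double>& ranges,
                                const std::vector<double>& anglesRad) {
    assert(ranges.size() == anglesRad.size());
    std::vector<double> corrected(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        corrected[i] = ranges[i] * std::sin(anglesRad[i]);
    }
    return corrected;
}

// Returns every index i where the corrected value jumps by more than
// jumpThreshold between beam i - 1 and beam i.
std::vector<std::size_t> findCornerPoints(const std::vector<double>& corrected,
                                          double jumpThreshold = 0.55) {
    std::vector<std::size_t> corners;
    for (std::size_t i = 1; i < corrected.size(); ++i) {
        if (std::fabs(corrected[i] - corrected[i - 1]) > jumpThreshold) {
            corners.push_back(i);
        }
    }
    return corners;
}

// A turn ends once the yaw has changed by more than minTurnDeg and no
// corner is visible any more.
bool turnFinished(double startYawDeg, double currentYawDeg, bool cornerVisible,
                  double minTurnDeg = 70.0) {
    // Wrap the difference into [-180, 180) so a jump across +/-180 degrees
    // in the odometry yaw is not counted as a large turn.
    double diff = std::fmod(currentYawDeg - startYawDeg + 180.0, 360.0);
    if (diff < 0.0) diff += 360.0;
    diff -= 180.0;
    return std::fabs(diff) > minTurnDeg && !cornerVisible;
}
```

For a straight wall the corrected values are constant, so only a gap or a corner produces a jump above the threshold.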

Camera node

At least two steps are needed for arrow detection: segmenting the red arrow from a complex background and detecting its direction. The details follow, with picture frame0008.jpg from the video as an example.

Segment red arrow

- Segment the red part from the original picture. Since pictures in OpenCV can easily be represented in both RGB and HSV and converted between the two, we tried two filtering methods, one based on RGB and one on HSV, and compared the results to choose the one with the better performance.

In the RGB colorspace, the three color channels can be split with the cvSplit() function in OpenCV. Because white is a combination of red, blue and green, white parts also show up in each of the three single channels. We therefore approximate [math]\displaystyle{ realRed = cRed - cBlue - cGreen }[/math] to remove the influence of white. After that, cvThreshold() makes the picture binary and filters out dark points below a set threshold.

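    cvSplit(img, cBlue, cGreen, cRed, NULL);                    // split the BGR image into single channels
    cvAdd(cBlue, cGreen, cGreen);                               // cGreen = cBlue + cGreen
    cvSub(cRed, cGreen, cRed);                                  // cRed = cRed - (cBlue + cGreen)
    cvThreshold(cRed, cRed, REDBOUND, 255, CV_THRESH_BINARY);   // keep only pixels above REDBOUND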
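To make the effect of these four calls concrete, the sketch below performs the same arithmetic on a single 8-bit pixel without OpenCV. The function `redMaskPixel` is hypothetical; the default threshold of 10 is the REDBOUND value used in our comparison.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Per-pixel version of the four calls above for 8-bit channels.
// cvAdd and cvSub saturate, so the sum is clipped to 255 and the
// difference to 0.
std::uint8_t redMaskPixel(std::uint8_t blue, std::uint8_t green,
                          std::uint8_t red, int redBound = 10) {
    const int blueGreen = std::min(255, static_cast<int>(blue) + green);
    const int realRed = std::max(0, static_cast<int>(red) - blueGreen);
    // CV_THRESH_BINARY: 255 if the value is above the threshold, else 0.
    return static_cast<std::uint8_t>(realRed > redBound ? 255 : 0);
}
```

A white pixel (255, 255, 255) ends up at 0 because the blue and green channels cancel the red one, while a saturated red pixel stays above the threshold.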

To work in the HSV colorspace, we first use cvCvtColor() to convert BGR to HSV and then select the red part of interest with cvInRangeS() and cvOr(). Two hue ranges are used because the hue axis of HSV is a circle, and red lies at both ends of it.

    cvInRangeS(imgHSV, Low1, High1, imageArrowPixels1);
    cvInRangeS(imgHSV, Low2, High2, imageArrowPixels2);
    cvOr(imageArrowPixels1, imageArrowPixels2, imageArrowPixels);
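The need for two ranges can be illustrated with a per-pixel check that does not depend on OpenCV. In 8-bit OpenCV HSV images the hue runs from 0 to 179, so red appears both near 0 and near 179. The bounds below are placeholders and not the values of Low1, High1, Low2 and High2 in our code, and the sketch treats both bounds as inclusive for simplicity.

```cpp
#include <cassert>

struct HsvRange {
    int hLow, hHigh;  // hue bounds (0..179 for 8-bit images)
    int sLow, sHigh;  // saturation bounds
    int vLow, vHigh;  // value bounds
};

// Simplified range check with inclusive bounds on all three channels.
bool inRange(int h, int s, int v, const HsvRange& r) {
    return h >= r.hLow && h <= r.hHigh && s >= r.sLow && s <= r.sHigh &&
           v >= r.vLow && v <= r.vHigh;
}

// Red wraps around the hue circle, so two ranges are combined with OR.
bool isRedPixel(int h, int s, int v) {
    // Placeholder bounds chosen for illustration only.
    static const HsvRange lowReds{0, 10, 100, 255, 50, 255};
    static const HsvRange highReds{170, 179, 100, 255, 50, 255};
    return inRange(h, s, v, lowReds) || inRange(h, s, v, highReds);
}
```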

With REDBOUND in the RGB method set to 10 and the boundaries in the HSV method set to a relatively large range, the segmentation result is shown below. The left picture is from the RGB method and the right one from the HSV method, which has less noise (fewer small areas). After trying all the samples from the video, we found that any REDBOUND that segments all the arrows always brings more noise than the HSV method. We therefore chose the second method.

- Remove all the environment noise.

To get rid of the influence of a more complex environment, we want to remove all the environment noise. For this we found a very useful blob extraction library for OpenCV, cvBlobsLib. It provides functions to manipulate, filter and extract results from the extracted blobs. We can use blobs.Filter to remove blobs with small areas, then select the biggest blob as the arrow and fill it with white. In this way only one blob is left in the picture, and as long as there is no other large red object in the environment, we can safely assume that the remaining area is the arrow. As can be seen below, this filter removes all the noise very well.

However, we ran into problems when we implemented the blob filter in ROS, because cvBlobsLib is not an internal library of OpenCV. Since we did not want to spend too much time fixing this, we tried a simple filter as a substitute. In this method, cvErode() and cvDilate() are used to remove small groups of pixels. Because our HSV filter in the first step is good enough (only quite small pieces are left), this simple additional filter also works well, as the following picture shows.
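The idea behind the erode and dilate combination can be sketched on a plain binary mask. This is not the OpenCV implementation: it uses a fixed 3x3 neighbourhood and, as a simplification, treats pixels outside the image as background. The names `Mask`, `morph3x3` and `removeSmallSpecks` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Mask = std::vector<std::vector<std::uint8_t>>;

// 3x3 erosion (erode = true) or dilation (erode = false) of a binary mask.
// Simplification: pixels outside the image count as background.
Mask morph3x3(const Mask& in, bool erode) {
    const int rows = static_cast<int>(in.size());
    const int cols = rows > 0 ? static_cast<int>(in[0].size()) : 0;
    Mask out(in.size(),
             std::vector<std::uint8_t>(static_cast<std::size_t>(cols), 0));
    for (int r = 0; r < rows; ++r) {
        assert(static_cast<int>(in[static_cast<std::size_t>(r)].size()) == cols);
        for (int c = 0; c < cols; ++c) {
            int white = 0;  // white pixels in the 3x3 neighbourhood
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr;
                    const int cc = c + dc;
                    if (rr >= 0 && rr < rows && cc >= 0 && cc < cols &&
                        in[static_cast<std::size_t>(rr)]
                          [static_cast<std::size_t>(cc)] != 0) {
                        ++white;
                    }
                }
            }
            // Erosion keeps a pixel only if all 9 neighbours are white,
            // dilation sets it if at least one neighbour is white.
            const bool keep = erode ? (white == 9) : (white > 0);
            out[static_cast<std::size_t>(r)][static_cast<std::size_t>(c)] =
                static_cast<std::uint8_t>(keep ? 255 : 0);
        }
    }
    return out;
}

// Erode first to delete small specks, then dilate to restore what is left.
Mask removeSmallSpecks(const Mask& in) {
    return morph3x3(morph3x3(in, true), false);
}
```

A single stray pixel disappears in the erosion step and cannot be restored by the dilation, while a larger region shrinks and then grows back to roughly its original size.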

Detect direction

At first we considered finding the corners of the arrow. However, because the filter above is relatively strict, the final arrow we get is not very accurate, i.e. it has no sharp angles, so we cannot get the correct number of corners. Based on the direct observation that the arrow head should be "heavier" than the arrow tail, we decided to use areas to detect the direction. The problem then becomes finding a proper dividing line in the arrow and comparing areas.

- Find dividing line.

In this part we obtain the approximate position of the arrow head by calculating the position of the longest vertical line: as long as the arrow plane is approximately parallel to the camera, the longest vertical line is always on the arrow head side. Thanks to Royce from group 3 for this idea.

- Calculate the areas of the left and right parts and get the direction by comparing the two area values.

To separate the two parts we use a Region of Interest (ROI) in OpenCV, and we use cvMoments() to easily calculate the areas. Since the dividing line lies at the arrow head, the smaller area indicates the arrow direction. To get a more accurate result, we again filter out arrows with small areas, which means an arrow can only be detected when it is large enough in Jazz's eyes.
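The two steps above can be sketched on a binary mask without OpenCV. The sketch interprets the longest vertical line as the column with the most white pixels, which is an assumption, and it counts pixels instead of calling cvMoments(). The minimum area of 10 pixels and all names are placeholders.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Mask = std::vector<std::vector<std::uint8_t>>;
enum class ArrowDirection { None, Left, Right };

ArrowDirection detectArrowDirection(const Mask& mask,
                                    std::size_t minArea = 10) {
    if (mask.empty() || mask[0].empty()) return ArrowDirection::None;
    const std::size_t cols = mask[0].size();

    // Count the white pixels in every column.
    std::vector<std::size_t> columnCount(cols, 0);
    for (const auto& row : mask) {
        assert(row.size() == cols);
        for (std::size_t c = 0; c < cols; ++c) {
            if (row[c] != 0) ++columnCount[c];
        }
    }

    // The dividing line is the column with the most white pixels.
    std::size_t divider = 0;
    std::size_t total = 0;
    for (std::size_t c = 0; c < cols; ++c) {
        total += columnCount[c];
        if (columnCount[c] > columnCount[divider]) divider = c;
    }
    if (total < minArea) return ArrowDirection::None;  // arrow too small

    // Compare the areas on both sides of the dividing line.
    std::size_t leftArea = 0;
    std::size_t rightArea = 0;
    for (std::size_t c = 0; c < cols; ++c) {
        if (c < divider) leftArea += columnCount[c];
        if (c > divider) rightArea += columnCount[c];
    }
    if (leftArea == rightArea) return ArrowDirection::None;
    // The dividing line lies at the head, so the smaller side is the tip.
    return leftArea < rightArea ? ArrowDirection::Left
                                : ArrowDirection::Right;
}
```

Returning `ArrowDirection::None` for small or ambiguous blobs mirrors the extra area filter described above: it is better to report no arrow than a wrong one.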

We tested this code on all the samples as well as on the video we made ourselves. The results are quite good, but wrong detections still occur when Jazz moves too sharply, so an accurate controller is needed to move Jazz smoothly.

Arrow testing video : from bag file

Results first test

The first test was scheduled on Wednesday the 13th of June. We mainly tested the controller node because the decision node and the camera node weren't ready yet. The result was pretty good and showed that the algorithm works the way it should. There is a small issue: the robot starts to stray when the corridor width varies a little, which is caused by the algorithm. The robot tries to stay at 0.5 m from both walls; when the corridor is narrower than 1 m it has to choose between the walls and shows undesirable behaviour. A solution would be to either decrease the required distance to the wall or add a dead zone.
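A dead zone on the distance error could look like the sketch below. It was not part of the tested controller; the function name `applyDeadZone` and the idea of applying it to the distance error before the control law are assumptions made for illustration.

```cpp
#include <cassert>

// Returns 0 while the error is inside [-halfWidth, halfWidth]. Outside the
// dead zone the error is shifted so that the output stays continuous.
double applyDeadZone(double error, double halfWidth) {
    assert(halfWidth >= 0.0);
    if (error > halfWidth) return error - halfWidth;
    if (error < -halfWidth) return error + halfWidth;
    return 0.0;
}
```

With a half width of 0.05, for example, a distance error of 0.03 would no longer cause any steering, so small variations in corridor width would not make the robot switch between the walls.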

The wall following algorithm also takes corners when part of the laser data is ignored. This means that the robot navigates based only on the left side lasers when turning left (it only sees the left wall), and vice versa. During testing we noticed that the robot takes the corner a bit too wide and corrects itself afterwards (overshoot). This could become a problem at higher speeds; a solution could be to shorten the distance to the wall on the inside of the corner.

A video of the trial run is shown here. The decision to take corners is made by hand, but driving through the corner is autonomous.

Results second test

Our second test was scheduled on Wednesday the 20th of June. Unfortunately testing wasn't possible then because the setup didn't work. This was a setback because we had implemented a lot of new features, and the next (and last) opportunity to test was only two days before the final contest.

Results third test

The last test was scheduled on Wednesday the 27th of June, two days before the contest. We wanted to test the final result with all the nodes integrated. A lot had changed since the first test a few weeks earlier, and we noticed that not everything worked as expected. We did not have enough time to find out exactly why and also had exams the following day, so we had to enter the contest knowing the robot wasn't going to do what it was supposed to, even though it performed very well in the simulator. For proof of this, see the video below. The video is sped up 5 times; the actual maximum speed of the Jazz robot in the simulator is 0.1.

We also integrated the camera node in the third test. The image recognition of the arrow works really well, as can be seen in the video below.

Closing

- In the final test, our Jazz robot did not perform as we expected.

- Many thanks to our tutor, Jos Elfring.