PRE2015 4 Groep4


=Introduction=

In large hospitals, elderly patients and visitors may have trouble reading or understanding the navigation signs. They may therefore go to the information desk to ask human caregivers for help. A more efficient alternative could be robots that guide elderly patients and visitors safely to their destination in the hospital. This leaves human caregivers free to help elderly people with more serious problems that robots cannot solve, such as helping someone stand up after a fall. Once a map of the hospital is programmed into the robot, it can easily guide elderly people to their destination. Even if the patient does not know what to do or where to go, the robot gives clear instructions, for example through a touchscreen or speakers.

=Scenario=

An old man wants to visit his wife in the hospital. Because of the abbreviations used for locations and his hunched back, he has trouble reading the signage, so he asks for help at the information desk. There he is directed to a guiding robot. The robot carries a touchscreen at eye level, which asks the user to press "start". The man presses start and the robot asks him to put on the smart bracelet. This request is also displayed on the screen, so that people with hearing problems can follow the instructions too. The old man puts on the bracelet and presses "proceed". The robot then asks where the man wants to go and uses voice recognition to identify his destination.

The robot guides the man through the hospital to his destination. Thanks to the smart bracelet, the robot can keep track of the distance between the user and itself. When the distance becomes too large, the robot adjusts its speed.

On arrival at the destination, the robot asks the user whether the desired location has been reached. If so, the robot leaves the user. When the user wants to leave the hospital again, he can summon a robot using his smart bracelet. When a robot has finished a guiding task, it checks whether other people are trying to summon a robot; otherwise it returns to its base to recharge.

=Users=

'''Primary users'''

Since the robot operates in a hospital environment, users want to be treated with care. We therefore identified a few things that the primary users need.

First of all, the robot needs to behave in a friendly way: its way of communicating with the user should be as neutral as possible, so that the user feels at ease with the robot. Secondly, the robot should be able to adjust its speed to the needs of the user; younger people, for instance, tend to walk faster than elderly people. The calculated route should be as safe and passable for the user as possible, with efficiency as the second priority, so that reaching the user's goal does not take longer than necessary. Finally, the user needs a way to contact a human when necessary. If, for example, the robot gets lost together with the user, it must be able to report this to a human operator so that the user can be helped.

'''Secondary users'''

Friends and family of the elderly target group can benefit from the robots, because they no longer need to fit the appointments of the elderly into their own schedule or accompany them to their destination in the hospital: the elderly can go by themselves. Caregivers can also benefit, because they can focus on their more important care tasks instead of simply guiding elderly people to a destination.

'''Tertiary users'''

Tertiary users work with the robots only indirectly, but the robots still require adjustments in their fields, such as design, construction, insurance and ethics. Industry involved in the robot business could benefit financially and scientifically from the use of these robots.

Although the needs of the primary users are key, the needs of the secondary and tertiary users should be considered as well to ensure that the robot is widely accepted.

=Stakeholders=

Stakeholders are those who are involved in the development of the system. The two main stakeholders are the technology developers and the hospitals. The hospital can be divided into several stakeholders: the workers (nurses, doctors and receptionists), the visitors, the hospital board and the patients. The primary stakeholders are the visitors, the workers and the developers; the secondary stakeholders are the government, the board and the patients.

Collaboration between developers, users and workers is needed.

The effect on hospitalised patients must be considered: they must not be bothered by the technology being used in the hallways.

=Requirements=

The following requirements apply to the guiding robot:

*The robot has to be able to reach every possible destination. It should therefore be able to pass through doors (doors in hospitals open automatically) and use elevators.

*The robot should be easy to use: figuring out how the robot works should be easier and quicker than figuring out how to get from A to B yourself. A user-friendly interface should therefore be designed.

*The robot should be able to calculate the optimal route to the destination. These routes can be preprogrammed, since the map of a hospital does not change.

*It should be clear to the user that the robot is guiding him or her to the desired destination.

*It should be easy to follow the robot.

*The robot has to be able to detect whether the user is following it.

*The robot gives clear visual and audible feedback, so that the user knows what the robot has understood and what it will do next.

*The robot is able to track its location in the hospital.

*The guiding robot should have a motor that is strong enough.

*The battery life of the robot should allow it to work for several hours continuously.

*The robot has to be able to avoid collisions.

*The patient should feel comfortable near the robot. Interaction with the robot should feel natural.

*The robot should have a low failure rate; it should work most of the time.

*If the robot stops working, or in case of an emergency, it should be possible for the patient to contact help.

*The design of the robot should fit in the hospital. It must be easy to clean, as sterile as possible, and pose a low risk of people hurting themselves on it.
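The route requirement can be illustrated with a minimal sketch in C, the language of the robot code on this page. It assumes, hypothetically, that the hospital map is stored as a small graph of corridor junctions with corridor lengths in metres; because the map does not change, the shortest routes could be computed once with Dijkstra's algorithm and stored. The names `shortest_route` and `MAX_NODES` are our own.

```c
#include <assert.h>
#include <float.h>

#define MAX_NODES 16 /* hypothetical upper bound on the number of junctions */

/* Dijkstra's shortest-path algorithm on an adjacency matrix.
 * dist[i][j] > 0 is the corridor length (m) between junctions i and j;
 * 0 means there is no direct corridor. prev[v] receives the junction before v
 * on the shortest route (-1 for the start and for unreachable junctions).
 * Returns the route length to goal, or -1.0 if goal cannot be reached. */
double shortest_route(int n, double dist[MAX_NODES][MAX_NODES],
                      int start, int goal, int prev[MAX_NODES])
{
    double best[MAX_NODES]; /* best known distance from start */
    int done[MAX_NODES];    /* 1 once the distance of a junction is final */

    assert(n > 0 && n <= MAX_NODES);
    assert(start >= 0 && start < n && goal >= 0 && goal < n);
    for (int i = 0; i < n; i++) {
        best[i] = DBL_MAX;
        done[i] = 0;
        prev[i] = -1;
    }
    best[start] = 0.0;

    for (int iter = 0; iter < n; iter++) {
        /* select the closest junction whose distance is not final yet */
        int u = -1;
        for (int i = 0; i < n; i++)
            if (!done[i] && (u < 0 || best[i] < best[u]))
                u = i;
        if (best[u] == DBL_MAX)
            break; /* all remaining junctions are unreachable */
        done[u] = 1;
        /* relax every corridor leaving u */
        for (int v = 0; v < n; v++) {
            if (dist[u][v] > 0.0 && !done[v] && best[u] + dist[u][v] < best[v]) {
                best[v] = best[u] + dist[u][v];
                prev[v] = u;
            }
        }
    }
    return best[goal] == DBL_MAX ? -1.0 : best[goal];
}
```

Following `prev` backwards from the goal gives the route, which could then be stored as a list of waypoints like the paths in the [[#Code|Code]] section.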

== | =State-of-the-art= | ||

==Human-Robot Interaction== | |||

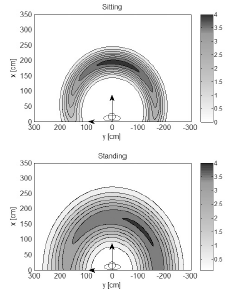

Several experiments have been done to determine the optimal distance between a robot and a user. Torta [7] conducted an experiment in which participants were asked to stand still, although they could move their head freely. The robot approached them from different directions, and the participants stopped the robot by pressing a button when the distance felt most comfortable. Afterwards they rated the direction from which the robot had approached them on a 5-point Likert scale, answering the question: "How did you find the direction at which the robot approached you?" The experiment was also carried out with the participants sitting. The results of these experiments are shown in the figure below.

[[File:Personal space model.png]]

These experiments show that the mean optimal approach distance is 173 cm when the user is standing and 182 cm when he or she is sitting. This is significantly larger than the preferred distance between humans (45 cm to 120 cm).

These results show that a following scenario should be tested with a real robot, because the preferred distances in human-human interaction differ from those in human-robot interaction. The values obtained in these experiments cannot be used directly, because the robot used (Nao) is much smaller than our guiding robot and the values apply to approaching scenarios, not to guiding scenarios.

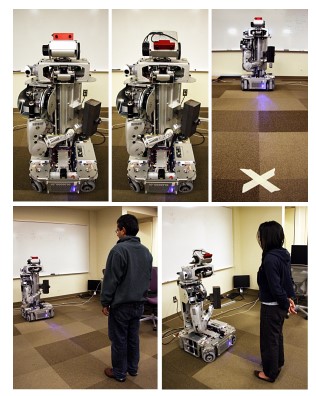

More experiments on proxemic behaviour in human-robot interaction have been conducted by Takayama and Pantofaru [8]. Each participant was asked to stand on an X marked on the floor, 2.4 meters from the front of the robot. The robot faced the participant directly, as shown in the figure below.

[[File:experiment takayama.jpg]]

For half of the participants, the robot's head was tilted to look at their face in round 1 and at their legs in round 2; for the other half the order was reversed. The participants were asked to move forward as far as they felt comfortable. Then the robot approached the participants, and when it came too close for comfort, the person had to step aside. Afterwards the participants filled in a questionnaire, and the procedure was repeated with the robot's head tilted the other way.

This experiment identified several factors that influence human-robot interaction. Experience with owning pets and experience with robots both decrease the personal space that people maintain around robots. Women maintain larger personal spaces than men from robots that are looking at their faces. It does not matter whether people approach the robot or let the robot approach them.

==Guiding robot==

Several projects on guiding robots have already been carried out. One of these is the SPENCER project. SPENCER is a socially aware service robot for guiding passengers at busy airports; it can track individuals and groups at Schiphol airport. SPENCER uses a variety of sensors and detection methods, such as a 2D laser detector, a multi-hypothesis tracker (MHT), a HOG-based detector and an RGB-D sensor. SPENCER is designed for a large environment, and there is no technology yet that provides similar detection quality in a smaller form. Nevertheless, SPENCER provides a good basis, and some of its technologies for recognising persons and groups could be used to avoid collisions with people moving about.

Another project is described in Akansel Cosgun's paper [6]: a navigation guidance system that guides a human to a goal point, using a vibro-tactile belt interface and a stationary laser scanner. Leg detection with a laser scanner placed at ankle height is common practice for robots, but in cluttered environments it was found to be prone to false positives. To reduce these false positives, an approach was tried that uses multiple layers of laser scans, usually at torso height in addition to ankle height. Studies showed that the upper-body tracker achieved better results than the leg tracker, thanks to its simpler linear motion model compared with a walking model. This technology is convenient for tracking people in general, but not for tracking one specific person such as our elderly user, so it is less suitable for tracking the user in our setting.
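To make the term "linear motion model" concrete: a tracker with such a model predicts the next position of a person by assuming a constant velocity, which fits the relatively smooth motion of the torso, whereas the alternating legs need a more complex walking model. The C sketch below shows only that prediction step; it is our own illustration with hypothetical names (`Track`, `predict_linear`), not code from the cited paper.

```c
#include <assert.h>

/* State of a tracked person in the plane under a constant-velocity
 * (linear) motion model: position in metres, velocity in m/s. */
typedef struct {
    double x, y;
    double vx, vy;
} Track;

/* Predict the state dt seconds ahead, assuming the velocity stays
 * constant during that interval (the velocity itself is unchanged). */
Track predict_linear(Track s, double dt)
{
    assert(dt >= 0.0);
    s.x += s.vx * dt;
    s.y += s.vy * dt;
    return s;
}
```

A full tracker would combine this prediction with the next laser measurement, for example in a Kalman filter.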

=USE-aspects=

Below, the USE aspects of our guiding robot are described.

==Users==

The users of the new guiding robot are mainly elderly people who have trouble finding their way in the hospital. Without this robot they would have to ask employees to guide them. Employees may not have time to guide them all the way to their destination, so elderly people can still get lost if they do not fully understand the employees' instructions, and may arrive late for an appointment as a result.

It is important that the users feel comfortable near the robot; otherwise they may refuse to let the robot guide them.

==Society==

Our society is aging, so the ratio between elderly and young people is changing and fewer young caregivers will be available for the older people who need care. Deploying robots for simple tasks reduces the need for caregivers, because caregivers can work more efficiently when they only have to focus on the more complex tasks. The growing demand for caregivers is thereby reduced, while the elderly still get the care they need.

==Enterprise==

The robot can help patients who have trouble finding their way to arrive on time, so fewer patients would be late for an appointment. This may make the hospital more efficient and could reduce costs. Industry involved in the robot business could also benefit financially and scientifically from the use of robots. The robot industry will grow, creating more jobs for engineers who develop and improve such robots.

= Objectives =

Below, the objectives of the system and of the final presentation are explained.

==Objectives of the system==

* Guiding elderly patients and visitors of a hospital safely, comfortably and quickly to their destination

* Reducing the number of human caregivers needed to guide patients and visitors, so they have their hands free to help patients with more serious problems

* Keeping lonely elderly people company, so they have someone to interact and talk with

* Keeping track of the user

* Avoiding obstacles

* Providing a suitable solution in case the robot malfunctions

* Using a touchscreen with an app and speakers to communicate with the user and to give instructions

==Objectives of presentation==

To present our design, we are going to use a Turtle robot from Tech United. Further information can be found in [[#Robot|Robot]].

*The Turtle will guide a person from A to B to C, etc. on the soccer field.

*The Turtle will react to the position of the user and will give signals, for example when it is about to turn left.

An [[#User interface|app]] is made for the first interaction with the robot.
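In the [[#Code|Code]] section, the Turtle's signals are sound files that are played at most once every `sound_timeout` seconds (3 s), so that the same message is not repeated continuously. That rate-limiting idea can be isolated in a small C sketch; `CueLimiter` and `should_play_cue` are hypothetical names, and the cue codes follow the `path_o` values used there (0 = no sound).

```c
#include <assert.h>
#include <stddef.h>

/* Remembers when the last sound cue was played. */
typedef struct {
    double last_played; /* time (s) of the last cue; start far in the past */
    double timeout;     /* minimum time (s) between two cues */
} CueLimiter;

/* Returns 1 if the cue should be played at time `now` (s) and records that
 * time; returns 0 if there is nothing to announce (cue == 0) or if the last
 * cue was played no more than `timeout` seconds ago. */
int should_play_cue(CueLimiter *lim, int cue, double now)
{
    assert(lim != NULL);
    if (cue == 0)
        return 0;
    if (now - lim->last_played <= lim->timeout)
        return 0;
    lim->last_played = now;
    return 1;
}
```

Sharing one limiter between all messages, as the robot code does with its variable `t`, also prevents two different messages from overlapping.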

= Tasks and approach (new; after week 3)=

'''Robot'''

A decision had to be made between using an existing [[#Robot|robot]] (for example the AMIGO or a RoboCup robot) and building our own robot with an Arduino, motor, battery, wheels, webcam, sensors and platform. We chose a RoboCup robot for the following reasons:

* It is more robust than the AMIGO, since it has bumpers.

* Navigation is already implemented; we only need a map of the hospital.

* Obstacle avoidance is already implemented, since RoboCup robots avoid each other when they play football. We have to define what is an obstacle and what is not.

What we still have to implement is the interaction between robot and user. The robot gives instructions through a speaker and a touchscreen. If the elderly person stops following the robot, because he or she stops walking or walks in another direction, the robot should communicate with the user or with the information desk, so that a human caregiver can take over guiding the user.
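One way to decide when the information desk should be called is sketched below in C, under assumptions of our own: the robot stops when the user is 2 m or more away (the `stopDist` used in the [[#Code|Code]] section), first calls the user back, and contacts the desk only if the user has stayed out of range for a hypothetical 30 seconds. All names and the 30-second value are illustrative.

```c
#include <assert.h>
#include <stddef.h>

typedef enum {
    GUIDE_CONTINUE,  /* user is following: keep guiding */
    GUIDE_CALL_USER, /* user too far away: stop and call the user back */
    GUIDE_CALL_DESK  /* user out of range too long: contact the information desk */
} GuideAction;

typedef struct {
    double stop_dist;          /* m; the robot stops at or beyond this distance */
    double escalate_after;     /* s; hypothetical waiting time before calling the desk */
    double out_of_range_since; /* s; when the user fell behind, or -1 while in range */
} LostUserMonitor;

/* Decide what to do given the current robot-user distance (m) and the
 * current time `now` (s, assumed non-negative and increasing). */
GuideAction check_user(LostUserMonitor *m, double user_dist, double now)
{
    assert(m != NULL && user_dist >= 0.0 && now >= 0.0);
    if (user_dist < m->stop_dist) {
        m->out_of_range_since = -1.0; /* user is close enough (again) */
        return GUIDE_CONTINUE;
    }
    if (m->out_of_range_since < 0.0)
        m->out_of_range_since = now;  /* user has just fallen behind */
    if (now - m->out_of_range_since >= m->escalate_after)
        return GUIDE_CALL_DESK;
    return GUIDE_CALL_USER;
}
```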

'''Actions the robot should take in case of [[#Malfunctions|malfunctions]]'''

The robot could have electrical, Wi-Fi and/or mechanical problems, such as a broken sensor, a broken Wi-Fi connection or broken motors. In that case the robot should call another robot, or call the information desk for human caregivers, so that the user can continue to be guided.

=User interface=

Interaction between the user and the guiding robot is needed. For this purpose, an app is designed for the first interaction. The app works as the user interface through which users tell the robot their desired destination. The app will be programmed in Android Studio. When starting on the app, the following questions should be discussed:

Which features should it have? How can we make it user-friendly? Who are the users?

The design of the app is based on the design of the Catharina Ziekenhuis website.

==App design==

Below, the design of the app is described, with all the features it should have.

----

'''''Homescreen:'''''

The home screen should contain the text 'Welkom' (Welcome) and two buttons: a start button and a button to change the language. When a different language is selected, the home screen reappears in that language.

----

'''''Next screen:'''''

The next screen appears when the user presses start. It shows the text 'Ik ben een' (I am a), with two buttons below it labelled 'Patiënt' (Patient) and 'Bezoeker' (Visitor).

----

'''''Patiënt:'''''

The screen following the patient button should contain the buttons 'Ik heb een afspraak' (I have an appointment) and 'Ik heb geen afspraak' (I do not have an appointment). The options behind each of these buttons are described below.

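*Ik heb een afspraak (I have an appointment)

**Op welke polikliniek heeft u een afspraak? Voor bloedprikken of het inleveren van andere lichaamsvochten is het niet nodig een afspraak te maken. (At which outpatient clinic do you have an appointment? No appointment is needed for having blood taken or for handing in other bodily fluids.)

***A scroll menu with all the outpatient clinics (poliklinieken), with the possibility to type in the clinic

*Ik heb geen afspraak (I do not have an appointment)

**U heeft geen afspraak, maar wel een verwijzing (You have no appointment, but you do have a referral)

***Voor een afspraak op één van onze poliklinieken kunt u bellen naar de betreffende polikliniek of afdeling. Tussen 08.30 en 16.30 uur zijn onze poliklinieken telefonisch bereikbaar. (To make an appointment at one of our outpatient clinics, you can call the clinic or department concerned. Our outpatient clinics can be reached by phone between 08:30 and 16:30.)

**U heeft geen afspraak en ook geen verwijzing (You have neither an appointment nor a referral)

***Voordat u een afspraak kunt maken op een van de poliklinieken, moet u eerst een verwijzing hebben van uw huisarts. Maak hiervoor eerst een afspraak met uw huisarts. (Before you can make an appointment at one of the outpatient clinics, you first need a referral from your general practitioner. Please make an appointment with your GP first.)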

----

'''''Bezoeker:'''''

The screen following the 'Bezoeker' (Visitor) button contains the following two buttons, with their sub-options:

*Op welke afdeling wilt u een bezoek brengen? (Which ward would you like to visit?)

**A menu with the wards

***Op welke kamer moet u zijn? (Which room do you need to go to?)

***Ik weet niet op welke kamer ik moet zijn (I do not know which room I need to go to)

*Ik weet niet op welke afdeling ik moet zijn (I do not know which ward I need to go to)

The visiting times can be checked once the ward is known.

----

'''''Ik weet niet waar ik een bezoek wil brengen:'''''

The following screen appears when the button 'Ik weet niet waar ik een bezoek wil brengen' (I do not know where I want to visit) is pressed:

*Possibility to enter the patient the user wants to visit

*We should check whether this is allowed with regard to privacy

=Robot=

The simulation of our idea will be made with a Turtle from Tech United. The Turtle and Tech United's software for it can be used and adapted to our idea. Using a DEV notebook we can program and simulate our idea; when the results are satisfactory, the demonstration with the real Turtle can be made. Below are a few features that are already programmed and that we will use:

*The Turtle knows the field and its own position on the field.

*The Turtle sees a black object as an obstacle, which it will avoid.

*The Turtle sees a yellow object as the ball.

*The Turtle is programmed using positions, not velocities.

We will work in Eclipse on the role_attacker_main.c file.

==Interpretation of the guiding system==

The Turtle knows the field and its own position. Its goal is to move from a position A along a set route to a position B. The route simulates hallways with turns. While moving, the Turtle avoids obstacles (black). The user, represented by the yellow ball, must be guided along the route to the final destination.
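Following such a route amounts to driving to one waypoint at a time and moving on once the current one is reached. A minimal C sketch under our own naming (`Point`, `next_waypoint`), with the reach distance as a parameter; the index never moves past the last waypoint, so `path[index]` always stays inside the array.

```c
#include <assert.h>

typedef struct { double x, y; } Point; /* position on the field (m) */

/* Returns the index of the waypoint to drive to next. The index advances
 * when the robot is within `reach` metres of the current waypoint, but
 * never past the last waypoint (n - 1). */
int next_waypoint(const Point *path, int n, int index, Point robot, double reach)
{
    assert(n > 0 && index >= 0 && index < n && reach > 0.0);
    double dx = robot.x - path[index].x;
    double dy = robot.y - path[index].y;
    /* compare squared distances to avoid a square root */
    if (dx * dx + dy * dy < reach * reach && index < n - 1)
        index++;
    return index;
}
```

The robot has reached the destination when the returned index is `n - 1` and the robot is within `reach` of that final waypoint.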

==Decision making== | |||

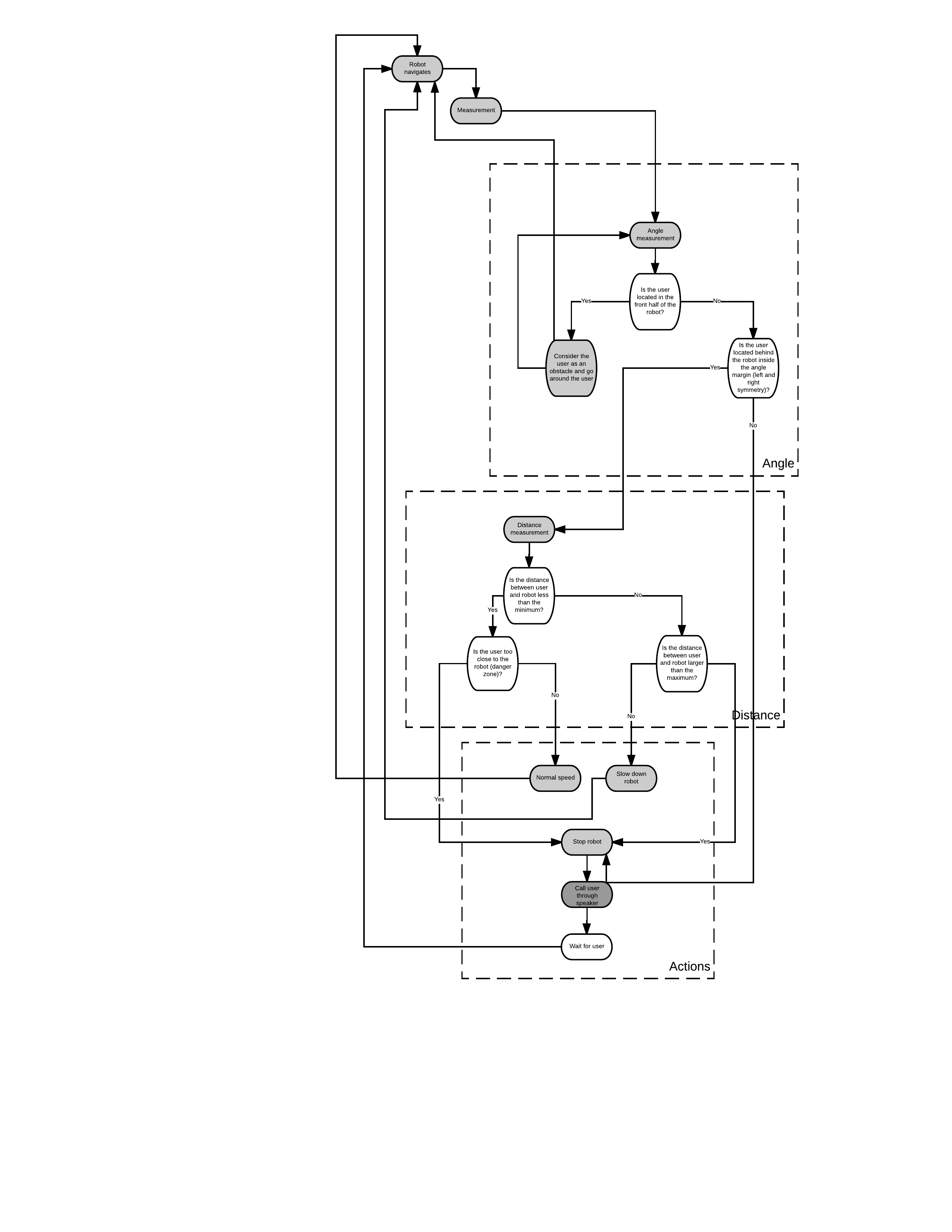

The robot cannot simply follow the route; it has to take several decisions. The following points have to be taken into account:

*Lead/follow: what the robot should do depends on the distance. When the robot leads, it follows the preprogrammed path from the starting point to the destination and may accelerate or keep the same speed (when the distance between robot and user is small enough). When the robot follows, it slows down. The robot calls the reception if the user is completely off track.

*Interaction: what the robot will do or say (through the speaker) in case of a malfunction (see section 'Malfunctions') or another issue (see section 'Possible issues'), and how to implement this.

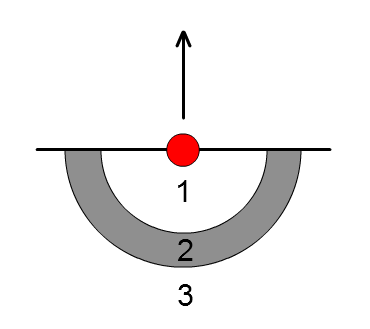

The decision the robot has to make depends on the zone that the user is in; see the figure below.

[[File:123zones.png]]

*1: The robot continues its route at a faster rate.

*2: The robot continues its route at a normal rate.

*3: The robot continues its route at a slower rate.

When the user is in the zone in front of the robot, the robot moves around the user and continues along the path.

When the user and the robot are too far away from each other, the robot stops on its route until the user comes close again.

Which decision the robot makes at which moment is shown in the flow chart below.

[[File:Blank_Flowchart_7_(1).png|1200px]]
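The zone logic reduces to a function from the robot-user distance to a speed. The sketch below uses the distance thresholds (0.6, 1.2, 1.6 and 2 m) and the `desired_vel` values from the [[#Code|Code]] section; how these bands line up with the numbered zones in the figure is our interpretation, and the function name `guide_speed` is hypothetical.

```c
#include <assert.h>

/* Speed for the robot given the distance (m) between robot and user,
 * using the thresholds and desired_vel values of the Code section
 * (speed units as used by the Turtle software). */
double guide_speed(double user_dist)
{
    assert(user_dist >= 0.0);
    if (user_dist >= 2.0)
        return 0.0;  /* too far away: stop until the user comes closer */
    if (user_dist > 1.6)
        return 0.3;  /* user far behind: lowest driving speed */
    if (user_dist > 1.2)
        return 0.4;
    if (user_dist > 0.6)
        return 0.44;
    return 0.5;      /* user close behind: highest speed */
}
```

Writing the decision as a pure function of the distance makes it easy to test separately from the robot software.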

==Code==

The code used for the robot is shown below. The comments in the code explain some of the steps.

----

The code starts there: | |||

/******************* | |||

* Prototypes * | |||

*******************/ | |||

int q = 0; | |||

int l = 0; //NEW | |||

int t = 0; | |||

int t2 = 0; | |||

void Role_eAttacker_Main(MUHParStruct_p pS_MUHP, AHParStruct* pS_AHP, | |||

InputStruct* pS_in, OutputStruct* pS_out, SimStruct *S) { | |||

/* global data */ | |||

psfun_global_data psfgd; | |||

get_pointers_to_global_data(&psfgd, S); | |||

Pose_t Turtle_Target; | |||

pPose_t cur_xyo = &pS_in->pSFB->cur_xyo; | |||

pBall_t ball = &pS_in->pSFB->ball_xyzdxdydz; | |||

/* calculate distance from turtle to ball :D */ | |||

//ballData->dist = getdistance(cur_xyo_delayed.arr, ball_xyz); | |||

int i; | |||

double obsDist = 100000., dist; | |||

pObstacle_t closestOpponent = NULL; | |||

for (i = 0; i < MAX_OPPONENTS; ++i) { | |||

dist = getdistance(pS_in->pTB->WMopponents[i].pose.arr, cur_xyo->arr); | |||

if (dist < obsDist) { //shortest distance | |||

obsDist = dist; | |||

closestOpponent = pS_in->pTB->WMopponents + i; | |||

} | |||

} | |||

//double speedUpDistance = 1; //0.5;//- | |||

//double slowDownDist = 1.5; //2.0;//1.0; | |||

double slowDownDist1 = 0.6; | |||

double slowDownDist2 = 1.2; | |||

double slowDownDist3 = 1.6; | |||

double stopDist = 2; | |||

double current_r_angle; | |||

double current_user_angle; | |||

double relative_angle; | |||

double allowed_angle = 90; //hoek links en rechts t.o.v bewegingsrichting robot | |||

//' Turtle_Target' is waar de robot naartoe moet, 'cur_xyo' is waar de robot nu is (x,y) en o is rotatie/orientation | |||

//BALLOBSTACLERADIUS=0.5; | |||

//current_angle=tan(()/()) | |||

AddObstacle(ball->x, ball->y, BALLOBSTACLERADIUS, pS_out->pAddObsStruct); | |||

/* calculate distance from turtle to ball :D */ | |||

double ballDist = getdistance(ball->pos.arr, cur_xyo->arr); | |||

if (ball != NULL) { | |||

//Turtle_Target.x = ball->x; DO NOT DELETE!!!!! | |||

/* | |||

if((cur_xyo->x=!-3)||(cur_xyo->y=!0)) //mistake: always go to there if current x=!-3 and y=!0 | |||

{ | |||

Turtle_Target.y=0; | |||

Turtle_Target.x=-3; | |||

//DoAction(Action_GoToTarget, pS_AHP, &Turtle_Target); | |||

} | |||

*/ | |||

//if ((cur_xyo->y==-3)&&(cur_xyo->x==0)){ | |||

//if ((cur_xyo->y==3)&&(cur_xyo->x==0)){ | |||

//The coordinates for the paths are below | |||

int path1_x[6] = { -3, -3, 0, 0, 3, 3 }; //coordinates in x-direction | |||

int path1_y[6] = { 0, 3, 3, -3, -3, 0 }; //coordinates in y-direction | |||

int path1_o[6] = { 0, 1, 1, 2, 2, 3 }; //NEW | |||

int path2_x[6] = { 0, -3, -3, 3, 3, 0 }; //NEW //coordinates in x-direction | |||

int path2_y[6] = { -3, -3, 0, 0, 3, 3 }; //NEW //coordinates in y-direction | |||

int path2_o[6] = { 0, 1, 1, 2, 2, 3 }; | |||

//NEW path_o, 1 play: turn left, 2 play: turn right, 3 play: finish | |||

//EXTR | |||

int path3_x[6] = { -3, 0, 0, 3, 3, -3 }; | |||

int path3_y[6] = { 0, 0, -3, -3, 3, 3 }; | |||

int path3_o[6] = { 0, 1, 2, 2, 2, 3 }; | |||

int path4_x[6] = { -3, 3, 3, 0, 0, -3 }; | |||

int path4_y[6] = { -3, -3, 3, 3, 0, 0 }; | |||

int path4_o[6] = { 0, 2, 2, 2, 1, 3 }; | |||

//smal path | |||

int path5_x[6] = { -3, 0, 0, 3, 3, -3 }; | |||

int path5_y[6] = { -2.8, -2.8, -5.6, -5.6, -0.2, -0.2 }; | |||

int path5_o[6] = { 0, 1, 2, 2, 2, 3 }; | |||

int path6_x[6] = { -3, 3, 3, 0, 0, -3 }; | |||

int path6_y[6] = { 0.2, 0.2, 5.6, 5.6, 2.8, 2.8 }; | |||

int path6_o[6] = { 0, 2, 2, 2, 1, 3 }; | |||

int path8_x[10] = { -3, 0, 0, 3, 3, -3 }; //0} | |||

int path8_y[10] = { -2.8, -2.8, -5.6, -5.6, -0.2, -0.2 }; //7,} | |||

int path8_o[10] = { 0, 1, 2, 2, 2, 3 }; | |||

int path13_x[10] = { -3, -0.5, 0, 0, 0.5, 2.5, 3, 3, 2.5, -3 }; //''//0};//.} | |||

int path13_y[10] = { -3, -3, -3.5, -5.1, -5.6, -5.6, -5.1, -0.5, 0, 0 }; //''//6} | |||

//int path13_o[10]={0,2,2,1,1,1,1,1,1,1,1} | |||

//int path13_o[10]={0,1,1,2,2,2,2,2,2} | |||

//int | |||

//int | |||

int path13_o[10] = { 0, 1, 1, 2, 2, 2, 2, 2, 2, 3 }; | |||

int data_array[8][100000]; //={} | |||

//smal path | |||

//EXTR | |||

int path_x[10]; //={0,0,0,0,0,0}; | |||

int path_y[10]; //={0,0,0,0,0,0}; | |||

int path_o[10]; //NEW | |||

for (t2 = 0; t2 < 10; t2++) { | |||

path_x[t2] = path13_x[t2]; //c[]//path_x[t2] = path5_x[t2]; //6 should be changed to the length of the array | |||

path_y[t2] = path13_y[t2]; | |||

path_o[t2] = path13_o[t2]; | |||

} | |||

Turtle_Target.o = LOOK_AT_BALL_FLAG; | |||

if (q < 10) { //similarly 6 should be changed | |||

//printl(cur_xyo->o, "angle robot=%0.1f"); | |||

//printl(2,"stopping\n"); | |||

current_r_angle = atan( | |||

(path_y[q] - cur_xyo->y) / (path_x[q] - cur_xyo->x)); | |||

if (ball->y - cur_xyo->y < 0) { | |||

current_user_angle = atan( | |||

(ball->y - cur_xyo->y) / -(ball->x - cur_xyo->x)); | |||

} | |||

if (ball->y - cur_xyo->y > 0) { | |||

current_user_angle = atan( | |||

(ball->y - cur_xyo->y) / (ball->x - cur_xyo->x)); | |||

} | |||

if (sqr(cur_xyo->x - path_x[q]) + sqr(cur_xyo->y - path_y[q]) | |||

< .1) { | |||

// if ((round(10. * cur_xyo->x) == 10 * path_x[q]) && (round(10. * cur_xyo->y) == 10 * path_y[q])) { | |||

q = q + 1; //go to next point in path. | |||

} | |||

double sound_timeout = 3.0; | |||

if (sqr(cur_xyo->x - path_x[q]) + sqr(cur_xyo->y - path_y[q]) < 1) { //NEW if 1 meter ahead of next point | |||

if (path_o[q] == 1) { //NEW | |||

if (getTimeSec() - t > sound_timeout) { //NEW | |||

system("aplay -Dplughw:CARD=Device,DEV=0 /home/robocup/rechtsaf.wav &"); //NEW | |||

t = getTimeSec(); //NEW | |||

} //NEW | |||

} //NEW | |||

                if (path_o[q] == 2) {  /* waypoint code 2: turn cue */
                    // printl(9, "rechtsaf\n");  (Dutch: "turn right")
                    if (getTimeSec() - t > sound_timeout) {  /* play at most one cue per sound_timeout */
                        // printl(8, "Rechtsaf geluidje\n");  (Dutch: "right-turn sound")
                        /* note: the debug prints say "rechtsaf" (right), but linksaf.wav ("turn left") is played */
                        system("aplay -Dplughw:CARD=Device,DEV=0 /home/robocup/linksaf.wav &");
                        t = getTimeSec();
                    }
                }

                if (path_o[q] == 3) {  /* waypoint code 3: destination reached */
                    if (getTimeSec() - t > sound_timeout) {
                        system("aplay -Dplughw:CARD=Device,DEV=0 /home/robocup/finish.wav &");
                        t = getTimeSec();
                    }
                }
                //if (q==6){
                //    if(fabs(t - ssGetT(S)) > 1.0) {
                //        system("aplay kom_hier_heen.wav &");  (Dutch: "come over here")
                //        t = ssGetT(S);
                //    }
                //}
                else {
                    /* no cue for other waypoint codes */
                }
            }

            relative_angle = current_r_angle - current_user_angle;

            /* Disabled data logging at 20 samples per second; 100000 samples
               would cover 5000 s, about 83 minutes. */
            // if (getTimeSec() - t > 0.05) { //1.0) {
            //     //system("aplay finish.wav &");
            //     data_array[1][l] = cur_xyo->x;  //Turtle_Target.x;
            //     data_array[2][l] = cur_xyo->y;  //Turtle_Target.y;
            //     data_array[3][l] = ballDist;
            //     data_array[4][l] = ball->x;
            //     data_array[5][l] = ball->y;
            //     data_array[6][l] = current_r_angle;
            //     data_array[7][l] = current_user_angle;
            //     data_array[8][l] = relative_angle;
            //     t = getTimeSec();
            // }

            printl(2,
                   "angle robot=%0.3f angle user=%0.3f balDist=%0.3f bal.x=%0.3f bal.y=%0.3f\n x=%0.3f y=%0.3f\n",
                   current_r_angle, current_user_angle, ballDist, ball->x,
                   ball->y, cur_xyo->x, cur_xyo->y);
            printl(4, " relative angle=%0.3f\n", relative_angle);

            pS_AHP->desired_vel = 0.5;  /* default speed: user close behind the robot */

            if (cur_xyo->x != ball->x || cur_xyo->y != ball->y) {
                if (ballDist >= stopDist) {
                    /* user too far away: stop and play the call-back sound */
                    pS_AHP->desired_vel = 0;
                    if (getTimeSec() - t > sound_timeout) {
                        system("aplay -Dplughw:CARD=Device,DEV=0 /home/robocup/komm.wav &");
                        t = getTimeSec();
                    }
                } else if (ballDist > slowDownDist3) {
                    pS_AHP->desired_vel = 0.3;  /* the robot slows down */
                } else if (ballDist > slowDownDist2) {
                    pS_AHP->desired_vel = 0.4;
                } else if (ballDist > slowDownDist1) {
                    pS_AHP->desired_vel = 0.44;
                }
            } else {
                pS_AHP->desired_vel = 0;
            }

            Turtle_Target.x = path_x[q];
            Turtle_Target.y = path_y[q];
            DoAction(Action_GoToTarget, pS_AHP, &Turtle_Target);
            //}
        } else {
            DoAction(Action_Idle, pS_AHP);
        }
    } else {
        DoAction(Action_Idle, pS_AHP);
    }

    /* copy game target to strategy bus */
    pS_out->pSTB->gameRoleTarget_xy = Turtle_Target;
    pS_out->pSTB->gameRoleTarget_Role = pS_in->pSTB->assigned_game_role; /* use the accompanying game role to ensure data consistency for role_assigner gettidGR_RA function */
    l = l + 1;
} //249

The code ends there.
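The speed selection in the fragment above can be read as a single function of the user's distance. This is a minimal sketch, assuming the thresholds are ordered stopDist > slowDownDist3 > slowDownDist2 > slowDownDist1; the fragment does not show their values, so the `SpeedBands` struct, the function name and the numbers used below are hypothetical. The check that sets the speed to 0 when the robot's position equals the tracked position is left out.

```c
/* Hypothetical container for the thresholds used in the fragment
   (stopDist, slowDownDist3, slowDownDist2, slowDownDist1). */
typedef struct {
    double stop_dist;   /* at or beyond this distance: stop and call the user */
    double slow_dist3;  /* beyond this: slowest moving speed */
    double slow_dist2;
    double slow_dist1;
} SpeedBands;

/* Same decision chain as the fragment: the farther the user is behind,
   the slower the robot drives; a user close behind gets the default 0.5. */
double desired_velocity(double user_dist, const SpeedBands *b)
{
    if (user_dist >= b->stop_dist)  return 0.0;   /* wait for the user */
    if (user_dist >  b->slow_dist3) return 0.3;
    if (user_dist >  b->slow_dist2) return 0.4;
    if (user_dist >  b->slow_dist1) return 0.44;
    return 0.5;                                   /* default speed */
}
```

With the hypothetical bands {3.0, 2.0, 1.5, 1.0}, a user 1.8 away gives 0.4 and a user 0.5 away gives 0.5 (in the same units as `ballDist` and `desired_vel`).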

----

=Tracking user=

The idea was to track the user with a 'smart bracelet', because tracking a person by leg detection is very difficult in a crowded environment.

The smart bracelet is worn on the user's wrist, so that the robot can keep track of the distance (radius) between the user and the robot. The basic idea is as follows: the robot sends a signal to the smart bracelet and starts a timer. The bracelet receives the signal and immediately sends a signal back. When the robot receives the reply, it stops the timer. The measured interval covers the trip to the bracelet and back, so it is twice the actual one-way travel time and has to be divided by 2. The distance between user and robot is therefore x = v*t/2, where x is the distance (radius) between user and robot, v is the propagation speed of the signal, t is the measured time between sending and receiving, and the factor 1/2 corrects for the round trip.
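As a worked example of x = v*t/2: radio signals travel at roughly 3.0e8 m/s, so a user 3 m away gives a round-trip time of 2 * 3 / 3.0e8 = 20 ns, and every nanosecond of timer error shifts the distance estimate by about 15 cm. The sketch below is a minimal illustration rather than a bracelet implementation; like the text, it assumes the bracelet's reply delay is negligible, and the function names are hypothetical.

```c
/* Propagation speed of a radio signal, approximately the speed of light. */
#define RADIO_SPEED_MPS 3.0e8

/* Distance from a round-trip time: x = v * t / 2. The measured time covers
   robot -> bracelet -> robot, so only half of it is the one-way travel time. */
double bracelet_distance(double signal_speed_mps, double round_trip_s)
{
    return signal_speed_mps * round_trip_s / 2.0;
}

/* Inverse: the round-trip time the robot's timer must resolve for a given distance. */
double round_trip_time(double signal_speed_mps, double distance_m)
{
    return 2.0 * distance_m / signal_speed_mps;
}
```

The inverse function makes the timing requirement explicit: the shorter the guiding distance, the finer the timer resolution has to be.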

Which kind of signal is most preferable and which components are best still has to be researched if the smart bracelet is to be implemented.

An example would be a myRIO device that communicates over WiFi with a program in the MATLAB environment. MATLAB starts a timer when it sends a signal to the myRIO. The myRIO receives this signal and immediately sends a signal back to MATLAB. MATLAB stops the timer when it receives the reply, so the elapsed time between sending and receiving is measured. The actual travel time is half of the measured time, since the signal travels back and forth.

However, tracking the user with this smart bracelet is not necessary when the [[#Robot|Turtle]] of Tech United is used for the presentation of the guiding system.

=Principle=

* The robot always treats the user as an obstacle, in order to avoid collisions.
* The robot drives from start to end at a set speed as long as the user stays behind it, within the range between two radii.
* The robot speeds up if the user comes too close (inside the inner radius).
* The robot stops if the user is too far away (outside the outer radius) and can call the user back through its speakers.
* When the user is ahead of the robot, the robot treats the user as an obstacle and goes around him to take the lead again.
* In this way the robot keeps the distance between robot and user within the band between the two radii.
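The rules above can be summarised as a small decision function on the user's position in polar coordinates relative to the robot. This is a minimal sketch under stated assumptions: the bearing is in degrees with 0 straight ahead of the robot and +/-180 directly behind it, "ahead of the robot" is taken to mean the front half-plane, and the enum and function names are hypothetical. The radii used in the usage note are placeholders, since their sizes are still to be determined experimentally; collision avoidance itself is left to the robot's planner.

```c
/* Hypothetical names for the behaviours listed above. */
typedef enum {
    GUIDE_LEAD,          /* user behind, between inner and outer radius */
    GUIDE_SPEED_UP,      /* user behind, inside the inner radius */
    GUIDE_STOP_AND_CALL, /* user behind, outside the outer radius */
    GUIDE_OVERTAKE       /* user ahead: treat as obstacle and go around */
} GuideAction;

/* r: distance to the user; bearing_deg in (-180, 180], 0 = straight ahead. */
GuideAction guide_action(double r, double bearing_deg, double inner_r, double outer_r)
{
    if (bearing_deg > -90.0 && bearing_deg < 90.0)
        return GUIDE_OVERTAKE;       /* user is in front of the robot */
    if (r < inner_r)
        return GUIDE_SPEED_UP;
    if (r > outer_r)
        return GUIDE_STOP_AND_CALL;
    return GUIDE_LEAD;
}
```

For example, with hypothetical radii of 1.0 and 2.5, a user 1.5 directly behind the robot yields `GUIDE_LEAD`, while the same user at a bearing of 10 degrees yields `GUIDE_OVERTAKE`.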

=Deliverables=

The following points will be demonstrated during the presentation of our design.

*We show that the robot can follow a preprogrammed path from the starting point to the destination (this simulates the route to a destination).
*We show that the robot can avoid obstacles.
*We show that the robot interacts with the user: the robot leads if the distance between robot and user is small enough, and the robot follows if the distance between robot and user is too large.
*We show that the robot can indicate when it will turn or brake.
*We show that the robot can keep track of its own position and the position of the user.
*We show that the robot can guide the user safely and time-efficiently from a starting point to a destination. Safety is measured in terms of comfort (survey) and in terms of the robot keeping track of the user and interacting in an appropriate way depending

To be checked!!

*We show that the user can input their destination via the app.
*Time efficiency will be measured in terms of elapsed time and distance travelled (both the planned route and unplanned detours, for example when the robot has to go after the user because the user did not follow the robot).
*The user's position can be tracked as a radius and an angle with respect to the robot. This can be done in two ways:

1. Either we use only the Kinect 2 to measure the angle (position of the user in the frames generated by the Kinect 2) and the radius (size of the user in those frames) of the user with respect to the robot

=Malfunctions=

One of our objectives is to provide a suitable solution in case of malfunctions. The robot's main objective is to guide users to their destination, so even in case of a technical defect the user should be able to arrive there. This means that our robot is responsible for the user's safe arrival. Malfunctions are divided into mechanical, electrical and WiFi connection problems. In case of any defect, the user should be able to contact the help desk with a telephone attached to the robot.

==WiFi problems==

When the WiFi connection fails, the robot may lose track of the user or of its own position. If other connections are still available, the robot can switch channels when the current network channel breaks down [1]: it looks for another available channel and, once one is found, tries to connect to it. If the robot detects multiple channels, it has to choose the best one and start communicating over that channel.

If this does not work, the robot will try to switch to its backup WiFi unit. The robot may also already have sensed that the WiFi unit is broken (for example, 0 V is measured across it, which means the antenna is not transmitting any signals); in that case it also switches to the backup WiFi unit. In this way the robot can continue to guide the elderly user safely. An alternative is to dispatch another robot.
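The fallback order described above (best available channel, then the backup WiFi unit, then no link at all) can be sketched as follows. This is an illustration under assumptions: the text does not define "best", so the sketch takes the usable channel with the strongest received signal (RSSI, in dBm), treats the backup unit as a single yes/no option, and uses hypothetical struct fields and function names.

```c
#include <stddef.h>

/* Hypothetical view of one visible network channel. */
typedef struct {
    int id;
    int rssi_dbm;  /* received signal strength; higher (less negative) is better */
    int usable;    /* 1 if the channel currently accepts connections */
} Channel;

typedef enum { LINK_PRIMARY, LINK_BACKUP, LINK_NONE } LinkChoice;

/* Index of the strongest usable channel, or -1 if there is none. */
int pick_channel(const Channel *ch, size_t n)
{
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (!ch[i].usable)
            continue;
        if (best < 0 || ch[i].rssi_dbm > ch[(size_t)best].rssi_dbm)
            best = (int)i;
    }
    return best;
}

/* primary_ok is 0 when the robot has sensed that its WiFi unit is broken
   (for example 0 V measured across it). */
LinkChoice choose_link(const Channel *ch, size_t n, int primary_ok, int backup_ok)
{
    if (primary_ok && pick_channel(ch, n) >= 0)
        return LINK_PRIMARY;
    if (backup_ok)
        return LINK_BACKUP;
    return LINK_NONE;  /* continue on the map and last known position */
}
```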

To extend the lifetime of the network, robots are designed to keep network communication among sensors to a minimum [2]. For example, they use an initial configuration with good coverage of the environment.

If the signal is lost completely, the robot will use its map and last known location to follow the route out of the dead, WiFi-less zone. If this fails, after a certain amount of time the robot informs the user of the problem and suggests that they look for the nearest staff member for further assistance, or lets them call the front desk over the phone.

If the loss of the WiFi connection results in movement in the wrong direction, other measures are needed to stop the robot. The developers of the Care-O-Bot solved this problem with a bumper that protects the robot when it bumps into a wall. The robot also shuts down automatically when it crosses a magnetic band integrated in the floor, so places where the robot should not go (outside the hospital or inside operating rooms) can be marked with this band. The robot can also be turned off manually with emergency buttons.

==Mechanical problems==

For the robot to function properly, it needs to be able to move around at a good pace. This is monitored by a tachometer consisting of a light source and a detector (light sensor) [3] [4]. The wheels have slots (holes) through which the light shines. When a wheel spins, the detector counts the number of times the light hits the sensor: the light reaches the sensor when a slot is aligned with the light source and the sensor, and it is blocked otherwise. If the robot commands the wheels to spin but the sensor measures zero speed (either continuous light or continuous blocking), the robot knows the motor has a problem. When this happens, the robot stops trying to move and informs the user that it is inoperable and has dispatched a request for a backup unit. The robot lists itself as malfunctioning and awaits pickup.
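The slot-counting check can be sketched in a few lines. This is a minimal sketch, assuming the detector is sampled as a stream of 0 (blocked) and 1 (light) values and one pulse is counted per dark-to-light transition; `SLOTS_PER_REV` and the function names are hypothetical. The observation window must be long enough for at least one slot to pass at the commanded speed (commanded revolutions per second times slots per revolution times window length at least 1), otherwise a slowly turning wheel would be reported as faulty.

```c
#include <stddef.h>

#define SLOTS_PER_REV 20  /* hypothetical number of slots in the wheel */

/* Counts dark-to-light transitions in the sampled detector signal. A constant
   signal (light always on or always blocked) yields 0 pulses. */
unsigned count_pulses(const int *samples, size_t n)
{
    unsigned pulses = 0;
    for (size_t i = 1; i < n; i++)
        if (samples[i - 1] == 0 && samples[i] == 1)
            pulses++;
    return pulses;
}

/* Measured wheel speed in revolutions per second. */
double wheel_rps(unsigned pulses, double window_s)
{
    return (double)pulses / SLOTS_PER_REV / window_s;
}

/* Motor fault: the wheel was commanded to turn, but no pulse was seen. */
int motor_fault(double commanded_rps, unsigned pulses)
{
    return commanded_rps != 0.0 && pulses == 0;
}
```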

==Electrical problems==

The robot has to make sure its battery is sufficiently charged before it even starts to guide the user, so that it can guide without interruptions. For example, at the start of a guiding task the robot should have enough charge for at least two rounds through the hospital; if not, it goes to the charging station first. During guiding, the robot keeps track of its battery level and compares it with the amount of energy it expects to need for the remaining path. If it predicts that the battery level is insufficient for the remaining path, it calls for a replacement and returns to a charging station.
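Both checks compare the stored energy with a predicted consumption. This is a minimal sketch, assuming a constant energy use per metre driven (a real robot's consumption also depends on speed, stops and load); the `EnergyModel` fields, the function names and the numbers below are hypothetical.

```c
/* Hypothetical linear energy model. */
typedef struct {
    double wh_per_m;        /* assumed energy use per metre driven */
    double round_length_m;  /* length of one round through the hospital */
} EnergyModel;

/* Start-of-task check: enough charge for at least two rounds. */
int may_start_guiding(const EnergyModel *m, double battery_wh)
{
    return battery_wh >= 2.0 * m->round_length_m * m->wh_per_m;
}

/* During guiding: returns 1 if the predicted need for the remaining path
   exceeds the remaining charge, i.e. a replacement should be called. */
int needs_replacement(const EnergyModel *m, double battery_wh, double remaining_path_m)
{
    return battery_wh < remaining_path_m * m->wh_per_m;
}
```

For example, with 0.05 Wh per metre and 500 m rounds, the robot needs at least 50 Wh before it may start a guiding task.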

Screen issues may occur during guiding. If the screen shuts down for no apparent reason, speech output through the speakers combined with speech recognition can serve as an alternative. The robot switches to this alternative when 0 V is measured at the screen even though the robot gave a command to display something. If the screen displays pixels incorrectly, the user can reset the screen manually.

=Impact=

The deployment of our robot will have a positive impact on the visitors and the employees of the hospital. Some elderly people may have trouble understanding the signage, so having someone walk with them to their destination will prevent them from getting lost. Nowadays visitors have to ask a human caregiver for help, which keeps caregivers from their own tasks. If a robot shows visitors the way, human caregivers can focus on more important care tasks. A further advantage is that robots are expected to be cheaper than human staff for this task, because a robot needs no salary and can work twenty-four hours a day, seven days a week.

A downside of deploying robots could be that elderly people have less personal human contact, which might make them dislike the robot. However, if a human caregiver gives a person elaborate directions and walks with them to their destination, the caregiver loses time that should go to other tasks. Usually the caregiver will give directions very quickly and continue with their own tasks, so the visitor does not get much personal contact anyway and could still get lost.

On the other hand, some elderly people could also enjoy the social interaction with the robot. They no longer have to burden human caregivers or other people with the problem of finding their destination in the hospital. This could increase their self-esteem, because they are less dependent on other people.

Because of its friendly appearance, the robot is more likeable, so users will accept it more easily. The bracelet keeps the robot close to the user, which makes it appear more trustworthy and attentive to the user. The robot waits for the user when needed and stops if the user wants to chat or go to the toilet, so users will feel comfortable while using the robot. The ability to handle malfunctions of the system also improves trustworthiness: if, for example, the WiFi connection is broken, the user can still contact the help desk and will not be left alone. The system will therefore be found not only helpful but also reliable.

=Experiment=

The range that will be used to adjust the speed will be derived from the experiment described in this section.

==Experimental setup==

The setup of the experiment is described below.

===Design===

In our study all participants are tested under the same conditions. The participants will carry out the experiment on the soccer field of Tech United. Environmental factors, such as noise and people passing by, cannot be controlled.

The participants will be asked to follow a robot at a distance they feel comfortable with. At the end of the experiment they will be asked to fill out a short questionnaire.

===Participants===

The experiment will be held among students from our own social network. Because there is not enough time to conduct an experiment with a large number of people of all ages, especially elderly people, we will hold it among students. This will give us the “right” data for the presentation of our idea.

===Procedure===

First, the participants get a short introduction to the experiment. They are told what the goal of the experiment is and for what purposes the data will be used.

The task is to follow the robot at a speed and distance the participant feels comfortable with. The robot moves from A to B in a straight line. The robot adjusts its speed to the participant, but only after a set delay; during this delay the participant can choose to walk closer to or further away from the robot. Each participant repeats this test 5 times.
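The delayed speed adaptation can be implemented as a short queue of the user's measured speeds: at every control tick the robot adopts the speed the user had a fixed number of ticks earlier, which gives the participant time to open or close the gap. This is a minimal sketch; the length of the delay is not specified, so `DELAY_TICKS` and the names are hypothetical.

```c
#include <stddef.h>

#define DELAY_TICKS 4  /* hypothetical delay, in control-loop ticks */

/* Ring buffer holding the user's speeds of the last DELAY_TICKS ticks. */
typedef struct {
    double buf[DELAY_TICKS];
    size_t head;  /* index of the oldest stored speed */
} SpeedDelay;

void speed_delay_init(SpeedDelay *d, double initial_speed)
{
    for (size_t i = 0; i < DELAY_TICKS; i++)
        d->buf[i] = initial_speed;
    d->head = 0;
}

/* Stores the user's current speed and returns the speed measured
   DELAY_TICKS ticks earlier, which becomes the robot's new speed. */
double speed_delay_step(SpeedDelay *d, double user_speed)
{
    double delayed = d->buf[d->head];
    d->buf[d->head] = user_speed;
    d->head = (d->head + 1) % DELAY_TICKS;
    return delayed;
}
```

A longer delay gives participants more room to settle on a distance, at the cost of larger distance fluctuations.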

After the test, the participants are asked to fill out a questionnaire.

===Apparatus and Materials===

Throughout the experiment the same robot with the same programming is used. The questionnaire will be on paper, for ease of use.

==Questionnaire==

The questionnaire consists of four questions, each answered on a closed 5-point scale.

*How much experience do you have with robots?
*How much experience do you have with owning pets?
*What is your attitude towards robots?
*Are you interested in technology?

Afterwards, three demographic questions are answered.

*What is your age?
*What is your gender?
*What is your occupation/study?

= Planning (old; Before week 3)=


'''week 7''' | '''week 7''' | ||

*Reaction on user behaviour (action) + reaction on user feedback: Victor, Huub, Marleen | *Reaction on user behaviour (action) + reaction on user feedback: Victor, Huub, Marleen | ||

* | *Experiment voorbereiden: Willeke, Marleen | ||

---- | ---- | ||

'''week 8''' | '''week 8''' | ||


Smart bracelet: used while the robot guides the user. The robot keeps track of the distance between user and robot with the smart bracelet and asks questions if this distance becomes too large. How the smart bracelet works: see 'Tasks and approach (new; after week 3)'-->'smart bracelet'.

=Literature=

[1] Cassinis, R., Tampalini, F., & Bartolini, P. (2005). Wireless network issues for a roaming robot. In Proc. ECMR'05.

[2] Coltin, B., & Veloso, M. (2010, October). Mobile robot task allocation in hybrid wireless sensor networks. In 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (pp. 2932-2937). IEEE.

[3] Visincy, M. L. (1999). Fault Detection and Fault Tolerance Methods for Robotics, p. 43.

[4] Visincy, M. L. (1999). Fault Detection and Fault Tolerance Methods for Robotics, Motor and Sensor Failures, p. 23.

[5] Leica, P., Toibero, J. M., Roberti, F., & Carelli, R. (2013). Switched control algorithms to robot-human bilateral interaction without contact. In Advanced Robotics (ICAR).

[6] Cosgun, A., Sisbot, E. A., & Christensen, H. I. (2014, May). Guidance for human navigation using a vibro-tactile belt interface and robot-like motion planning. In 2014 IEEE International Conference on Robotics and Automation (ICRA) (pp. 6350-6355). IEEE.

[7] Torta, E., Cuijpers, R. H., & Juola, J. F. (2013). Design of a parametric model of personal space for robotic social navigation. International Journal of Social Robotics, 5(3), 357-365.

[8] Takayama, L., & Pantofaru, C. (2009). Influences on proxemic behaviors in human-robot interaction. In 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems (pp. 5495-5502).

=Appendix=

Because we would like to show ideas that were part of our initial design but were not integrated into our final product, we include them in this appendix:

==Tasks and approach (old; Before week3)==

To test the design and system of the guiding robot, we had two options: use the real robot Amigo or make a simulation. The 3D simulation was chosen, since it is an easier and more suitable way to test than a real hospital. The simulation will be a first-person 3D game in which the player is the test subject, looking through the eyes of an elderly patient or visitor in a hospital.

To make the simulation, the following aspects have to be considered:

'''1. Unity/Blender'''

The simulation game will be made in Unity, and the 3D models (the hospital as environment and a character as user) will be made in Blender.

'''2. Catharina hospital map'''

A real hospital map is used for the simulation. The Catharina hospital is preferred since it is close to the TU/e, in case tests have to be performed there. The 3D environment will be modelled after this map as well.

'''3. Eindhoven Airport robot'''

A literature study and research about the Eindhoven Airport robot will be done to find the flaws of the airport guiding robots, so that they can be avoided in our design.

'''4. Interaction'''

The player (user) 'speaks' by typing a sentence, and the robot should respond appropriately and correctly through the speakers.

'''5. Virtual bracelet'''

A 3D model of the bracelet will be made that the character (user) can put on.

'''6. Same starting point and same destination'''

For now, each simulation will be performed with the same start and end point. Later on, more starting points and destinations may be added.

'''7. Obstacle avoidance'''

Whenever the robot notices that something blocks its way, it should avoid it or move around it.

'''8. Distance between user and robot (keeping track)'''

The robot constantly checks the distance (radius) between user and robot. If the distance is too large, the robot waits or comes to the user. Keeping track of the user can be done with smart bracelets: the robot sends a signal to the bracelet, and the bracelet sends a signal back to the robot. The robot measures the time between sending and receiving, and the distance is obtained by multiplying the speed of the signal by half of that time, since the signal travels there and back.

'''9. Malfunction/call human'''

If the robot malfunctions, there should be an alternative, for example calling a human caregiver or another, well-functioning robot.

'''10. Touchpad and speaker'''

The robot's touchpad and speaker are used to give instructions to the user (for example about putting on the bracelet) and to have a conversation with the user.

'''11. App for touchpad'''

A program has to be written that allows the user to use the touchpad.

'''12. Survey before/during/after test (feedback)'''

A survey is used to get feedback about the design and system. Aspects that can be asked about include comfort, ease of use and time efficiency.

'''13. Elevators in hospital'''

During guiding, the robot has to use elevators whenever it needs to switch between floors.

'''14. Navigation system/structure of signage'''

The usual navigation signs of a real hospital will be implemented as follows. Each location in the hospital where a navigation sign is placed is considered a node, and each hallway that the sign refers to is considered a branch. The main entrance is the root, and each node is connected to child nodes through branches. The different departments are the so-called 'leaves'. For example, the node 'Main entrance' has a navigation sign that tells the visitor to take the left hallway (branch) for routes 1-50 and the right hallway (branch) for routes 51-100. At the end of these two hallways are again signs (child nodes) with other hallways (subbranches) connected to them. The sign at the end of the left hallway tells the visitor to take the left subhallway (subbranch) for routes 1-25 and the right subhallway (subbranch) for routes 26-50. The sign at the end of the right hallway tells the visitor to take the left or right subhallway (subbranch) for routes 51-74 and 75-100 respectively.
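The sign structure is a tree in which every branch serves a contiguous range of route numbers, so directions follow from descending from the root and taking, at each sign, the hallway whose range contains the requested route. This is a minimal sketch using the example numbers above; the structs and `directions_to` are hypothetical names, and a department (leaf) is represented as a node without branches.

```c
#include <stddef.h>

typedef struct Sign Sign;

/* A hallway leaving a sign; it serves routes first_route..last_route. */
typedef struct {
    int first_route, last_route;  /* inclusive range of route numbers */
    const char *direction;        /* e.g. "left" or "right" */
    const Sign *next;             /* sign (or department) at the end */
} Branch;

/* A sign is a node; a node without branches is a department (leaf). */
struct Sign {
    const char *name;
    const Branch *branches;
    size_t n_branches;
};

/* Walks from the root to the leaf for `route`, writing the direction taken
   at each sign into `steps`. Returns the number of steps, or -1 if no
   hallway serves the route or `steps` is too small. */
int directions_to(const Sign *root, int route, const char **steps, size_t max_steps)
{
    size_t n = 0;
    const Sign *s = root;
    while (s->n_branches > 0) {
        const Branch *hit = NULL;
        for (size_t i = 0; i < s->n_branches; i++) {
            if (route >= s->branches[i].first_route && route <= s->branches[i].last_route) {
                hit = &s->branches[i];
                break;
            }
        }
        if (hit == NULL || n == max_steps)
            return -1;
        steps[n++] = hit->direction;
        s = hit->next;
    }
    return (int)n;
}
```

For route 30, the result is "left" at the main entrance followed by "right" at the sign at the end of the left hallway.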

==Smart bracelet==

2 Arduinos and two transceivers (transmitter and receiver in one): one for the robot, one for the smart bracelet.

The robot (continuously) sends a signal and starts a timer.

The bracelet receives the signal and (continuously) responds with another signal.

The robot (continuously) receives the response and stops the timer.

The measured time covers the trip there and back, so the actual travel time is half of it; hence the radius distance between user and robot is v*t/2.

If the distance is smaller than or equal to the required distance, the robot keeps leading.

If the distance is larger than required, the robot slows down (the robot follows).

The robot has an indicator that blinks a few seconds before it turns left or right (turn signal).

The robot has an indicator that lights up a few seconds before it brakes (brake light).
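The timing of both indicators reduces to the time until the manoeuvre: the distance to the point where the robot turns or brakes, divided by its current speed. This is a minimal sketch, assuming "a few seconds" means a hypothetical `WARN_S` of 3 s; the function name is hypothetical and the blinking pattern itself is left out.

```c
#define WARN_S 3.0  /* hypothetical warning time in seconds */

/* Returns 1 if the indicator for an upcoming turn or brake should be on.
   dist_m: distance along the path to the manoeuvre point;
   speed_mps: current robot speed. */
int indicator_on(double dist_m, double speed_mps)
{
    if (dist_m <= 0.0)
        return 1;                      /* manoeuvre point reached */
    if (speed_mps <= 0.0)
        return 0;                      /* standing still: no arrival time */
    return dist_m / speed_mps <= WARN_S;  /* seconds until the manoeuvre */
}
```

Using time rather than distance means the warning comes the same number of seconds ahead whether the robot is walking slowly with an elderly user or faster with a younger one.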

We already have one Arduino, so we have to buy another Arduino and two transceivers.

Since we want programmable transceivers (transmitter and receiver in one), we decided on Arduinos with compatible transceivers. Arduino options could be the Arduino Uno, Nano or Micro; Arduino-compatible transceivers could be the Nrf2401 or Nrf24L01.

First we have to talk to Tech United to obtain more information about the football robots. The second Arduino Uno and the two Arduino-compatible transceivers (Nrf2401 or Nrf24L01) have to be bought, or we may borrow them from the LaPlace building (lab room LG0.60) or the Flux building (floor 0). Both buildings are located on the campus of Eindhoven University of Technology (TU/e). These transceivers communicate over their own 2.4 GHz radio protocol (the same frequency band as WiFi, but not WiFi itself), so no WiFi network has to be set up; the two transceivers only need to be configured with the same channel and address.

http://playground.arduino.cc/InterfacingWithHardware/Nrf2401

https://arduino-info.wikispaces.com/Nrf24L01-2.4GHz-HowTo

http://forum.arduino.cc/index.php?topic=92271.0

http://www.societyofrobots.com/robotforum/index.php?topic=7599.0

==Experiment==

* Intention: find out the sizes of the radii for the zones.
* The robot drives in a straight line.
* The robot matches its own speed to the speed of the user, but adapts it with a delay.
* In this experiment the robot does not maintain a constant distance between robot and user; it only adapts its speed.
* Because of this delay, the user can come closer to the robot if they think the robot is too far away, or increase the distance if they think the robot is too close. If the user finds the distance comfortable, they walk at a constant speed and hence keep a constant distance.
* These distances fluctuate (because of the delayed speed adaptation) and are stored.
* After the experiment, the most frequent distance is taken as the optimum distance. The minimum and maximum distance can be obtained after doing more measurements with more different users.
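Taking the most frequent distance requires binning the continuous distance log first, since two real measurements are rarely exactly equal. This is a minimal sketch, assuming a hypothetical bin width of 10 cm and distances up to 10 m; the function name and constants are not from the original, and ties are resolved in favour of the smallest distance.

```c
#include <stddef.h>

#define BIN_WIDTH_M 0.10  /* hypothetical histogram resolution */
#define MAX_BINS 100      /* covers 0 m up to 10 m */

/* Most frequent distance: bins the logged user-robot distances and returns
   the centre of the fullest bin, or -1.0 if no sample falls in range. */
double modal_distance(const double *dist_m, size_t n)
{
    unsigned counts[MAX_BINS] = {0};
    for (size_t i = 0; i < n; i++) {
        if (dist_m[i] < 0.0 || dist_m[i] >= MAX_BINS * BIN_WIDTH_M)
            continue;  /* ignore invalid or out-of-range samples */
        size_t bin = (size_t)(dist_m[i] / BIN_WIDTH_M);
        if (bin < MAX_BINS)
            counts[bin]++;
    }
    size_t best = 0;
    for (size_t b = 1; b < MAX_BINS; b++)
        if (counts[b] > counts[best])
            best = b;
    if (counts[best] == 0)
        return -1.0;
    return (best + 0.5) * BIN_WIDTH_M;
}
```

The bin width trades resolution against the number of samples needed: with only a few runs per participant, coarser bins give a more stable mode.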

Latest revision as of 20:13, 20 June 2016

Group 4 members

- (Tim) T.P. Peereboom 0783677

- (Marleen) M.J.W. Verhagen 0810317

- (Willeke) J.C. van Liempt 0895980

- (Victor) V.T.T. Lam 0857216

- (Huub) H.W.J. van Rijn 0903068

Introduction

In large hospitals, elderly patients and visitors may have trouble reading or understanding the navigation signs, so they may go to the information desk to ask human caregivers for help. A more efficient alternative could be robots that guide elderly patients and visitors safely to their destination in the hospital. This leaves human caregivers free to help elderly people with more serious problems that robots cannot solve, such as helping someone stand up after a fall. Once a map of the hospital is programmed into the robot, it can easily guide elderly people to their destination. Even if the patient does not know what to do or where to go, the robot gives clear instructions, for example through a touchscreen or speakers.

Scenario

An old man wants to visit his wife in the hospital. Because of the abbreviated location names and his hunched back, he has trouble reading the signage, so he asks for help at the information desk. There he is directed to a guiding robot. The robot carries a touch screen at eye level which asks the user to press "start". The man presses start, and the robot asks him to put on the smart bracelet. This request is also displayed on the screen, so that people with hearing problems can follow the instructions too. The old man puts on the bracelet and presses "proceed". The robot then asks where the man wants to go and uses voice recognition to identify his destination. The robot guides the man through the hospital to his destination. Thanks to the smart bracelet, the robot can keep track of the distance between the user and the robot; when the distance gets too large, the robot adjusts its speed. On arrival, the robot asks the user whether the desired location has been reached. If so, the robot leaves the user. When the user wants to leave the hospital again, he can summon a robot using his smart bracelet. When a robot has finished a guiding task, it checks whether other people are trying to summon a robot; otherwise it goes back to its base and recharges.

Users

Primary users

Given that the user space is a hospital environment, users would like to be treated with care. We therefore identified a few needs of the user. First, the robot needs to behave in a friendly way: its way of communicating with the user should be as neutral as possible, so that the user feels at ease with the robot. Second, the robot should be able to adjust its speed to the needs of the user; for instance, younger people tend to walk faster than elderly people. The calculated route should be as safe and passable for the user as possible, with efficiency as second priority, so that it does not take longer than necessary to reach the user's goal. Finally, the user needs a way to communicate with a human if needed. In situations such as the robot getting lost together with the user, the robot must be able to report this to a human operator so that the user can be helped.

Secondary users

Friends and family of the elderly target group can benefit from the use of robots, because they no longer have to fit the hospital appointments of their elderly relatives into their own schedule in order to accompany them to their destinations in the hospital; the elderly can go by themselves. Caregivers can also benefit, because they can focus on their more important care tasks instead of simply guiding elderly people to a destination.

Tertiary users

These users should all take into account that they may work with robots indirectly, which requires adjustments in areas such as design, construction, insurance and ethics. Industry involved in the robot business could benefit financially and scientifically from the use of robots. Although the needs of the primary users are key, the needs of the secondary and tertiary users should be considered as well to ensure high acceptance of the robot.

Stakeholders

Stakeholders are those who are involved in the development of the system. The two main stakeholders are the technology developers and the hospitals. The hospital can be divided into several stakeholders: the workers (nurses, doctors and receptionists), the visitors, the board of the hospital and the patients. The primary stakeholders are the visitors, workers and developers; the secondary stakeholders are the government, the board and the patients. Collaboration between developers, users and workers is needed. The interests of hospitalised patients must also be considered: they must not be bothered by the use of the technology in the hallways.

Requirements

For the guiding robot the following requirements are drawn up:

- The robot has to be able to reach every possible destination. Therefore it should be able to get through doors (doors open automatically in hospitals) and use elevators.

- The robot should be easy to use: figuring out how the robot works should be easier and quicker than figuring out how to get from A to B yourself. Thus a user-friendly interface should be designed.

- The robot should be able to calculate the optimal route to the destination. These routes can be stored in advance, since the map of a hospital does not change.

- It should be clear to the user that the robot is guiding him or her to the desired destination.

- It should be easy to follow the robot.

- The robot has to be able to detect whether the user is following it.

- The robot gives clear visual and audible feedback to the user, so that the user is well informed about what the robot has understood and what it will do next.

- The robot is able to track its location in the hospital.

- The guiding robot should have a motor that is strong enough.

- The battery life of the robot should allow it to work several hours continuously.

- The robot has to be able to avoid collisions.

- The patient should feel comfortable near the robot. Interaction with the robot should feel natural.

- The robot should have a low failure rate. It should work most of the time.

- If the robot stops working or in case of emergency, then it should be possible for the patient to contact help.

- The design of the robot should fit in the hospital: it must be easy to clean, as sterile as possible and pose a low risk of injury.

State-of-the-art

Human-Robot Interaction

Several experiments have been done to determine the optimal distance between a robot and a user. Torta [7] conducted an experiment in which participants were asked to stand still, although they could move their head freely. The robot approached them from different directions, and the participants could stop the robot by pressing a button when they felt most comfortable with the distance. Afterwards they evaluated the direction from which the robot approached them by answering the 5-point Likert scale question: "How did you find the direction from which the robot approached you?" This experiment was also carried out with the participants sitting. The results of these experiments are shown in the figure below.

These experiments show that the mean optimal approach distance is 173 cm when the user is standing and 182 cm when he or she is sitting. This is significantly larger than the preferred distance between humans (45 to 120 cm).

These results show that it is important to test the preferred distance in a following scenario with a real robot, because the preferred distances in human-human interaction differ from those in human-robot interaction. The values obtained in these experiments cannot be used directly: the robot used (Nao) is much smaller than our guiding robot, and the experiments measured the optimal distances for approaching scenarios, not for guiding scenarios.

More experiments on proxemic behaviour in human-robot interaction have been conducted by Takayama and Pantofaru [8]. Each participant was asked to stand on an X marked on the floor, 2.4 meters away from the front of the robot. The robot was directly facing the participant, as shown in the figure below.

For half of the participants, the robot's head was tilted to look at their face in round 1 and at their legs in round 2; for the other half the order was switched. The participants were asked to move forward as far as they felt comfortable. Then the robot approached the participants, and when the robot came too close to feel comfortable, the person had to step aside. Afterwards the participants filled in a questionnaire, and the procedure was repeated with the robot's head tilted the other way.

This experiment identified several factors that influence human-robot interaction. Experience with owning pets and experience with robots both decrease the personal space that people maintain around robots. Also, women maintain a larger personal space than men from robots that look at their faces. It does not matter whether people approach the robot or let the robot approach them.

Guiding robot

In the field of guiding robots, several projects have already been carried out. One of these is the SPENCER project. The robot 'SPENCER' is a socially aware service robot for guiding passengers at busy airports; it is able to track individuals and groups at Schiphol airport. SPENCER combines several sensors and detection methods, such as a 2D laser detector, an RGB-D sensor, a HOG-based detector and a multi-hypothesis tracker (MHT). SPENCER is designed for large environments, and no technology yet provides detection of similar quality in a smaller form. However, SPENCER provides a good basis, and some of its technologies for recognising persons and/or groups can be used to avoid collisions with people moving about.

Another project is described in Akansel Cosgun's paper [6]: a navigation guidance system that guides a human to a goal point. The system uses a vibro-tactile belt interface and a stationary laser scanner. Leg detection with a laser scanner placed at ankle height is common practice for robots; however, in cluttered environments this leg detection was found to be prone to false positives. To reduce these false positives, the approach employs multiple layers of laser scans, typically at torso height in addition to ankle height. Studies showed that the upper-body tracker achieved better results than the leg tracker, because it can use a simpler linear motion model instead of a walking model. This technology is convenient for tracking people in general, but not for tracking one specific person such as our elderly user. It is therefore better not to use it for tracking the user in our specific setting.
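The advantage of a linear motion model, and the extra step needed to stay locked on one specific person, can be illustrated with a minimal sketch. This is not the tracker from [6]: it assumes torso positions have already been extracted from the laser scans, and it swaps in a simple alpha-beta filter with a constant-velocity model plus nearest-neighbour gating. The names `Track`, `associate` and `track_update`, and any gain values, are hypothetical.

```c
#include <assert.h>

/* Estimated state of one tracked person (torso position), in metres and m/s. */
typedef struct {
    double x, y;
    double vx, vy;
} Track;

/* Gating: returns the index of the detection closest to the track's predicted
 * position, or -1 if no detection lies strictly within `gate` metres. */
int associate(const Track *t, const double det_x[], const double det_y[],
              int n, double dt, double gate)
{
    double px = t->x + t->vx * dt;  /* constant-velocity prediction */
    double py = t->y + t->vy * dt;
    double best_d2 = gate * gate;
    int best = -1;

    for (int i = 0; i < n; i++) {
        double ex = det_x[i] - px;
        double ey = det_y[i] - py;
        double d2 = ex * ex + ey * ey;
        if (d2 < best_d2) {
            best_d2 = d2;
            best = i;
        }
    }
    return best;
}

/* Alpha-beta filter step with a linear (constant-velocity) motion model:
 * predict where the person is after dt seconds, then correct position and
 * velocity with the measured position (mx, my). */
void track_update(Track *t, double mx, double my, double dt,
                  double alpha, double beta)
{
    assert(dt > 0.0 && alpha >= 0.0 && alpha <= 1.0 && beta >= 0.0 && beta <= 1.0);

    double px = t->x + t->vx * dt;
    double py = t->y + t->vy * dt;
    double rx = mx - px;  /* residual: measurement minus prediction */
    double ry = my - py;

    t->x = px + alpha * rx;
    t->y = py + alpha * ry;
    t->vx += (beta / dt) * rx;
    t->vy += (beta / dt) * ry;
}
```

Gating on the predicted position is one simple way to keep following the same person among several detections; when two people walk close together they can still be confused, which matches the limitation noted above.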

USE-aspects

The USE aspects (users, society and enterprise) of our guiding robot are described below.

Users

The users of the new guiding robot are mainly elderly people who have trouble finding their way in the hospital. Without this robot they would have to ask employees to guide them, but employees may not have time to guide them all the way to their destination. If the elderly then do not fully understand the employees' instructions, they can still get lost and arrive late for an appointment. It is important that the users feel comfortable near the robot; otherwise they may refuse to let the robot guide them.

Society

Our society is aging, so the ratio between elderly and young people is changing, and fewer young caregivers will be available for the older people who need care. Deploying robots for simple tasks reduces the need for caregivers, because caregivers can work more efficiently when they only have to focus on the more complex tasks. The growing demand for caregivers will thus be reduced, while the elderly still get the care they need.

Enterprise

The robot can help patients who have trouble finding their way to arrive on time, so fewer patients would arrive late for an appointment. This may make the hospital more efficient and could reduce costs. The robotics industry could also benefit financially and scientifically from the use of such robots. The robotics industry will grow, which creates more jobs for engineers who want to develop and improve this robot.

Objectives

The objectives for the system and for the final presentation are explained below.

Objectives of the system

- Guiding elderly patients and visitors of a hospital safely, comfortably and quickly to their destination

- Reducing the number of human caregivers needed to guide patients and visitors, so that they have their hands free to help patients with more serious problems

- Keeping lonely elderly people company, so that they have someone to interact and talk with

- Keeping track of the user

- Avoiding obstacles

- Providing a suitable solution in case the robot malfunctions

- Using a touchscreen with an app, and speakers, to communicate with the user and to give instructions to the user

Objectives of the presentation

To present our design, we are going to use a Turtle robot from Tech United. Further information can be found in the section 'Robot'.

- The Turtle will guide a person from A to B to C, etc., on the soccer field.

- The Turtle will react to the position of the user and will give signals, for example when it is about to turn left.

An app is made for the first interaction with the robot.

Tasks and approach (new; after week 3)

Robot

A decision had to be made between using an existing robot (for example the Amigo or a RoboCup robot) and building our own robot with an Arduino, motor, battery, wheels, webcam, sensors and a platform. We chose a RoboCup robot for the following reasons:

- It is more robust than the Amigo: it has bumpers.

- Navigation is already implemented; we only need to provide a hospital map.

- Obstacle avoidance is already implemented, since RoboCup robots avoid each other when they play football. We still have to define what counts as an obstacle and what does not.

What we still have to implement is the interaction and conversation between the robot and the user. The robot gives instructions through a speaker and a touchscreen. If the elderly user stops following the robot, because he or she stops walking or walks in another direction, the robot should communicate with the user or with the information desk, so that a human caregiver can continue guiding the user.

Actions the robot should take in case of malfunctions

The robot may suffer from electrical, Wi-Fi and/or mechanical problems, such as a broken sensor, a lost Wi-Fi connection or broken motors. The robot should then call another robot, or call the information desk for a human caregiver, so that the user can continue to be guided.
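One way to detect the failures listed above and decide who takes over is a heartbeat watchdog: every subsystem periodically reports that it is alive, and a subsystem that stays silent for too long is treated as broken. The sketch below assumes such heartbeats exist; the subsystem list, the timeout and the preference for another robot over the information desk are illustrative assumptions, and the names `detect_faults` and `choose_action` are hypothetical. How the call is transmitted when the Wi-Fi link itself has failed is not addressed here.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical subsystems watched by a simple heartbeat watchdog. */
typedef enum { SUB_LASER, SUB_CAMERA, SUB_WIFI, SUB_MOTORS, SUB_COUNT } Subsystem;

typedef enum {
    ACTION_CONTINUE,   /* no fault: keep guiding the user */
    ACTION_CALL_ROBOT, /* stop and ask another robot to take over */
    ACTION_CALL_DESK   /* stop and ask the information desk for a caregiver */
} Action;

/* Returns a bit mask with bit s set for every subsystem s whose last heartbeat
 * (in seconds) is older than timeout_s at time now. */
unsigned detect_faults(const double last_heartbeat[SUB_COUNT], double now,
                       double timeout_s)
{
    unsigned faults = 0;

    assert(timeout_s > 0.0);
    for (int s = 0; s < SUB_COUNT; s++)
        if (now - last_heartbeat[s] > timeout_s)
            faults |= 1u << s;
    return faults;
}

/* Escalation: with any fault the robot stops guiding. Another robot is
 * preferred if one is available; otherwise the information desk is called. */
Action choose_action(unsigned faults, bool other_robot_available)
{
    if (faults == 0)
        return ACTION_CONTINUE;
    return other_robot_available ? ACTION_CALL_ROBOT : ACTION_CALL_DESK;
}
```

Keeping fault detection and the escalation decision in separate functions makes it easy to change the escalation policy (for example, always calling the desk for motor failures) without touching the watchdog.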

User interface

An interaction between the user and the guiding robot is needed. For this, an app is designed for the first interaction. The app serves as the user interface through which the user tells the robot his or her desired destination. The app will be programmed in Android Studio. When starting on the app, the following questions should be discussed: Which features should it have? How can we make it user-friendly? Who are the users?

The design of the app is based on the design of the Catharinaziekenhuis (Catharina Hospital) website.

App design

The design of the app, with all the features it should have, is described below.

Home screen: The home screen should contain the text 'Welkom' (Welcome) and two buttons: a start button and a change-language button. When a different language is selected, the home screen reappears in that language.

Next screen: This screen appears when the user presses start. It contains the text 'Ik ben een' (I am a), with two buttons below it: 'Patiënt' (Patient) and 'Bezoeker' (Visitor).

Patiënt: The screen that follows the 'Patiënt' button should contain two buttons: 'Ik heb een afspraak' (I have an appointment) and 'Ik heb geen afspraak' (I do not have an appointment). The screens that follow each button are described below.

- 'Ik heb een afspraak' (I have an appointment)

  - At which outpatient clinic do you have an appointment? You do not need an appointment to have blood taken or to hand in other body fluids.

    - A scroll menu with all outpatient clinics, with the option to type in the name of the clinic

- 'Ik heb geen afspraak' (I do not have an appointment)

  - 'U heeft geen afspraak, maar wel een verwijzing' (You do not have an appointment, but you do have a referral)

    - To make an appointment at one of our outpatient clinics, please call the clinic or department concerned. Our outpatient clinics can be reached by phone between 08:30 and 16:30.

  - 'U heeft geen afspraak en ook geen verwijzing' (You have neither an appointment nor a referral)

    - Before you can make an appointment at one of the outpatient clinics, you first need a referral from your general practitioner. Please make an appointment with your general practitioner first.

Bezoeker: The screen that follows the 'Bezoeker' (Visitor) button contains the following two buttons, each with its own sub-selections:

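- 'Op welke afdeling wilt u een bezoek brengen?' (Which section do you want to visit?)

- menu with the sections

- 'Op welke kamer moet u zijn?' (Which room do you need to go to?)

- 'Ik weet niet op welke kamer ik moet zijn' (I do not know which room I need to go to)

- menu with the sections

- 'Ik weet niet op welke afdeling ik moet zijn' (I do not know which section I need to go to)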

The visiting times can be checked once the section is known.

Ik weet niet waar ik een bezoek wil brengen (I do not know where I want to visit): The following screen appears when the button 'Ik weet niet waar ik een bezoek wil brengen' is pressed:

- Option to enter the name of the patient

- We should check whether this is allowed with regard to privacy

Robot

Our idea will be simulated using a Turtle from Tech United. The Turtle and Tech United's software can be used and adapted to our idea. Using a DEV notebook, we can program and simulate our idea. When the simulation results are satisfactory, the test with the real Turtle can be carried out. Some of the existing functionality that we will use is listed below:

- The Turtle knows the field and its own position on the field.

- The Turtle sees a black object as an obstacle, which it will avoid.

- The Turtle sees a yellow object as the ball.

- The Turtle is programmed with target positions rather than velocities.

We will work in Eclipse on the role_attacker_main.c file.

Interpretation of the guiding system

The Turtle knows the field and its position. The goal of the Turtle is to move from a position A along a set route to a position B. The route will simulate hallways with turns. During the movement the Turtle will avoid obstacles (black). The user (yellow) must be guided along the route to his or her destination.
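Because the Turtle is programmed with positions rather than velocities, a route with turns can be expressed as a list of waypoints (the corners of the simulated hallways), where the robot receives the next waypoint as its target position. The following sketch assumes that representation; `Point`, `Route` and `next_setpoint` are hypothetical names and are not part of the Tech United code in `role_attacker_main.c`. Obstacle avoidance is assumed to be left to the existing Turtle software.

```c
#include <assert.h>

typedef struct { double x, y; } Point;

/* Hypothetical route on the soccer field: hallway corners in metres. */
typedef struct {
    const Point *waypoints;
    int count;
    int current;  /* index of the waypoint the Turtle is heading for */
} Route;

/* Returns the next position setpoint for the Turtle. Once the Turtle is within
 * `tolerance` metres of the current waypoint, the next waypoint becomes the
 * target; at the last waypoint (the destination) the setpoint stays there. */
Point next_setpoint(Route *r, Point robot, double tolerance)
{
    assert(r->count > 0 && r->current >= 0 && r->current < r->count);

    Point target = r->waypoints[r->current];
    double ex = target.x - robot.x;
    double ey = target.y - robot.y;

    /* Compare squared distances, so no square root is needed. */
    if (ex * ex + ey * ey < tolerance * tolerance && r->current < r->count - 1) {
        r->current++;
        target = r->waypoints[r->current];
    }
    return target;
}
```

Calling `next_setpoint` once per control cycle with the Turtle's current position walks it corner by corner through the simulated hallways.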

Decision making

The robot cannot simply follow the route; it has to make several decisions along the way. The following points have to be taken into account when making a decision:

- Lead/follow: depending on the distance to the user, what should the robot do? When the robot leads (the distance between robot and user is small enough), it follows the preprogrammed path from the starting point to the destination and may accelerate or keep the same speed. When the robot follows, it slows down. The robot calls the reception if the user is completely off track.

- Interaction: what will the robot do or say (through the speaker) when it malfunctions (see section 'Malfunctions') or encounters an issue (see section 'Possible issues')? How should this be implemented?
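The lead/follow decision can be sketched as a function of the measured distance between robot and user, which also covers the requirement that the robot detects whether the user is following. This is a minimal sketch under assumptions: the distance is assumed to come from the user-tracking software, the mode and function names are hypothetical, and the thresholds `LEAD_MAX_DIST` and `OFF_TRACK_DIST` are placeholders, since the proxemics studies described earlier indicate that suitable distances have to be measured with the real robot.

```c
#include <assert.h>

typedef enum {
    MODE_LEAD,          /* user close: follow the path at cruise speed */
    MODE_FOLLOW,        /* user falls behind: slow down */
    MODE_CALL_RECEPTION /* user completely off track: stop and call reception */
} GuideMode;

/* Placeholder thresholds in metres; real values would have to be found by
 * testing with the actual robot and users. */
#define LEAD_MAX_DIST  1.5
#define OFF_TRACK_DIST 5.0

/* Chooses the guiding mode from the measured robot-user distance. */
GuideMode choose_mode(double user_dist)
{
    assert(user_dist >= 0.0);
    if (user_dist <= LEAD_MAX_DIST)
        return MODE_LEAD;
    if (user_dist <= OFF_TRACK_DIST)
        return MODE_FOLLOW;
    return MODE_CALL_RECEPTION;
}

/* Speed command in m/s: cruise speed while leading, decreasing linearly from
 * cruise speed to zero as the user falls behind, zero when calling reception. */
double choose_speed(double user_dist, double cruise_speed)
{
    switch (choose_mode(user_dist)) {
    case MODE_LEAD:
        return cruise_speed;
    case MODE_FOLLOW:
        return cruise_speed * (OFF_TRACK_DIST - user_dist)
                            / (OFF_TRACK_DIST - LEAD_MAX_DIST);
    case MODE_CALL_RECEPTION:
    default:
        return 0.0;
    }
}
```

The linear slow-down avoids an abrupt stop when the user falls just outside the lead distance; a real implementation would probably also add hysteresis so the robot does not switch modes back and forth at a threshold.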