Robotic Drone Referee: Difference between revisions


---- | ---- | ||

=Introduction - Project Description= | =Introduction - Project Description= | ||

<p> | |||

This project was carried out for the second module of the 2015 MSD PDEng program. The team consisted of the following members: | |||

* Cyrano Vaseur ('''Team Leader''') | |||

* Nestor Hernandez | |||

* Arash Roomi | |||

* Tom Zwijgers | |||

The goal was to create a system architecture and to provide a proof of concept in the form of a demo. | |||

</p> | |||

<p> | |||

*'''Context''': The demand for objective refereeing in sports is continuous. Technology is increasingly used to assist referees in their judgement at a professional level, e.g. Hawk-Eye and goal-line technology. As more technology is applied, this might someday lead to autonomous refereeing. Application of such technology, however, will most likely lead to disagreements. A more accepting environment for such technology is robot soccer (RoboCup). Developing an autonomous referee in this context is a first step towards future applications in professional sports. | |||

*'''Goal''': Therefore the goals of this project are: | |||

**To develop a System Architecture (SA) for a Drone Referee system | |||

**Realize part of the SA to prove the concept | |||

</p> | |||

---- | ---- | ||

=System Architecture= | =System Architecture= | ||

<p> | <p> | ||

Any ambitious long-term project starts with a vision of what the end product should do. For the robotic drone referee this has taken the form of the System Architecture presented in | |||

[[System Architecture Robotic Drone Referee|the System Architecture section]]. The goal is to provide a possible road map and create a framework to start development, such as the proof of concept described later on in this document. First, the four key drivers behind the architecture are discussed and explained. Second, a detailed description and overview of the proposed system is given. | |||

</p> | |||

==System Architecture - Design Choices== | ==System Architecture - Design Choices== | ||

<p> | <p> | ||

[[System Architecture Robotic Drone Referee#System Architecture - Design Choices|System Architecture - Design Choices]] | |||

</p> | |||

==Detailed System Architecture== | ==Detailed System Architecture== | ||

<p> | <p>[[System Architecture Robotic Drone Referee#Detailed System Architecture|Detailed System Architecture]]</p> | ||

---- | |||

=Proof of Concept (POC)= | =Proof of Concept (POC)= | ||

<p> | |||

<p> | To prove the concept, part of the system architecture is realized. This realization is used to demonstrate the use case. The selected use case is to referee a ball crossing the pitch border lines. To make sure this refereeing task works well, even in the worst cases, a benchmark test is set up. This test involves rolling the ball out of the pitch and, just after it has crossed the line, rolling it back into the pitch. In [[Proof of Concept Robotic Drone Referee|Proof of Concept]], the use case is specified in further detail. | ||

</p> | |||

==Use Case-Referee Ball Crossing Pitch Border Line== | ==Use Case-Referee Ball Crossing Pitch Border Line== | ||

<p> | <p> The goal of the demo is to provide a proof of concept. This will be achieved through a use case that focuses on one specific situation and makes sure that this situation is handled correctly. The details of this use case are presented in [[Proof of Concept Robotic Drone Referee#Use Case-Referee Ball Crossing Pitch Border Line|Use Case-Referee Ball Crossing Pitch Border Line]].</p> | ||

==Proof of Concept Scope== | ==Proof of Concept Scope== | ||

<p> | <p>[[Proof of Concept Robotic Drone Referee#Proof of Concept Scope|Proof of Concept Scope]]</p> | ||

==Defined Interfaces== | ==Defined Interfaces== | ||

<p> | <p>[[Proof of Concept Robotic Drone Referee#Defined Interfaces|Defined Interfaces]]</p> | ||

==Developed Blocks== | ==Developed Blocks== | ||

<p> | <p>In this section, all the developed and tested blocks from the [http://cstwiki.wtb.tue.nl/index.php?title=System_Architecture_Robotic_Drone_Referee#Detailed_System_Architecture| System Architecture] are described.</p> | ||

===Rule Evaluation=== | ===Rule Evaluation=== | ||

<p> | <p>Rule evaluation encloses all refereeing soccer rules that are currently taken into account in this project. The rule evaluation set consists of the following:</p> | ||

# [[Refereeing Out of Pitch]] | |||

===World Model - Field Line | ===World Model - Field Line predictor=== | ||

<p> | <p>The detection module needs a prediction module to predict the view that the camera on the drone has at each moment. Because the localization method does not use image processing, and no camera-view data other than the side lines is needed, the line predictor block only provides data on the side lines that are visible to the drone camera. [http://cstwiki.wtb.tue.nl/index.php?title=Field_Line_predictor [Continue...]] </p> | ||

===Detection Skill=== | ===Detection Skill=== | ||

<p> | <p>The Detection skill is in charge of the vision-based detection within the frame of reference. Currently, it consists of the following developed sub-tasks:</p> | ||

# [[Ball Detection]] | |||

# [[Line Detection]] | |||

===World Model - Ultra Wide Band System (UWBS) - Trilateration=== | ===World Model - Ultra Wide Band System (UWBS) - Trilateration=== | ||


==Integration== | ==Integration== | ||

<p> | <p> | ||

As many different skills have been developed over the course of the project, a combined implementation has to be made in order to give a demonstration. To this end, the integration strategy was developed and applied. While it is not recommended that the same strategy is applied throughout the project, the process was documented in [[Integration Strategy Robotic Drone Referee]]. | |||

</p> | |||

==Tests & Discussion== | ==Tests & Discussion== | ||

<p> | <p>The test consisted of a simultaneous coverage of the developed blocks so far: Rule Evaluation, Field Line Predictor, Detection Skill and Trilateration.</p> | ||

<p>The test was conducted on a small RoboCup soccer field (8 by 12 meters) and comprised:</p> | |||

* Trilateration set up and configuration | |||

* Localization testing | |||

* Ball detection and localization | |||

* Out of Pitch Refereeing | |||

The performed test can be viewed [https://youtu.be/_VaHlZv1tgI here]. | |||

The conclusions of the test (demo) are the following: | |||

* The localization method based on trilateration is very robust and accurate but it should be tested in bigger fields. | |||

* The color-based detection works well when there are no players or other objects in the field. Further testing that includes robots and takes occlusion into account should be considered. | |||

* The out of pitch refereeing is very sensitive to the psi/yaw angle of the drone and the accuracy of the references provided by the Field Line estimator. | |||

* Work on distinguishing between close parallel lines should be continued. | |||

* Increasing localization accuracy and refining the Field Line estimator should be researched. | |||

==Researched Blocks== | ==Researched Blocks== | ||

<p> | <p>In this section, all the blocks from the [[System_Architecture_Robotic_Drone_Referee#Detailed_System_Architecture| System Architecture]] that were researched, but are yet to be implemented, are described. Also we include in this section all the discarded options that might be interesting or useful to take into account in the future.</p> | ||

===Sensor Fusion=== | ===Sensor Fusion=== | ||

<p> | <p>In the designed system, several sensing methods provide the same information about the environment. It can be shown that additional measurement updates increase the accuracy of a probabilistic estimate. | ||

As an example, the Localization block can use the Ultra Wide Band system and the acceleration sensors to estimate the position of the drone. This data fusion is desirable because the Ultra Wide Band system has a high accuracy but responds slowly, whereas the acceleration sensors have a lower accuracy but respond faster. | |||

The other system that can benefit from sensor fusion is the psi angle block. The psi angle is needed for drone motion control and also for other detection blocks. The psi angle data come from the drone’s magnetometer and gyroscope. The magnetometer gives the psi angle with a rather high error. The gyroscope gives the derivative of the psi angle. The gyroscope data have a high accuracy, but because the angle must be obtained by integrating this derivative, the uncertainty increases over time. Sensor fusion can be used in this case to correct the data coming from both sensors. [http://cstwiki.wtb.tue.nl/index.php?title=Sensor_Fusion#Method continue] | |||

</p> | |||

===Cascaded Classifier Detection=== | ===Cascaded Classifier Detection=== | ||

<p> | <p>Classifiers built from multiple learners, such as the Viola-Jones algorithm, could provide good predictive performance in recognizing patterns and features in images. For this purpose, a [http://mathworks.com/help/vision/ug/train-a-cascade-object-detector.html cascaded object detector] was researched. After proper training, the object detector should be able to uniquely identify a soccer ball in real time within the expected environment. During this project several classifiers were trained, but the results were later discarded for the following reasons:</p> | ||

* Very sensitive to lighting changes | |||

* Not enough data for effective training | |||

* Little knowledge about how these algorithms really work | |||

* Difficult to find the trade-off when defining the acceptance criteria for a trained classifier | |||

<p>The results were promising, but not robust enough to be used at this stage. In the future, this detection method could be researched further in order to overcome the mentioned drawbacks.</p> | |||

===Position Planning and Trajectory planning=== | ===Position Planning and Trajectory planning=== | ||


===Motion Control=== | ===Motion Control=== | ||

<p> | |||

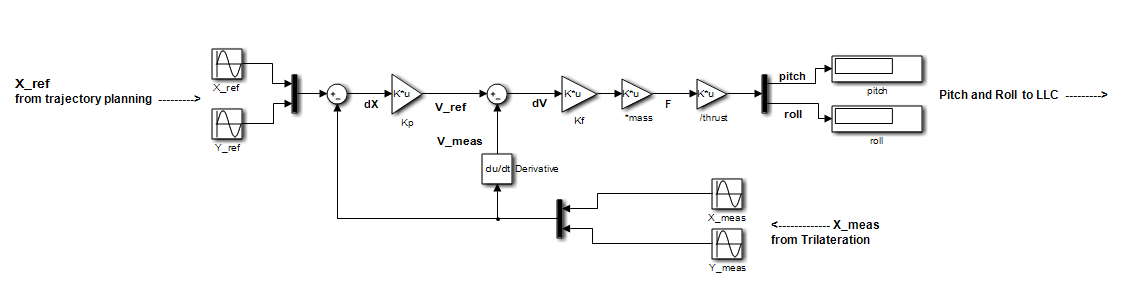

Drone control is decomposed into high level control (HLC) and low level control (LLC). High level (position) control converts the desired position reference into reference pitch and roll angles for the drone. Low level control then converts these desired pitch and roll angles into the motor PWM inputs required to steer the drone to the desired position. In this section only the HLC is discussed. | |||

<br /><br /> | |||

''High Level Control Design'' <br /> | |||

For the high level control, cascaded position-velocity control is applied. This is illustrated in Figure 1. The error between the reference position X<sub>ref</sub> and the measured position X<sub>meas</sub> is multiplied by K<sub>p</sub> to generate a reference velocity V<sub>ref</sub>. This reference is compared with the measured velocity V<sub>meas</sub>. The resulting error is multiplied by K<sub>f</sub> to generate the desired force F that moves the drone to the correct position. This force F is divided by the total drone thrust T to obtain the pitch (θ) and roll (φ) angles (input for the LLC). The relation between the angles, the thrust T and the force F is also explained well in the book by Peter Corke<ref>P. Corke, Robotics, Vision and Control, Berlin Heidelberg: Springer-Verlag , 2013.</ref>. The non-linear equations are: | |||

<br /><br /> | |||

F<sub>x</sub> = T*sin(θ) | |||

<br /> | |||

F<sub>y</sub> = T*sin(φ) | |||

<br /><br /> | |||

However, with small angle deviations (below 10°) the equations simplify to: | |||

<br /><br /> | |||

F<sub>x</sub> = T*θ | |||

<br /> | |||

F<sub>y</sub> = T*φ | |||

<br /><br /> | |||

Position measurements can be gathered from the WM-Trilateration block. Measured velocities can be taken as the derivative of position measurements. | |||

The challenge in designing this configuration is in tuning K<sub>p</sub> and K<sub>f</sub>. | |||

[[File:ControllorOverviewRoboticDroneReferee.png|1000px|thumb|center|Figure 1: High level control configuration]] | |||

</p> | |||

=Discussion= | |||

<p> | |||

In this section some of the most important improvement points for the referee are listed. These issues were encountered, but no solution was applied, either for lack of time or because they were out of scope. Anyone continuing this project should be aware of the current limitations and problems with the hardware and the software. | |||

</p> | |||

<p> | <p> | ||

Proposed improvements for the current rule evaluation algorithms: | |||

* Second layer testing modules: | |||

** Take into account line width and ball radius: For more precise evaluation on ball out of pitch, a second layer is required taking into account line width and ball radius. | |||

** Develop goal post detection block: For detecting a goal, a second layer is necessary to evaluate whether a ball crossing the back line is out of pitch or is indeed a goal. This requires detection of the goal posts. | |||

</p> | |||

<p> | |||

Proposed improvements to the sensor suite: | |||

* Improve altitude measurements: With additional weight on the drone, altitude measurements seemed unreliable. Therefore, in the proof of concept drone altitude is set and updated by hand. One possible solution for more reliable measurements of altitude is to extend the UWBS trilateration system to also measure drone altitude. | |||

* Improve yaw-angle measurement and control: | |||

** Current magnetometer unreliable: Hardware insufficient: Unpredictable freezes: The magnetometer on the drone freezes unpredictably. | |||

** Current top camera unreliable: Distortions in measurements: The top camera is used for measuring the yaw angle of the drone based on color. However, below the top camera a net is cast (partially wired). This causes light reflection causing distortions in the measurements. | |||

*An attempt was made to apply sensor fusion (Kalman filtering) with these measurements. However, due to the malfunctions in the hardware (unpredictable freezes) there was not much success with this. Therefore, in the proof of concept also yaw angle is set and updated by hand. Hence, new methods for measuring drone yaw are needed. One possible simple solution is applying a better quality magnetometer. | |||

* Establish drone self-calibration: Drone sensor outputs are extremely temperature sensitive. Therefore, drone calibration parameters require regular update. For the system to work properly without interruptions for calibration, drone self-calibration is required. A direction for a possible solution is to use Kalman filtering for prediction of both state and measurement bias. | |||

</p> | |||

<p> | <p> | ||

Implementation of autonomous drone motion control: | |||

* Improve low level control: Drone low level control currently is confined. For open outer loop, there still exists drift. This is possibly due to faulty measurements as mentioned already. Consequently these need to be resolved first. | |||

* Test high level control configuration: For high level control/ outer loop position control, a cascaded position velocity loop has been developed already. This however needs to be tested next. | |||

</p> | |||

= | <p> | ||

To avoid the issues encountered during system integration, the following changes are proposed: | |||

* Improve simultaneous drone control and camera feed input: One obstacle encountered was controlling the drone while simultaneously getting the camera feed from the onboard cameras. Possible solutions for this problem are: | |||

** Extending the script written by Daren Lee which is used for reading drone sensor data: The script could be extended to read camera feed next to navigation data. | |||

** Read the feed from an auxiliary camera through a second PC connected via UDP. This is a less elegant solution, however. | |||

* Write code in C/C++: For the demo, integration was done in Simulink in order to run all realized blocks concurrently. This led to some difficulties: certain MATLAB functions could not be called from Simulink without awkward workarounds, i.e. using MATLAB coder.extrinsic. For that reason future developers are advised to write code in C/C++. | |||

</p> | |||

= Links = | |||

<p> | |||

For the repository go to [https://github.com/nestorhr/MSD2015/ Github]. | |||

</p> | |||

<p> | |||

For the 01/04/16 demo footage click [https://youtu.be/_VaHlZv1tgI here]. | |||

</p> | |||

<p> | |||

For the documentation of the next generation system click [http://cstwiki.wtb.tue.nl/index.php?title=Autonomous_Referee_System here]. | |||

</p> | |||

< | =Notes= | ||

<references /> | |||

---- | ---- | ||

Latest revision as of 15:32, 9 November 2017

Abstract

Refereeing any kind of sport is not an easy job and the decision making procedure involves a lot of variables that cannot be fully taken into account at all times. Human refereeing has a lot of limitations but it has been the only way of proceeding until now. Due to the lack of information, referees sometimes make wrong decisions that can sometimes change the flow of the game or even make it unfair. The purpose of this project is to develop an autonomous drone that will serve as a referee for any kind of soccer match. The robotic referee should be able to make objective decisions taking into account all the possible information available. Thus, information regarding the field, players and ball should be assessed in real-time. This project will deliver an efficient, innovative, extensible and flexible system architecture able to cope with real time requirements and well-known robotic systems constraints.

Introduction - Project Description

This project was carried out for the second module of the 2015 MSD PDEng program. The team consisted of the following members:

- Cyrano Vaseur (Team Leader)

- Nestor Hernandez

- Arash Roomi

- Tom Zwijgers

The goal was to create a system architecture and to provide a proof of concept in the form of a demo.

- Context: The demand for objective refereeing in sports is continuous. Technology is increasingly used to assist referees in their judgement at a professional level, e.g. Hawk-Eye and goal-line technology. As more technology is applied, this might someday lead to autonomous refereeing. Application of such technology, however, will most likely lead to disagreements. A more accepting environment for such technology is robot soccer (RoboCup). Developing an autonomous referee in this context is a first step towards future applications in professional sports.

- Goal: Therefore the goals of this project are:

- To develop a System Architecture (SA) for a Drone Referee system

- Realize part of the SA to prove the concept

System Architecture

Any ambitious long-term project starts with a vision of what the end product should do. For the robotic drone referee this has taken the form of the System Architecture presented in the System Architecture section. The goal is to provide a possible road map and create a framework to start development, such as the proof of concept described later on in this document. First, the four key drivers behind the architecture are discussed and explained. Second, a detailed description and overview of the proposed system is given.

System Architecture - Design Choices

System Architecture - Design Choices

Detailed System Architecture

Proof of Concept (POC)

To prove the concept, part of the system architecture is realized. This realization is used to demonstrate the use case. The selected use case is to referee a ball crossing the pitch border lines. To make sure this refereeing task works well, even in the worst cases, a benchmark test is set up. This test involves rolling the ball out of the pitch and, just after it has crossed the line, rolling it back into the pitch. In Proof of Concept, the use case is specified in further detail.

Use Case-Referee Ball Crossing Pitch Border Line

The goal of the demo is to provide a proof of concept. This will be achieved through a use case that focuses on one specific situation and makes sure that this situation is handled correctly. The details of this use case are presented in Use Case-Referee Ball Crossing Pitch Border Line.

Proof of Concept Scope

Defined Interfaces

Developed Blocks

In this section, all the developed and tested blocks from the System Architecture are described.

Rule Evaluation

Rule evaluation encloses all refereeing soccer rules that are currently taken into account in this project. The rule evaluation set consists of the following:

World Model - Field Line predictor

The detection module needs a prediction module to predict the view that the camera on the drone has at each moment. Because the localization method does not use image processing, and no camera-view data other than the side lines is needed, the line predictor block only provides data on the side lines that are visible to the drone camera. [Continue...]

Detection Skill

The Detection skill is in charge of the vision-based detection within the frame of reference. Currently, it consists of the following developed sub-tasks:

World Model - Ultra Wide Band System (UWBS) - Trilateration

One of the most important building blocks for the drone referee is a method for positioning. At all times, the drone state, namely the set {X,Y,Z,Yaw}, should be known in order to perform the refereeing duties. Of the drone state, Z and Yaw are measured by either the drone sensor suite or other programs, as they are required for the low-level control of the drone. However, localizing the drone with respect to the field, i.e. finding X and Y, requires a separate solution. To this end, several concepts were composed. Of those concepts, trilateration using Ultra Wide Band (UWB) anchors was realized. For more details, go to Ultra Wide Band System - Trilateration. First, the rejected concepts are briefly listed, followed by a detailed explanation of the UWB system.
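As a rough illustration of the trilateration idea, the sketch below estimates X and Y from the ranges to three anchors. It is not the project's implementation: the function name, the anchor layout and the assumption that the ranges have already been projected onto the field plane (i.e. corrected for drone altitude) are all hypothetical.

```python
import math

def trilaterate_2d(anchors, distances):
    """Estimate (x, y) from three anchor positions and measured planar ranges."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    # Subtracting the first circle equation from the other two removes the
    # quadratic terms and leaves a 2x2 linear system in x and y.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear; position is not unique")
    # Solve the linear system with Cramer's rule.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y

# Hypothetical anchors on three corners of a 12 m by 8 m field.
anchors = [(0.0, 0.0), (12.0, 0.0), (0.0, 8.0)]
ranges = [5.0, math.sqrt(97.0), 5.0]
position = trilaterate_2d(anchors, ranges)
```

With noisy ranges or more than three anchors, a least-squares solution of the same linear system would be the more usual choice.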

Integration

As many different skills have been developed over the course of the project, a combined implementation has to be made in order to give a demonstration. To this end, the integration strategy was developed and applied. While it is not recommended that the same strategy is applied throughout the project, the process was documented in Integration Strategy Robotic Drone Referee.

Tests & Discussion

The test consisted of a simultaneous coverage of the developed blocks so far: Rule Evaluation, Field Line Predictor, Detection Skill and Trilateration.

The test was conducted on a small RoboCup soccer field (8 by 12 meters) and comprised:

- Trilateration set up and configuration

- Localization testing

- Ball detection and localization

- Out of Pitch Refereeing

The performed test can be viewed here.

The conclusions of the test (demo) are the following:

- The localization method based on trilateration is very robust and accurate but it should be tested in bigger fields.

- The color-based detection works well when there are no players or other objects in the field. Further testing that includes robots and takes occlusion into account should be considered.

- The out of pitch refereeing is very sensitive to the psi/yaw angle of the drone and the accuracy of the references provided by the Field Line estimator.

- Work on distinguishing between close parallel lines should be continued.

- Increasing localization accuracy and refining the Field Line estimator should be researched.

Researched Blocks

In this section, all the blocks from the System Architecture that were researched, but are yet to be implemented, are described. Also we include in this section all the discarded options that might be interesting or useful to take into account in the future.

Sensor Fusion

In the designed system, several sensing methods provide the same information about the environment. It can be shown that additional measurement updates increase the accuracy of a probabilistic estimate. As an example, the Localization block can use the Ultra Wide Band system and the acceleration sensors to estimate the position of the drone. This data fusion is desirable because the Ultra Wide Band system has a high accuracy but responds slowly, whereas the acceleration sensors have a lower accuracy but respond faster. The other system that can benefit from sensor fusion is the psi angle block. The psi angle is needed for drone motion control and also for other detection blocks. The psi angle data come from the drone’s magnetometer and gyroscope. The magnetometer gives the psi angle with a rather high error. The gyroscope gives the derivative of the psi angle. The gyroscope data have a high accuracy, but because the angle must be obtained by integrating this derivative, the uncertainty increases over time. Sensor fusion can be used in this case to correct the data coming from both sensors. continue
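To make the psi angle case concrete, the sketch below fuses a gyroscope rate with a magnetometer heading using a complementary filter. This is a deliberately simpler technique than the Kalman filtering mentioned elsewhere in this document, and the function names, the blend factor `alpha` and the sample values are assumptions chosen for illustration only.

```python
import math

def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi

def fuse_yaw(yaw_est, gyro_rate, mag_yaw, dt, alpha=0.98):
    """One complementary-filter step for the psi (yaw) angle, in radians."""
    # Predict by integrating the gyroscope rate: accurate short term, drifts long term.
    predicted = yaw_est + gyro_rate * dt
    # Correct with the noisy but drift-free magnetometer heading.
    error = wrap_angle(mag_yaw - predicted)
    return wrap_angle(predicted + (1.0 - alpha) * error)

# Hypothetical scenario: the true yaw is constant at 0.5 rad, while the
# gyroscope reports a spurious 0.1 rad/s because of bias.
yaw = 0.0
for _ in range(2000):
    yaw = fuse_yaw(yaw, gyro_rate=0.1, mag_yaw=0.5, dt=0.01)
```

In this scenario pure integration of the biased rate would drift by 2 rad over the same 20 seconds, whereas the fused estimate stays close to the magnetometer heading. A larger `alpha` trusts the gyroscope more and smooths magnetometer noise, at the cost of a larger residual offset from the bias.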

Cascaded Classifier Detection

Classifiers built from multiple learners, such as the Viola-Jones algorithm, could provide good predictive performance in recognizing patterns and features in images. For this purpose, a cascaded object detector was researched. After proper training, the object detector should be able to uniquely identify a soccer ball in real time within the expected environment. During this project several classifiers were trained, but the results were later discarded for the following reasons:

- Very sensitive to lighting changes

- Not enough data for effective training

- Little knowledge about how these algorithms really work

- Difficult to find the trade-off when defining the acceptance criteria for a trained classifier

The results were promising, but not robust enough to be used at this stage. In the future, this detection method could be researched further in order to overcome the mentioned drawbacks.

Position Planning and Trajectory planning

Creating a reference for the drone is not as simple as just following the ball, especially in the case of multiple drones. While the ball is of interest, many objects in the field are. For instance, if near a line or the goal, it is better to have this in frame. In case of a player it might be better to be prepared for a kick. Furthermore, with multiple drones the references and trajectory paths should never cross and the extra drones should be in positions of use.

As this is a complex problem, it would be best to have a configurable solution. If a drone gets another assignment, for example switching from following the ball to watching the line, the same algorithm should be applicable. At the beginning of the project a good positioning solution was within the scope, but as the project progressed it was omitted. For this reason a conceptual solution was devised, but no implementation was made. The proposal was to use a weighted algorithm: different factors influence the position, and their influence is adjustable using weights. Based on these criteria, a potential field algorithm was selected from a few candidates.

In a potential field algorithm, an artificial potential or gravitational field is overlaid on the actual world map. The agent using the algorithm goes from A to B by following this field. An obstacle is represented as a repulsive object, while other things can be classified as attracting objects. In this way the algorithm is used for path planning. The proposed implementation would differ in that it would be used to find the reference position to go to. For instance, the ball and the goal would be attractors, while other drones could be repulsors. By configuring the strength of the different attractors and repulsors, a different drone task could be represented. The implementation requires more study as well as some experimentation, which required time that was not available. However, it could be an area of interest for others who continue with this project.
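Since no implementation was made in the project, the following is only a sketch of how a reference position could be found from weighted attractors and repulsors. The force laws (linear attraction, repulsion decaying with distance), the step size and all names are assumptions made for illustration.

```python
import math

def field_force(pos, attractors, repulsors):
    """Net artificial force at pos; each source is ((x, y), weight)."""
    fx = fy = 0.0
    for (ax, ay), w in attractors:
        # Attractive force grows linearly with distance (quadratic potential).
        fx += w * (ax - pos[0])
        fy += w * (ay - pos[1])
    for (rx, ry), w in repulsors:
        dx, dy = pos[0] - rx, pos[1] - ry
        dist_sq = max(dx * dx + dy * dy, 1e-6)
        # Repulsive force points away from the source with magnitude w / distance.
        fx += w * dx / dist_sq
        fy += w * dy / dist_sq
    return fx, fy

def reference_position(start, attractors, repulsors, step=0.05, iterations=500):
    """Follow the field from start with small fixed steps; returns (x, y)."""
    x, y = start
    for _ in range(iterations):
        fx, fy = field_force((x, y), attractors, repulsors)
        x += step * fx
        y += step * fy
    return x, y

# Hypothetical task: keep ball and goal in view while avoiding another drone.
ball, goal, other_drone = (2.0, 0.0), (6.0, 0.0), (4.0, 1.0)
ref = reference_position((0.0, -1.0), [(ball, 1.0), (goal, 1.0)], [(other_drone, 0.5)])
```

With equal weights the reference settles midway between ball and goal, pushed slightly away from the other drone. Changing the weights changes the task, for example raising the goal weight to favor watching the goal line.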

In the scope of this project trajectory planning never received a lot of attention. As the purpose for the demo was always to implement only one drone, the trajectory could just be a straight line. However, in case of other drones, jumping players or bouncing balls, this is not sufficient. If a potential field algorithm is already being researched, it could of course also be implemented for the trajectory planning. However, swarm-based flying and intelligent positioning are fields in which a lot of research is conducted right now. As such, for trajectory planning it might be better to do more extensive research into the state of the art.

Motion Control

Drone control is decomposed into high level control (HLC) and low level control (LLC). High level (position) control converts the desired position reference into reference pitch and roll angles for the drone. Low level control then converts these desired pitch and roll angles into the motor PWM inputs required to steer the drone to the desired position. In this section only the HLC is discussed.

High Level Control Design

For the high level control, cascaded position-velocity control is applied. This is illustrated in Figure 1. The error between the reference position Xref and the measured position Xmeas is multiplied by Kp to generate a reference velocity Vref. This reference is compared with the measured velocity Vmeas. The resulting error is multiplied by Kf to generate the desired force F that moves the drone to the correct position. This force F is divided by the total drone thrust T to obtain the pitch (θ) and roll (φ) angles (input for the LLC). The relation between the angles, the thrust T and the force F is also explained well in the book by Peter Corke[1]. The non-linear equations are:

Fx = T*sin(θ)

Fy = T*sin(φ)

However, with small angle deviations (below 10°) the equations simplify to:

Fx = T*θ

Fy = T*φ

Position measurements can be gathered from the WM-Trilateration block. Measured velocities can be taken as the derivative of position measurements.

The challenge in designing this configuration is in tuning Kp and Kf.
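The cascaded loop described above can be sketched for a single axis as follows. The gains, the thrust value and the decision to clamp the command at 10° are illustrative assumptions, not tuned or tested values from the project.

```python
import math

def high_level_control(x_ref, x_meas, v_meas, thrust, kp, kf,
                       max_angle=math.radians(10.0)):
    """One axis of the cascaded position-velocity loop; returns a tilt angle in rad."""
    v_ref = kp * (x_ref - x_meas)   # outer loop: position error -> reference velocity
    force = kf * (v_ref - v_meas)   # inner loop: velocity error -> desired force
    # Invert F = T*sin(angle); the ratio is limited so that asin is defined.
    ratio = max(-1.0, min(1.0, force / thrust))
    angle = math.asin(ratio)
    # Clamp to the range in which the small-angle form F = T*angle is also valid.
    return max(-max_angle, min(max_angle, angle))

# Hypothetical values: 1 m position error, drone at rest, 10 N total thrust.
theta = high_level_control(x_ref=1.0, x_meas=0.0, v_meas=0.0,
                           thrust=10.0, kp=1.0, kf=1.0)
```

The same function would be called once with the X quantities to obtain the pitch θ and once with the Y quantities to obtain the roll φ. The measured velocity could be a finite difference of successive trilateration positions, which in practice would probably need filtering.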

Discussion

In this section some of the most important improvement points for the referee are listed. These issues were encountered, but no solution was applied, either for lack of time or because they were out of scope. Anyone continuing this project should be aware of the current limitations and problems with the hardware and the software.

Proposed improvements for the current rule evaluation algorithms:

- Second layer testing modules:

- Take into account line width and ball radius: For more precise evaluation on ball out of pitch, a second layer is required taking into account line width and ball radius.

- Develop goal post detection block: For detecting a goal, a second layer is necessary to evaluate whether a ball crossing the back line is out of pitch or is indeed a goal. This requires detection of the goal posts.
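A second-layer check that accounts for line width and ball radius could look like the sketch below. It assumes the usual soccer convention that the ball is out only when the whole ball has passed the whole line, a pitch-centered coordinate frame, and field dimensions measured to the center of the border lines; the function name and the example dimensions are hypothetical.

```python
def ball_out_of_pitch(ball_x, ball_y, half_length, half_width,
                      line_width, ball_radius):
    """True when the whole ball has passed the outer edge of a border line.

    Coordinates are in meters with the origin at the pitch center;
    half_length and half_width are measured to the center of the lines.
    """
    # Outer edge of each border line, measured from the pitch center.
    x_limit = half_length + line_width / 2.0
    y_limit = half_width + line_width / 2.0
    # The ball is out only when its point nearest the pitch is beyond that edge.
    return (abs(ball_x) - ball_radius > x_limit
            or abs(ball_y) - ball_radius > y_limit)

# Hypothetical 12 m by 8 m field with 0.125 m lines and a 0.11 m ball radius.
touching_line = ball_out_of_pitch(6.1, 0.0, 6.0, 4.0, 0.125, 0.11)
fully_out = ball_out_of_pitch(6.2, 0.0, 6.0, 4.0, 0.125, 0.11)
```

Here `touching_line` is false because part of the ball still overlaps the line, while `fully_out` is true.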

Proposed improvements to the sensor suite:

- Improve altitude measurements: With additional weight on the drone, altitude measurements seemed unreliable. Therefore, in the proof of concept drone altitude is set and updated by hand. One possible solution for more reliable measurements of altitude is to extend the UWBS trilateration system to also measure drone altitude.

- Improve yaw-angle measurement and control:

- Current magnetometer unreliable: Hardware insufficient: Unpredictable freezes: The magnetometer on the drone freezes unpredictably.

- Current top camera unreliable: Distortions in measurements: The top camera is used for measuring the yaw angle of the drone based on color. However, below the top camera a net is cast (partially wired). This causes light reflection causing distortions in the measurements.

- An attempt was made to apply sensor fusion (Kalman filtering) with these measurements. However, due to the malfunctions in the hardware (unpredictable freezes) there was not much success with this. Therefore, in the proof of concept also yaw angle is set and updated by hand. Hence, new methods for measuring drone yaw are needed. One possible simple solution is applying a better quality magnetometer.

- Establish drone self-calibration: Drone sensor outputs are extremely temperature sensitive. Therefore, drone calibration parameters require regular update. For the system to work properly without interruptions for calibration, drone self-calibration is required. A direction for a possible solution is to use Kalman filtering for prediction of both state and measurement bias.

Implementation of autonomous drone motion control:

- Improve low level control: Drone low level control currently is confined. For open outer loop, there still exists drift. This is possibly due to faulty measurements as mentioned already. Consequently these need to be resolved first.

- Test high level control configuration: For high level control/ outer loop position control, a cascaded position velocity loop has been developed already. This however needs to be tested next.

To avoid the issues encountered during system integration, the following changes are proposed:

- Improve simultaneous drone control and camera feed input: One obstacle encountered was controlling the drone while simultaneously getting the camera feed from the onboard cameras. Possible solutions for this problem are:

- Extending the script written by Daren Lee which is used for reading drone sensor data: The script could be extended to read camera feed next to navigation data.

- Read the feed from an auxiliary camera through a second PC connected via UDP. This is a less elegant solution, however.

- Write code in C/C++: For the demo, integration was done in Simulink in order to run all realized blocks concurrently. This led to some difficulties: certain MATLAB functions could not be called from Simulink without awkward workarounds, i.e. using MATLAB coder.extrinsic. For that reason future developers are advised to write code in C/C++.

Links

For the repository go to Github.

For the 01/04/16 demo footage click here.

For the documentation of the next generation system click here.

Notes

- ↑ P. Corke, Robotics, Vision and Control, Berlin Heidelberg: Springer-Verlag , 2013.