Embedded Motion Control 2015 Group 3: Difference between revisions

No edit summary |

|||

| (147 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

This is the Wiki-page for EMC-group 3 | This is the Wiki-page for EMC-group 3, part of the [[Embedded_Motion_Control_2015|Embedded Motion Control 2015 course]]. | ||

| | |||

= Group members = | = Group members = | ||

| Line 103: | Line 54: | ||

The goal of this course is to acquire knowledge and insight about the design and implementation of embedded motion systems. Furthermore, the purpose is to develop insight in the possibilities and limitations in relation with the embedded environment (actuators, sensors, processors, RTOS). To make this operational and to practically implement an embedded control system for an autonomous robot, there is the Maze Challenge. In which the robot has to find its way out in a maze. | The goal of this course is to acquire knowledge and insight about the design and implementation of embedded motion systems. Furthermore, the purpose is to develop insight in the possibilities and limitations in relation with the embedded environment (actuators, sensors, processors, RTOS). To make this operational and to practically implement an embedded control system for an autonomous robot, there is the Maze Challenge. In which the robot has to find its way out in a maze. | ||

PICO is the name of the robot that will be used. | PICO is the name of the robot that will be used. In this case, PICO has two types of useful inputs: | ||

# The laser data from the laser range finder | # The laser data from the laser range finder | ||

# The odometry data from the wheels | # The odometry data from the wheels | ||

| Line 109: | Line 60: | ||

In the fourth week there is the "Corridor Competition". During this challenge, students have to let the robot drive through a corridor and then take the first exit (whether left or right). | In the fourth week there is the "Corridor Competition". During this challenge, students have to let the robot drive through a corridor and then take the first exit (whether left or right). | ||

At the end of the project, the "A-maze-ing challenge" has to be solved. The goal of this competition is to let PICO autonomously drive through a maze and find the exit. | At the end of the project, the "A-maze-ing challenge" has to be solved. The goal of this competition is to let PICO autonomously drive through a maze and find the exit. Group 3 was the only group capable of solving the maze. | ||

= Design = | = Design = | ||

| Line 117: | Line 68: | ||

The final goal of the project is to solve a random maze, fully autonomously. Based on the description of the maze challenge, several requirements can be set: | The final goal of the project is to solve a random maze, fully autonomously. Based on the description of the maze challenge, several requirements can be set: | ||

* Move and reach the exit of the maze. | * Move and reach the exit of the maze. | ||

** As fast as possible | |||

** Enter a door | |||

** Do not get stuck in a loop | |||

* The robot should avoid bumping into the walls. | * The robot should avoid bumping into the walls. | ||

* | * Therefore, it should perceive its surroundings. | ||

* The robot has to solve the maze in a 'smart' way. | * The robot has to solve the maze in a 'smart' way. | ||

* Must be applicable to every maze. | |||

=== Functions & Communication === | === Functions & Communication === | ||

[[File:behaviour_diagram.png|thumb| | |||

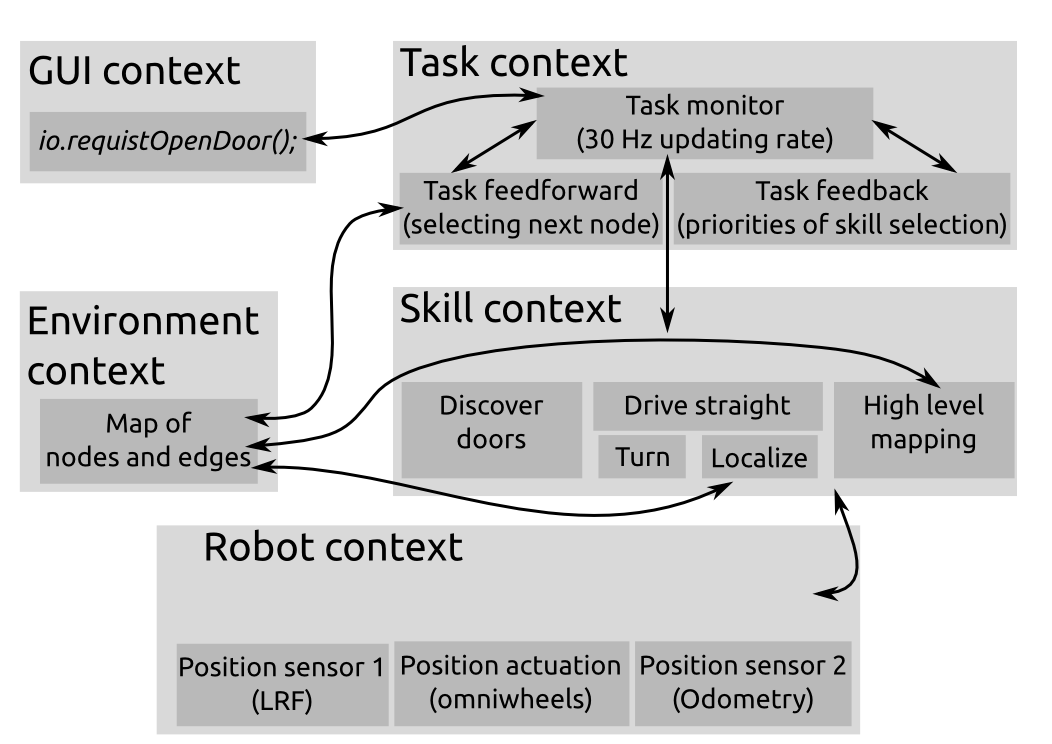

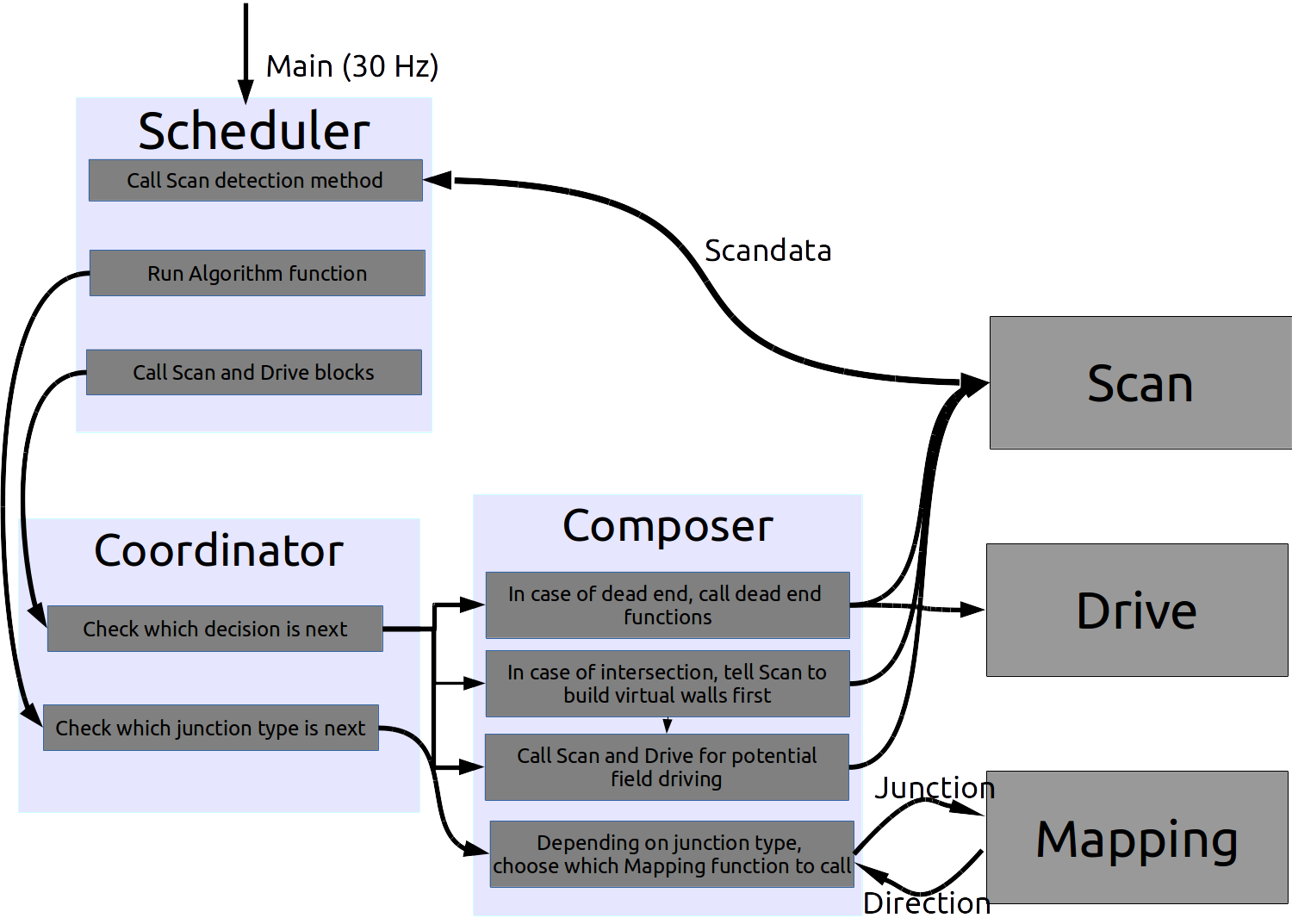

[[File:behaviour_diagram.png|250px|thumb|right|Blockdiagram for connection between the contexts]] The robot will know a number of basic functions. These functions can be divided into two categories: tasks and skills. | |||

The task are the most high level proceedings the robot should be able to do. These are: | The task are the most high level proceedings the robot should be able to do. These are: | ||

*Determine situation | |||

* Determine situation | *Decision making | ||

* Decision making | *Skill selection | ||

* Skill selection | |||

The skills are specific actions that accomplish a certain goal. The list of skills is as follows: | The skills are specific actions that accomplish a certain goal. The list of skills is as follows: | ||

*Handle intersections | |||

*Handle dead ends | |||

*Discover doors | |||

*Mapping environment | |||

*Make decisions based on the map | |||

*Detect the end of the maze | |||

* Drive | These skills need the following functions of the robot: | ||

* Rotate | *Drive | ||

* | *Rotate | ||

*Read out sensor data to scan environment | |||

=== Software architecture === | === Software architecture === | ||

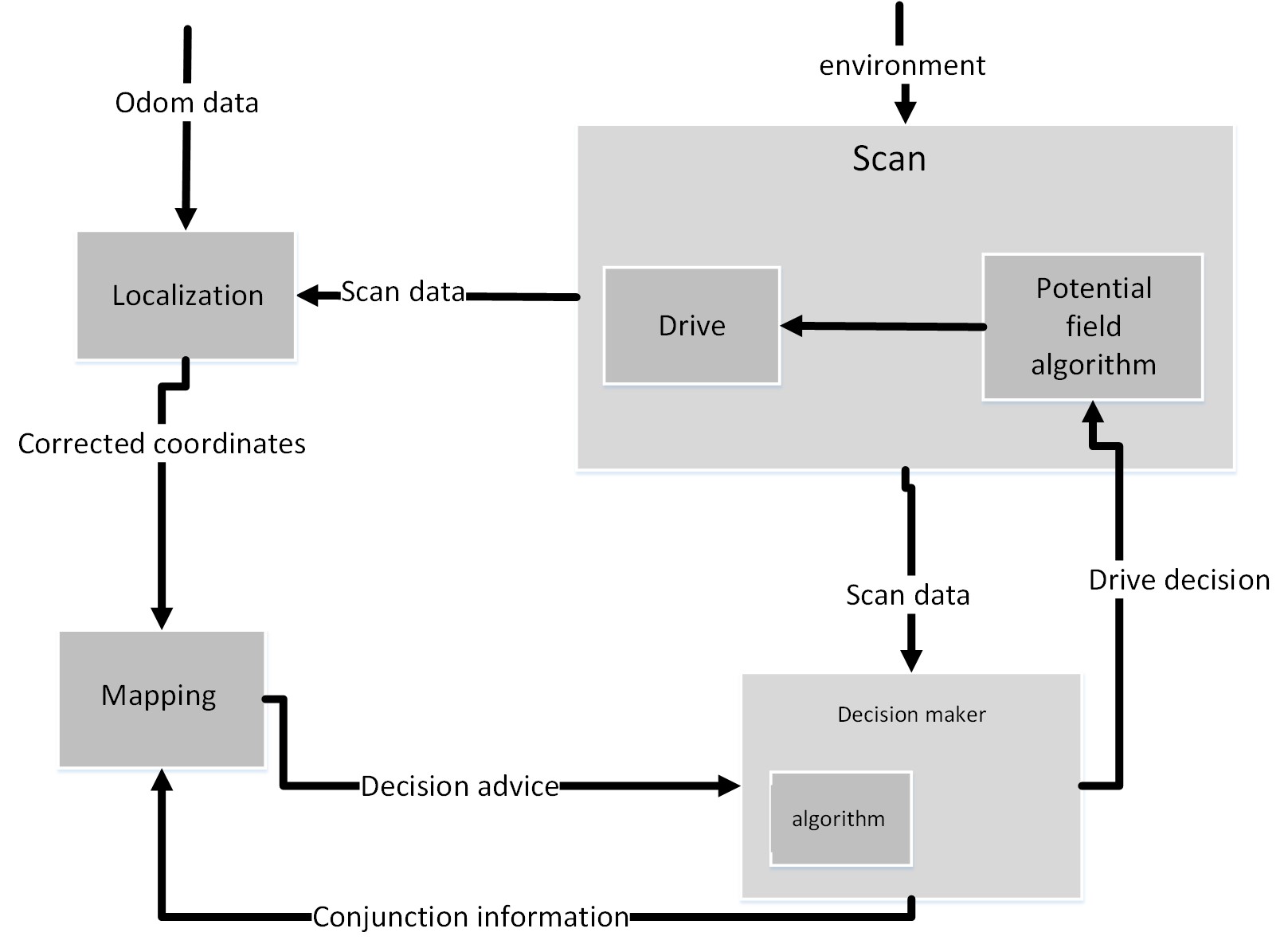

[[File:Overrall structure.jpg|thumb| | [[File:Overrall structure.jpg|250px|thumb|right|Overall structure]]To solve the problem, it is divided into different blocks with their own functions. We have chosen to make these five blocks: Scan, Drive, Localisation, Decision and Mapping. The figure on the right shows a simplified scheme of the software architecture and the cohesion of the individual blocks. In practice, Drive/Scan and Localisation/Mapping are closely linked. Now, a short clarification of the figure will be given. More detailed information of each block will be discussed in the next sections. | ||

Lets start with the Scan block: | Lets start with the Scan block: | ||

| Line 162: | Line 110: | ||

* Then it should know if the robot has been there before. Based on that, Decision can send a new action to Scan/Drive. | * Then it should know if the robot has been there before. Based on that, Decision can send a new action to Scan/Drive. | ||

* Now the new vector is based on the environment data and the information from Decision. In this way, the robot should find a strategic way to drive through the maze. | * Now the new vector is based on the environment data and the information from Decision. In this way, the robot should find a strategic way to drive through the maze. | ||

=== Calibration === | === Calibration === | ||

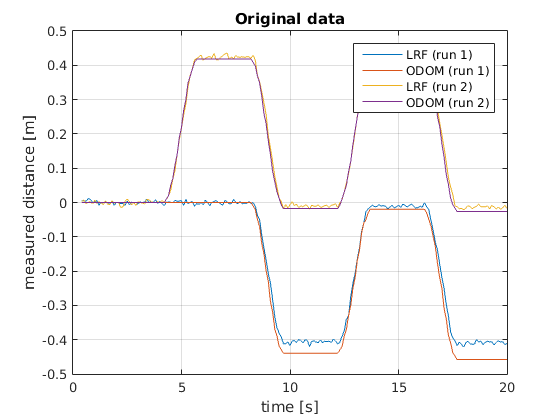

In the lectures, the claim was made that 'the odometry data is not reliable'. We decided to quantify the errors in the robot's sensors in some way. The robot was programmed to drive back and forth in front of a wall. At every time instance, it would | <p>[[File:Originaldata.png|250px|thumb|right|Calibration: Difference between odometry and LRF data]] In the lectures, the claim was made that 'the odometry data is not reliable'. We decided to quantify the errors in the robot's sensors in some way. The robot was programmed to drive back and forth in front of a wall. At every time instance, it would collect odometry data as well as laser data. The laser data point that was straight in front of the robot was compared to the odometry data, i.e. the driven distance is compared to the measured distance to the wall in front of the robot. The top figure on the right shows these results. The starting distance from the wall is substracted from the laser data signal. Then, the sign is flipped so that the laser data should match the odometry exactly, if the sensors would provide perfect data. Two things are now notable from this figure: | ||

The starting distance from the wall is substracted from the laser data signal. Then, the sign is flipped so that the laser data should match the odometry exactly, if the sensors would provide perfect data. Two things are now notable from this figure: | |||

*The laserdata and the odometry data do not return exactly the same values. | *The laserdata and the odometry data do not return exactly the same values. | ||

*The odometry seems to produce no noise at all. | *The odometry seems to produce no noise at all. | ||

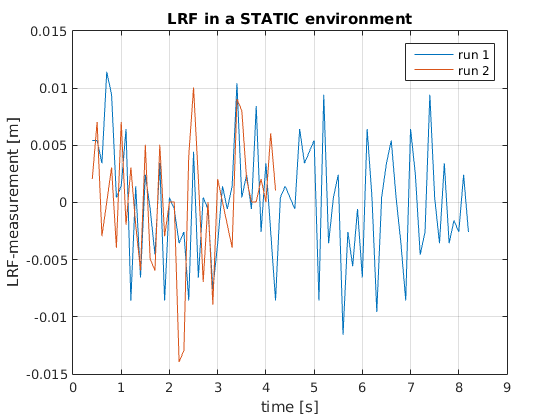

[[File:StaticLRF.png|250px|thumb|right|alt=Static LRF|Calibration: Static LRF]] | |||

[[File:StaticLRF.png|thumb| | |||

The noisy signal that was returned by the laser is presented in the bottom picture on the right. Here, a part of the laser data is picked from a robot that was not moving. | |||

* The maximum amplitude of the noise is roughly 12 mm. | * The maximum amplitude of the noise is roughly 12 mm. | ||

* The standard deviation of the noise is roughly 5.5 mm | * The standard deviation of the noise is roughly 5.5 mm | ||

* The laser produces a noisy signal. Do not trust one measurement but take the average over time instead. | * The laser produces a noisy signal. Do not trust one measurement but take the average over time instead. | ||

* The odometry produces no notable noise at all, but it has a significant drift as the driven distance increases. Usage is recommended only for smaller distances (<1 m) | * The odometry produces no notable noise at all, but it has a significant drift as the driven distance increases. Usage is recommended only for smaller distances (<1 m)</p> | ||

<br><br><br><br><br><br><br><br><br><br><br><br> | |||

= Software implementation = | = Software implementation = | ||

| Line 189: | Line 132: | ||

=== Drive block === | === Drive block === | ||

Basically, the [ | [[File:Drive.jpg|250px|thumb|right|Composition pattern of Drive]] Basically, the [[Embedded_Motion_Control_2015_Group_3/Drive|Drive block]] is the doer (not the thinker) of the complete system. The figure shows the composition pattern of Drive. In our case, the robot moves around using potential field. How the potential field works in detail is shown in [[Embedded_Motion_Control_2015_Group_3/Scan|Scan]]. Potential field is an easy way to drive through corridors, and making turns. Important is to note that information from the Decision maker can influence the tasks Drive has to do. | ||

Two other methods were also considered: [[Embedded_Motion_Control_2015_Group_3/Drive#Simple_method|Simple method]] and [[Embedded_Motion_Control_2015_Group_3/Drive#Path_planning_for_turning|Path planning]]. Especially, we worked a lot of time on the Path planning method. However, after testing, the potential field was the most robust and most convenient method. | |||

<br><br><br><br><br> | |||

=== Scan block === | === Scan block === | ||

[ | [[File:Scan_cp_new.jpg|250px|thumb|right|Composition pattern of Scan]][[Embedded_Motion_Control_2015_Group_3/Scan|The block Scan]] processes the laser data of the Laser Range Finders. This data is used to detect corridors, doors, and different kind of junctions. The data that is retrieved by 'scan' is used by all three other blocks. | ||

# Scan directly gives information to 'drive'. Drive uses this to avoid collisions. | # Scan directly gives information to 'drive'. Drive uses this to avoid collisions. | ||

| Line 203: | Line 148: | ||

# Mapping also uses data from scan to map the maze. | # Mapping also uses data from scan to map the maze. | ||

PICO always moves towards the place with the most space using its potential field. However, at junctions and intersections the potential field alone is incapable of leading PICO in the desired direction. Virtual walls are therefore constructed to shield potential pathways, so that PICO moves in the desired direction, which is chosen by the decision maker. To create an extra layer of safety, collision avoidance has been added on top of the potential field. The Scan block is also capable of detecting doors, which is a necessary part of solving the maze. More detailed information about the following properties can be found in [[Embedded_Motion_Control_2015_Group_3/Scan|the block Scan]]: | |||

To create an extra layer of | |||

* Potential field | |||

* Detecting junctions/intersections | |||

* Virtual walls | |||

* Collision avoidance | |||

* Detecting doors | |||

=== Decision block === | === Decision block === | ||

The | [[File:Composition_Pattern_Decision.png|250px|thumb|right|Composition pattern of Decision]]The [[Embedded_Motion_Control_2015_Group_3/Decision|Decision block]] contains the algorithm for solving the maze. It can be seen as the 'brain' of the robot. It receives the data of the world from 'Scan'; then decides what to do (it can consult 'Mapping'); finally it sends commands to 'Drive'. | ||

Input: | <ins>Input:</ins> | ||

* Mapping model | * Mapping model | ||

* Scan data | * Scan data | ||

Output: | <ins>Output:</ins> | ||

* Specific drive action command | * Specific drive action command | ||

The maze-solving algorithm used is Trémaux's algorithm. This algorithm requires drawing lines on the floor. Every time a direction is chosen, it is marked by drawing a line on the floor (from junction to junction). At every junction, choose the direction with the fewest marks. If two directions have been visited equally often, choose randomly between them. Eventually, the exit of the maze will be reached. | |||

The used maze solving algorithm is called: Trémaux's algorithm. This algorithm requires drawing lines on the floor. Every time a direction is chosen it is marked bij drawing a line on the floor (from junction to junction). Choose | |||

=== Mapping block === | === Mapping block === | ||

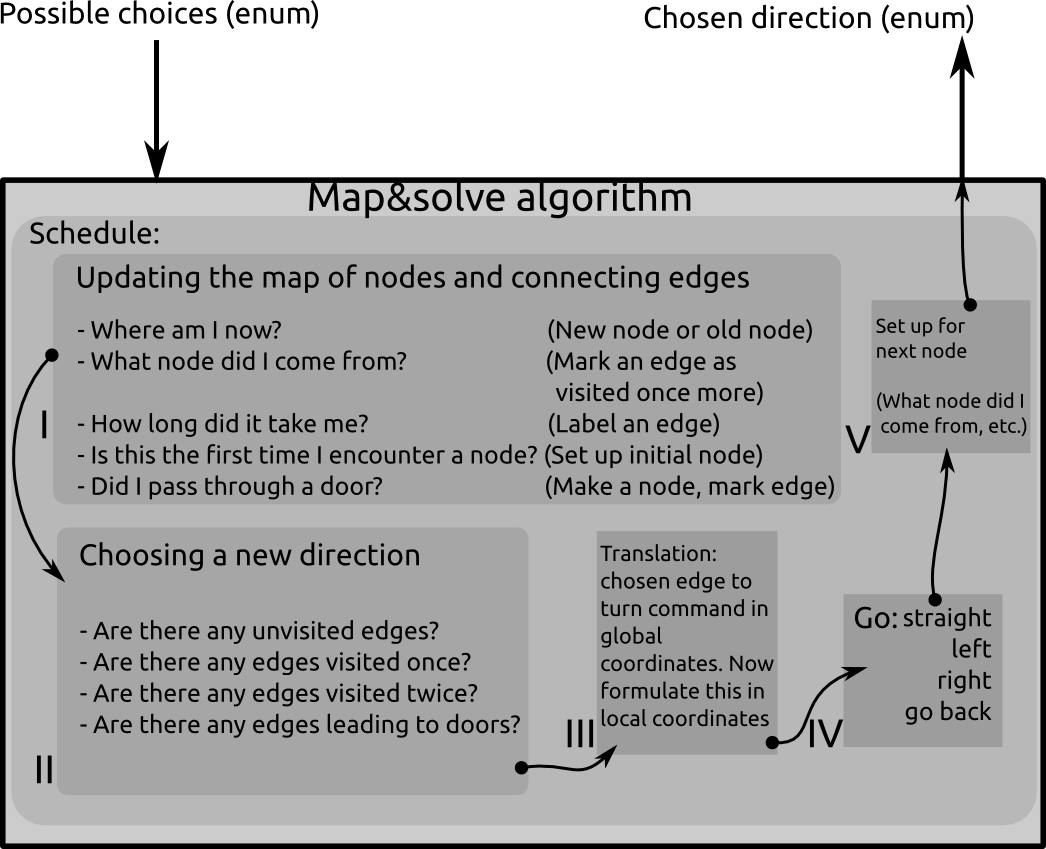

[[File:Emc03 wayfindingCP1.png|250px|thumb|right|Mapping & solve algorithm]] [[Embedded_Motion_Control_2015_Group_3/Mapping|The mapping block]] will store the corridors and junctions of the maze. This way, the decision maker can make informed decisions at intersections, to ensure that the maze will be solved in a strategic way. | |||

[ | To do this, the [http://www.cems.uvm.edu/~rsnapp/teaching/cs32/lectures/tremaux.pdf Tremaux algorithm] is used, which is an implementation of Depth First Search. | ||

The maze will consist of nodes and edges. A node is either a dead end, or any place in the maze where the robot can go in more than one direction. an edge is the connection between one node and another. An edge may also lead to the same node. In the latter case, this edge is a loop. The algorithm is called by the general decision maker whenever the robot encounters a node (junction or a dead end). The input of the algorithm is the possible routes the robot can go (left, straight ahead, right, turn around) and the output is a | The maze will consist of nodes and edges. A node is either a dead end, or any place in the maze where the robot can go in more than one direction (i.e., an intersection). An edge is the connection between one node and another, and as such is also called a corridor. An edge may also lead to the same node. In the latter case, this edge is a loop. The algorithm is called by the general decision maker whenever the robot encounters a node (junction or a dead end). The input of the algorithm is the possible routes the robot can go (left, straight ahead, right, turn around) and the output is a direction that the Mapping block considers the best option. | ||

In order to detect loops, the position of the robot must be known as well as the position of each node, to see when the robot has returned to the same location. This is decoupled from the Mapping block and done in the [[Embedded_Motion_Control_2015_Group_3/Localisation|Localisation block]]. Since the localization did not work in the end, a Random Walk implementation was also made in the Mapping block. | |||

<br><br><br> | |||

=== Localisation block === | |||

= Localisation = | |||

The purpose of the localisation is that the robot can prevent itself from driving in a loop for infinite time, by knowing where it is at a given moment in time. By knowing where it is, it can decide based on this information what to do next. As a result, the robot will be able to exit the maze within finite time, or it will tell there is no exit if it has searched everywhere without success. | The purpose of the localisation is that the robot can prevent itself from driving in a loop for infinite time, by knowing where it is at a given moment in time. By knowing where it is, it can decide based on this information what to do next. As a result, the robot will be able to exit the maze within finite time, or it will tell there is no exit if it has searched everywhere without success. | ||

The localisation algorithm is explained in on the [ | The localisation algorithm is explained in on the [[Embedded_Motion_Control_2015_Group_3/Localisation|Localisation page]]; by separating and discussing the important factors. | ||

= A-maze-ing Challenge = | = A-maze-ing Challenge = | ||

| Line 284: | Line 191: | ||

For the a-maze-ing challenge we decided on using two versions of our software package. In the first run (see section Video's further down on the page), we implemented Tremaux's algorithm together with a localiser that would together map the maze and try to solve it. Our second run was conducted with the Tremaux's algorithm and localisation algorithm turned off. Each time the robot encountered a intersection, a random decision was made on where to go next. | For the a-maze-ing challenge we decided on using two versions of our software package. In the first run (see section Video's further down on the page), we implemented Tremaux's algorithm together with a localiser that would together map the maze and try to solve it. Our second run was conducted with the Tremaux's algorithm and localisation algorithm turned off. Each time the robot encountered a intersection, a random decision was made on where to go next. | ||

== Run 1 == | === Run 1 === | ||

The first run is taped on video and can be seen [https://www.youtube.com/watch?v=fzsNA2OUwww here]. The robot recognizes a four-way cross-section and decides to turn to the left corridor. It then immediately starts do chatter as the corridor was more narrow than expected. Next, it follows the corridor smoothly until it encounters the next T-juction. The robot is confused because of the intersection immediatly to its left. After driving closer to the wall, it mistakes it for a door. Because it (of course) didn't open, it decides to turn to right and explore the dead end. In the part between 20 seconds and 24 seconds in to the video, the robot is visibly having a hard time with the narrow corridor. It tries to drive straight but also evade the walls to the left and to the right. It recognizes another dead-end and turns around swiftly. It crosses the T-junction again by going straight and at 43 seconds it again thinks it is in front of a door. After ringing the bell, it waits for the maximum of 5 seconds that it can take to open the door. Now, it recognized that also this is a dead-end and not a door. After turning around it drives back to the starting position. Between 1:11 and 1:30, it explores the edges that he has not yet seen. Here, the Tremaux's algorithm and the localiser 'seem' to be doing their job just fine. Unfortunately, as can be seen in the rest of the video, something went wrong with the other nodes to be placed. It decides to follow the same route as the first time, fails to drive to the corridor with the door in it and eventually got stuck in a loop. | The first run is taped on video and can be seen [https://www.youtube.com/watch?v=fzsNA2OUwww here]. The robot recognizes a four-way cross-section and decides to turn to the left corridor. It then immediately starts do chatter as the corridor was more narrow than expected. Next, it follows the corridor smoothly until it encounters the next T-juction. 
The robot is confused because of the intersection immediatly to its left. After driving closer to the wall, it mistakes it for a door. Because it (of course) didn't open, it decides to turn to right and explore the dead end. In the part between 20 seconds and 24 seconds in to the video, the robot is visibly having a hard time with the narrow corridor. It tries to drive straight but also evade the walls to the left and to the right. It recognizes another dead-end and turns around swiftly. It crosses the T-junction again by going straight and at 43 seconds it again thinks it is in front of a door. After ringing the bell, it waits for the maximum of 5 seconds that it can take to open the door. Now, it recognized that also this is a dead-end and not a door. After turning around it drives back to the starting position. Between 1:11 and 1:30, it explores the edges that he has not yet seen. Here, the Tremaux's algorithm and the localiser 'seem' to be doing their job just fine. Unfortunately, as can be seen in the rest of the video, something went wrong with the other nodes to be placed. It decides to follow the same route as the first time, fails to drive to the corridor with the door in it and eventually got stuck in a loop. | ||

Main reason for failure is thought to be the node placement. The first T-junction that the robot encountered made PICO go into its collision avoiding mode, which might have interfered with the commands to place a node. It is also possible that this actually happened, but that the localization went wrong because of all the lateral swaying to avoid collisions with the wall. It was clear that the combination of localisation, the maze-solving algorithm and the situation recognition by LRF was not yet ready to be implemented as a whole. Therefore, we decided to make the second run with a more simple version of our software, running only the core-modules that were tested and found to be reliable. | Main reason for failure is thought to be the node placement. The first T-junction that the robot encountered made PICO go into its collision avoiding mode, which might have interfered with the commands to place a node. It is also possible that this actually happened, but that the localization went wrong because of all the lateral swaying to avoid collisions with the wall. It was clear that the combination of localisation, the maze-solving algorithm and the situation recognition by LRF was not yet ready to be implemented as a whole. Therefore, we decided to make the second run with a more simple version of our software, running only the core-modules that were tested and found to be reliable. | ||

== Run 2== | === Run 2 === | ||

For the second run, we ran a version of our software without the Tremaux's algorithm implemented and with the global localiser absent. These features were developed later in the project and were not finished 100%. For this run, a random decision was passed to the decision maker every time it asked for a new direction to head to. | For the second run, we ran a version of our software without the Tremaux's algorithm implemented and with the global localiser absent. These features were developed later in the project and were not finished 100%. For this run, a random decision was passed to the decision maker every time it asked for a new direction to head to. | ||

| Line 295: | Line 202: | ||

= Experiments = | = Experiments = | ||

Seven experiments are done during the course. [ | Seven experiments are done during the course. [[Embedded_Motion_Control_2015_Group_3/Experiments|Here]] you can find short information about dates and goals of the experiments. Also there is a short evaluation for each experiment. | ||

= Conclusion = | |||

In the end, our final script was capable of solving the maze challenge, in a short time and robustly, in that it did not bump the wall. However, since we were not able to implement the higher order thinking into our script, and our final code was dependent on a random walk, the route the robot takes is up to chance. This still will solve the maze, eventually, as is shown in the second trial. | |||

Our recommendation is therefore to develop the localisation further, in combination with the mapping, and in this way implement the higher-order learning that was our aim. | |||

What we learned from this project was how to apply top-down software design using algorithms. Compartmentalizing the code into blocks and keeping a clear overview of the communication between the blocks helped us a lot in keeping an overview of such a large code base. It also allowed for an easier bottom-up implementation, with the added benefit that the code can be built up from scratch: we would now start by creating a composition pattern and then base the code on it. | |||

The classes did help us in figuring out the way to approach these diagrams, like the composition pattern. However we had trouble seeing the application to our problem right away, like how each block in this cp should be applied. | |||

= Files & presentations = | = Files & presentations = | ||

| Line 314: | Line 227: | ||

Maze challenge: Winning attempt! on June 17, 2015. | Maze challenge: Winning attempt! on June 17, 2015. | ||

* https://www.youtube.com/watch?v=UHz_41Bsi7c | * https://www.youtube.com/watch?v=UHz_41Bsi7c | ||

= EMC03 CST-wiki sub-pages = | = EMC03 CST-wiki sub-pages = | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Drive|Drive]] | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Scan|Scan]] | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Decision|Decision]] | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Mapping|Mapping]] | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Localisation|Localisation]] | ||

* [ | * [[Embedded_Motion_Control_2015_Group_3/Experiments|Experiments]] | ||

Latest revision as of 19:05, 26 June 2015

This is the Wiki-page for EMC-group 3, part of the Embedded Motion Control 2015 course.

Group members

| Name | Student number | Email |

|---|---|---|

| Max van Lith | 0767328 | m.m.g.v.lith@student.tue.nl |

| Shengling Shi | 0925030 | s.shi@student.tue.nl |

| Michèl Lammers | 0824359 | m.r.lammers@student.tue.nl |

| Jasper Verhoeven | 0780966 | j.w.h.verhoeven@student.tue.nl |

| Ricardo Shousha | 0772504 | r.shousha@student.tue.nl |

| Sjors Kamps | 0793442 | j.w.m.kamps@student.tue.nl |

| Stephan van Nispen | 0764290 | s.h.m.v.nispen@student.tue.nl |

| Luuk Zwaans | 0743596 | l.w.a.zwaans@student.tue.nl |

| Sander Hermanussen | 0774293 | s.j.hermanussen@student.tue.nl |

| Bart van Dongen | 0777752 | b.c.h.v.dongen@student.tue.nl |

General information

This course is about software design and how to apply it in the context of autonomous robots. The accompanying assignment is about applying this knowledge to a real-life robotics task.

The goal of this course is to acquire knowledge and insight about the design and implementation of embedded motion systems. Furthermore, the purpose is to develop insight into the possibilities and limitations of the embedded environment (actuators, sensors, processors, RTOS). To make this operational and to practically implement an embedded control system for an autonomous robot, there is the Maze Challenge, in which the robot has to find its way out of a maze.

PICO is the name of the robot that will be used. In this case, PICO has two types of useful inputs:

- The laser data from the laser range finder

- The odometry data from the wheels

In the fourth week there is the "Corridor Competition". During this challenge, students have to let the robot drive through a corridor and then take the first exit (whether left or right).

At the end of the project, the "A-maze-ing challenge" has to be solved. The goal of this competition is to let PICO autonomously drive through a maze and find the exit. Group 3 was the only group capable of solving the maze.

Design

In this section the general design of the project is discussed.

Requirements

The final goal of the project is to solve a random maze, fully autonomously. Based on the description of the maze challenge, several requirements can be set:

- Move and reach the exit of the maze.

  - As fast as possible

  - Enter a door

  - Do not get stuck in a loop

- The robot should avoid bumping into the walls.

- Therefore, it should perceive its surroundings.

- The robot has to solve the maze in a 'smart' way.

- Must be applicable to every maze.

Functions & Communication

The robot will know a number of basic functions. These functions can be divided into two categories: tasks and skills.

The tasks are the highest-level activities the robot should be able to perform. These are:

- Determine situation

- Decision making

- Skill selection

The skills are specific actions that accomplish a certain goal. The list of skills is as follows:

- Handle intersections

- Handle dead ends

- Discover doors

- Mapping environment

- Make decisions based on the map

- Detect the end of the maze

These skills need the following functions of the robot:

- Drive

- Rotate

- Read out sensor data to scan environment

Software architecture

To solve the problem, it is divided into different blocks with their own functions. We have chosen to make these five blocks: Scan, Drive, Localisation, Decision and Mapping. The figure on the right shows a simplified scheme of the software architecture and the cohesion of the individual blocks. In practice, Drive/Scan and Localisation/Mapping are closely linked. Now, a short clarification of the figure will be given. More detailed information of each block will be discussed in the next sections.

Let's start with the Scan block:

- Scan receives information about the environment. To do this it uses its laser range finder data.

- Based on this data Scan consults its potential field algorithm to make a vector for Drive.

- Drive interprets the vector and sends the robot in that direction.

- Together the LRF and odometry data determine the traveled distance and direction. Localisation saves this in an orthogonal grid.

- Mapping consults these positions to 'tell' Decision at what interesting point the robot is. For instance, this can be a junction or a dead end.

- Then it should know if the robot has been there before. Based on that, Decision can send a new action to Scan/Drive.

- Now the new vector is based on the environment data and the information from Decision. In this way, the robot should find a strategic way to drive through the maze.

Calibration

In the lectures, the claim was made that 'the odometry data is not reliable'. We decided to quantify the errors in the robot's sensors in some way. The robot was programmed to drive back and forth in front of a wall. At every time instant, it would collect odometry data as well as laser data. The laser data point that was straight in front of the robot was compared to the odometry data, i.e. the driven distance is compared to the measured distance to the wall in front of the robot. The top figure on the right shows these results. The starting distance from the wall is subtracted from the laser data signal. Then, the sign is flipped so that the laser data would match the odometry exactly if the sensors provided perfect data. Two things are now notable from this figure:

- The laser data and the odometry data do not return exactly the same values.

- The odometry seems to produce no noise at all.

The noisy signal that was returned by the laser is presented in the bottom picture on the right. It shows a segment of laser data recorded while the robot was not moving.

- The maximum amplitude of the noise is roughly 12 mm.

- The standard deviation of the noise is roughly 5.5 mm.

- The laser produces a noisy signal. Do not trust one measurement but take the average over time instead.

- The odometry produces no notable noise at all, but it has a significant drift as the driven distance increases. Usage is recommended only for smaller distances (<1 m).
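The processing steps described above (subtracting the starting distance, flipping the sign, and characterising the noise of a static signal) can be sketched as follows. This is a minimal illustration and not the group's own script: the function names are hypothetical, and all distances are assumed to be in metres. The moving average is one possible way to "take the average over time".

```python
import statistics


def laser_as_displacement(laser_ranges):
    """Convert front-facing laser ranges into a driven distance.

    The starting distance is subtracted and the sign is flipped, so that
    a perfect sensor would match the odometry exactly.
    """
    start = laser_ranges[0]
    return [-(r - start) for r in laser_ranges]


def noise_stats(static_ranges):
    """Return (max deviation from the mean, standard deviation)
    of a signal recorded while the robot stands still."""
    mean = statistics.fmean(static_ranges)
    max_dev = max(abs(r - mean) for r in static_ranges)
    return max_dev, statistics.pstdev(static_ranges)


def moving_average(samples, window):
    """Average each sample with up to `window - 1` preceding samples."""
    out = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```

Comparing the output of `laser_as_displacement` with the odometry signal over a longer drive would expose the drift, while `noise_stats` applied to a stationary recording yields the kind of amplitude and standard deviation figures listed above.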

Software implementation

In this section, implementation of this software will be discussed based on the block division we made.

A brief description of each block can be found here. In addition, more detailed problem-solving processes and ideas can be found in the sub-pages of each block.

Drive block

Basically, the Drive block is the doer (not the thinker) of the complete system. The figure shows the composition pattern of Drive. In our case, the robot moves around using potential field. How the potential field works in detail is shown in Scan. Potential field is an easy way to drive through corridors, and making turns. Important is to note that information from the Decision maker can influence the tasks Drive has to do.

Two other methods were also considered: the Simple method and Path planning. We spent a lot of time on the Path planning method in particular. However, testing showed that the potential field was the most robust and most convenient method.
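
One simple way to realise a potential field that steers towards free space is sketched here. It is not necessarily the formulation used on PICO: the weighting (each beam pulls with a strength equal to its clipped range), the parameter names and the angle convention (0 rad straight ahead, positive angles to the left) are all assumptions made for illustration.

```python
import math


def open_space_heading(ranges, angle_min, angle_increment, max_range=3.0):
    """Return the heading in radians that points towards the most free space.

    Every valid beam pulls the robot along its own direction with a weight
    equal to its clipped range, so long beams dominate the sum.
    """
    fx = fy = 0.0
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0:
            continue  # skip invalid readings
        weight = min(r, max_range)
        angle = angle_min + i * angle_increment
        fx += weight * math.cos(angle)
        fy += weight * math.sin(angle)
    if fx == 0.0 and fy == 0.0:
        return 0.0  # no usable beams: keep driving straight
    return math.atan2(fy, fx)
```

In a symmetric corridor the left and right contributions cancel and the heading stays at zero, while an opening on one side pulls the heading towards that side.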

Scan block

The Scan block processes the data of the laser range finder (LRF). This data is used to detect corridors, doors, and different kinds of junctions. The data that is retrieved by 'scan' is used by all three other blocks.

- Scan directly gives information to 'drive'. Drive uses this to avoid collisions.

- Scan sends its data to 'decision', which determines the action the 'drive' block should take at a junction.

- Mapping also uses data from scan to map the maze.

PICO always moves towards the place with the most space using its potential field. However, at junctions and intersections the potential field alone cannot lead PICO in the desired direction. Virtual walls are therefore constructed to shield off the other possible pathways, so that PICO moves in the direction chosen by the decision maker. To create an extra layer of safety, collision avoidance has been added on top of the potential field. The scan block is also capable of detecting doors, which is a necessary part of solving the maze. More detailed information about the following properties can be found in the block Scan:

- Potential field

- Detecting junctions/intersections

- Virtual walls

- Collision avoidance

- Detecting doors
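
The idea of a virtual wall can be illustrated by editing the scan before it reaches the potential field. The sketch below is an assumption about how this could be done, not the group's actual implementation: the function name, the sector representation and the wall distance are hypothetical.

```python
def add_virtual_wall(ranges, angle_min, angle_increment, sector, wall_distance):
    """Return a copy of the scan in which openings inside `sector` look closed.

    sector is a (low, high) pair of beam angles in radians. Any beam in that
    sector that sees farther than wall_distance is capped to wall_distance.
    """
    low, high = sector
    blocked = list(ranges)
    for i, r in enumerate(blocked):
        angle = angle_min + i * angle_increment
        if low <= angle <= high and r > wall_distance:
            blocked[i] = wall_distance
    return blocked


# Hypothetical three-beam scan at -1, 0 and 1 rad with openings everywhere.
scan = [3.0, 3.0, 3.0]
# Close off the right-hand opening so that only straight and left remain.
print(add_virtual_wall(scan, -1.0, 1.0, (-1.5, -0.5), 0.5))
```

Because the original scan is left untouched, collision avoidance can still work on the real measurements while the potential field sees the modified copy.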

Decision block

The Decision block contains the algorithm for solving the maze. It can be seen as the 'brain' of the robot. It receives the data of the world from 'Scan'; then decides what to do (it can consult 'Mapping'); finally it sends commands to 'Drive'.

Input:

- Mapping model

- Scan data

Output:

- Specific drive action command

The maze-solving algorithm used is Trémaux's algorithm. This algorithm requires drawing lines on the floor. Every time a direction is chosen, it is marked by drawing a line on the floor (from junction to junction). At each junction, the direction with the fewest marks is chosen. If two directions have been visited equally often, one of them is chosen at random. Eventually, the exit of the maze will be reached.
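
The selection rule described above (fewest marks first, random choice on a tie) can be sketched as follows. This covers only that simplified rule, not a complete Trémaux implementation, and the function name and the dictionary layout are assumptions.

```python
import random


def choose_direction(marks, rng=None):
    """Pick the direction with the fewest marks and add a mark to it.

    marks maps a direction name to the number of times it has been taken.
    Ties are broken at random. An empty dictionary raises ValueError.
    """
    rng = rng or random
    fewest = min(marks.values())
    candidates = [d for d, count in marks.items() if count == fewest]
    choice = rng.choice(candidates)
    marks[choice] += 1  # "draw a line on the floor" for the chosen direction
    return choice


# Hypothetical junction: straight ahead has never been taken before.
junction = {"left": 1, "straight": 0, "right": 2}
print(choose_direction(junction))
```

Passing a seeded `random.Random` instance as `rng` makes the tie-breaking reproducible, which helps when replaying a run in simulation.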

Mapping block

The mapping block will store the corridors and junctions of the maze. This way, the decision maker can make informed decisions at intersections, to ensure that the maze will be solved in a strategic way.

To do this, Trémaux's algorithm is used, which is a form of depth-first search.

The maze consists of nodes and edges. A node is either a dead end, or any place in the maze where the robot can go in more than one direction (i.e., an intersection). An edge is the connection between one node and another, and as such is also called a corridor. An edge may also lead back to the node it started from; in that case, the edge is a loop. The algorithm is called by the general decision maker whenever the robot encounters a node (a junction or a dead end). The input of the algorithm is the set of possible routes the robot can take (left, straight ahead, right, turn around) and the output is the direction that the Mapping block considers the best option.
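
A possible data structure for such a map is sketched here. The class and field names are hypothetical, and the sketch assumes that a node stores only the directions other than turning around, so that a dead end is a node with no open directions.

```python
from dataclasses import dataclass, field


@dataclass
class Edge:
    """A corridor between two nodes, identified by their ids."""
    start: int
    end: int
    visits: int = 0

    @property
    def is_loop(self):
        # An edge that returns to the node it started from is a loop.
        return self.start == self.end


@dataclass
class MazeGraph:
    nodes: dict = field(default_factory=dict)  # node id -> open directions
    edges: list = field(default_factory=list)

    def add_node(self, open_directions):
        """Store a new node and return its id."""
        node_id = len(self.nodes)
        self.nodes[node_id] = list(open_directions)
        return node_id

    def add_edge(self, start, end):
        """Connect two existing nodes and return the new edge."""
        if start not in self.nodes or end not in self.nodes:
            raise KeyError("both end points must be existing nodes")
        edge = Edge(start, end)
        self.edges.append(edge)
        return edge

    def is_dead_end(self, node_id):
        return len(self.nodes[node_id]) == 0
```

The `visits` counter on each edge is where the marks of the maze-solving algorithm could be kept.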

In order to detect loops, the position of the robot must be known as well as the position of each node, to see when the robot has returned to the same location. This is decoupled from the Mapping block and done in the Localisation block. Since the localisation did not work in the end, a Random Walk implementation was also made in the Mapping block.
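
Recognising a return to a known node could be done by comparing the estimated robot position with the stored node positions, as in the sketch below. The function name, the coordinate layout and the 0.3 m tolerance are assumptions; a working version depends on a localisation estimate that is accurate to within that tolerance.

```python
import math


def find_known_node(position, node_positions, tolerance=0.3):
    """Return the id of the closest stored node within tolerance, or None.

    position is an (x, y) pair in metres. node_positions maps a node id
    to the (x, y) position at which that node was first placed.
    """
    best_id, best_distance = None, tolerance
    for node_id, (x, y) in node_positions.items():
        distance = math.hypot(position[0] - x, position[1] - y)
        if distance <= best_distance:
            best_id, best_distance = node_id, distance
    return best_id


# Hypothetical map with two nodes that are 2 m apart.
nodes = {0: (0.0, 0.0), 1: (2.0, 0.0)}
print(find_known_node((1.9, 0.1), nodes))
print(find_known_node((1.0, 1.0), nodes))
```

If the function returns a node id, the robot has closed a loop. If it returns None, a new node has to be added to the map.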

Localisation block

The purpose of the localisation is to prevent the robot from driving in a loop indefinitely, by knowing where it is at any given moment. Based on this information, it can decide what to do next. As a result, the robot will be able to exit the maze within finite time, or it will report that there is no exit if it has searched everywhere without success.

The localisation algorithm is explained on the Localisation page, which separates and discusses the important factors.

A-maze-ing Challenge

In the third week of this project we had to do the corridor challenge. During this challenge, we had to let the robot drive through a corridor and then take the first exit (whether left or right). This task can be tackled with two different approaches:

- Make a script only based on the corridor challenge.

- Make a script for the corridor challenge but with clear references to the final maze challenge.

We chose the second approach. This meant extra work up front to think about properly structured code, because only then can the same script be reused for the final challenge. After the corridor competition, our choice was open to debate: we failed the corridor challenge while other groups succeeded. However, most of those groups had chosen the first approach, whereas we already had a decent base for the a-maze-ing challenge. This proved its worth later.

For the a-maze-ing challenge we decided to use two versions of our software package. In the first run (see the section Videos further down on the page), we used Trémaux's algorithm together with a localiser, which would together map the maze and try to solve it. Our second run was conducted with Trémaux's algorithm and the localisation algorithm turned off. Each time the robot encountered an intersection, a random decision was made on where to go next.

Run 1

The first run was recorded on video and can be seen here. The robot recognizes a four-way cross-section and decides to turn into the left corridor. It then immediately starts to chatter, as the corridor is narrower than expected. Next, it follows the corridor smoothly until it encounters the next T-junction. The robot is confused by the intersection immediately to its left. After driving closer to the wall, it mistakes the wall for a door. Because it (of course) does not open, the robot decides to turn right and explore the dead end. Between 20 and 24 seconds into the video, the robot is visibly having a hard time with the narrow corridor: it tries to drive straight while also evading the walls to its left and right. It recognizes another dead end and turns around swiftly. It crosses the T-junction again by going straight, and at 43 seconds it again thinks it is in front of a door. After ringing the bell, it waits for the maximum of 5 seconds that the door can take to open. It then recognizes that this, too, is a dead end and not a door. After turning around, it drives back to the starting position. Between 1:11 and 1:30, it explores the edges that it has not yet seen. Here, Trémaux's algorithm and the localiser seem to be doing their job just fine. Unfortunately, as can be seen in the rest of the video, something went wrong with the placement of the other nodes. The robot decides to follow the same route as the first time, fails to drive to the corridor with the door in it, and eventually gets stuck in a loop.

The main reason for the failure is thought to be the node placement. The first T-junction that the robot encountered made PICO go into its collision avoidance mode, which might have interfered with the commands to place a node. It is also possible that the node was actually placed, but that the localisation went wrong because of all the lateral swaying to avoid collisions with the walls. It was clear that the combination of localisation, the maze-solving algorithm and the situation recognition by the LRF was not yet ready to be used as a whole. Therefore, we decided to make the second run with a simpler version of our software, running only the core modules that had been tested and found to be reliable.

Run 2

For the second run, we ran a version of our software without Trémaux's algorithm and without the global localiser. These features were developed later in the project and were not completely finished. For this run, a random decision was passed to the decision maker every time it asked for a new direction to head in.

The second run can be seen here. Again the robot immediately decides to go left. Note that the first corner it takes in the corridor, between 0:02 and 0:04, is exactly the same as in the first run. This is because the robot is driven by separate blocks of software, and the blocks that are active while following a corridor were exactly the same for both runs. At 0:07, the collision detection works just in time to prevent a head-on collision with the wall in front of PICO at the T-junction. Now, a random decision is made to go left, followed by a right turn into the corridor with the door. The robot recognizes the door in front of it exactly as expected and stops to ring the doorbell. Although the door started moving immediately after the bell rang, the robot is programmed to wait for five seconds before it is allowed to move again. During these five seconds, it used the LRF to check whether the door had moved out of its way. Once the passage was clear, the robot started exploring the new area and drove into the open space. Note that, between 0:30 and 0:36, the robot made a zigzag manoeuvre. When it first drives into the open space, the potential field points at the center of this open space. Between 0:36 and 0:46 it drives in 'open space mode'. This means that the robot drives to the nearest wall and then drives alongside it. It should thereby always find a new node where a new decision can be made. By doing so, it drives into a corridor. Note that at 0:47, the normal 'corridor mode' started working again. The potential field method directs the robot towards the middle of the corridor, which explains the sharp turn it made at 0:47. After hearing the presenter ask to 'Please go left... Please go left?!?', the robot makes another random decision. As luck would have it, the random decision was indeed to go left. It slightly overturns, but the collision detection saves PICO from crashing into the wall yet again at 1:06. At 1:10, the well-earned applause for PICO started as it finished the maze in a total time of 1:16!
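
The check of whether a door has moved out of the way could be based on a comparison of the front laser beams before and after ringing the bell. The sketch below is only an assumption about how such a check might work: the function name and both thresholds are hypothetical and were not taken from the group's code.

```python
def door_has_opened(ranges_before, ranges_after, min_gain=0.5, min_fraction=0.5):
    """Return True when enough front beams see clearly farther than before.

    A beam counts as cleared when its range grew by at least min_gain metres.
    The door counts as open when at least min_fraction of the beams cleared.
    """
    pairs = list(zip(ranges_before, ranges_after))
    if not pairs:
        return False
    cleared = sum(1 for before, after in pairs if after - before >= min_gain)
    return cleared / len(pairs) >= min_fraction


# Hypothetical front beams, measured before and after the waiting time.
print(door_has_opened([0.4, 0.4, 0.4], [1.5, 1.6, 0.4]))
print(door_has_opened([0.4, 0.4, 0.4], [0.4, 0.45, 0.4]))
```

Requiring a fraction of the beams rather than a single one makes the check less sensitive to the laser noise measured earlier.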

Experiments

Seven experiments were done during the course. Here you can find brief information about the dates and goals of the experiments, along with a short evaluation of each experiment.

Conclusion

In the end, our final script was capable of solving the maze challenge quickly and robustly, in that it did not bump into the walls. However, since we were not able to implement the higher-order thinking in our script, and our final code depended on a random walk, the route the robot takes is up to chance. This will still solve the maze eventually, as shown in the second run. Our recommendation is therefore to develop the localisation further, in combination with the mapping, and in this way implement the higher-order learning that was our aim. What we learned from this project was to implement top-down software design using algorithms. Compartmentalizing the code into blocks and keeping a clear overview of the communication between the blocks helped us a lot to keep an overview of such a large code base. It also allowed for an easier bottom-up implementation, building the code up from scratch: in a new project, we would start by creating a composition pattern and then base the code on it. The lectures helped us figure out how to approach diagrams such as the composition pattern. However, we had trouble seeing the application to our problem right away, for instance how each block in the composition pattern should be applied.

Files & presentations

- Initial design document (week 1): File:Init design.pdf

- First presentation (week 3): File:Group3 May6.pdf

- Second presentation (week 6): File:Group3 May27.pdf

- Final design presentation (week 8): File:EMC03 finalpres.pdf

Videos

Experiment 4: Testing the potential field on May 29, 2015.

Maze challenge: Trémaux's algorithm, but failing to solve the maze. June 17, 2015.

Maze challenge: Winning attempt! on June 17, 2015.