PRE2024 3 Group3: Difference between revisions



<div class="floatleft" style="width: 80%; margin-left: auto; margin-right: auto;"> | |||

=Group Members=

{| class="wikitable" | {| class="wikitable" | ||

|'''Name''' | |'''Name''' | ||

| Line 13: | Line 13: | ||

|- | |- | ||

|Luis Fernandez Gu | |Luis Fernandez Gu | ||

| | |1804189 | ||

| | |Computer Science and Engineering | ||

| | |l.fernandez.gu@student.tue.nl | ||

|- | |- | ||

|Alex Gavriliu | |Alex Gavriliu | ||

| | |1785060 | ||

| | |Computer Science and Engineering | ||

| | |a.m.gavriliu@student.tue.nl | ||

|- | |- | ||

|Theophile Guillet | |Theophile Guillet | ||

| Line 28: | Line 28: | ||

|- | |- | ||

|Petar Rustić | |Petar Rustić | ||

| | |1747924 | ||

| | |Applied Physics | ||

| | |p.rustic@student.tue.nl | ||

|- | |||

|Floris Bruin | |||

|1849662 | |||

|Computer Science and Engineering | |||

|f.bruin@student.tue.nl | |||

|} | |} | ||

=Introduction=

=== Problem Statement ===

Diagnosing speech impairments in children is a complex and evolving area within modern healthcare. While early diagnosis is essential for effective treatment, current diagnostic practices can present challenges that may impact both accuracy and accessibility. Several studies and practitioner reports have highlighted inefficiencies in existing assessment models, especially for young children (e.g., ASHA, 2024<ref>https://www.asha.org/public/speech/disorders/SpeechSoundDisorders/</ref>, leader.pubs.asha.org<ref>https://leader.pubs.asha.org/doi/10.1044/leader.FTR3.19112014.48?utm_source=chatgpt.com</ref>). | |||

Traditionally, speech and language therapists (SLTs) rely on structured, in-person testing methods. These assessments often involve up to three hours of standardized questioning, which can be mentally exhausting for both the child and the therapist. Extended sessions may introduce human fatigue and subjectivity, increasing the risk of inconsistencies in analysis. Additionally, children—especially those between the ages of 3 and 5—may struggle to remain focused and responsive during lengthy appointments, which can affect the quality and reliability of their responses. | |||

Another significant consideration is the environment in which assessments take place. Therapist offices can feel unfamiliar or intimidating to young children, potentially affecting their comfort level and behavior during testing. The child’s perception of the therapist, the formality of the setting, and the pressure of the situation can all influence the outcome of the diagnostic process. Moreover, both therapist and child performance may decline over time, introducing bias and reducing the diagnostic clarity. | |||

To address these challenges, this project proposes the use of an interactive, speech-focused diagnostic robot designed to support and enhance speech impairment assessments. This tool aims not to replace therapists but to assist them by mitigating known sources of bias and fatigue while making the process more accessible and engaging for children. | |||

The robot contributes in three ways. First, it questions children consistently: the quality of its questioning does not decline as the test progresses. Second, it records the child's responses and preprocesses them so that the therapist can analyze the results easily, listening to each recording as often as needed and at a time when they have the energy and focus to do so. Third, it partitions the exam into smaller, gamified chunks, while still ensuring the test remains as effective as a full three-hour session, which gives children breaks and a more suitable interface that can improve the quality of their responses. In addition, its friendly, non-threatening appearance may give the child a more comfortable and engaging exam experience, although this will need to be tested empirically.

Such a robot also separates the therapist's office from the child's location. Diagnoses can then be carried out in locations where access to therapists is limited or nonexistent, since the recordings from the robot can be sent digitally to therapists abroad. It also allows several therapists to contribute to a diagnosis: with consent from the child's guardians, multiple therapists can access the recordings and test results, which makes the diagnosis more robust and reduces the bias of any single therapist.

=== Objectives === | |||

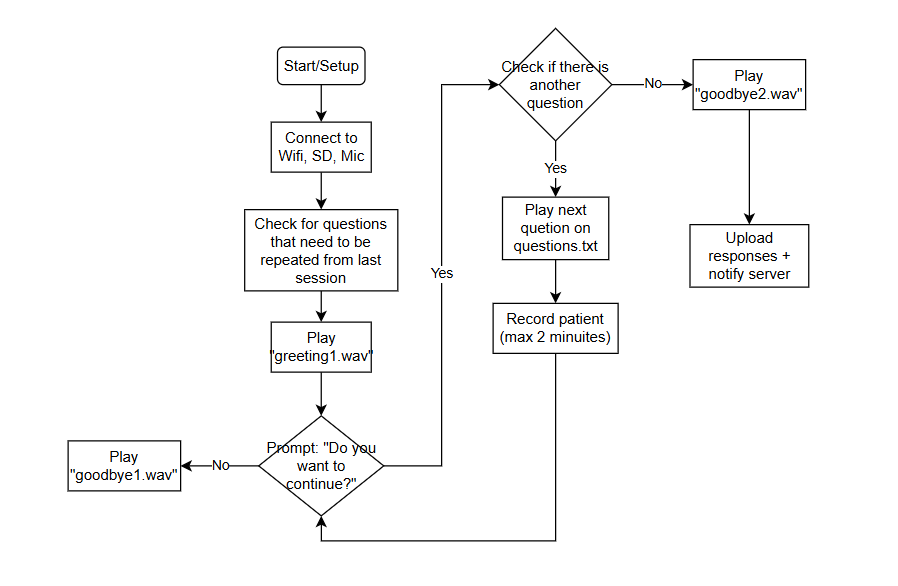

# Create a robot that can ask questions, record answers, apply basic preprocessing to the answers, and follow suitable execution paths for unexpected use cases, for example children crying or giving incoherent responses.

# Perform multiple tests to understand the effectiveness of the robot. The first test analyzes how well a child interacts with the robot. The second compares a therapist's analysis of the results from the device with the standard results from a current test.

# Test how well the robot performs when faced with unexpected use cases.

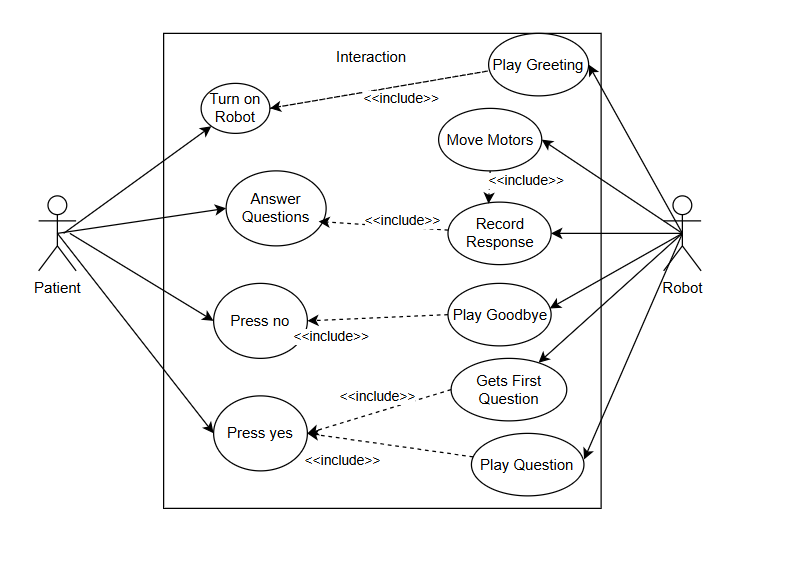

== USE Analysis == | |||

=== Users ===

==== Speech-Language Therapist (Primary User) ==== | |||

Speech-language therapists are clinicians responsible for diagnosing and treating children with speech, language, and communication challenges. They often work under high workloads and constant pressure to deliver accurate assessments quickly.

===== Needs: ===== | |||

* '''Efficient Diagnostic Processes:''' Therapists require engaging diagnostic methods that transform lengthy traditional assessments into shorter, gamified sessions to minimize child fatigue and cognitive overload (Sievertsen et al., 2016<ref name=":0">https://pmc.ncbi.nlm.nih.gov/articles/PMC4790980/</ref>). Utilizing robots to conduct playful tasks significantly enhances child engagement, allowing therapists to better manage their time and increase diagnostic accuracy (Estévez et al., 2021<ref>https://www.mdpi.com/2071-1050/13/5/2771</ref>). Interviews with the primary user group suggest that the robot can specifically target repetitive "production constraint" tasks, reducing the number of repetitions needed, which in turn reduces session time and patient fatigue. | |||

* '''Comprehensive Data Capture and Objective Analysis:''' Therapists identify data analysis as the most time-consuming aspect of diagnostics. Interviews indicate that high-quality audio and interaction data captured during robot-assisted sessions enable therapists to conduct thorough asynchronous reviews, significantly streamlining the analysis process. The robot’s ability to provide unbiased assessments, free from subjective influences such as therapist fatigue or emotional state, improves diagnostic reliability (Moran & Tai, 2001<ref>https://www.scirp.org/reference/referencespapers?referenceid=3826718</ref>). | |||

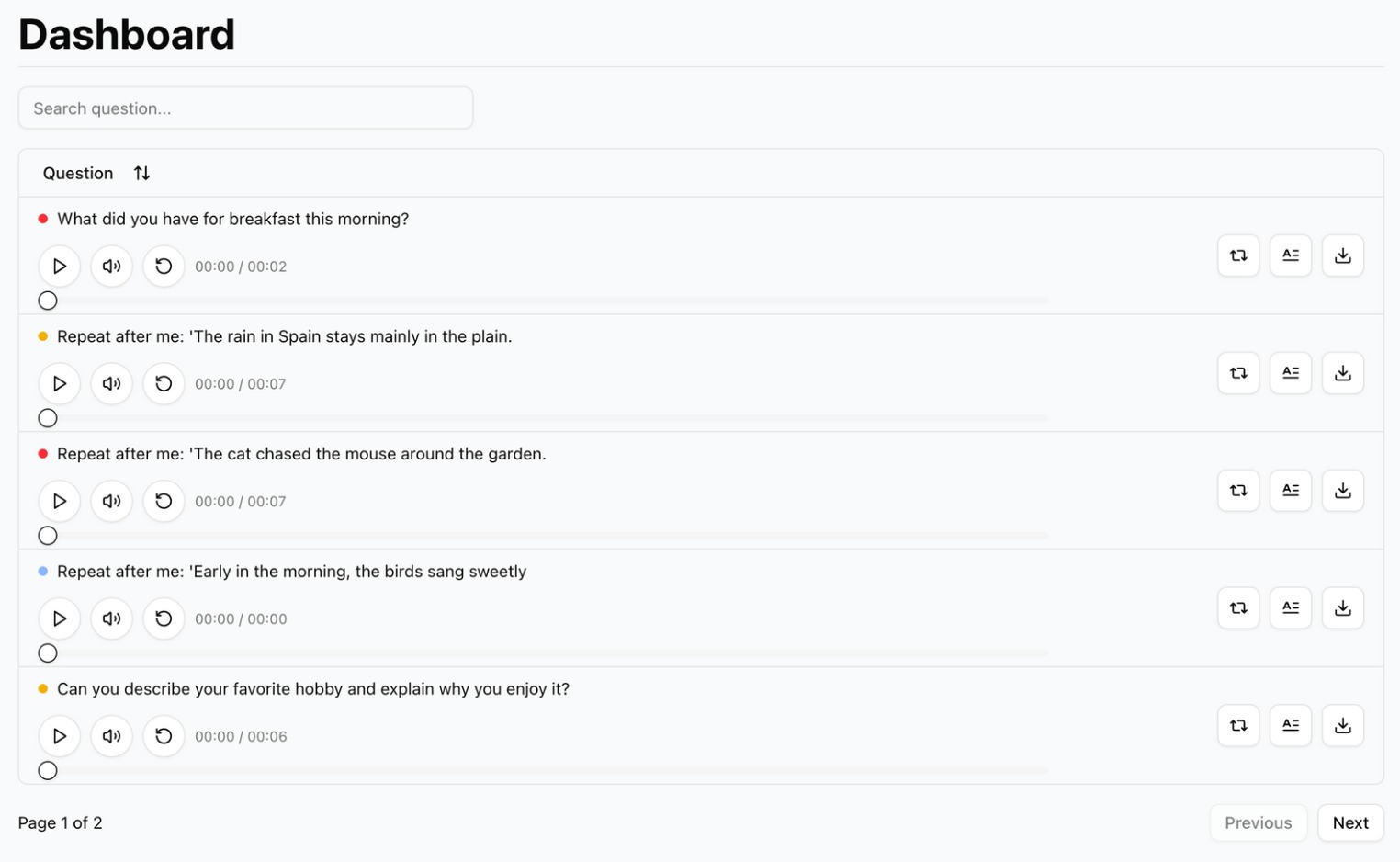

* '''Intuitive Digital Tools:''' Therapists benefit from secure, well-designed dashboards that streamline patient management, enable detailed annotations, and support remote, asynchronous assessments. A case study (Kushniruk & Borycki, 2006<ref>https://pubmed.ncbi.nlm.nih.gov/17238408/</ref>) in the ''Journal of Biomedical Informatics'' shows that intuitive clinical interfaces significantly improve diagnostic accuracy and reduce clinician errors by minimizing cognitive load and decision fatigue in health technology systems. When paired with privacy-by-design principles that align with legal frameworks like GDPR, these tools not only enhance usability but also maintain strict data protection standards. | |||

==== Child Patient (Secondary User) ==== | |||

Young children aged 5–10, who are the focus of speech assessments, often experience anxiety, discomfort or boredom in traditional clinical environments. Their engagement in therapy sessions is crucial for accurate assessment and treatment outcomes. | |||

This age range has been identified as the most suitable for early diagnosis due to several factors. By the age of 4 to 5, most children can produce nearly all speech sounds correctly, making it easier to identify atypical patterns or delays (ASHA<ref>https://www.asha.org/public/speech/disorders/SpeechSoundDisorders/</ref>, 2024; RCSLT, UK<ref>https://www.rcslt.org/speech-and-language-therapy/clinical-information/speech-sound-disorders/</ref>). Children within this range also possess a higher level of cognitive and behavioral development, which allows them to better understand and follow test instructions—critical for accurate assessments. | |||

Early identification is crucial because speech sound disorders (SSDs) often begin to emerge at this stage. Addressing these issues early greatly improves outcomes in speech development, literacy, and overall academic performance (NHS, 2023<ref>https://www.nhs.uk/conditions/speech-and-language-therapy/</ref>; European Journal of Pediatrics, 2013<ref>https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3560814/</ref>). | |||

While some speech impairments, such as developmental language disorders or fluency issues, are not apparent until after age 5, the younger demographic (ages 3–5) poses a unique challenge. Children aged 3–5 often have limited attention spans (6–12 minutes) and are in Piaget’s preoperational stage of development (CNLD.org<ref>https://www.cnld.org/how-long-should-a-childs-attention-span-be/</ref>), so they are often less willing or able to engage with structured diagnostic tasks. Diagnostic tools must therefore be simple, engaging, and developmentally appropriate to ensure accurate and efficient assessment.

===== Needs: ===== | |||

* '''Interactive, Game-Like Experience:''' Gamification significantly boosts child engagement and motivation, reducing anxiety and fatigue (Zakrzewski, 2024<ref><nowiki>https://digitalcommons.montclair.edu/etd/1386/</nowiki></ref>). Children interacting with socially assistive robots show higher attention spans, improved participation, and reduced stress compared to traditional assessments (Shafiei et al., 2023<ref><nowiki>https://pmc.ncbi.nlm.nih.gov/articles/PMC10240099/</nowiki></ref>). Interviews with therapists indicate that children often find traditional diagnostics "long," "tiring," and "laborious," underscoring the need for more engaging, playful methodologies. | |||

* '''Immediate, Clear Feedback:''' Immediate visual and auditory feedback from robots guides children effectively, providing reinforcement and maintaining high engagement levels (Grossinho, 2017<ref>https://www.isca-archive.org/wocci_2017/grossinho17_wocci.pdf</ref>). According to user interviews, such real-time feedback helps mitigate frustration during challenging speech tasks, supporting sustained engagement and more reliable outcomes. | |||

==== Parent/Caregiver (Support User) ==== | |||

Parents or caregivers play an essential role in supporting the child’s therapy and need to feel confident that the process is both secure and effective. To ensure the therapy is reinforced outside the clinical setting, the parents need to be fully on board.

===== Needs: ===== | |||

* '''Data Security and Transparency:''' Caregivers consistently express the need for robust privacy protections. Interviews and research indicate that many parents hesitate to adopt digital tools unless they are confident that their child’s recordings and personal data are securely encrypted, stored in compliance with healthcare regulations (e.g., HIPAA/GDPR), and used solely for clinical benefit. Transparent consent mechanisms and clear explanations of data use build trust and increase engagement (Houser, Flite, & Foster, 2023<ref>https://pmc.ncbi.nlm.nih.gov/articles/PMC9860467/</ref>). Parents want assurance that they retain control over data visibility, which reinforces their role as advocates for their child’s safety. | |||

* '''Progress Monitoring''': Parents expect to stay informed about their child’s development. A user-friendly caregiver dashboard or app interface should display digestible progress summaries, such as completed tasks, improvements in specific sounds, and visual/audio comparisons over time. This empowers parents to understand their child’s journey and reinforces their sense of involvement. Caregiver interviews showed that getting clear updates after each session, e.g. “Jonas practiced 20 words and improved on ‘s’ sounds”, helped parents feel more motivated and involved. This kind of feedback makes it easier for parents to support practice at home and builds a stronger connection between families and therapists (Roulstone et al., 2013<ref>https://www.researchgate.net/publication/265099078_Investigating_the_Role_of_Language_in_Children's_Early_Educational_Outcomes</ref>; Passalacqua & Perlmutter, 2022<ref>https://www.researchgate.net/publication/364191716_Parent_Satisfaction_With_Pediatric_Speech-Language_Pathology_Telepractice_Services_During_the_COVID-19_Pandemic_An_Early_Look</ref>). | |||

==== Personas ==== | |||

[[File:PRE2024 Group3 Persona3.jpg|thumb|720x720px|Personaboard, Secondary User]] | |||

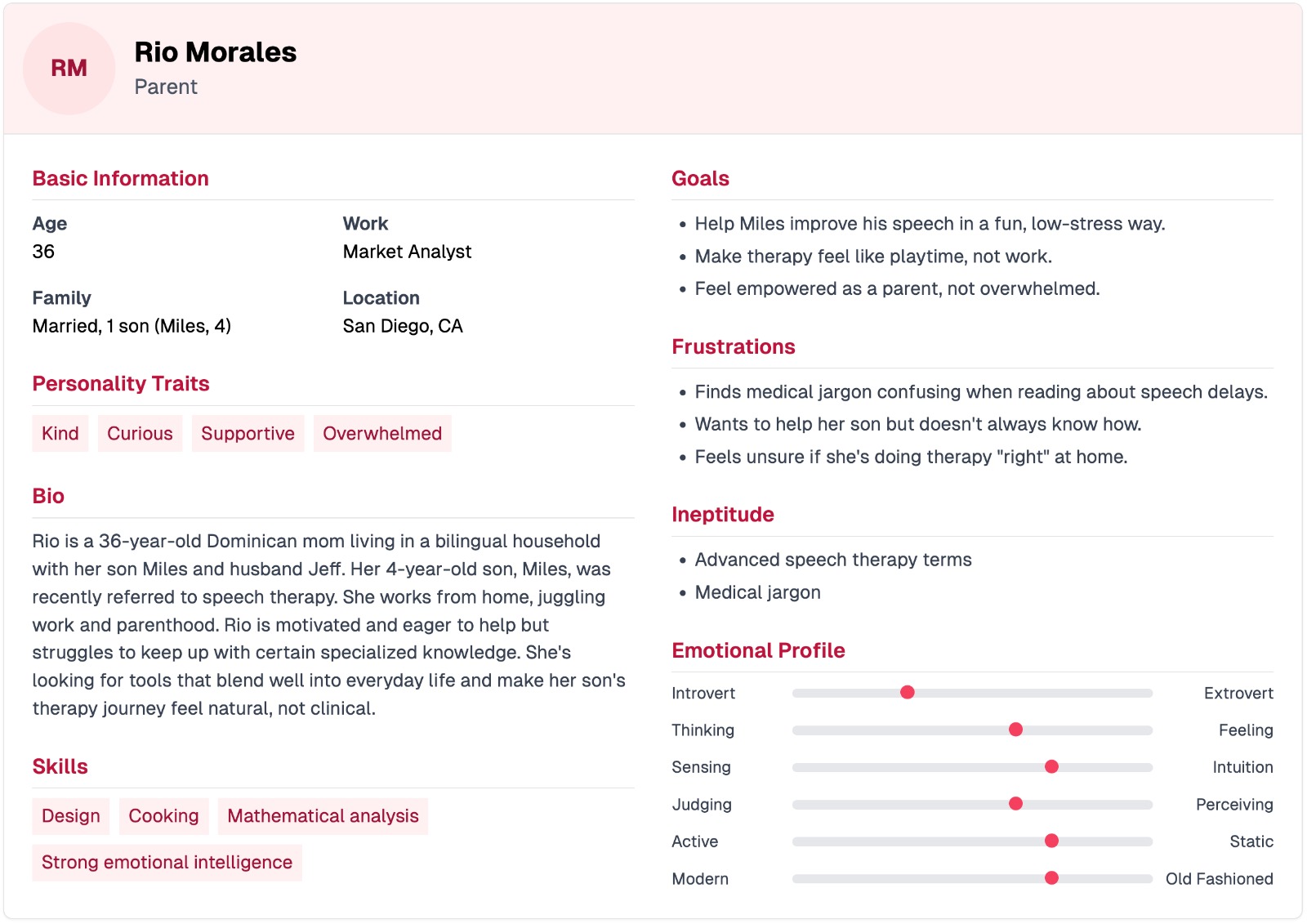

===== Persona 1: Parent =====

'''Rio Morales''' is a 36-year-old Dominican mom, market analyst, and creative spirit living in San Diego with her husband Jeff and their 4-year-old son, Miles. Kind, curious, and deeply supportive, Rio is navigating the early stages of her son’s speech therapy journey. While she’s highly motivated to help, she often feels overwhelmed by complex medical jargon and unsure if she’s “doing it right” at home. | |||

She thrives on emotional connection and values intuitive, playful approaches over rigid, clinical methods. Rio’s goal is to turn speech therapy into something that feels like quality time, not homework. With strong emotional intelligence, a knack for design and analysis, and a love for hands-on learning, Rio is looking for tools that fit seamlessly into family life and empower her to support Miles with confidence and ease. | |||

[[File:PRE2024 Group2 Persona3.jpg|thumb|721x721px|Personaboard, Secondary User]] | |||

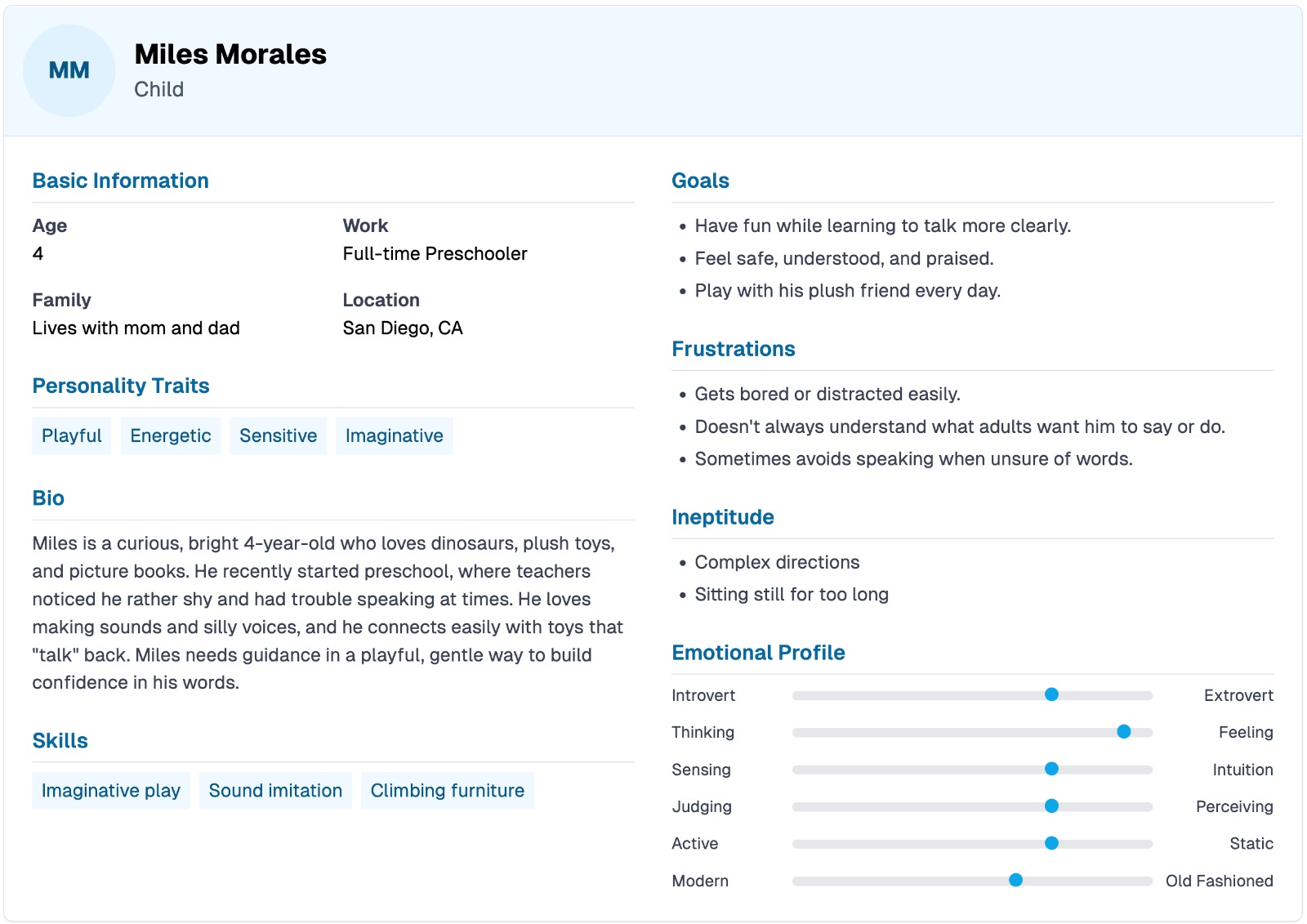

===== Persona 2: Child ===== | |||

'''Miles Morales''' is a bright, energetic 4-year-old with a big imagination and a love for dinosaurs, plush toys, and silly sounds. Recently enrolled in preschool, Miles is navigating early challenges with speech and communication. While he’s playful and emotionally intuitive, he can get easily distracted, especially by anything more serious or structured. He tends to avoid speaking when unsure, but thrives when communication is fun, safe, and full of encouragement. | |||

Miles connects deeply with his toys, especially ones that “talk,” and learns best through imaginative play and sound imitation. He responds well to gentle guidance and praise, and needs speech support to feel more like play than practice. With the right support, especially one that taps into his world of fun and fantasy, Miles has everything he needs to grow more confident with his words. | |||

[[File:PRE2024 Group3 Persona1.jpg|thumb|721x721px|Personaboard, Primary User]] | |||

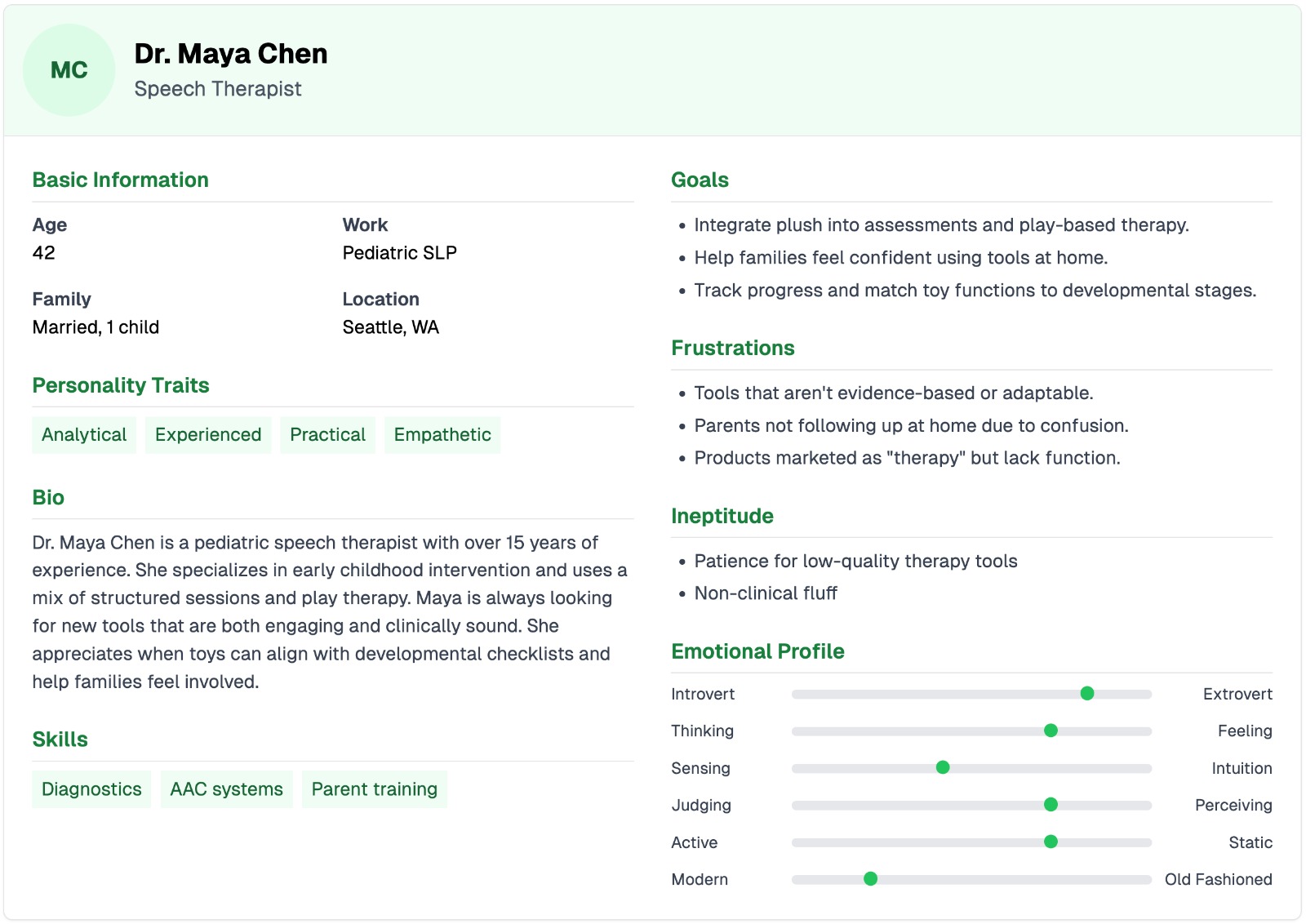

===== Persona 3: Speech Therapist =====

'''Dr. Maya Chen''' is a seasoned pediatric speech-language pathologist with over 15 years of experience, based in Seattle. Analytical, practical, and deeply empathetic, Maya blends clinical precision with a love for play-based learning. She specializes in early intervention and thrives when tools are both evidence-based and engaging for kids. | |||

Her biggest frustration is when therapy tools lack functionality or confuse parents rather than empower them. Maya seeks to bridge that gap, helping families feel confident at home while ensuring progress stays on track. She values adaptable resources that align with developmental stages and support her structured-yet-playful therapy style. With expertise in diagnostics, AAC systems, and parent coaching, Dr. Chen is always on the lookout for tools that are not only fun for kids but meaningful and effective in therapy. | |||

==== Scenarios ==== | |||

===== Scenario 1: =====

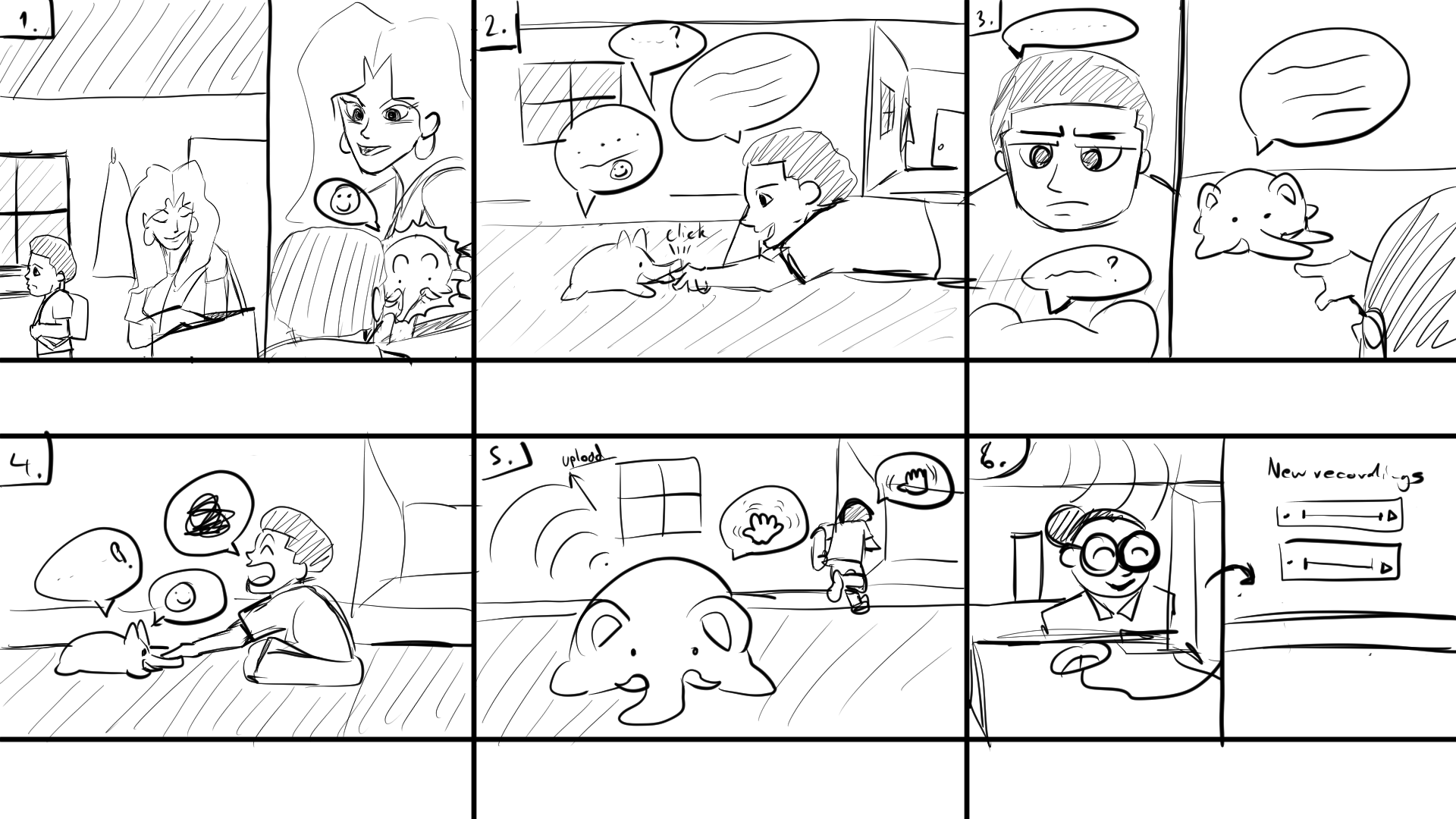

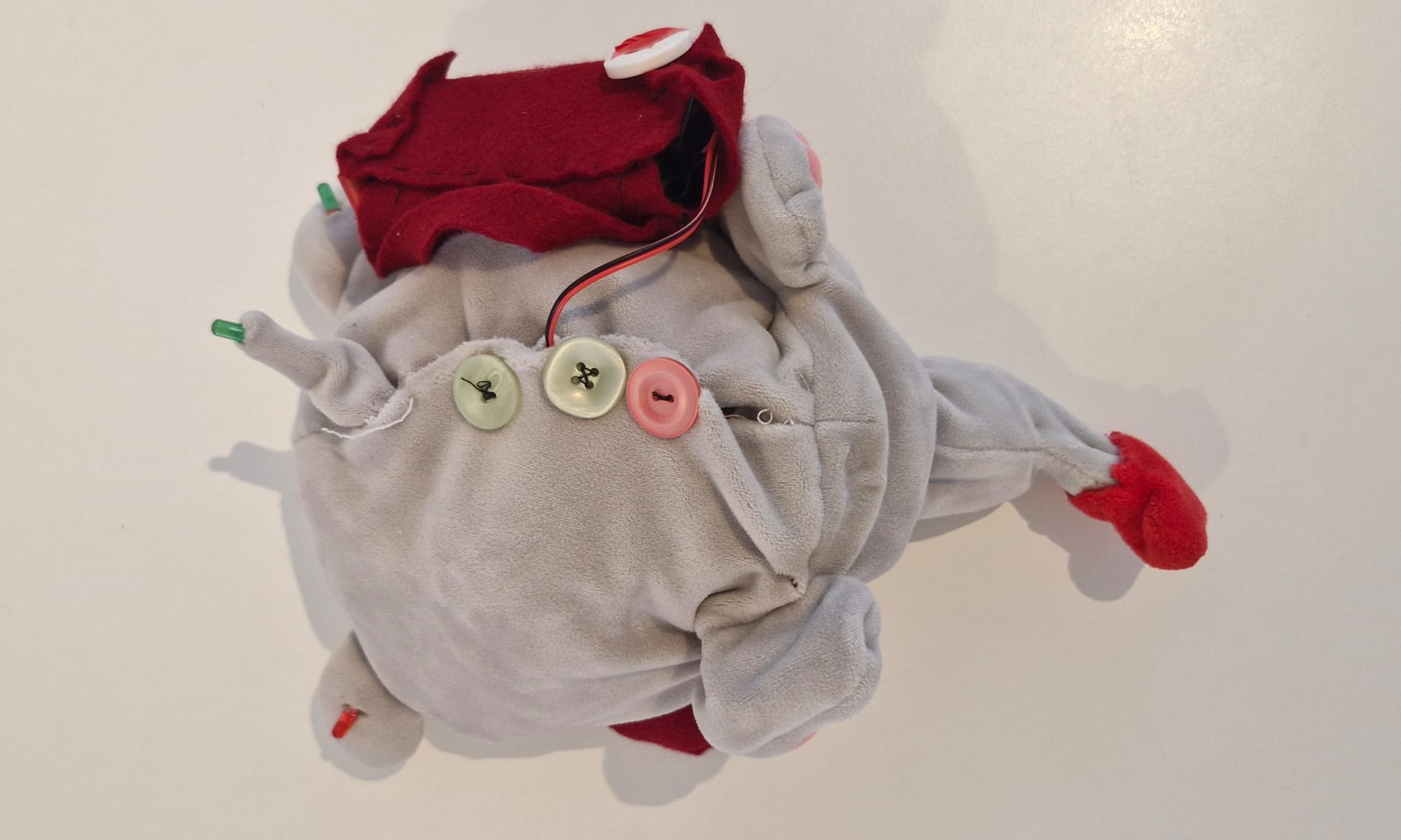

Rio has just come home after picking up Miles from school. Knowing that it's important to continue gathering diagnostic data for his speech therapy, she pulls out Rene, the plush elephant, and switches him on. Rene immediately comes to life with a cheerful sparkle in his voice, greeting Miles in a whimsical tone: “Hey there, Miles! Would you like to have a little chat with me today?” Miles lights up with a smile and, remembering what to do, squeezes Rene’s left paw to begin. Rene’s soft glow pulses as he starts asking questions, each one phrased in a playful and inviting way to make the experience feel like a fun game rather than a test. | |||

Miles follows Rene’s instructions eagerly at first. When asked, “What sound does a dog make?” he responds with a confident “Woof!” pressing Rene’s trunk to record his answer. Rene reacts with delight, “Great job, Miles!” and seamlessly moves to the next question. The session continues smoothly for the first few minutes. Each of Miles’ responses is neatly timestamped and logged as Rene records when prompted by the trunk being pressed. But soon, Rene asks a question that catches Miles off guard. Miles hesitates, then quietly presses the trunk without saying anything, producing a barely-there 1 millisecond audio clip. Rene, unfazed, still registers it as a response and moves on without drawing attention to the missed moment. | |||

After that, it becomes clear that Miles is losing interest. His answers become silly—giggling, making random noises, and throwing in nonsense words. Still, Rene patiently continues, recording each one and storing them just like any other response. Eventually, Miles gives Rene’s right paw a squeeze, signalling that he’s done for the day. Rene instantly switches tone, saying goodbye in a warm voice: “That was so much fun, Miles! Let’s talk again another time, okay?” | |||

As soon as the session ends, Rene automatically begins the upload process. All the audio recordings—whether clear, silly, or silent—are sent securely to Miles’ encrypted profile in the therapy database. Once received, the system begins analysing the data, scanning each response for clarity, duration, and consistency. It flags any problematic or ambiguous responses, including the one-millisecond silent clip and the burst of unrelated sounds toward the end. These are added to a follow-up list that Rene will be programmed to revisit in a future session, adapting his script accordingly to ensure each critical diagnostic point is eventually covered. | |||

At the same time, the therapist’s companion app receives a quiet notification: new recordings are available. When she logs in, she’ll see exactly which questions were answered, which ones need clarification, and can even listen to the audio if needed. From this playful interaction between a child and a soft toy, meaningful diagnostic data has been collected—seamlessly, naturally, and without stress.[[File:Story Board for scenario.png|none|thumb|658x658px|Story board for scenario]] | |||
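The flagging step described in this scenario could be sketched roughly as follows. This is only an illustration under stated assumptions, not the project's implementation: the <code>Response</code> record, the <code>MIN_DURATION_S</code> threshold, the reason labels, and the idea that a transcript from some speech recogniser is available are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical threshold: clips shorter than this count as an empty trunk press.
MIN_DURATION_S = 0.3


@dataclass
class Response:
    """One recorded answer (all fields are illustrative assumptions)."""
    question_id: str
    duration_s: float                # length of the audio clip in seconds
    transcript: str                  # text from an assumed speech recogniser, "" if none
    expected_words: Tuple[str, ...]  # answers the therapist would accept


def flag_reason(response: Response) -> Optional[str]:
    """Return why a response needs a follow-up, or None if it looks usable."""
    if response.duration_s < MIN_DURATION_S:
        return "too_short"
    if not response.transcript.strip():
        return "no_speech"
    words = response.transcript.lower().split()
    if not any(word in words for word in response.expected_words):
        return "off_topic"
    return None


def follow_up_list(responses: List[Response]) -> List[Tuple[str, str]]:
    """Collect (question_id, reason) pairs for every flagged response."""
    flagged = []
    for response in responses:
        reason = flag_reason(response)
        if reason is not None:
            flagged.append((response.question_id, reason))
    return flagged
```

In this sketch, the one-millisecond clip from the scenario would be flagged as <code>too_short</code> and the nonsense answers as <code>off_topic</code>, while every recording is still kept for the therapist to listen to. A real system would need a more careful check than simple word matching.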

===== Requirements =====

===== For the Therapist ===== | |||

* Robust Hardware Integration: The system must incorporate reliable and durable hardware to ensure diagnostic sessions are completed without data loss or interruption. The design should aim to minimise technical failures during assessments, ensuring that every session's data remains intact and can be reviewed later. | |||

* User-Friendly Dashboard: An intuitive and efficient digital dashboard is required to present clinicians with information on each recorded session. The dashboard should facilitate rapid review and analysis, with the goal of enabling therapists to quickly identify patterns or issues in speech. By streamlining navigation and data review, the tool should help therapists manage multiple patients efficiently while maintaining high diagnostic accuracy. | |||

* Secure Remote Accessibility: In today’s increasingly digital healthcare environment, therapists must be able to access patient data remotely. The system must employ state-of-the-art encryption and robust user authentication protocols to protect sensitive patient data from unauthorised access. Having robust security is crucial for both clinicians and patients, as it reassures all parties that the integrity and confidentiality of the clinical data are maintained as per today’s standards. | |||
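As one small illustration of the user authentication requirement above, the sketch below stores therapist passwords as salted PBKDF2 hashes using only the Python standard library. It is a minimal example under assumptions, not the project's design: the function names and the <code>ITERATIONS</code> value are hypothetical, and a deployed system would also need transport encryption, session handling, and encrypted storage of the recordings themselves.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Assumed work factor for this sketch; tune it to the deployment hardware.
ITERATIONS = 200_000


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted hash so the plain password is never stored."""
    if salt is None:
        salt = secrets.token_bytes(16)  # fresh random salt per account
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash and compare it in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

The salt and digest would be stored with the therapist's account, and a login attempt succeeds only if <code>verify_password</code> returns <code>True</code>.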

===== For the Child and Parent ===== | |||

* Engaging and Comfortable Design: For young children, the physical design of the robot plays a crucial role in therapy success. A soft, plush exterior coupled with interactive buttons and LED feedback systems can create a friendly, non-intimidating interface that fosters positive human-robot interaction. Ultimately, the sessions should be a fun experience for the child; otherwise no progress would be made and no speech data would be collected.

* Responsive Feedback Systems: Dynamic auditory and visual cues are essential components that should guide children through each step of a session. Real-time feedback, such as flashing LEDs synchronised with encouraging sound effects, aims to help the child understand when they are performing correctly, and gently corrects mistakes when necessary. This immediate reinforcement not only keeps the child engaged and motivated but also provides parents with clear, observable evidence of their child’s progress. In essence, cues ensure that the therapy sessions are both interactive and instructive. | |||

* Robust Data Security: The system must implement comprehensive security measures such as end-to-end encryption and secure storage protocols to prevent unauthorised access or data breaches. The level of protection must reassure both parents and therapists that the child’s data is handled with the highest level of care and confidentiality. Adhering strictly to healthcare regulations is essential to maintain trust and protect privacy throughout the therapy process. | |||

=== Society ===

Early, engaging diagnostic and intervention tools, such as a speech companion robot, offer substantial benefits for children with communication impairments and society at large. Timely identification and therapy in the preschool years can prevent persistent academic, communication, and social difficulties that often emerge when early intervention is missed, with broad effects on educational attainment and life opportunities (Hitchcock, E.R., et al., 2015<ref name=":5">https://pmc.ncbi.nlm.nih.gov/articles/PMC5708870/</ref>). Research further shows that developmental language disorders, if not addressed, can impede literacy and learning across all curriculum areas (Ziegenfusz, S., et al., 2022<ref name=":6">https://pmc.ncbi.nlm.nih.gov/articles/PMC9620692/</ref>). By delivering engaging, play-based practice at an early age, a robot helps close this gap, motivating children through game-like exercises and positive reinforcement, which in turn promotes consistent practice and faster skill gains. Significantly, trials of socially assistive robots in speech therapy report notable improvements in children’s linguistic skills, as therapists observe that robots keep young learners more engaged and positive during sessions (Spitale et al., 2023<ref name=":2" />). | |||

Beyond facilitating better speech outcomes, early intervention promotes social inclusion. Communication ability is a key factor in social development, and deficits often undermine peer interaction and participation into adulthood<ref name=":5" />. Even mild speech impairments can result in social withdrawal or negative peer perceptions, affecting confidence and classroom integration. By improving intelligibility and expressive skills in the preschool years, early intervention equips children to engage more fully with teachers and classmates, fostering an inclusive learning environment. Enhancing communication skills by age 5 has been linked to better social relationships and academic performance<ref name=":6" />. A therapy robot that makes speech practice fun and accessible therefore not only accelerates skill development but also strengthens a child’s long-term social and educational trajectory. | |||

Furthermore, digitally enabled tools can dramatically increase access to speech-language services and mitigate regional disparities in care. Demand for speech therapy outstrips supply in many European countries: in England, for example, over 75,000 children were on waitlists in 2024, with many facing delays of a year or longer (The Independent, 2024<ref>https://www.the-independent.com/news/uk/england-nhs-england-community-government-b2584094.html</ref>), while in Ireland some families waited over two years or even missed the critical early intervention window entirely (Sensational Kids, 2024<ref>https://www.sensationalkids.ie/shocking-wait-times-for-hse-therapy-services-revealed-by-sensational-kids-survey/</ref>). A digital companion robot can alleviate these bottlenecks by providing preliminary assessments and guided practice without requiring a specialist on-site for every session. Telehealth findings during the COVID-19 pandemic confirm that remote services can effectively deliver therapy and improve access, allowing children in rural or underserved areas to begin articulation work or screening immediately, with therapists supervising progress asynchronously. This approach lowers wait times, ensures earlier intervention, and reduces diagnostic disparities across Europe. Moreover, as the EU moves toward interoperable digital health networks (Siderius, L., et al., 2023<ref>https://pmc.ncbi.nlm.nih.gov/articles/PMC10766845/</ref>), data and expertise can be shared seamlessly across borders, enabling a harmonized standard of care. An EU-wide rollout of speech companion robots could thus accelerate early intervention for all children, regardless of geography, and foster continual refinement of therapy protocols through aggregated insights. | |||

=== Enterprise ===

Implementing a plush robotic therapy assistant could reduce long-term costs for clinics and schools by supplementing human therapists and taking over part of their workload. Despite the high initial investment, the robot's ability to handle repetitive practice drills and engage multiple children simultaneously could significantly increase therapists' efficiency. Many children with speech impairments require extensive therapy: approximately 5% of children have speech sound disorders needing intervention (CDC, 2015<ref name=":12">https://www.cdc.gov/nchs/products/databriefs/db205.htm</ref>). Robotic aides that accelerate progress and facilitate frequent practice could substantially reduce total therapy hours required, enabling therapists to manage higher caseloads and allocate more time to complex, personalized interventions.

The market for therapeutic speech robots spans both healthcare and education sectors. For example, in the U.S. alone, nearly 8% of children aged 3–17 have communication disorders, with speech issues being most prevalent<ref name=":12" />. This demographic represents millions of children domestically and globally. Clinics, educational institutions, and private practices serving these children constitute a significant market. Additional market potential exists among children with autism spectrum disorders or developmental language delays, further expanding the reach of such technology. Increasing awareness and investments in inclusive education and early interventions suggest robust market growth potential if therapeutic robots demonstrate clear efficacy and user acceptance. | |||

Robotic solutions offer substantial scalability. After development and validation, plush therapeutic robots could be widely implemented across classrooms, speech therapy clinics, hospitals and homes. Centralized software updates could uniformly introduce new therapy activities or languages, maintaining consistent therapy quality. Robots could also enable group sessions, increasing therapy accessibility and efficiency. Remote operation capabilities could further extend services to underserved regions lacking on-site specialists, thereby addressing workforce shortages and regional disparities in speech therapy services. | |||

Adoption barriers include initial cost, potential skepticism from traditional practitioners, and concerns from parents regarding effectiveness. Training requirements, technological reliability, privacy, data security issues, and regulatory approval processes also pose significant challenges. Demonstrating robust clinical efficacy through research is essential to encourage cautious institutions to adopt this technology. Addressing privacy concerns through secure data handling practices and obtaining necessary regulatory approvals will be critical in mitigating these barriers.

Successful integration requires plush robots to complement existing therapeutic infrastructures as supportive tools rather than replacements. Robots could guide structured therapy exercises prepared by therapists, allowing therapist intervention as needed. Data collected, such as task performance, pronunciation accuracy, and engagement metrics, could seamlessly integrate into electronic health records and individualized education plans. Reliable maintenance and robust technical support from institutional IT teams or vendors are necessary to sustain long-term functionality. Effective integration ensures robots become routine tools in speech therapy, similar to interactive software and educational materials, aligning well with evolving EU digital health strategies and facilitating standardized practices across institutions ([https://health.ec.europa.eu/ehealth-digital-health-and-care/digital-health-and-care_en European Commission, eHealth]).

=State of the Art=

=== Literature study === | |||

Traditional pediatric speech assessments often require hour-long in-person sessions, which can exhaust the child and even the clinician. Research shows that young children’s performance suffers as cognitive fatigue sets in: after long periods of sustained listening or test-taking, children exhibit more lapses in attention, slower responses, and declining accuracy (Key et al., 2017<ref>https://pmc.ncbi.nlm.nih.gov/articles/PMC5831094/</ref>; Sievertsen et al., 2016<ref name=":0" />). Preschool-aged children (≈3–5 years old) are especially prone to losing focus during extended diagnostics. In fact, to accommodate their limited attention spans, researchers frequently shorten and simplify tasks for this age group (Finneran et al., 2009<ref>https://pmc.ncbi.nlm.nih.gov/articles/PMC2740746/</ref>). Even brief extensions in task length can markedly worsen a young child’s performance; for example, one study found that increasing a continuous attention task from 6 to 9 minutes led 4–5-year-olds with attentional difficulties to make significantly more errors (Mariani & Barkley, 1997<ref name=":11">https://www.scirp.org/(S(ny23rubfvg45z345vbrepxrl))/reference/referencespapers?referenceid=2156170</ref>). Together, these findings suggest that lengthy, single-session evaluations may introduce cognitive fatigue and inconsistency into the results: a child tested at the ''beginning'' of a multi-hour session may perform very differently than at the ''end'', simply due to waning concentration and energy. Further supporting this concern, studies on pediatric neuropsychological testing have emphasized the need for flexible, modular tests to prevent fatigue-related bias. For instance, studies (Plante et al., 2019<ref><nowiki>https://pmc.ncbi.nlm.nih.gov/articles/PMC6802914/</nowiki></ref>) highlight how short therapy sessions can be effective. This is because attention and memory performance in young children is heavily influenced by time-on-task effects, which is why shorter tasks with breaks in between are recommended.

Another challenge is that the clinical setting itself can skew a child’s behavior and communication, potentially affecting diagnostic outcomes. Children are often taken out of familiar environments and placed in a sterile clinic or hospital room for these assessments. This change can lead to atypical behavior: some kids become anxious or reserved, while others might act out due to discomfort. Notably, children’s speech and language abilities observed in a clinic may not reflect their true skills in a natural environment. In a classic study, Scott and Taylor (1978<ref name=":1">https://pubmed.ncbi.nlm.nih.gov/732285/</ref>) found that preschool children produced significantly longer and more complex utterances at home with a parent than they did in a clinic with an unfamiliar examiner. The home setting elicited richer language (more past-tense verbs, longer sentences, etc.), whereas the clinic samples were shorter and simpler<ref name=":1" />. Similarly, Hauge et al. (2023<ref>https://www.liebertpub.com/doi/full/10.1089/eco.2022.0087</ref>) stress that conventional testing environments, often unfamiliar and rigid, can increase stress and distractibility in children, adding to fatigue-related performance issues. This suggests that standard clinical evaluations could underestimate a child’s capabilities if the child is uneasy or not fully engaged in that setting. Factors like unfamiliar adults, strange equipment, or the feeling of being “tested” can all negatively impact a young child’s behavior during assessment. Thus, there is a clear motivation to explore assessment methods that make children feel more comfortable and interested, in order to obtain more consistent and representative results. | |||

To address these issues, some recent work has turned to socially assistive robots (SARs) and gamified interactive tools as innovative means of conducting speech-language assessment and therapy. The underlying idea is that a friendly robot or game can transform a tedious evaluation into a fun, engaging interaction. There is growing evidence that such approaches can indeed improve children’s engagement, yield more consistent participation, and broaden accessibility. For instance, game-based learning techniques have been shown to boost motivation and focus: multiple studies (including meta-analyses) conclude that using “serious games” or gamified tasks significantly increases students’ engagement and learning in therapeutic or educational contexts (Brackenbury & Kopf, 2022<ref>https://pubs.asha.org/doi/abs/10.1044/2021_PERSP-21-00284</ref>). In speech-language therapy, this means a child might persevere longer and respond more enthusiastically when the session feels like play rather than an exam. Social robots, similarly, can serve as charismatic partners that hold a child’s attention. In one 8-week intervention study, a socially assistive humanoid robot was used to help deliver language therapy to children with speech impairments (Spitale et al., 2023<ref name=":2">https://psycnet.apa.org/record/2023-98356-001</ref>). The researchers found significant improvements in the children’s linguistic skills over the program, comparable to those made via traditional therapy. Importantly, children working with the physical robot displayed greater engagement (measured by eye gaze, attention, and number of vocal responses) than those who received the same therapy through a screen-based virtual agent<ref name=":2" />. In the robot-assisted sessions, children not only spoke more, but also stayed motivated and positive throughout, as the robot’s interactive games and feedback kept them interested. Therapists initially approached the technology with some scepticism, but after hands-on experience they reported that the robot helped maintain the children’s focus and could be a useful tool for keeping young clients motivated<ref name=":2" />. Such findings align with a broader trend in pediatric care: socially assistive robots and interactive platforms can mitigate boredom and fatigue by making therapy more engaging, all while delivering structured practice. Another advantage is consistency: unlike human evaluators, who might inadvertently vary their prompts or become tired, a robot can present therapy exercises in a highly standardized way each time. This consistency, paired with the robot’s playful demeanour, can lead to more reliable assessments and enjoyable therapy sessions that children look forward to rather than dread.

In addition to robot-assisted solutions, researchers are also exploring telehealth and asynchronous models to improve accessibility and flexibility of speech-language services. Telehealth (remote therapy via video call) saw a huge expansion during the COVID-19 pandemic, but its utility extends beyond that context. Studies have demonstrated that tele-speech therapy can be highly effective for children, often yielding progress equivalent to in-person therapy while removing geographic and scheduling barriers (Fekar Gharamaleki & Nazari, 2024<ref>https://pmc.ncbi.nlm.nih.gov/articles/PMC10851737/</ref>). By delivering evaluation and treatment over a secure video link, clinicians can reach families who live far from specialists or who have difficulty traveling to appointments. Parents and children have reported high satisfaction with teletherapy, citing benefits such as conducting sessions in the child’s own home (where they may be more comfortable and attentive) and easier scheduling for busy families. Beyond live video sessions, asynchronous approaches are being tried as well. In an asynchronous model, a clinician might prepare therapy activities or prompts in advance for the family to use at their convenience – for example, a tablet-based app that records the child’s speech attempts, which the clinician reviews later. Research (Vaezipour et al., 2020<ref><nowiki>https://mhealth.jmir.org/2020/10/e18858</nowiki></ref>) is being done in this field, looking at what kind of benefits this can have. This “store-and-forward” technique allows therapy to happen in short bursts when the child is most receptive, rather than insisting on a fixed appointment time. 
Hill and Breslin (2016<ref name=":3">https://www.frontiersin.org/journals/human-neuroscience/articles/10.3389/fnhum.2016.00640/full</ref>) describe a platform where speech-language pathologists could upload tailored exercises to a mobile app; children would complete the exercises (with the app capturing their responses) at home, and the therapist would asynchronously monitor the results and update tasks as needed. Such a system removes the need to coordinate schedules in real time and can reduce the burden of 2-hour sessions by spreading practice out in more frequent, bite-sized interactions. Early evaluations of these models indicate they can improve efficiency without compromising outcomes, though they require careful planning and reliable technology<ref name=":3" />. AI-driven platforms, in particular, can adapt to a child’s responses and maintain motivation through personalized challenges and praise, making them especially suitable for preschoolers with limited attention spans (Utepbayeva et al., 2022<ref>https://www.researchgate.net/publication/365865210_Artificial_intelligence_in_the_diagnosis_of_speech_disorders_in_preschool_and_primary_school_children</ref>) (Bahrdwaj et al., 2024<ref>https://www.sciencedirect.com/science/article/pii/S2772632024000412</ref>). Overall, the rise of telehealth in speech-language pathology shows promise for making assessment and therapy more flexible, family-centered, and accessible, especially for young children who may do better with multiple shorter interactions than a marathon clinic visit. It also means clinicians can observe children in naturalistic home settings via video, potentially gaining a more ecologically valid picture of the child’s communication skills than a clinic observation would provide. | |||

Looking ahead, the integration of wearable sensors, voice-activated assistants, and augmented reality (AR) platforms may become options available for pediatric speech-language therapy. These innovations offer the potential to track subtle speech metrics throughout the day, deliver immersive learning experiences, and provide real-time coaching in environments more natural for the child (Wandi et al., 2023). For instance, smart microphones embedded in toys or home devices could collect speech samples during play, giving clinicians richer data without requiring formal testing sessions. Meanwhile, AR systems might enable children to interact with animated characters that prompt speech practice through storytelling or collaborative games, making therapy feel more like an adventure than a clinical task. As these technologies develop, they could improve both assessment accuracy and child engagement, especially when combined with clinician oversight and family participation. | |||

Finally, any move toward robot-assisted or digital speech therapy must be accompanied by rigorous attention to privacy, consent, and ethics. Working with children introduces critical ethical responsibilities. Informed consent in pediatric settings actually involves parental consent (and when possible, the child’s assent); parents or guardians need to fully understand and agree to how an AI or robot will interact with their child and what data will be collected. Developers of such tools are urged to adopt a "privacy-by-design" approach, ensuring that any audio/video recordings or personal data from children are securely stored and used only for their intended therapeutic purposes (Lutz et al., 2019<ref name=":4">https://journals.sagepub.com/doi/10.1177/2050157919843961</ref>). Privacy considerations for socially assistive robots go beyond just data encryption; for example, a robot equipped with cameras and microphones could intrude on a family’s privacy if it is always observing, so clear limits and transparency about when it is “recording” are essential<ref name=":4" />. Ethical guidelines also emphasize that children’s participation should be voluntary and respectful: a child should never be forced or deceived by an AI system. One recent field study noted concerns about trust and deception when young children interact with social robots: kids might innocently over-trust a robot’s instructions or grow distressed if the robot malfunctions or behaves unpredictably (Singh et al., 2022<ref>https://pubmed.ncbi.nlm.nih.gov/35637787/</ref>). To address this, designers strive to make robots safe and reliable, and clinicians are careful to explain the robot’s role (for instance, framing it as a helper or toy, not as an all-knowing authority). It’s also important to maintain human oversight: the goal is to assist, not replace, the speech-language pathologist. 
Ethically designed systems will flag any serious issues to a human clinician and allow parents to opt out at any time. In summary, child-centric AI and robotics projects must prioritize the child’s well-being, autonomy, and rights at every stage. This involves obtaining proper consent, safeguarding sensitive information, and transparently integrating technology in a way that complements traditional care. By doing so, innovative speech diagnostic and therapy tools can be both cutting-edge and responsible, ultimately delivering engaging, consistent, and accessible support for children’s communication development without compromising ethics or privacy. | |||

=== Existing robots === | |||

To understand how we can create the best robot for our users, we have to look at which robots relevant to our project already exist. We analyzed the following robots and considered how their features could inform the design of our own robot.

=== RASA robot === | |||

The RASA (Robotic Assistant for Speech Assessment) robot is a socially assistive robot developed to enhance speech therapy sessions for children with language disorders. The robot uses facial expressions to make therapy sessions more engaging for the children. It also has a camera that applies facial expression recognition with convolutional neural networks to detect the way the children are speaking, which helps the therapist in improving the child's speech. [https://pubmed.ncbi.nlm.nih.gov/36467283/ Studies] have shown that incorporating the RASA robot into therapy sessions increases children's engagement and improves language development outcomes.[[File:RASA robot.png|thumb|160x160px|The RASA robot|none]]

=== Automatic Speech Recognition === | |||

Recent advancements in automatic speech recognition (ASR) technology have led to systems capable of analyzing children's speech to detect pronunciation issues. For instance, a [https://arxiv.org/abs/2403.08187 study] fine-tuned the wav2vec 2.0 XLS-R model to recognize speech as pronounced by children with speech sound disorders, achieving approximately 90% accuracy in matching human annotations. ASR technology can therefore streamline the diagnostic process and save clinicians time.

=== Nao robot === | |||

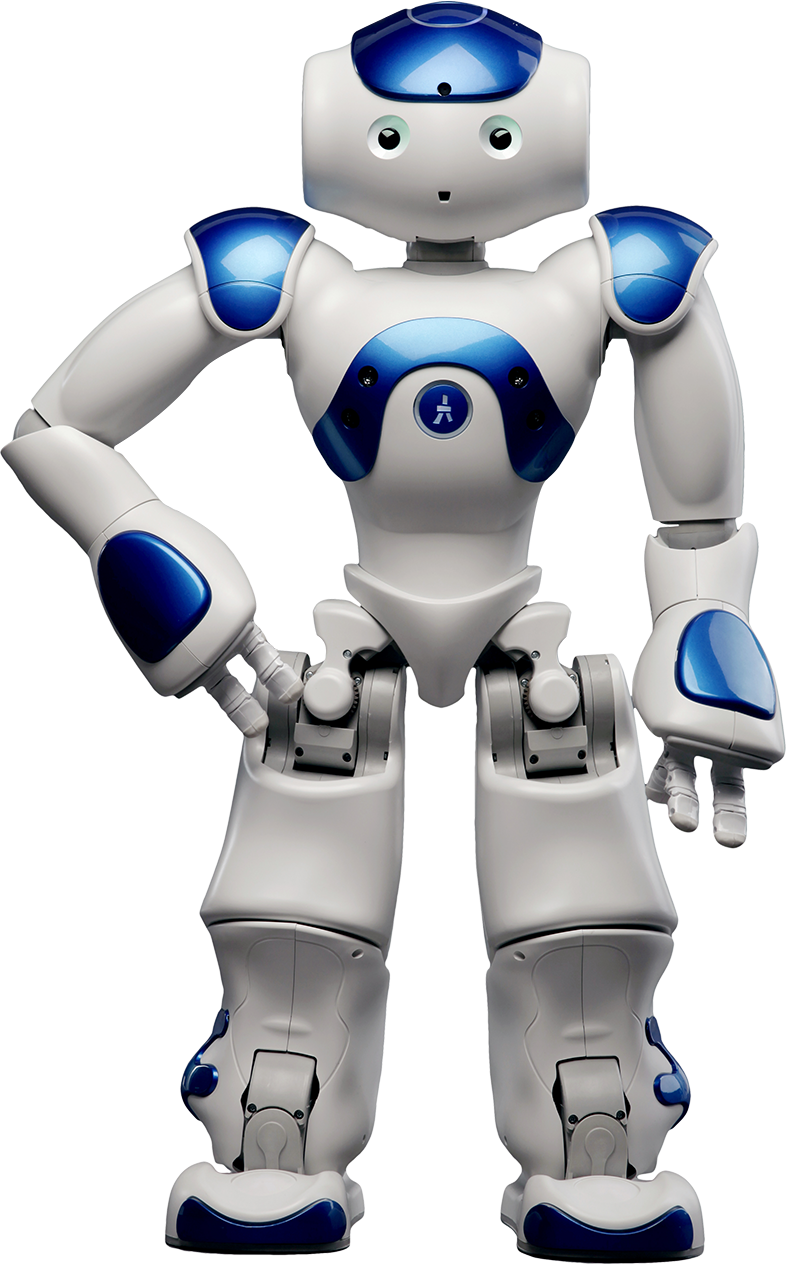

Developed by Aldebaran Robotics, the Nao robot is a programmable humanoid robot widely used in educational and therapeutic settings. Its advanced speech recognition and production capabilities make it a valuable tool in assisting speech therapy for children, helping to identify and correct speech impediments through interactive sessions.[[File:Nao robot.png|thumb|172x172px|The Nao robot|none]] | |||

=== Kaspar robot === | |||

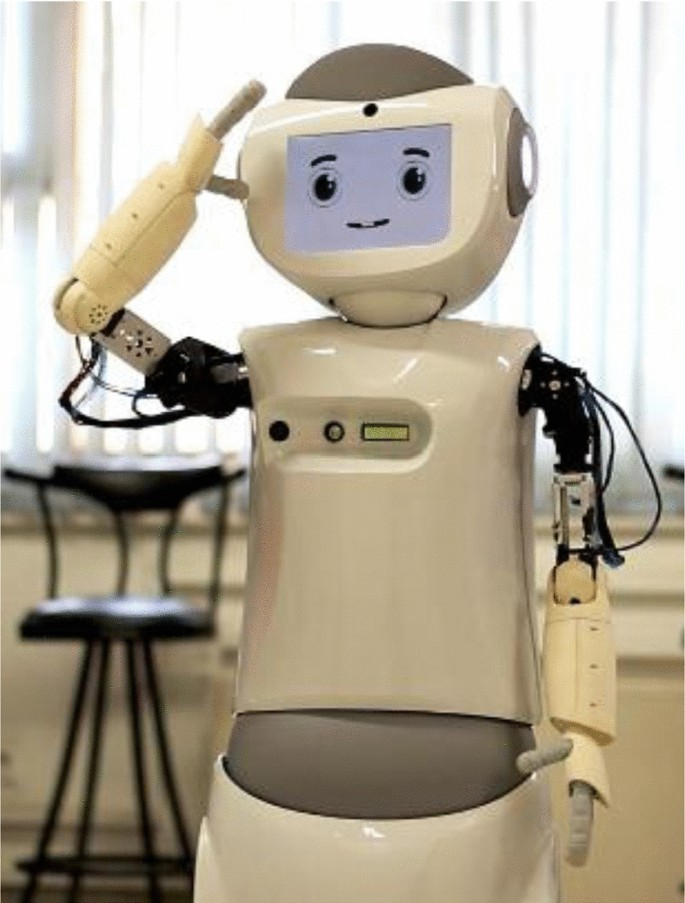

Kaspar is a socially assistive robot whose purpose is to help children with autism learn social and communication skills. A child-centric appearance and expressive behavior are given prominence in the design in order to invite users to engage in interactive activity. Studies <ref>https://www.jbe-platform.com/content/journals/10.1075/pc.12.1.03dau</ref> have indicated that children working with Kaspar show improved social responsiveness, and the same design principles could be applied to enhancing speech therapy outcomes.[[File:Uherts 368017448591-min.jpg|thumb|Kaspar the social robot, University of Hertfordshire|none]] | |||

=Requirements=

The aim of this project is to develop a speech therapy plush robot specifically designed to address issues in misdiagnoses of speech impediments in children. Our plush robot solution addresses these issues by transforming lengthy, traditional speech assessments into engaging, short, interactive sessions that are more enjoyable and less exhausting for both patients (children aged 5-10) and therapists. By providing an interactive plush toy equipped with audio recording, playback capabilities, local data storage, intuitive user interaction via buttons and LEDs, and secure data transfer, we aim to significantly reduce the strain on SLT resources and enhance diagnostic accuracy and patient engagement. | |||

To achieve all of this, the robot must satisfy a set of requirements covering design considerations, user interactions, data handling, and performance goals, each accompanied by a test plan. All requirements are prioritized using the MoSCoW method:

{| class="wikitable" | |||

|+MoSCoW Prioritization Model

!Category | |||

!Definition | |||

|- | |||

|Must Have | |||

|Essential for core functionality; mandatory for the product's success. | |||

|- | |||

|Should Have | |||

|Important but not essential; enhances usability significantly. | |||

|- | |||

|Could Have | |||

|Beneficial enhancements that can be postponed if necessary. | |||

|- | |||

|Won’t Have | |||

|Explicitly excluded from current scope and version. | |||

|} | |||

=== Design Specifications ===

{| class="wikitable" | |||

|+Physical and Interaction Design Requirements | |||

|Ref | |||

|Requirement | |||

|Priority | |||

|- | |||

|DS1 | |||

|Plush toy casing made from child-safe, non-toxic materials. | |||

|Must | |||

|- | |||

|DS2 | |||

|Secure internal mounting of microphone, speaker, battery, and processing units. | |||

|Must | |||

|- | |||

|DS3 | |||

|Accessible button controls (Power, Next Question, Stop Recording). | |||

|Must | |||

|- | |||

|DS4 | |||

|Visual feedback via LEDs and movement (e.g., Recording, Test Complete, Error, Low Storage, Low Battery).

|Must | |||

|- | |||

|DS5 | |||

|The robot casing should withstand minor physical impacts or drops. | |||

|Should | |||

|- | |||

|DS6 | |||

|Device will not be waterproof. | |||

|Won’t | |||

|} | |||

The plush robot’s physical design prioritizes child safety, comfort, and ease of interaction. Using child-safe materials (DS1) and securely mounted electronics (DS2) ensures safety, while intuitive button controls (DS3) and clear LED indicators (DS4) simplify usage for young children. Durability considerations (DS5) ensure the product withstands typical handling by children, while waterproofing (DS6) is excluded due to practical constraints and because it is unnecessary in the setting where the robot will be used.

The test plan is as follows: | |||

{| class="wikitable" | |||

|+Test Cases Design Specifications | |||

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|DS1 | |||

|N/A | |||

|Inspect the plush toy casing and its material certificates/specifications. | |||

|Plush toy casing is certified as child-safe and non-toxic. | |||

|- | |||

|DS2 | |||

|N/A | |||

|Inspect internal components of the plush toy. | |||

|Microphone, speaker, battery, and processing units are securely mounted internally. | |||

|- | |||

|DS3 | |||

|Plush robot is powered on. | |||

|Press each button (Power, Next Question, Stop Recording). | |||

|Each button activates its designated functionality immediately. | |||

|- | |||

|DS4 | |||

|Plush robot is powered on. | |||

|Observe LED and any movement indicators when performing different operations (recording, completing test, causing an error, storage nearly full, battery low). | |||

|LEDs correctly indicate each state as intended. | |||

|- | |||

|DS5 | |||

|N/A | |||

|Simulate minor drops or impacts on a safe surface. | |||

|Plush robot casing withstands minor impacts without significant damage or loss of functionality. | |||

|- | |||

|DS6 | |||

|N/A | |||

|N/A | |||

|N/A | |||

|} | |||

=== Functionalities === | |||

{| class="wikitable" | |||

|+Functional Requirements | |||

|Ref | |||

|Requirement | |||

|Priority | |||

|- | |||

|FR1 | |||

|Provide pre-recorded prompts for speech exercises. | |||

|Must | |||

|- | |||

|FR2 | |||

|Capture high-quality audio recordings locally in WAV format. | |||

|Must | |||

|- | |||

|FR3 | |||

|Securely store audio locally to maintain data privacy. | |||

|Must | |||

|- | |||

|FR4 | |||

|Enable intuitive button-based session controls. | |||

|Must | |||

|- | |||

|FR5 | |||

|Support secure data transfer via the Internet, USB and/or Bluetooth. | |||

|Must | |||

|- | |||

|FR6 | |||

|Implement basic noise-filtering algorithms. | |||

|Should | |||

|- | |||

|FR7 | |||

|Automatically shut down after prolonged inactivity to conserve battery. | |||

|Should | |||

|- | |||

|FR8 | |||

|Optional admin panel for therapists to configure exercises and session lengths. | |||

|Could | |||

|- | |||

|FR9 | |||

|Optional voice activation for hands-free interaction. | |||

|Could | |||

|- | |||

|FR10 | |||

|Explicitly exclude cloud storage for privacy compliance. | |||

|Won’t | |||

|} | |||

These functional requirements reflect the need to break lengthy therapy sessions into manageable segments (FR1, FR4), to securely capture and store speech data for later analysis (FR2, FR3, FR5), and to keep interaction user-friendly. Privacy and data security are prioritized by excluding cloud storage (FR10), while noise filtering (FR6), auto-shutdown (FR7), an admin panel (FR8), and voice activation (FR9) enhance practical usability and efficiency but are not essential to the robot's core functionality.
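As an illustration of FR2, the following is a minimal sketch of writing a recording to local storage as a WAV file using only the Python standard library. The sample rate, bit depth, and the helper names `synth_tone`, `save_wav`, and `wav_duration` are assumptions made for this example; the requirements above do not specify them, and a synthetic tone stands in for real microphone input.

```python
import math
import struct
import wave
from pathlib import Path

SAMPLE_RATE = 16_000  # Hz; assumed value, not specified in the requirements
SAMPLE_WIDTH = 2      # bytes per sample (16-bit PCM); also an assumption


def synth_tone(seconds: float, freq: float = 440.0) -> list[int]:
    """Stand-in for microphone input: a sine tone as 16-bit integer samples."""
    count = int(seconds * SAMPLE_RATE)
    return [
        int(0.5 * 32767 * math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
        for n in range(count)
    ]


def save_wav(path: Path, samples: list[int]) -> None:
    """Write mono 16-bit PCM samples to a WAV file on local storage."""
    with wave.open(str(path), "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(SAMPLE_WIDTH)
        wav.setframerate(SAMPLE_RATE)
        # "<h" packs each sample as a little-endian signed 16-bit integer.
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))


def wav_duration(path: Path) -> float:
    """Return the duration of a WAV file in seconds."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()
```

Reading the file back with `wav_duration` is one simple way to check, as in the FR2 test case, that a recording was actually stored with the expected length.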

The test plan is as follows: | |||

{| class="wikitable" | |||

|+Functionalities Test Plan | |||

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|FR1 | |||

|Plush robot is powered on. | |||

|Initiate a therapy session. | |||

|Robot plays clear pre-recorded speech exercise prompts correctly. | |||

|- | |||

|FR2 | |||

|Plush robot is powered on and ready to record. | |||

|Record speech audio using provided prompts. | |||

|High-quality WAV audio recordings are stored locally. | |||

|- | |||

|FR3 | |||

|Recording completed. | |||

|Inspect local storage on the plush robot (e.g., via SSH or USB).

|Audio recordings are securely stored locally. | |||

|- | |||

|FR4 | |||

|Plush robot is powered on. | |||

|Use button controls to navigate through a session. | |||

|Session navigation (Start/Stop, Next Question) operates smoothly via buttons. | |||

|- | |||

|FR5 | |||

|Plush robot has recorded data. | |||

|Connect robot via USB/Bluetooth to transfer data securely. | |||

|Data transfer via USB/Bluetooth completes successfully with no data corruption or leaks. | |||

|- | |||

|FR6 | |||

|Robot is powered on and ready to record. | |||

|Record speech in a moderately noisy environment. | |||

|Recorded audio demonstrates effective noise filtering with reduced background noise. | |||

|- | |||

|FR7 | |||

|Plush robot powered on, idle for prolonged period. | |||

|Leave robot idle for X+ minutes. | |||

|Robot automatically powers off to conserve battery. | |||

|- | |||

|FR8 | |||

|Admin panel feature implemented. | |||

|Therapist configures new exercises/session lengths via admin panel. | |||

|Admin panel accurately saves and applies changes to exercises/session durations. | |||

|- | |||

|FR9 | |||

|Voice activation implemented. | |||

|Use voice commands to navigate exercises. | |||

|Robot successfully responds to voice commands. | |||

|- | |||

|FR10 | |||

|Check product documentation/design specification. | |||

|Inspect data storage and upload protocols. | |||

|Confirm explicitly stated absence of cloud storage capability. | |||

|} | |||

=== UI/UX === | |||

{| class="wikitable" | |||

|+User Interaction Requirements | |||

|Ref | |||

|Requirement | |||

|Priority | |||

|- | |||

|UI1 | |||

|Clear visual indication of active recording status through LEDs. | |||

|Must | |||

|- | |||

|UI2 | |||

|Easy navigation between prompts using physical buttons. | |||

|Must | |||

|- | |||

|UI3 | |||

|Dedicated button to stop the session and securely store audio. | |||

|Must | |||

|- | |||

|UI4 | |||

|Audio or Visual notifications/indications when storage or battery capacity is low. | |||

|Could | |||

|- | |||

|UI5 | |||

|Optional voice commands to navigate exercises. | |||

|Could | |||

|- | |||

|UI6 | |||

|Exclude advanced manual audio processing controls for simplicity. | |||

|Won’t | |||

|} | |||

User interactions are designed to be intuitive, enabling children to comfortably navigate therapy sessions (UI1, UI2, UI3) without assistance. Audio or visual notifications (UI4) warn when storage or battery capacity is low, and potential voice command options (UI5) may further simplify operation. Advanced settings are deliberately excluded (UI6) to maintain simplicity for the primary child users, and also because of the limited time available for the project.

The test plan is as follows: | |||

{| class="wikitable" | |||

|+User Interaction Test Plan

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|UI1 | |||

|Plush robot is powered on. | |||

|Initiate audio recording session. | |||

|LEDs clearly indicate active recording status immediately. | |||

|- | |||

|UI2 | |||

|Session ongoing. | |||

|Press "Next" button. | |||

|Plush robot navigates to next prompt immediately and clearly. | |||

|- | |||

|UI3 | |||

|Session ongoing, recording active. | |||

|Press dedicated "Stop" button. | |||

|Recording stops immediately and audio securely stored. | |||

|- | |||

|UI4 | |||

|Low storage/battery conditions simulated. | |||

|Fill storage nearly full and/or drain battery low. | |||

|Robot issues clear audio or visual notifications indicating low storage or battery. | |||

|- | |||

|UI5 | |||

|Voice commands implemented. | |||

|Navigate prompts using voice commands. | |||

|Robot accurately navigates prompts using voice interaction. | |||

|- | |||

|UI6 | |||

|Check product documentation/design specification. | |||

|Verify available UI options. | |||

|Confirm explicitly stated absence of advanced audio processing UI controls. | |||

|} | |||

=== Data Handling and Privacy ===

{| class="wikitable" | |||

|+Data Handling and Privacy Requirements | |||

|Ref | |||

|Requirement | |||

|Priority | |||

|- | |||

|DH1 | |||

|Safe private storage of all collected data. | |||

|Must | |||

|- | |||

|DH2 | |||

|Encryption of stored data. | |||

|Should | |||

|- | |||

|DH3 | |||

|Facility for deletion of data post-transfer. | |||

|Should | |||

|- | |||

|DH4 | |||

|Optional automatic deletion feature to manage storage space. | |||

|Could | |||

|- | |||

|DH5 | |||

|No external analytics or third-party integrations unless anonymized.

|Won’t | |||

|} | |||

Data privacy compliance is paramount, which mandates safe and secure storage (DH1). Encryption (DH2) and data deletion capabilities (DH3, DH4) strengthen security, while excluding third-party integrations (DH5) aligns with data protection and privacy goals. If third-party integrations or analytics are ever used, the data they process must first be anonymized.
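The optional automatic deletion feature (DH4) could be sketched as an oldest-first cleanup. This is only one possible policy, shown under stated assumptions: the function name `prune_oldest`, the byte budget `max_bytes`, and the choice to look only at `.wav` files are hypothetical. A real device would probably also want to confirm that a recording has been transferred before deleting it.

```python
from pathlib import Path


def prune_oldest(folder: Path, max_bytes: int) -> list[str]:
    """Delete the oldest .wav files until the folder fits within max_bytes.

    Returns the names of the deleted files, oldest first.
    """
    # Sort recordings by modification time so the oldest come first.
    files = sorted(folder.glob("*.wav"), key=lambda f: f.stat().st_mtime)
    total = sum(f.stat().st_size for f in files)
    deleted = []
    for f in files:
        if total <= max_bytes:
            break
        total -= f.stat().st_size
        f.unlink()
        deleted.append(f.name)
    return deleted
```

Returning the deleted file names makes the behaviour easy to log and to verify in the DH4 test case.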

The test plan is as follows: | |||

{| class="wikitable" | |||

|+Data Handling and Privacy Test Plan | |||

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|DH1 | |||

|Robot has collected data. | |||

|Inspect robot’s storage location and methods. | |||

|Confirm data is stored locally/offline with no online/cloud-based storage. | |||

|- | |||

|DH2 | |||

|Data encryption implemented. | |||

|Attempt accessing stored data directly without proper keys. | |||

|Data is inaccessible or unreadable without proper decryption. | |||

|- | |||

|DH3 | |||

|Data has been transferred. | |||

|Delete data via provided facility after transfer. | |||

|Data is successfully deleted from local storage immediately after confirmation. | |||

|- | |||

|DH4 | |||

|Data exists (old) | |||

|Fill storage and observe automatic deletion feature. | |||

|Old data automatically deletes to maintain adequate storage space. | |||

|- | |||

|DH5 | |||

|N/A | |||

|Inspect software. | |||

|Confirm absence of external analytics and third-party integrations... | |||

|} | |||

=== Performance ===

{| class="wikitable" | |||

|+Performance Requirements | |||

|Ref | |||

|Requirement | |||

|Priority | |||

|- | |||

|PR1 | |||

|Prompt playback latency under one second after interaction. | |||

|Must | |||

|- | |||

|PR2 | |||

|Recording initiation latency under one second post-activation. | |||

|Must | |||

|- | |||

|PR3 | |||

|Real-time or near-real-time audio noise filtering. | |||

|Could | |||

|- | |||

|PR4 | |||

|Optional speech detection for audio segment trimming. | |||

|Could | |||

|- | |||

|PR5 | |||

|Exclusion of cloud-based or GPU-intensive AI processing. | |||

|Won’t | |||

|} | |||

Performance requirements call for rapid, seamless interaction (PR1, PR2) to maintain engagement and provide a good user experience. Noise filtering (PR3) improves the therapist's listening experience but is not necessary for the robot to function. Optional speech-detection features (PR4) are likewise nice to have, and resource-intensive cloud-based AI processing (PR5) is explicitly omitted to keep the system simple and, most importantly, to protect data security.
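To make PR4 more concrete, here is a minimal sketch of energy-based trimming that needs no GPU or cloud processing, in line with PR5. It is an illustrative technique chosen for this example, not necessarily the method used on the robot. The function name `trim_silence`, the frame length, and the RMS threshold are assumed values. The sketch removes only leading and trailing silence and keeps pauses inside the utterance.

```python
def trim_silence(
    samples: list[int], frame_len: int = 160, threshold: float = 500.0
) -> list[int]:
    """Keep the span from the first to the last frame whose RMS exceeds threshold."""

    def rms(frame: list[int]) -> float:
        # Root-mean-square amplitude of one frame.
        return (sum(s * s for s in frame) / len(frame)) ** 0.5

    # Split the signal into consecutive frames (the last one may be shorter).
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]
    voiced = [i for i, frame in enumerate(frames) if rms(frame) > threshold]
    if not voiced:
        return []  # nothing above the threshold: treat the clip as silent
    start = voiced[0] * frame_len
    end = min((voiced[-1] + 1) * frame_len, len(samples))
    return samples[start:end]
```

With 16-bit samples at an assumed 16 kHz sample rate, a frame of 160 samples corresponds to 10 ms. The threshold would need tuning against real recordings of children's speech.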

The test plan is as follows: | |||

{| class="wikitable" | |||

|+Performance Test Plan | |||

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|PR1 | |||

|Plush robot powered on. | |||

|Initiate speech prompt via interaction/button press. | |||

|Prompt playback latency is less than or equal to X seconds. | |||

|- | |||

|PR2 | |||

|Plush robot powered on. | |||

|Start recording via interaction/button press. | |||

|Recording initiation latency is less than or equal to X second. | |||

|- | |||

|PR3 | |||

|Noise filtering feature implemented. | |||

|Record audio in background-noise conditions and observe immediate playback. | |||

|Real-time or near-real-time audio noise filtering noticeably reduces background noise. | |||

|- | |||

|PR4 | |||

|Speech detection implemented. | |||

|Record speech with silent pauses. | |||

|Audio segments are correctly trimmed to include only relevant speech portions. | |||

|- | |||

|PR5 | |||

|N/A | |||

|Inspect software. | |||

|Confirm absence... | |||

|} | |||

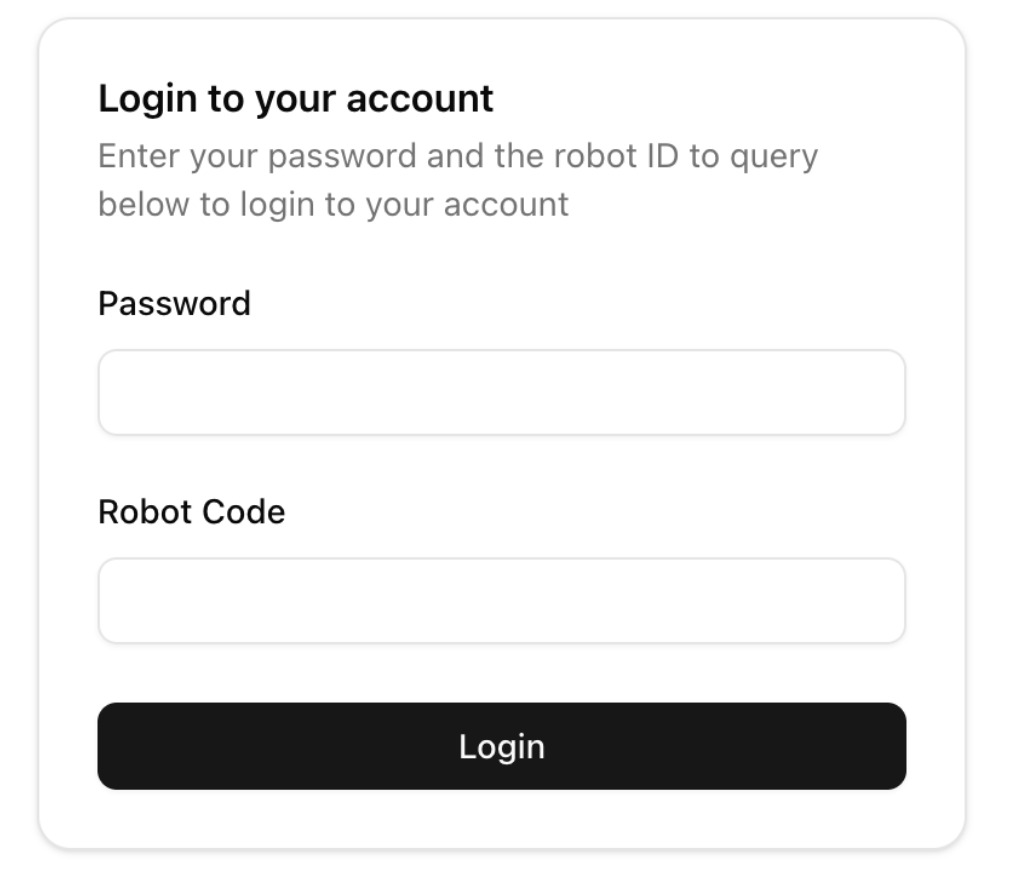

=== Application === | |||

{| class="wikitable" | |||

|+Application Requirements | |||

!Ref | |||

!Requirement | |||

!Priority | |||

|- | |||

|APP1 | |||

|Secure login system requiring therapist and robot passcodes. | |||

|Must | |||

|- | |||

|APP2 | |||

|Encrypted and Authenticated data transfer over HTTPS. | |||

|Must | |||

|- | |||

|APP3 | |||

|UI must be intuitive and allow easy navigation through audio recordings. | |||

|Must | |||

|- | |||

|APP4 | |||

|Visual flagging of audio quality (Red, Yellow, Green, Blue). | |||

|Must | |||

|- | |||

|APP5 | |||

|Audio playback should occur directly in-browser. | |||

|Must | |||

|- | |||

|APP6 | |||

|Manual override and reprocessing of flagged audio, triggering resend to robot. | |||

|Must | |||

|- | |||

|APP7 | |||

|Role-based access control to isolate data per therapist. | |||

|Must | |||

|- | |||

|APP8 | |||

|Application should be responsive and accessible across platforms. | |||

|Must | |||

|- | |||

|APP9 | |||

|Session tokens should persist login securely for a defined period (e.g. 1 day). | |||

|Should | |||

|- | |||

|APP10 | |||

|Transcript generation via integrated AI model. | |||

|Should | |||

|- | |||

|APP11 | |||

|Therapist UI should auto-update when new recordings are uploaded. | |||

|Should | |||

|- | |||

|APP12 | |||

|Admin panel to manage course content dynamically. | |||

|Should | |||

|} | |||

The therapist-facing web application is another central part of the speech diagnosis system. It provides a secure, intuitive interface for reviewing and managing recordings, which is what allows the therapist to perform the diagnosis more comfortably and with less bias. To meet its functional and clinical goals, the application enforces a dual-passcode login system (APP1) that authenticates both therapist and robot identities, ensuring only authorized access. All data transmission is secured through HTTPS encryption (APP2), upholding privacy and regulatory compliance, and each transfer additionally carries a custom authentication signature to validate its authenticity.
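One common way to attach an authentication signature to an upload is an HMAC over the payload, computed with a secret shared between robot and server. The sketch below assumes that approach. The text above does not say which scheme the project actually uses, and the names `sign_upload`, `verify_upload`, and the message layout are hypothetical.

```python
import hashlib
import hmac


def sign_upload(secret: bytes, robot_id: str, audio: bytes) -> str:
    """Return a hex HMAC-SHA256 signature over the robot ID and audio payload."""
    message = robot_id.encode("utf-8") + b"\n" + audio
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_upload(secret: bytes, robot_id: str, audio: bytes, signature: str) -> bool:
    """Recompute the signature server-side and compare in constant time."""
    expected = sign_upload(secret, robot_id, audio)
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))
```

The signature only proves that the sender knows the shared secret and that the payload was not altered. Confidentiality still comes from HTTPS, as required by APP2.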

To improve therapist workflows, the UI must also be intuitive and navigable (APP3), with an in-browser audio playback system (APP5) and visual quality indicators (APP4) that help therapists prioritize which recordings need attention. Therapists must also be able to manually override audio flags and resend prompts to the robot (APP6) when a recording is insufficient. The app enforces strict role-based access control (APP7), ensuring data is siloed between therapists, and must be responsive across devices (APP8), supporting both desktop and tablet workflows.

Additional features enhance usability and flexibility: persistent session tokens (APP9) reduce login friction during active use, automated transcript generation (APP10) simplifies documentation, and real-time dashboard updates (APP11) help therapists stay in sync with robot-side activity. A dedicated admin panel (APP12) further supports dynamic content management, enabling continuous evolution of therapeutic content without developer intervention. | |||
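
A minimal sketch of how the authentication signature (APP2) and the session lifetime (APP9) could work is given below. It assumes an HMAC-SHA256 signature over the uploaded payload and a fixed one-day session lifetime. The function names, the shared secret and the constant are hypothetical and do not describe the deployed implementation.

```python
import hashlib
import hmac
import time
from typing import Optional

# Assumed session lifetime, taken from the "1 day" example in APP9.
SESSION_TTL_SECONDS = 24 * 60 * 60


def sign_payload(shared_secret: bytes, payload: bytes) -> str:
    """Return a hex HMAC-SHA256 signature for the payload."""
    return hmac.new(shared_secret, payload, hashlib.sha256).hexdigest()


def verify_payload(shared_secret: bytes, payload: bytes, signature: str) -> bool:
    """Check the signature using a constant-time comparison."""
    expected = sign_payload(shared_secret, payload)
    return hmac.compare_digest(expected, signature)


def session_is_valid(issued_at: float, now: Optional[float] = None) -> bool:
    """A session is valid for SESSION_TTL_SECONDS after it was issued."""
    current = time.time() if now is None else now
    return 0 <= current - issued_at < SESSION_TTL_SECONDS
```

In this sketch the robot would send the signature alongside each upload over HTTPS, and the server would reject any upload for which `verify_payload` returns `False`.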

The test plan is as follows: | |||

{| class="wikitable" | |||

|+Application Test Plan | |||

!Ref | |||

!Precondition | |||

!Action | |||

!Expected Output | |||

|- | |||

|APP1 | |||

|App is deployed | |||

|Attempt login with valid therapist and robot passcodes | |||

|Secure login is successful; access granted only when both passcodes are valid | |||

|- | |||

|APP1 | |||

|App is deployed | |||

|Attempt login with one or both invalid passcodes | |||

|Access denied with clear, user-friendly error message | |||

|- | |||

|APP2 | |||

|App is live | |||

|Monitor network activity during login and uploads | |||

|All requests are transmitted over HTTPS; no plaintext data is sent, and each request carries an authentication signature that validates its authenticity | |||

|- | |||

|APP3 | |||

|Therapist logged in | |||

|Navigate through dashboard and audio recordings | |||

|Navigation is smooth, labels are clear, and all key functions are easily discoverable | |||

|- | |||

|APP4 | |||

|Audio files available | |||

|View audio table | |||

|Files are color-coded based on quality flags (Red, Yellow, Green, Blue) | |||

|- | |||

|APP5 | |||

|Audio files uploaded | |||

|Click play on an audio recording | |||

|Audio plays in-browser without downloading or external tools | |||

|- | |||

|APP6 | |||

|Poor-quality audio identified | |||

|Therapist flags it for reprocessing | |||

|Recording turns Blue; robot receives re-ask instruction during next poll | |||

|- | |||

|APP7 | |||

|Two therapists registered | |||

|Each logs in with their own robot passcode | |||

|Each sees only their respective data; no cross-access to recordings or transcripts | |||

|- | |||

|APP8 | |||

|Access from various devices | |||

|Open app on phone, tablet, and desktop | |||

|UI adapts properly; all functionalities remain intact | |||

|- | |||

|APP9 | |||

|Therapist logged in | |||

|Close browser and return after several hours | |||

|If within session time, access is maintained; otherwise redirected to login | |||

|- | |||

|APP10 | |||

|Transcript service enabled and recording exists | |||

|Click "Generate Transcript" button on a recording | |||

|Transcript appears in UI with non-verbal content filtered; therapist can edit/download | |||

|- | |||

|APP11 | |||

|Robot uploads a new recording | |||

|Watch therapist dashboard | |||

|New recording appears in real time with correct metadata and flag | |||

|- | |||

|APP12 | |||

|Admin user logged in | |||

|Create a new course and lesson in admin panel | |||

|Content is saved and becomes accessible to therapists on next login | |||

|} | |||
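
The flagging behaviour tested under APP4 and APP6 can be sketched as follows. The four colours and the meaning of Blue (re-ask requested) come from the test plan; the meanings attached to Red, Yellow and Green, as well as all class and function names, are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Flag(Enum):
    RED = "red"        # assumed: poor quality
    YELLOW = "yellow"  # assumed: borderline quality
    GREEN = "green"    # assumed: good quality
    BLUE = "blue"      # therapist requested a re-ask (APP6)


@dataclass
class Recording:
    recording_id: str
    flag: Flag


def request_reask(recording: Recording) -> None:
    """Therapist override: mark the recording for re-asking."""
    recording.flag = Flag.BLUE


def pending_reasks(recordings: List[Recording]) -> List[str]:
    """IDs the robot would receive on its next poll."""
    return [r.recording_id for r in recordings if r.flag is Flag.BLUE]
```

Here the robot is assumed to call something like `pending_reasks` whenever it polls the server, which matches the "during next poll" wording of the APP6 test.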

= Legal & Privacy Concerns = | |||

Note: We only consider EU legislation and regulations, as we reside in the EU. Furthermore, most of these regulations become relevant mainly for a full-scale implementation of this robot. | |||

We will mainly refer to the following regulations and legislation: | |||

* General Data Protection Regulation GDPR (https://gdpr-info.eu/) | |||

* Medical Device Regulation MDR (https://eur-lex.europa.eu/eli/reg/2017/745/oj/eng) | |||

* AI Act (https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32024R1689) | |||

* UN Convention on the Rights of the Child | |||

* AI Ethics Guidelines (EU & UNESCO) | |||

* Product Liability Directive (EU 85/374/EEC) | |||

* ISO 13482 (Safety for Personal Care Robots) | |||

* EN 71 (EU Toy Safety Standard) | |||

=== Data Collection & Storage === | |||

The robot we want to build for this project requires that specific audio snippets and related data be collected and stored where the therapists and professionals responsible for the patient's care can access them. This data is sensitive, however, and must be secured so that it is accessible only to those permitted to access it. We should also store only the minimum amount of data about the patient that the robot needs. In the EU, these data collection and storage concerns are outlined in Articles [https://gdpr-info.eu/art-5-gdpr/ 5] and [https://gdpr-info.eu/art-9-gdpr/ 9] of the GDPR. | |||

In this context, this means the data collected by the robot should at most include: | |||

* Speech audio data of the patient that the therapist needs to help treat the patient's impediment | |||

* Minimal identification data to know which data belongs to which patient | |||

* Other data may be needed, but its collection must be specifically justified (subject to change) | |||

Furthermore, all the data collected by the robot must be: | |||

* encrypted, so that it cannot be interpreted if it is stolen | |||

* securely stored, so that it can be accessed only by the permitted parties | |||

In addition to the basic principles of data minimization and secure local storage, [https://gdpr-info.eu/art-25-gdpr/ Article 25] of the GDPR mandates "privacy by design and by default". This means the architecture of the robot must enforce strict defaults: storage should be disabled until parental consent is granted, recordings should be timestamped and logged with audit trails, and access should be time-limited and traceable. Furthermore, for deployments in schools or healthcare settings, the system must be able to integrate with institutional data protection policies and local data controllers. | |||
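
The "privacy by default" behaviour described above can be sketched as a store that refuses to save anything until consent is recorded and that logs every attempt. This is only an in-memory illustration; the class name, method names and log format are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple


def _utc_now() -> str:
    """Timestamp used for recordings and audit entries."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class RecordingStore:
    # Storage is disabled by default until a guardian grants consent.
    consent_granted: bool = False
    recordings: List[Tuple[str, bytes]] = field(default_factory=list)
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def _log(self, actor: str, action: str) -> None:
        self.audit_log.append((_utc_now(), actor, action))

    def grant_consent(self, guardian: str) -> None:
        self.consent_granted = True
        self._log(guardian, "consent_granted")

    def save(self, actor: str, audio: bytes) -> bool:
        """Store a timestamped recording only if consent was granted."""
        if not self.consent_granted:
            self._log(actor, "save_refused_no_consent")
            return False
        self.recordings.append((_utc_now(), audio))
        self._log(actor, "recording_saved")
        return True
```

A real deployment would additionally need persistent, tamper-evident logs and encrypted storage, which this sketch does not attempt.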

=== User Privacy & Consent === | |||

In order for the robot to be used and for data to be collected and shared with the relevant parties, the patient must consent to this and must hold specific rights over the data (creation, deletion, restriction, etc.). On top of this, depending on the age of the patient, certain restrictions must be placed on the way data is shared, and all patients must have a way to opt out and withdraw consent to data collection if necessary. These points are covered in Articles [https://gdpr-info.eu/art-6-gdpr/ 6], [https://gdpr-info.eu/art-7-gdpr/ 7] and [https://gdpr-info.eu/art-8-gdpr/ 8] of the GDPR. | |||

In essence this means the user must have the most power and control over the data collected by the robot, and the data collected and its use must be made explicitly clear to the user to make sure that its function is legal and ethical. | |||

Under [https://gdpr-info.eu/art-8-gdpr/ Article 8] of the GDPR, children under the age of digital consent require explicit parental permission to engage with services that collect personal data. In practice, this means the robot must support consent verification mechanisms, such as requiring a digital signature from the guardian or integrating a PIN-based consent process at setup. Additionally, [https://www.privacy-regulation.eu/en/recital-38-GDPR.htm Recital 38] of the GDPR advises that the privacy notices shown to both child and guardian be presented in age-appropriate language. For example, using pictograms or child-friendly animations to explain when the robot is recording would not only support legal compliance but also improve trust and understanding. | |||
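
One possible shape for the PIN-based consent step mentioned above is sketched here. It assumes the guardian's PIN is never stored directly, only a salted PBKDF2 hash of it. The function names and the iteration count are illustrative choices, not project decisions.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Assumed work factor; a real deployment should tune this value.
PBKDF2_ITERATIONS = 200_000


def _derive(pin: str, salt: bytes) -> bytes:
    """Derive a hash from the PIN with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", pin.encode("utf-8"), salt, PBKDF2_ITERATIONS
    )


def enroll_guardian_pin(pin: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) to store at setup instead of the PIN."""
    salt = secrets.token_bytes(16)
    return salt, _derive(pin, salt)


def verify_guardian_pin(pin: str, salt: bytes, digest: bytes) -> bool:
    """Check an entered PIN against the stored salt and digest."""
    return hmac.compare_digest(_derive(pin, salt), digest)
```

Because short PINs are easy to guess, such a check would need to be combined with a limit on the number of attempts.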

=== Security Measures === | |||

Since we must exchange sensitive data between the patient and therapist, data must be secured and protected during transmission, storage and access. The relevant requirements are specified in [https://gdpr-info.eu/art-32-gdpr/ Article 32] of the GDPR (Data Security Requirements). | |||

This means that data communication must be end-to-end encrypted, and there must be strong, secure authentication protocols across the entire system. On the therapist's side, there must be appropriate RBAC (role-based access control) so that only the relevant admins can access the data. Because the robot may be in use over long periods, it should also support software updates to improve security. | |||
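
As an illustration of role-based access control, the sketch below filters recordings by the requesting user's role and identity. The role names and the rule that only the owning therapist sees a recording are assumptions based on requirement APP7; all identifiers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class User:
    user_id: str
    role: str  # hypothetical roles: "therapist" or "admin"


@dataclass(frozen=True)
class Recording:
    recording_id: str
    therapist_id: str  # owner of the recording


def visible_recordings(user: User, recordings: List[Recording]) -> List[Recording]:
    """Return only the recordings this user is allowed to see.

    Assumption: therapists see their own recordings only, and any
    other role (including content admins) sees no patient recordings.
    """
    if user.role != "therapist":
        return []
    return [r for r in recordings if r.therapist_id == user.user_id]
```

Filtering on the server side in this way means that a therapist's client never receives another therapist's data, rather than merely hiding it in the UI.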

To comply with Article 32, the robot's firmware should be hardened against attacks (e.g. disabling remote debugging in production) and should support secure boot to prevent tampering. Additionally, data uploads must use TLS 1.3 or better, with server authentication validated using public key pinning. Therapist access should require MFA (multi-factor authentication), and if possible, sessions should auto-expire after inactivity. Finally, a detailed Data Protection Impact Assessment (DPIA) should be conducted prior to releasing the product to users, as required for any product handling systematic monitoring of vulnerable groups ([https://gdpr-info.eu/art-35-gdpr/ Art. 35]). | |||
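
The transport requirements above could be approached as in the following sketch, which uses Python's standard `ssl` module to refuse anything older than TLS 1.3. Note that the pin check shown here hashes the whole certificate (certificate pinning), a simpler stand-in for the public key pinning mentioned in the text. The function names and the pinned value are hypothetical.

```python
import hashlib
import hmac
import ssl


def make_upload_context() -> ssl.SSLContext:
    """Client-side TLS context that only accepts TLS 1.3 or later."""
    ctx = ssl.create_default_context()  # verifies certificate and hostname
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def certificate_matches_pin(der_cert: bytes, pinned_sha256_hex: str) -> bool:
    """Compare the SHA-256 fingerprint of a DER certificate to a pin."""
    fingerprint = hashlib.sha256(der_cert).hexdigest()
    return hmac.compare_digest(fingerprint, pinned_sha256_hex.lower())
```

After a connection is made with this context, the server certificate can be obtained with `getpeercert(binary_form=True)` on the wrapped socket and passed to `certificate_matches_pin` before any audio is uploaded.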

=== Legal Compliance & Regulations === | |||

Since this robot can be considered a health-related or medical device, we must make sure that the data collected is used and treated as medical data. The regulations relevant to this are specified in the Medical Device Regulation. | |||

This robot may also have certain AI-specific features or functionalities, so it must also adhere to the regulations and laws in the AI Act so that its functionality and usage are ethical. | |||

=== Ethical Considerations === | |||

Since the patients using and interacting with this device are children, we must make sure that the interactions with the child are ethical and that the way in which data is used and analysed to form a diagnosis is not biased. | |||

The robot must minimize the psychological risks of AI-driven diagnosis and prevent any distress, anxiety or deception that the interaction could cause. Training assessments should be analysed in a fair and unbiased manner, and decisions on treatment and on the data required for a particular stage of treatment should be made almost entirely by the therapist, with minimal AI involvement. | |||

These points are outlined in the AI Ethics Guidelines and Article 16 of the UN Convention on the Rights of the Child. | |||

The UN Convention on the Rights of the Child [https://www.ohchr.org/en/instruments-mechanisms/instruments/convention-rights-child Article 3] requires that all actions involving children prioritize the child’s best interest. In practice, this means ensuring the robot never gives judgmental feedback ("That was bad") or creates hierarchical comparisons with other children’s responses. The robot should instead focus on affirmative reinforcement and therapist-supervised decision-making. Furthermore, models used to analyze speech should be trained and/or tested on diverse data to avoid systemic bias. If AI is integrated into the system, then the [https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32024R1689 AI Act] will require such fairness by design in all child-facing systems. | |||

=== Third-Party Integrations & Data Sharing === | |||

Since we are sharing the data collected by the robot with the therapist, we must ensure that strict data-sharing policies are in place that require parental/therapist consent. Furthermore, if we use any third-party services, such as cloud storage providers, AI tools or healthcare platforms, we must make sure the data is fully anonymised so that there is no risk of re-identification. | |||
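
As a first step towards this, patient identifiers could be replaced by keyed hashes before anything is handed to a third party, as sketched below. This is pseudonymisation rather than full anonymisation: whoever holds the key can re-link the data, and the audio itself may still identify a child, so it would not be sufficient on its own. The function name and the truncation length are hypothetical.

```python
import hashlib
import hmac


def pseudonymise_patient_id(patient_id: str, secret_key: bytes) -> str:
    """Replace a patient ID with a keyed HMAC-SHA256 pseudonym.

    The key must stay with the data controller; without it, the
    pseudonym cannot be recomputed from a known patient ID.
    """
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]
```

The same patient always maps to the same pseudonym under one key, so a third-party service can still group recordings per patient without learning who the patient is.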