PRE2024 3 Group15


{{DISPLAYTITLE:Computer Vision for hand gesture control of synthesizers (modulation of synthesizers)}}

Group members: Nikola Milanovski, Senn Loverix, Rares Ilie, Matus Sevcik, Gabriel Karpinsky

== Link to GitHub repository ==

<blockquote>[https://github.com/Gabriel-Karpinsky/Project-Robots-Everywhere-Group-15 https://github.com/Gabriel-Karpinsky/Handi]</blockquote>

= Table of work times =

Instead of placing a long table covering every week at the top of the wiki, we share a [https://docs.google.com/spreadsheets/d/1LjFgobNuYJQzRVwhrHQrzcVzEVyR9A_9NcWLjT7u2a0/edit?usp=sharing sheet with our work and tasks], which is also included as a table in the appendix.

= Problem statement and objectives =

The synthesizer has become an essential instrument in the creation of modern music. It allows musicians to create and modulate sounds electronically. Traditionally, an analog synthesizer uses a keyboard to generate notes and a set of knobs, buttons and sliders to shape the sound. Through MIDI (Musical Instrument Digital Interface), however, a synthesizer can be controlled by an external device, usually also shaped like a keyboard, while its other controls become digital. This opens up a wide range of possibilities for the kind of input device used to manipulate a digital synthesizer. Although traditional keyboard MIDI controllers have been very successful, their form may restrict the expressiveness of musicians who seek to create more dynamic and unique sounds. It may also limit accessibility for people who struggle with the controls, for example because they lack keyboard-playing experience or have a physical impairment.

In this project, we aim to design a new way of controlling a synthesizer using the motion of the user's hand. By moving their hand to a certain position in front of a suitable sensor system, consisting of one or more cameras, the user can control various aspects of the produced sound, such as pitch or reverb. Computer vision techniques will be implemented in software to track the position of the user's hand and fingers, and different hand positions and orientations will be mapped to operations that the synthesizer performs on the sound. This information will be passed via MIDI to synthesizer software, which produces the electronic sound. Our goal is to let users in the music industry integrate this technology seamlessly and create new sounds in an innovative, easy-to-control way that is more accessible than a traditional synthesizer.

= Users =

With this new way of performing live music, the main target users of this technology are people in the music industry. These include performance artists and DJs, who can use the technology to enhance their live performances or sets. The more expressive form of control offered by hand tracking adds another layer of immersion to a live performance, as the crowd can see more clearly how the DJ is manipulating the sound. Visual artists and motion-based performers could integrate the technology into their choreography to control the sound in a visually appealing way; controlling sound parameters with hand motions and gestures can be more visually engaging and crowd-pleasing than more traditional technology. Content creators who use audio equipment such as soundboards to enhance their content could use the technology as a new way to control their audio seamlessly. They could map hand gestures to sound effects, leading to entertaining and comedic moments.

This new way of controlling a synthesizer could also be a good way to introduce people to creating and producing electronic music. It would be especially useful for people with a physical impairment that may have prevented them from creating the music they wanted to make. For example, people with a muscle disease could have difficulty operating the knobs and sliders of digital music controllers. A hand tracking controller would allow them to use gestures and motions that work for them to produce or modify music.

= User requirements =

For the users mentioned above, we have drawn up a list of requirements we expect them to have for this technology. First of all, it should be easy to set up, so that performance artists and producers do not spend too much time preparing right before their performance or set. Next, the technology should be accessible and easy to understand for all users, both those with a lot of experience with electronic music and those who are relatively new to it.

Furthermore, the hand tracking should work in different environments. For example, a DJ who performs in dimly lit clubs and uses many different lighting and visual effects during their sets should still be able to rely on accurate hand tracking. It should also be easy to integrate the technology into the artist's workflow: an artist should not have to change their entire routine of performing or producing music in order to use a motion-based synthesizer.

Lastly, the technology should allow extensive customization to fit each user's needs. The user should be able to decide which attributes of the recognized hand gestures are important for their work and which should be ignored. For example, if the vertical position of the hand regulates the pitch of the sound and the rotation of the hand regulates the volume, the user should be able to ‘turn off’ the volume control so that rotating their hand changes nothing.

To better understand the user requirements, we interviewed several people in the music industry, including music producers, a DJ and an audiovisual producer. The interview questions were as follows:

'''Background and Experience:''' What tools or instruments do you currently use in your creative process? Have you previously incorporated technology into your performances or creations? If so, how?

'''Creative Process and Workflow:''' Can you describe your typical workflow? How do you integrate new tools or technologies into your practice? What challenges do you face when adopting new technologies in your work?

'''Interaction with Technology:''' Have you used motion-based controllers or gesture recognition systems in your performances or art? If yes, what was your experience?

How do you feel about using hand gestures to control audio or visual elements during a performance?

What features would you find most beneficial in a hand motion recognition controller for your work?

'''Feedback on Prototype:''' What specific functionalities or capabilities would you expect from such a device?

How important is the intuitiveness and learning curve of a new tool in your adoption decision?

'''Performance and Practical Considerations:''' In live performances, how crucial is the reliability of your equipment? What are your expectations regarding the responsiveness and accuracy of motion-based controllers?

How do you manage technical issues during a live performance?

How important are the design and aesthetics of the tools you use?

Do you have any ergonomic preferences or concerns when using new devices during performances?

What emerging technologies are you most excited about in your field?

=== Summary of interview results ===

The interviews revealed several key insights into the potential use of a hand motion recognition controller in music production and live performance. Most artists currently rely on prepared tracks and standard hardware or software tools, using effects and modulation to add expressiveness during live sets. A motion-based controller could serve as an additional input method for adjusting parameters such as EQ, reverb or pitch, offering a more granular and creative layer of control. However, it would be most effective as an optional enhancement rather than a replacement for existing gear, particularly if implemented as a software-based tool with a user-friendly interface.

Responsiveness and accuracy are important but context-dependent: slight delays are acceptable when modulating sound, but not when playing in real time like an instrument. For broader adoption, the controller must be practical, quick to set up, and highly compatible with standard digital audio workstations and plugins. Its benefits must clearly outweigh the effort required to learn and implement it. While it is not ideal for detailed production work due to the imprecise nature of motion tracking, it holds promise as a dynamic and visually engaging tool in live settings, especially if it is designed to be flexible, intuitive and easily integrated into existing performance environments.

==== Important takeaways ====

# Artists, mainly DJs, have prepared tracks that are chopped up and ready to perform, and during a set they mostly play with effects and parameter values. A possible application is therefore to use the controller instead of a knob or digital slider, giving the artist more granular control over an effect or a sound. For example, if our controller were an app with a GUI that could be turned on and off at will during the performance, it could add "spice" to a performance.
# For controlling visuals during a live performance, it is too difficult to use, especially compared to current methods. However, it could possibly be used to control a specific element, such as color.
# Many venues already have multiple cameras set up, which could be used to capture the gestures from multiple angles and at high resolution.
# There is interest in using it live.
# If it is used to modulate sound rather than being played like an instrument, latency is less of a problem.
# The difficulty of using the software (learning and implementing it) should not outweigh the benefit gained from it.
# It could be an interesting tool for live performances, but it is impractical for music production because motion tracking is less precise than physical controllers.
# It must be easily compatible with a wide range of software.

= State-of-the-art =

===== Preface =====

As there are currently no commercial products that fully realize the concept behind this project, the state of the art primarily resides in academic research and the open-source community. Consequently, our exploration focuses on the individual technological components needed to bring this product to life.

[[File:Mi.Mu Gloves.jpg|thumb|383x383px|Figure 1: Mi.Mu Gloves]]

The main inspiration for this project came from an Instagram video by a Taiwanese visual media artist ([https://www.instagram.com/p/DCwbZwczaER/ Instagram link]), showcasing gesture-driven musical interaction. Further inspiration came from related innovations such as the ''Mi.Mu Gloves'', developed by the English musician and producer Imogen Heap, which enable expressive control over music through hand gestures ([https://mimugloves.com/ Mi.Mu Gloves]). We also found a related commercial product, the ''Roli Airwave'', which uses a combination of cameras and ultrasonic sensors to track a pianist's hand movements in order to control a MIDI keyboard ([https://roli.com/eu/product/airwave-create Roli Airwave]). However, this product is expensive and is intended as a tool to help people learn to play the keyboard, rather than as a performance tool.

[[File:RoliAirwave.jpg|thumb|324x324px|Figure 2: Roli Airwave]]

===== Hardware =====

Hand tracking and gesture recognition can be achieved using a variety of imaging technologies, each with distinct trade-offs:

* '''RGB cameras''' are cost-effective and provide high-resolution color imagery but lack depth perception.
* '''Infrared (IR) sensors''' offer precise motion capture but are vulnerable to interference from other IR sources.
* '''RGB-D cameras''', which combine color and depth data, are widely adopted in real-time gesture recognition applications due to their balanced performance [3][4][5].

Recent literature supports the use of RGB-D sensors as a reliable choice in computer vision-based hand tracking systems, particularly when combined with robust pose estimation algorithms [4][5]. However, these sensors can be expensive and may not match RGB cameras in image resolution. Improving accuracy often involves the use of multiple camera types (e.g., combining RGB, IR and depth sensors), which enhances robustness across varying environments but increases system complexity and cost [17]. Alternatives such as mirror-based multi-view setups provide a more economical path to multi-perspective tracking, reducing hardware requirements while maintaining accuracy [18]. Camera placement also plays a critical role in tracking precision and can be optimized using algorithms designed for motion capture scenarios [19].

In addition to camera-based solutions, wearable sensors like those explored in [20] offer a non-invasive means of gesture capture, but may not suit the aesthetics and freedom of movement required for a performance-focused instrument.

===== Software =====

The software layer is pivotal in translating raw visual data into meaningful gestural input. Across the literature, OpenCV is consistently cited as the foundational library for computer vision tasks, including image processing, motion detection and object tracking [12]. It is often paired with MediaPipe, a framework developed by Google that provides high-performance pipelines for hand tracking and pose estimation [14].

A typical processing pipeline involves:

# Hand detection and segmentation,
# Keypoint or feature extraction,
# Gesture classification, often executed in real time [12][15][16].

Python is the most frequently recommended language for developing these systems, due to its ease of use, extensive community support and seamless integration with machine learning libraries and frameworks [12]–[16].

Recent works demonstrate the integration of machine learning and convolutional neural networks (CNNs) to improve the accuracy of gesture recognition under challenging conditions, such as varying lighting or complex backgrounds [21][23][24][25]. Additionally, novel segmentation techniques and optimization methods have been proposed to improve system responsiveness and gesture clarity [24].

Furthermore, examples such as ''AirCanvas'' [29], ''Gesture-Based MIDI Controllers'' [1][28] and browser-based systems [2] show growing interest in gesture-driven musical interaction in both research and hobbyist communities.

= Approach, milestones and deliverables =

The initial approach, taken after collecting the user requirements and researching the state of the art, was as follows:

# Research software solutions:
## Find a suitable library for hand tracking and gesture recognition and implement it in a way that satisfies the user requirements
## Research and attempt possible AI integration for gesture recognition
## Create a suitable GUI to satisfy the user requirements for ease of use and customizability
## Find and implement a way to turn hand tracking movements into MIDI commands that can be sent to the user's software of choice
# Research hardware solutions:
## Research the viability of different camera setups: multiple cameras, IR cameras, etc.
## Research hardware acceleration options if necessary
# Combine the separate modules:
## Connect the hand tracking/gesture recognition with the GUI, and connect both to a MIDI output that can be sent to the user's software of choice
# Refine the user requirements:
## Revise the user requirements to make sure they are still realistic for the time frame of the project, without sacrificing user satisfaction or the original goal of the project
# Test and refine the final product:
## Test the connections between the modules
## Test the hardware performance to determine whether acceleration is necessary
## Test the output to determine whether the product functions as intended
## Refine the GUI and add features suggested by users
## Prepare the final demo version of the product, ensuring that as many user requirements as possible are satisfied

The milestones and deliverables set and accomplished during the project are as follows:

# Have a working hand tracker that can recognize simple gestures (Week 3)
# Integrate hand tracking software with GUI (Week 5)
# Provide MIDI output from code (Week 5)
# Integrate MIDI output into hand tracking and gestures (Weeks 5 and 6)
# Test product with potential users and integrate feedback (Weeks 5 and 6)
# Add customizability options to GUI (Week 6)
# Add Mac support (Week 6)
# Fix bugs and prepare product for final demonstration (Weeks 6 and 7)

= The Design =

Our design consists of a software tool that acts as a digital MIDI controller. Using the position of the user's hand as input, it sends MIDI commands to music software or digital instruments. The design consists of three main parts: the GUI, the hand tracking and gesture recognition software, and the software responsible for sending out MIDI commands. Through the GUI, the user can see how their hand is being tracked and customize which sound parameters are controlled by which hand motions or gestures. The hand tracking software tracks the position of the hand in the camera input, and the gesture recognition software detects when the user performs one of a set of predetermined gestures. Lastly, the MIDI part of the code sends out the corresponding MIDI commands. The design of each part is explained and motivated below.

== GUI ==

The GUI chosen for the design is a dashboard through which the user can define their own style of sound control. It was designed to be as user-friendly as possible and to offer extensive options for customizing how the user controls the sound parameters. The buttons and sliders in the GUI were made to be as intuitive and easy to understand as possible, so that a music artist or producer using the design would not have to spend a long time familiarizing themselves with it. The GUI used in our design is shown in Figure 3.

[[File:GUI screen.png|center|thumb|540x540px|Figure 3: The GUI used in our design]]

The GUI shows the camera input the software is receiving and how the user's hand is being tracked, by drawing the landmarks on the hand and placing a bounding box around it. If the device has multiple camera inputs, the GUI also lets the user choose which camera to use. Furthermore, a “Detection Confidence” slider sets the detection confidence of the hand tracking code. The detection confidence indicates how certain the hand tracking algorithm needs to be about a hand before it starts tracking it: if it is lowered, the algorithm is more likely to track a hand even when there is a lot of uncertainty about its location. So if the hand is frequently lost, making the tracking choppy or jumpy, the user can lower the detection confidence; if the tracker produces errors such as false or misplaced detections, the user can raise it. This is convenient when using the design in different lighting conditions. Below the camera feed, the user can select which hand gesture controls which sound parameter. They can also select the MIDI channel through which the corresponding commands are sent, and activate or deactivate the control by ticking the active box. The hand gestures available for control are listed in Table 1, and the sound parameters that can be controlled are listed in Table 2.

<div style="text-align: center;">
<div style="display:inline-block; vertical-align: top; margin: 0 1em;">
{| class="wikitable"
|+ Table 1
!Hand gestures
|-
|Bounding box
|-
|Pinch
|-
|Fist
|-
|Victory
|-
|Thumbs up
|}
</div><!--
--><div style="display:inline-block; vertical-align: top; margin: 0 1em;">
{| class="wikitable"
|+ Table 2
!Sound parameters
|-
|Volume
|-
|Octave
|-
|Modulation
|}
</div>
</div>

Further explanation of how the control works and why these hand gestures were chosen is given in the section on hand tracking and gesture recognition. The GUI also allows the user to add and remove gestures; newly added gestures appear below the existing gesture settings. When the “Pinch” or “Bounding Box” gesture is used, the percentage by which the chosen parameter is changed is displayed below the camera feed. An example combination of gesture settings is shown in Figure 4. When the user clicks “Apply Gesture Settings”, the gestures set as active are used to control their corresponding sound parameters. If the user wishes to add or remove a gesture, change which gesture controls which parameter, or activate or deactivate a gesture, they have to click “Apply Gesture Settings” again for the new settings to take effect. To stop all MIDI commands from being sent, the user can click the “MIDI STOP” button.

[[File:Example gesture settings.png|center|thumb|732x732px|Figure 4: An example of possible gesture settings]]
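
The gesture settings rows and the “Apply Gesture Settings” step can be pictured as a list of small records that is validated and filtered when the button is clicked. The Python sketch below is only an illustration under our own assumptions: the names `GestureSetting` and `apply_gesture_settings` are hypothetical and do not come from HandTrackingGUI.py, and the option lists are copied from Tables 1 and 2.

```python
from dataclasses import dataclass

# Options offered by the GUI (Tables 1 and 2).
GESTURES = {"Bounding box", "Pinch", "Fist", "Victory", "Thumbs up"}
PARAMETERS = {"Volume", "Octave", "Modulation"}


@dataclass
class GestureSetting:
    """One gesture settings row in the GUI (hypothetical structure)."""
    gesture: str
    parameter: str
    channel: int          # MIDI channel as shown to the user, 1-16
    active: bool = True   # the "active" tick box


def apply_gesture_settings(rows):
    """Validate all rows and return (gesture, parameter, channel) tuples
    for the rows ticked as active, in the order they appear in the GUI."""
    applied = []
    for row in rows:
        if row.gesture not in GESTURES:
            raise ValueError(f"unknown gesture: {row.gesture!r}")
        if row.parameter not in PARAMETERS:
            raise ValueError(f"unknown parameter: {row.parameter!r}")
        if not 1 <= row.channel <= 16:
            raise ValueError(f"MIDI channel must be 1-16, got {row.channel}")
        if row.active:
            applied.append((row.gesture, row.parameter, row.channel))
    return applied


rows = [
    GestureSetting("Pinch", "Volume", channel=1),
    GestureSetting("Bounding box", "Modulation", channel=2, active=False),
]
print(apply_gesture_settings(rows))  # [('Pinch', 'Volume', 1)]
```

Keeping the applied settings as a separate list, rather than reading the widgets directly from the tracking loop, matches the behaviour described above: edits in the GUI only take effect once the button is clicked again.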

== Hand tracking and gesture recognition ==

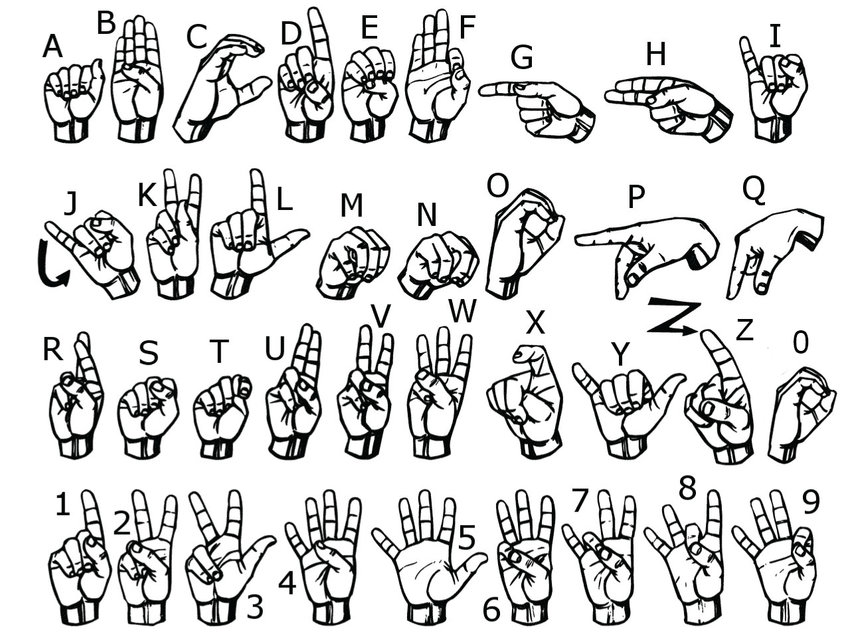

[[File:The-26-letters-and-10-digits-of-American-Sign-Language-ASL.png|thumb|499x499px|Figure 5: The American Sign Language]]

To control the sound parameters continuously, the “Pinch” and “Bounding Box” gestures were chosen. For the “Pinch” gesture, the landmarks at the tip of the index finger and the tip of the thumb are used. The Euclidean distance between these points is calculated and converted to a percentage, which determines how strongly the chosen sound parameter is influenced. For example, if the user pinches their thumb and index finger to a distance for which the GUI indicates 50%, and the chosen parameter is volume, the sound will play at 50% volume. The “Bounding Box” gesture works in a very similar way: a rectangle is drawn around the hand so that it encloses all the fingers and the bottom of the palm, and the area of this box is translated into a percentage that is used in the same way as for the pinch.
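
The two continuous gestures can be sketched as follows. This is a minimal example, not the project's code, assuming landmarks are given as (x, y) pairs in MediaPipe's normalized image coordinates with MediaPipe's numbering (4 = thumb tip, 8 = index fingertip). The calibration bounds `min_dist`/`max_dist` and `min_area`/`max_area` are hypothetical values chosen for illustration; in practice they would have to be tuned for the camera and the user.

```python
import math

THUMB_TIP = 4         # MediaPipe Hands landmark index (assumed numbering)
INDEX_FINGER_TIP = 8  # MediaPipe Hands landmark index (assumed numbering)


def to_percentage(value, low, high):
    """Linearly map value from [low, high] to [0, 100], clamping outside values."""
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (value - low) / (high - low)
    return 100.0 * min(1.0, max(0.0, fraction))


def pinch_percentage(landmarks, min_dist=0.02, max_dist=0.25):
    """Euclidean thumb-to-index distance converted to a percentage.
    The default bounds are hypothetical calibration values."""
    x1, y1 = landmarks[THUMB_TIP]
    x2, y2 = landmarks[INDEX_FINGER_TIP]
    return to_percentage(math.hypot(x2 - x1, y2 - y1), min_dist, max_dist)


def bounding_box_percentage(landmarks, min_area=0.01, max_area=0.25):
    """Area of the axis-aligned box enclosing all landmarks, as a percentage.
    The default bounds are hypothetical calibration values."""
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    area = (max(xs) - min(xs)) * (max(ys) - min(ys))
    return to_percentage(area, min_area, max_area)
```

With these example bounds, a thumb-to-index distance of 0.135 maps to 50%, which for the volume parameter would mean playing at half volume.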

We implemented several gestures in the final version of our product. For inspiration, we looked at American Sign Language (ASL), whose alphabet is shown in Figure 5. Besides pinch and bounding box as our two continuous gestures, we implemented the victory sign (V), thumbs-up and fist (A). These were chosen because they are easily distinguishable and widely recognized. These three act as our binary gestures, intended to send a one-time command, such as starting to play a given note or toggling a low-pass filter. To recognize them, we use a function that returns which fingers are up in each captured frame. A thumbs-up is recognized when the thumb is up and no other finger is, a victory sign is recognized when the index and middle fingers are up, and a fist is recognized when no fingers are up.
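
The fingers-up check and the mapping to the three binary gestures could look roughly like the sketch below. It is written under stated assumptions rather than being the function used in the project: it assumes MediaPipe's landmark numbering, an upright hand, and image y coordinates that grow downwards. The thumb test (tip farther from the little finger's knuckle, landmark 17, than the thumb's IP joint, landmark 3) is our own simplification that avoids needing to know whether a left or right hand is shown.

```python
import math

FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring, little fingertips
FINGER_PIPS = (6, 10, 14, 18)  # the middle (PIP) joint of each of those fingers
THUMB_TIP, THUMB_IP, PINKY_MCP = 4, 3, 17


def fingers_up(landmarks):
    """Return [thumb, index, middle, ring, pinky] as booleans.

    A finger counts as up when its tip is higher in the image (smaller y)
    than its PIP joint. The thumb counts as up when its tip is farther from
    the little finger's knuckle than its IP joint is (assumed heuristic).
    """
    thumb = (math.dist(landmarks[THUMB_TIP], landmarks[PINKY_MCP])
             > math.dist(landmarks[THUMB_IP], landmarks[PINKY_MCP]))
    others = [landmarks[tip][1] < landmarks[pip][1]
              for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)]
    return [thumb] + others


def classify_gesture(up):
    """Map a fingers-up pattern to one of the binary gestures, or None."""
    thumb, index, middle, ring, pinky = up
    if not any(up):
        return "Fist"
    if thumb and not (index or middle or ring or pinky):
        return "Thumbs up"
    if index and middle and not (thumb or ring or pinky):
        return "Victory"
    return None
```

Requiring the other fingers to be down for the victory sign is slightly stricter than the rule described above; it reduces accidental triggers when the whole hand is open.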

== Sending MIDI commands ==

MIDI was chosen for sending out information about which sound parameters are to be controlled. MIDI is a widely used communication protocol for controlling synthesizers, music software such as Ableton and VCV Rack, and other digital instruments. It supports simple Control Change commands, which can be used to quickly control various sound parameters, making them well suited to a hand tracking controller. The interviews also made clear that MIDI is very common in the music industry. Another advantage is that a single MIDI port connection carries up to 16 channels, so commands for different parameters can be sent at the same time on different channels.
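
A Control Change message consists of three bytes: a status byte 0xB0 combined with the channel number (0-15 on the wire), a controller number and a value, both in the range 0-127. The sketch below builds such a message from a GUI percentage. The choice of controller numbers is an assumption on our part: CC 7 (channel volume) and CC 1 (modulation wheel) are the standard MIDI assignments, but the project may use different ones, and octave changes are not covered because MIDI defines no standard controller for them. With the mido library, the equivalent message can be created as `mido.Message('control_change', channel=0, control=7, value=64)`; note that mido numbers channels from 0.

```python
VOLUME_CC = 7       # standard MIDI CC 7: channel volume (assumed mapping)
MODULATION_CC = 1   # standard MIDI CC 1: modulation wheel (assumed mapping)


def percentage_to_cc_value(percentage):
    """Scale a 0-100 % GUI value to the 7-bit MIDI data range 0-127."""
    percentage = min(100.0, max(0.0, percentage))
    return round(percentage / 100 * 127)


def control_change(channel, controller, value):
    """Build a raw 3-byte Control Change message.

    channel is 1-16 as shown to users; on the wire it is encoded as 0-15
    in the low nibble of the status byte 0xB0.
    """
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | (channel - 1), controller, value])
```

For example, `control_change(1, VOLUME_CC, percentage_to_cc_value(100))` produces the bytes B0 07 7F, which sets the volume on channel 1 to its maximum.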

= Other explored features =

=== Multiple camera inputs ===

To further improve hand tracking accuracy, we explored and tested using multiple camera inputs at the same time. To handle input from two cameras effectively, we studied various software libraries to find the most suitable one; OpenCV appeared to handle multiple camera inputs best. Using OpenCV, we created a program that takes the input of two cameras and displays the captured images. The two cameras used for testing were a laptop webcam and a phone camera wirelessly connected to the laptop as a webcam. The program ran correctly, but there was considerable delay between the two cameras. In an attempt to reduce this latency, we lowered the buffer size for both camera inputs and manually decreased the resolution, but this did not noticeably reduce the latency. An advantage of OpenCV is that it can be combined with threading, so that the frames of each camera are retrieved in a separate thread. In addition, a technique called FPS smoothing can be applied to try to synchronize the frames. However, even with both threading and FPS smoothing, the latency did not seem to decrease. The limitation may be caused by the wireless connection between the phone and the laptop, so a USB-connected webcam could lead to better results.
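
One common way to structure the per-camera threads is to let each thread read continuously and keep only the newest frame, so that processing never falls behind on old, buffered frames. The sketch below shows this pattern with Python's standard threading module; `LatestFrameReader` is a hypothetical helper, not code from the project. With OpenCV, `read_fn` would be the `read` method of a `cv2.VideoCapture`, optionally after `cap.set(cv2.CAP_PROP_BUFFERSIZE, 1)`, a property that not every capture backend honours. The pattern does not remove latency introduced before the frame reaches the computer, such as a wireless link.

```python
import threading


class LatestFrameReader:
    """Call read_fn repeatedly in a background thread and keep only the
    most recent frame, so a slow consumer never works through a backlog.

    read_fn must behave like cv2.VideoCapture.read: return (ok, frame).
    Reading stops when ok is False or stop() is called.
    """

    def __init__(self, read_fn):
        self._read_fn = read_fn
        self._lock = threading.Lock()
        self._frame = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        while not self._stopped.is_set():
            ok, frame = self._read_fn()
            if not ok:
                break
            with self._lock:
                self._frame = frame  # overwrite: older frames are dropped

    def latest(self):
        """Return the newest frame read so far (None before the first one)."""
        with self._lock:
            return self._frame

    def stop(self):
        self._stopped.set()
        self._thread.join()
```

For two cameras, one reader would be started per `VideoCapture`, and the main loop would call `latest()` on both in each iteration.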

Before continuing with multiple cameras, we had to test whether the hand tracking software works on multiple camera inputs. To do this, the code for handling multiple camera inputs, which used threading, was extended with the hand tracking software. At first inspection, the hand tracking did work for multiple cameras: for each camera input, a completely separate hand model is created, depending on the position of the camera. However, a major drawback of running the hand tracking algorithm on two camera inputs is that the FPS of both inputs decreases. Even with FPS smoothing, the camera inputs suffered from a very low frame rate, probably because running the hand tracking software on two camera inputs is computationally expensive. This very low FPS makes the system not very user-friendly with multiple cameras. We therefore decided to focus on creating a well-performing single-camera system before returning to multiple cameras.

=== Normalized distance for the "Pinch" gesture ===

One problem with calculating the percentage from the “Pinch” gesture is that the Euclidean distance also changes when the user moves their hand towards or away from the camera. To keep the percentage constant in that case, we tried using a normalized distance instead of the Euclidean distance between the tips of the index finger and thumb directly. To obtain it, we divided the Euclidean distance between index finger and thumb by the distance between two other landmarks. That way, if the user moves their hand towards or away from the camera, both distances increase or decrease by the same factor, and the normalized distance stays the same. The percentage should then only change if the normalized distance changes by more than a certain amount. Several landmark pairs were tried as the reference distance, including the base of the index finger and the base of the little finger, and the lowest point on the hand and one of its adjacent landmarks. We also tried using the size of the bounding box.

Although tests showed that the percentage did remain the same when moving the hand while keeping the pinching distance constant, this only held when the hand was moved very slowly. Updating the percentage to a correct value when the normalized distance did change also proved very difficult, as the percentage sometimes would not go above a certain value, or would suddenly jump to a nonsensical value. Although this looks like a promising solution, we decided to drop this feature to focus on other, more important features.
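
For reference, the normalization and the rule that the percentage should only change beyond a certain amount can be written compactly. This is an illustrative sketch, not the dropped implementation: it assumes MediaPipe's landmark numbering and picks one of the reference lengths mentioned above (base of the index finger, landmark 5, to base of the little finger, landmark 17). `DeadBand` and its threshold are hypothetical names and values.

```python
import math

THUMB_TIP, INDEX_FINGER_TIP = 4, 8
INDEX_MCP, PINKY_MCP = 5, 17   # knuckles used as the reference length


def normalized_pinch(landmarks):
    """Thumb-to-index distance divided by the index-to-little-finger knuckle
    distance; both scale by the same factor as the hand moves towards or
    away from the camera, so the ratio stays roughly constant."""
    reference = math.dist(landmarks[INDEX_MCP], landmarks[PINKY_MCP])
    if reference == 0:
        raise ValueError("degenerate hand: reference length is zero")
    return math.dist(landmarks[THUMB_TIP], landmarks[INDEX_FINGER_TIP]) / reference


class DeadBand:
    """Only report a new value when it differs from the last reported one
    by more than `threshold`; smaller changes are treated as jitter."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.value = None

    def update(self, new_value):
        if self.value is None or abs(new_value - self.value) > self.threshold:
            self.value = new_value
        return self.value
```

A dead band suppresses small jitter, but the output then moves in steps of at least the threshold; exponential smoothing is a common alternative that trades this for some added lag.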

=== AI model for gesture recognition ===

Instead of using conditional statements on the positions of certain landmarks to determine the user's gesture, we also explored training an AI model to classify gestures. The model was created with [https://keras.io/ Keras] so that it could be used from Python, and was trained on pictures of different hand gestures, each labelled with the gesture it represents. Although the model showed some potential, in that it could make correct classifications, its error rate was still too high. Differences in lighting conditions compared to the training pictures had a negative impact on its accuracy. A more accurate model would require training on many more pictures taken in different lighting conditions. Since there was not enough time for this training, we dropped this feature in favour of the vector tracking approach we were initially using.

= Code documentation =

=== Setup ===

# Download Python 3.9-3.12.
# Clone the repository: <code>git clone https://github.com/Gabriel-Karpinsky/Project-Robots-Everywhere-Group-15</code>
# Create and activate a Python virtual environment (for example with <code>python -m venv .venv</code>), then install the dependencies: <code>pip install -r requirements.txt</code>
# Install [https://www.tobias-erichsen.de/software/loopmidi.html loopMIDI].
# In loopMIDI, create a port named "Python to VCV".
# Use any software of your choice that accepts MIDI input.
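
To check that the loopMIDI port created in the setup steps is visible from Python, the output port can be looked up by name. The helper below is a hypothetical sketch (the function `find_port` is not part of the repository). On Windows the MIDI backend may list the port with a number appended, for example "Python to VCV 1", so it matches on the name prefix.

```python
def find_port(port_names, wanted="Python to VCV"):
    """Return the first MIDI output port whose name starts with `wanted`.

    Matching on the prefix tolerates backends that append an index to the
    port name (assumed behaviour, e.g. "Python to VCV 1").
    """
    for name in port_names:
        if name.startswith(wanted):
            return name
    raise LookupError(
        f"no MIDI output port starting with {wanted!r}; available: {list(port_names)}"
    )
```

If the mido library is installed (an assumption; it is not named in the setup steps), the helper could be used as `mido.open_output(find_port(mido.get_output_names()))`, after which messages are sent with the port's `send` method.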

=== GUI ===

After researching potential GUI solutions, we narrowed them down to two options: a native Python GUI built with PyQt6, or a JavaScript-based interface written in React or Angular, run either in a browser via a WebSocket or as a standalone app. The main considerations were looks, performance and ease of implementation. We settled on PyQt6, as it is native to Python, allows OpenGL hardware acceleration and is relatively easy to implement for our use case. An MVP prototype is not ready yet but is being worked on. We identified the most important features to implement first as: changing the input sensitivity of the hand tracking, changing the camera input in case multiple cameras are connected, and a start/stop button.

===== PyQt6 library ===== | |||

The code for the GUI was written in the file HandTrackingGUI.py. In order to create the GUI in Python, the PyQt6 library was used. This library contains Python bindings for Qt, a framework for creating graphical user interfaces.[31] | |||

The main GUI window is a subclass of '''QWidget''' called “HandTrackingGUI”. This class is responsible for setting up everything in the GUI window, including buttons and sliders. It also makes the user’s camera input visible: it calls the code responsible for hand tracking and then displays the obtained frames, with the landmarks drawn on the user’s hand, in the GUI using '''QImage'''. The window also contains gesture setting rows, where the user selects which gesture controls which sound parameter. Each row is an instance of the “GestureSettingRow” class, and the rows are managed by the GestureSettingsWidget class: every time the user adds a new gesture, a new GestureSettingRow instance is created. This allows for multiple different mappings from gestures to MIDI controls. The HandTrackingGUI class also handles button presses and slider adjustments, and makes sure the correct gesture settings are applied.
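The row bookkeeping described above can be sketched without any Qt code. The GestureSetting and GestureSettings names below are hypothetical: they only model the data behind GestureSettingRow and GestureSettingsWidget, not the widgets themselves. The 0 to 15 channel range follows mido's channel numbering.

```python
from dataclasses import dataclass, field


@dataclass
class GestureSetting:
    """One settings row: which gesture drives which MIDI control (hypothetical model)."""
    gesture: str   # e.g. "pinch" or "fist" (illustrative names)
    control: int   # MIDI control change number, 0-127
    channel: int   # MIDI channel as numbered by mido, 0-15

    def __post_init__(self):
        if not 0 <= self.control <= 127:
            raise ValueError("control must be 0-127")
        if not 0 <= self.channel <= 15:
            raise ValueError("channel must be 0-15")


@dataclass
class GestureSettings:
    """Plain-Python stand-in for the list of rows a settings widget would hold."""
    rows: list = field(default_factory=list)

    def add_row(self, gesture, control, channel):
        """Validate and append a new row, mirroring 'add gesture' in the GUI."""
        row = GestureSetting(gesture, control, channel)
        self.rows.append(row)
        return row

    def remove_row(self, index):
        del self.rows[index]

    def mapping(self):
        """Return (gesture, control, channel) tuples, e.g. when the user applies settings."""
        return [(r.gesture, r.control, r.channel) for r in self.rows]
```

Keeping the data separate from the widgets like this is one way to make the mappings testable without starting a GUI.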

As mentioned before, the hand tracking code has to run while the GUI is running. To keep the GUI from freezing, the tracking code runs concurrently with the GUI code using multithreading, a technique that lets multiple parts of a program run at the same time. PyQt6 supports this through '''QThread''': the class “HandTrackingThread” is built on QThread and runs the hand tracking code.
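In PyQt6 itself, the usual pattern is a QThread subclass whose run() method does the work and emits results to the GUI through a pyqtSignal. The sketch below shows the same producer/consumer idea with the standard library's threading and queue modules instead of Qt, so that it runs without a display; TrackingWorker, frame_source and drain are hypothetical names.

```python
import queue
import threading


class TrackingWorker(threading.Thread):
    """Stand-in for a tracking thread: produces results off the main (GUI) thread."""

    def __init__(self, results: queue.Queue, frame_source):
        super().__init__(daemon=True)
        self.results = results
        self.frame_source = frame_source  # hypothetical callable returning one processed frame or None
        self._stop_event = threading.Event()

    def run(self):
        while not self._stop_event.is_set():
            frame = self.frame_source()
            if frame is None:          # source exhausted (e.g. camera closed)
                break
            self.results.put(frame)    # plays the role of emitting a Qt signal

    def stop(self):
        """Ask the loop to finish after the current frame."""
        self._stop_event.set()


def drain(results: queue.Queue):
    """Collect everything produced so far without blocking, e.g. from a GUI timer."""
    items = []
    while True:
        try:
            items.append(results.get_nowait())
        except queue.Empty:
            return items
```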

The current GUI uses the default layout and styling, because the focus was on creating a functional GUI rather than a visually appealing one. However, there are plans to improve the GUI further using a tool called Qt Widgets Designer[32], a drag-and-drop GUI designer for Qt whose designs can be used from PyQt. It simplifies building a more customized and complex GUI, since the layout does not have to be coded by hand.

=== Output to audio software === | |||

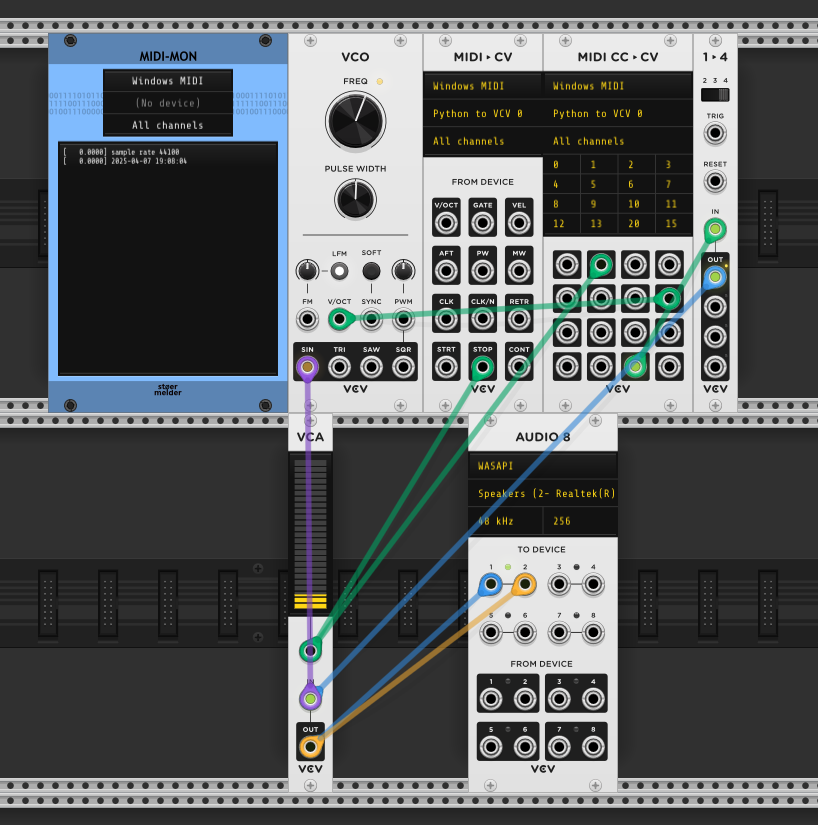

[[File:VCV setup.png|thumb|456x456px|Figure 6: The VCV Rack setup used to test MIDI commands generated using Python]] | |||

Initially there was a discussion about what the product should be: in very simple terms, a knob (or any number of knobs) on a mixing deck, an executable that runs inside Ableton, or something else entirely. Each option implies a different kind of output from the software. From the interview with Jakub K. we learned that we could simply send MIDI to Ableton, in some cases along with an integer or other metadata. Running the software as an executable inside Ableton would be much more difficult: we would need more research into how to make it interact correctly with Ableton, including how to change parameters from inside Ableton.

===== Music software for testing ===== | |||

Since we could not obtain a copy of Ableton, we tested sending MIDI commands from Python using VCV Rack. An overview of the VCV Rack setup used can be seen in figure 6. To play notes sent as MIDI commands, the setup needs a MIDI-CV module to convert MIDI signals to voltage signals and a MIDI-CC module to handle control changes. It also needs an amplifier to regulate the volume, an oscillator to set the pitch of the sound, and a module that outputs the audio. The setup also contains a monitor to keep track of the MIDI messages being sent. The Python code was connected to VCV Rack through a custom MIDI output port created with loopMIDI. A Python script was written that sends different notes at a customizable volume using MIDI commands, and all notes sent were successfully picked up and played by the VCV Rack setup. We also wanted to check whether the MIDI commands would be picked up by more commonly used professional music software such as Ableton. To do this, we asked Jakub K. to test the hand tracking MIDI controller on his Ableton setup. He confirmed that the controller worked with his setup as well and that the MIDI commands were successfully picked up by the software.

===== Mido library ===== | |||

To send MIDI commands to VCV Rack, a library called [https://mido.readthedocs.io/en/stable/index.html mido] was used. Mido builds MIDI messages, for example to play a specified note at a certain volume. Before sending messages, however, a MIDI output port must be opened. This is done with mido's open_output() function, passing the name of the created loopMIDI port as the argument. After this, notes can be sent using the following command structure:

{| class="wikitable" | |||

|+ | |||

!output_port.send(Message('note_on', note=..., velocity=..., time=...)) | |||

|} | |||

where ‘note_on’ indicates that a note is to be played, ‘note’ is a number from 0 to 127 that represents the pitch (60 is middle C), and ‘velocity’ is a number from 0 to 127 that represents how hard the note is struck, which most synthesizers use to set its volume. The ‘time’ attribute is used for MIDI files and is ignored when a message is sent to an output port, so it can be omitted; a note keeps playing until it is stopped. To stop the note, the same command can be sent with ‘note_on’ changed to ‘note_off’ (a ‘note_on’ message with a velocity of 0 has the same effect).
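Holding a note for a fixed duration can be done by sending note_on, waiting, and then sending note_off. The stdlib-only sketch below encodes the raw MIDI 1.0 bytes these messages consist of (a status byte of 0x90 or 0x80 combined with the channel, followed by note and velocity) and holds a note with time.sleep. The helper names and the send callable are hypothetical; with mido, output_port.send() with Message objects would be used instead.

```python
import time


def _check(name, value, upper):
    """Reject values outside the 0..upper range allowed by MIDI 1.0."""
    if not 0 <= value <= upper:
        raise ValueError(f"{name} must be in 0..{upper}, got {value}")


def note_on(note, velocity, channel=0):
    """Encode a Note On message: status 0x90 | channel, then note and velocity."""
    _check("note", note, 127)
    _check("velocity", velocity, 127)
    _check("channel", channel, 15)
    return bytes([0x90 | channel, note, velocity])


def note_off(note, channel=0, velocity=0):
    """Encode a Note Off message: status 0x80 | channel, then note and release velocity."""
    _check("note", note, 127)
    _check("velocity", velocity, 127)
    _check("channel", channel, 15)
    return bytes([0x80 | channel, note, velocity])


def play_note(send, note, velocity, duration_s, channel=0):
    """Hold a note for duration_s seconds: Note On, wait, then Note Off.

    send is any callable that delivers raw bytes (hypothetical).
    """
    send(note_on(note, velocity, channel))
    time.sleep(duration_s)
    send(note_off(note, channel))
```

Note that time.sleep blocks the calling thread, so in a real-time tracking loop the Note Off would more likely be scheduled or sent on a later frame.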

To change sound parameters such as volume, octave or modulation, a 'control_change' message can be sent. In order to send these messages, the following command structure can be used: | |||

{| class="wikitable" | |||

|+ | |||

!output_port.send(Message('control_change', control=... , channel=... ,value=...)) | |||

|} | |||

where ‘control’ takes the control change number of the sound parameter to be modified. An overview of these control change numbers can be found [https://midi.org/midi-1-0-control-change-messages here]. The ‘channel’ argument determines which MIDI channel the message is transmitted on; mido numbers channels 0 to 15, corresponding to MIDI channels 1 to 16. ‘value’ sets the chosen sound parameter and ranges from 0 to 127. For example, to set the volume to 70%, ‘control’ should be 7 (channel volume) and ‘value’ about 89 (0.7 × 127 ≈ 89). To stop all notes being played, control number 123 (All Notes Off) can be used.
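The 70% example works out as 0.70 × 127 = 88.9, which rounds to 89. A stdlib-only sketch of that scaling, and of the three raw bytes a control change message consists of (status 0xB0 combined with the channel, the controller number, and the value), is shown below. CC 1 (modulation wheel), CC 7 (channel volume) and CC 123 (All Notes Off) are standard MIDI 1.0 controller numbers; the function names are hypothetical.

```python
CC_MODULATION = 1       # modulation wheel
CC_VOLUME = 7           # channel volume
CC_ALL_NOTES_OFF = 123  # channel mode message: all notes off


def percent_to_cc(percent: float) -> int:
    """Scale 0-100 % to the 0-127 range of a control change value, rounding to nearest."""
    percent = min(max(percent, 0.0), 100.0)  # clamp out-of-range input
    return round(percent / 100 * 127)


def control_change(control: int, value: int, channel: int = 0) -> bytes:
    """Encode a Control Change message: status 0xB0 | channel, controller, value."""
    if not (0 <= control <= 127 and 0 <= value <= 127 and 0 <= channel <= 15):
        raise ValueError("control/value must be 0-127 and channel 0-15")
    return bytes([0xB0 | channel, control, value])
```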

===== MIDITransmitter class ===== | |||

In the hand tracking module (HandTrackingModule.py), a class called MIDITransmitter was created to send control change messages to the music software. When initialized, the class tries to establish a connection to a specified MIDI output port using the '''connect()''' function. In our case, this port is labelled “Python to VCV”. When all notes need to be turned off, the '''send_stop()''' function can be called; it makes sure that notes on all channels are turned off.

The class also contains functions that send control changes for volume, octave and modulation, called '''send_volume()''', '''send_octave()''' and '''send_modulation()''' respectively. There is also a more general function, '''send_cc()''', which can send a control change for any of these parameters. The GUI uses this function to make control changes, passing the appropriate control number as an argument.
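A minimal sketch of a class with this shape is shown below. It is hypothetical: instead of connecting to the "Python to VCV" port it writes raw bytes through an injected send callable, so it can run without MIDI hardware. send_octave() is left out because MIDI 1.0 has no standard "octave" controller, and the CC number the project uses for it is not stated here.

```python
class MIDITransmitterSketch:
    """Hypothetical stand-in for MIDITransmitter that writes raw MIDI bytes via `send`."""

    CC_MODULATION = 1
    CC_VOLUME = 7
    CC_ALL_NOTES_OFF = 123

    def __init__(self, send, channel=0):
        self.send = send        # e.g. a function that writes bytes to a MIDI port
        self.channel = channel  # 0-15

    def send_cc(self, control, value):
        """Send one control change on this transmitter's channel."""
        value = int(min(max(value, 0), 127))  # clamp to the valid MIDI data range
        self.send(bytes([0xB0 | self.channel, control, value]))

    def send_volume(self, value):
        self.send_cc(self.CC_VOLUME, value)

    def send_modulation(self, value):
        self.send_cc(self.CC_MODULATION, value)

    def send_stop(self):
        """Send All Notes Off (CC 123) on all 16 channels."""
        for ch in range(16):
            self.send(bytes([0xB0 | ch, self.CC_ALL_NOTES_OFF, 0]))
```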

===== BinaryGesture and ContinuousGesture classes =====

Each time the user defines a new gesture in the GUI, ''HandTrackingModule.py'' creates a new instance of the ContinuousGesture or BinaryGesture class, depending on the gesture type. These classes are responsible for sending the MIDI signals. Each of them creates its own instance of MIDITransmitter, which allows multiple different commands to be sent on multiple different channels simultaneously. In earlier implementations without this design, multiple continuous gestures tried to send commands through the same MIDITransmitter, which resulted in conflicts where two commands were sent with the same CC number or on the wrong channel. There is also a GestureCollection class that keeps track of all gestures. It is used to create the individual percentage readouts in the GUI and, more importantly, to correctly add and remove gestures when the user clicks the apply settings button in the GUI.
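One way to implement the add/remove bookkeeping is to diff the GUI settings against the gestures that currently exist. The sketch below is hypothetical (SimpleGesture and GestureCollectionSketch are illustrative names, not the project's classes): gestures missing from the new settings are dropped, new ones are created, changed ones are rebuilt, and unchanged ones keep their state.

```python
from dataclasses import dataclass


@dataclass
class SimpleGesture:
    """Hypothetical gesture object; a real one would also own its MIDITransmitter."""
    name: str
    settings: tuple        # e.g. (control number, channel)
    percent: float = 0.0   # last value, shown as a percentage readout


class GestureCollectionSketch:
    """Keeps one gesture object per name and brings it in line with the GUI settings."""

    def __init__(self, factory=SimpleGesture):
        self.factory = factory  # callable(name, settings) -> gesture object
        self.gestures = {}

    def apply_settings(self, settings):
        """settings maps gesture name -> settings; drop removed, add new, rebuild changed."""
        for name in list(self.gestures):
            if name not in settings:
                del self.gestures[name]
        for name, cfg in settings.items():
            current = self.gestures.get(name)
            if current is None or current.settings != cfg:
                self.gestures[name] = self.factory(name, cfg)

    def readouts(self):
        """Return {name: percent} for the per-gesture readouts."""
        return {name: g.percent for name, g in self.gestures.items()}
```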

=== Hand tracking and gesture recognition === | |||

We have implemented a real-time hand detection system that tracks the position of 21 landmarks on a user's hand using the MediaPipe library. It captures live video input, processes each frame to detect the hand, and maps the 21 key points to create a skeletal representation of the hand. The program calculates the Euclidean distance between the tip of the thumb and the tip of the index finger. This distance is then mapped to a volume control range using the pycaw library. As the user moves their thumb and index finger closer together or farther apart, the system's volume is adjusted in real time. We’ve also implemented functions to detect common gestures, such as fists or peace signs, by assessing which fingers are extended or folded. Each of these methods (e.g., <code>is_fist</code>, <code>is_victory</code>, <code>is_thumbs_up</code>) analyzes landmark positions, which makes it straightforward to build binary or continuous gestures that can be mapped onto musical parameters.
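A minimal sketch of both ideas is shown below, assuming landmarks are given as a list of 21 (x, y) pairs in MediaPipe's normalised image coordinates (with MediaPipe's Hands solution, such a list can be built as <code>[(lm.x, lm.y) for lm in hand_landmarks.landmark]</code>). Indices follow MediaPipe's hand model (4 = thumb tip, 8 = index tip, 6 = index PIP joint, and so on). The "tip above PIP" rule is a simplification that assumes an upright hand, and these functions are illustrations rather than the project's own <code>is_fist</code>/<code>is_victory</code>.

```python
import math

THUMB_TIP, INDEX_TIP = 4, 8
# (tip, PIP joint) index pairs for the index, middle, ring and pinky fingers
FINGER_TIPS_AND_PIPS = [(8, 6), (12, 10), (16, 14), (20, 18)]


def landmark_distance(landmarks, a, b):
    """Euclidean distance between two landmarks given as (x, y) pairs."""
    (x1, y1), (x2, y2) = landmarks[a], landmarks[b]
    return math.hypot(x2 - x1, y2 - y1)


def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamping at the ends."""
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)
    return out_min + t * (out_max - out_min)


def extended_fingers(landmarks):
    """[index, middle, ring, pinky] flags: a finger counts as extended when its tip is
    above its PIP joint (smaller y, since image y grows downwards)."""
    return [landmarks[tip][1] < landmarks[pip][1] for tip, pip in FINGER_TIPS_AND_PIPS]


def is_fist_sketch(landmarks):
    return not any(extended_fingers(landmarks))


def is_victory_sketch(landmarks):
    return extended_fingers(landmarks) == [True, True, False, False]
```

For example, <code>map_range(landmark_distance(lm, THUMB_TIP, INDEX_TIP), 0.02, 0.25, 0, 100)</code> would turn a pinch into a 0 to 100 percentage; the 0.02 and 0.25 bounds are placeholder calibration values, not measured ones.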

Additionally, we are in the process of implementing a program that is capable of recognising and interpreting American Sign Language (ASL) hand signs in real time. It consists of two parts: data collection and testing. The data collection phase captures images of hand signs using a webcam, processes them to create a standardised dataset, and stores them for training a machine learning model. The testing phase uses a pre-trained model to classify hand signs in real time. By recognizing and distinguishing different hand signs, the program will be able to map each gesture to specific ways of manipulating sound, such as adjusting pitch, modulating filters, changing volume, etc. For example, a gesture for the letter "A" could activate a low-pass filter, while a gesture for "B" could increase the volume. | |||
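The data collection step is described only as processing images into a standardised dataset. One common approach, assumed here rather than taken from the project, is to crop a square region around the detected hand landmarks and then resize every crop to the same size (for example with OpenCV's <code>cv2.resize</code>). The hypothetical helper below computes such a crop box from normalised landmark coordinates; the padding default is an arbitrary placeholder.

```python
def square_crop_box(points, width, height, padding=0.1):
    """Return (left, top, right, bottom) pixel coordinates of a square box around the hand.

    points: landmark (x, y) pairs in normalised [0, 1] image coordinates.
    padding: extra margin on each side, as a fraction of the larger hand dimension.
    The box is clipped to the image, so near the borders it may no longer be square.
    """
    xs = [x * width for x, _ in points]
    ys = [y * height for _, y in points]
    cx = (min(xs) + max(xs)) / 2
    cy = (min(ys) + max(ys)) / 2
    side = max(max(xs) - min(xs), max(ys) - min(ys)) * (1 + 2 * padding)
    half = side / 2
    left = max(int(cx - half), 0)
    top = max(int(cy - half), 0)
    right = min(int(cx + half), width)
    bottom = min(int(cy + half), height)
    return left, top, right, bottom
```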

Beyond the predefined gestures, we’ve set up a class-based structure that makes it easy to create and manage new gestures. Classes like <code>BinaryGesture</code> and <code>ContinuousGesture</code> each take a “detector” function and a callback that defines what to do when the gesture is recognized in a given frame. For example, to make the system play a drum note when someone makes a fist, simply pass <code>is_fist</code> as the detector function and specify the command to trigger in the <code>on_trigger</code> callback. <code>BinaryGesture</code> is used for gestures that send one-time MIDI commands, such as triggering something or turning it on or off, while <code>ContinuousGesture</code> is used for continuous commands such as changing volume or pitch. The <code>GestureCollection</code> class maintains all active gestures so that multiple ones can be tracked at once without conflict. Additionally, each gesture uses its own instance of <code>MIDITransmitter</code>, ensuring that separate controls can be adjusted simultaneously across different MIDI channels. With these flexible classes in place, you can expand the system to detect new gestures (like sign language letters or instrument-specific hand signals) and link them to whichever musical parameters or commands you prefer.
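The detector-plus-callback pattern can be sketched as follows. These are hypothetical classes, not the project's: the binary version fires its callback only on the frame where the detector changes from False to True (so a held fist triggers once), and the continuous version maps a measured value to the 0 to 127 MIDI range every frame the measurement is available.

```python
class BinaryGestureSketch:
    """Calls on_trigger once each time the detector goes from False to True."""

    def __init__(self, detector, on_trigger):
        self.detector = detector      # callable(landmarks) -> bool, e.g. a fist detector
        self.on_trigger = on_trigger  # callable() sending a one-time MIDI command
        self._was_active = False

    def update(self, landmarks):
        active = bool(self.detector(landmarks))
        if active and not self._was_active:  # rising edge only
            self.on_trigger()
        self._was_active = active


class ContinuousGestureSketch:
    """Calls on_value with a 0-127 value every frame the measurement is available."""

    def __init__(self, measure, on_value, in_min=0.0, in_max=1.0):
        self.measure = measure    # callable(landmarks) -> float, or None if no hand
        self.on_value = on_value  # callable(int), e.g. sending a control change
        self.in_min, self.in_max = in_min, in_max

    def update(self, landmarks):
        raw = self.measure(landmarks)
        if raw is None:
            return
        t = (raw - self.in_min) / (self.in_max - self.in_min)
        self.on_value(round(min(max(t, 0.0), 1.0) * 127))
```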

==Project reflection and future improvements== | |||

'''Achievement of Initial User Requirements''' | |||

At the start of the project, we established a set of user requirements aimed at developing an intuitive and efficient system that brings motion tracking and physical expression into live performances. Key objectives included ease of setup, modularity, non-intrusiveness in a live performance, and reliable functionality. Through iterative development and user feedback, we successfully met these objectives, resulting in a system that is user-friendly, responsive and modular, and that integrates seamlessly into the artist's workspace.

'''Design Decisions and Trade-offs''' | |||

One significant design consideration was the system's performance in low-light environments, a common setting for many artists. Supporting low-light conditions was initially one of our design objectives. During development, however, we found that reliable performance under such conditions would require extra hardware beyond a laptop, which would increase setup time and raise the barrier to entry for our project. After discussions with our collaborating artist and interviewees, we decided to deprioritize low-light support in favor of reducing system complexity and making setup easier. Our exit interviews supported this decision: ensuring good lighting during a performance requires additional setup and is an inconvenience to some artists (and a deal-breaker to others), but for many of the artists who would consider using our project it is a less critical requirement than we initially thought.

'''Insights from User Feedback''' | |||

The exit interview with our primary external collaborator, Jakub K., provided valuable insights into the system's real-world application. Jakub expressed appreciation for the system's simplicity and reliability, and to his own surprise said that he can not only imagine himself using it in a live performance, but that he will also try and implement it into parts of his workflow. Jakub also suggested that future iterations could benefit from increased customization options, enabling artists to tailor the system's behavior to their individual preferences and workflows. | |||

'''Future Development and Open-Source Commitment''' | |||

We recognize several ways of enhancing the overall system and making it more useful and accessible to more artists. Greater customization would allow artists to adapt the system to their own creative processes, broadening its applicability across live performance and production. We also identified other features that we consider mandatory before this project could be reliably deployed in a live performance setting.

These include a more user-friendly GUI. The current GUI was created for demonstration purposes and does not meet modern application design standards; it is also missing some features, such as proper image resizing. As mentioned in the GUI section, the next iteration of the GUI will most likely still use the PyQt6 library, but external tools such as Qt Widgets Designer will be used to bring it in line with modern interface standards.

Another major area of improvement is overall stability. The application in its current form is relatively stable, but there are a number of bugs and repeatable crashes that need to be addressed before we are confident in giving it to live performers.

A heavily requested feature in our interviews was better integration of a stop (panic) button that reliably stops the hand tracking if something goes wrong. The current implementation requires a mouse click within the app. A better implementation would be a MIDI-based binding that the artist could map to a MIDI controller or DJ deck of their choice.

Finally, we plan to offer greater customization of the gestures and gesture settings. We plan to implement more gestures to increase the variety and complexity of the hand tracking that artists can set up in the app; this would be the largest improvement in the app's usability across different live performances and applications. We also plan to add per-gesture settings, where users could tweak, for example, the sensitivity of each gesture and which hand a gesture is bound to.
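In the exit interview Jakub K. asked for motion smoothing, and another interviewee suggested a sensitivity parameter. One common way to provide both per gesture is exponential smoothing followed by a dead band that suppresses small changes. The SmoothedControl class below is a hypothetical sketch of that idea, not part of the current code; its alpha and dead_band defaults are arbitrary placeholders that would need tuning.

```python
class SmoothedControl:
    """Exponential smoothing plus a dead band, as one possible per-gesture sensitivity setting.

    alpha: in (0, 1]; higher values follow the hand faster, lower values smooth more.
    dead_band: minimum change from the last reported value before a new one is reported.
    """

    def __init__(self, alpha=0.3, dead_band=2):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.dead_band = dead_band
        self._smoothed = None
        self._last_sent = None

    def update(self, value):
        """Feed a raw 0-127 value; return the value to send, or None if the change is too small."""
        if self._smoothed is None:
            self._smoothed = float(value)
        else:
            self._smoothed += self.alpha * (value - self._smoothed)
        out = round(self._smoothed)
        if self._last_sent is not None and abs(out - self._last_sent) < self.dead_band:
            return None
        self._last_sent = out
        return out
```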

We also plan to continue supporting the project as an open-source initiative on GitHub. | |||

In conclusion, this project has successfully met its core objectives, delivering a system that effectively supports artists in their creative endeavors. By continuing to support the app and focusing on future enhancements, we aim to contribute meaningfully to the intersection of technology and art by providing a tool that is innovative and helps fulfill a need of the artistic community.

= References = | |||

[1] “A MIDI Controller based on Human Motion Capture (Institute of Visual Computing, Department of Computer Science, Bonn-Rhein-Sieg University of Applied Sciences),” ResearchGate. Accessed: Feb. 12, 2025. [Online]. Available: <nowiki>https://www.researchgate.net/publication/264562371_A_MIDI_Controller_based_on_Human_Motion_Capture_Institute_of_Visual_Computing_Department_of_Computer_Science_Bonn-Rhein-Sieg_University_of_Applied_Sciences</nowiki> | |||

[2] M. Lim and N. Kotsani, “An Accessible, Browser-Based Gestural Controller for Web Audio, MIDI, and Open Sound Control,” ''Computer Music Journal'', vol. 47, no. 3, pp. 6–18, Sep. 2023, doi: 10.1162/COMJ_a_00693. | |||

[3] M. Oudah, A. Al-Naji, and J. Chahl, “Hand Gesture Recognition Based on Computer Vision: A Review of Techniques,” ''J Imaging'', vol. 6, no. 8, p. 73, Jul. 2020, doi: 10.3390/jimaging608007 | |||

[4] A. Tagliasacchi, M. Schröder, A. Tkach, S. Bouaziz, M. Botsch, and M. Pauly, “Robust Articulated‐ICP for Real‐Time Hand Tracking,” Computer Graphics Forum, vol. 34, no. 5, pp. 101–114, Aug. 2015, doi: 10.1111/cgf.12700. | |||

[5] A. Tkach, A. Tagliasacchi, E. Remelli, M. Pauly, and A. Fitzgibbon, “Online generative model personalization for hand tracking,” ACM Transactions on Graphics, vol. 36, no. 6, pp. 1–11, Nov. 2017, doi: 10.1145/3130800.3130830. | |||

[6] T. Winkler, Composing Interactive Music: Techniques and Ideas Using Max. Cambridge, MA, USA: MIT Press, 2001. | |||

[7] E. R. Miranda and M. M. Wanderley, New Digital Musical Instruments: Control and Interaction Beyond the Keyboard. Middleton, WI, USA: AR Editions, Inc., 2006. | |||

[8] D. Hosken, An Introduction to Music Technology, 2nd ed. New York, NY, USA: Routledge, 2014. doi: 10.4324/9780203539149. | |||

[9] P. D. Lehrman and T. Tully, "What is MIDI?," Medford, MA, USA: MMA, 2017. | |||

[10] C. Dobrian and F. Bevilacqua, Gestural Control of Music Using the Vicon 8 Motion Capture System. UC Irvine: Integrated Composition, Improvisation, and Technology (ICIT), 2003. | |||

[11] J. L. Hernandez-Rebollar, “Method and apparatus for translating hand gestures,” US7565295B1, Jul. 21, 2009 Accessed: Feb. 12, 2025. [Online]. Available: https://patents.google.com/patent/US7565295B1/en | |||

[12] I. Culjak, D. Abram, T. Pribanic, H. Dzapo, and M. Cifrek, “A brief introduction to OpenCV,” in 2012 Proceedings of the 35th International Convention MIPRO, May 2012, pp. 1725–1730. Accessed: Feb. 12, 2025. [Online]. Available: https://ieeexplore.ieee.org/document/6240859/?arnumber=6240859 | |||

[13] K. V. Sainadh, K. Satwik, V. Ashrith, and D. K. Niranjan, “A Real-Time Human Computer Interaction Using Hand Gestures in OpenCV,” in IOT with Smart Systems, J. Choudrie, P. N. Mahalle, T. Perumal, and A. Joshi, Eds., Singapore: Springer Nature Singapore, 2023, pp. 271–282. | |||

[14] V. Patil, S. Sutar, S. Ghadage, and S. Palkar, “Gesture Recognition for Media Interaction: A Streamlit Implementation with OpenCV and MediaPipe,” International Journal for Research in Applied Science & Engineering Technology (IJRASET), 2023. | |||

[15] A. P. Ismail, F. A. A. Aziz, N. M. Kasim, and K. Daud, “Hand gesture recognition on python and opencv,” IOP Conf. Ser.: Mater. Sci. Eng., vol. 1045, no. 1, p. 012043, Feb. 2021, doi: 10.1088/1757-899X/1045/1/012043. | |||

[16] R. Tharun and I. Lakshmi, “Robust Hand Gesture Recognition Based On Computer Vision,” in 2024 International Conference on Intelligent Systems for Cybersecurity (ISCS), May 2024, pp. 1–7. doi: 10.1109/ISCS61804.2024.10581250. | |||

[17] E. Theodoridou ''et al.'', “Hand tracking and gesture recognition by multiple contactless sensors: a survey,” ''IEEE Transactions on Human-Machine Systems'', vol. 53, no. 1, pp. 35–43, Jul. 2022, doi: 10.1109/thms.2022.3188840. | |||

[18] G. M. Lim, P. Jatesiktat, C. W. K. Kuah, and W. T. Ang, “Camera-based Hand Tracking using a Mirror-based Multi-view Setup,” ''IEEE Engineering in Medicine and Biology Society. Annual International Conference'', pp. 5789–5793, Jul. 2020, doi: 10.1109/embc44109.2020.9176728. | |||

[19] P. Rahimian and J. K. Kearney, “Optimal camera placement for motion capture systems,” ''IEEE Transactions on Visualization and Computer Graphics'', vol. 23, no. 3, pp. 1209–1221, Dec. 2016, doi: 10.1109/tvcg.2016.2637334. | |||

[20] R. Tchantchane, H. Zhou, S. Zhang, and G. Alici, “A Review of Hand Gesture Recognition Systems Based on Noninvasive Wearable Sensors,” ''Advanced Intelligent Systems'', vol. 5, no. 10, p. 2300207, 2023, doi: 10.1002/aisy.202300207. | |||

[21] Sahoo, J. P., Prakash, A. J., Pławiak, P., & Samantray, S. (2022). Real-Time Hand Gesture Recognition Using Fine-Tuned Convolutional Neural Network. ''Sensors'', ''22''(3), 706. <nowiki>https://doi.org/10.3390/s22030706</nowiki> | |||

[22] Cheng, M., Zhang, Y., & Zhang, W. (2024). Application and Research of Machine Learning Algorithms in Personalized Piano Teaching System. International Journal of High Speed Electronics and Systems. <nowiki>http://doi.org/10.1142/S0129156424400949</nowiki> | |||

[23] Rhodes, C., Allmendinger, R., & Climent, R. (2020). New Interfaces and Approaches to Machine Learning When Classifying Gestures within Music. Entropy. 22. <nowiki>http://doi.org/10.3390/e22121384</nowiki> | |||

[24] Supriya, S., & Manoharan, C. (2024). Hand gesture recognition using multi-objective optimization-based segmentation technique. Journal of Electrical Engineering. 21. 133-145. <nowiki>http://doi.org/10.59168/AEAY3121</nowiki>

[25] Benitez-Garcia, G., & Takahashi, Hiroki. (2024). Multimodal Hand Gesture Recognition Using Automatic Depth and Optical Flow Estimation from RGB Videos. <nowiki>http://doi.org/10.3233/FAIA240397</nowiki> | |||

[26] Togootogtokh, E., Shih, T., Kumara, W.G.C.W., Wu, S.J., Sun, S.W., & Chang, H.H. (2018). 3D finger tracking and recognition image processing for real-time music playing with depth sensors. Multimedia Tools and Applications. 77. <nowiki>https://doi.org/10.1007/s11042-017-4784-9</nowiki> | |||

[27] Manaris, B., Johnson, D., & Vassilandonakis, Yiorgos. (2013). Harmonic Navigator: A Gesture-Driven, Corpus-Based Approach to Music Analysis, Composition, and Performance. AAAI Workshop - Technical Report. 9. 67-74. <nowiki>http://doi.org/10.1609/aiide.v9i5.12658</nowiki> | |||

[28] Velte, M. (2012). A MIDI Controller based on Human Motion Capture (Institute of Visual Computing, Department of Computer Science, Bonn-Rhein-Sieg University of Applied Sciences). <nowiki>http://doi.org/10.13140/2.1.4438.3366</nowiki> | |||

[29] Dikshith, S.M. (2025). AirCanvas using OpenCV and MediaPipe. International Journal for Research in Applied Science and Engineering Technology. 13. 14671-1473. <nowiki>http://doi.org/10.22214/ijraset.2025.66601</nowiki> | |||

[30] Patel, S., & Deepa, R. (2023). Hand Gesture Recognition Used for Functioning System Using OpenCV. 3-10. <nowiki>http://doi.org/10.4028/p-4589o3</nowiki> | |||

[31] Riverbank Computing Limited, ''PyQt6''. PyPI - the Python Package Index. [Online]. Available: https://pypi.org/project/PyQt6/. | |||

[32] The Qt Company, ''Qt Designer Manual''. [Online]. Available: https://doc.qt.io/qt-6/qtdesigner-manual.html

== Appendix == | |||

=== <u>Interviews:</u> === | |||

==== Interview (Gabriel K.) with (Jakub K., Music group and club manager, techno producer and DJ): ==== | |||

'''What tools or instruments do you currently use in your creative process? ''' | |||

For a live performance he uses a Pioneer 3000 player, which can send music to a Xone-92 or Pioneer V10 mixer (the industry standard). From there it goes to the speakers.

Alternatively, instead of the Pioneer 3000, a performer can have a laptop connected to a less advanced mixing deck.

Our hand tracking solution would be a laptop serving either as the full input to a mixing deck or as an additional input to the Xone-92 on a separate channel.

On the mixer, as a DJ, he mostly uses only the EQ, the faders and effects on a knob, leaving many channels open. Live performers use many more of the knobs than DJs.

'''Have you previously incorporated technology into your performances or creations? If so, how? ''' | |||

Yes. He and a colleague tried to add a drum machine to a live performance. They had an Ableton project with samples and virtual MIDI controllers to add a live element to their performance, but it was too cumbersome to add to his standard DJ workflow.

'''What challenges do you face when adopting new technologies in your work? ''' | |||

Practicality: hauling equipment and setting it up adds time before a performance and to packing up afterwards, especially at a club, and he is worried about club-goers damaging the equipment. In his case even having a laptop is extra work: his current workflow involves no laptop, just USB sticks with prepared music that he plays live on the equipment already set up at the venue.

'''What features would you find most beneficial in a hand motion recognition controller for your work? ''' | |||

Being able to learn specific gestures, which could be solved with a sign language library.

He likes the idea of assigning specific gestures in the GUI to be able to switch between different sound modulations. For example show thumbs up and then the movement of his fingers modulates pitch. Then a different gesture and his movements modulate something else. | |||

'''What are your expectations regarding the responsiveness and accuracy of motion-based controllers?'''

Delay is OK if he learns it and expects it. If he is playing it like an instrument, delay is a problem, but if it only modifies a sound without changing its rhythm, delay is completely fine.

'''How important are the design and aesthetics of the tools you use?'''

Aesthetics don't matter unless it's a commercial product. If he is supposed to pay for it, he expects nice visuals; otherwise, if it works, he doesn't care.

'''What emerging technologies are you most excited about in your field?'''

He says there is not that much new technology: there are some improvements in software, such as AI tools that can separate music tracks, but otherwise it is a fairly settled, standardized industry.

==== Interview (Matus S.) with (Sami L., music producer): ==== | |||

'''What tools or instruments do you currently use in your creative process?'''

For music production he mainly uses Ableton as his editing software, uses libraries to find sounds, and sometimes uses third-party equalizers or MIDI controllers (he couldn't name one on the spot).

'''Have you previously incorporated technology into your performances or creations? If so, how? ''' | |||

No, doesn't do live performances. | |||

'''What challenges do you face when adopting new technologies in your work?''' | |||

Learning curves | |||

Price | |||

'''What features would you find most beneficial in a hand motion recognition controller for your work? ''' | |||

Being able to control eq's in his opened tracks, or control some third party tools, changing modulation, or volume levels of sounds/loops. | |||

'''What are your expectations regarding the responsiveness and accuracy of motion-based controllers?''' | |||

He would not want delay if it were used like a virtual instrument, but if the tool were only used for EQ or for changing modulation or volume levels, delay would be fine. He would be a little skeptical about the accuracy of the gesture sensing.

'''How important are the design and aesthetics of the tools you use?''' | |||

If it's not commercial, he doesn't really care, but ideally he would avoid software or interfaces that look 20 years old.

'''What emerging technologies are you most excited about in your field?''' | |||

Does not really track them. | |||

==== Interview (Nikola M.) with ( a) Louis P., DJ and Producer, and b) Samir S., Producer and Hyper-pop artist): ==== | |||

'''What tools or instruments do you currently use in your creative process?''' | |||

a) MIDI duh, Ableton | |||

b) DAW (Ableton Live 11) and various synthesizers (mostly Serum and Omnisphere) | |||

'''Have you previously incorporated technology into your performances or creations? If so, how? ''' | |||

a) MIDI knobs | |||

b) Not a whole lot, outside of what I already use to create. When I perform I usually just have a backing track and microphone. | |||

'''What challenges do you face when adopting new technologies in your work?''' | |||

a) Software compatibility | |||

b) Lack of ease of use and lack of available tutorials | |||

'''What features would you find most beneficial in a hand motion recognition controller for your work?''' | |||

a) I'd like just an empty slot where you can choose between dry and wet 0-100% and you let the producer choose which effect / sound to put on it, but probably some basic ones like delay or reverb would be a start.

b) Being able to modulate chosen MIDI parameters (pitch bend, mod wheel, or even mapping a parameter to a plugin’s parameter), by using a certain hand stroke (maybe horizontal movements can control one parameter and vertical movements another parameter?) | |||

'''What are your expectations regarding the responsiveness and accuracy of motion-based controllers? ''' | |||

a) Instant recognition no lag | |||

b) Should be responsive and fluid/continuous rather than discrete. There should be a sensitivity parameter to adjust how sensitive the controller is to hand actions, so that (for example) the pitch doesn’t bend if your hand moves slightly up/down while modulating a horizontal movement parameter | |||

'''How important are the design and aesthetics of the tools you use?''' | |||

a) Not that important its about the music and I think any hand controller thing would look cool because its technology | |||

b) I would say it’s fairly important. | |||

'''What emerging technologies are you most excited about in your field?''' | |||

a) 808s | |||

b) I’m not sure unfortunately. I don’t keep up too much with emerging technologies in music production. | |||

=== Exit interview Jakub K. === | |||

This interview was conducted as a semi-structured interview. The general takeaway was a very positive reception of the project. The interviewee tested our final product on his MacBook using Ableton and was able to set it up within 10 minutes without any guidance from us. In the unstructured part, the main takeaways were that he really appreciated the modularity of the application and the visual feedback it gave him. He also liked how seamlessly he could switch between cameras and the generally open layout of the application. All features were immediately apparent to him, and there was no need for us to explain any functionality. He then experimented with modulating sound using the software for about 30 minutes; part of that session was shown as a video recording in the final presentation.

In the structured part, he was asked the same set of questions as at the start, plus some supplementary questions.

'''Can you imagine yourself using this tool in a live performance or music production?'''

a) Yes. Regarding the issue of the tool only working in good lighting conditions, I personally don't mind that, but some other performers might have a problem with it, especially if they perform in gloves or prefer low-light conditions. For music production, I intend to try it out and integrate it into my workflow.

'''Did you have any concerns initially that are now fixed?'''

a) Yes, I was worried about how it would integrate with the MIDI standard and my workflow. I can confidently say that it works better than I expected, and the modularity allows me to adjust it to exactly how I want to use it.

'''What are some features that would have to be implemented before you would feel confident performing with it live?'''

a) There are definitely a few. First of all, it needs to be a bit more stable and bug-free. I have not experienced many bugs or technical problems, but I need to be sure it won't fail me mid-performance. I would also like to see a nicer user interface; there is nothing wrong with the current one, I would just really appreciate it looking nicer and having more options. I would also need a better STOP implementation, preferably the ability to bind the stop tracking button to an external MIDI controller or DJ deck so I can shut it off if needed. I would also like to see some motion smoothing so there are no jumps and mistracks in the hand recognition. It needs to perform smoothly for a live performance.

'''What are some nice-to-have features you would like to see us implement?'''

a) I would really like to see more gestures and more modularity options. It's not mandatory, but it would expand what I can do with the app. I would also like to have an .exe file that I can run so I don't have to run the script. This last suggestion is a bit more out there, but if you could output the tracking data and the visualization from the app so I could use it for live visuals, that would be very nice. And maybe an Ableton template that people can download to try out all the features in a pre-made environment.

'''Anything you would like to add which you haven't mentioned already?'''

a) No, not much, but in general I really like the expressiveness it could add to a live performance, and I definitely see many different ways to use it. I am also very excited to see where it goes from here; there is definitely space for this even in music creation and the studio, which I initially didn't expect.

Latest revision as of 15:28, 10 April 2025


Users

With this new way of performing live music, the main target users of this technology are in the music industry. These include performance artists and DJs, who can use this technology to enhance their live performances or sets. The more expressive form of hand-tracking control adds another layer of immersion to a live performance, as the crowd can more clearly see how the DJ is manipulating the sound. Visual artists and motion-based performers could integrate the technology into their choreography to control the sound in a visually appealing way; controlling sound parameters with hand motions and gestures can be far more crowd-pleasing than more traditional technology. Content creators who use audio equipment such as soundboards to enhance their content could use the technology as a new way to seamlessly control their audio. They could map hand gestures to sound effects, leading to entertaining and comedic moments.

This new way of controlling a synthesizer could also be a great way to introduce people to creating and producing electronic music. It could be especially useful for people with a physical impairment that may have prevented them from creating the music they wanted before. For example, people with a muscle disease could have difficulty controlling the knobs and sliders of digital music controllers. A hand-tracking controller would allow them to use gestures and motions that work for them to produce or modify music.

User requirements

For the users mentioned above, we have drawn up a list of requirements we expect them to have for this technology. First of all, it should be easy to set up, so that performance artists and producers don’t spend too much time preparing right before their performance or set. Next, the technology should be easily accessible and easy to understand for all users: both people who have a lot of experience with electronic music and people who are relatively new to it.

Furthermore, the hand tracking should work in different environments. For example, a DJ who performs in dimly lit clubs and uses many different lighting and visual effects during their sets should still be able to rely on accurate hand tracking. It should also be easy to integrate the technology into the artist’s workflow: an artist should not have to change their entire performing or producing routine to use a motion-based synthesizer.

Lastly, the technology should allow for elaborate customization to fit each user’s needs. The user should be able to decide which attributes of the recognized hand gestures are important for their work and which ones should be ignored. For example, if the vertical position of the hand regulates the pitch of the sound and the rotation of the hand regulates the volume, the user should be able to ‘turn off’ the volume regulation so that nothing changes when they rotate their hand.
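One way to picture this customization requirement is a mapping table in which every hand attribute has its own on/off switch. The sketch below is a hypothetical illustration, not the project's code: the class names, attribute names and CC numbers are our own assumptions, and for simplicity every attribute drives a MIDI Control Change (CC) value, whereas a real pitch control would more likely send pitch-bend messages.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ParameterMapping:
    """Links one tracked hand attribute to one MIDI Control Change (CC) number."""
    attribute: str        # hypothetical attribute name, e.g. "vertical_position"
    cc_number: int        # CC number that the attribute drives (0-127)
    enabled: bool = True  # the user can switch a mapping off without deleting it


@dataclass
class MappingConfig:
    mappings: List[ParameterMapping] = field(default_factory=list)

    def set_enabled(self, attribute: str, enabled: bool) -> None:
        """Turn every mapping that uses `attribute` on or off."""
        for mapping in self.mappings:
            if mapping.attribute == attribute:
                mapping.enabled = enabled

    def to_cc_values(self, features: Dict[str, float]) -> Dict[int, int]:
        """Convert hand features normalized to [0, 1] into CC values (0-127).

        Disabled mappings and attributes missing from `features` are skipped,
        so a switched-off gesture never changes the sound.
        """
        values: Dict[int, int] = {}
        for mapping in self.mappings:
            if not mapping.enabled or mapping.attribute not in features:
                continue
            clamped = max(0.0, min(1.0, features[mapping.attribute]))
            values[mapping.cc_number] = round(clamped * 127)
        return values


config = MappingConfig([
    ParameterMapping("vertical_position", cc_number=1),  # CC 1 (mod wheel) stands in for "pitch"
    ParameterMapping("rotation", cc_number=7),           # CC 7 is MIDI channel volume
])
config.set_enabled("rotation", False)  # the user 'turns off' volume regulation
print(config.to_cc_values({"vertical_position": 0.5, "rotation": 0.9}))  # {1: 64}
```

Keeping a disabled mapping in the table, rather than deleting it, lets the user switch the volume control back on later without reconfiguring it.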

To get a better understanding of the user requirements, we interviewed some people in the music industry such as music producers, a DJ and an audiovisual producer. The interview questions are as follows:

Background and Experience: What tools or instruments do you currently use in your creative process? Have you previously incorporated technology into your performances or creations? If so, how?

Creative Process and Workflow: Can you describe your typical workflow? How do you integrate new tools or technologies into your practice? What challenges do you face when adopting new technologies in your work?

Interaction with Technology: Have you used motion-based controllers or gesture recognition systems in your performances or art? If yes, what was your experience?

How do you feel about using hand gestures to control audio or visual elements during a performance?

What features would you find most beneficial in a hand motion recognition controller for your work?

Feedback on Prototype: What specific functionalities or capabilities would you expect from such a device?

How important is the intuitiveness and learning curve of a new tool in your adoption decision?

Performance and Practical Considerations: In live performances, how crucial is the reliability of your equipment? What are your expectations regarding the responsiveness and accuracy of motion-based controllers?

How do you manage technical issues during a live performance?

How important are the design and aesthetics of the tools you use?

Do you have any ergonomic preferences or concerns when using new devices during performances?

What emerging technologies are you most excited about in your field?

Summary of interview results:

The interviews revealed several key insights into the potential use of a hand motion recognition controller in music production and live performance. Most artists currently rely on prepared tracks and standard hardware or software tools, using effects and modulation to add expressiveness during live sets. A motion-based controller could serve as an additional input method for adjusting parameters like EQ, reverb, or pitch, offering a more granular and creative layer of control. However, it would be most effective as an optional enhancement rather than a replacement for existing gear, particularly if implemented as a software-based tool with a user-friendly interface.

Responsiveness and accuracy are important but context-dependent; slight delays are acceptable when modulating sound but not when playing in real time like an instrument. For broader adoption, the controller must be practical, quick to set up, and highly compatible with standard digital audio workstations and plugins. Its benefits must clearly outweigh the effort required to learn and implement it. While not ideal for detailed production work due to the imprecise nature of motion tracking, it holds promise as a dynamic and visually engaging tool in live settings—especially if designed to be flexible, intuitive, and easily integrated into existing performance environments.
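The trade-off between responsiveness and smoothness can be made concrete with a simple smoothing filter. The sketch below uses an exponential moving average, a common choice that we swap in here purely for illustration; the project may use a different filter, and the class and function names are hypothetical. A smaller `alpha` hides tracking jitter better but makes the output lag behind the hand, which is tolerable when modulating an effect but not when playing notes.

```python
from typing import Optional


class ExponentialSmoother:
    """Exponential moving average over a stream of tracked values (e.g. one hand coordinate).

    alpha near 1.0: output follows the hand almost immediately (little lag, more jitter).
    alpha near 0.0: output is very smooth but trails behind the hand (more lag).
    """

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, sample: float) -> float:
        if self.value is None:
            self.value = sample  # first frame: nothing to smooth against yet
        else:
            self.value = self.alpha * sample + (1.0 - self.alpha) * self.value
        return self.value


def frames_to_settle(alpha: float, tolerance: float = 0.05) -> int:
    """Frames until the smoothed output is within `tolerance` of a sudden jump from 0 to 1."""
    smoother = ExponentialSmoother(alpha)
    smoother.update(0.0)  # hand at rest
    frames = 0
    while True:
        frames += 1
        if abs(1.0 - smoother.update(1.0)) <= tolerance:  # hand jumps to 1.0 and stays there
            return frames


for alpha in (1.0, 0.5, 0.2):
    print(alpha, frames_to_settle(alpha))
```

This prints 1, 5 and 14 frames for the three `alpha` values. Assuming a webcam running at 30 frames per second, 14 frames is roughly half a second of lag, which illustrates why heavy smoothing suits slow effect sweeps better than note-level playing.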

Important takeaways:

- Artists, mainly DJs, have prepared tracks that are chopped up and ready to perform, and mostly play with effects and values. For this application, a possible implementation would be to use it instead of a knob or a digital slider, giving the artist more granular control over an effect or a sound. For example, if our controller were an app with a GUI that could be turned on and off at will during the performance, it could add "spice" to a performance.

- For visual control during a live performance, it is too difficult to use live, especially compared to current methods. However, it could possibly be used to control a specific element, such as color.

- Many venues already have multiple cameras set up, which could be used to capture the gestures from multiple angles and at high resolution.

- There is interest in using it live.

- If it is used to modulate sound rather than played like an instrument, delay isn't much of a problem.

- Difficulty of using the software (learning it, implementing it) should not outweigh the benefit you could gain from it.

- Could be an interesting tool for live performances, but impractical for music production due to the imprecise nature of the software compared to physical controllers.

- Must be easily compatible with a wide range of software
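Whatever gesture drives it, what finally reaches the DAW or synthesizer would be ordinary MIDI messages, which is what keeps a hand controller compatible with a wide range of software. The sketch below builds the raw bytes of a Control Change and a Pitch Bend message according to the MIDI 1.0 byte layout, plus a "virtual slider" that turns hand height into a CC value. The helper names are hypothetical, and the sketch does not send anything: in practice a MIDI library would deliver these bytes through a (virtual) MIDI port.

```python
def control_change(channel: int, controller: int, value: int) -> bytes:
    """Raw 3-byte MIDI Control Change message: status 0xB0 | channel, controller, value."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15 (shown to users as 1-16)")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def pitch_bend(channel: int, value: int) -> bytes:
    """Raw 3-byte MIDI Pitch Bend message; the 14-bit value is sent as LSB, then MSB."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= value <= 16383:
        raise ValueError("value must be 0-16383 (8192 = centre)")
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])


def hand_to_slider(position: float) -> int:
    """Hypothetical 'virtual slider': hand height normalized to [0, 1] -> 7-bit CC value."""
    return round(max(0.0, min(1.0, position)) * 127)


# Hand halfway up the frame drives the modulation wheel (CC 1) on MIDI channel 1.
message = control_change(0, 1, hand_to_slider(0.5))
print(message.hex())  # b00140
```

Because these are standard messages, any DAW or plugin that supports MIDI learn could map them to an arbitrary parameter, in the same way it would map a hardware knob.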

State-of-the-art

Preface

As there are currently no commercial products that fully realize the concept behind this project, the state of the art primarily resides within academic research and the open-source community. Consequently, our exploration focuses on individual technological components necessary to bring this product to life.

The main inspiration for this project was an Instagram video by a Taiwanese visual media artist (Instagram link) showcasing gesture-driven musical interaction. Further inspiration came from related innovations such as the Mi.Mu Gloves, developed by English musician and producer Imogen Heap, which enable expressive control over music through hand gestures (Mi.Mu Gloves). A related commercial product was also found: the Roli Airwave, which uses a combination of cameras and ultrasonic sensors to track a pianist’s hand movements in order to control a MIDI keyboard (Roli Airwave). However, this product is expensive and is intended as a tool to help people learn to play the keyboard rather than as a performance tool.

Hardware

Hand tracking and gesture recognition can be achieved using a variety of imaging technologies, each with distinct trade-offs:

- RGB cameras are cost-effective and provide high-resolution color imagery but lack depth perception.

- Infrared (IR) sensors offer precise motion capture but are vulnerable to interference from other IR sources.

- RGB-D cameras, which integrate color and depth data, are widely adopted in real-time gesture recognition applications due to their balanced performance [3][4][5].