PRE2024 3 Group18: Difference between revisions


= Members =

{| class="wikitable"
|+
!Name
!Student Number
!Division
|-
|Bas Gerrits
|1747371
|BEE
|-
|Jada van der Heijden
|1756710
|BPT
|-
|Dylan Jansen
|1485423
|BEE
|-
|Elena Jansen
|1803654
|BID
|-
|Sem Janssen
|1747290
|BEE
|}

= Approach, milestones and deliverables =

{| class="wikitable"
|+Project Planning
!Week
!Milestones
|-
|Week 1
|Project orientation

Initial topic ideas

Creating planning

Defining deliverables

Defining target user group
|-
|Week 2
|Wiki layout

Task distribution

SotA research

UX Design: User research
|-
|Week 3
|UX Design: User Interviews

Identifying specifications (MoSCoW Prioritization)

Wiki: Specifications, Functionalities (sensors/motors used)
|-
|Week 4
|Prototyping system overview

UX Design: Processing interviews

Bill of materials
|-
|Week 5
|Evaluating and refining final design

Order needed items
|-
|Week 6
|Prototype creation and testing

Wiki: Prototype description & Testing results
|-
|Week 7
|Presentation/demo preparations

Wiki: Finalize and improve
|}

{| class="wikitable"
|+Project Roles
!Name
!Responsibilities
|-
|Bas Gerrits
|Sensor research
|-
|Jada van der Heijden
|Administration, UX design, Wiki
|-
|Dylan Jansen
|SotA, Prototype
|-
|Elena Jansen
|Designing, UX research
|-
|Sem Janssen
|Potential solutions, Prototype, Feedback Devices
|}

= Problem statement and objectives =

=== Problem Statement ===

[[File:Sketch of the road scenario.png|alt=A cartoon style image of a road with two bike lanes, two car lanes, a truck, a car and a bike, with a person with a cane trying to cross, made in Photoshop|thumb|Sketch of the road scenario]]

In an environment as ever-changing and unpredictable as traffic, it is important for safety that every traffic participant has as much information about the situation available as possible. Most roads already provide guiding cues: traffic lights, crosswalks and level crossings have visual, tactile and auditory signals that relay as much information as possible to road users. However, some user groups depend more heavily on certain types of signals than others, for example due to disabilities. Not every crossing or road offers all of these sensory cues, so it is important to find a solution for the user groups that struggle with this lack of information and therefore feel less safe in traffic. Specifically, we focus on visually impaired people and on creating a tool that aids them in crossing roads, even when external sensory cues are lacking. The scenario used for this project is a 50 km/h road with bike lanes on both sides and no accessibility features, traffic lights or zebra crossing. This scenario was recreated in Photoshop in the image on the right.

'''Main objectives'''

* The design aids the user in crossing roads regardless of the external sensory cues present, thus giving the user more independence.
* The design has an audible testing phase and then gives intuitive haptic feedback for crossing roads.
* The design must have a reliable detection system.
* The design does not restrict the user in what they wear or which activities they take part in, and does not draw unnecessary attention to the user.

An extended list of all features can be found in the ''MoSCoW part''.

= State of the Art Literature Research =

=== Existing visually-impaired aiding materials ===

[[File:Glidance.jpg|left|200px|thumb|Glidance, a prototype guidance robot for visually impaired people]]

Many aids for visually impaired people already exist today, and some of them can also help with crossing the road. The most common aids for crossing are audio traffic signals and tactile paving. Audio traffic signals give audible tones when it is safe to cross the road. Tactile paving consists of patterns on the sidewalk that alert visually impaired people to hazards or important locations such as crosswalks. These aids are already widely applied, but have the drawback that they are only available at dedicated crosswalks. This means visually impaired people may still be unable to cross at the locations they would like to, which limits their independence.

Another option is smartphone apps. There are currently two types of apps that visually impaired people can use. The first type uses a video call to connect visually impaired people to someone who can guide them through the phone's camera. Examples are [https://www.bemyeyes.com/ Be My Eyes] and [https://aira.io/ Aira]. The second type uses AI to describe scenes captured by the phone’s camera. An example is [https://apps.apple.com/us/app/seeing-ai/id999062298 Seeing AI] by Microsoft. The reliability of this sort of app is of course a major question; however, research found that Aira greatly improved quality of life (QoL) in severely visually impaired individuals<ref>Park, Kathryn & Kim, Yeji & Nguyen, Brian & Chen, Allison & Chao, Daniel. (2020). "Quality of Life Assessment of Severely Visually Impaired Individuals Using Aira Assistive Technology System". Vision Science & Technology. 9. 21. <nowiki>https://doi.org/10.1167/tvst.9.4.21</nowiki> </ref>. Other apps have also been developed, but they are often not widely tested or used, and some require a subscription of 20 euros a month, which is quite costly.

There have also been attempts to build guidance robots. These robots autonomously guide the user, avoid obstacles, stay on a safe path, and help the user reach their destination. [https://glidance.io/ Glidance] is one of these robots and is currently in the testing stage. It promises obstacle avoidance, the ability to detect doors and stairs, and a voice that describes the scene. Its demonstration also shows the ability to navigate to and across crosswalks: it navigates to a nearby crosswalk, slows down and comes to a standstill before the pavement ends, and takes traffic into account. It also gives the user subtle tactile feedback to communicate certain events. These robots could in some ways take over the tasks of guide dogs. There are also projects that try to give the robot a dog-like shape, even though this might make the implementation harder than it needs to be. The reason for the dog shape seems to be to make the robot feel more like a companion.

[[File:Noa by Biped.ai worn by a user.png|alt=Noa by Biped.ai worn by a user|thumb|Noa by Biped.ai worn by a user]]

There are also some wearable and accessory options for blind people. One example is the [https://www.orcam.com/ OrCam MyEye], a device attachable to glasses that helps visually impaired users by reading text aloud, recognising faces, and identifying objects in real time. Another is the [https://www.esighteyewear.com/ eSight Glasses], electronic glasses that enhance vision for people with legal blindness by providing a real-time video feed displayed on special lenses. There was also the Sunu Band (no longer available), a wristband equipped with sonar technology that provides haptic feedback when obstacles are near, helping users detect objects and navigate around them. While these devices can all technically assist in crossing the road, none of them is specifically designed for that purpose. The OrCam MyEye might help identify oncoming traffic, but may not be able to judge its speed. The eSight Glasses are unfortunately not applicable to all types of blindness, and the Sunu Band would most likely not react quickly enough to fast-moving cars. Lastly, there are smart canes with features such as haptic feedback or GPS that can help guide users to the safest crossing points.

[https://babbage.com/ Babbage] had a product called the N-vibe, consisting of two vibrating bracelets that give feedback on the surroundings, but the product page appears to have been removed from their site (https://babbage.com/?s=n+vibe). The product was a GPS aid that helps blind people get from point A to point B in a less invasive way, with feedback through vibration. This vibration feedback has been tested in multiple scenarios and tends to work, so it would also be a good idea for our project. Babbage is a company with many solutions for visually impaired people; some of its products are somewhat similar to our goal, but none is quite like it yet.

Biped.ai developed a wearable vest called 'NOA'. "NOA is a revolutionary vest worn like a backpack that guides people with blindness and low vision in complement to canes & dogs. With cameras, a computer, and hands-free operation, it speaks to you to give you clear instructions. Through audio feedback, NOA identifies obstacles, provides GPS navigation, describes surroundings, and helps locate important things like cross walks and doors - both indoors and outdoors." (description from their official site).<ref>''NOA by biped.ai, your AI mobility companion''. (n.d.). <nowiki>https://biped.ai/</nowiki></ref>

=User Experience Design Research: USEr research=

For this project, we will employ a process similar to UX design:

*We will contact the stakeholders or target group, in this case visually impaired people, to understand what they need and what our design could do for them
*Based on their insights and on literature research into the users, we will further specify our requirements list from the USE side
*Combined with the requirements formed via the SotA research, this results in a finished list with everything needed to start the design process
*From there we build, prototype and iterate until the needs are met

Below are the USE-related steps of this process.

===Target Group===

Primary Users:

*People whose vision is affected to the point that they have sizeable trouble navigating traffic independently, or who crave more independence in unknown areas: ranging from heavily visually impaired to fully blind (<10%)

Our user group only includes those who are able to take part in traffic independently in at least some familiar situations.

Secondary Users:

*Road users: any moving being or thing on the road will come into contact with the system.
*Fellow pedestrians: the system must consider other people when moving. This is a separate user category, as the system may have to interact with these users in a different way than, for example, oncoming traffic.

===Users===

'''General information'''

As not everyone is familiar with visual impairments and with what different percentages mean for a person's sight, some general information is included here.

Interviewed users have said that a percentage alone is not always enough to illustrate the level of blindness. We therefore combine it with the kind of blindness in our survey and interviews.

For example, Peter from Videlio has 0.5% vision with tunnel vision, and George (70 years old) is completely blind.

*"Total blindness: This is when a person cannot see anything, including light.
*Low vision: Low vision describes visual impairments that healthcare professionals cannot treat using conventional methods, such as glasses, medication, or surgery.
*Legal blindness: “Legal blindness” is a term the United States government uses to determine who is eligible for certain types of aid. To qualify, a person must have 20/200 vision or less in their better-seeing eye, even with the best correction.
*Visual impairment: Visual impairment is a general term that describes people with any vision loss that interferes with daily activities, such as reading and watching TV."<ref>Nichols, H. (2023b, April 24). ''Types of blindness and their causes''. <nowiki>https://www.medicalnewstoday.com/articles/types-of-blindness#age-related</nowiki></ref>

In the Netherlands, different terms and fractions are used. These appear in the answers to our interview questions:

*Mild (''milde'') – visual acuity worse than 6/12 to 6/18 (50%-33%)
*Moderate (''matig'') – visual acuity worse than 6/18 to 6/60 (33%-10%)
*Severe (''ernstig'') – visual acuity worse than 6/60 to 3/60 (10%-5%)
*Blindness (''blindheid'') – visual acuity worse than 3/60 (<5%)

'''6/60''' means that what a person with normal (20/20) vision can see clearly at '''60 meters''' only becomes clear at '''6 meters'''. For '''3/60''' this is 3 meters.
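The relation between these fractions and the percentages can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the handling of values that fall exactly on a boundary (for instance 6/60 counting as moderate rather than severe) is an assumption based on the "worse than" wording in the list.

```python
from fractions import Fraction


def acuity_percent(numerator, denominator):
    """Convert an acuity fraction such as 6/60 into a percentage."""
    return float(Fraction(numerator, denominator) * 100)


def classify(numerator, denominator):
    """Map an acuity fraction onto the categories listed above.

    Assumption: "worse than X" is read as strictly below X, so a value
    exactly on a boundary falls into the milder category.
    """
    acuity = Fraction(numerator, denominator)
    if acuity < Fraction(3, 60):
        return "blindness"
    if acuity < Fraction(6, 60):
        return "severe"
    if acuity < Fraction(6, 18):
        return "moderate"
    if acuity < Fraction(6, 12):
        return "mild"
    return "outside the listed categories"


if __name__ == "__main__":
    for num, den in [(6, 12), (6, 18), (6, 60), (3, 60), (1, 60)]:
        print(f"{num}/{den}: {acuity_percent(num, den):.1f}% -> {classify(num, den)}")
```

Exact fractions are used instead of floating-point numbers so that the boundary comparisons are not affected by rounding.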

The Dutch version of legal blindness: "Maatschappelijk blind: Your visual acuity is between 2% and 5%. You can still see light and the outlines of people and objects, but your visual impairment has a major impact on your life. Sometimes a person may see sharply, but there is a (severe) limitation in the field of vision, such as tunnel vision. If your field of vision is less than 10 degrees, this is referred to as maatschappelijk blind."<ref>Lentiamo.nl. (2023b, August 2). ''Wat betekent het om wettelijk blind te zijn?'' <nowiki>https://www.lentiamo.nl/blog/wettelijke-blindheid.html?srsltid=AfmBOorvRSTnULBQsYzCX0BvJ5k9JQnrlvt65dlUgwTOjzMnPugfEybp</nowiki></ref> <ref>Oogfonds. (2022b, September 9). ''Blindheid - oogfonds''. <nowiki>https://oogfonds.nl/visuele-beperkingen/blindheid/</nowiki></ref>

With 50% to 10% vision, people can usually take part in traffic by themselves without issues and without tools. Sometimes high-contrast glasses or other magnification tools are used. From 10% downwards, many people use a cane to detect obstacles. This is why our research and target group focus on people with less than 10% vision or with rapidly declining vision.

At 2% vision, people can still count fingers from a distance of 1 m. However, this can differ between kinds of blindness: tunnel vision, central vision loss (the centre of vision is blurry), peripheral vision loss, blurry vision, fluctuating vision (vision changes due to blood sugar etc.), hemianopia (loss of vision in half of the visual field in one or both eyes), and light perception blindness (being able to see shapes and a little colour).

'''''What Do They Require?'''''

The main takeaway here is that visually impaired people are still very capable people, and have developed different strategies for going about everyday life.

"I have driven a car, a jet ski, I have gone running, I have climbed a mountain. Barriers don’t exist we put them in front of us." (Azeem Amir, 2018)

‘Not having 20/20 vision is not an inability or disability, it's just not having the level of vision the world deems acceptable. I'm not disabled because I'm blind but because the sighted world has decided so.’<ref>TEDx Talks. (2018, November 8). ''Blind people do not need to see | Santiago Velasquez | TEDxQUT'' [Video]. YouTube. <nowiki>https://www.youtube.com/watch?v=LNryuVpF1Pw</nowiki></ref>. This was said by Azeem Amir in a TED Talk about how being blind would never stop him. In the interviews and research it came up again and again that being blind does not mean you cannot be an active participant in traffic. While there are people who have lost a lot of independence due to being blind, there is a large group that goes outside daily, takes the bus, walks in the city and crosses the street. There are, however, still situations where it does not feel safe to cross a busy street; in those cases users reported having to walk around it, even for kilometres, or asking a stranger for help. This can be annoying and frustrating. In a Belgian interview with blind children, a boy said the worst thing about being blind was not being able to cycle and walk everywhere by himself, and our own interviews reflected this as well<ref>Karrewiet van Ketnet. (2020, October 15). ''hoe is het om blind of slechtziend te zijn?'' [Video]. YouTube. <nowiki>https://www.youtube.com/watch?v=c17Gm7xfpu8</nowiki></ref>. So our number one objective with this project is to give back that independence.

A requirement of the product is that it does not draw too much attention to the user. While some have no problem showing the world that they are legally blind and need help, others prefer not to have a device announce over and over that they are blind.

Something that came up in the interviews is that Google Maps and other software with auditory feedback lack a repeat button. Traffic can be loud, and when an audible cue is missed it is hard to figure out where to go or what to do. So while audio is helpful, it is only helpful if it can be repeated or is not the sole accessible option. Popular crossing methods include listening to parallel traffic, the logic being that if the traffic parallel to the user is driving, the traffic in front cannot go. In the Netherlands there is also a legal rule stating that when a blind person raises their cane, all traffic is required to stop and let them cross. In reality this often does not happen, with many people not stopping. The AD also published an article on this in 2023, stating that half of cyclists and drivers do not stop, causing one-third of blind people to feel unsafe crossing the road.<ref>''DPG Media Privacy Gate''. (n.d.). <nowiki>https://www.ad.nl/auto/zo-moet-je-als-verkeersdeelnemer-reageren-als-blinden-en-slechtzienden-hun-stok-de-lucht-in-steken~ac8d46e0/</nowiki></ref>

Blind people do not like to be grouped with disabled people, or even with mentally challenged people. They might not have the same vision as sighted people, but that is the only difference. There is nothing wrong with their minds, and they are not helpless children. Blind people might also not look aware of their surroundings, but they often are. This has to be kept in mind when designing. Blind people can understand complicated solutions and a design process, and thus also why a product might be expensive. Accessibility solutions can often be simple, but we do not need to oversimplify them for the sake of understanding.

Many of the people we interviewed were fairly old. This makes sense, as our main contact, who used his network to find participants, was Peter, who is not young himself, and because the chance of vision loss increases with age. Due to their lack of vision, many of them have learned to use technology and more complicated devices than the average elderly person, and they might be more open to trying new things. This does not go for everyone, so we still have to take care to make the UX design intuitive, but less so than with other elderly users.

===Society & enterprise===

''How is the user group situated in society? How would society benefit from our design? What is the market like?''

People who are visually impaired are treated somewhat differently in society. In general, plenty of guidelines and forms of help are available for this group, considering that globally at least 2.2 billion people have a near or distance vision impairment<ref>World Health Organization: WHO. (2023, August 10). ''Blindness and vision impairment''. <nowiki>https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment</nowiki></ref>. In the Netherlands there are 200 000 people with a level of sight between 10% and 0% ('ernstige slechtziendheid' and 'blindheid', i.e. severe visual impairment and blindness). It is important to remember that a large share of legally blind people still have some residual vision. Peter, whom we interviewed for this project and who is a member of innovation space on campus, has 0.5% sight with tunnel vision. This means Peter can only see something in the very centre. Sam, from a Willem Wever interview, has 3% sight with tunnel vision and can be seen in the interview wearing special glasses through which he can still watch TV and read<ref>Kim Bols. (2015, February 18). ''Willem Wever - Hoe is het om slechtziend te zijn?'' [Video]. YouTube. <nowiki>https://www.youtube.com/watch?v=kg4_8dECL4A</nowiki></ref>. Society often forgets this and simply assumes blind people see nothing at all. This is true for a 70-year-old man we interviewed, but not for all target users.

The most popular aids for visually impaired people are currently the cane and the guide dog. Using these, blind people navigate their way through traffic. In the Netherlands the law states that when the cane is raised, cars have to stop. In reality this does not always happen; cyclists in particular do not stop, as was said in multiple interviews. Many people do have the decency to stop, but it is not a fail-proof method. Blind people thus remain dependent on their environment. Consistency, markings, auditory feedback and physical buttons are there to make independence more attainable. These features are more prominent in big cities, which is why our scenario takes place on a town road with no accessibility features.

Many interviewees mentioned apps on their phones, projects from other students, reports of products in other countries, devices with a monthly fee, etc. Many options for improvement are being designed or researched, but none are being brought to the wider market. Peter from Videlio says the community does not get much funding or attention when it comes to new innovations, which is why he has taken it into his own hands to create a light-up cane for use at night, which until now does not exist for Dutch users.

There is a lot of research at universities and in school projects because it is an 'interesting user group' with a lot of potential for new innovations, but this research does not result in actual new products on the market. People with less than 10% vision make up such a small part of society that there is simply not enough demand for new products, as those are very expensive to develop, which is a shame.

As society stands right now, the existing aids do help visually impaired people in their day-to-day life, but do not give them the security and confidence needed to feel more independent and on a more equal footing with people without impaired vision. To close this gap, it is key to create a design that focuses on giving users a greater sense of independence and self-reliance.

=User Experience Design Research: Stakeholder Analysis=

To come into closer contact with our target group, we reached out to multiple associations via mail. Some example questions were included to show the associations what our interviews could look like.

Following this, we received answers from multiple associations that were able to give us additional information and potentially participate in the interviews.

From this, we were able to set up interviews via Peter Meuleman, researcher and founder of Videlio Foundation. This foundation focusses on enhancing daily life for the visually impaired through personal guidance, influencing governmental policies and stimulating innovation in the field of assistive tools. Peter himself is very involved in the research and technological aspects of these tools, and was willing to be interviewed by us for this project. He also provided us with interviewees via his foundation and his personal circle.

Additionally, through a personal contact, we were able to visit StudioXplo on the 21st of March to conduct some in-person interviews.

These interviews were semi-structured, with a structured version being sent out via Microsoft Forms as an announcement on [https://www.kimbols.be/oproepjes/oproep-ervaring-van-het-verkeer-onder-slechtziende-blinde-mensen.html www.kimbols.be] (in Dutch). The announcement, form link and questions can be found in the Appendix as well.

==Interview results==

With these interviews, we aimed to find answers to the following research questions:

*How do visually impaired people '''experience''' crossing the road?
*What '''wishes''' do visually impaired people have when it comes to new and pre-existing aids?
*How can we improve the sense of '''security''' and '''independence''' for visually impaired people when it comes to crossing the road?

A (deductive) thematic analysis was done on the interviews. The approach is deductive because we went into these interviews with a predefined goal and some pre-existing notions of what the participants might answer.

A total of 17 people were interviewed: 5 via call (semi-structured), 2 face to face (semi-structured) and 10 via Microsoft Forms (structured). The average age of respondents was around 53, with ages ranging from 31 to 69. A large number of participants reported being fully blind, with some stating that they have legal blindness or low vision.

====Thematic Analysis====

{| class="wikitable"
|+
!Theme
!Codes
|-
|'''Experience of traffic'''
|Little attention to pedestrians

Abundance of non-marked obstacles

Very dependent on situation

Familiar = comfort
|-
|'''Experience of crossing the road'''
|Lack of clarity about crossing location

Lack of attention from other traffic users

Lack or abundance of auditory information

Taking detours for dangerous roads
|-
|'''Road crossing strategies'''
|Patience for safety

Focus on listening to traffic

Being visible to other traffic users

Confidence

Cyclists

Asking for help if needed
|-
|'''Aid system needs'''
|Not being able to pick up everything

Aid should not be handheld

Preference for tactile information + haptic feedback

Control over product
|}

=====Experience of traffic=====

Overall, participants gave widely varying answers about their general experience with traffic. Almost all users use a white cane, with some having an additional guide dog. Most if not all participants expressed that they are able to move around freely and with general ease and comfort, but that this depends on the situation at hand. Most participants said their experience with traffic worsens in higher-stakes situations with busy roads. '''This is mostly due to other traffic users "being impatient and racing on".''' This point comes back multiple times, with people expressing that at peak hours they lose track of the situation more easily.

Additionally, participants mentioned that they can find it hard to navigate new situations, especially those where things are not marked properly. One participant mentioned that there are cases of bike paths right behind a road with a crosswalk, where the crosswalk does not extend to the bike path. She mentioned that it is hard to anticipate situations like this, where there are '''unexpected obstacles'''. Curbs or tactile guiding lines being blocked by, for example, café tables or billboards were also mentioned as hazards. Another participant talked about road work and construction noise throwing her off even on familiar routes.

=====Experience of crossing the road=====

The sound guidance at traffic lights or traffic control systems ('''''rateltikker''''') came up multiple times. Participants mentioned that these really help, but are '''not available everywhere''', are sometimes '''turned off''', or have the sound set '''too low'''. Participants mentioned that, since they rely heavily on their hearing, the lack of such auditory signals can make it hard for them to cross. Things such as cyclists, electric vehicles and On the other hand,

Participants also mentioned it can sometimes be hard to '''find the spot to cross the road'''. One participant mentioned that the sound of the traffic can be a hint that a traffic light is present ("If the traffic is all going and then suddenly stops, I know there is a traffic light nearby"), but if there are no guidelines (no ''rateltikker'' or tactile guiding lines), she would not know where to cross the road.

Another participant talked about how he always avoids crossing at corners of the road and knows other blind people do this too, because the corner is one of the more dangerous places to cross.

=====Road crossing strategies=====

A general theme that comes up is '''safety first'''. Many participants described an approach of '''patience''' and '''clarity''' when it comes to crossing the road. Firstly, if the road is too busy (a high-speed road, a two-way road or at peak hour), many participants would choose to avoid the road or find the safest spot to cross. If there is no other option, some would also ask cyclists to help them cross the road. Secondly, all participants described that they would carefully '''listen to traffic''' and try to cross when it is least busy. Many mentioned they would do this when they '''were absolutely sure nothing was coming''' (no sound from traffic, and even then still waiting a bit). Then, in order to actually cross, the participants who owned a white cane would lift their cane or push it towards the road, so that other users '''can see that they want to cross'''. Participants clearly stated '''visibility''' as important, but mentioned that even then some traffic users would not stop.

Two participants mentioned that in order to show you want to cross, you should just move '''confidently''' and without hesitation, since otherwise traffic will just keep moving. Other participants seem to share this sentiment of traffic being unpredictable, but seem to prefer waiting until they are certain it is safe: when there is hesitation, they would not cross.

An additional issue brought up was '''cyclists''' (or electric vehicles): these were '''hard to hear''' and would often refuse to stop. This issue of traffic users being impatient was mentioned before under experience of traffic, and seems to recur here and restrict the participants' crossing strategies.

Two-way roads are a point of interest: some participants mentioned these types of roads as hard to cross. Most participants prefer to '''cross the road in one go''', and for two-way roads it is hard to anticipate whether a car is coming from the other side and whether cars are willing to stop and wait. Many mentioned the concept of '''parallel traffic''' to identify when to cross: if the traffic parallel to them is moving, the traffic in front of them has to wait, so they know they can cross, and vice versa. People with some form of vision left are more confident in crossing roads with two lanes, especially during daylight.

=====Aid system needs=====

One of the main issues brought up, also mentioned under Road crossing strategies, is that there are some traffic users that the participants are '''unable to hear'''. Their aids are not able to detect these, and circumstances such as loud wind or traffic can make it hard for the participants themselves to pick them up.

Almost all users expressed a preference for an aid system with '''tactile''' information, especially considering the overload of auditory information already present in traffic (cars, beeps, wind etc.). One participant mentioned that a combination of the two could be used as a way of learning how the aid works (this participant also travels with other visually impaired friends, and mentioned the auditory component could aid their friends in crossing the road together).

Many users expressed concern when it comes to '''control''': most prefer to have absolute control over the aid system, with many expressing concern and distrust ("A sensor is not a solution" and "I'd rather not use this"). Some prefer minimal control, mostly because their energy is already going towards crossing the road. One participant mentioned as an example that they would like to be able to turn their system on and off whenever it suits the situation. Another participant asked for a stand-by mode in which the system would not fully turn off and would recognise when it is time to cross a road, but this would be much harder to implement.

Participants also said they would prefer not to have to '''hold''' the system in their hand, as most already have their hands full with their other aids, and a dropped product could be hard to retrieve.

====Insight overview==== | |||

Overall, the general idea that independency is an important aspect for our user group has become very clear from our interviews. From the four themes created in the analysis, we can set up a more comprehensive list of what our user group seems to need and desire in the context of crossing roads. | |||

As for the research questions: | |||

*How do visually impaired people '''experience''' crossing the road? | |||

**Many things were mentioned about the general scanning of surroundings, also in unknown areas. A common struggle is finding the actual point where to stand to safely cross the road. A spot where the user is visible to others, a crossing, a green light: these are not always easy to find. In unknown surroundings, users are more prone to avoid the situation entirely. Roads have different setups: a common obstacle is a road that is divided in the middle by car parking, bushes, tiles etc. It is thus important that our product does not depend on the type of road or on differing traffic situations.

**Not all legally blind people are capable of taking part in traffic by themselves. When it comes to our product, we want to limit it to those who are able to go places without assistance. Other aspects mentioned by the interviewees that relate to the detection of objects and surroundings will not be taken into account when it comes to the product itself, as this is outside the scope of the project at this moment.

**Attention to cyclists is an unexpected extra point of interest: as interviewees mentioned these are hard to hear and are more prone to not stopping, it is important that our product is able to detect and differentiate all sorts of traffic, not just cars. | |||

**Users generally prefer to cross the road in one go. With respect to this, our device should let the user know when it is safe to cross the road entirely, not partially. | |||

*What '''wishes''' do visually impaired people have when it comes to new and pre-existing aids? | |||

**Users have a general need for the product to be out of their way and not to interrupt their general road-crossing procedures. This includes a general preference for the product to '''not''' be in their hands and not be too heavy. As a solution to this issue, we opt to place the sensors on the shoulders of the user, and to use a bracelet for the feedback.

**A general preference for tactile feedback over auditory feedback was mentioned, with the usage of auditory feedback being an additional mode of help. Auditory feedback may not be heard due to surrounding sounds, but it can also help fellow (visually impaired) pedestrians by giving information on when to cross the road. Additionally, some visually impaired people (especially as they age) have declining hearing. With all these considerations, the auditory feedback should at most be '''secondary''' to tactile feedback.

***The vibrations should already be on, also when the user cannot cross yet (with respect to the users mentioning that some traffic aids can be off or too low, leading to annoyance).

**The product '''can''' be visible, since our user group usually already has aids that indicate to others that they are visually impaired. Combined with the reluctance and uncertainty of whether traffic actually stops, it is important that the users remain recognisable as visually impaired.

*How can we improve the sense of '''security''' and '''independency''' for visually impaired people when it comes to crossing the road? | |||

**In order to give the users more independence, it is best to give the users a lot of control over the product. This means giving them the choice of what level of feedback they want to receive, and allowing them to turn the product off or on whenever they like. | |||

**In terms of security, the product should in practice always be on, and the product needs to have a long battery life to ensure it does not give faulty readings. | |||

=MoSCoW Requirements= | |||

From the user interviews we can infer some design requirements. These will be organised using the MoSCoW method. First off, the product will have to give feedback to the user. It was mentioned in the interviews that audio feedback might not always be heard, whereas vibrational feedback can be felt and might be more reliable. Therefore vibrational feedback will be the feedback method of choice and audio is seen as an optional feature. Many visually impaired people also already use tools to help them, so it should be taken into consideration that our solution does not impact the use of those tools; it could even integrate with them. Interviewees also mentioned that they would like to be more independent, so this is also a priority for the solution. Ideally it would create more opportunities for visually impaired people to cross the road where and when they want. Some people mentioned in the interviews that they do not like drawing much attention to their blindness, so the implementation should be rather discreet. This could be difficult however, as quite a few devices are needed which might be difficult to completely hide. A very important aspect of designing a road crossing aid is of course safety, because mistakes could injure someone or even cost them their life. Thus, safety should be a high priority when making a product. It is also clear from the interviews that users do not need the solution to be simplified for them and are capable of handling more complicated devices. The solution can thus be allowed to be more advanced.

Because the group of visually impaired users is quite diverse, a decision must be made about who to design for specifically. As people with minor visual impairments do not require much in the way of assistance in crossing the road, focusing the design around people with moderate to severe blindness will provide more usefulness. Furthermore, the solution will not be able to take the place of current assistance tools for blind people, but will rather act as an additional help. While AI detection of cars, bicycles, or other traffic could provide a good solution to evaluating traffic situations, this is outside of the scope of the project and will therefore not be considered.

The table below summarises the design requirements. | |||

{| class="wikitable" | |||

|+ | |||

!Must | |||

! Should | |||

!Could | |||

!Won't | |||

|- | |||

|Give vibration feedback | |||

|Not draw too much attention | |||

| Give audio feedback | |||

|Be designed for people with minor visual impairments | |||

|- | |||

|Ensure safety | |||

|Allow new road crossing opportunities | |||

|Integrate with existing aids | |||

|Implement AI detection | |||

|- | |||

|Not get in the way | |||

|Not obstruct use of canes/dogs | |||

|Be complex/advanced | |||

|Replace existing aids | |||

|- | |||

|Provide extra independence | |||

|Allow crossing the road in one go | |||

|Detect all kinds of traffic | |||

|Detect the physical road layout | |||

|} | |||

=Design ideas= | |||

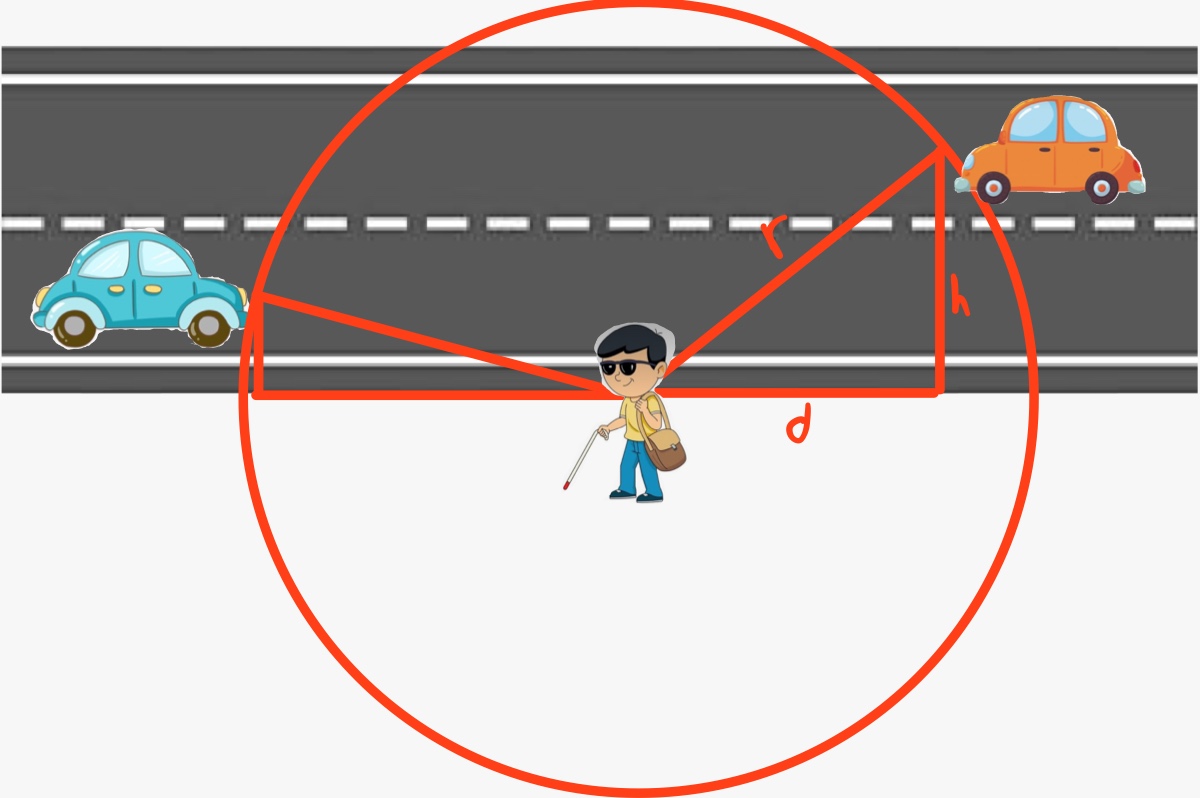

[[File:SituationCalculation.jpg|thumb|alt=Visualisation uses Pythagoras to calculate the minimum distance the sensor needs to detect cars. |Visualisation to calculate the minimum distance.]]To safely cross a road with a speed limit of 50 km/h using distance detection technology, we need to calculate the minimum distance the sensor must measure. | |||

Assuming a standard two-lane road width of 7.9 meters to be on the safe side, the middle of the second lane is at 5.8 meters. This gives us a height '''''h''''' of 5.8 meters. | |||

The average walking speed is 4.8 km/h, which converts to 1.33 m/s. Therefore, crossing the road takes approximately 5.925 seconds. | |||

The distance '''''d''''' can now be calculated. A car travelling at 50 km/h moves at 13.88 m/s, so: | |||

<math>d = 13.88 \cdot 5.925 = 82.24</math> meters

Applying the Pythagorean theorem, the radius '''''r''''' is:

<math>r=\sqrt{d^2 + h^2}=\sqrt{82.24^2 + 5.8^2}=82.44</math> meters

This means the sensor needs to measure a minimum distance of approximately 82.44 meters to ensure safe crossing. However, the required distance needs to be greater to ensure the user can cross the road safely. Users also need enough time to react to the device's feedback and make a decision based on additional cues, such as sound. Since the distance is significantly greater than the height, the height factor has minimal influence on the calculation and can be neglected. To be on the safe side, the threshold is set to 9 seconds by default. However, users can adjust this value in the settings based on what they feel comfortable with. To help prevent accidents, the threshold cannot be set lower than 8 seconds. | |||
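The calculation above can be reproduced with a short Python sketch. The function name <code>detection_radius</code> and the constant names are illustrative assumptions and not part of the project code; the numbers are the ones used in the text. It also shows how the required range grows when the 9 second default threshold is used instead of the bare crossing time.

```python
import math

# Values taken from the text above
CAR_SPEED_MS = 13.88         # 50 km/h
WALK_SPEED_MS = 4.8 / 3.6    # 4.8 km/h, about 1.33 m/s
ROAD_WIDTH_M = 7.9           # assumed two-lane road width
LANE_OFFSET_M = 5.8          # h: distance to the middle of the second lane


def detection_radius(time_s, speed_ms=CAR_SPEED_MS, h_m=LANE_OFFSET_M):
    """Distance r the sensor must cover so that a car arriving
    in `time_s` seconds is still detected (Pythagoras on d and h)."""
    d = speed_ms * time_s
    return math.hypot(d, h_m)


crossing_time_s = ROAD_WIDTH_M / WALK_SPEED_MS

print(f"crossing time: {crossing_time_s:.3f} s")
print(f"radius for the crossing time: {detection_radius(crossing_time_s):.2f} m")
print(f"radius for the 9 s threshold: {detection_radius(9.0):.2f} m")
```

With the 9 second default, the required range comes out at roughly 125 meters, which is why a sensor range of at least 100 meters is the relevant order of magnitude in the sensor comparison.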

===Sensors=== | |||

There are 3 main ways to measure distances which we will discuss and evaluate for our application: Ultrasonic sound sensor, lidar/radar, or an AI camera. | |||

====Radar/Lidar sensor==== | |||

This section discusses radar versus Lidar. Lidar and radar sensors are both able to measure distances of at least 100 meters. Both sensors are widely used, for example, in autonomous vehicles, robotics, traffic control and surveillance systems. To determine the best sensor for this application, the strengths and weaknesses are evaluated. | |||

The benefits of the lidar sensor are as follows: | |||

* High precision and resolution: Lidar uses laser beams, which offer high accuracy and resolution. It can be used for 3D mapping of environments and object detection. Due to the high resolution, its distance measurements are very precise.

* 3D mapping: The lidar sensor sends light beams in all directions, creating 3D images of the surroundings. This can be useful when implementing an AI with lidar helping the person navigate the surroundings. It can detect the difference between trucks, cars, bicycles and pedestrians. | |||

The drawbacks of the lidar sensor are as follows: | |||

* Weather conditions: The range decreases significantly when the conditions are not ideal. The conditions the lidar sensor struggles with are rain, fog, snow, dust and smoke. The light beam scatters, reducing the accuracy. Bright sunlight can cause noise to the data. | |||

* Expensive: Lidar sensors are expensive, which would make the product quite expensive and not appealing to the user. | |||

* Surface: The surface matters when measuring using light. Lidar sensors struggle with reflective and transparent surfaces, which results in inaccurate data. | |||

* Power consumption: Lidar systems consume more power than radar sensors, which is not ideal when using a battery system to power the device. | |||

The benefits of the radar sensor are as follows: | |||

* Longer range: Radar sensors are able to measure up to much larger ranges. | |||

* Robustness: The radar sensor is less affected by rain, fog or snow, making it more reliable for different weather conditions. The sensor is also not affected by sunlight because it uses radio waves. | |||

* Lower cost: Most radar sensors are less expensive than lidar sensors, especially when comparing sensors with the same measuring ranges. This makes the product less expensive for the user.

* Smaller: The radar sensor is significantly smaller in size than the lidar sensor. | |||

The drawbacks of the radar sensor are as follows: | |||

* Lower resolution: The radar sensor provides less data than the lidar sensor. Radar sensors have a hard time differentiating between similar objects and small objects. The situation measured using the radar sensor is less detailed than the lidar. | |||

* Busy environments: When there are multiple reflecting surfaces, a lot of echoes arise from the surfaces, causing the radar sensor to pick up more objects than there are in the surroundings. | |||

* Angles: Increasing the angle at which the radar sensor can measure the environment decreases the distance. Due to this the product needs two radar sensors to measure in 2 different directions to check if it is clear to cross the road. | |||

* Frequency dependent: The resolution of the radar sensor is dependent on the frequency of the sensor. Increasing the frequency increases the resolution of the radar but also increases the price.

In conclusion, the lidar provides better resolution; however, for this application the high resolution is not necessary to measure road users. The sensor needs to accurately measure the position and speed of road users. If the sensor picks up more road users at the same place because of echoes, that does not matter because filters can solve this problem. The robustness of the sensor is important for reliable operation in all weather conditions. Cost is also an important factor. While the radar setup requires two units to function, it is still cheaper than a single lidar sensor. Additionally, low power consumption is important to keep the device compact and light, avoiding the need for a large battery. That is why the radar sensor is the best fit for this application.

The LiDAR and radar sensors that were investigated can be found at the following links: [https://ouster.com/products/hardware/os0-lidar-sensor LIDAR] sensor and [https://www.youtube.com/watch?v=5SJbFr4cpfA Radar] sensor. | |||

====Ultrasound using Doppler effect ==== | |||

This section discusses ultrasound sensors that measure the speed of objects utilising the Doppler effect (Doppler shift). This technology is already widely researched and used in applications for blind and visually impaired people.<ref>''A blind mobility aid modeled after echolocation of bats''. (1991, May 1). IEEE Journals & Magazine | IEEE Xplore. <nowiki>http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=81565&isnumber=2674</nowiki></ref><ref>Culbertson, H., Schorr, S. B., & Okamura, A. M. (2018). Haptics: The Present and Future of Artificial Touch Sensation. ''Annual Review Of Control Robotics And Autonomous Systems'', ''1''(1), 385–409. <nowiki>https://doi.org/10.1146/annurev-control-060117-105043</nowiki></ref><ref>Pereira, A., Nunes, N., Vieira, D., Costa, N., Fernandes, H., & Barroso, J. (2015). Blind Guide: An Ultrasound Sensor-based Body Area Network for Guiding Blind People. ''Procedia Computer Science'', ''67'', 403–408. <nowiki>https://doi.org/10.1016/j.procs.2015.09.285</nowiki></ref> In essence, a high-frequency sound wave is emitted, which reflects back from the object of interest. Whenever this object is moving towards the receiver it will "squish" the reflected sound wave, decreasing its wavelength and increasing its frequency (higher pitch). The opposite holds for an object that moves away from the receiver: the wavelength increases and the frequency decreases. The difference between the original incident frequency and the reflected frequency can be used to calculate the speed of the detected object.

The Doppler effect is very useful in our situation, but ultrasound is the weaker link in this system. Ultrasound generally has a maximum range of about 21 meters, which is far below the required range of 80-100 meters. Secondly, it measures at a slow rate (200 Hz), which means it can be quite inaccurate in quickly changing environments. Lastly, ultrasound is susceptible to differences in the vapour concentration of the air, which changes the speed of sound and can therefore decrease the accuracy of the measurement results.

====Wearable AI camera==== | |||

This wearable device has a camera built into it in order to view traffic scenes that visually impaired people might want to cross but lack the vision to cross safely. The cameras send their video to some kind of small computer running an AI image processing model. This model would then extract some parameters that are important for safely crossing the road, for example: whether there is oncoming traffic, whether there are other pedestrians, how long crossing would take, or whether there are traffic islands. This information can then be communicated back to the user according to their preferences: audio (like a voice or certain tones) or haptic (through vibration patterns). The device could be implemented in various wearable forms, for example a camera attached to glasses, something worn around the chest or waist, a hat, or something worn on the shoulder. The main concern is that the user feels comfortable with the solution: it should be easy to use, not be in the way, and be hidden if it makes them self-conscious.

This idea requires quite a sophisticated AI model. The computer must be able to quickly process cars and relay the information back to the user, or else the window to safely cross the road might have already passed. It also needs to be reliable: should it misidentify a car or misjudge its speed, it could endanger the user's life. Running an AI model like the one proposed could also pose a challenge on a small computer. The computer has to be attached to the user in some way and can be quite obtrusive if it is too large. Users might also feel uncomfortable if it is very noticeable that they are wearing some aid, and a large computer could make it difficult to keep the device discreet. On the other hand, an advanced model could probably not run locally on a very small machine, especially considering the fast reaction times required.

===Feedback=== | |||

Feedback is the communication of spatial information to visually impaired people so that they can perceive their surroundings. Two main types of feedback are explored for this project: haptic feedback and auditory feedback. Haptic devices give feedback through touch, while auditory devices utilise sound to convey information.

It is important that the feedback device adheres to the following: | |||

#The signal must be noticeable, over all traffic noises and stimuli surrounding the user | |||

#The signal must clearly indicate whether it is time to walk (Go condition) or to keep still (No-Go condition), and these conditions must be distinguishable | |||

#The signal must not be confusable with any other surrounding stimuli

These rules must be adhered to in order to properly carry over the information from the device to the user, and for the user to use said information to then make their decisions. | |||

====Haptic Feedback==== | |||

Haptic means “relating to the sense of touch”. Haptic feedback is therefore a class of feedback devices that utilise the sense of touch for conveying information. These haptic systems can also be divided into three categories: graspable, wearable, and touchable devices.<ref>Culbertson, H., Schorr, S. B., & Okamura, A. M. (2018). Haptics: The Present and Future of Artificial Touch Sensation. ''Annual Review Of Control Robotics And Autonomous Systems'', ''1''(1), 385–409. <nowiki>https://doi.org/10.1146/annurev-control-060117-105043</nowiki></ref> | |||

[[File:Haptic Devices.gif|alt=Different types of haptic devices; specifically graspable, wearable and touchable devices|thumb|438x438px|Different types of haptic devices]] | |||

Graspable devices are typically kinaesthetic i.e. force-feedback devices, which allow the user to push and/or be pushed back by the device. | |||

Wearable systems include devices that can be worn on the hands, arms or other parts of the body and provide haptic feedback directly to the skin. Usually this is done by vibrating at different frequencies and/or in different vibrational patterns, like alternating long and short pulses (similarly to Morse code). Utilising vibrations to convey information is a technique that is already widely used in smartphones and game controllers. | |||

Finally, touchable systems are devices with changing tactile features and surfaces that can be explored by the user. | |||

For our purposes, a wearable system seems to be the most logical. A visually impaired person (VIP) can wear the device and immediately receive information gathered from the surroundings through vibrations directly on the skin. A human can sense vibrations ranging from 5 to 400 Hz.

====Auditory Feedback==== | |||

When it comes to sound cues, there are multiple aspects to consider. Most crossings with traffic lights already have an auditory system set up (''rateltikker'') in order to signal to the pedestrians whether they can cross or not. To mimic this type of auditory pedestrian signal, the same type of considerations should be used for our design. In the book Accessible Pedestrian Signals by Billie L. Bentzen, some guidelines and additional information have been laid out that can be considered when looking at what kinds of sounds, what volume levels and what frequencies are best to use.

The sound of the aiding device must be audible over the sounds of traffic. The details of noise levels around intersections are out of scope for this specific research, as there are many factors that go into determining the noise level (such as wind conditions, temperature, height of receiver and so on). Therefore, we will keep this straightforward, and use information from research with similar conditions to our key scenario, a 50 km/h road with no traffic lights.

In general, a signal that is '''5 dB above surrounding sound''' from the receiver is loud enough for the pedestrian to hear and process the sound made.<ref name=":0">Bentzen, B. L., Tabor, L. S., Architectural, & Board, T. B. C. (1998). ''Accessible Pedestrian Signals''. Retrieved from <nowiki>https://books.google.nl/books?id=oMFIAQAAMAAJ</nowiki></ref> As the sound in our scenario will be coming from a device relatively close to the user, we do not need to be that concerned with it being audible from across the street. From research and simulations on traffic noises at intersections, the general noise levels around intersections lie around 65-70 dB.<ref>Quartieri, J., Mastorakis, N. E., Guarnaccia, C., Troisi, A., D’Ambrosio, S., & Iannone, G. (2010). Traffic noise impact in road intersections. ''International Journal of Energy and Environment'', ''1''(4), 1-8.</ref> For a signal to be heard, a sound level of 70-75 dB therefore seems to be loud enough.

As for frequency levels of sound, research shows that high-frequency sounds are easier to distinguish and detect from traffic sounds than low- or medium-frequency sounds. For example, the results from a study done by Hsieh M.C. et al. from 2021, where the auditory detectability of electric vehicle warning sounds at different volumes, distances, and environmental noise levels was tested, show an overall preference for the high-frequency warning sound.<ref>Hsieh, M. C., Chen, H. J., Tong, M. L., & Yan, C. W. (2021). Effect of Environmental Noise, Distance and Warning Sound on Pedestrians' Auditory Detectability of Electric Vehicles. ''International journal of environmental research and public health'', ''18''(17), 9290. <nowiki>https://doi.org/10.3390/ijerph18179290</nowiki></ref> This sound was not affected by environmental noise. One thing to note, however, is that the detection rate (whether participants could detect the sound) of the higher sound pressure level (51 dBA) was significantly higher than that of the lower sound pressure level (46 dBA) under the high-frequency sound. This means that, when using high-frequency sound, it is imperative that a higher sound pressure level is used in order for users to actually detect the sound. Additionally, this research was done on electric vehicle warning sounds, which are by nature harder to detect. This study however still informs us that even under these lower sound level conditions, high-frequency sounds are preferred.

Research from Szeto, A. Y. et al. also notes this usage of high-frequency sounds: low-frequency sounds require longer durations and higher intensity to be heard, and low-frequency masking of the auditory signals can occur in traffic, as most of the sound energy in traffic noise occurs at frequencies below 2000 Hz.<ref name=":1">Szeto, A. Y., Valerio, N. C., & Novak, R. E. (1991). Audible pedestrian traffic signals: Part 3. Detectability. ''Journal of rehabilitation research and development'', ''28''(2), 71-78.</ref> From their testing, using different types of sounds (cuckoo vs. chirp, which we will get into later), for the signal to be audible there needs to be a good balance between the potential low-frequency masking by the traffic noise (<1000 Hz) and the hearing loss for higher-frequency sounds (>2500 Hz) that is most common in elderly adults. Their advice is to use signals with frequencies between 2000 and 3000 Hz, with harmonics up to 7000 Hz. It is best to use an array of frequencies that are continuous and have a duration of 500 ms. Varying the frequency around 2500 Hz, with a minimum of 2000 Hz and a maximum of 3000 Hz, is recommended by Szeto, A. Y. et al. The book on Accessible Pedestrian Signals by Billie L. Bentzen supports this idea: high harmonics, with continuously varying frequencies.<ref name=":0" />
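As an illustration of how these recommendations could translate into device settings, the Python sketch below picks a signal level 5 dB above the ambient noise and generates a set of tone frequencies spread over the recommended 2000 to 3000 Hz band. The function names and the number of steps are hypothetical choices made for this example, not part of the prototype.

```python
def signal_level_db(ambient_db, margin_db=5.0):
    """Target sound level: ambient noise plus the 5 dB margin from the text."""
    return ambient_db + margin_db


def sweep_frequencies(n_steps=5, low_hz=2000.0, high_hz=3000.0):
    """Evenly spaced tone frequencies covering the recommended band.

    n_steps is an illustrative choice; the source only gives the band limits.
    """
    if n_steps < 2:
        raise ValueError("n_steps must be at least 2")
    step = (high_hz - low_hz) / (n_steps - 1)
    return [low_hz + i * step for i in range(n_steps)]


# Ambient levels of 65-70 dB give target levels of 70-75 dB
print(signal_level_db(65.0), signal_level_db(70.0))
print(sweep_frequencies())
```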

As for type of sound, there are multiple options as well: | |||

*Voice messages: messages that communicate in speech are optimal for alerting pedestrians and their surroundings that the pedestrian is wanting to cross, as this signal is understandable and meaningful to everyone (ex. 'Please wait to cross')<ref name=":0" /> | |||

*Tones: chirp and cuckoo sounds. In America, the high-frequency, short duration 'chirp' and low-frequency, long duration 'cuckoo' type sounds are used to indicate east/west and north/south cross walks respectively. Additionally, the chirp sounds are of higher frequency compared to the cuckoo sounds, and are therefore easier to detect. In turn, this higher frequency sound is harder to detect for elderly people, as mentioned before.<ref name=":1" /> Another point of consideration is that pedestrians have mentioned that mockingbirds or other surrounding animals may imitate these sounds, therefore making it harder to distinguish from their surroundings.<ref name=":0" /> | |||

*Tones: major and minor scales. Major scales in music are often associated with positive emotions, whereas minor scales are associated with negative ones. Additionally, minor scales exhibit more uncertainty and variability, which can evoke feelings of instability. Compared to the major scale, a minor scale contains pitches that are lower than expected, paralleling characteristics of sad speech, which often features lower pitch and slower tempo.<ref>Parncutt, R. (2014). The emotional connotations of major versus minor tonality: One or more origins? Musicae Scientiae, 18(3), 324-353. <nowiki>https://doi.org/10.1177/1029864914542842</nowiki> (Original work published 2014)</ref>

Considering this research, it is thus important to think about the trade-off in how meaningful the auditory message can be in the context of its surroundings, so that it is distinguishable but still relays the information of whether crossing is safe or not.

=Creating the solution= | |||

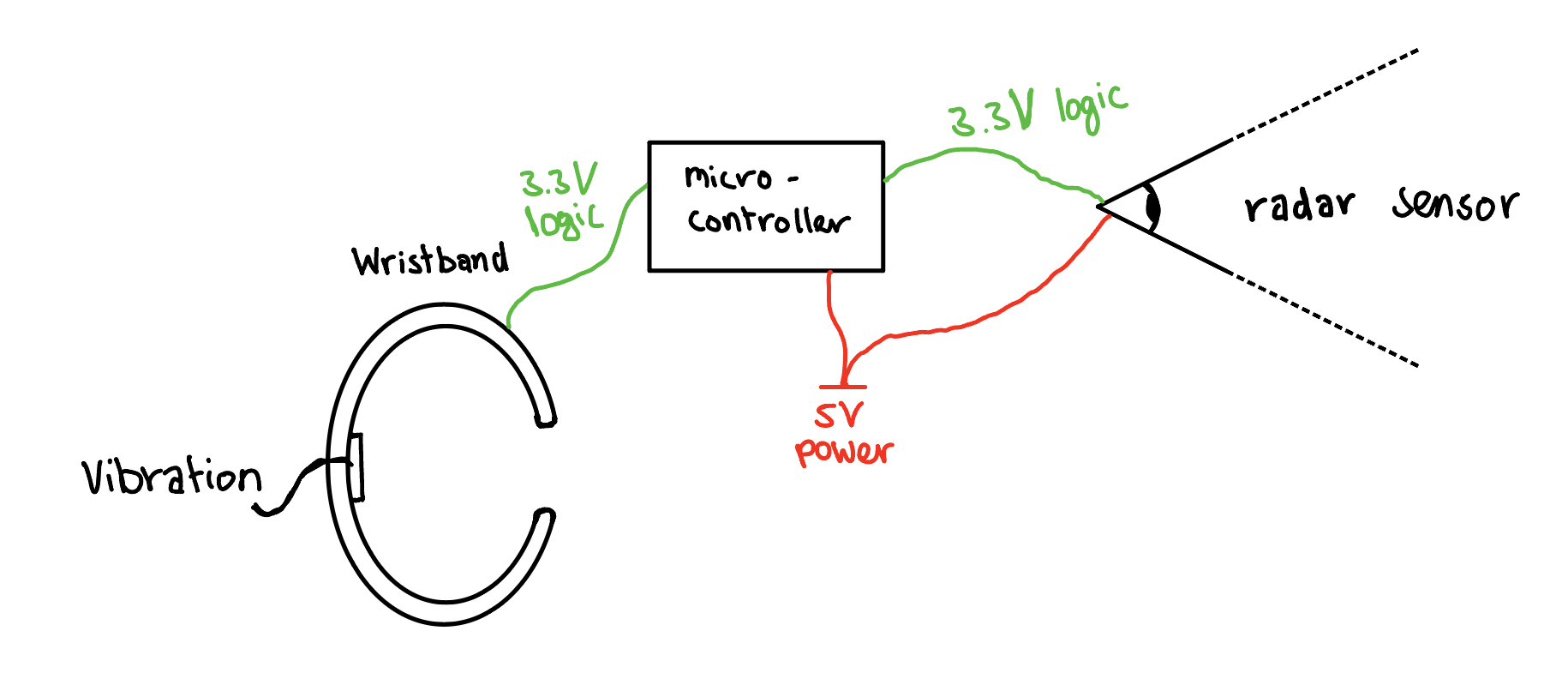

[[File:Road crossing aid system overview.jpg|alt=A system overview of the road crossing aid|thumb|568x568px|A system overview of the road crossing aid]] | |||

Keeping in mind the design requirements, we can start thinking about the prototype. First of all, there will have to be some feedback to the user when it is safe to cross the road. It was clear there is a preference for vibrational feedback, so a vibration motor will be used for this purpose. The next step is to think about how to attach this to the user so they can feel it. There are several options here: one is to attach some kind of belt to the waist, another is to make a vest, and the last is to have a wristband. In the interviews some people asked for discreet options and others preferred familiar placings. As such the wristband seems like the best choice here, which is what the prototype will use. That being said, the other solutions are not without merit. Their larger size might allow them to embed multiple vibration motors, allowing for more detailed or directional feedback.

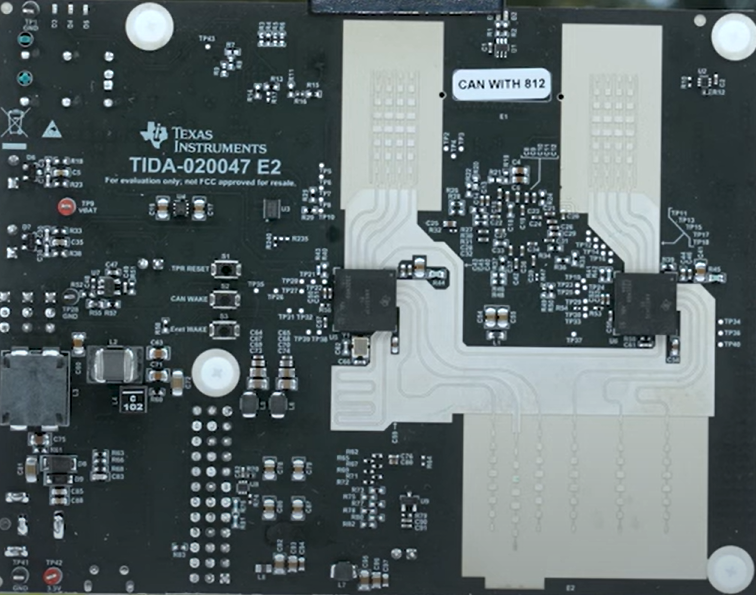

Next is to think about the detection of traffic. From the multiple design options, the long-range radar sensor seems the most reliable, and as safety (and thus reliability) is very important, this is the detection method of choice. The chosen sensor is the Texas Instruments AWR2243 radar sensor, the details of which will be discussed below.

==Simulation== | |||

To write a simulation for a radar sensor, we need to understand how a radar sensor works. Radar stands for Radio Detection and Ranging. The radar sensor uses radio waves to detect objects and measure their distance, velocity and position. The radar sensor transmits radio waves through an antenna. The electromagnetic waves travel at the speed of light and have a frequency ranging from 1 to 110 GHz. The radio waves reflect off objects, and the strength of the reflection depends on the material. The antenna receives the reflected waves. From the time between transmitting and receiving the radio waves, the distance can be calculated using the following equation.

<math>Distance = \frac{speed \ of \ light \cdot time}{2} </math> | |||

If the object is moving, the frequency of the reflected wave differs from the frequency of the transmitted wave. This is called the Doppler effect. The Doppler frequency shift depends on the relative velocity between the radar sensor and the object. Since the blind person is standing still, the sensor's own velocity is 0 m/s in all scenarios, which simplifies the simulation.

The velocity can be calculated using the Doppler shift equation: | |||

<math>f_d = \frac{2 \cdot v f_0 }{c}</math> | |||

where <math>f_d</math> is the Doppler shift frequency, <math>v</math> is the relative velocity of the object, <math>f_0</math> is the transmitted frequency and <math>c</math> is the speed of light. | |||
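The two equations above can be checked numerically with a small Python sketch. The function names are illustrative, and the 77 GHz carrier frequency is an assumption chosen as a typical value for automotive radar; it is not a parameter taken from the simulation.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(time_s):
    """Distance = speed of light * time / 2 (the wave travels there and back)."""
    return C * time_s / 2


def doppler_shift(v_ms, f0_hz):
    """f_d = 2 * v * f_0 / c for a target with relative velocity v."""
    return 2 * v_ms * f0_hz / C


def velocity_from_doppler(fd_hz, f0_hz):
    """Inverse of the Doppler equation: v = f_d * c / (2 * f_0)."""
    return fd_hz * C / (2 * f0_hz)


F0_HZ = 77e9  # assumed carrier frequency, for illustration only

fd = doppler_shift(13.88, F0_HZ)  # car approaching at 50 km/h
print(f"Doppler shift: {fd:.0f} Hz")
print(f"Recovered velocity: {velocity_from_doppler(fd, F0_HZ):.2f} m/s")
print(f"Range for a 1 microsecond round trip: {range_from_round_trip(1e-6):.1f} m")
```

Under this assumption, a car approaching at 50 km/h produces a shift of roughly 7.1 kHz, and an echo that returns after one microsecond corresponds to a target at about 150 meters.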

The radar simulation is based on a [https://uk.mathworks.com/help/radar/ug/simultaneous-range-and-speed-estimation-using-mfsk-waveform.html?searchHighlight=mfsk&s_tid=srchtitle_support_results_6_mfsk MATLAB radar package]. The helperMFSKSystemSetup.m file is used to set up the predefined situation. The situation can be set up by giving the distance and velocity of the vehicles. The model propagates in free space. The transmitter and receiver are also defined in the setup file. Lastly, the user velocity is set to zero. | |||

The radar sensor is demonstrated using the Multiple Frequency Shift Keying (MFSK) waveform. The MFSK waveform’s time frequency representation helps identify the vehicles. | |||

The key parameters for the MFSK waveform are sample rate, sweep bandwidth, step time, and steps per sweep. The radar sensor does not provide all these parameters.

The distance and velocity are calculated using the beat frequency and phase difference and Doppler estimation. The MFSK waveform provides the range and speed estimations. If there are ghost targets, they will be filtered out later. The estimated range and speed have a small difference with the set distance and velocity. | |||

The code filters out random outliers that the radar sensor would not be able to pick up: speeds above 100 km/h and distances larger than 200 meters are removed. Road users travelling away from the user can also be filtered out. If the radar sensor measures multiple road users with a similar speed, the values are averaged, since the radar sometimes picks up the same road user multiple times because of CFAR peak detections. This also gives more accurate results. Then, with the distance and velocity of the road users, the arrival time of each road user can be calculated. The threshold time needed to cross the road can be set and is compared to the arrival times. If all arrival times are larger than the threshold, the device signals the user that it is safe to cross.
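The filtering and decision steps described above can be sketched as follows. This is a simplified Python illustration, not the MATLAB code used in the simulation. The <code>Detection</code> class, the sign convention (positive speed means approaching), the 1 m/s tolerance for "similar speed" and the choice to drop stationary detections are all assumptions made for the example.

```python
from dataclasses import dataclass

MAX_SPEED_MS = 100 / 3.6  # 100 km/h expressed in m/s
MAX_RANGE_M = 200.0


@dataclass
class Detection:
    range_m: float
    speed_ms: float  # assumed convention: positive = approaching the user


def filter_detections(dets):
    """Drop outliers and road users that are not approaching the user."""
    return [d for d in dets
            if 0 < d.speed_ms <= MAX_SPEED_MS and 0 < d.range_m <= MAX_RANGE_M]


def _average(group):
    n = len(group)
    return Detection(sum(d.range_m for d in group) / n,
                     sum(d.speed_ms for d in group) / n)


def merge_similar(dets, speed_tol=1.0):
    """Average detections with similar speed (assumed duplicate CFAR peaks)."""
    merged, group = [], []
    for d in sorted(dets, key=lambda d: d.speed_ms):
        if group and d.speed_ms - group[0].speed_ms > speed_tol:
            merged.append(_average(group))
            group = []
        group.append(d)
    if group:
        merged.append(_average(group))
    return merged


def arrival_times(dets):
    """Time in seconds until each road user reaches the user."""
    return [d.range_m / d.speed_ms for d in dets]


def safe_to_cross(dets, threshold_s):
    """True if every remaining road user arrives later than the threshold."""
    cleaned = merge_similar(filter_detections(dets))
    return all(t > threshold_s for t in arrival_times(cleaned))
```

For example, two detections at about 50 m and 10 m/s would be merged into one road user with an arrival time of roughly 5 s, so <code>safe_to_cross</code> returns true for a 4 s threshold and false for a 6 s threshold.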

The following sections of the code are extras that would not be included in the design, since the real radar sensor examines the situation continuously, whereas the simulation runs only once on a predefined situation. The code sorts the arrival times and calculates the differences between them. If a difference exceeds the threshold, the corresponding position is selected, and the system signals the user that it is safe to proceed once the arrival time for that position has passed. The last part of the code visualises the predefined situation, showing that the radar sensor is working and that the user can safely cross the road after receiving the feedback from the device.
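The gap search described above can be sketched in Python as follows. This is an illustrative sketch rather than the MATLAB code itself. The function name is hypothetical, and the handling of the edge cases (no traffic, a first vehicle that is already far enough away, and no sufficient gap between vehicles) is an assumption.

```python
def first_safe_moment(arrival_times, threshold_s):
    """Return the time (in seconds from now) at which crossing can start.

    The arrival times are sorted and the gaps between consecutive arrivals
    are compared with the crossing threshold.
    """
    times = sorted(arrival_times)
    # Assumption: with no traffic, or if the first road user arrives later
    # than the threshold, the user can start crossing immediately.
    if not times or times[0] > threshold_s:
        return 0.0
    for current, following in zip(times, times[1:]):
        if following - current > threshold_s:
            return current  # cross once this road user has passed
    # Assumption: if no gap is large enough, wait for the last road user.
    return times[-1]
```

With arrival times of 2, 4, 15 and 16 seconds and a threshold of 8 seconds, the first sufficient gap lies between the second and third road user, so the function returns 4.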

Additionally, if the user signals their intention to cross by using a stick, the sensor also checks whether the traffic is slowing down. If the sensor detects that the first car in each lane has stopped and traffic has come to a halt in both directions (if traffic is present), it will signal that it is safe to cross, even if there are still vehicles further behind that need to slow down due to the car in front. This is achieved by monitoring the XY positions of the cars and bicycles and verifying whether their speeds have decreased to zero. This is not implemented in the simulation, since the simulation does not continuously sense the situation.
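Although this check is not part of the simulation, the idea can be sketched in a few lines of Python. Everything in this sketch is hypothetical: the dictionary keys, the lane labels and the small speed tolerance used to decide that a vehicle "has stopped" are assumptions for illustration only.

```python
def traffic_has_stopped(vehicles, speed_eps=0.1):
    """True if the closest vehicle in every lane is (almost) standing still.

    Each vehicle is a dict with the assumed keys 'lane', 'distance_m'
    and 'speed_ms'. Vehicles further back in a lane are ignored.
    """
    lead_per_lane = {}
    for v in vehicles:
        lead = lead_per_lane.get(v["lane"])
        if lead is None or v["distance_m"] < lead["distance_m"]:
            lead_per_lane[v["lane"]] = v
    # With no traffic at all, all() over an empty collection is True.
    return all(abs(lead["speed_ms"]) <= speed_eps
               for lead in lead_per_lane.values())
```

In this sketch a car that is still braking further back in a lane does not block the signal, as long as the car in front of it has stopped.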

==Physical product design== | |||

===Design wearable sensor:=== | |||

'''Function:''' | |||

Analyse the traffic situation and signal to the bracelet whether or not it is safe to cross.

'''Sensors:''' | |||

* Radar | |||

* Speaker | |||

* Bluetooth | |||

'''Requirements:''' | |||

* Cannot be heavy

* Radar needs to maintain the right angle

* Has to be moveable, as the same clothes, hats, etc. are not worn in every season

* Has to view the road the user is trying to cross on both sides | |||

'''Inspiration/reasoning:''' | |||

Two radars are needed, because a single radar would otherwise have to be turned manually from left to right. Users reported not wanting to hold the sensor in their hands, for fear of dropping it or having it stolen, or because their hands are full. Attaching it to the front of the user is not an option either: users told us it is dangerous for a blind person to look from side to side, as this signals to cars and other traffic that they can see, which means the traffic won't accommodate them.

The placement of the sensor was decided in the final interview, in which our preliminary design was discussed. The team was deciding between attaching it to the cane or having the user wear it on the shoulders. It was explained that a cane is constantly swept from side to side and gets stuck behind surfaces, tiles, etc., and thus receives a lot of impact and movement, which would make the sensor unstable. In addition, the legal protocol in the Netherlands is to hold the cane vertically and then forward when crossing roads, which would prevent the sensor from being angled in the right direction before crossing. The shoulders were said to be a good solution.

There was doubt about the aesthetics of having sensors on the shoulders, as this could look silly or robotic, but a user assured us that it was a good idea: "Out of experience I will tell you that blind people can be willing to sacrifice some looks for independence, functionality and comfort." (translation of Peter 2025). Once the functionality works, the aesthetics can be improved.

The clip-on attachment has to be sturdy so that the angle of the radar stays in place, yet moveable so the user can wear it through the seasons.

One way to do this is to add multiple attachment options: a clip works on a t-shirt but not on a sweater, and pins work on a sweater but not on a jacket. By adding clips, pins and a strap, the user can adjust it to their needs.

'''Sketch:''' | |||

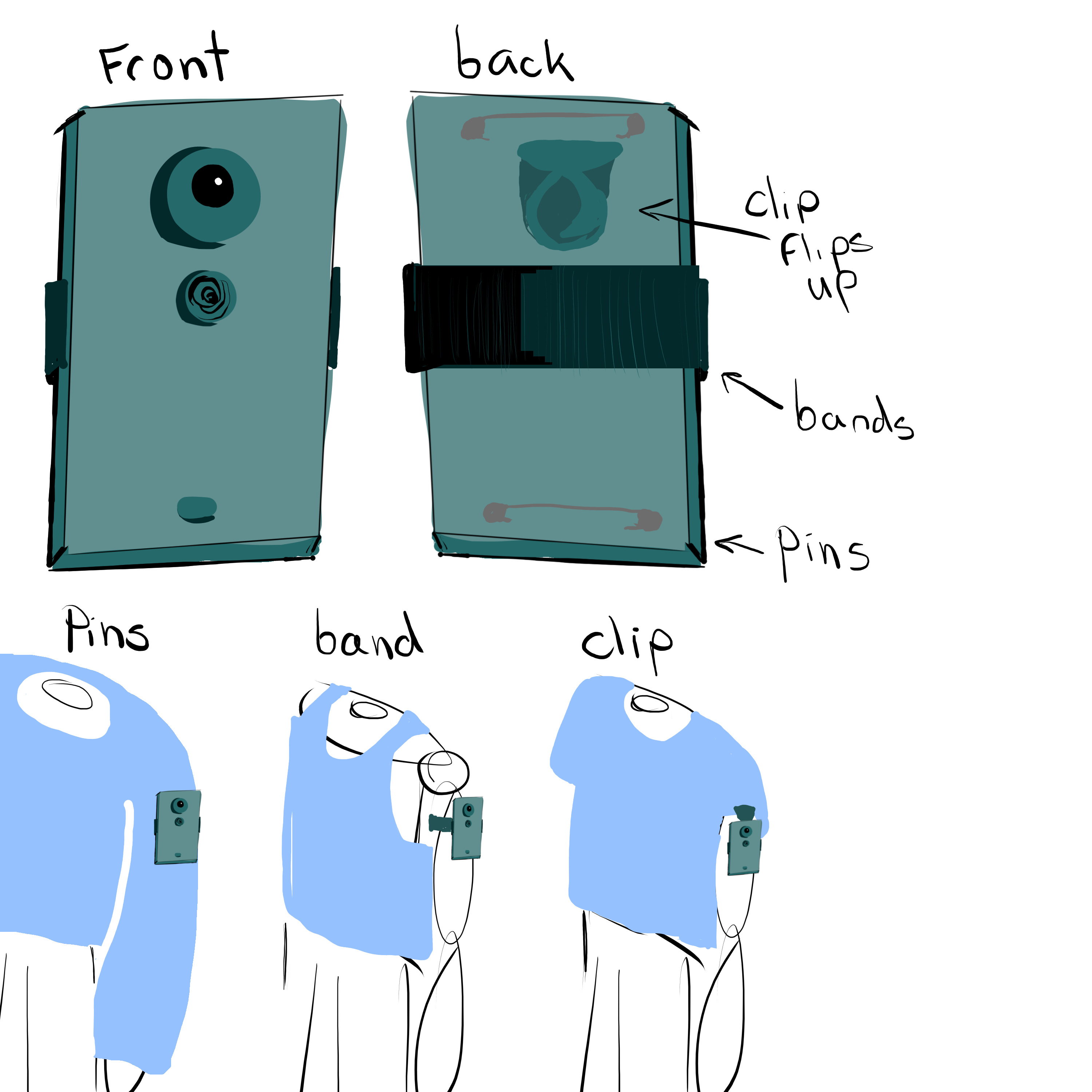

[[File:Sensor design sketch .png|alt=A sketch of the radar as a wearable in different seasons. It is the same color as the watch and has 2 pins, a clip and a band so it can be attached to the shoulders in different ways|thumb|left|Sketch of radar as a wearable]]

[[File:Radar sensor used.png|alt=An image of a sensor it is a flat radar. With a big white area on the right that makes sure it can see traffic.|thumb|right|The radar sensor used in the simulation]] | |||

Sketch of a possible design for the wearable radar. The top of the wearable does not reflect what the actual product would look like, because each radar has a different area that must not be covered. For our radar this is the white area in the image to the right of the sketch. The sketch does illustrate the several ways of keeping the radar on the shoulders. A combination of pins, a band and a clip makes sure it works for all sizes and clothes.

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

<br> | |||

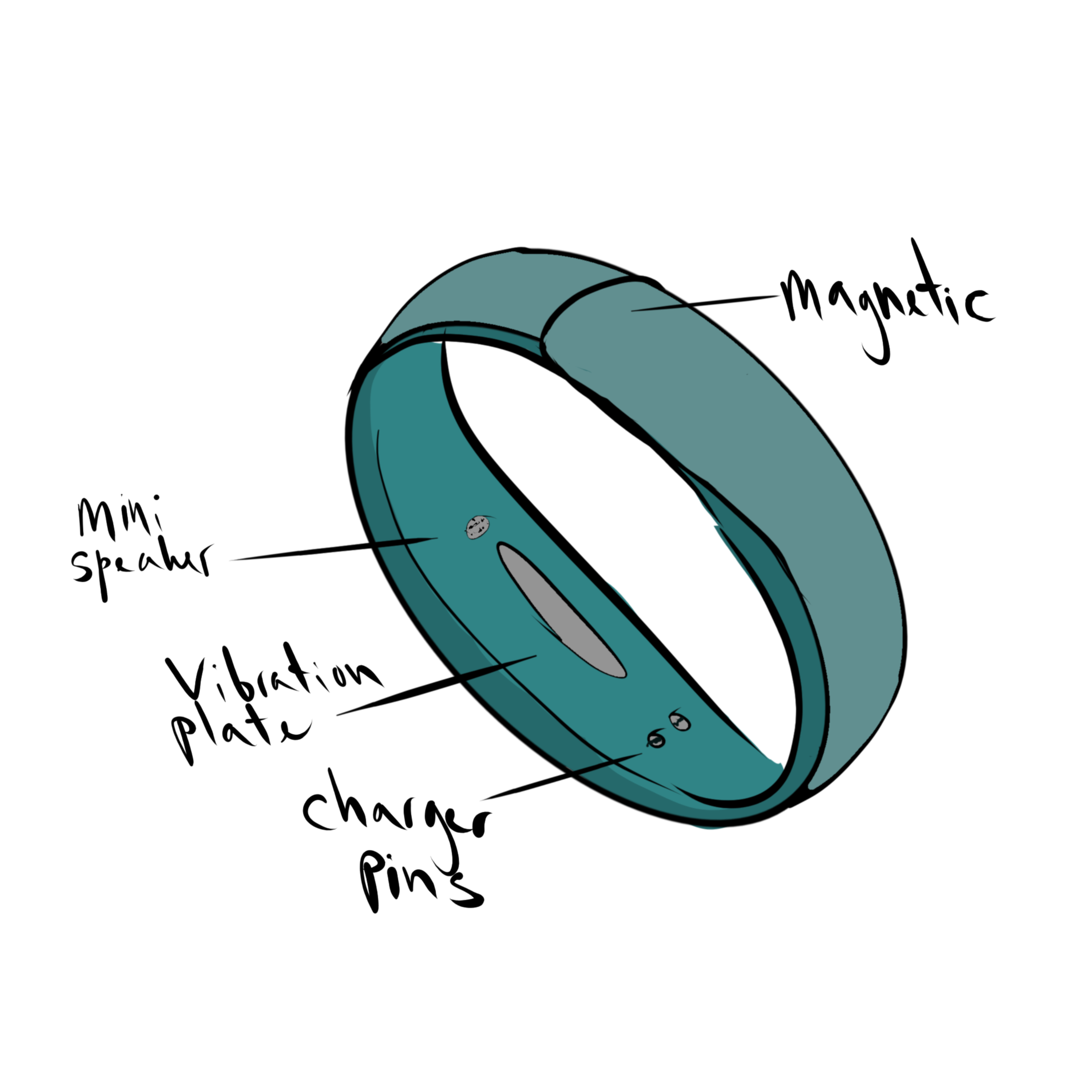

===Design feedback wristband:=== | |||

'''Function:''' Letting the user know when it is safe to cross the road and when it is not. | |||

'''Sensors:''' | |||

* Vibration plate | |||

* Charging port | |||

* Mini speaker (optional but ideal) | |||

* Some sort of Bluetooth/Wi-Fi connection | |||

'''Requirements:''' | |||

* Has to be chargeable | |||

* On/off button | |||

* Can not easily fall off, has to be easy to put on | |||

* Can not be bulky | |||

* Discreet and fashionable | |||

'''Inspiration:'''[[File:Xiaomi smartband 7.png|alt=in the image you can see a black smartwatch from the bottom with two little metal dots where the charging cable goes|thumb|173x173px|Xiaomi smartband 7|left]]For the design a bracelet that is as flat as possible is ideal. Users have to wear it through all seasons and bulky watches do not go under all winter sweaters and jackets. Having a bulky watch can also be uncomfortable. | |||

Because of the flat design, a flat charging option is needed. Considering affordability, ease of use, compactness and efficiency, the magnetic pogo pin charger is the best option. It can be seen in the image of the Xiaomi smartband, which is an affordable smartwatch. This charger is magnetic and thus easy for a blind person to use.

<br> | |||

[[File:Wristband2.png|alt=A sketch of a green blue wristband with a sensor in it for haptic feedback and charger holes|left|thumb|243x243px|Sketch of the wristband]]

'''Product sketch:''' This sketch was made based on the requirements listed above.

The bracelet is worn using a strong magnetic strap, which makes it easy to put on without clasps. The charger pins are also magnetic, so the charger pops right on.

The mini speaker notifies the user when the band is charging, and allows a signal to be sent from a phone so that the user can find the band.

The vibration plate in the sketch is a flat rectangle, but in our prototype it will be a slightly thicker circle because of budget and short-term availability.

Using a little bit of a wider band ensures the sensor will stay flat against the skin and the band will not move around too much. It is also more comfortable in daily use. | |||

The band has a very unisex feel and can be used by young and old, men and women. Multiple colours could be sold. | |||

'''Improvements:''' | |||

With the speaker and a Bluetooth module installed, the band could also be programmed further or connected to an app.

The vibration module could double as a navigation tool, or a sonar sensor could be added to notify the user of their surroundings.

<br> | |||

<br> | |||

<br> | |||

==Implementation== | |||

The next step is to create the prototype and implement the feedback and sensing aspects of the design. The sensing was tested as a proof of concept via a simulation, while the feedback was tested by gathering feedback from users.

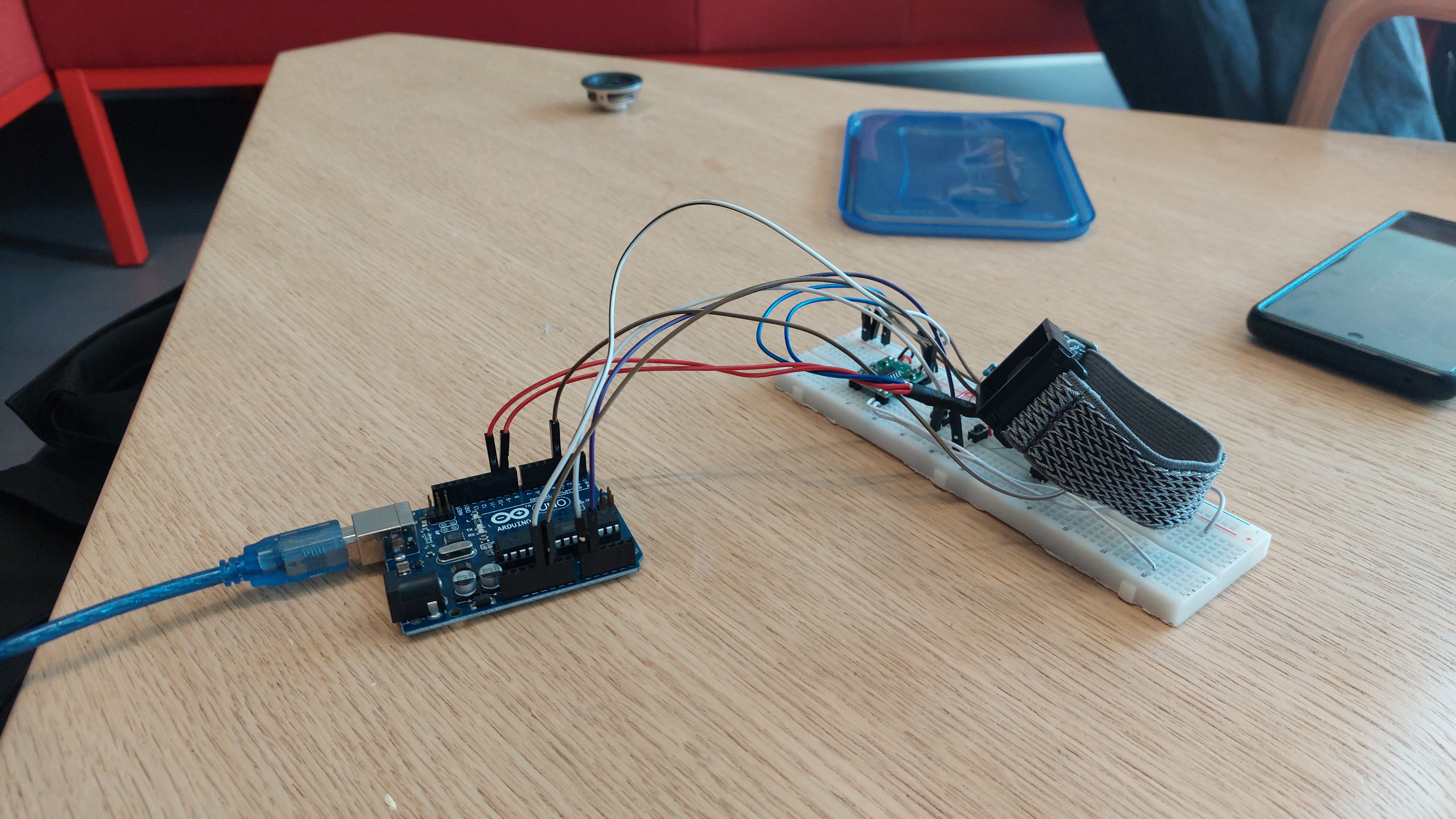

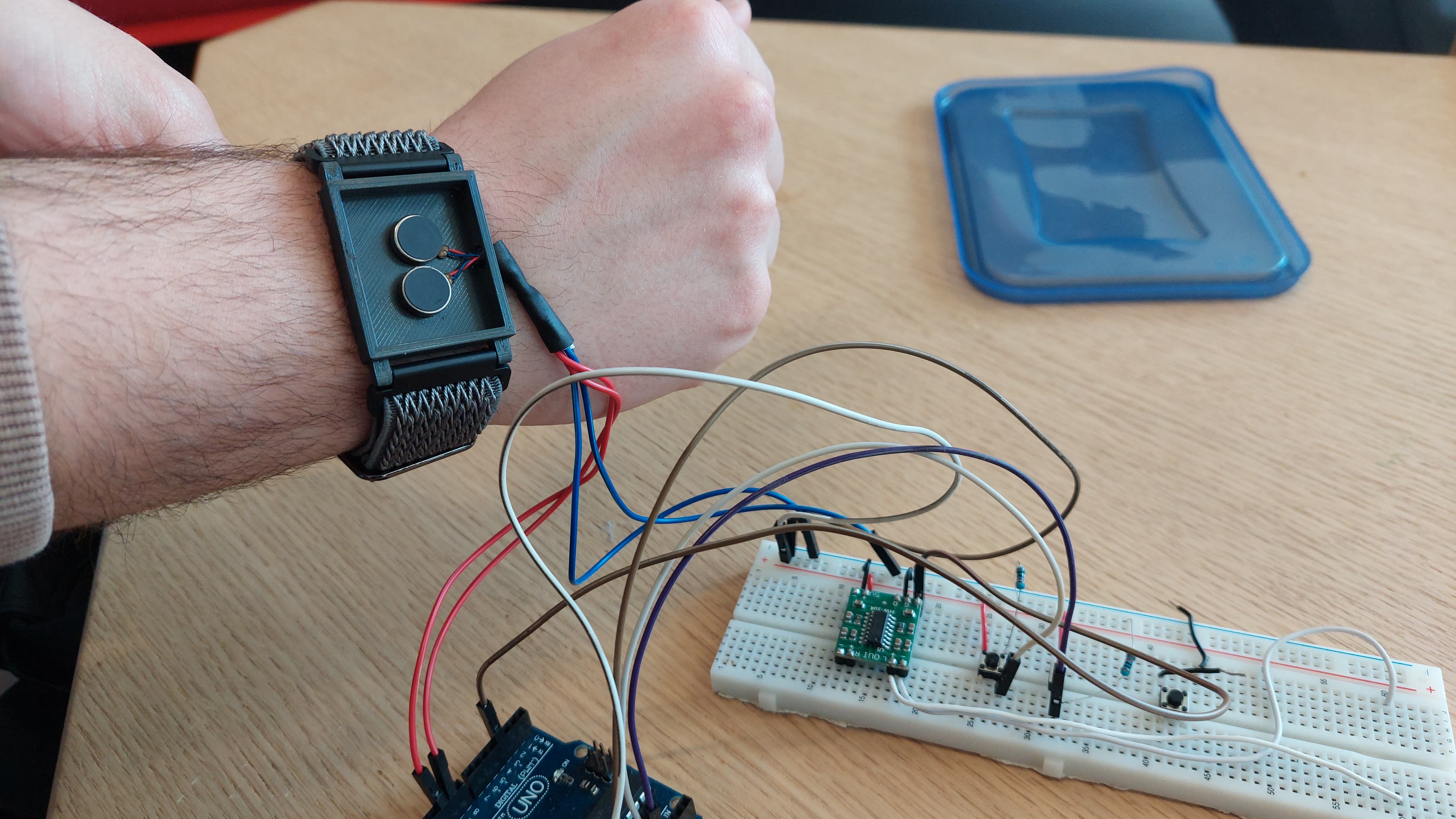

[[File:Physical protoype group 18.jpg|alt=A physical prototype for the device meant to aid visually impaired people with crossing busy roads. The picture includes an Arduino board, connected with a few wires to a wristband with two vibrating modules in it. |thumb|The physical prototype]] | |||

The prototype made can be seen in the picture next to the materials table. This prototype uses the following materials: | |||

{| class="wikitable" | |||

|+Materials Table | |||

!Materials | |||

!What for? | |||

!Representation in actual design: | |||

|- | |||

|Arduino Uno board | |||

|Sending timed signals to drive the feedback components, turning the system on and off. | |||

|The final design would have a small single-board computer such as a Raspberry Pi, which would provide the extra compute power needed to process the sensor data and could also facilitate wireless communication between the feedback devices.

|- | |||

|Buttons (3x) | |||

|Turning on the system (for the user), switching between conditions. | |||

|The final design would have one button on the wristwatch, so that the user can turn the sensing device on or off whenever they want. | |||

|- | |||

|Vibrating Modules (2x) | |||

|Provide the main vibration feedback of the device, with a different mode of vibration for the No-Go and the Go condition.

|The final design would have these vibration modules inside the wristband as described in the product sketches. In the prototype they are left exposed, so that during testing it is easier to sense whether they work and to change the wiring.

|- | |||

|Speaker | |||

|Providing auditory feedback to the user, plays an upbeat sound for a Go condition and a glum sound for a No-Go condition. | |||

|The final design would have earpieces so that the user can clearly hear the audio signals. These would be completely optional should the user not find them necessary.

|} | |||

====Testing the modes of vibration==== | |||

The physical prototype was made with two vibrating modules inside the wristband. Four different modes of vibration were tested. The reasoning behind each of these modes is based on the literature research.

*Mode 1: Varying waiting time. The No-Go condition used long waiting times between pulses, the Go condition used rapid, closely spaced pulses. The length of the pulses is equal in both conditions.

**The rhythm is a 100 ms vibration followed by a 900 ms wait in No-Go and a 100 ms vibration followed by a 100 ms wait in Go.

**This mode is similar to how a ''rateltikker'' works, but in vibration format instead of auditory.

*Mode 2: Varying pulse time. The No-Go condition used long pulses, the Go condition used short pulses. The waiting time between pulses is equal in both conditions.

**The rhythm is an 800 ms vibration followed by a 100 ms wait in No-Go and a 100 ms vibration followed by a 100 ms wait in Go.

**The idea of this mode is that it mimics the pulses used in Morse code to differentiate between different conditions.

*Mode 3: Varying intensity of pulse. The No-Go condition used low-intensity pulses, the Go condition used high-intensity pulses. The waiting time between pulses and the length of the pulses are equal in both conditions.

**The rhythm is the same as in Mode 1, but the power of vibration is 55% of the full power in No-Go and 90% of the full power in Go.

**The idea of this mode was that a higher intensity of the pulse is easy to notice when the environment is quite busy, giving a sense of urgency via the device.

*Mode 4: Combining Mode 2 & 3.

**The rhythm is an 800 ms vibration at 55% power followed by a 100 ms wait in No-Go and a 100 ms vibration at 90% power followed by a 100 ms wait in Go.

**This was done to see if combining different modes of information would increase the clarity of the information relayed to the user. | |||
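The four modes can be summarised as a small lookup table of pulse length, waiting time and power. The Python sketch below is only an illustration of the timings listed above and is not the Arduino code running on the prototype. The names <code>Pattern</code>, <code>MODES</code> and <code>pulses</code> are hypothetical, and the 100% power used for Modes 1 and 2 is an assumption, since no power level is specified for them.

```python
from typing import NamedTuple


class Pattern(NamedTuple):
    on_ms: int      # length of one vibration pulse
    off_ms: int     # waiting time after the pulse
    power_pct: int  # vibration power as a percentage of full power


# Timings as listed for each mode; 100% power in Modes 1 and 2 is assumed.
MODES = {
    1: {"no_go": Pattern(100, 900, 100), "go": Pattern(100, 100, 100)},
    2: {"no_go": Pattern(800, 100, 100), "go": Pattern(100, 100, 100)},
    3: {"no_go": Pattern(100, 900, 55), "go": Pattern(100, 100, 90)},
    4: {"no_go": Pattern(800, 100, 55), "go": Pattern(100, 100, 90)},
}


def pulses(pattern: Pattern, duration_ms: int):
    """List the (start_ms, end_ms, power_pct) pulses that fit in duration_ms."""
    period = pattern.on_ms + pattern.off_ms
    schedule = []
    start = 0
    while start + pattern.on_ms <= duration_ms:
        schedule.append((start, start + pattern.on_ms, pattern.power_pct))
        start += period
    return schedule


if __name__ == "__main__":
    for mode, conditions in MODES.items():
        for name, pattern in conditions.items():
            print(mode, name, pulses(pattern, 2000))
```

Writing the modes as data makes the differences easy to compare: within two seconds, the No-Go condition of Mode 1 produces two short pulses, while the Go condition produces ten.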

We tested each mode once, without giving any instruction. Then, each mode was played again, and the tester was asked for their opinion, with a main focus on whether the No-Go and Go conditions were (1) differentiable and (2) clear and readable.

[[File:Physical Protoype Group 18 (worn).jpg|alt=A physical prototype for the device meant to aid visually impaired people with crossing busy roads. The picture includes an Arduino board, connected with a few wires to a wristband worn, showing the two vibrating modules in it. |thumb|The physical prototype worn, showing the vibrating modules]] | |||

From this testing we concluded the following: | |||