PRE2024 1 Group2


== '''Group Members''' ==

{| class="wikitable"
|+
|Tim Damen
|1874810
|Applied Physics
|-
|Ruben Otter
|1810243
|Computer Science
|}

== '''Problem Statement''' ==

In modern warfare, drones play a major role. They are relatively cheap to make and can cause a lot of harm, while few countermeasures exist against them. Large anti-drone systems protect important areas from drone attacks, but they are expensive and too large to carry around. As a result, individuals at the front line are not protected by them and remain vulnerable to drone attacks. We aim to show that an anti-drone system can be made cheap, lightweight and portable, so that it can protect these vulnerable individuals.

== '''Objectives''' ==

To show that an anti-drone system can be made cheap, lightweight and portable, we do the following:

* Explore and determine the ethical implications of the portable device.
* Determine how drones and projectiles can be detected.
* Determine how a drone or projectile can be intercepted and/or redirected.
* Build a prototype of this portable device.
* Create a model for the interception.
* Prove the system’s utility.

== '''Planning''' ==

We will be working on this project during the upcoming 8 weeks. The table below shows when we aim to finish the different tasks within these 8 weeks.

{| class="wikitable"
|+
|-
|
|Start constructing prototype and software.
|-
|
|-
|4
|Continue constructing prototype and software.
|-
|5
|Finish prototype and software.
|-
|6
|Evaluate testing results and make final changes.
|-
|7
|Create final presentation.
|-
|8
|Finish Wiki page.
|}

== '''Risk Evaluation''' ==

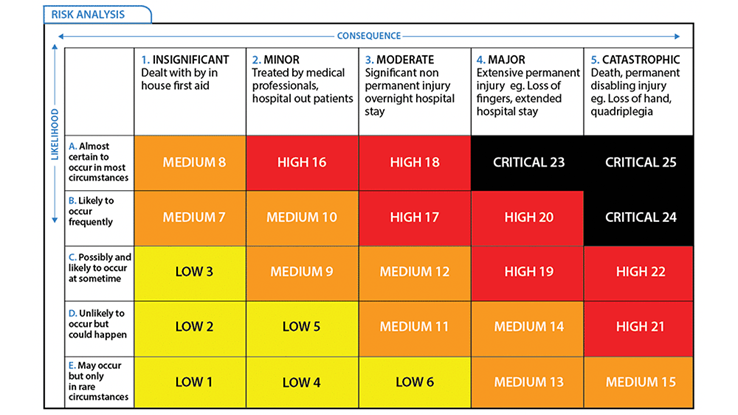

A risk evaluation matrix can be used to determine where the risks in our project lie. It is based on two factors: the consequence if a task is not fulfilled and the likelihood that this happens. Both factors are rated on a scale from 1 to 5. The matrix below combines them into a final risk level of low, medium, high or critical. Knowing the risks beforehand helps prevent failures, because it shows where special attention is required.<ref>How to read a risk matrix used in a risk analysis (assessor.com.au)</ref>

[[File:Imaged.png|thumb|Risk evaluation matrix|426x426px]]

{| class="wikitable"
|'''Task'''
|'''Consequence (1-5)'''
|'''Likelihood (1-5)'''
|'''Risk'''
|-
|Collecting 25 articles for the SoTA
|1
|1
|Low
|-
|Interviewing front line soldier
|1
|2
|Low
|-
|Finding features for our system
|4
|1
|Medium
|-
|Making a prototype
|3
|3
|Medium
|-
|Make the wiki
|5
|1
|Medium
|-
|Finding a detection method for drones and projectiles
|4
|1
|Medium
|-
|Determine (ethical) method to intercept or redirect drones and projectiles
|5
|1
|Medium
|-
|Prove the system's utility
|5
|2
|High
|}
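The matrix image is not reproduced in text, so the sketch below only approximates it. It assumes the matrix can be read as the product of consequence and likelihood. The thresholds are our own choice: low below 4, medium from 4 to 9, high from 10 to 19 and critical from 20 upward. They were picked to reproduce every rating in the risk table and are not taken from the referenced risk matrix. The function name `risk_level` is hypothetical.

```python
# Hypothetical reading of the 5x5 risk matrix: score = consequence * likelihood.
# The thresholds are assumptions chosen to reproduce the ratings in the risk table.
def risk_level(consequence: int, likelihood: int) -> str:
    if not (1 <= consequence <= 5 and 1 <= likelihood <= 5):
        raise ValueError("both factors must be rated from 1 to 5")
    score = consequence * likelihood
    if score >= 20:
        return "Critical"
    if score >= 10:
        return "High"
    if score >= 4:
        return "Medium"
    return "Low"


# (task, consequence, likelihood) as listed in the risk evaluation table.
TASKS = [
    ("Collecting 25 articles for the SoTA", 1, 1),
    ("Interviewing front line soldier", 1, 2),
    ("Finding features for our system", 4, 1),
    ("Making a prototype", 3, 3),
    ("Make the wiki", 5, 1),
    ("Finding a detection method for drones and projectiles", 4, 1),
    ("Determine (ethical) method to intercept or redirect drones and projectiles", 5, 1),
    ("Prove the system's utility", 5, 2),
]

if __name__ == "__main__":
    for task, c, l in TASKS:
        print(f"{risk_level(c, l):8} {task}")
```

If the real matrix uses different cut-offs, only the thresholds in `risk_level` need to change.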

== '''Target Market Interviews''' ==

=== '''<u>Interview 1: Introductory interview with F.W., Australian Military Officer Cadet (military as a potential user)</u>''' ===

<u>Q1: What types of anti-drone (/projectile protection) systems are currently used in the military?</u>
* A: The systems currently in use include the Trophy system from Israel, which can intercept anti-tank missiles and potentially also dropped payload explosives or drones. Another similar system is the Iron Fist, also from Israel. Additionally, Anduril's Lattice system offers detection and identification capabilities within a specified operational area, capable of detecting all possible threats and, if necessary, intercepting them.

<u>Q2: What are key features that make an anti-drone system effective in the field?</u>
* A: Effective systems are often deployed in open terrain like fields and roads, as drones have difficulty navigating dense forests. An effective anti-drone system could include a training or tactics manual to optimize its use in these environments. Key features also include a comprehensive warning system that alerts troops on the ground to incoming drones, allowing them to take cover. Systems should not focus solely on interception but also on early detection.

<u>Q3: What are the most common types of drones that these systems are designed to counter?</u>
* A: The systems are primarily designed to counter consumer drones and homemade FPV drones, which are known for their speed and accuracy. These drones are incredibly effective and easy to construct.

<u>Q4: What are the most common types of drone interception that these systems employ?</u>
* A: The common types of interception include non-kinetic methods such as RF and optical laser jamming, which have a low cost-to-intercept. Kinetic methods include shooting with regular rounds, using net charges, or employing blast interception techniques such as those used in the Iron Fist system. High-cost methods like air-burst ammunition are also used because of their high interception likelihood.

<u>Q5: Are there any specific examples of successful or failed anti-drone operations you could share?</u>
* A: No specific examples were shared during the interview.

<u>Q6: How are drones/signals determined to be friendly or not?</u>
* A: The identification process was not detailed in the interview.

<u>Q7: What are the most significant limitations of current anti-drone systems?</u>
* A: Significant limitations include the high cost, which makes these systems unaffordable for individual soldiers or small groups. Most systems are also complex and require vehicle mounts for transportation, making them less suitable for quick or discreet maneuvers.

<u>Q8: Are there any specific environments where anti-drone systems struggle to perform well?</u>
* A: These systems typically perform well in large open areas but may struggle in environments with dense vegetation, which can offer natural cover for troops but limits the functionality of the systems.

<u>Q9: Can you give a rough idea of the costs involved in deploying these systems?</u>
* A: The costs vary widely, and detailed pricing information is generally available on a need-to-know basis, making it difficult to provide specific figures without direct inquiries to manufacturers.

<u>Q10: Which ethical concerns may be associated with the use of anti-drone systems, particularly regarding urban, civilian areas?</u>
* A: Ethical concerns are significant, especially regarding deployment in civilian areas. The military undergoes extensive ethical training, and all new systems are evaluated for their ethical implications before deployment.

<u>Q11: What improvements do you think are necessary to make anti-drone systems more effective?</u>
* A: The interview did not specify particular improvements but highlighted the need for systems that can be easily deployed and operated by individual soldiers.

<u>Q12: Do you think AI or machine learning could help enhance anti-drone systems?</u>
* A: The potential for AI and machine learning to enhance these systems is recognized, with ongoing research into their application in anti-drone and anti-missile technology.

<u>Q13: Is significant training required for personnel to effectively operate anti-drone systems?</u>
* A: The level of training required varies, but there is a trend towards developing systems that require minimal training, allowing them to be used effectively straight out of the box.

<u>Q14: How do these systems usually handle multiple drone threats or swarm attacks?</u>
* A: Handling multiple threats involves a combination of detection, tracking and engagement capabilities, which were not detailed in the interview.

<u>Q15: How are these systems tested and validated before they are deployed in the field?</u>
* A: Systems undergo rigorous testing and validation processes, often conducted by military personnel, to ensure effectiveness under various operational conditions.

=== '''<u>Interview 2: Interview with B.D. to specify requirements for the system, Volunteer on Ukrainian front lines (volunteers and front line soldiers as potential users)</u>''' ===

<u>What types of anti-drone systems are currently used near the front lines?</u>
*Mainly see RF jammers and basic radar systems deployed here
*Detection is the priority
*Nothing specifically for intercepting munitions dropped by drones
**<u>Follow-up: What are general ranges for these RF jammers and other detection methods?</u>
***Ranges vary widely: RF detectors or radar can reach 100 meters, but this depends heavily on the conditions
***Rarely available consistently across positions
***Soldiers usually listen for drones, but this can be difficult when moving or during incoming/outgoing fire
***Any RELIABLE range would be beneficial, but to give enough time to react, a range of around 20 or 30 meters would probably be the minimum

<u>What are key features that make an anti-drone system effective in the field?</u>
*Needs to be light and easy to move and hide: no bulky shapes or protruding parts
**No space in backpacks, cars or trucks, which are already often full of equipment. Nothing you couldn’t carry and run with (estimate: 30 kg)
**Pack-up and deployment speed: exfil usually takes multiple hours of packing up for drone operator squads, but front line troops are in constant movement. Anything above 30 seconds is insufficient [and that already seems to be pushing it]
*No language barrier advisable [assumed to mean: basically no training required]
*Detection range is quite critical. Soldiers and others near the front lines need a few seconds to prepare for an incoming impact
**<u>Follow-up: What kind of alarm to show drone detection would be best?</u>
***[...] Conclusion: sound is the only real option that does not immediately give away the position of the system. Drones often fly over positions, so alerting soldiers with lights would risk revealing positions the drone would otherwise have flown past. Even sound is already risky.

<u>What are the most significant limitations of current anti-drone systems?</u>
*Apart from RF and some alternatives, there is no real solution to drone drops on either side
*Systems that are light enough to be carried by one person and simple enough to operate without extensive training are rare, if any exist at all

<u>General Discussion on Day-to-Day Experiences</u>
*Drone attacks are very unpredictable: periods of constant drone sounds above, then days of nothing. Constant vigilance is necessary, which tires you out and puts you under serious pressure.
*According to people he has talked to, RF jammers and RF/radar-based detection are useful, but it is very difficult to counter an FPV drone with a payload once it is able to get close to you. This is very environment-dependent, e.g. open field vs forest
*Despite the conditions, there is a shared sense of purpose among locals, volunteers, etc.
*Constant fundraising is needed to gather enough funds to fix vehicles (such as, recently, the van shown on the IG account), as well as equipment and supplies for both locals and soldiers

== '''Users and their Requirements''' ==

We currently have two main use cases in mind for this project:

* Military forces facing threats from drones and projectiles.
* Privately-managed critical infrastructure in areas at risk of drone-based attacks.

From our literature review and our interviews, we have determined that users of the system will require the following:

* Minimal maintenance
* High reliability
* The system should not pose an additional threat to its surroundings
* The system must be personally portable
* The system should work reliably in dynamic, often extreme, environments
* The system should be scalable and interoperable in concept

== '''Ethical Framework''' ==

The purpose of this project is to develop a portable defense system for neutralizing incoming projectiles, intended as a last line of defense in combat scenarios. In such circumstances, incoming projectiles vary and not all of them require neutralization, so decisions depend on the specific conditions. We focus on combat situations such as war zones, where environments evolve rapidly and soldiers need adaptive equipment. The system requires advanced software capable of distinguishing potential threats, such as differentiating between birds and drones or identifying grenades dropped from high altitudes<ref>Prof. Bharath Bharadwaj B S, Apeksha S, Bindu NP, S Shree Vidya Spoorthi, Udaya S (2023). ''A deep learning approach to classify drones and birds'', https://www.irjet.net/archives/V10/i4/IRJET-V10I4263.pdf</ref>. In these contexts, the system must adhere to the Geneva Conventions, which emphasize minimizing harm to civilians. This principle requires the device to evaluate its impact on civilians and to ensure that its actions do not directly or indirectly harm them. In addition, if the device is used in combat environments, it must comply with International Humanitarian Law, which prohibits targeting civilians in a combat environment<ref>Making Drones to Kill Civilians: Is it Ethical? | Journal of Business Ethics (springer.com)</ref>.

Consider a scenario in which a kamikaze drone targets a military vehicle. The device could intercept the drone in one of two ways: by redirecting it or by triggering it to explode above the ground. However, if the drone is redirected too close to a populated area, or if an aerial explosion scatters fragments, civilians could be killed or injured. Such scenarios introduce a moral dilemma similar to the "trolley problem"<ref>Peter Graham, ''Thomson's Trolley problem,'' DOI: 10.26556/jesp.v12i2.227</ref>. Activating the system may protect soldiers while risking civilian harm, whereas inaction leaves soldiers vulnerable. Therefore, the device must be capable of directing threats to secure locations, minimizing risk to civilians and aiming to maximize overall safety. To decide how the device should act, we look at the three major ethical theories: virtue ethics, deontology and utilitarianism. In short, virtue ethics holds that one should choose the path a virtuous person would choose<ref>Stanford Encyclopedia of philosophy, Virtue Ethics. https://plato.stanford.edu/entries/ethics-virtue/</ref>. In this scenario, virtue ethics cannot help us choose wisely, because we do not know what a virtuous person would choose with regard to redirecting a drone. Deontology is built upon strict rules to follow<ref>Ethics unwrapped, Deontology https://ethicsunwrapped.utexas.edu/glossary/deontology#:~:text=Deontology%20is%20an%20ethical%20theory%20that%20uses%20rules%20to%20distinguish,Don't%20cheat.%E2%80%9D</ref>. In our example, this could result in one of two rules: 'Do redirect the drone' or 'Do not redirect the drone'. Either rule would be an easy solution, but the question of which rule to choose remains.

Utilitarianism suggests that maximizing happiness often involves minimizing harm<ref>Utilitarianism meaning, ''Cambridge dictionary'' https://dictionary.cambridge.org/dictionary/english/utilitarianism</ref>. This theory judges actions by their outcomes, so ideally the device would anticipate whether redirecting the drone causes casualties, and how many. For now, we assume that it cannot, because this would probably require massive amounts of data and memory. Given the environment we have in mind, we assume that if the drone is not redirected there will always be casualties at its point of impact; otherwise the device would not need to be at that location. If the drone is redirected, the chance that people are outside the trenches at the new point of impact is relatively small, because anyone there would be in plain sight of the enemy. Therefore, from a utilitarian point of view, consistently redirecting the drone away from its point of impact causes the least harm over time, because the casualties prevented outweigh the casualties caused.
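The utilitarian argument can be written as a comparison of expected harm. The sketch below is only illustrative. The function names, the casualty count of 3 and the 5% probability that people are outside the trenches at the new point of impact are all hypothetical values, not estimates from our research. It mirrors the assumptions in the text: people are always present at the original target and rarely present at the new point of impact.

```python
# Hypothetical utilitarian decision rule: compare expected casualties
# with and without redirecting the drone. All inputs are illustrative estimates.
def expected_harm(p_people_present: float, casualties_if_present: float) -> float:
    """Expected casualties at an impact point."""
    return p_people_present * casualties_if_present


def should_redirect(harm_at_target: float, harm_at_new_impact: float) -> bool:
    """Redirect only if doing so is expected to cause less harm."""
    return harm_at_new_impact < harm_at_target


if __name__ == "__main__":
    # Assumption from the text: people are always present at the original target.
    at_target = expected_harm(1.0, 3)
    # Assumption: people are rarely outside the trenches at the new impact point.
    at_new_point = expected_harm(0.05, 3)
    print(should_redirect(at_target, at_new_point))
```

Under these assumptions redirection always wins, which matches the conclusion above. The rule would only change if the probability of people at the new point of impact approached that at the target.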

== '''Specifications''' ==

To guide our design decisions, we create a set of specifications that reflects our users' needs more precisely. These decisions include our choice of drone detection and projectile interception methods. Using the knowledge gained from our literature review and interviews, we set up a list of SMART requirements. These requirements help us design the best prototype for our users while staying within the bounds of International Humanitarian Law, as set out in our ethical framework.

{| class="wikitable"
!ID
!Requirement
!Preference
!Constraint
!Category
!Testing Method
|-
|R1
|Detect Rogue Drone
|Detection range of at least 30 m
|
|Software
|Simulate rogue drone scenarios in the field
|-
|R2
|Object Detection
|100% recognition accuracy
|Detects even small, fast-moving objects
|Software
|Test with various object sizes and speeds in the lab
|-
|R3
|Detect Drone Direction
|Accuracy of 90%
|
|Software
|Use drones flying in random directions for validation
|-
|R4
|Detect Drone Speed
|Accuracy within 5% of actual speed
|Must be effective up to 20 m/s
|Software
|Measure speed detection in controlled drone flights
|-
|R5
|Detect Projectile Speed
|Accurate speed detection for fast projectiles
|Must handle speeds above 10 m/s
|Software
|Fire projectiles at varying speeds and record accuracy
|-
|R6
|Detect Projectile Direction
|Accuracy within 5 degrees
|
|Software
|Test with fast-moving objects in random directions
|-
|R7
|Track Drone with Laser
|Tracks moving targets within a 1 m² area
|Must follow targets precisely within the boundary
|Hardware
|Use a laser pointer to follow a flying drone in real time
|-
|R8
|Can Intercept Drone/Projectile
|Drone/projectile is within the 1 m² square
|Must not damage surroundings or pose a threat
|Hardware
|Test in a field, using projectiles and drones in motion
|-
|R9
|Low Cost-to-Intercept
|Interception cost under $50 per event
|
|Hardware & Software
|Compare operational cost per interception in trials
|-
|R10
|Low Total Cost
|Less than $2000
|Should include all components (detection + net)
|Hardware
|Budget system components and assess affordability
|-
|R11
|Portability
|System weighs less than 3 kg
|
|Hardware
|Test for total weight and ease of transport
|-
|R12
|Easily Deployable
|Setup takes less than 5 minutes
|Must require no special tools or training
|Hardware & Software
|Timed assembly by users in various environments
|-
|R13
|Quick Reload/Auto Reload
|Reload takes less than 30 seconds
|Must be easy to reload in the field
|Hardware
|Measure time to reload net launcher in real-time scenarios
|-
|R14
|Environmental Durability
|Operates in temperatures between -20 °C and 50 °C
|Must work reliably in rain, dust and strong winds
|Hardware
|Test in extreme weather conditions (wind, rain simulation)
|}

=== Justification of Requirements ===

The first requirement, '''R1''' '''Detect Rogue Drone''', sets a detection range of at least 30 meters. This range is crucial to give soldiers or operators enough time to react to potential threats. According to B.D. (Interview 2), a detection range of 20–30 meters would be the minimum needed to give front-line soldiers a reliable chance to prepare for impact or take cover. This range aligns with the typical operational range of RF and radar detection systems, as discussed in ''The Rise of Radar-Based UAV Detection For Military'', making it a practical and achievable requirement. For '''Object Detection R2''', 90% recognition accuracy is preferred to prevent misidentifying other objects as drones, which could lead to wasted resources or unnecessary responses. ''Advances and Challenges in Drone Detection and Classification'' highlights the value of sensor fusion in improving detection accuracy, which would help the system distinguish drones from other objects in complex or cluttered environments. This accuracy is essential for military applications, where false detections could cause unnecessary alerts and distractions, and missed detections could leave troops vulnerable. The requirements to '''Detect Drone Direction''' '''R3''' with 90% accuracy and to '''Detect Drone Speed R4''' follow from the need to implement projectile interception. Our interviews indicate that most drones drop projectiles while hovering. However, the system also has to handle the scenario in which the drone is moving when it drops the projectile, so that the projectile's trajectory can be calculated. Moreover, fast detection is necessary, as it can alert troops to the presence of a drone well before it drops a projectile, allowing them to take cover and/or shoot the drone down.

Similarly, '''Detect Projectile Speed R5''' and '''Detect Projectile Direction''' '''R6''' (accurate to within 5 degrees) allow the system to predict where a projectile is heading and to calculate where the net should be shot to redirect it. As suggested by F.W., the system should address any incoming projectile within range, not only drones. This provides comprehensive situational awareness and maximizes the system's value in dynamic and potentially high-risk environments. The requirement to '''Track Drone with Laser R7''' is justified by the need for precise targeting. It also serves as an interim measure until the interception mechanism is complete. A laser tracking system that tracks within a 1 m² area lets the system monitor the movement of drones and shows the troops where the drone is. The ability to '''Intercept Projectiles''' '''R8''' within a 1 m² area ensures that the system can redirect the projectile using the 1 m<sup>2</sup> net designed for our system. In Interview 1, F.W. explained that while interception methods like jamming are often preferable, kinetic interception may still be necessary for drones that pose an immediate threat. A '''Low Cost-to-Intercept''' '''R9''' of under $50 per event is essential so that a single soldier or a small group of soldiers can afford this last line of defense. The cost of intercepting a drone should be close to, if not lower than, the cost of the drone itself, especially for military operations that may require frequent use. The article ''Countering the drone threat implications of C-UAS technology for Norway in an EU and NATO context'' discusses the importance of keeping interception costs low to ensure sustainability and usability in ongoing operations. By minimizing the cost per interception, the system remains practical and cost-effective for high-frequency use.

Similarly, the requirement for '''Low Total Cost R10''' (under $2000) ensures accessibility for smaller units or individual soldiers. B.D. noted that cost is a major constraint, especially for front-line volunteers who often rely on fundraising to support equipment needs. A lower total cost makes the system more widely deployable and achievable for those with limited budgets. '''Portability R11''', with a target weight of under 3 kg, is crucial for ease of use and mobility. According to B.D., lightweight systems are essential for front-line soldiers, who have limited space and carry substantial gear. A portable system ensures that soldiers can transport it efficiently and integrate it into their equipment without compromising mobility or comfort. '''Ease of Deployment R12''' is also essential, with a setup time of less than 5 minutes. B.D. emphasized that in unpredictable field environments, soldiers need a system that requires minimal setup. Quick deployment is highly important in dynamic situations where immediate action is required, allowing personnel to be prepared with minimal downtime. '''Quick Reload/Auto Reload''' '''R13''' capabilities, with a reload time of under 30 seconds, enable the system to handle multiple threats in rapid succession. This requirement addresses the feedback from F.W., who noted the importance of speed in high-risk areas. Fast reloading keeps the system ready and prevents delays when multiple drones or projectiles arrive. Lastly, '''Environmental Durability''' '''R14''' ensures that the system operates reliably across a wide temperature range and in adverse weather conditions. ''The Rise of Radar-Based UAV Detection For Military'' stresses that systems used in real-world military applications must withstand rain, dust and extreme temperatures. Durability in harsh environments increases the system's utility, ensuring it remains effective regardless of weather or climate conditions.
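Simple kinematics relates the numbers in R1, R4 and R5. The sketch makes three assumptions. First, the drone flies straight at the system at the R4 limit of 20 m/s. Second, a dropped munition falls freely without air drag. Third, the drop height is 50 m, a hypothetical example rather than a figure from the interviews. Under these assumptions, a 30 m detection range leaves about 1.5 s of warning, and a dropped munition exceeds the 10 m/s of R5 after falling only about 5 m. The function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def warning_time(detection_range_m: float, approach_speed_ms: float) -> float:
    """Time between detection and arrival for a drone flying straight at the system."""
    return detection_range_m / approach_speed_ms


def fall_time(drop_height_m: float) -> float:
    """Free-fall time of a dropped munition, ignoring air drag."""
    return math.sqrt(2 * drop_height_m / G)


def drop_height_for_speed(speed_ms: float) -> float:
    """Drop height at which a free-falling object reaches speed_ms (no drag)."""
    return speed_ms ** 2 / (2 * G)


if __name__ == "__main__":
    print(f"warning time at 30 m, 20 m/s: {warning_time(30, 20):.2f} s")  # 1.50 s
    print(f"fall time from 50 m: {fall_time(50):.2f} s")
    print(f"height to reach 10 m/s: {drop_height_for_speed(10):.2f} m")
```

Air drag would lengthen the fall time somewhat, so the free-fall values are a conservative estimate of the reaction time available.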

== '''Detection''' ==

=== Drone Detection ===

==== The Need for Effective Drone Detection ====

With the rapid advancement and production of unmanned aerial vehicles (UAVs), particularly small drones, new security challenges have emerged for the military sector.<ref name=":0" /> Drones can be used for surveillance, smuggling and launching explosive projectiles, posing threats to infrastructure and military operations.<ref name=":0" /> In our project, we mainly look at the threat of drones launching explosive projectiles. Our objective is to develop a portable, last-line-of-defense system that can detect drones and intercept and/or redirect the projectiles they launch. An important aspect of such a system is the ability to reliably detect drones in real time, in possibly dynamic environments.<ref name=":1" /> The challenge is to create a solution that is not only effective but also lightweight, portable and easy to deploy.

==== Approaches to Drone Detection ====

Numerous approaches to drone detection have been explored, each with its own advantages and disadvantages.<ref name=":2" /><ref name=":1" /> The main methods are radar-based detection, radio frequency (RF) detection, acoustic-based detection and vision-based detection.<ref name=":0" /><ref name=":2" /> For our project, it is essential to analyze these methods in terms of portability and reliability, in order to identify the most suitable method or combination of methods.

===== ''Radar-Based Detection'' =====

Radar-based systems are considered one of the most reliable methods for detecting drones.<ref name=":2" /> Radar systems transmit short electromagnetic waves that bounce off objects in the environment and return to the receiver. From these echoes, the system can determine the object's range, velocity and size.<ref name=":2" /><ref name=":1" /> Radar is especially effective at detecting drones in all weather conditions and can operate over long ranges.<ref name=":0" /><ref name=":2" /> Radars such as active pulse-Doppler radar can track the movement of drones. They distinguish drones from other flying objects by the Doppler shift caused by the motion of the propellers (the micro-Doppler effect).<ref name=":0" /><ref name=":1" /><ref name=":2" />
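The radar quantities mentioned above follow from standard formulas. Range is R = c·t/2 for a round-trip echo delay t. For a monostatic radar, the Doppler shift is f_d = 2·v·f_c/c for radial speed v and carrier frequency f_c. The sketch uses illustrative assumptions: a 24 GHz carrier, a drone approaching at the R4 limit of 20 m/s, and a 5-inch FPV propeller (0.0635 m radius) at 20,000 rpm. The function names are hypothetical. The much larger Doppler spread of the blade tips compared to the drone body illustrates why the micro-Doppler signature helps separate drones from other flying objects.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_delay(round_trip_s: float) -> float:
    """Target range from the round-trip time of the radar echo."""
    return C * round_trip_s / 2


def doppler_shift(radial_speed_ms: float, carrier_hz: float) -> float:
    """Doppler shift seen by a monostatic radar for a target moving radially."""
    return 2 * radial_speed_ms * carrier_hz / C


def blade_tip_speed(prop_radius_m: float, rpm: float) -> float:
    """Linear speed of a propeller tip, which sets the micro-Doppler spread."""
    return 2 * math.pi * prop_radius_m * rpm / 60


if __name__ == "__main__":
    carrier = 24e9  # assumed 24 GHz short-range radar
    delay = 2 * 30 / C  # echo delay for a target at 30 m
    print(f"range: {range_from_delay(delay):.1f} m, delay: {delay * 1e9:.0f} ns")
    print(f"body Doppler at 20 m/s: {doppler_shift(20, carrier):.0f} Hz")
    tip = blade_tip_speed(0.0635, 20_000)
    print(f"blade-tip micro-Doppler: up to {doppler_shift(tip, carrier):.0f} Hz")
```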

Despite its effectiveness, radar-based detection has limitations that must be considered. First, traditional radar systems are rather large and require significant power, making them less suitable for a portable defense system.<ref name=":2" /> Additionally, radar can struggle to detect small drones flying at low altitudes due to their limited radar cross-section (RCS), particularly in cluttered environments like urban areas.<ref name=":2" /> Millimeter-wave radar, which operates at high frequencies, offers a potential solution by providing better resolution for detecting small objects, but it is also more expensive and complex.<ref name=":2" /><ref name=":0" />

===== ''Radio Frequency (RF)-Based Detection'' =====

Another common method is detecting drones through radio frequency (RF) analysis.<ref name=":0" /><ref name=":1" /><ref name=":2" /><ref name=":3" /> Most drones communicate with their operators via RF signals in the 2.4 GHz and 5.8 GHz bands.<ref name=":0" /><ref name=":2" /> RF-based detection systems monitor the electromagnetic spectrum for these signals, which allows them to identify the presence of a drone and its controller.<ref name=":2" /> One advantage of RF detection is that it does not require line-of-sight, so the detection system does not need a view of the drone.<ref name=":2" /> It can also operate over long distances, making it effective in a wide range of scenarios.<ref name=":2" />
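As a rough illustration of band-based RF detection, the sketch does two things. It checks whether a detected emission falls into one of the two bands mentioned above, using the ITU ISM band edges (2.400 to 2.500 GHz and 5.725 to 5.875 GHz). It also estimates free-space path loss, which limits how far away a drone link can still be picked up. This is a simplification: real detectors analyze the spectrum over time rather than single frequencies, and free-space loss ignores terrain and obstacles. The function names are hypothetical.

```python
import math
from typing import Optional

# ITU ISM band edges in GHz; consumer drones commonly use these for control and video.
DRONE_BANDS_GHZ = {
    "2.4 GHz": (2.400, 2.500),
    "5.8 GHz": (5.725, 5.875),
}


def classify_emission(freq_ghz: float) -> Optional[str]:
    """Return the drone band an emission falls into, or None if it is outside both."""
    for name, (low, high) in DRONE_BANDS_GHZ.items():
        if low <= freq_ghz <= high:
            return name
    return None


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB (distance in m, frequency in Hz)."""
    return 20 * math.log10(distance_m) + 20 * math.log10(freq_hz) - 147.55


if __name__ == "__main__":
    for f in (2.44, 5.80, 1.20):
        print(f, classify_emission(f))
    # Path loss over 100 m, the RF detection range mentioned in Interview 2.
    print(f"{free_space_path_loss_db(100, 2.44e9):.1f} dB at 2.44 GHz")
    print(f"{free_space_path_loss_db(100, 5.80e9):.1f} dB at 5.80 GHz")
```

The higher loss at 5.8 GHz over the same distance is one reason the detection range depends on which band a drone uses.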

However, RF-based detection systems have their limitations. They cannot detect drones that do not communicate with an operator, such as autonomous drones.<ref name=":1" /> They are also less reliable in environments where many RF signals are present, such as cities.<ref name=":2" /> Therefore, RF-based detection alone may not be suitable in situations where high precision and reliability are a must.

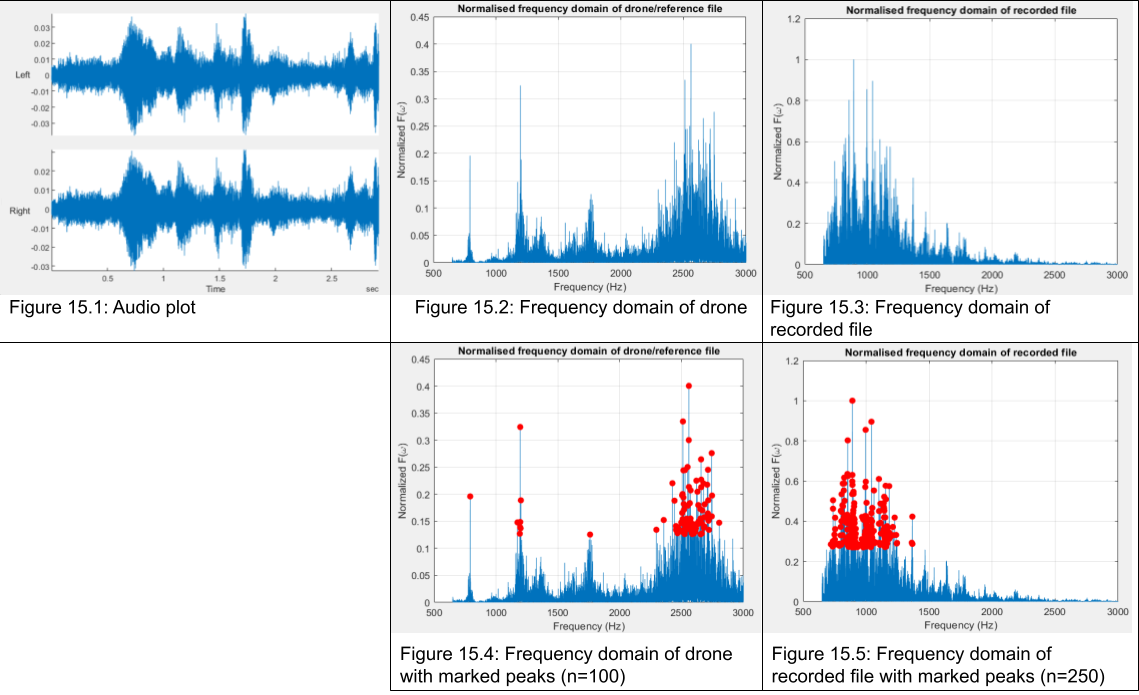

=====''Acoustic-Based Detection''=====

Acoustic detection systems rely on the unique sound signature produced by drones, particularly the noise generated by their propellers and motors.<ref name=":2" /> These systems use highly sensitive microphones to capture these sounds and then analyze the audio signals to identify the presence of a drone.<ref name=":2" /> The advantages of this type of detection are its low cost and the fact that it does not require line-of-sight, so it is mostly used for detecting drones behind obstacles in spaces that are not open.<ref name=":2" /><ref name=":0" />
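As a minimal sketch of the audio-analysis step, the code below computes what fraction of a recording's spectral energy lies in a frequency band and flags a possible drone when that fraction is high. The 100 to 1000 Hz band and the 0.5 threshold are hypothetical placeholders; real systems typically use richer features and trained classifiers, so this band-energy test only illustrates the idea.

```python
import numpy as np


def band_energy_ratio(signal: np.ndarray, fs: float, f_lo: float, f_hi: float) -> float:
    """Fraction of the signal's spectral energy between f_lo and f_hi (Hz)."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    in_band = (freqs >= f_lo) & (freqs <= f_hi)
    total = spectrum.sum()
    return float(spectrum[in_band].sum() / total) if total > 0 else 0.0


def looks_like_rotor_noise(signal: np.ndarray, fs: float,
                           f_lo: float = 100.0, f_hi: float = 1000.0,
                           threshold: float = 0.5) -> bool:
    """Hypothetical detector: flag a drone if most energy lies in the assumed rotor band."""
    return band_energy_ratio(signal, fs, f_lo, f_hi) >= threshold


fs = 8000.0
t = np.arange(int(fs)) / fs                  # one second of audio
rng = np.random.default_rng(0)
noise = 0.1 * rng.standard_normal(t.size)
print(looks_like_rotor_noise(np.sin(2 * np.pi * 200 * t) + noise, fs))   # True
print(looks_like_rotor_noise(np.sin(2 * np.pi * 3000 * t) + noise, fs))  # False
```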

However, acoustic detection also has disadvantages. In noisy environments, such as battlefields, these systems are less effective.<ref name=":1" /><ref name=":2" /> Additionally, some drones are designed to operate silently.<ref name=":1" /> Finally, acoustic systems only work over short ranges, since sound weakens with distance.<ref name=":2" />
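The short range follows largely from spherical spreading: in a free field, sound pressure falls as 1/r, so the level drops by 20·log10(r2/r1) dB, about 6 dB per doubling of distance. A small sketch (atmospheric absorption, which adds extra loss at higher frequencies, is ignored):

```python
import math


def spreading_loss_db(r_near_m: float, r_far_m: float) -> float:
    """Drop in sound pressure level (dB) from r_near_m to r_far_m under spherical spreading.

    Sound pressure falls as 1/r in a free field, i.e. about 6 dB per doubling of distance.
    Atmospheric absorption is ignored.
    """
    if r_near_m <= 0 or r_far_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(r_far_m / r_near_m)


print(round(spreading_loss_db(10, 20), 2))   # 6.02
print(round(spreading_loss_db(10, 100), 2))  # 20.0
```

Every tenfold increase in distance costs 20 dB, which, combined with background noise, limits the usable range.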

=====''Vision-Based Detection'' =====

Vision-based detection systems use cameras, operating in either the visible or the infrared spectrum, to detect drones visually.<ref name=":0" /><ref name=":2" /> These systems rely on image recognition algorithms, often based on machine learning.<ref name=":2" /><ref name=":3" /> Drones are then detected based on their shape, size and movement.<ref name=":3" /> The main advantage of this type of detection is that operators can confirm the presence of a drone themselves and can distinguish a drone from other objects such as birds.<ref name=":2" />

However, vision-based detection systems also have disadvantages.<ref name=":1" /><ref name=":2" /> They are highly dependent on environmental conditions: they need a clear line-of-sight and good lighting, and weather conditions can reduce their accuracy.<ref name=":1" /><ref name=":2" />

====Best Approach for Portable Drone Detection====

For our project, which focuses on a portable system, the ideal drone detection method must balance effectiveness, portability and ease of deployment. Based on this, a sensor fusion approach appears to be the most appropriate.<ref name=":2" />

===== ''Sensor Fusion Approach'' =====

Given the limitations of each individual detection method, a sensor fusion approach, which combines radar, RF, acoustic and vision-based sensors, offers the best chance of achieving reliable and accurate drone detection in a portable system.<ref name=":2" /> Sensor fusion allows the strengths of each detection method to compensate for the weaknesses of the others, providing more effective detection in dynamic environments.<ref name=":2" />

#'''Radar Component:''' A compact millimeter-wave radar system would provide reliable detection in different weather conditions and across long ranges.<ref name=":1" /> While radar systems are traditionally bulky, recent advancements make it possible to develop portable radar units for mobile systems.<ref name=":2" /> These units would most likely be less effective than full-size radars, which the other sensors in the fusion approach would have to compensate for.<ref name=":2" />
#'''RF Component:''' Integrating an RF sensor allows the system to detect drones that communicate with remote operators.<ref name=":2" /> This component is lightweight and relatively low-power, making it ideal for a portable system.<ref name=":2" />
#'''Acoustic Component:''' Adding acoustic sensors can help detect drones flying at low altitudes or behind obstacles, where radar may struggle.<ref name=":0" /><ref name=":2" /> This component consists mainly of a microphone, with the rest handled in software, so it is also well suited to a portable system.<ref name=":2" />
#'''Vision-Based Component:''' A camera system equipped with machine learning algorithms for image recognition can provide visual confirmation of detected drones.<ref name=":3" /><ref name=":2" /> This component can be implemented with a lightweight, wide-angle camera, which again does not prevent the device from being portable.<ref name=":2" />

====Conclusion====

To achieve portability, we have to limit the size and power of certain sensors and components. To still detect drones effectively, the best approach is sensor fusion: the system would integrate radar, RF, acoustic and vision-based detection, which together compensate for each other's limitations, resulting in an effective, reliable and portable system.

=== Sensor Fusion ===

When it comes to detection, sensor fusion is essential for integrating inputs from multiple sensor types, in our case radar, radio frequency, acoustic and vision-based sensors, to achieve higher accuracy and reliability in dynamic conditions. Sensor fusion can occur at different stages, referred to as '''early''' and '''late fusion'''.<ref name=":5">Svanström, F.; Alonso-Fernandez, F.; Englund, C. Drone Detection and Tracking in Real-Time by Fusion of Different Sensing Modalities. ''Drones'' 2022, ''6'', 317. https://doi.org/10.3390/drones6110317</ref>

'''Early fusion''' integrates raw data from the various sensors at the initial stage, creating a unified dataset for processing. This approach captures the relations between the different data sources, but requires extensive computational resources, especially when the sensors produce different types of data, for example acoustic and visual data.<ref name=":5" />

'''Late fusion''' integrates the processed outputs or decisions of each sensor. This method allows each sensor to apply its own processing before the results are fused, making it more suitable for systems where sensor outputs vary in type. According to recent studies in UAV tracking, late fusion improves robustness by allowing each sensor to operate independently under its optimal conditions.<ref name=":5" /><ref name=":6">Samaras, S.; Diamantidou, E.; Ataloglou, D.; Sakellariou, N.; Vafeiadis, A.; Magoulianitis, V.; Lalas, A.; Dimou, A.; Zarpalas, D.; Votis, K.; et al. Deep Learning on Multi Sensor Data for Counter UAV Applications—A Systematic Review. ''Sensors'' 2019, ''19'', 4837. https://doi.org/10.3390/s19224837</ref>

Therefore, we conclude that late fusion is best suited for our system.
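To make late fusion concrete, the sketch below combines per-sensor outputs in two simple ways: a majority vote on each sensor's yes/no decision, and a naive-Bayes combination of each sensor's probability by summing log-odds. The naive-Bayes step assumes the sensors are conditionally independent and that every per-sensor probability was computed with the same prior, which real sensors only approximately satisfy; the sensor names and probabilities are hypothetical.

```python
import math
from typing import Dict


def logit(p: float, eps: float = 1e-9) -> float:
    """Log-odds of a probability, clamped away from 0 and 1 to stay finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def fuse_log_odds(sensor_probs: Dict[str, float], prior: float = 0.5) -> float:
    """Naive-Bayes late fusion of per-sensor posteriors P(drone | sensor i).

    Assumes the sensors are conditionally independent given the true state and
    that every per-sensor posterior was computed with the same prior.
    """
    prior_lo = logit(prior)
    total = prior_lo + sum(logit(p) - prior_lo for p in sensor_probs.values())
    return 1.0 / (1.0 + math.exp(-total))


def majority_vote(sensor_probs: Dict[str, float], threshold: float = 0.5) -> bool:
    """Decision-level fusion: declare a drone if more than half the sensors vote yes."""
    votes = sum(p >= threshold for p in sensor_probs.values())
    return votes > len(sensor_probs) / 2


# Hypothetical per-sensor confidences for one time window
probs = {"radar": 0.8, "rf": 0.6, "acoustic": 0.3, "vision": 0.9}
print(round(fuse_log_odds(probs), 3))  # 0.959
print(majority_vote(probs))            # True
```

The log-odds sum lets a confident sensor outweigh a weak one, whereas the vote treats every sensor equally; which behaves better for our hardware would have to be tested.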

==== Algorithms for Sensor Fusion in Drone Detection ====

# '''Extended Kalman Filter (EKF)''': The EKF is widely used in sensor fusion for its ability to handle nonlinear motion and measurement models, making it suitable for tracking drones in real time by predicting their trajectory despite noisy inputs. The EKF has proven effective for fusing radar and LiDAR data, which is essential when estimating an object's location in complex settings like urban environments.<ref name=":6" />
# '''Convolutional Neural Networks (CNNs)''': Primarily used in vision-based detection, CNNs process visual data to recognize drones based on shape and movement. CNNs are particularly useful in late-fusion systems, where they can add a visual confirmation layer to radar or RF detections, enhancing overall reliability.<ref name=":5" /><ref name=":6" />
# '''Bayesian Networks''': These networks manage uncertainty by probabilistically combining sensor inputs. They are highly adaptable in scenarios where sensor reliability varies, such as when combining RF and acoustic data, making them suitable for applications where conditions affect certain sensors more than others.<ref name=":5" />
# '''Decision-Level Fusion with Voting Mechanisms''': This approach aggregates sensor outputs based on their agreement, or "votes", regarding an object's presence. This simple yet robust method enhances detection accuracy by prioritizing detections that are consistent across sensors.<ref name=":5" />
# '''Deep Reinforcement Learning (DRL)''': DRL optimizes sensor fusion adaptively by learning from patterns in sensor data, making it particularly suited for applications requiring dynamic adjustments, like drone tracking in unpredictable environments. DRL has shown promise in managing fusion systems by balancing multiple inputs effectively in real-time applications.<ref name=":5" /><ref name=":6" />
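The EKF linearizes a nonlinear motion or measurement model at every step. As a minimal sketch of the underlying predict/update cycle, the block below is a plain linear Kalman filter with a 1D constant-velocity model, which is the special case the EKF reduces to when both models are linear. The noise levels are assumptions, and a real drone tracker would use a 3D state and fuse measurements from several sensors.

```python
import numpy as np


def kalman_cv_track(measurements, dt: float, meas_std: float, accel_std: float) -> np.ndarray:
    """Linear Kalman filter with a 1D constant-velocity model.

    State x = [position, velocity]; only position is measured. Unmodelled
    acceleration is treated as white noise with standard deviation accel_std.
    Returns one [position, velocity] estimate per measurement.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])     # state transition
    H = np.array([[1.0, 0.0]])                # measurement picks out position
    G = np.array([[0.5 * dt**2], [dt]])       # how acceleration enters the state
    Q = (G @ G.T) * accel_std**2              # process noise covariance
    R = np.array([[meas_std**2]])             # measurement noise covariance

    x = np.array([measurements[0], 0.0])      # start at first reading, unknown speed
    P = np.diag([meas_std**2, 100.0])         # large initial velocity uncertainty
    estimates = []
    for z in measurements:
        # Predict
        x = F @ x
        P = F @ P @ F.T + Q
        # Update with the new position measurement
        y = np.array([z]) - H @ x             # innovation
        S = H @ P @ H.T + R                   # innovation covariance
        K = P @ H.T @ np.linalg.inv(S)        # Kalman gain, shape (2, 1)
        x = x + K @ y
        P = (np.eye(2) - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)


# Hypothetical track: object moving at 3 m/s, position measured every 0.1 s with 0.5 m noise
rng = np.random.default_rng(0)
t = np.arange(100) * 0.1
z = 2.0 + 3.0 * t + 0.5 * rng.standard_normal(t.size)
est = kalman_cv_track(z, dt=0.1, meas_std=0.5, accel_std=0.3)
print(est[-1])  # final [position, velocity] estimate
```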

These algorithms have demonstrated efficacy across diverse sensor configurations in UAV applications. EKF and Bayesian networks are particularly valuable when fusing data from radar, RF and acoustic sources, given their ability to handle noisy and uncertain data, while CNNs and voting mechanisms add reliability in vision-based and multi-sensor contexts. However, without testing, no conclusions can be drawn about which algorithms can be applied effectively and which would work best.<ref name=":5" /><ref name=":6" />

=='''Interception'''==

=== Protection against Projectiles Dropped by Drones ===

In modern warfare and security scenarios, small drones have emerged as cheap and effective tools capable of carrying payloads that can be dropped over critical areas or troops. Rather than intercepting the drones themselves, which would require a high-cost interception method, we shifted our focus to intercepting the projectiles they drop. These are usually artillery shells or grenades, which follow an easily computable trajectory and are far lighter than the drones, and thus cheaper and easier to intercept. By targeting these projectiles as a last line of defense, our system provides a more cost-effective way to neutralize threats only when necessary. This approach minimizes the resources spent on countering non-threatening drones and concentrates defensive efforts on imminent, high-risk projectiles, as a last resort for individual troops to remain safe.

===Key Approaches to Interception===

==== Kinetic Interceptors: ====

Kinetic methods destroy or incapacitate projectiles dropped by drones through direct impact. These systems are designed for medium to long distances and include missile-based and projectile-based interception systems. For example, the Raytheon Coyote Block 2+ missile is a kinetic interceptor designed to counter small aerial threats, such as drone projectiles. The Coyote's design allows it to engage moving targets with precision and agility. Originally developed as a UAV, the Coyote has been adapted for use as a missile system, with each missile costing approximately $24,000, a disproportionately high cost for destroying relatively inexpensive threats like drone-deployed projectiles<ref name=":7">''Coyote UAS''. (n.d.). <nowiki>https://www.rtx.com/raytheon/what-we-do/integrated-air-and-missile-defense/coyote</nowiki></ref>. The precision and effectiveness of kinetic systems like the Coyote make them particularly valuable against high-priority, threatening targets, despite the high cost-to-intercept.

==== Electronic Warfare (Jamming and Spoofing) ====

Electronic warfare techniques, such as radio frequency (RF) jamming and GNSS jamming, disrupt the control signals of drones, causing them to lose their connection to the controller or to the satellite signal. Spoofing, on the other hand, involves hijacking the drone's communication system and giving it instructions that benefit the defender, such as releasing the projectile in a safe place. While jamming is non-lethal, it may affect other electronics nearby and is ineffective against autonomous drones that do not rely on external signals. For example, DroneShield's DroneGun MKIII is a portable jammer capable of disrupting RF and GNSS signals up to 500 meters away. By targeting a drone's control signals, the DroneGun can cause the drone to lose connection, descend, or prematurely release its payload, which can then be intercepted by other defenses. Because RF jamming can interfere with nearby electronics, it is most suitable for remote or controlled environments, where collateral damage to one's own electronic infrastructure is minimized. This system has demonstrated effectiveness in remote military applications and large open spaces where the risk of collateral interference is low<ref name=":8">''DroneGun MK3: Counterdrone (C-UAS) Protection — DroneShield''. (n.d.). Droneshield. <nowiki>https://www.droneshield.com/c-uas-products/dronegun-mk3</nowiki></ref><ref name=":9">Michel, A. H., The Center for the Study of the Drone at Bard College, Aasiyah Ali, Lynn Barnett, Dylan Sparks, Josh Kim, John McKeon, Lilian O’Donnell, Blades, M., Frost & Sullivan, Peace Research Institute Oslo, Norwegian Ministry of Defense, Open Society Foundations, Pvt. James Newsome, & Senior Airman Kaylee Dubois. (2019). ''COUNTER-DRONE SYSTEMS'' (D. Gettinger, Isabel Polletta, & Ariana Podesta, Eds.; 2nd Edition). <nowiki>https://dronecenter.bard.edu/files/2019/12/CSD-CUAS-2nd-Edition-Web.pdf</nowiki></ref>.

==== Directed Energy Weapons (Lasers and Electromagnetic Pulses) ====

Directed energy systems such as lasers and electromagnetic pulses (EMP) are designed to disable dropped projectiles by damaging their electrical components or destroying them outright. Lasers provide precise, instant engagement with minimal collateral damage, although they are very expensive and lose effectiveness in environmental conditions like rain or fog. For example, Israel's Iron Beam is a high-energy laser system developed by Rafael Advanced Defense Systems for intercepting aerial threats, mainly missiles but also projectiles dropped by drones. Unlike kinetic interceptors, Iron Beam offers a lower cost per interception. EMPs, on the other hand, provide a broad area effect, allowing multiple projectiles to be disabled simultaneously. However, EMP systems may also disrupt nearby electronics, limiting their use in civilian-populated areas<ref name=":10">Hecht, J. (2021, June 24). Liquid lasers challenge fiber lasers as the basis of future High-Energy weapons. ''IEEE Spectrum''. <nowiki>https://spectrum.ieee.org/fiber-lasers-face-a-challenger-in-laser-weapons</nowiki></ref>.

==== Net-Based Capture Systems ====

Net-based systems use physical nets to capture projectiles mid-flight, redirecting them and stopping them from reaching their target. Nets can be launched from ground platforms or from other drones, effectively intercepting low-speed, low-altitude projectiles. This method is non-lethal and minimizes collateral damage. For example, Fortem Technologies' DroneHunter F700 is a specialized drone designed to intercept other drones or projectiles by deploying high-strength nets, stopping aerial threats from completing their intended path and thus minimizing potential damage. However, net-based systems have a limited range and require reloading unless automated, which can slow the response when drones drop projectiles continuously<ref name=":11">DroneHunter® F700. (2024, October 24). ''Fortem Technologies''. <nowiki>https://fortemtech.com/products/dronehunter-f700/</nowiki></ref>.

==== Geofencing ====

Geofencing involves creating virtual boundaries around sensitive areas using GPS coordinates. Drones equipped with geofencing technology are programmed to automatically avoid flying into restricted zones. This method is proactive, preventing drones from even getting close to troops. DJI, a major drone manufacturer, has integrated geofencing into its drones, preventing users from entering or flying in restricted zones. This allows DJI to choose which areas its drones can and cannot enter, and provides a non-lethal preventive measure. However, geofencing requires the cooperation of drone manufacturers, and modified or non-compliant drones can bypass these restrictions, making it unreliable<ref name=":12">''DJI FlySafe''. (n.d.). <nowiki>https://fly-safe.dji.com/nfz/nfz-query</nowiki></ref>.
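The core geofencing check, whether a GPS position lies inside a restricted zone, can be sketched with the haversine great-circle distance and a circular zone. This is a hypothetical illustration: it is not DJI's implementation, real no-fly zones are often polygons from a database, and the zone centre and radius below are made up.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance (m) between two latitude/longitude points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_circular_zone(lat: float, lon: float,
                         center_lat: float, center_lon: float, radius_m: float) -> bool:
    """True if the position lies within radius_m of the zone centre."""
    return haversine_m(lat, lon, center_lat, center_lon) <= radius_m


# Hypothetical 500 m zone; 0.003 degrees of latitude is roughly 334 m
print(inside_circular_zone(52.003, 5.0, 52.0, 5.0, 500.0))  # True
print(inside_circular_zone(52.006, 5.0, 52.0, 5.0, 500.0))  # False
```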

===Objectives of Effective Drone Neutralization===

When designing or selecting a drone interception system, several key objectives must be prioritised:

#'''Low Cost-to-Intercept''': Interception costs are critical, as small, cheap drones can carry projectiles that cost significantly less than the interception method itself. Low-cost systems, like net-based options, are preferred for frequent engagements. Conversely, more expensive kinetic methods may be necessary for high-speed or armored projectiles. Raytheon's Coyote missile is an example of the cost trade-off of kinetic systems and highlights the economic considerations that come into play in military versus civilian contexts<ref name=":7" />.
#'''Portability''': Interception systems should ideally be lightweight, collapsible, and transportable across various settings. Portable systems, like the DroneGun MKIII jammer and net-based launchers, enable rapid setup and adaptability to various operational environments, making them valuable in mobile defense scenarios<ref name=":8" />.
#'''Ease of Deployment''': Quick deployment is essential in dynamic scenarios like military operations or large-scale events. For example, drone-based net systems and RF jammers mounted on mobile units offer flexible deployment options, allowing for rapid response in fast-moving situations<ref name=":9" />.
#'''Quick Reloadability or Automatic Reloading''': In high-threat environments, interception systems with rapid or automated reloading capabilities ensure continuous defense. Systems like lasers and RF jammers support quick re-engagement, while net throwers and kinetic projectiles may require manual reloading, potentially reducing efficiency under sustained threats<ref name=":13">''Iron Beam laser weapon, Israel''. (2023, November 1). Army Technology. <nowiki>https://www.army-technology.com/projects/iron-beam-laser-weapon-israel/</nowiki></ref>.
#'''Minimal Collateral Damage''': In urban or civilian areas, minimizing collateral damage is of utmost importance. Non-lethal interception methods, such as jamming, spoofing, and net-based systems, provide effective solutions that neutralize threats without excessive environmental or infrastructural impact. Systems like the Fortem DroneHunter F700 illustrate the potential for non-destructive interception in urban areas<ref name=":11" />.

===Evaluation of Drone Interception Methods===

==== Pros and Cons of Drone Interception Methods====

#'''Jamming (RF/GNSS)'''
#* Pros: Jamming effectively disrupts communications between drones and operators, often forcing premature payload drops. Non-destructive and widely applicable, jamming can target multiple drones simultaneously, making it well-suited to civilian defense applications<ref name=":9" />.
#*Cons: Jamming is limited in effectiveness against autonomous drones and can interfere with nearby electronics, posing a risk in urban areas where collateral electronic disruption can impact civilian infrastructure.
#'''Net Throwers'''
#*Pros: Non-lethal and environmentally safe, nets can physically capture projectiles without destroying them, making them ideal for urban settings where collateral damage is a concern.
#*Cons: Effective primarily against slow-moving, low-altitude projectiles, net throwers require manual reloading between uses, which limits their response time during sustained threats unless automated.
#'''Missile Launch'''
#*Pros: High precision and range, missile systems like Raytheon's Coyote are effective for engaging fast-moving or long-range targets and are ideal in military settings for large-scale aerial threats<ref name=":7" />.
#*Cons: High cost per missile, risk of collateral damage, and infrastructure requirements restrict missile use to defense zones rather than civilian settings.
#'''Lasers'''
#* Pros: Laser systems are silent, precise, and capable of engaging multiple targets without producing physical debris. This makes them valuable in urban environments where damage control is essential<ref name=":13" />.
#*Cons: Lasers are costly, sensitive to environmental conditions like fog and rain, and have high energy demands that complicate portability, limiting their field application<ref name=":10" />.
#'''Hijacking'''
#*Pros: Allows operators to take control of drones without destroying them. It’s a non-lethal approach, ideal for situations where it’s essential to capture the drone intact.
#*Cons: Hijacking poses collateral risks for surrounding electronics, has limited range, and is operationally complex in active field environments, requiring specialized training and equipment.
#'''Spoofing'''
#*Pros: Non-destructively diverts drones from sensitive areas by manipulating signals, suitable for deterring drones from critical zones<ref name=":9" />.
#* Cons: Technically complex and less effective against drones with advanced anti-spoofing technology, requiring specialized skills and equipment.
#'''Geofencing'''
#*Pros: Geofencing restricts compliant drones from entering sensitive zones, creating a non-lethal preventive barrier that offers permanent coverage<ref name=":12" />.
#*Cons: Reliance on manufacturers for integration and potential circumvention by modified drones limits geofencing as a standalone defense measure in high-risk scenarios.

===Pugh Matrix===

The Pugh Matrix is a decision-making tool used to evaluate and compare multiple options against a set of criteria. By systematically scoring each option on each criterion, the Pugh Matrix helps to identify the most balanced or optimal solution given the chosen priorities. We created a Pugh Matrix to assess different interception methods for countering projectiles dropped by drones.

Each method was evaluated on six key criteria: Cost-to-Intercept, Portability, Ease of Deployment, Reloadability, Minimum Collateral Damage and Effectiveness. For each criterion, the methods were rated Low (0 points), Medium (1 point) or High (2 points), where a higher rating is always better (so a High rating for cost-to-intercept means a low cost). The points were then totaled to give an overall assessment of each method's viability as a counter-projectile solution. This approach enables a comprehensive comparison, highlighting methods that combine cost-efficiency, ease of use and effectiveness in interception.

{| class="wikitable"
|+
! Method
!Minimal Cost-to-intercept
!Portability
!Ease of Deployment
!Reloadability
!Minimum Collateral Damage
!Effectiveness
!Total Score
|-
|Jamming (RF/GNSS)
|Medium
|High
|High
|High
|High
|Medium
|10
|-
|Net Throwers
|High
|High
|High
|Medium
|High
|High
|11
|-
|Missile Launch
|Low
|Low
|Medium
|Low
|Low
| High
|3
|-
|Lasers
|High
|Medium
|Medium
|High
|High
|High
|10
|-
|Hijacking
|High
|High
|Medium
|Low
|High
|Medium
|8
|-
|Spoofing
|Medium
|High
|Medium
|Medium
|High
|Medium
|8
|-
|Geofencing
|High
|High
|High
|High
|High
|Low
|10
|}

The resulting scores are shown in the Pugh Matrix above. Net Throwers scored the highest with 11 points, indicating strong performance across several criteria, particularly minimal collateral damage and cost-effectiveness. Jamming, Lasers and Geofencing also scored well with 10 points each, while missile-based solutions, despite their high effectiveness, scored lowest with 3 points due to their high cost and limited portability. Consequently, we will focus on Net Throwers as our main interception mechanism.
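The totals of the Pugh Matrix can be recomputed mechanically from the ratings, which avoids arithmetic slips when ratings change. In the sketch below the point value of each rating is a parameter. Because every method is rated on all six criteria, changing every rating's point value by the same constant shifts all totals equally, so the ranking is identical whether Low counts as 0 or as 1.

```python
from typing import Dict, List

# Ratings per method, in the column order of the Pugh Matrix:
# cost-to-intercept, portability, ease of deployment, reloadability,
# minimum collateral damage, effectiveness
RATINGS: Dict[str, List[str]] = {
    "Jamming (RF/GNSS)": ["Medium", "High", "High", "High", "High", "Medium"],
    "Net Throwers":      ["High", "High", "High", "Medium", "High", "High"],
    "Missile Launch":    ["Low", "Low", "Medium", "Low", "Low", "High"],
    "Lasers":            ["High", "Medium", "Medium", "High", "High", "High"],
    "Hijacking":         ["High", "High", "Medium", "Low", "High", "Medium"],
    "Spoofing":          ["Medium", "High", "Medium", "Medium", "High", "Medium"],
    "Geofencing":        ["High", "High", "High", "High", "High", "Low"],
}


def pugh_totals(ratings: Dict[str, List[str]], points: Dict[str, int]) -> Dict[str, int]:
    """Sum the points of each method's ratings."""
    return {method: sum(points[r] for r in rs) for method, rs in ratings.items()}


def ranking(totals: Dict[str, int]) -> List[str]:
    """Methods from best to worst; ties keep the table order (sorted is stable)."""
    return sorted(totals, key=totals.get, reverse=True)


zero_based = pugh_totals(RATINGS, {"Low": 0, "Medium": 1, "High": 2})
one_based = pugh_totals(RATINGS, {"Low": 1, "Medium": 2, "High": 3})
print(zero_based["Net Throwers"], one_based["Net Throwers"])  # 11 17
print(ranking(zero_based) == ranking(one_based))              # True
```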

=='''Path Prediction of Projectile'''<ref>Autonomous Ball Catcher Part 1: Hardware — Baucom Robotics</ref><ref>Ball Detection and Tracking with Computer Vision - InData Labs</ref><ref>Detecting Bullets Through Electric Fields – DSIAC</ref><ref>An Introduction to BYTETrack: Multi-Object Tracking by Associating Every Detection Box (datature.io)</ref><ref>Online Trajectory Generation with 3D camera for industrial robot - Trinity Innovation Network (trinityrobotics.eu)</ref><ref>Explosives Delivered by Drone – DRONE DELIVERY OF CBNRECy – DEW WEAPONS Emerging Threats of Mini-Weapons of Mass Destruction and Disruption (WMDD) (pressbooks.pub)</ref><ref>Deadliest weapons: The high-explosive hand grenade (forcesnews.com)</ref><ref>SM2025.pdf (myu-group.co.jp)</ref><ref>Trajectory estimation method of spinning projectile without velocity input - ScienceDirect</ref><ref>An improved particle filtering projectile trajectory estimation algorithm fusing velocity information - ScienceDirect</ref><ref>(PDF) Generating physically realistic kinematic and dynamic models from small data sets: An application for sit-to-stand actions (researchgate.net)</ref><ref>https://kestrelinstruments.com/mwdownloads/download/link/id/100/</ref><ref>Normal and Tangential Drag Forces of Nylon Nets, Clean and with Fouling, in Fish Farming. An Experimental Study (mdpi.com)</ref><ref>A model for the aerodynamic coefficients of rock-like debris - ScienceDirect</ref><ref>Influence of hand grenade weight, shape and diameter on performance and subjective handling properties in relations to ergonomic design considerations - ScienceDirect</ref><ref>'Molotov Cocktail' incendiary grenade | Imperial War Museums (iwm.org.uk)</ref><ref>My Global issues - YouTube</ref><ref>4 Types of Distance Sensors & How to Choose the Right One | KEYENCE America</ref>==

===Theory:===

Catching a projectile requires several steps. First, the projectile has to be detected, after which its trajectory has to be determined. If we know how the projectile moves in space and time, the net can be shot to catch it. However, depending on the distance to the projectile, the net takes different amounts of time to reach it, and in this time the projectile moves to a different location. The net must therefore be shot towards the position where the projectile will be in the future, such that the two collide.

Since projectiles make little or no sound and do not emit RF waves, they are harder to detect than drones. In this part we assume that the projectile is visible. Extending the system to detect projectiles that are not visible would probably be possible, but would complicate things considerably. The U.S. Army uses electric fields to detect passing bullets; something similar could be used to detect projectiles that are not visible, but this will not be done in this project due to its complexity.

To detect a projectile, a camera with tracking software is used. The same camera also has to detect drones, which will be done by training an AI model for drone detection.

Once the projectile is in sight, its trajectory has to be determined. The projectile should only be slowed down by air friction and accelerated by gravity. For a first model, air friction can be neglected to get a good approximation of the projectile's flight. Since not every projectile experiences the same amount of air resistance, the best friction coefficient should be found experimentally by dropping different projectiles; the best coefficient is the one for which the system catches the most projectiles. An improvement is to use different pre-assigned friction coefficients for different projectile sizes. Since surface area plays a big role in the amount of friction a projectile experiences, this is a reasonable approach.
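The effect of the friction coefficient can be explored numerically. The sketch below assumes quadratic air drag with a single constant k = ρC_dA/(2m), which is one common drag model rather than the one fitted in this project; k = 0 reproduces the friction-free first model. The value k = 0.002 1/m is a placeholder that would have to be fitted from drop tests, as described above.

```python
import math

G = 9.81  # m/s^2


def fall_time(height_m: float, k_per_m: float = 0.0, dt: float = 1e-4) -> float:
    """Time (s) to fall height_m from rest with quadratic air drag.

    Equation of motion (downward positive): dv/dt = g - k * v**2, with
    k = rho * Cd * A / (2 * m) in 1/m. k = 0 gives free fall in vacuum.
    Integrated with a simple semi-implicit Euler step of size dt.
    """
    v = fallen = t = 0.0
    while fallen < height_m:
        v += (G - k_per_m * v * v) * dt
        fallen += v * dt
        t += dt
    return t


h = 78.48
print(round(fall_time(h), 2))         # 4.0
print(round(fall_time(h, 0.002), 2))  # with the hypothetical k = 0.002 1/m
```

For constant k this model also has a closed-form fall time, t = arccosh(e^(kh))/√(gk), which can be used to check the numerical integration.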

With the expected path of the projectile known, the net can be launched to catch the projectile midair. Basic kinematics gives accurate results for this problem. Moreover, the problem can be treated as two-dimensional: since we only protect against projectiles coming towards the system, we can always define a plane that contains both the trajectory of the projectile and the system. If the path of the projectile were to leave this plane, the projectile would not pose a threat, since the system lies in the plane, so the system does not need to protect against it.
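In the friction-free first model the interception angle even has a closed form. If the net is launched at the moment the projectile's position and velocity are measured, both fall with the same gravitational acceleration, so gravity cancels in their relative motion: the net only has to be aimed such that net_speed·(cos a, sin a) = (x0, y0)/t + (vx, vy), which gives a quadratic equation in 1/t. The sketch below assumes an instantaneous launch, no drag and a point-like net; it is an independent illustration in Python, not the project's Mathematica script.

```python
import math


def intercept_angle_2d(x0: float, y0: float, vx: float, vy: float, net_speed: float):
    """Launch angle (rad, from horizontal) and time to intercept in 2D.

    Projectile currently at (x0, y0) relative to the launcher, moving with (vx, vy);
    the net is launched now from the origin with speed net_speed. Both fall with
    the same g and drag is ignored, so gravity cancels in the relative motion.
    Returns None if no intercept exists.
    """
    # Solve |(x0*u + vx, y0*u + vy)| = net_speed for u = 1/t > 0
    a = x0 * x0 + y0 * y0
    b = 2.0 * (x0 * vx + y0 * vy)
    c = vx * vx + vy * vy - net_speed * net_speed
    disc = b * b - 4.0 * a * c
    if a == 0.0 or disc < 0.0:
        return None
    roots = [(-b + s * math.sqrt(disc)) / (2.0 * a) for s in (1.0, -1.0)]
    positive = [u for u in roots if u > 0.0]
    if not positive:
        return None
    u = max(positive)                  # largest 1/t is the earliest intercept
    angle = math.atan2(y0 * u + vy, x0 * u + vx)
    return angle, 1.0 / u


# Hypothetical case: projectile released at rest 20 m away and 50 m up, net at 30 m/s
angle, t = intercept_angle_2d(20.0, 50.0, 0.0, 0.0, 30.0)
print(f"{math.degrees(angle):.1f} deg, {t:.2f} s")  # 68.2 deg, 1.80 s
```

For a projectile released at rest, the result is simply to aim straight at it; once the projectile already has a downward velocity, the net must lead it.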

===Calculation:===

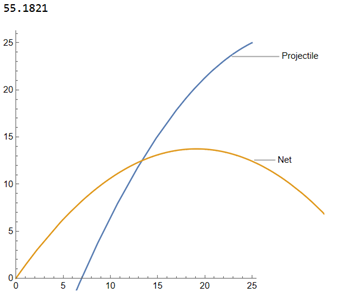

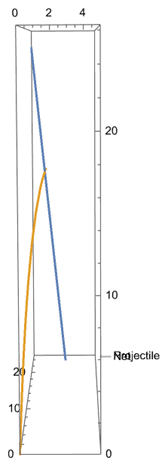

[[File:Imagedfdsfsdfdsf.png|thumb|223x223px|left|PP1: Output 2D model]]

A Mathematica script was written that calculates the angle at which the net should be shot. The script currently uses placeholder values that have to be determined experimentally for the hardware used; for example, the speed at which the net is shot should be measured and updated in the code to get a good result. The distance and speed of the projectile can be determined using sensors on the device. The output of the Mathematica script is shown in figure PP1: it gives the angle at which the net should be shot, as well as the trajectories of the net and the projectile to visualize how the interception happens.

===Accuracy:===

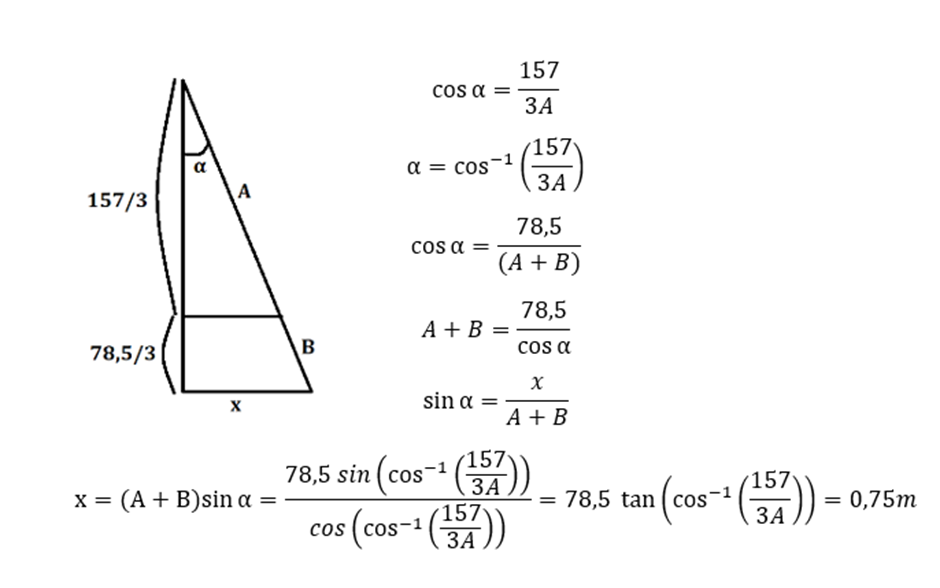

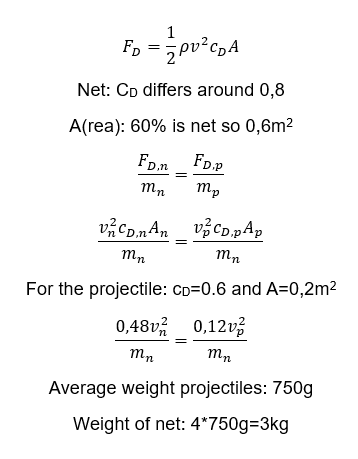

[[File:Imagevsdvsdvdsvsd.png|thumb|319x319px|PP2: Calculations accuracy]]

The height from which projectiles are dropped can be estimated from footage of projectile drops. Assuming the projectile falls in vacuum, which represents reality well, the height is <math>h = \tfrac{1}{2} g t^2</math>. Using a YouTube video<ref>Ukrainian Mountain Battalion drop grenades on Russian forces with weaponised drone (youtube.com)</ref> as data, almost every drop takes at least 4 seconds, which means that the projectiles are dropped from at least 78.5 m. Suppose we catch the projectile after two thirds of its fall, which still leaves plenty of height to redirect it safely, and the net measures 1 by 1 meter (so half a meter from its center to the closest side). Then, even if everything else goes perfectly, the system cannot catch a projectile that is dropped more than 0.75 meter next to us, outside of the plane (see figure PP2 for the calculation). Even if the projectile is dropped 0.7 meter next to the device, the net would hit it with its side, which does not guarantee that the projectile stays in the net.
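The 78.5 m figure follows directly from the vacuum relation with t = 4 s. A small helper makes the conversion in both directions, for example to turn a timed drop from footage into a height, or a height into the time available for interception:

```python
import math

G = 9.81  # m/s^2


def drop_height(fall_time_s: float) -> float:
    """Height (m) fallen from rest in fall_time_s seconds, ignoring air resistance."""
    return 0.5 * G * fall_time_s ** 2


def time_to_fall(distance_m: float) -> float:
    """Inverse relation: time (s) needed to fall distance_m from rest in vacuum."""
    return math.sqrt(2.0 * distance_m / G)


print(round(drop_height(4.0), 2))  # 78.48
```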

An explosive projectile will do damage even when it lands 0.75 meters away from a person. This means that the earlier assumption, that a 2D model suffices because everything happens in a plane, does not fulfill all our needs. Enemy drones do not need to be accurate to the centimeter, since explosive projectiles such as grenades can kill a person even 10 meters away. For better results, a 3D model should therefore be used.

===3D model:===

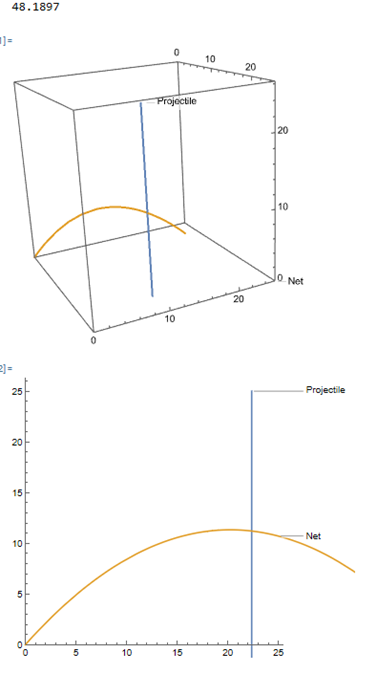

[[File:3D model.png|thumb|502x502px|PP3: Output 3D model]]

We tried to extend the 2D model to 3D with the same generality, but this did not work out. For this reason, some extra assumptions were made. These assumptions are based on reality and are therefore still valid for this system. The only thing they change is the generality of the model: the 2D model could be used in many different cases, whereas the 3D model only applies to projectiles dropped from drones.

In the 2D model, the starting velocity of the projectile could be changed. In reality, however, drones do not have a launching mechanism and simply drop the projectile without any starting velocity. The projectile therefore falls straight down, apart from some sideways movement due to external forces such as wind. This was noted after watching a lot of drone warfare footage, which also showed that drones usually do not crash into people but mainly into tanks, since a tank requires an accurate hit between its base and its turret. Against people, drones drop the projectile from a standstill to get the required aim. This simplification also makes the 2D model valid again: since the projectile has no sideways movement, it never leaves the plane, and we can always define a plane containing both the path of the projectile and the mechanism that shoots the net.
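
Because the projectile falls vertically, the 3D problem reduces to choosing the vertical plane through the device and the drop point. A minimal Python sketch of that reduction follows, assuming a frame with the device at the origin and the z-axis pointing up; the frame and function names are illustrative assumptions, not the project's model.

```python
import math
from typing import Tuple


def to_firing_plane(x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Express a drone position (device at the origin, z pointing up) in the vertical firing plane.

    Returns (azimuth_rad, horizontal_distance_m, height_m). Because the projectile is
    assumed to fall straight down, every point of its path shares this azimuth and
    horizontal distance, so the 2D model can be solved in (r, z) coordinates.
    """
    return math.atan2(y, x), math.hypot(x, y), z


def from_firing_plane(azimuth: float, r: float, z: float) -> Tuple[float, float, float]:
    """Rotate an in-plane point (r, z) back into 3D world coordinates."""
    return r * math.cos(azimuth), r * math.sin(azimuth), z


azimuth, r, z = to_firing_plane(30.0, 40.0, 78.5)
print(f"turn to {math.degrees(azimuth):.1f} deg, solve the 2D problem at r = {r:.1f} m")
```

The 2D trajectories computed in (r, z) can then be mapped back with `from_firing_plane` for a 3D plot.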

Since the mechanism operates in the real (3D) world, the 2D model is rotated to the required angle and plotted in 3D, which gives a good representation of how the mechanism will work. The new model also outputs the required shooting angle and shows the paths of the net and the projectile in 3D. For further insight, the 2D trajectories of the net and the projectile are plotted as well, as can be seen in figure PP3.
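
For the required shooting angle, one possible formulation (an assumption, not necessarily how the project's model solves it) treats the net as a point mass in vacuum and uses the textbook condition for a projectile launched at speed <math>v</math> to pass through a point <math>(r, z)</math>: <math>\tan\theta = \frac{v^2 \pm \sqrt{v^4 - g(g r^2 + 2 z v^2)}}{g r}</math>. The sketch below picks the lower, faster arc and then times the shot so that the net and the falling projectile reach the catch height together. The 40 m/s muzzle speed and the 20 m horizontal distance in the example are hypothetical.

```python
import math
from typing import Optional, Tuple

G = 9.81  # m/s^2


def aim_net(r: float, z_catch: float, drop_height: float, v_net: float,
            g: float = G) -> Optional[Tuple[float, float, float]]:
    """Elevation, net flight time and firing delay for a vacuum interception.

    Assumptions: the net is a point mass fired from the origin at speed v_net,
    the projectile is released at rest from drop_height at horizontal distance r,
    and both should meet at height z_catch. Returns (elevation_rad,
    flight_time_s, delay_after_release_s), or None if no solution exists.
    """
    if z_catch > drop_height:
        return None  # the projectile never reaches this height
    if r < 1e-9:
        # Target directly overhead: solve z_catch = v*T - g*T^2/2 (earlier root).
        disc = v_net ** 2 - 2.0 * g * z_catch
        if disc < 0:
            return None
        elevation = math.pi / 2
        flight_time = (v_net - math.sqrt(disc)) / g
    else:
        # Classic "hit the point (r, z)" equation; the minus sign gives the low, faster arc.
        disc = v_net ** 4 - g * (g * r ** 2 + 2.0 * z_catch * v_net ** 2)
        if disc < 0:
            return None  # point out of reach for this muzzle speed
        elevation = math.atan2(v_net ** 2 - math.sqrt(disc), g * r)
        flight_time = r / (v_net * math.cos(elevation))
    # Time after release at which the falling projectile passes z_catch.
    t_projectile = math.sqrt(2.0 * (drop_height - z_catch) / g)
    delay = t_projectile - flight_time
    if delay < 0:
        return None  # the net cannot get there before the projectile passes
    return elevation, flight_time, delay


solution = aim_net(r=20.0, z_catch=26.16, drop_height=78.48, v_net=40.0)
if solution is not None:
    elevation, flight_time, delay = solution
    print(f"elevation {math.degrees(elevation):.1f} deg, fire {delay:.2f} s after release")
```

Drag and wind are ignored here; they are treated separately in the accuracy analysis.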

===Accuracy of the 3D model===

[[File:Imagevvd.png|thumb|368x368px|PP5: Interception with wind]]

[[File:Imageddd.png|left|thumb|355x355px|PP4: Calculations weight net]]

The 3D model set up so far only works in a "perfect" world, with no wind, no air resistance, and no other external factors that may influence the paths of the projectile and the net. It also assumes that the system knows the position of the drone with infinite accuracy. Neither holds in reality, so it is important to know how closely the model replicates reality and whether it can be used.

Wind plays a big role in the paths of both the projectile and the net, and the model must also function under these circumstances. To determine the accelerations of the projectile and the net, the drag force on both objects must be determined. Two important factors the drag force depends on are the drag coefficient and the frontal area of the object. Since different projectiles are used in warfare, such as hand grenades or Molotov cocktails, the exact drag coefficient and frontal area of the projectile are unknown. After a literature review, average values were taken for the drag coefficient and the frontal area, since the reported values lie in the same range. For the frontal area this was to be expected, since drones can only carry objects of limited size. Calculations (see figure PP4) show that if the net, including the weights on its corners, weighs 3 kg, the accelerations of the projectile and the net due to wind are identical, which still leads to a perfect interception, as can be seen in figure PP5. This is based on literature values; at a later stage, the exact drag coefficient and surface area of the net must be determined and its weight adjusted accordingly. For projectiles that do not exactly match the assumed drag coefficient or frontal area, the model shows that deviations of up to 50% from the used values do not shift the projectile enough for the interception to miss. This range includes almost all theoretical values found for the different projectiles, which makes the model highly reliable under the influence of wind.
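
The matching condition described above can be illustrated with the standard drag equation <math>a = \tfrac{1}{2}\rho C_d A v^2 / m</math>: two objects in the same wind get the same drag acceleration, at the same relative airspeed, when their ratio <math>C_d A / m</math> is equal. The Python sketch below computes the net mass that satisfies this condition and shows how the projectile's acceleration shifts when its <math>C_d A</math> is off by 50% either way. All numbers are hypothetical placeholders, not the literature values behind figure PP4, so the resulting mass is not 3 kg.

```python
RHO_AIR = 1.225  # kg/m^3, sea-level air density (assumed)


def drag_acceleration(v_rel: float, cd: float, area_m2: float, mass_kg: float,
                      rho: float = RHO_AIR) -> float:
    """Drag acceleration magnitude a = F_d / m = 0.5 * rho * Cd * A * v_rel^2 / m."""
    return 0.5 * rho * cd * area_m2 * v_rel ** 2 / mass_kg


def matched_net_mass(cd_net: float, area_net: float,
                     cd_proj: float, area_proj: float, mass_proj: float) -> float:
    """Net mass giving the same Cd*A/m as the projectile, hence the same drag
    acceleration at the same relative airspeed."""
    return mass_proj * (cd_net * area_net) / (cd_proj * area_proj)


# Hypothetical inputs, NOT the literature values behind figure PP4.
CD_PROJ, AREA_PROJ, MASS_PROJ = 0.47, 0.0035, 0.4  # sphere-like grenade
CD_NET, AREA_NET = 1.0, 0.02                        # folded net, effective area
WIND = 10.0                                         # relative airspeed in m/s

m_net = matched_net_mass(CD_NET, AREA_NET, CD_PROJ, AREA_PROJ, MASS_PROJ)
a_net = drag_acceleration(WIND, CD_NET, AREA_NET, m_net)
for scale in (0.5, 1.0, 1.5):  # projectile Cd*A off by -50 %, 0 %, +50 %
    a_proj = drag_acceleration(WIND, CD_PROJ * scale, AREA_PROJ, MASS_PROJ)
    print(f"Cd*A x{scale}: projectile {a_proj:.2f} m/s^2 vs net {a_net:.2f} m/s^2")
```

Whether a given mismatch still leads to a catch depends on the trajectory model, which this sketch does not include.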

Another uncertainty in the system is the exact location of the drone. We aim to know accurately where the drone, and thus the projectile, is; an infinitely accurate location is unachievable in reality, but we can get close. The sensors in the system must be optimized to locate the drone as well as possible. Fortunately, some sensors achieve high accuracy, for example a LiDAR sensor with a range of 2000 m and an accuracy of 2 cm. Our system operates well within this 2000 m range, and the 2 cm accuracy is far smaller than the size of the net (100 cm by 100 cm), so it should not cause problems for the interception.[[File:Labeled system view.png|thumb|391x391px|Labeled System Overview]]

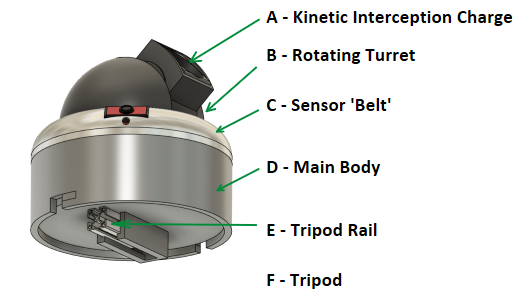

=='''Mechanical Design'''==

=== <u>Introduction</u> ===

To detail the mechanical design and the choices made throughout the design process, each component is discussed individually. For each component, a brief description explains why the feature is necessary in the system, followed by a number of possible design choices and, finally, the choice that was made and why. To conclude, an overview of the system as a whole is given, along with a short evaluation and some next steps for improving the system beyond the scope of this project.

=== <u>Kinetic Interception Charge (A)</u> ===

'''<u>Feature Description</u>'''

Kinetic interception techniques include a wide range of drone and projectile interception strategies that, as the name suggests, contain a kinetic element, such as a shotgun shell or a flying object. This method of interception relies on a physical impact or near-impact that knocks the target off course or disables it entirely. The need for kinetic interception capabilities was made clear during the first target market interview, in which the possibility of jamming and (counter-)electronic warfare was discussed, and with it the need to be able to intercept drones and dropped projectiles in a non-electronic way.

'''<u>Possible Design Choices</u>'''

* Shotgun shells

** Using a mounted shotgun to kinetically intercept drones and projectiles is a proven possibility: shotguns are used as a last line of defense by troops at fixed positions as well as during transport<ref>Across, M. (2024, September 27). ''Surviving 25,000 Miles Across War-torn Ukraine | Frontline | Daily Mail''. YouTube. <nowiki>https://youtu.be/kqKGYn13MeM?si=VPhO7jFG0sHQQXiW</nowiki></ref>. Shotguns are also relatively widely available, and the system could be designed to accept various shotgun types. However, the system would be very heavy and would need to withstand the powerful recoil of a firing shotgun.

* Net charge

** A net is a lightweight option that consistently covers a relatively large area of effect. This makes the projectile or drone much easier to hit and thus contributes to the reliability of the system. The low weight allows the net to be rotated and fired faster than heavier options, and it reduces the weight of any additional charges carried for the system, a critical factor mentioned in both the first and the second target market interviews.

* Air-burst ammunition

** This type of ammunition is designed to detonate in the air close to the incoming projectile or drone. It is very effective at preventing these threats from reaching their targets, but it sharply increases the cost-to-intercept, a concept also introduced in the interviews: it is the most expensive of the three options, which makes it less suitable for this system.

'''<u>Selected Design</u>'''

The chosen kinetic interception charge is a lightweight square net with a surface area of 1 m². The net has thin, weighted disks at its corners, which are stacked on top of one another to form a cylindrical charge that can be loaded into the spring-loaded or pneumatically loaded turret. This charge is fired at incoming targets; the net then spreads out and catches the projectile where the path prediction algorithm calculates it will be by the time the net reaches it. This decision is based on the net's light weight, low cost-to-intercept, quick reloading, and reliability in diverting a projectile caught within its surface area.
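
To size the spring-loaded variant of the launcher, an energy balance gives a first estimate: the spring energy <math>\tfrac{1}{2} k x^2</math> must supply the kinetic energy <math>\tfrac{1}{2} m v^2</math> plus the work <math>m g x \sin\theta</math> done against gravity over the spring travel <math>x</math> at elevation <math>\theta</math>. The Python sketch below assumes an ideal, massless, frictionless spring; the 3 kg charge mass comes from the drag calculation in the accuracy analysis, while the 0.3 m travel and 20 m/s muzzle speed are hypothetical.

```python
import math

G = 9.81  # m/s^2


def spring_constant_for(mass_kg: float, muzzle_speed: float, compression_m: float,
                        elevation_rad: float = 0.0, g: float = G) -> float:
    """Ideal spring constant k such that 1/2 k x^2 = 1/2 m v^2 + m g x sin(elevation).

    Friction, spring mass and air resistance are ignored; the last term is the
    work done against gravity while the charge travels x up an inclined barrel.
    """
    x = compression_m
    return (mass_kg * muzzle_speed ** 2 + 2.0 * mass_kg * g * x * math.sin(elevation_rad)) / x ** 2


def muzzle_speed_for(k: float, compression_m: float, mass_kg: float,
                     elevation_rad: float = 0.0, g: float = G) -> float:
    """Inverse of spring_constant_for: speed of the charge when it leaves the spring."""
    x = compression_m
    kinetic_energy = 0.5 * k * x ** 2 - mass_kg * g * x * math.sin(elevation_rad)
    return math.sqrt(max(kinetic_energy, 0.0) * 2.0 / mass_kg)


# Hypothetical launcher: 3 kg net charge, 0.3 m spring travel, fired straight up at 20 m/s.
k = spring_constant_for(3.0, 20.0, 0.3, math.radians(90))
print(f"required k ~ {k / 1000:.1f} kN/m, stored energy ~ {0.5 * k * 0.3 ** 2:.0f} J")
```

A pneumatic launcher would need an analogous energy budget from the stored gas instead of the spring.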

=== <u>Rotating Turret (B)</u> ===

<u>'''Feature Description'''</u>

The rotating turret gives the attached net charge 360 degrees of rotational freedom, allowing it to engage threats from any direction. The turret not only rotates 360 degrees in the horizontal plane but also 90 degrees in the vertical plane, i.e. from completely horizontal to completely vertical. This capability is critical in general, but especially in fast-paced wars with ever-changing frontlines, such as the one in Ukraine, where drone attacks can come from any direction around a given position. The versatility of a rotating turret significantly enhances the system's ability to respond to these aerial threats. Two stepper motors fitted with rotary encoders, one for each plane of rotation, rapidly move the turret. The encoders keep the system's electronics aware of the current orientation of the net charge, so that it can be moved to the correct position once a threat has been detected.
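
The motor control described here could look roughly like the following Python sketch: the encoder readings give the current orientation, the horizontal axis takes the shortest way around, and the vertical axis is clamped to its 0 to 90 degree range. The step resolution (a 1.8-degree stepper with 16x microstepping) and direct drive are hypothetical values, not the project's hardware.

```python
STEPS_PER_REV = 200 * 16  # hypothetical: 1.8-degree stepper with 16x microstepping
GEAR_RATIO = 1.0          # hypothetical: direct drive


def shortest_azimuth_delta(current_deg: float, target_deg: float) -> float:
    """Signed horizontal rotation in (-180, 180] degrees from current to target."""
    delta = (target_deg - current_deg) % 360.0
    return delta - 360.0 if delta > 180.0 else delta


def degrees_to_steps(angle_deg: float) -> int:
    """Convert a signed rotation into a signed number of motor steps."""
    return round(angle_deg / 360.0 * STEPS_PER_REV * GEAR_RATIO)


def plan_move(cur_az: float, cur_el: float, tgt_az: float, tgt_el: float) -> tuple:
    """Step counts for both motors; cur_* would come from the rotary encoders."""
    tgt_el = min(max(tgt_el, 0.0), 90.0)  # vertical axis only covers 0..90 degrees
    d_az = shortest_azimuth_delta(cur_az, tgt_az)
    return degrees_to_steps(d_az), degrees_to_steps(tgt_el - cur_el)


print(plan_move(cur_az=350.0, cur_el=10.0, tgt_az=10.0, tgt_el=45.0))
```

For a turret at 350 degrees that must turn to 10 degrees, the sketch commands a +20 degree move (178 steps) rather than a 340 degree one.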

[[File:Sensor belt.png|thumb|229x229px|Sensor Belt Top View]]

=== <u>Sensor 'Belt' (C)</u> ===

'''<u>Feature Description</u>'''

The sensor ring around the bottom of the turret contains slots for three cameras and microphones. Both the ring and the interior of the main body can be adapted to house further sensors, such as radar, thermal imaging, or RF detectors. Fitting a range of sensors to the system is important to ensure that threats of different types can be detected, and it gives a critical advantage in keeping the system effective under varying weather and environmental conditions, such as dense trees and foliage around the system, or fog or smoke in the vicinity.
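
With three cameras each covering 120 degrees (as listed under the sensor options below), a detection in one image can be converted into a bearing around the system. The Python sketch below assumes ideal distortion-free pinhole lenses, optical axes at 0, 120 and 240 degrees, pixel x growing to the right, and bearings growing clockwise seen from above; a real 120-degree lens would need distortion correction. All names are hypothetical.

```python
import math

HFOV_DEG = 120.0                          # horizontal field of view of each camera
MOUNT_HEADINGS_DEG = (0.0, 120.0, 240.0)  # hypothetical optical-axis headings of cameras 0, 1, 2


def pixel_to_bearing(camera_index: int, pixel_x: float, image_width: int,
                     hfov_deg: float = HFOV_DEG) -> float:
    """Bearing in degrees [0, 360) of a detection at image column pixel_x.

    Uses an ideal pinhole model: the focal length in pixels follows from the
    field of view, and the angular offset from the optical axis is atan(dx / f).
    """
    half_width = image_width / 2.0
    focal_px = half_width / math.tan(math.radians(hfov_deg) / 2.0)
    offset_deg = math.degrees(math.atan((pixel_x - half_width) / focal_px))
    return (MOUNT_HEADINGS_DEG[camera_index] + offset_deg) % 360.0


# A drone seen right of centre in camera 2 of a 1920-pixel-wide image:
print(f"{pixel_to_bearing(2, 1500, 1920):.1f} deg")
```

The resulting bearing could serve as the target azimuth for the turret.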

'''<u>Possible Design Choices</u>'''

* Sensors

** Camera

*** Best choice for open areas and any situation with a direct line of sight to the drone, and consequently to the projectile it drops. Three cameras, each covering 120 degrees, would be combined to provide a view of the entire 360-degree environment. However, as soon as line of sight is broken, cameras alone cannot detect drones effectively.

** Microphone

*** Fitting the system with microphones complements the cameras effectively. Even when line of sight is broken, a microphone can pick up the sound of a flying drone. This is done by continuously performing frequency analysis on the recorded audio and checking whether frequencies typically associated with flying drones are present, including those given off by the small motors that power the rotor blades. If these frequencies are significantly present, the system interprets the sound as a drone nearby. A shortcoming of the microphone is its relatively short range, and it works less well with background noise, such as loud explosions or frequent gunfire.

** Radar

*** While radar sensors typically have a very large range, this type of detection is significantly limited for small and fast FPV drones. The detectable 'radar cross-section' of FPV drones is very small, and radars are often designed and calibrated to detect much larger objects, such as fighter jets or naval vessels. A specialized radar component would therefore be required, which would be very expensive and likely difficult to source if a high-end option were necessary. However, some budget options are available, which are discussed earlier in this wiki. Finally, radar could also detect larger and slower surveillance drones flying at higher altitude. Detecting these and warning the soldiers of their presence would allow them to better prepare for incoming surveillance, and consequently for an influx of FPV drone attacks on their position if they are found.

** RF Detector

*** The RF detector is a very useful device that senses the radio frequencies (RF) emitted by drones, drone controllers, and other electronic equipment. By analyzing these for the frequencies typically used by drones to communicate with their pilots, drones in the vicinity can be detected quickly. In theory, this could also be used to block that signal and try to prevent the drone from being controlled near the system.

* Sensor implementation

** Sensors implemented directly onto the moving turret

*** This option fixes the sensors relative to the turret, and thus to the horizontal orientation of the net charge. The turret would then rotate until the cameras detect the drone at a predetermined 'angle zero', which aligns with where the turret points.

** Ring/Belt around the central turret