Mobile Robot Control 2024 R2-D2

=Introduction=

This is the wiki of the R2-D2 team for the course Mobile Robot Control, Q4 of the academic year 2023-2024. The team consists of the following members.

The project git repository can be found here: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/r2-d2

===Group members:===

'''Aditya'''

For this assignment I decided to improve upon the existing code of my group member Pavlos. Because I had a similar idea for the implementation of dont_crash, I decided to make the robot do more than what was asked, using the existing implementation. I developed a function turnRobot and added a loop in the main code that makes the robot turn 90 degrees to the left as soon as it detects an obstacle in front of it and then continue moving forward until it detects another obstacle, after which the process repeats. The robot does not make a perfect 90 degree turn, and because of this it crashes into the wall at a slight angle. I think this is because the loop only uses the single laser reading measured directly in front of the robot. To fix this, I can create a loop that checks a range of laser angles around the front and turns depending on the angle at which it detects the obstacle.

The simulation video can be found here: https://youtu.be/69iLlf4VPyo

==Practical Session==

Video from the practical session:

'''On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot?'''

The laser can see obstacles that are in the same plane as the sensor. The noise is very small: when we compared a laser measurement with one taken with a measuring tape, the difference was very small. The sensor has a range of 10 meters, so obstacles further away weren't visible in RViz. Furthermore, the viewing angle is around 115 degrees from the front of the robot to the right and 115 degrees from the front to the left, which means the robot can't see anything behind it. Our legs appear as half-circular shapes, since the sensor detects only the legs and not the feet.

'''Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)'''

The implementations that checked only a single point in the laser ranges list didn't work well, because a leg or box might be in front of the robot without being hit by the laser ray at 0 degrees. Thus, we modified our code to check the ranges within a specific angular window (e.g. 30 degrees) and stop if it finds an obstacle close enough within that window.
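The windowed check described above can be sketched as follows. This is a minimal illustration, not our actual implementation: the <code>Scan</code> struct, the function name <code>obstacleInWindow</code> and its parameters are hypothetical stand-ins for the laser data type used in the course framework.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a laser scan: evenly spaced rays, where ray i
// points at angle_min + i * angle_increment (rad, 0 = straight ahead).
struct Scan {
    double angle_min;
    double angle_increment;
    std::vector<double> ranges;  // measured distances in metres
};

// Returns true if any ray within +/- half_window (rad) of the heading
// reports a distance below stop_distance (m).
bool obstacleInWindow(const Scan& scan, double half_window, double stop_distance) {
    assert(half_window >= 0.0 && stop_distance > 0.0);
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        const double angle =
            scan.angle_min + static_cast<double>(i) * scan.angle_increment;
        if (std::fabs(angle) <= half_window && scan.ranges[i] < stop_distance) {
            return true;  // something is close enough inside the window
        }
    }
    return false;
}
```

A real scan may also contain invalid readings (for example values below the sensor's minimum range), which would have to be filtered out before this check.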

'''If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?'''

After making the aforementioned change, the robot could detect obstacles in front of it even if we moved them a bit in any direction. This was something we couldn't see in simulation, since we didn't have dynamic obstacles or small static obstacles there, and the underlying problem wasn't visible when we were dealing only with walls.

= Week 2 - Local Navigation=

====Implementation of code and functionality====

The Artificial Potential Fields method is implemented with the lecture slides as a basis. In theory, a potential can be calculated at every point the robot can occupy. The potential consists of an attractive component based on the goal: being further from the goal means a higher potential. The potential also increases when coming closer to an unwanted object, which acts as a repelling force. Taking the negative gradient of this potential field gives an artificial force that pushes the robot towards the goal while avoiding obstacles.
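As an illustration of the paragraph above, the sketch below computes the artificial force for a quadratic attractive potential and the commonly used inverse-distance repulsive potential. The exact potentials, the gains <code>k_att</code> and <code>k_rep</code>, the <code>influence_radius</code> and all names are assumptions made for this example and are not taken from our code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 { double x; double y; };

// Artificial force at the robot position: a pull towards the goal plus a push
// away from every obstacle point closer than influence_radius.
Vec2 potentialFieldForce(const Vec2& robot, const Vec2& goal,
                         const std::vector<Vec2>& obstacles,
                         double k_att, double k_rep, double influence_radius) {
    assert(influence_radius > 0.0);
    // Attractive part: negative gradient of 0.5 * k_att * |robot - goal|^2.
    Vec2 force{k_att * (goal.x - robot.x), k_att * (goal.y - robot.y)};
    for (const Vec2& obs : obstacles) {
        const double dx = robot.x - obs.x;
        const double dy = robot.y - obs.y;
        const double d = std::hypot(dx, dy);
        // Skip points outside the influence radius; also skip d ~ 0 to avoid
        // dividing by zero.
        if (d < 1e-6 || d >= influence_radius) continue;
        // Repulsive part: negative gradient of
        // 0.5 * k_rep * (1/d - 1/influence_radius)^2, pointing away from obs.
        const double magnitude =
            k_rep * (1.0 / d - 1.0 / influence_radius) / (d * d);
        force.x += magnitude * dx / d;
        force.y += magnitude * dy / d;
    }
    return force;
}
```

The resulting force vector can then be mapped to a forward and a rotational velocity, for example by steering towards the direction of the force.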

====Simulation Results====

Video displaying the simulation results: https://youtu.be/aqUUIiQzgVs.

====Problems====

Maps 3 and 4 do not work with the Artificial Potential Field due to the presence of local minima in the artificial field. We expect the global planner to solve this problem, provided it has enough nodes. If this is not the case, another solution has to be found.

===Artificial Potential Field Approach===

Video of the practical session tests: [https://youtu.be/0HrUQvFmpbw https://youtu.be/0HrUQvFmpbw.]

Note that the speed is capped compared to the simulation results in order to increase robustness and to keep up with the LiDAR refresh rate.

The algorithm worked quite well and the robot was able to reach the destination without any notable errors.

=Week 3 - Global Navigation=


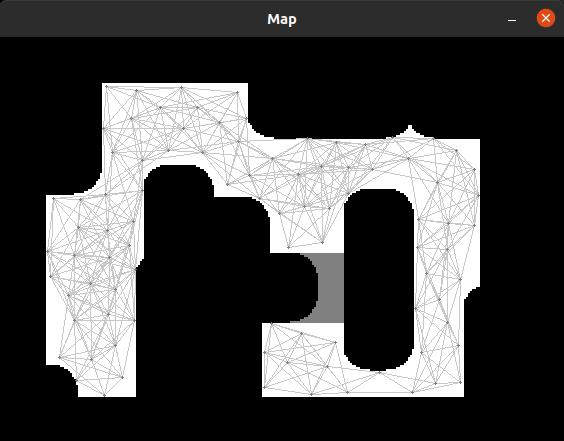

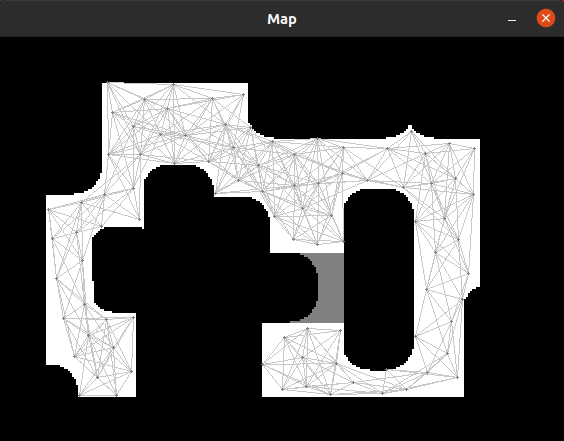

====Simulation Results====

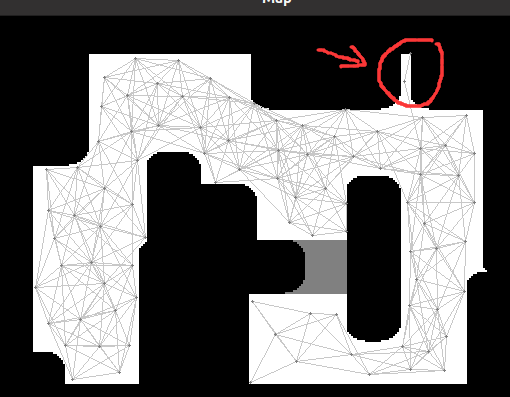

Results: The PRM result for the given map_compare is shown in the image above.

====Problems====

The DWA algorithm implements obstacle avoidance and path following through the following steps:

# Velocity sampling: sample different linear and angular velocities from the space of possible velocities.
# Trajectory prediction: for each sampled velocity, predict the trajectory of the robot over a short period of time.
# Collision detection: check whether the predicted trajectory collides with an obstacle.
# Trajectory scoring: calculate the score of each collision-free trajectory, which is determined by three factors: the distance between the end of the trajectory and the target point, the speed along the trajectory, and the safety of the trajectory.
# Velocity selection: select the velocity with the highest score as the current control command.

Through these steps, the DWA algorithm achieves real-time path following and obstacle avoidance, so that the robot can avoid obstacles while advancing to the target point.
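The steps listed above can be sketched in a compact form as shown below. This is a simplified illustration, not our planner: it works in the robot frame, ignores acceleration limits (so the "window" is the full velocity range), and the sample counts, time step and score weights are hypothetical values chosen for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Point { double x; double y; };     // position in the robot frame (m)
struct Velocity { double v; double w; };  // linear (m/s) and angular (rad/s)

Velocity dwaStep(const Point& goal, const std::vector<Point>& obstacles,
                 double v_max, double w_max, double horizon, double robot_radius) {
    assert(v_max > 0.0 && w_max > 0.0 && horizon > 0.0);
    const int samples = 10;   // velocity samples per axis (hypothetical)
    const double dt = 0.1;    // integration step in seconds (hypothetical)
    const int steps = static_cast<int>(std::lround(horizon / dt));
    const double w_goal = 1.0, w_speed = 0.1, w_clear = 0.2;  // score weights
    Velocity best{0.0, 0.0};  // stand still if every candidate collides
    double best_score = -std::numeric_limits<double>::infinity();
    for (int i = 0; i <= samples; ++i) {
        for (int j = -samples; j <= samples; ++j) {
            // 1. Velocity sampling.
            const Velocity cand{v_max * i / samples, w_max * j / samples};
            // 2. Trajectory prediction, starting at the robot frame origin.
            double x = 0.0, y = 0.0, theta = 0.0;
            double clearance = std::numeric_limits<double>::infinity();
            for (int k = 0; k < steps; ++k) {
                x += cand.v * std::cos(theta) * dt;
                y += cand.v * std::sin(theta) * dt;
                theta += cand.w * dt;
                for (const Point& o : obstacles) {
                    clearance = std::min(clearance, std::hypot(o.x - x, o.y - y));
                }
            }
            // 3. Collision detection.
            if (clearance <= robot_radius) continue;
            // 4. Scoring: goal distance, speed and safety.
            const double score = -w_goal * std::hypot(goal.x - x, goal.y - y)
                               + w_speed * cand.v
                               + w_clear * std::min(clearance, 1.0);
            // 5. Keep the best-scoring velocity.
            if (score > best_score) { best_score = score; best = cand; }
        }
    }
    return best;
}
```

With a goal straight ahead and no obstacles, this sketch selects the maximum forward velocity with zero rotation; an obstacle on that straight line rules that candidate out.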

To use our local planner with the global planning code we had to make some modifications to the implementation. Specifically, we implemented the local planner as a class. The planner's parameters are now attributes of an object, and the driver code (main) can set these parameters and call the relevant methods. Also, the velocities are now sent to the robot from the main file instead of from inside the planner's functions. The local planner method used most in the main code is "local_planner_step", which finds the next translational and rotational velocities given the current robot pose, the robot dynamics, the next (local) goal and the laser measurements.

Video after the described changes:

Based on the current robot pose, the obstacle point cloud generated from the laser scan, and the current target point, the DWA algorithm calculates the optimal velocity command (linear and angular velocity). The robot moves according to these commands, and when it approaches the current target point, the program switches to the next target point and continues to execute the DWA algorithm. In this way, the DWA algorithm handles obstacle avoidance and path following on a local scale, while the global path planning algorithm provides a global path so that the robot can get from the starting point to the end point smoothly.

'''5. Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?'''

When we test the combination of the local and global planners, we see that after some time and a few turns the robot believes it is at a different position from where it really is. This is expected, since the robot localizes itself only by dead reckoning using the wheel odometry, and there is always some slippage between the wheels and the ground, especially when accelerating or decelerating strongly. We believe this issue could be solved by running a localization algorithm in parallel, which would compare the LRF measurements to the prior map knowledge and correct the dead reckoning position.

'''6. Run the A* algorithm using the gridmap (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.'''

After working with the real robot, we noticed some problems with the DWA local planner. More specifically, the robot would crash if obstacles were too close to it, and it wouldn't move if the frequency of the planner was high. By observing this behavior we found and resolved some bugs in our DWA implementation. The issue we still need to solve is the case of a dynamic obstacle that ends up exactly on a node position, making a local goal unreachable.

Video of our first trial of the combination of local and global planners on the real robot:

https://youtu.be/qZW3frcgIZg

We see that the robot doesn't move even when there is enough space in front of it (when we placed the bag). This happens because the lidar is mounted at the front of the robot instead of in the middle as in the simulation, so the robot thinks that the obstacles are closer than they really are.

=Week 4 and 5 - Localization=

== Assignment Questions ==

===Assignment for first week===

====Assignment 0.1====

'''Imagine a case in which the filter average is inadequate for determining the robot position.'''

[screenshot]: same screenshot as above. In it, the big green arrow (x, y, direction) represents the average state, i.e. the output of the filter.

====Assignment 1.3====

Recording video of localization result: https://youtu.be/yGidSriYTFc

===Assignment for second week===

==== Assignment 2.1 ====

'''What does each of the components of the measurement model represent, and why is each necessary?'''

The <code>measuremntmodel</code> method of a particle object calculates the likelihood of a single ray's measurement, given the prediction for that ray based on the particle's pose. The likelihood is derived using the formula:

The components in the formula can be described as:

* <code>p_hit</code> - It represents how likely it is that the measurement is exactly what it should be (if the robot were at the particle's location and orientation). This probability is modeled with only the exponential part of a Gaussian distribution to ensure values in the range [0,1]: <code>p_hit = exp( -0.5 * pow(((data-prediction)/hit_sigma),2) )</code>.

* <code>p_short</code> - It represents how likely it is that the measurement corresponds to a dynamic obstacle which is closer than the one present in the map. For example, a specific ray for a specific particle might hit a wall at 3 meters based on the map, but in reality a human might be standing between the robot and the wall, making the ray hit an obstacle at 0.5 meters instead of 3 meters. This component calculates how likely it is that we are in this situation, and it is modeled with an exponential distribution for which we predefine the maximum value, reached for measurements close to zero. The probability decreases exponentially to zero for measurements close to the predicted measurement.

* <code>p_max</code> - It represents how likely it is that a measurement corresponds to a ray hitting a black/non-reflective surface or something transparent like glass. In this case we would get a maximum range measurement while the predicted measurement might be something completely different (e.g. wall at 0.5m but measurement is 10m due to a non-reflective wall). It has a value of 1 if the measurement corresponds to the maximum range and 0 in any other case.

* <code>p_rand</code> - It represents how likely it is that a measurement is random (not related to the prediction) even if the robot has the same pose as the particle. This isn't expected to happen often, which is why it has a small constant value for all measurements smaller than the maximum range.
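The four components described above could be combined as in the sketch below. Only the <code>p_hit</code> expression is taken from our code; the weighted-sum form, the parameter struct, the weight names and the unnormalized shape of <code>p_short</code> are assumptions made for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical parameter bundle; names and layout are illustrative only.
struct MeasurementModelParams {
    double hit_sigma;     // width of the Gaussian-shaped hit term (m)
    double short_lambda;  // decay rate of the short-reading term (1/m)
    double range_max;     // maximum sensor range (m)
    double w_hit, w_short, w_max, w_rand;  // mixture weights
};

// Likelihood of one measured range (data) given the range predicted from the
// particle's pose and the map (prediction).
double rayLikelihood(double data, double prediction,
                     const MeasurementModelParams& p) {
    assert(p.hit_sigma > 0.0 && p.range_max > 0.0);
    // Measurement matches the map: exponential part of a Gaussian, in [0,1].
    const double p_hit =
        std::exp(-0.5 * std::pow((data - prediction) / p.hit_sigma, 2));
    // Unexpected closer obstacle: decays with distance, zero beyond prediction.
    const double p_short =
        (data <= prediction) ? std::exp(-p.short_lambda * data) : 0.0;
    // No return (glass, non-reflective surface): reading equals max range.
    const double p_max = (data >= p.range_max) ? 1.0 : 0.0;
    // Unexplained reading: small constant below the maximum range.
    const double p_rand = (data < p.range_max) ? 1.0 / p.range_max : 0.0;
    return p.w_hit * p_hit + p.w_short * p_short
         + p.w_max * p_max + p.w_rand * p_rand;
}
```

If the weights sum to 1 and every component lies in [0,1], as they do here, the result also stays in [0,1].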

'''With each particle having N >> 1 rays, and each likelihood belonging to [0, 1], where could you see an issue given your current implementation of the likelihood computation?'''

The <code>computeLikelihood</code> method of a particle object calls <code>measuremntmodel</code> for each ray of the particle and the corresponding measurement, and returns the total likelihood of the measurement for the particle. To calculate the combined probability we assume independence of the rays and define the combined likelihood as the product of the likelihoods of all the particle's rays (combined_likelihood = p(z1)*p(z2)*p(z3)*...*p(zN), where N is the number of rays). For a particle whose pose is very different from the robot's pose, this can lead to numerical underflow: we repeatedly multiply very small numbers, until the product can no longer be represented by the data type used (e.g. double). C++ does not raise an error in this case; when the number is too small to fit in a float or double variable, the variable simply becomes 0. Regarding the independence assumption, we think it is valid since we are working with a heavily subsampled measurement and the prediction corresponds to the subsampled space. Specifically, the initial list of 1000 ranges becomes a list of 67 ranges, for which we can say that the measurements are not correlated. In the original dense list of ranges, however, a measurement would probably be highly correlated with its neighbors.
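A common way to avoid the underflow described above is to sum log-likelihoods instead of multiplying likelihoods, and to subtract the largest log-likelihood before converting back to weights. We did not implement this; the sketch below only illustrates the idea, and the function names and the clamping constant are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Sum of log-likelihoods over all rays of one particle. Equivalent to the log
// of the product, but it does not underflow for many small factors.
double combinedLogLikelihood(const std::vector<double>& ray_likelihoods) {
    const double min_likelihood = 1e-300;  // clamp so that log(0) never occurs
    double log_sum = 0.0;
    for (double p : ray_likelihoods) {
        assert(p >= 0.0 && p <= 1.0);
        log_sum += std::log(std::max(p, min_likelihood));
    }
    return log_sum;
}

// Converts per-particle log-likelihoods into weights that sum to 1. The
// largest value is subtracted first, so the best particle maps to exp(0) = 1.
std::vector<double> normalizedWeights(const std::vector<double>& log_likelihoods) {
    assert(!log_likelihoods.empty());
    const double max_log =
        *std::max_element(log_likelihoods.begin(), log_likelihoods.end());
    std::vector<double> weights;
    weights.reserve(log_likelihoods.size());
    double total = 0.0;
    for (double l : log_likelihoods) {
        weights.push_back(std::exp(l - max_log));
        total += weights.back();
    }
    for (double& w : weights) w /= total;
    return weights;
}
```

With this approach, two poor particles can still be ranked against each other, whereas with the plain product both would end up with a likelihood of exactly 0.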

==== Assignment 2.2 ====

'''What are the benefits and disadvantages of both the multinomial resampling and the stratified resampling?'''

'''Multinomial resampling'''

This algorithm draws particles uniformly at random from the list of cumulative weights/likelihoods. Its main benefit is that it is easy to understand. The main disadvantage is that it causes high variance in the number of times a particle is chosen, meaning some particles are repeated many times while other particles are hardly used. This could result in a selection of particles that does not describe the robot's pose well enough.

'''Stratified resampling'''

This algorithm splits the list of cumulative weights/likelihoods into equal parts/ranges, which are called strata. Then, in each stratum, we uniformly draw a sample of the corresponding particles. In this way, we still reproduce the particles with bigger weights with higher probability, while making sure we also reproduce particles with smaller weights. The result is a more diversified set of samples. Also, since each time we search in a smaller list that corresponds to the stratum instead of the initial list, the algorithm is computationally more efficient than the multinomial one. For our application we can't really identify a disadvantage of stratified resampling. In general, however, it could be hard to split the samples into strata, which could make the multinomial algorithm a better choice.
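The stratified scheme described above can be sketched as follows. This is a generic textbook version written for illustration, not the code from the provided framework; the function name and the choice to return particle indices are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Returns the indices of the particles selected by stratified resampling.
// The interval [0,1) is split into n equal strata and exactly one uniform
// sample is drawn in each stratum.
std::vector<std::size_t> stratifiedResample(const std::vector<double>& weights,
                                            std::mt19937& rng) {
    const std::size_t n = weights.size();
    assert(n > 0);
    double total = 0.0;
    for (double w : weights) total += w;
    assert(total > 0.0);

    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    std::vector<std::size_t> indices;
    indices.reserve(n);
    std::size_t i = 0;
    double cumulative = weights[0] / total;  // running cumulative weight
    for (std::size_t k = 0; k < n; ++k) {
        // One sample inside stratum k: [k/n, (k+1)/n).
        const double u =
            (static_cast<double>(k) + uniform(rng)) / static_cast<double>(n);
        // Samples are increasing, so the search continues where it stopped.
        while (u >= cumulative && i + 1 < n) {
            ++i;
            cumulative += weights[i] / total;
        }
        indices.push_back(i);
    }
    return indices;
}
```

Multinomial resampling would instead draw all n samples independently from [0,1), so several of them can fall into the same stretch of the cumulative weights by chance. With equal weights, the stratified version selects every particle exactly once.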

==== Assignment 2.3 ====

The parameters we tuned are the following:

* <code>Particles</code> - We set the number of particles to 500, since we could localize successfully with that number of particles while the code still ran fast enough.

* <code>motion_forward_std</code> and <code>motion_turn_std</code> - We set the propagation noise standard deviation to 10cm for translation and 5 degrees for rotation, since we could localize with that noise and still diversify the particles while tracking our position.

* <code>meas_dist_std</code> - We set the LRF measurement noise level to 2cm, since the sensor is very accurate and we don't expect larger deviations.

* <code>Algorithm</code> - We use the stratified resampling algorithm due to the aforementioned advantages.

* <code>Scheme</code> - We use the effectiveParticleThreshold scheme to resample only when it is detected that just a few particles dominate the average position of the filter.
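The criterion behind the resampling scheme in the list above is not spelled out on this page. A common choice is the effective number of particles, N_eff = (sum of weights)^2 / (sum of squared weights), compared against a fraction of the total particle count. The sketch below shows that assumed criterion; the function names and the threshold fraction are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Effective number of particles: equals the particle count for uniform
// weights and approaches 1 when a single particle carries all the weight.
double effectiveParticleCount(const std::vector<double>& weights) {
    assert(!weights.empty());
    double sum = 0.0;
    double sum_sq = 0.0;
    for (double w : weights) {
        sum += w;
        sum_sq += w * w;
    }
    assert(sum > 0.0);
    return (sum * sum) / sum_sq;
}

// Resample only when the effective count drops below a fraction of the total.
bool shouldResample(const std::vector<double>& weights, double threshold_fraction) {
    return effectiveParticleCount(weights) <
           threshold_fraction * static_cast<double>(weights.size());
}
```

Skipping the resampling step while the weights are still well spread keeps more particle diversity than resampling on every update.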

We tested the localization algorithm by initializing the simulation with the uncertain odometry option set to true. We also moved the robot further away from its initial guess. After performing several runs, we believe that our localization module is accurate and runs fast enough to be integrated with the rest of the modules for the final challenge.

A video that demonstrates a run in the simulation with uncertain odometry:

https://youtu.be/fkpTKTvYtzk

A video demonstrating a difficult scenario for localization: a large rectangular dynamic obstacle makes the measurements very similar to the ones predicted for a position close to the obstacle, which makes the particle filter converge to the wrong estimate:

https://youtu.be/JQYRa6BRUSE

= Final Challenge : Hero, the new age waiter =

== Videos of the final challenge ==

* The video of the designed system running on the real robot during the final challenge on 21 June 2024: https://youtu.be/ncUWcO743BM (3rd attempt)

* The video of initial attempts for the designed system for final challenge: https://youtu.be/-lya73TgR88 (1st and 2nd attempt)

* Simulation Testing video on the final challenge RestaurantMap2024: https://youtu.be/VLNTw9IdH2I

* Simulation Testing video on the final challenge RestaurantMap2024 with Variations and Moving Obstacles: https://youtu.be/_71ev8N3lXI

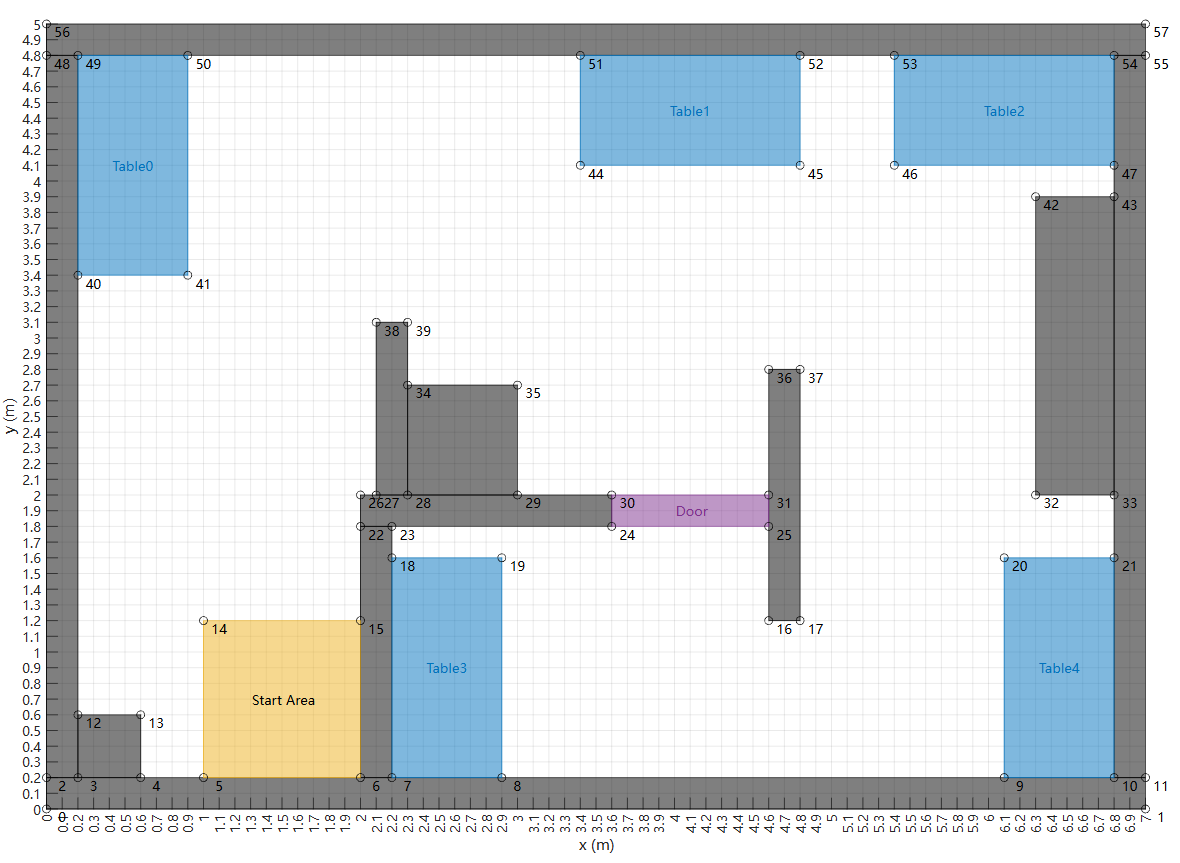

== Introduction == | |||

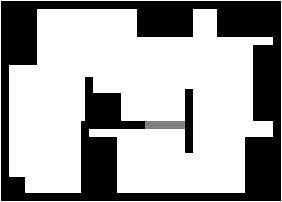

[[File:RestaurantChallengeMap2024.png|thumb|The final challenge map]] | |||

In this challenge, Hero the robot is tasked with delivering orders in a dynamic restaurant environment. The goal is to navigate from the kitchen (starting point) to specified tables, as determined by the judges right before the challenge begins. Hero must contend with various static and dynamic obstacles, including chairs and human actors, to successfully deliver the orders. The robot must approach each table, signal its arrival with a sound, that signifies the order is delivered comfortably to the customer. This involves precise navigation and obstacle avoidance, using LiDAR for object detection and the provided environment map. The challenge tests not only Hero's ability to follow instructions and reach designated locations but also its capacity to adapt to an ever-changing environment, making it a true test of advanced robotics and autonomous navigation. | |||

=== Prerequisites ===

There are certain requirements from each stakeholder's perspective that the robot Hero is expected to fulfil in order to successfully complete the challenge:

# From the customer's point of view -

#* The robot must use speech to communicate with customers, including phrases when it reaches a table or when it is planning a route from there towards a new table. This ensures customer safety and better overall service.

#* The robot must approach the table, orient towards it, and move close enough for customers to comfortably take their order.

#* It should be able to find an empty spot at the table and reposition if the initial spot is occupied.

# From the restaurant staff's point of view -

#* It should be reliable in all stages of operation, including localization, local planning, and global planning.

#* The robot must have robust obstacle avoidance to ensure the safety of the restaurant staff, preventing collisions at all costs.

#* There should be a simple table number input mechanism, allowing staff to easily input table numbers and manage orders.

# From the software developer's point of view -

#* The robot should have a modular code design for seamless integration of the various components. Separating the scenarios into distinct cases in the software architecture keeps the modules/components apart from one another, which improves readability and makes it easier for developers to pinpoint flaws in a specific component.

#* A config file allows developers and operators to adjust all the parameters without altering the core code, which helps to achieve optimal performance in various settings.

#** Restaurant staff or robot operators, who may not have a technical background, can still make necessary adjustments to the robot’s behavior through a simple config file.

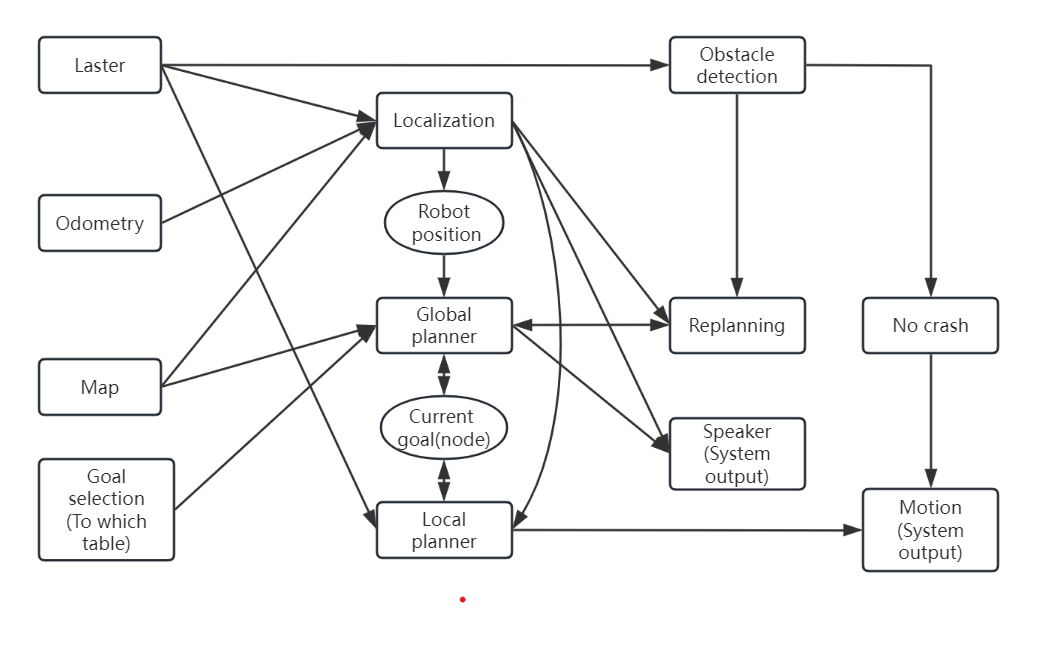

== System Design - Data Flow ==

[[File:Data R2-D2.png|thumb|451x451px|Data flow diagram]]

This diagram details the interactions and information flow between the various modules. Firstly, the laser sensor (Laser) provides obstacle information about the environment and, together with the odometry data, serves as input to the Localization Module to determine the robot's current position. The Map provides static information about the environment, and the Goal Selection Module determines the target location for the robot. This target is composed of the table location information stored in the backend and the manually entered table visit sequence. Additionally, a dynamic planning algorithm ensures that the robot always selects the closest edge of the table as the global planning target point; if this point is blocked, a new point is planned.

Based on these input data, the localization module calculates the robot's current position and transmits it to the Global Planner. The Global Planner module uses the current position and map information to generate a global path through the PRM algorithm and updates the Current Goal Node. The Local Planner dynamically plans the trajectory between the current position and the current goal node by comparing them in real time, and formulates specific motion commands. If the robot encounters any unknown obstacles, it attempts to bypass them using the local planner module. If an obstacle cannot be bypassed or is located at the next node, an error message is reported to the global planner and the path is replanned.

The Obstacle Detection Module uses the laser sensor data to detect obstacles ahead in real time. Once an obstacle is detected within 0.2 meters, the no-crash protector (No crash) triggers, causing the robot to stop immediately. If the obstacle is not removed after a long time, the Replanning Module is triggered, which reports the current obstacle location to the global planner and updates the path through PRM again so that the robot can adapt to the environment. Additionally, the system is equipped with a speaker module (Speaker (System output)) to provide voice feedback, informing users of the current system status or of warning messages. After initialization, the localization module sends a message to the speaker to inform users that the initialization is complete. Throughout the process, the modules collaborate to ensure the robot safely reaches its intended destination along the planned path.

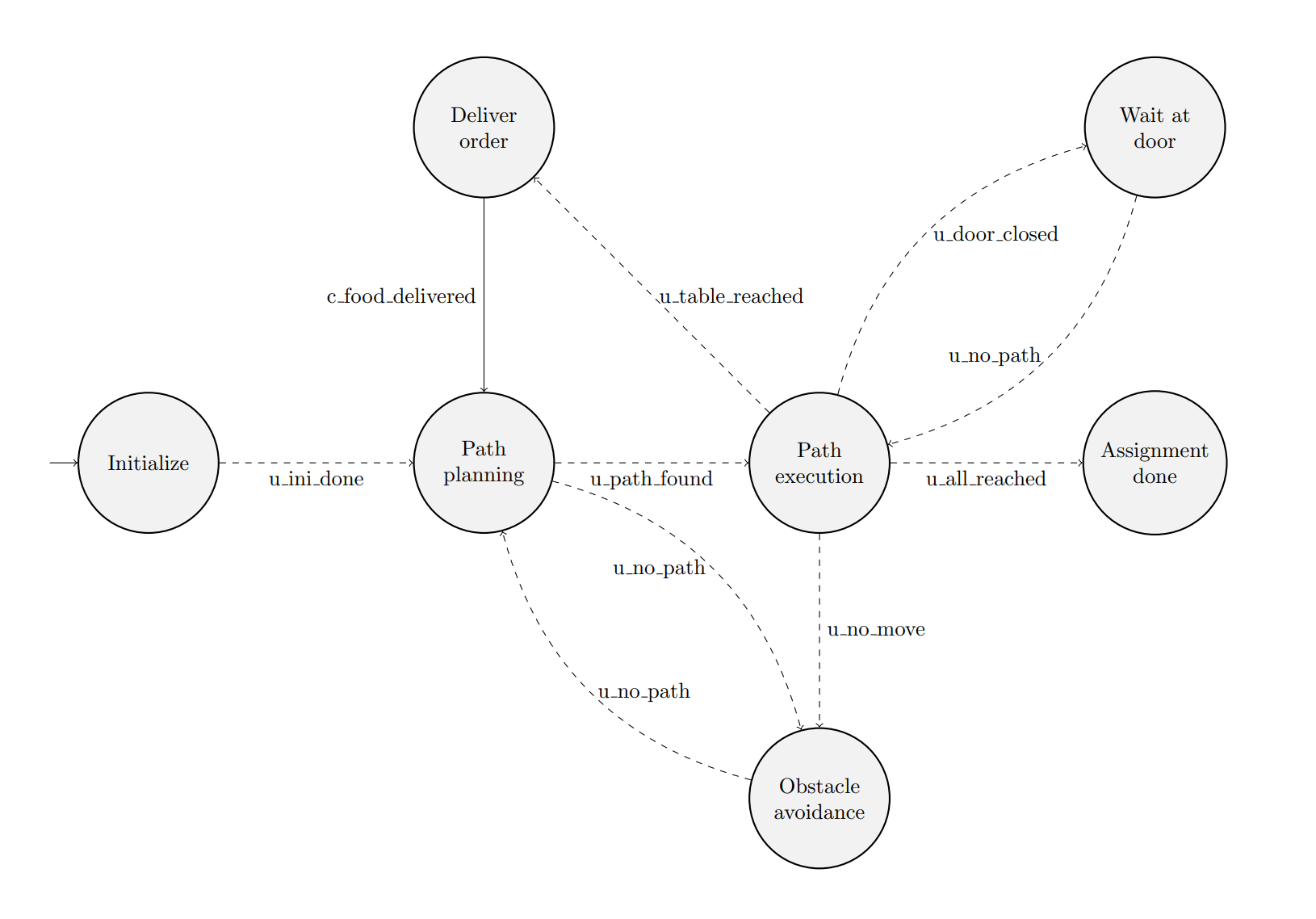

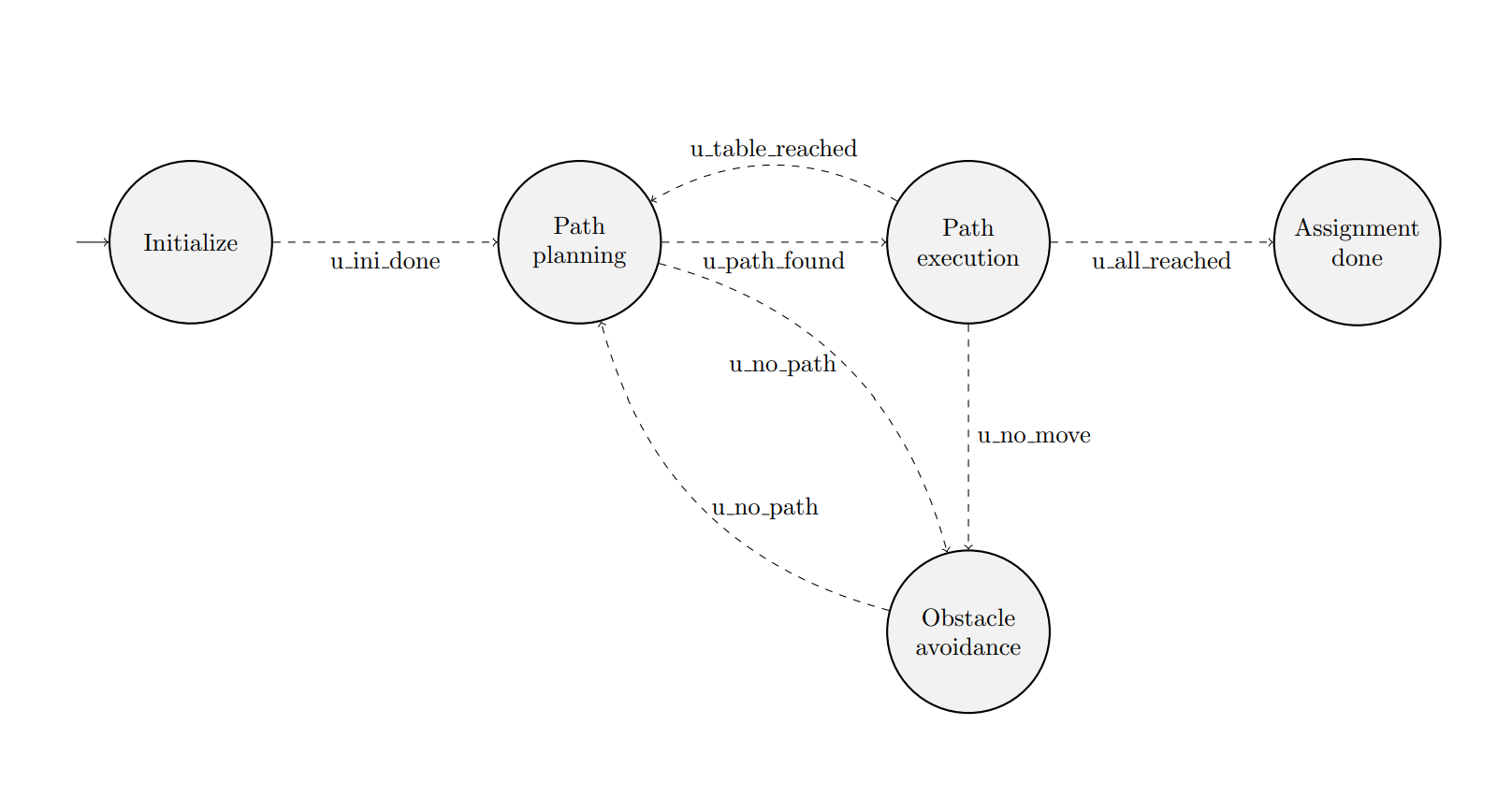

== System Architecture == | |||

[[File:Screenshot 2024-06-27 182950.png|thumb|478x478px|Intented state diagram for the final challenge]]The robot is controlled by a supervisor class in C++ called "Robot." This class is initialized in the main script "main.cpp." Before it can be initialized, the "World" class and the "ParticleFilterBase" class need to be initialized. The "ParticleFilterBase" class was created in assignment four and is responsible for the localization of the robot in the space. The same applies to "World," which places the sensor data and the robot itself in a world model. The "World" class is used by both "Robot" and "ParticleFilterBase." Both classes are used by "Robot" as pointers. Other relevant classes used by the robot include "DWA" for the local planner based on the Dynamic Window Approach, "Planner" for the global planner, and "PRM" for creating the PRM map. Additionally, "ParticleFilterBase" uses some additional classes. All relevant tuning parameters for all used classes and subclasses are dynamically loaded from a general config file for the robot. This allows for dynamic adjustment of parameters without recompiling the program. For example, adjusting the number of nodes for "PRM" or the clearance radius for "DWA." | |||

Once the aforementioned initialization of the classes is completed, a while loop is started that keeps running as long as there is an active IO connection with the robot. On each iteration of the while loop, the function "handle_current_state()" is invoked. This function calls the state function that is currently active. Each state has its own state ID, and the ID of the currently active state is stored in the "Robot" class. A switch statement is used to call the function corresponding to a state ID. After executing the state function, several conditions are checked that could result in changing the state and thus the state ID. For instance, if the robot reaches the last node of the planned global path, a new path needs to be planned. The possible transitions from state to state and the states themselves are explained below, and the state diagram is shown. The robot announces each transition.
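The dispatch described above could look roughly like the following sketch. It is not the team's actual "Robot" class: the enum, the member names, and the boolean flags standing in for the real transition conditions are all assumptions made for illustration, and the announcement string stands in for a call such as <code>io.speak</code>.

```cpp
#include <string>

// Hypothetical state IDs; the names mirror the states described in this section,
// but the enum itself and its values are assumptions.
enum class StateId { Initialize, SetGoal, GotoGoal, AddObstacle, Done };

// Minimal stand-in for the supervisor class (not the project's "Robot" class).
class RobotSketch {
public:
    StateId state = StateId::Initialize;
    int rotations_done = 0;     // placeholder condition for leaving Initialize
    bool path_found = false;    // placeholder result of the global planner
    bool goal_reached = false;  // placeholder: all intermediate nodes visited
    bool tables_left = true;    // placeholder: more tables to serve
    std::string last_announcement;

    // Called once per iteration of the main loop.
    void handle_current_state() {
        const StateId previous = state;
        switch (state) {
            case StateId::Initialize:
                if (rotations_done >= 1) state = StateId::SetGoal;
                break;
            case StateId::SetGoal:
                state = path_found ? StateId::GotoGoal : StateId::AddObstacle;
                break;
            case StateId::GotoGoal:
                if (goal_reached) state = tables_left ? StateId::SetGoal : StateId::Done;
                break;
            case StateId::AddObstacle:
                state = path_found ? StateId::GotoGoal : StateId::SetGoal;
                break;
            case StateId::Done:
                break;  // terminal state, no outgoing transitions
        }
        // Stands in for the spoken announcement of each transition.
        if (state != previous) last_announcement = "state changed";
    }
};
```

Keeping the transition checks next to the state they belong to makes it easy to compare the code against the state diagram.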

==== The "Robot" class contains the following states: ====

===== Initialization - Robot::state_initialize() ===== | |||

[[File:Screenshot 2024-06-27 183441.png|thumb|478x478px|Implemented state diagram for the final challenge]] | |||

Although the "Robot" class is initialized, more needs to be done before the robot is ready to depart for the first table. The robot must converge from the initial guess positions to the actual position of the robot. To do this, the robot rotates completely around its axis while making small forward and backward movements. Practical tests have shown that this best converges to the actual location. Before this can happen, the laser data in the "World" class must be initialized. | |||

From this state, it can transition to the "Path planning" state once the robot has completed one full rotation. In hindsight, it would have been better to add an extra condition here, namely checking whether the localized position is close enough to the initial guess. | |||

===== Path planning - Robot::state_set_goal() ===== | |||

Before the robot can depart for a goal or table, a path to this table must be planned. When the "Robot" class is initialized, a PRM map is created. In this state, only the nodes in the quadrant where the goal is located relative to the robot in the world frame are considered. The node closest to the robot is designated as the start node. The node closest to the table relative to the current robot location is chosen as the end node. When a path is found by the "Planner" class, the robot can move towards its goal. | |||

From this state, it can transition to the "Path execution" state if a path is found. If no path can be found, it can transition to the "Obstacle avoidance" state to find an alternative path. The robot saves the distance travelled for a set amount of past cycles. When the mean distance travelled becomes lower than a set threshold, the robot goes to the state "Obstacle avoidance". | |||
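The stuck check described above (mean distance travelled over a number of past cycles compared against a threshold) can be sketched as follows. The class name, the window size, and the threshold are hypothetical; the project's own implementation may differ.

```cpp
#include <cstddef>
#include <deque>
#include <numeric>

// Hypothetical helper: keeps the distance travelled in the last `window` cycles
// and reports when their mean drops below `threshold`.
class StuckDetector {
public:
    StuckDetector(std::size_t window, double threshold)
        : window_(window), threshold_(threshold) {}

    // Record the distance driven during the latest cycle (metres).
    void add(double distance) {
        history_.push_back(distance);
        if (history_.size() > window_) history_.pop_front();
    }

    // True only once the window is full, so the robot is not flagged at start-up.
    bool is_stuck() const {
        if (history_.size() < window_) return false;
        const double sum = std::accumulate(history_.begin(), history_.end(), 0.0);
        return sum / static_cast<double>(history_.size()) < threshold_;
    }

private:
    std::size_t window_;
    double threshold_;
    std::deque<double> history_;
};
```

Requiring a full window before reporting "stuck" avoids a false trigger in the first cycles, when the robot has not yet had time to move.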

===== Path execution - Robot::state_goto_goal() ===== | |||

The robot moves according to the nodes given by the "Planner." Each time the state is called from the main loop, localization is attempted if the sensor data for odometry and LiDAR are available. The average position is requested from "ParticleFilterBase," which "DWA" then uses to calculate the next step. When an intermediate node is reached, this node is removed from the list of coordinates to be visited. | |||

When all intermediate nodes are visited, it means that the final goal is reached. If not all tables have been visited, a new goal is sought through the "Path planning" state. Otherwise, it will transition to the "Assignment done" state. | |||
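A minimal sketch of the node bookkeeping in this state, assuming the path is stored as a queue of waypoints and that a node counts as reached within a tolerance radius (the <code>tolerance</code> parameter and the type names are assumptions):

```cpp
#include <cmath>
#include <deque>

struct Waypoint { double x, y; };

// Removes the front waypoint once the robot is within `tolerance` metres of it.
// Returns true when no waypoints remain, i.e. the final goal has been reached.
inline bool update_waypoints(std::deque<Waypoint>& path, double rx, double ry, double tolerance) {
    if (!path.empty() && std::hypot(path.front().x - rx, path.front().y - ry) < tolerance) {
        path.pop_front();
    }
    return path.empty();
}
```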

===== Obstacle avoidance - Robot::state_add_obstacle() ===== | |||

Sometimes the robot may encounter an obstacle. When it is identified that the robot can no longer move to its next intended node, this state is activated. The "Planner" is called again to create a new path in the same manner as in the "Path planning" (set goal) state. The main difference is that a new map is created by the "PRM" class based on the LiDAR data. Only LiDAR data points within a configurable range of the robot are used. From these points, a configurable distance in the same direction as the robot is removed from the map as a navigable area. This area is then inflated as previously explained in assignment 3. This is achieved by subtracting the created matte from the original map. The new map is used temporarily by the "Planner" to find an alternative path.

From this state, it can transition to the "Path execution" state if a path is found. If no path can be found, it can transition to the "Path planning" state to attempt regular path planning.

===== Assignment done - Robot::state_done() ===== | |||

This is the final state and has no outgoing transitions. It is ensured that the robot does not move anymore in this state.

==== The following states are included in the initial state diagram but not implemented: ==== | |||

===== Waiting at door - Robot::state_wait_door() ===== | |||

The ideal situation here would be to add a penalty to the "Planner" class when the robot wants to go through the door. This way, it is not preferred to take the closed door if another route is better. If the route through the door must be taken, then LiDAR information can be used to recognize if the desired path is blocked. The robot will then wait until the LiDAR information recognizes a clear path in this state. | |||

===== Delivering order - Robot::state_deliver_order() ===== | |||

In this state, the robot could deliver the order when a table is reached. It can do this by rotating to the table and waiting for a set amount of time. It is possible to move towards the table if that helps the people at the table to take the food. | |||

The individual components were adapted according to the needs of the final challenge and are explained as follows: | |||

=== Initialization === | |||

The initialization begins by setting up the current time using `std::chrono::system_clock::now()`, calculating the duration since the epoch, and extracting milliseconds using `std::chrono::duration_cast`. This timing information is for precise operations. Next, the robot sends a base reference using `io.sendBaseReference()`, calculated with a sine function to set initial movement parameters. The robot then reads laser data using `io.readLaserData(scan)`. Similarly, the robot reads odometry data using `io.readOdometryData(odom)`. This data is essential for navigation, providing information about the robot’s movement and position. The current position is obtained from the particle filter using `pFilt->get_average_state()`. | |||
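The timing and sine-based reference described above could be computed along these lines. This is only a sketch: the amplitude and period are illustrative values, the function names are hypothetical, and the result would be passed on to <code>io.sendBaseReference()</code> in the real code.

```cpp
#include <chrono>
#include <cmath>
#include <cstdint>

// Milliseconds elapsed since the system clock's epoch.
inline std::int64_t millis_since_epoch() {
    const auto now = std::chrono::system_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch()).count();
}

// Hypothetical forward/backward velocity: a sine wave in time.
// `amplitude` (m/s) and `period_ms` are illustrative, not taken from the project.
inline double oscillating_velocity(std::int64_t t_ms, double amplitude = 0.1,
                                   double period_ms = 2000.0) {
    const double two_pi = 2.0 * std::acos(-1.0);
    return amplitude * std::sin(two_pi * static_cast<double>(t_ms) / period_ms);
}
```

A sine wave yields the small forward and backward movements used during initialization without any net displacement over a full period.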

The localization process integrates laser and odometry data to accurately determine the robot’s position. The particle filter refines this estimate by averaging data points and adjusting for discrepancies, ensuring precise location awareness. | |||

If both laser and odometry data are updated and the initialization count exceeds a threshold, the robot sends a base reference of (0, 0, 0) to reset its state. The state is then set to 1, completing the initialization phase. The robot announces, “Hero is initialized and will plan a path,” signaling readiness for path planning. In subsequent steps, the robot computes the driven distance using `world->computeDrivenDistance(odom)` if both laser and odometry data are updated again. The pose is updated in the world model, and various filters are updated to reflect the new state, ensuring synchronization with its real-world position and movements. | |||

This comprehensive process ensures the robot is fully prepared to start its main tasks without errors or interruptions. Continuous monitoring and verification of system readiness allow the robot to transition smoothly to the next operational state. | |||

=== Local Navigation === | |||

The local planning phase is critical for ensuring that the robot, Hero, successfully navigates the dynamic restaurant environment while delivering orders to designated tables. During this phase, Hero uses the Dynamic Window Approach (DWA) to handle real-time path planning and obstacle avoidance. Key parameters for the DWA, such as translational and rotational velocities, acceleration limits, and clearance radius, are configured through a flexible settings config file to adapt to varying restaurant conditions. | |||

The robot continuously samples a range of possible translational and rotational velocities, simulating a short-term trajectory for each pair. These trajectories are evaluated based on their alignment with the goal, the distance to the nearest obstacle, and the robot's forward speed. Each trajectory is scored with a weighted objective function and the highest-scoring velocity pair is selected. This process repeats in a loop, allowing the robot to adapt to changes in the environment, such as moving obstacles or shifts in the goal position. Because the path is adjusted from real-time sensor data, navigation stays efficient and safe, which is crucial for the complexities of the final challenge. The robot updates its current position after each DWA step, as explained in assignment 2, and proceeds to the next goal node whenever one is reached, until the loop ends or replanning is triggered.
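The sampling and scoring loop can be illustrated with the simplified sketch below. It is not the project's "DWA" class: all type names, parameter names, default values, and the exact form of the objective function are assumptions, and obstacles are represented as a plain list of points. It assumes at least two samples per velocity axis.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>
#include <vector>

struct Pose { double x, y, theta; };
struct Point2 { double x, y; };
struct Velocity { double v, w; };

// All parameter names and default values are illustrative assumptions.
struct DwaParams {
    double v_max = 0.5, w_max = 1.0;   // velocity limits (m/s, rad/s)
    double a_v = 0.5, a_w = 1.5;       // acceleration limits
    double dt = 0.1, horizon = 1.0;    // control period and simulated time (s)
    double clearance = 0.25;           // minimum allowed obstacle distance (m)
    double k_heading = 1.0, k_clear = 0.5, k_speed = 0.2;  // objective weights
    int samples = 11;                  // samples per velocity axis (>= 2)
};

// Distance from p to the nearest obstacle point (infinity if there are none).
inline double nearest_obstacle(const Point2& p, const std::vector<Point2>& obstacles) {
    double best = std::numeric_limits<double>::infinity();
    for (const auto& o : obstacles) best = std::min(best, std::hypot(o.x - p.x, o.y - p.y));
    return best;
}

inline Velocity dwa_step_sketch(const Pose& pose, const Velocity& current, const Point2& goal,
                                const std::vector<Point2>& obstacles, const DwaParams& p) {
    const double pi = std::acos(-1.0);
    // Dynamic window: velocities reachable within one control period.
    const double v_lo = std::max(0.0, current.v - p.a_v * p.dt);
    const double v_hi = std::min(p.v_max, current.v + p.a_v * p.dt);
    const double w_lo = std::max(-p.w_max, current.w - p.a_w * p.dt);
    const double w_hi = std::min(p.w_max, current.w + p.a_w * p.dt);

    Velocity best{0.0, 0.0};  // stop if no admissible trajectory exists
    double best_score = -std::numeric_limits<double>::infinity();
    for (int i = 0; i < p.samples; ++i) {
        for (int j = 0; j < p.samples; ++j) {
            const double v = v_lo + (v_hi - v_lo) * i / (p.samples - 1);
            const double w = w_lo + (w_hi - w_lo) * j / (p.samples - 1);
            // Forward-simulate a short trajectory and track the smallest clearance.
            Pose s = pose;
            double min_clear = std::numeric_limits<double>::infinity();
            for (double t = 0.0; t < p.horizon; t += p.dt) {
                s.x += v * std::cos(s.theta) * p.dt;
                s.y += v * std::sin(s.theta) * p.dt;
                s.theta += w * p.dt;
                min_clear = std::min(min_clear, nearest_obstacle({s.x, s.y}, obstacles));
            }
            if (min_clear < p.clearance) continue;  // not admissible
            // Heading term: 1 when the end pose faces the goal, 0 when facing away.
            const double to_goal = std::atan2(goal.y - s.y, goal.x - s.x);
            const double err = std::atan2(std::sin(to_goal - s.theta), std::cos(to_goal - s.theta));
            const double heading = 1.0 - std::fabs(err) / pi;
            const double clear = std::min(min_clear, 2.0) / 2.0;  // saturate at 2 m
            const double score = p.k_heading * heading + p.k_clear * clear
                               + p.k_speed * v / p.v_max;
            if (score > best_score) { best_score = score; best = {v, w}; }
        }
    }
    return best;
}
```

In this sketch, an obstacle closer than the clearance radius makes every sampled trajectory inadmissible, so the function returns zero velocity.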

Obstacle avoidance is a key feature of the local planner, allowing Hero to detect and maneuver around static and dynamic obstacles, such as furniture and moving human actors, without collisions. This is achieved by continuously updating its environment model using LiDAR scans and odometry data. When an obstacle is detected within a predefined clearance radius in the config file, the robot dynamically recalculates its path to ensure a safe and smooth trajectory towards its goal. | |||

Path following is managed by setting sequential waypoints derived from a pre-planned global path. As Hero progresses, it adjusts its motion to stay on course towards the current waypoint. Upon reaching each waypoint, the robot reorients towards the next until it arrives near the target table. Once it reaches a table, Hero announces its arrival with a clear audio signal, ensuring a seamless and polite interaction with the customers. | |||

If an occupied spot is encountered, it calls the replanning module which generates an alternative path for docking around the table. | |||

=== Global Navigation === | |||

[[File:Final map nodes.png|thumb|Final Challenge Map Global Planning Nodes]] | |||

For global planning, the PRM (Probabilistic Roadmap) code from the project was utilized. Because the tables must be visited sequentially, the PRM is built only once for global planning, and the A* algorithm is run for path planning each time a table is visited. Based on the simulated map data, the resolution of PRM is set to 0.025 pixels. To ensure that nodes are not generated between two tables (since the robot's width cannot fit between tables, making those nodes unreachable), the wall expansion coefficient is set to 0.58, thus preventing the generation of nodes too close to any surface. Additionally, the grayscale recognition needs to be adjusted so that gray doors are not identified as walls, thereby allowing paths that pass through doors to be generated. To enhance the stability of the PRM algorithm, additional algorithms were incorporated to increase the number of nodes generated around tables. This ensures that even if one or several nodes are occupied, alternative nodes can still serve as endpoints near the tables.

To ensure that the robot can be controlled to visit tables in a specified order by inputting table numbers, the coordinates of the four corners of each table are stored in a configuration file. An algorithm matches the table numbers to their respective coordinates, ensuring that the table coordinates can be directly recognized. In the goal selection algorithm, the input number is first mapped to the corresponding table coordinates. Then, the algorithm searches for PRM-generated nodes near the table and selects the node closest to the current starting point as the target point as mentioned in path planning state. | |||

In practical operation, the robot first initializes and confirms its current coordinates. After a path is generated and its goal (initially the first table) is reached, the robot re-plans the path using its current coordinates as the new starting point and the next goal as the endpoint. This process continues iteratively until the robot reaches the final goal. This approach allows for dynamic switching of goals and robust path planning by incorporating localization, thereby avoiding errors that could arise from using the previous endpoint's coordinates as the new starting point.

=== Replanning === | |||

In path replanning, we need to consider two scenarios. The first scenario occurs when there is an obstacle on the current path. According to the DWA settings, if the next node is occupied by an obstacle, the robot will stop until the node becomes available. If the obstacle does not move, however, the robot will stay there indefinitely. To avoid this situation, it is necessary to replan a path around the obstacle while maintaining the same goal. The second scenario arises when the goal node near the table is occupied or there is an obstacle nearby, preventing access to the intended goal. In this case, a new goal must be selected to conclude the current path planning.

[[File:Blocked path.png|thumb|The region near the starting point was marked blocked by the robot as inaccessible due to presence of an unseen static object.]] | |||

To determine the need for replanning, we integrate data from the laser sensor. When the laser sensor detects an obstacle ahead that impedes movement, we read the sensor data to calculate the obstacle's width. By combining the obstacle's width with the global planning node information, we assess whether the obstacle obstructs subsequent paths. If the subsequent nodes are deemed inaccessible, path replanning is initiated; otherwise, the obstacle is simply bypassed. Additionally, we monitor the robot's speed. If the robot's speed is zero for more than 10 seconds, the system will trigger replanning. For scenarios where the goal node is inaccessible, the laser sensor detects if the goal node is occupied and compares the robot's current coordinates with the goal coordinates. If the sensor data indicates that the current distance to the node is greater than the distance to the obstacle, the goal replanning process is initiated. | |||

During path replanning, the first step involves measuring the obstacle's width using the laser sensor and constructing a rectangle in the PRM map with a side length equal to the measured width. The starting edge of the rectangle is the distance detected from the obstacle. Using the robot's current coordinates and the distance measured by the laser sensor as references, we calculate the approximate position coordinates of the obstacle. Before running the PRM algorithm again, we update the simulated map to mark this area as an impassable region (displayed as a black area on the map). Then, the PRM algorithm is rerun to generate new paths and nodes, keeping the original goal node unchanged. Using the robot's current coordinates as the starting point, the A* algorithm is employed to replan the path. | |||
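Marking the obstacle region before the PRM is rerun could be sketched as below. The grid type, the cell encoding, and the function name are hypothetical, and the square is kept axis-aligned for simplicity rather than rotated with the robot's heading.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical occupancy grid: 0 = free, 1 = blocked. `resolution` is metres per cell.
struct GridMap {
    int width, height;
    double resolution;
    std::vector<int> cells;  // row-major, width * height entries
    GridMap(int w, int h, double res)
        : width(w), height(h), resolution(res),
          cells(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 0) {}
    int& at(int cx, int cy) { return cells[static_cast<std::size_t>(cy) * width + cx]; }
};

// Marks a square of side `obstacle_width` as blocked. The near edge of the square
// lies `range` metres in front of the robot at (rx, ry) along its heading `theta`.
inline void mark_obstacle(GridMap& map, double rx, double ry, double theta,
                          double range, double obstacle_width) {
    const double half = 0.5 * obstacle_width;
    // Centre of the square: half a side length beyond the detected surface.
    const double cx = rx + (range + half) * std::cos(theta);
    const double cy = ry + (range + half) * std::sin(theta);
    // Axis-aligned cell range, clamped to the map bounds.
    const int x0 = std::max(0, static_cast<int>(std::floor((cx - half) / map.resolution)));
    const int x1 = std::min(map.width - 1, static_cast<int>(std::floor((cx + half) / map.resolution)));
    const int y0 = std::max(0, static_cast<int>(std::floor((cy - half) / map.resolution)));
    const int y1 = std::min(map.height - 1, static_cast<int>(std::floor((cy + half) / map.resolution)));
    for (int y = y0; y <= y1; ++y)
        for (int x = x0; x <= x1; ++x) map.at(x, y) = 1;
}
```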

For goal replanning, the steps are similar. The only difference is that after confirming the goal is obstructed, the goal selection algorithm is rerun to choose an alternative accessible node. If the path to this node is not obstructed, the goal is updated to this new node, and the A* algorithm is rerun to plan the path. If the path remains impassable, other nodes are selected until a feasible path is found. If all nodes are obstructed, the obstacle is marked, and the PRM is rerun. | |||

The simulation video to display working of replanning can be found here: https://youtu.be/dWZ9Ip53ogI | |||

=== Localization === | |||

The localization component is responsible for global localization during initialization and then for tracking the robot's pose for the rest of the challenge. Before the robot starts moving towards a table, it performs a small circle around itself to make the particle filter's estimates converge to (hopefully) the robot's real position. For estimating the initial position of the robot, we use a guess inside the given starting area, specifically <code>"initial_guess": [1.5, 0.7, 1.57]</code>. The inner workings of localization remain the same as in the exercises, apart from a bug that was causing unexpected errors during runs on the real robot. The problem was in the compute likelihood function of a particle, where the combined probability of a particle's rays is calculated as the product of the individual probabilities. As already mentioned in the exercises, that product can become so small that the likelihood is truncated to zero. During the exercises, however, we did not notice how that would affect the rest of the code, specifically the normalization of the probabilities before resampling. Since some particles now had zero probability, any number multiplied by their probability again resulted in zero, and after normalization the cumulative sum of the probabilities no longer added up to 1. Apart from essentially deleting some particles, this caused the unexpected errors during resampling, since the resampling algorithm assumes a search space of [0,1] for the cumulative sum vector. To solve the issue, we modified the compute likelihood function of a particle to set the calculated probability to a predefined lower bound if the product is smaller than this bound.
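The lower-bound fix can be sketched as follows. The function names and the default bound are assumptions for illustration; the project's actual compute likelihood function operates on its own particle and measurement types.

```cpp
#include <algorithm>
#include <vector>

// Combined likelihood of one particle: the product of the per-ray probabilities,
// clamped from below so that it never underflows to exactly zero.
// The default bound is an illustrative assumption.
inline double combined_likelihood(const std::vector<double>& ray_probs,
                                  double lower_bound = 1e-300) {
    double likelihood = 1.0;
    for (double p : ray_probs) likelihood *= p;
    return std::max(likelihood, lower_bound);
}

// Normalises the weights in place so that they sum to 1. Assumes a positive
// total, which the clamp above guarantees for a non-empty particle set.
inline void normalise(std::vector<double>& weights) {
    double total = 0.0;
    for (double w : weights) total += w;
    for (double& w : weights) w /= total;
}
```

With several hundred rays, each with a probability well below 1, the raw product underflows to 0.0 in double precision; the clamp keeps every weight strictly positive so that the normalized weights still sum to 1.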

To test the localization component, we used the real robot during practical sessions and teleop to move it. After verifying that things were working without dynamic obstacles, we started introducing obstacles that weren't present in the map. We noticed that the particle filter was affected a lot by that and we tuned the probability coefficients of the measurement model. In particular we tried to find a balance between the short probability, which accounts for unmodeled obstacles, and the hit probability, which makes correct measurements more important. | |||

=== Serving Tables === | |||

[[File:TableNumberFinalChallenge2024.png|thumb|Table numbers in Final Challenge Map 2024]] | |||

There are 5 tables in the map, in each circle we set a table as the destination of global planning. According to the given svg map, we manually set the center point of each table, as shown below. | |||

"tables": | |||

[[ 75, 320], [ 75, 190], [310, 290], [185, 50], [365, 50]]. | |||

When the robot plans a route to a table, PRM is used to generate nodes.

The code iterates over all nodes, considering only those that lie inside the rectangle whose diagonal runs from the current position to the table centre,

<code>if (!(min_x < node.x && node.x < max_x && min_y < node.y && node.y < max_y)) {</code>

<code>continue;</code> | |||

<code>}</code> | |||

and find out the node which is the closest to the table centre, and set it to the goal node. | |||

After reaching the goal node, the robot tells people that it has arrived (<code>io.speak("Table " + to_string(table_seq[cur_table]) + " reached, please pickup your food");</code>). The robot then plans the route to the next table.

[[File:NodeBetweenTables.png|alt=A node can be generated between table 1&2|thumb|A node can be generated between table 1&2]] | |||

A failure scenario identified during initial testing phase for final challenge was the lack of a clearly defined distance within which a robot is considered to be serving. Consequently, there is no specific limitation to control the distance between the goal node and the table center in the code. For instance, the code tends to select nodes located between tables 1 and 2 as the goal node (as shown in the red circle in the image, with an "infl_radius" of 0.58). However, this gap distance conflicts with the "dont_crash" code or "clearance_radius," causing the robot to stand still between the tables and fail to reach the goal node. Even if the robot reaches the goal node, it cannot move backward and needs a diameter to turn around. The narrow gap conflicts with the DWA algorithm, preventing the robot from exiting the gap. These problems can be resolved by increasing the inflation radius in the PRM, ensuring the gap between tables is filled, and no nodes are generated between the tables. However, increasing the inflation radius also increases the distance between the table and the robot's serving point. | |||

One improvement was to choose the node closest to a table edge instead of the node closest to the table centre. In the JSON file, each table is constructed from segments, and the segments of an individual table are given by four points.

"tables": | |||

[ [49, 50], [50, 41], [41, 40], [40, 49]], | |||

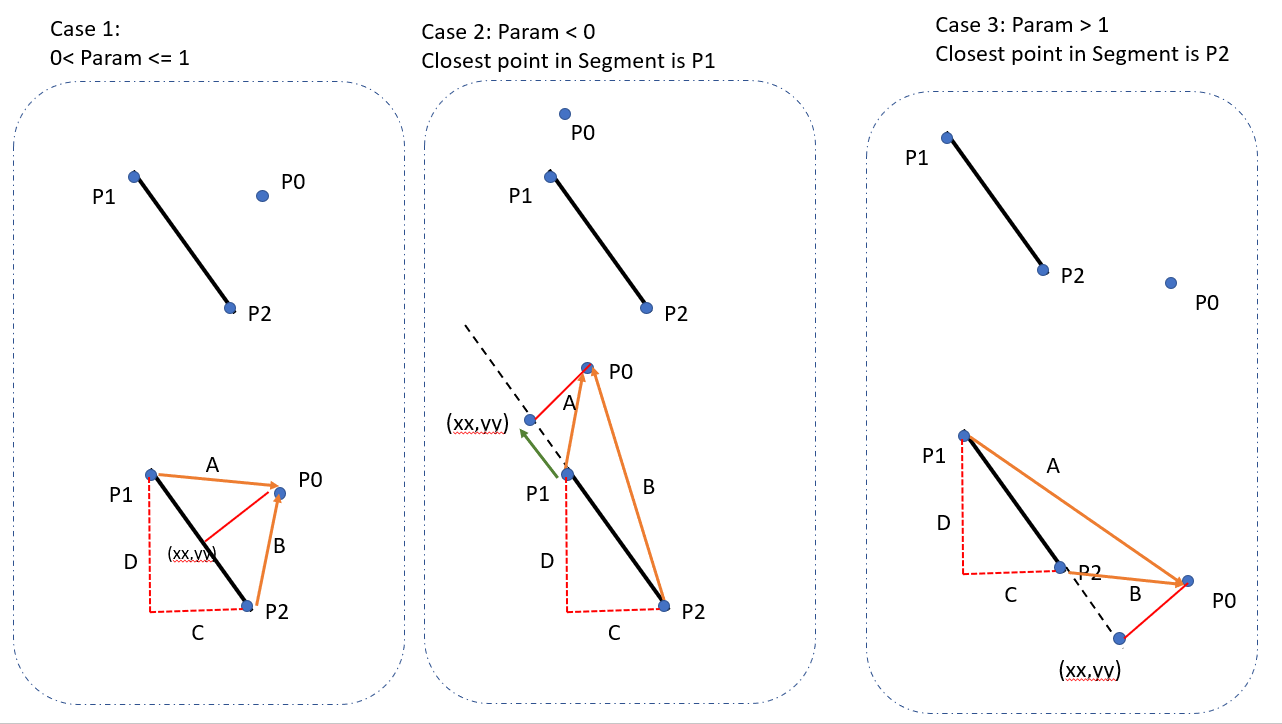

[[File:Distance from a point to a segment.png|thumb|Distance from a point to a segment<ref>Shortest distance between a point and a line segment https://i.sstatic.net/sXc2j.png</ref>]] | |||

In the new goal selection algorithm, the code goes through all nodes, finds the node that is closest to any segment of the table, and sets that node as the goal node for serving the table.

Achieving this requires reading and storing the JSON file and creating separate structs for points, segments, and tables.

<code>struct Point { double x; double y; };</code> | |||

<code>struct Segment { Point start; Point end; };</code> | |||

<code>struct Table { std::vector<Segment> segments; };</code> | |||
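Using these structs, the edge-based goal selection could be sketched as below. The two function names are hypothetical; the distance computation is the standard projection of a point onto a segment with the projection parameter clamped to the segment's ends.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point { double x; double y; };
struct Segment { Point start; Point end; };
struct Table { std::vector<Segment> segments; };

// Shortest distance from point p to segment s (projection clamped to the segment).
inline double distance_to_segment(const Point& p, const Segment& s) {
    const double dx = s.end.x - s.start.x;
    const double dy = s.end.y - s.start.y;
    const double len2 = dx * dx + dy * dy;
    double t = 0.0;  // degenerate segment: fall back to the start point
    if (len2 > 0.0) {
        t = ((p.x - s.start.x) * dx + (p.y - s.start.y) * dy) / len2;
        t = std::max(0.0, std::min(1.0, t));
    }
    return std::hypot(p.x - (s.start.x + t * dx), p.y - (s.start.y + t * dy));
}

// Index of the node closest to any edge of the table; nodes.size() if `nodes` is empty.
inline std::size_t closest_node_to_table(const std::vector<Point>& nodes, const Table& table) {
    std::size_t best = nodes.size();
    double best_dist = std::numeric_limits<double>::infinity();
    for (std::size_t i = 0; i < nodes.size(); ++i) {
        for (const auto& seg : table.segments) {
            const double d = distance_to_segment(nodes[i], seg);
            if (d < best_dist) { best_dist = d; best = i; }
        }
    }
    return best;
}
```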

=== Opening the Door === | |||

To implement the task of opening a door for the final challenge, the code first retrieves the robot's current position with coordinates x and y. It then checks if the robot is near the door by using the `isNearDoor` function, with a distance threshold of 0.5 units. If the robot is within this threshold, it stops the robot by sending a base reference of (0, 0, 0) to halt its movement. The code then pauses the robot's activity for five seconds using `std::this_thread::sleep_for`. After the pause, the robot announces "open the door" using the `io.speak` function. Following this, the robot resumes its tasks by calculating the next step of its Dynamic Window Approach (DWA) algorithm using `lp.dwa_step`, which considers the current position and point cloud data. Finally, the new velocity commands are sent to the robot's base to continue its movement. | |||
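The proximity check might look like the sketch below. The real signature of `isNearDoor` is not given in this report, so the parameters, the `DoorPoint` type, and the use of a single door centre point are assumptions.

```cpp
#include <cmath>

struct DoorPoint { double x, y; };  // hypothetical type for the door centre

// True when the robot at (x, y) is within `threshold` of the door centre.
// The default of 0.5 matches the threshold mentioned in the text.
inline bool isNearDoor(double x, double y, const DoorPoint& door, double threshold = 0.5) {
    return std::hypot(door.x - x, door.y - y) <= threshold;
}
```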

If the door does not open, replanning is triggered to find an alternative route towards the goal. Another thing to note is that, when the robot tries to cross the door boundary, the localization particles are seen to diverge. This was a major problem because the robot loses track of its position while passing through the door. Additionally, the system takes a long time to process this transition, which makes the robot move very slowly through the door. This issue can also trigger unwanted replanning even if the door was opened and the robot is passing safely through it.

The simulation video can be found here: https://youtu.be/J_RSyXLSdz4 | |||

= Final Challenge Results = | |||

Videos of the final results can be found at the start of the final challenge report.

== First Two Tries == | |||

In our first tries the robot couldn't perform global localization and had a big offset between its real and its estimated position. After the final challenge, we examined the video and recreated the scenario in simulation. We noticed that even when the particle filter pose was close to the real pose, the particles were quite spread out and would converge to a small region only when the measurements were very close to the predictions (no unmodeled obstacles). Considering this, we realized that the lower bound we had set for the combined likelihood was so large (1e-15) that in most real-life cases the measurement model would return the lower bound value for the majority of particles. After changing the lower bound to a much smaller value (1e-300), we no longer observed this undesired behavior.

== The Third Try == | |||

Unfortunately, during the final challenge we thought that the particle filter wasn't working because of the coefficients used for each component of the measurement model. Thus we tried to increase the hit probability coefficient and decrease the short probability coefficient to make the filter converge to a position where the measurements would better match the predictions. However, as mentioned in the previous section, the actual cause was that the lower bound for the combined probability was too large. By starting the robot facing the empty space, we managed to localize (no measurements shorter than predicted) and the particle filter's pose estimate was close to the real position most of the time. However, the particles were quite spread out, and after introducing a lot of dynamic obstacles the estimate drifted away from the real pose again.

Regarding the navigation, when the robot was heading towards the (unmodeled) chair, it came as close as the clearance radius would allow and practically stopped. This is a local minimum for the local planner, where all possible trajectories are considered non-admissible (leading to collision) due to the very short distance to the obstacle. After replanning, the robot was still very close to the obstacle in front, again making any trajectory with translation non-admissible. After many executions of the replanning, the next goal was located more to the right of the robot instead of in front of it, so that after turning towards the goal the robot could also move, since there were no longer obstacles ahead. This could be easily resolved by a head-away-from-obstacles procedure, where after replanning the robot would first turn until it reached an orientation with enough free space ahead and then try to go to the next goal with the local planner.

Lastly, we didn't have the time to test planning by considering the doors and since it was our last try, the team decided to not implement it and for that reason the door was treated as a wall. Consequently, when the robot reached a situation where its way was blocked and the only possible path would be through the door, it just remained stationary since it couldn't find a possible path to its goal. | |||

== Improvements == | |||

There are numerous improvements that can be implemented to further refine the robot's performance and efficiency. Based on our experiences and the challenges encountered, we propose the following future directions for improvement: | |||

'''Robust Initialization Process''' | |||

During our final run, the initialization failed because the robot miscalculated its starting position due to a small numerical value being rounded down to zero. This issue only surfaced during the real run when the robot was placed too close to a wall. To prevent such occurrences, we need to refine the initialization algorithm to handle edge cases more robustly. This includes thorough testing in various starting conditions and environments to ensure reliability. Additionally, incorporating safeguards against numerical errors and enhancing the precision of mathematical computations can significantly improve the initialization accuracy. | |||

'''Door Handling and Replanning''' | |||

Another area for improvement is the integration of door opening capabilities. Currently, the robot does not incorporate our function to reroute through a door and demand the door to be opened. This was due to time constraints we faced that prevented the refinement and integration of this functionality into the main code and the subsequent testing necessary. The lack of robustness meant we did not trust this functionality enough to not cause failures during the final run, and was thus excluded. This feature would have enabled the robot to navigate more complex environmental situations by interacting with doors, enhancing its ability to function autonomously and going to the final table in the challenge. | |||

'''Enhanced Obstacle Detection''' | |||

One significant improvement is the better detection of thin obstacles, such as chair legs. These obstacles are difficult for the robot to detect using its current LiDAR and odometry sensors because they do not present a solid obstacle profile. To address this, we suggest improving the obstacle detection algorithm to account for thin and irregularly shaped objects. This could involve: | |||

- Enhancing the LiDAR data processing to detect small and thin objects more accurately. | |||

- Implementing a pattern recognition system that can identify common shapes of thin obstacles, such as chair legs. | |||

These enhancements would enable the robot to navigate more smoothly and avoid collisions with thin obstacles in dynamic environments like a restaurant. | |||

'''Speed and Precision''' | |||

Fine-tuning the robot's control algorithms to improve speed and precision in task execution can be very useful. Enhancing these algorithms will allow the robot to perform operations more swiftly and accurately, which is particularly important in environments like a restaurant, where time and precision are critical. | |||

'''Error Handling''' | |||

It can be useful to have error detection and handling mechanisms for the purpose of minimizing downtime and improving reliability. Developing protocols for error identification and resolution will enable the robot to autonomously address issues, maintaining continuous operation. This includes detailed diagnostics and recovery procedures to quickly solve any problems that come up. | |||

'''Battery Management''' | |||

Efficient power management can be crucial for the robot's sustained operation. Implementing advanced battery management systems that predict power consumption based on tasks and optimize charging cycles can significantly extend operational time. Such systems would intelligently manage the battery’s charge and discharge processes, ensuring the robot remains functional for longer durations. | |||

'''Docking Protocol''' | |||

Implementing a docking protocol where the robot slows down or stops at tables can enhance user interaction. By allowing the robot to pause for a set amount of time, customers have adequate time to pick up their food, thus improving the service experience. This protocol could be fine-tuned to adjust the stopping duration and distance based on customer feedback. | |||

'''Summary''' | |||

These proposed improvements aim to make the robot more efficient, reliable, and user-friendly. By addressing the limitations observed during the course and implementing these enhancements, the robot’s performance can be significantly improved. This will not only elevate its capabilities in current scenarios but also prepare it for more complex and demanding environments. | |||

=References= | |||

<references /> | <references /> | ||

Latest revision as of 02:02, 29 June 2024

Introduction

This is the wiki of the R2-D2 team for the course Mobile Robot Control, Q4 of the academic year 2023-2024. The team consists of the following members.

The project git repository can be found here: https://gitlab.tue.nl/mobile-robot-control/mrc-2024/r2-d2

Group members:

| Name | student ID |

|---|---|

| Yuri Copal | 1022432 |

| Yuhui Li | 1985337 |

| Wenyu Song | 1834665 |

| Aditya Ade | 1945580 |

| Isabelle Cecilia | 2011484 |

| Pavlos Theodosiadis | 2023857 |

Week 1 - The art of not crashing

This code periodically reads sensor data (laser and odometry) and adjusts the robot's movement in real-time based on this data. Specifically, when an obstacle is detected in front, the robot stops; otherwise, it continues to move forward. This control method uses a fixed-frequency loop and conditional checks to achieve simple obstacle avoidance.

Approximate flow of algorithm:

- Initialize Input/Output and Frequency Control Objects: Create objects to manage sensor data reading and control command sending, as well as an object to control the loop frequency.

- Enter the Main Loop: Keep the loop running until a certain condition (e.g., loss of connection) stops the loop.

- Read Sensor Data: In each loop iteration, read the necessary sensor data (e.g., laser data and odometry data).

- Evaluate Conditions and Send Control Commands:

  - If the sensor data meets specific conditions (e.g., detecting an obstacle), send a stop command.

  - Otherwise, send a forward or other appropriate control command.

- Control Loop Frequency: Ensure the loop runs at the preset frequency to maintain real-time control and stability.

This method can be applied to various robot control scenarios by periodically acquiring environmental perception data and adjusting the robot's behavior in real-time based on predefined logic, thus achieving the desired functionality.
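The flow above can be sketched as follows. This is a minimal illustration and not the team's code: the `BaseCommand` struct and the three callbacks (`connected`, `read_scan`, `send_command`) are hypothetical stand-ins for the robot I/O layer, and treating non-positive ranges as invalid readings is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical velocity command: forward, sideways and rotational speed.
struct BaseCommand {
    double vx;
    double vy;
    double omega;
};

// True if any valid reading is closer than stop_distance.
// Assumption: non-positive values mark invalid readings and are skipped.
bool obstacleWithin(const std::vector<float>& ranges, float stop_distance) {
    return std::any_of(ranges.begin(), ranges.end(),
                       [stop_distance](float r) { return r > 0.0f && r < stop_distance; });
}

// Runs the fixed-frequency loop until connected() returns false.
// Returns the number of completed iterations.
int runControlLoop(double frequency_hz, float stop_distance, double cruise_speed,
                   const std::function<bool()>& connected,
                   const std::function<bool(std::vector<float>&)>& read_scan,
                   const std::function<void(const BaseCommand&)>& send_command) {
    assert(frequency_hz > 0.0);
    const auto period = std::chrono::duration_cast<std::chrono::steady_clock::duration>(
        std::chrono::duration<double>(1.0 / frequency_hz));
    auto next_tick = std::chrono::steady_clock::now();
    std::vector<float> ranges;
    int iterations = 0;
    while (connected()) {
        // Only act when new sensor data arrived in this cycle.
        if (read_scan(ranges)) {
            const bool blocked = obstacleWithin(ranges, stop_distance);
            send_command(BaseCommand{blocked ? 0.0 : cruise_speed, 0.0, 0.0});
        }
        // Sleep until the next tick so the loop keeps its preset frequency.
        next_tick += period;
        std::this_thread::sleep_until(next_tick);
        ++iterations;
    }
    return iterations;
}
```

Passing the I/O operations as callbacks keeps the decision logic testable without a robot or simulator; on the real system they would wrap the corresponding read and send calls.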

Simulation

Pavlos

My idea was to use the LiDAR sensor to detect any object directly in front of the robot. While moving forward, the robot measures the distance to the object directly ahead and stops before getting closer than a predefined threshold. In the video the threshold was 0.5 meters. To get the distance straight ahead, I used the measurement in the middle of the ranges list of a laser scan message. I also created a function that takes a laser scan message and an angle in degrees as arguments and returns the distance measurement of the ray at that angle.
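A lookup of this kind could look roughly like the sketch below. It is not Pavlos's actual function: the `LaserScan` struct is a hypothetical stand-in for the laser scan message, and the conventions that 0 degrees points straight ahead and that positive angles correspond to higher indices are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical scan type; field names are assumptions for illustration.
struct LaserScan {
    double angle_min;        // angle of the first ray [rad]
    double angle_increment;  // angle between consecutive rays [rad]
    std::vector<float> ranges;
};

// Returns the range of the ray closest to angle_deg.
// Angles outside the field of view are clamped to the first or last ray.
float rangeAtAngle(const LaserScan& scan, double angle_deg) {
    assert(!scan.ranges.empty() && scan.angle_increment > 0.0);
    const double pi = std::acos(-1.0);
    const double angle_rad = angle_deg * pi / 180.0;
    const long raw = std::lround((angle_rad - scan.angle_min) / scan.angle_increment);
    const long last = static_cast<long>(scan.ranges.size()) - 1;
    const long idx = std::max(0L, std::min(raw, last));
    return scan.ranges[static_cast<std::size_t>(idx)];
}
```

For a scan that is symmetric around the forward direction, `rangeAtAngle(scan, 0.0)` returns the middle element of the ranges list, which matches the single-ray check described above.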

Video displaying the run on the simulation environment:

https://www.youtube.com/watch?v=MXB-z1hzYxE

Isabelle

I took the laser reading at the middle angle by taking the middle value of the reading range, together with the two readings before and after it. The robot moves forward by default and if these values go under 0.3m, it will stop.

Video of the program in action: [link]

Wenyu

Video record of dont_crash implementation & measurements: https://youtu.be/brgnXSbE_CE

Aditya

For this assignment I decided to improve upon the existing code of my group member Pavlos. Because I had a similar idea to his for the implementation of dont_crash, I decided to make the robot do more than what was asked, using the existing implementation. I developed a function turnRobot and added a loop to the main code that makes the robot turn 90 degrees to the left as soon as it detects an obstacle in front of it, then continue moving forward until it detects another obstacle, after which the process repeats. I observed that the robot does not make a perfect 90-degree turn and, because of this, it crashes into the wall at a slight angle. I think this is because the loop uses only a single laser reading, measured directly ahead. To fix this, I can create a loop that takes a range of laser angles around the front and turns depending on the angle at which the obstacle is detected.

The simulation video can be found here: https://youtu.be/69iLlf4VPyo

Yuri

I immediately started with the Artificial Potential Field method as my "don't crash" implementation.

Yuhui

This code controls a robot's movement based on laser and odometry data at a fixed frequency (10Hz). When the laser or odometry data is updated, the robot moves forward at a speed of 0.1 meters per second. If any laser scan point detects an obstacle closer than 0.4 meters, the robot stops moving. The emc::IO object is used to initialize and manage input/output operations, while the emc::Rate object controls the loop's execution frequency. The functions io.readLaserData(scan) and io.readOdometryData(odom) read laser and odometry data, and the io.sendBaseReference function sends speed commands to control the robot's movement. Video of the program: https://youtu.be/qtMjFI86jlA

Practical Session

Video from the practical session:

https://youtube.com/shorts/uKRVOUrx3sM?feature=share

On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot?

The laser can see obstacles that are in the same plane as the sensor. The noise is very small: when we compared a laser measurement with the distance measured with a tape measure, the difference was very small. The sensor has a range of 10 meters, so obstacles further away weren't visible in RVIZ. Furthermore, the viewing angle is around 115 degrees from the front of the robot to the right and 115 degrees from the front to the left. That means the robot can't see anything behind it. Our legs appear as half-circular shapes, since the sensor detects only the legs and not the feet.

Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.)

The implementations that checked only a single point in the laser ranges list didn't work that well, because a leg or box might be in front of the robot without being hit by the laser ray at 0 degrees. Thus, we modified our code to check the ranges within a specific angle (e.g. 30 degrees) and stop if it finds an obstacle close enough in these ranges.
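The modified check could be sketched as below. This is an illustration under assumptions rather than the code we ran: `Scan2D` is a hypothetical scan type, the cone is parameterised by its half angle on either side of straight ahead (the text does not say whether 30 degrees is the full or half opening), and non-positive ranges are treated as invalid.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical scan type; field names are assumptions for illustration.
struct Scan2D {
    double angle_min;        // angle of the first ray [rad], 0 = straight ahead
    double angle_increment;  // angle between consecutive rays [rad]
    std::vector<float> ranges;
};

// True if any valid ray within +/- half_angle_deg of straight ahead
// reports an obstacle closer than stop_distance.
bool obstacleInFrontCone(const Scan2D& scan, double half_angle_deg, float stop_distance) {
    assert(half_angle_deg >= 0.0);
    const double half_angle_rad = half_angle_deg * std::acos(-1.0) / 180.0;
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        const double angle = scan.angle_min + static_cast<double>(i) * scan.angle_increment;
        if (std::fabs(angle) > half_angle_rad) {
            continue;  // ray lies outside the frontal cone
        }
        const float r = scan.ranges[i];
        if (r > 0.0f && r < stop_distance) {
            return true;
        }
    }
    return false;
}
```

Compared with the single-ray check, an obstacle that sits slightly off-centre, such as a leg, still falls inside the cone and triggers the stop.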

If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation?

After making the aforementioned change, the robot could detect obstacles in front of it even if we moved them a bit in any direction. We couldn't see this in the simulation, since we had no dynamic obstacles or small static obstacles there, and the underlying problem wasn't visible when dealing only with walls.

Problem Statement

Local planning's job is to find which translational and rotational velocity to send to the robot's wheel controller, given a "local" goal. The local goal in this context is usually some point on the global path that takes us from a starting position to a final destination. Local planning usually accounts for live sensor readings and is the algorithm most responsible for dynamic obstacle avoidance. The local planner tries to follow the global plan when one is given. If a dynamic object that the global planner did not account for appears in the environment, following the global plan alone would likely make the robot crash into it; avoiding such scenarios is the job of the local planner.

For local navigation, we considered two solutions for the assignment: the Dynamic Window Approach and Artificial Potential Fields.

Simulation

Dynamic Window Approach

We implemented the dynamic window approach algorithm based on the paper "The Dynamic Window Approach to Collision Avoidance" by D. Fox et al. [1].

Implementation of code and functionality

The algorithm is implemented in the DWA.cpp file. The main function of the algorithm is Velocity dwa_step(emc::PoseData current_pose, std::vector<Point2D>* point_cloud, Velocity robot_vel), which finds the next best velocity based on the current pose (position and orientation) of the robot, the point cloud that represents the current LRF measurement, and the robot's current velocity. The Velocity class represents a combination of translational and rotational velocity, and Point2D a two-dimensional point with x and y coordinates. To evaluate the candidate velocities we use the following formula:

score = k_h*((M_PI-heading_error)/M_PI) + k_d*distance/big_const + k_s*vel.trans_vel/max_trans_vel

The three different components of the scoring function are normalized in the [0,1] range to allow better tuning of the scoring by the use of a coefficient for each component. We try to maximize the score, thus all the components are expressed in a way where the higher the value, the better. The components can be explained as follows:

- heading_error: Given a candidate velocity, it represents the absolute value of the angle between the direction towards the goal and the robot's heading at its next position under that velocity. The heading of the robot is represented in the range [-pi,pi] and the heading error in the range [0,pi]. It is calculated with the function float calculateHeadingError(emc::PoseData next_pose, Point2D goal); the next pose for the candidate velocity is computed before calling the function.

- distance: Given a candidate velocity, the robot's current pose and a point cloud generated from the current LRF measurement, it represents the distance that the robot would need to travel with the candidate velocity before colliding with an obstacle. This distance is sometimes referred to as the clearance for the candidate velocity. The algorithm has a parameter for how many points to look ahead, where each point is dt=1/frequency seconds away from the previous one. A point of the point cloud is considered to cause a collision with the robot at a projected position if it lies inside a circle centered at the projected position with radius equal to a predefined clearance radius. The distance value is calculated with the function double calculateDistanceFromCollision(std::vector<Point2D>* points, emc::PoseData current_pose, Velocity vel).

- trans_vel: It represents the translational magnitude of a candidate velocity. Its purpose is to favor higher translational speeds, so that the robot moves further per step, when the other components are unaffected.
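The heading and clearance components can be illustrated with the sketch below. It is not the code from DWA.cpp: the structs are simplified stand-ins, the function names and extra parameters (`lookahead_steps`, `dt`, `clearance_radius`) are hypothetical, the forward projection uses a simple Euler unicycle model, and the point cloud is assumed to be expressed in the same frame as the pose.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Simplified stand-ins for the types named in the text.
struct Point2D { double x; double y; };
struct Pose { double x; double y; double a; };  // a = heading [rad]
struct Velocity { double trans_vel; double rot_vel; };

// Wraps an angle to [-pi, pi].
double wrapAngle(double a) { return std::atan2(std::sin(a), std::cos(a)); }

// Absolute angle between the robot heading and the direction to the goal, in [0, pi].
double headingError(const Pose& next_pose, const Point2D& goal) {
    const double to_goal = std::atan2(goal.y - next_pose.y, goal.x - next_pose.x);
    return std::fabs(wrapAngle(to_goal - next_pose.a));
}

// One Euler step of a unicycle model (assumed motion model).
Pose stepPose(const Pose& p, const Velocity& v, double dt) {
    return Pose{p.x + v.trans_vel * std::cos(p.a) * dt,
                p.y + v.trans_vel * std::sin(p.a) * dt,
                wrapAngle(p.a + v.rot_vel * dt)};
}

// Distance travelled along the projected path until a cloud point lies inside
// the clearance circle; returns the full horizon length if nothing is hit.
double distanceFromCollision(const std::vector<Point2D>& points, Pose pose,
                             const Velocity& vel, int lookahead_steps, double dt,
                             double clearance_radius) {
    assert(lookahead_steps > 0 && dt > 0.0);
    double travelled = 0.0;
    for (int i = 0; i < lookahead_steps; ++i) {
        pose = stepPose(pose, vel, dt);
        travelled += std::fabs(vel.trans_vel) * dt;
        for (const Point2D& p : points) {
            if (std::hypot(p.x - pose.x, p.y - pose.y) < clearance_radius) {
                return travelled;
            }
        }
    }
    return travelled;
}
```

With this structure the cost per candidate velocity grows with the number of look-ahead points times the size of the point cloud, so the look-ahead count and the density of the candidate grid directly affect how fast the planner can run.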

The tuning of the coefficients for each component of the scoring function wasn't trivial, since different relations between the coefficients result in different behaviors. For example, a lower coefficient for the heading component together with a higher one for the clearance score results in more exploration of the space: the robot prefers to move towards empty space instead of moving to the goal. With the reversed relation, the robot prefers to move towards the goal, but with a dynamic obstacle in between it wouldn't move away from the obstacle, since that would also make it move away from the goal.
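The effect of the coefficients can be made concrete with a small sketch of the scoring formula. The `DwaWeights` struct, the function name and all numeric values in the usage note are hypothetical and chosen only for illustration; they are not our tuned parameters.

```cpp
#include <cassert>
#include <cmath>

// Coefficients of the three score components (names follow the formula above).
struct DwaWeights {
    double k_h;  // heading
    double k_d;  // clearance (distance to collision)
    double k_s;  // translational speed
};

// Score of one candidate velocity; higher is better.
// heading_error is in [0, pi]; big_const and max_trans_vel normalise
// the clearance and speed terms.
double dwaScore(double heading_error, double distance, double trans_vel,
                const DwaWeights& w, double big_const, double max_trans_vel) {
    assert(big_const > 0.0 && max_trans_vel > 0.0);
    const double pi = std::acos(-1.0);
    const double heading_term = (pi - heading_error) / pi;
    const double clearance_term = distance / big_const;
    const double speed_term = trans_vel / max_trans_vel;
    return w.k_h * heading_term + w.k_d * clearance_term + w.k_s * speed_term;
}
```

As an example, take candidate A that points straight at the goal with 0.5 m clearance and candidate B that is 90 degrees off the goal with 3.0 m clearance, both at the same speed and with big_const = 3.0. With weights {1.0, 0.2, 0.1} candidate A scores higher, while with weights {0.2, 1.0, 0.1} candidate B wins, which is the trade-off between goal seeking and exploration described above.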

Simulation Results

Video displaying the run on the simulation environment:

https://www.youtube.com/watch?v=v6rQc6_jtUE

Artificial Potential Fields

Implementation of code and functionality

The Artificial Potential Fields method is implemented with the lecture slides as a basis. In theory, a potential can be calculated at every point the robot can be in. The potential consists of an attracting component based on the goal: being further from the goal means a higher potential. The potential also increases when coming closer to an unwanted object, which acts as a repelling force. By taking the derivative of this potential field, an artificial force can be derived that pushes the robot towards the goal while avoiding obstacles.

Implementing the theory in code requires a different approach to the same concept. The robot needs to base the potentials on sensor data instead of a known map. To accomplish this, the potential is calculated for multiple locations around the robot with shifted sensor data, essentially predicting the potential at multiple possible locations. All LiDAR points induce a repelling potential, normalised by the number of LiDAR points read. The artificial force is taken along the direction in which the difference with the current potential is largest. It is then scaled and applied directly as the desired translational and rotational velocity of the robot. After conducting experiments, the speed/force had to be capped to increase robustness and to keep up with the LiDAR refresh rate.
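The probing idea can be sketched as follows. This is an illustration under assumptions, not our implementation: the attractive potential is taken as linear in the distance to the goal, the repulsive term uses the common form with an influence radius, the probes lie on a circle around the robot, and all names and parameters (`FieldParams`, `probe_distance`, `n_directions`, `gain`, `max_speed`) are hypothetical. Positions are expressed in one common frame, which is equivalent to shifting the sensor data for each probe.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec2 { double x; double y; };

struct FieldParams {
    double k_att;             // attractive gain
    double k_rep;             // repulsive gain
    double influence_radius;  // points further away than this do not repel [m]
};

// Potential at pos: attraction grows with distance to the goal, repulsion
// grows near LiDAR points and is normalised by the number of points.
double potentialAt(const Vec2& pos, const Vec2& goal,
                   const std::vector<Vec2>& lidar_points, const FieldParams& p) {
    const double u = p.k_att * std::hypot(goal.x - pos.x, goal.y - pos.y);
    if (lidar_points.empty()) {
        return u;
    }
    double repulsion = 0.0;
    for (const Vec2& q : lidar_points) {
        const double d = std::max(std::hypot(q.x - pos.x, q.y - pos.y), 1e-3);
        if (d < p.influence_radius) {
            const double term = 1.0 / d - 1.0 / p.influence_radius;
            repulsion += 0.5 * p.k_rep * term * term;
        }
    }
    return u + repulsion / static_cast<double>(lidar_points.size());
}

// Probes the potential around the robot and returns a capped velocity along
// the direction with the largest potential drop (zero if no probe is lower).
Vec2 velocityFromField(const Vec2& robot, const Vec2& goal,
                       const std::vector<Vec2>& lidar_points, const FieldParams& p,
                       double probe_distance, int n_directions, double gain,
                       double max_speed) {
    assert(probe_distance > 0.0 && n_directions > 0);
    const double two_pi = 2.0 * std::acos(-1.0);
    const double u_here = potentialAt(robot, goal, lidar_points, p);
    double best_drop = 0.0;
    double best_angle = 0.0;
    for (int i = 0; i < n_directions; ++i) {
        const double angle = two_pi * i / n_directions;
        const Vec2 probe{robot.x + probe_distance * std::cos(angle),
                         robot.y + probe_distance * std::sin(angle)};
        const double drop = u_here - potentialAt(probe, goal, lidar_points, p);
        if (drop > best_drop) {
            best_drop = drop;
            best_angle = angle;
        }
    }
    // Cap the speed, mirroring the cap that was needed in the experiments.
    const double speed = std::min(gain * best_drop / probe_distance, max_speed);
    return Vec2{speed * std::cos(best_angle), speed * std::sin(best_angle)};
}
```

When no probe has a lower potential than the current position, the sketch returns a zero velocity, which is exactly the local-minimum situation in which a potential field planner gets stuck.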

Simulation Results

Video displaying the simulation results: https://youtu.be/aqUUIiQzgVs.

Problems

Maps 3 and 4 do not work with the Artificial Potential Field method due to the presence of local minima in the artificial field. It is expected that, given enough nodes, the global planner will solve this problem. If this is not the case, a solution has to be found.

Assignment Questions

1. What are the advantages and disadvantages of your solutions?

For the DWA algorithm the main advantage is that the robot can dynamically avoid unexpected obstacles provided that they are not forming a dead end. The main disadvantage is the conceptual and computational complexity, which made us lower the frequency of the algorithm and make its decision space more sparse. Another disadvantage is the unavoidable trade off between the heading towards the goal and the dynamic obstacle avoidance.

For the Artificial Potential Field method, the advantages are the robust and easily tunable parameters, together with the fact that the method is computationally cheap. The downside is the presence of local minima: the robot can get stuck when it moves into one. Additionally, there is always a force acting on the robot. This means the robot always wants to move, even when that might not be beneficial, for example in the restaurant challenge scenario.

2. What are possible scenarios which can result in failures for each implementation?

For DWA, a dead end behind which the goal is located would leave the robot in a position where turning in any direction makes it head away from the goal, so it would eventually move towards one of the two walls of the dead end. Lowering the heading coefficient even further could make the robot travel back towards the empty space, but then it would not move towards the goal even when it could, because the heading would have too little influence on the scoring function.

For the Artificial Potential Field method, the failure scenarios are identical to the previously mentioned disadvantages.

3. How would you prevent these scenarios from happening?