Mobile Robot Control 2024 HAL-9000: Difference between revisions

Tag: 2017 source edit |

|||

| (189 intermediate revisions by 6 users not shown) | |||

| Line 24: | Line 24: | ||

== Exercise 1: Non-crashing robot == | == Exercise 1: Non-crashing robot == | ||

For the first exercise, the goal was to ensure that the robot does not move through walls in the simulation and not crash into the walls in both the simulation and the real world. To solve this matter, there were multiple solutions. The first solution collects all of the data from the lasers and stops the robot when it reaches a wall. A second solution | For the first exercise, the goal was to ensure that the robot does not move through walls in the simulation and not crash into the walls in both the simulation and the real world. To solve this matter, there were multiple solutions. The first solution collects all of the data from the lasers and stops the robot when it reaches a wall. A second solution uses the data of the bumper to detect a collision. These solutions were then tested on the robot in real life. After testing, it became clear that, although stopping the robot was not an issue, the robot was not able to move along a wall in parallel. This issue occurred since the sensor data was taken uniformly. So on all sides, the robot would stop with the same threshold. Therefore, a third solution was made that took the orientation of the sensor data into account for the robot to move alongside a wall. The details of the solutions will now be covered in more detail. | ||

[[File:2024-06-0617-12-19-ezgif.com-video-to-gif-converter.gif|thumb|Video 1: Don't crash simulation run.]] | |||

[[File:Vid-ezgif.com-video-to-gif-converter.gif|thumb|464x464px|Video 3: Testing of solution 3 from the don't crash exercises.]] | |||

=== Solution 1 === | === Solution 1 === | ||

For the first solution, we utilize the laser data that the robot has. This contains data as to how far a wall or object is to the robot at a certain angle. For each angle, we check whether the robot is too close to an obstacle, thus within range x. If it is, it stops moving. Else, it keeps on going forward. | For the first solution, we utilize the laser data that the robot has. This contains data as to how far a wall or object is to the robot at a certain angle. For each angle, we check whether the robot is too close to an obstacle, thus within range x. If it is, it stops moving. Else, it keeps on going forward. | ||

=== Solution 2 === | === Solution 2 === | ||

For | For this solution, the robot utilizes bumper sensor data to detect collisions. When the bumper is pressed, indicating contact with an obstacle, the robot immediately halts and then reverses direction until the bumper is released. For the final challenge we will utilize both laser data and bumper data to avoid crashes. | ||

[[File:Output gif.gif|center|thumb|Video 2: Don't crash utilizing the bumper data]] | |||

=== Solution 3 === | === Solution 3 === | ||

This solution is | This solution is an expansion of solution 1. In this expansion, the size of the robot is taken into account. This solution was created after testing the robot physically. We came to the realisation that the size of the robot is not taken into account. Therefore, in the script an offset was included such that the body of the robot does not bump into the wall. Using the line: <code>scan.ranges[i] < minDist + robotWidth*abs(sin(currentAngle)</code> we introduce an offset by adding the sinusoid of the current angle, which is approximately the circular body of the robot. Because the laser of the robot is approximately in the middle of its body, the sinusoid creates a proper offset to not bump into a wall anymore. | ||

=== Questions: === | === Questions: === | ||

# Compare the different methods to one another. What situations do these methods handle well? Is there one "Best" method? | |||

## The best method was by far the 3rd solution. Once tested in real life you can see the caveats you can fall into when just using the simulation software. Therefore, by adding an accounting mechanism for the robots size, gives the best outcome. The best method is definitely the 3rd solution. However, there might even be improvements to it, yet since just stopping is so simple this is the best. | |||

# On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot. | # On the robot-laptop open rviz. Observe the laser data. How much noise is there on the laser? What objects can be seen by the laser? Which objects cannot? How do your own legs look like to the robot. | ||

## The amount of noise in the laser data, visually assessed, is present. In the gif below it can be seen, where the red lines, the laser data of the robot, are flashing. This means that it is changing each iteration, meaning there is noise in the data. However, the overall location of the laser data is accurate. The objects that can be seen by the laser are the walls, but also the doors. So solid, non transparent objects can be detected by the laser. There also is a blind spot behind the robot, where it cannot see anything. On top of that, transparent objects are harder for the robot to detect due to the laser going through the object. For the robot, your own legs are poles. Due to your feet being under the laser, they are not detected and thus can drive into your feet.[[File:2024-06-0613-53-08-ezgif.com-video-to-gif-converter.gif|center|thumb|375x375px|Video 4: Overview of the laser data's range and noise levels. The flashing of the red lines (laser data) shows noise in the sensors.]] | |||

# Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.) | # Take your example of don't crash and test it on the robot. Does it work like in simulation? Why? Why not? (choose the most promising version of don't crash that you have.) | ||

## When testing don't crash, we came to know that the physical robot size is not taken into account in the simulation. In the simulation, the robot did stop as soon as it came close to a wall, but the physical does not always do this. It crashed into the walls as soon as it was not directly in front of the laser, due to the circular shape of the robot that is not taken into account. When editing the software (solution 3), it did not crash into the walls anymore. | |||

# If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation? | #If necessary make changes to your code and test again. What changes did you have to make? Why? Was this something you could have found in simulation? | ||

##We changed the code to incorporate the size of the robot using <code>robotWidth*abs(sin(currentAngle)</code> and adding it to the minimal distance to the robot in solution 3. This adds a circle of the robots laser data such that the distances were approximately correct from the robots body. | |||

# Take a video of the working robot and post it on your wiki. | # Take a video of the working robot and post it on your wiki. | ||

## see video 1. | |||

#Testing of don't crash. As a second exercise, the don't crash algorithm has to be tested in the 2 maps provided. The videos are shown below. In the first video, we can see that the algorithm stops nicely in front of the wall. For the second algorithm, we see that the robot stops even though it can pass, the wall just came too close and it made the robot stop. This is something to work on in the future, because if it is not moving toward the wall, we should be able to just drive parallel to the wall cause we won't crash into it. | |||

<center> | |||

<table> | |||

<tr> | |||

<td>[[File:2024-06-0617-27-33-ezgif.com-video-to-gif-converter.gif|thumb|Video 5]]</td> | |||

<td>[[File:2024-06-0617-29-34-ezgif.com-video-to-gif-converter.gif|thumb|Video 6]] </td> | |||

</tr> | |||

</table> | |||

</center> | |||

== Exercise 2: Local Navigation == | === bugs and fixes === | ||

For local navigation, 2 different solutions had to be introduced for the restaurant scenario. We have chosen for artificial potential fields and vector field histograms because these solutions seemed the most robust to us. In this | The bug that occurred is mainly not being able to drive closely to a wall in parallel. This problem can be fixed through looking at the direction we are driving to and as long as we are not driving towards the wall, it should just keep driving. However, we decided not to implement this, because local navigation will likely account for this. | ||

==Exercise 2: Local Navigation == | |||

For local navigation, 2 different solutions had to be introduced for the restaurant scenario. We have chosen for artificial potential fields and vector field histograms because these solutions seemed the most robust to us. In this paragraph the 2 solutions will be explained. | |||

=== Artificial potential fields === | ===Artificial potential fields === | ||

Artificial potential fields are self-explanatory: they portray the potential fields around an object. A field can either be repulsive or attractive. The reason for being artificial is because these fields do not exist in the real world. In the real world, an object does not push the robot away or pull the robot towards it. In a simulation, however, artificial potential fields can be drawn such that a robot avoids certain objects and gravitates toward others. In this particular case, the robot is drawn towards the goal and repulsed by obstacles that are in its way. The distance between the robot and the goal or obstacle dictates the strength of the field around it. When an obstacle is close, for example, the repulsion force should be higher than the attraction force and high enough to repulse the robot away from the obstacle. This dependency on distance can be seen in the formulas for the two fields. For the implementation, the following source was used: <ref>Matoui, F., Naceur, A. M., & Boussaid, B. (2015). Local minimum solution for the potential field method in multiple robot motion planning task. Conference Paper presented at the 2015 IEEE/ACIS 14th International Conference on Computer and Information Science (ICIS). doi:10.1109/STA.2015.7505223. Retrieved from [ResearchGate](https://www.researchgate.net/publication/307799899).</ref> | Artificial potential fields are self-explanatory: they portray the potential fields around an object. A field can either be repulsive or attractive. The reason for being artificial is because these fields do not exist in the real world. In the real world, an object does not push the robot away or pull the robot towards it. In a simulation, however, artificial potential fields can be drawn such that a robot avoids certain objects and gravitates toward others. In this particular case, the robot is drawn towards the goal and repulsed by obstacles that are in its way. The distance between the robot and the goal or obstacle dictates the strength of the field around it. When an obstacle is close, for example, the repulsion force should be higher than the attraction force and high enough to repulse the robot away from the obstacle. This dependency on distance can be seen in the formulas for the two fields. For the implementation, the following source was used: <ref>Matoui, F., Naceur, A. M., & Boussaid, B. (2015). Local minimum solution for the potential field method in multiple robot motion planning task. Conference Paper presented at the 2015 IEEE/ACIS 14th International Conference on Computer and Information Science (ICIS). doi:10.1109/STA.2015.7505223. Retrieved from [ResearchGate](https://www.researchgate.net/publication/307799899).</ref> | ||

| Line 57: | Line 74: | ||

With the following: | With the following: | ||

* <math>K</math>: positive scaling factor. | *<math>K</math>: positive scaling factor. | ||

* <math>X</math>: position of the robot. | *<math>X</math>: position of the robot. | ||

* <math>X_g</math>: goal of the robot. | * <math>X_g</math>: goal of the robot. | ||

* <math>\rho(X, X_g) = \| X_g - X \|</math>: distance from robot to goal. | * <math>\rho(X, X_g) = \| X_g - X \|</math>: distance from robot to goal. | ||

| Line 73: | Line 90: | ||

</math> | </math> | ||

* <math>\eta > 0</math>: positive scaling factor. | *<math>\eta > 0</math>: positive scaling factor. | ||

* <math>\rho(X, X_0)</math>: the minimum distance (robot and obstacles). | *<math>\rho(X, X_0)</math>: the minimum distance (robot and obstacles). | ||

* <math>\rho_0</math>: distance influence imposed by obstacles; its value depends on the condition of the obstacle and the goal point of the robot, and is usually less than half the distances between the obstacles or shortest length from the destination to the obstacles. | *<math>\rho_0</math>: distance influence imposed by obstacles; its value depends on the condition of the obstacle and the goal point of the robot, and is usually less than half the distances between the obstacles or shortest length from the destination to the obstacles. | ||

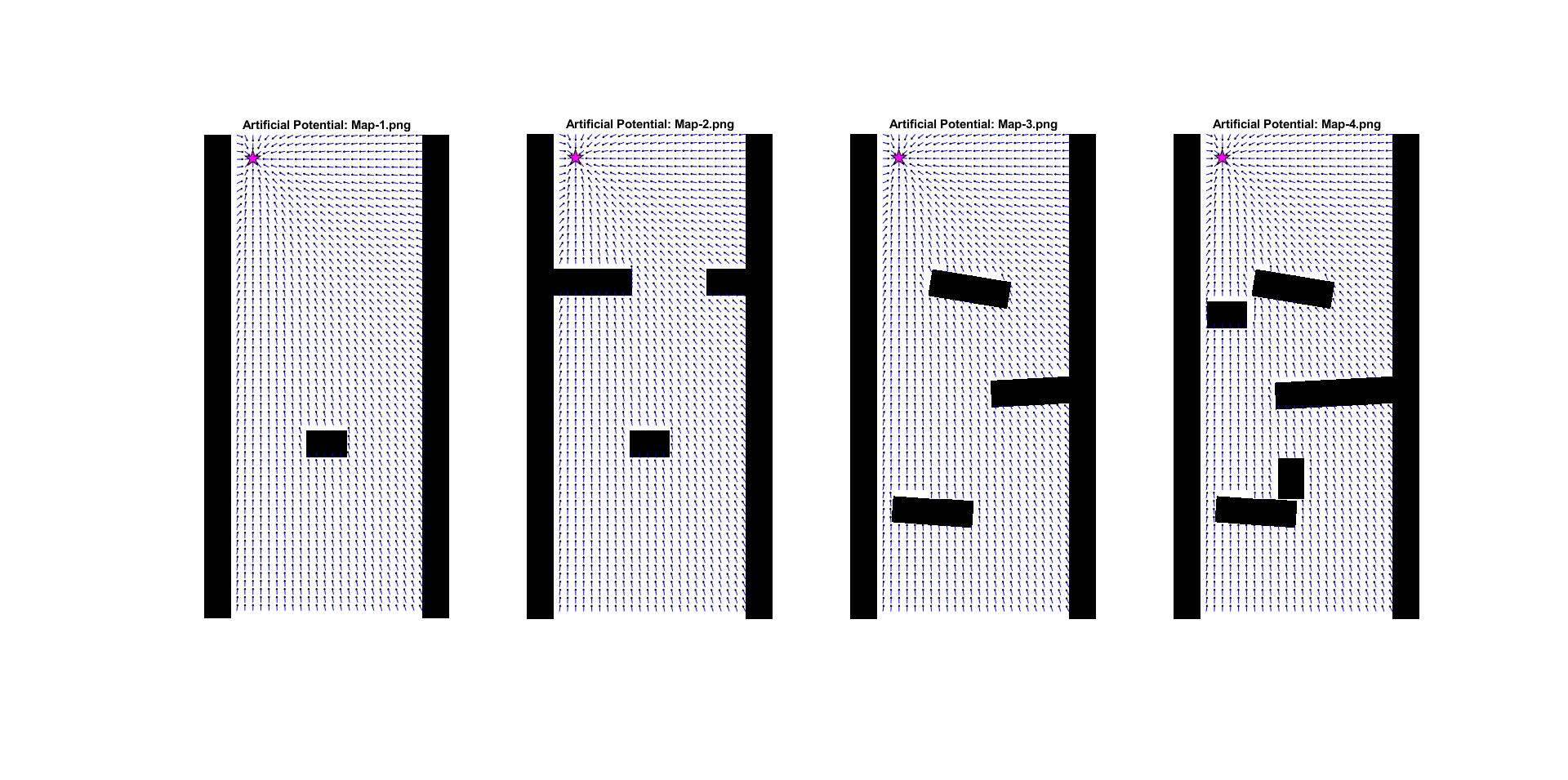

Using these formulas, the following artificial potential fields were created for all maps. | Using these formulas, the following artificial potential fields were created for all maps. | ||

[[File:Potential Fields maps.png|thumb|center|990x990px]] | [[File:Potential Fields maps.png|thumb|center|990x990px|Figure 1: Overview of the attraction field through the different maps.]] | ||

From this figure, the weakness of the artificial potential method becomes apparent: the 'local minimum' problem. This causes the robot to get stuck in places were the minimum potential does not result in a path towards the goal. There are methods to try and deal with them, but this is a fundamental problem with the technique. | From this figure, the weakness of the artificial potential method becomes apparent: the 'local minimum' problem. This causes the robot to get stuck in places were the minimum potential does not result in a path towards the goal. There are methods to try and deal with them, but this is a fundamental problem with the technique. | ||

| Line 102: | Line 119: | ||

In the figures bellow it can be seen how the artificial potential field method performed on the given maps. | In the figures bellow it can be seen how the artificial potential field method performed on the given maps. | ||

<div style="text-align: center;"> | |||

<table style="margin: auto;"> | |||

<tr> | |||

<td> | |||

[[File:Map 1 apf.gif|thumb|Video 7: Artificial potential field testes on map 1. The robot is stuck on a local minima. The robot is repulsed by the obstacle making it turn 180 degrees. After it, the robot is no longer repulsed by the obstacle, but attracted by the goal. Since the robot is in a new position, it manages to avoid the local minima, and avoid the obstacle, finally reaching the goal. |503x503px|center]] | |||

</td> | |||

<td> | |||

[[File:Apf map 2.gif|thumb|Video 8: Artificial potential field testes on map 2. After passing the first obstacle as in map 1, the direction of the robot was influenced by the two obstacles on the robot's sides. However, the attraction force was strong enough to guide the robot to the goal.|511x511px|center]] | |||

</td> | |||

</tr> | |||

<tr> | |||

<td> | |||

[[File:Apf map 4.gif|thumb|Video 9: Artificial potential field testes on map 4. The robot avoided the first two obstacles located on its left and in front of it. However, the robot got stuck after it. The robot had to pass by a narrow passage, where it was influenced by the repulsion forces of the obstacles. The robot did not manage to pass the narrow passages as in map 2 because the attraction force pointed to one of the obstacles, but not to a free space.|503x503px|center]] | |||

</td> | |||

<td> | |||

[[File:Apf map 3.gif|thumb|Video 10: Artificial potential field testes on map 3. The robot manages to avoid the first two obstacles, the first on the left of the robot, and the second on the right. However, the robot did not manage to avoid the third obstacle and got stuck on the local minima. The robot did not manage to adjust its position as in map 1 because the second obstacle influenced the robot's direction, forcing it to go back to the local minima.|503x503px|center]] | |||

</td> | |||

</tr> | |||

</table> | |||

</div> | |||

=== bugs and fixes === | |||

So the main bugs with the artificial potential field were the local minima. These local minima were too much of an issue to solve in time and therefore the decision was made to, instead of fixing the code, not use this algorithm anymore. | |||

=== Answers to Questions Artificial Potential Field=== | |||

<ol> | |||

<ol> | |||

<li><b>What are the advantages and disadvantages of your solutions?</b> | <li><b>What are the advantages and disadvantages of your solutions?</b> | ||

<p>Advantages:</p> | <p>Advantages:</p> | ||

<ul> | <ul> | ||

<li>Simplicity in implementation and understanding.</li> | <li> Simplicity in implementation and understanding. </li> | ||

<li>Effective for basic obstacle avoidance and goal seeking.</li> | <li> Effective for basic obstacle avoidance and goal seeking.</li> | ||

</ul> | </ul> | ||

<p>Disadvantages:</p> | <p>Disadvantages:</p> | ||

<ul> | <ul> | ||

<li>Susceptible to local minima, where the robot can get stuck.</li> | <li> Susceptible to local minima, where the robot can get stuck. </li> | ||

<li>Not effective in highly dynamic or complex environments.</li> | <li> Not effective in highly dynamic or complex environments. </li> | ||

</ul> | </ul> | ||

</li> | </li> | ||

<li><b>What are possible scenarios which can result in failures (crashes, movements in the wrong direction, ...) for each implementation?</b> | <li> <b>What are possible scenarios which can result in failures (crashes, movements in the wrong direction, ...) for each implementation?</b> | ||

<ul> | <ul> | ||

<li>For example, set the target position in an obstacle and let the robot try to get to the target; what happens? The robot may get stuck or oscillate around the obstacle.</li> | <li>For example, set the target position in an obstacle and let the robot try to get to the target; what happens? The robot may get stuck or oscillate around the obstacle.</li> | ||

<li>Another example: Narrow passages can cause the robot to get stuck due to strong repulsive forces from both sides.</li> | <li>Another example: Narrow passages can cause the robot to get stuck due to strong repulsive forces from both sides. </li> | ||

</ul> | </ul> | ||

</li> | </li> | ||

<li><b>How would you prevent these scenarios from happening?</b> | <li> <b>How would you prevent these scenarios from happening?</b> | ||

<p>Possible solutions include:</p> | <p>Possible solutions include:</p> | ||

<ul> | <ul> | ||

<li>Implementing a path planner that re-evaluates the route if the robot gets stuck.</li> | <li> Implementing a path planner that re-evaluates the route if the robot gets stuck. </li> | ||

<li>Using a combination of potential fields and other algorithms like A* or Dijkstra’s algorithm for global path planning.</li> | <li> Using a combination of potential fields and other algorithms like A* or Dijkstra’s algorithm for global path planning. </li> | ||

<li>Introducing randomness or perturbations in the path to escape local minima.</li> | <li>Introducing randomness or perturbations in the path to escape local minima. </li> | ||

</ul> | </ul> | ||

</li> | </li> | ||

<li><b>For the final challenge, you will have to link local and global navigation. The global planner will provide a list of (x, y) (or (x, y, θ) ) positions to visit. How could these two algorithms be combined?</b> | <li> <b>For the final challenge, you will have to link local and global navigation. The global planner will provide a list of (x, y) (or (x, y, θ) ) positions to visit. How could these two algorithms be combined?</b> | ||

<p>The combination could be implemented as follows:</p> | <p>The combination could be implemented as follows:</p> | ||

<ul> | <ul> | ||

<li>The global planner (e.g., A* algorithm) calculates a rough path from the start to the goal.</li> | <li> The global planner (e.g., A* algorithm) calculates a rough path from the start to the goal.</li> | ||

<li>The local planner (artificial potential fields) is used to avoid obstacles and refine the path by guiding the robot between the waypoints provided by the global planner.</li> | <li> The local planner (artificial potential fields) is used to avoid obstacles and refine the path by guiding the robot between the waypoints provided by the global planner.</li> | ||

<li>This hybrid approach ensures that the robot follows an efficient path while dynamically avoiding unforeseen obstacles.</li> | <li> This hybrid approach ensures that the robot follows an efficient path while dynamically avoiding unforeseen obstacles. A good thing to take into account is the balance between global and local navigation. By placing a lot of nodes in the graph of global navigation, the local planner does not have to do much. The other way around, with less nodes the local planner would dominate. In artificial potential fields, having a more influential global planner would be wise, to steer away from (quite literally) local minima. </li> | ||

</ul> | </ul> | ||

</li> | </li> | ||

</ol> | </ol> | ||

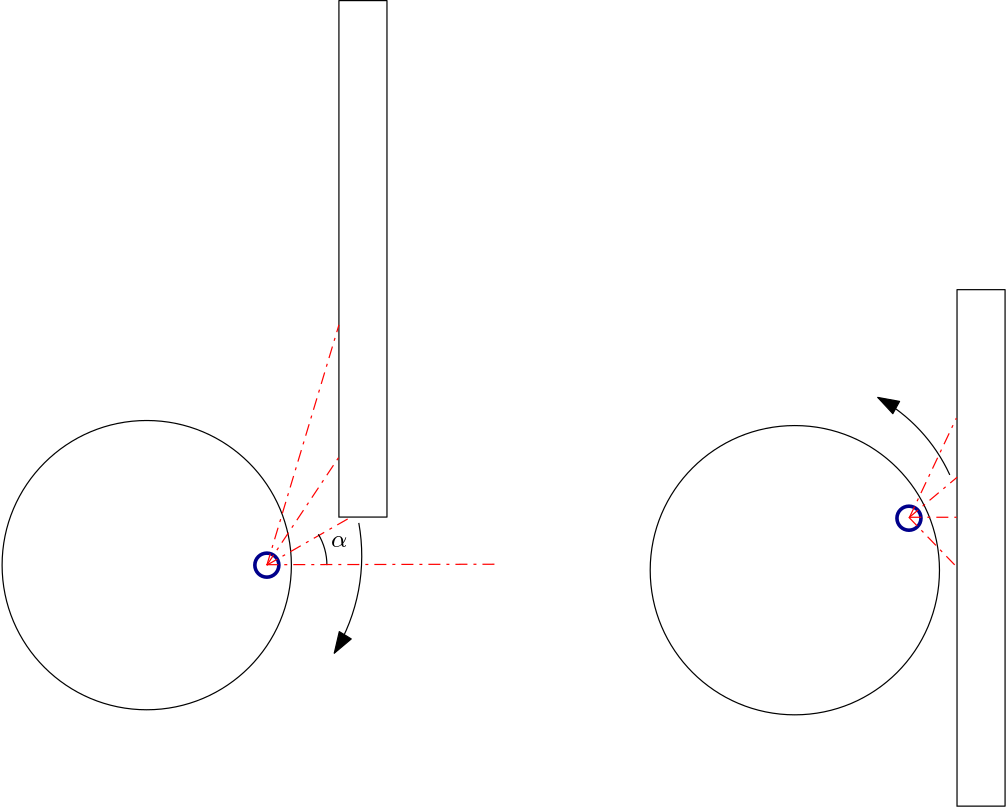

===Vector Field Histogram=== | |||

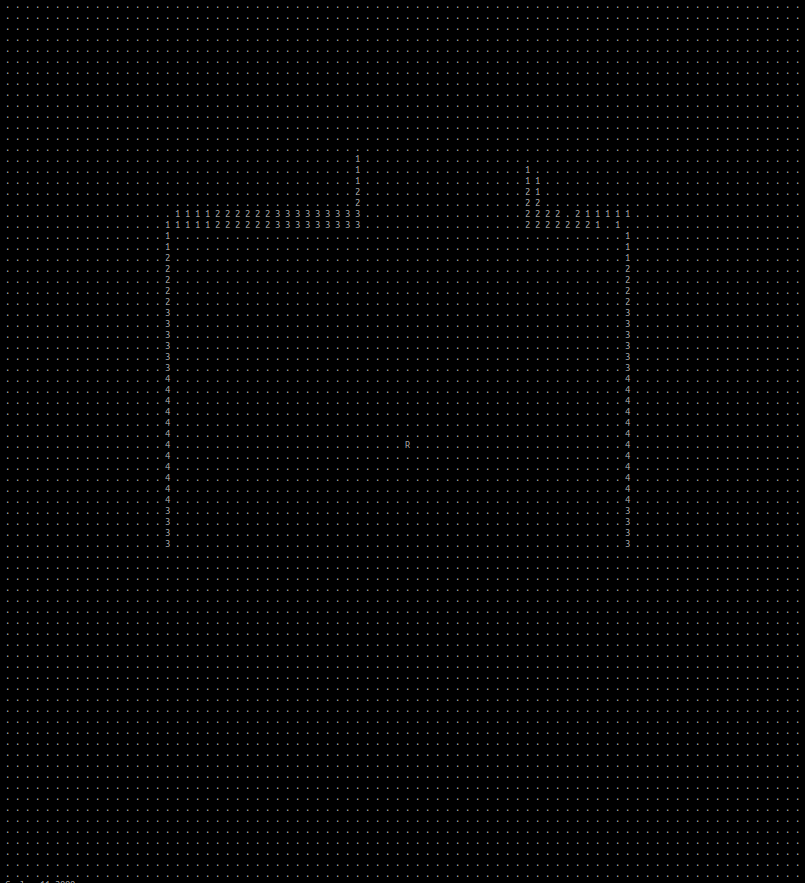

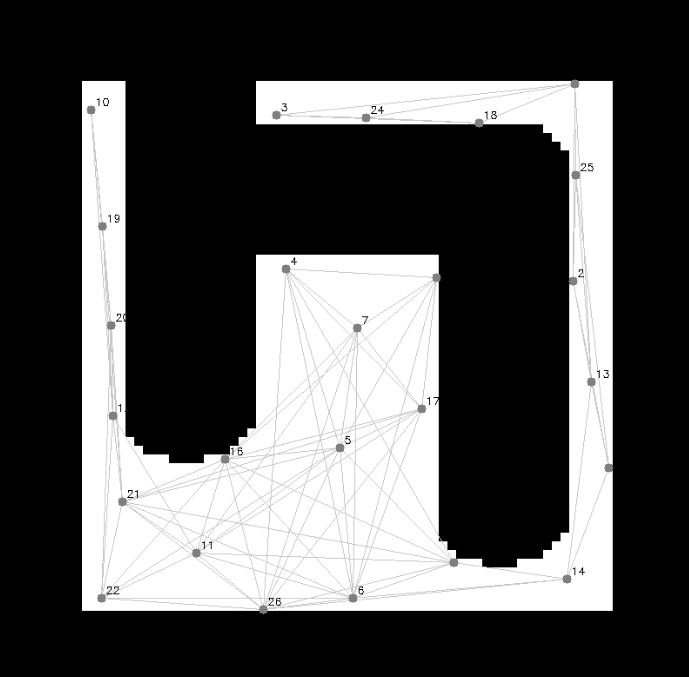

=== Vector Field Histogram === | [[File:Robot visualization.png|thumb|346x346px|[[File:Map_2_polar_histogram.png|frameless|325x325px]]Figure 2: Visualization of the occupation map, created from the laser data. The values shown are based on the distance from the robot. The map below is what the occupancy map is based on.]] | ||

Vector Field Histograms is a method to locally navigate through a space. It uses a map matrix and a polar histogram to determine where the robot can move to get to the goal. The map matrix stores cells which say that they are either occupied or not. The value in those squares then indicate the certainty that the cell is an obstacle. This map moves with the robot as an active window, where the cells in the map matrix are constantly updated just like the polar histogram which is based on the map matrix. Using the laser data of the robot, the height of the bins of the polar is determined and it is smoothed. Finally, a valley between the curves is chosen to move towards to. | Vector Field Histograms is a method to locally navigate through a space. It uses a map matrix and a polar histogram to determine where the robot can move to get to the goal. The map matrix stores cells which say that they are either occupied or not. The value in those squares then indicate the certainty that the cell is an obstacle. This map moves with the robot as an active window, where the cells in the map matrix are constantly updated just like the polar histogram which is based on the map matrix. Using the laser data of the robot, the height of the bins of the polar is determined and it is smoothed. Finally, a valley between the curves is chosen to move towards to. | ||

Summarizing, for vector field histograms, 2 major components had to be created. Firstly, the translation from the laser data to a map that contains the coordinates of the obstacles together with a certainty value and a polar histogram, from which a minima should be chosen as direction to continue moving. These components will be explained now. Note that the ''x, y'' and ''angle'' of the robot are kept track of globally. | Summarizing, for vector field histograms, 2 major components had to be created. Firstly, the translation from the laser data to a map that contains the coordinates of the obstacles together with a certainty value and a polar histogram, from which a minima should be chosen as direction to continue moving. These components will be explained now. Note that the ''x, y'' and ''angle'' of the robot are kept track of globally. | ||

==== Obstacle map ==== | ==== Obstacle map==== | ||

The obstacle map is created by defining a matrix | The obstacle map is created by defining a matrix with the height and width of the active window. Since each run items more in the map, we clear the map at the beginning of the run. Then, we look at the laser data and compute the coordinates of the obstacle using the distance retrieved from the laser data and the angle we globally keep track of. | ||

<math>x = \frac{\sin(\theta) \cdot laser\_data[i]}{CELL\_SIZE}</math> | <math>x = \frac{\sin(\theta) \cdot laser\_data[i]}{CELL\_SIZE}</math> | ||

| Line 168: | Line 196: | ||

<math>y = \frac{\cos(\theta) \cdot laser\_data[i]}{CELL\_SIZE}</math> | <math>y = \frac{\cos(\theta) \cdot laser\_data[i]}{CELL\_SIZE}</math> | ||

Using these coordinates, in the map, the distance of the robot to the wall is put to indicate the risk factor. This value is then normalized to be in range ''[1,10]'' . | Using these coordinates, in the map, the distance of the robot to the wall is put to indicate the risk factor. This value is then normalized to be in range ''[1,10]'' . The robots size is kept into account in this distance aswell. This is done by dilating the walls in the sensor map to the robot width such that it cannot bump into the walls. The laser data map and the map its supposed to represent are depicted in figure 2 and 3. | ||

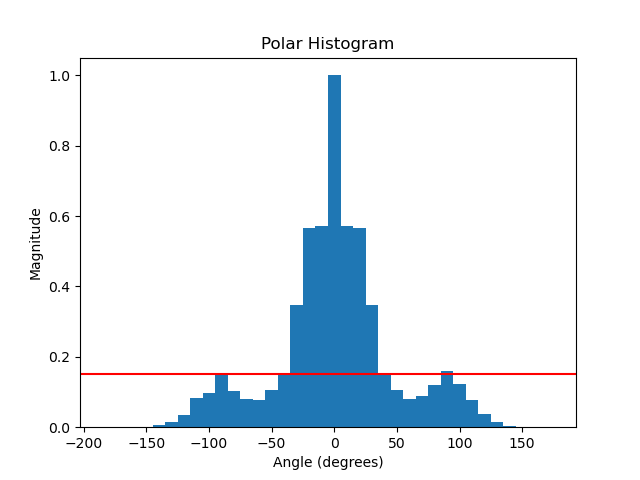

==== Polar Histogram==== | |||

The robots size is kept into account in this distance aswell. This is done by dilating the walls in the sensor map to the robot width such that it cannot bump into the walls. | |||

==== Polar Histogram ==== | |||

In order to create the polar historam, the article 'IEEE Journal of Robotics and Automation Vol 7, No 3, June 1991, pp. 278-288' is used, further refered as [1]. The polar histrogram is made, using the histrogram grid from the previous paragraph. An active window is chosen, with a certain width <math>w_d</math>. We assume a rectangular grid, hence a <math>w_d</math> x <math>w_d</math> grid. Also, it is assumed that the robot is exactly in the middel of this active grid. In order to make the polar histogram, the follow parameters are used: | In order to create the polar historam, the article 'IEEE Journal of Robotics and Automation Vol 7, No 3, June 1991, pp. 278-288' is used, further refered as [1]. The polar histrogram is made, using the histrogram grid from the previous paragraph. An active window is chosen, with a certain width <math>w_d</math>. We assume a rectangular grid, hence a <math>w_d</math> x <math>w_d</math> grid. Also, it is assumed that the robot is exactly in the middel of this active grid. In order to make the polar histogram, the follow parameters are used: | ||

| Line 202: | Line 222: | ||

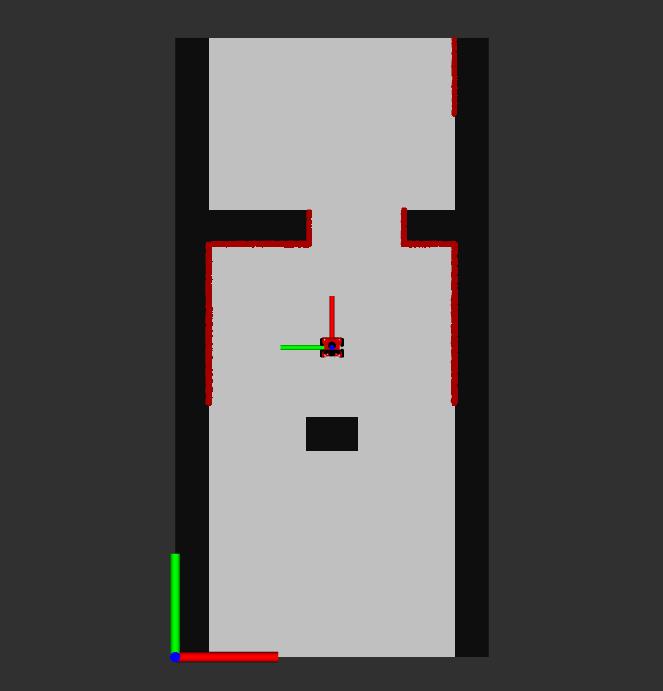

Once the polar histogram is made, it is possible to determine the optimal steering angle. To do so, multiple steps need to be done. The algorithm does this in the following sequence: | Once the polar histogram is made, it is possible to determine the optimal steering angle. To do so, multiple steps need to be done. The algorithm does this in the following sequence: | ||

# Determine the valleys which are bellow a certain threshold. This threshold is introduced in [1], in order to avoid steering towards small corridors. A valley is a 'range' between objects. By looking at the figure below, the possible valleys are (-80, -40), (40, 80) and (100, -100) (with wrapping). ''Note: in the application we used 0 to 360 degrees.'' | |||

#Determine the valleys which is closest to the target goal. For example, is the target angle is -60, the valley (-80, -40) would be chosen, as the target lies in this valley. If the target is 30, the valley (40, 80) is chosen, as the border of 40 is closest to this target angle. | |||

#From the chosen valley, determine the best angle. If the targer is within the valley, return the target. If the target is not within the valley, chose an angle which is as close as possible to the target without driving into a wall. Here, a distinct in made between ''wide'' and ''narrow'' valleys. A wide valley is has a certain width of ''s_max''. If the optimal valley exceeds this width, and the target angle is not in the valley, the new steering angle will be determined as <math>\theta = (k_n + s_max) / 2</math>. Here, ''k_n'' is the border which is closest to the target. This enables the robot to drive close to walls in a wide valley. If the valley is ''narrow'', hence not wider than ''s_max'', the exact middle is chosen as the new steering angle <math>\theta</math>. | |||

3 | [[File:Examplefig2.png|center|thumb|455x455px|igure 3: Example of polar histogram of small corridor containing an obstacle in front of the robot. The red line indicates the threshold.ld.]] | ||

==== Speed Control ==== | |||

Once the steering angle is determined, the robot should take a certain action. To do so, it looks at its own angle and the steering angle resulting from the vector field histogram algorithm. If the angle is small (less than 23 degrees), the robot can still drive forwards and also turn simultaneously. However, if the steering angle is larger than 23 degrees, the robot stops and first makes the turn. This is done in order to enable the robot to make large turns in narrow sections. The 23 degrees can be tuned to a preferred behaviour. If the robot has arrived at the goal within a certain distance (for example 0.2 meters), the robot has arrived and the robot goes to either the next goal of stays if it has no further goals. | |||

=== Bugs and fixes === | |||

One bug in this algorithm, is that the robot starts choosing between two angles rapidly. One of the causes for this, was that the robot could not detect anything in de blind laser spot (the range in which the laser does not detect an object). Therefore, the robot could sometimes steer towards the blank spot, see that there is an obstacle, steer back, and this goes on repeatedly. To fix this issue, a virtual obstacle is placed in the blind spot of the robot. For this reason, the robot will never choose to search for a steering angle in its blind spot. If it wants to steer in its blind spot, it first needs to turn and scan that spot. This solution worked well for most of the situations where the bug occurred, but not all of them. There are still situations where two angles are chosen rapidly after each other. This mainly happens when the steering goal is far away and the robot very close to an object. We hope to solve this with the global planner. | |||

Another solution for getting stuck, is shortening the horizon of the active grid. The laser-finders have a very large range. The map which is made from the laser finders is 4 x 4 meters. However, if the entire map is chosen, the algorithm sometimes can get stuck, i.e. it sees no valleys. To solve this, we can tune the size of the active grid. The video which are shown are with an active grid of 1 x 1 meters. This enables less interference of walls/obstacles which are far away. | |||

===Videos === | |||

<div style="display: flex; justify-content: center;"> | |||

<div style="margin: 5px;"> | |||

[[File:2024-05-2316-19-2011-ezgif.com-video-to-gif-converter.gif|thumb|559x559px|Video 11: Vector field histogram of the second map. The robots senses can be seen in the command line, such as the polar histogram and the obstacle map.]] | |||

</div> | |||

<div style="margin: 5px;"> | |||

[[File:2024-05-2316-14-32-ezgif.com-video-to-gif-converter.gif|thumb|563x563px|Video 12: Vector field histogram of the third map. The robots senses can be seen in the command line, such as the polar histogram and the obstacle map.]] | |||

</div> | |||

<div style="margin: 5px;"> | |||

[[File:2024-05-2316-16-16-ezgif.com-video-to-gif-converter.gif|thumb|571x571px|Video 13: Vector field histogram of the first map. The robots senses can be seen in the command line, such as the polar histogram and the obstacle map.]] | |||

</div> | |||

</div> | |||

[[File:WhatsAppVideo2024-06-05at11.51.46-ezgif.com-video-to-gif-converter.gif|thumb|Video 14: Vector field histogram is being tested in the physical robot. It seems to detect the obstacles well and can get to the goal that is at the end of the parkour.]] | |||

These videos (7-9) are tests of the vector field histogram implementation in the different maps provided. It seems to behave properly, but there are still some minor bugs to be solved. In video 10, we see the real life testing of the robot. We see that it nicely avoids the obstacles and that it goes towards the goal we set. However, when testing the robot in other cases, it sometimes gets stuck in between 2 choices where it jitters between these 2 options. We have tried implementing a hysteresis, but this only worked for small angles and not really for bigger angles, which were also occurring. Other than that, we tried implementing a cost function that also takes in the previous angle, the goal angle and the closest angle. This also did not work. | |||

The following link (https://youtu.be/VTsmuJFkQ40) shows the video on the simulation of the local navigation, as well as some real life testing. The previous explained bug happens at map 3. It shows that the robot starts shacking between two steering actions. Note that this not always happens, as in an example shown in the top videos with the additional printed map. It is very sensitive to starting positions and active fields. This is hopefully solved when the global navigation is used. In map 4, this bug does not occur. This is due to the fact that there is an extra box, which disables the option to choose between two angles. The real testing shows that the robot can navigate around a corner with some obstacles. | |||

=== Answers to Questions Vector Field Histogram === | |||

#<b>What are the advantages and disadvantages of your solutions?</b> | |||

##Advantages: Our solution is relatively robust and can usually find the route that it should take in an environment. It is intuitive as well, the polar histogram is a logical way to think of obstacles getting in a robots way. | |||

## Disadvantages: Vector Field Histogram has a problem with narrow corridors. This is because the polar histogram can sketch it as if there is no passageway (depending on the bin size of the polar histogram). Another problem we encountered was that the robot could not choose between two valleys and kept switching between those two options. | |||

#<b>What are possible scenarios which can result in failures (crashes, movements in the wrong direction, ...) for each implementation?</b> | |||

##Narrow corridors. | |||

##2 paths that are equally as good will result in the robot not being able to decide between one of the passages. | |||

#<b>How would you prevent these scenarios from happening?</b> | |||

##For narrow corridors, we could introduce more bins for the polar histogram such that the map is more refined and thus can spot smaller corridors. | |||

##Hysteresis could be introduced to fix the 2nd problem. Another implementation could be to introduce a cost function that not only takes the goal position into account but also the closest angle and the previous angle we have chosen. This way, there is another factor that can influence the choice, meaning that the chance to have an equal cost in 2 angles is smaller. | |||

##Another (and best) option, is to solve these problems with the global planner. If the nodes are close to each other, the local planner will hopefully not get stuck. In addition, we will try to implement a debugger, which detects of the robot gets stuck. If this happens, the robot will indicate it is stuck and an action has to be taken (which action is explained in the global planner). | |||

#<b>For the final challenge, you will have to link local and global navigation. The global planner will provide a list of (x, y) (or (x, y, θ) ) positions to visit. How could these two algorithms be combined?</b> | |||

##For our current implementation, we can give the robot a goal position towards which it will drive. These points for the robot to drive to are the nodes provided by the A* algorithm. These coordinates will serially driven towards. Once the robot cannot find a path for an amount of time or it discovers no path towards the node, it should consult the global planner again. The global planner should remove the node from the graph and find another path with the A* algorithm again, for the robot to follow. So the local planner will drive from node to node, in order to get to the goal. As mentioned, if the local planner gets stuck on a node, a possibility is to remove that node and move to the next node, which the robot hopefully can reach. There is also a balance here to be decided on, as talked about in artificial potential fields. For this implementation, it seems that the global planner and local planner can work together with each getting 50% of the job. So, this means we can space the random nodes more in global navigation and thus rely more on the local planner. However, the local planner still needs sufficient instructions from the global planner in order to not get stuck. | |||

==Exercise 3 Global Navigation== | |||

The goal of the global navigation exercise consists of three parts. First of all, apply the A* algorithm to find the shortest path in a map which has nodes and edges. Secondly, to create a Probabilistic Road Map to generate the node list and connections for a given map. Finally, the global planner should be connected to the local planner. A framework for the implementation has been provided, in which several parts of code have to be added or adjusted. These added or adjusted parts of code will be elaborated on in the following sections. | |||

=== A* implementation === | |||

For the A* star implementation, code has to be written such that a sequence of node IDs can be found that form the shortest path through the given map from start to the goal. To test the algorithm the map of a maze will be used. In the given code the initialization step and the codes main structure have already been provided. The exercises/parts to be completed are as indicated below. | |||

=== | ===== 1. Find in every iteration, the node that should be expanded next. ===== | ||

The node that should be expanded next, should be the node with the minimum ''f'' value, which takes into account the cost-to-come and the cost-to-go. Starting from the start node, while the goal is not reached yet, a while loop iterates through the ''open_nodes'' list and determines the ''f'' value. A parameter ''min_f_value'' and set to infinity, in the loop the ''f'' value of the current node is compared with this parameter ''min_f_value''. If the ''f'' value of the current node is less than the ''min_f_value'', the parameter is updated to the newly found minimal ''f'' value and the node ID is stored in ''nodeID_min_f''. This node with the minimal ''f'' value is the node which should be expanded next. | |||

==== | ===== 2. Explore its Neighbors and update if necessary. ===== | ||

To explore the Neighbors of the current node, a for loop iterates through each neighbour node ID and checks whether this node is in the ''closed_nodes'' list. If it is closed this node is skipped. For the open neighbour nodes the new cost-to-come, the ''g'' value, is calculated as the cost to come to the current node plus the distance to the neighbour node which is done with the ''calculate_distance'' function. Next it is checked whether the neighbour node is open or if the new ''g'' value is lower than the current ''g'' value of the neighbour node. If this is the case neighbour node should be updated with the new ''g'' value and ''f'' value accordingly. Next the parent node is updated to the current node ID which will help in determining the path. In the end if the neighbour is not already in the ''open_nodes'' list, it is added to the list for evaluation. | |||

===== 3. Trace back the optimal path ===== | |||

[[File:Small maze.png|thumb|354x354px|Figure 4: Visualization of A* on small maze]] | |||

Once the goal is reached, the optimal path can be determined. In order to trace back the optimal path, the path is reconstructed by starting at the goal node and tracing back to the start node using the parent nodes. So ''current_nodeID'' is initially set to ''goal_nodeID and in each iteration of the loop the node IDs are added to the front of path_node_IDs.'' This loop continues until the start node is reached. In the end the node IDs of the determined optimal path are printed in sequence. | |||

For the provided small maze the optimal path is then determined to be as followed. Optimal path from start to goal: 0 -> 7 -> 12 -> 11 -> 10 -> 6 -> 5 -> 4 -> 9 -> 14 -> 19 -> 20 -> 21 -> 23 -> 29 -> 28 -> 34 -> 39 -> 38 -> 37 -> 33 -> 27 -> 26 -> 25 -> 32 -> 31 -> Goal | |||

When comparing this path to the figure of the small maze, it can be concluded that the algorithm works properly to find the shortest path through the maze. | |||

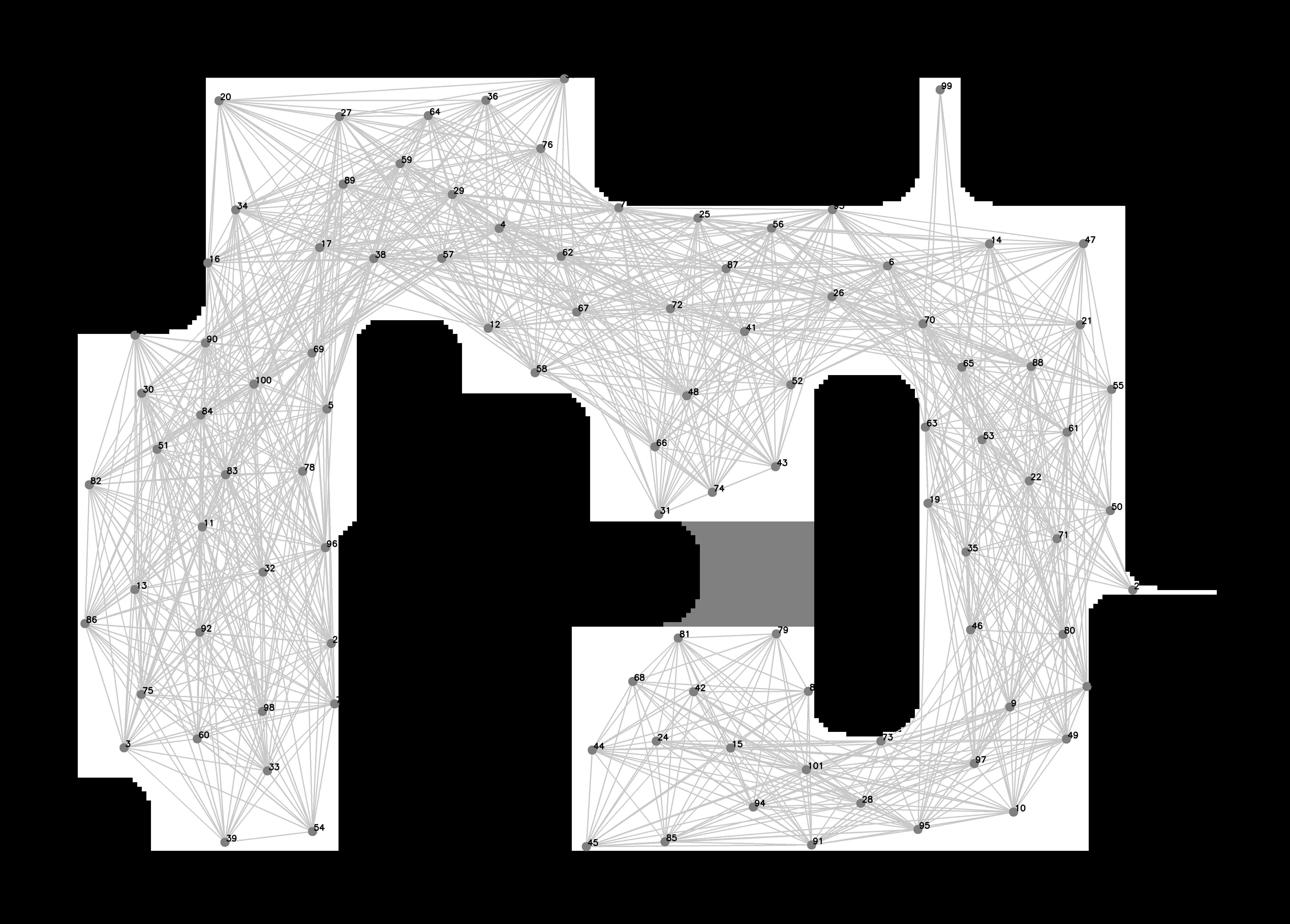

=== Probabilistic Road Map === | |||

The goal of this assignment is to generate a graph that represents a Probabilistic Road Map (PRM) for a given map. This graph consists of vertices and edges and can be used by the A* algorithm to create an optimal path of vertices from a start to a goal position. The exercises to be completed are elaborated below. | |||

===== 1. Inflate the occupied areas of the map (i.e., the walls) in order to account for the size of the robot. ===== | |||

In order to account for the size of the robot, a parameter ''robot_radius'' is defined in meters. This radius is then converted to pixels by dividing the radius by the resolution of provided the map. After this a kernel is created for the dilation operation. An ellipse is chosen which will be twice the radius of the robot in pixels to ensure the kernel is large enough. Since the default dilation operation expands white regions and we want to inflate the walls which are black, the colours of the map are first inverted. Then, the ''dilation'' operation is applied to the map with the use of the created kernel such that the now white walls are inflated. After this the colours in the map are inverted again. In the end this returns the original map with the inflated walls. | |||

== | ===== 2. Write code that checks whether a new randomly placed vertex is a certain distance away from the existing vertices in the graph. ===== | ||

In order to write code that checks the minimum distance between vertices, first a parameter ''min_distance'' is determined. A for loop iterates over each vertex in the graph ''G'' and for each vertex the distance between that vertex and ''new_vertex'' is calculated with the use of the function ''distanceBetweenVertices.'' If the calculated distance is less than ''min_distance'', the boolean ''acceptVert'' is set to false. This indicates that the new vertex is too close and thus should not be accepted. | |||

=== | ===== 3. Determine whether an edge between two vertices is valid ===== | ||

Whether an edge is valid is determined by two aspects. The first one is that the vertices are within a certain distance of each other. This is done with the function ''checkIfVerticesAreClose''. For this a parameter ''max_distance'' is defined and similarly as before, the distance between vertices is calculated with the function ''distanceBetweenVertices''. This distance is than checked with ''max_distance''. If the distance is less than or equal to ''max_distance'', the function returns true. Otherwise it returns false. This function is used further in the script to determine if an edge is valid.[[File:PRM.png|thumb|Figure 5: Example of created PRM]]Secondly, an edge is valid if it does not go through walls. This is checked with the function ''checkIfEdgeCrossesObstacle''. The boolean ''EdgeCrossesObstacle'' is set to false and will be set to true if an edge crosses an obstacle. The Bresenham's algorithm is used to iterate over the pixels along the line segment between two vertices. It checks if any pixel along the line is an obstacle, the function returns true otherwise, it returns false. This function is used further in the script to determine if an edge is valid. | |||

In the end a PRM is created as can be seen in the figure on the right. The PRM has inflated walls, randomly placed vertices which are in a range of distance from each other and edges which do not cross obstacles. Also numbers are printed next to the vertices which is convenient for visualization. The parameters ''robot_radius'', ''min_distance'' and ''max_distance'', together with the amount of vertices ''N'', can be tuned to satisfaction. | |||

=== | ====Answers Global Navigation questions.==== | ||

==== | #'''How could finding the shortest path through the maze using the A* algorithm be made more efficient by placing the nodes differently? Sketch the small maze with the proposed nodes and the connections between them. Why would this be more efficient?'''[[File:Maze with nodes.jpg|thumb|Figure 6: Maze with efficiently placed nodes]] | ||

##Finding the shortest path through the maze could be made more efficient by placing nodes only on the points where the path changes directions or splits into multiple sections. In the figure to the right, the positions where nodes should be placed are indicated with red circles. It can be seen that way less nodes are used than before. The optimal path now consists of less nodes because straight parts are no longer divided by different nodes and edges. | |||

#'''Would implementing PRM in a map like the maze be efficient?''' | |||

##Implementing PRM on a maze would not be efficient. This is due to the fact that the PRM places random vertices on the map and draws edges between them. In the exercises a minimum distance was determined to make the placement of nodes more efficient, as well as a function that would check of the edges would be valid. Since the maze has a lot of occupied spaces by walls, the algorithm would struggle to place all the nodes. The parameters ''robot_radius'', ''min_distance'' and ''max_distance'', together with the amount of vertices ''N'', can be tuned such that it would be possible for the algorithm to place vertices in the maze such that eventually, it could be used by the A* algorithm to find a path through the maze. However this would take a lot of trial and error and would definitely not be efficient. | |||

#'''What would change if the map suddenly changes (e.g. the map gets updated)?''' | |||

##The global planner is used to determine the optimal path for a map with a specified start and goal position. When the PRM is created and the A* algorithm is used to determine this path, the robot uses this path to drive towards the goal. If the map would suddenly change, so a road would be blocked with an obstacle for example, the global planner does not take this into account and the robot could possible crash into the obstacle. In order to prevent this the global planner should be connected to the local planner. This way if the map changes, this could be sensed by the global navigation and a new path could be determined in order to reach the goal. | |||

#'''How did you connect the local and global planner?''' | |||

##In order to combine the local planner and the global planner, the local planner needed to be updated. The first update is that it can update the next goal. It does this by driving towards a goal. If the goal is reached, the node counter increases, and the robot goes to the next node. If the robot cannot reach a node, a debugger should recognize it and delete the note. The local navigation should then be able to drive towards the next node, which hopefully it can reach. It is important to note that the robot does not reach the node exact, but it approximates the node within a certain reach. This enables the robot to use the global planner more a guide and let the local navigator really drives through the map. | |||

##The second thing which needed to be done, was to write the vector field histogram into header files. This way, it can easily be included in the global planner. Currently, the vector field histogram is a header file which is included in the ''include'' map from the global planner. | |||

#'''Test the combination of your local and global planner for a longer period of time on the real robot. What do you see that happens in terms of the calculated position of the robot? What is a way to solve this?''' | |||

##The last time we tested, we used BOBO for the first time. It showed that the odom data and real position is pretty far off, especially when you turn and drive. Therefore, it crashed pretty early, since the real position in the world and the position in the script did not align anymore. To solve this, localization has to be used in order to approximate the robots location, based on the sensor data. | |||

#'''Run the A* algorithm using the grid map (with the provided nodelist) and using the PRM. What do you observe? Comment on the advantage of using PRM in an open space.''' | |||

===== Submission ===== | |||

[[File:WhatsAppVideo2024-05-30at11.52.16-ezgif.com-video-to-gif-converter.gif|thumb|600x600px|center|Video 15]] | |||

#'''What are the advantages/disadvantages of using the first constructor, what are the advantages/disadvantages of the second one?''' | #'''Upload screen recordings of the simulation results and comment on the behaviour of the robot. If the robot does not make it to the end, describe what is going wrong and how you would solve it (if time would allow), and''' | ||

##In the video above a recording of the simulation results is shown. It can be seen that once the script ''follow_astar'' is started, first a PRM is created with vertices and edges. After this the A* algorithm determines the optimal path from the start to the end position and this is visualized in the simulation by the blue line. After this the robot will follow the path and reaches the end goal. It should be noted that this is with the provided path follower that was already in the script. | |||

##In the video below, the same procedure is shown as above but this time with our own path follower implemented. It can be observed that the robot follows the given path but also cuts some corners. This is due to the fact that the path follower does not have to reach the nodes very closely, but in a given range. When the node is reached within the range, the robot will continue to the next node until the goal is reached.[[File:Follow astar.gif|thumb|808x808px|center|Video 16]] | |||

# '''Upload video's of the robot's performance in real life (same as simulation videos), and comment the seen behavior (similar to the previous question).''' | |||

## Sadly, we were not able to test the robot's performance in real life before the deadline of the assignments. | |||

==Exercise 4: Localization== | |||

===0. Assignment introduction=== | |||

====0.1 Explore the code framework==== | |||

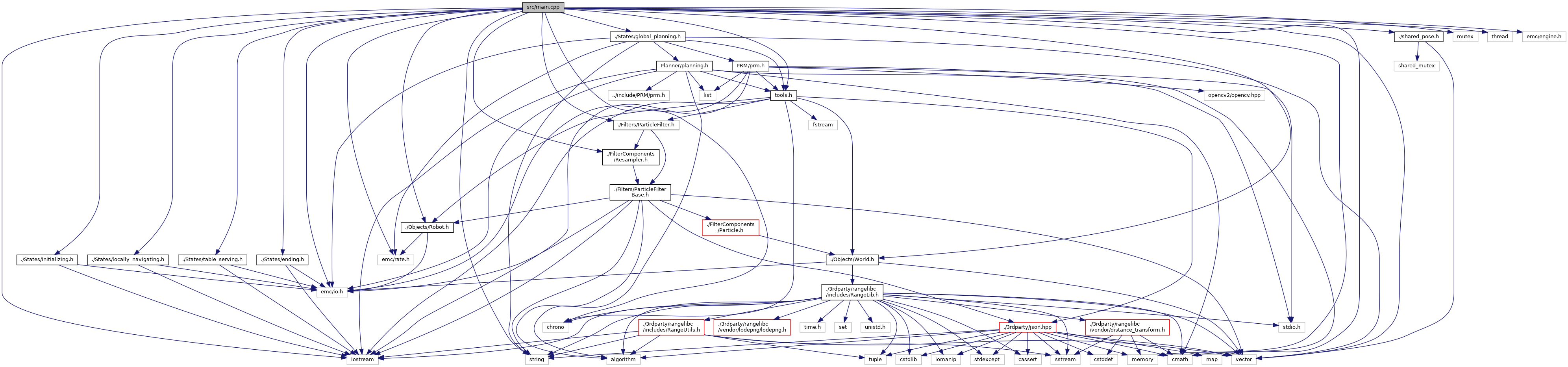

*'''How is the code structured? ''' | |||

The code is divided into different files in order to have definitions and implementations separate from each other. Objects such as particles are used as seperate entities to keep track of the properties of each particle such as positions and weight. | |||

[[File:Screenshot from 2024-06-05 21-02-30.png|center|thumb|900x900px|Figure 7: Dependency graph for main.cpp automatically generated.]] | |||

*'''What is the difference between the ParticleFilter and ParticleFilterBase classes, and how are they related to each other? ''' | |||

The particleFilter is a higher level of abstraction compared to the ParticleFilterBase. The ParticleFilter derives functionality from the ParticleFilterBase class. This was implemented as such to be able to switch the employed method more easily. | |||

* '''How are the ParticleFilter and Particle class related to each other? ''' | |||

The ParticleFilter uses the class particles to represent the particles as individual objects with their respective properties such as position and weight. | |||

* '''Both the ParticleFilterBase and Particle classes implement a propagation method. What is the difference between the methods?''' | |||

One is on the level of a single particle and the other one propagates the complete particle set by employing the class function propagate sample. | |||

===1. Assignments for the first week=== | |||

====1.1 Initialize the Particle Filter ==== | |||

*'''What are the advantages/disadvantages of using the first constructor, what are the advantages/disadvantages of the second one?''' | |||

One initializes the particles uniformly distributed over the map and the other normally distributed over an expected position with a certain confidence. The second one makes better use of the particles but is only possible if the initial position of the robot is known. If this is not known the particle filter would benefit from the uniformly distributed method where the particles (position hypothesis) are dispersed over the space to eventually find its position within the map. | |||

*'''In which cases would we use either of them?''' | |||

The Gaussian method when an initial position is known. | |||

The uniformly distributed method when this is completely unknown. | |||

*'''Add a screenshot of rviz to show that the code is working''' | |||

[[File:Partf11.jpg|center|thumb|521x521px|Figure 8: Gaussian distribution of the particles. Since the Gaussian distribution already accounts for the initial position of the robot, the particles are distributed around this starting point.]] | |||

====1.2 Calculate the pose estimate==== | ====1.2 Calculate the pose estimate==== | ||

*'''Interpret the resulting filter average. What does it resemble? ''' | |||

By implementing the weighted mean we get the weighted average of all the particles | |||

* '''Is the estimated robot pose correct? Why? ''' | |||

It is not yet correct since we consider all particles to be as likely not implementing the update step yet. | |||

*'''Imagine a case in which the filter average is inadequate for determining the robot position.''' | |||

The case where your initial position is far away from the actual robot initial position. Or when you have outliers in the case where you have a small amount of particles. | |||

*'''Add a screenshot of rviz to show that the code is working''' | |||

[[File:Particlefilter12.png|center|thumb|Figure 9: The pose estimate is visualized by a green arrow. It represents the weighted average of all of the particles.]] | |||

====1.3 Propagate the particles with odometry==== | |||

*'''Why do we need to inject noise into the propagation when the received odometry information already has an unknown noise component?''' | |||

Introducing different noise values to each particle in a particle filter ensures diversity and prevents degeneracy. | |||

*'''What happens when we stop here, and do not incorporate a correction step? ''' | |||

This results in increasing error and uncertainty in the estimated state, as the particles fail to adjust based on new data, leading to less accurate predictions over time. | |||

*'''Add a screen recording of rviz while driving to show that the code is working''' | |||

[[File:Propagate.gif|center|thumb|900x900px|Video 17: Propagate the particles with odometry]] | |||

===2. Assignment for the second week=== | |||

====2.1 Correct the particles with LiDAR==== | |||

#'''What does each of the component of the measurement model represent, and why is each necessary?''' | |||

*'''Local Measurement Noise''': Represents inherent inaccuracies in sensor readings due to factors like sensor noise. It is necessary for modelling the probability of an observation based on the prediction and assigning the probability. The following formulas found in the slides were employed to assign this probability. | |||

The probability ''p_hit'' for a given measurement given the prediction is defined by: ; <math> p_{hit}(z_t^k | x_t, m) = \begin{cases} \eta \mathcal{N}(z_t^k; z_t^{k*}, \sigma_{hit}^2) & \text{if } 0 \leq z_t^k \leq z_{max} \ 0 & \text{otherwise} \end{cases} </math> | |||

*'''Unexpected Obstacles''': Accounts for objects not present in the map, such as temporary items or new obstructions. This is modelled via exponential distribution. | |||

The probability ''p_short'' for a short measurement is given by: ;<math> p_{\text{short}}(z_t^k \mid x_t, m) = \begin{cases} \eta \lambda_{\text{short}} e^{-\lambda_{\text{short}} z_t^k} & \text{if } 0 \leq z_t^k \leq z_t^{k*} \\ 0 & \text{otherwise} \end{cases} </math> The normalizing constant ''\eta'' is calculated as: ;<math> \eta = \frac{1}{1 - e^{-\lambda_{\text{short}} z_t^{k*}}} </math> | |||

*'''Failures (Glass, Black Obstacles)''': Handles the inability of sensors to detect certain materials that don't reflect signals well, like glass or dark colored objects. Which will result in the maximum reading even though this is not representing the true distance. | |||

#'''With each particle having N >> 1 rays, and each likelihood being ∈ [0,1] , where could you see an issue given our current implementation of the likelihood computation | The probability ''p_max'' for the maximum range measurement is defined as: ;<math> p_{\text{max}}(z_t^k \mid x_t, m) = \begin{cases} 1 & \text{if } z_t^k = z_{\text{max}} \\ 0 & \text{otherwise} \end{cases} </math> | ||

*'''Random Measurements''': Captures spurious data and anomalies that don't correspond to actual obstacles, likely due to sensor malfunctions or external interferences. | |||

The probability ''p_rand'' for a random measurement is defined as: ;<math> p_{\text{rand}}(z_t^k \mid x_t, m) = \begin{cases} \frac{1}{z_{\text{max}}} & \text{if } 0 \leq z_t^k \leq z_{\text{max}} \\ 0 & \text{otherwise} \end{cases} </math> | |||

All of them combined make a measurement model that models the events that could have occurred for a particular ray (laser measurement), resulting in the following formula: Taking the weighted average of these distributions yields the overall model: ;<math> p(z_t^k \mid x_t, m) = z_{\text{hit}} p_{\text{hit}}(z_t^k \mid x_t, m) + z_{\text{short}} p_{\text{short}}(z_t^k \mid x_t, m) + z_{\text{max}} p_{\text{max}}(z_t^k \mid x_t, m) + z_{\text{rand}} p_{\text{rand}}(z_t^k \mid x_t, m) </math> | |||

#'''With each particle having N >> 1 rays, and each likelihood being ∈ [0,1] , where could you see an issue given our current implementation of the likelihood computation?''' | |||

Since we assume independence we calculate the overall likelihood of the complete measurement (all combined rays) to be their product since these are values between 0 and 1 this can result in computational errors. Related to underflow, when using floating-point arithmetic, the precision of very small numbers can become unreliable. | |||

====2.2 Re-sample the particles==== | ====2.2 Re-sample the particles==== | ||

* '''What are the benefits and disadvantages of both the multinomial resampling and the stratified resampling?''' | |||

The benefit of multinomial resampling is that it is easier to implement because it randomly selects the particles based on their weights, therefore the chance of picking a particle with a higher weight is higher. Stratified resampling assures that samples with a lower weight are also considered and resampled. This is a benefit because you can then easily update your stance if the first prediction was not correct and it preservers particle diversity. A disadvantage of multinomial is that it does not take these lower weighted particles into account and that it performs less efficient. Implementing this in the stratified resampling means that it harder to implement.[[File:Resampling.gif|center|thumb|600x600px|Video 18: Particle filter algorithm working on simulation]] | |||

==== 2.3 Test on the physical setup ==== | |||

*'''How you tuned the parameters.''' | |||

- Tested on simulation - Since the test was done in simulation, and the map was completely known in advance, a bigger weight was given to the hit_prob. Whereas, the other probabilities were reduced. It is because, for instance, it is not expected to find unforeseen obstacles in the simulation, therefore short_prob was reduced. Similarly, it was not expected to find glass walls, so max_prob was also decreased. rand_prob was also reduced since the random error is less in the simulation than in the real setup. After implementing this it was noticed that the particles were lagging behind the robot. It was due to the time taken to resample the particles. So, the resampling time was changed from "Always" to "everyNtimesteps". That way the particles had enough time to be resampled. | |||

*'''How accurate your localization is, and whether this will be sufficient for the final challenge.''' | |||

As the video above shows, the particle filter is already quite accurate in the simulation. In the real setup it might behave differently. We have not been able to test this however. So for the final assignment the algorithm still needs to be tested on the real robot. The simulation does already show that some improvements can be made on the resampling time to make the cloud of particles follow the robot even faster. | |||

*'''How your algorithm responds to unmodeled obstacles.''' | |||

Since we were unable to test this with the real robot, we do not yet know how it will respond to unmodelled obstacles. We hope that by tuning some of the probability factors, the localization algorithm is still able to perform well with the addition of random obstacles. Also, by using the Stratified resampling method, we hope that by keeping some of the lower probable particles, the robot is able to handle these unmodeled obstacles. | |||

*'''Include a video that shows your algorithm working on the real robot''' | |||

Unfortunately were did not finish the particle filter on time to also test the localization on the real robot. We are, however, confident that with the particle filter performing the way it does now that it will also perform well on the actual robot (with some changes in the parameters of course). | |||

== Final Challenge == | |||

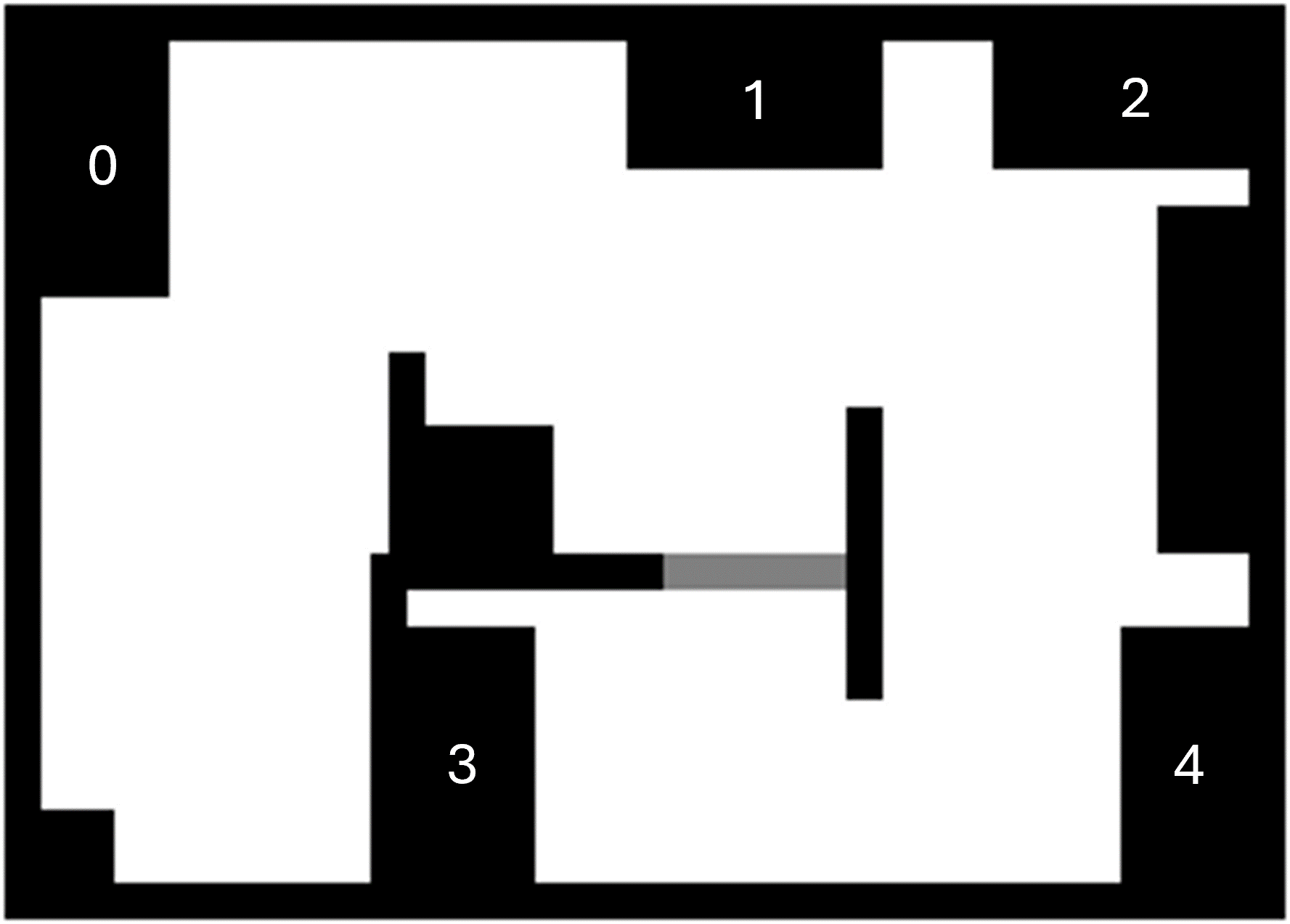

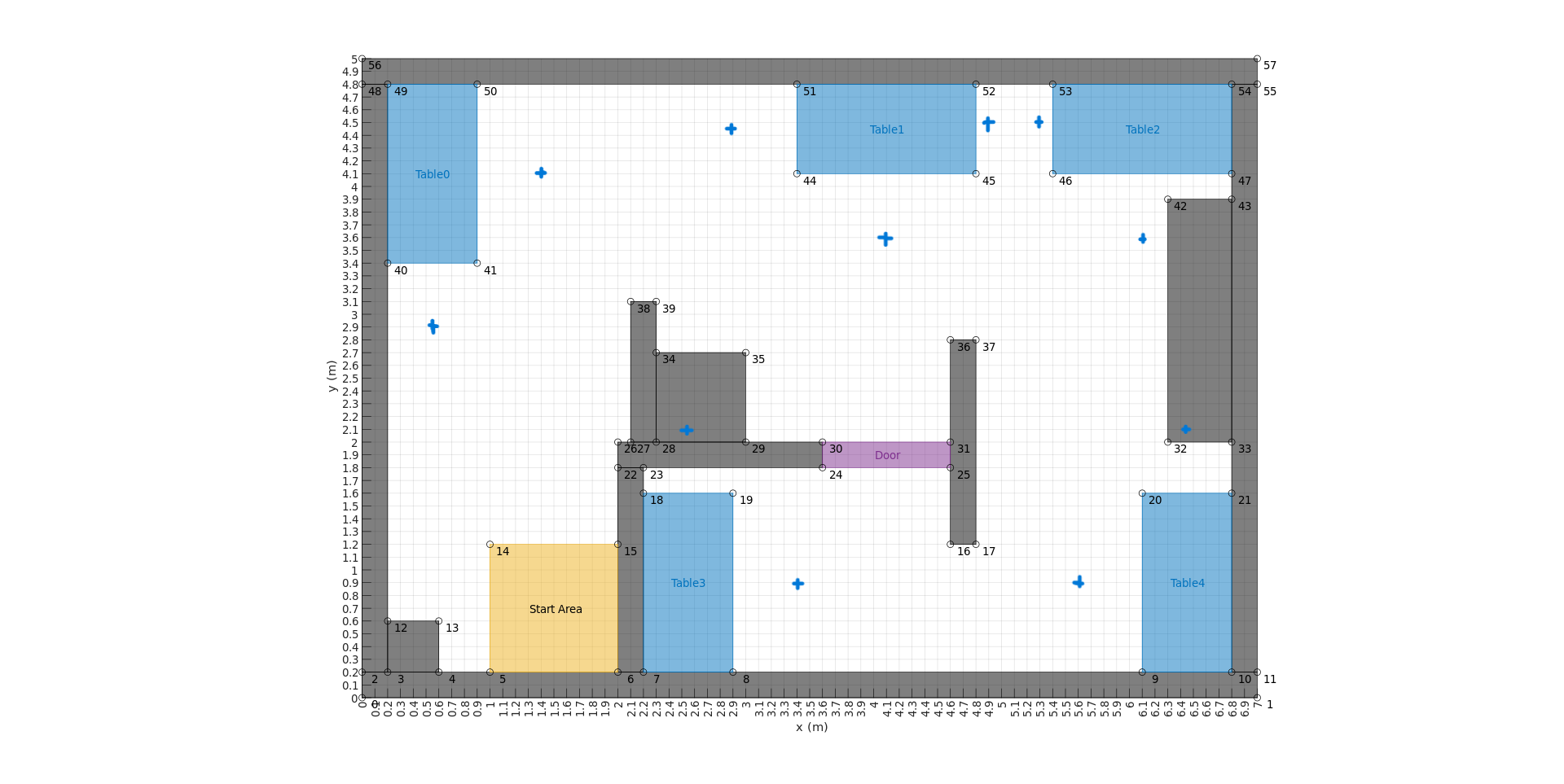

[[File:Table numbers.png|thumb|Figure:1 Map of the restaurant including the table numbers that can be used as input for the user interface.]] | |||

The integration of the major technological components—a local navigator, global planner, and localization algorithm—during the development of an autonomous restaurant robot is important. Real-time obstacle avoidance is ensured by the local navigation, improving worker and customer safety. The global planner improves customer service by figuring out the best paths for efficient order delivery. The robot can precisely navigate to tables via the localization algorithm to determine its position within the restaurant. Together, these parts ensure that the robot functions successfully and efficiently, satisfying the demands of customers, employees, and restaurant owners. We shall address these demands in the Requirements portion of this report. We shall talk about the structure of the essential parts in System Architecture. The components have been tested on their own and in combination with others; the setup of these tests and their results will be analysed in the testing section. The resulting changes made to the system for each component will follow this, along with additional components that were added to adhere to the requirements of the stakeholders. Finally, the integration of all the updated parts will be debated in the Final Challenge Results. | |||

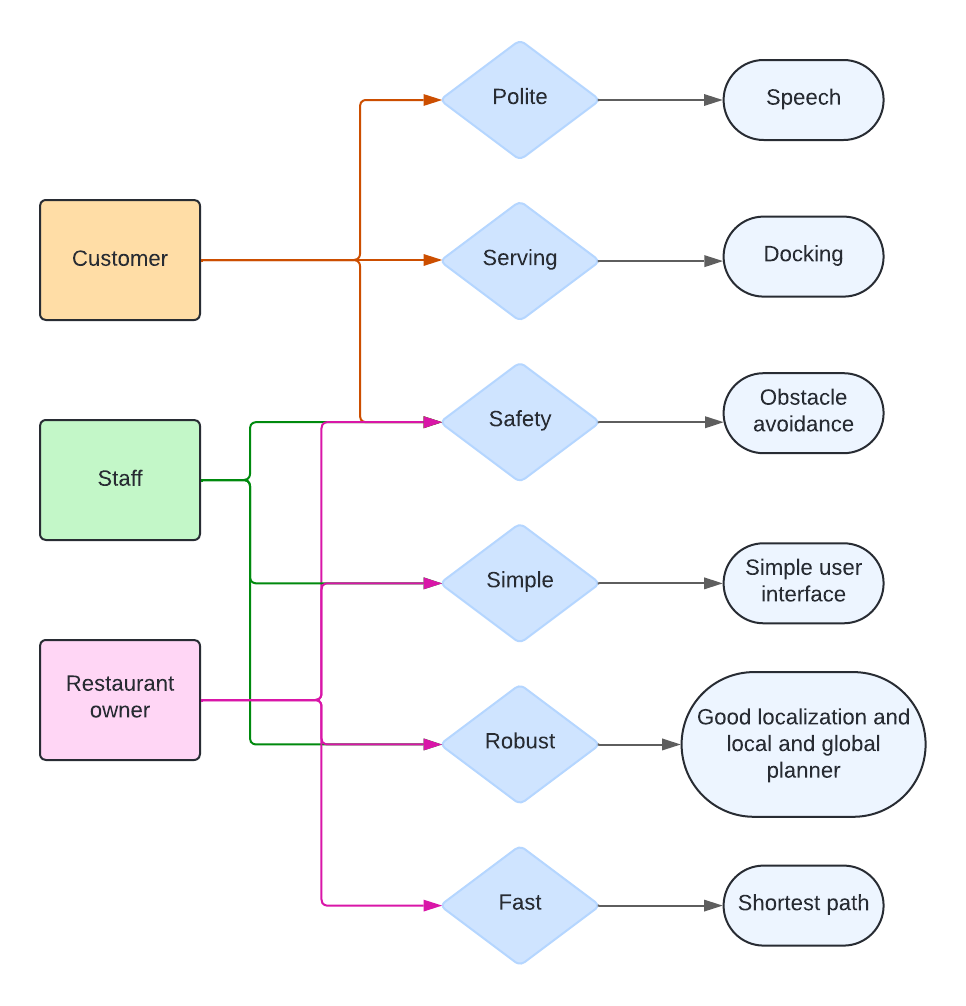

=== Requirements. === | |||

[[File:Stakeholders.png|thumb|Figure 2: Stakeholder environment requirements]]For the final challenge, all the different components listed above had to be combined. Next to that, some more requirements had to be met. These requirements come from the different stakeholders that are involved in the restaurant robot. These stakeholders include the owners of the restaurant, the staff of the restaurant, and the customers. In the image to the right, the requirements for each of the stakeholders can be found. | |||

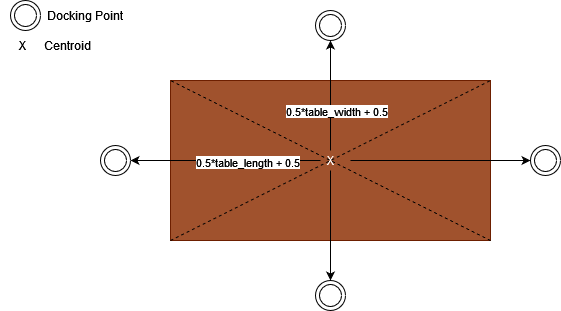

For the customers safety is, of course, important. They want to be sure that they are save while in the restaurant. Next to that, they want the serving to go well. So the robot should go up to the table, orient towards the table, and move close to the table such that the customers can easily take the order from the robot. Moreover, the robot needs to locate a free space on the table so that it can accomplish this. It will, therefore, attempt to use a different side of the table when a spot is taken. The third requirement for customers is that the robot needs to be polite. Servers in a restaurant also always need to be polite towards customers, so the robot should as well. An implementation of this could be to have the robot speak. While serving the table the robot can indicate to the customers that it has arrived with their order and that they can take the order from the tray once it has docked successfully. But also small pronunciations such as "enjoy your meal" are part of polite customer service. | |||

The second stakeholder is the staff of the restaurant. It could be that there is still crew delivering orders themselves. Otherwise the employees making the drinks and food can be accounted here. For them it will also be important to be save while working. For this reason, it is crucial that the robot has obstacle avoidance built in. They want to be able to roam around freely, without a robot crashing into them. Next to that, they might be the people who have to fix the mistakes the robot might be making. Therefore, having a robust robot is very important to them. The robustness of the robot should be in all the stages of the robot, so in localization and in local and global planning. The robot should also be easy to use by the staff. This can be implemented by using a simple user interface. The personnel only need to give the order of table numbers to the robot. | |||

The last stakeholder is the owner of the restaurant. Next to safety, simplicity, and robustness, they want the robot to be as fast as possible. Having the robot speedily deliver the orders will increase customer service and might also increase sales. An implementation of this could be that the robot should find the shortest path towards a table using the global planner. | |||

=== Possible situations. === | |||

While designing the code for the final challenge, more actions need to be taken into account. Not only do the components of local navigation, global planning, and localization need to work effectively together, they also need to give room for more implementations. These extra implementations include table docking, node skipping, speed control, and door opening. Adding these components together and integrating all of the requirements will come with its complications. It is expected that the map that is presented to us at the start will not completely resemble the setup of the map on the final challenge day. There will be obstacles present. These obstacles can be moving obstacles, such as humans walking around in the restaurant, stationary obstacles, such as chairs, and obstructive obstacles. The difference between stationary obstacles and obstructive obstacles is that stationary obstacles might only block one side of a table, while an obstructive obstacle can cut off an entire table. For this reason the extra implementation of node skipping was designed. More information on how this was tested can be found in Testing, the implementation can be found in local navigation. A second situation can be that the robot's initial position differs from its initial belief. For this, the localization should be made more robust. By increasing the particle cloud a bit and slowing down the resampling time, for example, will give the robot more time to adjust to its current position and updated belief. More information can again be found in Testing and the implementation can be found in Localization Improvements. Finally, it can be possible that a part of the map is not accessible. In the case of the third table, this would mean that the door should be utilized for the robot to reach its goal. This can be implemented using a door opening state. Due to time limits, this was not fully implemented yet and will be discussed in future directions. | |||

== Testing == | |||

To have all of the components work together harmoniously, all the individual states need to be robust and free of (major) errors. To ensure this, a couple of tests have been performed. This section includes the tests of the localization, which was not tested before the interim deadline, to show that this component is working and is robust. Since global and local navigation were already tested individually, this section will discuss the test of combining them. After this, tests were performed with localization included in the system. And lastly, all the restaurant challenges were added to the overall system, and these needed to be tested as well. | |||

[[File:Resobs1.png|thumb|Figure 3: Map with a wall that fully obstructs a table to test if the robot will skip an entire table after node skipping.]] | |||

[[File:Resobs2.png|thumb|Figure 4: Map with several obstructions in front of tables to check if the robot will skip one side of a table and try another side.]] | |||

First, for the testing of localization. The test for this individual component on the real robot was not performed before the deadline and therefore will be included here. Since localization already worked in the simulation, the test mostly consisted of changing a few parameters in the .json file. These parameters included the probability parameters discussed in the localization chapter for the exercises. In order to see which components needed change, or, in other words, which component had a higher or lower probability of happening, some changes were made to the map. The map that was used was the same one used for global planning. One of these changes was to have a human walking in the map to account for unexpected obstacles. Another element that was tested was the probability of the maximum range. This was done by taking away a random wall to see if the robot would keep its original prediction of its location. A third test that was performed was to remove a wall that changed the map in such a way that the robot could be standing in a different location. This test in particular was difficult for the robot, and it led to changing the function slightly. The function was changed to behave slightly 'poorer'. It might be odd to make a system behave less well than it did before, but for the particle filter, this gave an advantage. The reason for making this change was because the function was working a bit too well. The robot would quickly update its initial position, and the cloud of particles immediately became a dense pack around the robot pose. This seems nice, but the following experiment showed that it was more of a curse than a blessing. In this experiment, the robot was rotated 180 degrees from its predicted initial pose. After starting up the machine, the robot was not able to localize itself, causing a constant error in its position. It was not able to update its belief because the particles were too tightly packed and unable to change their belief. To account for wrong estimations, the stratified resampling model is used, such that particles are not always discarded. But even with this method, the particles are very conservative. Thus, the particle filter was made to be a bit slower and less dense to have a more robust initialization and localization in the map. | |||

Localization testing with global and local navigation, with a rotated initial pose: | |||

https://youtu.be/Z9WhAeV1nd8 | |||

Localization testing in simulation to test for robust initialization: | |||

https://youtu.be/b3j0EfqmyXs | |||

Second, the combination of global and local navigation was tested. During the experiment, it was important that the robot would not drive against walls, which is a problem often occurring with local navigation, and that the global planner did not put nodes into walls. These results are further discussed in the Local Navigating part bellow, as well as the corresponding video. | |||

The combination of the three components was tested as well. Here, it was discovered that localization was performing appropriately. The robot did, however, occasionally drive into walls. For this reason, a robust obstacle avoidance system was added to the local navigation state. The implementation of this will also be discussed in the section on local navigation below. It also became apparent that global navigation should not place any nodes in either the walls or the tables. | |||

During the combination of the three components, some additional functions were added to the system as well. These functions were node skipping and docking. To test node skipping, the two maps on the side were used. Figure 3 shows how one of the tables is fully obstructed. It was tested to see whether the model would skip the nodes associated with the path towards the table. After skipping these nodes, meaning that the robot is unable to reach these nodes, the robot will conclude that it cannot reach this particular table and will go to the next table on its list or stop if it was the last table. A problem that was identified while testing with this map was the timer included in the node skipping. A timer was included in the node skipping, which stated that the robot would try to reach a node for thirty seconds. Once it has not reached the node within thirty seconds, the robot will try a different node. The timer of thirty seconds was initially meant as a timer for trying to reach a certain table, and once the timer was finished, to go to the next table. The implementation, however, caused the robot to wait for thirty seconds for each node. This meant that if there were four nodes inside the obstructed area, we would have to wait for two minutes before the robot would move on to the next table. This phenomenon was also discovered when skipping table sides. The second map shows how obstacles were placed at the location where the robot would first try to dock. The robot would first try to reach the docking point for quite a long time and then move on to the next side. Meanwhile, another timer, which indicates if the table is reachable or not, was also operative. This often led to the robot skipping a table before reaching the second table side. Node skipping was therefore changed with shorter timers and an addition of tries. This addition of tries indicates how often the robot has tried to reach a node, and after a certain amount of tries, it will move on to the next node. This will be further discussed in the section about node skipping. | |||

==System architecture== | ==System architecture== | ||

=== Architecture Layout (motivation component decisions). === | |||

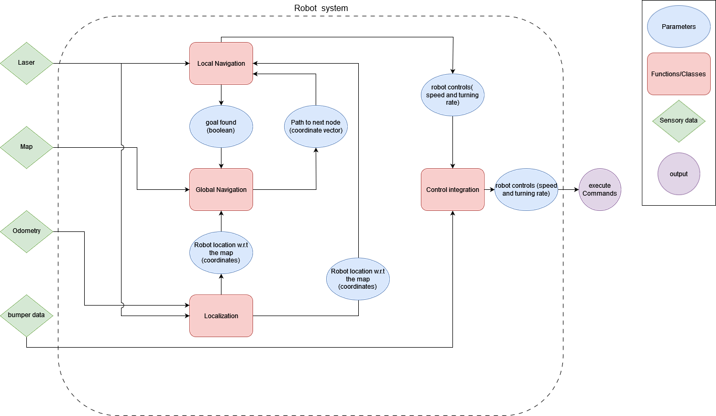

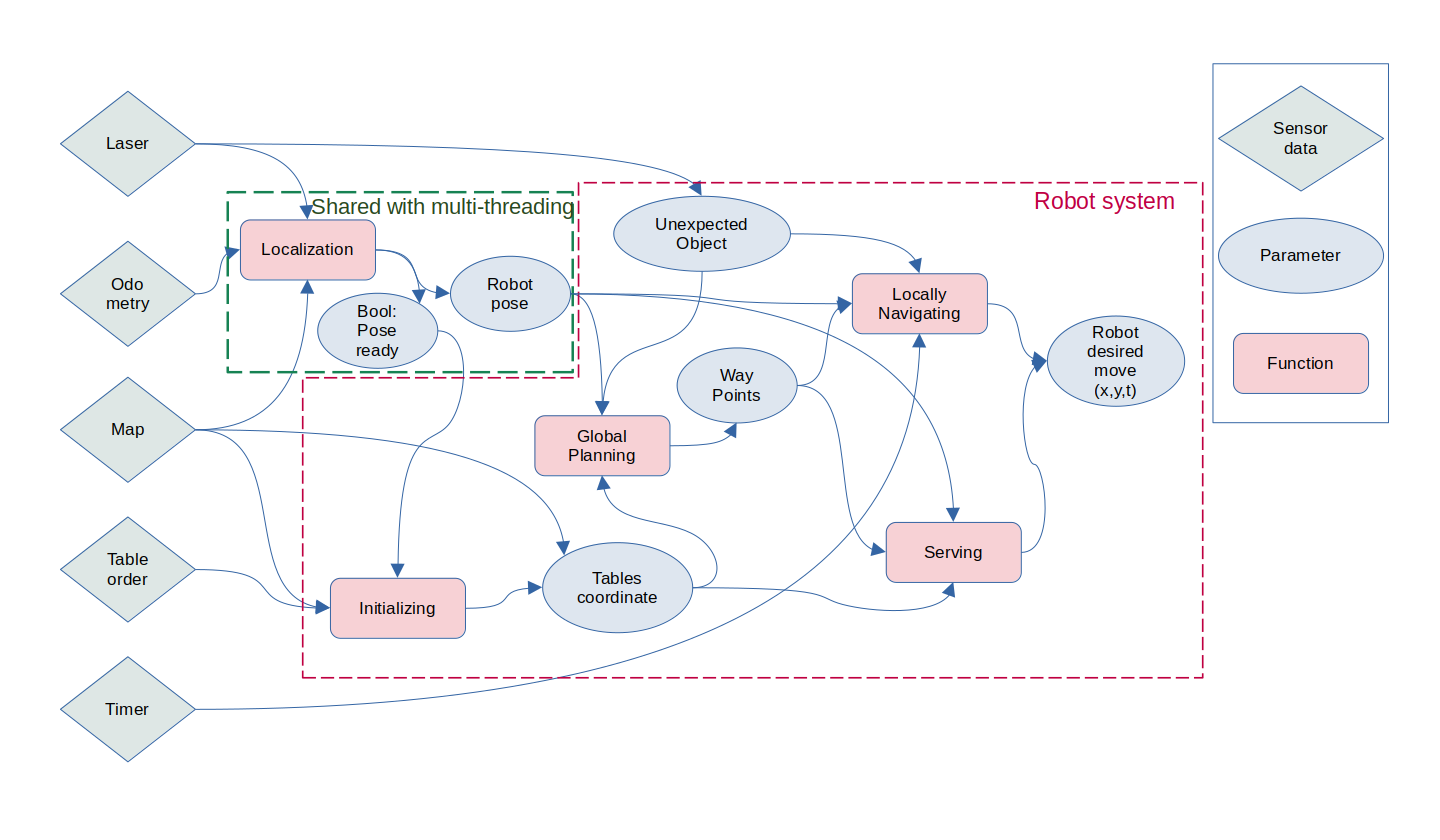

The system architecture must be carefully planned to integrate all components in an organized and understandable manner. This planning was an iterative process involving data flow diagrams and state flow diagrams to visually interpret and communicate the intended architecture. Below, the Data Flow and State Flow are described, presenting both the initial idealization and the final implementation. | |||

Overall, the initial design was preserved as much as possible, but we made adaptations to prioritize ease of implementation. The initial state flow design was simplified by reducing the number of states and adding "sub-states" into them. Additionally, while the data flow remained unchanged, it had to be implemented using multi-threading to have an organized state machine implementation. | |||

=== State Transitions. === | |||

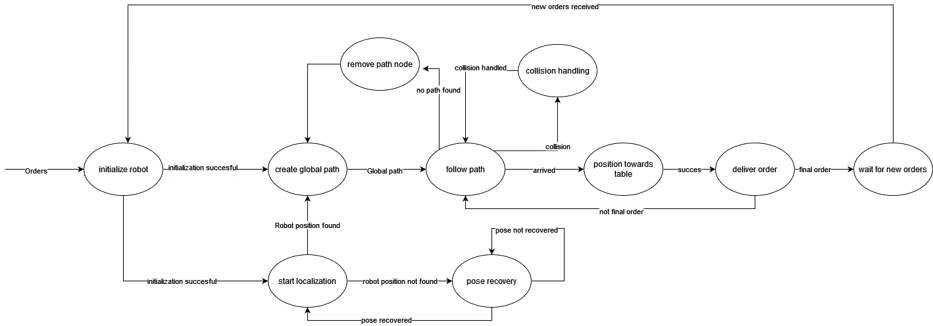

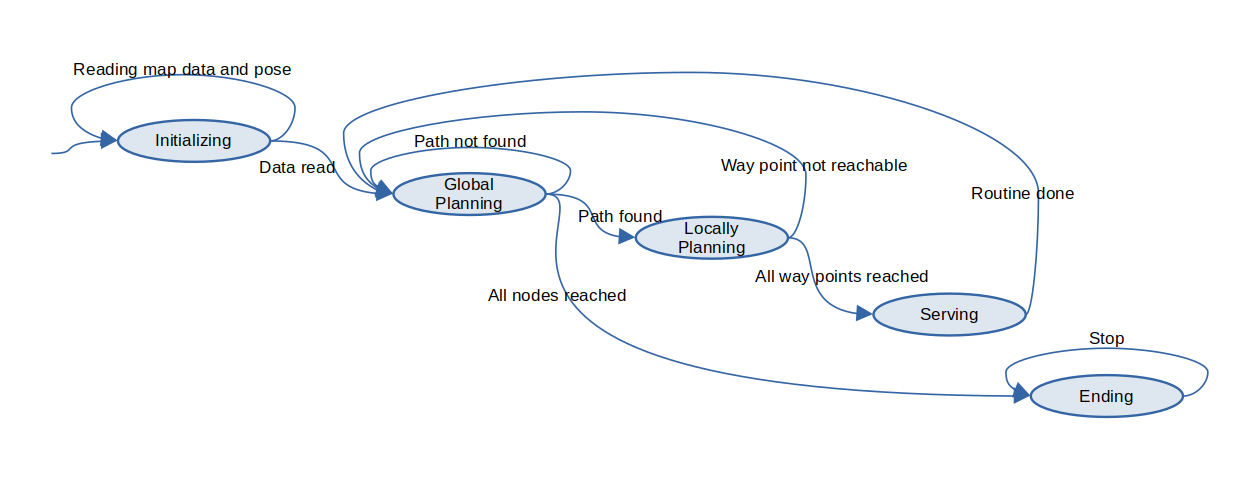

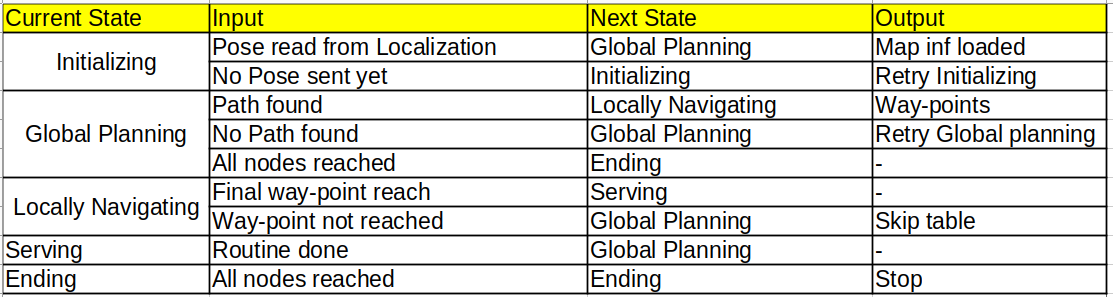

Implementation of the components to meet the requirements, the components of global planning, local navigation, don't crash, and localization need to be combined. Next to that, other implementations such as serving a table needs to be added as well. All the components were taken into consideration since the first idealization of the state flow (Fig. 6). However, for the final implementation, some of the initial states were removed or incorporated inside of other states. For instance, the "Position towards table" and "deliver order" states (of the first idealization) were merged into the "Serving" state of the final state machine implementation, shown in Table 1 and Figure 7. Moreover, the "Pose recovery" state was removed because it turned out that the Localization was robust enough to deal with the misplacing of the robot. That way the implementation of the state machine was simplified. Individual "sub-states" of the final implementation are described in their subsections. [[File:State flows.png|center|thumb|933x933px|Figure 6: Planned State flow diagram]] | |||

[[File:Updated State Flow.png|left|thumb|700x700px|Figure 7. Diagram of the state flow used in the final challenge]] | |||

[[File:State Flow Table.png|thumb|700x700px|Table 1. State machine table of the state flow used in the final challneged]] | |||

=== -- === | |||

=== Data Flow. === | |||

To visualize the data flow of these components, the following data flow diagram has been made. It can be seen in Figure 8. | |||

[[File:Dataflow.png|center|thumb|572x572px|Figure 8: Data flow diagram of the system]] | |||