PRE2023 3 Group10: Difference between revisions


= <ref name=":44">Simulation Code - https://github.com/viliamDokov/0LAUK0-FireRescueSim</ref>'''Fire Reconnaissance Robot Simulation''' =

== '''Group members''' ==

{| class="wikitable"
|+Group members
! Name !! Student number
!Email
!Study
!Responsibility
|-
| Dimitrios Adaos || 1712926
|d.adaos@student.tue.nl
|Computer Science and Engineering
|Simulation (fire simulation and mapping)
|-
|Wiliam Dokov
|
|w.w.dokov@student.tue.nl
|Computer Science and Engineering
|Simulation (sensor virtualization and mapping)
|-
|Kwan Wa Lam
|
|k.w.lam@student.tue.nl
|Psychology and Technology
|Research/USE analysis and modelling
|-
|Kamiel Muller
|
|k.a.muller@student.tue.nl
|Chemical Engineering and Chemistry
|Research/USE analysis and modelling
|-
|Georgi Nihrizov
|
|g.nihrizov@student.tue.nl
|Computer Science and Engineering
|Simulation (fire data and mapping)
|-
|Twan Verhagen
|
|t.verhagen@student.tue.nl
|Computer Science and Engineering
|Research and Simulation (mapping)
|}

== '''Introduction''' ==

=== Problem statement ===

[[File:Fire building.png|thumb|Firefighters have to operate in environments with low visibility, dangerous substances, extreme temperatures, unknown building layouts and obstacles<ref>Digitalhallway. (2007c, July 22). ''Inside house fire''. iStock. https://www.istockphoto.com/nl/foto/inside-house-fire-gm172338474-3821332</ref>]]

Firefighting is a field where robotic technology can offer valuable assistance. The environment in which firefighters operate can be very harsh and challenging, especially in enclosed spaces. They have to deal with very low visibility due to smoke and lack of light, as well as dangerous gases or other substances and extreme temperatures. Additionally, they are challenged by obstacles, unknown building layouts and even collapses of the building. In scenarios with so many uncertainties and unknowns, technology could lend a hand, both to help save people who are trapped in a building and to reduce the risks for the firefighters themselves. For this, it is crucial to be able to determine the layout of the inside of these buildings and the associated temperatures.

For this purpose our group will focus on the design of a simulation of a fire reconnaissance robot that can navigate inside a building, build a map of the interior without any prior knowledge of the layout, and add a heat-map on top of it, so that firefighters can see which pathways can be traversed and where the source of the fire is.

=== Objectives ===

Our objective is to design a simulation of a robot capable of mapping and heat-mapping the layout of a building without prior knowledge, in a fire scenario with a sufficient level of realism. To realize this objective we will focus on the following features for our simulation:

* An environment where such a robot would realistically be used
* A simulation of a fire scenario with a sufficient level of realism (what a sufficient level of realism consists of for the purposes of this project is expanded upon later)
* A robot which can be remotely controlled
* A robot capable of making and displaying a map of its environment and its position in it (SLAM)
* A robot capable of adding heat information to a map (creating a heat-map)
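
The last objective, adding heat information to a map, can be illustrated with a small sketch. This is only a minimal illustration under stated assumptions, not the project's implementation: the class name <code>HeatMap</code>, the 0.5 m cell size and the choice to keep the hottest reading per cell are all hypothetical, and the robot position is assumed to be supplied by a separate SLAM component.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int]


@dataclass
class HeatMap:
    """Sparse grid that keeps the hottest temperature seen in each cell.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """
    cell_size_m: float = 0.5  # assumed grid resolution in metres
    cells: Dict[Cell, float] = field(default_factory=dict)

    def to_cell(self, x_m: float, y_m: float) -> Cell:
        # Convert a world position in metres to integer grid indices.
        return (int(x_m // self.cell_size_m), int(y_m // self.cell_size_m))

    def add_reading(self, x_m: float, y_m: float, temp_c: float) -> None:
        # Store the reading, keeping the maximum temperature per cell.
        cell = self.to_cell(x_m, y_m)
        self.cells[cell] = max(temp_c, self.cells.get(cell, temp_c))

    def hottest_cell(self) -> Optional[Cell]:
        # Return the cell with the highest stored temperature, if any.
        if not self.cells:
            return None
        return max(self.cells, key=self.cells.get)


heat_map = HeatMap(cell_size_m=0.5)
heat_map.add_reading(0.2, 0.3, 40.0)   # near the entrance
heat_map.add_reading(0.4, 0.1, 35.0)   # same cell, cooler reading is ignored
heat_map.add_reading(2.6, 1.1, 310.0)  # close to a fire source
print(heat_map.hottest_cell())
```

Keeping the maximum per cell is a deliberately conservative choice for this sketch, since a cell that was hot once stays marked as hot; an average or a time-decayed value would be an equally plausible alternative.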

=== Users ===

Firefighters and first responders would be the primary users of the robot. They are the people who need to deploy and interact with the robot in the first place. This means that the robot should be quick and easy to set up and use in emergency situations where time is of the essence. Their insights and experiences are also valuable for making the robot work as effectively as possible in their field of expertise. It is also important that the robot can properly communicate with the firefighters during the emergency and relay information about fire sources, obstacles, victims and feasible rescue paths.

The secondary users of a firefighting/rescue robot would be victims and civilians. The robot is made to help them and come to their aid. It may be necessary to find a way to communicate with the victims so they can be assisted most effectively. This might pose a challenge because of the low visibility and low audibility during a fire.

Interested parties for deploying the robot are firefighting authorities, which are tasked with responding to fire incidents and saving lives and property; insurance companies, which can benefit from minimizing the loss of life and property; and companies that own large buildings and could consider having the robot as part of their regular infrastructure.

=== Approach ===

[[File:TUe.png|thumb|An in-person interview was conducted with the TU/e fire department (see appendix)<ref>Schalij, N. (2022b, September 20). ''Fire department will not leave TU/e campus any time soon''. https://www.cursor.tue.nl/en/nieuws/2022/september/week-3/fire-department-will-not-leave-tu-e-campus-any-time-soon/</ref>]]

The project started with an in-depth literature study on the subject matter and closely related topics. Meanwhile, we contacted the fire department at the TU/e so that we could base our user analysis on both literature and our actual target users. This was followed by research into the current state of the art in firefighting robots, so we could see what had been done before and what gaps could still be filled in this particular field. Once all this information was collected, we completed the user analysis and made a plan for what exactly we wanted to achieve during this project.

Once it was decided that the focus of the project would be a simulation of a (heat) mapping robot, the design part of the project could begin. We first researched the simulators that we could use (e.g. NetLogo, Webots, Gazebo, ROS, PyroSim), weighing their advantages and disadvantages against what we needed the simulation to do. We also researched which sensors could be used in the type of scenario the robot is intended for.

After settling on a simulator, the focus shifted to implementing a SLAM algorithm, building realistic simulation environments, simulating a realistic fire and implementing a heat map. Our target was to propose a robot design with the focus on its software, a simulation environment that could easily be used in further research on similar topics, and some additional hardware suggestions (the required sensors for our robot).

Further details on the course of the project and the week-to-week developments can be found in the appendix under logbook and project summary.

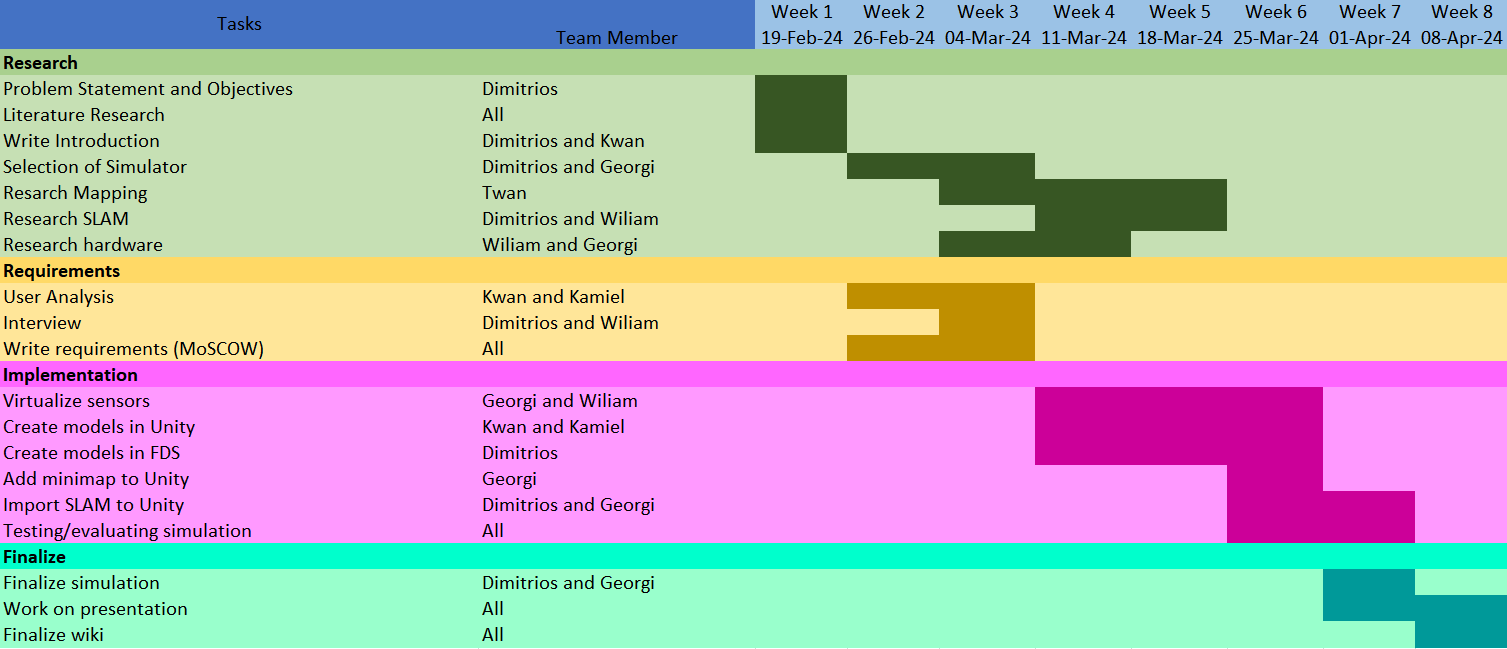

=== Planning ===

[[File:G10Planning.png|none|thumb|859x859px|Gantt chart for project planning.]]

== '''Research papers''' ==

To start off the project we did an in-depth literature study on firefighting robots and related subjects. The table below lists the titles of the relevant research papers, each with a short summary to give a general idea of its contents and labels to make it easier to find papers on specific subjects later in the project. Note that this does not include all sources used; the full list of references can be found at the bottom of the page.

{| class="wikitable"
|+Research papers
!№
!Title
!Labels
!Summary

|-
|1
|A Victims Detection Approach for Burning Building Sites Using Convolutional Neural Networks<ref name=":0">Jaradat, F. B., & Valles, D. (2020). A Victims Detection Approach for Burning Building Sites Using Convolutional Neural Networks. ''2020 10th Annual Computing And Communication Workshop And Conference (CCWC)''. https://doi.org/10.1109/ccwc47524.2020.9031275</ref>
|Victim detection, Convolutional neural network.
|The authors trained a convolutional neural network to detect people and pets in thermal IR images, using a dataset they gathered themselves. The networks reached high accuracy (One-Step CNN 96.3%, Two-Step CNN 94.6%).<ref name=":0" />

|-
|2
|Early Warning Embedded System of Dangerous Temperature Using Single exponential smoothing for Firefighters Safety<ref name=":1">Jaradat, F., & Valles, D. (2018). Early Warning Embedded System of Dangerous Temperature Using Single exponential smoothing for Firefighters. . . ''ResearchGate''. https://www.researchgate.net/publication/327209779_Early_Warning_Embedded_System_of_Dangerous_Temperature_Using_Single_exponential_smoothing_for_Firefighters_Safety</ref>
|Heat detection, Firefighter assistance.
|Proposes adding a temperature sensor to a firefighter's suit that warns the firefighter when they are in a very hot place (above 200 °C).<ref name=":1" />

|-
|3
|A method to accelerate the rescue of fire-stricken victims<ref name=":2">Lin, Z., & Tsai, P. (2024). A method to accelerate the rescue of fire-stricken victims. ''Expert Systems With Applications'', ''238'', 122186. https://doi.org/10.1016/j.eswa.2023.122186</ref>
|Victim search method.
|This paper describes an approach for locating victims and areas of danger in burning buildings. A floor plan of the burning building is translated into a grid so that the robot can navigate the building. A graph with nodes representing each of the rooms of the building is then generated from the grid to simplify the calculations needed for pathing. To estimate the probability that a victim is present in a room, the algorithm relies on crowdsourced information normalized using fuzzy logic and on the temperature of a region as detected by the robot's thermal sensors. The authors found that their approach was significantly faster at locating survivors than strategies currently employed by firefighters and strategies devised by other researchers.

Note: This paper uses the software PyroSim for its simulation. PyroSim offers a 30-day free trial, so it might be possible to use it for our own simulation. This needs further research into PyroSim.<ref name=":2" />

|-
|4
|The role of robots in firefighting<ref name=":3">Bogue, R. (2021). The role of robots in firefighting. ''Industrial Robot-an International Journal'', ''48''(2), 174–178. https://doi.org/10.1108/ir-10-2020-0222</ref>
|Overview current robots.
|This paper describes the State of the Art in terrestrial and aerial robots for firefighting. At the same time the paper indicates that there is a general difficulty in the autonomy of such robots, mainly due to difficulties in visualizing the operation environment. There are, however, several projects aiming to address this issue and allow such robots to operate with more autonomy.<ref name=":3" />

|-
|5
|SLAM for Firefighting Robots: A Review of Potential Solutions to Environmental Issues<ref name=":4">Hong, Y. (2022). SLAM for Firefighting Robots: A Review of Potential Solutions to Environmental Issues. ''2022 5th World Conference On Mechanical Engineering And Intelligent Manufacturing (WCMEIM)''. https://doi.org/10.1109/wcmeim56910.2022.10021457</ref>
|Simultaneous localization and mapping.
|This paper aims to address some of the unfavorable conditions of fire scenes, like high temperatures, smoke, and the lack of a stable light source. It reviews solutions to similar problems in other fields and analyzes their characteristics based on previous publications.

Based on the analysis in this paper, a combination of laser-based and radar-based methods is considered more robust against the effects of smoke. For darkness, combining laser-based methods with image capture and processing is considered the best approach. Thermal imaging technology is also suggested for addressing high temperatures.<ref name=":4" />

|-
|6
|A fire reconnaissance robot based on slam position, thermal imaging technologies, and AR display<ref name=":5">Li, S., Feng, C., Niu, Y., Shi, L., Wu, Z., & Song, H. W. (2019). A Fire Reconnaissance Robot Based on SLAM Position, Thermal Imaging Technologies, and AR Display. ''Sensors'', ''19''(22), 5036. https://doi.org/10.3390/s19225036</ref>
|Reconnaissance robot, Firefighter assistance, Thermal imaging, Simultaneous localization and mapping, Augmented reality.
|Presents the design of a fire reconnaissance robot that focuses on fire inspection: its function is to pass important fire information to firefighters, not to suppress the fire directly. It can be used to assist detection and rescue under fire conditions. It adopts infrared thermal imaging technology to detect the fire environment, uses SLAM (simultaneous localization and mapping) technology to construct a real-time map of the environment, and utilizes mixed A* and D* algorithms for path planning and obstacle avoidance. The obtained information, such as video, is transferred simultaneously to AR (Augmented Reality) goggles worn by the firefighters, so that they can focus on the rescue tasks with their hands free.<ref name=":5" />

|-
|7
|Design of intelligent fire-fighting robot based on multi-sensor fusion and experimental study on fire scene patrol<ref name=":6">Zhang, S., Yao, J., Wang, R., Liu, Z., Ma, C., Wang, Y., & Zhao, Y. (2022). Design of intelligent fire-fighting robot based on multi-sensor fusion and experimental study on fire scene patrol. ''Robotics And Autonomous Systems'', ''154'', 104122. https://doi.org/10.1016/j.robot.2022.104122</ref>
|Firefighting robot, Path planning, Fire source detection, Thermal imaging, Binocular vision camera.
|This paper presents the design of an intelligent firefighting robot based on multi-sensor fusion technology. The robot is capable of autonomous patrolling and firefighting. The paper mainly studies path planning and fire source identification, which are important aspects of robotic operation. A path-planning mechanism based on an improved version of ACO (Ant Colony Optimization) is presented to address the tendency of basic ACO to converge to a local solution. It also proposes a method to reduce the number of inflection points during movement to improve the motion and speed of the robot.

For fire source detection, it combines a binocular vision camera and an infrared thermal imager to detect and locate the fire source.

It also uses a ROS (Robot Operating System) based simulation to evaluate the path-planning algorithms.<ref name=":6" />

|-
|8
|Firefighting robot with deep learning and machine vision<ref name=":7">Dhiman, A., Shah, N., Adhikari, P., Kumbhar, S., Dhanjal, I. S., & Mehendale, N. (2021). Firefighting robot with deep learning and machine vision. ''Neural Computing And Applications'', ''34''(4), 2831–2839. https://doi.org/10.1007/s00521-021-06537-y</ref>
|Firefighting robot, Deep learning.
|The authors built a firefighting robot that is capable of extinguishing fires caused by electric appliances using deep learning and machine vision. Fires are identified using a combination of AlexNet and ImageNet, resulting in a high accuracy (98.25% and 92% respectively).<ref name=":7" />

|-
|9
|An autonomous firefighting robot<ref name=":8">Hassanein, A., Elhawary, M., Jaber, N., & El-Abd, M. (2015). An autonomous firefighting robot. ''IEEE''. https://doi.org/10.1109/icar.2015.7251507</ref>
|Firefighting robot, Fire detection, Infrared sensor, Ultrasonic sensor.
|The authors built an autonomous firefighting robot that uses infrared and ultrasonic sensors to navigate and a flame sensor to detect fires.<ref name=":8" />

|-
|10
|Real Time Victim Detection in Smoky Environments with Mobile Robot and Multi-sensor Unit Using Deep Learning<ref name=":9">Gelfert, S. (2023). Real Time Victim Detection in Smoky Environments with Mobile Robot and Multi-sensor Unit Using Deep Learning. In ''Lecture notes in networks and systems'' (pp. 351–364). https://doi.org/10.1007/978-3-031-26889-2_32</ref>
|Victim detection, Thermal imaging, Remote controlled.
|A low-resolution thermal camera is mounted on a remote-controlled robot, and a model is trained to detect victims. The victim detection model has a moderately high detection rate of 75% in dense smoke.<ref name=":9" />

|-
|11
|Thermal, Multispectral, and RGB Vision Systems Analysis for Victim Detection in SAR Robotics<ref name=":10">Ulloa, C. C., Orbea, D., Del Cerro, J., & Barrientos, A. (2024). Thermal, Multispectral, and RGB Vision Systems Analysis for Victim Detection in SAR Robotics. ''Applied Sciences'', ''14''(2), 766. https://doi.org/10.3390/app14020766</ref>
|Victim detection robot, multispectral imaging; primarily infrared and RGB
|Compares the effectiveness of three different cameras for victim detection: an RGB, a thermal and a multispectral camera.<ref name=":10" />

|-
|12
|Sensor fusion based seek-and-find fire algorithm for intelligent firefighting robot<ref name=":11">Kim, J., Keller, B., & Lattimer, B. Y. (2013). Sensor fusion based seek-and-find fire algorithm for intelligent firefighting robot. ''IEEE''. https://doi.org/10.1109/aim.2013.6584304</ref>
|Fire detection, Algorithm
|Introduces an algorithm for a firefighting robot that finds fires using a long-wave infrared camera, an ultraviolet radiation sensor and LIDAR.<ref name=":11" />

|-
|13
|On the Enhancement of Firefighting Robots using Path-Planning Algorithms<ref name=":12">Ramasubramanian, S., & Muthukumaraswamy, S. A. (2021). On the Enhancement of Firefighting Robots using Path-Planning Algorithms. ''SN Computer Science'', ''2''(3). https://doi.org/10.1007/s42979-021-00578-9</ref>
|Path planning algorithm
|Tests the performance of several path-planning algorithms to allow a firefighting robot to move more efficiently.<ref name=":12" />

|-
|14
|An Indoor Autonomous Inspection and Firefighting Robot Based on SLAM and Flame Image Recognition<ref name=":13">Li, S., Yun, J., Feng, C., Gao, Y., Yang, J., Sun, G., & Zhang, D. (2023). An Indoor Autonomous Inspection and Firefighting Robot Based on SLAM and Flame Image Recognition. ''Fire'', ''6''(3), 93. https://doi.org/10.3390/fire6030093</ref>
|Autonomous firefighting robot, Deep learning algorithm
|Presents a firefighting robot that maps the area using an algorithm and uses a deep-learning-based flame detection technology utilizing a LIDAR.<ref name=":13" />

|-
|15
|Human Presence Detection using Ultra Wide Band Signal for Fire Extinguishing Robot<ref name=":14">Bandala, A. A., Sybingco, E., Maningo, J. M. Z., Dadios, E. P., Isidro, G. I., Jurilla, R. D., & Lai, C. (2020). Human Presence Detection using Ultra Wide Band Signal for Fire Extinguishing Robot. ''IEEE''. https://doi.org/10.1109/tencon50793.2020.9293893</ref>
|Victim detection, Remote control
|A remotely controlled robot using ultra-wide band radar detects humans while fire and smoke are present, based on the person's respiration movement.<ref name=":14" />

|-
|16
|Firefighting Robot Stereo Infrared Vision and Radar Sensor Fusion for Imaging through Smoke<ref name=":15">Kim, J., Starr, J. W., & Lattimer, B. Y. (2014). Firefighting Robot Stereo Infrared Vision and Radar Sensor Fusion for Imaging through Smoke. ''Fire Technology'', ''51''(4), 823–845. https://doi.org/10.1007/s10694-014-0413-6</ref>
|Real time object identification, Sensor fusion
|Sensor fusion of stereo IR and FMCW radar was developed in order to improve the accuracy of object identification. This improvement ensures that the imagery shown is far more accurate while still maintaining real-time updates of the environment.<ref name=":15" />

|-
|17
|Global Path Planning for Fire-Fighting Robot Based on Advanced Bi-RRT Algorithm<ref name=":16">Tong, T., Guo, F., Wu, X., Dong, H., Liu, O., & Yu, L. (2021). Global Path Planning for Fire-Fighting Robot Based on Advanced Bi-RRT Algorithm*. ''IEEE''. https://doi.org/10.1109/iciea51954.2021.9516153</ref>
|Path planning algorithm
|Introduces a bidirectional fast search algorithm based on violent matching and regression analysis. Violent matching allows for direct path search when there are few obstacles, the other segments ensure that the total path search is more efficient and less computationally heavy.<ref name=":16" />

|-
|18
|Round-robin study of ''a priori'' modelling predictions of the Dalmarnock Fire Test One<ref name=":17">Rein, G., Torero, J. L., Jahn, W., Stern-Gottfried, J., Ryder, N. L., Desanghere, S., Lázaro, M., Mowrer, F. W., Coles, A., Joyeux, D., Alvear, D., Capote, J., Jowsey, A., Abecassis-Empis, C., & Reszka, P. (2009). Round-robin study of a priori modelling predictions of the Dalmarnock Fire Test One. ''Fire Safety Journal'', ''44''(4), 590–602. https://doi.org/10.1016/j.firesaf.2008.12.008</ref>
|Fire modelling comparison
|Compares the results of different types of fire simulation models with a real-world experiment.<ref name=":17" />

|-
|19
|Summary of recommendations from the National Institute for Occupational Safety and Health Fire Fighter Fatality Investigation and Prevention Program<ref name=":18">Hard, D. L., Marsh, S. M., Merinar, T. R., Bowyer, M. E., Miles, S. T., Loflin, M. E., & Moore, P. W. (2019). Summary of recommendations from the National Institute for Occupational Safety and Health Fire Fighter Fatality Investigation and Prevention Program, 2006–2014. ''Journal Of Safety Research'', ''68'', 21–25. https://doi.org/10.1016/j.jsr.2018.10.013</ref>
|Most common causes of death of firefighters
|Summary of the most common causes of death for firefighters. Cases were separated by nature and cause of death. They were also separated into 10 total categories as well as 2 major categories (medical and trauma).<ref name=":18" />

|-
|20
|The current state and future outlook of rescue robotics<ref name=":19">Delmerico, J. A., Mintchev, S., Giusti, A., Gromov, B., Melo, K., Havaš, L., Cadena, C., Hutter, M., Ijspeert, A. J., Floreano, D., Gambardella, L. M., Siegwart, R., & Scaramuzza, D. (2019). The current state and future outlook of rescue robotics. ''Journal Of Field Robotics'', ''36''(7), 1171–1191. https://doi.org/10.1002/rob.21887</ref>
|Overview current robots and what needs to be improved upon
|Discusses the main requirements and challenges that need to be solved by search and rescue robots. Generally applicable to our firefighting robot. The most important aspects of search and rescue robots are: ease of use, autonomy, information gathering and use as tools.<ref name=":19" />

|-
|21
|Smart Fire Alarm System with Person Detection and Thermal Camera<ref name=":20">Ma, Y., Feng, X., Jiao, J., Peng, Z., Qian, S., Xue, H., & Li, H. (2020). Smart Fire Alarm System with Person Detection and Thermal Camera. In ''Lecture Notes in Computer Science'' (pp. 353–366). https://doi.org/10.1007/978-3-030-50436-6_26</ref>
|Fire alarm, Person detection in fire, Heat detection
|Discusses a smart fire alarm system that distinguishes between heat when people are present and heat when people aren't present.<ref name=":20" />

|-
|22
|See through smoke: robust indoor mapping with low-cost mmWave radar<ref name=":21">Lu, C. X., La Rosa, S., Zhao, P., Wang, B., Chen, C., Stankovic, J. A., Trigoni, N., & Markham, A. (2019). See Through Smoke: Robust Indoor Mapping with Low-cost mmWave Radar. ''arXiv (Cornell University)''. https://doi.org/10.48550/arxiv.1911.00398</ref>
|Millimeter wave radar; Indoor mapping; Emergency response; Mobile robotics
|Shows that, using low-cost mmWave radar, a generative adversarial neural network can reliably reconstruct a grid map of a room.<ref name=":21" />

|-
|23
|Analysis and design of human-robot swarm interaction in firefighting<ref name=":22">Naghsh, A. M., Gancet, J., Tanoto, A., & Roast, C. (2008). Analysis and design of human-robot swarm interaction in firefighting. ''IEEE''. https://doi.org/10.1109/roman.2008.4600675</ref>
|Human-robot interaction, human-robot swarm approach to firefighting, Existing robot-human interaction in firefighting
|Describes the cooperation between robots and firefighters during a firefighting mission, including mission planning and execution. The premise is that robots can add sensing capabilities to improve awareness and efficiency in obscured environments.<ref name=":22" />

|-
|24
|Using directional antennas as sensors to assist fire-fighting robots in large scale fires<ref name=":23">Min, B., Matson, E. T., Smith, A., & Dietz, J. E. (2014). Using directional antennas as sensors to assist fire-fighting robots in large scale fires. ''IEEE''. https://doi.org/10.1109/sas.2014.6798976</ref>
|Establish communications via robots, Disasters and firefighting
|Describes how to establish communication networks between robots in disastrous fire situations using directional antennas, so that robots can be deployed to extinguish fires and reach places which firefighters can't easily reach.<ref name=":23" />

|-
|25
|Design And Implementation Of Autonomous Fire Fighting Robot<ref name=":24">Reddy, M. S. (2021). Design and implementation of autonomous fire fighting robot. ''Turkish Journal Of Computer And Mathematics Education (TURCOMAT)'', ''12''(12), 2437–2441. https://turcomat.org/index.php/turkbilmat/article/view/7836</ref>
|Fire prevention, Heat sensor, Extinguishing robot
|Describes a robot that can be used to go into fires and reach places that firefighters would be unable to reach safely.<ref name=":24" />

|-
|26
|NL-based communication with firefighting robots<ref name=":25">Hong, J. H., Min, B., Taylor, J. M., Raskin, V., & Matson, E. T. (2012). NL-based communication with firefighting robots. ''IEEE''. https://doi.org/10.1109/icsmc.2012.6377941</ref>
|Overview existing robots, Communication robot-human, Natural language
|Describes different ways in which firefighters and robots can work together during fires, and a robot that is meant to help firefighters during a fire.<ref name=":25" />

|-
|27
|Experimental and computational study of smoke dynamics from multiple fire sources inside a large-volume building<ref name=":26">Vigne, G., Węgrzyński, W., Cantizano, A., Ayala, P., Rein, G., & Gutiérrez-Montes, C. (2020). Experimental and computational study of smoke dynamics from multiple fire sources inside a large-volume building. ''Building Simulation'', ''14''(4), 1147–1161. https://doi.org/10.1007/s12273-020-0715-1</ref>
|Smoke dynamics, Statistical analysis, Smoke effects
|Summarizes results from a fire simulation of 4 fire sources using the computational fluid dynamics code FDS (Fire Dynamics Simulator, v6.7.1) and compares those results to a single-source simulation, demonstrating the importance of the number of and position of fire sources in a simulation.<ref name=":26" />

|-
|28
|Numerical Analysis of Smoke Spreading in a Medium-High Building under Different Ventilation Conditions<ref name=":27">Salamonowicz, Z., Majder–Łopatka, M., Dmochowska, A., Piechota-Polańczyk, A., & Polańczyk, A. (2021). Numerical Analysis of Smoke Spreading in a Medium-High Building under Different Ventilation Conditions. ''Atmosphere'', ''12''(6), 705. https://doi.org/10.3390/atmos12060705</ref>
|Smoke dynamics, Statistical analysis
|Uses simulation to compare smoke spreading in medium-high buildings under different ventilation conditions, and draws conclusions on important points to consider in the design of a ventilation system for such buildings, such as smoke inlets and outlets and high-pressure zones.<ref name=":27" />

|-
|29
|A REVIEW OF RECENT RESEARCH IN INDOOR MODELLING & MAPPING<ref name=":28">Gündüz, M. Z., Işıkdağ, Ü., & Başaraner, M. (2016). A REVIEW OF RECENT RESEARCH IN INDOOR MODELLING & MAPPING. ''The International Archives Of The Photogrammetry, Remote Sensing And Spatial Information Sciences'', ''XLI-B4'', 289–294. https://doi.org/10.5194/isprs-archives-xli-b4-289-2016</ref>
|Indoor, Mapping, Modelling, Navigation
|Summarizes the last 10 years of research on indoor modelling and mapping. Describes a variety of technologies in use, including laser scanners, cameras and indoor data models such as IFC, CityGML and IndoorGML. It also provides insight into recent navigation and routing algorithms, with emphasis on dynamic environments.<ref name=":28" />

|-
|30
|Developing a simulator of a mobile indoor navigation application as a tool for cartographic research<ref name=":29">Łobodecki, J., & Gotlib, D. (2022). Developing a simulator of a mobile indoor navigation application as a tool for cartographic research. ''Polish Cartographical Review'', ''54''(1), 108–122. https://doi.org/10.2478/pcr-2022-0008</ref>
|Virtual model, Indoor navigation, Indoor mapping
|Documents the process of creating a proof of concept of a virtual indoor environment using Unreal Engine, aimed at improving the indoor cartographic process. While still a prototype, the paper can be used to derive useful methods for building simulation and navigation.<ref name=":29" />

|-
|31
|Real time simulation of fire extinguishing scenarios<ref name=":30">Maschek, M. (2010). ''Real Time Simulation of Fire Extinguishing Scenarios'' [Technical university wien]. https://www.cg.tuwien.ac.at/research/publications/2010/maschek-2010-rts/maschek-2010-rts-Paper.pdf</ref>
|Virtual model
|Describes software and methodology for simulating fire response scenarios. Demonstrates implementation of FDS with Unreal Engine to generate a fire scenario simulation.<ref name=":30" />

|- | |||

|32 | |||

|Fire Fighting Mobile Robot: State of the Art and Recent Development<ref name=":31">Tan, C. F., Liew, S., Alkahari, M. R., Ranjit, S., Said, Chen, W., Rauterberg, G., & Sivakumar, D. (2013). Fire Fighting Mobile Robot: State of the Art and Recent Development. ''Australian Journal Of Basic And Applied Sciences'', ''7''(10), 220–230. http://www.idemployee.id.tue.nl/g.w.m.rauterberg/publications/AJBAS2013journal-a.pdf</ref> | |||

|Overview of current robots

|This paper gives an overview of some of the state of the art fire fighting robots that are used today.<ref name=":31" /> | |||

|} | |||

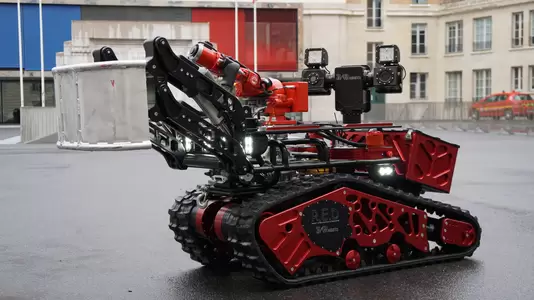

== '''State of the Art Robots''' == | |||

Because firefighting is still a dangerous job to this day, a lot of effort is put into developing ways to minimize the risk for firefighters. In this age of technology, this means that many different firefighting robots are being developed to help firefighters in this delicate task. To see what developments have already been made in this field, we give an overview of the current landscape of state-of-the-art firefighting robots by describing a number of robots that are in use today. This paints a clear picture of which technologies exist right now and what is already being done. This way we can try to find new and innovative angles on firefighting robots and avoid designing a robot that is already deployed in the field.

=== LUF60 === | |||

====== Main Characteristics: ====== | |||

[[File:LUF60.png|thumb|The LUF 60 fire fighting robot<ref>''LUF 60 - Wireless remote control fire fighting machine — Steemit''. (z.d.). Steemit. https://steemit.com/steemhunt/@memesdaily/luf-60-wireless-remote-control-fire-fighting-machine</ref>]] | |||

Diesel powered, Fire extinguishing, Remote controlled | |||

====== Description: ====== | |||

This is a remote controlled firefighting robot that is designed to extinguish fires. It consists of an air blower that blows a beam of water droplets (to a distance of approximately 60 meters). This water jet can deliver up to 2400 liters of water per minute. If needed it can also blow a beam of foam (to a distance of approximately 35 meters). It is designed to operate in difficult conditions and can, by remote control, be sent directly to the fire source. To accomplish this the robot is able to remove obstacles and climb stairs (up to a 30 degree angle)<ref name=":31" /><ref name=":32">''LUF 60 – LUF GmbH''. (z.d.). https://www.luf60.at/en/extinguishing-support/fire-fighting-robot-luf-60/</ref>. It should be noted that LUF produces more robots similar to this one<ref>''Extinguishing Support – LUF GmbH''. (z.d.). https://www.luf60.at/en/extinguishing-support/</ref>.

====== Advantages: ====== | |||

* Minimizes risk for firefighters (remote controlled) and designed to also clear obstructions from a distance. | |||

* Good extinguishing capabilities (up to 2400 liters per minute).

====== Disadvantages: ====== | |||

* Its size exceeds that of a standard door, restricting movement. Its dimensions are 2.33m x 1.35m x 2.00-2.50m (length x width x height). A standard door is 0.80-0.90m x 2.00-2.10m (width x height).

* Its high weight of 2200 kg results in low mobility. The maximum speed is 4.5 km/h, which is around average walking speed<ref name=":32" />.

=== EHang EH216-F === | |||

[[File:EHangEH216F.jpg|thumb|EHang EH216-F firefighting drone <ref>''EHang Announced Completion of EH216F’s Technical Examination by NFFE'' (z.d.). EHang. https://www.ehang.com/news/798.html</ref>]] | |||

====== Main Characteristics: ====== | |||

Battery powered, Fire extinguishing, Drone, Remote or directly controlled | |||

====== Description: ====== | |||

This is a firefighting robot that can be controlled either remotely or manually by a pilot. The main focus of this robot is to extinguish fires in high-rise buildings that cannot be extinguished from ground level, due to the limited range of fire hoses<ref name=":3" />. The robot has a maximum flight altitude of 600 m, is able to carry a single person and has a maximum cruising speed of 130 km/h. The drone carries 100 l of firefighting liquid, with the spray lasting for 3.5 minutes, as well as 6 fire extinguishing projectiles containing fire extinguishing powder and a window breaker. The drone is 7.33 m in length, 5.61 m in width and has a height of 2.2 m<ref name=":33">''EHang EH216-F (production model)''. (n.d.). https://evtol.news/ehang-eh216-f</ref>.

====== Advantages: ====== | |||

* Is able to reach and extinguish fires that grounded firefighting equipment cannot reach. | |||

* Can extinguish fires from outside of the buildings, thus minimizing the risk for firefighters. | |||

* Has very high mobility, meaning it can quickly respond to fires. | |||

====== Disadvantages: ====== | |||

* Can only carry a set amount of fire extinguishing equipment, thus limiting its capabilities.

* Its flight time is approximately 21 minutes, thus limiting deployment range and deployment time<ref name=":33" />.

=== '''THERMITE RS3''' === | |||

[[File:THERMITE RS3.png|thumb|The THERMITE RS3 firefighting robot<ref>''THERMITE®'' (z.d.). https://www.howeandhowe.com/civil/thermite</ref>]] | |||

====== Main Characteristics: ====== | |||

Diesel powered, Fire extinguishing, Remote controlled | |||

====== Description: ====== | |||

The THERMITE RS3 is a firefighting robot designed to extinguish fires from the outside of a building. It is a remote controlled, diesel powered robot equipped with a water cannon that is capable of shooting a beam of water 100 meters horizontally and 50 meters vertically, and it can use foam if needed. It was also adopted (relatively recently, in 2020) by the Los Angeles Fire Department<ref>''LAFD Debuts the RS3: First Robotic Firefighting Vehicle in the United States | Los Angeles Fire Department''. (z.d.). https://www.lafd.org/news/lafd-debuts-rs3-first-robotic-firefighting-vehicle-united-states</ref>. It can also be noted that Howe & Howe produces more robots with similar functionality<ref name=":34">''THERMITE®'' (z.d.-b). https://www.howeandhowe.com/civil/thermite</ref>.

====== Advantages: ======

* Good extinguishing capabilities (up to 9464 liters per minute).

* Good mobility for its size (up to 13 km/h and ability to climb 35% slope while weighing 1588 kg). | |||

====== Disadvantages: ====== | |||

* The robot was not designed to go inside buildings, so it has limited adaptability. Its dimensions are 2.14m x 1.66m x 1.63m (length x width x height). A standard door is 0.80-0.90m x 2.00-2.10m (width x height)<ref name=":34" />.

=== '''COLOSSUS''' === | |||

[[File:COLOSSUS.png|thumb|The COLOSSUS fire fighting robot<ref name=":36">''DPG Media Privacy Gate''. (z.d.). https://www.ad.nl/binnenland/robot-colossus-bleef-de-notre-dame-koelen-van-binnenuit~aee4510a2/</ref>]] | |||

====== Main Characteristics: ====== | |||

Battery powered, Fire extinguishing, Reconnaissance, Remote controlled | |||

====== Description: ====== | |||

The COLOSSUS is a firefighting robot developed to fight fires in both indoor and outdoor situations. It is a remotely controlled robot with some AI integration that provides driving assistance. The main purpose of this robot is to extinguish fires in places too dangerous for firefighters to go. The robot is battery powered (batteries last up to 12 hours) and has 9 interchangeable modules that can be used for different situations. Because of these different modules it is very adaptable and can be used for tasks ranging from reconnaissance to victim extraction. A very notable deployment was alongside the Paris firefighters during the Notre-Dame fire in 2019, where the robots were used to cool down the inside of the cathedral where firefighters couldn't go because of falling debris<ref name=":36" />. The robot is now being used in 15 different countries. Shark Robotics produces more similar robots<ref name=":35">''Colossus advanced firefighting robot | Shark Robotics''. (z.d.-b). https://www.shark-robotics.com/robots/Colossus-firefighting-robot</ref><ref>''Shark Robotics - Leader in safety robotics''. (z.d.). https://www.shark-robotics.com/</ref>.

====== Advantages: ====== | |||

* Relatively long operation time despite being battery powered (up to 12 hours in operational situations). | |||

* Capable of withstanding high temperatures (also waterproof and dust-proof).

* Can be deployed outdoors and indoors (minimizes risk for firefighters). The robot's dimensions are 1.60m x 0.78m x 0.76m (length x width x height). It can also climb slopes of up to 40 degrees.

* Different mountable modules for different situations (for instance 180 degree video turret or wounded people transport stretcher) | |||

* Good extinguishing capabilities (3000 liters per minute) | |||

====== Disadvantages: ====== | |||

* The robot has an extremely low speed of up to 3.5 km/h (which is slower than average walking speed)<ref name=":35" />.

=== '''X20 Quadruped Robot Dog''' === | |||

[[File:X30.png|thumb|The X20 Quadruped Robot Dog<ref name=":37">''Uncover Myriad Uses of Robotics across varied industries- DEEP Robotics''. (z.d.). https://www.deeprobotics.cn/en/index/industry.html</ref>]] | |||

====== Main Characteristics: ====== | |||

Battery powered, Reconnaissance, Autonomous and Remote controlled, Quadruped | |||

====== Description: ====== | |||

The X20 Quadruped Robot Dog is a fully autonomous robot (with remote control capabilities) made to traverse difficult terrain such as ruins, piles of rubble and other complex terrains using its four legs. Furthermore, the robot was developed to detect hazards using its different sensors and cameras. It comes equipped with a bi-spectrum PTZ camera, a dynamic infrared camera, a gas sensor, sound pickup and LiDAR. Using a SLAM algorithm it measures its environment and builds a 3D map of it. It also comes equipped with a lightweight robotic arm. The main job of this robot in a disaster area is reconnaissance, the results of which are sent directly back to the digital system. It can pick up sound from victims that it finds and make calls with them, and it can calculate the best pathways to safety<ref name=":38">''X20 Hazard Detection & Rescue Solution''. (z.d.). Deeprobotics. https://deep-website.oss-cn-hangzhou.aliyuncs.com/file/X20%20Hazard%20Detection%20%26%20Rescue%20Solution.pdf</ref>. Deep Robotics has produced more similar robots and even a newer model (the X30); however, the X20 was specifically marketed towards rescue operations, which is why we chose to look further into this older model (2021)<ref name=":37" /><ref>''DEEP Robotics - Global Quadruped Robot Leader''. (z.d.-b). https://www.deeprobotics.cn/en/index.html</ref>.

====== Advantages: ====== | |||

* The robot is relatively light (53kg) and can go up to 15 km/h*. | |||

* The robot can traverse difficult terrain (it is able to traverse 20cm high obstacles and climb stairs and 30 degree slopes) and is also capable of operating indoors as well as outdoors. The robot's dimensions are 0.95m x 0.47m x 0.70m (length x width x height).

* The robot is fully autonomous and can map the area as well as detect temperatures, toxic gases and victims. This makes it a good reconnaissance robot and makes the job safer for firefighters.

====== Disadvantages: ====== | |||

* The robot is battery powered and only lasts 2-4 hours. | |||

* Even though it can work under extreme conditions such as downpours, dust storms, frigid temperatures and hail, it is not specified whether it can work under extreme heat<ref name=":39">''X20: The Ultimate Quadruped Bot series for Industrial Use - DEEP Robotics''. (z.d.). https://www.deeprobotics.cn/en/index/product.html</ref>.

<nowiki>*</nowiki>It should be noted that some specifications of the robot varied significantly on the manufacturer's own website<ref name=":39" /><ref name=":38" />.

=== '''SkyRanger R70''' === | |||

[[File:SkyRanger R70.png|thumb|The SkyRanger R70<ref>''SkyRanger® R70 | Teledyne FLIR''. (z.d.). https://www.flir.eu/products/skyranger-r70/?vertical=uas&segment=uis</ref>]] | |||

====== Main Characteristics: ====== | |||

Battery powered, Drone, Reconnaissance, Remote controlled, Semi-autonomous | |||

====== Description: ====== | |||

The SkyRanger R70 was developed for a wide range of missions including, but not restricted to, fire scenes and search and rescue operations. The main goal of the drone is to give an unobstructed, wider view of what is happening in such a situation. It comes equipped with a detailed thermal camera, making it ideal for analyzing fires from a safe distance. The drone also carries a computer, making it capable of using AI for object detection and classification. It is not specified, but it is implied, that the drone is only meant for outdoor use, meaning that no scouting inside buildings can be done with this robot<ref name=":40">''Support for SkyRanger R70 | Teledyne FLIR''. (z.d.-b). https://www.flir.com/support/products/skyranger-r70/?vertical=uas&segment=uis#Documents</ref>. Teledyne FLIR makes more similar drones, thermal cameras and other products<ref>''Thermal Imaging, Night Vision and Infrared Camera Systems | Teledyne FLIR''. (z.d.). https://www.flir.eu/</ref>.

====== Advantages: ====== | |||

* Being a drone this robot is very mobile, with top speeds of 50 km/h. | |||

* Can give a very detailed top down view, including thermal vision. | |||

====== Disadvantages: ====== | |||

* The drone is only meant for reconnaissance meaning that it can only carry and deliver payloads up to 2 kg. | |||

* Its battery only lasts up to 50 minutes. | |||

* Can only operate in temperatures up to 50 degrees Celsius, meaning that it has to keep a safe distance from the fire<ref name=":40" />. | |||

== '''User analysis''' == | |||

The end goal for this project is to deliver a simulation of a remote controlled mapping robot that is capable of entering buildings that are on fire and providing mapping information regarding the layout of the building and the location of obstacles (including fire) and possibly victims.

The chosen programming environment for this task is Unity.

For the realization of this simulation the following MoSCoW list has been constructed:

=== Must have: === | |||

* A simulation of a remote controlled firefighting robot in a fire scenario. | |||

* Simulate the ability for a robot to turn the information from the 3D environment into a 2D map. | |||

* Realistic fire and physics mechanics in the simulation. | |||

* A simulation of the ability to detect fires and add them to the map (making a heat map). | |||

=== Should have: === | |||

* A simulation of the ability to detect victims and add them to the map. | |||

* A simulation of the ability to detect obstacles and add this information to the map. | |||

=== Could have: === | |||

* The ability to communicate with or help victims. | |||

* The ability to work (fully) autonomously. | |||

=== Will not have: === | |||

* The ability to detect smoke and make a smoke map. | |||

* The ability to open doors. | |||

* The ability to rescue/extract victims. | |||

* The ability to fight fires. | |||

* The ability to carry out complex communication and interaction beyond just data sharing.

== '''Reasoning behind chosen objectives''' == | |||

For this project we wanted to deliver a proof of concept for (parts of) a mapping robot to be used by firefighters in buildings. We decided to do this by using a simulation for several reasons:

* Our group's composition: as our group contains 4 computer scientists, making a programmed simulation costs less time learning new skills, so we can spend more time on the proof of concept itself.

* A simulation makes it easier to test and tweak the robot's software. As we don't have the resources to test in an actual burning building, a controlled fire in a lab would be the best possible way to test a live robot. This still takes time to set up and is harder to do repeatedly. Testing a simulation model is easier, and so gives us more opportunity to test and tweak the robot.

* Making a simulation instead of a real model gives the benefit of being able to ignore the hardware side of the robot. When building a real model it must be made by using hardware and focus needs to be diverted to getting the right hardware in place. With a simulation we can focus on the parts of the robot we are going to give a proof of concept for. | |||

We decided to make this simulation in Unity. Some of the options we had, with their advantages and disadvantages, are listed in the next chapter of this wiki. We ultimately decided between Unity and ROS and chose Unity for these reasons:

* ROS is primarily supported on Linux, which makes installing, setting up and using it a lot more difficult and time-consuming

* Unity has a lot more documentation and ready-made code than ROS, so in Unity we have more resources available to help us build the simulation

* Even though ROS has more ready-made features aimed at robot simulation, Unity has all the tools we need to build our simulation

For our simulation we made a MoSCoW list that shows what is going to be included in the simulation. | |||

== '''Simulator Selection''' == | |||

=== Main choices for simulation environments: === | |||

==== Core simulation environment: ==== | |||

* Multi-agent frameworks: NetLogo, RePast | |||

* ROS | |||

* Game Engine - Unreal Engine/Unity | |||

* FDS combined with an agent model | |||

* FDS combined with ROS/Unreal/Unity | |||

==== Fire simulation: ==== | |||

* Fire Dynamics Simulator (FDS) | |||

* Custom fire simulation | |||

===== Discarded: ===== | |||

Swarm - outdated and inferior to NetLogo and RePast | |||

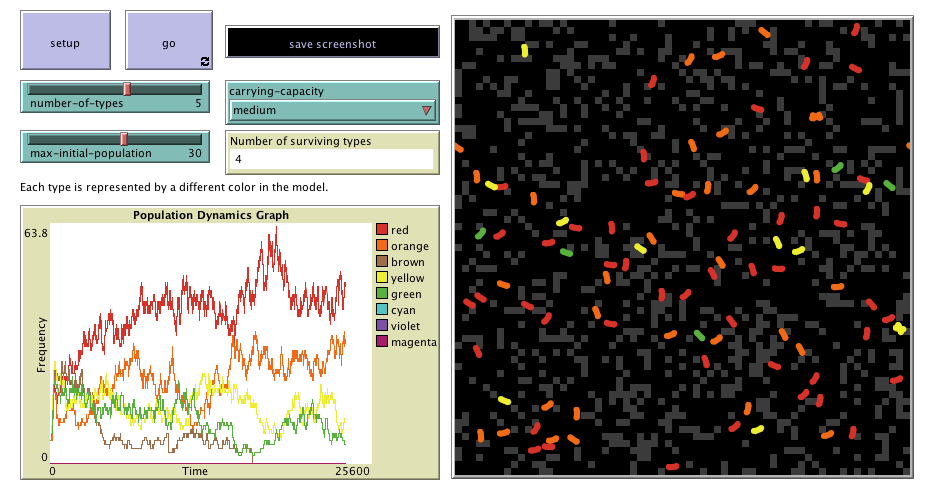

=== NetLogo === | |||

[[File:NetLogo.png|thumb|NetLogo<ref>''NetLogo Models Library: GenEVo 2 Genetic Drift''. (z.d.). https://ccl.northwestern.edu/netlogo/models/GenEvo2GeneticDrift</ref>]] | |||

====== Advantages: ====== | |||

* Simple and very versatile | |||

* Manual control possible | |||

* Well established in agent simulation | |||

====== Disadvantages: ====== | |||

* No integrated fire/smoke simulation | |||

* Cannot integrate actual sensor behavior | |||

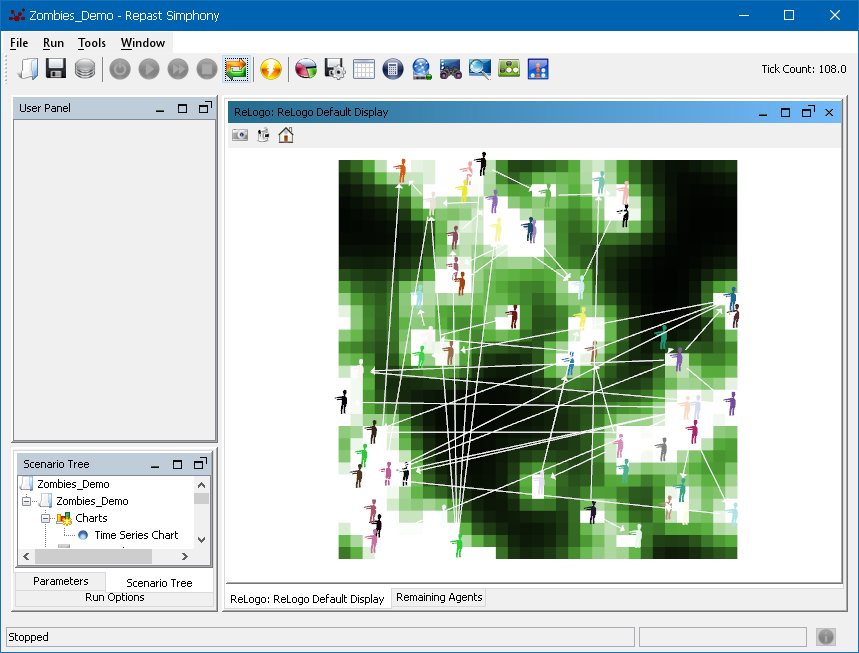

=== RePast === | |||

[[File:RePast.png|thumb|RePast<ref>''Repast suite documentation''. (z.d.). https://repast.github.io/quick_start.html</ref>]]RePast (Recursive Porous Agent Simulation Toolkit) is an open-source agent-based modeling and simulation (ABMS) toolkit for Java. It is designed to support the construction of agent-based models. | |||

====== Advantages: ====== | |||

* Can create very complex models | |||

* Allows the use of Java libraries | |||

====== Disadvantages: ====== | |||

* More complicated than NetLogo

* More verbose code (because of Java)

=== ROS/RViz === | |||

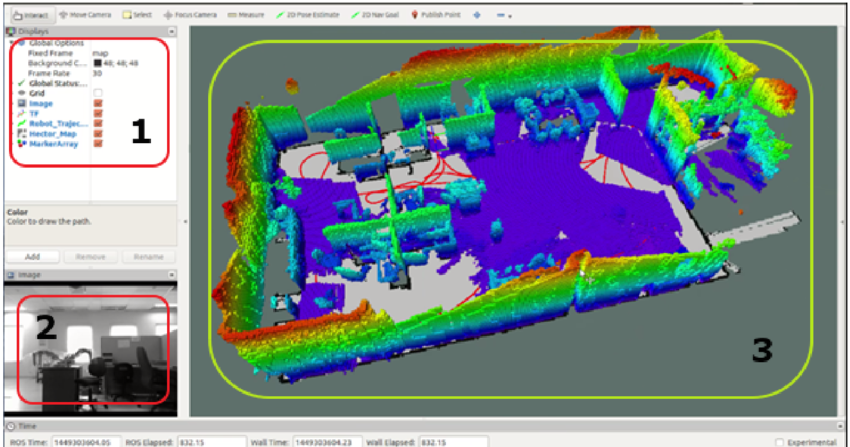

[[File:ROS-RViz.png|thumb|ROS with RViz<ref>''Fig. 7: RVIZ node panel for human-robot visual interface on ROS ecosystem''. (z.d.). ResearchGate. https://www.researchgate.net/figure/RVIZ-node-panel-for-human-robot-visual-interface-on-ROS-ecosystem_fig7_305730015</ref>]] | |||

RViz is a 3D visualization tool for ROS (Robot Operating System), which is commonly used in robotics research and development. ROS is an open-source framework for building robot software, providing various libraries and tools for tasks such as hardware abstraction, communication between processes, pathfinding, mapping and more. | |||

====== Advantages: ====== | |||

* Can simulate sensor data | |||

* Good 3D capabilities | |||

* Well-established in professional robot development | |||

* A variety of tools and plugins | |||

====== Disadvantages: ====== | |||

* Fire/smoke simulation not supported - outside implementation needed | |||

* Unfamiliar and complex | |||

* Requires Linux / difficult installation

=== FDS + (Custom)agent simulator === | |||

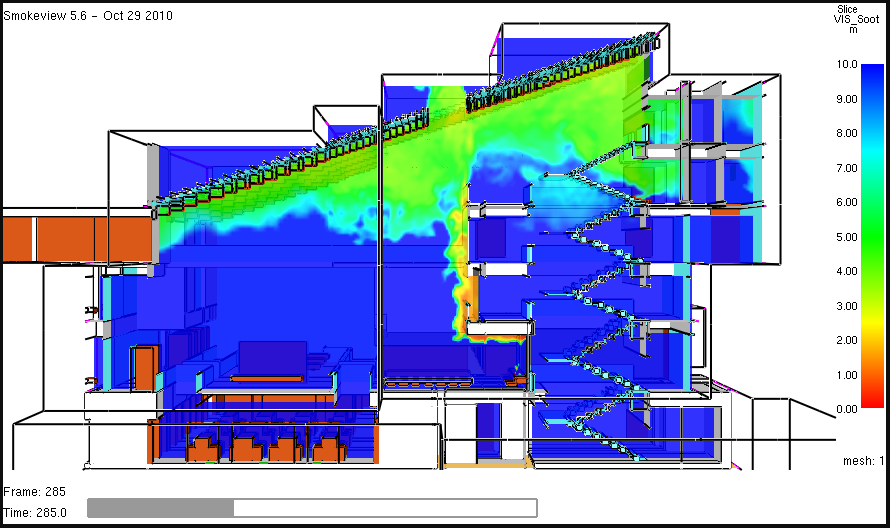

[[File:FDS.png|thumb|FDS<ref>''What is FDS? - FDS Tutorial''. (2021, 25 februari). FDS Tutorial. https://fdstutorial.com/what-is-fds/</ref>]] | |||

Fire Dynamics Simulator (FDS) is a computational fluid dynamics (CFD) model of fire-driven fluid flow. FDS is a program that reads input parameters from a text file, computes a numerical solution to the governing equations, and writes user-specified output data to files. | |||

FDS is primarily used to model smoke handling systems and sprinkler/detector activation studies, as well as for constructing residential and industrial fire reconstructions. | |||

====== Advantages: ====== | |||

* Professional system; Well established in the industry | |||

* Very accurate fire simulation | |||

* 3D | |||

====== Disadvantages: ====== | |||

* Pretty complicated to use | |||

* Computationally expensive | |||

* Couldn’t easily find resources for custom-agent behavior | |||

====== Note: ====== | |||

PyroSim is a GUI that makes FDS easier to use, but it is paid software unless a special offer is made for academic purposes.

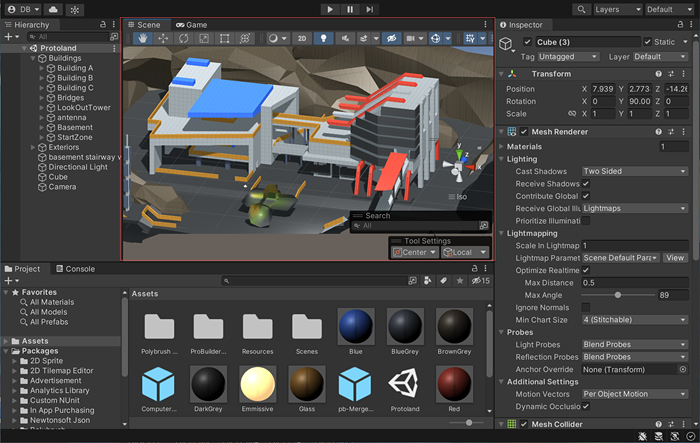

=== Unreal Engine/Unity + FDS === | |||

[[File:Unity.png|thumb|Unity<ref>Technologies, U. (z.d.). ''Unity - Manual: The Scene view''. https://docs.unity3d.com/Manual/UsingTheSceneView.html</ref>]] | |||

Data from FDS can be extracted and loaded into Unreal/Unity and they can handle agent simulation. | |||

====== Advantages: ====== | |||

* Abundance of resources for Unreal Engine/Unity | |||

* High flexibility | |||

* Ease of use of Unreal Engine/Unity combined with accurate simulation from FDS | |||

* Successful implementation in literature<ref name=":30" />

====== Disadvantages: ====== | |||

* Computationally expensive | |||

* Data exporting might not be a simple process | |||

* Complicated to use FDS | |||

=== ROS/RViz + FDS === | |||

====== Advantages: ====== | |||

* Both well established professional software for their respective uses | |||

* Very high control and customizability | |||

====== Disadvantages: ====== | |||

* Did not find resources on successful implementation but no reason why it shouldn’t be possible. | |||

* Both complicated and unknown software (might be too much work) | |||

* Computationally expensive | |||

== '''Chosen Simulator explanation''' == | |||

The final choice was Unity as the core environment combined with FDS for the fire simulation. | |||

For the core simulation environment it was decided that multi-agent frameworks such as NetLogo and RePast are too simple and lack the physical simulation capabilities of Unreal Engine, Unity or ROS. Of the latter options, Unreal Engine and Unity are both highly customizable, user-friendly and very well documented, while ROS has proper sensor and odometry implementations. The final choice was Unity due to its ease of use and customizability, as well as the previous experience of 2 team members with the software.

For the fire simulation FDS was chosen, as it is established software that provides highly accurate results. The downside of computational complexity was reduced by carefully selecting input and output parameters. A custom fire simulation would be at least as complicated to use as FDS while providing inferior results.

== '''Simulated robot specification''' == | |||

In order to achieve the objective of creating a robot that creates a 2D map of its environment we decided to have the following robot design: | |||

# A rectangular chassis containing all of the microcontrollers, the power supply and other electronics needed for the robot to function. The specific electronics contained in the chassis were ignored in the simulation. The chassis of the robot was simulated as a rectangular cuboid with the Rigidbody<ref name=":41">Unity - Rigidbody https://docs.unity3d.com/ScriptReference/Rigidbody.html</ref> component, which gives mass to the object and allows it to be simulated in the Unity physics engine.

# Differential drive wheel system. The robot has two motors which are attached to the front wheels and a free moving wheel at the back. | |||

# An air temperature sensor at the chassis of the robot. | |||

# A 2mm wave radar sensor on top of the chassis. This sensor serves the same function as a LiDAR sensor, with the added advantage that it is not affected by the extreme temperatures that can occur in a fire scenario.

# A camera that will be used to represent the point of view of the robot operator. | |||

These are the 5 main components of the robot. Further details on how each of the components was implemented in the simulation can be found in the subsections below.

=== FDS simulation === | |||

To generate fire data, we modelled the environments described in the section Simulated environments in FDS. To avoid the major issue of computational complexity, we applied the following simplifications:

# We limited the acceleration of the fire to 10 seconds, i.e. we applied a fire accelerant to the fire for only the first 10 seconds of the simulation.

# We only produced fire data for a single horizontal slice, i.e. the output data was a plane parallel to the plane spanned by the x and y axes. This plane was located 1 m above the origin along the z axis.

Following this procedure, we generated 5 minutes of data from 1 fire for each environment, with only the house having a second fire simulation. This gives a total of 5 minutes of simulation for each of the school and office environments, and 10 minutes for the house environment. | |||

=== FDS data transfer === | |||

The files generated by FDS are read using the ReadFds.ipynb notebook, which can be found in the Resources folder of the source code<ref name=":44" />. The Python library fdsreader is used to read and transform the data, which is then written to a CSV file in the following format:

First line: Number of timestamps, Number of sample points along the X axis, Number of sample points along the Z axis | |||

Second line: X dimension, Z dimension | |||

Third line: Timestamps of snapshots | |||

Lines 4 and after: Snapshots of the temperature data along the slice of space in the following format - value at position i * X + j corresponds to the datapoint at position i, j in the snapshot. | |||

The data is then read by Unity in the ReadHeatData.cs<ref name=":44" /> script according to this format and stored in a 3 dimensional array with dimensions - time, x and z. | |||
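As an illustration of the format above, the following Python sketch parses such a file into an array indexed by time, x and z. It is not the project's ReadHeatData.cs script. The function name <code>parse_heat_csv</code>, the comma separator, the layout of one snapshot per line, and the reading of <code>i</code> as the Z index and <code>j</code> as the X index are all assumptions made for this example.

```python
import csv
import io


def parse_heat_csv(text):
    """Parse the heat CSV described above into data[time][x][z].

    Assumptions (not confirmed by the source): values are comma separated,
    each snapshot occupies one line, and in the flattened index i * X + j
    the index i runs along Z while j runs along X.
    """
    rows = [row for row in csv.reader(io.StringIO(text)) if row]
    n_times, nx, nz = (int(v) for v in rows[0])
    size_x, size_z = (float(v) for v in rows[1])
    timestamps = [float(v) for v in rows[2]]
    snapshots = rows[3:3 + n_times]
    if len(timestamps) != n_times or len(snapshots) != n_times:
        raise ValueError("header does not match the number of snapshots")

    data = []
    for flat in snapshots:
        if len(flat) != nx * nz:
            raise ValueError("snapshot has the wrong number of values")
        values = [float(v) for v in flat]
        # grid[x][z] is read from flattened position z * nx + x
        grid = [[values[i * nx + j] for i in range(nz)] for j in range(nx)]
        data.append(grid)

    return {
        "size": (size_x, size_z),
        "timestamps": timestamps,
        "data": data,
    }


# Tiny hand-made example: 2 snapshots on a 3 (X) by 2 (Z) grid.
example = "2,3,2\n6.0,4.0\n0.0,1.5\n20,21,22,30,31,32\n40,41,42,50,51,52\n"
parsed = parse_heat_csv(example)
```

Indexing by time first keeps a lookup for a given moment cheap, because one whole snapshot is selected before the spatial indexes are applied.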

=== Heat sensor === | |||

The heat sensor simulation was designed to mimic a temperature probe at a height of 1 meter. The data generated from FDS is put into a 3-dimensional array with dimensions time, x and z. The exact world position of the heat sensor object on the robot is taken and converted to array indexes using a linear transformation, which is derived from the difference in scale between the Unity and FDS environments. The time elapsed since the start of the simulation is then used together with the translated coordinates to get a heat measurement.
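The lookup described above can be sketched in Python as follows. This is a minimal illustration and not the project's Unity code: the names <code>world_to_index</code> and <code>sample_temperature</code>, the <code>origin</code> and <code>size</code> parameters, rounding to the nearest sample, and picking the latest snapshot at or before the elapsed time are all assumptions.

```python
from bisect import bisect_right


def world_to_index(world, origin, size, count):
    """Linearly map one world coordinate to the nearest sample index on an axis."""
    if count == 1:
        return 0
    fraction = (world - origin) / size       # 0.0 at the origin, 1.0 at the far edge
    index = round(fraction * (count - 1))    # nearest sample (assumed rounding rule)
    return min(max(index, 0), count - 1)     # clamp positions outside the grid


def sample_temperature(data, timestamps, elapsed, world_x, world_z,
                       origin=(0.0, 0.0), size=(1.0, 1.0)):
    """Read data[time][x][z] at a world position and elapsed simulation time."""
    # Latest snapshot whose timestamp is not after the elapsed time (assumption).
    t = min(max(bisect_right(timestamps, elapsed) - 1, 0), len(timestamps) - 1)
    nx, nz = len(data[t]), len(data[t][0])
    ix = world_to_index(world_x, origin[0], size[0], nx)
    iz = world_to_index(world_z, origin[1], size[1], nz)
    return data[t][ix][iz]


# Hand-made data: 2 snapshots on a 3 (x) by 2 (z) grid covering 6 m by 4 m.
heat = [
    [[20, 30], [21, 31], [22, 32]],
    [[40, 50], [41, 51], [42, 52]],
]
times = [0.0, 1.5]
reading = sample_temperature(heat, times, 1.0, 6.0, 4.0, size=(6.0, 4.0))
```

Clamping the indexes means a sensor that leaves the simulated slice reads the nearest edge value instead of raising an error, which is one possible design choice among several.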

The data is read without any added noise, since it was concluded from the interview that there is no need for a high degree of accuracy or precision. The important aspect is to determine the general temperature distribution and to identify dangerous areas. For that purpose we did not need to simulate the inaccuracies of a temperature probe.

=== Wheel system === | |||

For this robot we selected a differential drive system with two front wheels connected to motors and a free moving caster wheel in the back of the chassis. The front wheels are represented in Unity as solid cylindrical objects with the Rigidbody<ref name=":41" /> component. The wheel connection to the robot chassis was handled by adding a HingeJoint<ref name=":42">Unity - Hinge Joint https://docs.unity3d.com/Manual/class-HingeJoint.html</ref> component. With the hinge joint we can restrict the movement of the wheels so that they move as if they were connected to the chassis of the robot. However, to still allow the wheels to rotate freely, we allowed rotation around the Y-axis of the hinge joint. Additionally, by default the hinge joints in Unity have a maximum angle of rotation. This effect is not desirable for our use case, since we want our wheels to have unrestricted rotation. We circumvented this by setting the "Min" and "Max" limit parameters of the HingeJoint to 0 to allow for continuous movement.

The back "free-spinning" wheel was designed to simulate a "ball-wheel"<ref>Pololu - Ball Wheel https://www.pololu.com/product/950</ref>. It was modeled using a sphere and a Rigidbody<ref name=":41" /> component. This wheel, unlike the front wheels, needs to rotate freely around every axis in order to allow the robot to turn freely. This extra requirement necessitated the use of the more complex ConfigurableJoint<ref>Unity - ConfigurableJoint https://docs.unity3d.com/Manual/class-ConfigurableJoint.html</ref>. With this joint we connected the wheel to the main chassis and locked the movement of the wheel along every axis, but we allowed free rotation.

We also need to move our robot. To do this we simulated the front wheel movement using the "Motor" function of the HingeJoint<ref name=":42" /> component. The HingeJoint motor has two settings: Torque and Velocity. We set the torque of the motors to a value high enough to move the robot, and we connected the velocity setting to the user's keyboard input. With this we can control the movement of the robot using the WASD keys. The code that handles the movement of the robot can be found in the MoveWheels2.cs<ref name=":44" /> script inside our Unity project.
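To illustrate how keyboard input can drive a differential drive, the Python sketch below maps pressed WASD keys to target velocities for the left and right wheel and then applies the standard differential-drive kinematics. It is not a transcription of MoveWheels2.cs: the function names, the speed values, the wheel radius and the track width are hypothetical, and the key mapping (W/S drive, A/D turn on the spot) is an assumption.

```python
import math


def wheel_targets(keys, drive_speed=180.0, turn_speed=90.0):
    """Map pressed keys to (left, right) wheel target velocities in deg/s.

    Assumed mapping: W/S drive both wheels forward/backward, A/D add an
    opposite offset to each wheel so the robot turns.
    """
    forward = ("w" in keys) - ("s" in keys)   # +1, 0 or -1
    turn = ("d" in keys) - ("a" in keys)      # +1 turns right, -1 turns left
    left = forward * drive_speed + turn * turn_speed
    right = forward * drive_speed - turn * turn_speed
    return left, right


def body_velocity(left_deg_s, right_deg_s, wheel_radius, track_width):
    """Standard differential-drive kinematics.

    Returns (linear velocity in m/s, angular velocity in rad/s), with
    counterclockwise rotation taken as positive.
    """
    w_left = math.radians(left_deg_s)
    w_right = math.radians(right_deg_s)
    linear = wheel_radius * (w_left + w_right) / 2.0
    angular = wheel_radius * (w_right - w_left) / track_width
    return linear, angular


# Driving straight: both wheels get the same target velocity.
left, right = wheel_targets({"w"})
v, omega = body_velocity(left, right, wheel_radius=0.05, track_width=0.3)
```

Equal wheel velocities give pure forward motion, while opposite velocities make the robot rotate in place, which is why two driven wheels and one passive ball wheel are sufficient for steering.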

An interesting issue that occurred during the development of the simulation was that the torque setting of the wheel motors was too high, which caused the robot to flip upside down since the chassis was too light. This issue was exacerbated by the different friction values of the floors of the different environments. In the end we resolved this by increasing the weight of the chassis.

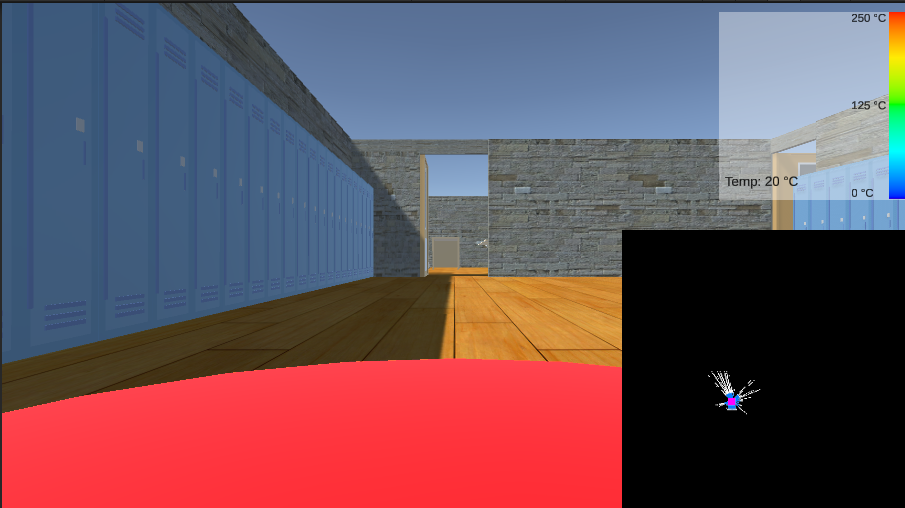

=== 2mm Wave Radar Sensor === | |||

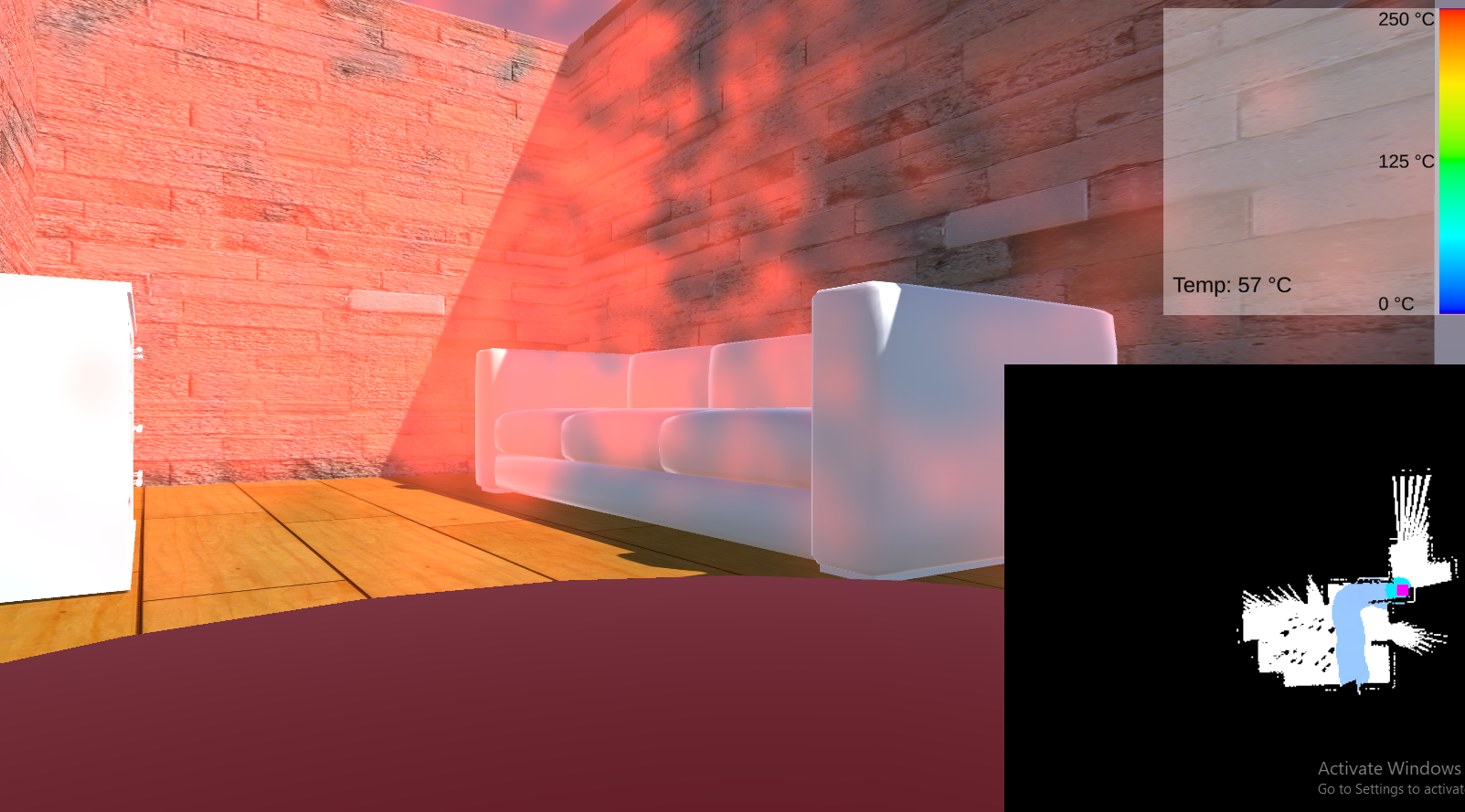

[[File:Screenshot 2024-04-10 224222.png|alt=View from main robot camera|thumb|View from main robot camera]] | |||

Although we officially call this sensor the 2mm Wave Radar Sensor, the unofficial term we used when developing the simulation was simply the "LiDAR sensor", because for the purposes of the simulation the behaviour of the radar and the LiDAR is the same. We want our LiDAR/radar sensor to generate a 2D array of scan points of the surrounding obstacles at a given frequency. We also want to simulate the physical nature of the LiDAR, which spins at a certain velocity and takes measurements at a certain frequency. Finally, we want to take into account that LiDAR sensor data is not perfect in the real world, so we also include these imperfect measurements in our simulation. All of this functionality was implemented in the LidarScan.cs<ref name=":44" /> script inside our Unity project. This script sends a predefined number of rays<ref>Unity - Raycast https://docs.unity3d.com/ScriptReference/Physics.Raycast.html</ref> in a circle around the radar. The rays travel a predetermined distance, and if they hit an object that is closer than this distance they report it back to the LiDAR. In the end we generate an array of measurements in which each entry contains the angle at which the measurement was taken and the distance to the object that was detected. If no object was detected, there is no entry in the measurement array. Finally, we randomize each entry using values sampled from a uniform random distribution. We can freely tweak the maximum scan distance of the LiDAR, the number of measurements taken per scan and the strength of the random noise that is added.
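The scan procedure described above can be sketched in plain Python with a 2D ray caster. This is only an illustration of the idea, not the LidarScan.cs script: walls are modelled as line segments instead of Unity colliders, the function names and default parameter values are hypothetical, and applying the uniform noise to the distance only (not the angle) is an assumption.

```python
import math
import random


def ray_segment_distance(origin, angle, segment):
    """Distance along a ray to a wall segment, or None if the ray misses it."""
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)
    (x1, y1), (x2, y2) = segment
    ex, ey = x2 - x1, y2 - y1
    denom = dx * ey - dy * ex
    if abs(denom) < 1e-12:
        return None  # ray is (numerically) parallel to the segment
    # Solve origin + t * d == p1 + u * e for t (along ray) and u (along segment).
    t = ((x1 - ox) * ey - (y1 - oy) * ex) / denom
    u = ((x1 - ox) * dy - (y1 - oy) * dx) / denom
    if t >= 0.0 and 0.0 <= u <= 1.0:
        return t
    return None


def lidar_scan(origin, segments, num_rays=360, max_range=10.0, noise=0.05, rng=None):
    """Return a list of (angle, distance) entries; rays that hit nothing are omitted."""
    rng = rng or random.Random()
    measurements = []
    for k in range(num_rays):
        angle = 2.0 * math.pi * k / num_rays
        nearest = None
        for segment in segments:
            d = ray_segment_distance(origin, angle, segment)
            if d is not None and (nearest is None or d < nearest):
                nearest = d
        if nearest is None or nearest > max_range:
            continue  # nothing within range: no entry for this ray
        # Uniform noise on the measured distance (assumed noise model).
        measurements.append((angle, nearest + rng.uniform(-noise, noise)))
    return measurements


# A 4 m by 4 m square room with the sensor in the centre.
room = [
    ((2.0, -2.0), (2.0, 2.0)),
    ((2.0, 2.0), (-2.0, 2.0)),
    ((-2.0, 2.0), (-2.0, -2.0)),
    ((-2.0, -2.0), (2.0, -2.0)),
]
scan = lidar_scan((0.0, 0.0), room, num_rays=8, noise=0.05, rng=random.Random(0))
```

Passing a seeded random generator makes a noisy scan reproducible, which is convenient when comparing mapping results between runs.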

=== Camera === | |||

[[File:Particle image.png|thumb|Fire particles in a burning room]] | |||

In the real world, the robot will use an infrared camera for two main reasons. First, it can detect the temperature of solid surfaces, and second, it can see through smoke, which occurs quite often in a house fire scenario. However, we chose not to simulate the infrared camera in Unity. This is because none of the current algorithms depend on the infrared camera input, only on the heat sensor and the LiDAR. Additionally, implementing the infrared camera would greatly increase the computational complexity of the simulation, since we would need to use FDS to precalculate (in great detail) the surface temperature of the solids in the room and we would also need to render these temperatures on the camera. Since the frame rate of the simulation was not very high (30 FPS), we decided against simulating a full-blown infrared camera. Instead, to still represent what the robot operator can see while controlling the robot, we attached an object with a Camera component<ref>Camera component - https://docs.unity3d.com/Manual/class-Camera.html</ref>.

To visualize the heat distribution during the simulation for demonstration purposes, we use the Unity particle system. For each point (x, z) generated by FDS we instantiate an object at the world coordinates corresponding to the data point. The object emits red, semitransparent particles at a rate proportional to the heat value at the given (x, z) coordinates and time. This is not accurate to real-world fire, but it serves as a visual aid when working with and testing the simulation.
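As a rough illustration of this mapping, the Python sketch below places an emitter for a grid point and derives its emission rate from the heat value. The cell size, the proportionality constant and the upper clamp are hypothetical values chosen for the example and are not taken from the project.

```python
def grid_to_world(ix, iz, origin=(0.0, 0.0), cell_size=0.5):
    """World (x, z) position of grid point (ix, iz); cell_size is an assumed spacing."""
    return (origin[0] + ix * cell_size, origin[1] + iz * cell_size)


def emission_rate(heat_value, particles_per_unit=0.5, max_rate=200.0):
    """Particles per second, proportional to the heat value.

    The clamp to max_rate is an assumption added to keep the particle count bounded.
    """
    return min(max_rate, max(0.0, heat_value) * particles_per_unit)
```

With these example constants a heat value of 100 gives 50 particles per second, and very hot points saturate at 200.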

== '''Simulated environments''' == | |||

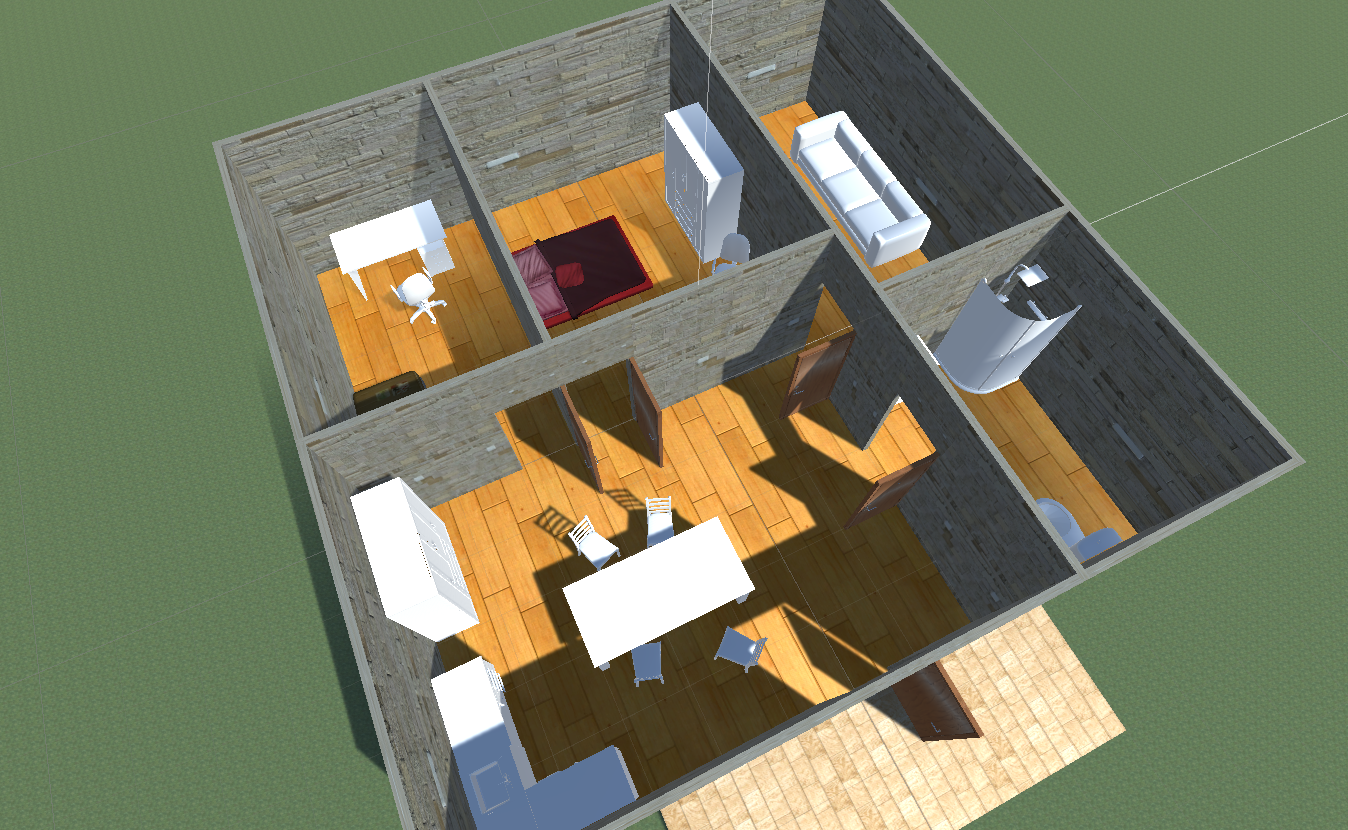

[[File:House.png|thumb|The house simulation environment]] | |||

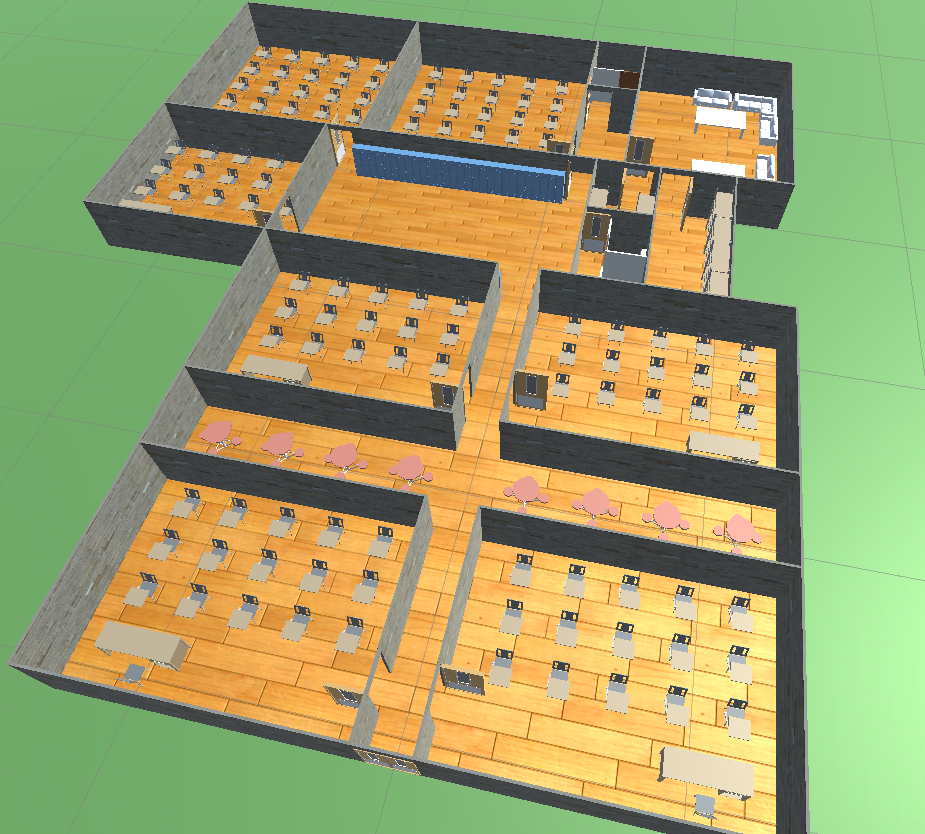

[[File:School.png|thumb|The school simulation environment]] | |||

One of the biggest advantages of simulating the fires was that multiple environments could be build to test the robots performance under different circumstances. It was chosen to build three different environments for the project that could all challenge the robot in different ways. Furthermore a house, school and office building were chosen because these seemed like very plausible locations for an actual fire. | |||

=== House ===

The house was the first and smallest environment that was constructed. It has a very small and simple layout and was chosen as the first environment, both to test the robot in the first stages of its development and to learn how to work with Unity. It consists of a living room/kitchen, another smaller living room, a bathroom and two bedrooms. It was a useful environment for testing, especially early on, and its relatively small size also meant that computation times were a lot shorter. It was also with this environment that we concluded that 3D fire was not practical or necessary for this project, because the computation time was too long even for this smaller environment. A house is also a very realistic place for the robot to be used, because firefighters are less likely to have building plans of houses than of larger buildings, which means that a mapping robot would be ideal.

=== School ===

The school was the second simulation environment that we made. The idea of this environment was to expand on the simple environment of the house and add more complexity. This complexity stems from the larger scale and more complex layout of the school. Firstly, long narrow hallways could challenge the capabilities of the mmWave Radar and of the mapping algorithms. Secondly, the layout was made so that some rooms lead to other rooms, making the resulting map a lot more complex than that of its predecessor. We were interested to see whether our mapping algorithm would be able to handle these more complicated layouts. The environment consists of 7 classrooms, the main hallway/cafeteria, 3 toilets, the teachers' room, 2 storage rooms and 2 smaller hallways.

=== Office ===

[[File:Office.png|thumb|The office simulation environment]]The office is the third and last simulation environment that was made for the project. An office building was chosen because all environments until then consisted of smaller rooms connected to each other: the house with its small rooms, and the school with its relatively small classrooms and narrow, well-defined hallways. The goal of the office building was therefore to challenge the robot with larger open spaces, which could be difficult to deal with because of the limited range of the mmWave Radar. It was also of interest because we wanted to see how differently a fire would spread through larger open areas compared with the relatively closed spaces of the other environments. In this environment the cafeteria is connected all the way to the work spaces, making one long main space with smaller meeting rooms connected to it. Larger environments were possible in this part of the project because the choice for 2D fire had already been made, which cut down the computation time considerably and allowed the scale to be increased a lot. The environment consists of the larger cafeteria, which is connected to the work spaces, 6 toilets, 3 separate meeting rooms, 1 kitchen and 2 storage rooms.

=== Future environments ===

More simulation environments could have been built, but for the purposes of this project the current ones were deemed sufficient. With the foundation laid in this project, it would be fairly easy to add more environments and simulate fires in them if someone were to continue this project.

== '''Mapping algorithms''' == | |||

=== SLAM === | |||

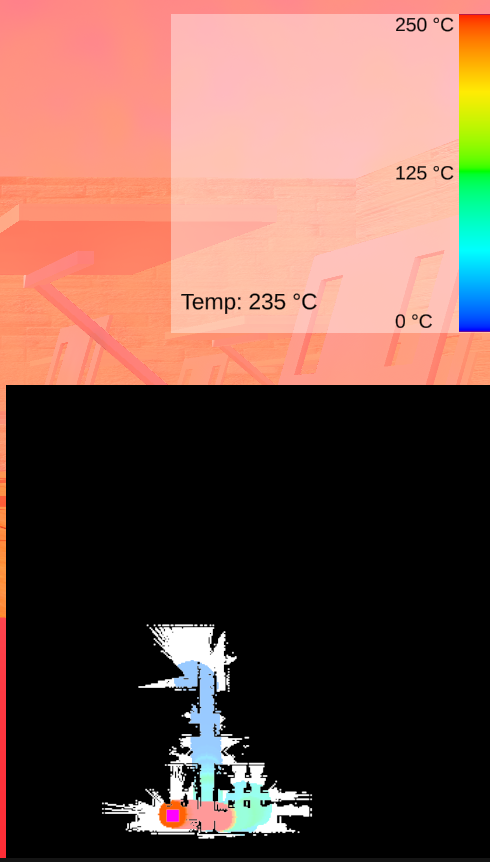

[[File:HeatMap.png|thumb|The map generated by the SLAM algorithm along with the heat data measure from the robot.]] | |||

SLAM<ref>Riisgaard, S., & Blas, M. R. (2005). SLAM for dummies. In SLAM for Dummies [Book]. https://dspace.mit.edu/bitstream/handle/1721.1/119149/16-412j-spring-2005/contents/projects/1aslam_blas_repo.pdf</ref> (simultaneous localization and mapping) is a technique for constructing the map of the environment of the robot (i.e., where the obstacles and empty space around the robot are), and, at the same time, determine the location of the robot inside the environment. | |||

In our simulation, an emulated LiDAR-like sensor provides the input to the SLAM algorithm: a set of observations, each consisting of the distance of an obstacle relative to the sensor and the angle at which that distance was measured. This is provided to the algorithm at regular time steps. This information is combined with a series of controls (i.e., movement instructions) given to the robot and with odometry information (i.e., how far the robot has moved based on its own sensors). Using this information, the SLAM algorithm updates both the state of the robot (i.e., its location) and the map of the environment using probabilistic techniques.
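To make the form of these observations concrete, the Python sketch below converts a scan of (angle, distance) pairs into world-frame points given a pose estimate. It only illustrates the geometry. It is not part of CoreSLAM or HectorSLAM, and the function name and the pose layout (x, y, heading in radians) are assumptions for the example.

```python
import math


def scan_to_world_points(scan, pose):
    """Convert (angle_rad, distance) observations into world-frame (x, y) points.

    pose is an assumed (x, y, heading_rad) tuple for the sensor; angles in the
    scan are taken relative to the heading.
    """
    x, y, heading = pose
    points = []
    for angle, distance in scan:
        a = heading + angle
        points.append((x + distance * math.cos(a), y + distance * math.sin(a)))
    return points
```

A SLAM algorithm compares such points with the map built so far in order to correct the pose estimate, and then uses the corrected pose to add the new points to the map.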

Two SLAM algorithms were ported to Unity: CoreSLAM<ref>Steux, Bruno & El Hamzaoui, Oussama. CoreSLAM: a SLAM Algorithm in less than 200 lines of C code. https://www.researchgate.net/publication/228374722_CoreSLAM_a_SLAM_Algorithm_in_less_than_200_lines_of_C_code </ref>, an efficient and fast SLAM algorithm that is more suitable for systems with limited resources, and a C# port of the HectorSLAM<ref>hector_slam - ROS Wiki. https://wiki.ros.org/hector_slam</ref> algorithm, which is available as a ROS package.

The starting point for our implementation was a public code base<ref>Mikkleini. GitHub - mikkleini/slam.net: Simultaneous localization and mapping libraries for C#. GitHub. https://github.com/mikkleini/slam.net </ref> that we adapted to compile with, and optimized for, the .NET version used by Unity. Several adaptations were needed because the original CoreSLAM and HectorSLAM implementations were written for a different version of .NET. To do this we ported the source code into our Unity project (the SLAM code can be found in the Assets/SLAM folder of our project). After this we needed to adapt the SLAM algorithms to accept the input from our LiDAR sensor. We did this for the two SLAM implementations in the BaseSlam.cs<ref name=":44" /> and HectorSlam.cs<ref name=":44" /> scripts respectively. In these scripts we translate the output of the LiDAR sensor and feed it into the SLAM algorithms, which generate an obstacle map of a given resolution and size. The resolution and size can be set as parameters before starting the simulation. The map generated by the SLAM algorithms is translated from raw byte data (the likelihood of there being an obstacle) into a dynamic image that appears on the screen. Initially this was done by calling the SetPixel<ref name=":43">Unity - SetPixel https://docs.unity3d.com/ScriptReference/Texture2D.SetPixel.html</ref> method of a texture<ref>Unity - Texture2D https://docs.unity3d.com/ScriptReference/Texture2D.html</ref> for an image<ref>Unity - RawImage https://docs.unity3d.com/2018.2/Documentation/ScriptReference/UI.RawImage.html </ref> in the simulation UI. However, this method of rendering the map was extremely slow for larger map resolutions, since each pixel had to be drawn by the CPU. Because of this, the frame rate of the simulation was very low (5-10 fps). This was fixed by using a ComputeShader<ref>Unity - ComputeShader https://docs.unity3d.com/ScriptReference/ComputeShader.html</ref>, which uses the GPU to draw each pixel of the texture in parallel, based on the predicted likelihood from the SLAM algorithm. The code for the shader is in LidarDrawShader.compute<ref name=":44" /> in the Assets folder of our Unity project. This method drastically increased our frame rate to around 70 fps.
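The per-pixel work that makes this step easy to parallelize can be sketched in Python as follows. This is not the contents of LidarDrawShader.compute. The byte convention (0 for free space, 255 for a certain obstacle) and the grayscale colouring are assumptions made for the illustration.

```python
def likelihood_to_rgba(value):
    """Map one obstacle-likelihood byte to an RGBA pixel.

    Assumed convention: 0 means free space (drawn white) and 255 means a
    certain obstacle (drawn black).
    """
    shade = 255 - value
    return (shade, shade, shade, 255)


def render_map(cells, width, height):
    """Convert a row-major byte map into a list of RGBA pixels.

    Every pixel depends only on its own cell, which is why a GPU can compute
    all of them in parallel; this loop does the same work one pixel at a time.
    """
    if len(cells) != width * height:
        raise ValueError("map size does not match width * height")
    return [likelihood_to_rgba(v) for v in cells]
```

Calling <code>render_map(bytes([0, 128, 255, 64]), 2, 2)</code> returns four pixels, ranging from white for the free cell to black for the certain obstacle.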

Besides showing the obstacle data, we also need to visualize the temperature measurements on our mini-map. This was done in two ways. First, we display the current temperature measurement as text on our user interface, so that the operator can clearly see whether the robot is in danger of overheating. Second, we draw a "heat path" of the robot, displaying the temperatures that were measured during the exploration of the environment. Older measurements slowly fade out as the transparency of their colors is increased. To implement this we also used the SetPixels<ref name=":43" /> method for a 2D texture in Unity. The code for displaying the heat data can be found in LidarDrawer.cs<ref name=":44" /> (not the best name). The final result of the mini-map can be seen in the image on the left.
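The fading heat path can be illustrated with a small Python sketch. It does not reproduce LidarDrawer.cs. The colour ramp, the temperature range and the fade speed are hypothetical values chosen for the example.

```python
def temperature_to_rgb(temp_c, t_min=20.0, t_max=300.0):
    """Map a temperature to a colour between yellow (cool) and red (hot).

    t_min and t_max are assumed bounds; values outside them are clamped.
    """
    f = (temp_c - t_min) / (t_max - t_min)
    f = min(1.0, max(0.0, f))
    return (1.0, 1.0 - f, 0.0)


def fade(alpha, dt, fade_per_second=0.05):
    """Lower the opacity of an older measurement; 0.0 is fully transparent."""
    return max(0.0, alpha - fade_per_second * dt)
```

Each stored measurement keeps its colour and has <code>fade</code> applied on every update, so with the example fade speed a point disappears about 20 seconds after it was measured.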

== '''Further work''' ==

There are quite a few things that we initially wanted to do but could not because of time constraints. Below are some simulation features and research directions that could be pursued to continue our project. Firstly, the parts of the robot we ignored for this project can be looked into. These include the movement of the robot, and with it the robot's path-finding, and the physical aspects of the robot. Currently the robot in the simulation is invincible and doesn't influence its environment. In a real firefighting situation this will not be the case, so to get closer to the design of an actual scouting robot for firefighters, research needs to be done into the materials and physical capabilities of such a robot.

Secondly, there are many features that could be added to the robot but did not fit in the scope of this course. The biggest feature we did not include in our simulation is victim detection. In our USE analysis this feature was identified as one of the most important features next to mapping. Adding a victim detection algorithm to the simulation would be one step closer to realizing an actual robot. Another possibility, which we encountered near the end of the course, is to include the heat sensor data in the SLAM algorithm. We did not have time to include this in the simulation at that point, but using the heat data could improve the mapping and so further improve the functionality of the robot. If all this is realized, one can look at what is ultimately the end goal of the research: actually building a fire reconnaissance robot to assist firefighters in dangerous indoor fires and help preserve the lives of victims and firefighters.

== '''Appendix''' ==

===Appendix 1; Logbook===

{| class="wikitable"
|+Logbook
!Week
!Name
!Hours spent
|-
| rowspan="6" |1
|Dimitrios Adaos
|Introductory Lecture (2h), Meeting (1h), Brainstorm (0.5h), Find papers (2h), Read and summarize papers (8h), Wrote Introduction (6h)
|19.5h
|-
|Wiliam Dokov
|Introductory Lecture (2h), Meeting (1h), Brainstorm (0.5h), Find papers (3h), Summary (7h)
|13.5h
|-
|Kwan Wa Lam
|Introductory Lecture (2h), Meeting (1h), Brainstorm (0.5h), Find papers (1h), Read and summarize papers (7h)
|11.5h
|-
|Kamiel Muller
|Introductory Lecture (2h), Meeting (1h), Brainstorm (0.5h), Find papers (1h)
|4.5h
|-
|Georgi Nihrizov
|Introductory Lecture (2h), Meeting (1h), Brainstorm (0.5h), Find papers (2h), Read and summarize papers (8h)
|13.5h
|-