Mobile Robot Control 2023 HAL-9000: Difference between revisions

No edit summary Tag: 2017 source edit |

|||

| (222 intermediate revisions by 6 users not shown) | |||

| Line 24: | Line 24: | ||

https://cstwiki.wtb.tue.nl/wiki/File:Midterm_presentation.pdf | https://cstwiki.wtb.tue.nl/wiki/File:Midterm_presentation.pdf | ||

==''' | <br /> | ||

=='''Towards Final Challenge'''== | |||

=='' | ===Introduction=== | ||

In recent days, robots have become indispensable in various domains, particularly in tasks that involve repetition. Among these applications, one instance is enabling robots to autonomously navigate and serve orders in restaurants, which is encapsulated in the Restaurant Challenge of the Mobile Robot Control course at TU/e. This project evaluates the capabilities of an autonomous robot to navigate and deliver orders within a restaurant setting. The challenge mandates teams to develop software and algorithms for robot 'Hero' to let it autonomously traverse the restaurant, avoiding obstacles and humans, while accurately delivering orders to the designated tables. | |||

This wiki page aims to encompasses the strategies and algorithms employed by the team HAL-9000 for the Restaurant Challenge. | |||

The page first begins with an overview of the general strategies. It details the systematic integration of various algorithms and software that have been developed to facilitate Hero's tasks. These include the robot's ability to traverse the restaurant independently, avoiding obstacles and humans, and delivering orders accurately to the appropriate tables. Additionally, a state flow diagram and data flow diagram are provided to elucidate Hero's task progressions and the processing of information. | |||

Subsequently, the wiki delves deeper into the specifics of each algorithm implemented in the project. This involves a clear explanation of the localization algorithm for identifying Hero's position in the restaurant, the global path planning algorithm for determining the overall path, and the local path planning algorithm for managing obstacle avoidance and local route adjustments. | |||

=== | After discussing the individual algorithms, the wiki elaborates on the integration of these systems and how they operate in unison. An evaluation of the project's performance is provided at the end, accompanied by a conclusion that summarizes the project outcome, a thorough discussion on the results, and recommendations for future improvements. | ||

===Strategy=== | |||

[[File:State flow diagram.png|800px|right|thumb|State flow diagram]] | |||

The challenge goal is to design a multi-faceted approach to ensure that the autonomous robot can deliver orders to the tables in a swift yet safe manner. To achieve this, the robot calculates the shortest path to the next table using the given table sequence, while continuously scanning the environment for potential obstacles. If an obstacle is detected, robot will enter into obstacle avoidance mode, ensuring the prevention of any crashes. Initially, only known static obstacles are considered to establish a solid foundation. As soon as the simplified version of the challenge is working, the complexity will increase incrementally: First integrate the capacity to cope with unknown static obstacles, then handle doors, and finally enable the robot to deal with unknown dynamic obstacles like humans. | |||

By creating a state and data flow diagram, a policy to create the separate blocks as well as for the integration of these blocks is determined. While progressing with the code, these diagrams should be taken into account to keep a clear and logic connection between the different parts of the code. | |||

The state diagram can be seen in the figure on the right. This is the state flow of the system that is desired to be reached by designing the code and integrating the different blocks. It includes the next table selection, planning a path to this table and what to do when detecting an obstacle at a certain range. If the obstacle is too close, the robot should stop. If the path is opened again, which can be the case with a dynamic obstacle, the robot can start driving again. Otherwise it has to plan a new path or drive carefully around the obstacle if possible. There is also an at door state, which will enable the robot to understand what a door is, know if it is open or closed, request the opening of the door or plan a new path without using the door if it remains closed. Note that the robot is continuously locating itself as it should know its position with respect to the map. When the robot has reached the next table, it will repeat the loop until all orders are delivered. The state diagram could be detailed further by including humans. For now it is decided that humans are considered as general unknown obstacles, therefore there is no dedicated state for different behavior if a human is detected. | |||

The data flow diagram can be seen in the figure at the right. It shows which blocks communicate with each other by sending or receiving data. Most flow connection are quite intuitive on what data flows over this connection, especially for the input blocks. These are not explained in detail, while other, less intuitive connection are elaborated on. The obstacle detection has a connection with the base controller. This is needed as the obstacle detection can detect when an obstacle gets too close, because of which the robot will have to stop to prevent a collision with the detected obstacle. The data flowing over this connection thus will be a signal indicating the robot must stop immediately. From the obstacle detection, there is a second connection to the planner selection. This planner selection makes sure the local planner will take over from the global planner when an obstacle is in proximity. This connection therefore carries data on what distance the obstacle is with respect to the robot. Localization provides information to the global and local planner about the position of the robot with respect to the map. The global and the local path planners communicate with each other as well. The local path planner needs a goal supplied by the global path planner, while the global path planner needs to know which nodes the local path planner has driven passed or skipped because of an obstacle. The data flowing between the planners thus are the next goals to drive to to eventually reach the correct table. The table coordinate is connected to the global path planner and provides it by supplying the node for the corresponding table to drive to. When this table is reached, the global planner will ask for the next node to plan a path to, that is why the connection is going both ways. Lastly, the base controller sends the actual robot inputs for the robot to be actuated.[[File:Data flow diagram.png|600px|right|thumb|Data flow diagram]] | |||

| Line 51: | Line 56: | ||

With the robot having determined its location with respect to the map, it will try and find the most optimal global path to reach the first table. The global path planning requires prior knowledge of the robot's environment, being the supplied map, the current position of the robot and the location of the next table. This is done using the A* algorithm, which identifies the path from an initial position to a targeted position in a nodal map with the smallest cost. The cost in this challenge is time or distance, therefore the shortest path will be selected by the A* algorithm. | With the robot having determined its location with respect to the map, it will try and find the most optimal global path to reach the first table. The global path planning requires prior knowledge of the robot's environment, being the supplied map, the current position of the robot and the location of the next table. This is done using the A* algorithm, which identifies the path from an initial position to a targeted position in a nodal map with the smallest cost. The cost in this challenge is time or distance, therefore the shortest path will be selected by the A* algorithm. | ||

Unknown objects cannot be taken into account by the A* algorithm. For to robot to handle these objects as well, a local path planning is required. The local path planning relies on laser data obtained by the sensors of the robot scanning the environment. Within their range, these sensors can detect any unknown obstacles present around the robot. As the obstacles are detected, the robot has to navigate around them locally by utilizing the Artificial Potential Field algorithm. APF creates attractive fields around the set target and repulsive fields around detected obstacles. These fields create a attractive and a repulsive force respectively, providing the robot with a collision-free path by taking the sum of these forces. | Unknown objects cannot be taken into account by the A* algorithm. For to robot to handle these objects as well, a local path planning is required. The local path planning relies on laser data obtained by the sensors of the robot scanning the environment. Within their range, these sensors can detect any unknown obstacles present around the robot. As the obstacles are detected, the robot has to navigate around them locally by utilizing the Artificial Potential Field algorithm. APF creates attractive fields around the set target and repulsive fields around detected obstacles. These fields create a attractive and a repulsive force respectively, providing the robot with a collision-free path by taking the sum of these forces. | ||

==='''Algorithm details'''=== | |||

The algorithms used make sure the robot can perform its tasks. The three kinds of algorithms are respectively for localization, global path planning and local path planning. The reasoning behind these algorithms' choices as well as the general working of the algorithms will be elaborated on in this section. | |||

====Localization==== | |||

====Localization | ====='''Localization by Particle filter'''===== | ||

Particle filter method is a potent technique used to estimate the position and orientation of a robot within a relatively authentic enviroment with noise and uncertainty. One of the key strengths of particle filters lies in their ability to manage non-linear conditions effectively, ensuring higher accuracy and robustness in complex environments. The following are the genral steps to implement this particle filter method for localization: | |||

1) Initialization | 1) Initialization | ||

The | The particle filter begins with a set of particles randomly distributed throughout the robot's known map or environment. Each particle represents a potential state of the robot, including its position and orientation, and the particle cloud can be initiliazed either through a uniform or a normal distribution. As the following figure shows, particles with Gaussian noise and random initial poses are constructed along with their filters. There are 1000 particles being generated and the center of them is the initial position of the robot. | ||

[[File:Initialloc.png|center|thumb|350px]] | [[File:Initialloc.png|center|thumb|350px|Particle filter: particle construction and initialization]] | ||

| Line 73: | Line 74: | ||

After generated the particle filters, now the prediction of the filter (average pose) should be calculated. The average pose can be obtained by iterating through the particle set while performing accumulation calculations on the position. The green arrow in the figure below shows the visualization of the average pose of particles generated around the initial point of the robot. | After generated the particle filters, now the prediction of the filter (average pose) should be calculated. The average pose can be obtained by iterating through the particle set while performing accumulation calculations on the position. The green arrow in the figure below shows the visualization of the average pose of particles generated around the initial point of the robot. | ||

[[File:avgposeloc.png|center|thumb|350px]] | [[File:avgposeloc.png|center|thumb|350px|Particle filter: average pose]] | ||

3) Propagation of particles | 3) Propagation of particles | ||

The robot | The robot would move around instead of staying still. Thus, updating the positions and orientations of particles based on the robot's motion model is crucial. In this step, each particle undergoes translation and rotation according to the robot's motion information while accounting for the noise and uncertainties in the motion model. The simulation result is shown as the gif below. With the propagation of particles, now the pose prediction (average pose) can be generated even though the robot is moving, getting the primary localization information. | ||

[[File:Local3.gif|center|thumb|450px]] | [[File:Local3.gif|center|thumb|450px|Particle filter: particle propagation]] | ||

| Line 96: | Line 97: | ||

6) Performance and analysis | 6) Performance and analysis | ||

After all the steps above, the particle filter method should be achieved. Thus, now the RVIZ and mrc-teleop can be utilized to simulate the performance of this method for localization. The simulation result is as the below gif | After all the steps above, the particle filter method should be achieved. Thus, now the RVIZ and mrc-teleop can be utilized to simulate the performance of this method for localization. The simulation result is as the below gifs shows. As shown in these two images, the likelihood and resample operations of the particles are working well. However, the only problem is the laser prediction (the green blocks) that similar to the figure shown in step 3), they are not accurate for not matching with the structure of the map. This results significant interference with the overall pose estimation of the particles. Despite continuously debugging the code and checking the map creation and loading, the root cause of the problem hasn't been spotted. Therefore, it is possible that there is an issue with the simulator system, which prevents the sensors from clearly detecting the boundaries created in the map. | ||

[[File:Local6.gif|center|thumb|350px|Particle filter: with classification by likelihood and resampling]] | |||

[[File:Local6new.gif|center|thumb|350px|Particle filter: with classification by likelihood and resampling in redefined config file]] | |||

====='''Localization by dead-reckoning approach'''===== | |||

Due to the incapability of laser information, the availability of the other method that learned from the course was tested and utilized as an alternative, which is the dead-reckoning approach. The principle of this method is to calculate the position change during a journey by accumulating the odometry information like previous velocity and direction, and then use them to estimate the current position. | |||

The gif below visualizes the process of moving robot to one certain point by relying on the dead-reckoning localization. The robot is moving by iterating through the laser sensor data, calculating the repulsive force exerted by each obstacle on the robot and accumulating it to the total repulsive velocity (the linear and angular velocities). Then, the robot would stop at different and specifically set coordinate points. With the utilization of the length measurement function from the map in the simulator, the accuracy of this dead-reckoning approach can be evaluated. Compare with the measurement values and the exact coordinate values at the stopped point, the errors are mostly less than 0.01m, which are really small. However, since this method does not account for various real-world factors such as noise and slip, it is not that practical. Thus, by adopting the uncertain odometry, the same method was carried out again with the same steps. The results of uncertain odometry indicate that the error is much larger than the certain odometry, and even gets larger with moving time increasing. | |||

[[File:Local7c.gif|center|thumb|450px|Dead-reckoning: drive the robot to a certain point by localization]] | |||

====='''Summary of localization'''===== | |||

After considering the designs of these methods for localization, due to time and capability constraints, there are only two viable options. One is to only use steps 1) to 3) of the particle filter method, while the other is the dead-reckoning method. After comparison, using only steps 1) to 3) of the particle filter method results in smaller errors compared to dead-reckoning in the same conditions. However, it is unable to determine the initial angle of the robot's position. Therefore, the main focus for future improvement would still lie in debugging the errors in laser prediction within the particle filter, or other similar localization methods such as Extended Kalman Filter (EKF) can also be learned and employed as an alternative. | |||

====Global path planning - A* | ====Global path planning - A*==== | ||

=====A* algorithm===== | =====A* algorithm===== | ||

The A* algorithm has been chosen as global path planner for this project. A* is a popular and widely-used search algorithm that finds the shortest path between two nodes in a graph. It combines the concepts of both breadth-first search and greedy best-first search to efficiently navigate through the graph. The algorithm maintains two lists: the open list and the closed list. It starts by initializing the open list with the starting node and assigns a heuristic value to each node, estimating the cost from that node to the goal. The algorithm then repeatedly selects the node with the lowest total cost, which is the sum of the cost from the start node and the heuristic value. It expands this selected node by examining its | The A* algorithm has been chosen as global path planner for this project. A* is a popular and widely-used search algorithm that finds the shortest path between two nodes in a graph. It combines the concepts of both breadth-first search and greedy best-first search to efficiently navigate through the graph. The algorithm maintains two lists: the open list and the closed list. It starts by initializing the open list with the starting node and assigns a heuristic value to each node, estimating the cost from that node to the goal. The algorithm then repeatedly selects the node with the lowest total cost, which is the sum of the cost from the start node and the heuristic value. It expands this selected node by examining its neighboring nodes, updating their costs and heuristic values accordingly. The process continues until the goal node is reached or there are no more nodes to explore. By carefully considering the estimated cost and choosing the most promising nodes first, A* efficiently finds the optimal path while avoiding unnecessary exploration.<br /> | ||

=====Creating of nodes and connections===== | =====Map Preparation: Creating of nodes and connections===== | ||

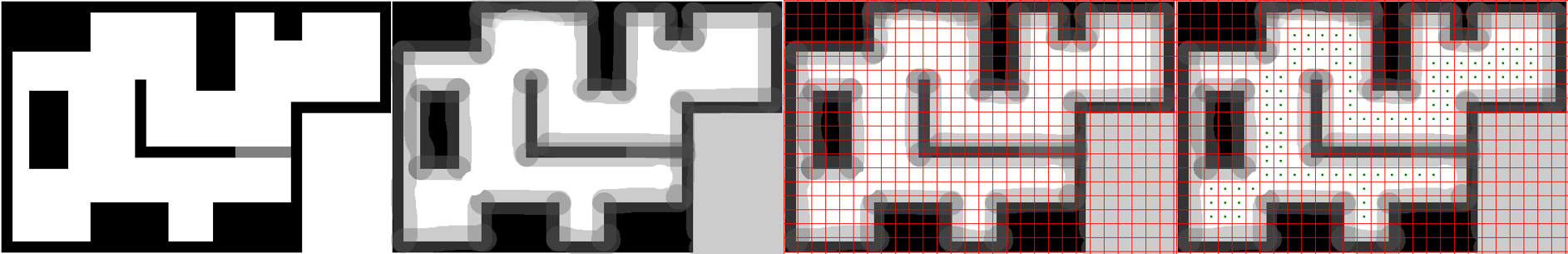

To create the nodes and connections the provided map was taken and divided into equal size squares. | To create the nodes and connections the provided map was taken and divided into equal size squares. The size of these squares can be decreased or increased for an accordingly finer or coarser grid. The current squares seen in the image are 20x20 pixels, which translates to 50x50cm for the physical robot. A finer grid will represent the map more accurately but also increases the chance of hitting walls while driving with the physical robot as well as increasing computation time and making the robot visit more nodes leading to a less smooth motion. The center of each square, in which no obstacles are present, are assigned as nodes. From these nodes the connections are found by checking each corner of the squares, in which the nodes are located, and if two squares have at least one corner in common then a connections between the two nodes will be made. Each wall and obstacles in the map were made bigger to account for uncertainty of the environment and size of the robot. | ||

[[File:Longmap.png|center|1250px|thumb|Creation of grid map, nodes and connections]] | |||

=====Performance of A*===== | |||

'''Simulation''' | |||

In simulation A* works really well as can be seen in the following videos, in both videos it is assumed the door in the map is closed. The first simulation video is made from a map in which the walls and objects have not been made bigger and hence the robot makes very sharp corners close to the walls. | |||

[[File:Close door.gif|center|thumb|Driving dangerously close to walls]] | |||

[[File: | |||

And the following video shows the final result of a safer path that was made with the grid map and nodes shown above. This is a better result as the robot drives in the middle of the corridor, which is safer as this will prevent clipping corners with the physical robot. | |||

[[File:Close door careful.gif|center|thumb|Driving safe distance from walls]] | |||

'''On physical system''' | |||

On the real robot, as can be seen from the following video: | |||

[[File:Wikireport.gif|center|thumb|450px|Hero navigating the first 3 tables using A*]] | |||

In this video, Hero arrives at a table, turns towards it and says 'I arrived at Table'. For the video with sound [https://tuenl-my.sharepoint.com/:v:/g/personal/a_h_d_pauw_student_tue_nl/EcRTOid_dJhFuJpP7wPTtF4BfdEl4oyFL_uu3NJc-nJ8Ww?e=aWCaYW&nav=eyJyZWZlcnJhbEluZm8iOnsicmVmZXJyYWxBcHAiOiJTdHJlYW1XZWJBcHAiLCJyZWZlcnJhbFZpZXciOiJTaGFyZURpYWxvZyIsInJlZmVycmFsQXBwUGxhdGZvcm0iOiJXZWIiLCJyZWZlcnJhbE1vZGUiOiJ2aWV3In19 click here]. | |||

The result can be seen in the following image. | |||

[[File:Wikiendtablesequence.jpg|center|thumb|450px|Result of Hero going from one table to the other for the first 3 tables]] | |||

Hero can be seen going from the starting point (first sheet of paper on the right) to table 1 (second sheet) then table 0 (third sheet) and finally stopping at table 2 (last sheet). The reason hero is not in the restaurant maze is because at that point during testing there was some initialization problem in which hero would first go from the centre of the map to the starting point (first sheet of paper on the right) of the maze ignoring all obstacles. The robot thinks it is at the map centre while in reality it is not placed in that location. This problem can be seen in the video above and in the following video in simulation: | |||

[[File:Initialize first.gif|center|thumb|Problem with initialization]] | |||

This problem was solved by adding an offset to the odometry of the robot. The offset makes sure the actual position of the robot with respect to the map and the position the robot thinks it is in are the same. Now the robot takes it's starting point as the start of the maze as can be seen in this simulation video below. Do note this solution is only temporary and should be replaced if a working localization is integrated. | |||

[[File:Starting immediately.gif|center|thumb|Follow path directly from initial position]]With the odometry offset applied, the robot was able to perform the tasks from the video's successfully. Therefore it can be concluded that the global path planning utilizing the A* algorithm is working properly both in simulation and on the physical system. | |||

====Local path planning - Artificial Potential Field | ====Local path planning - Artificial Potential Field==== | ||

The Artificial Potential Field method | The Artificial Potential Field (APF) method is a pioneering approach in local path planning algorithms. The primary principle behind this technique involves modeling obstacles and goals as repulsive and attractive vector fields, respectively. Conceptualizing the scenario from a potential perspective, it assigns a lower potential to the goal and a higher potential to obstacles. As a result, the robot navigates autonomously towards the coordinates with the lowest potential. | ||

The implementation of the APF method starts by calculating the robot's position's potential. This potential computation helps derive the induced velocities, which are then transmitted to the robot as navigation references. In practical terms, the APF method is constructed as a function that, given the robot's current pose, goal pose, and laser data, returns the reference velocities necessary to move towards the position with minimum potential. | |||

The laser data | The APF method employs laser data to model obstacles. One straightforward approach would be to consider only the nearest obstacle represented as a point in space. This point's relative position concerning the robot would be determined by the range and angle of the minimum laser scan. However, this approach may lack robustness since a single laser measurement might be susceptible to errors, leading to uncertainty in the obstacle's position. To address this issue, every laser measurement below a pre-set detection threshold is chosen to represent an obstacle. Consequently, each obstacle is modeled as a point cloud, with each point generating a repulsive velocity reference for the robot. The total repulsive velocity is then calculated as the sum of all these point-generated velocities, taking into account their orientation. This summation helps balance forces pointing in different directions and compensates for outliers by fighting the uncertainty of laser data. | ||

The APF function is iteratively called until the robot reaches the goal. This iterative process enables the robot to navigate to its destination while avoiding both static and dynamic obstacles. Since the obstacle set is recalculated every time the function is called, the method can handle errors or moving objects. | |||

As the goal is also a point on the map, the attractive velocity is unique and constant. To manage this, all the APF method parameters have been tuned to strike a balance between attractive and repulsive forces, enabling the robot to follow a viable and safe path towards the goal. This process of fine-tuning ensures that the robot is not overly repelled by obstacles that it cannot move forward or overly attracted to the goal that it cannot avoid obstacles.[[File:Apf.gif|thumb|APF on simulation]] | |||

The pros of this approach: | The pros of this approach: | ||

| Line 170: | Line 177: | ||

- It relies on parameter tuning: all the velocity coefficients and al the thresholds must be found through a trial-and-error process. | - It relies on parameter tuning: all the velocity coefficients and al the thresholds must be found through a trial-and-error process. | ||

<br /> | |||

===Integration=== | |||

====Software architecture==== | |||

To integrate the spare parts of the project a main file has been chosen to host the initialization code and a for loop in which the task to take on are managed on a high level. The initialization consists of reading the sequence of table and the json file containing the map parameters. Each table is then mapped into a goal node ID (to feed into A*) and a goal orientation. These values have been hardcoded into helper functions to be called in the main. | |||

Then the flow enters a for loop. Iterating over the list of tables given as input, the robot first computes the ideal path to the table using A*, then it enters the navigation mode. All the functions required for global navigation are collected into a library. Furthermore, a different library which collects the main APF function, all its helper functions and an obstacle detection function is included in the project. The local planning related functions, however, are not called in the main, but inside the global navigation function. The idea is, as a matter of fact, to use the APF as an exception to the default A* navigation, being triggered when an obstacle is detected on the path. | |||

====Obstacle avoidance policy==== | |||

To combine each of the block, the libraries from navigation and localization are merge first. Then, the right policy needs to be developed to switch between local path planning and global path planning. [[File:not_working.gif|center|thumb|First policy on simulation]] | |||

<br /> | The first designed policy is rather simple. The robot follows the global path as long as possible, then, if it detects an obstacle within a certain range, it switches to the obstacle detection mode. When obstacle detection mode is active, the robot gives control to the APF algorithm in order to find a safe path, drive past the obstacle and get back on the global track. The APF, however, needs a goal to compute velocity references. The idea is then to skip a few nodes, setting the goal for APF as the current_global_path_goal + n_skip. The assumption is that obstacles such that chairs or bags (which can be found in a restaurant) are not so large to take up many nodes. | ||

=== | |||

However, after simulating the first policy from the gif, it can be seen that although the robot switches to the local path planning state at the previous node of the obstacle, a significant portion of the robot's edges pass right through the robot in the local path planning state, which means that the current algorithm is not feasible. Since no issues were expected from the APF function as it works correctly on its own, the issue would originate from the policy. Unfortunately, the issue in the policy integration has not been identified completely, because of which no fitting solution could be found. | |||

Therefore, a new policy is put up without using APF: When the robot encounters an obstacle, the robot stops at the node Pn in front of the obstacle. The robot will then calculate whether it will pass the next node Pn+1 based on the data returned by lidar, with the path being a straight line from node Pn to node Pn+1. If the obstacle occupies the next node Pn+1, then the robot will calculate whether it will pass node Pn+2, which is a straight line from node Pn to node Pn+2, and so on. In the end, it can be seen in the gif that the robot avoided the obstacle and the "local" path is a straight line. However, the biggest disadvantage of this method is that if the obstacle is too large to obscure the robot to fail to detect the node after it, then the robot will stall forever. | |||

[[File:STABLE.gif|center|thumb|New policy on simuation]] | |||

<br /><br /> | |||

===Robustness & Evaluation in Restaurant challenge=== | |||

In the context of robotics, robustness typically refers to the ability of a robot to handle or recover from unforeseen circumstances, errors, or changes in its environment. Robustness is a key feature in robotics as it enables the robot to function accurately and consistently despite the presence of noise, disturbances, or variations in the operational environment. | |||

====Final Simulation Performance==== | |||

During the Restaurant challenge, Hero is supposed to: | |||

1) Start in certain area and visit a list of tables in sequence | |||

2) Position near the table, facing towards the table and give a clear sound signal when arrives | |||

3) Do not touch walls or objects | |||

The integrated global and local path planning are tested, and simulation result is as follows:[[File:Stable save.gif|center|thumb|Simulation performance on final challenge day]] | |||

From the simulation it can be seen that the robot move smoothly, avoid an unknown object and successfully plan and follow its way to all 5 tables in sequence, which perfectly meets all the requirements. | |||

====Robustness in Test==== | |||

During testing, we just tested the following robustness based on global navigation: | |||

1)Command sequence execution test: Test whether Hero can accurately access all tables in sequence given a series of table sequences. | |||

2)Sound signal test: Test whether Hero can emit a clear sound signal when reaching a table. | |||

The testing results can be found in the A* performance part. By adding the table sequence, we can make sure the robot does not perform the same task in a loop. And when arriving at each setting position, the robot would perform sound signal. These reduce the chance of error the robot would meet and increase robustness. However, since we just tackled our policy issue in simulation in the last day, we have no time to test combining (local and global) navigation in the restaurant challenge map. | |||

Besides, one issue should be improved is that the robot performance depends on the starting position. As we were not able to complete the localization module in time, if the robot had been tested in the restaurant map, the robot would have lost itself because it did not know its location. This means that when the robot initializes, we should set the robot in the coordinate and initial angle which are as same as those in simulation to make sure the robot will follow the setting path, which reduces the robustness. | |||

====Robustness in Final Restaurant challenge==== | |||

On the final challenge day the code can be demonstrated in a maximum of two trials. The software version used does not include a complete localization algorithm. | |||

*The recording of first trial is presented as follows: | |||

[[File:trial1.gif|center|thumb|First trial]] | |||

From the recording it can be seen that Hero first makes a fast turn to the left side, plans its way, moves straight forward and collides with the table. | |||

It is expected Hero could avoid objects and visit several tables in sequence but clearly it has under-performed compared to the simulation performance. | |||

*The recording of second trial is presented as follows: | |||

[[File:Second try.gif|center|thumb|Second trial]] | |||

From the recording it can be seen that Hero first turn left to plan its way, then go straight but unfortunately clipped the static coat rack object. It can be seen that the robot does try to steer left to avoid the box. However, as it is already in contact with the box, this obstacle avoidance try was not successful and therefore the second trial was ended as well. | |||

<br /> | A few hypothesis about what happened can be found in the deficiencies section later.<br /> | ||

====Deficiencies of Robustness==== | |||

First of all, being the particle filter not robust enough, we had to rely on dead-reckoning. This is a problem since the navigation is based on the knowledge of the current pose. Although, since the crash happened in the early stage of the challenge, the odometry drift might not be the main actor in the bad performance. The navigation policy could have not worked properly as well. The reason why this happened should be found by reproducing the occurrence in testing, after which faulty code can be identified and adjusted. | |||

Secondly, during the challenge it seemed as if the robot did not see the obstacles. It is expected that this is not caused by either the behavior of the local or the global planner. The most probable explanation to why that happened is because the robot got stuck in a loop somewhere and could not go back to either local or global planner. The problem therefore origins from integration between the two path planners and made to robot drive in a straight line without steering or avoiding obstacles. This is the most probable explanation because the robot did not drove directly to its assigned position at the table while it also did not avoid obstacles. Therefore the error is not caused by either of them, as the occurrence is not matching the tasks of the path planners. Being stuck in a loop without being able to exit the loop does match with the straight drive direction. The reason why this happened should be found by reproducing the occurrence in testing, after which faulty code can be identified and adjusted. | |||

Another hypothesis, based upon the outcome of the second trial, is a bad working obstacle detection. The robot relies on an obstacle detection function that checks only a part of the laser scans in front of the robot. The amount of laser scans to check is a parameter tuned on simulation first and later on the testing with the rosbot. Since Hero is larger than the rosbot, the number of laser scans to check should be larger as well. As a matter of fact, from the second video it can be seen that the robot's side collided with the obstacle. This indicates the range of laser scans used for obstacle detection should be increased when running the code on a different robot. | |||

The incomplete aspect of the particle filter method in the overall design of localization has been explained in step 6) in its design steps. The limitation of the dead-reckoning method is that it accumulates significant errors in real-world situations, making it unable to provide accurate positioning, which is crucial for the final restaurant challenge. As for using the method described in design steps of particle filter from step 1) to step 3) for localization, it does take into account the errors and demonstrates promising results in simulations. However, it is not adopted in the end because it couldn't determine the initial angle of the robot's position. And before the final challenge we didn't know we actually could adjust the initial angle of it. | |||

===Conclusion=== | ===Conclusion=== | ||

As can be concluded from the performance and deficiencies, not all steps planned in the strategy were executed successfully. Both the global path planner using A* and the local path planner using the artificial potential field algorithm work individually. The localization which makes use of a particle filter has not been successful in locating the robot with respect to the map. The integration of the global planner with a local planner has been achieved in some manner, but not with the artificial potential field algorithm. This version of the local planner is not very robust or efficient, but does do its job for simple environments. With the local and global planner integrated, the robot can, in simulation, successfully deliver orders to each table while avoiding unknown obstacles. Hereby it also correctly positions towards the table and gives a signal that is has arrived at this table with the order. So far so good. However, during the final challenge, it became clear the robot did not at all perform similar to the simulation. While is started well by orientating correctly, the emergency button of the robot had to be pressed as the robot drove into a table and a coat rack on the first and second trial respectively. This concluded the final challenge for HAL-9000 as the two trials had to be ended precautionary. | |||

The state flow has therefore not been completed as well, with the absence of the localization, interaction with a door and proper integration of the local planner. To make the robot achieve all its required tasks successfully, future steps have been considered, as can be found in the recommendations. These steps will make sure the robot is working substantially better in simulation, but will also achieve robust performance for the physical robot if executed correctly. | |||

===Recommendations | ===Recommendations=== | ||

Since code is has not been finished, the next topics that must be improved are quite clear. The first steps to take are to create a robust localization for the robot to know where it is. Simultaneously, it should be made sure the physical robots performs in similar fashion to the robot in simulation regarding the integration between global and local path planning. After that, the localization has to be integrated as well, creating a system that can handle unknown static objects and keep track of its location with respect to the map. This system is expected to handle dynamical objects already to some degree. | Since code is has not been finished, the next topics that must be improved are quite clear. The first steps to take are to create a robust localization for the robot to know where it is. Simultaneously, it should be made sure the physical robots performs in similar fashion to the robot in simulation regarding the integration between global and local path planning. After that, the localization has to be integrated as well, creating a system that can handle unknown static objects and keep track of its location with respect to the map. This system is expected to handle dynamical objects already to some degree. | ||

| Line 218: | Line 269: | ||

The last step to take is intensive and thorough testing to check the performance and robustness of the robot for all kinds of situations that might happen when such a robot is set to work in a restaurant. The importance of these tests is crucial for the safety of the robot and the environment around it. | The last step to take is intensive and thorough testing to check the performance and robustness of the robot for all kinds of situations that might happen when such a robot is set to work in a restaurant. The importance of these tests is crucial for the safety of the robot and the environment around it. | ||

A recommendation would be to start testing the code as soon as possible. This way, not only the different blocks of code can be verified individually but the integration as well. In addition, the robustness of the code can be thoroughly tested as this can only be done on the physical robot. | |||

Latest revision as of 22:22, 7 July 2023

Group members:

| Name | student ID |

|---|---|

| Xingyi Li | 1820443 |

| Alexander De Pauw | 1877267 |

| Timo van Gerwen | 1321854 |

| Yuzhou Nie | 1863428 |

| Ronghui Li | 1707183 |

| Guglielmo Morselli | 1959301 |

Design Presentation:

https://cstwiki.wtb.tue.nl/wiki/File:Midterm_presentation.pdf

Towards Final Challenge

Introduction

In recent days, robots have become indispensable in various domains, particularly in tasks that involve repetition. Among these applications, one instance is enabling robots to autonomously navigate and serve orders in restaurants, which is encapsulated in the Restaurant Challenge of the Mobile Robot Control course at TU/e. This project evaluates the capabilities of an autonomous robot to navigate and deliver orders within a restaurant setting. The challenge mandates teams to develop software and algorithms for robot 'Hero' to let it autonomously traverse the restaurant, avoiding obstacles and humans, while accurately delivering orders to the designated tables.

This wiki page aims to encompasses the strategies and algorithms employed by the team HAL-9000 for the Restaurant Challenge.

The page first begins with an overview of the general strategies. It details the systematic integration of various algorithms and software that have been developed to facilitate Hero's tasks. These include the robot's ability to traverse the restaurant independently, avoiding obstacles and humans, and delivering orders accurately to the appropriate tables. Additionally, a state flow diagram and data flow diagram are provided to elucidate Hero's task progressions and the processing of information.

Subsequently, the wiki delves deeper into the specifics of each algorithm implemented in the project. This involves a clear explanation of the localization algorithm for identifying Hero's position in the restaurant, the global path planning algorithm for determining the overall path, and the local path planning algorithm for managing obstacle avoidance and local route adjustments.

After discussing the individual algorithms, the wiki elaborates on the integration of these systems and how they operate in unison. An evaluation of the project's performance is provided at the end, accompanied by a conclusion that summarizes the project outcome, a thorough discussion on the results, and recommendations for future improvements.

Strategy

The challenge goal is to design a multi-faceted approach to ensure that the autonomous robot can deliver orders to the tables in a swift yet safe manner. To achieve this, the robot calculates the shortest path to the next table using the given table sequence, while continuously scanning the environment for potential obstacles. If an obstacle is detected, robot will enter into obstacle avoidance mode, ensuring the prevention of any crashes. Initially, only known static obstacles are considered to establish a solid foundation. As soon as the simplified version of the challenge is working, the complexity will increase incrementally: First integrate the capacity to cope with unknown static obstacles, then handle doors, and finally enable the robot to deal with unknown dynamic obstacles like humans.

By creating a state and data flow diagram, a policy to create the separate blocks as well as for the integration of these blocks is determined. While progressing with the code, these diagrams should be taken into account to keep a clear and logic connection between the different parts of the code.

The state diagram can be seen in the figure on the right. This is the state flow of the system that is desired to be reached by designing the code and integrating the different blocks. It includes the next table selection, planning a path to this table and what to do when detecting an obstacle at a certain range. If the obstacle is too close, the robot should stop. If the path is opened again, which can be the case with a dynamic obstacle, the robot can start driving again. Otherwise it has to plan a new path or drive carefully around the obstacle if possible. There is also an at door state, which will enable the robot to understand what a door is, know if it is open or closed, request the opening of the door or plan a new path without using the door if it remains closed. Note that the robot is continuously locating itself as it should know its position with respect to the map. When the robot has reached the next table, it will repeat the loop until all orders are delivered. The state diagram could be detailed further by including humans. For now it is decided that humans are considered as general unknown obstacles, therefore there is no dedicated state for different behavior if a human is detected.

The data flow diagram can be seen in the figure at the right. It shows which blocks communicate with each other by sending or receiving data. Most flow connection are quite intuitive on what data flows over this connection, especially for the input blocks. These are not explained in detail, while other, less intuitive connection are elaborated on. The obstacle detection has a connection with the base controller. This is needed as the obstacle detection can detect when an obstacle gets too close, because of which the robot will have to stop to prevent a collision with the detected obstacle. The data flowing over this connection thus will be a signal indicating the robot must stop immediately. From the obstacle detection, there is a second connection to the planner selection. This planner selection makes sure the local planner will take over from the global planner when an obstacle is in proximity. This connection therefore carries data on what distance the obstacle is with respect to the robot. Localization provides information to the global and local planner about the position of the robot with respect to the map. The global and the local path planners communicate with each other as well. The local path planner needs a goal supplied by the global path planner, while the global path planner needs to know which nodes the local path planner has driven passed or skipped because of an obstacle. The data flowing between the planners thus are the next goals to drive to to eventually reach the correct table. The table coordinate is connected to the global path planner and provides it by supplying the node for the corresponding table to drive to. When this table is reached, the global planner will ask for the next node to plan a path to, that is why the connection is going both ways. Lastly, the base controller sends the actual robot inputs for the robot to be actuated.

To complete the final challenge, an efficient and robust code is created to make the robot deliver orders to multiple tables in a restaurant which environment is known beforehand. However, as the world around us is not static nor constant, even in the presence of unknown static or dynamic obstacles the robot should still complete its task. To solve this challenge, an approach that incorporates global path planning, local path planning for obstacle avoidance and localization is proposed.

The first task the robot performs during the challenge is to find out where it is. This is called localization, for which a particle filter algorithm is utilized. Particle filters, or sequential Monte Carlo methods, are a set of Monte Carlo algorithms used to find approximate solutions for filtering problems for nonlinear state-space systems. The algorithm will estimate the robot's position with respect to the restaurant map by resampling particles until convergence to the position of the physical robot is achieved. The more particles used in the algorithm, the more accurate the algorithm can determine the position of the robot. However, the increase in particles will have a substantial effect on the computation time and could result in lag. Therefore, a balance has to be found, with the amount of particles as a tuning knob, between the computation time and the accuracy of the algorithm.

With the robot having determined its location with respect to the map, it will try and find the most optimal global path to reach the first table. The global path planning requires prior knowledge of the robot's environment, being the supplied map, the current position of the robot and the location of the next table. This is done using the A* algorithm, which identifies the path from an initial position to a targeted position in a nodal map with the smallest cost. The cost in this challenge is time or distance, therefore the shortest path will be selected by the A* algorithm.

Unknown objects cannot be taken into account by the A* algorithm. For to robot to handle these objects as well, a local path planning is required. The local path planning relies on laser data obtained by the sensors of the robot scanning the environment. Within their range, these sensors can detect any unknown obstacles present around the robot. As the obstacles are detected, the robot has to navigate around them locally by utilizing the Artificial Potential Field algorithm. APF creates attractive fields around the set target and repulsive fields around detected obstacles. These fields create a attractive and a repulsive force respectively, providing the robot with a collision-free path by taking the sum of these forces.

Algorithm details

The algorithms used make sure the robot can perform its tasks. The three kinds of algorithms are respectively for localization, global path planning and local path planning. The reasoning behind these algorithms' choices as well as the general working of the algorithms will be elaborated on in this section.

Localization

Localization by Particle filter

Particle filter method is a potent technique used to estimate the position and orientation of a robot within a relatively authentic enviroment with noise and uncertainty. One of the key strengths of particle filters lies in their ability to manage non-linear conditions effectively, ensuring higher accuracy and robustness in complex environments. The following are the genral steps to implement this particle filter method for localization:

1) Initialization

The particle filter begins with a set of particles randomly distributed throughout the robot's known map or environment. Each particle represents a potential state of the robot, including its position and orientation, and the particle cloud can be initiliazed either through a uniform or a normal distribution. As the following figure shows, particles with Gaussian noise and random initial poses are constructed along with their filters. There are 1000 particles being generated and the center of them is the initial position of the robot.

2) Filter prediction

After generated the particle filters, now the prediction of the filter (average pose) should be calculated. The average pose can be obtained by iterating through the particle set while performing accumulation calculations on the position. The green arrow in the figure below shows the visualization of the average pose of particles generated around the initial point of the robot.

3) Propagation of particles

The robot would move around instead of staying still. Thus, updating the positions and orientations of particles based on the robot's motion model is crucial. In this step, each particle undergoes translation and rotation according to the robot's motion information while accounting for the noise and uncertainties in the motion model. The simulation result is shown as the gif below. With the propagation of particles, now the pose prediction (average pose) can be generated even though the robot is moving, getting the primary localization information.

4) Likelihood of particles

Calculating the likelihood of a particle is an important step for particle filter. It is used to determine the weight of each particle, which in turn affects the sampling probability in the subsequent resampling step. By calculating the likelihood of a particle, the particle filter can better estimate the true state of the robot and assign more weight to particles that have a higher match with the observed data.

Generally there're two sub-steps to accomplish this step. First, generate the prediction of the expected measurements (ranges to obstacles) based on laser. Then, compute the likelihood of each measurement relative to this prediction using the parameters set in the config file (incorporating noise).

5)Resampling of particles

Now the particle filter method is almost done. But the resampling of particles is also crucial for the optimization of performance. Multinomial method was chosen as the resampling method. This multinomial resampling method samples particles based on their weights and in a probabilistical way, such that particles with higher weights have a greater probability of being selected into the new particle set, while particles with lower weights have a smaller probability of being selected. This allows the particle set to better reflect the posterior probability distribution, thereby improving the performance of the filter.

6) Performance and analysis

After all the steps above, the particle filter method should be achieved. Thus, now the RVIZ and mrc-teleop can be utilized to simulate the performance of this method for localization. The simulation result is as the below gifs shows. As shown in these two images, the likelihood and resample operations of the particles are working well. However, the only problem is the laser prediction (the green blocks) that similar to the figure shown in step 3), they are not accurate for not matching with the structure of the map. This results significant interference with the overall pose estimation of the particles. Despite continuously debugging the code and checking the map creation and loading, the root cause of the problem hasn't been spotted. Therefore, it is possible that there is an issue with the simulator system, which prevents the sensors from clearly detecting the boundaries created in the map.

Localization by dead-reckoning approach

Due to the incapability of laser information, the availability of the other method that learned from the course was tested and utilized as an alternative, which is the dead-reckoning approach. The principle of this method is to calculate the position change during a journey by accumulating the odometry information like previous velocity and direction, and then use them to estimate the current position.

The gif below visualizes the process of moving robot to one certain point by relying on the dead-reckoning localization. The robot is moving by iterating through the laser sensor data, calculating the repulsive force exerted by each obstacle on the robot and accumulating it to the total repulsive velocity (the linear and angular velocities). Then, the robot would stop at different and specifically set coordinate points. With the utilization of the length measurement function from the map in the simulator, the accuracy of this dead-reckoning approach can be evaluated. Compare with the measurement values and the exact coordinate values at the stopped point, the errors are mostly less than 0.01m, which are really small. However, since this method does not account for various real-world factors such as noise and slip, it is not that practical. Thus, by adopting the uncertain odometry, the same method was carried out again with the same steps. The results of uncertain odometry indicate that the error is much larger than the certain odometry, and even gets larger with moving time increasing.

Summary of localization

After considering the designs of these methods for localization, due to time and capability constraints, there are only two viable options. One is to only use steps 1) to 3) of the particle filter method, while the other is the dead-reckoning method. After comparison, using only steps 1) to 3) of the particle filter method results in smaller errors compared to dead-reckoning in the same conditions. However, it is unable to determine the initial angle of the robot's position. Therefore, the main focus for future improvement would still lie in debugging the errors in laser prediction within the particle filter, or other similar localization methods such as Extended Kalman Filter (EKF) can also be learned and employed as an alternative.

Global path planning - A*

A* algorithm

The A* algorithm has been chosen as global path planner for this project. A* is a popular and widely-used search algorithm that finds the shortest path between two nodes in a graph. It combines the concepts of both breadth-first search and greedy best-first search to efficiently navigate through the graph. The algorithm maintains two lists: the open list and the closed list. It starts by initializing the open list with the starting node and assigns a heuristic value to each node, estimating the cost from that node to the goal. The algorithm then repeatedly selects the node with the lowest total cost, which is the sum of the cost from the start node and the heuristic value. It expands this selected node by examining its neighboring nodes, updating their costs and heuristic values accordingly. The process continues until the goal node is reached or there are no more nodes to explore. By carefully considering the estimated cost and choosing the most promising nodes first, A* efficiently finds the optimal path while avoiding unnecessary exploration.

Map Preparation: Creating of nodes and connections

To create the nodes and connections the provided map was taken and divided into equal size squares. The size of these squares can be decreased or increased for an accordingly finer or coarser grid. The current squares seen in the image are 20x20 pixels, which translates to 50x50cm for the physical robot. A finer grid will represent the map more accurately but also increases the chance of hitting walls while driving with the physical robot as well as increasing computation time and making the robot visit more nodes leading to a less smooth motion. The center of each square, in which no obstacles are present, are assigned as nodes. From these nodes the connections are found by checking each corner of the squares, in which the nodes are located, and if two squares have at least one corner in common then a connections between the two nodes will be made. Each wall and obstacles in the map were made bigger to account for uncertainty of the environment and size of the robot.

Performance of A*

Simulation

In simulation A* works really well as can be seen in the following videos, in both videos it is assumed the door in the map is closed. The first simulation video is made from a map in which the walls and objects have not been made bigger and hence the robot makes very sharp corners close to the walls.

And the following video shows the final result of a safer path that was made with the grid map and nodes shown above. This is a better result as the robot drives in the middle of the corridor, which is safer as this will prevent clipping corners with the physical robot.

On physical system

On the real robot, as can be seen from the following video:

In this video, Hero arrives at a table, turns towards it and says 'I arrived at Table'. For the video with sound click here.

The result can be seen in the following image.

Hero can be seen going from the starting point (first sheet of paper on the right) to table 1 (second sheet) then table 0 (third sheet) and finally stopping at table 2 (last sheet). The reason hero is not in the restaurant maze is because at that point during testing there was some initialization problem in which hero would first go from the centre of the map to the starting point (first sheet of paper on the right) of the maze ignoring all obstacles. The robot thinks it is at the map centre while in reality it is not placed in that location. This problem can be seen in the video above and in the following video in simulation:

This problem was solved by adding an offset to the odometry of the robot. The offset makes sure the actual position of the robot with respect to the map and the position the robot thinks it is in are the same. Now the robot takes it's starting point as the start of the maze as can be seen in this simulation video below. Do note this solution is only temporary and should be replaced if a working localization is integrated.

With the odometry offset applied, the robot was able to perform the tasks from the video's successfully. Therefore it can be concluded that the global path planning utilizing the A* algorithm is working properly both in simulation and on the physical system.

Local path planning - Artificial Potential Field

The Artificial Potential Field (APF) method is a pioneering approach in local path planning algorithms. The primary principle behind this technique involves modeling obstacles and goals as repulsive and attractive vector fields, respectively. Conceptualizing the scenario from a potential perspective, it assigns a lower potential to the goal and a higher potential to obstacles. As a result, the robot navigates autonomously towards the coordinates with the lowest potential.

The implementation of the APF method starts by calculating the robot's position's potential. This potential computation helps derive the induced velocities, which are then transmitted to the robot as navigation references. In practical terms, the APF method is constructed as a function that, given the robot's current pose, goal pose, and laser data, returns the reference velocities necessary to move towards the position with minimum potential.

The APF method employs laser data to model obstacles. One straightforward approach would be to consider only the nearest obstacle represented as a point in space. This point's relative position concerning the robot would be determined by the range and angle of the minimum laser scan. However, this approach may lack robustness since a single laser measurement might be susceptible to errors, leading to uncertainty in the obstacle's position. To address this issue, every laser measurement below a pre-set detection threshold is chosen to represent an obstacle. Consequently, each obstacle is modeled as a point cloud, with each point generating a repulsive velocity reference for the robot. The total repulsive velocity is then calculated as the sum of all these point-generated velocities, taking into account their orientation. This summation helps balance forces pointing in different directions and compensates for outliers by fighting the uncertainty of laser data.

The APF function is iteratively called until the robot reaches the goal. This iterative process enables the robot to navigate to its destination while avoiding both static and dynamic obstacles. Since the obstacle set is recalculated every time the function is called, the method can handle errors or moving objects.

As the goal is also a point on the map, the attractive velocity is unique and constant. To manage this, all the APF method parameters have been tuned to strike a balance between attractive and repulsive forces, enabling the robot to follow a viable and safe path towards the goal. This process of fine-tuning ensures that the robot is not overly repelled by obstacles that it cannot move forward or overly attracted to the goal that it cannot avoid obstacles.

The pros of this approach:

- It can deal with all kinds of obstacles;

- It is robust with respect to uncertainties in the measurements;

- It is able to find a smooth path towards the goal.

The cons of this approach:

- It relies on good localization: the attractive force is, in fact, a function of the current pose and the goal pose;

- It is intrinsically affected by local minima issues, leading the robot to a low-potential position which might not be the goal;

- It relies on parameter tuning: all the velocity coefficients and al the thresholds must be found through a trial-and-error process.

Integration

Software architecture

To integrate the spare parts of the project a main file has been chosen to host the initialization code and a for loop in which the task to take on are managed on a high level. The initialization consists of reading the sequence of table and the json file containing the map parameters. Each table is then mapped into a goal node ID (to feed into A*) and a goal orientation. These values have been hardcoded into helper functions to be called in the main.

Then the flow enters a for loop. Iterating over the list of tables given as input, the robot first computes the ideal path to the table using A*, then it enters the navigation mode. All the functions required for global navigation are collected into a library. Furthermore, a different library which collects the main APF function, all its helper functions and an obstacle detection function is included in the project. The local planning related functions, however, are not called in the main, but inside the global navigation function. The idea is, as a matter of fact, to use the APF as an exception to the default A* navigation, being triggered when an obstacle is detected on the path.

Obstacle avoidance policy

To combine each of the block, the libraries from navigation and localization are merge first. Then, the right policy needs to be developed to switch between local path planning and global path planning.

The first designed policy is rather simple. The robot follows the global path as long as possible, then, if it detects an obstacle within a certain range, it switches to the obstacle detection mode. When obstacle detection mode is active, the robot gives control to the APF algorithm in order to find a safe path, drive past the obstacle and get back on the global track. The APF, however, needs a goal to compute velocity references. The idea is then to skip a few nodes, setting the goal for APF as the current_global_path_goal + n_skip. The assumption is that obstacles such that chairs or bags (which can be found in a restaurant) are not so large to take up many nodes.

However, after simulating the first policy from the gif, it can be seen that although the robot switches to the local path planning state at the previous node of the obstacle, a significant portion of the robot's edges pass right through the robot in the local path planning state, which means that the current algorithm is not feasible. Since no issues were expected from the APF function as it works correctly on its own, the issue would originate from the policy. Unfortunately, the issue in the policy integration has not been identified completely, because of which no fitting solution could be found.

Therefore, a new policy is put up without using APF: When the robot encounters an obstacle, the robot stops at the node Pn in front of the obstacle. The robot will then calculate whether it will pass the next node Pn+1 based on the data returned by lidar, with the path being a straight line from node Pn to node Pn+1. If the obstacle occupies the next node Pn+1, then the robot will calculate whether it will pass node Pn+2, which is a straight line from node Pn to node Pn+2, and so on. In the end, it can be seen in the gif that the robot avoided the obstacle and the "local" path is a straight line. However, the biggest disadvantage of this method is that if the obstacle is too large to obscure the robot to fail to detect the node after it, then the robot will stall forever.

Robustness & Evaluation in Restaurant challenge

In the context of robotics, robustness typically refers to the ability of a robot to handle or recover from unforeseen circumstances, errors, or changes in its environment. Robustness is a key feature in robotics as it enables the robot to function accurately and consistently despite the presence of noise, disturbances, or variations in the operational environment.

Final Simulation Performance

During the Restaurant challenge, Hero is supposed to:

1) Start in certain area and visit a list of tables in sequence

2) Position near the table, facing towards the table and give a clear sound signal when arrives

3) Do not touch walls or objects

The integrated global and local path planning are tested, and simulation result is as follows:

From the simulation it can be seen that the robot move smoothly, avoid an unknown object and successfully plan and follow its way to all 5 tables in sequence, which perfectly meets all the requirements.

Robustness in Test

During testing, we just tested the following robustness based on global navigation:

1)Command sequence execution test: Test whether Hero can accurately access all tables in sequence given a series of table sequences.

2)Sound signal test: Test whether Hero can emit a clear sound signal when reaching a table.

The testing results can be found in the A* performance part. By adding the table sequence, we can make sure the robot does not perform the same task in a loop. And when arriving at each setting position, the robot would perform sound signal. These reduce the chance of error the robot would meet and increase robustness. However, since we just tackled our policy issue in simulation in the last day, we have no time to test combining (local and global) navigation in the restaurant challenge map.

Besides, one issue should be improved is that the robot performance depends on the starting position. As we were not able to complete the localization module in time, if the robot had been tested in the restaurant map, the robot would have lost itself because it did not know its location. This means that when the robot initializes, we should set the robot in the coordinate and initial angle which are as same as those in simulation to make sure the robot will follow the setting path, which reduces the robustness.

Robustness in Final Restaurant challenge

On the final challenge day the code can be demonstrated in a maximum of two trials. The software version used does not include a complete localization algorithm.

- The recording of first trial is presented as follows:

From the recording it can be seen that Hero first makes a fast turn to the left side, plans its way, moves straight forward and collides with the table.

It is expected Hero could avoid objects and visit several tables in sequence but clearly it has under-performed compared to the simulation performance.

- The recording of second trial is presented as follows:

From the recording it can be seen that Hero first turn left to plan its way, then go straight but unfortunately clipped the static coat rack object. It can be seen that the robot does try to steer left to avoid the box. However, as it is already in contact with the box, this obstacle avoidance try was not successful and therefore the second trial was ended as well.

A few hypothesis about what happened can be found in the deficiencies section later.

Deficiencies of Robustness

First of all, being the particle filter not robust enough, we had to rely on dead-reckoning. This is a problem since the navigation is based on the knowledge of the current pose. Although, since the crash happened in the early stage of the challenge, the odometry drift might not be the main actor in the bad performance. The navigation policy could have not worked properly as well. The reason why this happened should be found by reproducing the occurrence in testing, after which faulty code can be identified and adjusted.

Secondly, during the challenge it seemed as if the robot did not see the obstacles. It is expected that this is not caused by either the behavior of the local or the global planner. The most probable explanation to why that happened is because the robot got stuck in a loop somewhere and could not go back to either local or global planner. The problem therefore origins from integration between the two path planners and made to robot drive in a straight line without steering or avoiding obstacles. This is the most probable explanation because the robot did not drove directly to its assigned position at the table while it also did not avoid obstacles. Therefore the error is not caused by either of them, as the occurrence is not matching the tasks of the path planners. Being stuck in a loop without being able to exit the loop does match with the straight drive direction. The reason why this happened should be found by reproducing the occurrence in testing, after which faulty code can be identified and adjusted.

Another hypothesis, based upon the outcome of the second trial, is a bad working obstacle detection. The robot relies on an obstacle detection function that checks only a part of the laser scans in front of the robot. The amount of laser scans to check is a parameter tuned on simulation first and later on the testing with the rosbot. Since Hero is larger than the rosbot, the number of laser scans to check should be larger as well. As a matter of fact, from the second video it can be seen that the robot's side collided with the obstacle. This indicates the range of laser scans used for obstacle detection should be increased when running the code on a different robot.

The incomplete aspect of the particle filter method in the overall design of localization has been explained in step 6) in its design steps. The limitation of the dead-reckoning method is that it accumulates significant errors in real-world situations, making it unable to provide accurate positioning, which is crucial for the final restaurant challenge. As for using the method described in design steps of particle filter from step 1) to step 3) for localization, it does take into account the errors and demonstrates promising results in simulations. However, it is not adopted in the end because it couldn't determine the initial angle of the robot's position. And before the final challenge we didn't know we actually could adjust the initial angle of it.

Conclusion

As can be concluded from the performance and deficiencies, not all steps planned in the strategy were executed successfully. Both the global path planner using A* and the local path planner using the artificial potential field algorithm work individually. The localization which makes use of a particle filter has not been successful in locating the robot with respect to the map. The integration of the global planner with a local planner has been achieved in some manner, but not with the artificial potential field algorithm. This version of the local planner is not very robust or efficient, but does do its job for simple environments. With the local and global planner integrated, the robot can, in simulation, successfully deliver orders to each table while avoiding unknown obstacles. Hereby it also correctly positions towards the table and gives a signal that is has arrived at this table with the order. So far so good. However, during the final challenge, it became clear the robot did not at all perform similar to the simulation. While is started well by orientating correctly, the emergency button of the robot had to be pressed as the robot drove into a table and a coat rack on the first and second trial respectively. This concluded the final challenge for HAL-9000 as the two trials had to be ended precautionary.

The state flow has therefore not been completed as well, with the absence of the localization, interaction with a door and proper integration of the local planner. To make the robot achieve all its required tasks successfully, future steps have been considered, as can be found in the recommendations. These steps will make sure the robot is working substantially better in simulation, but will also achieve robust performance for the physical robot if executed correctly.

Recommendations

Since code is has not been finished, the next topics that must be improved are quite clear. The first steps to take are to create a robust localization for the robot to know where it is. Simultaneously, it should be made sure the physical robots performs in similar fashion to the robot in simulation regarding the integration between global and local path planning. After that, the localization has to be integrated as well, creating a system that can handle unknown static objects and keep track of its location with respect to the map. This system is expected to handle dynamical objects already to some degree.

As proposed in the strategy, the next step is to include a feature to handle doors. This can be done by making the robot understand the difference between a wall and a door using map semantics. The hard part when adding this feature is to adapt the global path planning to plan the path via the door as an intermediate target, while also being able to switch the path if the door does not open for a certain amount of time after requesting.

The following step of the strategy is expected to be even harder, making to robot deal with dynamic obstacles like humans. First, dynamic obstacles should be detected and recognized. The robot should understand a human is nearby and should therefore take extra safety measures to prevent causing a collision as humans are harder to 'repair' if a collision ought to happen.

The last step to take is intensive and thorough testing to check the performance and robustness of the robot for all kinds of situations that might happen when such a robot is set to work in a restaurant. The importance of these tests is crucial for the safety of the robot and the environment around it.