Mobile Robot Control 2023 Wall-E: Difference between revisions

| (67 intermediate revisions by 5 users not shown) | |||

| Line 28: | Line 28: | ||

On this wiki page the following aspects of the project are discussed. First, the strategy with regards to the restaurant challenge is discussed. Next, the software architecture and robustness is explained. Finally, the results are evaluated and a conclusion is drawn. | On this wiki page the following aspects of the project are discussed. First, the strategy with regards to the restaurant challenge is discussed. Next, the software architecture and robustness is explained. Finally, the results are evaluated and a conclusion is drawn. | ||

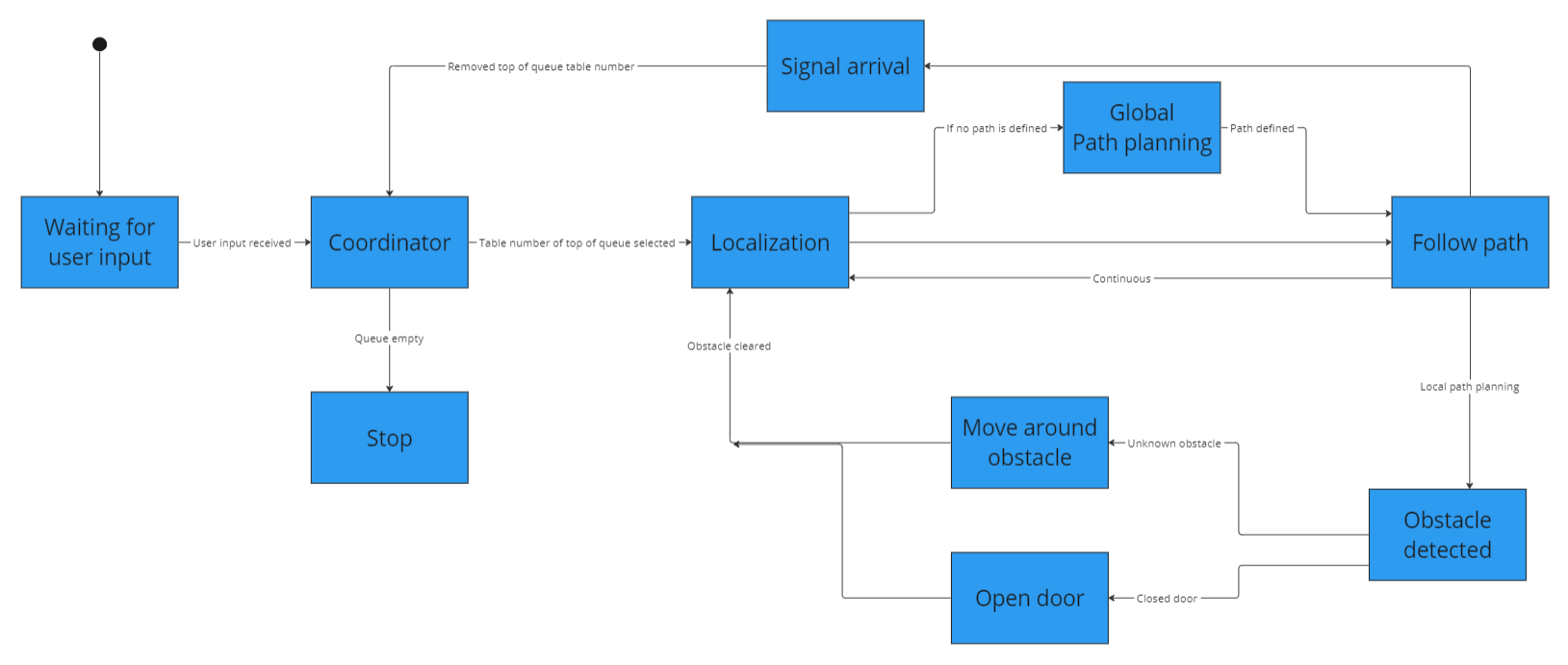

==State flow diagram== | ==State flow diagram== | ||

[[File:WallE state flow.png|thumb|State flow diagram for restaurant challenge.]] | [[File:WallE state flow.png|thumb|State flow diagram for restaurant challenge.]] | ||

| Line 43: | Line 41: | ||

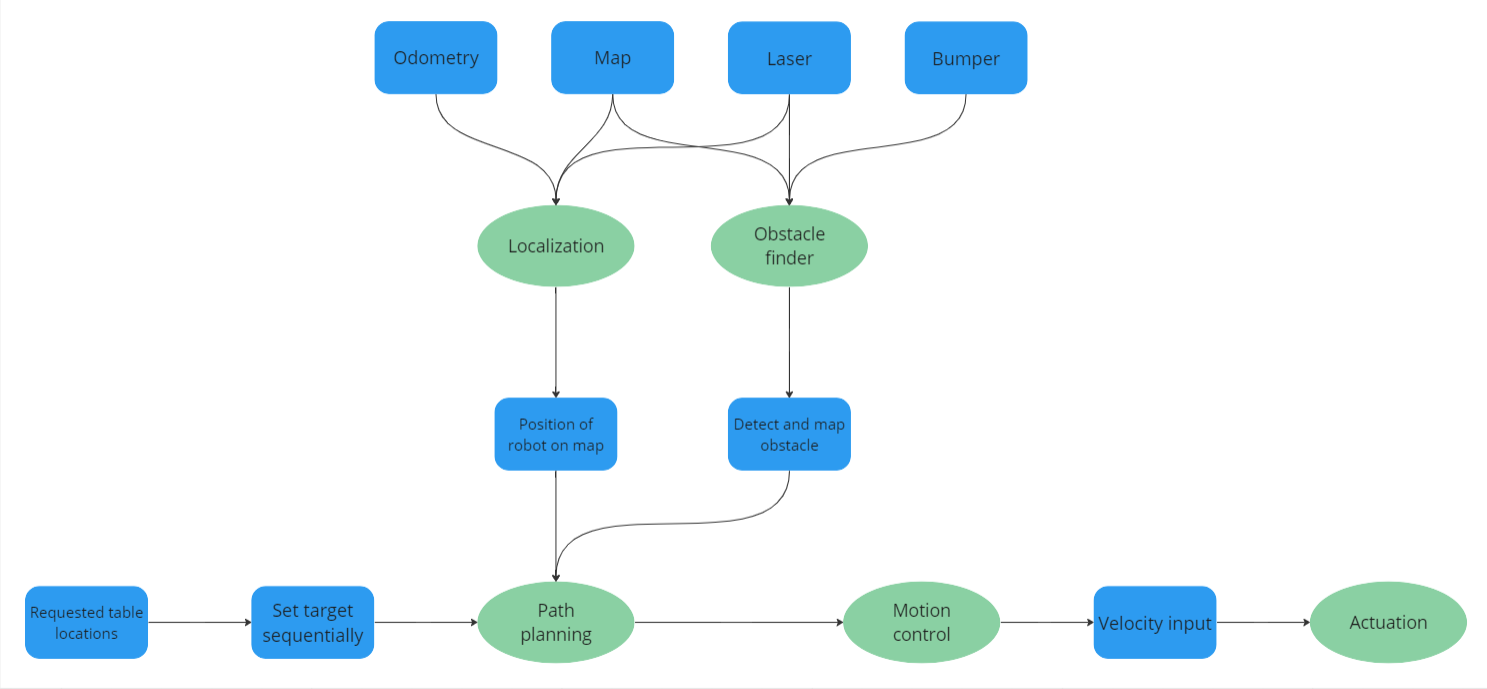

The data flow diagram uses all data available and the flows point to the appropriate processes in order to execute those parts accordingly with the received data. | The data flow diagram uses all data available and the flows point to the appropriate processes in order to execute those parts accordingly with the received data. | ||

==Strategy description Restaurant challenge | ==Strategy description Restaurant challenge== | ||

In this section a general strategy is mentioned, which is used by the group to get an idea of the tasks of the robot and the algorithms that need to be used. This strategy reflects the state flow diagram, as depicted in the state flow section. Further explanations about the final algorithms for the states will be given in the upcoming sections. | |||

- | First, the robot waits for the user input, which is the table queue (''block: Waiting for user input''). After this the coordinator will initialise the progress by 1) checking if the queue is empty, 2) if non-empty it will localise its location after loading the provided map of the restaurant (''block: Coordinator''). This localisation is done by the particle filter, which will estimate the robot’s position within the restaurant environment (''block: Localisation''). For this, the LiDAR data is processed to build and update the map. | ||

After the robot knows its location, the A* algorithm is invoked as the global path planner to generate an initial path from the robot’s position to the first table in the queue (''block: Global Path Planning''). When an initial path is found, the robot will start following this (''block: Follow Path''). The global path consists of waypoints (nodes), which the robot has to follow. Will following this path, the robot will continuously determine its positions by localisation. Based on the provided restaurant map, basic information such as the location of the walls, doors and tables are known, such that the robot can plan a collision-free path. However, there could be unknown obstacles, or the door can be closed, which can be detected by continuously checking the LiDAR data. If such an object is detected, the global path planning will switch to local path planning. | |||

The obstacles can be divided into two categories, which are closed doors, and unknown obstacles, either dynamic or static. For closed doors, the robot will ask for the door to be opened (''block: Open door''). For unknown obstacles, local path planning is an Open Space Approach. The robot will reassess the obstacle situation continuously and adjust the local path if needed (''block: Move around obstacle''). When using the open space approach the robot will check where the most room is available and will drive in this direction. When an object suddenly comes too close the robot will dodge the object by turning away from it. If an obstacle region is reached, the path planning will switch back to global, and a new path will be determined after localisation. During this switch, the robot will slow down temporarily to ensure safety during the planning of a new path. When a new path is found, the robot will continue with its delivery. | |||

When the first table in the queue is reached, it will signal its arrival by speaking (''block: Signal arrival''). The robot needs to position itself at a suitable range from the table and face it correctly. After a set delivery time, the table will be removed from the queue and the robot will restart its cycle until the last table has been reached. Nonetheless, this approach is not flawless, errors could occur due to unexpected situations, such as failed table delivery attempts. When this happens, the robot should replan to generate an alternative path or recovery strategy, by backing out of its spot and trying again with a slightly different angle or delivering at a different node. | |||

==Software Architecture Restaurant challenge== | ==Software Architecture Restaurant challenge== | ||

| Line 117: | Line 60: | ||

The folder containing the main executabe file also contains the files from the A*- algorithm (global path planning), the open space approach (local path planning) and the localisation (particle filter). The executable uses multi-threading in order to run the navigation and localisation algorithms in parallel. Two threads are run simultaneously, one calling the function for navigation and one for localisation. | The folder containing the main executabe file also contains the files from the A*- algorithm (global path planning), the open space approach (local path planning) and the localisation (particle filter). The executable uses multi-threading in order to run the navigation and localisation algorithms in parallel. Two threads are run simultaneously, one calling the function for navigation and one for localisation. | ||

The navigation function first retrieves the table order as arguments and stores it in a table list variable. This table list is then used together with a table nodes list to determine the predefined node in the map that corresponds to the table. The loading of the ''json'' file containing the map parameters including the node definitions is hardcoded into the executable. The node list containing nodes that correspond to the tables that have to be visited by the robot is used in a for loop where the A*- algorithm is used to compute a path from the start till end node. For each loop in the for loop the previous end node is taken as the new starting node for the new path. Once the robot | The navigation function first retrieves the table order as arguments and stores it in a table list variable. This table list is then used together with a table nodes list to determine the predefined node in the map that corresponds to the table. The loading of the ''json'' file containing the map parameters including the node definitions is hardcoded into the executable. The node list containing nodes that correspond to the tables that have to be visited by the robot is used in a for loop where the A*- algorithm is used to compute a path from the start till end node. For each loop in the for loop the previous end node is taken as the new starting node for the new path. Once the robot has reached the table node it will signal the customer that it has arrived with the ''io.speak()'' function. Once the robot notices an obstacle within a set range in its laser data the robot will switch from global path planning (A*- algorithm) to local path planning (Open space approach) and try to dodge the obstacle. After a set time interval the robot will use the node that is closest by to determine a new path using the A*- algorithm. | ||

The localisation function determines the location of the robot on the map using odom data, laser data and information from a png file of the map. The particle filter estimates the current location of the robot and stores this data in a variable. A pointer pointing to the location of this variable is given as an input to the navigation function so the robot can follow the path computed by the A*- algorithm. | The localisation function determines the location of the robot on the map using odom data, laser data and information from a png file of the map. The particle filter estimates the current location of the robot and stores this data in a variable. A pointer pointing to the location of this variable is given as an input to the navigation function so the robot can follow the path computed by the A*- algorithm. | ||

<br /> | <br /> | ||

===Global path planning | ===Global path planning=== | ||

As previously stated, the A* algorithm for global path planning provides the shortest route from a specified start node to a designated end node. In order for the A* algorithm to generate the intended path, it is necessary for the restaurant map to include nodes that connect to the desired table nodes. These nodes will serve as the basis for creating a global path. | As previously stated, the A* algorithm for global path planning provides the shortest route from a specified start node to a designated end node. In order for the A* algorithm to generate the intended path, it is necessary for the restaurant map to include nodes that connect to the desired table nodes. These nodes will serve as the basis for creating a global path. | ||

====''Node definition | ====''Node definition''==== | ||

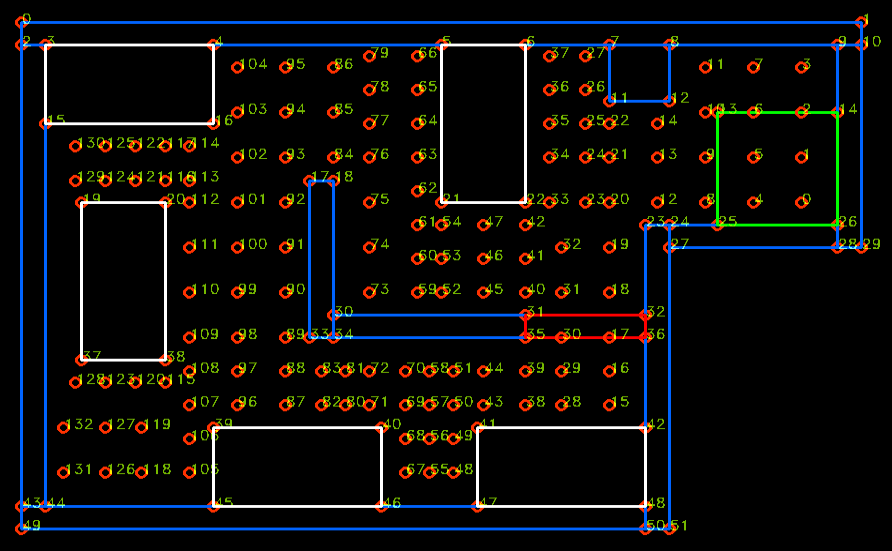

[[File:WallE vectormap.png|thumb|The defined nodes in the vector map for the restaurant challenge.|325x325px]] | |||

The nodes are specifically designed for the calculations involved in the A* algorithm, which aims to determine the distances for efficient route planning. The placement of the nodes is carefully selected to ensure that the robot can access any corner of the map. When considering the distance to the table, it is important to maintain a minimum distance of 20cm on all sides to prevent the robot from colliding with the table. In open areas, the distances between nodes are increased to allow the robot to travel longer distances, but there are still enough nodes to be able to go around unknown static or dynamic obstacles. | |||

Regarding the connections between nodes, each node is linked to its neighboring nodes, including both adjacent and diagonal nodes. However, these connections do not go through walls or tables, and special attention is given to avoid connecting nodes at sharp corners around tables. | |||

During testing, it was discovered that some node connections still posed a risk of cutting too closely around table corners, which could lead to collisions. As a result, certain connections were removed to ensure that the robot could navigate around tables without hitting the corners. | |||

<br /> | |||

====''A*- algorithm''==== | ====''A*- algorithm''==== | ||

The A* algorithm is a widely recognized search algorithm that starts at a designated start node and navigates to a target end node. Each node possesses three values: 'g' represents the cost of movement from the start node to the current node, 'h' estimates the movement cost from the current node to the end node, and 'f' represents the sum of 'h' and 'g' for the current node. The 'h' value, also known as the heuristic, is determined by calculating the Euclidean distance from the current position to the end node. | [[File:Astar WallEgif.gif|thumb|325x325px|Implementation of A* on the final map, visiting two tables without open door implementation.]] | ||

The A* algorithm is a widely recognized search algorithm that starts at a designated start node and navigates to a target end node. Each node possesses three values: 'g', 'h' and 'f'. 'g' represents the cost of movement from the start node to the current node, 'h' estimates the movement cost from the current node to the end node, and 'f' represents the sum of 'h' and 'g' for the current node. The 'h' value, also known as the heuristic, is determined by calculating the Euclidean distance from the current position to the end node. | |||

When the A* function is invoked with the provided start and end nodes, the first step involves initializing the open list. This initialization involves assigning initial values (infinity, distance to the end node, and the sum of 'g' and 'h') to the 'g', 'h', and 'f' values, respectively, for each node. Additionally, the parent parameter of each node is set to None. Then the values for the start node are computed. The ‘g’, ‘h’ and ‘f’ values are zero, the Euclidean distance between start and end node, and the sum of the previous value respectively. | When the A* function is invoked with the provided start and end nodes, the first step involves initializing the open list. This initialization involves assigning initial values (infinity, distance to the end node, and the sum of 'g' and 'h') to the 'g', 'h', and 'f' values, respectively, for each node. Additionally, the parent parameter of each node is set to None. Then the values for the start node are computed. The ‘g’, ‘h’ and ‘f’ values are zero, the Euclidean distance between start and end node, and the sum of the previous value respectively. | ||

| Line 141: | Line 89: | ||

The A* algorithm calculates the path to each specified table from the current node. Once the path has been established, the next step involves guiding the robot along the determined path. This task can be accomplished by utilizing the provided code from the A* assignment previously provided in this course. Particularly, the simulate_path_following function is invoked when the path has been found to let the robot follow the path. | The A* algorithm calculates the path to each specified table from the current node. Once the path has been established, the next step involves guiding the robot along the determined path. This task can be accomplished by utilizing the provided code from the A* assignment previously provided in this course. Particularly, the simulate_path_following function is invoked when the path has been found to let the robot follow the path. | ||

===Local path planning (Obstacle avoidance) | ===Local path planning (Obstacle avoidance)=== | ||

As mentioned before, the robot will switch from global to local path planning when an object is detected too close to the robot. The laser data is therefore scanned continuously. Two methods have been considered, the Artificial potential field algorithm (APF) and the open space approach. In the first subsection, the two algorithms will be compared. Thereafter, the chosen algorithm will be discussed in more detail. | As mentioned before, the robot will switch from global to local path planning when an object is detected too close to the robot. The laser data is therefore scanned continuously. Two local path planning methods have been considered, the Artificial potential field algorithm (APF) and the open space approach. In the first subsection, the two algorithms will be compared. Thereafter, the chosen algorithm will be discussed in more detail. | ||

==== | ====Artificial Potential Field (APF)==== | ||

[[File:APFwalle.gif|thumb|Artificial potential field|320x320px]] | |||

When using APF there will be a repulsion effect on objects and an attraction force on the goal. The repulsions and attractions forces are combined to obtain an artificial resulting force which indicates the motion direction of the robot. In this way, the robot will avoid obstacles and drive in the direction of the goal. The robot uses a box function where it checks the laser data around the robot in a box shape. The box is chosen such that the distance to an object to the sides of the robot is less and the distance to an object in front of the robot is more. Therefore, it will ensure that it can drive in narrow corridors. In comparison with a cone function, the robot will have less blind spots with the box function, which is beneficial. When an object is detected the robot will drive around it. when a dynamic object suddenly occurs in front of the robot, the robot will stop to avoid a collision. A video of the APF approach can at the right side.<br /> | |||

====Open space approach==== | |||

[[File:Localpath.gif|thumb|240x240px|Open space approach]] | |||

The open spaces algorithm works as a way to find the most open area for the robot to drive through. The algorithm starts once the front of the robot is measuring a distance below a threshold of for example, one meter. It works with a horizon, which is a distance that is defined as the average of the distance measured by all laser points. When comparing the laser measurements with the horizon, the algorithm searches for the largest streak of consecutive laser measurements that are larger than the horizon. Once found, it will rotate the robot with a constant turning velocity of 0.5 rad/s to face this open space and the algorithm will be completed. Besides, the robot will dodge objects when they are located too close to the robot (for the video on the right this switching value to the dodging method was set to 0.32 m). Furthermore, the open space approach will use a contant velocity of 0.2 m/s. A video where the robot only uses the local path planning by the open space approach can be seen to the right side. Here can be seen that the robot looks for the locations where there is the most space and then turns to face these open spaces. It will then drive towards this open space until it reaches a wall. Then it will start searching for an open space again. <br /> | |||

The final choice for the local path planning was open space. This decision was made by comparing the local path-planning methods in test sessions. During testing, it was concluded that the open space approach was more robust. The APF algorithm worked also fairly well, but had some problems with straight walls sometimes. Given the time and the robust open space approach, it was chosen to continue with open space. | |||

<br /> | |||

===Localisation=== | |||

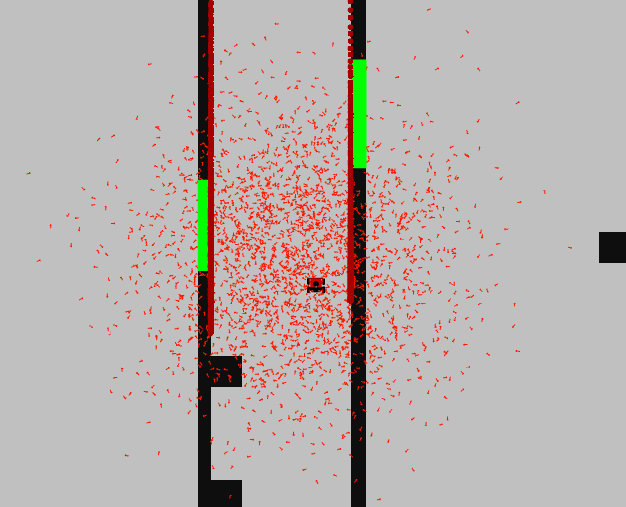

Localisation is necessary to determine the location of the robot in its environment. Determining the robots location solely on data from the wheel encoders results in a wrong location due to sensor drift and slip from the wheels. Laser data, odometry data from the wheel encoders and information from the map is combined to get a better approximation of the robots current location. Localisation is done by using a particle filter algorithm. The particle filter initialises a set of particles which are possible poses of the robot on the map. A probability is assigned to each particle after which the weighted average of these particles is taken to determine the most probable pose of the robot. The cloud of particles is constantly propagated through space using the odometry data of the wheel encoders and resampled to get a more accurate approximation. | |||

===Localisation | |||

Localisation is necessary to determine the location of the robot in its environment. Determining the robots location solely on data from the wheel encoders results in a wrong location due to sensor drift and slip from the wheels. Laser data, odometry data from the wheel encoders and information from the map is combined to get a better approximation of the robots current location. Localisation is done by using a particle filter algorithm. The particle filter initialises a set of particles which are | |||

====''Particle initialisation''==== | ====''Particle initialisation''==== | ||

| Line 171: | Line 111: | ||

====''Particle propagation''==== | ====''Particle propagation''==== | ||

Once the particles have been initialized, the algorithm proceeds with the particle propagation step. The reason for this is that the robot moves and hence its position needs to be updated over time. This step involves updating the positions and orientations of the particles based on the available odometry data and performing necessary frame transformations. | |||

During propagation, the algorithm incorporates the odometry information to simulate how the robot has moved since the last update. The distance and angle traveled by the robot are estimated by adding the received odometry values, which are subject to noise, to the previous pose of each particle. | |||

To account for frame transformations, the odometry data is first converted from the odometry frame to the robot frame. This transformation aligns the odometry information with the robot's orientation. Subsequently, the odometry data is transformed from the robot frame to the map frame, ensuring that the updated particle positions are represented correctly in the global reference frame. | |||

By applying the necessary frame transformations and incorporating odometry data, the particle propagation step allows the algorithm to predict the new positions and orientations of the particles, taking into account the robot's motion and its relationship to the map frame. This step forms an essential part of the particle filter algorithm, enabling the estimation of the robot's pose based on its movement and odometry information. | |||

====''Particle probability''==== | ====''Particle probability''==== | ||

In order to determine the particle that is the best approximation of the robots current pose the algorithm assigns a weight/likelihood to each particle. The largest weight value gets assigned to the particle that has the highest probability of resembling the current pose of the robot. The computation of the weight uses information from the map and the laser data of the robot. For each particle the laser data of the actual robot is compared to the what the laser would have looked like would the robot have the pose of the particle. The theoretical laser data of the particle is computed using a ray casting algorithm for a certain subsample of laser rays (to speed up computational time). The two sets of laser data are compared to | In order to determine the particle that is the best approximation of the robots current pose the algorithm assigns a weight/likelihood to each particle. The largest weight value gets assigned to the particle that has the highest probability of resembling the current pose of the robot. The computation of the weight uses information from the map and the laser data of the robot. For each particle the laser data of the actual robot is compared to the what the laser would have looked like would the robot have the pose of the particle. The theoretical laser data of the particle is computed using a ray casting algorithm for a certain subsample of laser rays (to speed up computational time). The two sets of laser data are compared to each other and used together with a set of probability density functions to determine the weight of the particle. The probability density functions used are: | ||

#A Gaussian distribution to account for noise in the laser signal. | #A Gaussian distribution to account for noise in the laser signal. | ||

#An exponential distribution to account for an unexpected short reading due to an obstacle being present that is not drawn in the map. | #An exponential distribution to account for an unexpected short reading due to an obstacle being present that is not drawn in the map. | ||

#A uniform distribution to account for | #A uniform distribution to account for unexpected long readings due to the laser sensor not retrieving a signal when the laser hits a glass or black object. | ||

#A uniform distribution to account for general random measurements | #A uniform distribution to account for general random measurements. | ||

In this way each laser ray of the particle is assigned a certain probability after which the product of all individual ray probabilites are taken to obtain the weight of the particle. | In this way each laser ray of the particle is assigned a certain probability after which the product of all individual ray probabilites are taken to obtain the weight of the particle. | ||

====''Resampling''==== | ====''Resampling''==== | ||

The resampling step in the particle filter algorithm is crucial for forcing particles to represent the posterior distribution accurately. Without resampling, particles would accumulate in low probability regions, requiring more particles for a reliable estimation. Resampling redistributes particles based on their importance weights, focusing on high likelihood regions. This concentrates computational resources where they matter the most, resembling the concept of "survival of the fittest". Implementing stratified and multinomial resampling schemes will enhance the particle set's representation of high posterior probability regions. | |||

In the end, a total of 500 particles were employed using the multinomial resampling algorithm, which was consistently applied. This configuration facilitated a resampling rate of approximately 5 to 6 Hertz, enabling seamless integration of the particle filter with other components. However, there is potential for further enhancement of the particle filter by considering alternative resampling methods or periodically adjusting the robot's position using a different number of points over longer time intervals. | |||

In | [[File:ParticleFilter.gif|thumb|Particle filter in action]] | ||

At present, the particle filter exhibits a high level of certainty in estimating the robot's position, as indicated by the dense cloud of position estimates. Nevertheless, this certainty poses challenges in cases where the estimate is incorrect or significantly disrupted by external disturbances, potentially hindering accurate convergence to the actual position. | |||

This | |||

To address this issue, one potential solution is to introduce periodic sampling instead of constant resampling. By increasing the time between consecutive resamples, the particle filter is more likely to encounter larger deviations, resulting in a broader estimation cloud. Although this approach may require additional time for the filter to accurately converge to the actual position after each resample, it offers the advantage of improved robustness, enhancing resistance to disturbances and external factors. | |||

<br /> | |||

==Robustness & Evaluation Restaurant challenge== | ==Robustness & Evaluation Restaurant challenge== | ||

Robustness of the robots performance is of utmost importance to ensure that the robot performs well under all circumstances. | Robustness of the robots performance is of utmost importance to ensure that the robot performs well under all circumstances. For example, the challenge environment was not entirely known prior to the final challenge (due to the addition of unknown objects). It was important that the robots software was made robust to these objects during the testing sessions. Robustness of the software against all possible difficult situations will result in the desired performance of the robot, namely the safe and fast delivery of orders to all tables. | ||

===Robustness Restaurant challenge=== | ===Robustness Restaurant challenge=== | ||

During the restaurant challenge several parts of the robots software were tested regarding robustness, namely: | |||

During the restaurant challenge several parts of the robots software were tested regarding robustness namely: | |||

#The robot handling unknown static objects that were not present in the map | #The robot handling unknown static objects that were not present in the map. | ||

#The robot replanning its path due to blockage of a corridor or a door not | #The robot replanning its path due to blockage of a corridor or a door not opening at request. | ||

#The robot localizing itself on the map after a random initial pose in the starting area | #The robot localizing itself on the map after a random initial pose in the starting area. | ||

#The robot handling people walking through the restaurant | #The robot handling people walking through the restaurant. | ||

The groups first goal was to make the robot deliver to a table in a static known environment using the A*- algorithm and the particle filter. After the robot was able to do this (in simulation) the next step was to make the robot robust for unknown objects in the map. The group decided to use an open space approach as a local path planner to dodge these unknown objects. This feature was also mainly tested in simulation where the robot was able to dodge these objects and compute a new path towards its goal. The problem | The groups first goal was to make the robot deliver to a table in a static known environment using the A*- algorithm and the particle filter. After the robot was able to do this (in simulation), the next step was to make the robot robust for unknown objects in the map. The group decided to use an open space approach as a local path planner to dodge these unknown objects. This feature was also mainly tested in simulation where the robot was able to dodge these objects and compute a new path towards its goal. The problem was however that the robots software was not entirely robust to these unknown objects as the second point written above was not achieved. The problem was that the open space algorithm was only able to dodge an object and recompute its path which means when the corridor is fully blocked off the robot will get stuck in a loop where it keeps on trying to follow a path that moves through the blocking object. A solution for this problem would be to let the robot 'try' the open space approach for a finite amount of times after which it will mark the nodes corresponding to the blocked corridor as inaccessible and plan a path through another corridor.[[File:Performance testing GIF3.gif|thumb|Robots performance during testing]]The robot was robust for localizing itself in the given starting area. During testing, the particles of the particle filter were all initialised in the starting area according to a normal distribution. The robot was able to localize itself every time regardless of its initial pose due to this initialisation and periodic resampling. | ||

Sadly, the group ran out of time at the end of the project to also make the robots software robust for people walking through the restaurant. The group was not able to test this feature for the final version so it is unknown what the current software's performance would be in such a scenario. The software is written such that the robot will always divert when an object is sensed inside a certain range by the laser range finder. As the robot was able to dodge people when the open space approach code on its own was tested, it is expected that the robot will apply its open space approach correctly when a human is detected in its range. | |||

Sadly, the group ran out of time at the end of the project to also make the robots software robust for people walking through the restaurant. The group was not able to test this feature so it is unknown what the current software's performance would be in such a scenario. The software is written such that the robot will always divert when an object is sensed inside a certain range by the laser range finder. | |||

===Evaluation Restaurant challenge=== | ===Evaluation Restaurant challenge=== | ||

[[File:Restaurant Challenge GIF.gif|thumb|Performance of robot during Restaurant Challenge]] | [[File:Restaurant Challenge GIF.gif|thumb|Performance of robot during Restaurant Challenge]] | ||

The | The performance during the restaurant challenge was not what the group desired. During the challenge, the robot started following its path to the first table but unfortunately hit the first corner it tried to drive around (See video on the right). The performance during testing the day before the final challenge was better than during the challenge itself (as can be seen in the video above). During the last test session, the robot could localize, reach the first table and avoid obstacles. | ||

However, the performance would have looked better during the final challenge, but the code which was used for the final challenge worked a lot better on the simulation. During the last test session there were some problems with the code when the first table was reached (this also holds for the simulation), as the robot would change from global to local path planning too fast. Moreover, the global path planning was not totally correct as the starting points from the A* algorithm were sometimes not logical when the robot replanned his path. To solve this, quite some changes in code were needed and unfortunately, the changed code could only be tested on the simulation and not on the real robot before the final challenge. However, in the simulation, the code worked a lot better than previously, as the robot could perform all tasks. So it was able to localize, make a global path and avoid obstacles with local path planning. When an object was observed it could also replan its path correctly and serve all tables. This can be seen in the following video. https://tuenl-my.sharepoint.com/:v:/g/personal/j_bongers_student_tue_nl/EWhLdfZ4mLpOuJg2mS4OcfoB2HEiRW6C1aRfEXKvNvqZ5g?e=erBgD2 | |||

Nevertheless, it turned out that the parameters that worked in simulation did not do well in real life, which caused the robot to hit the first corner it encountered. The most important parameters which should have been different in the real-life situation were the distance in which the open space approach was activated and the distance at which the robot only dodges objects. The first value was set to 0.33 m and the second value to 0.28 m. Both parameters were set to a too low value, which resulted that the robot was switching too late to open space and is therefore not able to drive in an open direction on time. Moreover, it is also too late with dodging the object. If the values were set higher during the final challenge, the robot should be able to dodge the objects and not drive into the wall. Another change that could improve the local path planning, is by implementing the box function. As used for the APF algorithm. This change would not be necessary but would remove the blind spots when scanning for objects. In comparison with a cone which is used for the open space approach right now. As can be seen in the performance during the final challenge, the wall into which the robot is driving is located a bit to the side of the robot. When the box function was used, the wall could be detected way easier and the robot would drive around it. However, the box function was not implemented in the first instance as the open space approach itself worked well when it was tested as can be seen in the video of the open space above. | |||

Despite the robot not performing as desired during the final challenge, the group knows that the robot did perform well in simulation and that it was just a matter of tweaking parameters such that the robot would perform the same in real life. | More importantly, the robot should have not driven into the wall in the first place. As it was not an unknown object the robot drove into. This has to do with the node placement for the A*-algorithm and the connections between the nodes. In the simulation, it can already be seen that the robot drives close to the wall where the robot crashes in real life. In the simulation, it was therefore not really a problem, however in the real-life situation it was. The group could have prevented it, as it is clear from the simulation that the robot drives sometimes quite close to the walls and did not take the most simple routes to reach the final node of a table. This was easily solvable by adding more nodes, using the nodes in the middle of the path and removing some connections. Despite the robot not performing as desired during the final challenge, the group knows that the robot did perform well in simulation and that it was just a matter of adjusting some nodes and tweaking parameters such that the robot would perform the same in real life. | ||

==Conclusion== | |||

This project aimed to address the labor shortage issue faced by restaurants by designing an algorithm for a robot capable of delivering orders to tables. The sections discussed on this wiki page provide a comprehensive overview of the end result of this project. | |||

The robot's performance robustness is vital for its effectiveness in various circumstances. The project's focus was on designing a restaurant robot capable of delivering orders to tables. Key challenges included handling unknown objects, achieving reliable localization, and addressing obstacles and human presence. While the robot successfully navigated unknown objects in simulation, it faced difficulties with blocked corridors in real-life situations. Improvements could involve marking inaccessible nodes after attempts to navigate blocked corridors. The robot demonstrated robust localization by consistently determining its position using particle initialization and periodic resampling. Although time constraints limited real-life testing for handling people, the software was designed to divert upon detecting objects, including humans. Testing revealed performance discrepancies between simulation and reality, emphasizing the need for fine-tuning local path planner parameters and optimizing node placement. Despite the challenges faced, the project provides promising insights for achieving desired performance in real-life scenarios and contributes to advancements in autonomous robotics. | |||

==Task description== | ==Task description== | ||

Latest revision as of 15:23, 7 July 2023

Group members:

| Name | student ID |

|---|---|

| Lars Blommers | 1455893 |

| Joris Bongers | 1446193 |

| Erick Hoogstrate | 1455176 |

| Noortje Hagelaars | 1367846 |

| Merlijn van Duijn | 1323385 |

| Sjoerd van der Velden | 1375229 |

Design presentation

The file for the design presentation can be found here:File:Design Presentation Group WallE.pdf

Introduction

For the last several years, restaurants have suffered from a labor shortage. Everywhere around the country restaurants workers are subjected to a heavy workload with no end in sight. In an attempt to resolve this problem, we set out as a group of 6 students to design an algorithm for a robot that can deliver orders to tables. In the span of 10 weeks, we learned all about software design and autonomous robots and immediately put our new knowledge to work in exercises and challenges.

On this wiki page the following aspects of the project are discussed. First, the strategy with regards to the restaurant challenge is discussed. Next, the software architecture and robustness is explained. Finally, the results are evaluated and a conclusion is drawn.

State flow diagram

The state flow diagram, a type of behavioural diagram, shows the design of the discrete control within the system. They graphically represent finite state machines.

The state flow diagram is a representation of what the blocks in code should do, when a certain input is received or a certain object is detected. The main aspects are worked into this, including the local and global path planning, obstacle avoidance and localization. This gives a rough idea of how the robot should behave.

Data flow diagram

The data flow diagram shows a structured analysis which gives insights into the origin of the data and interfaces between processes. The diagram consists of data, representing the information, process, which is a functional component with inputs and outputs, and lastly the flow, which specifies an input/output relation between the process and the data.

The data flow diagram uses all data available and the flows point to the appropriate processes in order to execute those parts accordingly with the received data.

Strategy description Restaurant challenge

In this section a general strategy is mentioned, which is used by the group to get an idea of the tasks of the robot and the algorithms that need to be used. This strategy reflects the state flow diagram, as depicted in the state flow section. Further explanations about the final algorithms for the states will be given in the upcoming sections.

First, the robot waits for the user input, which is the table queue (block: Waiting for user input). After this the coordinator will initialise the progress by 1) checking if the queue is empty, 2) if non-empty it will localise its location after loading the provided map of the restaurant (block: Coordinator). This localisation is done by the particle filter, which will estimate the robot’s position within the restaurant environment (block: Localisation). For this, the LiDAR data is processed to build and update the map.

After the robot knows its location, the A* algorithm is invoked as the global path planner to generate an initial path from the robot’s position to the first table in the queue (block: Global Path Planning). When an initial path is found, the robot will start following this (block: Follow Path). The global path consists of waypoints (nodes), which the robot has to follow. Will following this path, the robot will continuously determine its positions by localisation. Based on the provided restaurant map, basic information such as the location of the walls, doors and tables are known, such that the robot can plan a collision-free path. However, there could be unknown obstacles, or the door can be closed, which can be detected by continuously checking the LiDAR data. If such an object is detected, the global path planning will switch to local path planning.

The obstacles can be divided into two categories, which are closed doors, and unknown obstacles, either dynamic or static. For closed doors, the robot will ask for the door to be opened (block: Open door). For unknown obstacles, local path planning is an Open Space Approach. The robot will reassess the obstacle situation continuously and adjust the local path if needed (block: Move around obstacle). When using the open space approach the robot will check where the most room is available and will drive in this direction. When an object suddenly comes too close the robot will dodge the object by turning away from it. If an obstacle region is reached, the path planning will switch back to global, and a new path will be determined after localisation. During this switch, the robot will slow down temporarily to ensure safety during the planning of a new path. When a new path is found, the robot will continue with its delivery.

When the first table in the queue is reached, it will signal its arrival by speaking (block: Signal arrival). The robot needs to position itself at a suitable range from the table and face it correctly. After a set delivery time, the table will be removed from the queue and the robot will restart its cycle until the last table has been reached. Nonetheless, this approach is not flawless, errors could occur due to unexpected situations, such as failed table delivery attempts. When this happens, the robot should replan to generate an alternative path or recovery strategy, by backing out of its spot and trying again with a slightly different angle or delivering at a different node.

Software Architecture Restaurant challenge

Structure of software

In the exercises during the first weeks of the course all seperate elements of the robot control like global and local path planning and localisation were treated. The challenge was then to combine all seperate functions into one executable that can be called with:

./hero_do_your_thing 2 3 4

The folder containing the main executabe file also contains the files from the A*- algorithm (global path planning), the open space approach (local path planning) and the localisation (particle filter). The executable uses multi-threading in order to run the navigation and localisation algorithms in parallel. Two threads are run simultaneously, one calling the function for navigation and one for localisation.

The navigation function first retrieves the table order as arguments and stores it in a table list variable. This table list is then used together with a table nodes list to determine the predefined node in the map that corresponds to the table. The loading of the json file containing the map parameters including the node definitions is hardcoded into the executable. The node list containing nodes that correspond to the tables that have to be visited by the robot is used in a for loop where the A*- algorithm is used to compute a path from the start till end node. For each loop in the for loop the previous end node is taken as the new starting node for the new path. Once the robot has reached the table node it will signal the customer that it has arrived with the io.speak() function. Once the robot notices an obstacle within a set range in its laser data the robot will switch from global path planning (A*- algorithm) to local path planning (Open space approach) and try to dodge the obstacle. After a set time interval the robot will use the node that is closest by to determine a new path using the A*- algorithm.

The localisation function determines the location of the robot on the map using odom data, laser data and information from a png file of the map. The particle filter estimates the current location of the robot and stores this data in a variable. A pointer pointing to the location of this variable is given as an input to the navigation function so the robot can follow the path computed by the A*- algorithm.

Global path planning

As previously stated, the A* algorithm for global path planning provides the shortest route from a specified start node to a designated end node. In order for the A* algorithm to generate the intended path, it is necessary for the restaurant map to include nodes that connect to the desired table nodes. These nodes will serve as the basis for creating a global path.

Node definition

The nodes are specifically designed for the calculations involved in the A* algorithm, which aims to determine the distances for efficient route planning. The placement of the nodes is carefully selected to ensure that the robot can access any corner of the map. When considering the distance to the table, it is important to maintain a minimum distance of 20cm on all sides to prevent the robot from colliding with the table. In open areas, the distances between nodes are increased to allow the robot to travel longer distances, but there are still enough nodes to be able to go around unknown static or dynamic obstacles.

Regarding the connections between nodes, each node is linked to its neighboring nodes, including both adjacent and diagonal nodes. However, these connections do not go through walls or tables, and special attention is given to avoid connecting nodes at sharp corners around tables.

During testing, it was discovered that some node connections still posed a risk of cutting too closely around table corners, which could lead to collisions. As a result, certain connections were removed to ensure that the robot could navigate around tables without hitting the corners.

A*- algorithm

The A* algorithm is a widely recognized search algorithm that starts at a designated start node and navigates to a target end node. Each node possesses three values: 'g', 'h' and 'f'. 'g' represents the cost of movement from the start node to the current node, 'h' estimates the movement cost from the current node to the end node, and 'f' represents the sum of 'h' and 'g' for the current node. The 'h' value, also known as the heuristic, is determined by calculating the Euclidean distance from the current position to the end node.

When the A* function is invoked with the provided start and end nodes, the first step involves initializing the open list. This initialization involves assigning initial values (infinity, distance to the end node, and the sum of 'g' and 'h') to the 'g', 'h', and 'f' values, respectively, for each node. Additionally, the parent parameter of each node is set to None. Then the values for the start node are computed. The ‘g’, ‘h’ and ‘f’ values are zero, the Euclidean distance between start and end node, and the sum of the previous value respectively.

Following the initialization, a loop is executed, which follows a specific sequence. Initially, the node with the lowest 'f' value is determined. Next, the neighboring nodes of this selected node are added to the open list. This addition is carried out by computing the 'g', 'h', and 'f' values for the neighboring nodes and setting the parent node parameter to the current node with the lowest ‘f’ value. If a neighbor is already present in the open list, but the newly calculated 'f' value is lower than the currently saved 'f' value, the 'f' value and parent node are updated accordingly. This process continues until the node with the lowest 'f' value becomes the end node. At this point, the desired path has been found, and the code exits the loop.

Following the discovery of the path, the code enters a subsequent loop, which retraces the path from the end node to the start node using the parent node parameters. This backtracking loop enables the determination of the complete path.

The A* algorithm calculates the path to each specified table from the current node. Once the path has been established, the next step involves guiding the robot along the determined path. This task can be accomplished by utilizing the provided code from the A* assignment previously provided in this course. Particularly, the simulate_path_following function is invoked when the path has been found to let the robot follow the path.

Local path planning (Obstacle avoidance)

As mentioned before, the robot will switch from global to local path planning when an object is detected too close to the robot. The laser data is therefore scanned continuously. Two local path planning methods have been considered, the Artificial potential field algorithm (APF) and the open space approach. In the first subsection, the two algorithms will be compared. Thereafter, the chosen algorithm will be discussed in more detail.

Artificial Potential Field (APF)

When using APF there will be a repulsion effect on objects and an attraction force on the goal. The repulsions and attractions forces are combined to obtain an artificial resulting force which indicates the motion direction of the robot. In this way, the robot will avoid obstacles and drive in the direction of the goal. The robot uses a box function where it checks the laser data around the robot in a box shape. The box is chosen such that the distance to an object to the sides of the robot is less and the distance to an object in front of the robot is more. Therefore, it will ensure that it can drive in narrow corridors. In comparison with a cone function, the robot will have less blind spots with the box function, which is beneficial. When an object is detected the robot will drive around it. when a dynamic object suddenly occurs in front of the robot, the robot will stop to avoid a collision. A video of the APF approach can at the right side.

Open space approach

The open spaces algorithm works as a way to find the most open area for the robot to drive through. The algorithm starts once the front of the robot is measuring a distance below a threshold of for example, one meter. It works with a horizon, which is a distance that is defined as the average of the distance measured by all laser points. When comparing the laser measurements with the horizon, the algorithm searches for the largest streak of consecutive laser measurements that are larger than the horizon. Once found, it will rotate the robot with a constant turning velocity of 0.5 rad/s to face this open space and the algorithm will be completed. Besides, the robot will dodge objects when they are located too close to the robot (for the video on the right this switching value to the dodging method was set to 0.32 m). Furthermore, the open space approach will use a contant velocity of 0.2 m/s. A video where the robot only uses the local path planning by the open space approach can be seen to the right side. Here can be seen that the robot looks for the locations where there is the most space and then turns to face these open spaces. It will then drive towards this open space until it reaches a wall. Then it will start searching for an open space again.

The final choice for the local path planning was open space. This decision was made by comparing the local path-planning methods in test sessions. During testing, it was concluded that the open space approach was more robust. The APF algorithm worked also fairly well, but had some problems with straight walls sometimes. Given the time and the robust open space approach, it was chosen to continue with open space.

Localisation

Localisation is necessary to determine the location of the robot in its environment. Determining the robots location solely on data from the wheel encoders results in a wrong location due to sensor drift and slip from the wheels. Laser data, odometry data from the wheel encoders and information from the map is combined to get a better approximation of the robots current location. Localisation is done by using a particle filter algorithm. The particle filter initialises a set of particles which are possible poses of the robot on the map. A probability is assigned to each particle after which the weighted average of these particles is taken to determine the most probable pose of the robot. The cloud of particles is constantly propagated through space using the odometry data of the wheel encoders and resampled to get a more accurate approximation.

Particle initialisation

The algorithm starts by initialising the set of particles according to a certain distribution. Each particle in the set represents a hypothesis for the current pose of the robot. The particle cloud can be initiliased either through a uniform or a normal distribution. The particle initialisation through a normal distribution can be seen in the Figure on the right.

Particle propagation

Once the particles have been initialized, the algorithm proceeds with the particle propagation step. The reason for this is that the robot moves and hence its position needs to be updated over time. This step involves updating the positions and orientations of the particles based on the available odometry data and performing necessary frame transformations.

During propagation, the algorithm incorporates the odometry information to simulate how the robot has moved since the last update. The distance and angle traveled by the robot are estimated by adding the received odometry values, which are subject to noise, to the previous pose of each particle.

To account for frame transformations, the odometry data is first converted from the odometry frame to the robot frame. This transformation aligns the odometry information with the robot's orientation. Subsequently, the odometry data is transformed from the robot frame to the map frame, ensuring that the updated particle positions are represented correctly in the global reference frame.

By applying the necessary frame transformations and incorporating odometry data, the particle propagation step allows the algorithm to predict the new positions and orientations of the particles, taking into account the robot's motion and its relationship to the map frame. This step forms an essential part of the particle filter algorithm, enabling the estimation of the robot's pose based on its movement and odometry information.

Particle probability

In order to determine the particle that is the best approximation of the robots current pose the algorithm assigns a weight/likelihood to each particle. The largest weight value gets assigned to the particle that has the highest probability of resembling the current pose of the robot. The computation of the weight uses information from the map and the laser data of the robot. For each particle the laser data of the actual robot is compared to the what the laser would have looked like would the robot have the pose of the particle. The theoretical laser data of the particle is computed using a ray casting algorithm for a certain subsample of laser rays (to speed up computational time). The two sets of laser data are compared to each other and used together with a set of probability density functions to determine the weight of the particle. The probability density functions used are:

- A Gaussian distribution to account for noise in the laser signal.

- An exponential distribution to account for an unexpected short reading due to an obstacle being present that is not drawn in the map.

- A uniform distribution to account for unexpected long readings due to the laser sensor not retrieving a signal when the laser hits a glass or black object.

- A uniform distribution to account for general random measurements.

In this way each laser ray of the particle is assigned a certain probability after which the product of all individual ray probabilites are taken to obtain the weight of the particle.

Resampling

The resampling step in the particle filter algorithm is crucial for forcing particles to represent the posterior distribution accurately. Without resampling, particles would accumulate in low probability regions, requiring more particles for a reliable estimation. Resampling redistributes particles based on their importance weights, focusing on high likelihood regions. This concentrates computational resources where they matter the most, resembling the concept of "survival of the fittest". Implementing stratified and multinomial resampling schemes will enhance the particle set's representation of high posterior probability regions.

In the end, a total of 500 particles were employed using the multinomial resampling algorithm, which was consistently applied. This configuration facilitated a resampling rate of approximately 5 to 6 Hertz, enabling seamless integration of the particle filter with other components. However, there is potential for further enhancement of the particle filter by considering alternative resampling methods or periodically adjusting the robot's position using a different number of points over longer time intervals.

At present, the particle filter exhibits a high level of certainty in estimating the robot's position, as indicated by the dense cloud of position estimates. Nevertheless, this certainty poses challenges in cases where the estimate is incorrect or significantly disrupted by external disturbances, potentially hindering accurate convergence to the actual position.

To address this issue, one potential solution is to introduce periodic sampling instead of constant resampling. By increasing the time between consecutive resamples, the particle filter is more likely to encounter larger deviations, resulting in a broader estimation cloud. Although this approach may require additional time for the filter to accurately converge to the actual position after each resample, it offers the advantage of improved robustness, enhancing resistance to disturbances and external factors.

Robustness & Evaluation Restaurant challenge

Robustness of the robots performance is of utmost importance to ensure that the robot performs well under all circumstances. For example, the challenge environment was not entirely known prior to the final challenge (due to the addition of unknown objects). It was important that the robots software was made robust to these objects during the testing sessions. Robustness of the software against all possible difficult situations will result in the desired performance of the robot, namely the safe and fast delivery of orders to all tables.

Robustness Restaurant challenge

During the restaurant challenge several parts of the robots software were tested regarding robustness, namely:

- The robot handling unknown static objects that were not present in the map.

- The robot replanning its path due to blockage of a corridor or a door not opening at request.

- The robot localizing itself on the map after a random initial pose in the starting area.

- The robot handling people walking through the restaurant.

The groups first goal was to make the robot deliver to a table in a static known environment using the A*- algorithm and the particle filter. After the robot was able to do this (in simulation), the next step was to make the robot robust for unknown objects in the map. The group decided to use an open space approach as a local path planner to dodge these unknown objects. This feature was also mainly tested in simulation where the robot was able to dodge these objects and compute a new path towards its goal. The problem was however that the robots software was not entirely robust to these unknown objects as the second point written above was not achieved. The problem was that the open space algorithm was only able to dodge an object and recompute its path which means when the corridor is fully blocked off the robot will get stuck in a loop where it keeps on trying to follow a path that moves through the blocking object. A solution for this problem would be to let the robot 'try' the open space approach for a finite amount of times after which it will mark the nodes corresponding to the blocked corridor as inaccessible and plan a path through another corridor.

The robot was robust for localizing itself in the given starting area. During testing, the particles of the particle filter were all initialised in the starting area according to a normal distribution. The robot was able to localize itself every time regardless of its initial pose due to this initialisation and periodic resampling.

Sadly, the group ran out of time at the end of the project to also make the robots software robust for people walking through the restaurant. The group was not able to test this feature for the final version so it is unknown what the current software's performance would be in such a scenario. The software is written such that the robot will always divert when an object is sensed inside a certain range by the laser range finder. As the robot was able to dodge people when the open space approach code on its own was tested, it is expected that the robot will apply its open space approach correctly when a human is detected in its range.

Evaluation Restaurant challenge

The performance during the restaurant challenge was not what the group desired. During the challenge, the robot started following its path to the first table but unfortunately hit the first corner it tried to drive around (See video on the right). The performance during testing the day before the final challenge was better than during the challenge itself (as can be seen in the video above). During the last test session, the robot could localize, reach the first table and avoid obstacles.

However, the performance would have looked better during the final challenge, but the code which was used for the final challenge worked a lot better on the simulation. During the last test session there were some problems with the code when the first table was reached (this also holds for the simulation), as the robot would change from global to local path planning too fast. Moreover, the global path planning was not totally correct as the starting points from the A* algorithm were sometimes not logical when the robot replanned his path. To solve this, quite some changes in code were needed and unfortunately, the changed code could only be tested on the simulation and not on the real robot before the final challenge. However, in the simulation, the code worked a lot better than previously, as the robot could perform all tasks. So it was able to localize, make a global path and avoid obstacles with local path planning. When an object was observed it could also replan its path correctly and serve all tables. This can be seen in the following video. https://tuenl-my.sharepoint.com/:v:/g/personal/j_bongers_student_tue_nl/EWhLdfZ4mLpOuJg2mS4OcfoB2HEiRW6C1aRfEXKvNvqZ5g?e=erBgD2

Nevertheless, it turned out that the parameters that worked in simulation did not do well in real life, which caused the robot to hit the first corner it encountered. The most important parameters which should have been different in the real-life situation were the distance in which the open space approach was activated and the distance at which the robot only dodges objects. The first value was set to 0.33 m and the second value to 0.28 m. Both parameters were set to a too low value, which resulted that the robot was switching too late to open space and is therefore not able to drive in an open direction on time. Moreover, it is also too late with dodging the object. If the values were set higher during the final challenge, the robot should be able to dodge the objects and not drive into the wall. Another change that could improve the local path planning, is by implementing the box function. As used for the APF algorithm. This change would not be necessary but would remove the blind spots when scanning for objects. In comparison with a cone which is used for the open space approach right now. As can be seen in the performance during the final challenge, the wall into which the robot is driving is located a bit to the side of the robot. When the box function was used, the wall could be detected way easier and the robot would drive around it. However, the box function was not implemented in the first instance as the open space approach itself worked well when it was tested as can be seen in the video of the open space above.

More importantly, the robot should have not driven into the wall in the first place. As it was not an unknown object the robot drove into. This has to do with the node placement for the A*-algorithm and the connections between the nodes. In the simulation, it can already be seen that the robot drives close to the wall where the robot crashes in real life. In the simulation, it was therefore not really a problem, however in the real-life situation it was. The group could have prevented it, as it is clear from the simulation that the robot drives sometimes quite close to the walls and did not take the most simple routes to reach the final node of a table. This was easily solvable by adding more nodes, using the nodes in the middle of the path and removing some connections. Despite the robot not performing as desired during the final challenge, the group knows that the robot did perform well in simulation and that it was just a matter of adjusting some nodes and tweaking parameters such that the robot would perform the same in real life.

Conclusion

This project aimed to address the labor shortage issue faced by restaurants by designing an algorithm for a robot capable of delivering orders to tables. The sections discussed on this wiki page provide a comprehensive overview of the end result of this project.

The robot's performance robustness is vital for its effectiveness in various circumstances. The project's focus was on designing a restaurant robot capable of delivering orders to tables. Key challenges included handling unknown objects, achieving reliable localization, and addressing obstacles and human presence. While the robot successfully navigated unknown objects in simulation, it faced difficulties with blocked corridors in real-life situations. Improvements could involve marking inaccessible nodes after attempts to navigate blocked corridors. The robot demonstrated robust localization by consistently determining its position using particle initialization and periodic resampling. Although time constraints limited real-life testing for handling people, the software was designed to divert upon detecting objects, including humans. Testing revealed performance discrepancies between simulation and reality, emphasizing the need for fine-tuning local path planner parameters and optimizing node placement. Despite the challenges faced, the project provides promising insights for achieving desired performance in real-life scenarios and contributes to advancements in autonomous robotics.

Task description

| Name | Tasks week 8 | Tasks week 9 | Tasks week 10 | Tasks week 11 |

|---|---|---|---|---|

| Lars Blommers | 1. Localisation (particle filter) | 1. Main file state flow | 1. Combine different elements in main file

2. Combine A* with localisation |

Work on wiki |

| Joris Bongers | 1. Navigation (combining global

and local navigation) |

1. Update wiki with interface,

coordinator information |

1. Combine different elements in main file

2. Combine A* with localisation |

Work on wiki |

| Erick Hoogstrate | 1. Localisation (particle filter) | 1. Finish up localisation (particle filter) | 1. Combine A* with localisation | Work on wiki |

| Noortje Hagelaars | 1. Adjust state and data diagram

after feedback from tutors 2. Navigation (A* algorithm) |

1. Robot implementation of A* | 1. Create vector map of final challenge,

node placement for A* |

Work on wiki |

| Merlijn van Duijn | 1. Navigation (A* algorithm) | 1. Robot implementation of A* | - | Work on wiki |

| Sjoerd van der Velden | 1. Adjust state and data diagram

after feedback from tutors 2. Navigation (combining global and local navigation) |

1. Update wiki with interface,

coordinator information 2. Assist with robot testing A* |

1. Combine A* with local navigation | Work on wiki |