Mobile Robot Control 2023 R2-D2: Difference between revisions

| (25 intermediate revisions by 6 users not shown) | |||

| Line 21: | Line 21: | ||

|} | |} | ||

==Design Presentation== | ==Design Presentation== | ||

| Line 31: | Line 26: | ||

Presentation pdf: | Presentation pdf: | ||

<br /> | |||

==Introduction== | ==Introduction== | ||

This | This wiki presents an in-depth analysis of the Restaurant Challenge, focusing on the system description, software architecture, strategy employed, robustness considerations, and evaluation of the challenge. The Restaurant Challenge is a challenge that assesses the capabilities of an autonomous robot to navigate and deliver orders within a restaurant environment. The challenge requires teams to develop software for the robot, known as Hero, enabling it to autonomously navigate through the restaurant, avoiding obstacles and humans, while accurately delivering orders to designated tables. | ||

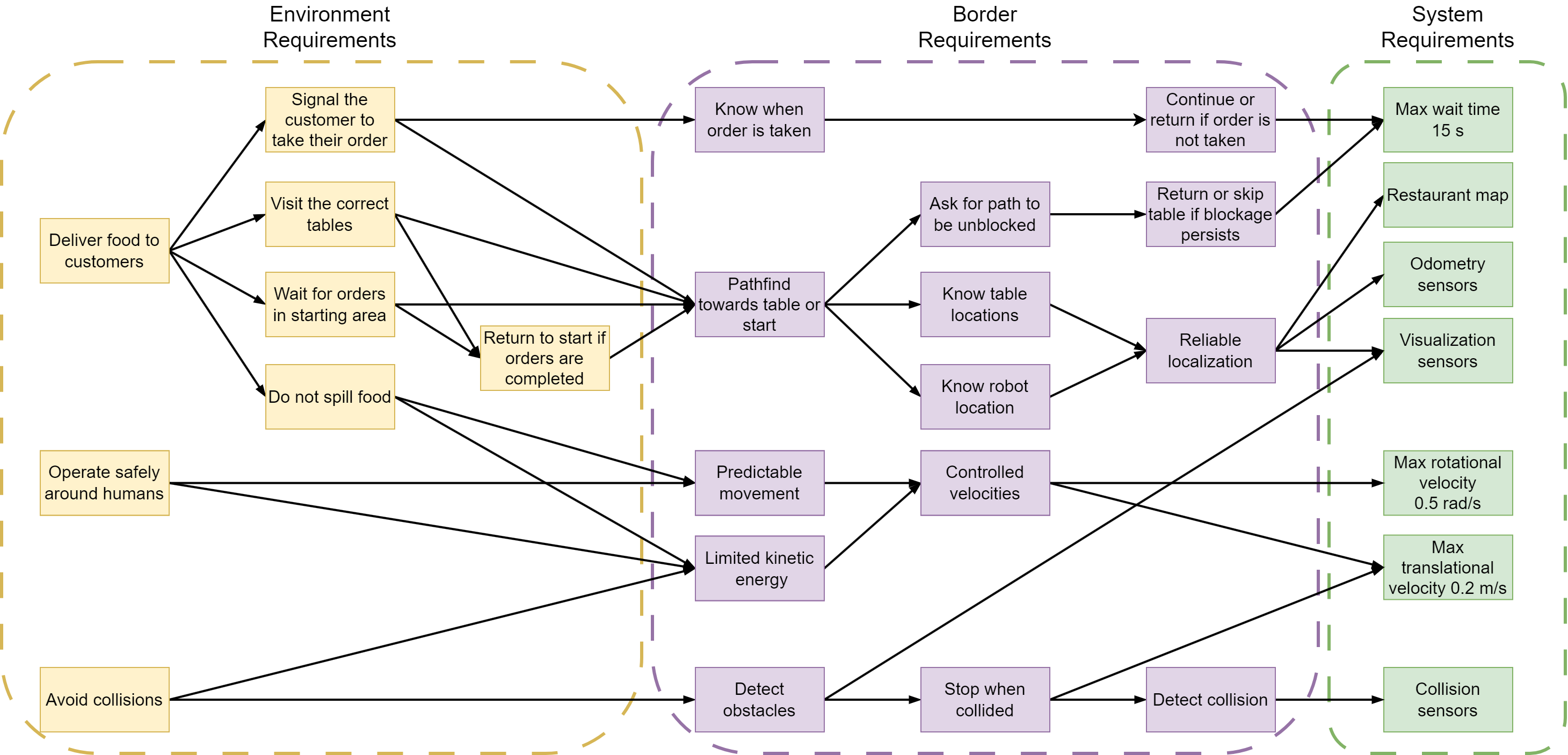

The | The wiki begins by introducing the challenge overview as well as the system description. The requirements are specified in the 'from desires to specs' diagram, which is then followed by the state flow and data flow created for the challenge. The state flow details how Hero progresses from the start area to the designated tables, while the data flow explains how information is exchanged and processed within the system. | ||

Furthermore, the | Furthermore, the wiki covers the strategy employed by the team to tackle the Restaurant Challenge. This includes a discussion of the global planner responsible for determining the overall path, the local planner handling immediate obstacles and route adjustments, the particle filter aiding in localization, and the main algorithm that orchestrates the entire delivery process. | ||

A crucial aspect of the challenge is the software architecture developed. This section of the | A crucial aspect of the challenge is the software architecture developed. This section of the wiki explores the architecture's design, how it was organized and its effectiveness in enabling Hero to perceive and navigate the environment, handle object detection, and execute the delivery task. | ||

The | The wiki also addresses the robustness considerations involved in the challenge, ensuring that Hero can handle unexpected situations, such as collisions, stalls, or navigational uncertainties. Therefore, the software needs to be designed with this in mind, and this section evaluates the robustness of the implementation created. | ||

Finally, the | Finally, the wiki discusses the results of the Restaurant Challenge. The performance of Hero will be assessed based on criteria such as successful delivery completion, adherence to the correct table order, object detection capabilities, and overall navigation efficiency. The evaluation section will include a discussion of the results, lessons learned throughout the challenge, things that could have been improved and future considerations. The wiki will then be concluded with a brief conclusion that describes the main achievements that were successfully completed. | ||

This wiki aims to provide a comprehensive analysis of the Restaurant Challenge, shedding light on the technical aspects, strategies employed, software architectures developed, and evaluation outcomes. This knowledge contributes to the advancement of autonomous navigation and delivery systems, fostering innovation in real-world scenarios where robots can assist in restaurant operations and similar tasks. | |||

==Challenge Overview== | ==Challenge Overview== | ||

| Line 56: | Line 51: | ||

The challenge environment specifications state that the walls are approximately straight, and tables are solid objects represented as rectangles in the LiDAR measurements such that it is not necessary to detect the legs of the tables. Doors may be open or closed, and there can be multiple routes to a given goal. Furthermore, the presence of dynamic objects in the form of humans adds complexity to the navigation task. Additionally, static obstacles such as chairs that are unknown to the robot and not present in the map given may be placed throughout the restaurant. Their detection and influence on the path taken is an additional challenge that needs to be overcome. | The challenge environment specifications state that the walls are approximately straight, and tables are solid objects represented as rectangles in the LiDAR measurements such that it is not necessary to detect the legs of the tables. Doors may be open or closed, and there can be multiple routes to a given goal. Furthermore, the presence of dynamic objects in the form of humans adds complexity to the navigation task. Additionally, static obstacles such as chairs that are unknown to the robot and not present in the map given may be placed throughout the restaurant. Their detection and influence on the path taken is an additional challenge that needs to be overcome. | ||

The challenge is subject to specific rules, including restrictions on touching walls or objects, limitations on robot speed, and time limits for each trial. Two trials are allowed, with a maximum of one restart | The challenge is subject to specific rules, including restrictions on touching walls or objects, limitations on robot speed, and time limits for each trial. Two trials are allowed, with a maximum of one restart. A trial ends if Hero bumps into a wall or object, remains stationary or makes non sensible movements for 30 seconds, exceeds the total time limit of 10 minutes, or if a restart is requested. A restart allows Hero to return to the start position while resetting the trial time but not the total time. | ||

The overall goal of the challenge is to demonstrate the robot's ability to autonomously navigate a complex environment, accurately deliver orders to designated tables, and effectively handle dynamic obstacles. The teams' performance will be evaluated based on their ability to complete the task within the given constraints. | The overall goal of the challenge is to demonstrate the robot's ability to autonomously navigate a complex environment, accurately deliver orders to designated tables, and effectively handle dynamic obstacles. The teams' performance will be evaluated based on their ability to complete the task within the given constraints. | ||

==System Description== | ==System Description== | ||

This section provides a comprehensive overview of Hero | This section provides a comprehensive overview of Hero, its limitations, and functionalities. | ||

Hero has certain limitations on its maximum | Hero has certain limitations on its maximum velocities, namely the translational velocity is limited to 0.5 m/s and the rotational velocity is limited to 1.2 rad/s. To establish position and movement of the robot, two types of outputs from the robot can be utilized: laser data from the laser range finder and odometry data from the wheels. | ||

The laser range finder provides information about the distances between the robot and its surrounding objects. The range_min and range_max values define the minimum and maximum measurable distances, respectively. Any distance reading below range_min or above range_max is considered invalid. Furthermore, the angle_min and angle_max values determine the angles of the first and last beams in the measurement. The angle_increment value is also provided as an input metric from the laser data. The actual laser sensor readings are stored in a vector of floats of which each reading is associated with the ranges. Each element in the vector corresponds to a measured distance at a specific angle, which can be calculated using angle_min, angle_increment, and the index of the element in the vector. Additionally, the timestamp associated with the laser data indicates the time at which the measurements were taken. | The laser range finder provides information about the distances between the robot and its surrounding objects. The range_min and range_max values define the minimum and maximum measurable distances, respectively. Any distance reading below range_min or above range_max is considered invalid. Furthermore, the angle_min and angle_max values determine the angles of the first and last beams in the measurement. The angle_increment value is also provided as an input metric from the laser data. The actual laser sensor readings are stored in a vector of floats of which each reading is associated with the ranges. Each element in the vector corresponds to a measured distance at a specific angle, which can be calculated using angle_min, angle_increment, and the index of the element in the vector. Additionally, the timestamp associated with the laser data indicates the time at which the measurements were taken. | ||

Moreover, odometry provides information about how far the robot has traveled and how much it has rotated. Each wheel and the rotational joint are equipped with encoders that keep track of their respective rotations. By utilizing the encoder data from all sources and considering the wheel configuration, it is possible to calculate the displacement and rotation of the robot since its initial position. However, it is important to note that odometry data is susceptible to drift due to small measurement errors and wheel slip, which accumulate over time. Therefore, relying solely on odometry data for longer periods is not recommended. Additionally, it is essential to be aware that the odometry data does not start at the coordinates (0,0), indicating that the robot's initial position may be different from the origin. Thus, the utilization of a particle filter will be needed. | Moreover, odometry provides information about how far the robot has traveled and how much it has rotated. Each wheel and the rotational joint are equipped with encoders that keep track of their respective rotations. By utilizing the encoder data from all sources and considering the wheel configuration, it is possible to calculate the displacement and rotation of the robot since its initial position. However, it is important to note that odometry data is susceptible to drift due to small measurement errors and wheel slip, which accumulate over time. Therefore, relying solely on odometry data for longer periods is not recommended. Additionally, it is essential to be aware that the odometry data does not start at the coordinates (0,0), indicating that the robot's initial position may be different from the origin. Thus, the utilization of a localization algorithm (Chosen: a particle filter) will be needed. | ||

Apart from the laser and odometry data, | Apart from the laser and odometry data, Hero is equipped with two separate bumpers, each acting as an individual sensor. Therefore, to obtain bumper information, the data from each bumper should be retrieved individually which would provide information about whether the robot made contact with an obstacle, from the front or the back. | ||

Furthermore, the velocity command given to the robot can only realize movement in the x-direction (which is forward and backwards), and rotate with a given input rotational velocity. Thus, by understanding these different types of input data, the robot can be controlled, and made to navigate without collision. | Furthermore, the velocity command given to the robot can only realize movement in the x-direction (which is forward and backwards), and rotate with a given input rotational velocity. Thus, by understanding these different types of input data, the robot can be controlled, and made to navigate without collision. | ||

==From Desires to Specs== | ==From Desires to Specs== | ||

''' | [[File:MrcRequirements.png|none|thumb|1000x1000px|Figure 1: Hero's requirements]] | ||

Before any work on Hero's algorithm was done, the system requirements, necessary to complete the restaurant challenge, were listed. From these requirements, the so-called 'from desires to specs' diagram was constructed, as seen in Figure 1. In this diagram, the environment, border and system requirements are ordered, and relations between them are indicated through the arrows. Constructing this diagram gave us a broad overview of the functionalities that Hero should have, and it helped us in defining the state and data flow of the system.<br /> | |||

==State Flow== | ==State Flow== | ||

[[File:StateflowR2D2.png|thumb|State flow of Hero's algorithm|1300x1300px|none]] | [[File:StateflowR2D2.png|thumb|Figure 2: State flow of Hero's algorithm|1300x1300px|none]] | ||

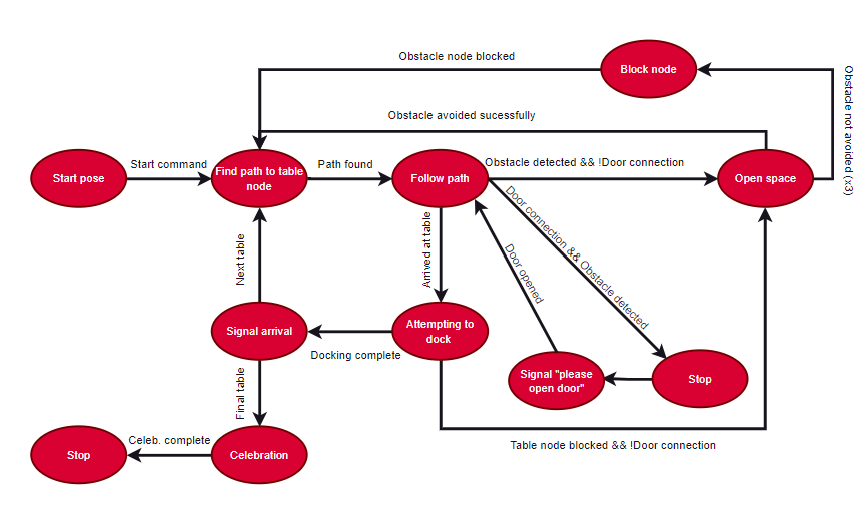

The state diagram is an important step to understanding the high level structure of the developed code. Each node can be visualized as a state that the robot might be in and each edge can be seen as a condition that results in the robot leaving the current state and going to the next. | |||

Firstly, the robot starts at the start position at a random orientation and localizes itself using the particle filter discussed in the particle filter section. After that, once the robot received the start command with the table order, it attempts to find a path to one of the table nodes specified in the table node list. The A* then finds a global path between the current node and the selected table node. The path is always found since the A* algorithm is executed on a global map that does not take into account the unknown obstacles. Now the robot can move to the follow path state, where the velocity commands are computed and sent to the controller to move the robot from one position to the next in the path. After the path is completed and the table node is reached, the robot attempts to dock at the table by for instance rotating or getting close enough to the table. Once this docking is completed successfully, i.e. the robot is close enough to the table, it signals that it has arrived with the order. This whole process is then restarted by again computing the global path to the next table node in the table list. This is repeated until the final table is reached, then the loop is exited and the robot goes in the celebration state, where it signals that all the tables have been reached successfully and then stops. This illustrates the happy flow of the robot in case nothing goes wrong and no unknown obstacles were detected close to the robot. | |||

Since the robot is navigating an environment where unknown obstacles will most certainly be met, a recovery behaviour flow must be added at two main states. The first being the follow path state, where the robot is unable to follow the path computed by the A* due to an unknown obstacle blocking the path or a closed door that needs to be opened. Secondly, at the attempting to dock state, where the robot may not be able to get close enough to the table, possibly due to an obstacle blocking the selected table node. | |||

Furthermore, at the follow path state, two possible recovery behavior scenarios can be realized. The first, is triggered when an obstacle is detected but the robot is not at a door connection. The robot then goes into open spaces state, where open spaces algorithm takes over and the path is disregarded and not followed momentarily. This is an attempt to overcome the obstacle that is blocking the pre-computed path. If the obstacle is indeed avoided, A* is computed from the current position to the table node again. In the case, where the obstacle could not be avoided by the open space approach three times in a row, the next node is removed from the possible nodes that A* uses to plan a path and the A* is recomputed. By doing so, the robot is able to overcome the obstacle by either following a completely different path or by deviating from the path momentarily and then converging back after the obstacle is avoided. The second is triggered when an obstacle is detected and the robot is at a door connection. Here the robot stops and requests for the door to be opened. When the door is opened, the robot continues to follow the path.If the door is not opened after Hero requested it three times, an alternative path is planned, which does not cross the closed door. | |||

In the other scenario, where the robot fails to dock at the table node. If an obstacle is detected and the robot is not at a door connection, the robot goes into the open spaces state to avoid the obstacle, but as expected it will most certainly not be able to avoid the obstacle since it is blocking the node. Therefore, the robot will remove the node from the list of reachable nodes and will recompute A* on the next table node in the list of table nodes. | |||

In conclusion, by following the state flow presented above, the robot is able to always reach one of the table nodes specified for each table. This state flow resembles and dictates the robot's behaviour given the possible scenarios the robot can encounter in its environment. | |||

==Data Flow== | ==Data Flow== | ||

[[File:MrcDataFlow.png|thumb|Data flow of Hero's algorithm| | [[File:MrcDataFlow.png|thumb|Figure 3: Data flow of Hero's algorithm|1200x1200px|none]] | ||

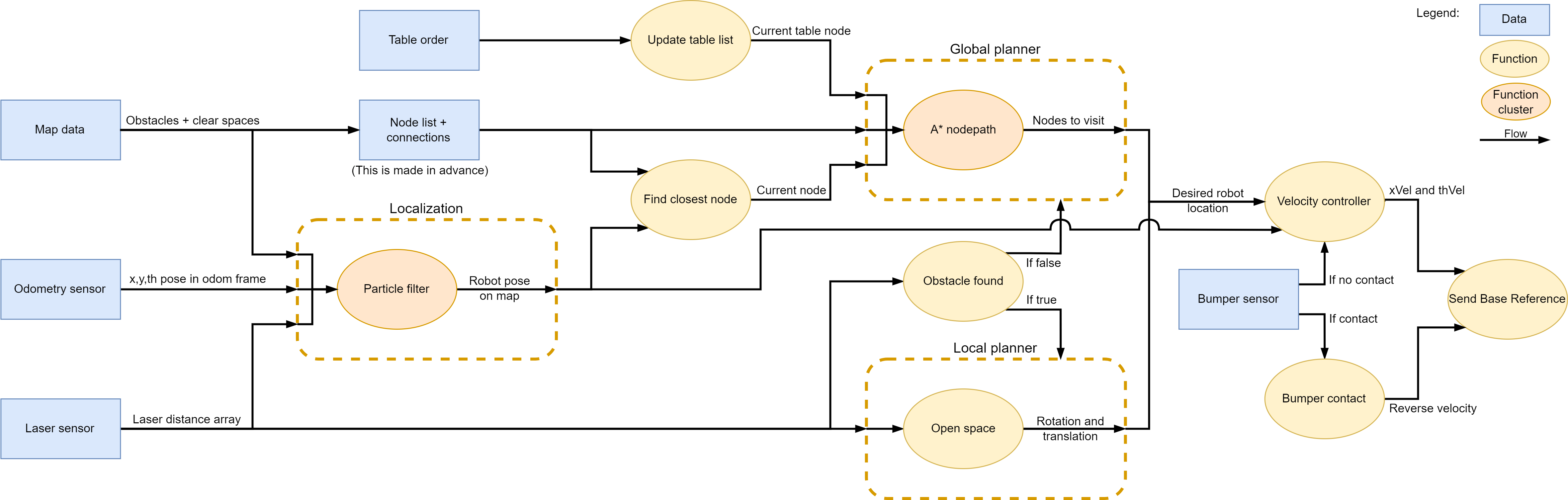

Figure 3 shows the data flow of Hero's algorithm. This data flow is completely revised, compared to the figure that was shown in the design presentation. Many complex elements (outside of the scope of this course) were scrapped due to time constraints, and instead all of the main software functionalities came from the assignments that were given during this course. During the start of the software design for the restaurant challenge, this data flow was still vague, with many key aspects missing. However, as time progressed, and more functionalities were added to the code, this data flow became more clear. One of the main realizations was that by making both the local and global planner output the same data, only one velocity controller was needed. This velocity controller would then steer Hero towards the desired position, based on the current position that is given by the particle function. | |||

' | |||

==Strategy Description Restaurant Challenge== | ==Strategy Description Restaurant Challenge== | ||

===Global Planner=== | ===Global Planner=== | ||

As mentioned, the chosen global planner for Hero is the A* algorithm. The A* algorithm is a widely used path planning algorithm that efficiently computes an optimal path from a starting point to a goal point. In the context of this assignment, the robot receives a specified order of tables as input, and the A* algorithm is responsible for generating a sequential path that visits these tables in the given order. | |||

The A* algorithm combines the strengths of both Dijkstra's algorithm and greedy best-first search. It intelligently explores the search space by considering both the cost of reaching a particular point and an estimate of the remaining cost to reach the goal. One of the key advantages of the A* algorithm is its ability to find the shortest path while minimizing unnecessary backtracking or inefficient movements. By planning the path to the tables in the given order, the algorithm ensures that the robot follows a coherent and streamlined delivery process. This leads to efficient navigation, reducing both the time and distance traveled. | The A* algorithm combines the strengths of both Dijkstra's algorithm and greedy best-first search. It intelligently explores the search space by considering both the cost of reaching a particular point and an estimate of the remaining cost to reach the goal. One of the key advantages of the A* algorithm is its ability to find the shortest path while minimizing unnecessary backtracking or inefficient movements. By planning the path to the tables in the given order, the algorithm ensures that the robot follows a coherent and streamlined delivery process. This leads to efficient navigation, reducing both the time and distance traveled. | ||

In this challenge, the optimal path, which is computed by the A* algorithm, is given as a sequence of nodes. The map is discretized into a grid representation, | In this challenge, the optimal path, which is computed by the A* algorithm, is given as a sequence of nodes. The map is discretized into a grid representation, where nodes have been chosen to mark locations on the map that the robot can visit. The robot traverses the map by moving from one node to another sequentially, following a predetermined set of connections between adjacent nodes. | ||

However, it is important to consider scenarios where unexpected obstacles may interfere with the computed path. In such cases, when an obstacle that was not pre-defined appears along the planned path, the local planner is activated. The local planner utilizes obstacle avoidance techniques to dynamically adjust the robot's trajectory, allowing it to safely navigate around the obstacle. Once the local planner successfully avoids the obstacle, the global planner, using the A* algorithm once again, is invoked to recompute an optimal path to the table goal. | However, it is important to consider scenarios where unexpected obstacles may interfere with the computed path. In such cases, when an obstacle that was not pre-defined appears along the planned path, the local planner is activated. The local planner utilizes obstacle avoidance techniques to dynamically adjust the robot's trajectory, allowing it to safely navigate around the obstacle. Once the local planner successfully avoids the obstacle, the global planner, using the A* algorithm once again, is invoked to recompute an optimal path to the table goal, based on the current robot position. | ||

By seamlessly integrating the A* algorithm as the global planner and incorporating the local planner for obstacle avoidance, Hero can effectively navigate the environment, efficiently delivering orders to the specified tables. The A* algorithm's ability to find optimal paths, combined with the adaptability of the local planner, ensures robust and intelligent decision-making during the robot's navigation process. | By seamlessly integrating the A* algorithm as the global planner and incorporating the local planner for obstacle avoidance, Hero can effectively navigate the environment, efficiently delivering orders to the specified tables. The A* algorithm's ability to find optimal paths, combined with the adaptability of the local planner, ensures robust and intelligent decision-making during the robot's navigation process. | ||

===Local Planner=== | ===Local Planner=== | ||

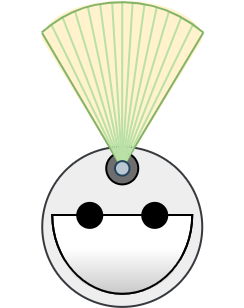

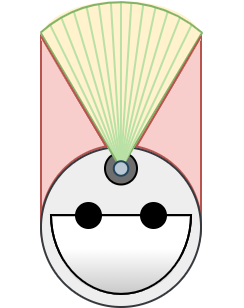

[[File:HeroObstacleAvoidance1.png|thumb|Figure 4: Hero's obstacle detection scanning cone]] | |||

[[File:HeroObstacleAvoidance1.png|thumb|Hero's obstacle detection scanning cone]] | |||

=====Open Space===== | =====Open Space===== | ||

In the implemented open space approach, the robot utilizes laser data to navigate through the environment by identifying and traversing through open spaces or gaps between obstacles. This approach enables the robot to safely move without colliding with objects in its path. The algorithm consists of several key steps: | Open space is activated, as was shown in Figure 3, once an obstacle is detected by the laser sensors, within a distance of 0.4 m in a front facing cone. This scanning area is depicted schematically in Figure 4. In the implemented open space approach, the robot utilizes laser data to navigate through the environment by identifying and traversing through open spaces or gaps between obstacles. This approach enables the robot to safely move without colliding with objects in its path. The algorithm consists of several key steps: | ||

'''1. Gap Detection:''' The algorithm analyzes the laser scan data to identify gaps or open spaces. It examines consecutive laser points within a specified range (2π/3 cone in front of robot) and checks if their distances exceed a certain threshold, look ahead distance that begins at 0.8 meters. If a sequence of laser points with distances greater than the threshold is found, it indicates the presence of a gap. | '''1. Gap Detection:''' The algorithm analyzes the laser scan data to identify gaps or open spaces. It examines consecutive laser points within a specified range (2π/3 rad cone in front of the robot) and checks if their distances exceed a certain threshold, a look ahead distance that begins at 0.8 meters. If a sequence of laser points with distances greater than the threshold is found, it indicates the presence of a gap. | ||

'''2. Gap Width Evaluation:''' After detecting a potential gap, the algorithm evaluates its width to determine if it is wide enough to be considered a significant open space. A minimum gap width criterion is set to 100 laser pointers to ensure that the detected gap is large enough to fit the width of the robot such that it can safely drive within this gap. | '''2. Gap Width Evaluation:''' After detecting a potential gap, the algorithm evaluates its width to determine if it is wide enough to be considered a significant open space. A minimum gap width criterion is set to 100 laser pointers to ensure that the detected gap is large enough to fit the width of the robot such that it can safely drive within this gap. | ||

| Line 113: | Line 115: | ||

'''3. Open Space Search:''' The algorithm searches for open spaces within the laser scan data by iteratively applying the gap detection process. It gradually adjusts the look ahead distance parameter by decreasing it by 10% each iteration to find wider gaps if no suitable gap is found initially. However, it should be noted that this look ahead distance should never decrease below the obstacle distance defined. This adaptive mechanism allows the robot to explore different distances and adapt to the environment's characteristics. | '''3. Open Space Search:''' The algorithm searches for open spaces within the laser scan data by iteratively applying the gap detection process. It gradually adjusts the look ahead distance parameter by decreasing it by 10% each iteration to find wider gaps if no suitable gap is found initially. However, it should be noted that this look ahead distance should never decrease below the obstacle distance defined. This adaptive mechanism allows the robot to explore different distances and adapt to the environment's characteristics. | ||

'''4. Open Space Calculation:''' Once a significant gap is detected, the algorithm calculates the distance and rotation required for the robot to navigate through the open space. It determines the start and end indices of the laser scan | '''4. Open Space Calculation:''' Once a significant gap is detected, the algorithm calculates the distance and rotation required for the robot to navigate through the open space. It determines the start and end indices of the gap found through the laser scan representing the open space and uses this information to calculate the desired rotation angle. The algorithm also accounts for small adjustments to the rotation angle to ensure smooth and precise navigation. | ||

By implementing the open space approach, the robot can effectively plan its trajectory by identifying safe areas to move through. This strategy minimizes the risk of collisions and enables efficient navigation in complex environments. | By implementing the open space approach, the robot can effectively plan its trajectory by identifying safe areas to move through. This strategy minimizes the risk of collisions and enables efficient navigation in complex environments. | ||

=====Re-planning Path===== | =====Re-planning Path===== | ||

Once an obstacle is detected and open space is called, Hero finds the gap using the algorithm described above and then moves a distance equal to the obstacle distance defined. | Once an obstacle is detected and open space is called, Hero finds the gap using the algorithm described above and then moves a distance equal to the obstacle distance defined. However, it does this by defining a desired position on the map, based on its current position, and the required rotation and translation. Following the adjustment of position to circumvent the obstacle, if open space is not called once more, Hero initiates a re-computation of the A* algorithm to re-plan its path. This ensures that the robot can adapt to the new environment and dynamically generate an optimal trajectory towards the next table on the delivery list depending on its new location on the map. | ||

The process of activating open spaces, moving a distance corresponding to the obstacle distance, and recalculating the A* algorithm enables Hero to navigate efficiently and effectively within the restaurant environment. This capability showcases the robot's adaptability and problem-solving skills, as it can respond to unexpected obstacles and make the necessary adjustments to ensure successful delivery completion. | The process of activating open spaces, moving a distance corresponding to the obstacle distance, and recalculating the A* algorithm enables Hero to navigate efficiently and effectively within the restaurant environment. This capability showcases the robot's adaptability and problem-solving skills, as it can respond to unexpected obstacles and make the necessary adjustments to ensure successful delivery completion. | ||

===== | =====Door Detection===== | ||

Being able to detect doors is a crucial aspect of navigating the restaurant environment. This is due to the possibility of certain paths being completely blocked by unforeseen obstacles. Therefore, the robot must be able to detect the door and request for it to be opened in order to successfully deliver orders to all tables. That being said, a door detection algorithm was implemented and integrated within the navigation planning. | |||

A list of door connections was constructed which consists of the combination of the node before the door and the node after the door. As can be seen in the map of Figure 5, the door connection consists of nodes 16 and 44, which in turn means that the list of door connections is: Node_connections [(44,16),(16,44)]. Therefore, to check whether the obstacle detected in front of the robot is indeed a door or an unknown obstacle, the algorithm simply checks whether an obstacle is detected and that the current node is either 44 or 16 and the next node in the node path list is either 16 or 44. If this is the case, and open space detects an obstacle in the way, Hero will request to open the door. If there is no obstacle detected, the door is assumed to be open and Hero will not request the door to be opened. It will continue along its path as planned by A*. | |||

===Particle Filter=== | ===Particle Filter=== | ||

Localization is a crucial task in robotics, involving the estimation of a robot's pose (position and orientation) relative to a known map or reference frame within its environment. It enables effective navigation, perception, and autonomous task execution. For this restaurant navigation challenge, localization is a must since relying on the odometry information is not | Localization is a crucial task in robotics, involving the estimation of a robot's pose (position and orientation) relative to a known map or reference frame within its environment. It enables effective navigation, perception, and autonomous task execution. For this restaurant navigation challenge, localization is a must since relying on the odometry information is not robust due to wheel slippage. Thus, a particle filter is implemented as a means of localization. | ||

A particle filter is a probabilistic localization technique widely employed in robotics. It utilizes an estimation algorithm that maintains a set of particles, each representing a hypothesis of the robot's pose. These particles are distributed across the map and updated based on sensor measurements and control inputs. This allows for robust and accurate localization, particularly in dynamic and uncertain environments. | A particle filter is a probabilistic localization technique widely employed in robotics. It utilizes an estimation algorithm that maintains a set of particles, each representing a hypothesis of the robot's pose. These particles are distributed across the map and updated based on sensor measurements and control inputs. This allows for robust and accurate localization, particularly in dynamic and uncertain environments. | ||

| Line 131: | Line 137: | ||

The functioning of a particle filter involves iterative updates of the particles, guided by sensor measurements and control inputs. Initially, the particles are uniformly distributed across the map, encompassing a broad range of potential robot poses. As sensor measurements, such as odometry or perception data, are acquired, the particles undergo resampling and weighting based on their likelihood of generating the observed data. Particles with higher weights, indicating better agreement with the measured data, have a greater probability of being selected during the resampling process. This iterative process allows the particle filter to converge towards the true robot pose. This is seen, in this implementation, as a group of red particles that are relatively close to the robot's actual position on the map. | The functioning of a particle filter involves iterative updates of the particles, guided by sensor measurements and control inputs. Initially, the particles are uniformly distributed across the map, encompassing a broad range of potential robot poses. As sensor measurements, such as odometry or perception data, are acquired, the particles undergo resampling and weighting based on their likelihood of generating the observed data. Particles with higher weights, indicating better agreement with the measured data, have a greater probability of being selected during the resampling process. This iterative process allows the particle filter to converge towards the true robot pose. This is seen, in this implementation, as a group of red particles that are relatively close to the robot's actual position on the map. | ||

Furthermore, the particle filter also handles the coordination between different frames used in robot localization. There are three frames which are being utilized, and that are being coordinated with each other by the particle filter. The map frame, robot frame, and odometry frame are those three frames. The map frame represents the global reference frame, in this case, it is defined by the map of the restaurant which was given. The robot frame denotes the robot's pose within the map frame, encompassing both its position and orientation. The odometry frame tracks the robot's motion through wheel encoders or other motion sensors. The particle filter leverages information from the odometry frame to update and propagate the particles, accounting for the robot's movement and estimating its pose relative to the map frame. By integrating measurements from the | Furthermore, the particle filter also handles the coordination between different frames used in robot localization. There are three frames which are being utilized, and that are being coordinated with each other by the particle filter. The map frame, robot frame, and odometry frame are those three frames. The map frame represents the global reference frame, in this case, it is defined by the map of the restaurant which was given. The robot frame denotes the robot's pose within the map frame, encompassing both its position and orientation. The odometry frame tracks the robot's motion through wheel encoders or other motion sensors. The particle filter leverages information from the odometry frame to update and propagate the particles, accounting for the robot's movement and estimating its pose relative to the map frame. By integrating measurements from the robot frame, which are obtained from the laser data, the particle filter refines the particles' weights and adjusts their positions, resulting in an accurate estimate of the robot's localization. | ||

Finally, there is a tradeoff between maximizing the particle filter performance by increasing the number of particles utilized and the computational load. So, a tradeoff has to be made between how accurate the robot's pose is estimated, and how fast it is estimated. For this implementation, the optimum number of particles was determined to be 400 particles. This number was found through a trial and error basis. | Finally, there is a tradeoff between maximizing the particle filter performance by increasing the number of particles utilized and the computational load. So, a tradeoff has to be made between how accurate the robot's pose is estimated, and how fast it is estimated. For this implementation, the optimum number of particles was determined to be 400 particles. This number was found through a trial-and-error basis. | ||

By utilizing the particle filter, alongside the laser, odometry and bumper data, the robot should be able to navigate the restaurant. | By utilizing the particle filter, alongside the laser, odometry and bumper data, the robot should be able to navigate the restaurant. | ||

===Announcing Arrival and Celebration=== | ===Announcing Arrival and Celebration=== | ||

The algorithm | The arrival algorithm handles the robot announcements when arrived at a table, and when all tables have been visited. This section outlines the approach used to announce the arrival and trigger a celebratory response. | ||

To begin with, the nodes that | To begin with, the nodes that surround each table are organized into separate vectors based on their relative positions. These vectors serve as reference points for determining the desired orientation of the robot when facing the table. Depending on the specific table being visited, the appropriate vector is selected. | ||

Upon reaching a goal node, the algorithm identifies which vector the node belongs to, thereby determining the desired angle for the robot's orientation. To ensure alignment with the desired angle, the robot's pose is requested through the particle filter. If the robot's current facing direction differs from the desired angle, the robot performs a turn to face the table. | Upon reaching a goal node, the algorithm identifies which vector the node belongs to, thereby determining the desired angle for the robot's orientation. To ensure alignment with the desired angle, the robot's pose is requested through the particle filter. If the robot's current facing direction differs from the desired angle, the robot performs a turn to face the table. | ||

Once the robot is properly oriented towards the table, it announces its arrival by vocalizing the phrase, "Hello, I have arrived with your order." This vocalization ensures that the customers are aware of the robot's presence and the delivery of their order. | Once the robot is properly oriented towards the table, it drives up to the table, until it is 20 cm away, based on the laser sensors. Then, it announces its arrival by vocalizing the phrase, "Hello, I have arrived with your order." This vocalization ensures that the customers are aware of the robot's presence and the delivery of their order. | ||

Furthermore, once all tables have been visited, and this is verified by checking whether the table list is empty, the algorithm triggers a celebratory response from the robot. The robot proclaims its achievement of visiting all tables, signaling the successful completion of its task. | Furthermore, once all tables have been visited, and this is verified by checking whether the table list is empty, the algorithm triggers a celebratory response from the robot. The robot proclaims its achievement of visiting all tables, signaling the successful completion of its task. | ||

===Creating Table List and Inputting Table Order from User=== | ===Creating Table List and Inputting Table Order from User=== | ||

When calling the executable from the terminal, arguments can be supplied to the system. These arguments are the table numbers that Hero should visit. These table numbers are taken and converted to node numbers. From the node map in | When calling the executable from the terminal, arguments can be supplied to the system. These arguments are the table numbers that Hero should visit. These table numbers are taken and converted to node numbers. From the node map in Figure 5, nodes were identified per table as an option to visit. Each possible table node was also assigned a direction to turn to, as discussed in the Announcing Arrival and Celebration section. When converting table numbers to node numbers, two options were created. | ||

Originally, the best nodes to reach were placed first in the list. The robot would only visit another node if this original node is blocked. The table number to node translation only happens at the start of the program, and if a node is blocked. Therefore, if no obstacles are placed in the restaurant, the same node will always be visited per table. This was tested prior to the challenge and used in the challenge as well. | |||

A second option was also created to optimize for the shortened path based on the current node number. The list of final goal nodes to visit was updated every time a table was reached, or a node was blocked. The list of possible nodes to visit is re-ordered based on which node the robot is in, and the closest node (by numerical number) is chosen as the node to visit. If this node is blocked, the next closest node is used instead. This implementation was chosen due to unoptimized paths. For example: the long path followed if table 0 is visited before table 1, causing the robot to drive all the way back around the table to node 46. This could be better chosen to be node 36. This version was only tested in simulation due to time constraints. Within simulation, the recalculation of the nodes based on shortest distance was confirmed. However, due to the preference of different nodes that had not been tested in real life, as well as unexpected simulation behavior of open space, it was decided to not use this during the challenge. | |||

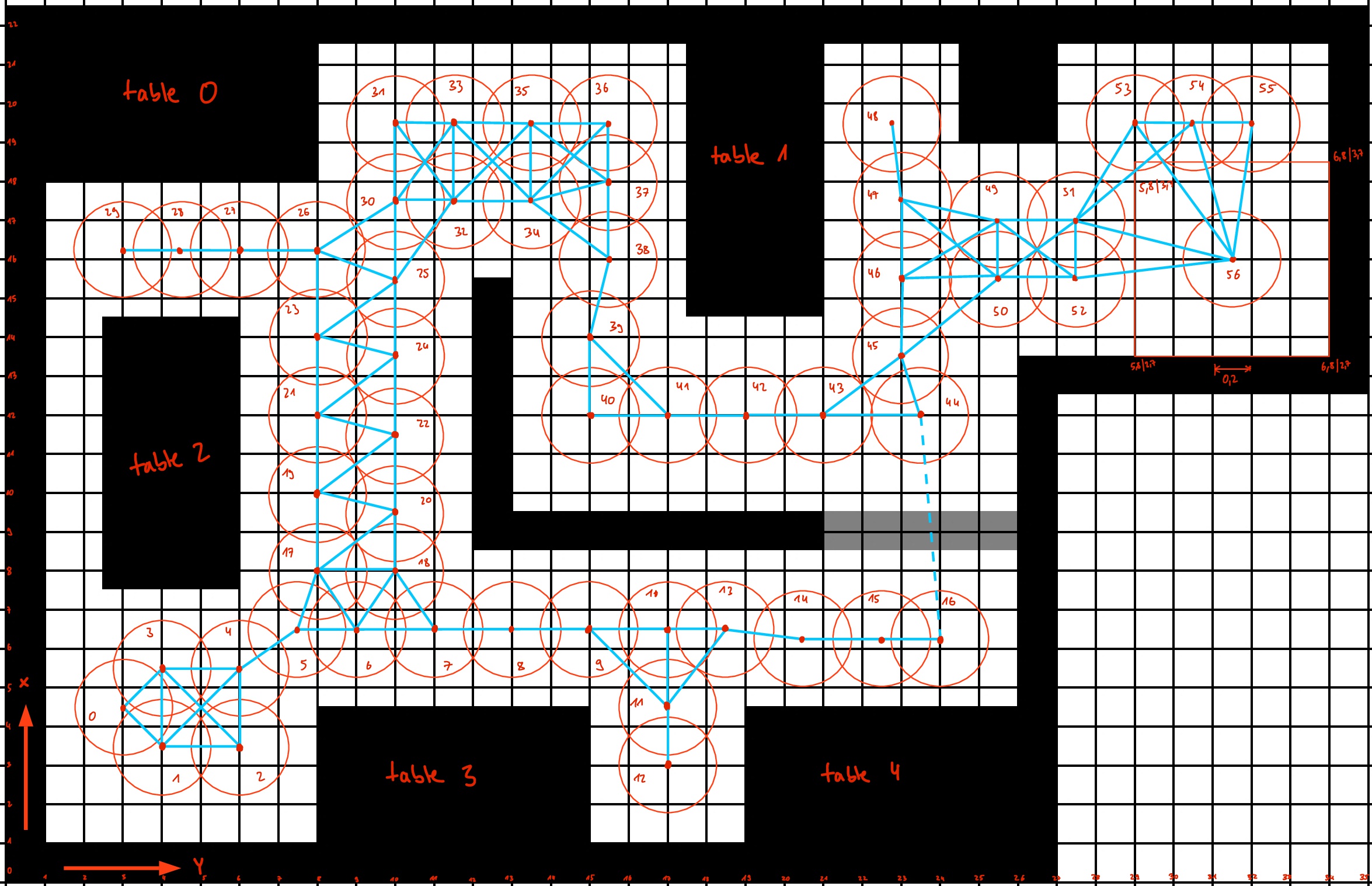

[[File:RestaurantChallengeMap grid16 R2D2.jpg|thumb|Figure 5: R2D2 generated map with nodes and connections in defined grid|800x800px]] | |||

===Map Generation=== | ===Map Generation=== | ||

The map plays a | The map plays a crucial role in the project. The map provided by the lecturers consists of a pixelated image representing the environment, accompanied by a corresponding coordinate JSON file that describes the corner nodes of tables, walls, and obstacles. Additionally, a config file is included, which contains information about the resolution of the pixelated image, enabling the establishment of a scale for projecting the real-life environment onto the image. It is important to note that the relative sizes of objects, tables, and walls in the image are consistent with those in the actual environment. | ||

The robot operates by navigating through nodes that it can visit. | The robot operates by navigating through nodes that it can visit. Therefore, the process of generating the map involves translating the given information, especially the relative size information of walls, tables, and obstacles, into a file that can be interpreted by the A* algorithm. This file allows the algorithm to effectively plan the robot's path, ensuring that it visits all the tables. To achieve this, the strategy involves defining a grid with a chosen grid size, identifying the nodes that the robot can visit, and establishing connections between these nodes. | ||

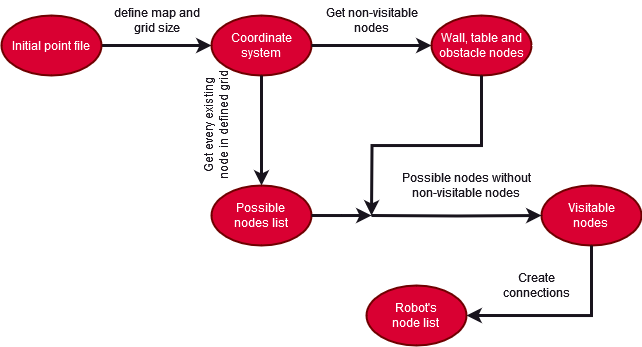

[[File:Algorithm nodelist generation.png|thumb|616x616px|Figure 6: Map generation algorithm visualized]] | |||

The provided coordinate file contains specific point numbers and coordinates in both 2D directions, representing a coordinate system measured in meters that originates from the bottom left corner of the map. The process of generating the node and connection list can either involve developing an algorithm that can convert these given points and connections into a functional grid consisting of nodes that the robot can visit, each with their respective coordinates, or, alternatively, a manual node file generation by hand. However, after attempting to develop the algorithm, it was found to be too complex, and for various reasons mentioned later, the idea was abandoned. | |||

The developed algorithm functions as depicted in the accompanying flow chart. After receiving an initial point file, a map size and a grid size of 0.2 meters are defined to establish a coordinate system. Subsequently, all non-visitable points (nodes) are determined by examining each pair of points in the given file along with their respective positions defined in meters. In parallel, another list is generated containing all the possible nodes in the entire grid. After obtaining both lists, the visitable nodes list is created by subtracting the non-visitable nodes from the possible nodes list. These visitable nodes are then connected to each other, resulting in a connection list. It is worth mentioning that connections are only established if no non-visitable nodes exist between two possible nodes in any direction. The resulting visitable nodes and connections lists are then stored in a JSON file format, facilitating reading by the program and utilization by the A* algorithm for path computation towards the respective tables. | |||

To further enhance the algorithm, it is imperative to include only feasible nodes in the output JSON file, reflecting the nodes that the robot can actually visit. Considering the robot's size, which is half a meter, and the relatively smaller grid size, certain nodes must be excluded from the final node list, taking into account the distance from walls. Moreover, nodes enclosed within tables, for example, must also be excluded from the final node list as they are surrounded by a closed loop of table nodes that the robot cannot traverse. Additional modifications aim to reduce the number of generated and stored nodes, allowing the robot to visit fewer nodes and avoiding unnecessary stop-and-go movements. | |||

The algorithm is limited to the inner table nodes, nodes in proximity to unreachable areas due to the robot's size relative to the distance from walls, and a reduced number of nodes to avoid storing every individual node along a straight path defined by the grid size. Furthermore, it was determined that generating every possible node in the grid through a small grid size algorithm is not a suitable solution for the project. It was also concluded that manually placing nodes is superior due to the simplicity of the given restaurant map. The effort invested in hand-writing around 50 nodes proved to be more efficient than developing an algorithm that is not as efficient. One major advantage of hand-writing the nodes was the ability to add specific diagonal connections or omit specific nodes that were deemed unsafe for the robot, resulting in a more robust node list. | |||

However, for larger projects involving more detailed or larger maps, an automated program must be developed to efficiently execute this concept within a short timeframe. | |||

To manually construct the node and connection lists, a grid with a grid size of 0.2 meters was overlaid onto the image file, taking into account the image's relative size. Subsequently, a 2D coordinate system originating from the bottom left corner of the map was established. Within this coordinate system, it was determined that one unit in the x or y direction would correspond to the grid size of 0.2 meters. The initial step involved marking the robot's starting area on the map, which entailed designating a single node at the center of the area. The subsequent initiation algorithm, to be programmed as part of the overall project, would enable the robot to locate itself within this area using localization methods such as the particle filter, regardless of its initial position within the starting area. Following this, additional points were defined, ensuring a distance of 10 cm from walls (equivalent to half a coordinate unit) and 20 cm from the door at all times. Careful consideration was given to the robot's size during the node selection process, with a circle representing the robot's size superimposed around each chosen node on the map to visually depict its dimensions. Furthermore, a deliberate effort was made to reduce the number of nodes through a selective process. The attached map displays the manually numbered nodes. The positive x-axis is oriented upwards in the image, while the positive y-axis extends to the left. | |||

Connections are depicted in blue on the map. The dotted connection represents passage through the gray door in the environment, although it is included in the regular connections list. It was crucial to ensure that the robot can traverse all these connections without violating any requirements, such as maintaining a safe distance from walls. That is why a circle representing the robot's size is placed around each node to ensure compliance. The complete map can be found alongside this text. The white space signifies the theoretically accessible area, while the black pixels represent obstacles, walls, or tables. The gray areas indicate doors. <br /> | |||

Connections are depicted in blue on the map. The dotted connection represents passage through the gray door in the environment, although it is included in the regular connections list. It was crucial to ensure that the robot can traverse all these connections without violating any requirements, such as maintaining a safe distance from walls. The complete map | |||

===Main Algorithm=== | ===Main Algorithm=== | ||

| Line 188: | Line 191: | ||

#'''Particle Filter and Node Propagation''' Once the robot starts moving, the particle filter continues to update the robot's pose to ensure accurate localization and proper arrival at the desired node and position. The algorithm propagates through the nodes along the planned path until it reaches the target table. | #'''Particle Filter and Node Propagation''' Once the robot starts moving, the particle filter continues to update the robot's pose to ensure accurate localization and proper arrival at the desired node and position. The algorithm propagates through the nodes along the planned path until it reaches the target table. | ||

#'''Obstacle Evasion''' If an obstacle is encountered during traversal, the algorithm activates the open space feature, which is the local planner, enabling the robot to evade the obstacle. However, if the robot fails to reach the next node successfully for two consecutive attempts, the algorithm removes this problematic node from future path planning to avoid further obstacles. | #'''Obstacle Evasion''' If an obstacle is encountered during traversal, the algorithm activates the open space feature, which is the local planner, enabling the robot to evade the obstacle. However, if the robot fails to reach the next node successfully for two consecutive attempts, the algorithm removes this problematic node from future path planning to avoid further obstacles. | ||

#'''''A* Path Recomputation''''' In cases where the A* path computation fails entirely, for example a node in that path is | #'''''A* Path Recomputation''''' In cases where the A* path computation fails entirely, for example a node in that path is inaccessible and deemed to be blocked, the algorithm then proceeds to recompute a different path that has the same goal node in the end as previously. | ||

#'''Door Handling''' When a door is encountered along the A* path, the algorithm sends a door open request and waits until the door is opened before proceeding. | #'''Door Handling''' When a door is encountered along the A* path, the algorithm sends a door open request and waits until the door is opened before proceeding. | ||

#'''Blocked Nodes and Goal Reassignment''' If the goal node at the table is blocked, the algorithm chooses an alternative node at the same table as the new goal, then progresses towards it. | #'''Blocked Nodes and Goal Reassignment''' If the goal node at the table is blocked, the algorithm chooses an alternative node at the same table as the new goal, then progresses towards it. | ||

| Line 197: | Line 200: | ||

==Software Architecture Restaurant Challenge== | ==Software Architecture Restaurant Challenge== | ||

''' | The main algorithm as described in the previous section works via the data flow and state flow from Figures 2 and 3 . These functions are not all in the main algorithm, and reference to a wide array of functions. A number of custom functions were created on top of functions and algorithms created and finished before the group project started. These pre-made items are the Particle Filter for localization, A* for global planning, and open space as a local planner, which were only modified for integration for this project. | ||

There are a number of custom files and functions made. Each file had a header file containing the information to be sent to the functions. The files are as follows: | |||

# '''Table Objectives''': Return the node numbers to be visited at the end of a path, based off of the blocked node list and the desired table list. | |||

# '''Arrival:''' Return the direction to rotate to once the final node at a table has been visited. | |||

# '''Laser Functions:''' Mathematical calculations to calculate between laser angle and laser index. | |||

# '''Node Functions:''' A conglomeration of functions that compute and recompute A* as needed, including finding distances to nodes and finding the nearest node. | |||

# '''Dont Crash:''' Computes bumper contact, if an obstacle is seen, and if there is a door. | |||

# '''Open Space:''' Completes open space as described in a previous section. | |||

# '''Pathfinding''': Computes the desired velocity commands, calls the global or local planner as needed. | |||

Together these files communicate according to the state flow from Figure 2 sending the data flow of Figure 3 in order to move the robot. The files were generally structured well, with small functions that complete one task. However, in hindsight, the Pathfinding file should have been broken up into more functions for easier readability and maintainability. | |||

Despite the overall progress and functionality of the main algorithm, there are certain challenges and issues that have been identified during the testing phase leading to the final challenge. | Despite the overall progress and functionality of the main algorithm, there are certain challenges and issues that have been identified during the testing phase leading to the final challenge. The encountered difficulties are highlighted to note the persisting issues that require further attention and resolution, but could not be realized within the given time frame. They will be further documented in the following section on robustness. | ||

#'''Open Space Interaction with Humans:''' One notable issue is that the open space feature, that is activated for obstacle evasion, does not work effectively in the presence of humans. The robot has a tendency to collide with human legs, potentially causing safety concerns. The root of this issue | #'''Open Space Interaction with Humans:''' One notable issue is that the open space feature, that is activated for obstacle evasion, does not work effectively in the presence of humans. The robot has a tendency to collide with human legs, potentially causing safety concerns. The root of this issue likely lies in the insufficient scanning cone that was used to initialize open space. | ||

#'''Particle Filter Localization Errors:''' It has been observed that once the particle filter is in a wrong position, it struggles to converge back to the correct position. This issue becomes more pronounced when the robot encounters unknown obstacles, particularly large ones, leading to incorrect pose estimation. This issue might stem from the usage of few particles, but due to the computational speed at hand, increasing the particles is not favorable. | #'''Particle Filter Localization Errors:''' It has been observed that once the particle filter is in a wrong position, it struggles to converge back to the correct position. This issue becomes more pronounced when the robot encounters unknown obstacles, particularly large ones, leading to incorrect pose estimation. This issue might stem from the usage of few particles, but due to the computational speed at hand, increasing the particles is not favorable. | ||

==Robustness Restaurant Challenge== | ==Robustness Restaurant Challenge== | ||

The performance verification of the system was conducted through a sequential approach, with examining each component of the algorithm. Initially, the A* algorithm was simulated to determine its effectiveness in pathfinding. It was found that A* functioned adequately, ensuring that the primary objective of efficient route planning was fulfilled. | The performance verification of the system was conducted through a sequential approach, with examining each component of the algorithm. Initially, the A* algorithm was simulated to determine its effectiveness in pathfinding. It was found that A* functioned adequately, ensuring that the primary objective of efficient route planning was fulfilled. However due to no localization, Hero tended to deviate from the real life path. Within Hero's odometry it performed well, so this test was passed. | ||

Next, the particle filter was integrated into the system, in conjunction with A* algorithm. This integration proved to be seamless, as the particle filter successfully operated without any complications when there were no unknown obstacles present in the environment. However, challenges arose when incorporating the open space module back into the system. To address this, a careful calibration of the look-ahead distance was necessary to enable the system to identify unknown obstacles while excluding non-obstacle elements such as tables. Considerable effort was dedicated to fine-tuning the open space module, which ultimately did not perform up to the expected standard. | Next, the particle filter was integrated into the system, in conjunction with A* algorithm. This integration proved to be seamless, as the particle filter successfully operated without any complications when there were no unknown obstacles present in the environment, and fixed the poor localization issues from A*. However, challenges arose when incorporating the open space module back into the system. To address this, a careful calibration of the look-ahead distance was necessary to enable the system to identify unknown obstacles while excluding non-obstacle elements such as tables. Considerable effort was dedicated to fine-tuning the open space module, which ultimately did not perform up to the expected standard. | ||

Furthermore, comprehensive testing was conducted to assess additional functionalities and ensure that all objectives were fulfilled. Tests encompassed scenarios involving door opening, blocking of a node, as well as functionalities like table identification and celebratory responses. These assessments served to validate the overall performance and understand the system's proficiency in executing its intended tasks. | Furthermore, comprehensive testing was conducted to assess additional functionalities and ensure that all objectives were fulfilled. Tests encompassed scenarios involving door opening, blocking of a node, as well as functionalities like table identification and celebratory responses. These assessments served to validate the overall performance and understand the system's proficiency in executing its intended tasks. | ||

To assess the robustness of the system, a series of tests were conducted to examine its performance under various challenging conditions. The tests encompassed different aspects, including obstacle avoidance, accuracy of walls, simulations, visualization of the particle filter, pathfinding, and verification of specific algorithm components. | To assess the robustness of the system, a series of tests were conducted to examine its performance under various challenging conditions. The tests encompassed different aspects, including obstacle avoidance, accuracy of walls, simulations, visualization of the particle filter, pathfinding, and verification of specific algorithm components. | ||

*One critical test involved evaluating the robot's ability to navigate in the presence of a moving human. Human movement was introduced as an obstacle, simulating real-world scenarios. However, it should be noted that the approach for handling human movement was not yet finalized, and further refinement was required to address the challenges posed by humans in the environment. The issues related to open space and the localization error of the particle filter were particularly relevant in this context. | *One critical test involved evaluating the robot's ability to navigate in the presence of a moving human. Human movement was introduced as an obstacle, simulating real-world scenarios. However, it should be noted that the approach for handling human movement was not yet finalized, and further refinement was required to address the challenges posed by humans in the environment. The issues related to open space and the localization error of the particle filter were particularly relevant in this context. | ||

*Simulations played a crucial role in assessing the system's robustness. This allowed for extensive testing in a controlled environment before deployment in real-world settings. | *Simulations played a crucial role in assessing the system's robustness. This allowed for extensive testing in a controlled environment before deployment in real-world settings. | ||

*Visualization of the particle filter was utilized to evaluate its performance and effectiveness in localizing the robot within the environment accurately. By examining the particle filter map, it was observed that removing the door completely from the map was necessary. Failure to do so resulted in localization errors, as the robot would incorrectly perceive its location once it passes the door, and assumes that the robot is still on the first end of the door. | *Visualization of the particle filter was utilized to evaluate its performance and effectiveness in localizing the robot within the environment accurately. By examining the particle filter map, it was observed that removing the door completely from the map was necessary. Failure to do so resulted in localization errors, as the robot would incorrectly perceive its location once it passes the door, and assumes that the robot is still on the first end of the door. | ||

The system's robustness was evaluated through tests and observations, revealing important insights. The performance of the particle filter was found to be influenced by the robot's interactions, with high velocity and high rotational velocity resulting in decreased accuracy, since the computations carried out by the particle filter will not be able to keep up with this rapid movement. Tuning the particle filter parameters and optimizing recovery from incorrect laser data were measures taken to improve robustness. The open space module, however, exhibited limitations and required further improvement as robustness was poor. Enhancing robustness requires addressing these factors which impact performance. | |||

===Videos:=== | |||

As part of the robustness testing of the challenge, some videos were made. Video 1 shows Hero visiting tables 1, 2, and 3. Note that open space recognition of humans is poor in this test, but bumper functionality works well once these bumpers are activated. Open space was later tuned slightly in the testing, in order to scan for a wider angle, but this again resulted in poor human recognition. The thinly blocked sections (like legs) are likely an issue. Video 2 shows Hero Visiting Tables 1, 4, 3, 0, and 2 in that order. Note no unknown obstacles are in the testing area, and Hero requested the door to be opened. This test shows that the Particle filter, A*, and door implementation work very well. No issues were seen in this test. Video 3 shows Hero visiting tables 1, 4, and 3 in that order. Here Hero notices the door is not opened twice and repeats the request to open the door to visit table 4. Once moving from table 4 to 3, an unexpected obstacle in the form of a human that will not move is encountered. Hero replans the path to pass back through the door and around to the other side of table 3. | |||

#'''Good test without doors: https://drive.google.com/file/d/1QjjkPH-98M7pvCE11sMnp0RJUNlhwpEW/view?usp=sharing''' | |||

#'''Perfect test with doors: https://drive.google.com/file/d/1QjmRv4MDE3_WtiGM_gy8g43pHbXBmKKp/view?usp=sharing''' | |||

#'''Plan around obstacle: https://drive.google.com/file/d/1Qlq6RXElBdS-wRzYQZ-_ZTRHnaEJYHck/view?usp=sharing''' | |||

==Evaluation Restaurant Challenge== | ==Evaluation Restaurant Challenge== | ||

The challenge, as expected, consisted of the map provided, in addition to, several static and dynamic objects. There was a static object completely closing off a hallway leading to table 4, and another one constricting the access to table 0. Furthermore, a dynamic object, in this case, a moving person, was also an added obstacle to the map. The observed behavior of the robot verified that there are several aspects of the algorithm that work, and several others that do not. The particle filter worked as expected. Localization was on point, and the robot did not lose its pose throughout the challenge. Also, the global planner was also working correctly. The path to the tables required to visit was generated correctly, and the robot ensured to follow that path. | The challenge, as expected, consisted of the map provided, in addition to, several static and dynamic objects. There was a static object completely closing off a hallway leading to table 4, and another one constricting the access to table 0. Furthermore, a dynamic object, in this case, a moving person, was also an added obstacle to the map. The observed behavior of the robot verified that there are several aspects of the algorithm that work, and several others that do not. The particle filter worked as expected. Localization was on point, and the robot did not lose its pose throughout the challenge. Also, the global planner was also working correctly. The path to the tables required to visit was generated correctly, and the robot ensured to follow that path. | ||

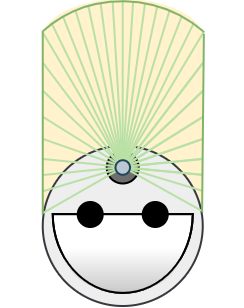

However, the local planner was the downfall. The local planner utilized the open space approach, as mentioned before. The open space is initialized once an obstacle is detected in that cone shape scan | However, the local planner was the downfall. The local planner utilized the open space approach, as mentioned before. The open space is initialized once an obstacle is detected in that cone shape scan shown in the Figure 7 but this means that there are blind spots in this detection method. This issue was already noticed during the final day of testing, but could not be solved due to time constraints. Thus, when the robot had to move through the constricted turn near table 0, the robot bumped into the obstacle, since this obstacle was in the detection blind spot. | ||

Furthermore, when the robot met the static object blocking table 4, the A* algorithm managed to re-plan a different path, and this was verified through the output of the terminal. However, the robot was not able to follow said path, due to open space kicking in, and not being able to exit it. | Furthermore, when the robot met the static object blocking table 4, the A* algorithm managed to re-plan a different path, and this was verified through the output of the terminal. However, the robot was not able to follow said path, due to open space kicking in, and not being able to exit it. | ||

Finally, when the robot was tasked to request the door to open, it succeeded. Just like in simulation and testing, the robot recognized the door as a door and not an obstacle, and then requested | Finally, when the robot was tasked to request the door to open, it succeeded. Just like in simulation and testing, the robot recognized the door as a door and not an obstacle, and then requested for the door to be opened. | ||

In conclusion, all aspects functioned as expected except for the open space. Further testing should have been conducted such that the open space issue would have been caught | In conclusion, all aspects functioned as expected except for the open space. Further testing should have been conducted such that the open space issue would have been caught in the weeks prior, and the blind spots of the cone shaped detection would be identified. If the open space functioned properly, then the challenge could likely have been completed. Also, the detection of humans was not tested nor seen during the challenge but from testing, human detection was not working properly, arguably due to the same oversight. | ||

[[File:HeroObstacleAvoidance2.png|thumb|Hero's obstacle detection blind spots]] | [[File:HeroObstacleAvoidance2.png|thumb|Figure 7: Hero's obstacle detection blind spots]] | ||

[[File:HeroObstacleAvoidance3.png|thumb|Hero's improved obstacle detection]] | [[File:HeroObstacleAvoidance3.png|thumb|Figure 8: Hero's improved obstacle detection]] | ||

===Discussion=== | ===Discussion=== | ||

The previous section elaborated on the performance of the algorithm in the final challenge. The algorithm showed a shortfall in the implementation of the open space approach used as a local planner. As mentioned, the obstacle detection cone used to instigate the local planner had a blind spot. As an improvement, a new detection shape should be used. A potential shape is displayed in | The previous section elaborated on the performance of the algorithm in the final challenge. The algorithm showed a shortfall in the implementation of the open space approach used as a local planner. As mentioned, the obstacle detection cone used to instigate the local planner had a blind spot, as seen in Figure 7. As an improvement, a new detection shape should be used. A potential shape is displayed in Figure 8. This new and improved shape should ensure that the robot sees obstacles when cutting close corners, or navigating an environment with tight turns and obstacles. Furthermore, the detection of humans should also be further tested after this new implementation, in order to see whether the problem is solved or not. | ||

Furthermore, the detection of humans should also be further tested | |||

===Conclusion=== | ===Conclusion=== | ||

In conclusion, the implementation of the system has demonstrated great promise, with several | In conclusion, the implementation of the system has demonstrated great promise, with several fundamentals of the algorithm functioning properly. The revised approach to the open space module and improving the code structure will induce enhancements to functionality and optimizing performance. The fine-tuning of the measurement model in the Particle Filter is a step yet to be taken to improve accuracy and reliability. | ||

Despite the considerable progress achieved, further refinements are required to fully optimize the algorithm. Addressing the identified limitations of the open space module and fine-tuning specific components are crucial next steps towards achieving optimal performance. | Despite the considerable progress achieved, further refinements are required to fully optimize the algorithm. Addressing the identified limitations of the open space module and fine-tuning specific components are crucial next steps towards achieving optimal performance. | ||

Overall, this implementation has shown tremendous potential and marks a significant stride towards meeting the desired objectives. By incorporating the suggested improvements, the system can be further enhanced, boosting its robustness, accuracy, and overall effectiveness in real-world scenarios. | Overall, this implementation has shown tremendous potential and marks a significant stride towards meeting the desired objectives. By incorporating the suggested improvements, the system can be further enhanced, boosting its robustness, accuracy, and overall effectiveness in real-world scenarios. | ||

Latest revision as of 17:08, 7 July 2023

Group members:

| Name | student ID |

|---|---|

| Ismail Elmasry | 1430807 |

| Carolina Vissers | 1415557 |

| John Assad | 1415654 |

| Jelle Cruijsen | 1369261 |

| Florian Geister | 1964429 |

| Omar Elba | 1492071 |

Design Presentation

File:MRC Design.pdf Presentation pdf:

Introduction

This wiki presents an in-depth analysis of the Restaurant Challenge, focusing on the system description, software architecture, strategy employed, robustness considerations, and evaluation of the challenge. The Restaurant Challenge is a challenge that assesses the capabilities of an autonomous robot to navigate and deliver orders within a restaurant environment. The challenge requires teams to develop software for the robot, known as Hero, enabling it to autonomously navigate through the restaurant, avoiding obstacles and humans, while accurately delivering orders to designated tables.

The wiki begins by introducing the challenge overview as well as the system description. The requirements are specified in the 'from desires to specs' diagram, which is then followed by the state flow and data flow created for the challenge. The state flow details how Hero progresses from the start area to the designated tables, while the data flow explains how information is exchanged and processed within the system.

Furthermore, the wiki covers the strategy employed by the team to tackle the Restaurant Challenge. This includes a discussion of the global planner responsible for determining the overall path, the local planner handling immediate obstacles and route adjustments, the particle filter aiding in localization, and the main algorithm that orchestrates the entire delivery process.

A crucial aspect of the challenge is the software architecture developed. This section of the wiki explores the architecture's design, how it was organized and its effectiveness in enabling Hero to perceive and navigate the environment, handle object detection, and execute the delivery task.

The wiki also addresses the robustness considerations involved in the challenge, ensuring that Hero can handle unexpected situations, such as collisions, stalls, or navigational uncertainties. Therefore, the software needs to be designed with this in mind, and this section evaluates the robustness of the implementation created.

Finally, the wiki discusses the results of the Restaurant Challenge. The performance of Hero will be assessed based on criteria such as successful delivery completion, adherence to the correct table order, object detection capabilities, and overall navigation efficiency. The evaluation section will include a discussion of the results, lessons learned throughout the challenge, things that could have been improved and future considerations. The wiki will then be concluded with a brief conclusion that describes the main achievements that were successfully completed.

This wiki aims to provide a comprehensive analysis of the Restaurant Challenge, shedding light on the technical aspects, strategies employed, software architectures developed, and evaluation outcomes. This knowledge contributes to the advancement of autonomous navigation and delivery systems, fostering innovation in real-world scenarios where robots can assist in restaurant operations and similar tasks.

Challenge Overview

The Restaurant Challenge is designed to test the navigation and delivery capabilities of Hero, an autonomous robot. The objective of the challenge is to deliver orders to designated tables in the correct order provided, following a specific set of rules and conditions. The challenge environment consists of a restaurant setup, including walls, tables, doors, and both static and dynamic objects.

At the start of the challenge, Hero is placed in a designated start area with an unknown orientation. The list of tables to be visited in the correct order is provided just before the challenge begins. Hero's task is to navigate through the restaurant, avoiding obstacles and humans, and reach each table to deliver the orders.

The delivery of an order is defined by driving up to the table, positioning near the table while facing towards it, and giving a clear sound signal to indicate Hero's arrival. The exact positioning next to the table is not critical as long as the robot is close enough for a customer to comfortably take their order from the tray.

The challenge environment specifications state that the walls are approximately straight, and tables are solid objects represented as rectangles in the LiDAR measurements such that it is not necessary to detect the legs of the tables. Doors may be open or closed, and there can be multiple routes to a given goal. Furthermore, the presence of dynamic objects in the form of humans adds complexity to the navigation task. Additionally, static obstacles such as chairs that are unknown to the robot and not present in the map given may be placed throughout the restaurant. Their detection and influence on the path taken is an additional challenge that needs to be overcome.

The challenge is subject to specific rules, including restrictions on touching walls or objects, limitations on robot speed, and time limits for each trial. Two trials are allowed, with a maximum of one restart. A trial ends if Hero bumps into a wall or object, remains stationary or makes non sensible movements for 30 seconds, exceeds the total time limit of 10 minutes, or if a restart is requested. A restart allows Hero to return to the start position while resetting the trial time but not the total time.

The overall goal of the challenge is to demonstrate the robot's ability to autonomously navigate a complex environment, accurately deliver orders to designated tables, and effectively handle dynamic obstacles. The teams' performance will be evaluated based on their ability to complete the task within the given constraints.

System Description

This section provides a comprehensive overview of Hero, its limitations, and functionalities.

Hero has certain limitations on its maximum velocities, namely the translational velocity is limited to 0.5 m/s and the rotational velocity is limited to 1.2 rad/s. To establish position and movement of the robot, two types of outputs from the robot can be utilized: laser data from the laser range finder and odometry data from the wheels.