Mobile Robot Control 2023 Group 8

Added image for nav assignment 1 |

m →Observations: Minor changes |

||

| (12 intermediate revisions by 2 users not shown) | |||

| Line 20: | Line 20: | ||

===Navigation Assignment 1=== | ===Navigation Assignment 1=== | ||

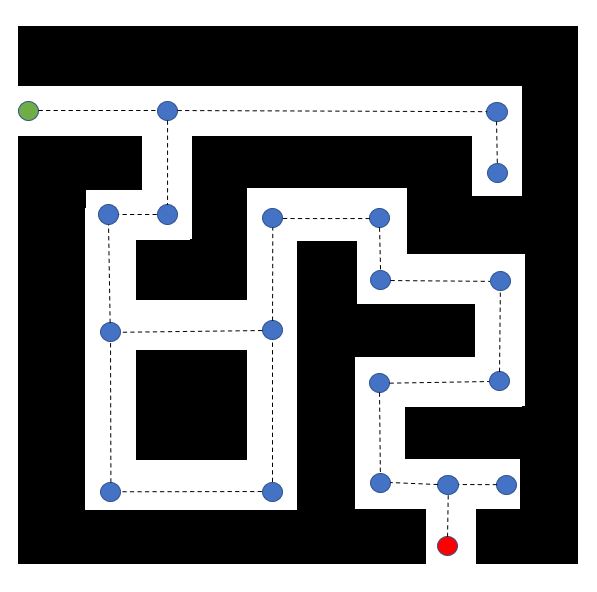

It is more efficient to only place nodes at turning points of the robot (so it can drive straight from node to node), or at a decision point (where the robot can take either of two routes). In between the nodes, the robot will have to drive straight anyway, so it is not necessary to use extra nodes in between. This way the number of nodes is decreased from 41 to 20. Hence, the algorithm will have to explore fewer nodes on the way. This will save unnecessary computations, making the algorithm more efficient. | It is more efficient to only place nodes at turning points of the robot (so it can drive straight from node to node), or at a decision point (where the robot can take either of two routes). In between the nodes, the robot will have to drive straight anyway, so it is not necessary to use extra nodes in between. This way the number of nodes is decreased from 41 to 20. Hence, the algorithm will have to explore fewer nodes on the way. This will save unnecessary computations, making the algorithm more efficient. | ||

[[File:Assignment1 NewNodes.JPG | [[File:Assignment1 NewNodes.JPG|thumb|Figure 1: Efficient node placement for A* algorithm|none]] | ||

===Navigation Assignment 2=== | |||

See main branch in repository. | |||

The used approach for this assignment is open space detection. First, it is determined whether the range of the laser data points is within the set horizon. This is evaluated for the laser data over the whole range. If the range of the laser point is larger than the horizon, this laser point is added to the open space. Laser points of the open space that are next to each other, belang to the same open space. Hence, it can occur that the robot observes multiple open spaces. In this case, the robot chooses the widest open space, and drives to the middle of it. The robot will only correct its direction if the new open space deviates more than 5 laser points from the midpoint of the robot. This, to make sure the movement is smooth and no unnessecary corrections are made. On top of that, if right in front of the robot no object is present within the horizon, it will drive straight forward for 0.5 seconds, or until it encounters an object. After this 0.5 seconds or when an object is detected, the open spaces will be determined again. If the robot detects no open space, or is very close to an object it will rotate, in an attempt to find a new open space. | |||

Screen recording of simulation: https://drive.google.com/file/d/1EcfIyBl419EeOkya5rmSvxfzes4J4Fpt/view?usp=share_link | |||

Recording of experiment: https://drive.google.com/file/d/1usk2VdcQFwjlW3zfe1Fuc-l8EOWuFPkF/view | |||

Possible improvements we worked on/are working on: | |||

- The implementation of multiple horizons: In this version, when no open space is found at the maximum horizon, the horizon is reduced in an attempt to find an open space with a smaller horizon. The horizon will be reduced until 0.5m, since from then onwards objects will be very close and it is better for the robot to first rotate and then re-check for open spaces. This should make the algorithm more robust against different sizes of hallways and object distances. This implementation has been succesfully tested in simulation, but could not be tested on the real robot due to time constraints. | |||

- Dead-reckoning and global localization: In this version, the final goal (being the end of the hallway) is taken into account. This to try to make sure the robot always reaches the end of the hallway, also when it encounters a dead end somewhere in the hallway. To this extent, the position of the robot is tracked and compared to the final position, to make a better choice about which open space to drive to. The code needs a bit more time to finalize, so it is not tested yet. | |||

<br /> | <br /> | ||

=== | |||

TBD | ===Localisation Assignment 1=== | ||

=====Assignment 2===== | |||

#It is known how far the robot should have driven given the input velocity and time. This can be compared with the distance from the odometry data. | |||

#With uncertain odometry the initial position of the odomotry does not start at 0. On top of that, slip is included which makes that the reported distance from odometry does not match the real travelled distance or expected distance. | |||

#It could be used for position updates over a short amount of time. When using only dead-reckoning for too long the error will be added every time, resulting in very bad localization. Therefore, it will have to be corrected after every time step or every few time steps by some other type of localization. | |||

=====Assignment 3===== | |||

In an experiment, the robot drove forward until its odometry data crossed 5 meters, after which it stopped. The path length travelled was measured to be 4.99 ± 0.01 m, while the odometry data indicated 5.07 m in x-direction. Thus, there is a significant difference of about 8 cm between the odometry measurements and the physical displacement.<br /> | |||

===Localisation Assignment 2=== | |||

====Assignment 2.0: Exploration==== | |||

*The ParticleFilter class inherits functions from class ParticleFilterBase. Therefore, ParticleFilterBase has a number of functions which ParticleFilter will also have. On top of that, the ParticleFilter class itself defines some extra functions related to resampling. | |||

*The Particle class represents a single particle in the filter, while the ParticleFilter class manages a collection of particles. | |||

*propagateSample in Particle will propagate only one particle. propagateSamples in ParticleFilter propagates all particles by running the function defined in Particle on all particles in ParticleFilter. | |||

====Assignment 2.1: Initializing the particle filter==== | |||

*One constructor is used for a uniform distribution, while the other is used for a Gaussian distribution about a certain point. | |||

*The uniform distribution constructor covers the entire range. However, it likely gives only a crude estimation of the location. It is not able to sample more often in certain areas. | |||

*The Gaussian distribution constructor is able to sample around a given area, allowing samples to be generated in a concentrated area. It is less efficient at covering the entire map/range. | |||

*The uniform distribution is used for the initial guess of the robot's location. The Gaussian distribution is used for subsequent guesses, when the likelihood around a certain location is large and it makes more sense to concentrate around there. | |||

====Assignment 2.2: Calculating the filter prediction==== | |||

*The angle state-variable is averaged using a special formula which takes into account how angles wrap around.<ref>https://rosettacode.org/wiki/Averages/Mean_angle</ref> | |||

*The average of the filter represents the estimated robot pose. The estimated robot pose is not correct, because the particles are currently randomly generated. After future steps, the filter average will be a better representation of the robot pose. | |||

*''When is the filter average not adequate for estimating the robot position? (TBD)'' | |||

====Assignment 2.3: Propagation of particles==== | |||

*''Injecting noise (TBD)'' | |||

*''No correction step (TDB)'' | |||

====Assignment 2.4: Computation of the likelihood of a particle==== | |||

*''Explaining the code structure (TBD)'' | |||

*The presence of a single ray with a very low likelihood would result in a total low likelihood of the particle. For example, if the likelihoods are 0.9 * 0.9 * 0.04 * 0.7, the impact of the 0.04 ray is relatively large, and the particle would become very unlikely. Additionally, every ray which has likelihood ≠ 1 will decrease the particle likelihood. For a large amount of rays, this could mean that all particle likelihoods are relatively low. | |||

====Assignment 2.5: Resampling our particles==== | |||

* | |||

====Observations==== | |||

* | |||

* [Add image of resulting simulation] | |||

=== Sources === | |||

* | |||

<references /> | |||

Latest revision as of 10:26, 2 June 2023

Welcome to our group page.

Group members

| Name | Student ID |
|---|---|
| Eline Wisse | 1335162 |
| Lotte Rassaerts | 1330004 |
| Marijn Minkenberg | 1357751 |

Exercise 1: The art of not crashing

Instead of just stopping, we made the robot turn around whenever it came close to a wall in front of it. A video of the robot Bobo running our dont_crash script can be found here: https://drive.google.com/file/d/109fDDzf6ou2HHuSZgOicY27pRdOpJs0s/view?usp=sharing.
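The turn-around rule can be sketched as a single decision per laser scan. This is a minimal sketch, not our dont_crash script: it assumes the scan is a plain list of ranges whose middle element points straight ahead, and the function name, 0.5 m threshold, cone width and speeds are hypothetical illustration values. Sending the command to the robot is left out.

```python
def dont_crash_command(ranges, stop_distance=0.5, cone=20,
                       forward_speed=0.3, turn_speed=1.0):
    """Return a (forward, angular) velocity pair for one laser scan.

    ranges: laser ranges in metres; the middle element is assumed to be
    the beam pointing straight ahead.
    """
    mid = len(ranges) // 2
    # Only the beams in a cone around the straight-ahead direction matter.
    front = ranges[max(0, mid - cone):mid + cone + 1]
    # Zero readings are treated as invalid here (an assumption about the sensor).
    valid = [r for r in front if r > 0.0]
    if valid and min(valid) < stop_distance:
        # Wall ahead: stop driving forward and rotate in place to turn around.
        return 0.0, turn_speed
    # Free space ahead: keep driving straight.
    return forward_speed, 0.0


# Usage: a wall 0.3 m ahead in the middle of a 100-beam scan.
scan = [2.0] * 45 + [0.3] * 10 + [2.0] * 45
print(dont_crash_command(scan))  # (0.0, 1.0)
```

Rotating instead of stopping means the robot keeps moving once the cone in front is clear again.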

Navigation Assignment 1

It is more efficient to place nodes only at the robot's turning points (so that it can drive straight from node to node) or at decision points (where the robot can take either of two routes). Between these nodes the robot has to drive straight anyway, so extra intermediate nodes are unnecessary. This reduces the number of nodes from 41 to 20, so the A* algorithm has to explore fewer nodes on the way. This saves unnecessary computations and makes the algorithm more efficient.

Figure 1: Efficient node placement for A* algorithm
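The placement rule (keep turning points and decision points, drop nodes on straight stretches) can be sketched as a graph compression. This assumes the map is an undirected graph with a grid position per node; `key_nodes` and `compress` are hypothetical helpers for illustration, not code from our repository. Dead ends are kept as well, since a route can start or end there.

```python
def key_nodes(pos, adj):
    """Return the nodes worth keeping: dead ends, decision points and turns.

    pos: dict node -> (x, y) grid position
    adj: dict node -> list of neighbouring nodes (undirected graph)
    """
    keep = set()
    for n, nbrs in adj.items():
        if len(nbrs) != 2:
            # Dead end (1 neighbour) or decision point (3 or more neighbours).
            keep.add(n)
            continue
        (ax, ay), (bx, by), (cx, cy) = pos[nbrs[0]], pos[n], pos[nbrs[1]]
        # A zero cross product means both edges are collinear: no turn here.
        cross = (bx - ax) * (cy - by) - (by - ay) * (cx - bx)
        if cross != 0:
            keep.add(n)  # turning point
    return keep


def compress(pos, adj):
    """Connect the kept nodes directly by walking along straight stretches."""
    keep = key_nodes(pos, adj)
    edges = {n: set() for n in keep}
    for start in keep:
        for nxt in adj[start]:
            prev, cur = start, nxt
            # A removed node has exactly two neighbours: continue to the other one.
            while cur not in keep:
                prev, cur = cur, next(m for m in adj[cur] if m != prev)
            if cur != start:
                edges[start].add(cur)
    return edges


# Usage: an L-shaped corridor of five nodes shrinks to its two ends and the corner.
pos = {0: (0, 0), 1: (1, 0), 2: (2, 0), 3: (2, 1), 4: (2, 2)}
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
print(compress(pos, adj))  # {0: {2}, 2: {0, 4}, 4: {2}}
```

A* then runs on the compressed graph, with edge costs equal to the straight-line distances between the kept nodes.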

Navigation Assignment 2

See the main branch in our repository.

The approach used for this assignment is open space detection. For every laser point over the whole scan range, we check whether its range lies within the set horizon. If the range of a laser point is larger than the horizon, that point is added to the open space. Adjacent laser points in the open space belong to the same open space, so the robot can observe multiple open spaces. In that case, the robot chooses the widest open space and drives towards its middle. The robot only corrects its direction if the middle of the new open space deviates more than 5 laser points from the robot's midpoint (its straight-ahead direction). This keeps the movement smooth and avoids unnecessary corrections. In addition, if no object is present within the horizon directly in front of the robot, it drives straight forward for 0.5 seconds, or until it encounters an object. After these 0.5 seconds, or as soon as an object is detected, the open spaces are determined again. If the robot detects no open space, or is very close to an object, it rotates in an attempt to find a new open space.
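The open-space selection described above can be sketched as follows. This is a minimal sketch under stated assumptions, not our repository code: the scan is a plain list of ranges whose middle element points straight ahead, the function names are hypothetical, and the 0.5 s straight-driving timer and the rotation behaviour are left to the caller.

```python
def find_open_spaces(ranges, horizon):
    """Group adjacent laser points beyond the horizon into open spaces.

    Returns a list of (first_index, last_index) pairs, both inclusive.
    """
    spaces = []
    start = None
    for i, r in enumerate(ranges):
        if r > horizon:
            if start is None:
                start = i  # a new open space begins at this laser point
        elif start is not None:
            spaces.append((start, i - 1))  # the open space ended one point earlier
            start = None
    if start is not None:
        spaces.append((start, len(ranges) - 1))  # open space runs to the scan edge
    return spaces


def choose_heading_index(ranges, horizon, tolerance=5):
    """Return the laser index to drive towards, or None if there is no open space.

    The widest open space is chosen. The robot only turns towards its middle
    if that middle deviates more than `tolerance` points from straight ahead.
    """
    spaces = find_open_spaces(ranges, horizon)
    if not spaces:
        return None  # caller should rotate to search for a new open space
    first, last = max(spaces, key=lambda s: s[1] - s[0])
    middle = (first + last) // 2
    straight_ahead = len(ranges) // 2
    if abs(middle - straight_ahead) > tolerance:
        return middle
    return straight_ahead


# Usage: two open spaces; the wider one (points 10 to 29) is chosen.
scan = [0.5] * 10 + [3.0] * 20 + [0.5] * 10 + [3.0] * 5 + [0.5] * 5
print(find_open_spaces(scan, 1.0))      # [(10, 29), (40, 44)]
print(choose_heading_index(scan, 1.0))  # 19
```

Returning the straight-ahead index when the deviation is small is what implements the 5-point dead band.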

Screen recording of simulation: https://drive.google.com/file/d/1EcfIyBl419EeOkya5rmSvxfzes4J4Fpt/view?usp=share_link

Recording of experiment: https://drive.google.com/file/d/1usk2VdcQFwjlW3zfe1Fuc-l8EOWuFPkF/view

Possible improvements we have worked on or are still working on:

- The implementation of multiple horizons: In this version, when no open space is found at the maximum horizon, the horizon is reduced in an attempt to find an open space at a smaller horizon. The horizon is reduced down to 0.5 m, since below that objects are very close and it is better for the robot to first rotate and then re-check for open spaces. This should make the algorithm more robust to different hallway widths and object distances. This implementation has been successfully tested in simulation, but could not be tested on the real robot due to time constraints.

- Dead-reckoning and global localization: In this version, the final goal (the end of the hallway) is taken into account, to make sure the robot always reaches the end of the hallway, even when it encounters a dead end somewhere in the hallway. To this end, the position of the robot is tracked and compared with the final position, so that a better choice can be made about which open space to drive to. The code needs some more time to be finalized, so it has not been tested yet.
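The multiple-horizon fallback can be sketched as a loop over decreasing horizons. Only the 0.5 m lower bound comes from the text above; the 0.25 m step, the function names and the list-of-ranges scan format are hypothetical choices for illustration.

```python
def find_open_spaces(ranges, horizon):
    """Return (first, last) index pairs of adjacent points beyond the horizon."""
    spaces, start = [], None
    for i, r in enumerate(ranges):
        if r > horizon and start is None:
            start = i  # an open space begins
        elif r <= horizon and start is not None:
            spaces.append((start, i - 1))  # the open space ended one point earlier
            start = None
    if start is not None:
        spaces.append((start, len(ranges) - 1))
    return spaces


def open_spaces_shrinking_horizon(ranges, max_horizon, min_horizon=0.5, step=0.25):
    """Try horizons from max_horizon down to min_horizon until a space is found.

    Returns (horizon, spaces), or (None, []) if even min_horizon gives nothing,
    in which case the robot should rotate first and then check again.
    """
    if max_horizon < min_horizon:
        return None, []
    # Use an integer count so floating-point steps never undershoot min_horizon.
    n_steps = int((max_horizon - min_horizon) / step + 1e-9)
    for k in range(n_steps + 1):
        horizon = max_horizon - k * step
        spaces = find_open_spaces(ranges, horizon)
        if spaces:
            return horizon, spaces
    return None, []


# Usage: the only gap is 1.0 m deep, so it is first found at a 0.75 m horizon.
scan = [0.4] * 10 + [1.0] * 5 + [0.4] * 10
print(open_spaces_shrinking_horizon(scan, 2.0))  # (0.75, [(10, 14)])
```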

Localisation Assignment 1

Assignment 2

- The distance the robot should have driven is known from the commanded velocity and the elapsed time. This can be compared with the distance reported by the odometry data.

- With uncertain odometry, the initial odometry position does not start at 0. On top of that, wheel slip is included, so the distance reported by odometry matches neither the actual travelled distance nor the expected distance.

- Dead reckoning could be used for position updates over a short amount of time. When only dead reckoning is used for too long, the error accumulates at every step, resulting in very poor localization. Therefore, it has to be corrected every time step, or every few time steps, by another type of localization.
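Both ideas, comparing the commanded motion with odometry and the growing error of pure dead reckoning, can be illustrated in one dimension. The constant slip fraction is a hypothetical simplification for illustration, not the noise model used by the simulator.

```python
def expected_distance(velocity, duration):
    """Distance the robot should cover at a constant commanded speed."""
    return velocity * duration


def dead_reckon(velocities, dt, slip=0.0):
    """Integrate forward speeds into a 1D position, with a constant slip.

    slip is a hypothetical fraction of each step that the wheels turn
    without the robot actually moving. Returns (estimate, actual_position).
    """
    estimate = 0.0
    actual = 0.0
    for v in velocities:
        estimate += v * dt  # what wheel odometry reports
        actual += (1.0 - slip) * v * dt  # what the robot really travels
    return estimate, actual


# The gap between estimate and reality grows with every step taken.
for n in (100, 200, 400):
    est, act = dead_reckon([0.5] * n, dt=0.1, slip=0.02)
    print(n, round(est - act, 3))  # gaps of 0.1, 0.2 and 0.4 m
```

Because nothing in the loop ever corrects the estimate, the error only grows, which is why another localization source is needed.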

Assignment 3

In an experiment, the robot drove forward until its odometry data crossed 5 meters, after which it stopped. The travelled path length was measured to be 4.99 ± 0.01 m, while the odometry data indicated 5.07 m in the x-direction. Thus, there is a significant difference of about 8 cm between the odometry measurement and the physical displacement.
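Worked out, treating the 0.01 m measurement uncertainty as the only source of uncertainty, the discrepancy and its size relative to the measured path length are:

$$
\Delta = x_{\text{odom}} - s_{\text{meas}} = 5.07\,\text{m} - (4.99 \pm 0.01)\,\text{m} = (0.08 \pm 0.01)\,\text{m},
\qquad
\frac{\Delta}{s_{\text{meas}}} = \frac{0.08}{4.99} \approx 1.6\,\%.
$$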

Localisation Assignment 2

Assignment 2.0: Exploration

- The ParticleFilter class inherits from ParticleFilterBase, so the functions defined in ParticleFilterBase are also available in ParticleFilter. On top of that, the ParticleFilter class itself defines some extra functions related to resampling.

- The Particle class represents a single particle in the filter, while the ParticleFilter class manages a collection of particles.

- propagateSample in Particle propagates only one particle. propagateSamples in ParticleFilter propagates all particles by calling the propagateSample function defined in Particle on every particle in the ParticleFilter.
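The class relationships can be mirrored in a small Python stand-in for the C++ framework classes. The method names follow propagateSample/propagateSamples, but all bodies are hypothetical: the motion increment is applied directly in world coordinates without noise, and `resample` uses simple multinomial resampling, which is not necessarily the scheme the framework uses.

```python
import random


class Particle:
    """A single pose hypothesis (x, y, theta) with an importance weight."""

    def __init__(self, x, y, theta, weight=1.0):
        self.x, self.y, self.theta, self.weight = x, y, theta, weight

    def propagate_sample(self, dx, dy, dtheta):
        # Move only this particle by the given motion increment.
        self.x += dx
        self.y += dy
        self.theta += dtheta


class ParticleFilterBase:
    """Holds the particle set and the functions shared by all filters."""

    def __init__(self, particles):
        self.particles = list(particles)

    def propagate_samples(self, dx, dy, dtheta):
        # Apply Particle.propagate_sample to every particle in the set.
        for p in self.particles:
            p.propagate_sample(dx, dy, dtheta)


class ParticleFilter(ParticleFilterBase):
    """Inherits propagate_samples and adds resampling-related functions."""

    def resample(self, rng=random):
        # Multinomial resampling: draw particles in proportion to their weight.
        weights = [p.weight for p in self.particles]
        n = len(self.particles)
        chosen = rng.choices(self.particles, weights=weights, k=n)
        # Copy the drawn particles so duplicates can later move independently.
        self.particles = [Particle(p.x, p.y, p.theta, 1.0 / n) for p in chosen]


# Usage: one call on the filter moves every particle.
pf = ParticleFilter([Particle(0.0, 0.0, 0.0), Particle(1.0, 1.0, 0.0)])
pf.propagate_samples(0.5, 0.0, 0.1)
print([(p.x, p.y) for p in pf.particles])  # [(0.5, 0.0), (1.5, 1.0)]
```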

Assignment 2.1: Initializing the particle filter

- One constructor is used for a uniform distribution, while the other is used for a Gaussian distribution around a given point.

- The uniform distribution constructor covers the entire range. However, it likely gives only a crude estimate of the location, since it cannot sample more densely in certain areas.

- The Gaussian distribution constructor concentrates the samples around a given point. However, it is less efficient at covering the entire map/range.

- The uniform distribution is used for the initial guess of the robot's location. The Gaussian distribution is used for subsequent guesses, when the likelihood around a certain location is high and it makes more sense to concentrate the samples there.
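The difference between the two initializations can be sketched as two sampling functions. This is not the framework's constructor code: particles are plain (x, y, theta) tuples, the map is assumed to be a rectangle, and the function names are hypothetical.

```python
import math
import random


def uniform_particles(n, x_range, y_range, rng=random):
    """Spread n particles uniformly over a rectangular map region."""
    return [(rng.uniform(*x_range), rng.uniform(*y_range),
             rng.uniform(-math.pi, math.pi)) for _ in range(n)]


def gaussian_particles(n, mean, std, rng=random):
    """Concentrate n particles around mean = (x, y, theta) with per-axis std."""
    mx, my, mt = mean
    sx, sy, st = std
    particles = []
    for _ in range(n):
        theta = rng.gauss(mt, st)
        # Wrap the heading back into [-pi, pi].
        theta = math.atan2(math.sin(theta), math.cos(theta))
        particles.append((rng.gauss(mx, sx), rng.gauss(my, sy), theta))
    return particles


# Usage: the uniform set spans the whole 10 m x 5 m map, the Gaussian set
# stays within a few standard deviations of (2.0, 3.0).
rng = random.Random(1)
spread = uniform_particles(1000, (0.0, 10.0), (0.0, 5.0), rng)
focused = gaussian_particles(1000, (2.0, 3.0, 0.0), (0.1, 0.1, 0.05), rng)
```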

Assignment 2.2: Calculating the filter prediction

- The angle state variable is averaged using a special formula that takes into account how angles wrap around (Rosetta Code, "Averages/Mean angle": https://rosettacode.org/wiki/Averages/Mean_angle).

- The average of the filter represents the estimated robot pose. At this stage the estimated pose is not correct, because the particles are still generated randomly. Once the subsequent steps are implemented, the filter average will be a better representation of the robot pose.

- When is the filter average not adequate for estimating the robot position? (TBD)
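The averaging step can be sketched as a weighted mean in which the heading uses the circular mean from the Rosetta Code page, extended with particle weights. This assumes particles are (x, y, theta, weight) tuples and is not necessarily how the framework computes the average.

```python
import math


def filter_average(particles):
    """Weighted mean pose of (x, y, theta, weight) particles.

    x and y are averaged linearly. theta uses the circular mean
    atan2(sum w*sin(theta), sum w*cos(theta)), so headings near +pi and
    -pi average to roughly pi instead of 0.
    """
    total = sum(w for _, _, _, w in particles)
    x = sum(w * px for px, _, _, w in particles) / total
    y = sum(w * py for _, py, _, w in particles) / total
    s = sum(w * math.sin(t) for _, _, t, w in particles)
    c = sum(w * math.cos(t) for _, _, t, w in particles)
    return x, y, math.atan2(s, c)


# Usage: a naive mean of 3.1 and -3.1 rad would give 0 (facing the wrong way);
# the circular mean gives a heading close to pi.
print(filter_average([(0.0, 0.0, 3.1, 1.0), (2.0, 0.0, -3.1, 1.0)]))
```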

Assignment 2.3: Propagation of particles

- Injecting noise (TBD)

- No correction step (TBD)

Assignment 2.4: Computation of the likelihood of a particle

- Explaining the code structure (TBD)

- A single ray with a very low likelihood results in a low total likelihood for the particle. For example, if the ray likelihoods are 0.9 * 0.9 * 0.04 * 0.7 ≈ 0.0227, compared with 0.567 for the other three rays alone, the impact of the 0.04 ray is relatively large and the particle becomes very unlikely. Additionally, every ray with a likelihood below 1 decreases the particle likelihood. For a large number of rays, this could mean that all particle likelihoods are relatively low.
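The effect of a single low-likelihood ray, and of many rays, can be checked numerically. This assumes, as the text does, that the particle likelihood is the product of the individual ray likelihoods; `particle_likelihood` is a hypothetical helper and `math.prod` requires Python 3.8 or newer.

```python
import math


def particle_likelihood(ray_likelihoods):
    """Particle likelihood as the product of independent ray likelihoods."""
    return math.prod(ray_likelihoods)


# One poor ray (0.04) cuts the likelihood by a factor of 25.
with_low_ray = particle_likelihood([0.9, 0.9, 0.04, 0.7])  # about 0.0227
without_low_ray = particle_likelihood([0.9, 0.9, 0.7])     # about 0.567

# Even consistently good rays shrink the product quickly when there are many.
many_rays = particle_likelihood([0.9] * 200)  # below 1e-9
print(with_low_ray, without_low_ray, many_rays)
```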

Assignment 2.5: Resampling our particles

Observations

- [Add image of resulting simulation]