PRE2022 3 Group2: Difference between revisions

| (114 intermediate revisions by 6 users not shown) | |||

| Line 32: | Line 32: | ||

==Abstract== | ==Abstract== | ||

The use of robotics in disaster response operations has gained significant attention in recent years. In particular, low-cost vine robots | The use of robotics in disaster response operations has gained significant attention in recent years. In particular, robotics is being used to navigate and locate survivors through complex terrain. However, current rescue robots have multiple limitations which make it difficult for them to be used successfully. This paper proposes and argues for the use of low-cost vine robots as a promising technology for search and rescue missions in urban areas affected by earthquakes. Vine robots are small, flexible and lightweight robots that can navigate through tight spaces and confined areas, making them ideal for searching collapsed buildings for survivors. They are cheaper than the current state-of-the-art search and rescue robots. However, vine robots have not been implemented into real-life search and rescue missions due to various limitations. In this paper, the limitations are addressed, specifically with regard to the path finding and localization capabilities. This includes research into components that support the vine robot in its capabilities such as sensors, where a comparison is made to determine which type of sensor would be best suited for extending the vine robot's capability to localize survivors. Furthermore, a simulation is made to investigate a localization algorithm that includes path finding capabilities, to assess the usefulness of such a robot during a search and rescue mission when looking through a collapsed building. The findings of this paper conclude that further development needs to take place for the vine robot technology to be used successfully in search and rescue missions. | ||

==Introduction and | ==Introduction and research aims== | ||

“Two large earthquakes struck the southeastern region of Turkey near the border with Syria on Monday, killing thousands and toppling residential buildings across the region.” (AJLabs, 2023) The earthquakes were both above 7.5 on the Richter scale, which caused buildings to be displaced from foundations with people still in them. Some people survived the fall when a building collapsed, but were trapped in all of the rubble. | |||

To address these challenges, | Earthquakes are one of the most devastating natural disasters that can occur in urban areas, leading to significant damage to infrastructure, loss of life and displacement of communities. In recent years, search and rescue operations have become increasingly important in the aftermath of earthquakes. These operations aim to locate victims trapped under collapsed buildings and provide them with the necessary medical care and assistance. However, these operations can be challenging due to the complexity and dangers associated with navigating through the rubble of damaged buildings. To address these challenges, technology has emerged as promising for search and rescue operations in urban areas affected by earthquakes. Technology has the potential to significantly improve the efficiency and effectiveness of search and rescue operations, as well as reduce the risk to human rescuers. | ||

This paper provides an overview of the usage of technology and robotics in the localization of victims of earthquakes that lead to infrastructure damage, specifically in urban areas. It will further go into the design and capabilities of robotics, highlighting potential advantages over traditional search and rescue methods. The paper will also discuss challenges associated with the usage of technology and robotics, such as limited battery life, difficulties in controlling the robot in complex environments and the need for specialized training for operators. | |||

The research aims addressed in this paper include: | |||

#What are the potential advantages and challenges associated with the usage of low-cost robotics and technology in earthquake response efforts? | |||

#How have robots been deployed in recent earthquake disasters and what has their impact on search and rescue operations been? | |||

#What are the future prospects of low-cost robots in earthquake response efforts? | |||

#What does a localization and path-planning algorithm for urban search and rescue look like? | |||

#What are the potential advantages and challenges associated with the usage of low-cost | |||

#How have | |||

#What are the future prospects of low-cost | |||

==State-of-the-art literature== | ==State-of-the-art literature== | ||

| Line 125: | Line 60: | ||

'''Design of four-arm four-crawler disaster response robot OCTOPUS''' | '''Design of four-arm four-crawler disaster response robot OCTOPUS''' | ||

[[File:Four-arm disaster response robot OCTOPUS.gif|thumb|Four-arm disaster response robot OCTOPUS (Kamezaki et al., 2016).]] | |||

The OCTOPUS robot, presented in this paper, boasts four arms and four crawlers, providing exceptional mobility and flexibility. Its arms are engineered with multiple degrees of freedom, enabling the robot to execute intricate tasks such as lifting heavy objects and opening doors. Furthermore, the crawlers are designed to offer stability and traction, which allow the robot to move seamlessly on irregular and slippery surfaces (Kamezaki et al., 2016). | The OCTOPUS robot, presented in this paper, boasts four arms and four crawlers, providing exceptional mobility and flexibility. Its arms are engineered with multiple degrees of freedom, enabling the robot to execute intricate tasks such as lifting heavy objects and opening doors. Furthermore, the crawlers are designed to offer stability and traction, which allow the robot to move seamlessly on irregular and slippery surfaces (Kamezaki et al., 2016). | ||

| Line 138: | Line 73: | ||

'''Search and Rescue System for Alive Human Detection by Semi-Autonomous Mobile Rescue Robot''' | '''Search and Rescue System for Alive Human Detection by Semi-Autonomous Mobile Rescue Robot''' | ||

[[File:7856489-fig-8-source-small.gif|thumb|Search and rescue system for alive human detection by semi-autonomous mobile rescue robot (Uddin & Islam 2016).]] | |||

This study introduces a cheap robot designed for detecting humans in perilous rescue missions. The paper presents several system block diagrams and flowcharts to demonstrate the robot's operational capabilities. The robot incorporates a PIR sensor and IP camera to detect human presence through their infrared radiation. These sensors are readily available and cost-effective compared to other urban search and rescue robots (Uddin & Islam 2016). | This study introduces a cheap robot designed for detecting humans in perilous rescue missions. The paper presents several system block diagrams and flowcharts to demonstrate the robot's operational capabilities. The robot incorporates a PIR sensor and IP camera to detect human presence through their infrared radiation. These sensors are readily available and cost-effective compared to other urban search and rescue robots (Uddin & Islam 2016). | ||

| Line 162: | Line 97: | ||

'''Robots Gear Up for Disaster Response''' | '''Robots Gear Up for Disaster Response''' | ||

[[File:4399386-fig-10-source-small.gif|thumb|Active scope camera for urban search and rescue. Turning motion in narrow gaps (Hatazaki et al., 2007).]] | |||

Although brilliant robotic technology exists, there is a need to integrate it into complete, robust systems. Furthermore, there is a need to develop sensors and other components that are smaller, stronger, and more affordable (Anthes 2010). | Although brilliant robotic technology exists, there is a need to integrate it into complete, robust systems. Furthermore, there is a need to develop sensors and other components that are smaller, stronger, and more affordable (Anthes 2010). | ||

| Line 174: | Line 109: | ||

'''Multimodality robotic systems: Integrated combined legged-aerial mobility for subterranean search-and-rescue''' | '''Multimodality robotic systems: Integrated combined legged-aerial mobility for subterranean search-and-rescue''' | ||

[[File:1-s2.0-S0921889022000756-gr2.jpg|thumb|The legged-aerial explorer with its full sensor suite and the UAV carrier platform (Lindqvist et al., 2022).]] | |||

This article discusses a Boston Dynamics Spot robot that is enhanced with a UAV carrier platform, and an autonomy sensor payload (Lindqvist et al., 2022). The paper demonstrates how to integrate hardware and software with each other and with the architecture of the robot. | This article discusses a Boston Dynamics Spot robot that is enhanced with a UAV carrier platform, and an autonomy sensor payload (Lindqvist et al., 2022). The paper demonstrates how to integrate hardware and software with each other and with the architecture of the robot. | ||

| Line 200: | Line 135: | ||

This book offers a comprehensive overview of rescue robotics within the broader context of emergency informatics. It provides a chronological summary of the documented deployments of robots in response to disasters and analyzes them formally. The book serves as a definitive guide to the theory and practice of previous disaster robotics (Murphy, 2017). | This book offers a comprehensive overview of rescue robotics within the broader context of emergency informatics. It provides a chronological summary of the documented deployments of robots in response to disasters and analyzes them formally. The book serves as a definitive guide to the theory and practice of previous disaster robotics (Murphy, 2017). | ||

'''Application of robot technologies to the disaster sites''' | '''Application of robot technologies to the disaster sites''' | ||

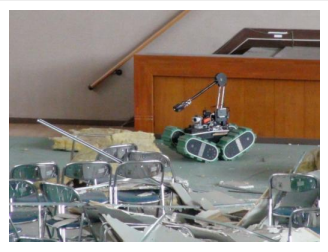

[[File:Kohga 3 in the gymnasium.png|thumb|Kohga 3 in the gymnasium. Application of Robot Technologies to the Disaster Sites (Osumi, 2014).]] | |||

For the first time during the Great East Japan Earthquake disaster, Japanese rescue robots were utilized in actual disaster sites. Their tele-operation function and ability to move on debris were essential due to the radioactivity and debris present (Osumi, 2014). | For the first time during the Great East Japan Earthquake disaster, Japanese rescue robots were utilized in actual disaster sites. Their tele-operation function and ability to move on debris were essential due to the radioactivity and debris present (Osumi, 2014). | ||

| Line 221: | Line 156: | ||

'''Drone-assisted disaster management: Finding victims via infrared camera and lidar sensor fusion''' | '''Drone-assisted disaster management: Finding victims via infrared camera and lidar sensor fusion''' | ||

[[File:7941945-fig-3-source-small.gif|thumb|Drone hardware specification. Drone-assisted disaster management: Finding victims via infrared camera and lidar sensor fusion (Lee et al., 2016).]] | |||

This article mentions that the use of drones has proven to be an efficient method to localize survivors in hard-to-reach areas (e.g., collapsed structures) (Lee et al., 2016). The paper presents a comprehensive framework for drone hardware that makes it possible to explore GPS-denied environments. Furthermore, the Hokuyo lidar is used for global mapping, and the Intel RealSense for local mapping. The outcomes show that the combination of these sensors can assist USAR operations to find victims of natural disasters. | This article mentions that the use of drones has proven to be an efficient method to localize survivors in hard-to-reach areas (e.g., collapsed structures) (Lee et al., 2016). The paper presents a comprehensive framework for drone hardware that makes it possible to explore GPS-denied environments. Furthermore, the Hokuyo lidar is used for global mapping, and the Intel RealSense for local mapping. The outcomes show that the combination of these sensors can assist USAR operations to find victims of natural disasters. | ||

| Line 253: | Line 188: | ||

'''[[PRE2020 4 Group2|PRE2020_4_Group2]]''' | '''[[PRE2020 4 Group2|PRE2020_4_Group2]]''' | ||

This project researched how a swarm of RoboBees (flying microbots) could be used in USAR operations. The main concern of this project was to identify the location of a gas leak and to communicate that information to the USAR team. They looked into infrared technology, wireless LAN and | This project researched how a swarm of RoboBees (flying microbots) could be used in USAR operations. The main concern of this project was to identify the location of a gas leak and to communicate that information to the USAR team. They looked into infrared technology, wireless LAN and Bluetooth for communication. | ||

# | # | ||

| Line 270: | Line 205: | ||

===Users=== | ===Users=== | ||

Within urban search and rescue operations, several users of | Within urban search and rescue operations, several users of technology and robotics can be named. | ||

First, the International Search and Rescue Advisory Group (INSARAG) determines the minimum international standards for urban search and rescue (USAR) teams (INSARAG – Preparedness Response, z.d.). This organization establishes a methodology for coordination in earthquake response. Therefore, this organization will have to weigh the pros and cons of using | First, the International Search and Rescue Advisory Group (INSARAG) determines the minimum international standards for urban search and rescue (USAR) teams (INSARAG – Preparedness Response, z.d.). This organization establishes a methodology for coordination in earthquake response. Therefore, this organization will have to weigh the pros and cons of using robotics in USAR. If INSARAG sees the added value of using robotics in search and rescue operations, it can promote the usage, and include it in the guidelines. | ||

Second, governments will need to purchase all necessary equipment. For the Netherlands, Nederlands Instituut Publieke Veiligheid is the owner of all the equipment of the Dutch USAR team (Nederlands Instituut Publieke Veiligheid, 2023). This Institute will need to see the added value of the robot while taking into account the guidelines of INSARAG. | Second, governments will need to purchase all necessary equipment. For the Netherlands, Nederlands Instituut Publieke Veiligheid is the owner of all the equipment of the Dutch USAR team (Nederlands Instituut Publieke Veiligheid, 2023). This Institute will need to see the added value of the robot while taking into account the guidelines of INSARAG. | ||

The third group of users consists of members of the USAR teams that will have to work with the | The third group of users consists of members of the USAR teams that will have to work with the technology on site. It will be used alongside other techniques that are already used right now. USAR teams are multidisciplinary and not all members of the team will come in contact with the robot (e.g., nurses or doctors). In order to properly use robotics and technology, USAR members who execute the search and rescue operation will need training. For the Dutch USAR team, this training can be conducted by the Staff Officer Education, Training and Exercise (Het team - USAR.NL, z.d.). USAR members will need to be able to set up the technology, navigate it inside a collapsed building (if it is not fully autonomous), read data that it provides, and find survivors with the help of the technology. Furthermore, they will need to decide whether it is safe to follow the path to a survivor. Lastly, team members will need to retract the technology and reuse it if possible. | ||

===Users' needs=== | ===Users' needs=== | ||

| Line 297: | Line 230: | ||

#What makes a rescue operation expensive? | #What makes a rescue operation expensive? | ||

Furthermore, similar questions were posted on Reddit, which can be found in the Appendix | Furthermore, similar questions were posted on Reddit, which can be found in the Appendix. Several people responded to the first query on Reddit; their answers can also be found in the Appendix. The answers keep coming back to the main problem with the current technology, which is that it is expensive. The equipment is expensive, as is the training that people need in order to use it. The second query on Reddit received one answer, from a USAR search specialist. This specialist mentions that they do not really use technology right now because dogs are much more effective at finding an area that has a person in it. They use technology when trying to find the exact spot that the person is in, but this technology has many disadvantages, since it cannot reach deep into the debris. | ||

===Society=== | ===Society=== | ||

Society will benefit from | Society will benefit from technology and robotics as it will help USAR operations to localize victims and find a path within rubble to a victim. This will reduce the time needed to search for survivors after earthquakes, which is important as the chances of surviving decrease with each passing day. Furthermore, replacing human rescuers or search dogs with a robot will expose them to less danger. Lastly, robots will be able to go further into the debris without endangering humans or the technology itself. So, the usage of robots and technology will influence the number of people that can be saved. | ||

===Enterprise=== | ===Enterprise=== | ||

If the | If the robot or technology becomes available for sale, the company behind it will have several interests. First, it will want to create a robot that can make a difference. The robot should help USAR operations with localizing survivors in the rubble. Second, the company will want to either make a profit or make enough money to break even. It will need money to invest back in the product to further improve the robot. For the company, it is important to take into account the guidelines of INSARAG as this institute will promote the usage of rescue robots in their global network. | ||

==Specifications== | ==Specifications== | ||

Before identifying the solutions for navigation and localization, a clear list of specifications for the | Before identifying the solutions for navigation and localization, a clear list of specifications for the robot/technology is given. | ||

'''Must:''' | '''Must:''' | ||

| Line 335: | Line 268: | ||

*Be able to put out fires and melt in extreme heat | *Be able to put out fires and melt in extreme heat | ||

Now that the | ==Vine robot== | ||

[[File:Vinebot.jpg|thumb|367x367px|Vine Robot]] | |||

With the help of the state-of-the-art, the vine robot was chosen as the design. Vine robots are soft, small, lightweight, and flexible robots designed to navigate through tight spaces, making them ideal for searching collapsed buildings for survivors. These robots utilize air pressure, which expands them through the tip, making them move and grow into a worm-like robot. The idea of the vine robot was brought up in 2017, making it a new robotic design. There have been some attempts to move the vine robot in chosen directions using a so-called muscle. This includes 4 tubes (muscles) around the large tube (vine robot), each with their own air supply. This allows the vine robot to move in every direction, depending on the pressure of the muscles. | |||

However, the current vine robots lack the ability to navigate and localize, which is a critical requirement for them to be used successfully in such missions. The following chapters provide a research analysis on the best possible sensors that can be used for localization, as well as a simulation to find out what algorithm works best for navigation. | |||

Now that the vine robot has been chosen as the robotic design, the constraints of the robot can be established. This is mainly a brief description of how the robot can be designed. A complete design and manufacturing plan for the robot falls outside the scope of this report. | |||

'''Constraints:''' | '''Constraints:''' | ||

*'''Size:''' | *'''Size:''' A small vine robot can navigate through tight spaces more easily than larger robots, allowing it to search for survivors in areas that might otherwise be inaccessible. | ||

*'''Material:''' | *'''Diameter:''' The diameter of the robot that is chosen should be small enough to enable an air compressor to quickly fill the expanding volume of the body while facilitating growth also at low pressure. In research, the main body tube diameter of the robot is 5 cm (Coad et al., 2019). | ||

*'''Weight:''' | *'''Length:''' The robot body can grow as the fluid pressure inside the body is higher in comparison to the outside (Blumenschein, 2020). | ||

*'''Cost:''' The cost of the vine robot should be reasonable and affordable for rescue teams to deploy. For the base of the robot this will be about €700,-, including one vine robot body, | *'''Material:''' For the vine robot to be lightweight and flexible, the materials must share these properties. | ||

**Soft and flexible materials such as silicone or rubber can be used to mimic the flexibility of a vine. These materials can be easily deformed and are able to conform to complex shapes. | |||

**Carbon fiber can be used to create the structural stability of the vine robot. Carbon fiber is a lightweight and strong material with a high strength-to-weight ratio, making it ideal for this application. | |||

*'''Weight:''' The vine robot should be lightweight, so that it can be easily transported to the search site and placed into position by the rescue team. Additionally, this makes it possible to deploy the robot in unstable environments, as it will not add significant stress to the collapsed structure. | |||

*'''Cost:''' The cost of the vine robot should be reasonable and affordable for rescue teams to deploy. For the base of the robot this will be about €700,-, including one vine robot body; additional bodies are €40,- per body (Vine robots, z.d.). The chosen sensor then needs to be included in the cost, based on which one proves best after research. | |||

*'''Load Capacity:''' The vine robot keeps the pressure within the body at 3.5 psi (Vine robots, z.d.). This corresponds to about 0.246 kgf/cm² (roughly 24 kPa), so how much the robot can hold depends on the length of the body at that time. | *'''Load Capacity:''' The vine robot keeps the pressure within the body at 3.5 psi (Vine robots, z.d.). This corresponds to about 0.246 kgf/cm² (roughly 24 kPa), so how much the robot can hold depends on the length of the body at that time. | ||

*'''Mobility:''' The vine robot should be able to navigate through tight spaces and climb up surfaces with ease. It can go through gaps as small as 4.5 centimeters with a body diameter of 7 centimeters (Coad et al., 2020). | *'''Mobility:''' The vine robot should be able to navigate through tight spaces and climb up surfaces with ease. It can go through gaps as small as 4.5 centimeters with a body diameter of 7 centimeters (Coad et al., 2020). | ||

*'''Growth speed:''' The vine robot right now can grow at a maximum speed of 10 cm/s (Coad et al., 2020). | *'''Growth speed:''' The vine robot right now can grow at a maximum speed of 10 cm/s (Coad et al., 2020). | ||

* | *'''Turn radius:''' In research, a vine robot was able to round a 90 degree turn (Coad et al., 2019). The optimal turn radius supplied in current literature is 20 centimeters (Auf der Maur et al., 2021). | ||
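The pressure and growth-speed figures in the constraints above can be checked with a short calculation. The following is a minimal sketch, not part of the original design: it assumes that 3.5 psi is a gauge pressure, that the body diameter is the 5 cm reported by Coad et al. (2019), and that the ideal axial force at the tip is simply pressure times cross-sectional area. The function names are hypothetical, and the force is an upper bound that ignores friction and material losses.

```python
import math

# Standard unit conversion factors.
PSI_TO_PA = 6894.757        # pascals per psi
PSI_TO_KGF_CM2 = 0.0703070  # kgf/cm^2 per psi


def psi_to_kgf_per_cm2(psi):
    """Convert a pressure in psi to kgf/cm^2."""
    return psi * PSI_TO_KGF_CM2


def ideal_tip_force_newton(psi, diameter_m):
    """Idealized axial force (N): gauge pressure times cross-sectional area.

    Assumption: no friction or eversion losses, so this is an upper bound.
    """
    area_m2 = math.pi * (diameter_m / 2) ** 2
    return psi * PSI_TO_PA * area_m2


def growth_time_s(length_m, speed_m_per_s=0.10):
    """Time to grow to a given length at the maximum speed of 10 cm/s."""
    return length_m / speed_m_per_s


if __name__ == "__main__":
    print(f"3.5 psi = {psi_to_kgf_per_cm2(3.5):.3f} kgf/cm^2")
    print(f"Ideal tip force (5 cm body): {ideal_tip_force_newton(3.5, 0.05):.1f} N")
    print(f"Time to grow 5 m: {growth_time_s(5.0):.0f} s")
```

Under these assumptions the pressure matches the 0.246 figure quoted above when expressed in kgf/cm², and a 5 m path through rubble would take at least 50 seconds to traverse at maximum growth speed.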

== | ==Localization== | ||

===Current Difficulties=== | ===Current Difficulties=== | ||

| Line 363: | Line 306: | ||

In conclusion, localizing victims after earthquakes is a challenging task that requires extensive planning, coordination, and resources. The scale of the disaster, the nature of the terrain, the complexity of the affected infrastructure, and the timing of the earthquake can all pose significant challenges for rescue teams. | In conclusion, localizing victims after earthquakes is a challenging task that requires extensive planning, coordination, and resources. The scale of the disaster, the nature of the terrain, the complexity of the affected infrastructure, and the timing of the earthquake can all pose significant challenges for rescue teams. | ||

=== | ===Background of localization for vine robots=== | ||

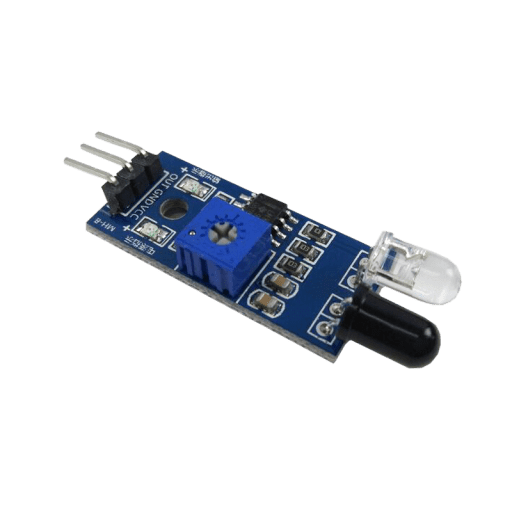

[[File:3-512x512.png|thumb|Infrared sensor module for Arduino]] | |||

Localization of survivors is a critical task in search and rescue operations in destroyed buildings. Vine robots can play an important role in this task by using their flexibility and agility to navigate through complex and unpredictable environments and locate survivors. Localization involves determining the position of the robot and the position of any survivors in the environment and can be achieved through a variety of techniques and strategies. | Localization of survivors is a critical task in search and rescue operations in destroyed buildings. Vine robots can play an important role in this task by using their flexibility and agility to navigate through complex and unpredictable environments and locate survivors. Localization involves determining the position of the robot and the position of any survivors in the environment and can be achieved through a variety of techniques and strategies. | ||

| Line 370: | Line 314: | ||

====Detecting of heat==== | ====Detecting of heat==== | ||

Vine robots can be equipped with a range of sensors that enable them to detect heat in their surroundings. Infrared cameras and thermal imaging systems are among the most commonly used sensors for detecting heat in robots. | Vine robots can be equipped with a range of sensors that enable them to detect heat in their surroundings. Infrared cameras and thermal imaging systems are among the most commonly used sensors for detecting heat in robots. | ||

'''Infrared cameras''' | |||

Infrared cameras work by detecting infrared radiation emitted by objects and converting it into visual representations that can be interpreted by the robot's control systems. | Infrared cameras work by detecting infrared radiation emitted by objects and converting it into visual representations that can be interpreted by the robot's control systems. | ||

[[File:Thermal imaging.jpg|thumb|Thermal imaging with cold spots]] | |||

'''Thermal imaging sensors''' | |||

Thermal imaging systems use a more advanced technology that can detect temperature changes with higher precision, which can enable vine robots to identify potential sources of heat and determine the location and movement of individuals in a given environment. | Thermal imaging systems use a more advanced technology that can detect temperature changes with higher precision, which can enable vine robots to identify potential sources of heat and determine the location and movement of individuals in a given environment. | ||
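As an illustration of how a thermal frame could be turned into a candidate survivor location, the sketch below marks pixels within a body-temperature band and returns their centroid. This is a simplified example and not the method of any cited system: the frame is assumed to be a grid of temperatures in degrees Celsius, the 30 to 40 °C band is an assumed range for exposed skin, and the function names are hypothetical.

```python
def find_warm_pixels(frame, low_c=30.0, high_c=40.0):
    """Return (row, col) positions whose temperature lies in [low_c, high_c].

    Assumption: `frame` is a list of rows of temperatures in degrees Celsius.
    """
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, temp in enumerate(row)
        if low_c <= temp <= high_c
    ]


def centroid(pixels):
    """Average (row, col) of the given pixels, or None if there are none."""
    if not pixels:
        return None
    rows = sum(r for r, _ in pixels) / len(pixels)
    cols = sum(c for _, c in pixels) / len(pixels)
    return (rows, cols)


if __name__ == "__main__":
    # Toy 3x3 frame: cool rubble with a warm patch in the lower right area.
    frame = [
        [18.0, 18.5, 19.0],
        [18.0, 34.0, 35.0],
        [18.0, 33.5, 21.0],
    ]
    warm = find_warm_pixels(frame)
    print("warm pixels:", warm)
    print("centroid:", centroid(warm))
```

A real system would also need to reject other heat sources, such as fires or hot machinery, which fall outside the assumed band or form implausibly large regions.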

'''Contact sensors''' | |||

Contact sensors can be used to detect heat sources that come into direct contact with the robot's sensors. For example, if a vine robot comes into contact with a hot object such as a stove or a piece of machinery, the heat from the object can be detected by the contact sensors. | Contact sensors can be used to detect heat sources that come into direct contact with the robot's sensors. For example, if a vine robot comes into contact with a hot object such as a stove or a piece of machinery, the heat from the object can be detected by the contact sensors. | ||

'''Gas sensors''' | |||

Gas sensors can be used to detect the presence of combustible gases such as methane or propane, which can be an indicator of a potential fire or explosion. | Gas sensors can be used to detect the presence of combustible gases such as methane or propane, which can be an indicator of a potential fire or explosion. | ||

| Line 386: | Line 335: | ||

Detecting sound is another critical capability for vine robots, especially in search and rescue operations. Vine robots can be equipped with a range of sensors that enable them to detect and interpret sound waves in their surroundings. A common type of sensor used to detect sound is a microphone. Microphones can be used to capture sound waves in the environment and convert them into electrical signals that can be interpreted by the robot's control systems or be communicated back to a human operator for analysis. | Detecting sound is another critical capability for vine robots, especially in search and rescue operations. Vine robots can be equipped with a range of sensors that enable them to detect and interpret sound waves in their surroundings. A common type of sensor used to detect sound is a microphone. Microphones can be used to capture sound waves in the environment and convert them into electrical signals that can be interpreted by the robot's control systems or be communicated back to a human operator for analysis. | ||

'''Ultrasonic sensors''' | |||

Vine robots can also be equipped with ultrasonic sensors which enable them to detect sound waves that are beyond the range of human hearing. Ultrasonic sensors work by emitting high-frequency sound waves that bounce off objects in the environment and return to the sensor, producing an electrical signal that can be interpreted by the robot's control systems. | Vine robots can also be equipped with ultrasonic sensors which enable them to detect sound waves that are beyond the range of human hearing. Ultrasonic sensors work by emitting high-frequency sound waves that bounce off objects in the environment and return to the sensor, producing an electrical signal that can be interpreted by the robot's control systems. | ||
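The echo principle described above translates into a distance with a simple time-of-flight calculation: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of sound. The sketch below is an illustrative example with hypothetical function names. It assumes dry air and uses the common linear approximation for the speed of sound as a function of temperature.

```python
def speed_of_sound_m_per_s(temp_c=20.0):
    """Approximate speed of sound in dry air (m/s) at a temperature in Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s, temp_c=20.0):
    """Distance to the reflecting object from the round-trip echo time.

    The pulse covers the distance twice (out and back), hence the division by 2.
    """
    return speed_of_sound_m_per_s(temp_c) * round_trip_s / 2.0


if __name__ == "__main__":
    # A 10 ms round trip at 20 degrees Celsius is roughly 1.7 m.
    print(f"{echo_distance_m(0.010):.2f} m")
```

Inside rubble, dust, humidity and irregular surfaces would all affect the reading, so the result is best treated as an estimate.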

Vibration sensors | '''Vibration sensors''' | ||

In addition to ultrasonic sensors, vine robots can be equipped with vibration sensors, which can detect sound waves that are not audible to the human ear. Vibration sensors work by detecting the tiny vibrations in solid objects caused by sound waves passing through them. These vibrations are converted into electrical signals that can be interpreted by the robot's control systems. | In addition to ultrasonic sensors, vine robots can be equipped with vibration sensors, which can detect sound waves that are not audible to the human ear. Vibration sensors work by detecting the tiny vibrations in solid objects caused by sound waves passing through them. These vibrations are converted into electrical signals that can be interpreted by the robot's control systems. | ||

| Line 397: | Line 347: | ||

Detecting movement is another critical capability for vine robots, especially in search and rescue operations. Vine robots can be equipped with a range of sensors that enable them to detect and interpret movement in their surroundings. | Detecting movement is another critical capability for vine robots, especially in search and rescue operations. Vine robots can be equipped with a range of sensors that enable them to detect and interpret movement in their surroundings. | ||

'''Cameras''' | |||

One common type of sensor used in vine robots for detecting movement is a camera. Cameras can be used to capture visual data from the environment and interpret it using computer vision algorithms to detect movement. | One common type of sensor used in vine robots for detecting movement is a camera. Cameras can be used to capture visual data from the environment and interpret it using computer vision algorithms to detect movement. | ||
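One of the simplest computer vision techniques for this purpose is frame differencing, in which two consecutive grayscale frames are compared pixel by pixel. The sketch below is a minimal illustration rather than a production algorithm: frames are assumed to be equally sized grids of 0 to 255 intensity values, both thresholds are arbitrary example values, and the function names are hypothetical.

```python
def changed_fraction(prev_frame, curr_frame, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than pixel_threshold.

    Assumption: both frames are equally sized lists of rows of 0-255 values.
    """
    total = 0
    changed = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(curr_px - prev_px) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_detected(prev_frame, curr_frame, pixel_threshold=25, area_threshold=0.05):
    """Report motion when enough of the image has changed between frames."""
    return changed_fraction(prev_frame, curr_frame, pixel_threshold) >= area_threshold


if __name__ == "__main__":
    before = [[10, 10], [10, 10]]
    after = [[10, 200], [10, 10]]  # one of four pixels changed strongly
    print(changed_fraction(before, after))  # 0.25
    print(motion_detected(before, after))   # True
```

Because the tip of a vine robot moves as it grows, the camera's own motion would also change the image, so this comparison is most meaningful while growth is paused.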

'''Motion sensors''' | |||

Another type of sensor used in vine robots for detecting movement is a motion sensor. | Another type of sensor used in vine robots for detecting movement is a motion sensor. | ||

| Line 407: | Line 359: | ||

There are four types of motion sensors commonly used in industry:

#'''Passive infrared (PIR) sensors:''' These sensors detect changes in the level of infrared radiation (heat) in their field of view caused by moving objects.
#'''Ultrasonic sensors:''' These sensors emit high-frequency sound waves and measure the time it takes for the waves to bounce back from an object. If an object moves in front of the sensor, the time it takes for the sound waves to return changes, which triggers a response.
#'''Microwave sensors:''' These work like ultrasonic sensors, but emit electromagnetic waves instead of sound waves.
#'''Vibration sensors:''' These sensors measure changes in acceleration caused by movement.
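The time-of-flight principle behind the ultrasonic sensor can be illustrated with a minimal Python sketch. The speed of sound, the function names and the 5 cm motion threshold are assumptions chosen for illustration; they are not taken from a specific sensor datasheet.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting object, given the echo round-trip time."""
    # The pulse travels to the object and back, so halve the path length.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def motion_detected(prev_round_trip_s: float, curr_round_trip_s: float,
                    threshold_m: float = 0.05) -> bool:
    """Report motion when two consecutive echoes differ by more than threshold_m."""
    change_m = abs(echo_distance_m(curr_round_trip_s) - echo_distance_m(prev_round_trip_s))
    return change_m > threshold_m
```

A microwave sensor follows the same logic with the speed of light in place of the speed of sound.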

===Evaluation of sensors in localization=== | |||

The sections above covered the basics of detecting a human. This section evaluates those sensor types together with several others.

'''Types of Sensors:''' | |||

*Thermal Imaging Camera: This sensor creates an image of an object from the infrared radiation the object emits. It can detect the heat of a person in dark environments, making it efficient for search and rescue missions. It can identify the location of a person by detecting their body heat through walls or other obstructions. (Engineering, 2022)
*Gas Detector: These are used to detect the presence of gases across an area, such as carbon monoxide and methane. They can detect carbon dioxide, which is exhaled by humans, to localize survivors. They can also be used to detect toxic gases that could pose risks to the rescuers. (Instruments, 2021)
*Sound Sensor: This sensor detects the intensity of sound waves, using a microphone as input. It can be used to locate survivors who may be calling for help, or to detect sounds made by people, such as digging or moving debris. (Agarwal, 2019)
*Light Detection and Ranging (LiDaR): These sensors use light in the form of a pulsed laser to measure the distances to objects. They can be used to create 3D maps of different environments and can detect movements, such as a survivor moving around debris. (''What Is Lidar?'', n.d.)
*Global Positioning System (GPS): This is a satellite constellation that provides accurate positioning and navigation measurements worldwide. It can track the movements of people and can help create maps of the environment so that rescuers can locate survivors. (''What Is GPS and How Does It Work?'', n.d.)

These sensors are evaluated in the table below and ranked based on multiple sources. In order to get a better understanding of the outcomes, '''the results can be found in the appendix'''. The ranking criteria are as follows:

*Range: Maximum distance at which the sensor can detect an object
*Resolution: The smallest object the sensor can detect
*Accuracy: The precision of the measurements
*Cost: The cost of the sensor

{| class="wikitable"
!Sensor Type
!Range
!Resolution
!Accuracy
!Cost
!Ranking
|-
|Thermal Imaging Camera
|High
|High
|Very high
|High
|1
|-
|Gas Detector
|Medium
|High
|High
|High
|5
|-
|Sound Sensor
|Medium
|High
|High
|Medium
|2
|-
|Light Detection and Ranging (LiDaR)
|Medium
|High
|High
|High
|3
|-
|Global Positioning System (GPS)
|Very High
|Medium
|Medium
|Medium
|4
|}

Based on the ranking, it was determined that thermal imaging cameras are the most effective sensors for localizing survivors under rubble. This is due to their ability to detect heat given off by a human body from a large range with very high accuracy. Additionally, this sensor can be used in dark environments, which some of the others cannot. Even if a survivor is buried under the rubble, their body will still give off a heat signature that can be detected by a thermal imaging camera. However, one major limitation of using only this sensor is that it provides no visual image of the surrounding environment. Therefore, a visual camera can be used in conjunction with a thermal imaging camera, allowing rescuers to get a better picture of the situation by identifying potential obstacles and hazards.

==Pathfinding== | |||

Pathfinding is the process of finding the shortest or most efficient path between two points in a given environment. For a vine robot, pathfinding is critical as it allows the robot to navigate through the porous environment of destroyed buildings and reach its intended destination. Pathfinding algorithms are typically used to determine the best route for the robot to take based on factors such as obstacles, terrain and distance. | |||

One approach is to use a combination of reactive and deliberative pathfinding strategies. Reactive pathfinding involves using sensors to detect obstacles in real time and making rapid adjustments to the robot's path to avoid them. This can be especially useful in environments where the obstacles and terrain are constantly changing, such as in a destroyed building. However, reactive pathfinding algorithms are often less efficient than traditional pathfinding algorithms because they only consider local information and may not find the optimal path. Deliberative pathfinding, on the other hand, involves planning paths ahead of time based on a map or model of the environment. While this approach can be useful in some cases, it may not be practical in a destroyed building where the environment is constantly changing. Another approach is to use machine learning algorithms to train the robot to navigate through the environment based on real-world data. Machine learning is not the main focus of this paper; the combination of reactive and deliberative pathfinding is discussed in further detail below.
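As an illustration of deliberative pathfinding, the following Python sketch plans a shortest route on a known 2D grid map using breadth-first search. The grid encoding ('#' for debris, '.' for free space) and the function name are assumptions made for this example; this is not the algorithm used in the simulation described later.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Return the shortest list of (row, col) cells from start to goal, or None.

    grid is a list of equal-length strings: '#' marks debris, '.' marks free space.
    Movement is restricted to the four orthogonal neighbours.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start via parent links
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                      # goal is unreachable on this map
```

A reactive strategy would instead pick each step from the current sensor readings only, without access to the full map that this planner assumes.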

===Pathfinding for vine robots=== | |||

As described above, sensors are one of the critical components in reactive and deliberative pathfinding. Sensors provide the real-time information that the algorithm needs to navigate the vine robot through a dynamic environment, avoid obstacles, and adapt to changing conditions. In the vine robot system, reactive pathfinding is used to localize the survivor, as described in a previous section of this paper. This section instead focuses on deliberative pathfinding, in which sensory information from a radar is used to build a model of an environment in which signs of life have been detected within a collapsed building. These radars currently require a surface area far larger than that available on the vine robot itself. However, work is under way to reduce the size of these radars so that they can operate on a drone. Current experiments seem promising, but are not yet fully realized within the research community (example of a drone with a radar). When this becomes a viable option, attaching such a radar to the vine robot should be possible.

Thus, the proposed solution for now is to use these large sensors in conjunction with the vine robot: the rescue team first scans the environment and then sets up the vine robot in the correct location, which gives a higher chance of locating the survivor. The built-in localization algorithm, which includes pathfinding capabilities, should then be able to correctly identify a survivor within the rubble. It is worth noting that this greatly increases the cost of the search and rescue mission as a whole, and that this approach does not enhance the localization capabilities of the vine robot itself. The mechanisms of reactive pathfinding play a role within the localization techniques of the vine robot itself. This extension is therefore not necessary for the vine robot to work as intended, but it can increase the success rate of finding survivors.

===Ground Penetrating Radar (GPR)=== | |||

The underlying technology for GPR is electromagnetic propagation. Many technologies can perform detection, but electromagnetic ones give the best results in terms of speed and accuracy of the survey, and they also work in noisy environments. Three methods can be used to detect the presence of a person in a given area through the use of electromagnetic propagation. The first method detects active or passive electronic devices carried by the person, while the second method detects the person's body as a perturbation of the backscattered electromagnetic wave. In both cases, a discontinuity in the medium of electromagnetic wave propagation is required, which means that trapped individuals must have some degree of freedom of movement. However, these methods are only effective in homogeneous media, which is not always the case in collapsed buildings, where debris can consist of stratified slabs and heavy rubble at different angles. Therefore, the retrieval of the dielectric contrast features cannot be used as a means of detection in these situations.

Moreover, the irregular surface and instability of ruins make linear scans of radar at soil contact impossible. Instead, the sensor system must be located in a static position or on board of an aerial vehicle to detect the presence of individuals. (Ferrara V., 2015) | |||

====Vital Sign Detection==== | |||

Vital signs are detected as follows: a continuous wave (CW) signal is transmitted towards the human subject; the movements of heartbeat and breathing modulate the phase of the signal, generating a Doppler shift on the reflected signal, which travels back to the receiver; finally, demodulation of the received signal reveals the vital signs. These radar systems can be compact, making it feasible to fit them on unmanned aerial vehicles (UAVs). Unfortunately, the vibration and aerodynamic instability of a flying vehicle limit the accuracy and validity of the measurement. In addition, the frequency at which these radars operate greatly affects the penetration depth of the sensing capability. Currently, GPR systems have an increased sensitivity level due to the switch to ultra-wideband radars. The increase in sensitivity improves the probability of detection, but at the same time it can produce a larger number of false alarms. There is research with regards to these two systems, but both still need further improvement before being able to work in present day situations. (Ferrara V., 2015)
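The phase modulation described above can be made concrete with a short Python sketch. It uses the standard relation for a CW radar, in which a target displacement x changes the round-trip phase by 4πx/λ. The carrier frequency, displacement amplitudes and function names are illustrative assumptions and are not values reported by Ferrara (2015).

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def phase_shift_rad(displacement_m: float, carrier_hz: float) -> float:
    """Round-trip phase change of a CW radar echo for a given target displacement."""
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    # Factor 4*pi (not 2*pi) because the wave covers the displacement twice.
    return 4.0 * math.pi * displacement_m / wavelength_m


def chest_phase_signal(t_s: float, breath_amp_m: float, breath_hz: float,
                       heart_amp_m: float, heart_hz: float, carrier_hz: float) -> float:
    """Phase of the echo at time t_s for a chest moving with breathing and heartbeat."""
    displacement_m = (breath_amp_m * math.sin(2.0 * math.pi * breath_hz * t_s)
                      + heart_amp_m * math.sin(2.0 * math.pi * heart_hz * t_s))
    return phase_shift_rad(displacement_m, carrier_hz)
```

Under these assumptions, a 5 mm chest movement at a 2.4 GHz carrier shifts the phase by roughly half a radian, which is what the demodulation step has to recover from the received signal.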

=====Constant false alarm rate (CFAR)===== | |||

Airborne ground penetrating radar can effectively survey large areas that may not be easily accessible, making it a valuable tool for underground imaging. One method to address this imaging challenge is by utilizing a regularized inverse scattering procedure, known as CFAR. CFAR detection is an adaptive algorithm commonly used in radar systems to detect target returns amidst noise, clutter, and interference. Among the CFAR algorithms, the cell averaging (CA) CFAR is significant, as it estimates the mean background level by averaging the signal level in M neighboring range cells. The CA-CFAR algorithm has shown promising capabilities in achieving a stable solution and providing well-focused images. By using this technique, the actual location and size of buried anomalies can be inferred. (Ilaria C. et al, 2012)
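The cell-averaging step can be sketched in a few lines of Python. This is a minimal one-dimensional illustration, assuming a list of received power values per range cell; the parameter names, the guard cells and the threshold scale factor are assumptions for this example and do not come from the cited work.

```python
def ca_cfar(power, train_per_side=4, guard_per_side=1, scale=4.0):
    """Return the indices of range cells flagged as detections by CA-CFAR.

    For each cell under test, the background level is the mean power of the
    training cells on both sides, skipping the guard cells next to the cell.
    A cell is a detection when its power exceeds scale times that mean.
    """
    detections = []
    n = len(power)
    for i in range(n):
        left = range(max(0, i - guard_per_side - train_per_side),
                     max(0, i - guard_per_side))
        right = range(min(n, i + guard_per_side + 1),
                      min(n, i + guard_per_side + train_per_side + 1))
        training = [power[j] for j in left] + [power[j] for j in right]
        if not training:
            continue  # no neighbours available to estimate the background
        background = sum(training) / len(training)
        if power[i] > scale * background:
            detections.append(i)
    return detections
```

Because the threshold follows the local background, the same scale factor keeps the false alarm rate roughly constant when the clutter level changes along the range axis.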

=====Ultra-wideband (UWB)===== | |||

The proposed method for vital sign detection is highly robust and suitable for detecting multiple trapped victims in challenging environments with low signal-to-noise and clutter ratios. The removal of clutter has been effective, and the method can extract multiple vital sign information accurately and automatically. These findings have significant implications for disaster rescue missions, as they have the potential to improve the effectiveness of rescue operations by providing reliable and precise vital sign detection. Future research should focus on extending the method to suppress or separate any remaining clutter and developing a real-time and robust approach to detect vital signs in even more complex environments. (Yanyun X. et al, 2013) | |||

<br /> | |||

==Simulation== | |||

===Goal=== | |||

To evaluate the localization capabilities of the Vine Robot, a simulation was created. For a Vine Robot to accurately determine the location of a target, the robot must be able to get in close proximity to that target. As such, the robot’s localization algorithm must include pathfinding capabilities. The simulation will be used to test out how a custom developed localization algorithm picks up stimuli from the environment and reacts to these stimuli to then try and locate the survivor from whom these stimuli originate. For the simulation to succeed, the robot is required to reach the survivor’s location in at least 75% of the randomly generated scenarios. | |||

The complexity of creating such a vine robot and its testing environment, due to the sheer amount of different possible scenarios and variables involved in finding survivors within debris, is currently outside of the scope of this course. However, it is believed that the noisy environment and the robot can be simulated to retain their essential properties. The simulated environment will be generated using clusters of debris, with random noise representing interference, and intermittent stimuli that indicate the presence of a survivor. The random noise and intermittent stimuli are critical in simulating the robot’s sensors, since the data received from the sensors are unreliable when going through debris. | |||

===Specification=== | |||

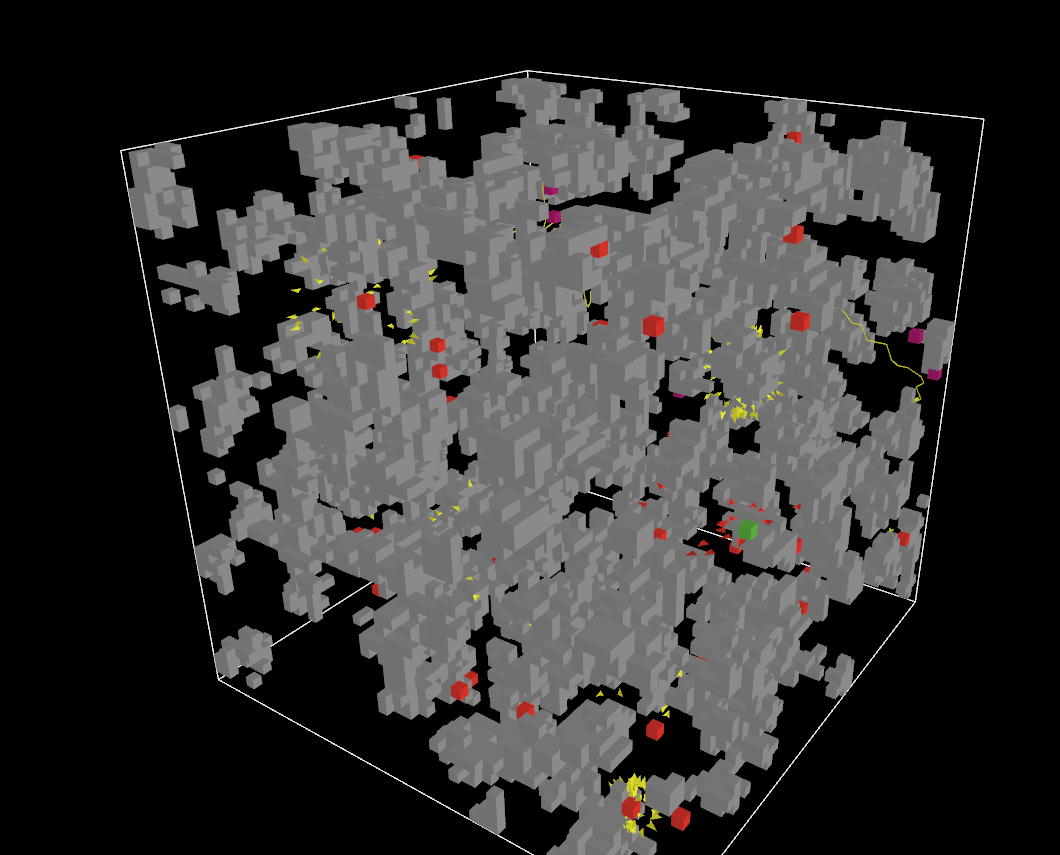

[[File:Simulation environment.png|thumb|A screenshot of the simulation environment with all components visible.]] | |||

The simulation is made using NetLogo 3D. This software has been chosen for its simplicity in representing the environment using patches and turtles. | |||

The vine robot is represented as a turtle. For algorithms involving swarm robotics, multiple turtles can be used. The robot can only move forwards with a maximum speed of 10 centimetres per second and a turning radius of at least 20 centimetres. The robot cannot move onto patches it has visited before, because the vine robot would then hit itself. The robot has a viewing angle of at most 180 degrees. When involving swarm robotics, it is not allowed for the different robots to cross each other either. | |||

The environment is randomly filled with large chunks of debris. These are represented by grey patches. The robot may not move onto these patches. To simulate the debris from collapsed buildings more closely, the debris patches are clustered together. All other patches contain smaller rubble, which the robot can move through. | |||

The environment also contains an adjustable number of survivors, which are represented by green patches. These survivors are stationary, as they are stuck underneath the rubble. Each survivor gives off heat in the form of stimuli, which the robot can pick up on within its field of view. The stimuli are represented by turtles that move away from the corresponding survivor and die after a few ticks. The survivor itself can also be detected when within the robot’s field of view. Once the robot has reached a survivor, the user is notified and the simulation is stopped. | |||

Red patches are used to simulate random noise and false positives. These red patches send out stimuli in the same way as the green patches do. The robot cannot distinguish these stimuli from one another, but it can identify red patches as false positives when they are in its field of view. | |||

===Design choices=== | |||

The simulation cannot represent the whole scenario. Some design decisions were made where it may not be directly apparent as to why they were chosen. Therefore, these design choices are explained in detail here. | |||

In the simulation, the survivor cannot be inside a patch that indicates a large chunk of debris. Intuitively this would mean that the survivor is stuck in small, light debris which the vine robot can move through. However, it is known that the target is stuck underneath rubble, as they would not need rescuing otherwise. As such, it is assumed that the weight of all the rubble underneath which the survivor is trapped prevents them from moving into another patch. The robot, however, is able to move between patches of small, light debris due to its lesser size. Whether the weight is caused by a single big piece of debris or a collection of smaller pieces of debris can be influential in getting the survivor out of the debris, but it is not important for finding them. Thus it is also not important for the simulation. | |||

To properly represent the robot’s physical capabilities and limitations, the simulation environment requires a sense of scale. For simplicity, patches were given a dimension of 10 centimetres cubed and each tick represents 1 second. As a result, any patch that is not marked as a debris chunk patch has an opening that is larger than the robot’s main body tube with a diameter of 5 centimetres. For the robot’s mobility, it entails that a single patch can be traversed per tick and that sideways movement is limited to a 45-degree angle per tick. | |||
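The movement rule that follows from this scale (one patch per tick, at most a 45-degree change of heading per tick, no entering debris or previously visited patches) can be sketched in Python. The simulation itself is written in NetLogo 3D; this two-dimensional version with eight headings, and all names in it, are simplifying assumptions for illustration.

```python
# Eight headings spaced 45 degrees apart, as (dx, dy) patch offsets.
HEADINGS = [(0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1)]


def allowed_moves(pos, heading_idx, debris, visited):
    """List the (next_patch, next_heading_idx) pairs the robot may take this tick.

    The robot may keep its heading or turn 45 degrees to either side, and it
    advances exactly one patch. Debris patches and patches already occupied by
    the robot's own body are excluded.
    """
    moves = []
    for turn in (-1, 0, 1):
        new_heading = (heading_idx + turn) % len(HEADINGS)
        dx, dy = HEADINGS[new_heading]
        nxt = (pos[0] + dx, pos[1] + dy)
        if nxt not in debris and nxt not in visited:
            moves.append((nxt, new_heading))
    return moves
```

An empty result corresponds to the robot being stuck, since it cannot reverse or cross its own body.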

One might argue that the robot can turn with a tighter radius when pressed up against a chunk of debris. In theory, this would function by trying to turn in a certain direction while pressing up against the debris. The forwards movement would then be blocked by the debris, while the sideways movement can continue unrestricted. However, this theory has a major downside. Deliberately applying pressure to a chunk of stationary debris could cause it, or the surrounding debris, to shift. Such a shift has unpredictable side effects, which could have disastrous consequences for survivors stuck in the debris. | |||

===Simulation Scenario=== | |||

In active use the robot has to guide rescuers to possible locations of survivors in the environment. This scenario can be described in PEAS as: | |||

*Performance Measure: The performance measure for the vine robot in the simulation would be the number of survivors found within a given time frame. | |||

*Environment: The environment for the vine robot in the simulation would be a disaster zone with multiple clusters of debris. The debris can be of varying sizes and shapes and may obstruct the robot's path. | |||

*Actuators: The actuators for the vine robot in the simulation would be the various robotic mechanisms that enable the robot to move and interact with its environment. | |||

*Sensors: The sensors for the vine robot in the simulation would include a set of cameras and microphones to capture different stimuli. Additionally, the robot would also have sensors to detect obstacles in its path. | |||

Environment description: | |||

*Partially observable | |||

*Stochastic | |||

*Collaborative | |||

*Single-agent | |||

*Dynamic | |||

*Sequential<br /> | |||

===Test Plan=== | |||

The objective of the test plan is to evaluate the localization algorithm of the Vine Robot in a simulated environment with clusters of debris, random noise representing interference, and intermittent stimuli that indicate the presence of a survivor. The test aims to ensure the Vine Robot can locate a survivor in at least 75% of the randomly generated scenarios. | |||

However, the following risks should be considered during the test: | |||

*The simulation may not accurately represent the real-world scenario. | |||

*The Vine Robot's pathfinding algorithm may not work as expected in the simulated environment. | |||

*The Vine Robot's limitations may not be accurately represented in the simulation.

====Test Scenarios:==== | |||

Each of the following scenarios will be run in rounds of 10 runs, with one round each for the minimum, median and maximum value of the spawn-interval parameter. Doing this gives the simulation more merit concerning the effect of stimuli on the tests. In addition, each scenario is assigned a percentage weight. This weight is used for the final result, as not every scenario is equally important to the simulation goal. Further explanation is given in the test metrics.

Scenario 1 (20%): In this scenario, the Vine Robot will be placed in an environment with a survivor in a clear path, with no debris and with the maximum noise. The aim is to test the robot's pathfinding capability in a clear path. | |||

Scenario 2 (20%): In this scenario, the Vine Robot will be placed in an environment with the maximum amount of debris and a survivor with no noise. The aim is to test the robot's ability to manoeuvre through the debris and locate the survivor.

Scenario 3 (55%): In this scenario, the Vine Robot will be placed in an environment with the median input of clusters of debris and noise with one survivor. The aim is to test the robot's ability to navigate through the ideal environment and locate the survivor. | |||

Scenario 4 (5%): In this scenario, the Vine Robot will be placed in an environment with the maximum random noise and maximum amount of cluster of debris. The aim is to test the robot's ability in the worst environment possible. | |||

====Test Procedure:==== | |||

For each scenario, the following procedure will be followed: | |||

*The Vine Robot will be placed in the environment with the survivor and any other required parameters set for the scenario. | |||

*The Vine Robot's localization algorithm will be initiated, and the robot will navigate through the environment to locate the survivor. | |||

*The number of scenarios where the robot successfully locates the survivor will be recorded. | |||

====Test Metrics:==== | |||

The results will be used to evaluate the Vine Robot's localization algorithm. For each scenario, the percentage of runs in which the Vine Robot successfully locates the survivor will be converted to a grade between 1 and 10. Each grade will then be multiplied by the scenario's percentage of importance. This gives a better representation of the 75% success rate required of the Vine Robot; the aim is therefore a weighted average grade of at least 7.5.
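The weighting can be written out as a small Python sketch. The scenario weights are the percentages listed in the test scenarios; the linear mapping from success rate to grade (10 times the success fraction) and the function names are assumptions, since the exact conversion is not specified.

```python
# Importance of each scenario, taken from the test scenario descriptions.
SCENARIO_WEIGHTS = {1: 0.20, 2: 0.20, 3: 0.55, 4: 0.05}
TARGET_GRADE = 7.5


def weighted_grade(success_rates):
    """Combine per-scenario success fractions (0.0 to 1.0) into one grade.

    Assumption: a scenario's grade is 10 times its success fraction.
    """
    return sum(SCENARIO_WEIGHTS[scenario] * 10.0 * rate
               for scenario, rate in success_rates.items())


def passes(success_rates):
    """True when the weighted grade reaches the 7.5 target."""
    return weighted_grade(success_rates) >= TARGET_GRADE
```

For example, hypothetical success fractions of 1.0, 0.5, 0.6 and 0.0 for scenarios 1 to 4 give a weighted grade of 6.3, which would fall short of the target.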

====Test results:==== | |||

Below are some of the results shown for Scenario 3. The other results are in the appendix. | |||

{| class="wikitable"
|+
! colspan="4" |Scenario 3
|-
! colspan="4" |lifespan of stimuli 50
|-
|Run iteration
|Success/Failure
|Reason for Failure / Blocks discovered
|Ticks
|-
|#1
|Success
|33/50 blocks discovered
|509
|-
|#2
|Failure
|Wall hit / 15/50 blocks discovered
|228
|-
|#3
|Failure
|Wall hit / 6/50 blocks discovered
|74
|-
|#4
|Success
|1/50 blocks discovered
|14
|-
|#5
|Success
|9/50 blocks discovered
|108
|-
|#6
|Failure
|Wall hit / 24/50 blocks discovered
|167
|-
|#7
|Success
|10/50 blocks discovered
|56
|-
|#8
|Success
|12/50 blocks discovered
|149
|-
|#9
|Failure
|Wall hit / 8/50 blocks discovered
|51
|-
|#10
|Failure
|Dead end / 8/50 blocks discovered
|80
|-
! colspan="4" |lifespan of stimuli 100
|-
|#1
|Success
|4/50 blocks discovered
|11
|-
|#2
|Success
|19/50 blocks discovered
|278
|-
|#3
|Success
|
|6
|-
|#4
|Success
|7/50 blocks discovered
|94
|-
|#5
|Success
|3/50 blocks discovered
|22
|-
|#6
|Success
|16/50 blocks discovered
|105
|-
|#7
|Failure
|Wall hit / 5/50 blocks discovered
|14
|-
|#8
|Failure
|Dead end / 10/50 blocks discovered
|106
|-
|#9
|Success
|8/50 blocks discovered
|39
|-
|#10
|Failure
|Wall hit / 3/50 blocks discovered
|3
|-
|
|
|Average ticks
|72
|}
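The success rates for the two rounds shown in the table can be tallied with a short Python sketch. The outcome strings are transcribed from the Success/Failure column above ('S' for success, 'F' for failure); the variable and function names are assumptions for illustration.

```python
# Outcomes of runs #1 to #10 in Scenario 3, keyed by lifespan of stimuli.
SCENARIO_3_OUTCOMES = {
    50: "SFFSSFSSFF",
    100: "SSSSSSFFSF",
}


def success_rate(outcomes: str) -> float:
    """Fraction of runs in which the robot reached the survivor."""
    return outcomes.count("S") / len(outcomes)


for lifespan, outcomes in SCENARIO_3_OUTCOMES.items():
    print(f"lifespan {lifespan}: {success_rate(outcomes):.0%} success")
```

Both rounds shown here stay below the 75% target for this scenario.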

<br /> | |||

====Test conclusion==== | |||

The simulation algorithm used to model the vine robot's behavior in a disaster response scenario involved a combination of pathfinding and survivor detection algorithms. The pathfinding algorithm utilized a search-based approach to navigate through the debris clusters and identify potential paths for the robot to follow. The survivor detection algorithm relied on the robot's sensors to detect signs of life, such as heat. However, while the simulation algorithm provided a useful framework for testing the vine robot's capabilities, it also had several limitations that could have affected the accuracy of the simulation results. For example, the algorithm did not account for potential variations in the environment, such as the presence of dead ends. This led to inaccuracies in the robot's pathfinding decisions, causing it to get stuck quite often.

Another potential shortcoming of the simulation algorithm was its reliance on pre-determined search strategies and decision-making processes. While the algorithm was designed to identify the most efficient paths through the debris clusters and locate survivors based on sensor data, it did not account for potential variations in the environment or unforeseen obstacles that could impact the robot's performance. This could have limited the algorithm's ability to adapt to changing conditions and make optimal decisions in real-time. | |||

Finally, the simulation algorithm may also have been limited by the accuracy and precision of the simulated sensor data used to model the robot's behavior. While the algorithm tried to emulate advanced sensor technology to detect survivors and navigate through the debris clusters, the accuracy and precision of these sensors may have been misrepresented due to the limitations of NetLogo. This could have led to inaccuracies in the simulation results and limited the algorithm's ability to accurately model the vine robot's behavior in a real-world disaster response scenario.

==Conclusion== | |||

Overall, the vine robot shows potential for urban search and rescue operations. From the user investigation, it was clear that the technology should be semi-autonomous and not too expensive regarding material and training. Furthermore, it was investigated that the vine robot could be equipped with a thermal imaging camera and a visual camera in order to localize survivors in rubble. A simulation was used to test a localization algorithm due to the time and budget constraints. However, a prototype of the vine robot could have given more exact insights into the challenges regarding the algorithm. The results of the simulation tests show that the success rate of the customized algorithm is not high enough for the algorithm to be accepted as the average grade is below 7.5. Therefore, the algorithm will need to be optimized and tested again before it can be used in real-life scenarios. The algorithm should include the potential variations of the environment. Further research, especially in partnership with the users, is necessary for the vine robot to be implemented in USAR operations. | |||

==Future work== | |||

First of all, the customized localization algorithm will need to be improved. The simulation as it is now is fully autonomous. However, the user investigation pointed out that it should be semi-autonomous, since current fully autonomous technology cannot handle the chaotic environment.

Secondly, if the robot is to be used semi-autonomously in rescue operations, USAR members should be trained. This training will teach members how to set up the device, how to read data from the robot, and how to control it if necessary. For instance, if the robot senses a stimulus that is not a human being, the human operator could steer the robot in another direction. It should be investigated what this training will look like, who will need it, and who will give it.

Thirdly, when the vine robot has found a survivor in rubble, it has to communicate in some way the exact location to the human operator. This will be done via the program that the operator uses to control it semi-autonomously. | |||

Furthermore, it was found that the vine robot could be equipped with a thermal imaging camera and a visual camera. However, it was not investigated how these sensors could be mounted on the robot. Therefore, a detailed design and manufacturing plan should be made.

Lastly, it has not been investigated how the robot can be retracted when used in rubble. Before it is deployed in USAR missions, it should be researched whether the robot can be retracted without damage to its sensors. In addition, it should be explored how many times the robot can be reused.

Overall, if the customized algorithm is found to meet the required success rate, and the vine robot is equipped with the right sensors according to a design plan, the robot should be reviewed by USAR teams and the INSARAG for deployment in disaster sites.

==References== | |||

Ackerman, E. (2023i). Boston Dynamics’ Spot Is Helping Chernobyl Move Towards Safe Decommissioning. IEEE Spectrum. <nowiki>https://spectrum.ieee.org/boston-dynamics-spot-chernobyl</nowiki> | |||

Agarwal, T. (2019, July 25). ''Vibration Sensor: Working, Types and Applications''. ElProCus - Electronic Projects for Engineering Students. <nowiki>https://www.elprocus.com/vibration-sensor-working-and-applications/#:~:text=The%20vibration%20sensor%20is%20also,changing%20to%20an%20electrical%20charge</nowiki> | |||

Agarwal, T. (2019, August 16). ''Sound Sensor: Working, Pin Configuration and Its Applications''. ElProCus - Electronic Projects for Engineering Students. <nowiki>https://www.elprocus.com/sound-sensor-working-and-its-applications/</nowiki> | |||

Agarwal, T. (2022, May 23). ''Different Types of Motion Sensors And How They Work''. ElProCus - Electronic Projects for Engineering Students. <nowiki>https://www.elprocus.com/working-of-different-types-of-motion-sensors/</nowiki> | |||

AJLabs. (2023). Infographic: How big were the earthquakes in Turkey, Syria? Earthquakes News | Al Jazeera. https://www.aljazeera.com/news/2023/2/8/infographic-how-big-were-the-earthquakes-in-turkey-syria | |||

Al-Naji, A., Perera, A. G., Mohammed, S. L., & Chahl, J. (2019). Life Signs Detector Using a Drone in Disaster Zones. Remote Sensing, 11(20), 2441. <nowiki>https://doi.org/10.3390/rs11202441</nowiki> | |||

Ambe, Y., Yamamoto, T., Kojima, S., Takane, E., Tadakuma, K., Konyo, M., & Tadokoro, S. (2016). Use of active scope camera in the Kumamoto Earthquake to investigate collapsed houses. International Symposium on Safety, Security, and Rescue Robotics. <nowiki>https://doi.org/10.1109/ssrr.2016.7784272</nowiki> | |||

Analytika (n.d.). ''High quality scientific equipment.'' <nowiki>https://www.analytika.gr/en/product-categories-46075/metrites-anichneftes-aerion-nh3-co-co2-ch4-hs-nox-46174/portable-voc-gas-detector-measure-range-0-10ppm-resolution-0-01ppm-73181_73181/#:~:text=VOC%20gas%20detector%20.-,Measure%20range%3A%200%2D10ppm%20.,Resolution%3A%200.01ppm</nowiki>

Anthes, Gary. Robots Gear Up for Disaster Response. Communications of the ACM (2010): 15, 16. Web. 10 Oct. 2012

''Assembly''. (n.d.). <nowiki>https://www.assemblymag.com/articles/85378-sensing-with-sound#:~:text=These%20sensors%20provide%20excellent%20long,waves%20cannot%20be%20accurately%20detected</nowiki>.

Auf der Maur, P., Djambazi, B., Haberthür, Y., Hörmann, P., Kübler, A., Lustenberger, M., Sigrist, S., Vigen, O., Förster, J., Achermann, F., Hampp, E., Katzschmann, R. K., & Siegwart, R. (2021). RoBoa: Construction and Evaluation of a Steerable Vine Robot for Search and Rescue Applications. 2021 IEEE 4th International Conference on Soft Robotics (RoboSoft), New Haven, CT, USA, 2021, pp. 15-20, doi: 10.1109/RoboSoft51838.2021.9479192.

Baker Engineering and Risk Consultants. (n.d.). ''Gas Detection in Process Industry – The Practical Approach''. Gas Detection in Process Industry – the Practical Approach. <nowiki>https://www.icheme.org/media/8567/xxv-poster-07.pdf</nowiki>

Bangalkar, Y. V., & Kharad, S. M. (2015). Review Paper on Search and Rescue Robot for Victims of Earthquake and Natural Calamities. International Journal on Recent and Innovation Trends in Computing and Communication, 3(4), 2037-2040.

Blines. (2023). ''Project USAR''. Reddit. <nowiki>https://www.reddit.com/r/Urbansearchandrescue/comments/11lvoms/project_usar/</nowiki>

Blumenschein, L. H., Coad, M. M., Haggerty, D. A., Okamura, A. M., & Hawkes, E. W. (2020). Design, Modeling, Control, and Application of Everting Vine Robots. https://doi.org/10.3389/frobt.2020.548266

Boston Dynamics, Inc. (2019). Robotically negotiating stairs (Patent Nr. 11,548,151). Justia. <nowiki>https://patents.justia.com/patent/11548151</nowiki>

''Camera Resolution and Range''. (n.d.). <nowiki>https://www.flir.com/discover/marine/technologies/resolution/#:~:text=Most%20thermal%20cameras%20can%20see,640%20x%20480%20thermal%20resolution</nowiki>.

CO2 Meter. (2022, September 20). How to Measure Carbon Dioxide (CO2), Range, Accuracy, and Precision. <nowiki>https://www.co2meter.com/blogs/news/how-to-measure-carbon-dioxide#:~:text=This%200.01%25%20(100%20ppm),around%2050%20ppm%20(0.005%25)</nowiki>

Coad, M. M., Blumenschein, L. H., Cutler, S., Zepeda, J. A. R., Naclerio, N. D., El-Hussieny, H., ... & Okamura, A. M. (2019). Vine Robots. IEEE Robotics & Automation Magazine, 27(3), 120-132.

Coad, M. M., Blumenschein, L. H., Cutler, S., Zepeda, J. A. R., Naclerio, N. D., El-Hussieny, H., Mehmood, U., Ryu, J., Hawkes, E. W., & Okamura, A. M. (2020). Vine Robots: Design, Teleoperation, and Deployment for Navigation and Exploration. https://arxiv.org/pdf/1903.00069.pdf

Da Hu, Shuai Li, Junjie Chen, Vineet R. Kamat, Detecting, locating, and characterizing voids in disaster rubble for search and rescue, Advanced Engineering Informatics, Volume 42, 2019, 100974, ISSN 1474-0346, <nowiki>https://doi.org/10.1016/j.aei.2019.100974</nowiki>

De Cubber, G., Doroftei, D., Serrano, D., Chintamani, K., Sabino, R., & Ourevitch, S. (2013, October). The EU-ICARUS project: developing assistive robotic tools for search and rescue operations. In 2013 IEEE international symposium on safety, security, and rescue robotics (SSRR) (pp. 1-4). IEEE.

Delmerico, J., Mintchev, S., Giusti, A., Gromov, B., Melo, K., Horvat, T., Cadena, C., Hutter, M., Ijspeert, A., Floreano, D., Gambardella, L. M., Siegwart, R., & Scaramuzza, D. (2019). The current state and future outlook of rescue robotics. Journal of Field Robotics, 36(7), 1171–1191. <nowiki>https://doi.org/10.1002/rob.21887</nowiki>

Engineering, O. (2022a, October 14). Thermal Imaging Camera. <nowiki>https://www.omega.com/en-us/</nowiki>. <nowiki>https://www.omega.com/en-us/resources/thermal-imagers</nowiki>

F. Colas, S. Mahesh, F. Pomerleau, M. Liu and R. Siegwart, "3D path planning and execution for search and rescue ground robots," 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 2013, pp. 722-727, doi: 10.1109/IROS.2013.6696431. This paper presents a path planning system for a static 3D environment that uses lazy tensor voting.

Foxtrot841. (2023). ''Technology in SAR''. Reddit. https://www.reddit.com/r/searchandrescue/comments/11bw8qh/technology_in_sar/

''GPS.gov: Space Segment''. (n.d.). <nowiki>https://www.gps.gov/systems/gps/space/</nowiki>

Hampson, M. (2022). Detecting Earthquake Victims Through Walls. IEEE Spectrum. <nowiki>https://spectrum.ieee.org/dopppler-radar-detects-breath</nowiki>

Hatazaki, K., Konyo, M., Isaki, K., Tadokoro, S., & Takemura, F. (2007). Active scope camera for urban search and rescue. IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 2007, pp. 2596-2602, doi: 10.1109/IROS.2007.4399386

Hensen, M. (2023, April 5). ''GPS Tracking Device Price List''. Tracking System Direct. <nowiki>https://www.trackingsystemdirect.com/gps-tracking-device-price-list/</nowiki>

Huamanchahua, D., Aubert, K., Rivas, M., Guerrero, E. L., Kodaka, L., & Guevara, D. C. (2022). Land-Mobile Robots for Rescue and Search: A Technological and Systematic Review. 2022 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS). <nowiki>https://doi.org/10.1109/iemtronics55184.2022.9795829</nowiki>

Instruments, M. (2021, May 16). What are Gas Detectors and How are They Used in Various Industries? MRU Instruments - Emissions Analyzers. <nowiki>https://mru-instruments.com/what-are-gas-detectors-and-how-are-they-used-in-various-industries/</nowiki>

J. (n.d.-b). ''What is the difference between the Sound Level Sensor and the Sound Level Meter? - Technical Information Library''. Technical Information Library. <nowiki>https://www.vernier.com/til/3486</nowiki>

Kamezaki, M., Ishii, H., Ishida, T., Seki, M., Ichiryu, K., Kobayashi, Y., Hashimoto, K., Sugano, S., Takanishi, A., Fujie, M. G., Hashimoto, S., & Yamakawa, H. (2016). Design of four-arm four-crawler disaster response robot OCTOPUS. International Conference on Robotics and Automation. <nowiki>https://doi.org/10.1109/icra.2016.7487447</nowiki>

Kawatsuma, S., Fukushima, M., & Okada, T. (2013). Emergency response by robots to Fukushima-Daiichi accident: summary and lessons learned. Journal of Field Robotics, 30(1), 44-63. doi: 10.1002/rob.21416

Kruijff, G. M., Kruijff-Korbayová, I., Keshavdas, S., Larochelle, B., Janíček, M., Colas, F., Liu, M., Pomerleau, F., Siegwart, R., N., Looije, R., Smets, N. J. J. M., Mioch, T., Van Diggelen, J., Pirri, F., Gianni, M., Ferri, F., Menna, M., Worst, R., . . . Hlaváč, V. (2014). Designing, developing, and deploying systems to support human–robot teams in disaster response. Advanced Robotics, 28(23), 1547–1570. <nowiki>https://doi.org/10.1080/01691864.2014.985335</nowiki>

Lee, S., Har, D., & Kum, D. (2016). Drone-Assisted Disaster Management: Finding Victims via Infrared Camera and Lidar Sensor Fusion. 2016 3rd Asia-Pacific World Congress on Computer Science and Engineering (APWC on CSE). <nowiki>https://doi.org/10.1109/apwc-on-cse.2016.025</nowiki>

Li, F., Hou, S., Bu, C., & Qu, B. (2022). Rescue Robots for the Urban Earthquake Environment. Disaster Medicine and Public Health Preparedness, 17. <nowiki>https://doi.org/10.1017/dmp.2022.98</nowiki>

Lindqvist, B., Karlsson, S., Koval, A., Tevetzidis, I., Haluška, J., Kanellakis, C., Agha-mohammadi, A. A., & Nikolakopoulos, G. (2022). Multimodality robotic systems: Integrated combined legged-aerial mobility for subterranean search-and-rescue. Robotics and Autonomous Systems, 154, 104134. <nowiki>https://doi.org/10.1016/j.robot.2022.104134</nowiki>

Liu, Y., Nejat, G. Robotic Urban Search and Rescue: A Survey from the Control Perspective. J Intell Robot Syst 72, 147–165 (2013). <nowiki>https://doi.org/10.1007/s10846-013-9822-x</nowiki>

Lyu, Y., Bai, L., Elhousni, M., & Huang, X. (2019). An Interactive LiDAR to Camera Calibration. ''ArXiv (Cornell University)''. <nowiki>https://doi.org/10.1109/hpec.2019.8916441</nowiki>

ManOfDiscovery. (2023). ''Technology in SAR''. Reddit. https://www.reddit.com/r/searchandrescue/comments/11bw8qh/technology_in_sar/

Matsuno, F., Sato, N., Kon, K., Igarashi, H., Kimura, T., Murphy, R. (2014). Utilization of Robot Systems in Disaster Sites of the Great Eastern Japan Earthquake. In: Yoshida, K., Tadokoro, S. (eds) Field and Service Robotics. Springer Tracts in Advanced Robotics, vol 92. Springer, Berlin, Heidelberg. <nowiki>https://doi.org/10.1007/978-3-642-40686-7_</nowiki>

Murphy, R. R. (2017). Disaster Robotics. Amsterdam University Press

Musikhaus Thomann, (n.d.). ''Behringer ECM8000''. <nowiki>https://www.thomann.de/gr/behringer_ecm_8000.htm?glp=1&gclid=Cj0KCQjwxMmhBhDJARIsANFGOSsIyaQUtVOUtdpO6YneKcSOCqkvtbBp3neddsfc7hhylTgvQNprkJcaAvWmEALw_wcB</nowiki>

Nosirov, K. K., Shakhobiddinov, A. S., Arabboev, M., Begmatov, S., and Togaev, O. T. (2020) "Specially Designed Multi-Functional Search And Rescue Robot," Bulletin of TUIT: Management and Communication Technologies: Vol. 2, Article 1. <nowiki>https://doi.org/10.51348/tuitmct211</nowiki>

Osumi, H. (2014). Application of robot technologies to the disaster sites. Report of JSME Research Committee on the Great East Japan Earthquake Disaster, 58-74

Park, S., Oh, Y., & Hong, D. (2017). Disaster response and recovery from the perspective of robotics. International Journal of Precision Engineering and Manufacturing, 18(10), 1475–1482. <nowiki>https://doi.org/10.1007/s12541-017-0175-4</nowiki>

PCE Instruments UK: Test Instruments. (2023, April 9). ''- Sound Sensor | PCE Instruments''. <nowiki>https://www.pce-instruments.com/english/control-systems/sensor/sound-sensor-kat_158575.htm</nowiki>

Raibert, M. H. (2000). Legged Robots That Balance. MIT Press

Sanfilippo F, Azpiazu J, Marafioti G, Transeth AA, Stavdahl Ø, Liljebäck P. Perception-Driven Obstacle-Aided Locomotion for Snake Robots: The State of the Art, Challenges and Possibilities. Applied Sciences. 2017; 7(4):336. <nowiki>https://doi.org/10.3390/app7040336</nowiki>

Seitron. (n.d.). ''Portable gas detector with rechargeable battery''. <nowiki>https://seitron.com/en/portable-gas-detector-with-rechargeable-battery.html</nowiki>

Shop - Wiseome Mini LiDAR Supplier. (n.d.). Wiseome Mini LiDAR Supplier. <nowiki>https://www.wiseomeinc.com/shop</nowiki>

Tadokoro, S. (Ed.). (2009). Rescue robotics: DDT project on robots and systems for urban search and rescue. Springer Science & Business Media.

Tai, Y.; Yu, T.-T. Using Smartphones to Locate Trapped Victims in Disasters. Sensors 2022, 22, 7502. <nowiki>https://doi.org/10.3390/s22197502</nowiki>

Teledyne FLIR. (2016, April 12). Infrared Camera Accuracy and Uncertainty in Plain Language. <nowiki>https://www.flir.eu/discover/rd-science/infrared-camera-accuracy-and-uncertainty-in-plain-language/</nowiki>

Tenreiro Machado, J. A., & Silva, M. F. (2006). An Overview of Legged Robots

Texas Instruments. (n.d.). ''An Introduction to Automotive LIDAR''. <nowiki>https://www.ti.com/lit/slyy150#:~:text=LIDAR%20and%20radar%20systems%20can,%3A%20%E2%80%A2%20Short%2Drange%20radar</nowiki>.

''Thermal Zoom Cameras''. (n.d.). InfraTec Thermography Knowledge. <nowiki>https://www.infratec.eu/thermography/service-support/glossary/thermal-zoom-cameras/#:~:text=Depending%20on%20the%20camera%20configuration,and%20aircraft%20beyond%2030%20km</nowiki>.

Uddin, Z., & Islam, M. (2016). Search and rescue system for alive human detection by semi-autonomous mobile rescue robot. 2016 International Conference on Innovations in Science, Engineering and Technology (ICISET). <nowiki>https://doi.org/10.1109/iciset.2016.7856489</nowiki>

Urban Search and Rescue Team. (2023). ''Update zaterdagmiddag''. USAR. <nowiki>https://www.usar.nl/</nowiki>

Van Diggelen, F., & Enge, P. (2015). The World’s first GPS MOOC and Worldwide Laboratory using Smartphones. Proceedings of the 28th International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GNSS+ 2015), 361–369

VectorNav. (2023). Lidar - A measurement technique that uses light emitted from a sensor to measure the range to a target object. <nowiki>https://www.vectornav.com/applications/lidar-mapping#:~:text=LiDAR%20sensors%20are%20able%20to,sensing%20tool%20for%20mobile%20mapping</nowiki>

Wang, B. (2017, August). ''New inexpensive centimeter-accurate GPS system could transform mainstream applications | NextBigFuture.com''. NextBigFuture.com. <nowiki>https://www.nextbigfuture.com/2015/05/new-inexpensive-centimeter-accurate-gps.html</nowiki>

WellHealthWorks (2022, December 11). ''Thermal Imaging Camera Price''. <nowiki>https://wellhealthworks.com/thermal-imaging-camera-price-and-everything-you-need-to-know/#:~:text=Battery%20life%20of%2010%20hours</nowiki>

''What is GPS and how does it work?'' (n.d.). <nowiki>https://novatel.com/support/knowledge-and-learning/what-is-gps-gnss</nowiki>

''What is lidar?'' (n.d.). <nowiki>https://oceanservice.noaa.gov/facts/lidar.html</nowiki>