Embedded Motion Control 2014 Group 3

| (63 intermediate revisions by 4 users not shown) | |||

| Line 27: | Line 27: | ||

= | = Software architecture and used approach = | ||

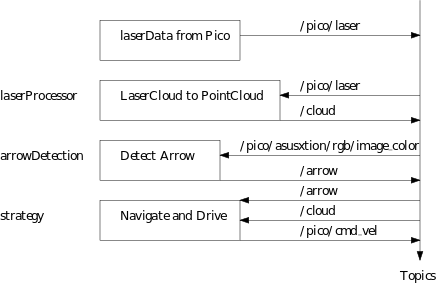

A schematic overview of the software architecture is shown in the figure below. The software basically consists only of 3 nodes, laserProcessor, arrowDetection and strategy. A short elaboration of the nodes: | |||

'''laserProcessor:''' This node reads the ''/pico/laser'' topic which sends the laser data in polar coordinates. All this node does is it converts the polar coordinates to cartesian coordinates and filters out points closer than 10 cm. The cartesian coordinate system is preferred over the polar coordinate system because it feels more intuitive. Finally once the conversion and filtering is done it publishes the transformed coordinates onto topic ''/cloud''. | |||

'''arrowDetection:''' This node reads the camera image from topic ''/pico/asusxtion/rgb/image_color'' and detects red arrows. If it detects an arrow the direction is determined and posted onto topic ''/arrow''. | |||

'''strategy:''' This node does multiple things. First it detects openings where it assigns a target to, if no openings are found the robot finds itself in a dead end. Secondly it reads the topic ''/arrow'' and if an arrow is present it overrides the preferred direction. Finally the robot determines the direction of the velocity of the robot using the Potential Field Method (PFM) and sends the velocity to the topic ''/pico/cmd_vel''. | |||

The approach is very simple and easy to implement. The strategy node for example has approximately only 100 lines of code. This is the reason why there has not been chosen to divide all elements over different nodes. The benefit of easy code is the easiness to tackle bugs and that bugs are less likely to occur. Another benefit of this 'simple' code is the easiness to tweak the robot's behavior to optimize for performance. | |||

The | |||

[[File:softarch2.png]] | |||

= Applied methods description = | |||

== Arrow detection == | |||

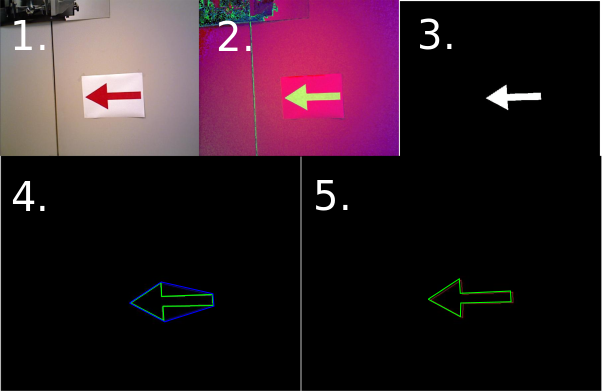

The following steps describe the algorithm to find the arrow and determine its direction: | |||

'''1.''' Read RGB image from ''/pico/asusxtion/rgb/image_color'' topic. | |||

'''2.''' Convert RGB image to HSV image. | |||

'''3.''' Filter out the red color using ''cv::inRange''. | |||

'''4.''' Find contours and convex hulls and apply filter. | |||

The filter removes all contours and convex hulls where the following relationship does not hold: | |||

<math>0.5 < \frac{Contour \ area}{Convex \ hull \ area} < 0.65 </math>. | |||

'''5.''' Use ''cv::approxPolyDP'' over the contours and apply filter. | |||

''' | The function ''cv::approxPolyDP'' is used to fit polylines over the resulting contours. The arrow should have approximately 7 lines per polyline. The polyline with the number of lines as described in the following formula is the arrow: | ||

<math>5 \ < \ number \ of \ lines \ in \ polyline \ < \ 10 </math> | |||

'''6.''' Determine if arrow is pointing left or right. | |||

First the midpoint of the arrow is found using <math>x_{mid} = \frac{x_{min}+x_{max}}{2}</math>. When the midpoint is known the program iterates over all points of the arrow contour. Two counters are made which count the number of points left and right of <math>x_{mid}</math>. If the left counter is greater than the right counter the arrow is pointing to the left, otherwise the arrow is pointing to the right. | |||

'''7.''' Making the detection more robust. | |||

''' | Extra robustness is required for example when at one frame the arrow is not detected the program still has to know there is an arrow. This is done by taking the last 5 iterations, check if in all these iterations the arrow is detected then publish the direction of the arrow onto the topic ''/arrow''. If in the last 5 iterations no arrow has been detected the arrow is not visible anymore thus publish that there is no arrow onto the topic ''/arrow''. | ||

[[File:arrowwiki.png]] | |||

== Strategy == | |||

=== Target === | |||

In the approach used a target is required for Pico to drive to. In this section the determination of the target point is explained. | |||

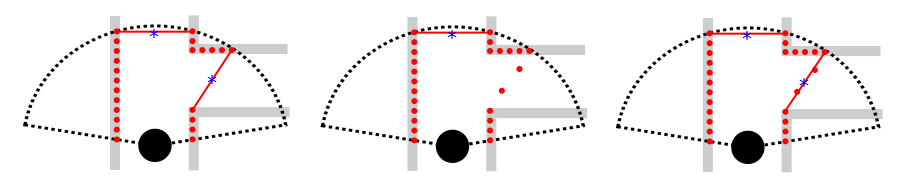

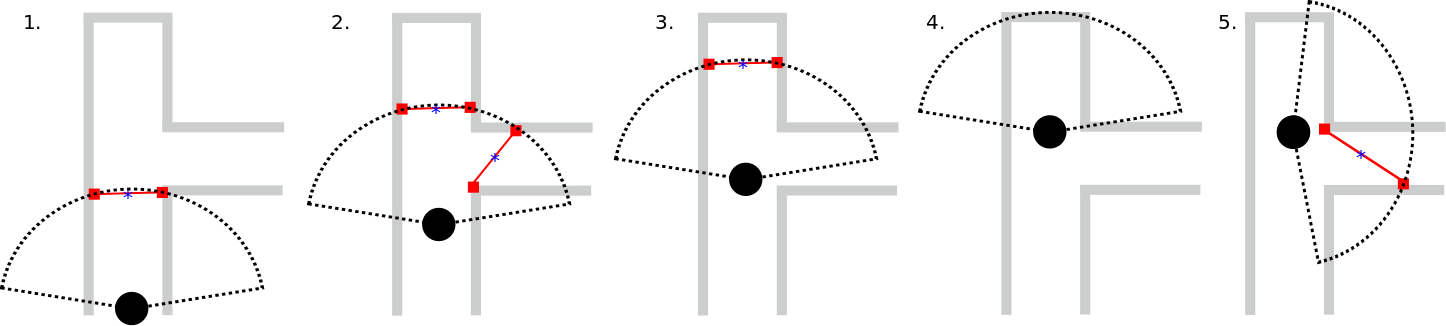

In the code a loop iterates through all data points, which are in order from right to left. Only the points within 1.4 meter from Pico are taken into account. If the distance between two consecutive points is greater than a determined threshold, the presence of a door (opening) is assumed. This will be further explained with the following figure. | |||

[[File: | [[File:Base_setpointPointWIKI.png]] | ||

In the figure the vision of Pico is illustrated by the dotted black arc and the detected points within this marge by red dots. In the left figure one can see that two openings are detected, i.e. the distance between two consecutive points is greater than a user defined threshold value (0.6 m). The potential targets (shown in blue) are just simply placed in the middle of the two consecutive points. | |||

In the middle figure a problem is illustrated which occured while testing. When Pico looks past a corner it somehow detects points which are not really there, so called ghost points. This is a problem because in the case of ghost points the distance between two points becomes smaller than the threshold and no openings are detected. To filter this effect the distance is not taken between two points, but over five (found by testing). A downside of this method is that points far ahead of Pico give the possibility of false positives. But because in this case Pico only looks at a relative short distance, this will not occur. In the figure on the right the filter is applied and the door is found again. | |||

The targets are used to orientate Pico. By adjusting <math>\theta</math> the target point is kept straight in front of Pico. | |||

=== PFM === | |||

Now the target points are determined Pico needs to drive towards them without hitting any walls. In the first strategy, shown at the bottom of this page, walls were fitted so Pico knew where to drive. This method had robustness issues which lead to some problems during the corridor challenge. Therefore a more robust method was chosen for the maze competition. | |||

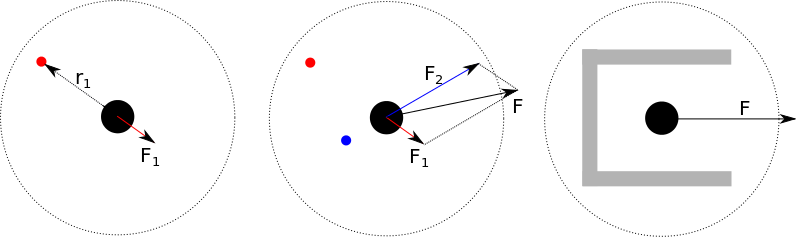

With the Potential Field Method virtual repulsive forces are applied to Pico for every laser point. The force caused by a point depends on the distance, <math>r</math>, between Pico and a laser point. The virtual force is defined as: <math>F_r=-\frac{1}{r^5}</math>, where the resulting force <math>F</math> is pointing away from the obstacle. | |||

When the sum is taken over all repulsive forces, the net repulsive force is pointing away from close objects. | |||

[[File:ForceWIKI.png]] | |||

A target is added as an attractive forces. The function for the attractive force is <math>F_a=r</math>. In one of the first iterations of the software the attractive force was composed like the repulsive force, but during testing a linear relation between distance and force of the target point proved to be working best. | |||

The resulting force is not always the same and because Pico must drive as fast as possible only the direction of the total force is taken. The maximum speed is set in the direction of the resulting force. | |||

=== Dead-end === | |||

The strategy node detects a dead-end when no doors are detected. The robot anticipates by turning around its z-axis in opposite direction of the preferred direction (which is always left or always right). When it detects a door it continues the normal procedure. Consider the following example: | |||

'''1.''' The robot sees a door and keeps on going. The preferred direction of the robot is going left. | |||

'''2.''' The robot sees 2 options, but it takes the most left door since this is the preferred direction. | |||

'''3.''' The robot sees one door and drives to the target. | |||

'''4.''' The robot does not see any doors, it recognises a dead end. It now starts turning around its z-axis in clockwise direction (opposite to the preferred direction which was left). | |||

'''5.''' The robot detects a new door and continues normal procedure. | |||

[[File:Base_deadendWIKI.png]] | |||

In this case a dead-end is only detected if it is within the vision of Pico, which is limited to 1.4 meters. When the dead-end is longer than in the example Pico will drive in the dead-end until the dead-end is within the vision. If the vision field for dead-ends is extended a dead-end will be detected sooner and pico will finish the maze faster. There was no time however to implement this in the algorithm. | |||

= | === Safety === | ||

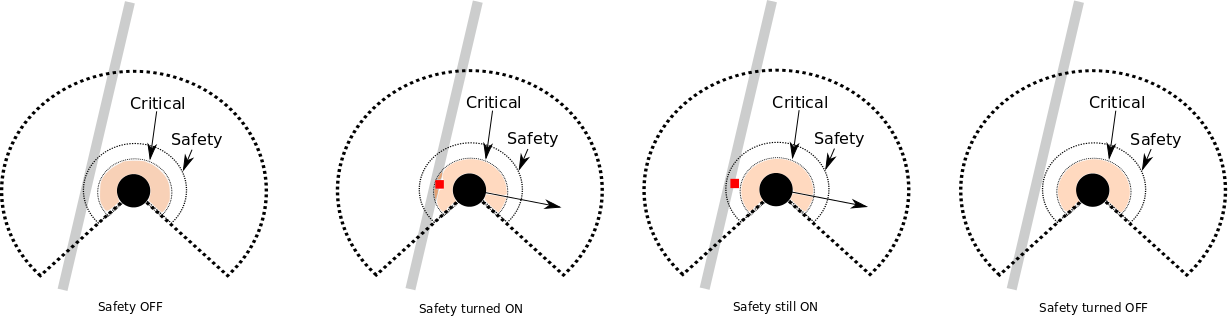

== | The importance of safety and the requirement that pico should not hit an obstacle under any circumstances was realized. For this reason a safety feature was introduced. In ideal situation, pico should never need to use this safety feature. The program was designed in such a way that the robot could complete its task without using 'Safety'. But there are many variables in real world, all of which are difficult to account particularly in the given time frame. It is always a possibility that for some unforeseen reason, pico gets too close to an object and might hit it. 'Safety' was designed to prevent this. The first version of 'Safety' was designed to stop pico if it moves very close to any object it detects. This version was, of course, not very effective as the robot would come to a stand-still once Safety was invoked which prevented it from finishing its task. | ||

In the | 'Safety' was later upgraded. When too close, pico needs to move away from the obstacle and this movement should not interfere with path-detection and space orientation of the robot. In the latest version, a sophisticated safety design was incorporated. Two circles, critical circle and safety circle are considered. The program first counts the number of points detected within the critical circle. If more than 10 points are counted, 'Safety' mode is started. This is done for noise filtering. Safety is given highest priotity and overrides decisions for movement of pico. The program now calculates average x and y position of all points within safety circle (safety circle is larger than critical circle). Without changing the direction it is facing, pico moves in direction directly opposite to the average obstacle point. Since there is no turning involved, spacial orientation of pico remains unaffected. This point is updated with every cycle and pico keeps moving away until the point moves out of safety circle. 'Safety' mode is then turned off and normal operation is resumed. | ||

[[File:base_safetyWIKI_upd.png]] | |||

= | === End of maze === | ||

=== | |||

A part of the script is dedicated to determine if pico has reached the end of maze and its time to stop. In the point cloud, a maximum 1080 points can be detected in a 270 degree range in front of pico. There are many differences that pico can detect when its outside the maze compared to within the maze. One of those differences, the one that has been implemented in the program, is that the detected points are farther away from pico when it is outside the maze. Pico counts the number of points that are farther than 1.6 meters from itself. Within the maze, at all times, this number is not larger than 400 points. When this number is greater than 0.6 times the maximum possible (0.6x1080 = 648) pico runs in 'End of maze' mode. In this mode, pico drives straight for forty cycles after which it comes to a standstill. 'End of maze' counter is checked during every cycle and if, at any point, the counter is not large enough, the cycle counter is reset to zero and pico moves out of 'End of maze' mode. This is a preventive measure against false end-of-maze detection. | |||

= First software approach = | |||

==Obstacle Detection== | |||

===Finding Walls and Doors=== | |||

Our first approach was to find walls and doors (openings) expressed as a line so we could easily steer the robot to a certain door. The following algorithm was developed: | |||

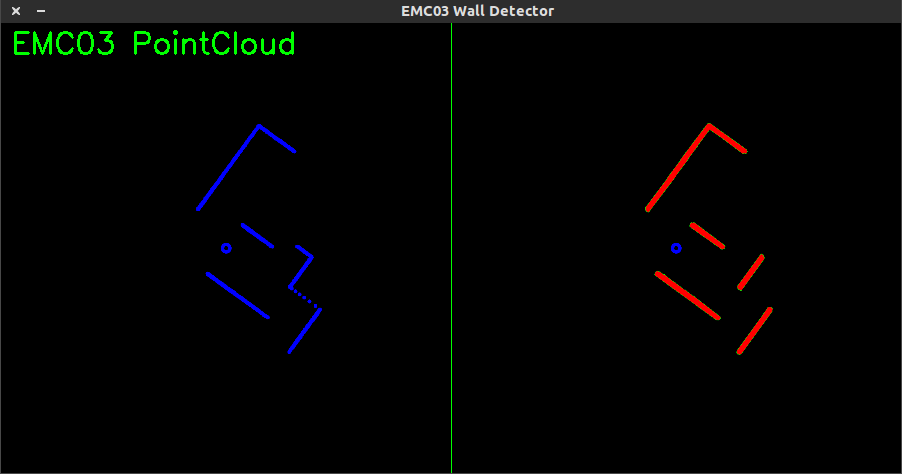

1. All laserpoints are plotted in a ''cv::Mat'' stucture (left). Secondly a Probalistic Hough Line Transform is applied to the plotted laserpoints (right). | |||

[[File:Emc03wall.png]] | [[File:Emc03wall.png]] | ||

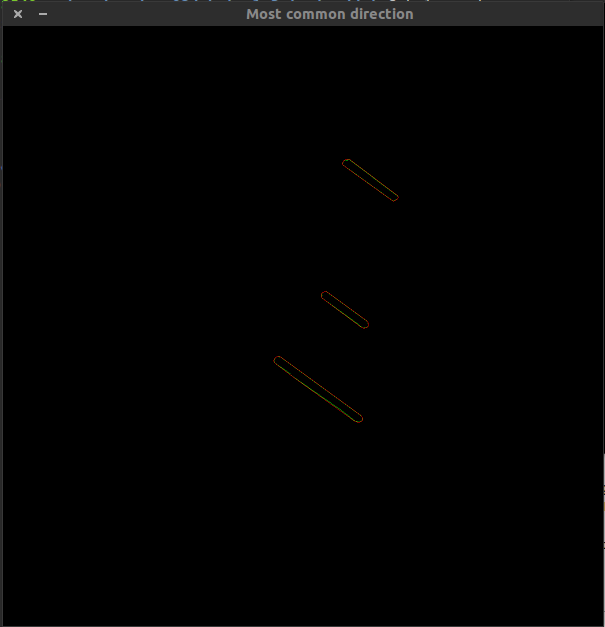

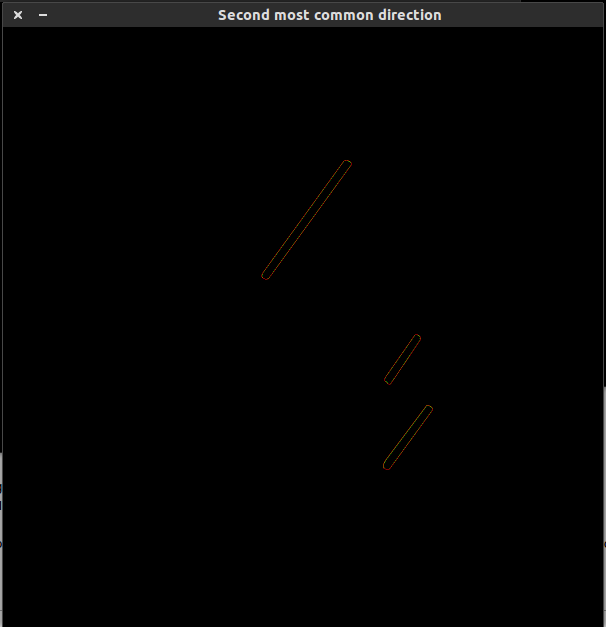

The | 2. The resulting lines are filtered into two categories, a most common directory and a second most common directory. This is shown in the figure below. | ||

[[File:secondmostcommon.png]] | [[File:mostcommon.png]] [[File:secondmostcommon.png]] | ||

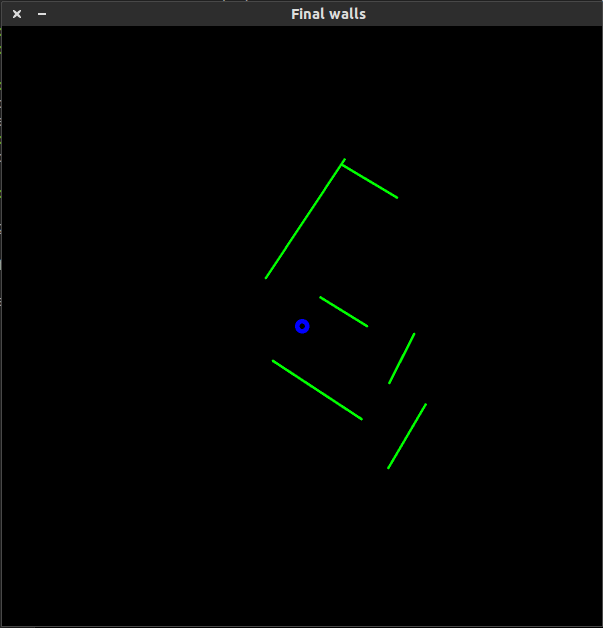

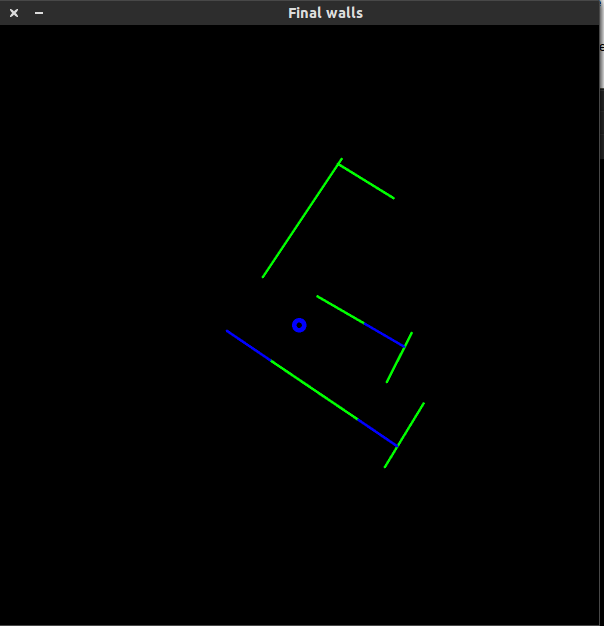

3. Since the Probalistic Hough Line Transformation return multiple lines per wall, i.e. a wall can consists of 20 lines, a line is fit through the contour of each wall. The result is 1 line per wall as shown in the figure below. | |||

[[File:finalwallok.png]] | [[File:finalwallok.png]] | ||

4. Now it is possible to fit 'doors' between walls which is shown in the figure below. | |||

Now | |||

[[File:deurtjes.png]] | [[File:deurtjes.png]] | ||

===Why this method doesn't work in real life=== | ===Why this method doesn't work in real life=== | ||

The method described in how to find walls and doors did not work properly in real life. We experienced some serious robustness problems due to the fact that in some of the iterations complete walls and/or doors were not detected, thus the robot couldn't steer in a proper fashion to the target. Secondly this method required some serious computation power which is not preferable | The method described in how to find walls and doors did not work properly in real life. We experienced some serious robustness problems due to the fact that in some of the iterations complete walls and/or doors were not detected, thus the robot couldn't steer in a proper fashion to the target. Secondly this method required some serious computation power which is not preferable. | ||

= Evaluation of the maze competetion = | |||

During the corridor competition it became clear that the chosen strategy was not robust enough to detect the maze in real life. This first strategy did work in the simulator but not in real-life. Thus to achieve a strategy which will work more robust we changed our strategy as can be read above. | |||

During the testing of the second strategy software in the days before the maze competition we noticed that this was a good decision with excellent results. This conclusion was drawn because of the fact that PICO was not only able to drive correctly through a maze with straight walls and 90 degrees corners, it was also able to drive through a 'maze' with bended walls and random degree angles. Even when people were walking through the maze PICO still did not gave up solving the maze. This made us confident about the performance during the real competition. | |||

During the competition the ‘always go left’ rule was chosen, although it did not matter because of the design of the maze. As a result, PICO chose the correct direction at the first crossing. The correct action for the second crossing was taking a right turn. PICO first looked into the left and front corridor to check whether or not is was a dead end, which it was, so it turned right. The crossings in the rest of the maze were provided with a red arrow which was detected correctly and used to steer into the right corridor. | |||

To improve the strategy a few adaptions for efficiency and robustness can be made. The first corner was taken really fast, it seemed like it was ‘drifting’ through the corned which is the most speed efficient way to pass a crossing. But at the second crossing it first had to check if the other directions where dead ends which costs valuable time. To improve this, the dead end detection should look at a wider range of point instead of only in front of PICO which will make it turn in the right direction even more early. | |||

To accomplish an even better robustness the ghost point ‘filter’ should be improved. In the current strategy a fixed amount of points is chosen to sum the distances, but this is not perfectly robust. Although the PICO finished correctly, an algorithm to detect if a point is a ghost point (for example to check if there are other points close to the current point) should make the detection even more robust. | |||

In conclusion, the chosen strategy turned out to be robust and efficient compared to other groups. Only a few lines of code are used and the strategy does not use a lot of data. During the competition we became first with a total time of 1 minute and 2 seconds, a great achievement! | |||

Latest revision as of 15:00, 2 July 2014

Group Members

| Name | Student id |
|---|---|
| Jan Romme | 0755197 |
| Freek Ramp | 0663262 |
| Kushagra | 0873174 |
| Roel Smallegoor | 0753385 |
| Janno Lunenburg - Tutor | - |

Software architecture and used approach

A schematic overview of the software architecture is shown in the figure below. The software consists of only three nodes: laserProcessor, arrowDetection and strategy. Each node is described briefly below:

laserProcessor: This node reads the /pico/laser topic, which provides the laser data in polar coordinates. The node converts the polar coordinates to Cartesian coordinates and filters out points closer than 10 cm. Cartesian coordinates are preferred over polar coordinates because they are more intuitive to work with. Once the conversion and filtering are done, the node publishes the transformed coordinates on the topic /cloud.

arrowDetection: This node reads the camera image from the topic /pico/asusxtion/rgb/image_color and detects red arrows. If it detects an arrow, the direction is determined and published on the topic /arrow.

strategy: This node does several things. First, it detects openings and assigns a target to each of them; if no openings are found, the robot is in a dead end. Second, it reads the topic /arrow, and if an arrow is present it overrides the preferred direction. Finally, it determines the direction of the robot's velocity using the Potential Field Method (PFM) and sends the velocity to the topic /pico/cmd_vel.

The approach is simple and easy to implement. The strategy node, for example, has only about 100 lines of code. For this reason we chose not to divide the functionality over more nodes. Simple code makes bugs less likely and easier to fix, and it makes it easier to tweak the robot's behavior for better performance.
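As an illustration of what the laserProcessor node does, the following is a minimal sketch of the polar-to-Cartesian conversion with the 10 cm filter. It is not the original node: the function name `polarToCartesian` and the parameters `angleMin` and `angleIncrement` are assumptions chosen to mirror the fields of a typical laser-scan message, and dropping non-finite readings is an extra assumption not mentioned in the text.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    double x;  // forward, in meters
    double y;  // left, in meters
};

// Convert a polar scan to Cartesian points in the robot frame and drop
// readings closer than minRange (10 cm in the text).
// angleMin / angleIncrement are hypothetical names for the angle of the
// first beam and the angular step between beams, both in radians.
std::vector<Point> polarToCartesian(const std::vector<double>& ranges,
                                    double angleMin,
                                    double angleIncrement,
                                    double minRange = 0.10) {
    std::vector<Point> cloud;
    cloud.reserve(ranges.size());
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double r = ranges[i];
        // Assumption: invalid (NaN/inf) readings are discarded as well.
        if (!std::isfinite(r) || r < minRange) continue;
        const double theta = angleMin + static_cast<double>(i) * angleIncrement;
        cloud.push_back({r * std::cos(theta), r * std::sin(theta)});
    }
    return cloud;
}
```

The beam index is kept implicit in the output order, so later steps can still rely on the points being sorted from right to left.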

Applied methods description

Arrow detection

The following steps describe the algorithm to find the arrow and determine its direction:

1. Read RGB image from /pico/asusxtion/rgb/image_color topic.

2. Convert RGB image to HSV image.

3. Isolate the red color using cv::inRange.

4. Find contours and convex hulls and apply a filter.

The filter removes all contours and convex hulls for which the following relationship does not hold:

[math]\displaystyle{ 0.5 \lt \frac{Contour \ area}{Convex \ hull \ area} \lt 0.65 }[/math].

5. Use cv::approxPolyDP on the contours and apply a filter.

The function cv::approxPolyDP is used to fit polylines to the remaining contours. The arrow should have approximately 7 lines per polyline. A polyline whose number of lines satisfies the following condition is taken to be the arrow:

[math]\displaystyle{ 5 \ \lt \ number \ of \ lines \ in \ polyline \ \lt \ 10 }[/math]

6. Determine whether the arrow is pointing left or right.

First the midpoint of the arrow is found using [math]\displaystyle{ x_{mid} = \frac{x_{min}+x_{max}}{2} }[/math]. Once the midpoint is known, the program iterates over all points of the arrow contour. Two counters count the number of points left and right of [math]\displaystyle{ x_{mid} }[/math]. If the left counter is greater than the right counter, the arrow is pointing to the left; otherwise it is pointing to the right.

7. Make the detection more robust.

Extra robustness is required because, for example, when the arrow is missed in a single frame the program still has to know that there is an arrow. This is done by looking at the last 5 iterations: if the arrow is detected in all of them, the direction of the arrow is published on the topic /arrow. If no arrow has been detected in any of the last 5 iterations, the arrow is no longer visible and the node publishes on /arrow that there is no arrow.
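The decision logic of steps 4 to 7 can be sketched without OpenCV as follows. This is only an illustration under assumptions: the areas and the number of lines are taken as inputs (in the real node they would come from the contour, hull and cv::approxPolyDP results), all names are hypothetical, contour points lying exactly on [math]\displaystyle{ x_{mid} }[/math] are ignored, and between "seen in all 5 frames" and "seen in none of the 5 frames" the previously published value is kept.

```cpp
#include <algorithm>
#include <cstddef>
#include <deque>
#include <vector>

struct Pixel { int x; int y; };
enum class Arrow { None, Left, Right };

// Step 4: keep a contour only if 0.5 < contour area / hull area < 0.65.
bool passesAreaRatio(double contourArea, double hullArea) {
    if (hullArea <= 0.0) return false;
    const double ratio = contourArea / hullArea;
    return ratio > 0.5 && ratio < 0.65;
}

// Step 5: keep a polyline only if 5 < number of lines < 10.
bool passesLineCount(std::size_t lines) { return lines > 5 && lines < 10; }

// Step 6: count contour points left and right of x_mid.
Arrow arrowDirection(const std::vector<Pixel>& contour) {
    if (contour.empty()) return Arrow::None;
    int xMin = contour[0].x, xMax = contour[0].x;
    for (const Pixel& p : contour) {
        xMin = std::min(xMin, p.x);
        xMax = std::max(xMax, p.x);
    }
    const double xMid = (xMin + xMax) / 2.0;
    int left = 0, right = 0;
    for (const Pixel& p : contour) {
        if (p.x < xMid) ++left;
        else if (p.x > xMid) ++right;  // points exactly on x_mid are ignored
    }
    return left > right ? Arrow::Left : Arrow::Right;
}

// Step 7: publish a direction only after 5 consecutive detections and
// publish "no arrow" only after 5 consecutive misses.
class ArrowFilter {
public:
    Arrow update(Arrow detected) {
        history_.push_back(detected);
        if (history_.size() > kFrames) history_.pop_front();
        if (history_.size() == kFrames) {
            const auto isNone = [](Arrow a) { return a == Arrow::None; };
            if (std::none_of(history_.begin(), history_.end(), isNone)) {
                published_ = detected;      // seen in all 5 frames
            } else if (std::all_of(history_.begin(), history_.end(), isNone)) {
                published_ = Arrow::None;   // seen in none of the 5 frames
            }
        }
        return published_;                   // otherwise keep the last value
    }

private:
    static constexpr std::size_t kFrames = 5;
    std::deque<Arrow> history_;
    Arrow published_ = Arrow::None;
};
```

Keeping the last published value in the mixed case is what lets a single missed frame pass without the arrow being dropped.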

Strategy

Target

The approach requires a target for Pico to drive to. This section explains how the target point is determined. In the code a loop iterates through all data points, which are ordered from right to left. Only the points within 1.4 m of Pico are taken into account. If the distance between two consecutive points is greater than a chosen threshold, the presence of a door (opening) is assumed. This is explained further with the following figure.

In the figure the vision of Pico is illustrated by the dotted black arc and the detected points within this range by red dots. In the left figure two openings are detected, i.e. the distance between two consecutive points is greater than a user-defined threshold value (0.6 m). The potential targets (shown in blue) are simply placed midway between the two consecutive points.

The middle figure illustrates a problem that occurred during testing. When Pico looks past a corner it sometimes detects points that are not really there, so-called ghost points. This is a problem because with ghost points the distance between two consecutive points becomes smaller than the threshold and no opening is detected. To filter out this effect, the distance is not taken between two points but over five (a number found by testing). A downside of this method is that points far ahead of Pico can cause false positives. Because Pico only looks at a relatively short distance, this does not occur in practice. In the figure on the right the filter is applied and the door is found again.

The targets are used to orient Pico. By adjusting [math]\displaystyle{ \theta }[/math] the target point is kept straight in front of Pico.
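A minimal sketch of the opening detection described above is given here. It assumes one particular reading of "over five": the consecutive distances inside a window of five points are summed and compared with the 0.6 m threshold, and the target is placed midway between the first and last point of the window. The function name `findDoorTargets`, the struct `LaserPoint` and the rule of skipping past a window once it has produced a target are assumptions, not the original code.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct LaserPoint { double x; double y; };

// cloud: points in the robot frame, ordered from right to left.
// Returns one target per detected opening, also ordered from right to left.
std::vector<LaserPoint> findDoorTargets(const std::vector<LaserPoint>& cloud,
                                        double maxRange = 1.4,   // vision limit [m]
                                        double threshold = 0.6,  // opening width [m]
                                        std::size_t window = 5) {
    std::vector<LaserPoint> targets;
    if (window < 2) return targets;

    // Only points within maxRange of the robot are taken into account.
    std::vector<LaserPoint> visible;
    for (const LaserPoint& p : cloud) {
        if (std::hypot(p.x, p.y) <= maxRange) visible.push_back(p);
    }

    std::size_t i = 0;
    while (i + window <= visible.size()) {
        // Sum the consecutive distances inside the window so that a few
        // ghost points inside a gap cannot hide the opening.
        double span = 0.0;
        for (std::size_t j = i; j + 1 < i + window; ++j) {
            span += std::hypot(visible[j + 1].x - visible[j].x,
                               visible[j + 1].y - visible[j].y);
        }
        if (span > threshold) {
            const LaserPoint& a = visible[i];
            const LaserPoint& b = visible[i + window - 1];
            targets.push_back({(a.x + b.x) / 2.0, (a.y + b.y) / 2.0});
            i += window - 1;  // assumption: skip ahead to avoid duplicate targets
        } else {
            ++i;
        }
    }
    return targets;
}
```

With densely sampled walls the summed distance over five points stays far below 0.6 m, so only real gaps (or gaps interrupted by a few ghost points) exceed the threshold.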

PFM

Now that the target points are determined, Pico needs to drive towards them without hitting any walls. In the first strategy, shown at the bottom of this page, walls were fitted so Pico knew where to drive. This method had robustness issues, which led to some problems during the corridor challenge. Therefore a more robust method was chosen for the maze competition.

With the Potential Field Method, a virtual repulsive force is applied to Pico for every laser point. The force caused by a point depends on the distance, [math]\displaystyle{ r }[/math], between Pico and that laser point. The virtual force is defined as: [math]\displaystyle{ F_r=-\frac{1}{r^5} }[/math], where the resulting force [math]\displaystyle{ F_r }[/math] points away from the obstacle. When the sum is taken over all repulsive forces, the net repulsive force points away from nearby objects.

A target is added as an attractive force. The function for the attractive force is [math]\displaystyle{ F_a=r }[/math]. In one of the first iterations of the software the attractive force had the same form as the repulsive force, but during testing a linear relation between the distance to the target point and the force proved to work best.

The magnitude of the resulting force varies, and because Pico must drive as fast as possible, only the direction of the total force is used. The maximum speed is applied in the direction of the resulting force.
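The force summation can be sketched as follows. This is an illustration under assumptions, not the original strategy node: the robot is at the origin of its own frame, the repulsive and attractive terms are added with equal weight (the text does not state any gains), and the names `pfmVelocity` and `Vec2` are hypothetical.

```cpp
#include <cmath>
#include <vector>

struct Vec2 { double x; double y; };

// cloud:  laser points in the robot frame (robot at the origin).
// target: target point in the robot frame.
// Returns a velocity of magnitude maxSpeed along the total virtual force.
Vec2 pfmVelocity(const std::vector<Vec2>& cloud, Vec2 target, double maxSpeed) {
    // Attractive force F_a = r towards the target: a unit vector towards the
    // target scaled by the distance r is the target vector itself.
    Vec2 force{target.x, target.y};

    // Repulsive force of magnitude 1 / r^5 per point, directed away from it.
    for (const Vec2& p : cloud) {
        const double r = std::hypot(p.x, p.y);
        if (r <= 0.0) continue;  // skip degenerate points at the origin
        const double magnitude = 1.0 / std::pow(r, 5);
        force.x -= magnitude * p.x / r;
        force.y -= magnitude * p.y / r;
    }

    // Only the direction of the total force is used; speed is always maximal.
    const double norm = std::hypot(force.x, force.y);
    if (norm <= 0.0) return {0.0, 0.0};
    return {maxSpeed * force.x / norm, maxSpeed * force.y / norm};
}
```

Because of the fifth power, a point at 0.5 m contributes a force of 32 while a point at 1 m contributes only 1, so walls are practically ignored until Pico gets close to them.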

Dead-end

The strategy node detects a dead-end when no doors are detected. The robot responds by rotating about its z-axis in the direction opposite to the preferred direction (which is always left or always right). When it detects a door, it resumes the normal procedure. Consider the following example:

1. The robot sees a door and keeps going. The preferred direction of the robot is left.

2. The robot sees 2 options and takes the leftmost door, since left is the preferred direction.

3. The robot sees one door and drives to the target.

4. The robot does not see any doors; it recognises a dead-end. It now starts rotating about its z-axis in the clockwise direction (opposite to the preferred direction, which was left).

5. The robot detects a new door and resumes the normal procedure.

In this case a dead-end is only detected if it is within the vision of Pico, which is limited to 1.4 m. When the dead-end is longer than in the example, Pico will drive into it until its end is within range. If the vision field for dead-ends were extended, a dead-end would be detected sooner and Pico would finish the maze faster. However, there was no time to implement this in the algorithm.
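The door selection and dead-end behaviour of the example can be condensed into a small decision function. The sketch below is hypothetical: the names `chooseAction`, `Action` and `Preference`, the default turn rate, and the sign convention (positive yaw rate means counter-clockwise, i.e. turning left) are assumptions made for illustration.

```cpp
#include <cstddef>

enum class Preference { Left, Right };

struct Action {
    bool driveToDoor;       // true: drive to the door at doorIndex
    std::size_t doorIndex;  // valid only when driveToDoor is true
    double yawRate;         // rad/s, positive = counter-clockwise (assumed)
};

// doorCount: number of openings found, ordered from right to left like the
// laser points, so index 0 is the rightmost door.
Action chooseAction(std::size_t doorCount, Preference preferred,
                    double turnRate = 0.5 /* hypothetical value */) {
    if (doorCount == 0) {
        // Dead end: rotate opposite to the preferred direction.
        const double yaw =
            (preferred == Preference::Left) ? -turnRate : turnRate;
        return {false, 0, yaw};
    }
    // Otherwise take the door furthest in the preferred direction.
    const std::size_t index =
        (preferred == Preference::Left) ? doorCount - 1 : 0;
    return {true, index, 0.0};
}
```

An arrow published on /arrow would simply replace `preferred` for the current cycle before this function is called.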

Safety

Pico must not hit an obstacle under any circumstances, so a safety feature was introduced. Ideally Pico never needs it: the program was designed so that the robot can complete its task without using 'Safety'. In the real world, however, there are many variables that are difficult to account for, particularly in the given time frame, and it is always possible that Pico gets too close to an object for some unforeseen reason and might hit it. 'Safety' was designed to prevent this. The first version of 'Safety' stopped Pico when it came very close to any detected object. This version was not very effective, because the robot came to a standstill once 'Safety' was invoked, which prevented it from finishing its task.

'Safety' was later upgraded. When too close, Pico needs to move away from the obstacle, and this movement should not interfere with the path detection and spatial orientation of the robot. The latest version uses two circles: a critical circle and a larger safety circle. The program first counts the number of points detected within the critical circle. If more than 10 points are counted, 'Safety' mode is started; the minimum count serves as a noise filter. 'Safety' is given the highest priority and overrides all other movement decisions. The program then calculates the average x and y position of all points within the safety circle. Without changing the direction it is facing, Pico moves in the direction directly opposite to this average obstacle point. Since there is no turning involved, the spatial orientation of Pico remains unaffected. The point is updated every cycle and Pico keeps moving away until the point moves out of the safety circle. 'Safety' mode is then turned off and normal operation is resumed.
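A minimal sketch of the two-circle logic is given below. The radii are not given in the text, so they are constructor parameters; the class and field names are hypothetical. One interpretation is made explicit in the code: since the average of points inside a circle always lies inside that circle, "the point moves out of the safety circle" is implemented as "no points remain inside the safety circle".

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct ScanPoint { double x; double y; };

struct SafetyCommand {
    bool active;  // true while 'Safety' overrides the normal strategy
    double dirX;  // unit vector to translate along (robot frame),
    double dirY;  // with no rotation commanded
};

class SafetyMonitor {
public:
    // Both radii are hypothetical; criticalRadius < safetyRadius is assumed.
    SafetyMonitor(double criticalRadius, double safetyRadius)
        : critical_(criticalRadius), safety_(safetyRadius), active_(false) {}

    SafetyCommand update(const std::vector<ScanPoint>& cloud) {
        std::size_t criticalCount = 0, safetyCount = 0;
        double sumX = 0.0, sumY = 0.0;
        for (const ScanPoint& p : cloud) {
            const double r = std::hypot(p.x, p.y);
            if (r < critical_) ++criticalCount;
            if (r < safety_) { ++safetyCount; sumX += p.x; sumY += p.y; }
        }
        if (criticalCount > 10) active_ = true;  // more than 10 points: not noise
        if (safetyCount == 0) active_ = false;   // interpretation: circle is clear
        if (!active_) return {false, 0.0, 0.0};

        // Move directly away from the average obstacle point.
        const double avgX = sumX / static_cast<double>(safetyCount);
        const double avgY = sumY / static_cast<double>(safetyCount);
        const double norm = std::hypot(avgX, avgY);
        if (norm <= 0.0) return {true, 0.0, 0.0};
        return {true, -avgX / norm, -avgY / norm};
    }

private:
    double critical_;
    double safety_;
    bool active_;
};
```

The gap between the two radii acts as hysteresis: the mode switches on at the critical circle but only switches off once the larger safety circle is clear, which avoids rapid toggling at the boundary.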

End of maze

Part of the script determines whether Pico has reached the end of the maze and it is time to stop. The point cloud contains at most 1080 points, covering a 270 degree range in front of Pico. Pico can detect several differences between being outside and being inside the maze. The one implemented in the program is that the detected points are farther away from Pico when it is outside the maze. Pico counts the number of points that are farther than 1.6 m from itself. Within the maze this number never exceeds 400 points. When it is greater than 0.6 times the maximum possible number (0.6 x 1080 = 648), Pico runs in 'End of maze' mode. In this mode Pico drives straight for forty cycles, after which it comes to a standstill. The count of far points is checked every cycle, and if at any point it is not large enough, the cycle counter is reset to zero and Pico leaves 'End of maze' mode. This is a preventive measure against false end-of-maze detection.
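The end-of-maze check amounts to a small counter-based state machine, sketched below. The class name, the state names and the choice to pass the point distances as a vector are assumptions; the numbers (1.6 m, 648 points, forty cycles) are taken from the text.

```cpp
#include <cstddef>
#include <vector>

enum class MazeState { InMaze, DrivingOut, Stopped };

class EndOfMazeDetector {
public:
    // distances: distance of every detected point to the robot, in meters.
    // Call once per control cycle.
    MazeState update(const std::vector<double>& distances) {
        std::size_t farCount = 0;
        for (double d : distances) {
            if (d > farDistance_) ++farCount;
        }
        if (farCount <= threshold_) {
            cycles_ = 0;               // false alarm: leave 'End of maze' mode
            return MazeState::InMaze;
        }
        if (cycles_ >= driveCycles_) return MazeState::Stopped;
        ++cycles_;                     // keep driving straight
        return MazeState::DrivingOut;
    }

private:
    double farDistance_ = 1.6;        // points beyond this count as "far" [m]
    std::size_t threshold_ = 648;     // 0.6 x 1080 points
    std::size_t driveCycles_ = 40;    // cycles to drive straight before stopping
    std::size_t cycles_ = 0;
};
```

Since the far-point count inside the maze stays below 400, the threshold of 648 leaves a wide margin, and the reset of the cycle counter covers the remaining cases where the threshold is exceeded only briefly.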

First software approach

Obstacle Detection

Finding Walls and Doors

Our first approach was to find walls and doors (openings) expressed as lines, so that we could easily steer the robot to a certain door. The following algorithm was developed:

1. All laser points are plotted in a cv::Mat structure (left). Then a Probabilistic Hough Line Transform is applied to the plotted laser points (right).

2. The resulting lines are sorted into two categories: the most common direction and the second most common direction. This is shown in the figure below.

3. Since the Probabilistic Hough Line Transform returns multiple lines per wall (a wall can consist of 20 lines, for example), a single line is fitted through the contour of each wall. The result is 1 line per wall, as shown in the figure below.

4. Now it is possible to fit 'doors' between walls, which is shown in the figure below.

Why this method doesn't work in real life

The method described above for finding walls and doors did not work properly in real life. We experienced serious robustness problems because in some iterations complete walls and/or doors were not detected, so the robot could not steer properly towards the target. In addition, this method required considerable computation power, which is undesirable.

Evaluation of the maze competition

During the corridor competition it became clear that the chosen strategy was not robust enough to detect the maze in real life. This first strategy worked in the simulator but not in real life. To obtain a more robust strategy, we changed our approach as described above.

While testing the second strategy in the days before the maze competition, we saw that this was a good decision with excellent results. PICO was not only able to drive correctly through a maze with straight walls and 90 degree corners, it was also able to drive through a 'maze' with curved walls and arbitrary angles. Even when people were walking through the maze, PICO did not give up solving it. This made us confident about the performance during the real competition.

During the competition the ‘always go left’ rule was chosen, although the choice did not matter because of the design of the maze. As a result, PICO chose the correct direction at the first crossing. The correct action at the second crossing was a right turn. PICO first looked into the left and front corridors to check whether they were dead ends, which they were, so it turned right. The crossings in the rest of the maze were marked with a red arrow, which was detected correctly and used to steer into the right corridor.

To improve the strategy, a few adaptations for efficiency and robustness can be made. The first corner was taken really fast; it seemed like PICO was ‘drifting’ through the corner, which is the fastest way to pass a crossing. At the second crossing, however, it first had to check whether the other directions were dead ends, which cost valuable time. To improve this, the dead end detection should look at a wider range of points instead of only in front of PICO, which would make it turn in the right direction even earlier.

To achieve even better robustness, the ghost point ‘filter’ should be improved. In the current strategy a fixed number of points is chosen over which to sum the distances, but this is not perfectly robust. Although PICO finished correctly, an algorithm that detects whether a point is a ghost point (for example by checking whether there are other points close to the current point) should make the detection even more robust.

In conclusion, the chosen strategy turned out to be robust and efficient compared to other groups. Only a few lines of code are used and the strategy does not use a lot of data. During the competition we finished first with a total time of 1 minute and 2 seconds, a great achievement!