PRE2019 4 Group6: Difference between revisions


| (90 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

<div style="width:calc(100vw - 175px);background-color:#FDFEFE;padding-bottom:35px;position:absolute;top:0;left:0;"> | <div style="width:calc(100vw - 175px);background-color:#FDFEFE;padding-bottom:35px;position:absolute;top:0;left:0;"> | ||

<div style="font-family: 'q_serif', Ariel, Helvetica, sans-serif; font-size: 14px; line-height: 1.5; max-width: | <div style="font-family: 'q_serif', Ariel, Helvetica, sans-serif; font-size: 14px; line-height: 1.5; max-width: 80%; word-wrap: break-word; color: #333; font-weight: 400; box-shadow: 4px 4px 28px 0px rgba(0,0,0,0.75); margin-left: auto; margin-right: auto; padding: 70px; background-color: white; padding-top: 25px;"> | ||

<center><big><big><big><b>R.E.D.C.A.(Robotic Emotion Detection Chatbot Assistant) </b></big></big></big></center><br> | <center><big><big><big><b>R.E.D.C.A.(Robotic Emotion Detection Chatbot Assistant) </b></big></big></big></center><br> | ||

[[File:face front.png|800px|Image: 800 pixels|center]] | |||

= Group Members = | = Group Members = | ||

| Line 55: | Line 55: | ||

=== Facial Detection === | === Facial Detection === | ||

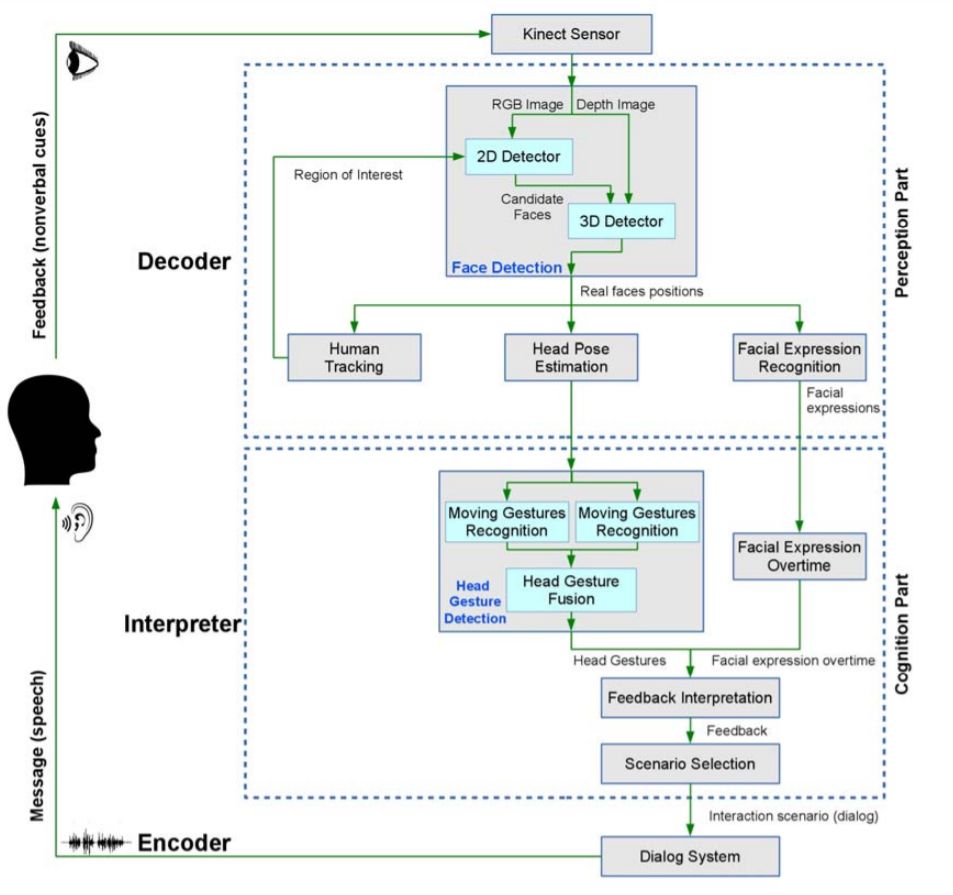

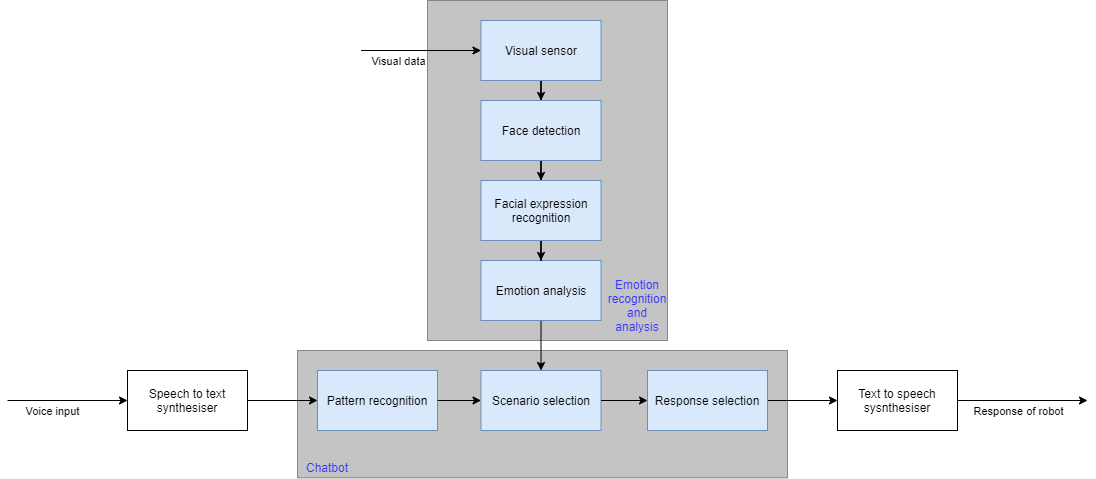

Facial detection combined with emotion recognition has been implemented before. One method that could be employed for facial detection is shown in the following block diagram:

| Line 85: | Line 82: | ||

Many varieties of care robots have been integrated in our society. They vary from low level automata to high level designs that keep track of patient biometrics. For our problem, we focus on the more social care robots that interact with users on a social level. A few relevant robots are PARO, ZORA and ROBEAR. | Many varieties of care robots have been integrated in our society. They vary from low level automata to high level designs that keep track of patient biometrics. For our problem, we focus on the more social care robots that interact with users on a social level. A few relevant robots are PARO, ZORA and ROBEAR. | ||

'''PARO'''<ref name = 'PAROresearch'>Vincentian Collaborative System: Measuring PARO's impact, with an emphasis on residents with Alzheimer's disease or dementia., 2010</ref> is a therapeutic robot resembling a seal. Based on animal therapy, its purpose is to create a calming effect and to elicit emotional reactions from patients in hospitals and nursing homes. It interacts with patients via tactile sensors and contains software that lets it look at users, remember their faces and express emotions.

[[File:CareRobotParo.jpg|300px|Image: 800 pixels|center|thumb|Figure 3: PARO being hugged]] | |||

'''ZORA'''<ref name='ResearchZora'>Huisman, C., Helianthe, K., Two-Year Use of Care Robot Zora in Dutch Nursing Homes: An Evaluation Study, 2019, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6473570/</ref>, which stands for Zorg, Ouderen, Revalidatie en Animation, is a small humanoid robot with a friendly haptic design. It is primarily deployed in Dutch nursing homes to engage the elderly in a more active lifestyle. It can dance, sing and play games with the user, and its design has been shown to evoke positive associations in users. Additionally, ZORA is used with autistic children, as it offers predictable movement behavior and a consistent intonation.

[[File:CareRobotZora.jpg|300px|Image: 800 pixels|center|thumb|Figure 4: ZORA in action]] | |||


'''ROBEAR'''<ref name='ResearchRobear'>Olaronke, I., Olawaseun, O., Rhoda, I., State Of The Art: A Study of Human-Robot Interaction in Healthcare, 2017, https://www.researchgate.net/publication/316717436_State_Of_The_Art_A_Study_of_Human-Robot_Interaction_in_Healthcare</ref> is a humanoid care robot resembling a bear. It is designed to aid patients with physical constraints, for example by supporting them while walking and by lifting them into their wheelchair. Its friendly design sparks positive associations for the user and makes it a lovable and useful robot.


| | [[File:CareRobotRobear.jpg|300px|Image: 800 pixels|center|thumb|Figure 5: ROBEAR demonstration]] | ||

'''PEPPER'''<ref name='ResearchPepper'>Pandey, A., Gelin, R., A Mass-Produced Sociable Humanoid Robot Pepper: The First Machine of Its Kind, 2018</ref> is a humanoid robot of medium size. It was introduced in 2014 and its primary function is to detect human emotion from facial expressions and from the intonation of one's voice. Initially, Pepper was deployed in offices as a receptionist or host. Since 2017, the robot has been a focal point in research on assisting the elderly in care homes.

[[File:CareRobotPepper.jpg|400px|Image: 800 pixels|center|thumb|Figure 6: Care robot Pepper]] | |||

== Limitations and issues == | == Limitations and issues == | ||

| Line 109: | Line 110: | ||

== R.E.D.C.A. design == | == R.E.D.C.A. design == | ||

R.E.D.C.A. also needs an initial design to pursue as a prototype. Based on the previous work discussed in the State of the Art section, Pepper is the design that most closely resembles our goal, with state-of-the-art sensors and actuators for speaking and listening. Our aim is to implement R.E.D.C.A. as such a robot.

= USE Aspects = | = USE Aspects = | ||

[[File:USE_parties.jpg|400px|Image: 800 pixels|right|thumb|Figure | [[File:USE_parties.jpg|400px|Image: 800 pixels|right|thumb|Figure 7: Stakeholders in Facial Recognition Robot]] | ||

Researching and developing facial recognition robots requires taking into account which stakeholders are involved in the process. These stakeholders will interact with, create or regulate the robot and may affect its design and requirements. The users, society and enterprise stakeholders are put into perspective below.

| Line 118: | Line 121: | ||

===Users=== | ===Users=== | ||

Lonely elderly people are the main users of the robot. They are reached via a government-funded institute where applications for such a robot can be submitted. Applications can be filed by anyone (the elderly themselves, friends, physiatrists, family, caregivers), provided the elderly person consents. In cases where the health of the elderly person is in danger due to illness (final-stage dementia etc.), consent is not necessary if the application is filed by doctors or physiatrists. Where applicable, a caregiver or employee of the institute visits the elderly person to check whether the care robot is really necessary or whether a different solution can be found. If the application is approved, the elderly person is assigned a robot.

They will interact with the robot on a daily basis by talking to it, and the robot will build an emotional profile of the person, which it uses to help the person through the day. When the robot detects certain negative emotions (sadness, anger etc.), it can offer to help with various actions, such as calling family, friends or real caregivers, or having a simple conversation with the person.

===Society=== | ===Society=== | ||

| Line 132: | Line 134: | ||

= User Requirement = | = User Requirement = | ||

In order to develop R.E.D.C.A., several requirements must be defined for it to function appropriately with its designated user. The requirements are split into sections covering the software and hardware aspects we focus on.

===Emotion Recognition=== | ===Emotion Recognition=== | ||

| Line 138: | Line 140: | ||

* Recognise user face. | * Recognise user face. | ||

* Calculate emotion for only the user's face. | * Calculate emotion for only the user's face. | ||

* Translate to useful data for chatbot. | * Translate to useful data for the chatbot. | ||

* Requirements above done in quick succession for natural conversation flow. | * Requirements above done in quick succession for natural conversation flow. | ||

| Line 144: | Line 146: | ||

* Engage the user or respond | * Engage the user or respond | ||

* High level conversation ability. | * High-level conversation ability. | ||

* Preset options for the user to choose from. | * Preset options for the user to choose from. | ||

* Store user data to enhance conversation experience. | * Store user data to enhance conversation experience. | ||

* Able to call caregivers or guardians for emergencies. | * Able to call caregivers or guardians for emergencies. | ||

* Allows user to interact by speech or text input/output. | * Allows the user to interact by speech or text input/output. | ||

===Hardware=== | ===Hardware=== | ||

| Line 158: | Line 160: | ||

===Miscellaneous=== | ===Miscellaneous=== | ||

* Able to store and transmit data for research if the user has opted in.

= Approach = | = Approach = | ||

This project has multiple problems that need to be solved in order to create a system/robot that is able to combat the emotional problems that the elderly are facing. To categorize them, the problems are split into three main parts:

''' Technical ''' | ''' Technical ''' | ||

| Line 234: | Line 171: | ||

''' Social / Emotional ''' | ''' Social / Emotional ''' | ||

The robot should be able to act accordingly. Research therefore needs to be done into which types of actions the robot can perform in order to get positive results. For example, the robot could have a simple conversation with the person or start playing an audiobook in order to keep the person active during the day.

''' Physical ''' | ''' Physical ''' | ||

| Line 240: | Line 177: | ||

What type of physical presence of the robot is optimal? Is a more conventional robot with a somewhat humanoid look needed? Or does a system that interacts using speakers and different screens spread over the room get better results? Maybe a combination of both works best.

The main focus of this project will be the technical problem stated above. However, for a more complete use case, the other subjects should be researched as well.

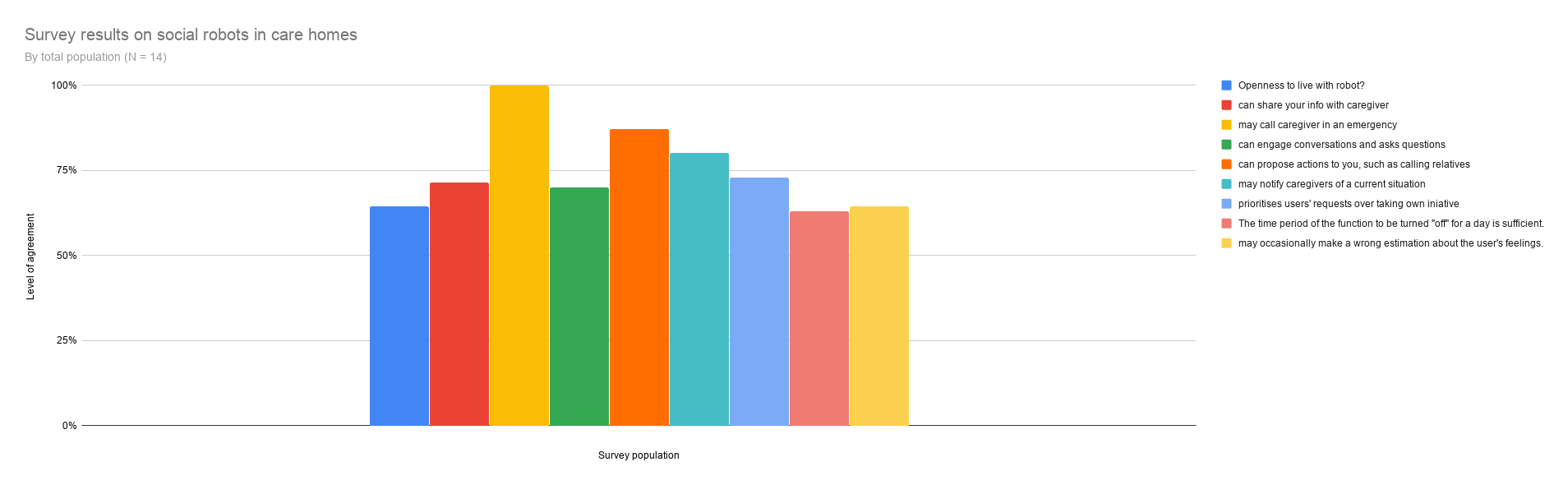

= Survey = | = Survey = | ||

Implementing a social robot in daily life is not an easy task. Having assessed our USE stakeholders, the robot must now cater to their needs, in particular the needs of the elderly (the primary users) as well as those of caregivers and relatives. To gain more insight into their opinions on a social support robot such as R.E.D.C.A., the functionalities it should contain and its desired behaviours, a survey has been sent out to these groups. Care homes, elderly people and caregivers we know on a personal level, as well as national ones willing to participate, have been asked to answer a set of questions. There were two sets of questions. The first set consists of closed questions that ask the partaker for their level of agreement with certain robot-related statements, for example "the robot can engage the user in conversation". This level ranges from Strongly Disagree (0%) to Strongly Agree (100%), with 50% being a grey area where the partaker does not know. The second set consists of open-ended questions that ask the partaker what they expect the robot to do when a theoretical user is in a specified emotional state.

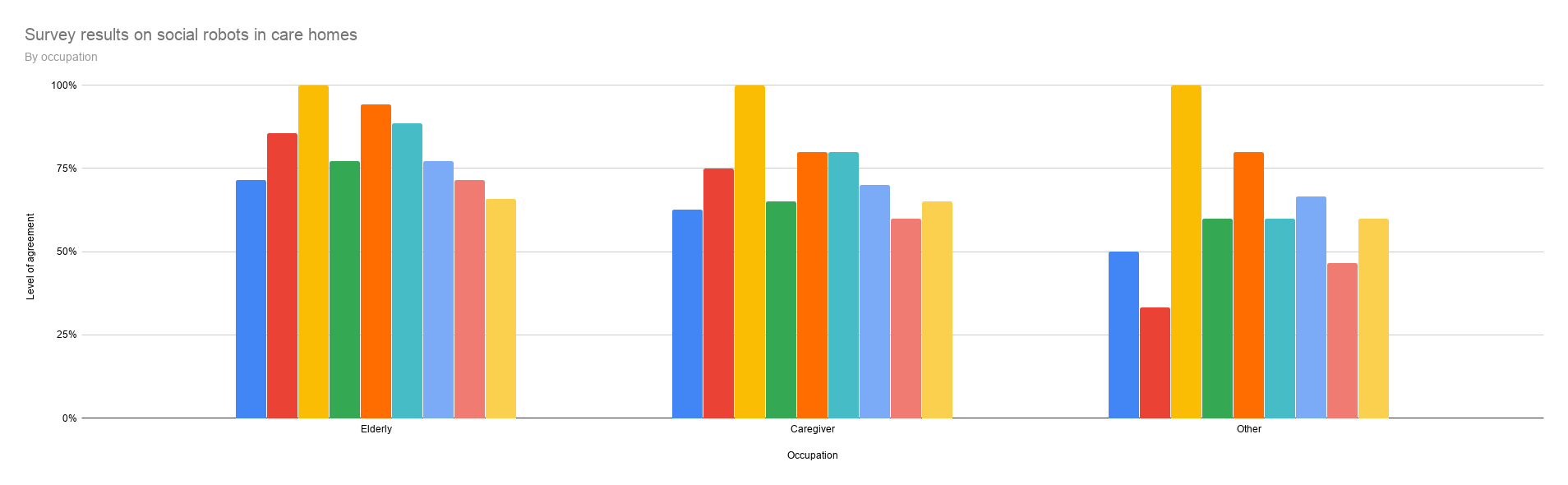

Below are the resulting graphs by accumulating all survey data. | Below are the resulting graphs by accumulating all survey data. | ||

The data from the first set of questions has been processed into a bar chart (figure 8), indicating the average level of agreement of the total population per statement. This data has also been grouped per occupation (figure 9) for a more specific overview of the partakers' opinions.
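The averaging behind both charts can be sketched as follows. This is only an illustration: the function name, the occupation labels and the three responses are made up for the example and are not taken from the actual survey data.

```python
from collections import defaultdict


def average_agreement(responses):
    """Average agreement per statement, overall and per occupation.

    `responses` is an iterable of (occupation, statement, percent) tuples,
    where percent runs from 0 (Strongly Disagree) to 100 (Strongly Agree).
    """
    overall = defaultdict(list)
    per_occupation = defaultdict(list)
    for occupation, statement, percent in responses:
        overall[statement].append(percent)
        per_occupation[(occupation, statement)].append(percent)

    def mean(values):
        return sum(values) / len(values)

    return (
        {key: mean(values) for key, values in overall.items()},
        {key: mean(values) for key, values in per_occupation.items()},
    )


# Hypothetical responses, for illustration only (not real survey results).
statement = "the robot can engage the user in conversation"
sample = [
    ("elderly", statement, 100),
    ("elderly", statement, 75),
    ("caregiver", statement, 50),
]
overall, per_occupation = average_agreement(sample)
```

With these made-up values, the overall average for the statement is 75.0, while the per-occupation averages are 87.5 for the elderly and 50.0 for caregivers, which is the kind of difference the per-occupation chart is meant to expose.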

[[File:SR-Total.png|1000px|Image: 4000 pixels|center|thumb|Figure | [[File:SR-Total.png|1000px|Image: 4000 pixels|center|thumb|Figure 8: Survey Results - Total Population]] | ||

[[File:SR-Occupation.png|1000px|Image: 4000 pixels|center|thumb|Figure | [[File:SR-Occupation.png|1000px|Image: 4000 pixels|center|thumb|Figure 9: Survey Results - Per Occupation]] | ||

Additionally, a third graph has been made to display the preferred actions for certain emotional states of a theoretical user. As these were open questions, the answers ranged from precisely specified to vaguely described. To be able to process them into the chart below (figure 10), the answers to the open-ended questions have been generalised, so that multiple different answers are put in the same category. For example, if one partaker wrote "speak to user" and another "tell the user joke", both have been categorised as Start conversation. Specific answers have still been taken into consideration when creating the chatbot. Overall, there have been no strong signs of disapproval of the proposed statements, which suggests that they are worth implementing.

[[File:SR-Emotion.png|1000px|Image: 2000 pixels|center|thumb|Figure | [[File:SR-Emotion.png|1000px|Image: 2000 pixels|center|thumb|Figure 10: Survey Results - Preferred Action on Emotion]] | ||

The survey results can be used to adapt R.E.D.C.A. and its chatbot functionality to its users' needs. As the first charts indicate, many partakers appreciate the robot being able to call for help if required, as well as being able to call a person of interest. Additionally, a large portion of the surveyed elderly are interested in having R.E.D.C.A. in their home, which encourages our research and reinforces the demand for such a robot.

| Line 271: | Line 204: | ||

=Framework= | =Framework= | ||

In the coming section, the total framework will be elaborated upon. The picture below shows this framework as a flowchart. There are two inputs to the system: the emotion expressed on the face of the user and the vocal input of the user. Due to limited time, speech-to-text and text-to-speech were not implemented. However, the Pepper robot design already has a built-in text-to-speech and speech-to-text system, so these would not need to be built again. How the individual modules work is explained in the sections below.

[[File:Framework_Robot.png]] | [[File:Framework_Robot.png|1000 px|center|thumb|Figure 11: Framework of R.E.D.C.A.]] | ||

=Emotion recognition design= | =Emotion recognition design= | ||

| Line 296: | Line 229: | ||

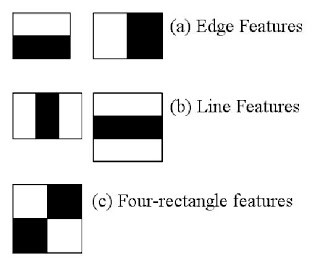

The first step is to extract the Haar Features. The three types of features used for this algorithm are shown below: | The first step is to extract the Haar Features. The three types of features used for this algorithm are shown below: | ||

[[File:name1.jpg|400px|Image: 800 pixels|center|thumb|]] | [[File:name1.jpg|400px|Image: 800 pixels|center|thumb|Figure 12: Haar features]] | ||

Each Haar Feature is a single value obtained by subtracting the sum of pixels under the white rectangle from the sum of pixels under the black rectangle. | Each Haar Feature is a single value obtained by subtracting the sum of pixels under the white rectangle from the sum of pixels under the black rectangle. | ||
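As a minimal sketch of this definition, the snippet below computes one two-rectangle (edge) feature on a toy image by summing pixels directly. The function names and the layout (white rectangle on top, black rectangle below) are assumptions made for the example, not the code used in this project.

```python
def rect_sum(image, top, left, height, width):
    """Sum the pixel values inside a rectangle of a 2D list-of-lists image."""
    return sum(
        image[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    )


def two_rect_edge_feature(image, top, left, height, width):
    """Edge feature with the white rectangle on top and the black one below.

    Value = sum(black) - sum(white), following the definition in the text.
    The top/bottom layout is an assumption chosen for this illustration.
    """
    half = height // 2
    white = rect_sum(image, top, left, half, width)
    black = rect_sum(image, top + half, left, half, width)
    return black - white


# Toy 4x4 image: bright upper half (value 9), dark lower half (value 1).
toy = [[9] * 4, [9] * 4, [1] * 4, [1] * 4]
value = two_rect_edge_feature(toy, 0, 0, 4, 4)  # 8 - 72 = -64
```

A strongly negative or positive value indicates a clear brightness edge at that position, while a value near zero indicates a flat region.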

| Line 302: | Line 235: | ||

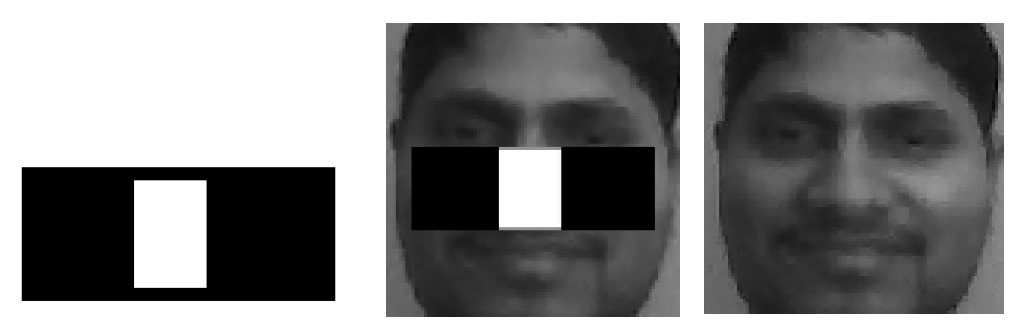

Each Haar Feature considers such adjacent rectangular regions at a specific location in the detection window. For example, one of the properties that all human faces share is that the nose bridge region is brighter than the eyes, so we get the following Haar Feature: | Each Haar Feature considers such adjacent rectangular regions at a specific location in the detection window. For example, one of the properties that all human faces share is that the nose bridge region is brighter than the eyes, so we get the following Haar Feature: | ||

[[File:name2.jpg|400px|Image: 800 pixels|center|thumb|]] | [[File:name2.jpg|400px|Image: 800 pixels|center|thumb|Figure 13: Human edge features]] | ||

Another example comes from the property that the eye region is darker than the upper-cheeks: | Another example comes from the property that the eye region is darker than the upper-cheeks: | ||

[[File:name3.jpg|400px|Image: 800 pixels|center|thumb|]] | [[File:name3.jpg|400px|Image: 800 pixels|center|thumb|Figure 14: Human line features]] | ||

To give an idea of how many Haar Features are extracted from an image: in a standard 24x24 pixel sub-window there are a total of 162336 possible features. After extraction, the next step is to evaluate these features. Because of the large quantity, the Integral Image representation is used, which allows each feature to be evaluated in constant time. This speed is the main advantage of Haar Features over more sophisticated alternative features. Even so, it would still be prohibitively expensive to evaluate all Haar Features when testing an image. Thus, a variant of the learning algorithm AdaBoost is used both to select the best features and to train classifiers that use them.
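Both claims in this paragraph can be checked with a short sketch. It builds an integral image so that any rectangle sum needs only four lookups, and it counts the features in a 24x24 window. The function names are hypothetical, and the count assumes the five base shapes commonly used for this figure (two edge orientations, two line orientations and one four-rectangle shape), which is an assumption about how the 162336 was obtained.

```python
def integral_image(image):
    """Return a (rows+1) x (cols+1) table where ii[r][c] is the sum of all
    pixels above and to the left of position (r, c), exclusive."""
    rows, cols = len(image), len(image[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0
        for c in range(cols):
            row_sum += image[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum_ii(ii, top, left, height, width):
    """Rectangle sum in constant time: four lookups in the integral image."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def count_features(window=24,
                   base_shapes=((2, 1), (1, 2), (3, 1), (1, 3), (2, 2))):
    """Count every position and scale of each base shape (width, height)
    that fits inside a window x window sub-window."""
    total = 0
    for base_w, base_h in base_shapes:
        for w in range(base_w, window + 1, base_w):
            for h in range(base_h, window + 1, base_h):
                total += (window - w + 1) * (window - h + 1)
    return total


ii = integral_image([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
bottom_right_block = rect_sum_ii(ii, 1, 1, 2, 2)  # 5 + 6 + 8 + 9 = 28
total_features = count_features()  # 162336 under the stated assumptions
```

Since a two-rectangle feature needs only two such rectangle sums, its cost does not depend on the size of the rectangles.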

| Line 314: | Line 247: | ||

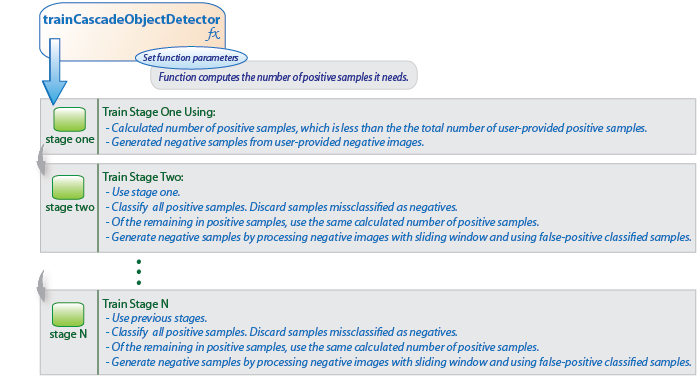

The cascade classifier consists of a collection of stages, where each stage is an ensemble of weak learners. The stages are trained using the Boosting technique which takes a weighted average of the decisions made by the weak learners and this way provides the ability to train a highly accurate classifier. A simple stage description of the Cascade classifier training is given in the figure below: | The cascade classifier consists of a collection of stages, where each stage is an ensemble of weak learners. The stages are trained using the Boosting technique which takes a weighted average of the decisions made by the weak learners and this way provides the ability to train a highly accurate classifier. A simple stage description of the Cascade classifier training is given in the figure below: | ||
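How a trained cascade evaluates a single sub-window can be sketched as follows. The data layout (a dict per stage holding decision stumps) and all numbers are made up for illustration and do not correspond to the format of a real trained detector.

```python
def stage_passes(features, stage):
    """One stage: a weighted vote of weak learners (decision stumps).

    Each stump is (feature_index, threshold, polarity, weight) and votes 1
    when polarity * feature_value < polarity * threshold, otherwise 0.
    """
    score = 0.0
    for feature_index, threshold, polarity, weight in stage["stumps"]:
        value = features[feature_index]
        vote = 1 if polarity * value < polarity * threshold else 0
        score += weight * vote
    return score >= stage["stage_threshold"]


def cascade_detects_face(features, stages):
    """A window counts as a face only if it passes every stage.

    Most non-face windows are rejected by an early, cheap stage, which is
    what makes the cascade fast.
    """
    for stage in stages:
        if not stage_passes(features, stage):
            return False
    return True


# Hypothetical two-stage cascade with made-up thresholds and weights.
stages = [
    {"stumps": [(0, 10.0, 1, 1.0)], "stage_threshold": 0.5},
    {"stumps": [(1, 5.0, -1, 0.6), (2, 0.0, 1, 0.4)], "stage_threshold": 0.6},
]
accepted = cascade_detects_face([3.0, 8.0, 1.0], stages)
rejected = cascade_detects_face([20.0, 8.0, 1.0], stages)
```

In this toy setup the first window passes both stages, while the second is rejected by the first stage, so its remaining features are never evaluated.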

[[File:name4.png|400px|Image: 800 pixels|center|thumb|]] | [[File:name4.png|400px|Image: 800 pixels|center|thumb|Figure 15: Cascade classifier training]] | ||

After the training phase, the Cascade face detector is stored as an XML file, which can then be easily loaded in any program and used to get the rectangular areas of an image that contain a face. The haarcascade_frontalface_default.xml<ref name="frontalface">haarcascade_frontalface_default.xml, https://github.com/opencv/opencv/tree/master/data/haarcascades</ref> we use is a Haar Cascade provided by OpenCV, a library of programming functions mainly aimed at real-time computer vision, for detecting frontal faces.
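A minimal sketch of loading this cascade with OpenCV's Python bindings is given below. It assumes the opencv-python package, which ships the XML files under cv2.data.haarcascades. The helper names, the scaleFactor and minNeighbors values, and the choice to treat the largest detected face as the user's face are assumptions for this example rather than the project's actual settings.

```python
def largest_face(rects):
    """Return the (x, y, w, h) rectangle with the largest area, or None."""
    rects = list(rects)
    if not rects:
        return None
    return max(rects, key=lambda r: r[2] * r[3])


def detect_user_face(image_path):
    """Detect frontal faces in an image file and return the largest one."""
    # Imported here so that largest_face works without OpenCV installed.
    import cv2

    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    # Each detection is a rectangle (x, y, width, height) in pixels.
    faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    return largest_face(faces)
```

Picking the largest rectangle is one simple way to approximate the requirement of calculating the emotion for only the user's face, since the user is usually the person closest to the camera.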

| Line 323: | Line 256: | ||

Emotion recognition is a computer technology that identifies human emotion, in our case from digital frontal images of human faces. The type of emotion recognition we use is a specific case of image classification, as our goal is to classify the expressions on frontal face images into categories such as anger, fear, surprise, sadness and happiness.

As of yet, there is no single best emotion recognition algorithm, as there is no common way to compare the different algorithms with each other. However, for some emotion recognition datasets (containing frontal face images, each labelled with one of the emotion classes), accuracy scores are available for different machine learning algorithms trained on the corresponding dataset.

It is important to note that the state-of-the-art accuracy score of one dataset being higher than the score for another dataset does not mean that in practice using the first dataset will give better results. The reason for this is that the accuracy scores for the algorithms used on a dataset are based only on the images from that dataset. | It is important to note that the state-of-the-art accuracy score of one dataset being higher than the score for another dataset does not mean that in practice using the first dataset will give better results. The reason for this is that the accuracy scores for the algorithms used on a dataset are based only on the images from that dataset. | ||

| Line 329: | Line 262: | ||

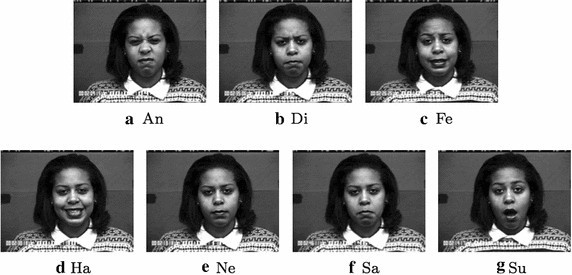

The first dataset we attempted to train a model on was the widely used Extended Cohn-Kanade dataset (CK+)<ref>Lucey, Patrick & Cohn, Jeffrey & Kanade, Takeo & Saragih, Jason & Ambadar, Zara & Matthews, Iain. (2010). The Extended Cohn-Kanade Dataset (CK+): A complete dataset for action unit and emotion-specified expression. 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops, CVPRW 2010. 94 - 101. 10.1109/CVPRW.2010.5543262.</ref>, currently consisting of 981 images. We chose it because the state-of-the-art algorithm for it had an accuracy of 99.7%<ref>Meng, Debin & Peng, Xiaojiang & Wang, Kai & Qiao, Yu. (2019). Frame Attention Networks for Facial Expression Recognition in Videos. 3866-3870. 10.1109/ICIP.2019.8803603.</ref><ref>State-of-the-Art Facial Expression Recognition on Extended Cohn-Kanade Dataset, https://paperswithcode.com/sota/facial-expression-recognition-on-ck</ref>, much higher than for any other dataset. Some samples from the CK+ dataset are provided below:

[[File:name5.jpg|400px|Image: 800 pixels|center|thumb|]] | [[File:name5.jpg|400px|Image: 800 pixels|center|thumb|Figure 16: CK+ dataset]] | ||

On the CK+ dataset, we tried using the Deep CNN model called Xception<ref>Chollet, Francois. (2017). Xception: Deep Learning with Depthwise Separable Convolutions. 1800-1807. 10.1109/CVPR.2017.195.</ref> that was proposed by Francois Chollet. Following the article “Improved Facial Expression Recognition with Xception Deep Net and Preprocessed Images”<ref>Mendes, Maksat,. (2019). Improved Facial Expression Recognition with Xception Deep Net and Preprocessed Images. Applied Mathematics & Information Sciences. 13. 859-865. 10.18576/amis/130520.</ref>, whose authors reached up to 97.73% accuracy, we preprocessed the images before training the Xception model on them. However, even though our accuracy on the dataset was very similar, the model was quite inaccurate when we tried using it in practice, correctly recognizing no more than half of the emotion classes.

| Line 335: | Line 268: | ||

Then we tried the same model with another widely used emotion recognition dataset, fer2013<ref>fer2013 dataset, https://www.kaggle.com/deadskull7/fer2013</ref>. This dataset, consisting of 35887 face crops, is much larger than the previous one. It is quite challenging, as the depicted faces vary significantly in terms of illumination, age, pose, expression intensity, and occlusions that occur under realistic conditions. In the sample below, the images in the same column depict identical expressions, namely anger, disgust, fear, happiness, sadness, surprise, and neutral (the 7 classes of the dataset):

[[File:name6.png|400px|Image: 800 pixels|center|thumb|]] | [[File:name6.png|400px|Image: 800 pixels|center|thumb|Figure 17: Fer2013 dataset]] | ||

Using Xception with image preprocessing on the fer2013 dataset led to around 66% accuracy<ref>Arriaga, Octavio & Valdenegro, Matias & Plöger, Paul. (2017). Real-time Convolutional Neural Networks for Emotion and Gender Classification.</ref>, but in practice, the produced model was much more accurate when testing it on the camera feed. | Using Xception with image preprocessing on the fer2013 dataset led to around 66% accuracy<ref>Arriaga, Octavio & Valdenegro, Matias & Plöger, Paul. (2017). Real-time Convolutional Neural Networks for Emotion and Gender Classification.</ref>, but in practice, the produced model was much more accurate when testing it on the camera feed. | ||


Based on the results with the previous two datasets, we assumed that bigger datasets probably lead to better accuracy in practice. We therefore decided to try Xception on one more emotion recognition dataset, FERG<ref>Facial Expression Research Group 2D Database (FERG-DB), http://grail.cs.washington.edu/projects/deepexpr/ferg-2d-db.html</ref><ref>Aneja, Deepali & Colburn, Alex & Faigin, Gary & Shapiro, Linda & Mones, Barbara. (2016). Modeling Stylized Character Expressions via Deep Learning. 10.1007/978-3-319-54184-6_9.</ref>, a database of 2D images of stylized characters with annotated facial expressions. This dataset contains 55767 annotated face images; a sample is shown below:

[[File:name7.jpg|400px|Image: 800 pixels|center|thumb|Figure 18: FERG dataset]]

The state of the art for this dataset is 99.3%<ref>Minaee, Shervin & Abdolrashidi, Amirali. (2019). Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network.</ref><ref>State-of-the-Art Facial Expression Recognition on FERG, https://paperswithcode.com/sota/facial-expression-recognition-on-ferg</ref>, and using Xception with image preprocessing we obtained very similar results. However, even though this dataset was the biggest so far, the resulting model performed the worst of the three in practice. This is most likely because the images are not of real human faces.


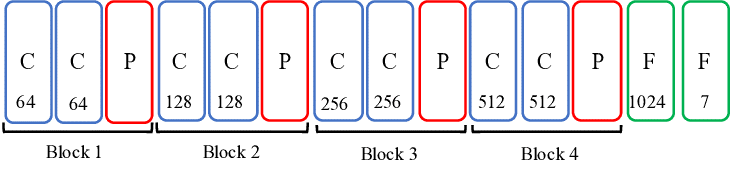

The VGG architecture is similar to the VGG-B<ref>Simonyan, Karen & Zisserman, Andrew. (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 1409.1556. </ref> configuration but with one fewer CCP block, i.e. CCPCCPCCPCCPFF:

[[File:name8.png|400px|Image: 800 pixels|center|thumb|Figure 19: VGG architecture]]

Note that in convolutional neural networks, C denotes a convolutional layer, which convolves its input and passes the result to the next layer; P denotes a pooling layer, which reduces the dimensions of the data by combining the outputs of neuron clusters at one layer into a single neuron in the next layer; and F denotes a fully-connected layer, which connects every neuron in one layer to every neuron in the next.
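To make the CCPCCPCCPCCPFF notation more concrete, the following minimal sketch traces how the feature-map size could evolve through such a layout. It is not the project's training code: the 48x48 grayscale input (the fer2013 image size), the 3x3 size-preserving convolutions, the 2x2 pooling and the filter counts starting at 64 and doubling per block are all assumptions borrowed from the usual VGG conventions, and the function name is hypothetical.

```python
def trace_shapes(layout, size=48, filters=64):
    """Trace (layer, height/width, channels) through the C and P layers of a layout string.

    Assumptions: square grayscale input, 'same'-padded convolutions that keep the
    spatial size, 2x2 pooling that halves it, and filters doubling after each pool.
    Returns the trace and the number of values fed into the first F layer.
    """
    channels = 1  # grayscale input
    trace = []
    for layer in layout:
        if layer == "C":
            channels = filters      # convolution changes the channel count only
        elif layer == "P":
            size //= 2              # pooling halves width and height
            filters *= 2            # the next block uses twice as many filters
        elif layer == "F":
            break                   # fully-connected layers work on the flattened map
        else:
            raise ValueError(f"unknown layer code: {layer!r}")
        trace.append((layer, size, channels))
    return trace, size * size * channels


if __name__ == "__main__":
    trace, flattened = trace_shapes("CCPCCPCCPCCPFF")
    for layer, side, channels in trace:
        print(f"{layer}: {side}x{side}x{channels}")
    print("values entering the first F layer:", flattened)
```

Under these assumptions the four pooling layers shrink a 48x48 image to 3x3 before the fully-connected layers.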


=Chatbot design=

Once the facial data has been obtained from the algorithm, the robot can use this information to better help the elderly fulfil their needs. What this chatbot will look like is described in the following section.

==Needs and values of the elderly==

The most important task of the robot is to support and help the elderly person as much as it can. This has to be done carefully, however, as ethical boundaries are easily crossed. The most important ethical value for an elderly person is their autonomy, the power to make one's own choices.<ref name=“Emotion3”>Johansson-Pajala, R., Thommes, K., Hoppe, J.A. et al. Care Robot Orientation: What, Who and How? Potential Users’ Perceptions. Int J of Soc Robotics (2020). https://doi.org/10.1007/s12369-020-00619-y</ref> For most elderly people this value has to be respected more than anything. It is therefore vital that the chatbot design does not force the elderly person to do anything; the robot can merely ask. Problems could also arise if the person asks the robot to do something that would hurt him/her. In this design, however, the robot cannot do anything physical that could hurt or help the person. Its most important task is to support the mental health of the person. Such a design resembles the PARO robot, a robotic seal that helps elderly people with dementia feel less lonely. The problem with PARO's design is that it cannot read the person's emotion: it is treated as a hugging robot, which makes sensing the head very difficult. Therefore, different robot designs were made for this project. These can be seen in the robot design section.

== Why facial emotion recognition==

For the chatbot design, there are many possible sensors that could provide extra data. However, more data does not equal a better system. It is important to have a good idea of what each piece of data will be used for. For example, it is possible to measure the heart rate of the elderly person or track his/her eye movements, but what would it add? These are the important questions to ask when building such a chatbot. For our chatbot design, we chose to use emotion recognition based on facial expressions to enhance the chatbot. The main reason for this is that experiments have shown that an empathic computer agent can promote a more positive perception of the interaction.<ref name=“Emotion4”>U. K. Premasundera and M. C. Farook, "Knowledge Creation Model for Emotion Based Response Generation for AI," 2019 19th International Conference on Advances in ICT for Emerging Regions (ICTer), Colombo, Sri Lanka, 2019, pp. 1-7, doi: 10.1109/ICTer48817.2019.9023699.</ref> For example, Martinovski and Traum demonstrated that many errors can be prevented if the machine is able to recognize the emotional state of the user and react sensitively to it.<ref name=“Emotion4”>B. Martinovsky and D. Traum, "The Error Is the Clue: Breakdown In


Marina Del Rey Ca Inst For Creative Technologies, 2006</ref> This is because knowing the state of a person lets the chatbot adapt to that state and avoid annoying the person, for example by not pushing to keep talking when the person is clearly annoyed by the robot. Such pushing can break down the conversation and leave the person disappointed. If the chatbot detects that its queries make the person's valence more negative, it can try to change the way it approaches the person.

Other studies propose that chatbots should have their own emotional model, as humans themselves are emotional creatures. Research on the connection between a person's current feelings and the past was done by Lagattuta and Wellman <ref name=“Emotion5”>K. Lagattuta and H. Wellman, "Thinking about the Past: Early Knowledge about Links between Prior Experience, Thinking, and


The goal of the robot is then to regulate the emotions of the elderly person in such a way that they are more in line with that person's own goals.

To do this, the robot needs to know whether the situation is controllable or not. For example, when a person is feeling a little sad, the robot can try to talk to the person to change that feeling into a more positive one. However, when the situation is not controllable, the robot should not try to change the emotion of the elderly person, but should help the person accept what is happening to them. For example, if the person's house cat has just died, this can have a huge impact on how the person is feeling. Trying to make them happy will not work, because the situation is uncontrollable. In this case, the robot can only try to reduce the duration and intensity of the negative emotion by comforting them.

It is of course not possible to know the exact long-term goals of the elderly person. It is therefore important that the robot can adapt how it reacts to different situations based on the person's long-term goals. This is not possible within this project due to time and resource constraints.


== Robot user interaction==

In this section, the interaction between the elderly person and the robot is described in detail, so that it is clear what the robot needs to do once it has the valence and arousal information from the emotion recognition. As described in the robot design section, the robot will be around 1.2 meters high, with the camera module mounted on top to get the best view of the person's head. The robot will talk to the user through a text-to-speech module, as a voice conveys the robot's message better than just displaying it. The user can interact with the robot by speaking to it. A speech-to-text module converts this speech into text input that the robot can use.

The main goal of the robot in this interaction is to make sure that the emotions the person is feeling are in line with their long-term goals. It will do this by making smart response decisions. These decisions are also based on the arousal of the emotion, as high-arousal emotions (e.g. anger) may also cause the user to deviate from their long-term goals. As stated before, implementing individual long-term goals is not feasible for this project, although this could be researched in future projects. Instead, the main focus will be on the following long-term goal:


===Cognitive Change===

This technique tries to modify how the user constructs the meaning of a specific situation. It is most applicable in highly aroused situations, because these most often involve uncontrollable emotions. This uncontrollability means that the robot should try to reduce the intensity and duration of the emotion through cognitive change. For example, during a very highly aroused state the robot should try to comfort the person by asking questions and listening, in order to change how the user construes this bad emotion into a less intense, higher-valence emotion.

==Implementation of facial data in chatbot ==


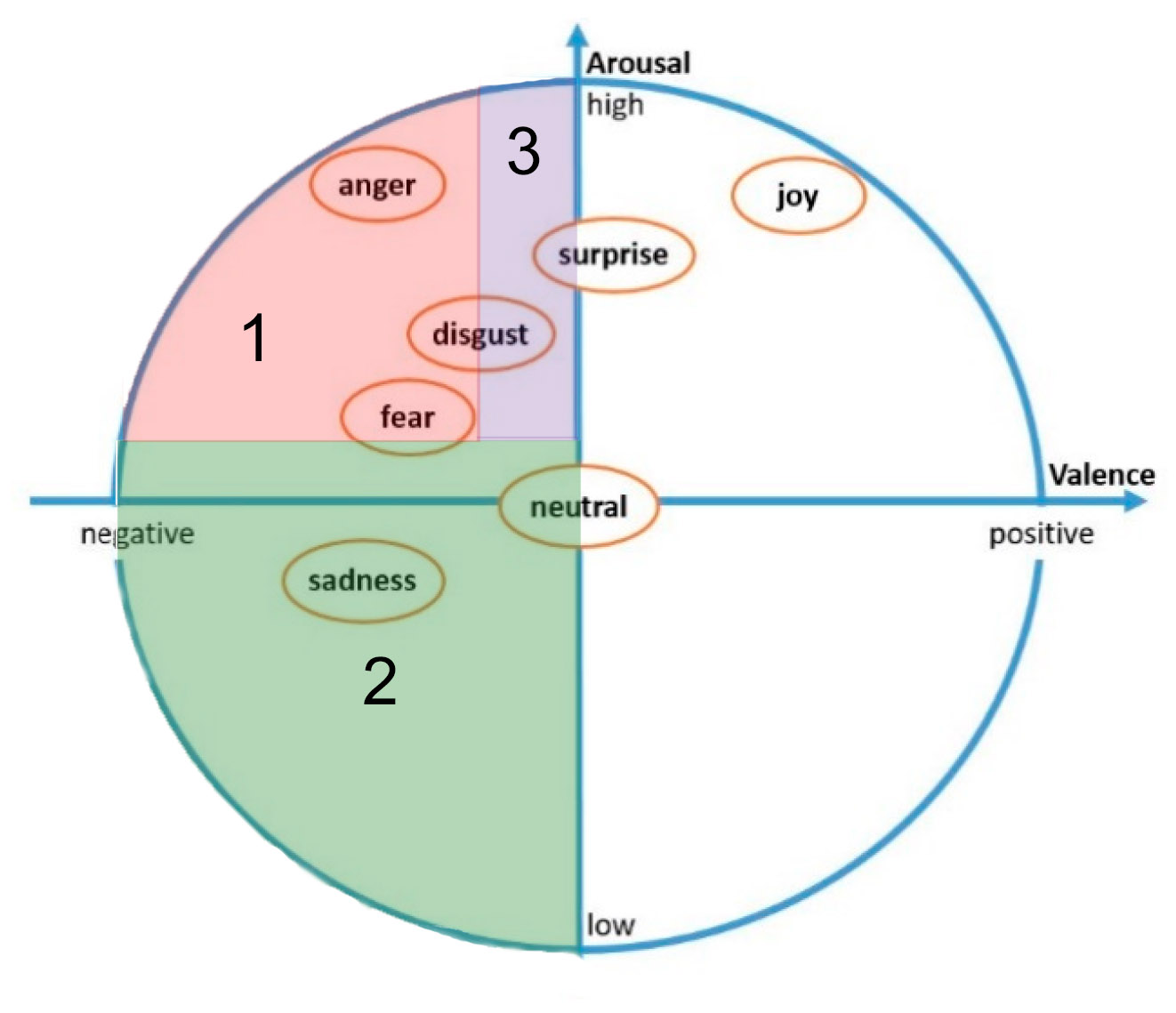

e.g., fear and anger, might not be captured in fewer than four dimensions.<ref name = "Emotive5">Marmpena, Mina & Lim, Angelica & Dahl, Torbjorn. (2018). How does the robot feel? Perception of valence and arousal in emotional body language. Paladyn, Journal of Behavioral Robotics. 9. 168-182. 10.1515/pjbr-2018-0012. </ref> Nevertheless, for this project the 2-dimensional representation will suffice, as the robot does not need to be 100% accurate in its readings: the project uses a region of the circle to determine what action to take, so if the emotional state is slightly misread the robot still follows the right trajectory. Such a valence/arousal diagram looks like the following:

[[File:Emotional_Model.png|400px|Image: 800 pixels|center|thumb|Figure 20: Valence and arousal diagram<ref name="ValenceDia">Gunes, H., & Pantic, M. (2010). Automatic, Dimensional and Continuous Emotion Recognition. International Journal of Synthetic Emotions (IJSE), 1(1), 68-99. doi:10.4018/jse.2010101605</ref>]]

How negative or positive a person is feeling can be expressed by the valence state of the person. Valence is a measure of affective quality, referring to the intrinsic goodness (positive feelings) or badness (negative feelings) of an emotion. For some emotions the valence is quite clear, e.g. the negative affect of anger, sadness, disgust and fear, or the positive affect of happiness. Surprise, however, can be either positive or negative depending on the context.<ref name=“Valance2”> Maital Neta, F. Caroline Davis, and Paul J. Whalen(2011, December) ''Valence resolution of facial expressions using an emotional oddball task'', Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3334337/#__ffn_sectitle/</ref>

The valence is calculated simply from the intensity of each emotion and whether it is positive or negative. When the results are not as expected, the weights of the different emotions can be altered to better fit the situation.
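One way to read this calculation is as a signed, weighted sum over the per-emotion intensities. The sketch below illustrates that reading only; the weight values, the dictionary keys and the function name are hypothetical and are not taken from the project's code. Surprise and neutral are given a weight of zero here because their sign is ambiguous.

```python
# Hypothetical default weights: negative emotions pull valence down,
# happiness pulls it up, ambiguous classes contribute nothing.
DEFAULT_WEIGHTS = {
    "anger": -1.0,
    "disgust": -1.0,
    "fear": -1.0,
    "sadness": -1.0,
    "happiness": 1.0,
    "surprise": 0.0,
    "neutral": 0.0,
}


def valence(intensities, weights=None):
    """Return a valence score as the weighted sum of emotion intensities.

    `intensities` maps emotion names to values in [0, 1], for example the
    class probabilities produced by the emotion recognition step.
    """
    if weights is None:
        weights = DEFAULT_WEIGHTS
    return sum(weights[emotion] * value for emotion, value in intensities.items())


if __name__ == "__main__":
    reading = {"happiness": 0.75, "sadness": 0.25}
    print(valence(reading))  # positive: happiness outweighs sadness
    # Tuning a weight, as the text suggests, changes the result:
    tuned = dict(DEFAULT_WEIGHTS, sadness=-2.0)
    print(valence(reading, tuned))
```

Passing a modified weight dictionary corresponds to the tuning step mentioned above, where the weight of an emotion is altered when the results do not match expectations.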

Arousal is a measure of how active or passive the person is. For example, a person who is very angry has very high arousal, while a person who is feeling sad has low arousal. This extra axis helps to better define what the robot should do, as with high arousal a person might panic or hurt someone or themselves. Where exactly the values for these six emotions lie differs from person to person, but the general locations can be seen in the graph above.

==Implementation of valence and arousal==


Once the presence of every emotion has been detected, together with whether the individual is feeling positive or negative and with what arousal, the robot can use this information. How it is used is very important, as history has shown that if a robot does not meet the needs and desires of its potential users, it will go unused.

Therefore, based on the survey, we chose three different trajectories for which the chatbot will have responses. These three can be seen in the picture below:

[[File:Trajects_Emotions.jpg|400px|Image: 800 pixels|center|thumb|Figure 21: Trajectories in Valence and arousal diagram]]

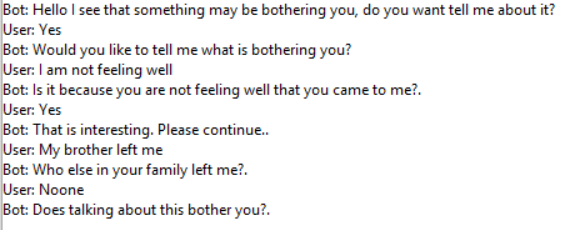

Trajectory 1 is where the user has high arousal and low valence. How to deal with these emotions is based on the survey results and literature<ref name="PSy">Glancy, G., & Saini, M. A. (2005). An evidenced-based review of psychological treatments of anger and aggression. Brief Treatment & Crisis Intervention, 5(2).</ref>. From these, the most important factor in dealing with such strong emotions is to listen to the person and ask questions about why he or she is feeling angry. Therefore, the main thing the robot does is ask whether something is bothering the person and whether it can help. If the user confirms, the conversation immediately moves into a special environment where a psychiatric chatbot has been set up. This chatbot is based on the ELIZA bot with additional functionalities added from the ALICE bot. The ELIZA bot is quite old but does exactly what the users want: listen and ask questions. It works by rephrasing the input of the user in such a way that it evokes a continuous conversation.<ref name="Elize">Joseph Weizenbaum. 1966. ELIZA—a computer program for the study of natural language communication between man and machine. Commun. ACM 9, 1 (Jan. 1966), 36–45. DOI:https://doi.org/10.1145/365153.365168</ref> An example of user interaction can be seen in the figure below:

[[File:Example 3 bot.png|400px|Image: 800 pixels|center|thumb|Figure 22: Example interaction Trajectory 1]]
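The rephrasing idea behind ELIZA can be illustrated with a very small sketch. This is not the project's chatbot, which is written in SIML and combines ELIZA with ALICE functionality; the rules, the reflection table and the function names below are hypothetical and only show the general mechanism of matching a pattern, swapping pronouns and turning the statement into a question.

```python
import re

# Hypothetical pronoun swaps applied to the captured part of the user's sentence.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "mine": "yours"}

# Hypothetical rules: a pattern for the user input and a question template.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"(.*) bothers me", re.IGNORECASE), "What is it about {0} that bothers you?"),
]

FALLBACK = "Please tell me more."


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input):
    """Rephrase the user's statement as a follow-up question."""
    cleaned = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I feel angry at my neighbour."))
    print(respond("Hello"))
```

Because every reply is a question built from the user's own words, the conversation keeps going without the bot needing any understanding of the topic.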

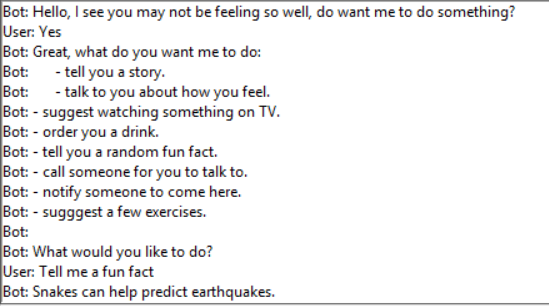

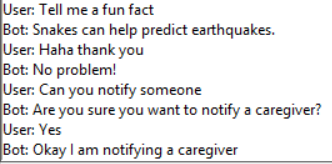

Trajectory 2 is when the person is sad with low to neutral arousal. From the survey and literature, it became clear that in this state there are many more things the robot should be able to do. In the survey, people suggested that the robot should ask questions, but also that it should be able to suggest some physical exercises, tell a story and do a few other things. These have all been implemented in this trajectory. An interaction could look like the following:

[[File:Example 4 bot.png|400px|Image: 800 pixels|center|thumb|Figure 23: Example interaction Trajectory 2(1/2)]]

[[File:Example 5 bot.png|400px|Image: 800 pixels|center|thumb|Figure 24: Example interaction Trajectory 2(2/2)]]

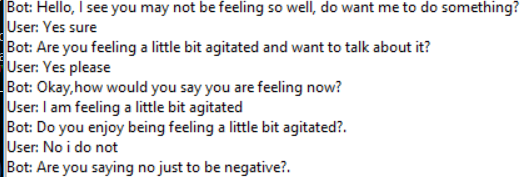

Trajectory 3 is when the person has high arousal with a slightly negative valence. In terms of what the robot does, this lies in between the first two trajectories. As in trajectory 1, talking and listening to the user is very important. However, due to the higher valence, talking and listening is not always wanted. Therefore, the robot should first ask whether the person wants to talk about their feelings or would rather do something else. If the person wants to do something else, all the options the robot offers will be listed, just as in trajectory 2. An interaction could look like the following:

[[File:Example 6 bot.png|400px|Image: 800 pixels|center|thumb|Figure 25: Example interaction Trajectory 3(1/2)]]

[[File:Example 7 bot.png|400px|Image: 800 pixels|center|thumb|Figure 26: Example interaction Trajectory 3(2/2)]]

==Chatbot implementation==


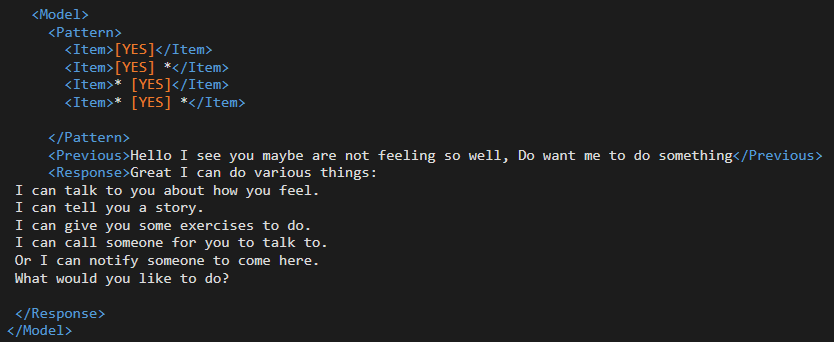

===SIML===

As stated before, SIML is a special language developed for chatbots. SIML stands for Synthetic Intelligence Markup Language<ref name="SIML">Synthetic Intelligence Network, Synthetic Intelligence Markup Language Next-generation Digital Assistant & Bot Language. https://simlbot.com/</ref> and has been developed by the Synthetic Intelligence Network. It matches input patterns against what the user says or types and uses the match to generate a response. These responses are generated based on the emotional state of the person and the input of the user. This is best explained with an example.

[[File:SIML Example2.PNG|400px|Image: 800 pixels|center|thumb|Figure 27: Example of an SIML model]]

In this example, one model is shown. Such a model houses a user input and a robot response. Inside the model there is a pattern tag, which specifies what the user has to say to get the response stated in the response tag. In this case, the user has to say any form of yes to get the response. This yes is placed between brackets to specify that there are multiple ways to say yes (e.g. yes, of course, sure). The user says yes or no in reply to a question the robot asked. To specify what this question was, the previous tag is used: the model only matches when the previous response of the robot was that question. In short, this code makes sure that when the user says any form of yes to a specific question, the robot gives a certain response. The chatbot consists of many of these models to generate appropriate responses.
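The matching logic described above (a pattern, an optional previous robot utterance, and a response) can be mimicked in a few lines of Python. This is only an illustration of the idea, not SIML itself and not the SIML interpreter's API; the class, the example sentences and the set of yes-words are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-in for the bracketed "yes" set in the SIML example.
YES = frozenset({"yes", "of course", "sure"})


@dataclass(frozen=True)
class Model:
    pattern: frozenset        # user inputs this model accepts
    previous: Optional[str]   # robot utterance that must precede it (None = any)
    response: str             # what the robot answers


# Hypothetical models: the same "yes" leads to different answers
# depending on which question the robot asked before.
MODELS = [
    Model(YES, "Would you like to hear a story?", "Great, let me tell you one."),
    Model(YES, "Is something bothering you?", "I am listening. What happened?"),
]


def respond(models, user_input, previous_response):
    """Return the response of the first model whose pattern and previous both match."""
    text = user_input.strip().lower().rstrip(".!?")
    for model in models:
        previous_ok = model.previous is None or model.previous == previous_response
        if text in model.pattern and previous_ok:
            return model.response
    return None  # no model matched


if __name__ == "__main__":
    print(respond(MODELS, "Sure!", "Would you like to hear a story?"))
    print(respond(MODELS, "Yes", "Is something bothering you?"))
```

The same user input produces different responses depending on the preceding question, which is the role the previous tag plays in the SIML model.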

However, this example does not show how emotions are handled. How the robot deals with different emotions is explained in the next example.

[[File:SIML Example.PNG|400px|Image: 800 pixels|center|thumb|Figure 28: Emotion selection of scenarios]]

This model is the first interaction of the robot with the user. It does not use user input, but a specific keyword used to check the emotional state of the person: every time the keyword AFGW is sent to the robot, it enters this model. The response distinguishes 4 different scenarios that the robot can detect, separated by if-else statements. These 4 scenarios are the following:


- High negative valence and high arousal (Trajectory 1)

In each of these scenarios, the section between think tags is executed in the background. In this case, it sends the user to a specific concept, which contains all the responses for that situation. So when the robot detects that the user has low arousal but negative valence, it sends them to the concept that deals with this state. In these concepts, the methods described above are implemented.

= Integration =

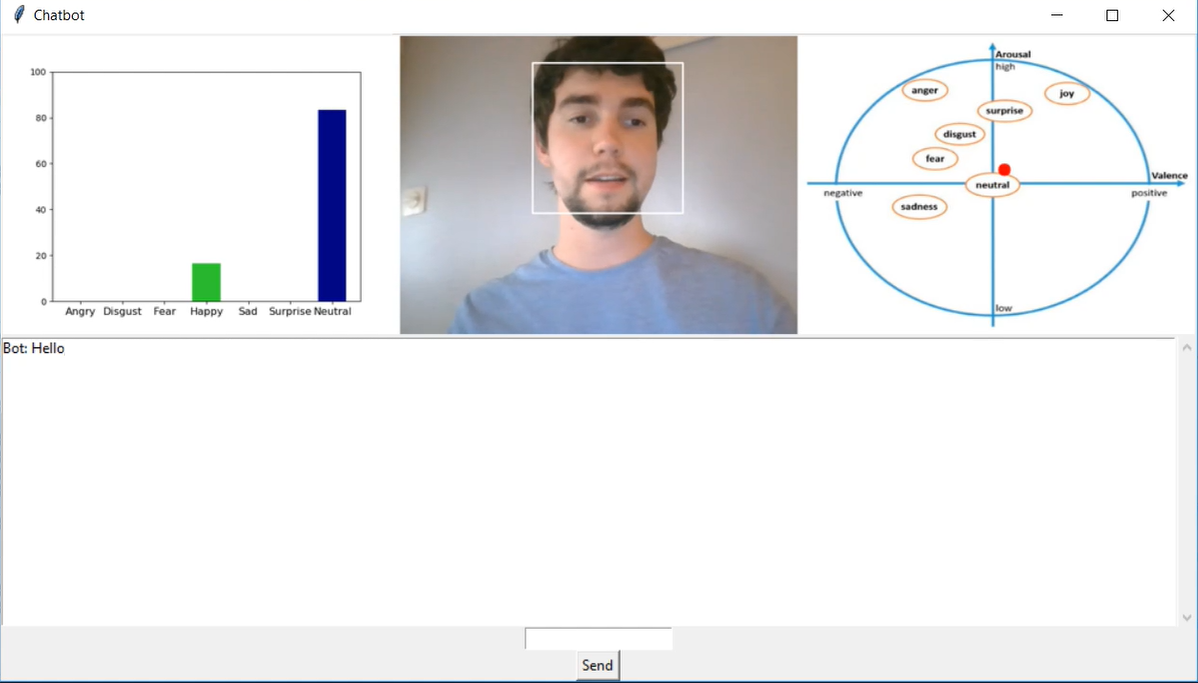

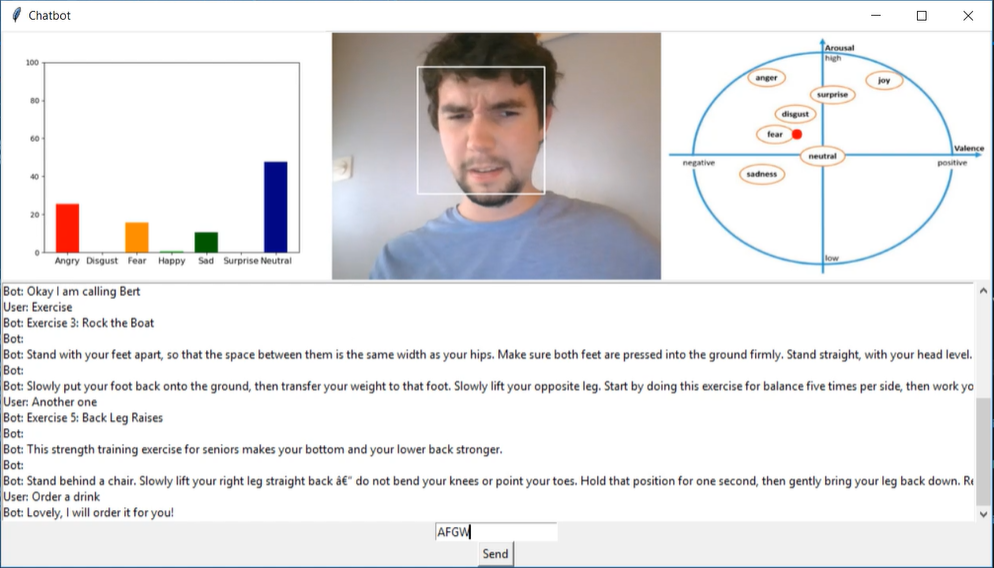

In this section, we discuss how emotion recognition is integrated with the chatbot, how the two communicate, how everything comes together and what the user interface looks like.

[[File:Screen.PNG|400px|Image: 1000 pixels|center|thumb|Figure 29: Interface of R.E.D.C.A.]]

== Emotion recognition ==

One of the main components displayed when our program runs is an image consisting of a frame from the camera feed and two diagrams representing the emotional state based on the facial expression. This image is updated at each processed frame. Frames are processed one at a time, so, due to time constraints, some camera frames are skipped.

At each processed frame, we first run face detection, which gives us the coordinates of a rectangle surrounding the person’s face. We then draw that rectangle on the frame; the resulting image is the middle part of the displayed image.

Then, after preprocessing the frame as previously described, we feed it to the emotion recognition algorithm. From its output, we extract the probabilities for each emotion class, as explained in the emotion recognition section. These probabilities are used to draw the bar chart that forms the left part of the displayed image.

Finally, for each emotion class, we multiply the probability by the default valence and arousal values of that class in the valence/arousal diagram, and sum the results to estimate the valence and arousal of the person. These values are then translated into coordinates in the valence/arousal diagram. The right part of the displayed image is a copy of the valence/arousal diagram with a red circle drawn at the computed coordinates, i.e. the circle represents the person's valence and arousal values.
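The weighted sum described above can be sketched as follows. This is a minimal illustration, not the project's code: the class names, the default valence/arousal values in `DEFAULT_VA`, the assumed value range of [-1, 1] and the helper names are all hypothetical placeholders.

```python
# Hypothetical default (valence, arousal) coordinates per emotion class, each
# in [-1, 1]. The real class list and values are those of the project's
# valence/arousal diagram; the numbers below are placeholders.
DEFAULT_VA = {
    "happy": (0.8, 0.5),
    "sad": (-0.7, -0.4),
    "angry": (-0.6, 0.7),
    "neutral": (0.0, 0.0),
}


def estimate_valence_arousal(probabilities, defaults=DEFAULT_VA):
    """Probability-weighted sum of the per-class default valence/arousal."""
    valence = sum(p * defaults[name][0] for name, p in probabilities.items())
    arousal = sum(p * defaults[name][1] for name, p in probabilities.items())
    return valence, arousal


def to_diagram_coordinates(valence, arousal, width, height):
    """Map values in [-1, 1] to pixel coordinates (origin at the top left)."""
    x = round((valence + 1) / 2 * (width - 1))
    y = round((1 - arousal) / 2 * (height - 1))  # image y axis points down
    return x, y


# Example: a face classified as half happy, half sad.
probabilities = {"happy": 0.5, "sad": 0.5, "angry": 0.0, "neutral": 0.0}
valence, arousal = estimate_valence_arousal(probabilities)
print(to_diagram_coordinates(valence, arousal, width=401, height=401))
```

Because the probabilities sum to one, the estimate always lies inside the region spanned by the default points, so the red circle cannot leave the diagram.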

== Chatbot ==

We want emotion recognition to work simultaneously with the chatbot. So that the chatbot project starts automatically when we run the main Python script, we use the executable file produced by Visual Studio the last time the project was built. We run the continuous emotion recognition in one thread and launch the executable in a separate thread. The emotion information is sent to the chatbot by writing the valence/arousal values to a comma-separated values file at each processed frame; the chatbot then reads this file.
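A minimal sketch of this arrangement is given below. The function names, the single-row file layout and the write-then-rename step are assumptions made for illustration; the project's actual file format and launch code are not shown in this section.

```python
import csv
import os
import subprocess
import tempfile
import threading


def write_valence_arousal(path, valence, arousal):
    """Write one 'valence,arousal' row, replacing the old file in one step.

    Writing to a temporary file first means the reader never sees a
    half-written row. On Windows the final replace can still raise
    PermissionError while the reader holds the file open, so real code
    may need to retry.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", newline="") as tmp:
        csv.writer(tmp).writerow([valence, arousal])
    os.replace(tmp_path, path)


def start_workers(recognition_loop, chatbot_command):
    """Run the recognition loop and the chatbot executable side by side.

    `recognition_loop` is a hypothetical callable that processes frames and
    calls write_valence_arousal; `chatbot_command` is the argument list for
    the built executable, e.g. ["path/to/chatbot.exe"] (placeholder path).
    """
    workers = [
        threading.Thread(target=recognition_loop, daemon=True),
        threading.Thread(target=subprocess.run, args=(chatbot_command,), daemon=True),
    ]
    for worker in workers:
        worker.start()
    return workers
```

Keeping only the latest row in the file is one possible design: the chatbot needs the current emotional state, not its history, so the file stays small and each read is cheap.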

== Conversation ==

Firstly, we need a nice chat-like user interface. We use Tkinter<ref>tkinter - Python interface to Tcl/Tk, https://docs.python.org/3/library/tkinter.html </ref>, Python's de facto standard graphical user interface package. The chat is displayed right below the above-mentioned image. All messages between the chatbot and the user are displayed in the chat window.

If the user wants to send a message to the chatbot, they type it in the text field at the bottom of the window and then press the “Send” button. The message is then both displayed in the chat and written to a file, which the chatbot reads. Note that since two processes can’t access the same file simultaneously, special attention has been paid to synchronizing these communications.

Each time the robot reads the user’s message, it generates a corresponding output message and writes it to a file. For our main program to read that file, we have made a separate thread which constantly checks the file for changes. Once a change has been detected, the new message is read and displayed in the chat window for the user to see.
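The file-watching thread described above could look roughly like the sketch below. It assumes polling on the file's modification time and size; the function name `watch_file`, the callback and the polling interval are illustrative choices, not the project's actual code.

```python
import os
import threading


def watch_file(path, on_message, stop_event, interval=0.1):
    """Poll `path` and call `on_message(text)` whenever its content changes."""
    last_signature = None
    while not stop_event.is_set():
        try:
            stat = os.stat(path)
            signature = (stat.st_mtime_ns, stat.st_size)
            if signature != last_signature:
                with open(path, encoding="utf-8") as handle:
                    text = handle.read().strip()
                if text:  # skip a file caught mid-write (still empty)
                    last_signature = signature
                    on_message(text)
        except OSError:
            pass  # file missing or briefly locked by the writer; retry
        stop_event.wait(interval)


# Usage: start the watcher in a background thread.
# stop = threading.Event()
# threading.Thread(target=watch_file,
#                  args=("reply.txt", print, stop), daemon=True).start()
```

Tkinter widgets are generally only safe to update from the main thread, so in a GUI the callback would typically place the text on a `queue.Queue` that the main loop drains periodically (for example with the widget's `after` method) rather than touching the chat window directly.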

= Consequences of erroneous emotion assessments =

For its design, R.E.D.C.A.'s accuracy regarding emotion recognition is on par with the current state-of-the-art CNNs. However, this accuracy is not without flaws, and as such a small percentage of error must not be neglected. Errors made by the robot have generally been tolerated by the surveyed group, but regardless of that, it is important to elaborate on the effects a mistaken emotion may have on the user. The false positives and false negatives for the three main emotions, anger, sadness and agitation, are considered below. Aside from the confusion a wrong assessment may cause, it may have other consequences for the user.

=== Trajectory 1 (Anger) ===

'''False Positive:''' When a user is not angry but the routine is triggered nonetheless, R.E.D.C.A. may become inquisitive towards the user and try to calm them down. However, if the user is already calm, this routine will end shortly and only alter the user's state marginally. The initial question, asking whether they want to talk about how they feel, may disturb the user at first, but it can quickly be dismissed.

'''False Negative:''' Not engaging an angry user with the anger subroutine may cause the user's emotion to escalate, as they are not treated calmly and with the correct questions. If the chosen subroutine is happy (for this project this means no action is taken), this may only frustrate the user more, putting stress on and possibly harming the user as well as endangering the robot. If the valence is lower than measured, the person ends up in the sadness routine, which also offers tools to make the person feel better, so this is not very harmful.

=== Trajectory 2 (Sadness) ===

'''False Positive:''' When the user is not in a sad state, engaging them with the chatbot's sadness routine results in a reduction of happiness. For a happy user, the sadness subroutine may fail and even anger them by asking too many questions. For other emotional states, the sadness subroutine may be less ineffective, as it has been created to be supportive to the user.

'''False Negative:''' When another subroutine is applied to a sad user, they may feel resentment towards the robot, as it does not recognise their sadness and does not function as the support device they expect it to be. For example, asking why they are agitated when this is not the case may reduce the valence even more. As a consequence of repeated false negatives, the robot may be harmed or disowned by the user.

=== Trajectory 3 (Agitated) ===

'''False Positive:''' When the user is not in an agitated state, yet the robot's routine for agitation is triggered, the robot addresses the user and attempts to defuse and refocus the user's thoughts. This behavior may benefit sad users, but by being too inquisitive it may fail to help happy users or even worsen the emotional state of angry users.

'''False Negative:''' The agitated routine is required but not called. Any other routine will most likely increase the level of agitation, which may lead to anger. The situation won't be defused in time and, as a result, R.E.D.C.A. has failed its primary purpose. False negatives for both the Anger and Agitated trajectories are the least desirable, and their error rate should be negligibly small.

= Scenarios =

This section is dedicated to portraying various scenarios. These scenarios elaborate on moments in our theoretical users’ lives where the presence of our robot may improve their emotional wellbeing. They also demonstrate the steps both robot and user may perform and consider edge cases.

=== Scenario 1: Chatty User ===


=== Scenario 2: Sad Event User ===

The user has recently experienced a sad event, for example, the death of a friend. It is assumed that the user will express more emotions on the negative valence spectrum, including sadness, in the time span after the event. The robot present in their house is designed to pick up on the changing set and frequency of emotions. Whenever the sad emotion is recognised, the robot may engage the user by demanding attention, and the user is given a choice to respond and start a conversation. To encourage the user to engage, the robot acts with care, applying regulatory strategies and tailoring the question to the user’s need. Once a conversation has started, the user may open up to the robot, and the robot asks questions as well as comforting the user by means of attentional deployment. Throughout, the robot may ask the user if they would like to contact a registered person (e.g. personal caregiver or family), whether or not a conversation has been started. This allows for all possible facets of coping to be reachable for the user. In the end, the user will have cleared their heart, which will help alleviate their sadness.

=== Scenario 3: Frustrated User ===

A user may have a bad day. Something bad has happened that has left them in a bad mood. Therefore, their interest in talking with anyone, including the robot, has diminished. The robot has noticed the change in their facial emotions and must alter its approach to reach its goal of conversation and improved wellbeing. To avoid conflict and the user lashing out at the harmless robot, it may engage the user by diverting their focus. Rather than have the user’s mindset on what frustrated them, the robot encourages the user to talk about a past experience they’ve conversed about. It may also start a conversation itself by telling a joke, in an attempt to defuse the situation. When the user still does not wish to engage, the robot may ask if they wish to call someone. In the end, noncompliant users may need time, and it may be best for the robot not to engage further.

=== Scenario 4: Quiet User ===


Some users may not be as vocal as others. They won’t take the initiative to engage the robot or other humans. Fortunately, the robot will frequently demand attention, asking the user if they wish to talk. It does so by attempting to start a conversation at appropriate times (e.g. outside of dinner and recreational events, when the user is at home). If the robot receives no input, it may ask again several times at short intervals. In case of no response, the robot may be prompted to ask questions or tell stories on its own, until the user tells it to stop or – preferably – engages with the robot.

=Conclusion=

<!--Use-->

Regarding the users, we've researched the affected demographic and given initial thought to their needs and requirements. Once a foundation was laid and a general robot design was established, we proceeded to contact the users via a survey. This yielded fruitful results, which we've applied to enhance R.E.D.C.A. and narrow down the needs of our user.

<!--Emotion recognition-->