Embedded Motion Control 2014 Group 1: Difference between revisions


<div align="justify">

<table border="1" cellpadding="5" cellspacing="0" style="width:100%;border-collapse:collapse;"> | This wiki page is written as a report for the course Embedded Motion Control (EMC). For this course, software needs to be written for a robot (PICO) to autonomously solve a maze. As an intermediate review, the robot has to drive through a corridor and take the first exit. | ||

This page aims to give all relevant information with respect to the EMC course. First, the approach to solve the maze is explained. Second, all software components are explained. Furthermore, the result of the corridor challenge and maze competition are described. Last, conclusions are drawn and recommendations for future improvements are presented. | |||

Organizational information, such as planning, meetings and group info can be found in the appendices. Additionally, all videos made in simulation and during experiments are included there, as well as a summary of the topics and messages used for the communication between ROS nodes. | |||

= Approach = | |||

In this section, the considerations with respect to the choice of a maze solving algorithm are explained. Furthermore, the software architecture based on the chosen maze solving algorithm is presented. | |||

== Considerations == | |||

Solving a maze can be done in different ways. For the maze competition some algorithms were considered: | |||

* Random mouse algorithm | |||

* Wall follower | |||

* Pledge algorithm | |||

* Tremaux's algorithm | |||

* Dead-end filling | |||

* Recursive algorithm | |||

* Shortest path algorithm | |||

Explanations about these algorithms can be found at: [http://en.wikipedia.org/wiki/Maze_solving_algorithm algorithms]. | |||

Our approach for the maze competition will be based on the wall follower strategy. Essentially, this algorithm follows corridors while always turning right (or left) at any junction where it can do so. This is equivalent to a human solving a maze by putting their hand on the right, or left, wall and leaving it there as they walk through the maze. For mazes with no loops, as is the case for this course, this approach always leads to the exit. The choice for this algorithm is based on the fact that it is simple and easy to implement, but nevertheless effective. By adding arrow detection and dead-end recognition to the algorithm, the maze can be solved in a simple, efficient and robust way. | |||
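The right-hand rule described above can be illustrated with a minimal sketch. The <code>Junction</code> struct and the <code>chooseDirection</code> function are hypothetical names introduced only for this illustration; they are not part of the project code.

```cpp
#include <string>

// Hypothetical description of a junction: which directions are open,
// relative to the robot's current heading.
struct Junction {
    bool left;
    bool front;
    bool right;
};

// Right-hand wall follower: prefer a right turn, then straight ahead,
// then a left turn; turn around only when nothing else is open.
std::string chooseDirection(const Junction& j) {
    if (j.right) return "right";
    if (j.front) return "straight";
    if (j.left)  return "left";
    return "turn around";
}
```

Arrow detection and dead-end recognition would act as overrides on top of this fixed priority order.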

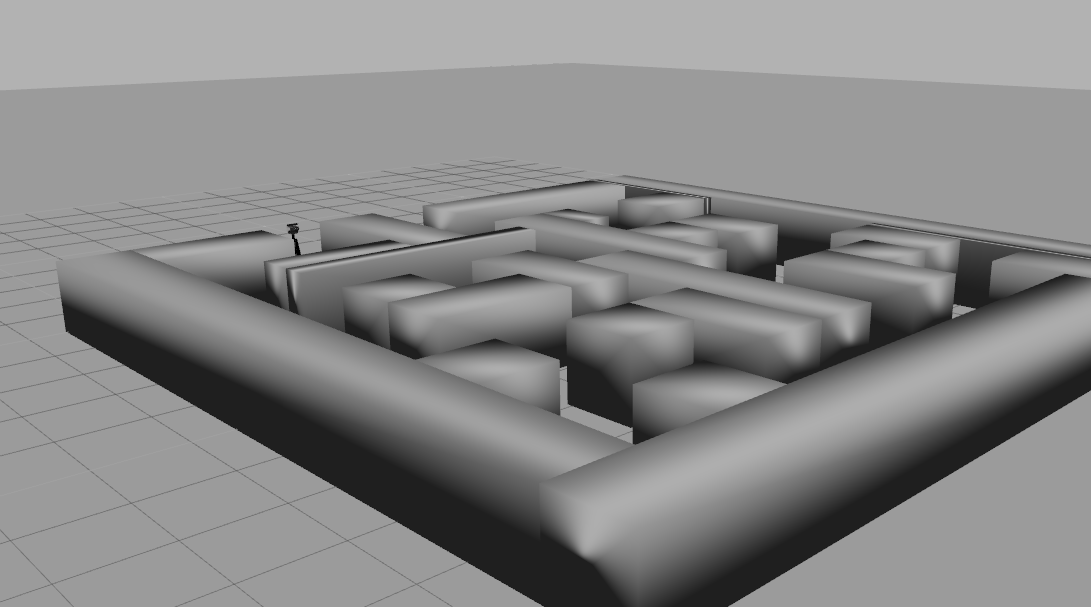

Examples of a maze solved by the Wall follower algorithm are shown below. | |||

* [http://youtu.be/Ww1cJ7ALuwE Link 1] | |||

* [https://www.youtube.com/watch?v=JPOMaMzH5pA Link 2] | |||

Implementing a wall follower algorithm can be done in different ways (see below). The choice of implementation was based on the following defined requirements: | |||

* The algorithm needs to be modular, i.e., it must be possible to start with a simple algorithm which can be improved by adding and deleting different nodes or parts of code. | |||

* The wall follower algorithm needs to be as robust as possible, able to cope with the defined situations of the maze, while remaining as simple as possible.

Implementing the wall follower for PICO using available sensors and actuators requires different steps which need to be considered. First, an approach is defined for how situations can be recognized and decisions are made. Then, choices are made regarding the trajectory PICO will follow through the maze. | |||

'''Situation recognition/Decision making'''<br> | |||

To recognize situations and act on them, some kind of a representation of the world is necessary. This is also needed to localize PICO. Three approaches are considered for the wall follower algorithm. | |||

The first approach is mapping of the environment with [http://wiki.ros.org/gmapping gmapping]. Gmapping makes it possible to perform Simultaneous Localization And Mapping (SLAM). Based on this map and localization, it is possible to plan a path in the environment. An advantage of this approach is that gmapping is available as a ROS package, which only needs tuning before use. Furthermore, a map gives a robust representation of the environment. The main drawback of this approach is that the main advantage of a map is in fact not used. When a map is known beforehand, a motion planner can determine the fastest route to the exit of the maze. Unfortunately, this is not the case with the maze competition. Furthermore, within the time span of the course, mapping can be a complex and time-consuming approach.

The second option is situation recognition based on laser data. With use of laser data, it is possible to determine the position of the robot with respect to the walls. Furthermore, it is possible to detect exits and dead ends based on laser data. Laser data is very easy to interpret because it gives straightforward geometrical information. However, a drawback of direct use of laser data is limited reliability because of noise, giving unpredictable results. In addition, it can be difficult to determine more complex features of the environment solely based on laser data. Finally, raw laser scanner data uses a very large number of points to describe an environment made out of a few straight walls, which seems inefficient for direct use.

The last option is situation recognition based on lines. With use of some transformations, lines can be determined based on the laser data. These lines will represent the walls of the maze. Line data is more reliable than laser data for situation recognition because it filters out noise. Also, determining exits or dead ends may be easier and more robust because of the far smaller amount of data used to describe the environment. A difficulty with line detection is that its output can be incorrect: multiple lines may be given for one wall, or a line may be drawn where no wall exists. This mostly comes down to correct tuning of the algorithm.

As already stated in the requirements, the algorithm will be built based on a modular design, starting simple and improving the algorithm over the course of time. For this reason, a start will be made using laser data to recognize situations. For the final implementation, line detection should be inserted in the software architecture. Gmapping is not chosen because it is seen as a more difficult and time-consuming approach, more complex than necessary for the maze competition. Our approach is to use the available time to implement a robust algorithm with use of line detection.

'''Behavior'''<br> | |||

Based on the laser and/or line data, situations can be recognized. However, PICO still needs to get commands as to what its trajectory will be. Trajectory planning can be done using a global or a local approach, or a combination. Global planning plans a path in advance, creating setpoints in the environment which PICO should attempt to reach. Local planning only determines the direction PICO should move in at any given moment; what its position is in the maze or where it may ultimately move to is not considered. Because of the use of a (local) wall-follower principle, only local planning is used, which is also one of the driving reasons to not use mapping, as described before.

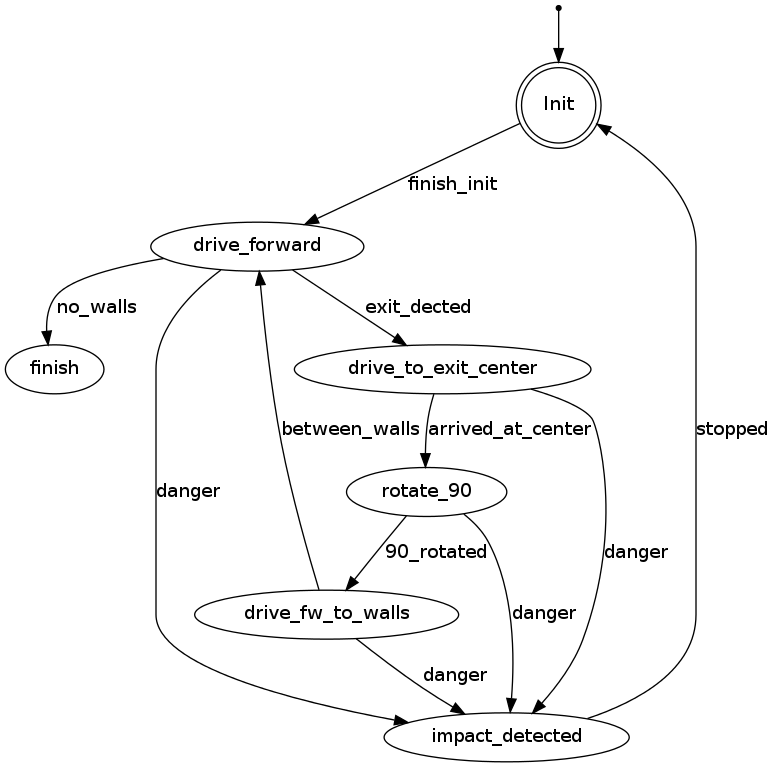

To make the correct decisions using local planning, the approach of a Finite State Machine (FSM) is considered. An FSM provides a structured and very insightful way to model the decision making process. Based on the state or situation PICO is in, its behavior is determined. The state machine will be used in such a way that different states can be added during the course. This aids in developing a modular and robust design, which are two of the most important requirements for the software, as described before.
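As an illustration of the FSM idea, the following is a minimal sketch of a transition function. The states, the <code>Situation</code> fields and the transition rules are assumptions made for this example; the actual state machine of the project contains more states and inputs.

```cpp
// Hypothetical states of a small wall-follower state machine.
enum class State { DriveStraight, TurnRight, TurnAround, SafetyStop };

// Hypothetical inputs produced by situation recognition and odometry.
struct Situation {
    bool obstacleTooClose;  // raw laser data reports an object within the safety distance
    bool exitRight;         // an exit to the right was recognized
    bool deadEnd;           // the corridor ahead is closed
    bool turnFinished;      // odometry reports that the requested rotation is done
};

// Returns the next state given the current state and the recognized situation.
State nextState(State current, const Situation& s) {
    if (s.obstacleTooClose) return State::SafetyStop;  // safety has priority in every state
    switch (current) {
        case State::DriveStraight:
            if (s.exitRight) return State::TurnRight;
            if (s.deadEnd)   return State::TurnAround;
            return State::DriveStraight;
        case State::TurnRight:
        case State::TurnAround:
            return s.turnFinished ? State::DriveStraight : current;
        case State::SafetyStop:
            return State::DriveStraight;  // obstacle is no longer too close
    }
    return current;
}
```

New behavior can be added by introducing a state and its transitions, without touching the other cases, which is what makes the approach modular.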

==Software architecture == | |||

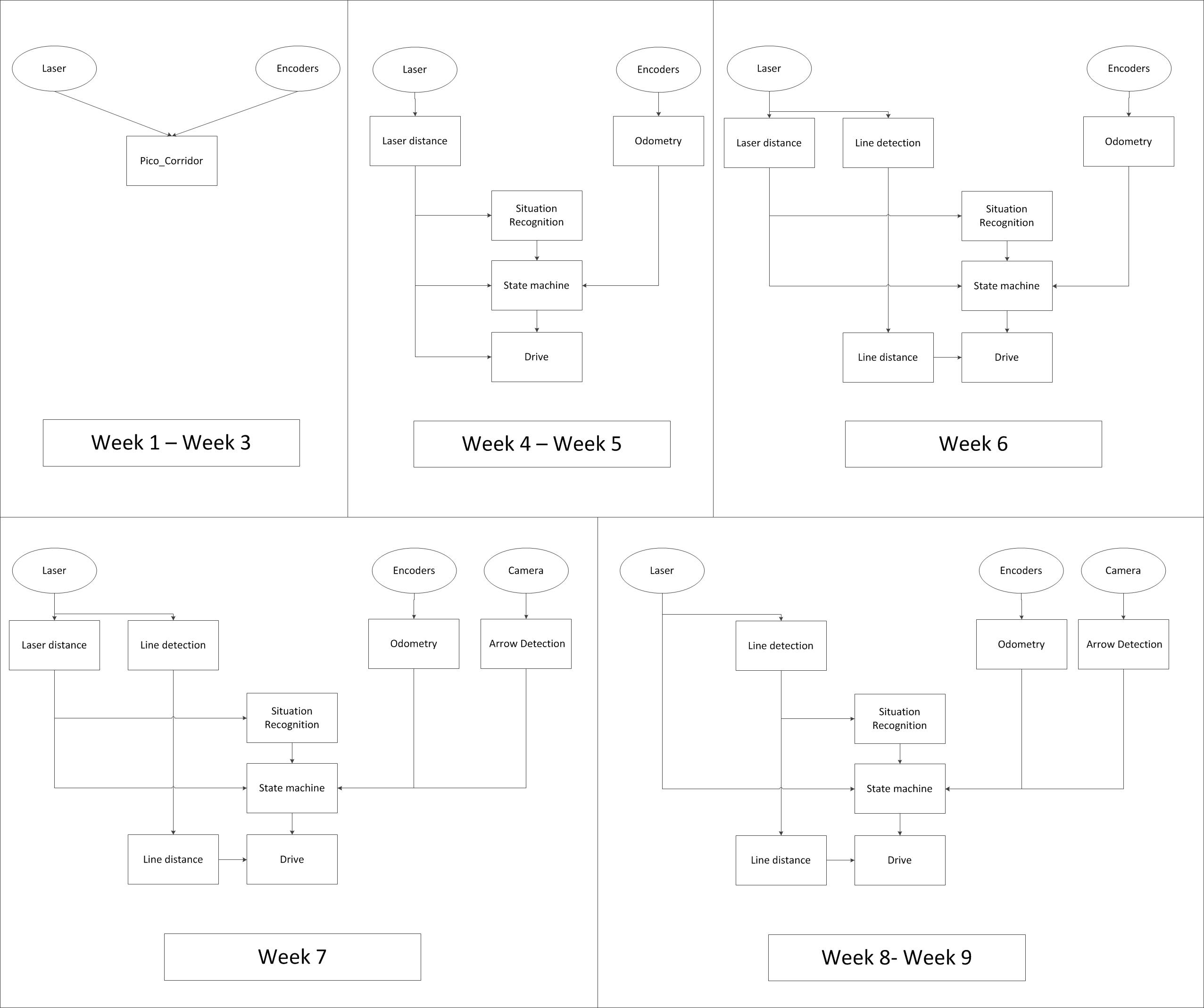

[[File:EMC_history2.jpg|thumb|350px|Evolution of the software architecture during the course of the project]] | |||

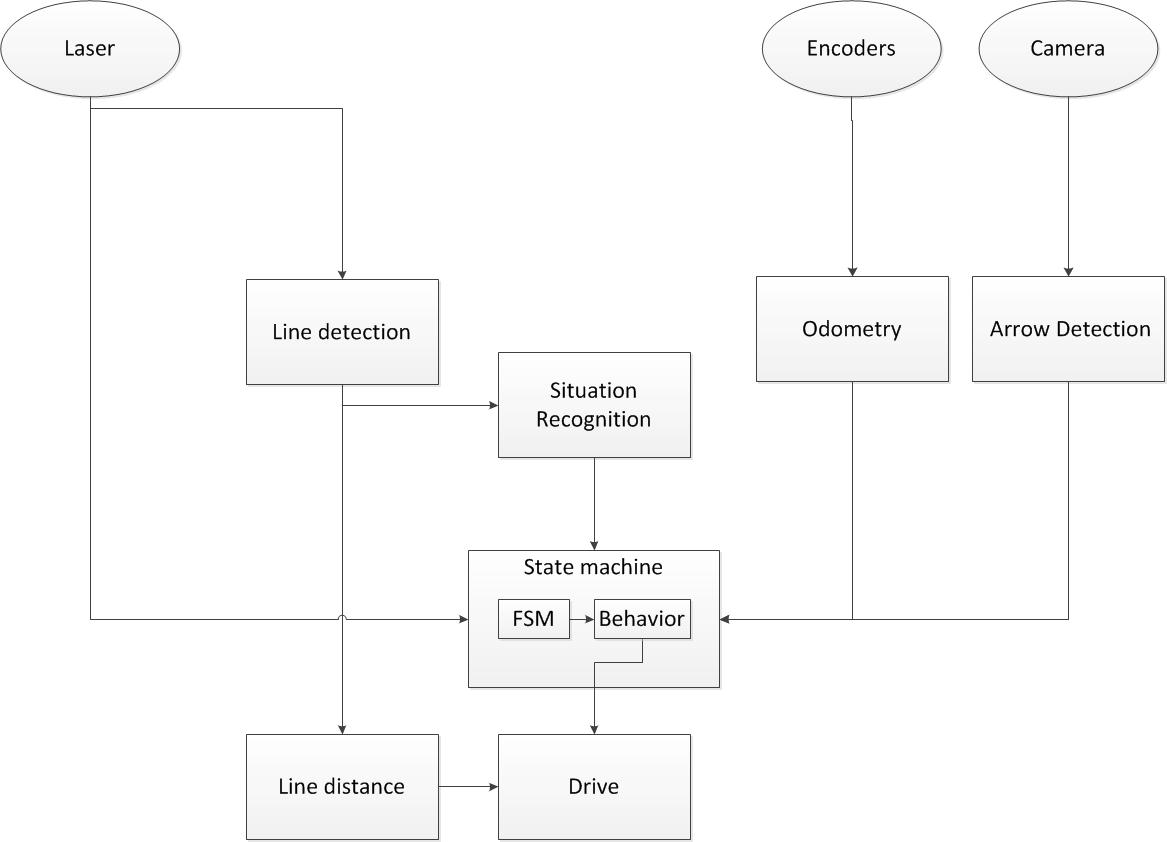

In the previous section, the use of line detection and laser data for situation recognition was discussed. To determine behavior, a state machine will be used. These different operations must be implemented in ROS. Because of the requirement of a modular design, the different operations will be split into separate ROS nodes. This results in a good overview of the system architecture and a modular design in which it is possible to easily add, improve or delete different nodes. | |||

The figure to the right shows how the software architecture changed during the course (click on the picture to enlarge). Oval blocks represent sensor data, rectangular blocks represent ROS nodes. During the first three weeks, only a single script was used for the corridor challenge. After this, the new architecture was implemented and improved over the course of time. Important additions were the line detection and the arrow detection. Furthermore, it becomes clear that line detection replaces distance calculation using laser data. In the final situation, raw laser scanner sensor data is only used for safety in the state machine.

The final software architecture is a robust, modular, simple and logical design. An enlarged picture of the final software architecture is shown below. The general working principle will first be explained. In the next sections, the different blocks will be explained in more detail. | |||

Based on the incoming laser data from PICO, lines are generated with use of a Hough transform and a custom-built filter. With use of the line data, situations are recognized, such as exits and dead ends. This information is sent to the state machine. In addition to regular situation recognition, information about a detected arrow is sent to the state machine. This information is used to determine what kind of behavior is desired in the given situation. An example could be that the robot needs to turn 90 degrees to the right because a T-junction is recognized. The state machine sends the command to rotate the robot with a given speed, until the odometry shows that the robot has turned 90 degrees.

The desired behavior of the robot is sent to the drive module, which sends velocity commands to PICO. The drive block also makes sure that the robot drives in the middle of the maze corridors and that it has an orientation perpendicular to the driving direction. For this, distances based on line input are used. Depending on the state of the robot, this 'automatic centering' control can be turned on or off.
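Checking whether the odometry shows that a turn is complete requires care, because the yaw angle wraps around at plus or minus <math>\pi</math>. The sketch below shows one way to do this; the function names and the tolerance value are assumptions for illustration, not the project's implementation.

```cpp
#include <cmath>

// Smallest signed difference (to - from), wrapped to the interval (-pi, pi].
double angleDifference(double from, double to) {
    const double pi = std::acos(-1.0);
    double d = std::fmod(to - from, 2.0 * pi);
    if (d > pi)   d -= 2.0 * pi;
    if (d <= -pi) d += 2.0 * pi;
    return d;
}

// True once the robot has rotated by (approximately) targetTurn radians
// since startYaw. The tolerance is a hypothetical tuning value.
bool turnCompleted(double startYaw, double currentYaw,
                   double targetTurn, double tolerance = 0.02) {
    const double turned = angleDifference(startYaw, currentYaw);
    return std::fabs(turned) >= std::fabs(targetTurn) - tolerance;
}
```

For a 90-degree turn to the right, <code>targetTurn</code> would be <math>-\pi/2</math> under the usual convention that counter-clockwise rotation is positive.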

The raw laser sensor data is used for the 'safe drive' state of PICO. When an object is detected within a certain distance, PICO will go to its safety state. | |||

[[File:Schematic_EMC012324.JPG|thumb|center|450px|Schematic overview of the final software architecture]] | |||

= Software Components = | |||

== Line detection == | |||

The primary sensor for gathering information about the environment of PICO is its laser scanner. The scanner describes a discretized 270-degree angle around PICO, in the plane of the scanner, in the form of polar coordinates of the closest detected points. While useful in some cases, this results in a large dataset from which it can be difficult to extract useful information, such as the distribution of walls surrounding PICO or an upcoming dead end. Since the environment will be built entirely of approximately straight walls, a logical step is to transform the laser points to a set of lines representing the walls. This greatly reduces the number of coordinates, allowing for a better interpretation of the surroundings.

=== Goal === | |||

The goal of this component is to accurately transform received laser points to lines describing the surrounding walls. Lines will be described by four Cartesian coordinates. | |||

=== Considerations === | |||

For detecting straight lines, a commonly used method is the (generalized) Hough transform. The Hough transform is an algorithm which detects points in an image which together form a straight line. It does this by collecting sets of neighboring points, each of which could be a point on the same straight line. If a large enough set of such points is found, a line is ‘detected’. | |||

A drawback of using the Hough transform for this application is that it cannot immediately be used on the laser scan data, because the Hough transform requires an image to operate on. This requires the collected laser scan data to be transformed into a binary image on which the Hough transform can be applied, while the resulting lines too need to be transformed back into real-world coordinates. | |||

Another way to collect lines from the laser data would be to write a simplified algorithm which doesn’t require an image, but operates directly on data from the laser scan. Such an algorithm could use the fact that the laser data is ordered in increasing angle and could look at consecutive points with similar X and Y coordinates. However, after discussion the decision was made that a self-written algorithm would be too complex to develop if it is to be robust in varying situations. In contrast, the Hough transform has been thoroughly developed over many years and therefore delivers a more generalized solution. The decision was therefore made to use the Hough transform for detection of lines.

=== Implementation === | |||

In C++, the Hough transform is implemented in the OpenCV library through the [http://docs.opencv.org/doc/tutorials/imgproc/imgtrans/hough_lines/hough_lines.html HoughLines and HoughLinesP] functions. These functions operate on a binary image (cv::Mat object). In order to arrive at such an image, several transformations are performed:

* From laser scan points in polar coordinates ([http://docs.ros.org/api/sensor_msgs/html/msg/LaserScan.html sensor_msgs::LaserScan]) to Cartesian points ([http://docs.ros.org/api/sensor_msgs/html/msg/PointCloud2.html sensor_msgs::PointCloud2]). This is done using ROS's built-in projection method [http://wiki.ros.org/laser_geometry#High_fidelity_projection laser_geometry::transformLaserScanToPointCloud()], which transforms the laser scan to points in a fixed frame, in this case PICO's base (/pico/base_link). | |||

* From PointCloud2 to [http://docs.pointclouds.org/1.7.0/classpcl_1_1_point_cloud.html pcl::PointCloud<pcl::PointXYZ>], which has an easier interface for extracting the individual points. This is done using the [http://docs.pointclouds.org/1.0.0/namespacepcl.html#abc8688665cb9e229c199344d767684f6 pcl::fromROSMsg()] method.

* From points in Cartesian coordinates to a binary image ([http://docs.opencv.org/doc/tutorials/core/mat_the_basic_image_container/mat_the_basic_image_container.html cv::Mat]). This is done by scaling the coordinates and overlaying them onto a matrix of dimensions, which are determined by the chosen resolution and a maximum range. Points are then ‘drawn’ onto the image, resulting in a black-and-white image where each projected point is colored white. | |||

The Hough transform can then be performed on this image, the parameters of which are discussed later. The resulting line coordinates, which are scaled on the dimensions of the image, are then transformed back into real-world coordinates. In order to easily publish them on a ROS topic, and to visualize them in rviz, they are stored in a [http://docs.ros.org/api/visualization_msgs/html/msg/Marker.html visualization_msgs::Marker] object, containing a list of couples of points describing the lines. | |||
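The coordinate transformations described above can be sketched without any ROS, PCL or OpenCV dependency. In the sketch below, a plain byte vector stands in for the cv::Mat image, and the <code>Grid</code> struct, its parameters and all function names are assumptions chosen for illustration only.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x; double y; };
struct Pixel { int row; int col; };

// Assumed image layout: the image covers [-maxRange, maxRange] in x and y,
// with `resolution` meters per pixel.
struct Grid {
    double maxRange;
    double resolution;
    int size() const {
        return static_cast<int>(std::lround(2.0 * maxRange / resolution)) + 1;
    }
};

// Polar laser measurement to Cartesian coordinates in the robot frame.
Point polarToCartesian(double range, double angle) {
    return {range * std::cos(angle), range * std::sin(angle)};
}

// Real-world coordinates to image coordinates (shift, then scale).
Pixel toPixel(const Point& p, const Grid& g) {
    return {static_cast<int>(std::lround((p.y + g.maxRange) / g.resolution)),
            static_cast<int>(std::lround((p.x + g.maxRange) / g.resolution))};
}

// Image coordinates back to real-world coordinates (inverse of toPixel,
// up to the rounding error of one pixel).
Point toWorld(const Pixel& px, const Grid& g) {
    return {px.col * g.resolution - g.maxRange,
            px.row * g.resolution - g.maxRange};
}

// Draw every valid laser point as a white pixel (255) on a black image (0),
// stored row-major in a size() x size() buffer.
std::vector<unsigned char> rasterize(const std::vector<double>& ranges,
                                     double angleMin, double angleIncrement,
                                     const Grid& g) {
    const int n = g.size();
    std::vector<unsigned char> image(static_cast<std::size_t>(n) * n, 0);
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        // Skip NaN, non-positive and out-of-range measurements.
        if (!(ranges[i] > 0.0) || ranges[i] > g.maxRange) continue;
        const Point p = polarToCartesian(ranges[i], angleMin + i * angleIncrement);
        const Pixel px = toPixel(p, g);
        image[static_cast<std::size_t>(px.row) * n + px.col] = 255;
    }
    return image;
}
```

The resolution trades accuracy against image size: a finer grid preserves more detail but makes the Hough transform more expensive.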

After implementing the Hough transform, it was found that the resulting set of lines contained many small line segments as well as close and approximately parallel lines. It was found that a cause for this was the variance in the coordinates of the detected laser points. Because of this ‘spread’ of the points, several similar lines could be drawn in the same area, whereas only a single wall exists. Several solutions were investigated, such as further tuning of the parameters of the Hough transform and the use of blurring and edge detection on the input image. However, these solutions often resulted in decreased accuracy, many short line segments or did not fully solve the problem. Therefore, a different approach was pursued, in the form of a custom filter to combine multiple ‘duplicate’ lines into a single line. | |||

==== Custom filter ==== | |||

For two lines to be considered to form a single line in the real world, they must meet some conditions: | |||

* Their angle must be identical up to a threshold (here +/- 10 degrees) | |||

* The distance perpendicular to the lines must be identical up to a threshold (here 0.1m) | |||

* The lines must overlap along their length, or the distance between the endpoints must be within some threshold (here: 0.1m) | |||
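The three conditions can be expressed as a single predicate on a pair of segments. The sketch below is one possible formulation, not the project's code: the <code>Segment</code> struct and function names are hypothetical, and measuring the perpendicular offset at the segment midpoints in a frame aligned with the first segment is an assumption of this example.

```cpp
#include <algorithm>
#include <cmath>

struct Segment { double x1, y1, x2, y2; };

const double kPi = 3.14159265358979323846;

// Direction of a segment, normalized to [0, pi) so that opposite
// directions are treated as the same angle.
double normalizedAngle(const Segment& s) {
    double a = std::atan2(s.y2 - s.y1, s.x2 - s.x1);
    if (a < 0.0)  a += kPi;
    if (a >= kPi) a -= kPi;
    return a;
}

// Rotate a segment about the origin by -angle.
Segment rotated(const Segment& s, double angle) {
    const double c = std::cos(-angle), sn = std::sin(-angle);
    return {c * s.x1 - sn * s.y1, sn * s.x1 + c * s.y1,
            c * s.x2 - sn * s.y2, sn * s.x2 + c * s.y2};
}

// True if the two segments satisfy all three conditions. Default thresholds
// follow the values quoted in the text (10 degrees, 0.1 m, 0.1 m).
bool belongToSameWall(const Segment& a, const Segment& b,
                      double angleTol = 10.0 * kPi / 180.0,
                      double offsetTol = 0.1, double gapTol = 0.1) {
    // 1. Similar direction; the difference is taken modulo pi.
    const double ta = normalizedAngle(a), tb = normalizedAngle(b);
    double d = std::fabs(ta - tb);
    d = std::min(d, kPi - d);
    if (d > angleTol) return false;

    // 2. Similar perpendicular offset, in a frame aligned with segment a.
    const Segment ra = rotated(a, ta), rb = rotated(b, ta);
    const double ya = 0.5 * (ra.y1 + ra.y2), yb = 0.5 * (rb.y1 + rb.y2);
    if (std::fabs(ya - yb) > offsetTol) return false;

    // 3. Overlap along the length (negative gap) or a sufficiently small gap.
    const double aMin = std::min(ra.x1, ra.x2), aMax = std::max(ra.x1, ra.x2);
    const double bMin = std::min(rb.x1, rb.x2), bMax = std::max(rb.x1, rb.x2);
    const double gap = std::max(aMin, bMin) - std::min(aMax, bMax);
    return gap <= gapTol;
}
```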

An algorithm was written to procedurally consider which line segments from a set satisfy these conditions. Matching line segments were then used to determine a single line which best represents them. The algorithm works in the following way: | |||

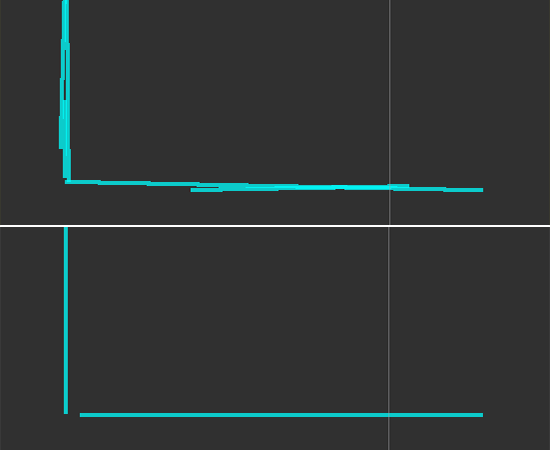

[[File:Filtering.png|thumb|300px|Improvement of line data using custom line filter. Result from Hough transform shown on top, after filtering shown below.]] | |||

* The angle of each line segment w.r.t. the x-axis is determined using <math>\theta = atan2(y2-y1,\ x2-x1)</math>. The angle is normalized to the [0,<math>\pi</math>] range so that lines in opposing directions are not treated as having different angles. The normalized angles are stored in a vector with the same indices as the line segments for later reference.

* Iterating over the angles, the indices of lines whose angle corresponds to the angle of another line are stored in a new vector. This results in vectors with the indices of approximately parallel lines. | |||

* The original line segments are rotated about the origin by their angle so that they are parallel to the x-axis. Because lines with a similar angle are rotated by the same angle around the same point, their relative distance remains approximately the same. | |||

* The lines are ordered in increasing ‘leftmost’ x-coordinate to facilitate future operations. | |||

* Iterating over the vectors of parallel lines, the distance w.r.t. the x-axis is compared. If the difference in distance between two lines exceeds the defined threshold, the index of the second line is moved to a new vector. This results in new vectors with the indices of lines which are parallel as well as close to each other. | |||

* Iterating over the new vectors, the distance between the rightmost vertex of a line and the leftmost vertex of the next line is determined. If this distance exceeds the threshold, the indices of the remainder of lines are moved to a new vector. | |||

* The result of the previous steps is a set of vectors with indices of line segments which satisfy the conditions to form a single line. For each of these, the minimum and maximum x-value and the average <math>\theta</math> and y values are determined in the rotated coordinate frame. A new line with these coordinates is created, which is rotated back by the average <math>\theta</math> value. This line now represents the combination of the original line segments. | |||
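The final merging step can be sketched as follows. This is a simplified illustration under stated assumptions: the group passed in is non-empty and already satisfies the matching conditions, all segments are rotated by the group's average angle, and the angles in the group do not straddle the 0/<math>\pi</math> wrap-around. The names <code>Segment</code> and <code>mergeSegments</code> are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Segment { double x1, y1, x2, y2; };

// Merge a non-empty group of matching segments into one representative line.
Segment mergeSegments(const std::vector<Segment>& group) {
    const double pi = std::acos(-1.0);

    // Average of the normalized angles (plain, unweighted average).
    double thetaSum = 0.0;
    for (const Segment& s : group) {
        double t = std::atan2(s.y2 - s.y1, s.x2 - s.x1);
        if (t < 0.0) t += pi;
        thetaSum += t;
    }
    const double theta = thetaSum / group.size();
    const double c = std::cos(theta), sn = std::sin(theta);

    // Rotate every endpoint by -theta and collect the extent along x
    // and the average offset along y in the rotated frame.
    double xMin = 0.0, xMax = 0.0, ySum = 0.0;
    bool first = true;
    for (const Segment& s : group) {
        const double xs[2] = {s.x1, s.x2};
        const double ys[2] = {s.y1, s.y2};
        for (int k = 0; k < 2; ++k) {
            const double xr =  c * xs[k] + sn * ys[k];
            const double yr = -sn * xs[k] + c * ys[k];
            xMin = first ? xr : std::min(xMin, xr);
            xMax = first ? xr : std::max(xMax, xr);
            ySum += yr;
            first = false;
        }
    }
    const double yAvg = ySum / (2.0 * group.size());

    // Rotate the merged line back by +theta into the original frame.
    return {c * xMin - sn * yAvg, sn * xMin + c * yAvg,
            c * xMax - sn * yAvg, sn * xMax + c * yAvg};
}
```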

Testing of the filter revealed that the new representations matched the position of original laser points very accurately, while reliably reducing the number of lines to the minimum for each situation without falsely removing lines of interest. Because of the effectiveness of the filter, the parameters of the Hough transform could be tuned to allow more sensitive detection of lines, which normally results in more separate line segments, but which are now combined into a single line regardless. This especially improved line detection accuracy for larger distances. | |||

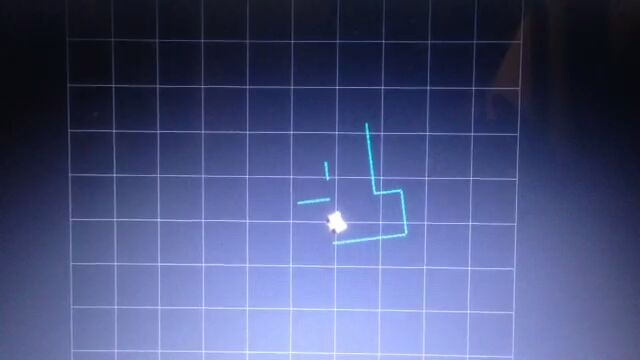

[[File:Lines-animation.gif|thumb|400px|Animation showing laser data, unfiltered lines and final lines after filtering.]] | |||

Even though the filter is somewhat complex, it was found to execute computationally efficiently. Because it iterates over relatively small vectors and effectively only executes floating point comparisons, little load is added to the computationally more expensive Hough transform. This means that the filter can be freely applied if the Hough transform is already being used. | |||

While the filter performed accurately for static situations, it was found that during moving, the line representations would slightly vary between frames, in particular the angle of the lines. An explanation for this could be an inaccuracy in the algorithm, which averages the angle of similar lines. Many small line segments with small deviations in angle can therefore pollute the calculated average. One way to solve this would be to calculate a weighted average based on the length of the line segment. As it turned out, however, the relatively small ‘flickering’ of the lines did not cause problems in the relevant control processes, therefore no effort was put into developing this solution. | |||
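The suggested length-weighted average was not implemented in the project, but it could look roughly like the sketch below. The function name and the <code>Segment</code> struct are hypothetical, and the sketch again assumes that the angles do not straddle the 0/<math>\pi</math> wrap-around.

```cpp
#include <cmath>
#include <vector>

struct Segment { double x1, y1, x2, y2; };

// Average direction of a group of segments, weighted by segment length,
// so that short, noisy segments contribute less than long ones.
double weightedAverageAngle(const std::vector<Segment>& group) {
    const double pi = std::acos(-1.0);
    double weightedSum = 0.0;
    double totalLength = 0.0;
    for (const Segment& s : group) {
        const double dx = s.x2 - s.x1, dy = s.y2 - s.y1;
        const double length = std::hypot(dx, dy);
        double t = std::atan2(dy, dx);
        if (t < 0.0) t += pi;  // normalize so opposite directions coincide
        weightedSum += length * t;
        totalLength += length;
    }
    return totalLength > 0.0 ? weightedSum / totalLength : 0.0;
}
```

With this weighting, a 10 m segment at angle 0 and a 0.14 m segment at 45 degrees give an average close to 0, whereas a plain average would give 22.5 degrees.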

Because the filter by nature does not depend on any particular process it is used in, it was structured as a static method in a container class. This allows the filter to be re-used by other processes if desired, as is discussed in the section about [[#Arrow_detection|Arrow detection]].

=== Conclusion & Recommendations === | |||

By applying the Hough transform and a custom filter to the laser scan data, a minimal set of lines is accurately detected within a range of 3 meters. The minimal representation allows for easy use in other processes, such as determining distance to walls and situation detection. In the animation to the right, the outputs from laser points and unfiltered lines to the final, filtered lines are compared.

Improvements could be made to the range up to which lines are accurately detected. However, considering the choice for local planning only, the range was found to be sufficient and this was not pursued. Improvements could also be made to the filter to reduce ‘flickering’ of lines in dynamic situations. Finally, line detection as described here is limited to straight lines only, which limits its use in a generalized environment. | |||

== Distance == | |||

=== Goal === | |||

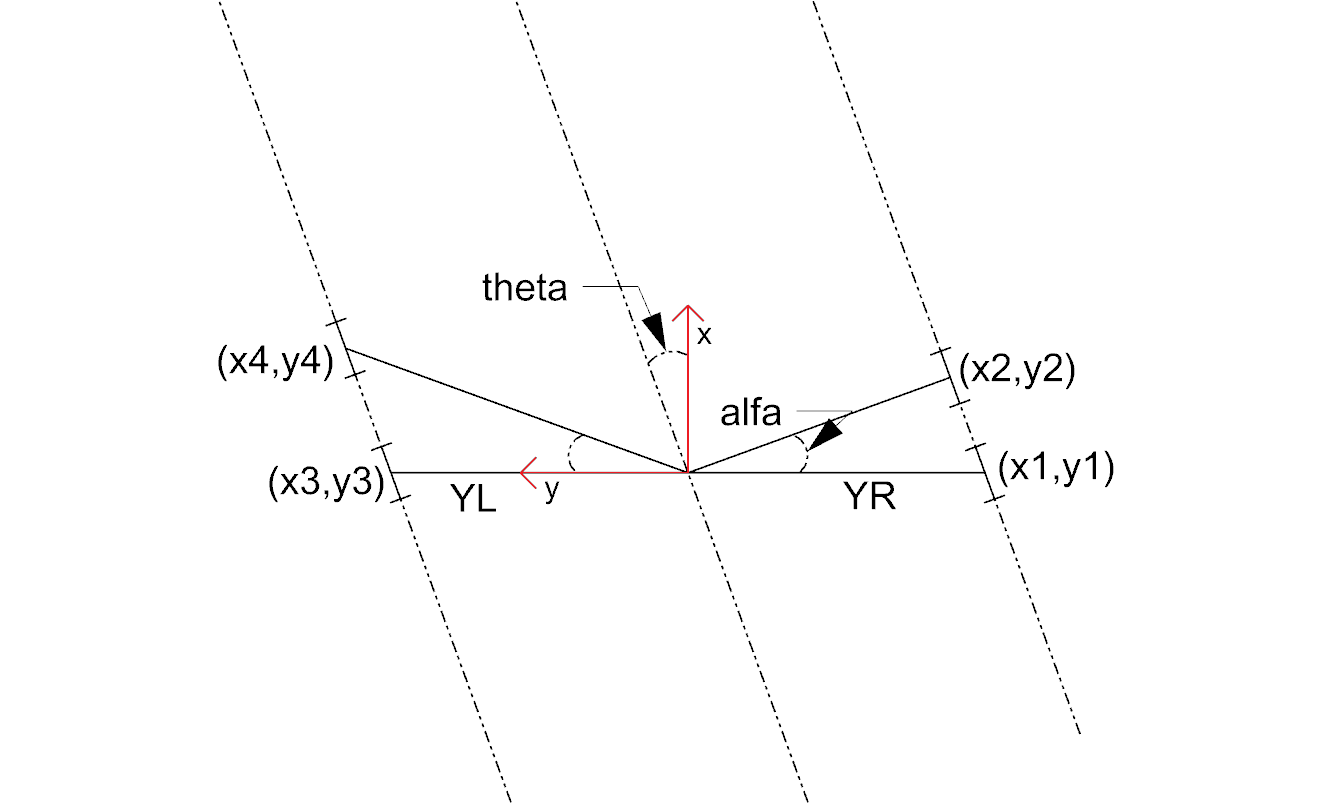

The goal for this part is to determine the distance to the left and right walls and to a wall in front, if any exists. Additionally, the angle <math>\theta</math> with respect to the left and right walls is determined.

=== Considerations === | |||

Two different approaches were considered to determine the distance to the walls. The first approach was based on the laser data points (laser distance), while the second approach utilizes the lines (line distance) generated by the line detection node. The former approach was used in the corridor challenge; it was later replaced by the latter approach using lines, for use in the final maze challenge. | |||

<b>Laser distance</b> | |||

[[file:Dist_corridor.png|thumb|350px|Determining the distance to walls using laser data]] | |||

Using raw laser scanner data, a small sample of laser points can be used to determine the distance to the walls. The figure to the right shows how the distance is determined. A sample of points directly next to PICO and at a 5 degree forward angle of PICO are averaged and used for the distance calculations.

A drawback of this approach is that only a small sample is used to determine the distance to a wall, which can easily lead to errors. Another drawback of the approach arises when the sampled laser points do not find a wall (for instance in case of a gap in the walls); in that case, the distance and angle cannot be determined. Because of these drawbacks, another approach should be pursued, using line data.

<b>Line distance</b> | |||

The calculation of the distance based on lines has several advantages in comparison to the laser distance. | |||

*When lines are used to determine the distance to the walls, effectively all the laser points corresponding to points on that line are used instead of a small sample of laser points. | |||

*When PICO is not directly between walls, for instance when a turn is made in a corridor to take an exit, it is still possible to calculate a distance to other relevant walls based on lines in front of PICO. | |||

The method to determine distances based on line data and its implementation are described in the next part. | |||

=== Implementation === | |||

[[File:Dist_lines2.png|thumb|300px|Determining distance to walls using line data]] | |||

[[File:Distance_lines1.png|thumb|300px|Situation 1 in which a false distance could be calculated; problem did not arise in practice.]] | |||

[[File:Distance_lines2.png|thumb|300px|Situation 2 in which a false distance could be calculated; problem solved by control strategy.]] | |||

The input for this component is the set of lines, each described by two points (x1,y1 and x2,y2). To be able to calculate the distance to the lines correctly, a first step is to check the orientation of each line. We want to determine whether the first point or the second point of the line is closest to PICO. This is done using the following, straightforward code:

<code>

// Read the two endpoints of line i from the marker message

x1 = markers.points[i].x;

y1 = markers.points[i].y;

x2 = markers.points[i+1].x;

y2 = markers.points[i+1].y;<br />

// Swap the endpoints if needed, so that (x1,y1) has the smaller x-coordinate

if (x1 > x2){

x1 = markers.points[i+1].x;

y1 = markers.points[i+1].y;

x2 = markers.points[i].x;

y2 = markers.points[i].y;

}

</code>

After this transformation, the first point (x1,y1) is always closest to PICO in x-direction. | |||

For every line, angle <math>\theta</math> can be calculated using the following equation:<br><br> | |||

<math>\theta = atan((y2-y1)/(x2-x1))</math><br><br> | |||

The position perpendicular to the line/wall is calculated with the following equation:<br><br> | |||

<math>Y = \left(y2 - \frac{y2-y1}{x2-x1} \cdot x2\right) \cdot cos(\theta)</math><br><br>

Conceptually, this equation extends the line until it is next to PICO, so a line that starts in front of PICO also results in a value for Y, as if the line ran next to PICO. This is very useful when PICO has turned and is not between the walls yet. The definitions of the distances are visualized in the figure to the right. Note that in the figure, only the angle with respect to the center line of the corridor is given, when in reality the angles to the left and right walls are calculated separately.
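The two equations can be combined into one small function. The sketch below assumes that the cosine factor multiplies the whole y-intercept of the extended line, which yields the perpendicular distance from PICO (at the origin) to the line; it also assumes the line is not exactly perpendicular to the driving direction (x1 ≠ x2). The names <code>LinePose</code> and <code>lineDistance</code> are hypothetical.

```cpp
#include <cmath>
#include <utility>

struct LinePose {
    double theta;  // angle of the line w.r.t. PICO's x-axis (driving direction)
    double Y;      // signed perpendicular distance from PICO to the extended line
};

// Compute the angle and perpendicular distance for the line through
// (x1,y1) and (x2,y2), given in PICO's frame. Assumes x1 != x2.
LinePose lineDistance(double x1, double y1, double x2, double y2) {
    // Order the endpoints so that (x1,y1) has the smaller x-coordinate.
    if (x1 > x2) {
        std::swap(x1, x2);
        std::swap(y1, y2);
    }
    const double slope = (y2 - y1) / (x2 - x1);
    const double theta = std::atan(slope);
    // y-intercept of the extended line: its y value directly next to PICO.
    const double intercept = y2 - slope * x2;
    return {theta, intercept * std::cos(theta)};
}
```

For a wall parallel to the driving direction at y = 1, this gives <math>\theta = 0</math> and Y = 1, regardless of whether the detected segment starts next to PICO or further ahead.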

The two equations described above are applied to each line. Based on the values resulting from these equations, some filtering is applied to determine which lines could be relevant. The following filtering criteria are used: | |||

*The absolute value of the angle <math>\theta</math> must be smaller than 0.785 rad (45 degrees): | |||

::this returns only the lines that are "vertical" (+ or - 45 degrees) for PICO. | |||

*When y > 0, a line is to the left of PICO. | |||

*When y < 0, a line is to the right of PICO. | |||

After these filtering steps, it is still possible that multiple lines are detected to the left or the right of PICO. Therefore, an additional criterion was added:

*The line with the minimum absolute y-value, i.e. the line closest to PICO, is used for the distance to the walls.
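The filtering criteria can be sketched as a single pass over the candidate lines. This is an illustration only: the struct and function names are hypothetical, and "minimum y-value" is interpreted here as the smallest absolute distance on each side.

```cpp
#include <cmath>
#include <vector>

struct LinePose { double theta; double Y; };

struct WallDistances {
    bool hasLeft;   double left;   // distance to the nearest wall on the left
    bool hasRight;  double right;  // distance to the nearest wall on the right
};

// Select the nearest roughly-parallel wall on each side of PICO.
WallDistances selectWalls(const std::vector<LinePose>& lines) {
    WallDistances w{false, 0.0, false, 0.0};
    for (const LinePose& l : lines) {
        // Keep only lines within 45 degrees of the driving direction.
        if (std::fabs(l.theta) >= 0.785) continue;
        if (l.Y > 0.0) {
            // Line to the left of PICO: keep the closest one.
            if (!w.hasLeft || l.Y < w.left) { w.hasLeft = true; w.left = l.Y; }
        } else if (l.Y < 0.0) {
            // Line to the right of PICO: keep the closest one.
            if (!w.hasRight || -l.Y < w.right) { w.hasRight = true; w.right = -l.Y; }
        }
    }
    return w;
}
```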

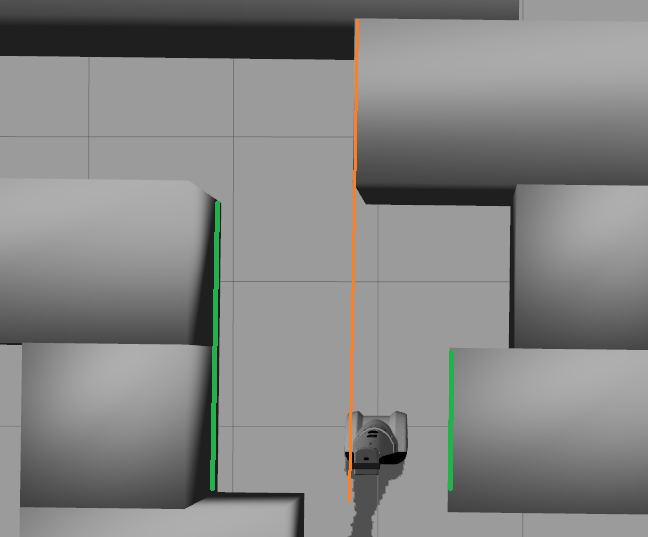

One critical situation when this approach might fail is shown in Situation 1 to the right. In that situation, the wall in front of PICO (orange line) has the minimum y value, so the distance to the orange line would give <math>Y_{left}</math>. However, because PICO has only a few laser points on that wall, it is not detected as a line so this does not give a problem. In this scenario, the distance relative to the two green lines will be returned. | |||

Another approach that was tested was to do the last filtering step based on the line with the x coordinate closest to PICO, which in most cases is the line directly next to PICO. This resulted in problems when PICO turned towards an opening and a far removed wall was found left or right from PICO, as shown in Situation 2 to the right. PICO would then calculate one distance to the wall of the opening and the other distance to the far-removed wall (red line), which is undesired.

=== Conclusion & recommendations === | |||

The calculation of the distance to the walls based on line data was found to be very robust, compared to using laser data. This is partly thanks to the detected lines, which are provided very reliably.

One improvement that could be made is in the choice of the line used to determine the distance. Choosing the line with the y-coordinate closest to PICO sometimes leads to a false distance, to which the control is adapted. This could be improved by a smarter selection of the correct line. However, this proved to be unnecessary for the maze competition, for which the implementation described here was sufficient.

==Odometry == | |||

=== Goal === | |||

The odometry block transforms the Quaternion odometry data to roll, pitch and yaw angles. | |||

=== Considerations === | |||

For the transformation, the header file ''tf/transform_datatypes.h'' is included. No other options were found for this transformation.

=== Implementation === | |||

With use of the transformation, the odometry data is transformed to roll, pitch and yaw angles. For PICO only the yaw angle is relevant; for that reason, only the yaw data is published.
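The project relies on the tf header for this conversion. For reference, the underlying formula for the yaw angle of a unit quaternion can be written out directly, as in the dependency-free sketch below; the <code>Quaternion</code> struct and function name are hypothetical stand-ins for the ROS message types.

```cpp
#include <cmath>

// Unit quaternion (x, y, z, w), in the same component order as the
// orientation field of a ROS odometry message.
struct Quaternion { double x, y, z, w; };

// Yaw (rotation about the z-axis) in radians, in the range [-pi, pi].
double yawFromQuaternion(const Quaternion& q) {
    return std::atan2(2.0 * (q.w * q.z + q.x * q.y),
                      1.0 - 2.0 * (q.y * q.y + q.z * q.z));
}
```

For a robot moving in a plane, x and y are zero and the expression reduces to <math>2 \cdot atan2(z, w)</math> wrapped to the same range.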

=== Conclusion & Recommendation === | |||

The odometry block is a very simple node. It publishes the yaw data which is used by the state machine to determine rotation of PICO. | |||

==Arrow detection == | |||

=== Goal === | |||

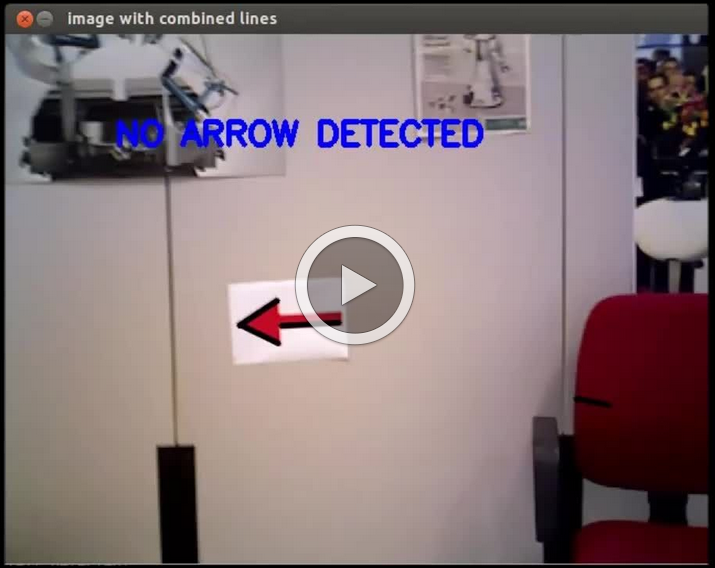

The goal of the arrow detection block is to detect arrows in the corridor using the ASUS Xtion camera mounted on PICO. Because a right-hand wall follower strategy is used, mainly arrows pointing to the left need to be recognized in order to override that strategy; however, both directions are implemented.

=== Considerations === | |||

Three different approaches/methods are considered to achieve this goal: | |||

* Template matching: Template matching is a technique which tries to find a reference image in the current 'video-image'. The technique slides the smaller reference image over the current image, and at every location, a metric is calculated which represents how “good” or “bad” the match at that location is (or how similar the patch is to that particular area of the source image). This is implemented using the OpenCV function matchTemplate, which results in a matrix with 'correlation' values from which a conclusion can be drawn where the template is matched best in the current image.

During testing of this technique, it was found that only a single reference image of a fixed size is compared to the camera image. Because the apparent size of the arrow changes as PICO moves, the arrow is only detected correctly if its size in the camera image exactly matches the size of the reference arrow. To make this method work in our case, many reference images of the arrow in different sizes would be needed, which is not practical.

* Feature detection: Another approach is to use algorithms which detect characteristic 'features' in both the reference and camera image and attempt to match these. Because these features are generally not scale or rotation dependent, this method does not run into the same problems as described for template matching. Therefore, this approach was investigated in the following way: | |||

** The OpenCV library contains several algorithms for feature detection and matching. In general, some steps are followed to match a reference to an image: | |||

*** Detect features or 'keypoints'. For this several algorithms are available, notably the [http://docs.opencv.org/trunk/doc/py_tutorials/py_feature2d/py_sift_intro/py_sift_intro.html SIFT] and [http://docs.opencv.org/trunk/doc/py_tutorials/py_feature2d/py_surf_intro/py_surf_intro.html SURF] algorithms. Generally, these algorithms detect corners in images on various scales, which after some filtering results in detected points of interest or features. | |||

*** From the detected features, descriptors are calculated, which are vectors describing the features. | |||

*** The features of the reference image are matched to the features of the camera image by use of a nearest-neighbor search algorithm, such as the [http://www.cs.ubc.ca/~lowe/papers/09muja.pdf FLANN algorithm]. This results in a vector of points in the reference image and a vector of the respective matched points in the camera image. If many such points exist, the reference image can be said to be detected.

*** Finally, using the coordinates of the points in both images, a transformation or homography from the plane of the reference image to that of the camera image can be inferred. This transformation tells us how the orientation has changed between the reference image and the image seen by the camera.

** Implementation of the previously described steps resulted in detection of the arrow in a sample camera image. However, it was found that the orientation of the arrow could not accurately be determined by means of the algorithms in the OpenCV library. Due to the line symmetry of the reference arrow, a rotation-independent detector would match equally many points from the 'top' of the arrow to both the top and the bottom of the arrow in the camera image, because the 'bottom' in the camera image can just as well be the top of the arrow when it is flipped horizontally. Therefore, the detector could not determine the orientation of the arrow, even if the full orientation is not of interest in this application. | |||

** One solution to the above problem could be to compare the vectors between the matched points in both images. Because it is only of interest whether the arrow is facing left or right, a simple algorithm could be defined which determines if many points which were located 'left' in the reference image are now located 'right', and vice versa. If this is the case, the orientation of the image has been reversed, allowing us to determine which way the arrow is pointing. However, this approach is probably quite laborious and a different method of detecting the arrow was found to also be suitable, which is why the solution described above was not pursued. | |||

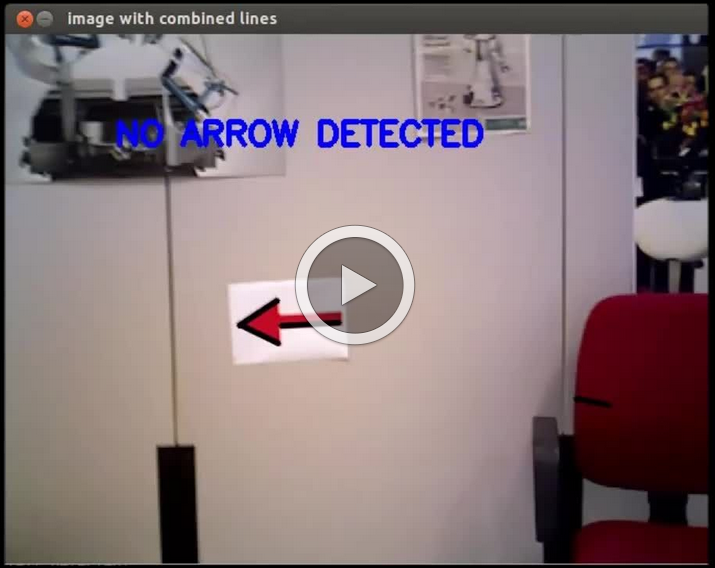

*Edge detection: With the edge detection method, an arrow is recognized by analyzing the 'edges' of a red object within the camera image. This method was chosen because its implementation proved to be simple and the conclusions drawn from it were correct and effective.

=== Implementation === | |||

Several steps are performed to recognize the edges of a red object within the camera image. Using the lines fitted to those edges, a possible arrow can be detected. The steps are as follows:

** Receive the RGB camera image.

** Convert the RGB camera image to an HSV image.

** This HSV image is converted to a binary image: red pixels are converted to white and all other pixels to black. A graphical user interface (GUI) with slider bars is implemented to enable easy selection of the 'red' HSV values, so that changes can be made during video playback.

** Using a flood fill algorithm (with 8-connectivity), the 'red' area is smoothed out.

** Edge detection is applied using a Canny edge detection algorithm, which recognizes an edge as a 'jump' in intensity.

** Using a Hough transform, lines are fitted through the detected edges.

** The '''CombineLines()''' function is used to 'neaten' the lines coming out of the Hough transform. This function was developed to improve the detection of lines in the [[#Line_detection|Line detection]] component, but could be re-used in this component. By using CombineLines(), the performance of the arrow detection was increased by a factor '''2.5'''.

** By recognizing the slanted lines (at about 30 degrees), the arrow head can be recognized and the direction of the arrow can be derived.

To ensure robustness, the arrow needs to be detected at least 2 times within 10 adjacent frames before it is assumed valid and the 'arrow-detected' event is triggered. When a false positive is detected within these 10 frames, the counter of detected arrows (left or right) is reset to 0.
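The frame-voting rule above can be sketched in a few lines of C++. This is only an illustrative sketch, not the code used on PICO: the names <code>Arrow</code> and <code>ArrowVote</code> are hypothetical, and it assumes that a 'false positive' means a detection that contradicts the direction already seen in the window.

```cpp
#include <deque>

// Hypothetical per-frame detection result; names are illustrative only.
enum class Arrow { None, Left, Right };

// Sliding-window vote over the most recent frames.
class ArrowVote {
public:
    explicit ArrowVote(int window = 10, int required = 2)
        : window_(window), required_(required) {}

    // Feed one per-frame result; returns the confirmed direction, or None.
    Arrow update(Arrow seen) {
        if (seen != Arrow::None) {
            // Assumption: a detection contradicting an earlier one in the
            // window is treated as a false positive and resets the count.
            for (Arrow a : history_) {
                if (a != Arrow::None && a != seen) {
                    history_.clear();
                    return Arrow::None;
                }
            }
        }
        history_.push_back(seen);
        if (static_cast<int>(history_.size()) > window_) history_.pop_front();
        if (seen == Arrow::None) return Arrow::None;

        // Count how often this direction occurs in the current window.
        int count = 0;
        for (Arrow a : history_) {
            if (a == seen) ++count;
        }
        return count >= required_ ? seen : Arrow::None;
    }

private:
    int window_;
    int required_;
    std::deque<Arrow> history_;
};
```

In such a setup, <code>update()</code> would be called once per camera frame, and the 'arrow-detected' event would be published only when it returns <code>Left</code> or <code>Right</code>.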

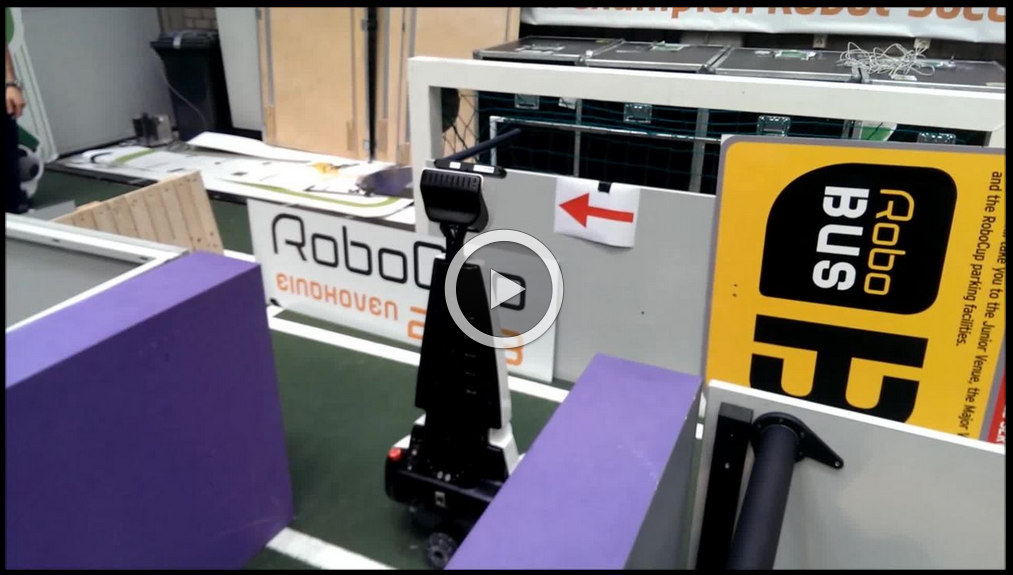

The result obtained is shown in the following videos, the left video showing an example of the edge detection (we use a right wall follower), and the right video a successful detection of an arrow by PICO within the maze: | |||

<table border="1" cellpadding="5" cellspacing="0" style="text-align:center;width:100%;border-collapse:collapse;">

<tr style="background: #D3D3D3;">

<td><b>Edge detection</b></td>

<td><b>PICO arrow in maze</b></td>

</tr>

<tr>

<td>[[File:arrowdetectie-bewerkt-thumb.png|400px|center|link=http://youtu.be/aTww-nS7fTU]]</td>

<td>[[File:pico_arrowdetected-thumb.png|400px|center|link=http://youtu.be/IBCsY7kr_-w]]</td>

</tr>

</table>

=== Conclusion & Recommendation ===

After trying different techniques, it was found that the edge detection approach was the most successful. At first it was difficult to tune the HSV values for the red of the arrow under different lighting conditions, but with the GUI they could eventually be tuned easily. With the implementation described above, PICO was shown to recognize arrows robustly. It is recommended to find a way to stabilize the images, so that arrow recognition can be improved even further.

== Finite State Machine == | |||

To successfully navigate a maze, PICO must make decisions based on the environment it perceives. Because of the multitude of situations it may encounter and the desire to accurately define its behavior in those different situations, the rules for making the correct decisions may quickly become complex and elaborate. One way to structure its decision making is by defining it as a Finite State Machine (FSM). In an FSM, a set of states is defined in which specific behavior is described. Events may be triggered by, for instance, changes in the environment, and may cause a transition to another state, with different behavior. By accurately specifying the states, transitions between states, and the behavior in each state, complex behavior can be described in a well-structured way.

=== Goal === | |||

The goal of this component is to provide a solid and easy to implement structure for high-level decision making of PICO. It must be possible to specify behavior in a modular way, such that testing, debugging and improving PICO's behavior is a straightforward procedure and PICO's decision logic can always easily be observed.

=== Considerations === | |||

As described above, structuring high-level decision making in the form of an FSM provides a powerful solution, in which behavior can easily be determined by considering the state PICO is in. Several FSM packages exist for ROS:

* [http://wiki.ros.org/smach SMACH], a feature-rich task-level architecture for coordinating and executing behavior. However, SMACH is only available in Python, while the remainder of our code is written in C++. Although it is possible to combine Python and C++ at runtime, this was considered too complex in the time frame of the course, therefore SMACH was disregarded.

* [http://wiki.ros.org/decision_making/Tutorials/FSM Decision_making FSM], a lightweight FSM solution available in c++. While promising, this package was available only for ROS Hydro, a more recent distribution of ROS than used in this course. In addition, it uses the catkin build system, whereas the older rosbuild system is used for this course. Therefore, this package too could not be used. | |||

Because no off-the-shelf package for building an FSM was available, but the FSM logic was considered the most suitable to the current objectives, a custom FSM system was built. This system must meet the following requirements: | |||

* Provide essential FSM functionality: configure and execute an FSM with event-based transitions between states | |||

* Abstract 'operational' logic so that it can be applied without having to consider the inner workings. | |||

* Provide an easy to use and logical interface to configure and run the FSM. | |||

=== Implementation === | |||

To ensure clean code and a well-structured system, the custom FSM was written in OOP as its own class. Inner logic is defined through private methods, with only a constructor and relevant public methods for manipulating and executing the FSM. | |||

==== Functioning ==== | |||

States and events constituting a transition between states are internally described as integers and can be defined after instantiation of the FSM. Transitions can be registered by supplying the begin state, event and target state. After defining all states, transitions and an initial state, sensors can send events to the FSM which may trigger a transition, if such a transition is available from the current state. Because ROS uses a polling system with a definable update frequency, multiple events can be received at once. These are therefore collected in a vector, which then contains all available events at that time instance. By executing the FSM, the system runs through all available events and checks if that event can trigger a transition from the current state. If this is the case, the current state is updated to the transitioned state. To ensure unambiguous behavior, care must be taken that multiple events that can each trigger a transition do not occur at once; otherwise, the FSM can result in undesired behavior. This can be prevented by updating and executing the FSM at a high enough frequency and by proper definition of the states and transitions. | |||

To further facilitate configuration of the FSM, special 'panic' transitions can be registered. These transitions are made available from all states and can be used for, for instance, detecting collisions and going into a safe mode. Use of panic transitions thus eliminates the need to register this transition for each state, which could easily lead to errors when new states are introduced. | |||

To ensure all nodes use the same integers to describe an event or state, these were defined as constants in <code>Event</code> and <code>State</code> messages, which could then be accessed by any node which includes them. This way, states and events could be referred to in human-readable form, further improving readability and preventing errors.

==== Interface ==== | |||

[[File:Fsm.png|thumb|400px|FSM class interface flow]] | |||

The following methods are used to configure and run the FSM: | |||

* <code>fsm(int state_init)</code> Instantiates the FSM object and stores the initial state. | |||

* <code>tr(int state_from, int event, int state_to)</code> Registers a transition from a state to another state by an event. Transitions are stored in a vector of structs containing the three integers. | |||

* <code>panic(int event, int state_to)</code> Registers a special transition which is available from any state. | |||

* <code>clearEvents()</code> Clears the list of available events. | |||

* <code>makeAvailable(int event)</code> Adds an event to the list of available events for the next time the FSM is run. | |||

* <code>int runOnce()</code> Runs the FSM, making a transition to a new state if an available event was found in the list. Iterates over all transitions, calling the private method <code>tryTransition()</code> (see below). Returns the new state. | |||

==== Inner logic ==== | |||

* <code>bool isAvailable(int candidate)</code> Searches the list of available events for the given candidate event, returning true if it is found or false otherwise. | |||

* <code>tryTransition(int event, int candidate_state)</code> Attempts to make the given transition from the current state. Checks if the transition can be made by running <code>isAvailable()</code>, updates the current state if true is returned. | |||
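The interface and inner logic listed above can be illustrated with a minimal C++ sketch. It follows the method names from the lists, but the details are assumptions: the class is called <code>Fsm</code> here, <code>tryTransition()</code> returns a <code>bool</code>, panic transitions are stored with a <code>from_any</code> flag, and at most one transition is made per call to <code>runOnce()</code>. The original implementation may differ on each of these points.

```cpp
#include <algorithm>
#include <vector>

// Minimal event-driven FSM sketch; states and events are plain integers.
class Fsm {
public:
    explicit Fsm(int state_init) : state_(state_init) {}

    // Register a transition: state_from --event--> state_to.
    void tr(int state_from, int event, int state_to) {
        Transition t = {state_from, event, state_to, false};
        transitions_.push_back(t);
    }

    // Register a 'panic' transition, available from any state.
    void panic(int event, int state_to) {
        Transition t = {0, event, state_to, true};
        transitions_.push_back(t);
    }

    void clearEvents() { events_.clear(); }
    void makeAvailable(int event) { events_.push_back(event); }

    // Try all registered transitions in order of registration.
    // Assumption: the first one that fires ends this run.
    int runOnce() {
        for (const Transition& t : transitions_) {
            bool from_here = t.from_any || t.state_from == state_;
            if (from_here && tryTransition(t.event, t.state_to)) break;
        }
        return state_;
    }

private:
    struct Transition {
        int state_from;
        int event;
        int state_to;
        bool from_any;  // true for panic transitions
    };

    bool isAvailable(int candidate) const {
        return std::find(events_.begin(), events_.end(), candidate) != events_.end();
    }

    // Updates the current state if the event is available.
    bool tryTransition(int event, int candidate_state) {
        if (!isAvailable(event)) return false;
        state_ = candidate_state;
        return true;
    }

    int state_;
    std::vector<Transition> transitions_;
    std::vector<int> events_;
};
```

A typical cycle in this sketch would be: call <code>makeAvailable()</code> for every received event, call <code>runOnce()</code>, and then call <code>clearEvents()</code> before the next update.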

==== Implementation in ROS ==== | |||

In this implementation, decision making is executed from the behavior control node, which instantiates and configures the FSM. Events can be published from any node to a dedicated topic <code>/pico/events</code>, which is listened to by the behavior control node. The received events are periodically pushed to the FSM system, which is then run using the <code>runOnce()</code> method. The returned, possibly changed state is then published on the <code>/pico/state</code> topic to be used by any other node which requires it. Furthermore, now that the current state has been decided, the correct behavior for PICO is executed. This low-level behavior is in this implementation defined in a separate class, containing functions for each of the behaviors PICO can perform, and executed through a public <code>perform()</code> method. More about this can be read in the [[#Behavior_control|Behavior control]] section.

=== Conclusion & Recommendations === | |||

The developed FSM system provides an easy structure to define high-level decision making for PICO, requiring little configuration and abstracting the heavy lifting of its inner workings. Events can be triggered from any process and the resulting state can be used by other processes. The system allows for simple developing, tuning, altering and debugging of the behavior. The solution is lightweight and adds little overhead to the program. It can also be used in different implementations, not relying on a particular process it is run from or logic it is used in. | |||

The system could be further improved to include execution of the desired behavior directly by the FSM, by registering a function call to each state. This way, configuration of the FSM can fully determine the behavior of PICO, making the process of developing behavior even more transparent. An improvement in the implementation could be made, in which multiple FSM instances can be used to define a hierarchical state machine. A state could contain an FSM of its own, allowing consecutive or other more complex behavior to be performed, before transitioning to a new 'master state'. Due to the relatively simple behavior in this course, this was not implemented. | |||

==Situation Recognition == | |||

Even though a wall-follower strategy in its purest form requires only to detect a wall to either side, performance can be greatly improved when having the ability to recognize the surroundings of PICO and being able to base actions on them accordingly. In order to do so, some situations PICO can encounter must be detected. | |||

=== Goal === | |||

The goal of the situation recognition component is to interpret the data received through PICO's sensors and to provide relevant parameters describing the situation PICO is in. It should do so in a way that works with the way decision making is implemented, using an event-based FSM. | |||

=== Considerations === | |||

In the maze challenge, PICO can encounter many distinct situations, such as a straight corridor, a junction with one or two exits, or a T junction. In addition, the corridors beyond the immediate situation can lead to different situations of their own, including dead ends or additional junctions. Whereas a complete identification of each situation is a noble goal, this does not always carry a benefit for the decision making process. To keep with the [http://en.wikipedia.org/wiki/KISS_principle KISS] principle, the decision was therefore made to focus on detecting only those situations which are immediately relevant. This also works well with the local planning approach, which does not explicitly anticipate on future events. | |||

With the event-based FSM in mind, situation recognition should work with the high-level decision making by publishing the recognized environment features as events, which are used by the FSM. This way, information about the situation can be immediately used to determine PICO's behavior. | |||

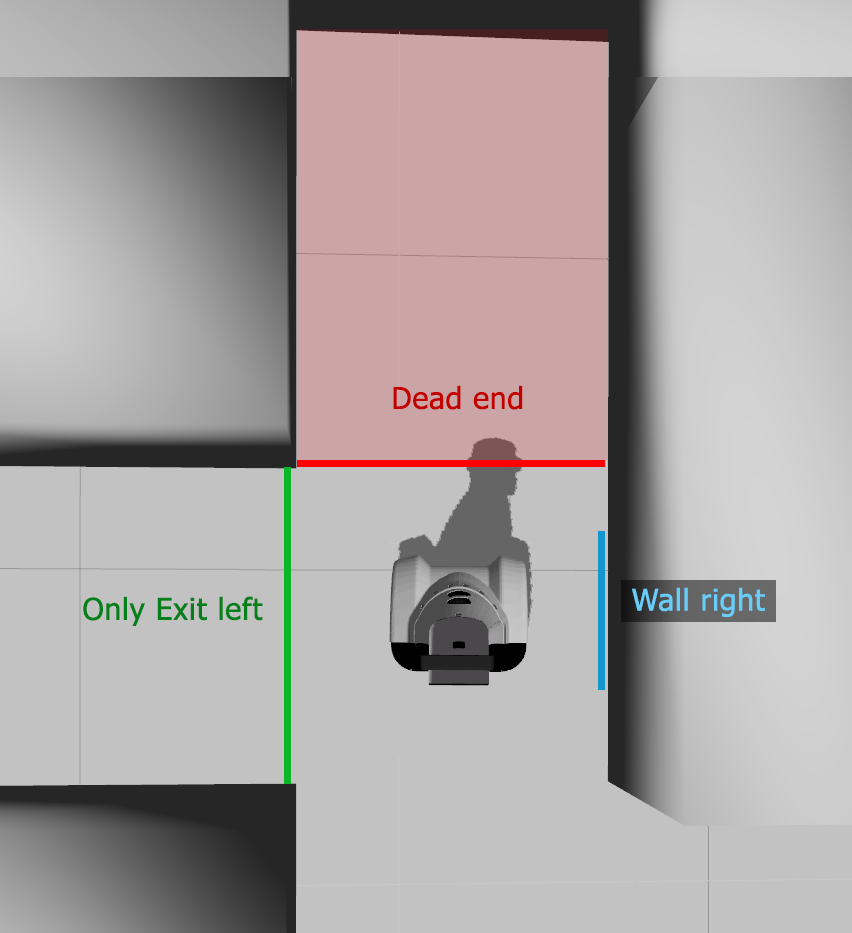

[[File:Situation-detection2.png|thumb|250px|Detecting walls to either side, dead end ahead and a situation with only an exit to the left.]] | |||

From the description of the maze competition, the following situations were identified which could be relevant to the decision making: | |||

* An exit available to be taken to the left or right. | |||

* A wall in front blocking PICO's path. | |||

* A dead end ahead, with no more exits to be taken. | |||

* A dead end ahead with only an exit on the side opposite from the side being followed by the wall-follower strategy. | |||

* Recognition of exits that lead to a dead end. | |||

* Near-collision, to trigger safety behavior. | |||

* Being out of the maze. | |||

Being the primary sensor for sensing the environment, the laser scanner provides data that could be used to detect these situations. However, this becomes increasingly difficult for more complex situations, such as exits leading to a dead end. The available line data is therefore better suited to detect situations, because it represents the environment in a greatly reduced number of parameters. However, the lines describing the walls are still only approximations with some degree of inaccuracy. To provide robust situation detection, it must therefore be taken into account that some observations ("two walls meet at a 90 degree angle to form a corner") may not always be found exactly in the equivalent line data (the walls may be at a slightly different angle and they may stop at some distance from each other).

Care should be taken to avoid false detection of features, in particular dead ends, because the situation recognition is only used to increase performance of a maze solving strategy which on its own would always find the exit. Whereas a false negative might result in slower finding of the exit, a false positive can disrupt the wall-following strategy, possibly causing PICO to never find the exit. In the implementation, erroneously not detecting an existing situation should therefore always be preferred over detecting a situation which in fact does not exist. | |||

The first method considered for detecting features from the surroundings was to use 'scanning' along the walls forming the corridor PICO is in. In this approach, the distance to the walls would be determined first. Then, a small bracket would be defined which the wall intersects, and this bracket would be 'moved' along the direction of the wall, calculating if some line intersects the bracket at each iteration. If no intersection is found, this signals the beginning of an exit. Similarly, detection of a new intersection determines the end of the exit. The scanning approach could then also be used along the direction of the walls of the exit, to determine if the exit leads to a dead end or not. | |||

However, early implementations of this approach proved difficult because of varying angles of the surrounding walls, which need to be taken into account when scanning along them with a bracket, or the wall may be 'lost' at larger distances. In addition, it was found that conceptually, this approach did not really leverage the benefits of having lines describing the environment, as it uses a 'discretized' approach which may just as well use laser data points. Therefore, a different approach was implemented which uses the location of lines with respect to each other to describe the environment.

=== Implementation === | |||

To be able to easily access relevant lines later, as a first step all lines are classified and stored as 'vertical' (along the x-axis) to the left or right, or 'horizontal' (along the y-axis) in front or behind PICO. In addition, they are ordered in increasing distance along their respective axis. The classification in left, right, front or back leads to ambiguity in situations where PICO is not orientated straight in a corridor, for instance during turning. Because situation information is typically not required in such situations, situation detection is not performed when the received lines are at an angle larger than 15 degrees. | |||
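The classification step can be sketched as follows. This is a hedged illustration, not the original code: the <code>Line</code> struct and <code>classify()</code> function are hypothetical names, and the sketch assumes a robot frame with the x-axis pointing forward and the y-axis pointing left. Lines that deviate more than 15 degrees from both axes are marked <code>Skewed</code>, which a caller could use as the signal to skip situation detection.

```cpp
#include <cmath>

// A wall segment by its end points in PICO's frame (metres).
// Assumed frame: x forward, y to the left.
struct Line { double x1, y1, x2, y2; };

enum class LineClass { Left, Right, Front, Back, Skewed };

// Classify a line as 'vertical' (along x, left or right of PICO),
// 'horizontal' (along y, in front of or behind PICO), or skewed.
LineClass classify(const Line& l, double max_skew_deg = 15.0) {
    const double kPi = 3.14159265358979323846;
    const double dx = l.x2 - l.x1;
    const double dy = l.y2 - l.y1;
    // Angle between the line and the x-axis, folded into [0, 90] degrees.
    const double angle = std::atan2(std::fabs(dy), std::fabs(dx)) * 180.0 / kPi;
    const double mid_x = 0.5 * (l.x1 + l.x2);
    const double mid_y = 0.5 * (l.y1 + l.y2);

    if (angle <= max_skew_deg) {
        return mid_y > 0.0 ? LineClass::Left : LineClass::Right;
    }
    if (angle >= 90.0 - max_skew_deg) {
        return mid_x > 0.0 ? LineClass::Front : LineClass::Back;
    }
    return LineClass::Skewed;
}
```

After classification, each group could be sorted by the distance of the line midpoint along its respective axis, matching the ordering described above.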

==== Exits ==== | |||

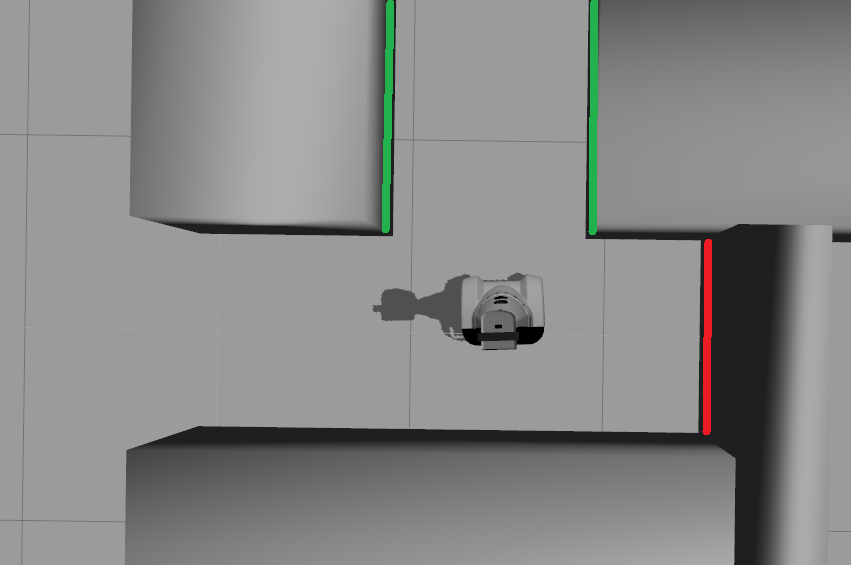

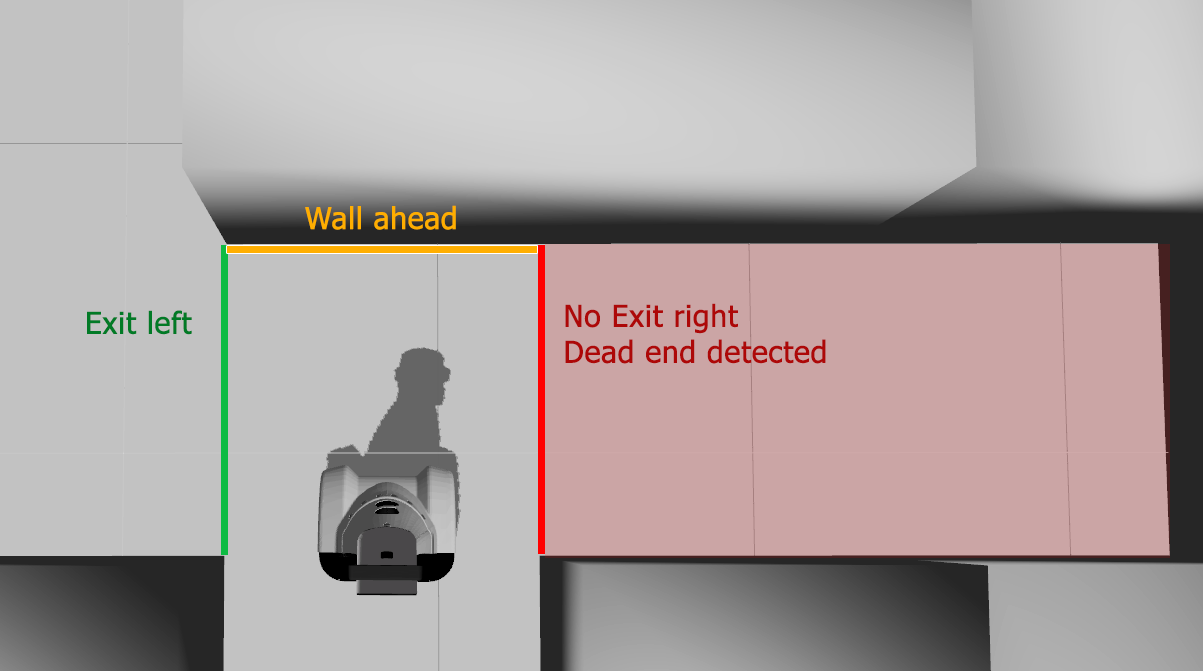

[[File:Situation-detection1.png|thumb|350px|Detecting exits, upcoming walls and undesired exits leading to a dead end.]] | |||

Now the lines have been classified, 'scans' are performed on either side to detect an exit available to be taken. Each vertical line on the side of interest is considered, checking if it is close enough (< 1m) to PICO to form a wall and if it spans the area next to PICO. If no walls are found to be blocking the area to the side of PICO, the horizontal lines in front and behind are considered, which may also 'limit' an exit if they exist in the same area. While performing these comparisons, coordinates describing the closest distance of a line limiting the exit are updated for each line. After considering all lines, if these coordinates constitute an exit of large enough size, an exit is considered to be found. | |||

Rather than immediately publishing the exit on the Events topic, an extra function is executed which checks if the exit may lead to a dead end. This is the case if two horizontal lines span the exit width and a vertical line spans the distance between the end points of these lines. In a similar way as described for detecting an exit, a check is performed for the existence of such lines. If they are found, the exit can be considered a dead end of its own, and an exit event is not published.

During simulation, it was found that while functioning well in static situations, while driving PICO would not be able to detect a 'dead end exit' when it first approaches an exit, because at that point one wall of the exit would not be detected because of insufficient laser points describing it. The same occurs just before PICO finishes driving past a detected 'dead end exit', when again one wall is no longer detected. Because the detection no longer sees the dead end, an exit event is published, and PICO would move into the exit it should have considered a dead end. To solve this, the algorithm was updated to check for a dead end during the first 10 function calls after it finds the exit. If a dead end is detected in this time, the exit is regarded a dead end, which is stored in memory until the exit is no longer detected, and no exit event is published for that exit. If no dead end was detected during the first 10 function calls, it is considered a 'free' exit for the duration of the time it is detected. This acts as a form of memory for the state of the exit and was found to solve the problem, while also increasing robustness in situations where the line data would sometimes erroneously draw a too short line, resulting in a free exit when in other instances it was found to be a dead end. | |||
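The 'memory' for the state of an exit can be sketched as a small state holder that is updated on every function call. The names <code>ExitStatus</code> and <code>ExitMemory</code> are hypothetical, and the two boolean inputs stand in for the results of the exit check and the dead end check described above.

```cpp
// Decision about the exit currently in view.
enum class ExitStatus { NoExit, Undecided, Free, DeadEnd };

// Remembers whether a detected exit was classified as a dead end.
class ExitMemory {
public:
    explicit ExitMemory(int samples = 10) : samples_(samples) {}

    // Call once per detection cycle with the raw check results.
    ExitStatus update(bool exit_seen, bool dead_end_seen) {
        if (!exit_seen) {
            // The exit is no longer detected: forget everything about it.
            calls_ = 0;
            status_ = ExitStatus::NoExit;
            return status_;
        }
        if (status_ == ExitStatus::NoExit) status_ = ExitStatus::Undecided;

        if (status_ == ExitStatus::Undecided) {
            ++calls_;
            if (dead_end_seen) {
                status_ = ExitStatus::DeadEnd;   // latched until exit is lost
            } else if (calls_ >= samples_) {
                status_ = ExitStatus::Free;      // latched until exit is lost
            }
        }
        return status_;
    }

private:
    int samples_;
    int calls_ = 0;
    ExitStatus status_ = ExitStatus::NoExit;
};
```

In this sketch an exit event would only be published while <code>update()</code> returns <code>Free</code>, which also shows where the short detection delay comes from.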

Finally, for a detected exit, some information about that exit is published on the Situation topic to be used by low-level behavior control to optimize behavior. The exit width is published, as is the location of the corner of the exit. The exit corner is determined by considering the laser scanner point closest to the side of PICO. If this point is inside a 60 degree 'cone' to the side of PICO and at a distance < 0.75m, close enough to be a plausible corner, this point is assumed to be the exit corner. This information is also determined only once when the exit is detected and remains available while taking the exit. | |||
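A possible way to determine the exit corner from the laser data is sketched below. Several details are assumptions made for illustration: the function name <code>findExitCorner()</code>, the scan layout (a list of ranges with a start angle and a fixed angle increment, angles positive to the left), and the interpretation of the 60 degree cone as 30 degrees to either side of the direction perpendicular to PICO. Invalid range readings are not handled here.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Result of the corner search; x and y are in PICO's frame (metres).
struct Corner { bool found; double x; double y; };

// direction_sign: +1 for the left side, -1 for the right side.
Corner findExitCorner(const std::vector<double>& ranges, double angle_min,
                      double angle_increment, int direction_sign) {
    const double kPi = 3.14159265358979323846;
    const double side_angle = direction_sign * kPi / 2.0;
    const double half_cone = 30.0 * kPi / 180.0;  // assumed: cone centred on the side
    const double max_distance = 0.75;             // metres, from the text

    const Corner none = {false, 0.0, 0.0};
    bool have_best = false;
    double best_range = 0.0;
    double best_angle = 0.0;

    // Find the closest laser point on the requested side of PICO.
    for (std::size_t i = 0; i < ranges.size(); ++i) {
        const double angle = angle_min + i * angle_increment;
        if (direction_sign * angle <= 0.0) continue;  // other side or straight ahead
        if (!have_best || ranges[i] < best_range) {
            have_best = true;
            best_range = ranges[i];
            best_angle = angle;
        }
    }
    if (!have_best) return none;

    // Accept it only if it is inside the cone and close enough.
    if (std::fabs(best_angle - side_angle) > half_cone) return none;
    if (best_range >= max_distance) return none;

    const Corner corner = {true, best_range * std::cos(best_angle),
                           best_range * std::sin(best_angle)};
    return corner;
}
```

The shared <code>direction_sign</code> argument is what allows one function to serve both the left and the right side.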

The functions for checking an exit and a dead end are direction-independent, meaning that they are shared for the left and right side, using a <code>direction_sign</code> integer to determine which side is being assessed. | |||

==== Wall ahead, dead end, only exit left ==== | |||

Apart from exits, some information about the corridor in which PICO is driving is collected. First, the closest horizontal line directly in front of PICO, if any, is determined. If such a line is found within 1.0m, a <code>Wall_Ahead</code> event is published, signalling an upcoming blockage. If it is within 0.5m, the <code>Forward_Blocked</code> event is published, which should trigger immediate action.

In addition, the existence of a dead end is detected in the same way as previously described for exits. In that case, a <code>Dead_End</code> event is published. In the same function, if a dead end is not found but only a single exit to the left is found, with the corridor ahead not continuing and no right exit, an <code>Only_Exit_Left</code> event is published, which is relevant because PICO would normally only consider right-hand exits.

==== Collision ==== | |||

For detecting a near-collision, the raw laser scanner data is used. If the closest distance of a laser point is found to be < 0.3m, the <code>Collision</code> event is published. To allow PICO to resume driving when the collision threat is removed, a separate <code>No_Collision</code> event is published when no laser point is at a distance < 0.35m. | |||
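The two thresholds form a hysteresis band, which prevents the events from toggling rapidly when an object sits at roughly 0.3m. A minimal sketch is given below. The names <code>CollisionEvent</code> and <code>CollisionMonitor</code> are hypothetical, and the sketch assumes that an event is only emitted when the situation changes, which the description above does not state explicitly.

```cpp
#include <algorithm>
#include <vector>

enum class CollisionEvent { None, Collision, NoCollision };

// Near-collision detection on raw laser ranges with hysteresis.
class CollisionMonitor {
public:
    // Returns an event only when the collision status changes (assumption).
    CollisionEvent update(const std::vector<double>& ranges) {
        if (ranges.empty()) return CollisionEvent::None;
        const double closest = *std::min_element(ranges.begin(), ranges.end());

        if (!in_collision_ && closest < 0.30) {
            in_collision_ = true;
            return CollisionEvent::Collision;
        }
        // Release only when no point is closer than 0.35 m.
        if (in_collision_ && closest >= 0.35) {
            in_collision_ = false;
            return CollisionEvent::NoCollision;
        }
        return CollisionEvent::None;
    }

private:
    bool in_collision_ = false;
};
```

A closest distance between 0.30m and 0.35m leaves the status unchanged in this sketch, whichever state the monitor is in.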

=== Conclusion & Recommendations === | |||

All important situations can be recognized using this implementation. Because of reliable line data and a structured approach to determining features of the surroundings, detection was found to be robust during both simulation and testing. In the implementation care was taken to avoid false positives, which could cause PICO to be unable to find the exit. Only out-of-maze detection was not implemented, as it was not a requirement for the maze challenge and could only add to false-positive detection. | |||

Improvements could be made to the exit detection, which uses some sampling to determine if the exit leads to a dead end before an exit is considered to be detected. This causes a short delay in exit detection, which may lead to non-optimal behavior. However, the increased robustness it provides was considered a higher priority. | |||

The implementation of situation detection is only useful for local planning strategies, as it only considers situations which are immediately relevant. In case of a different path planning strategy, the implementation could easily be adjusted to include publishing of information about upcoming situations. | |||

== Behavior control == | |||

The ultimate objective of the software architecture is to steer PICO in the desired direction. Using a State Machine to determine the action PICO should take, the information supplied by each component can be used for robust control and high performance. | |||

=== Goal === | |||

The goal of the behavior control component is to use the information received from other components to provide input to the [[#Drive|Drive]] component for desired behavior.

=== Considerations === | |||

High-level decision making is performed using a Finite State Machine instance. In each state, some behavior function can be defined. Therefore, in these low-level behavior control functions, we do not need to be concerned with ''when'' some behavior is desired, but only ''how'' that behavior should be performed. Because of the holonomic platform with two translational and one rotational DOF and the restriction of driving between walls, only a limited set of behaviors needs to be defined. By separating the behavior control component into high-level decision making and low-level behavior functions, behavior shared by different states does not need to be defined multiple times, reducing code complexity and ensuring consistent behavior in each situation. | |||

==== Performance ==== | |||

Because of strict velocity limits, controlling for performance in the case of the maze challenge depends mostly on moving in the right direction. In addition to the chosen strategy, the detection of dead ends, of exits leading to a dead end, and of arrows pointing in the right direction must be used in the behavior control to increase performance.

==== Safety ==== | |||

To prevent PICO from hitting a wall, some safety measures must be implemented. The first measure was to let PICO stop when it is too close to a wall or other object. This was done by iterating through all the laser points, stopping PICO when a point is at a distance between 0.15m and 0.30m. However, this does not allow PICO to continue after a near-collision is detected. If, in some scenario, the object causing the near-collision is removed, for instance when a person walked in front of PICO and has moved away, PICO should continue on its way. If the object is not removed within some time frame, PICO should move away from the object and attempt to position itself in a safe position. Since PICO will always drive forward, driving backwards will normally move it away from the object causing the near-collision.

Even though in some cases, PICO will continue the wrong direction after recovering, this is preferable to having no error recovery, in which case PICO will remain halted indefinitely. | |||

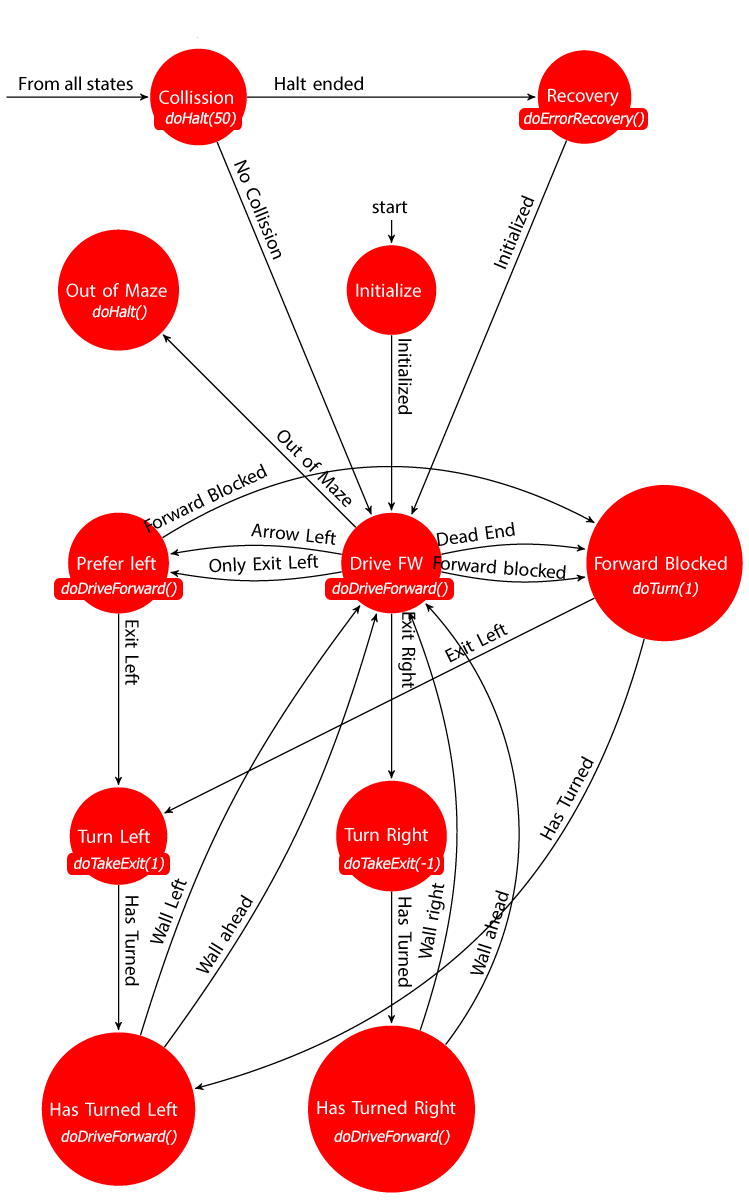

=== Implementation - High-level decision making === | |||

[[File:fsmedit.png|thumb|right|200px|State diagram of the implemented decision making strategy]] | |||

High-level decision making is implemented using the FSM mechanism described before. Functionally, the decision making structure works as follows. After initialization, which is not actively used, PICO starts driving forward in a corridor. Exits to the left are not regarded, as a right-wall follower strategy is used. If an exit to the right is detected, it will take a smooth turn into that exit, returning to its drive-forward state when a wall to the right is detected, signalling taking the exit has been completed. When a situation is detected where there is only one exit to the left, a dead end ahead and no exit to the right, PICO moves into the prefer-left state. The same happens when an arrow to the left is detected. In this state, exits to the right are disregarded, and PICO will drive forward until it detects an exit to the left, which is then taken. From both the regular drive-forward state and the prefer-left state, when a wall in front of PICO is detected which it would otherwise soon drive into, it moves into a forward-blocked state, which has PICO rotate a counter-clockwise <math>\pi/2</math> turn, returning to the drive-forward state once the turn has completed. | |||

After any turn, PICO will move back to the drive-forward state after a wall is detected on the side towards which it has turned, or an upcoming wall ahead is detected. This ensures that PICO will not immediately detect an exit to its side after it has made a turn in a corridor, in which case such an exit may in fact represent the corridor it was driving in. Additionally, in very wide corridors where no wall may be detected, an upcoming wall ahead will have it return to the drive-forward state, because otherwise the transition to take an exit would remain blocked, causing PICO to stop moving when the wall is reached.

From any state, if a near-collision is detected, PICO halts and waits for some time. If the object causing the collision is removed, PICO will continue to the drive-forward state. If the collision threat persists, PICO will move backwards for some time, before returning to the drive-forward state. | |||

It is worth mentioning that several state transitions can be made in quick succession. This is important, because although PICO often returns to the drive-forward state after performing some action, it can almost instantaneously move to any other state accessible by a non-blocked transition, for instance when a wall is blocking its path. Returning to the drive-forward state can therefore be interpreted as returning to PICO's default behavior, from which all other desired behavior is accessible through the relevant transitions. | |||

The state diagram of the implemented strategy is shown to the right (click to enlarge). States are shown in red, with the behavior function each state executes shown in italics below the state name. State transitions are drawn as arrows between the states.
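The transitions described above can be summarized in a small transition function. The following is a minimal sketch only: the state and event names are hypothetical stand-ins chosen for illustration and are not taken from the actual node, which receives its events over the Events topic.

```cpp
// Hypothetical state and event names, chosen for illustration only.
enum class State { DriveForward, TakeExitRight, PreferLeft, TakeExitLeft,
                   ForwardBlocked, Halt, ErrorRecovery };
enum class Event { ExitRight, ExitLeft, OnlyLeftExit, ArrowLeft, WallAhead,
                   WallBeside, HasTurned, NearCollision, HaltEnded,
                   CollisionPersists, Initialized };

// Returns the next state; events without a matching transition are ignored.
State nextState(State s, Event e) {
    // A near-collision is handled from any state.
    if (e == Event::NearCollision) return State::Halt;
    switch (s) {
        case State::DriveForward:
            if (e == Event::ExitRight) return State::TakeExitRight;
            if (e == Event::OnlyLeftExit || e == Event::ArrowLeft) return State::PreferLeft;
            if (e == Event::WallAhead) return State::ForwardBlocked;
            break;
        case State::PreferLeft:
            // Exits to the right are disregarded in this state.
            if (e == Event::ExitLeft) return State::TakeExitLeft;
            if (e == Event::WallAhead) return State::ForwardBlocked;
            break;
        case State::TakeExitRight:
        case State::TakeExitLeft:
            // A wall beside or ahead of PICO signals that the exit has been taken.
            if (e == Event::WallBeside || e == Event::WallAhead) return State::DriveForward;
            break;
        case State::ForwardBlocked:
            if (e == Event::HasTurned) return State::DriveForward;
            break;
        case State::Halt:
            if (e == Event::HaltEnded) return State::DriveForward;
            if (e == Event::CollisionPersists) return State::ErrorRecovery;
            break;
        case State::ErrorRecovery:
            if (e == Event::Initialized) return State::DriveForward;
            break;
    }
    return s;
}
```

Because unmatched events leave the state unchanged, several transitions can follow each other in quick succession simply by feeding consecutive events to the function.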

=== Implementation - Low-level behavior === | |||

To reduce the amount of code in the behavior control node and allow for easy replacement of behavior functionality, the behavior functions are placed in a behavior class. This class is instantiated from the behavior control node, which updates the sensor information it may require (such as distances to walls and situation information) each time it is run and can then have it perform some desired function. Executing the behavior is further facilitated by the public <code>perform()</code> method of the behavior class. This method takes the state as an input argument and uses a switch statement to execute the desired behavior function; the behavior functions are private methods of the behavior class. Because of this structure, care must be taken that behavior functions are non-blocking: a function does not run until a new behavior is requested, but is instead called each time the behavior controller is run (in this case at approximately 20 Hz).
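The dispatch structure can be sketched as follows. This is an illustrative outline under stated assumptions, not the actual class: the <code>State</code> values and the <code>setFrontDistance()</code> setter are hypothetical, and the private functions return a label where the real functions would publish to a topic.

```cpp
#include <string>

// Hypothetical subset of states, for illustration only.
enum class State { DriveForward, Turn, Halt };

class Behavior {
public:
    // The control node refreshes sensor data before calling perform().
    void setFrontDistance(double d) { front_distance_ = d; }

    // Dispatches to the behavior function for the given state.
    // Called once per controller cycle, so every function must return promptly.
    std::string perform(State state) {
        switch (state) {
            case State::DriveForward: return doDriveForward();
            case State::Turn:         return doTurn();
            case State::Halt:         return doHalt();
        }
        return "none";
    }

private:
    // Stand-ins for the real behavior functions, which would publish commands.
    std::string doDriveForward() { return "drive_forward"; }
    std::string doTurn()         { return "turn"; }
    std::string doHalt()         { return "halt"; }

    double front_distance_ = 0.0;
};
```

Keeping the behavior functions private means the control node can only reach them through <code>perform()</code>, which makes it easier to swap out an individual behavior without touching the node.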

Several behavior functions are developed, which together model the complete behavior of PICO. They are described below. | |||

==== Drive Forward ==== | |||

Function <code>doDriveForward(lin_x = 0.2)</code> takes a velocity in forward direction as input argument (defaults to 0.2 m/s) and publishes it to the Drive topic. This function is used in situations where PICO should drive forward, typically through a corridor with a wall on either or both sides. Rotational and lateral control is left to the Drive component, which uses a proportional controller to keep PICO facing forward and in the center of the corridor.

==== Turn ==== | |||

Function <code>doTurn(direction_sign = 1, angle = CV_PI/2, ang_z = 1.0, lin_x = 0.0, lin_y = 0.0)</code> is used to rotate PICO in place or while using its holonomic capabilities to rotate while driving. To be able to rotate the amount defined by ''angle'' even when the function cannot continue execution until that angle is reached, as described above, a static ''target_angle'' is defined when the function is first called, adding the desired angle to the yaw received from the Odometry topic. Upon every consecutive call of the function, the current yaw is compared to the target yaw, and a command with the given angular and linear velocities is published on the Drive topic if the target yaw has not been reached. When the target yaw is reached, it is reset and the HasTurned event is published on the Events topic, signalling the end of the turn to the FSM. | |||

Because the yaw received from the odometry sensors is normalized on the [-<math>\pi</math>,<math>\pi</math>] domain, simply adding some angle to the current yaw to use as a target yaw can result in a value outside this domain, and will therefore never be reached. To solve this, the yaw at each function call is compared to the previous yaw. If the difference exceeds <math>\pi</math>, it is assumed a full <math>2 \pi</math> rotation is 'obscured' by the yaw normalization, and it is added when being compared to the target yaw. | |||

[[File:Cornering-story.png|thumb|400px|Steps involved in smooth cornering.]] | |||

To allow the use of this function for rotation in both directions, a ''direction_sign'' can be supplied, which can be either -1 or 1, with -1 describing a rotation in clockwise direction and 1 describing a rotation in anti-clockwise direction. | |||
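The handling of yaw normalization can be illustrated with a short sketch. Note that this is a variant of the approach described above rather than the actual implementation: instead of correcting the comparison against an absolute target yaw, it accumulates the unwrapped yaw increments between calls, which handles the same <math>2\pi</math> jumps. The class name <code>TurnTracker</code> and its methods are hypothetical.

```cpp
const double kPi = 3.14159265358979323846;

// Tracks the progress of a turn from successive yaw readings in [-pi, pi].
class TurnTracker {
public:
    // angle: amount to rotate [rad]; direction_sign: 1 = anti-clockwise, -1 = clockwise.
    void start(double yaw, double angle, int direction_sign) {
        prev_yaw_ = yaw;
        target_ = angle;
        sign_ = direction_sign;
        turned_ = 0.0;
    }

    // Feed the latest yaw; returns true once the requested angle has been turned.
    bool update(double yaw) {
        double delta = yaw - prev_yaw_;
        // A jump larger than pi between two calls is assumed to be a wrap-around.
        if (delta > kPi) delta -= 2.0 * kPi;
        else if (delta < -kPi) delta += 2.0 * kPi;
        turned_ += delta;
        prev_yaw_ = yaw;
        return sign_ * turned_ >= target_;
    }

private:
    double prev_yaw_ = 0.0;
    double target_ = 0.0;
    double turned_ = 0.0;
    int sign_ = 1;
};
```

Because <code>update()</code> only stores a few numbers and returns immediately, it fits the non-blocking calling pattern of the behavior controller.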

==== Take an Exit ==== | |||

The simplest way to take an exit is to drive to the middle of the exit, rotate in place, then drive forward into the exit. To improve smoothness while navigating the maze, forward translation can be used to drive into the exit. Function <code>doTakeExit(direction_sign, angle)</code> does this by acting as an 'intermediate' function which calculates the desired translational velocities, before calling the <code>doTurn()</code> function which executes the turn. | |||

The strategy for calculating the correct translational velocities for a smooth turn is to keep the corner of the exit directly to the side (<math>x_{corner} = 0</math>), at a distance of half the exit width (<math>y_{corner} = width/2</math>). These values are used as setpoints in a controller using proportional control. The distance to the corner of the exit and the width of the exit are supplied by the Situation component. Because PICO will drive some distance into the intersection in order to robustly determine any dead ends, it must rotate by some amount before the exit corner is to the side. To ensure PICO will not drive backwards during the first part of the turn, which would occur using proportional control when <math>x_{corner} < 0</math>, the controller is only used for values of <math>x_{corner} > 0</math>. This results in PICO rotating in place for a short moment before starting a smooth turn, but this is preferred over negative forward velocities, which add time to cornering, or a more complex approach to smooth cornering.

The steps involved in taking a 'smooth corner' are also depicted in the image to the right. | |||
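The setpoint logic for smooth cornering can be sketched as below. This is a minimal illustration under assumptions: the gains, the function name <code>exitVelocities</code> and the sign conventions (positive <code>lin_y</code> pointing toward the exit side) are hypothetical and not taken from the actual code.

```cpp
struct Velocity {
    double lin_x;  // forward velocity
    double lin_y;  // lateral velocity, positive toward the exit side (assumed)
};

// x_corner: longitudinal offset of the exit corner relative to PICO
// y_corner: lateral distance to the exit corner
// width:    width of the exit
// The gains are placeholder values chosen for illustration.
Velocity exitVelocities(double x_corner, double y_corner, double width,
                        double gain_x = 1.0, double gain_y = 1.0) {
    Velocity v = {0.0, 0.0};
    // The controller is only active for x_corner > 0; otherwise PICO
    // rotates in place so that it never drives backwards.
    if (x_corner > 0.0) {
        v.lin_x = gain_x * x_corner;                  // setpoint x_corner = 0
        v.lin_y = gain_y * (y_corner - width / 2.0);  // setpoint y_corner = width/2
    }
    return v;
}
```

The resulting velocities would then be passed on as <code>lin_x</code> and <code>lin_y</code> to <code>doTurn()</code>, which adds the rotation.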

==== Halt ==== | |||

Function <code>dohalt(samples = 0)</code> publishes zero velocities to the Drive topic, stopping PICO in place. Optionally, ''samples'' can be supplied, in which case the event ''Halt_Ended'' is published once the function has been called the given number of times. This acts as a pseudo-timer in cases where PICO should continue with different behavior after halting for a given time.
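The pseudo-timer can be sketched as a simple call counter. The class name <code>HaltTimer</code> is hypothetical, and the return value stands in for publishing the ''Halt_Ended'' event.

```cpp
// Counts calls instead of measuring time: at roughly 20 Hz, 40 samples
// correspond to about two seconds.
class HaltTimer {
public:
    // Returns true on the call where Halt_Ended would be published.
    bool tick(int samples) {
        if (samples <= 0) return false;  // no timer requested
        if (++count_ >= samples) {
            count_ = 0;                  // reset for the next halt
            return true;
        }
        return false;
    }

private:
    int count_ = 0;
};
```

Counting calls keeps the function non-blocking, at the cost of the halt duration depending on the rate at which the behavior controller runs.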

==== Error Recovery ====

Function <code>doErrorRecovery()</code> implements the recovery strategy described in the considerations. Using a counter, a negative longitudinal velocity is published on the Drive topic for a pre-defined time, backing up PICO. The drive component's default angle and lateral position control functions are used in an attempt to move PICO to a safe position. When the counter finishes, the ''Initialized'' event is published, which will in turn move PICO to the ''Drive_Forward'' state. | |||

=== Conclusion & Recommendations === | |||

Combining the decision making and behavior control functionality, all important behavior for PICO is implemented. The implementation proved to function as expected both in simulation and during testing. With the implementation, PICO can smoothly and accurately navigate a complex maze, making use of situation detection and arrow detection to increase performance. Use of proportional controllers proved sufficient for stable and well-performing position and rotation control. | |||

The major improvement that could be made is to implement fully smooth cornering, eliminating the initial phase during cornering when PICO rotates in place. This would require either earlier exit/dead-end detection or a different approach to derive translational setpoints for driving into the exit. Another approach would be to allow PICO to start taking an exit before it has determined whether or not that exit is 'desirable' (not leading to a dead end). This would, however, require a method of recovering from taking an undesirable exit, adding to code complexity.

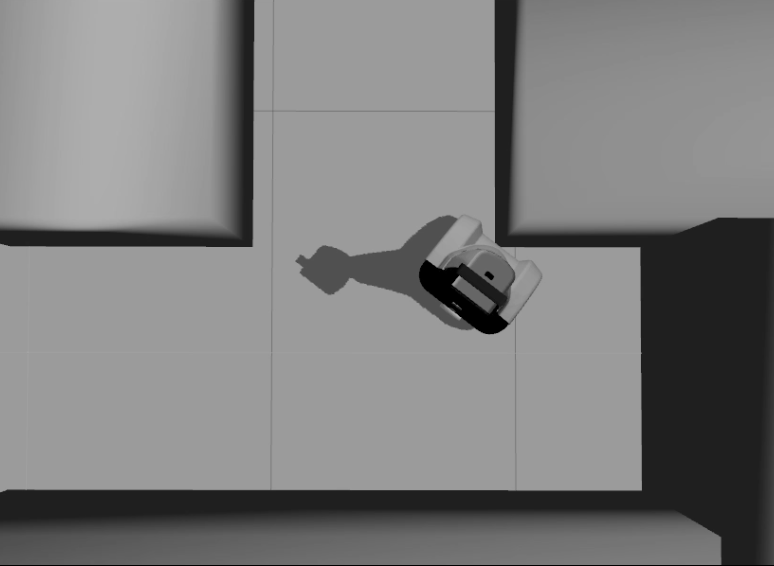

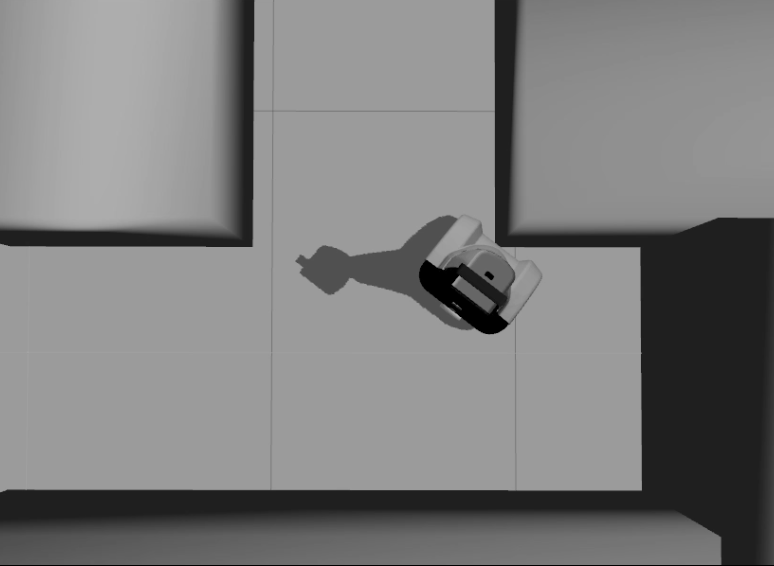

Some videos of PICO's behavior in simulation are shown below (click to view). | |||

<table border="1" cellpadding="5" cellspacing="0" style="text-align:center;width:100%;border-collapse:collapse;">

<tr style="background: #D3D3D3;"> | |||

<td><b>Robustness</b></td> | |||

<td><b>An example of the implemented safety function</b></td> | |||

</tr> | |||

<tr> | |||

<td>[[File:Robust.png|500px|link=http://youtu.be/wu6yMYyqOtg]]</td> | |||

<td>[[File:safety_collision.png|400px|center|link=http://youtu.be/JnfYCiSv1jk]]</td> | |||

</tr> | |||

</table> | |||

== Drive ==

=== Goal === | |||

The drive node sends velocity commands to PICO based on information from the line distance and behavior controller. The line distance gives information about PICO's relative linear and angular position with respect to the walls. The behavior controller gives, if applicable in the state, an angular speed and a speed in forward direction. The goal of this component is to send commands to PICO's base, taking care of the posed velocity limits, and to provide lateral position and rotation control when desired. | |||

=== Considerations === | |||

The behavior controller gives straight-forward velocity information <math>(\dot{x}</math> and <math>\dot{\theta}_{turning})</math> which can be used immediately as velocity commands. | |||

The line distance node provides the left and right lateral distances to a wall (<math>Y_L</math> and <math>Y_R</math>). Furthermore, the relative angles to the walls on the left and right of PICO are provided (<math>\theta_L</math> and <math>\theta_R</math>). This information needs to be interpreted to position and orient PICO with respect to the walls. This control is called position control. A decision was made to implement this control in the Drive component rather than the behavior component because it is required in the majority of situations. Also, it does not contribute to the 'target' of PICO's behavior, but only aids in 'not running into walls'.

The position control of PICO should not always be used. For example, PICO needs position control when it is driving in a corridor. However, when PICO is turning for an exit, position control should not be used because this will result in conflicting behavior. Furthermore, in cases when PICO does require position control, a situation may arise where there is a wall only on the left or right side, or no wall is found at all. This needs to be interpreted robustly for good behavior of PICO through the maze. | |||

=== Implementation ===

Whether or not position control should be applied is determined by a boolean <code>do_position_control</code>. If its value is <code>false</code>, <math>\dot{x}</math> and <math>\dot{\theta}_{turning}</math> are sent as velocity commands to PICO as they are received. If its value is <code>true</code>, position control is used. In that case, the velocity <math>\dot{x}</math> from the behavior controller and the outputs of the position controller (<math>\dot{y}</math> and <math>\dot{\theta}_{orientation}</math>) are sent to PICO's base.

For the position controller, different calculations of <math>(\dot{y}</math> and <math>\dot{\theta}_{orientation})</math> are possible depending on the input data. The basic position control is a simple proportional controller. In the general case (when two walls are detected), the difference between the left and right lateral distance is determined. This value is multiplied with a gain ''(0.6)'' and sent as a velocity command to PICO. | |||

<math>\dot{y}=(Y_R+Y_L)\cdot y_{gain}</math><br><br>

For the angle, the average of the left and right angles is calculated and multiplied by a gain ''(0.4)''.


<math>\dot{\theta}_{orientation} = ((\theta_R+\theta_L)/2)\cdot\theta_{gain}</math><br><br>


This approach gave good results during simulation and testing, so no additional control blocks (for example the addition of D action to the controller) needed to be introduced. | |||

The other options for position control are: | |||

* Wall detected on only left or right side (for instance when driving past an exit): PICO will drive 0.5m from the detected wall and orient itself based on the angle to this wall. | |||

* No walls detected: no position control will be applied. | |||
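The three cases of the position controller can be sketched as follows. This is an illustration under assumptions rather than the actual node code: it assumes signed lateral distances (left positive, right negative), so that the sum <math>Y_R+Y_L</math> is zero when PICO is centered, and the struct and function names are hypothetical. The gains and the 0.5 m single-wall distance are the values given in the text.

```cpp
struct Wall {
    bool detected;  // false when no wall was found on this side
    double y;       // signed lateral distance [m]: left positive, right negative (assumed)
    double theta;   // relative angle to the wall [rad]
};

struct Correction {
    double lin_y;   // lateral velocity command
    double ang_z;   // rotational velocity command
};

const double kYGain = 0.6;
const double kThetaGain = 0.4;
const double kSingleWallDistance = 0.5;  // [m]

Correction positionControl(const Wall& left, const Wall& right) {
    Correction c = {0.0, 0.0};  // no walls detected: no position control
    if (left.detected && right.detected) {
        c.lin_y = (right.y + left.y) * kYGain;
        c.ang_z = 0.5 * (right.theta + left.theta) * kThetaGain;
    } else if (left.detected) {
        // Keep 0.5 m from the left wall and align with it.
        c.lin_y = (left.y - kSingleWallDistance) * kYGain;
        c.ang_z = left.theta * kThetaGain;
    } else if (right.detected) {
        // Keep 0.5 m from the right wall and align with it.
        c.lin_y = (right.y + kSingleWallDistance) * kYGain;
        c.ang_z = right.theta * kThetaGain;
    }
    return c;
}
```

With this sign convention, a positive <code>lin_y</code> moves PICO to the left, so being closer to the right wall than to the left wall yields a correction toward the corridor center.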

Before sending any command, the velocities are limited to the maximums for this course (0.2 m/s for the combined linear velocity and 1.0 rad/s for the rotational velocity). To make sure that the forward velocity is not overly reduced when the lateral velocity suddenly becomes large, for instance when the proportional control goes wrong, the lateral velocity is additionally limited to 0.1 m/s. If the length of the combined linear velocity vector exceeds 0.2 m/s, the x- and y-components are both scaled proportionally to respect the limit.
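The limiting steps can be sketched as follows. The struct and function names are hypothetical; the limits are the values stated above.

```cpp
#include <algorithm>
#include <cmath>

struct Twist {
    double lin_x;  // forward velocity [m/s]
    double lin_y;  // lateral velocity [m/s]
    double ang_z;  // rotational velocity [rad/s]
};

Twist limitVelocity(Twist v) {
    const double kMaxLinear = 0.2;   // combined linear limit [m/s]
    const double kMaxLateral = 0.1;  // additional lateral limit [m/s]
    const double kMaxAngular = 1.0;  // rotational limit [rad/s]

    // Clamp lateral and rotational velocities first.
    v.lin_y = std::max(-kMaxLateral, std::min(kMaxLateral, v.lin_y));
    v.ang_z = std::max(-kMaxAngular, std::min(kMaxAngular, v.ang_z));

    // Scale x and y together if the combined vector is still too long,
    // which preserves the direction of travel.
    const double norm = std::hypot(v.lin_x, v.lin_y);
    if (norm > kMaxLinear) {
        const double scale = kMaxLinear / norm;
        v.lin_x *= scale;
        v.lin_y *= scale;
    }
    return v;
}
```

Clamping the lateral component before scaling is what keeps a runaway lateral command from consuming most of the 0.2 m/s budget.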

=== Conclusions and Recommendations ===

The drive node is a simple node that sends the velocity commands to PICO. Simulations and experiments have shown that PICO is able to drive robustly through every situation in the maze. The robustness is the result of the simple approach used in this node.

Improvements to this node can be made by adding a derivative part to the position controller, though as already stated it functions very robustly with the current implementation. | |||

== Software overview ==

The table below gives a summary of all the software components described in detail above. | |||

{| border="1" cellpadding="5" cellspacing="0" style="border-collapse:collapse;"

| style="background: #D3D3D3;" | '''Node'''


|/pico/lines

|Array of detected lines. Each line consists of a start and an end point (x1,y1),(x2,y2)

|Transformation of raw data to lines with use of the Hough transform. Each line is described by two points in the Cartesian coordinate system with PICO at the origin, i.e. (0,0).

|-

|Arrow detection

|/pico/camera

|Camera images

|/pico/event

|Arrow detected to the left or to the right

|Detection of arrows pointing to left or right.

|-


|/pico/yaw