PRE2019 3 Group11

Revision as of 14:09, 31 March 2020

Emotional Support Bot aka The Lonely Bot

Group Members

| Name | Student ID | Study | Student mail |

|---|---|---|---|

| Aristide Arnulf | 1279793 | Computer Science | a.l.a.arnulf@student.tue.nl |

| Floren van Barlingen | 1314475 | Industrial Design | f.c.e.v.barlingen@student.tue.nl |

| Robin Chen | 1250590 | Computer Science | h.r.chen@student.tue.nl |

| Merel Eikens | 1247158 | Computer Science | m.a.eikens@student.tue.nl |

| Dylan Harmsen | 1313754 | Electrical Engineering | d.a.harmsen@student.tue.nl |

Introduction

The global population is growing ever older, and as a result more and more elderly people are in need of care. One issue that the elderly face is increasing feelings of loneliness. This may be due to the loss of close relationships or to the increasing isolation of living in a care home. To address this problem, our group has decided to design a social robot that interacts with the elderly to combat loneliness. This robot will use emotion recognition technology to find out how an elderly person is feeling and will use that information to choose a way of communicating that comforts them.

Objectives

- Define a concept of a robot e.g. what features it needs to give the appropriate reaction, how it acts

- Analyze the ways in which the robot can communicate with the elderly be it verbal or non-verbal communication

- Provide scenarios in which our robot would be needed and the actions the robot needs to take

- Gather information based on these scenarios and responses to these scenarios via survey and interview

Subject

Our project is about elderly care robots that use emotion recognition technology to give appropriate feedback to the elderly person. We shall design a robot that uses emotion recognition to optimize its response towards its user. Our goal is to improve the social interface of robots interacting with lonely elders by using emotion recognition.

Problem Statement

Firstly, we are going to look at the different emotion recognition technologies and figure out which technology is the most suitable for our design. Secondly, we need to know how the robot should respond to different emotions, in different situations.

Approach

Collect papers and theory on which to base the robot's reactions to expressions

Validate our problem statement with scientific papers

Make fitting reactions to certain (measurable) expressions

User test:

- Find participants

- Practice test

- Do interview

Milestones

- Complete the software that we want to use for facial recognition.

- Make a system that uses the facial recognition output and selects a response to the detected emotion.

- Survey/interview lonely elderly people.

- Analyze all the collected data to form a conclusion.

- Tweak our system so it is better suited to the results of the conclusion.

Deliverables

Software that uses a neural network for emotion recognition and provides feedback based on the detected emotions

Analysis of results provided by surveying/interviewing the elderly on their thoughts on such robots and the feedback these robots could provide

Potential feedback that a social robot could give to the elderly who are suffering from loneliness

USE Case Analysis

Users

The world population is growing older each year, with the UN predicting that in 2050, 16% of the people in the world will be over the age of 65.[1] In the Netherlands alone, the number of people aged 65 and over is predicted to increase by 26% by 2035.[2] This means that having facilities in place to help and take care of the elderly is becoming a greater necessity. A key issue among the elderly today is a lack of social communication and interaction. In a study performed in the Netherlands, of a group of 4001 elderly individuals, 21% reported that they experienced feelings of loneliness.[3] Since the number of elderly people increases each year, this problem is becoming more and more relevant.

Many factors affect quality of life in old age. Being healthy is a complex state that includes physical, mental and emotional well-being. The quality of life of the elderly is impacted by feelings of loneliness and social isolation.[4] The elderly are especially prone to feeling lonely due to living alone and having fewer and fewer intimate relationships.[5]

Loneliness is a state in which people don’t feel understood, lack social interaction and feel psychological stress due to this isolation.[6] We distinguish two types of loneliness: social isolation and emotional loneliness.[7] Social isolation is when a person lacks interactions with other individuals.[8] Emotional loneliness refers to the state of mind in which one feels alone and out of touch with the rest of the world.[9] Although they are different concepts, they are two sides of the same coin and there is a clear overlap between the two.[10] The effects of loneliness on the elderly, ranging from mental to physical health issues, can be profound. Research shows that such loneliness can lead to depression, cardiovascular disease, general health issues, loss of cognitive functions, and increased risk of mortality.[11]

In order to aid the elderly, our robot will have to communicate effectively with them.[12] One of the best ways to communicate with the elderly population is to use a mixture of verbal and non-verbal communication. At this age, people tend to have communication issues due to cognitive and sensory problems, so effective communication can be a challenge. To facilitate interaction between the robot and the elderly, we must take the specific needs of this age group into consideration. Older people respond better to carers who show empathy and compassion, take their time to listen, show respect and try to build a rapport with the person to make them feel comfortable. Rather than being ordered around forcefully, they feel more at ease when they are asked questions and offered choices. Elderly people like to feel in control of their life as much as possible.[13]

Society

With the advent of the 21st century, technology has grown rapidly and in unpredictable ways. With this growth came progress in all areas of human life. However, a seemingly unavoidable downside has been increased social isolation. The use of smartphones and social media gives people less incentive to go out and have meaningful social interaction. The elderly already suffer from social isolation due to the loss of family members and loved ones, and as they get older it becomes harder to maintain social contact. With a rise in the overall age of the population, there will be a growing number of lonely elderly people. Care homes are understaffed and care workers are already overworked, so a new solution needs to be found. One possible solution is the use of care robots to help keep the elderly occupied and improve their overall living situation. Japan is a leading force in the development of this technology, as the country is already facing a shortage of workers and a growing elderly population. A robot cannot fully replace human-to-human social interaction, but in some ways it can provide benefits that a human cannot. Since a robot never sleeps, it can always tend to the elderly patient. Furthermore, using neural networks and data gathering technologies, the robot can learn the best ways to interact with the person in order to relieve their loneliness and be a ‘friend’ figure that knows what they need and when they need it. Research has shown that care robots in elderly homes have positive effects on mood and loneliness.[14]

A few issues arise when trying to introduce such robots into the lives of the elderly. One of the main ones is that the person's autonomy should be preserved. A study shows that, when questioned, people agreed that out of a few key values that the robot should provide, autonomy was the most important.[15] This means that although our robot is meant to help reduce loneliness in the elderly, it should not impede that person's autonomy. A related problem arises when the robot presses something onto an elderly person who does not have the mental or physical capacity to agree or disagree with what the robot wants to do. To avoid such issues, our robot should mainly be applied to elderly people who are both physically and mentally healthy. Another issue is privacy. For our robot to function correctly and aid the person as well as possible, it needs to gather a reasonable amount of data on the person in order to respond appropriately. Video footage will be recorded in order to correctly grasp the person's emotions, and robots will have access to sensitive information and private data in order to perform their tasks. This means that strict policies will need to be put in place to make sure their privacy is respected. Furthermore, the elderly should be in control of this information and have the right to decide what the robot may and may not store. This large amount of data could have ethical and commercial repercussions. It is easy to use such data to profile a person and, for example, target advertisements at them. Thus there should be a guarantee that the data will not be sold to other companies and that it is stored safely such that only the elderly person or care home workers have access to it.

Enterprise

The world is moving towards a more technological age, and with it come new and innovative business opportunities. Social robots are becoming more and more common and many companies have been founded to focus on creating such robots.[16] There have been many advancements related to our robot, with businesses such as Affectiva developing emotion recognition software and many different care robots being made, e.g. Stevie the robot. However, there is a gap in the market for a robot that can read the emotions of the elderly and respond in a positive manner to their benefit. As we have seen, the technology is available, and thus taking this step is not too far-fetched. With the increasing number of elderly people and care homes having high turnover due to burnout, the need for this robot is high, meaning there will be demand for this technology.[17] Although some care homes would welcome this technology to alleviate the loss of employees, some may disagree with using a robot to provide social interaction. However, the labour force in care homes is decreasing drastically due to hard working conditions and low pay. This makes it likely that such a robot will need to be implemented in the near future. Since there is a market for this technology and many companies are already building similar robots, investing in our robot proposal would be plausible, as there appears to be profit to be made. Taking care of the elderly requires good communication skills, empathy and showing concern. The elderly population covers people from various social circles, with different cultures, ages, goals and abilities. To take care of this population in the best way possible, we need a wide variety of techniques and knowledge.

State-of-the-art

Current emotion recognition technologies

Developing an interface that can respond to the emotion of a person starts with choosing a way to read and recognize this emotion. There are many techniques for recognizing emotion; three common ones are facial recognition, measuring physiological signals and speech recognition. Emotion recognition using facial recognition can be extended to analyze the full body of the person (e.g. gestures), and other combinations of techniques are also possible. A 2009 study proposed such a combination of different methods. The proposed method used facial expressions and physiological signals like heart rate and skin conductivity to classify four emotions of users: joy, fear, surprise and love.[18]

The most intuitive way to detect emotion is using facial recognition software.[19] Emotion recognition using facial recognition is easy to set up: the only things needed are a camera and code. Together, these factors make the facial recognition technique user-friendly. However, humans can fake emotions, and in this way a person can trick the recognition software. When the technique is extended with full body analysis, the result can be more accurate, depending on how much body language the person uses. But body language can also be faked.

There are many papers about using physiological signals to detect emotions.[20][21][22][23][24] These papers investigate physiological signals like heart rate, skin temperature, skin conductivity and respiration rate to infer the person’s emotion. To measure these physiological signals, biosensors, electrocardiography and electromyography are used. Even though these methods show more reliable results than methods like facial recognition, they all require some effort to set up and are not particularly user-friendly.

Lastly, speech recognition can be used to detect emotions.[25] Different emotions can be recognized by analyzing the tone and pitch of a person’s voice. Of course, the norm differs from person to person and from region to region. In addition, the tone of voice for some emotions is very similar, which makes it hard to classify emotions purely based on voice. And similarly to facial expressions, speech can also be faked.

Various papers talk about using support vector machines to lessen the overlap in their classification and to improve the overall accuracy of their classification.

Since our robot is going to be a service robot, it needs to be user-friendly. This means using physiological signals to detect emotion is a bad choice. Assuming that the user does not fake their emotions, since the user does not benefit from doing so, we are going to use only facial recognition in our robot. Due to time restrictions and because our main emphasis is not on the emotion recognition part, we decided not to use speech recognition. This could be added in the future to improve on our concept.

Current robots

Communication with the Elderly: Verbal vs Non-Verbal

A central aspect of our robot is its ability to communicate effectively with the elderly. We would like our robot to be relatively human-like, such that having a conversation with the robot is almost indistinguishable from having a conversation with a human. Communication theory states that communication is made of around six basic elements: the source, the sender, the message, the channel, the receiver and the response.[26] This shows that although communication appears simple, it is actually a very complex interaction, and breaking it down into simple steps for our robot to follow is impractical. Instead, we may observe existing human-human interaction and use this as a means to give our robot lifelike communication. Communication in our case can be split up into two categories: verbal and non-verbal communication. Verbal communication is the act of conveying a message with language, whereas non-verbal communication is the act of conveying a message without the use of verbal language.[27]

A key source to look at in order to find out how our robot should communicate is the nurse-elderly interaction, as nurses tend to be extremely competent at comforting an elderly patient and easing them into conversation. Communication with older people has its own characteristics: elderly people might have sensory deficits, nurses and patients might have different goals, and there is the generation gap that might hinder communication. Robots can easily overcome these difficulties and thus focus on the relational aspect. Nurses need to develop different types of abilities, which might overlap. They must be able to fulfill objectives in terms of physical care and at the same time establish a good relationship with the patient. These two aspects call upon two types of communication: instrumental communication, which is task-related behaviour, and affective communication, which is socio-emotional behaviour. Research from Peplau shows that, depending on the action performed by a nurse, the communication varies from purely instrumental to fully emotional, with a mix of both in many situations. In our case, the robot would focus on the more emotional aspect of communication to try and build a relationship with the elderly.[28]

Nurses tend to use affective behaviour in which they express support, concern and empathy.[29] Furthermore, socio-emotional communication such as jokes and personal conversation is often used in order to establish a close relationship. Although conversations come in the form of both verbal and non-verbal communication, studies show that non-verbal behaviour is key in creating a solid relationship with a patient. Actions such as eye gazing, head nodding and smiling are all important aspects of communication with the elderly.[30] Another important aspect of communication is touch, which serves as a tool both to communicate and to show affection. One study shows that the use of touch significantly increased non-verbal responses from patients, indicating that it helps trigger a communicative response.[31] Overall, for successful communication with the elderly, our robot will have to use a mix of verbal and non-verbal communication with a slight focus on some specific non-verbal behaviour such as head nodding and smiling.

Emotion Recognition

A key requirement for our robot is the ability to read the emotions of the elderly and respond in kind. To achieve this, we use deep convolutional neural networks (CNNs). CNNs excel at image recognition, which makes them well suited to recognizing emotions from facial images. We used an existing piece of emotion recognition software made by atulapra, which classifies emotions using a CNN.[32] The CNN is able to distinguish in real time between seven different emotions: angry, disgusted, fearful, happy, neutral, sad and surprised. For the CNN to classify the different emotions properly, the model must first be trained on data. The dataset chosen for training and validation is the FER-2013 dataset.[33] Compared to other datasets, the images it provides are not of people posing, which makes it more realistic when compared with a webcam feed of an elderly person. Furthermore, this dataset has 35,887 different images, and although they are quite low resolution, the quantity makes up for the lack of quality. With a dataset for training and validation in place, the network needs to be programmed. With the use of TensorFlow and the Keras API, a simple 4-layer CNN is constructed. These libraries help lower the complexity of the code and allow us to get extensive feedback on the accuracy and loss of the model. Once the model has been trained, it can be saved and reused in the future.
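To give a feel for what a 4-layer CNN does to a 48x48 FER-2013 image, the sketch below traces the feature map size through a stack of four 3x3 convolutions with interleaved 2x2 max-pooling. The layer stack, filter counts and helper names are assumptions chosen for illustration; they are not taken from this write-up and may differ from the software that was actually used.

```python
def conv_out(size, kernel=3, stride=1, padding=0):
    """Side length of a square feature map after a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2):
    """Side length after non-overlapping max-pooling."""
    return size // window


# Hypothetical layer stack (an assumption for illustration only):
# four convolution layers, three pooling layers.
LAYERS = [
    ("conv", 32), ("conv", 64), ("pool", None),
    ("conv", 128), ("pool", None),
    ("conv", 128), ("pool", None),
]


def trace_shapes(input_size=48, layers=LAYERS):
    """Return the (height, width, channels) shape after every layer."""
    size, channels = input_size, 1  # FER-2013 images are 48x48 grayscale
    shapes = [(size, size, channels)]
    for kind, filters in layers:
        if kind == "conv":
            size, channels = conv_out(size), filters
        else:
            size = pool_out(size)
        shapes.append((size, size, channels))
    return shapes


shapes = trace_shapes()
# Number of values handed to the dense layers after flattening.
flattened = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
for shape in shapes:
    print(shape)
print("flattened features:", flattened)
```

Under these assumptions the 48x48 input shrinks to a 4x4 map with 128 channels, so the classifier part of the network would see 2048 features per face.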

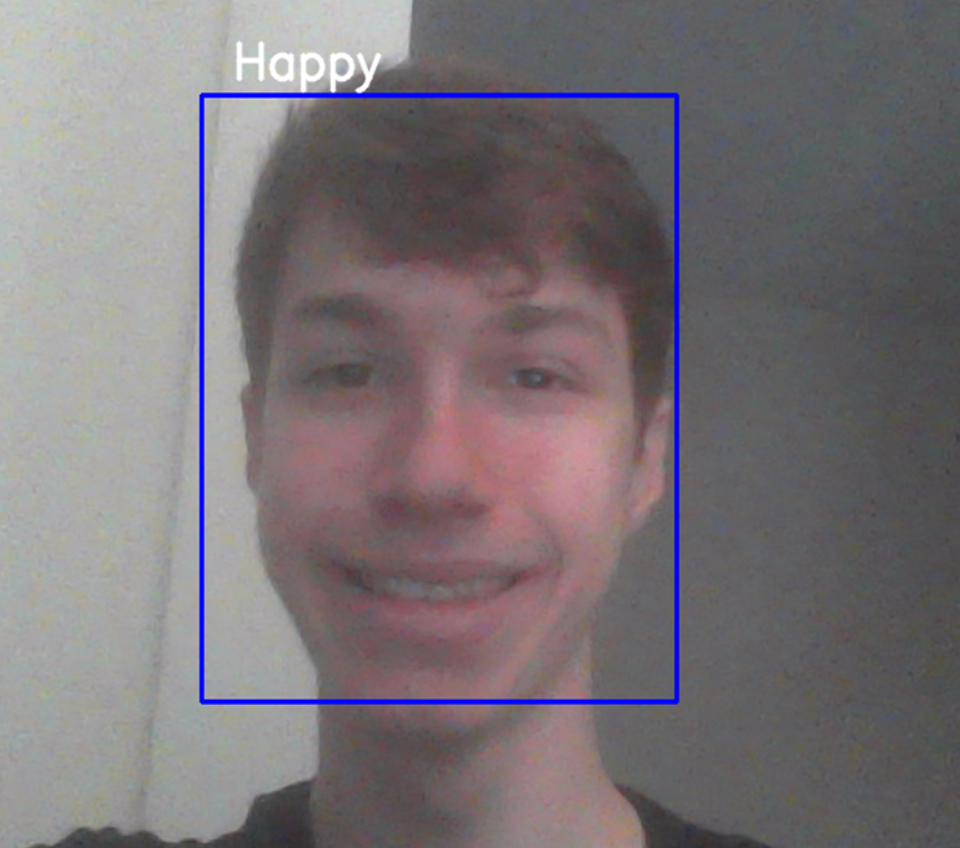

Now that we have a fairly accurate neural network, we just need to apply it to a real-time video feed. Using the OpenCV library, we can capture real-time video and use the cascade classifier to perform Haar cascade object detection, which allows for face detection. The program takes the detected face and scales it to a 48x48 input, which is then fed into the neural network. The network returns the values from the output layer, which represent the probabilities of the emotions the user is portraying. The emotion with the highest probability is then displayed as white text above the user's face. This can be seen in Fig. 1. When in use, the software performs relatively well, displaying the emotions fairly accurately when the user is facing the camera. However, with poor lighting and at sharp angles, the software starts to struggle to detect a face, which means it cannot properly detect emotions. These aspects are ones to improve on in the future.
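The last step of this pipeline, turning the output layer into a single displayed label, can be sketched as follows. This is a minimal illustration in plain Python: the order of the labels in `EMOTIONS`, the helper names and the example scores are assumptions, and a real model would supply the output values.

```python
import math

# The seven classes named in the text; the index order is an assumption.
EMOTIONS = ["angry", "disgusted", "fearful", "happy",
            "neutral", "sad", "surprised"]


def softmax(scores):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_emotion(probabilities, labels=EMOTIONS):
    """Return the most probable label together with its probability."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per emotion label")
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[index], probabilities[index]


# Made-up scores standing in for the network output of one video frame.
raw_scores = [0.1, -1.2, 0.3, 2.5, 1.0, 0.2, -0.5]
label, confidence = top_emotion(softmax(raw_scores))
print(label, round(confidence, 2))
```

Keeping the probability alongside the label makes it possible to ignore frames where the network is unsure, which may help with the lighting and angle problems described above.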

With all this information, a basic response program was created as a prototype so that it could be tested. It contains responses to two of the seven emotions, happy and sad. If the software detects that the user is portraying a happy emotion for an extended period of time, it uses text to speech to say “How was your day?” and outputs an uplifting image to reinforce the person's positive attitude. If the software detects that the user is portraying a sad emotion for an extended period of time, it uses text to speech to say “Let’s watch some videos!” and outputs some funny YouTube clips in order to cheer the person up.
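One way to express "for an extended period of time" is to count consecutive frames with the same label and respond once when the streak reaches a threshold. The sketch below is an assumption about how this could be done, not the prototype's actual code; the class name and the `hold_frames` parameter are hypothetical, and only the two spoken phrases come from the text.

```python
# Spoken responses for the two emotions handled by the prototype.
RESPONSES = {
    "happy": "How was your day?",
    "sad": "Let's watch some videos!",
}


class SustainedEmotionTrigger:
    """Fire a response once an emotion has been seen for hold_frames frames in a row."""

    def __init__(self, hold_frames=90):
        self.hold_frames = hold_frames  # hypothetical threshold, e.g. ~3 s at 30 fps
        self.current = None
        self.streak = 0

    def update(self, label):
        """Feed one per-frame label; return a response string or None."""
        if label == self.current:
            self.streak += 1
        else:
            self.current, self.streak = label, 1
        # Respond exactly once per streak, when the threshold is first reached.
        if self.streak == self.hold_frames and label in RESPONSES:
            return RESPONSES[label]
        return None


trigger = SustainedEmotionTrigger(hold_frames=3)
frames = ["neutral", "sad", "sad", "sad", "sad", "happy"]
fired = [trigger.update(frame) for frame in frames]
print(fired)
```

Because the comparison uses equality rather than "at least", the response is not repeated on every later frame of the same streak, which avoids interrupting the user continuously.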

Due to unforeseen circumstances, we were not able to test this software with the elderly and thus it was tested with two siblings. The test revolved around installing the software onto their laptops such that it would trigger at an unexpected time. With their consent, the software was set up such that if it detects a particular emotion much more than the others, it will trigger a response in order to reinforce this emotion or to try to comfort them. The two emotions focused on for the test were happy and sad. On one particular laptop a response for happy was installed and on the other a response for sad. The responses are as stated above. Once the test was over, the two participants were questioned for feedback.
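The condition "detects a particular emotion much more than the others" can be made concrete by counting labels over a session and requiring one label to hold a minimum share. This is a sketch under assumed thresholds; `min_share` and `min_samples` are hypothetical parameters, not values used in the test.

```python
from collections import Counter


def dominant_emotion(labels, min_share=0.6, min_samples=30):
    """Return the label that clearly dominates the session, or None.

    min_share and min_samples are illustrative assumptions: the label must
    account for at least min_share of the frames, and at least min_samples
    frames must have been collected before any decision is made.
    """
    if len(labels) < min_samples:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count / len(labels) >= min_share else None


session = ["happy"] * 8 + ["neutral"] * 2
result = dominant_emotion(session, min_share=0.6, min_samples=10)
print(result)
```

Requiring a minimum number of samples prevents a response from being triggered by the first few frames after the software starts.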

Participant 1 (Happy): “Although it startled me at first, I was quite content that someone asked how my day was going and this gave me motivation to keep a positive attitude for the rest of the day. It did overall uplift my spirit and I honestly thought it was helpful. I would personally use this software again.”

Participant 2 (Sad): “To be honest, my day wasn’t going well at all. And when the program triggered and shoved a youtube video in my face I really didn’t think it was going to make it any better. But it actually did end up helping me feel a bit better. It took my mind off of all the extraneous things I was worrying about and I just spent a good few minutes having a laugh. I could see this being pretty useful if it had varying responses, maybe some different videos!”

Although the results are purely qualitative, as we lacked people to test it on, the software shows promise. Both participants were very positive about the software, but with so few results there is no way to rule out bias, so their feedback should be interpreted with caution. With some tweaking of the responses and further research into improving the accuracy of the neural network, it is possible that we could see this technology being used in a few years' time.

Planning

Week 1

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 6 | Lecture(2), Meeting(4) |

| Floren van Barlingen | 6 | Lecture(2), Meeting(4) |

| Robin Chen | 6 | Lecture(2), Meeting(4) |

| Merel Eikens | 6 | Lecture(2), Meeting(4) |

| Dylan Harmsen | 6 | Lecture(2), Meeting(4) |

Week 2

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 12 | Meeting(8), Finding and reading articles(4) |

| Floren van Barlingen | 15 | Meeting(8), Finding and reading articles(4), Concepting(3) |

| Robin Chen | 15 | Meeting(8), Finding and reading articles(3), Summarizing the articles(2), Ideating(2) |

| Merel Eikens | 15 | Meeting(8), Finding and reading articles(4), Concepting(3) |

| Dylan Harmsen | 15 | Meeting(8), Finding and reading articles(5), Summarizing the articles(2) |

Week 3

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 14 | Meeting(8), Research into facial recognition software(6) |

| Floren van Barlingen | 13 | Meeting(8), Research into feedback(5) |

| Robin Chen | 15 | Meeting(8), Research into emotion detection technologies(5), Writing summaries(2) |

| Merel Eikens | 14 | Meeting(8), Research into feedback(6) |

| Dylan Harmsen | 14 | Meeting(8), Research into emotion detection technologies(6) |

Week 4

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 8 | Research(5), Ideating(3) |

| Floren van Barlingen | 4 | Research into scenarios(4) |

| Robin Chen | 6 | Research into emotion recognition(3), Writing about emotion recognition(3) |

| Merel Eikens | 4 | Research into loneliness(4) |

| Dylan Harmsen | 9 | Research into emotion recognition(3), Looking into the survey questions and the questions for no1robotics(6) |

Week 5

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 20 | Meeting(10), Research into scenarios(6), Presentation preparation(4) |

| Floren van Barlingen | 21 | Meeting(10), Research into scenarios(5), Presentation preparation(4), Contact companies(2) |

| Robin Chen | 20 | Meeting(10), Research into scenarios(6), Presentation preparation(4) |

| Merel Eikens | 20 | Meeting(10), Research into scenarios(6), Presentation preparation(4) |

| Dylan Harmsen | 20 | Meeting(10), Research into scenarios(6), Presentation preparation(4) |

Week 6

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 14 | Meeting(8), Emotion Recognition Using Deep CNN(6) |

| Floren van Barlingen | 8 | Meeting(8) |

| Robin Chen | 12 | Meeting(8), Research human emotional reactions(4) |

| Merel Eikens | 8 | Meeting(8) |

| Dylan Harmsen | 8 | Meeting(8) |

Week 7 (university free week due to corona)

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 8 | |

| Floren van Barlingen | 4 | |

| Robin Chen | 4 | Analyzing facial expressions and reactions in videos(4) |

| Merel Eikens | 4 | |

| Dylan Harmsen | 9 | |

Week 8

| Name | Total Hours | Tasks |

|---|---|---|

| Aristide Arnulf | 8 | Meeting(8) |

| Floren van Barlingen | 8 | Meeting(8) |

| Robin Chen | 18 | Meeting(8), Analyzing videos(4), Writing about current emotion recognition technologies(6) |

| Merel Eikens | 8 | Meeting(8) |

| Dylan Harmsen | 8 | Meeting(8) |

Literature

- ↑ United Nations Publications. (2019b). World Population Prospects 2019: Data Booklet. World: United Nations.

- ↑ Smits, C. H. M., van den Beld, H. K., Aartsen, M. J., & Schroots, J. J. F. (2013). Aging in The Netherlands: State of the Art and Science. The Gerontologist, 54(3), 335–343. https://doi.org/10.1093/geront/gnt096

- ↑ Holwerda, T. J., Beekman, A. T. F., Deeg, D. J. H., Stek, M. L., van Tilburg, T. G., Visser, P. J., … Schoevers, R. A. (2011). Increased risk of mortality associated with social isolation in older men: only when feeling lonely? Results from the Amsterdam Study of the Elderly (AMSTEL). Psychological Medicine, 42(4), 843–853. https://doi.org/10.1017/s0033291711001772

- ↑ Luanaigh, C. Ó., & Lawlor, B. A. (2008). Loneliness and the health of older people. International Journal of Geriatric Psychiatry, 23(12), 1213–1221. https://doi.org/10.1002/gps.2054

- ↑ Victor, C. R., & Bowling, A. (2012). A Longitudinal Analysis of Loneliness Among Older People in Great Britain. The Journal of Psychology, 146(3), 313–331. https://doi.org/10.1080/00223980.2011.609572

- ↑ Şar, A. H., Göktürk, G. Y., Tura, G., & Kazaz, N. (2012). Is the Internet Use an Effective Method to Cope With Elderly Loneliness and Decrease Loneliness Symptom? Procedia - Social and Behavioral Sciences, 55, 1053–1059. https://doi.org/10.1016/j.sbspro.2012.09.597

- ↑ DiTommaso, E., & Spinner, B. (1997). Social and emotional loneliness: A re-examination of weiss’ typology of loneliness. Personality and Individual Differences, 22(3), 417–427. https://doi.org/10.1016/s0191-8869(96)00204-8

- ↑ Gardiner, C., Geldenhuys, G., & Gott, M. (2016). Interventions to reduce social isolation and loneliness among older people: an integrative review. Health & Social Care in the Community, 26(2), 147–157. https://doi.org/10.1111/hsc.12367

- ↑ Weiss, R. (1975). Loneliness: The Experience of Emotional and Social Isolation (MIT Press) (New edition). Amsterdam, Netherlands: Amsterdam University Press.

- ↑ Golden, J., Conroy, R. M., Bruce, I., Denihan, A., Greene, E., Kirby, M., & Lawlor, B. A. (2009). Loneliness, social support networks, mood and wellbeing in community-dwelling elderly. International Journal of Geriatric Psychiatry, 24(7), 694–700. https://doi.org/10.1002/gps.2181

- ↑ Courtin, E., & Knapp, M. (2015). Social isolation, loneliness and health in old age: a scoping review. Health & Social Care in the Community, 25(3), 799–812. https://doi.org/10.1111/hsc.12311

- ↑ Hollinger, L. M. (1986). Communicating With the Elderly. Journal of Gerontological Nursing, 12(3), 8–9. https://doi.org/10.3928/0098-9134-19860301-05

- ↑ Ni, P. I. (2014, November 16). How to Communicate Effectively With Older Adults. Retrieved February 19, 2020, from https://www.psychologytoday.com/us/blog/communication-success/201411/how-communicate-effectively-older-adults

- ↑ Broekens, J., Heerink, M., & Rosendal, H. (2009). Assistive social robots in elderly care: a review. Gerontechnology, 8(2). https://doi.org/10.4017/gt.2009.08.02.002.00

- ↑ Draper, H., & Sorell, T. (2016b). Ethical values and social care robots for older people: an international qualitative study. Ethics and Information Technology, 19(1), 49–68. https://doi.org/10.1007/s10676-016-9413-1

- ↑ Lathan, C. G. E. L. (2019, July 1). Social Robots Play Nicely with Others. Retrieved from https://www.scientificamerican.com/article/social-robots-play-nicely-with-others/

- ↑ Costello, H., Cooper, C., Marston, L., & Livingston, G. (2019). Burnout in UK care home staff and its effect on staff turnover: MARQUE English national care home longitudinal survey. Age and Ageing, 49(1), 74–81. https://doi.org/10.1093/ageing/afz118

- ↑ Chang, C.-Y., Tsai, J.-S., Wang, C.-J., & Chung, P.-C. (2009). Emotion recognition with consideration of facial expression and physiological signals. 2009 IEEE Symposium on Computational Intelligence in Bioinformatics and Computational Biology. https://doi.org/10.1109/cibcb.2009.4925739

- ↑ Zhentao Liu, Min Wu, Weihua Cao, Luefeng Chen, Jianping Xu, Ri Zhang, Mengtian Zhou, Junwei Mao. (2017). A Facial Expression Emotion Recognition Based Human-robot Interaction System. IEEE/CAA Journal of Automatica Sinica, 4(4), 668-676

- ↑ Haag, A., Goronzy, S., Schaich, P., & Williams, J. (2004). Emotion recognition using bio-sensors: First steps towards an automatic system. In Lecture Notes in Artificial Intelligence (Subseries of Lecture Notes in Computer Science) (Vol. 3068, pp. 36–48). Springer Verlag. https://doi.org/10.1007/978-3-540-24842-2_4

- ↑ Jerritta, S., Murugappan, M., Nagarajan, R., & Wan, K. (2011). Physiological signals based human emotion recognition: A review. In Proceedings - 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, CSPA 2011 (pp. 410–415). https://doi.org/10.1109/CSPA.2011.5759912

- ↑ Kim, K. H., Bang, S. W., & Kim, S. R. (2004). Emotion recognition system using short-term monitoring of physiological signals. Medical and Biological Engineering and Computing, 42(3), 419–427. https://doi.org/10.1007/BF02344719

- ↑ Shu, L., Xie, J., Yang, M., Li, Z., Li, Z., Liao, D., … Yang, X. (2018, July 1). A review of emotion recognition using physiological signals. Sensors (Switzerland). MDPI AG. https://doi.org/10.3390/s18072074

- ↑ Zong, C., & Chetouani, M. (2009). Hilbert-Huang transform based physiological signals analysis for emotion recognition. 2009 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT). https://doi.org/10.1109/isspit.2009.5407547

- ↑ Park, J.-S., Kim, J.-H., & Oh, Y.-H. (2009). Feature vector classification based speech emotion recognition for service robots. IEEE Transactions on Consumer Electronics, 55(3), 1590–1596. https://doi.org/10.1109/tce.2009.5278031

- ↑ Shannon, C. E. (1948). A Mathematical Theory of Communication. Bell System Technical Journal, 27(3), 379–423. https://doi.org/10.1002/j.1538-7305.1948.tb01338.x

- ↑ Caris‐Verhallen, W. M. C. M., Kerkstra, A., & Bensing, J. M. (1997). The role of communications in nursing care for elderly people: a review of the literature. Journal of Advanced Nursing, 25(5), 915–933. https://doi.org/10.1046/j.1365-2648.1997.1997025915.x

- ↑ Hagerty, T. A., Samuels, W., Norcini-Pala, A., & Gigliotti, E. (2017). Peplau’s Theory of Interpersonal Relations. Nursing Science Quarterly, 30(2), 160–167. https://doi.org/10.1177/0894318417693286

- ↑ Caris-Verhallen, W. M. C. M., Kerkstra, A., van der Heijden, P. G. M., & Bensing, J. M. (1998). Nurse-elderly patient communication in home care and institutional care: an explorative study. International Journal of Nursing Studies, 35(1–2), 95–108. https://doi.org/10.1016/s0020-7489(97)00039-4

- ↑ Caris‐Verhallen, W. M. C. M., Kerkstra, A., & Bensing, J. M. (1999). Non‐verbal behaviour in nurse–elderly patient communication. Journal of Advanced Nursing, 29(4), 808–818. https://doi.org/10.1046/j.1365-2648.1999.00965.x

- ↑ Langland, R. M., & Panicucci, C. L. (1982). Effects of Touch on Communication with Elderly Confused Clients. Journal of Gerontological Nursing, 8(3), 152–155. https://doi.org/10.3928/0098-9134-19820301-09

- ↑ atulapra. Emotion-detection [Computer software]. Retrieved from https://github.com/atulapra/Emotion-detection

- ↑ Carrier, P.-L., & Courville, A. (2013). FER-2013 [The Facial Expression Recognition 2013 (FER-2013) Dataset]. Retrieved from https://datarepository.wolframcloud.com/resources/FER-2013

Baron, R. J. (1981). Mechanisms of human facial recognition. International Journal of Man-Machine Studies, 15(2), 137–178. https://doi.org/10.1016/s0020-7373(81)80001-6

Breazeal, C. (2003). Emotion and sociable humanoid robots. International Journal of Human-Computer Studies, 59(1–2), 119–155. https://doi.org/10.1016/s1071-5819(03)00018-1

Cañamero, L. (2005). Emotion understanding from the perspective of autonomous robots research. Neural Networks, 18(4), 445–455. https://doi.org/10.1016/j.neunet.2005.03.003

Cavallo, F., Semeraro, F., Fiorini, L., Magyar, G., Sinčák, P., & Dario, P. (2018). Emotion Modelling for Social Robotics Applications: A Review. Journal of Bionic Engineering, 15(2), 185–203. https://doi.org/10.1007/s42235-018-0015-y

Donaldson, J. M., & Watson, R. (1996). Loneliness in elderly people: an important area for nursing research. Journal of Advanced Nursing, 24(5), 952–959. https://doi.org/10.1111/j.1365-2648.1996.tb02931.x

Doroftei, I., Adascalitei, F., Lefeber, D., Vanderborght, B., & Doroftei, I. A. (2016). Facial expressions recognition with an emotion expressive robotic head. IOP Conference Series: Materials Science and Engineering, 147, 012086. https://doi.org/10.1088/1757-899x/147/1/012086

Draper, H., & Sorell, T. (2016). Ethical values and social care robots for older people: an international qualitative study. Ethics and Information Technology, 19(1), 49–68. https://doi.org/10.1007/s10676-016-9413-1

Duffy, B. R. (2003). Anthropomorphism and the social robot. Robotics and Autonomous Systems, 42(3–4), 177–190. https://doi.org/10.1016/s0921-8890(02)00374-3

Gallego-Perez, J. (n.d.). Robots to motivate elderly people: Present and future challenges. Retrieved February 12, 2020, from https://www.mendeley.com/catalogue/robots-motivate-elderly-people-present-future-challenges/

Golden, J., Conroy, R. M., Bruce, I., Denihan, A., Greene, E., Kirby, M., & Lawlor, B. A. (2009). Loneliness, social support networks, mood and wellbeing in community‐dwelling elderly. International Journal of Geriatric Psychiatry, 24(7), 694–700. https://s3.amazonaws.com/academia.edu.documents/4888511/elders_social_nets.pdf

Kachouie, R., Sedighadeli, S., Khosla, R., & Chu, M.-T. (2014). Socially Assistive Robots in Elderly Care: A Mixed-Method Systematic Literature Review. International Journal of Human-Computer Interaction, 30(5), 369–393. https://doi.org/10.1080/10447318.2013.873278

Kidd, C. D., Taggart, W., & Turkle, S. (2006). A sociable robot to encourage social interaction among the elderly. Proceedings 2006 IEEE International Conference on Robotics and Automation, 2006. ICRA 2006. https://doi.org/10.1109/robot.2006.1642311

Lee, K. M., Jung, Y., Kim, J., & Kim, S. R. (2006). Are physically embodied social agents better than disembodied social agents?: The effects of physical embodiment, tactile interaction, and people's loneliness in human–robot interaction. International Journal of Human-Computer Studies, 64(10), 962–973. https://dr.ntu.edu.sg/bitstream/10356/100910/1/IJHCS-S-05-00003.pdf

Liu, Z., Wu, M., Cao, W., Chen, L., Xu, J., Zhang, R., … Mao, J. (2017). A facial expression emotion recognition based human-robot interaction system. IEEE/CAA Journal of Automatica Sinica, 4(4), 668–676. https://doi.org/10.1109/jas.2017.7510622

Luanaigh, C. Ó., & Lawlor, B. A. (2008). Loneliness and the health of older people. International Journal of Geriatric Psychiatry. https://doi.org/10.1002/gps.2054

Robinson, H. (n.d.). The Role of Healthcare Robots for Older People at Home: A Review. Retrieved February 12, 2020, from https://www.mendeley.com/catalogue/role-healthcare-robots-older-people-home-review/

Russell, J. A. (1994). Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin, 115(1), 102–141. Retrieved February 12, 2020, from https://www.mendeley.com/catalogue/universal-recognition-emotion-facial-expression-review-crosscultural-studies/

Santhoshkumar, R., & Geetha, M. K. (2019). Deep Learning Approach for Emotion Recognition from Human Body Movements with Feedforward Deep Convolution Neural Networks. Procedia Computer Science, 152, 158–165. https://doi.org/10.1016/j.procs.2019.05.038

Şar, A. H. (n.d.). Is the Internet Use an Effective Method to Cope With Elderly Loneliness and Decrease Loneliness Symptom? Retrieved February 12, 2020, from https://www.mendeley.com/catalogue/internet-effective-method-cope-elderly-loneliness-decrease-loneliness-symptom/

Sharkey, A. (2014). Robots and human dignity: a consideration of the effects of robot care on the dignity of older people. Ethics and Information Technology, 16(1), 63–75. https://doi.org/10.1007/s10676-014-9338-5

Sharkey, A., & Sharkey, N. (2012). Granny and the robots: ethical issues in robot care for the elderly. Ethics and Information Technology, 14(1), 27–40. https://philpapers.org/archive/SHAGAT.pdf

Stafford, R. Q., Broadbent, E., Jayawardena, C., Unger, U., Kuo, I. H., Igic, A., … MacDonald, B. A. (2010, September). Improved robot attitudes and emotions at a retirement home after meeting a robot. In 19th International Symposium in Robot and Human Interactive Communication (pp. 82–87). IEEE. https://s3.amazonaws.com/academia.edu.documents/31365205/05598679.pdf

Tomaka, J., Thompson, S., & Palacios, R. (2006). The relation of social isolation, loneliness, and social support to disease outcomes among the elderly. Journal of Aging and Health, 18(3), 359–384. https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.911.9588&rep=rep1&type=pdf

Voth, D. (2003). Face recognition technology. IEEE Intelligent Systems, 18(3), 4–7. https://doi.org/10.1109/mis.2003.1200719

Wu, Y.-H., Fassert, C., & Rigaud, A.-S. (2012). Designing robots for the elderly: Appearance issue and beyond. Archives of Gerontology and Geriatrics, 54(1), 121–126. https://doi.org/10.1016/j.archger.2011.02.003

Zhao, X., Naguib, A. M., & Lee, S. (2014). Kinect based calling gesture recognition for taking order service of elderly care robot. The 23rd IEEE International Symposium on Robot and Human Interactive Communication. https://doi.org/10.1109/roman.2014.6926306