PRE2018 4 Group8

<div style="font-family: 'Arial'; font-size: 16px; line-height: 1.5; max-width: 1300px; word-wrap: break-word; color: #333; font-weight: 400; box-shadow: 0px 25px 35px -5px rgba(0,0,0,0.75); margin-left: auto; margin-right: auto; padding: 70px; background-color: rgb(255, 255, 253); padding-top: 25px; transform: rotate(0deg)">

<font size='7'>Emotion recognition</font>

----

==Members==

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4"

=== Surgery robots ===

The DaVinci surgery system has become a serious competitor to conventional laparoscopic surgery techniques, because the machine has more degrees of freedom and thus allows the surgeon to carry out movements that are not possible with other techniques. The DaVinci system is controlled by the surgeon, who therefore has full control over, and responsibility for, the result. However, as robots become more advanced, they might also become more autonomous. Mistakes can still occur, albeit perhaps less frequently than with human surgeons. In such cases, who is responsible: the robot manufacturer or the surgeon? In this research project, the ethical implications of autonomous robot surgery could be addressed.

=== Elderly care robots ===

The aging population is rapidly increasing in most developed countries, while vacancies in elderly care often remain unfilled. Elderly care robots could therefore be a solution, as they relieve the pressure on the carers of elderly people. They can also offer more specialized care and aid the person in their social development. However, the information recorded by the sensors and the video images recorded by cameras should be well protected to ensure the privacy of the elderly. In addition, robot care should respect the autonomy of the elderly rather than infantilize them.

=== Facial emotion recognition ===

Facebook uses advanced Artificial Intelligence (AI) to recognize faces. This data can be used or misused in many ways: totalitarian governments can use such techniques to control the masses, but care robots could use facial recognition to read the emotional expressions of the person they are taking care of. In this research project, facial emotion recognition can be explored, as this technology might have interesting technical and ethical implications for the field of robotic care.

== Introduction ==

19.9% <ref> Holwerda, T. J., Deeg, D. J., Beekman, A. T., van Tilburg, T. G., Stek, M. L., Jonker, C., & Schoevers, R. A. (2014). Feelings of loneliness, but not social isolation, predict dementia onset: results from the Amsterdam Study of the Elderly (AMSTEL). J Neurol Neurosurg Psychiatry, 85(2), 135-142. </ref> of the elderly report that they experience feelings of loneliness. A potential cause is that many of them have lost a large part of their family and friends. This is an alarming figure, as loneliness has been linked to increased rates of depression and degenerative diseases in the elderly. A solution to the problem of lonely elderly could be an assistive robot with a human-robot interactive aspect, which can also communicate with primary or secondary carers. Such a robot would recognize the facial expression of the elderly person and deduce their needs from it. If the elderly person looks sad, the robot might suggest that they contact a family member or a friend via a Skype call. However, the technology for such interaction is still rather immature.

The EU-funded SocialRobot project <ref> Portugal, D., Santos, L., Alvito, P., Dias, J., Samaras, G., & Christodoulou, E. (2015, December). SocialRobot: An interactive mobile robot for elderly home care. In 2015 IEEE/SICE International Symposium on System Integration (SII) (pp. 811-816). IEEE. </ref> has developed a care robot that can move around, record audio and video and, most importantly, perform emotion recognition on audio recordings. However, the accuracy of emotion classification based on audio alone lies between 60 and 80 percent, depending on the specific emotion <ref> da Silva Costa, L. M. (2013). Integration of a Communication System for Social Behavior Analysis in the SocialRobot Project. </ref>, which is inadequate for the envisioned application. Research has shown that combining video images with recorded speech data is especially powerful and accurate for determining a person's emotion <ref> Han, M. J., Hsu, J. H., Song, K. T., & Chang, F. Y. (2008). A New Information Fusion Method for Bimodal Robotic Emotion Recognition. JCP, 3(7), 39-47. </ref>. Therefore, this research project proposes a facial emotion recognition package that could be used in the SocialRobot project.
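The benefit of combining audio and video can be illustrated with decision-level (late) fusion, one common way of merging two modalities; it is not necessarily the fusion method of Han et al. The sketch assumes that an audio classifier and a facial classifier each output a probability vector over the same emotion labels, and that a fixed weight `w_video` (a hypothetical value of 0.6) expresses how much the video prediction is trusted. The three-label set and all probability values are made up for illustration.

```python
import numpy as np

# Reduced, hypothetical label set for illustration only.
EMOTIONS = ["anger", "joy", "sadness"]


def late_fusion(p_audio, p_video, w_video=0.6):
    """Weighted average of two per-class probability vectors, renormalised to sum to 1.

    w_video is an assumed trust weight for the facial (video) classifier;
    the audio classifier gets the remaining 1 - w_video.
    """
    p_audio = np.asarray(p_audio, dtype=float)
    p_video = np.asarray(p_video, dtype=float)
    fused = w_video * p_video + (1.0 - w_video) * p_audio
    return fused / fused.sum()


p_audio = [0.40, 0.35, 0.25]  # audio alone is unsure and slightly favours "anger"
p_video = [0.10, 0.20, 0.70]  # the face clearly looks sad
fused = late_fusion(p_audio, p_video)
print(EMOTIONS[int(np.argmax(fused))])  # sadness
```

With these numbers the audio classifier on its own would pick "anger", while the fused vector (0.22, 0.26, 0.52) picks "sadness". In practice the weight would have to be tuned on validation data.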

=== Research Question ===

We chose emotion recognition in the elderly as our subject of study. For this purpose, the following research question was defined:

''In what way can Convolutional Neural Networks (CNNs) be used to perform emotion recognition on real-time video images of lonely elderly people?''

What are the requirements for the dataset that will be used?

What is a suitable CNN architecture to analyze dynamic facial expressions?

* USE sub-questions:

The deliverables of this project include:

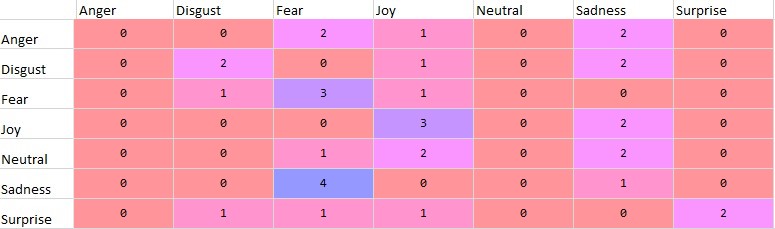

* Software: a neural network trained to recognize emotions from pictures of facial expressions. This software should be able to distinguish between the 7 basic facial expressions: anger, disgust, joy, fear, surprise, sadness and neutral.

* A Wiki page describing the entire process the group went through during the research, as well as a technical and USE evaluation of the software product.

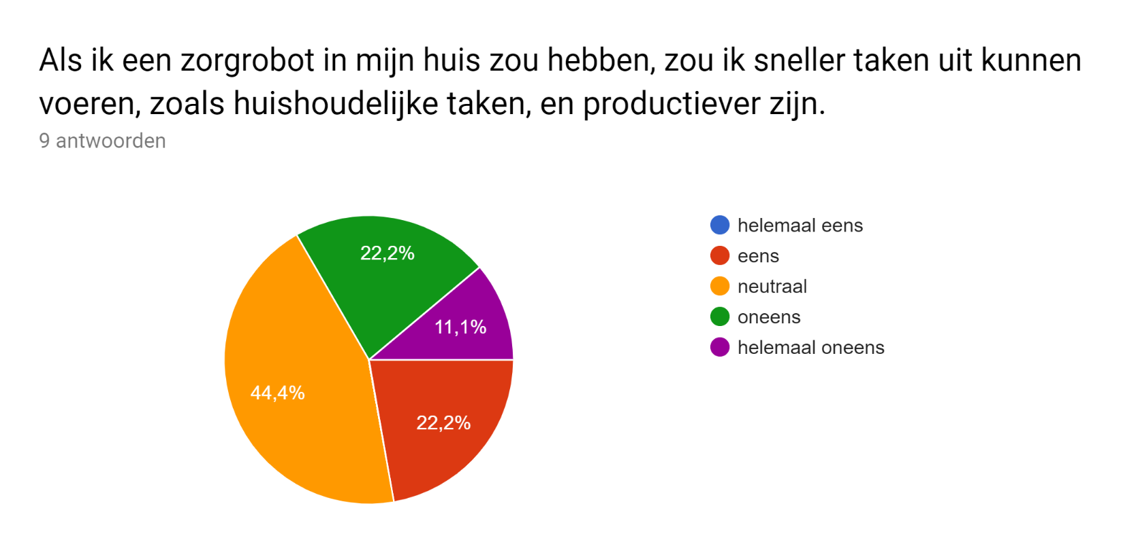

* The analyzed results of a survey on the acceptance of robots in their homes by elderly people.

* The analyzed results of an interview conducted with several groups of carers in a home for the elderly.
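For the first deliverable, a classification network typically ends in one output per class, which a softmax turns into probabilities. The sketch below assumes such an output layer for the seven expressions listed above, in an assumed order; the logit values are invented, and `predict_emotion` is a hypothetical helper, not part of any existing package.

```python
import numpy as np

# The seven expressions from the deliverables list, in an assumed output order.
EMOTIONS = ["anger", "disgust", "joy", "fear", "surprise", "sadness", "neutral"]


def softmax(logits):
    """Turn raw network outputs (logits) into probabilities that sum to 1."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max()  # subtracting the maximum avoids overflow in exp()
    e = np.exp(z)
    return e / e.sum()


def predict_emotion(logits):
    """Return the most probable emotion label and the full probability vector."""
    probs = softmax(logits)
    return EMOTIONS[int(np.argmax(probs))], probs


# Made-up output of a trained network for one face image.
label, probs = predict_emotion([0.1, -1.2, 2.5, 0.0, 0.3, 1.1, 0.9])
print(label)  # joy
```

Keeping the full probability vector, rather than only the label, leaves room for later steps such as smoothing over video frames or combining with audio.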

===Steps to be taken===

First, a study of the state of the art was conducted to become familiar with the different techniques for using a CNN for facial recognition. This information was crucial for deciding how our project will go beyond existing research. Next, a database of relevant photos will be constructed for training the CNN, as well as a separate one for testing it. A CNN will then be constructed, implemented and trained to recognize emotions using the training database. After training, the CNN will be evaluated on the test database and, if time allows, on real people. Finally, the usefulness of this CNN in elderly care robots will be analyzed, as well as the ethical aspects surrounding our application.
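Building separate training and test databases could look roughly like the sketch below. It assumes the photos are available as `(image_path, label)` pairs and uses a stratified split, so that each emotion keeps roughly the same share in both sets. The file names, the 20% test fraction and the function name `stratified_split` are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=0):
    """Split (image_path, label) pairs into train and test lists per emotion label.

    Every label keeps roughly the same share in both sets; labels with a single
    photo go to the training set only.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction)) if len(items) > 1 else 0
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical file names: 10 "joy" photos and 5 "sadness" photos.
photos = [(f"img_{i:03d}.png", "joy") for i in range(10)]
photos += [(f"img_{i:03d}.png", "sadness") for i in range(10, 15)]
train, test = stratified_split(photos)
print(len(train), len(test))  # 12 3
```

Stratifying matters when some emotions are rare in the database: a purely random split could leave a class almost absent from the test set.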

== Case-study ==

Bart always describes himself as "pretty active back in his days". But now that he has reached the age of 83, he is not that active anymore. He lives in an apartment complex for elderly people with an artificial companion named John. John is a care robot that, besides helping Bart in the household, also functions as an interactive conversation partner. Every morning after greeting Bart, the robot gives a weather forecast. It learned this trick from analyzing casual human-human conversations, which almost always start with a chat about the weather. Today this approach seems to work fine, as after some time Bart reacts with: “Well, it promises to be a beautiful day, doesn’t it?” But if the robot were equipped with simple emotion recognition software, it would have noticed that a sad expression appeared on Bart’s face after the weather forecast was mentioned. In fact, every time Bart hears the weather forecast, he thinks about how he used to go out to enjoy the sun and that he cannot do that anymore. With emotion recognition, the robot could avoid this subject in the future. The robot could even try to arrange with the nurses that Bart goes outside more often.

In this example, Bart would benefit from the implementation of facial emotion recognition software in his care robot. At the same time, a value conflict might arise. On the one hand, emotion recognition software could seriously improve the quality of the care delivered by the care robot. On the other hand, we should seriously consider to what extent these robots may replace interaction with real humans. Moreover, if the robot takes action to get the nurses to let Bart go outside more often, this might conflict with his right to privacy and autonomy: Bart might feel that he is treated like a child when the robot contacts his carers without his consent.

== State-of-the-Art technology ==

[[File:databaseCKphotos.gif|thumb|400px|alt=Alt text|Figure 1: An example of a database used for facial emotion recognition, CK+]]

To promote the clarity of our literature study, the state-of-the-art technology section has been subdivided into three parts: sources that provide ''technical insight'', sources that explain the implications our technology can have on ''USE stakeholders'', and sources that describe possible ''applications'' of our technology.

=== Technical insight ===

[[File:cknormalised.jpg|thumb|400px|alt=Alt text|Figure 2: The normalised CK+ image (right) and its original intensity image (left)]]

Neural networks can be used for both facial recognition and emotion recognition. The approaches in the literature can be classified based on the following elements:

* The database used for training

* The feature selection method

* The neural network architecture

An example of a ''database'' is the extended Cohn-Kanade dataset (CK+, see fig. 1) <ref> Lucey, P., Cohn, J. F., Kanade, T., Saragih, J., Ambadar, Z., & Matthews, I. (2010, June). The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops (pp. 94-101). IEEE. </ref>. It contains pictures of a diverse set of people: the participants were aged 18 to 50, 69% were female, 81% were Euro-American, 13% Afro-American and 6% from other groups. The participants started out with a neutral face and were then instructed to take on several emotions, in which different muscle groups in the face (called action units, or AUs, by the researchers) were active. The database contains labeled pictures, classified into 7 different emotions: anger, disgust, fear, happiness, sadness, surprise and contempt.

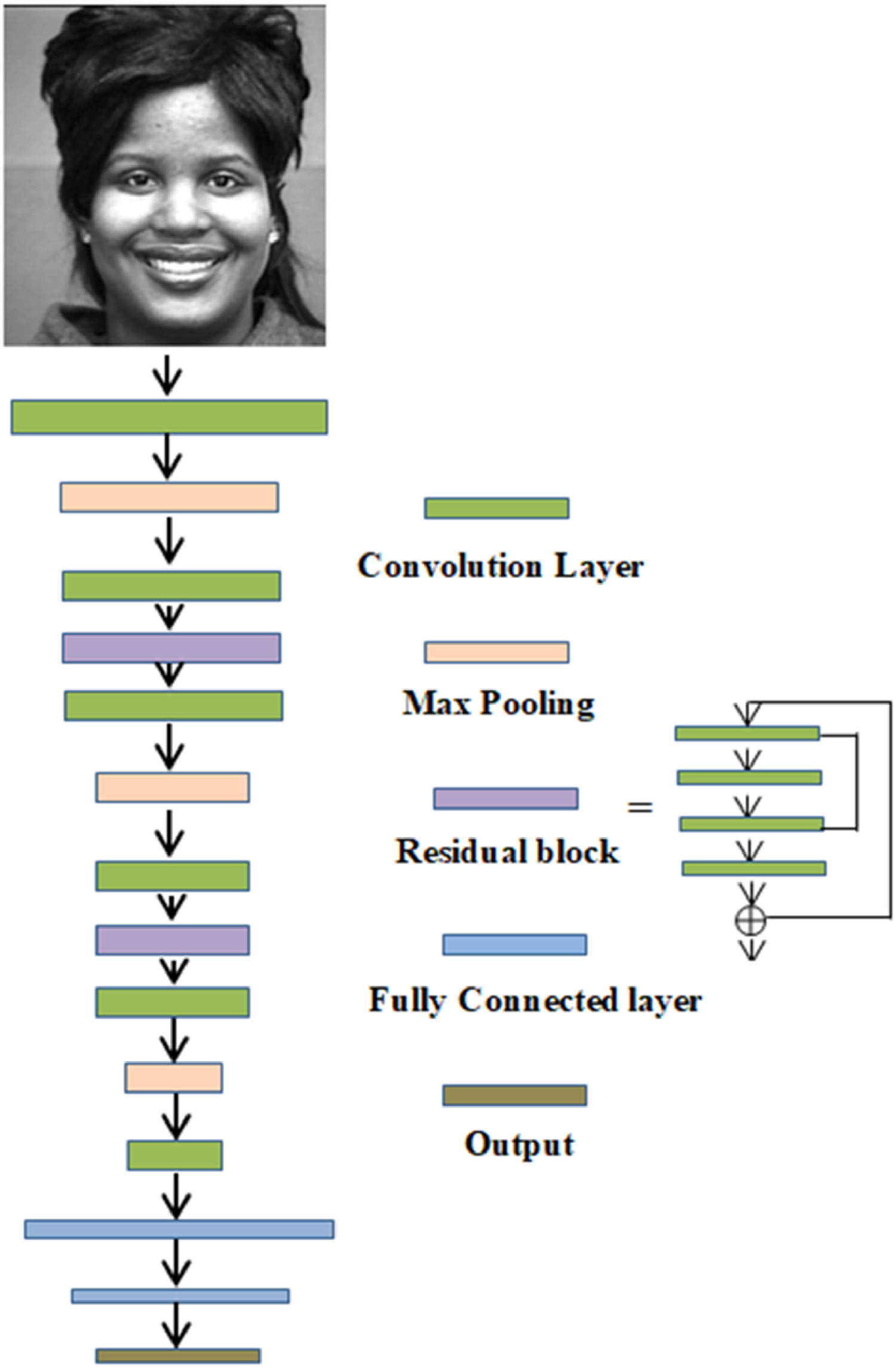

[[File:deepconvolutnetwork.jpg|thumb|400px|alt=Alt text|Figure 3: The machine learning structure used by the researchers from source 9]]

Source 9 used this database. To extract the ''features'', the researchers first cropped the image and then normalized its intensity (see fig. 2). They then computed the local deviation of the normalized image using a structuring element of size NxN.
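These three preprocessing steps can be sketched in NumPy as follows. This is a minimal sketch under assumptions that source 9 does not state here: the crop box is taken as given (e.g. from a face detector), intensity normalization is assumed to be min-max scaling to [0, 1], and the "local deviation" is assumed to be the standard deviation inside an NxN window around every pixel, with mirrored borders and odd N. `sliding_window_view` requires NumPy 1.20 or newer.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20


def crop(image, top, left, height, width):
    """Cut out the face region; the box is assumed to come from a face detector."""
    return image[top:top + height, left:left + width]


def normalize_intensity(image):
    """Min-max scale grey values to [0, 1] (an assumed normalisation scheme)."""
    image = image.astype(float)
    lo, hi = image.min(), image.max()
    if hi == lo:  # a flat image has no contrast to stretch
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)


def local_std(image, n):
    """Standard deviation inside the n x n window centred on each pixel (n odd)."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that every window has a centre pixel")
    padded = np.pad(image, n // 2, mode="reflect")  # mirror the borders
    windows = sliding_window_view(padded, (n, n))   # shape: (H, W, n, n)
    return windows.std(axis=(-2, -1))


# Random 64x64 grey-scale "photo" standing in for a CK+ image.
rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(64, 64))
face = normalize_intensity(crop(photo, top=8, left=8, height=48, width=48))
features = local_std(face, n=5)
print(features.shape)  # (48, 48)
```

The local standard deviation is high around edges and texture (eyebrows, mouth corners) and low on smooth skin, which is why it can serve as a simple feature map.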

As fig. 3 shows, the ''neural network architecture'' chosen by the researchers consists of six convolutional layers and two blocks of deep residual learning; because of its size, it is called a 'deep convolutional neural network'. Each convolutional layer is followed by a max-pooling layer, and the network additionally contains 2 fully connected layers.
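A residual block adds its input back to the output of a few stacked layers, so those layers only have to learn a correction to the identity. The single-channel NumPy sketch below shows this idea for the generic form conv, ReLU, conv plus a shortcut; the kernel sizes and the exact layer order inside source 9's residual blocks are not given in the text, so they are assumptions here.

```python
import numpy as np


def conv2d_same(x, kernel):
    """Single-channel 2D cross-correlation with zero padding (odd kernel): output shape == input shape."""
    kh, kw = kernel.shape
    padded = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))  # zero padding
    out = np.zeros(x.shape, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, k1, k2):
    """y = ReLU(x + F(x)) with F = conv -> ReLU -> conv; the shortcut adds x back unchanged."""
    f = conv2d_same(relu(conv2d_same(x, k1)), k2)
    return relu(x + f)


x = np.arange(9, dtype=float).reshape(3, 3)
zero_kernel = np.zeros((3, 3))
# If F has learned nothing (all-zero kernels), the block simply passes x through.
print(np.array_equal(residual_block(x, zero_kernel, zero_kernel), x))  # True
```

This property is what makes deep residual networks easier to train: adding a block can at worst leave the signal unchanged instead of degrading it.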

There are different ways of connecting neurons in machine learning. If the neurons are fully connected, even a small 200x200 pixel input image already requires 40,000 weights for every neuron in the first layer (one weight per pixel). Convolutional layers instead apply a convolution operation to the input: a small kernel of weights is shared across the whole image, which greatly reduces the number of free parameters. Max-pooling layers combine the outputs of several neurons of the previous layer into a single neuron in the next layer: only the highest value is passed on, which shrinks the representation and thus further reduces the number of parameters needed in later layers. Fully connected layers connect each neuron in one layer to every neuron in the next layer. Training the neural network determines the values of all these weights.
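The operations described above can be illustrated with a small NumPy sketch: a "valid" convolution (implemented, as in most CNN libraries, as cross-correlation), non-overlapping 2x2 max pooling, and the weight count from the 200x200 example compared with a hypothetical 3x3 kernel. The toy input and kernel are made up for illustration.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (what CNN layers call convolution) of one channel."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep only the largest value of every size x size block."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop edge rows/columns that do not fill a block
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


x = np.arange(16, dtype=float).reshape(4, 4)
k = np.array([[1.0, 0.0], [0.0, -1.0]])  # made-up 2x2 kernel: x[i, j] - x[i+1, j+1]
print(conv2d_valid(x, k))  # 3x3 array in which every entry is -5
print(max_pool(x))         # values [[5, 7], [13, 15]]

# Weight counts for one 200x200 grey-scale image:
fc_weights_per_neuron = 200 * 200  # a fully connected neuron has one weight per pixel
conv_weights_per_kernel = 3 * 3    # a 3x3 kernel is reused at every image position
print(fc_weights_per_neuron, conv_weights_per_kernel)  # 40000 9
```

The same 9 kernel weights are applied at every position, whereas a single fully connected neuron already needs 40,000; max pooling itself has no weights but halves each spatial dimension.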

The remaining sources used a similar approach and have been classified in the same manner in the table below:

|-

|-

| <ref>Liu, Z., Wu, M., Cao, W., Chen, L., Xu, J., Zhang, R., ... & Mao, J. (2017). A facial expression emotion recognition based human-robot interaction system.</ref> || own database || Facial images are divided into three regions of interest (ROIs): the eyes, nose and mouth. Features are then extracted using a 2D Gabor filter and local binary patterns (LBP), and principal component analysis (PCA) is used to reduce the number of features. || Extreme Learning Machine classifier || This article describes a robotic system that not only recognizes human emotions but also generates its own facial expressions in the form of cartoon symbols

|-

| <ref>Ruiz-Garcia, A., Elshaw, M., Altahhan, A., & Palade, V. (2018). A hybrid deep learning neural approach for emotion recognition from facial expressions for socially assistive robots. Neural Computing and Applications, 29(7), 359-373.</ref> || Karolinska Directed Emotional Faces (KDEF) dataset || A deep convolutional neural network (CNN) is used for feature extraction and a Support Vector Machine (SVM) for emotion classification. || Deep Convolutional Neural Network (CNN) || This approach reduces the number of layers required and achieves a classification rate of 96.26%

| <ref>Han, M. J., Hsu, J. H., Song, K. T., & Chang, F. Y. (2008). A New Information Fusion Method for Bimodal Robotic Emotion Recognition. JCP, 3(7), 39-47.</ref> || own database || The facial expression images were recorded and then segmented using skin color. Features were extracted using integral optic density (IOD) and edge detection. || SVM-based classification || In addition to facial expressions, speech signals were recorded. Five different emotions were classified with 87% accuracy (5% more than with the images alone).

|-

| <ref>Moghaddam, B., Jebara, T., & Pentland, A. (2000). Bayesian face recognition. Pattern Recognition, 33(11), 1771-1782.</ref> || own database || unknown || Bayesian facial recognition algorithm || This early article (2000) is a foundational work that uses a Bayesian matching algorithm to predict whether two face images belong to the same person.

|-

| <ref>Fan, P., Gonzalez, I., Enescu, V., Sahli, H., & Jiang, D. (2011, October). Kalman filter-based facial emotional expression recognition. In International Conference on Affective Computing and Intelligent Interaction (pp. 497-506). Springer, Berlin, Heidelberg.</ref> || unknown || This article uses a 3D candidate face model that describes facial movement features, such as 'brow raiser', from which the authors selected the ones they considered most important. || CNN || A joint probability expresses how well the image matches each emotion, as described by the parameters of a Kalman filter for each emotional expression; the emotion that maximizes this probability is selected for the picture. This article builds on the methods described in 8. The system is reported to be more effective than other approaches such as Hidden Markov Models and Principal Component Analysis.

| <ref>Maghami, M., Zoroofi, R. A., Araabi, B. N., Shiva, M., & Vahedi, E. (2007, November). Kalman filter tracking for facial expression recognition using noticeable feature selection. In 2007 International Conference on Intelligent and Advanced Systems (pp. 587-590). IEEE.</ref> || Cohn-Kanade database || unknown || Bayes optimal classifier || The features were tracked with a Kalman filter. The correct classification rate was almost 94%.

|-

| <ref>Wang, F., Chen, H., Kong, L., & Sheng, W. (2018, August). Real-time Facial Expression Recognition on Robot for Healthcare. In 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR) (pp. 402-406). IEEE.</ref> || own database || unknown || CNN || A moving average is used to compensate for the drawbacks of still-image-based approaches; it efficiently smooths the real-time facial expression recognition (FER) results

|-

|}
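The moving-average smoothing reported by Wang et al. could be sketched as follows. This is one plausible variant, not their implementation: it assumes the per-frame classifier outputs a probability vector over seven emotions (in an assumed order) and averages those vectors over the last `window` frames (5 here, a hypothetical value) before picking the most likely emotion. The frame probabilities are made up.

```python
from collections import deque

import numpy as np

# Seven expressions in an assumed classifier output order.
EMOTIONS = ("anger", "disgust", "joy", "fear", "surprise", "sadness", "neutral")


class MovingAverageSmoother:
    """Average the per-frame probability vectors of the last `window` video frames."""

    def __init__(self, window=5):
        self.frames = deque(maxlen=window)  # the oldest frame drops out automatically

    def update(self, probs):
        self.frames.append(np.asarray(probs, dtype=float))
        mean = np.mean(np.stack(list(self.frames)), axis=0)
        return EMOTIONS[int(np.argmax(mean))], mean


smoother = MovingAverageSmoother(window=5)
happy = [0.0, 0.0, 0.8, 0.0, 0.0, 0.1, 0.1]     # made-up frame classified as "joy"
sad_blip = [0.0, 0.0, 0.2, 0.0, 0.0, 0.7, 0.1]  # one frame that alone would read "sadness"
for frame in (happy, happy, sad_blip):
    label, mean = smoother.update(frame)
print(label)  # joy: a single deviating frame does not flip the smoothed result
```

A larger window gives steadier output but reacts more slowly to a genuine change of expression, so the window length is a trade-off that would have to be tuned for real-time use.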

| Line 151: | Line 161: | ||

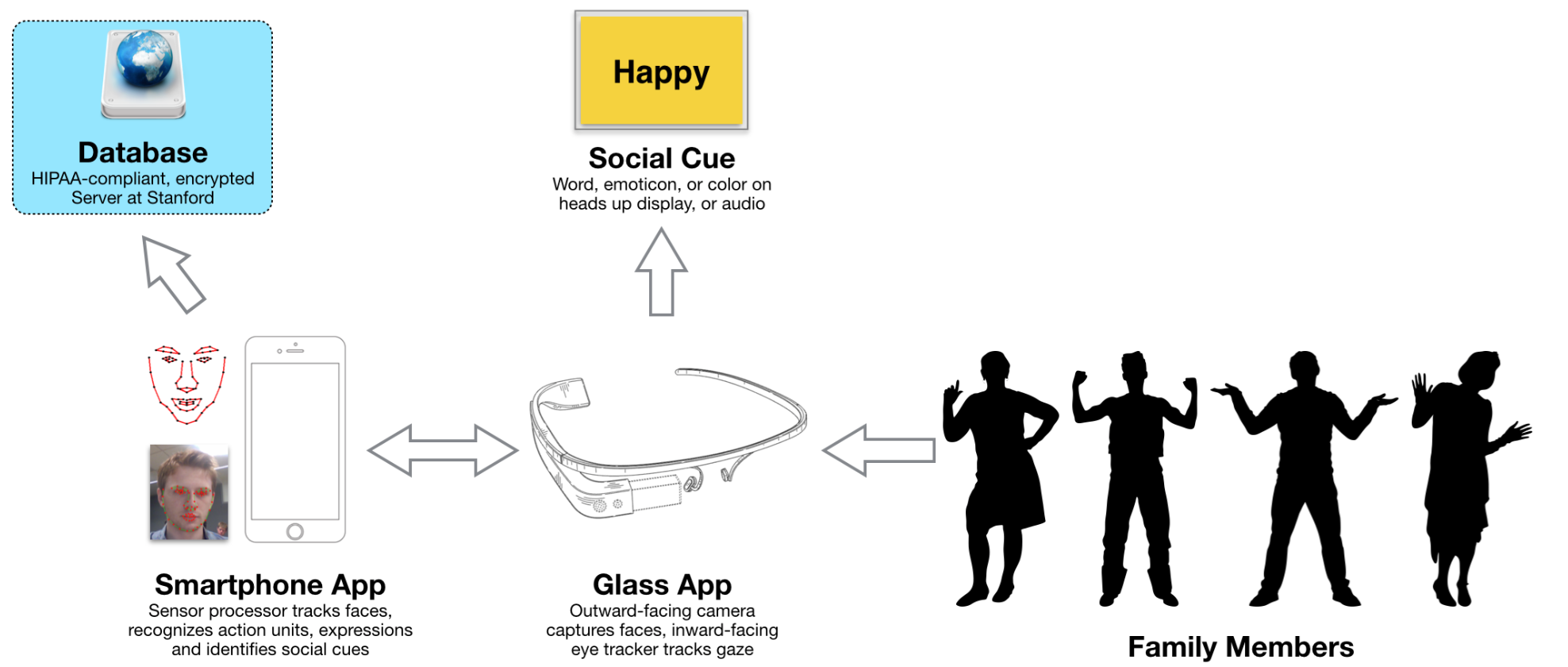

[[File:autismglass.png|thumb|400px|alt=Alt text|Figure 4: The Autismglass, developed by Stanford University]]

Some elderly people have difficulty recognizing emotions <ref>Sullivan, S., & Ruffman, T. (2004). Emotion recognition deficits in the elderly. International Journal of Neuroscience, 114(3), 403-432.</ref>. This is problematic, as carers in elderly care facilities use their own emotional expressions when providing care, e.g. smiling to cheer the elderly person up. A device similar to the Autismglass <ref> Sahin, N. T., Keshav, N. U., Salisbury, J. P., & Vahabzadeh, A. (2018). Second Version of Google Glass as a Wearable Socio-Affective Aid: Positive School Desirability, High Usability, and Theoretical Framework in a Sample of Children with Autism. JMIR human factors, 5(1), e1. </ref> could therefore be very useful for the elderly. The Autismglass is a Google Glass equipped with facial emotion recognition software (see fig. 4). It is currently used to help children diagnosed with autism recognize the emotions of the people around them.

Assistive social robots have a variety of effects or functions, including (i) improved health through reduced stress levels, (ii) more positive mood, (iii) decreased loneliness, (iv) increased communication with others, and (v) rethinking the past <ref>Broekens, J., Heerink, M., & Rosendal, H. (2009). Assistive social robots in elderly care: a review. Gerontechnology, 8(2), 94-103.</ref>. Most studies report positive effects. With regard to mood, companion robots are reported to increase positive mood, typically measured by evaluating the facial expressions of elderly people as well as by questionnaires.

The research team of <ref>Pioggia, G., Igliozzi, R., Ferro, M., Ahluwalia, A., Muratori, F., & De Rossi, D. (2005). An android for enhancing social skills and emotion recognition in people with autism. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 13(4), 507-515.</ref> has developed an android with facial tracking, expression recognition and eye tracking for the treatment of children with high-functioning autism. During sessions with the robot, the children can enact social scenarios in which the robot is the 'person' they are conversing with.

Espinosa-Aranda et al. <ref>Espinosa-Aranda, J., Vallez, N., Rico-Saavedra, J., Parra-Patino, J., Bueno, G., Sorci, M., ... & Deniz, O. (2018). Smart Doll: Emotion Recognition Using Embedded Deep Learning. Symmetry, 10(9), 387.</ref> developed a doll that recognizes emotions and acts accordingly using the Eyes of Things (EoT), an embedded computer vision platform for developing artificial vision and deep learning applications that analyze images locally. Because the EoT runs inside the doll, the doll does not need to connect to the cloud for emotion recognition, which reduces latency and sidesteps some ethical issues. The doll was tested with children.

Wang et al. <ref>Wang, F., Chen, H., Kong, L., & Sheng, W. (2018, August). Real-time Facial Expression Recognition on Robot for Healthcare. In 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR) (pp. 402-406). IEEE.</ref> use real-time facial expression recognition on a robot to monitor the safety and health status of elderly people.


=== Society ===

==== Combating loneliness ====

In most Western societies, the share of elderly people in the population keeps growing. Together with the shortage of healthcare personnel, this poses a major problem for the future elderly care system. Robots could fill the vacancies and might even outperform regular personnel: using neural networks, they might anticipate the elderly person's wishes and, if required, provide 24/7 specialized care in the elderly person's own home. This use of care robots is supported by <ref> Broekens, J., Heerink, M., & Rosendal, H. (2009). Assistive social robots in elderly care: a review. Gerontechnology, 8(2), 94-103. </ref>, which reports that care robots can be used not only in a functional way, but also to promote the autonomy of the elderly person by helping them live in their own home, and to provide psychological support. This is important, as social isolation is detrimental to both the quality of life and the mental state of elderly people.


Elderly people in all categories may struggle with loneliness, but those in categories 2 and 3 are more likely to need a care robot. They are also a particularly vulnerable group, as they might lack the mental capacity to consent to the robot's actions. Interpreting their social cues is therefore vital for their treatment, since they might not be able to put their feelings into words. For this group, false negatives for unhappiness can have an especially large impact. To assess that impact, it is important to look at the possible applications of this technology in elderly care robots.

Because elderly people, especially those in categories 2 and 3, are vulnerable, their privacy should be protected. Information about their emotions can be used for their benefit, but it can also be used against them, for example to force psychological treatment on a patient who does not score well enough on a 'happiness scale' determined by the AI. The system should therefore be safe and secure. Where possible, secondary users such as formal caregivers and social contacts can also play a large role, at least in the first stages. The elderly person should be able to consent to information about their emotions being shared with these secondary users. For this reason, only elderly people in category 1 are included in this study.

==== Privacy and autonomy ====

“Amazon’s smart home assistant Alexa records private conversations.” Headlines like this were all over the news less than a year ago, and they left many Americans shocked about their privacy <ref> Fowler, G. A. (2019, May 6). Alexa has been eavesdropping on you this whole time. The Washington Post. Retrieved from https://www.washingtonpost.com/technology/2019/05/06/alexa-has-been-eavesdropping-you-this-whole-time/?utm_term=.7fc144aca478</ref>. Yet if you think about how this smart home assistant works, it should not come as a surprise that it captures every word you say.

To be able to react to its wake word “Alexa”, the device has to analyze everything you say. This is where the distinction between “listening” and “recording” enters the discussion. While the device is waiting for its wake word, it is “listening”: audio is deleted immediately after a quick local scan. Once the wake word is said, the device starts recording. However, contrary to Amazon's promises, these recordings were neither processed offline nor deleted afterwards. After online processing, which need not violate privacy if the recordings are properly encrypted, the recordings were stored on Amazon's servers to be used for further deep learning. The main problem was that Alexa was sometimes triggered by words other than its wake word and then recorded private conversations that were never meant to stay in the cloud forever. These headlines affected the general public's sense of privacy, and Amazon was even accused of using data from its assistant to create personalized advertisements for its customers.
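The difference between “listening” and “recording” can be made concrete with a small toy model. The sketch below is a hypothetical illustration, not Amazon's implementation: audio chunks are represented as strings, the wake-word check is a plain substring match standing in for an on-device speech model, and only a few recent chunks are kept while listening. Because the check is naive, it also fires on a word such as “Alexander”, which mirrors the false-trigger problem described above.

```python
from collections import deque


class WakeWordGate:
    """Toy model of 'listening' versus 'recording' (hypothetical, not Amazon's code).

    Each frame is a string standing in for a short audio chunk.
    """

    def __init__(self, wake_word: str = "alexa", buffer_frames: int = 3):
        self.wake_word = wake_word
        # While listening, only the most recent frames are held; older frames
        # fall off the deque and are never stored anywhere else.
        self.listen_buffer = deque(maxlen=buffer_frames)
        self.recording = False
        self.recorded = []  # frames captured after the wake word

    def feed(self, frame: str) -> None:
        if self.recording:
            self.recorded.append(frame)
            return
        self.listen_buffer.append(frame)
        # Placeholder for a local, on-device wake-word model: a naive substring
        # match, which also fires on words like "Alexander" (a false trigger).
        if self.wake_word in frame.lower():
            self.recording = True
            self.listen_buffer.clear()

    def stop(self) -> list:
        """End the interaction and return the recorded frames, keeping no copy."""
        captured, self.recorded = self.recorded, []
        self.recording = False
        return captured


gate = WakeWordGate()
for frame in ["talking about dinner", "a private remark", "Alexa", "what is the weather"]:
    gate.feed(frame)
print(gate.stop())  # ['what is the weather']
```

Everything said before the wake word only passes through the short rolling buffer; what happens to the recorded frames after `stop()` is exactly the part where the Alexa case went wrong.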

Introducing a camera into people's homes is a major invasion of their privacy, and if privacy is not seriously considered during development and implementation, problems similar to those in the Amazon case are likely to arise.


'''Feeling of safety'''

Would you like a camera recording you on the toilet? Even if the camera only recognizes your emotions and keeps that information to itself unless you agree to share it, the answer would probably be “no”, because you would not feel safe being recorded during a visit to the toilet. In the same way, introducing a robot with a camera into an elderly person's house will affect their feeling of safety. To minimize this impact, the robot will not record throughout the day. The camera will be integrated into the robot's eye: when the robot is recording, its eyes are open; when it is not recording, its eyes are closed and a physical barrier covers the camera. This should give the user a feeling of privacy whenever the robot's eyes are closed. The robot will only record the elderly person while they interact with it: as soon as they address it, its eyes open and it monitors their emotional state until the interaction stops.

In addition, the robot will have a checkup function, which can be enabled after consultation with the elderly person. When enabled, the robot wakes every hour to check the elderly person's emotional state. If the person is sad, the robot can start a conversation to cheer them up; if they are in pain, the robot might suggest to their primary carers that the medication be adjusted.
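The recording rule for the robot's eyes can be summarized as a small decision function. This is a minimal sketch under our own assumptions: the class and function names are hypothetical, time is counted in minutes since the last checkup, and the reaction to pain only produces a suggestion for the primary carers rather than acting on the medication itself.

```python
from dataclasses import dataclass


@dataclass
class CameraPolicy:
    """Hypothetical sketch of when the robot's eyes (camera) may be open."""

    checkup_enabled: bool = False    # only switched on after consulting the user
    checkup_interval_min: int = 60   # hourly checkup

    def eyes_open(self, interacting: bool, minutes_since_checkup: int) -> bool:
        # Record during an interaction with the user...
        if interacting:
            return True
        # ...or for an hourly checkup, but only if the user agreed to it.
        # Otherwise the eyes stay closed and the shutter covers the camera.
        return self.checkup_enabled and minutes_since_checkup >= self.checkup_interval_min


def checkup_reaction(observed_state: str) -> str:
    """Map the state seen during a checkup to the robot's next step."""
    if observed_state == "sadness":
        return "start a cheering-up conversation"
    if observed_state == "pain":
        return "suggest a medication review to the primary carers"
    return "close eyes until the next checkup"


policy = CameraPolicy(checkup_enabled=True)
print(policy.eyes_open(interacting=False, minutes_since_checkup=75))  # True
print(checkup_reaction("sadness"))  # start a cheering-up conversation
```

Keeping the default `checkup_enabled=False` reflects the idea that the checkup is opt-in: without consultation, the camera is only ever open during an interaction.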

'''Privacy of third parties'''


'''Enterprises that develop the robot'''

Some enterprises are already developing robots that can recognize facial expressions to a certain extent; see, for example, the sub-section 'Possible Applications' in the state-of-the-art technology section. The robot of the SocialRobot project is another example. Apart from the SocialRobot, none of these applications was developed specifically for elderly people. Nevertheless, such enterprises could be interested in software, or a robot, specialized in recognizing the facial expressions of elderly people, since they already develop robots and designs suitable for elderly care. Because there are currently no robots that recognize facial expressions specifically of elderly people, and demand for such robots may increase in the near future, these enterprises could be willing to invest in facial expression recognition software for elderly people. Enterprises developing care robots could be interested in such software as well, and manufacturers of companion robots such as Paro could also integrate it into their robots.

'''Enterprises that provide healthcare services'''

The market for the robot lies in care homes and in the homes of elderly people. Care homes could be interested in the development of a robot with facial expression recognition, because by being closely involved they can have the robot developed and built to their own wishes.

However, care homes could also reject this robot, either because they believe robots do not belong in the healthcare system or because they simply do not think the robot fits their care home. On the other hand, as Dutch society is ageing, care homes face a shortage of employees, which is expected to worsen in the near future. Care homes are therefore searching for alternatives, and this robot could be part of the solution.

== Our robot proposal ==

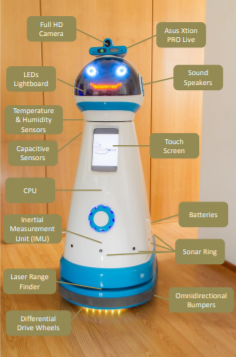

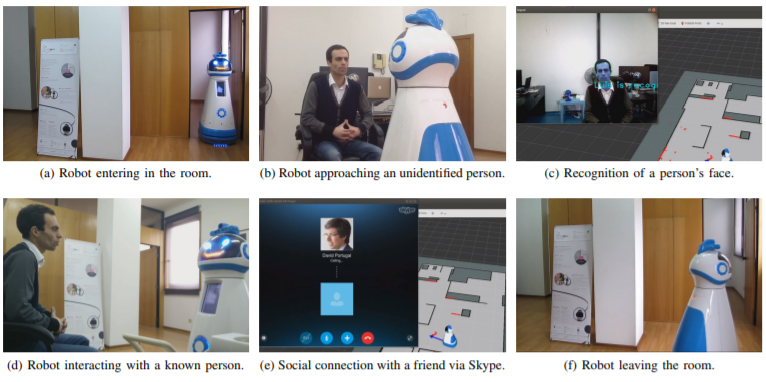

[[File:Socialrobot.png|thumb|400px|left|alt=Alt text|Figure 5: The SocialRobot]] [[File:Socialrobotactions.png|thumb|400px|right|alt=Alt text|Figure 6: The SocialRobot can carry out several different actions: a) enter the room, b) approach a person, c) perform facial recognition, d) interact with a person, e) establish a social connection via its Skype interface, f) leave the room]]

=== SocialRobot project ===

The SocialRobot (see Figure 5) was developed in a European project that aimed to help elderly people live independently in their own homes and to improve their quality of life by helping them maintain social contacts. The robotic platform has two wheels and is 125 cm tall, a height chosen so that it looks approachable. Its equipment includes, among other things, a camera, an infrared sensor, microphones, a programmable LED array that can display facial expressions, and a computer. Its battery pack allows it to operate continuously for 5 hours. Most importantly, the SocialRobot can analyze the emotional state of its user from audio recordings.

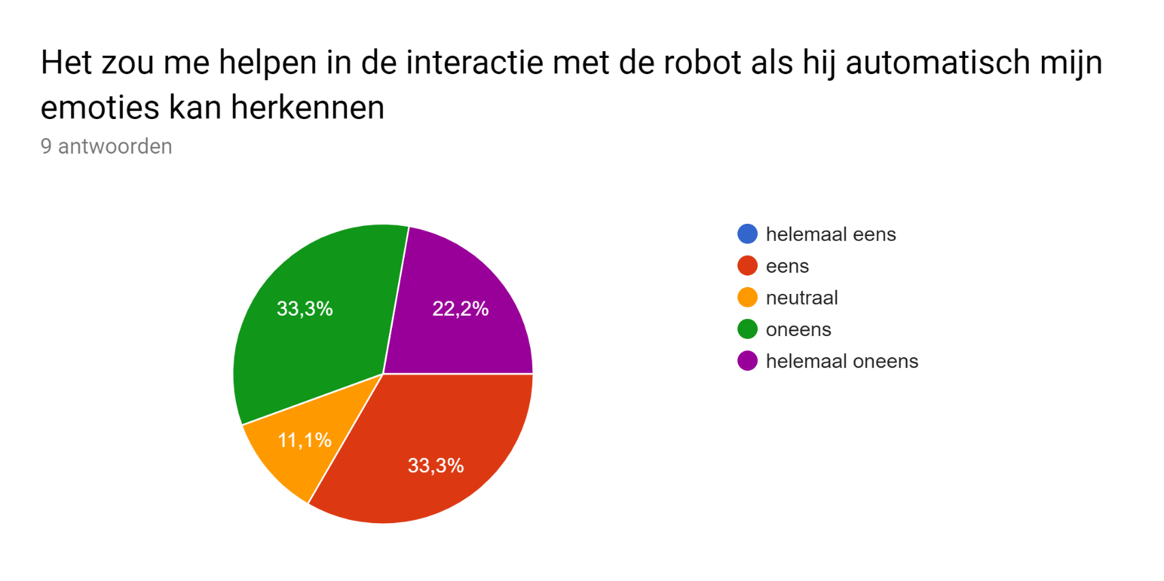

In the original SocialRobot project, the robot identifies the elderly person using face recognition and then infers their emotion from their spoken answers to its questions: the speech data is analyzed, and the elderly person's emotion is derived from it. One of the papers published by the SocialRobot researchers reports an accuracy of 82% for this system (source 2). The robot uses the recognized emotion as input for its subsequent actions: for example, if the person is sad, it encourages them to start a Skype call with their friends, and if the person is bored, it encourages them to play cards online with friends. We think the quality of care provided by this robot could increase significantly if facial emotion recognition were used as well.
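One simple way to add facial emotion recognition to the existing speech-based classifier is late fusion: each modality produces a probability per emotion, and the two are combined with a weighted average. The sketch below assumes both classifiers output such probabilities; the equal weighting (`w_face = 0.5`) and the function names are hypothetical and would need to be tuned and validated on real data.

```python
def fuse_emotions(face: dict[str, float], speech: dict[str, float],
                  w_face: float = 0.5) -> dict[str, float]:
    """Late fusion: weighted average of per-emotion probabilities (hypothetical weights)."""
    if not 0.0 <= w_face <= 1.0:
        raise ValueError("w_face must be between 0 and 1")
    labels = set(face) | set(speech)
    fused = {label: w_face * face.get(label, 0.0) + (1 - w_face) * speech.get(label, 0.0)
             for label in labels}
    total = sum(fused.values())
    # Renormalise so the fused scores again sum to 1.
    return {label: p / total for label, p in fused.items()} if total > 0 else fused


def top_emotion(probs: dict[str, float]) -> str:
    """Return the emotion with the highest fused probability."""
    return max(probs, key=probs.get)


speech = {"sadness": 0.4, "neutral": 0.6}   # speech alone would say 'neutral'
face = {"sadness": 0.8, "neutral": 0.2}     # the face clearly looks sad
fused = fuse_emotions(face, speech)
print(top_emotion(fused))  # sadness
```

Late fusion keeps the two classifiers independent, so the existing openEAR-based speech pipeline would not need to change; the weight then controls how much the face is trusted relative to the voice.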

=== What can our robot do? ===

The SocialRobot platform can execute various actions. Because these actions differ in how invasive they are, a minimum accuracy standard for facial emotion recognition can be specified based on the actions.

* facial recognition: The robot can recognize the faces of the elderly person and their carers.

* emotion recognition: Using a combination of facial data and speech data, the robot classifies the emotions of the elderly person.

* navigate to: The robot can go to a specific place or room in the elderly person's environment.


=== Emotion Classification ===

The current emotion recognition package of the SocialRobot platform covers the following emotions: anger, fear, happiness, disgust, boredom, sadness and neutral. These classes are used because the project researchers employed the openEAR software, which uses the Berlin Speech Emotion Database (EMO-DB) <ref> Eyben, F., Wöllmer, M., & Schuller, B. (2009, September). OpenEAR—introducing the Munich open-source emotion and affect recognition toolkit. In 2009 3rd international conference on affective computing and intelligent interaction and workshops (pp. 1-6). IEEE. </ref>. Not all of these emotions are equally important for our application. Moreover, we do not consider boredom an emotion, but rather a state. The emotions most relevant to the care of elderly persons are probably anger, fear and sadness. For the purposes of our research project, boredom and disgust could be omitted, since including unnecessary classes may complicate the classification process.
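Omitting boredom and disgust amounts to filtering the EMO-DB label set before training. The following sketch assumes the training data is available as (features, label) pairs with lowercase label names; the names `reduce_dataset` and `PRIORITY` are hypothetical.

```python
# The seven EMO-DB classes as listed for the SocialRobot package.
EMO_DB_LABELS = ("anger", "fear", "happiness", "disgust", "boredom", "sadness", "neutral")
OMITTED = {"boredom", "disgust"}          # boredom is a state; disgust is not needed here
KEPT = tuple(label for label in EMO_DB_LABELS if label not in OMITTED)
PRIORITY = {"anger", "fear", "sadness"}   # most relevant for elderly care


def reduce_dataset(samples):
    """Keep only (features, label) pairs whose label is in the reduced set."""
    return [(features, label) for features, label in samples if label not in OMITTED]


data = [([0.1, 0.3], "sadness"), ([0.7, 0.2], "boredom"), ([0.5, 0.5], "neutral")]
print(KEPT)                       # ('anger', 'fear', 'happiness', 'sadness', 'neutral')
print(len(reduce_dataset(data)))  # 2
```

Dropping the samples, rather than merging them into another class, avoids teaching the classifier that a bored face is, for example, 'neutral'.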

=== Actions ===

The actions described under "What can our robot do?" can be combined so that the robot fulfills more complex tasks. We have chosen three tasks that are especially important for the care of lonely elderly people, given the privacy requirements described in the previous sub-section. These three tasks are associated with the emotion 'sadness', but many other tasks can of course be envisioned. For each of the other emotions, we illustrate possible actions in less detail than for 'sadness'. This approach is similar to that of source 3; the difference is that that project investigates speech emotion recognition specifically, whereas we mainly investigate the influence of facial emotion recognition.

''anger''


''happiness''

Suppose that the elderly person's grandchildren visit on their birthday. The person will likely get a high happiness score, as they are happy to see their family. In such a situation, the robot does not need to carry out an action immediately. However, it could further strengthen the social connection between the elderly person and their grandchildren by letting them play a game together on the user interface.

''neutral''

As with happiness, if the elderly person's facial expression is neutral, there is no immediate reason to carry out an action. However, the robot should keep monitoring the person's face, since it may simply not have enough information to determine a facial expression. In that case, the robot could ask the elderly person a question, for example whether they are happy. The answer, combined with the facial expression, gives the robot more information about the person's state.

The three actions for ''sadness'' are illustrated with a chronological overview of a potential situation in which the person is sad. Based on the sadness score, the SocialRobot chooses one of the three actions.
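How the SocialRobot could pick one of the three actions from the sadness score can be sketched as a threshold rule. The 1-10 scale follows the daily scores described in the ''Get Carer'' section, but the thresholds and the ordering of the actions are our own hypothetical choices, not values from the SocialRobot project.

```python
def choose_sadness_action(score: int, thresholds: tuple[int, int, int] = (4, 6, 8)) -> str:
    """Pick a response to a daily sadness score on a 1-10 scale.

    The thresholds and the ordering of the actions are hypothetical.
    """
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    talk, call, carer = thresholds
    if score >= carer:
        return "get_carer"            # still requires the person's 'yes'
    if score >= call:
        return "call_a_friend"
    if score >= talk:
        return "have_a_conversation"
    return "no_action"


for s in (2, 5, 7, 9):
    print(s, choose_sadness_action(s))
```

Making the thresholds a parameter reflects that they would have to be set per person and validated, which ties in with the question of how much classification error each action can tolerate.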

''' Get Carer '''


This action is most useful in elderly care homes. As care homes are becoming increasingly understaffed, a care robot could help by entertaining the elderly person or by determining when they need additional help from a human carer. The latter is a complicated task, as the autonomy of the elderly person is at stake and each elderly person communicates the need for help differently. One option is to include a 'get carer' button on the robot's information display, so that the elderly person can decide for themselves whether they need help. This could be useful if, for example, the elderly person cannot open a can of beans or does not know how to control the central heating system. This option is well suited to our target group of elderly people with full cognitive capabilities, for simple requests that carry a low risk of violating the person's dignity.

However, if the elderly person feels depressed and lonely, it can be harder for them to admit that they need help. The robot could use emotion recognition to determine a daily score (1-10) for each emotion, and these scores can be tracked over time in the SocialRobot's SoCoNet database. The elderly person should consent before the robot shares this information with a carer. A less confronting approach might be to show the elderly person the decline in their happiness scores on the display and ask whether they would like to talk to a professional about how they are feeling, with a 'yes' and a 'no' button. If the elderly person presses 'yes', the robot can get a carer and tell them that the elderly person needs someone to talk to, without reporting the happiness scores or going into detail about what is going on.
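The less confronting approach can be sketched as two steps: detect a decline in the daily happiness scores, and only after the elderly person presses 'yes', send the carer a message that contains no scores. The one-week windows and the drop of 2 points are hypothetical parameters, and the function names are illustrative only.

```python
from statistics import mean
from typing import Optional


def happiness_declining(daily_scores: list[int], window: int = 7, drop: float = 2.0) -> bool:
    """True if the mean of the last `window` days fell by at least `drop`
    points compared with the `window` days before (hypothetical parameters)."""
    if len(daily_scores) < 2 * window:
        return False  # not enough history yet
    earlier = mean(daily_scores[-2 * window:-window])
    recent = mean(daily_scores[-window:])
    return earlier - recent >= drop


def carer_message(declining: bool, pressed_yes: bool) -> Optional[str]:
    """Only contact a carer after explicit consent, and never include the scores."""
    if declining and pressed_yes:
        return "The elderly person would like someone to talk to."
    return None


scores = [8, 8, 7, 8, 8, 7, 8] + [6, 5, 5, 4, 5, 5, 4]
print(happiness_declining(scores))             # True
print(carer_message(True, pressed_yes=False))  # None
```

The scores themselves stay with the robot; the carer only receives the request, which matches the idea of not going into detail about what is going on.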

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | {| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | ||


''' Call a friend '''

Lonely elderly people often do have some friends or family, but these might live elsewhere and rarely visit. The elderly person should not become socially isolated, as social isolation is an important contributor to loneliness. As mentioned before, loneliness is detrimental not only to their quality of life but also to their physical health, so it is important for the care robot to address this problem. The SocialRobot platform could monitor the elderly person's emotions over time, and if 'sad' scores higher than usual, the robot could encourage the elderly person to start a Skype call with one of their relatives or friends via its social connection function. To preserve autonomy, the elderly person should still press a button on the display to actually start the call.
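“Higher than usual” can be made precise by comparing today's sadness score with the person's own history, for example with a z-score. In this sketch the threshold of 1.5 standard deviations and the `CallSuggestion` class are hypothetical; the robot only proposes the call, and the call starts only when the elderly person presses the button.

```python
from statistics import mean, stdev


def sad_above_baseline(history: list[float], today: float, z_threshold: float = 1.5) -> bool:
    """Is today's sadness score unusually high for this person? (hypothetical threshold)"""
    if len(history) < 2:
        return False  # stdev needs at least two past values
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma >= z_threshold


class CallSuggestion:
    """The robot only proposes the call; the user must press the button."""

    def __init__(self, contact: str):
        self.contact = contact
        self.call_started = False

    def prompt(self) -> str:
        return f"Would you like to call {self.contact}?"

    def press_call_button(self) -> None:
        self.call_started = True  # starting the actual Skype call is out of scope here


history = [3, 4, 3, 4, 3, 4, 3]
if sad_above_baseline(history, today=6):
    print(CallSuggestion("your daughter").prompt())
```

A personal baseline matters because some people habitually score higher on 'sad' than others; a fixed threshold would prompt them constantly.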

{| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | {| class="wikitable" style="border-style: solid; border-width: 1px;" cellpadding="4" | ||


''' Have a conversation '''

Sometimes the lonely person just needs someone to talk to but has no relatives or friends available. In such cases, the robot might step in and have a basic conversation with the elderly person about the weather or one of their hobbies. When humans have a conversation, they tend to move closer to each other, if only to understand each other better. Similarly, the SocialRobot can approach the elderly person by recognizing their face and moving towards them.

The conversation could be initiated by the elderly person themselves. For this purpose, it might be a good idea to give the SocialRobot a name, like 'Fred', so that the elderly person can call out to Fred and the robot will undock itself and approach them. If the robot initiates the conversation, it is important for it to know when the elderly person is in the mood to be approached. If the person appears 'sad' or 'angry' while the robot is approaching, the robot should stop and ask 'Do you want to talk to me?'. If the elderly person says no, the robot retreats.
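The approach behaviour can be written as a small state machine. This is a minimal sketch with hypothetical state and method names; emotions are passed in as labels from the classifier, and the robot only stops to ask when it initiated the approach itself, as described above.

```python
from enum import Enum, auto


class State(Enum):
    DOCKED = auto()
    APPROACHING = auto()
    ASKING = auto()       # stopped: "Do you want to talk to me?"
    TALKING = auto()
    RETREATING = auto()


NEGATIVE = {"sadness", "anger"}


class ApproachController:
    """Hypothetical state machine for starting a conversation."""

    def __init__(self, name: str = "Fred"):
        self.name = name.lower()
        self.state = State.DOCKED
        self.user_initiated = False

    def hear(self, utterance: str) -> None:
        text = utterance.strip().lower()
        if self.state is State.DOCKED and self.name in text:
            self.user_initiated = True       # the person called the robot
            self.state = State.APPROACHING
        elif self.state is State.ASKING:
            if text == "no":
                self.state = State.RETREATING
            elif text == "yes":
                self.state = State.TALKING

    def initiate(self) -> None:
        if self.state is State.DOCKED:
            self.user_initiated = False      # the robot takes the initiative
            self.state = State.APPROACHING

    def observe(self, emotion: str) -> None:
        # Only a robot-initiated approach is paused for a negative emotion.
        if (self.state is State.APPROACHING and not self.user_initiated
                and emotion in NEGATIVE):
            self.state = State.ASKING

    def arrive(self) -> None:
        if self.state is State.APPROACHING:
            self.state = State.TALKING


robot = ApproachController()
robot.initiate()
robot.observe("sadness")
robot.hear("no")
print(robot.state)  # State.RETREATING
```

Any answer other than a clear 'yes' or 'no' leaves the robot waiting in `ASKING`, so it never moves closer without consent.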

| Line 374: | Line 379: | ||

The actions described in the previous section can have several consequences when the emotion classification results are corrupted by false positives or false negatives. | The actions described in the previous section can have unintended consequences when the emotion classification produces false positives or false negatives. | ||

While most current literature on emotion classification is geared towards improving the reliability of the classification, they not set a minimum classification accuracy per emotion. It therefore seems that this is a relatively unexplored field of technological ethics. Instead of setting a minimum value, we have thus decided to solely regard the consequences of false positives and false negatives for each emotion. For the purposes of solving our research question, false positives and negatives concerning the recognition of 'sadness' were found to be particularly important. | While most current literature on emotion classification is geared towards improving the reliability of the classification, it does not set a minimum classification accuracy per emotion. This therefore appears to be a relatively unexplored field of technology ethics. Instead of setting a minimum accuracy, we have thus decided to consider only the consequences of false positives and false negatives for each emotion. For the purpose of answering our research question, false positives and false negatives in the recognition of 'sadness' were found to be particularly important. | ||
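To make the per-emotion notion of false positives and false negatives concrete: in a one-vs-rest view, a false positive for an emotion is a prediction of that emotion when the true emotion is something else, and a false negative is an instance of that emotion that was predicted as something else. A minimal sketch; the function name and the label lists in the example are made-up illustration data, not our results.

```python
from collections import Counter


def fp_fn_per_emotion(y_true, y_pred):
    """Count one-vs-rest false positives and false negatives for each emotion."""
    fp, fn = Counter(), Counter()
    for true, pred in zip(y_true, y_pred):
        if true != pred:
            fp[pred] += 1  # predicted this emotion, but it was not the true one
            fn[true] += 1  # the true emotion was missed
    emotions = set(y_true) | set(y_pred)
    return {e: (fp[e], fn[e]) for e in sorted(emotions)}
```

With `y_true = ["sad", "sad", "happy", "neutral", "angry"]` and `y_pred = ["sad", "neutral", "happy", "sad", "angry"]`, 'sad' gets one false positive and one false negative, which are exactly the two error types discussed for sadness.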

'''anger''' | '''anger''' | ||

| Line 394: | Line 399: | ||

''FP'' | ''FP'' | ||

A false positive results in unnecessary workload of the carer. | A false positive results in unnecessary workload for the carer. | ||

'''happiness''' | '''happiness''' | ||

| Line 404: | Line 409: | ||

''FP'' | ''FP'' | ||

If a false positive occurs for happiness, the consequences depend on the true emotion. However, as all other emotions are more negative than happiness, it probably means that the SocialRobot is negligent, not carrying out an action that it should have carried out, had it | If a false positive occurs for happiness, the consequences depend on the true emotion. However, as all other emotions are more negative than happiness, it probably means that the SocialRobot is negligent: it fails to carry out an action that it would have carried out had it recognized the correct emotion. | ||

'''neutral''' | '''neutral''' | ||

| Line 421: | Line 426: | ||

For a false negative, the consequences are immense. The main point of this research project is to identify sadness for the target group of lonely elderly people. If sadness is not identified, the robot does not have a negative effect, or might even have an adverse effect. | For a false negative, the consequences are severe. The main point of this research project is to identify sadness in the target group of lonely elderly people. If sadness is not identified, the robot has no positive effect, and might even have an adverse effect. | ||

''FP'' | ''FP'' | ||

| Line 440: | Line 444: | ||

This article notes that the user will only trust the robot if it is accurate in reading their emotions and basing the actions upon this info. | This article notes that users will only trust the robot if it reads their emotions accurately and bases its actions on this information. | ||

== Data sets == | == Data sets == | ||

The neural network needs to be trained on a dataset with annotated pictures. The pictures in this dataset should contain the faces of people and should be labeled with their emotion. To make sure our neural network works on elderly people, there should be at least some pictures of elderly in the dataset. Creating our own dataset with pictures of good quality will be a lot of work. Besides that gathering this data from elderly participants gives rise to a lot of privacy issues and privacy legislation. So we looked for an existing dataset that we could use. Underneath you’ll find a list of databases we considered with some | The neural network needs to be trained on a dataset of annotated pictures. The pictures in this dataset should contain people's faces and should be labeled with their emotion. To make sure our neural network works on elderly people, the dataset should contain at least some pictures of elderly people. Creating our own dataset with good-quality pictures would be a lot of work. Besides that, gathering this data from elderly participants raises many privacy issues and falls under privacy legislation. We therefore looked for an existing dataset that we could use. Below is a list of the databases we considered, with some pros and cons. This list is based on the one on the Wikipedia page “Facial expression databases” <ref>Wikipedia. Facial expression databases. 16 March 2019 https://en.wikipedia.org/wiki/Facial_expression_databases</ref>. Most of these datasets were gathered by universities and are available for further research, but access must be requested. | ||

- Cohn-Kanade: We got | - Cohn-Kanade: We got access to this dataset. Unfortunately, we could not handle the annotation; many labels seemed to be missing. | ||

- RAVDESS: only video's, as we want to | - RAVDESS: only videos. As we want to analyze pictures, it is better to have annotated pictures than frames from annotated videos. | ||

- JAFFE: only | - JAFFE: only Japanese women. Not relevant to our research. | ||

- MMI: | - MMI: | ||

- Faces DB: | - Faces DB: the dataset of our choice. It contains well-annotated pictures of 35 subjects from different age categories. | ||

- Belfast Database | - Belfast Database | ||

- MUG In the database participated 35 women and 51 men all of Caucasian | - MUG: 35 women and 51 men participated in this database, all of Caucasian origin and between 20 and 35 years of age. The men appear with or without beards. The subjects do not wear glasses, except for 7 subjects in the second part of the database. There are no occlusions except for a few strands of hair falling on the face. The images of 52 subjects are available to authorized internet users. The data that can be accessed amounts to 38GB. | ||

- RaFD Request for access has been sent (Rik). Access denied. | - RaFD: a request for access was sent (Rik). Access was denied. | ||

| Line 466: | Line 468: | ||

- FERG<ref>D. Aneja, A. Colburn, G. Faigin, L. Shapiro, and B. Mones. Modeling stylized character expressions via deep learning. In Proceedings of the 13th Asian Conference on Computer Vision. Springer, 2016.</ref> avatars with annotated emotions. | - FERG<ref>D. Aneja, A. Colburn, G. Faigin, L. Shapiro, and B. Mones. Modeling stylized character expressions via deep learning. In Proceedings of the 13th Asian Conference on Computer Vision. Springer, 2016.</ref>: avatars with annotated emotions. | ||

- AffectNet<ref> A. Mollahosseini; B. Hasani; M. H. Mahoor, "AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild," in IEEE Transactions on Affective Computing, 2017. </ref> | - AffectNet<ref> A. Mollahosseini; B. Hasani; M. H. Mahoor, "AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild," in IEEE Transactions on Affective Computing, 2017. </ref>: a huge database (122GB) containing 1 million pictures collected from the internet, of which 400,000 are manually annotated. This dataset was too big for us to work with. | ||

: Huge database (122GB) contains 1 million pictures collected from the internet of which 400 000 are | - IMPA-FACE3D: 36 subjects, 5 of them elderly; open access. | ||

- IMPA-FACE3D 36 subjects 5 elderly open | |||

- FEI only neutral-smile university employees. This dataset does not contain elderly. | - FEI: only neutral and smiling expressions of university employees. This dataset does not contain elderly people. | ||

| Line 475: | Line 476: | ||

== Facial Emotion Recognition Network == | == Facial Emotion Recognition Network == | ||

There is also a technical aspect | There is also a technical aspect to our research. This involves creating a convolutional neural network (CNN) that can distinguish between seven emotions based on facial expressions alone. The seven emotions used are listed in the emotion classification section of our robot proposal. | ||

This CNN needs to be trained before it can be used. This training is done by providing the CNN with the data you want to analyze (in this case images of facial expressions) with the correct label, the output you would want the network to give you for this input. The network iteratively optimizes its weights by using for instance the sum of the squared errors between the predicted value and the | This CNN needs to be trained before it can be used. Training is done by providing the CNN with the data to be analyzed (in this case, images of facial expressions) together with the correct label, i.e. the output the network should give for this input. The network iteratively optimizes its weights by minimizing a loss function, for instance the sum of the squared errors between the predicted values and the ground truth (the given labels). During training, a separate validation set is also used, and the accuracy and loss on this validation set are reported as training progresses. The validation set shows whether the network is overfitting on the training data. In that case, the network would merely learn to recognize the specific images in the training set, instead of learning features that generalize to other data. Monitoring the performance on the validation set makes it possible to detect and prevent this. After training, yet another separate dataset, called the test set, is used to evaluate how well the CNN performs. | ||
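The two ideas in this paragraph, a sum-of-squared-errors loss against the given labels and using the validation loss to detect overfitting, can be sketched in plain Python. Assumptions (ours, not from the text): labels are one-hot vectors over the seven emotions, and overfitting is flagged with a simple "patience" rule, a common early-stopping heuristic in which training stops once the validation loss has not improved for `patience` epochs. The function names and the patience value are hypothetical.

```python
def sum_squared_error(predicted, target):
    """Sum of squared differences between predicted scores and the one-hot label."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target))


def should_stop(val_losses, patience=3):
    """Early stopping: True when none of the last `patience` epochs improved
    on the best validation loss seen before them."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before
```

A validation loss that keeps rising while the training loss keeps falling is the typical overfitting pattern this check reacts to. In practice, classification networks are more often trained with a cross-entropy loss; SSE is used here only because the text names it.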

For the training, validation and initial testing, a dataset called FacesDB <ref>FacesDB website, http://app.visgraf.impa.br/database/faces/</ref> is used. There will be two test sets, one of people | For training, validation and initial testing, a dataset called FacesDB <ref>FacesDB website, http://app.visgraf.impa.br/database/faces/</ref> is used. There will be two test sets: one of people with estimated ages below 60, and one of people with estimated ages above 60. After this, the goal is to perform a test with elderly people, in which images of their facial expressions are processed in real time. | ||

The first thing to be tested is whether a network trained only on images of people younger than the estimated age of 60, will still predict the right emotions for elderly people. | The first thing to be tested is whether a network trained only on images of people with an estimated age below 60 still predicts the right emotions for elderly people. The initial plan was to get this working first and then make it work in real time. However, it was decided to change priorities and give a working real-time program precedence over better classification of elderly people. | ||

===Dataset=== | ===Dataset=== | ||

The Dataset that is used for training, validation and initial testing is called FacesDB. FacesDB was chosen because it contained fully labeled images | The dataset used for training, validation and initial testing is FacesDB. FacesDB was chosen because it contains fully labeled images of people of different ages. Moreover, this dataset also contains elderly participants, which is relevant since they are our target audience. The dataset consists of 38 participants, each acting out the seven different emotions. Five of the 38 participants are considered to be “elderly”. These five are excluded from all other sets and put into a separate test set. There is also a validation set, consisting of 3 participants, and a “younger” test set, also consisting of 3 participants, which leaves 27 participants for training. | ||
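The split described above is made per participant rather than per image, so that no person appears in more than one set; otherwise the network could score well on the validation or test set simply by recognizing faces it has already seen. A minimal sketch: the participant IDs, the `is_elderly` mapping, the function name and the random seed are hypothetical, while the group sizes (5 elderly, 3 validation, 3 younger test, the rest for training) are those from the text.

```python
import random


def split_participants(participants, is_elderly, n_val=3, n_young_test=3, seed=0):
    """Split participant IDs (not individual images) into disjoint sets."""
    elderly = [p for p in participants if is_elderly[p]]
    younger = [p for p in participants if not is_elderly[p]]
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(younger)
    val = younger[:n_val]
    young_test = younger[n_val:n_val + n_young_test]
    train = younger[n_val + n_young_test:]
    return {"train": train, "val": val, "young_test": young_test, "elderly_test": elderly}
```

With 38 participants of whom 5 are elderly, this yields 27 training, 3 validation, 3 younger-test and 5 elderly-test participants; all seven emotion images of a participant then go into that participant's set.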

The images in this dataset are 640x480 pixels, with the faces of the participants in the middle of the image. The background of all images is completely black. | The images in this dataset are 640x480 pixels, with the faces of the participants in the middle of the image. The background of all images is completely black. | ||

| Line 490: | Line 491: | ||

===Architecture=== | ===Architecture=== | ||

For a complicated problem like this, a simple CNN does not suffice. This is why, instead of building our own CNN, for now a well-known image classification model is used. This CNN is called VGG16.<ref>Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.</ref> This network has been trained for the classification of 1000 different objects in images. Using transfer learning, we will be using this network for facial emotion classification. As can be seen in Figure 7, | For a complicated problem like this, a simple CNN does not suffice. This is why, instead of building our own CNN, a well-known image classification model is used for now. This CNN is called VGG16.<ref>Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.</ref> This network has been trained to classify 1000 different object categories in images. Using transfer learning, we reuse this network for facial emotion classification. As can be seen in Figure 7, the CNN is built up of several layers. Some of these layers are convolutional layers, which use filters to extract features from the input they receive, hence the name convolutional neural network. The data is passed between these layers, from the input towards the output, through weighted connections. These weights scale the data as it is passed to the next layer and are the part of the CNN that is actually optimized during training. Since the VGG16 network is already trained, there is no need to train all these weights again. All that needs to be done is to teach the network to recognize the seven emotions needed as output, instead of the 1000 object categories. First, the output layer itself is changed to have the number of outputs needed for the problem at hand, which is seven. Next, the weights connecting to the output layer are trained. 
This is done by "freezing" all the weights except those connected to the output layer, so that only their values can be altered during training. The network, and thus essentially only the final set of weights, is then trained on the data. | ||
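The freezing idea can be illustrated without any deep-learning library. In this toy sketch a fixed ("frozen") feature function stands in for the pretrained VGG16 layers, and only the weights of a new seven-output layer are updated, using stochastic gradient descent on the sum-of-squared-errors loss mentioned earlier. Everything here is our own assumption for illustration: the tiny two-number inputs, the values in `FROZEN_W`, the linear output layer and the learning rate. In practice a library such as Keras would be used instead, e.g. by setting `trainable = False` on the pretrained layers.

```python
import random

N_EMOTIONS = 7

# Hypothetical "pretrained" weights standing in for the frozen VGG16 layers.
# They are read during training but never modified.
FROZEN_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def frozen_features(x):
    """Frozen part of the network: fixed weights followed by a ReLU."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_W]
    return hidden + [1.0]  # constant input that acts as a bias for the new layer


def init_output_layer(n_features, n_outputs=N_EMOTIONS, seed=0):
    """New, randomly initialised output layer: one row of weights per emotion."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in range(n_outputs)]


def predict(weights, x):
    """One score per emotion from the linear output layer."""
    f = frozen_features(x)
    return [sum(w * v for w, v in zip(row, f)) for row in weights]


def train_output_layer(weights, data, lr=0.05, epochs=500):
    """SGD on the sum of squared errors; only `weights` is updated,
    FROZEN_W is left untouched."""
    for _ in range(epochs):
        for x, label in data:
            f = frozen_features(x)
            out = predict(weights, x)
            for k, row in enumerate(weights):
                target = 1.0 if k == label else 0.0
                grad = 2.0 * (out[k] - target)  # derivative of (out_k - target)^2
                for j in range(len(row)):
                    row[j] -= lr * grad * f[j]
    return weights
```

Trained on three toy inputs labelled 0, 1 and 2, the new output layer should give the highest score to the correct emotion index for each of them, while `FROZEN_W` stays exactly as it was. That is the essence of freezing: the pretrained part only supplies features.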