PRE2019 3 Group12


Revision as of 15:50, 9 February 2020

Group Members

| Name | ID | Major |
|---|---|---|

Subject

Our subject for the project is a system that uses camera-based recognition software to find empty workplaces in various areas. Examples include empty workspaces at Metaforum for studying during exam weeks, quiet places in a library, or empty desks at a company's flexible workspaces.

Objectives

- Library or other flexible workplaces

The system should tell the user whether there are one or more empty spaces in the area and, if so, where they are located. It should also detect whether a person has left a workplace before the end of their reserved time, so that the workplace can be set to "available" sooner.

- Room reservation

The system should detect whether there are people in the room and, if the room stays empty for a certain time window, set it to available for reservation.
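The room-reservation objective amounts to a small timer: every occupancy reading either resets the timer or advances it, and the room is released once it has stayed empty for the whole window. The sketch below assumes that some detector (camera- or sound-based) already produces a boolean `people_detected` per reading; the class name `RoomAvailability` and the 15-minute default window are hypothetical choices, not part of our design yet.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoomAvailability:
    """Sketch: release a room once nobody has been detected in it for a while."""

    empty_timeout_s: float = 15 * 60       # hypothetical 15-minute window
    available: bool = False                # False while the room is reserved or in use
    empty_since_s: Optional[float] = None  # time at which the room became empty

    def update(self, people_detected: bool, now_s: float) -> bool:
        """Process one occupancy reading taken at time now_s (seconds)."""
        if people_detected:
            # Someone is in the room: it is in use, so restart the empty timer.
            self.empty_since_s = None
            self.available = False
        else:
            if self.empty_since_s is None:
                self.empty_since_s = now_s  # the room has just become empty
            if now_s - self.empty_since_s >= self.empty_timeout_s:
                self.available = True       # empty for the whole window: release it
        return self.available
```

With `empty_timeout_s=600`, a room first seen empty at t = 100 s would be released by a reading at t = 700 s. The same timer could mark a flexible workplace as available when its occupant leaves before the end of the reserved time.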

Users

The users we plan to target include multiple groups of people, since the system can be applied in various environments. Possible users include students, employees using flexible workspaces, and library visitors. Each of these users can benefit from the technology in two situations. The first is in larger study or working halls, comparable to Metaforum, for checking whether there are available places. The second is in smaller study rooms, comparable to the bookable rooms in Atlas, for cancelling reservations if the student does not show up or leaves early.

Approach

The system is based on the reflection of sound waves emitted by a sound source. The reflected sound differs depending on whether a person is sitting on the chair: if a person (taller than the chair) is seated, the sound has a shorter distance to travel before it is reflected.

Advantages: It does not capture any visual data, so people are less likely to feel that their privacy is violated. A single sensor can cover a relatively large range, since it measures differences between signals over time and therefore always has a reference to compare against.

Disadvantages: Other objects close to the chair or person can degrade the signal, making the measurement of the sound waves less accurate. The system can also only detect whether someone is sitting in the chair; it cannot tell whether the person has left belongings on the desk, indicating that they intend to come back.
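The sound-based approach reduces to time-of-flight ranging: the sensor measures the round-trip time t of an echo, and the distance to the reflecting surface is d = v·t/2, with v the speed of sound. The sketch below assumes a sensor mounted above the chair pointing down, a speed of sound of about 343 m/s (air at roughly 20 °C) and a hypothetical 0.2 m margin. The baseline is calibrated from readings taken while the seat is known to be empty, which gives the system a reference to compare against.

```python
import statistics
from typing import Sequence

SPEED_OF_SOUND_M_S = 343.0  # assumed: speed of sound in air at about 20 °C


def echo_distance_m(round_trip_s: float) -> float:
    """Convert an echo's round-trip time into the distance to the reflecting surface."""
    # The pulse travels to the surface and back, so halve the total path.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def calibrate_baseline_m(empty_round_trips_s: Sequence[float]) -> float:
    """Median distance measured while the chair is known to be empty."""
    # The median is less sensitive to a single spurious echo than the mean.
    return statistics.median(echo_distance_m(t) for t in empty_round_trips_s)


def is_occupied(round_trip_s: float, baseline_m: float, margin_m: float = 0.2) -> bool:
    """A seated person reflects the pulse from closer than the empty seat does."""
    return echo_distance_m(round_trip_s) < baseline_m - margin_m
```

The margin trades sensitivity against robustness: a larger margin ignores small objects such as a bag on the seat, but may also miss a person who is slouching low in the chair.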

State-of-the-art

A widely used library for computer vision and machine learning is OpenCV (Open Source Computer Vision Library), an open-source computer vision and machine learning software library. OpenCV was built to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. Because it is BSD-licensed, OpenCV makes it easy for businesses to use and modify the code.

The library has more than 2500 optimized algorithms, including a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, extract 3D models of objects, produce 3D point clouds from stereo cameras, stitch images together to produce a high-resolution image of an entire scene, find similar images in an image database, remove red eyes from images taken using flash, follow eye movements, recognize scenery and establish markers to overlay it with augmented reality. OpenCV has a user community of more than 47 thousand people and an estimated number of downloads exceeding 18 million. The library is used extensively by companies, research groups and governmental bodies.

For object detection, the current state of the art is an algorithm called Cascade Mask R-CNN. Detectors use an intersection over union (IoU) threshold to decide which detections count as positives; the commonly used threshold of 0.5 produces noisy detections, while simply training with a higher threshold tends to degrade performance. The multi-stage Cascade R-CNN architecture was proposed to address these problems. It consists of a sequence of detectors trained with increasing IoU thresholds, so that each stage is more selective against close false positives. Compared with the previous year's state-of-the-art algorithm, Cascade Mask R-CNN shows an overall performance increase of 15%, and a 25% increase compared with the state of the art of 2016.
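The IoU criterion behind Cascade R-CNN can be illustrated in a few lines. The sketch computes the IoU of two boxes and the positive/negative label a proposal would receive at each stage of a three-stage cascade. The thresholds 0.5, 0.6 and 0.7 are illustrative (they match the three-stage setting usually described for Cascade R-CNN), and unlike the real architecture the sketch does not refine the box between stages, so it only shows how a close false positive passes the early stages and is rejected by a later one.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def cascade_labels(
    proposal: Box, ground_truth: Box, thresholds: Sequence[float] = (0.5, 0.6, 0.7)
) -> List[bool]:
    """Label (positive=True) the proposal would get at each stage of the cascade."""
    overlap = iou(proposal, ground_truth)
    return [overlap >= u for u in thresholds]
```

For example, a proposal shifted by 2 pixels against a 10 × 10 ground-truth box has an IoU of about 0.67, so it counts as a positive for the first two stages but as a negative for the strictest one.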

This approach and related methods are described in the following papers:

https://ieeexplore.ieee.org/abstract/document/7447728 A paper that uses a convolutional neural network to detect land use from satellite images, with an accuracy of around 98.6%.

Summary:

Hybrid Task Cascade for Instance Segmentation http://openaccess.thecvf.com/content_CVPR_2019/papers/Chen_Hybrid_Task_Cascade_for_Instance_Segmentation_CVPR_2019_paper.pdf

Summary:

CBNet: A Novel Composite Backbone Network Architecture for Object Detection https://arxiv.org/pdf/1909.03625v1.pdf

Summary:

Cascade R-CNN: High Quality Object Detection And Instance Segmentation https://arxiv.org/pdf/1906.09756.pdf

Summary:

https://www.tandfonline.com/doi/full/10.1080/09500341003693011 A paper describing the workings, differences and origins of several infrared cameras and techniques.

Similar systems have already been implemented in real-life situations for car parking spaces. Many parking environments identify vacant parking spaces in an area using one of several methods: counter-based systems, which only provide the number of vacant spaces; sensor-based systems, which require ultrasound, infrared-light or magnetic sensors to be installed on each parking spot; and image- or camera-based systems.[1] Camera-based systems are the most cost-efficient method, as they only require a few cameras to function and many areas already have cameras installed. However, they are rarely implemented because they do not work well in outdoor situations.

Researchers from the University of Melbourne have created a parking occupancy detection framework that uses a deep convolutional neural network (CNN) to detect the occupancy of outdoor parking spaces from images with an accuracy of up to 99.7%.[2] This shows the potential of image-based systems using CNNs for these types of tasks. However, a major difference between our project and this research is that our spaces move. Parking spaces always stay in the same position, so a CNN can focus on the specific part of the camera image that contains one parking space. In our situation the chairs can move around and are not in a constant position. The neural network will therefore first have to find and identify all the chairs in its view before it can detect whether each one is vacant, an additional challenge we need to overcome.
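The extra step our setting needs, first finding the chairs and then deciding whether each one is vacant, could be sketched as post-processing on the output of an object detector. The sketch assumes a hypothetical detector that returns `(label, box)` pairs with the labels `"chair"` and `"person"`; a chair counts as occupied when a person box covers at least a hypothetical 30% of it. Overlap is measured relative to the chair box rather than as IoU, because a seated person's box usually extends well beyond the chair.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def overlap_fraction(chair: Box, person: Box) -> float:
    """Fraction of the chair box that is covered by the person box."""
    w = max(0.0, min(chair[2], person[2]) - max(chair[0], person[0]))
    h = max(0.0, min(chair[3], person[3]) - max(chair[1], person[1]))
    chair_area = (chair[2] - chair[0]) * (chair[3] - chair[1])
    return (w * h) / chair_area if chair_area > 0 else 0.0


def chair_occupancy(
    detections: List[Tuple[str, Box]], min_overlap: float = 0.3
) -> Dict[int, bool]:
    """Stage 1: collect the chairs found in this frame.
    Stage 2: mark each chair occupied if some person box covers enough of it."""
    chairs = [box for label, box in detections if label == "chair"]
    people = [box for label, box in detections if label == "person"]
    return {
        i: any(overlap_fraction(chair, p) >= min_overlap for p in people)
        for i, chair in enumerate(chairs)
    }
```

Because the chairs are re-detected in every frame, the indices are only valid within one frame; tracking a chair that has been moved between frames would need an additional matching step.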

A group of students from the Singapore Management University tried to tackle a similar problem.[3] In their research they proposed a method using capacitance and infrared sensors to solve the problem of seat hogging. With this method they can accurately determine whether a seat is empty or occupied by a human, or whether the table is occupied by items. However, the method requires a sensor to be placed underneath each table, and since the chairs in our situation move around, it cannot be used everywhere.
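The three outcomes described for the SMU system can be expressed as a simple decision rule. This is not their published method: it assumes, hypothetically, that a capacitive reading normalized to the range 0 to 1 responds mainly to a human body, that the infrared sensor responds to any object near the seat, and that seat hogging is flagged once several consecutive readings show only items. All thresholds are placeholders.

```python
from enum import Enum
from typing import Sequence


class SeatState(Enum):
    EMPTY = "empty"
    HUMAN = "occupied by a human"
    ITEMS = "occupied by items"


def classify_seat(capacitance: float, ir_object_present: bool, cap_threshold: float = 0.5) -> SeatState:
    """Hypothetical rule combining one capacitive and one infrared reading."""
    # Assumption: a human body changes the capacitance, belongings generally do not.
    if capacitance >= cap_threshold:
        return SeatState.HUMAN
    # Something is there, but it does not look like a person: likely items left behind.
    if ir_object_present:
        return SeatState.ITEMS
    return SeatState.EMPTY


def is_hogged(history: Sequence[SeatState], min_item_readings: int = 3) -> bool:
    """Flag hogging when the last min_item_readings readings all show only items."""
    recent = history[-min_item_readings:]
    return len(recent) == min_item_readings and all(s is SeatState.ITEMS for s in recent)
```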

Autodesk Research also proposed a method for detecting occupancy, this time for a cubicle or an entire room rather than a single seat.[4] They used decision trees in combination with several types of sensors to determine which sensor is the most effective. The individual feature that best distinguished presence from absence was the root mean square of the signal from a passive infrared (PIR) motion sensor. It achieved an accuracy of 98.4%, whereas using multiple sensors only made the result worse, probably due to overfitting. This method could be implemented for rooms around the university but not for individual chairs.
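The best single feature in the Autodesk study, the root mean square of the PIR signal over a time window, can be paired with the simplest possible decision tree: a single split. The sketch assumes PIR samples centred around zero and windows labelled as presence or absence. It picks the split with the fewest training errors, whereas full decision-tree learners such as the ones used in the study typically choose splits by information gain or Gini impurity, so it illustrates the idea rather than reproducing their model.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root mean square of one window of (zero-centred) PIR samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def fit_stump(features: List[float], labels: List[bool]) -> float:
    """Depth-1 decision tree: return the threshold with the fewest training errors.

    The stump predicts 'present' when feature > threshold. Requires at least one sample.
    """
    values = sorted(set(features))
    # Candidate splits: one below every value, plus midpoints between neighbours.
    candidates = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        return sum((f > threshold) != y for f, y in zip(features, labels))

    return min(candidates, key=errors)


def predict_present(threshold: float, feature: float) -> bool:
    """Apply the fitted stump to the RMS feature of a new window."""
    return feature > threshold
```

A single split on one feature has very little capacity, which fits the study's observation that adding more sensors made the results worse through overfitting.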

Planning

Milestones

For our project we have decided on the following weekly milestones:

- Week 1: Decide on a subject, make a planning and do research on existing similar products and technologies.

- Week 2: Finish the preparatory research on the USE aspects and the subject, and have a clear idea of the possibilities for our project.

- Week 3: Start writing code for our device and finish the design of our prototype.

- Week 4: Create the dataset of training images and buy the required items to build our device.

- Week 5: Have a working neural network and train it using our dataset.

- Week 6: Test our prototype in a staged setting and gather results and possible improvements.

- Week 7: Finish our prototype based on the test results and do one more final test.

- Week 8: Completely finish the Wiki page and the presentation on our project.

Deliverables

This project plans to provide the following deliverables:

- A Wiki page containing all our research and summaries of our project.

- A prototype of our proposed device.

- A presentation showing the results of our project.

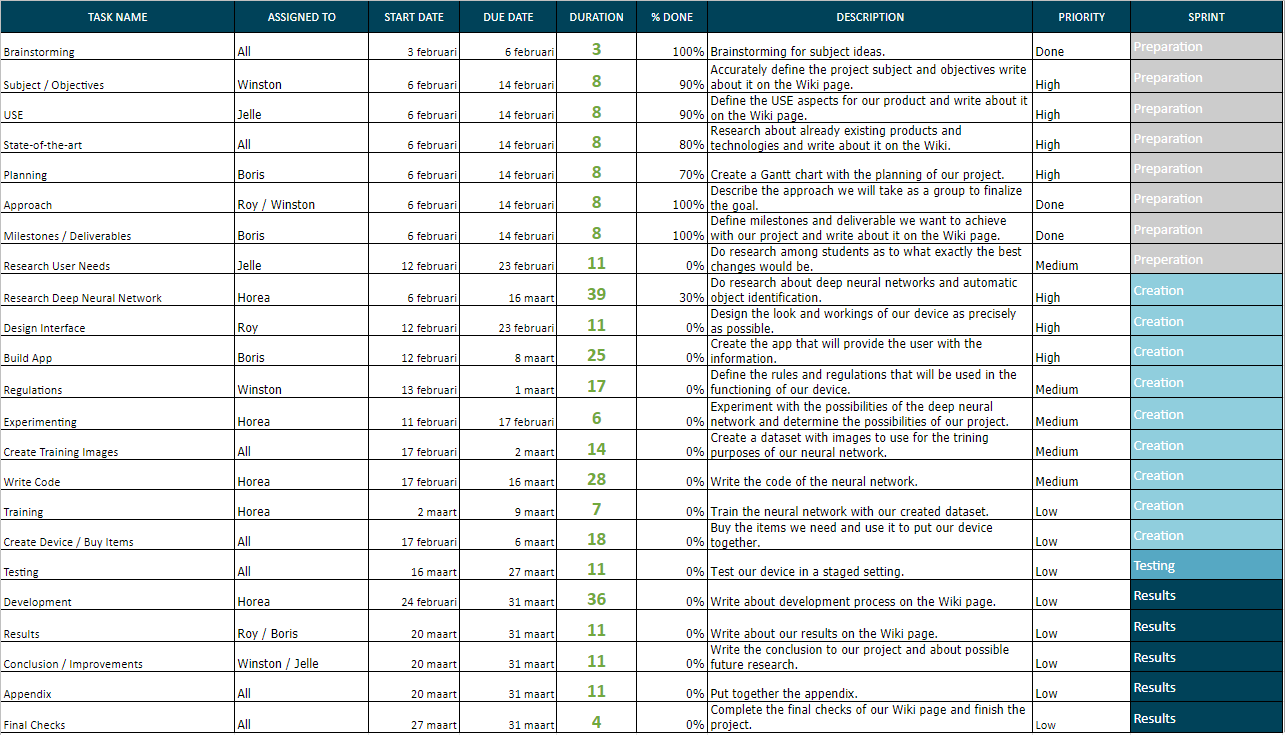

Schedule

Our current planning can be seen in the table below. This planning is not entirely finished and will be updated as the project goes on.

References

- [1] Bong, D.B.L., "Integrated Approach in the Design of Car Park Occupancy Information System (COINS)", IAENG International Journal of Computer Science, 2008

- [2] Acharya, Debaditya, "Real-time image-based parking occupancy detection using deep learning", CEUR Workshop Proceedings, 2018

- [3] Nguyen, Huy Hoang, "Real-time Detection of Seat Occupancy and Hogging", IoT-App, 2015

- [4] Hailemariam, Ebenezer, "Real-Time Occupancy Detection using Decision Trees with Multiple Sensor Types", Autodesk Research, 2011