PRE2018 3 Group6: Difference between revisions

| (89 intermediate revisions by 5 users not shown) | |||

| Line 18: | Line 18: | ||

== Problem statement == | == Problem statement == | ||

=== How can the uncertainty humans have about | === How can the uncertainty humans have about robot actions be reduced, using vocal cues. === | ||

* Can vocal cues help reduce the anxiety people feel, because of uncertainty, towards these robots? | |||

Define Uncertainty: | |||

Employees working with robots have trouble understanding them. | |||

*They do not know how intelligent the robots are and give them made-up characters and intelligence based on what they believe. (Min Kyung Lee, et al. 2012) | |||

*Robots are not competitive or trying to be better than human, although humans can feel like they have to. (Margot Neggers) | |||

*Humans don’t understand robots which makes them feel uncomfortable since they can't predict the robots' actions. As a result humans tend to stop doing their own task (Margot Neggers) | |||

*“The ability to estimate engagement and regulate social signals is particularly important when the robot interacts with people that have not been exposed to robotics, or do not have experience in using/operating them: a negative attitude towards robots, a difficulty in communicating or establishing mutual understanding may cause unease, disengagement and eventually hinder the interaction.” (Ivaldi, S. et al. 2017) | |||

In short, people don’t understand what the robot is thinking or doing, so they impose false assumptions on the robot and act under those assumptions, or they simply stop doing their own tasks. | |||

== Users == | == Users == | ||

List of users highly involved with the introduction of robots in warehouse environments. Since there are many different titles for warehouse workers, mentioned are the ones that need to be distinguished because of their relationship with the robot. | |||

'''General Warehouse Laborer''' | '''General Warehouse Laborer''' | ||

| Line 62: | Line 59: | ||

*Jobs that mostly have to do with overseeing, organizing and preparing the shipments and with speaking with clients to make new deals respectively. | *Jobs that mostly have to do with overseeing, organizing and preparing the shipments and with speaking with clients to make new deals respectively. | ||

*Education: again, nothing more than a high school diploma but computer handling skills are required | *Education: again, nothing more than a high school diploma but computer handling skills are required | ||

*This group of employees | *This group of employees will probably be the one that is affected (replaced or given changed responsibilities) the least, since they mostly work with human clients or make higher-level decisions | ||

*I believe their interest again should be somewhere in the middle, since their colleagues are mostly getting replaced but they can do their jobs better since the introduction of robots will bring efficiency. Since their jobs are fairly stable, it makes sense to believe that they will have a positive stance towards robots having better speaking skills, because these will bring better collaboration and thus make their organizing tasks easier. | *I believe their interest again should be somewhere in the middle, since their colleagues are mostly getting replaced but they can do their jobs better since the introduction of robots will bring efficiency. Since their jobs are fairly stable, it makes sense to believe that they will have a positive stance towards robots having better speaking skills, because these will bring better collaboration and thus make their organizing tasks easier. | ||

Sources | Sources | ||

https://www.horizonstaffingsolutions.com/different-types-warehouse-jobs | https://www.horizonstaffingsolutions.com/different-types-warehouse-jobs | ||

https://www.friday-staffing.com/blog/warehouse-job-titles-and-descriptions/ | https://www.friday-staffing.com/blog/warehouse-job-titles-and-descriptions/ | ||

| Line 88: | Line 85: | ||

**annoying robot | **annoying robot | ||

= | =Report= | ||

==Introduction== | |||

A | Industrial robots are taking over more and more tasks in the workforce. Where there were previously 1.2 million industrial robots around the world, there were 1.9 million in 2017 (West, D. M. 2015). The tasks done by these robots are usually very repetitive (Tamburrini, G. 2019), making them easy to automate. The robots make the process faster and cheaper; for example, robots can work 24 hours a day without getting tired. Robots will take up more space in the workforce as they get smarter and cheaper and start to take over more complex tasks (West, D. M. 2015). The transition to robot co-workers can often bring trouble due to human resistance to robots, caused for example by humans having trouble understanding the robot, the robot working at a different pace (Weiss, A., et al. 2016) or the fear of being replaced by the robot (Salvini, P. et al. 2010). | ||

In this research we are looking at creating a more efficient and pleasant transition for the human co-workers by giving the robot communication tools. Voice will be tested as a communication tool. To identify strengths and weaknesses, a scenario with LEDs as communication and a scenario without communication will be added to the experiment for comparison. These tools will be tested to see if enriching a robot with one of them results in a more enjoyable work experience for its human co-workers. A study by Sauppé, A., et al. (2015) has already shown how giving the robot eyes can make its functions clearer for humans. People's jobs often change due to the introduction of a robot in their work (Salvini, P. et al. 2010). The voice could be used to explain what the robot is currently working on or to give instructions to its human counterpart. | |||

This research will mainly focus on industrial robots in warehouses as this is a common place where robots are taking over tasks. | |||

== | ==Related work== | ||

In the introduction it was shown that there is not just an increase in the number of robots, but also in the difficulty of the tasks they perform. In this section we will cover some of the many works that relate to the problems emerging from these increases. We will also cover research that has been done on specific aspects of our problem. | |||

One of these papers focuses specifically on how helpful vocal interaction can be in learning. In the paper “Effects of voice-based synthetic assistant on performance of emergency care provider in training” (Damacharla P., 2019) the researchers measure whether a person can learn how to provide emergency care more quickly if they receive assistance from a voice-based synthetic assistant. This research paper is related to our work in that it shows that a vocal stimulus can help people in situations that are normally difficult for them to deal with, giving us an indication that our research might result in a positive outcome. | |||

Another paper that is related to ours is “Intelligent agent supporting human-multi-robot team collaboration” (Rosenfeld, A., 2017). This paper gives an in-depth explanation of how an intelligent agent can help humans in their collaboration with robots. This article is especially interesting since one of the scenarios it focuses on is warehouses. In this scenario the intelligent agent points out possibly difficult situations for robots, such as a box that has fallen. The human can then judge whether this hinders the robots or not, and possibly change the robots' behavior based on this. At some point the paper discusses whether the human found these suggestions helpful or annoying. Another paper that writes about a robot giving feedback to humans is “Inspector Baxter: The Social Aspects of Integrating a Robot as a Quality Inspector in an Assembly Line” (Banh, A., 2015). However, this paper focuses on the influence movement and facial expressions can have on the social interaction. | |||

The paper “The Social Impact of a Robot Co-Worker in Industrial Settings” (Sauppé A., 2017) discusses the social phenomena that emerge when a robot co-worker is introduced in an industrial environment. It does this by discussing the relationship that each employee developed with the robot. In the end it suggests some improvements for future robot implementations that would improve further social interactions. This article relates to our problem in that it discusses the problem in a broader sense, i.e. without focusing on a specific solution. A paper called “Ripple effects of an embedded social agent: A field study of a social robot in the workplace” (Kyung Lee. M., 2012) also discusses the social phenomena that emerge in a human-robot cooperative industrial environment. However, the robots in this paper perform the same basic tasks as the robots in a warehouse, i.e. picking up goods and delivering them to people. | |||

One paper that we found tries to show the importance of movement in the social interaction between humans and robots. As cited: “they [robots] also need the ability to model and reason about human activities, preferences and conventions. This knowledge is fundamental for robots to smoothly blend their motions, tasks and schedules into the workflows and daily routines of people. We believe that this ability is key in the attempt to build socially acceptable robots for many domestic and service applications” (Tipaldi, G.D., 2011). This relates to our work because it indicates a variable we need to keep track of. | |||

== | ==Interviews== | ||

A large part of the orientation and validation process was done using interviews with subject experts. These ranged from philosophy researchers to Robotics R&D engineers. Combining these interviews with the knowledge gained from our literature research served to create a broad base of knowledge concerning robotics and human-robot interactions. The major findings from these interviews are summarized below. | |||

'''Margot Neggers''' | |||

Margot Neggers is a PhD candidate at the Technical University of Eindhoven, where she does research for the Human Technology Interaction group, mainly concerning robots and their interaction and communication with humans. | |||

Margot sketched the current situation within human-robot interaction while mainly focusing on the industrial sector. According to her there is a lot of room for improvement in the way robots and humans interact on the work floor. At this point both robots and humans do their own work independently of each other, and the robots often do not signal what they are currently working on. This increases the uncertainty for human workers, and when humans become uncertain their anxiety increases and their productivity drops. This is because human workers who do not know what their robotic colleague is working on at that moment have only one way to reduce this uncertainty: waiting to see what the robot is going to do next. | |||

“That is what people do when they do not understand the robot: they wait.” (Margot Neggers) | |||

Margot also explained previous research she has done on human-robot interaction. This was an experiment in which a subject and a robot navigated concurrently through the same room. For this experiment they tried three communication methods: blinking LEDs, speech and gestures. From this experiment it became clear that subjects prefer speech as a communication tool over gestures and light signals; they found this the most pleasant way for the robot to communicate with them. But when performance was also taken into account, speech was actually not the fastest method; gestures were. These findings were extremely interesting to us and formed the basis of this research project. | |||

Another important insight gained from Margot was that, especially in this discipline, it is crucial for your results that subjects do not have to imagine what something would be like, because when subjects think deeply about how they would experience something, the results differ from when they actually experience it. For human-robot interaction this could have a very large impact on your final conclusion. Therefore we started to work towards doing an actual experiment to give more validity to our conclusion. | |||

Besides this, Margot also pointed us towards the anthropomorphic side of human-robot interaction. She stated that humans working with robots often assign personalities to the robot, which has a very large impact on how humans feel about the robot in future interactions. We thought this would be interesting to look into and therefore decided to try to gain some information on this during the rest of the experiment. | |||

'''Jilles Smids''' | |||

Jilles Smids is a PhD candidate in the ethics of persuasive technology at the section of Philosophy and Ethics at the Technical University of Eindhoven. He has been working on a paper named: Robots in the warehouse: working with or against the machine. This got our interest and kick-started our project. Jilles confirmed the issues at hand with humans and robots in warehouses. He mentioned that the fear among human workers of being replaced by robots is unjustified. Warehouses are actually expanding and cannot find the additional workforce they need, so they use robots to co-exist with the current workers. According to Jilles, people tend to fear robots because they are afraid of losing their jobs, whilst robots can actually take over the tasks that are too heavy or uninteresting for humans to do. | |||

Jilles helped us realize that a big problem in automation in the warehouse is not informing workers of what exactly is going on. This leads to cases where robots are being vandalized by human co-workers. It helped give us the idea that humans should be more aware of the actions of robots and gave us a clear ground to start our project from. | |||

Jilles also gave us some examples of where humans and robots did not get along, such as the incidents with Waymo’s self-driving cars in Arizona. This made it clear that humans and robots are still in the early phases of understanding and working with each other. | |||

'''Bas Coenen''' | |||

Bas Coenen is a graduate of the Technical University of Eindhoven who is currently working at Vanderlande as Team Leader Robotics, Senior R&D integration Engineer. | |||

Bas helped us get a clearer understanding of what is actually being done with warehouse-type robotics. He gave insight into current issues with warehouses and robotic involvement around the world. He mentioned that multinational companies that have warehouses or factories all over the world have to deal with different cultures. Something as simple as a positive gesture here could mean something negative somewhere else. Robots therefore have to be able to fit into any culture in the world. Otherwise, changes will have to be made for every warehouse around the world, which can be inconvenient for a company. | |||

He also took us into a demonstration area where different setups for different supply lines were displayed. This gave us a better sense of how professionals have made solutions to warehouse robotics. However, most lines still gave the sense that robots and humans are separate from each other and not really co-existing. | |||

His colleague explained her work on adding eyes to robots to give them a certain sense of being. This way people can tell when the robot has noticed them. For example, when crossing a pedestrian crossing while a car is coming, many people try to make eye contact with the driver to check that they have been seen and won’t be run over. Robots tend to just carry out their task without giving any sense of knowing that someone is there. People tend to be afraid or uncertain of a robot's actions, so they stop working to make sure they are safe. If a person can tell that they have been noticed, it could save valuable time. | |||

Speaking to people actually working on robotics on a large scale definitely gave us some ideas on how to carry out our experiment, and the time they made available for us increased our interest in robotics. | |||

==Research questions and hypothesis== | ==Research questions and hypothesis== | ||

| Line 189: | Line 154: | ||

If the answer to either of these two questions is true, then we can look at which method is better. Which is why the third research question is the following: “Do vocal cues increase the likeability of a warehouse robot more than visual cues?” | If the answer to either of these two questions is true, then we can look at which method is better. Which is why the third research question is the following: “Do vocal cues increase the likeability of a warehouse robot more than visual cues?” | ||

Now that we have defined some research questions, we can define a hypothesis for the experiment that will be explained a little bit further on. Because of the paper “Effects of voice-based synthetic assistant on performance of emergency care provider in training” [1], we predict that “Vocal cues increase the likeability of a warehouse robot.” Because of the research Margot Neggers has done, we also predict that “Visual cues increase the likeability of a warehouse.” | Now that we have defined some research questions, we can define a hypothesis for the experiment that will be explained a little bit further on. Because of the paper “Effects of voice-based synthetic assistant on performance of emergency care provider in training” [1], we predict that “Vocal cues increase the likeability of a warehouse robot.” Because of the research Margot Neggers has done, we also predict that “Visual cues increase the likeability of a warehouse robot.” | ||

With this, we can formulate the following hypothesis for the experiment. | With this, we can formulate the following hypothesis for the experiment. | ||

'''Hypothesis:''' ''Adding a vocal cue or a visual cue to a robot in a warehouse setting will increase the likeability people feel towards it, compared to the likeability they have towards the same robot without these features.'' | '''Hypothesis:''' ''Adding a vocal cue or a visual cue to a robot in a warehouse setting will increase the likeability people feel towards it, compared to the likeability they have towards the same robot without these features.'' | ||

==Health & Safety Plan == | |||

During the experiment, it is of pivotal importance to keep the participants safe. Since the robot taking part in the experiment weighs around 50 kg, an accident could cause serious injury. To avoid this, some safety measures have to be taken: | |||

• There is a clear line on the floor that the test subject has to stand behind while the robot is moving. Only when the robot has come to a complete standstill is the test subject allowed to cross the line. The line is not directly in the robot’s path, so the chance of the robot crossing the line is reduced. | |||

• | • Conversely the robot will only start moving when the test subject is standing behind the line and gives a clear signal that he is done. | ||

• There is always one person responsible for the safety measures taken. This | • There is always one person responsible for the safety measures taken. This person's task is to operate an external “kill switch”. This person focuses solely on the experiment and on pressing the button if needed. Once the button is pressed, it immediately turns off the robot. | ||
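The three safety rules above can be summarized as a small piece of decision logic. The following is only a minimal sketch: in the experiment the robot was remote controlled and these rules were enforced by the team members, so the class and function names here are hypothetical and do not describe any software that ran on the robot.

```python
from dataclasses import dataclass


@dataclass
class SafetyState:
    """Hypothetical snapshot of the conditions named in the safety plan."""
    subject_behind_line: bool   # test subject stands behind the floor line
    subject_gave_signal: bool   # test subject gave a clear signal that they are done
    kill_switch_pressed: bool   # safety observer pressed the external kill switch


def robot_may_move(state: SafetyState) -> bool:
    """The robot may only start moving when all safety conditions hold."""
    if state.kill_switch_pressed:
        # The kill switch overrides everything else.
        return False
    return state.subject_behind_line and state.subject_gave_signal


def subject_may_cross_line(robot_speed: float) -> bool:
    """The subject may only cross the line once the robot stands completely still."""
    return robot_speed == 0.0


print(robot_may_move(SafetyState(True, True, False)))   # all conditions met
print(robot_may_move(SafetyState(True, True, True)))    # kill switch pressed
print(subject_may_cross_line(0.0))
```

Treating the kill switch as an override that is checked first mirrors the plan's requirement that pressing the button turns the robot off immediately, regardless of the other conditions.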

==Questionnaires== | ==Questionnaires== | ||

| Line 225: | Line 191: | ||

The test subjects would fill in a general intake questionnaire to ask general questions about themselves; such as age, education, etc. Then they would start with one of the scenarios, this could be the list, voice, or LEDs. Then they would fill in the trial questionnaire. The trial questionnaire consisted of a combination of two validated questionnaires: the Co-existence questionnaire similar to that used in the | The test subjects would fill in a general intake questionnaire to ask general questions about themselves; such as age, education, etc. Then they would start with one of the scenarios, this could be the list, voice, or LEDs. Then they would fill in the trial questionnaire. The trial questionnaire consisted of a combination of two validated questionnaires: the Co-existence questionnaire similar to that used in the PEPPER GAME [12] and the Godspeed questionnaire. The Godspeed questionnaire gives a better insight on what people think of the robot. After the test subjects repeated each scenario they got another trial questionnaire. After finishing all three questionnaires, a short interview was conducted. This interview was done to see if additional interesting observations could be made. | ||

A link to the Questionnaires and Interview can be found at [[Questionnaires Link]] | A link to the Questionnaires and Interview can be found at [[Questionnaires Link]] | ||

| Line 233: | Line 199: | ||

'''Context''' | '''Context''' | ||

In order to test our hypothesis, an industrial-like robot (FAST platform) was used to see how people would react. The robot drove packages to a person who would place the packages in the correct box. The idea of the experiment is modeled after the warehouse robots used at Amazon. | In order to test our hypothesis, an industrial-like robot (FAST platform) was used to see how people would react. The robot drove packages to a person who would place the packages in the correct box. The idea of the experiment is modeled after the warehouse robots used at Amazon where robots bring large racks with packages towards human order pickers. | ||

'''Procedure''' | '''Procedure''' | ||

This experiment is conducted in different parts. First, the setup of the experiment is described. Secondly, the steps taken in the experiment shall be explained. Thirdly, the separate scenarios (picklist, voice, LED) that have been tested shall be described. And finally, how the theoretical experiment happened in practice. | This experiment is conducted in different parts. First, the setup of the experiment is described. Secondly, the steps taken in the experiment shall be explained. Thirdly, the separate scenarios (picklist, voice, LED) that have been tested shall be described. And finally, how the theoretical experiment happened in practice shall be described. | ||

'''Setup''' | '''Setup''' | ||

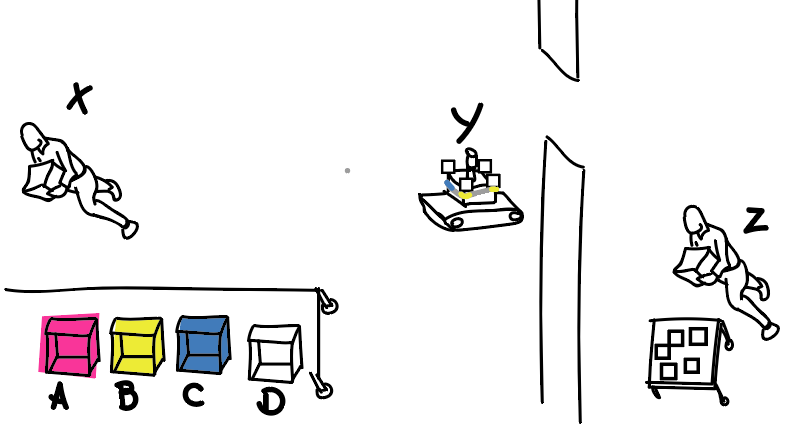

Firstly, the experiment was set up as can be seen in '''Figure'''. Test subject X is placing numbered packages in the corresponding boxes (A, B, C, D). The robot Y hands over these packages. Out of sight, a researcher Z places packages on the robot. This is done out of sight to simulate an automated warehouse. | Firstly, the experiment was set up as can be seen in '''Figure 1'''. Test subject X is placing numbered packages in the corresponding boxes (A, B, C, D). The robot Y hands over these packages. Out of sight, a researcher Z places packages on the robot. This is done out of sight to simulate an automated warehouse. | ||

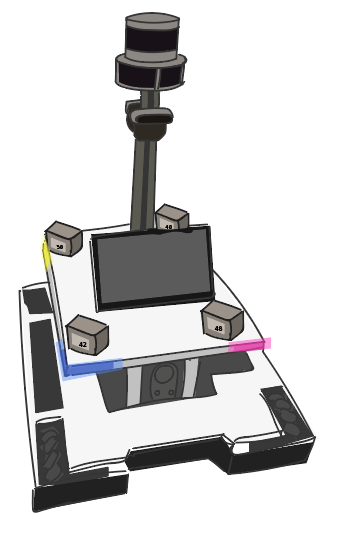

[[File:test drawing.png|300 px]] | [[File:test drawing.png|300 px]] [[File:Robot_with_lights.PNG|200 px]] | ||

Figure 1 Schematic drawing of the experiment | |||

'''Steps''' | '''Steps''' | ||

| Line 252: | Line 219: | ||

A test subject would enter the room and would be given the intake questionnaire as described in the subsection Questionnaire. After they had filled this out they were given half a page of information on what was going to happen; it can be found | A test subject would enter the room and would be given the intake questionnaire as described in the subsection Questionnaire. After they had filled this out they were given half a page of information on what was going to happen; it can be found here [[information sheet]]. Once they had thoroughly read the information sheet and one of the researchers had made sure they understood the safety aspect, the experiment would start. The person would not be told which scenario would take place. The test subject would wait behind the safety line and the robot would drive to the table. Once the robot stood still the scenario would happen and we started timing. This would be repeated three times. Then, the test subject would fill in a questionnaire also described in the subsection Questionnaire. This process would be repeated for the other two scenarios as well. | ||

Once all three scenarios and all trials within the scenarios have been completed, a final interview is conducted. | Once all three scenarios and all trials within the scenarios have been completed, a final interview is conducted. | ||

'''Scenarios''' | '''Scenarios''' | ||

In one scenario the test subject (x) has only the pick-list it received at the start to help him/her with finding the right packets for the right box. An example of the pick list can be found here [[list]]. | In one scenario the test subject (x) has only the pick-list it received at the start to help him/her with finding the right packets for the right box. An example of the pick list can be found here [[list]]. | ||

In another scenario the robot (y) will use LED lights at the side to indicate (using color) in which box each packet goes. It does this by lighting up the corners at which the packet is located in the colors corresponding to the correct boxes. | In another scenario the robot (y) will use LED lights at the side to indicate (using color) in which box each packet goes. It does this by lighting up the corners at which the packet is located in the colors corresponding to the correct boxes. The order of the colored boxes can be seen here: [[LED script]] | ||

In the third scenario the robot (y) will use audio communication to indicate which packet goes into which box. It could say, using the speakers, “Packet 138 goes into box blue”. The human (x) can then place this packet in the corresponding box. | In the third scenario the robot (y) will use audio communication to indicate which packet goes into which box. It could say, using the speakers, “Packet 138 goes into box blue”. The human (x) can then place this packet in the corresponding box. The script of the voice can be found here [[Voice Script]]. | ||

After the human (x) feels it has finished its job, it will say “Done” and the robot will drive away. | After the human (x) feels it has finished its job, it will say “Done” and the robot will drive away. | ||
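As an illustration of how the voice and LED scenarios convey the same pick list in different forms, the sketch below generates both kinds of cue from one mapping. Everything except the sentence pattern “Packet 138 goes into box blue” is an assumption: the packet numbers other than 138, the colors, the corner names and the function names are hypothetical placeholders, not the contents of the actual pick list, LED script or voice script.

```python
# Hypothetical pick list: packet number -> color of the target box.
# Only the pair (138, "blue") comes from the example sentence in the text.
PICK_LIST = {138: "blue", 241: "red", 305: "green", 412: "yellow"}

# Hypothetical position of each packet on the robot (one packet per corner).
PACKET_CORNER = {
    138: "front-left",
    241: "front-right",
    305: "rear-left",
    412: "rear-right",
}


def voice_cue(packet: int, box: str) -> str:
    """Build the sentence the robot speaks for one packet (voice scenario)."""
    return f"Packet {packet} goes into box {box}"


def led_cues(pick_list: dict, packet_corner: dict) -> dict:
    """Map each robot corner to the color of the box its packet belongs in (LED scenario)."""
    return {packet_corner[packet]: color for packet, color in pick_list.items()}


# Voice scenario: one sentence per packet, spoken one after the other.
for packet, box in PICK_LIST.items():
    print(voice_cue(packet, box))

# LED scenario: all corners light up at the same time.
print(led_cues(PICK_LIST, PACKET_CORNER))
```

The sketch makes one structural difference visible: the voice cues form a sequence of sentences, while the LED cues are a single mapping that can be shown all at once.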

| Line 265: | Line 232: | ||

The person doing the experiment should not be blind, color blind or deaf, since they would then not be able to do all scenarios. | The person doing the experiment should not be blind, color blind or deaf, since they would then not be able to do all scenarios. | ||

The test will be done in "Wizard of Oz" style: the robot will be remote controlled by us, since the experiment is about which scenario the subjects find most useful/comforting, and not about testing the capabilities of the robot. | The test will be done in "Wizard of Oz" style: the robot will be remote controlled by us, since the experiment is about which scenario the subjects find most useful/comforting, and not about testing the capabilities of the robot. | ||

'''Comparison to real Experiment''' | '''Comparison to real Experiment''' | ||

| Line 276: | Line 242: | ||

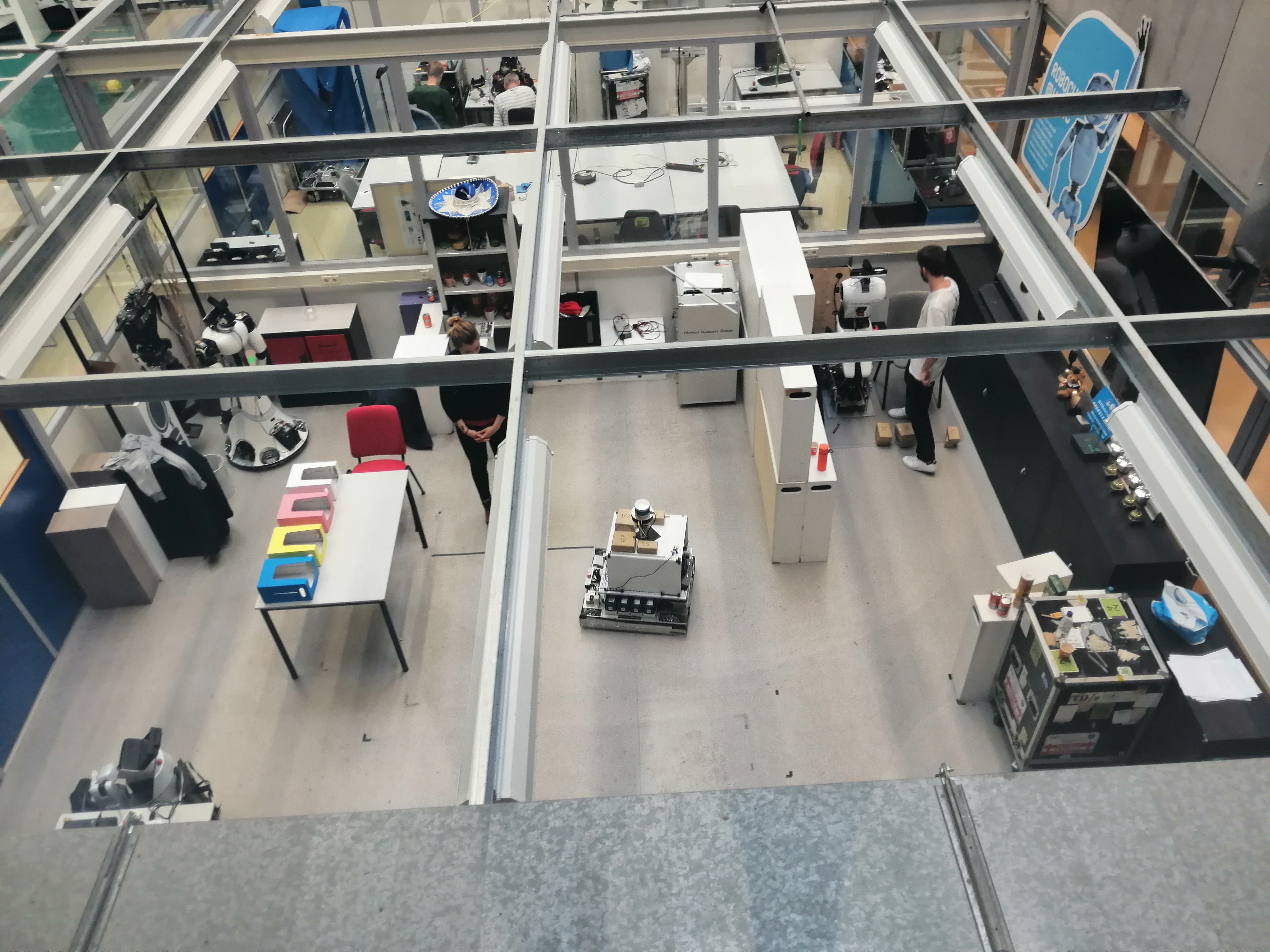

Figure 2 Actual situation during the experiment | Figure 2 Actual situation during the experiment | ||

== | ==Results== | ||

The video showing the experiment can be found here: "https://www.youtube.com/embed/a1PyLLNk4No" | |||

==Observations From the Experiment== | |||

During the experiment, most of the team members were present. This allowed for observations that did not appear in the questionnaires or the interviews, which could not only be interesting for our discussion but could also influence the final answer to our research question. Further investigation of some of these observations could also lead to some interesting new findings about robot-human interaction. | |||

Firstly, the subjects perceived a clear difference in the fluidity of the robot's motion depending on whether it used voice or light signals to communicate (2.6 to 3.5), even though the robot was controlled in the same way in all cases. | |||

Also, if the lights were the first of the scenarios, there was notable confusion for some of the subjects. In two cases they even waited for a second "go ahead" signal from the observers (team members) before starting the trial. | |||

With light signals, subjects sometimes handled the boxes with significantly less care than with the list and the voice signals, and some subjects took two boxes at the same time, resulting in confusion as to which package should go into which box. | |||

Most subjects did not notice that the robot was remotely controlled but instead assumed that it was completely autonomous. | |||

Finally, subjects that said they had experience with robotics assigned higher anthropomorphic values to the robot than those that said they had no experience with robotics. | |||

==Discussion of Results== | |||

All our data can be found here: https://drive.google.com/file/d/1ex0o5aJxVB0nneAn4oEOX2MK6QRtVYUX/view?usp=sharing | |||

Because of the use of the questionnaires after every set of trials in the experiment and the fact that the team was close to the participants during the trials, a large amount of data was collected. By analyzing this data, presented in the previous sections, together with our observations from the experiment, we can reach a conclusion that answers our research question. Not all results are directly relevant to answering the research question, but they help to understand the thought process of someone in the scenario we are examining. | |||

One of the most important results, which was also one of the easiest to collect and process because it was quantitative, was the amount of time it took the participants to place the packages in the correct boxes. The speed at which someone completes a task not only reflects how quickly each robot-human interaction method communicates the placement information to the participant, but is also a good indication of how understandable and clear the method itself is. The results showed that using vocal cues is clearly the slowest, using the list is slightly faster, and using the LEDs is the fastest of all three. Compared with the voice, the LEDs need 54% of the time to convey the same amount of information. The explanation for this is fairly straightforward. The LEDs not only conveyed the correct information instantly, without needing to form a whole sentence, but could also inform the participant of the correct package placement for all four boxes simultaneously. This level of speed was not possible with the voice, as the robot had to speak an individual sentence for every single box. That does not necessarily make the LEDs the best choice, though. As was seen in the observation section, some participants who had the LEDs in their first set of trials were notably confused as to what they should do and needed extra assistance from the team members, even though the procedure was explained to them before the experiment. This was not observed when the list or the voice was used for interaction. Another observation regarding the LEDs was that people were more aggressive with their package placement, as participants tried to be as quick as possible in their task. That behavior sometimes led to participants grabbing two packages simultaneously and then being confused as to where to place each.
Of course, there is a trade-off of accuracy for speed, which is not always favorable and in a warehouse scenario could come down to the priorities of the warehouse owners. | |||

The results from the personal evaluation section of the questionnaires were not what was expected. The participants found the LEDs clearer, more pleasant and calmer. This could be for a variety of reasons. In terms of clarity, the voice could have been hard to hear or understand for the participants. This could be the case because a fairly robotic voice (as can be heard in the videos) was chosen for the robot and because the volume of the speakers was not maximized. That would mean that in a warehouse scenario the voice of the robot would have to be both loud and clear enough to communicate with the employees without needing repetitions, since those would seriously hinder efficiency. It is important to note, though, that no participant asked the robot to repeat itself or to speak louder. Pleasantness and calmness, on the other hand, could be explained by the way people viewed the robot itself. The “Perceived Intelligence” questions showed that some participants viewed the robot as an authority (responsible) that was commanding them to do something, and not as a co-worker that was just telling them what to do because it couldn’t do it itself. That may have made participants feel stressed and generally have negative emotions towards the robot, which was in a sense “looking over their shoulder” to see their work output, resulting in them rating it as neither calm nor pleasant. In general, other parts of the questionnaires reflected that people did not have the positive reaction to the voice that was expected.

The questionnaires also included questions on how safe the participants felt during and after the experiment. From these questions we can see how comfortable and certain participants felt around the robot. Results show that participants seemed to feel safe and relaxed in all scenarios. However, the LEDs stand out as calmer, more relaxed and safer. This difference between the LEDs and the voice could be because of the feeling of authority that some participants experienced while working with the robot, but also because, as we can see from the results of our “Animacy” questions, people thought of the voice as more alive, resulting in them anthropomorphizing the robot and feeling uneasy around it.

The fact that the participants anthropomorphized the robot more when the voice was used can also be seen from the results of the “Anthropomorphism” questions. There, it can be seen that even though the LEDs were considered more natural by participants, the voice was considered more conscious, and in general the voice was rated as more anthropomorphic. This difference in results could be caused by the robotic nature of the voice chosen. Even though the voice was not lifelike and natural, people still attributed signs of conscious thought to it. This could mean that people don’t need signs of naturalness to think of something as conscious, but that is something that should be further investigated.

In general, participants ranked the voice as the most alive, lifelike and lively, as can be seen from the results of the “Animacy” questions. This was in line with what was expected, as the voice is closer to the forms of communication we have as humans. It did not, however, lead to the voice being ranked as the most liked robot-human interaction technique: it was surpassed by the LEDs, which goes against our hypothesis. This is mostly because of reasons that have already been mentioned: the slow speed at which the voice was talking frustrated the participants who wanted to put the packages in the boxes, the robot using the voice seemed like an authority, and people felt uneasy and unsafe while working with a speaking robot.

Finally, as indicated by the results of the “Perceived Intelligence” questions, participants ranked the voice and the LEDs equally. Looking into the subcategories specifically, we can see that, as mentioned before, people found the voice more responsible but also more intelligent and competent. Especially the latter, thinking that the robot is competent, could result in the same problem this research is trying to solve, namely people overestimating the capabilities of the robot.

==Conclusion==

Research shows that there is a lot of confusion concerning robots. This confusion originates mainly from a lack of knowledge about their abilities and goals, and it in turn creates inefficient behaviour and anxious feelings. For example, people who are uncertain about what task the robot is performing will stand still and wait until they find a way to reduce this uncertainty. Besides this, there is also the possibility that people will try to compete with the robot or might dislike working with it. Our research explores whether adding communication tools to the robot could take away this confusion and create a more efficient and pleasant working environment.

From the results of our tests it can be concluded that a voice is not the optimal choice for a communication tool in warehouse robots. Our test shows that using a voice was less efficient than the other two ways of communicating. Besides being less efficient, the voice was also rated worse by our participants on clarity, pleasantness and calmness.

However, the voice as a communication tool should be explored further in the future. The voice could be a valuable addition to industrial robots, as it does offer a lot of benefits. The voice can be an option in scenarios where the robot has authority or needs to influence the pace at which someone works. The voice can also be a good addition for explaining or introducing tasks, as this could require more information than LEDs can provide, without teaching employees a new language specifically for communicating with the robot.

Previous research has shown that human features like eyes or humanlike movement can efficiently clarify what the robot wants and needs (Baxter; Banh, A., 2015). Research on the voice as a communication tool in warehouse robots is very limited. We hope that our research has opened up new possibilities for integrating communication in warehouse robots.

Further research can be done, for example:

-Does adding voice communication to warehouse robots make human workers behave more consciously and carefully?

-Does the voice as a communication tool create a higher authority for the robot?

-How could the voice as an additional communication tool in warehouse robots be implemented in a real-life setting?

== References ==

[1] Tamburrini, Guglielmo. (2019). Robot ethics: a view from the philosophy of science.

[2] Weiss, A., & Huber, A. (2016). User Experience of a Smart Factory Robot: Assembly Line Workers Demand Adaptive Robots. In AISB2016: Proceedings of the 5th International Symposium on New Frontiers in Human-Robot Interaction.

[3] Sauppé, A., & Mutlu, B. (2015, April). The social impact of a robot co-worker in industrial settings. In Proceedings of the 33rd annual ACM conference on human factors in computing systems (pp. 3613-3622).

[4] West, D. M. (2015). What happens if robots take the jobs? The impact of emerging technologies on employment and public policy. Centre for Technology Innovation at Brookings, Washington DC.

[5] Salvini, P., Laschi, C., & Dario, P. (2010). Design for acceptability: improving robots’ coexistence in human society. International journal of social robotics, 2(4), 451-460.

[6] Damacharla, P., Dhakal, P., Stumbo, S., Javaid, A., Ganapathy, S., Malek, D., . . . Devabhaktuni, V. (2019). Effects of voice-based synthetic assistant on performance of emergency care provider in training. International Journal of Artificial Intelligence in Education: Official Journal of the International AIED Society, 29(1), 122-143. doi:10.1007/s40593-018-0166-3

[7] Rosenfeld, A., Agmon, N., Maksimov, O., & Kraus, S. (2017). Intelligent agent supporting human-multi-robot team collaboration. Artificial Intelligence, 252, 211-231. doi:10.1016/j.artint.2017.08.005

[8] Allison Sauppé, Bilge Mutlu, The Social Impact of a Robot Co-Worker in Industrial Settings, Department of Computer Sciences, University of Wisconsin

[9] Min Kyung Lee, Sara Kiesler, Jodi Forlizzi, Paul Rybski, Ripple Effects of an Embedded Social Agent: A Field Study of a Social Robot in the Workplace, Human-Computer Interaction Institute, Robotics Institute, Carnegie Mellon University, Pittsburgh USA

[10] Gian Diego Tipaldi, Kai O. Arras, Planning Problems for Social Robots, Social Robotics Lab, Albert-Ludwigs-University of Freiburg

[11] Amy Banh, Daniel J. Rea, James E. Young, Ehud Sharlin, Inspector Baxter: The Social Aspects of Integrating a Robot as a Quality Inspector in an Assembly Line, Proceedings of the 3rd International Conference on Human-Agent Interaction, October 21-24, 2015, Daegu, Kyungpook, Republic of Korea

[12] Pepper Game https://pure.tue.nl/ws/portalfiles/portal/109006948/Scheffer_Tim_Report_Pepper_Game_Project_V1.2.pdf

[13] Ivaldi, S., Lefort, S., Peters, J. et al. Int J of Soc Robotics (2017) "Towards Engagement Models that Consider Individual Factors in HRI: On the Relation of Extroversion and Negative Attitude Towards Robots to Gaze and Speech During a Human–Robot Assembly Task" 9: 63. https://doi.org/10.1007/s12369-016-0357-8

[14] Min Kyung Lee, Sara Kiesler, Jodi Forlizzi, Paul Rybski, Ripple Effects of an Embedded Social Agent: A Field Study of a Social Robot in the Workplace, May 2012, Human-Computer Interaction Institute, Robotics Institute, Carnegie Mellon University, Pittsburgh USA

== SotA ==

===First orientation research===

| Line 413: | Line 366: | ||

Short Summary: This article takes an industry-related issue and tries to solve it, explaining how effective robot-human interaction takes place.

'''Human-robot interaction in rescue robotics'''

| Line 447: | Line 398: | ||

Short Summary: This article describes how robots can help make the workplace better by doing tedious tasks. It also describes important factors in robot acceptance, such as their ability to do tasks that are too dangerous for humans, and how important expectations about the robot are for acceptance.

Weiss, A., & Huber, A. (2016). '''User Experience of a Smart Factory Robot: Assembly Line Workers Demand Adaptive Robots.''' In AISB2016: Proceedings of the 5th International Symposium on New Frontiers in Human-Robot Interaction. https://arxiv.org/ftp/arxiv/papers/1606/1606.03846.pdf

| Line 498: | Line 446: | ||

'''Robots in Society, Society in Robots'''

Latest revision as of 11:00, 24 April 2019

Group members

| Name | ID |
|---|---|
| Pim van Berlo | 0957823 |
| Timo Boer | 0965729 |
| Charlotte Bording | 1246089 |
| Luuk Roozen | 0948743 |
| Panagiotis Kyriakou | 1256416 |

Problem statement

How can the uncertainty humans have about robot actions be reduced, using vocal cues.

- Can vocal cues help reduce the anxiety people feel, because of uncertainty, towards these robots?

Definition of uncertainty:

Employees working with robots have trouble understanding them.

- They do not know how intelligent the robots are and give them made-up characters and intelligence based on what they believe. (Min Kyung Lee, et al. 2012)

- Robots are not competitive or trying to be better than humans, although humans can feel like they have to compete. (Margot Neggers)

- Humans don’t understand robots which makes them feel uncomfortable since they can't predict the robots' actions. As a result humans tend to stop doing their own task (Margot Neggers)

- “The ability to estimate engagement and regulate social signals is particularly important when the robot interacts with people that have not been exposed to robotics, or do not have experience in using/operating them: a negative attitude towards robots, a difficulty in communicating or establishing mutual understanding may cause unease, disengagement and eventually hinder the interaction.” (Ivaldi, S. et al. 2017)

In short, people don’t understand what the robot is thinking or doing. They therefore impose false assumptions on the robot and act under those assumptions, or they simply stop doing their own tasks.

Users

List of users highly involved with the introduction of robots in warehouse environments. Since there are many different titles for warehouse workers, only the ones that need to be distinguished because of their relationship with the robot are mentioned.

General Warehouse Laborer

- Description: Responsible for general duties involving physical handling of product, materials, supplies and equipment.

- Education: nothing more than a high school diploma needed.

- Much of their work is moving things around in the warehouse, similar to forklift drivers but on a smaller scale.

- Relatively safe (employment-wise) since they are the jack of all trades in a warehouse.

- Logically, their interest is against the introduction of robots, and if anyone were to cause inefficiencies because of the robot introduction it would be them. But since they will be the ones working the most with the robots, it makes sense to believe that they will have a positive stance towards robots with better speaking skills, since their job will be easier and they will socialize more.

Forklift driver, material handler

- Description: Operate forklifts to move pallets of products, materials, and supplies between production areas, shipping areas, and storage areas, load and unload trailers

- Education: again nothing more than a high school diploma, but this time a forklift driver’s license is required and experience is usually required as well.

- The main group that will be replaced in their transporting role, but they could still remain because of loading and unloading.

- Logically, their interest is against the introduction of robots, since this is the group that is mostly getting replaced. For those who stay, the interests are the same as above.

Shipping and Receiving Associate, Warehouse Clerk

- Jobs that mostly have to do with overseeing, organizing and preparing the shipments, and with speaking with clients to make new deals, respectively.

- Education: again, nothing more than a high school diploma but computer handling skills are required

- This group of employees will probably be affected the least (replaced or given different responsibilities), since they mostly work with human clients or make higher-level decisions.

- I believe their interest should again be somewhere in the middle: their colleagues are mostly getting replaced, but they can do their own jobs better since the introduction of robots will bring efficiency. Since their jobs are fairly stable, it makes sense to believe that they will have a positive stance towards robots with better speaking skills, because these will bring better collaboration and thus make their organizing tasks easier.

Sources https://www.horizonstaffingsolutions.com/different-types-warehouse-jobs https://www.friday-staffing.com/blog/warehouse-job-titles-and-descriptions/ https://www.indeed.com/

What do the users require?

- Users require:

  - efficiency

  - worth of their work

  - safety

  - robots doing tedious tasks

  - time to adapt

  - absence of stress

  - understandable robots

- Users do not require:

  - losing their job

  - robots that make their work less efficient

  - annoying robots

Report

Introduction

Industrial robots are taking over more and more tasks in the workforce. Where there were once 1.2 million industrial robots around the world, there were 1.9 million in 2017 (West, D. M. 2015). The tasks done by these robots are usually very repetitive (Tamburrini, G., 2019), making them easy to automate. The robots make the process faster and cheaper; for example, robots can work 24 hours a day without getting tired. Robots will take up more space in the workforce, as they are getting smarter and cheaper and are starting to take over more complex tasks (West, D. M. 2015). The transition to robot co-workers can often bring trouble due to human resistance to robots, such as humans having trouble understanding the robot, the robot working at a different pace (Weiss, A., et al. 2016) or the fear of being replaced by the robot (Salvini, P. et al. 2010).

In this research we are looking at creating a more efficient and pleasant transition for the human co-workers by giving the robot communication tools. Voice will be tested as a communication tool. To identify strengths and weaknesses, a scenario with LEDs as communication and a scenario without communication will be added to the experiment for comparison. These tools will be tested to see if enriching a robot with one of them results in a more enjoyable work experience for its human coworkers. A study by Sauppé, A., et al. (2015) has already shown how giving the robot eyes can make its functions clearer for humans. People's jobs often change due to the introduction of a robot in their work (Salvini, P. et al. 2010). The voice could be used to explain what the robot is currently working on or to give instructions to its human counterpart. This research will mainly focus on industrial robots in warehouses, as this is a common place where robots are taking over tasks.

Related work

In the introduction it was shown that there is not just an increase in the number of robots, but also in the difficulty of the tasks they perform. In this section we will cover some of the many works that relate to the problems emerging from these increases. We will also cover research that has been done into specific aspects of our problem.

One of these papers focusses specifically on how helpful vocal interaction can be in learning. In the paper “Effects of voice-based synthetic assistant on performance of emergency care provider in training” (Damacharla, P., 2019) the researchers measure whether a person can learn how to provide emergency care more quickly if they receive assistance from a voice-based synthetic assistant. This research paper is related to our work in that it shows that a vocal stimulus can help people in situations that are normally difficult for them to deal with, giving us an indication that our research might result in a positive outcome.

Another paper that is related to ours is the paper called “Intelligent agent supporting human-multi-robot team collaboration” (Rosenfeld, A., 2017). This paper gives an in-depth explanation of how an intelligent agent can help humans in their collaboration with robots. This article is especially interesting since one of the scenarios it focusses on is warehouses. In this scenario the intelligent agent points out possibly difficult situations for robots, such as a box that has fallen. The human can then judge whether this hinders the robots or not, and possibly change the robots' behavior based on this. At some point the paper discusses whether the human found these suggestions helpful or annoying. Another paper that writes about a robot giving feedback to humans is “Inspector Baxter: The Social Aspects of Integrating a Robot as a Quality Inspector in an Assembly Line” (Banh, A., 2015). However, this paper focusses on the influence movement and facial expressions can have on the social interaction.

The paper “The Social Impact of a Robot Co-Worker in Industrial Settings” (Sauppé, A., 2015) gives a discussion of the social phenomena that emerge when a robot co-worker is introduced in an industrial environment. It does this by discussing the relationship that each employee developed with the robot. In the end it suggests some improvements for future robot implementations that would improve further social interactions. This article relates to our problem in that it discusses the problem in a broader sense, i.e. without focusing on a specific solution. A paper called “Ripple effects of an embedded social agent: A field study of a social robot in the workplace” (Lee, M. K., 2012) also discusses the social phenomena that emerge in a human-robot cooperative industrial environment. The robots in this paper, however, perform the same basic tasks as the robots in a warehouse, i.e. picking up goods and delivering them to people.

One paper that we found tries to show the importance of movement in the social interaction between humans and robots. As cited: “they [robots] also need the ability to model and reason about human activities, preferences and conventions. This knowledge is fundamental for robots to smoothly blend their motions, tasks and schedules into the workflows and daily routines of people. We believe that this ability is key in the attempt to build socially acceptable robots for many domestic and service applications” (Tipaldi, G. D., 2011). This relates to our work because it indicates a variable we need to keep track of.

Interviews

A large part of the orientation and validation process was done using interviews with subject experts, ranging from philosophy professors to robotics R&D engineers. Combining these interviews with the knowledge gained from our literature research served to create a broad base of knowledge concerning robotics and human-robot interaction. The major findings from these interviews are summarized below.

Margot Neggers

Margot Neggers is a PhD candidate at the Technical University of Eindhoven, where she does research for the Human Technology Interaction group, mainly concerning robots and their interaction and communication with humans.

Margot sketched the current situation within human-robot interaction, mainly focusing on the industrial sector. According to her there is a lot of room for improvement in the way robots and humans interact on the work floor. At this point robots and humans do their own work independently of each other, and the robots often do not signal what they are currently working on. This increases the uncertainty for human workers, and when humans become uncertain their anxiety increases and their productivity drops. This is because human workers who do not know what their robotic colleague is working on at that moment have only one way to reduce this uncertainty: waiting to see what the robot is going to do next.

“That is what people do when they do not understand the robot: they wait.” - Margot Neggers

Margot also explained previous research she has done on human-robot interaction. This was an experiment where a subject and a robot would both navigate concurrently through the same room. For this experiment they tried three communication methods: blinking LEDs, speech and gestures. From this experiment it became clear that subjects prefer speech as a communication tool over gestures and light signals. They found this the most pleasant way for the robot to communicate with them. But if performance was also taken into account, it was found that speech was actually not the fastest; gestures were. These findings were extremely interesting to us and formed the basis of this research project.

Another important insight gained from Margot was that, especially in this discipline, it is crucial for the results that subjects do not have to imagine what something would be like, because when subjects think deeply about how they would experience something, the results differ from when they actually experience it. For human-robot interaction this could have a very large impact on the final conclusion. Therefore we started to work towards doing an actual experiment to give more validity to our conclusion.

Besides this, Margot also pointed us towards the anthropomorphic side of human-robot interaction. She stated that humans working with robots often assign personalities to the robot, which has a very large impact on how humans feel about the robot in future interactions. We thought this would be interesting to look into and therefore decided to try to gain some information on this during the rest of the experiment.

Jilles Smids

Jilles Smids is a PhD candidate in the ethics of persuasive technology at the section of Philosophy and Ethics at the Technical University of Eindhoven. He has been working on a paper named “Robots in the warehouse: working with or against the machine”. This caught our interest and kick-started our project. Jilles confirmed the issues at hand with humans and robots in warehouses. He mentioned that the fear of human workers being replaced by robots is unjustified. Warehouses are actually expanding and cannot find the large workforce needed to staff them, so they use robots alongside the current workers. According to Jilles, people tend to fear robots because they are afraid of losing their jobs, whilst robots can actually take over the tasks that are too heavy or uninteresting for humans to do.

Jilles helped us realize that a big problem in warehouse automation is that workers are not informed of what exactly is going on. This leads to cases where robots are vandalized by human co-workers. It gave us the idea that humans should be more aware of the actions of robots and gave us a clear ground to start our project from.

Jilles also gave us examples of where humans and robots did not get along, such as the Waymo self-driving car incidents in Arizona. This made it clear that humans and robots are still in the early phases of understanding each other and working together.

Bas Coenen

Bas Coenen is a graduate of the Technical University of Eindhoven who is currently working at Vanderlande as Team Leader Robotics, Senior R&D Integration Engineer.

Bas helped us get a clearer understanding of what is actually being done with warehouse-type robotics. He gave insight into current issues with warehouses and robotic involvement around the world. He mentioned that multinational companies that have warehouses or factories all over the world have to deal with different cultures. Something as simple as a positive gesture here could mean something negative somewhere else, so robots have to be able to fit into any culture in the world. Otherwise, changes will always have to be made for every warehouse, which is inconvenient for a company.

He also took us into a demonstration area where different setups for different supply lines were displayed. This gave us a better sense of the solutions professionals have built for warehouse robotics. However, most lines still gave the sense that robots and humans are separate from each other and not really co-existing.

His colleague explained her work on adding eyes to robots to give them a certain sense of being. This way people notice when they are being noticed. For example, when crossing a pedestrian crossing while a car is coming, many people try to make eye contact with the driver to see if they are being noticed. This way you know you are seen and won’t be run over. Robots tend to just carry out their task and give no sign of knowing someone is there. People tend to be afraid or uncertain of robots' actions, so they stop working to make sure they are safe. If a person notices that they have been noticed, it could save valuable time.

Speaking to people actually working on robotics on a large scale definitely gave us some ideas on how to carry out our experiment, and the time they spent with us increased our interest in robotics.

Research questions and hypothesis

Based on the work already done it is possible to formulate some research questions. The main question will be: “Do vocal cues increase the likeability of a warehouse robot, compared to the use of a so-called pick list?” The reason that this is the main research question is that it covers every problem discussed in the introduction. If people like the robot more, then they are less likely to feel anxious about it.

Since we are able to borrow the robot and can do the same experiment with visual cues instead of vocal cues, we decided to do both. For this reason the second research question is defined as follows: “Do visual cues increase the likeability of a warehouse robot compared to the use of a so-called pick list?”

If the answer to either of these two questions is yes, then we can look at which method is better. This is why the third research question is the following: “Do vocal cues increase the likeability of a warehouse robot more than visual cues?”

Now that we have defined some research questions, we can define a hypothesis for the experiment that will be explained a little further on. Because of the paper “Effects of voice-based synthetic assistant on performance of emergency care provider in training” [6], we predict that “Vocal cues increase the likeability of a warehouse robot.” Because of the research Margot Neggers has done, we also predict that “Visual cues increase the likeability of a warehouse robot.” With this, we can formulate the following hypothesis for the experiment.

Hypothesis: Adding a vocal cue or a visual cue to a robot in a warehouse setting will increase the likeability people feel towards it, compared to the likeability they feel towards the same robot without these features.

Health & Safety Plan

During the experiment, it is of pivotal importance to keep the participants safe. Since the robot taking part in the experiment weighs around 50 kg, an accident could cause serious injury. To avoid this, some safety measures have to be taken:

• There is a clear line on the floor that the test subject has to stand behind while the robot is moving. Only when the robot has come to a complete standstill is the test subject allowed to cross the line. The line is not directly in the robot’s path, so the chances of the robot crossing the line are diminished.

• Conversely, the robot will only start moving when the test subject is standing behind the line and gives a clear signal that they are done.

• There is always one person responsible for the safety measures taken. This person's task is to have access to an external “kill switch”. This person focuses solely on the experiment and on pressing the button. Once the button is pressed, it immediately turns off the robot.

Questionnaires

During the experiment, the test subjects were given a couple of questionnaires. This was done as follows:

1. Fill in intake Questionnaire

2. Scenario A

3. Fill in Trial Questionnaire

4. Scenario B

5. Fill in Trial Questionnaire

6. Scenario C

7. Fill in Trial Questionnaire

8. Ending Short Interview

The test subjects first filled in a general intake questionnaire with questions about themselves, such as age and education. Then they started with one of the scenarios: the list, the voice, or the LEDs. After that they filled in the trial questionnaire. The trial questionnaire consisted of a combination of two validated questionnaires: the Co-existence questionnaire, similar to that used in the PEPPER GAME [12], and the Godspeed questionnaire. The Godspeed questionnaire gives a better insight into what people think of the robot. After each of the remaining scenarios, the test subjects filled in another trial questionnaire. After all three trial questionnaires were finished, a short interview was conducted to see if additional interesting observations could be made.

A link to the Questionnaires and Interview can be found at Questionnaires Link

Procedure Experiment

Context

In order to test our hypothesis, an industrial-like robot (FAST platform) was used to see how people would react. The robot drove packages to a person who would place the packages in the correct box. The idea of the experiment is modeled after the warehouse robots used at Amazon where robots bring large racks with packages towards human order pickers.

Procedure

The experiment is described in four parts. First, the setup of the experiment is described. Second, the steps taken in the experiment are explained. Third, the separate scenarios (picklist, voice, LED) that were tested are described. Finally, the differences between the planned experiment and its execution in practice are described.

Setup

The experiment was set up as shown in Figure 1. Test subject X places numbered packages in the corresponding boxes (A, B, C, D). Robot Y hands over these packages. Out of sight, researcher Z places packages on the robot; this is done out of sight to simulate an automated warehouse.

Figure 1 Schematic drawing of the experiment

Steps

Three different scenarios were tested: picklist, voice, and LEDs. Each scenario was tested with each test subject in three trials. To keep the comparison fair, the order of the scenarios was counterbalanced across test subjects. For example, test subject 1 would first get a pick list, then experience a robot using voice, and finally a robot with LEDs. Test subject 2 would first get the voice, then the LEDs, and finally the pick list. There are six possible orders. Since twelve test subjects took part, each possible order was used twice.
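
The counterbalancing can be sketched in a few lines of Python. This is only an illustration of the idea: the function name `counterbalanced_orders` is hypothetical, and the particular order assigned to each subject number here is an assumption that does not necessarily match the assignment used in the actual experiment.

```python
from collections import Counter
from itertools import permutations

SCENARIOS = ("picklist", "voice", "LEDs")
NUM_SUBJECTS = 12


def counterbalanced_orders(scenarios=SCENARIOS, num_subjects=NUM_SUBJECTS):
    """Assign each subject one scenario order so that every order is used equally often."""
    orders = list(permutations(scenarios))  # 3! = 6 possible orders
    if num_subjects % len(orders) != 0:
        raise ValueError("number of subjects must be a multiple of the number of orders")
    # Cycle through the orders: subjects 1 and 7 share an order, 2 and 8, and so on.
    return {
        subject: orders[(subject - 1) % len(orders)]
        for subject in range(1, num_subjects + 1)
    }


assignment = counterbalanced_orders()
usage = Counter(assignment.values())  # how often each order is used
```

With three scenarios and twelve subjects, `usage` contains six distinct orders, each with a count of two.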

A test subject would enter the room and would be given the intake questionnaire described in the subsection Questionnaires. After filling this out, they were given half a page of information on what was going to happen, which can be found here: information sheet. Once they had thoroughly read the information sheet and one of the researchers had made sure they understood the safety aspect, the experiment would start. The person would not be told which scenario would take place. The test subject would wait behind the safety line and the robot would drive to the table. Once the robot stood still, the scenario would take place and timing started. This would be repeated three times. Then the test subject would fill in the trial questionnaire, also described in the subsection Questionnaires. This process would be repeated for the other two scenarios as well.

Once all three scenarios and all trials within the scenarios had been completed, a final interview was conducted.

Scenarios

In one scenario the test subject (X) has only the pick list they received at the start to help them find the right packet for the right box. An example of the pick list can be found here: list. In another scenario the robot (Y) uses LED lights at its side to indicate, using color, in which box each packet goes. It does this by lighting up the corners at which the packet is located in the colors corresponding to the correct boxes. The order of the colored boxes can be seen here: LED script. In the third scenario the robot (Y) uses audio communication to indicate which packet goes into which box. It could say, using the speakers, “Packet 138 goes into box blue”. The test subject (X) can then place this packet in the corresponding box. The script of the voice can be found here: Voice Script. Once the test subject (X) feels they have finished the job, they say “Done” and the robot drives away.

Notes: The person doing the experiment should not be blind, color blind, or deaf, since they would then not be able to do all scenarios. The test is done in "Wizard of Oz" style: the robot is remote controlled by us, since the experiment is about which scenario the subjects find most useful/comforting, and not about testing the capabilities of the robot.

Comparison to real Experiment

The experiment was adjusted slightly in practice, as can be seen by comparing Figure 2 with Figure 1. The table is rotated 90 degrees, but researcher Z, on the right in Figure 2, still remains out of the test subject's sight. Test subject X is on the left, waiting behind the line until robot Y stops. The colored boxes can be clearly seen on the left and the packages are on the robot.

Figure 2 Actual situation during the experiment

Results

The video showing the experiment can be found here: "https://www.youtube.com/embed/a1PyLLNk4No"

Observations From the Experiment

During the experiment, most of the team members were present. This allowed us to make observations that did not appear in the questionnaires or the interviews. These observations are not only interesting for our discussion but could also influence the final answer to our research question. Further investigation of some of them could also lead to interesting new findings about robot-human interaction.

Firstly, the subjects perceived a clear difference in the fluidity of the robot's motion depending on whether the robot used voice or light signals to communicate (2.6 to 3.5), even though the robot was controlled in the same way in all cases.

Also, if the lights were the first of the scenarios, there was notable confusion for some of the subjects, and in two cases they even waited for a second "go ahead" signal from the observers (team members) before starting the trial.

Sometimes, with light signals, the subjects handled the packages with significantly less care than with the list and the voice signals, and some subjects took two packages at the same time, resulting in confusion as to which package should go into which box.

Most subjects did not notice that the robot was remotely controlled but instead assumed that it was completely autonomous.

Finally, subjects who said they had experience with robotics assigned higher anthropomorphic values to the robot than those who said they had no experience with robotics.

Discussion of Results

All our data can be found here: https://drive.google.com/file/d/1ex0o5aJxVB0nneAn4oEOX2MK6QRtVYUX/view?usp=sharing

Because questionnaires were filled in after every set of trials and the team was close to the participants during the trials, a large amount of data was collected. By analyzing this data, presented in the previous sections, together with our observations from the experiment, we can reach a conclusion that answers our research question. Not all results are directly relevant to the research question, but they help to understand the thought process of someone in the scenario we are examining.

One of the most important results, which was also one of the easiest to collect and process because it is quantitative, was the amount of time it took the participants to place the packages in the correct boxes. The speed at which someone completes the task not only reflects how quickly each robot-human interaction method communicates the placement information to the participant but is also a good indication of how understandable and clear the method itself is. The results showed that using vocal cues is clearly the slowest, using the list is slightly faster, and using the LEDs is the fastest of all three. Compared with the voice, the LEDs needed 54% of the time the voice needed to convey the same amount of information. The explanation for this is fairly straightforward. The LEDs not only conveyed the correct information instantly, without needing to form a whole sentence, but could also inform the participant of the correct package placement for all four boxes simultaneously. This level of speed was not possible with the voice, as the robot had to produce an individual sentence for every single box. That does not necessarily make the LEDs the best choice, though. As noted in the observation section, some participants who had the LEDs in their first set of trials were notably confused as to what they should do and needed extra assistance from the team members, even though the procedure was explained to them before the experiment. This was not observed when the list or the voice was used for interaction. Another observation regarding the LEDs was that people were more aggressive with their package placement, as participants tried to be as quick as possible in their task. That behavior sometimes led to participants grabbing two packages simultaneously and then being confused as to where to place each. There is therefore a trade-off of accuracy for speed, which is not always favorable and in a warehouse scenario could come down to the priorities of the warehouse owners.
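
The 54% figure is presumably a ratio of average completion times. A small sketch of that arithmetic in Python is given below; the timing values are invented placeholders chosen only for illustration and are not the measured data, and `relative_time` is a hypothetical helper.

```python
from statistics import mean


def relative_time(times, baseline_times):
    """Mean completion time expressed as a fraction of the baseline's mean completion time."""
    return mean(times) / mean(baseline_times)


# Placeholder completion times in seconds (NOT the experiment's measurements).
voice_times = [50.0, 48.0, 52.0]
led_times = [27.0, 26.0, 28.0]

ratio = relative_time(led_times, voice_times)
print(f"LEDs need {ratio:.0%} of the time the voice needs")
```

The same helper could be applied to the list scenario by passing its completion times as the first argument.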

The results from the personal evaluation section of the questionnaires were not what was expected. The participants found the LEDs clearer, more pleasant and calmer. This could be for a variety of reasons. In terms of clarity, the voice could have been hard to hear or understand for the participants. This could be the case because a fairly robotic voice (as can be heard in the videos) was chosen for the robot and because the volume of the speakers was not maximized. That would mean that in a warehouse scenario the voice of the robot would have to be both loud and clear enough to communicate with the employees without needing repetitions, since those would seriously hinder efficiency. It is important to note, though, that no participant asked the robot to repeat itself or to speak louder. Pleasantness and calmness, on the other hand, could be explained by the way people viewed the robot itself. The “Perceived Intelligence” questions showed that some participants viewed the robot as an authority (responsible) that was commanding them to do something, and not as a co-worker that was just telling them what to do because it could not do it itself. That may have made participants feel stressed and generally have negative emotions towards the robot, which was in a sense “looking over their shoulder” to see their work output, resulting in them rating it as less calm and less pleasant. In general, other parts of the questionnaires also reflected that people did not have the positive reaction to the voice that was expected.

The questionnaires included questions on how safe the participants felt during and after the experiment. From these questions we can see how comfortable and certain participants felt around the robot. Results show that participants seemed to feel safe and relaxed in all scenarios. However, the LEDs stand out as calmer, more relaxed and safer. This difference between the LEDs and the voice could be because of the feeling of authority that some participants experienced while working with the robot, but also because, as we can see from the results of our “Animacy” questions, people thought of the voice as more alive, resulting in them anthropomorphizing the robot and feeling uneasy around it.

The fact that the participants anthropomorphized the robot more when voice was used can also be seen from the results of the “Anthropomorphism” questions. There, it can be seen that even though the LEDs were considered more natural by participants, the voice was considered more conscious, and in general the voice was rated as more anthropomorphic. This difference in results could be caused by the robotic nature of the voice chosen. Even though the voice was not lifelike and natural, people still attributed signs of conscious thought to it. This could mean that people do not need signs of naturalness to think of something as conscious, but that is something that should be investigated further.

In general, participants ranked the voice as the most alive, lifelike and lively, as can be seen from the results of the “Animacy” questions. This was in line with what was expected, as the voice is closer to the forms of communication we have as humans. However, this did not lead to the voice being ranked as the most liked robot-human interaction technique; it was surpassed by the LEDs, which goes against our hypothesis. This is mostly because of reasons that have already been mentioned: the slow speed at which the voice was talking frustrated the participants, who wanted to put the packages in the boxes; the robot using the voice seemed like an authority; and people felt more uneasy and less safe while working with a speaking robot.

Finally, as indicated by the results of the “Perceived Intelligence” questions, participants ranked the voice and the LEDs equally overall. Looking at the subcategories specifically, we can see that, as mentioned before, people found the voice more responsible but also more intelligent and competent. Especially the latter, thinking that the robot is competent, could result in the same problem this research is trying to solve, namely people overestimating the capabilities of the robot.

Conclusion

Research shows that there is a lot of confusion concerning robots. This confusion originates mainly from a lack of knowledge about their abilities and goals, and it in turn creates inefficient behaviour and anxious feelings. For example, people who are uncertain about what task the robot is performing will stand still and wait until they find a way to reduce this uncertainty. Besides this, there is also the possibility that people will try to compete with the robot or will dislike working with it. Our research explores whether adding communication tools to the robot could take away this confusion and create a more efficient and pleasant working environment.