PRE2018 3 Group2


| Iza Linders || 0945517
|-
| Noor van Opstal || 0956340
|-
|}

== Abstract ==

We live in an ageing society: the number of elderly people is ever increasing (OECD, 2007). As a consequence, the pressure on caregivers is rising, and research into how a robotic platform could alleviate this pressure is growing. Emotional comfort tends to be vital in medical environments, and such a robotic platform needs to account for this aspect of elderly care (ANR, 2005). In this project, we zoom in on the part of many modern robots whose primary task is conveying emotional information: the screen-based face. During the eight weeks of this project, we will set up a research plan, carry out tests with the target users (seniors), and attempt to design and validate a screen-based robotic face. Since our group is a multidisciplinary team, this problem will be approached from both a technical and a user-centered perspective.

== Introduction ==

When humans interact with each other, social cues are important (Pickett, C. L. et al., 2004). Social cues are defined as symbols expressed through body language, tone of voice or words, and they can help clarify people's intentions (White, n.d.). A robot that has to function in a human environment should behave naturally and according to the social rules of humans. Therefore, the robot should pay attention to people's social cues and adapt to them. One of these social cues is facial expression: the act of making one's thoughts or feelings known using only the face (Oxforddictionaries, n.d.). Facial expressions help us understand a situation; without seeing someone's facial expression, one cannot see which emotion that person is experiencing.

As noted by Ross G. Macdonald et al., eyes are important tools in communication between people: "Twelve-month-old infants respond to objects cued by adults' gaze (Thoermer & Sodian, 2001), indicating that eyes have communicative value well before the development of spoken language" (Macdonald, R. G. et al., 2013). Gaze cues provide information, express intimacy, exercise social control and regulate interaction (Barakova, E. I., 2018-2019).

To improve the interaction between a human and a robot, it is therefore important that the robot has eyes that can communicate emotions. A study by Becker (2009) found that round shapes tend to evoke associations with warmth and with traits such as approachability, friendliness and harmony, whereas angular shapes tend to evoke associations with sharpness and with traits that express energy, toughness and strength (Becker, L., 2009). In addition, angular shapes receive higher ratings for aggressiveness or confrontation. According to the same study, people prefer colors made up of short wavelengths over colors made up of long wavelengths (Becker, L., 2009).

A study on emotion recognition deficits in the elderly reports several relevant findings. According to Sullivan, S., & Ruffman, T. (2004), elderly people experience a decline in the recognition of some emotional expressions. In particular, anger, sadness and fear are perceived differently by elderly people than by younger people.

Moreover, as explained by Spear, P. D. (2013) and Mellerio, J. (1987), short-wavelength light transmission decreases with increasing age, so elderly people perceive colors differently from young people.

When designing a robot face, the designer is confronted with a wide range of possible design choices. Previous studies have attempted, with some success, to analyze this design space. One study in particular, “Characterizing the Design Space of Rendered Robot Faces”, collects existing (non-fictional) screen-based face designs and surveys how the different characteristics of these faces influence their perception. Based on the survey results, the authors create a baseline face and vary individual details, and a second survey examines the influence of these variations. However, that study focuses on the general appearance of robot faces, whereas we want to research the portrayal of emotions.

Little research has been done on robots and the emotional perception of the elderly, which is why we decided to investigate this topic. The research question is: How do the shape and color of a robot's eyes influence the emotional perception of elderly people? Based on the literature research, two hypotheses were formulated.

The first hypothesis states that round eyes will be associated with positive emotions, such as happiness, surprise and friendliness, whereas angular eyes will be associated with negative emotions, such as fear, anger, sadness and disgust. The second hypothesis states that eyes with a short-wavelength color will be associated more with positive emotions, such as happiness, surprise and friendliness, whereas eyes with a long-wavelength color will be associated more with negative emotions, such as fear, anger, sadness and disgust.

We draw inspiration from the methods used by our colleagues and further investigate the design choices one could or should make when designing a screen-based robot face. Below, we first describe research on the State of the Art; the Method section then describes the structure of the user test and its two validation tests, after which we present the results, draw a conclusion and discuss possibilities for further research.

=== Planning ===

The tasks and milestones of this group (both those already achieved and those still on our to-do lists) are elaborated in the Gantt chart, accessible via the following link: [https://docs.google.com/spreadsheets/d/1yGHbRs6K4x4QR3-7oYmUpe15eQcp-TSZ5pxlC4x34rs/edit?usp=sharing Gantt chart]

=== Problem Statement ===

We live in an ageing society: the number of elderly people is ever increasing<ref name=OECD,2007>https://www.oecd.org/newsroom/38528123.pdf OECD. (2007). Annual Report 2007. Paris: OECD Publishing.</ref>. As a consequence, the pressure on caregivers is rising, and research into how a robotic platform could alleviate this pressure is growing. Emotional comfort tends to be vital in medical environments, and such a robotic platform needs to account for this aspect of elderly care<ref name=ANR,2005>https://www.sciencedirect.com/science/article/pii/S0897189704000874 ANR. (2005). Applied Nursing Research 18 (2005) 22-28</ref>. In this project, we zoom in on the part of many modern robots whose primary task is conveying emotional information: the screen-based face. During the eight weeks of this project, we will set up a research plan, carry out tests with the target users (seniors), and attempt to design and validate a screen-based robotic face. Since our group is a multidisciplinary team, this problem will be approached from both a technical and a user-centered perspective.


== Initial ideas ==

Different themes related to our project can be identified. They are listed here, together with the relevant papers that provide the stated information.

'''Robot emotion expression'''

Different papers provide insight into existing technology for recognizing human emotion in HCI research.<ref>https://www.researchgate.net/publication/235328873_Dominance_and_valence_A_two-factor_model_for_emotion_in_HCI Dryer, Christopher. (1998). Dominance and valence: A two-factor model for emotion in HCI.</ref> ''Article 1'' discusses existing literature on social robots in the care of elderly people with dementia. It also discusses the contributions and cautions associated with the use of these assistive robotic agents, and provides literature related to the care of elderly people with dementia.<ref>https://www.ncbi.nlm.nih.gov/pubmed/23177981 Elaine Mordoch, Angela Osterreicher, Lorna Guse, Kerstin Roger, Genevieve Thompson (2013), Use of social commitment robots in the care of elderly people with dementia: A literature review, Maturitas, p 14-20</ref> ''Article 2'' focuses on the more general user and contains a study of how a robot's expressions and physical appearance affect the user's perception of that robot.<ref>http://www.cs.cmu.edu/~social/reading/breemen2004c.pdf</ref> ''Article 3'' and ''Article 4'' describe, respectively, the development, testing and evaluation of a robotic system, and a framework for emotion interaction in service robots. ''Article 3'' focuses on the development of EDDIE, a flexible low-cost emotion display with 23 degrees of freedom for expressing realistic emotions through a humanoid face, while ''Article 4'' presents a framework for recognizing, analyzing and generating emotions based on touch, voice and dialogue.<ref>https://ieeexplore.ieee.org/document/4058873 S. Sosnowski, A. Bittermann, K. Kuhnlenz and M. Buss (2006), Design and Evaluation of Emotion-Display EDDIE, 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 3113-3118.</ref><ref>https://ieeexplore.ieee.org/document/4415108 D. Kwon et al. (2007), Emotion Interaction System for a Service Robot, RO-MAN 2007 - The 16th IEEE International Symposium on Robot and Human Interactive Communication, pp. 351-356.</ref> The results of a different project, KOBIAN, are described in ''Article 5'', in which different ways of expressing emotions through the robot's entire body are tested and evaluated.<ref>https://ieeexplore.ieee.org/document/5326184 M. Zecca et al. (2009), Whole body emotion expressions for KOBIAN humanoid robot — preliminary experiments with different Emotional patterns —, RO-MAN 2009 - The 18th IEEE International Symposium on Robot and Human Interactive Communication, pp. 381-386.</ref>


'''Emotion recognition'''

In ''Article 6'', titled "Affective computing for HCI", multiple broad areas related to HCI are addressed, discussing recent and ongoing work at the MIT Media Lab. Affective computing aims to reduce user frustration during interaction. It enables machines to recognize meaningful patterns of affective expression, and the article explains different types of communication (parallel vs. non-parallel).<ref>https://affect.media.mit.edu/pdfs/99.picard-hci.pdf R. W. Picard (2003), Affective computing for HCI</ref> ''Article 7'' elaborates extensively on emotion, mood and the effects of affect on performance and memory, along with their causes and measurement, and discusses the interaction between affective design and HCI.<ref>https://www.researchgate.net/publication/242107189_Emotion_in_Human-Computer_Interaction Brave, Scott & Nass, Clifford. (2002). Emotion in Human–Computer Interaction. The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications. 10.1201/b10368-6.</ref> ''Article 8'' reports a study on emotion-specific autonomic nervous system activity in elderly people. The elderly participants followed muscle-by-muscle instructions for constructing facial prototypes of emotional expressions and relived past emotional experiences.<ref>https://www.ncbi.nlm.nih.gov/pubmed/2029364 Levenson, R. W., Carstensen, L. L., Friesen, W. V., & Ekman, P. (1991). Emotion, physiology, and expression in old age. Psychology and Aging, 6(1), 28-35.</ref> Reporting on two studies, ''Article 9'' shows that elderly people have difficulty differentiating anger and sadness when shown faces. The two studies provide methods for measuring the ability to recognize emotions in humans.<ref>https://www.tandfonline.com/doi/abs/10.1080/00207450490270901 Susan Sullivan & Ted Ruffman (2004) Emotion recognition deficits in the elderly, International Journal of Neuroscience, 403-432.</ref> Lastly, a definition of several emotions and their relation to physical responses (breathing, facial expressions, body language, etc.) is needed. ''Article 10'' describes how a computer can track and analyse a face to compute the emotion the subject is showing. This is useful as insight into how input data can be gathered, analysed and used for mimicking or mirroring by a robot.<ref>https://ieeexplore.ieee.org/document/911197 R. Cowie et al., "Emotion recognition in human-computer interaction," in IEEE Signal Processing Magazine, 32-80, Jan 2001.</ref>


'''Assistive technology for elderly'''

This category focuses mostly on robotic care for elderly people living at home. Articles such as ''article 15'' <ref>https://www.researchgate.net/publication/3450481_Living_With_Seal_Robots_-_Its_Sociopsychological_and_Physiological_Influences_on_the_Elderly_at_a_Care_House Wada, K & Shibata, Takanori. (2007). Living With Seal Robots - Its Sociopsychological and Physiological Influences on the Elderly at a Care House. Robotics, 972-980.</ref> and ''article 11'' <ref>https://www.researchgate.net/publication/229058790_Assistive_social_robots_in_elderly_care_A_review Broekens, Joost & Heerink, Marcel & Rosendal, Henk. (2009). Assistive social robots in elderly care: A review. Gerontechnology, 94-103.</ref> explore the effects of robots on the social, psychological and physiological levels, providing a basis for knowing what results certain actions yield.

''Article 14'' <ref>https://www.researchgate.net/publication/226452328_Granny_and_the_robots_Ethical_issues_in_robot_care_for_the_elderly Sharkey, Amanda & Sharkey, Noel. (2010). Granny and the robots: Ethical issues in robot care for the elderly. Ethics and Information Technology. 27-40.</ref> explores six ethical issues related to deploying robots in elderly care, and ''article 13'' <ref>https://ieeexplore.ieee.org/document/5751987 A. Sharkey and N. Sharkey, "Children, the Elderly, and Interactive Robots," in IEEE Robotics & Automation Magazine, vol. 18, no. 1, pp. 32-38, March 2011. doi: 10.1109/MRA.2010.940151</ref> provides further insight by comparing children, the elderly and robots and their roles in relationships with humans.

''Article 12'' <ref>https://www.ncbi.nlm.nih.gov/pubmed/11742772 F. G. Miskelly, Assistive technology in elderly care. Department of Medicine for the Elderly</ref> looks at the available technologies for tracking elderly people at home, keeping them safe and alerting others when needed.

<br/><br/>

'''Dementia and Alzheimer's'''

Closely related to old age are dementia and Alzheimer's disease. These diseases affect the patient's brain, making them forgetful or unable to complete day-to-day tasks without help. ''Article 16'' <ref>https://alzres.biomedcentral.com/articles/10.1186/alzrt143 M. Wortmann, Alzheimer's Research & Therapy (2012)</ref> elaborates on this, explains the impact of these diseases and proposes a possible framework to counter them. ''Article 17'' <ref>https://academic.oup.com/biomedgerontology/article/59/1/M83/533605 The Journals of Gerontology: Series A, Volume 59, Issue 1, 1 January 2004, Pages M83–M85</ref> discusses whether an entertainment robot is useful in the care of elderly people with dementia.

<!--

Article 11: Assistive social robots in elderly care a review

Different social robots are discussed. Research is performed to test positive reactions of elderly to assistive social robots. The positive effects influence mood, loneliness and social connections to others.

<ref>https://www.researchgate.net/publication/3450481_Living_With_Seal_Robots_-_Its_Sociopsychological_and_Physiological_Influences_on_the_Elderly_at_a_Care_House</ref>

'''Dementia and Alzheimers'''

Article 16: Dementia a global health priority

Alzheimer’s disease is explained with its impacts. A framework to counter Alzheimer and dementia is elaborated.

Effectiveness of an entertainment robot in occupational robot is discussed. Robots as therapeutic tools are compared and discussed in order to comfort elderly with dementia.

<ref>https://academic.oup.com/biomedgerontology/article/59/1/M83/533605</ref>

-->

'''Measuring tools'''

Here, papers are collected that aid the design of a robotic system. ''Article 18'' <ref>https://dl.acm.org/citation.cfm?id=1358952&dl=ACM&coll=DL N. Sadat Shami, Jeffrey T. Hancock, Christian Peter, Michael Muller, and Regan Mandryk. 2008. Measuring affect in hci: going beyond the individual. In CHI '08 Extended Abstracts on Human Factors in Computing Systems (CHI EA '08). ACM, New York, NY, USA, 3901-3904. DOI: https://doi.org/10.1145/1358628.1358952</ref> discusses some of the difficulties in measuring affect in Human-Computer Interaction (HCI). For designing a system that is capable of expressing emotion, ''article 19''<ref>https://ieeexplore.ieee.org/document/1642261 H. Shibata, M. Kanoh, S. Kato and H. Itoh, "A system for converting robot 'emotion' into facial expressions," Proceedings 2006 IEEE International Conference on Robotics and Automation, 2006. ICRA 2006., Orlando, FL, 2006, pp. 3660-3665. doi: 10.1109/ROBOT.2006.1642261</ref> and ''article 20'' <ref>https://ieeexplore.ieee.org/document/4755969 M. Zecca, N. Endo, S. Momoki, Kazuko Itoh and Atsuo Takanishi, "Design of the humanoid robot KOBIAN - preliminary analysis of facial and whole body emotion expression capabilities-," Humanoids 2008 - 8th IEEE-RAS International Conference on Humanoid Robots, Daejeon, 2008, pp. 487-492. doi: 10.1109/ICHR.2008.4755969</ref> show recent developments in designing a ‘face’ or whole body that expresses emotion to a user.

'''Miscellaneous'''

In the study of emotions, models are often useful. ''Article 21'' elaborates on social aspects of emotions, together with their implementation in human-computer interaction. A model is tested, and accordingly a system is designed with the goal of providing a means of affective input (for example, emoticons).<ref>https://www.researchgate.net/publication/235328873_Dominance_and_valence_A_two-factor_model_for_emotion_in_HCI Dryer, Christopher. (1998). Dominance and valence: A two-factor model for emotion in HCI.</ref>

''Article 22'' describes research on the emotional comfort of patients in a therapeutic setting. It provides an understanding of the role of personal control in recovery and of the aspects of the hospital environment that affect hospitalized patients' feelings of personal control.<ref>https://www.ncbi.nlm.nih.gov/pubmed/15812732 AM. Williams et al., 'Enhancing the therapeutic potential of hospital environments by increasing the personal control and emotional comfort of hospitalized patients.' (2005)</ref>

A more medical approach is taken in ''Article 23'', which provides evidence for altered functional responses in brain regions subserving emotional behaviour in elderly subjects, compared to younger subjects, during the perceptual processing of angry and fearful facial expressions.<ref>https://www.ncbi.nlm.nih.gov/pubmed/15936178 A. Tessitore et al., 'Functional changes in the activity of brain regions underlying emotion processing in the elderly.' (2005)</ref>

Statistics on the greying of the global population provide insight into the challenges associated with this trend. ''Article 24'' collects evidence for the greying of the world's population, with its causes and consequences, and identifies three challenges.<ref>https://academic.oup.com/ppar/article-abstract/17/4/12/1456824?redirectedFrom=fulltext Adele M. Hayutin; Graying of the Global Population, Public Policy & Aging Report, Volume 17, Issue 4, 1 September 2007, Pages 12–17</ref> ''Article 25'' indicates the importance of a healthy community for the efficiency and economic growth of that community. It sets out the goals of improving the healthcare system and how these improvements can be made.<ref>https://www.who.int/hrh/com-heeg/reports/en/ World Health Organisation, 'Working for health and growth: investing in the health workforce' (2016)</ref>


'''Characterizing the Design Space of Rendered Robot Faces'''<ref>https://www.researchgate.net/publication/323590718_Characterizing_the_Design_Space_of_Rendered_Robot_Faces Kalegina, Alisa & Schroeder, Grace & Allchin, Aidan & Berlin, Keara & Cakmak, Maya. (2018). Characterizing the Design Space of Rendered Robot Faces. 96-104. 10.1145/3171221.3171286</ref> | |||


A study on the design space of robots with a digital (screen-based) face. A summary can be found [https://drive.google.com/open?id=1w2BsjFuKtT7KNKa2nRXOACpIou6h9FAc here].

== Project setup ==

=== Approach ===

We conduct user tests based on a previous study on the same subject; our study, however, focuses on testing robot faces and emotional expression with seniors as the target group.

We carry out three different tests, combined with statistical analysis, to validate whether the robot faces we designed are suitable for application in care robots for the elderly.

=== Deliverables ===

At the end of this project period, these are the things we want to have completed to present:<br/>

* This wiki page

* A study report. We regard the report, rather than this wiki page, as the leading document: https://drive.google.com/file/d/10eOyrqrv0_qM8moqtG3LNrSrNkcRFg7s/view?usp=sharing

* The consent forms used: https://drive.google.com/open?id=1d5nMSqIM2S2ulaygWP1BQDxTVSX1sGNc

== User Test 1 ==

=== Introduction ===

When designing a robot face, the designer is confronted with a wide range of possible design choices. Previous studies have attempted, with some success, to analyze this design space. One study in particular, “Characterizing the Design Space of Rendered Robot Faces”, collects existing (non-fictional) screen-based face designs and surveys how the different characteristics of these faces influence their perception. Based on the survey results, the authors create a baseline face and vary individual details, and a second survey examines the influence of these variations. However, that study focuses on the general appearance of robot faces, whereas we want to research the portrayal of emotions. We draw inspiration from the methods used by our colleagues and further investigate the design choices one could or should make when designing a screen-based robot face. Below, the Method section first describes the structure of the interviews, after which we present the results, draw a conclusion and discuss possibilities for further research.

=== Method ===

This section describes the structure of the interviews. The interviews consisted of three parts: the introductory questions, test 1 and test 2. All parts were conducted in Dutch, as all participants are native Dutch speakers.

First, the elderly were asked to participate in our study on robot faces. Once they had agreed, we showed them the informed consent form and explained its contents to those who had trouble reading it due to poor eyesight. The informed consent form explained the study and stated that the interviews would be recorded.

After they had signed the informed consent form, we started the interview. Its purpose was to introduce the participants to the topic of robots and get them thinking about it. The questions used can be found here: https://drive.google.com/open?id=1DhAuVQ5AHjHgi6Yt51g0OR6pSJITHXHh

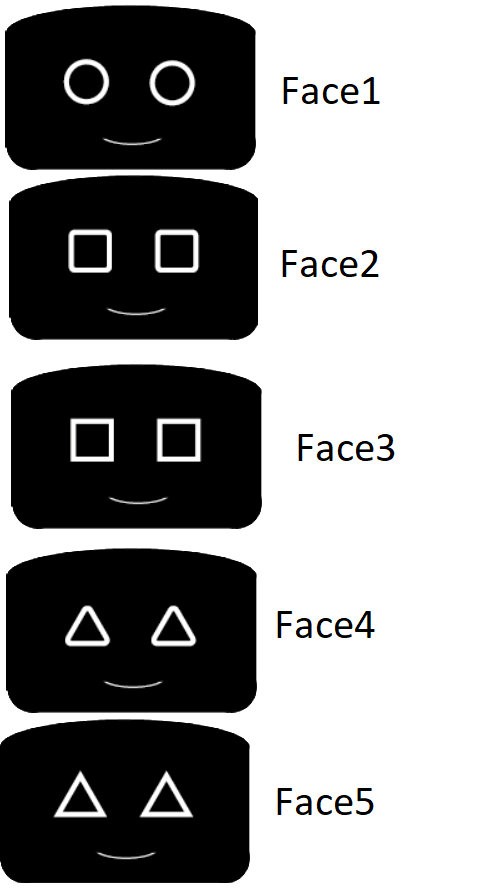

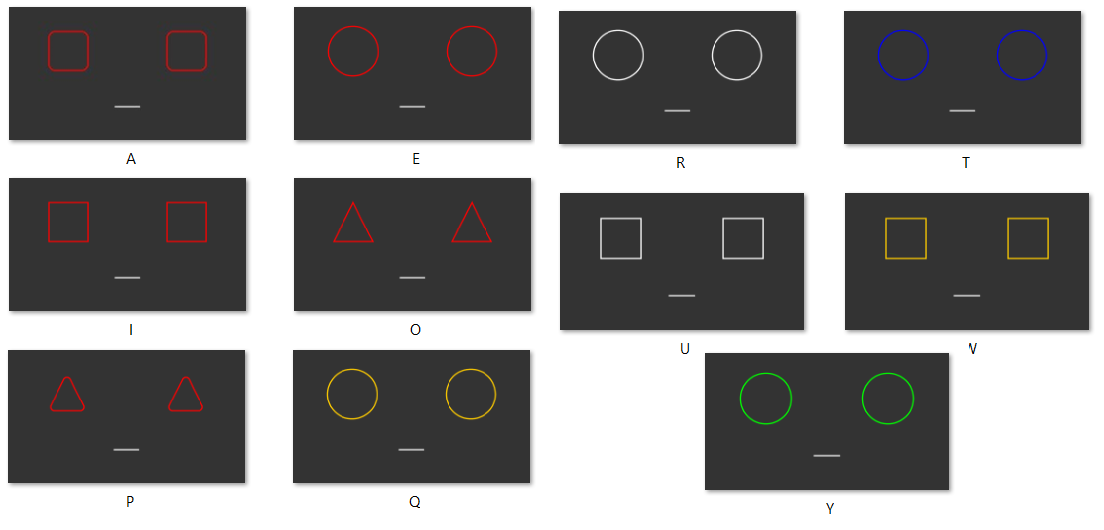

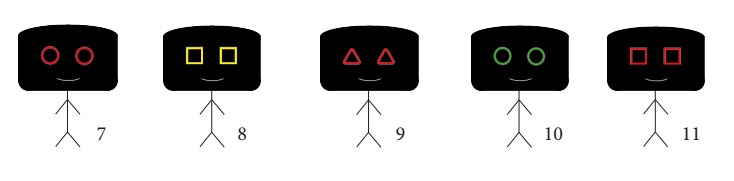

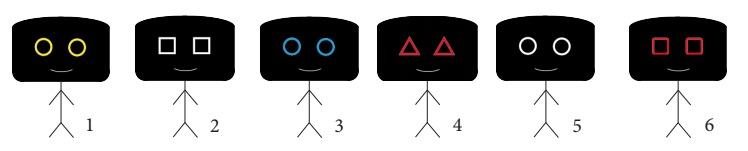

After the interview section, we moved on to the first test. Test 1 was designed to determine which emotions are associated with certain shapes of the robot's eyes. The participants were asked to sort the faces based on their first response to a given emotion. For example, for the emotion ‘anger’, the participant had to order the faces shown in figure 1 according to how strongly they associated each face with that emotion, ranking the five faces from ‘the most angry’ to ‘the least angry’. The emotions used were friendliness, anger, sadness, joy, surprise, dislike and fear.
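The ranking task can be turned into the 1-5 scores reported in the Results section by giving the face ranked first the highest score. The sketch below is a minimal illustration, assuming each answer is stored as a list of face labels ordered from ‘the most’ to ‘the least’ for one emotion; the function name `ranking_to_scores` and the labels `"A"` to `"E"` are hypothetical and not part of the study materials.

```python
def ranking_to_scores(ordering):
    """Convert an ordering of faces (most -> least) into scores.

    The first face gets the highest score (len(ordering), i.e. 5 for
    five faces) and the last face gets 1, matching the scale used in
    the results figures.
    """
    if len(set(ordering)) != len(ordering):
        raise ValueError("each face must appear exactly once")
    n = len(ordering)
    return {face: n - position for position, face in enumerate(ordering)}


# Hypothetical answer of one participant for the emotion 'anger'
anger_order = ["C", "A", "E", "B", "D"]
print(ranking_to_scores(anger_order))
# {'C': 5, 'A': 4, 'E': 3, 'B': 2, 'D': 1}
```

For five faces, the face ranked first receives 5 and the face ranked last receives 1, so a higher score always means a stronger association with the emotion asked.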

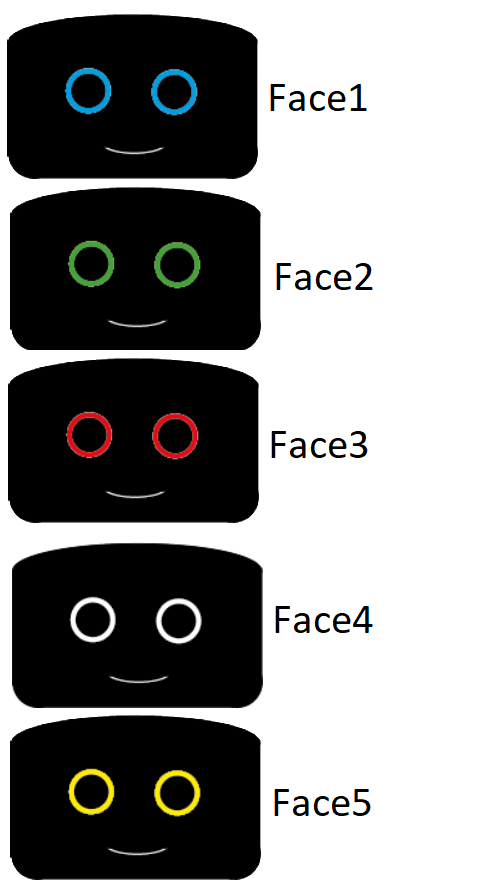

After completing test 1, the participant moved on to test 2. Test 2 had the same design, but used different eye colors with a fixed eye shape. The same emotions were asked, and the participants again had to order the faces from ‘the most’ to ‘the least’, based on their first response. The eye colors used can be found in figure 2.

After each test, we asked which face the participant liked most and least, and whether they had any additional comments. Afterwards, they were thanked for their participation.

[[File:FacesTest1.png|thumb|400px|left|alt=Alt text|Figure 1: the faces for test 1]][[File:FacesTest2.png|thumb|400px|right|alt=Alt text|Figure 2: the faces for test 2]]

=== Interview plan (first try, before feedback) ===

Interview Guide

'''INTRODUCTION''' - questions intended to get people thinking about the theme in advance.

* Could you trust a robot? What would you need in order to trust a robot?

* What do you expect from a robot?

* What positive/negative experiences have you had with robots so far? What would you like to see changed about robots?

* Would you want to make use of a robot? Why (not)?

* What would you like the face of a robot to look like?

* What kind of material would you want a robot to be made of?

'''ROBOTS IN CARE - QUESTIONS'''

* What, for you, is the most important aspect of receiving care?

* How would you feel about receiving this care from robots?

* Which tasks do you think a robot could perform in the context of (home) care?

* How important is it to you that contact with your caregiver is emotional?

* Which characteristics should a robot have to be, or come across as, trustworthy?

* Would you rather have a robot express its emotions in a pleasant/comfortable way or in a clear way?

'''TEST PLAN'''

'''Test 1'''

* Select various shapes (squares, triangles, circles), and variations of these shapes (tall, broad, small, large, etc.)

* Show these to people and ask them to rate them on a Likert scale of emotions

* Use 6 basic emotions: happiness, anger, sadness, surprise, disgust, fear

* Side note: movement/dynamic shapes are not included in this test, although the transitions between shapes may affect the implied emotion.

* Side note: no knowledge is gained about the expected, context-dependent reaction of an emotional interface (e.g. nuance in mirroring emotion; laughing when expected to be angry; etc.)

* Go through all questions with the elderly participants, and at the end of the questionnaire review the questions again to gain more insight:

- Which of the shapes would you most like to see in a face?

- Which of the shapes would you most like to see as eyes for a robot?

- Which of the shapes would you most like to see as a nose for a robot?

- Which of the shapes would you most like to see as a mouth for a robot?

The goal of this test is to find out which shapes are most suitable as a means of communicating emotions.

'''Test 2'''

* Select various robots displaying emotions from visual media.

* Rank the robots by the degree of human-likeness they display

* Ask questions about the various pictures:

* Which robot is preferred for tasks inside the participant's personal space?

* Which robot expresses the emotion angry/happy/etc. best or most clearly?

* Which robot did you find the most cheerful (across all emotions)?

* For various tasks, which robot would you pick to do this task?

- Vacuuming

- Making coffee

- Giving medication

- Feeding/cooking

- Help with showering

- Activities: painting, etc.

Side note: we are aware that there may be some bias due to the characters of the robots.

The goal of this test is to find a guideline for which features of a robot, including what degree of human-likeness, are desired in a robot that the elderly would use in their own environment.

== Interview guide ==

The link below leads to the interview guide we used, including the pictures and scales used for the two tests.

https://drive.google.com/open?id=1E91jO9-nM1NU0apAUHHymIyyJo98OSrf

=== Interview plan (second try, after feedback) ===

https://drive.google.com/open?id=1DhAuVQ5AHjHgi6Yt51g0OR6pSJITHXHh

=== Thematic Analysis ===

:*The recorded interviews [https://drive.google.com/open?id=1nBHtbsOHz-xiz1mxOtzvmSUyfA2LsXX1 Recordings]

:*The transcribed and coded interviews [https://drive.google.com/open?id=1lHo-z-DGVEmlly8Eo38tIuMAA8EV6tj7 Transcriptions]

:*The determined codes and themes [https://drive.google.com/open?id=17UOvepmRTjqSnlGFNmM6h5OFcuoLrw6k Codes and themes]

The results, conclusion and discussion are presented below, each in its own section.

== Results ==

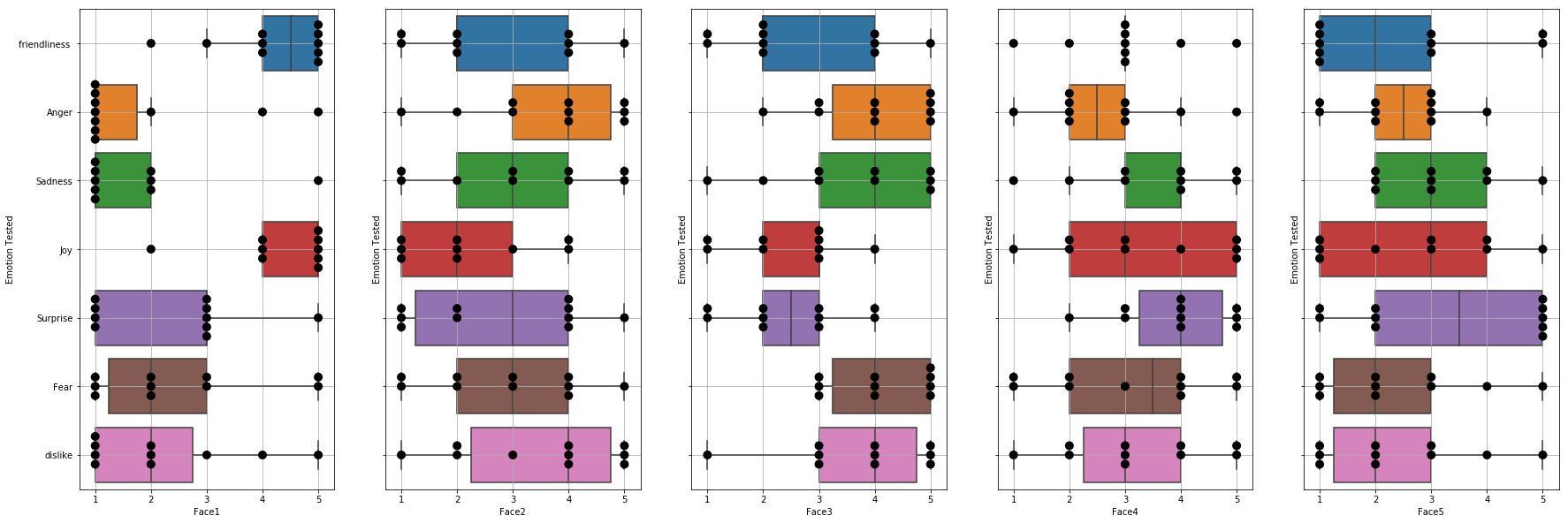

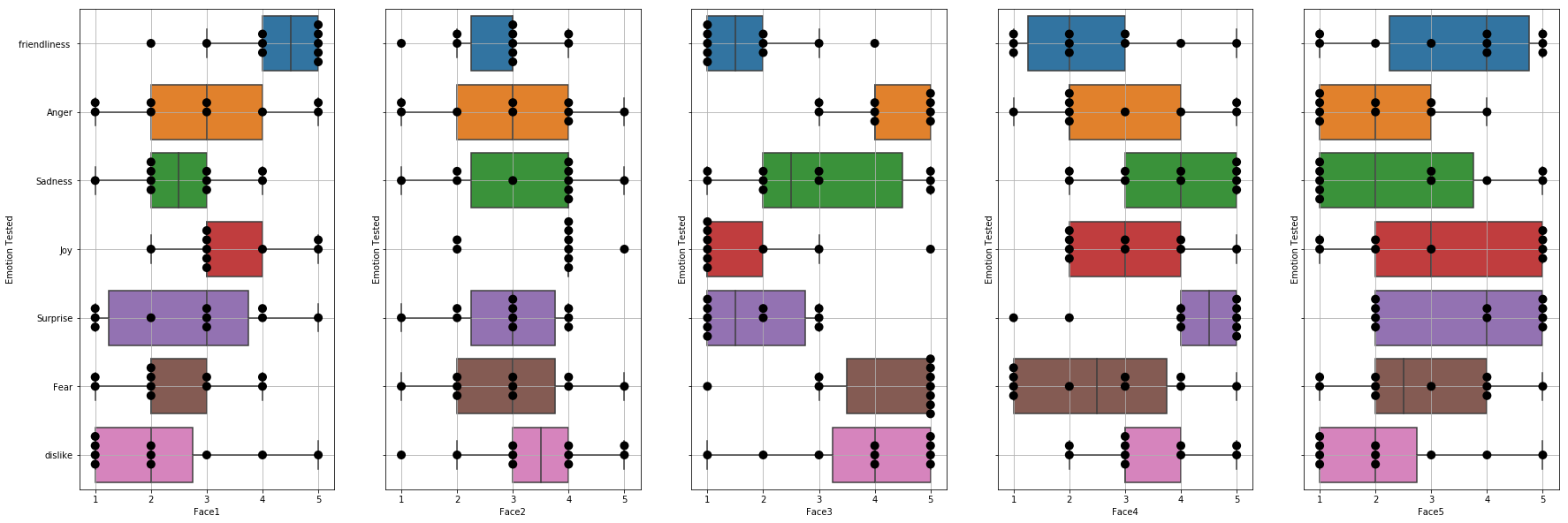

To obtain and visualize results, both STATA and the scikit-learn where used. The results for tests 1 and 2 are given in figure 3 and figure 4 respectively. In these figures a score of 5 means that the face is “the most” friendly/angry/sad of all the faces shown to the interviewee. And a score of 1 means “the least”. The excel file with the raw results can be found in the following link: | |||

[https://drive.google.com/open?id=1fgnQ16IMMsOcNJWlpLSjyXYG948wUYq1 Raw results] | |||

[[File:Test1ResultsEnglish.png|thumb|1000px|left|alt=Alt text|Figure 3: The results of test 1, for all tested faces, on the y axis the assessed emotions and on the x axis their relative responses (5 represents "the most" and 1 represents "the least".]][[File:Test2ResultsEnglish.png|thumb|1000px|left|alt=Alt text|Figure 4: The results of test 2, for all tested faces, on the y axis the assessed emotions and on the x axis their relative responses (5 represents "the most" and 1 represents "the least".]] | |||

====Normality==== | |||

With STATA the normality was checked, for each combination of face and emotion. The normality was fine for all faces and emotions except for; | |||

:*face 5, the normality was rejected for the emotion of surprise; | |||

:*face 8 for the emotions joy and fear. | |||

Since the normality was pretty close to being supported we decided to keep face 5 and face 8 in the dataset. | |||
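The page does not say which normality test STATA ran. As a dependency-free illustration only, the sketch below swaps in the Jarque-Bera test, which checks whether the skewness and kurtosis of one face/emotion column match those of a normal distribution. The function name `jarque_bera` and the example scores are hypothetical. With samples as small as ours (about ten participants) this asymptotic test has little power, so it is a rough check and not a replacement for the test that was actually used.

```python
import math

def jarque_bera(scores):
    """Return (JB statistic, p-value) for a list of numeric scores.

    Uses population (biased) moment estimates for skewness and kurtosis.
    The p-value uses the chi-square distribution with 2 degrees of freedom,
    whose survival function is exactly exp(-x / 2).
    """
    n = len(scores)
    mu = sum(scores) / n
    m2 = sum((x - mu) ** 2 for x in scores) / n
    m3 = sum((x - mu) ** 3 for x in scores) / n
    m4 = sum((x - mu) ** 4 for x in scores) / n
    if m2 == 0:
        raise ValueError("all scores are identical; skewness/kurtosis undefined")
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2
    jb = n / 6 * (skew ** 2 + (kurt - 3) ** 2 / 4)
    return jb, math.exp(-jb / 2)

# Hypothetical 1-5 ranking scores for one face/emotion combination
jb, p = jarque_bera([1, 2, 3, 4, 5])
print(f"JB = {jb:.3f}, p = {p:.3f}")
```

A large p-value means normality is not rejected for that column; a small one (for example below 0.05) corresponds to the rejected cases listed above.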

====Regression==== | |||

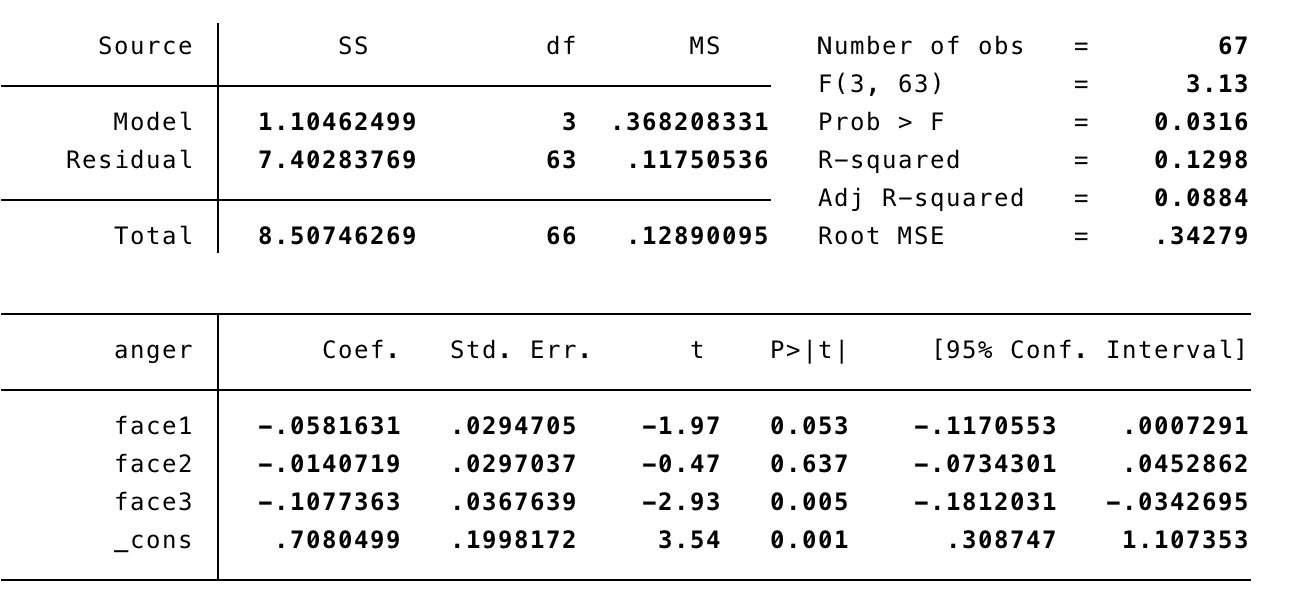

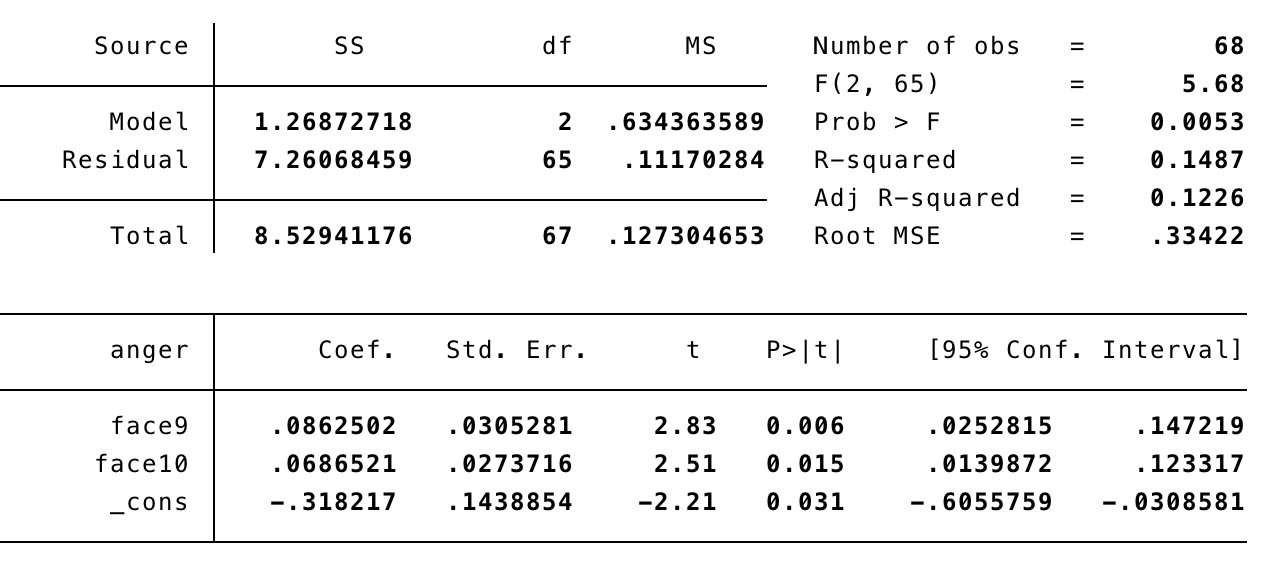

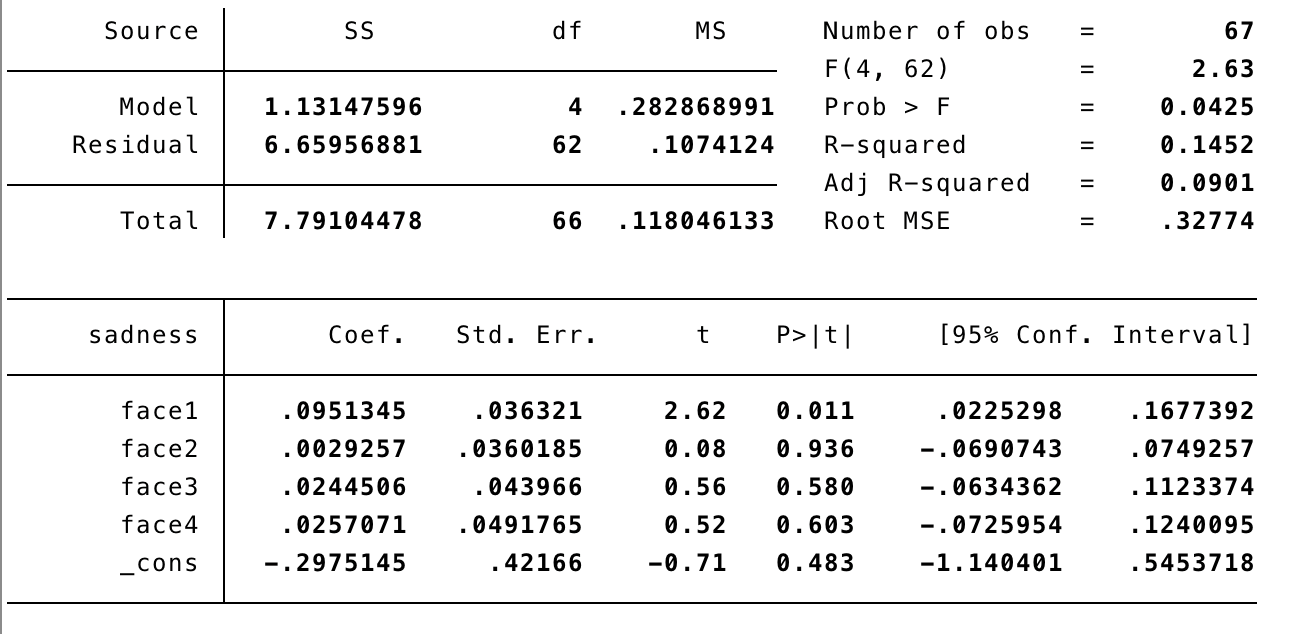

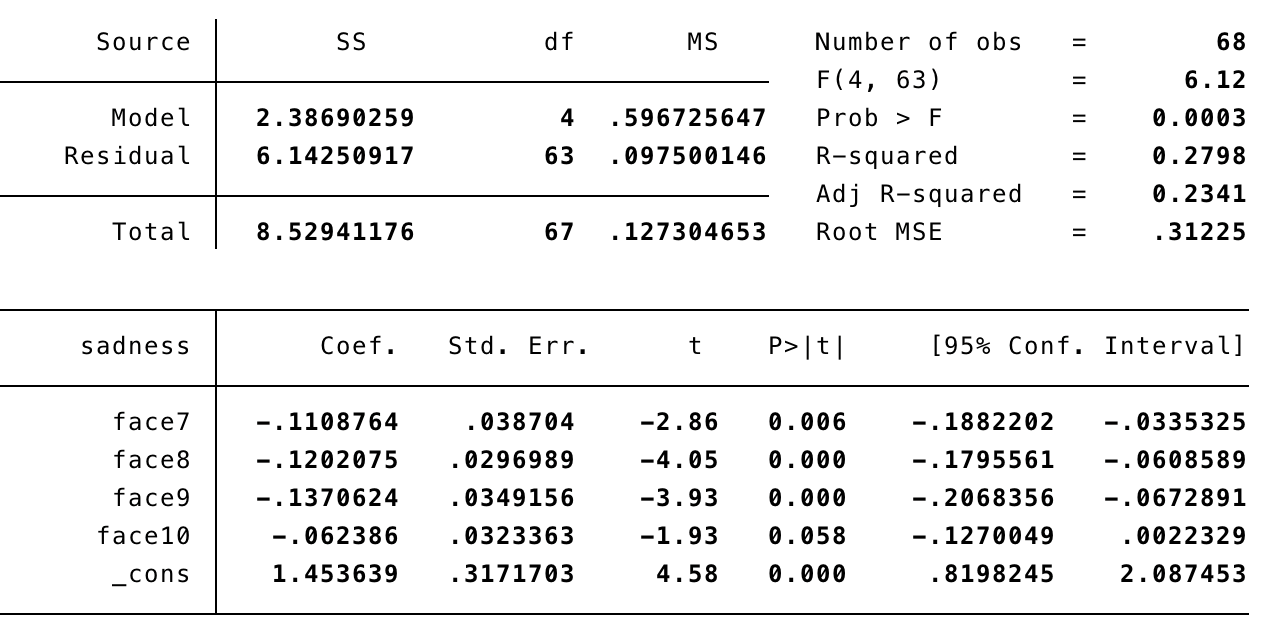

After checking the normality we ran regressions on all emotions. The used emotions are friendliness, anger, sadness, joy, surprise, dislike and fear. The used faces can be found in the method. The results of these regressions will be discussed in this section. | |||

In the explanations down below, the effect of the coefficient will be mentioned. To elaborate this; having a coefficient of +3 means that, if the unit of the emotion increases by 1, the unit of the variable with a coefficient of +3 increases with 3. | |||

However, the coefficients are very small in each regression, therefore they are almost negligible. | |||
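To make the interpretation of a coefficient concrete, the sketch below assumes that each regression modelled an emotion score on dummy variables for the faces, with one face left out as the baseline. The page does not spell out the model, so this coding, the function `dummy_regression` and the scores are hypothetical. For a single categorical predictor, ordinary least squares has a closed-form solution: the intercept is the mean score of the baseline face, and each coefficient is the difference between that face's mean and the baseline mean.

```python
from statistics import mean

def dummy_regression(scores_by_face, baseline):
    """OLS of score on face dummies (baseline face omitted).

    With a single categorical predictor the least-squares solution is closed
    form: the intercept is the baseline face's mean score and each dummy
    coefficient is that face's mean minus the baseline mean.
    """
    intercept = mean(scores_by_face[baseline])
    coefficients = {
        face: mean(scores) - intercept
        for face, scores in scores_by_face.items()
        if face != baseline
    }
    return intercept, coefficients

# Hypothetical friendliness scores (1 = least, 5 = most) from three participants
scores = {
    "face1": [5, 4, 5],
    "face2": [1, 2, 3],
    "face3": [3, 3, 3],
}
intercept, coefs = dummy_regression(scores, baseline="face2")
print(intercept, coefs)
```

In this made-up example face 1 gets a coefficient of about +2.67, meaning it was rated on average 2.67 points more friendly than the baseline face 2.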

First, the results of the regressions on the faces and emotions of test 1 are assessed, followed by those of test 2.

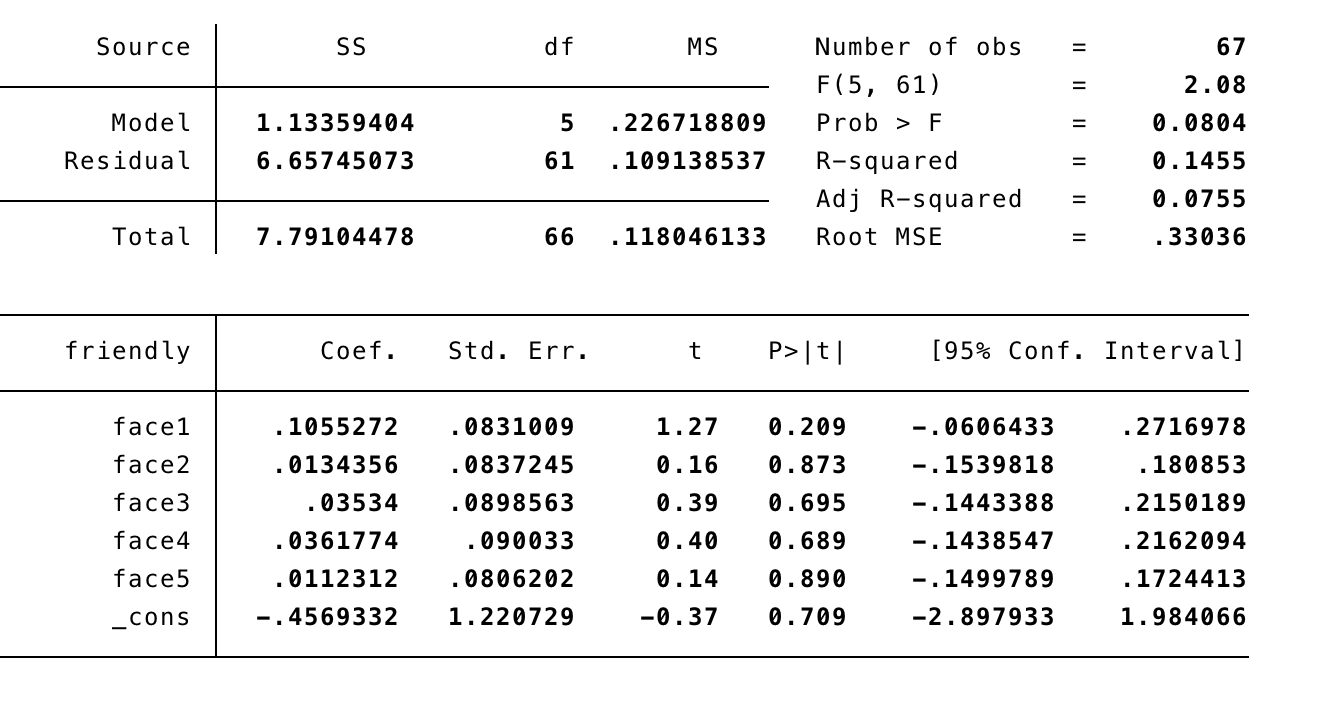

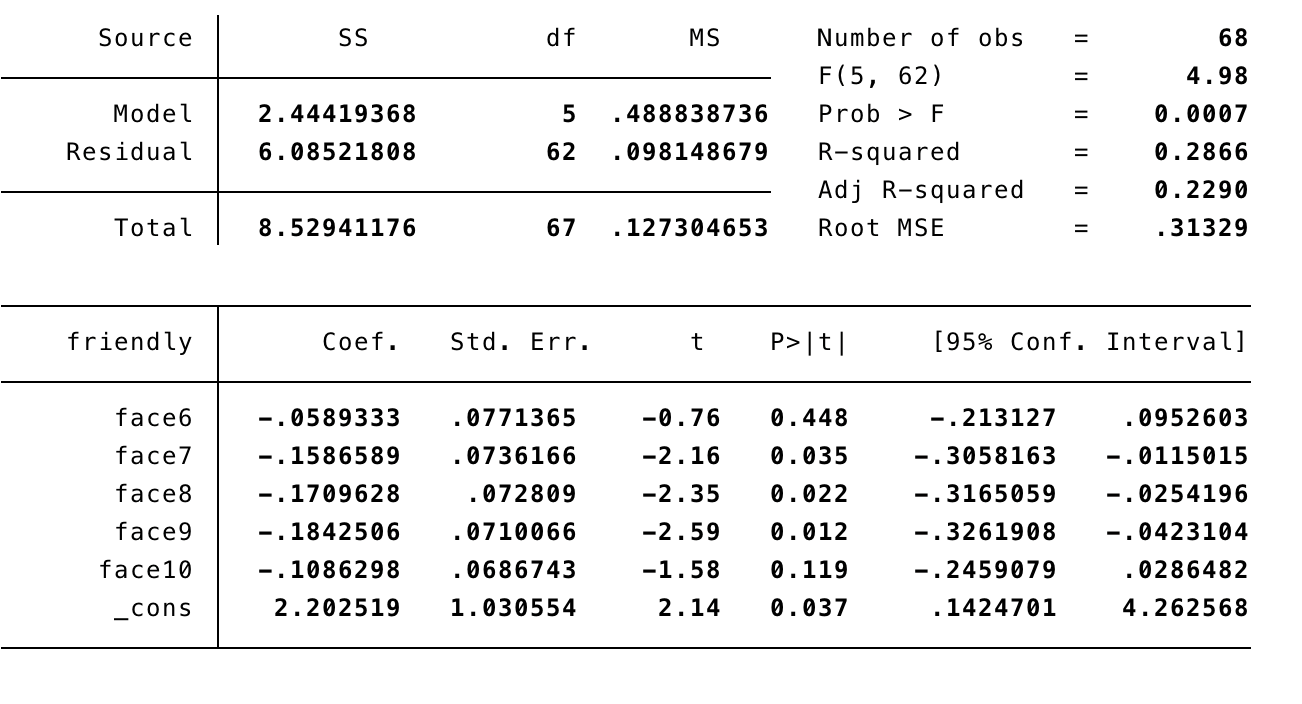

[[File:FriendlyTest1.png|thumb|400px|left|alt=Alt text|Figure 5: the regression of the emotion friendliness of test 1]] [[File:FriendlyTest2.png|thumb|400px|right|alt=Alt text|Figure 13: the regression of the emotion friendliness of test 2]]

[[File:FriendlyTest1b.png|thumb|400px|left|alt=Alt text|Figure 6: the regression of the emotion friendliness of test 1, with an improved model]] [[File:FriendlyTest2b.png|thumb|400px|right|alt=Alt text|Figure 14: the regression of the emotion friendliness of test 2, with an improved model]]

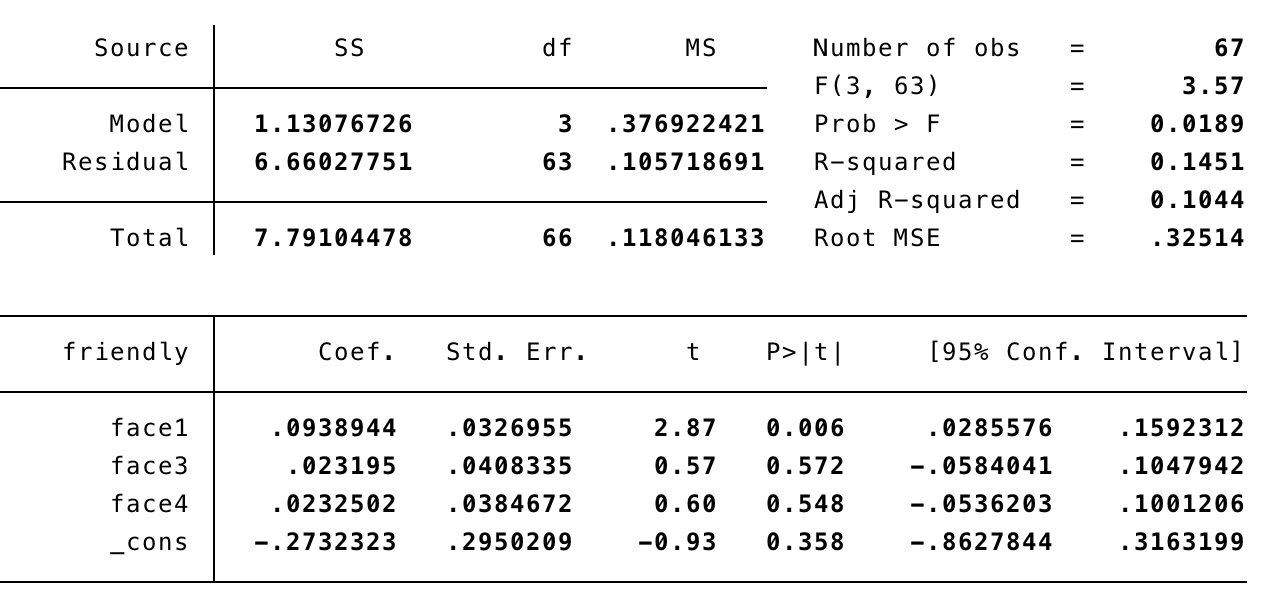

[[File:AngerTest1b.png|thumb|400px|left|alt=Alt text|Figure 7: the regression of the emotion anger of test 1, with an improved model]] [[File:AngerTest2b.png|thumb|400px|right|alt=Alt text|Figure 15: the regression of the emotion anger of test 2, with an improved model]]

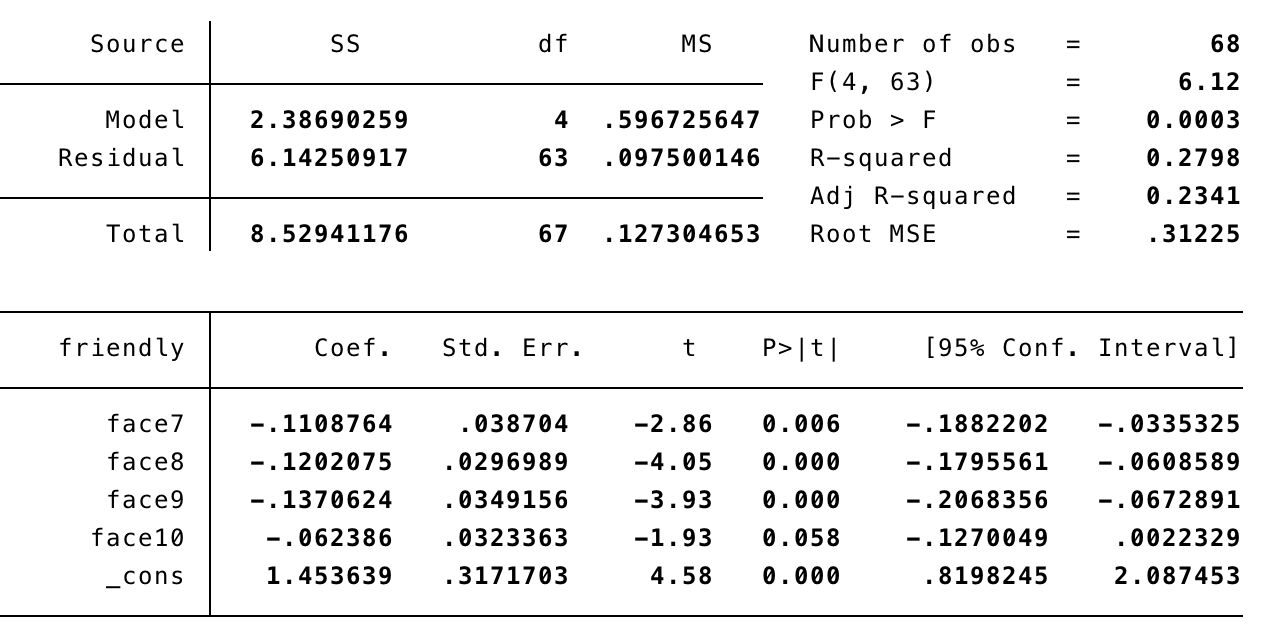

[[File:SadnessTest1b.png|thumb|400px|left|alt=Alt text|Figure 8: the regression of the emotion sadness of test 1, with an improved model]] [[File:SadnessTest2b.png|thumb|400px|right|alt=Alt text|Figure 16: the regression of the emotion sadness of test 2, with an improved model]]

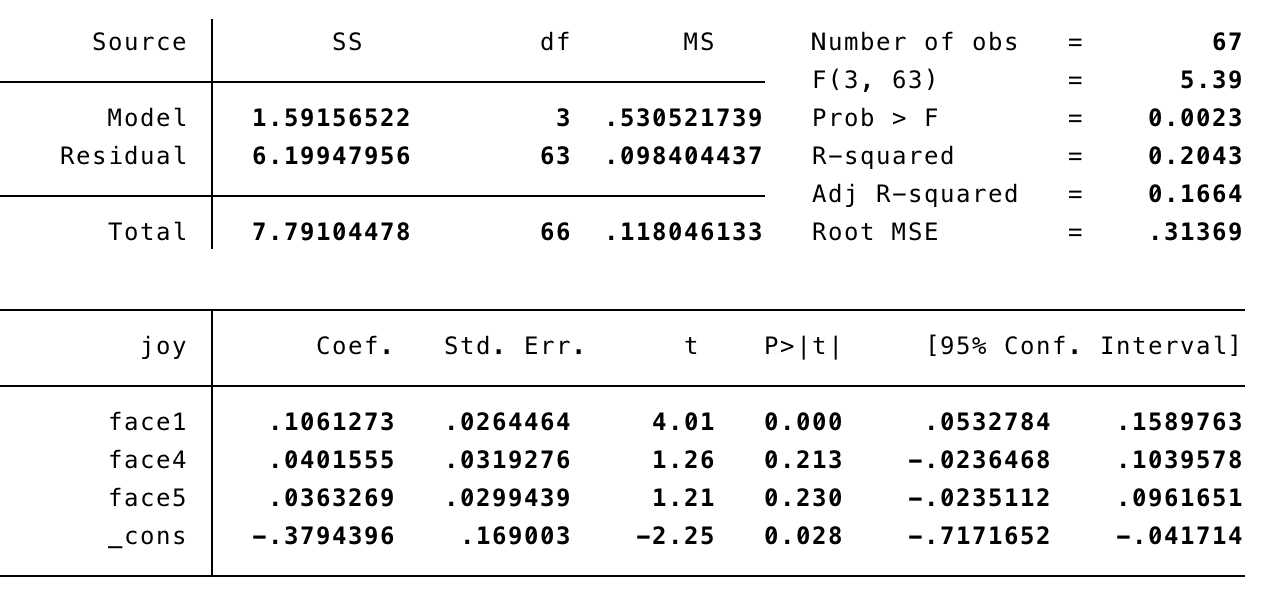

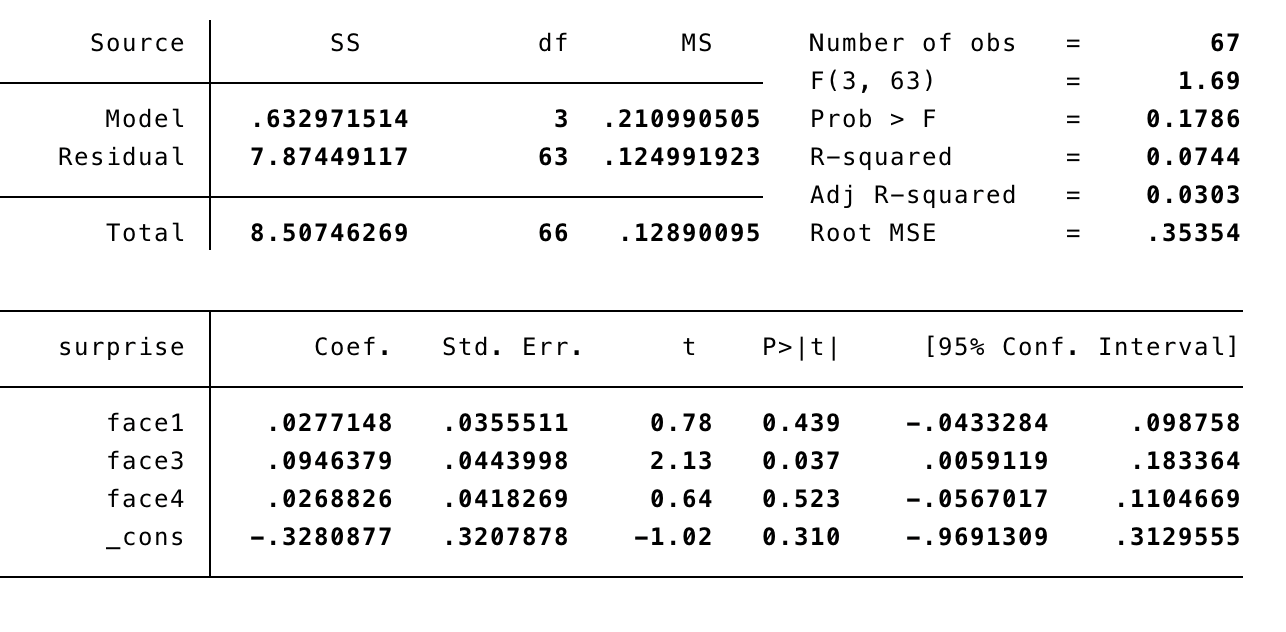

[[File:JoyTest1b.png|thumb|400px|left|alt=Alt text|Figure 9: the regression of the emotion joy of test 1, with an improved model]] [[File:JoyTest2b.png|thumb|400px|right|alt=Alt text|Figure 17: the regression of the emotion joy of test 2, with an improved model]]

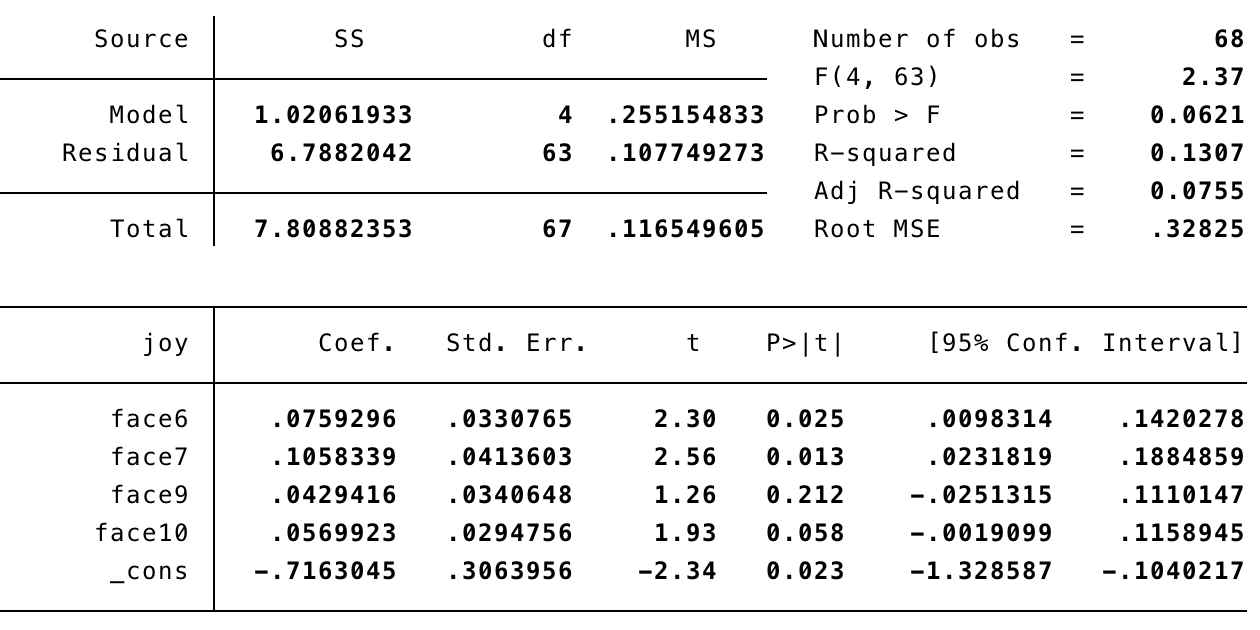

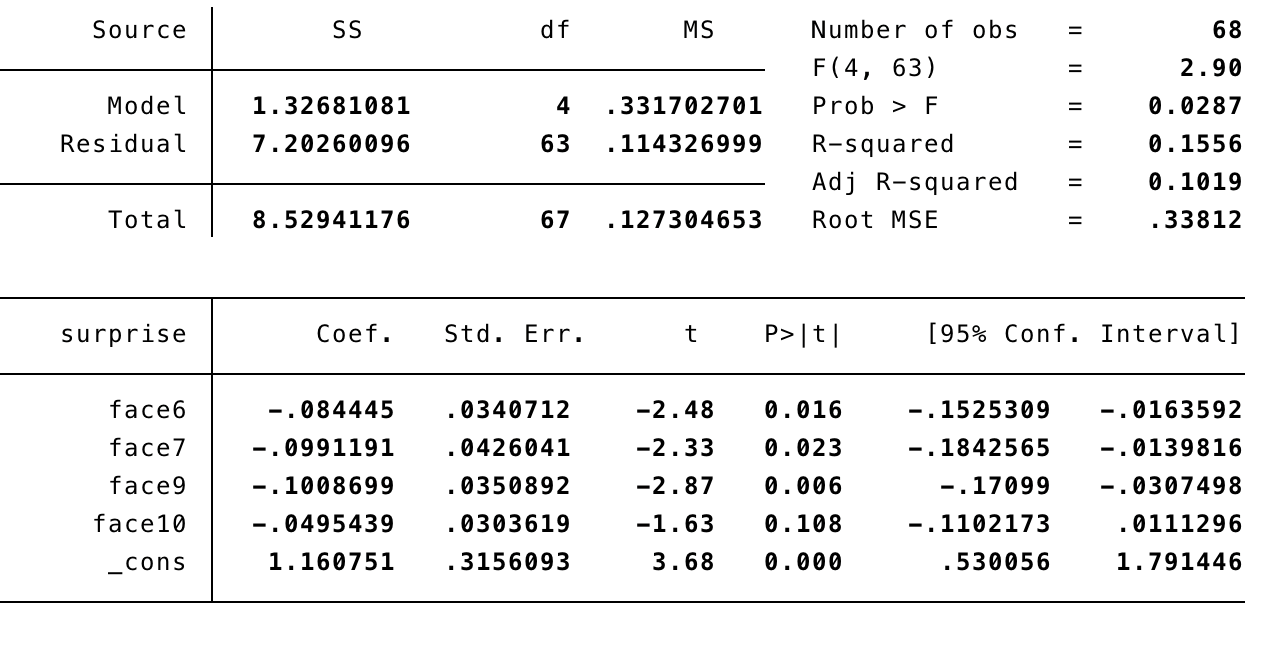

[[File:SupriseTest1b.png|thumb|400px|left|alt=Alt text|Figure 10: the regression of the emotion surprise of test 1, with an improved model]] [[File:SupriseTest2b.png|thumb|400px|right|alt=Alt text|Figure 18: the regression of the emotion surprise of test 2, with an improved model]]

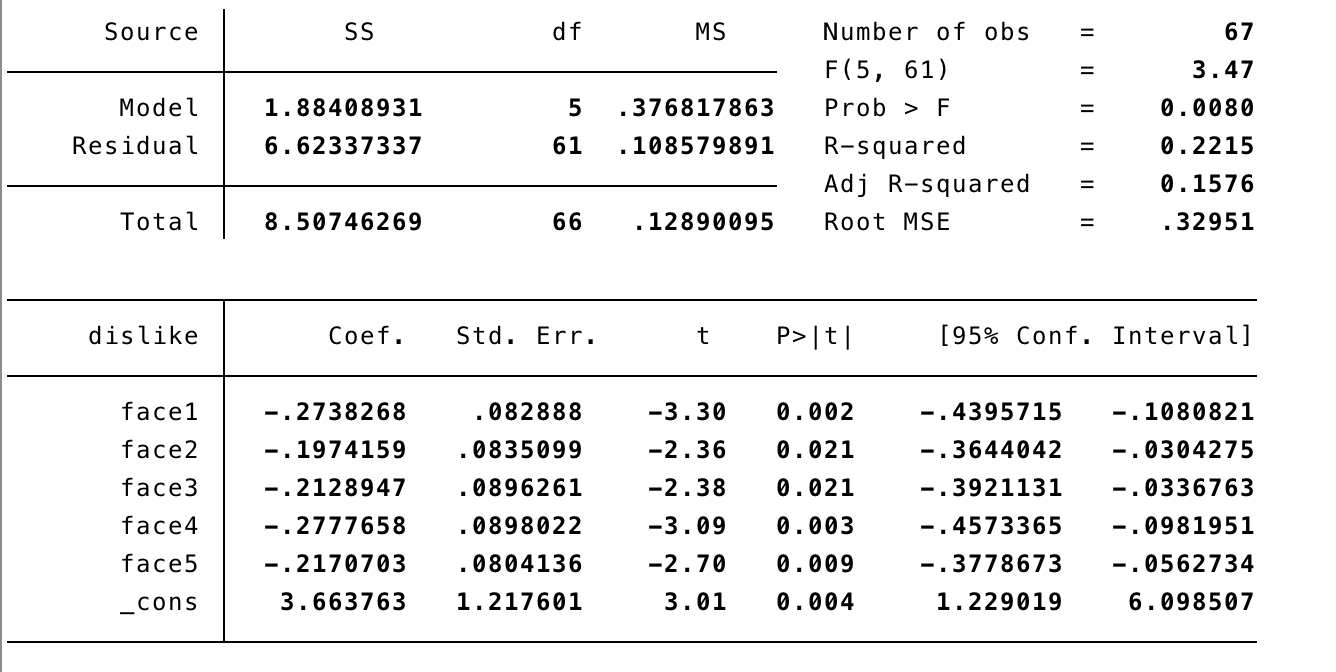

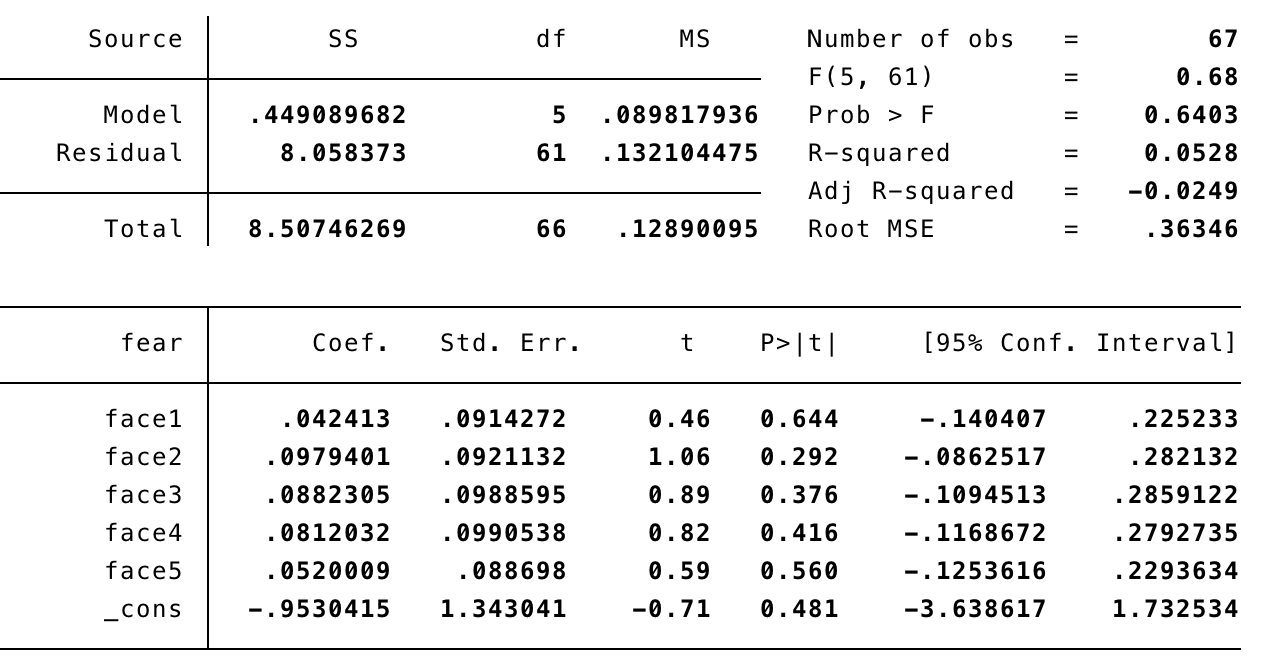

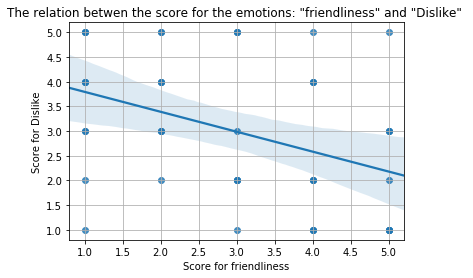

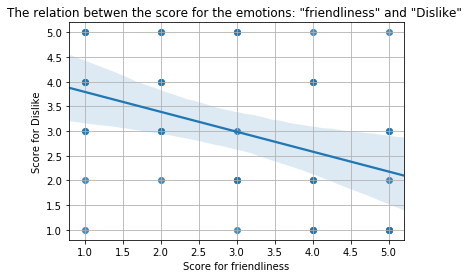

[[File:DislikeTest1.png|thumb|400px|left|alt=Alt text|Figure 11: the regression of the emotion dislike of test 1]] [[File:DislikeTest2b.png|thumb|400px|right|alt=Alt text|Figure 19: the regression of the emotion dislike of test 2, with an improved model]]

[[File:FearTest1.png|thumb|400px|left|alt=Alt text|Figure 12: the regression of the emotion fear of test 1]] [[File:FearTest2b.png|thumb|400px|right|alt=Alt text|Figure 20: the regression of the emotion fear of test 2, with an improved model]]

:*The results of the regression on the emotion of friendliness are given in figure 5. Since none of the variables were significant in this model, we improved the model further; the improved model is given in figure 6.

:*The results of the regression on the emotion of anger are given in figure 7. Since none of the variables were significant, we improved the model further, which is why faces 4 and 5 are missing.

:*The results of the regression on the emotion of sadness are given in figure 8. As with the previous regressions, none of the variables were significant, so the model was improved.

:*The results of the regression on the emotion of joy are given in figure 9, again with an improved model.

:*The results of the regression on the emotion of surprise are given in figure 10, again with an improved model.

:*The results of the regression on the emotion of dislike are given in figure 11; this model did not require any improvement.

:*The results of the regression on the emotion of fear are given in figure 12.

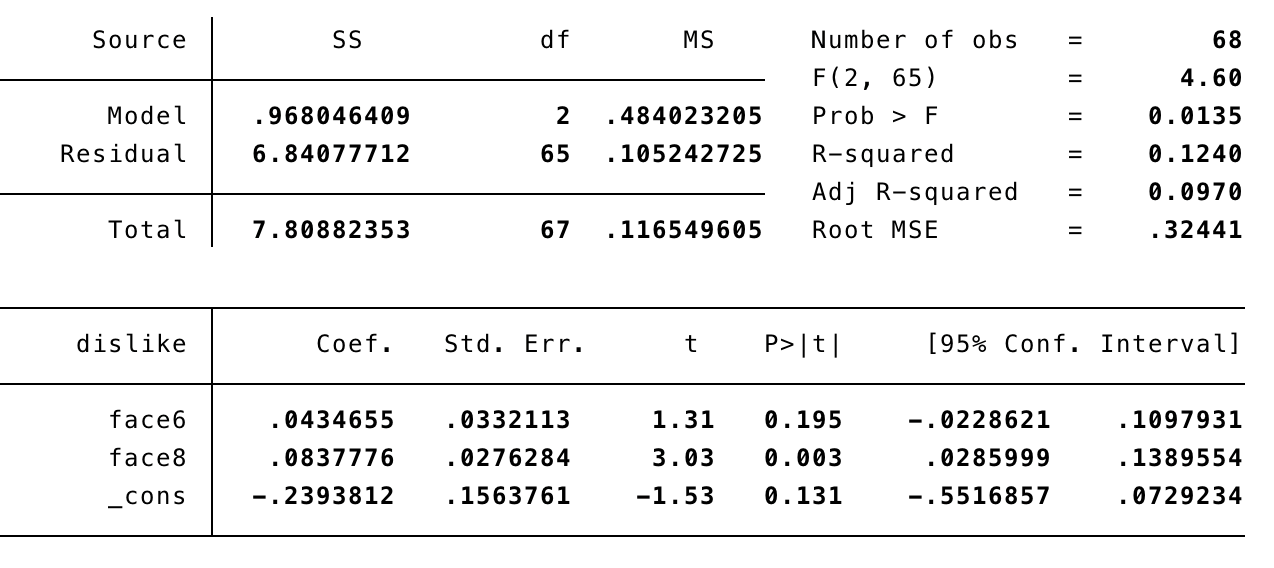

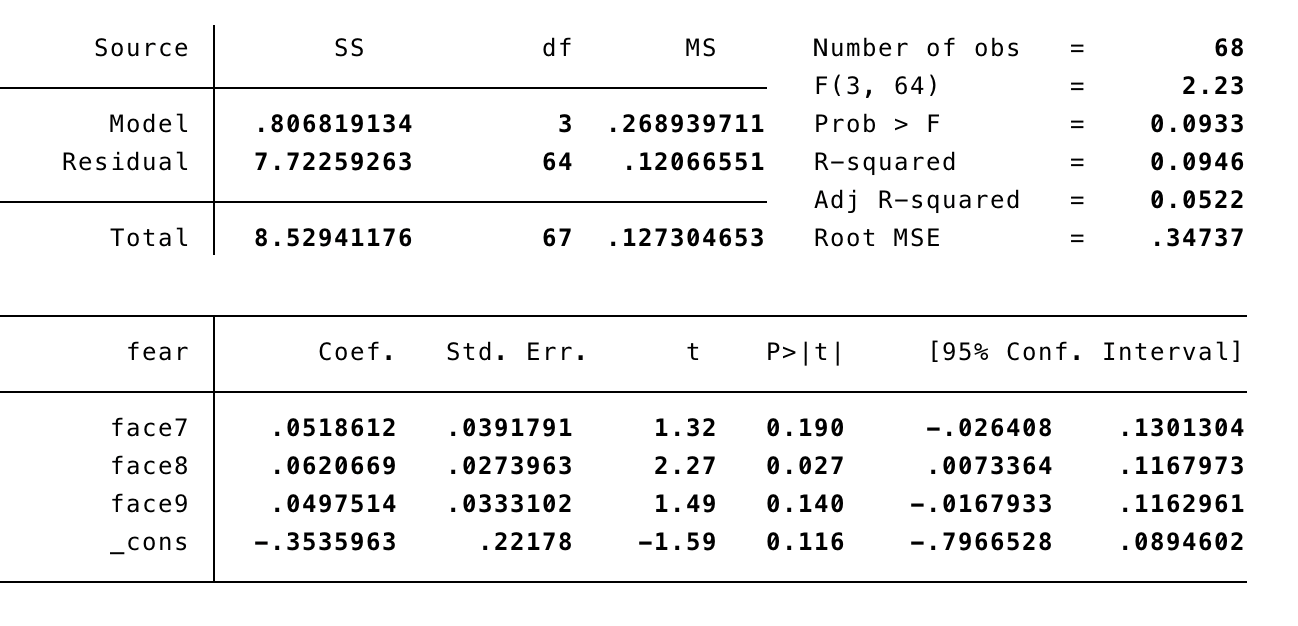

Next, the results of the regressions on the faces and emotions of test 2 are assessed:

:*The results of the regression on the emotion of friendliness are given in figure 13. Even though faces 7, 8 and 9 were significant in this model, we tried to improve the model anyway to see what would happen; the improved model is given in figure 14.

:*The results of the regression on the emotion of anger are given in figure 15. Since none of the variables were significant, we improved the model further, which is why faces 6, 7 and 8 are missing.

:*The results of the regression on the emotion of sadness are given in figure 16. We tried to improve the model further by removing face 6.

:*The results of the regression on the emotion of joy are given in figure 17. Since no variables were significant, the model was improved by removing face 8.

:*The results of the regression on the emotion of surprise, after improvement, are given in figure 18. Since no variables were significant, the model was improved by removing face 8.

:*The results of the regression on the emotion of dislike, after improvement, are given in figure 19. Since no variables were significant, the model was improved by removing face 8.

:*The results of the regression on the emotion of fear, after improvement, are given in figure 20.

From these regression tables one can draw the following conclusions.

For test 1:

:*Face 1 (round eyes) was judged as expressing friendliness, sadness and joy;

:*Face 3 (cubic eyes with sharp angles) was judged as expressing anger and surprise;

:*For the emotion of dislike, all faces were significant;

:*None of the faces were significant for the emotion of fear in this test, which means that the elderly did not judge any particular face as expressing fear.

For test 2:

:*The assignment of emotions to faces was less distinct than in test 1. Here, every face is identified with more than one emotion, except for face 10;

:*Face 10 (rounded yellow eyes) is only matched with anger;

:*Face 6 (rounded blue eyes) expresses joy and surprise;

:*Face 7 (rounded green eyes) expresses friendliness, sadness, joy and surprise;

:*Face 8 (rounded red eyes) expresses, according to the elderly, friendliness, sadness, dislike and fear;

:*Face 9 (rounded white eyes) expresses friendliness, anger, sadness and surprise. For surprise, face 9 was highly significant compared to the other faces.

We did some additional testing on the emotions that are matched with multiple faces, because we needed more conclusive answers to be able to continue with our validation. This additional testing consisted of bootstrapped regressions. Bootstrapping the regressions above shows that for the emotion of friendliness, the face with the red eyes is the most significant. For the emotions of sadness and surprise, the face with the white eyes is the most significant.
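A bootstrap resamples the observed data with replacement many times and re-estimates the quantity of interest on each resample; the spread of those estimates gives a confidence interval that does not rely on the normality assumption. The sketch below applies a percentile bootstrap to the difference in mean scores between two faces, resampling each face's scores separately. For a regression with only one face dummy, this difference equals the face coefficient. The function name `bootstrap_mean_diff`, the number of resamples and the example scores are assumptions for illustration, not the settings used in STATA.

```python
import random
from statistics import mean

def bootstrap_mean_diff(a, b, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for mean(a) - mean(b).

    Each face's scores are resampled separately (with replacement), and the
    difference in means is recomputed for every resample.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        resample_a = rng.choices(a, k=len(a))
        resample_b = rng.choices(b, k=len(b))
        diffs.append(mean(resample_a) - mean(resample_b))
    diffs.sort()
    lower = diffs[int(alpha / 2 * n_boot)]
    upper = diffs[int((1 - alpha / 2) * n_boot) - 1]
    return mean(a) - mean(b), (lower, upper)

# Hypothetical friendliness scores for the red-eyed face and another face
red_eyes = [5, 5, 4, 5, 4]
other_face = [1, 2, 1, 2, 3]
estimate, ci = bootstrap_mean_diff(red_eyes, other_face)
print(f"difference = {estimate:.2f}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
```

If the interval excludes zero, the difference between the two faces is unlikely to be due to the particular participants sampled.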

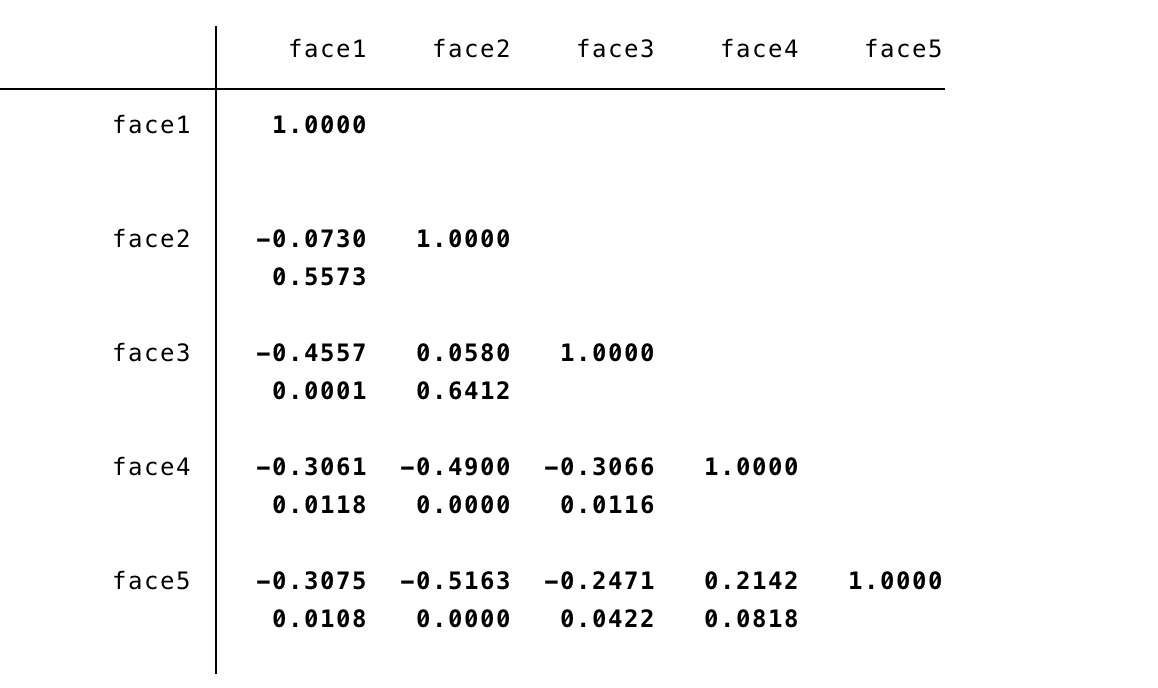

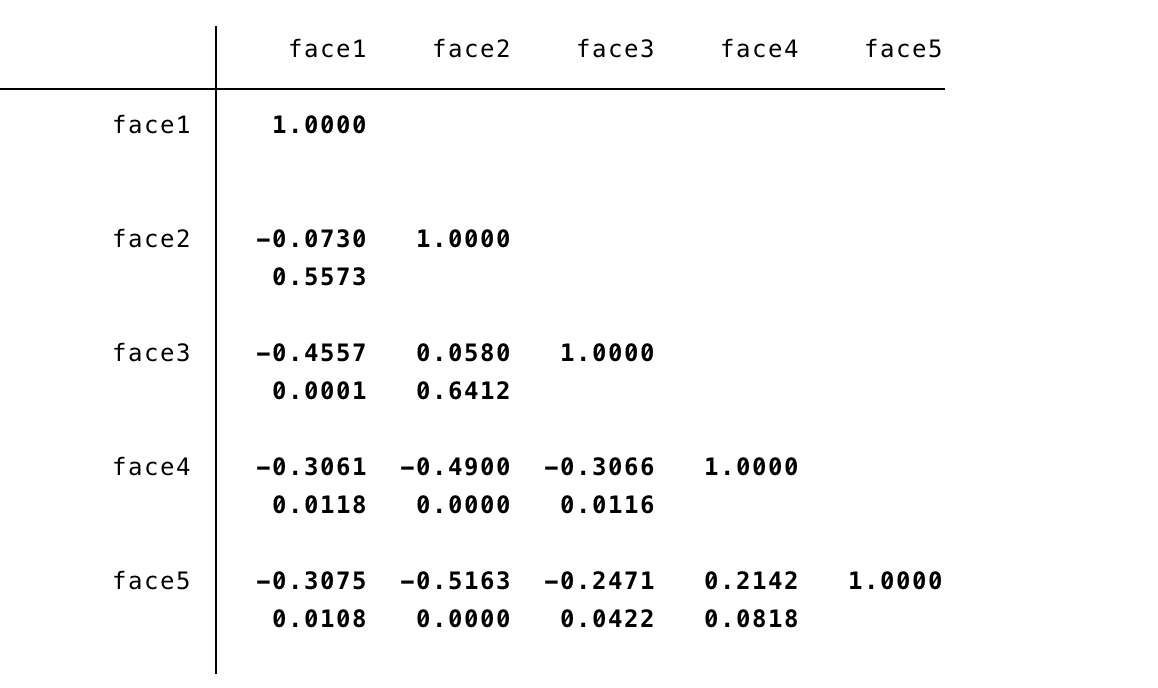

====Correlation==== | |||

[[File:CorrelationMatrixTest1.jpg|thumb|400px|left|alt=Alt text|Figure 21: the correlation test of test 1]] [[File:CorrelationMatrixTest1.jpg|thumb|400px|right|alt=Alt text|Figure 22: the correlation test of test 2]] | |||

[[File:VriendelijkheidAfschuwEnglish.png|thumb|400px|left|alt=Alt text|Figure 23: the regression plot of the emotions "Friendliness" and "Dislike"]] [[File:VriendelijkheidVreugdeEnglish.png|thumb|400px|middle|alt=Alt text|Figure 24: the regression plot of the emotions "Friendliness" and "Joy"]] | |||

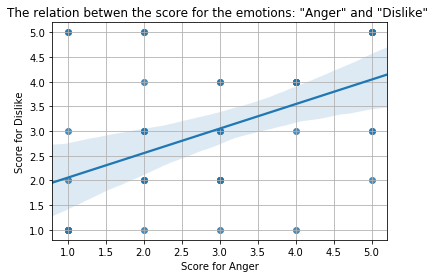

[[File:WoedeAfschuwEnglish.png|thumb|400px|right|alt=Alt text|Figure 25: the regression plot of the emotions "Anger" and "Dislike"]] | |||

Figures 21 and 22 show a correlation tests for both test 1 and test 2. From these figures one can conclude that faces 1 and 3 are not likely to be assessed with the same score (thus are usually not ordered next to each other). | |||

For the correlation between different emotions a regression is done as well, for the emotions which have the clearest correlation the regression plots are given in figure 23, 24 and 25 for the combination of emotions; | |||

:*From figure 23, one can derive that if an elderly assesses a face with a high score of the the emotion sadness this person will most likely asses the same face with a low score for the emotion of friendliness. So quite trivially, one can conclude that those emotions are each others opposites. | |||

:*From figure 24, one can clearly derive a relationship between the emotions friendliness and joy, that is, a face with a high score for the emotion of friendliness is most likely assessed with a high score for the emotion of joy as well. | |||

:*Idem dito for the emotions anger and dislike. | |||
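The emotion pairs above can be checked with a correlation coefficient. The sketch below computes Pearson's r between the scores two emotions received for the same faces from the same participants: a value near +1 matches the friendliness/joy pattern, and a value near -1 the pattern of opposite emotions. The function name `pearson` and the example data are hypothetical; since the scores are 1 to 5 rankings, a rank-based coefficient such as Spearman's rho would be a reasonable alternative.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined when one list is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical scores that five faces received for two emotions
friendliness = [5, 4, 2, 1, 3]
joy = [4, 5, 1, 2, 3]
print(round(pearson(friendliness, joy), 2))
```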

=== Conclusion ===

To summarize the statistical tests, we start with normality. Normality held for each variable except for two faces: face 5 (triangle eyes with sharp edges) and face 8 (red rounded eyes). However, both were close to normal and seemed fine to continue with, so we did.

Next are the regression tests. The first test performed with the elderly gave more distinctive results: face 1 (round eyes) came out as expressing friendliness, sadness and joy, and face 3 (cubic eyes with sharp angles) as expressing anger and surprise. From the results one can conclude that none of the faces is (relatively) related to fear.

The second test performed with the elderly yielded multiple emotions for almost every face. Face 6 (rounded blue eyes) expresses joy and surprise. Face 7 (rounded green eyes) expresses friendliness, sadness, joy and surprise. Face 8 (rounded red eyes) expresses, according to the elderly, friendliness, sadness, dislike and fear. Face 9 (rounded white eyes) expresses friendliness, anger, sadness and surprise. Only face 10 (rounded yellow eyes) was matched with a single emotion, namely anger.

Finally, a correlation test was performed. Here we learned that faces 1 (round eyes) and 3 (cubic eyes with sharp angles) are not likely to be given the same score (and thus usually do not compete for the same place in the ranking from 1 to 5). According to the tests, sadness and friendliness are each other's opposites, while friendliness and joy complement each other, as do anger and dislike.

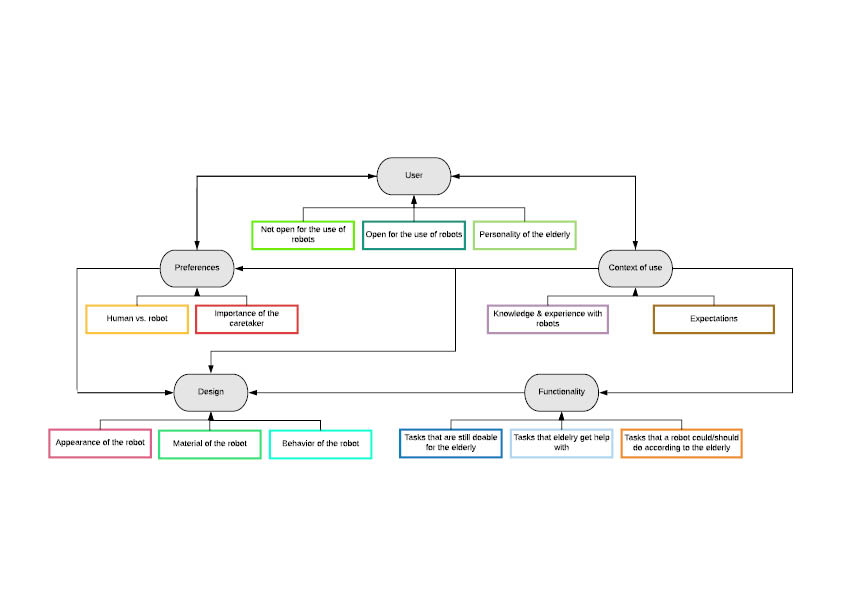

For the thematic analysis, the interviews were transcribed and coded. These codes were evaluated to create sub-themes and themes. As such, 5 main themes were created: “User”, “Preferences”, “Context of use”, “Design” and “Functionality”.

The theme “User” includes codes that describe the personalities of the elderly and the way they think about robots. The opinion of the elderly is important for designing an interface that will eventually fit this type of user. There are 3 sub-themes within this theme: “Not open to the use of robots”, “Open to the use of robots” and “Personality of elderly”. They are described below:

• Not open to the use of robots

Some elderly are not open to the use of robots. Most of the participants said that they did most of the chores themselves and that they did not need a robot to help them with that. Other participants had never had any experience with a robot and were convinced that they did not want one.

Example:

o Interviewer: “So you have never had any experience with it?”

Participant 001: “No, but I don't want any either.”

• Open to the use of robots

Some elderly are open to the use of robots and said that they would trust a robot. Moreover, they would make use of a robot if it were offered to them.

Example:

o Interviewer: “Did you feel that you could trust those robots?”

Participant 002: “Oh yes, yes. Yes. Yes. Yes.”

Interviewer: “Okay, and what made you think that?”

Participant 002: “Because they come across as sweet.”

• Personality of elderly

Most of the participants told us that they wanted to do everything on their own for as long as they could. Almost all the participants said that independence is an important part of their lives. Some of the elderly were no longer very mobile, while others still took part in social and sports activities.

Example:

o “I want to stay independent for as long as possible.” - Participant 001

“Preferences” aggregates the codes that relate to the preferences of the elderly when it comes to receiving care and help in the household. Some people said that caretakers would become more important if they needed more intensive care. Most of the people did not need much care, so they did not mind if a human caretaker were replaced by a robot. Others said that a robot in their house would be strange and scary, and pointed out that robots can make mistakes too. The theme “Preferences” has two sub-themes: “Human vs. robot” and “Importance of the caretaker”.

• Human vs. Robot

This sub-theme describes whether the elderly would prefer a human or a robot to help them with the household. Opinions on this subject were divided. Some people would prefer a robot so that they would not burden anyone with their care. Others would prefer a human.

Example:

o “Then I would rather choose a robot. Asking a fellow human being to run an errand for me, I think I would find that difficult.” – Participant 105

• Importance of the caretaker

This sub-theme describes how important a caretaker is for the elderly. Some of the participants were used to one caretaker and had a good connection with them. Others had never used a caretaker, or only used their help for some cleaning once a month.

Example:

o “But at the moment she is off, Ingrid. And then I get a different helper. And that is a bit strange, really. You just have to wait and see how things go.” – Participant 002

“Context of use” describes the codes about the knowledge, experience and expectations of robots. When designing an interface, it is important to understand the users and their environment, including what people expect of robots and what they already know about them. With this information you can design to meet these expectations, so that nobody will be disappointed with the final product. This theme has two sub-themes: “Knowledge & experience” and “Expectation”.

• Knowledge & experience with robots

Most of the participants said that they did not have much knowledge of or experience with robots. If they did know something about robots, it came from television. A few participants talked about the robot ‘Pepper’ and about hospital robots.

Example:

o Participant 102: “Yes, I wouldn't know what I would want to change about that, or where I should look for that. I wouldn't know.”

Interviewer: “Why is that? Do you think you don't know enough about robots for that?”

Participant 102: “Yes, I don't know much about them, no.”

• Expectation

Because of their lack of knowledge of and experience with robots, participants held many assumptions about robots. Some participants told us that robots are something for the future and that, because of their age, they would probably not live to see them. Others thought that robots could not go outside or that they would make a mess.

Example:

o The interviewer asked the participant why he/she does not need a robot. The participant answered: “They make far too much mess in the apartment.” - Participant 001

“Design” describes codes that deal with the design of the robot. This includes how the elderly think a robot should behave, look and feel. Because of the lack of experience, these answers were mostly based on their own imagination or on images they had seen on television. This theme has three sub-themes: “Appearance of the robot”, “Material of the robot” and “Behavior of the robot”.

• Appearance of the robot

Opinions about the appearance of a robot were divided. Some participants told us that a robot should have a face that can display emotions and that it should look humanoid. Other participants told us that they did not like a robot with a face, and that it should be a purely functional system without any human features. Some participants were very specific about what the face should look like.

Example:

o Interviewer: “And when you imagine it, what kind of face should it have?”

Participant 101: “A somewhat friendly face.”

Interviewer: “A friendly face?”

Participant 101: “Yes, a bit friendly. They can do that with the eyes, right?”

Interviewer: “So you find the eyes especially important?”

Participant 101: “Yes, yes, the eyes, right?”

Interviewer: “Okay, and why the eyes specifically? Is that because they carry meaning for you?”

Participant 101: “Yes, you can sort of see it from the eyes, yes.”

Interviewer: “Is that because there is simply a lot of emotion in them?”

Participant 101: “Yes, yes.”

• Material of the robot

Most of the participants wanted the robot to feel soft. They did not like wood, steel or other metals, but preferred plastic or doll-like material. One participant thought it was important to consider a sustainable material.

Example:

o Interviewer: “Suppose you had to touch the robot, what would you want to feel?”

Participant 102: “Yes, just plastic, so that it feels a bit soft. Steel is so cold, you know. It feels cold.”

• Behavior of the robot

Almost all the participants told us that it is important that a robot can show emotions. These emotions should be displayed clearly and in a human way. We noticed that some elderly prioritize norms and values when it comes to interaction between people.

Example:

o Interviewer: “And should it also show a clear emotion?”

Participant 003: “Yes, of course. It should do the same as a human, because that's what they want, isn't it?”

Interviewer: “So really a fully human robot?”

Participant 003: “Yes.”

“Functionality” includes codes that describe what the elderly can still do themselves, where they need help and what they think a robot could do for them. This information is important for design, because you need to know the requirements of a system for it to bring value to its users. This theme includes 3 sub-themes: “Tasks that are still doable for the elderly”, “Tasks that elderly get help with” and “Tasks that a robot could/should do according to the elderly”.

• Tasks that are still doable for the elderly

The participants wanted to do as much in the household as possible without help from anyone. They mentioned small tasks such as doing the dishes, washing clothes and making the bed.

Example:

o “I do all the rest myself: washing, ironing, cooking. [unintelligible]. That keeps you a bit busy. If you cook yourself, you also have to do the shopping and the dishes. Carrying things, I just did that a moment ago. That way you stay a bit active.” - Participant 004

• Tasks that elderly get help with

The participants told us that they get help with the big chores, like cleaning the bathroom and the kitchen.

Example:

o “With the... a bit with the bathroom. The big things. Bathroom, toilets. I have two toilets [unintelligible] and yes, the kitchen [unintelligible], no cooking is done there but it does need to be kept clean.” - Participant 003

• Tasks that a robot could/should do according to the elderly

Most of the participants were not very keen to tell us what a robot could do for them. They emphasized that they still do a lot by themselves and that they have a caretaker who can help them with big tasks; they only need help with tasks they can no longer do themselves. Most of the elderly expect a robot to be able to do everything you ask of it: give you attention, talk to you, pick up objects, clean a wall and help in the household.

Example:

o “Yes, then I would have it do things that I am no longer able to do myself. If, for example, something needs to be vacuumed, which I can no longer do, then I would say: well, you go and do that.” – Participant 004

This resulted in the following relational scheme:

[[File: Relational_scheme.jpg]]

=== Discussion ===

Looking back at the test we designed and executed, the majority went according to plan, but there were a couple of things that should be improved for a future test.<br/><br/>

First of all, the face design we used as a base for testing the connection between shape and color and the emotion of a robot's expression had a slight grin in all variations. At first we anticipated that this would not cause an issue because it was the same for each face, but during the test we noticed that at least one participant did not want to rate the proposed variations on anger or sadness because the face smiled. To solve this in a future test, a more neutral mouth shape should be used.<br/><br/>

Secondly, we collected data from only ten participants, who all lived in the same care facility. This means that the test population of our experiment, and therefore our analysis and conclusions, do not represent the target group on a real-world scale. To solve this, we would have to spread our group over different facilities while also enlarging the test population to make the results more meaningful. <br/><br/>

Thirdly, as we aimed to gather both quantitative and qualitative data, we asked participants to explain their reasoning behind the sorting of the faces in both tests. The problem we encountered was that the answers to these questions were generally superficial and did not provide much added value. As a result, the interviewers tended to deviate from the interview guide in order to still collect the qualitative part of the data.

Concerning the thematic analysis, refer to the relational scheme shown in the results section. The theme that connects to most of the other themes is “User”. Users have preferences about the care and help they receive, including whether they would rather receive care from a human or a robot (theme: “Preferences”). Furthermore, users have certain expectations based on their knowledge of and experience with robots (theme: “Context of use”). The preferences, context of use and personality of the users will eventually be used to design an interface for this type of user. Without this information a designer could not make an interface that brings value to the elderly. One piece of information is still missing: “Functionality”. Once it is known what the elderly can and cannot do themselves, a designer can adapt the design to the needs of the user.

The relational scheme shows the relations between the 5 themes and 13 sub-themes found in the thematic analysis. Each theme consists of a few sub-themes, and each sub-theme consists of a group of codes. The codes were created after transcribing each participant's interview. Some sub-themes are related to each other:

* The knowledge and experience of people can influence whether they are open to robots. If people do not know much about robots, they do not know what to expect and will choose the safest option, which is probably not using a robot.

* Moreover, the preferred appearance, material and behavior of the robot can depend on the knowledge and experience the elderly already have. When they have only heard of one type of robot, they will probably have that image in their head for every robot. This also shapes their expectations of a certain type of robot.

* The importance of the caretaker depends fully on which tasks the elderly are still able to do. The caretaker is most important when an elderly person depends on their care.

== Validation test 1 ==

=== Method ===

[[File:AllFacesUserTest2.png|thumb|1000px|left|alt=Alt text|Figure 26: All Faces of user test 2]]

The results of the user test were used to design robot eyes that display emotions. To validate these designs, a validation test was conducted. The validation test consisted of 2 parts: an introductory talk and a survey. The participants in this study are all residents of the elderly homes Vitalis De Hagen and Engelsbergen and are aged over 65. The seniors are native Dutch speakers; hence the study was conducted in Dutch.

The participants were recruited in the living room of Vitalis De Hagen and Engelsbergen, since that is where we had permission to perform this research. They were asked whether they would like to participate in a study on robot faces. When they agreed, they were asked to fill in an informed consent form that explained the study.

In addition, the informed consent form clarified that participants would remain anonymous and that the data would only be used for this particular study. When the participant had signed the informed consent form, a conversation about robots was started to make sure that the participant understood the subject of the study.<br/>

The participants were asked whether they knew about robots, what robots are capable of, and whether they had any experience with one. If they did not know about robots, this was explained to them so that they could still take part in our study.<br/>

Next, the survey was conducted. The survey consisted of 7 questions for each robot face, with 11 faces in total. The faces were based on the results of the user test and our hypotheses, and were programmed in Processing (documentation: [https://processing.org/ processing]).<br/> Each face was shown on a computer screen and the participants were asked to answer the following question: To what extent does this face match [emotion]? In place of [emotion], the following emotions were inserted: anger, friendliness, fear, sad, happy, disgust and surprise. Answers were given on a 5-point Likert scale, so the participant could answer absolutely not (5), not really (4), neutral (3), a little bit (2) or very much (1). The interview guide can be found here: [https://drive.google.com/file/d/1C7R90fzNW-QIHXLZNtcmgAfiPwuGDB9w/view?usp=sharing interview guide]. Afterwards, the participants were thanked for their time and participation.
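This scale runs in the opposite direction from the rankings of user test 1, where 5 meant "the most". A minimal recoding sketch, assuming the answers were stored as the numbers 1 to 5 listed above, reverses the scale so that a higher number means a stronger match between face and emotion. The helper `reverse_code` and the example answers are hypothetical.

```python
# Hypothetical recoding step: the survey scale ran from 1 ("very much")
# to 5 ("absolutely not"); reversing it makes higher numbers mean a stronger match.
LIKERT_MAX = 5

def reverse_code(answer, scale_max=LIKERT_MAX):
    """Map 1..scale_max onto scale_max..1."""
    if not 1 <= answer <= scale_max:
        raise ValueError(f"answer {answer} outside 1..{scale_max}")
    return scale_max + 1 - answer

responses = {"anger": 1, "friendliness": 4, "fear": 3}  # hypothetical answers for one face
recoded = {emotion: reverse_code(a) for emotion, a in responses.items()}
print(recoded)  # {'anger': 5, 'friendliness': 2, 'fear': 3}
```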

=== Results ===

We went to the same elderly homes as in user test 1 (Vitalis De Hagen and Engelsbergen) to conduct the experiment described in the Method section. We asked 7 people in total; of these 7 people, 3 told us that they could not recognize human-like faces. One cooperated enthusiastically, but her results were questionable: she rated every emotion as matching every face "very much". One person was clearly irritated after several faces, indicating survey fatigue. However, 3 interviewees cooperated well during the interviews.

The raw results are available at this link: [https://docs.google.com/spreadsheets/d/1Sx5BmDq5QIkvAFr-M794LMWcb6dPUCAT3E--0IehBg4/edit?usp=sharing results user test 2]

Although most of the participants suffered from survey fatigue, we still performed statistical tests on the results from the completed surveys.

The regression tests show barely any significance for the faces and emotions. The adjusted R-squared values for all models were far too low (negative, to be precise), so we re-estimated the models with bootstrapped and robust standard errors to see whether this would change the outcome. Using robust standard errors helped, but fell well short of significance. The adjusted R-squared indicates how much of the variance in the scores a model explains, corrected for the number of predictors. Negative values mean that even if we had found significant coefficients, they would not yield reliable results.
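Adjusted R-squared corrects R-squared for the number of predictors: adjusted R² = 1 - (1 - R²)(n - 1)/(n - p - 1), with n observations and p predictors (excluding the intercept). When a model explains little variance and p is large relative to n, the correction outweighs R² and the value drops below zero. The sketch below only illustrates the formula; the function name and numbers are hypothetical, not our actual model output.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 for n observations and p predictors (excluding the intercept)."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Hypothetical model: 11 observations, 4 predictors, and a weak fit
print(round(adjusted_r2(0.20, n=11, p=4), 3))  # -0.333
```

With only a handful of usable participants, even a modest number of face dummies is enough to push the adjusted value negative.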

=== Conclusion ===

From the results we can conclude that most of the participants had problems recognizing faces in the designs; to them, they were just circles on a screen. No reliable significance was found, and the predictive power of the models was too low. Survey fatigue came back as a problem: the questions were tedious, too similar and too numerous.

=== Discussion ===

From the results we derived the following points:

:*People were not able to recognize faces in the shapes we showed them. When we asked elderly people to help us with our interview and showed them the first face, we got many answers like "This does not look like a face to me." After such answers, we always terminated the interview, since asking which emotions people see in random shapes is of little use for our user test.

:*Compared to our first visit to Vitalis De Hagen, the common area was empty, perhaps because of the nice weather, so we were not able to conduct many interviews.

:*The interview was designed to contain 7 questions for each of the 11 face designs, 77 questions in total, and each question asked exactly the same thing of the interviewee. This often caused survey fatigue, making the results of these interviews less valuable. We tried to overcome this by shuffling the order of the emotions and of the faces so that the interviewees would stay more engaged, but the number of questions was simply too large.
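Shuffling the presentation order, as described above, is meant to spread order and fatigue effects across faces and emotions instead of always affecting the last questions, but it does not shorten the survey. A minimal sketch of a per-participant randomized order for the 11 faces and 7 emotions follows; the helper `presentation_order`, the face labels and the seed value are hypothetical, and seeding per participant is only one way to make each session reproducible.

```python
import random

# Hypothetical labels: 11 face designs and the 7 emotions from the survey
FACES = [f"face{i}" for i in range(1, 12)]
EMOTIONS = ["anger", "friendliness", "fear", "sad", "happy", "disgust", "surprise"]

def presentation_order(participant_id, seed=2018):
    """Return the 77 (face, emotion) questions in a shuffled, reproducible order.

    Faces are shuffled per participant, and the emotion order is reshuffled
    for every face, so no emotion is always asked first or last.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    faces = FACES[:]
    rng.shuffle(faces)
    order = []
    for face in faces:
        emotions = EMOTIONS[:]
        rng.shuffle(emotions)
        order.extend((face, emotion) for emotion in emotions)
    return order

for face, emotion in presentation_order(participant_id=1)[:3]:
    print(f"To what extent does {face} match {emotion}?")
```

The order still contains all 77 questions, which is consistent with our observation that shuffling alone could not prevent survey fatigue.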

In addition, when the participant showed difficulty in seeing an emotion, we paused the actual test for a second and gave them an idea to imagine. For example, we asked them to imagine that the robot with that certain face walked in the room and told them to drink a glass of water or something like that. This helped a couple of times but to the participants who did not recognize a face at all, this did not work, unfortunately. | |||

Because the test consisted of a long list of near-identical questions, the participants became bored and found it demanding and tiring. We quickly concluded that the design of this test was insufficient and that a third test was needed to confirm the results of user test 1.

== Validation test 2 ==

=== Method ===

Our second validation test consisted of a set of robot faces with different expressions, based on the findings of the user test. The goal was to validate those findings, both to confirm that they were correct and to remove the ambiguity found in the user test. This test was designed differently because, while conducting the first validation test, we found its design insufficient: participants found it too long and repetitive, and some did not see a face on the screen at all, partly because they struggled to imagine one.

[[File:2.jpg|thumb|1000px|left|alt=Alt text|Figure 27: Faces with bodies of validation test 2]][[File:1.jpg|thumb|1000px|left|alt=Alt text|Figure 28: Faces with bodies of validation test 2]]

For our second validation test, we approached several elderly people at the previously visited locations Vitalis De Hagen and Engelsbergen. We explained our research goal to the participants and how the test would proceed.

We also gave the participants an informed consent form prepared for this test, which stated that the results would be anonymized, that participants could withdraw from the test at any time, that questions could be asked at any time, and that the research complied with the TU/e Code of Conduct.

Once a participant had given consent and signed the form, we started the test. We had prepared a sheet showing eleven different robot faces with bodies. The same faces and the same seven emotions as in validation test 1 were used; the faces are shown in Figures 27 and 28.

We asked the participants to draw a line from each emotion to the robot that most strongly matched it (e.g. a line from 'anger' to the robot with red, square eyes). If a participant indicated that a second face also matched the emotion, this second choice was recorded separately.

Afterwards, we thanked the participants for their time and participation.

=== Results ===

First, all variables were checked. For the emotions sad and angry, every face was either a participant's first choice or not chosen at all; there were no second choices for these two emotions.

All faces are shown in the Method section of validation test 2 (Figures 27 and 28).

The raw results are available here: [https://drive.google.com/file/d/1i1T-9T4Tgg2VlC5EIhEE47VIP4MEW6dP/view?usp=sharing Results validation 2]

After checking the variables, we ran a correlation test. No notable correlation was found: all coefficients stayed below 0.35, well under the threshold of 0.8 at which we would have considered correlation a concern. We therefore moved on to regression testing.
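The report does not specify how the correlation test was computed. As a minimal sketch, assuming each variable is a 0/1 indicator of whether a participant linked a given face to a given emotion, a pairwise Pearson screen against the 0.8 threshold could look like this. The function names, variable names and values are hypothetical and are not the study's data.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    ss_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if ss_x == 0 or ss_y == 0:
        return 0.0  # undefined for a constant variable; reported as 0 in this sketch
    return cov / (ss_x * ss_y)


def flag_correlated(variables, threshold=0.8):
    """List (name_a, name_b, r) for every variable pair with |r| >= threshold."""
    flagged = []
    for a, b in combinations(sorted(variables), 2):
        r = pearson(variables[a], variables[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 3)))
    return flagged


# Hypothetical 0/1 indicators (1 = participant linked this face to this emotion)
# for six made-up participants; these are NOT the study's data.
toy_variables = {
    "angry_face1": [1, 0, 0, 1, 0, 1],
    "angry_face8": [0, 1, 1, 0, 1, 0],
    "joy_face10": [1, 1, 0, 1, 1, 1],
}

print(flag_correlated(toy_variables))
```

On this toy data only the angry_face1/angry_face8 pair is flagged (r = -1, because every made-up participant picked exactly one of the two faces); the other pairs have |r| of about 0.45 and stay below the threshold. For 0/1 variables, Pearson's r equals the phi coefficient.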

Finally, t-tests were performed. For the emotion friendly, face 3 had the lowest p-value (p = 0.062, just above the conventional 0.05 level), so face 3 was judged the most friendly by the elderly.

For the emotion angry, faces 1 and 8 were both significant (p = 0.0075 each).

For the emotion surprise, only face 5 was significant (p = 0.0053), meaning that the elderly most strongly associated face 5 with a surprised robot.

For dislike, face 7 was significant (p = 0.0098), so face 7 was judged most likely to express dislike.

Joy also had one significant face, face 10 (p < 0.001).

For fear, face 7 was significant (p = 0.0048), so according to the elderly it best shows fear.

For sadness, faces 1, 7 and 10 were significant (p = 0.0075 each). By coincidence, all three faces had the same p-value, and no additional testing revealed a preference among them.

Overall, these tests gave conclusive answers for most emotions.
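The report does not state which t-test was used. One plausible design, assumed here, is a one-sample t-test per face and emotion: code each participant's first choice as 1 if it was that face and 0 otherwise, and compare the mean against the chance level of 1/11 for eleven faces. The sketch below uses only the Python standard library and computes the two-sided p-value by integrating the Student's t density with Simpson's rule; in practice scipy.stats.ttest_1samp computes the same t and two-sided p. The function names and example responses are hypothetical.

```python
import math
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value 1 - P(-|t| < T < |t|), via Simpson's rule on [0, |t|]."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight * t_pdf(i * h, df)
    half_area = total * h / 3  # area under the density between 0 and |t|
    return max(0.0, 1.0 - 2 * half_area)  # density is symmetric around 0


def one_sample_t(choices, chance):
    """Return (t, p) for mean(choices) against chance, or None if undefined."""
    n = len(choices)
    sd = statistics.stdev(choices)  # sample standard deviation (n - 1)
    if sd == 0:
        return None  # every participant answered the same way
    t = (statistics.mean(choices) - chance) / (sd / math.sqrt(n))
    return t, two_sided_p(t, n - 1)


# Hypothetical example: 7 of 12 made-up participants picked this face for an
# emotion; chance level with eleven faces is 1/11. Not the study's data.
example = [1] * 7 + [0] * 5
t_stat, p_value = one_sample_t(example, chance=1 / 11)
print(f"t = {t_stat:.3f}, p = {p_value:.4f}")
```

With 7 of 12 hypothetical participants choosing a face, t is about 3.31 with 11 degrees of freedom and p falls between 0.005 and 0.01. The function returns None when every participant answered identically (for example, a face nobody chose), because the standard deviation is then zero and the t statistic is undefined.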

=== Conclusion ===

To summarize: for fear, red round eyes are the way to show that a face is afraid. For joy, a robot with green round eyes is seen as happy. Face 7, with red round eyes, was also judged by the elderly to show dislike, whereas face 5, with white rounded eyes, clearly signals surprise. The faces with yellow eyes, including the yellow cubic eyes with sharp corners, show anger; unfortunately, both reached the same significance level, so the results do not indicate whether rounded or cubic eyes convey anger more strongly. A robot with blue rounded eyes seems the most friendly to the elderly. Lastly, the faces with yellow, red and green rounded eyes were all judged to be sad; what they have in common is their rounded eyes.

=== Discussion ===

For some emotions, multiple faces were significant. This may be due to the limited sample size; a somewhat larger sample might separate these faces. Nevertheless, the results are now much more conclusive and back up the findings of the user test.

Round red eyes express fear as well as dislike. Joy is expressed by rounded green eyes. White rounded eyes express some sort of surprise to the elderly. A face with yellow eyes expresses anger. Blue, round eyes are friendly. Sadness is expressed by several faces, namely those with rounded yellow, red and green eyes.

For some participants, drawing the lines was too difficult because of their age and motor skills, so we drew the lines for them to make sure the intended connections were recorded. One participant had trouble grasping the method, but this was resolved after some help. Another problem was that the faces were too small on a single A4 sheet: the eyes with cubic, rounded corners were almost indistinguishable for nearsighted and other visually impaired elderly. We had considered this beforehand, but did not want to split the faces across two enlarged sheets. Lastly, the same people appear to sit in the living rooms of Vitalis De Hagen and Engelsbergen at the same time of day, week in, week out, so having multiple locations to visit is an advantage.

== Overall discussion and suggestions for future research ==

In the user test, we found that the first face with rounded eyes was judged as friendly, sad and joyful. Face 3, the one with cubic eyes with pointed edges, was judged as angry and surprised. Rounded blue eyes were matched with joy and surprise. The face with rounded green eyes was matched with friendliness, sadness, joy and surprise. The face with rounded red eyes was matched with friendliness, sadness, dislike and fear. The face with rounded white eyes was matched with friendliness, anger, sadness and surprise. The face with yellow rounded eyes was matched with anger.

In validation test 2, we found that rounded red eyes express fear as well as dislike. Joy is expressed by rounded green eyes. White rounded eyes express some sort of surprise to the elderly. A face with yellow eyes expresses anger. Blue, round eyes are friendly. Finally, sadness is expressed by rounded yellow, red and green eyes.

Thus, some of the hypotheses are supported, and we were able to narrow most of the results down to one or two faces. Below is a point-by-point list of the validated findings:

:*Joy is expressed by a face with green round eyes.

:*Dislike is expressed by a face with red round eyes.

:*Fear is expressed by a face with red round eyes.

:*Anger is expressed by a face with yellow eyes; whether the eyes should be round or cubic is undecided.

:*Surprise is expressed by a face with white rounded eyes.

:*A friendly face has blue rounded eyes. The user test did not single out blue eyes, but it did find that friendliness is associated with rounded eyes, so this partly confirms the hypothesis.

:*Sadness is expressed by the faces with green, red and yellow rounded eyes. All three were hypothesized and, unfortunately, all have been confirmed, so we conclude that rounded eyes evoke sadness.

All in all, we found unambiguous answers for many of the emotions and faces.

Sadness may not have been tied to a single face because all the faces had a slight smile. We tried to make the faces as neutral as possible, but the smile may have influenced the participants' judgements. This also came up in the thematic analysis of the user test.

For future tests, it might be better to use a more neutral mouth and perhaps add more human-like features to the face, since the first validation test showed that the elderly found it difficult to see a face on the screen. A careful introduction is also needed: many participants initially had a negative attitude towards being helped by a robot, but after some conversation and realistic examples they were more open to the idea. Finally, the faces should be printed on paper large enough for everyone to see them.

=References=

'''Useful links'''

Netflix film 'Next Gen' <ref>https://www.youtube.com/watch?v=uf3ALGKgpGU Netflix film trailer 'Next Gen'</ref>

Pickett, C. L., Gardner, W. L., & Knowles, M. (2004). Getting a cue: The need to belong and enhanced sensitivity to social cues. Personality and Social Psychology Bulletin, 30(9), 1095-1107.

Macdonald, R. G., & Tatler, B. W. (2013). Do as eye say: Gaze cueing and language in a real-world social interaction. Journal of Vision, 13(4), 6.

Spear, P. D. (1993). Neural bases of visual deficits during aging. Vision Research, 33(18), 2589-2609.

Barakova, E. I. (2018-2019). Recognizing social cues: Facial expression and gesture recognition. Course: Social Interaction with Robots.

Mellerio, J. (1987). Yellowing of the human lens: Nuclear and cortical contributions. Vision Research, 27(9), 1581-1587.

Becker, L. (2009, May). Can the design of food packaging influence the taste and experience of its content? Thesis for the Master in Psychology Consumer & Behavior.

Oxforddictionaries. (n.d.). Expression. Retrieved from https://en.oxforddictionaries.com/definition/expression

White, D. (n.d.). What are social cues? Definition & examples. Retrieved from study.com: https://study.com/academy/lesson/what-are-social-cues-definition-examples.html

Sullivan, S., & Ruffman, T. (2004). Emotion recognition deficits in the elderly. International Journal of Neuroscience, 114(3), 403-432.

<!--To cite a new source: <nowiki><ref name="reference name">reference link and description</ref></nowiki><br/>

To cite a previously cited source: <nowiki><ref name="reference name" \></nowiki>-->

<references />

Latest revision as of 23:21, 8 April 2019

0LAUK0 - 2018/2019 - Q3 - group 2

Group members

| Name | Student ID |
|---|---|
| Koen Botermans | 0904507 |
| Ruben Hendrix | 1236095 |
| Jakob Limpens | 1019496 |
| Iza Linders | 0945517 |
| Noor van Opstal | 0956340 |
