0LAUK0 2018Q1 Group 2 - Programming overview

Latest revision as of 01:22, 30 October 2018

Introduction

This page contains an overview of everything related to the programming of the prototype of PRE2018 1 Group2 for the course Project Robots Everywhere (0LAUK0).

The programming was done by three of our team's members. Mitchell worked on facial detection using OpenCV and Processing, depth measurement using IR and Arduino, and serial communication between Processing and our Arduino program. Hans worked on controlling the prototype's stepper motors using an Arduino, and on parsing movement commands received over a serial connection. Roelof worked on kinematic models in MATLAB that would be ported to either the Processing or the Arduino programs.

Ultimately we wanted to combine our programs to enable all intended functionalities of the prototype. Unfortunately we were not able to combine our Arduino programs before the end of the project. Therefore we had to demo the two systems in isolation during the final presentation.

All source code is available in our Google Drive project folder (https://drive.google.com/open?id=1yvuAhzbvv15BWTA4pkX-36xujxOrgy07) for you to view and test.

Processing

We used the Processing software sketchbook to develop our face tracking program. We chose this environment mainly because of personal experience, and because it supports all the functions that we need to make our prototype work properly.

Face tracking

Face tracking is achieved using a port of OpenCV (Borenstein, 2013). This library has built-in functions to detect faces. The programmer needs to specify a cascade that will be used to detect faces. We decided to only look for faces that are looking straight at the screen, as this is most useful for our design. We initially wanted to use a stereoscopic camera setup to measure the distance between the user and the screen. However, after two weeks of working on this feature using OpenCV functions, we were unable to generate calibrated stereoscopic images. Therefore we switched to a single-camera setup to detect faces, and used an IR sensor connected to the Arduino (which we were going to use anyway) to measure the distance between the user and the monitor.

OpenCV will detect most of the faces in view of the camera most of the time. This creates a problem: we need to identify the user’s face amongst false positives, such as paintings and lamps that sort of look like a face, or people walking by the user’s desk. Ideally we would use Template Matching to recognize the user’s face amongst all faces detected by OpenCV.

Using template matching we would ideally look at the profile picture of the user, and detect which region of the webcam image most likely matches the profile picture. If OpenCV detects a face at that location, then we would have found our user. There are problems with this approach. The template would have to be rescaled dynamically to cope with changes in the ‘on-screen’ image of the webcam’s output: the size of the user’s face will change as they move closer to or further away from the camera, which means that a fixed-size template would only work if the user maintains a steady distance to the camera.

This means that we would have to develop a system that can dynamically rescale the profile picture to the sizes of the detected faces and rerun the template matching for each of the resized templates. Due to delays in the Processing sketch’s development this idea was abandoned. Instead we use a simple distance heuristic to determine which face belongs to the user. By performing the face detection frequently, the differences between successive webcam images are minimized (the user has less time to move around between measurements if they occur twice a second instead of once every two seconds). We then constrain new detections by stating the following:

- The new location of the user’s face has a minimum distance of 99% to the previously detected location.

- The new location of the user’s face has a maximum distance of 101% to the previously detected location.

- The minimum size of the user’s face is 98% of the previously detected size.

- The maximum size of the user’s face is 102% of the previously detected size.

In quiet scenes these constraints were good enough to accurately track the user. However, there is always some variation in the detected location, even if the user holds their face completely still. Therefore we added a wiggle-factor quite early on in development, such that the program ignores changes in location that are smaller than the wiggle-factor. This also improved the reliability of the tracking. However, the smallest-distance heuristic struggles when many faces are looking at the screen at the same time. An alternative (such as template matching with dynamically rescaled template images) should therefore be used for future prototypes.
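The constraints and the wiggle-factor described above can be sketched roughly as follows. This is a minimal illustration and not the code of our Processing sketch: it is written in C++ to match the Arduino code on this page, and it assumes one possible reading of the constraints, namely that each coordinate of a new detection must lie within 99% to 101% of the previous coordinate and that the size must lie within 98% to 102% of the previous size. The names `Face`, `withinRatio`, `isPlausibleUser`, `applyWiggle` and the parameter `wigglePixels` are hypothetical.

```cpp
#include <cmath>

// A detected face in webcam pixels (hypothetical structure).
struct Face {
    double x;     // horizontal position
    double y;     // vertical position
    double size;  // width of the detected face
};

// True if value lies within [lowRatio, highRatio] times the reference.
// Assumes positive references, which holds for pixel coordinates and sizes.
bool withinRatio(double value, double reference, double lowRatio, double highRatio) {
    return value >= reference * lowRatio && value <= reference * highRatio;
}

// Assumed reading of the four constraints: position within 99%-101%
// and size within 98%-102% of the previous detection.
bool isPlausibleUser(const Face& previous, const Face& candidate) {
    return withinRatio(candidate.x, previous.x, 0.99, 1.01)
        && withinRatio(candidate.y, previous.y, 0.99, 1.01)
        && withinRatio(candidate.size, previous.size, 0.98, 1.02);
}

// Wiggle-factor: keep the previous detection when the face moved less
// than wigglePixels, so small jitter does not count as movement.
Face applyWiggle(const Face& previous, const Face& candidate, double wigglePixels) {
    double moved = std::hypot(candidate.x - previous.x, candidate.y - previous.y);
    return (moved < wigglePixels) ? previous : candidate;
}
```

With a previous detection at (400, 300) and size 100, a candidate at (402, 299) with size 101 would be accepted, while a candidate at (420, 300) would be rejected as a different face.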

Another concern was performance. Processing is not really designed for low-level optimizations and high-speed programs, and we found out that many of its core functions and even functions in OpenCV cannot deal with multithreading. Early in development the program was written as a single-threaded program. Processing can automatically create new threads for its own subroutines, and the OpenCV library was using all cores of our test system’s CPU, but because the OpenCV functionalities were called in the main loop of our program, the entire program would slow to a crawl and run at around 6 frames per second in the best case with 100% CPU usage across all cores. Therefore a new focus of development entailed managing OpenCV from a separate thread. After about 2 weeks of working on it (considering this was my first time writing custom threads) I managed to create a system where the program would call OpenCV at a predetermined interval. This interval can be set by the person configuring the program, anywhere from multiple times per second to once every hour (not that either one of those extremes is remotely desirable).

The program can still be very intensive on the system’s processor if the user chooses to run the face detection very often. If the detection interval is set such that the program performs the face detection more than 5 times per second, an Intel Core i7 6700 HQ with a nominal clock speed of 2.6 GHz and 8 logical cores is fully utilized at 100% CPU usage. Setting the interval to once every 2 seconds lowers CPU usage to around 15-20%. Even though the program can take 100% of CPU resources, it appears to have a low priority, as opening browsers and editing documents can still be done without any hitching or poor performance while the program is running at a high detection frequency. Still, such a degree of CPU usage is not desirable: the computer’s cooling system becomes noisy when the program runs for a prolonged amount of time, and it is not environmentally friendly. Resource utilization is very low if the face detection runs infrequently.

Late in development, configuration files were added to the program. These allow the user to change the behavior of a compiled binary of the program without having to modify its source code. This was used primarily to perform our behavior experiment.
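The interval logic itself is simple and can be sketched on its own. The example below is an illustration under assumptions, not our Processing implementation: it is written in C++ to match the Arduino code on this page, it leaves out the worker thread entirely, and the class name `DetectionScheduler` and its method `isDue` are hypothetical. A worker thread could poll such a gate with the current time in milliseconds and only call OpenCV when it returns true.

```cpp
#include <cstdint>

// Decides whether a new face detection is due, given a configurable
// interval in milliseconds (hypothetical helper).
class DetectionScheduler {
public:
    explicit DetectionScheduler(std::uint64_t intervalMs)
        : intervalMs_(intervalMs), lastRunMs_(0), hasRun_(false) {}

    // Returns true when a detection should run at time nowMs and
    // records that time as the start of the next interval.
    bool isDue(std::uint64_t nowMs) {
        if (!hasRun_ || nowMs - lastRunMs_ >= intervalMs_) {
            lastRunMs_ = nowMs;
            hasRun_ = true;
            return true;
        }
        return false;
    }

private:
    std::uint64_t intervalMs_;  // time between detections
    std::uint64_t lastRunMs_;   // time of the most recent detection
    bool hasRun_;               // false until the first detection
};
```

With an interval of 500 ms the gate opens twice per second, and with an interval of 2000 ms once every two seconds, which are the two settings compared in the text.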

Arduino

Infrared distance sensors

The Arduino is connected to a Sharp GP2Y0A02YK0F Distance Measuring Sensor Unit. This sensor can detect distances from 20 to 150 cm. It is connected to an analog port on the Arduino Uno. We first convert the analog signal into a voltage, then we use a custom transformation function to approximate the distance in meters. Using a ruler and some math, we derived a set of linear equations that match the datasheet's graph of the inverse number of distance (Sharp Corporation, 2006, p. 5).

We tested the reliability of the linear equations by placing the IR sensor on a table with a ruler, and placing an object in front of the sensor at various distances. We then recorded the predicted distance. We tried coming up with regression models that could predict better than the set of linear equations from our first prototype, but we were unable to find one. We easily achieved a high R-squared of above 0.90, but for each item there was a significant (sometimes up to 15 cm) difference between the distance predicted by the regression and the actual distance. Because of this we stuck with the set of linear equations. The testing data and analysis script used to test possible regressions are available in our Google Drive project folder. You can also download and use them directly from https://drive.google.com/open?id=1fFcFpkGtPpEnZpY_1lBBkssDVsuV4-RJ.
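The two conversion steps can be sketched as follows. This is a minimal illustration, not our actual transformation function: it assumes the Arduino Uno's default 10-bit analog reading (0 to 1023) with a 5 V reference, and it interpolates the inverse distance linearly between calibration points. The calibration values in `kCalibration` are placeholders chosen for the example, not our measured values and not values taken from the datasheet. All names are hypothetical.

```cpp
#include <cstddef>

// Convert a 10-bit analog reading (0-1023) into volts,
// assuming the default 5 V analog reference of the Arduino Uno.
double adcToVolts(int reading) {
    return reading * (5.0 / 1023.0);
}

// One calibration point: sensor voltage and inverse distance (1/m).
struct CalibrationPoint {
    double volts;
    double inverseMeters;
};

// PLACEHOLDER calibration points, ordered by increasing voltage.
// Replace with values measured for the actual sensor.
const CalibrationPoint kCalibration[] = {
    {0.5, 1.0 / 1.5},  // far end of the range (150 cm)
    {1.0, 1.0 / 0.6},
    {2.0, 1.0 / 0.3},
    {2.5, 1.0 / 0.2},  // near end of the range (20 cm)
};
const std::size_t kCalibrationCount = sizeof(kCalibration) / sizeof(kCalibration[0]);

// Piecewise-linear approximation: interpolate 1/distance between the two
// neighbouring calibration points, then invert to get meters.
// Voltages outside the table are clamped to the nearest end point.
double voltsToMeters(double volts) {
    if (volts <= kCalibration[0].volts) {
        return 1.0 / kCalibration[0].inverseMeters;
    }
    for (std::size_t i = 1; i < kCalibrationCount; ++i) {
        if (volts <= kCalibration[i].volts) {
            const CalibrationPoint& a = kCalibration[i - 1];
            const CalibrationPoint& b = kCalibration[i];
            double t = (volts - a.volts) / (b.volts - a.volts);
            double inverse = a.inverseMeters + t * (b.inverseMeters - a.inverseMeters);
            return 1.0 / inverse;
        }
    }
    return 1.0 / kCalibration[kCalibrationCount - 1].inverseMeters;
}
```

Each pair of neighbouring points corresponds to one linear equation, so a set of linear equations and a table of calibration points describe the same kind of model.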
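A high R-squared and a large error on individual measurements can occur together, because R-squared compares the residuals to the total spread of the data, and that spread is large when distances range from 20 to 150 cm. The sketch below illustrates this with two helper functions. It is not our analysis script, the function names are hypothetical, and the numbers in the usage note are made up for the illustration rather than taken from our test data.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Coefficient of determination: 1 - SS_res / SS_tot.
// Assumes both vectors have the same non-zero length and that the
// actual values are not all identical.
double rSquared(const std::vector<double>& actual, const std::vector<double>& predicted) {
    double mean = 0.0;
    for (double a : actual) {
        mean += a;
    }
    mean /= static_cast<double>(actual.size());

    double ssRes = 0.0;  // squared prediction errors
    double ssTot = 0.0;  // squared deviations from the mean
    for (std::size_t i = 0; i < actual.size(); ++i) {
        ssRes += (actual[i] - predicted[i]) * (actual[i] - predicted[i]);
        ssTot += (actual[i] - mean) * (actual[i] - mean);
    }
    return 1.0 - ssRes / ssTot;
}

// Largest absolute difference between prediction and actual value.
double maxAbsError(const std::vector<double>& actual, const std::vector<double>& predicted) {
    double worst = 0.0;
    for (std::size_t i = 0; i < actual.size(); ++i) {
        double error = std::fabs(actual[i] - predicted[i]);
        if (error > worst) {
            worst = error;
        }
    }
    return worst;
}
```

For the made-up distances {20, 50, 80, 110, 150} cm and predictions {30, 45, 90, 100, 165} cm, the R-squared is above 0.90 even though the worst prediction is 15 cm off.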

Serial communication

The serial communication from the Arduino to Processing is pretty much a mirror image of the design of the serial communication from Processing to the Arduino. The Arduino compares text strings that it received against templates for the different operations that it can perform. Two operations are available:

- If the Arduino receives the command “request: move monitor to x y z”, for some values of x y z, then the program will extract those coordinates and use the motor control functionalities of the other Arduino program to move the monitor in line with our kinematic model.

- If the Arduino receives the command “request: measure depth”, then the output from the IR sensor is read, and a new command string for Processing is generated that includes the distance in meters. For debug purposes the raw voltage of the IR sensor is also transmitted, but the Processing sketch will ignore this value.
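Matching a received line against the two command templates can be sketched as below. This is an illustration under assumptions rather than our Arduino code: it is plain C++ that runs on a desktop, it assumes the line terminator has already been stripped, and it assumes x, y and z are decimal numbers. The names `CommandType`, `Command` and `parseCommand` are hypothetical. On an Arduino Uno, parsing floating-point numbers with `sscanf` may not be available by default, so a different number parser may be needed there.

```cpp
#include <cstdio>
#include <cstring>

// The two operations described above, plus a fallback.
enum class CommandType { MoveMonitor, MeasureDepth, Unknown };

struct Command {
    CommandType type;
    float x;  // only meaningful for MoveMonitor
    float y;
    float z;
};

// Compare a received line against the command templates.
Command parseCommand(const char* line) {
    Command cmd{CommandType::Unknown, 0.0f, 0.0f, 0.0f};
    float x = 0.0f, y = 0.0f, z = 0.0f;

    if (std::strcmp(line, "request: measure depth") == 0) {
        cmd.type = CommandType::MeasureDepth;
    } else if (std::sscanf(line, "request: move monitor to %f %f %f", &x, &y, &z) == 3) {
        // All three coordinates were extracted successfully.
        cmd.type = CommandType::MoveMonitor;
        cmd.x = x;
        cmd.y = y;
        cmd.z = z;
    }
    return cmd;
}
```

A line such as "request: move monitor to 0.1 -0.2 0.3" yields a `MoveMonitor` command with those coordinates, while a line with a missing coordinate or unrelated text yields `Unknown`.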

Motor control

For motor control we use the Arduino and the AccelStepper library for better control of the 28BYJ 5V stepper motors. The AccelStepper library includes options to set the acceleration and to position the motor absolutely based on steps, which are quite useful in the case of a monitor arm.

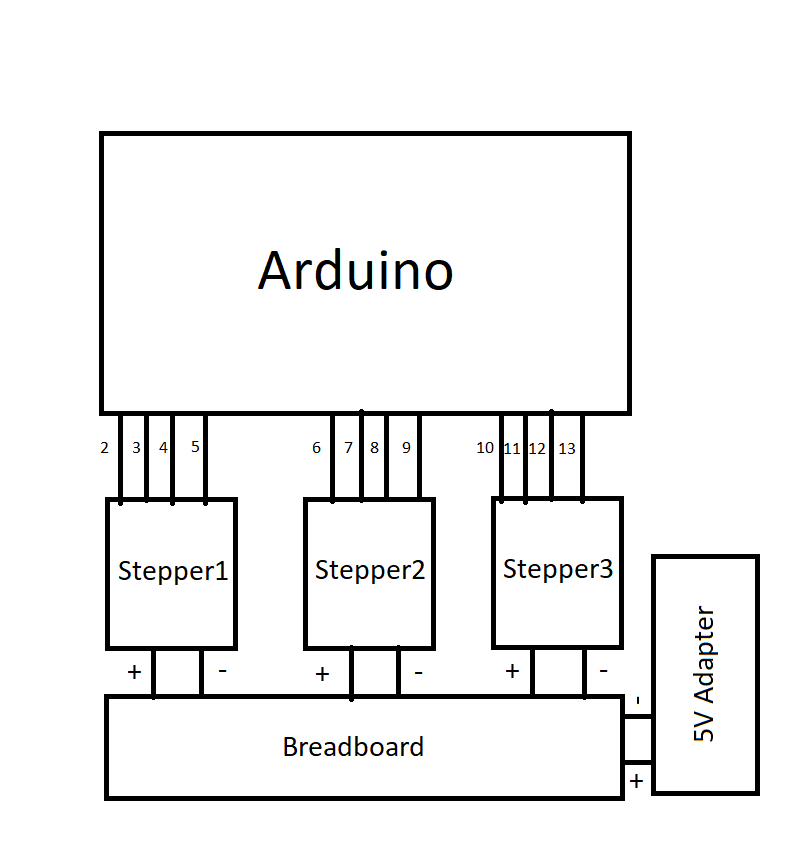

Arduino Setup

A basic overview of the setup. Numbers are digital pins on the Arduino.

An overview of the code

Initialising digital pins for the motors

Example of initialising digital pins of motor: AccelStepper stepper1(HALFSTEP, motorPin1, motorPin3, motorPin2, motorPin4); The motor stepper1 controls the bottom part of the monitor arm. The motor stepper2 controls the upper part of the monitor arm. The motor stepper3 controls the front piece of the monitor arm.

Initialising starting values of the motor

We set the basic speed, acceleration and current position. stepper.move(1) has to be called to start the motor; without this line of code the motor doesn’t work. stepper.move(-1) then moves the motor 1 step back to position 0.

Serial is initialised with Serial.begin(115200); we use a baud rate of 115200 for the fastest processing of serial commands.

A serial command contains the absolute positions, in steps, for motors x and y. The command is parsed into int x and int y. For example, “1024, -1024” is parsed to x = 1024 and y = -1024. Afterwards stepper1.moveTo(x) and stepper2.moveTo(y) are called. This means that stepper1 moves to the position it would reach by taking 1024 steps from the 0 position. Similarly, stepper2 moves to -1024 steps from the 0 position. Stepper3 tries to point to the front by taking the opposite of the positions of stepper1 and stepper2 combined, so stepper3.moveTo(-(x+y)).

Afterwards the number of steps to the destination position is calculated. This is done in a more complicated manner, because the function distanceToGo() of the AccelStepper library can only be called after the moveTo() or move() functions are called. However, the speed and acceleration of the motor can’t be changed after the move() or moveTo() functions are called. The distances are needed to calculate how fast each motor has to go compared to the other motors so that they all finish at the same time. The speed of the motor can’t go much above 1000 before it becomes unstable, so it is limited to 1024. The speed of the motor can’t go below 32, because the motor then becomes incredibly slow and somehow doesn’t finish at the same time as the other motors do.
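The parsing step and the rule for the third motor can be sketched as follows. This is a minimal illustration in plain C++, not our Arduino sketch: the names `StepTargets` and `parseStepTargets` are hypothetical, and `long` is used because that is the type AccelStepper's moveTo() accepts.

```cpp
#include <cstdio>

// Absolute target positions, in steps, for the three motors.
struct StepTargets {
    long stepper1;  // bottom part of the monitor arm
    long stepper2;  // upper part of the monitor arm
    long stepper3;  // front piece of the monitor arm
};

// Parse a command such as "1024, -1024" into targets for all three motors.
// Returns false if the line does not contain two comma-separated integers.
bool parseStepTargets(const char* line, StepTargets& out) {
    long x = 0;
    long y = 0;
    if (std::sscanf(line, "%ld , %ld", &x, &y) != 2) {
        return false;
    }
    out.stepper1 = x;
    out.stepper2 = y;
    // The front piece counteracts the other two so it keeps pointing forward.
    out.stepper3 = -(x + y);
    return true;
}
```

For the command "1024, -1024" this gives targets of 1024, -1024 and 0 steps, which would then be passed to stepper1.moveTo(), stepper2.moveTo() and stepper3.moveTo().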
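One way to choose the speeds is to give the motor with the longest travel the maximum speed and scale the others in proportion to their distance, clamped to the limits mentioned above. The sketch below shows that idea in plain C++. It is an assumption about how the calculation could look, not a copy of our code, and the names `kMaxSpeed`, `kMinSpeed` and `scaledSpeed` are hypothetical. The distance of each motor can be computed before calling moveTo() as the target position minus the current position.

```cpp
#include <algorithm>
#include <cstdlib>

const float kMaxSpeed = 1024.0f;  // above roughly 1000 the motor becomes unstable
const float kMinSpeed = 32.0f;    // below this the motor becomes too slow

// Speed (in steps per second) for a motor that has to travel `distance`
// steps while the motor with the longest travel covers `longestDistance`
// steps at kMaxSpeed. The result is clamped to [kMinSpeed, kMaxSpeed].
float scaledSpeed(long distance, long longestDistance) {
    if (longestDistance == 0) {
        return kMinSpeed;  // nothing has to move
    }
    float speed = kMaxSpeed * std::labs(distance) / std::labs(longestDistance);
    return std::min(kMaxSpeed, std::max(kMinSpeed, speed));
}
```

A motor that travels half as far as the slowest-to-finish motor gets half the speed, so both arrive together. A motor whose proportional speed falls below 32 is clamped to 32 and therefore finishes early, which may be related to the mismatch we observed at very low speeds.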

References

- Borenstein, G. (2013). OpenCV for Processing [software library]. Retrieved from [1]

- OpenCV team. (n.d.). About. Retrieved from [2]

- Sharp Corporation. (2006). Sharp_Gp2Y0a02Yk0F_E. Retrieved from [3]

- The Processing Foundation. (n.d.). Processing. Retrieved from [4]

Layout of the ideal Processing sketch for our prototype

Processing code layout

User object has

- Average location (x,y,z detected by OpenCV and IR)

- Ideal location (x,y,z of center of screen)

- Max RSI preventive compliant posture (how extreme can the user posture be without breaking the RSI prevention rules)

- Time spent in current posture

- Pixel map of face at startup

- Precision measure of repeated observations of face pixel map.

Cameras have

- Conversion metrics to transform pixels into angles into meters

Arduino IR has

- Conversion metric to transform voltage into meters

At startup

- User's face will be around their ideal location, capture the pixel map of the face detected around this location

- Load equipment and user attributes mentioned above.

At a predefined interval - call OpenCV to detect faces

- If no face is detected, maintain the average face location calculated from previous face detections

- If one face is detected, compare to face pixel map

  - If similarity is too low, maintain the average face location calculated from previous face detections

  - If similarity is high, update the average face location

- If multiple faces are detected, compare detected faces to face pixel map

  - If there is no match, maintain the average face location calculated from previous face detections.

  - If there is one match, update average face location using this face.

  - If there are multiple matches, choose the face closest to the average face location and update the average face location using this face.

- If the current location of the face is significantly different from the average face location:

  - Start counting time that this new posture is held:

    - If time is very low: it was a split-second movement, no long term change in posture -> ignore

    - If time is long: it is a long term change in posture -> move monitor

- If detected as posture change:

  - Face has moved up or down:

    - Calculate delta_x, delta_y. Give command to move monitor. When the monitor is in front of the user, calculate delta_z using the Arduino IR. Give command to move monitor in z direction if delta_z > threshold

  - Detected face width has changed: (user moved forward or backward)

    - Calculate delta_z using the Arduino IR. Give command to move monitor in z direction if delta_z > threshold

- Determine if new posture is RSI preventive compliant

- Face has moved up or down:

- Start counting time that this new posture is held:

Keep track of how long a posture is held

- If posture is RSI prevention compliant: held for too long, notify user to move

- If posture is not RSI prevention compliant: use a much shorter time threshold, notify user sooner
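The rule that separates a brief movement from a long term change in posture can be sketched as a small timer. This is an illustration of the layout above, not code from our prototype. It is written in C++ to match the Arduino code on this page, and the names `PostureDecision`, `PostureHoldTracker` and the threshold parameter are hypothetical. Whether the face location deviates significantly from the average is assumed to be decided elsewhere and passed in as a boolean.

```cpp
#include <cstdint>

enum class PostureDecision {
    Ignore,      // face is at its average location, nothing to do
    Waiting,     // face has moved, but not for long enough yet
    MoveMonitor  // new posture was held long enough: move the monitor
};

// Tracks how long the face has been away from its average location.
class PostureHoldTracker {
public:
    explicit PostureHoldTracker(std::uint64_t holdThresholdMs)
        : holdThresholdMs_(holdThresholdMs), startMs_(0), counting_(false) {}

    // deviates: true if the current face location differs significantly
    // from the average face location. nowMs: current time in milliseconds.
    PostureDecision update(bool deviates, std::uint64_t nowMs) {
        if (!deviates) {
            counting_ = false;  // brief movement ended, reset the timer
            return PostureDecision::Ignore;
        }
        if (!counting_) {
            counting_ = true;   // start timing the new posture
            startMs_ = nowMs;
        }
        if (nowMs - startMs_ >= holdThresholdMs_) {
            counting_ = false;  // the new posture becomes the baseline
            return PostureDecision::MoveMonitor;
        }
        return PostureDecision::Waiting;
    }

private:
    std::uint64_t holdThresholdMs_;  // how long a posture must be held
    std::uint64_t startMs_;          // when the current deviation started
    bool counting_;                  // true while a deviation is being timed
};
```

With a threshold of 3000 ms, a user who leans away for one second and returns is ignored, while a user who stays in the new position for three seconds triggers a monitor move. The same pattern, with different thresholds, could be used for notifying the user when a posture is held too long.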