PRE2017 4 Groep3


== Group members ==

* Stijn Beukers (0993791)

* Marijn v.d. Horst (1000979)

* Rowin Versteeg (1001652)

* Pieter Voors (1013620)

* Tom v.d. Velden (1013694)

== Brainstorm ==


== Chosen Subject ==

For centuries our species has known that it is not perfect and never shall be. To get ever closer to perfection, we have created many tools to bridge the gap between our weaknesses and the perfection and satisfaction we so desire. Though many problems have been tackled and the quality of human life has greatly improved, we are still capable of losing the very items that provide such comfort, for example phones, tablets or laptops. Even at home, a TV remote is often lost. We propose a solution to the problem of losing items within the confines of a building: applying Artificial Intelligence (AI) to find items using live video footage. This approach was chosen because image classification has proven to be very efficient and effective at classifying and detecting objects in images. For convenience's sake, the system will be provided with a user interface in which users can easily locate the object they are looking for.

== Users ==

Though many people may benefit from the proposed system, some people would benefit more than others. A prime example is people who are visually impaired or blind. They may have a hard time finding an item because they cannot recognize it themselves or cannot see it at all. The system would give them peace of mind, as they would no longer have to keep track of where all their items are. However, to make this a viable system for the visually impaired, we would have to implement some sort of voice interface, which is a challenge in its own right.

Secondly, people with some form of dementia would greatly benefit from this system, as they would no longer have to worry about forgetting where they left their belongings. The elderly in general are also a good target group for the proposed system, since memory tends to decline with age. In addition, they are also the people who suffer most from the aforementioned conditions. Smart home enthusiasts could also be interested in this system, as it is a new type of smart device. Moreover, people living in large mansions could be interested, as items are easily lost in such a large house.

Lastly, companies could be interested in investing in this software. By implementing the system, companies would be able to keep track of their staff's belongings and help find important documents that may have been lost on someone's desk.

=== User Requirements ===


* The system should be able to tell the user, on command, where specific items are.

* The system should be available at all times.

* The system should only respond to the main user, for security purposes.

* The system should respect the privacy concerns of the user.


* Expand the AI's capabilities by having it classify objects correctly within video footage

* Have the AI classify objects within live camera footage

* Have the AI determine the location of an object on command and communicate it to the user

* Have the AI remember objects which are out of sight

* Find out the best way to communicate the location to the user

== Planning ==


'''Object detection'''

* Passive object detection (detecting all objects or specific objects in a video/image)

* Live video feed detection (using a camera)

* Input: find a specific item (input: e.g. item name; output: e.g. camera and location)

* Location classification (what is pixel [100,305] of camera2 called, and how can this be determined?)

* Keeping track of where an item was last seen.
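The location classification task listed above (naming pixel [100,305] of camera2) could, assuming every camera is static, be handled with a hand-labelled lookup table of rectangular regions per camera. The sketch below is hypothetical: the region names, coordinates, the `(x, y)` pixel convention and the names `REGIONS` and `classify_location` are made up for illustration and are not part of the project.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical hand-labelled regions per static camera:
# (name, x_min, y_min, x_max, y_max) in pixel coordinates.
Region = Tuple[str, int, int, int, int]
REGIONS: Dict[str, List[Region]] = {
    "camera1": [("kitchen counter", 0, 200, 320, 480)],
    "camera2": [
        ("living room table", 50, 250, 250, 400),
        ("sofa", 300, 150, 640, 450),
    ],
}


def classify_location(camera: str, x: int, y: int) -> Optional[str]:
    """Return the name of the first labelled region that contains pixel (x, y)."""
    for name, x_min, y_min, x_max, y_max in REGIONS.get(camera, []):
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return name
    return None  # the pixel lies outside every labelled region


print(classify_location("camera2", 100, 305))  # living room table
```

A lookup table only works while cameras and furniture stay in place; moving either would require relabelling the regions.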

'''Interface'''

* Define the interface (which data is needed as input and output in the communication between the interface and the object detection system)

* Pure data input coupling with a system that then gives output (e.g. send “find bottle” and check that it returns “living room table” as data, without a user interface for now)
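The data-only coupling described in the last item ("find bottle" in, "living room table" out) could be sketched as a small last-seen store that the detector updates and the interface queries. This is a minimal, hypothetical sketch: the `LastSeenStore` class, its methods and the `find <item>` command form are assumptions, and in a real system the `update` calls would come from the object detection pipeline.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Sighting:
    camera: str
    location: str     # named location, e.g. produced by a location classifier
    timestamp: float  # seconds since the epoch


class LastSeenStore:
    """Hypothetical store keeping only the most recent sighting per item."""

    def __init__(self) -> None:
        self._sightings: Dict[str, Sighting] = {}

    def update(self, item: str, camera: str, location: str,
               timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        # Overwrite the previous sighting: no location history is kept.
        self._sightings[item.lower()] = Sighting(camera, location, ts)

    def handle_command(self, command: str) -> str:
        """Answer commands of the assumed form 'find <item>'."""
        words = command.strip().lower().split(maxsplit=1)
        if len(words) != 2 or words[0] != "find":
            return "unknown command"
        sighting = self._sightings.get(words[1])
        if sighting is None:
            return "item not seen yet"
        return sighting.location


store = LastSeenStore()
store.update("bottle", "camera2", "kitchen counter", timestamp=1.0)
store.update("bottle", "camera2", "living room table", timestamp=2.0)
print(store.handle_command("find bottle"))  # living room table
```

Keeping only the latest sighting per item is one design choice; it keeps the stored data small, at the cost of losing any history of where an item has been.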

'''Research'''

* Check whether users actually like the system in question.

* Check which locations in a building are most useful for users.

* Research privacy concerns regarding cameras in a home.

* Analyse the expected cost.

* Research the best way to communicate the location to the user.

=== Deliverables ===

'''Prototype'''

* Create an object recognition setup with a camera.

* Create an interface that can process certain commands.

* Create a system that can locate requested objects on a live camera feed.

* Create an interface that can explain where a found object is located.

* Create a prototype that works according to the requirements.

=== Planning ===

{|
! scope="col" | Week
! scope="col" | Milestones
|-
| Week 1 (23-04)
|
* Define problem statement
* Define goals, milestones, users and planning
* Research state of the art
|-
| Week 2 (30-04)
|
* Interview users
* Live video feed object detection
* Data interface
|-
| Week 3 (07-05)
|
* Report on user results
* Look into point feature recognition
|-
| Week 4 (14-05)
|
* Create interface for users
* Implement database for the system
|-
| Week 5 (21-05)
|
* Research the best way to communicate the location to the user
* Create a basic usable interface which can tell the location of objects in some way
|-
| Week 6 (28-05)
|
* Location classification in the best way
* Implement basic tracking
|-
| Week 7 (04-06)
|
* Check privacy and security measures and additional features
|-
| Week 8 (11-06)
|
* Tests
* Prototype
* Wiki
|-
| Week 9 (18-06)
|
* Final presentation
|}

== State of the art ==

A number of studies have already been conducted on finding objects using artificial intelligence in different ways. Studies aimed specifically at finding objects for visually impaired people include systems that use FM sonar, which mostly detects the smoothness, repetitiveness and texture of surfaces<ref name="FM Sonar detection">http://www.aaai.org/Papers/Symposia/Fall/1996/FS-96-05/FS96-05-007.pdf</ref>, and systems based on Speeded-Up Robust Features (SURF), which are more robust with regard to scaling and rotation of objects.<ref name="Spotlight finding">Chincha, R., & Tian, Y. (2011). Finding objects for blind people based on SURF features. 2011 IEEE International Conference on Bioinformatics and Biomedicine Workshops (BIBMW). doi:10.1109/bibmw.2011.6112423 ( https://ieeexplore.ieee.org/abstract/document/6112423/ )</ref> Other, more general, research into object recovery makes use of radio-frequency (RFID) tags attached to objects<ref name="rfid tags">Orr, R. J., Raymond, R., Berman, J., & Seay, F. A. (1999). A system for finding frequently lost objects in the home. Georgia Institute of Technology. Retrieved from https://smartech.gatech.edu/handle/1853/3391.</ref> and Spotlight, which "employs active RFID and ultrasonic position detection to detect the position of a lost object [and] illuminates the position".<ref name="Spotlight">Nakada, T., Kanai, H., & Kunifuji, S. (2005). A support system for finding lost objects using spotlight. Proceedings of the 7th International Conference on Human Computer Interaction with Mobile Devices & Services - MobileHCI 05. doi:10.1145/1085777.1085846 ( https://dl.acm.org/citation.cfm?id=1085846 )</ref>

A relevant study has been conducted on the nature of losing and finding objects. It addresses general questions such as how often people lose objects, which strategies they use to find them, which types of objects are lost most frequently and why people lose objects.<ref name="the nature of losing items">Pak, R., Peters, R. E., Rogers, W. A., Abowd, G. D., & Fisk, A. D. (2004). Finding lost objects: Informing the design of ubiquitous computing services for the home. PsycEXTRA Dataset. doi:10.1037/e577282012-008</ref>

Research into the needs and opinions of visually impaired people is also applicable to the project. A book has been written about assistive technologies for visually impaired people.<ref name="book assistive technology">Hersh, M. A., & Johnson, M. A. (2008). Assistive technology for visually impaired and blind people. London: Springer-Verlag London Limited.</ref> Multiple surveys have also been conducted on the opinions of visually impaired people about research on visual impairment, both in general<ref>Duckett, P. S., & Pratt, R. (2001). The Researched Opinions on Research: Visually impaired people and visual impairment research. Disability & Society, 16(6), 815-835. doi:10.1080/09687590120083976 ( https://www.tandfonline.com/doi/abs/10.1080/09687590120083976 )</ref> and in the Netherlands specifically.<ref>Schölvinck, A. M., Pittens, C. A., & Broerse, J. E. (2017). The research priorities of people with visual impairments in the Netherlands. Journal of Visual Impairment & Blindness, 237-261. Retrieved from https://files.eric.ed.gov/fulltext/EJ1142797.pdf.</ref>

Convolutional networks are at the core of most state-of-the-art computer vision solutions<ref name="architecture computer vision">Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., & Wojna, Z. (2016). Rethinking the Inception Architecture for Computer Vision. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi:10.1109/cvpr.2016.308</ref>. TensorFlow is an open-source machine learning framework by Google; its image recognition tutorial uses a convolutional network model trained specifically for image recognition<ref name="test">Image Recognition | TensorFlow. (n.d.). Retrieved April 26, 2018, from https://www.tensorflow.org/tutorials/image_recognition</ref>.

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is a benchmark for object category classification and detection<ref>Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., . . . Fei-Fei, L. (2015). ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision, 115(3), 211-252. doi:10.1007/s11263-015-0816-y</ref>. Inception-v3, at the time of writing the latest and highest-quality image recognition model available for TensorFlow, reaches 21.2% top-1 and 5.6% top-5 error for single-crop evaluation on the ILSVRC 2012 classification task, which set a new state of the art<ref name="architecture computer vision"/>.
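Top-1 error is the fraction of test images whose correct label is not the single highest-scoring prediction; top-5 error is the fraction whose correct label is not among the five highest-scoring predictions. A minimal NumPy sketch of this metric, where `top_k_error` is a hypothetical helper and the toy scores are made up for illustration (they are not ILSVRC data):

```python
import numpy as np


def top_k_error(scores: np.ndarray, labels: np.ndarray, k: int) -> float:
    """Fraction of samples whose true label is not among the k highest scores.

    scores: array of shape (num_samples, num_classes)
    labels: array of shape (num_samples,) with integer class indices
    """
    # Indices of the k highest-scoring classes per sample (their order does not matter).
    top_k = np.argsort(scores, axis=1)[:, -k:]
    hits = np.any(top_k == labels[:, None], axis=1)
    return float(1.0 - hits.mean())


# Toy example: 3 samples, 4 classes.
scores = np.array([
    [0.1, 0.6, 0.2, 0.1],  # true class 1 is ranked first
    [0.5, 0.1, 0.3, 0.1],  # true class 2 is ranked second
    [0.4, 0.3, 0.2, 0.1],  # true class 3 is ranked last
])
labels = np.array([1, 2, 3])
print(top_k_error(scores, labels, k=1))  # 2 of 3 missed, about 0.667
print(top_k_error(scores, labels, k=2))  # 1 of 3 missed, about 0.333
```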

A lot of progress has been made in recent years with regard to object detection. Modern object detectors based on these networks, such as Faster R-CNN, R-FCN, MultiBox, SSD and YOLO, are now good enough to be deployed in consumer products, and some have been shown to be fast enough to run on mobile devices<ref name="speed object detectors">Huang, J., Rathod, V., Sun, C., Zhu, M., Korattikara, A., Fathi, A., . . . Murphy, K. (2017). Speed/Accuracy Trade-Offs for Modern Convolutional Object Detectors. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). doi:10.1109/cvpr.2017.351</ref>. Research comparing these architectures on running time, memory use and accuracy<ref name="speed object detectors"/> can be used to determine which implementation to use in a concrete application.

In order to keep track of where a certain object resides, an object tracking (also known as video tracking) system needs to be implemented. Different such systems have been compared on a large-scale benchmark, providing a fair comparison<ref>Leal-Taixé, L., Milan, A., Schindler, K., Cremers, D., Reid, I., & Roth, S. (2017). Tracking the Trackers: An Analysis of the State of the Art in Multiple Object Tracking. CoRR. Retrieved April 26, 2018, from http://arxiv.org/abs/1704.02781</ref>.
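One of the simplest multiple object tracking approaches associates detections in consecutive frames by bounding-box overlap (intersection over union, IoU); it is not necessarily one of the trackers evaluated in the cited benchmark. A minimal sketch, assuming boxes are `(x_min, y_min, x_max, y_max)` tuples per frame; the `IouTracker` class and the 0.3 threshold are hypothetical choices:

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Greedily matches each new detection to the best-overlapping existing track."""

    def __init__(self, threshold: float = 0.3) -> None:
        self.threshold = threshold
        self.tracks: Dict[int, Box] = {}  # track id -> last known box
        self._ids = count()

    def step(self, detections: List[Box]) -> Dict[int, Box]:
        """Process one frame; return the tracks that were seen in this frame."""
        unmatched = dict(self.tracks)
        updated: Dict[int, Box] = {}
        for det in detections:
            best_id, best_iou = None, self.threshold
            for track_id, box in unmatched.items():
                overlap = iou(det, box)
                if overlap >= best_iou:
                    best_id, best_iou = track_id, overlap
            if best_id is None:
                best_id = next(self._ids)  # no good match: start a new track
            else:
                del unmatched[best_id]
            updated[best_id] = det
        # Unmatched tracks are kept, so an object out of sight keeps its last box.
        self.tracks = {**unmatched, **updated}
        return updated


tracker = IouTracker()
first = tracker.step([(0, 0, 10, 10)])
second = tracker.step([(1, 1, 11, 11), (50, 50, 60, 60)])
print(first, second)  # the moved box keeps id 0, the new box gets id 1
```

Keeping unmatched tracks is one simple way to remember objects which are out of sight; a practical tracker would also have to handle occlusion, identity switches and stale tracks.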

A master's thesis implementing object tracking in video using TensorFlow has also been published<ref>Ferri, A. (2016). Object Tracking in Video with TensorFlow (Master's thesis, Universitat Politècnica de Catalunya, Spain). Barcelona: UPCommons. Retrieved April 26, 2018, from http://hdl.handle.net/2117/106410</ref>.

Essentially, we are designing a kind of "smart home" by extending the house with this technology. However, several issues arise with smart homes, security and privacy in particular. A study involving a smart home and the elderly<ref name="Elderly and smart homes">Melenhorst, A.-S., Fisk, A. D., Mynatt, E. D., & Rogers, W. A. (2004). Potential Intrusiveness of Aware Home Technology: Perceptions of Older Adults. Proceedings of the Human Factors and Ergonomics Society 48th Annual Meeting.</ref>, which also used cameras, had rather positive results. 1783 quotes were selected from interviews to determine the thoughts of the users, and only 12% of the quotes were negative about the privacy and security of the users. In total, 63% of the quotes were positive about the system and 37% were negative, so privacy and security accounted for only about a third (12/37) of the negative quotes. Most of the complaints concerned other issues, such as the use of certain products in the study, which are irrelevant for this project.

Another study on privacy concerns and the elderly<ref name="Elderly and cameras">Caine, K. E., Fisk, A. D., & Rogers, W. A. (2006). Benefits and Privacy Concerns of a Home Equipped with a Visual Sensing System: a Perspective from Older Adults. Proceedings of the Human Factors and Ergonomics Society 50th Annual Meeting.</ref> focused specifically on the use of cameras. In this study, however, the elderly were not the users themselves but commented on potential uses of the system. They were shown several families and the systems those families used, and were asked several questions about the privacy and benefits of these scenarios. Regarding privacy, the higher the level of functioning of the depicted user, the higher the privacy concerns were rated. In other words, for a low-functioning user the potential benefits outweigh the privacy concerns.

A similar study<ref name="People and cameras">Ziefle, M., Röcker, C., & Holzinger, A. (2011). Medical Technology in Smart Homes: Exploring the User's Perspective on Privacy, Intimacy and Trust. 2011 IEEE 35th Annual Computer Software and Applications Conference Workshops (COMPSACW).</ref> also asked people, not only the elderly, about a system that uses cameras in their homes. The results show that people are very reluctant to place a monitoring system in their homes, except when it provides an actual health benefit, in which case almost everyone would want the system. People would also prefer the installed cameras to be invisible or unobtrusive. There were also many security concerns: people do not trust that their footage is safe and fear that it could be obtained by third parties.

To tackle the privacy concerns introduced by cameras, IBM has researched a camera system that filters out any identity-revealing features before the video is received<ref name="IBM and cameras">Senior, A., Pankanti, S., Hampapur, A., Brown, L., Tian, Y.-L., & Ekin, A. (2003). Blinkering Surveillance: Enabling Video Privacy through Computer Vision. IBM Research Report RC22886 (W0308-109), August 28, 2003, Computer Science.</ref>. This could be used in our camera-based system to preserve the privacy of all primary and secondary users seen by the cameras.
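The IBM system itself is not reproduced here. As a much simpler stand-in, the sketch below pixelates given image regions before a frame is stored or analysed further; the regions could be bounding boxes of people returned by a detector, which is assumed to exist. The function name `pixelate_regions` and the block size are hypothetical, and only NumPy is needed.

```python
import numpy as np


def pixelate_regions(frame: np.ndarray, boxes, block: int = 16) -> np.ndarray:
    """Return a copy of `frame` (H x W x C) with every box replaced by coarse blocks.

    boxes: iterable of (x_min, y_min, x_max, y_max) pixel coordinates,
    e.g. person detections from an (assumed) object detector.
    """
    out = frame.copy()
    height, width = frame.shape[:2]
    for x_min, y_min, x_max, y_max in boxes:
        # Clip the box to the image bounds.
        x_min, x_max = max(0, x_min), min(width, x_max)
        y_min, y_max = max(0, y_min), min(height, y_max)
        for y in range(y_min, y_max, block):
            for x in range(x_min, x_max, block):
                tile = out[y:min(y + block, y_max), x:min(x + block, x_max)]
                # Replace every pixel in the tile (a view into `out`) by the tile's mean colour.
                tile[...] = tile.mean(axis=(0, 1), keepdims=True).astype(out.dtype)
    return out


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)  # stand-in for a camera frame
anonymised = pixelate_regions(frame, [(0, 0, 16, 16)], block=16)
```

Pixelation is weaker than the re-rendering approach in the IBM report; it only illustrates where such a filter would sit in the pipeline.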

Many frameworks have been implemented for voice recognition. One such framework is discussed in the paper by Dawid Polap et al.<ref name = Image approach to voice recognition>Polap, D., & Wozniak, M. (2017). Image approach to voice recognition. 2017 IEEE Symposium Series on Computational Intelligence (SSCI). doi:10.1109/ssci.2017.8280890 ( https://ieeexplore.ieee.org/document/8280890/ )</ref>, who describe a way to represent audio as an image-type file, which allows convolutional neural networks to be used. This could be worthwhile to look into, as a convolutional neural network is already needed to find objects using the cameras.
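Treating audio as an image usually means converting it to a spectrogram, a 2D time-frequency array that a convolutional network can take as input. The sketch below computes a log-magnitude spectrogram with plain NumPy; the frame length, hop size and the name `log_spectrogram` are assumptions for illustration, not the exact preprocessing used by Polap and Wozniak.

```python
import numpy as np


def log_spectrogram(signal: np.ndarray, frame_len: int = 400, hop: int = 160) -> np.ndarray:
    """Return a (num_frames, frame_len // 2 + 1) log-magnitude spectrogram.

    At 16 kHz, frame_len=400 and hop=160 correspond to 25 ms frames every 10 ms.
    The signal must contain at least frame_len samples.
    """
    window = np.hanning(frame_len)
    num_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop:i * hop + frame_len] * window for i in range(num_frames)
    ])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    return np.log(magnitude + 1e-10)  # small offset avoids log(0)


# One second of a 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
image = log_spectrogram(np.sin(2 * np.pi * 440 * t))
print(image.shape)  # (98, 201): 98 time frames, 201 frequency bins of 40 Hz each
```

The resulting array can be treated like a single-channel image; the brightest row for this tone is frequency bin 11 (11 × 40 Hz = 440 Hz).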

A widely used framework for speech recognition is the hidden Markov model (HMM) approach. Lawrence R. Rabiner gives a detailed description of how to implement it and where it is used, which provides a good starting point for the implementation.<ref name = HMMM>Rabiner, L. R. (1990). A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition. Readings in Speech Recognition, 267-296. doi:10.1016/b978-0-08-051584-7.50027-9 ( https://ieeexplore.ieee.org/document/18626/ )</ref>
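A core computation in Rabiner's tutorial is the forward algorithm, which gives the likelihood P(O | λ) of an observation sequence O under an HMM λ with transition matrix A, emission matrix B and initial state distribution π. A minimal NumPy sketch for discrete observations; the toy parameters are made up, and real speech recognizers use continuous emission densities and scaled or log-space computations to avoid numerical underflow:

```python
import numpy as np


def forward(A: np.ndarray, B: np.ndarray, pi: np.ndarray, obs) -> float:
    """Return P(obs | model) for a discrete HMM.

    A:  (N, N) transition probabilities, A[i, j] = P(next state j | state i)
    B:  (N, M) emission probabilities, B[i, k] = P(symbol k | state i)
    pi: (N,) initial state distribution
    obs: sequence of symbol indices
    """
    alpha = pi * B[:, obs[0]]               # initialisation: alpha_1(i)
    for symbol in obs[1:]:
        alpha = (alpha @ A) * B[:, symbol]  # induction: sum over previous states
    return float(alpha.sum())               # termination: sum over final states


# Toy two-state model with two observation symbols.
A = np.array([[0.7, 0.3], [0.4, 0.6]])
B = np.array([[0.9, 0.1], [0.2, 0.8]])
pi = np.array([0.5, 0.5])
print(forward(A, B, pi, [0, 1, 0]))  # approximately 0.099375
```

The recursion costs O(N²T) for N states and T observations, instead of the O(N^T) cost of summing over every possible state sequence.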

As an alternative to voice control, the system could also react to motion controls. This would allow people who cannot speak, or who have difficulty speaking because of a disability, to still use the system. This process is described by M. Sarkar et al.<ref name = Android>Sarkar, M., Haider, M. Z., Chowdhury, D., & Rabbi, G. (2016). An Android based human computer interactive system with motion recognition and voice command activation. 2016 5th International Conference on Informatics, Electronics and Vision (ICIEV). doi:10.1109/iciev.2016.7759990 ( https://ieeexplore.ieee.org/document/7759990/ )</ref>

As mentioned above, some people have a disability that affects their speech. If they still want to use a voice-controlled system, some adjustments need to be made. Xiaojun Zhang et al. describe how deep neural networks can be used to recognize pathological voices, so that these people can still use a voice recognition system.<ref name = DNN>Zhang, X., Tao, Z., Zhao, H., & Xu, T. (2017). Pathological voice recognition by deep neural network. 2017 4th International Conference on Systems and Informatics (ICSAI). doi:10.1109/icsai.2017.8248337 ( https://ieeexplore.ieee.org/document/8248337/ )</ref>

When it comes to the environment, i.e. the house or building in which the system will be installed, decisions have to be made about the extent to which the space is mapped. A study similar to our project discussed which rooms could be used and where the cameras could be placed.<ref name = FindingObjects>Yi, C., Flores, R. W., Chincha, R., & Tian, Y. (2013). Finding objects for assisting blind people. Network Modeling Analysis in Health Informatics and Bioinformatics, 2(2), 71-79. (https://link.springer.com/article/10.1007/s13721-013-0026-x)</ref> The cameras could, for example, be static or attached to the user in some way.

Regarding camera placement, studies on surveillance cameras have derived optimal placements based on the camera specifications, to make their use as efficient as possible and thereby reduce costs.<ref name = efficiency>Yabuta, K., & Kitazawa, H. (2008, May). Optimum camera placement considering camera specification for security monitoring. In Circuits and Systems, 2008. ISCAS 2008. IEEE International Symposium on (pp. 2114-2117). IEEE. (https://ieeexplore.ieee.org/abstract/document/4541867/)</ref> They have also produced an algorithm that tries to maximize the performance of the cameras by considering the task they are meant for.<ref name = camAlgorithm>Bodor, R., Drenner, A., Schrater, P., & Papanikolopoulos, N. (2007). Optimal camera placement for automated surveillance tasks. Journal of Intelligent and Robotic Systems, 50(3), 257-295. (https://link.springer.com/article/10.1007%2Fs10846-007-9164-7)</ref>
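The cited placement methods are not reproduced here. As a simpler, commonly used stand-in, camera placement can be framed as a maximum coverage problem: given candidate camera positions and the spots each one would see, repeatedly pick the candidate that covers the most still-uncovered spots. The candidate names and coverage sets below are hypothetical, and the greedy choice is a heuristic that is not guaranteed to be optimal.

```python
from typing import Dict, List, Set


def greedy_placement(coverage: Dict[str, Set[str]], budget: int) -> List[str]:
    """Pick up to `budget` cameras, each time taking the one that adds most new coverage."""
    chosen: List[str] = []
    covered: Set[str] = set()
    for _ in range(budget):
        best = max(
            (c for c in coverage if c not in chosen),
            key=lambda c: len(coverage[c] - covered),
            default=None,
        )
        if best is None or not coverage[best] - covered:
            break  # no remaining candidate adds anything new
        chosen.append(best)
        covered |= coverage[best]
    return chosen


# Hypothetical candidate positions and the spots each one can see.
coverage = {
    "ceiling corner": {"sofa", "tv table", "armchair"},
    "above door": {"sofa", "door"},
    "kitchen wall": {"counter", "fridge", "door"},
}
print(greedy_placement(coverage, budget=2))  # ['ceiling corner', 'kitchen wall']
```

With a budget of three, the sketch still returns two cameras, because "above door" would not see anything new.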

Using depth cameras, it is also possible to generate a 3D model of the indoor environment, as described in a paper on RGB-D mapping. This can help explain to the user where the requested object is located.<ref name = depth>Henry, P., Krainin, M., Herbst, E., Ren, X., & Fox, D. (2010). RGB-D mapping: Using depth cameras for dense 3D modeling of indoor environments. In the 12th International Symposium on Experimental Robotics (ISER). (http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.226.91)</ref>
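With a depth camera, a pixel at which an object was detected can be turned into a 3D point in the camera's coordinate frame using the pinhole camera model: X = (u − c_x)·Z / f_x and Y = (v − c_y)·Z / f_y, where Z is the measured depth and f_x, f_y, c_x, c_y are the camera intrinsics. The intrinsic values below are hypothetical placeholders, not calibration data of a specific camera, and the function name `back_project` is illustrative. Combining such points into a full map, as RGB-D mapping does, additionally requires the camera pose.

```python
from typing import Tuple


def back_project(u: float, v: float, depth: float,
                 fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Convert pixel (u, v) with depth in metres to camera coordinates (X, Y, Z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


# Hypothetical intrinsics for a 640x480 depth camera.
FX = FY = 525.0
CX, CY = 319.5, 239.5
print(back_project(319.5, 239.5, 2.0, FX, FY, CX, CY))  # (0.0, 0.0, 2.0): straight ahead
print(back_project(844.5, 239.5, 2.0, FX, FY, CX, CY))  # (2.0, 0.0, 2.0)
```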

== User Research ==

To investigate the interest in and concerns about our system, we distributed a survey among various potential users.

We are mainly developing this system for people who have dementia or are visually impaired, since we estimate that they are among the people most likely to lose their personal belongings. For this reason, the survey asks respondents, who are considered potential users, whether they have such a disability.

We are also interested in the correlation between losing objects, age and interest in this system.

Since we expect many privacy concerns, we specifically ask users how they would like these concerns to be addressed.

We also ask in which rooms users would want cameras installed, to estimate how many cameras would need to be installed on average.

We are also interested in the price people would be willing to pay for the system. This is essential, as the system must have a chance on the current market.

Lastly, we ask how important it is that the cameras are hidden, because people may not want a visible camera in their rooms.

The survey linked below was filled in by '''61''' people; its results are discussed afterwards.

Google form: https://docs.google.com/forms/d/e/1FAIpQLScCbIxM10migwrO-rNiF07-iIabRcVXuj8jqcqDpZFWPJ392Q/viewform?usp=sf_link

----'''Results'''

The survey was filled in by people from all over the world, mostly in the age ranges 11-20 (31%) and 21-30 (64%), so these are the only age groups we can make proper statements about. There is no notable difference between the two age groups.

46% of the respondents were not interested in the system, while 42% were interested (the remaining 12% were neutral), which is roughly an even split.

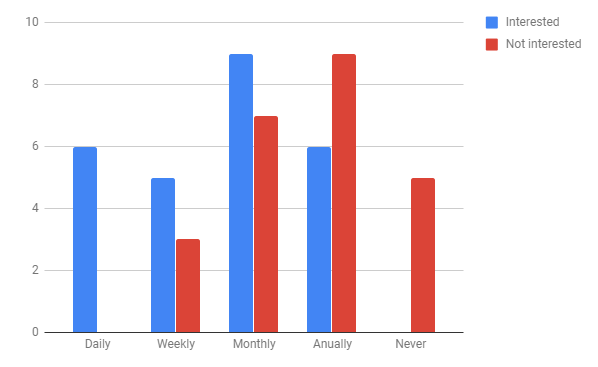

The correlation between interest in the system and how often a person loses something is clearly noticeable, as shown in the bar chart below.

[[File:Interested.png]]

Note that some people were not interested in the system even though they lose objects now and then; almost all of them were not interested because of privacy and security concerns.

Although not statistically significant, two visually impaired people filled in the survey, and both were very interested in the system.

Some reasons given for being interested in the system:

* The system would help me retrieve the objects I occasionally lose.

* The system is an interesting gimmick to fool around with.

* The system would help me to keep track of valuable items.

* The system is a great idea for people with disabilities.

Some reasons given for not being interested in the system:

* I do not want cameras in my house.

* I (almost) never lose objects.

* The system might get annoying.

* The system is probably too expensive to install.

* The use cases are too limited.

* The house will use more electricity.

* Privacy and security concerns (discussed below)

As expected, we received several privacy and security concerns. This is understandable, as people have become more concerned about their privacy in recent years, with many companies using personal data for their own benefit.

Below we discuss several of the comments that were made, and explain how misuse of the system would be prevented.

* The information is stored longer than necessary.

We plan to store only the information the system needs to work, for example the last seen locations of certain objects, which are updated over time.

* The data is shared with third parties.

A contract can be signed that legally prohibits us from sharing any of the data.

* The system can be hacked, as the internet is insecure.

The system will operate locally, with a central computer to which all cameras are connected directly, so no internet connection is involved. However, for the prototype we use an existing Google framework for voice control, the "Google Assistant SDK".

* The system can be used by burglars.

Only registered users can use the system; a voice recognition or password system will take care of this. Furthermore, the data on the physical system will be encrypted so that it cannot easily be accessed.
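For the password option mentioned above, a common practice is to store only a salted, deliberately slow hash of the password instead of the password itself. A minimal sketch using Python's standard library (`hashlib.pbkdf2_hmac` and `hmac.compare_digest`); the iteration count and the function names are assumptions, and neither the voice recognition option nor the encryption of stored data is covered here.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # assumed work factor; tune for the target hardware


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, hash) to store instead of the plain password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, stored: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(digest, stored)


salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))  # True
print(verify_password("burglar guess", salt, stored))  # False
```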

* The system cannot be turned off.

In principle the system can be turned off, but note that it may "lose" some objects, since they can be moved while the system is offline.

* I do not want cameras watching me, or I would prefer another method of locating objects.

This is the only concern that cannot be addressed, as it concerns the user's own preference. Other methods of locating objects exist, such as Bluetooth tracking devices, but these can also be hacked, so in a sense this system could be safer.

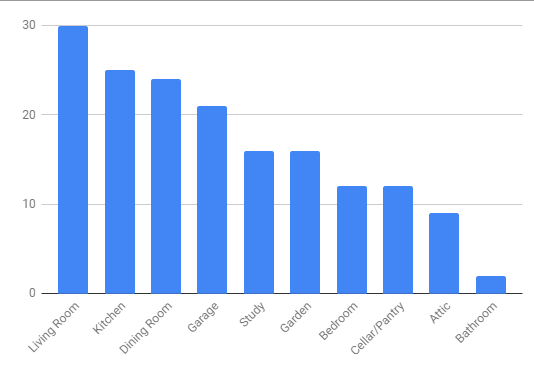

We asked the respondents who were interested in the system in which rooms they would want a camera installed. The results are shown in the bar chart below.

[[File:rooms.png]]

38 people indicated the rooms in which they would want a camera installed, selecting 167 rooms in total. This means that each user would have about 4 cameras in their home on average (167 / 38 ≈ 4.4).

After accounting for the different currencies used in the answers, the average price people were willing to pay for the system was €230. This amount would have to cover an average of 4 cameras and a computer capable of live processing, excluding installation costs.

Lastly, we asked whether the cameras have to be unobtrusive. For 31% of the respondents this was not important, while 58% found it important (the remaining 11% answered in between). Since the majority finds it important, we have to make sure that the cameras are unobtrusive.

We received some comments and tips for the system:

* You should also add a security camera feature to the system that can detect burglars and report them.
* You should call the cameras "sensors" so that fewer users are scared off.

----
'''Results from other sources'''

A survey about lost items was conducted by the company that created "Pixie", a tool to track items in your home using Bluetooth<ref name="Pixie">https://getpixie.com/blogs/news/lostfoundsurvey</ref>. The survey concluded that Americans spend 2.5 days a year looking for lost items, that it takes 5 minutes on average to find a lost item, and that this causes people to be late for events. The most commonly lost items are TV remotes, keys, shoes, wallets, glasses and phones. The lost items that take longer than 15 minutes to find on average are keys, wallets, umbrellas, passports, driver's licences and credit cards.

== Location description research ==

[[ Image:Scene1.png | thumb | right | 5000px | Scenario with object box <ref name="roomImage">https://www.123rf.com/photo_54669575_top-view-of-modern-living-room-interior-with-sofa-and-armchairs-3d-render.html, unwatermarked version found on http://www.revosense.com/2018/01/28/realize-your-desires-living-room-layout-ideas-with-these-5-tips/top-view-of-living-room-interior-3d-render/</ref>]]

One of the major difficulties of this system is describing where an object is located in such a way that the user understands the description and can find the object. To find the best way to do this, we consider several options and compare them with each other. For this we assume that there is an interface in which the user can indicate which object they are looking for. To visualize the scenarios below, we use the picture on the right as an example of a room in which the Object Locating System is used. In this picture, the red square indicates where a requested item was lost. For the purpose of illustration, we assume the lost item to be a TV remote.

----

===Scenario 1===

One of the most convenient ways to show users where an item is located is to show them live camera footage with a box around the location where the object was last seen. In the example scenario, this would be the video feed shown to the user. This allows users to identify quickly and easily where the requested object is, because they can see exactly where the item was lost. The approach is therefore very accurate and leaves little room for confusion, as the interaction with the system does not require difficult communication. However, showing such a video feed is quite taxing on the hardware, which makes this option rather expensive. There is also no guarantee that the user will not obstruct the object in the live feed, which makes it hard for the system to show where the item is. Besides this, a live feed is not necessarily better than a picture. Even if users want to watch themselves on the feed to guide themselves to the location, there will be a delay, which makes the system less user-friendly.

===Scenario 2===

Instead of a live feed, the system can also show a still picture of the room, taken at the moment the user asks for an object. This means that the object box may mark the location where the object was last seen even though it is no longer there, as in the example scenario picture on the right. Compared to the live feed, this requires far less computation while delivering almost the same result. The main difference is that users cannot guide themselves to the object using the live feed, but since the room will probably be well known to the user, this is not necessary. The other advantage of scenario 1 also applies here: the communication between the user and the system is intuitive and clear, with little chance of miscommunication, such as the user not understanding the result the system gives. The only disadvantage is that, as in scenario 1, the user may obstruct the object's location while the picture is taken, which makes the result less clear.

===Scenario 3===

In the example scenario picture, there is an empty box where a TV remote was last seen but is no longer present. This may not help the user at all, so saving a picture of the moment the object was last seen could improve user-friendliness. This keeps the advantages of scenarios 1 and 2, so user interaction is easy and clear. However, it might raise storage concerns, because a picture has to be saved for every object the system finds, and these pictures have to be updated at a certain time interval. Since only one picture per object is needed, namely the most recent one, storage does not have to be a problem. One could also argue that saving pictures puts privacy at risk, but the saved pictures are no more privacy-sensitive than the camera feeds themselves, so saving them does not increase the risk.

===Scenario 4===

We now consider a situation where the system states n objects around the object that you wish to find. In our example, the system would say "The TV remote is near the couch, pillow and table" if it states 3 objects.

This requires detecting more kinds of objects, and the system has to know how close they are to the object you are looking for. The latter is easy; the former is more difficult, as the system has to be trained on more objects. The system also has to know how many objects to state and which objects are useful to the user. This can be done by considering all objects within a certain distance of the searched-for object, which is easy to do, although the system may then state some useless objects. The system also cannot distinguish between objects of the same type: in this example it would just say "couch" instead of referring to a specific couch.
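The selection step could look like the following Python sketch, which picks at most n detected objects whose box centers lie within a fixed radius of the searched-for object and builds a sentence like the one in the example. The box format (x_min, y_min, x_max, y_max in pixels), the radius of 200 pixels and all function names are assumptions for illustration; we did not implement this scenario.

```python
import math
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def center(box: Box) -> Tuple[float, float]:
    """Return the center point of a bounding box."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def nearby_objects(target: Box, others: List[Tuple[str, Box]],
                   n: int = 3, radius: float = 200.0) -> List[str]:
    """Labels of at most n objects within `radius` pixels of the target, closest first."""
    tx, ty = center(target)
    in_range = []
    for label, box in others:
        ox, oy = center(box)
        distance = math.hypot(ox - tx, oy - ty)
        if distance <= radius:
            in_range.append((distance, label))
    in_range.sort()  # closest objects first
    return [label for _, label in in_range[:n]]


def describe(item: str, labels: List[str]) -> str:
    """Build a sentence such as 'The TV remote is near the couch, pillow and table.'"""
    if not labels:
        return f"No known objects were found near the {item}."
    if len(labels) == 1:
        listed = labels[0]
    else:
        listed = ", ".join(labels[:-1]) + " and " + labels[-1]
    return f"The {item} is near the {listed}."
```

For a remote at (300, 200, 340, 220) with a couch, pillow and table close by and a plant on the far side of the image, `describe("TV remote", nearby_objects(remote, scene))` yields "The TV remote is near the couch, pillow and table."; the plant falls outside the radius. This also shows that the fixed radius effectively decides which objects count as useful.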

===Scenario 5===

We now consider a situation where the system states the object that encapsulates the searched-for object. This is very similar to the previous scenario, but instead of stating multiple objects, only one object is stated. For objects on the table, for example, it can say "On the table", as these objects are completely encapsulated by the table. The TV remote, however, is not encapsulated by anything, so in this case the system cannot state any object.
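In image coordinates, "encapsulated" could be approximated by checking whether the searched-for object's bounding box lies completely inside another object's box, as in the Python sketch below. Choosing the smallest enclosing box (so that a book on a table that lies on a rug is reported as being on the table) is our own assumption, as are the box format and the function names. A box inside another box in a 2D image does not guarantee that the object is physically on or in that object, so this remains an approximation.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def contains(outer: Box, inner: Box) -> bool:
    """True if `inner` lies completely inside `outer`."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def area(box: Box) -> float:
    return (box[2] - box[0]) * (box[3] - box[1])


def encapsulating_object(target: Box, others: List[Tuple[str, Box]]) -> Optional[str]:
    """Label of the smallest object whose box fully contains the target, or None."""
    candidates = [(area(box), label) for label, box in others if contains(box, target)]
    if not candidates:
        return None  # like the TV remote in the example: nothing encloses it
    return min(candidates)[1]
```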

===Scenario 6===

We now consider a situation where the system states the region of the room an item is in. In our example, the system would state that the TV remote is in the top right region of the room. As this example shows, it can already be hard to define a specific region, although it may be easier in other cases (a certain corner of a room, for instance).

The system would need to build a world model of the room and divide the room into regions that are understandable to the user. It cannot use objects for this, which increases the difficulty of this approach. The system then needs to detect objects and determine which region(s) they are in, which is more difficult when an object lies in multiple regions at once.
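A very crude version of this idea skips the world model entirely and divides a single camera image into a fixed 3x3 grid with a name for each cell. The Python sketch below is purely illustrative and uses hypothetical names; it returns every cell a bounding box overlaps, which also shows the problem of objects lying in multiple regions at once.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)

ROWS = ["top", "middle", "bottom"]
COLS = ["left", "center", "right"]


def regions(box: Box, width: float, height: float) -> List[str]:
    """Names of all cells of a 3x3 grid over the image that the box overlaps."""
    x_min, y_min, x_max, y_max = box
    names = []
    for r, row in enumerate(ROWS):
        cell_top, cell_bottom = r * height / 3, (r + 1) * height / 3
        for c, col in enumerate(COLS):
            cell_left, cell_right = c * width / 3, (c + 1) * width / 3
            overlaps = (x_min < cell_right and x_max > cell_left
                        and y_min < cell_bottom and y_max > cell_top)
            if overlaps:
                # The middle cell is simply called "center".
                names.append("center" if (row, col) == ("middle", "center") else f"{row} {col}")
    return names
```

A box fully inside one cell gives a single clear answer such as `["top right"]`, but a box on a grid line already yields up to four regions, which a spoken description would somehow have to summarize.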

===Scenario 7===

We now consider the situation where the system states the direction the user should walk in. This can be done using voice commands or an arrow on a screen; the medium does not matter for now. Imagine you are standing in the top left corner of the image and you want to find the TV remote. The system will indicate that you have to move forward until you reach the couch. This may sound convenient, as the user does not have to think at all when finding an object and only has to follow the steps.

However, this gives rise to many technical and usability issues. It is very difficult to track which direction a user is facing and to indicate in which (exact) direction the user has to walk; the system would need a perfect world model and an understanding of depth and distance. When multiple users are on screen, the system would have trouble guiding a particular user, unless user recognition is implemented. Moreover, users already know their own house, so being guided step by step is slower than finding the object themselves; this approach would be more suitable for an unfamiliar location. The system may also not know exactly where an object is (if it relies on its last-seen functionality), so it may direct the user to the wrong location. Finally, there will be a delay, which decreases user-friendliness considerably, as a delayed instruction may send the user the wrong way.

===Summary===

In the table below we summarize the different scenarios with their advantages and disadvantages.

We also state whether the user interaction uses a screen or voice, whether the interaction is live (the communication with the user is constantly updated) and whether a world model of the room has to be built. These variants have the following advantages and disadvantages:<br>

* '''Screen'''
** Advantages:
*** Information can easily be communicated, as a user easily understands an image.
*** The user knows the room, so it is relatively easy to find an object.
** Disadvantages:
*** A screen needs to be installed, or the system has to be able to work via Wi-Fi.
*** The user may obstruct the camera's view.
* '''Voice'''
** Advantages:
*** Blind people can only use the system if voice interaction is implemented.
** Disadvantages:
*** Voice user interaction is difficult to implement.
*** Speakers are required.
* '''Live'''
** Advantages:
*** It updates in real time, so the user can be guided or guide themselves.
** Disadvantages:
*** There will be a delay in the output.
*** It may be unnecessary.
* '''World model'''
** Advantages:
*** The model of the room can be used to describe item locations in a more understandable way.
** Disadvantages:
*** It is very difficult to implement.
*** Multiple rooms need to be combined with each other.

<br>

{| class="wikitable" border="2" style="vertical-align:top; border-collapse:collapse;"
|-
! Option
! Screen/Voice
! Live
! World model
! Additional Advantages
! Additional Disadvantages
|-
| rowspan="1" | 1. Show live footage and draw a box around the corresponding location of the object found. || rowspan="1" style="text-align: center;" | Screen || rowspan="1" style="text-align: center;" | Yes || rowspan="1" style="text-align: center;" | No || ||
|-
| rowspan="1" | 2. Show a picture of the room, taken when the user asks where an object is located, and draw a box around the corresponding location. || rowspan="1" style="text-align: center;" | Screen || rowspan="1" style="text-align: center;" | No || rowspan="1" style="text-align: center;" | No || || The user may obstruct the camera's view, in which case the picture may have to be retaken.
|-
| rowspan="1" | 3. Show a picture of the room, taken when the object was last seen, and draw a box around the corresponding location. || rowspan="1" style="text-align: center;" | Screen || rowspan="1" style="text-align: center;" | No || rowspan="1" style="text-align: center;" | No || The user can easily see how the object was lost. || Requires many pictures to be taken and saved.
|-
| rowspan="2" | 4. State n objects around the object that you wish to find. || rowspan="2" style="text-align: center;" | Voice || rowspan="2" style="text-align: center;" | No || rowspan="2" style="text-align: center;" | No || || Hard to determine which objects to state to the user.
|-
| || May not be accurate enough.
|-
| rowspan="1" | 5. State the object in which the searched-for object is encapsulated. || rowspan="1" style="text-align: center;" | Voice || rowspan="1" style="text-align: center;" | No || rowspan="1" style="text-align: center;" | No || || The object may not always be encapsulated by another object.
|-
| rowspan="1" | 6. State the region of the room in which the object is located. || rowspan="1" style="text-align: center;" | Voice || rowspan="1" style="text-align: center;" | No || rowspan="1" style="text-align: center;" | Yes || Can be very clear if done correctly. || The room needs to be divided into subsections.
|-
| rowspan="5" | 7. State the direction the user should walk in. || rowspan="5" style="text-align: center;" | Voice || rowspan="5" style="text-align: center;" | Yes || rowspan="5" style="text-align: center;" | Yes || Requires no thinking from the user. || The system may misguide the user if the object is off-screen.
|-
| || Very hard to implement, as the system needs to see what the user is doing as well.
|-
| || Slow way of guiding the user in a location they already know.
|-
| || The camera needs to know the distance of the object relative to the user.
|-
| || Ambiguous when multiple users are on screen.
|}

===Discussion===

Now that all scenarios have been discussed, we have to determine which one is the most viable for our project. Some features turn out to be too difficult to implement. One of these is the world model: after discussion, we concluded that because the system monitors multiple rooms, building a world model is too hard. This already eliminates scenarios 6 and 7. Of the scenarios that use a screen, 1, 2 and 3 are similar. However, scenario 1 does not add enough over the other two to justify its extra computation. Scenarios 2 and 3 seem to be viable choices, as they are user-friendly and can clearly indicate where an object is. The remaining two scenarios, 4 and 5, are also similar to each other. Scenario 4 seems the better of the two, since scenario 5 does not guarantee a good location description if the object is not encapsulated. However, due to the project's time constraints, we do not have the time to implement a system that communicates with the user by voice. Of scenarios 2 and 3 we chose scenario 3, as storage is not a problem and the user can easily see what they were doing when the object was lost, which reduces the time needed to find it.

== Software ==

[[ Image:Object_Detection_Table.jpg | thumb | right | 500px | Tensorflow object detection]]

For object recognition we use the TensorFlow Object Detection API<ref name="TF">https://github.com/tensorflow/models/tree/master/research/object_detection</ref>, which is built on TensorFlow, an open source software library for deep learning. TensorFlow was developed by researchers and engineers from the Google Brain team within Google's AI organization. The object detection uses deep convolutional neural networks to classify objects in camera footage. The basic implementation provided can run a camera, draw boxes around the classified objects and label them with a computed certainty, as shown in the image. The API is written in Python, and we can alter the code for our own implementation.

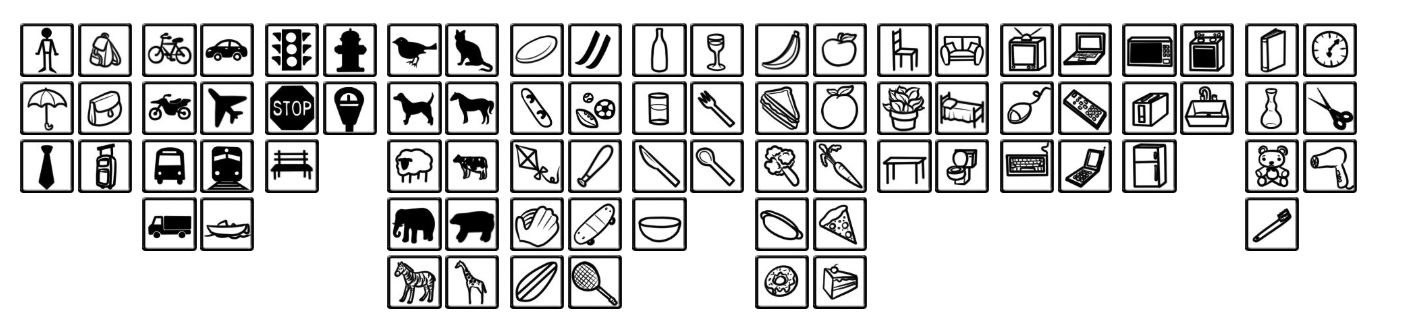

[[ Image:coco.png | thumb | right | 500px | All objects in the COCO dataset. <ref name="cocoimage">http://cocodataset.org/#explore</ref>]]

We use models trained on the COCO<ref name="COCO">http://cocodataset.org/#home</ref> (Common Objects in Context) dataset, which covers about 80 object categories, including certain animals. All object categories of the COCO dataset are shown in the image on the right. This results in certain limitations for our implementation: we can only use the objects that are already in the dataset, as adding new objects would take too much time and resources. We would have to re-train the neural network with thousands of good-quality, distinct and general images of every new object, taken from different angles, as otherwise we would run the risk of overfitting, which would make the neural network useless. We can therefore only detect a limited set of objects. Luckily, these include some objects that are often lost, such as TV remotes, books, cell phones and even cats and dogs. However, we cannot add other objects we wanted, such as keys, shoes and other often-lost household items.

Combining multiple frameworks is also hard: it is only feasible if they are written in Python as well, because again we do not have the time and resources to understand multiple frameworks and rewrite them into one. This means that we have to create our own tracking software with basic functionality, which is less optimal than most other frameworks. Tracking keeps track of an object's location even when it is moving, which prevents 'duplicate' objects from being classified.

For the pre-trained frozen inference graph used for object detection, we chose the faster_rcnn_inception_v2_coco model from the TensorFlow model zoo.<ref name="model-zoo">https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/detection_model_zoo.md</ref> This decision is based on the speed and score of this model. It is possible to use a different model that is quicker but has a lower score, and vice versa. A quicker model takes less time to classify the objects in an image, so more frames per second can be analyzed. This increase in speed generally comes at the expense of the score, meaning that the model is less certain about which item is which. This trade-off can be adjusted to one's needs. During the demo, for example, it was important that the system quickly detected a book to show how it works, which did lead to a false positive. The model can easily be swapped for a different one using a setting in the settings file.

We implemented the software such that when a camera detects a certain object, a picture of its current location is sent to the database every 10 seconds. The object recognition system processes footage at 3 frames per second to update the object locations. Via a website, the location of a certain object can be looked up using these pictures (more on this later). The picture is updated every time the same object is seen again. This of course raises several questions, as we need to be able to determine whether an object is the same object as before, even if it has moved. For this we use tracking, based on the position, size, colors, point features and time intervals of a seen object, as it is unlikely that an object changes both color (due to a light switch, for example) and position (moving) within a short time interval. Hence we can estimate whether an object is the same. This becomes extra difficult, however, when footage from multiple cameras has to be taken into account, or when the same cell phone has moved because someone put it in their pocket, as the position and color could then change drastically.

We have talked with Wouter Houtman, a PhD student at the TU/e in the Control Systems Technology section of the Mechanical Engineering department, about ways to implement tracking. We learned that it is important to use the location and color of an object, as we had already considered, but he also told us that we can use velocity (meters per second, or pixels per frame in our case) to predict where an object will be in the next frame. Unfortunately, we cannot base our tracking assumptions on the dimensions of an object, since we are only using camera footage. Wouter proposed using a hypothesis tree, as in the WIRE framework<ref name="WIRE">http://wiki.ros.org/wire</ref>, to assign probabilities to whether two objects are the same or different. For instance, when we move a cup off-screen and a very similar-looking cup then enters the screen, the system will assign a high probability that it is a new cup, but it also remembers the hypothesis that it may be the same cup. When the old cup then enters the screen again, the system sees two cups, and the cup that just entered is more similar to the previously saved cup than the cup already on screen. It will therefore alter its probabilities and use the hypotheses it created to conclude that the two cups are different, and that the first cup is the same as the cup that entered last. So we can use this hypothesis tree in multiple ways to revise previously made assumptions, but the tree can lead to a state explosion (in this case a hypothesis explosion) in which too many hypotheses are created to keep track of. Hence we need to discard old hypotheses and only create new ones when deemed necessary, for example not when comparing two objects of different classes.
We used this idea in our implementation: when a new object is seen that does not match an object seen shortly before, the system first collects data over a longer timespan before matching it against earlier-seen objects, to determine which object it is or whether it is a new one.
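Wouter's suggestion to use velocity can be illustrated with a constant-velocity prediction: estimate the velocity in pixels per frame from the last two observed box centers and extrapolate it. The Python sketch below is hypothetical and not part of our implementation; the `Track` class and its methods are names made up for illustration. A matcher could then compare a new detection with the predicted position instead of the last seen one.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Track:
    """Hypothetical track state: box center in pixels and velocity in pixels per frame."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0

    def predict(self, frames_ahead: int = 1) -> Tuple[float, float]:
        """Constant-velocity prediction of the center a number of frames ahead."""
        return (self.x + self.vx * frames_ahead, self.y + self.vy * frames_ahead)

    def update(self, new_x: float, new_y: float, frames_elapsed: int = 1) -> None:
        """Store a new observation and re-estimate the velocity from the displacement."""
        self.vx = (new_x - self.x) / frames_elapsed
        self.vy = (new_y - self.y) / frames_elapsed
        self.x, self.y = new_x, new_y
```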

===Implementation===

The object detection and tracking system is implemented in Python. The source code is publicly available in [https://gitlab.com/VortexAI/AI_USE_0LAUK0_Group_3 our GitLab repository]. In this section we explain the most important parts of the program.

The purpose of the Python script is to continuously analyze the feeds of multiple cameras. It runs object detection on each frame, identifying which items are currently visible. The second part of the script, the matching, then has to match these detections to the items the user has, making sure that no duplicates appear, that new items are inserted, and so on. The system then uploads the information on the items and their pictures to the database so that the website can access them.

In pseudocode, one iteration of the script's main loop (with the details of the matching left out) looks as follows:

<pre>
Mark each remembered instance as "not matched yet this frame"
For each camera:
    Pull a new camera frame
    Run object detection on this frame
    Extract the boxes and detection data from the object detection
    For each detected item:
        Skip the item unless its detection score is high enough
        Create a new instance with the latest values
        Check whether it matches an object from the last few frames (based on position matching)
        If it matched with high enough certainty:
            Merge the two and mark the instance as matched this frame
        Otherwise, check the detection certainty again:
            If it is above the higher threshold for new items, add the new instance to the data dictionary
For each instance that has not been long-term matched yet:
    Check whether it should be, and run long-term matching if it is time
Only once every 10 seconds:
    Start an asynchronous thread that pushes all updated instances to the database and uploads the new last-seen pictures
</pre>

When the script is started, it first opens the camera streams, opens a connection to the database, loads the frozen inference graph used for object detection, initializes a FLANN matcher and a SIFT point feature detector (explained in the Matching part of this section) and initializes other needed variables, such as the dataset used to keep track of all items. For this dataset we use a dictionary containing instances of a class "Instance". An Instance represents a possible item in the system: it can be an item about which the system has not yet collected enough information to draw a conclusion, or an item that has already been marked as an individual item. For each Instance, the following values are kept track of:

* The instance ID (-1 if the item has not been uploaded yet)
* The label ID, which represents what kind of item it is
* A photo of when the item was last seen
* The coordinates of the box surrounding the item in that photo
* The ID of the camera the photo was taken with
* A timestamp of when the item was first seen
* A timestamp of when the item was last seen
* The object detection certainty of the last detection
* The number of times the item has been detected
* Whether the item has been long-term matched yet
* Whether the item has been short-term matched this frame
* The highest object detection certainty ever achieved for this item
* The 'best' photo taken of this item, using the detection certainty as a measure of the quality of the picture. This photo is used for matching.
* Whether the keypoints and descriptors of the best photo have been computed
* The keypoints of the best photo, if already computed
* The descriptors of the keypoints of the best photo, if already computed
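As an illustration, the per-item state listed above maps naturally onto a Python dataclass. The sketch below is a reconstruction from this list, not the code in our repository; the field names, the types and starting with the first detection as the best one are assumptions.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Optional, Tuple


@dataclass
class Instance:
    """Sketch of the per-item state listed above; field names are illustrative."""
    label_id: int                            # what kind of item it is
    photo: Any                               # photo of when the item was last seen
    box: Tuple[float, float, float, float]   # box coordinates in that photo
    camera_id: int                           # camera the photo was taken with
    score: float                             # certainty of the last detection
    instance_id: int = -1                    # -1 until the item is uploaded
    first_seen: float = field(default_factory=time.time)
    last_seen: float = field(default_factory=time.time)
    times_detected: int = 1
    long_term_matched: bool = False
    matched_this_frame: bool = False         # short-term matched in this frame
    best_score: float = 0.0                  # highest certainty ever achieved
    best_photo: Any = None                   # photo taken at best_score, used for matching
    features_computed: bool = False          # keypoints/descriptors of best_photo ready?
    keypoints: Optional[Any] = None
    descriptors: Optional[Any] = None

    def __post_init__(self) -> None:
        # A brand-new instance starts with its first detection as the best one.
        if self.best_photo is None:
            self.best_photo = self.photo
            self.best_score = self.score
```

The dataset itself would then be a plain dictionary mapping some key to `Instance` objects.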

Once this initialization is complete, the script enters the main loop, which continuously updates the dataset with newly detected and updated items and uploads changes to the database. First, it sets the matched status of each instance to False, indicating that the instance has not been seen in this frame yet. For each camera, a frame is retrieved and run through the inference graph to obtain the detection boxes and their accompanying descriptions. For each detected item, the confidence score is checked: a value output by the object detection that describes how sure it is that an object actually is what it is labeled as. Based on testing, we use a confidence of 30% as the threshold for analyzing a detection. If the confidence score is high enough, a new instance is created first. This instance is then used to check whether the item has been seen earlier, by comparing it against all other instances of the same object type using short-term matching. In other words, a book is compared against all earlier detected books that were in view very recently, to see whether this detection matches a very recent detection. This mostly concerns objects that are continuously in view at that moment. The inner workings of short-term matching are explained later. If the detected item matches a recently seen item, that item's values are updated. If it does not match any recently seen item, the confidence of the object detection is checked again. If the confidence is high enough (based on tests, we use 50% here), the item is added to the dataset so that it can be kept track of a little longer to gather more data. This data is then used for long-term matching, which checks whether the item matches an item that has been seen before but has been out of view for a while.
Using two separate thresholds, a lower one for updating previously seen items and a higher one for adding new items, ensures that the system does not pick up too many false positives while still updating the previously seen items (true positives). When an item has only ever been detected with low confidence, there is a higher chance that the detection is wrong. When the confidence is low but the item is expected to be there based on earlier detections, however, we are more certain that the detection is correct. This means that we can update the detected objects more often while filtering out false positives.
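The decision made for each detection with these two thresholds can be summarized in a few lines. This Python sketch is a simplification under our own assumptions: the result of short-term matching is passed in as a boolean, the function name is hypothetical, and both thresholds are treated as inclusive.

```python
ANALYZE_THRESHOLD = 0.30   # minimum certainty to consider a detection at all
NEW_ITEM_THRESHOLD = 0.50  # minimum certainty to start tracking a previously unseen item


def handle_detection(score: float, matched_recent_item: bool) -> str:
    """Return "ignore", "update" (merge into a recently seen item) or "new"."""
    if score < ANALYZE_THRESHOLD:
        return "ignore"
    if matched_recent_item:
        return "update"  # an expected item: a lower certainty is good enough
    if score >= NEW_ITEM_THRESHOLD:
        return "new"     # an unknown item: demand a higher certainty
    return "ignore"
```

A detection with 40% certainty therefore updates a book that was just seen, but is not enough to introduce a new book.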

After these checks have been run for each camera, all objects that are still gathering information are checked to see whether a conclusion can be drawn about them. If so, the number of times the item has been detected is checked. If the item was detected only a few times (we use 2 or fewer), it is discarded from the dataset. This avoids false positives we encountered, such as an entire room being detected as a motorcycle for one frame. Otherwise, the item is compared to all other items of the same type using long-term matching. If it matches a previously seen item, the two are merged: the old item's values are updated so that it shows the latest information, and it can be short-term tracked again until it is out of view. If no long-term match is found, the item is kept in the dataset and is now ready to be uploaded to the database to be viewed on the website.
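The resolution step for an item that has finished gathering data can be sketched as follows. The `Candidate` class is a minimal stand-in for an Instance, the long-term matching function is passed in as a parameter, and the match threshold of 0.5 is a hypothetical value; only the rule of discarding items detected 2 times or fewer comes from the description above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    """Minimal stand-in for an Instance that has finished gathering data."""
    label_id: int
    times_detected: int


def resolve(candidate: Candidate, known: List[Candidate],
            long_term_match: Callable[[Candidate, Candidate], float],
            min_detections: int = 3, match_threshold: float = 0.5) -> str:
    """Return "discard", "merge" or "keep" for the candidate."""
    # Detected only 2 times or fewer: most likely a false positive.
    if candidate.times_detected < min_detections:
        return "discard"
    # Only compare against previously seen items of the same type.
    same_type = [item for item in known if item.label_id == candidate.label_id]
    if any(long_term_match(candidate, item) >= match_threshold for item in same_type):
        return "merge"  # update the old item with the new information
    return "keep"       # a genuinely new item, ready to be uploaded
```

In a full implementation the merge would of course use the best-matching item rather than just report that one exists.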

After all detection is done, the time is checked to see whether the database should be updated. We chose to update the database every 10 seconds, but this value can be tweaked without changing the behavior of the system much. The interval makes sure that not too much time is spent on uploading changes, while a slight delay does not matter to the user. To upload the new data and pictures, an asynchronous thread is started. Running the upload in series with the detection system leads to a noticeable pause in detection, which makes the time between detections inconsistent and, in more extreme cases, causes seconds of detection to be missed while the updates are uploaded. Therefore, we run a parallel thread alongside the main detection thread that uploads all new information about objects using SQL and uploads new pictures using the picture API described in the UI section.
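A minimal sketch of such a periodic, non-blocking upload using Python's standard `threading` module is shown below. The `upload` callable stands in for the SQL updates and picture API calls, the class name is hypothetical, and skipping a cycle while the previous upload is still running is our own assumption rather than documented behavior.

```python
import threading
import time
from typing import Callable, List, Optional

UPLOAD_INTERVAL = 10.0  # seconds between database updates


class PeriodicUploader:
    """Start a background upload at most once every `interval` seconds."""

    def __init__(self, upload: Callable[[List[dict]], None], interval: float = UPLOAD_INTERVAL):
        self.upload = upload
        self.interval = interval
        self.last_upload = float("-inf")
        self.thread: Optional[threading.Thread] = None

    def maybe_upload(self, changed: List[dict]) -> bool:
        """Call once per loop iteration; returns True if an upload thread was started."""
        now = time.monotonic()
        if now - self.last_upload < self.interval:
            return False
        if self.thread is not None and self.thread.is_alive():
            return False  # previous upload still running; try again next iteration
        self.last_upload = now
        # Pass a copy of the list so that items the detection loop adds or removes
        # afterwards do not affect this upload.
        self.thread = threading.Thread(target=self.upload, args=(list(changed),), daemon=True)
        self.thread.start()
        return True
```

The detection loop only pays for the time check and the thread start, so the time between detections stays consistent while the upload runs.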

====Matching====

To match instances, we use a function that compares two instances and returns a certainty value from 0.0 to 1.0 representing how certain it is that they are the same item. This value is based on 4 main comparisons and a few small pre-checks. The main comparisons are based on size, relative location, color and matching point features.

The size comparison is the simplest: it compares the sizes of the two instances. This is useful when an item was probably seen in the previous frame, as its size stays roughly the same over a short period of time.

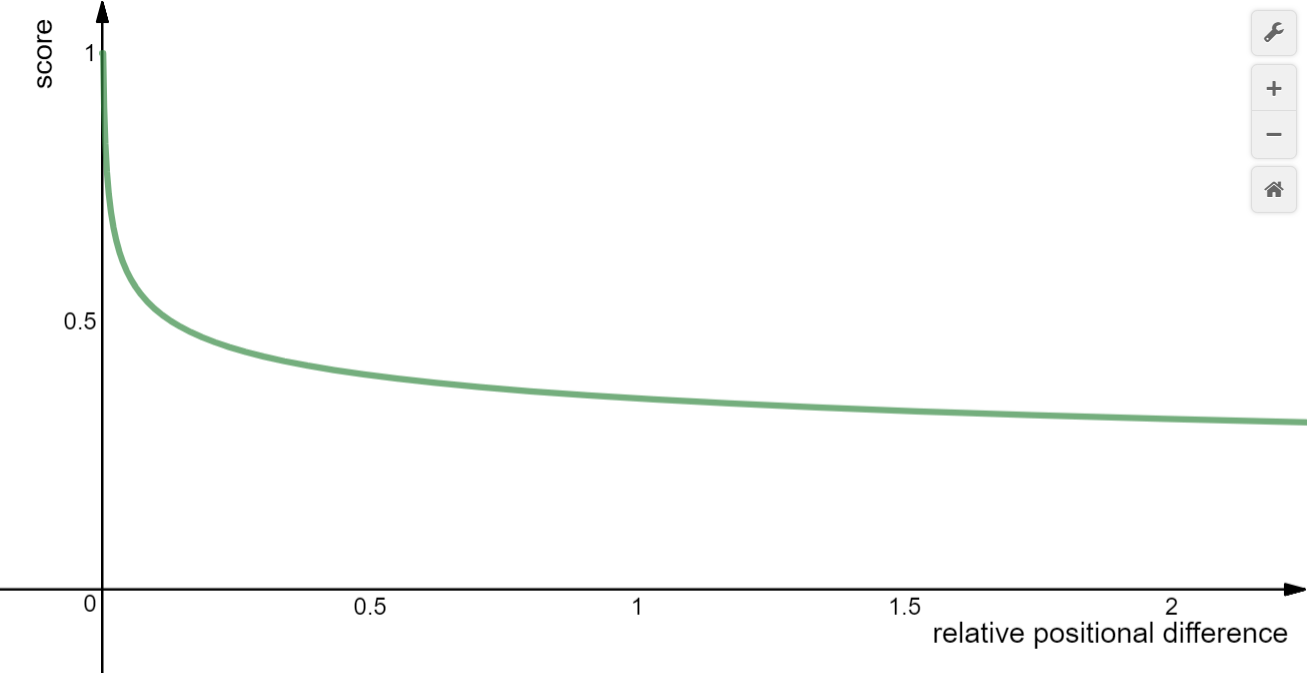

[[File:RelativePositionalDifference.png | thumb | upright=2 | right | The score achieved from the relative positional difference]]

The relative location comparison returns how much the positions of two objects differ relative to their scale. This means that the larger the objects appear in view, the more pixels they can move while still receiving the same location score. An item at the back left of the view will not suddenly move to the right side of the view in a short timespan, but when you are holding the item while walking close to the camera, for example, it appears larger and can move further across the camera view for an equal score. We calculate this score by first computing the sizes and centers of both instances. The positional distance along the X and Y axes is then scaled by the items' sizes, and the two components are combined using the Pythagorean theorem. This results in the relative positional difference. We then use the formula score = min(1.0, (scalar / relative positional difference) ^ (1 / power)) to obtain a probability score, with a scalar of 0.02 and a power of 6. A plot of this formula is shown in the picture on the side.
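The score formula translates directly into code. In the Python sketch below, scaling the X distance by the mean width and the Y distance by the mean height of the two boxes is our interpretation of how the distance is scaled by the items' sizes; the box format and function names are assumptions, while the scalar of 0.02 and power of 6 are the values given above.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def relative_positional_difference(a: Box, b: Box) -> float:
    """Center distance of two boxes, each axis scaled by the boxes' mean size on that axis."""
    width = ((a[2] - a[0]) + (b[2] - b[0])) / 2
    height = ((a[3] - a[1]) + (b[3] - b[1])) / 2
    dx = ((a[0] + a[2]) - (b[0] + b[2])) / 2 / width
    dy = ((a[1] + a[3]) - (b[1] + b[3])) / 2 / height
    return math.hypot(dx, dy)  # Pythagorean combination of both axes


def location_score(a: Box, b: Box, scalar: float = 0.02, power: float = 6) -> float:
    """score = min(1.0, (scalar / difference) ** (1 / power))"""
    difference = relative_positional_difference(a, b)
    if difference == 0:
        return 1.0  # identical centers; also avoids division by zero
    return min(1.0, (scalar / difference) ** (1 / power))
```

With these parameters the score stays at 1.0 until the centers are 0.02 box sizes apart and then decays slowly: a shift of 1.28 box widths still scores 0.5.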

For color matching, we use the OpenCV (cv2) library. For this matching, we keep track of a best photo for each item. Whenever an item is detected, we check the detection score reported by the object detector: the higher this score, the more confident the detector is about this object. We use this score to select a representative picture in which the object is most clearly visible. The item is cropped out of the full camera frame, and the crop is converted from the RGB color space to the HSV color space. This allows us to match on hue and saturation only, which makes the comparison more robust to changes in brightness. We then compare the hue-saturation histograms with OpenCV's histogram comparison function using the correlation metric. This metric yields 1 for identical histograms and lower values as they diverge. We raise the result to the power of 5 so that the certainty drops off more quickly as the histogram match gets worse.
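The project used OpenCV for this step, presumably `cv2.compareHist` with `cv2.HISTCMP_CORREL` on a hue/saturation histogram of each crop. The sketch below reproduces the same idea with only the Python standard library, so it runs without OpenCV. The bin counts are hypothetical. Clamping negative correlations to 0 before raising to the power of 5 is also an assumption.

```python
import colorsys
import math

H_BINS, S_BINS = 30, 32  # hypothetical bin counts
COLOR_POWER = 5          # exponent stated in the text


def hs_histogram(pixels, h_bins=H_BINS, s_bins=S_BINS):
    """2-D hue/saturation histogram of RGB pixels (0-255 per channel).

    The value (brightness) channel is ignored, as described in the text.
    """
    hist = [0.0] * (h_bins * s_bins)
    for r, g, b in pixels:
        h, s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        hi = min(int(h * h_bins), h_bins - 1)
        si = min(int(s * s_bins), s_bins - 1)
        hist[hi * s_bins + si] += 1
    return hist


def correlation(h1, h2):
    """Pearson correlation of two histograms (the formula behind
    OpenCV's HISTCMP_CORREL); 1.0 means identical shape."""
    n = len(h1)
    m1, m2 = sum(h1) / n, sum(h2) / n
    num = sum((a - m1) * (b - m2) for a, b in zip(h1, h2))
    den = math.sqrt(sum((a - m1) ** 2 for a in h1) * sum((b - m2) ** 2 for b in h2))
    return num / den if den else 0.0


def color_score(pixels_a, pixels_b, power=COLOR_POWER):
    corr = correlation(hs_histogram(pixels_a), hs_histogram(pixels_b))
    # Assumption: negative correlations are clamped to 0 before the power.
    return max(0.0, corr) ** power
```

A bright red crop and a dark red crop land in the same hue/saturation bins and score 1.0, which is the robustness to brightness that the text aims for. Red compared with green scores 0.0.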

The last main comparison metric is based on point features. For this metric, OpenCV computes the keypoints and their descriptors for the best photo of each item. This analysis looks for distinctive spots in the image, such as corners, and computes a descriptor for each of them. A FLANN-based matcher then matches keypoints in one image to keypoints in the other. The more points that match with sufficiently high certainty, the more likely it is that the two items are the same.
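The FLANN matcher itself is part of OpenCV. As a dependency-free illustration of what "enough matches with high enough certainty" can mean, the sketch below makes two substitutions. It uses exact brute-force nearest-neighbour search as a stand-in for FLANN's approximate search, and it applies Lowe's ratio test, which the text does not mention. The 0.7 threshold and the normalisation of the count into a 0 to 1 score are also assumptions.

```python
import math

RATIO = 0.7  # hypothetical ratio-test threshold


def good_matches(desc_a, desc_b, ratio=RATIO):
    """Count descriptors in desc_a whose nearest neighbour in desc_b is
    clearly closer than the second-nearest (Lowe's ratio test).

    Brute-force search stands in for FLANN, which finds approximate
    nearest neighbours faster on large descriptor sets.
    """
    if len(desc_b) < 2:
        return 0  # the ratio test needs two neighbours
    count = 0
    for d in desc_a:
        dists = sorted(math.dist(d, e) for e in desc_b)
        if dists[0] < ratio * dists[1]:
            count += 1
    return count


def feature_score(desc_a, desc_b, ratio=RATIO):
    """Fraction of desc_a's keypoints with a good match, in [0, 1]."""
    if not desc_a:
        return 0.0
    return good_matches(desc_a, desc_b, ratio) / len(desc_a)
```

A keypoint that is equally close to several candidates, such as `(5, 5)` against three equidistant points, is rejected as ambiguous rather than counted as a match.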

Of these four metrics, size and location play a big role in short-term matching, which tracks an item that remains in view. Color and point features play the main role in long-term matching, which matches a newly seen item to an item that has been out of view.
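The text does not state how the four scores are merged into one certainty. Purely as an illustration of the short-term/long-term split, the sketch below uses a weighted average. Its weights shift from size and location to color and point features when the item was not seen in the previous frame. Every weight is hypothetical.

```python
# Hypothetical weights: the text does not state how the four scores are combined.
SHORT_TERM_WEIGHTS = {"size": 0.3, "location": 0.4, "color": 0.15, "features": 0.15}
LONG_TERM_WEIGHTS = {"size": 0.05, "location": 0.05, "color": 0.4, "features": 0.5}


def combined_certainty(scores, seen_last_frame):
    """Weighted average of the four metric scores (each in [0, 1])."""
    weights = SHORT_TERM_WEIGHTS if seen_last_frame else LONG_TERM_WEIGHTS
    total = sum(weights.values())
    return sum(weights[k] * scores[k] for k in weights) / total
```

With these weights, an item that matches in size and location but not in appearance scores 0.7 while it stays in view, but only 0.1 after it has been out of view.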

Apart from the main metrics described above, we run a few smaller checks on each comparison. First, we check whether the items are of the same type, so that a book, for example, can never be matched to a glass. Next, we check whether one of the objects has already been matched in this cycle. This ensures that two books in view of the camera at the same time, for example, will never be considered the same item. Finally, we check whether one of the objects lies inside the area of the other. We check for this because we noticed during testing that an object is often detected multiple times. We suspect this is caused by the way the YOLO system works: multiple candidate boxes are proposed and classified, so several boxes can be output for a single object. For example, a bag may be detected as a bag, while its lower part alone is also detected as a bag. We filter these out by ignoring items that have only ever been inside the area of another item of the same type.
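The three pre-checks can be sketched as below, assuming a hypothetical `Instance` record with an id, a label and an `(x1, y1, x2, y2)` box. The `always_inside` flag mirrors the rule of ignoring items that have only ever been seen inside another item of the same type. Its name and the per-frame update are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    id: int
    label: str
    box: tuple                  # (x1, y1, x2, y2) in pixels
    always_inside: bool = True  # only ever seen inside a same-label box so far?


def contains(outer, inner):
    """True if box `inner` lies completely inside box `outer`."""
    return (outer[0] <= inner[0] and outer[1] <= inner[1]
            and inner[2] <= outer[2] and inner[3] <= outer[3])


def passes_prechecks(a, b, matched_ids):
    """Cheap checks run before the four main metrics are computed.

    `matched_ids` holds the ids already matched in the current cycle.
    """
    if a.label != b.label:  # e.g. a book can never match a glass
        return False
    if a.id in matched_ids or b.id in matched_ids:  # at most one match per cycle
        return False
    return True


def update_inside_flag(inst, detections):
    """Track whether `inst` has only ever appeared inside another detection
    of the same label (e.g. the lower half of a bag). Returns True if the
    instance should still be ignored."""
    inside_now = any(
        other is not inst and other.label == inst.label
        and contains(other.box, inst.box)
        for other in detections
    )
    inst.always_inside = inst.always_inside and inside_now
    return inst.always_inside
```

Once an instance has been seen outside every same-label box, the flag stays `False`, so a real second bag that briefly overlaps the first is not discarded later.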

Note that all metrics have many parameters that can be tweaked, and our script contains over 15 settings that influence the behaviour of the system. We chose most of these values based on limited testing of different values. We also measured typical input values so that we could calculate the settings needed to produce sensible output values. Further tuning may still improve the system.
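One way to keep these settings together is a frozen dataclass. Only the values below are stated in the text. The field names are hypothetical, and the script's other settings are omitted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrackerSettings:
    """A few of the tunable settings; field names are hypothetical,
    values are the ones stated in the text."""
    upload_interval_s: float = 10.0  # database update interval
    location_scalar: float = 0.02    # scalar in the location score
    location_power: float = 6.0      # power in the location score
    color_power: float = 5.0         # exponent applied to the histogram correlation


DEFAULTS = TrackerSettings()
# A tuning experiment derives a new object instead of editing the defaults.
stricter = replace(DEFAULTS, location_power=4.0)
```

Freezing the defaults means a tuning experiment must derive a new object with `replace` instead of silently changing the shared settings.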

In our current implementation, a visual output is continuously updated with feedback on what the system sees, detects and matches. Using a frame counter, we measured that disabling this visual feedback reduces the analysis time per frame by 30% to 45%. The feedback is unnecessary in a final in-home deployment, so disabling it would allow the system to analyse more frames or run a heavier model.
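The measured 30% to 45% reduction in time per frame translates into a frame-rate gain of 1 / (1 - reduction), as this short calculation shows:

```python
def throughput_gain(time_reduction):
    """Frames-per-second multiplier when per-frame time drops by the
    given fraction: new_fps / old_fps = 1 / (1 - reduction)."""
    if not 0.0 <= time_reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - time_reduction)


for r in (0.30, 0.45):
    print(f"{r:.0%} less time per frame -> {throughput_gain(r):.2f}x frames per second")
```

So the same hardware could analyse roughly 1.43 to 1.82 times as many frames per second.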

===Testing===

We defined several levels of tests to guide the implementation of the tracking. A check mark (✔) indicates that the system handled the test correctly. The tests were executed on a live feed with a resolution of 1280x720 pixels at 10 frames per second, using two backpacks of different colors.

* ✔ Place a stationary object in front of the camera and check that it is not recognized as two separate objects over time.

** This ensures that one object is not recognized as two separate objects, which sometimes happens within a single frame.

** The system should know that an object that is standing still (in every frame) remains the same object over time.

** '''Results:'''

*** The system handled this test correctly every time. However, it sometimes recognizes the same object twice and then creates a second instance of that object in the database.

* ✔ Place a stationary object in front of the camera, switch the lights on and off, and check that the object is not recognized as two separate objects over time.

** The tracking should not depend too much on color. Otherwise, a slight color change caused by lighting could suggest that a new object has appeared.

** '''Results:'''

*** This test was executed in an unsuitable setting, so the results are not usable.

* ✔ Place two distinct but identical objects next to each other (like two identical chairs), and check that they are recognized as exactly two separate objects over time.

** The system should know that two objects in different locations are different objects.

** '''Results:'''

*** The system correctly identified the two objects as unique and never created more than two instances in the database.

* ✔ Place two dissimilar objects of the same class next to each other (like two distinct books), swap their positions, and check that the location of each book is updated correctly.

** The system should be able to track two moving objects without creating new objects while they move.

** The system should update the locations of the objects with the correct corresponding IDs.

** '''Results:'''

*** The results were mostly correct. Once, the system was tricked into saving the same bag twice. However, this was corrected after the next image update.

* ✔ Place an object in front of the camera, then stand in front of it for a while. When you step away, the system should not think it is a new object.

** The system should know that it is the same object, based on color and location.

** '''Results:'''

*** Even though the system loses sight of the object, it correctly updates the image once the object has been recognized again.

* ✔ Move an object (at a constant angle to the camera) across the whole view of the camera at different speeds, and check that the system recognizes only one object.

** The system should detect a moving object and classify it as the same object throughout.

** '''Results:'''

*** This test passed at normal walking speed. At relatively fast walking speed, however, the system could not keep up and added multiple instances of the same object to the database.

* ✔ Place an object in front of the camera, then rotate it 180 degrees. The system should not think it is a new object.

** The system should know it is the same object, even at a different angle.

** '''Results:'''

*** The system did not have any difficulty with this test.

* ✔ Move a rotating object across the whole view of the camera at different speeds, and check that the system recognizes only one object.

** The system should recognize objects at different angles and while moving.

** '''Results:'''

*** In this test, too, the system could handle normal walking speed while the object rotated, but not fast walking.

* ✔ Show an object to the camera and put it behind your back. Move to the other side of the view with the object hidden, reveal the object again, and check that the system recognizes only one object. (This was the only test performed with point feature detection.)

** The system has to know that when an object disappears, an object appearing somewhere else may be the same one.

** '''Results:'''

*** The system handled this test surprisingly well after point feature detection had been re-implemented. Without point feature detection, some objects were duplicated in the database.

* ✔ Show an object to the camera, move it off-screen and bring it back, and check that the system recognizes only one object.

** The system has to know that an object that goes off-screen may enter the screen again later.

** '''Results:'''

*** With point feature detection, this test works well when the same object returns, but a similar object may also be classified as the previous object.

* X Show a room full of objects to the camera, shut down the system and boot it again, and check whether the system creates new instances of every object.

** The system should keep working after a power outage.

** '''Results:'''

*** We decided not to implement this, as it is not relevant for the demo or the prototype. It can be implemented easily by downloading the database of the previous session when the system boots.

* ✔ Place two cameras next to each other (each with a completely different view), move an object from one camera's view to the other, and check that the system recognizes only one object.

** The system should be able to work with different cameras.

** The system should know that an object that goes off-screen may enter the view of the other camera.

** '''Results:'''

*** This works when the same object is moved, but the system may confuse different objects with each other.
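The power-outage scenario (marked X above) was not implemented. The sketch below shows a minimal version of the suggested fix, which seeds the tracker from the previous session's instances at boot. SQLite stands in for the shared SQL database, and the simplified `instances` columns are assumptions.

```python
import sqlite3


def load_previous_instances(conn):
    """Return the tracked instances stored by the previous session.

    Assumes a simplified `instances` table (id, label_id, x1, y1, x2, y2);
    the real schema and database server may differ.
    """
    rows = conn.execute(
        "SELECT id, label_id, x1, y1, x2, y2 FROM instances ORDER BY id"
    ).fetchall()
    return [{"id": r[0], "label_id": r[1], "box": tuple(r[2:])} for r in rows]


# Demo with an in-memory database standing in for the shared SQL server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE instances (id INTEGER PRIMARY KEY, label_id INTEGER,"
             " x1 INTEGER, y1 INTEGER, x2 INTEGER, y2 INTEGER)")
conn.execute("INSERT INTO instances VALUES (1, 24, 10, 20, 110, 220)")
tracked = load_previous_instances(conn)  # seed the tracker instead of starting empty
```

Seeding the tracker this way lets the matching step treat every restored instance as an item that has been out of view, rather than creating new instances after a reboot.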

== Database == | |||

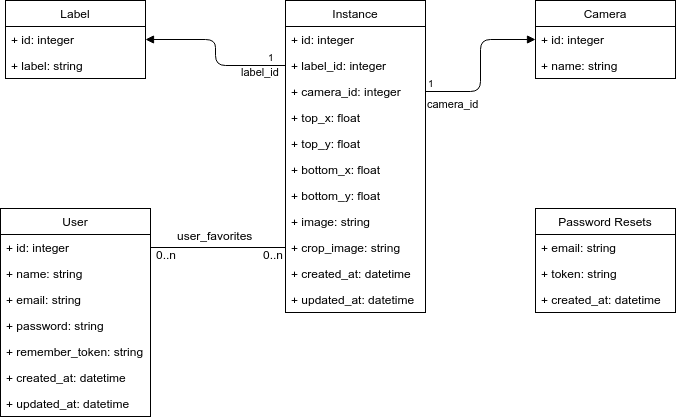

[[File:database.png | thumb | upright=5 | right | Database Structure]] | |||

On the right a diagram of the database structure can be found. First of all, the database has a table containing all labels which can be classified by the system. This table links a label id to a label string. Then there is a cameras table. This table can be used to store the different cameras connected to the locating system. This gives the possibility to give cameras a name, like the room they are in. This table is currently not used by the system. The users table simply stores all registered users, where the password is saved using the bcrypt hashing algorithm. The password resets table is generated automatically by the PHP framework, and is used for the password reset functionality. | |||

The instances table is the most interesting one, as it holds all object instances tracked by the system. Each instance references its label and the camera that detected it most recently. The two corner coordinates of the bounding box are also stored here. The table also holds the paths on the webserver to the image of the whole frame and to the cropped image. More on this can be found in the [[#UI | UI]] section.
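To make the structure concrete, here is a simplified version of the labels, cameras and instances tables in SQLite, together with a typical "where is my book?" query. The table names follow the text. The column names, the types and the use of SQLite are assumptions; the real schema is the one shown in the diagram.

```python
import sqlite3

# Simplified schema: table names follow the text, column names are assumptions.
SCHEMA = """
CREATE TABLE labels    (id INTEGER PRIMARY KEY, label TEXT NOT NULL);
CREATE TABLE cameras   (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE instances (
    id INTEGER PRIMARY KEY,
    label_id  INTEGER NOT NULL REFERENCES labels(id),
    camera_id INTEGER REFERENCES cameras(id),        -- camera that saw it last
    x1 INTEGER, y1 INTEGER, x2 INTEGER, y2 INTEGER,  -- bounding box corners
    frame_path TEXT,                                 -- whole frame on the webserver
    crop_path  TEXT                                  -- cropped image
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO labels VALUES (1, 'book')")
conn.execute("INSERT INTO cameras VALUES (1, 'Living room')")
conn.execute("INSERT INTO instances VALUES (1, 1, 1, 40, 60, 140, 200,"
             " 'frames/a.jpg', 'crops/a.jpg')")

# "Where is my book?": join the label and camera names onto each instance.
rows = conn.execute("""
    SELECT i.id, l.label, c.name, i.crop_path
    FROM instances i
    JOIN labels l ON l.id = i.label_id
    LEFT JOIN cameras c ON c.id = i.camera_id
    WHERE l.label = ?
""", ("book",)).fetchall()
```

The `LEFT JOIN` on cameras keeps instances visible even while the cameras table is unused and `camera_id` is empty.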

User favorites also have to be stored. This is a many-to-many relation between users and instances, and it is stored in a pivot table called user_favorites.
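The many-to-many favorites relation can be sketched as below. The pivot table holds one row per (user, instance) pair, and a composite primary key prevents the same favorite from being stored twice. The column names, the example users and the simplified `instances` table (with the label stored inline) are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users     (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL);
CREATE TABLE instances (id INTEGER PRIMARY KEY, label TEXT NOT NULL);
-- Pivot table: one row per (user, instance) pair.
CREATE TABLE user_favorites (
    user_id     INTEGER NOT NULL REFERENCES users(id),
    instance_id INTEGER NOT NULL REFERENCES instances(id),
    PRIMARY KEY (user_id, instance_id)   -- no duplicate favorites
);
INSERT INTO users VALUES (1, 'alice@example.com'), (2, 'bob@example.com');
INSERT INTO instances VALUES (1, 'book'), (2, 'remote');
INSERT INTO user_favorites VALUES (1, 1), (1, 2), (2, 2);
""")


def favorites_of(conn, user_id):
    """Labels of all instances a user has marked as favorite."""
    return [row[0] for row in conn.execute(
        "SELECT i.label FROM instances i"
        " JOIN user_favorites f ON f.instance_id = i.id"
        " WHERE f.user_id = ? ORDER BY i.id", (user_id,))]
```

Both users can favorite the same remote, and each user can favorite several items, which is exactly what a single foreign key on either table could not express.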

== UI ==

We also face an additional challenge: the user should be able to easily determine where an object is located. We must therefore present our database of pictures in a meaningful way, as the raw data is not useful to the average user. For this purpose we host a website (https://ol.pietervoors.com/) on which the user can easily find the object they are looking for. The user is presented with a list of items detected in the home. An example of the usage of the website is described below. You can access the website using the following guest account:

username: visitor@pietervoors.com

password: password123

The UI is built on top of the [https://laravel.com/ Laravel PHP framework], and the source can be found in our [https://gitlab.com/xmarinusx/object-locator GitLab repository].

As the object detection system and the UI do not run on the same machine, the images have to be transferred between them. This is implemented using an API on the webserver: the object detection system sends an HTTP POST request containing an image to the web application. As the object detection system and the UI share the same underlying database, the web application also has access to the coordinates of the bounding box. The web application then crops the image according to this box and stores both the cropped image and the original file in its storage. Both images get a unique name, and their paths on the webserver are saved in the database. The web application then has all the information it needs to serve the UI.
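The Laravel (PHP) web application performs the cropping and naming steps. The sketch below restates them in Python only for consistency with the other examples. Three details are assumptions: the image is treated as a list of pixel rows, `x2`/`y2` are exclusive, and random UUIDs provide the unique names.

```python
import uuid


def crop(image, box):
    """Crop a row-major image (a list of pixel rows) to box (x1, y1, x2, y2).

    x2 and y2 are exclusive; coordinates are clamped to the image bounds.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    x1, y1, x2, y2 = box
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    return [row[x1:x2] for row in image[y1:y2]]


def storage_names(extension="jpg"):
    """Unique file names for an uploaded frame and its crop."""
    stem = uuid.uuid4().hex  # random, so collisions are practically impossible
    return f"{stem}.{extension}", f"{stem}_crop.{extension}"
```

Sharing one random stem between the original and the crop keeps the pair easy to identify in storage, while the database still records both paths explicitly.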

Security is one of our main concerns, so the API communication has to be well secured. We secured the API using the OAuth 2.0 protocol, for which Laravel offers an easy-to-implement package called [https://laravel.com/docs/5.6/passport Laravel Passport]. In their profile on the website, users can request a Personal Access Token, which can be used to authenticate with the API endpoints. Note that this is only needed for development purposes; regular users do not need it.
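On the detection side, an upload authenticated with a Passport Personal Access Token sends the token in an `Authorization: Bearer` header. The sketch below uses the Python standard library to build such a multipart POST without sending it. The URL, the form field names and `instance_id` are hypothetical.

```python
import urllib.request
import uuid


def build_upload_request(url, token, instance_id, jpeg_bytes):
    """Build (but do not send) a multipart/form-data POST carrying one frame.

    `url`, the field names and `instance_id` are hypothetical; `token` is a
    Personal Access Token issued via Laravel Passport.
    """
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="instance_id"\r\n\r\n',
        f"{instance_id}\r\n".encode(),
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="image"; filename="frame.jpg"\r\n',
        b"Content-Type: image/jpeg\r\n\r\n",
        jpeg_bytes,
        b"\r\n",
        f"--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # OAuth 2.0 bearer token
            "Content-Type": f"multipart/form-data; boundary={boundary}",
            "Accept": "application/json",
        },
    )


req = build_upload_request("https://example.com/api/images", "TOKEN", 1, b"\xff\xd8")
# urllib.request.urlopen(req) would actually send it.
```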

To protect the images uploaded through this API, we do not store them in the public folder on the webserver. Instead, we store them in a secured storage folder that only authenticated users can access.
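Conceptually, serving a protected image comes down to two checks: the visitor must be authenticated, and the requested path must stay inside the private storage folder. The minimal Python sketch below illustrates that logic. The actual implementation lives in the Laravel application, and `STORAGE_ROOT` and the `user` argument are hypothetical.

```python
from pathlib import Path

STORAGE_ROOT = Path("/srv/app/storage/images")  # hypothetical, outside public/


def resolve_image(user, relative_path, root=STORAGE_ROOT):
    """Return the file path to serve, or raise PermissionError.

    Sketch only: `user` is None for anonymous visitors.
    """
    if user is None:
        raise PermissionError("authentication required")
    root = root.resolve()
    target = (root / relative_path).resolve()
    try:
        target.relative_to(root)  # raises ValueError if target escapes root
    except ValueError:
        raise PermissionError("path outside storage folder") from None
    return target
```

Resolving the path before the containment check is what stops requests such as `../../etc/passwd` from reaching files outside the storage folder.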