Firefly Eindhoven - Localization - Verax: Difference between revisions

No edit summary |

|||

| (5 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

This page discusses the work done on the Verax system in cooperation with Rogier de Rijk, a former | This page discusses the work done on the Verax system in cooperation with Rogier de Rijk, a former MSc. student at TU/e and Avular, a high-tech start-up focused on developing industrial aerial systems. Two students (Daan and Johan) cooperated with Rogier and Avular in the development of a new, vision based localization system. Firstly, the general idea behind the system will be discussed as well as the hardware components present in the realization. Secondly, the specific subproblem Daan and Johan worked on will be introduced and discussed with respect to the theoretical background needed to understand the current implementation. Thirdly, the multiple iterations of implementation in more efficient software will be described. | ||

==Working principle== | ==Working principle== | ||

The Verax system is based on visual pose estimation using a number of infrared LEDs and a calibrated camera wide-lens fish-eye camera. The camera is equipped with a specific band-pass filter to filter out all frequencies outside the infrared band of the LEDs. Then, the resulting blobs are associated with LEDs and the image coordinates of these LEDs are computed. These image coordinates can then be used to solve the perspective-n-point (P''n''P) problem, which is stated as follows: "Given n (n ≥ 3) 3D reference points in the object framework and their corresponding 2D projections, to determine the orientation and position of a fully calibrated perspective camera" [https://ieeexplore.ieee.org/document/6751402/]. Thus, given the known coordinates of the infrared LEDs in the world frame and given the image positions of these LEDs in a calibrated camera image, the orientation and position of the camera with respect to the world frame can be computed. Both the OPnP algorithm of [https://ieeexplore.ieee.org/document/6751402/] and the blob association are running on a NVIDIA Jetson TX1 Module, branded by NVIDIA as "the world's first supercomputer on a module"[https://developer.nvidia.com/embedded/buy/jetson-tx1]. The performance of the Jetson TX1 with respect to visual computing applications makes it very suited for running these algorithm. | The Verax system is based on visual pose estimation using a number of infrared LEDs and a calibrated camera wide-lens fish-eye camera. The camera is equipped with a specific band-pass filter to filter out all frequencies outside the infrared band of the LEDs. Then, the resulting blobs are associated with LEDs and the image coordinates of these LEDs are computed. These image coordinates can then be used to solve the perspective-n-point (P''n''P) problem, which is stated as follows: "Given n (n ≥ 3) 3D reference points in the object framework and their corresponding 2D projections, to determine the orientation and position of a fully calibrated perspective camera" [https://ieeexplore.ieee.org/document/6751402/]. Thus, given the known coordinates of the infrared LEDs in the world frame and given the image positions of these LEDs in a calibrated camera image, the orientation and position of the camera with respect to the world frame can be computed. Both the OPnP algorithm of [https://ieeexplore.ieee.org/document/6751402/] and the blob association are running on a NVIDIA Jetson TX1 Module, branded by NVIDIA as "the world's first supercomputer on a module"[https://developer.nvidia.com/embedded/buy/jetson-tx1]. The performance of the Jetson TX1 with respect to visual computing applications makes it very suited for running these algorithm. | ||

The OPnP minimizes the error of the projected image coordinates of the infrared LEDs with respect to the observed image coordinates for the camera orientation and position, i.e. how should | The OPnP minimizes the error of the projected image coordinates of the infrared LEDs with respect to the observed image coordinates for the camera orientation and position, i.e. how should one hold the camera such that the LEDs (of which the coordinates in the world frame are known) are projected onto the image exactly at the image coordinates at which they have been observed by the camera? The paper proposes a globally optimal, closed form solution to the unconstrained minimization problem by using a novel rotation parametrization and an automatically generated Gröbner basis solver [https://link.springer.com/chapter/10.1007/978-3-540-88690-7_23] for solving the resulting multivariate polynomial system. | ||

==Implementation== | ==Implementation== | ||

Since a regular implementation on either the GPU or CPU | Since a regular implementation of the automatically generated Gröbner basis solver on either the GPU or CPU by making use of MATLAB's embedded C/GPU coder does not meet the performance requirements, the software has to be ported to a lower level language that makes use of GPU accelerated libraries to fully utilize the Jetsons hardware. Since Rogier lacked the expertise to port the code and did not have time to fully look into this, Daan and Johan were assigned the task of porting the MATLAB code and achieve an update rate of approximately 30 Hz. | ||

===Linear algebra=== | ===Linear algebra=== | ||

The automatically generated OPnP algorithm (thus an implementation of the | The automatically generated OPnP algorithm (thus an implementation of the Gröbner basis solver) has two main function calls that limit its performance. These functions implement the following procedures: | ||

* | * Solving a linear system <math> Ax = b </math> with size(A) = 575x575 and size(b) = 575x81. | ||

* An eigenvalue decomposition of a matrix with dimensions 81x81 to retrieve all its eigenvectors. | * An eigenvalue decomposition of a matrix with dimensions 81x81 to retrieve all its eigenvectors. | ||

Both elements take up 50% of the total computation time, so both routines are worth optimizing. | Both elements take up 50% of the total computation time, so both routines are worth optimizing. | ||

====Solving the linear system==== | ====Solving the linear system==== | ||

In general, there are three ways to approach this problem: | In general, there are three ways to approach this problem: | ||

# Invert the matrix A and postmultiply the inverted matrix with b to obtain x. This requires | # Invert the matrix A and postmultiply the inverted matrix with b to obtain x. This requires explicitly calculating the inverse of matrix A, which is time consuming. | ||

# Decompose matrix A into lower and upper triangular matrices L and U, such that A = LU and Ax = LUx = b. Then first solve Ly = b for y (=Ux) by forward substitution, as L is lower triangular. Afterwards solve Ux = y for x by backward substitution, as U is upper triangular. | # Decompose matrix A into lower and upper triangular matrices L and U, such that A = LU and Ax = LUx = b. Then first solve Ly = b for y (=Ux) by forward substitution, as L is lower triangular. Afterwards solve Ux = y for x by backward substitution, as U is upper triangular. | ||

# Decompose matrix A into orthonormal and upper triangular matrices R and Q, such that A = QR and Ax = QRx = b. Then first solve Ly = b for y (=Rx) by backward substitution, as Q is upper triangular. Afterwards solve Rx = y by inverting R. Since R is orthonormal, the inverse is given by the transpose, which greatly reduces the computational costs. | # Decompose matrix A into orthonormal and upper triangular matrices R and Q, such that A = QR and Ax = QRx = b. Then first solve Ly = b for y (=Rx) by backward substitution, as Q is upper triangular. Afterwards solve Rx = y by inverting R. Since R is orthonormal, the inverse is given by the transpose, which greatly reduces the computational costs. | ||

| Line 23: | Line 23: | ||

====Eigenvalue decomposition==== | ====Eigenvalue decomposition==== | ||

Since all eigenvectors are required for the OPnP algorithm, the most general approach to calculating all eigenvectors and eigenvalues of a matrix was chosen. Note: this is also the method implemented by | Since all eigenvectors are required for the OPnP algorithm, the most general approach to calculating all eigenvectors and eigenvalues of a matrix was chosen. Note: this is also the method implemented by LAPACK. Other methods like the power iteration or Rayleigh quotient iteration only return one specific eigenvector or eigenvalue, so these are not suited for our problem. The general approach consists of three stages, further detailed below. | ||

=====Reducing to upper Hessenberg form===== | =====Reducing to upper Hessenberg form===== | ||

In order to speed up the computation in next stage, the square matrix is first reduced to an upper Hessenberg matrix. All entries of an upper Hessenberg matrix below its first subdiagonal are 0. A matrix A can always be converted to upper Hessenberg form by Gaussian elimination or a series of Householder transformations. Since | In order to speed up the computation in next stage, the square matrix is first reduced to an upper Hessenberg matrix. All entries of an upper Hessenberg matrix below its first subdiagonal are 0. A matrix A can always be converted to upper Hessenberg form by Gaussian elimination or a series of Householder transformations. Since LAPACKs implementation uses Householder reduction and Gaussian elimination is unstable for a certain class of matrices, the Householder reduction method is chosen to reduce the matrix to upper Hessenberg form. | ||

=====Reducing to triangular matrix===== | =====Reducing to triangular matrix===== | ||

| Line 32: | Line 32: | ||

=====Calculating eigenvectors===== | =====Calculating eigenvectors===== | ||

When the eigenvalues <math>\lambda_i</math> have all been computed, the eigenvectors <math>b_i</math> can be computed with | When the eigenvalues <math>\lambda_i</math> have all been computed, the eigenvectors <math>b_i</math> can be computed with the inverse power method for an arbitrary initial guess of eigenvector i at iteration k=0 <math>b_{i,k}</math>: | ||

<math> b_{i,k+1} = \frac{(A-\lambda_i I)^{-1} b_{i,k}}{norm((A-\lambda I)^{-1} b_{i,k}) } </math> | <math> b_{i,k+1} = \frac{(A-\lambda_i I)^{-1} b_{i,k}}{norm((A-\lambda I)^{-1} b_{i,k}) } </math> | ||

Although the inverse power method is an iterative procedure in itself, only one iteration is needed since all eigenvalues are already known. The exact knowledge of the eigenvalue ensures convergence of the algorithm after one iteration for an arbitrary, nonzero, initial guess for the eigenvector. Calculating one inverse power method iteration for all eigenvalues gives all eigenvectors, so the problem is solved. | |||

===Software=== | ===Software=== | ||

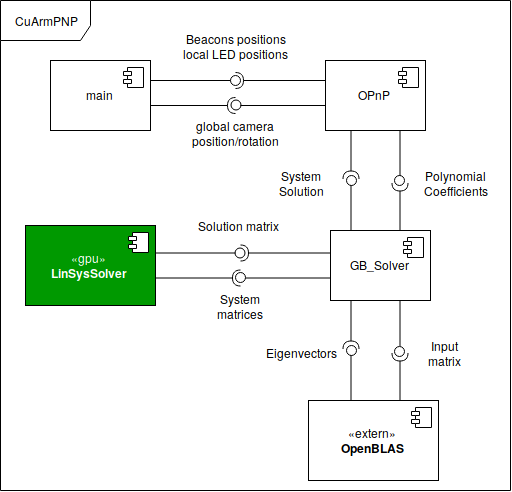

[[File:Component_diagram_Verax.png|thumb|500px|Component diagram of the current implementation]] | |||

This section describes the different software approaches we tried to increase the performance of the algorithm on the Jetson in chronological order. | This section describes the different software approaches we tried to increase the performance of the algorithm on the Jetson in chronological order. | ||

# GPU coder. Rogier requested a trial of the experimental Matlab GPU Coder to generate CUDA code from the | # GPU coder. Rogier requested a trial of the experimental Matlab GPU Coder to generate CUDA code from the Matlab implementation. The resulting performance was terrible, most likely due to the fact that the GPU Coder is not yet very well optimized and that some parts of the code that are more efficient to run on the CPU. Unfortunately the resulting code was also very messy and we could not reuse parts of it. | ||

# C coder. We used Matlab's CPU coder to generate C code instead from the matlab implementation. This outperformed the GPU implementation even though the GPU of the Jetson is significantly more powerful than the CPU, but it was still far from optimal as we were not using the GPU at all and only a single CPU core. The rate achieved by this implementation was 3 FPS. | # C coder. We used Matlab's CPU coder to generate C code instead from the matlab implementation. This outperformed the GPU implementation even though the GPU of the Jetson is significantly more powerful than the CPU, but it was still far from optimal as we were not using the GPU at all and only a single CPU core. The rate achieved by this implementation was 3 FPS. | ||

# cuSOLVER for solving the linear system. After profiling the code we noticed that most of the running time was spent solving a linear system of equations, so we decided to try and accelerate this process using the GPU. We built a library to solve such systems entirely on the GPU using the cuSOLVER toolkit. Combining this with the C coder version yielded a rate of 9FPS, a significant improvement but still far from the desired 30 FPS. | # cuSOLVER for solving the linear system. After profiling the code we noticed that most of the running time was spent solving a linear system of equations, so we decided to try and accelerate this process using the GPU. We built a library to solve such systems entirely on the GPU using the cuSOLVER toolkit. Combining this with the C coder version yielded a rate of 9FPS, a significant improvement but still far from the desired 30 FPS. | ||

# Paper with CUDA pseudocode. Further profiling showed that most of the running time was now spent calculating eigenvectors for a non-symmetric matrix, so we set out to do this on the GPU too, as we were still barely using any of its processing power. We | # Paper with CUDA pseudocode. Further profiling showed that most of the running time was now spent calculating eigenvectors for a non-symmetric matrix, so we set out to do this on the GPU too, as we were still barely using any of its processing power. We were unable to find existing implementations of an eigenvalue solver in CUDA, but we did find a paper describing eigenvector decomposition of a general matrix using CUDA[https://ieeexplore.ieee.org/document/6885312/] and reached out to the original authors to ask for their implementation in CUDA. After receiving no response we set out to recreate the algorithm based on the provided pseudocode. We did not succeed in implementing the algorith, because typos in the pseudocode resulted in infinite loops on the GPU. Since we lacked expertise in the workings of the algorithm and the art of debugging CUDA code, we abandoned this approach. | ||

# Armadillo with MAGMA | # Armadillo with MAGMA. As we were unable to do an eigenvector decomposition entirely on the GPU, we searched for libraries that could do at least some part of the decomposition on the GPU. The [http://icl.cs.utk.edu/magma/ MAGMA] library seemed ideal for this. We had also noticed that the C coder was not making effective use of the Jetson's multiple CPU cores, even though the original matlab script did. The new approach we developed was to rewrite the entire algorithm using the [http://arma.sourceforge.net/ Armadillo] C++ library, and then accelerate the linear system solver and eigenvector decomposition using MAGMA. After implementing this setup and testing the performance, we were shocked to find that the frame rate had actually dropped to 1 FPS instead of increasing. | ||

# Armadillo with cuSOLVER. The previous approach had rather disappointing results, but what did stand out however was that all the execution time outside of the MAGMA library had diminished, suggesting that the Armadillo library was actually rather efficient, and the MAGMA library only slowed the algorithm down. We removed the MAGMA library calls and instead used the cuSOLVER code in approach 3 to solve the linear system, along with Armadillo's own eigenvector decomposition algorithm. With this setup we achieved a rate of well over 20 frames per second. It is the implementation we currently use, but it is still being optimized further in hopes of reaching 30 Hz eventually. | |||

Latest revision as of 14:14, 26 May 2018

This page discusses the work done on the Verax system in cooperation with Rogier de Rijk, a former MSc. student at TU/e and Avular, a high-tech start-up focused on developing industrial aerial systems. Two students (Daan and Johan) cooperated with Rogier and Avular in the development of a new, vision based localization system. Firstly, the general idea behind the system will be discussed as well as the hardware components present in the realization. Secondly, the specific subproblem Daan and Johan worked on will be introduced and discussed with respect to the theoretical background needed to understand the current implementation. Thirdly, the multiple iterations of implementation in more efficient software will be described.

Working principle

The Verax system is based on visual pose estimation using a number of infrared LEDs and a calibrated camera wide-lens fish-eye camera. The camera is equipped with a specific band-pass filter to filter out all frequencies outside the infrared band of the LEDs. Then, the resulting blobs are associated with LEDs and the image coordinates of these LEDs are computed. These image coordinates can then be used to solve the perspective-n-point (PnP) problem, which is stated as follows: "Given n (n ≥ 3) 3D reference points in the object framework and their corresponding 2D projections, to determine the orientation and position of a fully calibrated perspective camera" [1]. Thus, given the known coordinates of the infrared LEDs in the world frame and given the image positions of these LEDs in a calibrated camera image, the orientation and position of the camera with respect to the world frame can be computed. Both the OPnP algorithm of [2] and the blob association are running on a NVIDIA Jetson TX1 Module, branded by NVIDIA as "the world's first supercomputer on a module"[3]. The performance of the Jetson TX1 with respect to visual computing applications makes it very suited for running these algorithm.

The OPnP minimizes the error of the projected image coordinates of the infrared LEDs with respect to the observed image coordinates for the camera orientation and position, i.e. how should one hold the camera such that the LEDs (of which the coordinates in the world frame are known) are projected onto the image exactly at the image coordinates at which they have been observed by the camera? The paper proposes a globally optimal, closed form solution to the unconstrained minimization problem by using a novel rotation parametrization and an automatically generated Gröbner basis solver [4] for solving the resulting multivariate polynomial system.

Implementation

Since a regular implementation of the automatically generated Gröbner basis solver on either the GPU or CPU by making use of MATLAB's embedded C/GPU coder does not meet the performance requirements, the software has to be ported to a lower level language that makes use of GPU accelerated libraries to fully utilize the Jetsons hardware. Since Rogier lacked the expertise to port the code and did not have time to fully look into this, Daan and Johan were assigned the task of porting the MATLAB code and achieve an update rate of approximately 30 Hz.

Linear algebra

The automatically generated OPnP algorithm (thus an implementation of the Gröbner basis solver) has two main function calls that limit its performance. These functions implement the following procedures:

- Solving a linear system [math]\displaystyle{ Ax = b }[/math] with size(A) = 575x575 and size(b) = 575x81.

- An eigenvalue decomposition of a matrix with dimensions 81x81 to retrieve all its eigenvectors.

Both elements take up 50% of the total computation time, so both routines are worth optimizing.

Solving the linear system

In general, there are three ways to approach this problem:

- Invert the matrix A and postmultiply the inverted matrix with b to obtain x. This requires explicitly calculating the inverse of matrix A, which is time consuming.

- Decompose matrix A into lower and upper triangular matrices L and U, such that A = LU and Ax = LUx = b. Then first solve Ly = b for y (=Ux) by forward substitution, as L is lower triangular. Afterwards solve Ux = y for x by backward substitution, as U is upper triangular.

- Decompose matrix A into orthonormal and upper triangular matrices R and Q, such that A = QR and Ax = QRx = b. Then first solve Ly = b for y (=Rx) by backward substitution, as Q is upper triangular. Afterwards solve Rx = y by inverting R. Since R is orthonormal, the inverse is given by the transpose, which greatly reduces the computational costs.

The LU decomposition method was chosen, as this algorithm is the fastest of the three, thus offering the biggest improvement with respect to the update rate requirement. As the LU decomposition is already present in the compiled MATLAB code and this code does not cause issues with respect to numerical instability, there is no need for the more numerically stable but more time consuming QR decomposition.

Eigenvalue decomposition

Since all eigenvectors are required for the OPnP algorithm, the most general approach to calculating all eigenvectors and eigenvalues of a matrix was chosen. Note: this is also the method implemented by LAPACK. Other methods like the power iteration or Rayleigh quotient iteration only return one specific eigenvector or eigenvalue, so these are not suited for our problem. The general approach consists of three stages, further detailed below.

Reducing to upper Hessenberg form

In order to speed up the computation in next stage, the square matrix is first reduced to an upper Hessenberg matrix. All entries of an upper Hessenberg matrix below its first subdiagonal are 0. A matrix A can always be converted to upper Hessenberg form by Gaussian elimination or a series of Householder transformations. Since LAPACKs implementation uses Householder reduction and Gaussian elimination is unstable for a certain class of matrices, the Householder reduction method is chosen to reduce the matrix to upper Hessenberg form.

Reducing to triangular matrix

Next, the upper Hessenberg matrix is reduced to an upper triangular form. This is done by the QR algorithm, which boils down to calculating the QR decomposition [math]\displaystyle{ A_k = Q_k R_k }[/math] and then reversing the order of Q and R to calculate [math]\displaystyle{ A_{k+1} = R_{k}Q_{k} }[/math]. After a certain amount of iterations (depending on the difference in magnitude of the neighbouring eigenvalues), the matrix will converge to an upper triangular matrix with on its main diagonal either a single eigenvalue or blocks of 2x2 submatrices containing two eigenvalues with similar magnitude, but possibly complex conjugated or negated sign. Like this, the eigenvalues can be computed by computing the eigenvalues of all 2x2 blocks of the reduced matrix.

Calculating eigenvectors

When the eigenvalues [math]\displaystyle{ \lambda_i }[/math] have all been computed, the eigenvectors [math]\displaystyle{ b_i }[/math] can be computed with the inverse power method for an arbitrary initial guess of eigenvector i at iteration k=0 [math]\displaystyle{ b_{i,k} }[/math]:

[math]\displaystyle{ b_{i,k+1} = \frac{(A-\lambda_i I)^{-1} b_{i,k}}{norm((A-\lambda I)^{-1} b_{i,k}) } }[/math]

Although the inverse power method is an iterative procedure in itself, only one iteration is needed since all eigenvalues are already known. The exact knowledge of the eigenvalue ensures convergence of the algorithm after one iteration for an arbitrary, nonzero, initial guess for the eigenvector. Calculating one inverse power method iteration for all eigenvalues gives all eigenvectors, so the problem is solved.

Software

This section describes the different software approaches we tried to increase the performance of the algorithm on the Jetson in chronological order.

- GPU coder. Rogier requested a trial of the experimental Matlab GPU Coder to generate CUDA code from the Matlab implementation. The resulting performance was terrible, most likely due to the fact that the GPU Coder is not yet very well optimized and that some parts of the code that are more efficient to run on the CPU. Unfortunately the resulting code was also very messy and we could not reuse parts of it.

- C coder. We used Matlab's CPU coder to generate C code instead from the matlab implementation. This outperformed the GPU implementation even though the GPU of the Jetson is significantly more powerful than the CPU, but it was still far from optimal as we were not using the GPU at all and only a single CPU core. The rate achieved by this implementation was 3 FPS.

- cuSOLVER for solving the linear system. After profiling the code we noticed that most of the running time was spent solving a linear system of equations, so we decided to try and accelerate this process using the GPU. We built a library to solve such systems entirely on the GPU using the cuSOLVER toolkit. Combining this with the C coder version yielded a rate of 9FPS, a significant improvement but still far from the desired 30 FPS.

- Paper with CUDA pseudocode. Further profiling showed that most of the running time was now spent calculating eigenvectors for a non-symmetric matrix, so we set out to do this on the GPU too, as we were still barely using any of its processing power. We were unable to find existing implementations of an eigenvalue solver in CUDA, but we did find a paper describing eigenvector decomposition of a general matrix using CUDA[5] and reached out to the original authors to ask for their implementation in CUDA. After receiving no response we set out to recreate the algorithm based on the provided pseudocode. We did not succeed in implementing the algorith, because typos in the pseudocode resulted in infinite loops on the GPU. Since we lacked expertise in the workings of the algorithm and the art of debugging CUDA code, we abandoned this approach.

- Armadillo with MAGMA. As we were unable to do an eigenvector decomposition entirely on the GPU, we searched for libraries that could do at least some part of the decomposition on the GPU. The MAGMA library seemed ideal for this. We had also noticed that the C coder was not making effective use of the Jetson's multiple CPU cores, even though the original matlab script did. The new approach we developed was to rewrite the entire algorithm using the Armadillo C++ library, and then accelerate the linear system solver and eigenvector decomposition using MAGMA. After implementing this setup and testing the performance, we were shocked to find that the frame rate had actually dropped to 1 FPS instead of increasing.

- Armadillo with cuSOLVER. The previous approach had rather disappointing results, but what did stand out however was that all the execution time outside of the MAGMA library had diminished, suggesting that the Armadillo library was actually rather efficient, and the MAGMA library only slowed the algorithm down. We removed the MAGMA library calls and instead used the cuSOLVER code in approach 3 to solve the linear system, along with Armadillo's own eigenvector decomposition algorithm. With this setup we achieved a rate of well over 20 frames per second. It is the implementation we currently use, but it is still being optimized further in hopes of reaching 30 Hz eventually.