Embedded Motion Control 2018 Group 6


Revision as of 15:44, 10 May 2018

Group members

| Name: | Report name: | Student id: |
|---|---|---|
| Thomas Bosman | T.O.S.J. Bosman | 1280554 |
| Raaf Bartelds | R. Bartelds | add number |
| Bas Scheepens | S.J.M.C. Scheepens | 0778266 |
| Josja Geijsberts | J. Geijsberts | 0896965 |
| Rokesh Gajapathy | R. Gajapathy | 1036818 |
| Tim Albu | T. Albu | 19992109 |
| Marzieh Farahani | Marzieh Farahani | Tutor |

Initial Design

Requirements and Specifications

Use cases for Escape Room

1. Wall and Door Detection

2. Move with a certain profile

3. Navigate

Requirements for Escape Room

R1.1 The system shall detect walls without touching them

R1.2 The system shall detect the goal/door

R1.3 The system shall log and map the environment

R2.1 The system shall move slower than 0.5 m/s translational and 1.2 rad/s rotational

R2.2 The system shall not stay still for more than 30 seconds

R3.1 The system shall find the goal

R3.2 The system shall determine a path to the goal

R3.3 The system shall navigate to the goal

R3.4 The system shall not touch the walls

R3.5 The system shall complete the task within 5 minutes
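Requirement R2.1 can be enforced in software by limiting every velocity command before it is sent to the robot. The following is a minimal sketch in Python, not the group's implementation: the function name `clamp_velocity` and the `margin` parameter are assumptions made for illustration, and only the two limits come from R2.1.

```python
MAX_TRANSLATIONAL = 0.5  # m/s, limit from R2.1
MAX_ROTATIONAL = 1.2     # rad/s, limit from R2.1


def clamp_velocity(v, omega, margin=0.95):
    """Limit a (translational, rotational) command to stay below R2.1.

    `margin` is a hypothetical safety factor so that the command stays
    strictly below the limits rather than exactly on them.
    """
    v_limit = margin * MAX_TRANSLATIONAL
    w_limit = margin * MAX_ROTATIONAL
    v = max(-v_limit, min(v_limit, v))
    omega = max(-w_limit, min(w_limit, omega))
    return v, omega
```

Commands that are already within the limits pass through unchanged, so the function can wrap every setpoint without affecting normal operation.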

Use cases for Hospital Room

(unfinished)

1. Mapping

2. Move with a certain profile

3. Orient itself

4. Navigate

Functions, Components and Interfaces

The software that will be deployed on PICO can be divided into four components: perception, monitoring, plan and control. They exchange information through the world model, which stores all the data. The functions of the four components are described below for both the Escape Room Challenge (ERC) and the Hospital Challenge (HC).
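One way to picture the world model is as a single shared data container that each component reads from and writes to. The sketch below is illustrative only: the class name `WorldModel` and all field names are assumptions, chosen to mirror the items that each component shares with the world model in the descriptions that follow.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class WorldModel:
    """Hypothetical shared data store; field names are illustrative."""
    # Written by perception: distances to all near obstacles
    obstacle_distances: List[float] = field(default_factory=list)
    # Written by monitoring: which situation is occurring
    situation: Optional[str] = None
    # Written by plan: the state machine transition (what to do next)
    next_step: Optional[str] = None
    # Written by control: setpoint angle (rad) and velocity (m/s)
    setpoint_angle: float = 0.0
    setpoint_velocity: float = 0.0
```

Keeping all shared data in one object means the four components never call each other directly, which matches the design in which they exchange information only through the world model.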

Perception:

ERC:

Input: LRF-data

Use:

- Process (filter) the laser-readings

Interface to world model, perception will store:

- Distances to all near obstacles
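As an illustration of the filtering step, the sketch below discards invalid laser readings and keeps only the nearby ones. It assumes the LRF data arrives as a list of ranges with a start angle and a fixed angle increment; the function name and the thresholds `range_min`, `range_max` and `near` are hypothetical values, not taken from the design.

```python
import math


def filter_ranges(ranges, angle_min, angle_increment,
                  range_min=0.1, range_max=10.0, near=1.0):
    """Return (distance, angle) pairs for valid readings within `near` metres.

    Readings that are NaN or outside [range_min, range_max] are treated
    as invalid and skipped. All thresholds are illustrative assumptions.
    """
    near_obstacles = []
    for i, r in enumerate(ranges):
        if math.isnan(r) or r < range_min or r > range_max:
            continue  # invalid reading
        if r <= near:
            near_obstacles.append((r, angle_min + i * angle_increment))
    return near_obstacles
```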

HC:

Input: LRF-data, odometry

Use:

- Process both sensors with gmapping

- Process (filter) the laser-readings

Interface to world model, perception will store:

- Processed data from the laser sensor, translated to a map (walls, rooms and exits)

- Odometry data: encoders, control effort

Monitoring:

ERC:

Input: distances to objects

Use:

- Process distances to determine situation: following wall, (near-)collision, entering corridor, escaped room

Interface to world model, monitoring will provide to the world model:

- Which situation is occurring
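The ERC monitoring step could be sketched as a function that maps the filtered distances to one of the four situations listed above. The decision rules and thresholds below are assumptions made for illustration (for example, treating "no obstacle nearby" as having escaped the room), and the sketch additionally assumes that each distance comes with the angle at which it was measured.

```python
def classify_situation(obstacles, collision_dist=0.3, side_dist=0.6):
    """Classify the situation from a list of (distance, angle) pairs.

    Angles are in radians, positive to the left of the robot.
    Thresholds and rules are hypothetical, not from the design.
    """
    if not obstacles:
        return "escaped_room"        # nothing nearby any more
    if min(d for d, _ in obstacles) < collision_dist:
        return "near_collision"
    left = any(a > 0 and d < side_dist for d, a in obstacles)
    right = any(a < 0 and d < side_dist for d, a in obstacles)
    if left and right:
        return "entering_corridor"   # walls close on both sides
    return "following_wall"
```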

HC:

Input: map, distances to objects

Use:

- Process distances and map to determine situation: following path, (near-)collision, found object

Interface to world model, monitoring will provide to the world model:

- Which situation is occurring

Plan:

ERC:

Input: state of situation

Use:

- Determine next step: prevent collision, continue following wall, stop because finished

Interface to world model, plan will share with the world model:

- The transition in the state machine, meaning what should be done next

HC:

Input: state of situation

Use:

- Determine next step: go to new waypoint, continue following path, prevent collision, go back to start, stop because finished

- Determine path to follow

Interface to world model, plan will share with the world model:

- The transition in the state machine, meaning what should be done next

Control:

ERC:

Input: distances and angles to objects, state of next step

Use:

- Determine setpoint angle and velocity of robot

Interface to world model, what control shares with the world model:

- Setpoint angle and velocity of robot
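To make the ERC control step concrete, the sketch below computes a setpoint angle and velocity from the next step and the nearest wall point. It is an assumption-laden illustration rather than the group's controller: it assumes the wall is followed on the robot's right-hand side, uses a simple proportional correction on the distance error, and all names, step labels, gains and speeds are hypothetical.

```python
import math


def compute_setpoint(next_step, wall_distance, wall_angle,
                     desired_distance=0.5, gain=1.0, cruise_speed=0.3):
    """Return (setpoint_angle [rad], setpoint_velocity [m/s]).

    wall_angle is the direction of the nearest wall point in the robot
    frame (negative = right). Assumes the wall is on the right.
    """
    if next_step == "stop":
        return 0.0, 0.0
    if next_step == "prevent_collision":
        # Face away from the obstacle and do not drive forward.
        away = wall_angle + math.pi
        return math.atan2(math.sin(away), math.cos(away)), 0.0
    # Follow wall: drive parallel to it, steering towards the wall
    # when too far away and away from it when too close.
    error = wall_distance - desired_distance
    correction = max(-math.pi / 4, min(math.pi / 4, gain * error))
    return wall_angle + math.pi / 2 - correction, cruise_speed
```

With the wall directly to the right at the desired distance, the setpoint angle is zero and the robot drives straight ahead at cruise speed.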

HC:

Input: state of next step, path to follow

Use:

- Determine setpoint angle and velocity of robot

Interface to world model, what control shares with the world model:

- Setpoint angle and velocity of robot

Overview of the interface of the software structure:

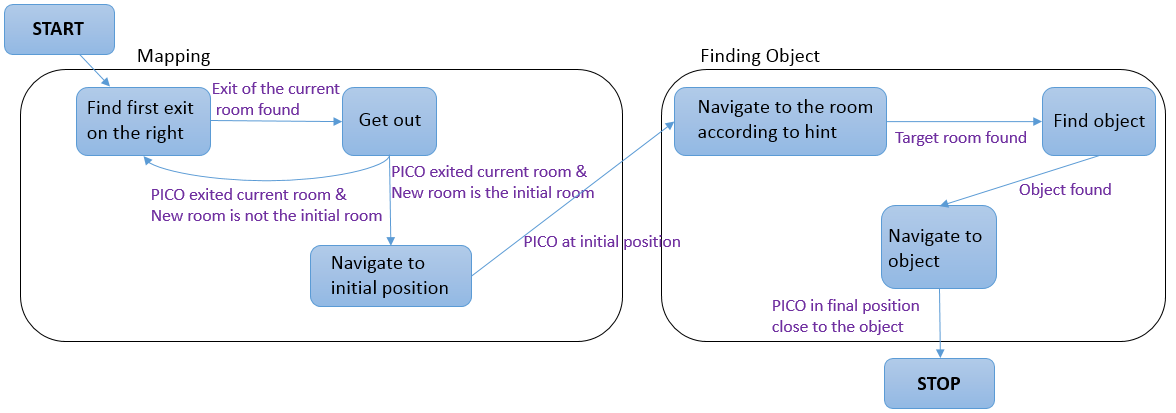

The diagram below gives a graphical overview of the state machine. The diagram does not show the events "Wall was hit" and "Stop". Each state checks whether one of these events has occurred, and if so, the state machine transitions to the state STOP. The final state machine is likely to be more complex, since some states will contain a sub-state machine.
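The rule that the events "Wall was hit" and "Stop" are checked in every state can be sketched as a transition function that handles these two events before consulting the regular transition table. Only the STOP state and the two global events come from the description above; the other state names, event names and table entries are hypothetical placeholders.

```python
STOP = "STOP"
GLOBAL_STOP_EVENTS = {"wall_hit", "stop"}  # checked in every state

# Hypothetical transition table: (current state, event) -> next state
TRANSITIONS = {
    ("FOLLOW_WALL", "near_collision"): "AVOID_COLLISION",
    ("AVOID_COLLISION", "following_wall"): "FOLLOW_WALL",
    ("FOLLOW_WALL", "entering_corridor"): "FOLLOW_CORRIDOR",
    ("FOLLOW_CORRIDOR", "escaped_room"): STOP,
}


def next_state(state, event):
    """Return the next state; global stop events override the table."""
    if event in GLOBAL_STOP_EVENTS:
        return STOP
    # Unknown (state, event) pairs leave the state unchanged.
    return TRANSITIONS.get((state, event), state)
```

Handling the global events in one place avoids repeating the same two transitions in every row of the table, which is also why they can be left out of the diagram.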