Drone Referee - MSD 2017/18

Revision as of 09:31, 12 April 2018

Introduction

Abstract

Football is a billion-euro industry that is constantly evolving through the use of advancing technologies that improve not only the game but also the fan experience. Most football stadiums are outfitted with state-of-the-art camera technologies that provide previously unseen vantage points to audiences worldwide. However, football matches are still refereed by humans who make decisions based on their visual information alone. This can cause the referee to make incorrect decisions, which might strongly affect the outcome of a game. There is a need for supporting technologies that can improve the accuracy of referee decisions. Through this project, TU Eindhoven hopes to develop a system with intelligent technology that can monitor the game in real time and make fair decisions based on observed events. This project is a first step towards that goal.

In this project, a drone is used to evaluate a football match, detect events and provide recommendations to a remote referee. The remote referee is then able to make decisions based on these recommendations from the drone. This football match is played by the university’s RoboCup robots, and, as a proof-of-concept, the drone referee is developed for this environment.

This project focuses on the design and development of a high-level system architecture and corresponding software modules on an existing quadrotor (drone). It builds upon data and recommendations from the first two generations of Mechatronics System Design trainees, with the purpose of providing a proof-of-concept Drone Referee for a 2-against-2 robot-soccer match.

Background and Context

The Drone Referee project was introduced to the PDEng Mechatronics Systems Design team of 2015. The team was successful in demonstrating a proof-of-concept architecture, and the PDEng team of 2016 developed this further on an off-the-shelf drone. The challenge presented to the team of 2017 was to use the lessons of the previous teams to develop a drone referee using a new custom-made quadrotor. This drone was built and configured by a master student and his thesis was used as the baseline for this project.

The MSD 2017 team is made up of seven people with different technical and academic backgrounds. One project manager and two team leaders were appointed and the remaining four team-members were divided under the two team leaders. The team is organized as below:

| Name | Role | Contact |
|---|---|---|
| Siddharth Khalate | Project Manager | s.r.khalate@tue.nl |
| Mohamed Abdel-Alim | Team 1 Leader | m.a.a.h.alosta@tue.nl |
| Aditya Kamath | Team 1 | a.kamath@tue.nl |
| Bahareh Aboutalebian | Team 1 | b.aboutalebian@tue.nl |
| Sabyasachi Neogi | Team 2 Leader | s.neogi@tue.nl |
| Sahar Etedali | Team 2 | s.etedalidehkordi@tue.nl |
| Mohammad Reza Homayoun | Team 2 | m.r.homayoun@tue.nl |

Problem Description

Problem Setting

Two agents will be used to referee the game: a drone moving above the pitch and a remote human referee who receives video streams from a camera-gimbal system attached to the drone. The motion controller of the drone and the gimbal must provide video streams that give the human referee adequate situational awareness, enabling him/her to make proper decisions. A visualization and command interface shall allow interaction between the drone and the human referee. In particular, besides showing the real-time video stream, this interface shall offer further features such as on-demand repetitions of recent plays, and shall enable the human referee to send decisions (kick-off, foul, free throw, etc.), which should be signaled to the audience via LEDs placed on the drone and a display connected to a ground station. Moreover, based on computer vision processing, an algorithm, which can run on the remote PC showing the video stream to the human referee, shall give a recommendation to the human referee with respect to the following two rules:

- RULE A: Free throw, when the ball moves out of the bounds of the pitch, crossing one of the four lines delimiting the field.

- RULE B: When robot players in the pitch touch each other a potential foul can occur. Therefore, the system should detect and signal when two robot players in the pitch are touching each other.

If time permits, the autonomous system can give the remote referee recommendations on more rules, such as the distance rules for free kicks. The robot soccer field at the mechanical engineering department, building GEM-N, will be used to test the algorithms.

Goal

The final goal is to have a 5-minute, 2-against-2 robot soccer game, using the TechUnited Turtles, refereed by the system described above, which receives a positive recommendation from the human referee. By positive recommendation we mean that the human referee acknowledges that the refereeing system provided, for that 5-minute game, all the required information and recommendations that enabled him/her to make decisions at least as good as those he/she would make if physically present on the pitch. A member of TechUnited with previous refereeing experience will be asked to referee the game from a different building (GEM-Z) based on the live stream and repetitions provided by the system. Another member of TechUnited will be responsible for simple tasks such as placing the ball at the correct position for free throws, free kicks, etc.

Workpackages

Four workpackages were defined in the problem statement. These were stated as guidelines to tackle the problem at hand. In addition, the team was given freedom to define their own approach to reach the goal. The four workpackages are defined below:

- WP1: Design and simulation of the motion planner and supervisory control

- WP2: Motion control of the drone and gimbal system

- WP3: Visualization and command interface

- WP4: Automatic recommendation for rule enforcement

Final Deliverables

- System architecture of the proposed solution by January 31st along with a time plan and task distribution for the members of the group

- Software of the proposed solutions

- Demo to be scheduled by the end of March or beginning of April

- A wiki page documenting the project and providing a repository for the software developed, similar to the one obtained from the first generation of MSD students

- One minute long video to be used in presentations illustrating the work

- 5 minute long video with the actual game, the remote referee visualizations and the audience screen visualizations

System Objectives and Requirements

Project Scope

The scope of this project was refined during the design phase of the project. This was done due to considerable hardware issues and time constraints. The scope was narrowed down to the following deliverables.

- System Architecture
  - Task-Skill-World model
  - DSM
- Flight and Control
  - Manual and Semi-Autonomous Flight
  - Autonomous Flight
  - Hardware and Software Interfaces
- Event Detection
  - Ball-out-of-pitch Detection
  - Collision detection
  - Hardware and Software Interfaces
- Rule Enforcement and HMI
  - Supervisory Control
  - Referee/Audience GUI
  - Hardware and Software Interfaces
- Demonstration Video and Final Presentation
- Wiki page

System Architecture

Architecture Description and Methodology

Implemented System Architecture

Implementation

Flight and Control

Manual Flight

Drone Localization

To plan and execute trajectories efficiently, it is crucial for the drone to have an accurate representation of its current pose. Preliminary testing showed that the internal IMU on the Pixhawk generated sufficiently fast and accurate measurements of the roll, pitch and yaw angles of the drone. However, the X, Y and Z measurements were very noisy. To solve this issue, two methods of localizing the drone were explored in this project. This section explains the choices made and the reasoning behind them. The two implemented methods are:

- Top-cam localization

- Marvelmind localization

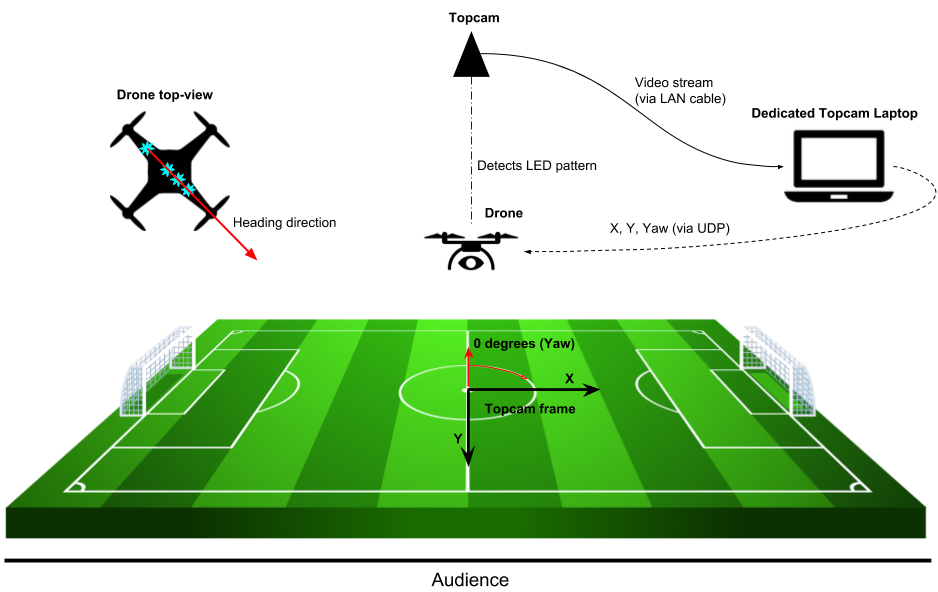

Top-cam Localization

This method was the team's first approach. Pre-existing code was incorporated that detects an LED pattern on top of the drone and calculates the X, Y and yaw of the drone in the top-cam frame of reference. These measurements are calculated by computer vision software running on a separate top-cam laptop. The values are then sent over UDP to the drone ground station, where they are received by Simulink and transmitted to the Pixhawk using the MAVLink-Simulink interface. The altitude is measured using a LiDAR, as mentioned in the interface diagram earlier. This is described with the diagram below:

Marvelmind Localization

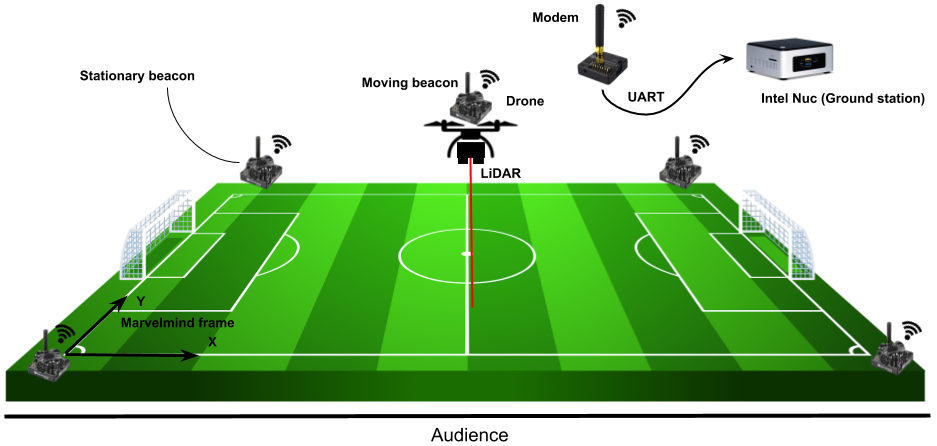

Marvelmind Robotics is a company that develops precise indoor GPS for robots and drones. Indoor localization is achieved with the use of ultrasound beacons. In this application, four stationary beacons are placed at a height of around 1 meter, one at each corner of the RoboCup field. A fifth beacon is attached to the drone and connected to the drone's battery management system. This beacon is known as the hedgehog and is a mobile beacon whose position is tracked by the stationary beacons. Pose data of the four beacons and the hedgehog are communicated using the modem, which also provides data output via UART. In this application, the pose data for the hedgehog is accessed through the modem using a C program. This program reads the data via UART, decodes the pose data and broadcasts it to the MATLAB/Simulink file via UDP. All of this is executed on the Intel Nuc.

Autonomous Flight

Path Planning

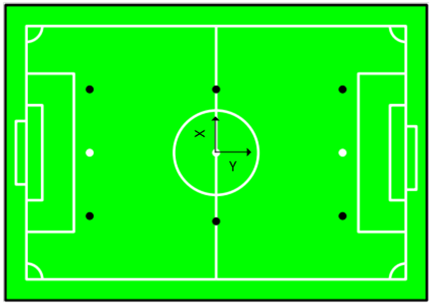

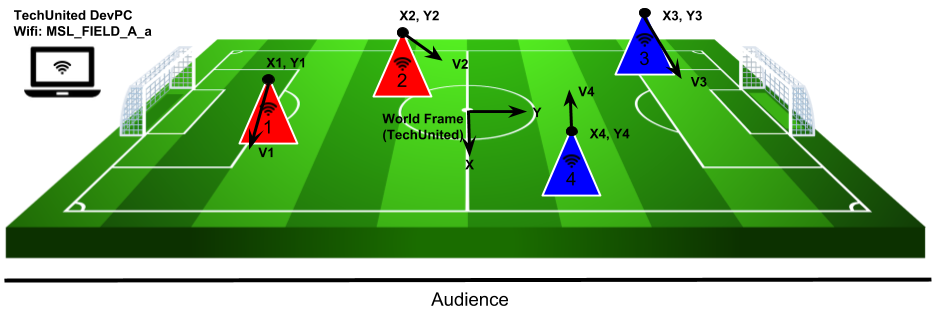

Path planning is of significant importance for autonomous systems, since it lets a system such as a drone find an optimal path. An optimal path can be defined as one that minimizes the amount of turning, hovering, or whatever else a specific application requires. Path planning also requires a map of the environment and requires the drone to know its location with respect to that map. In this project, the drone is assumed to fly to the center of the pitch in manual mode and then switch to automatic mode. In automatic mode, a number of further assumptions are made. For now, the drone is assumed to be able to localize itself with respect to the map, which is a RoboSoccer football field. A view of the match field is depicted in Figure 1. The drone is assumed to keep the same attitude during the game, and it receives the ball location on the map as x and y coordinates. The ball is assumed to be on the ground, and there are assumed to be no obstacles at the drone's flying height. Finally, the position obtained from the vision algorithms is assumed to contain minimal noise.

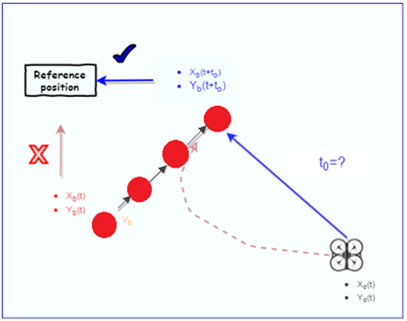

Based on these assumptions, the problem simplifies to 2D path planning. Simple 2D path planning algorithms cannot deal with complex 3D environments, which contain many structures, constraints and uncertainties, but the assumptions above remove that complexity here. The path planning problem is defined as finding the shortest path for the drone, because of the energy constraint imposed by the hardware and the duration of the match. The drone used in this project is limited to a maximum velocity of 3 m/s. Another constraint is to keep the ball in the view of the camera attached to the drone. The path planning algorithm receives the x and y coordinates of the ball from the vision algorithm, and from these a reference path must be defined for the drone. One possible solution is to give the ball position directly as the reference. However, since the ball frequently changes direction during the game, it is more efficient to use the ball velocity to predict its future position. In this manner, the drone needs less effort to follow the ball. Figure 2 illustrates how the path traversed by the drone is reduced by using the predicted future position of the ball.
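The velocity-based prediction described above can be sketched as a constant-velocity extrapolation. This is only an illustration: the type `vec2`, the function `predict_ball_position` and the look-ahead parameter `horizon_s` are hypothetical names that do not come from the project code, and the project may well have used a different prediction model.

```c
/* Hypothetical 2D vector type (illustrative name, not from the project). */
typedef struct {
    double x;
    double y;
} vec2;

/* Predict where the ball will be after horizon_s seconds, assuming it
   keeps its current velocity (constant-velocity model, an assumption).
   The result can be used as the drone's position reference instead of
   the current ball position. */
vec2 predict_ball_position(vec2 ball_pos, vec2 ball_vel, double horizon_s)
{
    vec2 predicted;
    predicted.x = ball_pos.x + ball_vel.x * horizon_s;
    predicted.y = ball_pos.y + ball_vel.y * horizon_s;
    return predicted;
}
```

A longer look-ahead makes the reference smoother but less accurate when the ball changes direction, so `horizon_s` would have to be tuned experimentally.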

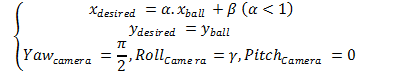

Using this approach, the result of the generated trajectory and drone position are shown in Figure 3.

Figure 3. Ball Tracking without position hold mechanism.

The drone must still put considerable effort into tracking the ball constantly. Furthermore, results from the simulated game show that following the ball requires a fast-moving drone, which may affect the quality of the images. Therefore, the total velocity of the drone along the x- and y-axes is limited for the sake of image quality. A video of the simulated game in which the drone follows the ball is shown in Video 1.

To make tracking more efficient, a circle around the ball can be treated as its vicinity. When the drone is within this vicinity, the supervisory control can switch the drone to position-hold mode. As soon as the ball moves so that the drone is outside its vicinity, the drone switches back to ball-tracking mode. In addition, the ball is sometimes occluded in the images by the robot players. Thus, three different modes are designed in the current path planning. The first is ball-tracking mode, in which the ball is far from the drone. The second is position-hold mode, in which the drone is in the vicinity of the ball. The third is occlusion mode, in which the drone moves around the last known position of the ball. However, this algorithm adds complexity to the system and may be hard to implement. A different scenario is to follow the ball from one side of the field by following it along the y-axis, as shown in Video 2. In this case, the drone would have to fly higher than is possible in reality, so this scenario is not practical.
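The three modes can be sketched as a simple selection function. This is a minimal sketch under stated assumptions: the enum values, the function `select_mode` and the parameter `vicinity_radius` are hypothetical names, and the actual supervisory control in the project may use different switching conditions (for example hysteresis to avoid rapid toggling at the edge of the vicinity).

```c
#include <stdbool.h>

/* Hypothetical names for the three path-planning modes in the text. */
typedef enum {
    MODE_BALL_TRACKING,  /* ball is far from the drone */
    MODE_POSITION_HOLD,  /* drone is inside the ball's vicinity circle */
    MODE_OCCLUSION       /* ball not visible: use last known position */
} flight_mode;

/* Select the mode from the drone position, the ball position (the last
   known one if the ball is occluded) and an assumed vicinity radius.
   Squared distances are compared, so no square root is needed. */
flight_mode select_mode(double drone_x, double drone_y,
                        double ball_x, double ball_y,
                        bool ball_visible, double vicinity_radius)
{
    if (!ball_visible) {
        return MODE_OCCLUSION;
    }
    double dx = ball_x - drone_x;
    double dy = ball_y - drone_y;
    if (dx * dx + dy * dy <= vicinity_radius * vicinity_radius) {
        return MODE_POSITION_HOLD;
    }
    return MODE_BALL_TRACKING;
}
```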

The drone follows the ball along the x-axis, but it does not follow the ball completely along the y-axis. Instead, it moves along one side of the field and the camera records the image at an angle (see Equation 1).

A result of this approach is that the drone moves in only a part of the field and does not need to move all around the field to follow the ball. The coefficient in Equation 1 should be set based on the camera properties and the height at which the drone flies. Video 3 illustrates this approach.

Event Detection and Rule Enforcement

Ball Out Of Pitch

Collision Detection

Collisions between robots can be detected by the distance between them and the speeds the robots are traveling at. The collision detection algorithm assumes prior knowledge of the positions of each player robot. In this project, two methods of tracking the robot position were studied and trialed - ArUco Markers, and the use of TechUnited's world model.

ArUco Markers

ArUco is a library for augmented reality (AR) applications based on OpenCV. The library produces a dictionary of uniquely numbered AR tags that can be printed and stuck to any surface. With a sufficient number of tags, the library is able to determine the 3D position of the camera that is viewing these tags, assuming that the positions of the tags are known. In this application, the ArUco tags were stuck on top of each player robot and the camera was attached to the drone. The ArUco algorithm was reversed using perspective projections to determine the positions of the AR tags from the known position of the drone (localization). However, this solution turned out to have two drawbacks.

- The strategy for trajectory planning is to have the ball visible at all times. Since the priority is given to the ball, it is not necessary that all robot players are visible to the camera at all times. In certain circumstances, it is also possible that a collision occurs away from the ball and hence away from the camera’s field of view.

- In this application, the drone and the robot players will be moving at all times. At high relative speeds, the camera is unable to detect the AR tags or detects them with high latency. During trials, it was also observed that the field of view of the fish-eye camera to track these AR tags is heavily limited.

Due to these drawbacks, and considering that the drone referee will be showcased using the TechUnited robots, it was decided to use TechUnited’s infrastructure to track the player robots.

TechUnited World Model

The TechUnited robots, also known as Turtles, continuously broadcast their states to the TechUnited network. At the robotics field of TU Eindhoven, this is a wireless network named MSL_FIELD_A_a. The broadcast states are stored in the TechUnited World Model, which can be accessed through their multicast server. In this application, the following states are used for the collision detection method:

- Pose (x, y, heading)

- Velocity (in x direction, in y direction)

The image below shows four player robots divided into two teams. Using the multicast server, the position and velocity (with direction) of each robot are measured and stored. The axes in the center show the global world frame convention followed by TechUnited.

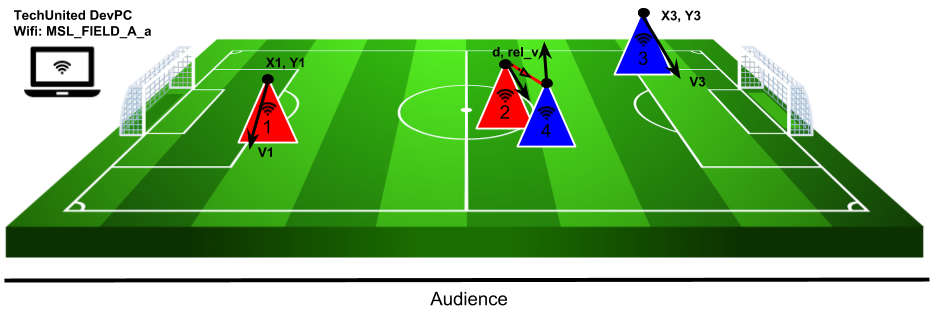

Collision Detection

Once robot positions are known, collisions between them can be detected using a function of two variables:

- Distance between the robots

- Relative velocity between the robots

These variables can be measured using the positions of the robots, their headings and their velocities. The algorithm to detect collisions uses the following pseudo-code.

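    while (1) {
        /* Refresh the stored state of every playing robot. */
        for (int i = 0; i < total_players; i++) {
            store_state_values(i);
        }
        /* Check each unordered pair of robots exactly once. */
        for (int reference = 0; reference < total_players; reference++) {
            for (int target = reference + 1; target < total_players; target++) {
                distance_check(reference, target);
                if (dist_flag == 1) {  /* robots are within touching distance */
                    velocity_check(reference, target);
                    determine_guilty_player(target, reference, vel_flag);
                }
            }
        }
    }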

In the above pseudo-code, collisions are considered only if two robots are within a certain distance of each other. In this case, the relative velocity between these robots is checked. If this velocity is above a certain threshold, a collision is detected. The diagram below describes the scenario for a collision. In this diagram, the red line indicates that the two robots are close to each other, within the distance threshold. The robots can be considered to be touching at this point. However, a collision is only registered if these robots are actually colliding. So, the relative velocity, described as rel_v' in the diagram, is checked.

The above pseudo-code is broken down and explained below:

    for (int i = 0; i < total_players; i++) {  /* check over all playing robots */
        store_state_values(i);
    }

Store State Values: The for loop runs over all playing robots. In this application, the number of playing robots is checked before entering the while loop. The store_state_values function stores the state values of each robot in a player_state matrix. The state values stored are the pose (x, y, heading) and the velocities (vel_x, vel_y).

    for (int reference = 0; reference < total_players; reference++) {
        for (int target = reference + 1; target < total_players; target++) {
            distance_check(reference, target);
            ...
        }
    }

Distance Check: For this application, an n x n matrix is made, where n is the total number of players. The rows of this matrix describe the reference robot and the columns describe the target. The code snippet above contains two for loops, and the second loop starts from the player after the reference player. This avoids checking a robot against itself, avoids checking the same pair twice, and so restricts the number of computations. This can be seen in the matrix description below. Every element with an x is redundant or irrelevant.

| | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | x | 0 | 0 | 0 |
| 2 | x | x | 0 | 0 |
| 3 | x | x | x | 0 |
| 4 | x | x | x | x |

So, for 4 playing robots (16 matrix elements), only 6 interactions are checked: 1-2, 1-3, 1-4, 2-3, 2-4, 3-4. The remaining elements in the matrix are redundant. The distance_check function checks whether any two robots are within colliding distance of each other (only the above 6 interactions are checked). In this application, the colliding distance was chosen to be 50 cm. If the distance between two robots is less than 50 cm, the corresponding element in the above matrix is set to 1.
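The distance check over the upper triangle of the matrix can be sketched as follows. This is a minimal sketch, not the project's implementation: the struct layout, `MAX_PLAYERS`, `fill_distance_flags` and the signature given here to `distance_check` are assumptions made for illustration, and positions are assumed to be in meters in the world frame.

```c
#define MAX_PLAYERS 4             /* assumed: 2-against-2 match */
#define COLLIDING_DISTANCE_M 0.5  /* 50 cm threshold from the text */

/* Hypothetical per-robot state; fields follow the states named in the text. */
typedef struct {
    double x, y, heading;  /* pose in the world frame */
    double vel_x, vel_y;   /* velocity components */
} player_state;

/* Returns 1 when two robots are closer than the colliding distance, else 0.
   Squared distances are compared, so no square root is needed. */
int distance_check(const player_state *a, const player_state *b)
{
    double dx = a->x - b->x;
    double dy = a->y - b->y;
    return (dx * dx + dy * dy) < (COLLIDING_DISTANCE_M * COLLIDING_DISTANCE_M);
}

/* Clears the matrix, fills its upper triangle and returns the number of
   pairs checked. The caller must ensure total_players <= MAX_PLAYERS. */
int fill_distance_flags(const player_state players[], int total_players,
                        int dist_flag[MAX_PLAYERS][MAX_PLAYERS])
{
    int pairs_checked = 0;
    for (int r = 0; r < MAX_PLAYERS; r++) {
        for (int c = 0; c < MAX_PLAYERS; c++) {
            dist_flag[r][c] = 0;
        }
    }
    for (int reference = 0; reference < total_players; reference++) {
        for (int target = reference + 1; target < total_players; target++) {
            dist_flag[reference][target] =
                distance_check(&players[reference], &players[target]);
            pairs_checked++;
        }
    }
    return pairs_checked;
}
```

For n players the inner loop runs n(n-1)/2 times, which gives the 6 interactions listed above for n = 4.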

    if (dist_flag == 1) {
        velocity_check(reference, target);
        determine_guilty_player(target, reference, vel_flag);
    }

Velocity Check: This function is called only if the distance flag is set to 1, when two robots are touching each other (distance between the robots < 50cm). Using the individual velocities and headings of each touching robot, a relative velocity is calculated at the reference robot as origin. If the absolute value of this relative velocity is greater than 5 m/s (velocity threshold in this application), the velocity flag is set. This flag, however, is a signed value, i.e. it is either +1 or -1 depending upon the direction of this relative velocity.

Determine Guilty Player: The sign of the velocity flag tells us which robot is guilty of causing this collision. If the flag is positive, the reference robot is the aggressor and the target robot is the victim of the collision. For a negative flag value, it is the other way around. This determination is important to assign blame and award free-kicks/penalties by the referee during the match.
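The text does not spell out how the sign of the velocity flag is computed, so the following is only one possible interpretation, written as a sketch. It assumes that velocities are already expressed in the world frame (so the heading is not needed), that a collision requires the robots to be approaching each other, and that the aggressor is the robot moving faster towards the other. The struct `robot_state`, the signature of `velocity_check` and these rules are illustrative assumptions, not the project's code.

```c
#define VELOCITY_THRESHOLD_MPS 5.0  /* threshold value quoted in the text */

/* Hypothetical minimal state: position and world-frame velocity. */
typedef struct {
    double x, y;
    double vel_x, vel_y;
} robot_state;

/* Returns +1 if the reference robot is the aggressor, -1 if the target
   is, and 0 if no collision is flagged. Quantities are scaled by the
   distance between the robots so that no square root is required. */
int velocity_check(const robot_state *ref, const robot_state *tgt)
{
    double dx = tgt->x - ref->x;  /* vector from reference to target */
    double dy = tgt->y - ref->y;
    double dist_sq = dx * dx + dy * dy;

    /* Closing speed multiplied by the distance between the robots. */
    double closing = (ref->vel_x - tgt->vel_x) * dx
                   + (ref->vel_y - tgt->vel_y) * dy;
    if (closing <= 0.0) {
        return 0;  /* robots are not approaching each other */
    }
    /* closing/dist > threshold  <=>  closing^2 > threshold^2 * dist^2 */
    if (closing * closing <=
        VELOCITY_THRESHOLD_MPS * VELOCITY_THRESHOLD_MPS * dist_sq) {
        return 0;  /* approaching, but too slowly to count as a collision */
    }
    /* Each robot's own speed towards the other (scaled by distance). */
    double ref_approach = ref->vel_x * dx + ref->vel_y * dy;
    double tgt_approach = -(tgt->vel_x * dx + tgt->vel_y * dy);
    return (ref_approach >= tgt_approach) ? 1 : -1;
}
```

With this convention a positive return value blames the reference robot and a negative value blames the target, matching the sign convention described above.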

Interfaces

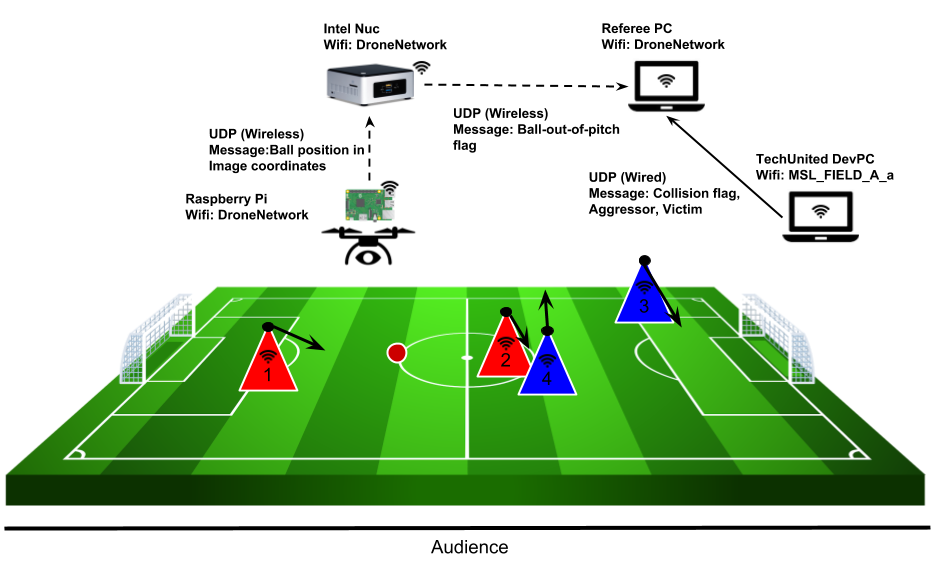

Developing additional interfaces for event detection and rule enforcement was necessary due to the following constraints.

- The Intel Nuc was unreliable on board the drone. While the telemetry module and the Pixhawk were used to fly the drone, a Raspberry Pi was used for the computer vision. This Raspberry Pi communicates over UDP with the Nuc. Both the Nuc and the Raspberry Pi operate on the DroneNetwork wireless network.

- The collision detection utilizes the World Model of the TechUnited robots. To access this, the computer must be connected to the TechUnited wireless network. Since the collision detection algorithm is independent of the flight of the drone, it is not necessary to send the collision detection information to the Intel Nuc. Hence, this information is broadcast directly to the remote referee over the TechUnited network.

The diagram below describes all these interfaces and the data that is being transmitted/received.

Human-Machine Interface

The HMI implementation consists of 2 parts.

- A supervisor, which reads the collision detection and ball-out-of-pitch flag and determines the signals to be sent to the referee.

- A GUI, where the referee is able to make decisions based on the detected events and also display these decisions/enforcements to the audience.

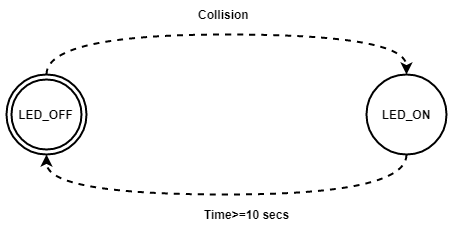

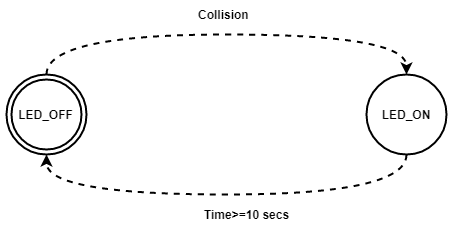

Supervisory Control

The tasks performed by the supervisor were confined to rule enforcement. These tasks are explained below.

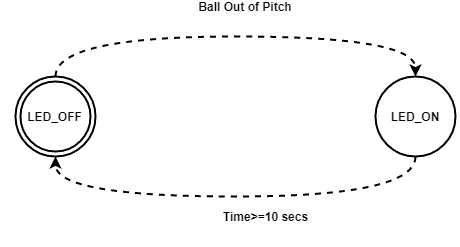

- The supervisor receives input from the vision algorithm as soon as the Ball Out of Pitch event occurs. It then provides a recommendation to the remote referee via a GUI platform running on the referee's laptop. The recommendation is terminated automatically after 10 seconds if the referee does not acknowledge it.

- The supervisor receives input about the occurrence of the Collision Detected event. As soon as this event is detected, a recommendation is sent to the remote referee via the GUI platform. The termination strategy is the same as for the previous event.

- The referee has a button to show the audience the decision he/she has taken. As soon as the button is pushed in the GUI, the decision is sent to the audience screen and displayed as an LED. The supervisor turns the LED off after a certain time by releasing the push button automatically.
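The recommendation lifecycle described in the list above (raise on an event, clear on acknowledgement or after 10 seconds) can be sketched as a small state holder. The actual supervisor was implemented in MATLAB/Simulink, so this C sketch is only illustrative; the names `recommendation`, `recommendation_raise` and `recommendation_update` are hypothetical.

```c
#include <stdbool.h>

#define RECOMMENDATION_TIMEOUT_S 10.0  /* automatic termination time from the text */

/* Hypothetical state of one recommendation shown to the remote referee. */
typedef struct {
    bool active;         /* true while the recommendation is displayed */
    double raised_at_s;  /* time at which the event was detected */
} recommendation;

/* Called when an event (ball out of pitch, collision) is detected. */
void recommendation_raise(recommendation *r, double now_s)
{
    r->active = true;
    r->raised_at_s = now_s;
}

/* Called periodically. Clears the recommendation when the referee
   acknowledges it or when the timeout expires; returns whether the
   recommendation is still active. */
bool recommendation_update(recommendation *r, double now_s, bool acknowledged)
{
    if (r->active &&
        (acknowledged || now_s - r->raised_at_s >= RECOMMENDATION_TIMEOUT_S)) {
        r->active = false;
    }
    return r->active;
}
```

The same pattern could be reused for the audience LED, with the timeout replaced by the LED display duration.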

The following automata were used for these tasks.

Graphical User Interface

Integration

Demo Video

Conclusions and Recommendations

Conclusions

Recommendations

Additional Resources

The developed software is divided into two categories:

- C/C++ Code

- MATLAB/Simulink Code

The C/C++ codes are stored in the team's Gitlab private repository here. For access to this resource, please write an email to Aditya Kamath. The Gitlab repository contains the following source code:

- ArUco detection/tracking: This is a re-used code sample using the aruco library for the detection and tracking of AR markers

- UDP interfaces: This repository contains sample code for local/remote UDP servers and clients

- TechUnited World Model: The repositories multicast and Global_par are used to access the TechUnited world model and also contain the code developed for collision detection

- Ball detection/tracking: This contains the code for the detection of a red colored ball

- Marvelmind: This code reads pose data of a selected beacon from the MarvelMind modem and broadcasts data via UDP. This is accessed by the MATLAB/Simulink files.

The MATLAB/Simulink files are stored here and contain the following resources:

- MAV004: This repository contains all the files required to communicate with and control the Pixhawk using MATLAB/Simulink

- HMI: This contains an integration of the developed supervisor and referee/audience GUI