PRE2016 3 Groep2: Difference between revisions


Revision as of 17:35, 3 April 2017

This is group 2's page

Note: To the professors reading, the last section titled "Summary of Tasks Completed" contains the summary of what we have done in the past week (and weeks before as well).

Group Composition

Group 2 has the following members:

- Steven Ge

- Rolf Morel

- Rick Coonen

- Noud de Kroon

- Bas van Geffen

- Jaimini Boender

- Herman Galioulline

Project Description

In this project, we will explore music generation using machine learning. Specifically, we will consider how taking user preferences into account when generating music via machine learning could create a unique and customized product for music consumers. Applications include generating music in the style of deceased artists (for fans of those artists), generating scores for movies, and a new type of tool to inspire artists with new musical ideas. Our project will explore whether there is indeed a substantial need or desire for this type of machine learning music generation. Furthermore, we will investigate whether such a machine learning algorithm is feasible to implement and can provide acceptable music for each of the applications.

Applications

One application targets music consumers who have a particular taste for artists who are no longer alive (Bach, for example). These users are restricted to the fixed set of works the artist already composed, but desire new or more music by that same artist. A possible solution is a machine learning algorithm capable of generating songs that contain the key characteristics that uniquely identify that artist. This would be done by training a machine learning algorithm on that specific artist's songs. Assuming this is feasible, it could become an emerging market that helps classical music lovers relive the past.

Another example of an application is the movie industry, where millions of dollars are spent on creating soundtracks for different scenes. Over time, certain sounds have become key identifiers for evoking certain types of emotions in the audience (clashing chords to create distress and discomfort, for example). So when a new score is developed for a movie, the music is different, but several elements in the music must remain the same in order to create the desired effect. This could potentially be done much more efficiently and cheaply by training a machine learning algorithm for each of the desired effects. For example, an algorithm could generate only "scary" music after being trained on the music played during scary scenes in movies.

The final example of an application is a musical idea generator or source of inspiration for musical artists. Music artists (mainly in techno or house music) already use everyday sounds as samples for their beats or tracks. A machine learning algorithm could provide a similar source of interesting ideas for those artists. The artist would feed the algorithm different kinds of music (either known songs or some composed samples) and listen to the generated music for inspiration or an interesting idea. This could be repeated as many times as necessary until a desired idea or sample has been generated.

USE aspects

Throughout this project we will concern ourselves with how an AI that can generate music on demand may have an impact on Users, Society and Enterprise.

User

A product that does not satisfy the user's needs is inherently deficient. The most important question here is whether users would actually exist for a product that generates music, where hitherto creating music was a creative affair performed solely by humans. Music is often more to people than just the aural pleasure of hearing it performed; the artist behind the music is also of importance.

To gauge interest in an AI product that generates music, and whether such a product will find application, we use surveys to find out which features are actually desired by users. Guided by the insights the survey gives us, we will steer the project so as to accommodate user needs.

Society

A possible consequence of a very effective musical AI is that it will be competent and efficient to a degree where it competes with humans. Until now, humans have considered creative tasks, such as composing music, safe from automation. Recent advances in AI technology have shown that there are algorithms that produce artefacts very similar in quality to exemplars from the domain of interest. Even if these algorithms learn no more than patterns from a data set, there are jobs that ask for exactly this (temporary music in the film industry, for example).

Given these advances and the rate of research progress, it could very well mean that humans now performing these creative musical tasks will be replaced by AI technology. We will therefore try to look into the impact of the intrusion of musical AI into the field of musicians and discuss how this influences employment in this sector.

Enterprise

Beyond being a creative pursuit, making music is responsible for a multi-billion dollar industry. Besides artists there are producers, record labels and content delivery services (among others). On the production side, musical artificial intelligence offers producers the possibility to more efficiently obtain the kind of music they are after, be it a generated film score or a sample to be incorporated in a song. This could give content owners creative control that is otherwise not available, but it also means less dependence on artists who need to be paid. This, of course, creates opportunities to increase profits.

Another aspect to consider is ownership of the produced music. Successful AI technologies as we know them today learn patterns from training data. Often there will be no way around the fact that if you would like the AI to produce songs similar to those of a certain artist, then this artist's discography will have to be part of the training data. In general, however, these songs will be copyright protected. As the AI will not reproduce songs but only generate similar songs based on the patterns extant in the original songs, a strong case could be made that the generated song does not (directly) infringe on the copyright of the original songs. The AI distills the underlying patterns of the music, and ownership of these interpretive patterns is currently unclear; the patterns even differ depending on the AI technology used.

We examine the possible applications for AI music in industry as well as look into the legal aspects of creating similar music through pattern recognition.

Machine Learning

Multiple approaches to generating audio samples with Artificial Intelligence technology are available. We outline two such approaches:

Wavenet

To implement our Machine Learning idea to be able to mimic a certain artist we planned to use a Machine Learning algorithm called WaveNet. WaveNet is a deep neural network that takes raw audio as input. Already, it has been used successfully to train on certain dialects to create a state-of-the-art text-to-speech converter. Specifically, WaveNet has been shown to be capable of capturing a large variety of key speaker characteristics with equal fidelity. So instead of feeding it voice data, we intended to feed it musical input in order to train the network to generate our desired music.

Furthermore, WaveNet is an open source project written in Python and is available on GitHub. We thought this easy access would not only allow us to modify WaveNet if we found it necessary, but also ensure that there is a community available to help if needed.

However, the results of WaveNet were not satisfactory: it was too slow at producing content, so we decided to focus on the GAN architecture.

Generative Adversarial Networks (GANs)

A generative adversarial network (GAN) is a generative modeling approach, introduced by Ian Goodfellow in 2014. The intuitive idea behind GANs is a game-theoretic scenario in which the generator neural network competes against a discriminator network (the adversary). The generator is trained to produce samples from a particular data distribution of interest. Its adversary, the discriminator, attempts to accurately distinguish between "real" samples (i.e. from the training set) and "fake" samples (i.e. produced by the generator). More concretely, for a given sample the discriminator produces a value that indicates the probability that the sample is a real training example, as opposed to a generated sample.

A simple analogy to explain the general idea is art forgery. The generator can be considered the forger, who tries to sell fake paintings to art collectors. The discriminator is the art specialist, who tries to establish the authenticity of given paintings, e.g. by comparing a new painting with known (authentic) paintings. Over time, both forgers and experts will learn new methods and become better at their respective tasks. The cat-and-mouse game that arises is the competition that drives a GAN.

More formally, learning in GANs is a zero-sum game in which the payoff of the generator is the additive inverse (i.e. sign change) of the payoff of the discriminator. The goal of training is to maximize the payoff of the generator, although during training each player attempts to maximize its own payoff. GANs are not guaranteed to converge in general, and failure to converge can cause them to underfit. Nevertheless, recent research on GANs has led to many successes, and progress on multiple problems is being made at a high rate. In particular, a divide-and-conquer technique that breaks the generative process up into multiple layers can simplify learning. Mirza and Osindero [1] introduced a conditional version of the generative adversarial network, which conditions both the generator and the discriminator on class labels. This idea was used by Denton et al. [2] to show that a cascade of conditional GANs can generate higher resolution samples by incrementally adding details to the sample. A recent example is the StackGAN architecture.
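The zero-sum structure can be illustrated with a small sketch. It assumes the value function from the original GAN formulation, V = E[log D(x)] + E[log(1 - D(G(z)))]. The function names and the example discriminator outputs are hypothetical and serve only as illustration; this is not the code used in our project.

```python
import math

def discriminator_payoff(d_real, d_fake):
    """Value V = mean(log D(x)) + mean(log(1 - D(G(z)))).

    d_real: discriminator outputs (probabilities) on real training samples.
    d_fake: discriminator outputs (probabilities) on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_payoff(d_real, d_fake):
    # Zero-sum game: the generator's payoff is the additive inverse.
    return -discriminator_payoff(d_real, d_fake)

# Hypothetical discriminator outputs, for illustration only.
# A discriminator that separates real from fake well:
confident = discriminator_payoff([0.9, 0.8], [0.1, 0.2])
# A discriminator that is completely fooled (outputs 0.5 everywhere):
fooled = discriminator_payoff([0.5, 0.5], [0.5, 0.5])
```

The fooled discriminator receives the lower payoff, so by the sign change the generator receives the higher one in that case.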

StackGAN

TODO: Comprehensive explanation

Training Data

LSTM (Back Up)

Since, as far as we know, GANs have not yet been used to create music, we cannot guarantee that the output of that network will be good. We will therefore use another network, one that has already been used several times to create music, as a backup plan: the long short-term memory (LSTM) network. An LSTM is a recurrent neural network, which means it keeps an internal state that is used when processing the input. This allows for memory-like behavior. We will use and modify existing models to create a network that fits our needs.

Data

We use MIDI files for the LSTM network. The data sets we currently plan on using are the Lakh MIDI Dataset (http://colinraffel.com/projects/lmd/) and a data set created by a redditor called The Midi man (https://www.reddit.com/r/datasets/comments/3akhxy/the_largest_midi_collection_on_the_internet/). The data set created by The Midi man contains information about the artist and the genre of the MIDI files. The Lakh MIDI Dataset consists of several versions. We are using the LMD-matched version, a subset of the full data set in which all MIDI files are matched with metadata from the Million Song Dataset.

Basic

This configuration acts as a baseline for melody generation with an LSTM model. It uses basic one-hot encoding to represent extracted melodies as input to the LSTM.
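As a rough illustration of one-hot encoding, the sketch below maps each melody event to a vector containing a single 1. The pitch range and the two special event markers are assumptions made for this example and are not taken from the Magenta code.

```python
MIN_PITCH = 48      # assumed lowest MIDI pitch in the melody range
MAX_PITCH = 83      # assumed highest MIDI pitch in the melody range
NO_EVENT = "hold"   # hypothetical marker: the previous note keeps sounding
NOTE_OFF = "off"    # hypothetical marker: the current note stops

# Two special events plus one class per pitch in the range.
NUM_CLASSES = 2 + (MAX_PITCH - MIN_PITCH + 1)

def event_to_index(event):
    """Map a melody event to its class index."""
    if event == NO_EVENT:
        return 0
    if event == NOTE_OFF:
        return 1
    if not isinstance(event, int) or not MIN_PITCH <= event <= MAX_PITCH:
        raise ValueError(f"event out of range: {event!r}")
    return 2 + (event - MIN_PITCH)

def one_hot(event):
    """Return a vector with a 1 at the event's index and 0 elsewhere."""
    vec = [0] * NUM_CLASSES
    vec[event_to_index(event)] = 1
    return vec

# Middle C, held for one step, then D, then silence.
melody = [60, NO_EVENT, 62, NOTE_OFF]
encoded = [one_hot(e) for e in melody]
```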

Lookback

Lookback RNN introduces custom inputs and labels. The custom inputs allow the model to more easily recognize patterns that occur across 1 and 2 bars. They also help the model recognize patterns related to an event's position within the measure. The custom labels reduce the amount of information that the RNN's cell state has to remember by allowing the model to more easily repeat events from 1 and 2 bars ago. This results in melodies that wander less and have a more musical structure.
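The idea behind the custom labels can be sketched as follows. This is a simplified illustration, not Magenta's actual encoding: the label names and the default of 16 steps per bar are assumptions for this example.

```python
STEPS_PER_BAR = 16  # assumption: sixteenth-note steps in 4/4 time

def lookback_labels(events, steps_per_bar=STEPS_PER_BAR):
    """Replace an event by a 'repeat' label when it matches the event
    one or two bars earlier; otherwise keep the event itself."""
    labels = []
    for i, event in enumerate(events):
        one_bar = i - steps_per_bar
        two_bars = i - 2 * steps_per_bar
        if one_bar >= 0 and events[one_bar] == event:
            labels.append("repeat_1_bar")
        elif two_bars >= 0 and events[two_bars] == event:
            labels.append("repeat_2_bars")
        else:
            labels.append(event)
    return labels

# A four-step bar played twice: the second bar becomes pure repeats.
bar = [60, 62, 64, 65]
labels = lookback_labels(bar + bar, steps_per_bar=4)
```

With labels like these, the network can express "play the same thing as one bar ago" with a single class instead of having to remember the exact pitch.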

Attention

In this configuration we introduce the use of attention. Attention allows the model to more easily access past information without having to store that information in the RNN cell's state. This allows the model to more easily learn longer term dependencies, and results in melodies that have longer arching themes.
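A minimal sketch of the attention idea: instead of relying only on the cell state, the model computes a weighted average of earlier states, with weights given by a softmax over similarity scores. The dot-product scoring used here is an assumption chosen for brevity; the scoring function in Magenta's attention configuration may differ.

```python
import math

def attend(query, past_states):
    """Return softmax attention weights and the weighted sum of past states."""
    # Similarity of the current query to every past state (dot product).
    scores = [sum(q * s for q, s in zip(query, state)) for state in past_states]
    # Numerically stable softmax over the scores.
    max_score = max(scores)
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted average of the past states.
    context = [
        sum(w * state[d] for w, state in zip(weights, past_states))
        for d in range(len(query))
    ]
    return weights, context

# Hypothetical two-dimensional states, for illustration only.
weights, context = attend([2.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```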

For Music Analysis

For the training data, we use the FMA dataset ("FMA: A Dataset For Music Analysis"), created by researchers at the École polytechnique fédérale de Lausanne (EPFL) from the Free Music Archive. This data set is specifically made for music analysis tasks. It contains the song files (in mp3 format) and the metadata corresponding to the songs. The creators of this data set found several limitations in other existing data sets, for example: small size, absence of metadata, and limited availability and legality of the music files. The full version of this data set has not been released yet; the small and medium versions have. The small and medium data sets are subsets of the full version and also come with metadata. The distribution of genres is balanced in the small data set and unbalanced in the medium data set. The distribution does not really matter for us, as we are going to train on each genre separately. Unlike the full version, the small and medium data sets do not contain full-length songs but 30-second segments taken from the middle of the songs. The researchers chose the middle because it is usually more active than the beginning or ending. The metadata includes artist name, song title, music genre, and track counts. In the next section we explain what the song vector and the metadata vector look like.

Paper: https://arxiv.org/pdf/1612.01840.pdf

Data set: https://github.com/mdeff/fma

Data Generator Class

To make use of this data set, we created a class that can extract the information from the small and medium data sets. The medium data set contains 20 different genres and the small data set contains 10 different genres, all of which are also genres of the medium data set. The function that creates the metadata vector for the medium data set therefore also works for the small data set. The class contains the following functions:

- __init__(self, sample_length, genre=None): Loads all songs from the data set with a certain genre. Also splits each 30-second sample into multiple samples of sample_length seconds. If 30 is not divisible by sample_length, the remaining part is dropped. (This is not exactly how it is done in the code, but the idea is the same.)

- _get_genre(self, index): Returns the genre of a song with the corresponding index

- get_sample(self, index, sample_rate): Gets the index'th sample and creates the song vector with the corresponding sample_rate (Again not exactly like this in code). Also calls the create_metadata_vector function to get the metadata vector of the song.

- create_metadata_vector(self, song): Creates the metadata vector of the song.

Format song vector: A vector created by the librosa library from a given song. The vector has (length_song_in_seconds * sample_rate) entries.
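The relation between clip length, sample rate and the splitting described for __init__ can be sketched as below. As noted above, the real class does this slightly differently; the function name split_clip and the tiny sample rate are hypothetical and chosen only to keep the example small.

```python
def split_clip(song_vector, sample_rate, sample_length):
    """Split a song vector into chunks of sample_length seconds.

    A trailing part shorter than sample_length seconds is dropped.
    """
    chunk_size = sample_length * sample_rate
    n_chunks = len(song_vector) // chunk_size
    return [
        song_vector[i * chunk_size:(i + 1) * chunk_size]
        for i in range(n_chunks)
    ]

# A stand-in for a 30-second clip at an (unrealistically low) rate of 4 Hz.
sample_rate = 4
clip = list(range(30 * sample_rate))  # 30 * 4 = 120 entries

# 30 is not divisible by 7, so the last 2 seconds are dropped.
chunks = split_clip(clip, sample_rate, sample_length=7)
```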

Format metadata vector (entries): acousticness, artist_discovery, artist_familiarity, artist_hotttnesss, danceability, energy, instrumentalness, liveness, play_count, song_currency, song_hotttnesss, speechiness, tempo, valence, train, Blues, Chiptune, Classical, Electronic, Folk, Hip-Hop, Indie-Rock, International, Jazz, Metal, Old-Time / Historic, Pop, Post-Punk, Post-Rock, Psych-Folk, Psych-Rock, Punk, Rock, Soundtrack, Trip-Hop

The reason why each genre is a separate entry is that we do not want the neural network to learn a linear relation between the genres.
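A short sketch of the genre part of the metadata vector: each genre gets its own 0/1 entry, so no ordering between genres is implied, as it would be with a single integer genre code. The function name genre_entries is hypothetical; the genre list is the one given above.

```python
GENRES = [
    "Blues", "Chiptune", "Classical", "Electronic", "Folk", "Hip-Hop",
    "Indie-Rock", "International", "Jazz", "Metal", "Old-Time / Historic",
    "Pop", "Post-Punk", "Post-Rock", "Psych-Folk", "Psych-Rock", "Punk",
    "Rock", "Soundtrack", "Trip-Hop",
]

def genre_entries(genre):
    """Return one 0/1 entry per genre, with a 1 only for the given genre."""
    if genre not in GENRES:
        raise ValueError(f"unknown genre: {genre!r}")
    return [1.0 if g == genre else 0.0 for g in GENRES]

jazz = genre_entries("Jazz")
```

With a single integer code, "Jazz" (index 8) would look numerically closer to "Metal" (index 9) than to "Blues" (index 0), which is exactly the kind of spurious linear relation the separate entries avoid.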

Survey

To explore the wishes of users we conducted a survey asking people general questions about music generation as well as their opinion on various features we proposed for a music generator. To get quantifiable results we asked the subjects to rate their level of agreement with our statements, or interest in our suggested features, on a scale from 1 to 5. The survey we conducted is available here: File:SurveyArtificialArt.pdf.

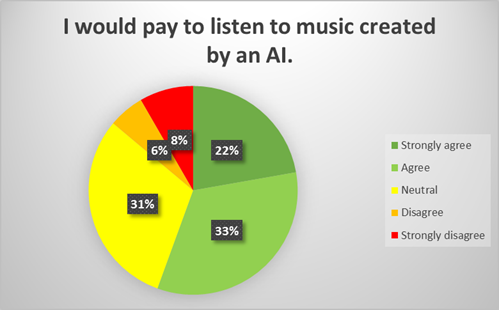

An interesting observation from the survey results is that around half of the people seem to be open to paying for music generated by an AI:

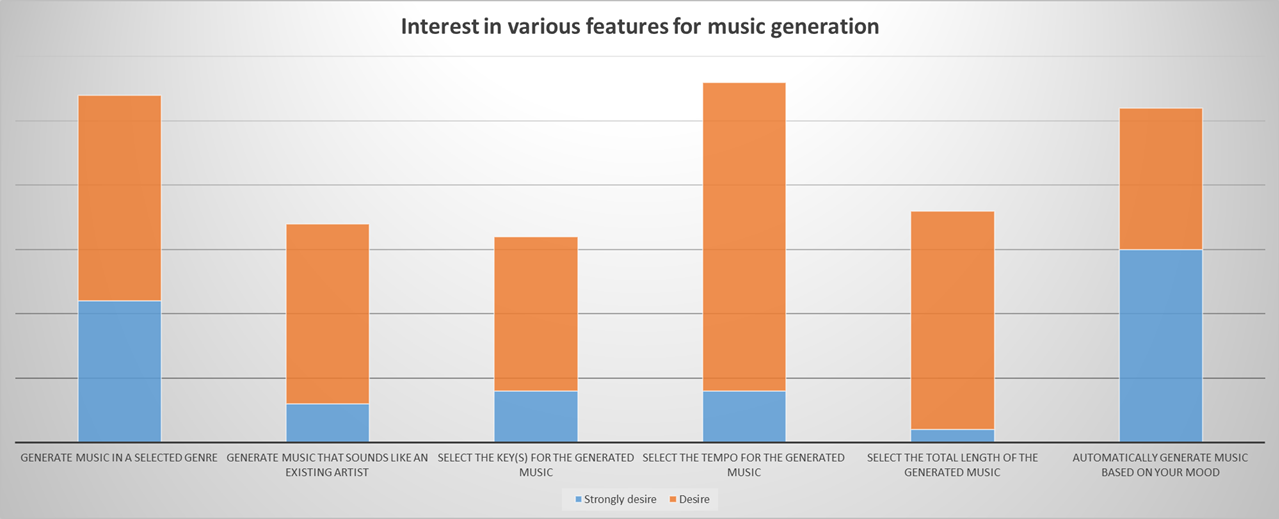

We have compiled the survey results in a bar chart that shows how the different features compared to each other when it comes to how much the subjects desired each of them.

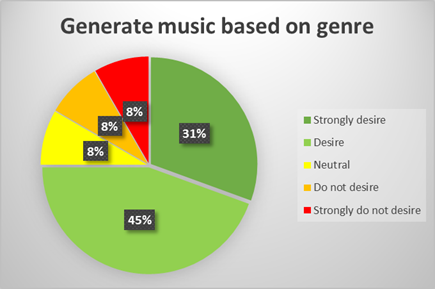

From these results we can see that, in general, users are most interested in generating music based on their selected genre, tempo and mood. Of these three, generating music based on mood seems to be especially desirable, since many people strongly desire this feature. Genre and tempo have a higher total number of interested people, but a bigger part of these people is only mildly interested. This is especially true for generating music based on tempo. To give an idea of the number of people interested in a feature compared to those not interested, the following chart shows the distribution of people's desire for generating music based on genre.

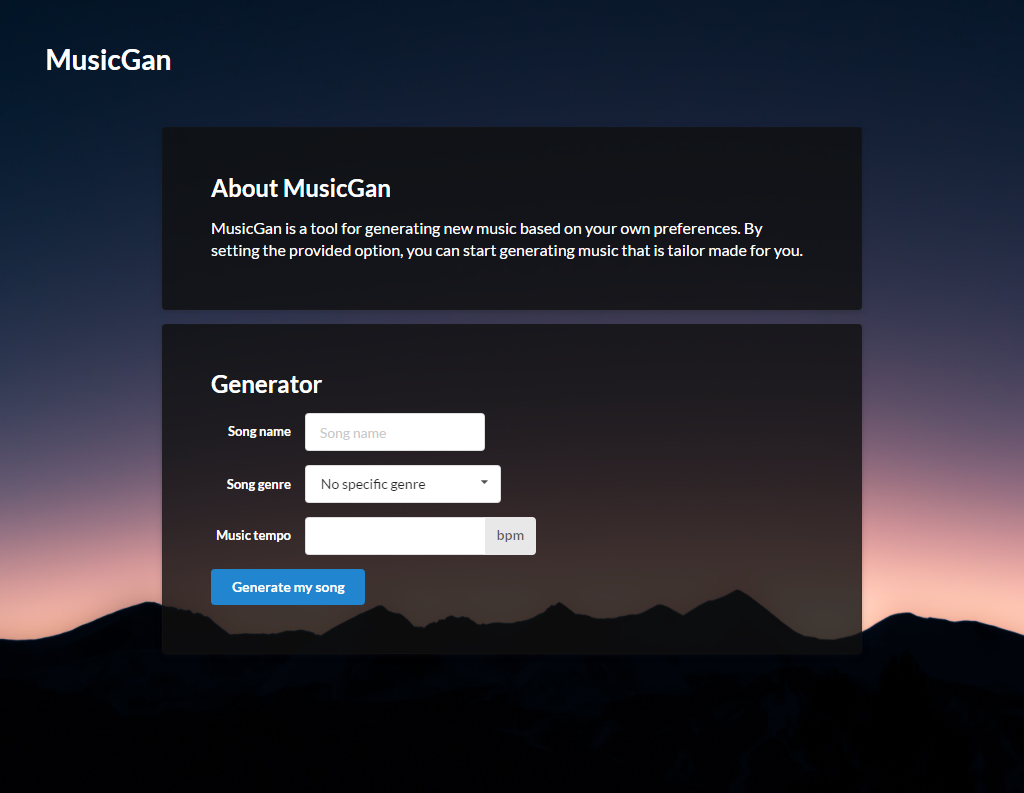

Frontend

Based on the survey results and what we deemed realistic in the timespan of this course we have decided to make our application revolve around two main features: generate based on genre and tempo. This resulted in the first basic prototype in the image below.

The newest version of the website can be seen on MusicGan.eu.

User feedback

To make sure our product actually satisfies potential users, we need to evaluate it by having them try it out and ask them questions. By doing this we want to find out the following things:

- Is it clear for the user what the application is doing?

- Is it clear for the user how to use the application?

- Is the user satisfied with the available options?

- If not, what options are missing?

- What does the user think of the generated song?

- Does it reflect the selected options?

AI Technology research

TODO: Evaluation of experiments with currently available technology (WaveNet) as well as discussion of applying GANs.

Interviews

We plan to conduct a survey of regular people to get a perspective not only on how useful and interesting they would find a machine learning algorithm that mimics a certain artist's playing style, but also on what else they would possibly find useful (genre generation or a mixture of artist styles).

Planning (subject to change)

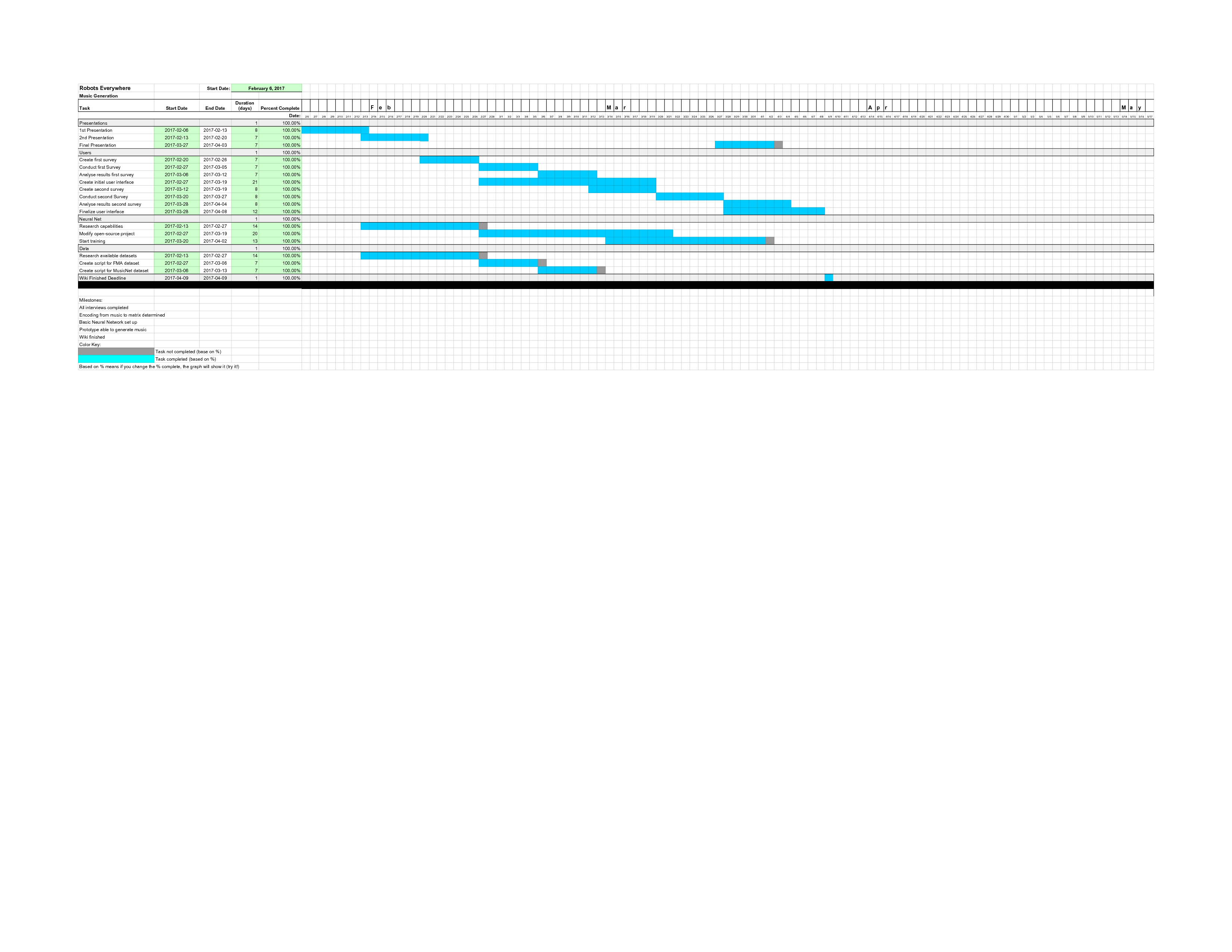

Gantt chart

This Gantt chart was created to keep the project organized. It shows which activities the project consists of, when these activities take place and when each activity needs to be finished.

It also contains a list of milestones. This list shows the parts into which the project is divided. The major activities of the Gantt chart (somewhat) correspond to these milestones.

During the course, the percentage of completion in this Gantt chart will be updated.

week 1

- Deciding on the subject of the project

- Working on first presentation

week 2

- First presentation done

- Working on second presentation

- Create a project plan (the Gantt chart)

- Create a task division

- Start researching Data Engineering

- Start researching TensorFlow

- Start working on wiki

week 3

- Second presentation done

- Rework project based on feedback

- Start interviews

- Create questionnaire for survey and hold survey

- First experiments with AI software (WaveNet)

- Incorporate changed direction in wiki and flesh out certain sections

- Conduct survey

- Continue experiments with WaveNet

- Begin website

week 4

- DataTeam starts finding data

- Continue to give out survey

- First "Hello World" with WaveNet

week 5

- Analyse results from survey

- Start developing WaveNet prototype

- Data Team starts working on script

week 6

- Working prototype of WaveNet Network

- Working script to gather and format data

- Fleshing out user-facing product (based on survey results)

week 7

- Initial version of user-facing product (i.e. website)

- Start working on the backend of our user-facing product

week 8

- user-facing product finished

- Incorporate WaveNet prototype into product

- Further USE analysis

week 9

- Tidy up user facing product with working Machine Learning Algorithm

- Tidy up wiki

- Research GAN if extra time

week 10

Back up week (in case we run into unforeseen obstacles)

Division of Tasks

For the technical part of this project, we have split the group into two teams. The Deep Neural Network team (Team TensorFlow) will work on the neural network and the Data Engineering team will gather test data and find a suitable encoding.

Team TensorFlow:

- Noud de Kroon

- Bas van Geffen

- Rolf Morel

Team Data Engineering:

- Rick Coonen

- Jaimini Boender

- Herman Galioulline

Summary of Tasks Completed

Week1

During this week, we brainstormed many different ideas for the project as a group. The top ideas included: this idea (music generation via machine learning), news bias detection (for example, rating how "conservative" or "liberal" a news article is), European law on Internet privacy, and a few others that were discarded quickly. We settled on music generation because it not only seemed the most interesting but also had the most potential to produce a meaningful product. We also created a presentation explaining our idea.

Week2

During this week we hashed out the details of how to create a machine learning algorithm capable of producing music. This included researching the available options (libraries and other tools), identifying the different tasks that would be necessary to complete, and assigning them to different members of the group. Lastly, we created a presentation explaining everything.

Week3

During this week we did our best to incorporate feedback from the presentation in week 2. This included choosing a slightly different angle on our topic, one that puts more emphasis on keeping the user in mind when deciding the capabilities of the algorithm. This changed the project into designing and making a product (one that could potentially be sold or be expanded into something that could be sold) rather than a simple machine learning feasibility research project. We split the team into two groups: the Neural Net team (responsible for the actual machine learning algorithm) and the Data Team (responsible for gathering data to train the machine on).

Week 4

This week the Neural Net team focused on adapting the open source StackGAN project to work with sound instead of text. This included fixing some internal inconsistencies and understanding the project in general. The Data Team started writing a script that generates a stub to help the Neural Net team in their development. That process involved communication between the two groups about what each expected from the other and about how the data should be formatted as input for the algorithm. This covered things like the correct way to label the data set, the number of different features to be trained on (the number of genres, for example), and the type of structure the data should be in. Lastly, the website that will serve as the UI for the whole project was created and can be viewed!

Week 5

This week the Neural Net team continued adapting the StackGAN project. Furthermore, they created a script that pre-processes the music data into a format that matches the network input. The Data Team created a script that takes a "small" (15,000 songs) data set and creates vectors representing each song along with the associated metadata. Lastly, the URL of the website was changed to http://musicgan.eu.

As a backup to the GAN approach to creating music, we decided that a recurrent neural network approach could be promising. More specifically, we are looking at implementing an LSTM (long short-term memory) neural network, which has been documented to produce interesting results. A Google Brain project, Magenta, was recently involved in the release of A.I. Duet, which is based on the LSTM approach. Magenta is also completely open source, and the team behind the project is looking to get more people involved by documenting their implementation and posting tutorials on their blog. This week we started working on generating music samples using Magenta's LSTM.

We were able to produce some preliminary results using Magenta's pre-trained models. It is interesting to hear the differences between the various configurations (basic, lookback and attention) of the LSTM.

Basic Recurrent Neural Network Example 1

Basic Recurrent Neural Network Example 2

Lookback Recurrent Neural Network Example 1

Lookback Recurrent Neural Network Example 2

Attention Recurrent Neural Network Example 1

Attention Recurrent Neural Network Example 2

The next two samples were generated by priming the LSTM with the first four notes of "Twinkle Twinkle Little Star" using the attention recurrent neural network.

Twinkle Twinkle Little Star Example 1

Twinkle Twinkle Little Star Example 2

Next steps include tweaking the training of the neural network towards what we found our users are looking for, like generating samples based on genre, existing artists and tempo.

Week 6

This week the Neural Net team continued to correct errors in the current GAN algorithm (modifying the different layers of the network to match the music input). A slight bug in the script made by the Data Team was found and has been corrected (a string was sent instead of an integer). The modification of the first layer of StackGAN is finished, and the software is now ready to run. Since we have learned a lot from this process, we expect that adapting layer 2 will go a lot smoother. We propose 4 different test setups:

- The outputs of the neural network are spectrograms in which alternating rows record the real and imaginary parts of the coefficients.

- The outputs of the neural network are 1D arrays representing songs. We put a 1D convolutional layer between the song and the rest of the network. We conjecture that the network might learn by itself some form of spectrogram encoding.

- The outputs of the neural network are spectrograms, which are 256x256x2 images for the final output and 64x64x2 images for the intermediary output. The first channel represents the magnitude of the spectrogram and the second channel the angle.

- As 3, but with 512x512x2 and 128x128x2 resolutions for the final and intermediary output respectively.
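The two spectrogram encodings mentioned in the list above can be sketched on a tiny example. This is a hedged illustration with hypothetical function names and made-up coefficients, not the pre-processing script of the Neural Net team; real spectrograms would come from a short-time Fourier transform of the audio.

```python
import cmath

def to_interleaved_rows(spec):
    """Setup 1: each complex row becomes a real-part row followed by
    an imaginary-part row."""
    rows = []
    for row in spec:
        rows.append([c.real for c in row])
        rows.append([c.imag for c in row])
    return rows

def to_magnitude_angle(spec):
    """Setups 3 and 4: rows x cols x 2, channel 0 is the magnitude and
    channel 1 is the angle (phase) of each coefficient."""
    return [[[abs(c), cmath.phase(c)] for c in row] for row in spec]

def from_magnitude_angle(channels):
    """Invert to_magnitude_angle, recovering the complex coefficients."""
    return [[cmath.rect(mag, ang) for mag, ang in row] for row in channels]

# A made-up 2x2 spectrogram of complex coefficients.
spec = [
    [complex(1, 1), complex(0, -2)],
    [complex(3, 0), complex(-1, 0)],
]

rows = to_interleaved_rows(spec)
channels = to_magnitude_angle(spec)
restored = from_magnitude_angle(channels)
```

Both encodings keep all information in the complex coefficients, so the generated output can be converted back to a complex spectrogram and from there to audio.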

The final deliverable of the Neural Network team will be an analysis of the pros and cons of each of these test setups.

With the Data Team, we finished a script that enables us to download any of the mp3 clips from 7digital. A new data set has also been found (MusicNet), which seems to have a lot of nice features (classical music that is very well labeled). For now, testing will occur with the MusicNet data set. Furthermore, the data has been compiled and added to the wiki.

The part of the Data Team that started working on a backup LSTM has found data sets (see the "Data" subsection of the "LSTM" section) that can be used for the LSTM network. They also started preprocessing the data and found some LSTM models that can be trained for our purpose. The existing re-trainable models they found are: Magenta (LSTM by TensorFlow), music_rnn by Yoav Zimmerman and deepjazz by Ji-Sung Kim.

Here is a sample from a Magenta network that we trained: First trained output

We used the same MIDI data set as Magenta's pre-trained models. The next steps would be to train the network on a different data set, and also to look into categorizing input and output into different instruments.

Current task assignment:

- Noud de Kroon: Deep neural net

- Bas van Geffen: Deep neural net

- Herman Galioulline: LSTM and data

- Rick Coonen: Website and data

- Jaimini Boender: Wiki and Data

- Rolf Morel: Deep neural net

- Steven Ge: Data

References

- [1] Mirza, Mehdi, and Simon Osindero. "Conditional generative adversarial nets." arXiv preprint arXiv:1411.1784 (2014).

- [2] Denton, Emily L., Soumith Chintala, and Rob Fergus. "Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks." Advances in Neural Information Processing Systems. 2015.