PRE2016 3 Groep2

Revision as of 10:45, 6 March 2017

This is group 2's page

Group Composition

Group 2 has the following members:

- Steven Ge

- Rolf Morel

- Rick Coonen

- Noud de Kroon

- Bas van Geffen

- Jaimini Boender

- Herman Galioulline

Project Description

In this project, we will explore music generation using machine learning. Specifically, we will consider how taking user preferences into account when generating music via machine learning could create a unique and customized product for music consumers. Applications include generating new music in the style of deceased artists (for fans of those artists), generating music scores for movies, and a new type of tool to help inspire artists with new musical ideas. Our project will explore whether there is indeed a substantial need or desire for this type of machine-generated music. Furthermore, we will investigate whether such a machine learning algorithm is in fact feasible to implement and can provide acceptable music for each of the applications.

Applications

One application targets music consumers who have a particular taste for artists who are no longer alive (Bach, for example). These users are restricted to the fixed set of pieces the artist already composed, yet they desire new music by that same artist. A possible solution is a machine learning algorithm capable of generating songs containing the key characteristics that uniquely identify that artist. This would be done by training a machine learning algorithm on that specific artist's songs. Assuming this is feasible, this could become an emerging market to help classical music lovers relive the past.

Another example of an application would be the movie industry, where millions of dollars are spent on creating soundtracks for different scenes. Over time, certain sounds have become key identifiers for evoking certain types of emotions in the audience (clashing chords to create distress and discomfort, for example). So when a new score is developed for a movie, the music is different, but several elements in the music must remain the same in order to create the desired effect. This could potentially be done much more efficiently and cheaply by training a machine learning algorithm for each of the desired effects. For example, an algorithm that only generates "scary" music could be trained on the music played during scary scenes in movies.

The final example of an application would be a musical idea generator that serves as inspiration for musical artists. Music artists (mainly in techno or house music) already use everyday sounds as samples for their beats or tracks. A machine learning algorithm could provide a similar source of interesting ideas for those types of artists. The artist would feed the algorithm different kinds of music (either known songs or some composed samples) and listen to the generated music for inspiration. This could be repeated as many times as necessary until a desired idea or sample has been generated.

USE aspects

Throughout this project we will concern ourselves with how an AI that can generate music on demand may impact Users, Society and Enterprise.

User

A product that does not satisfy the user's needs is inherently deficient. The most important question here is whether there would actually be users for a product that generates music, given that creating music has hitherto been a creative affair performed solely by humans. Music often means more to people than just the aural pleasure of hearing it performed; the artist behind the music is also of importance.

To gauge interest in an AI product that generates music, and whether such a product will find application, we use surveys to find out which features users actually desire. Guided by the insights the survey gives us, we will steer the project to accommodate user needs.

Society

A possible consequence of a very effective musical AI is that it becomes competent and efficient to a degree where it competes with humans. Until now, humans have considered creative tasks, such as composing music, safe from automation. Recent advances in AI technology have shown that there are algorithms that produce artefacts very similar in quality to exemplars from the domain of interest. Even if these algorithms learn no more than patterns from a data set, there are jobs that ask for exactly this (for example, temporary music in the film industry).

Given these advances and the rate of research progress, it could very well mean that humans now performing these creative musical tasks will be replaced by AI technology. We will therefore try to look into the impact of musical AI entering the field of musicians and discuss how this influences employment in this sector.

Enterprise

Besides being a creative pursuit, making music is responsible for a multi-billion dollar industry. Besides artists, there are producers, record labels and content delivery services (among others). On the production side, musical artificial intelligence could let producers obtain the kind of music they are after more efficiently, be it a generated film score or a sample to be incorporated in a song. This could give content owners creative control that is otherwise not available, but it also means less dependence on artists who need to be paid. This, of course, creates opportunities to increase profits.

Another aspect to consider is ownership of the produced music. Successful AI technologies as we know them today learn patterns from training data. Often there will be no way around the fact that if you would like the AI to produce songs similar to songs from a certain artist, then this artist's discography will have to be part of the training data. In general, however, these songs will be copyright protected. As the AI will not reproduce songs but only generate similar songs based on the patterns extant in the original songs, a strong case could be made that the generated song does not (directly) infringe on the copyright of the original songs. The AI distils the underlying patterns of the music, and ownership of these learned patterns is currently unclear. The patterns themselves even differ depending on the AI technology used.

We examine the possible applications for AI music in industry as well as look into the legal aspects of creating similar music through pattern recognition.

Machine Learning

Multiple approaches to using Artificial Intelligence technology are available for generating audio samples. We outline two such approaches:

WaveNet

To implement our Machine Learning idea to be able to mimic a certain artist we plan to use a Machine Learning algorithm called WaveNet. WaveNet is a deep neural network that takes raw audio as input. Already, it has been used successfully to train on certain dialects to create a state-of-the-art text-to-speech converter. Specifically, WaveNet has been shown to be capable of capturing a large variety of key speaker characteristics with equal fidelity. So instead of feeding it voice data, we intend to feed it musical input in order to train the network to generate our desired music.
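To give an impression of what working on raw audio involves: the WaveNet paper describes compressing each audio sample with mu-law companding and quantizing it to 256 discrete values before it is fed to the network. The sketch below illustrates that step in plain Python. The function names are our own and are not taken from any WaveNet code base, and the sketch assumes samples that are already normalised to the range [-1, 1].

```python
import math

MU = 255  # 256 quantization levels, as described in the WaveNet paper


def mu_law_encode(sample: float, mu: int = MU) -> int:
    """Map an audio sample in [-1, 1] to an integer class in [0, mu]."""
    sample = max(-1.0, min(1.0, sample))  # clip out-of-range input
    # Logarithmic compression: quiet samples get finer resolution than loud ones.
    compressed = math.copysign(
        math.log1p(mu * abs(sample)) / math.log1p(mu), sample
    )
    # Shift from [-1, 1] to [0, mu] and round to the nearest integer.
    return int((compressed + 1) / 2 * mu + 0.5)


def mu_law_decode(index: int, mu: int = MU) -> float:
    """Approximate inverse of mu_law_encode: integer class back to [-1, 1]."""
    compressed = 2 * index / mu - 1
    return math.copysign(((1 + mu) ** abs(compressed) - 1) / mu, compressed)


if __name__ == "__main__":
    for value in (-1.0, -0.25, 0.0, 0.01, 0.5, 1.0):
        code = mu_law_encode(value)
        print(value, code, round(mu_law_decode(code), 4))
```

The round trip is lossy by design: the network then only has to predict one of 256 classes per sample instead of a 16-bit value.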

An open source implementation of WaveNet, written in Python, is available on GitHub. This easy access will not only allow us to modify WaveNet if we find it necessary but also ensure that there is a community available to help if needed.

Generative Adversarial Networks (GAN)

If time permits, we would like to look into Generative Adversarial Networks, an approach that has been successfully applied to generating images.
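For reference, the standard objective from the original GAN formulation is given below. A generator G maps random noise z to samples, a discriminator D estimates the probability that a sample comes from the real data, and the two are trained against each other. This is the general formulation only, not something specific to our project; conditional variants add extra inputs to both networks.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```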

TODO: comprehensive explanation.

Training Data

For training data we plan to use three main artists: Mozart, Bach and Beethoven. The first reason for these choices is that there are no copyright concerns about the compositions of any of these artists. The second is that they have written hundreds of pieces, all of which are fairly long (compared to today's music). The many hours of music available from these artists should therefore ensure that the machine learning algorithm is trained on a sufficient amount of data. Depending on how the project proceeds, this may be subject to change.

Survey

TODO: discussion of survey which was performed before the commencement of week 5. File:SurveyArtificialArt.pdf

Frontend

TODO: discussion of website that includes the aspects users would like to control on their generated music.

AI Technology research

TODO: Evaluation of experiments with currently available technology (WaveNet) as well as discussion of applying GANs.

Interviews

We plan to conduct a survey of regular people to get a perspective not only on how useful and interesting they would find a machine learning algorithm that mimics a certain artist's playing style, but also on what else they would possibly find useful (genre generation or a mixture of artist styles).

Planning (subject to change)

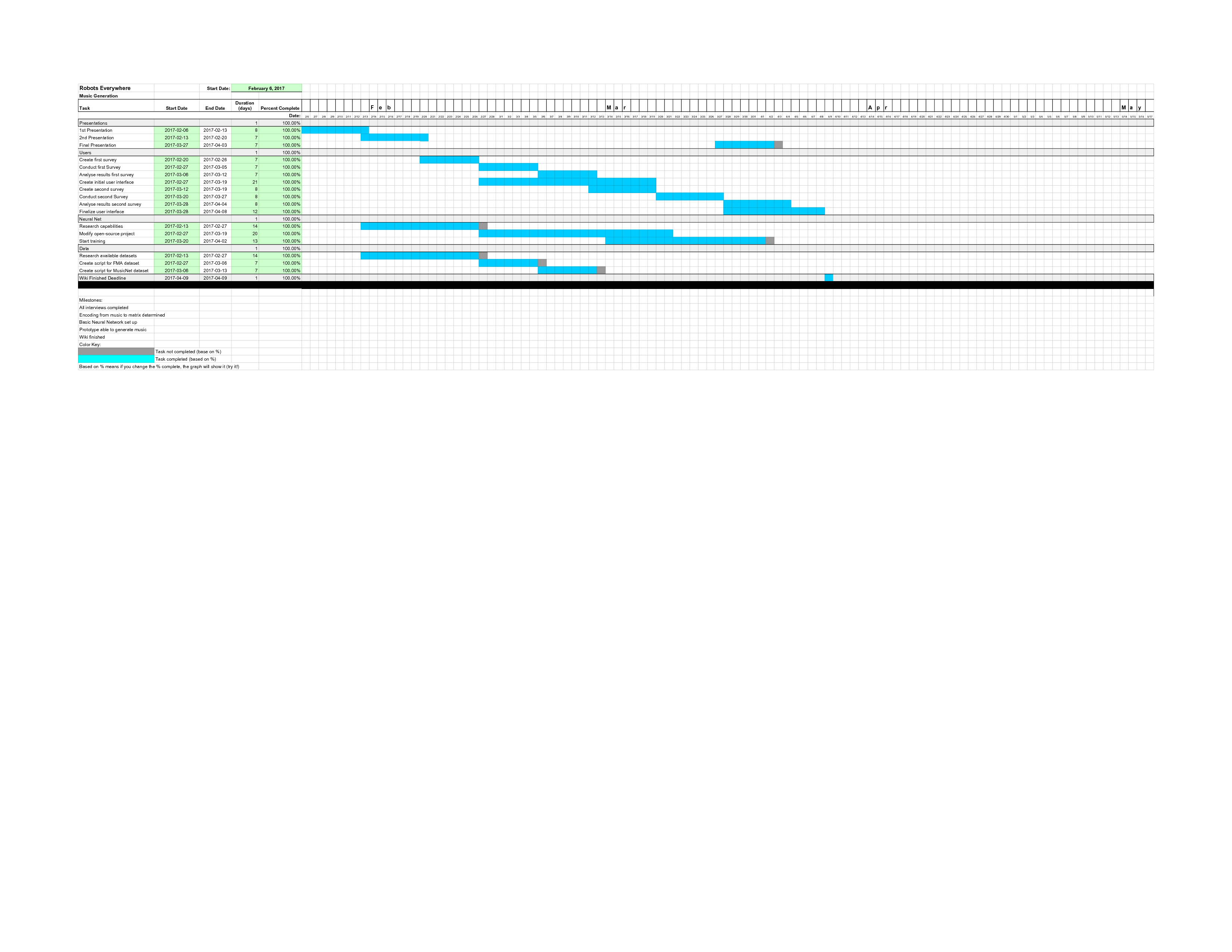

Gantt chart

This Gantt chart was created to keep the project organized. It shows which activities the project consists of, when these activities take place and when each activity needs to be finished.

It also contains a list of milestones. This list shows into which parts the project is divided. The major activities of the Gantt chart (somewhat) correspond to these milestones.

During the course, the percentage of completeness in this Gantt chart will be updated.

week 1

- Deciding on the subject of the project

- Working on first presentation

week 2

- First presentation done

- Working on second presentation

- Create a project plan (the Gantt chart)

- Create a task division

- Start researching Data Engineering

- Start researching TensorFlow

- Start working on wiki

week 3

- Second presentation done

- Rework project based on feedback

- Start interviews

- Create questionnaire for survey and hold survey

- First experiments with AI software (WaveNet)

- Incorporate changed direction in wiki and flesh out certain sections

week 4

- Evaluating progress on AI and researching direction to continue in

- Continue to give out survey

- Experiment with WaveNet

week 5

- Analyse results from survey

- Initial version of user-facing product (i.e. website)

- Construct first "Hello World" with WaveNet

week 6

- Interviews done

- Fleshing out user-facing product (based on survey results)

week 7

- Start creating prototype (WaveNet)

- Start working on the backend of our user-facing product

week 8

- Incorporate WaveNet prototype

- Researching done

week 9

- Tidy up user-facing product with working machine learning algorithm

- Tidy up wiki with

week 10

- Backup week (in case we run into unforeseen obstacles)

- Research GAN if there is extra time

Division of Tasks

For the USE part of this project, we have given each member of the group a task. Each task involves interviewing people involved in the creation of art/music with artificial intelligence and/or people involved in the music industry.

- Steven Ge: Make the USE part go smoothly (and conduct extra interviews)

- Rolf Morel: Interview a deep learning professor

- Rick Coonen: Interview a musician (or any other person that creates music)

- Noud de Kroon: Survey the general public

- Bas van Geffen: Interview a professor at a conservatorium

- Jaimini Boender: Interview Tijn Borghuis

- Herman Galioulline: Interview a producer (A person that makes money from the music industry, but does not create music him-/herself)

For the technical part of this project, we have split the group into two teams. The TensorFlow team will work on the neural network (for which we use TensorFlow) and the Data Engineering team will gather test data and find a suitable encoding.

Team TensorFlow:

- Noud de Kroon

- Bas van Geffen

- Herman Galioulline

Team Data Engineering:

- Rick Coonen

- Jaimini Boender

- Rolf Morel

Carnaval Week

- Noud de Kroon: David Bowie with WaveNet

- Bas van Geffen: Website deployment

- Herman Galioulline: Survey

- Rick Coonen: Survey

- Jaimini Boender:

- Rolf Morel:

- Steven Ge: Survey

Week 4

This week we are focusing on adapting the open source StackGAN project to work with sound, instead of text. It is also critical to have a data set to train the neural network on. The labels of this data set should be correct and contain information relevant to the user preferences which we gathered with our survey.

- Noud de Kroon: Deep neural net

- Bas van Geffen: Deep neural net

- Herman Galioulline: Wiki and data

- Rick Coonen: Website and prototype survey

- Jaimini Boender: Data

- Rolf Morel: Deep neural net

- Steven Ge: Data