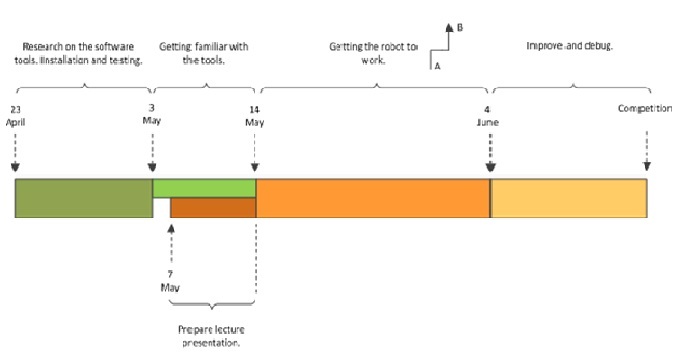

Embedded Motion Control 2012 Group 3: Difference between revisions

| (104 intermediate revisions by 3 users not shown) | |||

| Line 37: | Line 37: | ||

'''Remark:''' | '''Remark:''' | ||

<p style="text-align: justify;"> | |||

Due to incompatibilities with Lenovo W520 (wireless doesn’t work), Ubuntu (10.04) did not work and other versions were tried and tested to work properly. | Due to incompatibilities with Lenovo W520 (wireless doesn’t work), Ubuntu (10.04) did not work and other versions were tried and tested to work properly. | ||

All the software was installed for all members of the group. | All the software was installed for all members of the group. | ||

</p> | |||

<br><br> | |||

''' 2. Discussion about the robot operation''' | ''' 2. Discussion about the robot operation''' | ||

| Line 92: | Line 94: | ||

'''4. Had the first meeting with the tutor.''' | '''4. Had the first meeting with the tutor.''' | ||

== Week 3== | == Week 3== | ||

| Line 109: | Line 110: | ||

# Investigation of the navigation stacks and possibility to create map of the environment. The link for relevant messages is given here. | # Investigation of the navigation stacks and possibility to create map of the environment. The link for relevant messages is given here. | ||

# Thinking and discussing about smart navigation. | # Thinking and discussing about smart navigation. | ||

<br><br> | |||

The | The ideas we came up with: | ||

<p style="text-align: justify;"> | |||

We should save in a buffer the route were the robot went and not go twice through same place. | We should save in a buffer the route were the robot went and not go twice through same place. | ||

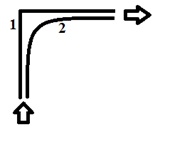

For turning left or right, for case 2 presented in Week 1 and 2, we want to use so cubic or quitting splines. An example of the idea is given in the paper "Task-Space Trajectories via Cubic Spline Optimization" - J. Zico Kolter and Andrew Y. Ng | For turning left or right, for case 2 presented in Week 1 and 2, we want to use so cubic or quitting splines. An example of the idea is given in the paper "Task-Space Trajectories via Cubic Spline Optimization" - J. Zico Kolter and Andrew Y. Ng | ||

</p> | |||

== Week 4== | == Week 4== | ||

| Line 131: | Line 134: | ||

[[File:Example2.jpg|thumb|center|400px|''Figure 2 Robot raw laser data on left junction'']] | [[File:Example2.jpg|thumb|center|400px|''Figure 2 Robot raw laser data on left junction'']] | ||

<p style="text-align: justify;"> | |||

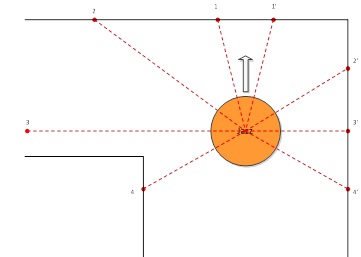

The points in front of the robot will be used for preventing it from hitting the walls of the maze. The two sideways points(2 and 2’) will be used to keep the robot moving straight, by comparing them. 3 and 3’ will be used for detecting of a junction and they with 4 and 4’ will be used to determine where the center of the junction is. | The points in front of the robot will be used for preventing it from hitting the walls of the maze. The two sideways points(2 and 2’) will be used to keep the robot moving straight, by comparing them. 3 and 3’ will be used for detecting of a junction and they with 4 and 4’ will be used to determine where the center of the junction is. | ||

After the junction is detected its type will be sent to the process that builds the map. The map will be array of structures (or classes) that keep the coordinates and orientation of every junction, its type and the exits that have been taken before and that the robot has come from. | After the junction is detected its type will be sent to the process that builds the map. The map will be array of structures (or classes) that keep the coordinates and orientation of every junction, its type and the exits that have been taken before and that the robot has come from. | ||

| Line 139: | Line 142: | ||

We consider that taking right at every junction, the robot will be able to go out of the maze and for the case when we have only a left turn and front path the robot will go forward. | We consider that taking right at every junction, the robot will be able to go out of the maze and for the case when we have only a left turn and front path the robot will go forward. | ||

There will be considered 5 types of junctions: The X type (1), T type (2), left (3) and right (4) type, and dead-end (5). These possibilities are depicted in the figure below. | There will be considered 5 types of junctions: The X type (1), T type (2), left (3) and right (4) type, and dead-end (5). These possibilities are depicted in the figure below. | ||

</p> | |||

<br> | |||

[[File:Example3.jpg|thumb|center|400px|''Figure 3 Junction cases'']] | [[File:Example3.jpg|thumb|center|400px|''Figure 3 Junction cases'']] | ||

| Line 151: | Line 156: | ||

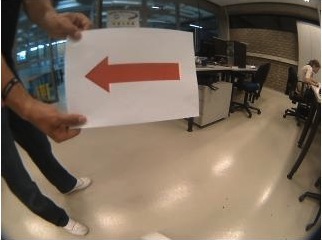

[[File:Example4.jpg|thumb|center|600px|''Figure 4 Steps in image processing that will be considered for the arrow'']] | [[File:Example4.jpg|thumb|center|600px|''Figure 4 Steps in image processing that will be considered for the arrow'']] | ||

<p style="text-align: justify;"> | |||

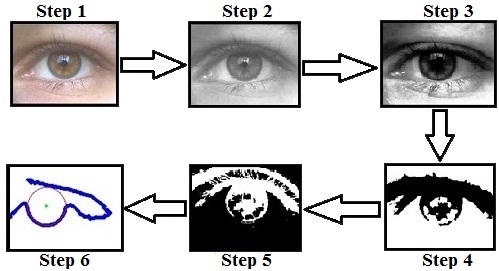

In the first step we can see that is the acquisition of the RGB images, were in second step they are converted to grayscale. Because the distribution of the intensities of the grey is not even, a histogram of the grayscale image will be performed in order to evenly distribute the intensities of grey like in Step 3. After this step a conversion to black and white will be made and possibly an inversion of the colors will be performed in order to identify a contour of the arrow as should be done in Step 6. | In the first step we can see that is the acquisition of the RGB images, were in second step they are converted to grayscale. Because the distribution of the intensities of the grey is not even, a histogram of the grayscale image will be performed in order to evenly distribute the intensities of grey like in Step 3. After this step a conversion to black and white will be made and possibly an inversion of the colors will be performed in order to identify a contour of the arrow as should be done in Step 6. | ||

These steps are only for providing information about future work that will be actually implemented for the arrow after we finish all the left hand side steps from Figure 1, which do not involve image processing. | These steps are only for providing information about future work that will be actually implemented for the arrow after we finish all the left hand side steps from Figure 1, which do not involve image processing. | ||

For more information reference will be made for the paper: | For more information reference will be made for the paper: ”''Electric wheelchair control for people with locomotor disabilities using eye movements''” - Rascanu, G.C. and Solea, R. | ||

</p> | |||

The direction pointing(Left or Right) is still to be discussed. | The direction pointing(Left or Right) is still to be discussed. | ||

| Line 170: | Line 176: | ||

* We decided that is important to figure out fail safe things. | * We decided that is important to figure out fail safe things. | ||

Now that we have images from the robot's real camera and have run some tests, we concluded that the vision algorithm presented the week before is not very robust. We decided to switch to a more robust approach, which is in progress. | |||

The above points will be treated in Week 6 as we make our last preparation for the corridor competition. | The above points will be treated in Week 6 as we make our last preparation for the corridor competition. | ||

== Week 6 == | |||

At this moment the robot is able to: | |||

* find the entrance of the maze and enter it along the center line. | |||

* identify each junction and center itself in the corridor. | |||

* identify the center of a junction, for the moment by using a timing function and the known lab width. | |||

* detect dead-ends and continue without driving into them. | |||

* drive to the end of the maze and come back. | |||

<br> | |||

'''VIDEO DOCUMENTATION''' <br> | |||

Trial with the algorithm just before the corridor competition (http://youtu.be/BgYXToQaHE8) <br> | |||

<br> | |||

=== Vision === | |||

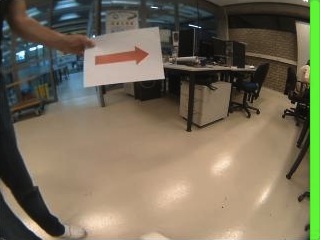

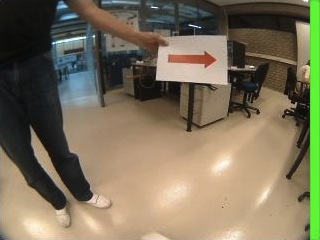

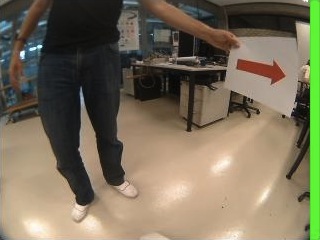

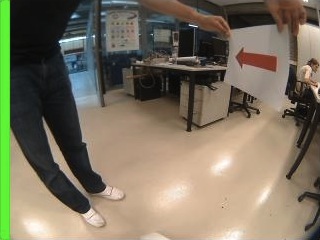

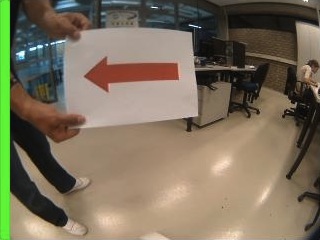

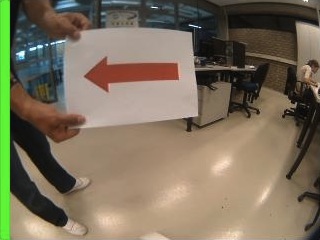

This week we discussed some important issues concerning arrow detection with Rob, i.e. how the arrow will be placed in the maze and the difference between the special fish-eye camera on the robot and the normal laptop cameras with which we test our code. Since some sample pictures containing the arrow have been uploaded to the wiki, we made some useful observations as well. | |||

; Important issues to keep in mind: | |||

* The camera only has a resolution of 320 by 240 | |||

:: This low resolution is spread over a 170-degree field of view, so the image quality cannot match that of our laptop cameras. A highly robust algorithm is therefore needed to get satisfactory results even with low image quality. | |||

* The arrow can be far away from the robot | |||

:: Because the resolution is low, an arrow far from the camera may cover only 30 pixels. If our algorithm cannot deal with small arrows, the robot has to drive close to the arrow before it can tell its orientation, which costs time. | |||

* Straight lines may appear curved through the fish-eye camera | |||

:: This effect is most obvious at the edges of the image. If our algorithm relies on the assumption of straight lines, it may give wrong output when the arrow is close to the edge. | |||

* The arrow's color is red | |||

:: The arrow is red and there will be no other confusing objects of the same color. This leads us to color recognition instead of converting RGB to grayscale. | |||

; Detecting process | |||

{| | |||

| [[File:G3_Untitled1.jpg|thumb|upright|Original Image]] | |||

| [[File:G3_Untitled2.jpg|thumb|upright|Detect red color]] | |||

| [[File:G3_Untitled3.jpg|thumb|upright|Form binary picture]] | |||

| [[File:G3_Untitled4.jpg|thumb|upright|Remove noise points]] | |||

| [[File:G3_Untitled5.jpg|thumb|upright|Tell arrow's direction]] | |||

|} | |||

[[File:HSV color solid cylinder.png|thumb|right|250px|HSV color solid cylinder]] | |||

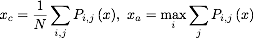

# Color detection | |||

#: Since the arrow's color is known to be red, it is better to transform the RGB image into HSV format. The advantage comes from the definition of HSV: every color corresponds to a certain range of Hue values, while Saturation and Value reflect its intensity and brightness. Red is a bit different since its hue lies near either 0 or 360 degrees. Thus we need an ''' "Or" ''' operation to combine both ranges of red points. | |||

#: [[File:Latex-image-2.jpeg|center|]] | |||

#: The above mathematical representation shows our parameters for color detection. We arrived at these numbers by testing sample pictures, which we assume to have the same color features as the actual images from ROS. | |||

# Binary map | |||

#: The binary map indicates, for every pixel, whether it lies within the color range defined previously. Eventually every pixel inside the arrow has the value 1 and every pixel outside it has the value 0. This lets us run our own algorithm on the map. | |||

# Noise reduction | |||

#: When forming the binary map, noise points are inevitable, no matter how small our range is. What is more, if we restrict the HSV range to a very small domain, points near the arrow's edge may be eliminated as well. It is therefore always a dilemma to find an HSV range that guarantees a sharp arrow shape without noise pixels. Ideally we would filter the binary map so that it keeps only the arrow and removes the noise pixels. Once we no longer need to worry about noise, we can choose a relatively large HSV range, which guarantees good color detection, and eliminate all the noise afterwards. | |||

#: We failed to find any OpenCV function that eliminates noise pixels in a binary map. Most of them just "smoothen" the binary picture. So we decided to write our own function. The basic idea is very simple: it removes every group of noise pixels whose pixel count is smaller than a predefined value. It is safe to choose a large value; arrows at a far distance are then simply not detected. | |||

#: [[File:g3_enlarge1.jpg|thumb|right|Zoomed frame0009.jpg]] | |||

#: [[File:g3_enlarge2.jpg|thumb|right|Zoomed frame0015.jpg]] | |||

# Detect orientation | |||

#: This is the step for which every group has to develop its own method to detect the arrow's orientation. There is no given function that we can apply directly. Before we settled on our final algorithm, we came up with several ideas with different approaches. | |||

#: First is shape recognition. | |||

#: This method is widely used since any kind of shape can be defined. It basically compares different regions of a picture with a given pattern. In principle, it should work for arrow detection. But we think this idea is too elaborate and may not be very robust. The coding part may take too much time as well. So we decided not to follow this approach. | |||

#: Second is edge detection. | |||

#: We found that it is quite easy to detect edges in OpenCV. Based on our testing, it is possible to detect the arrow's orientation from the contour plot. This seems to be a nice idea and some other groups use a similar approach as well. But we do not think it is an optimal solution. To get a clean contour, we need a high-quality picture. Since the robot's camera only has a resolution of 320 by 240, the arrow must be in front of and close enough to the robot; otherwise it is very difficult to give correct output. As stated above, we will lose much time if the robot has to drive close to the arrow. | |||

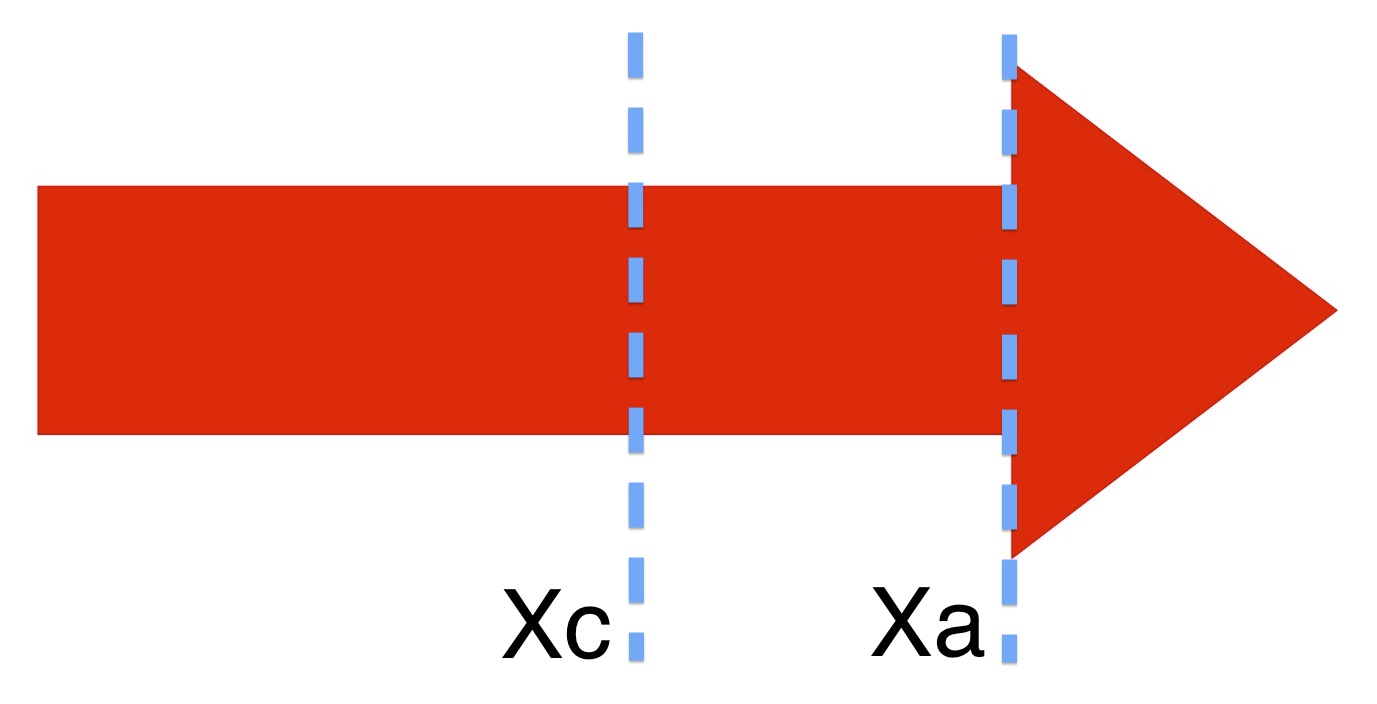

#: Third is position comparison | |||

#: [[File:G3_Arrow.jpg|thumb|right|250px|Detection Algorithm]] | |||

#: This is the most direct method. It compares the x position of the arrow's center with that of its triangular tip. If the tip is to the right of the center, the arrow points right, and vice versa. We only need two for loops to compute the two values and compare them afterwards. The mathematical representation is: | |||

#: [[File:G3-latex-image-1.png|center|]] | |||

#: where N is the number of pixels with non-zero value, i.e. the number of pixels that make up the arrow. Since the arrow has a long tail and a small triangular tip, xc can be regarded as the x position of the geometric center. xa is the x position of the longest vertical segment, which can be regarded as the position of the triangular tip. By comparing xc and xa, we can easily tell the arrow's orientation, as illustrated in the picture. | |||

#: This method is surprisingly precise and robust. It works with arrows at a distance as well as on side walls. We tested it with all sample pictures and it recognized all the arrows and their orientation, including ones that are very difficult to recognize, such as those in '' frame0009.jpg ''and ''frame0015.jpg ''. The algorithm performs this well because its task is very simple: it only works under particular conditions. For example, it would fail if there were multiple red objects in view. | |||
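The detecting process above can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not the group's OpenCV code: the HSV thresholds (`hue_tol`, `min_sat`, `min_val`) are invented, since the real parameters are only given in the formula image, and the function names are hypothetical. As a simplification, xa is taken as the column containing the most arrow pixels, which matches the longest vertical segment whenever each column of the arrow is one contiguous run.

```python
import colorsys


def is_red(r, g, b, hue_tol=20.0, min_sat=0.5, min_val=0.3):
    """True if an 8-bit RGB pixel counts as red (all thresholds are assumed)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_deg = h * 360.0
    # Red sits at both ends of the hue circle, hence the "or" of two ranges.
    near_red = hue_deg <= hue_tol or hue_deg >= 360.0 - hue_tol
    return near_red and s >= min_sat and v >= min_val


def binary_map(image):
    """image: rows of (r, g, b) tuples -> rows of 0/1 values."""
    return [[1 if is_red(*px) else 0 for px in row] for row in image]


def remove_small_blobs(mask, min_pixels):
    """Zero out 4-connected blobs that contain fewer than min_pixels pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    out = [row[:] for row in mask]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            blob, stack = [], [(r0, c0)]
            seen[r0][c0] = True
            while stack:  # iterative flood fill
                r, c = stack.pop()
                blob.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            if len(blob) < min_pixels:
                for r, c in blob:
                    out[r][c] = 0
    return out


def arrow_direction(mask):
    """Compare the centroid x (xc) with the x of the tallest column (xa)."""
    cols = len(mask[0])
    col_counts = [sum(row[c] for row in mask) for c in range(cols)]
    n = sum(col_counts)
    if n == 0:
        return None  # no arrow pixels at all
    xc = sum(c * k for c, k in enumerate(col_counts)) / n
    xa = max(range(cols), key=lambda c: col_counts[c])
    if xa > xc:
        return "right"
    if xa < xc:
        return "left"
    return None
```

A typical call chain would be `arrow_direction(remove_small_blobs(binary_map(image), min_pixels))`, where a larger `min_pixels` suppresses more noise but also discards small, distant arrows.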

; Testing | |||

<gallery caption="Sample pictures" widths="128px" heights="96px" perrow="5"> | |||

File:g3_test1.jpg | |||

File:g3_test2.jpg | |||

File:g3_test3.jpg | |||

File:g3_test4.jpg | |||

File:g3_test5.jpg | |||

File:g3_test6.jpg | |||

File:g3_test7.jpg | |||

File:g3_test8.jpg | |||

File:g3_test9.jpg | |||

File:g3_test10.jpg | |||

</gallery> | |||

: We tested the algorithm both with the sample pictures and with our own camera. The green line indicates the direction. The algorithm is able to detect the arrow's orientation in any position. The test video, in which the green circle indicates the direction, shows that the output is both correct and stable. | |||

<center> | |||

<table> | |||

<tr> | |||

<td>[[File:test_video.png|250px|thumb|center|http://youtu.be/x0uvgFHG8F8]]</td> | |||

</tr> | |||

</table> | |||

</center><br> | |||

: We believe this algorithm is the best solution we could find. Further tests based on PICO's camera topics will be performed in week 7. | |||

''''' | |||

== '''Algorithm''' == | |||

''''' | |||

<p style="text-align: justify;"> | |||

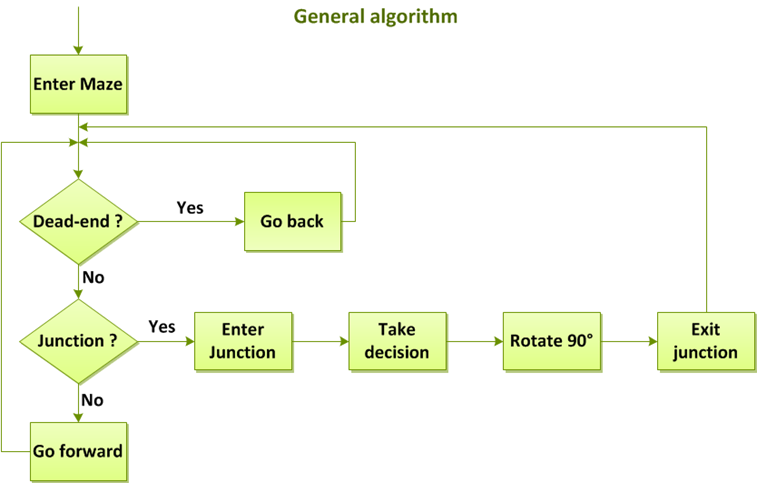

Our code is based on the general algorithm described in the picture below. After entering the maze, the robot checks whether it has reached a dead-end. If so, it has to go back. Otherwise it goes forward until it reaches a junction or a dead-end. When a junction is detected, the robot starts a junction-entry routine. Once inside the junction, it decides which direction to follow. The decision is based on the right-hand rule. An exception is made if an arrow is detected: in this case the robot follows the direction indicated by the arrow, regardless of the right-hand rule. Another exception is the case of islands in the maze. If we follow the right-hand rule and detect an island, after a number of consecutive turns the robot changes direction to avoid entering an infinite loop. After the decision is made, Jazz rotates 90 degrees using the odometry and laser data. After the rotation the robot makes the appropriate corrections, then goes forward and exits the junction. | |||

</p> | |||
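The decision step described above could look roughly like the sketch below. It is an illustration only: the function name, the string labels for the exits, the threshold `max_right_turns` and the fallback order used when an island is suspected are all assumptions, since the text does not specify how the robot changes direction.

```python
def choose_direction(open_exits, arrow=None,
                     consecutive_right_turns=0, max_right_turns=4):
    """Pick an exit from a junction.

    open_exits: set of available exits, e.g. {"right", "forward", "left"}.
    arrow: "left" or "right" if an arrow was detected, else None.
    """
    # An arrow overrides the right-hand rule.
    if arrow in open_exits:
        return arrow
    # Default right-hand rule: prefer right, then forward, then left.
    priority = ["right", "forward", "left", "back"]
    # Assumed island handling: after too many consecutive right turns,
    # stop preferring right so the robot leaves the loop.
    if consecutive_right_turns >= max_right_turns:
        priority = ["forward", "left", "right", "back"]
    for direction in priority:
        if direction in open_exits:
            return direction
    return "back"  # dead-end: nothing open, turn around
```

For example, `choose_direction({"forward", "left"})` returns `"forward"`, which matches the rule that the robot goes forward when only a left turn and a forward path are available.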

<center> | |||

<gallery caption="General algorithm" widths="400px" heights="350px"> | |||

File:Struc1.png | |||

</gallery> | |||

</center><br><br> | |||

<p style="text-align: justify;"> | |||

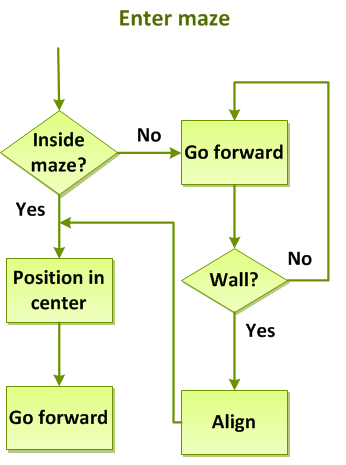

The pictures below show the flowcharts describing the processes of: | |||

<br><br> | |||

'''1. Entering the maze:''' | |||

We assume that the robot roughly faces the entrance to the maze (straight on or at an angle smaller than 90 degrees), so the robot goes forward until it detects the entrance to the maze. | |||

<br><br> | |||

- if the entrance is detected, Jazz positions itself in the center using the side points of the laser and, once it is parallel to the walls of the corridor, starts moving forward; | |||

<br> | |||

- if the entrance is not detected yet, it keeps moving forward as long as it does not find a wall. If a wall is detected, it aligns itself by rotating, positions itself in the center and goes forward again. | |||

<br><br> | |||

'''2. Detection of junction:''' | |||

One of the side laser points is used for early detection, as described in the Robot Control section below. The robot goes forward until it gets an early-detection message from the laser. At this moment it reduces speed and continues forward at low speed until it detects the entry to the junction. When the entry is detected, Jazz makes the proper corrections to ensure that it faces forward. Afterwards it measures the width of the junction and moves to the center of the junction. | |||

</p> | |||

<br><br> | |||

<center> | |||

<gallery caption=" " widths="400px" heights="350px"> | |||

File:Struc3.png | |||

File:Struc2.png | |||

</gallery> | |||

</center> | |||

''''' | |||

== Robot Control == | |||

''''' | |||

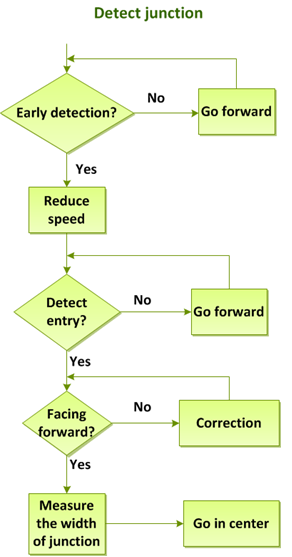

The next picture shows the '''laser points''' we considered relevant for detection, aligning and positioning inside the maze: | |||

'''1. Front (main) laser point 1''' - used for wall detection, collision avoidance, navigation through the maze. | |||

'''2. Front-side points 2, 2' ''' - situated at +-10 degrees around the first point, used for averaging and for robustness of the first data point; | |||

'''3. Middle points 3, 3'''' - at 45 degrees from the main laser point - used for early detection of junctions and for measuring the width of the junctions; | |||

'''4. Side points 4, 5, 6''' - used for positioning in the middle of the corridor and aligning. | |||

<br><br> | |||

<center> | |||

<gallery caption="Laser points used" widths="400px" heights="350px"> | |||

File:Control1.png | |||

</gallery> | |||

</center> | |||

<br><br> | |||

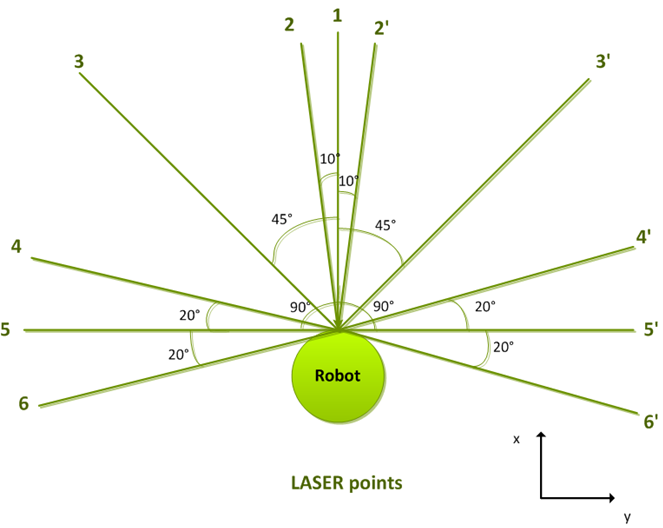

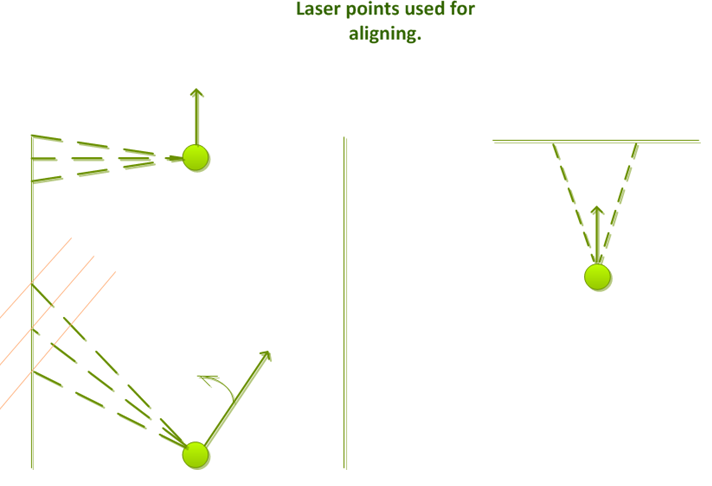

The left-hand picture below shows a typical situation in which we use '''points 4, 5 and 6 for aligning'''. If the robot is not facing forward inside the corridor, we draw parallel lines through the points returned by these three laser points. By rotating appropriately, Jazz tries to align itself such that, when it faces forward, the three lines almost coincide. | |||

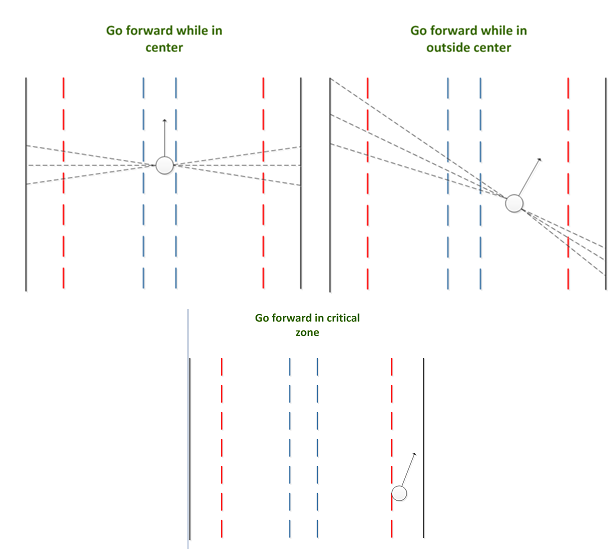

We used the side points 4, 5 and 6 for '''positioning inside the corridor'''. The right-hand picture shows the behaviour of the robot in three different regions along the width of the corridor: | |||

'''1. Center''' - If the distances shown by 5 and 5' are almost the same, the robot continues going forward; | |||

'''2. Outside center, middle region''' - the robot uses points 4, 5, 6 to rotate and align such that it returns to the center region; | |||

'''3. critical zone''' - very close to the wall. In this case, the robot stops, aligns and continues moving only when it faces the center of the corridor. | |||
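The three regions above can be expressed as a small classification on the two sideways distances. The sketch below is only illustrative: the function name and the thresholds `center_tol` and `critical_dist` (in metres) are assumptions, not values taken from our code.

```python
def corridor_region(left_dist, right_dist, center_tol=0.05, critical_dist=0.15):
    """Classify the robot's lateral position from the 5 and 5' distances."""
    # Critical zone: one wall is very close, whatever the other side reads.
    if min(left_dist, right_dist) < critical_dist:
        return "critical"
    # Center: both sides report almost the same distance.
    if abs(left_dist - right_dist) <= center_tol:
        return "center"
    # Anything else lies in the middle region between center and critical.
    return "middle"


# Behaviour attached to each region, as listed in the text above.
ACTIONS = {
    "center": "keep going forward",
    "middle": "rotate and align to return to the center region",
    "critical": "stop, align, move only when facing the corridor center",
}
```

Checking the critical zone first means that a robot hugging one wall is always stopped, even if noise makes the two distances look similar.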

<br><br> | |||

<center> | |||

<gallery caption="Laser points used for aligning (left) and Behavior of the robot in different regions of the corridor (right)" widths="400px" heights="350px"> | |||

<right> | |||

File:Control2.png | |||

<left> | |||

File:Control3.png | |||

</gallery> | |||

</center> | |||

<br><br> | |||

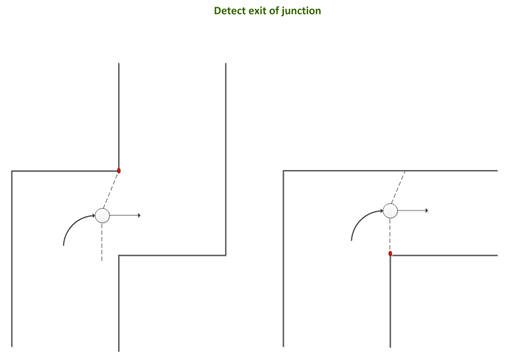

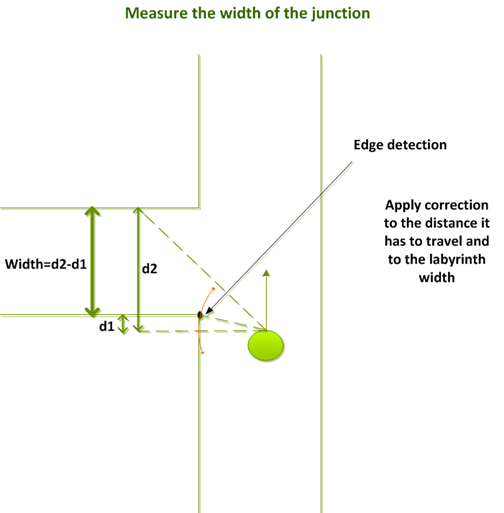

The left part of the figure below shows two cases of '''exit of junction'''. The first one is the critical type of junction exit, which we handled easily by using the third laser point, together with all other points for good positioning. | |||

The right part of the picture shows how we '''measure the width of the junction'''. After the robot detects the entrance to the junction by early detection, the corner is found using the 4th laser point. At this moment we are able to compute d1: with p4 the distance measured by the 4th laser point, d1 = p4·sin(20°). At the same time, d2 is calculated using the third laser point. The width of the junction is then given by d2-d1. | |||

In order to perform a '''90 degrees rotation''' we use: | |||

- Odometry data for initial rotation | |||

- Lasers for applying correction | |||

- Smooth acceleration to eliminate the drift from the rear wheels | |||
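The odometry-based part of the rotation with smooth acceleration can be sketched as below. This is a simplified simulation under assumptions: the gain, speed limit, step size and time step are invented values, the yaw is integrated ideally instead of being read from the robot, and the laser correction step is not modelled.

```python
import math


def angle_error(target_yaw, yaw):
    """Smallest signed difference target - yaw, wrapped to (-pi, pi]."""
    diff = target_yaw - yaw
    return math.atan2(math.sin(diff), math.cos(diff))


def ramp(current, target, max_step):
    """Move current towards target by at most max_step (smooth acceleration)."""
    delta = target - current
    if abs(delta) <= max_step:
        return target
    return current + max_step if delta > 0 else current - max_step


def simulate_turn(turn=math.pi / 2, dt=0.05, max_speed=1.0,
                  max_step=0.1, gain=2.0, tol=0.01):
    """Simulate a turn; returns the final yaw and the commanded speeds."""
    yaw, speed, speeds = 0.0, 0.0, []
    for _ in range(2000):
        err = angle_error(turn, yaw)
        if abs(err) < tol:
            break
        # Proportional command, clipped to the speed limit.
        wanted = max(-max_speed, min(max_speed, gain * err))
        # Rate-limit the command so the wheels do not slip.
        speed = ramp(speed, wanted, max_step)
        yaw += speed * dt  # ideal odometry: yaw integrates the command
        speeds.append(speed)
    return yaw, speeds
```

On the real robot the yaw would come from the odometry topic, and the remaining error after the turn would be corrected with the laser whenever a wall is available as reference.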

<br><br> | |||

<center> | |||

<gallery caption="Detect exit of junction (left) and Measure the width of the junction (right)" widths="400px" heights="350px"> | |||

File:Control4.png | |||

File:Control5.png | |||

</gallery> | |||

</center><br><br> | |||

== Positives and drawbacks of the algorithm == | |||

'''Positives:''' | |||

* Good forward control | |||

* Robust junction entry and exit identification | |||

* Smooth acceleration and deceleration | |||

* Using lasers to apply correction whenever there is a reference | |||

* Accurate and robust arrow detection | |||

<br> | |||

'''Drawbacks''' | |||

* No map of the labyrinth is being created | |||

* Rotation in junctions relies heavily on odometry- source of error if no laser reference is available | |||

<br><br> | |||

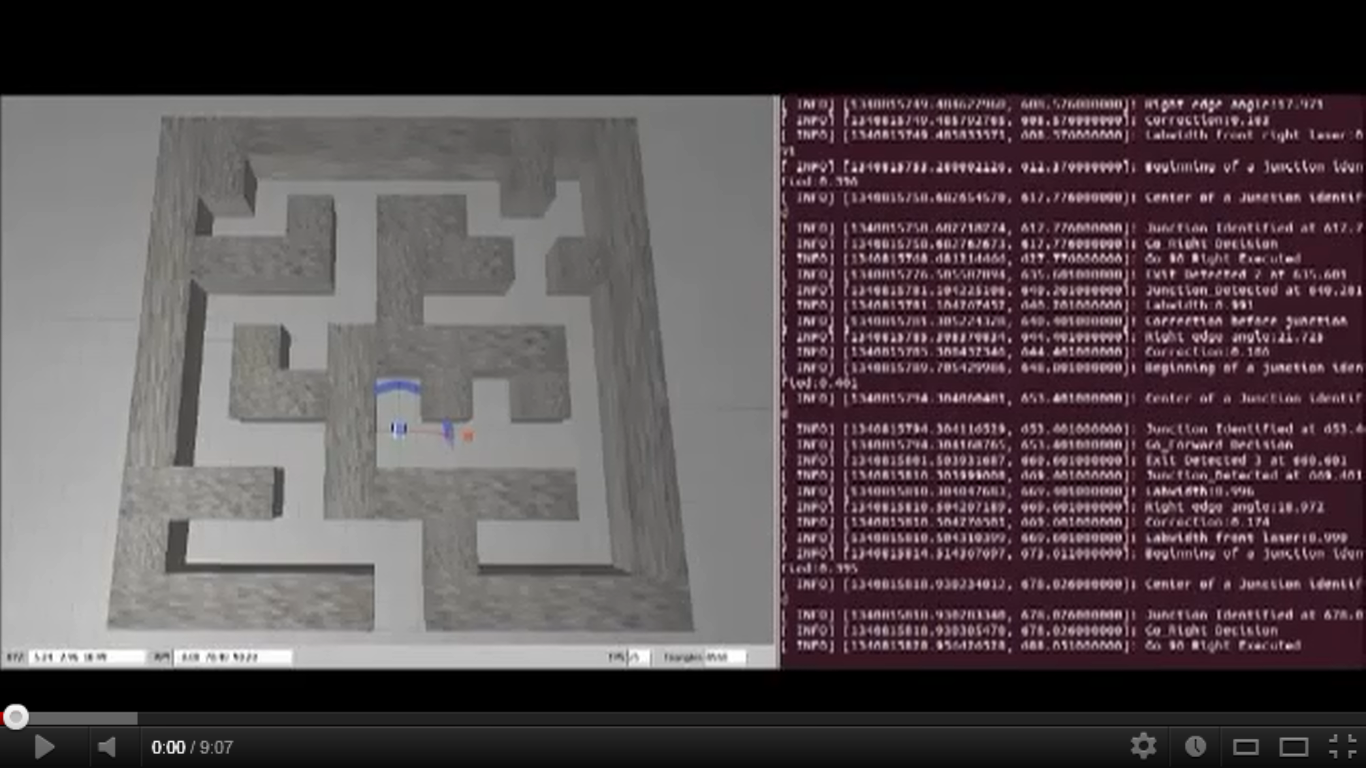

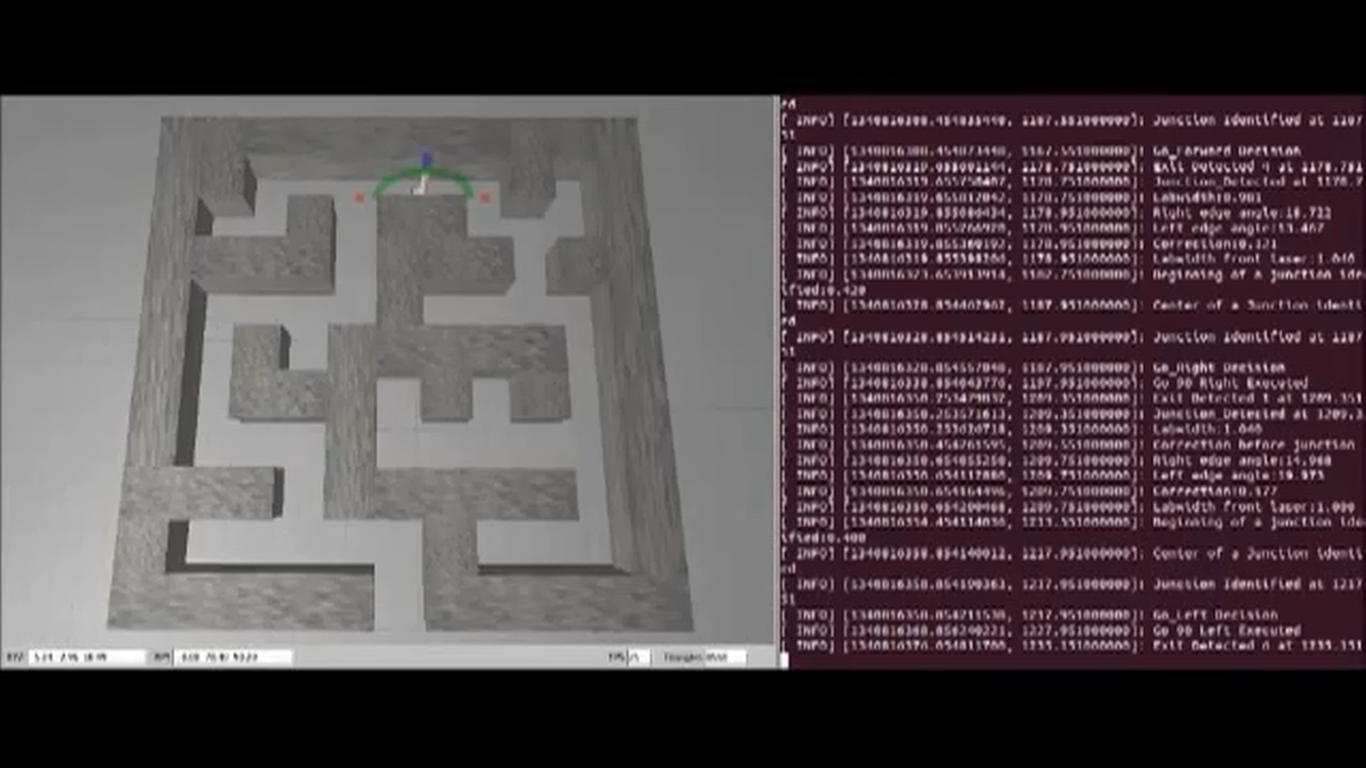

== Results of the last test in the simulator == | |||

Due to its length the video was split in 3 parts showing the entire progress of the robot through the maze. | |||

<center> | |||

<table> | |||

<tr> | |||

<td>[[File:Sim1.png|250px|thumb|center|http://youtu.be/M-3-o_OwRIU]]</td> | |||

<td>[[File:Sim2.png|250px|thumb|center|http://youtu.be/rADehiBpVlM]]</td> | |||

<td>[[File:Sim3.png|250px|thumb|center|http://youtu.be/OlyVTb8dCp8]]</td> | |||

</tr> | |||

</table> | |||

</center> | |||

As can be seen in the videos, the robot performs flawlessly in the simulator. | |||

== Results of the last test with the real robot == | |||

This is the last test we performed on the real robot before the final competition. The robot was able to detect the junctions, center of the junctions, arrows and their correct direction. It performed well and it went out of the maze! | |||

<center> | |||

<table> | |||

<tr> | |||

<td>[[File:test_robot.png|250px|thumb|center|http://youtu.be/jxAjOk3ZQ6k]]</td> | |||

</tr> | |||

</table> | |||

</center><br> | |||

== Final Competition == | |||

The presentation of the algorithm and the strategy that we adopted is given in [[Media:Final_presentation.pdf]]. | |||

<center> | |||

<table> | |||

<tr> | |||

<td>[[File:competition.png|250px|thumb|center|http://youtu.be/9aPt-MePz7A]]</td> | |||

</tr> | |||

</table> | |||

</center> | |||

<p style="text-align: justify;"> | |||

At the final competition the robot achieved a very good overall result, ranking second among all the teams. What went wrong at the junction where the robot stopped was apparently the odometry problem (the drawback mentioned above). We also noticed after this first run that the camera was not working due to some issues with the robot, and a second trial was no longer possible. | |||

Overall we were pleased with the final results. | |||

</p> | |||

== Conclusions == | |||

'''Team:''' | |||

* good understanding between each other and efficient team work; | |||

* perfect simulation results; | |||

* good last test (the robot was able to go out of the maze also with the arrow recognition working) on the real robot. | |||

'''Tutor:''' | |||

* the weekly meetings were constructive, and the suggestions from Rob and Sjoerd were really helpful. | |||

Latest revision as of 16:04, 30 June 2012

Contact Info

| Name | Number | E-mail address |

|---|---|---|

| X. Luo (Royce) | 0787321 | x.luo@student.tue.nl |

| A.I. Pustianu (Alexandru) | 0788040 | a.i.pustianu@student.tue.nl |

| T.L. Balyovski (Tsvetan) | 0785780 | t.balyovski@student.tue.nl |

| R.V Bobiti (Ruxandra) | 0785835 | r.v.bobiti@student.tue.nl |

| G.C Rascanu (George) | 0788035 | g.c.rascanu@student.tue.nl |

GOAL

Win the competition!

HOW ?

Good planning and team work

Week 1 + Week 2

1. Installing and testing software tools

- Linux Ubuntu

- ROS

- Eclipse

- Jazz simulator

Remark:

Due to incompatibilities with Lenovo W520 (wireless doesn’t work), Ubuntu (10.04) did not work and other versions were tried and tested to work properly. All the software was installed for all members of the group.

2. Discussion about the robot operation

Targeting units:

- Maze mapping

- Moving Forward

- Steering

- Decision making

Moving forward and steering

Choose which sensors to use for driving in a straight line and which for turning left/right.

Case 1 – Safer, but slower

Case 2- Faster, but more challenging

Is the time difference between these 2 cases small or not? We will decide between Case 1 and Case 2 based on the simulations.

Backward movement!

Because the target is to get out of the maze as fast as possible, this kind of movement will only be considered as a safety precaution at the end of the project, if time permits.

Speed

As mentioned earlier, the main requirement is to be as fast as possible, hence we take the maximum speed (~ 1 m/s).

Sensors

Web cam -> Identify arrows on the walls by color and shape.

Laser -> Map of the world (range ~< 6 m).

Encoders -> Mounted on each wheel -> used for odometry (estimates change in position over time). How many pulses per revolution?

3. Making planning of the work process

All the team members start reading C++ and ROS tutorials given on the wiki page of the course.

4. Had the first meeting with the tutor.

Week 3

After our meeting, the lecture regarding Tasks of Chapter 5 was split among our team members:

- Introduction + Task definition – Bobiti Ruxandra

- Task states and scheduling – Luo Royce

- Typical task operation - Pustianu Alexandru

- Typical task structure – Rascanu George

- Tasks in ROS - Balyovski Tsvetan

The link for the presentation is given here: http://cstwiki.wtb.tue.nl/images/Tasks.pdf

- Problem with RViz was fixed and solution was posted in FAQ.

- Investigation of the navigation stacks and possibility to create map of the environment. The link for relevant messages is given here.

- Thinking and discussing about smart navigation.

The ideas we came up with:

We should save the route the robot has taken in a buffer and not go through the same place twice. For turning left or right, for Case 2 presented in Week 1 and 2, we want to use cubic or quintic splines. An example of the idea is given in the paper "Task-Space Trajectories via Cubic Spline Optimization" - J. Zico Kolter and Andrew Y. Ng

Week 4

This week was focused mainly on the software structure. The structure we came up with was developed after discussing multiple options, with the aim of keeping it simple so that everyone can write their own part of the code and no one is confused about what is where. In other words: make it as simple as possible.

Software structure

The structure of the control software is depicted in Figure 1:

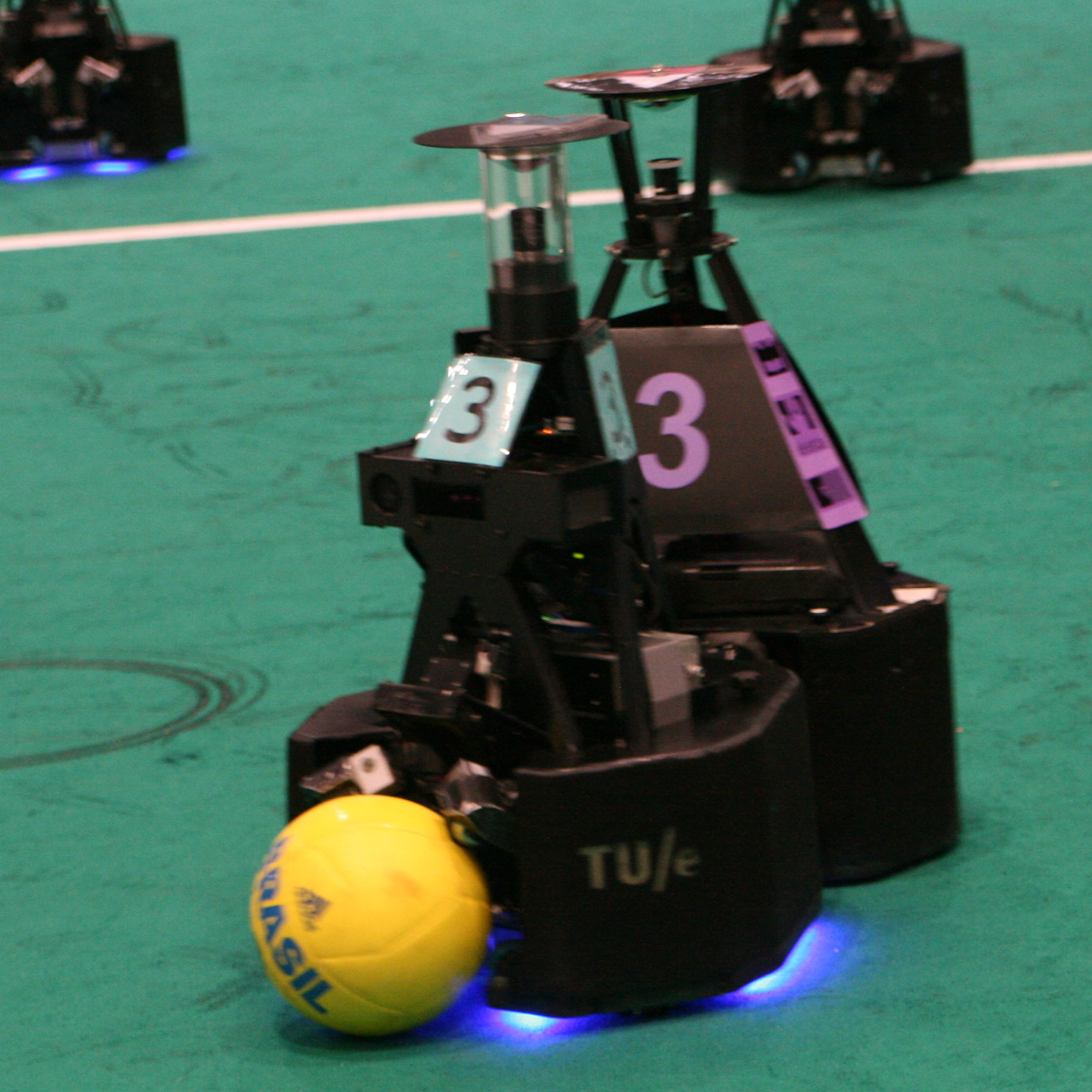

The robot will use the data from the odometry, laser and camera to navigate through the maze. The raw laser data will be processed and a few relevant points will be extracted from it. The noise will be filtered by averaging the data (after applying a trigonometric transformation) from a few points around the desired one. The relevant points are shown in the next figure:

The points in front of the robot will be used to prevent it from hitting the walls of the maze. The two sideways points (2 and 2’) will be used to keep the robot moving straight, by comparing them. Points 3 and 3’ will be used to detect a junction and, together with 4 and 4’, to determine where the center of the junction is.

After a junction is detected, its type will be sent to the process that builds the map. The map will be an array of structures (or classes) that store the coordinates and orientation of every junction, its type, the exits that have already been taken and the exit the robot came from. The position will be used in building the map, but the map will also be used to readjust the current position if the robot reaches a junction it has visited before. The odometry will be reset at every junction, and the value taken into account will be [math]\displaystyle{ \sqrt{x^2+y^2} }[/math], as this is the total distance traveled by the robot since the junction. Depending on the direction the robot takes after the junction, this value will be added to the x or y coordinate. If a large rotational correction is applied after a junction, this means that the corner is not exactly 90 degrees, which will be taken into account in the coordinate computation.

The exact movement strategy is yet to be determined. It will stop the robot at every junction, determine the direction, and send a rotation command, which will be executed by the movement control. The control that keeps the robot straight will operate only when the robot is not at a junction and is not currently rotating.

We expect that by turning right at every junction the robot will be able to leave the maze; when only a left turn and a forward path are available, the robot will go forward. Five types of junctions will be considered: the X type (1), T type (2), left (3) and right (4) type, and dead-end (5). These possibilities are depicted in the figure below.
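One possible shape for the map entries and the coordinate update described above is sketched here. The class name, field names and the helper functions are hypothetical, and the maze is assumed to be axis-aligned with headings that are multiples of 90 degrees.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Junction:
    x: float            # map coordinates of the junction center
    y: float
    heading_deg: float  # orientation of the robot when it arrived
    kind: str           # "X", "T", "left", "right" or "dead-end"
    came_from: str = ""  # exit through which the robot entered
    exits_taken: set = field(default_factory=set)


def distance_since_junction(odom_x, odom_y):
    """Distance from the last odometry reset: sqrt(x^2 + y^2)."""
    return math.hypot(odom_x, odom_y)


def advance(x, y, heading_deg, distance):
    """Add the distance to x or y, depending on the travel direction."""
    heading = heading_deg % 360
    if heading == 0:
        return x + distance, y
    if heading == 90:
        return x, y + distance
    if heading == 180:
        return x - distance, y
    if heading == 270:
        return x, y - distance
    raise ValueError("heading must be a multiple of 90 degrees")
```

The map itself would then simply be a list of `Junction` objects, with `exits_taken` updated each time the robot leaves a junction.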

Arrow detection and processing

For the arrow detection, the necessary drivers were installed and a ROS to OpenCV tutorial was followed to get familiar with ROS and the OpenCV library. Based on some experience from our bachelor studies, we concluded that the image processing steps presented in Figure 4 should be performed.

The first step is the acquisition of the RGB images, which in the second step are converted to grayscale. Because the distribution of the grey intensities is not even, histogram equalization will be applied to the grayscale image in order to distribute the intensities of grey evenly, as in Step 3. After this step a conversion to black and white will be made, possibly followed by an inversion of the colors, in order to identify the contour of the arrow as in Step 6. These steps only outline future work, which will be implemented for the arrow after we finish all the left-hand side steps from Figure 1, which do not involve image processing. For more information, see the paper "Electric wheelchair control for people with locomotor disabilities using eye movements" - Rascanu, G.C. and Solea, R.
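The first planned steps (grayscale conversion, evening out the grey levels, conversion to black and white with optional inversion) can be sketched in plain Python on a small nested-list image. This is only an illustration under assumptions: the function names, the grayscale weights and the threshold of 128 are chosen for the example, and a real implementation would use the OpenCV routines instead.

```python
def to_gray(rgb):
    """Convert rows of (r, g, b) tuples to 8-bit grey levels (assumed BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb]


def equalize(gray):
    """Histogram equalization: spread the grey levels over the range 0..255."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)   # first occupied grey level
    n = cdf[-1]
    if n == cdf_min:                          # single grey level: nothing to spread
        return [row[:] for row in gray]
    return [[round((cdf[v] - cdf_min) * 255 / (n - cdf_min)) for v in row]
            for row in gray]


def to_binary(gray, threshold=128, invert=False):
    """Black-and-white conversion with optional inversion of the colors."""
    on, off = (0, 1) if invert else (1, 0)
    return [[on if v >= threshold else off for v in row] for row in gray]
```

The contour step is left out here, since it depends on the library used.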

How to determine the pointing direction (left or right) is still to be discussed.

Week 5

Now the robot is able to go around the maze using the right-hand rule. Based on simulations, multiple observations were made:

- The robot is not able to go straight when a message with both angular and linear velocity is published. Hence, Case 1 from Week 1 will be used to turn left/right.

- Currently every function that needs to publish does so on its own. This is acceptable, but in a few places the publishing needs to be done in cycles, which requires a timer that pauses for about 20 ms between cycles.

- After some more tests, we concluded that we need more points from the laser than first thought: some to detect the entry of a junction and some to identify its center. This is to be inspected further.

- We decided that it is important to work out fail-safe behaviour.

Now that we have images from the real camera of the robot and have done some tests, we concluded that the algorithm presented the week before for the vision part is not very robust. We decided to go for a more robust approach, which is in progress.

The above points will be treated in Week 6 as we make our last preparation for the corridor competition.

Week 6

At this moment the robot is able to:

- find the entrance of the maze and enter the maze along the center;

- identify each junction and center itself in the corridor;

- identify the center of a junction, for now by means of a timing function and the known lab width;

- detect dead-ends and continue without entering them;

- go to the end of the maze and come back.

VIDEO DOCUMENTATION

Trial with the algorithm just before the corridor competition (http://youtu.be/BgYXToQaHE8)

Vision

This week, we discussed with Rob some important issues relating to arrow detection, i.e. how the arrow will be placed in the maze and the difference between the special fish-eye camera on the robot and the normal cameras on our laptops with which we test our code. Since some sample pictures containing the arrow have been uploaded to the wiki, we have made some useful observations as well.

- We list here some important issues that need to be noted

- The camera only has a resolution of 320 by 240

- This low resolution covers a 170-degree field of view, which means the image quality cannot be as high as that of our laptops' cameras. A highly robust algorithm is therefore needed to get a satisfactory result even with low image quality.

- The arrow can be far away from the robot

- As the resolution is low, an arrow far away from the camera may cover only 30 pixels. If our algorithm cannot deal with small arrows, the robot has to drive close enough to the arrow to tell its orientation, which costs more time in the end.

- Straight lines may turn into curves in the fish-eye image

- This effect is most obvious at the edge of the image. If our algorithm relies on the assumption of straight lines, we may get wrong output when the arrow is close to the edge.

- The arrow's color is red

- The arrow is red and there will be no other confusing objects with the same color. This leads us to the approach of color recognition instead of converting RGB to grayscale.

- Detecting process


- Color detection

- Since the arrow's color is given as red, it is better to transform the RGB format into the HSV format. The advantage follows from the definition of HSV: every color corresponds to a certain range of Hue values, while Saturation and Value reflect its intensity and brightness. Red is a bit different, since its hue lies at both ends of the hue scale, near 0 and near 360. We therefore need an "Or" operation to collect all the red points.

- The above mathematical representation shows our parameters for color detection. We arrived at these numbers by testing sample pictures, which we assume to have the same color features as the actual images from ROS.
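The hue wrap-around for red can be illustrated with a short Python sketch using the standard `colorsys` module. The thresholds (`hue_margin_deg`, `min_sat`, `min_val`) are placeholder assumptions for the example, not the parameters we tuned on the sample pictures, and the function names are hypothetical.

```python
import colorsys


def is_red(r, g, b, hue_margin_deg=15.0, min_sat=0.5, min_val=0.3):
    """Return True if an 8-bit RGB pixel falls in the (assumed) red range."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_deg = h * 360.0
    # Red sits at both ends of the hue circle, hence the "or".
    near_zero = hue_deg <= hue_margin_deg
    near_360 = hue_deg >= 360.0 - hue_margin_deg
    return (near_zero or near_360) and s >= min_sat and v >= min_val


def binary_map(rgb):
    """Build a 0/1 map from rows of (r, g, b) tuples."""
    return [[1 if is_red(*px) else 0 for px in row] for row in rgb]
```

A pixel such as (255, 0, 30) has a hue just below 360 degrees and is only accepted because of the second half of the "or" condition.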

- Binary map

- The binary map only indicates whether each pixel is within the color range we defined previously. Eventually, every pixel inside the arrow has the value 1 and every pixel outside the arrow the value 0. With this map we are able to apply our own algorithm.

- Noise reduction

- When forming the binary map, it is inevitable to have noise points, no matter how small our range is. What is more, if we restrict our HSV range to a very small domain, points near the arrow's edge may be eliminated as well. So it is always a dilemma to find an appropriate HSV range which guarantees a sharp arrow shape without noise pixels. Ideally, we filter the binary map so that it keeps only the arrow and removes the noise pixels. As we then do not need to worry about the noise, we can choose a relatively large HSV range, which guarantees good color detection, and eliminate all the noise afterwards.

- We did not find any OpenCV function that eliminates noise pixels in a binary map; most of them just smooth the binary picture. So we decided to write our own function. The basic idea is very simple: it removes every noise blob that contains fewer pixels than a pre-defined value. It is safe to choose a large value, so that arrows at a far distance are not detected.
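One possible way to write such a filter is sketched below in plain Python. It is an assumption about the approach, not our actual function: it groups non-zero pixels into 4-connected blobs with a breadth-first search and keeps only blobs of at least `min_pixels` pixels. The name `remove_small_blobs` is hypothetical.

```python
from collections import deque


def remove_small_blobs(binary, min_pixels):
    """Return a copy of a non-empty 0/1 map without blobs smaller than min_pixels."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    cleaned = [[0] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not binary[r0][c0] or seen[r0][c0]:
                continue
            # Collect one 4-connected blob starting from (r0, c0).
            blob, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and binary[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Keep the blob only if it is large enough to be the arrow.
            if len(blob) >= min_pixels:
                for r, c in blob:
                    cleaned[r][c] = 1
    return cleaned
```

With a threshold of 3, an isolated pixel is removed while a 2 by 2 block of pixels is kept.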

Zoomed frame0009.jpg

Zoomed frame0015.jpg

- Detect orientation

- This is the step for which every group has to develop its own method to detect the arrow's orientation; there is no ready-made function that we can apply directly. Before we settled on our final algorithm, we came up with several ideas with different approaches.

- First is shape recognition.

- This method is widely used, since any kind of shape can be defined. It basically compares different regions of a picture with a given pattern. In principle, it should work for arrow detection, but we think this idea is too elaborate and may not be robust enough. The coding may take too much time as well, so we decided not to follow this approach.

- Second is edge detection.

- We found that it is quite easy to detect edges in OpenCV. Based on our testing, it is possible to detect the arrow's orientation from the contour plot. This seems to be a good idea and some other groups use a similar approach. But we do not think it is an optimal solution. In order to get a clean contour, we need a high-quality picture. Since the robot's camera only has a resolution of 320 by 240, the arrow must be in front of and close enough to the robot; otherwise it is very difficult to give the correct output. As stated above, we will lose much time if the robot has to go close to the arrow.

- Third is position comparison

Detection Algorithm - This is the most direct method. It compares the x position of the arrow's center with that of its triangular tip. If the tip is to the right of the center, the arrow points right, and vice versa. We only need two for loops to compute two values and compare them afterwards. The mathematical representation is:

- where N is the number of pixels with non-zero value, i.e. the number of pixels that make up the arrow. Since the arrow has a long tail and a small triangular tip, xc can be regarded as the x position of the geometric center. xa indicates the x position of the longest segment in the vertical direction, which can be regarded as the position of the triangular tip. By comparing the values of xc and xa, we can easily tell the arrow's orientation, as illustrated in the picture.

- This method is surprisingly precise and robust. It works with arrows at a far distance as well as on side walls. We tested it with all sample pictures and it managed to recognize all the arrows and tell their orientation, including arrows that are very difficult to recognize, like the ones in frame0009.jpg and frame0015.jpg. The reason why the algorithm performs so well is that its task is very simple: it is tailored to this particular task. For example, the algorithm would fail if there were multiple red objects in view.
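The position comparison can be sketched in Python as follows. This is a simplified reading of the method, with assumptions stated here: xc is taken as the mean x of all non-zero pixels, and xa as the column holding the most non-zero pixels, which stands in for "the longest vertical segment". The function name `arrow_direction` is hypothetical.

```python
def arrow_direction(binary):
    """Return 'left', 'right' or None for a cleaned 0/1 arrow map."""
    cols = len(binary[0]) if binary else 0
    col_counts = [0] * cols      # non-zero pixels per column
    n = 0                        # N: number of non-zero pixels
    x_sum = 0
    for row in binary:           # the two nested loops mentioned in the text
        for x, v in enumerate(row):
            if v:
                n += 1
                x_sum += x
                col_counts[x] += 1
    if n == 0:
        return None              # no arrow pixels at all
    x_c = x_sum / n                                        # geometric center
    x_a = max(range(cols), key=lambda x: col_counts[x])    # tallest column
    if x_a > x_c:
        return "right"
    if x_a < x_c:
        return "left"
    return None                  # undecided
```

On a small test map with a long tail and a triangular head on the right, the tallest column lies to the right of the center and the function returns "right"; mirroring the map gives "left".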

- Testing

- Sample pictures

- We tested the algorithm both with sample pictures and with our own camera. The green line indicates the direction. The algorithm is able to detect the arrow's orientation in any position. The test video shows that the output is both correct and stable; there the green circle indicates the direction.

- We are confident that this algorithm is the best solution we can get. Further tests based on PICO's camera topics will be performed in week 7.

Algorithm

Our code is based on a general algorithm, described in the picture below. After entering the maze, the robot checks whether it has reached a dead-end. If so, it has to go back. Otherwise it goes forward until it reaches a junction or a dead-end. When a junction is detected, the robot starts a routine for entering the junction. Once it is inside the junction, it decides which direction to follow. The decision is based on the right-hand rule. An exception is made if an arrow is detected: in this case, the robot follows the direction indicated by the arrow, regardless of the right-hand rule. Another exception is the case of islands in the maze. If we follow the right-hand rule and detect an island after a number of consecutive turns, the robot changes direction to avoid entering an infinite loop. After the decision is made, Jazz rotates 90 degrees by making use of the odometry and laser data. After the rotation the robot makes the appropriate corrections and then goes forward and exits the junction.

- General algorithm
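The decision step inside a junction can be illustrated with a small Python sketch. The function `choose_direction`, the string labels and the value `max_turns=4` are assumptions for this example; in particular, the text does not specify how the direction is changed when an island is suspected, so switching to a left-first order is only one possible choice.

```python
def choose_direction(open_exits, arrow=None, consecutive_turns=0, max_turns=4):
    """Pick 'right', 'forward', 'left' or 'back' at a junction.

    open_exits: set of available exits, e.g. {"right", "forward"}.
    arrow: direction indicated by a detected arrow, or None.
    consecutive_turns: turns made in a row in the same direction.
    """
    # An arrow overrides the right-hand rule.
    if arrow in open_exits:
        return arrow
    # Right-hand rule: prefer right, then forward, then left.
    order = ["right", "forward", "left"]
    # Assumed island handling: after too many turns in a row, prefer left.
    if consecutive_turns >= max_turns:
        order = ["left", "forward", "right"]
    for direction in order:
        if direction in open_exits:
            return direction
    return "back"    # dead-end: no exit available
```

For example, `choose_direction({"left", "forward"})` returns "forward", while `choose_direction({"right", "left"}, arrow="left")` follows the arrow and returns "left".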

In the pictures below we show the flowcharts describing the processes of:

1. Entering the maze:

As we assume that the robot more or less faces the entrance to the maze (straight or at an angle smaller than 90 degrees), the robot goes forward until it detects the entrance of the maze.

- if the entrance is detected, Jazz positions itself in the center by making use of the side points of the laser and, after reaching a position parallel to the walls of the corridor, it starts moving forward;

- if the entrance is not detected yet, it keeps moving forward as long as it does not find a wall. If a wall is detected, it aligns itself by executing a rotation, positions itself in the center and goes forward again.

2. Detection of junction:

One of the side laser points is used for early detection, as described under Robot Control below. The robot goes forward until it gets an early-detection message from the laser. At this moment it reduces speed and continues going forward at low speed until it detects the entry to the junction. When the entry is detected, Jazz makes the proper corrections to ensure that it faces forward. Afterwards it measures the width of the junction and goes to the center of the junction.

Robot Control

The next picture shows the laser points we considered relevant for detection, alignment and positioning inside the maze:

1. Front (main) laser point 1 - used for wall detection, collision avoidance and navigation through the maze;

2. Front-side points 2, 2' - situated at ±10 degrees around the first point, used for averaging and for robustness of the first data point;

3. Middle points 3, 3' - at 45 degrees from the main laser point, used for early detection of junctions and for measuring the width of the junctions;

4. Side points 4, 5, 6 - used for positioning in the middle of the corridor and for aligning.

- Laser points used

In the left-hand picture below we show one typical situation in which we make use of points 4, 5 and 6 for aligning. If the robot is not facing forward inside the corridor, we draw parallel lines through the points returned by these three laser points. By rotating appropriately, Jazz tries to align itself such that, when it faces forward, the three lines almost coincide.

We used the side points 4, 5 and 6 for positioning inside the corridor. In the right-hand picture we show the behaviour of the robot in three different regions along the width of the corridor:

1. Center - if the distances shown by 5 and 5' are almost the same, the robot continues going forward;

2. Outside center, middle region - the robot uses points 4, 5, 6 to rotate and align such that it returns to the center region;

3. Critical zone - very close to the wall. In this case, the robot stops, aligns and then continues moving only when it faces the center of the corridor.

- Laser points used for aligning (left) and Behavior of the robot in different regions of the corridor (right)
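The three corridor regions can be expressed as a simple classification of the two side distances (points 5 and 5'). The sketch below is illustrative only: the function name and the tolerances `center_tol` and `critical_dist` (in metres) are assumed values, not the ones used on the robot.

```python
def corridor_region(left_dist, right_dist, center_tol=0.05, critical_dist=0.15):
    """Classify the robot position across the corridor width.

    left_dist, right_dist: side distances from laser points 5 and 5'.
    Returns 'critical', 'center' or 'middle'.
    """
    # Very close to either wall: stop and align before moving on.
    if min(left_dist, right_dist) < critical_dist:
        return "critical"
    # Both sides almost equal: keep going forward.
    if abs(left_dist - right_dist) <= center_tol:
        return "center"
    # Otherwise rotate and align to return to the center region.
    return "middle"
```

The critical check comes first so that a robot close to a wall is always stopped, whatever the difference between the two sides.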

The left part of the figure below shows two cases of exiting a junction. The first one is the critical type of junction exit, which we managed to handle easily by using the third point of the laser data and all other points for good positioning.

The right part of the picture shows how the width of the junction is measured. After the robot detects the entrance of the junction by early detection, the corner is found using the 4th laser point. At this moment we are able to compute d1: if p4 is the distance detected by the 4th laser point, then d1 = p4·sin(20°). At the same time, d2 is calculated using the third point of the laser data. The width of the junction is then given by d2-d1.

In order to perform a 90 degrees rotation we use:

- Odometry data for initial rotation

- Lasers for applying correction

- Smooth acceleration to eliminate the drift from the rear wheels
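Smooth acceleration can be obtained by limiting how much the commanded velocity may change per control cycle. The following Python sketch shows the idea; the function name, the acceleration limit and the cycle time are assumptions for illustration and do not reproduce our controller.

```python
def ramp_velocity(current, target, max_accel, dt):
    """Move the commanded velocity towards target, limited to max_accel * dt per cycle."""
    max_step = max_accel * dt
    delta = target - current
    if delta > max_step:
        return current + max_step     # still accelerating
    if delta < -max_step:
        return current - max_step     # still decelerating
    return target                     # close enough: reach the target exactly


def ramp_profile(start, target, max_accel, dt, cycles):
    """Velocities commanded over a number of control cycles."""
    profile, v = [], start
    for _ in range(cycles):
        v = ramp_velocity(v, target, max_accel, dt)
        profile.append(v)
    return profile
```

With `max_accel=0.5` and `dt=0.1`, the commanded velocity rises by at most 0.05 per cycle instead of jumping directly to the target value.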

- Detect exit of junction (left) and Measure the width of the junction (right)

Positives and drawbacks of the algorithm

Positives:

- Good forward control

- Robust junction entry and exit identification

- Smooth acceleration and deceleration

- Using lasers to apply correction whenever there is a reference

- Accurate and robust arrow detection

Drawbacks

- No map of the labyrinth is being created

- Rotation in junctions relies heavily on odometry, which is a source of error if no laser reference is available

Results of the last test in the simulator

Due to its length the video was split in 3 parts showing the entire progress of the robot through the maze.


As can be seen from the videos, the robot performs perfectly in the simulator.

Results of the last test with the real robot

This is the last test we performed on the real robot before the final competition. The robot was able to detect the junctions, the centers of the junctions, and the arrows with their correct direction. It performed well and found its way out of the maze!


Final Competition

The presentation of the algorithm and the strategy that we adopted is given in Media:Final_presentation.pdf.


At the final competition the robot achieved a very good overall result, being ranked second among all the teams. Apparently, what went wrong at the junction where the robot stopped was exactly the problem with the odometry (the drawback mentioned above). Also, we noticed after this first run that the camera was not working due to some issues with the robot, and a second trial was no longer possible. Overall we were pleased with the final results.

Conclusions

Team:

- good understanding between each other and efficient team work;

- perfect simulation results;

- good last test (the robot was able to go out of the maze also with the arrow recognition working) on the real robot.

Tutor:

- the weekly meetings were constructive and the suggestions from Rob and Sjoerd were really helpful.