Embedded Motion Control 2012 Group 1: Difference between revisions

No edit summary |

|||

| (69 intermediate revisions by 5 users not shown) | |||

| Line 35: | Line 35: | ||

'''Tutor:''' [mailto:j.elfring@tue.nl Jos Elfring] | '''Tutor:''' [mailto:j.elfring@tue.nl Jos Elfring] | ||

==Week 1== | ==Progress== | ||

====Week 1==== | |||

* Installed all required software on our computers :) | |||

* Everybody gained some knowledge about Unix, ROS and C++. | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="2" | | |||

====Week 2==== | |||

* Everybody gained more knowledge about Unix, ROS and C++. | |||

* Looked at Gazebo, Rviz, Turtlebot_simulator, PR2_simulator, Jazz_robot_simulator | |||

* Managed to create 2D map with Jazz Robot Simulator. | |||

* Made presentation of Chapter 1: Introduction, Link to the presentation: http://cstwiki.wtb.tue.nl/images/Powerpoint_Chapter_1.pdf | |||

|[[File:Movie1_emc01.png|200px|thumb|http://www.youtube.com/watch?v=Cmh8dMrmgN0]] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="2" | | |||

====Week 3==== | |||

* Thought about an efficient algorithm to solve the 'maze'-problem. Depth-First-Search is proposed as a candidate algorithm. | |||

* Started with arrow recognition using the OpenCV package. | |||

* Started with map-processing to build up a tree of the maze which can be used by the decision algorithm. | |||

* Optimized mapping algorithm and did some processing on the map. See Figure "Optimized map" | |||

* Started with navigation node for autonomous navigation of the jazz | |||

Tasks for Week 4: | |||

* Process map | |||

* Add markers at crossings | |||

* Define paths between these markers for navigation | |||

|[[File:Mapping.jpg|thumb|200px|''Optimized map'']] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="2" | | |||

====Week 4==== | |||

* Arrows can be detected by camera with use of the OpenCV package. | |||

* Robot can detect its position in a corridor with use of its laser data and can drive autonomous. | |||

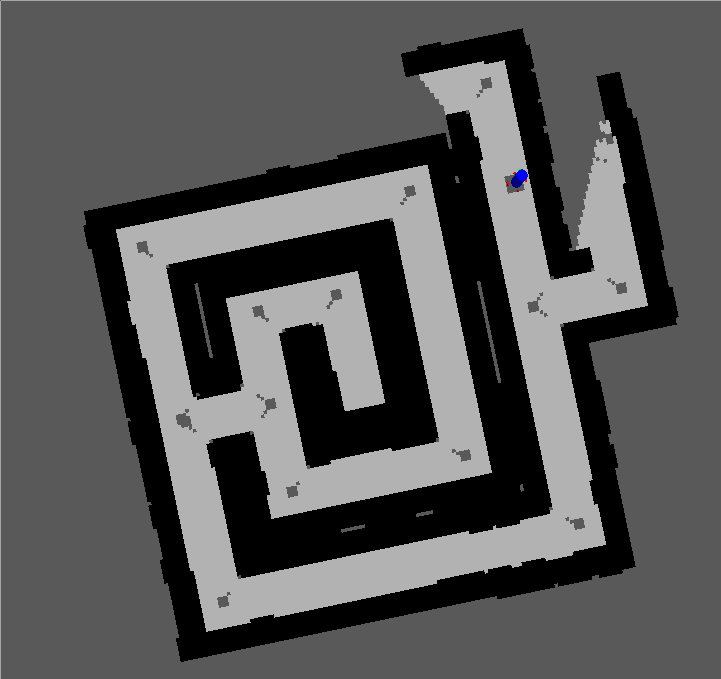

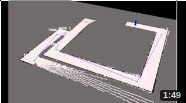

* Corner detection; extracted out of the occupancy-grid. See Figure "Corner detection". | |||

|[[File:Detected corners straight map 22-5-12.png|thumb|200px|''Corner detection'']] | |||

|} | |||

====Week 5==== | |||

* Map-processing is cancelled, since the used package (hector_mapping) creates incorrect maps. | |||

* Now working on processing the laserdata to let the Jazz drive "autonomously" | |||

* Writing an algorithm by using depth-first-search, this algorithm obtains the kind of crossing, the position of the Robot and the angle of the Robot and sends the new direction to the Robot | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="3"| | |||

====Week 6==== | |||

* Did tests with the real Jazz Robot; some issues were find concerning the update-rate; perhaps a new navigation strategy is needed. | |||

* New navigation strategy implemented. Still a couple of bugs that have to be fixed; this is for next week. | |||

* Created a visualization-class to visualize the node-structure for better perception of the algorithm. | |||

* Finished DFS algorithm; algorithm works now if there are no loops. | |||

Tasks for Week 7: | |||

* Debug navigation node; fix issue with no 'corridor' between 'corners'. | |||

* Debug algorithm; try to find some errors. | |||

* Corridor competition | |||

* Test on real robot | |||

|[[File:Test1.png|200px|thumb|http://youtu.be/_g9S24xXes4]] | |||

|[[File:Movie2_emc01.png|200px|thumb|http://www.youtube.com/watch?v=KSjsA6BR-Bs&feature=channel&list=UL]] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="3"| | |||

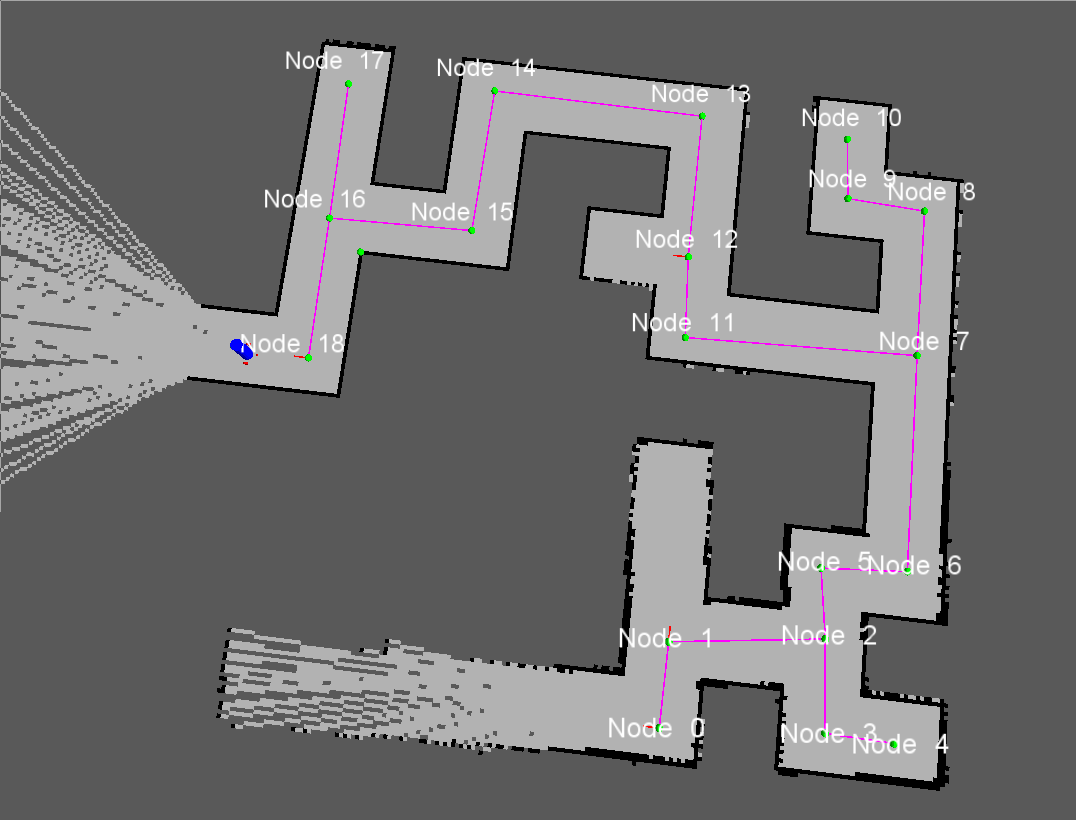

====Week 7==== | |||

* Navigation node debugged, fixed issue with no corridor between corners. | |||

<br> | |||

Original maze without loops solved. Figure "Original map solved" also shows the how the original given maze looks in Rviz when it is solved the robot. | |||

<br> | |||

Big maze solving also possible: The big maze solved can also be seen in Figure "Big maze solved". | |||

|[[File:maze solved.png|thumb|200px|''Original maze solved'' http://www.youtube.com/watch?v=eQblXU57aeU&feature=youtu.be]] | |||

|[[File:Big_maze.jpg|thumb|200px|''Big maze solved'' http://www.youtube.com/watch?v=HEO7ETBTaDs&feature=youtu.be]] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="3" | | |||

====Week 8==== | |||

* Some adaptions on code with new simulator, see left movie | |||

* Enormous update on wiki | |||

* Minor adaptions on navigation and algorithm | |||

* First test on Jazz robot, see right movie | |||

|[[File:Movie4_emc01.png|200px|thumb|http://www.youtube.com/watch?v=tgHOjsxDUjk&feature=plcp]] | |||

|[[File:F3.PNG|200px|thumb|https://www.youtube.com/watch?v=HjW0VavlWrg&feature=plcp]] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="2" | | |||

====Week 9==== | |||

* Simulated all possible maze cases, see movies | |||

** Loop | |||

** Double loop | |||

** Triple loop | |||

** Crossings | |||

* Update on wiki | |||

* Could not do any testing, because set up was not working | |||

|[[File:F1.PNG|200px|thumb|https://www.youtube.com/watch?v=e1CASyzed3E&feature=plcp]] | |||

|[[File:F2.PNG|200px|thumb|https://www.youtube.com/watch?v=qrbDDAJchS8&feature=plcp]] | |||

|[[File:F4.PNG|200px|thumb|https://www.youtube.com/watch?v=XwLcYULewZ8&feature=plcp]] | |||

|} | |||

{| style="color: black; width="100%" class="wikitable" | |||

| colspan="2" | | |||

==Navigation== | |||

====Strategy==== | |||

The navigation node is used to steer the robot correctly in the maze. This navigating is done with use of the laser data. The laser data is first set into a certain variable. On the basis of this variable the robot can be navigated through the maze. The navigation is divided into 6 cases. | |||

The first case is the case in which the robot is driving trough a corridor. In this case the robot is kept in the middle of the corridor by checking and adjusting its angle with respect to the walls of the corridors. While driving trough it is constantly checked whether a corner of any type or a dead end can be recognized. Whenever a corner is recognized, the navigation switches to case 2. | |||

In case 2 the recognized corner or dead end is labeled as one of seven possible corner-types. This corner-type can be a corner left or right, one of three possible T-junctions, a cross-over or a dead end. These corner-types all get a number to identify them easily:<br> | |||

0. Dead end <br> | |||

1. Corner right <br> | |||

2. Corner left <br> | |||

3. T-junction middle (means the robot approaches for the middle) <br> | |||

4. T-junction left (means the robot approaches for the left) <br> | |||

5. T-junction right (means the robot approaches for the right) <br> | |||

6. Cross-over <br> | |||

This corner-type is now published together with the coordinates of the center of this corner-type. The coordinates of the center of the corner-type, also called the coordinates of the node, can be retrieved by processing the coordinates retrieved from the map topic, published by the hector_mapping node. Now the algorithm node processes this corner-type and at its turn publish the direction the robot should go. If the corner-type is 0 (dead end), the navigation node will still publish this type and the coordinates of the node, but then the navigation directly switches to case 6. Once the direction in which the robot should go, is received, the navigation switches to case 3. | |||

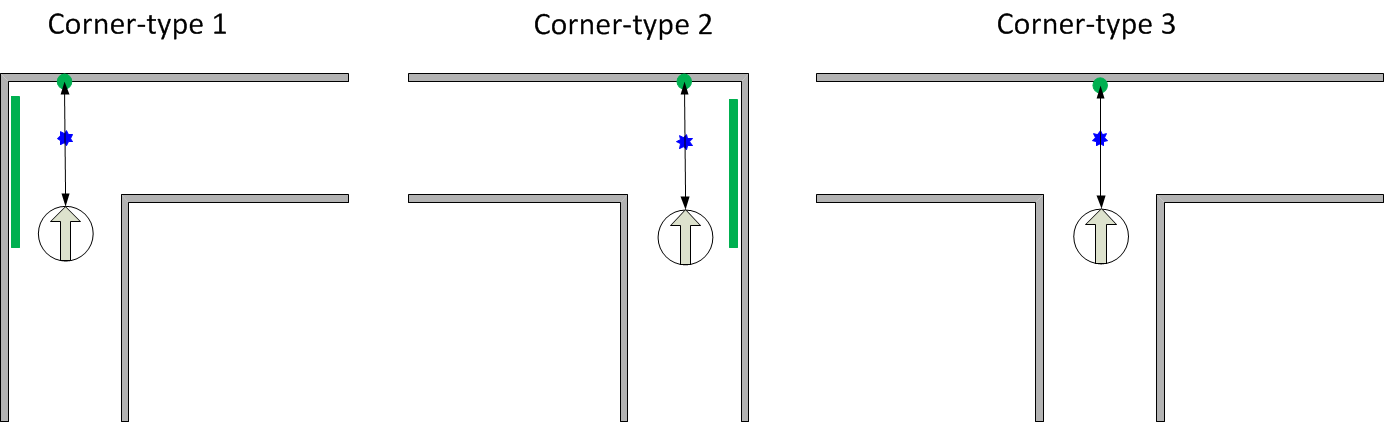

<table align="right"><tr><td>In case 3 the robot drives to the middle of the corner-type. To get to the middle of a corner the robot uses different points with respect to which it gets to the middle. If the corner is of a type in which the robot would drive into a wall if it would not make the corner (type 1, 2 and 3) it uses the distance to the wall to know when to stop driving forward. If this distance is half the distance of a corridor, the robot has reached the middle. If the corner is of type 1 (corner right), the robot uses its laser data on the left, to keep the right distance from the walls it is driving parallel to, while driving to the middle of the corner. With a corner of type 2 (corner left) it does the same, only with the laser data on the right. A type 3 corner (T-junction middle) is the only corner-type in which the robot does not have any points with respect to which it can stay in the middle, while driving forward. In Figure “Corner-type 1, 2, 3” the corner-types 1, 2, 3 can be seen, with in green the laser data or points with respect to which the robot drives to the middle of the corner.</td><td>[[File:Corner type 123.png|thumb|300px|''Corner-type 1, 2, 3'']]</td></tr></table> | |||

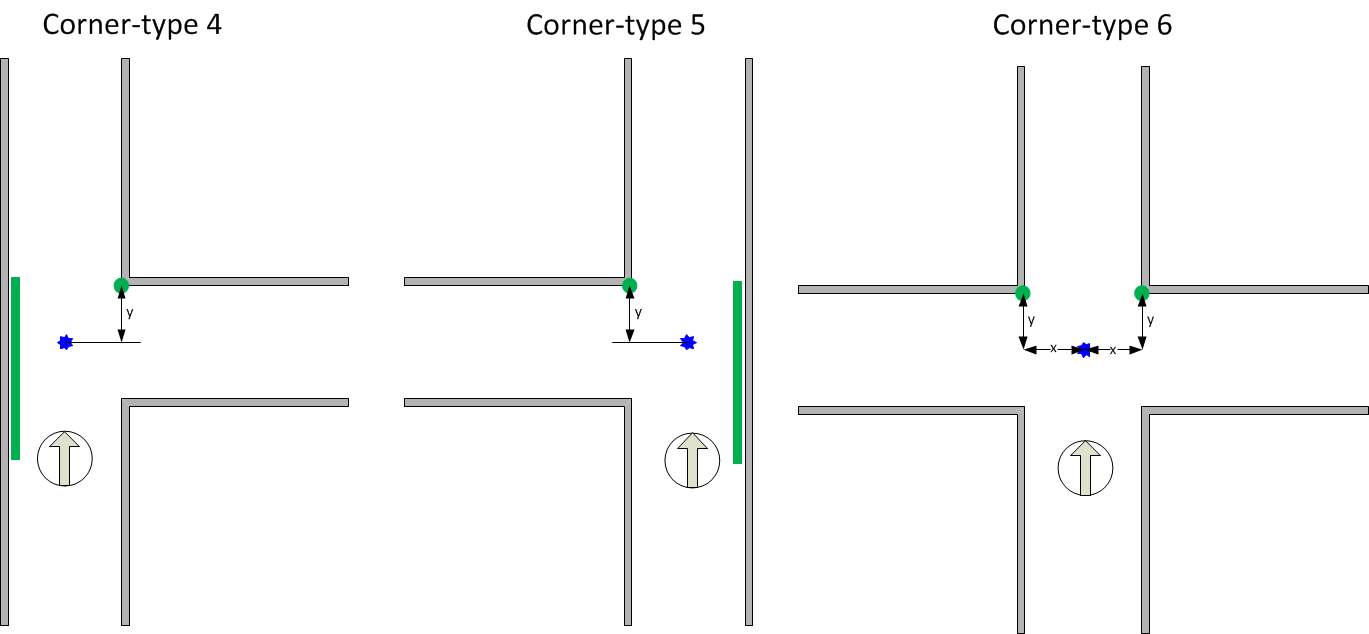

<table align="right"><tr><td>If the corner is of type 4, 5 or 6 the robot uses the distance to the 90 degrees corners to know when to stop driving forward. When the y-distance (distance in the way the robot is driving) is half the distance of a corridor, it has reached the middle. With corners of type 4 and 5 the robot uses its right or left laser data the same way as it uses it when driving to the middle of a corner of type 1 and 2, to stay in the middle while driving forward. With a corner of type 6 the robot uses the x-distance to the 90 degrees corners to stay in the middle while driving forward. When the robot has reached the middle, the navigation switches to case 4. In Figure “Corner-type 4, 5, 6” the corner-types 4, 5, 6 can be seen, with in green the laser data or points with respect to which the robot drives to the middle of the corner.</td><td>[[File:Corner type 456.png|thumb|300px|''Corner-type 4, 5, 6'']]</td></tr></table> | |||

In case 4 the robot makes a turn or does nothing. This is dependant of the direction in which the robot has to go. If the algorithm node publishes as direction to go straight ahead, the robot will not do anything in case 4 and will immediately switch to case 5. On the other hand, if the algorithm publishes to go to the left or right, the robot will make a turn of +(1/2)π radials or -(1/2)π radials respectively. After the turn is completed, the navigation switches to case 5. | |||

In case 5 the robot starts driving forward again until it reaches the corridor. When the corridor is reached, the navigation switches back to case 1. | |||

Case 6 is the case in which the navigation goes, when the robot is driving towards a dead end. In this case only direction is possible and that is backwards. So after driving to center of the dead end, which is at half the distance of a corridor of the dead end, the robot will make a turn of π radials. After the turn is completed, the navigation switches back to case 1. | |||

====Detection corners==== | |||

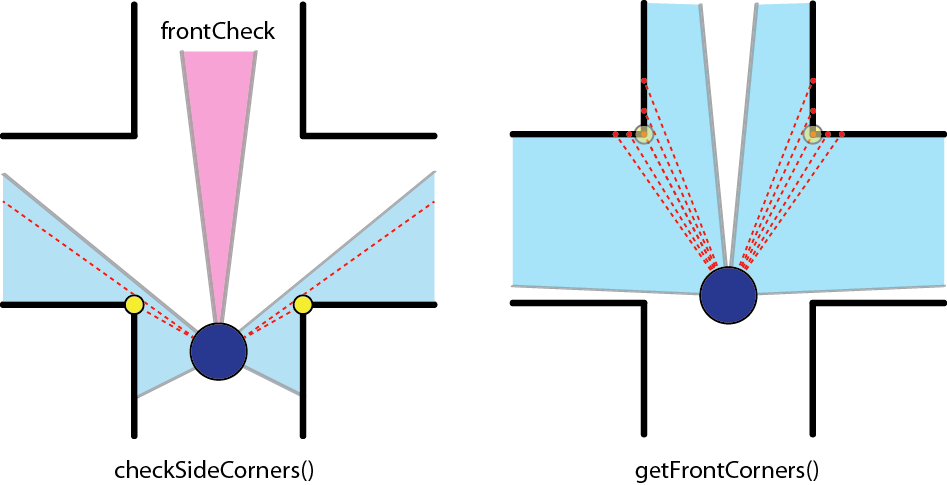

[[File:CheckCorners.png|thumb|500px|''Corner detection'']] | |||

=====Determine CornerType===== | |||

If the robot arrives at a crossing, a specific algorithm is triggered to determine the type of the crossing. The algorithm determines the cornerType which use of three variables (as can also be seen in the left figure): | |||

<ol> | |||

<li><b>frontCheck:</b> This variable denotes if the laser bundel on the north side of the robot detects any close objects. This boolean is determined with use of an average distance and a specific treshold.</li> | |||

<li><b>leftCheck:</b> This variable denotes if the checkSideCorner() algorithm finds an opening on the left side.</li> | |||

<li><b>rightCheck:</b> This variable denotes if the checkSideCorner() algorithm finds an opening on the right side.</li> | |||

</ol> | |||

Based on these three booleans, the cornerType can be determined using some logical operators. | |||

The pseudo code algorithm to detect the sideCorners is given below: | |||

'''loop''' over specific range of laser data points: | |||

'''if''' we look at the left side: | |||

'''if''' distance[i] > distance[i+1] AND distance[i]/distance[i+1] > ratioTreshold: | |||

Do some extra checks | |||

'''if''' extraChecks succeed '''then''' return true '''end''' | |||

'''end''' | |||

'''end''' | |||

'''if''' we look at the right side: | |||

'''if''' distance[i] < distance[i+1] AND distance[i]/distance[i+1] > ratioTreshold: | |||

Do some extra checks | |||

'''if''' extraChecks succeed '''then''' return true '''end''' | |||

'''end''' | |||

'''end''' | |||

=====Find FrontCorners to determine reference Phi===== | |||

Finding these 90 degrees front corners is used in the following states: | |||

<ul> | <ul> | ||

<li><b>DriveToCenter STATE:</b> | |||

<ul> | |||

<li>To determine the reference Phi in case the corner is of type 6 [crossing].</li> | |||

<li>To determine when we reached the center of the crossing when the corner is of type 4,5 or 6.</li> | |||

</ul></li> | |||

<li><b>DriveToCorridor STATE:</b> | |||

<ul> | |||

<li>To determine the reference Phi in case the corner is of type 4,5 or 6.</li> | |||

</ul></li> | |||

</ul> | </ul> | ||

== | The figure on the right illustrates the working of the detection method. In short, the algorithm iterates over a specif domain of laser-points. At each point, it checks the angle between the previous and following laserpoint around its vertical axis. If this angle is more or less 90 degrees, a 90 degrees angle has been found on the x,y position of the base. The algorithm in pseudocode is given below: | ||

< | |||

'''loop''' over specific range of laser data points | |||

Determine coordinates of each point relative to the robot | |||

Base = coordinates[i] | |||

Vector1 = coordinates[i-offset]-coordinates[i] | |||

</ | Vector2 = coordinates[i+offset]-coordinates[i] | ||

Normalize vectors | |||

innerProduct = innerProduct of vectors Vector1 and Vector2 | |||

'''if''' innerProduct is close to zero: | |||

// Possible 90 degrees angle at coordinates[i] | |||

Do some extra checks to make sure coordinates[i] is at a 90 degrees angle | |||

'''if''' extra checks are true: | |||

return the x and y value of the 90 degrees angle | |||

'''end''' | |||

'''end''' | |||

return that no corner of 90 degrees is found | |||

====Controller==== | |||

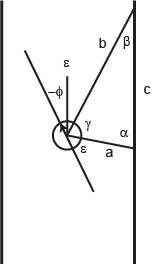

[[File:Angle_calculation.jpg|thumb|200px|''Angle calculation'']] | |||

The forward velocity is set to a constant velocity (v = 0.1) under all circumstances, except when making a turn.<br> | |||

The angular velocity controller depends on its current orientation and a certain reference orientation.<br> | |||

This controller is a simple P-controller and given by:<br> | |||

<math>\omega = -k(\phi - \phi_{ref})</math><br><br> | |||

The angle in the corridor is calculated using the following formulas (with the variables given in Figure "Angle calculation"):<br> | |||

<math>2 \epsilon + \gamma = \pi</math><br> | |||

Z-angles:<br> | |||

<math>\epsilon + \phi = \beta</math><br> | |||

Cosine rule: <br> | |||

<math>c = \sqrt{a^2 + b^2 - 2ab \cos \gamma}</math><br> | |||

Cosine rule: <br> | |||

<math>\beta = \arccos \frac{a^2 + c^2 - b^2}{2 ab}</math><br> | |||

Therefore:<br> | |||

<math>\phi = \beta - \frac{\pi}{2} + \frac{\gamma}{2}</math><br><br> | |||

The reference angle is dependend on the position in the corridor, namely if it is more to the left, the robot should steer to the right and viceversa. Therefore the following relation holds for the reference angle:<br> | |||

<math>\phi_{ref} = \frac{\pi}{4} \frac{e_x}{d}</math><br> | |||

Where <math>d</math> is the corridor width and <math>e_x</math> is the error with respect to the center of the corridor.<br> | |||

==Algorithm== | |||

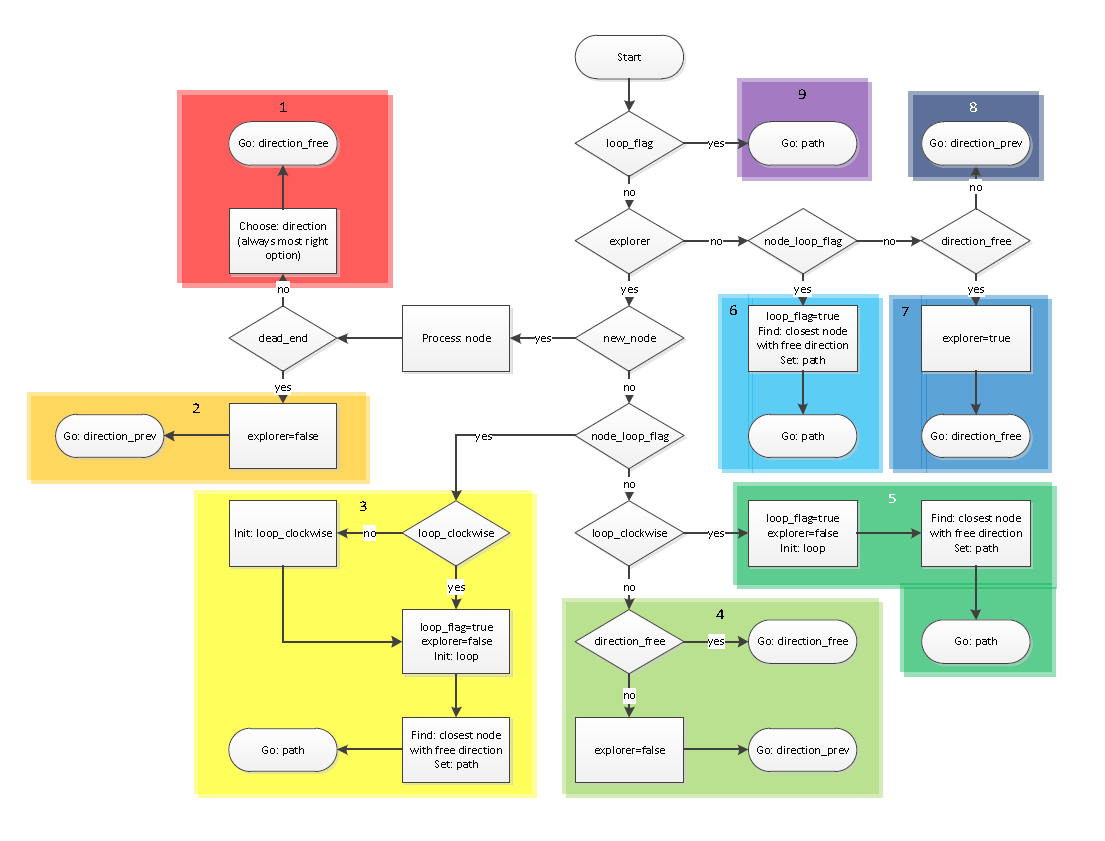

<table align="right"><tr><td>The algorithm node is to choose where the robot has to go, when it is arriving at a node. The algorithm gets the corner-type and the coordinates of the node over a topic published by the navigation node. When the robot arrives at a node, which is new and where the robot has not been jet, it processes this node. This processing means a number-id is given to the node and the coordinates, previous and free directions of this node are saved. | |||

<br> | |||

When the robot is arriving at a node, which has as corner-type the T-junction type where the robot arrives in the middle and can go to the right or the left, the algorithm will first check with the camera whether an arrow can be found on the wall or not. If an arrow is visible, the algorithm returns that the robot has to go in the direction of the arrow. If no arrow is found the algorithm as visible in the flowchart in Figure "Flowchart algorithm" is used to choose in which direction the robot has to go.</td><td>[[File:Flowchart_algorithm.PNG|thumb|400px|''Flowchart algorithm'']]</td></tr></table> | |||

<table align="right"><tr><td><h4>Driving along a set path</h4> | |||

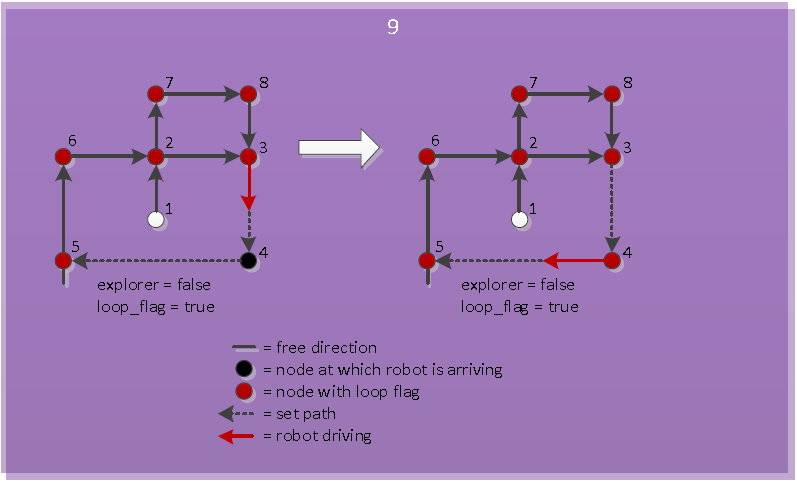

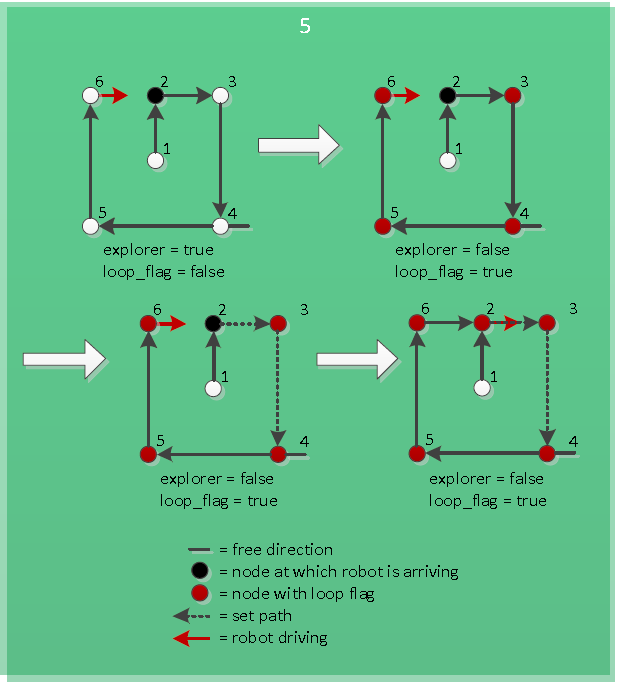

First the algorithm checks whether the boolean ‘loop_flag’ is true or not. This boolean indicates whether the robot is following a path on a loop inside the maze, or not. This path leads the robot from the node on which it was discovered that the robot was in a loop to the closest node on which a direction is not discovered jet. So if this variable is true (flowchart goes to the purple box 9) the algorithm returns that the robot has to keep following the path that has been set. How this works exactly is visualized in Figure "Driving along a set path".</td><td>[[File:Pic_9_algorithm.PNG|thumb|200px|''Driving along a set path'']]</td></tr></table> | |||

<table align="right"><tr><td><h4>Driving back, arriving at node of a known loop</h4> | |||

If the boolean ‘loop_flag’ is not true, which means the robot is not in a loop, the algorithm checks whether the boolean ‘explorer’ is true or not. This boolean indicates whether the robot is exploring a part of the maze which is not discovered jet or it is driving back in a corridor it already discovered. | |||

<br> | |||

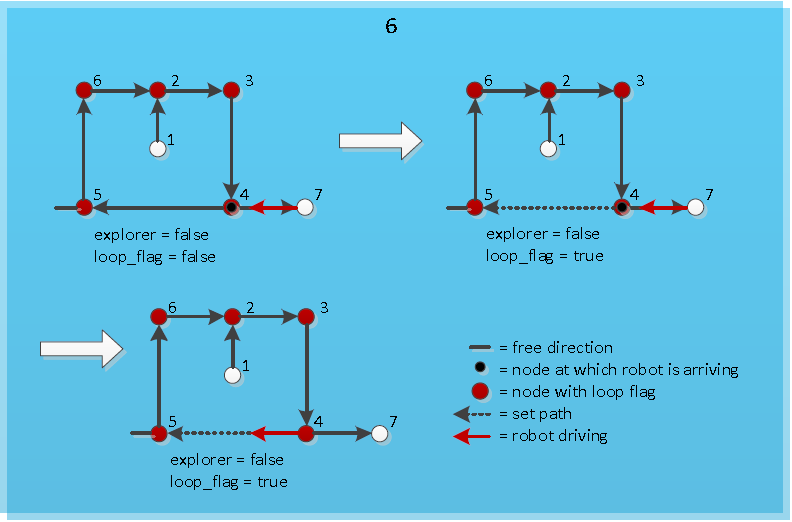

If this boolean is false the algorithm checks whether the node at which it is arriving is part of a loop, indicated by the boolean ‘node_loop_flag’. If this is the case (flowchart goes to light-blue box 6) the robot is back at a loop it already discovered, so the boolean ‘loop_flag’ is set to true, the closest node with a free direction is found and a path towards this node is set. Now the algorithm returns that the robot has to start driving along this set path. Figure "Driving backwards, arriving at node loop" visualizes how this works exactly.</td><td>[[File:Pic_6_algorithm.PNG|thumb|200px|''Driving back, arriving at node loop'']]</td></tr></table> | |||

= | <table align="right"><tr><td><h4>Driving back, arriving at node with or without free direction</h4> | ||

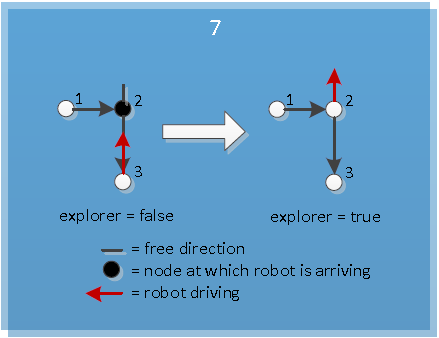

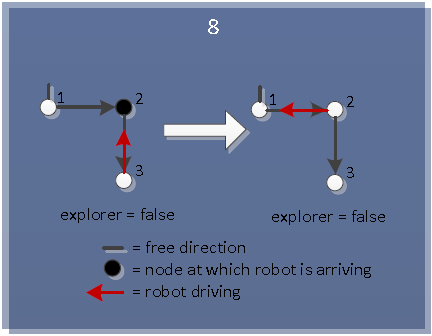

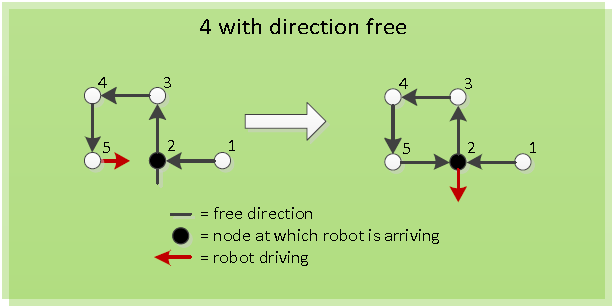

If the boolean ‘node_loop_flag’ is not true, it means the robot is arriving at a node which is already discovered but is not a part of loop. This node can still have a direction free, the robot can discover, indicated by the boolean ‘direction_free’. If this is the case ( flowchart goes to the blue box 7), the ‘explorer’ is set to true and the algorithm outputs that the robot has to go into the free direction. This is visualized in Figure "Driving back, arriving at node with free direction". If the node at which the robot is arriving does not have a free direction left, so ‘direction_free’ is false, the algorithm returns that the robot has to go to the previous node of the node it is arriving at (flowchart goes to the dark blue box 8). This is visualized in Figure "Driving back, arriving at node with no free direction".</td><td>[[File:Pic_7_algorithm.PNG|thumb|200px|''Driving back, arriving at node with free direction'']]</td><td>[[File:Pic_8_algorithm.PNG|thumb|200px|''Driving back, arriving at node without free direction'']]</td></tr></table> | |||

< | |||

< | |||

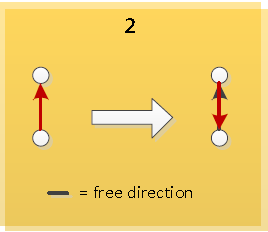

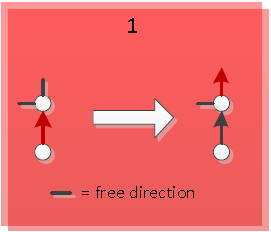

= | <table align="right"><tr><td><h4>Arriving at dead end or new node with free direction</h4> | ||

If ‘explorer’ is true, the next thing the algorithm checks is whether the node, the robot is arriving at, is a new node or not, indicated by boolean ‘new_node’. If the node is a new node, first the node is processed. After this, it is checked whether the node has as corner-type a dead end or not. If it is a dead end (flowchart goes to the orange box 2), so boolean ‘dead_end’ is true, ‘explorer’ is set to false and the algorithm returns that the robot has to go to the previous node of this node. This visualized in Figure "Arriving at new node, which is a dead end". If the node the robot is arriving at is not a dead end (flowchart goes to the red box 1), at least one direction is free at the node. If more directions are free always the most right option is chosen. The algorithm now returns that the robot has go to into the chosen, or in some cases only, free direction. This visualized in Figure "Arriving at new node, with free direction".</td><td>[[File:Pic_2_algorithm.PNG|thumb|200px|''Arriving at new node, which is a dead end'']]</td><td>[[File:Pic_1_algorithm.PNG|thumb|200px|''Arriving at new node, with free direction'']]</td></tr></table> | |||

</ | |||

< | |||

= | <table align="right"><tr><td><h4>Arriving at known node, making a clockwise loop</h4> | ||

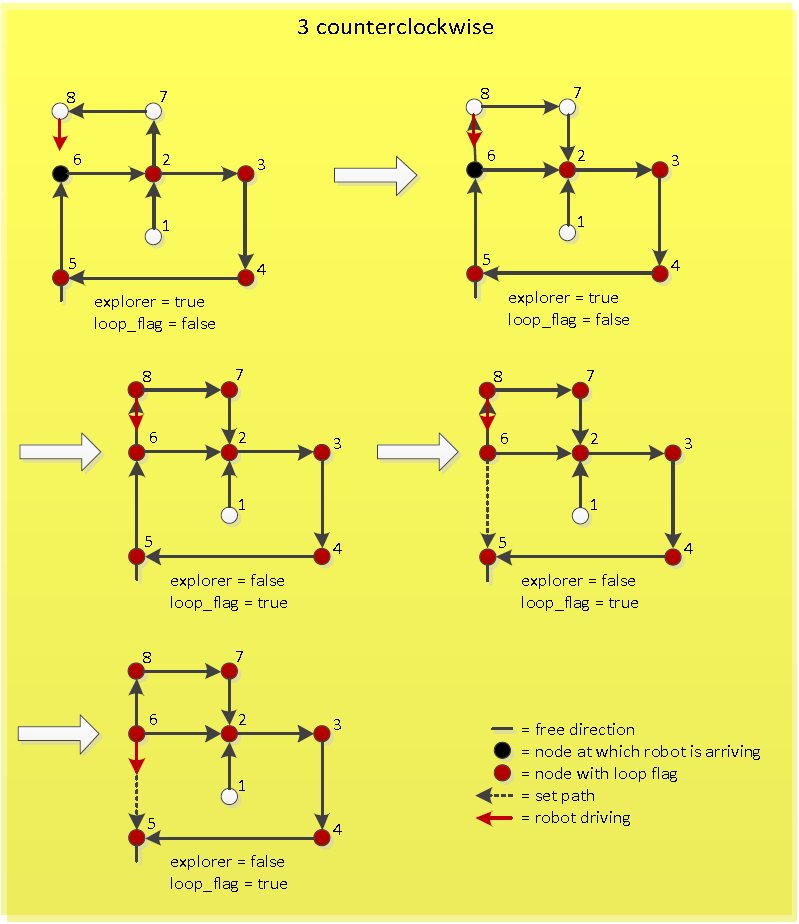

< | Now going back to the boolean ‘new_node’ in the flowchart. If the node the robot is arriving at is not a new node, the algorithm will check whether this node is part of an already discovered and “flagged” loop or not, by checking the boolean ‘node_loop_flag’. If this boolean is true, it means the robot is arriving at a node which is shared by two adjacent loops. On the other hand, if the boolean is false, the robot is arriving at a node which it already has discovered, but is not already part of a loop, so this is a single loop. | ||

<br> | |||

To clarify this, first have a look at the case that there is only one loop, so ‘node_loop_flag’ is false. Now the algorithm will first check whether the robot has made this loop in a clockwise or counterclockwise direction. This is important, because, since at all the times the most right option is chosen at a node, any free direction of a node in a counterclockwise loop would lead to the inside of the loop . Beacuse the exit of the maze is always at the outside, these directions can be ignored. | |||

<br> | |||

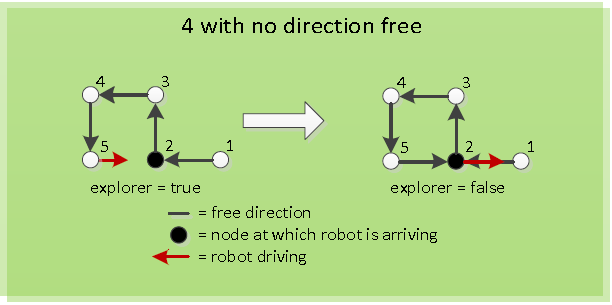

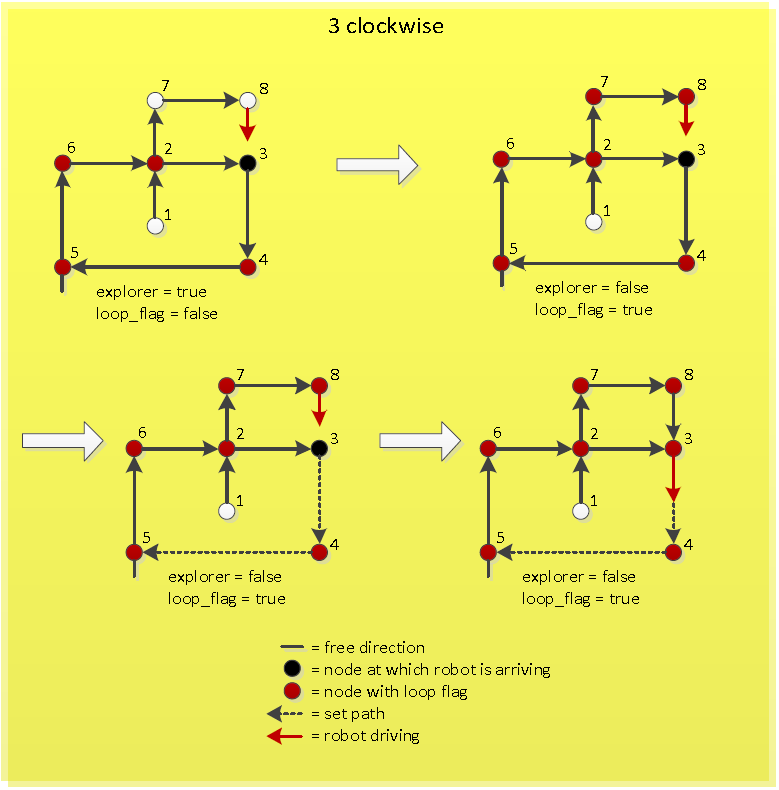

</ | So the algorithm checks the direction of the loop, indicated by the boolean ‘loop_clockwise’. If this boolean is true, the direction of the loop is clockwise. In this case (flowchart goes to the dark green box 5), ‘loop_flag’ is set to true, ‘explorer’ is set to false and the loop is initialized. Initializing the loop means that at each node, which is part of the loop, the boolean ‘node_loop_flag’ is set to true, indicating it is part of the loop. This way a node which is part of the loop can be recognized as being part of a loop, whenever the robot gets back to this node. After this the closest node with a free direction is searched for and a path towards this node is set. Now the algorithm returns that the robot has to start driving along this set path. How this works exactly is visualized in Figure "Arriving at known node, clockwise loop". | ||

</td><td>[[File:Pic_5_algorithm.PNG|thumb|200px|''Arriving at known node, clockwise loop'']]</td></tr></table> | |||

= | <table align="right"><tr><td><h4>Arriving at known node, making a counterclockwise loop, with or without free direction</h4> | ||

< | If ‘loop_clockwise’ is false, the direction of the loop is counterclockwise (flowchart goes to the light green box 4) and as told in this case no node part of this loop now has a value in finding the exit of the maze except for the node the robot is arriving at. This node can still have one free direction, which leads outside the loop. So now it is first checked whether the node, the robot is arriving at, still has a free direction. If this is the case the algorithm returns that the robot has to go into this free direction. This is visualized in Figure "Arriving at known node, counterclockwise loop, direction free". If this is not the case, the only way to go for the robot is to the previous node of the node it is arriving at, leaving the counterclockwise behind. So the algorithm sets ‘explorer’ to false and returns that the robot has to go the previous node of the node it is arriving at. This is visualized in Figure "Arriving at known node, counterclockwise loop, no direction free".</td><td>[[File:Pic_4_dir_free_algorithm.PNG|thumb|200px|''Arriving at known node, counterclockwise loop, direction free'']]</td><td>[[File:Pic_4_no_dir_free_algorithm.PNG|thumb|200px|''Arriving at known node, counterclockwise loop, no direction free'']]</td></tr></table> | ||

< | |||

= | <table align="right"><tr><td><h4>Arriving a known node of a known clockwise or counterclockwise loop</h4> | ||

< | Now there is still the case left, in which the robot is arriving at a node it already discovered, but which is already part of another loop. So it is arriving at a node which is shared by two adjacent loops. In this case (flowchart goes to the yellow box 3) it is first checked whether the new found loop has a clockwise or a counterclockwise direction. | ||

<br> | |||

</ | If the new loop has a clockwise direction ‘loop_flag’ is set to true, ‘explorer’ is set to false and the loop is initialized. Initializing means in this case that both the new loop and already discovered loop are being merged into one larger loop and at all nodes, which are part of this merged loop, the boolean ‘node_loop_flag’ is set to true. After this the closest node with a free direction is searched for in merged loop and a path towards this node is set. Now the algorithm returns that the robot has to start driving along this set path. How this works exactly is visualized in Figure "Arriving at known node of clockwise loop". | ||

<br> <br> | <br> <br> | ||

If the new loop has a counterclockwise direction, first the new loop has to be initialized clockwise. This means that the nodes in the new loop are set in a clockwise order. So the node at which the robot is just arriving now becomes the previous node of the node from which the robot just came. After this the same procedure can be followed as when the new loop is clockwise initially, because now the new counterclockwise loop is made a new clockwise loop. The working of this is visualized in Figure "Arriving at known node of counterclockwise loop". | |||

< | </td><td>[[File:Pic_3_clockwise_algorithm.PNG|thumb|200px|''Arriving at known node of clockwise loop'']]</td><td>[[File:Pic_3_counterclockwise_algorithm.PNG|thumb|200px|''Arriving at known node of counterclockwise loop'']]</td></tr></table> | ||

[[File: | |||

==Arrow detection== | |||

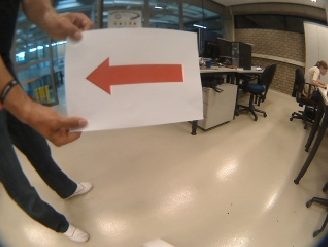

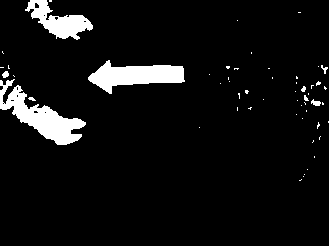

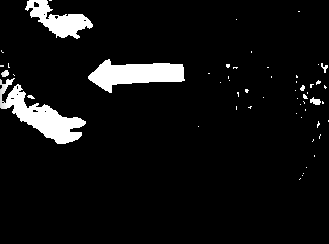

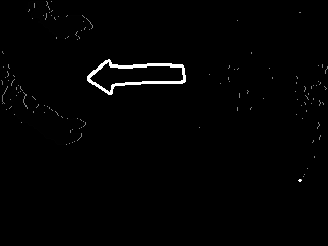

The arrow processing only is performed at T-crossings, since they are only useful at these crossings. The arrow processing algorithm is performed with the OpenCV package and is performed in several steps, which are given here: <br> | |||

* First the camera image, which is shown in Figure “Camera Image” is converted into an HSV image. | |||

* The different channels (Hue, Saturation, Value) are split. | |||

* The Hue and Saturation channels are thresholded with a lower and upper bound and the combination of these two channels are merged into 1 image, which is shown in Figure “Thresholded Image”. The lower and upper bound of the Hue channel are 100 and 150 respectively. The lower and upper bound of the Saturation channel are 100 and 210 respectively. | |||

* After the thresholding, the image is dilated and eroded, to close possible gaps in the arrow. The image after closing is shown in Figure “Closed Image”. | |||

* The contours can be detected of this binary image using the findContours function in OpenCV. | |||

* Every contour is checked whether it is an arrow using four key points, namely the most left, bottom, right and top point. | |||

* The arrow is highlighted and shown in Figure “Arrow detected”. The direction is returned to the algorithm. | |||

<table> | |||

<tr> | |||

<td>[[File:Pic_img_1_original.png|200px|thumb|Figure "Camera Image"]]</td> | |||

<td>[[File:Pic_img_2_thresholded.png|200px|thumb|Figure "Thresholded Image"]]</td> | |||

<td>[[File:Pic_img_3_dilate_erode.png|200px|thumb|Figure "Closed Image"]]</td> | |||

<td>[[File:Pic_img_4_processed.png|200px|thumb|Figure "Arrow detected"]]</td> | |||

</tr> | |||

</table> | |||

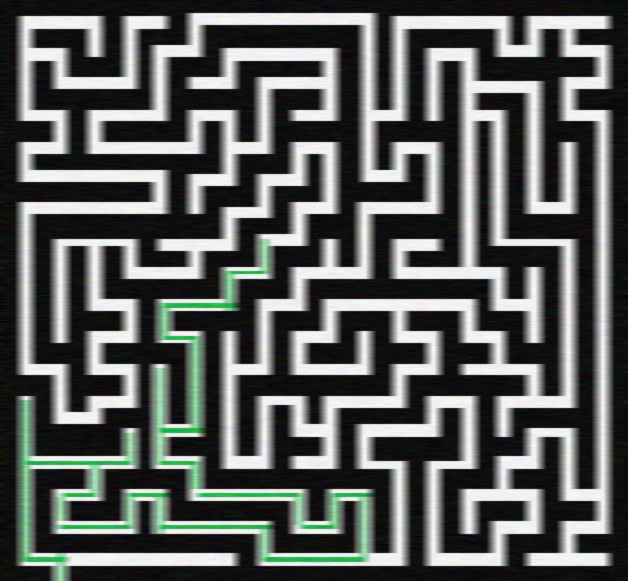

==Simulation results== | |||

Managed to solve various mazes in simulation. Make sure you take a look at the fourth video, triple loop maze solved! | |||

Movies: | |||

<table> | |||

<tr> | |||

<td>[[File:Movie4_emc01.png|200px|thumb|http://www.youtube.com/watch?v=tgHOjsxDUjk&feature=plcp]]</td> | |||

<td>[[File:F1.PNG|200px|thumb|https://www.youtube.com/watch?v=e1CASyzed3E&feature=plcp]]</td> | |||

<td>[[File:F2.PNG|200px|thumb|https://www.youtube.com/watch?v=qrbDDAJchS8&feature=plcp]]</td> | |||

<td>[[File:F4.PNG|200px|thumb|https://www.youtube.com/watch?v=XwLcYULewZ8&feature=plcp]]</td> | |||

</tr> | |||

</table> | |||

==Experimental results== | |||

<table> | |||

< | <tr> | ||

<td>[[File:Test1.png|200px|thumb|http://youtu.be/_g9S24xXes4]]</td> | |||

</ | <td>[[File:F3.PNG|200px|thumb|https://www.youtube.com/watch?v=HjW0VavlWrg&feature=plcp]]</td> | ||

</tr> | |||

</table> | |||

Latest revision as of 13:33, 26 June 2012

Group Members

| Name: | Student id: | Email: |

| Rein Appeldoorn | 0657407 | r.p.w.appeldoorn@student.tue.nl |

| Jeroen Graafmans | 0657171 | j.a.j.graafmans@student.tue.nl |

| Bart Peeters | 0748906 | b.p.j.j.peeters@student.tue.nl |

| Ton Peters | 0662103 | t.m.c.peters@student.tue.nl |

| Scott van Venrooij | 0658912 | s.j.p.v.venrooij@student.tue.nl |

Tutor: Jos Elfring

Progress

Week 1

- Installed all required software on our computers :)

- Everybody gained some knowledge about Unix, ROS and C++.

Week 2

|

| |

Week 5

- Map-processing is cancelled, since the used package (hector_mapping) creates incorrect maps.

- Now working on processing the laserdata to let the Jazz drive "autonomously"

- Writing an algorithm by using depth-first-search, this algorithm obtains the kind of crossing, the position of the Robot and the angle of the Robot and sends the new direction to the Robot

Week 6

Tasks for Week 7:

|

|

| ||

Week 7

|

|

| ||

Week 8

|

|

| ||

Week 9

|

|

|

| |

StrategyThe navigation node is used to steer the robot correctly in the maze. This navigating is done with use of the laser data. The laser data is first set into a certain variable. On the basis of this variable the robot can be navigated through the maze. The navigation is divided into 6 cases. The first case is the case in which the robot is driving trough a corridor. In this case the robot is kept in the middle of the corridor by checking and adjusting its angle with respect to the walls of the corridors. While driving trough it is constantly checked whether a corner of any type or a dead end can be recognized. Whenever a corner is recognized, the navigation switches to case 2. In case 2 the recognized corner or dead end is labeled as one of seven possible corner-types. This corner-type can be a corner left or right, one of three possible T-junctions, a cross-over or a dead end. These corner-types all get a number to identify them easily: In case 4 the robot makes a turn or does nothing. This is dependant of the direction in which the robot has to go. If the algorithm node publishes as direction to go straight ahead, the robot will not do anything in case 4 and will immediately switch to case 5. On the other hand, if the algorithm publishes to go to the left or right, the robot will make a turn of +(1/2)π radials or -(1/2)π radials respectively. After the turn is completed, the navigation switches to case 5. In case 5 the robot starts driving forward again until it reaches the corridor. When the corridor is reached, the navigation switches back to case 1. Case 6 is the case in which the navigation goes, when the robot is driving towards a dead end. In this case only direction is possible and that is backwards. So after driving to center of the dead end, which is at half the distance of a corridor of the dead end, the robot will make a turn of π radials. After the turn is completed, the navigation switches back to case 1. Detection corners Determine CornerTypeIf the robot arrives at a crossing, a specific algorithm is triggered to determine the type of the crossing. The algorithm determines the cornerType which use of three variables (as can also be seen in the left figure):

Based on these three booleans, the cornerType can be determined using some logical operators. The pseudo code algorithm to detect the sideCorners is given below: loop over specific range of laser data points:

if we look at the left side:

if distance[i] > distance[i+1] AND distance[i]/distance[i+1] > ratioTreshold:

Do some extra checks

if extraChecks succeed then return true end

end

end

if we look at the right side:

if distance[i] < distance[i+1] AND distance[i]/distance[i+1] > ratioTreshold:

Do some extra checks

if extraChecks succeed then return true end

end

end

Find FrontCorners to determine reference PhiFinding these 90 degrees front corners is used in the following states:

The figure on the right illustrates the working of the detection method. In short, the algorithm iterates over a specif domain of laser-points. At each point, it checks the angle between the previous and following laserpoint around its vertical axis. If this angle is more or less 90 degrees, a 90 degrees angle has been found on the x,y position of the base. The algorithm in pseudocode is given below: loop over specific range of laser data points

Determine coordinates of each point relative to the robot

Base = coordinates[i]

Vector1 = coordinates[i-offset]-coordinates[i]

Vector2 = coordinates[i+offset]-coordinates[i]

Normalize vectors

innerProduct = innerProduct of vectors Vector1 and Vector2

if innerProduct is close to zero:

// Possible 90 degrees angle at coordinates[i]

Do some extra checks to make sure coordinates[i] is at a 90 degrees angle

if extra checks are true:

return the x and y value of the 90 degrees angle

end

end

return that no corner of 90 degrees is found

Controller The forward velocity is set to a constant velocity (v = 0.1) under all circumstances, except when making a turn. AlgorithmArrow detectionThe arrow processing only is performed at T-crossings, since they are only useful at these crossings. The arrow processing algorithm is performed with the OpenCV package and is performed in several steps, which are given here:

Simulation resultsManaged to solve various mazes in simulation. Make sure you take a look at the fourth video, triple loop maze solved! Movies: Experimental results

|

|||||||