Embedded Motion Control 2015 Group 11: Difference between revisions

| (11 intermediate revisions by 2 users not shown) | |||

| Line 256: | Line 256: | ||

=== Map Saving=== | === Map Saving=== | ||

Because of the limitation of time, we did not finish this part. | |||

=== Door Detection === | === Door Detection === | ||

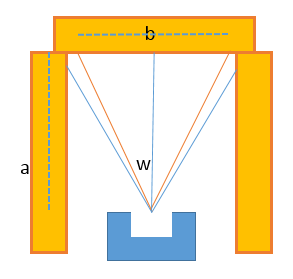

First consider the door is in the left/right side. Once the return value from the laser sensor on 45 deg experiences an increasing for about 30cm, like the green line and blue line in the diagram at c point, there may be exists a door. Hence, check the differences between the laser return value at this side from 45 deg to 90 deg. Such as the thin blue line in the diagram. Check the difference is negative or positive. Once the differences change from negative to positive, such as the orange line and red line in the diagram, there should exists a door. Calculate the degree of the response laser, and from this, because the width of the road 2a has already know, from triangle function, the length of b can be calculated as bellowing: <br> | |||

Assume the angle of the return signal changing from positive to negative is w deg, which means that the angle between this return laser to the straight road is 90 - w deg. Also, as noticed before, in 45 deg, there exists a return value gap, the gap value can be calculated by d. The return value which just before the gap is e. Then, the length of the door can be calculated as: <math> b=\frac{a}{tan(90-\omega)}-\frac{(d+e)}{\sqrt{2}}+\frac{d}{\sqrt{2}} </math> | |||

Also the width of the door is <math> \frac{d}{\sqrt{2}}</math> . The diagram is shown as bellowing: | |||

[[File:door.png|center|500 px]] <br> | |||

The calculation in right side is as same as left side.<br> | |||

Then think of the door in the dead end. Same as before, calculate the diff between each laser sensor, once there exists a change from positive to negative, or from negative to positive, the angle of the return sensor can be set as w. Once there exists to changes in about 60 deg, there exists a dead end or a door, stop the robot and bleep during this time. The width of the door is the width of the route, and the distance between robot and door can be calculated as bellowing: | |||

[[File:door 2.png|center|400 px]] <br> | |||

Hence, the width of the door is as same as the road, b, and the distance from robot to the door can be calculated as bellowing:<math> a=\frac{{\frac{b}{2}}}{tan(\omega)} </math> | |||

Hence, the door can be detected. | |||

<br> | |||

== Result and Evaluation == | == Result and Evaluation == | ||

| Line 268: | Line 283: | ||

=== Real Competition === | === Real Competition === | ||

The evaluation of the maze competition:<br> | The evaluation of the maze competition:<br> | ||

As putted previously, there was a problem with the initialization of our program so that it did not perform well in the final competition. There are some differences between the simulator and the real robot which are not understood. In the real test, we found sometimes the laser data | As putted previously, there was a problem with the initialization of our program so that it did not perform well in the final competition. There are some differences between the simulator and the real robot which are not understood. In the real test, we found sometimes the laser data is some random numbers, making the computations corrupted. Every time we ensured that there was new data available before updating the variables but it still didn't work. During the last test, the tutor tried to find the problem for us but there was no result. After thinking in the past few days, we think a possible approach is to store the laser data in memory by our own and update it whenever new laser data is available. We can compare the difference between consequent laser data and set a threshold to decide if discard the new collected laser data or not. In addition, a median filter is applied to the laser data to suppress the effect of noises. For future work, we first need to refine our code and make it more readable since now we have many functions and it is somehow complicate. Second, perfect the structure of the node used to store the map and accelerate our DP algorithm. | ||

=References= | =References= | ||

Latest revision as of 11:12, 27 June 2015

Group Members

| Name | Student number | |

|---|---|---|

| Changjie Guan | 0927222 | c.guan@student.tue.nl |

| Yang Xu | 0918430 | y.xu.1@student.tue.nl |

| Bolin Zhao | 0925735 | b.zhao@student.tue.nl |

| Zhe Zhao | 0815651 | z.zhao@student.tue.nl |

| Fei Chen | 0923761 | f.chen@student.tue.nl |

| Yizhou Ye | 0925611 | y.ye@student.tue.nl |

| Yiran Liu | 0843177 | y.liu.1@student.tue.nl |

Introduction

The aim of this course is to implement embedded software design to let a robot (PICO) navigate autonomously throughout a maze. PICO is a telepresence robot from Aldebaran. The PICO is programmed to find its way through a maze, without user intervention. The knowledge of C-programming (C++), ROS, and control technology is needed. The environment of the maze is unknown in advance. Besides crossings, intersections, dead ends, and the exit, the maze also contains doors that automatically open and close. On this wiki page, the approach, concept, program design and chosen strategies and algorithms which are presented and explained.

Design Process

Task and goal: Find way out of maze as fast as possible.

Requirement

The requirement analysis base on the problem statement and divide in different level of important as ‘must’,’should’,’could’ and ‘wont'

- the robot must can get out of the maze

- the robot must take action by itself

- the robot should get out of the maze as soon as possible

- the robot won’t hint the wall

Functions

Ensure all the requirements can be meeted.

- Move forward and backward, turn around

- Detect the doors

- Detec the distance between the front wall and the robot

- Remember the path and and the door, grid the path and store it with 0 or 1, representing the pass available or not respectively.

- Each step, select a direction where is available. If the current grid has not been recorded, record it and move on. If the current gird has been recorded as well as its surrounding grids, randomly choose a grid from recorded and move on. If all the maze has been searched, the robot must go out the maze.

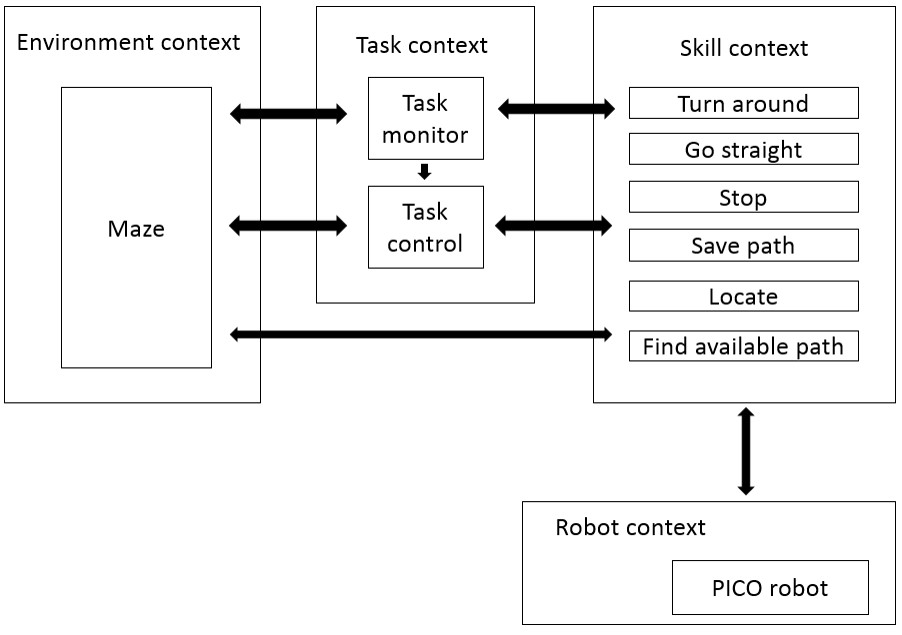

Activity model

Behavioural model

Behavioural model:

- Environment context

- Task context

- Task monitor

- Task control

- Skill context (updated to latest version)

- Find minimum value of sensor (“index”)

- Save data (including map, robot situation, etc.)

- Calculate the location and situation of robot (“update”)

- Align the robot coordinate system (Feedback angle)

- Turn around & J-turn

- Judge the robot is in the center of the road

- Check and judge cross

- Check exit (Leave the maze)

- Check dead end (J-turn)

- Make the robot back to the center of the road (Feedback location)

- Robot context

Structure Model

Function block – Behaviour and Structure

Ten functions:

- Find minimum value of sensor (“index”)

- Judge the robot is in the center of the road

- Save data (including map, robot situation, etc.)

- Check and judge cross

- Calculate the location and situation of robot (“update”)

- Make the robot back to the center of the road (Feedback location)

- Align the robot coordinate system (Feedback angle)

- Turn around & J-turn

- Check exit (Leave the maze)

- Check dead end (J-turn)

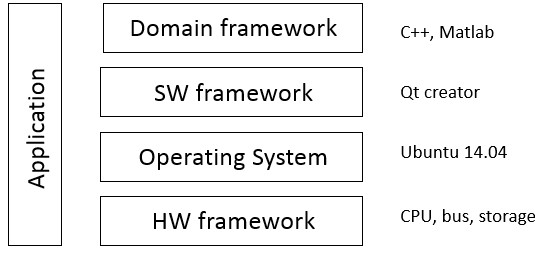

To be more specific, the structure model will be decoupled into Composition Pattern (5Cs). Below we describe how the 5Cs have been decoupled in the design of the depicted components.

5Cs:

- Computation: this is the core of a system's functionality, and it implements the domain knowledge that provides the real added value of the overall system.

- Configuration: this brings data towards the computational components that require it, with the right quality of service. These aspects can be configured by setting the values of a set of parameters definded in the components implementation.

- Coordination: Coordination components are in charge of starting and stopping the computational components of a composite.

- Communication: Communication deals with the exchange of data.

- Composition: the design concern of Composition models the coupling that is always required between the four "Cs" explained above.

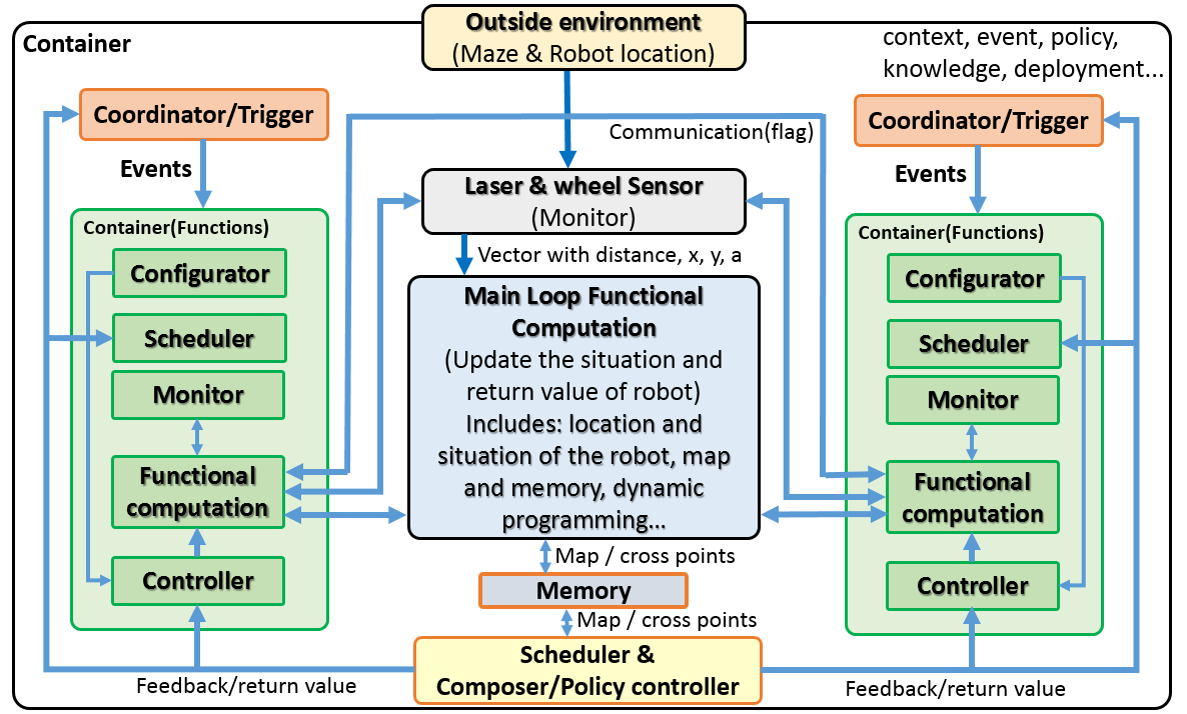

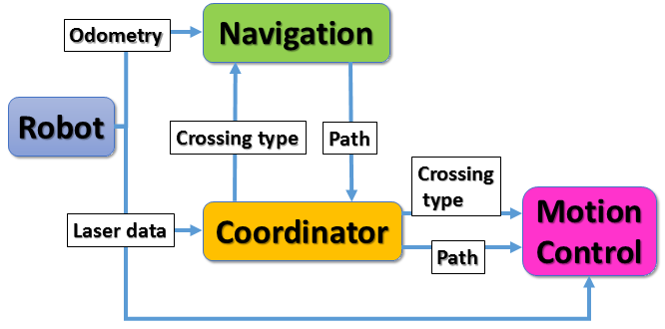

Firstly, the structure model is obtained in system level. Secondly, the lower level composition patterns of the coordinator and the computation blocks (Navigation and Motion control) are shown respectively.

This is the highest level of the composition pattern in our design. In this composition pattern, Navigation and Motion Control are two computation components. The blue arrays show the communication between each components. The coordinator gets the setting values from configurator which is not shown in the below figure. The robot gives two kinds of data, laser data and odometery, as the inputs of the system. The laser and odometry data goes to coordinator and navigation block respectively. Navigation block save and update the map. In the other words, it saves all the data about the passed path, crossings (type, location, decision) and doors (location, decision). According to the data above, Navigation calculates the next optimal path, which becomes the input of the coordinator. The coordinator analyses the crossing type and also passes the possible next path to the motion control block.

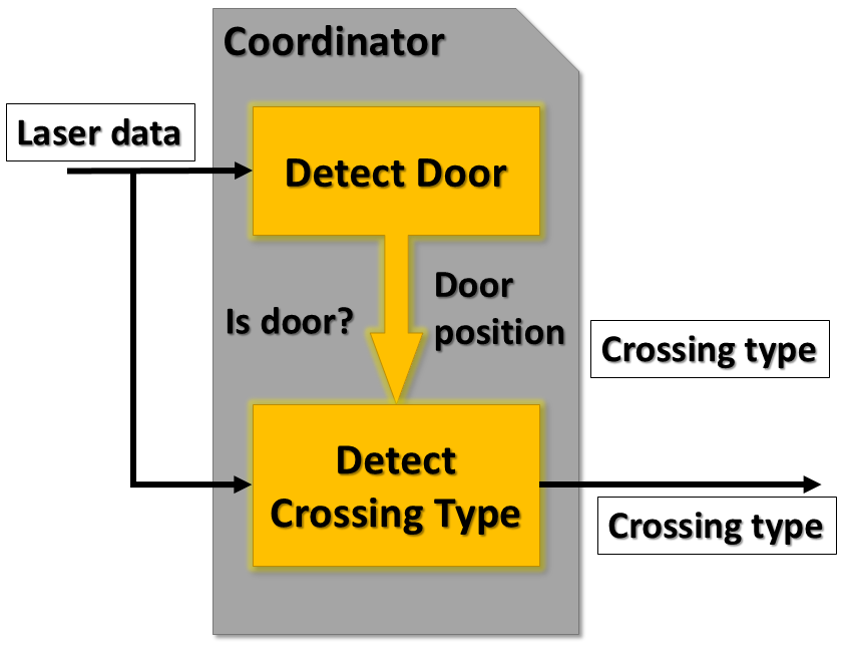

There are two computation components in the coordination block. One is the algorithm to detect the door, and the other is to detect the crossing type. Both computation components use laser data (distances and angles) as the input. For detecting door, the algorithm generates result that tells if there is a door and the location of the door. It is possible and reasonable to treat doors as crossings, and the only difference between doors and real crossing is the detecting algorithm. In this case, we could make full use of the door detecting computation component. With the information of the door (for example, it is on the left/right/front, and the distance between robot and the door), the algorithm of detecting crossing type could analyse the respective crossing type. In a result, crossing type detecting not only check the crossings, but also transfer the data of doors into crossings. Again, in this diagram, all the arrays represent the communication. There are coordinator and configurator for the whole coordinator block, but not shown in the figure.

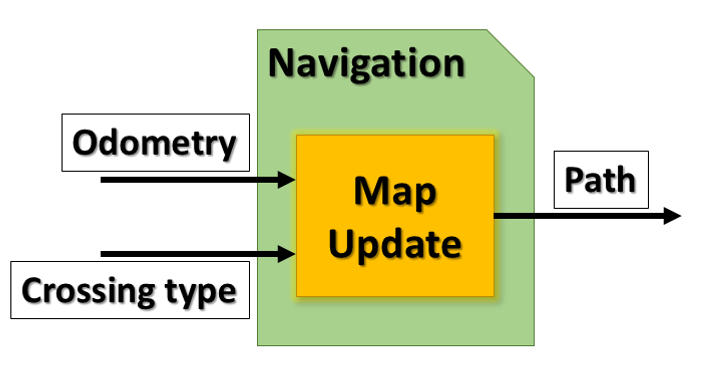

The main computation component here is to save the passed path and update the map. It uses the odometry data and crossing type to memorize the location of the passed door/crossing, the crossing type, and the chosen decision (eg. turned left/right/go straight). The other function of "map update" block is to generate an optimized path. For example, when the robot meets one intersection, the algorithm will check if it passed this intersection before. If so, the decision to be made is simply different from that of last time. If not, the algorithm will randomly choose one direction to turn.

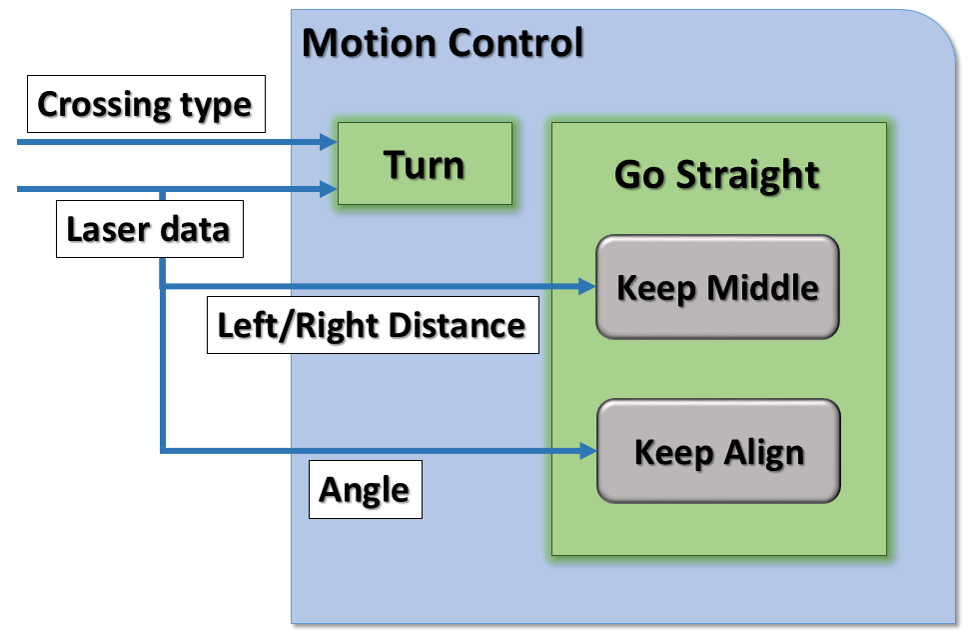

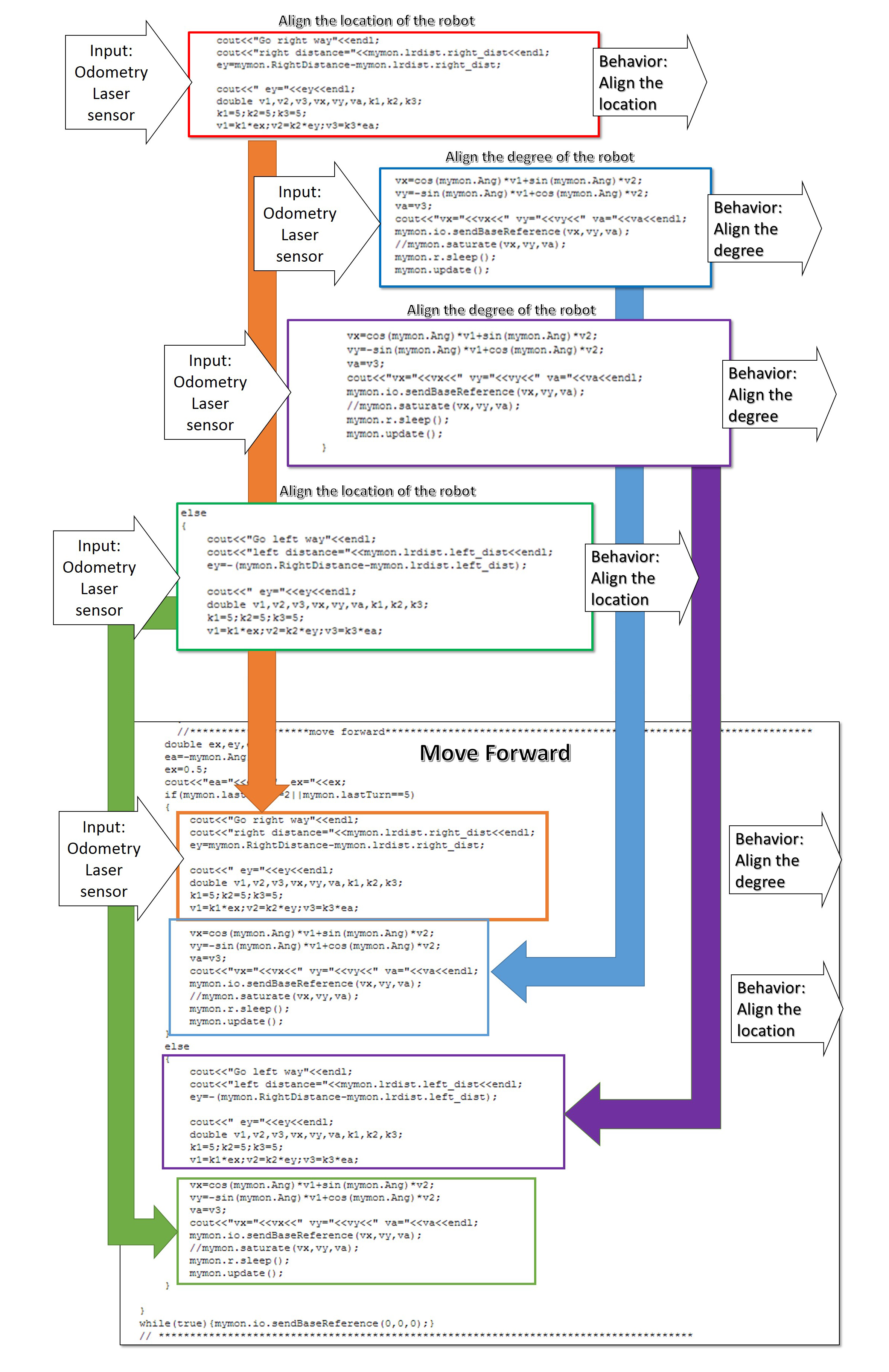

The motion control block has two computation components which are "Turn" and "Go straight".

The "Turn" block receives the crossing type as an input from the coordinator. The other input is the laser data from the robot. According to different types of crossing, different algorithms are used to deal with turning into the desired direction. In order to adjust the turning angle and wheel speed, a PD controller is applied.

With regard to the "Go straight" block, Only the laser data is used as the input. There are two functions within this block, which are keeping middle and keeping align. To ensure the robot to be always in the middle of the corridor, the algorithm compares the distance to left or right wall with the limit (minimum distance to the wall). To achieve this goal, a P controller is used to adjust the distance from the robot to the wall. Similarly, to keep align, the input used is the angle from the laser data. A P controller is designed to adjust the angle that the robot is facing forward.

Schedule

May. 13: Corridor competition.

June. 10: Final presentation.

June. 17: Maze competition.

Pico test schedule

- Test 1: May. 21

At the initial time, we design the robot to walk straight and try to keep it in the middle of the passage. This method make the robot crush to the wall due to that we do not take into account the small error case by the odometry. There will be a turning when we want the robot walk straight.

- Test 2: May. 29

We improve the walk straight function. Now the robot will turn itself during walking to avoid crush. But the speed is relatively low. While this phenomenon is cased by the fact that the errors of two side is small and the robot will try to keep middle all the time. Now we want the robot turn left or right in the certain kinds of cross base on the flags we set. If the robot follow any flag, it should turn to a desired position. But in fact it fails.

- Test 3: June. 4

Check the data during running we find that the measurement of the distance from left and right. Then we fix this problem and the robot now can follow the commander of the flags to stop to the middle of the exit of cross as we want.

- Test 4: June. 11

We replace some function by new method. The old method only works in a simple environment. If we take into account the complex maze, the robot will lost itself e.g. the 180 degrees turning. The new method will lead the robot just base on one side of the wall (left or right ). At the same time the steering do not base on the flags (the kinds of the cross) any more. Now the robot will turn base on the center of the cross detected by the laser sensor during turning.

- Test 5: June. 15

There is a fatal error during this test. In fact we find it last time. The initial data from the laser senor seems cannot readable (or not correct) at the very beginning after the program is runing. This will case the problem that the program cannot go into the main function loop. We still need to fix this.

Corridor Competition

Our PICO failed at the corridor competiton. Fortunately, we get some feedback from it:

- The “move ahead” function was executed in open loop, with too accurate drift control.

- The robot crashed into the wall.

- The robot slide was not controlled, the judgement condition code needs to be rebuilt.

After some effort in improving code, during the second time test, our program finally works.

|

Algorithms

Moving forward or backward

Compare left and right distance, combined with P controller to make the PICO in the middle of corridor; Check the angle of the PICO.

Turning

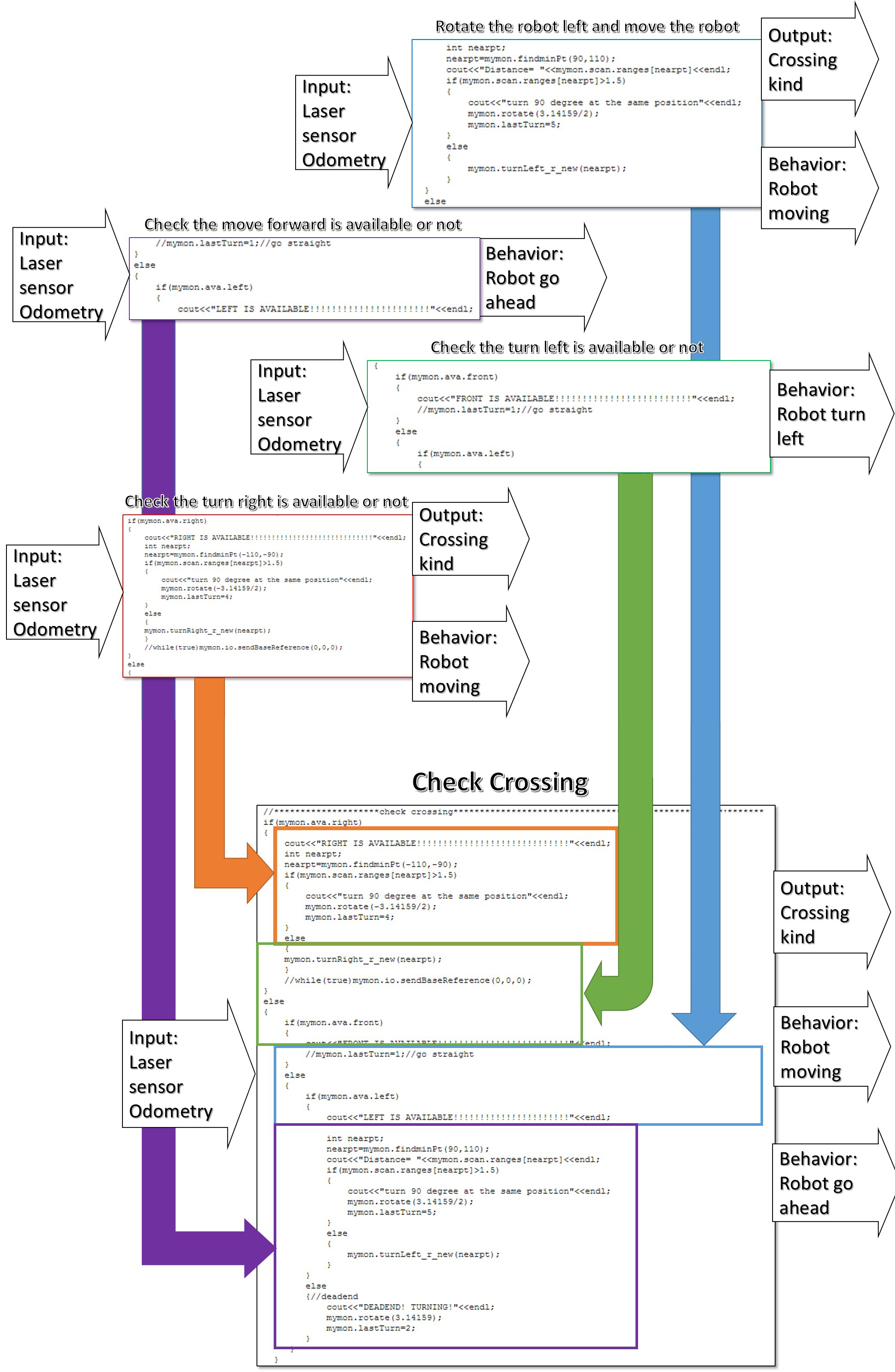

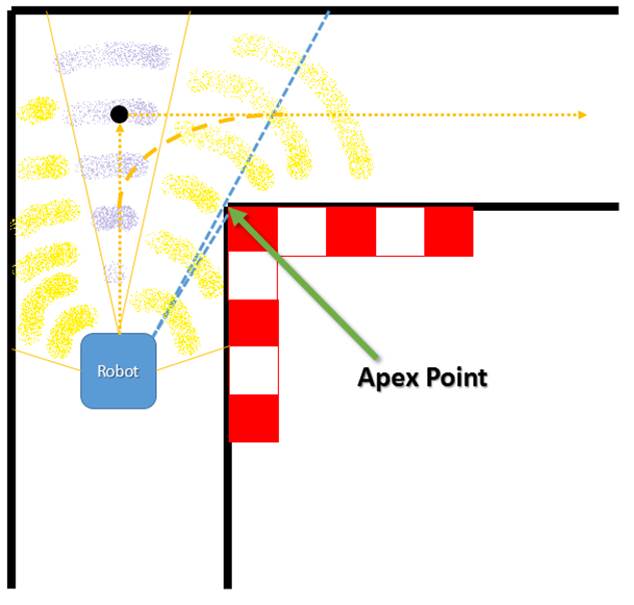

During the first several weeks, the judgement of corner is defined as bellowing:

Input: light sensor

Output:

- The corner exists or not

- The current location (based on robot axis system) to the corner central point distance

- The width of the corner (return value is half of the width)

|

|

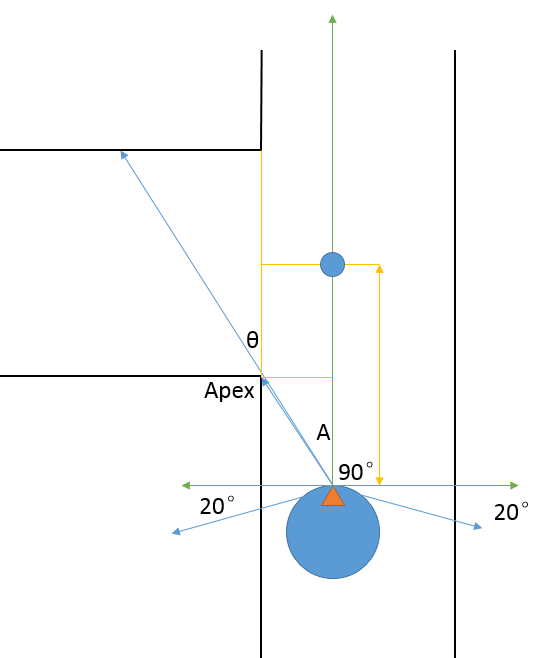

Assume the sensor range is 220 degree, from the -20 degree, the return value is put in the array, the sampling time is 1000 times.

To calculated the return value between 40 degree to 80 degree, calculate the differences. If the return value is bigger than threshold, it can be judged that this side has corner.

Also return the return value of the number, (which return value has a big differences) and then get the diff value between the return value.

After that, use the triangle function to calculate the width of the corner, and the distance from the current location to the center of the corner.

This function can be written in the loop, each loop will correct the distance from local location to the center of the corner.

Presentations

- First presentation (week 3): File:First presentation.pdf

First presentation is about: Task-skill-motion system architecture and the composition pattern.

- Second presentation (week 6): File:Second presentation.pdf

Second presentation is more about: Coding and Composition Pattern.

- Final presentation (week 8): File:Final presentation 11.pdf

Maze Competition

Function Description

Front Road Detected

The different kind of front roads will be set as different flags. The robot will base on those flags to determine the next action to handle the cross.

Take into account the left, right and straight way, the all the situation the robot will meet is:[math]\displaystyle{ 2^3=8 }[/math]

Put all the situation into flags as

| Left | Right | Straight | Flag |

|---|---|---|---|

| Open | Open | Open | 1 |

| Close | Open | Open | 2 |

| Open | Close | Open | 3 |

| Open | Close | Close | 4 |

| Close | Close | Open | 5 |

| Close | Close | Open | 6 |

| Close | Open | Close | None(keep going) |

| Close | Close | Close | 7(Dead-end) |

| Left | Right | Flag |

|---|---|---|

| Open | Open | 8 |

| Open | Close | 9 |

| Close | Open | 10 |

From the Table 2 for more detail condition, if the robot has been faced the wall, those flag may be fail to get due to the sensor cannot detected the corner in front of it. So some more flags are introduced into this mode combine with the judgement of the front wall.

Map Saving

Because of the limitation of time, we did not finish this part.

Door Detection

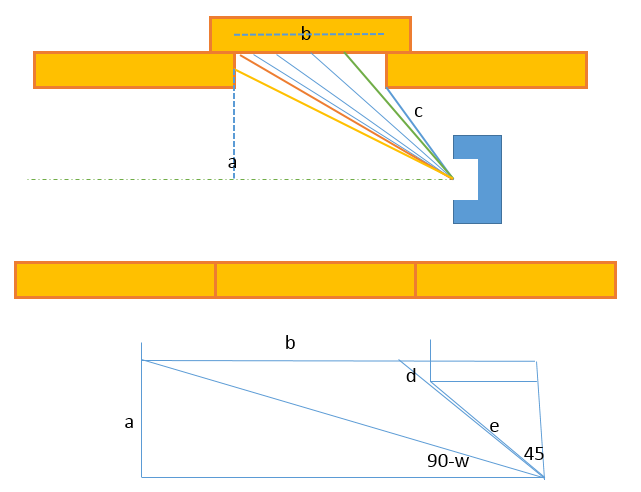

First consider the door is in the left/right side. Once the return value from the laser sensor on 45 deg experiences an increasing for about 30cm, like the green line and blue line in the diagram at c point, there may be exists a door. Hence, check the differences between the laser return value at this side from 45 deg to 90 deg. Such as the thin blue line in the diagram. Check the difference is negative or positive. Once the differences change from negative to positive, such as the orange line and red line in the diagram, there should exists a door. Calculate the degree of the response laser, and from this, because the width of the road 2a has already know, from triangle function, the length of b can be calculated as bellowing:

Assume the angle of the return signal changing from positive to negative is w deg, which means that the angle between this return laser to the straight road is 90 - w deg. Also, as noticed before, in 45 deg, there exists a return value gap, the gap value can be calculated by d. The return value which just before the gap is e. Then, the length of the door can be calculated as: [math]\displaystyle{ b=\frac{a}{tan(90-\omega)}-\frac{(d+e)}{\sqrt{2}}+\frac{d}{\sqrt{2}} }[/math]

Also the width of the door is [math]\displaystyle{ \frac{d}{\sqrt{2}} }[/math] . The diagram is shown as bellowing:

The calculation in right side is as same as left side.

Then think of the door in the dead end. Same as before, calculate the diff between each laser sensor, once there exists a change from positive to negative, or from negative to positive, the angle of the return sensor can be set as w. Once there exists to changes in about 60 deg, there exists a dead end or a door, stop the robot and bleep during this time. The width of the door is the width of the route, and the distance between robot and door can be calculated as bellowing:

Hence, the width of the door is as same as the road, b, and the distance from robot to the door can be calculated as bellowing:[math]\displaystyle{ a=\frac{{\frac{b}{2}}}{tan(\omega)} }[/math]

Hence, the door can be detected.

Result and Evaluation

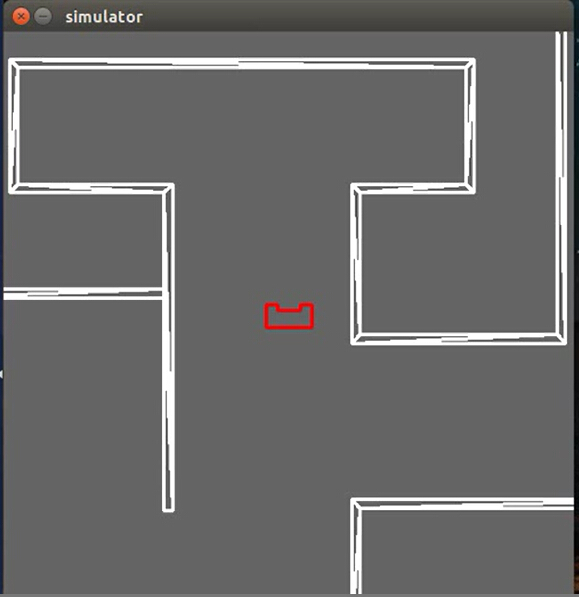

Simulation Result

From the simulation result, it seems works well without considering door.

|

Real Competition

The evaluation of the maze competition:

As putted previously, there was a problem with the initialization of our program so that it did not perform well in the final competition. There are some differences between the simulator and the real robot which are not understood. In the real test, we found sometimes the laser data is some random numbers, making the computations corrupted. Every time we ensured that there was new data available before updating the variables but it still didn't work. During the last test, the tutor tried to find the problem for us but there was no result. After thinking in the past few days, we think a possible approach is to store the laser data in memory by our own and update it whenever new laser data is available. We can compare the difference between consequent laser data and set a threshold to decide if discard the new collected laser data or not. In addition, a median filter is applied to the laser data to suppress the effect of noises. For future work, we first need to refine our code and make it more readable since now we have many functions and it is somehow complicate. Second, perfect the structure of the node used to store the map and accelerate our DP algorithm.

References

- Bruyninckx, Herman, et al. "The BRICS component model: a model-based development paradigm for complex robotics software systems." Proceedings of the 28th Annual ACM Symposium on Applied Computing. ACM, 2013.

- Klemm, William Robert. Atoms of Mind: The" ghost in the Machine" Materializes. Springer Science & Business Media, 2011.

- Herman Bruyninck, 6th European PhD School in Robotic Systems. University of Leuven, Eindhoven University of Technology, 2014.

- Prassler, Erwin, et al. "The use of reuse for designing and manufacturing robots." White Paper (2009).

- Vanthienen, Dominick, Markus Klotzbücher, and Herman Bruyninckx. "The 5C-based architectural Composition Pattern: lessons learned from re-developing the iTaSC framework for constraint-based robot programming." JOSER: Journal of Software Engineering for Robotics 5.1 (2014): 17-35.

- Nikunj R. Mehta, and Nenad Medvidovic. "Composition Of Style-Based Software Architectures From Architectural Primitives". Department of Computer Science University of Southern California, 2004.