Embedded Motion Control 2015 Group 7: Difference between revisions

| (285 intermediate revisions by 9 users not shown) | |||

| Line 9: | Line 9: | ||

|- | |- | ||

! scope="row" | Student 1 | ! scope="row" | Student 1 | ||

| Camiel Beckers || 0766317 || c.j.j.beckers@student.tue.nl | | [http://www.linkedin.com/profile/view?id=342078144&authType=NAME_SEARCH&authToken=f-Ak&locale=nl_NL&trk=tyah&trkInfo=clickedVertical%3Amynetwork%2Cidx%3A1-1-1%2CtarId%3A1433324854375%2Ctas%3Acamiel%20becker Camiel Beckers] || 0766317 || c.j.j.beckers@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 2 | ! scope="row" | Student 2 | ||

| Brandon Caasenbrood || 0772479 || b.j.caasenbrood@student.tue.nl | | [https://www.linkedin.com/profile/view?id=388280019&authType=NAME_SEARCH&authToken=3-nV&locale=nl_NL&trk=tyah&trkInfo=clickedVertical%3Amynetwork%2Cidx%3A1-1-1%2CtarId%3A1433325801717%2Ctas%3Abrandon%20ca Brandon Caasenbrood] || 0772479 || b.j.caasenbrood@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 3 | ! scope="row" | Student 3 | ||

| Line 18: | Line 18: | ||

|- | |- | ||

! scope="row" | Student 4 | ! scope="row" | Student 4 | ||

| Falco Creemers || 0773413 || f.m.g.creemers@student.tue.nl | | [https://www.linkedin.com/profile/view?id=387576768&authType=NAME_SEARCH&authToken=Mnt5&locale=nl_NL&trk=tyah&trkInfo=clickedVertical%3Amynetwork%2Cidx%3A1-1-1%2CtarId%3A1433325863422%2Ctas%3Afalco%20creemer Falco Creemers] || 0773413 || f.m.g.creemers@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 5 | ! scope="row" | Student 5 | ||

| Diem van Dongen || 0782044 || d.v.dongen@student.tue.nl | | [https://www.linkedin.com/profile/view?id=388384726&authType=NAME_SEARCH&authToken=_Fqk&locale=nl_NL&srchid=3882800191433326139320&srchindex=1&srchtotal=1&trk=vsrp_people_res_name&trkInfo=VSRPsearchId%3A3882800191433326139320%2CVSRPtargetId%3A388384726%2CVSRPcmpt%3Aprimary%2CVSRPnm%3Atrue Diem van Dongen] || 0782044 || d.v.dongen@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 6 | ! scope="row" | Student 6 | ||

| Mathijs van Dongen || 0768644 || m.c.p.v.dongen@student.tue.nl | | [https://www.linkedin.com/profile/view?id=366033350&authType=NAME_SEARCH&authToken=YvWP&locale=nl_NL&trk=tyah&trkInfo=clickedVertical%3Amynetwork%2Cidx%3A1-1-1%2CtarId%3A1433325907818%2Ctas%3Amat Mathijs van Dongen] || 0768644 || m.c.p.v.dongen@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 7 | ! scope="row" | Student 7 | ||

| Line 30: | Line 30: | ||

|- | |- | ||

! scope="row" | Student 8 | ! scope="row" | Student 8 | ||

| Rik van der Struijk || 0739222 || r.j.m.v.d.struijk@student.tue.nl | | [https://www.linkedin.com/profile/view?id=224622675&authType=NAME_SEARCH&authToken=CGj3&locale=nl_NL&trk=tyah&trkInfo=clickedVertical%3Amynetwork%2Cidx%3A1-1-1%2CtarId%3A1433325953407%2Ctas%3Arik%20van%20der%20struijk Rik van der Struijk] || 0739222 || r.j.m.v.d.struijk@student.tue.nl | ||

|- | |- | ||

! scope="row" | Student 9 | ! scope="row" | Student 9 | ||

| Ward Wiechert || 0775592 || w.j.a.c.wiechert@student.tue.nl | | [https://www.linkedin.com/profile/view?id=241112595&authType=NAME_SEARCH&authToken=SUKa&locale=nl_NL&srchid=3883847261433325982878&srchindex=1&srchtotal=2&trk=vsrp_people_res_name&trkInfo=VSRPsearchId%3A3883847261433325982878%2CVSRPtargetId%3A241112595%2CVSRPcmpt%3Aprimary%2CVSRPnm%3Atrue Ward Wiechert] || 0775592 || w.j.a.c.wiechert@student.tue.nl | ||

|- | |- | ||

|} | |} | ||

=''' | <!-- | ||

='''Update Log'''= | |||

<p>28-04: Updated the page with planning and initial design.</p> | <p>28-04: Updated the page with planning and initial design.</p> | ||

<p>29-04: Updated page with initial design PDf (not yet "wikistyle")</p> | <p>29-04: Updated page with initial design PDf (not yet "wikistyle")</p> | ||

| Line 45: | Line 46: | ||

<p>13-05: Evaluating second experiment, corridor competition and discuss initial software</p> | <p>13-05: Evaluating second experiment, corridor competition and discuss initial software</p> | ||

<p>14-05: Evaluating corridor competition, update initial ideas wrt detection, movement etc. </p> | <p>14-05: Evaluating corridor competition, update initial ideas wrt detection, movement etc. </p> | ||

<p>16-05: Update Movement.</p> | |||

<p>20-05: Evaluatue third experiment.</p> | |||

<p>24-05: Update Design, Detection, Movement, SLAM and overall Planning.</p> | |||

<p>27-05: Correct design, detection, movement, SlAM etc. to last design.</p> | |||

<p>03-06: Revised composition pattern.</p> | |||

--> | |||

<!-- | |||

='''Planning'''= | ='''Planning'''= | ||

'''Week | '''Week 7: 1 June - 7 June''' | ||

<ul > | <ul> | ||

<li> | <li> 2 June: Revised Detection, correcting Hough Transform and improving robustness </li> | ||

<li> | <li> 2 June: Back-up plan considering homing of PICO (driving based on potential field) </li> | ||

<li> | <li> 3 June: SLAM finished, functionality will be discussed (will it or will it not be used?) </li> | ||

<li> 4 June: New experiment: homing, SLAM, .... </li> | |||

<li> Working principle of decision maker </li> | |||

<li> Add filter to Detection: filter markers to purely corners </li> | |||

</ul> | </ul> | ||

'''Week | '''Week 8: 8 June - 14 June''' | ||

<ul | <ul> | ||

<li> '''10 June: Final design presentation - design and "educational progress" </li> | |||

<li>''' | |||

</ul> | </ul> | ||

'''Week | '''Week 9: 15 June - 22 June''' | ||

<ul > | <ul> | ||

<li> | <li> '''17 June: Maze Competition </li> | ||

</ul> | </ul> | ||

--> | |||

='''Introduction'''= | ='''Introduction'''= | ||

| Line 87: | Line 82: | ||

<p>For the course Embedded Motion Control the "A-MAZE-ING PICO" challenge is designed, providing the learning curve between hardware and software. The goal of the "A-MAZE-ING PICO" challenge is to design and implement a robotic software system, which should make Pico or Taco be capable of autonomously solving a maze in the robotics lab. </p> | <p>For the course Embedded Motion Control the "A-MAZE-ING PICO" challenge is designed, providing the learning curve between hardware and software. The goal of the "A-MAZE-ING PICO" challenge is to design and implement a robotic software system, which should make Pico or Taco be capable of autonomously solving a maze in the robotics lab. </p> | ||

<p>PICO will be provided with | <p>PICO will be provided with low level software (ROS based), which provides stability and the primary | ||

functions such as communication and movement. Proper software must be designed to complete the | |||

said goal. Various models are used to design the behavior and structure of the software, see | |||

[http://cstwiki.wtb.tue.nl/index.php?title=Embedded_Motion_Control_2015_Group_7#Software_Design Software Design]. The final software design, including Git-links, is also discussed in this section. The details of the various software functions can be found in the [http://cstwiki.wtb.tue.nl/index.php?title=Embedded_Motion_Control_2015_Group_7#Software_subsystems Software Subsystems]. At the end of | |||

the page, the Maze challenge is discussed and an overall conclusion is presented.<br><br><br><br><br><br></p> | |||

='''Design'''= | ='''Software Design'''= | ||

The software design is discussed in the following subsections. The | |||

requirements and functions of the software are listed and two different | |||

models are used to characterize the behavior and structure of the software. | |||

Note that the actual functionality of the software is not discussed in this | |||

section. More detailed information about the individual software functions | |||

can be found in [http://cstwiki.wtb.tue.nl/index.php?title=Embedded_Motion_Control_2015_Group_7#Software_subsystems Software subsystems]. | |||

=='''Requirements'''== | =='''Requirements and functions'''== | ||

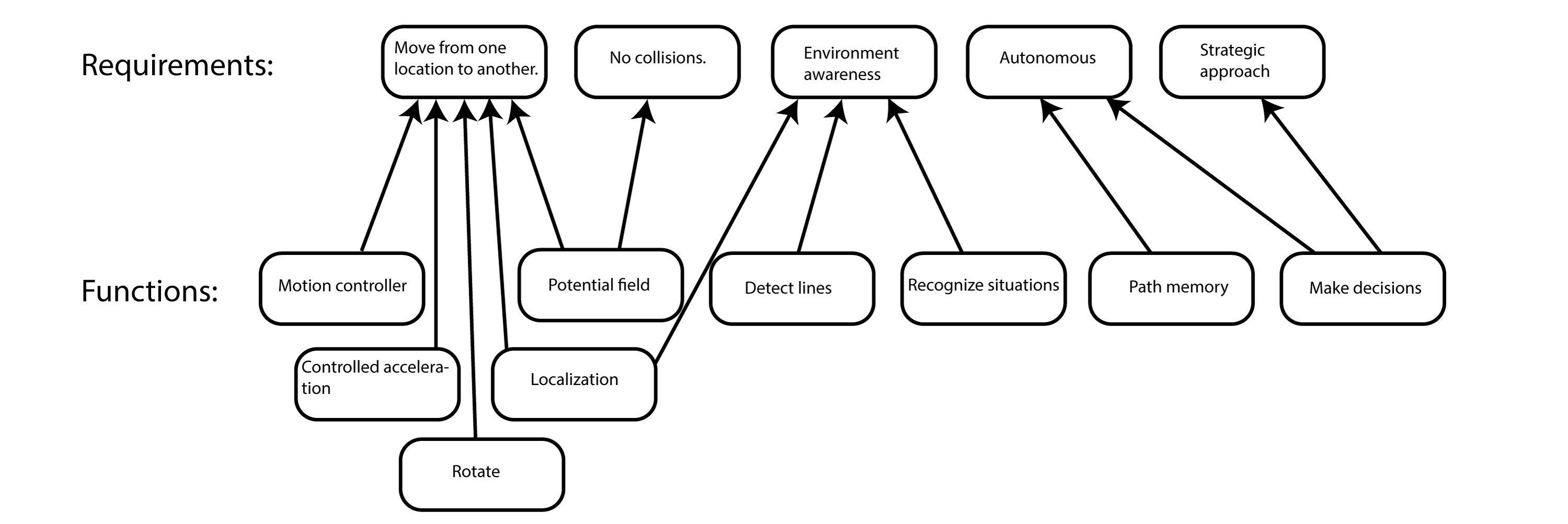

<p> | [[File:req_func.png|thumb|left|alt=Functions and requirements.|Functions and requirements]] | ||

autonomously navigate trough a maze, and being able to solve this maze the following requirements | <p>The requirements specify what expectations the software should fulfill. In order for the robot to autonomously navigate trough a maze, and being able to solve this maze the following requirements are determined with respect to the software of the robot. It is desired that the software meets the following requirements:</p> | ||

are determined with respect to the software of the robot. It is desired that the software meets the | |||

following requirements:</p> | |||

<ul > | <ul > | ||

<li>Move from | <li>Move from one location to another</li> | ||

<li>No collisions</li> | <li>No collisions</li> | ||

<li>Environment awareness: | <li>Environment awareness: interpreting the surroundings</li> | ||

<li>Fully autonomous: not depending on human interaction</li> | <li>Fully autonomous: not depending on human interaction</li> | ||

<li>Strategic approach</li> | <li>Strategic approach</li> | ||

</ul> | </ul> | ||

<p>To satisfy the said requirements, one or multiple functions are designed and assigned to the requirements. The figure describes which functions are considered necessary to meet a specific requirement.</p> | |||

<p>To satisfy the said requirements, one or multiple functions are designed and assigned to the requirements. | <!-- | ||

== Components and Specifications == | |||

<p>Components, both hardware and software, are used to realize the | |||

functions. To realize these functions, | |||

the following components can be assigned to each function respectively, see | |||

Figure 1.2. In which both | |||

hardware and software components are considered.</p> | hardware and software components are considered.</p> | ||

<p>'''[Image will follow soon]'''</p> | <p>'''[Image will follow soon]'''</p> | ||

<p>If the requirements should be satisfied within certain boundaries, desired specifications should be | <p>If the requirements should be satisfied within certain boundaries, | ||

assigned to the components | |||

must at least be able to. The initial hardware specifications correspond with a certain accuracy of | desired specifications should be | ||

a part of the robot. The initial software specifications mainly correspond with the amount of data | assigned to the components realizing the functions. The specifications | ||

describe what the components | |||

must at least be able to. The initial hardware specifications correspond | |||

with a certain accuracy of | |||

a part of the robot. The initial software specifications mainly correspond | |||

with the amount of data | |||

which is stored and applied for faster calculations.</p> | which is stored and applied for faster calculations.</p> | ||

| Line 146: | Line 151: | ||

the width of the robot | the width of the robot | ||

|- | |- | ||

| Software: 2D location to wall coordinates || data point separation 0.25 times | | Software: 2D location to wall coordinates || data point separation 0.25 | ||

times | |||

the width of the robot | the width of the robot | ||

|- | |- | ||

| Line 169: | Line 176: | ||

|} | |} | ||

<p>Note that the accuracy of the left and right motor in centimeters is based on the accuracy of the | <p>Note that the accuracy of the left and right motor in centimeters is | ||

encoders. Due to unknown measurements of the maze, an initial accuracy 1 [cm] for the motors is | based on the accuracy of the | ||

applied. The data point | encoders. Due to unknown measurements of the maze, an initial accuracy 1 | ||

that a sufficient amount of data is present to for instance recognize corners or a door that recently | [cm] for the motors is | ||

opened. Larger data | applied. The data point separation of 0.25 times the width of the robot is | ||

result in an increase of accuracy and a decrease in processing time (more data to be taken into account | initially determined such | ||

for certain decisions and processes).</p>--> | that a sufficient amount of data is present to for instance recognize | ||

corners or a door that recently | |||

opened. Larger data separation should result in a loss of accuracy, whilst | |||

a smaller separation should | |||

result in an increase of accuracy and a decrease in processing time (more | |||

data to be taken into account | |||

for certain decisions and processes).</p> | |||

--> | |||

=='''Behaviour model - The task-skill-motion pattern'''== | |||

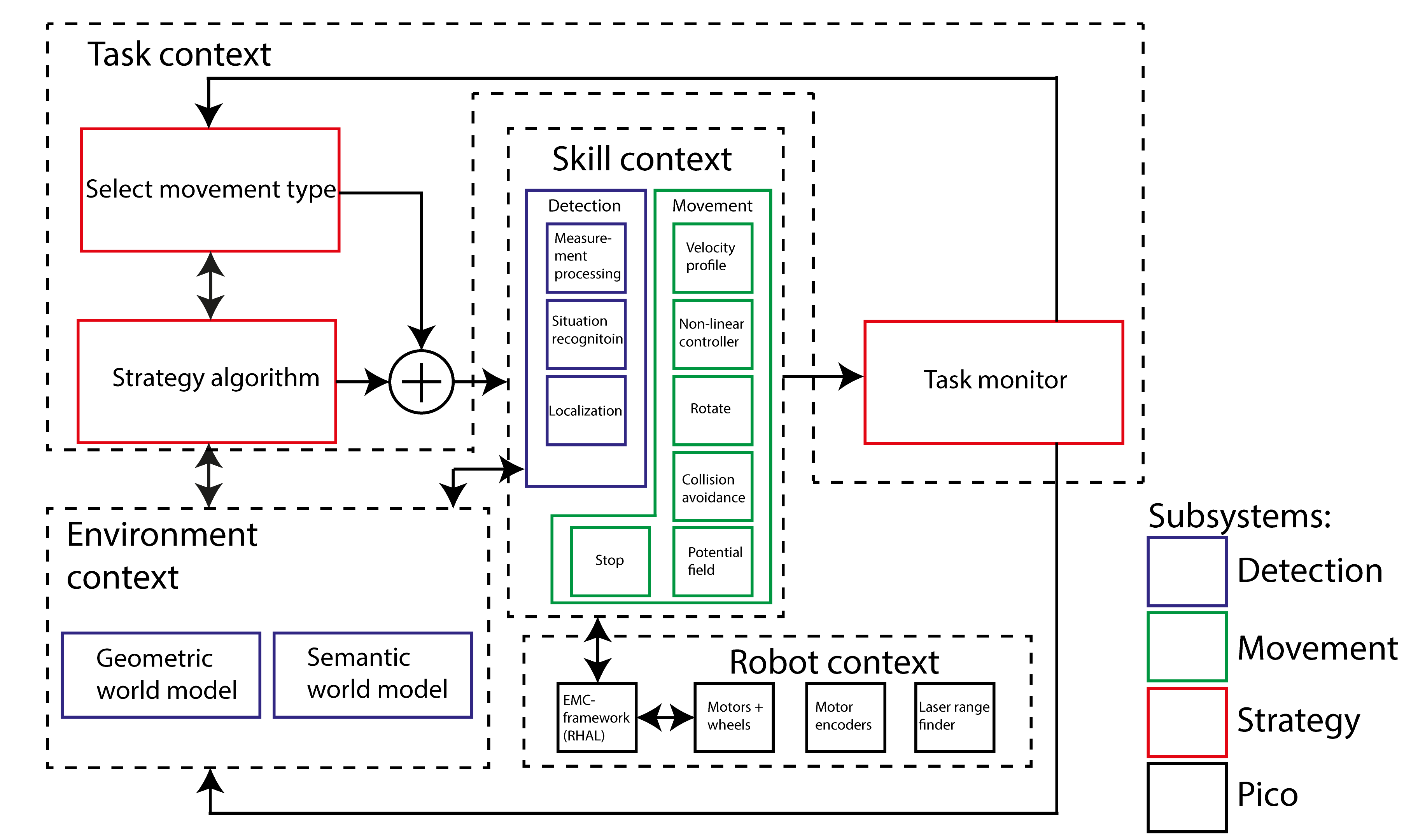

[[File:Behaviour_V3.png|thumb|left|alt=Interface.|Interface]] | |||

<p>In order to further specify the behavior of the software, a task-skill- | |||

motion pattern is constructed. This pattern describes the communication | |||

between the specified contexts and functions necessary to perform the task. | |||

The contexts and functions are represented by blocks in the interface and | |||

correspond with the requirements and functions previously discussed.</p> | |||

<p>The | <p>The robot context describes the lower level abilities and properties of | ||

the robot. In this case motors + wheels, the motor encoders and the laser | |||

range finder are mentioned. The EMC-framework controls this hardware and | |||

functions as an abstraction layer for the rest of the skills.</p> | |||

<p> | <p>The environment context describes the ways the robot stores the information it knows about its surroundings. A local geometric world model is used to store the location of corners in the field of view of PICO. The semantic world model, which entails the 'words' PICO understands. This is used by the detection subsystem (measurement processing) to tell the other subsystems what's going on. For example it knows the words: door, dead-end, T-junction, cross-junction, open, closed. This is perfect for the high-level communication used by strategy. It is also used to store choices made at a junction which the robot has already visited. Note that both these world models are implicit and temporary. There is no explicit world model in the final version of the software.</p> | ||

the | |||

the | |||

<p>The task context describes the control necessary for the robot to deal | |||

with certain situations (such as a corner, intersection or a dead end). The | |||

<p>The | skill context contains the functions which are considered necessary to | ||

realize the task. Note that the block `decision making` is considered as | |||

feedback control, it acts based on the situation recognized by the robot. | |||

However `Strategic Algorithm` corresponds with feed-forward control, based | |||

on the algorithm certain future decisions for instance can be ruled out or | |||

given a higher priority.</p> | |||

<p> | <p>Furthermore, the skill context is divided into two subsections, which | ||

correspond to two of the subsections in the composition pattern, and | |||

contains all the skills the robot possesses.</p> | |||

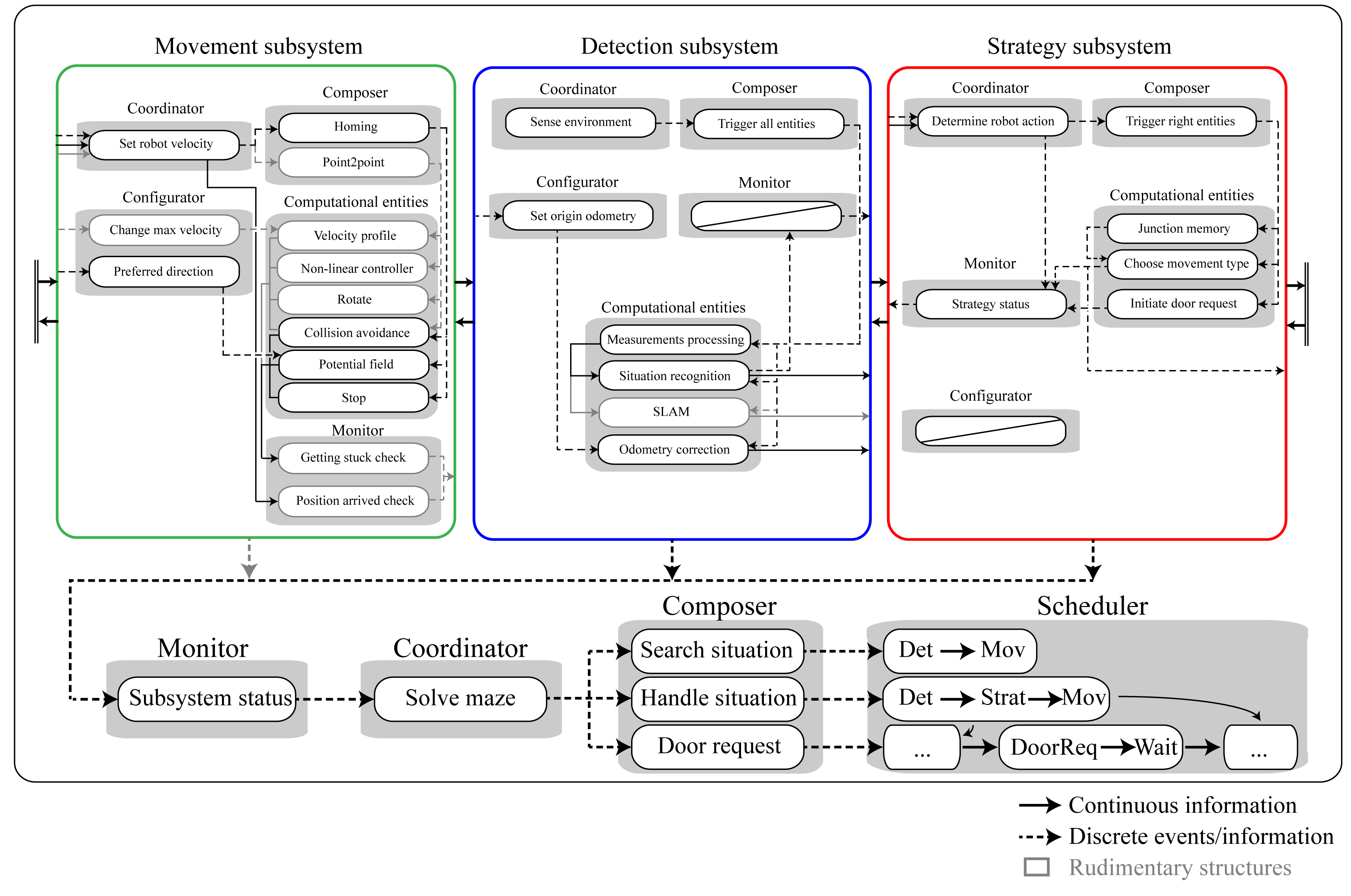

<p>The | =='''Structure model - The composition pattern'''== | ||

<p>The composition pattern, which functions as the structural model for the code, decouples the behavior model functionalities into the 5C’s. The code is divided into these five separate roles:</p> | |||

<p> | |||

<ul> | |||

<li>Computational entities which deliver the functional, algorithmic part | |||

of the system.</li> | |||

<li> Coordinators which deal with event handling and decision making in | |||

the system.</li> | |||

<li>Monitors check if what a function is supposed to accomplish is also | |||

realized in real life.</li> | |||

<li>Configurators which configure parameters of the computational | |||

entities.</li> | |||

<li>Composers which determine the grouping and connecting of computation | |||

entities.</li> | |||

</ul></p> | |||

<p>The | <p>The complete system is split into three subsystems, consisting of the movement subsystem, the detection subsystem and the strategy subsystem. The operation of each of these subsystems is explained in the sections below. The way in which these three work together is determined by the monitor, coordinator and composer of the main system.</p> | ||

[[File:Compositionpattern_V14.png|thumb|left|alt=Composition Pattern.|Composition Pattern]] | |||

==='''Main'''=== | |||

<p>The main task of the main file is to schedule the different subsystems correctly. Initially, only the detection subsystem and the movement subsystem are active. In this state, the robot moves forward by use of a potential field, while situations are searched. Once the detection subsystem recognizes a certain situation, the strategy subsystem is scheduled into the main loop. This subsystem makes a decision regarding the direction of the robot, based on the information provided by the detection subsystem. New orders are then send to the movement subsystem, which determines and sets the robot velocity. In the case that the strategy indicates a door request should be called, this is also scheduled in the main file. The implementation of these functionalities can be found in [http://cstwiki.wtb.tue.nl/index.php?title=Embedded_Motion_Control_2015_Group_7#Code Code].</p> | |||

=''' | [[File:comp_V14-Detection.png|thumb|left|alt=The detection subsystem|The detection subsystem]] | ||

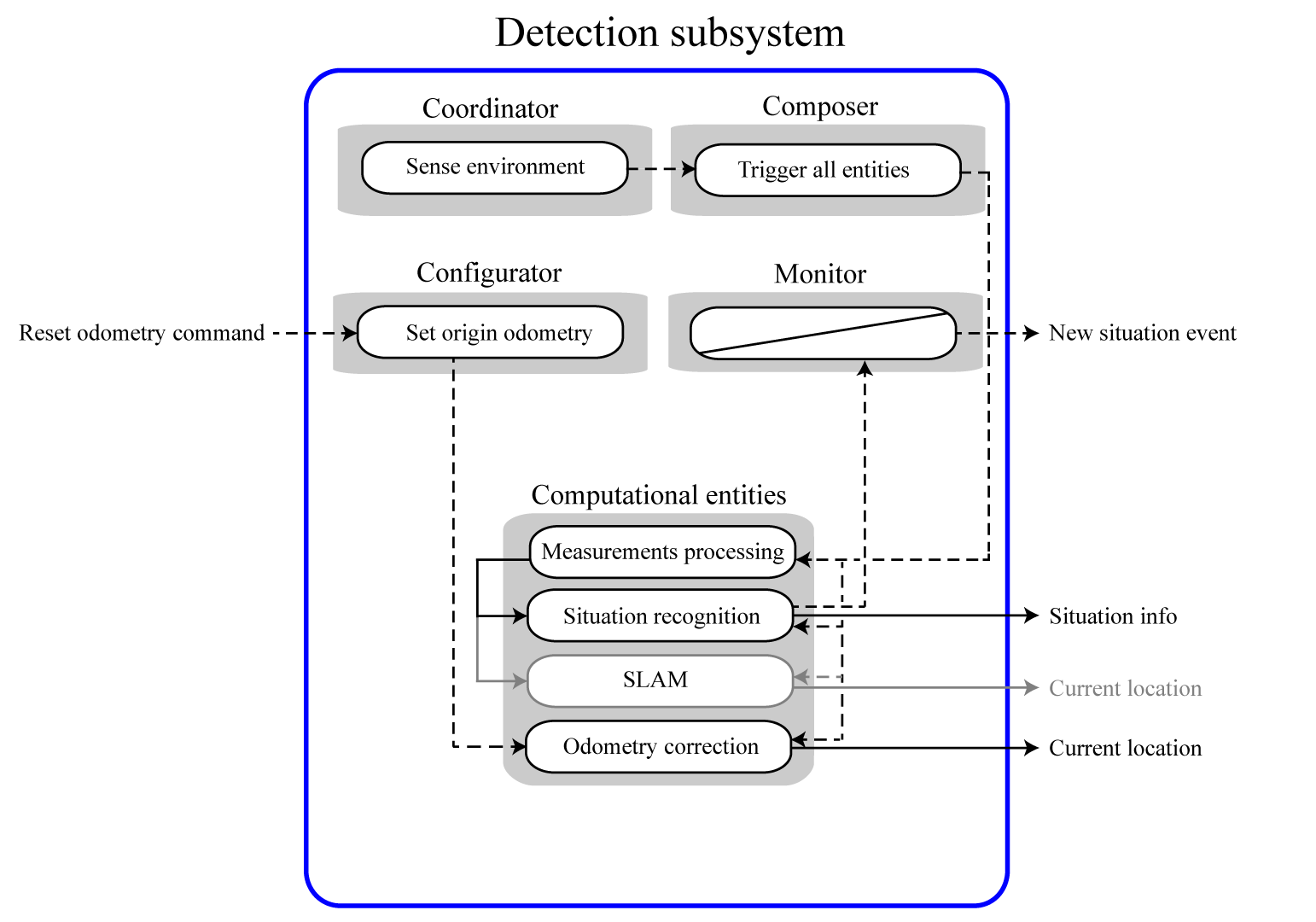

<p>The | ==='''Detection'''=== | ||

<ul> | <p>The detection subsystem is used to process the data of the laser sensors. Its main function is to observe the environment and send relevant information to the other subsystem and the main coordinator. | ||

<li> | The coordinator of the detection subsystem receives the unprocessed data from the EMC-framework | ||

and corrects the odometry data for a possible offset. As commissioned by the coordinator the composer then triggers all computational entities, which are then carried out consecutively. The computational entities | |||

<li> | are shortly explained, and further elaborated in the "Software Design".</p> | ||

<li> | <ul> | ||

<li> | <li>Measurement Processing: using Hough transform to convert LFR data to | ||

lines and markers.</li> | |||

<li>Situation Recognition: detecting corners, corridors and doors based | |||

on lines obtained by the Measurement Processing function.</li> | |||

<li>SLAM: The markers, as determined by measurement processing, are used in combination with our self developed, extended Kalman filter to localize the robot in a global coordinate system. (not used in final version of the code)</li> | |||

<li>Odometry correction: sending out the odometry data with respect to a certain origin. The location of this origin can be reset by the configurator.</li> | |||

</ul> | </ul> | ||

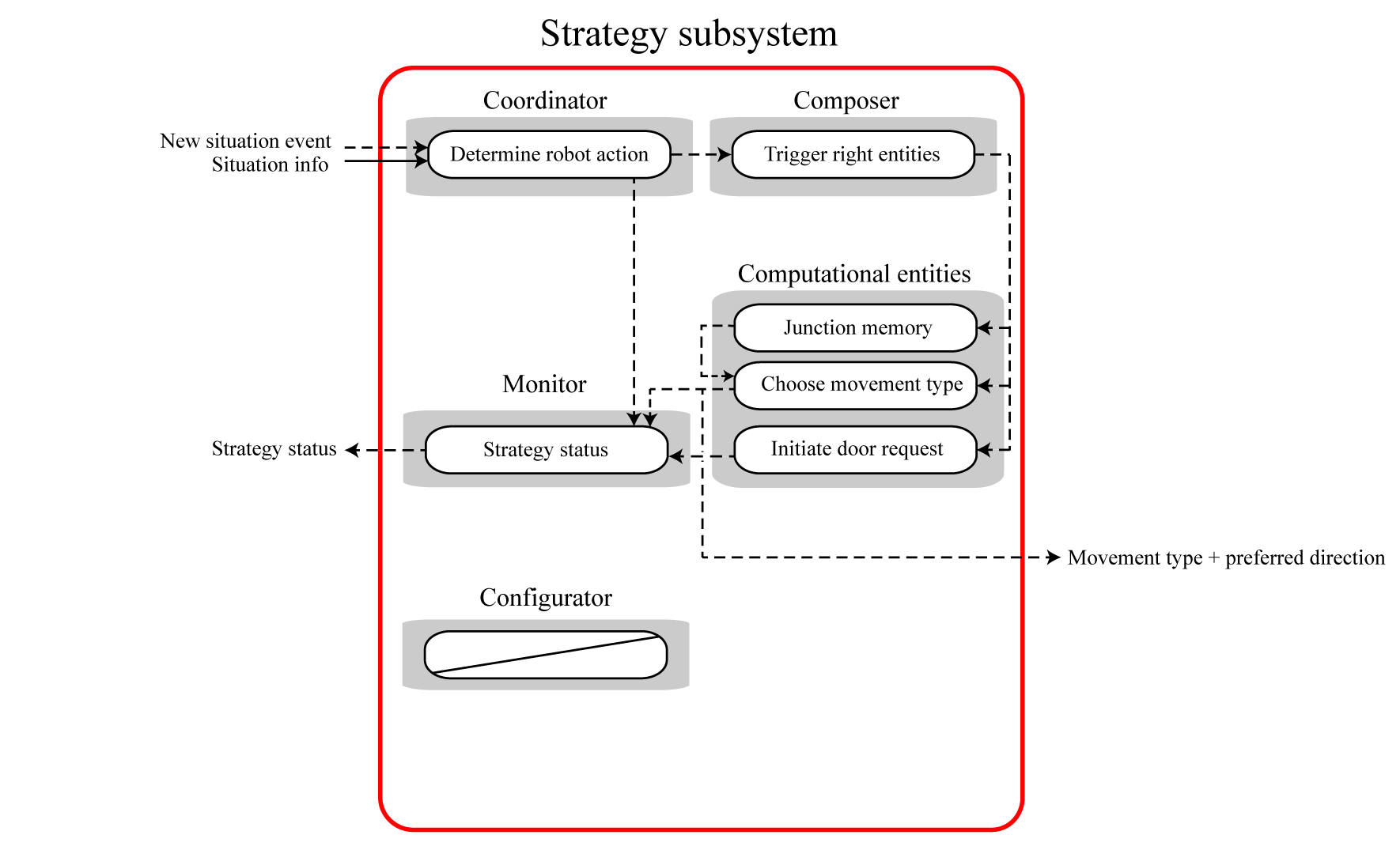

<p>The | [[File:comp_V14-Strategy.png|thumb|left|alt=The strategy subsystem.|The strategy subsystem]] | ||

==='''Strategy'''=== | |||

<p>When the detection subsystem recognizes a situation (junction, T-junction or cross-junction) a discrete | |||

event is send to the coordinator of the strategy subsystem which then will make a decision regarding the direction the robot should move, based on the available options (as detected by the detection subsystem) and junction memory. The junction memory records which of the junctions of the situation have already been investigated and turned out to be unsuccessful (dead-end). | |||

Based on this information one of the two movement types is chosen, see movement subsection, to guide to robot in the right direction. The movement type + direction is sent to the movement subsection. | |||

The strategy subsystem also has the possibility to request the opening of doors, when it encounters a dead end.</p> | |||

[[File:comp_V14-Movement.png|thumb|left|alt=The movement subsystem.|The movement subsystem]] | |||

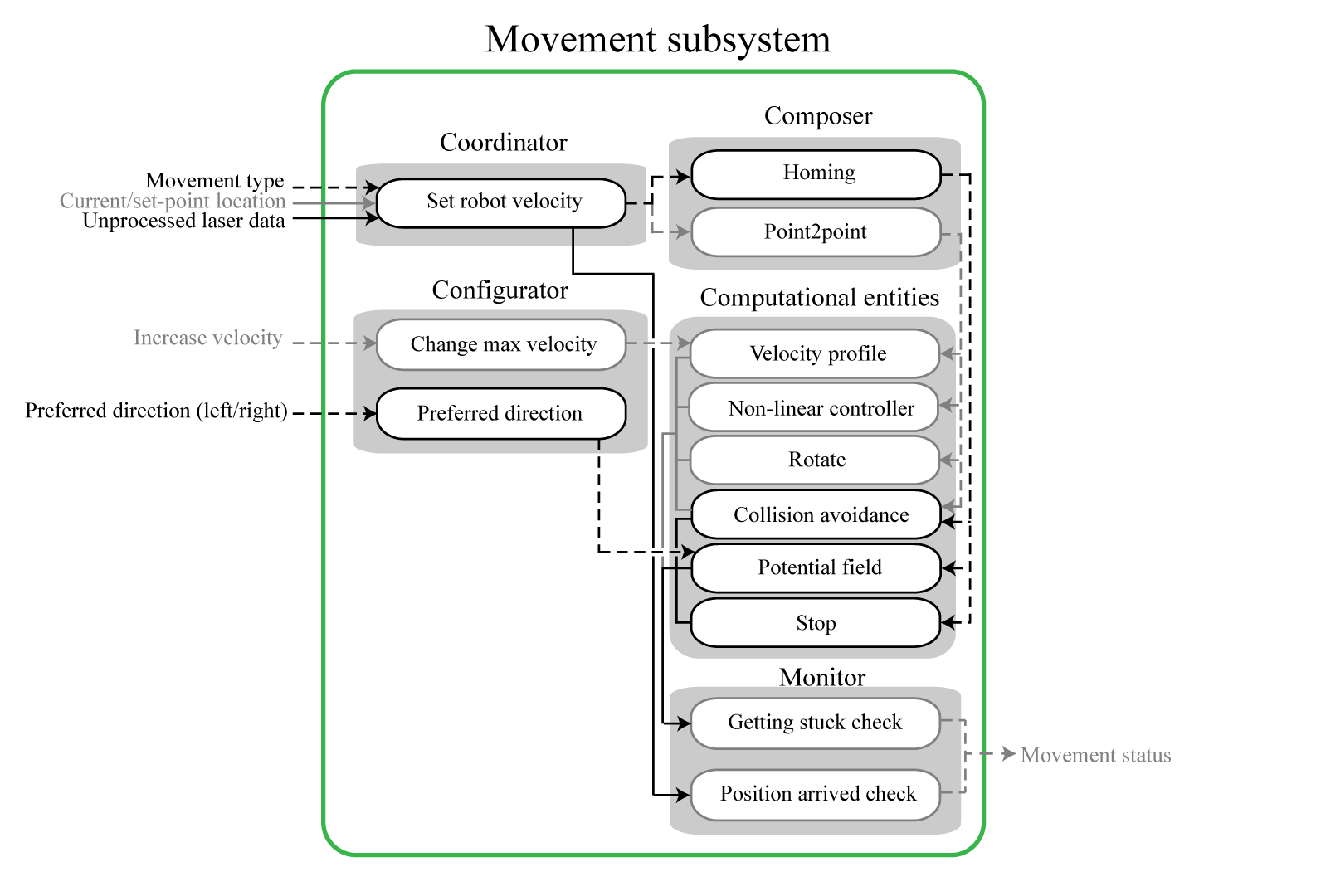

==='''Movement'''=== | |||

<p>The movement subsystem controls the movement of the robot, based on the input it receives from the | |||

other subsystems. This subsystem has two different composers; point2point and homing. In theory, the strategy subsystem has the ability to switch between these two composers. However, in the final version of the code, only the homing composer is used, due to the fact that the point2point algorithm requires the absolute location of the robot (SLAM). | |||

</p> | |||

<p>If used, point2point composer receives the current location of the robot, from detection, and a set-point location, from strategy, both in absolute coordinates. A non-linear controller is used to guide the robot from the current location to this set-point location. Due to the disability of this controller to move to a location directly behind the robot, a separate rotate functionality is included. The magnitude of the robot velocity is controlled separately according to a certain profile in order to limit the robot acceleration and reduce wheel slip. The maximum velocity of the profile can also be set by other subsystems. Lastly, a small potential field is used to avoid collisions. For more information about the individual computational entities, see [http://cstwiki.wtb.tue.nl/index.php?title=Embedded_Motion_Control_2015_Group_7#Movement_2 Subsystems - Movement]. Unfortunately, due to the fact that absolute localization (SLAM) appeared to difficult within the given time frame, this point2point composer is not used in the final version of the code and is therefore marked gray in the composition pattern.</p> | |||

<p>The second composer, homing, uses a large potential field to move the robot through the maze, without knowing the absolute position of the robot. In the standard situation, the robot moves forward. However, a preferred direction, for instance 'left' or 'right' can be ordered by the strategy subsystem. Once again, a smaller, stronger potential field is used to avoid collisions. Also, a separate stop entity is implemented to let the robot wait before a door. | |||

</p> | |||

<p>The movement subsection also contains a monitor. If the point2point composer is used, this monitor can check if the set-point location is reached. The monitor can also check if the robot is stuck, based on the current robot velocity. Unfortunately, the output of this monitor is not used (by strategy) in the final version of the code and is therefore marked gray in the composition pattern.</p> | |||

<!-- OUDE MOVEMENT STUK!! | |||

The movement subsystem receives the current position of the robot, determined by the localization algorithm in the detection subsystem, together with the set point location, determined by the strategy subsystem, both in a global coordinate system. The movement subsystem also receives a boolean from the strategy subsystem, which indicates which of the two movement methods should be used. Based on this, the movement subsection selects one of the two composers.</p> | |||

<p>The first composer (Homing) uses a potential field to guide the robot in the right direction. An | |||

additional smaller, stronger potential field (collision avoidance) is used to make sure that collisions | |||

are avoided. These two entities determine the robot velocity together. Both potential fields use unprocessed laser data.</p> | |||

<p>The second composer (Point2point) mainly uses a non-linear controller to determine the robot velocity (in local x, y and theta-direction) so the robot will move from the current location (A) to | |||

the set-point location (B), while avoiding collisions with object. In total, this composer uses four | |||

computational entities. The composer and the corresponding computational entities are marked gray | |||

because they depend on detection’s ability to localize the robot (SLAM), and might therefore not be | |||

used in the final design. A non-linear controller is used to guide the robot from A to B, by controlling | |||

only the x and theta velocity. This makes for nice smooth corners. Due to the controllers disability to move backwards, an additional turning entitiy is included. The magnitude of this velocity is | |||

controlled by a certain velocity profile, which ensures a certain maximum acceleration. This reduces | |||

slip en thus increases the accuracy of the odometry data. The maximum velocity of this profile is | |||

set by the configurator of the subsystem, which operates based on events raised by other subsystems. | |||

For instance: strategy could indicate that the current location has already been visited, enabling the | |||

robot to move faster.</p> | |||

<p>The movement subsystem also uses the unprocessed laser data to construct a potential field in | |||

the near proximity of the robot. This potential field is used to redirect the movement of the robot | |||

if it gets too close to an object. This entity controls the x and y movement of the robot. The final | |||

velocity that is sent to the robot is the sum of the velocity determined by the non-linear controller | |||

and the collision avoidance algorithm.</p> | |||

<p>The movement monitor checks what the difference is between the point A and B and checks if | |||

the robot has already reached the set-point location (A = B). The monitor raises an event if the | |||

robot gets stuck in a certain location, while trying to reach an unreachable point B.</p> | |||

EINDE OUDE MOVEMENT STUK--> | |||

=='''Code'''== | |||

In this section the 'best' pieces of code are presented and connected to the composition pattern. The software design of the composition pattern is based on the lessons learned from the lectures of [http://people.mech.kuleuven.be/~bruyninc/ Herman Bruyninckx]. When we started using more and more of the C++ classes available functionalities, it became evident that the composition pattern could almost directly be mapped to our software structure. In our opinion the best developed peice of software/composition pattern is the Movement subsystem together with the overal communication between all the subsystems and strategy's connection with the main coordinator. Therefore we would like to mainly show all the connections between the subsystems and the main, while omitting long peices of code necessary for the computational entities. When interessted in our full code we would like to refer you to our 'master4' branch which had the most success during the maze challenge [http://roboticssrv.wtb.tue.nl:8800/emc07/emc7/tree/master4 git:master4]. | |||

We would like to point out that all of our .h and .cpp files have the same structure, this made it very easy to keep our code well organized and readable for our colleagues. Private variables were used to share variables between functions. Public getters and setters were used to access and change these private variables from outside the subsystem. Getters proved very usefull in sending information to other subsystems and monitoring. Setters proved usefull for recieving information as well as configurators. Constructors were used to set all the private variables to their default value at creation in the main file. | |||

'''Main''' | |||

Since it all begins in the main loop lets start there. The main's function is already explained in the composition pattern, so for the actual implementation in the software take a look at [https://gist.github.com/b1c796aab2a9a355a975 main.cpp]. We are proud of the connection with the strategy subsystem and how it helps schedule the main loop. The strategy subsystem is able to activate one of the three composers in the main via main's coordinator by sending its status via the strategy monitor. | |||

'''Detection''' | |||

The loop starts with the detection subsystem, it is necessary to first 'sense' your environment before taking any actions. The structure of the detection class/subsystem is set out in [https://gist.github.com/a802b10a3d6e4a043da2 detection.h]. With the actual implementation [https://gist.github.com/2c4aa10d0d826a58461c detection.cpp]. To improve the readability, the private variables of the slam subssytem were deleted in this snippet. We wish we had time to split the computational entities mprocessing into mprocessing and sitrec. We are proud on how this subsystem communicates with the other subsystems with our well designed configurators and monitors. The monitor allows for data flow control, it controls under what conditions a situation is send out. This is beneficial for the strategy subsystem since it will only be triggered when necessary and not for false or bad readings. The configurator allows for an odometry reset from anywhere in our software, now only used in the strategy subsystem when initiating an action. | |||

'''Movement''' | |||

When nothing 'special' has been sensed the movement subsystem is set to homing and will start driving. However it can recieve a movement 'type' from the strategy subsystem. The structure of the detection class/subsystem is set out in [https://gist.github.com/e03b96f78852f924c50b movement.h]. With the actual implementation [https://gist.github.com/4e1e6dce3a89d1bffe5a movement.cpp]. We are proud on how well we were able to map our composition pattern this subsystem. A clear coordinator and composer is available which select the available computational entities based on a recieved movement type. A monitor is used to check the status of the most important variables within the subsytem and is able to send this to any subsystem or the main. Whereas the configurator can be used to change the behaviour of some of the computational entities. Such as the maximum velocity is used in the velocity profile or the preferred direction when using homing (the potential field) to navigate around. | |||

'''Strategy''' | |||

Strategy is the brains of the software, when the detection subsystem has found something strategy is activated in the main. The structure of the strategy class/subsystem is set out in [https://gist.github.com/54a0af9286ebf54ec561 strategy.h]. With the actual implementation [https://gist.github.com/df4218b9149bfb66f720 strategy.cpp] (note: this is not the full code). We are proud on the fact how well this function communicates with the [https://gist.github.com/b1c796aab2a9a355a975 main.cpp]. It is able to keep itself turned on in the main loop when a situation has been detected for the first time and will turn itself off in the main loop when the situation has been handeled. This communication is handeled by the monitor of strategy. Moreover when a door has been detected the strategy subsystem will tell the main to execute an 'open door' request, wait for a few seconds and after that it will check wheter or not the door went open if not PICO will turn around. We would have liked to have enough time to split the computational entities in seperate functions as the composition pattern suggests. | |||

='''Software subsystems'''= | |||

<p>This section explains in detail the theory behind the various computational entities of the composition pattern. The entities are grouped in their respective subsections: detection, strategy and movement.</p> | |||

=='''Detection'''== | |||

<p>In order for Pico to be aware of its surroundings, the data collected by the laser is transformed into a useful format. Pico should be able to detect walls, different types of junctions and dead ends. These situations can all be expressed through certain couplings of lines and corner points. To achieve this, the laser data is first transformed into lines using the Hough transformation. Then corner points are detected using the intersection points of these lines. By coupling each corner point to one or two lines, certain situations can be recognized. It must be noted that both the Hough transformation and the edge detection function were coded using our own method, without the use of OpenCV or other given functions. OpenCV is only used to plot detected lines, corners and situations.</p> | |||

==='''Measurement processing'''=== | |||

<p> The measurement processing entity receives the raw laser data from the robot oi-layer and processes it, in order to extract the relevant information for other entities. The laser data is processed in two different ways; lines are recognized using the Hough transform, and edges are detected.</p> | |||

===='''Hough transformation'''==== | |||

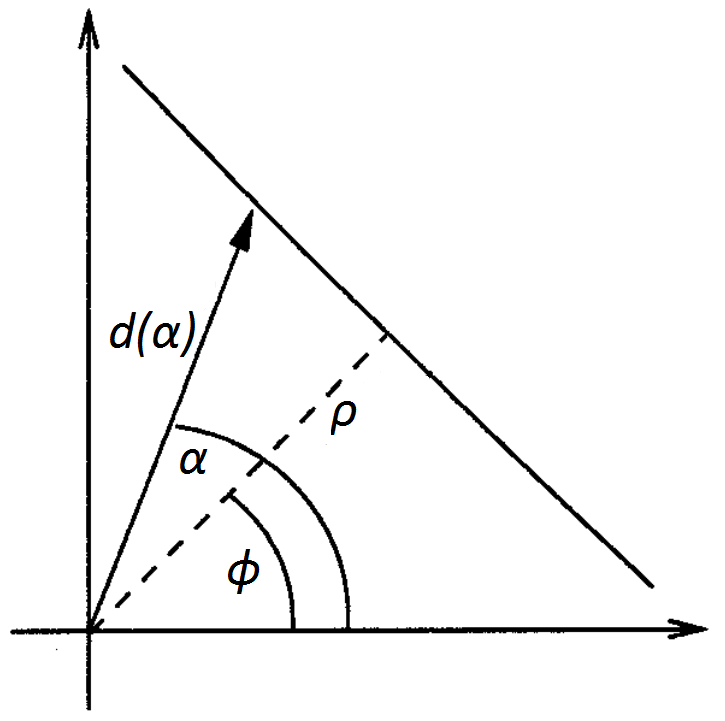

[[File:Hough_Transform.png|thumb|left|alt=Geomtery in Hough Transform, source: Giesler et al(1998).|Geomtery in Hough Transform, source: Giesler et al(1998)]] | |||

<p>The [https://en.wikipedia.org/?title=Hough_transform Hough transformation]is used to detect lines from the laser data. The basic idea is to transform a single point (x,y) into the parameter space of the set of infinite lines running through it, called the Hough space. In the polar coordinate system of the laser scan, a line through a data point is given by ρ=d/cos(α-φ) where ρ is the length of the orthogonal from the origin to the line and φ is its inclination. | |||

By varying φ for each data point from 0 to 180 degrees, different (ρ,φ) pairs are obtained. Laser points which are aimed at the same wall will yield the same (ρ,φ) pair. To determine if a given (ρ,φ) pair is a wall, ρ is discretized, and a vote is cast at the corresponding element for that (ρ,φ) pair in an accumulator matrix. Elements with a high number of votes will correspond with the presence of a wall. As such, finding the local maxima in the accumulator matrix yields the locations of the walls seen by Pico.</p><br><br><br><br><br><br><br><br><br> | |||

===='''Edge detection'''==== | |||

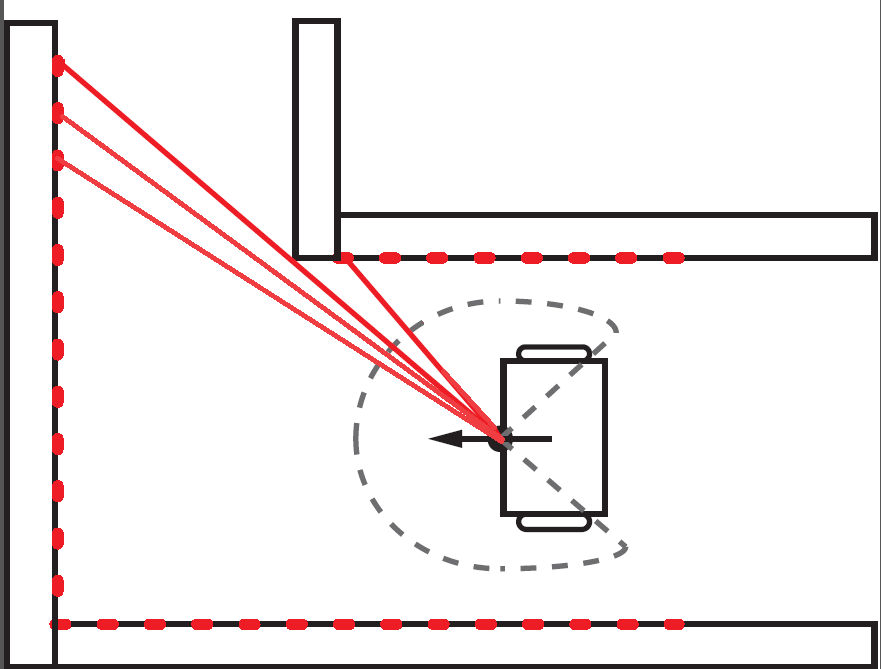

[[File:edge_detection3.png|thumb|left|alt=Sketch of edge detetion.|Figure edge detection]] | |||

The lines found using the Hough transformation are infinite, without any end points. These end points are detected by coupling each increment of the laser data with one of the found lines. This is done by comparing the measured distance of that increment with the projected distance of that increment on the line, given by r<sub>projected</sub>=ρ/cos(α-φ). After having coupled each increment with a line, the increment at which this coupling switches from one line to another gives the location of an end point. However, some of the detected end points will be located at the edge of Pico’s vision, for example when Pico is in front of a turn and cannot see the wall completely. Therefore only end points which lie close to each other, i.e. the corner of a turn where two detected line intersect, are taken into account while the rest are discarded. Because the end points which are not located at the intersection of two lines are discarded, an extra method is needed to find end points which lie on only one line, such as in the case of a T-junction. These end points are detected by searching for a large difference in the measured distance between two laser increments. In order to improve the robustness of this method, the average of multiple neighbouring elements is checked. This idea is shown in the figure edge detection. The edge detection function and the Hough transformation together make up the mprocessing entity.<br><br><br><br><br> | |||

==='''Situation recognition'''=== | |||

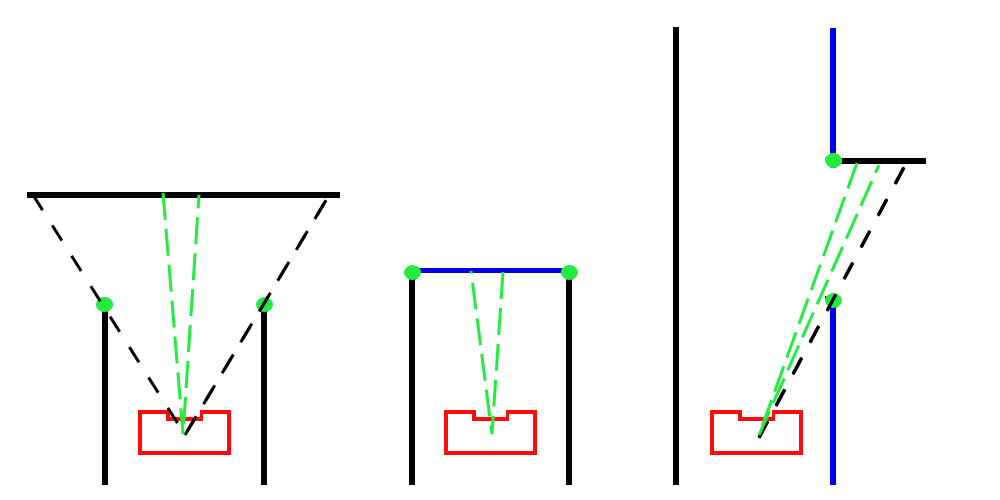

[[File:sitrec.png|thumb|left|alt=Sketch of situation recognition.|Figure situation recognition]] | |||

<p>Situations are recognized using the coupling of each detected corner with one or two detected lines. By comparing two corners which lie within a certain distance of each other, three main types of situations are discerned. These are: a T-junction where the left and right directions are open, a T-junction where the forward and left/right directions are open, and dead ends. These three situations are depicted in Figure situation recognition, where the corners are shown in green, solid black lines show lines which are coupled with one or no corners, and solid blue lines show lines which are shared by two corners. The dashed green lines show the range of laser increments used to confirm the situation.<br><br> | |||

In the case of a T-junction where the left and right directions are open, both corners will be coupled with only one line. The distance to the opposite wall is measured and must not be too far from the two corners for this situation to be present. In a dead end, the two detected corners will both be coupled to two lines, one of which is shared. To confirm a dead end, the laser data between the two corners must lie on the shared line, and the other two lines must be on opposite sides of the robot.<br><br> | |||

Finally, in the case of a T-junction where the forward and left or right directions are open, the corners share one line, and only one corner is coupled with a second line. If the laser data between the corners lies on this second line, such a T-junction is present. Whether there is an opening in the left or right direction is determined by looking at the angle of the corner coupled with two lines compared to the angle of the other corner. If two such T-junctions are found in close proximity to each other, they will be combined into a crossroads.</p><br> | |||

The gif below shows Pico’s performance in a simulation. The maze used in the simulation consists of multiple dead ends and a door. The left screen shows how Pico drives through the maze in the simulation. The right screen shows what Pico sees based on the implementation from the detection subsystem. The blue dots are the measured laser data, while the white dots are the detected corner points. When Pico detects a situation, a green line is drawn between the two corner points where the situation has been found. The red dots show which laser increments are used to confirm the presence of the situation. It is clear from the gif that corner points and different types of situations are detected robustly. | |||

[[File:Opgdet3.gif]] | |||

==='''SLAM'''=== | |||

<p>For localisation and mapping, the [https://en.wikipedia.org/wiki/Extended_Kalman_filter extended Kalman filter] is used for the [https://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping slam] algorithm. Our own made algorithm can be divided into two parts. The first part exists out of founded markers, in our case corners, are used to make a prediction of the robot’s position (<math>\bar{\mu}_x</math>, (<math>\bar{\mu}_y(</math>, <math>\bar{\mu}_{\theta}</math>) and the markers (<math>\bar{\mu}_{x,i}(</math>, <math>\bar{\mu}_{y,i}</math>). The second part gives, the robot the positions of detected corners and tries to associate these with already found markers, or saved the position of the new found marker. By using the extended Kalman filter, the positions of the robot and found markers are continuously updated. | |||

</p><br> | |||

'''Prediction problem''' | |||

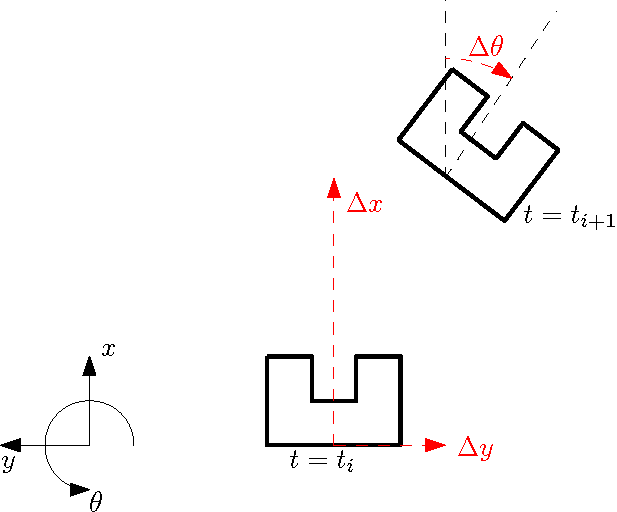

[[File:Explaining_formula.png|thumb|left|alt=Explain values formula|Values for the prediction]] | |||

<p>To make a prediction of the position of the robot, the odometry data are used to make an initial guess. While the robot is driving, the prediction is made as follows </p> | |||

<math>\begin{bmatrix} | |||

\bar{\mu}_x \\ \bar{\mu}_y \\ \bar{\mu}_{\theta} | |||

\end{bmatrix} = \begin{bmatrix} | |||

\mu_x + \Delta x \cdot \cos(\Delta \theta) - \Delta y \cdot \sin(\Delta \theta)\\ | |||

\mu_y + \Delta x \cdot \sin(\Delta \theta) + \Delta y \cdot \cos(\Delta \theta)\\ | |||

\mu_{\theta} + \Delta \theta | |||

\end{bmatrix}</math> | |||

<p> | |||

Here <math>\Delta \theta</math> is the angle, the robot moved between the current and previous angle, <math>\Delta x</math> the difference in x-direction, and <math>\Delta y</math> the difference in y-direction as illustrated in the figure to the left. The position of the robot is given in <math>x</math>, <math>y</math> and <math>\theta</math> direction is given in <math>\mu_x</math>, <math>\mu_y</math> and <math>\mu_{\theta}</math>, respectively. </p> | |||

<p> | |||

Also the covariance matrix <math>\Sigma</math> is determined to predict the accuracy of the model. The values for measurement and process noise, are tuned with experimental data. | |||

</p> | |||

==''' | <br> | ||

<p> The | |||

'''Association problem''' | |||

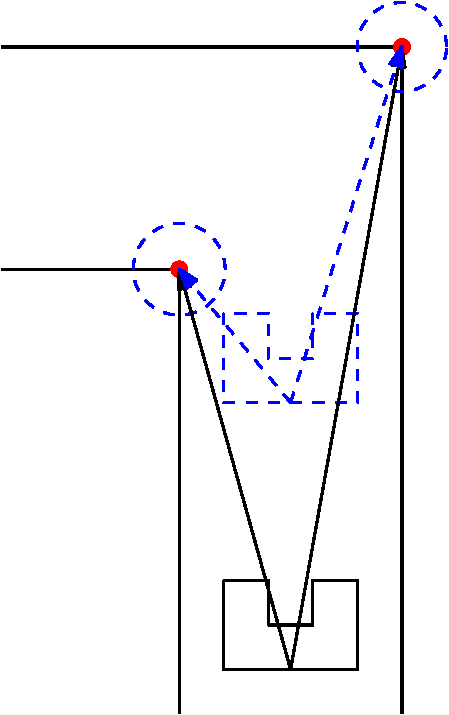

[[File:Association.png|thumb|left|alt=Association|Assciation ]] | |||

<p> | |||

When the robot is driving through the maze, of each corner that is detected, the algorithm is receiving the position of that corner. For each corner, there must be found out if these corners are already detected, or are newly detected ones. Figure to the left, shows the principle which was chosen. In the initial position (black) the two corners are found and saved. When the robot is moved (blue) the corners are found again. To associate the markers to the one found before, a searching area is used (blue circles). The location of this searching area can be determined with use of the prediction. There is determined how much the robot is moved in the three directions. From there the location of the marker can be determined. The marker is found again when the difference of the predicted location and the measured location of the corners is smaller than the tolerances. | |||

</p> | |||

<p>When the marker is found again, the position of the marker is updated with use of the [https://en.wikipedia.org/wiki/Extended_Kalman_filter extended Kalman filter], and the same is done for the position of the robot. When the marker is not found before, the marker is saved in the vector <math>\mu</math> and in the covariance matrix. This vector <math>\mu</math> contains all the positions of the robot and the found markers as, | |||

</p> | |||

<math>\begin{bmatrix} x \\ y \\ \theta \\ M_{x,1} \\ M_{y,1} \\ \vdots \\ M_{x,N} \\ M_{y,N} \end{bmatrix}</math> | |||

<br> | |||

'''Results''' | |||

<p>In the movie, the result of the algorithm is showed. In the left figure, the results of the detection is showed as explained above. At the right, the markers placed by the slam algorithm are showed. As can be seen, the robot is moving through the maze and is located correctly in the map made with the vector <math>\mu</math>. The found corners (white dots), are processed by SLAM and visualized in the right figure. When a corner is detected only ones, or a second time close to the original position, the algorithm updates and positions the marker correctly. All the movements except rotations are performed in the video. When the robot rotates, it experiences some problems by associating the measured corners to the found corners. Therefore the algorithm was not used during the maze-challenge. When this problem is solved, this own made algorithm can be used to position the robot into a world map and use a more advanced algorithm to solve the maze. </p> | |||

<!--[[File:SlamResults.gif]]--> | |||

[[File:SlamAlgorithmGroup7.gif]] | |||

==='''Odometry correction'''=== | |||

<p>The detection subsystem is also used to read and process the odometry data. The original odometry data is read from the oi-layer and corrected for for a certain pre-determined origin loction. At the very beginning of the script the odometry origin is initialized. However, the detection subsystem also offers other subsystems to command an odometry origin reset, by use of a setter function. This results in the current location becoming the odometry origin. The corrected odometry data is sent to other subsystems by use of a getter function.</p> | |||

=='''Strategy'''== | |||

<p>The Strategy subsystem is responsible for the decision making. Strategy is triggered based on the information processed by detection, and will determine an action to act accordingly. The initial plan corresponds to the first idea, which ideally would have been implemented. A change of plans and other necessary changes results in the final Strategy plan, as implemented in the software.</p> | |||

==='''Initial Plan'''=== | |||

<p>The initial strategy plan is dependent on the SLAM and Detection part of the code. It consists of a Tremaux algorithm which is provided with a highest succes rate decision maker, a pathplanner and a Dijkstra algorithm to determine the shortest path between nodes.</p> | |||

<p>[https://en.wikipedia.org/wiki/Maze_solving_algorithm#Tr.C3.A9maux.27s_algorithm Tremaux] describes the placing of landmarks and the (random) order selection of certain choices which can be made by PICO, in this case which path to continue at i.e. an intersection. The highest succes rate decision maker is applied to remove the randomness in the Tremaux algorithm, the direction of the intersection with the least side branches is most preferred (it has the most chance of being successful).</p> | |||

<p>The pathplanner is used to process all the landmarks placed by the Tremaux algorithm into a matrix, describing all possible connections and costs (in this case distance) between nodes. The [https://en.wikipedia.org/?title=Dijkstra%27s_algorithm Dijkstra algorithm] will determine the lowest distance to an unvisited node, connected to a certain starting node. All possibilities will be stepwise determined, updating the most preferred path from start to end. This is particularly useful if PICO at a certain point wants to return to a specific node as quickly as possible. At the end of the strategy process an array will result, describing all necessary landmarks PICO should move to in order to reach the end. </p> | |||

==='''Change of Plans'''=== | |||

<p> As earlier discussed, SLAM is not working robustly as desired. Since the original plan of Strategy was highly dependant on both the overall Detection as SLAM, a change of tactics is necessary. The alternative plan will not make use of SLAM, and therefore the strategy lacks the presence of certain map. As a result , some issues might occur in specific occasions. These issues will be tackled individually, the main goal in this stage of the project is a PICO actually capable of finishing the Maze challenge. The Strategy subsystem can be divided in the following computational entities. </p> | |||

===='''Junction Memory'''==== | |||

<p> Junction Memory describes the prescribed set of integers, corresponding to the situations and previous decisions of PICO and is used to implement a systematic order in the decisions. Every possible situation recognizable by Detection is linked to their own integer (hence junction, T-junction and dead-end). The value of this integer corresponds to a certain decision or decision maker (depending on i.e. dead-end or crossjunction).</p> | |||

<p>In order to (sort of) memorize the previous decisions of PICO at junctions, a specific integer is assigned which corresponds to the most previous action taken by PICO. The value of this integer is linked to a certain “decision” and movement type, the movement type will be further described in computational entity “Choose Movement Type”. If PICO would have made a certain decision at a junction, and this choice was unsuccessful (dead-end or wall), it will return to the same junction if applicable and the " decision" is updated. The updated value corresponds to a different movement type. By updating the “decision” and corresponding movement type for every unsuccessful decision, PICO will analyse every junction in structured manner until succession. If during a certain decision PICO would again recognize a junction this means that this choice is in some way succesfull, therefore the " decision" is resetted.</p> | |||

===='''Movement Type'''==== | |||

<p>The integer as described in Junction Memory describes the “decision” PICO has taken. The value of “Decision” corresponds to certain actions which can be triggered. The values of “decision” and the corresponding movement type are (1):“go left”, (2):”go straight”, (3):”go right”. </p> | |||

<p>Hence if a situation is recognized, the main will receive the new movement type corresponding to a certain decision. Communication with Movement will result in the desired motion of PICO. As previously discussed there is a switch between Movement and Strategy, hence between Homing and a different Movement type. Homing will be on until a situation is recognized, resulting in a certain decision after which Homing is turned back on. In the case of a dead-end Strategy will initiate the door request, further discussed in the following paragraph. If the door request is unsuccesful, the dead-end remains. This corresponds to the Movement type in which PICO is turned 180 degrees. </p> | |||

===='''Initiate Door Request'''==== | |||

<p>If Detection recognizes a dead-end, the corresponding integer is raised. Since a door can always be seen as a dead-end, the corresponding request can be initiated for every dead-end. Strategy will send the request to the main for further processing. During the request PICO will be halted for a period of 5 seconds (which is scheduled in the main) and hence no movement will occur. Communication is temporarily discarded and the bell is rang. After that period, Detection is again called in the main to determine if the so called "dead-end" in fact was a door and a new corridor has opened. If not the signal “dead-end” is send to Strategy, resulting in the desired Movement Type for the Movement subsystem. </p> | |||

<p>The | =='''Movement'''== | ||

<p> The "movement" part of the software uses data gathered by "detection" to send travel commands to PICO. As already discussed, the part of "strategy" is basically the "decision maker" which commands the "movement" of PICO. Initially, the idea was to implement a non-linear controller for point-to-point based composer, however, SLAM wasn't successfully operational and a second approach was implemented. Namely the homing composer. Although the non-linear controller wasn't used, it is still implemented in the original code. </p> | |||

<p> | |||

The composers homing and point-to-point were both different tactics for movement, where point-to-point worked on global coordinates, and homing could solve the maze locally. Furthermore, homing was based on a potential field, whereas point-to-point was based on dynamic system. Both of these movement composers are discussed. | |||

</p> | |||

==='''Point-to-point'''=== | |||

<p>First, the point-to-point movement is discussed, where we discuss how the entity of the non-linear controller and the required velocity profile works. Note, that this movement type is not used, but only implemented in the code.</p> | |||

<p>The initial plan is to first allow PICO to rotate, followed by the translation to the desired coordinates. | '''Non-linear controller''' | ||

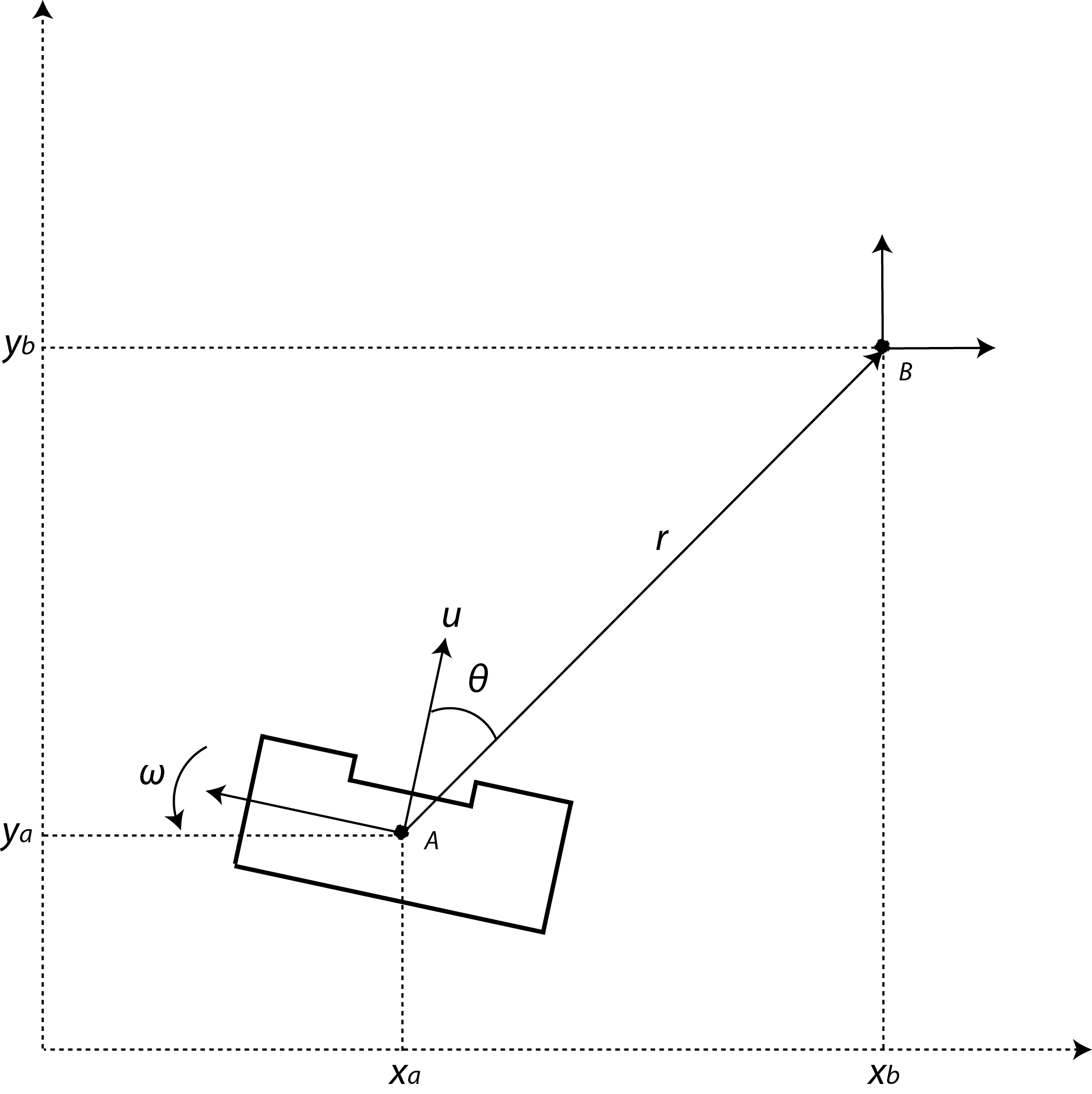

[[File:robot_p2p.png|right|350px|alt=Locations of points A,B.|Locations of points A,B]] | |||

<p> Consider the following example: coordinate A is considered the position of PICO in x, y and theta. With use of the coordinates of edges received by "detection" point B can be placed, which decribes the position PICO should travel towards. This point B will then become the new initial coordinate, as the routine is reset. In case of a junction multiple points can be placed, allowing PICO to choose one of these points to travel to. The initial plan is to first allow PICO to rotate, followed by the translation to the desired coordinates. The idea of a non-linear controller was used, that asymptotically stabilizes the robot towards the desired coordinates.</p> | |||

<p>Although the PICO has an omni-wheel drive platform, it is considered that the robot has an uni-cylce like platform whose control signals are the linear and angular velocity (<math>u</math> and <math>\omega</math>). The mathematical model in polar coordinates describing the navigation of PICO in free space is as follow: </p> | <p>Although the PICO has an omni-wheel drive platform, it is considered that the robot has an uni-cylce like platform whose control signals are the linear and angular velocity (<math>u</math> and <math>\omega</math>). The mathematical model in polar coordinates describing the navigation of PICO in free space is as follow: </p> | ||

| Line 243: | Line 460: | ||

:<math>\dot{r}=-u \, \text{cos}(\theta)\!</math> | :<math>\dot{r}=-u \, \text{cos}(\theta)\!</math> | ||

:<math>\dot{\theta}=-\omega + u\, \frac{\text{sin}(\theta)}{r}\!</math> </p> | :<math>\dot{\theta}=-\omega + u\, \frac{\text{sin}(\theta)}{r},\!</math> </p> | ||

<p> where are <math>r</math> is the distance between the robot and the goal, and <math>\theta</math> is the orientation error. Thus, the objective of the control is to make the variables <math>r</math> and <math>\theta</math> to asymptotically go to zeros. For checking such condition, one can consider the following Lyapunov function candidate: <math>V(r,\theta) = 0.5 \, r^2 + 0.5 \, \theta^2 | <p> where are <math>r</math> is the distance between the robot and the goal, and <math>\theta</math> is the orientation error. Thus, the objective of the control is to make the variables <math>r</math> and <math>\theta</math> to asymptotically go to zeros. For checking such condition, one can consider the following Lyapunov function candidate: <math>V(r,\theta) = 0.5 \, r^2 + 0.5 \, \theta^2</math>. The time derivative of <math>V(r,\theta)</math> should be negative definite to guarantee asymptotic stability; therefore the state feedback for the variables <math>u</math> and <math>\omega</math> are defined as, </p> | ||

<p> | <p> | ||

:<math>u = u_{max}\, \text{tanh}(r)\, \text{cos}(\theta) \! </math> | :<math>u = u_{max}\, \text{tanh}(r)\, \text{cos}(\theta) \! </math> | ||

:<math>\omega = k_{\omega} \, \theta + u_{max} \frac{\text{tanh}(r)}{r} \, \text{sin}( \theta) \, \text{cos}( \theta)\!</math> </p> | :<math>\omega = k_{\omega} \, \theta + u_{max} \frac{\text{tanh}(r)}{r} \, \text{sin}( \theta) \, \text{cos}( \theta),\!</math> </p> | ||

<p> where <math> u_{max} </math> and <math> k_{\omega}</math> both are postive constants. </p> | <p> where <math> u_{max} </math> and <math> k_{\omega}</math> both are postive constants. </p> | ||

===''' | '''Velocity profile''' | ||

<p> | |||

The velocity profile was implemented due to a noticeable behavior of the non-linear controller. The controller would cause the robot to slow down when it is close to the desired position. Moreover, the robot could accelerate more desirably. Hence, a velocity profile was implemented that would store the velocity of the robot, and based on previous velocities ,w.r.t. the current velocity, it would increase, or decrease the control input. Resulting in a far quicker point-to-point movement. Furthermore, the performance was increased, since the non-linear controller does not take viscous friction in account. Whereas the velocity profile would aid in overcoming the viscous friction</p> | |||

==='''Homing'''=== | |||

<p>Second, the homing composer is discussed, where we discuss how the entity of the potential field works. This was the active component of the final movement subsystem, which replaced the point-to-point composer.</p> | |||

'''Potential field ''' | |||

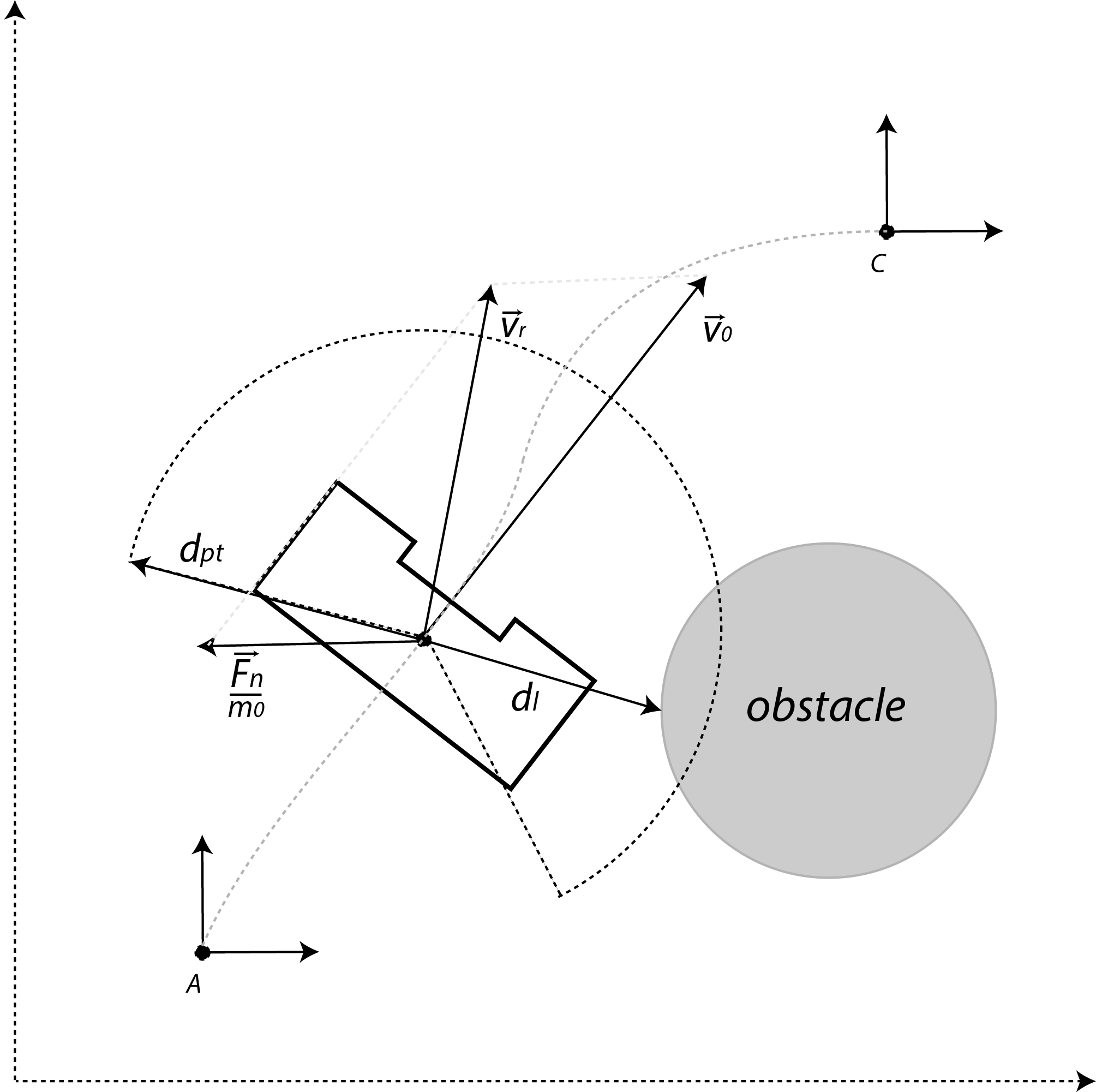

[[File: | [[File:colavoid.png|340px|right|alt=Vectors used in collision avoidance.|Vectors used in collision avoidance.]] | ||

<p> | <p>Consider the following example. The PICO would be located at position A and would travel towards point C, this will result in a collision with the walls. To avoid collision with the walls and other objects, a potential field around the robot will be created using the laser. This can be interpreted as a repulsive force <math> \vec{U} </math> given by:</p> | ||

:<math> \vec{U} = \begin{cases} | :<math> \vec{U}(d_l) = \begin{cases} | ||

K_0 \left(\frac{1}{d_{pt}}- \frac{1}{d_l} \right) \frac{1}{{d_l}^2} & \text{if } d_l < d_{pt}, \\ | |||

0 & \text{otherwise}, \end{cases}</math> | |||

<p> where <math> | <p> where <math>K_0</math> is the virtual stiffness for repulsion, <math>d_{pt}</math> the range of the potential field, and <math>d_l</math> the distance picked up from the laser. Moreover, the same trick can be applied in order for an attractive force, which give the PICO a sense of movement flow. Therefore, the attractive force <math> \vec{V} </math> is given by: </p> | ||

:<math> \vec{ | :<math> \vec{V}(d_l) = \begin{cases} | ||

K_1 d_{l}^2 & \text{if } d_l < \alpha d_{pt}, \\ | |||

0 & \text{otherwise}, \end{cases}</math> | |||

<p>where <math> | <p>where <math>K_1</math> is virtual spring for attraction,and <math>\alpha</math> a scaling for the potential field. Summing these repulsive forces <math> \vec{U} </math> and <math> \vec{V} </math> results into a netto force,</p> | ||

:<math> \vec{F}_n = \sum_{i=1}^N \vec{U}(d_{l,i}) + w_i\vec{V}(d_{l,i}), </math> | |||

<p>where <math>N</math> is the number of laser data, and <math>w</math> a weight filter used to make turns. This weighting filter <math>w</math> has three different types, where we treat all the increments on the left <math>n_{l}</math>, or all the increments on the right <math>n_r</math>, or the filter equal to one. Therefore the expression for the filtler, </p> | |||

<p> | |||

< | :<math> w_i = \begin{cases} | ||

w_i(i = n_r) = \beta, w_i(i \neq n_r) = 1 & \text{if left turn}, \\ | |||

w_i(i = n_l) = \beta, w_i(i \neq n_l) = 1 & \text{if right turn}, \\ | |||

w_i = 1 & \text{otherwise}, \\ | |||

\end{cases}</math> | |||

<p> | <p>where <math>\beta= 0.65</math>. Now, we are able to adjust the weighting filter. This gives control over the direction of the attraction force which steers the robot into a desired direction. This desired direction is called from "Strategy". Consider that the PICO has an initial velocity <math>\vec{v_0}</math>. Once a force is introduced, this velocity vector is influenced. This influence is modeled as an impulse reaction; therefore the new velocity vector <math>\vec{v}_r</math> becomes, </p> | ||

:<math> \vec{v}_r = \vec{v_0} + \frac{\vec{F}_n \,}{m_0} \, \Delta t, </math> | |||

<p> | <p> where <math>m_0</math> is a virtual mass of PICO, and <math>\Delta t</math> the specific time frame. The closer PICO moves to an object, the greater the repulsive force becomes. Some examples of the earlier implementation are included:</p> | ||

{| | |||

|[[File:Col_avoid_video.png|center|315px|link=http://youtu.be/f88-AavnpWo]] | |||

|} | |||

{| | |||

|[[File:push_test_video.png|center|315px|link=http://youtu.be/FSsQrfoJID8]] | |||

|} | |||

='''Evaluating first experiment 8 May'''= | <!-- ='''Evaluating first experiment 8 May'''= | ||

The goal of the first experiment on 8 May was to get used with PICO and handling the communication between user and PICO (with use of GIT). Data is gathered for future use and the point-to-point movement is tested. Due to small errors, other software subsystems were not tested. During this experiment the following items were discussed/observed: | The goal of the first experiment on 8 May was to get used with PICO and handling the communication between user and PICO (with use of GIT). Data is gathered for future use and the point-to-point movement is tested. Due to small errors, other software subsystems were not tested. During this experiment the following items were discussed/observed: | ||

| Line 313: | Line 541: | ||

<li>There are some doubts with the implementation of a nonlinear controller</li> | <li>There are some doubts with the implementation of a nonlinear controller</li> | ||

</ul> | </ul> | ||

='''Evaluating third experiment'''= | |||

{| | |||

|[[File:Col_avoid_video.png|center|315px|link=http://youtu.be/f88-AavnpWo]] | |||

|} | |||

{| | |||

|[[File:push_test_video.png|center|315px|link=http://youtu.be/FSsQrfoJID8]] | |||

|} | |||

='''Evaluating Corridor Competition'''= | ='''Evaluating Corridor Competition'''= | ||

<p>Between the second experiment on 12 May and the corridor competition several changes were made to the existing code. The "detection" part of the software was improved and made slightly more robust by evaluating more laser beams wrt older versions. The communication between "detecion" and "movement" was further improved by implementing dynamic arrays (also known as Vector in C++).</p> | <p>Between the second experiment on 12 May and the corridor competition several changes were made to the existing code. The "detection" part of the software was improved and made slightly more robust by evaluating more laser beams wrt older versions. The communication between "detecion" and "movement" was further improved by implementing dynamic arrays (also known as Vector in C++).</p> | ||

| Line 324: | Line 558: | ||

<li>The "path planning" (planning the path to take the corner) was not as desired, as the collision avoidance was triggered.</li> | <li>The "path planning" (planning the path to take the corner) was not as desired, as the collision avoidance was triggered.</li> | ||

<li>The second mainfile, consiting a "normal" controller was not considered a "better" option out of the two.</li> | <li>The second mainfile, consiting a "normal" controller was not considered a "better" option out of the two.</li> | ||

</ul> | </ul> --> | ||

='''Maze challenge'''= | |||

During the maze challenge, the performance of PICO was not satisfactory as it wasn't able to reach the door or solve the maze. During the final test, the day before the challenge, it was already clear that the detection part of the code did not give the desired results in real life, which is in contrary to the simulation where it worked fine. This is caused by the fact that in real life, the incoming laserdata is not so nicely received by PICO due to diffuse reflectance of the walls, opposed to in the simulation where the walls are 'smooth', and due to noise present in the data. Although prior testdata was saved to optimize the detection afterwards with the simulation, it did not give the same results as in real life. Apparently these tests were not representative for the actual maze. The robot did not recognize situations properly because the detection part, which should have distinguished the situations that could appear in a maze, was not robust enough. To try to make it work, some tolerances were changed. However, during the first try of the maze challenge, PICO started rotating too early or kept rotating because the detection was still not good enough. This made PICO think it was at a junction all the time and that it had to take action, i.e. make a turn. | |||

For the second try, a different code was used with other detection parameters. This time, PICO did a better job at recognizing the situations, but still not satisfactory. It failed to recognize the T-junction of the door, after which it came to a halt at a corner. Stuck in its place, it did not move anymore and that concluded the second try. The fact that it did not move anymore might be because of the used potential field which was used to drive. The input command for driving was very low when it got stuck. The potential field did not have an integrator action to give it a high value when standing still. With the low drive input, the motors did not get enough power to overcome the static friction, resulting in PICO being stuck in one place. | |||

An improvement therefore would be to add an extra check to verify if the drive input is high enough and, if necessary, increase this input. The detection part of the code should be made more robust as well to make sure the situations that are present in a maze will be detected properly. This could be done by for instance adding filters to the incoming laserdata or by changing the detection tolerances which are used to determine whether something is a cross- or T-junction. | |||

='''Overall conclusion'''= | |||

We learned a lot during the course of this project, i.e. programming in C++ in a clear and structured way by making use of a composition pattern. How to set requirements, functions, components, specifications, and interfaces while designing software to reach a certain goal and the big advantages of designing in this way have become clear. Working with Git and Ubuntu, of which no member had experience with, is something that has improved our overall programming efficiency and will be useful in the future. Furthermore, the communication of the hardware and software of a robot has become clear thanks to the hands on tests with PICO and the lectures of [http://people.mech.kuleuven.be/~bruyninc/ Herman Bruyninckx]. | |||

It is unfortunate that PICO did not solve the maze in the end. Up to the final test, the day before the maze challenge, it could detect situations in the maze and move nicely through our small test-mazes. However, when we put everything together it did not do what we intended it to do. In the end, we think we bit off more than we could chew. At the start of the project we decided to use SLAM to create a world model and based on that we would use Tremaux's alghorithm to solve the maze. We wanted to make everything ourselves and not just simply implement already existing methods from OpenCV or ROS, so we would understand what is happening. However, it proved that SLAM was hard to realize and that creating our own Hough-lines algorithm was no easy task either. Furthermore, the overall code structure kept changing during the project to match the composition pattern, which step after step kept improving. These improvements were a combination of new code/tactics and the lack of experience at the beginning of the project. After it had been decided that it would be too risky to rely on SLAM, as it might or might not work, a simpler strategy was used and there would be no mapping of where the robot has been. The main focus was shifted to creating a clear code structure, in which we succeeded if we do say so ourselves. | |||

Regarding SLAM, we were very close to successfully creating it on our own as can be seen in [http://cstwiki.wtb.tue.nl/index.php?title=File:SlamResultsGroup7.gif SLAM]. With more time, this would be a success as well. A thing which was a success, was the way PICO moved using Point2Point and [https://www.youtube.com/watch?v=f88-AavnpWo&feature=youtu.be Collision Avoidance]. It moved nicely towards a set point in space while it avoided obstacles in its way. Due to the change of strategy without SLAM, we did not implement it as it would rely on SLAM. In the end, with the changed strategy PICO was able to move nicely through the maze in the simulator, but unfortunately not in real life. | |||

A challenge during the entire project was our group size. We noticed it was important to maintain good communication with everyone, something which was difficult and not always achieved. We experienced that the reality is different from the simulation and if we had more testing time, we might have altered the code such that the results were satisfactory. We also learned that first determining a good overall code structure and sticking to it is key. We did change the structure a lot, but we are proud of it now. Another thing we are proud of, is the fact that we wrote everything ourselves, even if it was not used eventually, like SLAM.<br><br><br> | |||

P.S. Perhaps PICO could use a larger battery pack: | |||

[[File:pico_battery_down.jpg|alt=Testing with PICO hooked up to the adapter.|Testing with PICO hooked up to the adapter.]] | |||

Latest revision as of 22:53, 26 June 2015

About the group

| Name | Student id | ||

|---|---|---|---|

| Student 1 | Camiel Beckers | 0766317 | c.j.j.beckers@student.tue.nl |

| Student 2 | Brandon Caasenbrood | 0772479 | b.j.caasenbrood@student.tue.nl |

| Student 3 | Chaoyu Chen | 0752396 | c.chen.1@student.tue.nl |

| Student 4 | Falco Creemers | 0773413 | f.m.g.creemers@student.tue.nl |

| Student 5 | Diem van Dongen | 0782044 | d.v.dongen@student.tue.nl |

| Student 6 | Mathijs van Dongen | 0768644 | m.c.p.v.dongen@student.tue.nl |

| Student 7 | Bram van de Schoot | 0739157 | b.a.c.v.d.schoot@student.tue.nl |

| Student 8 | Rik van der Struijk | 0739222 | r.j.m.v.d.struijk@student.tue.nl |

| Student 9 | Ward Wiechert | 0775592 | w.j.a.c.wiechert@student.tue.nl |

Introduction

For the course Embedded Motion Control the "A-MAZE-ING PICO" challenge is designed, providing the learning curve between hardware and software. The goal of the "A-MAZE-ING PICO" challenge is to design and implement a robotic software system, which should make Pico or Taco be capable of autonomously solving a maze in the robotics lab.

PICO will be provided with low level software (ROS based), which provides stability and the primary

functions such as communication and movement. Proper software must be designed to complete the

said goal. Various models are used to design the behavior and structure of the software, see

Software Design. The final software design, including Git-links, is also discussed in this section. The details of the various software functions can be found in the Software Subsystems. At the end of

the page, the Maze challenge is discussed and an overall conclusion is presented.

Software Design

The software design is discussed in the following subsections. The requirements and functions of the software are listed and two different models are used to characterize the behavior and structure of the software. Note that the actual functionality of the software is not discussed in this section. More detailed information about the individual software functions can be found in Software subsystems.

Requirements and functions

The requirements specify what expectations the software should fulfill. In order for the robot to autonomously navigate trough a maze, and being able to solve this maze the following requirements are determined with respect to the software of the robot. It is desired that the software meets the following requirements:

- Move from one location to another

- No collisions

- Environment awareness: interpreting the surroundings

- Fully autonomous: not depending on human interaction

- Strategic approach

To satisfy the said requirements, one or multiple functions are designed and assigned to the requirements. The figure describes which functions are considered necessary to meet a specific requirement.

Behaviour model - The task-skill-motion pattern

In order to further specify the behavior of the software, a task-skill- motion pattern is constructed. This pattern describes the communication between the specified contexts and functions necessary to perform the task. The contexts and functions are represented by blocks in the interface and correspond with the requirements and functions previously discussed.

The robot context describes the lower level abilities and properties of the robot. In this case motors + wheels, the motor encoders and the laser range finder are mentioned. The EMC-framework controls this hardware and functions as an abstraction layer for the rest of the skills.

The environment context describes the ways the robot stores the information it knows about its surroundings. A local geometric world model is used to store the location of corners in the field of view of PICO. The semantic world model, which entails the 'words' PICO understands. This is used by the detection subsystem (measurement processing) to tell the other subsystems what's going on. For example it knows the words: door, dead-end, T-junction, cross-junction, open, closed. This is perfect for the high-level communication used by strategy. It is also used to store choices made at a junction which the robot has already visited. Note that both these world models are implicit and temporary. There is no explicit world model in the final version of the software.

The task context describes the control necessary for the robot to deal with certain situations (such as a corner, intersection or a dead end). The skill context contains the functions which are considered necessary to realize the task. Note that the block `decision making` is considered as feedback control, it acts based on the situation recognized by the robot. However `Strategic Algorithm` corresponds with feed-forward control, based on the algorithm certain future decisions for instance can be ruled out or given a higher priority.

Furthermore, the skill context is divided into two subsections, which correspond to two of the subsections in the composition pattern, and contains all the skills the robot possesses.

Structure model - The composition pattern

The composition pattern, which functions as the structural model for the code, decouples the behavior model functionalities into the 5C’s. The code is divided into these five separate roles:

- Computational entities which deliver the functional, algorithmic part of the system.

- Coordinators which deal with event handling and decision making in the system.

- Monitors check if what a function is supposed to accomplish is also realized in real life.

- Configurators which configure parameters of the computational entities.

- Composers which determine the grouping and connecting of computation entities.

The complete system is split into three subsystems, consisting of the movement subsystem, the detection subsystem and the strategy subsystem. The operation of each of these subsystems is explained in the sections below. The way in which these three work together is determined by the monitor, coordinator and composer of the main system.

Main

The main task of the main file is to schedule the different subsystems correctly. Initially, only the detection subsystem and the movement subsystem are active. In this state, the robot moves forward by use of a potential field, while situations are searched. Once the detection subsystem recognizes a certain situation, the strategy subsystem is scheduled into the main loop. This subsystem makes a decision regarding the direction of the robot, based on the information provided by the detection subsystem. New orders are then send to the movement subsystem, which determines and sets the robot velocity. In the case that the strategy indicates a door request should be called, this is also scheduled in the main file. The implementation of these functionalities can be found in Code.

Detection

The detection subsystem is used to process the data of the laser sensors. Its main function is to observe the environment and send relevant information to the other subsystem and the main coordinator. The coordinator of the detection subsystem receives the unprocessed data from the EMC-framework and corrects the odometry data for a possible offset. As commissioned by the coordinator the composer then triggers all computational entities, which are then carried out consecutively. The computational entities are shortly explained, and further elaborated in the "Software Design".

- Measurement Processing: using Hough transform to convert LFR data to lines and markers.

- Situation Recognition: detecting corners, corridors and doors based on lines obtained by the Measurement Processing function.

- SLAM: The markers, as determined by measurement processing, are used in combination with our self developed, extended Kalman filter to localize the robot in a global coordinate system. (not used in final version of the code)

- Odometry correction: sending out the odometry data with respect to a certain origin. The location of this origin can be reset by the configurator.

Strategy

When the detection subsystem recognizes a situation (junction, T-junction or cross-junction) a discrete event is send to the coordinator of the strategy subsystem which then will make a decision regarding the direction the robot should move, based on the available options (as detected by the detection subsystem) and junction memory. The junction memory records which of the junctions of the situation have already been investigated and turned out to be unsuccessful (dead-end). Based on this information one of the two movement types is chosen, see movement subsection, to guide to robot in the right direction. The movement type + direction is sent to the movement subsection. The strategy subsystem also has the possibility to request the opening of doors, when it encounters a dead end.

Movement

The movement subsystem controls the movement of the robot, based on the input it receives from the other subsystems. This subsystem has two different composers; point2point and homing. In theory, the strategy subsystem has the ability to switch between these two composers. However, in the final version of the code, only the homing composer is used, due to the fact that the point2point algorithm requires the absolute location of the robot (SLAM).

If used, point2point composer receives the current location of the robot, from detection, and a set-point location, from strategy, both in absolute coordinates. A non-linear controller is used to guide the robot from the current location to this set-point location. Due to the disability of this controller to move to a location directly behind the robot, a separate rotate functionality is included. The magnitude of the robot velocity is controlled separately according to a certain profile in order to limit the robot acceleration and reduce wheel slip. The maximum velocity of the profile can also be set by other subsystems. Lastly, a small potential field is used to avoid collisions. For more information about the individual computational entities, see Subsystems - Movement. Unfortunately, due to the fact that absolute localization (SLAM) appeared to difficult within the given time frame, this point2point composer is not used in the final version of the code and is therefore marked gray in the composition pattern.

The second composer, homing, uses a large potential field to move the robot through the maze, without knowing the absolute position of the robot. In the standard situation, the robot moves forward. However, a preferred direction, for instance 'left' or 'right' can be ordered by the strategy subsystem. Once again, a smaller, stronger potential field is used to avoid collisions. Also, a separate stop entity is implemented to let the robot wait before a door.