Embedded Motion Control 2015 Group 7: Difference between revisions

No edit summary |

|||

| Line 296: | Line 296: | ||

<p>The Hough transformation is used to detect lines using the measurement data. The basic idea is to transform a single point into the parameter space of the set of infinite lines running through it, called the Hough space. In the polar coordinate system of the laser scan, a line through a data point is given by <math>d(\alpha)=\frac{\rho}{\cos(\alpha-\phi)}</math> where <math>\rho</math> is the length of the orthogonal from the origin to the line and <math>\phi</math> is its inclination.</p> | <p>The Hough transformation is used to detect lines using the measurement data. The basic idea is to transform a single point into the parameter space of the set of infinite lines running through it, called the Hough space. In the polar coordinate system of the laser scan, a line through a data point is given by <math>d(\alpha)=\frac{\rho}{\cos(\alpha-\phi)}</math> where <math>\rho</math> is the length of the orthogonal from the origin to the line and <math>\phi</math> is its inclination.</p> | ||

<p>By varying <math>\phi</math> for each data point from 0 to 180 degrees, different <math>(\rho,\phi)</math> pairs are obtained. Laser points which are aimed at the same wall will yield the same <math>(\rho,\phi)</math> pair. To determine if a given <math>(\rho,\phi)</math> pair is a wall, <math>\rho</math> is discretized, and a vote is cast at the corresponding element for that <math>(\rho_d,\phi)</math> pair in an accumulator matrix. Elements with a high number of votes will correspond with the presence of a wall. The end-points of the walls are found using corners and the intersection points of the walls.</p> | <p>By varying <math>\phi</math> for each data point from 0 to 180 degrees, different <math>(\rho,\phi)</math> pairs are obtained. Laser points which are aimed at the same wall will yield the same <math>(\rho,\phi)</math> pair. To determine if a given <math>(\rho,\phi)</math> pair is a wall, <math>\rho</math> is discretized, and a vote is cast at the corresponding element for that <math>(\rho_d,\phi)</math> pair in an accumulator matrix. Elements with a high number of votes will correspond with the presence of a wall. The end-points of the walls are found using corners and the intersection points of the walls.</p> <br><br><br> | ||

==='''SLAM'''=== | ==='''SLAM'''=== | ||

Revision as of 15:16, 24 May 2015

About the group

| Name | Student id | ||

|---|---|---|---|

| Student 1 | Camiel Beckers | 0766317 | c.j.j.beckers@student.tue.nl |

| Student 2 | Brandon Caasenbrood | 0772479 | b.j.caasenbrood@student.tue.nl |

| Student 3 | Chaoyu Chen | 0752396 | c.chen.1@student.tue.nl |

| Student 4 | Falco Creemers | 0773413 | f.m.g.creemers@student.tue.nl |

| Student 5 | Diem van Dongen | 0782044 | d.v.dongen@student.tue.nl |

| Student 6 | Mathijs van Dongen | 0768644 | m.c.p.v.dongen@student.tue.nl |

| Student 7 | Bram van de Schoot | 0739157 | b.a.c.v.d.schoot@student.tue.nl |

| Student 8 | Rik van der Struijk | 0739222 | r.j.m.v.d.struijk@student.tue.nl |

| Student 9 | Ward Wiechert | 0775592 | w.j.a.c.wiechert@student.tue.nl |

Updates Log

28-04: Updated the page with planning and initial design.

29-04: Updated page with initial design PDf (not yet "wikistyle")

07-05: Small changes in wiki page

09-05: Changes in design (incl. composition pattern, removed components&specifications)

11-05: Changes in design (incl. composition pattern), evaluating first experiment

13-05: Evaluating second experiment, corridor competition and discuss initial software

14-05: Evaluating corridor competition, update initial ideas wrt detection, movement etc.

16-05: Update Movement.

20-05: Evaluatue third experiment.

24-05: Update Design, Detection, Movement, SLAM and overall Planning.

Planning

Week 5: 18 May - 24 May

- 22 May: Third epxeriment: movement and collision avoidance

- 24 May: large Wiki page update: including all software sections and initial design

- 24 May: Brainstorming and implementing first parts of strategy

Week 6: 25 May - 31 May

- 25 May: Implementing Tremaux algorithm

- 27 May: Fourth experiment: detection and movement

- 27 May: Secomd presentation, main focus on structure and composition pattern

Introduction

For the course Embedded Motion Control the "A-MAZE-ING PICO" challenge is designed, providing the learning curve between hardware and software. The goal of the "A-MAZE-ING PICO" challenge is to design and implement a robotic software system, which should make Pico or Taco be capable of autonomously solving a maze in the robotics lab.

PICO will be provided with lowlevel software (ROS based), which provides stability and the primary functions such as communication and movement. Proper software must be designed to complete the said goal, the software design requires the following points to be taken in account: requirements, functions,

components, specifications, and interfaces. A top-down structure can be applied to discuss the

following initial points.

Design

This software design is discussed in the following subsections. These sections will be updated frequently. The Components and specifications are replaced by i.e. the interface.

Requirements *To be Updated*

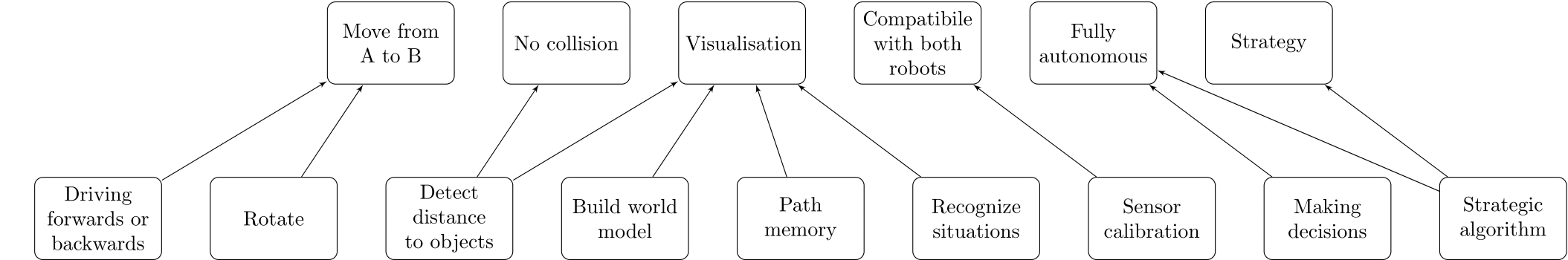

Requirements specify and restrict the possible ways to reach a certain goal. In order for the robot to autonomously navigate trough a maze, and being able to solve this maze the following requirements are determined with respect to the software of the robot. It is desired that the software meets the following requirements:

- Move from certain A to certain B

- No collisions

- Environment awareness: interpeting the surroundins

- Fully autonomous: not depending on human interaction

- Strategic approach

Functions *To be Updated*

To satisfy the said requirements, one or multiple functions are designed and assigned to the requirements. describes which functions are considered necessary to meet a specific requirement.

The function ’Build world model’ describes the visualisation of the environment of the robot. ’Path memory’ corresponds with memorizing the travelled path of the robot. ’Sensor calibration’ can be applied to ensure similar settings of both robots, so that the software can be applied for both.

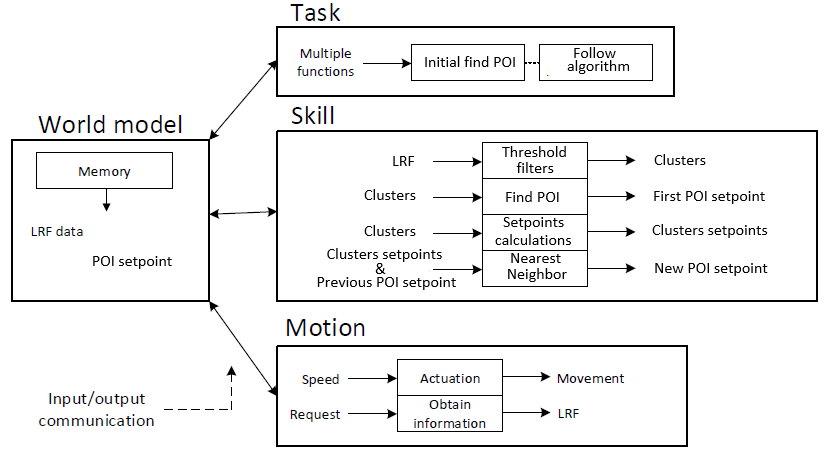

Interface *To be Updated*

The interface describes the communication between the specified contexts and functions necessarry to perform the task. The contexts and functions are represented by blocks in the interface and correspond with the requirements, functions, components and specifications previously discussed.

The robot context describes the abilities the robots Pico and Taco have in common, which in this case is purely hardware. The environment context describes the means of the robot to visualize the surroundings, which is done via the required world model. The task context describes the control necessary for the robot to deal with certain situations (such as a corner, intersection or a dead end). The skill context contains the functions which are considered necessary to realise the task.

Note that the block ’decision making’ is considered as feedback control, it acts based on the situation recognized by the robot. However ’Strategic Algorithm’ corresponds with feedforward control, based on the algorithm certain future decisions for instance can be ruled out or given a higher priority.

To further elaborate on the interface, the following example can be considered in which the robot approaches a T-junction: The robot will navigate trough a corridor, in which information from both the lasers as the kinect can be applied to avoid collision with the walls. At the end of the corridor, the robot will approach a T-junction. With use of the data gathered from the lasers and the kinect, the robot will be able to recognize this T-junction. The strategic algorithm will determine the best decision in this situation based on the lowest cost. The decision will be made which controls the hardware of the robot to perform the correct action. The T-junction is considered a ’decision location’, which will be saved in the case the robot is not capable of solving the maze with the initial decision at this location. During the movement of the robot, the walls are mapped to visualise the surroundings.

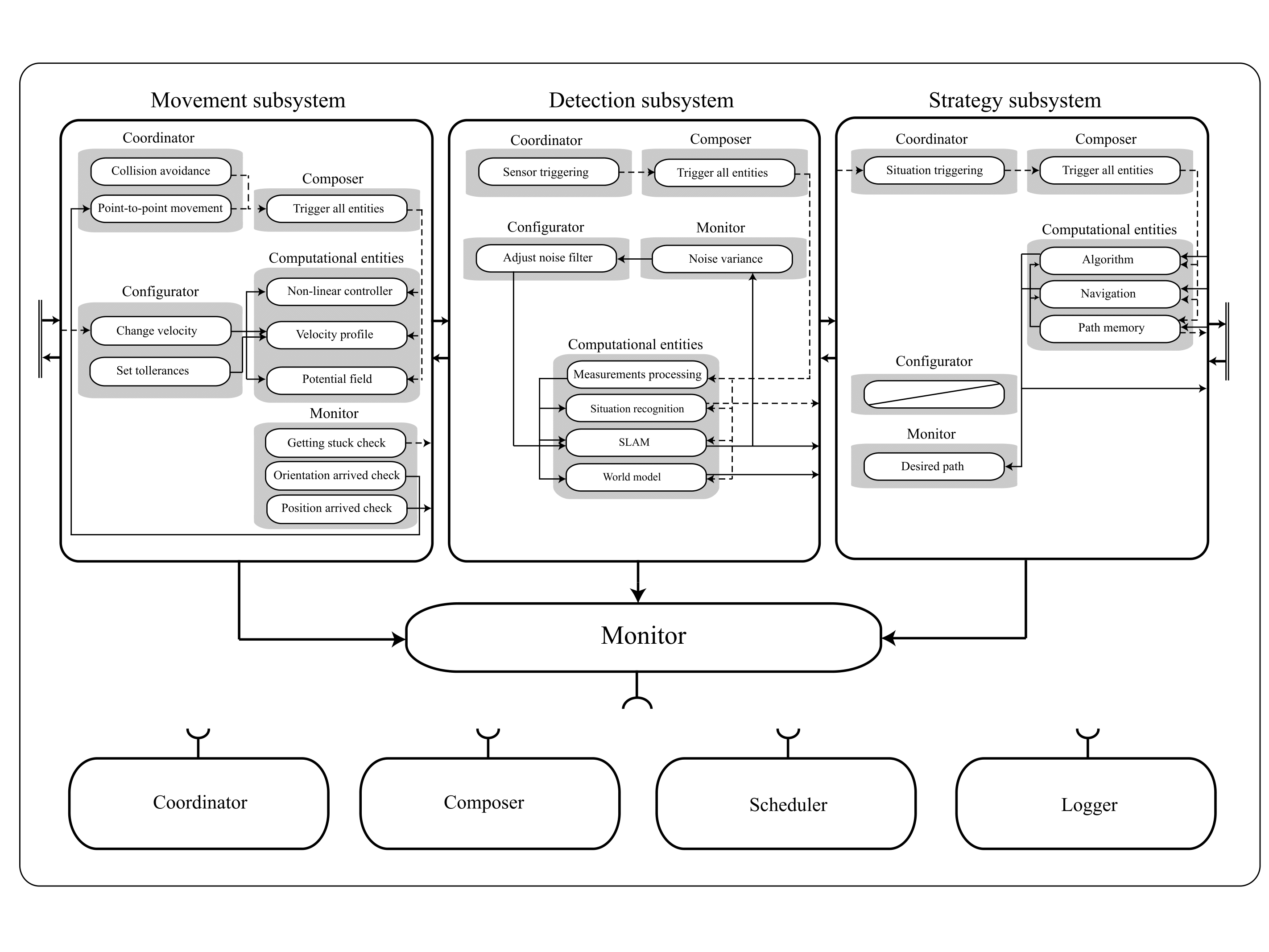

Composition Pattern

The structural model, also known as the composition pattern, decouples the behavior model functionalities into the 5C’s. The code is divided into five seperate roles:

- Computation; provides functionality, such as the algorithms in a application.

- Coordination; event handling, provides the discrete behaviour of entities.

- Configuration; influencing the behaviour of computational entities by changing the parameters.

- Monitoring; checking if a function is accomplishing a task as it is supposed to.

- Composing; determines the gathering and interconnection of computational entities.

The total structure is divided into three subsystems which will be discussed indivually: movement, detection and strategy. Note that black arrows are data communication and dotted arrows are events. The collaboration between the subsystems is determined by the monitor, coordinator and composer of the main system. Note: the computational entities are discussed in the chapter "Software design".

In the current structure of the code, the three subsystems are combined in the C/C++ main file. This file handles the communication of the various subsystems by using the outputs of the various subsystems as inputs for other subsystems. The different subsystems are scheduled manually. First, the detection subsystem is called to sense the environment and recognise situations/objects. Also, the current location of the robot is determined using the Simultaneous Localization And Mapping (SLAM) algorithm. The strategy subsystem uses this information to make a decision regarding the direction of the robot. This subsystem has a setpoint location as output which in turn will be used by the movement subsystem. The movement subsystem uses the current location and the set-point location to control the velocity of the robot. Currently, there is no data logged in the main file. Also, there is no composing happening in the main file.

The movement subsystem is used to control movement of the robot and consists of two coordinators. With respect to the older compostion pattern, situation handling is now a part of the strategy part of the code. The movement subsystem receives the current position of the robot, determined by the SLAM algorithm in the detection subsystem, together with the set point location, determined by the strategy subsystem, both in a global coordinate system. The movement subsystem calculates the robot velocity (in local x, y and theta-direction) so the robot will move from the current location (A) to to the set-point location (B), while avoiding collisions with object. To this end, three computational entities are used. The movement monitor checks what the difference is between the point A and B and checks if the robot has already reached the set-point location (A = B). The monitor raises an event if the robot gets stuck in a certain location, while trying to reach an unreachable point B.

The detection subsystem is used to process the data of the laser sensors. It's main function is to observe the environment and send relevant information to the other subsystem and the main coordinator. As commissioned by the coordinator the composer simply triggers all computational entities, which are then carried out in the shown order. The monitor checks the noise variance present in the marker locations used in SLAM, and if this exceeds a certain maximum the configurator adjusts the noise filter.

The strategy subsystem is used for path planning. This subsystem receives edge, corridor and door as global coordinate data from the detection subsystem for the computational entities. When the detection subsystem recognizes a situation in the maze a discrete event is send to the coordinator of this system which will then triggers all computational entities via the composer. These then choose the best path to take, which is executed by the Movement subsystem. The planned path is send to the monitor as well as to the Point-to-point movement coordinator in the movement subsystem. The monitor will check whether or not the desired path has been carried out.

Initial software, testing and Corridor competition

The first steps of actually coding the software is based on the requirements necessary for the corridor competition. In order to pass the corridor assignment succesfully, PICO should be capable of:

- Move from point A to point B

- Avoid collisions with walls/obstacles

- Detect edge corners in corridor

- Taking corners (with relative coordinates of detected edge)

- Detect end of corridor challenge

The group is divided into a "detection" group, "movement" group and a "world map" group (usefull in a later stage of the project). The primary ideas and implementations will be discussed in a similar structure.

Movement

The "movement" part of the software uses data gathered by "detection" to send travel commands to PICO. In the near future, a new "strategy" part of the software will be designed, which basically becomes the "decision maker" which commands the "movement" of PICO."

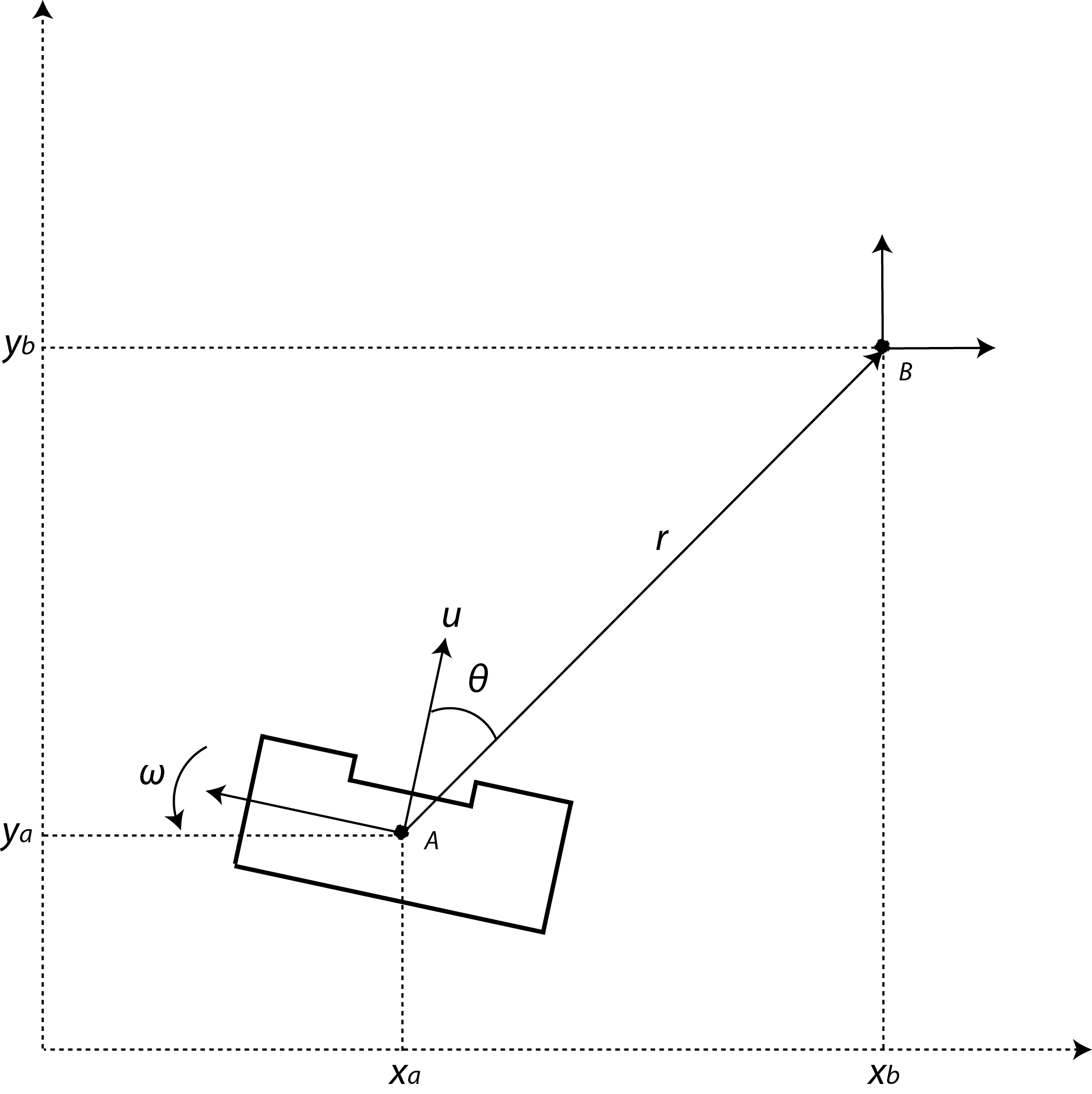

Local coordinates

The main idea is to work with local coordinates. At time t=0, coordinate A is considered the position of PICO in x, y and theta. With use of the coordinates of edges received by "detection" point B can be placed, which decribes the position PICO should travel towards. This point B will then become the new initial coordinate, as the routine is reset. In case of a junction as seen in the figure multiple points can be placed, allowing PICO to choose one of these points to travel to.

The initial plan is to first allow PICO to rotate, followed by the translation to the desired coordinates. This idea was scrapped, because this sequence would be time consuming. Instead a non-linear controller was used, that asymptotically stabilizes the robot towards the desired coordinates.

Although the PICO has an omni-wheel drive platform, it is considered that the robot has an uni-cylce like platform whose control signals are the linear and angular velocity ([math]\displaystyle{ u }[/math] and [math]\displaystyle{ \omega }[/math]). The mathematical model in polar coordinates describing the navigation of PICO in free space is as follow:

- [math]\displaystyle{ \dot{r}=-u \, \text{cos}(\theta)\! }[/math]

- [math]\displaystyle{ \dot{\theta}=-\omega + u\, \frac{\text{sin}(\theta)}{r},\! }[/math]

where are [math]\displaystyle{ r }[/math] is the distance between the robot and the goal, and [math]\displaystyle{ \theta }[/math] is the orientation error. Thus, the objective of the control is to make the variables [math]\displaystyle{ r }[/math] and [math]\displaystyle{ \theta }[/math] to asymptotically go to zeros. For checking such condition, one can consider the following Lyapunov function candidate: [math]\displaystyle{ V(r,\theta) = 0.5 \, r^2 + 0.5 \, \theta^2 }[/math]. The time derivative of [math]\displaystyle{ V(r,\theta) }[/math] should be negative definite to guarantee asymptotic stability; therefore the state feedback for the variables [math]\displaystyle{ u }[/math] and [math]\displaystyle{ \omega }[/math] are defined as,

- [math]\displaystyle{ u = u_{max}\, \text{tanh}(r)\, \text{cos}(\theta) \! }[/math]

- [math]\displaystyle{ \omega = k_{\omega} \, \theta + u_{max} \frac{\text{tanh}(r)}{r} \, \text{sin}( \theta) \, \text{cos}( \theta),\! }[/math]

where [math]\displaystyle{ u_{max} }[/math] and [math]\displaystyle{ k_{\omega} }[/math] both are postive constants.

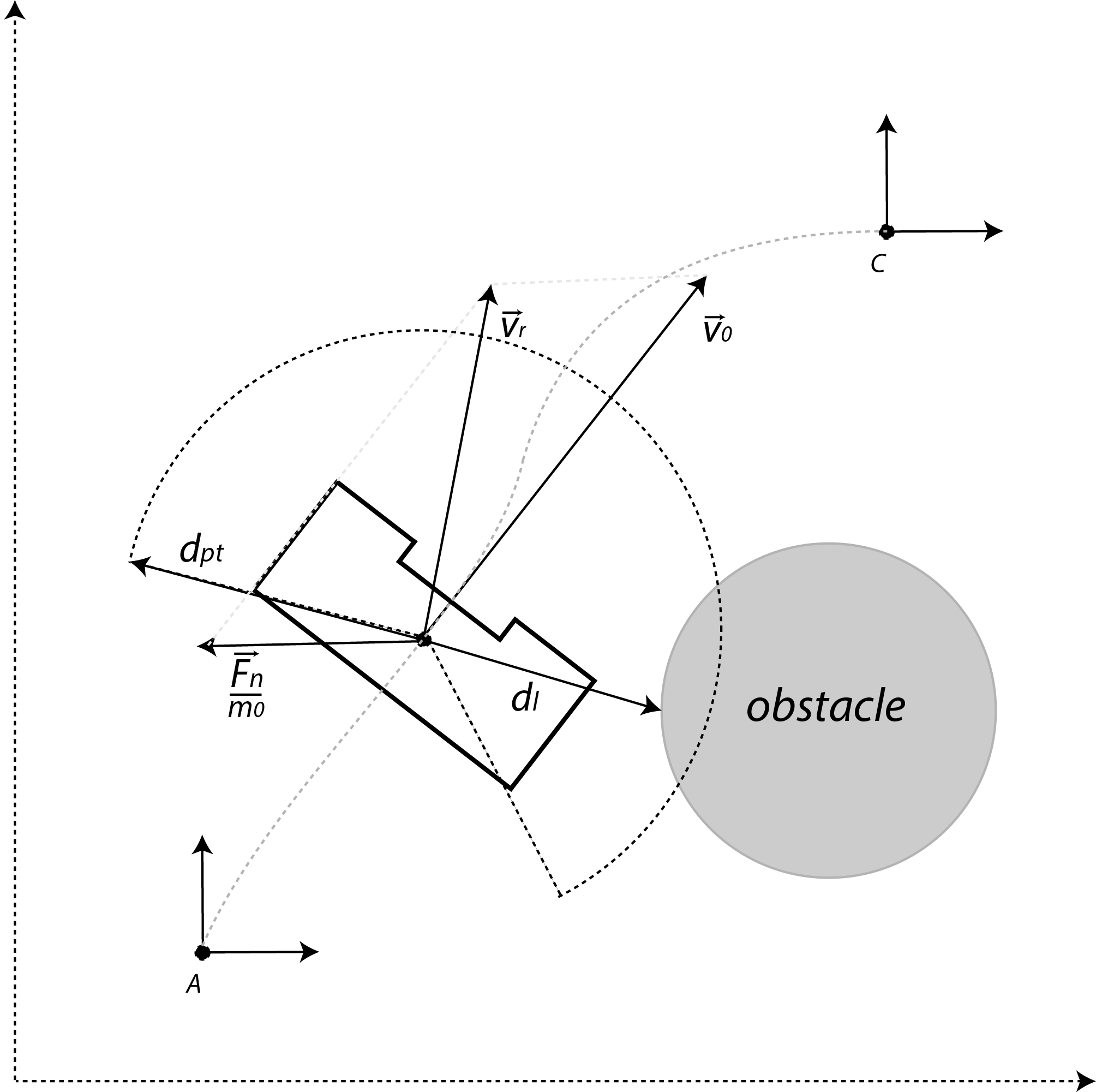

Potential field

If PICO would be located at position A and would directly travel towards point C, this will result in a collision with the walls. To avoid collision with the walls and other objects, a potential field around the robot will be created using the laser. This can be interpreted as a repulsive force [math]\displaystyle{ \vec{U} }[/math] given by:

- [math]\displaystyle{ \vec{U}(d_l) = \begin{cases} -K_0 \left(\frac{1}{d_{pt}}- \frac{1}{d_l} \right) \frac{1}{{d_l}^2} & \text{if } d_l \lt d_{pt}, \\ ~~\, 0 & \text{otherwise}, \end{cases} }[/math]

where [math]\displaystyle{ K_0 }[/math] is the virtual stiffness, [math]\displaystyle{ d_{pt} }[/math] the range of the potential field, and [math]\displaystyle{ d_l }[/math] the distance picked up from the laser. Summing these repulsive force [math]\displaystyle{ \vec{U} }[/math] results into a netto force,

- [math]\displaystyle{ \vec{F}_n = \sum_{i=1}^N \vec{U}(d_{l,i}), }[/math]

where [math]\displaystyle{ N }[/math] is the number of laser data. Assume that the PICO has an initial velocity [math]\displaystyle{ \vec{v_0} }[/math]. Once a force is introduced, this velocity vector is influenced. This influence is modeled as an impuls reaction; therefore the new velocity vector [math]\displaystyle{ \vec{v}_r }[/math] becomes,

- [math]\displaystyle{ \vec{v}_r = \vec{v_0} + \frac{\vec{F}_n \,}{m_0} \, \Delta t, }[/math]

where [math]\displaystyle{ m_0 }[/math] is a virtual mass of PICO, and [math]\displaystyle{ \Delta t }[/math] the specific time frame. The closer PICO moves to an object, the greater the repulsive force becomes

Detection

The initial idea of edge detection will be replaced by a set of multiple detection methods: Hough Transform and SLAM. At this point edge detection is still in use, this will be become a part of the Hough Transform code.

Edge detection

An edge can be considered as a discontinuity of the walls perpendicular to the travel direction of PICO. This can be either an door (open/closed), a corner or a slit in the wall. At this stage only the detection of a corner will be implemented.

.

The edge is then defined as the position of the corner (relative to PICO). At every (time)step the relative distances (wall to pico)will be checked, based on LRF data. If at some point the distance between one increment of the LRF and the neighbouring increment exceeds a certain treshold, it can be considered as an edge point. This is further illustrated by the image. This primary idea is however not robust, therefore the average of neigbouring increments is checked. Robustness will be further improved later on.

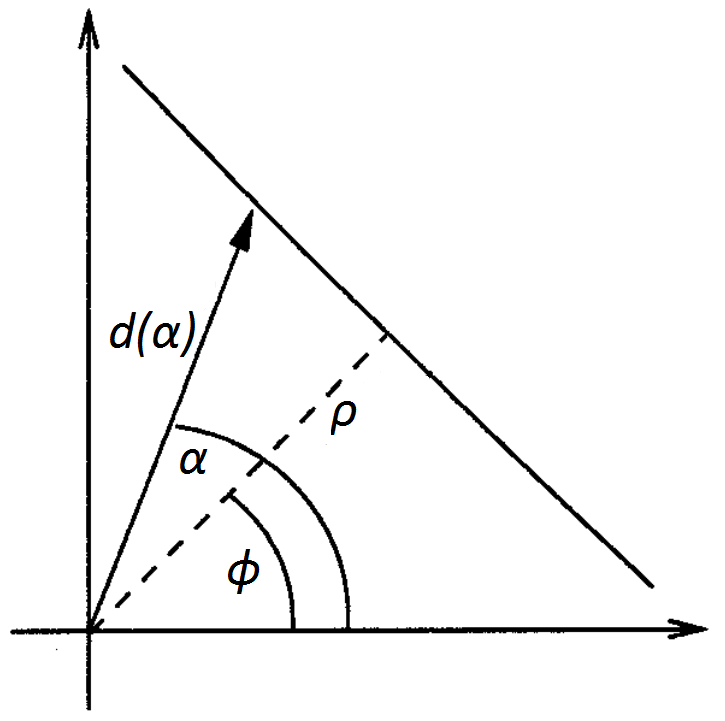

Hough Transform

The Hough transformation is used to detect lines using the measurement data. The basic idea is to transform a single point into the parameter space of the set of infinite lines running through it, called the Hough space. In the polar coordinate system of the laser scan, a line through a data point is given by [math]\displaystyle{ d(\alpha)=\frac{\rho}{\cos(\alpha-\phi)} }[/math] where [math]\displaystyle{ \rho }[/math] is the length of the orthogonal from the origin to the line and [math]\displaystyle{ \phi }[/math] is its inclination.

By varying [math]\displaystyle{ \phi }[/math] for each data point from 0 to 180 degrees, different [math]\displaystyle{ (\rho,\phi) }[/math] pairs are obtained. Laser points which are aimed at the same wall will yield the same [math]\displaystyle{ (\rho,\phi) }[/math] pair. To determine if a given [math]\displaystyle{ (\rho,\phi) }[/math] pair is a wall, [math]\displaystyle{ \rho }[/math] is discretized, and a vote is cast at the corresponding element for that [math]\displaystyle{ (\rho_d,\phi) }[/math] pair in an accumulator matrix. Elements with a high number of votes will correspond with the presence of a wall. The end-points of the walls are found using corners and the intersection points of the walls.

SLAM

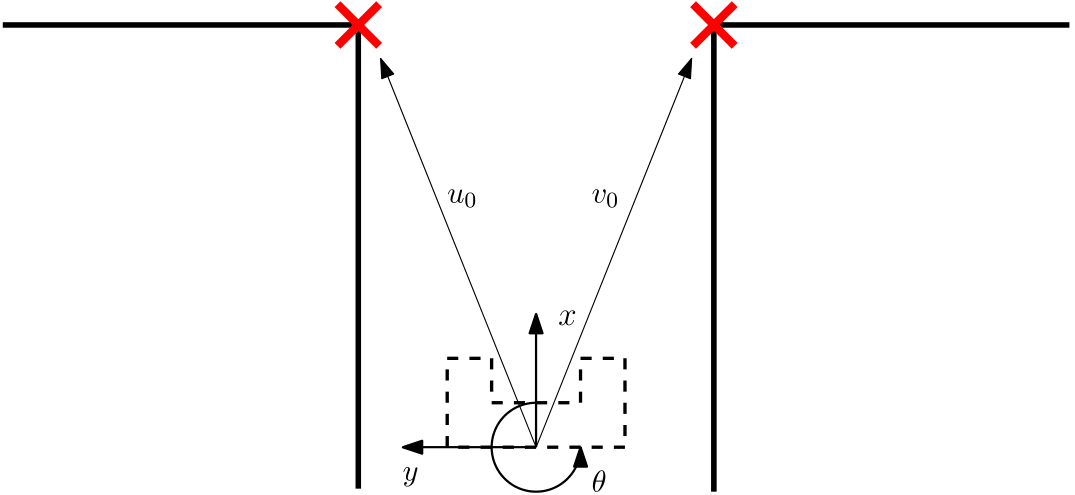

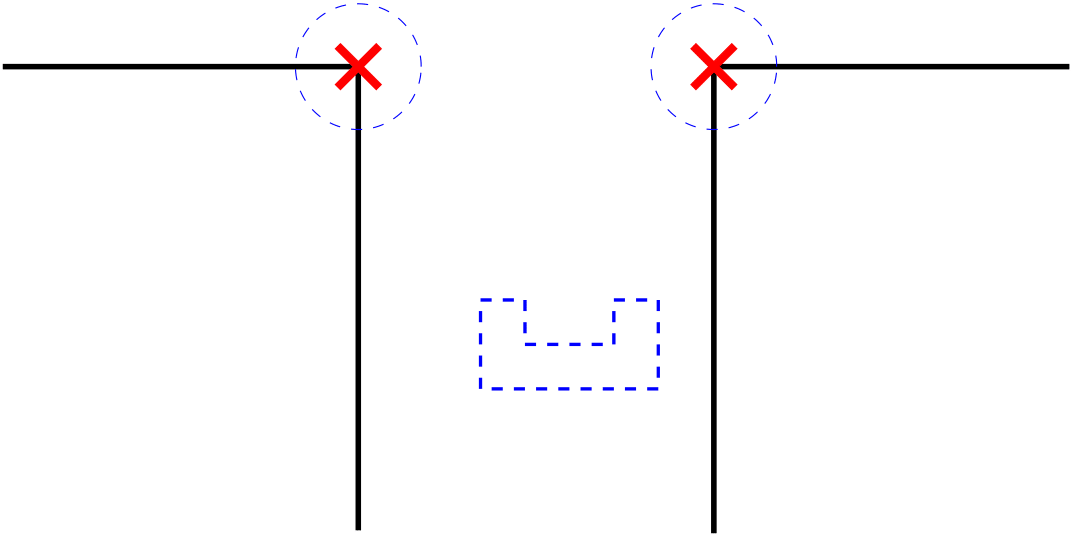

To determine the map of the maze and simultaneously the position of the PICO inside the maze, the odometry is used to make an initial guess. Due to the inaccuracy of the odometry data, the position must be verified and if necessary corrected with the laser measurements. In the figure to the right, the initial position of PICO is showed. Here the odometry data is [math]\displaystyle{ (x,y,\theta)=(0,0,0) }[/math] and exact. The data is verified with the laser measurements requiring landmarks, which in this case the are the corners denoted by the red crosses.

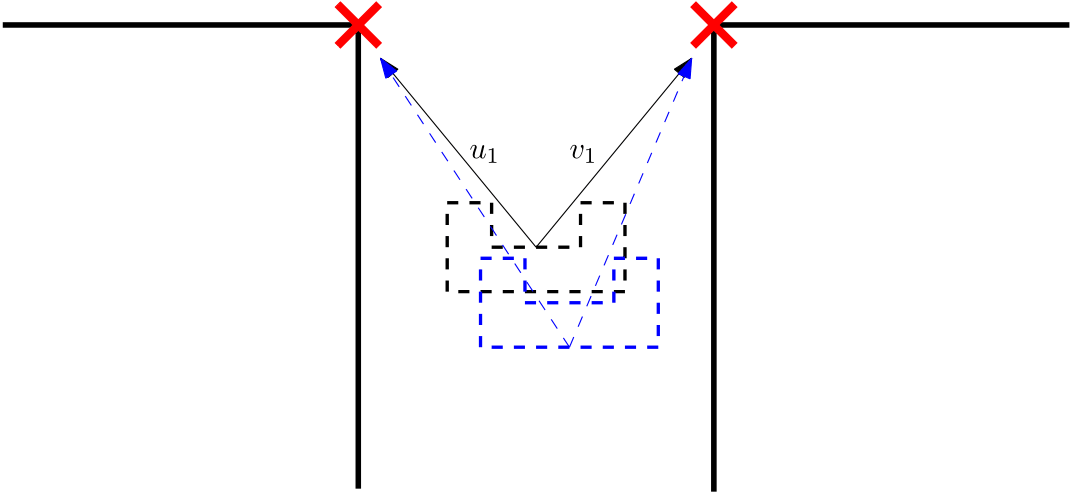

After a certain time step PICO is positioned at a different coordinate, resulting in an estimate and exact position. In the second figure to the right, the exact position of PICO is showed in black and the measured position of the PICO is given in blue. To correct the initial guess to the exact position the landmarks as determined earlier are used. The laser data at [math]\displaystyle{ t_k }[/math] is compared with the laser data at [math]\displaystyle{ t_{k-1} }[/math]. However, the laser data is not perfectly accurate aswell. Therefore, a trade off has to be made. To filter incomming data, laser sensors and odometry, an Extended Kalman Filter is used.

For the correct functionality of the SLAM method, landmarks are necessary. On the downside, the landmarks are not positioned similar with respect to PICO every time the laser measurement is performed. To connect the same landmarks of [math]\displaystyle{ t_k }[/math] and [math]\displaystyle{ t{k-1} }[/math], the data of the odometry is used. An initial guess will determine an estimate for the range where the landmark will be positioned. In \autoref{fig:landmarkRegion} the region where the landmark must be in is showed. In this region there can be measured where the landmark is positioned exactly. All the new landmarks that are not in the region are not used for determine the exact position at [math]\displaystyle{ t_k }[/math].

To simultaneously locate and map the robot and its environment, the observations have to be filtered. An Extended Kalman Filter is used to filter the observation and update the position of the robot and its environment. The EKF has two input parameters. The first parameter is the robot's controls ([math]\displaystyle{ \mu_t }[/math]) which are the velocity outputs or the odometry reading. Second given parameter is the observations ([math]\displaystyle{ z_t }[/math]) which is in this case, the laser data. With this information, a map of the environment can be made and in this case this map will consist out of markers ($ m$). Second the path of the robot ([math]\displaystyle{ x_t }[/math]) can be calculated with the input and the EKF. This is done by comparing the certainty of the odometry with the certainty of the laser measurement. This comparison results in a Kalman gain. When the Kalman gain is equal to zero, the system uses the odometry data to update the positions. However, when the Kalman gain is large, the system uses the markers and laser data update the positions.

Evaluating first experiment 8 May

The goal of the first experiment on 8 May was to get used with PICO and handling the communication between user and PICO (with use of GIT). Data is gathered for future use and the point-to-point movement is tested. Due to small errors, other software subsystems were not tested. During this experiment the following items were discussed/observed:

- Significant slits present in the walls are observed by PICO.

- Gradient present in the velocity, note the stick-slip like behaviour.

- Largers tolerances necessary in the code.

- The movement as prescribed by the code differs from the actual movement of PICO, monitoring will be important.

- Data is gathered during 6 different experiments.

- Armadillo is capable of detecting deadends

These items provided extra insight, the code will be changed to better suit the robot in the real case.

Evaluating second experiment 12 May

The goal of the second experiment was to check if all implemented functions act such as expected (in comparison with the simulator). During the experiment, PICO was not yet capable of solving the maze autonomously in every situation. The following observations are discussed:

- The coordinates of first edge of the corner is not recognized w.r.t. other detected edges (PICO must make the corner).

- Collision avoidance is working as expected.

- Every software piece indivually acts as expected, however the complete implementation need extra attention.

- There are some doubts with the implementation of a nonlinear controller

Evaluating Corridor Competition

Between the second experiment on 12 May and the corridor competition several changes were made to the existing code. The "detection" part of the software was improved and made slightly more robust by evaluating more laser beams wrt older versions. The communication between "detecion" and "movement" was further improved by implementing dynamic arrays (also known as Vector in C++).

The corridor competition was a succes, PICO managed to find the corner and to take this corner. With a winning time of 16 seconds we can call it a succes. However during the competition some minor things were observed which needs further attention:

- The nonlinear controller works fine, but could some fine tuning wrt slow velocity near the corner

- Cornering is happening at quite a slow pace.

- The "path planning" (planning the path to take the corner) was not as desired, as the collision avoidance was triggered.

- The second mainfile, consiting a "normal" controller was not considered a "better" option out of the two.