Summary



Back: [[PRE_Groep2]]

----

== Introduction ==

Freedom is valuable to humans. It is so important that the right to freedom is protected by the constitution and by several human rights organizations. Freedom relates to both the physical and the mental state, and it can be limited or taken away. Muscle diseases take away a person’s freedom on a physical level.

A muscular disease that has recently gained more attention is ALS, mainly because of the ‘ice bucket challenge’ that spread all over Facebook. The aim of the campaign was to raise awareness of the disease so that money would be raised for further research. In ALS, the neurons that are responsible for muscle movement die off over time (Foundation ALS, 2014). Groups of muscles lose their function because the responsible neurons can no longer send a signal from the brain to the muscles. This process continues until vital muscles, such as the muscles that help a person breathe, stop functioning.


During their illness, people who suffer from ALS feel like prisoners in their own bodies. Their freedom decreases in multiple ways. For example, at some point they may no longer be able to express themselves verbally, because the vocal cords are muscles too and can stop functioning.

A team of researchers (EUAN MacDonald Centre) tries to help people with ALS by giving them back their voice. They record the voice of ALS patients before the muscles fail, so that those recordings can later be used in speech technology. Instead of hearing a computerized sound, ALS patients can hear their own voice once their ability to speak is impaired. This creates a stronger emotional bond between the patient and the loved ones around them. Although this technology is already being developed, it can be improved further by adding emotion to a person’s own voice.

Several studies have investigated the acoustic features of particular emotions (Williams & Stevens, 1972; Breazeal, 2001; Bowles & Pauletto, 2010). These findings could be implemented in a speech program to add an emotion to a sentence. Although some emotions were recognized based on those features, the sample groups had difficulty recognizing certain emotions, such as sadness. Other studies offer an explanation for this problem: emotion cannot be recognized by acoustic features alone. Brusso et al. (2004) state that combining acoustic features (pitch, frequency, etc.) with anatomical features (facial expressions) is more effective for recognizing emotions. Scherer, Ladd, & Silverman (1984) found that combining acoustic features with grammatical features is effective. Both studies thus conclude that combining multiple characteristics of emotions leads to the correct recognition of a specific emotion. A speech program cannot include anatomical features. Nevertheless, it is important to find the best possible way for ALS patients to express themselves verbally, even if it is based only on acoustic and grammatical features, because it gives them an increased sense of freedom.

The goal of this research is not to look further into the characteristics of emotions, but to investigate the strength of the combination of acoustic and grammatical features alone. The research starts from sentences that are spoken without emotion but express emotion grammatically. The sentence ‘You did so great, I thought it was amazing’ expresses the emotion ‘happy’ because of the words that were chosen. But how large is the effect of adding acoustic features of emotion to such a sentence? This leads to the research question:

What is the effect on human perception of adding acoustic features of emotion to a sentence that already contains grammatical emotional features?

Based on prior research, a sentence with both acoustic and grammatical features should be more convincing. The hypothesis is that recognizing emotion in a voice has a positive effect on likeability, animacy and persuasiveness (Yoo & Gretzel, 2011; Vosse, Ham, & Midden, 2010).

The outcome of this research can be used in speech technology for patients who suffer from ALS, but it also has broader applications. It can be used whenever no anatomical features of emotion are available but a certain emotion still has to be communicated. In persuasive technology, for example, there are devices that aim to convince people to behave in a certain way. Some of these devices use only voice recordings instead of an avatar. In such cases, combining acoustic and grammatical features could make the device more convincing and thereby help bring about the desired change in behavior.

== Method ==

* '''Materials'''

Five programs were used for the research: Acapela Box, Audacity, Google Forms, Microsoft Office Excel, and Stata. Their use is explained below.

Acapela Box is an online text-to-speech generator that creates voice messages from your text using its voices. The voice of Will was used because it is English (US) and is available in the variants ‘happy’, ‘sad’ and ‘neutral’ (Acapela Group, 2009). To generate the voice of Will, the speech of a human speaker was recorded, and the parameters derived from these recordings are applied to the written text. In building the voice, attention was paid to diphones, syllables, morphemes, words, phrases, and sentences (Acapela Group, 2014). Acapela Box also lets you change the speech rate and the voice shaping (Acapela Group, 2009), which is very useful for different emotions. A sad sentence is spoken more slowly than a happy one because of long breaks between words and slower pronunciation (Williams & Stevens, 1972). A person speaks at 1.91 syllables per second when sad and at 4.15 syllables per second when angry (Williams & Stevens, 1972). Because the speech rates of happiness and anger do not differ much (Breazeal, 2001), the value of 4.15 syllables per second was also used for happiness. Voice shaping changes the pitch of the voice: a happy voice has a high average pitch and a sad voice a low average pitch (Liscombe, 2007). Acapela Box was therefore used to adjust the voice shaping.
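To give a feel for what the cited speech rates mean, the sketch below converts them into the duration of a sentence and into a tempo change percentage. The function names and the 10-syllable example sentence are hypothetical, and the percentage formula assumes that a tempo change scales the speech rate linearly (a pure time-stretch), which is a simplification.

```python
# Speech rates cited in the text, in syllables per second (Williams & Stevens, 1972).
SAD_RATE = 1.91
HAPPY_RATE = 4.15  # measured for anger, reused for happiness in this research


def sentence_duration(n_syllables: int, rate: float) -> float:
    """Seconds needed to speak n_syllables at `rate` syllables per second."""
    return n_syllables / rate


def tempo_change_percent(source_rate: float, target_rate: float) -> float:
    """Tempo change (percent) that turns source_rate into target_rate.

    Assumes a pure time-stretch: positive values speed up, negative values slow down.
    """
    return (target_rate / source_rate - 1.0) * 100.0


if __name__ == "__main__":
    n = 10  # hypothetical sentence of 10 syllables
    print(f"sad:   {sentence_duration(n, SAD_RATE):.2f} s")
    print(f"happy: {sentence_duration(n, HAPPY_RATE):.2f} s")
    print(f"happy -> sad: {tempo_change_percent(HAPPY_RATE, SAD_RATE):.1f} %")
```

For a 10-syllable sentence this gives about 5.24 s when sad versus 2.41 s when happy; slowing a happy rendering down to the sad rate would take a tempo change of roughly -54%.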

Audacity is free, open-source, cross-platform software for recording and editing audio (Audacity, 2014). It offers many functions that can give an audio fragment an emotional tone. The function ‘Amplify’ lets you choose a new peak amplitude; it was used to make the happy audio fragments louder than the sad ones. According to Bowles and Pauletto (2010), the amplitude difference between these two emotions is 7 dB on average, and the loudness of a neutral voice is close to that of a sad voice. In a sad voice, the pitch decreases at the beginning of the sentence and remains fairly constant in the second half (Williams & Stevens, 1972). A sad voice can also be recognized by a pitch drop at the end of the sentence. In contrast, a happy voice shows a wide variety of pitches (Breazeal, 2001). In Audacity, the pitch of an individual word can be changed by selecting it, choosing the effect ‘Change Pitch’ and entering the desired percentage change. Another feature of a sad voice is that the breaks between words are longer than for all other emotions (Bowles & Pauletto, 2010). By selecting a break between two words and applying ‘Change Tempo’, this break can be lengthened. The same function can lengthen or shorten individual words in a sentence. This is useful because one-syllable words are pronounced faster in a happy voice, whereas in a sad voice longer words are pronounced 20% slower and short words 10-20% slower than in a neutral voice (Bowles & Pauletto, 2010). ‘Change Tempo’ changes the speed of the audio but not its pitch, which is exactly what is needed for these emotions.
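The Audacity settings above are expressed in decibels and percentages. The following sketch, with hypothetical helper names, shows the arithmetic behind them: how a 7 dB difference translates into an amplitude ratio, how a pitch change in percent relates to semitones, and how much longer a word becomes when it is slowed down. It assumes that "20% slower" means a tempo change of -20%, which is only one possible reading of the cited figures.

```python
import math


def db_to_amplitude_ratio(db: float) -> float:
    """Amplitude (not power) ratio corresponding to a gain in decibels."""
    return 10 ** (db / 20)


def pitch_percent_to_semitones(percent: float) -> float:
    """Semitones corresponding to a relative frequency change in percent."""
    return 12 * math.log2(1 + percent / 100)


def duration_factor_for_slowdown(percent_slower: float) -> float:
    """Duration multiplier when the tempo is reduced by `percent_slower` percent.

    Assumption: "X% slower" is read as a tempo change of -X%.
    """
    return 1 / (1 - percent_slower / 100)


if __name__ == "__main__":
    print(f"7 dB louder -> amplitude x {db_to_amplitude_ratio(7):.2f}")
    print(f"+10% pitch  -> {pitch_percent_to_semitones(10):.2f} semitones")
    print(f"20% slower  -> duration x {duration_factor_for_slowdown(20):.2f}")
```

So a 7 dB difference corresponds to roughly 2.24 times the amplitude, and slowing a word by 20% under this reading stretches it to 1.25 times its neutral length.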

(Ten different sentences were used and adjusted for this research. The specific adjustments made to these sentences can be found at [[Adjustments sentences]].)

Google Forms lets you create a survey together with others. It is a tool for collecting information: audio fragments (embedded as video) can be inserted and any kind of question can be written. Once enough responses have been received, the data are collected in a spreadsheet that can be exported as an .xlsx file for Microsoft Office Excel (Google Inc., 2014).

Microsoft Office Excel is a spreadsheet program. The spreadsheet can be imported into Stata, where its columns become variables. Stata was used to analyze and interpret the data.

* '''Design'''

Before the experiment was executed, a power analysis was done to estimate how many participants would be needed. The power was set to 0.8 at a significance level of 0.05. For a moderate effect size, 64 participants per condition would be needed for a two-tailed t-test and 51 per condition for a one-tailed t-test. A one-tailed t-test suits this experiment, because either no relation or a positive relation was expected; a negative relation was not. Since an effect could only occur in one direction, a two-tailed t-test was not needed.

However, the effect size was presumably small rather than moderate. A second power analysis was therefore executed for a small effect size: a two-tailed t-test would need 394 participants per condition and a one-tailed t-test 310. Because the resources and time to recruit that many participants were not available, the experiment was executed with 51 participants per condition.
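The sample sizes above can be approximated without dedicated power software. The sketch below uses the normal approximation for an independent two-sample t-test plus the small-sample correction z²/4, assuming Cohen's d = 0.5 for a moderate and d = 0.2 for a small effect. The function name is hypothetical, and this approximation is not necessarily the procedure the authors used; exact software solves the noncentral t-distribution instead.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.8, tails: int = 2) -> int:
    """Approximate participants per group for an independent two-sample t-test.

    n = 2 * (z_alpha + z_beta)^2 / d^2 + z_alpha^2 / 4, rounded up.
    The second term corrects the normal approximation towards the t-test result.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / tails)  # critical value, one- or two-tailed
    z_beta = z(power)               # quantile for the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / d ** 2 + z_alpha ** 2 / 4
    return math.ceil(n)


if __name__ == "__main__":
    for label, d in [("moderate", 0.5), ("small", 0.2)]:
        print(label, "two-tailed:", n_per_group(d, tails=2),
              "one-tailed:", n_per_group(d, tails=1))
```

With these settings the function returns 64 and 51 participants per condition for a moderate effect and 394 and 310 for a small effect (two- and one-tailed), in line with the numbers reported above.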

A between-subjects design was used. The two conditions were emotionally loaded voices and neutral voices, and each group heard only one of them. These two conditions form the independent variable, which is therefore categorical. The dependent variables were likeability, animacy and persuasiveness. Each consisted of items on a Likert scale from 1 to 5 and was treated as an interval variable. The dependent variables were composed of several questions from the questionnaire: likeability of questions 27, 30, 35, 38 and 40; animacy of questions 28, 31, 32 and 34; and persuasiveness of questions 29, 33, 36 and 37.

Because the survey is about water consumption and persuasiveness, two covariates were constructed that could influence how participants responded to the comments of Will. These covariates measure how easily a participant is convinced and how much they care about the environment. Both are composed of several questions from the questionnaire: how easily a participant is convinced of questions 5, 8, 13 and 18, and how much they care about the environment of questions 4, 6, 10, 11, 12, 15, 17 and 19.

(The questions of the questionnaire can be found at [[Research design]].)
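To illustrate how the scale scores can be built from the questionnaire, here is a minimal sketch that averages the item responses per construct, using the question numbers listed above. It assumes each response is stored as a dictionary keyed by question number with values on the 1-5 Likert scale; whether the original analysis averaged or summed the items, and whether any items were reverse-coded, is not stated, so both are assumptions. The names `SCALES` and `scale_scores` are hypothetical.

```python
from statistics import mean

# Question numbers per construct, as listed in the Design section.
SCALES = {
    "likeability": [27, 30, 35, 38, 40],
    "animacy": [28, 31, 32, 34],
    "persuasiveness": [29, 33, 36, 37],
    "ease_of_persuasion": [5, 8, 13, 18],                     # covariate
    "environmental_concern": [4, 6, 10, 11, 12, 15, 17, 19],  # covariate
}


def scale_scores(response: dict[int, int]) -> dict[str, float]:
    """Mean 1-5 item score per construct for one participant.

    Assumes no reverse-coded items; a missing question raises KeyError.
    """
    return {name: mean(response[q] for q in items) for name, items in SCALES.items()}


if __name__ == "__main__":
    # Hypothetical participant: 3 on every question, higher on the likeability items.
    resp = {q: 3 for q in range(1, 41)}
    resp.update({27: 5, 30: 4, 35: 3, 38: 4, 40: 4})
    print(scale_scores(resp))
```

Subtracting 3 from a mean score maps it onto the -2 to +2 coding used for persuasion in the results.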

* '''Procedure'''


The first part was a general one in which some demographic information was asked. In addition, some questions were asked about two personal characteristics, and some questions were included to prevent participants from directly guessing the research goal. The second part consisted of a simulation in which participants answered questions about their showering habits. After each answer, an audio fragment was played that gave either positive or negative feedback. The third part contained questions about how participants experienced the voice they heard. Finally, some closing questions gave an indication of general matters such as concentration and comprehensibility.

* '''Participants'''


The participants (n = 101) were personally asked to fill in the questionnaire. They were recruited from our lists of friends on Facebook. Facebook was used to avoid elderly people who cannot change their showering habits because they live in a care home. In addition, only participants older than 18 were asked, since younger people often do not pay their own energy bill.

From the original data set, three participants were removed because they submitted the questionnaire twice. One more participant was removed because he commented that he had not understood the questions about Will. Furthermore, participants who totally disagreed when asked whether they master the English language were removed, as were participants who were totally distracted while filling in the questionnaire. This left 94 participants.

Each participant received either the questionnaire with the neutral voice (n1 = 46) or the one with the emotionally loaded voice (n2 = 48). The condition with the neutral voice is called category 1 and the other condition category 2.


== Results ==

As stated in the introduction, three concepts of perception were used in this research: persuasion, likeability and animacy. The reliability of the scales for these concepts was tested with Cronbach's alpha, which was 0.85, 0.89 and 0.85 respectively. The concepts are discussed in this order.
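For reference, Cronbach's alpha compares the sum of the item variances with the variance of the total score. The sketch below is a plain-Python version of the standard formula with a hypothetical function name and toy data; the values reported above were presumably obtained in Stata (which provides an `alpha` command), and that computation is not reproduced here.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*rows))          # one tuple of scores per item
    totals = [sum(r) for r in rows]   # total score per respondent
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


if __name__ == "__main__":
    # Hypothetical data: 3 respondents answering 2 items.
    print(cronbach_alpha([[1, 2], [2, 2], [3, 5]]))
```

Values around 0.85 to 0.89, as found here, are usually read as good internal consistency.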

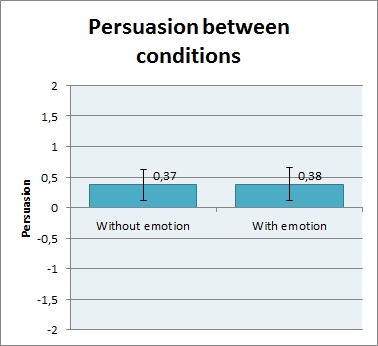

To test whether the persuasiveness of the voice was perceived differently between the conditions, an ANOVA was performed. This resulted in p = 0.96 and <math>\eta ^2</math> = 0.00003. A graphical representation of this test can be seen in figure 1. The y-axis represents a 5-point Likert scale, coded from -2 (totally disagree) to +2 (totally agree), where zero means neutral.

Next, a second test was done that included only participants who said they were willing to adjust their showering habits. In this case p = 0.95 and <math>\eta ^2</math> = 0.00005.
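In a one-way ANOVA, <math>\eta ^2</math> is the share of the total variance in the scale score that is explained by the condition, SS_between / SS_total. The minimal stdlib sketch below, with a hypothetical function name, computes F and <math>\eta ^2</math>; the p-value needs the F-distribution and is left to a statistics package (for example `scipy.stats.f_oneway`, which returns F and p).

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, float]:
    """Return (F, eta squared) for a one-way ANOVA on the given groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    # variation explained by group membership
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # variation of individuals around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)
    return f_stat, eta_sq
```

An <math>\eta ^2</math> of 0.00003, as found for persuasion, means that the condition explains practically none of the variance in the persuasion scores.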

It was also tested whether persuasion differed between the conditions for participants who heard at least four positive audio fragments (p = 0.43; <math> \eta ^2 </math> = 0.03) and for participants who heard at least four negative audio fragments (p = 0.69; <math> \eta ^2 </math> = 0.02).

[[File: Persuasion.jpg |350px|Figure 1: Persuasion per condition]]

Figure 1: Persuasion per condition

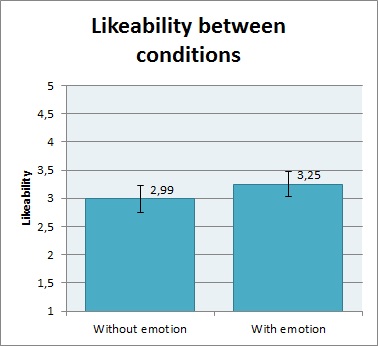

The third test examined whether participants in the condition with emotion rated the voice as more likeable than participants in the neutral condition. Since not all assumptions for an ANOVA were met (normality across both conditions was rejected), the non-parametric Kruskal-Wallis test (Stata's kwallis) was performed. This gave p = 0.17 and <math>\eta ^2</math> = 0.02. The effect size was calculated with the following formula: <math> \eta ^2=\frac{\chi ^2}{N-1}</math> (NAU EPS625). Figure 2 shows these results. The y-axis represents the scale from the Godspeed questionnaire, which ranges from 1 to 5.
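The effect-size formula above takes the Kruskal-Wallis statistic (reported by Stata as a chi-squared value) and divides it by N - 1. The sketch below computes that statistic H in plain Python, with the usual correction for tied ranks, and then applies the formula. The function names and example scores are hypothetical; it illustrates the calculation and is not a replacement for Stata's kwallis or `scipy.stats.kruskal`, which also return the p-value.

```python
from collections import Counter


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kruskal_wallis(groups: list[list[float]]) -> tuple[float, float]:
    """Return (H, eta squared): H corrected for ties, eta^2 = H / (N - 1)."""
    values = [x for g in groups for x in g]
    n = len(values)
    ranks = average_ranks(values)
    rank_term, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n)
    ties = sum(t ** 3 - t for t in Counter(values).values())
    h /= 1 - ties / (n ** 3 - n)
    return h, h / (n - 1)


if __name__ == "__main__":
    # Hypothetical 1-5 scale scores for category 1 (neutral) and category 2 (emotional).
    neutral = [2.6, 3.0, 3.2, 2.8, 3.4]
    emotional = [3.0, 3.6, 3.8, 3.4, 4.0]
    h, eta_sq = kruskal_wallis([neutral, emotional])
    print(f"H = {h:.3f}, eta^2 = {eta_sq:.3f}")
```

Working on ranks rather than raw scores is what makes the test robust to the non-normal distributions that ruled out the ANOVA here.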

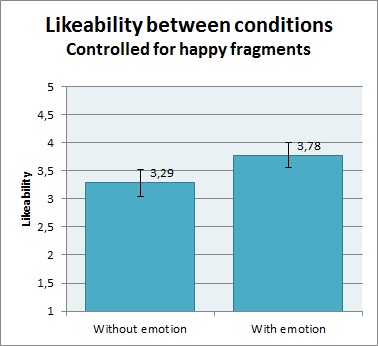

As a follow-up, the Kruskal-Wallis test was run two more times: once with only participants who heard at least four positive audio fragments (p = 0.02; <math> \eta ^2 </math> = 0.22), and once with only participants who heard at least four negative audio fragments (p = 0.73; <math> \eta ^2 </math> = 0.01). The effect of mainly hearing positive audio fragments on likeability is shown in figure 3, which uses the same y-axis scale as figure 2.

[[File: Likeability1.jpg | 350px |Figure 2: Likeability per condition]]

[[File: Likeability2.jpg | 350px | Figure 3: Likeability per condition when heard audio-fragments were mostly positive]]

Figure 2: Likeability per condition

Figure 3: Likeability per condition when the heard audio fragments were mostly positive

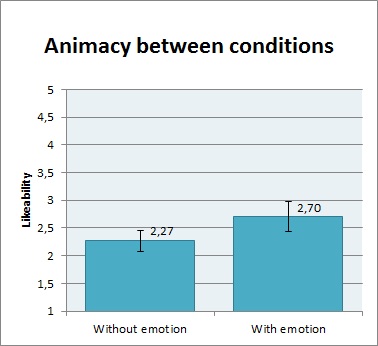

Finally, a Kruskal-Wallis test was done to test whether animacy differed between the conditions. This test was chosen because the assumptions for an ANOVA were not met (equal variance in both groups was rejected). The p-value was 0.02 and <math>\eta ^2</math> = 0.06. The difference can be seen in figure 4, whose y-axis represents the scale from the Godspeed questionnaire, coded from 1 to 5.

To test whether the type of emotion heard influenced perceived animacy, participants who heard at least four positive fragments (p = 0.16; <math> \eta ^2 </math> = 0.08) and participants who heard at least four negative fragments (p = 0.17; <math> \eta ^2 </math> = 0.21) were tested separately.

[[File: Animacy.jpg | 350px |Figure 4: Animacy per condition]]

Figure 4: Animacy per condition

== Discussion and conclusion ==

Looking at the results, persuasion did not differ significantly between the conditions, as the high p-value shows. The effect size was also close to zero. Even after controlling for willingness to adjust showering habits, the effect remained non-significant and the effect size hardly increased.

Likeability also showed a non-significant effect, but unlike persuasion a small effect size was found. However, a significant, large effect of condition on likeability was found when only participants who heard at least four out of five positive audio fragments were included. This makes sense, since likeability is itself a positive concept: the happier a fragment sounds (happy versus neutral), the more likeable it is perceived to be.

Both findings, for persuasion and for likeability, go against the hypothesis formulated in the introduction. Several explanations are possible. First, for persuasion, emotion alone might not be enough to persuade people to change their behavior. Some participants commented that the content of the sentence also matters: more information about water consumption should be available and supporting arguments should be given. This information was deliberately left out, to focus only on the emotional rather than the informational content of the sentences.

For likeability (without selecting on positive fragments), another problem may have affected the results. Many participants commented that the voice sounded too fake or robotic. It has been found that the more human-like a robot is, the more accepted and likeable it is (Royakkers et al., 2012). Some participants did not find the robotic voice human-like and therefore probably did not find it very likeable. However, when mostly positive fragments were heard, the voice was perceived as more likeable, so the positive fragments probably sounded more human-like than the negative ones.

For animacy, a significant difference was found between the two conditions: perceived animacy was higher in the condition with emotion than in the condition without emotion. The effect size indicates a medium effect (following the guidelines for a one-way ANOVA from the Cognition and Brain Sciences Unit). This finding is in line with the hypothesis: an emotional voice is perceived as more lively than a neutral voice. Animacy was also tested separately for participants who heard at least four positive audio fragments and for those who heard at least four negative fragments. Both groups showed larger effect sizes than the overall analysis. A possible explanation is that participants who heard the same emotion several times became more accustomed to the voice and, having no other voice to compare it with, perceived it as more lively. However, these findings were not significant, and their reliability is questionable because the two groups consisted of only 24 and 10 participants respectively.
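The labels small, medium and large used for <math>\eta ^2</math> in this report can be made explicit with a tiny helper. It assumes the commonly cited benchmarks of 0.01, 0.06 and 0.14, which we take to correspond to the guideline referred to above; the function name is hypothetical.

```python
def classify_eta_sq(eta_sq: float) -> str:
    """Label an eta-squared value using the 0.01 / 0.06 / 0.14 benchmarks (assumed)."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Effect sizes reported in the results section.
    for label, value in [("persuasion", 0.00003), ("likeability", 0.02),
                         ("likeability, mostly positive", 0.22), ("animacy", 0.06)]:
        print(f"{label}: {value} -> {classify_eta_sq(value)}")
```

Applied to the reported values, 0.06 for animacy falls in the medium band, 0.22 for likeability after mostly positive fragments in the large band, and 0.02 for likeability overall in the small band.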

Besides the issues mentioned above, further improvements are possible. The following limitations affect all three measured concepts. First, given the time available for this research, concessions had to be made on the size of the sample groups. According to the prior power analysis, the number of participants was only enough to reliably detect a medium effect, so more participants would have been needed to enhance reliability. A second problem might be the speech program that was used. The difference between the neutral voice and the emotionally loaded voice may have been somewhat hard to hear, which again decreases the chance of finding an effect. The reason lies in how the program creates its voices. As stated in the method, the speech produced by Acapela is not purely computer-generated: it is based on recordings of a human speaker. But is it possible for a person to speak without any emotion at all? To create a more obvious difference between sentences with and without acoustic features of emotion, a mechanical voice could be used. This was ultimately not done, because manufacturers of robots and speech programs can already quite easily generate a better-sounding voice than a robotic one. Using a robotic voice for the neutral condition would therefore have reduced the practical applicability of this research.

The outcome of this research is in accordance with the previous done research that is stated in the introduction. Namely, when multiple characteristics of emotions were combined, e.g acoustic and meaning of the sentence, it has a reinforcing effect. This reinforcing effect after a combination of different features was also found in previous researches. | |||

Now lets look back at the problem that was given at the beginning of this reserach: the extreme limited freedom of patients who suffer from ALS. As any human they strive for independence, but this becomes impossible in many cases as the illness develops. Increasing their freedom in any way would be a gift to them. The freedom to express yourself is the main focus of this research. Researches are busy with technology that allows people with ALS to use their own voice with speech technology (EUAN MacDonald Centre). This research looks further than using the sound of the voice from people with ALS. By using someone’s own sound the level of animacy would improve a lot, but as this research shows adding acoustic features of emotion to a voice produced by a TTS, will also enhance the level of animacy. Also the perceived likeability when using certain emotions will be increased when implementing the acoustic features. Using these findings in speech technology will allow people with ALS to create a stronger emotional bond through speech with the people surroundig them. | |||

Meanwhile, this implementation might be less useful for persuasive technology, which was mentioned in the introduction as a possible application. The analysis showed no significant difference in persuasiveness between voices with and without acoustic features of emotion, and the effect size was close to zero. Persuasiveness was included to explore broader applications of the findings; it is not necessarily relevant to the issue of ALS. This does not mean that the findings are irrelevant for other applications. When no anatomical features of emotion are available, the combination of acoustic and grammatical features has a positive effect on animacy and likeability. This can be used for social robots that cannot express themselves through facial expressions, such as the Nao robot and the Amigo robot. In this research, the voices were created by changing several parameters according to the intended emotion. The Nao robot can change the pitch and the volume of its voice (Aldebaran, 2013). Both are acoustic features of emotion that were also used to create the voices in this research. This research also manipulated the speech rate and the pitch variation within sentences and words, but the documentation of Nao's text-to-speech program mentions neither speed changes nor pitch variation within sentences and words. Nao can therefore be used to create emotionally loaded sentences, but these sentences will not express the emotion as well as the sentences in this research did, because not all of the parameters used here can be changed on Nao. Nao can serve many different goals; if the goal is known, a set of predefined sentences can be programmed that are (partially) adjusted to the right emotion.
If future improvements to Nao's speech technology expand the set of adjustable parameters, Nao could become even more suitable for conveying emotionally loaded sentences.

The second social robot mentioned was Amigo. Amigo uses three text-to-speech programs: one made by Philips, Google's TTS, and Ubuntu's eSpeak (Voncken, 2013). In eSpeak the voice can be adjusted by hand. Of the parameters used in this research, speech rate and volume can also be changed in eSpeak (eSpeak, 2007). However, these settings apply only to a fragment as a whole; they cannot be changed within a sentence or a word. eSpeak is therefore not a flexible system for communicating emotional sentences. The TTS developed by Philips, on the other hand, is already quite advanced: it is possible to select a certain emotion, including sad and excited (Philips, 2014). In addition, Amigo generates its speech in real time: parts of the sentences are predefined, while other parts are filled in by Amigo itself. Amigo can also choose among multiple sentences for specific situations (Lunenburg). These characteristics make Amigo more flexible than Nao for communicating emotional sentences.
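As a hedged illustration of the per-fragment limitation, the sketch below builds an eSpeak command line. It assumes the standard eSpeak command-line options `-s` (speed in words per minute) and `-a` (amplitude, 0 to 200). The emotion presets are hypothetical values chosen for illustration, not settings used in this research. Because each option applies to the whole call, changing speed or volume within a sentence would require splitting the sentence into separate fragments.

```python
# Minimal sketch: one eSpeak call = one fragment with one set of voice settings.
# The preset numbers below are hypothetical, not values from this research.
PRESETS = {
    "neutral": {"speed_wpm": 175, "amplitude": 100},
    "happy": {"speed_wpm": 200, "amplitude": 140},
    "sad": {"speed_wpm": 130, "amplitude": 90},
}


def espeak_command(text: str, emotion: str) -> list[str]:
    """Build an argument list for the eSpeak CLI.

    -s sets the speed in words per minute and -a the amplitude (0-200);
    both apply to the entire text passed in this single call.
    """
    settings = PRESETS[emotion]
    amplitude = settings["amplitude"]
    if not 0 <= amplitude <= 200:
        raise ValueError("eSpeak amplitude must be between 0 and 200")
    return ["espeak", "-s", str(settings["speed_wpm"]), "-a", str(amplitude), text]


cmd = espeak_command("You did so great, I thought it was amazing", "happy")
print(cmd)
# To actually speak it (requires eSpeak to be installed):
# import subprocess; subprocess.run(cmd, check=True)
```

A sentence whose second half should sound sadder would need two such calls, one per fragment, which is exactly the inflexibility described above.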

Overall, the findings of this research can help increase the perceived animacy and likeability of robots. At present, their applicability depends on the kind of robot used, including its technical capabilities and purpose.

During this research a program was found (Oddcast, 2012) that can add specific sounds to a voice recording, such as ‘Wow’ or sobbing sounds. Such sounds could strengthen the effects found here, so this research can serve as a basis for further work. In addition, more research is needed on the acoustic characteristics of emotions: the findings of previous research on this topic can be applied, but they can also be improved. An important caveat is that it will always be difficult for people to recognise an emotion based on acoustic features alone, because correct recognition of an emotion depends on a combination of multiple features.

== References ==

Acapela Group. (2009, November 17). Acapela Box. Retrieved from Acapela: https://acapela-box.com/AcaBox/index.php

Acapela Group. (2014, September 26). How does it work? Retrieved from Acapela: http://www.acapela-group.com/voices/how-does-it-work/

Aldebaran Commodities B.V. (2013). ALTextToSpeech. Retrieved from Aldebaran: http://doc.aldebaran.com/1-14/naoqi/audio/altexttospeech.html

Audacity. (2014, September 29). Audacity. Retrieved from Audacity: http://audacity.sourceforge.net/?lang=nl

Bowles, T., & Pauletto, S. (2010). Emotions in the voice: humanising a robotic voice. York: The University of York.

Breazeal, C. (2001). Emotive Qualities in Robot Speech. Cambridge, Massachusetts: MIT Media Lab.

Busso, C., Deng, Z., Yildirim, S., Bulut, M., Lee, C. M., Kazemzadeh, A., ... & Narayanan, S. (2004, October). Analysis of emotion recognition using facial expressions, speech and multimodal information. In Proceedings of the 6th international conference on Multimodal interfaces (pp. 205-211). ACM.

Cognition and Brain Science Unit. (2014, October 2). Rules of thumb on magnitudes of effect sizes. Retrieved from CBU: http://imaging.mrc-cbu.cam.ac.uk/statswiki/FAQ/effectSize

eSpeak. (2007). eSpeak text to speech. Retrieved from eSpeak: http://espeak.sourceforge.net/

Google Inc. (2014, April 14). Google Forms. Retrieved from Google: http://www.google.com/forms/about/

Liscombe, J. J. (2007). Prosody and Speaker State: Paralinguistics, Pragmatics, and Proficiency. New York: Columbia University.

Lunenburg, J. J. M. Contact person about TTS of Amigo. Contacted on: 16 October 2014.

Northern Arizona University. EPS 625 – Intermediate statistics: The Kruskal-Wallis test. Retrieved from EPS 625: http://oak.ucc.nau.edu/rh232/courses/EPS625/Handouts/Nonparametric/The%20Kruskal-Wallis%20Test.pdf

Oddcast. (2012). Text-to-speech. Retrieved from Oddcast: http://www.oddcast.com/demos/tts/emotion.html

Philips. (2014). Text-to-speech. Retrieved from Philips: http://www.extra.research.philips.com/text2speech/ttsdev/index.html

Royakkers, L., Damen, F., Est, R. V., Besters, M., Brom, F., Dorren, G., & Smits, M. W. (2012). Overal robots: automatisering van de liefde tot de dood.

Scherer, K. R., Ladd, D. R., & Silverman, K. E. (1984). Vocal cues to speaker affect: Testing two models. The Journal of the Acoustical Society of America, 76(5), 1346-1356.

Voncken, J. M. R. Investigation of the user requirements and desires for a domestic service robot, compared to the AMIGO robot.

Vossen, S., Ham, J., & Midden, C. (2010). What makes social feedback from a robot work? disentangling the effect of speech, physical appearance and evaluation. In Persuasive technology (pp. 52-57). Springer Berlin Heidelberg.

Williams, C. E., & Stevens, K. N. (1972). Emotions and Speech: Some Acoustical Correlates. Cambridge, Massachusetts: Massachusetts Institute of Technology.

Yoo, K. H., & Gretzel, U. (2011). Creating more credible and persuasive recommender systems: The influence of source characteristics on recommender system evaluations. In Recommender systems handbook (pp. 455-477). Springer US.

Latest revision as of 15:52, 19 October 2014

Introduction

Freedom is valuable to humans. It is so important that the right to freedom is protected by constitutions and by several human rights organizations. Freedom relates to both the physical and the mental state, and it can be limited or taken away. Muscle diseases take away a person’s freedom on a physical level.

A muscular disease that has recently gained more attention is ALS, mainly because of the ‘ice bucket challenge’ that spread across Facebook. The aim of the campaign was to raise awareness of the disease so that money would be raised for further research. In ALS, the neurons responsible for muscle movement die off over time (Foundation ALS, 2014). Groups of muscles lose their function because the responsible neurons can no longer send signals from the brain to the muscles. This process continues until vital muscles, such as those that help a person breathe, stop functioning. During their illness, people who suffer from ALS feel like prisoners in their own bodies. Their freedom decreases in multiple ways. For example, at some point they may no longer be able to express themselves verbally, because the vocal cords are moved by muscles that can stop functioning.

A team of researchers (EUAN MacDonald Centre) tries to help people with ALS by giving them back their voice. They record the voices of ALS patients before the muscles fail, so that those recordings can be used in speech technology. Instead of hearing a computerized sound, ALS patients can then hear their own voice once their ability to speak is impaired. This creates a stronger emotional bond between patients and the loved ones around them. This technology could be improved further by adding emotion to a person's own voice.

Several studies have investigated the acoustic features of particular emotions (Williams & Stevens, 1972; Breazeal, 2001; Bowles & Pauletto, 2010). These findings could be implemented in a speech program to add an emotion to a sentence. Although some emotions were recognized based on those features, the sample groups had difficulty recognizing certain emotions, such as sadness. Other studies offered an explanation for this problem: emotion cannot be recognized from acoustic features alone. Busso et al. (2004) state that combining acoustic features (pitch, frequency, etc.) with anatomical features (facial expressions) makes emotion recognition more effective. Scherer, Ladd, & Silverman (1984) found that combining acoustic features with grammatical features is effective. Both studies thus conclude that combining multiple characteristics of an emotion leads to correct recognition of that emotion. A speech program, however, cannot include anatomical features. Nevertheless, it is important to find the best possible way for ALS patients to express themselves verbally, even if only acoustic and grammatical features are available, because it gives them an increased sense of freedom.

The goal of this research is not to investigate the characteristics of emotions further, but to investigate the strength of the combination of acoustic and grammatical features alone. The starting point is sentences that carry no acoustic emotion but express emotion grammatically. For example, the sentence ‘You did so great, I thought it was amazing’ expresses the emotion ‘happy’ through its choice of words. But how large is the effect of adding acoustic features of emotion to such a sentence? This leads to the following research question:

What is the effect on human perception of adding acoustic features of emotion to a sentence with grammatical emotional features?

Based on prior research, a sentence with both acoustic and grammatical features should be more convincing. The hypothesis is that recognizing emotion in a voice has a positive effect on likeability, animacy and persuasiveness (Yoo & Gretzel, 2011; Vossen, Ham, & Midden, 2010).

The outcome of this research can be used in speech technology for patients who suffer from ALS, but it also has broader applications. It can be used whenever no anatomical features of emotion are available but a certain emotion still needs to be communicated. In persuasive technology, for example, some devices aim to convince people to behave in a certain way, and some of them use only voice recordings instead of an avatar. In these cases, combining acoustic and grammatical features could make the device more persuasive and so lead to the desired change in behavior.

Method

- Materials

Five programs were used for the research: Acapela Box, Audacity, Google Forms, Microsoft Office Excel, and Stata. Their use is explained below.

Acapela Box is an online text-to-speech generator that creates voice messages from your text using its voices. The voice of Will was used because it is English (US) and offers the styles ‘happy’, ‘sad’ and ‘neutral’ (Acapela Group, 2009). To create the voice of Will, the speech of a human speaker was recorded; these recordings are the basis from which the written text is synthesized. In building the voice, attention was paid to diphones, syllables, morphemes, words, phrases, and sentences (Acapela Group, 2014). Acapela Box also lets you change the speech rate and the voice shaping (Acapela Group, 2009), which is useful for different emotions. A sad sentence is spoken more slowly than a happy one, because of long breaks between words and slower pronunciation (Williams & Stevens, 1972). A person speaks at 1.91 syllables per second when sad and at 4.15 syllables per second when angry (Williams & Stevens, 1972). Because the speech rates of happiness and anger do not differ much, the value of 4.15 syllables per second was also used for happiness (Breazeal, 2001). Voice shaping changes the pitch of the voice: a happy voice has a high average pitch and a sad voice a low average pitch (Liscombe, 2007). Acapela Box was therefore also used to adjust the voice shaping.
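The syllable rates above translate directly into target durations. The sketch below is a hypothetical helper, not part of the authors' workflow. It assumes the sentence's syllable count is known and that a tempo effect's percentage is defined so that +p% multiplies the speed by (1 + p/100). The 3-second neutral duration in the usage lines is an invented example value.

```python
# Speech rates quoted in the text (Williams & Stevens, 1972); the happy value
# reuses the angry rate, as described above.
SYLLABLES_PER_SECOND = {"sad": 1.91, "happy": 4.15}


def target_duration(syllables: int, emotion: str) -> float:
    """Seconds a sentence should last when spoken at the emotion's syllable rate."""
    return syllables / SYLLABLES_PER_SECOND[emotion]


def tempo_change_percent(current_seconds: float, target_seconds: float) -> float:
    """Percent tempo change that turns current_seconds into target_seconds.

    Assumes +p% multiplies speed by (1 + p/100), so the new length is
    current_seconds / (1 + p/100). Positive = faster, negative = slower.
    """
    return (current_seconds / target_seconds - 1.0) * 100.0


# 'You did so great, I thought it was amazing' has 11 syllables.
happy = target_duration(11, "happy")  # about 2.65 s
sad = target_duration(11, "sad")      # about 5.76 s
# Hypothetical neutral fragment lasting 3.0 seconds:
print(round(tempo_change_percent(3.0, happy), 1))  # 13.2
print(round(tempo_change_percent(3.0, sad), 1))    # -47.9
```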

Audacity is free, open-source, cross-platform software for recording and editing audio (Audacity, 2014). Audacity offers many functions that can give an audio fragment an emotional tone. With the function ‘amplify’ you can choose a new peak amplitude; this was used to make the happy audio fragments louder than the sad ones. According to Bowles and Pauletto, the difference in amplitude between these two emotions is 7 dB on average, and the loudness of a neutral voice is close to that of a sad voice (Bowles & Pauletto, 2010). When a speaker is sad, the pitch decreases at the beginning of the sentence and remains rather constant in the second half (Williams & Stevens, 1972). A sad voice can also be recognized by a pitch that decreases at the end of the sentence. In contrast, happiness gives the voice a wide variety of pitches (Breazeal, 2001). In Audacity you can change the pitch of an individual word by selecting it, choosing the effect ‘adjust pitch’ and entering the percentage by which the pitch should change. Another feature of a sad voice is that the breaks between words are longer than for all other emotions (Bowles & Pauletto, 2010). By selecting a break between words and applying ‘change tempo’, this break can be lengthened. The same function can lengthen or shorten individual words of a sentence. This is useful because one-syllable words are pronounced faster when a speaker is happy, whereas for a sad speaker longer words are pronounced 20% slower and short words 10-20% slower than in a neutral voice (Bowles & Pauletto, 2010). ‘Change tempo’ changes the speed of the voice without changing its pitch, which is exactly what these emotions require.
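The 7 dB loudness difference and the percentage-based pitch changes can be made concrete with standard decibel and semitone arithmetic. The sketch below is illustrative. It assumes dB refers to amplitude (20·log10), which is how peak amplitude is usually expressed, and that a pitch change of p percent multiplies the frequency by (1 + p/100). The -9 dB sad peak in the usage lines is a hypothetical value.

```python
import math


def db_to_amplitude_ratio(db: float) -> float:
    """Linear amplitude factor for a level difference in decibels."""
    return 10 ** (db / 20)


def pitch_percent_to_semitones(percent: float) -> float:
    """Semitones corresponding to a pitch change of `percent` percent."""
    return 12 * math.log2(1 + percent / 100)


# Happy fragments about 7 dB louder than sad ones (Bowles & Pauletto, 2010):
print(round(db_to_amplitude_ratio(7), 2))  # 2.24, roughly 2.2x the amplitude
# If a sad fragment peaks at -9 dB (hypothetical), the happy target peak is:
sad_peak_db = -9.0
happy_peak_db = sad_peak_db + 7.0  # value to enter as the new peak amplitude
print(happy_peak_db)  # -2.0
# Raising one word's pitch by 10% is about 1.65 semitones:
print(round(pitch_percent_to_semitones(10), 2))  # 1.65
```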

(Ten different sentences were used and adjusted for the research. The specific adjustments per sentence are listed on the page Adjustments sentences.)

Google Forms lets several people create a survey together at the same time. It is a tool for collecting information. Audio fragments (embedded as video) can be inserted and any kind of question can be written. Once enough responses have been collected from the required participants, they are gathered in a spreadsheet that can be exported to a .xlsx document for Microsoft Office Excel (Google Inc., 2014).

Microsoft Office Excel is a spreadsheet program. The spreadsheet was imported into Stata, where its columns are treated as variables. Stata was used to analyze and interpret the data.

- Design

Before the experiment was executed, a power analysis was done to estimate how many participants would be needed. The power was set to 0.8 at a significance level of 0.05. For a moderate effect size, 64 participants per condition were needed for a two-tailed t-test and 51 per condition for a one-tailed t-test. A one-tailed t-test suits this experiment, because either no relation or a positive relation was expected; a negative relation was not. Since an effect could only occur in one direction, a two-tailed test was not needed. However, the effect size may well be small rather than moderate. A second power analysis showed that for a small effect size, 394 participants per condition would be needed for a two-tailed t-test and 310 for a one-tailed t-test. Because the resources and time to recruit so many participants were not available, the experiment was set up for 51 participants per condition.
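The sample sizes above can be reproduced approximately with a short calculation. The sketch below is not the tool the authors used (the text does not name it). It uses a normal approximation to the two-sample t-test with a small-sample correction term z²/4, and it assumes Cohen's conventions of d = 0.5 for a moderate and d = 0.2 for a small effect. Dedicated tools such as G*Power use the noncentral t distribution instead, but for these inputs the approximation gives the same rounded numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.8,
                two_tailed: bool = True) -> int:
    """Approximate participants per condition for a two-sample t-test.

    n = 2 * ((z_alpha + z_beta) / d)**2 + z_alpha**2 / 4, rounded up,
    where the last term roughly corrects the normal approximation
    for using a t-test.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2) if two_tailed else z(1 - alpha)
    z_beta = z(power)
    return ceil(2 * ((z_alpha + z_beta) / d) ** 2 + z_alpha ** 2 / 4)


for d in (0.5, 0.2):
    print(d, n_per_group(d), n_per_group(d, two_tailed=False))
# 0.5 64 51
# 0.2 394 310
```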

A between-subjects design was used. The two conditions were emotionally loaded voices and neutral voices, and each participant heard only one of them. These two conditions form the independent variable, which is therefore categorical. The dependent variables were likeability, animacy and persuasiveness. Each was measured on a Likert scale from 1 to 5 and treated as an interval variable. Each dependent variable was composed of several questions in the questionnaire: questions 27, 30, 35, 38 and 40 for likeability; questions 28, 31, 32 and 34 for animacy; and questions 29, 33, 36 and 37 for persuasiveness.

Because the survey is about water consumption and persuasiveness, two covariates were constructed that could influence how participants responded to Will's comments: how easily participants are persuaded and how much they care about the environment. Both covariates are composed of several questions in the questionnaire: questions 5, 8, 13 and 18 for how easily participants are persuaded, and questions 4, 6, 10, 11, 12, 15, 17 and 19 for how much they care about the environment.

(The questions of the questionnaire can be found at Research design.)
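The scale construction can be sketched as follows. This is a hypothetical illustration: the text lists which questions belong to each scale but not how they were combined, so the sketch assumes each score is the mean of its items and that no items are reverse-coded.

```python
from statistics import mean

# Question numbers per scale, as listed in the Design section.
SCALES = {
    "likeability": [27, 30, 35, 38, 40],
    "animacy": [28, 31, 32, 34],
    "persuasiveness": [29, 33, 36, 37],
    "easily_persuaded": [5, 8, 13, 18],
    "environmental_concern": [4, 6, 10, 11, 12, 15, 17, 19],
}


def composite_scores(responses: dict[int, int]) -> dict[str, float]:
    """Score one participant; `responses` maps question number -> Likert answer.

    Assumption: each scale is the unweighted mean of its items.
    """
    return {name: mean(responses[q] for q in items) for name, items in SCALES.items()}


# A participant who answered 3 ('neutral') everywhere scores 3 on every scale:
print(composite_scores({q: 3 for q in range(1, 41)}))
```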

- Procedure

Each participant received a questionnaire consisting of three parts. The first, general part asked for some demographic information and contained questions about two personal characteristics. It also included some filler questions to prevent participants from immediately guessing the research goal. The second part was a simulation in which everyone answered questions about their showering habits. After each answer, an audio fragment gave either positive or negative feedback. The third part contained questions about the participant's experience of the voice. Finally, some closing questions gave an indication of general matters such as concentration and comprehensibility.

- Participants

The participants (n = 101) were asked personally to fill in the questionnaire. They were recruited from the friends lists of the authors' Facebook accounts. Facebook was used to avoid including elderly people who cannot change their showering habits because they live in a care home. In addition, only participants aged 18 or older were asked, since younger people often do not pay their own energy bill.

From the original data set, three participants were removed because they submitted the questionnaire twice. One more participant was removed because he commented that he had not understood the questions about Will. Participants who totally disagreed with the statement that they master the English language were also removed, as were participants who were totally distracted while filling in the questionnaire. This left 94 participants.

Each participant received either the questionnaire with the neutral voice (n1 = 46) or the one with the emotionally loaded voice (n2 = 48). The neutral condition is called category 1 and the emotional condition category 2.

The first category consisted of 15 men and 31 women aged between 18 and 57 (M = 27.5, SD = 12.2). The second category consisted of 18 men and 30 women aged between 18 and 57 (M = 29.6, SD = 13.3). The highest completed level of education was distributed as follows (category 1; category 2): primary school (0.0%; 4.2%), mavo (2.2%; 2.1%), havo (4.4%; 8.3%), vwo (39.1%; 31.3%), mbo (6.5%; 12.5%), hbo (15.2%; 22.9%), and university (32.6%; 18.8%).

Results

As stated in the introduction, three concepts of perception were used in this research: persuasion, likeability and animacy. The reliability of the scales for these concepts was tested with Cronbach's alpha, which was 0.85, 0.89 and 0.85 respectively. The concepts are discussed below in this order.
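For reference, Cronbach's alpha for a scale of k items is alpha = k / (k - 1) * (1 - sum of the item variances / variance of the total score). A minimal standard-library sketch follows; the toy data in the usage lines are invented and unrelated to the questionnaire.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of scores per item,
    with participants in the same order in every list."""
    k = len(items)
    n = len(items[0])
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Three identical items are perfectly consistent:
print(round(cronbach_alpha([[1, 2, 3, 4]] * 3), 6))  # 1.0
# Two unrelated items give an alpha of zero:
print(round(cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]]), 6))  # 0.0
```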

To test whether the persuasiveness of the voice was perceived differently between the two conditions, an ANOVA was performed. This resulted in p = 0.96 and $\eta^2$ = 0.00003. Figure 1 shows this test graphically. The y-axis represents a 5-point Likert scale coded from -2 (totally disagree) to +2 (totally agree), where zero means neutral.
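For a one-way ANOVA, $\eta^2$ is the share of the total variation explained by the condition: $\eta^2 = SS_{between} / SS_{total}$. A value of 0.00003 therefore means that the condition explains almost none of the variation in persuasion. The sketch below computes it from raw scores; the toy groups are invented.

```python
def eta_squared(*groups: list[float]) -> float:
    """Eta squared for a one-way ANOVA: SS_between / SS_total."""
    scores = [x for group in groups for x in group]
    grand_mean = sum(scores) / len(scores)
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variation of every individual score around the grand mean.
    ss_total = sum((x - grand_mean) ** 2 for x in scores)
    return ss_between / ss_total


print(eta_squared([1, 2, 3], [4, 5, 6]))  # 13.5 / 17.5, about 0.771
print(eta_squared([1, 2, 3], [1, 2, 3]))  # 0.0: identical groups
```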

Next, a second test included only participants who said they were willing to adjust their showering habits. In this case p = 0.95 and $\eta^2$ = 0.00005.

It was also tested whether persuasion differed between the conditions among participants who heard at least four positive audio fragments (p = 0.43; $\eta^2$ = 0.03) and among those who heard at least four negative audio fragments (p = 0.69; $\eta^2$ = 0.02).

Figure 1: Persuasion per condition

The third test examined whether participants in the emotional condition rated the voice as more likeable than participants in the neutral condition. Since not all assumptions for an ANOVA were met (normality in both conditions was rejected), the non-parametric Kruskal-Wallis test (kwallis in Stata) was performed. This gave p = 0.17 and $\eta^2$ = 0.02. The effect size was calculated with the formula $\eta^2 = \frac{\chi^2}{N-1}$ (NAU EPS625), where $\chi^2$ is the test statistic and $N$ the number of participants. Figure 2 shows these results. The y-axis represents the scale taken from the Godspeed questionnaire, which ranges from 1 to 5.
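The effect-size formula above divides the Kruskal-Wallis statistic by N - 1. A minimal sketch of both steps follows, assuming the tie-corrected statistic is the one used; the toy data are invented, and the p-value step (a chi-squared distribution with the number of groups minus one degrees of freedom) is left out.

```python
from itertools import chain


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start..end
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def kruskal_wallis_h(*groups: list[float]) -> float:
    """Tie-corrected Kruskal-Wallis statistic (chi-squared approximation)."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term, offset = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[offset:offset + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        offset += len(g)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Correction for tied observations: 1 - sum(t^3 - t) / (n^3 - n).
    counts: dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are tied")
    return h / correction


def eta_squared_from_h(h: float, n: int) -> float:
    """Effect size as given in the text: eta^2 = chi^2 / (N - 1)."""
    return h / (n - 1)


h = kruskal_wallis_h([1, 2, 3], [4, 5, 6])
print(round(h, 3), round(eta_squared_from_h(h, 6), 3))  # 3.857 0.771
```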

As a follow-up, the Kruskal-Wallis test was run twice more: once with only participants who heard at least four positive audio fragments (p = 0.02; $\eta^2$ = 0.22), and once with only participants who heard at least four negative audio fragments (p = 0.73; $\eta^2$ = 0.01). The effect of mainly hearing positive audio fragments on likeability is shown in figure 3, which uses the same y-axis scale as figure 2.

Figure 2: Likeability per condition

Figure 3: Likeability per condition when the audio fragments heard were mostly positive

Finally, a Kruskal-Wallis test was done to test whether animacy differed between the two conditions. This test was chosen because the assumptions for an ANOVA were not met (equal variance for both groups was rejected). The test gave p = 0.02 and $\eta^2$ = 0.06. The difference is shown in figure 4, whose y-axis represents the scale from 1 to 5 taken from the Godspeed questionnaire.

To test whether the type of emotion heard influenced perceived animacy, the test was repeated for participants who heard at least four positive fragments (p = 0.16; $\eta^2$ = 0.08) and for participants who heard at least four negative fragments (p = 0.17; $\eta^2$ = 0.21).

Figure 4: Animacy per condition

Discussion and conclusion

Looking at the results, persuasion did not differ significantly between the conditions, as the high p-value shows. The effect size was also close to zero. Even after controlling for willingness to adjust showering habits, the effect remained non-significant and the effect size hardly increased.

Likeability also showed a non-significant effect, but in contrast to persuasion a small effect size was found. However, a significant, large effect on likeability was found between the conditions when only participants who heard at least four out of five positive audio fragments were included. This makes sense, since likeability is itself a positive concept: the happier a fragment sounds (happy versus neutral), the more likeable it is perceived.

The findings for both persuasion and likeability contradict the hypotheses formulated in the introduction. Several explanations are possible. First, for persuasion, emotion alone might not be enough to persuade people to change their behavior. Some participants commented that the content of the sentence matters as well: more information about water consumption and supporting arguments should be given. This information was left out deliberately, to focus only on the emotional content of the sentence rather than its informational content.

For likeability in the full sample (not restricted to mainly positive fragments), another problem may have affected the results. Many participants commented that the voice sounded too fake or robotic. The more human-like a robot is, the more it is accepted and liked (Royakkers et al., 2012). Some participants did not find the voice human-like and therefore probably did not find it very likeable. However, when mostly positive fragments were heard, the voice was perceived as more likeable, so the positive fragments probably sounded more human-like than the negative ones.

For animacy, a significant effect was found between the two conditions: perceived animacy was higher in the condition with emotion than in the condition without. According to the rules of thumb for a one-way ANOVA from the Cognition and Brain Science Unit, the effect size indicates a medium effect. This finding is in line with the hypothesis: an emotional voice is perceived as more lively than a neutral voice. Animacy was also tested for participants who heard at least four positive audio fragments and for those who heard at least four negative fragments. Both groups showed larger effect sizes than the sample as a whole. One explanation could be that people who heard the same emotion several times became more accustomed to that voice, and perceived it as more lively because they had no other voice to compare it with. However, these findings were not significant, and their reliability is questionable because the two groups consisted of only 24 and 10 persons respectively.
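The rules of thumb referred to above can be written as a small lookup. The sketch assumes the commonly cited thresholds for $\eta^2$ of 0.01 (small), 0.06 (medium) and 0.14 (large); the label "negligible" below 0.01 is an additional assumption. Applied to the values in the results section, it reproduces the labels used in this discussion.

```python
def eta_squared_label(eta2: float) -> str:
    """Rule-of-thumb label for an eta squared effect size (assumed thresholds)."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "negligible"


# Effect sizes reported in the results section:
reported = [("persuasion", 0.00003), ("likeability", 0.02),
            ("likeability, mostly positive", 0.22), ("animacy", 0.06)]
for name, eta2 in reported:
    print(f"{name}: {eta2} -> {eta_squared_label(eta2)}")
```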

Besides the issues mentioned above, further improvements are possible; these limitations affect all three measured concepts. First, given the time available for this research, concessions had to be made about the size of the sample groups. According to the prior power analysis, the number of participants was only enough to reliably detect a medium effect, so more participants would have been needed to improve reliability. A second problem might be the speech program that was used. The difference between the neutral voice and the emotionally loaded voice may have been somewhat hard to hear, which again decreases the chance of finding an effect. The reason lies in the way the program creates its voices. As stated in the method, the speech produced by Acapela is based on recordings of a human speaker rather than being generated purely by computer. But is it possible for a person to speak without any emotion at all? A mechanical voice might create a more obvious difference between sentences with and without acoustic features of emotion. In the end this was not used, because manufacturers of robots and speech programs can already easily generate voices that sound better than a robotic voice. Using a robotic voice as the neutral condition would therefore have reduced the practical relevance of this research.

The outcome of this research is in accordance with the previous research described in the introduction: when multiple characteristics of emotion are combined, e.g. acoustic features and the meaning of the sentence, they reinforce each other. Previous studies also found this reinforcing effect when different features were combined.

Now let's look back at the problem stated at the beginning of this research: the extremely limited freedom of patients who suffer from ALS. Like any human, they strive for independence, but as the illness progresses this becomes impossible in many respects. Increasing their freedom in any way would be a gift to them. The freedom to express oneself is the main focus of this research. Researchers are working on technology that allows people with ALS to use their own voice with speech technology (Euan MacDonald Centre). This research looks beyond using the sound of the voice of people with ALS. Using a person's own voice would greatly improve the level of animacy, but, as this research shows, adding acoustic features of emotion to a voice produced by a TTS system also enhances perceived animacy. For certain emotions, implementing these acoustic features also increases perceived likeability. Applying these findings in speech technology could allow people with ALS to build a stronger emotional bond through speech with the people around them.

However, this implementation might be less useful for persuasive technology, which was mentioned in the introduction. The analysis showed no significant difference in persuasiveness between voices with and without acoustic features of emotion, and no large effect either. Persuasiveness was included to explore broader applications of the findings; it is not necessarily relevant to the issue of ALS.

This does not mean that the findings of this research are irrelevant to other applications. When no anatomical features of emotion are available, the combination of acoustic and grammatical features has a positive effect on animacy and likeability. This can be used for social robots that cannot express emotions through facial expressions, such as the Nao robot and the Amigo robot. This research used several parameters to adapt the voices to a certain emotion. The Nao robot can change the pitch and the volume of its voice (Aldebaran, 2013); both are acoustic features of emotion that were also used to create the voices in this research. In addition, this research manipulated speech rate and pitch variation within sentences and words, but the documentation of Nao's text-to-speech module mentions neither speed changes nor pitch variation within sentences and words. Nao can therefore be used to produce emotionally loaded sentences, but these will not express the emotion as well as the sentences in this research did, because not all of the parameters used here can be changed on Nao. Nao can be used for many different purposes; if its purpose is known, a set of predefined sentences can be programmed that are (partially) adjusted to the right emotion. If future improvements to Nao's speech technology expand the set of adjustable parameters, Nao could become even better suited to conveying emotionally loaded sentences.

The second social robot mentioned was Amigo. Amigo uses three text-to-speech programs: one made by Philips, Google's TTS, and eSpeak on Ubuntu (Voncken, 2013). In eSpeak the voice can be adjusted by hand. Of the parameters used in this research, speech rate and volume can also be changed in eSpeak (eSpeak, 2007). However, these settings apply only to a whole fragment; they cannot be changed within a sentence or even within a word. eSpeak therefore does not offer a flexible way of communicating emotional sentences. The TTS developed by Philips, on the other hand, is already quite advanced: it is possible to select a certain emotion, including 'sad' and 'exciting' (Philips, 2014). Besides that, Amigo's speech is generated in real time: parts of the sentences are predefined, while other parts are filled in by Amigo itself. Amigo can also choose among multiple sentences for specific situations (Lunenburg, 2014). These two characteristics of the Philips TTS make Amigo more flexible than Nao for communicating emotional sentences.
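The fragment-level limitation of eSpeak can be made concrete with a small command builder. eSpeak's command-line `-s` option sets the speed in words per minute and `-a` sets the amplitude (0 to 200); one value of each is passed per call, so every word in the fragment shares it. The emotion presets below are hypothetical values chosen for illustration, not the parameters used in this research, and the sketch only builds the command without running eSpeak.

```python
import shlex
from typing import Dict, List, Tuple

# Hypothetical presets: (words per minute, amplitude). Illustrative only;
# these are NOT the parameter values used in this research.
EMOTION_PRESETS: Dict[str, Tuple[int, int]] = {
    "neutral": (160, 100),
    "sad": (130, 80),
    "happy": (190, 120),
}


def espeak_command(text: str, emotion: str = "neutral") -> List[str]:
    """Build an eSpeak command line for one text fragment.

    -s sets the speed (words per minute) and -a the amplitude (0-200).
    Both flags apply to the entire fragment, so every word in `text`
    gets the same rate and volume.
    """
    words_per_minute, amplitude = EMOTION_PRESETS[emotion]
    if not 0 <= amplitude <= 200:
        raise ValueError("eSpeak amplitude must be between 0 and 200")
    return ["espeak", "-s", str(words_per_minute), "-a", str(amplitude), text]


if __name__ == "__main__":
    cmd = espeak_command("I am so glad to see you", emotion="happy")
    # Only prints the command; pass `cmd` to subprocess.run() to speak it
    # on a machine where eSpeak is installed.
    print(shlex.join(cmd))
```

Changing the rate or volume for a single word would require splitting the sentence into separate calls, which is exactly the inflexibility described above.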

Overall, the findings of this research can be used to increase the perceived animacy and likeability of robots. At present, their applicability depends on the kind of robot used, including its technical capabilities and purpose.

During this research, a program was found (Oddcast, 2012) that can add specific sounds, such as ‘Wow’ or sobbing, to a voice recording. Such sounds could enhance the effects found in this research, so future research could use this study as a starting point. In addition, more research is needed on the characteristics of acoustic features of emotion. Although the findings of previous research on this topic can already be applied, they can still be improved. It should be noted, however, that it will always be difficult for people to recognise an emotion based on acoustic features alone, because correct recognition of an emotion depends on a combination of multiple features.

References

Acapela Group. (2009, November 17). Acapela Box. Retrieved from Acapela: https://acapela-box.com/AcaBox/index.php

Acapela Group. (2014, September 26). How does it work? Retrieved from Acapela: http://www.acapela-group.com/voices/how-does-it-work/

Aldebaran Commodities B.V. (2013). ALTextToSpeech. Retrieved from Aldebaran: http://doc.aldebaran.com/1-14/naoqi/audio/altexttospeech.html

Audacity. (2014, September 29). Audacity. Retrieved from Audacity: http://audacity.sourceforge.net/?lang=nl

Bowles, T., & Pauletto, S. (2010). Emotions in the voice: humanising a robotic voice. York: The University of York.

Breazeal, C. (2001). Emotive Qualities in Robot Speech. Cambridge, Massachusetts: MIT Media Lab.

Busso, C., Deng, Z., Yildirim, S., Bulut, M., Lee, C. M., Kazemzadeh, A., ... & Narayanan, S. (2004, October). Analysis of emotion recognition using facial expressions, speech and multimodal information. In Proceedings of the 6th international conference on Multimodal interfaces (pp. 205-211). ACM.

Cognition and Brain Science Unit. (2014, October 2). Rules of thumb on magnitudes of effect sizes. Retrieved from CBU: http://imaging.mrc-cbu.cam.ac.uk/statswiki/FAQ/effectSize

eSpeak. (2007). eSpeak text to speech. Retrieved from eSpeak: http://espeak.sourceforge.net/

Google Inc. (2014, April 14). Google Forms. Retrieved from Google: http://www.google.com/forms/about/

Liscombe, J. J. (2007). Prosody and Speaker State: Paralinguistics, Pragmatics, and Proficiency. New York: Columbia University.

Lunenburg, J. J. M. (2014, October 16). Personal communication about the TTS of Amigo.

Northern Arizona University. (n.d.). EPS 625: Intermediate statistics, The Kruskal-Wallis test. Retrieved from EPS 625: http://oak.ucc.nau.edu/rh232/courses/EPS625/Handouts/Nonparametric/The%20Kruskal-Wallis%20Test.pdf

Oddcast. (2012). Text-to-speech. Retrieved from Oddcast: http://www.oddcast.com/demos/tts/emotion.html

Philips. (2014). Text-to-speech. Retrieved from Philips: http://www.extra.research.philips.com/text2speech/ttsdev/index.html

Royakkers, L., Damen, F., Est, R. V., Besters, M., Brom, F., Dorren, G., & Smits, M. W. (2012). Overal robots: automatisering van de liefde tot de dood.

Scherer, K. R., Ladd, D. R., & Silverman, K. E. (1984). Vocal cues to speaker affect: Testing two models. The Journal of the Acoustical Society of America, 76(5), 1346-1356.

Voncken, J. M. R. (2013). Investigation of the user requirements and desires for a domestic service robot, compared to the AMIGO robot.

Vossen, S., Ham, J., & Midden, C. (2010). What makes social feedback from a robot work? disentangling the effect of speech, physical appearance and evaluation. In Persuasive technology (pp. 52-57). Springer Berlin Heidelberg.

Williams, C. E., & Stevens, K. N. (1972). Emotions and Speech: Some Acoustical Correlates. Cambridge, Massachusetts: Massachusetts Institute of Technology.

Yoo, K. H., & Gretzel, U. (2011). Creating more credible and persuasive recommender systems: The influence of source characteristics on recommender system evaluations. In Recommender systems handbook (pp. 455-477). Springer US.