PRE2023 3 Group5


Fall Guard

==Group Members==

{| class="wikitable"
!Name
!Student ID
|Computer Science
|}

== Planning == | |||

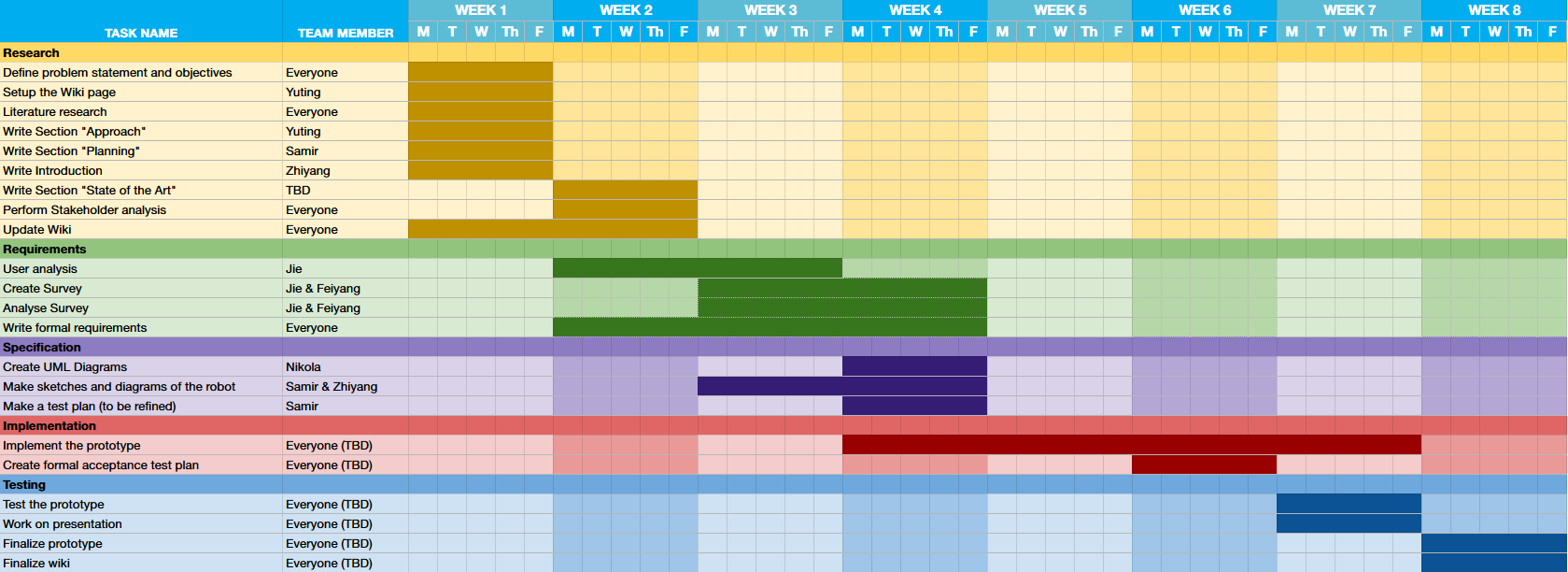

We created a plan for the development process of our product based off of the previously described approach. This plan is shown in the Gantt chart below: | |||

[[File:PRE2023 3 Group 5 Gantt Chart 2.png|center|thumb|1000x1000px|A Gantt chart of our development plan.]] | |||

===Task Division=== | |||

We subdivided the tasks amongst ourselves as follows: | |||

{| class="wikitable" border="1" style="border-collapse: collapse;" cellpadding="2"
! colspan="2" style="background: #d1e3ff;" |Research
!
! colspan="2" style="background: #ff8f8f;" |Requirements
!
! colspan="2" style="background: #a7e8b3;" |Specification
!
! colspan="2" style="background: #d9a7e8;" |Implementation
!
! colspan="2" style="background: #ffbf7a;" |Testing
|-
|'''Task'''
|'''Group member'''
|
|'''Task'''
|'''Group member'''
|
|'''Task'''
|'''Group member'''
|
|'''Task'''
|'''Group member'''
|
|'''Task'''
|'''Group member'''
|-
|Define problem statement and objectives
|Everyone
|
|User Analysis
|Jie
|
|Create UML Diagrams
|Nikola
|
|Implement the prototype
|Everyone
|
|Test the prototype
|Everyone
|-
|Set up the Wiki page
|Yuting
|
|Create Survey
|Jie & Feiyang
|
|Define user interface
|Samir & Zhiyang
|
|Create formal acceptance test plan
|Everyone
|
|Work on presentation
|Everyone
|-
|Literature research
|Everyone
|
|Analyse Survey
|Jie & Feiyang
|
|Make a test plan
|Samir
|
|Finalize prototype
|Everyone
|
|-
|Write Section "Approach"
|Yuting
|
|Write Formal Requirements
|Everyone
|
| colspan="3" |
|-
|Write Section "Planning"
|Samir
|
|-
|Write Introduction
|Zhiyang
|
|-
|Write Section "State of the Art"
|Samir & Nikola
|
|-
|Perform Stakeholder Analysis
|Everyone
|
|-
|Update Wiki
|Everyone
|
|}

===Milestones===

By the end of Week 1, we should have a solid plan for what we want to make, a brief inventory of the current literature, and a broad overview of the development steps required to make our product.

By the end of Week 3, we should have analysed the needs of our users and stakeholders, formalized these needs as requirements according to the MoSCoW method, and have a clear overview of the state of the art.

By the end of Week 4, we should have specified the requirements as UML diagrams, blueprints, etc., created a basic user interface, and created an informal test plan.

By the end of Week 6, we should have created a formal acceptance test plan.

By the end of Week 7, we should have finished implementing our product's prototype.

By the end of Week 8, we should have tested the product according to the acceptance test plan, finished the presentation, finalized the prototype, and finalized our report.

===Deliverables===

The final product will be a robot programmed to detect when a user falls and to alert emergency services when this happens. Furthermore, we would like it to be able to identify fall risks and alert the user to them, but we do not yet know whether this can be done within the course timeframe.

== Approach ==

To reach our objectives, we split the project into five stages, distributed over eight weeks with some overlap. Every team member is responsible for some of the tasks in these stages.

# '''Research stage''': In weeks 1 and 2, we mainly focus on formulating the problem statement and objectives, and on research. We first need to make a plan for the project. Defining the problem statement and objectives fixes the direction of the project. Literature research helps us gather information on the state of the art and on the advantages and limitations of current solutions.
# '''Requirements stage''': From week 2 to week 4, we will do user analysis to further determine the goal and requirements of our product. We will collect information about user needs through surveys and interviews, which can build on information found in the research stage, for example what users think of current solutions and what improvements could be made.
# '''Specification stage''': In weeks 3 and 4, we create the specification of our product using techniques such as UML diagrams and user interface drawings. The user analysis and the research allow us to make the specification more detailed. After this, a test plan will be made so that the product can be tested to see whether it meets the requirements and the specification.
# '''Implementation stage''': The prototype of our product will be implemented from week 5 to week 7. Due to time constraints, we plan to create only the digital part of the product. A more formal test plan will also be constructed for later use.
# '''Testing stage''': In weeks 7 and 8, the prototype will be tested according to the test plan, and we will examine whether the product reaches our goal and solves the problem. The prototype, presentation and wiki page will also be finalized in this stage.

== Introduction ==

=== Problem Statement ===

Nowadays, an increasing number of elderly or impaired people have health conditions or disabilities that prevent them from taking care of themselves. Meanwhile, many of them have no choice but to live alone, either entirely or most of the time. For some people, especially those with limited mobility, even walking in their own home is a struggle. They can easily get hurt if they are not properly cared for, and their families may remain unaware of it. There have been cases where individuals fell at home without anyone noticing, which can lead to severe or even fatal consequences. Caretakers therefore play a necessary role in society, ensuring the well-being and quality of life of individuals facing these challenges. However, contemporary families often face a dual dilemma: they struggle to find time for caregiving, and they face financial barriers to hiring professional caregivers. Consequently, a significant portion of the population in need is left without the care they require.

=== Objectives ===

Considering the situation that individuals with limited mobility currently face, we decided to design an easily accessible home robot with a primary focus on safety monitoring. The robot should keep an eye on individuals while they are walking and take action when they fall and cannot get up on their own. The main goal of the robot is to ensure the safety of the user and to reach out for help whenever needed. On the one hand, the robot closely monitors the individual's mobility at home and responds swiftly to falls. On the other hand, it enhances the overall safety of individuals living at home and provides peace of mind for both users and their caregivers or family. Moreover, the robot could easily be applied in public settings such as hospitals or nursing homes for the same purpose. However, it may raise privacy concerns if the data recorded by the robot is not kept secure.

As an initial idea, the design of the robot covers these features:

'''1.''' '''Built-in program:''' The robot should be designed as a digital program that can be easily installed and that uses cameras as its primary sensor.

'''2.''' '''Fall Detection and Response System:''' The robot uses high-accuracy artificial intelligence algorithms for human detection and fall detection, together with decision-making logic to respond appropriately to each detected fall.

=== Users ===

'''Target group 1''': Elderly and impaired individuals living alone. According to the United Nations' World Social Report 2023, the number of people aged 65 or older is expected to more than double by 2050, reaching 1.6 billion, and the number of people aged 80 or older is growing even faster<ref>United Nations. ''World Social Report 2023: Leaving No One Behind in an Ageing World''. https://www.un.org/development/desa/dspd/wp-content/uploads/sites/22/2023/01/WSR_2023_Chapter_Key_Messages.pdf</ref>. According to statistics from the Centers for Disease Control and Prevention (CDC), one in four older adults in the United States falls each year, making falls a public health concern, particularly among the aging population<ref>Falls and Fall Injuries Among Adults Aged ≥65 Years — United States, 2014</ref>.

Needs:
# '''Timely fall detection:''' This is the primary concern for this group. A fall can have serious consequences, so they need a solution that can quickly detect falls and request help if needed.
# '''Ease of use:''' As members of this group are over 65, expecting them to learn a complicated technological product is unrealistic. A simple user interface is thus required for this design.
# '''Automatic and accurate:''' Monitoring the user and requesting help should be done automatically and accurately; otherwise, it would become a burden on users and caregivers.
# '''Privacy and safety:''' As this robot needs to monitor the activities of users, their data should either be stored safely or not stored at all.
# '''No interference with the user:''' This robot should not restrict or interfere with the activities of the user group.

'''Target group 2''': Informal and formal caregivers, and doctors. In developing countries, some family members do not have enough time to look after their elderly relatives, for example because of heavy overtime at work, while developed countries are experiencing a shortage of caregivers. These groups are potential users, as they are looking for a way to lighten their burden.

Needs:
# '''Reliable assistant:''' It is stressful and tiring for them to keep an eye on the activities of the elderly. Even professional caregivers cannot focus all their attention on their patients, so they would welcome a reliable assistant robot to help them while they are doing other tasks, like preparing medicine. The notifications from the robot must also be reliable; otherwise, the robot would become another burden for them.
# '''Remote monitoring and real-time information:''' They may want to use the robot to check on the user when an emergency happens, so that they can take the next step immediately.

'''Another consideration for both target groups''': The product should be cheap enough that most families can afford it.

'''Analysis of interviewee 1'''

'''Background information''': The interviewee works in the customer data analysis department of a bank. His children work in the US. He exercises regularly, mainly playing tennis. One of his family members experienced a severe fall that caused a bone fracture. The interviewee has experience using a service robot and generative AI tools.

'''Feedback from the interviewee''': He is interested in using a robot that monitors the user for falls via a camera, as this is more convenient than other methods, such as wearable devices. He prefers to interact with the robot through voice commands, as he wants to keep the robot at a safe distance. He clearly stated that he wants the robot to reach the emergency contact via a phone call or a similar method, as other methods are easily ignored. He also mentioned that he would prefer the robot to have a non-human-like face, ideally a cute one, because he would find a human-like robot following him everywhere unnerving, especially at night. The interviewee expressed several concerns about the robot. The first is safety: he is afraid that the robot will block, hit or collide with him, causing more falls. The second is privacy: he fears that the company might misuse the data. As most of the data comes from the user's daily life, he does not want it to be used by a company, even for research purposes.

'''Analysis of interviewee 2'''

'''Background information''': She is retired and used to work as an officer in a government department. She has one child, who works in the same city where she lives but only visits her once a week. She likes to play tennis. She has fallen at home several times, though not often, and she has little experience interacting with robots.

'''Feedback from the interviewee''': She is quite concerned about falling because she lives alone and no one can help her immediately when she falls. She is positive about the idea and interested in having a robot that uses a camera to detect her falls. However, she is worried about privacy and hopes that her photos will not be stored or used for other purposes. She expects the robot to be able to phone her son as well as the emergency services, because she thinks other methods, like SMS messages, are neither quick nor efficient. Moreover, she does not want the robot following her around all the time; she would like to be able to control when the robot follows her. Regarding appearance, she does not want a large object in her home that takes up too much space. She is also concerned about the accuracy of the detection and whether the robot can avoid obstacles.

'''Analysis of interviewee 3'''

'''Background information''': The interviewee is a middle school teacher, around 40 years old, who lives with her mother and a caregiver. She has a severe leg condition and her mother has Parkinson's disease, so she hires a caregiver to look after her mother and do housework for her. Her mother experienced a fall many years ago, while the interviewee herself falls once or twice a year; these falls are not very severe and she has grown used to them.

'''Feedback from the interviewee''': She was open to the robot, as she hopes to see a growing variety of fall detection devices. She preferred the robot to have a non-human-like appearance and to be controlled by voice commands when an emergency happens, although she was skeptical about its accuracy. She did not object to user data being collected and sent to the company, as long as this fully complies with the law and the company only uses the data to improve accuracy. Her main concern, however, was the accuracy and reliability of the robot: she does not believe that a camera-based robot can detect falls accurately. She has used Siri, a voice assistant made by Apple, and was disappointed by its accuracy, which makes her doubt camera-based fall detection. Her second concern, which she raised repeatedly after seeing our prototype, was that the robot itself could fall. She was afraid that the robot was too tall and heavy: many obstacles at home could make it fall, and once it has fallen, it would be troublesome to lift it back up.

=== Society ===

As living standards rise and more medical resources become publicly available, life expectancy in many countries is increasing. This results in a growing aging population, particularly in developed countries, and this population needs more care from the public. However, the number of caregivers does not meet the needs of the aging population. One report estimates that by 2030 there will be a shortage of 151,000 caregivers in the US<ref>Etkin, K. (2023, January 12). ''Ring the alarm! there’s a global caregiver shortage. can technology help?'' TheGerontechnologist. <nowiki>https://thegerontechnologist.com/ring-the-alarm-theres-a-global-caregiver-shortage-can-technology-help/</nowiki></ref>. Governments are therefore interested in investing in automated care robots that can take on some of the responsibilities of caring for the elderly. In addition, elderly people increasingly prefer to live independently at home rather than having someone take care of them all the time. They would like a robot that can automate the job of a caregiver, but at the same time they are concerned about potential problems such as data leaks and social acceptance. These problems need to be addressed in the design of the robot.

Needs:
# '''Social acceptance:''' This robot should be accepted by the public, so that most elderly people will be willing to use it. Some people might think that installing a robot at home that monitors them every day is unsafe and an invasion of privacy.
# '''Data privacy:''' The developers of the robot and the government should consider the potential problem of data breaches; data privacy measures must be taken, and data storage methods must fully comply with the law.

=== Enterprise ===

Robotics companies are the main stakeholders. As the elderly population grows, there will be more and more potential customers whose needs fall detection robots suit, so robotics companies are interested in developing such robots. Hospitals and nursing/care homes will also be interested in fall detection robots to some extent, as the shortage of caregivers also affects hospital revenue. If fall detection robots can detect patients' falls, hospital staff can spend less time monitoring patients.

Needs:
# '''No failure case:''' If the robot consistently fails to detect falls, this will have a large negative impact on the developing companies and on hospitals, because society will lose its trust in such robots. Therefore, the companies would like to ensure that the robot is always able to detect falls.
# '''Price:''' As there are fewer human caregivers, companies are starting to invest in robots as a replacement. However, if a robot costs more than hiring a human caregiver, there is no reason to purchase one. Therefore, the price must be affordable for users.

== Requirements ==

=== Design specifications ===

Rae et al. (2013<ref>Rae, I., Takayama, L., & Mutlu, B. (2013). ''The influence of height in robot-mediated communication. 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI).'' doi:10.1109/hri.2013.6483495 </ref>) found that a telepresence robot was less persuasive when it was shorter than the user. Thus, for requirement 1.1, we decided that the robot should be about the same height as the user or slightly taller, to minimize the loss of persuasiveness that may occur when the robot is shorter than the user. Requirement 1.2 is merely an estimate based on the Atlas robot made by Boston Dynamics, which weighs 75-85 kilograms depending on its version; we took the average across the three versions (75, 80, and 85 kg respectively). For requirement 1.3, we would like the robot to be able to move (Req. 1.8), and to do so it needs wheels, which ideally can also be rotated (Req. 1.9). Normally, at least two wheels are sufficient, but three or four wheels would also work.

The design of our robot also has a body with a microphone, speaker and camera at its front (Req. 1.5 + 1.7) and a charging port at the back to recharge its battery (Req. 1.6), with the inside of the body housing all of the electronics and wiring (Req. 1.4). Since we would like the robot to be able to make a video call to the caretaker or family members upon fall detection, the robot needs to have at least these elements.
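Written out, the averaging behind requirement 1.2 uses only the three Atlas version weights listed above:

<math>\bar{m} = \frac{75\,\text{kg} + 80\,\text{kg} + 85\,\text{kg}}{3} = \frac{240\,\text{kg}}{3} = 80\,\text{kg}</math>

This average corresponds to the 80-kilogram upper bound checked in the test plan for requirement 1.2.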

{| class="wikitable"
!Index
|-
|1.1
|The robot shall have a height of at most 1.7 meters.
|Must
|-
|The robot shall be able to rotate its wheels.
|Should

|}

The test plan is as follows:

{| class="wikitable"
!Index
!Precondition
!Action
!Expected Output
|-
|1.1
|None
|Measure the height of the robot.
|The height of the robot is at most 1.7 meters.
|-
|1.2
|None
|Measure the weight of the robot.
|The weight of the robot is at most 80 kilograms.
|-
|1.3
|None
|Inspect the robot's structure.
|The robot has a base containing two wheels.
|-
|1.4
|None
|Inspect the robot's structure.
|The robot has a body casing with electronics inside it.
|-
|1.5
|None
|Inspect the robot's structure.
|The robot has a camera on its body.
|-
|1.6
|The robot is powered on.
|Charge the robot using the provided charger.
|The robot begins to charge its battery with no issues.
|-
|1.7
|The robot is powered on.
|Pretend to fall down, so that the robot calls the designated contact.
|The designated contact answers; you can see, hear, and talk to them with no issues, and vice versa.
|-
|1.8
|The robot is powered on.
|Set up the robot and move in any direction.
|The robot starts to move.
|-
|1.9
|The robot is powered on.
|Set up the robot and move in one direction, then turn at any angle and continue moving.
|The robot starts to move, then turns, and moves in a new direction.
|}

=== Functionalities ===

In terms of core functionality, we would like the robot to continuously monitor the user (Req. 2.10) to detect falls (Req. 2.1) and to notify caretakers or family members as soon as possible. We set this timeframe to 30 seconds (Req. 2.2): after researching other devices on the market, we found that on average they contact emergency services or caretakers within 30 seconds of detecting a fall if the user does not respond. We would like the user to be able to cancel the notification if desired (Req. 2.8), in case the fall was not serious. However, if the robot detects that the user has been completely immobile for 10 seconds, it must notify the caretakers or family members immediately, without waiting for the full 30 seconds (Req. 2.9). We chose this 10-second timeframe because similar devices on the market use similar time ranges; we simply took the average of these times.
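A minimal sketch of how these timing rules could be expressed follows; it is not the team's implementation. The class `FallResponseTimer` and its methods are hypothetical, timestamps are plain seconds, and we assume the vision pipeline reports once per processed frame whether the user is moving. We also assume the notification is sent exactly when the 30-second window closes, so that the cancellation window of Req. 2.8 and the deadline of Req. 2.2 coincide.

```python
from dataclasses import dataclass
from typing import Optional

# Thresholds taken from the requirements (Req. 2.2/2.8 and Req. 2.9).
NOTIFY_DEADLINE_S = 30.0     # cancellation window and notification deadline
IMMOBILE_THRESHOLD_S = 10.0  # continuous immobility that triggers the fast path


@dataclass
class FallResponseTimer:
    """Hypothetical helper deciding when to notify after a detected fall."""

    fall_time: float                     # time (s) at which the fall was detected
    cancelled: bool = False              # set when the user cancels (Req. 2.8)
    still_since: Optional[float] = None  # start of the current immobile period

    def cancel(self, now: float) -> None:
        # A cancellation only counts inside the 30-second window.
        if now - self.fall_time < NOTIFY_DEADLINE_S:
            self.cancelled = True

    def update_motion(self, now: float, is_moving: bool) -> None:
        # Assumed interface: called once per processed frame after the fall.
        if is_moving:
            self.still_since = None
        elif self.still_since is None:
            self.still_since = now

    def should_notify(self, now: float) -> bool:
        if self.cancelled:
            return False
        # Fast path (Req. 2.9): immobile for at least 10 s after the fall.
        if self.still_since is not None and now - self.still_since >= IMMOBILE_THRESHOLD_S:
            return True
        # Default path (Req. 2.2): the cancellation window has closed.
        return now - self.fall_time >= NOTIFY_DEADLINE_S


# Usage: the user lies still immediately after the fall.
timer = FallResponseTimer(fall_time=0.0)
timer.update_motion(0.0, is_moving=False)
print(timer.should_notify(5.0))   # False: only 5 s of immobility so far
print(timer.should_notify(10.0))  # True: 10 s immobile, notify now
```

Keeping the rules in a small object without camera or telephony dependencies makes it easy to check them against the test plan: a user who lies still is reported after 10 seconds, while a user who moves but does not cancel is reported when the 30-second window closes.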

We decided to make the notification a VoIP/Wi-Fi video call to the designated emergency contact, or a SIM-card-based call if the robot does not have access to the Internet (Req. 2.3). A video call adds to the reliability of the robot and allows a more dynamic two-way interaction between the user and their emergency contact. However, if the contact is unavailable, the robot will immediately call local emergency services and request help (Req. 2.4). In this case, it will also send a text message to the contact (Req. 2.5) with a photo attached (Req. 2.7). In any case, the information relayed must contain at least the name, date of birth, and location of the user (Req. 2.6), so that emergency services or a professional caretaker can identify and assist them. We included these three attributes because in most medical settings in the Netherlands, one's name and date of birth (and possibly address) are required to access the services of the clinic the user is registered with. In some cases, the BSN (citizen service number) and the name of the huisarts (general practitioner) may additionally be required by emergency services, but we could not find a definitive answer about this protocol. If it turns out to be required, we would also need to add this information to Req. 2.6.
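The escalation order described above can be sketched as a single function. This is an illustration under assumptions, not the robot's actual code: `UserProfile`, `build_message`, `escalate` and the callables passed in (`call_contact`, `call_emergency`, `send_sms`, `take_photo`) are hypothetical stand-ins for the telephony and camera layers, and `call_contact` is assumed to return whether the contact answered.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class UserProfile:
    """Hypothetical record of the details required by Req. 2.6."""
    name: str
    date_of_birth: str  # e.g. "1950-04-12"
    location: str       # home address


def build_message(p: UserProfile) -> str:
    # Req. 2.6: every notification carries name, date of birth and location.
    return (f"{p.name} (born {p.date_of_birth}) has fallen at {p.location} "
            "and requires assistance.")


def escalate(profile: UserProfile,
             has_internet: bool,
             call_contact: Callable[[str, str], bool],
             call_emergency: Callable[[str], None],
             send_sms: Callable[[str, Optional[bytes]], None],
             take_photo: Callable[[], Optional[bytes]]) -> List[str]:
    """Run the hypothetical escalation chain and return a log of the steps taken."""
    message = build_message(profile)
    # Req. 2.3: VoIP video call when online, SIM-card call otherwise.
    channel = "voip_video" if has_internet else "sim_voice"
    log = [f"call contact via {channel}"]
    if call_contact(channel, message):
        log.append("contact answered")
        return log
    # Req. 2.4: the contact did not answer, so call local emergency services.
    call_emergency(message)
    log.append("called emergency services")
    # Req. 2.5 and 2.7: text the contact, attaching a photo if one was taken.
    photo = take_photo()
    send_sms(message, photo)
    log.append("sent SMS with photo" if photo is not None else "sent SMS")
    return log


# Usage with stub callables: offline, and the contact does not answer.
user = UserProfile("A. User", "1950-04-12", "Example Street 1")
steps = escalate(user, has_internet=False,
                 call_contact=lambda channel, msg: False,
                 call_emergency=lambda msg: None,
                 send_sms=lambda msg, photo: None,
                 take_photo=lambda: b"jpeg-bytes")
print(steps)
# ['call contact via sim_voice', 'called emergency services', 'sent SMS with photo']
```

Passing the telephony and camera functions in as parameters keeps the decision logic separate from the hardware, so the order of the steps can be checked with stubs before any real call is placed.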

In terms of processing and data storage, the robot should keep all data local instead of sending it to an online server, and it should delete video data shortly after processing it, due to the privacy concerns raised during the interviews (Req. 2.11, 2.12).
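One way to satisfy Req. 2.11 and 2.12 together is to keep processed frames only in a local, time-limited buffer and purge them periodically. The sketch below is an illustration under stated assumptions: `LocalFrameBuffer` is a hypothetical name, frames are held in memory instead of on the hard drive, and the 50-second retention is an assumed margin below the 60-second limit, so that a purge running at least every 10 seconds still deletes each frame within one minute. Dropping a reference in Python does not securely wipe memory, and a version that writes frames to disk would also need to delete the files.

```python
import time
from collections import deque
from typing import Deque, Optional, Tuple

# Assumed retention margin: frames older than this are purged. Kept below the
# 60-second limit of Req. 2.12 so that a purge running at least every 10 s
# still deletes each frame within one minute of processing.
RETENTION_S = 50.0


class LocalFrameBuffer:
    """Hypothetical local-only buffer of processed video frames (never uploaded)."""

    def __init__(self, retention_s: float = RETENTION_S) -> None:
        self.retention_s = retention_s
        # (processed_at, frame) pairs, oldest first.
        self._frames: Deque[Tuple[float, bytes]] = deque()

    def add_processed(self, frame: bytes, processed_at: Optional[float] = None) -> None:
        # Frames are assumed to arrive in processing order.
        ts = time.monotonic() if processed_at is None else processed_at
        self._frames.append((ts, frame))

    def purge(self, now: Optional[float] = None) -> int:
        """Drop frames older than the retention period; return how many were dropped."""
        now = time.monotonic() if now is None else now
        removed = 0
        while self._frames and now - self._frames[0][0] >= self.retention_s:
            self._frames.popleft()
            removed += 1
        return removed

    def __len__(self) -> int:
        return len(self._frames)


# Usage: two frames processed 30 s apart, purged at t = 55 s.
buf = LocalFrameBuffer()
buf.add_processed(b"frame-1", processed_at=0.0)
buf.add_processed(b"frame-2", processed_at=30.0)
print(buf.purge(now=55.0), len(buf))  # 1 1: only frame-1 has exceeded 50 s
```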

{| class="wikitable"
!Index
|-
|2.2
|When the robot detects a fall, the robot shall notify the designated emergency contact within 30 seconds.
|Must
|-
|2.6
|The notification (text or call) that the robot sends shall contain the name, date of birth, and location of the user.
|Must
|-
|2.7
|When the robot detects a fall, and the emergency contact has not responded (as described in 2.4), the robot shall take a picture of the fall and send the picture to the designated emergency contact via SMS.
|Could
|-
|2.8
|When the robot detects a fall, the robot shall have a mechanism to allow the user to cancel the notification within 30 seconds.
|Should
|-
|2.9
|When the robot detects a fall, the robot shall additionally detect if the user is immobile, and if they are immobile for at least 10 seconds after the fall detection, it shall send the notification to the emergency contact within 1 second of determining the user is immobile.
|Should
|-
|2.10
|The robot shall monitor the user continuously using the camera mounted on its body.
|Must
|-
|2.11
|All data that the robot processes or sends shall be stored locally on a hard drive.
|Must
|-
|2.12
|The robot shall delete any video data within 1 minute after it has been processed.
|Must

|} | |||

The test plan is as follows:
{| class="wikitable"
!Index
!Precondition
!Action
!Expected Output
|-
|2.1
|The robot is powered on.
|Pretend to fall down.
|The robot detects the fall and tells you so by voice. It asks whether you are okay and whether you need help.
|-
|2.2
|The robot is powered on.
|Pretend to fall down.
|The robot detects the fall and notifies the designated contact within 30 seconds. The notification is a VOIP video call or, if the Internet is unavailable, a SIM-card-based call. In the call, the robot says that the user has fallen and requires assistance, and states the user's name, date of birth, and address.
|-
|2.3
|The robot has detected a fall.
|To test the video call, wait until the robot notifies the contact. To test the SIM call, turn off the Internet connection, repeat the fall, and wait until the robot notifies the contact.
|Both the video call and the SIM call connect to the emergency contact without issues. In the call, the robot says that the user has fallen and requires assistance, and states the user's name, date of birth, and address.
|-
|2.4
|The robot has detected a fall.
|Wait until the robot notifies the contact. Make sure that no emergency contact responds.
|The robot notifies the local emergency services. In the call, the robot says that the user has fallen and requires assistance from an ambulance, and states the user's name, date of birth, and address.
|-
|2.5
|The robot has detected a fall.
|Wait until the robot notifies the contact. Make sure that no emergency contact responds.
|The emergency contact(s) receive a text message from the robot. The message says that the user has fallen and requires assistance, and contains the user's name, date of birth, and address.
|-
|2.6
|The robot has sent a text message (2.5).
|Inspect the text message.
|The text message contains the name, date of birth, and location of the user.
|-
|2.7
|The robot has sent a text message (2.5).
|Inspect the text message.
|The text message contains a picture of the fall.
|-
|2.8
|The robot has detected a fall.
|Within 30 seconds, tell the robot to cancel the notification.
|The robot cancels the notification process and goes back to idly following you around.
|-
|2.9
|The robot has detected a fall.
|Stay immobile for 10 seconds.
|The robot sends a notification to the emergency contact within 1 second. In the call, the robot says that the user has fallen and requires assistance, and states the user's name, date of birth, and address.
|-
|2.10
|The robot is powered on.
|Move around, or pretend to fall down. If you pretend to fall down, say "no" when the robot asks whether you need assistance.
|If the user moves, the robot follows the user around. If the user pretends to fall down, the robot tells the user so by voice and asks whether they need assistance. After the user replies "no", the robot goes back to following the user around.
|-
|2.11
|None
|Inspect the robot's internal hard drive.
|The hard drive contains the data that the robot has processed or sent.
|-
|2.12
|None
|Inspect the robot's internal hard drive.
|The only video data remaining was recorded in the last minute before the robot was turned off for inspection.
|}
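The timing rules in requirements 2.2, 2.4, 2.5, 2.7, 2.8 and 2.9 interact, so it may help to view them as a single decision procedure. The following is a simplified sketch under our own assumptions: times are seconds since the fall was detected, the call is placed at the latest allowed moment, a cancellation only counts if it arrives before the call is placed, and `CONTACT_TIMEOUT_S` is a hypothetical placeholder for the no-response timeout of requirement 2.4, which is not restated in this section. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

CANCEL_WINDOW_S = 30.0       # Req 2.8: the user may cancel within 30 s
NOTIFY_DEADLINE_S = 30.0     # Req 2.2: notify the contact within 30 s
IMMOBILE_THRESHOLD_S = 10.0  # Req 2.9: immobile for at least 10 s
CONTACT_TIMEOUT_S = 60.0     # hypothetical placeholder for the Req 2.4 timeout

@dataclass
class FallEvent:
    """What happened after a fall was detected (times in seconds since detection)."""
    cancelled_at: Optional[float] = None    # when the user cancelled, if ever
    immobile_since: Optional[float] = None  # when the user stopped moving, if ever
    contact_answered: bool = False          # whether any emergency contact responded

def plan_response(event: FallEvent) -> List[Tuple[float, str]]:
    """Return the (time, action) pairs the robot performs for one detected fall."""
    call_at = NOTIFY_DEADLINE_S
    if event.immobile_since is not None:
        # Req 2.9: immobility is confirmed after 10 s; notify at that moment.
        call_at = min(call_at, event.immobile_since + IMMOBILE_THRESHOLD_S)

    # Req 2.8: cancelling before the call is placed stops the whole process.
    if event.cancelled_at is not None and event.cancelled_at <= min(CANCEL_WINDOW_S, call_at):
        return [(event.cancelled_at, "cancel notification")]

    actions = [(call_at, "call emergency contact")]  # Req 2.2 / 2.3
    if not event.contact_answered:
        escalate_at = call_at + CONTACT_TIMEOUT_S
        actions += [
            (escalate_at, "call emergency services"),       # Req 2.4
            (escalate_at, "send text message to contact"),  # Req 2.5 / 2.6
            (escalate_at, "send picture of the fall"),      # Req 2.7
        ]
    return actions
```

Placing the call at the deadline is only one possible policy; a real implementation would more likely call as soon as the user confirms they need help.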

=== User Interface ===

The user interface of the robot should be lightweight and focused on achieving its core functionality. We have therefore chosen a voice-controlled system (Req 3.1), because our user group has difficulty with complex technology, which would otherwise make interacting with the robot challenging. Our interviews also support this choice. The voice-controlled system responds to a predefined set of commands, and only when a command is preceded by the robot's name (Req 3.2). This prevents the robot from doing something the user did not ask for, for example when it hears something that sounds like a command from another source, such as the TV. Speech data is interpreted by a natural language processing algorithm and classified into one of the predefined commands of 3.1. This does not mean that the robot only responds to these exact phrases; it should interpret speech for nuance. For example, "Yeah no, I'm fine" must be interpreted as "Do not need help". The robot should also allow the user to change, add, and delete emergency contacts in order to perform its function (Req 3.3, 3.4, 3.5).

{| class="wikitable"
!Index

!Description
!Priority

|-
|3.1
|The robot's interface shall be controlled using voice commands classified as follows: "Need help", "Do not need help", "change emergency contact", "add emergency contact", "delete emergency contact", "end call", "mute microphone", "mute camera".
|Must
|-
|3.2
|The robot shall only respond to commands beginning with its name.
|Must
|-
|3.3
|The robot shall have a mechanism for changing the designated emergency contact, such as a family member or caretaker.
|Must
|-
|3.4
|The robot shall have a mechanism for adding multiple emergency contacts.
|Must
|-
|3.5
|The robot shall have a mechanism for deleting emergency contacts, except when only one contact remains.
|Must
|}
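To illustrate requirements 3.1 and 3.2 together, the sketch below ignores any transcript that does not start with the robot's name and then maps the rest to one of the command labels of 3.1. It is a minimal rule-based stand-in for the natural language processing model of requirement 6.4, not the model we intend to use: the wake name `"robot"` and all trigger patterns are hypothetical examples, and a learned classifier would generalise much better to unseen phrasings.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical wake name: the requirements only say commands must begin with the robot's name.
WAKE_NAME = "robot"

# Command labels from Req 3.1 with a few illustrative trigger patterns each.
# This keyword table is a stand-in for the NLP model of Req 6.4.
# "Do not need help" is listed first because "need help" also occurs inside it.
COMMAND_PATTERNS: List[Tuple[str, List[str]]] = [
    ("Do not need help", [r"\b(don'?t|do not) need help\b", r"\bi'?m (fine|okay|ok)\b"]),
    ("Need help", [r"\bneed help\b", r"\bhelp me\b"]),
    ("change emergency contact", [r"\bchange\b.*\bcontact"]),
    ("add emergency contact", [r"\badd\b.*\bcontact"]),
    ("delete emergency contact", [r"\b(delete|remove)\b.*\bcontact"]),
    ("end call", [r"\bend\b.*\bcall\b"]),
    ("mute microphone", [r"\bmute\b.*\bmicrophone\b"]),
    ("mute camera", [r"\b(mute|turn off)\b.*\bcamera\b"]),
]

def classify_utterance(transcript: str) -> Optional[str]:
    """Map a speech transcript to a Req 3.1 command label, or None if it is ignored."""
    text = transcript.lower().strip()
    # Req 3.2: only react to utterances that start with the robot's name.
    match = re.match(rf"{re.escape(WAKE_NAME)}\b[\s,]*(.*)", text)
    if match is None:
        return None
    command_text = match.group(1)
    for label, patterns in COMMAND_PATTERNS:
        if any(re.search(pattern, command_text) for pattern in patterns):
            return label
    return None  # addressed to the robot, but not a known command
```

With these rules, "Robot, yeah no, I'm fine" is classified as "Do not need help", while "I need help" without the name is ignored, which is the behaviour test 3.2 checks for.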

The test plan is as follows:
{| class="wikitable"
!Index
!Precondition
!Action
!Expected Output
|-
|3.1
|The robot is powered on.
|Go through each action and check that it matches the expected output.
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I don't need help."
# Say "Robot name, I would like to change one of my emergency contacts."
# Say "Robot name, I would like to add a new emergency contact."
# Say "Robot name, I would like to delete an emergency contact."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "Robot name, end the call."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "Robot name, mute my microphone."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "Robot name, turn off my camera."
|Check the output for the corresponding action.
# The robot notifies the designated contact. The notification is a VOIP video call or, if the Internet is unavailable, a SIM-card-based call. In the call, the robot says that the user has fallen and requires assistance, and states the user's name, date of birth, and address.
# The robot goes back to following the user around.
# The robot asks you which emergency contact you would like to change.
# The robot asks you for information about the emergency contact you would like to add, such as their name and phone number.
# The robot asks you which emergency contact you would like to delete.
# The robot ends the call.
# The robot mutes your microphone.
# The robot turns off your camera.
|-
|3.2
|The robot is powered on.
|Go through each action and check that it matches the expected output.
# Pretend to fall down. When the robot asks if you need help, say "I need help."
# Pretend to fall down. When the robot asks if you need help, say "I don't need help."
# Say "I would like to change one of my emergency contacts."
# Say "I would like to add a new emergency contact."
# Say "I would like to delete an emergency contact."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "End the call."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "Mute my microphone."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help." The robot will start a call with your emergency contact, who must pick up the call. Say "Turn off my camera."
|In every case, the robot does not respond to the user and remains in its previous state, so it should be possible to retry the command by saying the robot's name first.
|-
|3.3
|The robot is powered on.
|Tell the robot to change an emergency contact.
|The robot responds by asking you which contact you would like to change.
|-
|3.4
|The robot is powered on.
|Tell the robot to add a new emergency contact.
|The robot responds by asking you for information about the contact.
|-
|3.5
|The robot is powered on.
|Tell the robot to delete an emergency contact.
|The robot responds by asking you which contact you would like to delete or, if there is only one contact, by telling you that it cannot delete it.
|}

=== Safety ===

The safety of the user is paramount, so we have included requirements to ensure it. The most important is the prevention of false negatives (Req 4.1), i.e., falls that go undetected. Because no system can be 100% reliable, we require a reliability of 99%: the false-negative rate of the system must not exceed 1%. We believe that a higher false-negative rate would make the system too unreliable for our users. '''(waiting until we have figured out how the robot will follow the user)'''

{| class="wikitable"
!Index

!Description
!Priority

|-
|4.1
|During training, the false-negative rate for falls shall be at most 1%.
|Should
|}
The test plan is as follows:
{| class="wikitable"
!Index
!Precondition
!Action
!Expected Output
|-
|4.1
|The robot is powered on.
|Inspect the training statistics of the robot.
|The false-negative rate for falls is at most 1%.
|}
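The 1% bound in requirement 4.1 is a false-negative rate, i.e. the share of real falls that the detector misses: FNR = FN / (FN + TP). The helper below is a minimal sketch of the check in test 4.1, assuming the training statistics report the number of correctly detected falls (true positives) and missed falls (false negatives); the function names are hypothetical.

```python
def false_negative_rate(true_positives: int, false_negatives: int) -> float:
    """Share of actual falls that the detector missed: FN / (FN + TP)."""
    actual_falls = true_positives + false_negatives
    if actual_falls == 0:
        raise ValueError("the evaluation data contains no actual falls")
    return false_negatives / actual_falls

def meets_req_4_1(true_positives: int, false_negatives: int,
                  max_rate: float = 0.01) -> bool:
    """Req 4.1: the false-negative rate for falls is at most 1%."""
    return false_negative_rate(true_positives, false_negatives) <= max_rate
```

For example, 990 detected and 10 missed falls give a rate of exactly 1% and pass, whereas 985 detected and 15 missed falls give 1.5% and fail. False alarms (false positives) do not affect this rate.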


|Must
|}

The test plan for these requirements is straightforward: it only requires inspecting the hardware specifications of the components listed above. Therefore, we do not include a full test plan.

=== Algorithm ===

The robot uses several algorithms to accomplish its functionality. It must use an object detection algorithm that allows it to identify falls (Req 6.1, 6.2) and a pathfinding algorithm to move around and monitor the user (Req 6.3). Furthermore, for the voice interface, it needs a natural language processing algorithm, a text-to-speech algorithm, and a voice processing algorithm (Req 6.4, 6.5, 6.6) to receive commands from, and interact with, the user.

{| class="wikitable"
!Index
!Description
!Priority
!Notes
|-
|6.1
|The robot shall use an object detection algorithm in order to identify objects and people in its environment.
|Must
|Algorithms 6.1 to 6.6 could additionally be constrained by time and memory complexity, and by the environment in which the robot is to operate.
|-
|6.2
|The robot shall use the algorithm in 6.1 to identify when an object or person has fallen.
|Must
|
|-
|6.3
|The robot shall use a pathfinding algorithm in order to move from one location to another.
|Must
|
|-
|6.4
|The robot shall use a natural language processing algorithm in order to interpret and classify commands.
|Could
|
|-
|6.5
|The robot shall use a text-to-speech algorithm in order to talk to the user.
|Could
|
|-
|6.6
|The robot shall use a voice processing algorithm in order to recognize speech.
|Could
|
|}

Passing the test plans of the previous sections also verifies the requirements of this section. In particular, most tests in section 2 exercise 6.1 and 6.2, the tests for requirements 1.8 and 1.9 exercise 6.3, and all tests in section 3 exercise 6.4, 6.5, and 6.6.
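Requirement 6.3 leaves the pathfinding algorithm open. As one possible choice, the sketch below runs A* search on a small occupancy grid of the home (0 = free cell, 1 = obstacle) with 4-connected moves and a Manhattan-distance heuristic. The grid representation and the function name are our own assumptions; a real robot would more likely plan on a map built from its sensors.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in the occupancy grid

def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal; cells are 0 (free) or 1 (obstacle)."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance: never overestimates the remaining 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(heuristic(start), 0, start)]
    best_cost: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, new_cost + 1):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable
```

Because the Manhattan distance never overestimates the number of remaining moves on a 4-connected grid, the first time the goal is taken from the priority queue, the reconstructed path is a shortest one.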

Further requirements on these algorithms are shown below:


==== Voice processing ====

The algorithm must be able to recognize speech and transcribe it into text for the NLP algorithm to process. It should still work in the presence of noise; for example, speech coming from the TV should be ignored or suppressed. To achieve this, it could distinguish the user's voice from other voices.
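One common way to distinguish the user's voice from others is speaker verification: a speaker-encoder model turns each utterance into a fixed-length embedding vector, which is compared with an embedding recorded when the user enrolled. The sketch below shows only the comparison step. The encoder model, the embedding size, and the threshold of 0.75 are assumptions that would have to be chosen and tuned on real recordings.

```python
import math
from typing import Sequence

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def is_enrolled_user(utterance_embedding: Sequence[float],
                     enrolled_embedding: Sequence[float],
                     threshold: float = 0.75) -> bool:
    """Accept the utterance only if it is close enough to the enrolled user's voice.

    The threshold is a hypothetical value: raising it rejects more other speakers
    (e.g. a TV presenter) but also rejects the user more often.
    """
    return cosine_similarity(utterance_embedding, enrolled_embedding) >= threshold
```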

== Specification ==

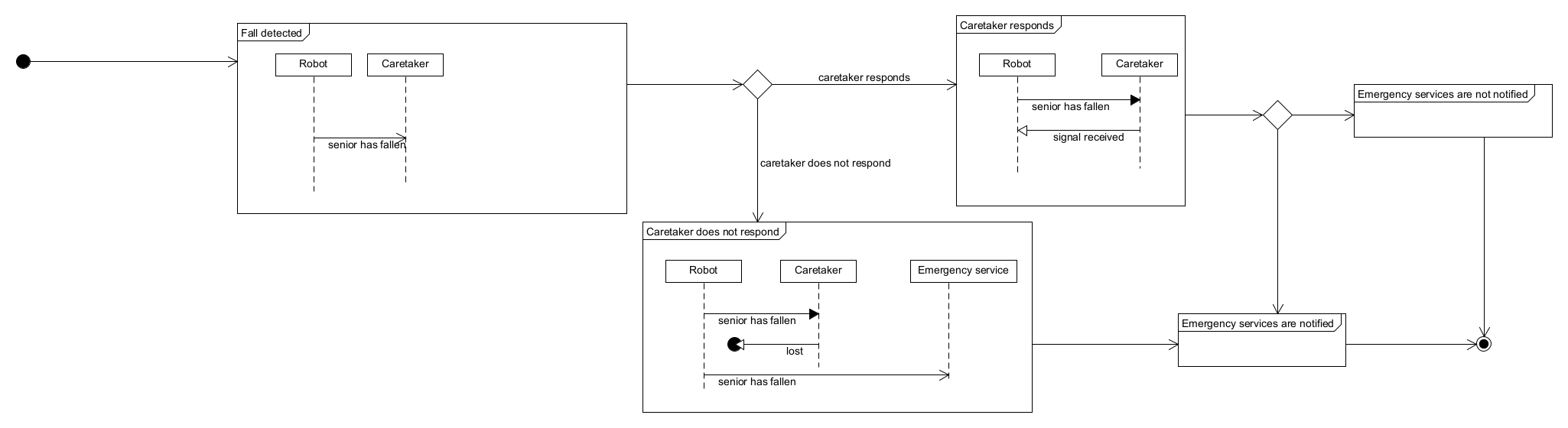

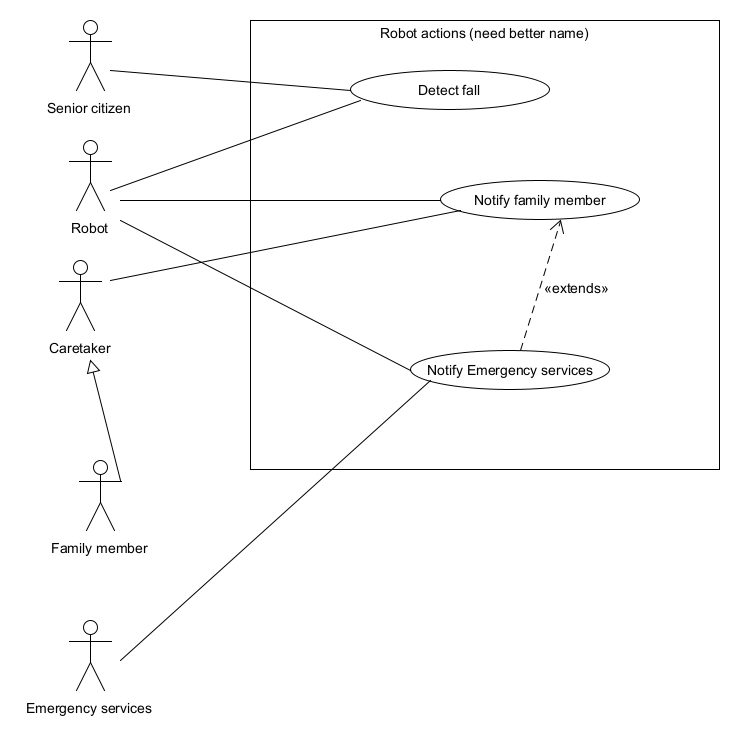

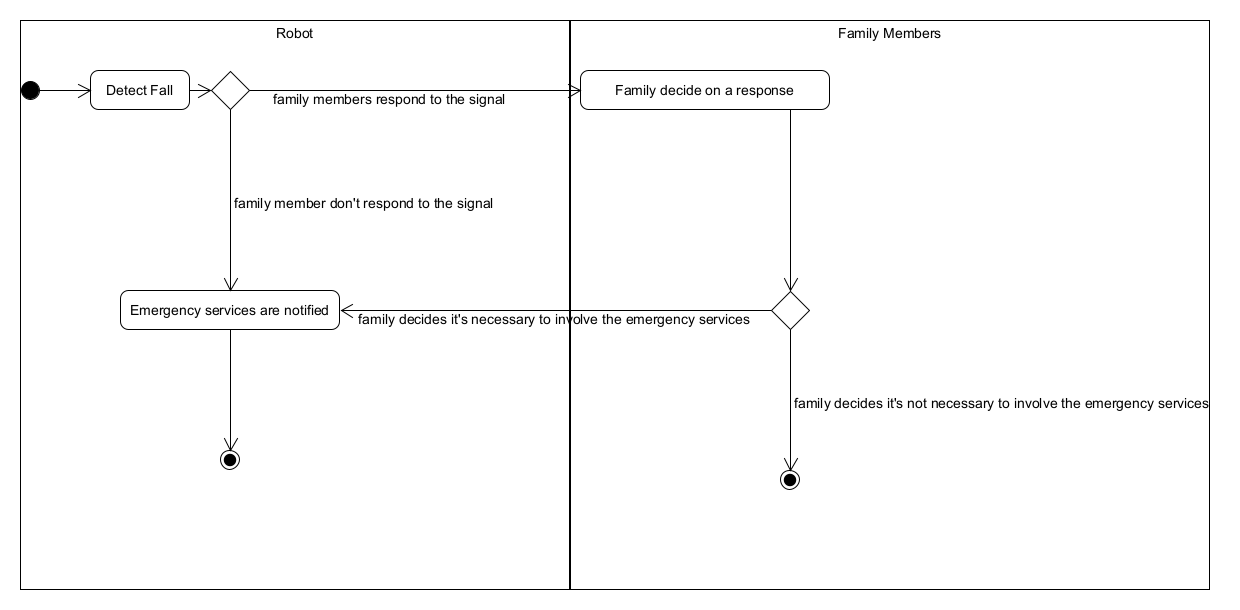

The functionality and behavior of the robot are represented through four types of UML (Unified Modeling Language) diagrams: sequence, use case, state machine, and activity. Each diagram illustrates a different facet of the robot’s operation. The sequence diagram shows the order of interactions between the robot and other actors, while the use case diagram gives an overview of the system from the user’s perspective, detailing the interactions between the actors and the system. The state machine diagram shows the states the robot can be in during its lifecycle and the transitions between them. Lastly, the activity diagram focuses on the procedural aspects of the system, outlining the control and data flow between its steps. Together, these diagrams provide a holistic view of the robot’s interactions, responses, and internal processes.

1. '''Sequence diagram''': The sequence diagram models the communication between the interaction partners, capturing different types of messages such as synchronous, asynchronous, response, and create messages. The interaction starts with the detection of a fall, which sets off a chain of communication between the robot, the caretaker, and the emergency services. When a senior falls, a message is sent to both the robot and the caretaker. If the caretaker responds, the robot receives a signal and the emergency services are not notified, indicating that the situation is being handled. If the caretaker does not respond, the robot notifies the emergency services, since immediate assistance may be needed. Solid arrows denote synchronous messages, which expect an immediate response, while dotted arrows denote asynchronous messages, which do not require an immediate reply. The diagram thus shows the sequence of interactions and the conditions under which they occur, ensuring clear and effective communication during critical events.

[[File:Sequence group5 2024.png|center|thumb|1311x1311px]]

2. '''Use case diagram''': The use case diagram offers a user-centric view of the system’s behavior, highlighting the roles and interactions of the actors without going into the internal workings of the implementation. It shows the actions and responsibilities of each actor in relation to the system, keeping the user’s experience and requirements at the center of the design process. This makes it clear how the system will function from the user’s point of view, facilitating communication between stakeholders and guiding the development team in building a system that meets the user’s needs.

[[File:Use case group5 2024.png|center|thumb|627x627px]]

3. '''Activity diagram''': The activity diagram models the procedural aspects of the system, specifically the response to a fall detection event. The activity starts when the robot detects a fall. The robot then notifies the family members or, if the family does not respond, automatically notifies the emergency services. If the family members do respond to the robot’s signal, they decide on the appropriate response: they may notify the emergency services if they assess the situation as critical or, if it is non-critical, choose not to involve the emergency services, which ends the activity flow. The diagram thus communicates the decision-making process and the actions taken by both the robot and the family members in the event of a fall, ensuring a swift and appropriate response. It underlines the importance of timely intervention and the respective roles of automated systems and human judgment in emergencies, serving as a visual guide to the sequence of actions and the conditional logic that governs the activity.

[[File:Activity group5 2024.png|center|thumb|1240x1240px]]

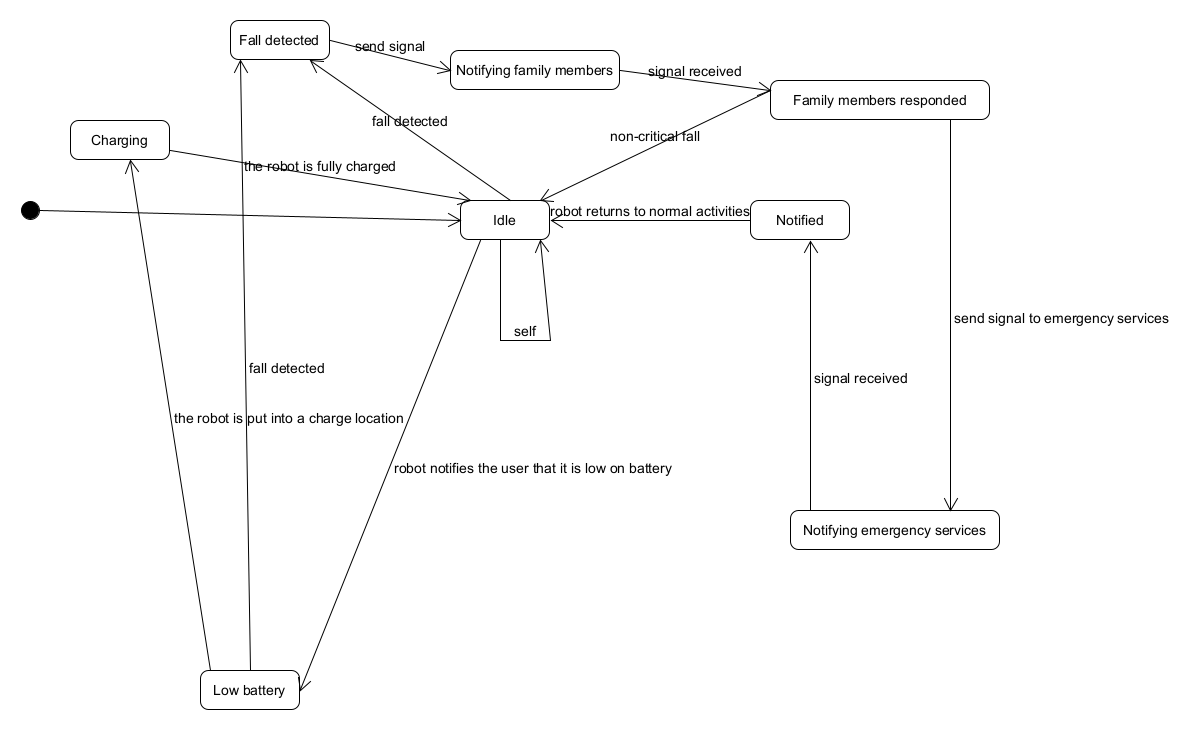

4. '''State machine diagram''': The state machine diagram shows the dynamic behavior of a system or object throughout its life cycle, from initialization to termination. It captures the states an object may occupy and the transitions between them, governed by specific conditions and events. In our diagram, the robot begins in a charging state, where it remains until fully charged. Once charged, it transitions to an idle state, awaiting further input or events. A fall detection event triggers a transition to a notifying state, in which the robot decides whether to alert family members or the emergency services based on the severity of the fall and the response received. If the fall is non-critical or family members intervene, the robot returns to its normal activities (the idle state); otherwise, it notifies the emergency services. The diagram also covers scenarios such as a low battery, in which the robot must inform the user and return to the charging state. Together, these states and transitions describe the operational flow of the robot.

[[File:State machine group5 2024.png|center|thumb|1004x1004px]]

== Ethical analysis ==

The introduction of this home robot designed for safety monitoring, particularly for individuals with limited mobility, raises several ethical considerations that must be carefully addressed. As mentioned previously, privacy and data security are two of the main concerns when information is collected with cameras and shared remotely. In addition, users (mainly the individuals being monitored) may feel a loss of independence and autonomy under monitoring. Robots of this kind may also lead to excessive reliance on technology, which can reduce people's sense of responsibility for their loved ones. These issues and possible solutions are discussed below:

'''1. Privacy concerns:''' The primary ethical concern is privacy. The built-in camera, while essential for safety monitoring, may intrude on the personal privacy of individuals within their homes, since it collects and processes sensitive data about their private lives. It is crucial to implement robust privacy protection features, ensuring that users have control over camera access and that visual data is stored securely. Clear communication and transparency regarding data usage and storage are essential, and users should be well informed about every privacy-related aspect of the technology before opting to use it.

'''2. Program performance:''' The program must be sufficiently accurate, especially because it handles health-related tasks, where any misjudgment or mistake in decision-making may have severe consequences. This also raises questions of accountability and responsibility, since the developer would be expected to take full responsibility for any unexpected failure of the program. Therefore, the program must undergo strict testing to ensure its precision and reliability.

'''3. Compromised autonomy:''' While the robot aims to enhance safety and well-being, it compromises the autonomy and privacy of the user to some extent. Being constantly monitored, even by loved ones, deprives individuals of personal space. It is crucial to strike a balance between providing necessary care and preserving personal freedom, and to obtain full, informed consent from individuals before deploying the robot in their homes.

'''4. Dependence on technology:''' With the help of the robot, family members no longer need to be physically present most of the time to attend to the user. Dependence on the robot could grow to the point where people no longer take responsibility for their loved ones, even though providing companionship, particularly for elderly individuals, is just as important. Moreover, if robots took over most caretaking tasks, many human caretakers could lose their jobs.

'''5. Affordability and continuous improvement:''' It is critical to ensure that the home robot is affordable and accessible to a broad range of individuals, since the technology aims to solve health-related problems for society as a whole rather than for a privileged few. The developers are responsible for controlling the investment in the product and limiting its cost. At the same time, it would be good practice for the developers to gather feedback and stay responsive, since continuous improvement is needed to address any unforeseen ethical challenges.

== Design ==

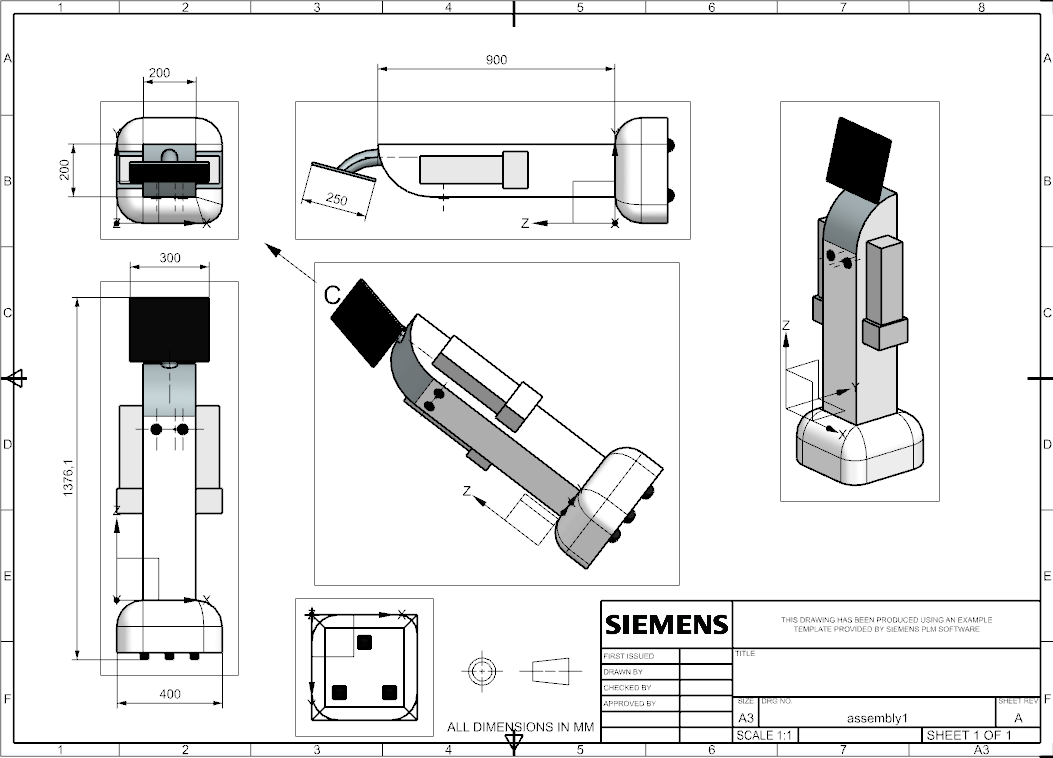

After making sketches, we decided that although the robot's design serves its purpose, a more user-friendly design could be created. We therefore adjusted it so that the screen is now the "head" of the robot, which now also has arms. The wheels are still attached to the base of the robot, but they are now hidden underneath it. We settled on this as our design for the time being and created CAD models to show what the robot might look like. The technical drawings generated from the CAD model are shown below.


=== Prototype ===

Unfortunately, due to the restrictions of the project, it is not possible for us to implement all of the requirements we have developed. For the prototype, we have selected the significant features and functions listed in the table below, which will be implemented by the end of this project.

{| class="wikitable"
|+Implementation
!Index
!Description
|-
!2.1
|The robot shall be able to detect falls.
|-
!2.2
|When the robot detects a fall, the robot shall notify the designated emergency contact within 30 seconds.
|-
!2.3
|The notification shall be in the form of a VOIP video call to the emergency contact, or a SIM-card based call if an Internet connection is unavailable.
|-
!2.5
|When the robot detects a fall, and the emergency contact has not responded (as described in 2.4), the robot shall send a text message to the emergency contact.
|-
!2.6
|The notification (text or call) that the robot sends shall contain the name, date of birth, and location of the user.
|-
!2.7
|When the robot detects a fall and the emergency contact has not responded (as described in 2.4), the robot shall take a picture of the fall and send it to the designated emergency contact via MMS.
|-
!2.8
|When the robot detects a fall, the robot shall provide a mechanism that allows the user to cancel the notification within 30 seconds.
|-
!2.10
|The robot shall monitor the user continuously using the camera mounted on its body.
|-
!3.1
|The robot's interface shall be controlled using voice commands classified as follows: "Need help", "Do not need help".
|-
!4.1
|During training, the false-negative rate for falls shall be at most 1%.
|-
!6.1
|The robot shall use an object detection algorithm in order to identify objects and people in its environment.
|-
!6.2
|The robot shall use the algorithm in 6.1 to identify when an object or person has fallen.
|-
!6.4
|The robot shall use a natural language processing algorithm in order to interpret and classify commands.
|-
!6.5
|The robot shall use a text-to-speech algorithm in order to talk to the user.
|-
!6.6
|The robot shall use a voice processing algorithm in order to recognize speech.
|}

As the selected requirements in the table above show, most of them concern functionality, which will form the major part of our implementation. We have omitted sections 1 and 5 because we do not yet know whether we will be able to use an actual robot or will have to simulate it on a laptop; in either case, most if not all of those requirements would be satisfied by default. We will use pre-trained models to realize fall detection, natural language processing, voice processing, and text-to-speech, and implement the remaining functionality ourselves.

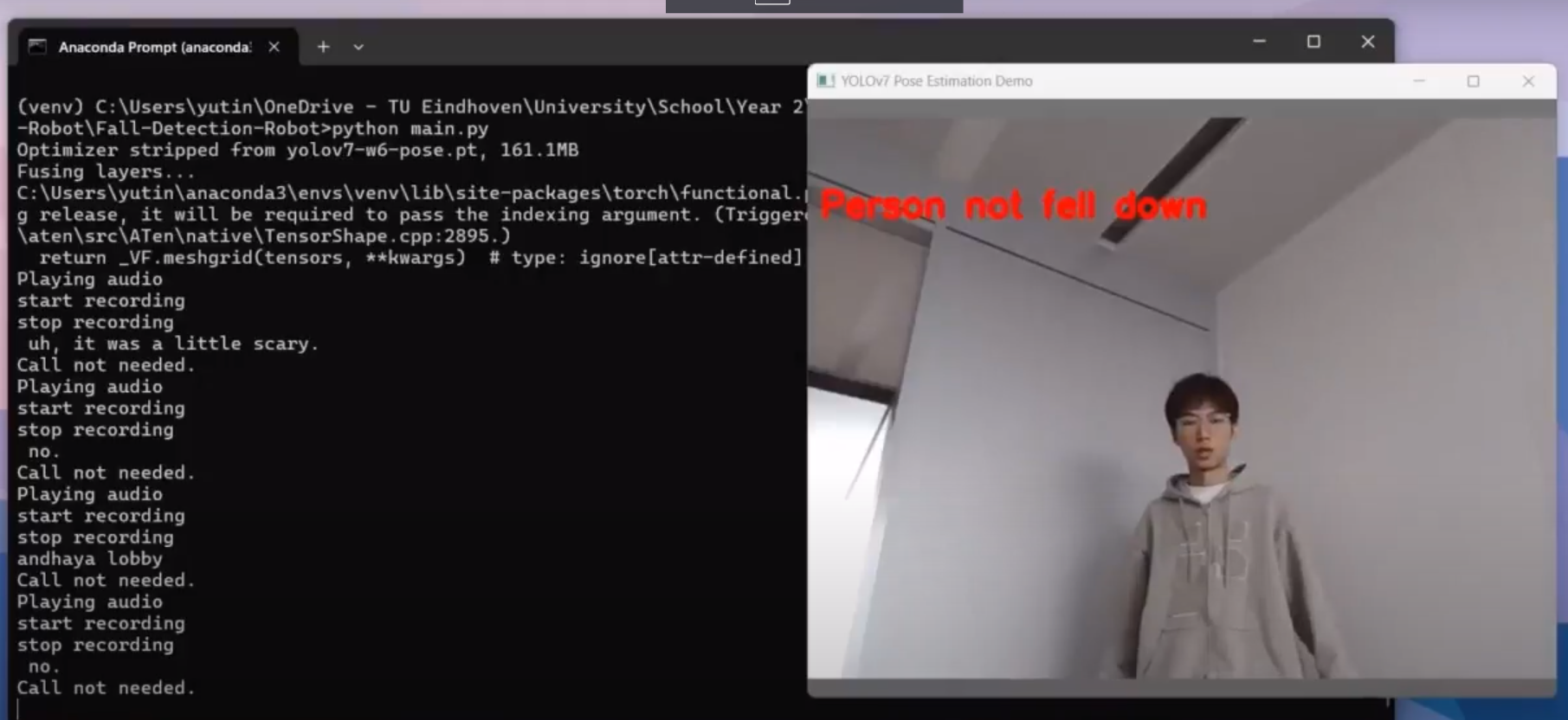

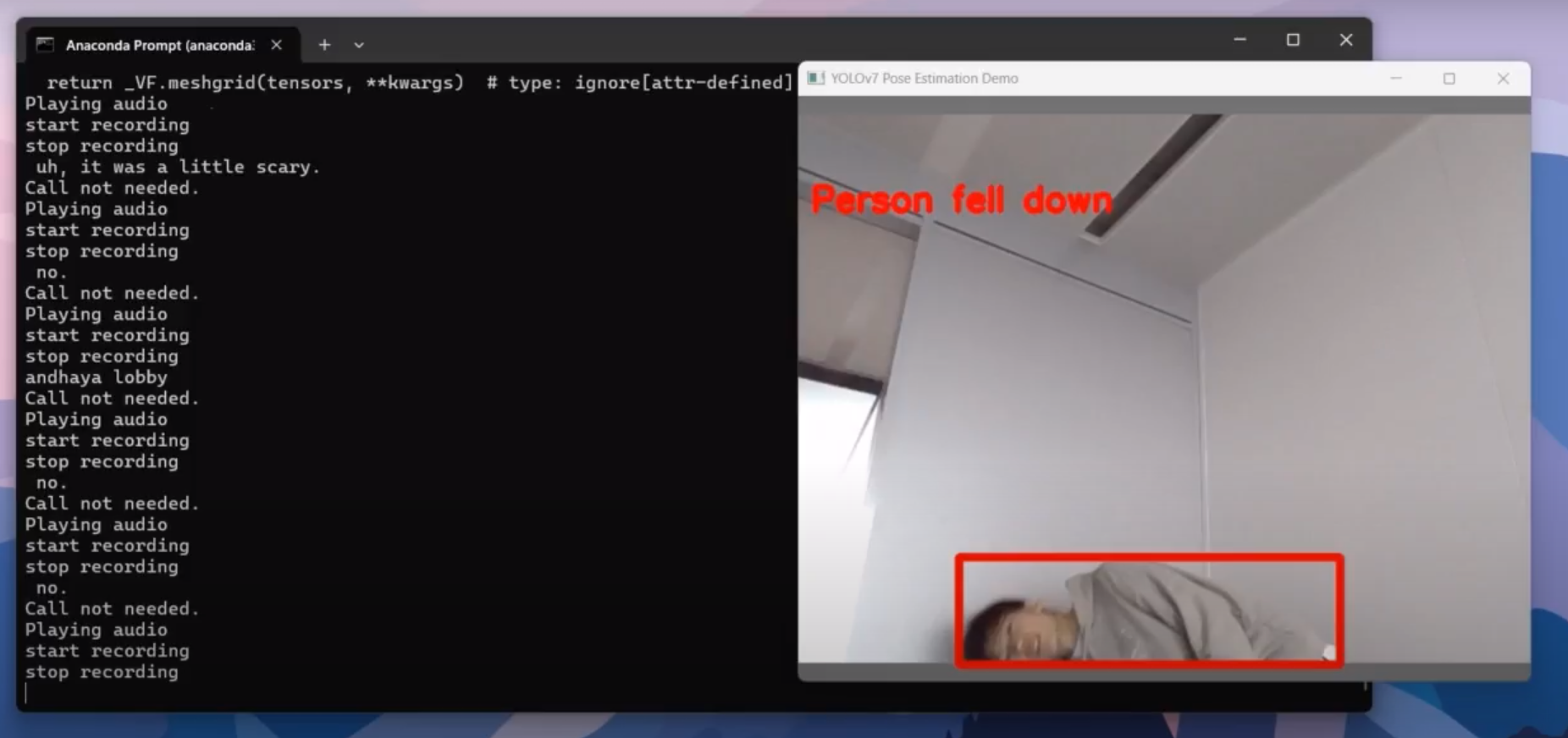

==== Fall Detection Algorithm ====

[[File:Fall-detection-not-fall.png|center|thumb|995x995px|The algorithm detecting a person who has not fallen.]]

[[File:Fall-detection-fall.png|center|thumb|1001x1001px|The algorithm detecting a person who has fallen.]]

We used two libraries built on the YOLOv7<ref name=":19">[https://github.com/WongKinYiu/yolov7/tree/main?tab=readme-ov-file WongKinYiu. (2022). ''Yolov7: Implementation of paper - yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors''. GitHub. https://github.com/WongKinYiu/yolov7/tree/main?tab=readme-ov-file]</ref> object detection model. The first<ref name=":20">RizwanMunawar. (2022). ''Yolov7-Pose-estimation: Yolov7 pose estimation using opencv, pytorch''. GitHub. <nowiki>https://github.com/RizwanMunawar/yolov7-pose-estimation/tree/main?tab=readme-ov-file</nowiki></ref> is a package for human pose estimation: it can use the laptop camera to detect a person's pose in real time and display it as a skeleton. The second<ref name=":21">Manirajanvn. (2022). ''yolov7_keypoints''. GitHub. <nowiki>https://github.com/manirajanvn/yolov7_keypoints/tree/main</nowiki></ref> is a package for detecting a person falling down in a video. We combined these two packages to detect human falls in real time and show the result on screen.

===== 1. YOLOv7<ref name=":19" /> =====

At the time of its publication, YOLOv7 was reported by its authors to surpass all known real-time object detectors in both speed and accuracy. Its GitHub repository provides several branches for object recognition, pose estimation, Lidar and other functionalities. It can perform detection on images and videos provided by users, and it leaves room for customization: for example, users can train the model on their own datasets or modify certain key points to bias the object recognition differently. This makes it a good model for detecting human falls in this project. However, on its own it only detects poses in images and videos, whereas our robot needs to detect falls in real time, so some integration with other code is needed.

===== 2. yolov7-pose-estimation<ref name=":20" /> =====

This library is a modification of YOLOv7. The main reason we use it is that it can apply YOLOv7 pose detection to a webcam feed in real time, which suits our needs: it lets us detect the pose of the user in real time. The remaining step is to decide when the user's pose should be recognized as a fall.

===== 3. yolov7_keypoints<ref name=":21" /> =====

This library is another modification of YOLOv7. It uses a fall detection algorithm that extracts several human body keypoints and calculates the differences in y-coordinate between the shoulder, hip, and foot. When the vertical distances between shoulder and hip, hip and foot, and shoulder and foot are all small enough, that is, when the body is close to horizontal, it classifies the pose as a fall.

We therefore integrated the second and third libraries to detect human falls in real time. We are now able to use a webcam to detect whether a person falls down.
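Applied to every webcam frame, the shoulder/hip/foot rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code of either library: the keypoint names, the single pixel `threshold` and the `estimate_pose` callback (a hypothetical stand-in for the yolov7-pose-estimation model) are our own, and the actual package uses its own keypoint indices and thresholds.

```python
from typing import Callable, Iterable, Iterator, Mapping, Optional

# Hypothetical keypoint format: name -> (x, y) in pixels; y grows downwards.
Keypoints = Mapping[str, tuple[float, float]]


def is_fall(kp: Keypoints, threshold: float) -> bool:
    """Flag a fall when shoulder, hip and foot lie at almost the same height.

    Standing: the three y values are far apart.
    Lying down: all three vertical gaps become small.
    """
    shoulder_y, hip_y, foot_y = kp["shoulder"][1], kp["hip"][1], kp["foot"][1]
    return (abs(shoulder_y - hip_y) < threshold
            and abs(hip_y - foot_y) < threshold
            and abs(shoulder_y - foot_y) < threshold)


def detect_falls(frames: Iterable[object],
                 estimate_pose: Callable[[object], Optional[Keypoints]],
                 threshold: float = 40.0) -> Iterator[bool]:
    """Run pose estimation on each frame and yield whether it shows a fall."""
    for frame in frames:
        kp = estimate_pose(frame)  # None when no person is detected
        yield kp is not None and is_fall(kp, threshold)


# Fake "frames" standing in for webcam images.
standing = {"shoulder": (320, 100), "hip": (320, 250), "foot": (320, 420)}
lying = {"shoulder": (100, 400), "hip": (250, 410), "foot": (420, 415)}
poses = {"f1": standing, "f2": lying, "f3": None}
print(list(detect_falls(["f1", "f2", "f3"], poses.get)))  # [False, True, False]
```

Keeping the pose model behind a callback means the same rule can be exercised with recorded keypoints before it is connected to the live camera.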

==== Voice Recognition Algorithm ====

After a fall, the user might not be able to move, and interviewees mentioned that in this situation they would prefer to use voice commands to call their emergency contacts. We therefore decided to add voice recognition to our robot. When a possible fall is detected, the robot shall ask the user whether they need help. If the user gives a positive response or does not reply, the robot shall call the emergency contact immediately. A suitable voice recognition API is needed to analyze the user's response.
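Whatever speech recognition API is chosen, its transcript still has to be mapped onto the two command classes of requirement 3.1 and turned into a decision. A minimal sketch, assuming simple keyword matching rather than a full NLP model: `speak`, `listen` and `call_contact` are hypothetical callbacks for text-to-speech, speech recognition and the phone call, the 30-second default mirrors the window in requirement 2.8, and treating an unrecognized reply like silence is our own assumption.

```python
import re
from enum import Enum
from typing import Callable, Optional


class Reply(Enum):
    NEED_HELP = "Need help"
    NO_HELP = "Do not need help"
    NO_REPLY = "No reply"


def classify_reply(transcript: Optional[str]) -> Reply:
    """Map a speech transcript onto the command classes of requirement 3.1."""
    if transcript is None or not transcript.strip():
        return Reply.NO_REPLY
    # Normalise case and typographic apostrophes (e.g. "don\u2019t").
    text = transcript.lower().replace("\u2019", "'")
    words = set(re.findall(r"[a-z']+", text))
    # Negative phrases first: "don't need help" also contains "need help".
    if "don't need help" in text or "do not need help" in text or "no" in words:
        return Reply.NO_HELP
    if "need help" in text or "yes" in words:
        return Reply.NEED_HELP
    # Unrecognised answers are treated like silence (assumption).
    return Reply.NO_REPLY


def handle_possible_fall(speak: Callable[[str], None],
                         listen: Callable[[float], Optional[str]],
                         call_contact: Callable[[], None],
                         timeout_s: float = 30.0) -> Reply:
    """Ask the user, then call unless they explicitly decline help."""
    speak("It looks like you may have fallen. Do you need help?")
    reply = classify_reply(listen(timeout_s))
    if reply is not Reply.NO_HELP:  # positive answer or no reply -> call
        call_contact()
    return reply
```

Checking the negative phrases first matters: a naive "contains *need help*" test would classify "I don't need help" as a request for help.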

1. Whisper<ref>Whisper Introduction. https://openai.com/research/whisper</ref> is an automatic speech recognition (ASR) system developed by OpenAI and trained on 680,000 hours of multilingual and multitask supervised data collected from the web. It is based on the Transformer architecture, which is also commonly used in large language models. Whisper is free, open-source, robust to different accents, and supports multiple languages. Its downside is that transcribing speech into text can take a long time. However, it offers several model sizes with different trade-offs between speed and accuracy, and if we restrict the user's reply to simple words, such as yes or no, the processing time remains acceptable.

2. Google Speech-to-Text AI<ref>Speech-to-Text documentation. https://cloud.google.com/speech-to-text/docs</ref>: like Whisper, it can transcribe speech into text. As a cloud-based service, it requires no local setup, but its downside is that it is not free: after the first 60 minutes each month, it charges $0.024 per minute, which could affect users' purchasing decisions.
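To make the trade-off between the two options concrete, the sketch below shows a local Whisper transcription and the monthly Google cost. `whisper.load_model` and `model.transcribe` are calls from the open-source `openai-whisper` package (which also needs ffmpeg); the function names, the default `"base"` model and the `language="en"` setting are our own assumptions. `google_stt_monthly_cost` simply applies the prices quoted above, which may change over time.

```python
def transcribe_with_whisper(audio_path: str, model_name: str = "base") -> str:
    """Transcribe a recorded reply locally with Whisper.

    Smaller models such as "tiny" or "base" are faster but less accurate
    than "medium" or "large".
    """
    import whisper  # lazy import: `pip install openai-whisper`

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path, language="en")
    return result["text"].strip()


def google_stt_monthly_cost(minutes: float, free_minutes: float = 60.0,
                            price_per_minute: float = 0.024) -> float:
    """Monthly cost in US dollars, using the pricing figures quoted above."""
    billable = max(0.0, minutes - free_minutes)
    return billable * price_per_minute
```

For example, 100 minutes of audio in a month would cost about (100 − 60) × $0.024 = $0.96 with Google, while Whisper only costs local compute time. In a real loop, the Whisper model should be loaded once and reused instead of being reloaded for every reply.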

==== Programmable Calling and SMS Texting ====

We have also implemented programmable calling and SMS texting. We used Twilio as the application programming interface for initiating the call and sending the text message. We created a host phone number on Twilio and registered the phone number of one of the group members as the recipient of the call and the SMS message.
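A minimal sketch of the calling and texting step with the Twilio Python helper library (`pip install twilio`). `Client`, `calls.create` (with inline `twiml`) and `messages.create` belong to that library; the helper names, the message wording and the way credentials and phone numbers are passed in are our own assumptions. Credentials should come from environment variables rather than being written into the source code.

```python
from xml.sax.saxutils import escape


def build_alert_message(name: str, date_of_birth: str, address: str) -> str:
    """Alert text containing the details required by requirement 2.6."""
    return (f"Fall alert: {name} (born {date_of_birth}) may have fallen and "
            f"requires assistance. Address: {address}.")


def twiml_say(message: str) -> str:
    """Wrap the alert in TwiML so that Twilio reads it aloud during the call."""
    return f"<Response><Say>{escape(message)}</Say></Response>"


def notify_contact(account_sid: str, auth_token: str, from_number: str,
                   to_number: str, message: str) -> tuple[str, str]:
    """Place a voice call and send an SMS with the same alert text.

    Returns the SIDs of the created call and message.
    """
    from twilio.rest import Client  # lazy import: only needed when notifying

    client = Client(account_sid, auth_token)
    call = client.calls.create(twiml=twiml_say(message),
                               to=to_number, from_=from_number)
    sms = client.messages.create(body=message, to=to_number, from_=from_number)
    return call.sid, sms.sid
```

Escaping the message before embedding it in TwiML keeps characters such as `&` in an address from producing invalid XML. For requirement 2.7, `messages.create` also accepts a `media_url` argument for sending a picture as MMS, although MMS availability depends on the country of the phone numbers.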

==== Test Plan & Results ====

We made a test plan that covers everything listed in the implementation, then performed the tests and recorded the results.

The test procedure below lists all functionalities in an optimal testing order. The rows appear in this order: 2.1, then 3.1, then 2.8, and so on, until 4.1. As a reminder, there are no dedicated tests for the requirements of Section 6, because the tests below also implicitly test the Section 6 requirements. We have modified the Action and Expected Output columns of some tests for clarity.

{| class="wikitable"
!Index
!Requirement
!Precondition
!Action
!Expected Output
!Actual Output

|-
|2.1
|The robot shall be able to detect falls.
|The robot is powered on.
|Pretend to fall down.
|The robot detects the fall and notifies you of it via voice. The robot asks you whether you are okay and whether you need help.
|Once the tester pretended to fall, the robot asked the tester whether he needed help.

|-
|3.1
|The robot's interface shall be controlled using voice commands classified as follows: "Need help", "Do not need help".
|The robot is powered on.
|Note: it may be better to perform test 2 first, then test 1.

Go through each action and check that it matches the expected output.
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I need help."
# Pretend to fall down. When the robot asks if you need help, say "Robot name, I don't need help."
|Check the output based on the corresponding action.
# The robot notifies the designated contact. The notification is either a VOIP video call or, if the Internet is not available, a SIM-card based call. In the call, the robot says that the user has fallen and requires assistance, and gives the name, date of birth, and address of the user.
# The robot goes back to following the user around.
|In test 1, the tester gave a positive response, and the robot called the emergency contact.

In test 2, the tester gave a negative response, and the robot did not call the emergency contact.

|-
|2.8
|When the robot detects a fall, the robot shall have a mechanism to allow the user to cancel the notification within 30 seconds.
|The robot has detected a fall.
|Tell the robot to cancel the notification.
|The robot cancels the notification process and goes back to idly following you around.
|The tester waited 25 seconds and then gave a negative response; the robot did not call.

| | |||

| | |||

| | |||

| | |||

| | |||

|-
|2.2
|When the robot detects a fall, the robot shall notify the designated emergency contact within 30 seconds.
|The robot is powered on.
|Pretend to fall down.
|The robot detects the fall and notifies the designated contact within 30 seconds. The notification is either a VOIP video call or, if the Internet is not available, a SIM-card based call. In the call, the robot says that the user has fallen and requires assistance, and gives the name, date of birth, and address of the user.
|Once the tester gave a positive response to the robot, the emergency contact received the call within 5 seconds.

| | |||

|-
|2.10
|The robot shall monitor the user continuously using the camera mounted on its body.
|The robot is powered on.
|Move or pretend to fall down. If you pretend to fall down, say no when the robot asks whether you need assistance.
|If the user moves, the robot follows the user around. If the user pretends to fall down, the robot notifies the user of it via voice and asks whether they need assistance. After the user replies no, the robot goes back to following the user around.
|The tester moved in front of the robot while staying within the field of view of its camera; the robot did not ask whether the tester needed help and did not call the emergency contact.

Then the tester pretended to fall, and the robot asked within 1 second whether he needed help.

|-
|2.3
|The notification shall be in the form of a VOIP video call to the emergency contact, or a SIM-card based call if an Internet connection is unavailable.
|The robot has detected a fall.
|To test the video call, simply wait until the robot notifies the contact. To test the SIM call, turn off the Internet, repeat the fall, and wait until the robot notifies the contact.
|The video call and the SIM call both connect to the emergency contact without issues. In the call, the robot says that the user has fallen and requires assistance, and gives the name, date of birth, and address of the user.
|Once the tester pretended to fall and gave a positive response, the emergency contact received a SIM-card based call. During the call, the robot stated the name, date of birth, and address of the tester.

The emergency contact did not receive a VOIP video call.

|-
|2.5
|When the robot detects a fall, and the emergency contact has not responded (as described in 2.4), the robot shall send a text message to the emergency contact.
|The robot has detected a fall.
|Wait until the robot notifies the contact. Make sure the emergency contact(s) has/have not responded.
|The emergency contact(s) receive(s) a text message from the robot. The text message says that the user has fallen and requires assistance, and contains the name, date of birth, and address of the user.
|Once the tester pretended to fall and gave a positive response, the emergency contact received an SMS message containing the name, date of birth, and address of the tester.

|-
|2.6
|The notification (text or call) that the robot sends shall contain the name, date of birth, and location of the user.
|The robot has sent a text message (2.5).
|Inspect the text message.
|The text message contains the name, date of birth, and location of the user.
|Both the SMS message and the SIM-card based call received by the emergency contact contained the name, date of birth, and location of the user.

|-
|2.7
|When the robot detects a fall, and the emergency contact has not responded (as described in 2.4), the robot shall take a picture of the fall and send it to the designated emergency contact via SMS.
|The robot has sent a text message (2.5).
|Inspect the text message.
|The text message contains a picture of the fall.
|The emergency contact did not receive any picture from the robot.

|-
|4.1
|During training, the rate of false negative falls shall be at most 1%.
|The robot is powered on.